Windows Azure and Cloud Computing Posts for 12/1/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA) and Hyper-V Cloud

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

SQL Azure Database and Reporting

Lauren Crawford announced on 12/1/2010 that she published Sync Framework Tips and Troubleshooting on TechNet Wiki:

I've started an article on the TechNet Wiki that collects usage tips and troubleshooting for Sync Framework. This can be the first place you look when you have a nagging question that isn't answered anywhere else.

Some tips already collected are recommendations about how to speed up initialization of multiple SQL Server Compact databases, how to find the list of scopes currently provisioned to a database, and how to improve the performance of large replicas for file synchronization.

It's a wiki, so you can add to it! If the answer you need isn't in the article, or if you have more to say about a tip that's already in the article, post what you know. By sharing our knowledge, we can all make Sync Framework even more useful for everyone.

Check out the article, and share your tips: http://j.mp/f1TQLi!

Lori MacVittie (@lmacvittie) asserted It is the database tier and its unique characteristics that ultimately determine where an application will be deployed as a preface to her The Database Tier is Not Elastic post of 12/1/2010 to F5’s DevCentral blog:

Cloud computing is mostly about “elasticity”: the expansion and contraction of resources based on demand. It is the contraction of resources that is oftentimes forgotten, but without it, cloud computing and highly dynamic, virtualized infrastructures are little more than seamless capacity growth engines. For web and application architectural tiers, the contraction of resources is as much a requirement for realizing the benefits of shared, dynamic capacity as the ability to rapidly expand. But in the database tier, the application data layer, contraction is more a contradiction than anything else.

WHAT COMES UP USUALLY COMES DOWN

Elasticity in applications is a good thing. It is important to the overall success rate of cloud computing and dynamic infrastructure initiatives to remember that “what comes up, must come down” – especially in relation to provisioned compute resources. Applications should expand their resource consumption to meet demand, but when demand wanes, so too should their resource consumption rates. By spreading compute resources around the various applications that need them in a dynamic way, based on demand, we achieve peak efficiency and make the most of our capital expenditures. Such architectural approaches allow us to allocate “temporary” compute resources when necessary from cloud computing environments external to the organization, and release them when not necessary.
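The expand-and-contract behavior described above can be reduced to a simple sizing rule. The Python sketch below is illustrative only: the function name, the per-instance capacity figure and the minimum/maximum bounds are hypothetical assumptions, not part of any cloud provider's API. It computes how many web or application instances a given load needs and, just as importantly, returns a smaller number when demand falls.

```python
import math


def desired_instances(requests_per_sec: float,
                      capacity_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 20) -> int:
    """Hypothetical sizing rule: enough instances for the load, clamped to [min, max]."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    if min_instances > max_instances:
        raise ValueError("min_instances cannot exceed max_instances")
    needed = math.ceil(requests_per_sec / capacity_per_instance)
    # Clamping keeps a floor for availability and a ceiling for cost.
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    # Demand rises, then wanes: the instance count follows it back down.
    for load in (120, 950, 300, 40):
        print(load, "req/s ->", desired_instances(load, capacity_per_instance=100))
```

With an assumed 100 requests per second per instance, the loop yields 2, 10, 3 and 1 instances; the last two steps are the contraction that, by the author's argument, the database tier cannot follow.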

This is all well and good, except when we’re talking about the database.

Databases employ a number of techniques to improve their performance, and most of them involve complex caching and pooling strategies that make use of lots and lots of RAM. At the database tier, RAM may increase, but it rarely decreases. It’s a different kind of workload than web and application servers, which can easily be scaled out using parallel processing strategies. Many, many copies of the same code can execute in isolated chunks around the data center because they do not need access to a centralized store of information about all the sessions that may be occurring at the same time. In order to maintain consistency, databases use indexes and locks and other computational techniques to manage access to data, especially in the case of modification. This means that even though the code to perform such tasks can ostensibly be executed on multiple copies of a database, the data required to ensure consistent operations is contained in a single, contiguous data structure. That data cannot be easily transferred or replicated in real time to other copies. There is a single data overlord that must maintain a holistic view of the data and therefore must (today) run on a single machine (virtual or iron).

That means all access is through a single gateway, and scaling that gateway is generally only possible through the expansion of resources available to the database application. Scale up is the traditional strategy, and until we learn how to share memory blocks across the network in a way that assures consistency, we can either bow to the belief that eventual consistency is good enough or accept that there will be one ginormous system that continually expands along with data growth.

YOU CAN SCALE OUT READ but NOT WRITE

It is the unique characteristics of data that result in a quirky architecture that allows us to scale out read but only scale up write. This makes the database tier a lot more complex than perhaps it once was. In the past, a single ginormous server housed a database and it was the only path to data. Today, however, the need for better performance and support for hyperscaling of applications has led to a functional partitioning scheme that separates reads from writes and assumes that eventual consistency is better than non-availability.
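The read/write split described above can be sketched as a small routing class. In this hedged Python sketch, the class name, the replica names and the naive "starts with SELECT" test are hypothetical simplifications; a real deployment would route through a database driver or proxy. Writes always go to the single primary, while reads are spread round-robin across replicas that may lag behind it, which is the eventual-consistency trade-off the author describes.

```python
import itertools
from typing import List


class ReadWriteRouter:
    """Hypothetical sketch: send writes to one primary, spread reads over replicas."""

    def __init__(self, primary: str, replicas: List[str]) -> None:
        self.primary = primary
        # With no replicas, reads fall back to the primary.
        self._replicas = itertools.cycle(replicas or [primary])

    def route(self, sql: str) -> str:
        # Naive classification: only plain SELECT statements count as reads.
        is_read = sql.lstrip().upper().startswith("SELECT")
        return next(self._replicas) if is_read else self.primary


if __name__ == "__main__":
    router = ReadWriteRouter("primary", ["replica-1", "replica-2"])
    for stmt in ("SELECT * FROM orders", "UPDATE orders SET paid = 1", "SELECT 1"):
        print(router.route(stmt), "<-", stmt)
```

Because replicas may lag, a read issued immediately after a write can miss that write; applications that cannot tolerate this have to send such reads to the primary, which brings back the scale-up bottleneck.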

This does not mean it’s impossible to put a database into an external cloud computing environment. It just means that it’s going to run 24x7, and scalability cannot necessarily be achieved by scaling out – the traditional means by which a cloud computing environment enables scale. It means that scaling up will require migration if you haven’t adjusted for future growth to begin with, and that there may be, depending on the cloud computing environment you choose, an upper bound to your data growth. If you’ve only got X amount of disk and memory available, at some point your database will hit that upper bound and either begin to drag down performance or availability or simply be unable to continue growing.

Or you’ll need to consider the use of distributed database systems which can scale out by distributing data across multiple database nodes (local or remote) either using replication or duplication. When used over a LAN – low latency, high performing, high bandwidth – the replication and/or duplication required for the master database to manage and maintain its minion databases can be successful. One would assume, then, that the use of distributed database systems in a cloud computing environment would be the appropriate marriage of the two architectural approaches to scalability. However, most enterprise applications existing today – both developed in-house and packaged – do not take advantage of such technology and there exist no standardized means by which a traditional DBMS can be morphed into a DDBMS.

Additionally, the replication/duplication of database systems over a WAN – high latency, lower performing, low bandwidth – is problematic for maintaining consistency. Which often means a closed-system, LAN connected only approach to application architecture is the only feasible option.

Which puts us right back where we were – with the database tier being upward-bound only, not elastic, and potentially outgrowing the ability of a provider to offer an appropriate level of compute resources to maintain performance and capacity, effectively limiting data growth.

Which is not a good thing, because limiting data growth means limiting business growth.

DATA GROWTH is AN INDICATOR of BUSINESS SUCCESS

It is almost universally true that the growth of data is an indicator of business success. As business grows, so does the customer data. As business grows, so does the user-generated content. As business grows, so do the financial and employee records and e-mail. And, of course, the gigabytes of PowerPoint presentations and standard operating procedure documents, which grow, morph, and are ultimately discarded but maintained for posterity and future reference, grow along with the business.

Data grows, it doesn’t shrink. There is nothing that so accurately lives up to the “pack rat” mentality as a business. And much of it is stored in databases, which live in the data tier and are increasingly web (and mobile client) enabled.

So when we talk about elastic applications we’re really talking just about the applications, not necessarily the data tier. Unless you have employed a sharded architectural approach to enabling long-term growth, you have “THE database,” and it’s going to grow and grow and grow and never shrink. It isn’t elastic; the elastic parts of an application are the applications that access THE database.
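A sharded approach replaces "THE database" with several databases, each holding a slice of the data. The Python sketch below shows one common way to pick a shard: hash a key and take the result modulo the number of shards. The function name, the shard names and the choice of SHA-256 are illustrative assumptions; Python's built-in hash() is avoided because it is randomized per process for strings, which would send the same key to different shards on different machines.

```python
import hashlib
from typing import List


def shard_for(key: str, shards: List[str]) -> str:
    """Hypothetical sketch: map a key to a shard with a stable hash."""
    if not shards:
        raise ValueError("at least one shard is required")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Treat the first 8 bytes of the digest as an unsigned integer.
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


if __name__ == "__main__":
    shards = ["db0", "db1", "db2", "db3"]
    for customer in ("customer-1", "customer-42", "customer-99"):
        print(customer, "->", shard_for(customer, shards))
```

Simple modulo placement moves most keys whenever the shard count changes, which is why consistent hashing or a shard-directory lookup is often preferred when shards will be added over time.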

It is this “nut” that needs to be cracked for cloud computing to truly become “the” standard for data center architectures. Until we either see DDBMS become the standard for database systems or figure out how to really share compute resources across the LAN such that RAM from multiple machines appears to be a contiguous, locally accessible chunk of memory, the database tier will be the limiting – and deciding – factor in determining how an application is architected and where it might end up residing.

Noel Yuhanna, Mike Gilpin and Adam Knoll wrote SQL Azure Raises The Bar On Cloud Databases: Ease Of Use, Integration, Reliability, And Low Cost Make It A Compelling Solution, which was published as a Forrester Research white paper on 11/2/2010 (missed when posted):

Executive Summary

Over the past six months, Forrester interviewed 26 companies using Microsoft SQL Azure to find out about their implementations. Most customers stated that SQL Azure delivers a reliable cloud database platform to support various small to moderately sized applications as well as other data management requirements such as backup, disaster recovery, testing, and collaboration. Unlike other DBMS vendors such as IBM, Oracle, and Sybase that offer public cloud databases largely using the Amazon Elastic Compute Cloud (Amazon EC2) platform, Microsoft SQL Azure is unique because of its multitenant architecture, which allows it to offer greater economies of scale and increased ease of use. Although SQL Azure currently has a 50 GB database size limit (which might increase in the near future), a few companies are already using data sharding with SQL Azure to scale their databases into hundreds of gigabytes.
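The 50 GB per-database limit cited above turns "how many shards?" into a simple calculation. This Python sketch is an illustration under stated assumptions: the 50 GB figure is the limit reported in the Forrester summary at the time, and the 80 percent fill factor (headroom left in each database for growth) is a hypothetical choice, not a Microsoft recommendation.

```python
import math

SQL_AZURE_MAX_DB_GB = 50  # per-database cap cited in the Forrester summary (late 2010)


def shards_needed(total_gb: float, fill_factor: float = 0.8) -> int:
    """Minimum number of databases to hold total_gb, filling each to at most fill_factor of the cap."""
    if not 0 < fill_factor <= 1:
        raise ValueError("fill_factor must be in (0, 1]")
    usable_gb = SQL_AZURE_MAX_DB_GB * fill_factor
    return max(1, math.ceil(total_gb / usable_gb))


if __name__ == "__main__":
    print(shards_needed(300))                   # with headroom
    print(shards_needed(300, fill_factor=1.0))  # filling every database to the cap
```

For example, 300 GB at an 80 percent fill factor needs 8 databases (40 GB usable in each), while filling every database to the cap would need 6.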

Application developers and database administrators seeking a cloud database will find that SQL Azure offers a reliable and cost-effective platform to build and deploy small to moderately sized applications.

Table of Contents

- Microsoft SQL Azure Takes A Leading Position Among Cloud Databases

- Microsoft’s Enhancements To SQL Azure Give It Key Advantages

- SQL Azure Has Limitations That Microsoft Will Probably Address

- Other Database Vendors Currently Offer Only Basic Public Cloud Database Implementations

- Case Studies Show That SQL Azure Is Ready For Enterprise Use

- Case Study: TicketDirect Handles Peak Loads With A Cost-Effective SQL Azure Solution

- Case Study: Kelley Blue Book Leverages SQL Azure In A Big Way

- Case Study: Large Financial Services Company Uses SQL Azure For Collaboration

Recommendations

- SQL Azure Offers A Viable Cloud Database Platform To Support Most Apps

The white paper reads like Microsoft-sponsored research, but I see no indication of sponsorship in the PDF file.

Marketplace DataMarket and OData

Jon Galloway (@jongalloway) posted Okay, WCF, we can be friends now on 12/1/2010:

Over the years, I've had a tough time with Windows Communication Foundation, otherwise known as (and sometimes cursed as) WCF. I knew it was what I was "supposed" to be using because it handled complex scenarios like managing access as secured messages passed through systems and users with different access rights. However, it didn't seem to be able to handle my simple scenarios – things like returning very simple, unrestricted information from a server to a client – without requiring hours of pain, configuration, and things like writing a custom ServiceHostFactory.

I understand the idea of WCF – it’s an entire API that’s designed to allow for just about anything you might want to do in a SOA scenario, and it offers a pretty big abstraction over the transport mechanism so you can swap SOAP over HTTP for binary over TCP with a few well-placed config settings, and you can theoretically do it without caring about the transport or wire format.

So, I guess my thoughts on WCF up until very recently can be summed up as follows:

- I knew it did a lot of neat, advanced things that I never seemed to need to do

- WCF configuration always seemed to be a problem for me in rather simple use, e.g. hosting a basic, unauthenticated WCF service in an MVC application on shared hosting

- My co-workers got sick of trying to explain to me why I shouldn’t just use an ASMX service

All that’s changing, though. There have been two new developments in WCF-land that have broken down the walls I’d built around my heart:

- WCF 4.0 took a first step to making simple things simple by defaulting to “it just works” mode. For example, when you create a service that uses HTTP transport, it uses all the same settings you’d get with an ASMX service unless you go in and explicitly change them. That’s cool.

- The really big news – Glenn Block’s PDC 2010 announcement of the new WCF Web APIs.

Favorite new features in WCF Web APIs

Focus: First, the overall focus on HTTP as a good thing, not something to be abstracted away. There can be value in a variety of approaches, and those values can shift over time. WCF’s original design seemed to be oriented towards letting coders write code without worrying about the transport protocol. That can be useful in some cases, but the fact is that HTTP contains some great features that we’ve been ignoring. This seems a little similar to the difference in approaches between ASP.NET Web Forms / ASP.NET MVC. In some cases an abstraction can help you be more productive by handling insignificant details for you, but in other cases those details are significant and important.

And I think the variety in focus is a good thing. Different software challenges call for different tools. For instance, looking at the WCF offerings:

- WCF Data Services is a great way to expose data in a raw format which is queryable and accessible in a great, standards-friendly format (OData)

- WCF RIA Services solves some common client-server communication issues, such as keeping business logic in sync on both client and server through some pretty slick cross-compilation

- [more WCF options here – IANAWE (I am not a WCF Expert)]

- Now, WCF Web APIs provide an option for writing pure, RESTful services and service clients that work close to the HTTP level with minimal friction

Pipeline: Rather than stack piles of attributes on your service methods or write a ton of configuration, you can surgically process the input and output using lightweight processors. For instance, you can create a processor that displays information for a specific media type, like JSON or an image format like PNG. What’s really powerful here is that these processors can be associated with a media type, so they’re automatically selected for clients of your service based on the media types they prefer, as stated in the Accept header.

More on that in the next post, where I talk about using the Speech API to create a SpeechProcessor that responds to clients which accept audio/x-wav.
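The processor idea rests on content negotiation: the server keeps one processor per media type and picks the one that best matches the client's Accept header. The Python sketch below illustrates only that selection step. It is not the WCF Web API processor API; the processor table and function names are hypothetical, and it simplifies HTTP's rules by ordering on q-values alone (it ignores the precedence of more specific media ranges). A client that accepts only audio/x-wav, as in the SpeechProcessor scenario, gets None here because no processor is registered for that type.

```python
import json
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical processors: each turns a response object into bytes for one media type.
PROCESSORS: Dict[str, Callable[[object], bytes]] = {
    "application/json": lambda data: json.dumps(data).encode("utf-8"),
    "text/plain": lambda data: str(data).encode("utf-8"),
}


def parse_accept(header: str) -> List[Tuple[str, float]]:
    """Split an Accept header into (media_range, q) pairs, highest q first."""
    ranges = []
    for part in header.split(","):
        pieces = [p.strip() for p in part.split(";")]
        media = pieces[0].lower()
        if not media:
            continue
        q = 1.0
        for param in pieces[1:]:
            key, _, value = param.partition("=")
            if key.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed q-value as "not acceptable"
        ranges.append((media, q))
    # sorted() is stable, so ranges with equal q keep their header order.
    return sorted(ranges, key=lambda r: r[1], reverse=True)


def negotiate(header: str, processors: Dict[str, Callable[[object], bytes]]) -> Optional[str]:
    """Pick the supported media type that best matches the Accept header (simplified)."""
    for media, q in parse_accept(header):
        if q <= 0:
            continue
        if media in processors:
            return media
        if media == "*/*":
            return next(iter(processors), None)
        if media.endswith("/*"):
            prefix = media[:-1]  # "image/*" -> "image/"
            for supported in processors:
                if supported.startswith(prefix):
                    return supported
    return None


if __name__ == "__main__":
    chosen = negotiate("text/plain;q=0.5, application/json", PROCESSORS)
    if chosen is None:
        print("406 Not Acceptable")
    else:
        print(chosen, PROCESSORS[chosen]({"name": "Jon"}))
```

Returning None maps naturally onto an HTTP 406 Not Acceptable response.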

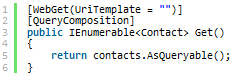

Queryable services: Glenn’s announcement post shows the promise here – you can simply annotate a service with the [QueryComposition] attribute, which allows clients to query over a service’s results. Glenn’s post shows how this works pretty well, so I’m just quoting it here. First, a service is marked as queryable:

Now we can query over the results on the client. For instance, if we wanted to get all contacts in Florida, we could use a request like "http://localhost:8081/contacts?$filter=State%20eq%20'FL'" which says "find me the contacts with a State equal to FL".
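On the wire, such a query is just an OData $filter expression in the URL. The following Python sketch builds one for an equality test; the helper name is hypothetical and it is not part of WCF or its client libraries. It follows the OData URI convention that string literals are enclosed in single quotes, with an embedded single quote written as two single quotes, and it percent-encodes the spaces in the expression.

```python
from urllib.parse import quote


def odata_filter_url(base: str, field: str, value: str) -> str:
    """Hypothetical helper: build `base?$filter=field eq 'value'` as a URL."""
    # OData string literals are single-quoted; an embedded quote is doubled.
    literal = "'" + value.replace("'", "''") + "'"
    expression = f"{field} eq {literal}"
    # Percent-encode spaces and other reserved characters, keeping quotes readable.
    encoded = quote(expression, safe="'")
    return f"{base}?$filter={encoded}"


if __name__ == "__main__":
    print(odata_filter_url("http://localhost:8081/contacts", "State", "FL"))
```

Called with the contacts URL, "State" and "FL", the helper returns http://localhost:8081/contacts?$filter=State%20eq%20'FL'.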

Client-side Features

There are some great client-side features which mirror new features on the server side. For instance, once your services are exposed as queryable, there’s client-side support for query via LINQ. There’s support via HttpRequestMessage and HttpResponseMessage for accessing the HTTP values on the client as well as on the server.

The new HttpClient provides a client which was built with the same philosophy and API support on the client to match what’s on the server. I plan to look at HttpClient first when I need to query services from .NET code.

WCF jQuery Support

There’s some interesting work in progress to build out support for writing services which are accessible from jQuery. While I’d probably use ASP.NET MVC’s JSON support for simple cases, if I needed to build out a full API which exposed services over AJAX, this looks really useful.

JsonValue

The jQuery support gets even more interesting with new support for JsonValue. Tomek spells it out in a great post covering WCF support for jQuery, but I think broad support for JsonValue in the .NET Framework deserves some individual attention because it enables some really slick things like LINQ to JSON:

Where to go next

There’s a lot to the new WCF Web APIs, and I’m only scratching the surface in this overview. Check out my next post to see a sample processor, and take a look at the posts listed in the News section at http://wcf.codeplex.com/ for more info.

Some top resources:

- http://wcf.codeplex.com

- Glenn’s talk at PDC: Building Web APIs for the Highly Connected Web

- Glenn’s announcement blog post: WCF Web APIs, HTTP Your Way

- Darrel Miller’s overview: Microsoft WCF Gets Serious about HTTP

Jon’s conclusion reflects mine.

Abishek reported a Problem Accessing Dynamics CRM 2011 Beta services from BCS in SharePoint 2010 in a 12/1/2010 post:

Dynamics CRM 2011 Beta exposes a new OData-based service which can be used to pull data out of the system. I am working on BCS for SharePoint 2010 for a simple demo, and I decided to use this service to pull data from a CRM 2011 beta system.

After setting the reference, I tried to use the DataContext object to pull data out and got an exception which said: "Request version '1.0' is too low for the response. The lowest supported version is '2.0'."

On digging a little deeper, I realized that a header mismatch is happening between the OData service and my client.

MSDN social says that in order to change this header from 1.0 to 2.0, I need to target my assemblies to compile and run against .NET 4.0. To my utter surprise, the SharePoint 2010 runtime can't load assemblies that target .NET 4.0, and hence I had to fall back on the old way of accessing data from CRM, which is using the CRM data service. That's bad news, as this means I will have to write a lot of code which I could have done without.
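The header mismatch can be seen at the HTTP level. OData v1/v2 services use the DataServiceVersion and MaxDataServiceVersion headers for version negotiation, and an error like the one quoted above typically means the client advertised a maximum version lower than the response requires. Assuming that is the cause here, a client that sends MaxDataServiceVersion: 2.0 should get past it. The Python sketch below only builds such a request without sending it; the CRM URL is a hypothetical placeholder, and this does not address the SharePoint 2010 / .NET 4.0 assembly-loading problem itself.

```python
import urllib.request

# Hypothetical placeholder endpoint; substitute your organization's OData service URL.
CRM_ODATA_URL = "https://crm.example.com/OrganizationData.svc/AccountSet"


def build_odata_v2_request(url: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that advertises OData v2 support."""
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/json",
            # Highest protocol version the client can accept in the response.
            "MaxDataServiceVersion": "2.0",
        },
        method="GET",
    )


if __name__ == "__main__":
    req = build_odata_v2_request(CRM_ODATA_URL)
    # urllib normalizes header-name capitalization; HTTP header names are case-insensitive.
    for name, value in req.header_items():
        print(f"{name}: {value}")
```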

The good thing, though, is that I will learn the old way of accessing data from the system. I will try to post some code samples.

Windows Azure AppFabric: Access Control and Service Bus

David Kearns asserted Identity services are key to new applications in a 12/1/2010 post to NetworkWorld’s Infrastructure Management blog:

More often than not, when I’m writing the monthly “here’s what we were talking about ten years ago” edition of the newsletter I’m tempted to lead with the French proverb “plus ça change, plus c'est la même chose,” which means the more things change the more they stay the same. Happily (at least for the sake of variety) that’s not true for this issue.

Here’s what I wrote in November, 2000:

“Is it time to stop talking about the directory? You know it, I know it: mention directory services and line-of-business execs glaze over within two minutes. To them a directory is the telephone white pages, and that's just not very sexy in this whiz-bang, dot-com, sock-puppet, bingo-bango marketplace that's today's business.

“So what do you do? You don't mention the directory, that's what. You talk about security and authentication. You talk about CRM and value chains. Slide in a touch of digital persona. Above all, talk about applications and services - what you're selling and how you sell it.

“It doesn't matter if it’s a client or your boss you're talking to, you still need to sell, and it's easier, as any old-line salesman will tell you, to sell sizzle than it is to sell steak.”

One thing has dramatically changed since then: the IT department is no longer the leader when it comes to bringing in new applications and services. It’s marketing, sales, finance and even HR that are clamoring for new apps, especially when it comes to cloud-based services. More than ever, IT needs to talk about the importance of identity services (represented by the directory in our discussions 10 years ago) to make those new apps viable, efficient and secure.

Of course, one thing really hasn’t changed – the business folk still glaze over when we bring up identity services. So it’s imperative that IT people be on the cutting edge of new service offerings, and partner with the line-of-business innovators so that they can have input into the strategic architecture of the new services, the “plumbing,” so to speak. Because an example I used in 2000 still works:

“Take a look at the Delta Faucet website. Notice their catchphrase? "Style. Innovation. Beauty…that lasts." It's not the PVC (or even the copper) pipe they're talking about, but those beautiful fixtures. But if you buy the fixtures (because they'll last, or just because they're sexy) you also need to have the PVC or copper tubing in place to carry the water to the fixture.”

It’s the same with identity services - sell the sexy parts and the plumbing gets taken along for the ride.

The Windows Azure AppFabric Team reported on 11/30/2010 the availability of Wade Wegner’s Sample on using SAML CredentialType to Authenticate to the Service Bus code:

To answer a popular request we’ve been getting from our customers, Wade Wegner, our AppFabric evangelist, has written a blog post that explains how to use the SAML CredentialType to authenticate to the Service Bus.

Read Wade’s blog post to learn more on this topic: Using the SAML CredentialType to Authenticate to the Service Bus.

If you have other AppFabric related requests please visit our Windows Azure AppFabric Feature Voting Forum.

See Wade Wegner explained Using the SAML CredentialType to Authenticate to the Service Bus in Windows Azure and Cloud Computing Posts for 11/24/2010.

Nuno Filipe Godinho posted his abbreviated Windows Azure AppFabric Service Bus Future – PDC10 Session Review on 11/30/2010:

Clemens Vasters – Principal Technical Lead @ Windows Azure Team

Service Bus Today

- Naming fabric: a structure that sits out on the Internet and lets us create a namespace

- When we create a namespace for the service bus, Microsoft provisions a tenant in a multi-tenant value fabric for us, where we can create endpoints, message buffers, and communicate through those.

- Around this routing fabric we have several nodes where we connect, and that’s where the fabric is used.

- Use Case Samples:

- Message Queues: Wherever you need some application queue

- An airline is using this to handle check-in and synchronize devices

- Outside Services: whenever you need to provide a public-facing service, but your service is internal

- MultiCast (Eventing)

- Direct Connect (bridge two environments through firewalls and NAT)

A look into the Future

- Service Bus Capability Scope

- Connectivity (currently in the CTP)

- Service Relay

- Protocol Tunnel

- Eventing

- Push

- Messaging (currently in the CTP)

- Queuing

- Pub/Sub

- Reliable Transfer

- Service Management

- Naming

- Discovery

- Monitoring

- Orchestration (Workflow)

- Routing

- Coordination

- Transformation

- Service Bus October 2010 Labs (the Labs release does not have parity with production services)

- New and Improved

- Load Balancing

- I need to be able to take multiple listeners and host them on Service Bus and have load balancing on them

- This is not possible in the production environment; in the Labs we have a new protocol called AnyCast to provide this.

- Richer Management

- Durable Message Buffers

- We want to be more about queues and less about message buffers

- In this October 2010 Labs Release we don’t have:

- HttpListeners

- Capabilities in the Service Registry

- Missing some composition capabilities

- Missing all the bindings

- The purpose is to gather feedback about this new protocol

- Durable Message Buffers

- Same lightweight REST protocol

- Long polling support

- Future Releases

- Reliable Transfer protocol options

- Higher throughput transport options

- Volatile buffers for higher throughput

- Currently the same protocol, backed by a real queuing system that offers reliability and capacity.

- Listener Load Balancing

- Connection point management separate from listener

- Explicit Management of service bus connection points to the service bus

- We gain the ability to know if someone is listening or not.

- Multiple listeners can share the same connection point

- Load balancing and no single point of failure

- Sticky sessions

- Session Multiplexing

- One socket per listener

- Optimized for short sessions, and short messages

- The latency for setting up that session is now very short

- No extra round-trip to set up a session

- Note: The purpose is to reduce latency

- Explicit Streaming Support (Default model in production Today)

- One socket per client

- Optimized for long sessions, streaming and very large messages

- Lightweight handshake, mostly ‘naked’ end-to-end socket afterwards

- Note: This will be made available later in the Labs

- Namespace and Management

- Management Surface Today

- Implicit for connectivity

- Connection points created on-the-fly

- Explicit for message buffers

- Atom Publishing Protocol

- https://<tenant>.servicebus.windows.net

- Runtime artifacts (listeners, message buffers) share address space with management

- Refactoring Goals

- Management consistently explicit

- No more implicit connection points, just explicit

- Split management and runtime surface

- Enable richer addressing/organization

- In the Labs

- Namespace is split into 3 different views

- Runtime (Organized by Concerns)

- Observation & Discovery (Organized as mirror of runtime view)

- Management (Organized by Resource and Taxonomies) – Currently not available

- Management operations are now explicit only

- Management store is persistent. No renewals

- Atom Publishing Protocol / REST

- Uses OData protocol behind the scenes

- New url will be:

- Each of the artifacts has a mapping into the runtime namespace (this is the URL we know today: https://<namespace>.servicebus.windows.net/).

- Protocols

- Currently allowed

- NMF (net.tcp)

- Http(S)

- Currently being considered

- FTP

- SMTP

- SMS

- Other

- Candidate examples: XMPP, … (not planned, but considered possible candidates)

- Messaging

- Reliable, transacted, end-to-end transfer of message sequences

- Local Transactions

- Batches are transferred completely or not at all

- Service Bus Pub/Sub – Topics

- Service Bus Topics

- Think of it as a message log with multiple subscriptions

- Durable message sequences for multiple readers with per-subscription cursors

- Pull Model consumption using messaging protocols

- Service Bus manages subscription state

- Service Bus Pub/Sub – Eventing

- Service Bus Events

- We already have it with NetEventRelayBinding

- Multicast event distribution

- Push delivery via outbound push or connectivity listeners

- Same subscription and filter model as topics

- Considering UDP for (potentially lossy) ultra-low latency delivery

- Service Bus Push Notifications

- Distribution points allow for push delivery to a variety of targets

- Built on top of pub/sub topic infrastructure

- Protocol adapters for HTTP, NET/TCP, SMS, SMTP, …

- Subscription-level AuthN and reference headers

- Monitoring

- Monitoring of Service Bus state

- System events exposed as topics with pull and push delivery

- Events can flow into store for analytics

- Ad-Hoc queries into existing resources and their state

- Discovery

- Discovery of Service Bus resources and resource instances

- Eventing and query mechanisms similar to monitoring

- Service Presence

- Custom Taxonomies
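The Topics model in these notes (a message log, several subscriptions each with its own cursor, pull-based consumption, and long-polling receives) can be illustrated with an in-memory Python sketch. This is not the Service Bus API; the class and method names are hypothetical, and nothing here is durable or distributed. It only shows why per-subscription cursors let several readers consume the same sequence independently, and how a long-polling receive waits up to a timeout for a message instead of returning immediately.

```python
import threading
from typing import Any, Dict, List, Optional


class InMemoryTopic:
    """Hypothetical sketch: append-only log plus one read cursor per subscription."""

    def __init__(self) -> None:
        self._log: List[Any] = []
        self._cursors: Dict[str, int] = {}
        self._cond = threading.Condition()

    def subscribe(self, name: str) -> None:
        with self._cond:
            # A new subscription sees only messages published after it joins.
            self._cursors.setdefault(name, len(self._log))

    def publish(self, message: Any) -> None:
        with self._cond:
            self._log.append(message)
            self._cond.notify_all()  # wake any receivers that are long polling

    def receive(self, name: str, timeout: float) -> Optional[Any]:
        """Return the subscription's next message, waiting up to `timeout` seconds."""
        with self._cond:
            if name not in self._cursors:
                raise KeyError(f"unknown subscription: {name}")
            has_message = self._cond.wait_for(
                lambda: self._cursors[name] < len(self._log), timeout)
            if not has_message:
                return None  # long poll expired without a message
            message = self._log[self._cursors[name]]
            self._cursors[name] += 1  # the topic, not the reader, tracks position
            return message


if __name__ == "__main__":
    topic = InMemoryTopic()
    topic.subscribe("billing")
    topic.subscribe("audit")
    topic.publish("order-1")
    topic.publish("order-2")
    print(topic.receive("billing", timeout=0.1))
    print(topic.receive("audit", timeout=0.1))
    print(topic.receive("billing", timeout=0.1))
```

Here both subscriptions receive order-1 independently, billing then receives order-2, and a further receive on billing would return None once the timeout expires.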

What can you do now? Use the AppFabric Service Bus Labs

- http://portal.appfabriclabs.com

- Register for a Labs account

- Download the SDK

- Try things out

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Neil MacKenzie described Configuration Changes to Windows Azure Diagnostics in Azure SDK v1.3 in a 12/1/2010 post:

The configuration of Windows Azure Diagnostics (WAD) was changed in the Windows Azure SDK v1.3 release. This post is a follow-on to three earlier posts on Windows Azure Diagnostics – overview, custom diagnostics and diagnostics management – and describes how WAD configuration is affected by the Azure SDK v1.3 changes.

WAD is implemented using the pluggable-module technique introduced to support VM Roles. The idea with pluggable modules is that various Azure features are represented as modules and these modules can be imported into a role. Other features implemented in this way include Azure Connect and Remote Desktop.

Service Definition and Service Configuration for WAD

WAD is added to a role by adding the following to the role definition in the Service Definition file:

```xml
<Imports>
  <Import moduleName="Diagnostics" />
</Imports>
```

The Azure Diagnostics service needs to be configured with a storage account where it can persist the diagnostic information it captures and stores locally in the instance. This has traditionally been done using an ad-hoc Service Configuration setting typically named DiagnosticsConnectionString. The pluggable Diagnostics module expects the storage account to be provided in a configuration setting with a specific name:

Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString

Note that this setting does not need to be defined in the Service Definition file – its need is inferred from the Diagnostics module import specified in the Service Definition file. The following is a fragment of a Service Configuration file with this setting:

```xml
<Role name="WebRole1">
  <Instances count="2" />
  <ConfigurationSettings>
    <Setting
      name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString"
      value="UseDevelopmentStorage=true" />
  </ConfigurationSettings>
</Role>
```

Configuring WAD in Code

After the Diagnostics module is added to a role, the Azure Diagnostics service is started automatically using the default configuration. There is no longer a need to use DiagnosticMonitor.Start() to start the Azure Diagnostics service. However, in a somewhat counter-intuitive manner, this method can still be used to modify the default configuration just as before. This is presumably to ensure that existing code works with only configuration changes.

A more logical way to change the WAD configuration is to use RoleInstanceDiagnosticManager.GetCurrentConfiguration() to retrieve the current configuration and then use RoleInstanceDiagnosticManager.SetCurrentConfiguration() to update it. The blob in wad-control-container that holds an instance’s WAD configuration now contains a new element, <IsDefault>, which is true when the default configuration is in use.

The following is an example of this technique:

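```csharp
// Namespaces used (Windows Azure SDK 1.3); the method is a member of the role's class.
using System;
using Microsoft.WindowsAzure;                        // CloudStorageAccount
using Microsoft.WindowsAzure.Diagnostics;            // DiagnosticMonitorConfiguration, PerformanceCounterConfiguration
using Microsoft.WindowsAzure.Diagnostics.Management; // RoleInstanceDiagnosticManager, DeploymentDiagnosticManager
using Microsoft.WindowsAzure.ServiceRuntime;         // RoleEnvironment

private void ConfigureDiagnostics()
{
    // Setting name the Diagnostics plugin module reads its storage account from.
    string wadConnectionString =
        "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString";
    CloudStorageAccount cloudStorageAccount =
        CloudStorageAccount.Parse(RoleEnvironment.GetConfigurationSettingValue(wadConnectionString));

    // Deployment-wide manager (not used further in this method).
    DeploymentDiagnosticManager deploymentDiagnosticManager =
        new DeploymentDiagnosticManager(cloudStorageAccount, RoleEnvironment.DeploymentId);

    // Manager for this role instance's configuration in wad-control-container.
    RoleInstanceDiagnosticManager roleInstanceDiagnosticManager =
        cloudStorageAccount.CreateRoleInstanceDiagnosticManager(
            RoleEnvironment.DeploymentId,
            RoleEnvironment.CurrentRoleInstance.Role.Name,
            RoleEnvironment.CurrentRoleInstance.Id);

    // Start from the current (possibly default) configuration.
    DiagnosticMonitorConfiguration diagnosticMonitorConfiguration =
        roleInstanceDiagnosticManager.GetCurrentConfiguration();

    // Transfer directory-based logs every 5 minutes and trace logs every minute.
    diagnosticMonitorConfiguration.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(5.0d);
    diagnosticMonitorConfiguration.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1.0d);

    // Collect all Application and System event log entries; transfer every 5 minutes.
    diagnosticMonitorConfiguration.WindowsEventLog.DataSources.Add("Application!*");
    diagnosticMonitorConfiguration.WindowsEventLog.DataSources.Add("System!*");
    diagnosticMonitorConfiguration.WindowsEventLog.ScheduledTransferPeriod = TimeSpan.FromMinutes(5.0d);

    // Sample total CPU usage every 10 seconds; transfer every minute.
    PerformanceCounterConfiguration performanceCounterConfiguration = new PerformanceCounterConfiguration();
    performanceCounterConfiguration.CounterSpecifier = @"\Processor(_Total)\% Processor Time";
    performanceCounterConfiguration.SampleRate = TimeSpan.FromSeconds(10.0d);
    diagnosticMonitorConfiguration.PerformanceCounters.DataSources.Add(performanceCounterConfiguration);
    diagnosticMonitorConfiguration.PerformanceCounters.ScheduledTransferPeriod = TimeSpan.FromMinutes(1.0d);

    // Write the modified configuration back for this instance.
    roleInstanceDiagnosticManager.SetCurrentConfiguration(diagnosticMonitorConfiguration);
}
```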

I’ll update my current diagnostics test harness and see if there’s any difference in reported data.

Brent Stineman (@BrentCodeMonkey) briefly reviewed The Great Azure Update – 1.3, November 2010 and described a couple of gotchas in an 11/30/2010 post:

Well here we are with the single biggest release for Windows Azure since it became available earlier this year. Little about this update is a surprise, as everything was covered in depth at PDC10 back in October. But as of yesterday we have the new 1.3 SDK and associated training kit. We also got an updated Windows Azure Management Portal. I won’t be diving deeply into any of these for the moment; the Windows Azure team has its post up and is doing a webcast tomorrow, so it seems unnecessary.

What I do want to do today is call out a couple things that the community at large has brought to light about the updates.

Wade Wegner, Microsoft’s Technical Evangelist for the Windows Azure AppFabric, has invested a non-trivial amount of time updating the BidNow sample used at PDC for the latest release. I have it on good authority that this is something Wade was really passionate about doing and excited to finally be able to share with the world.

The Azure Storage team has a blog post that outlines fixes, bugs, and breaking changes in the Azure 1.3 SDK.

The 1.3 Azure SDK is only for VS 2010 and will automatically upgrade any cloud service solution you open once it’s been loaded. Additionally, none of the new 1.3 features can be used by deployed services until they are rebuilt using the new SDK and redeployed. This includes features like Remote Desktop.

The new management portal includes enhanced functionality for managing Windows Azure and SQL Azure services. However, as yet there is no updated portal for the Azure AppFabric. Hopefully this will come soon. Additionally, if you’re using IE9, be sure to enable pop-ups when at the portal or you won’t be able to launch the SQL Azure Management Portal (previously known as project “Houston”). [Emphasis added.]

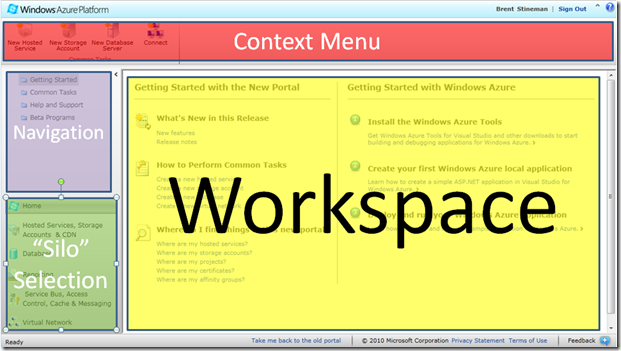

The new portal, being a web app, takes a few moments to get your head wrapped around. So I put together a quick guide using the following image.

The “Silo” Selection area in the lower left is where we select which set of assets (SQL Azure, Azure AppFabric, Azure Connect, etc.) we want to work with. Once an area has been selected, we can then use the Navigation section, a bit higher on the left, to move around within our selected silo, switching SQL Azure database servers or moving between Windows Azure services. Once we select an item in the Navigation menu, we can then view it in the large Workspace area. Lastly, across the top is our tool bar or a Context Menu. I prefer the term context menu because the options on this menu and their enabled/disabled state will vary depending on your selections in the other areas.

You can switch back and forth between the new and old portals easily. Just look for the links at the top (in the old) or bottom (on the new). This is helpful because not everything in the old portal is in the new one. I was helping a friend with a presentation and noticed the new portal doesn’t have the handy “connection strings” button that was in the old SQL Azure portal.

I can’t wait to start digging into this but unfortunately I’m still preparing for my talk on the Azure AppFabric at next week’s AzureUG.NET user group meeting. So get out there and start playing for me! I’ll catch up as soon as I can.

PS – ok Wade. Can I go to bed now?

The Windows Azure Team announced Updated Windows Azure Platform Training Kit Now Available on 11/30/2010:

Yesterday, we announced availability of the Windows Azure SDK and Windows Azure Tools for Visual Studio release 1.3, as well as several features being rolled out as a beta or CTP. To help you better understand how to use these new features and enhancements, as well as those announced at PDC10 last month, a new version of the Windows Azure Platform Training Kit is now available for free download. This release of the training kit includes several new hands-on labs (HOLs) that focus on the latest Windows Azure features and enhancements.

Updates include:

- Advanced Web and Worker Role - explains how to use admin mode and startup tasks

- Connecting Cloud Apps to on-premises resources with Windows Azure Connect

- Virtual Machine Role - describes how to get started with VM Role by creating and deploying a VHD

- Windows Azure CDN - a simple introduction to the CDN

- Introduction to the Windows Azure AppFabric Service Bus Futures - shows how to use the new Service Bus features in the AppFabric labs environment

- Building Windows Azure Apps with Caching Service - explains how to use the new Windows Azure AppFabric Caching service

- Introduction to the AppFabric Access Control Service V2 - covers how to build a simple web application that supports multiple identity providers

- Introduction to Windows Azure - updated to use the new Windows Azure Platform Portal

- Introduction to SQL Azure - updated to use the new Windows Azure Platform Portal

If you prefer to download selected HOLs without downloading the entire training kit package, you can browse and download all of the available labs here.

Morebits explained Using Windows Azure Development Environment Essentials in a 12/1/2010 tutorial posted to MSDN’s Technical Notes blog:

The Windows Azure development environment is a simulation environment which enables you to run and debug your applications (services) before deploying them. The development environment provides the following utilities:

- The development fabric utility. The development fabric is a simulation environment for running your application (service) locally, for testing and debugging it before deploying it to the cloud. The fabric handles the lifecycle of your role instances, as well as providing access to simulated resources such as local storage resources.

- The development storage utility. It simulates the Blob, Queue, and Table services available in the cloud. If you are building a hosted service that employs storage services or writing any external application that calls storage services, you can test locally against development storage.

- The Windows Azure SDK also provides a set of utilities for packaging your service for either the development environment or for Windows Azure, and for running your service in the development fabric.

For more information see Using the Windows Azure Development Environment.

Development Fabric

Before using the Windows Azure development environment, you must ensure that it is running. Usually this happens automatically when you start debugging your application in Visual Studio. You can verify that it is running by checking that the related icon appears in the system tray, as shown in the following illustration.

Figure 1 Windows Azure Development Environment Icon

Note. Using the CSRun command-line tool starts the development fabric if it is not yet running. You must run this tool as an administrator. For more information see CSRun Command-Line Tool.

You can right-click this icon to display the user interfaces for the development fabric and development storage and to start or shut down these services.

1. Right-click the icon and in the pop-up dialog click Show Development Fabric UI. A development fabric UI window is activated similar to the one shown in the following illustration. The development fabric UI shows your service deployments in an interactive format. You can examine the configuration of a service, its roles, and its role instances. From the UI, you can run, suspend, or restart a service. In this way, you can verify the basic functionality of your service.

Figure 2 Development Fabric UI

2. Right-click the icon and in the pop-up dialog click Show Development Storage UI. A development storage UI window is activated similar to the one shown in the following illustration. You can use the development storage UI to start or stop any of the services, or to reset them. Resetting a service stops the service, cleans all data for that service from the SQL database (including removing any existing blobs, queues, or tables), and restarts the service.

Figure 3 Development Storage UI

Development Storage

The development storage relies on a Microsoft SQL Server instance to simulate the storage servers. By default, development storage is configured for Microsoft SQL Server Express 2005 or 2008. Development storage also uses Windows authentication to connect to the server.

Using the CSRun command-line tool automatically starts development storage. You can also use this command to stop development storage. The first time you run development storage, an initialization process runs to configure the environment. The initialization process creates a database in SQL Server Express and reserves HTTP ports for each local storage service.

Configuring Microsoft SQL Server

You can also configure the development storage to access a local instance of SQL Server different from the default SQL Server Express. You can do that by running the DSInit.exe command-line tool as shown next. Remember that you must run this tool as an administrator. For more information see DSInit Tool.

1. In the Windows Azure SDK command prompt execute the following command as shown in the next illustration:

>dsinit /sqlinstance:.

Figure 4 Initializing Development Environment Storage

2. A dialog prompt is displayed similar to the one shown in the next illustration.

Figure 5 Confirming Development Environment Storage Initialization

The initialization process creates a database named DevelopmentStorageDb20090919 in your current SQL Server default instance and reserves HTTP ports for each local storage service.

3. Click OK.

You can view the created database by using Microsoft SQL Server Management Studio as shown in the following illustration. The tool can be downloaded from this location: Microsoft SQL Server 2008 Management Studio Express.

Figure 6 Displaying Development Environment Storage Database

Connecting to Microsoft SQL Server

This section shows you how to connect to your own Microsoft SQL Server instance. To do that you need to configure the connection string that development storage can use. The following steps show how to do this.

The first thing you want to do is to obtain the actual connection string to use.

1. Run Visual Studio as an administrator, then open Server Explorer.

2. Right-click Data Connections and then click Add Connection… as shown in the following illustration.

Figure 7 Creating Development Environment Storage Database Server Connection

3. In the displayed dialog window, in the Server name box enter the name of your SQL server (by default, it is the name of your machine).

4. In the Log on to the server section, leave Use Windows Authentication selected. This is the authentication mode used by development storage.

5. In the Connect to a database section, choose Select or enter a database name.

6. In the drop-down list select the development storage database DevelopmentStorageDb20090919. The following illustration is an example of these steps.

Figure 8 Specifying Development Environment Storage Database

7. Click Test Connection. If everything is correct, a pop-up dialog reports that the connection succeeded. Click OK.

8. Finally, click OK in the main dialog window.

A new database connection, similar to the one shown in the following illustration, is created and displayed in Server Explorer.

Figure 9 Development Storage Database Connection

9. Right-click the data connection just created and in the pop-up dialog select Properties.

10. In the Properties window copy the Connection String property value that is similar to this:

Data Source=mmieleLAPTOP;Initial Catalog=DevelopmentStorageDb20090919;Integrated Security=True

Among other things, the string defines the name of the SQL server instance and the development storage database.
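To see exactly which two values development storage depends on, the copied string can be split into its keywords with the BCL’s DbConnectionStringBuilder (System.Data.Common). This is a minimal sketch; the DevStorageConnectionInfo class and Describe method are hypothetical names, and the code only parses the string without connecting to SQL Server.

```csharp
using System.Data.Common;

public static class DevStorageConnectionInfo
{
    // Parses a connection string such as the one copied from Server Explorer
    // and returns the SQL Server instance and database it points to.
    public static (string Server, string Database) Describe(string connectionString)
    {
        var builder = new DbConnectionStringBuilder { ConnectionString = connectionString };

        // Keyword lookups on DbConnectionStringBuilder are case-insensitive.
        string server = builder.TryGetValue("Data Source", out object ds) ? (string)ds : null;
        string database = builder.TryGetValue("Initial Catalog", out object ic) ? (string)ic : null;
        return (server, database);
    }
}
```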

Now you need to instruct development storage to use this connection string to connect to the database. You do this by following the next steps.

11. In Visual Studio open the file C:\Program Files\Windows Azure SDK\v1.2\bin\devstore\dsservice.exe.config. Remember that you must run Visual Studio as an administrator.

12. In the <configuration> element create a <connectionStrings> section.

13. In the section just created enter an <add> element.

14. To the name attribute assign the value DevelopmentStorageDbConnectionString.

15. To the connectionString attribute assign the connection string evaluated in the previous steps.

The following is an example of these connection string settings.

```xml
<connectionStrings>
  <add name="DevelopmentStorageDbConnectionString"
       connectionString="Data Source=mmielelaptop;Initial Catalog=DevelopmentStorageDb20090919;Integrated Security=True"
       providerName="System.Data.SqlClient" />
</connectionStrings>
```

Notice that development storage expects the name attribute to be DevelopmentStorageDbConnectionString.

16. Save and close the file.

You have configured development storage to use your own instance of the SQL server instead of the default SQL Express server.

Mary Jo Foley (@maryjofoley) reported Microsoft starts rolling out test builds of promised Azure cloud add-ons on 12/1/2010:

At this year’s Professional Developers Conference, the Azure team promised to deliver a slew of new add-ons and services — in either test or final form — before the end of calendar 2010. As of this week, test versions of many of those services are available for download.

The majority of the promised services and add-ons are available via the new Azure Management Portal; a few others are available via a newly released Azure Tools for Visual Studio software development kit (version 1.3), which Microsoft made available for download earlier this week.

There are still a few services that Microsoft officials said would be available before the end of this year that are not downloadable yet. Those include server application virtualization (due in Community Technology Preview, or CTP, test form), SQL Azure Reporting (due in CTP form), and SQL Azure Data Sync (also due in CTP form). These are still on track for end of year, the Softies said on November 30.

As of this week, public betas are now available for:

- Windows Azure Virtual Machine Role

- Extra Small Windows Azure Instance, which is priced at $0.05 per compute hour, to attract developers who need a cheaper cloud training and prototyping environment

- The application section of the Windows Azure Marketplace, a site offering Azure building-block components, premium data sets, training and services

A CTP is now available for:

- Windows Azure Connect (formerly Project Sydney), which provides IP-based network connectivity between on-premises and Windows Azure resources

Microsoft is providing an overview Webcast about these new features on December 1 at 9 a.m. PT.

By the way, if you’re wondering whether “CTP” and “beta” mean the same thing in the cloud as they do on-premises, Microsoft’s answer is “not exactly.” Server and Tools President Bob Muglia told me at the PDC that Microsoft is moving towards a slightly different nomenclature when talking about the path to rollout in the cloud. “Preview” in the cloud refers to code that Microsoft is showing but not providing to testers outside the company. “CTP” refers to code testers can use, but that will be provided for free. “Beta” refers to code available to testers, for which Microsoft plans to charge, Muglia said.

Bill Zack explained Sending Email from Azure in this 12/1/2010 post:

On-premises applications often need to send email. For instance, an order entry system might have to send an email request to an external vendor to drop-ship all or part of an order. An event registration system might have to send email confirmations and reminders of an upcoming event.

In an on-premises application, ASP.NET developers can use a component such as Collaboration Data Objects (CDO) to send email directly from the application. Windows Azure does not provide a built-in capability for sending email.

There are, however, a couple of solutions for this that you can use until Azure does support this capability:

1. You can leverage an on-premises SMTP server. You will need to have the SMTP server accessible by the Azure application. Normally, that requires you to give network engineers the IP address of the application so that they can allow connectivity. This IP address may change when you delete and re-deploy your application (such as when you add or remove a role, add or remove a configuration setting, change certificate, etc.), but it is one solution.

2. You can also use the Office365 (Exchange Online) service. However, you can only send messages in the name of the authenticated sender (i.e. you can’t use an arbitrary email address such as no-reply@mytest.com as the sender). You also need to set EnableSsl to true. For more information on how to set this method up see this blog post.
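The second option can be sketched in C# with the .NET Framework’s System.Net.Mail classes. This is a minimal sketch, not the method from the referenced blog post: the MailSketch class, the host name smtp.example.com, port 587, and the account values are hypothetical placeholders, so look up the actual Exchange Online SMTP settings for your account. The sketch only builds the client and the message; sending is a single client.Send(message) call.

```csharp
using System.Net;
using System.Net.Mail;

public static class MailSketch
{
    // Hypothetical placeholders: substitute the real SMTP host and port.
    public const string SmtpHost = "smtp.example.com";
    public const int SmtpPort = 587;

    // Builds an SSL-enabled SMTP client that authenticates as 'user'.
    public static SmtpClient CreateClient(string user, string password)
    {
        return new SmtpClient(SmtpHost, SmtpPort)
        {
            EnableSsl = true,                                // SSL must be on
            Credentials = new NetworkCredential(user, password)
        };
    }

    // The From address is the authenticated account, not an arbitrary
    // address such as no-reply@mytest.com.
    public static MailMessage CreateMessage(string user, string to, string subject, string body)
    {
        return new MailMessage(user, to, subject, body);
    }
}
```

Tying the message’s From address to the same account used for the credentials keeps the code within the sender restriction described above.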

My thanks to Harry Chen of Microsoft for some of the material in this article.

There have been many requests to add simple e-mail sending capability to Azure.

<Return to section navigation list>

Visual Studio LightSwitch

John Rivard posted Anatomy of a LightSwitch Application Part 4 – Data Access and Storage to the Visual Studio LightSwitch Team blog on 11/30/2010:

In prior posts I’ve covered the LightSwitch presentation tier and the logic tier. The presentation tier is primarily responsible for human interaction with the application using data from a LightSwitch logic tier. The logic tier comprises one or more LightSwitch data services whose primary job is to process client requests to read and write data from external data stores. This post explores how the logic tier accesses data and what the supported data storage services are.

The following diagram shows the relevant pieces of each tier that participate in data access.

Figure 1: LightSwitch Tiers

In Visual Studio LightSwitch, when you define a data source by creating a new table or connecting to an external data source, LightSwitch creates a corresponding data service and configures its data provider. For application-defined data, LightSwitch creates a special data source called “ApplicationData” (sometimes referred to as the intrinsic data source). In addition to creating a data service for the intrinsic data source, LightSwitch also creates and publishes the SQL database.

Figure 2 below shows a LightSwitch application with two data sources: the intrinsic “ApplicationData” and an attached “NorthwindData”. LightSwitch will create the corresponding “ApplicationDataService” and “NorthwindDataService” and will publish the intrinsic database.

Figure 2: Data Sources in Project Explorer

Each data service has a data provider that corresponds to the kind of data storage service. LightSwitch supplies the data provider for Microsoft SQL Server and for Microsoft SharePoint, but others can be plugged in to support other data storage services. The following table summarizes the supported data providers and data storage services in LightSwitch 1.0.

Table 1: Supported Data Access Providers

† Not all 3rd party Entity Framework providers have been tested or are known to be compatible with LightSwitch.

‡ Provider specific.

So let’s begin our tour of data access and storage in LightSwitch. We’ll cover the following areas.

- LightSwitch Data Types

- Using Microsoft SQL Server and SQL Azure

- Using Microsoft SharePoint

- Using a WCF RIA DomainService as a Data Adapter

- Using an Alternate Entity Framework Provider

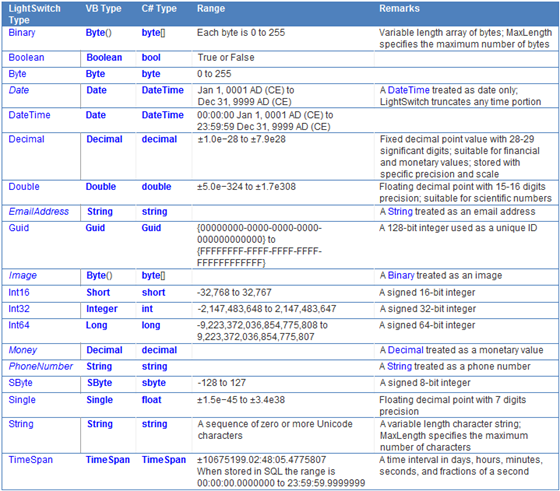

LightSwitch Data Types

Before we look at the various data providers that you can use with LightSwitch, it will help to understand the data types that LightSwitch supports. In the sections following, we will see how LightSwitch maps data types from other data sources and data access providers to the built-in LightSwitch data types.

Table 2 below lists the simple data types that LightSwitch supports. The data types shown in italic fonts (Date, EmailAddress, Image, PhoneNumber) are referred to as semantic types. These do not represent a distinct value type, but provide specific formatting, visualization and validation for an existing simple data type. The set of simple data types is fixed but the set of semantic types is open for extensibility.

Table 2: LightSwitch Simple Data Types

Nullable Data Types

Each of the LightSwitch simple and semantic data types has a corresponding nullable data type. The data type is represented in the LightSwitch modeling language as datatype?. In the design experience, the q-mark notation is not displayed. Instead a “Required” property indicates a non-nullable value. In code, LightSwitch uses the corresponding nullable VB and C# data types, for example Integer? or int?. The one exception is that both required and non-required string values are represented as the CLR String type. If a string is required, LightSwitch validates that it is non-null and non-empty.
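As a rough illustration of how those rules surface in C#, here is a hand-written sketch, not LightSwitch-generated code: CustomerSketch, CreditLimit, and IsValidRequiredString are hypothetical names. A non-required Integer becomes int?, while a string stays a plain CLR string whether or not it is required, so the required check has to test for both null and empty.

```csharp
using System;

public class CustomerSketch
{
    // Hypothetical non-required Integer property: surfaces as int?.
    public int? CreditLimit { get; set; }

    // Required and non-required strings are both plain CLR strings.
    public string Name { get; set; }

    // Mirrors the described rule for required strings: non-null and non-empty.
    public static bool IsValidRequiredString(string value) => !string.IsNullOrEmpty(value);
}
```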

Using Microsoft SQL Server and SQL Azure

This section applies to data accessed from SQL Server, SQL Server Express, or SQL Azure, including the intrinsic “ApplicationData” database created for a LightSwitch application.

Note: In Beta 1 you can connect to an external SQL Azure database but you cannot deploy the intrinsic database to it.

At publish time, you specify the connection string that will be used to access data in SQL Server or SQL Azure. Your production SQL connection string should have the following properties set. (See ConnectionString Property for more information.)

Unsupported Features

LightSwitch does not support accessing SQL stored procedures. The LightSwitch designer ignores all other database objects that may be configured such as triggers, UDFs or SQL CLR. (It is possible to create a custom data adapter that accesses SQL stored procedures. This is covered below in “Using a WCF RIA DomainService as a Data Adapter”.)

LightSwitch does not support certain SQL data types as noted in the table below. LightSwitch omits columns with unsupported data types.

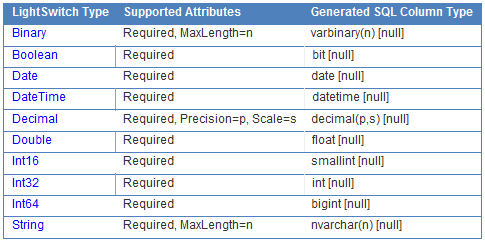

SQL Data Type Mapping

When you attach to an existing SQL Server or SQL Azure database, LightSwitch maps the SQL column types to LightSwitch data types according to the following table. A non-nullable SQL type is mapped to a LightSwitch “Required” data type. LightSwitch also imports the length, precision and scale of certain data types.

Table 3: SQL Data Type Mapping

SQL Data Provider

The LightSwitch logic tier uses the Entity Framework data access provider for SQL Server. In the data service implementation, LightSwitch uses an Entity Framework ObjectContext to move entity instances to and from SQL Server. The LightSwitch developer doesn’t see the Entity Framework objects or API directly. He writes the logic tier business logic in terms of the LightSwitch Entity and Data Workspace API—without concern for the underlying data access mechanism.

LightSwitch supports transactions over SQL Client. The default isolation level for query operations is IsolationLevel.ReadCommitted. The default isolation level for updates is IsolationLevel.RepeatableRead. LightSwitch doesn’t enlist in a distributed transaction by default. You can control that behavior by creating an ambient transaction scope; if available, LightSwitch will compose with it. For more details, see Transaction Management under the Logic Tier architecture post.
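Creating an ambient transaction scope looks roughly like the following minimal sketch using System.Transactions.TransactionScope. The AmbientTransactionSketch class is hypothetical, and the work delegate is a stand-in for whatever save code you run; the LightSwitch data workspace calls themselves are not shown. Any code that supports System.Transactions and runs inside the scope sees it through Transaction.Current.

```csharp
using System;
using System.Transactions;

public static class AmbientTransactionSketch
{
    // Runs 'work' inside an ambient transaction with the requested isolation
    // level and returns the isolation level the ambient transaction reported.
    public static IsolationLevel RunInScope(IsolationLevel level, Action work)
    {
        var options = new TransactionOptions
        {
            IsolationLevel = level,
            Timeout = TimeSpan.FromSeconds(30)
        };

        using (var scope = new TransactionScope(TransactionScopeOption.Required, options))
        {
            // Code called from here composes with Transaction.Current.
            IsolationLevel observed = Transaction.Current.IsolationLevel;
            work();
            scope.Complete();   // vote to commit; without this the scope rolls back
            return observed;
        }
    }
}
```

Because the scope uses TransactionScopeOption.Required, an outer scope, if one already exists, is joined rather than replaced.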

Generating the Intrinsic SQL Database

As we’ve noted above, a LightSwitch application has a default application database called “Application Data”. This database provides the data storage for entity types defined by your LightSwitch application – as opposed to entity types that are imported from an external storage service.

At design-time, LightSwitch uses a local SQL Server Express database on the developer’s machine for the intrinsic database. In production, LightSwitch uses Microsoft SQL Server Express, Microsoft SQL Server or Microsoft SQL Azure for the application database. Runtime data access is the same as for any other attached SQL Server or SQL Azure database, as described above.

LightSwitch deploys the intrinsic database schema when you publish the application. It will also attempt to upgrade an existing database schema when you upgrade the application.

Note: LightSwitch does not deploy design-time or test data to production. It just deploys the schema. If you have requirements to publish data along with your application, you’ll need to do that as a first-time install or first-time run step.

SQL Schema Generation

LightSwitch maintains a model of the application data entities and relationships. From this model it generates a database storage schema that can be used to create tables and relationships in SQL Server or SQL Azure.

When you use the “Create Table” command in the LightSwitch designer, you are actually defining an entity type and a corresponding entity set. From the entity set, LightSwitch infers the database table and columns. The column types are mapped from the entity properties according to the following table.

Table 4: Generated SQL Column Types

The following LightSwitch data types are not supported when defining entity properties on the intrinsic database: Byte, Guid, SByte, Single, and TimeSpan. These were omitted to keep the design experience simple. They are supported, however, when attaching to an external database.

A LightSwitch “Required” property translates into a SQL “NOT NULL” column.

The LightSwitch designer handles keys and foreign keys for entity relationships. For every entity type, it creates an auto-increment primary key property named “Id” of type Int32. LightSwitch also automatically creates hidden foreign-key fields for entity properties that reference [1] entity or [0..1] entities. These are generated as SQL columns with an appropriate foreign-key constraint.

In general, there is full fidelity in converting data between the LightSwitch data types and the SQL column types. The one exception is DateTime. LightSwitch chose to generate DateTime properties as SQL datetime instead of as datetime2 so that our generated SQL would be compatible with SQL Server 2005. This means that the range and precision of date/time values that LightSwitch handles (via the System.DateTime type) is greater than what the SQL column can store. See SQL date and time types for more details. This is only a problem if your application needs to deal with dates prior to January 1, 1753, which isn’t typical for most business applications.
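A quick way to check whether a System.DateTime value survives the trip into a SQL datetime column is to compare it with the bounds exposed by System.Data.SqlTypes.SqlDateTime. This minimal sketch (SqlDateTimeRange and FitsSqlDatetime are hypothetical names) checks only the range; SQL datetime also rounds to increments of .000, .003 or .007 seconds, which this check ignores.

```csharp
using System;
using System.Data.SqlTypes;

public static class SqlDateTimeRange
{
    // True if 'value' lies within the range of SQL Server's datetime type:
    // January 1, 1753 through December 31, 9999 23:59:59.997.
    public static bool FitsSqlDatetime(DateTime value) =>
        value >= SqlDateTime.MinValue.Value && value <= SqlDateTime.MaxValue.Value;
}
```

Note that DateTime.MinValue (January 1, 0001), the default of an uninitialized DateTime, falls outside this range.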

Schema Versioning

After you’ve built and deployed your Application Data for the first time, you may need to make updates to the application and to its data schema. LightSwitch allows you to deploy over the top of an existing Application Database – to a degree. At publish time LightSwitch will read the schema of the existing published database and attempt to deploy just the differences, for example adding tables, relationships, and adding or altering columns.

There are a number of changes that LightSwitch will not deploy because the changes could result in data loss. Here are some of the breaking changes that could be made in the LightSwitch Entity designer that could result in being unable to deploy the schema updates.

- Renaming an entity property

- Changing an entity property’s data type to something that is incompatible

- Rearranging the order of entity properties

- Adding a required property

- Adding a 1-many relationship

- Adding a 1-0..1 relationship

- Changing the multiplicity of a relationship (however changing from 1-many to 0..1-many is allowed)

- Adding a unique index (not available in Beta 1)

LightSwitch doesn’t prevent you from making a breaking change during iterative development. In some cases you may be warned that a change may cause data loss for your design-time test data. If you don’t mind losing your test data, you can accept the warning and make the change. But beware that if you’ve already deployed the data schema once, such a change may prevent you from successfully deploying a schema update because the scripts that LightSwitch uses to update the data schema will fail and roll-back if the potential for data loss is detected.

There are also certain schema changes that may cause data loss but are intentionally allowed. These include:

- Deleting an entity property. The column will be dropped and any existing data will be lost.

- Deleting an entity. Any foreign-key constraints will be dropped, the table will be dropped, and any existing data will be lost.

- Deleting a relationship. Any existing foreign-keys and foreign-key constraints will be removed. There is the potential for data loss.

The Membership Database

If you use Windows Authentication or Forms Authentication in your application, LightSwitch will also deploy the SQL tables needed to store membership information for users and roles. These tables are included in the intrinsic database. Internally, LightSwitch uses the ASP.NET SQL Membership provider, which requires these tables to be present:

- dbo.aspnet_Applications

- dbo.aspnet_Membership

- dbo.aspnet_Profile

- dbo.aspnet_Roles

- dbo.aspnet_SchemaVersions

- dbo.aspnet_Users

- dbo.aspnet_UsersInRoles

In addition to Users and Roles, LightSwitch applications have the concept of Permissions. Permissions are defined statically, per application, in the LightSwitch application model (LSML). LightSwitch associates Permissions with Roles. A Role acts as an administrative grouping for Permissions. LightSwitch creates one additional table called RolePermissions to track this association.

- dbo.RolePermissions

After deployment, you can add and remove users, add and remove roles, associate users with roles, and assign permissions to roles. A user gets all the permissions that are associated with the roles that he or she is in.
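
The rule that a user gets every permission attached to any of his or her roles amounts to a set union. The following C# sketch assumes the membership data has already been loaded into memory as (user, role) pairs, mirroring dbo.aspnet_UsersInRoles, and (role, permission) pairs, mirroring dbo.RolePermissions. The EffectivePermissions class is hypothetical; LightSwitch's own permission checks are not shown.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: computes a user's effective permissions from
// in-memory copies of the user-role and role-permission associations.
public static class EffectivePermissions
{
    public static ISet<string> For(
        string user,
        IEnumerable<(string User, string Role)> usersInRoles,
        IEnumerable<(string Role, string Permission)> rolePermissions)
    {
        // All roles the user belongs to
        var roles = usersInRoles.Where(ur => ur.User == user)
                                .Select(ur => ur.Role)
                                .ToHashSet();

        // Union of the permissions granted to any of those roles
        return rolePermissions.Where(rp => roles.Contains(rp.Role))
                              .Select(rp => rp.Permission)
                              .ToHashSet();
    }
}
```

A user in no role ends up with an empty set, and a permission granted by two roles appears only once.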

Design-time Data

When you press F5 to run the application during development, LightSwitch generates a temporary SQL Server Express database to hold test data. This physical database is not deployed with the application, nor is any of the data that is entered at design time.

LightSwitch attempts to maintain this data between F5 iterations, even as you make changes to the shape of the data. In cases where a data schema change cannot be performed on the design-time database without data loss, LightSwitch will warn you. If you accept the warnings, it will delete the test database and start from scratch with an empty database.

Using SharePoint Lists

LightSwitch allows you to access lists on a SharePoint 2010 site as tabular data.

Unsupported Features

LightSwitch supports only SharePoint 2010 and later, the versions that expose list data via OData. LightSwitch does not support managing SharePoint attachments. All other data types are supported, but there is limited support for displaying or editing some of them, as noted below.

SharePoint Data Type Mapping

LightSwitch maps column types from SharePoint lists to LightSwitch data types according to the following table.

Table 5: SharePoint Data Type Mapping

A SharePoint Lookup column maps to an entity relationship in LightSwitch.

SharePoint Data Provider

All LightSwitch data services use an Entity Framework ObjectContext to manage data in the server data workspace. When no Entity Framework data provider is available, LightSwitch uses a disconnected ObjectContext and builds its own shim layer to move entities in and out of the ObjectContext via an alternate data access provider.

In the case of SharePoint 2010, there is no Entity Framework provider. LightSwitch has an internal adapter that talks to SharePoint via its OData feed, using an instance of System.Data.Services.Client.DataServiceContext. Under the hood, LightSwitch does all the necessary query translation and entity type marshaling to go between the EF ObjectContext and the OData DataServiceContext.
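
To give a feel for what that query translation produces on the wire, the C# sketch below builds, by hand, the kind of OData URL that a filtered, sorted and paged query against a SharePoint 2010 list turns into. It uses the SharePoint 2010 ListData.svc endpoint and the standard OData $filter, $orderby, $skip and $top options. The ODataUrl helper, the site URL and the list and field names are hypothetical; LightSwitch generates these requests internally through DataServiceContext rather than with code like this.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: assembles an OData query URL for a SharePoint 2010 list.
public static class ODataUrl
{
    public static string ForList(string siteUrl, string listName,
                                 string filter, string orderBy, int skip, int top)
    {
        var options = new List<string>();
        if (!string.IsNullOrEmpty(filter)) options.Add("$filter=" + Uri.EscapeDataString(filter));
        if (!string.IsNullOrEmpty(orderBy)) options.Add("$orderby=" + Uri.EscapeDataString(orderBy));
        if (skip > 0) options.Add("$skip=" + skip);   // paging: rows to skip
        if (top > 0) options.Add("$top=" + top);      // paging: page size

        // SharePoint 2010 exposes list data through the ListData.svc OData service
        var url = siteUrl.TrimEnd('/') + "/_vti_bin/ListData.svc/" + listName;
        return options.Count == 0 ? url : url + "?" + string.Join("&", options);
    }
}
```

For instance, the third page of ten open orders sorted newest first becomes a single GET request ending in $skip=20&$top=10.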

The Entity Framework ObjectContext requires entity keys and foreign-keys to manage entity relationships. OData doesn’t handle entity relationships in the same way and does not naturally provide the foreign-key data. LightSwitch compensates for this by traversing the entity relationships to get to the necessary related entity keys and auto-populates the foreign-keys. This is a bit of extra work in the logic tier, but results in a consistent data access model and API.
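
The foreign-key fix-up can be pictured with a tiny C# sketch. The Customer and Order classes and the ForeignKeyFixup helper are hypothetical: the OData side supplies the related entity through a navigation property, and the fix-up copies that entity's key into the foreign-key property the ObjectContext expects.

```csharp
// Hypothetical entity shapes: OData gives us the related Customer entity,
// while the EF-style model also wants the CustomerId foreign-key value.
public class Customer
{
    public int Id { get; set; }
}

public class Order
{
    public int Id { get; set; }
    public int? CustomerId { get; set; }    // foreign key expected by the ObjectContext
    public Customer Customer { get; set; }  // navigation property populated from OData
}

public static class ForeignKeyFixup
{
    // Traverses the relationship and copies the related entity's key into the
    // foreign-key property; an order with no related customer gets null.
    public static void Populate(Order order)
    {
        order.CustomerId = order.Customer?.Id;
    }
}
```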

Using a WCF RIA DomainService as a Data Adapter

LightSwitch lets you plug in a custom data adapter for cases where no other data provider technology is available. Because it requires writing some custom code and entity classes, it works best for custom in-house scenarios or for access to public services that always have the same data schema.

Rather than defining our own protocol for the data adapter, we noted that WCF RIA Services had already done a great job of defining an object that supports query and update to user-defined entity types: the DomainService. LightSwitch uses the same DomainService class as an in-memory adapter—without necessarily exposing it as a public WCF service endpoint.

The solution involves writing a custom DomainService and referencing the DLL from the LightSwitch project. LightSwitch uses .NET reflection to examine the DomainService and to infer the entity types and their read/insert/update/delete accessibilities. From this, LightSwitch generates its own data service and its own entity types so that business logic can be written against it just like with any other data service.
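
The reflection step can be sketched in C# as follows. To keep the example self-contained, it defines its own hypothetical marker attributes (QueryOp, InsertOp, UpdateOp, DeleteOp) instead of referencing the real RIA Services attributes. The inference rules are simplified assumptions: a query returning IQueryable&lt;T&gt; makes T readable, and an attributed method taking a single entity parameter marks that entity as insertable, updatable or deletable.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical marker attributes standing in for the RIA Services
// [Query], [Insert], [Update] and [Delete] attributes (not the real types)
[AttributeUsage(AttributeTargets.Method)] public sealed class QueryOpAttribute : Attribute { }
[AttributeUsage(AttributeTargets.Method)] public sealed class InsertOpAttribute : Attribute { }
[AttributeUsage(AttributeTargets.Method)] public sealed class UpdateOpAttribute : Attribute { }
[AttributeUsage(AttributeTargets.Method)] public sealed class DeleteOpAttribute : Attribute { }

public class Product { public int Id { get; set; } }

// Example service: products can be read and inserted, but not updated or deleted
public class ProductService
{
    [QueryOp] public IQueryable<Product> GetProducts() => Enumerable.Empty<Product>().AsQueryable();
    [InsertOp] public void InsertProduct(Product p) { }
}

public static class ServiceInspector
{
    // Infers, per entity type, which operations a service class exposes
    public static Dictionary<Type, SortedSet<string>> Inspect(Type serviceType)
    {
        var result = new Dictionary<Type, SortedSet<string>>();
        foreach (var m in serviceType.GetMethods(BindingFlags.Public | BindingFlags.Instance))
        {
            Type entity = null;
            string op = null;
            if (m.IsDefined(typeof(QueryOpAttribute), false) && m.ReturnType.IsGenericType
                && m.ReturnType.GetGenericTypeDefinition() == typeof(IQueryable<>))
            {
                // A query returning IQueryable<T> makes T a readable entity type
                entity = m.ReturnType.GetGenericArguments()[0];
                op = "Read";
            }
            else if (m.GetParameters().Length == 1)
            {
                // Write operations take the entity as their single parameter
                entity = m.GetParameters()[0].ParameterType;
                op = m.IsDefined(typeof(InsertOpAttribute), false) ? "Insert"
                   : m.IsDefined(typeof(UpdateOpAttribute), false) ? "Update"
                   : m.IsDefined(typeof(DeleteOpAttribute), false) ? "Delete"
                   : null;
            }
            if (entity == null || op == null) continue;   // not a domain operation
            if (!result.TryGetValue(entity, out var ops))
                result[entity] = ops = new SortedSet<string>();
            ops.Add(op);
        }
        return result;
    }
}
```

Inspecting ProductService reports Product as readable and insertable only. A generated data service could then expose it as read/insert without update or delete.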

Unsupported Features

LightSwitch does not support the following features of the WCF RIA DomainService:

- Custom Entity Operations

- Domain Invoke Operations

- Multiple assemblies. The DomainService class and the entity classes that it exposes must be defined in the same assembly. The LightSwitch data import feature doesn’t resolve the entity types to a different assembly.

RIA Data Type Mapping

LightSwitch maps data types from the DomainService entities to LightSwitch data types according to the following table.

Table 6: RIA Entity Data Type Mapping

Data types that are not exposed as nullable types will be imported as required.

LightSwitch also imports metadata attributes specified on the entity types exposed by the DomainService. These attributes are defined in System.ComponentModel.DataAnnotations.

Table 7: RIA Attribute Mapping
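
For illustration, here is a hypothetical DomainService entity decorated with standard System.ComponentModel.DataAnnotations attributes, plus a small C# reader that pulls that metadata out via reflection. Which attributes LightSwitch actually honors, and how they map, is what Table 7 lists. The Supplier entity and AnnotationReader helper are assumptions for the example only.

```csharp
using System;
using System.ComponentModel.DataAnnotations;
using System.Linq;
using System.Reflection;

// Hypothetical entity as a DomainService might expose it
public class Supplier
{
    [Key] public int SupplierId { get; set; }
    [Required, StringLength(50)] public string CompanyName { get; set; }
    public string Phone { get; set; }
}

public static class AnnotationReader
{
    // Names of the properties marked [Required]
    public static string[] RequiredProperties(Type t) =>
        t.GetProperties()
         .Where(p => p.IsDefined(typeof(RequiredAttribute), true))
         .Select(p => p.Name)
         .ToArray();

    // The [StringLength] maximum for a property, or null if none is declared
    public static int? MaxLength(Type t, string property) =>
        t.GetProperty(property)?.GetCustomAttribute<StringLengthAttribute>()?.MaximumLength;
}
```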

Exposing Stored Procedures

LightSwitch doesn’t support access to SQL stored procedures via the standard Entity Framework provider. However, you can use a custom DomainService to expose stored procedures.

Say for example that you have a SQL database where access to the data table is read-only. All inserts, updates and deletes must go through a stored procedure. You can create a DomainService that exposes access to the table as a query and implements custom Insert/Update/Delete methods to invoke the stored procedures.

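using System.Linq;
using System.ServiceModel.DomainServices.Server;

// MyEntityDomainService is a placeholder name
public class MyEntityDomainService : DomainService
{
    // Reads go against the read-only table
    [Query(IsDefault = true)]
    public IQueryable<MyEntity> GetMyEntity() { … }

    // Inserts, updates and deletes each invoke a stored procedure
    [Insert]
    public void InsertMyEntity(MyEntity e) { … }

    [Update]
    public void UpdateMyEntity(MyEntity e) { … }

    [Delete]
    public void DeleteMyEntity(MyEntity e) { … }
}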

Calling a Web Service

Consider another case where a call to a web service returns structured information. If the return value(s) can be construed as an entity type (even a pseudo-entity), you can model the web service call as a parameterized query on a DomainService that returns a single entity that contains the results. LightSwitch will model the query as a parameterized domain service query that can be called from the LightSwitch client or server.

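using System;
using System.Collections.Generic;
using System.Linq;
using System.ServiceModel.DomainServices.Server;

// MyResultsDomainService is a placeholder name
public class MyResultsDomainService : DomainService
{
    // LightSwitch requires a default query for each entity type
    [Query(IsDefault = true)]
    public IQueryable<MyResults> GetMyResults()
    {
        return new List<MyResults>().AsQueryable(); // dummy entity set
    }

    // A singleton query returns the results of a web service call
    [Query]
    public MyResults CallWebService(string param1)
    {
        // call web service with param1 and get xxx and yyy
        return new MyResults() {
            Id = Guid.NewGuid(), // dummy id
            Value1 = xxx,
            Value2 = yyy };
    }
}

Data Provider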

Under the hood, LightSwitch does all of the necessary translation between its native entity types and the DomainService entity types. For queries, we also rewrite the query expression tree with the DomainService entity types and hand it off to the DomainService for processing. For updates, we copy the changed LightSwitch entities into a new DomainService change set.
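
Rewriting a query expression tree for a different entity type can be sketched with System.Linq.Expressions.ExpressionVisitor. The C# example below is a simplified, hypothetical illustration, not LightSwitch's implementation. It handles only Where-style predicates and direct property accesses, which it re-binds by name from a stand-in LightSwitch entity (LsCustomer) to a stand-in DomainService entity (RiaCustomer).

```csharp
using System;
using System.Linq.Expressions;

// Hypothetical stand-ins: a LightSwitch-side entity and the DomainService entity
public class LsCustomer { public string Name { get; set; } public int Age { get; set; } }
public class RiaCustomer { public string Name { get; set; } public int Age { get; set; } }

// Rewrites a predicate over TSource into an equivalent predicate over TTarget,
// re-binding each direct property access by name. Nested member paths and
// OrderBy/Select calls are out of scope for this sketch.
public sealed class EntityTypeRewriter<TSource, TTarget> : ExpressionVisitor
{
    private readonly ParameterExpression _target = Expression.Parameter(typeof(TTarget), "e");

    public Expression<Func<TTarget, bool>> Rewrite(Expression<Func<TSource, bool>> predicate) =>
        Expression.Lambda<Func<TTarget, bool>>(Visit(predicate.Body), _target);

    // Replace the source lambda parameter with the target-typed parameter
    protected override Expression VisitParameter(ParameterExpression node) =>
        node.Type == typeof(TSource) ? _target : base.VisitParameter(node);

    protected override Expression VisitMember(MemberExpression node)
    {
        if (node.Expression != null && node.Expression.Type == typeof(TSource))
        {
            // Look up the same-named property on the target entity type
            var property = typeof(TTarget).GetProperty(node.Member.Name)
                ?? throw new InvalidOperationException(
                    $"{typeof(TTarget).Name} has no property {node.Member.Name}");
            return Expression.Property(Visit(node.Expression), property);
        }
        return base.VisitMember(node);
    }
}
```

Applied to c => c.Age > 30 && c.Name.StartsWith("A"), the rewriter yields the same predicate typed against RiaCustomer, which could then be composed into a query over the DomainService entities.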

Using Alternate Entity Framework Providers

The LightSwitch logic tier uses Entity Framework as its primary data access mechanism. By default we use the SQL provider for access to Microsoft SQL Server. But any other EF provider can be used—so long as it conforms to the features that LightSwitch expects from it.

When you connect to a data source via an EF provider, LightSwitch can ask the provider for the database schema. The EF provider hands off its schema information via the EDMX format.

Unsupported Features

LightSwitch does not support the EDM FunctionImport, typically used to expose stored procedures. It does not support the DateTimeOffset data type and will ignore those columns when importing the data schema.

EDM Data Type Mapping

LightSwitch maps the EDM Simple Types from the imported data schema into LightSwitch data types and attributes according to the following table.

Table 8: EDM Data Type Mapping

Entity Framework Data Provider

There are many third-party Entity Framework providers available. How well each provider works with LightSwitch is unknown, as we have not tested any specific provider other than the SQL provider.

LightSwitch relies on many features of an Entity Framework provider to perform correctly. These include handling complex query expressions, data paging via skip and take operators, searching on string fields, sorting by arbitrary columns, and including related records. All this is to say that your mileage may vary.
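
Several of these operators map directly onto LINQ's IQueryable methods. The following C# sketch composes a search filter, a sort on an arbitrary key and a Skip/Take page into one query. It runs here against an in-memory IQueryable; against a real EF provider, the same expression tree would have to be translated to the provider's query language, which is where provider support matters. The QueryShapes class is hypothetical.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

public static class QueryShapes
{
    // Hypothetical helper: composes a search filter, a sort on an arbitrary key,
    // and a Skip/Take page into one IQueryable expression. A provider must be
    // able to translate every one of these operators for the query to work.
    public static IQueryable<T> SearchSortPage<T, TKey>(
        IQueryable<T> source,
        Expression<Func<T, bool>> search,
        Expression<Func<T, TKey>> sortKey,
        int pageIndex,
        int pageSize)
    {
        return source
            .Where(search)
            .OrderBy(sortKey)              // paging should follow an explicit order
            .Skip(pageIndex * pageSize)    // zero-based page index
            .Take(pageSize);
    }
}
```

With the names Ann, Bob, Alice, Amy and Carl, searching for names starting with "A" and paging two at a time yields Alice and Amy on page 0 and Ann on page 1.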

While LightSwitch is able to configure any data provider via the EF connection string, a specific provider may require additional deployment or configuration steps. You’ll need to contact the publisher to find out how to use and deploy it with LightSwitch.
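
For reference, an Entity Framework connection string bundles three parts: the model metadata, the ADO.NET provider's invariant name, and the provider's own connection string nested as a single value. The C# sketch below assembles one with System.Data.Common.DbConnectionStringBuilder so that the nested value is quoted correctly. The provider name Hypothetical.Provider, the metadata resource paths and the database settings are placeholders, not a tested LightSwitch configuration.

```csharp
using System.Data.Common;

public static class EfConnectionString
{
    // Builds an EF-style connection string; the builder takes care of quoting
    // the nested provider connection string, which itself contains ';' and '='.
    public static string Build(string metadata, string providerInvariantName, string providerConnectionString)
    {
        var builder = new DbConnectionStringBuilder();
        builder["metadata"] = metadata;
        builder["provider"] = providerInvariantName;
        builder["provider connection string"] = providerConnectionString;
        return builder.ConnectionString;
    }
}
```

Parsing the result with another DbConnectionStringBuilder returns each of the three values intact, which is a quick way to check the quoting.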

We do hope to see many compatible providers available by the time LightSwitch 1.0 ships. Stay tuned!

Summary

LightSwitch supports accessing data from a number of different data sources and aggregating that data together. LightSwitch can access data from SQL Server Express, SQL Server and SQL Azure, and can also deploy its intrinsic database to any of these. LightSwitch can also access lists from SharePoint 2010, but does not yet support arbitrary OData sources.

For extensible data access, LightSwitch can use a compatible Entity Framework provider. And finally, you can use a custom data adapter by creating a WCF RIA DomainService class and the related entity classes.


Windows Azure Infrastructure

David Linthicum explained Selling SOA/Cloud Part 1 in a 12/1/2010 post to ebizQ’s Where SOA Meets Cloud blog:

Many organizations out there don't really have to sell SOA, and thus cloud computing. They understand that the hype is the driver, and they, in essence, leverage the thousands of articles and books on the topic to sell this architectural pattern. SOA is easy to sell if everyone else seems to be doing it, and there are plenty of smart people talking about its benefits.

However, in most cases, SOA has to be sold within the enterprise; it's not a slam-dunk. Indeed, if you're doing SOA right, you'll find that the cost quickly goes well into the millions, so you'll need executive approval for that kind of acceleration in spending. But the benefits are there as well, including the core benefit of agility, which could save the company many times the cost of building a SOA. At least, that's the idea.

Truth be told, technical folk are not good at selling the value of a technology, or, in this case, a grouping of technologies, into the enterprise. They rely on the assumption that everyone sees the benefit without their having to explain it, but that is not always the case. Moreover, while in many instances the benefit is clear, in more instances it's not. Also, there is a chance that SOA may not be a fit, and you'd better figure that out up-front.

So, how do you sell SOA? Let's look at a few key concepts, including:

- Shining a light on existing limitations

- Creating the business case

- Creating the execution plan

- Delivering the goods

Shining a light on existing limitations refers to the process of admitting how bad things are. This is difficult for most architects, because you're more or less exposing yourself to criticism. In many instances, you're the person in charge of keeping things working correctly. Architecture within most Global 2000 companies, however, is in need of fixing. You can't change the architectures; they are too complex and ill-planned. If your architecture has issues, and they all do, now is the time to list them.