Windows Azure and Cloud Computing Posts for 11/4/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

•• Updated 11/7/2010 with articles marked ••

• Updated 11/6/2010 with articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Marketplace DataMarket, and OData

- AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

•• Neil MacKenzie (@mknz) posted Comparing Azure Queues With Azure AppFabric Labs’ Durable Message Buffers on 11/6/2010:

The Azure AppFabric team made a number of important announcements at PDC 10, including the long-awaited migration of the Velocity caching service to Azure as the Azure AppFabric Caching Service. The team also released into the Azure AppFabric Labs a significantly enhanced version of the Azure AppFabric Service Bus Message Buffers feature.

The production version of Message Buffers provides a small in-memory store inside the Azure Service Bus where up to 50 messages, each no larger than 64 KB, can be stored for up to ten minutes. Each message can be retrieved by a single receiver. In his magisterial book Programming WCF Services, Juval Löwy suggests that Message Buffers provide for “elasticity in the connection, where the calls are somewhere between queued calls and fire-and-forget asynchronous calls.” The production version of Message Buffers meets a very specific need, so it is perhaps rightly not widely discussed.

The Azure AppFabric Labs version of Message Buffers is sufficiently enhanced that the feature could have much wider appeal. Message Buffers are now durable and look much more like a queuing system. There may be places where Durable Message Buffers could provide an alternative to Azure Queues.

The purpose of this post is to compare the enhanced Durable Message Buffers with Azure Queues primarily so that Durable Message Buffers get more exposure to those who need queuing capability. In the remainder of this post any reference to Durable Message Buffers will mean the Azure AppFabric Labs version.

Note that the Azure AppFabric Labs are available only in the US South Central sub-region. Furthermore, any numerical or technical limits for Durable Message Buffers described in this post apply to the Labs version, not to any production version that may be released.

Scalability

Azure Queues are built on top of Azure Tables. In Azure Queues, messages are stored in individual queues. The only limit on the number of messages is the 100 TB maximum size allowed for an Azure Storage account. Each message has a maximum size of 8 KB. However, the message data is stored in base64-encoded form, so the maximum size of the actual data in a message is somewhat less than this, perhaps around 6 KB. Messages are automatically deleted from a queue after a message-specific time-to-live interval, which has a maximum and default value of 7 days.
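The “around 6 KB” figure follows from base64 arithmetic: every 3 bytes of data become 4 characters of encoded text. The short Python sketch below works it out, assuming the full 8 KB limit is available to the encoded text; any envelope or serialization overhead in a real request would shrink the usable payload further.

```python
import base64

# Azure Queues limit each stored message to 8 KB (8,192 bytes).
MAX_STORED_BYTES = 8 * 1024


def max_raw_payload(stored_limit: int = MAX_STORED_BYTES) -> int:
    """Largest raw payload whose base64 encoding fits within stored_limit.

    Base64 turns every 3 input bytes into 4 output characters, so the
    encoded length of n bytes is 4 * ceil(n / 3).
    """
    return (stored_limit // 4) * 3


def encoded_size(payload: bytes) -> int:
    """Length of the payload after base64 encoding."""
    return len(base64.b64encode(payload))


limit = max_raw_payload()
print(limit)                             # 6144
print(encoded_size(b"x" * limit))        # 8192, exactly at the limit
print(encoded_size(b"x" * (limit + 1)))  # 8196, one byte too many
```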

Durable Message Buffers are built on top of SQL Azure. In Durable Message Buffers, messages are stored in buffers. Currently, each account is allowed only 10 buffers. Each buffer has a maximum size of 100MB and individual messages have a maximum size of 256 KB including service overhead. There appears to be no limit to the length of time a message remains in a buffer.

Authentication

Azure Queues use the same HMAC-based account-and-key authentication as Azure Blobs and Azure Tables. Azure Queue operations may be performed over either a secure (HTTPS) or an unsecured (HTTP) communications channel.

Durable Message Buffers use the Azure AppFabric Labs Access Control Service (ACS) to generate a Simple Web Token (SWT) that must be transmitted with each operation.
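To make the token less abstract, here is a minimal Python sketch of the SWT format: form-encoded claims followed by an HMACSHA256 pair computed over everything before it. In practice ACS issues and signs the token, and the client only forwards it. The claim values, the all-zero signing key, and the WRAP-style Authorization header shape are assumptions for illustration, not values taken from the Labs release.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote, unquote, urlencode

SIGNATURE_FIELD = "&HMACSHA256="


def make_swt(claims: dict, signing_key: bytes) -> str:
    """Form-encode the claims, then append an HMAC-SHA256 signature
    computed over the encoded claims; the signature pair comes last."""
    unsigned = urlencode(claims)
    digest = hmac.new(signing_key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return unsigned + SIGNATURE_FIELD + quote(signature, safe="")


def verify_swt(token: str, signing_key: bytes) -> bool:
    """Recompute the signature over everything before HMACSHA256 and compare."""
    unsigned, sep, encoded_sig = token.rpartition(SIGNATURE_FIELD)
    if not sep:
        return False
    try:
        received = base64.b64decode(unquote(encoded_sig), validate=True)
    except ValueError:  # binascii.Error is a subclass of ValueError
        return False
    expected = hmac.new(signing_key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    return hmac.compare_digest(received, expected)


# Hypothetical claims and key; ACS would normally mint this token.
token = make_swt(
    {"Issuer": "https://example-ns-sb.accesscontrol.appfabriclabs.com/",
     "Audience": "http://example-ns.servicebus.appfabriclabs.com/",
     "ExpiresOn": "1289088000"},
    signing_key=b"\x00" * 32,
)
# Assumed header shape for sending the token with each buffer operation.
authorization_header = f'WRAP access_token="{token}"'
```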

Programming Model

The RESTful Queue Service API is the definitive way to interact with Azure Queues. The Storage Client library provides a high-level .Net interface on top of the Queue Service API.

The only way to interact with Durable Message Buffers is through a RESTful interface. Although there is a .Net API for the production version of Message Buffers, there is currently no .Net API for the Labs release of Durable Message Buffers.

Management of Azure Queues is fully integrated with use of the queues so that the same namespace is used for operations to create and delete queues as for processing messages. For example:

{storageAccount}.queue.core.windows.net

The Durable Message Buffers API incorporates the recent change to the Azure AppFabric Labs whereby service management is provided with a namespace distinct from that of the actual service. For example:

{serviceName}.servicebus.appfabriclabs.com

{serviceName}-mgmt.servicebus.appfabriclabs.com

The Queue Service API supports the following operations on messages in a queue:

- Put – inserts a single message in the queue

- Get – retrieves and makes invisible up to 32 messages from the queue

- Peek – retrieves up to 32 messages from the queue

- Delete – deletes a message from the queue

- Clear – deletes all messages from the queue

These operations are all addressed to the following endpoint:

http{s}://{storageAccount}.queue.core.windows.net/{queueName}/messages

When the Get operation is used to retrieve a message from a queue, the message is made invisible to other requests for a specified period of up to two hours, with a default value of 30 seconds.

Note that all these operations have equivalents in the Storage Client API.
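As a rough map from the operations listed above to HTTP requests, the Python sketch below builds the method and URL for each one. The query parameters (numofmessages, visibilitytimeout, peekonly, popreceipt) are those of the Queue service REST API as we understand it; the helper name queue_request is hypothetical, and authentication headers and request bodies are left out.

```python
from urllib.parse import urlencode

# Per-request maximum documented for Get Messages and Peek Messages.
MAX_MESSAGES_PER_REQUEST = 32
# Two hours, the visibility-timeout ceiling quoted in the text above.
MAX_VISIBILITY_TIMEOUT_SECONDS = 2 * 60 * 60


def queue_request(account, queue, op, *, https=True, count=None,
                  visibility_timeout=None, message_id=None, pop_receipt=None):
    """Return (HTTP method, URL) for a Queue service message operation."""
    scheme = "https" if https else "http"
    url = f"{scheme}://{account}.queue.core.windows.net/{queue}/messages"
    params = {}
    if count is not None:
        if not 1 <= count <= MAX_MESSAGES_PER_REQUEST:
            raise ValueError(f"count must be 1..{MAX_MESSAGES_PER_REQUEST}")
        params["numofmessages"] = count

    if op == "put":
        return "POST", url  # the message itself travels in the request body
    if op == "get":
        if visibility_timeout is not None:
            if not 1 <= visibility_timeout <= MAX_VISIBILITY_TIMEOUT_SECONDS:
                raise ValueError("visibility timeout out of range")
            params["visibilitytimeout"] = visibility_timeout
    elif op == "peek":
        params = {"peekonly": "true", **params}
    elif op == "delete":
        # Deleting one message requires the pop receipt returned by Get.
        return "DELETE", f"{url}/{message_id}?" + urlencode({"popreceipt": pop_receipt})
    elif op == "clear":
        return "DELETE", url
    else:
        raise ValueError(f"unknown operation: {op}")
    return "GET", url + ("?" + urlencode(params) if params else "")
```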

The Durable Message Buffer API supports the following operations on messages in a message buffer:

- Send – inserts a single message in the buffer

- Peek-Lock – retrieves and locks a single message

- Unlock – unlocks a message so other retrievers can get it

- Read and Delete Message – retrieves and deletes a message as an atomic operation

- Delete Message – deletes a locked message

These operations are all addressed to the following endpoint:

http{s}://{serviceNamespace}.servicebus.windows.net/{buffer}/messages

When the Peek-Lock operation is used to retrieve a message from a buffer, the message is locked (made invisible) to other requests for a buffer-dependent period of up to 5 minutes. This period is specified when the buffer is created and is not changeable. It is possible to lose a message irretrievably if a receiver crashes while processing a message retrieved using Read and Delete Message.
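The difference between Peek-Lock and Read and Delete Message is easiest to see in a toy model. The Python class below is a hypothetical in-memory stand-in, not the service or its API: a peek-locked message that is never deleted reappears once its lock expires, while a message taken with read_and_delete is gone whether or not the receiver finishes processing it. Time is passed in explicitly so the behavior is deterministic.

```python
class InMemoryBuffer:
    """Toy model of Durable Message Buffer receive semantics."""

    def __init__(self, lock_duration):
        self.lock_duration = lock_duration  # fixed at creation, like a real buffer
        self._messages = {}                 # message id -> body
        self._lock_expiry = {}              # message id -> time the lock ends
        self._next_id = 0

    def send(self, body):
        msg_id = self._next_id
        self._next_id += 1
        self._messages[msg_id] = body
        return msg_id

    def _first_available(self, now):
        # Oldest message that is unlocked or whose lock has expired.
        for msg_id in sorted(self._messages):
            if self._lock_expiry.get(msg_id, float("-inf")) <= now:
                return msg_id
        return None

    def peek_lock(self, now):
        msg_id = self._first_available(now)
        if msg_id is None:
            return None
        self._lock_expiry[msg_id] = now + self.lock_duration
        return msg_id, self._messages[msg_id]

    def unlock(self, msg_id):
        self._lock_expiry.pop(msg_id, None)

    def delete(self, msg_id, now):
        # Only a message whose lock is still held can be deleted.
        if self._lock_expiry.get(msg_id, float("-inf")) <= now:
            raise KeyError(f"message {msg_id} is not currently locked")
        del self._messages[msg_id]
        del self._lock_expiry[msg_id]

    def read_and_delete(self, now):
        msg_id = self._first_available(now)
        if msg_id is None:
            return None
        self._lock_expiry.pop(msg_id, None)
        return self._messages.pop(msg_id)  # no copy survives a receiver crash
```

A receiver that wants at-least-once processing would peek-lock, process, and then delete; Read and Delete Message trades that safety for a single round trip.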

The Queue Service API supports the following queue management operations:

- Create Queue – creates a queue

- Delete Queue – deletes a queue

- Get Queue Metadata – gets metadata for the queue

- Set Queue Metadata – sets metadata for the queue

These operations are all addressed to the following endpoint:

http{s}://{storageAccount}.queue.core.windows.net/{queueName}

The Queue Service API supports an additional queue management operation:

- List Queues – lists the queues under the Azure Storage account

This operation is addressed to the following endpoint:

http{s}://{storageAccount}.queue.core.windows.net

The Durable Message Buffers API supports the following buffer-management operations:

- Create Buffer – creates and configures a message buffer

- Delete Buffer – deletes a message buffer

- Get Buffer – retrieves the configuration for a message buffer

- List Buffers – lists the message buffers associated with the service

These operations are addressed to the following endpoint:

https://{serviceNamespace}-mgmt.servicebus.windows.net/Resources/MessageBuffers

When a buffer is created, the following configuration parameters are fixed for the buffer:

- Authorization Policy – specifies the authorization needed for various operations

- Transport Protection Policy – specifies the transport protection used for operations

- Lock Duration – specifies the duration when messages are locked for a receiver

- Maximum Message Size – specifies the maximum message size

- Maximum Message Buffer Size – specifies the maximum size of the message buffer

Note that these configuration parameters are set when the message buffer is created and are immutable following creation. The Get Buffer operation may be invoked to view the configuration parameters for a message buffer.
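Because these parameters cannot change after creation, a frozen value object is a natural client-side model of a buffer's configuration. The Python sketch below is hypothetical: the field names and the example policy strings are ours, not the Labs wire format, and the bounds are the Labs limits quoted earlier in this post (5-minute lock, 256 KB messages, 100 MB buffers).

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class MessageBufferPolicy:
    # Illustrative names only; not the service's actual schema.
    authorization: str
    transport_protection: str
    lock_duration_seconds: int
    max_message_size_bytes: int
    max_buffer_size_bytes: int

    def __post_init__(self):
        # Bounds taken from the Labs limits described in this post.
        if not 0 < self.lock_duration_seconds <= 5 * 60:
            raise ValueError("lock duration must be at most 5 minutes")
        if not 0 < self.max_message_size_bytes <= 256 * 1024:
            raise ValueError("messages are limited to 256 KB")
        if not 0 < self.max_buffer_size_bytes <= 100 * 1024 * 1024:
            raise ValueError("buffers are limited to 100 MB")


policy = MessageBufferPolicy("Required", "AllPaths", 120, 64 * 1024, 10 * 1024 * 1024)
```

Changing a setting means building a new policy and creating a new buffer with it, which dataclasses.replace mirrors without touching the original object.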

Resources

The Azure AppFabric Labs portal is here.

The Windows Azure AppFabric Team blog is here. This post announces the October 2010 (PDC) release of the Azure AppFabric labs.

The Windows Azure AppFabric SDK V2.0 CTP described here is downloadable from here.

The PDC 10 presentation by Clemens Vasters on Windows Azure AppFabric Service Bus Futures is here. Channel 9 hosts a discussion with Clemens Vasters and Maggie Myslinska on the Azure AppFabric Labs release here.

• Joe Giardino, Jai Haridas, and Brad Calder show you How to get most out of Windows Azure Tables in a chapter-length article of 11/6/2010 for the Microsoft Windows Azure Storage Team blog. The full article rivals the length of most OakLeaf Windows Azure and Cloud Computing posts:

Introduction

Windows Azure Storage is a scalable and durable cloud storage system in which applications can store data and access it from anywhere and at any time. Windows Azure Storage provides a rich set of data abstractions:

- Windows Azure Blob – provides storage for large data items such as files and allows you to associate metadata with them.

- Windows Azure Drives – provides a durable NTFS volume for applications running in the Windows Azure cloud.

- Windows Azure Table – provides structured storage for maintaining service state.

- Windows Azure Queue – provides asynchronous work dispatch to enable service communication.

This post will concentrate on Windows Azure Table, which supports massively scalable tables in the cloud. A table can contain billions of entities and terabytes of data, and the system will efficiently scale out automatically to meet the table’s traffic needs. However, the scale you can achieve depends on the schema you choose and the application’s access patterns. One of the goals of this post is to cover best practices, tips to follow, and pitfalls to avoid that will allow your application to get the most out of Table Storage.

Table Data Model

For those who are new to Windows Azure Table, we would like to start off with a quick description of the data model. Because it is a non-relational storage system, a few concepts differ from those of a conventional database system.

To store data in Windows Azure Storage, you would first need to get an account by signing up here with your Live ID. Once you have completed registration, you can create storage and hosted services. The storage service creation process will request a storage account name, and this name becomes part of the host name you would use to access Windows Azure Storage. The host name for accessing Windows Azure Table is <accountName>.table.core.windows.net.

While creating the account you also get to choose the geo location in which the data will be stored. We recommend that you collocate it with your hosted services. This is important for a couple of reasons – 1) applications will have fast network access to your data, and 2) the bandwidth usage in the same geo location is not charged.

Once you have created a storage service account, you will receive two 512-bit secret keys, called the primary and secondary access keys. Either of these secret keys is used to authenticate user requests to the storage system by creating an HMAC-SHA256 signature for the request. The signature is passed with each request to authenticate it. Having two access keys allows you to regenerate keys by rotating between the primary and secondary access keys in your existing live applications.
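The signing step can be sketched in a few lines of Python. This is a minimal sketch, assuming the Table service’s Shared Key string-to-sign layout (verb, Content-MD5, Content-Type, Date, canonicalized resource) and the SharedKey Authorization header format; check both against the REST authentication documentation before relying on them. The two keys are hypothetical, and server_accepts only models the rule that either key validates a request.

```python
import base64
import hashlib
import hmac


def table_string_to_sign(verb, content_md5, content_type, date, account, path):
    # Assumed Table service Shared Key layout: five newline-separated fields.
    canonicalized_resource = f"/{account}/{path.lstrip('/')}"
    return "\n".join([verb, content_md5, content_type, date, canonicalized_resource])


def sign(string_to_sign, account_key_b64):
    # Account keys are handed out base64-encoded; decode before signing.
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def authorization_header(account, string_to_sign, account_key_b64):
    return f"SharedKey {account}:{sign(string_to_sign, account_key_b64)}"


def server_accepts(account, string_to_sign, header, valid_keys):
    # Either key validates a request, which is what makes rotation possible.
    return any(
        hmac.compare_digest(header, authorization_header(account, string_to_sign, k))
        for k in valid_keys
    )


PRIMARY = base64.b64encode(bytes(range(64))).decode("ascii")  # hypothetical 512-bit key
SECONDARY = base64.b64encode(bytes(64)).decode("ascii")       # hypothetical 512-bit key
```

Rotation then works in stages: move clients to the secondary key, regenerate the primary, and later move back, so requests signed with the key in use keep validating throughout.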

Using this storage account, you can create tables that store structured data. A Windows Azure table is analogous to a table in a conventional database system in that it is a container for storing structured data. But an important differentiating factor is that it does not have a schema associated with it. If a fixed schema is required for an application, the application will have to enforce it at the application layer. A table is scoped by the storage account and a single account can have multiple tables.

The basic data item stored in a table is called an entity. An entity is a collection of properties that are name-value pairs. Each entity has 3 fixed properties called PartitionKey, RowKey and Timestamp. In addition to these, a user can store up to 252 additional properties in an entity. If we were to map this to concepts in a conventional database system, an entity is analogous to a row and a property is analogous to a column. Figure 1 shows these concepts in a picture, and more details can be found in our documentation “Understanding the Table Service Data Model”.

Figure 1 Table Storage Concepts
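The entity rules above can be expressed as a small validator. This Python sketch is illustrative only: entities are modeled as plain dicts, and the function checks just the constraints stated here, the mandatory keys and the 252-property limit, while the two sample entities show the absence of a fixed schema.

```python
SYSTEM_PROPERTIES = ("PartitionKey", "RowKey", "Timestamp")
MAX_CUSTOM_PROPERTIES = 252  # plus the 3 system properties = 255 in total


def validate_entity(entity: dict) -> None:
    """Raise ValueError if an entity breaks the data-model rules above."""
    for required in ("PartitionKey", "RowKey"):
        if required not in entity:
            raise ValueError(f"missing {required}")
    # Timestamp is maintained by the service, so clients may leave it out.
    custom = [name for name in entity if name not in SYSTEM_PROPERTIES]
    if len(custom) > MAX_CUSTOM_PROPERTIES:
        raise ValueError(f"{len(custom)} custom properties; limit is {MAX_CUSTOM_PROPERTIES}")


# No fixed schema: entities in the same table may carry different properties.
customer = {"PartitionKey": "Smith", "RowKey": "John", "Email": "john@example.com"}
order = {"PartitionKey": "Smith", "RowKey": "Order-0001", "Total": 42.5}
for entity in (customer, order):
    validate_entity(entity)
```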

Every entity has 3 fixed properties:

- PartitionKey – The first key property of every table. The system uses this key to automatically distribute the table’s entities over many storage nodes.

- RowKey – A second key property for the table. This is the unique ID of the entity within the partition it belongs to. The PartitionKey combined with the RowKey uniquely identifies an entity in a table. The combination also defines the single sort order that is provided today; i.e., all entities are sorted (in ascending order) by (PartitionKey, RowKey).

- Timestamp – Every entity has a version maintained by the system which is used for optimistic concurrency. Update and Delete requests by default send an ETag using the If-Match condition and the operation will fail if the timestamp sent in the If-Match header differs from the Timestamp property value on the server.

The PartitionKey and RowKey together form the clustered index for the table and by definition of a clustered index, results are sorted by <PartitionKey, RowKey>. The sort order is ascending.
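The sort order behaves like a sorted list of (PartitionKey, RowKey) tuples. The Python sketch below is an in-memory model, not how the service stores data, showing a full scan, a point lookup, and a RowKey range within one partition.

```python
from bisect import bisect_left, bisect_right


class ClusteredIndex:
    """In-memory model of a table's (PartitionKey, RowKey) ordering."""

    def __init__(self, entities):
        self._rows = sorted(entities, key=lambda e: (e["PartitionKey"], e["RowKey"]))
        self._keys = [(e["PartitionKey"], e["RowKey"]) for e in self._rows]

    def scan(self):
        """Full-table order: ascending by PartitionKey, then RowKey."""
        return list(self._keys)

    def get(self, pk, rk):
        """Point lookup on the full key."""
        i = bisect_left(self._keys, (pk, rk))
        if i < len(self._keys) and self._keys[i] == (pk, rk):
            return self._rows[i]
        return None

    def range(self, pk, rk_from, rk_to):
        """Entities in one partition with rk_from <= RowKey <= rk_to."""
        lo = bisect_left(self._keys, (pk, rk_from))
        hi = bisect_right(self._keys, (pk, rk_to))
        return self._rows[lo:hi]
```

Because both keys are strings, comparison is lexicographic: RowKey "10" sorts before "9", so numeric keys are usually zero-padded to a fixed width.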

Operations on Table

The following are the operations supported on tables:

- Create a table or entity

- Retrieve a table or entity, with filters

- Update an entity

- Delete a table or entity

- Entity Group Transactions - These are transactions across entities in the same table and partition

Note: We currently do not support Upsert (Insert an entity or Update it if it already exists). We recommend that an application issue an update/insert first depending on what has the highest probability to succeed in the scenario and handle an exception (Conflict or ResourceNotFound) appropriately. Supporting Upsert is in our feature request list.
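The recommended workaround for the missing Upsert can be sketched as follows. FakeTable, Conflict, and ResourceNotFound are hypothetical stand-ins for a real client and its errors; the point is the order of attempts, chosen by which operation is more likely to succeed.

```python
class Conflict(Exception):
    """Insert failed: an entity with this key already exists."""


class ResourceNotFound(Exception):
    """Update failed: no entity with this key exists."""


class FakeTable:
    """Hypothetical in-memory table keyed by (PartitionKey, RowKey)."""

    def __init__(self):
        self.rows = {}

    @staticmethod
    def _key(entity):
        return entity["PartitionKey"], entity["RowKey"]

    def insert(self, entity):
        if self._key(entity) in self.rows:
            raise Conflict(self._key(entity))
        self.rows[self._key(entity)] = dict(entity)

    def update(self, entity):
        if self._key(entity) not in self.rows:
            raise ResourceNotFound(self._key(entity))
        self.rows[self._key(entity)] = dict(entity)


def upsert(table, entity, likely_new=True):
    """Try the operation most likely to succeed first, then fall back."""
    if likely_new:
        try:
            table.insert(entity)
        except Conflict:
            table.update(entity)
    else:
        try:
            table.update(entity)
        except ResourceNotFound:
            table.insert(entity)
```

A concurrent delete between a failed insert and the following update would still surface as ResourceNotFound, so production code would typically retry. With the real service, the fallback update would also need to be unconditional (If-Match: *) rather than tied to a particular ETag.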

For more details on each of the operations, please refer to the MSDN documentation. Windows Azure Table uses WCF Data Services to implement the OData protocol. The wire protocol is ATOM-Pub. We also provide a StorageClient library in the Windows Azure SDK that provides some convenience in handling continuation tokens for queries (See “Continuation Tokens” below) and retries for operations.
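Handling continuation tokens by hand amounts to a loop: while the response carries x-ms-continuation-NextPartitionKey (and possibly x-ms-continuation-NextRowKey), reissue the query with NextPartitionKey and NextRowKey. The Python sketch below assumes a hypothetical fetch_page callable that performs one request and returns the entities and response headers; note that a page may be empty and still carry a token.

```python
from typing import Callable, Dict, Iterator, List, Tuple

# One response: the entities it carried plus its HTTP response headers.
Page = Tuple[List[dict], Dict[str, str]]


def query_all(fetch_page: Callable[[Dict[str, str]], Page]) -> Iterator[dict]:
    """Yield every entity, following continuation tokens until none remain."""
    params: Dict[str, str] = {}
    while True:
        entities, headers = fetch_page(params)
        yield from entities
        # Assumes exact header casing; real HTTP header lookups should ignore case.
        next_pk = headers.get("x-ms-continuation-NextPartitionKey")
        if next_pk is None:
            return  # no token: the result set is complete
        params = {"NextPartitionKey": next_pk}
        next_rk = headers.get("x-ms-continuation-NextRowKey")
        if next_rk is not None:
            params["NextRowKey"] = next_rk
```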

The schema used for a table is defined as a .NET class with the additional DataServiceKey attribute, which informs WCF Data Services that our key is <PartitionKey, RowKey>. Also note that all public properties are sent over the wire as properties for the entity and stored in the table. …

The post continues with about 50 more feet of C# sample code examples and related documentation. Read more from the original source.

• Goeleven Yves posted Operational costs of an Azure Message Queue on 11/6/2010:

A potential issue with Azure message queues is that they carry hidden costs: the more often the system accesses the queues, the more you pay, whether or not there are messages.

Let’s have a look at what the operational cost model for NServiceBus would look like if we treated Azure queues the same way we treat MSMQ queues.

On Azure you pay $0.01 per 10K storage transactions. Every message send is a transaction, every successful message read is two transactions (GET + DELETE), and every poll that returns no message is also a transaction. Multiply this by the number of roles you have, then by the number of threads in each role, and again by the number of polls the CPU can launch per second in an infinite for-loop, and you’ve got yourself quite a bill at the end of the month. For example, 2 roles with 4 threads each, in idle state, at a rate of 100 polls per second would result in an additional $2.88 per hour for accessing your queues.
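The arithmetic behind the $2.88 figure is easy to check. This Python sketch uses the post’s 2010 price of $0.01 per 10,000 transactions and counts only empty polls, as in the idle example; exact fractions avoid floating-point rounding in the result.

```python
from fractions import Fraction

PRICE_PER_10K_TRANSACTIONS = Fraction("0.01")  # USD, as quoted in the post


def polling_cost_per_hour(roles, threads_per_role, seconds_between_polls):
    """Hourly cost of empty polls, one transaction per poll per thread."""
    polls_per_hour = Fraction(3600) / Fraction(seconds_between_polls)
    transactions = roles * threads_per_role * polls_per_hour
    return transactions / 10_000 * PRICE_PER_10K_TRANSACTIONS


busy = polling_cost_per_hour(2, 4, Fraction(1, 100))  # 100 polls per second
print(float(busy))  # 2.88
idle = polling_cost_per_hour(2, 4, 60)                # one poll per minute
print(float(idle))  # 0.00048
```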

Scary, isn’t it?

As a modern-day developer you have the additional responsibility to take these operational costs into account and balance them against other requirements such as performance. In order for you to achieve the correct balance, I’ve implemented a configurable back-off mechanism in the NServiceBus implementation.

The basic idea is this: if the thread has just processed a message, it’s very likely that there are more messages, so we check the queue again in order to maintain high throughput. But if there was no message, we delay our next read a bit before checking again. If there is still no message, we delay the next read a little more, and so on, until a certain threshold has been reached. This poll interval is maintained until there is a new message on the queue. Once a message has been processed, we start the entire sequence again from zero…

You can configure the increment of the poll interval by setting the PollIntervalIncrement property (in milliseconds) on the AzureQueueConfig configuration section; by default, the interval is increased by one second at a time. To define the maximum wait time when there are no messages, you can configure the MaximumWaitTimeWhenIdle property (also in milliseconds). By default this property is set to 60 seconds. So an idle NServiceBus role will slow down incrementally by one second until it polls only once every minute. For our example of 2 roles with 4 threads, in idle state, this would now result in a cost of $0.00048 per hour.
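The back-off described above can be sketched as a linear schedule. PollBackoff and run_worker are hypothetical names, not the NServiceBus implementation; only the two settings, PollIntervalIncrement and MaximumWaitTimeWhenIdle, and their defaults come from the post. receive, handle, and sleep are injected so the loop can be exercised without a real queue.

```python
class PollBackoff:
    """Linear back-off between empty polls (hypothetical sketch)."""

    def __init__(self, poll_interval_increment_ms=1000,
                 maximum_wait_time_when_idle_ms=60000):
        self.increment_ms = poll_interval_increment_ms
        self.max_wait_ms = maximum_wait_time_when_idle_ms
        self.current_ms = 0

    def next_delay(self, got_message):
        """Return how long to wait (ms) before the next poll."""
        if got_message:
            self.current_ms = 0  # more work is likely, so poll again right away
        else:
            self.current_ms = min(self.current_ms + self.increment_ms, self.max_wait_ms)
        return self.current_ms


def run_worker(receive, handle, sleep, backoff, iterations):
    """Poll `iterations` times, sleeping according to the back-off schedule."""
    for _ in range(iterations):
        message = receive()
        if message is not None:
            handle(message)
        delay_ms = backoff.next_delay(message is not None)
        if delay_ms:
            sleep(delay_ms / 1000)
```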

Not so scary anymore

Please play with these values a bit, and let me know what you feel is the best balance between cost and performance…

Until next time…

Steve Yi reported Wiki: Understanding Data Storage Offerings on the Windows Azure Platform in an 11/4/2010 post:

Larry Franks has published an article in the wiki section of TechNet called: “Understanding Data Storage Offerings on the Windows Azure Platform”. This article describes the data storage offerings available on the Windows Azure platform, including: Windows Azure Storage: Tables, Queues, Blobs and our favorite: SQL Azure. He has done a nice job of comparing and contrasting storage to help you find the best Windows Azure Platform storage solution for your needs.

Read: “Understanding Data Storage Offerings on the Windows Azure Platform”

<Return to section navigation list>

SQL Azure Database, Marketplace DataMarket and OData

•• Kalen Delaney (@sqlqueen) wrote an Inside SQL Azure white paper, which Wayne Walter Berry posted to the Microsoft TechNet Wiki on 11/1/2010 (missed when posted). The white paper is especially interesting because it offers architectural and data center implementation details that Microsoft doesn’t advertise broadly:

Summary

With Microsoft SQL Azure, you can create SQL Server databases in the cloud. Using SQL Azure, you can provision and deploy your relational databases and your database solutions to the cloud, without the startup cost, administrative overhead, and physical resource management required by on-premises databases. The paper will examine the internals of the SQL Azure databases, and how they are managed in the Microsoft Data Centers, to provide you high availability and immediate scalability in a familiar SQL Server development environment.

Introduction

SQL Azure Database is Microsoft’s cloud-based relational database service. Cloud computing refers to the applications delivered as services over the Internet and includes the systems, both hardware and software, providing those services from centralized data centers. This introductory section will present basic information about what SQL Azure is and what it is not, define general terminology for use in describing SQL Azure databases and applications, and provide an overview of the rest of the paper.

What Is SQL Azure?

Many, if not most, cloud-based databases provide storage using a virtual machine (VM) model. When you purchase your subscription from the vendor and set up an account, you are provided with a VM hosted in a vendor-managed data center. However, what you do with that VM is then entirely isolated from anything that goes on in any other VM in the data center. Although the VM may come with some specified applications preinstalled, in your VM you can install additional applications to provide for your own business needs, in your own personalized environment. Even though your applications can run in isolation, you are dependent on the hosting company to provide the physical infrastructure, and the performance of your applications is impacted by the load on the data center machines, from other VMs using the same CPU, memory, disk I/O, and network resources.

Microsoft SQL Azure uses a completely different model. The Microsoft data centers have installed large-capacity SQL Server instances on commodity hardware that are used to provide data storage to the SQL Azure databases created by subscribers. One SQL Server database in the data center hosts multiple client databases created through the SQL Azure interface. In addition to the data storage, SQL Azure provides services to manage the SQL Server instances and databases. More details regarding the relationship between the SQL Azure databases and the databases in the data centers, as well as the details regarding the various services that interact with the databases, are provided later in this paper.

What Is in This Paper

In this paper, we describe the underlying architecture of the SQL Server databases in the Microsoft SQL Azure data centers, in order to explain how SQL Azure provides high availability and immediate scalability for your data. We tell you how SQL Azure provides load balancing, throttling, and online upgrades, and we show how SQL Azure enables you to focus on your logical database and application design, instead of having to worry about the physical implementation and management of your servers in an on-premises data center.

If you are considering moving your SQL Server databases to the cloud, knowing how SQL Azure works will provide you with the confidence that SQL Azure can meet your data storage and availability needs.

What Is NOT in This Paper

This paper is not a tutorial on how to develop an application using SQL Azure, but some of the basics of setting up your environment will be included to set a foundation. We will provide a list of links where you can get more tutorial-type information.

This paper will not cover specific Windows Azure features such as Windows Azure Table store and Blob store.

Also, this paper is not a definitive list of SQL Azure features. Although SQL Azure does not support all the features of SQL Server, the list of supported features grows with every new SQL Azure release. Because things are changing so fast with SQL Azure service updates every few months and new releases several times a year, users will need to get in the habit of checking the online documentation regularly. New service updates are announced on the SQL Azure team blog, found here: http://blogs.msdn.com/b/sqlazure/. In addition to announcing the updates, the blog is also your main source for information about new features included in the updates, as well as new options and new tools. …

Skipping to the architectural content:

SQL Azure Architecture Overview

As discussed earlier, each SQL Azure database is associated with its own subscription. From the subscriber’s perspective, SQL Azure provides logical databases for application data storage. In reality, each subscriber’s data is actually stored multiple times, replicated across three SQL Server databases that are distributed across three physical servers in a single data center. Many subscribers may share the same physical database, but the data is presented to each subscriber through a logical database that abstracts the physical storage architecture and uses automatic load balancing and connection routing to access the data. The logical database that the subscriber creates and uses for database storage is referred to as a SQL Azure database.

Logical Databases on a SQL Azure Server

SQL Azure subscribers access the actual databases, which are stored on multiple machines in the data center, through the logical server. The SQL Azure Gateway service acts as a proxy, forwarding the Tabular Data Stream (TDS) requests to the logical server. It also acts as a security boundary providing login validation, enforcing your firewall and protecting the instances of SQL Server behind the gateway against denial-of-service attacks.

The Gateway is composed of multiple computers, each of which accepts connections from clients, validates the connection information and then passes on the TDS to the appropriate physical server, based on the database name specified in the connection. Figure 2 shows the complex physical architecture represented by the single logical server.

Figure 2: A logical server and its databases distributed across machines in the data center (SQL stands for SQL Server)

In Figure 2, the logical server provides access to three databases: DB1, DB3, and DB4. Each database physically exists on one of the actual SQL Server instances in the data center. DB1 exists as part of a database on a SQL Server instance on Machine 6, DB3 exists as part of a database on a SQL Server instance on Machine 4, and DB4 exists as part of a database on a SQL Server instance on Machine 5. There are other SQL Azure databases existing within the same SQL Server instances in the data center (such as DB2), available to other subscribers and completely unavailable and invisible to the subscriber going through the logical server shown here.

Each database hosted in the SQL Azure data center has three replicas: one primary replica and two secondary replicas. All reads and writes go through the primary replica, and any changes are replicated to the secondary replicas asynchronously. The replicas are the central means of providing high availability for your SQL Azure databases. For more information about how the replicas are managed, see “High Availability with SQL Azure” later in this paper.

In Figure 2, the logical server contains three databases: DB1, DB3, and DB4. The primary replica for DB1 is on Machine 6 and the secondary replicas are on Machine 4 and Machine 5. For DB3, the primary replica is on Machine 4, and the secondary replicas are on Machine 5 and on another machine not shown in this figure. For DB4, the primary replica is on Machine 5, and the secondary replicas are on Machine 6 and on another machine not shown in this figure. Note that this diagram is a simplification. Most production Microsoft SQL Azure data centers have hundreds of machines with hundreds of actual instances of SQL Server to host the SQL Azure replicas, so it is extremely unlikely that if multiple SQL Azure databases have their primary replicas on the same machine, their secondary replicas will also share a machine.
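The placement rule can be sketched as follows. The paper does not say how machines are chosen, so random selection here is an assumption; the sketch only enforces that the three replicas of a database land on three different machines, and routes all reads and writes to the primary.

```python
import random


def place_replicas(databases, machines, replicas=3, seed=None):
    """Assign each database a primary and (replicas - 1) secondaries,
    each on a different machine. Random choice is an assumption."""
    if len(machines) < replicas:
        raise ValueError("need at least as many machines as replicas")
    rng = random.Random(seed)
    placement = {}
    for db in databases:
        chosen = rng.sample(machines, replicas)  # distinct machines
        placement[db] = {"primary": chosen[0], "secondaries": chosen[1:]}
    return placement


def route(placement, db):
    """Reads and writes for a database both go to its primary replica."""
    return placement[db]["primary"]
```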

The physical distribution of databases that all are part of one logical instance of SQL Server means that each connection is tied to a single database, not a single instance of SQL Server. If a connection were to issue a USE command, the TDS might have to be rerouted to a completely different physical machine in the data center; this is the reason that the USE command is not supported for SQL Azure connections.

Network Topology

Four distinct layers of abstraction work together to provide the logical database for the subscriber’s application to use: the client layer, the services layer, the platform layer, and the infrastructure layer. Figure 3 illustrates the relationship between these four layers.

Figure 3: Four layers of abstraction provide the SQL Azure logical database for a client application to use

The client layer resides closest to your application, and it is used by your application to communicate directly with SQL Azure. The client layer can reside on-premises in your data center, or it can be hosted in Windows Azure. Every protocol that can generate TDS over the wire is supported. Because SQL Azure provides the same TDS interface as SQL Server, you can use familiar tools and libraries to build client applications for data that is in the cloud.

The infrastructure layer represents the IT administration of the physical hardware and operating systems that support the services layer. Because this layer is technically not a part of SQL Azure, it is not discussed further in this paper.

The services and platform layers are discussed in detail in the next sections.

Services Layer

The services layer contains the machines that run the gateway services, which include connection routing, provisioning, and billing/metering. These services are provided by four groups of machines. Figure 4 shows the groups and the services each group includes.

Figure 4: Four groups of machines provide the services layer in SQL Azure

The front-end cluster contains the actual gateway machines. The utility layer machines validate the requested server and database and manage the billing. The service platform machines monitor and manage the health of the SQL Server instances within the data center, and the master cluster machines keep track of which replicas of which databases physically exist on each actual SQL Server instance in the data center.

The numbered flow lines in Figure 4 indicate the process of validating and setting up a client connection:

- When a new TDS connection comes in, the gateway, hosted in the front-end cluster, establishes a connection with the client. A minimal parser verifies that the command is one that should be passed to the database and not something such as CREATE DATABASE, which must be handled in the utility layer.

- The gateway performs the SSL handshake with the client. If the client refuses to use SSL, the gateway disconnects; all traffic must be fully encrypted. The protocol parser also includes a “Denial of Service” guard, which keeps track of IP addresses. If too many requests come from the same IP address or range of addresses, further connections from those addresses are denied.

- The server name and login credentials supplied by the user are verified. Firewall validation is also performed, allowing connections only from the range of IP addresses specified in the firewall configuration.

- After the server is validated, the master cluster is accessed to map the database name used by the client to a database name used internally. The master cluster is a set of machines maintaining this mapping information. In SQL Azure, partition means something quite different from what it means on your on-premises SQL Server instances: a partition is a piece of a SQL Server database in the data center that maps to one SQL Azure database. In Figure 2, for example, each of the databases contains three partitions, because each hosts three SQL Azure databases.

- After the database is found, authentication of the user name is performed, and the connection is rejected if the authentication fails. The gateway verifies that it has found the database that the user actually wants to connect to.

- After all connection information is determined to be acceptable, a new connection can be set up.

- This new connection goes straight from the user to the back-end (data) node.

- After the connection is established, the gateway’s job is only to proxy packets back and forth from the client to the data platform.
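The ordering of the gateway checks is easier to follow as code. The class below is a hypothetical, simplified model and not SQL Azure's implementation. The firewall ranges, credential table, master-cluster map and the per-IP request limit are all invented for illustration. The checks run in the order described above: command parsing, SSL, the denial-of-service guard, firewall validation, the master-cluster lookup, and authentication.

```python
import ipaddress
from collections import Counter


class GatewaySketch:
    """Hypothetical model of the gateway's connection checks, not SQL Azure's code."""

    def __init__(self, firewall_ranges, credentials, master_map, max_requests_per_ip=50):
        self.firewall = [ipaddress.ip_network(r) for r in firewall_ranges]
        self.credentials = credentials    # {(server, login): password}
        self.master_map = master_map      # {(server, database): (data_node, partition)}
        self.max_requests_per_ip = max_requests_per_ip
        self.requests = Counter()         # denial-of-service guard: requests per client IP

    def connect(self, client_ip, use_ssl, server, login, password, database, command=""):
        # Minimal parser: CREATE DATABASE is handled by the utility layer instead.
        if command.strip().upper().startswith("CREATE DATABASE"):
            return ("utility-layer", None)
        # SSL is mandatory; the gateway disconnects clients that refuse it.
        if not use_ssl:
            return ("rejected", "SSL required")
        # Denial-of-service guard: too many requests from one address are refused.
        self.requests[client_ip] += 1
        if self.requests[client_ip] > self.max_requests_per_ip:
            return ("rejected", "denial-of-service guard")
        # Firewall validation against the configured address ranges.
        addr = ipaddress.ip_address(client_ip)
        if not any(addr in net for net in self.firewall):
            return ("rejected", "firewall")
        # Master cluster lookup: client database name -> internal partition location.
        target = self.master_map.get((server, database))
        if target is None:
            return ("rejected", "unknown database")
        # Authenticate the login; reject the connection on failure.
        if self.credentials.get((server, login)) != password:
            return ("rejected", "authentication failed")
        # Connection accepted: from here on the gateway only proxies packets.
        return ("connected", target)
```

Cheap, stateless rejections (SSL, firewall) happen before the master-cluster lookup. This mirrors the flow above, where the expensive mapping step runs only for clients that have already passed the front-door checks.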

Platform Layer

The platform layer includes the computers hosting the actual SQL Server databases in the data center. These computers are called the data nodes. As described in the previous section on logical databases on a SQL Azure server, each SQL Azure database is stored as part of a real SQL Server database, and it is replicated twice onto other SQL Server instances on other computers. Figure 5 provides more details on how the data nodes are organized. Each data node contains a single SQL Server instance, and each instance has a single user database, divided into partitions. Each partition contains one SQL Azure client database, either a primary or secondary replica.

Figure 5: The actual data nodes are part of the platform layer

A SQL Server database on a typical data node can host up to 650 partitions. Within the data center, you manage these hosting databases just as you would manage an on-premises SQL Server database, with regular maintenance and backups being performed within the data center. There is one log file shared by all the hosted databases on the data node, which allows for better logging throughput with sequential I/O and group commits. Unlike on-premises databases, in SQL Azure, the database log files pre-allocate and zero out gigabytes of log file space before the space is needed, thus avoiding stalls due to autogrow operations.
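Two constraints from this section combine when a new SQL Azure database is placed. Each database needs a primary and two secondary replicas on different machines, and a data node can host at most 650 partitions. The paper does not describe the actual placement algorithm. The function below is only a sketch that assumes a simple least-loaded policy; the node names and load figures are hypothetical.

```python
def place_replicas(nodes, load, copies=3, capacity=650):
    """Choose distinct data nodes for one primary and (copies - 1) secondaries.

    Hypothetical least-loaded policy: `load` maps node name -> partitions already
    hosted and is updated in place. Ties are broken by node name.
    """
    # Only nodes with a free partition slot are candidates.
    candidates = sorted(
        (n for n in nodes if load.get(n, 0) < capacity),
        key=lambda n: (load.get(n, 0), n),
    )
    if len(candidates) < copies:
        raise RuntimeError("not enough data nodes with free partition slots")
    chosen = candidates[:copies]  # distinct nodes, so no machine holds two replicas
    for n in chosen:
        load[n] = load.get(n, 0) + 1
    return {"primary": chosen[0], "secondaries": chosen[1:]}
```

Spreading the three replicas across distinct machines is what makes the quorum commit and failure recovery described next possible.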

Another difference between log management in the SQL Azure data center and in on-premises databases is that every commit needs to be a quorum commit. That is, the primary replica and at least one of the secondary replicas must confirm that the log records have been written before the transaction is considered to be committed.
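The quorum rule can be written down in a few lines. In this minimal sketch, replicas are in-memory objects and "hardening" a log record just means appending it to a list. The Replica class and its fields are hypothetical. A transaction counts as committed only when the primary and at least one secondary acknowledge the record.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    name: str
    available: bool = True
    log: list = field(default_factory=list)

    def write_log(self, record) -> bool:
        """Harden a log record; an unavailable replica cannot acknowledge."""
        if not self.available:
            return False
        self.log.append(record)
        return True


def quorum_commit(primary: Replica, secondaries: list, record) -> bool:
    """Committed only if the primary and at least one secondary write the record."""
    if not primary.write_log(record):
        return False
    # Send the record to every secondary (not just until the first ack).
    acks = [s.write_log(record) for s in secondaries]
    return any(acks)
```

With one secondary down, commits still succeed. With both secondaries down, they fail even though the primary wrote the record. A real system would then roll back or retry that uncommitted record, which this sketch does not model.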

Figure 5 also indicates that each data node machine hosts a set of processes referred to as the fabric. The fabric processes perform the following tasks:

- Failure detection: notes when a primary or secondary replica becomes unavailable so that the Reconfiguration Agent can be triggered

- Reconfiguration Agent: manages the re-establishment of primary or secondary replicas after a node failure

- PM (Partition Manager) Location Resolution: allows messages to be sent to the Partition Manager

- Engine Throttling: ensures that one logical server does not use a disproportionate amount of the node’s resources, or exceed its physical limits

- Ring Topology: manages the machines in a cluster as a logical ring, so that each machine has two neighbors that can detect when the machine goes down
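The ring-topology idea can be shown with a small model. The sketch below assumes heartbeats have already been reduced to a set of machines that are alive. Each live machine watches its two ring neighbors, so any single failed machine is noticed by both of its neighbors. The machine names are hypothetical.

```python
def ring_neighbors(machines, name):
    """Return the (previous, next) neighbors of `name` in a logical ring."""
    i = machines.index(name)
    n = len(machines)
    return machines[(i - 1) % n], machines[(i + 1) % n]


def detect_failures(machines, alive):
    """Each live machine checks its two neighbors and reports those that are down."""
    reported = set()
    for m in machines:
        if m not in alive:
            continue  # a dead machine can't report anything
        for neighbor in ring_neighbors(machines, m):
            if neighbor not in alive:
                reported.add(neighbor)
    return reported
```

In the fabric described above, a report like this is what would trigger the Reconfiguration Agent to re-establish the lost primary or secondary replicas.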

The machines in the data center are all commodity machines with components that are of low-to-medium quality and low-to-medium performance capacity. At this writing, a commodity machine is a SKU with 32 GB RAM, 8 cores, and 12 disks, with a cost of around $3,500. The low cost and the easily available configuration make it easy to quickly replace machines in case of a failure condition. In addition, Windows Azure machines use the same commodity hardware, so that all machines in the data center, whether used for SQL Azure or for Windows Azure, are interchangeable.

The term cluster refers to a collection of machines in the data center plus the operating system and network. A cluster can have up to 1,000 machines, and at this time, most data centers have one cluster of machines in the platform layer, over which SQL Azure database replicas can be spread. The SQL Azure architecture does not require a single cluster, and if more than 1,000 machines are needed, or if there is need for a set of machines to dedicate all their capacity to a single use, machines can be grouped into multiple clusters.

Kalen continues with about 20 feet of details about “High Availability with SQL Azure,” “Scalability with SQL Azure,” “SQL Azure Management,” and “Future Plans for SQL Azure,” which is excerpted here:

The list of features and capabilities of SQL Azure is changing rapidly, and Microsoft is working continuously to release more enhancements.

For example, in SQL Azure building big databases means harnessing the easy provisioning of SQL Azure databases and spreading large tables across many databases. Because of the SQL Azure architecture, this also means scaling out the processing power. To assist with scaling out, Microsoft plans to make it easier to manage tables partitioned across a large set of databases. Initially, querying is expected to remain restricted to one database at a time, so developers will have to handle the access to multiple databases to retrieve data. In later versions, improvements are planned in query fan-out, to make the partitioning across databases more transparent to user applications.
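Until query fan-out is built in, the application has to decide which database holds a given row and, for cross-database queries, query each database itself. The sketch below assumes hash-based routing on a string key and a caller-supplied query function. The database names are hypothetical, and this is not a Microsoft-provided API.

```python
import hashlib


def shard_for(key: str, databases: list) -> str:
    """Route a key to one database with a stable hash (not Python's salted hash())."""
    digest = hashlib.sha1(key.encode("utf-8")).digest()
    return databases[int.from_bytes(digest[:8], "big") % len(databases)]


def fan_out(query_fn, databases):
    """Client-side fan-out: run the same query against every database and merge rows."""
    rows = []
    for db in databases:
        rows.extend(query_fn(db))
    return rows


dbs = ["orders_0", "orders_1", "orders_2"]  # hypothetical database names
print(shard_for("customer-42", dbs))
```

Simple modulo routing remaps most keys when a database is added. Applications expecting to grow often use a lookup table or consistent hashing instead, which is part of what Microsoft's planned tooling is meant to hide.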

Another feature in development is the ability to take control of your backups. Currently, backups are performed in the data centers to protect your data against disk or system problems. However, there is no way currently to control your own backups to provide protection against logical errors and use a RESTORE operation to return to an earlier point in time when a backup was made. The new feature involves the ability to make your own backups of your SQL Azure databases to your own on-premises storage, and the ability to restore those backups either to an on-premises database or to a SQL Azure database. Eventually Microsoft plans to provide the ability to perform SQL Azure backups across data centers and also make log backups so that point-in-time recovery can be implemented.

Conclusion

Using SQL Azure, you can provision and deploy your relational databases and your database solutions to the cloud, without the startup cost, administrative overhead, and physical resource management required by on-premises databases. In this paper, we examined the internals of the SQL Azure databases. By creating multiple replicas of each user database, spread across multiple machines in the Microsoft data centers, SQL Azure can provide high availability and immediate scalability in a familiar SQL Server development environment.

For more information:

- http://www.microsoft.com/sqlserver/: SQL Server Web site

- http://msdn.microsoft.com/en-us/windowsazure/sqlazure/default.aspx: SQL Azure Web site

- http://blogs.msdn.com/b/sqlazure/: SQL Azure Team blog

- http://msdn.microsoft.com/en-us/library/ee336279.aspx: SQL Azure documentation

- http://technet.microsoft.com/en-us/sqlserver/: SQL Server TechCenter

- http://msdn.microsoft.com/en-us/sqlserver/: SQL Server DevCenter

Kalen’s article deserves more publicity from Microsoft’s SQL Azure and other Azure-related Teams. Read the entire white paper here.

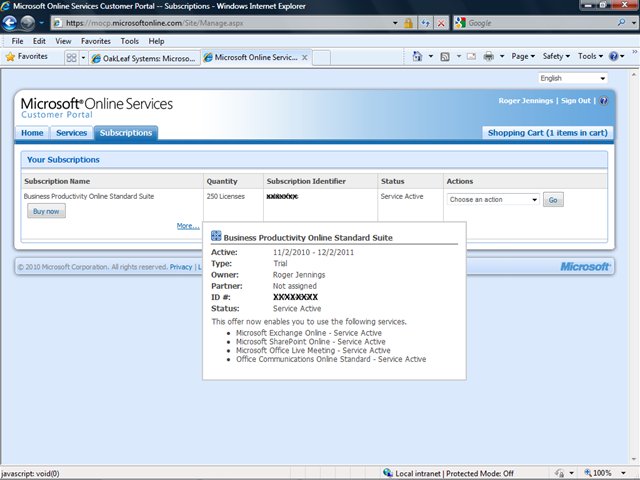

•• The SQL Azure Team updated their SQL Azure Products page after PDC 2010 with a comparison of SQL Server and SQL Azure Reporting, as well as new details about registering for the forthcoming SQL Azure Reporting feature CTP:

Register to be invited to the community technology preview (CTP) at Microsoft Connect.

Note: When the Azure Reporting CTP goes live, this section will be divided into “SQL Azure Database and Reporting” and “Azure Marketplace DataMarket and OData” sections.

• Elisa Flasko’s (@eflasko) Introducing DataMarket article for MSDN Magazine’s November 2010 issue, which has a “Data in the Cloud” theme, carries this deck:

See how the former Microsoft Project Codename "Dallas" has matured into an information marketplace that makes it easy to find and purchase the data you need to power applications and analytics.

and continues

Windows Azure Marketplace DataMarket, which was first announced as Microsoft Project Codename “Dallas” at PDC09, changes the way information is exchanged by offering a wide range of content from authoritative commercial and public sources in a single marketplace. This makes it easier to find and purchase the data you need to power your applications and analytics.

If I were developing an application to identify and plan stops for a road trip, I would need a lot of data, from many different sources. The application might first ask the user to enter a final destination and any stops they would like to make along the way. It could pull the current GPS location or ask the user to input a starting location and use these locations to map the best route for the road trip. After the application mapped the trip, it might reach out to Facebook and identify friends living along the route who I may want to visit. It might pull the weather forecasts for the cities that have been identified as stops, as well as identify points of interest, gas stations and restaurants as potential stops along the way.

Before DataMarket, I would’ve had to first discover sources for all the different types of data I require for my application. That could entail visiting numerous companies’ Web sites to determine whether or not they have the data I want and whether or not they offer it for sale in a package and at a price that meets my needs. Then I would’ve had to purchase the data directly from each company. For example, I might have gone directly to a company such as Infogroup to purchase the data enabling me to identify points of interest, gas stations and restaurants along the route; to a company such as NavTeq for current traffic reports; and to a company such as Weather Central for my weather forecasts. It’s likely that each of these companies would provide the data in a different format, some by sending me a DVD, others via a Web service, Excel spreadsheet and so on.

Today, with DataMarket, building this application becomes much simpler. DataMarket provides a single location—a marketplace for data—where I can search for, explore, try and purchase the data I need to develop my application. It also provides the data to me through a uniform interface, in a standard format (OData—see OData.org for more information). By exposing the data as OData, DataMarket ensures I’m able to access it on any platform (at a minimum, all I need is an HTTP stack) and from any of the many applications that support OData, including applications such as Microsoft PowerPivot for Excel 2010, which natively supports OData.
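To illustrate the "all I need is an HTTP stack" point, here is a minimal Python sketch using only the standard library. It assumes the service returns OData's verbose JSON format. In OData V1 that format puts the entry array directly under "d"; in V2 the array is wrapped in "d": {"results": [...]}. The URL is a hypothetical placeholder, and the sketch omits the account credentials a real DataMarket offering requires.

```python
import json
import urllib.request


def odata_request(url: str) -> urllib.request.Request:
    """Build (but don't send) a GET request asking an OData service for JSON."""
    return urllib.request.Request(url, headers={"Accept": "application/json"})


def odata_entries(payload: str) -> list:
    """Extract the list of entries from a verbose-JSON OData response body."""
    d = json.loads(payload)["d"]
    if isinstance(d, dict) and "results" in d:
        return d["results"]  # V2-style wrapper
    return d  # V1-style: "d" holds the array directly


req = odata_request("https://example.org/Service.svc/Titles?$top=5")  # hypothetical URL
```

Against a live service you would pass the request to `urllib.request.urlopen(req)` and hand the decoded response body to `odata_entries`.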

DataMarket provides a single marketplace for various content providers to make their data available for sale via a number of different offerings (each offering may make a different subset or view of the data available, or make the data available with different terms of use). Content providers specify the details of their offerings, including the terms of use governing a purchase, the pricing model (in version 1, offerings are made available via a monthly subscription) and the price.

Elisa continues with “Getting Started with DataMarket,” which includes Figure 1, and with “Consuming OData” and “Selling Data on ‘Data Market’” sections.

Elisa is a program manager in the Windows Azure Marketplace DataMarket team at Microsoft. She can be reached at blogs.msdn.com/elisaj.

• Lynn Langit (@llangit) asserts “SQL Azure provides features similar to a relational database for your cloud apps. We’ll show you how to start developing for SQL Azure today” and explains Getting Started with SQL Azure Development in MSDN Magazine’s November 2010 issue:

Microsoft Windows Azure offers several choices for data storage. These include Windows Azure storage and SQL Azure. You may choose to use one or both in your particular project. Windows Azure storage currently contains three types of storage structures: tables, queues and blobs.

SQL Azure is a relational data storage service in the cloud. Some of the benefits of this offering are the ability to use a familiar relational development model that includes much of the standard SQL Server language (T-SQL), tools and utilities. Of course, working with well-understood relational structures in the cloud, such as tables, views and stored procedures, also results in increased developer productivity when working in this new platform. Other benefits include a reduced need for physical database-administration tasks to perform server setup, maintenance and security, as well as built-in support for reliability, high availability and scalability.

I won’t cover Windows Azure storage or make a comparison between the two storage modes here. You can read more about these storage options in Julie Lerman’s July 2010 Data Points column (msdn.microsoft.com/magazine/ff796231). It’s important to note that Windows Azure tables are not relational tables. The focus of this article is on understanding the capabilities included in SQL Azure.

This article will explain the differences between SQL Server and SQL Azure. You need to understand the differences in detail so that you can appropriately leverage your current knowledge of SQL Server as you work on projects that use SQL Azure as a data source.

If you’re new to cloud computing you’ll want to do some background reading on Windows Azure before continuing with this article. A good place to start is the MSDN Developer Cloud Center at msdn.microsoft.com/ff380142.

Lynn continues with “Getting Started with SQL Azure,” which includes Figure 1, “Setting Up Databases,” and “Creating Your Application,” with Figures 2, 3 and 4, and concludes with “Using SQL Azure,” “Data Migration and Loading,” “Data Access and Programmability,” and “Data Administration” sections.

Lynn is a developer evangelist for Microsoft in Southern California. She’s published two books on SQL Server Business Intelligence and has created a set of courseware to introduce children to programming at TeachingKidsProgramming.org. Read her blog at blogs.msdn.com/b/SoCalDevGal.

• The ADO.NET Team posted Entity Framework [EF] & OData @ SQL PASS and TechEd Europe! on 11/5/2010:

Next week there are two exciting conferences happening, SQL PASS and TechEd Europe, and the EF and OData teams will be at both! Check out the session lineup below, and if you’re there please swing by the booth and say hello.

SQL PASS

- Introduction to the Entity Framework, Rowan Miller

- Entity Framework for the DB Administrator, Faisal Mohamood

TechEd Europe

For more detail on each of these talks please see http://europe.msteched.com/Topic/List

- Introduction to the Entity Framework, Jeff Derstadt, Tim Laverty

- Code First Development with Entity Framework, Jeff Derstadt, Tim Laverty

- WCF Data Services – A Practical Deep Dive!, Mario Szpuszta

- Open Data for the Open Web, Jonathan Carter

- Custom OData Services: Inside Some of the Top OData Services, Jonathan Carter

- Data Development GPS: Guidance for Choosing the Right Data Access Technology for Your Application Today, Drew Robbins

• PR.com published Digital Map Products Brings Parcel Boundary Data to Microsoft’s Windows Azure Marketplace DataMarket press release on 11/5/2010:

Digital Map Products (DMP), a leading provider of cloud-based spatial technology solutions, announced that the company’s ParcelStream™ web service for nationwide parcel boundary data, is now available through Microsoft’s new Windows Azure Marketplace DataMarket. Through DataMarket, developers and information workers can access DMP’s parcel boundaries and parcel interactivity features through simple, cross platform compatible APIs and can even combine parcel data with other premium and public geospatial data sets. [DataMarket link added.]

Microsoft’s Windows Azure Marketplace represents a wide range of new Platform-as-a-Service capabilities to help developers improve their productivity and bring their applications to market more rapidly. DataMarket, the first component of the Azure Marketplace to be made commercially available, is a cloud-based service that brings data, web services, and analytics from leading commercial data providers and authoritative public data sources together into a single location.

“Microsoft is transforming the application development market by making the building blocks of cloud applications more readily available and lowering the barriers to entry for small and medium sized developers,” says Jim Skurzynski, DMP CEO and President. “We’ve long believed in the power of the cloud to mainstream spatial technology and we’re proud to be a launch partner with Microsoft in bringing our parcel boundary data to a much wider audience.”

Digital Map Products’ ParcelStream™ web service has been readily adopted by online real estate sites as the standard for parcel boundary data. Other industries, such as utilities, financial services, and insurance also leverage parcel boundary data to enhance their mapping applications and decision analytics. ParcelStream™ is a turn-key solution for integrating parcel boundaries into mapping applications. To learn more about ParcelStream™ and how you can access it through the Windows Azure Marketplace DataMarket, visit www.spatialstream.com/microsite/ParcelStreamForDataMarket.html.

About Digital Map Products

Digital Map Products is a leading provider of web-enabled spatial solutions that bring the power of spatial technology to mainstream business, government and consumer applications. SpatialStream™, the company’s SaaS spatial platform, enables the rapid development of spatial applications. Its ParcelStream™ web service is powering national real estate websites with millions of hits per hour. LandVision™ and CityGIS™ are embedded GIS solutions for real estate and local government. To learn more, visit http://www.digmap.com.

• Trupti Kamath reported LOC-AID Named Launch Partner for Microsoft's Windows Azure Marketplace DataMarket in an 11/5/2010 article for TMCNet:

LOC-AID Technologies, a location enabler and Location-as-a-Service (LaaS) provider in North America, announced that Microsoft Corp., has named LOC-AID as a launch partner for Windows Azure Marketplace DataMarket, a cloud-based micro-payment marketplace for data and business services that is comparable to Apple iTunes or Amazon online retail environments. [Link added.]

The new platform was unveiled at the Microsoft Professional Developers Conference 2010 on Oct. 28, in Seattle, Wash. Windows Azure Marketplace DataMarket now offers LOC-AID's single API and ubiquitous carrier location data.

"We are delighted to be the only mobile location enabler in the Windows Azure Marketplace," said Rip Gerber, president and CEO of LOC-AID Technologies in a statement. "Our partnership directly supports the Windows Azure DataMarket mission to extend the reach of content through exposure to Microsoft's global developer and information worker community."

The platform is hosted within Microsoft's recently announced Windows Azure cloud-based services platform, creating an open system for users, developers and resellers to transact across a wide range of applications and with tremendous access to vast data resources including U.S. Census data, demographic and consumer expenditures via free and for-fee transaction services.

LOC-AID is one of a select group of Windows Azure DataMarket partners along with Accenture, D&B, Lexis-Nexis, NASA and National Geographic.

LOC-AID's ability to locate more than 300 million mobile devices is greater than the reach provided by any other location enabler in the industry. This comprehensive reach, to more than 90 percent of all U.S. consumers, is possible because of LOC-AID's extensive mobile subscriber access via all top-tier wireless carrier networks.

LOC-AID offers mobile developers the ability to get location data for over 300 million devices, all through a single privacy-protected, CTIA best-practice API. Any company with a privacy-approved location-based service can now locate any phone or mobile device across all of the top-tier carrier networks in North America. A number of large companies are already utilizing LOC-AID's Location-as-a-Service platform and developer services to build and launch innovative location-based applications.

The company recently announced that it has surpassed the 300 million mark for mobile device access.

• Klint Finley described how to Create Mashups in the Cloud with Microsoft Azure DataMarket and JackBe in this 11/4/2010 post to the ReadWriteCloud blog:

Mashup tool provider JackBe is working with Microsoft to create dashboard apps using Azure DataMarket. In our coverage of the DataMarket, we noted that it's a marketplace, not an app environment. That's where JackBe comes in. JackBe can run in Azure to help end users create their own mashups using data sources from the marketplace.

JackBe shares an example app in a company blog post. The example is a logistics app designed to plan routes that keep perishable food fresh, and it incorporates data from the following sources:

- Bing maps: with Navteq dynamic routing information and Microsoft's Dynamics CRM on Demand customer data;

- Microsoft Dynamics CRM on Demand: customer order data and real-time Weather Central information visualized in Microsoft Silverlight; this app also supports write-back to Dynamics CRM;

- Microsoft SharePoint: aggregating information on delivery trucks and their locations;

- And from Azure Data Market services: dynamic fuel prices and geographically correlated fuel station locations.

There's a video on the blog post that explains how it works.

The advent of cloud computing and big data makes huge amounts of data available to organizations, but it's not always clear how to make practical use of it. Tools like JackBe can help turn all this data into something end users can use with minimal support from IT.

We've previously covered JackBe's enterprise app store here and the potential for point-and-click app development here.

eBay deployed its eBay OData API documentation to Windows Azure on 11/5/2010:

Here’s the default collection list at http://ebayodata.cloudapp.net/:

Here’s the OData document for the Antiques category:

and the first entry for the Antiques category’s children:

The documentation’s “Getting Started” section describes the supported OData syntax in the following sections:

- Top level collections

- Individual resources

- Parameters support

- Filtering support

- Order by support
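The features listed above correspond to standard OData URI conventions. Individual resources are addressed by key in parentheses, with string keys in single quotes and embedded quotes doubled. Parameters, filtering and ordering are expressed as $-prefixed system query options. The helper below is a minimal sketch of composing such URLs. The collection and property names ("Items", "Categories", "CurrentPrice") are hypothetical and are not taken from eBay's documentation.

```python
from urllib.parse import quote


def odata_url(base, collection, key=None, **options):
    """Compose an OData URL for a collection or one keyed resource plus query options."""
    url = base.rstrip("/") + "/" + collection
    if key is not None:
        # String keys go in single quotes; an embedded quote is escaped by doubling it.
        url += "('" + str(key).replace("'", "''") + "')"
    if options:
        # Each option becomes $name=value, with spaces and quotes percent-encoded.
        url += "?" + "&".join(
            f"${name}={quote(str(value), safe='')}" for name, value in options.items()
        )
    return url


print(odata_url("http://ebayodata.cloudapp.net/", "Items",
                filter="CurrentPrice lt 20", orderby="CurrentPrice desc", top=10))
```

Keyword arguments keep their order, so the options appear in the URL in the order you pass them.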

Peter McIntyre showed students how to Consume JSON from a RESTful web service in iOS in an 11/4/2010 post:

In this post, you will learn to consume JSON from a RESTful web service in iOS. In a later post, you will learn to code the full range of RESTful web service operations.

One of the characteristics of a good iOS app is its supporting web services. A device that runs iOS is also typically a mobile device which relies on network communication, so iOS apps that use web services are and should be very common. We’ll introduce you to the consumption of web service data today, and build a foundation for more complex operations in the future.

Web services definitions

Web services are applications with two characteristics:

- A web service publishes, and is defined by, an application programming interface (API) for the data and functionality it makes available to external callers

- A web service is accessed over a network, by using the hypertext transfer protocol (HTTP)

Modern systems today are often built upon a web services foundation. There are two web app API styles in wide use today: SOAP XML Web Services, and RESTful Web Services. In this course, we will work with RESTful web services.

A RESTful web service is resource-centric. Resources (data) are exposed as URIs. The operations that can be performed on the URIs are defined by HTTP, and typically include GET, POST, PUT, and DELETE.
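The following sketch shows the resource-centric model in Python rather than iOS code. Each URI names a resource, and the HTTP method selects the operation. The "/books" URIs and the in-memory store are hypothetical, and the status codes follow common HTTP practice rather than any particular service.

```python
class BookResource:
    """In-memory sketch of a RESTful resource; '/books' is a hypothetical URI."""

    def __init__(self):
        self.books = {}
        self.next_id = 1

    def handle(self, method, uri, body=None):
        parts = [p for p in uri.split("/") if p]
        if not parts or parts[0] != "books" or len(parts) > 2:
            return 404, None
        if len(parts) == 1:  # the collection URI: /books
            if method == "GET":
                return 200, list(self.books.values())
            if method == "POST":  # create a new member of the collection
                book = dict(body, id=self.next_id)
                self.books[self.next_id] = book
                self.next_id += 1
                return 201, book
            return 405, None
        try:  # an individual resource URI: /books/{id}
            book_id = int(parts[1])
        except ValueError:
            return 404, None
        if book_id not in self.books:
            return 404, None
        if method == "GET":
            return 200, self.books[book_id]
        if method == "PUT":  # replace the resource's representation
            self.books[book_id] = dict(body, id=book_id)
            return 200, self.books[book_id]
        if method == "DELETE":
            del self.books[book_id]
            return 204, None
        return 405, None
```

A client performs reads and writes by combining the same small set of verbs with different URIs, not by calling operation-specific methods as in SOAP.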

There are two web services data payload formats in wide use today: XML and JSON. Both are text-based, language-independent, data interchange formats.

- SOAP XML Web Services use XML messages that conform to the SOAP protocol.

- REST typically uses either XML or JSON messages.

In this course, we will work with the JSON data payload format.

The JSON format can express the full range of results from a web service, including:

- A scalar (i.e. one value) result

- A single object (or data structure)

- A collection of objects (or data structures …
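A client usually has to tell these result shapes apart before it can bind the data to its own types. This Python sketch (not iOS code) decodes a JSON payload and classifies it as a scalar, a single object or a collection. The sample payloads are invented, and Python's json module accepts a bare scalar at the top level.

```python
import json


def result_shape(payload: str) -> str:
    """Classify a decoded JSON result as 'scalar', 'object' or 'collection'."""
    value = json.loads(payload)
    if isinstance(value, list):
        return "collection"  # e.g. [{"id": 7}, {"id": 8}]
    if isinstance(value, dict):
        return "object"      # e.g. {"id": 7, "name": "Widget"}
    return "scalar"          # e.g. 42 or "ok"


for sample in ('42', '{"id": 7, "name": "Widget"}', '[{"id": 7}, {"id": 8}]'):
    print(sample, "->", result_shape(sample))
```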

Peter continues with “An Introduction to JSON” and related topics.

Sreedhar Pelluru reported Microsoft Sync Framework 4.0 October 2010 CTP Documentation is posted to MSDN Online in this 11/4/2010 post:

Sync Framework 4.0 October 2010 CTP documentation is posted to MSDN Library Online at http://msdn.microsoft.com/en-us/library/gg299051(SQL.110).aspx. Read through the CTP Overview topic, skim the OData + Sync Protocol specification, practice the tutorials, and play around with the samples.

This is the first post I’ve seen from Sreedhar.

See Steve Yi’s Wiki: Understanding Data Storage Offerings on the Windows Azure Platform post of 11/4/2010 in the Azure Blob, Drive, Table and Queue Services section above.

James Senior posted Announcing the OData Helper for WebMatrix Beta on 11/4/2010:

I’m a big fan of working smarter, not harder. I hope you are too. That’s why I’m excited by the helpers in WebMatrix which are designed to make your life easier when creating websites. There are a range of Helpers available out of the box with WebMatrix – you’ll use these day in, day out when creating websites – things like Data access, membership, WebGrid and more. Get more information on the built-in helpers here.

It’s also possible to create your own helpers (more on that in a future blog post) to enable other people to use your own services or widgets. We are currently working on a community site for people to share and publicize their own helpers; stay tuned for more information on that.

Today we are releasing the OData Helper for WebMatrix. Designed to make it easier to use OData services in your WebMatrix website, the helper is open source on CodePlex, where it is available for you to use, explore and contribute to. You can download it from the CodePlex website.

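```cshtml
@{
    // Fetch the five highest-rated horror titles from the Netflix OData catalog.
    var result = OData.Get("http://odata.netflix.com/Catalog/Genres('Horror')/Titles",
                           "$orderby=AverageRating desc&$top=5");
    // Bind the results to a WebGrid for rendering.
    var grid = new WebGrid(result);
}
@grid.GetHtml()
```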

<Return to section navigation list>

AppFabric: Access Control and Service Bus

•• Balasubramanian Sriram and Burley Kawasaki announced plans for combining BizTalk Server 2010 and the Windows Azure AppFabric as “Integration as a Service” in a Changing the game: BizTalk Server 2010 and the Road Ahead post of 10/28/2010 to the BizTalk Team Blog (out of scope for this blog when published):

What’s next for BizTalk? As excited as we are about the recent announcement that we shipped BizTalk Server 2010 (see blog post), we know that customers depend upon us to give them visibility into the longer-term roadmap; given the lifespan of their enterprise systems, making an investment in BizTalk Server represents a significant bet and commitment on the Microsoft platform. While we are currently working thru product planning on BizTalk VNext, we wanted to share some of the early direction to date.

- At PDC’09 last year, we discussed at a high level our strategy for BizTalk betting deeply on AppFabric architecturally so that we can benefit from the application platform-level investments we are making both across on-premises and in the cloud. This strategy has not changed, and in fact we are accelerating some of our investments; we started this journey even in BizTalk Server 2010 with built-in integration with Windows Server AppFabric for maps and LOB connectivity (a feature called AppFabric Connect).

- At PDC’10 this week we released-to-web a new innovative BizTalk capability which will allow you to bridge your existing BizTalk Server investments (services, orchestrations) with the Windows Azure AppFabric Service Bus – this new set of simplified tooling will help accelerate hybrid on/off premises composite application scenarios which we believe are critical to enable our customers to start taking advantage of the benefits of cloud computing (see blog post on this capability).

- Also this week, we disclosed an early peek into our strategy of “Integration as a Service” which begins to shed light on how we will be taking the integration workload to the cloud. This is a transition we have already made with Windows Server and SQL Server (as we have released Azure flavors of these server products); and we are committed to following this same path with integration. Link to recorded Integration session.

Our plans to deliver a true Integration service – a multi-tenant, highly scalable cloud service built on AppFabric and running on Windows Azure – will be an important and game changing step for BizTalk Server, giving customers a way to consume integration easily without having to deploy extensive infrastructure and systems integration. Due to the agile delivery model afforded by cloud services, we are able to bring early CTPs of this out to customers much more rapidly than traditional server software. We intend to offer a preview release of this Azure-based integration service during CY11, and will update on a regular cadence of roughly 6 month update cycles (similar to how Windows Azure and SQL Azure deliver updates). This will give us the opportunity to rapidly respond to customer feedback and incorporate changes quickly.

However, regardless of the innovative investments we are making in the cloud, we know our BizTalk customers will want to know that these advantages can be applied on-premises (either for existing or new applications). We are committed to delivering this new “Integration as a Service” capability on-premises on AppFabric server-based architecture. This will be available in the 2 year cadence that is consistent with previous major releases of BizTalk Server and other Microsoft enterprise server products.

Additionally, knowing well that our existing 10,000+ customers will move to a new version only at their own pace and on their own terms, we are committed to not breaking our customers’ existing applications by providing side-by-side support for the current BizTalk Server 2010 architecture. We will also continue to provide enhanced integration between BizTalk and AppFabric to enable them to compose well together as part of an end-to-end solution. This will preserve the investments you have made in building on BizTalk Server and enable easy extension into AppFabric (as we have delivered today with pre-built integration with both Windows Server AppFabric and Windows Azure AppFabric).

Another critical element is providing guidance to our customers on how best to deploy BizTalk and AppFabric together, in order to best prepare for the future. At PDC this week we delivered the first CTP of the Patterns and Practices Composite Application Guidance which provides practices and guidance for using BizTalk Server 2010, Windows Server AppFabric and Windows Azure AppFabric together as part of an overall composite application solution. We will also be delivering soon a companion offering from Microsoft Services which will provide the right expertise and strategic consulting on architecture and implementation for BizTalk Server and AppFabric. We will work closely with our Virtual-TS community & Partners to extend similar offerings. We will continue to update both the Composite Application Guidance and consulting offering as we release our next generation integration offerings, to help guide our customers as they move to newer versions of our products and take advantage of our next-generation integration platform built natively on AppFabric architecture.

We are excited to share these plans for the first time and prove our commitment to continue to innovate in the integration space. As BizTalk Server takes a bold step forward in its journey to harness the benefits of a new middleware platform, which will provide cloud and on-premises symmetry, we will make it a lot easier for our customers to build applications targeting cloud and hybrid scenarios. We look forward to delivering the first CTP of integration as a service to market next year!

Balasubramanian Sriram, General Manager, BizTalk Server & Integration

Burley Kawasaki, Director, Product Management

See •• Neil MacKenzie (@mknz) posted Comparing Azure Queues With Azure AppFabric Labs’ Durable Message Buffers on 11/6/2010 in the Azure Blob, Drive, Table and Queue Services section above.

• Vittorio Bertocci’s (@vibronet) ACS and Windows Phone 7 post of 11/6/2010 commented on Caleb Baker’s post (see below):

I still need to finish packing for Berlin, but this is so good that it warrants taking a break from socks tetris and jotting a few lines on the blog right away.

Caleb just released the code of the WP7+ACS demo I showed last week in my PDC session (direct link to the WP7 demo here). If you didn’t see it: in a nutshell, the sample demonstrates some early thinking about how to leverage both social and business IPs (Facebook, Windows Live ID, Google, Yahoo!, ADFS2 instances) to access REST web services (secured via OAuth2.0; part of the OAuth2.0+WIF code comes from the FabrikamShipping SaaS source package) from a Windows Phone 7 application. What are you still doing here? Go get it! :-)

• Caleb Baker announced Access Control for Windows Phone 7 Apps in an 11/5/2010 post to the Claims-Based Identity blog:

With the U.S. release of Windows Phone 7 around the corner, I’m excited to share a sample that shows some of our early thinking around how ACS in LABS can be used to enable sign in to web services… from the phone apps.

This makes it simple to write REST services for Windows Phone 7 Silverlight applications that can be used by millions of users, including those with Live ID, Facebook, Google, Yahoo and AD FS accounts.

To see it in action, check out Vittorio’s PDC talk. The sample appears in the last few minutes, but I recommend watching the full talk.

As an early sample of how mobile apps may be supported, your feedback is very valuable. Download it and try it out!

• The Windows Azure AppFabric Team posted Windows Azure AppFabric Caching CTP - Interview and Feedback Opportunity on 11/4/2010:

- Wade Wegner, Technical Evangelist for Windows Azure AppFabric, has released an interview with Karandeep Anand, Principal Group Program Manager with Application Platform Services, about the new Caching service:

- We are conducting a survey on Caching where you can help guide the future of Caching: let us know how you use Caching and what features you would most like to see in the future.

Go to the original post to view Wade’s interview.

Richard Seroter (@rseroter) recommended Using Realistic Security For Sending and Listening to The AppFabric Service Bus in this 11/3/2010 post:

I can’t think of any demonstration of the Windows Azure platform AppFabric Service Bus that didn’t show authenticating to the endpoints using the default “owner” account. At the same time, I can’t imagine anyone wanting to do this in real life. In this post, I’ll show you how you should probably define the proper permissions for listening on the cloud endpoints and sending to them.

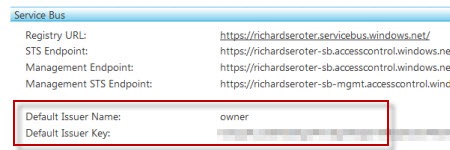

To start with, you’ll want to grab the Azure AppFabric SDK. We’re going to use two pieces from it. First, go to the “ServiceBus\GettingStarted\Echo” demonstration in the SDK and set both projects to start together. Next visit the http://appfabric.azure.com site and grab your default Service Bus issuer and key.

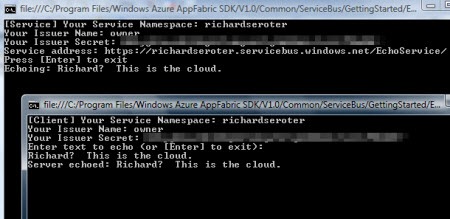

Start up the projects and enter in your service namespace and default issuer name and key. If everything is set up right, you should be able to communicate (through the cloud) between the two windows.

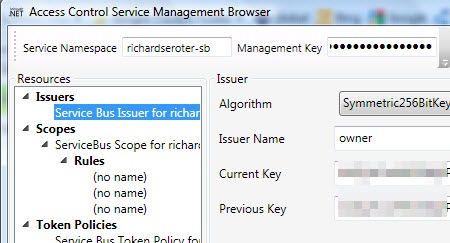

Fantastic. And totally unrealistic. Why would I want to share what are, in essence, my namespace administrator permissions with every service and consumer? Ideally, I should be scoping access to my service and providing specific claims to deal with the Service Bus. How do we do this? The Service Bus has a dedicated Security Token Service (STS) that manages access to the Service Bus. Go to the “AccessControl\ExploringFeatures\Management\AcmBrowser” solution in the AppFabric SDK and build the AcmBrowser. This lets us visually manage our STS.

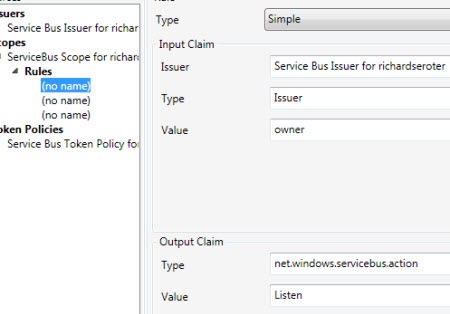

Note that the service namespace value used is your standard namespace PLUS “-sb” at the end. You’ll get really confused (and be looking at the wrong STS) if you leave off the -sb suffix. Once you “load from cloud” you can see all the default settings for connecting to the Service Bus. First, we have the default issuer that uses a Symmetric Key algorithm and defines an Issuer Name of “owner.”
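To make the “-sb” point concrete, here is a minimal Python sketch of the token request a client sends to the Service Bus STS. It assumes the WRAP v0.9 protocol (form fields `wrap_name`, `wrap_password`, `wrap_scope`) and an STS endpoint of the form `https://<namespace>-sb.accesscontrol.windows.net/WRAPv0.9/`; the namespace `contoso` and the key are hypothetical placeholders, and the SDK’s own bindings normally do all of this for you. The function only builds the request; it doesn’t send anything.

```python
from urllib.parse import urlencode


def build_token_request(namespace, issuer_name, issuer_key, service_path=""):
    """Return (sts_url, form_body) for a WRAP token request (assumed protocol)."""
    # The Service Bus STS lives at the namespace name PLUS "-sb".
    sts_url = f"https://{namespace}-sb.accesscontrol.windows.net/WRAPv0.9/"
    # The scope is the Service Bus address the token should grant access to.
    scope = f"http://{namespace}.servicebus.windows.net/{service_path.strip('/')}"
    body = urlencode({
        "wrap_name": issuer_name,      # e.g. "owner", or a restricted issuer
        "wrap_password": issuer_key,   # the issuer's secret key
        "wrap_scope": scope,
    })
    return sts_url, body


def authorization_header(access_token):
    """Header carrying a token returned by the STS (assumed WRAP format)."""
    return {"Authorization": f'WRAP access_token="{access_token}"'}


# Hypothetical namespace and key; "Sender" is the vendor issuer defined later in this post.
url, body = build_token_request("contoso", "Sender", "c2VjcmV0", "EchoService")
print(url)
print(body)
```

As I understand the protocol, the STS replies with a form-encoded `wrap_access_token` value, which (once URL-decoded) goes into the `Authorization` header on calls to the Service Bus. Pointing the request at the namespace without “-sb” reaches a different STS, which is exactly the confusion described above.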

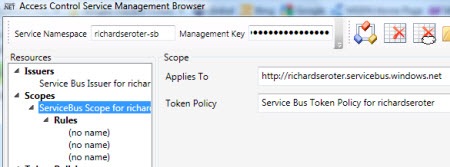

Underneath the Issuers, we see a default Scope. This scope is at the root level of my service namespace meaning that the subsequent rules will provide access to this namespace, and anything underneath it.

One of the rules below the scope defines who can “Listen” on the scoped endpoint. Here you see that if the service knows the secret key for the “owner” Issuer, then they will be given permission to “Listen” on any service underneath the root namespace.

Similarly, there’s another rule that has the same criteria and the output claim lets the client “Send” messages to the Service Bus. So this is what virtually all demonstrations of the Service Bus use. However, as I mentioned earlier, someone who knows the “owner” credentials can listen or send to any service underneath the base namespace. Not good.

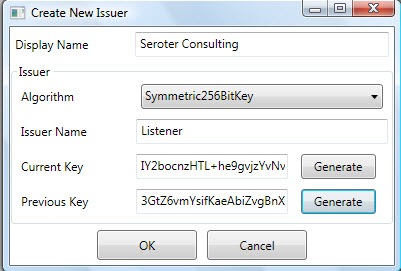

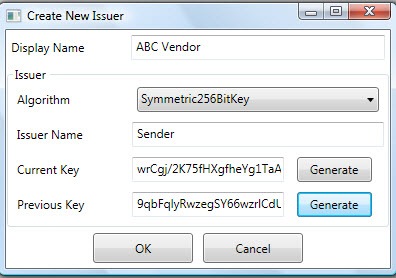

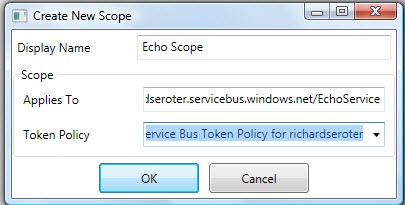

Let’s apply a tad bit more security. I’m going to add two new Issuers (one who can listen, one who can send), and then create a scope specifically for my Echo service where the restricted Issuer is allowed to Listen and the other Issuer can Send.

First, I’ll add an Issuer for my own fictitious company, Seroter Consulting.

Next I’ll create another Issuer that represents a consumer of my cloud-exposed service.

Wonderful. Now, I want to define a new scope specifically for my EchoService.

Getting closer. We need rules underneath this scope to govern who can do what with it. So, I added a rule that says that if you know the Seroter Consulting Issuer name (“Listener”) and key, then you can listen on the service. In real life, you also might go a level lower and create Issuers for specific departments and such.

Finally, I have to create the Send permissions for my vendors. In this rule, if the person knows the Issuer name (“Sender”) and key for the Vendor Issuer, then they can send to the Service Bus.
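Taken together, the default root-scope rules and the two new EchoService rules behave roughly like the lookup table below: an issuer name goes in, action claims come out, and a scope applies to its own path and everything underneath it. This is a hypothetical Python model for reasoning about who can do what, not how the AcmBrowser or the STS actually store or evaluate rules.

```python
# Hypothetical model: scope path -> list of (input issuer name, output action claim).
RULES = {
    "/": [("owner", "Listen"), ("owner", "Send")],                 # default rules at the root
    "/EchoService": [("Listener", "Listen"), ("Sender", "Send")],  # rules added in this post
}


def in_scope(scope, path):
    """The root scope covers everything; other scopes cover themselves and sub-paths."""
    # Comparing against scope + "/" keeps "/EchoService" from matching "/EchoServiceX".
    return scope == "/" or path == scope or path.startswith(scope + "/")


def claims_for(issuer, path, rules=RULES):
    """Collect the action claims an issuer receives for a path in the namespace."""
    return {
        action
        for scope, scope_rules in rules.items() if in_scope(scope, path)
        for who, action in scope_rules if who == issuer
    }


print(sorted(claims_for("Listener", "/EchoService")))  # ['Listen']
print(sorted(claims_for("Sender", "/EchoService")))    # ['Send']
print(sorted(claims_for("Sender", "/Payroll")))        # []
print(sorted(claims_for("owner", "/Payroll")))         # ['Listen', 'Send']
```

The last line is the reason to keep the “owner” key to yourself: the default root-scope rules still grant both actions on every path in the namespace, while the new issuers only earn one action on the EchoService scope.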

We are now ready to test this bad boy. Within the AcmBrowser we have to save our updated configuration back to the cloud. There’s a little quirk (which will be fixed soon) where you first have to delete everything in that namespace and THEN save your changes. Basically, there’s no “merge” function. So, I clicked the “Clear Service Namespace in the Cloud” button, and then clicked “Save to Cloud”.

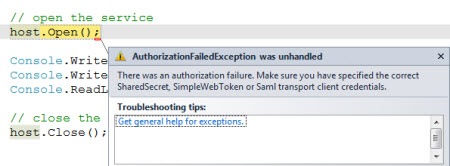

To test our configuration, we can first try to listen on the cloud endpoint using the Vendor Issuer credentials. As you might expect, I get an authentication error because the vendor’s output claims don’t include the “net.windows.servicebus.action = Listen” claim.
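Behind the scenes, the STS hands back a signed token carrying the output claims, and the Service Bus checks it for the required action claim. The sketch below assumes the token is a Simple Web Token (SWT): form-encoded name/value pairs followed by an `HMACSHA256` signature computed over everything before `&HMACSHA256=`, using a signing key shared by the STS and the relying party. The key, issuer URL and claim values are hypothetical; this models the check rather than reproducing the Service Bus implementation.

```python
import base64
import hashlib
import hmac
from urllib.parse import parse_qs, quote, unquote

ACTION_CLAIM = "net.windows.servicebus.action"


def _mac(unsigned, key_b64):
    # HMAC-SHA256 over the unsigned token text, returned base64-encoded.
    digest = hmac.new(base64.b64decode(key_b64), unsigned.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def sign_swt(claims, key_b64):
    """Form-encode the claims and append an HMACSHA256 signature (SWT style)."""
    unsigned = "&".join(f"{quote(k, safe='')}={quote(v, safe='')}" for k, v in claims.items())
    return f"{unsigned}&HMACSHA256={quote(_mac(unsigned, key_b64), safe='')}"


def actions_in_token(token, key_b64):
    """Verify the signature, then return the action claims, e.g. {'Send'}."""
    unsigned, sep, sig = token.rpartition("&HMACSHA256=")
    if not sep or not hmac.compare_digest(_mac(unsigned, key_b64), unquote(sig)):
        raise ValueError("missing or invalid signature")
    # Multiple actions are assumed to be comma-separated in a single claim value.
    values = parse_qs(unsigned).get(ACTION_CLAIM, [""])[0]
    return {action for action in values.split(",") if action}


# Hypothetical signing key and vendor token: the vendor may Send but not Listen.
KEY = base64.b64encode(b"\x01" * 32).decode("ascii")
vendor_token = sign_swt({"Issuer": "https://contoso-sb.accesscontrol.windows.net/",
                         ACTION_CLAIM: "Send"}, KEY)
print("Listen" in actions_in_token(vendor_token, KEY))  # prints False
```

Because the claims are covered by the signature, the vendor can’t simply edit “Send” into “Listen”; a tampered token fails verification before its claims are ever read.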

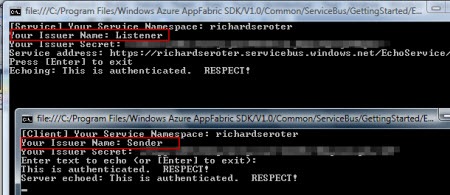

I then launched both the service and the client, and put the “Listener” issuer name and key into the service and the “Sender” issuer name and key into the client and …

It worked! So now, I have localized credentials that I can pass to my vendor without exposing my whole namespace to that vendor. I also have specific credentials for my own service without requiring root namespace access.

To me this seems like the right way to secure Service Bus connections in the real world. Thoughts?

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

• The Windows Azure Virtual Network Team opened an introductory page after PDC 2010 and is accepting requests for notification when the Windows Azure Connect CTP is available:

To be notified when the team begins accepting registrations for the Windows Azure Connect CTP, click here.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

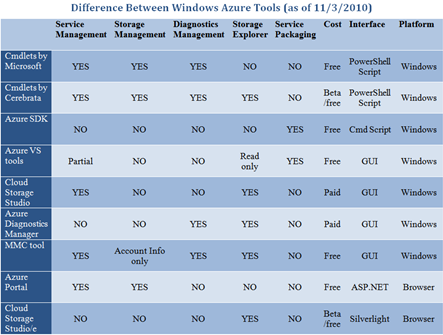

•• Guy Shahine listed and described nine Azure Service Management Tools in an 11/4/2010 post (missed when posted):

In your day-to-day efforts to build a cloud service on Windows Azure, it’s crucial to be aware of the currently available tools that will facilitate your job and make you more productive. I’ve created a table that illustrates the different aspects of each tool, and then I expressed my opinion about each one of them.

See the table in Zoom.it

UI tools

Cloud Storage Studio

A tool developed by a company called Cerebrata. It’s currently my favorite tool, which I use extensively for service management and for browsing storage accounts. Even though the name is confusing, the interface is really nice, the application is stable and the team is very receptive to feedback. The only thing that annoys me is that I can’t retrieve storage account information. Plus, it’s not free, but the team is actively adding new features and rolling out fixes.

Windows Azure MMC tool

It has the richest set of utilities and it’s FREE. Now, if you can bite the bullet and accept a bland interface, and you expect it to hang here and there, then this tool is for you. Also note that the tool is lightly maintained and I’m not aware of any planned new releases.

Azure Diagnostics Manager

Another tool developed by Cerebrata. This tool is more focused on managing your diagnostics. It includes a bunch of utilities that will definitely help you a lot in browsing through the logs, getting performance counters, trying to figure out some weird issue, etc. It also includes a storage explorer (which I haven’t used because I already have Cloud Storage Studio).

Visual Studio Tools For Azure

There are multiple parts. It has a nice interface for configuring your service. You’re able to build, package and deploy your service when you ask Visual Studio to publish your app. You can also build, package and run locally in the Development Fabric (devFabric). There is also a read-only storage explorer. The downside is that you can’t manage your services or storage data, but I believe there are plans to allow you to (at least for storage data).

Windows Azure Portal

The portal is required for many rarely performed tasks, such as creating and deleting a storage account. It’s slow and poor on features, but there was an announcement at PDC 2010 that there will be a redesigned portal built on top of Silverlight.

Cloud Storage Studio /e

A web-based storage explorer built by Cerebrata. It’s still in beta and free. I personally haven’t used it in a while.

Script based tools

Windows Azure Service Management Cmdlets

Even though I haven’t wr[itten] any scripts that make use of the cmdlets, I know the test and operations team relies on them to automate different kinds of service management tasks.

Azure Management Cmdlets

I also didn’t get the chance to play with it, but I believe it’s very similar to the Windows Azure Service Management Cmdlets. I’ll leave it to you to figure out the difference.

Azure SDK command line tools

The SDK command line tools are essential for automation. There are multiple ways you can find those tools useful; I’ll name a couple: 1) Write a custom script that will allow you to package and then run your service locally (or perform other actions) using cspack.exe and csrun.exe. 2) Integrate cspack.exe into your build system to automate packaging the service.
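As an illustration of the first idea, here is a small Python wrapper that assembles the cspack.exe and csrun.exe command lines for a local run. The switches used (`/out:`, `/role:<name>;<directory>`, `/copyOnly`) and csrun’s `<package directory> <configuration file>` argument order are as I recall them from the SDK 1.x tools, so check `cspack /?` and `csrun /?` for your SDK version. File and role names are hypothetical, and the script only prints the commands unless `dry_run=False`.

```python
import subprocess


def cspack_cmd(csdef, out, roles, copy_only=False):
    """Build a cspack.exe command line.

    roles maps a role name to the directory holding that role's binaries.
    copy_only=True lays the package out as a directory for the dev fabric
    instead of producing an upload-ready .cspkg file.
    """
    cmd = ["cspack.exe", csdef]
    cmd += [f"/role:{name};{path}" for name, path in roles.items()]
    cmd.append(f"/out:{out}")
    if copy_only:
        cmd.append("/copyOnly")
    return cmd


def csrun_cmd(package_dir, cscfg):
    """Build a csrun.exe command line that runs a laid-out package locally."""
    return ["csrun.exe", package_dir, cscfg]


def run_locally(csdef, cscfg, roles, package_dir="MyService.csx", dry_run=True):
    """Package for the dev fabric, then launch it; returns the commands used."""
    steps = [
        cspack_cmd(csdef, package_dir, roles, copy_only=True),
        csrun_cmd(package_dir, cscfg),
    ]
    for cmd in steps:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the script if either tool reports an error.
            subprocess.run(cmd, check=True)
    return steps


if __name__ == "__main__":
    run_locally("ServiceDefinition.csdef", "ServiceConfiguration.cscfg",
                roles={"WebRole1": "WebRole1"})
```

For the second idea, a build step could call `cspack_cmd(...)` without `copy_only` and an `out` ending in `.cspkg` to produce the package you upload.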

•• Steve Peschka (@speschka) posted The Claims, Azure and SharePoint Integration (CASI) Toolkit Part 2 on 11/6/2010 with a source code link at the end:

This is part 2 of a 5 part series on the CASI (Claims, Azure and SharePoint Integration) Kit. Part 1 was an introductory overview of the entire framework and solution and described what the series is going to try and cover. In this post we’ll focus on the pattern for the approach:

1. Use a custom WCF application as the front end for your data and content

2. Make it claims aware

3. Make some additional changes to be able to move it up into the Windows Azure cloud

Using WCF