Windows Azure-Compatible NoSQL Databases: GraphDB, RavenDB and MongoDB

I’m guessing that the properties of these three NoSQL databases are what the original SQL Server Data Services (SSDS) and later SQL Data Services (SDS) teams had in mind when they developed their original SQL Server-hosted Entity-Attribute-Value (EAV) database. GraphDB is the newest and one of the most interesting of the databases.

SQL Azure has become very popular among .NET developers working on Windows Azure deployments due to its relatively low cost for 1-GB Web databases (US$9.99 per month). By comparison, a single small Worker role instance hosting a NoSQL database costs US$0.12 * 24 * 30 = US$86.40 per 30-day month, not counting storage charges.
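The arithmetic above is easy to rerun for other instance counts. The following is a minimal sketch that uses only the two prices quoted in the paragraph; the function name and the 30-day month are my own assumptions, and storage and bandwidth charges are ignored.

```python
# Prices quoted in the post; everything else here is illustrative.
HOURLY_RATE_USD = 0.12          # small Worker role instance, per hour
SQL_AZURE_WEB_1GB_USD = 9.99    # 1-GB SQL Azure Web database, per month


def monthly_compute_cost(hourly_rate, instances=1, days=30):
    """Return the compute charge in USD for always-on instances."""
    return round(hourly_rate * 24 * days * instances, 2)


worker_cost = monthly_compute_cost(HOURLY_RATE_USD)
ratio = worker_cost / SQL_AZURE_WEB_1GB_USD
print(f"Worker role: ${worker_cost:.2f}/month, roughly {ratio:.1f}x the 1-GB SQL Azure price")
```

Passing `instances=2` shows how quickly the gap widens once a second instance is added for availability.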

As more details on the scalability and availability of these Azure-compatible databases become available, I’ll update this post.

GraphDB

Alex Popescu reported sones GraphDB available on Microsoft Windows Azure to his myNoSQL blog on 8/9/2010:

sones GraphDB available in the Microsoft cloud:

“The sones GraphDB is the first graph database which is available on Microsoft Windows Azure. Since the sones GraphDB is written in C# and based upon Microsoft .NET it can run as an Azure Service in its natural environment. No Wrapping, no glue-code. It’s the performance and scalability a customer can get from an on-premise hosted solution paired with the elasticity of a cloud platform.”

You can read a bit more about it ☞ here.

In case you’ve picked [an]other graph database, you can probably set it up with one of the cloud providing Infrastructure-as-a-Service.

Reading List:

- sones Releases First Open Source Version of GraphDB

- Video: Emil Eifrem about NoSQL and the Benefits of Graph Databases

- NoSQL Graph Database Matrix

- InfiniteGraph Graph Database Reaches 1.0 Release

- Quick Review of Existing Graph Databases


Alex’s post has an interesting set of “Most Read Articles” and “Latest Posts”. He describes his site:

myNoSQL features the best daily NoSQL news, articles and links covering all major NoSQL projects and following closely all things related to the NoSQL ecosystem.

myNoSQL is maintained by Alex Popescu, a code hacker, geek co-founder and CTO of InfoQ.com, web and NoSQL aficionado.

sones GmbH posted sones GraphDB on Microsoft Windows Azure platform on 8/4/2010:

What is Microsoft Windows Azure?

Azure Services Platform is an application platform in the cloud that allows applications to be hosted and run at Microsoft datacenters. It provides a cloud operating system called Windows Azure that serves as a runtime for the applications and provides a set of services that allows development, management and hosting of applications off-premises. All Azure Services and applications built using them run on top of Windows Azure. Windows Azure has three core components: Compute, Storage and Fabric. As the names suggest, Compute provides computation environment with Web Role and Worker Role while Storage focuses on providing scalable storage (Blobs, Tables, Queue, Drives) for large scale needs.

Why is the sones GraphDB a perfect fit?

The sones GraphDB is the first graph database which is available on Microsoft Windows Azure. Since the sones GraphDB is written in C# and based upon Microsoft .NET it can run as an Azure Service in its natural environment. No Wrapping, no glue-code. It's the performance and scalability a customer can get from an on-premise hosted solution paired with the elasticity of a cloud platform.

How can I access/buy it?

The sones GraphDB 1.1 for Windows Azure is available now!

This edition brings all the features from the Open Source and Enterprise Edition to the Windows Azure cloud.

If you are interested in evaluating or buying this edition of the sones GraphDB please contact us!

sones GmbH offers a GraphDB whitepaper that describes their product. The whitepaper begins:

The sones GraphDB is a new, object-oriented data management system based on graphs. It enables efficient saving, management and evaluation of extremely complex data bases that are networked to a high degree. The integrated solution combines the advantages of file storage with the possibilities of a Database Management System. Unstructured data (e.g. video files), semi-structured data (Meta data, e.g. log files) and structured data can be linked to each other and thus be managed from one source and be evaluated as needed.

The idea for a new development of a database based on the graph theory arose in the beginning of 2005 due to dissatisfaction with the existing solutions available in the market. Often, those offered no or only insufficient ad-hoc analysis functions for queries into strongly linked data. Besides, these systems were mostly not able to cope with the frequently changing data and information structures applications have to process especially in the web environment. Therefore, the development focused on providing efficient data handling and real time analysis for these tasks which could previously due to their complexity hardly be solved or only with the highest efforts. A fundamentally new database technology was developed based on totally independent and new concepts.

The solution includes an object-oriented storage solution (sones GraphFS) for high-performance data storage as well as the graph-oriented database management system (sones GraphDB), which links the data to each other and makes their high-performance evaluation possible. The summation of these two parts into one software offers the advantage of highly efficient processing since any abstraction layers can be avoided. The otherwise customary efforts for the operation of a database solution will be extremely decreased by this solution.

The object-oriented storage solution (sones GraphFS) also stores unstructured and semi-structured data, like e.g. document formats, photos, more efficiently than file systems, which are the basis for the operation system. This principle successfully overcomes one of the significant performance problems in the previous object-oriented databases, which is the mapping of object models in relational tables. A patent has been filed for the file system.

The graph-oriented data management (sones GraphDB) offers high flexibility of the data structuring and enables the direct linking of the data without additional auxiliary constructs like, for example, allocation tables in relational data bases. Since the data model is oriented on the established programming languages, there is no discrepancy between application logic and data storage. The complexity is in part drastically smaller compared to the existing relational solutions. This leads amongst others to the following advantage: With increasing linkage of the data, the complexity does not increase exponentially, but in a linear manner. A further decisive advantage is the ability of the data base to change or amend data structures during runtime. New requirements on the part of the application can therefore be realized without extensive re-programming of a relational database schematic.

The Graph Query Language (sones GQL) was developed to facilitate the data management for the user. While its extent and syntax is oriented on SQL, it was adjusted to the graph-oriented approach and makes the advantages which exist in this model accessible to a direct and easily comprehensible query. …

Graph-oriented databases

Graph-oriented data bases store nodes and relations between nodes. An indirect (complex) relation (e.g. between object 1 and 3) exists if an object references a second and this in turn a third.

Multilevel relations are called graphs.

Graph-oriented databases allow a query of indirect relations over multiple edges. The edges can also be weighted to describe the degree of the relation. Thus, complex and indirect dependencies in data bases can be easily described and their high-performance evaluation can be enabled.
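To make the idea of querying indirect relations over weighted edges concrete, here is a small sketch in Python. It is not sones code and does not use GQL; the node names and weights are made up, and Dijkstra's algorithm stands in for whatever pathfinding the product actually implements.

```python
import heapq

# Hypothetical weighted graph: each node maps to {neighbour: edge weight}.
GRAPH = {
    "object1": {"object2": 1.0},
    "object2": {"object3": 2.5},
    "object3": {},
    "object4": {"object1": 0.5},
}


def cheapest_path(graph, start, goal):
    """Dijkstra's algorithm: return (total_weight, path), or None if unreachable."""
    queue = [(0.0, start, [start])]
    settled = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in settled:
            continue
        settled.add(node)
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in settled:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    return None


# object1 references object2, which references object3: an indirect relation.
result = cheapest_path(GRAPH, "object1", "object3")
print(result)
```

The relation between object 1 and object 3 is found by following two edges, which is the kind of multi-hop lookup that needs repeated joins in a relational schema.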

The evaluation of the data is not limited to the lists, but the data can also be processed structured as graphs. The advantage of this approach is that the work step of data structuring will be saved in the data processing application. The GraphDB of sones is a graph-oriented database:

… The graphic illustrates the structure of the sones GraphDB. It consists of four application layers – the Storage Layer, the File System, the Database Management System as well as the Data Access Layer:

… The attributes of the data objects can be defined free of type errors (C# data types). Complex structures can be shown through lists and object dependencies.

The database offers the following functions:

- Attributes of objects can be defined free of type errors (C# data types)

- Complex types can, for example, be shown through object referencing

- Inheritance of objects (for more specifics standardization of types or for expansion)

- Editing of objects

- Versioning of objects

- Data safety (redundancy, mirroring, striping, backup) can be defined on object level

- Data integrity is ensured via checksums (CRC32, SHA1, MD5)

- Authorization concept for the database can be defined

- DataStorage – GraphDS

GraphDS provides the interface to the product. A GQL command line as well as a user interface is provided for administration. The system can also be accessed via WebService, REST or WebShell.

The Graph Query Language offers the following functions:

- Functionalities analogous to SQL (CREATE, INSERT, UPDATE, ALTER, SELECT, etc.)

- Graph theoretical functions, e.g. pathfinding or weighting of edges

The REST interface is an URL-encoded query string with a JSON-encoded response; it doesn’t support the OData protocol at present.
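The request/response shape described above (URL-encoded query string in, JSON out) can be sketched without a running server. In the example below the host, port, `/gql` path, `query` parameter name, the GQL statement and the response body are all hypothetical placeholders, not the documented sones API.

```python
import json
from urllib.parse import parse_qs, urlencode, urlsplit


def build_query_url(base_url, gql):
    """URL-encode a query statement into a query string (path and parameter name are hypothetical)."""
    return f"{base_url}/gql?{urlencode({'query': gql})}"


def parse_response(body):
    """Decode a JSON response body into Python objects."""
    return json.loads(body)


url = build_query_url("http://localhost:9975", "FROM User SELECT Name")

# Round-trip check: the server side would decode the same statement.
decoded = parse_qs(urlsplit(url).query)["query"][0]

# A made-up response body, only to show the JSON decoding step.
sample_body = '{"results": [{"Name": "Alice"}, {"Name": "Bob"}]}'
names = [row["Name"] for row in parse_response(sample_body)["results"]]
print(url, names)
```

Because the interface is plain HTTP plus JSON, any client that can build a query string can talk to it, but OData tooling cannot be pointed at it directly.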

RavenDB

Mark Rendle (@markrendle) describes Running RavenDB on Azure Drive as a Worker Role in this 8/9/2010 post:

Microsoft’s Azure platform provides excellent data storage facilities in the form of the Windows Azure Storage service, with Table, Blob and Queue stores, and SQL Azure, which is a near-complete SQL Server-as-a-service offering. But one thing it doesn’t provide is a “document database”, in the NoSQL sense of the term.

I saw Captain Codeman’s excellent post on running MongoDB on Azure with CloudDrive, and wondered if Ayende’s new RavenDB database could be run in a similar fashion; specifically, on a Worker role providing persistence for a Web role.

The short answer was, with the current build, no. RavenDB uses the .NET HttpListener class internally, and apparently that class will not work on worker roles, which are restricted to listening on TCP only.

I’m not one to give up that easily, though. I’d already downloaded the source for Raven so I could step-debug through a few other blockers (to do with config, mainly), and I decided to take a look at the HTTP stack. Turns out Ayende, ever the software craftsman, has abstracted his HTTP classes and provided interfaces for them. I forked the project and hacked together implementations of those interfaces built on the TcpListener, with my own HttpContext, HttpRequest and HttpResponse types. It’s still not perfect, but I have an instance running at http://ravenworker.cloudapp.net:8080.

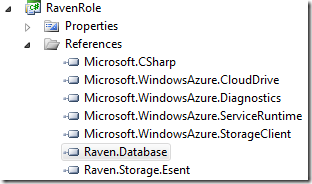
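The approach Mark describes, speaking HTTP over a plain TCP listener instead of relying on HttpListener, can be illustrated in a few lines. This is a Python sketch of the general technique only; it is not Mark's C# implementation, and the request path is just an example.

```python
import socket
import threading


def handle_request(raw):
    """Parse the request line and build a minimal HTTP/1.1 response."""
    request_line = raw.split(b"\r\n", 1)[0].decode("ascii", "replace")
    parts = request_line.split(" ")
    if len(parts) != 3:
        status, body = "400 Bad Request", b"bad request"
    else:
        method, path, _version = parts
        status, body = "200 OK", f"{method} {path}".encode("ascii", "replace")
    head = (f"HTTP/1.1 {status}\r\n"
            f"Content-Length: {len(body)}\r\n"
            "Connection: close\r\n\r\n")
    return head.encode("ascii") + body


def serve_once(listener):
    """Accept one TCP connection, read the request head, reply, and close."""
    conn, _addr = listener.accept()
    with conn:
        data = b""
        while b"\r\n\r\n" not in data:
            chunk = conn.recv(4096)
            if not chunk:
                break
            data += chunk
        conn.sendall(handle_request(data))


listener = socket.create_server(("127.0.0.1", 0))  # port 0: let the OS pick a free port
port = listener.getsockname()[1]
threading.Thread(target=serve_once, args=(listener,), daemon=True).start()

with socket.create_connection(("127.0.0.1", port)) as client:
    client.sendall(b"GET /raven/index.html HTTP/1.1\r\nHost: localhost\r\n\r\n")
    reply = b""
    while True:
        chunk = client.recv(4096)
        if not chunk:
            break
        reply += chunk
listener.close()
print(reply.decode("ascii"))
```

A real server would also need header parsing, request bodies, keep-alive and authentication, which is presumably why Mark describes his version as not yet perfect.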

My fork of RavenDB is at http://github.com/markrendle/RavenDB – the Samples solution contains an Azure Cloud Service and a Worker Role project. My additions to the core code are mainly within the Server.Abstractions namespace if you want to poke around.

HOWTO

My technique for getting Raven running on a worker role differs from the commonly-used methods for third-party software. Generally these rely on running applications as a separate process, getting them to listen on a specified TCP port. With Raven, this is unnecessary since it consists of .NET assemblies which can be referenced directly from the worker project, so that’s how I did it:

The role uses an Azure CloudDrive for Raven’s data files. A CloudDrive is a VHD disk image that is held in Blob storage, and can be mounted as a drive within Azure instances. …

[Mark’s C# source code excised for brevity.]

A few points on the configuration properties:

- the Port is obtained from the Azure Endpoint, which specifies the internal port that the server should listen on, rather than the external endpoint which will be visible to clients;

- I added a new Enum, ListenerProtocol, which tells the server whether to use the Http or Tcp stack;

- AnonymousUserAccessMode is set to all. My intended use for this project will only expose the server internally, to other Azure roles, so I have not implemented authentication on the TCP HTTP classes yet;

- The DataDirectory is set to the path that the CloudDrive Mount operation returned.

I have to sign a contribution agreement, and do some more extensive testing, but I hope that Ayende is going to pull my TCP changes into the RavenDB trunk so that this deployment model is supported by the official releases. I’ll keep you posted.

See Google Group’s Raven running in Azure thread for more background on Mark’s project. Mark wrote on 7/30/2010:

There is an instance running (as at 30 July 2010) at http://ravenworker.cloudapp.net:8080/raven/index.html which I'll leave active until I need the role for something else.

Hibernating Rhinos (@Ayende) briefly described RavenDB on 5/11/2010 at its site:

Raven is an Open Source (with a commercial option) document database for the .NET/Windows platform. Raven offers a flexible data model design to fit the needs of real world systems. Raven stores schema-less JSON documents, allows you to define indexes using LINQ queries and focuses on low latency and high performance.

- Scalable infrastructure: Raven builds on top of existing, proven and scalable infrastructure

- Simple Windows configuration: Raven is simple to set up and run on Windows as either a service or IIS7 website

- Transactional: Raven supports System.Transactions with ACID transactions. If you put data in it, that data is going to stay there

- Map/Reduce: Easily define map/reduce indexes with Linq queries

- .NET Client API: Raven comes with a fully functional .NET client API which implements Unit of Work and much more

- RESTful: Raven is built around a RESTful API

Raven is released under a dual Open Source license and a commercial license. Simply put, that means that it is freely available under the AGPL license but if you want to use this with proprietary software, you must buy a commercial license.

Documents...

in Raven are schema less JSON documents. Documents are independent entities, unrelated to one another. The following are three different documents:

Documents can be queried by defining indexes.

Indexes...

are persistent Linq queries that are executed in the background. The nearest thing in RDBMS terminology is a materialized view. Unlike in RDBMS systems, indexes do not slow CRUD performance. Here is the index for PostsByCategory:

    from post in docs.Posts
    from category in post.Categories
    select new { category }

Indexes can be queried using the following syntax: "category:Raven"
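What such an index computes can be sketched outside Raven. The Python example below is an in-memory illustration of the PostsByCategory mapping and the "category:Raven" query; the document ids and fields are invented, and Raven itself builds its indexes in the background rather than eagerly as this sketch does.

```python
from collections import defaultdict

# Hypothetical documents; ids and fields are made up for illustration.
DOCS = {
    "posts/1": {"Title": "Hello Raven", "Categories": ["Raven", "NoSQL"]},
    "posts/2": {"Title": "Azure notes", "Categories": ["Azure"]},
    "posts/3": {"Title": "Raven on Azure", "Categories": ["Raven", "Azure"]},
}


def build_posts_by_category(docs):
    """Map step: emit one (category, document id) entry per category of each post."""
    index = defaultdict(list)
    for doc_id, doc in docs.items():
        for category in doc.get("Categories", []):
            index[category].append(doc_id)
    return index


def query(index, expression):
    """Answer a 'field:value' query such as 'category:Raven'."""
    field, _, value = expression.partition(":")
    if field != "category":
        raise ValueError(f"unsupported field: {field}")
    return index.get(value, [])


posts_by_category = build_posts_by_category(DOCS)
matches = query(posts_by_category, "category:Raven")
print(matches)
```

The nested loop mirrors the two `from` clauses of the LINQ definition: one entry is produced for every category of every post.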

You can read more about Raven in our documentation.

MongoDB

Simon Green posted Running MongoDb on Microsoft Windows Azure with CloudDrive on 5/24/2010:

I’ve been playing around with the whole CQRS approach and think MongoDb works really well for the query side of things. I also figured it was time I tried Azure so I had a look round the web to see if there were instructions on how to run MongoDb on Microsoft’s Azure cloud. It turned out there were only a few mentions of it or a general approach that should work but no detailed instructions on how to do it. So, I figured I’d give it a go and for a total-Azure-newbie it didn’t turn out to be too difficult.

Obviously you’ll need an Azure account which you may get with MSDN or you can sign-up for their ‘free’ account which has a limited number of hours included before you have to start paying. One thing to be REALLY careful of though – just deploying an app to Azure starts the clock running and leaving it deployed but turned off counts as hours so be sure to delete any experimental deployments you make after trying things out!!

First of all though it’s important to understand where MongoDb would fit with Azure. Each web or worker role runs as a virtual machine which has an amount of local storage included depending on the size of the VM. Currently the four pre-defined VMs are:

- Small: 1 core processor, 1.7GB RAM, 250GB hard disk

- Medium: 2 core processors, 3.5GB RAM, 500GB hard disk

- Large: 4 core processors, 7GB RAM, 1000GB hard disk

- Extra Large: 8 core processors, 15GB RAM, 2000GB hard disk

This local storage is only temporary though and while it can be used for processing by the role instance running it isn’t available to any others and when the instance is moved, upgraded or recycled then it is lost forever (as in, gone for good).

For permanent storage Azure offers SQL-type databases (which we’re not interested in), Table storage (which would be an alternative to MongoDb but harder to query and with more limitations) and Blob storage.

We’re interested in Blob storage or more specifically Page-Blobs which support random read-write access … just like a disk drive. In fact, almost exactly like a disk drive because Azure provides a new CloudDrive which uses a VHD drive image stored as a Page-Blob (so it’s permanent) and can be mounted as a disk-drive within an Azure role instance.

The VHD images can range from 16 MB to 1 TB and apparently you only pay for the storage that is actually used, not the zeroed-bytes (although I haven’t tested this personally).

So, let’s look at the code to create a CloudDrive, mount it in an Azure worker role and run MongoDb as a process that can use the mounted CloudDrive for its permanent storage so that everything is kept between machine restarts. We’ll also create an MVC role to test direct connectivity to MongoDb between the two VMs using internal endpoints so that we don’t incur charges for Queue storage or Service Bus messages.

The first step is to create a ‘Windows Azure Cloud Service’ project in Visual Studio 2010 and add both an MVC 2 and Worker role to it.

We will need a copy of the mongod.exe to include in the worker role so just drag and drop that to the project and set it to be Content copied when updated. Note that the Azure VMs are 64-bit instances so you need the 64-bit Windows version of MongoDb.

We’ll also need to add a reference to the .NET MongoDb client library to the web role. I’m using the mongodb-csharp one but you can use one of the others if you prefer.

Our worker role needs a connection to the Azure storage account which we’re going to call ‘MongoDbData’

The other configured setting that we need to define is some local storage allocated as a cache for use with the CloudDrive; we’ll call this ‘MongoDbCache’. For this demo we’re going to create a 4 GB cache which will match the 4 GB drive we’ll create for MongoDb data. I haven’t played enough to evaluate performance yet but from what I understand this cache acts a little like the write-cache that you can turn on for your local hard drive.

The last piece before we can crack on with some coding is to define an endpoint which is how the Web Role / MVC App will communicate with the MongoDb server on the Worker Role. This basically tells Azure that we’d like an IP Address and a port to use and it makes sure that we can use it and no one else can. It should be possible to make the endpoint public to the world if you wanted but that isn’t the purpose of this demo. The endpoint is called ‘MongoDbEndpoint’ and set to Internal / TCP:

Now for the code and first we’ll change the WorkerRole.cs file in the WorkerRole1 project (as you can see, I put a lot of effort into customizing the project names!). We’re going to need to keep a reference to the CloudDrive that we’re mounting and also the MongoDb process that we’re going to start so that we can shut them down cleanly when the instance is stopping:

    private CloudDrive _mongoDrive;
    private Process _mongoProcess;

In the OnStart() method I’ve added some code copied from the Azure SDK Thumbnail sample – this prepares the CloudStorageAccount configuration so that we can use the method CloudStorageAccount.FromConfigurationSetting() to load the details from configuration (this just makes it easier to switch to using the Dev Fabric on our local machine without changing code). I’ve also added a call to StartMongo() and created an OnStop() method which simply closes the MongoDb process and unmounts the CloudDrive when the instance is stopping. …

[Simon’s C# source code excised for brevity.]
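Since the C# is omitted here, the lifecycle it implements (start the database process when the role starts, keep a reference to it, and shut it down cleanly when the role stops) can be sketched in a language-neutral way. The Python below is an illustration under my own assumptions, not Simon's code: the class and method names are invented, a sleeping child process stands in for mongod.exe, and mounting the CloudDrive is left out entirely.

```python
import subprocess
import sys


class DatabaseHost:
    """Keeps a handle on the child process so it can be stopped cleanly."""

    def __init__(self, command):
        self._command = command
        self._process = None

    def on_start(self):
        """Launch the child process and remember it for later shutdown."""
        self._process = subprocess.Popen(self._command)
        return self._process.pid

    def on_stop(self, timeout=10):
        """Ask the child to exit, forcing it only if it does not stop in time."""
        if self._process is None:
            return None
        self._process.terminate()
        try:
            code = self._process.wait(timeout=timeout)
        except subprocess.TimeoutExpired:
            self._process.kill()
            code = self._process.wait()
        self._process = None
        return code


# Stand-in for mongod.exe: a Python child that sleeps until it is stopped.
host = DatabaseHost([sys.executable, "-c", "import time; time.sleep(60)"])
pid = host.on_start()
running = host._process.poll() is None
exit_code = host.on_stop()
print(pid, running, exit_code)
```

Holding the process handle as a field is what makes a clean stop possible; without it the role could only abandon the child when the instance is recycled.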

So, that’s the server-side, oops, I mean Worker Role setup which will now run MongoDb and persist the data permanently. We could get fancier and have multiple roles with slave / sharded instances of MongoDb but they will follow a similar pattern.

The client-side in the Web Role MVC app is very simple; the only extra work we need to do is to figure out the IP address and port for connecting to MongoDb, which Azure sets up for us. The RoleEnvironment lets us get to this and I believe (but could be wrong so don’t quote me) that the App Fabric part of Azure handles the communication between roles to pass this information. Once we have it we can create our connection to MongoDb as normal and save NoSQL JSON documents to our heart’s content …

I hope you find this useful. I’ll try and add some extra notes to explain the code and the thinking behind it in more detail and will post some follow ups to cover deploying the app to Azure and what I’ve learned of that process.

Windows-Compatible NoSQL Databases

Max Indelicato’s NoSQL on the Microsoft Platform post of 8/9/2010 lists some additional non-relational DBs that run on Windows Server, not specifically under Windows Azure:

NoSQL is a trend that is gaining steam primarily in the world of Open Source. There are numerous NoSQL solutions available for all levels of complexity: from queryable distributed solutions like MongoDB to simpler distributed key-value storage solutions like Cassandra. Then there’s Riak, Tokyo Cabinet, Voldemort, CouchDB, and Redis. However, very few of these packaged NoSQL products are available for the other end of the platform market: Microsoft Windows. I’m going to outline what’s available now and briefly touch on some opportunities that are still available to the daring Microsoft engineer.

What’s Available Now

There are a handful of NoSQL projects that currently support Microsoft Windows and support it well enough for practical use.

Memcached

Memcached is not traditionally considered a NoSQL solution, but being a distributed key-value in-memory cache, it can be used to house a variety of transient datasets in a manner typical of other NoSQL data stores.

NorthScale has a nicely packaged, freely downloadable, version of Memcached that works on both 32-bit and 64-bit versions of Windows. You can check it out here: http://www.northscale.com/products/memcached.html

MongoDB

MongoDB is a document-based (JSON-style) data store capable of scaling horizontally via its auto-sharding feature. It uses a simple but powerful query language based in JSON/JavaScript and is capable of fast inserts and updates thanks largely to its low-overhead atomic modifiers. Additionally, Map/Reduce is used for aggregation and data processing across MongoDB databases.

The team at 10gen, the company behind MongoDB, officially supports the Windows platform and has done so since early in the development process. It currently sits at version 1.6.0 and is in use at a number of high-profile web companies.

You can find more information about setting up MongoDB on Windows here: http://www.mongodb.org/display/DOCS/Windows

And you can download the latest version here: http://www.mongodb.org/downloads

[It’s important to note that MongoDB can run under Windows Azure, as well as Windows server, as noted above.]

sones GraphDB

The sones GraphDB is an enterprise graph data store developed in managed .NET code using C#. It is open source and available for free download for non-commercial usage. Commercial usage licenses are available.

Graph databases in general are a different kind of beast than the typically referenced NoSQL storage examples. They excel at handling a specific class of problem: datasets that include a high number of relationships and require traversing those relationships quickly and efficiently.

A common use-case for graph databases is for storing social relationships or “social graphs”. Often, these social graphs are made up of nodes with many individual relationships between other nodes. This is a problem domain that traditional relational databases handle poorly.

You can find more information about the sones GraphDB source code on GitHub here: http://github.com/sones/sones

And cost information and a feature breakdown of the various license options here: http://www.sones.com/produkte

[It’s important to note that sones GraphDB runs under Windows Azure, as well as Windows server, as noted above.]

Voldemort

Voldemort is a distributed key-value storage system used at LinkedIn for “certain high-scalability storage problems where simple functional partitioning is not sufficient.” It’s written in Java and by virtue of the fact that Java is cross-platform, it can be configured to work on Windows.

Check out this link on GoNoSQL.com to learn more about setting it up in a Windows environment: http://www.gonosql.com/how-to-install-voldemort-on-windows/

NoSQL Project Opportunities

This is an exciting time in the world of Microsoft. Partly as a result of the fact that the Microsoft club is slow to take hold of the NoSQL trend, opportunities abound for developers to begin implementing a host of NoSQL storage solutions.

Thinking through some of the possibilities and what there is to work with and build upon, some interesting possibilities have presented themselves…

Managed ESENT-Backed Distributed Data Store

The best analogy I can think of to describe Managed ESENT is that it is the Berkeley DB of the Microsoft world. It is hardly known and rarely used by .NET developers, but its performance and reliability have been proven time and time again by ESENT’s usage in major Microsoft products like Active Directory, Exchange Server, and more.

More technically, ESENT is an “embeddable database engine native to Windows.” Managed ESENT is a CodePlex project and the .NET wrapper around the esent.dll that is part of every recent Windows version.

I will say this - in the limited testing that I have done, it is damn fast – on the order of 100,000 inserts per second. See the Performance section here for more rough stats: http://managedesent.codeplex.com/wikipage?title=ManagedEsentDocumentation&referringTitle=Documentation

I’m imagining a Microsoft NoSQL solution that uses Managed ESENT as the backing store for a simple, distributed, key-value or columnar data store. Use C# or F# with asynchronous TCP networking and consistent hashing or a lookup/routing instance and we could have something here. Makes me want to play around with that and see what comes of it – anyone else interested in thinking this through with me in the comments?
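The consistent-hashing piece of that idea is small enough to sketch. The Python below shows a hash ring with virtual nodes that routes keys to storage nodes; the node names, the choice of SHA-256 and the 100 virtual nodes per server are arbitrary assumptions, and the ESENT-backed storage and TCP networking are not modeled at all.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring with virtual nodes."""

    def __init__(self, nodes, replicas=100):
        self._replicas = replicas
        self._ring = []  # sorted list of (hash, node) points
        for node in nodes:
            self.add(node)

    @staticmethod
    def _hash(key):
        return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)

    def add(self, node):
        """Place several virtual points for the node around the ring."""
        for i in range(self._replicas):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove(self, node):
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def node_for(self, key):
        """Return the node owning the first ring point at or after the key's hash."""
        if not self._ring:
            raise LookupError("ring is empty")
        index = bisect.bisect_left(self._ring, (self._hash(key), ""))
        return self._ring[index % len(self._ring)][1]  # wrap around past the end


ring = HashRing(["node-a", "node-b", "node-c"])
keys = [f"user:{n}" for n in range(1000)]
before = {key: ring.node_for(key) for key in keys}
ring.remove("node-c")
after = {key: ring.node_for(key) for key in keys}
moved = [key for key in keys if before[key] != after[key]]
print(f"{len(moved)} of {len(keys)} keys moved after removing node-c")
```

Only the keys that lived on the removed node are reassigned, which is the property that makes consistent hashing attractive over a simple `hash(key) % node_count` scheme when nodes come and go.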

In-Memory Dictionary-Backed Distributed Data Store

Another alternative, and admittedly this will likely be food for thought for a future post, is the viability of an in-memory dictionary-backed distributed data store. This is similar in concept to the Managed ESENT version above, but contained entirely in volatile memory.

This could serve as the basis for a distributed cache, or could be persisted by replicating data across a series of nodes, with the intent that by having at least a subset of nodes running at any one time, data within the data store would be persisted. Amazon, or any other cloud-based non-persistent server solution, would host this perfectly. It’s “out there” as a general concept, but I’m a proponent in a big way – more on this in a later post.
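The persistence-by-replication idea can be shown with a toy model. In this Python sketch every write goes to all online nodes and data survives as long as one replica stays up; the class names and the write-to-all policy are my own simplifications, and there is no networking, partitioning or recovery of restarted nodes.

```python
class Node:
    """One in-memory node: a plain dictionary that can go offline."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.online = True


class ReplicatedStore:
    """Writes each key to every online node; reads from the first online node holding it."""

    def __init__(self, nodes):
        self._nodes = list(nodes)

    def put(self, key, value):
        written = 0
        for node in self._nodes:
            if node.online:
                node.data[key] = value
                written += 1
        if written == 0:
            raise RuntimeError("no nodes online; the write would be lost")
        return written

    def get(self, key):
        for node in self._nodes:
            if node.online and key in node.data:
                return node.data[key]
        raise KeyError(key)

    def fail(self, name):
        """Simulate a node going down: its volatile memory is wiped."""
        for node in self._nodes:
            if node.name == name:
                node.online = False
                node.data.clear()


nodes = [Node("n1"), Node("n2"), Node("n3")]
store = ReplicatedStore(nodes)
copies = store.put("session:42", {"user": "max"})
store.fail("n1")
store.fail("n2")
survivor_value = store.get("session:42")
print(copies, survivor_value)
```

Losing the last node loses the data, so the durability of such a store depends entirely on how many replicas are kept and how independent their failures are.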

Closing Thoughts

There are clearly limited options available to Microsoft/.NET developers as far as NoSQL solutions go. That is a shame, but can and will change with time. As .NET developers, it is up to us to make that happen and with some of the ideas I’ve presented above, it should be clear that opportunities abound.

I consider this an exciting time and being able to bring NoSQL to the Microsoft masses is an effort that I’m willing to get behind. If there are any volunteers that would like to discuss this further, please comment below!

UPDATE:

Matt Warren mentioned RavenDB.net in the comments. Looks like an interesting document-database project written in .NET. Thanks Matt!

Max is Director of Infrastructure and Software Development at Stride & Associates. He has worked in a variety of companies, including startups, growth-stage businesses, and established enterprises.
