Windows Azure and Cloud Computing Posts for 8/24/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Updated 9/25/2010: Removed post by Wictor Wilén at his request.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Maarten Balliauw describes how to create Hybrid Azure applications using OData in this 8/24/2010 post:

In the whole Windows Azure story, Microsoft has always told you that you could build hybrid applications: an on-premises application with a service on Azure or a database on SQL Azure. But how do you do it in the opposite direction? Easy answer there: use the (careful, long product name coming!) Windows Azure platform AppFabric Service Bus to expose an on-premises WCF service securely to an application hosted on Windows Azure.

Now how would you go about exposing your database to Windows Azure? Open a hole in the firewall? Use something like PortBridge to redirect TCP traffic over the Service Bus? Why not just create an OData service for our database and expose that over AppFabric Service Bus? In this post, I’ll show you how.

For those who cannot wait, download the sample code: ServiceBusHost.zip (7.87 kb)

What we are trying to achieve

The objective is quite easy: we want to expose our database to an application on Windows Azure (or another cloud or in another datacenter) without having to open up our firewall. To do so, we’ll be using an OData service which we’ll expose through Windows Azure platform AppFabric Service Bus. But first things first…

- OData??? The Open Data Protocol (OData) is a Web protocol for querying and updating data over HTTP using REST. And data can be a broad subject: a database, a filesystem, customer information from SAP, … The idea is to have one protocol for different datasources, accessible through web standards. More info? Here you go.

- Service Bus??? There’s an easy explanation for this one, although the product itself offers many more use cases. We’ll be using the Service Bus to interconnect two applications sitting on different networks and protected by firewalls. This can be done by using the Service Bus as the “man in the middle”, relaying data between the two applications.

Our OData feed will be created using WCF Data Services, formerly known as ADO.NET Data Services formerly known as project Astoria.
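To make the OData description concrete: a consumer of such a feed, for example an application on Windows Azure reading the relayed service, simply issues HTTP GETs whose query strings use standard OData system query options such as $filter, $orderby, $select and $top. The sketch below only composes such a URL. The service root is a hypothetical placeholder, and the Customers entity set with its CustomerID, CompanyName and Country properties is assumed from the Northwind sample schema, not taken from Maarten's code.

```python
from urllib.parse import quote


def build_odata_query(service_root, entity_set, filter_expr=None,
                      order_by=None, select=None, top=None):
    """Compose an OData query URL from standard system query options.

    Options are appended in a fixed order: $filter, $orderby, $select, $top.
    """
    options = []
    if filter_expr is not None:
        # Keep single quotes (OData string literals) readable; spaces and
        # other reserved characters are percent-encoded.
        options.append("$filter=" + quote(filter_expr, safe="'"))
    if order_by is not None:
        options.append("$orderby=" + quote(order_by, safe="'"))
    if select is not None:
        options.append("$select=" + quote(",".join(select), safe=","))
    if top is not None:
        options.append(f"$top={int(top)}")
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(options) if options else "")


# Hypothetical service root; substitute the address your feed is exposed at.
print(build_odata_query(
    "https://contoso.example/odata/", "Customers",
    filter_expr="Country eq 'Germany'", order_by="CompanyName",
    select=["CustomerID", "CompanyName"], top=5))
```

Because the protocol is plain HTTP plus URL conventions, the same request works whether the feed is reached directly or through a relay; only the service root changes.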

Maarten continues with a detailed tutorial and C# source code and concludes:

That was quite easy! Of course, if you need full access to your database, you are currently stuck with PortBridge or similar solutions. I am not completely exposing my database to the outside world: there’s an extra level of control in the EDMX model where I can choose which datasets to expose and which not. The WCF Data Services class I created allows for specifying user access rights per dataset.

Download sample code: ServiceBusHost.zip (7.87 kb)

Wayne Walter Berry (@WayneBerry) describes Using SQL Server Migration Assistant to Move Access Tables to SQL Azure in this 8/24/2010 post to the SQL Server team blog:

The new version (v4.2) of SQL Server Migration Assistant that came out on August 12th, 2010 allows you to move your Microsoft Access tables and queries to SQL Azure. In this blog post I will walk through how to use the SQL Server Migration Assistant migration wizard to move the Northwind database to SQL Azure.

Download

You can download and install the SQL Server Migration Assistant for free from here. Note that you will want the 2008 version of the download, which aligns with SQL Azure because SQL Azure is built on the SQL Server 2008 code base.

Migration Wizard

Once you have it downloaded and installed, start it up and you will be presented with a migration wizard to migrate the data.

The first page of the wizard gets you started with a migration project.

Make sure to select SQL Azure in the Migration To: drop-down list. On the next page of the wizard we are asked to select one or more Microsoft Access 2010 databases to upload to SQL Azure.

The illustrated tutorial that follows is similar to my Migrating a Moderate-Size Access 2010 Database to SQL Azure with the SQL Server Migration Assistant of 8/21/2010.

Cliff Hobbs reported two new SQL Azure hot fixes for SQL Server 2008 R2 Management Studio in his [MS KBs] New KB Articles At Microsoft 23 Aug 2010 - Weekly Summary post of 8/24/2010:

2285720 FIX: A fix is available for SQL Server Management Studio 2008 R2 that enables you to rename SQL Azure databases in Object Explorer

2285711 FIX: A fix is available that improves the manageability of SSMS 2008 R2 to support Spatial Types for SQL

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Vittorio Bertocci (@vibronet) shows you how to use SelfSTS: when you need a SAML token NOW, RIGHT NOW in this 8/24/2010 post:

A little new toy for you claims-based identity aficionados to play with! Available here.

Tokens are the currency on the identity market. Any identity solution you develop will require you to acquire & consume tokens (& associated claims) at some point.

ADFS2 is super-easy to install, but it does require Active Directory; as a result it is not always readily available at development time, especially if you are not targeting a departmental application or a classic federation scenario. And even in that case, you don’t always have a test endpoint set up. When you test a payment service you don’t move real money right away, and there’s no reason to use real identities right away either: do you have a test account for every role value you want to test?

The standard solution, one that has been used heavily in our training kit and other examples, is creating a custom STS. Almost three years have passed since the very first preview we gave in Barcelona of what became WIF, and I still remember Barry in the first row facepalming at the sight of an STS built in under 10 minutes. Well, with the WIF SDK tooling that now happens in seconds (or less, if your machine has an SSD ;-)), hence it’s incredibly easy to set up a test STS. However, after you’ve done that hundreds of times (and believe me, I did), even that can start to wear you down: writing new fed metadata every time you change something takes time, and above all, creating many custom STSes can litter your IIS and certificate stores if you are not disciplined in keeping things clean (I’m not). Also, if you are packaging your solution for others (in my case to make labs and sample code available to you; more commonly, when something is not working and you want the help of others), you need to include some setup, which has requirements (IIS, certificate stores, etc.) and an impact on the target machine.

Well, enter SelfSTS.

SelfSTS is a little WinForms app which will not deny a token to anyone. It’s an EXE which exposes one endpoint for a passive STS, and one endpoint with the corresponding fed metadata document. The claim types and values are taken from a config section, which can be edited directly in the SelfSTS UI. The tokens are signed with simple self-signed certificates from PFX files; the certificate store remains untouched. You can even create new self-signed certs with a simple UI, instead of risking conjuring mystical beasts by mistake if you write the wrong makecert parameter.

With SelfSTS you can produce test input for your apps very easily: you start it, point the Add STS Wizard to the metadata endpoint, and hit F5. If you want the token to have a different claim type or value, you just go to the claims editing UI and change things accordingly. If you need more than one STS at once, you just copy the EXE, the config and the PFX certificate you want to another folder, change the port on which the STS is listening and fire it up; there you go, you can now do all the home realm discovery experiments you want. I have already heard of people considering using this for testing SharePoint even in situations in which the WIF SDK isn’t installed: that’s right, it just requires the WIF runtime! I am sure you’ll come up with your own creative ways to use it. I am even considering using it instead of many of the custom STSes in the next drops of the training kit…

I feel silly even having to say this, but for due diligence… SelfSTS is obviously ABSOLUTELY INSECURE. It is just a test toy, and it should be used as such. It gives out tokens without even checking who the caller is, and it does that over plain HTTP. It signs tokens with self-signed certificates, and it stores the associated passwords in the clear in the config file. It is the very essence of insecurity itself. Do not use it for anything other than testing applications at development time, on non-production systems.

Below there’s an online version of the readme we packed in the sample. Have fun!

Overview

Securing web applications with Windows Identity Foundation requires the use of an identity provider, which may not always be available at development or test time. The standard solution to the issue is creating a test STS. The WIF SDK templates make it very easy to create a minimal STS; however, it requires you to write code to customize the claims it emits and, if you want to be able to use the WIF tooling to its fullest, to customize the metadata generation code. What’s worse, you need to repeat the process for every new application.

SelfSTS is a quick & dirty utility which provides a minimal WS-Federation STS endpoint and its associated federation metadata document. You can use SelfSTS for testing your web applications by simply pointing WIF’s Add STS Wizard to its metadata endpoint.

Figure 1: The main SelfSTS screen

SelfSTS is a simple .EXE file, which does not require IIS and never touches the certificates store. There is no installation required, you just need the .EXE file itself, its configuration file and the PFX file of the certificate you want to use for signing tokens. Its only requirements are .NET 4.0, the WIF runtime and (if you want to generate extra certificates) the Windows SDK.

SelfSTS provides a simple UI for easily editing the types and values of the claims it will emit: the metadata document will be dynamically updated accordingly.

SelfSTS offers a UI for simplified creation of self-signed X.509 certificates, which you can use if you need to use a signing certificate with a specific subject or if for some reason you cannot use the certificate provided out of the box.

WARNING: SelfSTS is not, and is not meant to be, secure by any measure. All traffic takes place in the clear, over HTTP; requests are automatically accepted regardless of who the caller is; certificates are handled from the file system, without specific password protection. This is all by design: SelfSTS is just meant to help you test web applications by providing an easy way of obtaining tokens via WS-Federation.
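Under the hood, a passive WS-Federation sign-in is just a browser redirect to the STS carrying a few query parameters defined by the WS-Federation specification: wa=wsignin1.0 for the sign-in action, wtrealm for the relying party, and optionally wreply (where to send the response) and wctx (opaque context the STS returns unchanged). WIF builds this request for you once the Add STS Wizard has wired up your app; the sketch below only shows the request's shape. The endpoint and realm URLs are hypothetical placeholders, not SelfSTS's actual defaults.

```python
from urllib.parse import urlencode


def build_wsfed_signin_url(sts_endpoint, realm, reply=None, context=None):
    """Build a WS-Federation passive sign-in request URL.

    wa=wsignin1.0 identifies the sign-in action and wtrealm identifies the
    relying party; wreply and wctx are optional.
    """
    params = {"wa": "wsignin1.0", "wtrealm": realm}
    if reply is not None:
        params["wreply"] = reply
    if context is not None:
        params["wctx"] = context
    # Append to an existing query string if the endpoint already has one.
    separator = "&" if "?" in sts_endpoint else "?"
    # urlencode percent-encodes ':' and '/' inside the parameter values.
    return sts_endpoint + separator + urlencode(params)


# Hypothetical endpoint and realm; use the values your STS and app actually expose.
print(build_wsfed_signin_url("http://localhost:8000/STS/", "http://localhost:1234/MyApp/"))
```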

Vittorio continues with detailed, illustrated instructions for using SelfSTS.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Platform Team posted a new Pivot view of Case Studies evidence at http://www.microsoft.com/windowsazure/evidence/ on about 8/24/2010:

All that’s missing is a sort by date (DESC) so I can check the latest ones.

Andrew Freyer reported SQL Server Reporting Services “will soon be available in SQL Azure” in his SQL Server Reporting Services interop post of 8/23/2010 to his TechNet blog:

SQL Server Reporting Services is nearly as ubiquitous as SQL Server itself: it is in nearly all versions of SQL Server from Express (with Advanced Services) to Datacenter edition, and will soon be available in SQL Azure. It’s been around for 8 years, and if Microsoft used traditional version numbers it would now be on V5. This widespread availability and long history do cause some confusion when trying to work out what works with what.

So here are the FAQs I commonly see and get asked about:

FAQ 1. Can Reporting Services report on data stored in [ insert relevant database platform here]?

Although Reporting Services is part of SQL Server, it can consume data from pretty well any structured data source, e.g., Excel, XML, and anything you can get an ADO.NET, OLE DB or ODBC connector for. To design your reports you’ll need these database connectors (drivers) on the machine you’re designing the reports on and on the server they’ll be run from.

Also, don’t forget that it doesn’t matter if your source data is in a different version of SQL Server, provided, again, that you use the right connectors/drivers.

FAQ 2. Can I design a report in SQL Server 2XXX and then run it on SQL Server 2YYY?

A report can generally be run on a later version of SQL Server than the one it was designed for (unless you have custom code or a rare authentication or security setup). Once you save it to the newer version of Reporting Services, it will be automatically updated. However, you can never do this the other way around, i.e., run a report on an older version than the one it was designed in. This is because the Report Definition Language (RDL) changes in each release: a newer server gracefully upgrades older report definitions, but an older server cannot interpret the newer format.

Other resources on upgrading to SQL Server 2008 R2 can be found here
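FAQ 2's rule of thumb (forward compatible, never backward) can be written down as a small check. This is a minimal sketch: the release list covers only the Reporting Services versions named in this post, the function name is hypothetical, and it deliberately ignores the custom-code and security exceptions Andrew mentions.

```python
# Reporting Services releases named in the post, oldest first (illustrative list).
SSRS_RELEASES = ["2005", "2008", "2008 R2"]


def can_run_report(designed_in: str, server: str) -> bool:
    """Return True if a report designed for `designed_in` should run on `server`.

    A same-or-later server upgrades the report definition automatically;
    an earlier server cannot read the newer RDL. Unknown version strings
    raise ValueError (from list.index).
    """
    return SSRS_RELEASES.index(server) >= SSRS_RELEASES.index(designed_in)


print(can_run_report("2005", "2008 R2"))  # designed older, runs on newer
print(can_run_report("2008 R2", "2008"))  # designed newer, runs on older
```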

FAQ 3. Can I use an older version of SQL Server to host the report server databases?

Reporting Services uses two SQL Server databases: one holds the metadata about the reports and the other is a temporary workspace. Hosting them on an older version of SQL Server is possible.

FAQ 4. Which versions of Visual Studio (VS) work with which version of Reporting Services?

When SQL Server 2005 came out, it introduced the Business Intelligence Development Studio (BIDS), which is essentially a cut-down version of Visual Studio, in that case VS 2005. When SQL Server 2008 came out, BIDS was built on VS 2008. BIDS in SQL Server 2008 R2 is also still built on VS 2008, but it’s simple to have another version alongside it, e.g., VS 2010.

FAQ 5. Which versions of Visual Studio do I use to embed my reports into my applications?

An ancillary question to this relates to the report viewer control that developers can use to embed reports in their projects.

- For SQL Server 2005 there is a report viewer tool, described here, that works in Visual Studio 2005

- If you followed that link, you’ll notice there is an Other Versions option that takes you to similar details about the report viewer control that works in VS 2008 but still against SQL Server 2005.

- The report viewer in VS 2010 only works against SQL Server 2008 and SQL Server 2008 R2, as detailed here.

See Andrew’s post for detailed compatibility tables.

<Return to section navigation list>

Visual Studio LightSwitch

Tim Anderson (@timanderson) lists Ten things you need to know about Microsoft’s Visual Studio LightSwitch and suggests a future use with Windows Phone 7 apps in this 8/24/2010 post:

Microsoft has announced a new edition of Visual Studio called LightSwitch, now available in beta, and it is among the most interesting development tools I’ve seen. That does not mean it will succeed; if anything it is too radical and might fail for that reason, though it deserves better. Here are some of the things you need to know.

1. LightSwitch builds Silverlight apps. In typical Microsoft style, it does not make the best of Silverlight’s cross-platform potential, at least in the beta. Publish a LightSwitch app, and by default you get a Windows click-once installation file for an out-of-browser Silverlight app. Still, there is also an option for a browser-hosted deployment, and in principle I should think the apps will run on the Mac (this is stated in one of the introductory videos) and maybe on Linux via Moonlight. Microsoft does include an “Export to Excel” button on out-of-browser deployments that only appears on Windows, thanks to the lack of COM support on other platforms.

I still find this interesting, particularly since LightSwitch is presented as a tool for business applications without a hint of bling – in fact, adding bling is challenging. You have to create a custom control in Silverlight and add it to a screen.

Microsoft should highlight the cross-platform capability of LightSwitch and make sure that Mac deployment is easy. What’s the betting it hardly gets a mention? Of course, there is also the iPhone/iPad problem to think about. Maybe ASP.NET and clever JavaScript would have been a better idea after all.

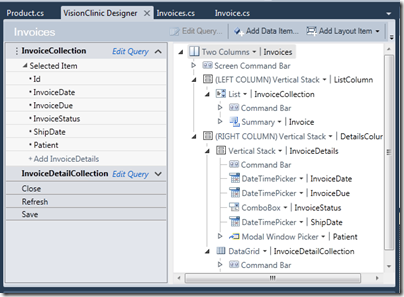

2. There is no visual form designer – at least, not in the traditional Microsoft style we have become used to. Here’s a screen in the designer:

Now, on one level this is ugly compared to a nice visual designer that looks roughly like what you will get at runtime. I can imagine some VB or Access developers will find this a difficult adjustment.

On the positive side though, it does relieve the developer of the most tedious part of building this type of forms application – designing the form. LightSwitch does it all for you, including validation, and you can write little snippets of code on top as needed.

I think this is a bold decision – it may harm LightSwitch adoption but it does make sense.

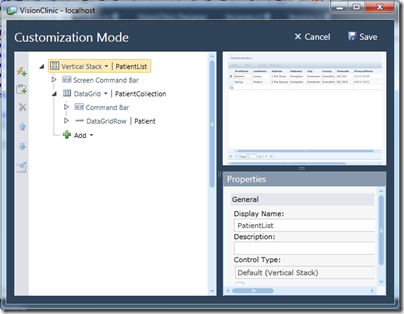

3. LightSwitch has runtime form customization. Actually it is not quite “runtime”, but only works when running in the debugger. When you run a screen, you get a “Customize Screen” button at top right:

which opens the current screen in Customization Mode, with the field list, property editor, and a preview of the screen.

It is still not a visual form designer, but mitigates its absence a little.

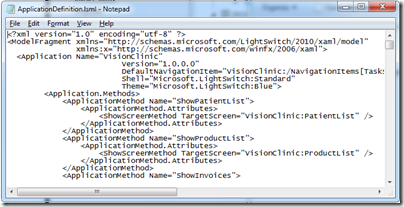

4. LightSwitch is model driven. When you create a LightSwitch application you are writing out XAML, not the XAML you know that defines a WPF layout, but XAML to define an application. The key file seems to be ApplicationDefinition.lsml, which starts like this:

Microsoft has invested hugely in modelling over the years with not that much to show for it. The great thing about modelling in LightSwitch is that you do not know you are doing it. It might just catch on.

Let’s say everyone loves LightSwitch, but nobody wants Silverlight apps. Could you add an option to generate HTML and JavaScript instead? I don’t see why not.

5. LightSwitch uses business data types, not just programmer data types. I mean types like EmailAddress, Image, Money and PhoneNumber:

I like this. Arguably Microsoft should have gone further. Do we really need Int16, Int32 and Int64? Why not “Whole number” and “Floating point number”? Or hide the techie choices in an “Advanced” list?

6. LightSwitch is another go at an intractable problem: how to get non-professional developers to write properly designed relational database applications. I think Microsoft has done a great job here. Partly there are the data types as mentioned above. Beyond that though, there is a relationship builder that is genuinely easy to use, but which still handles tricky things like many-to-many relationships and cascading deletes. I like the plain English explanations in the tool, like “When a Patient is deleted, remove all related Appointment instances” when you select Cascade delete.

Now, does this mean that a capable professional in a non-IT field – such as a dentist, shopkeeper, small business owner, departmental worker – can now pick up LightSwitch and write a well-designed application to handle their customers, or inventory, or appointments? That is an open question. Real-world databases soon get complex and it is easy to mess up. Still, I reckon LightSwitch is the best effort I’ve seen – more disciplined than FileMaker, for example, (though I admit I’ve not looked at FileMaker for a while), and well ahead of Access.

This does raise the question of who the target developer for LightSwitch really is. It is being presented as a low-end tool, but in reality it is a different approach to application building that could be used at almost any level. Some features of LightSwitch will only make sense to IT specialists; in fact, as soon as you step into the code editor, it is a daunting tool.

7. LightSwitch is a database application builder that does not use SQL. The query designer is entirely visual, and behind the scenes Linq (Language Integrated Query) is everywhere. Like the absence of a visual designer, this is a somewhat risky move; SQL is familiar to everyone. Linq has advantages, but it is not so easy to use that a beginner can express a complex query in moments. When using the Query designer I would personally like a “View and edit SQL” or even a “View and edit Linq” option.

8. LightSwitch will be released as the cheapest member of the paid-for Visual Studio range. In other words, it will not be free (like Express), but will be cheaper than Visual Studio Professional.

9. LightSwitch applications are cloud-ready. In the final release (but not the beta) you will be able to publish to Windows Azure. Even in the beta, LightSwitch apps always use WCF RIA Services, which means they are web-oriented applications. Data sources supported in the beta are SQL Server, SharePoint and generic WCF RIA Services. Apparently in the final release Access will be added.

10. Speculation – LightSwitch will one day target Windows Phone 7. I don’t know this for sure yet. But why else would Microsoft make this a Silverlight tool? This makes so much sense: an application builder using the web services model for authentication and data access, firmly aimed at business users. The first release of Windows Phone 7 targets consumers, but if Microsoft has any sense, it will have LightSwitch for Windows Phone Professional (or whatever) lined up for the release of the business-oriented Windows Phone.
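Point 6 mentions the relationship builder's Cascade delete option (“When a Patient is deleted, remove all related Appointment instances”). LightSwitch generates the plumbing for you, so the following is not LightSwitch code; it is a minimal sketch of the same relational rule in plain SQLite via Python's built-in sqlite3 module, with hypothetical Patient and Appointment tables.

```python
import sqlite3


def demo_cascade_delete():
    """Delete a patient and return the appointments that survive."""
    conn = sqlite3.connect(":memory:")
    # SQLite enforces foreign keys only when this pragma is on (per connection).
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute("CREATE TABLE Patient (Id INTEGER PRIMARY KEY, Name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE Appointment ("
        " Id INTEGER PRIMARY KEY,"
        " PatientId INTEGER NOT NULL REFERENCES Patient(Id) ON DELETE CASCADE,"
        " Slot TEXT NOT NULL)"
    )
    conn.execute("INSERT INTO Patient (Id, Name) VALUES (1, 'Alice'), (2, 'Bob')")
    conn.executemany(
        "INSERT INTO Appointment (PatientId, Slot) VALUES (?, ?)",
        [(1, "Mon 09:00"), (1, "Tue 10:00"), (2, "Wed 11:00")],
    )
    # Deleting Alice removes her two appointments automatically.
    conn.execute("DELETE FROM Patient WHERE Id = 1")
    remaining = conn.execute("SELECT PatientId, Slot FROM Appointment").fetchall()
    conn.close()
    return remaining


print(demo_cascade_delete())
```

The alternative most relationship designers offer is to restrict the delete (refuse it while related rows exist), which is the safer default when orphaned or lost child records would be a problem.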

Beth Massi (@BethMassi) lists her Visual Studio LightSwitch How Do I Videos in this 8/24/2010 post:

I’ve done my fair share of How Do I videos over the years, particularly on Visual Basic and related technologies. And even though I really don’t like hearing the sound of my own voice (who is that? ;)) I do love teaching. I also love doing How Do I videos for LightSwitch because the tool really lends itself well to short 5-10 minute videos. I’ll be working on more of them this week and will release them weekly. For now, enjoy the first 5 that we released yesterday on the LightSwitch Developer Center.

These videos are meant to be watched in order because each one builds on the last one. If you are familiar with the old Windows Forms Over Data series I did a few years ago, the application will be familiar to you because we build out the same tables and fields for a simple order management system in this series. It’s amazing how much farther along we get in this series building the application with LightSwitch instead.

- #1 - How Do I: Define My Data in a LightSwitch Application?

- #2 - How Do I: Create a Search Screen in a LightSwitch Application?

- #3 - How Do I: Create an Edit Details Screen in a LightSwitch Application?

- #4 - How Do I: Format Data on a Screen in a LightSwitch Application?

- #5 - How Do I: Sort and Filter Data on a Screen in a LightSwitch Application?

In the next few videos I’m going to tackle Custom Validation, Master-Detail forms, Lookup Tables, and some more advanced Queries.

Enjoy!

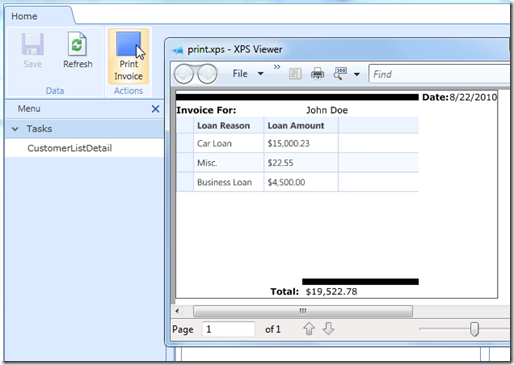

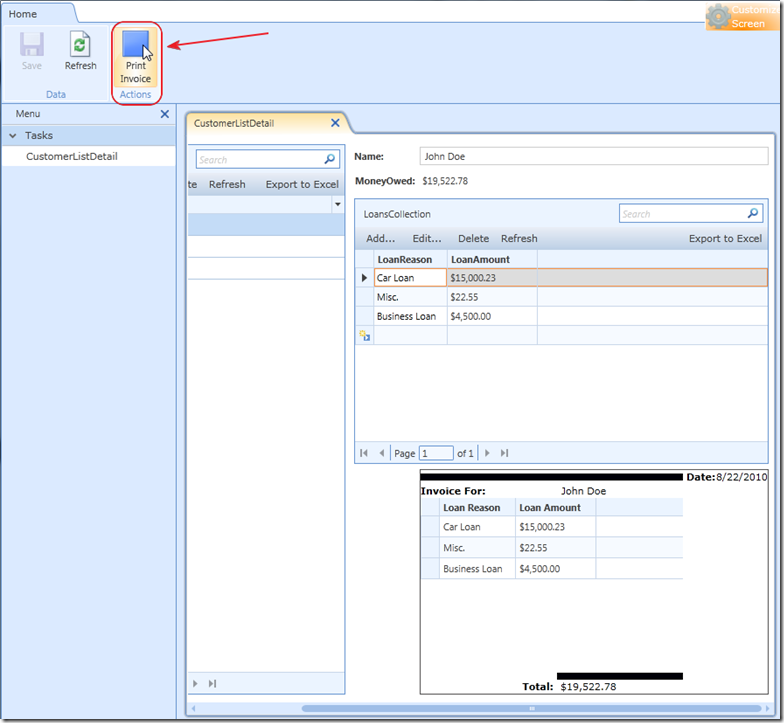

Michael Washington posted Printing With LightSwitch to the Visual Studio LightSwitch Help Website on 8/22/2010. His tutorial shows you in detail how to create a custom control in Visual Studio for printing the content of a form:

With LightSwitch Beta 1, there is no built-in printing. Here is a method that works. It will also show you how to create a custom template that lets you print exactly what you want, not just the current screen.

Note: you need Visual Studio Professional (or higher) to create Custom Silverlight Controls. …

Michael continues with a detailed, illustrated tutorial and C# source code to create the following form:

I don’t believe that presenting invoices is a normal part of loan processing, but it’s clear from the effort involved that LightSwitch needs a built-in printing capability.

<Return to section navigation list>

Windows Azure Infrastructure

Nicole Hemsoth reported Large Enterprises Giving IaaS a Second Glance based on a Yankee Group survey to the HPC in the Cloud blog on 8/24/2010:

The commonly-held view among those who don’t spend their days immersed in the clouds is that cloud computing can be pared down to what is essentially SaaS. After all, most mainstream definitions spun from the distance of the small and mid-sized business camp can be nicely boiled down to just that—“you see,” they say, “ the cloud is pretty much just like Google Docs, instead of installing software on your computer, you use it over the web as a service. Some things you pay for, some things you don’t.”

However, the definitions about multiple cloud models and strategies, including Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) are often overlooked because, quite frankly, not everyone needs them, except, of course, those who are functioning on the larger scale. While there are several emerging PaaS and IaaS use cases that are of interest (and even more on the books for the last couple of years in particular) we don’t hear enough about these models and because of that, it’s difficult to believe that there are actually a relatively (should I italicize that “relatively” or repeat it a few times?) large number of large-scale enterprises considering the IaaS model—at least if you take this as truth from the 400 or so IT professionals who fessed up to appease a survey.

According to a recently released Yankee Group survey, Infrastructure as a Service (IaaS) is increasingly showing up on the IT radar for a number of enterprises as a new cloud computing strategy. The results, which were based on questions directed at the leaders of 400 enterprises (although industry or focus was not specified in any detail), claim that “24 percent of large enterprises with cloud experience are already using IaaS, and an additional 37 percent expect to adopt IaaS during the next 24 months.” The research group notes that, while surprising, these numbers pale in comparison to those whose use of cloud extends only as far as select SaaS adoption, but they might represent a growing trend, and hence a new push on the part of vendors to reach potential IaaS users.

One of the more compelling points this study makes about IaaS is how it is being considered by large enterprises. The Yankee Group states that “storage and on-demand compute are top IaaS use cases” for organizations that have already instituted broader PaaS or IaaS strategies in the past two years. They also note that “enterprises see cloud as a second-tier storage alternative” and point out that while “on demand compute is attractive, use cases are not clear cut.”

As Joe McKendrick at ZDNet noted of the results, virtualization security is the biggest barrier preventing wider IaaS adoption. “However, those that have already deployed IaaS say other issues come to the fore, including regulatory compliance, data migration, reliability, employee use and quantitative benefits.”

What’s in a Name?

The report also included some interesting fodder for vendors, albeit some that comes without much surprise. The respondents indicated, with minimal exception, that “traditional IT suppliers are the most trusted suppliers of cloud for enterprises” and that as organizations begin to put their efforts into their internal cloud projects, the “role of vendors and integrators becomes most critical.”

There is no doubt that with a technological movement like cloud, which many are still wary about given the host of security, regulatory and other issues, large enterprises brave enough to make the leap are not going to do so without the perceived safety net of a big name vendor. For those newcomers to the cloud space, the biggest challenge will be convincing customers that it is possible to supply the most intangible, yet most important deliverable of all—the trust that only seems to come with longevity in technology. IBM, Microsoft, and now even Amazon are all “big name celebrities” in the world of cloud and competing with this kind of history is nearly impossible, even when you leave out the question of available marketing dollars.

The State of the Enterprise Cloud Market

On the whole, the Yankee Group contends that enterprises are “optimistic about cloud computing” in terms of capex-to-opex issues and the ability to have elastic, on-demand resources. In a graphic representing enterprise sentiments about the cloud (which is available here, but be prepared to surrender your vital details before you can have it), only 1% thought the cloud was nothing more than marketing hype, a figure that would make interesting comparison fodder for a survey asking the same question a few years ago. On the flip side, 57% of respondents agreed that the cloud is “an enabling technology that drives business transformation and innovation,” with a less enthusiastic 35% stating that, while it is essentially a good thing, it’s an “evolving concept that will take years to mature.”

Responding to a survey about intended plans to optimize IT infrastructure is far easier than actually doing it; ask any enterprise IT exec who has done both. Still, it will be interesting to see whether there will be a greater push from IaaS vendors who might have been wary of investing heavily in heralding an offering that only a select few seemed to want not so long ago.

David Linthicum asks “Many cloud computing providers are using other cloud services to form their offerings. Is this adding invisible risk?” in a preface to his The rise -- and risk -- of the composite clouds article of 8/23/2010 for InfoWorld’s Cloud Computing blog:

Many cloud providers are learning to eat their own dog food, and they're leveraging other clouds to augment missing pieces of their offerings. As a result, you may find that a cloud provider offering software as a service (SaaS) is actually leveraging another cloud for storage, another cloud for compute, and perhaps other components as well.

As cloud computing evolves -- and providers try to get to market quickly -- we could find that offerings appearing to be from a single provider are actually a composite of various cloud providers brought together to form a solution. I've found myself in briefing after briefing where this is the case. But should you care?

I think you should. The fact of the matter is that IT is moving to cloud computing with the assumption that the cloud provider will give a certain level of security and a certain level of service. Having core components, such as storage, compute, security, and so on, outsourced to other cloud providers could mean that your data and application processing exists across many different physical providers -- and the risk of outages, compliance issues, and data leaks increases dramatically.

Consider that the primary cloud, which is in reality made up of many clouds, could stop dead if a single subordinate cloud provider fails. Thus, your cloud provider that's offering five-nines reliability is not truly providing that level of SLA, considering the added risk of leveraging a composite cloud.
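The arithmetic behind that point can be sketched as follows. This is a minimal illustration, assuming that every component must be up for the service to be up and that component failures are independent (real outages are often correlated, which can make things worse). The availability figures for the "primary", "storage", and "compute" providers are hypothetical, not taken from any real SLA.

```python
from math import prod

def composite_availability(availabilities):
    """Availability of a service that needs *every* listed component up.

    Assumes independent failures: the composite availability is then the
    product of the component availabilities.
    """
    return prod(availabilities)

def annual_downtime_minutes(availability):
    # 365 days * 24 hours * 60 minutes = 525,600 minutes per non-leap year
    return (1 - availability) * 525_600

if __name__ == "__main__":
    # Hypothetical figures: the front-end provider advertises five nines,
    # but it silently depends on two subordinate clouds.
    components = {"primary": 0.99999, "storage": 0.9999, "compute": 0.9995}
    a = composite_availability(components.values())
    print(f"composite availability: {a:.5%}")
    print(f"expected downtime: {annual_downtime_minutes(a):.0f} min/year")
```

With these made-up numbers, the composite works out to roughly 99.94% availability, or about 320 minutes of expected downtime a year, compared with about 5 minutes for five nines on its own.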

I suspect that this trend will continue as smaller cloud providers come to market and look for shortcuts in getting services online quickly. Moreover, as cloud providers learn to work and play better together, they'll tap other clouds as channels. And I suspect that we'll see some outages in 2011 around the vulnerabilities that composite clouds bring. Keep an eye on this one.

Don MacVittie asserts “There are not yet viable cloud interoperability standards” as a deck for his “Just in Case, Bring Alternate Plans to the Cloud Party” post of 8/23/2010 to F5’s DevCentral blog:

As I write this, Lori and The Toddler are on their way to the store on his first trip out of the house without a diaper (nappy for our UK friends). She bravely told him that they would go get some robots as a reward for being a big boy. I told her that it was brave – possibly brazen – to take him out straight away, to which she replied, “I’m putting clothes in the diaper bag and taking it along just in case.” I’m sure all will be well; he has inherited his mother’s stubbornness and is pretty focused on any activity that ends with a robot “IN MY HAND” (shout that in toddler voice, and you’ll get the idea). But Lori has planned ahead just in case, because this is all new to him.

And that’s precisely the deal with cloud. It’s new. In fact, if you look at something cloud and go “this is the same stuff that we were doing 5/10/20 years ago”, then chances are a vendor is hoodwinking you with what I’m told is called “cloud-washing”, or you’re doing it wrong. Since it is new to you – Infrastructure as a Service is the best definition I’ve seen to date – you should go into it with contingency plans. What happens if it doesn’t scale as expected? What happens if it does scale and the costs get exorbitant? For public cloud, how do you get your stuff out of the cloud if need be? Once you go cloud – be it private or public – your infrastructure consumption is likely to be larger than your physical infrastructure, so what’s the backup plan?

In short, expect the same type of growth that you saw with virtualization. It will be easier to provision, and thus more projects are likely to be tried. The choke point will be development, which will help keep the numbers down a bit, but don’t count on it to keep your cloud provisioned applications in line with your physical ability to host them, particularly if public cloud is part of your plan (and it should be). So you’ll need to have a backup plan should your vendor do any of the many things that vendors do that make them less appealing. Like get purchased, or have a major security breach or any of a thousand other things.

There are not yet viable cloud interoperability standards, largely because there is not yet (in my estimation) market convergence on the definition of “cloud”. Until there is, you’ll have to fend for yourself. That means knowing how to get your apps/infrastructure/data back out of cloud A and into cloud B (or a non-cloud datacenter).

Another odd thing that you will want to consider is data protection laws and storage locations in the cloud. Lori has talked about this quite a bit for apps, but it’s even more important from a storage perspective. Know where your cloud provider is physically storing your data, and what the implications are for you. If you are in a country with one set of infosec laws, and your cloud provider stores your data in a different country, what exactly does that mean to you? At this time it probably means that you will have to comply with the more stringent of the two countries’ laws. Of course, if the storage provider doesn’t tell you what country your data is in (Amazon, for example, spawns copies of data, who knows where), then you have a fuzzier problem. Are you responsible for maintaining the standards of a country you didn’t know your data was stored in? I’d say no, but I could argue that it’s your data, so you are responsible for seeing that it is protected and knowing where it is. If you’ve got data centers in multiple countries, you already understand the implications here, but it’s worth revisiting with cloud in mind, particularly if your cloud provider has datacenters in a variety of locales.

Finally, if your applications are running in more than one geographic location, you’ll need something like our GTM product to make sure you are directing connections to the right servers. If you have multiple datacenters you may or may not have already put some type of architecture in place, but with cloud – particularly with cloud-bursting – it becomes a necessity to front your URLs with something that resolves them intelligently, since the application is running in multiple places at once.
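To make the idea of "resolving URLs intelligently" more concrete, here is a toy sketch of the kind of decision a global load balancer makes when an application runs in several places at once. It is not how F5's GTM works internally; the site names, region labels, latency table, and health flags are all hypothetical, and the addresses come from the reserved documentation ranges. The policy shown (lowest-latency healthy site, falling back to any healthy site) is just one simple choice among many.

```python
from dataclasses import dataclass, field

@dataclass
class Site:
    name: str                 # hypothetical datacenter or cloud region label
    address: str              # IP address handed back to the client
    healthy: bool = True      # assumed to be set by some external health check
    latency_ms: dict = field(default_factory=dict)  # client region -> latency

def resolve(sites, client_region):
    """Return the address of the healthy site with the lowest measured
    latency from the client's region. Sites with no measurement for that
    region rank last; if none have one, the first healthy site wins."""
    candidates = [s for s in sites if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy site available")
    best = min(candidates,
               key=lambda s: s.latency_ms.get(client_region, float("inf")))
    return best.address

if __name__ == "__main__":
    sites = [
        Site("on-prem-us", "192.0.2.10", latency_ms={"us": 15, "eu": 110}),
        Site("cloud-eu", "198.51.100.20", latency_ms={"us": 95, "eu": 12}),
    ]
    print(resolve(sites, "eu"))   # European clients go to the cloud site
    sites[0].healthy = False      # simulate an on-premises outage
    print(resolve(sites, "us"))   # US clients now fail over to the cloud
```

In a cloud-bursting setup, the same lookup is what lets traffic spill over to the public cloud site when the on-premises one is unavailable or overloaded.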

Cloud provides a great mechanism for doing more and being more adaptable as an IT department, but look before you leap. As the old adage goes, “Those who fail to plan, plan to fail.” Cloud is big enough that you don’t want to jump off that cliff without knowing your parachute is packed.

And hopefully you will have fewer incidents than The Toddler is likely to have over the next few days. Though first time implementations of new technology tend to have their share of incidents in my experience.

Eric Ligman’s Microsoft cloud computing & cloud services – So much more than just BPOS post of 8/23/2010 reminds partners that there’s more than BPOS in Microsoft’s cloud portfolio:

“Cloud” – Now there’s a topic that I had multiple conversations with Microsoft partners about at Worldwide Partner Conference, as well as one that was covered in many presentations, communications, sessions, updates, etc. both at Worldwide Partner Conference but also since then. One thing that I have heard or seen several times, and why I am putting up this post, is a perception that at Microsoft, “cloud” = BPOS and BPOS = “cloud." Now don’t get me wrong, BPOS (Business Productivity Online Services) is a component of Microsoft cloud computing / Microsoft cloud services; however, it is not the holistic, all encompassing definition of Microsoft cloud computing / Microsoft cloud services, it is merely one offering available.

Let’s take a look in a little more depth at what Microsoft Cloud Computing / Microsoft Cloud Services is and offers…

First, let’s be clear on the timeline. I’ve heard some statements along the line of “Microsoft is new to offering cloud services.” I guess it depends on how you define “new.” Compared to how long the automobile has been on the road, sure 15 years could be considered “new,” but in terms of “new” as in “just getting into it,” then no, and I think this is something people forget. Here’s a quick look over time at Microsoft involvement in cloud services/offerings (including before “cloud computing” was being used as a term).


The journey leading up to where we are today has been taking place for 15 years now, starting way back with Windows Live and Hotmail. Since then, the services and offerings served up online through cloud from Microsoft have continued and expanded. Today, there are a number of cloud based solutions available, enabling individuals and businesses around the world to do so much. Here’s a look at some of these, with links to more information about each and trials of these for you:

- Windows Azure - flexible, familiar environment to create applications and services for the cloud. You can shorten your time to market and adapt as demand grows.

- Get the trial

- More info

- Check out the Windows Azure platform learning and readiness for partners

- Windows Azure Platform resources on Microsoft Partner Network portal

- Windows Azure Platform Partner Hub

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Windows Intune - simplifies how businesses manage and secure PCs using Windows cloud services and Windows 7

- More info & how to sign up for the beta

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Office Web Apps - online companions to Word, Excel, PowerPoint, and OneNote, giving you the freedom to access, edit, and share Microsoft Office documents from virtually anywhere.

- Get the trial

- More info

- Watch the Office Web Apps video

- Microsoft Office 2010 information & resources

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft SQL Azure - provides a highly scalable, multi-tenant database that you don't have to install, setup, patch or manage.

- Get the trial

- More info

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Exchange Online - highly secure hosted e-mail with "anywhere access" for your employees. Starts at just $5 per user per month.

- Get the trial

- More info

- Microsoft Exchange Online Standard Datasheet

- Microsoft Exchange Online Dedicated Datasheet

- Online Demo (Silverlight)

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Forefront Online Protection for Exchange - helps protect businesses' inbound and outbound e-mail from spam, viruses, phishing scams, and e-mail policy violations.

- Get the trial

- More info

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft SharePoint Online - gives your business a highly secure, central location where employees can collaborate and share documents.

- Get the trial

- More info

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Office Live Meeting - provides real-time, Web-hosted conferencing so you can connect with colleagues and engage clients from almost anywhere – without the cost of travel.

- Get the trial

- More info

- Office Live Meeting Standard Datasheet

- Office Live Meeting Dedicated Datasheet

- View product videos:

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Office Communications Online - delivers robust messaging functionality for real-time communication via text, voice, and video.

- Get the trial

- More info

- Watch the demo

- Office Communications Online Standard Datasheet

- Office Communications Online Dedicated Datasheet

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Dynamics CRM Online - helps you find, keep, and grow business relationships by centralizing customer information and streamlining processes with a system that quickly adapts to new demands.

- Get the trial

- More info

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Windows Live ID - identity and authentication system provided by Windows Live that lets you create universal sign-in credentials across diverse applications.

- Get the trial

- More info

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

- Microsoft Business Productivity Online Suite (BPOS) - brings together online versions of Microsoft's messaging and collaboration solutions, including: Exchange Online, SharePoint Online, Office Live Meeting, and Office Communications Online.

- Get the trial

- More info

- BPOS Standard Suite Datasheet

- View online demo (Silverlight)

- Watch the “What is Microsoft Business Productivity Online Suite (BPOS)?” video online

- Microsoft Business Productivity Online Suite Role-Based Learning Resources for partners

- Microsoft Online Services partner resources on Microsoft Partner Network portal

- Learn about partner opportunities to become a Microsoft Online Services reseller

Hopefully the information above shows that when it comes to Microsoft cloud computing and Microsoft cloud services, BPOS is just one aspect and offering available; the portfolio goes far beyond it. Whether you are a business or a partner, the opportunities that cloud computing/cloud services and Microsoft bring you are very exciting and continue to expand every day. Please be sure to use the resources above to either begin or continue your journey into what Microsoft and cloud/online services can bring to light for you. Also, if you are a business looking to learn more about what Microsoft’s online/cloud solutions can do for your business, be sure to talk with a Microsoft partner near you.


Windows Azure Platform Appliance (WAPA)

Bill Claybrook warned “Check your traditional data center mind-set at the door or be prepared to fail” as a preface to his Building a private cloud: Get ready for a bumpy ride post of 8/24/2010 to the ComputerWorld Blogs:

When cloud computing became a topic of discussion a few years ago, public clouds received the bulk of the attention, mostly due to the high-profile nature of public-cloud announcements from some of the industry's biggest names, including Google and Amazon. But now that the talk has turned into implementation, some IT shops have begun steering away from public clouds because of the security risks; data is outside the corporate firewall and is basically out of their control.

Tom Bittman, vice president at Gartner, said in a blog post that based on his poll of IT managers, security and privacy are of more concern than the next three public cloud problems combined. He also wrote that 75% of those polled said that they would be pursuing a private cloud strategy by 2012, and 75% said that they would invest more in private clouds than in public clouds through 2012.

Frank Gillett, an analyst at Forrester Research, agrees that IT's emphasis is more on private clouds these days. He says that IT managers "are not interested in going outside" the firewall.

Still, as Bittman's blog post points out, private clouds have their share of challenges, too; in his poll, management issues and figuring out operational processes were identified as the biggest headaches. And, of course, an on-premises private cloud needs to be built internally by IT, so time frame and learning curve, as well as budget, need to be part of the equation.

Indeed, transitioning from a traditional data center -- even one with some servers virtualized -- to a private cloud architecture is no easy task, particularly given that the entire data center won't be cloud-enabled, at least not right away.

In this two-part article, we'll examine some of the issues. Part 1 looks at how cloud differs from virtualization and from a "traditional" data center. Part 2 will examine some of the management issues and look at a few shops actively building private clouds, and the lessons they've learned.

While we generally think of a private cloud as being inside a company's firewall, a private cloud can also be off-premises -- hosted by a third party, in other words -- and still remain under the control of the company's IT organization. But in this article we are talking only about on-premises private clouds.

Also, despite all the hype you might hear, no single vendor today provides all of the software required to build and manage a real private cloud -- that is, one with server virtualization, storage virtualization, network virtualization, and resource automation and orchestration. Look for vendors to increasingly create their own definitions of private cloud to fit their product sets.

Sidebar: Hurdles involved

Building your own private cloud involves some challenges, including these:

- Budget. Private clouds can be expensive, so you need to do your due diligence and figure out what the upper and lower bounds for your ROI will be.

- Integrating with public clouds. Build your private cloud so that you can move to a hybrid model if public cloud services are required. This involves many factors, including security and making sure you can run your workloads in both places.

- Scaling. Private cloud computing services usually don't have the economies of scale that large public cloud providers provide.

- Reconfiguring on the fly. You may have to tear down servers and other infrastructure while they are still in production in order to move them into the private cloud. This could create huge problems.

- Legacy hardware. Leave your oldest servers behind -- you should not try to repurpose any servers that require manual configuration with a private cloud, since it would be impossible to apply automation/orchestration management to these older machines.

- Technology obsolescence. The complexity and speed of technology change will be hard for any IT organization to handle, especially the smaller ones. Once you make an investment in a private-cloud technology stack, you need to protect that investment and make sure you stay up to date with new releases of software components.

- Fear of change. Your IT team may not be familiar with private clouds, and there will be a learning curve. There may also be new operational processes and old processes that need to be reworked. Turn this into a growth opportunity for your people -- the stress of doing and learning all this may be mitigated by helping your folks keep in mind that these are important new skills in today's business environment.


Cloud Security and Governance

Lydia Leong’s (@cloudpundit) Liability and the cloud post of 8/24/2010 deals with cloud service provider liability issues:

I saw an interesting article about cloud provider liability limits, including some quotes from my esteemed colleague Drue Reeves (via Gartner’s acquisition of Burton). A quote in an article about Eli Lilly and Amazon also caught my eye: Tanya Forsheit, founding partner at the Information Law Group, “advised companies to walk away from the business if the cloud provider is not willing to negotiate on liability.”

I frankly think that this advice is both foolish and unrealistic.

Let’s get something straight: Every single IT company out there takes measures to strongly limit its liability in the case something goes wrong. For service providers — data center outsourcers, Web hosting companies, and cloud providers among them — their contracts usually specify that the maximum that they’re liable for, regardless of what happens, is related in some way to the fees paid for service.

Liability is different from an SLA payout. The terms of service-level agreements and their financial penalties vary significantly from provider to provider. SLA payouts are usually limited to 100% of one month of service fees, and may be limited to less. Liability, on the other hand, in most service provider contracts, specifically refers to a limitation of liability clause, which basically states the maximum amount of damages that can be claimed in a lawsuit.

It’s important to note that liability is not a new issue in the cloud. It’s an issue for all outsourced services. Prior to the cloud, any service provider who had their contracts written by a lawyer would always have a limitation of liability clause in there. Nobody should be surprised that cloud providers are doing the same thing. Service providers have generally limited their liability to some multiple of service fees, and not to the actual damage to the customer’s business. This is usually semi-negotiable, i.e., it might be six months of service fees, twelve months of fees, some flat cap, etc., but it’s never unlimited.
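A little arithmetic shows why an SLA credit and a limitation-of-liability cap are so different from the customer's actual loss. The sketch below is a hypothetical illustration only: the 100%-of-a-month ceiling on SLA payouts and the "multiple of service fees" cap follow the description above, but the fee, credit percentage, damages figure, and twelve-month multiple are invented, and real contracts word these clauses in many different ways.

```python
def sla_credit(monthly_fee, credit_pct):
    """SLA payout for one month's breach, never more than 100% of that
    month's fee (a typical ceiling; some contracts cap it lower)."""
    return monthly_fee * min(credit_pct, 100) / 100

def recoverable_damages(actual_damages, monthly_fee, cap_months):
    """Limitation-of-liability clause: whatever the real loss, the most
    that can be claimed is a multiple of the service fees."""
    return min(actual_damages, monthly_fee * cap_months)

if __name__ == "__main__":
    fee = 10_000            # hypothetical monthly service fee, in dollars
    lost_revenue = 2_000_000  # hypothetical business impact of an outage
    print(sla_credit(fee, 25))                         # 25% service credit
    print(recoverable_damages(lost_revenue, fee, 12))  # 12-month fee cap
```

With these made-up numbers, the service credit is $2,500 and the most that could be recovered in court is $120,000, a small fraction of the $2 million loss, which is the gap the limitation-of-liability clause is written to create.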

For years, Gartner’s e-commerce clients have wanted service providers to be liable for things like revenues lost if the site is down. (American Eagle Outfitters no doubt wishes it could hold IBM’s feet to the fire with that, right now.) Service providers have steadfastly refused, though a decade or so ago, the insurance industry considered whether it was reasonable to offer insurance for this kind of thing.

Yes, you’re taking a risk by outsourcing. But you’re also taking risks when you insource. Contract clauses are not a substitute for trust in the provider, or any kind of proxy indicating quality. (Indeed, a few years back, small SaaS providers often gave away so much money in SLA refunds for outages that we advised clients not to use SLAs as a discounting mechanism!) You are trying to ensure that the provider has financial incentive to be responsible, but just as importantly, a culture and operational track record of responsibility, and that you are taking a reasonable risk. Unlimited liability does not change your personal accountability for the sourcing decision and the results thereof.

In practice, the likelihood that you’re going to sue your hosting provider is vanishingly tiny. The likelihood that it will actually go to trial, rather than being settled in arbitration, is just about nil. The liability limitation just doesn’t matter that much, especially when you take into account what you and the provider are going to be paying your lawyers.

Bottom line: There are better places to focus your contract-negotiating and due-diligence efforts with a cloud provider than worrying about the limitation-of-liability clause. (I’ve got a detailed research note on cloud SLAs coming out in the future that will go into all of these issues; stay tuned.)

Chris Czarnecki posted Cloud Computing Security and Audit Moves Forward on 8/24/2010 to the Learning Tree blog:

A key concern for many organisations adopting cloud computing is security. Moving to the cloud means many aspects of security are handled by the cloud provider, especially when using Platform as a Service (PaaS). In addition to security, the operational, policy and regulatory procedures of cloud providers are also a concern.

Businesses that require information on a cloud provider’s security policies, auditing and compliance face several problems in gathering it. Firstly, public cloud providers cannot spend all their time supplying this information to their customers. Secondly, it is easy for a provider to misunderstand what its customers are actually asking for, resulting in incorrect information being provided.

To help solve this problem for both cloud providers and cloud consumers, a welcome development is the formation of the Cloud Audit Organisation. The goal of this organisation is to provide a common interface and namespace that enables cloud computing providers to automate the audit, assertion, assessment and assurance of their Infrastructure (IaaS), Platform (PaaS) and Application (SaaS) environments. The result will be the ability of authorised cloud consumers to automatically gather the required security and audit information in a standard manner without any misunderstanding or ambiguity and with no burden on the cloud provider. This follows a key benefit of the cloud – self service.

The Cloud Audit Organisation is a cross-industry effort that currently has over 250 participants, comprising members of all the leading Cloud Computing providers including Google, Amazon, Microsoft, VMware, Cisco and many others. As anybody who has attended Learning Tree’s Cloud Computing course and participated in the course workshops knows, this organisation is a welcome and vital development in removing one of the perceived barriers to Cloud Computing adoption.


Cloud Computing Events

My (@rogerjenn) List of Cloud Computing and Online Services Sessions at TechEd Australia 2010 post of 8/24/2010 is somewhat untimely:

It’s a bit late because Tuesday is already over down under, but here’s the list of sessions in the Cloud Computing and Online Services Track of TechEd Australia 2010 categorized as Breakout Sessions, Interactive Sessions, and Instructor-Led Labs. Surprisingly, searching with LightSwitch returns no sessions.

Note: I’ll add links to slide decks and videos if and when they become available. (See my List of 77 Cloud-Computing Sessions at TechEd North America 2010 Updated with Webcast Links post of 6/14/2010 as an example. Unlike TechEd North America, Tech*Ed Australia is an event I’m not registered for, so it might be a while before the links appear.)

See the list here.


Other Cloud Computing Platforms and Services

Steve reported Updated: AWS Security Whitepaper on 8/24/2010 in the Amazon Web Services blog:

Good news for all those interested in security...we've released the fourth version of our Overview of Security Processes whitepaper. It contains ten pages of new and additional detailed information. Highlights of new content include:

- A description of the AWS control environment

- A list of our SAS-70 Type II Control Objectives

- Some discussion of risk management and shared responsibility principles

- Greater visibility into our monitoring and communication processes and our employee lifecycle

- Descriptions of our physical security, environmental safeguards, configuration management, and business continuity management processes and plans

- Updated summaries of new AWS security features

- Additional detail about the security attributes of various AWS components

The additional information and greater level of detail should help to answer many common questions. As always, feel free to reach out to us if you still need more information.

