Windows Azure and Cloud Computing Posts for 8/2/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are also available at no charge from the book's Code Download page.

Azure Blob, Drive, Table and Queue Services

Steve Marx (@smarx) describes Publishing Adaptive Streaming Video from Expression Encoder to Windows Azure Blobs in an 8/2/2010 update to his earlier post on the topic:

I’ve just released a new drop of Adaptive Streaming with Windows Azure Blobs Uploader on Code Gallery, which includes a publishing plug-in for Expression Encoder 3 that pushes your Smooth Streaming content directly to Windows Azure Blobs.

For background on the technique and the tool, please read last week’s post “Adaptive Streaming with Windows Azure Blobs and CDN.”

See it in Action

[This] 2-minute video shows the Expression Encoder plug-in in action.

Detailed Walkthrough

To use the plug-in, download the binary drop from Adaptive Streaming with Windows Azure Blobs Uploader on Code Gallery, and run

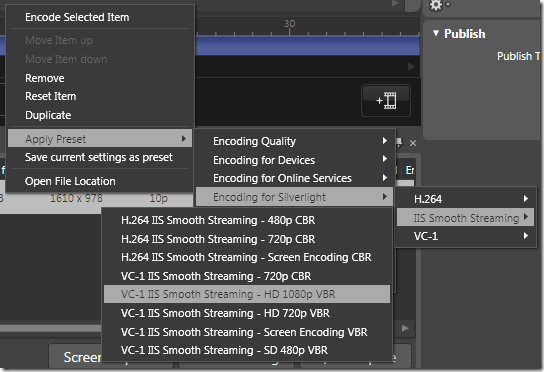

EncoderPlugin\install.cmdfrom an elevated command prompt.Once the plug-in is installed, go to Expression Encoder and set up an encode job to use one of the IIS Smooth Streaming presets.

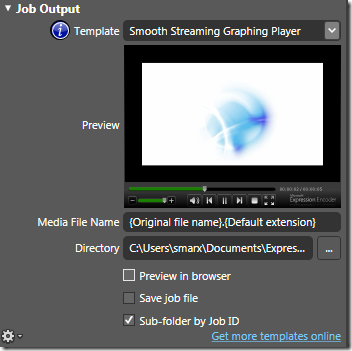

If you want a prepackaged Smooth Streaming player with your content, choose a template in the Job Output area. You might want to uncheck “Preview in browser,” because the publishing plug-in also includes an option to launch the browser once publishing is complete.

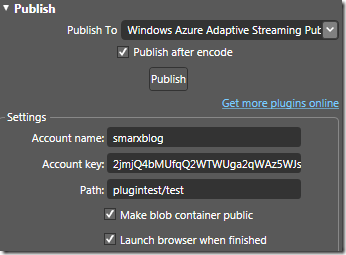

Finally, you can choose the “Windows Azure Adaptive Streaming Publisher” option in the Publish area to invoke the plug-in. You’ll need to provide your Windows Azure storage account name and key, as well as a full path to where you would like the video published (at least a container name, but possibly also a path under that container).

If you’ve checked “make blob container public,” the container will automatically be set for full read access permissions, and you’ll also have the option of launching the browser when the publish is finished.
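The Publish area asks for a path that is at least a container name, optionally followed by a path under that container. The following Python sketch shows one way such a value might be split and checked before uploading. `split_publish_path` is a hypothetical helper, not part of the plug-in; the name check follows the documented Azure container naming rules (3 to 63 characters of lowercase letters, digits and single hyphens, starting and ending with a letter or digit).

```python
import re
from typing import Tuple

# Azure container names: 3-63 chars of lowercase letters, digits and hyphens,
# starting and ending with a letter or digit, with no consecutive hyphens.
_CONTAINER_NAME = re.compile(r"[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]")


def split_publish_path(path: str) -> Tuple[str, str]:
    """Split 'container[/sub/path]' into (container, blob name prefix).

    Hypothetical helper for illustration; the plug-in's own parsing may differ.
    """
    segments = [s for s in path.split("/") if s]  # ignore leading/trailing/double slashes
    if not segments:
        raise ValueError("a container name is required")
    container, rest = segments[0], segments[1:]
    if not _CONTAINER_NAME.fullmatch(container):
        raise ValueError(f"invalid container name: {container!r}")
    # Blobs published under a sub-path share a '/'-terminated name prefix.
    prefix = "/".join(rest) + "/" if rest else ""
    return container, prefix


print(split_publish_path("videos/2010/keynote"))  # ('videos', '2010/keynote/')
```

Ending the prefix with a slash means every uploaded blob name reads like a file inside a folder, which is how tools that browse blob storage present it.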

Although the Encoder plug-in is based on the same library as the command-line tool, it exposes only a small subset of options. If you’re looking for more fine-grained control over things like the number of upload threads or cache policies, you’ll need to use the command-line tool (which can be downloaded from the same project).

Download

You can download the Expression Encoder plug-in, the command-line tool, and the full source code at the “Adaptive Streaming with Windows Azure Blobs Uploader” project on Code Gallery.

Niall Best explains Using Windows Azure tables to persist session data in this 8/2/2010 tutorial:

Being able to store session data is arguably one of the reasons the Web features the dynamic applications we all use every day. If it weren’t for sessions, we would have a fairly boring, static Web experience.

Windows Azure, being built on top of Windows Server 2008, allows ASP.Net developers to utilise all the session features of IIS. Additionally, developers can extend these options with Windows Azure table storage to persist session data cheaply and easily in the Cloud.

Session storage for Windows Azure

The scalability of Windows Azure means that you are going to have at least a couple of web role instances, and possibly tens or hundreds of them for busy sites. All these individual instances need access to a central session store, and the best place to put each user’s session data is in Windows Azure table storage.
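To see why a central store matters, consider a load balancer that spreads one user's requests across several instances. The following Python sketch is a hypothetical simulation, not the AspProviders code: `SharedSessionStore` stands in for the Azure table, the instances keep no session state of their own, and each row is keyed by application name plus session ID.

```python
import itertools
import uuid
from typing import Dict, Tuple


class SharedSessionStore:
    """Stand-in for a central session table (hypothetical, in memory)."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, str], dict] = {}  # (app name, session id) -> data

    def load(self, app: str, sid: str) -> dict:
        return dict(self._rows.get((app, sid), {}))

    def save(self, app: str, sid: str, data: dict) -> None:
        self._rows[(app, sid)] = dict(data)


class WebRoleInstance:
    """One web role instance; it keeps no session state in its own memory."""

    def __init__(self, name: str, store: SharedSessionStore, app: str = "MyApp") -> None:
        self.name, self.store, self.app = name, store, app

    def handle(self, sid: str, key=None, value=None) -> dict:
        session = self.store.load(self.app, sid)
        if key is not None:
            session[key] = value
            self.store.save(self.app, sid, session)
        return session


store = SharedSessionStore()
instances = [WebRoleInstance(f"web{i}", store) for i in range(3)]
balancer = itertools.cycle(instances)  # round-robin load balancing

sid = str(uuid.uuid4())
next(balancer).handle(sid, "cart", ["book"])  # request 1 lands on web0
print(next(balancer).handle(sid))  # request 2 lands on web1: {'cart': ['book']}
```

Because every instance reads and writes through the shared store, it doesn't matter which instance the load balancer picks; the cost is a storage round trip for each request that touches session state.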

Getting started with storing session data in the table store requires modifying the following files.

Web.config

As with normal ASP.Net web.config settings, you need to modify the <sessionState> element to point to the custom table storage provider. The application name is recorded in the table when the session details are logged and helps identify the session.

AspProviders

The sample code for the AspProviders project is available from Microsoft; search for “Windows Azure Platform Training Kit”. At the time of writing, the June 2010 release is the latest. If you look at the namespace in TableStorageSessionStateProvider.cs, you can see that the code still resides in the Microsoft.Samples namespace; hopefully this code will be rolled up into the main Windows Azure SDK at some point in the future.

You can either include the AspProviders project in your solution or compile the DLL and include that. Whichever you choose, make sure you add it as a reference to your web role project.

ServiceConfiguration.cscfg

The most important setting here is “DataConnectionString”. The AspProviders project uses it to obtain the rest of the settings needed to access the table store for your account; the remaining settings here are standard for Azure. I’ve shown the values needed for running in the cloud environment when deployed.
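DataConnectionString holds a semicolon-separated list of key=value pairs. The Python sketch below, a hypothetical helper rather than the AspProviders implementation, parses such a string and derives the table endpoint from it. `myaccount` and the key are placeholders; `UseDevelopmentStorage=true` points at the local storage emulator, whose table service listens on port 10002.

```python
from typing import Dict


def parse_connection_string(value: str) -> Dict[str, str]:
    """Split 'Key1=Value1;Key2=Value2' into a dict."""
    settings: Dict[str, str] = {}
    for segment in value.split(";"):
        if not segment.strip():
            continue  # tolerate a trailing ';'
        # partition() splits on the first '=' only, so base64 account keys
        # that end in '=' or '==' keep their padding.
        key, sep, val = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        settings[key.strip()] = val.strip()
    return settings


def table_endpoint(settings: Dict[str, str]) -> str:
    """Return the Table service base URL the settings refer to."""
    if settings.get("UseDevelopmentStorage", "").lower() == "true":
        return "http://127.0.0.1:10002/devstoreaccount1"
    protocol = settings.get("DefaultEndpointsProtocol", "https")
    return f"{protocol}://{settings['AccountName']}.table.core.windows.net"


cloud = parse_connection_string(
    "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=bXlrZXk=")
print(table_endpoint(cloud))  # https://myaccount.table.core.windows.net
```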

At this point you will need to add the setting names to your ServiceDefinition.csdef, or you will get a build error.

Global.asax

You will need to set the configuration setting for the Azure CloudStorageAccount before you try to access table storage. This is best done in the Global.asax of the web role project.

If all has gone to plan, you can run your application and connect to the live Windows Azure services. Using a tool like Cerebrata Cloud Storage Studio, you can look in your table store and see that a new table called “session” has been created for you automatically. If you perform a task requiring state in your application, you should see a serialised version of your session data stored in the table.

Richard Parker shows you how to implement Open-source FTP-to-Azure blob storage: multiple users, one blob storage account, and offers source code in this 8/2/2010 post:

A little while ago, I came across an excellent article by Maarten Balliauw in which he described a project he was working on to support FTP directly to Azure’s blob storage. I discovered it while doing some research on a similar concept I was working on. At the time of writing this post though, Maarten wasn’t sharing his source code and even if he did decide to at some point soon, his project appears to focus on permitting access to the entire blob storage account. This wasn’t really what I was looking for but it was very similar…

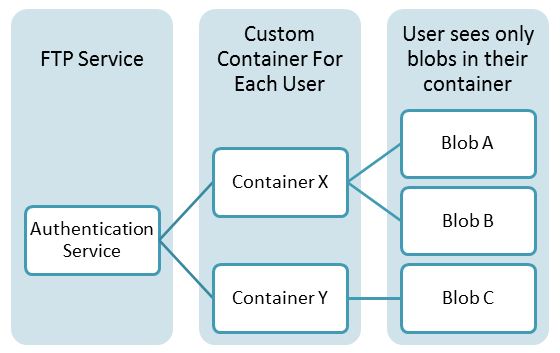

My goal: FTP to Azure blobs, many users: one blob storage account with ‘home directories’

I wanted a solution to enable multiple users to access the same storage account, but to have their own unique portion of it, thereby mimicking an actual FTP server. A bit like giving authenticated users their own ‘home folder’ on your Azure Blob storage account.

This would ultimately give your Azure application the ability to accept incoming FTP connections and store files directly into blob storage via any popular FTP client – mimicking a file and folder structure and permitting access only to regions of the blob storage account you determine. There are many potential uses for this kind of implementation, especially when you consider that blob storage can feed into the Microsoft CDN…

Features

- Deploy within a worker-role

- Support for most common FTP commands

- Custom authentication API: because you determine the authentication and authorisation APIs, you control who has access to what, quickly and easily

- Written in C#

How it works

In my implementation, I wanted the ability to literally ‘fake’ a proper FTP server to any popular FTP client: the server component to be running on Windows Azure. I wanted to have some external web service do my authentication (you could host yours on Windows Azure, too) and then only allow each user access to their own tiny portion of my Azure Blob Storage account.

It turns out, Azure’s containers did exactly what I wanted, more or less. All I had to do was to come up with a way of authenticating clients via FTP and returning which container they have access to (the easy bit), and write an FTP to Azure ‘bridge’ (adapting and extending a project by Mohammed Habeeb to run in Azure as a worker role).

Here’s how my first implementation works:

A quick note on authentication

When an FTP client authenticates, I grab the username and password sent by the client, pass that into my web service for authentication, and if successful, I return a container name specific to that customer. In this way, the remote user can only work with blobs within that container. In essence, it is their own ‘home directory’ on my master Azure Blob Storage account.

The FTP server code will deny authentication for any user who does not have a container name associated with them, so just return null to the login procedure if you’re not going to give them access (I’m assuming you don’t want to return a different error code for ‘bad password’ vs. ‘bad username’ – which is a good thing).

Your authentication API could easily be adapted to permit access to the same container by multiple users, too.
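The login flow described above can be sketched in Python as follows. This is a hypothetical stand-in for the external authentication web service, not code from the CodePlex project: `authenticate` returns the user's container name on success and `None` otherwise, giving the same answer for an unknown user and a wrong password, and two users are mapped to one container to show the shared case.

```python
import hashlib
import hmac
import os
from typing import Dict, Optional, Tuple

# Hypothetical user directory: username -> (salt, password hash, container name).
_USERS: Dict[str, Tuple[bytes, bytes, str]] = {}


def _hash(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def add_user(username: str, password: str, container: str) -> None:
    salt = os.urandom(16)
    _USERS[username] = (salt, _hash(password, salt), container)


def authenticate(username: str, password: str) -> Optional[str]:
    """Return the container ('home directory') for this login, or None to deny it."""
    record = _USERS.get(username)
    if record is None:
        return None  # unknown user: same result as a bad password
    salt, expected, container = record
    if not hmac.compare_digest(_hash(password, salt), expected):
        return None
    return container or None  # no container means no access


add_user("alice", "s3cret", "alice-home")
add_user("bob", "hunter2", "team-share")
add_user("carol", "pa55word", "team-share")  # several users, one container
```

Salting and hashing with PBKDF2 and comparing with `hmac.compare_digest` are choices made for this sketch; the post leaves the authentication back end entirely up to you.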

Simulating a regular file system from blob storage

Azure Blob Storage doesn’t work like a traditional disk-based system in that it doesn’t actually have a hierarchical directory structure – but the FTP service simulates one so that FTP clients can work in the traditional way. Mohammed’s initial C# FTP server code was superb: he wrote it so that the file system could be replaced back in 2007 – to my knowledge, before Azure existed, but it’s like he meant for it to be used this way (that is to say, it was so painless to adapt it one could be forgiven for thinking this. Mohammed, thanks!).
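Blob names are flat strings, so a folder view has to be derived from them, much as the List Blobs operation does when you pass a prefix and a '/' delimiter. The Python sketch below is a hypothetical illustration of that idea, not Mohammed's or Richard's code: `to_blob_name` maps an FTP path (relative to the current directory) to a blob name inside the user's container, and `list_dir` splits the blobs under a directory into subdirectories and files.

```python
import posixpath
from typing import Iterable, List, Tuple


def to_blob_name(cwd: str, ftp_path: str) -> str:
    """Resolve an FTP path against the current directory into a blob name.

    normpath collapses '.' and '..'; '..' at the root stays at the root, so a
    client cannot climb out of its container's namespace.
    """
    full = posixpath.normpath(posixpath.join("/", cwd, ftp_path))
    return full.lstrip("/")


def list_dir(blob_names: Iterable[str], directory: str) -> Tuple[List[str], List[str]]:
    """Emulate a directory listing over flat blob names using '/' as delimiter."""
    prefix = directory.strip("/")
    prefix = prefix + "/" if prefix else ""
    subdirs, files = set(), []
    for name in blob_names:
        if not name.startswith(prefix):
            continue
        rest = name[len(prefix):]
        head, sep, _ = rest.partition("/")
        if sep:
            subdirs.add(head)  # deeper blob: its first segment is a "folder"
        elif rest:
            files.append(rest)
    return sorted(subdirs), sorted(files)


blobs = ["readme.txt", "docs/a.txt", "docs/b.txt", "docs/img/logo.png", "music/song.mp3"]
print(list_dir(blobs, "/"))  # (['docs', 'music'], ['readme.txt'])
```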

Now that I have my FTP server, modified and adapted to work for Azure, there are many ways in which this project can be expanded…

Over to you (and the rest of the open source community)

It’s my first open source project and I actively encourage you to help me improve it. When I started out, most of this was ‘proof of concept’ for a similar idea I was working on. As I look back over the past few weekends of work, there are many things I’d change but I figured there’s enough here to make a start.

If you decide to use it “as is” (something I don’t advise at this stage), do remember that it’s not going to be perfect and you’ll need to do a little legwork: it’s a work in progress, and it wasn’t written (at least initially) to be an open-source project. Do drop me a note to let me know how you’re using it, though; it’s always fun to see where these things end up once you’ve released them into the wild.

Where to get it

Head on over to the FTP to Azure Blob Storage Bridge project on CodePlex.

It’s free for you to use however you want. It carries all the usual caveats and warnings as other ‘free open-source’ software: use it at your own risk.

The Windows Azure Team posted How to Use Windows Azure Blobs and CDN to Deliver Adaptive Streaming Video on 8/2/2010:

Steve Marx just posted a great overview about how to use Windows Azure Blobs and the Windows Azure CDN to deliver adaptive streaming video content in a format compatible with Silverlight's Smooth Streaming player. For those of you unfamiliar with Smooth Streaming, he also explains what it is and how it works and points to a great article, "Smooth Streaming Technical Overview" by Alex Zambelli for an even deeper dive.

You can get all the details in Steve's blog post. If you want to dive right in and start hosting Smooth Streaming content in Windows Azure Blobs, check out the Adaptive Streaming with Windows Azure Blobs Uploader project on Code Gallery, a command-line tool and reusable library for hosting adaptive streaming videos in Windows Azure Blobs.


SQL Azure Database, Codename “Dallas” and OData

Walter Wayne Berry posted I Miss You SQL Server Agent: Part 2 to the SQL Azure Team blog on 8/2/2010:

Currently, SQL Azure doesn’t have the concept of a SQL Server Agent. In this blog series we are attempting to create a light-weight substitute using a Windows Azure Worker role. In the first blog post of the series, I covered how the Windows Azure worker roles compare to SQL Server Agent, and got started with Visual Studio and some code. In this blog post I am going to create a mechanism to complete the “job” once per day.

Creating a Database

Windows Azure is a stateless platform, where the worker role could be moved to a different server in the data center at any time. Because of this, we need to persist the state of the job completion ourselves; so the obvious choice is SQL Azure. To do this I have created a database under my SQL Azure server, called SQLServerAgent (the database name msdb is reserved). In this database I created a table called jobactivity, which is a simplified version of the on-premise SQL Server Agent table sysjobactivity. Here is the creation script I used:

```sql
CREATE TABLE [dbo].[jobactivity](
    [job_id] uniqueidentifier NOT NULL PRIMARY KEY,
    [job_name] nvarchar(100) NOT NULL,
    [start_execution_date] datetime NOT NULL,
    [stop_execution_date] datetime NULL
)
```

The job_id identifies each daily instance of a job, and job_name is an arbitrary key for the job being executed, so we can use this table to run many jobs with different names.

Tracking Jobs Starting And Stopping

I also need a couple of stored procedures that add a row to the table when the job starts, and set the stop execution date when the job ends. The StartJob stored procedure ensures that the job has not been started for this day before it adds a row for the job execution as a signal that one worker role has started the job. It conveniently allows us to have multiple worker roles acting as SQL Server Agents, without executing the job multiple times.

```sql
CREATE PROCEDURE StartJob (
    @job_name nvarchar(100),
    @job_id uniqueidentifier OUTPUT)
AS
BEGIN TRANSACTION
    -- UPDLOCK, HOLDLOCK make a second caller wait at this check until the
    -- first caller's transaction commits, so only one of them inserts a row.
    IF NOT EXISTS (
        SELECT 1 FROM [jobactivity] WITH (UPDLOCK, HOLDLOCK)
        WHERE DATEDIFF(d, [start_execution_date], GetDate()) = 0
          AND [job_name] = @job_name)
    BEGIN
        -- Has Not Been Started
        SET @job_id = NewId()
        INSERT INTO [jobactivity] ([job_id], [job_name], [start_execution_date])
        VALUES (@job_id, @job_name, GetDate())
    END
    ELSE
    BEGIN
        SET @job_id = NULL
    END
COMMIT TRAN
```

The other stored procedure, StopJob, looks like this:

```sql
CREATE PROCEDURE [dbo].[StopJob] (
    @job_id uniqueidentifier)
AS
UPDATE [jobactivity]
SET [stop_execution_date] = GetDate()
WHERE [job_id] = @job_id
```

Now let’s write some C# in the worker role to call our new stored procedures.

```csharp
// These methods belong to the worker role class and need:
// using System; using System.Configuration; using System.Data; using System.Data.SqlClient;

protected Guid? StartJob(String jobName)
{
    using (SqlConnection sqlConnection = new SqlConnection(
        ConfigurationManager.ConnectionStrings["SQLServerAgent"].ConnectionString))
    {
        try
        {
            // Open the connection
            sqlConnection.Open();

            using (SqlCommand sqlCommand = new SqlCommand("StartJob", sqlConnection))
            {
                sqlCommand.CommandType = CommandType.StoredProcedure;
                sqlCommand.Parameters.AddWithValue("@job_name", jobName);

                // WWB: Sql Job Id Output Parameter
                SqlParameter jobIdSqlParameter =
                    new SqlParameter("@job_id", SqlDbType.UniqueIdentifier);
                jobIdSqlParameter.Direction = ParameterDirection.Output;
                sqlCommand.Parameters.Add(jobIdSqlParameter);

                sqlCommand.ExecuteNonQuery();

                if (jobIdSqlParameter.Value == DBNull.Value)
                    return (null);
                else
                    return ((Guid)jobIdSqlParameter.Value);
            }
        }
        catch (SqlException)
        {
            // WWB: SQL Exceptions Means It Is Not Started
            return (null);
        }
    }
}

protected void StopJob(Guid jobId)
{
    using (SqlConnection sqlConnection = new SqlConnection(
        ConfigurationManager.ConnectionStrings["SQLServerAgent"].ConnectionString))
    {
        // Open the connection
        sqlConnection.Open();

        using (SqlCommand sqlCommand = new SqlCommand("StopJob", sqlConnection))
        {
            sqlCommand.CommandType = CommandType.StoredProcedure;
            sqlCommand.Parameters.AddWithValue("@job_id", jobId);
            sqlCommand.ExecuteNonQuery();
        }
    }
}
```

Now let’s tie it all together in the Run() method of the worker role. We want our spTestJob stored procedure to execute once a day, right after 1:00 pm UTC.

```csharp
public override void Run()
{
    Trace.WriteLine("WorkerRole1 entry point called", "Information");

    while (true)
    {
        // Read the clock once per pass so the date parts can't straddle midnight
        DateTime now = DateTime.UtcNow;
        DateTime nextExecutionTime = new DateTime(
            now.Year, now.Month, now.Day, 13, 0, 0, DateTimeKind.Utc);

        if (now > nextExecutionTime)
        {
            // WWB: After 1:00 pm, Try to Get a Job Id.
            Guid? jobId = StartJob("TestJob");
            if (jobId.HasValue)
            {
                Trace.WriteLine("Working", "Information");

                // WWB: This Method Has the Code That Execute
                // A Stored Procedure, The Actual Job
                ExecuteTestJob();

                StopJob(jobId.Value);
            }

            // WWB: Sleep For An Hour
            // This Reduces The Calls To StartJob
            Thread.Sleep(3600000);
        }
        else
        {
            // WWB: Check Every Minute
            Thread.Sleep(60000);
        }
    }
}
```

Notice that there isn’t any error handling code in the sample above. What happens when there is an exception? What happens when SQL Azure returns a transient error? What happens when the worker role is recycled to a different server in the data center? I will try to address these issues in part 3 of this series by adding more code.
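The once-per-day claim that StartJob provides can be tried locally without SQL Azure. The Python sketch below substitutes SQLite for SQL Azure, so it illustrates the pattern rather than reproducing the series' code: `start_job` checks for a row for today and inserts one inside a single transaction, and `BEGIN IMMEDIATE` takes SQLite's write lock up front so that two callers cannot both pass the check (in SQL Server, locking hints such as UPDLOCK and HOLDLOCK serve a similar purpose). The function names and the fixed timestamps are assumptions for the example.

```python
import sqlite3
import uuid
from datetime import datetime, timedelta
from typing import Optional


def create_schema(conn: sqlite3.Connection) -> None:
    conn.execute("""CREATE TABLE IF NOT EXISTS jobactivity (
        job_id TEXT PRIMARY KEY,
        job_name TEXT NOT NULL,
        start_execution_date TEXT NOT NULL,
        stop_execution_date TEXT NULL)""")


def start_job(conn: sqlite3.Connection, job_name: str, now: datetime) -> Optional[str]:
    """Claim today's run of job_name; return a new job id, or None if already claimed."""
    conn.execute("BEGIN IMMEDIATE")  # take the write lock before checking
    try:
        already = conn.execute(
            "SELECT 1 FROM jobactivity WHERE job_name = ? AND date(start_execution_date) = ?",
            (job_name, now.date().isoformat())).fetchone()
        if already:
            conn.execute("COMMIT")
            return None
        job_id = str(uuid.uuid4())
        conn.execute(
            "INSERT INTO jobactivity (job_id, job_name, start_execution_date) VALUES (?, ?, ?)",
            (job_id, job_name, now.isoformat(sep=" ", timespec="seconds")))
        conn.execute("COMMIT")
        return job_id
    except Exception:
        conn.execute("ROLLBACK")
        raise


def stop_job(conn: sqlite3.Connection, job_id: str, now: datetime) -> None:
    conn.execute("UPDATE jobactivity SET stop_execution_date = ? WHERE job_id = ?",
                 (now.isoformat(sep=" ", timespec="seconds"), job_id))


# isolation_level=None: no implicit transactions, so BEGIN/COMMIT above are ours.
conn = sqlite3.connect(":memory:", isolation_level=None)
create_schema(conn)
run_at = datetime(2010, 8, 2, 13, 5)          # just after 1:00 pm UTC
first = start_job(conn, "TestJob", run_at)    # claims today's run
again = start_job(conn, "TestJob", run_at + timedelta(hours=1))  # None: already claimed
stop_job(conn, first, run_at + timedelta(minutes=20))
tomorrow = start_job(conn, "TestJob", run_at + timedelta(days=1))  # a fresh claim
```

The date comparison plays the role of DATEDIFF(d, ...) = 0 in the stored procedure: an earlier row for the same job on the same calendar day blocks a new claim, while a different job name or the next day gets a fresh id.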

Chris “Woody” Woodruff (@cwoodruff) opened his OData Workshop Screencasts folder on 8/2/2010 at TechSmith’s Screencast.com:

Woody’s first two screencasts are:

Jess Chadwick posted his Using jQuery with OData and WCF Data Services feature article prefaced by “Create robust, data-driven AJAX apps with little hassle” on 8/2/2010 to the Windows IT Pro site:

Creating solutions that leverage AJAX data retrieval is relatively easy with the tools available today. However, creating solutions that depend on AJAX updates to server-side data stores remains time-consuming at best. Luckily, we have a few tools in our repertoire to make the job significantly easier: jQuery in the browser and OData (via WCF Data Services) on the server side. These frameworks not only help make developers’ lives easier, they do so in a web-friendly manner. In this article, I'll demonstrate how these technologies can help create robust, data-driven AJAX applications with very little hassle.

Microsoft created the OData protocol to make it easy to expose and work with data in a web-friendly manner. OData achieves this web-friendliness by embracing ubiquitous web standards such as HTTP, Atom, and (most relevant to this article) JSON to expose Collections of Entries. At the core of these concepts is the referencing of data via Links (along with a new and intuitive querying and filtering language), which provides a very RESTful, discoverable, and navigable way to present data over the web. In this way, OData further enhances the value of its underlying protocols, making heavy use of standard HTTP methods and response codes as meaningful, semantic communication features.

The easiest way to express OData’s power and simplicity is showing it in action. The code snippet in Figure 1 includes what should be relatively familiar jQuery code calling the root (feed) of an OData service to retrieve the various Collections it’s exposing. The jQuery call is pretty simple: the $.getJSON() utility method makes it easy to provide jQuery with a URL to execute a JSON request against, as well as a JavaScript method to execute once the response returns with data. The request URL in this example is straightforward: it’s the base URL to the NetFlix OData catalog with the special OData $format keyword instructing the service to return JSON data back to the browser, as well as the WCF Data Service keyword $callback that instructs the service to trigger our callback method.
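The same request can be sketched outside the browser. This Python snippet builds the kind of URL the jQuery example sends, with `$format=json` and an optional `$callback`, and unwraps the callback-wrapped (JSONP) body that `$callback` produces. The service root is a placeholder rather than the NetFlix catalog URL, and the payload in the usage check is a hypothetical sample whose shape is assumed.

```python
import json
import re
from urllib.parse import urlencode

SERVICE_ROOT = "https://example.com/odata/Catalog/"  # placeholder service root


def odata_json_url(root: str, collection: str = "", callback: str = "") -> str:
    """URL for the service root (or one Collection) as JSON, optionally as JSONP."""
    params = {"$format": "json"}
    if callback:
        params["$callback"] = callback
    base = root.rstrip("/") + ("/" + collection if collection else "/")
    # keep '$' unescaped so the system query options stay readable
    return base + "?" + urlencode(params, safe="$")


def unwrap_jsonp(body: str, callback: str):
    """Strip 'callback( ... )' from a JSONP response and parse the JSON inside."""
    match = re.fullmatch(rf"\s*{re.escape(callback)}\s*\((.*)\)\s*;?\s*", body, re.DOTALL)
    if match is None:
        raise ValueError("response is not wrapped in the expected callback")
    return json.loads(match.group(1))


print(odata_json_url(SERVICE_ROOT, callback="showCatalogs"))
# https://example.com/odata/Catalog/?$format=json&$callback=showCatalogs
```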

Figure 2 shows the raw data that would be returned from this request. Because the request executed against the root feed URL, the OData protocol dictates that the NetFlix service respond with a list of the Catalogs available in this feed. OData’s inherent discoverability is immediately apparent because—even though the service’s root URL is the only thing the client knows at this point—it has all the information it needs to navigate all the service’s exposed data.

Once the client retrieves a list of the Catalogs that the service exposes, it's able to append any one of those Catalog names to the root service URL to retrieve the contents (Entries) of that Catalog. The snippet in Figure 3 provides an example of this concatenation, effectively copying the original request, then adding /Titles to the end of the root service URL. Things really start to get interesting in Figure 4, which shows that the response containing the Catalog’s Entries provides much more than just its Entries’ basic information. Notice that each Entry has a __metadata property that includes a uri property indicating that particular Entry’s URI. It's this URI that uniquely identifies this Entry, providing clients with a direct reference to the Entry. Thus, retrieving the details for a particular Entry is as easy as making another GET request to its URI (as shown in Figure 5). What’s more, if that Entry contains references to yet more Entries, they too will be exposed using this same method. Thus, the entire model is navigable using only GET requests.
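Following those links from code takes only a few lines. The Python sketch below reads each Entry's `__metadata.uri` from a verbose-JSON response and collects navigation links, which this JSON format represents as `__deferred` objects. The sample payload and its URIs are hypothetical, and the helper accepts both a `{"d": [...]}` and a `{"d": {"results": [...]}}` wrapper on the assumption that either may appear depending on the service version.

```python
from typing import Dict, List

# Hypothetical response to GET .../Titles?$format=json
sample = {"d": {"results": [
    {"__metadata": {"uri": "https://example.com/odata/Catalog/Titles('A1')"},
     "Name": "Example Title",
     "Genres": {"__deferred": {"uri": "https://example.com/odata/Catalog/Titles('A1')/Genres"}}},
    {"__metadata": {"uri": "https://example.com/odata/Catalog/Titles('B2')"},
     "Name": "Another Title"},
]}}


def entries(payload: dict) -> List[dict]:
    """Return the list of Entries, whichever wrapper the service used."""
    d = payload["d"]
    return d["results"] if isinstance(d, dict) else d


def entry_uris(payload: dict) -> List[str]:
    """Each Entry's own URI; a GET on it retrieves that Entry's details."""
    return [e["__metadata"]["uri"] for e in entries(payload)]


def deferred_links(entry: dict) -> Dict[str, str]:
    """Navigation properties that point at further Entries, keyed by property name."""
    return {name: value["__deferred"]["uri"]
            for name, value in entry.items()
            if isinstance(value, dict) and "__deferred" in value}


for uri in entry_uris(sample):
    print(uri)
```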

Read more:

Andrew Freyer offers an abbreviated tour of SQL Azure’s Project “Houston” in his Managing SQL Azure post of 7/26/2010 to the TechNet blogs:

Effective management of the cloud is essential, and SQL Azure is no exception. Until a couple of days ago, the only way to manage it was to fire up SQL Server Management Studio in SQL Server 2008 R2. However, the Azure team have now released a public beta of Project Houston, a lightweight web-based management console.

I simply connect…

to get a screen full of Silverlight loveliness…

I can then do all the basic stuff I need with my database, such as view and modify table designs, run and save queries, and create new tables, views and stored procedures. Here I am looking at the data in dimDate…

from where I can also add and delete rows.

The more astute among you will realise that despite the gloss, the tool is quite basic at the moment. I agree entirely, but I would emphasise the ‘at the moment’ in that statement. One of the game-changing things about cloud-based solutions is that they are regularly refreshed, and because they are cloud based I can use the new version straight away, as there’s nothing to deploy. In the case of SQL Azure, this rate of change is unique for any database platform. For example, since its launch in January, SQL Azure has added spatial data type support and is now available as a 50GB offering, in addition to this new management tool.

Apart from that, SQL Azure is really, really boring, as you just use it like any other SQL Server database, and when it comes to databases, boring is good.

My Test Drive Project Houston CTP1 with SQL Azure post, updated 7/32/2010, offers a detailed test drive with the Northwind sample database (ww0h28tyc2.database.windows.net) published by the SQL Azure Houston team.


AppFabric: Access Control and Service Bus

See Geoff Snowman’s upcoming MSDN Webcast: Windows Azure AppFabric: Soup to Nuts (Level 300) in the Cloud Computing Events section below.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Jeremiah Peschka analyzes CloudSleuth’s comparative performance data in his Would the Fastest Cloud Please Step Forward? post of 8/2/2010:

Not so fast, there, buddy.

CloudSleuth has released a new tool that allows users and customers to see how the different cloud providers stack up. As of 6:45 PM EST on August 1, Microsoft’s Azure services come out ahead by about 0.08 seconds.

0.08 seconds per what?

Turns out that it was the time to request and receive the same files from each service provider. Is this a meaningful metric for gauging the speed of cloud service providers? Arguably, yes. There was considerable variance across the different cloud providers, but that is also to be expected since there are different internet usage patterns in different parts of the world.

More importantly, using speed as a metric points out something much more important: As we develop more and more applications in the cloud, we will need new benchmarks to determine the effectiveness of an application. We can no longer just look at raw query performance and raw application response time. We need to consider things like global availability and global response times. In the past, we could get away with storing data on a remote storage server and caching commonly used files in memory on our web servers or in a separate reverse proxy server. Moving to the cloud makes it more difficult to accomplish some of this advanced performance tuning. Arguably, our cloud service providers should be handling these things for us, but that may not be the case.
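CloudSleuth's exact methodology isn't described here, so the following is only a hypothetical Python harness for the metric the post describes: time how long it takes to request and receive the same content from each provider, repeat, and compare medians. The `fetch` callables are placeholders; in practice each would download the same test file from a different provider, and you would run the harness from several regions to capture the global response times the post calls for.

```python
import statistics
import time
from typing import Callable, Dict, List, Tuple


def median_latency(fetch: Callable[[], object], runs: int = 5) -> float:
    """Median wall-clock seconds for fetch() over several runs."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch()  # should request AND fully read the response
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def rank_providers(providers: Dict[str, Callable[[], object]],
                   runs: int = 5) -> List[Tuple[str, float]]:
    """(provider, median seconds) pairs, fastest first."""
    medians = {name: median_latency(fetch, runs) for name, fetch in providers.items()}
    return sorted(medians.items(), key=lambda item: item[1])


# Stand-ins that just sleep; replace with real downloads of the same file.
fake = {"provider-a": lambda: time.sleep(0.02), "provider-b": lambda: time.sleep(0.002)}
print(rank_providers(fake, runs=3))
```

The median is used rather than the mean so that one slow outlier doesn't dominate a comparison where the differences are as small as the 0.08 seconds quoted above.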

With the proliferation of cheaper and cheaper cloud based hosting (did you know you can host basic apps for free with heroku?) the problems that used to plague only large customers can now bother smaller customers. As soon as you begin distributing data and applications across data centers, you can run into all kinds of problems. If you are sharding data based on geographic location, you may have problems when a data center goes down. Worse than that, because you’re in the cloud you may not notice it.

This isn’t meant to be fear mongering. I’ve come to the conclusion that the cloud is a great thing and it opens up a lot of exciting options that weren’t available before. But moving applications into the cloud carries some risks and requires new ways of looking at application performance. Latency is no longer as simple as measuring the time to render a page or the time to return a database query. We need to be aware of the differences between different cloud providers’ offerings and why performance on one provider may not directly equate to performance on a different provider.

Microsoft’s PR Phalanx asserted “Windows Azure is helping enterprise customers drive profits and maximize business value by pushing their computing infrastructure onto the cloud” as a preface to its From Viral Onions to Flight Maps: Businesses Use Windows Azure to Create Flexible Services in the Cloud feature story of 8/2/2010:

For devotees of the Outback Steakhouse chain, a meal of horseradish-crusted filet mignon is seldom complete without the restaurant’s signature offering — “the Bloomin’ Onion” — a large, deep-fried onion, artfully sliced to resemble a flower in bloom. Last year, when Outback’s marketing team brainstormed a social networking campaign to expand the restaurant’s customer base, it seemed logical to lure diners with free samples of the pièce de résistance on their menu. It was time for the Bloomin’ Onion to go viral.

Screenshot of Outback’s Facebook application developed by Thuzi, a Florida-based social media marketing company and member of Microsoft’s partner network.

Outback partnered with Thuzi, a Florida-based social media marketing company and a member of Microsoft’s partner network, to build an application that would offer a free Bloomin’ Onion coupon to the first 500,000 people to sign up as fans on the restaurant’s Facebook page. Thuzi searched extensively for a solution that would meet all the needs of the viral campaign and found one in Windows Azure. …

From 10 to 10 million: Dynamic Scalability

Zimmerman attributes much of the application’s success to its ability to instantly scale up to ensure coverage during peak usage periods, and then scale back again when the extra capacity isn’t needed. Using Windows Azure, developers can easily create applications that can scale from 10 users to 10 million users without any additional coding. Since the Outback Facebook application has its own cloud-based database, it is also much easier for the company to run queries and produce reports.

Prashant Ketkar, director of product marketing for Windows Azure at Microsoft, says scalability and adaptability are among a growing list of attributes that distinguish Windows Azure from its competitors.

“Windows Azure is truly differentiated in terms of the enterprise-class service options that it offers. The ease of development and adaptability is unmatched. With Windows Azure, you supply only a Windows application, along with instructions about how many instances to run. The platform takes care of everything else,” says Ketkar.

Ketkar adds that the Windows Azure platform manages the entire maintenance lifecycle, including updating system software when required. This enables Windows Azure customers to focus on addressing business needs instead of resolving operational challenges. Windows Azure also enables partners and customers to utilize their existing skills in familiar languages such as Microsoft .NET, Java and PHP, and familiar tools such as Visual Studio and Eclipse, to create and manage Web applications and services.

Keeping Costs Down

Deploying applications with Windows Azure is also more cost-effective for businesses, since the Windows Azure model lets customers “rent” resources that reside in Microsoft datacenters and the amount they pay depends on how much of those resources they use. This was the motivation behind 3M’s decision to use Windows Azure to host an innovative Web-based application last year.

The 3M Visual Attention Service (VAS) application lets designers, marketers and advertisers know where people’s eyes are drawn when they look at an image. Since the initial beta test in November last year, customers have successfully used the VAS service to optimize the design of websites, in-store signage and billboards.

Terry Collier, business development manager of 3M’s commercial graphics division, says the company wanted to provide the application as a service to customers worldwide without having to open datacenters around the world.

“The real challenge was keeping the cost of operating the VAS tool low, considering the computing-intensive nature of our image-processing algorithms,” says Collier. “With the pay-as-you-go structure of Windows Azure, we only need to budget for the resources we use.”

Collier adds that in addition to requiring a low up-front investment, the Windows Azure platform aligns seamlessly with the Microsoft-based development tools and processes deployed throughout 3M. …

What’s Next for Windows Azure?

Microsoft’s Ketkar says customers can expect to see a host of new features added to the Windows Azure platform within the next year. Topping that list is virtual machine deployment functionality, a key forthcoming feature announced at last year’s Professional Developers Conference (PDC) in Los Angeles, which will enable customers to migrate existing Windows Server applications. [Emphasis added.]

“We’re on track to fulfill our commitment from PDC 2009 and empower customers to run a wide range of Windows applications in Windows Azure, while taking full advantage of the built-in automated service management,” says Ketkar. “And that’s just the first step. Almost every feature in Windows Server is on the list of things for us to consider bringing up to the cloud.”

Enrique Lima asked How do you manage your Azure? in this 8/1/2010 post:

Recently in preparation for a project, I was pointed to the Azure MMC.

This is a great tool. While the portal gives you the capabilities for creating the environment and handling its basic upkeep, the MMC tool lets you look at metrics and understand the performance of what you are running, and of what you are running against.

Give it a download and then try it out. The download is an executable file that deploys a project. When that deployment is done, you will find an open folder containing 3 folders and a command script called StartHere; double-click StartHere and the actual MMC snap-in deployment takes place. http://code.msdn.microsoft.com/windowsazuremmc

Take some time (about 15 minutes) to review the feature and configuration video. Click here to watch the video.

A reminder of the existence of the Windows Azure Management Tool (MMC) is useful for those who haven’t tried it. Here’s a table of its capabilities from its MSDN CodeGallery page:

J. C. Stame posted Windows Azure – I’m All In! on 8/1/2010:

I am super excited!!! I recently joined (first week of July) the Windows Azure Platform Field organization focused on business development and sales. I will be working with the largest enterprise customers in the Northwest (Northern California, Oregon, Washington) based out of the Bay Area.

If you have ever read my professional blog, you know that I have been writing about cloud for the last 2 years. It's an exciting time in our industry, as the cloud platform is enabling enterprises to build and deploy rich applications, while lowering their capital investments and costs in computer infrastructure.

Cloud computing is here, and the Windows Azure Platform is leading the way!

It would be interesting to read sales war stories in blogs from the Windows Azure Platform Field organization, even if the customers aren’t identified.

The Microsoft Case-Study Team posted Video Publisher [Sagastream] Chooses Windows Azure over Amazon EC2 for Cost-Effective Scalability on 7/29/2010:

Software company Sagastream developed an online video platform that enables customers to publish and manage interactive videos over the web. The company developed its platform for the cloud, initially choosing Amazon Elastic Compute Cloud (EC2) as its cloud service provider. Sagastream quickly realized, however, that while EC2 offered low infrastructure costs, it did so at the expense of scalability and manageability, and the company found that it spent considerable time managing scaling logic and load-balancing requirements.

Sagastream decided to implement the next version of its online video platform on the Windows Azure platform. Since making the switch, the company has already benefited from quick development, improved scalability, and simplified IT management. Sagastream also reduced its costs—savings it will pass along to customers.

Organization Profile: Based in Gothenburg, Sweden, Sagastream develops an online video platform that enables customers to publish interactive videos on the web. Founded in 2008, the company has nine employees.

Business Situation: Sagastream used Amazon EC2 for its Saga Player online video platform, but wanted even more efficient scalability and to further streamline IT management.

Solution: The company implemented the Windows Azure cloud services platform for its compute and storage needs, and is releasing its next-generation online video platform, ensity, in November 2010.

Benefits:

- Fast development

- Improved, easy scalability

- Simplified IT management

- Reduced costs; lowered prices

Jeff Harrison posted a Windows Azure cloud in real-time geospatial collaboration demo case study of CarbonCloud Sync, with a 00:08:08 video clip, on 7/29/2010:

The Carbon Project's CarbonCloud Sync platform was tested and demonstrated at the recent Open Geospatial Consortium (OGC) Web Services 7 (OWS-7) event. The problem addressed by this solution is the need to receive real-time geographic updates from many sources, validate them, and then share the updates with web services from many organizations. The demonstration uses Gaia, WFS from ESRI, plus WFS and REST Map Tile Services from CubeWerx. All real-time communications between users and web services are handled by CarbonCloud Sync on the Windows Azure cloud.

The components of CarbonCloud Sync as deployed on Azure Cloud were:

- A web role - to host the management part and front facing services

- A worker role - to process the transactions, do the transpositions, etc.

- SQL Azure database – to store the transactions, users, etc.

- Azure Queue Storage – web role uses this to add events that the worker role will process.
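The web role, queue and worker role split listed above is the usual Windows Azure way to decouple front-end requests from background processing. Below is a minimal, self-contained Python sketch of that flow, not CarbonCloud Sync's actual code. It assumes Python's `queue.Queue` as a stand-in for Azure Queue storage and an in-memory SQLite database as a stand-in for SQL Azure; the function names, table schema and validation rule are all hypothetical. Real Azure Queue messages are hidden for a visibility timeout and must be explicitly deleted after processing, which this sketch does not model.

```python
import json
import queue
import sqlite3

# Stand-ins (assumptions): queue.Queue plays Azure Queue storage,
# an in-memory SQLite database plays SQL Azure.
event_queue: "queue.Queue[str]" = queue.Queue()
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE transactions (id INTEGER PRIMARY KEY, source TEXT, feature TEXT, status TEXT)"
)


def web_role_submit(source: str, feature: dict) -> None:
    """Front-facing web role (hypothetical): accept an update and enqueue it, nothing more."""
    event_queue.put(json.dumps({"source": source, "feature": feature}))


def worker_role_drain() -> int:
    """Worker role (hypothetical): dequeue every pending event, validate it, record the outcome."""
    processed = 0
    while True:
        try:
            message = event_queue.get_nowait()
        except queue.Empty:
            return processed
        event = json.loads(message)
        valid = "geometry" in event["feature"]  # hypothetical validation rule
        db.execute(
            "INSERT INTO transactions (source, feature, status) VALUES (?, ?, ?)",
            (event["source"], json.dumps(event["feature"]), "accepted" if valid else "rejected"),
        )
        processed += 1


# Usage: two updates arrive at the web role; the worker processes them later.
web_role_submit("field-crew-1", {"geometry": [-122.3, 47.6], "name": "hydrant"})
web_role_submit("field-crew-2", {"name": "update with no geometry"})
print(worker_role_drain())  # 2
print(db.execute("SELECT status FROM transactions ORDER BY id").fetchall())
# [('accepted',), ('rejected',)]
```

Because the web role only enqueues, it stays responsive under bursts of updates, and the number of worker instances can be scaled independently of the front end.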

Lots of positive feedback on this - and many thanks to ESRI, CubeWerx and Windows Azure for the services!

Visit Jeff’s site for the video.

The Microsoft Case Study Team posted Software Start-Up [Transactiv] Scales for Demand Without Capital Expenses by Using Cloud Services on 7/26/2010:

Transactiv facilitates business-to-business and business-to-consumer commerce through social networking websites. When the start-up company set out to build its application, it knew that it wanted a cloud-based infrastructure that offered high levels of scalability to manage unpredictable demand, but without cost-prohibitive capital expenses and IT management costs associated with on-premises servers. After ruling out competitors’ offerings based on scalability limitations and maintenance requirements, Transactiv developed its solution on the Windows Azure platform.

As a result of using Windows Azure, Transactiv gained the scalability it wanted while avoiding capital expenses and saving a projected 54.6 percent of costs over a three-year period compared to an on-premises solution. In addition, the company has greater agility with which to respond to business needs.

Organization Size: 20 employees

Organization Profile: Transactiv is a start-up company that facilitates online transactions between buyers and sellers through social networking platforms. The company has 20 employees and is based in Cincinnati, Ohio.

Business Situation: The company wanted a scalable architecture to handle unpredictable traffic for its online commerce solution, but it wanted to avoid capital expenses and ongoing infrastructure maintenance costs.

Solution: Transactiv developed its patent-pending application, which integrates with the popular social networking site Facebook, on the Windows Azure platform with hosting through Microsoft data centers.

Benefits:

- Cost-effective scaling

- Avoids capital expenses

- Quickly responds to business needs


Windows Azure Infrastructure

Mary Jo Foley asserts Microsoft's cloud conclusions: Pre-assembled components trump datacenter containers in her article of 8/2/2010 for ZDNet’s All About Microsoft blog:

ISO standard containers are out. Air-cooling is in.

Those are just a couple of the findings from a just-published Microsoft white paper, entitled “A Holistic Approach to Energy Efficiency in Datacenters.”

The author of the paper, Microsoft Distinguished Engineer Dileep Bhandarkar, includes some of the Microsoft Global Foundation Services’ learnings about how best to populate and cool cloud datacenters.

(Global Foundation Services is the part of Microsoft that provides the infrastructure that powers Microsoft’s various cloud offerings. GFS runs the various datacenter servers running Hotmail, Bing, Business Productivity Online Services, CRM Online, Azure and anything else Microsoft counts as its “cloud.”)

The GFS team has discovered some “non-intuitive” changes that helped Microsoft improve its power consumption by 25 percent in some of its existing datacenters. These included cleaning the datacenter’s roof and painting it white, and repositioning concrete walls around the externally-mounted air conditioning units to improve air flow, according to the paper.

There were some more intuitive changes that helped, as well. Microsoft has been on a campaign to “rightsize its servers,” by eliminating unnecessary components and using higher efficiency power supplies and voltage converters. The company also is moving away from ISO standard containers as the best way to “contain” servers. From the white paper:

“We have learned a lot from our container experience in Chicago and have now moved more towards containment as a useful tool. We do not see ISO standard containers as the best way of achieving containment in future designs. Our experience with air side economization in our Dublin datacenter has taught us a lot too. Our discussions with server manufacturers has convinced us that we can widen the operating range of our servers and use free air cooling most of the time. Our early proof-of-concept with servers in a tent, followed by our own IT Pre-Assembled Component (ITPAC) design (shown in the image above) have led us to a new approach for our future datacenters.”

Microsoft has found that air cooling is sufficient for datacenters in some climates. Evaporative coolers (also known as “swamp coolers”) are necessary when temperatures go above 90 to 95 degrees Fahrenheit, according to the paper. While adding secondary cooling with air or water-cooled chillers does raise operational costs, their infrequent use reduces overall energy consumption, the Softies have found.

The conclusion of the white paper:

“Microsoft views its future datacenters as driving a paradigm shift away from traditional monolithic raised floor mega datacenters. Using modular pre-manufactured components yields improvements in cost and schedule. The modular approach reduces the initial capital investment and allows you to scale capacity with business demand in a timely manner.”

Microsoft is hardly the only cloud vendor to be focusing on how to optimize its own datacenters to be more power-efficient and maintainable. At Amazon, former Softie (now Amazon Vice President and Distinguished Engineer) James Hamilton has continued his explorations of how best to optimize cloud datacenter performance and architecture.

Meanwhile, Microsoft is working with a handful of OEM partners to enable them to provide customers with private cloud capabilities via the recently announced Windows Azure Appliances, which are virtual private clouds in a box. I’d assume many of Microsoft’s own datacenter learnings will be applied to these partner/customer-hosted datacenters, as well.

Along similar lines, Rich Miller describes A Vision for the Modular Enterprise in an 8/2/2010 post to DataCenterKnowledge.com:

One of the prototype units for the i/o Anywhere modular data center.

PHOENIX, Ariz. – The way George Slessman sees it, i/o Anywhere is much more than a container. Slessman, the CEO of i/o Data Centers, believes the company’s new modular data center design is at the forefront of a fundamental shift in the way enterprise companies will buy and deploy data centers.

“Our feeling is that the underlying demand for data center properties is outstripping supply over the long term, but the way the industry is building data centers will not scale,” said Slessman.

i/o Anywhere is the company’s response: a family of modular data center components that can create and deploy a fully-configured enterprise data center within 120 days. i/o Data Centers says these factory-built modular designs can transform the cost and delivery time for IT capacity.

Most importantly, the new modular designs alter the location equation, allowing data centers to be deployed at or near a customer premises, offering the benefits of outsourced data centers in an in-house environment.

Multiple Customized Modules

Data Center Knowledge got an early look at i/o Anywhere, which is standardized on a container form factor, but includes custom modules for IT equipment, power infrastructure and network gear. i/o Data Centers will also offer containerized chillers and generators, as well as modules supplying thermal storage and even solar power.

i/o Anywhere is the latest example of container-based designs that seek to go beyond the “server box” to create a fully-integrated data center. Early container implementations have focused on cloud data centers (as at Microsoft), remote IT requirements or temporary data center capacity. i/o Data Centers is notable for its commitment to its modular approach as the basis for its own enterprise-centric colocation and wholesale data center business.

The company is used to making bold bets on growth. In mid-2009 i/o Data Centers opened one of the world’s largest colocation data centers in the midst of a bruising recession. A year after opening the doors at the 538,000-square-foot Phoenix ONE, the company has fully leased the 180,000-square-foot first phase.

i/o Anywhere to Drive Phoenix ONE Growth

The entire second phase of Phoenix ONE will be delivered using the i/o Anywhere modular design, which will also serve as the basis of i/o Data Centers’ future facilities, Slessman says. The company has deployed its first i/o Anywhere module in Phase II of Phoenix ONE, which eventually will house at least 22 modules supporting customer equipment. The company expects to begin installing customers in December. i/o Data Centers said it is also in active negotiations on i/o Anywhere deployments in other locations.

Here’s a look at some of the features of i/o Anywhere:

- Each module is 42 feet long – slightly larger than a standard ISO container but still easily shipped by truck, train or plane.

- Each data module supports 300 kilowatts of critical power, with a chilled-water cooling system housed under a 3-foot raised floor. Chilled air is delivered into a 4-foot wide cold aisle, travels through the equipment, and returns via a 3-foot wide hot aisle in the rear of the container.

- A power module includes an uninterruptible power supply (UPS) and batteries. Each module can support 1 megawatt of capacity.

- i/o Data Centers can supply generators or chillers. A standard 20-module configuration would include two 2.5 megawatt generators and three 500-ton chillers.

- Additional modules can provide a thermal storage system, which makes ice at night when energy is less expensive and stores it in large tanks to supply chilled water during the day.

- For customers interested in renewable energy, i/o Anywhere will offer a 500 kilowatt solar power module.

- Each deployment can be configured with security, including mantraps, biometric access control and round-the-clock security staffing.

- The modules are managed using i/o OS, a software package the company describes as “a fully-integrated data center monitoring, alarming, remote control and management operating system.”

The modular design incorporates key features of the infrastructure i/o Data Centers has adopted in its facilities in Scottsdale and Phoenix, including ultrasonic humidification and energy-efficient variable frequency drives in chillers, plug fans and air handlers. i/o Anywhere will also be backed by the same 100 percent uptime service level agreement (SLA) offered in i/o’s existing data centers.

A key challenge for i/o Anywhere and other modular offerings is finding customers’ comfort level with new designs. Slessman said enterprise customers are interested in container-based designs that resemble a traditional data center. That feedback prompted the use of a raised floor, which isn’t essential but provides a familiar look and feel (see image at left of the interior of an i/o Anywhere data module).

But i/o Data Centers believes that interest in cloud computing and capital preservation will drive changes in the data center sector. Slessman said the industry can scale using two approaches: the “747 model” featuring a small number of massive facilities, and the “Volkswagen model” offering a larger volume at an attractive price point.

i/o Data Centers hopes to compete on both fronts as it expands beyond its core Phoenix market. The company is contemplating additional data centers in new markets. Meanwhile, Slessman says modular designs may be of particular interest to companies that have previously resisted outsourcing their data centers.

“Only 15 percent of enterprise users have outsourced their data centers,” said Slessman. “We are interested in that other 85 percent.”

Cloud Computing May Drive Adoption

The buzz about cloud computing could be a factor in adoption of container-based design. Many enterprises are interested in the benefits of cloud computing, but wary of placing mission-critical IT assets in a public cloud environment. Containers provide a way for these companies to quickly deploy private clouds on premises. Microsoft is already targeting this market with its Windows Azure Platform Appliance.

“The key is to talk about this as a platform,” said Anthony Wanger, President of i/o Data Centers. “We think we have a meaningful first movers advantage. Our focus is on building a toolset for our customers, and maintaining a capital structure that allows I/O to reinvest in innovation and infrastructure.”

James Hamilton rang in with an Energy Proportional Datacenter Networks article on 8/1/2010:

A couple of weeks back Greg Linden sent me an interesting paper called Energy Proportional Datacenter Networks. The principle of energy proportionality was first coined by Luiz Barroso and Urs Hölzle in an excellent paper titled The Case for Energy-Proportional Computing. The core principle behind energy proportionality is that computing equipment should consume power in proportion to its utilization level. For example, a computing component that consumes N watts at full load should consume X/100*N watts when running at X% load. This may seem like an obviously important concept but, when the idea was first proposed back in 2007, it was not uncommon for a server running at 0% load to be consuming 80% of full-load power. Even today, you can occasionally find servers that poor. The incredible difficulty of maintaining near 100% server utilization makes energy proportionality a particularly important concept. …
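Hamilton's definition can be checked with a simple power model. This Python sketch assumes power rises linearly from an idle draw to the full-load draw N (an assumption: real servers are not exactly linear). Setting `idle_fraction` to 0 gives the fully proportional case, X/100*N; 0.8 matches the 2007-era servers he mentions. N = 200 W is a hypothetical value.

```python
def power_watts(load_pct: float, peak_watts: float, idle_fraction: float) -> float:
    """Linear power model (an assumption): a fixed idle draw plus a load-proportional part.

    idle_fraction = 0.0 is the fully energy-proportional case, X/100 * N.
    """
    idle = idle_fraction * peak_watts
    return idle + (peak_watts - idle) * load_pct / 100


N = 200.0  # hypothetical full-load draw in watts

print(power_watts(30, N, 0.0))  # 60.0  (fully proportional: 30/100 * 200)
print(power_watts(0, N, 0.8))   # 160.0 (idle server drawing 80% of full-load power)
print(power_watts(30, N, 0.8))  # 172.0 (same server at 30% load)
```

At 30% load the non-proportional server draws 172 W, nearly three times the 60 W of a fully proportional one, which is why low utilization and poor proportionality compound each other.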

James continues:

Understanding the importance of power proportionality, it’s natural to be excited by the Energy Proportional Datacenter Networks paper. In this paper, the authors observe “if the system is 15% utilized (servers and network) and the servers are fully power-proportional, the network will consume nearly 50% of the overall power.” Without careful reading, this could lead one to believe that network power consumption was a significant industry problem and immediate action at top priority was needed. But the statement has two problems. The first is the assumption that “full energy proportionality” is even possible. There will always be some overhead in having a server running. And we are currently so distant from this 100% proportional goal that any conclusion that follows from this assumption is unrealistic and potentially misleading.

The second issue is perhaps more important: the entire data center might be running at 15% utilization. 15% utilization means that all the energy (and capital) that went into the datacenter power distribution system, all the mechanical systems, and the servers themselves is only being 15% utilized. The power consumption is actually a tiny part of the problem. The real problem is that, at this utilization level, most resources in a nearly $100M investment are being wasted. There are many poorly utilized data centers running at 15% or worse utilization, but I argue the solution to this problem is to increase utilization. Power proportionality is very important and many of us are working hard to find ways to improve power proportionality. But power proportionality won’t reduce the importance of ensuring that datacenter resources are near fully utilized.

Just as power proportionality will never be fully realized, 100% utilization will never be realized either so clearly we need to do both. However, it’s important to remember that the gain from increasing utilization by 10% is far more than the possible gain to be realized by improving power proportionality by 10%. Both are important but utilization is by far the strongest lever in reducing cost and the impact of computing on the environment.

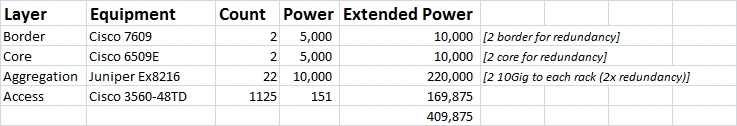

Returning to the negative impact of networking gear on overall datacenter power consumption, let’s look more closely. It’s easy to get upset when you look at net gear power consumption. It is prodigious. For example, a Juniper EX8200 consumes nearly 10 kW. That’s roughly as much power as an entire rack of servers (server rack power varies greatly, but 8 to 10 kW is pretty common these days). A fully configured Cisco Nexus 7000 requires 8 full 5 kW circuits to be provisioned. That’s 40 kW, or roughly as much power provisioned to a single network device as would be required by 4 average racks of servers. These numbers are incredibly large individually, but it’s important to remember that there really isn’t all that much networking gear in a datacenter relative to the number of servers.

To get precise, let’s build a model of the power required in a large datacenter to understand the details of networking gear power consumption relative to the rest of the facility. In this model, we’re going to build a medium to large sized datacenter with 45,000 servers. Let’s assume these servers are operating at an industry-leading 50% power proportionality and consume only 150 W each at full load. This facility has a PUE of 1.5, which is to say that for every watt of power delivered to IT equipment (servers, storage and networking equipment), ½ watt is lost in power distribution and powering mechanical systems. PUEs as low as 1.2 are possible but rare, and PUEs as poor as 3.0 are possible and unfortunately quite common. The industry average is currently 2.0, which says that in an average datacenter, for every watt delivered to the IT load, 1 watt is spent on overhead (power distribution, cooling, lighting, etc.).

For networking, we’ll first build a conventional, over-subscribed, hierarchical network. In this design, we’ll use the Cisco 3560 as a top of rack (TOR) switch. We’ll connect these TORs 2-way redundant to Juniper EX8216s at the aggregation layer. We’ll connect the aggregation layer to Cisco 6509Es in the core, where we need only 1 device for a network of this size, but we use 2 for redundancy. We also have two border devices in the model:

Using these numbers as input we get the following:

- Total DC Power: 10.74 MW (1.5 * IT load)

- IT Load: 7.16 MW (net gear + servers and storage)

- Servers & Storage: 6.75 MW (45,000 * 150 W)

- Networking gear: 0.41 MW (from table above)

This would have the networking gear consuming only 3.8% of the overall power consumed by the facility (0.41/10.74). If we were running at 0% utilization, which I truly hope is far worse than anyone’s worst case today, what networking consumption would we see? Using the numbers above with the servers at 50% power proportionality (unusually good), we would have the networking gear at 7.2% of overall facility power (0.41/((6.75*0.5+0.41)*1.5)).
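Hamilton's facility model is simple enough to reproduce in a few lines. This Python sketch uses his inputs (45,000 servers at 150 W, 0.41 MW of networking from his table, PUE 1.5, servers idling at 50% of peak) and, as his 0% calculation does, treats networking draw as constant. The linear interpolation between idle and full load for intermediate utilizations is an added assumption.

```python
SERVERS = 45_000
SERVER_PEAK_W = 150   # full-load draw per server (watts)
NETWORK_MW = 0.41     # total networking draw, taken from Hamilton's table
PUE = 1.5             # total facility power / IT power
IDLE_FRACTION = 0.5   # 50% power proportionality: an idle server draws half its peak


def network_share(server_load_fraction: float) -> float:
    """Fraction of total facility power drawn by networking gear (held constant)."""
    per_server_w = SERVER_PEAK_W * (IDLE_FRACTION + (1 - IDLE_FRACTION) * server_load_fraction)
    servers_mw = SERVERS * per_server_w / 1e6
    total_mw = (servers_mw + NETWORK_MW) * PUE  # PUE overhead applies to the whole IT load
    return NETWORK_MW / total_mw


print(f"full load: {network_share(1.0):.1%}")  # full load: 3.8%
print(f"idle:      {network_share(0.0):.1%}")  # idle:      7.2%
```

Even in the idle case, where servers shed half their power and networking sheds none, networking stays well under a tenth of facility power.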

This data argues strongly that networking is not the biggest problem or anywhere close. We like improvements wherever we can get them and so I’ll never walk away from a cost-effective networking power solution. But networking power consumption is not even close to our biggest problem, so we should not get distracted.

James concludes with a similar analysis for a “fat tree network using commodity high-radix networking gear along the lines alluded to in Data Center Networks are in my Way.”

James Staten posited To get cloud economics right, think small, very, very small on 8/2/2010:

A startup, which wishes to remain anonymous, is delivering an innovative new business service from an IaaS cloud and most of the time pays next to nothing to do this. This isn't a story about pennies per virtual server per hour (sure, they take advantage of that) but rather about a nuance of cloud optimization any enterprise can follow: reverse capacity planning.

Most of us are familiar with the black art of capacity planning. You take an application, simulate load against it, trying to approximate the amount of traffic it will face in production, then provision the resources to accommodate this load. With web applications we tend to capacity plan against expected peak, which is very hard to estimate, even if historical data exists. You capacity plan to peak because you don't want to be overloaded and cause clients to wait, error out or go to your competition for your service.

And if you can't accommodate a peak you don't know how far above the capacity you provisioned the spike would have gone. If you built out the site to accommodate 10,000 simultaneous connections and you went above capacity for five minutes, was the spike the 10,001st connection or an additional 10,000 connections?

But of course we can never truly get capacity planning right because we don't actually know what peak will be, and if we did, we probably wouldn't have the budget (or data center capacity) to build it out to this degree.

The downside of traditional capacity planning is that while we build out the capacity for a big spike, that spike rarely comes. And when there isn't a spike a good portion of those allocated resources sit unused. Try selling this model to your CFO. That's fun.

Cloud computing platforms, specifically platform as a service (PaaS) and infrastructure as a service (IaaS) offerings, provide a way to change the capacity planning model. For scale-out applications you can start with a small application footprint and incrementally adjust the number of instances deployed as load patterns change. This elastic compute model is a core tenet of cloud computing platforms, and most developers and IT infrastructure and operations professionals get this value. But are you putting it into practice?

To get the most value from the cloud, you should approach capacity planning in exactly the opposite way from the traditional one, because the objective on the cloud is to give the application as small a base footprint as possible. You don't preallocate resources for peak; that's the cloud's job. You plan for the minimum hourly bill because you know you can call up more resources when you need them, provided you have the appropriate monitoring regime and can provision additional IaaS resources fast enough.
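To make the reverse-capacity-planning argument concrete, this Python sketch compares one day's bill for a fleet sized to peak with an elastic fleet that tracks load above a base footprint. The 24-hour load profile, the per-instance throughput (50 requests/sec) and the $0.12 instance-hour price are hypothetical illustrations, not Forrester figures, and the sketch assumes instances can be added and removed each hour.

```python
import math


def instances_needed(load_rps: float, rps_per_instance: float, min_instances: int = 1) -> int:
    """Instances required for the current load, never below the base footprint."""
    return max(min_instances, math.ceil(load_rps / rps_per_instance))


def daily_bills(hourly_load, rps_per_instance, price_per_hour, min_instances=1):
    """Return (peak-provisioned bill, elastic bill) for one day's hourly load profile."""
    per_hour = [instances_needed(load, rps_per_instance, min_instances) for load in hourly_load]
    static_bill = max(per_hour) * len(hourly_load) * price_per_hour  # sized for the spike all day
    elastic_bill = sum(per_hour) * price_per_hour                    # pay only for what runs
    return static_bill, elastic_bill


# Hypothetical day (requests/sec): quiet night, steady daytime traffic, one evening spike.
profile = [5] * 8 + [40] * 10 + [400] + [40] * 3 + [5] * 2
static, elastic = daily_bills(profile, rps_per_instance=50, price_per_hour=0.12)
print(f"peak-provisioned: ${static:.2f}/day, elastic: ${elastic:.2f}/day")
# peak-provisioned: $23.04/day, elastic: $3.72/day
```

With this profile the elastic fleet runs 31 instance-hours against 192 for the peak-provisioned fleet; the single spike hour drives the whole cost of the traditional approach.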

In the case study we published on GSA's use of Terremark's The Enterprise Cloud, this is the approach GSA took for USA.gov. It determined the minimum footprint the site required and placed this capacity into traditional Terremark hosting under a negotiated 12-month contract. It then tapped into The Enterprise Cloud for additional capacity as traffic loads rose above that base. The result: a 90% reduction in hosting costs.

You can do the same but don't stop once you've determined your base capacity. Can you make it even smaller? The answer is most likely yes. Because if there is a peak, then there is also a trough.

That's what the startup above has done. They tweaked their code to shrink this footprint even further, then determined that the only thing they really needed persistently was their service's home page, which could be cached in a content delivery network. So when their service has no traffic at all, they have no instances running on their IaaS platform. Once the cached page gets a hit, they fire up the minimal footprint (which they tuned down to a single virtual machine) and scale up from there.
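The startup's refinement amounts to a scale-to-zero rule: no instances while the CDN serves the cached home page and no requests reach the origin, a one-VM floor as soon as traffic arrives, and ordinary scale-out above that. The Python sketch below is illustrative only; the function name, the 50 requests/sec per-VM throughput and the hourly profile are hypothetical, and a real deployment would also need a trigger (such as a monitoring alert) and must tolerate the VM's startup latency.

```python
import math


def target_instances(requests_per_sec: float, rps_per_instance: float) -> int:
    """Hypothetical scale-to-zero rule."""
    if requests_per_sec <= 0:
        return 0  # idle: the CDN-cached home page is the only thing being served
    # Traffic has reached the origin: start the one-VM minimal footprint, scale out beyond it.
    return max(1, math.ceil(requests_per_sec / rps_per_instance))


# Hypothetical day (requests/sec reaching the origin), including idle hours.
profile = [0] * 6 + [3] * 6 + [60] * 6 + [0] * 6
hours = [target_instances(r, rps_per_instance=50) for r in profile]
always_on = sum(max(1, h) for h in hours)  # same rule with a permanent one-VM floor
print(sum(hours), "instance-hours with scale-to-zero")  # 18 instance-hours with scale-to-zero
print(always_on, "instance-hours with a one-VM floor")  # 30 instance-hours with a one-VM floor
```

The savings come entirely from the trough: the 12 idle hours that a permanent one-VM floor would still bill for.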

Now that's leveraging cloud economics. If you can find the trough then you can game the system to your advantage. What are you doing to maximize your cloud footprint? Share your best tips here and we'll collect them for a future blog post and the forthcoming update to our IaaS Best Practices report.

Mike Gualtieri contributed to this report.

James Staten serves Infrastructure & Operations Professionals as a Forrester Research analyst.

Lori MacVittie (@lmacvittie) claimed Discussions on scaling are focused on the application, but don’t forget everything else. And I do mean everything in a preface to her The Domino Effect post of 8/2/2010 to F5’s DevCentral blog:

You played with dominos as a child, I’m sure, or perhaps your children do now. You know what happens when the first domino topples and hits the second and then the third and then… The entire chain topples to the ground. When you play dominos it’s actually cool to watch them topple in interesting patterns. Playing a game of dominos with your infrastructure, however, is not.

SCALABILITY is ABOUT the WHOLE BUSINESS

Almost every discussion revolving around “scale” is centered on the application. Don’t get me wrong, that’s the right place to start, but there are quite a few other facets of IT and the business that need to be “scale-ready” if you’re anticipating hot and heavy growth. A recent article on dramatic growth delved a bit more deeply into what at first glance may appear to be non-technical, i.e. business, related growth concerns but upon deeper reflection you may see the connection back to technology.

Make sure your third parties are ready to scale: Whoever you are working with, whether it be suppliers for your widget manufacturing or your shipping company who was used to pushing only 100 pieces of product a week, you have to make sure that the third parties you are working with can scale with you. If your suppliers can’t ensure that widgets ordered during peak sales can be fulfilled in a timely manner, or your shipper can’t move more than 100 boxes at a time, then you will have massive problems that are outside of your control. Also understand that it’s not easy to simply pick up and find another supplier to support the excess volume that your current supplier cannot manage.

Relating this back to technology, if you’re integrated with or rely upon any kind of third-party or hosted service, you simply must ask: can they handle the anticipated growth? Can they scale out?

YOU ARE ONLY AS SCALABLE AS THE LEAST SCALABLE POINT IN YOUR CHAIN

One of the great equalizing aspects of the Internet was the ability of smaller suppliers, distributors, and providers to be able to provide and consume services from anyone. This made them more competitive as the costs, previously a barrier to entering the marketplace, were eliminated in terms of the technology required to participate in the supply-chain game. While often cost-competitive, reliance on any third-party supplier can be detrimental to the health of an organization. Growth in this case really can kill: the infrastructure, the application, and ultimately the business.

The aforementioned article is talking strictly about the business side of third-parties, but consider when the business side of a third-party supplier is providing services – think payment processing or order-processing – and those services are integrated via technology. It’s an application, somewhere. If it can’t scale, you can’t scale. It’s riding atop an infrastructure, somewhere. Which means if its infrastructure can’t scale, you can’t scale. And if it relies on third-third-party services that can’t scale, well, you get the picture. Ad infinitum.

You might be patting yourself on the back because you don’t rely upon any third-party services but you might want to wait a minute before you do. The least scalable point in your chain might be you, after all.

We focus on scaling out the application, but what about the means by which folks find your application in the first place? Yes, you guessed it. What about your DNS infrastructure? Is it ready? Is it able to handle the deluge? Or did you leave it running on an old server that’s still on a 100 Mbps connection to the rest of your network because, well, it ain’t broke?

What about e-mail? Sure, you might be able to handle the incoming connections, but what about storage? How much capacity is available for storing all that e-mail from satisfied customers? What about the storage for your application? And your infrastructure? Of particular interest might be your logging servers…they’re going to have a lot of traffic to log, right? It’s got to go somewhere…
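A quick back-of-the-envelope estimate helps answer the logging-storage question. The Python sketch below assumes that log volume grows linearly with request volume. The request counts, lines per request, bytes per line and free disk space are all hypothetical figures chosen only to show the arithmetic, and the helper names are illustrative.

```python
GB = 10**9  # decimal gigabyte, as disk vendors count it


def daily_log_bytes(requests_per_day: int, lines_per_request: int, bytes_per_line: int) -> int:
    """Estimate how many bytes of log data one day of traffic produces."""
    return requests_per_day * lines_per_request * bytes_per_line


def days_until_full(free_bytes: int, bytes_per_day: int) -> float:
    """How many days of logging the remaining free space can absorb."""
    return free_bytes / bytes_per_day


free = 100 * GB                               # hypothetical free space on the log server
normal = daily_log_bytes(1_000_000, 3, 200)   # hypothetical normal day: 0.6 GB
peak = daily_log_bytes(10_000_000, 3, 200)    # hypothetical tenfold spike: 6 GB

print(f"normal: {days_until_full(free, normal):.1f} days")  # normal: 166.7 days
print(f"peak:   {days_until_full(free, peak):.1f} days")    # peak:   16.7 days
```

With these made-up numbers, disk space that would last about 167 days at normal load fills in under 17 days during a tenfold spike. That is exactly the kind of secondary bottleneck a complete scalability plan needs to catch.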

COMPREHENSIVE is the ONLY WAY to WIN THIS GAME

A complete scalability strategy should be just that: complete. It’s got to be as comprehensive as a disaster recovery or business continuity strategy. It must take into consideration all the myriad points in your infrastructure where an increase in traffic also increases the load on other related, and just as significant, technology that must be scaled and ready to go.

Because when the first domino topples, the entire chain is going to go. The sound of dominoes toppling is almost pleasant. The sound coming from the data center when the infrastructure topples is, well, not so pleasant.

The Economist asserted “As Microsoft and Intel move apart, computing becomes multipolar” as the lead-in to its The end of Wintel article in the 7/29/2010 issue:

THEY were the Macbeths of information technology (IT): a wicked couple who seized power and abused it in bloody and avaricious ways. Or so critics of Microsoft and Intel used to say, citing the two firms’ supposed love of monopoly profits and dead rivals. But in recent years, the story has changed. Bill Gates, Microsoft’s founder, has retired to give away his billions. The “Wintel” couple (short for “Windows”, Microsoft’s flagship operating system, and “Intel”) are increasingly seen as yesterday’s tyrants. Rumours persist that a coup is brewing to oust Steve Ballmer, Microsoft’s current boss.

Yet there is life in the old technopolists. They still control the two most important standards in computing: Windows, the operating system for most personal computers, and “Intel Architecture”, the set of rules governing how software interacts with the processor it runs on. More than 80% of PCs still run on the “Wintel” standard. Demand for Windows and PC chips, which flagged during the global recession, has recovered. So have both firms’ results: to many people’s surprise, Microsoft announced a thumping quarterly profit of $4.5 billion in July; Intel earned an impressive $2.9 billion. …

Other firms have leapt into the gap. Apple is now worth more than Microsoft, thanks to its hugely successful mobile devices, such as the iPod and the iPhone. Google may be best known for its search service, but the firm can also be seen as a global network of data centres—dozens of them—which allow it to offer free web-based services that compete with many of Microsoft’s pricey programmes.

The shift to mobile computing and data centres (also known as “cloud computing”) has speeded up the “verticalisation” of the IT industry. Imagine that the industry is a stack of pancakes, each representing a “layer” of technology: chips, hardware, operating systems, applications. Microsoft, Intel and other IT giants have long focused on one or two layers of the stack. But now firms are becoming more vertically integrated. For these new forms of computing to work well, the different layers must be closely intertwined. …

In response to these threats, Microsoft has made big bets on cloud computing. It has already built a global network of data centres and developed an operating system in the cloud called Azure. The firm has put many of its own applications online, even Office, albeit with few features. What is more, Microsoft has made peace with the antitrust authorities and even largely embraced open standards. … [Emphasis added.]

Read the entire article here.

<Return to section navigation list>

Windows Azure Platform Appliance

The Windows Azure Team recently updated information about the Windows Azure Platform Appliance (WAPA) on its landing page:

Delivering the power of the Windows Azure Platform to your datacenter.

Windows Azure™ platform appliance is a turnkey cloud platform that customers can deploy in their own datacenter, across hundreds to thousands of servers. The Windows Azure platform appliance consists of Windows Azure, SQL Azure and a Microsoft-specified configuration of network, storage and server hardware. This hardware will be delivered by a variety of partners.

The appliance is designed for service providers, large enterprises and governments and provides a proven cloud platform that delivers breakthrough datacenter efficiency through innovative power, cooling and automation technologies.

Use Windows Azure Platform Appliance to:

- Offer scale-out applications, platform-as-a-service or software-as-a-service in your own datacenter.

- Deploy a proven cloud platform that can scale to tens of thousands of servers.

- Leverage the benefits of the Windows Azure platform in your own datacenter while maintaining physical control, data sovereignty and regulatory compliance.

Windows Azure Platform Appliance Benefits

Efficiency and Agility:

- Datacenter efficiency – proven to run at low cost of operations across hundreds to thousands of servers with a small ratio of IT operators to servers and efficient power, cooling and server utilization.

- Massive Scale – ability to scale from hundreds to tens-of-thousands of servers.

- Elasticity – ability to scale up and down from one to thousands of application instances depending on demand.

- Integration – with existing datacenter tools and operations

- Optimized for multi-tenancy – designed for multi-tenancy across business units or organizations.

- Optimized for service availability – designed for service availability via fault tolerance and self-healing capabilities

Simplicity:

Partners and customers can utilize their existing development skills in familiar languages and frameworks such as .NET and PHP to create and manage web applications and services. The platform is optimized for scale-out applications: it is designed so that developers can easily build them using their existing application platforms and development tools.

Trustworthy:

Partners and customers will receive enterprise class service backed by reliable service level agreements and deep online services experience.

Why call it an appliance?

We call it an appliance because it is a turn-key cloud solution on highly standardized, preconfigured hardware. Think of it as hundreds of servers in pre-configured racks of networking, storage, and server hardware that are based on Microsoft-specified reference architecture.

The Microsoft Windows Azure platform appliance differs from typical server appliances in that it involves hundreds of servers rather than one or a few nodes, and it is designed to be extensible: customers can simply add more servers, depending on their needs, to scale out their platform.

Windows Azure Platform Appliance Availability:

The appliance is currently in Limited Production Release to a small set of customers and partners. We will develop our roadmap depending on what we learn from this set of customers and partners. We have no additional details to share at this time.

Who is using the appliance?

Dell, eBay, Fujitsu and HP intend to deploy the appliance in their datacenters to offer new cloud services.

Ways to get started with the Windows Azure Platform

- New to Windows Azure? Learn about what you can accomplish here

- Ready to start building with Windows Azure? Download free Tools & SDK

- Need to know pricing? Compare all of our platform packages

The team’s page offers links to Windows Azure platform FAQs and News.

<Return to section navigation list>

Cloud Security and Governance

Nicole Lewis asserted “Healthcare providers still have many reservations about the security of cloud computing for electronic medical records and mission-critical apps” as a preface to her Microsoft Aims To Alleviate Health IT Cloud Concerns in this 7/6/2010 post to InformationWeek’s HealthCare blog (overlooked when posted):

Many healthcare providers have questions and doubts about adopting cloud computing for administration and hosting of their healthcare information. Steve Aylward, Microsoft's general manager for U.S. health and life sciences, has encouraged healthcare IT decision makers to embrace the technology, which he said could help them improve patient care and provide new delivery models that can increase efficiency and reduce costs.

"Just about everyone I know in healthcare is asking the same question: 'What can cloud computing do for me?'" Aylward writes in a June 28 blog post.

"Plenty," Aylward answers. "The cloud can allow providers to focus less on managing IT and more on delivering better care: It can, for instance, be used to migrate e-mail, collaboration, and other traditional applications into the web. It can also be used to share information seamlessly and in near-real-time across devices and other organizations," Aylward explains.

Cloud computing, generally defined as anything that involves delivering hosted services over the Internet, is increasingly being offered to healthcare delivery organizations as a software-as-a-service platform, especially to small and medium-size physician practices that are budget constrained and have few technical administrators on staff.

What has helped information technology managers at health delivery organizations take a closer look at cloud computing, however, is the Obama administration's objective to move medical practices and hospitals away from paper-based systems and onto digitized records. Primarily through the Health Information Technology for Economic and Clinical Health (HITECH) Act, the government has established programs under Medicare and Medicaid to provide incentive payments for the "meaningful use" of certified electronic medical record (EMR) technology.

The government's drive to have every American provided with an EMR by 2014 means that digitized clinical data is expected to grow exponentially. However, several doctors contacted by InformationWeek say that, even with those considerations, they are in no rush to outsource the maintenance of their important records, and they have delayed their decisions to put sensitive information, such as their EMR systems, onto a cloud-based computing model.

Dr. Michael Lee, a pediatrician and director of clinical informatics for Atrius Health, an alliance of five nonprofit multi-specialty medical groups with practice sites throughout eastern Massachusetts, said that while he recognizes that cloud computing can be a cheaper and more practical model, especially for non-mission-critical applications, he is waiting to see what improvements in security will take place over the next five to 10 years before supporting a decision to put high-level data on cloud computing technology.

"The only cloud computing that we would contemplate at the moment is in the personal health record space, so that patients would own the dimension in the cloud in terms of where they want to store or access information," Lee said.

Page 2: Cloud Is Not Secure Enough

<Return to section navigation list>

Cloud Computing Events

Gartner will conduct A Mastermind Interview With Steve Ballmer, CEO, Microsoft on 10/21/2010 at its ITxpo 2010 Symposium in Orlando, FL:

Thursday, 21 October 2010

11:00 AM-11:45 AM

Keynote Speaker: Steve Ballmer

Moderators: Neil MacDonald, John Pescatore

Session Type: Keynote Session

Tracks: APP, BIIM, BPI, CIO, EA, COMMS, I&O, PPM, SRM, SVR

Virtuals: CC, CAC, ETT, ITM, MM, PBS, SM, SUS

Marc Benioff, Chairman and CEO of Salesforce.com, and John T. Chambers, Chairman and Chief Executive Officer of Cisco, also will be keynote speakers.

Click here to display the Cloud Computing (CC) virtual tracks.

Geoff Snowman will present an MSDN Webcast: Windows Azure AppFabric: Soup to Nuts (Level 300) on Wed., 9/15/2010 at 9:00 AM PDT:

Event Overview: In this webcast, we demonstrate a web service that combines identity management via Active Directory Federation Services (AD FS) and Windows Azure AppFabric Access Control with cross-firewall connectivity via Windows Azure AppFabric Service Bus. We also discuss several possible architectures for services and sites that run both on-premises and in Windows Azure and take advantage of the features of Windows Azure AppFabric. In each case, we explain how the pay-as-you-go pricing model affects each application, and we provide strategies for migrating from an on-premises service-oriented architecture (SOA) to a Service Bus architecture.

Presenter: Geoff Snowman, Mentor, Solid Quality Mentors

Geoff Snowman is a mentor with Solid Quality Mentors, where he teaches cloud computing, Microsoft .NET development, and service-oriented architecture. His role includes helping to create SolidQ's line of Windows Azure and cloud computing courseware. Before joining SolidQ, Geoff worked for Microsoft Corporation in a variety of roles, including presenting Microsoft Developer Network (MSDN) events as a developer evangelist and working extensively with Microsoft BizTalk Server as both a process platform technology specialist and a senior consultant. Geoff has been part of the Mid-Atlantic user-group scene for many years as both a frequent speaker and user-group organizer.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Yahoo! Finance regurgitated Painless Migration Package Puts a Stop to Lost Business Profits and Productivity Resulting from Continued Quickbase Downtimes on 8/2/2010 as a lead-in to the Caspio Offers Intuit Quickbase Customers a Reliable Alternative After Repeated Service Outages press release of 7/29/2010: