Windows Azure and Cloud Computing Posts for 8/6/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

•• Update 8/8/2010: New articles marked •• (copy, Ctrl+F and paste to find items)

• Update 8/7/2010: New articles marked • (copy, Ctrl+F and paste, add [space])

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

• Richard Parker uploaded FTP to Azure Blob Storage Bridge to CodePlex on 8/5/2010:

Project Description

Deployed in a worker role and written in C#, the code creates an 'FTP Server' that can accept connections from all popular FTP clients (FileZilla, for example). It's easy to deploy, and you can easily customise it to support multiple logins.

What does it do?

The project contains code which will emulate an FTP server while running as a Windows Azure worker role. It connects to Azure Blob Storage and has the capability to restrict each authenticated user to a specific container within your blob storage account. This means it is possible to actually configure an FTP server much like any traditional deployment and use blob storage for multiple users.

This project contains slightly modified source code from Mohammed Habeeb's project, "C# FTP Server" and runs in an Azure Worker Role.
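The per-user restriction described above amounts to mapping each FTP path into the caller's own container. The sketch below is a hypothetical illustration, not code from the CodePlex project: it assumes an in-memory table that binds each login to exactly one container (`alice-files` and `bob-files` are made-up names) and refuses paths that use `..` to climb above the user's root.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: NOT taken from the FTP to Azure Blob Storage Bridge source.
public static class FtpBlobMapper
{
    // Assumption: each authenticated FTP user is bound to exactly one container.
    private static readonly Dictionary<string, string> UserContainers =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { "alice", "alice-files" },
            { "bob", "bob-files" }
        };

    // Maps an FTP path such as "/reports/2010/q3.csv" to (container, blobName).
    // Returns null when the user is unknown or the path tries to escape the user's root.
    public static Tuple<string, string> Map(string user, string ftpPath)
    {
        string container;
        if (!UserContainers.TryGetValue(user, out container))
            return null;

        var segments = new List<string>();
        foreach (var part in ftpPath.Replace('\\', '/').Split('/'))
        {
            if (part.Length == 0 || part == ".") continue;
            if (part == "..")
            {
                // Refuse to walk above the root instead of silently clamping.
                if (segments.Count == 0) return null;
                segments.RemoveAt(segments.Count - 1);
                continue;
            }
            segments.Add(part);
        }

        // Blob storage has a flat namespace; "/" inside the blob name only simulates folders.
        return Tuple.Create(container, string.Join("/", segments));
    }
}
```

Because the `/` characters kept in the blob name only simulate folders, the container boundary is the real isolation between users in this design, which is why the `..` check happens before any storage call.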

Jim O’Neil continues his blog series with Azure@home Part 3: Azure Storage of 8/5/2010:

This post is part of a series diving into the implementation of the @home With Windows Azure project, which formed the basis of a webcast series by Developer Evangelists Brian Hitney and Jim O’Neil. Be sure to read the introductory post for the context of this and subsequent articles in the series.

In case you haven’t heard: through the end of October, Microsoft is offering 500 free monthly passes (in the US only) for you to put Windows Azure and SQL Azure through their paces. No credit card is required, and you can apply on-line. It appears to be equivalent to the two-week Azure tokens we provided to those that attended our live webcast series – only twice as long in duration! Now you have no excuse not to give the @home with Windows Azure project a go!

In my last post, I started diving into the WebRole code of the Azure@home project covering what the application is doing on startup, and I’d promised to dive deeper into the default.aspx and status.aspx implementations next. Well, I’m going to renege on that! As I was writing that next post, I felt myself in a chicken-and-egg situation, where I couldn’t really talk in depth about the implementation of those ASP.NET pages without talking about Azure storage first, so I’m inserting this blog post in the flow to introduce Azure storage. If you’ve already had some experience with Azure and have set up and accessed an Azure storage account, much of this article may be old hat for you, but I wanted to make sure everyone had a firm foundation before moving on.

Azure Storage 101

Azure storage is one of the two main components of an Azure service account (the other being a hosted service, namely a collection of web and worker roles). Each Azure project can support up to five storage accounts, and each account can accommodate up to 100 terabytes of data. That data can be partitioned across three storage constructs:

- blobs – for unstructured data of any type or size, with support for metadata,

- queues – for guaranteed delivery of small (8K or less) messages, typically used for asynchronous, inter-role communication, and

- tables – for structured, non-schematized, non-relational data.

Also available are Windows Azure drives, which provide mountable NTFS file volumes and are implemented on top of blob storage.

Azure@home uses only table storage; however, all storage shares some common attributes:

- accessibility via a similar REST-based API, with a .NET abstraction layer provided by the StorageClient API,

- strong consistency (as opposed to eventual consistency),

- redundancy (written to three locations to support high availability), and

- shared key authentication.
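Shared key authentication boils down to signing a canonical description of each request with HMAC-SHA256, keyed by the Base64-decoded account key. The sketch below assumes the simplified SharedKeyLite scheme for the Table service, whose string-to-sign (as I understand it) is the request date, a newline, and the canonicalized resource `/account/path`. The full SharedKey scheme, and the Blob and Queue services, sign additional headers, so treat this as an illustration and check the REST API reference before relying on it. `SharedKeyLiteSigner` is a hypothetical name.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class SharedKeyLiteSigner
{
    // Builds the Authorization header value for a Table service request
    // (simplified SharedKeyLite form; see the assumptions above).
    public static string AuthorizationHeader(string account, string base64Key,
                                             string rfc1123Date, string resourcePath)
    {
        // CanonicalizedResource: "/" + account name + URI path, e.g. "/myaccount/workunit()".
        string canonicalizedResource = "/" + account + resourcePath;
        string stringToSign = rfc1123Date + "\n" + canonicalizedResource;

        // The account key is stored Base64-encoded; the HMAC key is the decoded bytes.
        using (var hmac = new HMACSHA256(Convert.FromBase64String(base64Key)))
        {
            byte[] mac = hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign));
            return "SharedKeyLite " + account + ":" + Convert.ToBase64String(mac);
        }
    }
}
```

The date would normally come from `DateTime.UtcNow.ToString("R")` and be sent in the `x-ms-date` header, so the service can recompute the same signature and reject stale or tampered requests.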

Development Storage

To support the development and testing of applications with Windows Azure, you may be aware that the Windows Azure SDK includes a tool known as csrun, which provides a simulation of Azure compute and storage capabilities on your local machine – often referred to as the development fabric. csrun is a stand-alone application, but that’s transparent when you’re running your application locally, since Visual Studio communicates directly with the fabric to support common developer tasks like debugging. In terms of Azure storage, the development fabric simulates tables, blobs, and queues via a SQLExpress database (by default, but you can use the utility dsinit to point to a different instance of SQL Server on your local machine).

Development storage acts like a full-fledged storage account in an Azure data center and so requires the same type of authentication mechanisms – namely an account name and a key – but it’s a fixed and well-known value:

Account name: devstoreaccount1

Account key: Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==

That’s probably not a value you’re going to commit to memory, though, so as we’ll see in a subsequent post, there’s a way to configure a connection string (just as you might a database connection string) to easily reference your development storage account.
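As a preview of that connection-string idea, here is a hypothetical helper (not the StorageClient API) that parses a `key=value;...` string and expands the `UseDevelopmentStorage=true` shortcut into the well-known account name and key listed above. The local endpoints (127.0.0.1 on ports 10000, 10001, and 10002 for blobs, queues, and tables) are the development storage defaults as I understand them; verify them against your SDK version.

```csharp
using System;
using System.Collections.Generic;

public static class StorageConnectionString
{
    const string DevAccount = "devstoreaccount1";
    const string DevKey = "Eby8vdM02xNOcqFlqUwJPLlmEtlCDXJ1OUzFT50uSRZ6IFsuFq2UVErCz4I6tq/K1SZFPTOtr/KBHBeksoGMGw==";

    public static Dictionary<string, string> Parse(string connectionString)
    {
        var settings = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        foreach (var pair in connectionString.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries))
        {
            if (pair.Trim().Length == 0) continue;
            // Split on the FIRST '=' only: Base64 account keys end with '=' padding.
            int eq = pair.IndexOf('=');
            if (eq <= 0) throw new FormatException("Malformed setting: " + pair);
            settings[pair.Substring(0, eq).Trim()] = pair.Substring(eq + 1).Trim();
        }

        string useDev;
        if (settings.TryGetValue("UseDevelopmentStorage", out useDev) &&
            string.Equals(useDev, "true", StringComparison.OrdinalIgnoreCase))
        {
            // Expand the shortcut into the fixed development storage credentials.
            settings["AccountName"] = DevAccount;
            settings["AccountKey"] = DevKey;
            settings["BlobEndpoint"] = "http://127.0.0.1:10000/" + DevAccount;
            settings["QueueEndpoint"] = "http://127.0.0.1:10001/" + DevAccount;
            settings["TableEndpoint"] = "http://127.0.0.1:10002/" + DevAccount;
        }
        return settings;
    }
}
```

Switching between local and cloud storage then becomes a configuration change, for example from `UseDevelopmentStorage=true` to `DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=...`, with no code changes.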

Keep in mind though that development storage is not implemented in exactly the same way as a true Azure storage account (for one thing, few of us have 100TB of disk space locally!), but for the majority of your needs it should suffice as you’re developing and testing your application. Also note that you can run your application in the development fabric but access storage (blobs, queues, and tables, but not drives) in the cloud by simply specifying an account name and key for a bona fide Azure storage account. You might do that as a second level of testing before incurring the expense and time to deploy your web and worker roles to the cloud.

Provisioning an Azure Storage Account

Sooner or later, you’re going to outgrow the local development storage and want to test your application in the actual cloud. The first step toward doing so is creating a storage account within your Azure project. You accomplish this via the Windows Azure Developer Portal as I’ve outlined below. If you’re well-versed in these steps, feel free to skip ahead. …

Jim continues with a table of steps for creating an Azure Storage account (excised for brevity).

Table Storage in Azure@home

With your storage account provisioned, you’re now equipped to create and manipulate your data in the cloud, and for Azure@home that specifically means two tables: client and workunit.

As depicted on the left, data is inserted into the client table by WebRole, and that data consists of a single row including the name, team number, and location (lat/long) of the ‘folder’ for the Folding@home client application. We’ll see in a subsequent post that multiple worker roles are polling this table waiting for a row to arrive so they can pass that same data on to the Folding@home console application provided by Stanford.

WebRole also reads from a second table, workunit, which contains rows reflecting both the progress of in-process work units and the statistics for completed work units. The progress data is added and updated in that table by the various worker roles.

Reiterating what I mentioned above, Azure tables provide structured, non-relational, non-schematized storage (essentially a NoSQL offering). Let’s break down what that means:

- structured – Every row (or more correctly, entity) in a table has a defined structure and is atomic. An entity has properties (‘columns’), and those properties have specific data types like string, double, int, etc. Contrast that with blob storage where each entity is just a series of bytes with no defined structure. Every Azure table also includes three properties:

  - PartitionKey – a string value; the ‘partition’ has great significance in terms of performance and scalability:

    - the table clusters data in the same partition, which enhances locality of reference, and conversely it distributes different partitions across multiple storage nodes

    - each partition is served by a single storage node (which is beneficial for intra-partition queries but *can* present a bottleneck for updates)

    - transactional capabilities (Entity Group Transactions) apply only within a partition

  - RowKey – a string value that, along with the PartitionKey, forms the unique (and currently only) index for the table

  - Timestamp – a read-only DateTime value assigned by the server marking the time the entity was last modified

Recommendation: The selection of PartitionKey and RowKey is perhaps the most important decision you’ll make when designing an Azure table. For a good discussion of the considerations you should make, be sure to read the Windows Azure Table whitepaper by Jai Haridas, Niranjan Nilakantan, and Brad Calder. There are complementary white papers for blob, queue, and drive storage as well.

- non-relational – This is not SQL Server or any of the other DBMSes you’ve grown to love. There are no joins, there is no SQL; for that you can use SQL Azure. But before you jump ship here back to the relational world, keep in mind that the largest database supported by SQL Azure is 50GB, and the largest table store supported by Windows Azure is 100TB (equivalent of 2000 maxed-out SQL Azure databases), and even then you can provision multiple 100TB accounts! Relational capability comes at a price, and that price is scalability and performance. Why, you ask? Check out the references for the CAP Theorem.

- non-schematized – Each entity in a table has a well-defined structure; however, that structure can vary among the entities in the table. For example, you could store employees and products in the same table, and employees may have properties (columns) like last_name and hire_date, while products may have properties like description and inventory. The only properties that have to be shared by all entities are the three mentioned above (PartitionKey, RowKey, and Timestamp). That said, in practice it’s fairly common to impose a fixed schema on a given table, and when using abstractions like ORMs and the StorageClient API, it’s somewhat of a necessity.
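To make the non-schematized point concrete, the sketch below models a table as a set of property bags keyed by PartitionKey and RowKey. It is an in-memory illustration only (`PropertyBagTable` is a hypothetical name, and real entities travel as OData/Atom payloads), but it shows an employee and a product coexisting in one table while sharing only the three system properties.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory stand-in for an Azure table; not the StorageClient API.
public class PropertyBagTable
{
    private readonly Dictionary<Tuple<string, string>, Dictionary<string, object>> _rows =
        new Dictionary<Tuple<string, string>, Dictionary<string, object>>();

    public void Insert(string partitionKey, string rowKey, Dictionary<string, object> properties)
    {
        var key = Tuple.Create(partitionKey, rowKey);
        // PartitionKey + RowKey is the only (and unique) index.
        if (_rows.ContainsKey(key))
            throw new InvalidOperationException("Entity already exists: " + partitionKey + "/" + rowKey);

        // Each entity keeps whatever properties it was given, plus the three system properties.
        var entity = new Dictionary<string, object>(properties);
        entity["PartitionKey"] = partitionKey;
        entity["RowKey"] = rowKey;
        entity["Timestamp"] = DateTime.UtcNow; // set by the "server", not the caller
        _rows.Add(key, entity);
    }

    public Dictionary<string, object> Get(string partitionKey, string rowKey)
    {
        Dictionary<string, object> entity;
        return _rows.TryGetValue(Tuple.Create(partitionKey, rowKey), out entity) ? entity : null;
    }
}
```

The ORM-style classes used with StorageClient give up this flexibility in exchange for compile-time types, which is why a fixed schema per table is the common practice mentioned above.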

Let’s take a look at the schema we defined for the two tables supporting the Azure@home project:

client Table

The PartitionKey defined for this table is the UserName, and the RowKey is the PassKey. Was that the best choice? Well, in this case the point is moot, since there will be at most one record in this table.

workunit Table

The PartitionKey defined for this table is the InstanceId, and the RowKey is a concatenation of the Name, Tag, and DownloadTime fields. Why those choices?

- The InstanceId defines the WorkerRole currently processing the work unit. At any given time there is one active role instance with that ID, and so it’s the only one that’s going to write to that partition in the workunit table, avoiding any possibility of contention. Other concurrently executing worker roles will also be writing to that table, but they will have different InstanceIds and thus target different storage nodes. Over time each partition will grow since each WorkerRole retains its InstanceId after completing a work unit and moving on to another, but at any given time the partition defined by that InstanceId has a single writer.

- Remember that the PartitionKey + RowKey defines the unique index for the entity. When a work unit is downloaded from Stanford’s servers (by the WorkerRole instances), it’s identified primarily by a name and a tag (e.g., “TR462_B_1 in water” is the name of a work unit, and “P6503R0C68F89” is a tag). Empirically we discovered that the same work units may be handed out to multiple different worker roles, so for our purposes the Name+Tag combination was not sufficient. Adding the DownloadTime (also provided by the Stanford application) gave us the uniqueness we required.
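Here is a minimal sketch of that key scheme. The post doesn't say how Name, Tag, and DownloadTime are concatenated or how the time is formatted, so the `|` separator, the sortable UTC timestamp format, and the `WorkUnitKeys` class are assumptions for illustration. The check for `/`, `\`, `#`, and `?` reflects characters that Azure table keys do not allow.

```csharp
using System;
using System.Globalization;

public static class WorkUnitKeys
{
    // Characters that are not permitted in PartitionKey or RowKey values.
    private static readonly char[] Disallowed = { '/', '\\', '#', '?' };

    // One partition per worker role instance, so each partition has a single writer.
    public static string PartitionKey(string instanceId)
    {
        return Validate(instanceId);
    }

    // Hypothetical format: Name|Tag|DownloadTime (UTC, e.g. 2010-08-05T14:30:00Z).
    public static string RowKey(string name, string tag, DateTime downloadTimeUtc)
    {
        string time = downloadTimeUtc.ToString("yyyy-MM-dd'T'HH:mm:ss'Z'", CultureInfo.InvariantCulture);
        return Validate(name + "|" + tag + "|" + time);
    }

    private static string Validate(string key)
    {
        if (key.IndexOfAny(Disallowed) >= 0)
            throw new ArgumentException("Key contains a character not allowed in table keys: " + key);
        return key;
    }
}
```

Two downloads of the same work unit (same Name and Tag) at different times yield different RowKeys, which is exactly the uniqueness the DownloadTime component was added to provide.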

Programmatic Access

When I introduced Azure storage above, I mentioned how all the storage options share a consistent RESTful API. REST stands for Representational State Transfer, a term coined by Dr. Roy Fielding in his Ph.D. dissertation back in 2000. In a nutshell, REST is an architectural style that exploits the natural interfaces of the web (including a uniform API, resource based access, and using hypermedia to communicate state). In practice, RESTful interfaces on the web

- treat URIs as distinct resources (versus operations as is typical in SOAP-based APIs),

- use more of the spectrum of available HTTP verbs (specifically, but not exclusively, GET for retrieving a resource, PUT for updating a resource, POST for adding a resource, and DELETE for removing a resource), and

- embed hyperlinks in resource representations to navigate to other related resources, such as in a parent-child relationship.
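Applied to Azure table storage, the resource-oriented style gives every table and every entity its own URI. The sketch below builds those addresses for a hypothetical account named `myaccount`; the entity URI shape, `table(PartitionKey='<pk>',RowKey='<rk>')`, follows the Table service's OData addressing, while `TableAddress` is an illustrative name. Note that the Table service itself uses POST to insert, PUT to replace, MERGE to update selected properties, and DELETE to remove an entity.

```csharp
using System;

public static class TableAddress
{
    // OData string literals escape a single quote by doubling it; the value is then URL-encoded.
    private static string Literal(string value)
    {
        return "'" + Uri.EscapeDataString(value.Replace("'", "''")) + "'";
    }

    // Inserts are POSTed to the table URI.
    public static string TableUri(string account, string table)
    {
        return "https://" + account + ".table.core.windows.net/" + table;
    }

    // GET (read), PUT (replace), MERGE (partial update) and DELETE target the entity URI.
    public static string EntityUri(string account, string table, string partitionKey, string rowKey)
    {
        return TableUri(account, table) +
               "(PartitionKey=" + Literal(partitionKey) + ",RowKey=" + Literal(rowKey) + ")";
    }
}
```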

OData

The RESTful architecture employed by Azure table storage specifically subscribes to the Open Data Protocol (or OData), an open specification for data transfer on the web. OData is a formalization of the protocol used by WCF Data Services (née ADO.NET Data Services, née “Astoria”).

The obvious benefit of OData is that it makes Azure storage accessible to any client, any platform, any language that supports an HTTP stack and the Atom syndication format (an XML specialization) – PHP, Ruby, curl, Java, you name it. At the lowest levels, every request to Azure storage – retrieving data, updating a value, creating a table – occurs via an HTTP request/response cycle (“the uniform interface” in this RESTful implementation).
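To see the uniform interface at work, the sketch below builds (without sending) the GET request for every entity in one partition of a table. The `$filter` syntax is OData's, and `x-ms-date` and `x-ms-version` are headers the storage service expects; the version string is a caller-supplied assumption here, and the Authorization header (computed per request for shared key authentication) is intentionally left out. `TableQueryRequest` is a hypothetical name.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class TableQueryRequest
{
    // Builds, but does not send, the HTTP request for "all entities in one partition".
    public static HttpRequestMessage ForPartition(string account, string table,
                                                  string partitionKey, string apiVersion)
    {
        // OData filter expression; single quotes inside the value are doubled.
        string filter = "PartitionKey eq '" + partitionKey.Replace("'", "''") + "'";
        var uri = new Uri("https://" + account + ".table.core.windows.net/" + table +
                          "()?$filter=" + Uri.EscapeDataString(filter));

        var request = new HttpRequestMessage(HttpMethod.Get, uri);
        request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/atom+xml"));
        request.Headers.Add("x-ms-date", DateTime.UtcNow.ToString("R"));
        request.Headers.Add("x-ms-version", apiVersion);
        return request;
    }
}
```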

StorageClient

While it’s great to have such an open and common interface, as developers, our heads would quickly explode if we had to craft HTTP requests and parse HTTP responses for every data access operation (just as they would explode if we had to code to the core ODBC API or parse TDS for SQL Server). The abstraction of the RESTful interface that we crave is provided in the form of the StorageClient API for .NET, and there are abstractions available for PHP, Ruby, Java, and others. StorageClient provides a LINQ-enabled model, with client-side tracking, that abstracts all of the underlying HTTP implementation. If you’ve worked with LINQ to SQL or the ADO.NET Entity Framework, the programmatic model will look familiar.

To handle the object-“relational” mapping in Windows Azure there’s a bit of a manual process required to define your entities, which makes sense since we aren’t dealing with nicely schematized tables as with LINQ to SQL or the Entity Framework. In Azure@home that mapping is incorporated in the AzureAtHomeEntities project/namespace, which consists of a single code file: AzureAtHomeEntities.cs. There’s a good bit of code in that file, but it divides nicely into three sections:

- a ClientInformation entity definition,

- a WorkUnit entity definition, and

- a ClientDataContext.

Jim continues with source code for the preceding sections and concludes:

At this point we’ve got a lot of great scaffolding set up, but we haven’t actually created our tables yet, much less populated them with data! In the next post, I’ll revisit the WebRole implementation to show how to put the StorageClient API and the entities described above to use.
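Since Jim's entity code is not reproduced here, the following sketch only suggests the shape a ClientInformation entity might take. It declares a stand-in base class so it compiles without the Azure SDK (in the real project the base would be StorageClient's TableServiceEntity), maps UserName to PartitionKey and PassKey to RowKey as described for the client table, and uses hypothetical property names for the team number and location.

```csharp
using System;

// Hypothetical stand-in for StorageClient's TableServiceEntity, declared only so this compiles.
public abstract class TableEntityBase
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }
    public DateTime Timestamp { get; set; }
}

// Illustrative shape only; property names other than UserName/PassKey are assumptions.
public class ClientInformation : TableEntityBase
{
    // Data-services materialization typically requires a public parameterless constructor.
    public ClientInformation() { }

    public ClientInformation(string userName, string passKey, int teamNumber,
                             double latitude, double longitude)
    {
        PartitionKey = userName; // PartitionKey = UserName
        RowKey = passKey;        // RowKey = PassKey
        UserName = userName;
        PassKey = passKey;
        TeamNumber = teamNumber;
        Latitude = latitude;
        Longitude = longitude;
    }

    public string UserName { get; set; }
    public string PassKey { get; set; }
    public int TeamNumber { get; set; }
    public double Latitude { get; set; }
    public double Longitude { get; set; }
}
```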

SQL Azure Database, Codename “Dallas” and OData

•• Darryl K. Taft offered Microsoft LightSwitch: 15 Reasons Non-Programmers Should Try It Out on 8/4/2010 in the form of a slideshow:

Microsoft has announced a new product called Microsoft Visual Studio LightSwitch, which the company claims is the simplest way to build business applications for the desktop and cloud.

LightSwitch is a new member of the Visual Studio family focused on making it easy to develop line-of-business applications. Jason Zander, corporate vice president for Visual Studio at Microsoft, says, at their core, "most end-user business applications combine two things: data plus screens." LightSwitch is optimized around making these two things very simple, he said.

Simple export of data from grids to Excel worksheets is #11 of the 15 reasons.

•• James Senior (@jsenior) tweeted The OData helper for WebMatrix is now on the list of consumers at odata.org http://www.odata.org/consumers #webmatrix on 8/5/2010:

Here are the OData Helper for WebMatrix link’s contents (written by James):

Project Description

The OData Helper for WebMatrix and ASP.NET Web Pages allows you to easily retrieve and update data from any service that exposes its data using the OData Protocol.

Get Started in 60 seconds

- Download the OData Helper DLL

- In your WebMatrix project, create a folder named "bin" off the root

- Copy the OData Helper DLL there

- Check out the documentation

- Start writing queries!

Documentation and Samples

Documentation is available here, and be sure to check out the sample application, which is available as a download too.

Other useful resources

- View the WebMatrix website to learn more about WebMatrix and download it

- Watch James Senior's video tutorial on using the OData Helper

- For more information on OData check out the spec website.

- Find out which websites and services are producing OData feeds

- Learn how to query a service using the OData syntax

•• Doug Finke (@ODataPrimer) updated his Welcome to OData Primer page on 8/7/2010:

What is OData?

The Open Data Protocol (OData) is an open protocol for sharing data. It provides a way to break down data silos and increase the shared value of data by creating an ecosystem in which data consumers can interoperate with data producers in a way that is far more powerful than currently possible, enabling more applications to make sense of a broader set of data. Every producer and consumer of data that participates in this ecosystem increases its overall value.

Following are categories of Doug’s many links to OData resources:

- List of Articles about Consuming OData Services

- List of Articles about Producing OData Services

- List of Podcasts about OData

- List of Screencasts, Videos, Slide Decks and Presentations about OData

- Where to get your questions answered?

- OData Feeds to Use and Test (Free)

- OData Tools

- Open Discussions

- OData Open Source/Source Available Projects and Code Samples

- OData People

• Wayne Walter Berry (@WayneBerry) updated his Handling Transactions in SQL Azure post of 7/19/2010 on 8/6/2010:

In this article I am going to touch on some of the aspects of transaction handling in SQL Azure.

This blog post was originally published on 7/19/2010; it was updated on 8/06/2010 for clarity and to address some of the issues raised in the comments.

Local Transactions

SQL Azure supports local transactions. These types of transactions are done with the Transact-SQL commands BEGIN TRANSACTION, ROLLBACK TRANSACTION, COMMIT TRANSACTION. They work exactly the same as they do on SQL Server.

Isolation Level

By default, SQL Azure enables read committed snapshot isolation (RCSI) database-wide by setting both the READ_COMMITTED_SNAPSHOT and ALLOW_SNAPSHOT_ISOLATION database options to ON; learn more about isolation levels here. You cannot change the database's default isolation level. However, you can control the isolation level explicitly on a connection. One way to do this is to issue any one of these statements in SQL Azure before you BEGIN TRANSACTION:

SET TRANSACTION ISOLATION LEVEL SERIALIZABLE
SET TRANSACTION ISOLATION LEVEL SNAPSHOT
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ
SET TRANSACTION ISOLATION LEVEL READ COMMITTED
SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED

SET TRANSACTION ISOLATION LEVEL controls the locking and row versioning behavior of Transact-SQL statements issued by a connection to SQL Server and spans batches (GO statement). All of the above works exactly the same as SQL Server.

Distributed Transactions in SQL Azure

SQL Azure Database does not support distributed transactions, which are transactions that involve multiple transaction managers (multiple resources). For more information, see Distributed Transactions (ADO.NET). This means that SQL Azure doesn’t allow Microsoft Distributed Transaction Coordinator (MS DTC) to delegate distributed transaction handling. Because of this, you can’t use ADO.NET or MS DTC to commit or roll back a single transaction that spans multiple SQL Azure databases or a combination of SQL Azure and an on-premise SQL Server.

This doesn’t mean that SQL Azure doesn’t support transactions, it does. However, it only supports transactions that are not escalated to a resource manager such as MS DTC. An article entitled: Transaction Management Escalation on MSDN can give you more information.

TransactionScope

The TransactionScope class provides a simple way to mark a block of code as participating in a transaction, without requiring you to interact with the transaction itself. The TransactionScope class works with the Transaction Manager to determine how the transaction will be handled. If the transaction manager determines that the transaction should be escalated to a distributed transaction, using the TransactionScope class will cause a runtime exception when running commands against SQL Azure, since distributed transactions are not supported.

So the question is when is it safe to use the TransactionScope class with SQL Azure? The simple answer is whenever you use it in a way that the Transaction Manager does not promote the transaction to a distributed transaction. So another way to ask the question is what causes the transaction manager to promote the transaction? Here are some cases that cause the transaction to be promoted:

- When you have multiple connections to different databases.

- When you have nested connections to the same database.

- When the ambient transaction is a distributed transaction, and you don’t declare a TransactionScopeOption.RequiresNew.

- When you invoke another resource manager with a database connection.

Juval Lowy wrote an excellent whitepaper (downloadable here) all about System.Transactions, where he covers promotion rules in detail.

Because transaction promotion happens at runtime you need to make sure you understand all your runtime code paths in order to use TransactionScope successfully. You don’t want the thread calling your method to be involved in an ambient transaction.

SqlTransaction

One way to write your code without using the TransactionScope class is to use SqlTransaction. The SqlTransaction class doesn’t use the transaction manager; it wraps the commands within a local transaction that is committed when you call the Commit() method. You still can’t have a single transaction across multiple databases; however, the SqlTransaction class provides a clean way in C# to wrap the commands. If your code throws an exception, the using statement guarantees a call to Dispose() (SqlTransaction implements IDisposable), which rolls back the uncommitted transaction.

Here is some example code to look over:

using System.Data;
using System.Data.SqlClient;

// ConnectionString is assumed to hold a valid SQL Azure connection string.
using (SqlConnection sqlConnection = new SqlConnection(ConnectionString))
{
    sqlConnection.Open();
    using (SqlTransaction sqlTransaction = sqlConnection.BeginTransaction())
    {
        // Create the SqlCommand object and execute the first command.
        using (SqlCommand firstCommand = new SqlCommand("sp_DoFirstPieceOfWork", sqlConnection, sqlTransaction))
        {
            firstCommand.CommandType = CommandType.StoredProcedure;
            firstCommand.ExecuteNonQuery();
        }

        // Create a second SqlCommand object and execute the second command.
        using (SqlCommand secondCommand = new SqlCommand("sp_DoSecondPieceOfWork", sqlConnection, sqlTransaction))
        {
            secondCommand.CommandType = CommandType.StoredProcedure;
            secondCommand.ExecuteNonQuery();
        }

        // Commit only after both commands succeed; if an exception escapes first,
        // disposing the uncommitted transaction rolls it back.
        sqlTransaction.Commit();
    }
}

• Joydip Kanjilal’s In Sync: Creating Synchronization Providers With The Sync Framework appeared in MSDN Magazine’s August 2010 “Dealing with Data” issue:

The Sync Framework can be used to build apps that synchronize data from any data store using any protocol over a network. We’ll show you how it works and get you started building a custom sync provider.

Learn how to use the AtomPub protocol to pump up your blogs. Chris Sells includes a practical demonstration of mapping so you can expose a standard AtomPub service from a Web site and use Windows Live Writer to provide a rich editing experience against the service.

• Michael Maddox’s Consuming OData from Silverlight 4 with Visual Studio 2010 includes workarounds for discovered problems:

Consuming OData from Silverlight 4 can be a very frustrating experience for people like me who are just now joining the Silverlight party.

When it doesn't work, it can fail silently and/or with incorrectly worded warnings.

This is a perfect example of where a blog post can hopefully fill in some of the gaps left by the Microsoft documentation.

First, let's set up a very simple Silverlight 4 application against the odata.org Northwind service.

Note: You may need to install the Silverlight 4 Tools for VS 2010 for this sample.

http://www.bing.com/search?q=Microsoft+Silverlight+4+Tools+for+Visual+Studio+2010

In Visual Studio 2010:

File -> New -> Project

Visual C# -> Silverlight -> Silverlight Application -> OK

New Silverlight Application wizard

Uncheck "Host the Silverlight application in a new website"

Silverlight Version: Silverlight 4

OK

Right Click References in Solution Explorer -> Add Service Reference...

Address: http://services.odata.org/Northwind/Northwind.svc/

Namespace: RemoteNorthwindServiceReference

OK

From the toolbox, drag and drop a DataGrid from the "Common Silverlight Controls" section onto MainPage.xaml

Change the AutoGenerateColumns property to True

Change the Margin to 0,0,0,0

Change the Width to 400

In MainPage.xaml.cs:

Add the following using directive:

using System.Data.Services.Client;

Add the following class variable:

private DataServiceCollection<RemoteNorthwindServiceReference.Shipper> _shippers;

Add the following to the bottom of the MainPage constructor method:

RemoteNorthwindServiceReference.NorthwindEntities remoteNorthwindService =
    new RemoteNorthwindServiceReference.NorthwindEntities(
        new Uri("http://services.odata.org/Northwind/Northwind.svc/"));
_shippers = new DataServiceCollection<RemoteNorthwindServiceReference.Shipper>();
_shippers.LoadCompleted += new EventHandler<LoadCompletedEventArgs>(_shippers_LoadCompleted);
var query = from shippers in remoteNorthwindService.Shippers select shippers;
_shippers.LoadAsync(query);

Add the following new method:

private void _shippers_LoadCompleted(object sender, LoadCompletedEventArgs e)
{
    if (_shippers.Continuation != null)
    {
        _shippers.LoadNextPartialSetAsync();
    }
    else
    {
        this.dataGrid1.ItemsSource = _shippers;
        this.dataGrid1.UpdateLayout();
    }
}

Debug -> Start Without Debugging

You should get a dialog box titled "Silverlight Project" that says:

"The Silverlight project you are about to debug uses web services. Calls to the web service will fail unless the Silverlight project is hosted in and launched from the same web project that contains the web services. Do you want to debug anyway?"

Click "Yes"

This application runs fine. The warning dialog message was obviously inaccurate and misleading.

I wish I had understood what this warning dialog was trying to say, but since the remote OData service was working, I had no real choice but to ignore the dialog, which would haunt me when trying to use the same application to call a local OData service.

I believe this dialog is trying to warn you that Silverlight has special constraints when calling web services, but it's still not clear to me what those are. Proceed cautiously.
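The `Continuation` check in `_shippers_LoadCompleted` handles OData server-driven paging: the service returns one page plus a continuation link, and the client keeps asking until none remains. The synchronous sketch below isolates that loop, using a hypothetical in-memory `FakePagedService` in place of the Northwind service and `DataServiceCollection`, so it runs without a network.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical page source standing in for an OData feed with server-driven paging.
public class FakePagedService
{
    private readonly List<string> _items;
    private readonly int _pageSize;

    public FakePagedService(List<string> items, int pageSize)
    {
        _items = items;
        _pageSize = pageSize;
    }

    // Returns one page and a continuation token (the next offset), or null when done.
    public List<string> GetPage(int? continuation, out int? next)
    {
        int start = continuation ?? 0;
        int count = Math.Min(_pageSize, _items.Count - start);
        var page = _items.GetRange(start, count);
        next = start + count < _items.Count ? start + count : (int?)null;
        return page;
    }
}

public static class PagingClient
{
    // Same shape as the LoadCompleted handler: keep loading while a continuation exists.
    public static List<string> LoadAll(FakePagedService service)
    {
        var all = new List<string>();
        int? continuation = null;
        do
        {
            int? next;
            all.AddRange(service.GetPage(continuation, out next));
            continuation = next;
        } while (continuation != null);
        return all;
    }
}
```

Binding the grid only in the final `else` branch, as the handler does, avoids showing a partially loaded list when the service pages its results.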

Here is one way to successfully call a local OData web service from Silverlight 4 in Visual Studio 2010:

File -> New -> Project

Visual C# -> Silverlight -> Silverlight Application -> OK

New Silverlight Application wizard

OK (Accept the defaults: ASP.NET Web Application Project, Silverlight 4, etc.)

Right Click SilverlightApplication<number>.Web in Solution Explorer -> Add New Item...

Visual C# -> Data -> ADO.NET Entity Data Model -> Add

Entity Data Model Wizard

Next (Generate from database - this assumes you have Northwind running locally)

New Connection... -> Point to your local Northwind Database Server -> Next

Select the Tables check box (to select all tables) -> Finish

Right Click SilverlightApplication<number>.Web in Solution Explorer -> Add New Item...

Visual C# -> Web -> WCF Data Service -> Add

In WcfDataService1.cs:

Replace " /* TODO: put your data source class name here */ " with "NorthwindEntities" (no quotes)

Add the following line to the InitializeService method:

config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);

Debug -> Start Without Debugging

Right Click References under SilverlightApplication<number> in Solution Explorer -> Add Service Reference...

Discover -> Services In Solution (note the port number of the discovered service, you will need it below)

Namespace: LocalNorthwindServiceReference

OK

Stop the browser that was started above

Follow the same basic steps as the first example above to modify MainPage except:

Replace RemoteNorthwindServiceReference with LocalNorthwindServiceReference

Replace "http://services.odata.org/Northwind/Northwind.svc/" with "http://localhost:<port number noted above>/WcfDataService1.svc/"

Debug -> Start Without Debugging

This application runs fine (without the warning dialog this time).

It can be ridiculously difficult to get a local OData service working with Silverlight 4 if you don't carefully dance around the project setup issues.

The warning dialog when you start debugging is nearly useless, and the app will return no data with no apparent errors if you set up the project incorrectly.

Some references I found useful while building this sample:

MSDN: How to: Create the Northwind Data Service (WCF Data Services/Silverlight)

http://msdn.microsoft.com/en-us/library/cc838239(VS.95).aspx

Audrey PETIT's blog: Use OData data with WCF Data Services and Silverlight 4

http://msmvps.com/blogs/audrey/archive/2010/06/10/odata-use-odata-data-with-wcf-data-services-and-silverlight-4.aspx

Darrel Miller's Bizcoder blog: World’s simplest OData service

http://www.bizcoder.com/index.php/2010/03/26/worlds-simplest-odata-service/

Once you deploy your OData service to a real web hosting environment, you'll likely need to do some special setup to access your OData service from Silverlight 4 (clientaccesspolicy.xml / crossdomain.xml):

Making a Service Available Across Domain Boundaries

http://msdn.microsoft.com/en-us/library/cc197955(VS.95).aspx

Tremendous thanks go out to Scott Davis of Ignition Point Solutions for pointing me to the flawed project setup as the reason I couldn't get this working initially.
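For the cross-domain setup mentioned above, Silverlight looks for a clientaccesspolicy.xml file at the root of the domain that hosts the service. The following minimal Python sketch generates a permissive version of that file; the helper name build_client_access_policy is hypothetical, and the wide-open "*" domain and header settings are assumptions suited to testing, not a recommended production policy.

```python
import xml.etree.ElementTree as ET


def build_client_access_policy(allowed_domain: str = "*") -> str:
    """Return the text of a Silverlight clientaccesspolicy.xml file.

    allowed_domain is an assumption for illustration: "*" admits callers from
    any domain, which is convenient while testing but should normally be
    narrowed to the site that serves your Silverlight application.
    """
    root = ET.Element("access-policy")
    policy = ET.SubElement(ET.SubElement(root, "cross-domain-access"), "policy")

    # Who may call the service, and which request headers they may send.
    allow_from = ET.SubElement(policy, "allow-from", {"http-request-headers": "*"})
    ET.SubElement(allow_from, "domain", {"uri": allowed_domain})

    # Which paths on this host those callers may reach.
    grant_to = ET.SubElement(policy, "grant-to")
    ET.SubElement(grant_to, "resource", {"path": "/", "include-subpaths": "true"})

    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="utf-8"?>\n' + body


if __name__ == "__main__":
    # Save this output as clientaccesspolicy.xml at the root of the web site
    # that hosts the OData service.
    print(build_client_access_policy())
```

Narrowing allowed_domain to the host that serves your XAP is the usual first step once the service works.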

• Steve Fox posted Office Development using OData: Combining the Old with the New on 8/6/2010:

You may have heard the “We’re all in” message, but some of you may be wondering how the cloud applies to Office. The answer is that it applies in many different ways, which I’ll be exploring over the next few weeks and months with you. For example, the following are a couple of ways in which Office and the cloud come together:

- Rich Office client apps that integrate with the cloud (e.g. Word or Outlook add-ins that leverage cloud-based services or data). These apps can consume OData feeds, Web 2.0 applications, or Azure services.

- Integrating Office and SharePoint through Office server-side services such as InfoPath, Excel Services, and Access Services.

As mentioned above, one of the new ways you can leverage cloud-based data is by using OData; in this post I’d like to walk through a sample that integrates Outlook 2010 with OData. OData is the Open Data Protocol (http://www.odata.org/), which exposes web-based data through lightweight RESTful interfaces.

First, if you’re looking to get started with some pre-existing data, you can use one of a couple of OData samples: 1) Netflix data (http://odata.netflix.com/Catalog/) or 2) Northwind data (http://services.odata.org/Northwind/Northwind.svc/). For example, if you navigate to the Northwind URI you’ll see the following feed. Note that you can drill into the results by extending this URI; for example, entering http://services.odata.org/Northwind/Northwind.svc/Customers will return all of the Northwind customers.
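Extending the URI follows a few simple conventions: a key in parentheses selects one entity, a navigation property follows a relationship, and system query options such as $filter and $top narrow the results. This minimal Python sketch only builds such URIs, without fetching them; the helper odata_uri is hypothetical, and ALFKI and Country are Northwind sample values used for illustration.

```python
from urllib.parse import quote

# Base URI of the public Northwind sample feed used in the post.
NORTHWIND = "http://services.odata.org/Northwind/Northwind.svc/"

# Characters left unescaped so the query stays readable: $ , / ( ) and '.
SAFE_CHARS = "$,/()'"


def odata_uri(resource_path: str, **options: str) -> str:
    """Build a Northwind feed URI from a resource path and system query options.

    Option names are given without the leading '$' (filter -> $filter).
    """
    uri = NORTHWIND + resource_path
    if options:
        pairs = [f"${name}={quote(value, safe=SAFE_CHARS)}" for name, value in options.items()]
        uri += "?" + "&".join(pairs)
    return uri


if __name__ == "__main__":
    print(odata_uri("Customers"))                      # every customer
    print(odata_uri("Customers('ALFKI')/Orders"))      # one customer's orders
    print(odata_uri("Customers", filter="Country eq 'Germany'", top="5"))
```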

Using OData interfaces, you can leverage data like the Northwind data in a rich Office client application. Let’s walk through creating an Outlook add-in that leverages the Northwind data via OData.

To begin, open Visual Studio 2010 and use the Office 2010, Outlook add-in project template. Right-click and add a new WPF user control. In the figure below you can see that I’ve created a straightforward XAML-based user control. The UI includes a combo-box that will load customers, labels (e.g. Contact Name, Contact Address, etc.) that will display customer information, two buttons (one to view order data for a specific customer and another to create a contact from the customer data), and a listbox to display the order data.

The following shows the code for the above user control.

XAML code excised for brevity

After you’ve created the user control, add a service reference to the Northwind OData source by right-clicking the project and selecting Add Service Reference. Enter the URI http://services.odata.org/Northwind/Northwind.svc into the service reference dialog, and you’ll be able to use the Northwind data in your application. To get to the code-behind, right-click the XAML file and select View Code.

The code-behind for the user control is as follows. Note that you can use the OData connection as a data context (see bolded line of code). This application loads two sets of data from Northwind, Customers and Orders. You’ll also note that you can use LINQ queries against the OData feed, which in this application are used to populate the list collections for the customers and orders (the list collections use custom objects: oDataCustomer and oDataOrders). The LINQ queries also enable you to take the selected customer in the combo-box and display the orders for that customer. One of the interesting parts of this application is the integration of the OData Northwind data with the Outlook object model. For example, you can see in the btnAddCustomer_Click method you’re creating a new contact from the selected customer data.
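The excised code-behind is C#, but the core idea of the "orders for the selected customer" query can be shown as an in-memory filter. This is a minimal Python sketch, not the author's code: ODataCustomer and ODataOrder are hypothetical stand-ins for the post's oDataCustomer and oDataOrders classes, and the sample records are illustrative rather than read from the live feed.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ODataCustomer:
    """Hypothetical Python counterpart of the post's oDataCustomer class."""
    customer_id: str
    contact_name: str


@dataclass
class ODataOrder:
    """Hypothetical Python counterpart of the post's oDataOrders class."""
    order_id: int
    customer_id: str


def orders_for(selected: ODataCustomer, orders: List[ODataOrder]) -> List[ODataOrder]:
    """In-memory analogue of a LINQ query such as
    'from o in orders where o.CustomerID == selected.CustomerID select o'."""
    return [o for o in orders if o.customer_id == selected.customer_id]


if __name__ == "__main__":
    # Illustrative sample data, not values read from the live feed.
    customers = [ODataCustomer("ALFKI", "Maria Anders"), ODataCustomer("ANATR", "Ana Trujillo")]
    orders = [ODataOrder(1, "ALFKI"), ODataOrder(2, "ANATR"), ODataOrder(3, "ALFKI")]
    print([o.order_id for o in orders_for(customers[0], orders)])  # prints [1, 3]
```

Filtering on the client like this is fine for a small sample; for larger feeds it is usually better to let the service do the work with a URI such as Customers('ALFKI')/Orders.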

C# source code excised for brevity.

You can then click Create Contact to add that customer to your Outlook contacts. As the figure below shows, the customer is now added.

There are a ton of other ways to integrate with Office, and in future posts I’ll discuss other types of integrations, e.g. Azure and Web 2.0.

If you’re looking for the code, you can get the code for this post here: http://cid-40a717fc7fcd7e40.office.live.com/browse.aspx/Outlook%5E_oData%5E_Code.

[oData converted to OData throughout the preceding post.]

Wayne Walter Berry (@WayneBerry) invites you to Try SQL Azure for One Month for Free in this 8/6/2010 post to the SQL Azure Team blog:

How would you like to try SQL Azure and Windows Azure for a month - for free? Now you can, with the Windows Azure Platform One Month Pass USA. Through this unique offer, US developers can get a one month pass to try out SQL Azure and Windows Azure - without having to submit a credit card!

The offer is limited to US developers; the first 500 to sign up each month will get a pass good for one full calendar month. In addition, you'll get free phone, chat and email support through the Front Runner for Windows Azure program.

More Information: Windows Azure Platform One Month Pass USA

• Some of Wayne’s earlier posts on the Project 31-A blog about migrating from SQL Server 2005 to SQL Azure are here.

Bruce Kyle reported New PHP Drivers for SQL Server Support Improved BI, Connection to SQL Azure in this 8/5/2010 post to the US ISV Evangelism blog:

Microsoft Drivers for PHP for SQL Server 2.0 are now available. The new drivers add support for PHP Data Objects (PDO), integration with SQL Server business intelligence features, and connectivity to SQL Azure.

The driver, called PDO_SQLSRV, enables popular PHP applications to use the PDO data access “style” to interoperate with Microsoft’s SQL Server database. The new drivers make it easier for you to take advantage of SQL Server features, such as SQL Server's Reporting Services and Business Intelligence capabilities.

In addition to accessing SQL Server, the drivers also enable PHP developers to easily connect to and use SQL Azure for a reliable and scalable relational database in the cloud and expose OData feeds.

For more information, including information about the new architecture, sample code, highlights, and download information, see Microsoft Drivers for PHP for SQL Server 2.0 released from the SQL Server Team.

Alex James (@adjames) resets Enhancing OData support for querying derived types – revisited on 8/6/2010:

About a week ago we outlined some options for enhancing OData support for querying derived types.

From there the discussion moved to the OData Mailing list, where the community gave us very valuable feedback:

- Everyone seemed to like the idea in general - evidenced by the fact that all debate focused on syntax.

- Most liked the idea of the shorthand syntax for OfType.

- However Robert pointed out that using the !Employee syntax is weird because ! implies NOT to a lot of developers.

- Given this, some suggested alternatives like using @ or [].

- Erik wondered if we could simply use the name of the type in a new segment, dealing with any ambiguity by picking properties and navigations first.

- Robert pointed out that it would be hard to know when to use IsOf and when to use OfType.

Feedback Takeaways

Well, we just sat down and discussed all this, so what follows is an updated proposal taking into account your feedback.

Clearly the '! means NOT' issue is a showstopper; thanks for catching this early, Robert.

That option is out.

Most seem to want a shorthand syntax; the complicating factor, though, is that we really don't like ambiguity, so whatever syntax we choose must be precise and unambiguous.

Using []'s:

We considered using '[]' instead of ! like this:

~/People[Employee]

~/People[Employee](2)/Manager

~/People(2)/[Employee]/Manager

~/People(2)/Mother[Employee]/Manager

~/People(2)/Mother[Employee]/Manager/Father[Employee]/Reports

and something like this in query segments:

~/People/?$filter=[Employee]/Building eq 'Puget Sound Building 18'

~/People/?$expand=[Employee]/Manager

~/People/?$orderby=[Employee]/Manager/Firstname

This is okay but it does have at least three issues:

- It makes OData URIs a little harder to parse.

- It makes OData URIs harder to read.

- It makes []() combo segments reasonably common.

Not to mention it is the return of [] which has a colorful history in the OData protocol: early versions of Astoria used [] for keys!

Using Namespace Qualified Type Names:

We think a better option is to use Namespace Qualified Names.

The Namespace qualification removes any chance of ambiguity with property and navigation names. So each time an OfType or Null propagating cast is required, you simply create a new segment with the Namespace Qualified type name.

If Employee is in the HR namespace the earlier queries would now look like this:

~/People/HR.Employee

~/People/HR.Employee(2)/Manager

~/People(2)/HR.Employee/Manager

~/People(2)/Mother/HR.Employee/Manager

~/People(2)/Mother/HR.Employee/Manager/Father/HR.Employee/Reports

and something like this in query segments:

~/People/?$filter=HR.Employee/Building eq 'Puget Sound Building 18'

~/People/?$expand=HR.Employee/Manager

~/People/?$orderby=HR.Employee/Manager/Firstname

This does make the choice of your namespace more important than it is today, because it is more front and centre, but the final result is:

- URIs that are more readable.

- Casting is always done in a separate segment which makes it feel more like a function that operates on the previous segment.

- [] is preserved for later use.

So this seems like a good option.
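Because a cast always occupies its own segment, a server or client can classify segments mechanically. This is a minimal Python sketch under one assumption the proposal implies: property and navigation names never contain a '.', so any dotted segment is a namespace-qualified type cast. parse_path and Segment are hypothetical names, not part of any OData library, and the sketch ignores query options and keys that contain parentheses.

```python
import re
from typing import List, NamedTuple, Optional

# A path segment is a name with an optional key in parentheses, e.g. People(2).
SEGMENT_RE = re.compile(r"^(?P<name>[^()]+)(?:\((?P<key>[^()]*)\))?$")


class Segment(NamedTuple):
    kind: str            # "member", "cast" or "system"
    name: str
    key: Optional[str]   # text inside the parentheses, if any


def parse_path(path: str) -> List[Segment]:
    """Classify each segment of an OData resource path under the proposal.

    Assumes property and navigation names never contain '.', so any dotted
    segment is a namespace-qualified type cast. Query options (after '?')
    and keys containing parentheses are out of scope for this sketch.
    """
    segments = []
    for raw in path.lstrip("~").strip("/").split("/"):
        match = SEGMENT_RE.match(raw)
        if match is None:
            raise ValueError(f"malformed segment: {raw!r}")
        name, key = match.group("name"), match.group("key")
        if name.startswith("$"):
            kind = "system"      # $count, $value, ...
        elif "." in name:
            kind = "cast"        # e.g. HR.Employee
        else:
            kind = "member"      # entity set, property or navigation property
        segments.append(Segment(kind, name, key))
    return segments


if __name__ == "__main__":
    for seg in parse_path("~/People(2)/Mother/HR.Employee/Manager"):
        print(seg)
```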

Impact on the protocol

How does it work across the rest of the protocol?

404's

If you try something like this:

~/People(11)/HR.Employee

And person 11 is not an employee, you will get a 404. Indeed, if you try any other operations on this URI you will get a 404 too.

Composition

After using the Namespace Qualified Type name to filter and change the segment type, all normal OData URI composition rules should apply. So, for example, these queries should be valid:

~/People/HR.Employee/$count/?$filter=Firstname eq 'Bill'

~/People(6)/HR.Employee/Reports/$count

~/People(6)/HR.Employee/Reports(56)/Firstname/$value

Also you should be able to apply type filters to service operations that support composition:

~/GetPeopleOlderThanSixty/HR.Employee

$filter

As explained in the earlier post this:

~/People/?$filter=HR.Employee/Manager/Firstname eq 'Bill'

Will include only employees managed by Bill.

Whereas this:

~/People/?$filter=HR.Employee/Manager/Firstname eq 'Bill' or Firstname eq 'Bill'

Will include anyone called Bill or any *employee* managed by Bill.
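To make the null-propagating cast concrete, here is a minimal in-memory Python sketch of how the two $filter expressions above would evaluate. Person, Employee and the helper functions are hypothetical, and the sketch assumes, as OData comparison rules do, that comparing null with 'Bill' yields false rather than an error.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Person:
    firstname: str


@dataclass
class Employee(Person):
    manager: Optional["Employee"] = None


def manager_firstname(p: Person) -> Optional[str]:
    """Evaluate HR.Employee/Manager/Firstname with null propagation.

    The cast yields None for non-employees, and a missing Manager also
    yields None, instead of either one raising an error.
    """
    employee = p if isinstance(p, Employee) else None   # null-propagating cast
    if employee is None or employee.manager is None:
        return None
    return employee.manager.firstname


def managed_by_bill(people: List[Person]) -> List[Person]:
    # $filter=HR.Employee/Manager/Firstname eq 'Bill'
    return [p for p in people if manager_firstname(p) == "Bill"]


def bill_or_managed_by_bill(people: List[Person]) -> List[Person]:
    # $filter=HR.Employee/Manager/Firstname eq 'Bill' or Firstname eq 'Bill'
    return [p for p in people if manager_firstname(p) == "Bill" or p.firstname == "Bill"]
```

A Person named Bill who is not an Employee therefore appears only in the second result, exactly as the proposal describes.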

$expand

$expand will expand related results where possible. So this:

~/People/?$expand=HR.Employee/Manager

Would include managers for each employee using the standard OData <link><m:inline> approach, and non-employees would have no Manager <link>.

$orderby

This query:

~/People/?$orderby=HR.Employee/Manager/Firstname

Would order people by Manager name. And because HR.Employee is a null-propagating cast, any entries that aren't Employees would be ordered as if their Manager's Firstname were null.

$select

Given this query:

~/People/?$select=Firstname, HR.Employee/Manager/Firstname

All Employees will contain Firstname and Manager/Firstname. All non-employees will contain just Firstname.

You might expect people to have a NULL Manager/Firstname, but there is a real difference between having a NULL property value and having no property!
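The distinction between a NULL value and an absent property is easy to see in a small projection function. This minimal Python sketch represents entries as dictionaries; the "__type" key, the HR.Person base type and the select helper are assumptions made for illustration, not part of the proposal.

```python
from typing import Any, Dict, List, Optional

# Hypothetical type hierarchy: type name -> base type name (None for the root).
BASE_TYPE: Dict[str, Optional[str]] = {"HR.Person": None, "HR.Employee": "HR.Person"}


def is_of(type_name: Optional[str], target: str) -> bool:
    """True if type_name is target or derives from it."""
    while type_name is not None:
        if type_name == target:
            return True
        type_name = BASE_TYPE.get(type_name)
    return False


def select(entry: Dict[str, Any], paths: List[str]) -> Dict[str, Any]:
    """Project one entry onto a $select list under the proposed cast rules.

    A path that starts with a type cast (e.g. HR.Employee/Manager/Firstname)
    is applied only when the entry is of that type; otherwise the property
    is omitted entirely rather than returned with a None value.
    """
    result: Dict[str, Any] = {}
    for path in paths:
        parts = path.strip().split("/")
        if "." in parts[0]:
            if not is_of(entry.get("__type"), parts[0]):
                continue                      # wrong type: no property at all
            parts = parts[1:]
        value: Any = entry
        for part in parts:
            # Null propagation: once a step is None, the whole path is None.
            value = value.get(part) if value is not None else None
        result["/".join(parts)] = value
    return result
```

An employee without a manager gets "Manager/Firstname": None, while a non-employee has no such key at all.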

Inserts - POST

Today when inserting into a Collection that allows derived types you must specify the type using the entry's category's term.

However, if you post to a type-specialized URI like this:

~/People/HR.Employee

The server may be able to infer the exact type being created.

So if there are no types derived from HR.Employee, the server can unambiguously infer that you want to insert an employee, without the request specifying a category.

On the other hand if there are types derived from HR.Employee the request must specify the type via the category.

Finally, if the type is specified via the category and it is not an HR.Employee (or derived from HR.Employee), the request will fail.
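The three POST rules above amount to a small decision function. This is a minimal Python sketch under stated assumptions: the type hierarchy (including HR.Engineer, an invented subtype of HR.Employee) and the function names are hypothetical, and a real server would answer with an HTTP error status rather than raise ValueError.

```python
from typing import Dict, Optional

# Hypothetical hierarchy: type name -> base type name (None marks the root).
# HR.Engineer is an invented subtype of HR.Employee, used only for illustration.
BASE_TYPE: Dict[str, Optional[str]] = {
    "HR.Person": None,
    "HR.Employee": "HR.Person",
    "HR.Engineer": "HR.Employee",
}


def derives_from(type_name: Optional[str], target: str) -> bool:
    """True if type_name is target or one of its descendants."""
    while type_name is not None:
        if type_name == target:
            return True
        type_name = BASE_TYPE.get(type_name)
    return False


def resolve_insert_type(uri_type: str, category_term: Optional[str] = None) -> str:
    """Pick the entity type for a POST to a type-specialized URI such as
    ~/People/HR.Employee, following the rules proposed in the post."""
    if category_term is not None:
        # An explicit type must be the URI's type or derived from it.
        if not derives_from(category_term, uri_type):
            raise ValueError(f"{category_term} is not {uri_type} or derived from it")
        return category_term
    # No explicit type: infer it only if nothing derives from the URI's type.
    if any(base == uri_type for base in BASE_TYPE.values()):
        raise ValueError(f"types derive from {uri_type}; the request must specify a category")
    return uri_type
```

With this hierarchy, a POST to ~/People/HR.Engineer needs no category, while a POST to ~/People/HR.Employee must name one.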

Deep Inserts

OData already supports 'deep inserts'. A 'deep insert' creates an entity and builds a link to an existing entity. So posting here:

~/People(6)/Friends

Inserts a new person and creates a 'friends' link with Person 6.

The same type specification rules apply for deep inserts too, so posting here:

~/People(6)/Friends/HR.Employee

Will succeed only if the type is unambiguous and allowed (i.e. it is an Employee or derived from Employee).

Updates - PUT / MERGE / PATCH

You can't change type during an update. So putting here:

~/People(6)/HR.Employee

Will only succeed if Person 6 is an Employee.

The same applies for MERGE and will apply to PATCH when supported.

Deletes - DELETE

Delete is as you would expect, and will work unless the URI 404's.

Summary

Clearly adding better support for derived types to OData is valuable. And it seems like the proposal is starting to take shape - thanks in large part to your feedback!

What do you think?

The best place to tell us is on the OData Mailing List.

Wade Wegner (@WadeWegner) announced an Article: Tips for Migrating Your Applications to the Cloud about the SQL Azure Migration Wizard in MSDN Magazine’s August 2010 issue:

I had the great pleasure of co-authoring an article on application migration to Windows Azure for MSDN Magazine with my friend George Huey, creator of the SQL Azure Migration Wizard. This article stems from our work helping dozens of customers – both small and big – migrate their existing applications to run in the cloud.

(Ours is the second down on the left.)

While this article is by no means exhaustive, we did try to explain some of the common patterns and scenarios we faced when helping customers migrate their applications into Windows Azure. I hope you find it valuable.

Michael Desmond continues his Developers at VSLive! respond to Microsoft's LightSwitch visual development tool commentary with Gauging Response to Visual Studio LightSwitch of 8/4/2010:

A day after Microsoft Corporate Vice President of Visual Studio Jason Zander introduced the LightSwitch visual application development environment to the crowd at VSLive! in Redmond, Washington, opinions on the business-oriented tool are still evolving. During the announcement, Zander placed the value of LightSwitch squarely on the business end user.

"When you look at business applications, not all of them are written by us, the developers," Zander said Tuesday morning, noting that legions of business end users are spinning solutions in Access and SharePoint rather than waiting for corporate development to respond. "When I talk with people who are writing these types of applications, they say look, at some point it was easier for me to build it than just talk about it. We also know there are challenges when these applications start to grow up."

Sanjeev Jagtap of component-maker GrapeCity said the LightSwitch announcement has been a source of buzz among conference attendees. He said the product is aimed squarely at in-house IT application developers who are struggling with varied tools like Office, Access and Visual Studio, and need to manage the complexity of working with multiple data sources and offline/online scenarios.

"Microsoft's motivation in bringing this product would be to help accelerate the adoption of the new generation of the stack -- that includes Azure, SharePoint, Office, etc. -- by taking away the typical developer's pain in learning and correctly aggregating these technologies. So this product could very well be a win-win for both sides in this scenario," Jagtap said.

Several developers expressed doubt that LightSwitch would appeal to business analysts. One development manager for a state government said her end users are knowledgeable about process, but lack an understanding of the data structures in the organization. Another senior application developer, Mary Kay Larson in the City of Tacoma Information Technology department, said LightSwitch could prove very helpful in raising the productivity of entry level developers.

Walter Kimrey, information technology manager for software development at Delta Community Credit Union, looks at LightSwitch as a fresh take on rapid application development.

"Similar to how VB enabled rapid development of simple Windows applications back in the day, LightSwitch enables rapid development of Silverlight applications, but with the added benefits of the power, flexibility and interoperability of the .NET framework behind it," Kimrey said. "It at least appears to provide a very good starting point. Within our organization, I can see us using LightSwitch to rapidly develop in-house administrative applications."

Gent Hito, chief executive officer for data connector provider RSSBus, said his company worked closely with Microsoft to craft custom Entity Framework data providers for LightSwitch. LightSwitch provides mechanisms for both WCF RIA Services and Entity Framework. "It's the much harder option," Hito said of Entity Framework, "it's a much harder beast to deal with and build. But it makes much more sense from an integration perspective for the customers."

Hito was very positive about LightSwitch, noting that the product matured significantly from earlier pre-release versions. "It's a cool idea. It's coming together as a product. We'll see how adoption goes," he said. "If they keep this one simple, they will be very successful. They've done a very good job of keeping their nose clean and giving people exactly what they need."

Michael Desmond is editor in chief of Visual Studio Magazine and former editor in chief of Redmond Developer News.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Michele Leroux Bustamante’s Federated Identity: Passive Authentication for ASP.NET with WIF appeared in MSDN Magazine’s August 2010 “Dealing with Data” issue:

The goal of federated security is to provide a mechanism for establishing trust relationships between domains. Platform tools like Windows Identity Foundation (WIF) make it much easier to support this type of identity federation. We show you how.

Just in time for the Windows Azure AppFabric upgrade.

My (@rogerjenn) Creating a Windows Azure AppFabric Labs Account, Project, and Namespace tutorial of 8/6/2010 begins:

To take advantage of the new Windows Azure AppFabric Access Control Service preview features announced by the Azure AppFabric team on 8/5/2010, you must create an AppFabric Labs account, then add a named AppFabric project and namespace. Here’s how…

1. Log into the Windows Azure AppFabric Labs Portal with your Windows Live ID credentials to open the Summary page:

…

and ends:

7. Click Manage to open the Access Control Service page:

8. Future tutorials will address the Getting Started section’s five steps.

Stay tuned.

Justin Smith reported Major update to ACS now available in this 8/5/2010 blog post:

Today I’m excited to announce a major update to ACS. It’s available in our labs environment: http://portal.appfabriclabs.com. Keep in mind that there is no SLA around this release, but accounts and usage of the service are free while it is in the labs environment.

This release includes many of the features I discussed late last year: http://blogs.msdn.com/b/justinjsmith/archive/2009/09/28/access-control-service-roadmap-for-pdc-and-beyond.aspx. Here’s a snapshot of what’s in this release:

- Integrates with Windows Identity Foundation (WIF) and tooling

- Out-of-the-box support for popular web identity providers including: Windows Live ID, Google, Yahoo, and Facebook

- Out-of-the-box support for Active Directory Federation Server v2.0

- Support for OAuth WRAP, WS-Trust, and WS-Federation protocols

- Support for the SAML 1.1, SAML 2.0, and Simple Web Token (SWT) token formats

- Integrated and customizable Home Realm Discovery that allows users to choose their identity provider

- An OData-based Management Service that provides programmatic access to ACS configuration

- A Web Portal that allows administrative access to ACS configuration

There’s quite a bit more information available on our CodePlex project: http://acs.codeplex.com. There you will find documentation, screencasts, samples, readmes, an issue tracker, and discussion lists.

Also check out the Channel 9 video at http://channel9.msdn.com/shows/Identity/Introducing-the-new-features-of-the-August-Labs-release-of-the-Access-Control-Service.

Like always, I encourage you to check it out and let the team know what you think.

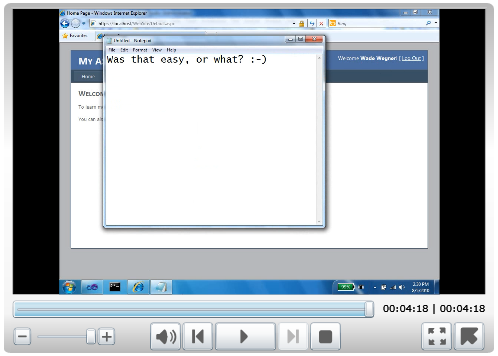

Wade Wegner (@WadeWegner) posted a 00:04:18 demo screencast of the new Access Control Services features on 8/6/2010 in his Use social web providers in less than 5 minutes post:

Significant Access Control Service (ACS) updates released to AppFabric LABS environment today. Take a look at the Windows Azure AppFabric team blog for the formal announcement. I’d rather show you a quick demo.

Quick summary of new capabilities in the Access Control Service:

- Integration with Windows Identity Foundation (WIF) and tooling

- Out-of-the-box support for popular web identity providers including: Windows Live ID, OpenID, Google, Yahoo, and Facebook

- Out-of-the-box support for Active Directory Federation Server v2.0

- Support for OAuth WRAP, WS-Trust, and WS-Federation protocols

- Support for the SAML 1.1, SAML 2.0, and Simple Web Token (SWT) token formats

- Integrated and customizable Home Realm Discovery that allows end-users to choose their identity provider

- An OData-based Management Service that provides programmatic access to ACS configuration

- A Web Portal that allows administrative access to ACS configuration

Try this out yourself. Use Visual Studio, install Windows Identity Foundation, and go to https://portal.appfabriclabs.com/.

For detailed information and a more verbose walkthrough (i.e. including explanations), [watch and] listen to Justin Smith’s walkthrough and interview.

The Windows Azure AppFabric Team posted three Access Control Services Video Clips to CodePlex on 8/4/2010:

- Walkthrough Sample that [s]hows the most basic walkthrough of ACS. It shows how to use ACS with ASP.NET MVC, Windows Identity Foundation, and web identity providers (e.g. Live ID, Google, Yahoo!, etc.) (00:12:59)

- IdElement Interview … with Vittorio [Bertocci (Vibro)] that discusses some of the key features of ACS (00:20:22)

- AD FS 2.0 Integration (Active Profile) shows how to connect a REST web service to an enterprise identity provider like AD FS 2.0. It is slightly dated (I refer to the team as .NET Services, which is no longer the case), but the capability shown in it still works with this version of ACS.

Justin Smith posted Access Control Service Samples and Documentation (Labs) to CodePlex on 8/3/2010:

Introduction

The Windows Azure AppFabric Access Control Service (ACS) makes it easy to authenticate and authorize users of your web sites and services. ACS integrates with popular web and enterprise identity providers, is compatible with most popular programming and runtime environments, and supports many protocols including: OAuth, OpenID, WS-Federation, and WS-Trust.

Contents

- Prerequisites: Describes what's required to get up and running with ACS.

- Getting Started: Walks through the simple end-to-end scenario

- Working with the Management Portal: Describes how to use the ACS Management Portal

- Working with the Security Token Service: Provides technical reference for working with the ACS Security Token Service

- ACS Token Anatomy: coming soon

- WS-Federation: coming soon

- WS-Trust: coming soon

- OAuth WRAP: coming soon

- Working with the Management Service: coming soon

- Samples: Descriptions and Readmes for the ACS Samples

Key Features

- Integrates with Windows Identity Foundation (WIF) and tooling

- Out-of-the-box support for popular web identity providers including: Windows Live ID, Google, Yahoo, and Facebook

- Out-of-the-box support for Active Directory Federation Server v2.0

- Support for OAuth, WS-Trust, and WS-Federation protocols

- Support for the SAML 1.1, SAML 2.0, and Simple Web Token (SWT) token formats

- Integrated and customizable Home Realm Discovery that allows users to choose their identity provider

- An OData-based Management Service that provides programmatic access to ACS configuration

- A Web Portal that allows administrative access to ACS configuration

Platform Compatibility

ACS is compatible with virtually any modern web platform, including .NET, PHP, Python, Java, and Ruby. For a list of .NET system requirements, see Prerequisites.

Core Scenario

Most of the scenarios that involve ACS consist of four autonomous services:

- Relying Party (RP): Your web site or service

- Client: The browser or application that is attempting to gain access to the Relying Party

- Identity Provider (IdP): The site or service that can authenticate the Client

- ACS: The partition of ACS that is dedicated to the Relying Party

The core scenario is similar for web services and web sites, though the interaction with web sites utilizes the capabilities of the browser.

Web Site Scenario

This web site scenario is shown below:

1. The Client (in this case a browser) requests a resource at the RP. In most cases, this is simply an HTTP GET.

2. Since the request is not yet authenticated, the RP redirects the Client to the correct IdP. The RP may determine which IdP to redirect the Client to by using the Home Realm Discovery capabilities of ACS.

3. The Client browses to the IdP authentication page, which prompts the user to log in.

4. After the Client is authenticated (e.g. the user enters credentials), the IdP issues a token.

5. After issuing a token, the IdP redirects the Client to ACS.

6. The Client sends the IdP-issued token to ACS.

7. ACS validates the IdP-issued token, inputs the data in the IdP-issued token to the ACS rules engine, calculates the output claims, and mints a token that contains those claims.

8. ACS redirects the Client to the RP.

9. The Client sends the ACS-issued token to the RP.

10. The RP validates the signature on the ACS-issued token, and validates the claims in the ACS-issued token.

11. The RP returns the resource representation originally requested in (1).

Web Service Scenario

The core web service scenario is shown below. It assumes that the web service client does not have access to a browser and the Client is acting autonomously (without a user directly participating in the scenario).

1. The Client logs in to the IdP (e.g. sends credentials).

2. After the Client is authenticated, the IdP mints a token.

3. The IdP returns the token to the Client.

4. The Client sends the IdP-issued token to ACS.

5. ACS validates the IdP-issued token, inputs the data in the IdP-issued token to the ACS rules engine, calculates the output claims, and mints a token that contains those claims.

6. ACS returns the ACS-issued token to the Client.

7. The Client sends the ACS-issued token to the RP.

8. The RP validates the signature on the ACS-issued token, and validates the claims in the ACS-issued token.

9. The RP returns the requested resource representation.
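The RP's validation step can be illustrated with the Simple Web Token (SWT) format, one of the token formats listed above. An SWT token is a set of form-encoded name/value pairs whose last pair, HMACSHA256, carries a base64 HMAC-SHA256 signature over everything before it, computed with a key shared by ACS and the RP. This minimal Python sketch assumes ACS issues an SWT token for this flow (SAML tokens would typically be validated by WIF instead) and that the signing key is already available as raw bytes; validate_swt and sign_swt are hypothetical helper names, and the Issuer and Audience values you compare against depend on your own ACS configuration.

```python
import base64
import hashlib
import hmac
import time
from typing import Dict, Optional
from urllib.parse import parse_qsl, quote, unquote

SIG_MARKER = "&HMACSHA256="


def sign_swt(body: str, key: bytes) -> str:
    """Append an HMACSHA256 signature to form-encoded claims (handy for local tests)."""
    digest = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    return body + SIG_MARKER + quote(base64.b64encode(digest).decode("ascii"), safe="")


def validate_swt(token: str, key: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> Dict[str, str]:
    """Check an SWT's signature, issuer, audience and expiry; return its claims.

    key is the relying party's shared signing key as raw bytes. Any failure
    raises ValueError.
    """
    body, sep, encoded_sig = token.rpartition(SIG_MARKER)
    if not sep:
        raise ValueError("token has no HMACSHA256 signature")
    expected = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    actual = base64.b64decode(unquote(encoded_sig))
    if not hmac.compare_digest(expected, actual):
        raise ValueError("signature mismatch")

    claims = dict(parse_qsl(body, keep_blank_values=True))
    if claims.get("Issuer") != issuer:
        raise ValueError("unexpected issuer")
    if claims.get("Audience") != audience:
        raise ValueError("unexpected audience")
    # ExpiresOn is seconds since 1970-01-01 UTC.
    current = time.time() if now is None else now
    if int(claims.get("ExpiresOn", "0")) <= current:
        raise ValueError("token expired")
    return claims
```

Checking the signature before trusting any claim is the important ordering here; the remaining claims then feed the RP's own authorization logic.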

Vittorio Bertocci (@vibronet) rings in with New Labs Release of ACS marries Web Identities and WS-*, Blows Your Mind on 8/5/2010:

They did it. Justin, Hervey and the AppFabric ACS gang just released a new version of the ACS in the LABS environment, a version that is chock-full of news and enhancements, including:

- Integration with Windows Identity Foundation (WIF) and tooling

- Out-of-the-box support for popular web identity providers including: Windows Live ID, OpenID, Google, Yahoo, and Facebook

- Out-of-the-box support for Active Directory Federation Server v2.0

- Support for OAuth WRAP, WS-Trust, and WS-Federation protocols

- Support for the SAML 1.1, SAML 2.0, and Simple Web Token (SWT) token formats

- Integrated and customizable Home Realm Discovery that allows end-users to choose their identity provider

- An OData-based Management Service that provides programmatic access to ACS configuration

- A Web Portal that allows administrative access to ACS configuration

As you’ve come to expect, the IdElement covered the news and captured an interview with Justin about the new features in this release.

Join Justin Smith, Program Manager on the Windows Azure AppFabric Access Control Service (ACS) team, on a whirlwind tour of the new features of today's Labs release of ACS. Just to whet your appetite, here's a list of some of the news touched on in this video:

- Support for identity providers such as Facebook, Windows Live ID, Google, Yahoo, OpenID providers, and ADFS 2 instances

- Support for a wide range of protocols: WS-Federation, WS-Trust, OAuth WRAP

- Seamless integration with Windows Identity Foundation

- Brand new management portal and OData-based management API

- Tools for helping developers embed identity providers selection UI in their applications

What are you waiting for? Tune in!

Once you have watched the video, create your account at http://portal.appfabriclabs.com/ and start experimenting: it's free, there are no tokens to redeem, and there's no waiting time. Instant gratification!

This release marks an inflection point.

You asked for WS-Federation and WS-Trust to be brought back into ACS, and the ACS team did it, but this release offers so much more. The chance to use a consistent programming model (the Visual Studio-friendly Windows Identity Foundation) for a variety of popular identity providers brings together the best of the web and business worlds, eliminating friction and enabling scenarios that were simply too complex or onerous to implement before. Imagine the possibilities! Write your ASP.NET or WCF application as usual, run the WIF wizard on it, and suddenly you can authenticate users from Facebook, Windows Live ID, Google, Yahoo, ADFS2 instances… without having to change a single line of code in your application. To my knowledge, no other service in the industry offers as many protocols today.

See for yourself, experiment with ACS. It’s easy, free and immediately available. Relevant links:

- ACS LABS: http://portal.appfabriclabs.com/

- Samples and Documentation on Codeplex: http://acs.codeplex.com/

- Interview with Justin

- Justin’s blog: http://blogs.msdn.com/b/justinjsmith

- Hervey’s blog: http://www.dynamic-cast.com/

Congratulations to the ACS team for a great release!

Mary Jo Foley summarizes the new Access Control Services release in her Microsoft begins adding single-sign on support to its Azure cloud post of 8/6/2010 to ZDNet’s All About Microsoft blog:

Microsoft is adding federated-identity support for providers including Google, Facebook, LiveID and OpenID to its Azure cloud platform via a new update to its Windows Azure AppFabric component.

Windows Azure AppFabric is the new name for .Net Services, and currently includes service bus and access control only. Microsoft has started making regular, monthly updates to Azure AppFabric. The August update — which the Softies are characterizing as a major one — includes a number of identity-specific updates to the access control piece.

The August Azure AppFabric update is available via the AppFabric LABS environment, which is where the AppFabric team showcases some of its early bits and makes them available for free to get user feedback. (Microsoft characterizes the features it delivers via AppFabric LABS as “similar to a Community Technology Preview,” but notes that these technologies “may occasionally be even farther away from commercial availability.”) …

Mary Jo continues with the list of new features and concludes:

Microsoft officials outlined the company’s plans to add single sign-on/federated identity support to Azure in the fall of 2009. Microsoft execs recently said that the company is working to add federated-identity support to Microsoft’s Business Productivity Online Suite (BPOS) of hosted applications. (BPOS is not yet running on Azure, just to be clear; however, it’s still running in Microsoft datacenters.)

How major are these new AppFabric updates? Sergejus, a .Net developer, tweeted: “Finally, Azure #AppFabric supports LiveID, OpenID, Google and Facebook authentication. Now real development starts!”

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Bradley Millington explained Deploying Orchard to Windows Azure in this 8/4/2010 post to the Orchard Project site (missed when posted):

Orchard supports building and deploying to the Windows Azure environment. If you don't want or need to build the package by yourself, a binary version of the Windows Azure package is available on the CodePlex site. This topic describes the detailed steps you can take to build and deploy packages of Orchard to Azure.

If you’re not familiar with the Orchard project, here’s the description from CodePlex:

Orchard is a free, open source, community-focused project aimed at delivering applications and reusable components on the ASP.NET platform. It will create shared components for building ASP.NET applications and extensions, and specific applications that leverage these components to meet the needs of end-users, scripters, and developers. Additionally, we seek to create partnerships with existing application authors to help them achieve their goals. Orchard is delivered as part of the ASP.NET Open Source Gallery under the CodePlex Foundation. It is licensed under a New BSD license, which is approved by the OSI. The intended output of the Orchard project is three-fold:

- Individual .NET-based applications that appeal to end-users, scripters, and developers

- A set of re-usable components that makes it easy to build such applications

- A vibrant community to help define these applications and extensions

In the near term, the Orchard project is focused on delivering a .NET-based CMS application that will allow users to rapidly create content-driven Websites, and an extensibility framework that will allow developers and customizers to provide additional functionality through modules and themes. You can learn more about the project on the Orchard Project Website.

Project Status

Orchard is currently working to deliver a V1 release. We invite participation by the developer community in shaping the project’s direction, so that we can publicly validate our designs and development approach.

Our 0.5 release is available from our Downloads page, and it's easy to install Orchard using the Web Platform Installer. We encourage interested developers to check out the source code on the Orchard CodePlex.com site and get involved with the project.

A rogues’ gallery of Microsoft developers working on the Orchard project is here.

The Orchard team is a small group of developers at Microsoft who are passionate about delivering open source solutions on .NET technology. The team is primarily composed of ASP.NET developers and has recently grown with the addition of two of the principal developers on Oxite, Erik Porter and Nathan Heskew, as well as Louis DeJardin, a long-time ASP.NET developer, community software advocate, and creator of the SparkViewEngine for MVC.

Following is a screen capture of the live Orchard Azure demo at orchardproject.cloudapp.net:

• Ward Bell blogged DevForce in the Cloud: 1st Flight about IdeaBlade’s live Windows Azure demo on 8/6/2010:

Our first Azure DevForce “application” debuted today.

Image edited and captured by –rj on 8/7/2010

Sure it’s just Northwind customers in an un-styled, read-only Silverlight DataGrid. Kind of like putting a monkey in orbit. But it’s an important step for IdeaBlade and DevForce … and I’m feeling a wee bit proud.

Behind that simple (simplistic?) exterior are DevForce 2010, SQL Azure, Windows Azure, Silverlight 4, Entity Framework v.4, and .NET 4. That really is EF 4 running in the cloud, not on the client as it is in most Azure demonstrations. That really is the DevForce middle tier “BOS” in the cloud and a DevForce Silverlight client executing in your browser.

We’ll probably have taken this sample down by the time you read this post. But who knows; if you’re quick, you may still be able to catch it at http://dftest003.cloudapp.net/

Kudos to Kim Johnson, our ace senior architect / developer who made it happen. Thanks to Microsoft’s Developer Evangelist Bruno Terkaly who first showed us EF 4 in Azure when there were no other published examples.

I’ll be talking about DevForce and Azure much more as our “DevForce in the Cloud” initiative evolves. It’s clearly past the “pipe dream” phase and I anticipate acceleration in the weeks ahead.

Those of you who’ve been wondering if we’d get there (Hi Phil!) or whether to "play it safe" and build your own datacenter (really?) … wonder no more!

Ward is a Microsoft MVP and the V.P. of Technology at IdeaBlade (www.ideablade.com), makers of the "DevForce" .NET application development product.

• Bruno Terkaly continued his Creating a Web Site with Membership and User Login – Implementing Forms-based Authentication series, which is intended for ASP.NET but also applies to Windows Azure Web Roles, on 8/7/2010. It begins:

Controlling access to a web site

Building an Infrastructure for Authentication and Authorization

It is very common to restrict access to a web site.

An infrastructure to manage logins needs to be implemented. We will need to provide a way for users to log in (authenticate), such as by prompting users for a name and password.

The application must also include a way to hide information from anonymous users (users who are not logged in). ASP.NET provides Web site project templates that include pages that let you create a Web site that already includes basic login functionality.

What this post is about

This post is about manually building this infrastructure using ASP.NET controls and ASP.NET membership services to create an application that authenticates users and uses ASP.NET membership to hide information from anonymous users. It covers:

- Configuring ASP.NET membership services

- Defining users

- Using login controls to get user credentials and to display information to logged-in users

- Protecting one or more pages in your application so that only logged-in users can view them

- Allowing new users to register at your site

- Allowing members to change and reset their passwords
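To make the concepts in the task list above concrete outside of Visual Studio, here is a minimal, language-neutral sketch in Python. It is not how ASP.NET membership is implemented, and `MembershipStore`, `render_page`, `Login.aspx` and the PBKDF2 iteration count are hypothetical choices for illustration. It shows defining users with salted password hashes, validating credentials, changing a password, and hiding a protected page from anonymous users.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets
from dataclasses import dataclass


@dataclass
class Member:
    username: str
    salt: bytes
    password_hash: bytes


def _hash_password(password: str, salt: bytes) -> bytes:
    # PBKDF2-HMAC-SHA256; the 100,000-iteration count is an assumed setting.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


class MembershipStore:
    """Hypothetical in-memory stand-in for a membership database."""

    def __init__(self) -> None:
        self._members: dict[str, Member] = {}

    def register(self, username: str, password: str) -> bool:
        # New users get a fresh random salt; duplicate user names are rejected.
        if username in self._members:
            return False
        salt = secrets.token_bytes(16)
        self._members[username] = Member(username, salt, _hash_password(password, salt))
        return True

    def validate_user(self, username: str, password: str) -> bool:
        member = self._members.get(username)
        if member is None:
            return False
        candidate = _hash_password(password, member.salt)
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(candidate, member.password_hash)

    def change_password(self, username: str, old: str, new: str) -> bool:
        # The old password must validate before a new salt and hash are stored.
        if not self.validate_user(username, old):
            return False
        salt = secrets.token_bytes(16)
        self._members[username] = Member(username, salt, _hash_password(new, salt))
        return True


def render_page(page: str, protected_pages: set[str], current_user: str | None) -> str:
    # Anonymous users (current_user is None) are sent to a hypothetical login page.
    if page in protected_pages and current_user is None:
        return "redirect:Login.aspx"
    return f"content:{page}"
```

For example, after `store.register("bruno", "P@ssw0rd!")`, calling `store.validate_user("bruno", "P@ssw0rd!")` succeeds, while `render_page("SafePage.aspx", {"SafePage.aspx"}, None)` redirects an anonymous visitor. Storing only a salted hash means the store never holds plaintext passwords.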

Create the Web Site

Make your selections from the “File” menu.

Bruno’s larger-than-life screen captures are ideal for projection in a classroom setting. His post, which is almost as long as mine, concludes:

Success!

Now that we have logged in, “SafePage.aspx” can safely appear. Notice that the upper right-hand corner of the browser reads, “Welcome Bruno Terkaly.”

Conclusion

We have successfully implemented Forms-based authentication. Visual Studio 2010 makes it easy.

By now we can see how forms-based authentication works. Next, I will post about securing WCF Services and, ultimately, Silverlight clients.

Additional Content

- Walkthrough: Creating a Web Site with Membership and User Login http://msdn.microsoft.com/en-us/library/879kf95c.aspx

- Creating and Configuring the Application Services Database for SQL Server http://msdn.microsoft.com/en-us/library/2fx93s7w.aspx

Cloud Ventures says they’re Introducing Microsoft [Unified Communications] Cloud Archiving Services in the It's All About Cloud UC post of 8/6/2010:

Introducing Microsoft UC Cloud Archiving Services

A key application area for Cloud providers to consider is the UC suite (Unified Communications), referring to technologies for VoIP, 'Presence' and Instant Messaging, and a single inbox for email, fax and voicemail. Not only can it improve staff collaboration, but it can also provide quick solutions for e-workflow needs and help meet record-keeping compliance needs through new 'Cloud Archiving' features that can plug in to popular tools like the Microsoft UC suite.

Unified Communications

Microsoft caused big waves in the telco industry in 2007 when it launched its products into this space. This was significant because, traditionally, the worlds of IT applications and telco telephony have remained quite separate. Business applications run as software in the enterprise data-centre, while telephony is provided by black-box hardware maintained by a different team, who look after the networks too.

The 'convergence' of these two worlds is the same underlying driver pushing Cloud Computing forward, so it's a very potent area for their combination. The fact that the Microsoft option is so software-centric makes it ideal for Cloud deployment.

It is a useful way to help frame a strategy for successful adoption of Cloud Computing because it highlights what's real and practical about this approach.

Moving an application "into the Cloud" doesn't just mean relocating it from an on-site location to a remote data-centre. As many experts will tell you, UC is exactly the type of application that will quickly show you the limitations of this model, because it depends so heavily on local high performance; without it, voice quality will not be good enough to be usable.

It's more likely that performance-intensive apps, like the main OCS communications engine, will stay on-site, making them ideal early candidates for delivery via the private Enterprise Cloud.

Cloud Archiving Services

So for this and many other applications, the most likely scenarios for enterprise adoption of Cloud are an integrated combination of on-site and remote data-centre based services, where the limitations and strengths of each are used in the right way. One simple and very practical example that illustrates both points is 'Cloud Archiving Services', referring to connecting the Microsoft applications to remote Cloud storage facilities for archiving the documents and other information they're working with.

The UC suite includes Instant Messaging tools that swap files, email that receives attachments and the like, and its key selling feature is better use of these tools in various e-workflow scenarios. For example, the Unified Messaging component of Exchange lets you receive faxes directly in your email inbox, rather than printed to paper by the fax machine.

Doesn't sound like much but for users who have only had paper-based fax processes all of their lives this is a huge productivity booster.

Not only that, with a Cloud plug-in for storage services that can automatically time-stamp and archive the fax as it's coming through, it can tick the Compliance box too. All government agencies are required to retain their records in line with certain legislation (the MIA here in Newfoundland, for example), which means that all of their customer forms received this way must be kept according to these standards.

Cloud technology can not only automate the process, converting a paper-based workflow to an electronic one, but it can certify compliance with these laws too.

For example, there are deadlines for receiving various types of documents at the Courts and other government offices, so when documents are received via fax or other methods, their arrival needs to be 'stamped' in some form to record the transaction, making it possible to prove that you delivered them.
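The time-stamping idea described above can be sketched as recording a cryptographic fingerprint of each incoming document together with its arrival time. This is a hypothetical Python illustration, not the Cloud Ventures service: `ArchiveRecord`, `archive_document`, `verify_document` and `met_deadline` are invented names, and a locally recorded timestamp alone is not legal proof. A real certification service would likely rely on a trusted third-party time-stamping authority.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ArchiveRecord:
    document_id: str
    sha256_hex: str       # fingerprint of the exact bytes received
    received_at_utc: str  # ISO 8601 arrival time, normalized to UTC


def archive_document(document_id: str, content: bytes,
                     received_at: datetime | None = None) -> ArchiveRecord:
    # Default to "now" in UTC when no arrival time is supplied.
    if received_at is None:
        received_at = datetime.now(timezone.utc)
    digest = hashlib.sha256(content).hexdigest()
    return ArchiveRecord(document_id, digest,
                         received_at.astimezone(timezone.utc).isoformat())


def verify_document(record: ArchiveRecord, content: bytes) -> bool:
    # True only if the content is byte-for-byte identical to what was archived.
    return hashlib.sha256(content).hexdigest() == record.sha256_hex


def met_deadline(record: ArchiveRecord, deadline: datetime) -> bool:
    # The deadline must be timezone-aware so it can be compared with the UTC stamp.
    return datetime.fromisoformat(record.received_at_utc) <= deadline
```

Because any change to the archived bytes changes the SHA-256 digest, the same record can later show both when a filing arrived and that it has not been altered since.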

"CloudLocking" - Securing Unstructured Content Records

This certification process can be thought of as "Cloud Locking", and is ideal for 'Unstructured content'. This refers to all the information outside of databases, like Word documents, Excel spreadsheets and multimedia files. What this example highlights is that there can still be 'structured data' within them, like customer information, so they must be e-archived the same way as the main corporate databases.

However, as this Aberdeen report, Securing Unstructured Data (33-page PDF), highlights, these types of files fly around relatively unprotected: stored on users' laptops outside the firewall and shared promiscuously through UC tools.