Windows Azure and Cloud Computing Posts for 8/11/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codenames “Dallas” and “Houston” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

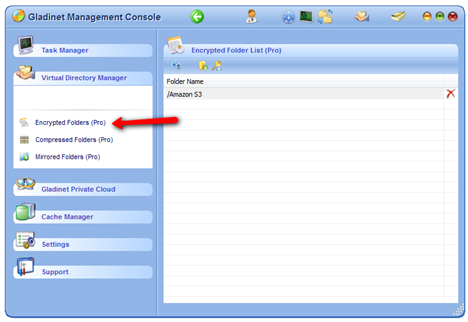

Jerry Huang describes A Secure Network Drive for Windows Azure Blob Storage in this 8/10/2010 post to the Gladinet blog:

Windows Azure Platform has been available since February 2010. Azure Blob Storage is part of the offering and provides a good online storage service. So how do you easily copy data in and out of Azure Blob Storage? How do you do it securely, with SSL and AES encryption for your files?

This article talks about how to set up an AES-encrypted network drive to Windows Azure Blob Storage.

First you will need to install the Gladinet Cloud Desktop and map a network drive to Windows Azure Blob Storage.

After that you have a mapped drive. Without further setup, you can drag and drop files to transfer them in and out of Azure Blob Storage. The transfer runs over SSL, but the files are not yet encrypted once they reach Azure Blob Storage.

So now let’s set it up with AES-256 encryption. You will need to open the Gladinet Management Console (Task Manager) and add encrypted folders.

Add the root folder of the Windows Azure Blob Storage to your encrypted folder.

If this is the first time you set up an encrypted folder, you will need to add a password to generate the encryption key. You will need to remember the password; without it, the files can’t be recovered.

This is it. Now you have a secure (AES-256 encrypted), mapped network drive to Windows Azure Storage.

Read more about Gladinet’s Access Solutions at the Gladinet website.

<Return to section navigation list>

SQL Azure Database, Codenames “Dallas” and “Houston” and OData

Elisa Flasko of Microsoft’s Project “Dallas” Team suggested Migrating your [Project “Dallas”] application from CTP2 to CTP3 in this 8/11/2010 post:

CTP3 uses HTTP Basic Authentication instead of a custom HTTP header. As a result, applications written against CTP2 will encounter errors until they are updated to work with our release of CTP3 today. CTP3 introduces the first live OData services and we will continue to migrate additional services to OData over the coming weeks. In the meantime, many of the CTP2-based services will remain accessible, and will continue to be accessible for a period of time after a service is migrated to OData. However, the CTP2 endpoints will eventually be turned off.

How you update your application will depend on which service your application uses and how your application was written.

If you are writing or updating an application against a service that already has an OData endpoint available, we recommend that you use the OData endpoint.

If you are writing or updating an application against a CTP2-based service that does not yet have an OData endpoint, the process will largely depend on how your application uses “Dallas”. We also highly recommend that you keep an eye on our blog and plan to migrate your application when the OData endpoint becomes available.

If you used the C# proxy classes for a “Dallas” dataset, you must download and use the updated proxy classes. You can obtain the new classes by clicking on the “Click here to explore the dataset” link from the Subscriptions page on the portal. Then click on the “Download C# service classes” link. When you build against the new version of the service classes, you must also change your application to use the zero-argument constructor and set the AccountKey property to your “Dallas” account key. The Unique User Id from CTP2 is no longer supported.

If you invoked the “Dallas” service directly, you must stop sending the custom HTTP headers for $accountKey and $uniqueUserId. Instead, you must pass the authorization header for HTTP Basic Authentication. If you used the WebRequest class in .NET, you can use the Credentials property to accomplish this as follows:

WebRequest request = WebRequest.Create(dallasUri);

request.Credentials = new NetworkCredential("accountKey", dallasAccountKey);
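For callers that build requests without the Credentials property, the same Basic credentials can be sent as an explicit Authorization header. The following is a minimal sketch, not code from the “Dallas” team: the class and method names are hypothetical, and the user name "accountKey" is simply the one used in the snippet above.

```csharp
using System;
using System.Text;

// Hypothetical helper for illustration; not part of any "Dallas" SDK.
public static class DallasBasicAuth
{
    // Builds the value of an HTTP Basic Authorization header:
    // "Basic " followed by base64(userName + ":" + accountKey).
    public static string BuildAuthorizationHeader(string userName, string accountKey)
    {
        if (userName == null) throw new ArgumentNullException("userName");
        if (accountKey == null) throw new ArgumentNullException("accountKey");

        string raw = userName + ":" + accountKey;
        return "Basic " + Convert.ToBase64String(Encoding.UTF8.GetBytes(raw));
    }
}
```

With a WebRequest, the result could be assigned with request.Headers["Authorization"] = DallasBasicAuth.BuildAuthorizationHeader("accountKey", dallasAccountKey);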

Wayne Walter Berry (@WayneBerry) asserted A Server Is Not a Machine in this 8/11/2010 post to the SQL Azure Team blog:

I see this come up from time to time, so I thought I would set the record straight; a server isn’t a machine. In SQL Azure, there is a concept of a server, which appears in the SQL Azure portal looking like this:

However, a server isn’t the same as a server in an on-premise SQL Server installation. In an on-premise SQL Server installation a server is synonymous with a machine, which has a fixed set of resources, storage, and hence can hold a limited number of databases.

With SQL Azure, a server is a TDS endpoint. The databases created under that server are spread across multiple machines in the data center. A server in SQL Azure has unlimited capacity to hold additional databases, and has the full resources of the data center. In other words, there is no way for your SQL Azure server to run out of resources.

Another way to think about it is that you don’t have to create new servers in the data center in order to distribute your database load; SQL Azure does it automatically for you. With an on-premise SQL Server installation, the concepts of load balancing and redundancy require that you take the number of servers into consideration. With SQL Azure, one server will provide you with all the load balancing and redundancy you need in the data center.

A single SQL Azure server doesn’t mean a single point of failure; the TDS endpoints are serviced by multiple redundant SQL Azure front-end servers.

SQL Azure Front-End

The SQL Azure front-end servers are the Internet-facing machines that expose the TDS protocol over port 1433. In addition to acting as the gateway to the service, these servers also provide some necessary customer features, such as account provisioning, billing, and usage monitoring. Most importantly, the servers are in charge of routing requests to the appropriate back-end server. SQL Azure maintains a directory that keeps track of where on the SQL Azure back-end servers your primary data and all the backup replicas are located. When you connect to SQL Azure, the front end looks in the directory to see where your database is located and forwards the request to that specific back-end node (your database).
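From the client's side, the "server" is therefore just a host name and port that reach the front end. A minimal sketch of a connection string that targets the TDS endpoint on port 1433 follows. The helper name and all argument values are hypothetical, and the database.windows.net host suffix and login@server user format are assumptions based on common SQL Azure usage rather than on this post.

```csharp
using System;

// Hypothetical helper for illustration only.
public static class SqlAzureConnection
{
    // Formats an ADO.NET-style connection string for a SQL Azure server.
    // Note: values containing ';' would need quoting, which this sketch omits.
    public static string BuildConnectionString(
        string server, string database, string user, string password)
    {
        return string.Format(
            "Server=tcp:{0}.database.windows.net,1433;Database={1};" +
            "User ID={2}@{0};Password={3};Encrypt=True;",
            server, database, user, password);
    }
}
```

The same string shape applies no matter which back-end machines hold the database, because routing happens behind the endpoint.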

Unlimited Databases

Conceptually, there is no limit to the number of databases you can have under a SQL Azure server. However, by default, a SQL Azure account is restricted to 150 databases in each SQL Azure server, including the master database. An extension of this limit is available for your SQL Azure server. For more information, contact a customer support representative at the Microsoft Online Services Customer Portal.

Beth Massi (@BethMassi) interviews Joe Binder in this 00:43:24 Channel9 Visual Studio LightSwitch - Beyond the Basics video segment of 8/11/2010:

In this interview with Joe Binder, a Program Manager on the LightSwitch team, we discuss the LightSwitch application framework architecture and how a LightSwitch application is built on top of well-known technologies like Silverlight, MVVM, RIA Services, and Entity Framework. Joe shows us how to modify the behavior of a screen and how it exposes the commanding pattern in an easy-to-use way. He also shows us how to extend the UI with our own custom Silverlight controls, as well as how to connect our own data sources using RIA Services.

For an introduction to LightSwitch please see this interview:

Jay Schmelzer: Introducing Visual Studio LightSwitch

For more information on LightSwitch, please see:

Visual Studio LightSwitch Developer Center

Visual Studio LightSwitch Team Blog

Visual Studio LightSwitch Forums

Elisa Flasko of Microsoft’s Project “Dallas” Team updated its 8/10/2010 Notice of site maintenance on 8/11/2010:

We are performing maintenance on www.sqlazureservices.com today, Aug 10th, from 10am-4pm PST and tomorrow Aug 11th, from 10am-4pm.

NOTE 8/11/2010: Yesterday's maintenance was completed successfully. Main[t]enance will continue today betwee[n] 10am-4pm PST.

During this time, users may experience a short interruption to service availability.

We ap[p]ologize for any inconv[en]ience this may cause. The site will be back up and running at full capacity shortly.

Thank you,

The Dallas Team

Elisa: Rather short notice, no? Note that Windows Live Writer has a spell-checker you might want to try.

Stephen Forte posted yet another Project “Houston” walk-through as Houston there is a database on 8/11/2010:

Microsoft recently released a CTP of the cloud based SQL Azure management tool, code named “Houston”. Houston was announced last year at the PDC and is a web based version of SQL Management Studio (written in Silverlight 4.0.) If you are using SQL Management Studio, there really is no reason to use Houston, however, having the ability to do web based management is great. You can manage your database from Starbucks without the need for SQL Management Studio. Ok, that may not be a best practice, but hey, we’ve all done it. :)

You can get to Houston here. It will ask you for your credentials; log in using your standard SQL Azure credentials. For “Login”, however, you have to use the username@server format.

I logged in via Firefox and had no problem at all. I was presented with a cube control that allowed me to see a snapshot of the settings and usage statistics of my database. I browsed that for a minute and then went straight to the database objects. Houston gives you the ability to work with SQL Azure objects (Tables, Views, and Stored Procedures) and the ability to create, drop, and modify them.

I played around with my tables’ DDL and all worked fine. I then decided to play around with the data. I was surprised that you can open a .SQL file off your local disk inside of Houston!

I opened up some complex queries that I wrote for Northwind on a local version of SQL Server 2008 R2 and tested it out. The script and code all worked fine, however there was no code formatting that I could figure out (hey, that is ok).

I wanted to test if Houston supported the ability to select a piece of TSQL and only execute that piece of SQL. I was sure it would not work so I tested it with two select statements and got back one result. (I tried rearranging the statements and only highlighted the second one and it still worked!) Just to be sure I put in a select and a delete statement and highlighted only the select statement and only that piece of TSQL executed.

I then tried two SQL statements and got back two results, so the team clearly anticipated this scenario!

All in all I am quite happy with the CTP of Houston. Take it for a spin yourself.

My Test Drive Project Houston CTP1 with SQL Azure post of 7/31/2010 has more details about Project Houston (@ProjHou).

Guy Harrison posted a reasoned, rational answer to Why NoSQL? in his brief article of 6/10/2010 to Database Trends and Applications magazine:

NoSQL - probably the hottest term in database technology today - was unheard of only a year ago. And yet, today, there are literally dozens of database systems described as "NoSQL." How did all of this happen so quickly?

Although the term "NoSQL" is barely a year old, in reality, most of the databases described as NoSQL have been around a lot longer than the term itself. Many databases described as NoSQL arose over the past few years as reactions to strains placed on traditional relational databases by two other significant trends affecting our industry: big data and cloud computing.

Of course, database volumes have grown continuously since the earliest days of computing, but that growth has intensified dramatically over the past decade as databases have been tasked with accepting data feeds from customers, the public, point of sale devices, GPS, mobile devices, RFID readers and so on.

Cloud computing also has placed new challenges on the database. The economic vision for cloud computing is to provide computing resources on demand with a "pay-as-you-go" model. A pool of computing resources can exploit economies of scale and a levelling of variable demand by adding or subtracting computing resources as workload demand changes. The traditional RDBMS has been unable to provide these types of elastic services.

The demands of big data and elastic provisioning call for a database that can be distributed on large numbers of hosts spread out across a widely dispersed network. While commercial relational databases - such as Oracle's RAC - have taken steps to meet this challenge, it's become apparent that some of the fundamental characteristics of relational databases are incompatible with the elastic and Big Data demands.

Ironically, the demand for NoSQL did not come about because of problems with the SQL language. The demand arose because of the strong consistency and transactional integrity that relational databases enforce. In a transactional relational database, all users see an identical view of data. In 2000, however, Eric Brewer outlined the now famous CAP theorem, which states that Consistency and high Availability cannot both be maintained when a database is Partitioned across a fallible wide area network.

Google, Facebook, Amazon and other huge web sites, therefore, developed non-relational databases that sacrificed consistency for availability and scalability. It just so happened that these databases didn't support the SQL language either, and, when a group of developers organized a meeting in June 2009 to discuss these non-relational databases, the term "NoSQL" seemed convenient. Perhaps unfortunately, the term NoSQL caught on beyond expectations, and now is used as shorthand for any non-relational database.

Within the NoSQL zoo, there are several distinct family trees. Some NoSQL databases are pure key-stores without an explicit data model, with many based on Amazon's Dynamo key-value store. Others are heavily influenced by Google's BigTable database, which supports Google products such as Google Maps and Google Reader. Document databases store highly structured self-describing objects, usually in an XML-like format called JSON. Finally, graph databases store complex relationships such as those found in social networks.

Within these four NoSQL families are at least a dozen database systems of significance. Some probably will disappear as the NoSQL segment matures, and, right now, it's anyone's guess as to which ones will win, and which will lose.

NoSQL is a fairly imprecise term - it defines what the databases are not, rather than what they are, and rejects SQL rather than the more relevant strict consistency of the relational model. As imprecise as the term may be, however, there's no doubt that NoSQL databases represent an important direction in database technology.

Guy is Director of Research and Development at Quest Software.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Zane Adams posted New Windows Server AppFabric benchmarks vs. IBM WebSphere 7 and IBM eXtreme Scale 7.1 on 8/10/2010:

Microsoft just released two new benchmark reports for Windows Server AppFabric and Microsoft .NET Framework 4.0. These reports can be downloaded from http://msdn.microsoft.com/en-us/netframework/stocktrader-benchmarks.aspx.

The first looks at the use of Microsoft Windows Server AppFabric Caching Services, comparing the performance of Microsoft Windows Server AppFabric to IBM eXtreme Scale 7.1 as a middle-tier distributed caching solution.

The second report looks at the performance of .NET Framework 4.0 in a variety of scenarios as compared to IBM WebSphere 7.0. Both reports include pricing information and comparisons, as well as full tuning details and source code.

The results show a very large price-performance advantage with Windows Server AppFabric and Microsoft .NET Framework 4.0 vs. IBM WebSphere 7 and IBM eXtreme Scale 7.1.

I encourage customers to visit http://msdn.microsoft.com/en-us/netframework/stocktrader-benchmarks.aspx and download the two benchmark reports.

The post isn’t about Windows Azure AppFabric, but deserves mention due to representation of IBM as developers’ “first choice for private clouds” by Evans Data.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Jim O’Neil continues his series with Azure@home Part 4: WebRole Implementation (redux) of 8/11/2010:

This post is part of a series diving into the implementation of the @home With Windows Azure project, which formed the basis of a webcast series by Developer Evangelists Brian Hitney and Jim O’Neil. Be sure to read the introductory post for the context of this and subsequent articles in the series.

Here’s a quick recap as to what I’ve covered in this series so far (and what you might want to review if you’re just joining me now):

- Introductory post – overview of Folding@home and project files and prerequisites

- Part 1 – application architecture and flow

- Part 2 – WebRole implementation (OnStart method and diagnostics)

- Part 3 – Azure storage overview and explanation of entities used in Azure@home

By the way, I’d encourage you to play along as you walk through my blog series: deploy Azure@home yourself and experiment with some of the other features of Windows Azure by leveraging a free Windows Azure One Month Pass.

The focus of this post is to pick up where Part 2 left off and complete the walkthrough of the code in the WebRole implementation, this time focusing on the processing performed by the two ASP.NET pages, default.aspx and status.aspx.

default.aspx

entry page to the application via which the user enters a name and location to initiate the “real work,” which is carried out by worker roles already revved up in the cloud

status.aspx

read-only status page with a display of progress of the Folding@home work units processed by the cloud application

Recall too the application flow; the portion relevant to this post is shown to the right:

- The user browses to the home page (default.aspx) and enters a name and location which get posted to the ASP.NET application,

- The ASP.NET application

- writes the information to a table in Azure storage (client) that is being monitored by the worker roles, and

- redirects to the status.aspx page.

Subsequent visits to the site (a) bypass the default.aspx page and go directly to the status page to view the progress of the Folding@home work units, which is extracted (b) from a second Azure table (workunit).

In the last post, I covered the structure of the two Azure tables as well as the abstraction layer in AzureTableEntities.cs. Now, we’ll take a look at how to use that abstraction layer and the StorageClient API to carry out the application flow described above.

Default.aspx and the client table

This page is where the input for the Folding@home process is provided and as such is strongly coupled to the client table in Azure storage. The page itself has the following controls:

- txtName – an ASP.NET text box via which you provide your username for Folding@home. The value entered here is eventually passed as a command-line parameter to the Folding@home console client application.

- litTeam – an ASP.NET hyperlink control with the static text “184157”, which is the Windows Azure team number for Folding@home. This value is passed as a parameter by the worker roles to the console client application. For those users who may already be part of another folding team, the value can be overridden via the TEAM_NUMBER constant in the code behind. Veteran folders may also have a passkey, which can likewise be supplied via the code behind.

- txtLatitude and txtLongitude – two read-only ASP.NET text boxes that display the latitude and longitude selected by the user via the Bing Map control on the page

- txtLatitudeValue and txtLongitudeValue – two hidden fields used to post the latitude and longitude to the page upon a submit

- cbStart – submit button

- myMap – the DIV element providing the placeholder for the JavaScript Bing Maps control. The implementation of the Bing Map functionality is completely within JavaScript on the default.aspx page, the code for which is fairly straightforward (and primarily ~~stolen from~~ researched at the Interactive SDK).

The majority of the code present in this page uses the StorageClient API to access the client table. That table contains a number of columns (or more correctly, properties) as you can see below. The first three properties are required for any Azure table (as detailed in my last post), and the other fields are populated for the most part from the input controls listed above. …

The remainder of Jim’s post consists primarily of C# code of interest to .NET developers.

The Windows Azure Team reported Windows Azure Evangelist Ryan Dunn Shares Top Tips for Running in the Cloud on 8/11/2010:

If you're looking for tips on monitoring and working with production level Windows Azure applications in the cloud, you should check out the recent video interview with Windows Azure Evangelist Ryan Dunn [@dunnry] on Bytes by MSDN.

During his conversation with Joe Healy, principal developer evangelist at Microsoft, Ryan also talks about what's new in the latest Windows Azure SDK, and how the new features make it easier to monitor and manage Windows Azure applications.

Ryan Dunn and Joe recommend you check out

Jon Udell shows you How to make Azure talk to Twitter in this 8/10/2010 post to the O’Reilly Answers blog:

Note: This series of how to articles is a companion to my elmcity series on the Radar blog, which chronicles what I've been learning as I build a calendar aggregation service on Azure.

Overview

The elmcity service doesn't (yet) require its users -- who are called curators -- to register in order to create and operate calendar hubs that aggregate calendar feeds. Instead it relies on several informal contracts involving partner services, including delicious, FriendFeed, and Twitter. Here's the Twitter contract:

The service will follow the curator at a specified Twitter account. By doing so, it enables the curator to send authentic messages to the service. The vocabulary used by those messages will initially be just a single verb: start. When the service receives the start message from a hub, it will re-aggregate the hub's list of feeds.

The code that supports this contract, Twitter.cs, only needs to do two things:

1. Follow curators' Twitter accounts from the service's Twitter account

2. Read direct messages from curators' accounts and transmit them to the service's account

Following a Twitter account

Here's the follow request:

[C# code removed for brevity]

Elsewhere I'll explore the HttpUtils class. It's a wrapper around the core .NET WebRequest and WebResponse classes that helps me focus on the HTTP mechanics underlying the various partner services that my service interacts with. But as you can see, this is just a simple and straightforward HTTP request for Twitter to follow the specified account on behalf of the service's own account.

Monitoring Twitter direct messages

The method that reads Twitter direct messages is more involved. Let's start with its return type, List<TwitterDirectMessage>. In C# you can make a generic List out of any type. In this case, I've defined a type called TwitterDirectMessage like so:

[C# code removed for brevity]

Define a custom type, or just use a dictionary?

It's worth noting that you don't have to define a type for this purpose. Originally, in fact, I didn't. When I'm working in Python I often don't define types but instead use dictionaries to model simple packages of data in a fluid way. You can do the same in C#. The first incarnation of this method was declared like so:

public static List<Dictionary<string,object>> TwitterDirectMessage(...)

In that version, the return type was also a generic List. Each item was a generic Dictionary whose keys and values were both of type String. Modeling a Twitter direct message that way avoids the overhead of declaring a new type, but makes it a little harder to keep track of things. Consider these two declarations:

[C# code removed for brevity]

They look similar when viewed in the debugger:

[C# code removed for brevity]

But these objects behave differently when you're writing code that uses them. If I use a dictionary-style object, I have to remember (or look up) that my convention for that dictionary is, for example, to use the key sender_screen_name rather than, say, sender_name. And if I get that wrong, I won't find out until run time. When I create a new custom type, though, Visual Studio knows the names and types of the object's properties and prompts for their values by name. I regard this static typing as optional, and sometimes I don't bother, but increasingly I've come to see it as a form of documentation that repays the overhead required to create it.
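Because the original declarations were removed for brevity, here is a minimal sketch of the trade-off rather than Jon's actual code. The TwitterDirectMessage shape below is an assumption whose property names mirror the message fields that appear elsewhere in this post (id, sender_screen_name, recipient_screen_name, text), and the MessageStyles class is hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Assumed shape of the custom type; property names mirror Twitter's field names.
public class TwitterDirectMessage
{
    public string id { get; set; }
    public string sender_screen_name { get; set; }
    public string recipient_screen_name { get; set; }
    public string text { get; set; }
}

// Hypothetical helpers contrasting the two modeling styles.
public static class MessageStyles
{
    // Dictionary style: the key is just a string, so a misspelling such as
    // "sender_name" compiles and only fails at run time (KeyNotFoundException).
    public static string SenderFromDictionary(Dictionary<string, string> message)
    {
        return message["sender_screen_name"];
    }

    // Custom-type style: a misspelled property name is rejected by the compiler.
    public static string SenderFromType(TwitterDirectMessage message)
    {
        return message.sender_screen_name;
    }
}
```

Both methods return the same value for the same message; the difference is when a naming mistake gets caught.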

Unpacking a Twitter message using LINQ

Now let's look at how GetDirectMessagesFromTwitter unpacks the XML response from the Twitter API.

[C# code removed for brevity]

In this example, response.bytes is the XML text returned from the Twitter API, and xdoc is a System.Xml.Linq.XDocument. I could alternatively have read that XML text into a System.Xml.XmlDocument, and used XPath to pick out elements from within it. That was always my approach in Python, it's available in C# too, and there are some cases where I use it. But here I'm instead using LINQ (language-integrated query) which is a pattern that works for many different kinds of data sources. The elmcity project uses LINQ to query a variety of sources including XML, in-memory objects, JSON, and CSV. I've come to appreciate the generality of LINQ. And when the data source is XML, I find that System.Xml.Linq.XDocument makes namespaces more tractable than System.Xml.XmlDocument does.

The type of object returned by xdoc.Descendants() is IEnumerable<XElement>. That means it's an enumerable set of objects of type XElement. If you wanted to simply return an enumerable set of XElement, you could do this:

var messages = from message in xdoc.Descendants("direct_message") select message;

It would then be the caller's responsibility to pick out the elements and construct TwitterDirectMessages from them. But instead, this method does that on the fly. Although xdoc.Descendants() is an IEnumerable<XElement>, messages is an IEnumerable<TwitterDirectMessage>. The transformation occurs in the LINQ select clause, which unpacks the System.Xml.Linq.XElement and makes a TwitterDirectMessage.

You could return that messages object directly, in which case it would be the caller's responsibility to actually do the enumeration that it encapsulates. That style of deferred execution is fundamental to LINQ. But here there's no need to decouple the declaration of the query from the materialization of a view based on it. So GetDirectMessagesFromTwitter returns messages.ToList(), which does the enumeration and sends back a List<TwitterDirectMessage> as advertised in its declaration.
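The pattern described above can be sketched as follows. This is a reconstruction under assumptions, not the removed original: the parser class name, the TwitterDirectMessage shape, and the child element names of direct_message are all assumed for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Assumed shape of the custom type; property names mirror the XML element names.
public class TwitterDirectMessage
{
    public string id { get; set; }
    public string sender_screen_name { get; set; }
    public string recipient_screen_name { get; set; }
    public string text { get; set; }
}

// Hypothetical parser illustrating the select-and-materialize pattern.
public static class DirectMessageParser
{
    public static List<TwitterDirectMessage> Parse(string xml)
    {
        XDocument xdoc = XDocument.Parse(xml);

        // Declaring the query does not run it; execution is deferred.
        IEnumerable<TwitterDirectMessage> messages =
            from message in xdoc.Descendants("direct_message")
            select new TwitterDirectMessage
            {
                // Casting an XElement to string yields null if the element is missing.
                id = (string)message.Element("id"),
                sender_screen_name = (string)message.Element("sender_screen_name"),
                recipient_screen_name = (string)message.Element("recipient_screen_name"),
                text = (string)message.Element("text")
            };

        // ToList() enumerates the query and materializes the results.
        return messages.ToList();
    }
}
```

Returning the list rather than the query means callers get a snapshot and never re-run the XML traversal by accident.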

Moving Twitter messages to and from Azure storage

Each message sent from a curator to the elmcity service should only be handled once. So the service needs to be able to identify messages it hasn't seen before, and then dispose of them after its handling is done. To accomplish this, and also to keep an archive of all messages sent to the service, it reflects all new messages into the Azure table service, which is a scalable key/value database that stores bags of properties without requiring an explicit schema. In this case, scaling isn't a concern. At any given time, there shouldn't ever be more than a handful of Twitter messages stored in Azure. But the bags-of-properties aspect of the Azure table service is really convenient, for the same reason that hashtables and dictionaries are.
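One way to identify messages that haven't been seen before is a set difference between the ids fetched from Twitter and the ids already stored. This is a minimal sketch of that idea and not necessarily how elmcity does it; the class and method names are hypothetical, and it assumes each message is stored under its Twitter id.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for illustration only.
public static class MessageDeduplicator
{
    // Returns the ids that appear in the Twitter response but not in storage.
    // Enumerable.Except also removes duplicates from the first sequence.
    public static List<string> FindUnseenIds(
        IEnumerable<string> idsFromTwitter, IEnumerable<string> idsInStorage)
    {
        return idsFromTwitter.Except(idsInStorage).ToList();
    }
}
```

Only the ids returned here would need handling and archiving; everything else has already been processed.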

(Azure is, by the way, not restricted to the key/value table store. There's a cloud-based relational store as well. My project's storage needs are basic, so I haven't used SQL Azure yet. But I expect that I eventually will.)

The Azure SDK models the table service using a set of abstractions that work with defined types, but I wanted to preserve the flexibility to store and retrieve arbitrary key/value collections. Hence my project's alternate interface to the service. I'll explore it in more detail elsewhere. For now, just consider the Azure-oriented counterpart to GetDirectMessagesFromTwitter:

[C# code removed for brevity]

If it were written in English, that method would say the following.

From the Azure table named twitter, retrieve all entities identified by a partition key whose value is direct_messages. The result is an Atom feed whose entries look like this:

<entry m:etag="W/&quot;datetime'2010-06-14T15%3A01%3A39.103296Z'&quot;">

<id>https://elmcity.table.core.windows.net/twitter(PartitionKey='direct_messages',RowKey='1003299227')</id>

<title type="text"/>

<updated>2010-08-09T22:13:20Z</updated>

<author><name/></author>

<link rel="edit" title="twitter" href="twitter(PartitionKey='direct_messages',RowKey='1003299227')"/>

<category term="elmcity.twitter" scheme="http://schemas.micro...services/scheme"/>

<content type="application/xml">

<m:properties>

<d:PartitionKey>direct_messages</d:PartitionKey>

<d:RowKey>1003299227</d:RowKey>

<d:Timestamp m:type="Edm.DateTime">2010-06-14T15:01:39.103296Z</d:Timestamp>

<d:id>1003299227</d:id>

<d:sender_screen_name>westborough</d:sender_screen_name>

<d:recipient_screen_name>elmcity_azure</d:recipient_screen_name>

<d:text>start</d:text>

</m:properties>

</content>

</entry>
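An entry like this can be turned back into a bag of properties with LINQ to XML. The sketch below is an illustration, not the elmcity project's code: the class and method names are hypothetical, and it assumes the d: and m: prefixes are bound to the standard data-services namespaces, which the excerpt above doesn't show.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical reader for illustration only.
public static class TableEntryReader
{
    // Assumed namespace bindings for the d: and m: prefixes.
    static readonly XNamespace D = "http://schemas.microsoft.com/ado/2007/08/dataservices";
    static readonly XNamespace M = "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata";

    // Collects every d:* child of m:properties into a name/value dictionary.
    public static Dictionary<string, string> ReadProperties(XElement entry)
    {
        return entry.Descendants(M + "properties")
                    .Elements()
                    .Where(e => e.Name.Namespace == D)
                    .ToDictionary(e => e.Name.LocalName, e => e.Value);
    }
}
```

For the entry shown, the resulting dictionary would map text to start and sender_screen_name to westborough, with all values kept as strings.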

Jon Udell is an author, information architect, software developer, and new media innovator. His 1999 book, Practical Internet Groupware, helped lay the foundation for what we now call social software. Udell was formerly a software developer at Lotus, BYTE Magazine's executive editor and Web maven, and an independent consultant.

From 2002 to 2006 he was InfoWorld's lead analyst, author of the weekly Strategic Developer column, and blogger-in-chief. During his InfoWorld tenure he also produced a series of screencasts and an audio show that continues as Interviews with Innovators on the Conversations Network. In 2007 Udell joined Microsoft as a writer, interviewer, speaker, and experimental software developer. Currently he is building and documenting a community information hub that's based on open standards and runs in the Azure cloud.

David Pallman explains Dynamically Computing Web Service Addresses for Azure-Silverlight Applications in this 8/10/2010 post to his Technology Blog:

If you work with Silverlight or with Windows Azure you can experience some pain when it comes to getting your WCF web services to work at first. If you happen to be using both Silverlight and Azure together you might feel this even more.

One troublesome area is the Silverlight client knowing the address of its Azure web service. Let’s assume for this discussion that your web service is a WCF service that you’ve defined in a .svc file (“MyService.svc”) that resides in the same web role that hosts your Silverlight application. What’s the address of this service? There are multiple answers depending on the context you’re running from:

- If you’re running locally, your application is running under the Dev Fabric (local cloud simulator), and likely has an address similar to http://127.0.0.1:81/MyService.svc.

- If you run the web project outside of Windows Azure, it will run under the Visual Studio web server and have a different address of the form http://localhost:<port>/MyService.svc.

- If you deploy your application to a Windows Azure project’s Staging slot, it will have an address of the form http://<guid>.cloudapp.net/MyService.svc. Moreover, the generated GUID part of the address changes each time you re-deploy.

- If you deploy your application to a Windows Azure project’s Production slot, it will have the chosen production address for your cloud service of the form http://<project>.cloudapp.net/MyService.svc.

One additional consideration is that when you perform an Add Service Reference to your Silverlight project the generated client configuration will have a specific address. All of this adds up to a lot of trouble each time you deploy to a different environment. Fun this is not.

Is there anything you can do to make life easier? There is. With a small amount of code you can dynamically determine the address your Silverlight client was invoked at, and from there you can derive the address your service lives at.

You can use code similar to what’s shown below to dynamically compute the address of your service. To do so, you’ll need to make some replacements to the MyService = … statement:

- Replace “MyService.MyServiceClient” with the namespace and class name of your generated service proxy client class.

- Replace “CustomBinding_MyService” with the binding name used in the generated client configuration file for your service endpoint (ServiceReferences.ClientConfig).

- Replace “MyService.svc” with the name of your service .svc file.

// URI of the page that hosts the Silverlight application
string hostUri = System.Windows.Browser.HtmlPage.Document.DocumentUri.AbsoluteUri;

// Trim everything after the last '/' to get the folder the page was served from
int pos = hostUri.LastIndexOf('/');

if (pos != -1)

{

hostUri = hostUri.Substring(0, pos);

}

// Create the proxy with the named binding and the computed service address
MyService.MyServiceClient MyService = new MyService.MyServiceClient("CustomBinding_MyService", hostUri + "/MyService.svc");

With this in place you can effortlessly move your application between environments without having to change addresses in configuration files. Note this is specific to “traditional” WCF-based web services only. If you’re working with RIA Services, the client seems to know its service address innately and you should not need to worry about computing web service addresses.
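The address derivation can also be checked outside Silverlight. The following Python sketch is an illustration only, not part of David Pallman's post: the function name derive_service_url and the page names in the usage lines (Default.aspx, app/Page.aspx) are hypothetical. It differs from the C# above in one respect: it discards the query string and fragment before trimming, an added assumption that guards against a '/' appearing after the '?' in the page address.

```python
from urllib.parse import urlsplit, urlunsplit


def derive_service_url(page_url, service_file="MyService.svc"):
    """Return the address of service_file in the same folder as page_url."""
    parts = urlsplit(page_url)
    # Keep the path up to and including its last '/'; fall back to the root.
    base_path = parts.path[: parts.path.rfind("/") + 1] or "/"
    # Rebuild the URL without query string or fragment.
    return urlunsplit((parts.scheme, parts.netloc, base_path + service_file, "", ""))


if __name__ == "__main__":
    for page in (
        "http://127.0.0.1:81/Default.aspx",                    # Dev Fabric style address
        "http://localhost:1234/app/Page.aspx?return=/a/b#top", # query string containing '/'
    ):
        print(page, "->", derive_service_url(page))
```

The same page-relative rule covers the Staging and Production addresses, because only the host part differs between them.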

<Return to section navigation list>

Windows Azure Infrastructure

Mary Jo Foley’s Microsoft's Windows Azure: What a difference a year makes post of 8/11/2010 to ZDNet’s All About Microsoft blog is a survey of recently added Windows Azure Platform features:

In some ways, Microsoft’s Azure cloud operating environment doesn’t seem to have changed much since the Softies first made it available to beta testers almost two years ago. But in other ways — feature-wise, organization-wise and marketing-wise — Azure has morphed considerably, especially in the last 12 months.

Microsoft started Windows Azure (when it was known as “Red Dog”) with a team of about 150 people. Today, the Azure team is about 1,200 strong, having recently added some new big-name members like Technical Fellow Mark Russinovich. Over the past six months, the Azure team and the Windows Server team have been figuring out how to combine their people and resources into a single integrated group. During that same time frame, the Azure team has launched commercially Microsoft’s cloud environment; added new features like content-delivery-network, geo-location and single sign-on; and announced plans for “Azure in a box” appliances for those interested in running Azure in their own private datacenters.

In the coming months, Azure is going to continue to evolve further. Microsoft is readying a new capability to enable customers to add virtual roles to their Azure environments, as well as a feature (codenamed “Sydney”) that will allow users to more easily network their on-premises and cloud infrastructures. The biggest change may actually be on the marketing front, however, as Microsoft moves to position Azure as an offering not just for developers but for business customers of all sizes. (I’ll have more on that in Part 2 of this post on Thursday August 12.)

Senior Vice President of Microsoft’s combined Server and Cloud Division, Amitabh Srivastava, has headed the Windows Azure team from the start. Srivastava said Windows Azure is still fundamentally the same as when his team first built it. At its core, it consists of the same group of building blocks: Compute, Storage and a Fabric Controller (providing management and virtualization). Microsoft’s latest “wedding cake” architectural diagram detailing Azure looks almost identical — at least at the operating system level — to the team’s original plan for Red Dog:

Most of the work that Microsoft has been doing on Azure for the past year has been quiet and behind-the-scenes. The team regularly updates the Azure platform weekly and sometimes even daily. By design, there are no “big releases” of Azure. The Azure team designs around “scenarios,” not features. Some scenarios — like the forthcoming VM role — can take as long as a year or more to put together; something else, like a more minor user interface change, could take less and show up more quickly.

All these little changes do add up, however.

Next page: The Red Dog puppy grows up

Page 1 of 2: Read more

Comment (added 8/12/2010): Windows Azure’s compute and storage features are “still fundamentally the same as when [Amitabh’s] team first built it” but the relational SQL Azure database replaced the original Entity-Attribute-Value (EAV or key-value) SQL Server Data Services (SSDS, later SQL Data Services, SDS.) SSDS was an EAV data store hosted on a modified SQL Server version. This was a good decision because it eliminated the confusion caused by providing two competing EAV data stores. (Windows Azure tables are a highly scalable and very reliable EAV data store.)

Page 2 contains a detailed Windows Azure architectural diagram which I prepared for Mary Jo at her request. The diagram includes new features added during the last year, including a Content Delivery Network (CDN), the Windows Azure AppFabric, single-sign-on (SSO) with Access Control Services (ACS), OData, Project “Dallas”, Project “Houston”, and SQL Azure Data Sync:

Image updated 8/12/2010. Click for full-size (1,024-px) PowerPoint SlideShow capture.

Mike Wickstrand asked are you Interested in becoming a Windows Azure MVP? in this 8/11/2010 post to his MSDN Pondering Clouds blog:

We are in the final stages of accepting nominations for the newly formed Windows Azure Most Valuable Professional (MVP) Program. We are looking for a few good developers to become charter MVPs for Windows Azure. What does it take to become a Windows Azure MVP?

Assuming you have the requisite technical skills, Windows Azure MVPs (1) are independent experts on Windows Azure, (2) represent and amplify the voice of our customers, (3) are thought leaders in online and local communities, (4) provide feedback to the Windows Azure development teams, and (5) provide customer support while evangelizing Windows Azure. Do you have what it takes?

If you are interested, please send email to Robert Duffner (rduffner@microsoft.com) the Windows Azure MVP Community Lead.

Thanks in advance – Mike

David Linthicum asserts “Despite IT's bravado, most don't have the skills or understanding to properly handle the task of building a private cloud” as a preface to his Why you're not ready to create a private cloud post of 8/11/2010:

According to James Staten at Forrester Research, those of you looking to implement private clouds may be lacking the skills required to succeed.

While many IT pros are hot on cloud computing – Staten [pictured at right] notes that the common attitude is "I'll bring these technologies in-house and deliver a private solution, an internal cloud" -- they don't grasp an underlying truth of cloud computing. As Staten puts it: "Cloud solutions aren't a thing, they're a how, and most enterprise I&O shops lack the experience and maturity to manage such an environment."

I'm reaching the same conclusion. While private clouds seem like mounds of virtualized servers to many in IT, true private clouds are architecturally and operationally complex, and they require that the people behind the design and cloud creation know what they are doing. Unfortunately, few do these days.

Private clouds are not traditional architecture, nor are they virtualized servers. I say that many times a day, but I suspect that many IT organizations will still dive in under false assumptions. Private clouds have to include such architectural components as auto-provisioning, identity-based security, governance, use-based accounting, and multitenancy -- concepts that are much less understood than they need to be.

At the core of this problem is the fact that we're hype-rich and architect-poor. IT pros who understand the core concepts behind SOA, private cloud architecture, governance, and security -- and the enabling technology they require -- are few and far between, and they clearly are not walking the halls of rank-and-file enterprises and government agencies. I can count the ones I know personally on a single hand.

What can you do to get ready? The most common advice is to hire people who know what they're doing and have the experience required to get it right the first time. Make sure to encourage prototyping and testing, learning all you can from that process. Focus on projects with the best chances of success, initially. This means not trying to port legacy systems to private clouds on the initial attempt, but instead focusing on new automation.

Kurt Mackie asserted Microsoft Lays Out Its 'Open Cloud' Vision and quotes Microsoft’s Jean Paoli in this 8/10/2010 post to 1105 Media’s Virtualization Review site:

Microsoft used the occasion of an open source conference last month to advance its vision of an open cloud by breaking it down into four basic principles.

The principles were laid out by Jean Paoli [pictured at right], Microsoft's general manager for interoperability strategy, a group that coordinates with Microsoft's product teams. He spoke at the O'Reilly Open Source Convention in Portland, Ore. (video). OSCON might not seem like the most welcoming venue for Microsoft, but Paoli has renown beyond being a Microsoft employee. He's a co-creator of the XML 1.0 standard in conjunction with the World Wide Web Consortium (W3C) standards body. [See post below.]

More than anything, XML serves as an example of successful adoption of a standard. It's a good back-drop for Paoli's current interoperability career.

"You know, XML is really pervasive today; it's like electricity," Paoli said in a telephone interview. "I'm very humbled by the level of adoption in the world. I mean, even refrigerators have XML these days. It really became the backbone of interoperability of the Internet, literally, today."

Interoperability or Vendor Lock-In?

Interoperability questions currently seem complex when it comes to the Internet cloud. Organizations face questions about legal, logistical and technical issues. Will companies have data and application portability in the future or will they be locked into the vendor's cloud service platform?

Such prospects of vendor lock-in become even more unclear because standards are being debated even as cloud providers promote their platforms and deliver services. Players such as Amazon, Google, Rackspace, Salesforce.com, Microsoft and others have adopted technologies that may not line up. An open cloud, if possible to achieve, seems to be an important prerequisite for providers and customers alike.

Paoli, speaking to open source software professionals at OSCON, outlined four "interoperability elements" that Microsoft sees as necessary to achieve an open cloud platform. Microsoft's main goal, at this point, is to advance the conversation, connecting with the open source community and customers, Paoli explained in a blog post.

The four cloud interoperability elements outlined by Paoli include:

- Enabling portability for customer data;

- Supporting commonly used standards;

- Providing easy migration and deployment; and

- Ensuring developer choice on languages, runtimes and tools.

In Microsoft's case, it has built its Windows Azure cloud computing infrastructure as an "open platform" from the ground up, Paoli said.

"At the base, Azure has been built using the basic XML, REST and WS protocols," he explained. "And because of that, we are able to put multiple languages and tool support on top of it." Windows Azure supports the use of Visual Studio and the Eclipse development environments. It also supports different languages and runtimes, such as .NET, Java, PHP, Python and Ruby.

Microsoft believes that customers own their data in the cloud, Paoli said. To support data portability in Windows Azure, Microsoft advocates the Open Data Protocol (OData).

"OData originated at Microsoft and it just basically depends on JSON, XML and REST," Paoli said. "And it enables you to do basic cloud operations -- read, update and queries and all of that -- through a protocol that we opened up." He added that OData is used by IBM's WebSphere, as well as Microsoft's SQL Azure. Microsoft is currently discussing standardization of OData; organizations such as the W3C and OASIS are interested, Paoli said.

Microsoft prefers to rely on existing standards for the cloud. It has an Interoperability Bridges and Labs Center for collaboration with the community. It is working through multiple standards organizations with other vendors on the open cloud concept. And the standards talk already is happening, Paoli said. One of those forums is the Distributed Management Task Force (DMTF).

"The DMTF…is a standards body where this discussion around what to do for an open interoperable cloud is happening," Paoli said. "It's the same thing in ISO. Very recently, there is a new group called 'SB38' that has been created to also discuss the cloud, openness, standards and interoperability."

Clouds Are Moving Targets

The concept of an open cloud has come up before. In March of 2009, the Cloud Computing Interoperability Forum published the "Open Cloud Manifesto," which was backed by 53 companies but support was missing from some of the larger players. Microsoft claimed at the time that it was excluded from participating in drafts of the document. Allegedly, IBM was lurking in the background and using the document to promote its views. The Open Cloud Manifesto eventually went nowhere; even its backers backed away from it.

So, Microsoft's attempt to define an open cloud isn't the first. It's perhaps the third or fourth attempt, according to James Staten, an analyst with Forrester Research.

"One of the challenges here is that everyone acknowledges that it would be great to do a common integration among these clouds," Staten said in a phone interview. "All of these clouds are moving targets. They're all adapting and maturing as they are figuring out what their market is, what their specialization is, and as they add new capabilities and change infrastructure choices. Most of them want to have some consistency on the interfaces customers use, but don't have the discipline to ensure that."

Read page 2

Jean Paoli posted Interoperability Elements of a Cloud Platform Outlined at OSCON to the Interoperability @ Microsoft blog on 7/22/2010:

This week I’m in Portland, Oregon attending the O’Reilly Open Source Convention (OSCON). It’s exciting to see the great turnout as we look to this event as an opportunity to rub elbows with others and have some frank discussions about what we’re collectively doing to advance collaboration throughout the open source community. I even had the distinct pleasure of giving a keynote this morning at the conference.

The focus of my presentation, titled “Open Cloud, Open Data,” was how interoperability is an essential component of a cloud computing platform. I personally think it’s critical to acknowledge that the cloud is intrinsically about connectivity. Because of this, interoperability is really the key to successful connectivity.

We’re facing an inflection point in the industry, with the cloud still in a nascent state, at which we need to focus on removing the barriers to customer adoption and enhancing the value of cloud computing technologies. As a first step, we’ve outlined what we believe are the foundational elements of an open cloud platform.

They include:

- Data Portability:

How can I keep control over my data?

Customers own their own data, whether stored on-premises or in the cloud. Therefore, cloud platforms should facilitate the movement of customers’ data in and out of the cloud.

- Standards:

What technology standards are important for cloud platforms?

Cloud platforms should support commonly used industry standards so as to facilitate interoperability with other software and services that support the same standards. New standards may be developed where existing standards are insufficient for emerging cloud platform scenarios.

- Ease of Migration and Deployment:

Will your cloud platforms help me migrate my existing technology investments to the cloud and how do I use private clouds?

Cloud platforms should provide a secure migration path that preserves existing investments and should enable the co-existence between on-premise software and cloud services. This will enable customers to run “customer clouds” and partners (including hosters) to run “partner clouds” as well as take advantage of public cloud platform services.

- Developer Choice:

How can I leverage my developers’ and IT professionals’ skills in the cloud?

Cloud platforms should offer developers a choice of software development tools, languages and runtimes.

Through our ongoing engagement in standards and with industry organizations, open source developer communities, and customer and partner forums, we hope to gain additional insight that will help further shape these elements. We’ve also pulled together a set of related technical examples which can be accessed at www.microsoft.com/cloud/interop to support continued discussion with customers, partners and others across the industry.

In addition, we continue to work with others in the industry to deliver resources and technical tools to bridge non-Microsoft languages — including PHP and Java — with Microsoft technologies. As a result, we have produced several useful open source tools and SDKs for developers, including the Windows Azure Command-line Tools for PHP, the Windows Azure Tools for Eclipse and the Windows Azure SDK for PHP and for Java. Most recently, Microsoft joined Zend Technologies Ltd., IBM Corp. and others for an open source, cloud interoperability project called Simple API for Cloud Application Services, which will allow developers to write basic cloud applications that work in all of the major cloud platforms.

The latest version of the Windows Azure Command Line Tools for PHP is available today through the Microsoft Web Platform Installer (Web PI). The Windows Azure Command Line Tools for PHP enable developers to use a simple command-line tool without an Integrated Development Environment to easily package and deploy new or existing PHP applications to Windows Azure. Microsoft Web PI is a free tool that makes it easy to get the latest components of the Microsoft Web Platform as well as install and run the most popular free web applications.

On the data portability front, we’re also working with the open source community to support the Open Data Protocol (OData), a REST-based Web protocol for manipulating data across platforms ranging from mobile to server to cloud. You can read more about the recent projects we’ve sponsored (see OData interoperability with .NET, Java, PHP, iPhone and more) to support OData. I’m pleased to announce that we’ve just released a new version of the OData Client for Objective-C (for iOS & MacOS), with the source code posted on CodePlex, joining a growing list of already available open source OData implementations.

Microsoft’s investment and participation in these projects is part of our ongoing commitment to openness, from the way we build products, collaborate with customers, and work with others in the industry. I’m excited by the work we’re doing, and equally eager to hear your thoughts on what we can collectively be doing to support interoperability in the cloud.

Jean Paoli, general manager for Interoperability Strategy at Microsoft

David Linthicum claimed For SMBs, Cloud Computing is a No-Brainer in an 8/10/2010 post to ebizQ’s Where SOA Meets Cloud blog:

According to a Spiceworks survey of over 1,500 IT professionals, the smaller the company, the more willing they are to adopt cloud computing technology. 38 percent report that they plan to adopt cloud computing within the next 6 months.

While we focus on cloud computing adoption by the Global 2000 and the government, the reality is that small businesses have been on the cloud for years, and continue to fuel the largest and most rapid growth around the cloud. They were the first to adopt SaaS earlier this decade, driving the rapid growth of SaaS providers such as Salesforce.com. Now SMBs are looking to drive much of their IT into the cloud, largely around the cost benefits.

While the larger enterprises and government agencies attempt to sort out issues around security, privacy, compliance, and, that all-important control, the decision to go cloud for small businesses is a no-brainer. They can't afford traditional IT, in many cases. Lacking the "cloud option," most SMBs would do without core enterprise systems such as CRM, ERP, calendar sharing, e-mail, and even business intelligence. Now, all of these can be had out of the cloud for a few dollars a day.

In addition, the availability of clouds that provide IaaS, such as Amazon Web Services, GoGrid, and Rackspace, has opened the gates for small technology-oriented startups to rent virtual data centers and avoid the million or so dollars needed to make capital expenditures around hardware, software, and renting data center space. Many tech startups have no infrastructure to speak of on-premise, and leverage the savings in capital to drive faster and more effective product development efforts. When I was running small startups, you figured a million dollars minimum just to get ready for development. With the cloud computing options today, that's money you can leverage for other purposes.

What's interesting about all this is that many cloud computing providers are not paying much attention to the emerging SMB market. I think many providers assume they will come to cloud anyway, and perhaps they are right. However, you also have to keep in mind that small businesses soon become big businesses. Many of those fast-growing businesses will use cloud computing as a strategic differentiator that will allow them to lead their markets, using cloud computing solutions that provide both lower costs and agile computing benefits. Thus, cloud computing could find that it grows more around the success of the smaller players, than it does by waiting for the behemoths to begin adopting cloud computing.

<Return to section navigation list>

Windows Azure Platform Appliance

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) asserted The fallacy of security is that simplicity or availability of the solution has anything to do with time to resolution as a preface to her Six Lines of Code article of 8/11/2010 for F5’s DevCentral blog:

The announcement of the discovery of a way in which an old vulnerability might be exploited gained a lot of attention because of the potential impact on Web 2.0 and social networking sites that rely upon OAuth and OpenId, both of which use affected libraries. What was more interesting to me, however, was the admission by developers that the “fix” for this vulnerability would take only “six lines of code”, essentially implying a “quick fix.”

For most of the libraries affected, the fix is simple: Program the system to take the same amount of time to return both correct and incorrect passwords. This can be done in about six lines of code, Lawson said.

It sounds simple enough. Six lines of code.
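For readers who want to see what such a fix can look like, here is a minimal Python sketch of a comparison that does not stop at the first mismatching byte. It is an illustration, not the patch applied to any of the affected libraries. The function names are hypothetical; hmac.compare_digest is the Python standard library's helper for this purpose.

```python
import hmac


def constant_time_equals(expected: bytes, supplied: bytes) -> bool:
    """Compare two byte strings without exiting early on the first mismatch."""
    if len(expected) != len(supplied):
        # Only the length is revealed by this early return.
        return False
    diff = 0
    for x, y in zip(expected, supplied):
        # Accumulate differences instead of returning as soon as one is found.
        diff |= x ^ y
    return diff == 0


def verify_signature(expected: bytes, supplied: bytes) -> bool:
    """Preferred form: delegate to the standard library helper."""
    return hmac.compare_digest(expected, supplied)


if __name__ == "__main__":
    print(constant_time_equals(b"s3cret-mac", b"s3cret-mac"))
    print(verify_signature(b"s3cret-mac", b"s3cret-mad"))
```

The hand-written loop shows the idea in roughly six lines, but the Python interpreter gives no timing guarantees for it, so the library helper is the safer choice in practice.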

If you’re wondering (and I know you are) why it is that I’m stuck on “six lines of code” it’s because I think this perfectly sums up the problem with application security today. After all, it’s just six lines of code, right? Shouldn’t take too long to implement, and even with testing and deployment it couldn’t possibly take more than a few hours, right?

Try thirty-eight days, on average. That’d be 6.3 days per line of code, in case you were wondering.

SIMPLICITY OF THE SOLUTION DOES NOT IMPLY RAPID RESOLUTION

Turns out that responsiveness of third-party vendors isn’t all that important, either.

But a new policy announced on Wednesday by TippingPoint, which runs the Zero Day Initiative, is expected to change this situation and push software vendors to move more quickly in fixing the flaws.

Vendors will now have six months to fix vulnerabilities, after which time the Zero Day Initiative will release limited details on the vulnerability, along with mitigation information so organizations and consumers who are at risk from the hole can protect themselves.

-- Forcing vendors to fix bugs under deadline, C|Net News, August 2010

To which I say, six lines of code, six months. Six of one, half-a-dozen of the other. Neither is necessarily all that pertinent to whether or not the fix actually gets implemented. Really. Let’s examine reality for a moment, shall we?

The least amount of time taken by enterprises to address a vulnerability is 38 days, according to WhiteHat Security’s 9th Website Security Statistic Report.

Only one of the eight reasons cited by organizations in the report for not resolving a vulnerability is external to the organization: affected code is owned by an unresponsive third-party vendor. The others are all internal, revolving around budget, skills, prioritization, or simply that risk of exploitation is acceptable. Particularly of note is that in some cases, the “fix” for the vulnerability conflicts with a business use case. Guess who wins in that argument?

What WhiteHat’s research shows, and what most people who’ve been inside the enterprise know, is that there are a lot of reasons why vulnerabilities aren’t patched or fixed. We can say it’s only six lines of code and yes, it’s pretty easy, but that doesn’t take into consideration all the other factors that go into deciding when, if ever, to resolve the vulnerability. Consider that one of the reasons cited for security features of underlying frameworks being disabled in WhiteHat’s report is that it breaks functionality. That means that securing one application necessarily breaks all others. Sometimes it’s not that the development folks don’t care, it’s just that their hands are essentially tied, too. They can’t fix it, because that breaks critical business functions that impact directly the bottom line, and not in a good way.

For information security professionals this must certainly appear to be little more than gambling; a game which the security team is almost certain to lose if/when the vulnerability is actually exploited. But the truth is that information security doesn’t get to set business and development priorities, unfortunately, yet they’re the ones responsible.

All the accountability and none of the authority. It’s no wonder these folks are high-strung.

INFOSEC NEEDS THEIR OWN INFRASTRUCTURE TOOLBOX

This is one of the places that a well-rounded security toolbox can provide security teams some control over their own destiny, and some of the peace of mind needed for them to get some well-deserved sleep.

If the development team can’t/won’t address a vulnerability, then perhaps it’s time to explore other options. IPS solutions with an automatically updated signature database for those vulnerabilities that can be identified by a signature can block known vulnerabilities. For exploits that may be too variable or subtle, a web application firewall or application delivery controller enabled with network-side scripting can provide the means by which the infosec professional can write their own “six lines of code” and at least stop-gap the risk of actively being exploited.
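A stop-gap of that kind can be sketched in a few lines. The example below uses Python WSGI middleware as a stand-in for a web application firewall or network-side script; it is an assumption for illustration and does not represent any F5 product API. The class name VirtualPatch, the signature in BLOCK_PATTERN and the helper functions are all hypothetical.

```python
import re

# Hypothetical signature for illustration only: a crude script-tag probe.
BLOCK_PATTERN = re.compile(r"<script|%3Cscript", re.IGNORECASE)


class VirtualPatch:
    """WSGI middleware that rejects requests whose query string matches a signature."""

    def __init__(self, app, pattern=BLOCK_PATTERN):
        self.app = app
        self.pattern = pattern
        self.blocked = 0  # number of rejected requests, kept for reporting

    def __call__(self, environ, start_response):
        query = environ.get("QUERY_STRING", "")
        if self.pattern.search(query):
            self.blocked += 1
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Request blocked\n"]
        # No match: hand the request to the unmodified application.
        return self.app(environ, start_response)


def demo_app(environ, start_response):
    """Stand-in for the vulnerable application that cannot be changed yet."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok\n"]


def call(app, query):
    """Invoke a WSGI app with a minimal environ and return (status, body)."""
    seen = {}

    def start_response(status, headers):
        seen["status"] = status

    body = b"".join(app({"REQUEST_METHOD": "GET", "QUERY_STRING": query}, start_response))
    return seen["status"], body


if __name__ == "__main__":
    patched = VirtualPatch(demo_app)
    print(call(patched, "q=hello"))
    print(call(patched, "q=%3Cscript%3Ealert(1)"))
    print("blocked so far:", patched.blocked)
```

The application itself is untouched, which is the point of the approach: the filter can be deployed and withdrawn independently of the development team's release schedule.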

Infosec also needs visibility into the effectiveness of their mitigating solutions. If a solution involves a web application firewall, then that web application firewall ought to provide an accurate report on the number of exploits, attacks, or even probing attempts it stopped. There’s no way for an application to report that data easily – infosec and operators end up combing through log files or investing in event correlation solutions to try and figure out what should be a standard option. Infosec needs to make sure it can report on the amount of risk mitigated in the course of a month, or a quarter, or a year. Being able to quantify in terms of hard dollars provides management and the rest of the organization (particularly the business) what they consider real “proof” of value of not just infosec but the solutions in which it invests to protect data and applications and business concerns.

Every other group in IT has, in some way, embraced the notion of “agile” as an overarching theme. Infosec needs to embrace agile as not only an overarching theme but as a methodology in addressing vulnerabilities. Because the “fix” may be a simple “six lines of code”, but who implements that code and where is less important than when. An iterative approach that initially focuses on treating the symptoms (stop the bleeding now) and then more carefully considers long-term treatment of the disease (let’s fix the cause) may result in a better overall security posture for the organization.

<Return to section navigation list>

Cloud Computing Events

Colinizer reported his Live Meeting Presentation Today on Windows Phone 7 + OData + Silverlight + Azure on 8/11/2010 at 1:00 PM PDT (16:00 EDT):

I’m doing a 1.5-hour Live Meeting presentation today at 16:00 ET on these hot topics for the Windows Azure User Group.

The audience objectives include:

- Learn key features of Silverlight, OData & the Windows Azure Platform

- Learn about preparing an application for use with Windows Azure & SQL Azure

- Learn stages and ways to deploy a full application to the Windows Azure Platform

- Learn how Silverlight can interact with Windows Azure Platform technologies.

Register for this hot-topic event and participate remotely.

https://www.clicktoattend.com/invitation.aspx?code=147804

If you are looking for in-depth rapid training on developing for Windows Phone 7 AND hands-on time with a device, then you should consider registering for this major 2-day boot camp running across Canada.

Geva Perry compares cloud benchmarking services in his Shopping the Cloud: Performance Benchmarks post of 8/10/2010:

As cloud computing matures -- meaning it is being used by increasingly larger companies for mission critical applications -- companies are shopping around for cloud providers with requirements that are more sophisticated than merely price and ease-of-use. One of these criteria is performance.

Performance has consistently been one of the main concerns enterprise buyers have had about cloud computing, as indicated by the chart of the responses to a survey conducted by IDC in Q3 of 2009.

To address this concern, and help potential cloud users in selecting their cloud provider, a number of research, measurement and academic groups have initiated efforts to actually measure and compare the performance of various cloud providers under a variety of circumstances (application use case, geographical location and more).

Here are some of the more interesting performance benchmarks out there today:

Compuware Gomez: CloudSleuth

Still in beta, Gomez CloudSleuth is likely to be one of the more important reference points for customer and media cloud performance testing. Gomez has developed a benchmark Java e-commerce application and measures its end-to-end response time across various cloud providers and locations. The tests are run from 125 end-user U.S. locations in all 50 states and from 75 international locations in 30 countries and are conducted 200 times per hour. Gomez is planning on adding the ability to benchmark a user’s own application.
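The basic idea of repeatedly timing a request and summarizing the results can be sketched as follows. This is a minimal illustration only, not CloudSleuth's actual method: the function names are hypothetical, and `fake_provider_request` is a stand-in that sleeps briefly instead of calling a real provider-hosted application.

```python
import statistics
import time


def benchmark(probe, runs=5):
    """Call probe() `runs` times and return the elapsed seconds of each call."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        probe()
        timings.append(time.perf_counter() - start)
    return timings


def summarize(timings):
    """Reduce a list of timings to a few headline numbers."""
    return {
        "runs": len(timings),
        "median_s": statistics.median(timings),
        "max_s": max(timings),
    }


def fake_provider_request():
    # Stand-in for an HTTP request to a test application hosted by a provider.
    time.sleep(0.01)


if __name__ == "__main__":
    print(summarize(benchmark(fake_provider_request)))
```

Replacing `fake_provider_request` with a function that fetches the same test page from each provider, and running it from several locations, would give a crude version of the comparison described above.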

CloudHarmony

Although just a startup, if it succeeds CloudHarmony is likely to be an important resource for customers and the media for evaluating cloud provider performance. CloudHarmony has a service called Cloud SpeedTest, which allows users to benchmark the performance of a web application across multiple cloud providers and services (servers, storage, CDN, PaaS). This service is currently in beta and is quite simplistic, but CloudHarmony is working on a more sophisticated version with additional features.

In addition, the CloudHarmony staff conducts a variety of performance benchmarks for specific scenarios such as CPU performance, storage I/O, memory I/O or video encoding and publishes them on their blog.

UC Berkeley: Cloudstone Project

Cloudstone is an academic open source project from UC Berkeley. It provides a framework for testing realistic performance. The project does not yet publish comparative results across clouds, but provides users with the framework that allows them to do so. The reference application is a social Web 2.0-style application.

Duke University and Microsoft Research: Cloud CMP Project

The objective of the Cloud CMP project is to enable “comparison shopping” across cloud providers -- both IaaS and PaaS -- and do so for a number of application use cases and workloads. To that end, the project combines straight performance benchmarks with a cost-performance analysis. The project has already measured computational, storage, intra-cloud and WAN performance for three cloud providers (two IaaS and one PaaS) and intends to expand.

BitCurrent: The Performance of Clouds

BitCurrent conducted a comprehensive performance benchmarking study, entitled The Performance of Clouds, which was commissioned by Webmetrics and used their testing service. The study covered three IaaS providers (Amazon, Rackspace and Terremark) and two PaaS providers (Salesforce.com and Google App Engine). It measured four categories of performance: raw response time and caching, network throughput and congestion, computational performance (CPU-intensive tasks) and I/O performance.

The Bit Source: Rackspace Cloud Servers Vs. Amazon EC2

According to its website, The Bit Source is an “online publication and testing lab”, which appears to be a one-man show and may not play a significant role going forward. It conducted a one-time benchmark comparing Rackspace Cloud Servers and Amazon EC2 performance.

What are your thoughts about cloud performance and these benchmarks? Please share in the comments below.

[P.S. I added a new category to the blog called "Shopping the Cloud", which will include posts that discuss various aspects of comparison shopping for cloud providers.]

TM Forum claimed Boeing, Commonwealth Bank of Australia, Deutsche Bank, Washington Post and Schumacher amongst global leaders at TM Forum's Cloud Summit on 8/10/2010 in conjunction with a press release for its TM Forum Sees Sunny Future for Cloud Services at Management World Americas to be held 11/9 to 11/11/2010 in Orlando, FL:

With the Cloud Services promise meant to transform the way enterprises operate today, TM Forum is at the forefront, announcing their Cloud Summit at Management World Americas 2010 (November 9-11, Orlando, FL). Bringing together enterprise customers, cloud service providers and technology suppliers to remove barriers to adoption, create a vibrant marketplace for cloud services, and support transparency through industry standards, TM Forum's Cloud Summit will see open discussion and debates with industry leaders and attendees.

With three days full of user case studies, panels, intensive debates and enterprise roundtables, senior executives from Boeing, Commonwealth Bank of Australia, Deutsche Bank, Washington Post and Schumacher will join others to discuss strategies to enable Cloud service providers and enterprise users to turn cloud services into business reality.

The key issues addressed at the Summit will be:

- Enhanced security

- Optimizing management and operations of cloud services

- How to combine TM Forum Business Process Framework (Frameworx) and ITIL in the context of the cloud

- Client billing and partner revenue sharing

- Defining and maintaining a set of standardized definitions of common cloud computing services

- Reducing deployment costs for services such as desktop as a service, database as a service and Virtual Private Clouds

Speakers include:

- David Nelson, Chief Cloud Strategist, Boeing Company

- Doug Menefee, CIO, Schumacher Group

- Sean Kelley, CIO, Deutsche Bank and Chair, TM Forum's Enterprise Cloud Leadership Council (ECLC)

- Yuvi Kochar, CTO & VP Technology - Washington Post

Dedicated panel discussions include:

- A "Buyer Meets Provider" Panel - bringing together the key stakeholders in the cloud eco-system to examine the role of standards in removing risk and barriers to entry. Panelists include Jon Waldron, Executive Architect, Commonwealth Bank of Australia; John Engates, Chief Technology Officer, Rackspace Hosting; Douglas Menefee, CIO, Schumacher Group; Joe Weinman, VP Strategy & Business Development, AT&T; and Michael Lawrey, Executive Director - Network & Technology, Telstra

- War stories from the front, featuring Brett Piatt, Director Technical Alliances, Rackspace; Michael Crandell, CEO, Rightscale; Trae Chancellor, VP, salesforce.com; and Joe Graves, CIO, Stratus Technologies focusing on applications and how enterprises can make the most of them

- Hearing how China Mobile has rolled out a full scale mobile cloud computing solution, offering tangible benefits to their enterprise customers

Key Facts:

- Cloud services revenue is expected to hit $68.3 billion this year, a 16.6% rise compared to 2009, and $148.8 billion by 2014, according to Gartner.

- Management World Americas 2010 (November 9-11) Rosen Creek Orlando, FL, is the leading communications management conference for the Americas, delivering compelling strategies to help companies invest wisely, cut costs and diversify their revenue portfolios, offering inspiration and access to knowledge based on real-world experiences.

- New this year will be a series of intensive debates following each session that are designed to enable knowledge transfer and promote collaboration and debate amongst attendees. An extensive program of executive roundtables, enterprise roundtables, a full training and certification program, Executive Appointment Service, and Forumville, where visitors can see TM Forum and its members in action, make Management World Americas your go-to event this fall.

- Mark your calendars now to join this year's premier US communications management conference, November 9-11. …

About TM Forum:

With more than 700 corporate members in 195 countries, TM Forum is the world's leading industry association focused on enabling best-in-class IT for service providers in the communications, media and cloud service markets. The Forum provides business-critical industry standards and expertise to enable the creation, delivery and monetization of digital services.

TM Forum brings together the world's largest communications, technology and media companies, providing an innovative, industry-leading approach to collaborative R&D, along with a wide range of support services including benchmarking, training and certification. The Forum produces the renowned international Management World conference series, as well as thought-leading industry research and publications.

CloudTweaks reported on 8/10/2010 CloudTweaks Event Partner “Cloud Symposium” Hosting World’s Largest SOA and Cloud Event on 10/4/2010:

This week the SOA & Cloud Symposium program committees announced an exciting free event to kick-start this year’s SOA & Cloud Symposium. The first conference day (Monday, October 4th) will be dedicated to hosting a Cloud Camp event, which is open for anyone to attend free of charge. Cloud Camp events are based on the “unconference” format whereby experts and attendees openly discuss and debate current topics pertaining to Cloud Computing. The Cloud Camp day is further supplemented by lightning talks also performed by attending Cloud Computing experts.

The Cloud Camp event, followed by the numerous Cloud-related sessions during the subsequent two conference days, culminates in the delivery of the Cloud Computing Specialist Certification Workshop, provided by SOASchool.com in Europe for the very first time. This post-conference workshop enables IT practitioners to achieve formal vendor-neutral accreditation in the field of Cloud Computing. To register or learn more about the Cloud Camp event and the Cloud Computing Specialist Certification Workshop, visit: www.cloudsymposium.com

The tracks spanning the SOA & Cloud Symposium conference days (Tuesday October 5 plus Wednesday October 6) currently cover over 17 topics. Newly added time slots have brought the count to over 100 speaker sessions, making this the largest SOA and Cloud event in the world. The content, context, and application for SOA and Cloud Computing are ever-expanding. This event will be the hot spot for cutting edge technologies, methodologies, and their impacts on IT practitioners today.

The agenda can be found here: www.soasymposium.com/agenda2010.php

The SOA & Cloud Symposium event organizers are also excited to launch the new Official Facebook Fan Page and Twitter account.

To commemorate the launch of these new social media platforms, a special promotional giveaway has been organized with the following prizes:

- 1st Prize - 3 x SOA & Cloud Symposium Passes (valued at over 3,000 Euros) + 1 x SOA Security Specialist Self-Study Kit Bundle (valued at over 800 Euros) or 1 x Cloud Computing Specialist Self-Study Kit Bundle (valued at over 800 Euros) from SOASchool.com. There will only be one first prize.

- 2nd Prize – 2 x SOA & Cloud Symposium Passes (valued at over 2,000 Euros) + 1 x any one Self-Study Kit (valued at over 300 Euros) from SOASchool.com. The 2nd prize will be issued to three winners.

- 3rd Prize – 1 x SOA & Cloud Symposium Pass (valued at over 1,000 Euros). The 3rd prize will be issued to five winners.

Contestants must visit and “like” the Facebook Fan Page before August 23rd to be entered into the draw. The prizes will be issued on the morning of August 24th.

Stay connected with SOA & Cloud Symposium news, announcements, contests, and ongoing developments at: www.twitter.com/soacloud and www.linkedin.com/groups?mostPopular=&gid=1951120

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Nicole Hemsoth’s To Build or to Buy Time: That is the Question article for the HPC in the Cloud blog describes an SMB’s use of a cloud-based high-performance computing Linux cluster for designing aerospike nozzles:

Generally, when one thinks about the vast array of small to medium-sized businesses deploying a cloud to handle peak loads or even mission-critical operations, the idea that such a business might be designing the future of missile defense strategy isn’t the first thing that comes to mind. After all, SMB concerns have historically not had much in common with those of large-scale enterprise and HPC users. The cloud is creating a convergence of these spaces, and smaller businesses that were once unable to gain a foothold in their market due to high infrastructure start-up costs are now a competitive force due to the availability of shared or rented infrastructure and a virtualized environment. This convergence creates new possibilities but can complicate end user decision-making about ideal options for mission-critical workloads.