Windows Azure and Cloud Computing Posts for 8/27/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

![AzureArchitecture2H_thumb31[1] AzureArchitecture2H_thumb31[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgYzRdbDR0pI-8aqVuBbVjDw5CncBotE2X_t1OoZ__k5-gtAoWZpf-6kFgs3B8JJzm1XHLjC4_Z_isj62saUBHsF9pgizUNqKtADd5KOGt5fiBrTrRu8tCmoDYVL5tz0H8VZPZFTyZ4/?imgmax=800)

• Updated 8/29/2010 with new articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available for download at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Azure Blob, Drive, Table and Queue Services

James Senior delivered a guided tour of New: Windows Azure Storage Helper for WebMatrix in this 00:13:21 Channel9 video segment:

Hot on the heels of the OData Helper for WebMatrix, today we are pushing a new helper out into the community. The Windows Azure Storage Helper makes it ridiculously easy to use Windows Azure Storage (both blob and table) when building your apps. If you’re not familiar with “cloud storage,” I recommend you take a look at this video where my pal Ryan explains what it’s all about. In a nutshell, it provides infinitely simple yet scalable storage, which is great if you are a website with lots of user generated content and you need your storage to grow auto-magically with the success of your apps. Tables aren’t your normal relational databases—but they are great for simple data structures and they are super fast.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• Beth Massi recommends that you Add Some Spark to Your OData: Creating and Consuming Data Services with Visual Studio and Excel 2010 in an article for the September/October 2010 issue of CoDe Magazine:

The Open Data Protocol (OData) is an open REST-ful protocol for exposing and consuming data on the web. Also known as Astoria, ADO.NET Data Services, now officially called WCF Data Services in the .NET Framework. There are also SDKs available for other platforms like JavaScript and PHP. Visit the OData site at www.odata.org.

With the release of .NET Framework 3.5 Service Pack 1, .NET developers could easily create and expose data models on the web via REST using this protocol. The simplicity of the service, along with the ease of developing it, make it very attractive for CRUD-style data-based applications to use as a service layer to their data. Now with .NET Framework 4 there are new enhancements to data services, and as the technology matures more and more data providers are popping up all over the web. Codename “Dallas” is an Azure cloud-based service that allows you to subscribe to OData feeds from a variety of sources like NASA, Associated Press and the UN. You can consume these feeds directly in your own applications or you can use PowerPivot, an Excel Add-In, to analyze the data easily. Install it at www.powerpivot.com.

As .NET developers working with data every day, the OData protocol and WCF data services in the .NET Framework can open doors to the data silos that exist not only in the enterprise but across the web. Exposing your data as a service in an open, easy, secure way provides information workers access to Line-of-Business data, helping them make quick and accurate business decisions. As developers, we can provide users with better client applications by integrating data that was never available to us before or was clumsy or hard to access across networks.

In this article I’ll show you how to create a WCF data service with Visual Studio 2010, consume its OData feed in Excel using PowerPivot, and analyze the data using a new Excel 2010 feature called sparklines. I’ll also show you how you can write your own Excel add-in to consume and analyze OData sources from your Line-of-Business systems like SQL Server and SharePoint.

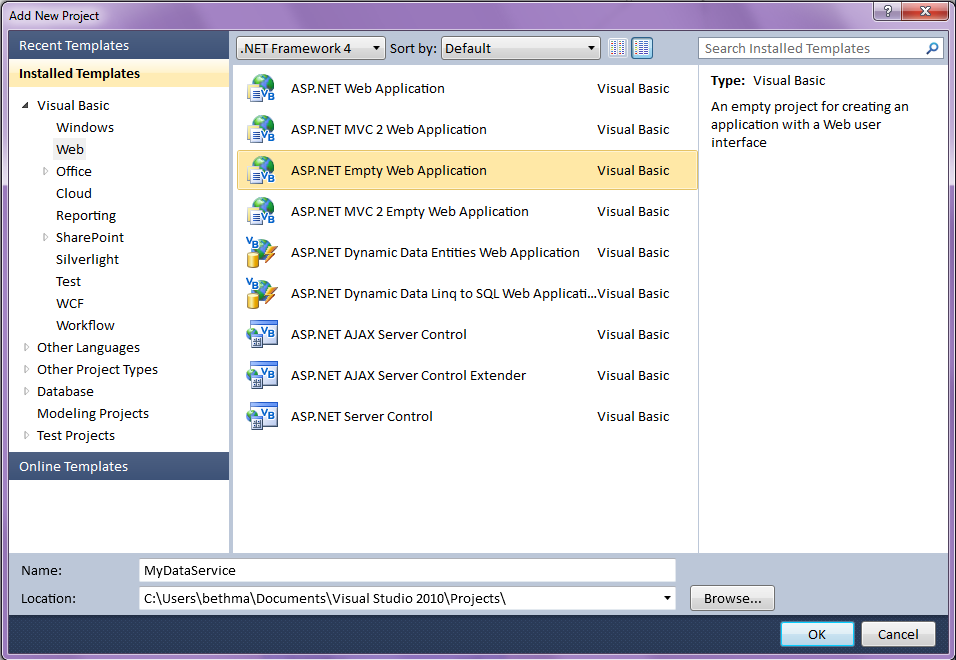

Creating a Data Service Using Visual Studio 2010

Let’s quickly create a data service using Visual Studio 2010 that exposes the AdventureWorksDW data warehouse. You can download the AdventureWorks family of databases here: http://sqlserversamples.codeplex.com/. Create a new Project in Visual Studio 2010 and select the Web node. Then choose ASP.NET Empty Web Application as shown in Figure 1. If you don’t see it, make sure your target is set to .NET Framework 4. This is a new handy project template to use in VS2010 especially if you’re creating data services.

Figure 1: Use the new Empty Web Application project template in Visual Studio 2010 to set up a web host for your WCF data service.

Click OK and the project is created. It will only contain a web.config. Next add your data model. I’m going to use the Entity Framework so go to Project -> Add New Item, select the Data node and then choose ADO.NET Entity Data Model. Click Add and then you can create your data model. In this case I generated it from the AdventureWorksDW database and accepted the defaults in the Entity Model Wizard. In Visual Studio 2010 the Entity Model Wizard by default will include the foreign key columns in the model. You’ll want to expose these so that you can set up relationships easier in Excel.

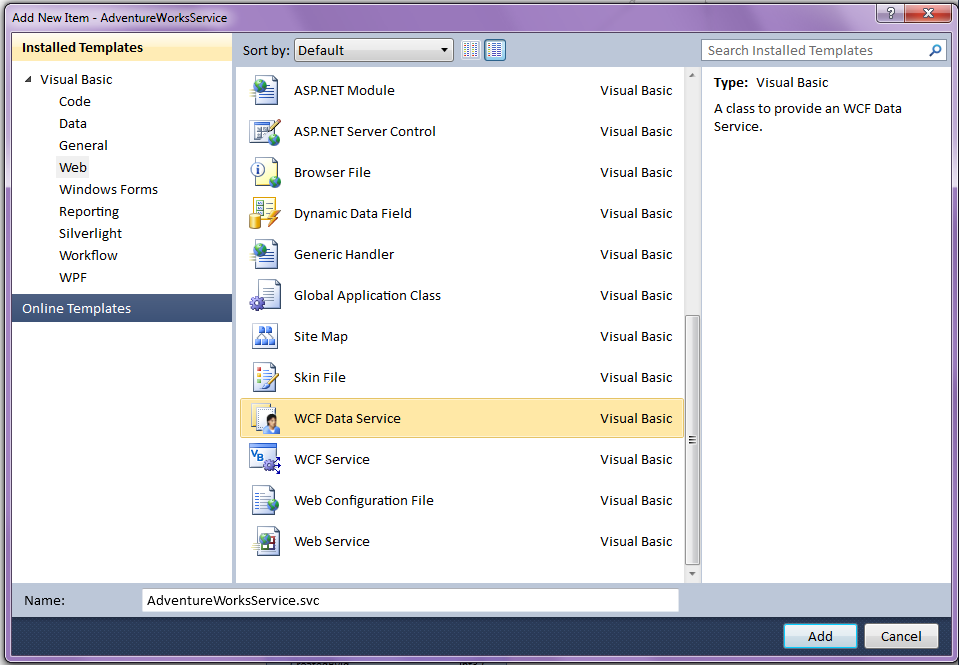

Next, add the WCF Data Service (formerly known as ADO.NET Data Service in Visual Studio 2008) as shown in Figure 2. Project -> Add New Item, select the Web node and then scroll down and choose WCF Data Service. This item template is renamed for both .NET 3.5 and 4 Framework targets so keep that in mind when trying to find it.

Figure 2: Select the WCF Data Service template in Visual Studio 2010 to quickly generate your OData service.

Now you can set up your entity access. For this example I’ll allow read access to all my entities in the model:

Public Class AdventureWorksService

Inherits DataService(

Of AdventureWorksDWEntities)

' This method is called only once to

' initialize service-wide policies.

Public Shared Sub InitializeService(

ByVal config As DataServiceConfiguration)

' TODO: set rules to indicate which

'entity sets and service operations

' are visible, updatable, etc.

config.SetEntitySetAccessRule("*",

EntitySetRights.AllRead)

config.DataServiceBehavior.

MaxProtocolVersion =

DataServiceProtocolVersion.V2

End Sub

End ClassYou could add read/write access to implement different security on the data in the model or even add additional service operations depending on your scenario, but this is basically all there is to it on the development side of the data service. Depending on your environment this can be a great way to expose data to users because it is accessible anywhere on the web (i.e., your intranet) and doesn’t require separate database security setup. This is because users aren’t connecting directly to the database, they are connecting via the service. Using a data service also allows you to choose only the data you want to expose via your model and/or write additional operations, query filters, and business rules. For more detailed information on implementing WCF Data Services, please see the MSDN library.

You could deploy this to a web server or the cloud to host for real or you can keep it here and test consuming it locally for now. Let’s see how you can point PowerPivot to this service and analyze the data a bit.

Article Pages: 1 2 3 - Next Page: 'Using PowerPivot to Analyze OData Feeds' >>

• Kevin Fairs points out a problem when using SqlBulkCopy with SQLAzure in this 8/29/2010 post:

Using SqlBulkCopy (.NET 4.0) with SQL Azure (10.25.9386) gives the error:

A transport-level error has occurred when receiving results from the server. (provider: TCP Provider, error: 0 - An existing connection was forcibly closed by the remote host.)

The SqlBulkCopy class is recommended by Microsoft for migration in their General Guidelines and Limitations (SQL Azure Database) document. However, it is clear to me that this does not work having tried many approaches, generally of the form:

Using cn As SqlConnection = New SqlConnection("connection string") cn.Open() Using BulkCopy As SqlBulkCopy = New SqlBulkCopy(cn) BulkCopy.DestinationTableName = "table" BulkCopy.BatchSize = 5000 BulkCopy.BulkCopyTimeout = 120 Try BulkCopy.WriteToServer(ds.Tables(0)) Catch ex As Exception End Try End Using End UsingAlternatives

- Use a traditional row at a time insert.

- Write a wrapper around BCP which works fine.

Or use George Huey’s SQL Azure Migration Wizard.

Wayne Walter Berry (@WayneBerry) issued a warning on 8/27/2010 about a problem with the original version of his Transferring a SQL Azure Database:

The original blog post doesn’t work as written, since the destination user executing the CREATE DATABASE must have exactly the same name as SQL Azure Portal Administrator name on the source database; i.e. you can only copy from a database where you know the SQL Azure Portal administrator name and password.

I will be revisiting this topic of how to transfer a SQL Azure database between two different parties in another blog post.

Dare Obasanjo (@Carnage4Life) posted Lessons from Google Wave and REST vs. SOAP: Fighting Complexity of our own Choosing on 8/27/2010:

Software companies love hiring people that like solving hard technical problems. On the surface this seems like a good idea, unfortunately it can lead to situations where you have people building a product where they focus more on the interesting technical challenges they can solve as opposed to whether their product is actually solving problems for their customers.

I started being reminded of this after reading an answer to a question on Quora about the difference between working at Google versus Facebook where Edmond Lau wrote

Culture:

Google is like grad-school. People value working on hard problems, and doing them right. Things are pretty polished, the code is usually solid, and the systems are designed for scale from the very beginning. There are many experts around and review processes set up for systems designs.Facebook is more like undergrad. Something needs to be done, and people do it. Most of the time they don't read the literature on the subject, or consult experts about the "right way" to do it, they just sit down, write the code, and make things work. Sometimes the way they do it is naive, and a lot of time it may cause bugs or break as it goes into production. And when that happens, they fix their problems, replace bottlenecks with scalable components, and (in most cases) move on to the next thing.

Google tends to value technology. Things are often done because they are technically hard or impressive. On most projects, the engineers make the calls.

Facebook values products and user experience, and designers tend to have a much larger impact. Zuck spends a lot of time looking at product mocks, and is involved pretty deeply with the site's look and feel.

It should be noted that Google deserves credit for succeeding where other large software have mostly failed in putting a bunch of throwing a bunch of Ph.Ds at a problem at actually having them create products that impacts hundreds of millions people as opposed to research papers that impress hundreds of their colleagues. That said, it is easy to see the impact of complexophiles (props to Addy Santo) in recent products like Google Wave.

If you go back and read the Google Wave announcement blog post it is interesting to note the focus on combining features from disparate use cases and the diversity of all of the technical challenges involved at once including

“Google Wave is just as well suited for quick messages as for persistent content — it allows for both collaboration and communication”

“It's an HTML 5 app, built on Google Web Toolkit. It includes a rich text editor and other functions like desktop drag-and-drop”

“The Google Wave protocol is the underlying format for storing and the means of sharing waves, and includes the ‘live’ concurrency control, which allows edits to be reflected instantly across users and services”

“The protocol is designed for open federation, such that anyone's Wave services can interoperate with each other and with the Google Wave service”

“Google Wave can also be considered a platform with a rich set of open APIs that allow developers to embed waves in other web services”

The product announcement read more like a technology showcase than an announcement for a product that is actually meant to help people communicate, collaborate or make their lives better in any way. This is an example of a product where smart people spent a lot of time working on hard problems but at the end of the day they didn't see the adoption they would have liked because they they spent more time focusing on technical challenges than ensuring they were building the right product.

It is interesting to think about all the internal discussions and time spent implementing features like character-by-character typing without anyone bothering to ask whether that feature actually makes sense for a product that is billed as a replacement to email. I often write emails where I write a snarky comment then edit it out when I reconsider the wisdom of sending that out to a broad audience. It’s not a feature that anyone wants for people to actually see that authoring process.

Some of you may remember that there was a time when I was literally the face of XML at Microsoft (i.e. going to http://www.microsoft.com/xml took you to a page with my face on it ). In those days I spent a lot of time using phrases like the XML<-> objects impedance mismatch to describe the fact that the dominate type system for the dominant protocol for web services at the time (aka SOAP) actually had lots of constructs that you don’t map well to a traditional object oriented programming language like C# or Java. This was caused by the fact that XML had grown to serve conflicting masters. There were people who used it as a basis for document formats such as DocBook and XHTML. Then there were the people who saw it as a replacement to for the binary protocols used in interoperable remote procedure call technologies such as CORBA and Java RMI.

The W3C decided to solve this problem by getting a bunch of really smart people in a room and asking them to create some amalgam type system that would solve both sets of completely different requirements. The output of this activity was XML Schema which became the type system for SOAP, WSDL and the WS-* family of technologies. This meant that people who simply wanted a way to define how to serialize a C# object in a way that it could be consumed by a Java method call ended up with a type system that was also meant to be able to describe the structural rules of the HTML in this blog post.

Thousands of man years of effort was spent across companies like Sun Microsystems, Oracle, Microsoft, IBM and BEA to develop toolkits on top of a protocol stack that had this fundamental technical challenge baked into it. Of course, everyone had a different way of trying to address this “XML<-> object impedance mismatch which led to interoperability issues in what was meant to be a protocol stack that guaranteed interoperability. Eventually customers started telling their horror stories in actually using these technologies to interoperate such as Nelson Minar’s ETech 2005 Talk - Building a New Web Service at Google and movement around the usage of building web services using Representational State Transfer (REST) was born.

In tandem, web developers realized that if your problem is moving programming language objects around, then perhaps a data format that was designed for that is the preferred choice. Today, it is hard to find any recently broadly deployed web service that doesn’t utilize

onJavascript Object Notation (JSON) as opposed to SOAP.

The moral of both of these stories is that a lot of the time in software it is easy to get lost in the weeds solving hard technical problems that are due to complexity we’ve imposed on ourselves due to some well meaning design decision instead of actually solving customer problems. The trick is being able to detect when you’re in that situation and seeing if altering some of your base assumptions doesn’t lead to a lot of simplification of your problem space then frees you up to actually spend time solving real customer problems and delighting your users. More people need to ask themselves questions like do I really need to use the same type system and data format for business documents AND serialized objects from programming languages?

I well remember learning about .NET 1.0’s XML support from Dare when he was literally the face of XML at Microsoft.

Wade Wegner (@WadeWegner) explained Using the ‘TrustServerCertificate’ Property with SQL Azure and Entity Framework in this 8/26/2010 post:

I’ve spent the last few days refactoring a web application to leverage SQL Server via Entity Framework 4.0 (EF4) in preparation for migrating it to SQL Azure. It’s a neat application, and a great example of how to fully encapsulate your data tier (the previous version had issues due to tight coupling between the data and web tier). More on this soon.

So, when I deployed my database to SQL Azure (using the SQL Azure Migration Wizard) I was confounded by the following error:

A connection was successfully established with the server, but then an error occurred during the pre-login handshake. (provider: SSL Provider, error: 0 – The certificate’s CN name does not match the passed value.)

I was caught off guard by this error, as I was pretty sure my connection string was valid – after all, I had copied it directly from the SQL Azure portal. Then I realized that this was the first time I had attempted to use EF4 along with SQL Azure; my first thought was, “oh crap!”

After a little bit of frantic research I found the following question on the SQL Azure forums. Raymond Li of Microsoft made the suggestion to set the ‘TrustServerCertificate’ property to True. So, I updated my connection string from …

… to …

… and voilà! It worked!

Turns out that when Encryt=True and TrustServerCertificate=False, the driver will attempt to validate the SQL Server SSL certificate. By setting the property TrustServerCertificate=True the driver will not validate the SQL Server SSL certificate.

Of course, once I learned/tried this, I came across an article on MSDN called How to: Connect to SQL Azure Using ADO.NET[, which] says to set the TrustServerCertificate property to False and the Encrypt property to True to prevent any man-in-the-middle attacks, so I guess I should include the following disclaimer: Use at your own risk!

Michael Otey wrote SQL Server vs. SQL Azure: Where SQL Azure is Limited for SQL Server Magazine on 8/26/2010:

Cloud computing is one of Microsoft’s big pushes in 2010, and SQL Azure is its cloud-based database service. Built on top of SQL Server, it shares many features with on-premises SQL Server.

For example, applications connect to SQL Azure using the standard Tabular Data Stream (TDS) protocol. SQL Azure supports multiple databases, as well as almost all of the SQL Server database objects, including tables, views, stored procedures, functions, constraints, and triggers.

However, SQL Azure is definitely not the same as an on-premises SQL Server system. Here's how it differs:

7. SQL Azure Requires Clustered Indexes

When you first attempt to migrate your applications to SQL Azure, the first thing you’re likely to notice is that SQL Azure requires all tables to have clustered indexes. You can accommodate this by building clustered indexes for tables that don’t have them. However, this usually means that most databases that are migrated to SQL Azure will usually require some changes before they can be ported to SQL Azure.

6. SQL Azure Lacks Access to System Tables

Because you don’t have access to the underlying hardware platform, there’s no access to system tables in SQL Azure. System tables are typically used to help manage the underlying server and SQL Azure does not require or allow this level of management. There's also no access to system views and stored procedures.

5. SQL Azure Requires SQL Server Management Studio 2008 R2

To manage SQL Azure databases, you must use the new SQL Server Management Studio (SSMS) 2008 R2. Older versions of SSMS can connect to SQL Azure, but the Object Browser won’t work. Fortunately, you don’t need to buy SQL Server 2008 R2. You can use the free version of SSMS Express 2008 R2, downloadable from Microsoft's website.

4. SQL Azure Doesn't Support Database Mirroring or Failover Clustering

SQL Azure is built on the Windows Azure platform which provides built-in high availability. SQL Azure data is automatically replicated and the SQL Azure platform provides redundant copies of the data. Therefore SQL Server high availability features such as database mirroring and failover cluster aren't needed and aren't supported.

3. No SQL Azure Support for Analysis Services, Replication, Reporting Services, or SQL Server Service Broker

The current release of SQL Azure provides support for the SQL Server relational database engine. This allows SQL Azure to be used as a backend database for your applications. However, the other subsystems found in the on-premises version of SQL Server, such as Analysis Services, Integration Services, Reporting Services, and replication, aren't included in SQL Azure. But you can use SQL Azure as a data source for the on-premises version of Analysis Services, Integration Services, and Reporting Services.

2. SQL Azure Offers No SQL CLR Support

Another limitation in SQL Azure is in the area of programmability: It doesn't provide support for the CLR. Any databases that are built using CLR objects will not be able to be moved to SQL Azure without modification.

1. SQL Azure Doesn't Support Backup and Restore

To me, one of the biggest issues with SQL Azure is the fact that there no support for performing backup and restore operations. Although SQL Azure itself is built on a highly available platform so you don’t have to worry about data loss, user error and database corruption caused by application errors are still a concern. To address this limitation, you could use bcp, Integration Services, or the SQL Azure Migration Wizard to copy critical database tables.

Michael’s “limitations” are better termed “characteristics” in my opinion. Note that a new Database Copy backup feature is available, as announced in the SQL Azure Team’s Backing Up Your SQL Azure Database Using Database Copy post of 8/25/2010.

Wayne Walter Berry (@WayneBerry) described Creating a Bing Map Tile Server from Windows Azure on 8/26/2010:

In this example application, I am going to build a Bing Maps tile server using Windows Azure that draws push pins on the tiles based on coordinates stored as spatial data in SQL Azure. Bing Maps allows you to draw over their maps with custom tiles; these tiles can be generated dynamically from a Windows Azure server. The overlay that this example produces corresponds to latitude and longitude points stored in a SQL Azure database.

There is another option for drawing pushpins, using JavaScript the Bing Map Ajax Controls allow you draw pushpins. However, after about 200 push pins on a map, the performance slows when using JavaScript. So if you have a lot of pushpins, draw them server side using a tile server.

Windows Azure is a perfect solution to use when creating a tile server, since it can scale to meet the traffic needs of the web site serving the map. SQL Azure is a perfect match to hold the latitude and longitude points that we want to draw as push pins because of the spatial data type support.

The tricky part of writing about this application is assembling all the knowledge about these disjointed technologies in the space of a blog post. Because of this, I am going to be off linking to articles about Bing Maps, Windows Azure, and writing mostly about SQL Azure.

Bing Maps

In order to embed a Bing Map in your web page you need to get a Bing Map Developer Account and Bing mapping keys, You can do that here at the Bing Map Portal. Once you have your keys, read this article about getting started with the Bing Maps AJAX control. This is what I used in the example to embed the Bing Map in my web page. Since we are going to build our own Bing Map Tile Server, you will want to read up on how Bing organizes tiles, and the JavaScript you need to add to reference a tile server from an article entitled: Building Your Own Tile Server. Or you can just cut and paste my HTML for a simple embedded map looks like this:

See original post for code snippet.

Notice that I am using my local server for the tile server. This allows me to quickly test the tile server in the Windows Azure development fabric without having to deploy it to Windows Azure every time I make a programming change to the ASP.NET code.

Windows Azure

We are going to use a Windows Azure web role to serve our titles to the Bing Map Ajax Control. If you haven’t created a web role yet, you need to read: Walkthrough: Create Your First Windows Azure Local Application. When you are ready to deploy you will need to visit the Windows Azure portal and create a new Hosted Service to serve your tiles to the Internet. However, for now you can serve your tiles from the Windows Azure Development Fabric; it is installed when you install the Windows Azure Tools. The download can be found here.

Once you have created your web role in your cloud project, add an ASP.NET page entitled tile.aspx. Even though an ASP.NET page typically serves HTML, in this case we are going to serve an image as a PNG from the ASP.NET page. The Bing Map AJAX Control calls the tile.aspx page with the quadkey in the query string to get a tile (which is an image); this is done roughly 4 times with 4 different tile identifiers for every zoom level displayed. More about the quadkey can be found here. The example ASP.NET code uses the quadkey to figure out the bounding latitude/longitude of the tile. With this information we can make a query to SQL Azure for all the points that fall within the bounding latitude and longitude. The points are stored as a geography data type (POINT) in SQL Azure and we use a Spatial Intersection query to figure out which ones are inside the tile.

SQL Azure

Here is a script for the table that I created on SQL Azure for the example:

CREATE TABLE [dbo].[Points]( [ID] [int] IDENTITY(1,1) NOT NULL, [LatLong] [geography] NULL, [Name] [varchar](50) NULL, CONSTRAINT [PrimaryKey_2451402d-8789-4325-b36b-2cfe05df04bb] PRIMARY KEY CLUSTERED ( [ID] ASC ) )It is nothing fancy, just a primary key and a name, along with a geography data type to hold my latitude and longitude. I then inserted some data into the table like so:

INSERT INTO Points (Name, LatLong) VALUES ('Statue of Liberty', geography::STPointFromText('POINT(-74.044563 40.689168)', 4326)) INSERT INTO Points (Name, LatLong) VALUES ('Eiffel Tower', geography::STPointFromText('POINT(2.294694 48.858454)', 4326)) INSERT INTO Points (Name, LatLong) VALUES ('Leaning Tower of Pisa', geography::STPointFromText('POINT(10.396604 43.72294)', 4326)) INSERT INTO Points (Name, LatLong) VALUES ('Great Pyramids of Giza', geography::STPointFromText('POINT(31.134632 29.978989)', 4326)) INSERT INTO Points (Name, LatLong) VALUES ('Sydney Opera House', geography::STPointFromText('POINT( 151.214967 -33.856651)', 4326)) INSERT INTO Points (Name, LatLong) VALUES ('Taj Mahal', geography::STPointFromText('POINT(78.042042 27.175047)', 4326)) INSERT INTO Points (Name, LatLong) VALUES ('Colosseum', geography::STPointFromText('POINT(41.890178 12.492378)', 4326))The code is only designed to draw pinpoint graphics at points, it doesn’t handle the other shapes that the geography data type supports. In other words make sure to only insert POINTs into the LatLong column.

One thing to note is that SQL Azure geography data type takes latitude and longitudes in the WKT format which means that Longitudes are first and then Latitudes. In my C# code, I immediately retrieve the geography data type as a string, strip out the latitude and longitude and reverse it for my code.

Now that we have a table and data, the application needs to be able to query the data, here is the stored procedure I wrote:

CREATE PROC [dbo].[spInside]( @southWestLatitude float, @southWestLongitude float, @northEastLatitude float, @northEastLongitude float) AS DECLARE @SearchRectangleString VARCHAR(MAX); SET @SearchRectangleString = 'POLYGON((' + CONVERT(varchar(max),@northEastLongitude) + ' ' + CONVERT(varchar(max),@southWestLatitude) + ',' + CONVERT(varchar(max),@northEastLongitude) + ' ' + CONVERT(varchar(max),@northEastLatitude) + ',' + CONVERT(varchar(max),@southWestLongitude) + ' ' + CONVERT(varchar(max),@northEastLatitude) + ',' + CONVERT(varchar(max),@southWestLongitude) + ' ' + CONVERT(varchar(max),@southWestLatitude) + ',' + CONVERT(varchar(max),@northEastLongitude) + ' ' + CONVERT(varchar(max),@southWestLatitude) + '))' DECLARE @SearchRectangle geography; SET @SearchRectangle = geography::STPolyFromText(@SearchRectangleString, 4326) SELECT CONVERT(varchar(max),LatLong) 'LatLong' FROM Points WHERE LatLong.STIntersects(@SearchRectangle) = 1The query takes two corners of a box, and creates a rectangular polygon, then is queries to find all the latitude and longitudes that are in the Points table which fall within the polygon. There are a couple subtle things going on in the query, one of which is that the rectangle has to be drawn in a clock-wise order, and that the first point is the last point. The second thing is that the rectangle must only be in a single hemisphere, i.e. either East or West of the dateline, but not both and either North or South of the equator, but not both. The code determines if the rectangle (called the bounding box in the code) is a cross over and divides the box into multiple queries that equal the whole.

The Code

To my knowledge there is only a couple complete, free code examples of a Bing tile server on the Internet. I borrowed heavily from both Rob Blackwell’s “Roll Your Own Tile Server” and Joe Schwartz’s “Bing Maps Tile System”.

I had to make a few changes, one of which is to draw on more than one tile for a single push pin. The push pin graphic is both wide and tall and if you draw it on the edge of a tile, it will be cropped by the next tile over in the map. That means for push pins that land near the edges, you need to draw it partially on both tiles. To accomplish this you need to query the database for push pins that are slightly in your tile, as well as the ones in your tiles. The downloadable code does this.

Caching

The whole example application could benefit from caching. It is not likely that you will need to update the push pins in real time, which means that based on your application requirements, you could cache the tiles on both the server and the browser. Querying SQL Azure, drawing bitmaps in real-time for 4 tiles per page would be a lot of work for a high traffic site with lots of push pins. Better to cache the tiles to reduce the load on Windows Azure and SQL Azure; even caching them for just a few minutes would significantly reduce the workload.

Another technique to improve performance is not to draw every pin on the tile when there are a large number of pins. Instead come up with a cluster graphic that is drawn once when there are more than X numbers of pins on a tile. This would indicate to the user that they need to zoom in to see the individual pins. This technique would require that you query the database for a count of rows within the tile, to determine if you are need to use the cluster graphic.

You can read more about performance and load testing with Bing Maps here.

- Attachment: BingMapTileServer.zip

- Code Sample, C#, T-SQL

Mike Godfrey explains how to remove the web server from a web application for searching a remote OData source in his JQuery and OData post of 8/26/2010:

A common model enabling a web application to search a remote source for data could be as follows.

The HTTP POST method allows the data set of an HTML form to be sent to a processing agent, typically a web server. The web server is responsible for querying a remote data source, typically a database. This can be achieved using a variety of techniques, such as old-fashioned ADO.Net in the ASP.Net page code behind or by more up-to-date ORM technologies. The web server will use the result of the data source query to re-render the HTML page and send it back to the browser.

There are several steps here making the model more complicated than it might need to be. Why do we need to POST to the web server? After all, is it not essentially acting as an intermediary between the browser and the data source? Is it possible to remove the web server from this model?

WCF Data Services and the Open Data Protocol (OData) combined with JQuery make it possible to remove the web server from our web application searching a remote data source scenario. If you are unfamiliar with WCF Data Services and OData there is plenty of material elsewhere that I won’t attempt to regurgitate here, http://msdn.microsoft.com/en-us/data/aa937697.aspx is a good primer, OData itself has a comprehensive site at http://www.odata.org/.

That’s the introduction finished with, let’s look at an example using the Netflix OData service (http://developer.netflix.com/docs/oData_Catalog).

See original post for code snippet.

Here I’ve set up a very basic page containing a form to search with and an empty div to display the results in. Using JQuery to hook into the submission of the form I’ve set up a fairly standard ajax call that will get the top 10 Titles from the Netflix catalogue. Because the Netflix OData service is on a different domain to my script I need to use JSONP to call it. This is where the added url complexity comes from, if the service was on the same domain as my script the url would be much simpler (e.g. “http://odata.netflix.com/Catalog/Titles?$top=10&$format=json”) and we would not need to specify the jsonpCallback parameter. I specify a success callback function to do something with the JSON result, I simply spit out a few properties to screen in the results div – but it’s easy to imagine more funky examples! Finally I return false to prevent the standard form submission from occurring.

If you look into the OData protocol you will notice that there are many many things that can make up the url:- $filter, $orderby, $skip, $expand, $select and $inlinecount come to mind in addition to the possibility of calling service operations. This makes handcrafting the url potentially complex and time consuming.

After a quick bit of research I discovered a small OData plugin that already exists for JQuery - http://wiki.github.com/egil/jquery.odata/. It removes the need to handcraft the urls – replacing them with more intuitive function calls – as well as hiding the potentially nasty ajax call setup.

See original post for Code Snippet

JQuery.OData makes it much easier to work with OData services but still allows you pass through many options as per normal JQuery plugin patterns, above I pass through a dataType property set to JSONP to be used in the ajax call. The success callback is pretty much identical, JQuery.OData does wrap up result.d into a more friendly result.data.

We’re currently using this plugin in a project at iMeta and have been pleased with it so far. If you find yourself with the need call an OData service and don’t want to perform full page postbacks, I thoroughly recommend giving JQuery.OData a go!

If you’re unfortunate enough to be supporting IE6 then give me a ping as I’ve spent many an hour so far debugging it and had to make a couple of tweaks to get JQuery.OData to play nice with it.

Other than that, the Fiddler web debugging tool is your friend when working with OData services, studying the request and response headers has proved to be a vital skill.

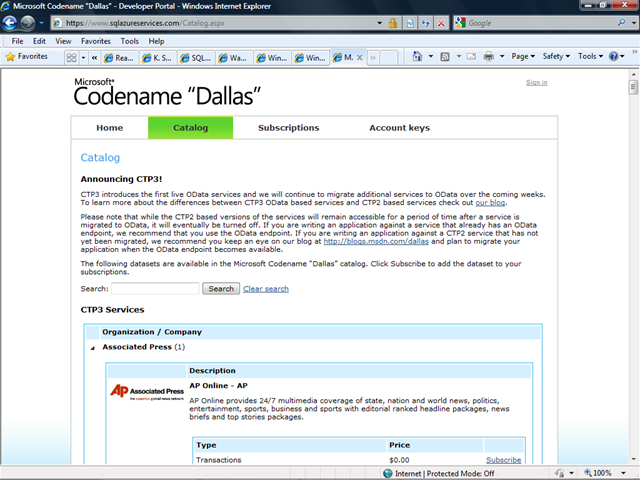

The SQL Azure Services Team recently updated its Codename “Dallas” Catalog with a list of services migrated from CPT2 to CPT3:

Announcing CTP3!

CTP3 introduces the first live OData services and we will continue to migrate additional services to OData over the coming weeks. To learn more about the differences between CTP3 OData based services and CTP2 based services check out our blog.

Please note that while the CTP2 based versions of the services will remain accessible for a period of time after a service is migrated to OData, it will eventually be turned off. If you are writing an application against a service that already has an OData endpoint, we recommend that you use the OData endpoint. If you are writing an application against a CTP2 service that has not yet been migrated, we recommend you keep an eye on our blog at http://blogs.msdn.com/dallas and plan to migrate your application when the OData endpoint becomes available.

The following datasets are available in the Microsoft Codename “Dallas” catalog. Click Subscribe to add the dataset to your subscriptions.

Only the Associated Press, Government of the USA, Gregg London Consulting, and InfoUSA had migrated data to CTP3 as of 8/27/2010.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

• The Endpoint.tv Team wants you to Vote on [AppFabric] show topics for endpoint.tv:

Got a great idea for endpoint.tv? Interested in Windows Server AppFabric and Windows Azure AppFabric? Want to see a sample, tip, technique, code, etc.? Let us know and vote for your favorites.

See the Atom feed here in your reader.

• Brian ? helps Understanding Windows Azure AppFabric Access Control via PHP in this 8/29/2010 post:

AppFabric Access Control Service (ACS) as a Nightclub

The diagram below (adapted from Jason Follas’ blog) shows how a nightclub (with a bouncer and bartender) might go about making sure that only people of legal drinking age are served drinks (I’ll draw the analogy to ACS shortly):

Before the nightclub is open for business, the bouncer tells the bartender that he will be handing out blue wristbands tonight to customers who are of legal drinking age.

Once the club is open, a customer presents a state-issued ID with a birth date that shows he is of legal drinking age.

The bouncer examines the ID and, when he’s satisfied that it is genuine, gives the customer a blue wristband.

Now the customer can go to the bartender and ask for a drink.

The bartender examines the wristband to make sure it is blue, then serves the customer a drink.

I would add one step that occurs before the steps above and is not shown in the diagram:

0. The state issues IDs to people and tells bouncers how to determine if an ID is genuine.

To draw the analogy to ACS, consider these ideas:

You, as a developer, are the State. You issue ID’s to customers and you tell the bouncer how to determine if an ID is genuine. You essentially do this (I’m oversimplifying for now) by giving giving both the customer and bouncer a common key. If the customer asks for a wristband without the correct key, the bouncer won’t give him one.

The bouncer is the AppFabric access control service. The bouncer has two jobs:

He issues tokens (i.e. wristbands) to customers who present valid IDs. Among other information, each token includes a Hash-based Message Authentication Code (HMAC). (The HMAC is generated using the SHA-256 algorithm.)

Before the bar opens, he gives the bartender the same signing key he will use to create the HMAC. (i.e. He tells the bartender what color wristband he’ll be handing out.)

The bartender is a service that delivers a protected resource (drinks). The bartender examines the token (i.e. wristband) that is presented by a customer. He uses information in the token and the signing key that the bouncer gave him to try to reproduce the HMAC that is part of the token. If the HMACs are not identical, he won’t serve the customer a drink.

The customer is any client trying to access the protected resource (drinks). For a customer to get a drink, he has to have a State-issued ID and the bouncer has to honor that ID and issue him a token (i.e. give him a wristband). Then, the customer has to take that token to the bartender, who will verify its validity (i.e. make sure it’s the agreed-upon color) by trying to reproduce a HMAC (using the signing key obtained from the bouncer before the bar opened) that is part of the token itself. If any of these checks fail along the way, the customer will not be served a drink.

To drive this analogy home, I’ll build a very simple system composed of a client (barpatron.php) that will try to access a service (bartender.php) that requires a valid ACS token.

Hiring a Bouncer (i.e. Setting up ACS)

In the simplest way of thinking about things, when you hire a bouncer (or set up ACS) you are simply equipping him with the information he needs to determine if a customer should be issed a wristband. Essentially, this means providing information to a customer that the bouncer will recognize as a valid ID. And, we also need to equip the bartender with the tools for validating the color of a wristband issued by the bouncer. I think this is worth keeping in mind as you work through the following steps.

To use Windows Azure AppFabric, you need a Windows Azure subscription, which you can create here:http://www.microsoft.com/windowsazure/offers/. (You’ll need a Windows Live ID to sign up.) I purchased theWindows Azure Platform Introductory Special, which allows me to get started for free as long as keep my usage limited. (This is a limited offer. For complete pricing details, see http://www.microsoft.com/windowsazure/pricing/.) After you purchase your subscription, you will have to activate it before you can begin using it (activation instructions will be provided in an e-mail after signing up).

After creating and activating your subscription, go to the AppFabric Developer Portal:https://appfabric.azure.com/Default.aspx (where you can create an access control service). To create a service…

Brian continues with a step-by-step PHP tutorial and ends with a link to the source code for Barterder.php and BarPatron.php: ACS_tutorial.zip.

Michael Gorsuch explained Using DotNetOpenAuth with Windows Azure in an 8/28/2010 post:

I found a few hours of spare time over the past couple of weeks, and used it to get a handle on Windows Azure. I’ll write more on my reasons for this in a future post, but thought I’d share a tip.

My project requires OpenID authentication, and I chose to use the DotNetOpenAuth library. Since I’m working with ASP.NET MVC, I implemented the example listed on their website.

I found that it works just fine in production and in any other MVC project, but it does not work with the Azure development fabric (this is what you use to run your projects locally before deploying to cloud). As soon as the redirect is called to send you out for authentication, the fabric crashes.

The offending line is as follows:

returnrequest.RedirectingResponse.AsActionResult();I do not know the precise nature of this error, and was not able to find a fix on the internets. Everyone who came across it proposed using a DEBUG switch to mock the transaction if you were in your development environment. While that’s probably okay, I’m rather new to federated authentication and am not entirely sure how to ‘fake it’.

Fortunately, I made a small ‘fix’. It’s more of a hack, in that I don’t fully understand the cause of this problem – I only know that this slight modification lets you get on with your life. I change the previously mentioned line to read:

stringlocation = request.RedirectingResponse.Headers["Location"];returnRedirect(location);For whatever reason, fashioning a redirect request from scratch works. I can only assume that the DotNetOpenAuth code is producing something that the Azure Dev environment finds offensive.

Hope this helps!

Brian Loesgen reported A New Blog is Born: Windows Server AppFabric Customer Advisory Team on 8/27/2010:

Microsoft’s Windows Server AppFabric Customer Advisory Team ( CAT) has launched a new blog focused on AppFabric (both Windows Server AppFabric and Azure AppFabric), WCF, WF, BizTalk, data modeling and StreamInsight. You can find the blog at http://blogs.msdn.com/b/appfabriccat/. [Emphasis added.]

If you read my blog, then these are technologies that will be of interest to you, and this promises to be a good source of information.

Thanks to the CAT team for putting this together!

Ryan Dunn (@dunnry) [left] and Steve Marx (@smarx) [center] put Wade Wegner (@WadeWegner) [right] through the wringer in this 00:40:52 Cloud Cover Episode 23 - AppFabric Service Bus:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow

In this episode:

- Wade Wegner joins us as we talk about what the Windows Azure AppFabric Service Bus is and how to use it.

- Discover the different patterns and bindings you can use with the AppFabric Service Bus.

- Learn a tip on how to effectively host your Service Bus services in IIS.

Show Links:

SQL Azure Support for Database Copy

Perfmon Friendly Viewer for Windows Azure MMC

Infographic: IPs, Protocols, & Token Flavours in the August Labs release of ACS

Wade's Funky Fresh Beat

AutoStart WCF Services to Expose them as Service Bus Endpoints

Host WCF Services in IIS with Service Bus Endpoints

Neil Mackenzie posted Messenger Connect Beta .Net Library to his Azurro blog on 8/26/2010:

Introduction

The early posts on this blog focused on the Live Framework API which at the time was part of Windows Azure. The Live Framework API provided a programming model for Live Mesh an attempt to integrate Live Services with synchronization. The Live Framework API was moved into the Live Services group and subsequently cancelled. The Live Mesh lives on although the website now advertises: Live Mesh will soon be replaced by the new Windows Live Sync. UPDATE 8/27/2010 - Microsoft has announced that Windows Live Sync is to be rebranded as Windows Live Mesh. [Emphasis added.]

The Live Services group has now released the Windows Live Messenger Connect API Beta providing programmatic access to Live Services but without the synchronization that caused some confusion in the Live Framework API. This is a large offering encompassing ASP.Net sign in controls along with both JavaScript and .Net APIs. The Live Services team deserves credit for a solid offering – and this is particularly true of the documentation team which has provided unusually strong and extensive documentation for a product still in Beta.

The goal of this post is to provide a narrative guide through only the .Net portion of the Messenger Connect API.

OAuth WRAP

The Live Framework used Delegated Authentication to give user’s control over the way a website or application can access their Live Services information. Messenger Connect instead uses OAuth WRAP (Web Resource Authorization Protocol) an interim standard already been deprecated in favor of the not yet completed OAuth 2 standard. A number of articles – here and here - describe the politico-technical background of the move from OAuth 1 to OAuth2 via OAuth WRAP. From the perspective of the .Net API the practical reality is that this is an implementation detail burnt into the API so it is not necessary to know anything at all about the underlying standard being used.

Three core concepts from OAuth WRAP (and Delegated Authentication) are exposed in the Messenger Connect API:

- scopes

- access tokens

- refresh tokens

Authorization to access parts of a user’s information is segmented into scopes. For example, a user may give permission for an application to have read access but not write access to the photos uploaded to Live Services. An application typically requests access only for those scopes it requires. The user can manage the consent provided to an application and revoke that consent at any time through the consent management website at http://consent.live.com.

The Messenger Connect API supports various scopes grouped into public and restricted scopes. Public scopes are usable by any application while restricted scopes – allowing access to sensitive information such as email addresses and phone numbers – are usable only by specific agreement with Microsoft.

The public scopes are:

- WL_Activities.View

- WL_Activities.Update

- WL_Contacts.View

- WL_Photos.View

- WL_Profiles.UpdateStatus

- WL_Profiles.View

- Messenger.SignIn

The restricted scopes are:

- WL_Calendar.Update

- WL_Calendar.View

- WL_Contacts.Update

- WL_Contacts.ViewFull

- WL_Photos.Update

- WL_Profiles.ViewFull

The extent of these scopes is pretty apparent from their name with, for example, WL_Activities.Update being permission to add items to the activities stream of a user.

An application requests permission to some scopes and then initiates the sign in process which pops up a Windows Live sign-in page in which the user can view the scopes which have been requested. On successful sign-in Windows Live returns an access token and a refresh token. The access token is a short-lived token the application uses to access the user’s information consistent with the scopes authorized by the user. The refresh token is a long-lived token an application uses to reauthorize itself without involving the user and to generate a new access token – it essentially allows the application to continue accessing the user’s information without continually asking the user for permission. The access token is valid for the order of hours while the refresh token lasts substantially longer (very helpful, I know). …

Neil continues with a detailed analysis of the API beta.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Humphrey Scheil described Umbraco CMS - complete install on Windows Azure (the Microsoft cloud) in this 8/29/2010 post:

We use the Umbraco CMS a lot at work - it's widely regarded as one of (if not the) best CMSs out there in the .NET world. We've also done quite a bit of R&D work on Microsoft Azure cloud offering and this blog post shares a bit of that knowledge (all of the other guides out there appear to focus on getting the Umbraco database running on SQL Azure, but not how to get the Umbraco server-side application itself up and running on Azure). The cool thing is that Umbraco comes up quite nicely on Azure, with only config changes needed (no code changes).

So, first let's review the toolset / platforms I used:

- Umbraco 4.5.2, built for .NET 3.5

- Latest Windows Azure Guest OS (1.5 - Release 201006-01)

- Visual Studio 2010 Professional

- Azure SDK 1.2

- SQL Express 2008 Management Studio

- .NET 3.5 sp1

Step one is simply to get Umbraco running happily in VS 2010 as a regular ASP.NET project. The steps to achieve this are well documented here. Test your work by firing up Umbraco locally, accessing the admin console and generating a bit of content (XSLTs / Macros / Documents etc.) before progressing further. (The key to working efficiently with Azure is to always have a working case to fall back on, instead of wondering what bit of your project is not cloud-friendly).

Then use these steps to make your Umbraco project "Azure-aware." Again, test your installation by deploying to the Azure Dev Compute and Storage Fabric on your local machine and testing that Umbraco works as it should before going to production. The Azure Dev environment is by no means perfect (see below) or a true synonym for Azure Production, but it's a good check nonetheless.

Now we need to use the SQL Azure Migration Wizard tool to migrate the Umbraco SQL Express database. I used v3.3.6 (which worked fine with SQL Express contrary to some of the comments on the site) to convert the Umbraco database to its SQL Azure equivalent - the only thing the migration tool has to change is add a clustered index on one of the tables (dbo.Users) as follows - everything else migrates over to SQL Azure easily:

CREATE CLUSTERED INDEX [ci_azure_fixup_dbo_umbracoUserLogins] ON [dbo].[umbracoUserLogins] ([userID]) WITH (IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF) GOThen create a new database in SQL Azure and re-play the script generated by AzureMW into it to create the db schema and standing data that Umbraco expects. To connect to it, you'll replace a line like this in the Umbraco web.config:

<add key="umbracoDbDSN" value="server=.\SQLExpress; database=umbraco452;user id=xxx;password=xxx" />with a line like this:

<add key="umbracoDbDSN" value="server=tcp:<<youraccountname>> .database.windows.net;database=umbraco;user id=<<youruser>> @<<youraccount>>;password=<<yourpassword>>" />So we now have the Umbraco database running in SQL Azure, and the Umbraco codebase itself wrapped using an Azure WebRole and deployed to Azure as a package. If we do this using the Visual Studio tool set, we get:

- 19:27:18 - Preparing...

- 19:27:19 - Connecting...

- 19:27:19 - Uploading...

- 19:29:48 - Creating...

- 19:31:12 - Starting...

- 19:31:52 - Initializing...

- 19:31:52 - Instance 0 of role umbraco452_net35 is initializing

- 19:38:35 - Instance 0 of role umbraco452_net35 is busy

- 19:40:15 - Instance 0 of role umbraco452_net35 is ready

- 19:40:16 - Complete.

Note the total time taken - Azure is deploying a new VM image for you when it does this, it's not just deploying a web app to IIS, so the time taken is always ~ 13 minutes, give or take. I wish it was quicker..

Final comments

If you deploy and it takes longer than ~13 minutes, then double check the common Azure gotchas. In my experience they are:

1. Missing assemblies in production - so your project runs fine on the Dev Fabric and just hangs in Production on deploy - for Umbraco you need to make sure that Copy Local is set to true for cms.dll, businesslogic.dll and of course umbraco.dll so that they get packaged up.

2. Forgetting to change the default value of DiagnosticsConnectionString in ServiceConfiguration.cscfg (by default it wants to persist to local storage which is inaccessible in production - you'll need to use an Azure storage service and update the connection string to match, e.g. your ServiceConfiguration.cscfg should look something like this:

<?XML:NAMESPACE PREFIX = [default] http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" /> <?xml version="1.0"?> <ServiceConfiguration serviceName="UmbracoCloudService" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"> <Role name="umbraco452_net35"> <Instances count="1" /> <ConfigurationSettings> <Setting name="DiagnosticsConnectionString" value="DefaultEndpointsProtocol=https;AccountName=travelinkce;AccountKey=pIiMwawlZohZSmgrQr8tUPL5DRPE31Sg/9lqJog11Y3FdXTG7HnRSlVNXMNGLbZyCsG3JX1DlbqYtBBrce1w7g==" /> </ConfigurationSettings> </Role> </ServiceConfiguration>The Azure development tools (Fabric etc.) are quite immature in my opinion - very slow to start up (circa one minute) and simply crash when you've done something wrong rather than give a meaningful error message and then exit (for example, when trying to access a local SQL Server Express database (which is wrong - fair enough), the loadbalancer simply crashed with a System.Net.Sockets.SocketException{"An existing connection was forcibly closed by the remote host"}. I have the same criticism of the Azure production system - do a search to see how many people spin their wheels waiting for their roles to deploy with no feedback as to what is going / has gone wrong. Azure badly needs more dev-friendly logging output.

I couldn't get the .NET 4.0 build of Umbraco to work (and it should, .NET 4.0 is now supported on Azure). The problem appears to lie in missing sections in the machine.config file on my Azure machine that I haven't had the time or inclination to dig into yet.

You'll also find that the following directories do not get packaged up into your Azure deployment package by default: xslt, css, scripts, masterpages. To get around this quickly, I just put an empty file in each directory to force their inclusion in the build. If these directories are missing, you will be unable to create content in Umbraco.

Exercises for the reader

- Convert the default InProc session state used by Umbraco to SQLServer mode (otherwise you will have a problem once you scale out beyond one instance on Azure). Starting point is this article - http://blogs.msdn.com/b/sqlazure/archive/2010/08/04/10046103.aspx, but Google for errata to the script - the original script supplied does not work out of the box.

- Use an Azure XDrive or similar to store content in one place and cluster Umbraco

• Roberto Bonini continues his Windows Azure Feed Reader series with a 00:45:00 Windows Azure Feed Reader Episode 4: The OPML Edition post and video segment on 8/29/2010:

As you’ve no doubt surmised from the title, this weeks episode deals almost entirely with the OPML reader and fitting it in with the rest of our code base.

If you remember, last week I showed a brand new version of the OPML reader code using LINQ and Extension Methods. This week, we begin by testing said code. Given that its never been been tested before, bugs are virtually guaranteed. Hence we debug the code, make the appropriate changes and fold the changes back into our code base.

We then go on to creating an Upload page to upload the OPML file. We store the OPML file in a Blob and drop a message in a special queue for this OPML file to be uploaded. We make the changes to WorkerRole.cs to pull that message off the queue and process the file correctly, retrieve the feeds and store them. If you’ve been following along, none of this code will be earth shattering to you either.

The fact is that a) making the show any longer would bust my Vimeo Basic upload limit and b) I couldn’t think of anything else to do that could be completed in ~10 minutes.

The good thing is that we’re back to our 45 minute-ish show time, after last weeks aberration.

Don’t forget, you can head over to Vimeo to see the show in all its HD glory: http://www.vimeo.com/14510034

After last weeks harsh lessons in web-casting, file backup and the difference between 480p and 720p when displaying code, this weeks show should go perfectly well.

Enjoy.

Remember, the code lives at http://windowsazurefeeds.codeplex.com

• Ankur Dave describes his work on Windows Azure Clustering as an intern for Microsoft Research’s Extreme Computing Group (XCG) in this Looking back at my summer with Microsoft post of 8/24/2010:

August 13th was my last day as a Microsoft intern. Ever since then, I’ve been missing working with great people, reading lots of interesting papers, and contributing to a larger effort in the best way I know -- by writing code :)

I spent the summer working on the Azure Research Engagement project within the Cloud Computing Futures (CCF) team at Microsoft Research’s eXtreme Computing Group (XCG). My project was to design and build CloudClustering, a scalable clustering algorithm on the Windows Azure platform. CloudClustering is the first step in an effort by CCF to create an open source toolkit of machine learning algorithms for the cloud. My goal within this context was to lay the foundation for our toolkit and to explore how suitable Azure is for data-intensive research.

Unfortunately, high school ends late and Berkeley starts early, so the internship was compressed into just seven weeks. In the first week, I designed the system from scratch, so I got to control its architecture and scope. I spent the next two weeks building the core clustering algorithm, and three weeks implementing and benchmarking various optimizations, including multicore parallelism, data affinity, efficient blob concatenation, and dynamic scalability.

I presented my work to XCG in the last week, in a talk entitled "CloudClustering: Toward a scalable machine learning toolkit for Windows Azure." Here are the slides in PowerPoint [with the script] and PDF, and here’s the video of the talk. On my last day, it was very gratifying to receive a request from the Azure product group to give this talk at a training session for enterprise customers :)

- Introduction by Roger Barga, my manager - http://www.youtube.com/watch?v=Sy6MyB_w0fs

- General introduction - http://www.youtube.com/watch?v=djkiyhG0e4A

- Technical introduction - http://www.youtube.com/watch?v=N9BsoXze61Y

- Algorithm and implementation - http://www.youtube.com/watch?v=MpAGwyFQqHw

- Optimizations (Part 1) - http://www.youtube.com/watch?v=bU43KnbCfxs

- Optimizations (Part 2) and Results - http://www.youtube.com/watch?v=vxucDtIpttI

These seven weeks were some of the best I've ever had -- and for that I especially want to thank my mentors, Roger Barga and Wei Lu. I'd love to come back and work with them again next year! :)

Unfortunately, the link to Ankur Dave’s talk is missing.

Jenna Pitcher reported Computershare’s infrastructure turns Azure in this 8/27/2010 article for Delimiter.com.au:

Australian investor services giant Computershare this week revealed that it had rebuilt its most highly trafficked sites on Microsoft’s Azure cloud computing platform, sending them live in the first week of August.

Speaking at Microsoft’s Tech.Ed conference on the Gold Coast this week, Computershare architect Peter Reid explained the company’s online investor services to corporations were quite seasonal and “spikey” because of the nature of financial reporting.

“What we did was had a look at some of the worst-performing applications on our website — that we buy a lot of hardware for,” Reid said. “We had a look at those sites as the primary candidates to putting to Azure.” Reid and his team then rebuilt and re-targeted the “spikey” short run events in Azure.

The company immediately saw several benefits from moving to Microsoft’s cloud computing platform, the first being that it no longer needed to keep extra hardware provisioned for its high traffic events.

“The other one is that we would like to protect our normal business — traditional employee and investor side business from these unusual events,” said Reid. Previously, the “spikey” applications had affected all of the other services running on the same software.

Reid said that he did not look at other hosting options because the team already had the tools and knowledge to go forward. “We had the application written in .NET, we already had the skills base of the development staff on .NET,” he said.

Computershare has a priority projects group of five employees which takes on interesting projects within the organisation. Four of them were assigned to the Azure project for a month and worked closely with Microsoft. This computer development team worked closely with Microsoft’s Technical Adoption Program (TAP).

“We were kind of guided through the process by the Azure TAP guys,” Reid said. Computershare touched base with the Azure experts at least once a week with a phone call. But no real re-education of the Computershare developers was needed, given they had a lot of the .NET knowledge already.

One other benefit Reid touched on was scalability. “We were really interested in looking at the the flexible deployment side of the Azure framework,” he said. “So the ability to scale up and scale down automatically without having to touch it.”

Reid said that other cloud computing options didn’t have Azure’s abilities in this area. “Ssome of the other providers — where you have infrastructure as a service with [virtual machines] in the cloud — didn’t give us that flexible ability to spin up extra web servers on demand and spin them back down as we needed,” he said.

The GFC

One of the main motivations for the switch was also the changing nature of the financial sector. In 2007 and 2008 Computershare had some load issues due to a lot of initial public offering launches coming in from Hong Kong. But then everything changed due to the GFC — the IPOs dried up.However, Reid’s team noticed early this year that the market was getting set to ramp up again — and they knew they would have another big season on their doorstep very soon. “We knew it was imminent, that we were going to have these big IPOs coming on board,” the architect said. “We just got the application Azure two weeks before we had to go live the first time.”

Reid stated that the data centres for the project were located in South East Asia — whereas normally they would use their Brisbane and Melbourne facilities. This location worked out being better for the Hong Kong listings. And the cheaper processing architecture also drove savings.

There was only one pressing issue that Computershare came up against — the ability to monitor the infrastructure.

“They really wanted to know how they were going to monitor this application in the cloud,” said Reid. Not all the needed tools were available out of the box, so there was a bit of work to do in that area, with some tools needing to be built in-house.

In terms of the future, Reid said Computershare was currently looking at all of its applications and working out which ones it would make sense to run in the cloud. Computershare is also looking a content management system, an edge network and caching technology.

“So if you go to our website, we send you the page and the images out of our datacentre,” said Reid of the current situation, noting Computershare wanted to shift to a system where a browser’s closest local datacentre would actually serve the traffic.

One last aspect of the project which Reid highlighted as being important was that of its impact on the environemnt,

“Its important for us to deliver our website in the most efficient way we can,” he said. “Running these kind of servers all year round wasting energy is adding to our carbon foot print and one thing we would like to do is wipe those loads and turn off the servers as soon as we can can so we don’t end up killing the environment unnecessarily by running idle machines that are not doing anything.”

However, the architect has not estimated how much the Azure project has reduced the company’s carbon footprint.

“We don’t have any figures of how much carbon we are burning at the moment but it is certainly something I want to do,” he said.

Image credit: TechFlash Todd, Creative Commons

Delimiter’s Jenna Pitcher is attending Tech.Ed this week as a guest of Microsoft, with flights, accommodation and meals paid for.

Patrick Butler Monterde posted Code Contracts – Best Practices to Azure on 8/26/2010:

Code Contracts provide a language-agnostic way to express coding assumptions in .NET programs. The contracts take the form of pre-conditions, post-conditions, and object invariants. Contracts act as checked documentation of your external and internal APIs.

The contracts are used to improve testing via runtime checking, enable static contract verification, and documentation generation. Code Contracts bring the advantages of design-by-contract programming to all .NET programming languages. We currently provide three tools:

- Runtime Checking. Our binary rewriter modifies a program by injecting the contracts, which are checked as part of program execution. Rewritten programs improve testability: each contract acts as an oracle, giving a test run a pass/fail indication. Automatic testing tools, such as Pex, take advantage of contracts to generate more meaningful unit tests by filtering out meaningless test arguments that don't satisfy the pre-conditions.

- Static Checking. Our static checker can decide if there are any contract violations without even running the program! It checks for implicit contracts, such as null dereferences and array bounds, as well as the explicit contracts. (Premium Edition only.)

- Documentation Generation. Our documentation generator augments existing XML doc files with contract information. There are also new style sheets that can be used with Sandcastle so that the generated documentation pages have contract sections.

Code Contracts comes in two editions:

- Code Contracts Standard Edition: This version installs if you have any edition of Visual Studio other than the Express Edition. It includes the stand-alone contract library, the binary rewriter (for runtime checking), the reference assembly generator, and a set of reference assemblies for the .NET Framework.

- Code Contracts Premium Edition: This version installs only if you have one of the following: Visual Studio 2008 Team System, Visual Studio 2010 Premium Edition, or Visual Studio 2010 Ultimate Edition. It includes the static checker in addition to all of the features in the Code Contracts Standard Edition.

The Visual Studio ALM Rangers uploaded a Visual Studio Database Guide to CodePlex on 8/12/2010 (missed when posted):

Project Description

Practical guidance for Visual Studio 2010 Database projects, which is focused on 5 areas:This release includes common guidance, usage scenarios, hands on labs, and lessons learned from real world engagements and the community discussions.

- Solution and Project Management

- Source Code Control and Configuration Management

- Integrating External Changes with the Project System

- Build and Deployment Automation with Visual Studio Database Projects

- Database Testing and Deployment Verification

The goal is to deliver examples that can support you in real world scenarios, instead of an in-depth tour of the product features.Visual Studio ALM Rangers

This guidance is created by the Visual Studio ALM Rangers, who have the mission to provide out of band solutions for missing features or guidance. This content was created with support from Microsoft Product Group, Microsoft Consulting Services, Microsoft Most Valued Professionals (MVPs) and technical specialists from technology communities around the globe, giving you a real-world view from the field, where the technology has been tested and used.

For more information on the Rangers please visit http://msdn.microsoft.com/en-us/vstudio/ee358786.aspx and for more a list of other Rangers projects please see http://msdn.microsoft.com/en-us/vstudio/ee358787.aspx.What is in the package?

The content is packaged in 3 separate zip files to give you the choice of selective downloads, but the default download is the first of the listed packages:

- Visual Studio Guidance for Database Projects --> Start here

- Visual Studio Database Projects Hands-On-Labs document

- Hands-On-Labs (HOLs), including:

- Solution and Project Management

- Refactoring a Visual Studio Database Solution to Leverage Shared Code

- Source Code Control and Configuration Management

- Single Team Branching Model

- Multiple Team Branching Model

- Integrating External Changes with the Project System

- Maintaining Linked Servers in a Visual Studio Database Project

- Complex data movement

- Build and Deployment Automation

- WiX-Integration with deployment of databases

- The Integrate with Team Build Scenario

- Building and deploying outside team build

- Database Testing and Deployment Verification

- The “Basic” Create Unit Test Scenario

- The “Advanced” Create Unit Test Scenario

- Find Model drifts Scenario

Team

- Contributors

- Shishir Abhyanker (MSFT), Chris Burrows (MSFT), David V Corbin (MVP), Ryan Frazier (MSFT), Larry Guger (MVP), Barclay Hill (MSFT), Bob Leithiser (MSFT), Pablo Rincon (MSFT), Scott Sharpe (MSFT), Jens K. Süßmeyer (MSFT), LeRoy Tuttle (MSFT)

- Reviewers

- Christian Bitter (MSFT), Regis Gimenis (MSFT), Rob Jarrat (MSFT), Bijan Javidi (MSFT), Mathias Olausson (MVP), Willy-Peter Schaub (MSFT)

How to submit new ideas?

The recommended method is to simply post ideas to the Discussions page or to contact the Rangers at http://msdn.microsoft.com/en-us/teamsystem/ee358786.aspx

Return to section navigation list>

VisualStudio LightSwitch

The Video Studio Lightswitch Team announced MSDN Radio: Visual Studio LightSwitch with John Stallo and Jay Schmelzer on MSDN Radio at 8/30/3010 9:00 to 9:30 AM PDT:

Tune in on Monday, August 30, 2010 from 9:00-9:30 AM Pacific Time for MSDN Radio. This week they’ll be interviewing LightSwich team members! It’s your chance to call in and ask us questions about LightSwitch.

About MSDN Radio: “MSDN Radio is a weekly Developer talk-show that helps answer your questions about the latest Microsoft news, solutions, and technologies. We dive into the challenges of deciphering today’s technology stack. Register today and have a chance to call-in and talk with the experts on the air, or just tune in to the show.”

Register here: MSDN Radio: Visual Studio LightSwitch with John Stallo and Jay Schmelzer

Matt Thalman showed How to: designing one LightSwitch screen to create or edit an entity in this 8/26/2010 post (Click an empty image frame to generate a 404 error and then click Back to view the screen captures):

In Beta 1, Visual Studio LightSwitch provides screen templates for defining a details screen or a new data screen for an entity. But you may want to use the same screen for doing both tasks. This allows you to have a consistent experience no matter whether you are creating an entity or simply editing an existing one. Here’s how you can do this.

Create an entity to work with. For the purposes of this tutorial, I’ve created a Customers table.

- Create a Details Screen for Customer:

- Rename the Customer property on the screen to CustomerQuery:

- Click the “Add Data Item…” button in the command bar and create a local Customer property:

- Delete the current content on the screen bound to the CustomerQuery. We want to replace the content in the screen to be bound to the Customer property, not the CustomerQuery.

- In its place, we want to bind the Customer property onto the screen:

- Change the control type of the Customer to be a Vertical Stack. By default, this will add controls for each of the fields defined in Customer which is exactly what we want.

- Now we need to write some code to hook everything up. Click the Write Code button on the command bar at the top of the screen designer. Paste the following code into the generated class:

partial void CustomerDetail_Loaded()

{

if (!this.Id.HasValue)

{

this.Customer = new Customer();

}

else

{

this.Customer = this.CustomerQuery;

}

}

So what happens here is that the screen has a parameter called Id which represents the Id of the Customer. If that property is not set, we assume the screen should create and display a new customer. If that property is set, we simply set the Customer property to the return value of the CustomerQuery query which is actually bound to the Id property (the screen template did that work for us). Notice that we’re setting the Customer property here because that is what all of the screen content is bound to. So by setting this property, it updates the controls on the screen with the state from that entity.- Ok, how do we use this screen? Well, by default any customer displayed as Summary control defined as a link will automatically navigate to this screen. If you want to navigate to this screen through the use of a List, for example, just following the next set of steps.

- Create a List and Details Screen for Customers:

- Expand the Command Bar of the Customer List and override the code for the Add button:

- Paste the following code into the CustomerListAddAndEditNew_Execute method:

this.Application.ShowCustomerDetail(null);

This code passes null as the customer ID parameter to the screen which will cause the screen to create a new entity.- Go back to the screen designer and override the code for the Edit button in the same way you did for the Add button. Paste the following code into the CustomerListEditSelected_Execute method:

this.Application.ShowCustomerDetail(this.CustomerCollection.SelectedItem.Id);