Windows Azure and Cloud Computing Posts for 8/26/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

![AzureArchitecture2H](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhbe659Q0gWu19e6K0tRzsa5PmGIr83GwwVGJaKXIpk1LnaHob3WXFwdJDLTLrU7UFZGNtqqfpgwraCG6Ggk-oyB76yrvyKqPUtm02sKRKZOAfEz1BtRXkFQhTjqs57kfg8nLEgdvx8/?imgmax=800)

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform was published on 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

On 9/29/2009, Wrox’s Web site manager posted a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage,” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Nathan Stuller posted 7 Reasons I Used Windows Azure for Media Storage to his Midwest Developer Insights blog on 8/26/2010:

Windows Azure is one service that Microsoft offers as part of its Cloud Computing platform. Earlier this year, I began using a small piece of the entire platform, known as “Blob Storage,” for my side projects. Essentially, I am just storing large media files on Microsoft’s servers. Below I outline why I chose this option over storing the large files on my traditional web host.

General Reasons for Cloud Storage:

- Stored files are backed up

- Workload scalability if traffic increases

- Cost scalability if traffic increases

Benefits of Microsoft’s Windows Azure:

- Easy to Learn for .NET Developers

I am a pretty heavy Microsoft developer, so it was easier for me to learn how to use it and to find the resources for learning it.

- Azure Cheaper than Competitors

Thirsty Developer: Azure was cheaper than competitors

- Azure Discounts with Microsoft BizSpark program

Azure is heavily discounted for BizSpark program members (BizSpark is Microsoft’s startup program, which provides free software for three years). BizSpark members get a big discount for 16 months, and a continued benefit from having an MSDN membership for as long as it is current (presumably a minimum of three years).

- Ease of use

Retrieving and creating stored files is extremely easy. For public containers, one can just GET the blob from a REST URI.
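A minimal sketch of that anonymous read follows. It assumes a hypothetical storage account `myaccount` and a container `videos` whose access level has been set to public. The `https://<account>.blob.core.windows.net/<container>/<blob>` address is the standard Blob service endpoint format. Only reads are anonymous: uploading a blob still requires an authenticated (Shared Key) request, which this sketch does not attempt.

```python
from urllib.parse import quote
from urllib.request import urlopen


def blob_url(account: str, container: str, blob: str) -> str:
    """Build the public REST URI of a blob in the Windows Azure Blob service."""
    # Blob names may contain '/' to mimic folders, so '/' is left unescaped.
    return f"https://{account}.blob.core.windows.net/{container}/{quote(blob, safe='/')}"


def download_public_blob(url: str) -> bytes:
    """GET a blob from a public container; no authentication header is sent."""
    with urlopen(url) as response:
        return response.read()


if __name__ == "__main__":
    # Hypothetical account, container and blob names, for illustration only.
    url = blob_url("myaccount", "videos", "2010/demo clip.wmv")
    print(url)
    # data = download_public_blob(url)  # run against a real public container
```

This is the same kind of plain HTTP URI that the Silverlight player described below streams from.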

How this applied to me

Traditional hosting had a very low threshold for data storage (1GB), above which it was very expensive to add more storage. The same was true for Bandwidth, but the threshold was higher (80GB). With Azure, the cost is instead directly proportional to the amount of data stored and delivered, so it is much easier to calculate cost, and it will be cheaper once I get over those thresholds.

Using Azure for Storage produces the following Download Process

- When a user requests a video file, he/she receives the web content and the Silverlight applet from my website.

- Embedded in the web content is a link to a public video from Windows Azure storage.

- Silverlight uses the link to stream the video content.

The link that my Silverlight applet uses to retrieve the file is a simple HTTP URI, which Silverlight streams to the client as it receives it. This is nice behavior, especially because the file is downloaded directly from Azure to the client browser and does not incur additional bandwidth costs (from my traditional web host) for every download.

What this means in terms of cost

There are several cost-increasing metrics as I scale my use of Windows Azure (see pricing below). Fortunately, Azure is much cheaper than my traditional web site hosting provider.

Under my current plan, costing roughly $10 per month, my hosting provider allows me to use:

- 80 GB of Bandwidth per Month

- 1 GB of storage space

For each additional 5GB of bandwidth used per month, a $5 fee is charged.

For each additional 500MB of storage on the server above 1GB, the additional fee would be $5.

Fees for Windows Azure uploads & downloads can be easily predicted using the metrics below.

Azure pricing (effective 02/01/2010):

- Compute = $0.12 / hour (Not applicable to this example)

- Storage = $0.15 / GB stored / month

- Storage transactions = $0.01 / 10K

- Data transfers = $0.10 in / $0.15 out / GB

An Example

Let’s say that I average 80 GB of Bandwidth Usage per month on a traditional hosting provider. Because I would be using the full allotment from my provider, that would be the cheapest scenario per GB. Let’s assume that half of my $10 payment per month accounts for Bandwidth and the other half accounts for storage. In that case, my cost for Bandwidth Usage would be $5 / 80 GB, or about $0.06 / GB.

That may seem cheap, but remember that this price is capped at 80 GB per month. If my website sees tremendous growth, say to 500 GB per month, then I would be in real trouble. For that additional 420 GB (84 blocks of 5 GB at $5 each), I would have to pay a total of $420 per month. My total monthly bill attributed to Bandwidth Usage of 500 GB would be $425, or $0.85 / GB.

In contrast, the Azure storage model is simple and linear. For the low traffic case of downloading 80GB per month, it would actually be more expensive. However, as Bandwidth Usage grows, the rates do not go through the roof. Case in point, my total monthly bill attributed to Bandwidth Usage of 500 GB would be $75 (assuming all outgoing traffic).
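The arithmetic above can be checked with a short Python sketch. The host model follows the example's own assumptions: $5 of the $10 plan pays for the first 80 GB, and each extra 5 GB block costs $5. Billing a partly used block as a whole block is an added assumption. The Azure model uses only the $0.15/GB outbound rate listed above.

```python
import math


def host_bandwidth_cost(gb: float) -> float:
    """Traditional host: $5 covers 80 GB; each extra 5 GB block (or part of one) costs $5."""
    base_cost, included_gb, block_gb, block_fee = 5.0, 80.0, 5.0, 5.0
    extra_gb = max(0.0, gb - included_gb)
    return base_cost + math.ceil(extra_gb / block_gb) * block_fee


def azure_bandwidth_cost(gb: float, rate_out: float = 0.15) -> float:
    """Windows Azure: linear charge per GB of outbound data transfer."""
    return gb * rate_out


if __name__ == "__main__":
    for gb in (80, 500):
        host = host_bandwidth_cost(gb)
        azure = azure_bandwidth_cost(gb)
        print(f"{gb:>3} GB: host ${host:.2f} (${host / gb:.4f}/GB), "
              f"Azure ${azure:.2f} (${azure / gb:.2f}/GB)")
```

At 80 GB the host is cheaper ($5.00 vs. $12.00). At 500 GB the sketch reproduces the $425 and $75 figures quoted above.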

I could walk through a similar example for the cost of storage, but I think you see the point. In fact, the traditional host would likely fare even worse on storage: since my website collects videos, the amount of storage used is likely to grow faster than the Bandwidth used.

As you can see, Azure storage and bandwidth are much cheaper, especially after scaling beyond the initial allotment.

Note: Storage transactions are a negligible contributor to cost in comparison.
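The storage side and the claim about transactions can be sketched the same way. The 10 GB of stored video and 100,000 storage transactions per month are hypothetical figures chosen for illustration. The host model assumes that $5 of the plan covers the first 1 GB and that each extra 500 MB (or part of it) costs $5, per the plan described above.

```python
import math


def host_storage_cost(gb: float) -> float:
    """Traditional host: $5 covers 1 GB; each extra 0.5 GB block (or part of one) costs $5."""
    base_cost, included_gb, block_gb, block_fee = 5.0, 1.0, 0.5, 5.0
    extra_gb = max(0.0, gb - included_gb)
    return base_cost + math.ceil(extra_gb / block_gb) * block_fee


def azure_storage_cost(gb: float, rate: float = 0.15) -> float:
    """Windows Azure: $0.15 per GB stored per month."""
    return gb * rate


def azure_transaction_cost(transactions: int, rate_per_10k: float = 0.01) -> float:
    """Windows Azure: $0.01 per 10,000 storage transactions."""
    return transactions / 10_000 * rate_per_10k


if __name__ == "__main__":
    stored_gb, transactions = 10, 100_000  # hypothetical monthly figures
    print(f"host storage:       ${host_storage_cost(stored_gb):.2f}")
    print(f"Azure storage:      ${azure_storage_cost(stored_gb):.2f}")
    print(f"Azure transactions: ${azure_transaction_cost(transactions):.2f}")
```

Under these assumptions the host charges $95.00 for storage versus $1.50 on Azure, and the 100,000 transactions add only $0.10.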


SQL Azure Database, Codename “Dallas” and OData

Walter Wayne Berry (@WayneBerry) announced Microsoft Project Code-Named “Houston” CTP 1 (August 2010 Update) on 8/25/2010:

Microsoft® Project Code-Named “Houston” is a lightweight and easy to use database management tool for SQL Azure databases. It is designed specifically for Web developers and other technology professionals seeking a straightforward solution to quickly develop, deploy, and manage their data-driven applications in the cloud. Project “Houston” provides a web-based database management tool for basic database management tasks like authoring and executing queries, designing and editing a database schema, and editing table data.

Major features in this release include:

- Navigation pane with object search functionality

- Information cube with basic database usage statistics and resource links

- Table designer and table data editor

- Aided view designer

- Aided stored procedure designer

- Transact-SQL editor

What’s new in the August update:

- Project “Houston” is deployed to all data centers. For best results, use this tool for managing your databases located in the same data center.

- A refresh button in the database toolbar will retrieve the latest database object properties from the server.

- User interface and performance enhancements. …

Wayne continues with “Resources for Project ‘Houston’ Customers” and “Known Issues in this Release” topics.


AppFabric: Access Control and Service Bus

Geoff Snowman presented a 01:00:00 MSDN Webcast: Introduction to Windows Azure AppFabric (Level 200) on 8/11/2010 (online registration with your Windows Live ID required):

- Language(s): English.

- Product(s): Windows Azure.

- Audience(s): Pro Dev/Programmer.

- Duration: 60 Minutes

- Start Date: Wednesday, August 11, 2010 5:00 PM Pacific Time (US & Canada)

Event Overview

Windows Azure AppFabric provides Access Control and an Internet-scale service bus. Access Control can authenticate users and claims, authorizing access to web sites and web services. The Service Bus allows applications running inside different firewalls to connect with each other and with services running in the cloud. In this webcast, you learn how Windows Azure AppFabric fits into the Microsoft cloud strategy and see simple demonstrations of both Windows Azure AppFabric Access Control and Windows Azure AppFabric Service Bus. We also take a look at Windows Azure AppFabric pricing and how the pay-as-you-go model affects application usage.

Presenter: Geoff Snowman, Mentor, Solid Quality Mentors

Geoff Snowman is a mentor with Solid Quality Mentors, where he teaches cloud computing, Microsoft .NET development, and service-oriented architecture. His role includes helping to create SolidQ's line of Windows Azure and cloud computing courseware. Before joining SolidQ, Geoff worked for Microsoft Corporation in a variety of roles, including presenting Microsoft Developer Network (MSDN) events as a developer evangelist and working extensively with Microsoft BizTalk Server as both a process platform technology specialist and a senior consultant. Geoff has been part of the Mid-Atlantic user-group scene for many years as both a frequent speaker and user-group organizer.

Register here.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Thomas Erl continues his series with Cloud Computing, SOA and Windows Azure - Part 3 about Windows Azure Roles on 8/26/2010:

A cloud service in Windows Azure will typically have multiple concurrent instances. Each instance may be running all or a part of the service's codebase. As a developer, you control the number and type of roles that you want running your service.

Web Roles and Worker Roles

Windows Azure roles are comparable to standard Visual Studio projects, where each instance represents a separate project. These roles represent different types of applications that are natively supported by Windows Azure. There are two types of roles that you can use to host services with Windows Azure:

- Web roles

- Worker roles

Web roles provide support for HTTP and HTTPS through public endpoints and are hosted in IIS. They are most comparable to regular ASP.NET projects, except for differences in their configuration files and the assemblies they reference.

Worker roles can also expose external, publicly facing TCP/IP endpoints on ports other than 80 (HTTP) and 443 (HTTPS); however, worker roles do not run in IIS. Worker roles are applications comparable to Windows services and are suitable for background processing.

Virtual Machines

Underneath the Windows Azure platform, in an area that you and your service logic have no control over, each role is given its own virtual machine (VM). Each VM is created when you deploy your service or service-oriented solution to the cloud. All of these VMs are managed by a modified hypervisor and hosted in one of Microsoft's global data centers.

Each VM can vary in size, which pertains to the number of CPU cores and memory. This is something that you control. So far, four predefined VM sizes are provided:

- Small - 1.7ghz single core, 2GB memory

- Medium - 2x 1.7ghz cores, 4GB memory

- Large - 4x 1.7ghz cores, 8GB memory

- Extra large - 8x 1.7ghz cores, 16GB memory

Notice how each subsequent VM on this list is twice as big as the previous one. This simplifies VM allocation, creation, and management by the hypervisor.
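Here is one way to see why the doubling helps. With the core and memory figures listed above, every size divides evenly into the next, so capacity sized for one Extra large VM can be split into exact multiples of any smaller size. The Python sketch below only encodes those four figures. The `VmSize` type and `fits_in` helper are hypothetical and are not part of any Windows Azure API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VmSize:
    name: str
    cores: int
    memory_gb: int


def vm_sizes() -> List[VmSize]:
    """The four sizes from the list above; each doubles the one before it."""
    names = ["Small", "Medium", "Large", "Extra large"]
    return [VmSize(name, 1 << i, 2 << i) for i, name in enumerate(names)]


def fits_in(small: VmSize, large: VmSize) -> int:
    """How many `small` VMs exactly fill one `large` VM's cores and memory."""
    if large.cores % small.cores or large.memory_gb % small.memory_gb:
        raise ValueError("sizes do not divide evenly")
    count = large.cores // small.cores
    if count != large.memory_gb // small.memory_gb:
        raise ValueError("cores and memory do not scale together")
    return count


if __name__ == "__main__":
    sizes = vm_sizes()
    biggest = sizes[-1]
    for size in sizes:
        print(f"{size.name}: {size.cores} core(s), {size.memory_gb} GB "
              f"-> {fits_in(size, biggest)} per Extra large slot")
```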

Windows Azure abstracts away the management and maintenance tasks that come along with traditional on-premise service implementations. When you deploy your service into Windows Azure and the service's roles are spun up, copies of those roles are replicated automatically to handle failover (for example, if a VM were to crash because of hard drive failure). When a failure occurs, Windows Azure automatically replaces that "unreliable" role with one of the "shadow" roles that it originally created for your service. This type of failover is nothing new. On-premise service implementations have been leveraging it for some time using clustering and disaster recovery solutions. However, a common problem with these failover mechanisms is that they are often server-focused. This means that the entire server is failed over, not just a given service or service composition.

When you have multiple services hosted on a Web server that crashes, each hosted service experiences downtime between the current server crashing and the time it takes to bring up the backup server. Although this may not affect larger organizations with sophisticated infrastructure too much, it can impact smaller IT enterprises that may not have the capital to invest in setting up the proper type of failover infrastructure.

Also, suppose you discover after performing the failover that some background worker process caused the crash. This probably means that unless you can address it quickly enough, your failover server is under the same threat of crashing.

Windows Azure addresses this issue by focusing on application and hosting roles. Each service or solution can have a Web frontend that runs in a Web role. Even though each role has its own "active" virtual machine (assuming we are working with single instances), Windows Azure creates copies of each role that are physically located on one or more servers. These servers may or may not be running in the same data center. These shadow VMs remain idle until they are needed.

Should the background process code crash the worker role and subsequently put the underlying virtual machine out of commission, Windows Azure detects this and automatically brings in one of the shadow worker roles. The faulty role is essentially discarded. If the worker role breaks again, then Windows Azure replaces it once more. All of this is happening without any downtime to the solution's Web role front end, or to any other services that may be running in the cloud.

Input Endpoints

Web roles used to be the only roles that could receive Internet traffic, but now worker roles can listen on any port specified in the service definition file. Internet traffic is received through input endpoints. Input endpoints and their listening ports are declared in the service definition (*.csdef) file.

Keep in mind that when you specify the port for your worker role to listen on, Windows Azure isn't actually going to assign that port to the worker. In reality, the load balancer will open two ports: one for the Internet and the other for your worker role. Suppose you wanted to create an FTP worker role, and in your service definition file you specify port 21. This tells the fabric load balancer to open port 21 on the Internet side, open pseudo-random port 33476 on the LAN side, and begin routing FTP traffic to the FTP worker role.

To find out which randomly assigned internal port to listen on, use the RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["FtpIn"].IPEndpoint object.
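The following Python sketch models that behavior with a hypothetical `LoadBalancer` class. It is not the Windows Azure SDK. It only illustrates why code inside the role must look up its endpoint by name, as `InstanceEndpoints["FtpIn"]` does, instead of binding to the port declared in the .csdef file. The internal IP address and the range for the pseudo-random port are assumptions.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class LoadBalancer:
    """Hypothetical model of the fabric load balancer's port mapping."""
    internal_ip: str = "10.0.0.4"  # assumed LAN address of the role instance
    # endpoint name -> (public port, internal port)
    mappings: Dict[str, Tuple[int, int]] = field(default_factory=dict)

    def open_input_endpoint(self, name: str, public_port: int) -> None:
        # The public port comes from the service definition; the LAN-side
        # port is picked pseudo-randomly (range assumed for illustration).
        internal_port = random.randint(20000, 65000)
        self.mappings[name] = (public_port, internal_port)

    def instance_endpoint(self, name: str) -> Tuple[str, int]:
        """Analogue of InstanceEndpoints[name].IPEndpoint: (ip, internal port)."""
        return self.internal_ip, self.mappings[name][1]

    def route(self, public_port: int) -> Tuple[str, int]:
        """Forward Internet traffic arriving on public_port to the role."""
        for public, internal in self.mappings.values():
            if public == public_port:
                return self.internal_ip, internal
        raise KeyError(f"no input endpoint on port {public_port}")


if __name__ == "__main__":
    lb = LoadBalancer()
    lb.open_input_endpoint("FtpIn", 21)
    # The FTP server inside the role must listen here, not on port 21.
    ip, port = lb.instance_endpoint("FtpIn")
    print(f"listen on {ip}:{port}; Internet clients connect to port 21")
```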

Inter-Role Communication

Inter-Role Communication (IRC) allows multiple roles to talk to each other by exposing internal endpoints. With an internal endpoint, you specify a name instead of a port number. The Windows Azure application fabric will assign a port for you automatically and will also manage the name-to-port mapping.

Here is an example of how you would specify an internal endpoint for IRC:

    <ServiceDefinition xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"
        name="HelloWorld">
      <WorkerRole name="WorkerRole1">
        <Endpoints>
          <!-- An internal endpoint takes a name, not a port; the fabric assigns the port. -->
          <InternalEndpoint name="NotifyWorker" protocol="tcp" />
        </Endpoints>
      </WorkerRole>
    </ServiceDefinition>

Example 1

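In this example, NotifyWorker is the name of the internal endpoint of a worker role named WorkerRole1. Next, you need to look up the internal endpoint and host your service on it, as follows:

    // Requires: using System; using System.ServiceModel;
    //           using Microsoft.WindowsAzure.ServiceRuntime;

    // Look up this instance's internal endpoint. The key must match the
    // InternalEndpoint name declared in the service definition ("NotifyWorker").
    RoleInstanceEndpoint internalEndPoint =
        RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["NotifyWorker"];

    // Host the WCF service on the IP address and port the fabric assigned.
    // 'binding' is a TCP-based binding (e.g., NetTcpBinding) created elsewhere;
    // INameOfYourContract stands for your own service contract.
    this.serviceHost.AddServiceEndpoint(
        typeof(INameOfYourContract),
        binding,
        String.Format("net.tcp://{0}/NotifyWorker", internalEndPoint.IPEndpoint));

    // Channel factory used to call other instances over the same binding.
    WorkerRole.factory = new ChannelFactory<IClientNotification>(binding);

Example 2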

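You only need to specify the IP endpoint of the other worker role instances in order to communicate with them. For example, you could get a list of these endpoints with the following routine:

    // Requires: using System.Linq; using Microsoft.WindowsAzure.ServiceRuntime;
    var current = RoleEnvironment.CurrentRoleInstance;

    // Every other instance of this role, projected to its NotifyWorker endpoint.
    // Instances are compared by ID rather than by object reference.
    var endPoints = current.Role.Instances
        .Where(instance => instance.Id != current.Id)
        .Select(instance => instance.InstanceEndpoints["NotifyWorker"]);

Example 3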

IRC only works for roles in a single application deployment. Therefore, if you have multiple applications deployed and would like to enable some type of cross-application role communication, IRC won't work. You will need to use queues instead.

Summary of Key Points

- Windows Azure roles represent different types of supported applications or services.

- There are two types of roles: Web roles and worker roles.

- Each role is assigned its own VM.

This excerpt is from the book, SOA with .NET & Windows Azure: Realizing Service-Orientation with the Microsoft Platform, edited and co-authored by Thomas Erl, with David Chou, John deVadoss, Nitin Ghandi, Hanu Kommapalati, Brian Loesgen, Christoph Schittko, Herbjörn Wilhelmsen, and Mickie Williams, with additional contributions from Scott Golightly, Daryl Hogan, Jeff King, and Scott Seely, published by Prentice Hall Professional, June 2010, ISBN 0131582313, Copyright 2010 SOA Systems Inc. For a complete Table of Contents please visit: www.informit.com/title/0131582313

ISC reported MapDotNet UX 8.0.2000 Released Today on 8/25/2010:

Today we released version 8.0.2000 of MapDotNet UX. Even though this is not versioned as a major or minor upgrade, there are some impressive new features in this drop. See the release notes for more information. Three additions worth noting are:

1. We brought back Oracle support for 32-bit installs using Oracle’s recently released ODP.NET for .NET 4. Oracle has not released a 64-bit connector yet, so 64-bit support will have to wait until it does.

2. Dynamic loading of data-source connectors (e.g. if you remove ISC.MapDotnetServer.Core.Data.OracleSpatial.dll, UX Services and Studio continue to function normally – just the data source option is omitted from the list of available options.)

3. REST map interface (more information here)

In this blog I want to briefly explain what the new REST interface is and why you might use it over our existing WCF (SOAP) and WMS map interfaces. The new REST interface is built on Microsoft’s .NET 4 WCF support for WebHttp Services. In this drop, we have added REST for our map service only. The contract methods are nearly identical to what is provided through our WCF SOAP interface. The REST offering fits nicely between our current SOAP and WMS versions: it is the most performant of the three and takes the middle ground for flexibility. The following chart should help you decide which map interface to use in UX8 for a particular application:

Also included in this release are ITileRequestor REST implementations for WPF and Silverlight RIM (MDNSRestTileRequestor). If you look at Page.xaml for the Silverlight sample, you will see this requestor in use. As far as runtime flexibility is concerned, the WMS service is the least flexible, allowing only map size, extent, projection and individual layer visibility changes. The SOAP version is the most flexible but also the slowest; it allows complete control over the map definition at runtime. The REST version provides more flexibility than the WMS version and is also the fastest option. It also allows for tile rendering using x,y,z positioning or quad-keys. Neither the SOAP nor the WMS version supports this.
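The quad-key scheme most commonly paired with x,y,z tiles is the one defined by the Bing Maps tile system. Each digit of the key encodes one zoom level and combines one bit of the tile's x coordinate with one bit of its y coordinate. A minimal Python sketch of that conversion follows. Whether MapDotNet's REST tile requests use exactly this encoding is an assumption, so check the release notes before relying on it.

```python
from typing import Tuple


def tile_to_quadkey(x: int, y: int, z: int) -> str:
    """Encode tile (x, y) at zoom level z as a quad-key (Bing Maps tile system convention)."""
    if z < 0 or not (0 <= x < 2 ** z and 0 <= y < 2 ** z):
        raise ValueError("tile coordinates out of range for this zoom level")
    digits = []
    for level in range(z, 0, -1):
        mask = 1 << (level - 1)
        digit = 0
        if x & mask:
            digit += 1  # x bit selects the eastern half
        if y & mask:
            digit += 2  # y bit selects the southern half
        digits.append(str(digit))
    return "".join(digits)


def quadkey_to_tile(key: str) -> Tuple[int, int, int]:
    """Decode a quad-key back into (x, y, z)."""
    x = y = 0
    z = len(key)
    for i, ch in enumerate(key):
        if ch not in "0123":
            raise ValueError(f"invalid quad-key digit: {ch!r}")
        mask = 1 << (z - i - 1)
        digit = int(ch)
        if digit & 1:
            x |= mask
        if digit & 2:
            y |= mask
    return x, y, z


if __name__ == "__main__":
    key = tile_to_quadkey(3, 5, 3)
    print(key, quadkey_to_tile(key))
```

For example, tile (3, 5) at level 3 encodes as `213`, and decoding `213` returns the same tile.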

MapDotNet powers HeyGov!, which is a new and open way for citizens and government to communicate about access to non-emergency municipal services. The cities of San Francisco and Miami use HeyGov! currently.


Visual Studio LightSwitch

Paul Patterson (@PaulPatterson) explained Microsoft LightSwitch – Customizing an Entity Field using the Designer on 8/26/2010:

In a previous post I went through an exercise of modifying some of the properties of a table. In this post I am going to use the entity designer to customize some of the fields in my Customer entity.

Here is a look at my Customer table (entity).

Customer Entity

The first field in my Customer table is a field named “Id”, and it is an Int32 (Integer) type field. I notice that the Id field is kinda grayed out a bit. This is because the field named “Id” is a field that has been automatically defined as a unique identifier field for the entity. The unique id in a database table is how a record is uniquely identified. There is a lot more to it than just that; however, the scope of this post is to only show what I do in LightSwitch. I am planning a future post on the whole relational database concepts and design thing, but for now, we’ll just stick with the LightSwitch stuff. Note that this field is required by LightSwitch, and as such, the Required property for this field cannot be disabled.

My Customer entity was created using LightSwitch. LightSwitch automatically added the Id field. Looking at the properties of the Id field, I see only two items that I can modify. The first is the Description; I enter “This is a unique id of the customer”. Nothing fantastic here.

Hey, something I just discovered! Hovering over the label for the Description property produces a tooltip that explains what the property is for…

Tooltip for the Description property

Well, there you have it. This tells me that the value of the Description property is displayed in tooltips or help text on the screens that display the field. Cool!

Taking a look at the Display Name property now, I hover over the label and it tells me that the display name is what shows as the label for the field when it is displayed on screens. Alright then. I change the display name value from the defaulted “Id” to “Customer ID”.

The last property is the Is Visible On Screen property. Thinking about it a bit more, I wonder if there is any real value to having this field actually show up on a screen. Maybe I want to open up some screen real estate by not showing this field. So, I uncheck the property.

Looking some more at the properties window I notice a small circular button beside the text fields. Wondering what they are for, I click the button beside the description value.

What does that little circle do?

Interesting. Seems that I can optionally reset the field value to what LightSwitch had originally created. Sure, why not. Let’s reset to the default value. I am not going to display the Id field anyway, so why worry about having a tooltip? I click the Reset To Default item and presto, the Description property value returns to the default value (which happens to be blank for the Id field).

More on that little circle button. I am assuming that this button is used to present a context menu specifically for the field. So far the only menu item that I can see is the Reset To Default item. Maybe other types of field properties present different types of menu items; I don’t know yet. So far, resetting to default is the only one I have experienced.

Moving on to my next field, the CustomerName field. I added this field to store information about the name of my customer. It is a string data type, which really means you can have just about anything for text in there, including just numbers if you want.

Clicking on the CustomerName value within the table designer allows me to edit the name property directly within the designer. Similarly, I can change the field type and the required properties from within the designer. Each of these three properties is also available to edit in the Properties window, so I am going to work directly from there for now.

Making the CustomerName field the active field in the designer caused my Properties window to change to show the properties that are available for the field. Because the field is a String type of field, the Properties window includes properties that are available to String type fields.

The first property is the Is Computed property. If enabled, I can add a business rule that would compute the value of my field. A good example of this may be to show a money value that has to have some tax applied. I am thinking that I am going to tackle this subject in my next post. For now, I am going to leave this unchecked.

The IsSearchable property, if checked, allows this field to be searched from the default search screens. If I end up with a few hundred customers in my application, you can bet that I am going to want to do some searching based on their name. I leave this property checked. (Make sure your table is searchable too; otherwise this field property is useless, because you can’t search the table to begin with.)

I leave the Name property as is.

The ChoiceList link on the properties window allows me to create a list of possible choices for my field. I am going to leave this alone for now.

For the Description property, I enter “This is the name of the customer”.

Next I change the Display Name property to “Name” so that the labels and column headers of my default screens show this.

I do want this name displayed on the default screens, so I make sure the Is Visible On Screen is checked.

The Validation section contains properties where I can apply some simple rules about the field. The customer name is required for my application, so I make sure the Is Required property is checked.

I can also define the maximum number of characters allowed in my text field by entering a number into the Maximum Length property. Note that you can only enter a valid number between 1 and 2147483647 (although, for some reason, it allows 0 (zero) to be entered). I’ve changed the customer name field’s Maximum Length property from the default 255 to just 50.
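Taken together, the Is Required and Maximum Length settings behave like the checks in this Python sketch. It is a hypothetical model for illustration, not code that LightSwitch generates. The `validate_customer_name` name and its messages are invented, the 50-character limit mirrors the setting chosen above, and treating `None` or an empty string as missing is an assumption.

```python
from typing import List, Optional

# Maximum Length chosen for CustomerName above (LightSwitch's default was 255).
CUSTOMER_NAME_MAX_LENGTH = 50


def validate_customer_name(value: Optional[str],
                           max_length: int = CUSTOMER_NAME_MAX_LENGTH) -> List[str]:
    """Apply the Is Required and Maximum Length rules to a CustomerName value."""
    errors = []
    if value is None or value == "":
        errors.append("Name is required.")
    elif len(value) > max_length:
        errors.append(f"Name cannot be longer than {max_length} characters.")
    return errors


if __name__ == "__main__":
    for candidate in (None, "Contoso Ltd.", "X" * 60):
        print(repr(candidate)[:20], validate_customer_name(candidate))
```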

The Custom Validation link allows me to enter some…custom validation or business rule via code. I’m not going to do any custom validation just yet. I’ll touch on this one in another post too.

Here is what my CustomerName field properties look like…

CustomerName field properties window

For the remaining fields, I update each so that they are NOT required, except for my IsActive field, as I need to know whether the customer is still an active account. I also want to make sure the customer name has information in it whenever I create or edit a customer.

Back to the CustomerType field. Although the field is a String type field, I want to constrain the information to only a specific set of values. This is valuable in scenarios where I want to search my customers. There may be a customer type that I applied to many customers, but formed or spelled the information differently. There would be risk that I would miss some customers when searching for a specific type of customer. By constraining the information allowed in the field to a defined list, I make sure that the customer type values are consistent for all customers.

As an alternative to selecting the CustomerType field by clicking on it in the designer, I select it from the dropdown at the top of the Properties window.

Next, I hover over the Choice List link to see what it tells me.

Tooltip for Choice List link

Clicking on the Choice List link opens up the Choice List dialog. The choice list contains two columns. The first column is the Value column. The value column is what will be used as your field value when the choice is selected. The second column is the Display Name which is what will be displayed in the choice list that shows up on screens. If nothing is entered into the Display Name column, the Display Name defaults to the value entered into the Value column.
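The Value/Display Name fallback can be modeled in a few lines of Python. This is a hypothetical sketch of the behavior described above, not LightSwitch's own object model. The `Choice` type and the sample customer types are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Choice:
    value: str                          # stored in the field when the choice is picked
    display_name: Optional[str] = None  # text shown in the screen's drop-down

    @property
    def label(self) -> str:
        # A blank Display Name falls back to the Value.
        return self.display_name or self.value


def dropdown_labels(choices: List[Choice]) -> List[str]:
    """Labels a screen would show for a field's choice list, in list order."""
    return [choice.label for choice in choices]


if __name__ == "__main__":
    # Hypothetical customer types, not the ones entered in the post.
    customer_types = [Choice("RET", "Retail"), Choice("Wholesale"), Choice("GOV", "")]
    print(dropdown_labels(customer_types))
```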

I enter some choices that I want to have available for my CustomerType field…

Some Choice List values

I click on the OK button and head back to the properties window. On the properties window I now see a red X icon beside the Choice List link. Hovering over the icon I see this…

Choice List option to delete

It seems that I can now delete the choice list that I created. I don’t want to delete it. I clicked on the Choice List link to see if I could later edit the list I created, and it opened with my list.

To see how this works on a screen, I click the Start Debugging button. In my NewCustomer screen, the CustomerType field is enabled with a dropdown box that offers items from the choice list I created.

Choice List in a screen

I also have the option of still being able to manually enter anything I wanted, so I am not constrained to only the items in the list. This is great because not only do I have an easy to reference list of common customer types to use, I still have the flexibility to add something that is not on the list…

On the screen I happen to notice that the labels beside my fields are not quite what I want. So, I stop the application and head back to the properties of each field to update its Display Name value. And voilà…

New display names for my fields.

As far as experiencing how to use the designer to modify the properties of a field, that is it in a nutshell. There are other types of fields that have other properties that can be managed too. These include:

- Date, DateTime, and Double type fields have properties to define the minimum and maximum values that are allowed.

- A Decimal field type has minimum and maximum value properties, as well as properties to define the value’s precision and scale.

- The EmailAddress type, a custom business entity type, has a property to require an email domain in its value.

- Money entity types have a number of properties to define the appearance of the value, such as the currency symbol and number of decimal places. Similar to the Decimal field, the Money type has validation properties for the minimum, maximum, precision, and scale of the value.

As I move forward in creating other tables in my project, I’ll likely come across something else that might be unique to the field type. Until then, I think I now have a pretty good understanding of how to manage the fields of my entities using the LightSwitch designers.

In my next post I am going to create a couple more tables and then apply relationships to those tables.

Cheers!

I agree with Michael Washington’s comment to Paul’s article:

This is really well done. It forced me to go through each option and think about it.

I learned a lot, like the Is Searchable property (I saw it but I didn’t “think about it” until I read your article). That setting is important because the more fields that are searchable, the slower the search.

See also Ayende’s comments on search performance below.

Sergey Vlasov explained Troubleshooting Visual Studio LightSwitch IDE extension installation using Runtime Flow on 8/26/2010:

With the Visual Studio LightSwitch Beta 1 release this week, I wanted to verify that [my] Text Sharp – Visual Studio 2010 text clarity tuner is compatible with the standalone LightSwitch installation:

Visual Studio LightSwitch Beta standalone IDE startup

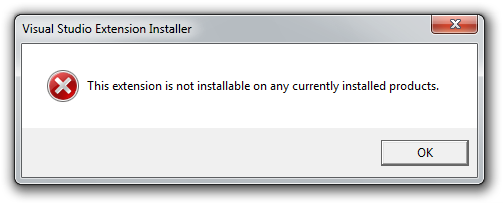

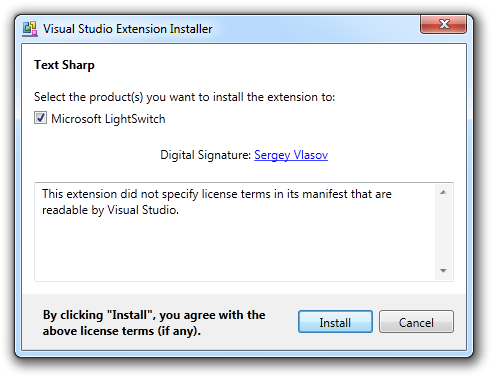

Unfortunately, running the TextSharp_12.vsix installer gave me the “This extension is not installable on any currently installed products” error:

Visual Studio Extension Installer error

.vsixmanifest for Text Sharp contained the following list of supported products:

```xml
<SupportedProducts>
  <VisualStudio Version="10.0">
    <Edition>Pro</Edition>
  </VisualStudio>
</SupportedProducts>
```

I looked for documentation specifying what needs to be added for LightSwitch, but found absolutely nothing. Then I decided to look inside the vsix installer using Runtime Flow.

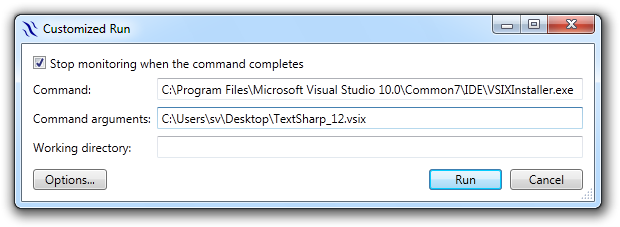

With the error message box on the screen, I used Process Explorer to find the path to the vsix installer and saw that its command line contained just the path to TextSharp_12.vsix. I installed Runtime Flow on the same machine as the LightSwitch IDE (no problems this time) and set up a customized monitoring run:

Runtime Flow customized run settings for VSIX installer monitoring

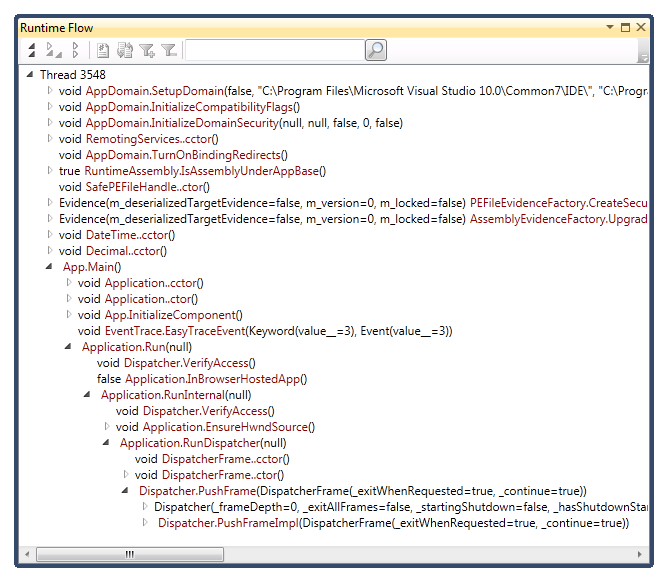

I received the same installation error under Runtime Flow monitoring and tried to find the call that shows the message box (which should be close to the place where the error occurs). I thought it would be the last call in the Runtime Flow tree, but after opening several levels of calls I didn’t feel that I was going in the right direction:

Going to the last call in the Runtime Flow tree

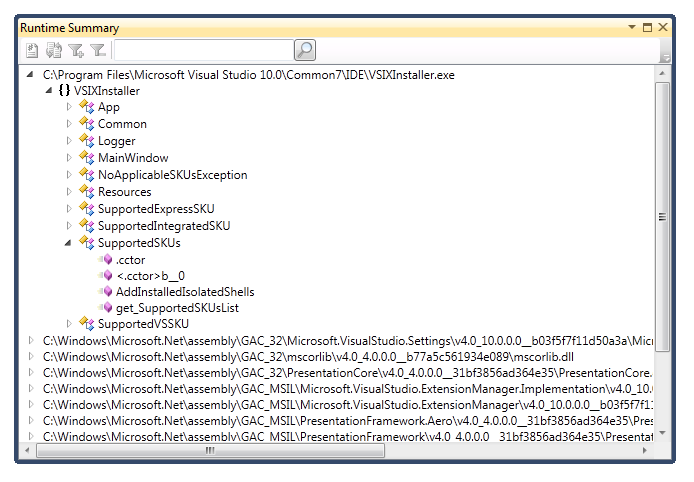

Then I opened the Runtime Summary window and for VSIXInstaller.exe several SupportedSKU classes immediately caught my attention:

Classes and functions called in the VSIX installer

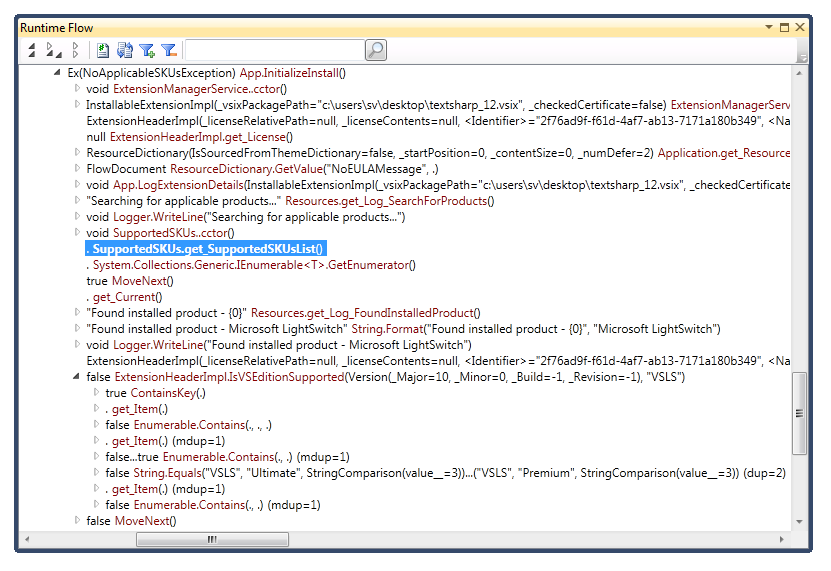

I selected get_SupportedSKUsList, clicked Locate in flow, and landed at the beginning of a very interesting sequence of calls:

Supported editions check in the VSIX installer

It was clearly visible that the supported edition strings were compared with VSLS and the comparison returned false. Indeed, adding the VSLS edition to .vsixmanifest in Text Sharp solved the original problem:
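The check Sergey located can be approximated in a few lines. This Python sketch is a hypothetical reconstruction, not the installer’s actual code: it collects the `<Edition>` strings listed under `<VisualStudio Version="10.0">` and tests whether the installed SKU (here `VSLS`) is among them. It ignores any edition hierarchy the real installer may apply, and `supported_editions` and `is_installable` are made-up names.

```python
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an '{namespace}' prefix so the check works with or without an xmlns."""
    return tag.rsplit("}", 1)[-1]


def supported_editions(manifest_xml, version="10.0"):
    """Collect the <Edition> strings listed under <VisualStudio Version=...>."""
    root = ET.fromstring(manifest_xml)
    editions = []
    for elem in root.iter():
        if local_name(elem.tag) == "VisualStudio" and elem.get("Version") == version:
            editions.extend(
                (child.text or "").strip()
                for child in elem
                if local_name(child.tag) == "Edition"
            )
    return editions


def is_installable(manifest_xml, installed_sku):
    # Hypothetical rule: the installed SKU must appear verbatim in the edition list.
    return installed_sku in supported_editions(manifest_xml)


manifest = ('<SupportedProducts><VisualStudio Version="10.0">'
            '<Edition>Pro</Edition></VisualStudio></SupportedProducts>')
print(is_installable(manifest, "VSLS"))  # False: the LightSwitch SKU is not listed
```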

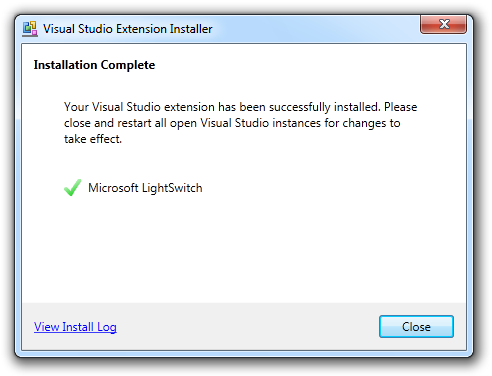

```xml
<SupportedProducts>
  <VisualStudio Version="10.0">
    <Edition>Pro</Edition>
    <Edition>VSLS</Edition>
  </VisualStudio>
</SupportedProducts>
```

Successful start of the Text Sharp installer

Successful Text Sharp installation
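If you maintain several extensions, the manifest change can be scripted. This is a minimal Python sketch, assuming the manifest contains the `<SupportedProducts>` shape shown in the fix; `add_edition` is a hypothetical helper that appends an `<Edition>` element only when it is missing, so running it twice is harmless.

```python
import xml.etree.ElementTree as ET


def add_edition(manifest_xml, edition="VSLS", version="10.0"):
    """Append <Edition>edition</Edition> to each matching <VisualStudio> element if missing."""
    root = ET.fromstring(manifest_xml)
    for elem in list(root.iter()):  # snapshot, since we add children while walking
        tag = elem.tag
        ns = tag[: tag.index("}") + 1] if tag.startswith("{") else ""
        if tag == ns + "VisualStudio" and elem.get("Version") == version:
            existing = {(c.text or "").strip() for c in elem if c.tag == ns + "Edition"}
            if edition not in existing:
                new = ET.SubElement(elem, ns + "Edition")  # same namespace as its parent
                new.text = edition
    return ET.tostring(root, encoding="unicode")


before = ('<SupportedProducts><VisualStudio Version="10.0">'
          '<Edition>Pro</Edition></VisualStudio></SupportedProducts>')
print(add_edition(before))
```

One caveat: a real .vsixmanifest declares a default XML namespace, and `ElementTree` writes namespaced tags back out with generated prefixes such as `ns0:` unless you first call `ET.register_namespace("", uri)` with the manifest’s namespace URI, so inspect the output before packaging it.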

Channel9 posted Steve Anonsen and John Rivard: Inside LightSwitch delivering a 01:06:14 presentation about LightSwitch architecture on 8/25/2010:

"Visual Studio LightSwitch is a new tool aimed at easily building data-driven applications, such as an inventory system or a basic customer relationship management system."

Typically, when making difficult things easy, the price is solving a set of very difficult technical problems. In this case, the LightSwitch engineering team needed to remove the necessity for non-programmer domain experts to think about application tiers (e.g., client, web server, and database) when constructing data-bound applications for use in their daily business lives. LightSwitch is designed for non-programmers, but it also offers the ability to customize and extend it, which will most likely be done by experienced developers (see Beth Massi's Beyond the Basics interview to learn about some of the more advanced capabilities).

This conversation isn't really about how to use LightSwitch (or how to extend it to meet your specific needs)—that's already been covered. Rather, in this video we meet the architects behind LightSwitch, Steve Anonsen and John Rivard, focusing on how LightSwitch is designed and what problems it actually solves as a consequence of the design. Most of the time is spent at the whiteboard, discussing architecture and solutions to some hard technical problems. This is Going Deep, so we will open LightSwitch's hood and dive into the rabbit hole.

Enjoy! For more information on LightSwitch, please see:

- Visual Studio LightSwitch Developer Center

- Visual Studio LightSwitch Team Blog

- Visual Studio LightSwitch Forums

The geo2web.com blog continues its Switch on the Light – Bing Maps and LightSwitch (Part 2/2) series:

In the first part we used Visual Studio 2010 LightSwitch to create a database and a user interface to select, add, edit and delete data without writing a single line of code. The one thing that bothers me for now is that the summary list on the left half of the screen shows only the data from the AddressLine1 field.

I would like to have the complete address string in this summary, partly because it is more informative but also because we will later hand this string over to the Bing Maps Geocoder. To do this we need to stop the debugger and make some changes in the table itself. We create a new column, let’s call it QueryString, and set its properties:

- is visible on screen: unchecked and

- is required: unchecked

My first thought was to make this a computed column but that half-broke something with the Bing Maps extension that we are going to implement later so we need to make a little detour:

We click on CountryRegion column and select “Write Code” from the menu bar of the designer. From the dropdown list we select the method CountryRegion_Changed.

I swear this is the only time during this walk-through that we need to write a line of code :-). In the automatically generated method we add the following line of code:

```vb
QueryString = AddressLine1 + ", " + City + ", " + PostCode + ", " + CountryRegion
```
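The one-liner above concatenates the four fields unconditionally, so a blank PostCode leaves a dangling “, , ” in the string handed to the geocoder. A slightly more defensive variant is sketched below in Python to show the logic; `build_query_string` is a hypothetical helper, not part of LightSwitch. Note also that because the line lives in CountryRegion_Changed, the value is presumably recomputed only when that field changes.

```python
def build_query_string(address_line1, city, post_code, country_region):
    """Join the non-empty address parts with ', ' (hypothetical helper).

    Blank or missing fields are skipped instead of producing ', , '.
    """
    parts = (address_line1, city, post_code, country_region)
    return ", ".join(p.strip() for p in parts if p and p.strip())


print(build_query_string("1 Main St", "Redmond", None, "USA"))  # 1 Main St, Redmond, USA
```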

The summary in our list view is picked up from the column that follows Id, so we simply drag our QueryString column to that position.

Let’s see the result. As expected, we now have the concatenated address string in the summary on the left-hand side.

Integrating Bing Maps

LightSwitch uses extensions in order to add new capabilities. Custom business type extensions such as the Money and Phone Number types are examples of extensions that are included in LightSwitch, but we can also create our own extensions and make them available for others to use, for example in the Visual Studio Extension Gallery. In order to support this extensibility, the LightSwitch framework leverages the capabilities of the Managed Extensibility Framework (MEF) to expose (and discover) extensibility points. You will find a hands-on lab on how the Bing Maps Silverlight Control is implemented as such a custom extension in the LightSwitch Training Kit.

To develop your own extensions you will additionally need the Visual Studio 2010 Software Development Kit which is available as a free download.

The example in the LightSwitch Training Kit will receive an address-string from a selected item in our list view, geocode the address and center & zoom the map to this location. The lab is very detailed and hence we won’t go into more depth here. The result of the lab is packed into a Visual Studio extension and can be installed on your development machine.
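The lab’s map control handles the center-and-zoom step for you, but the arithmetic behind it is simple enough to sketch. The following Python is an illustration under stated assumptions, not the training kit’s code: it assumes the geocoder returns a latitude/longitude bounding box and that the map uses Web Mercator tiles of 256 pixels, so the world is 256 × 2^zoom pixels wide. The center is a plain midpoint, boxes that cross the 180° meridian are not handled, and `center_and_zoom` is a hypothetical name.

```python
import math


def center_and_zoom(south, west, north, east, width_px=800, height_px=600,
                    tile_size=256, max_zoom=19):
    """Pick a map center and the largest integer zoom at which the box still fits.

    Assumes Web Mercator, where the world is tile_size * 2**zoom pixels wide.
    """
    def merc_y(lat):
        # Mercator y for a latitude (valid away from the poles).
        return math.log(math.tan(math.pi / 4 + math.radians(lat) / 2))

    # Fraction of the whole world the box covers horizontally and vertically.
    lon_fraction = (east - west) / 360.0
    lat_fraction = (merc_y(north) - merc_y(south)) / (2 * math.pi)

    zooms = [max_zoom]  # a single point just gets the maximum zoom
    if lon_fraction > 0:
        zooms.append(math.floor(math.log2(width_px / (tile_size * lon_fraction))))
    if lat_fraction > 0:
        zooms.append(math.floor(math.log2(height_px / (tile_size * lat_fraction))))
    zoom = max(1, min(zooms))
    center = ((south + north) / 2, (west + east) / 2)
    return center, zoom


print(center_and_zoom(0, 0, 10, 10))  # ((5.0, 5.0), 6)
```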

Note: If your Visual Studio was open during the installation you need to close and restart your Visual Studio.

Once we have installed the package we can enable the extension in the properties of our project:

In the screen-designer we can now drag & drop our recently created QueryString column into our details view and simply switch the control type from Text to “Bing Maps Control”

In order to use Bing Maps Control we will need a Bing Maps Key and if you don’t have one you should get it straight away in the Bing Maps Portal. The key is then entered in the properties of our QueryString control:

And that’s it. Let’s see the result:

Whenever we select an address in the summary view on the left, the address string is sent to our Bing Maps extension. The Bing Maps extension then geocodes the address and centers and zooms the map.

From the Desktop to the Web

What we have seen so far is all on the desktop and we can package and publish the application for the desktop straight from Visual Studio but what about the web? Well, be prepared for a surprise. It can’t be much simpler than this.

We just go to the project properties, select the “Application Type” tab and flick the switch from Desktop to Browser client.

Done!

As a free bonus we also got full-text search over all columns in the table. It can indeed not be much simpler than this. So let’s go and create some nice Bing Maps extensions for LightSwitch to do even more useful things.

Ayende Rahien (@ayende) begs to differ with LightSwitch’s design in a series of posts (in chronological order) beginning with LightSwitch: Initial thoughts of 8/24/2010, which I missed when posted:

As promised, I intend to spend some time today with LightSwitch, and see how it works. Expect a series of posts on the topic. In order to make this a real scenario, I decided that a simple app recording animals and their feed schedule is appropriately simple.

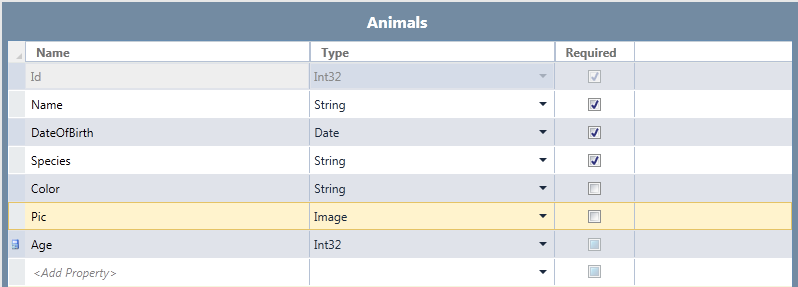

I created the following table:

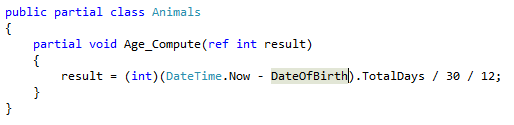

Note that it has a calculated field, which is computed using:

There are several things to note here:

- ReSharper doesn’t work with LightSwitch, which is a big minus to me.

- The decision to use partial methods has resulted in really ugly code.

- Why is the class called Animals? I would expect to find an inflector at work here.

- Yes, the actual calculation is crap, I know.
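An inflector, as in Ayende’s third point, derives a singular class name from a plural table name. A deliberately naive Python sketch of the idea follows; production inflectors (Rails’ ActiveSupport::Inflector, for example) carry many more rules and irregular-word tables, and `singularize` here is purely illustrative.

```python
# Words that are the same in singular and plural (a tiny, incomplete sample).
UNCHANGED = {"species", "series", "news"}


def singularize(word):
    """Very naive English singularizer, for illustration only."""
    lower = word.lower()
    if lower in UNCHANGED:
        return word
    if lower.endswith("ies") and len(word) > 3:
        return word[:-3] + "y"      # Categories -> Category
    if lower.endswith(("sses", "shes", "ches", "xes")):
        return word[:-2]            # Addresses -> Address, Boxes -> Box
    if lower.endswith("s") and not lower.endswith(("ss", "us", "is")):
        return word[:-1]            # Animals -> Animal
    return word                     # Status, Class, Analysis left alone


print(singularize("Animals"))  # Animal
```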

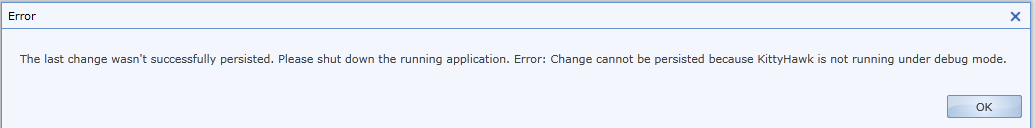

This error kept appearing at random:

It appears to be a known issue, but it is incredibly annoying.

This is actually really interesting:

- You can’t really work with the app unless you are running in debug mode. That isn’t the way I usually work, so it is a bit annoying.

- More importantly, it confirms that this is indeed KittyHawk, which was a secret project in 2008 MVP Summit that had some hilarious aspects.

There is something really interesting: it takes roughly 5 to 10 seconds to start an LS application. That is a huge amount of time. I am guessing, but I would say that a lot of that is because the entire UI is built dynamically from the data source.

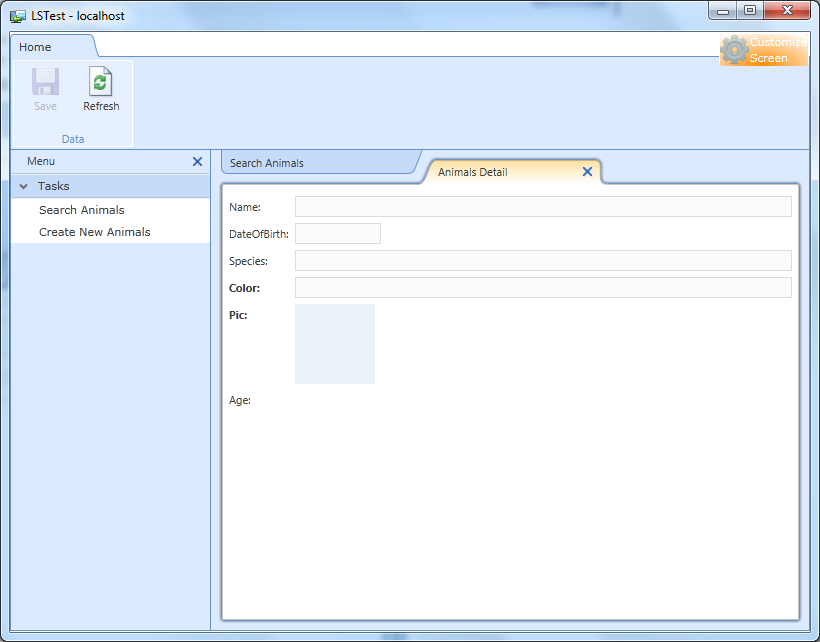

That would be problematic, but acceptable, except that it takes seconds to load data even after the app has been running for a while. For example, take a look here:

This is running on a quad core, 8 GB machine, in 2 tiers mode. It takes about 1 – 2 seconds to load each screen. I was actually able to capture a screen half way loaded. Yes, it is beta, I know. Yes, perf probably isn’t a priority yet, but that is still worrying.

Another issue is that Visual Studio is very slow, busy about 50% of the time, whether or not the LS app is running. As a side issue, it is hard to know if the problem is with LS or VS, because of all the problems that VS normally has.

As an example of that, this is me trying to open the UserCode, it took about 10 seconds to do so.

What I like about LS is that getting to a working CRUD sample is very quick. But the problems there are pretty big, even at a cursory examination. More detailed posts touching each topic are coming shortly.

I’m running the LightSwitch beta on a similar machine, although it’s running Windows 7 Pro as a guest OS with 3 GB RAM on an Intel Core Duo Quad processor. I encounter start and screen load times that are similar to Ayende’s.

Ayende’s LightSwitch posts don’t appear in the #lightswitch Daily because he doesn’t use #VSLS or #LightSwitch hashtags. Google doesn’t include his posts in the first few pages of their Blog Search for LightSwitch.

Ayende continues with Profiling LightSwitch using Entity Framework Profiler of 8/24/2010:

This post is to help everyone who wants to understand what LightSwitch is going to do under the covers. It allows you to see exactly what is going on with the database interaction using Entity Framework Profiler.

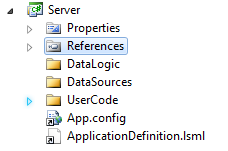

In your LightSwitch application, switch to file view:

In the server project, add a reference to HibernatingRhinos.Profiler.Appender.v4.0, which you can find in the EF Prof download.

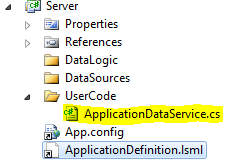

Open the ApplicationDataService file inside the UserCode directory:

Add a static constructor with a call to initialize the entity framework profiler:

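```csharp
public partial class ApplicationDataService
{
    // Static constructor: runs once, before the data service is first used,
    // and hooks Entity Framework Profiler into Entity Framework.
    static ApplicationDataService()
    {
        HibernatingRhinos.Profiler.Appender.EntityFramework.EntityFrameworkProfiler.Initialize();
    }
}
```

This is it!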

You’re now able to work with the Entity Framework Profiler and see what sort of queries are being generated on your behalf.

Ayende’s Entity Framework Profiler is a commercial product that costs US$305 to license or US$16 per month to rent. You can apply for a trial registration license here. @julielerman recommends it highly.

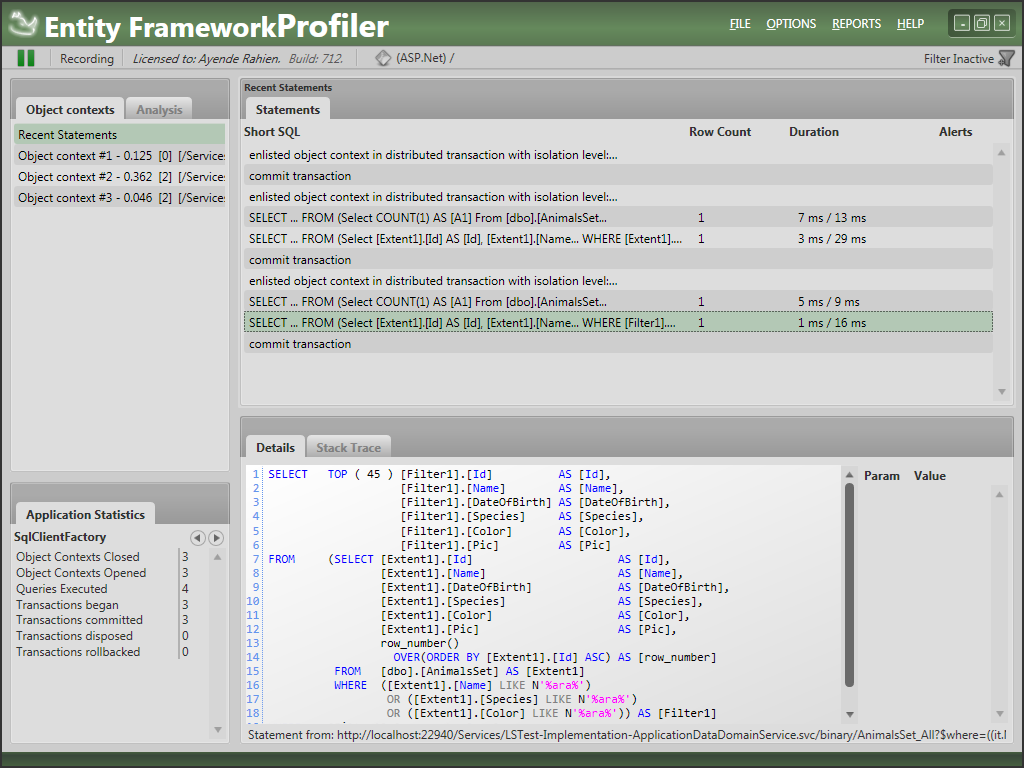

Ayende’s third LightSwitch post is Analyzing LightSwitch data access behavior of 8/24/2010:

I thought it would be a good idea to see what sort of data access behavior LightSwitch applications have. So I hooked it up to the Entity Framework Profiler and took it for a spin.

It is interesting to note that it seems that every operation that is running is running in the context of a distributed transaction:

There is a time & place to use DTC, but in general, you should avoid them until you really need them. I assume that this is something that is actually being triggered by WCF behavior, not intentional.

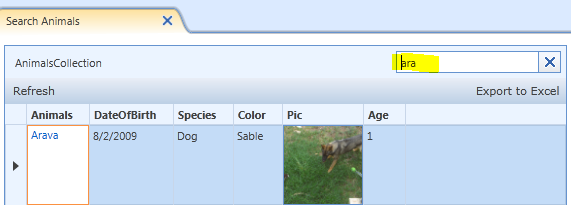

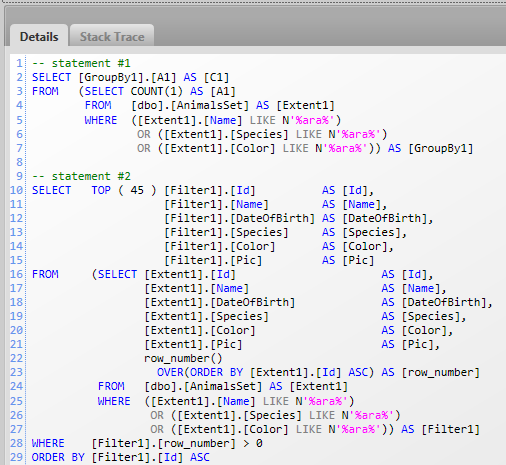

Now, let us look at what a simple search looks like:

This search results in:

That sound? Yes, the one that you just heard. That is the sound of a DBA somewhere expiring. The presentation about LightSwitch touted how you can search every field. And you certainly can. You can also swim across the English Channel, but I found that taking the train seems to be an easier way to go about it.

Doing this sort of searching is going to:

- Be very expensive once you have any reasonable amount of data.

- Prevent the use of indexes to optimize performance.

In other words, this is an extremely brute force approach for this, and it is going to be pretty bad from performance perspective.
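The excerpt doesn’t reproduce the SQL LightSwitch generated for the search, so the following Python/SQLite sketch only illustrates the general pattern Ayende objects to: an `OR` of `LIKE '%term%'` predicates across every searchable column. Because the pattern starts with a wildcard, an ordinary B-tree index can’t be used to seek, and the database ends up reading every row; each extra searchable column adds another predicate to evaluate per row. Table and column names are hypothetical, and `search_all_columns` is not a LightSwitch API.

```python
import sqlite3


def search_all_columns(conn, table, columns, term):
    """'Search every field' as an OR of LIKE '%term%' predicates (illustrative only).

    `table` and `columns` are interpolated into the SQL, so they must be trusted names.
    """
    # Escape LIKE metacharacters so the user's term is matched literally.
    escaped = term.replace("\\", "\\\\").replace("%", "\\%").replace("_", "\\_")
    pattern = f"%{escaped}%"  # leading wildcard: no index seek possible
    where = " OR ".join(f"{col} LIKE ? ESCAPE '\\'" for col in columns)
    sql = f"SELECT * FROM {table} WHERE {where}"
    return conn.execute(sql, [pattern] * len(columns)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Animals (Id INTEGER PRIMARY KEY, Name TEXT, Species TEXT, Color TEXT)")
conn.executemany("INSERT INTO Animals (Name, Species, Color) VALUES (?, ?, ?)",
                 [("Rex", "Dog", "Brown"), ("Tom", "Cat", "Grey"), ("Bella", "Dog", "Black")])
print(search_all_columns(conn, "Animals", ["Name", "Species", "Color"], "dog"))
```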

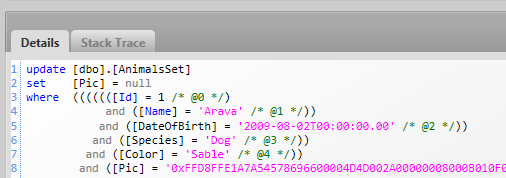

Interestingly, it seems that LS is using optimistic concurrency by default.

I wonder why they use the slowest method possible for this, instead of using version numbers.
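Ayende doesn’t spell out the alternative, but the version-number approach he mentions usually looks like the Python/SQLite sketch below: each row carries an integer `Version`, and an update succeeds only if the version the client originally read is still current. Only the key and one integer are compared, rather than, say, every original column value. The `Animals` schema and the `update_name` helper are hypothetical; on SQL Server a `rowversion` column typically plays the role of `Version`.

```python
import sqlite3


class ConcurrencyError(Exception):
    """Raised when the row changed (or vanished) since the client read it."""


def update_name(conn, animal_id, new_name, expected_version):
    # The WHERE clause matches only if nobody has bumped the version in the meantime.
    cur = conn.execute(
        "UPDATE Animals SET Name = ?, Version = Version + 1 "
        "WHERE Id = ? AND Version = ?",
        (new_name, animal_id, expected_version),
    )
    if cur.rowcount == 0:
        raise ConcurrencyError(f"Animal {animal_id} was changed by someone else")
    return expected_version + 1  # the version the caller now holds


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Animals (Id INTEGER PRIMARY KEY, Name TEXT, "
             "Version INTEGER NOT NULL DEFAULT 0)")
conn.execute("INSERT INTO Animals (Name) VALUES ('Rex')")
version = update_name(conn, 1, "Max", 0)  # succeeds, version becomes 1
```

A second writer still holding version 0 would now get `ConcurrencyError` instead of silently overwriting “Max”.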

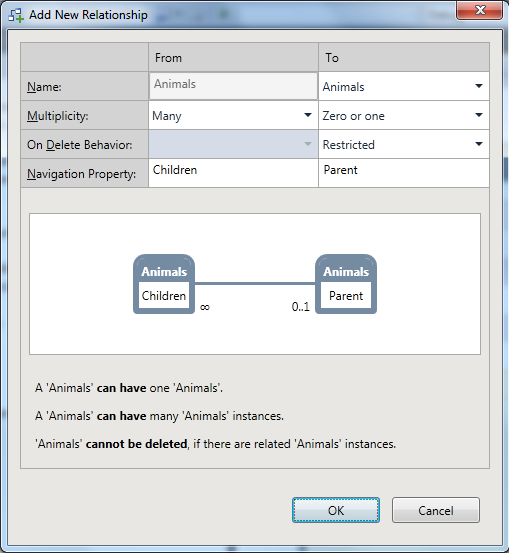

Now, let’s see how it handles references. I think that I ran into something which is a problem; consider:

Which generates:

This makes sense only if you think in terms of the underlying data model. It certainly seems backward to me.

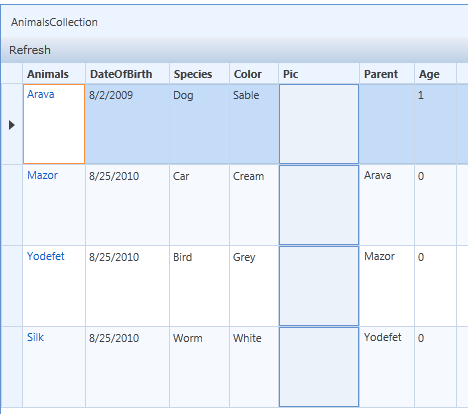

I fixed that, and created four animals, each as the parent of the other:

Which is nice, except that here is the SQL required to generate this screen:

```sql
-- statement #1
SELECT [GroupBy1].[A1] AS [C1]
FROM (SELECT COUNT(1) AS [A1] FROM [dbo].[AnimalsSet] AS [Extent1]) AS [GroupBy1]

-- statement #2
SELECT TOP ( 45 ) [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], [Extent1].[DateOfBirth] AS [DateOfBirth],
  [Extent1].[Species] AS [Species], [Extent1].[Color] AS [Color], [Extent1].[Pic] AS [Pic],
  [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM (SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], [Extent1].[DateOfBirth] AS [DateOfBirth],
    [Extent1].[Species] AS [Species], [Extent1].[Color] AS [Color], [Extent1].[Pic] AS [Pic],
    [Extent1].[Animals_Animals] AS [Animals_Animals],
    row_number() OVER(ORDER BY [Extent1].[Id] ASC) AS [row_number]
  FROM [dbo].[AnimalsSet] AS [Extent1]) AS [Extent1]
WHERE [Extent1].[row_number] > 0
ORDER BY [Extent1].[Id] ASC

-- statement #3
SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], [Extent1].[DateOfBirth] AS [DateOfBirth],
  [Extent1].[Species] AS [Species], [Extent1].[Color] AS [Color], [Extent1].[Pic] AS [Pic],
  [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM [dbo].[AnimalsSet] AS [Extent1]
WHERE 1 = [Extent1].[Id]

-- statement #4
SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], [Extent1].[DateOfBirth] AS [DateOfBirth],
  [Extent1].[Species] AS [Species], [Extent1].[Color] AS [Color], [Extent1].[Pic] AS [Pic],
  [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM [dbo].[AnimalsSet] AS [Extent1]
WHERE 2 = [Extent1].[Id]

-- statement #5
SELECT [Extent1].[Id] AS [Id], [Extent1].[Name] AS [Name], [Extent1].[DateOfBirth] AS [DateOfBirth],
  [Extent1].[Species] AS [Species], [Extent1].[Color] AS [Color], [Extent1].[Pic] AS [Pic],
  [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM [dbo].[AnimalsSet] AS [Extent1]
WHERE 3 = [Extent1].[Id]
```

I told you that there is a Select N+1 built into the product, now didn’t I?

Now, to make things just that much worse, it isn’t actually a Select N+1 that you’ll easily recognize, because this doesn’t happen on a single request. Instead, we have a multi-tier Select N+1.

What is actually happening is that in this case, we make the first request to get the data, then we make an additional web request per returned result to get the data about the parent.

And I think that you’ll have to admit that a Parent->>Children association isn’t something that is out of the ordinary. In a typical system, where you may have many associations, this “feature” alone is going to slow the system to a crawl.
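The cost of the multi-tier Select N+1 is easiest to see by counting round trips. The Python sketch below uses a hypothetical in-memory `FakeService` standing in for the web-service tier (ignoring the COUNT query) and contrasts loading each row’s parent one request at a time with fetching all parents in one batched request. With the four animals from the example, the first approach makes 1 + 3 requests and the second makes 2, however many rows are on the page. In Entity Framework itself the usual remedy is eager loading (for example `Include`), which LightSwitch didn’t apply here.

```python
class FakeService:
    """Stand-in for the web-service tier that counts round trips (hypothetical)."""

    def __init__(self, rows):
        self.rows = {r["Id"]: r for r in rows}
        self.requests = 0

    def get_page(self, take):
        self.requests += 1
        return sorted(self.rows.values(), key=lambda r: r["Id"])[:take]

    def get_by_id(self, row_id):
        self.requests += 1
        return self.rows[row_id]

    def get_by_ids(self, ids):
        self.requests += 1
        return [self.rows[i] for i in ids]


def load_with_parents_n_plus_1(svc, take=45):
    page = svc.get_page(take)
    # One extra round trip per row that has a parent: 1 + N requests.
    return [(r, svc.get_by_id(r["ParentId"]) if r["ParentId"] is not None else None)
            for r in page]


def load_with_parents_batched(svc, take=45):
    page = svc.get_page(take)
    parent_ids = sorted({r["ParentId"] for r in page if r["ParentId"] is not None})
    # A single extra round trip for all parents: 2 requests total.
    parents = {p["Id"]: p for p in svc.get_by_ids(parent_ids)} if parent_ids else {}
    return [(r, parents.get(r["ParentId"])) for r in page]


animals = [{"Id": 1, "ParentId": None}, {"Id": 2, "ParentId": 1},
           {"Id": 3, "ParentId": 2}, {"Id": 4, "ParentId": 3}]
```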

Ayende’s fourth LightSwitch post of 8/24/2010 is LightSwitch & Source Control:

Source control is something that I have found many high-level tools to be really bad at, so I thought that I would give LightSwitch a chance there.

I created a Git repository and shoved everything into it, then I decided that I would rename a property and see what is going on.

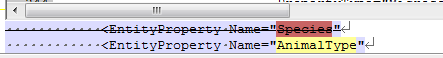

I changed the Animals.Species to Animals.AnimalType, which gives me:

This is precisely what I wanted to see.

Let us see what happens when I add a new table. That created a new set in the ApplicationDefinition.lsml file.

Overall, this is much better than I feared.

I am still concerned about having everything in a single file (which is a recipe for a lot of merge conflicts), but at least you can diff & work with it, assuming that you know how the file format works, and it seems like it is at least a semi-reasonable one. …

And his fifth and final post in the series is LightSwitch on the wire on 8/24/2010:

This is going to be my last LightSwitch post for a while.

I wanted to talk about something that I found which was at once both very surprising and Doh! at the same time.

Take a look here:

What you don’t know is this was generated from a request similar to this one:

```shell
wget 'http://localhost:22940/Services/LSTest-Implementation-ApplicationDataDomainService.svc/binary/AnimalsSet_All?$orderby=it.Id&$take=45&$includeTotalCount='
```

What made me choke was that the size of the response for this was 2.3 MB.

Can you guess why?

The image took up most of the data, obviously. In fact, I just dropped an image from my camera, so it was a pretty big one.

And that led to another problem. It is obviously a really bad idea to send that image over the wire all the time, but LightSwitch makes it so easy that, even after I noticed the size of the request, it took me a while to understand what exactly was causing the issue.

And there doesn’t seem to be any easy way to tell LightSwitch that we want to put the property here, but only load it in certain circumstances. For that matter, I would generally want to make the image accessible via HTTP, which means that I gain advantages such as parallel downloads, caching, etc.

But there doesn’t seem to be any (obvious) way to do something as simple as binding a property to an Image control’s Url property.
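One way around the problem Ayende describes is to keep large binary columns out of the list payload and expose them by URL instead. The Python sketch below is an illustration under assumptions, not something LightSwitch Beta 1 offers: `to_wire` replaces a blob field such as `Pic` with a `PicUrl` string built from a hypothetical `/images/{table}/{id}/{field}` route, so the client fetches images separately and can benefit from HTTP caching and parallel downloads.

```python
def to_wire(entity, blob_fields=("Pic",), table="Animals",
            url_template="/images/{table}/{id}/{field}"):
    """Copy an entity dict, replacing large binary fields with URLs (hypothetical route)."""
    out = {}
    for key, value in entity.items():
        if key in blob_fields and value is not None:
            # Send a short URL instead of the raw bytes.
            out[key + "Url"] = url_template.format(table=table, id=entity["Id"], field=key)
        else:
            out[key] = value
    return out


animal = {"Id": 7, "Name": "Rex", "Pic": b"\x89PNG" * 500_000}  # roughly 2 MB of bytes
print(to_wire(animal))  # {'Id': 7, 'Name': 'Rex', 'PicUrl': '/images/Animals/7/Pic'}
```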

<Return to section navigation list>

Windows Azure Infrastructure

David Linthicum asserted “If you want to see the growth of cloud computing, you should resist any efforts to restrict Internet traffic” and promised The lowdown on Net neutrality and cloud computing in this 8/26/2010 post to InfoWorld’s Cloud Computing blog:

By now, most of us have heard about the Net neutrality proposal from Google and Verizon Wireless that would exempt wireless Internet and some broadband services from equal treatment on carrier networks, setting the stage for carriers to favor some services over others or charge more for specific services.

Google and Verizon say the proposal will make certain that most traffic is treated equally, more appropriately scale usage so that heavy users and heavy services don't crowd out the rest, as well as ensure sufficient income for further wireless broadband investment.

The reaction to any restrictions on Internet traffic, wireless or not, set the blogosphere on fire and created a huge PR problem for Google and Verizon. To be fair, they are chasing their own interests. After all, Google is a huge provider of mobile OSes -- many of which run on the Verizon network -- and the two companies effectively created a common position around imposing control to drive additional revenue.

The concept of allowing specific networks, especially wireless networks, to restrict or prioritize some traffic is a huge threat to the success of cloud computing. If provider networks are allowed to control traffic, they could give priority to the larger cloud computing vendors who write them a big check for the privilege. At the same time, smaller cloud computing upstarts who can't afford the fee will have access to their offerings slowed noticeably, or perhaps not even allowed on the network at all.

Considering that most clouds are limited by bandwidth, the Google-Verizon proposal would have the effect of narrowing the playing field for cloud computing providers quickly. Businesses using public cloud computing will have fewer choices, and their costs will go up as these fees are passed onto cloud computing customers.

If what Google and Verizon are promoting becomes accepted as FCC policy or law, cloud computing will become more expensive, lower performing, and much less desirable. Moreover, the innovative aspect of cloud computing, which typically comes from very lean startups, is quickly removed from the market as the Internet toll roads go up.

It's bad for the Web, bad for users, and very bad for cloud computing. Let's work together to make sure that the Internet stays open and neutral.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

James Hutchinson asserted “Private cloud trumps public infrastructure in Australia due to concerns about security, focus on OpEx” as a preface to his Pendulum swings back toward private cloud: IDC post to ComputerWorld Australia:

After years of publicity around the virtues of the public cloud, analysts are predicting a huge swing back to private infrastructure.

According to analyst firm IDC, 20 per cent of Australian IT heads are looking to implement cloud services within the next year, while a further 45 per cent will implement these services after 12 months. The continued moves to external infrastructure are seen as a huge boost to the 20 or so per cent of local companies already taking advantage of the cloud.

However, IDC’s cloud technologies and services Asia Pacific research director, Chris Morris, has forecast a shift away from current practices.

“The interesting thing is, these people who are already using cloud now, almost all of them were public cloud users,” he told attendees at IDC’s Cloud Computing Conference 2010. “But when we look at those who are planning to use in the next 6 to 12 months, there are still public cloud users, but we’ve switched to a majority of people who are planning to use a private cloud rather than public.”

Notably, 85 per cent of Australian companies are expected to look toward virtualisation services in a private cloud by 2013. Also:

- 45 per cent will look to private standardisation,

- 45 per cent will look to automation,

- 26 per cent will adopt private service level management, and

- 19 per cent will look at private self service models.

The swing is expected to continue exponentially, with 95 per cent of companies adopting a virtualised private cloud by 2014.

According to Morris, the same security concerns that have afflicted public cloud services for years are likely to continue - against his own logic - driving demand for private infrastructure.

“The other thing, simply, is we’ve got a lot of money invested already in assets in our organisations. We want to drive up the utilisation levels of those servers and storage devices,” Morris said.

The economic downturn’s effect on companies saw IT departments influenced more by the chief financial officer, Morris said, and subsequently an inherent move toward projects based on minimising operational expenditure rather than utilising capital expenditure.

While public cloud services will continue to exist, a drive toward private cloud services will likely see a proliferation of niche, specialised clouds from which companies will pick and choose based on their individual needs.

The last two years have already seen such proliferation, with services such as Microsoft’s Windows Azure focussing on application testing and development rather than all available cloud applications.

“People are saying that, ‘yeah we now understand what the cloud can do, we understand that we can do public and we can do private. For some things private is better than public and for others public has great advantages,’” Morris said. “I think in the last twelve months we’ve come out of this period of hype and our view has crystallised on what cloud can and can’t do and we can build proper strategies.”

Morris made similar predictions with the release of the analyst firm’s Cloud Services and Technologies End-User Survey earlier in the month.

IDC’s forecast also coheres with those made recently by Gartner vice president, Tom Bittman, who provided statistics from his own poll indicating IT leaders were concentrating more on private clouds.

He wrote that 75 per cent of those polled in the US said that they would be pursuing a private cloud strategy by 2012, and 75 per cent said that they would invest more in private clouds than in public clouds through 2012.

Some have derided the private cloud as a name change for the hosted infrastructure and services offered by the likes of IBM for years, and a step away from the multi-tenant base on which many public clouds are based. However, the concerns of security lacking in public infrastructure - and particularly the lack of data centres based in Australia - have held companies back from pushing sensitive data into the cloud.

In contrast, those in the private arena offer localised centres that provide greater comfort for apprehensive companies, with services from the likes of HP, IBM and CSC.

<Return to section navigation list>

Cloud Security and Governance

Nicole Hemsoth reported IBM’s X-Force Warns Earth’s Citizens of Imminent Threat in this 8/26/2010 post to the HPC in the Cloud blog:

IBM X-Force team, which the company describes as the “premier security research organization within IBM that has catalogued, analyzed and researched more than 50,000 vulnerability disclosures since 1997” seems to me to be very much like the X-Men; a protected group of particularly gifted IT superhero types who are cloistered in majestic, isolated quarters until they are needed by the masses...

A group of freakishly talented protectors that live to hone their gifts in secret, defending us from threats we never see, able to relish the joys and tragedies of their societal burden only from the confines of their own community of special people as the world spins on, oblivious to the dangers that have been squelched as we dreamed and vacuumed our dens.

I could be wrong about that, but I prefer to think of things in these ways so please don’t ever tell me otherwise. If nothing else, it makes writing about IBM’s take on cloud security much more interesting.

In their seek and destroy (well, probably more like discover and analyze) missions for the sake of protecting the cloud that so many have come to appreciate, the X-Force team has released its Trend and Risk Report for the first part of the year, stating that there are new threats emerging and virtualization is a prime target.

Of the cloud, X-Force stated today, “As organizations transition to the cloud, IBM recommends that they start by examining the security requirements of the workloads they intend to host in the cloud, rather than starting with an examination of different potential service providers.”

IBM is making a point of warning consumers of cloud services to look past what the vendors themselves are claiming to offer and to take a much closer glance at the application-specific security needs. Since security (not to mention compliance and other matters related to this sphere for enterprise users) depends on the workload itself, this is good advice, but when the vendors, all of whom are pushing their services, discourage this by taking a “we’ll take care of everything for you” approach, it’s no surprise that IBM feels the need to repeat this advice.

The X-Force team also contributed a few discussion points about virtualization and a multitenant environment, stating that “as organizations push workloads into virtual server infrastructures to take advantage of ever-increasing CPU performance, questions have been raised about the wisdom of sharing workloads with different security requirements on the same physical hardware.”

On this note, according to the team’s vulnerability reports, “35 percent of vulnerabilities impacting server class virtualization systems affect the hypervisor, which means that an attacker with control of one virtual system may be able to manipulate other systems on the same machine.”

The concept of an evil supergenius attacking the hypervisor and creating “puppets” out of other systems is frightening indeed and there have been some examples of this occurring, although not frequently enough (or on a large-enough scale) to generate big news. However, this point of concern is enough to keep cloud adoption rates down if there are not greater efforts to secure the hypervisor against attack.

IBM recommends that enterprises plan their own strategy with careful attention to application requirements versus reliance on vendor support.

I recommend a laser death ray.

Robert Mullins claimed “Cloud Security Alliance makes it easier to plan cloud computing smartly” as a prefix to his The 13 things you should know before going cloud post of 8/25/2010 to NetworkWorld’s Microsoft Tech blog:

There’s a lot of hype about cloud computing but not as much clarity into exactly how to adopt cloud computing for your particular environment or specifically to address all the security “what ifs” the technology raises. A group called the Cloud Security Alliance (CSA) is trying to provide that insight.

The CSA, whose membership includes Microsoft, as well as Google, HP, Oracle and other marquee names -- 60 companies and 11,000 individuals in all -- was formed in early 2009 to try to educate the tech community about cloud computing, establish standards and basically give companies a clear path to bring cloud computing to their enterprise. I listened in on a Webcast today by Jim Reavis, executive director of the CSA, which was hosted by security vendor RSA.

While intrigued by the promise of cloud computing, which is a company contracting with a third party to deliver compute cycles without the expense of building and maintaining their own data center, concerns about cloud security abound. (That’s an overly-simplified definition that doesn’t get into the whole private versus public cloud, but it should suffice for the moment.)

To help companies navigate the myriad issues regarding cloud computing and to properly evaluate the qualifications of a cloud vendor, CSA has made available what it calls the Cloud Controls Matrix Tool, a free download that lays out in a spreadsheet all the considerations that should go into a cloud decision. Although the tool was introduced back in April, CSA is already working on version 2.0, which may launch in November.

In all, there are 13 general areas of consideration for going cloud, and they are divided into two main categories, governance issues and operational issues, said Reavis. Under governance, he said, a company evaluating cloud vendors needs to do a risk management assessment, asking: What are the risks, and who accepts them, the cloud provider or you? What compliance and audit assurances are given? How is information lifecycle management handled? How is electronic discovery provided when it becomes necessary? Under operational concerns: What assurances does the provider offer for business continuity and disaster recovery? What kind of data encryption is provided? How is access controlled? Such questions and more need to be asked of the prospective cloud vendor and detailed in requests for proposals, contracts and service level agreements, Reavis said.

The idea behind the Cloud Controls Matrix spreadsheet is to help companies make sure all bases are covered before making a decision.

“Our idea is that for IT security, audit functions [etc.] that this could bridge the gap with your current knowledge and current tools to look for the presence of appropriate security controls in any type of cloud environment,” Reavis said.

To further expand the knowledge base of players in the cloud market, CSA is also launching a certification program, the Certificate of Cloud Security Knowledge (CCSK), an online training and testing program that will be available starting Sept. 1. The certification costs $295, although CSA is discounting it to $195 through the end of the year.

Although cloud computing is all the rage, there is understandable reluctance to go “all in,” as one top vendor puts it, without having security, governance and other concerns fully addressed. This CSA initiative is a solid effort to make the cloudy picture clearer.

W. Scott Blackmer analyzed European Reservations About Cloud Computing and the EU-US Safe Harbor Framework for the Info Law Group on 8/25/2010:

German state data protection authorities have recently criticized both cloud computing and the EU-US Safe Harbor Framework. From some of the reactions, you would think that both are in imminent danger of a European crackdown. That’s not likely, but the comments reflect some concerns with recent trends in outsourcing and transborder data flows that multinationals would be well advised to address in their planning and operations.

In April, the Düsseldorfer Kreis, an informal group of state data protection officials that attempts to coordinate approaches to international data transfers under Germany’s federal system, called on the US Federal Trade Commission to increase its monitoring and enforcement of Safe Harbor commitments by US companies handling European personal data. On July 23, Dr. Thilo Weichert, head of the data protection commission in the northernmost German state of Schleswig-Holstein (capital: Kiel), issued a press release provocatively titled “10th Anniversary of Safe Harbor – many reasons to act but none to celebrate.” Dr. Weichert cites an upcoming report by an Australian consultancy (Galexia) asserting that hundreds of American companies claiming to be part of the Safe Harbor program are not currently certified, and that many Safe Harbor companies fail to provide information to individuals on how to enforce their rights or refer them to costly self-regulatory dispute resolution programs. Dr. Weichert urges a radical solution: “From a privacy perspective there is only one conclusion to be drawn from the lessons learned – to terminate safe harbor immediately.”

Dr. Weichert also attracted international attention with another press release issued this summer, entitled (translating loosely) “Data protection in cloud computing? So far, nil!” The press release refers to his recently published opinion on “Cloud Computing und Datenschutz,” which is deeply skeptical about the ability of cloud customers to assure compliance with European data protection laws. …

Scott continues with a detailed description of “The European Context” and related topics.


Cloud Computing Events

No significant articles today.


Other Cloud Computing Platforms and Services

Chris Hoff (@Beaker) answered Why Is NASA Re-Inventing IT vs. Putting Men On the Moon? Simple on 8/26/2010:

I was struck with a sense of disappointment as I read Bob Warfield’s (SmoothSpan) blog post today, “NASA Fiddles While Rome Is Burning.” Just as Bob was rubbed the wrong way by Alex Howard’s post (below), so too was I by Bob’s perspective. All’s fair in love and space, I suppose.

In what amounts to a scathing indictment of new areas of innovation and research, he laments the passing of the glory days of NASA’s race to space, bemoans the lack of focus on planet-hopping, and chastises the organization for what he suggests is its dabbling in spaces where it doesn’t belong:

Now along comes today’s NASA, trying to get a little PR glory from IT technology others are working on. Yeah, we get to hear Vinton Cerf talk about the prospects for building an Internet in space. Nobody will be there to try to connect their iGadget to it, because NASA can barely get there anymore, but we’re going to talk it up. We get Lewis Shepherd telling us, “Government has the ability to recognize long time lines, and then make long term investment decisions on funding of basic science.” Yeah, we can see that based on NASA’s bright future, Lewis.

Bob’s upset about NASA’s (and our nation’s) lost focus on space exploration. So am I. However, he’s barking up the wrong constellation. Sure, the technologies mentioned in Alex Howard’s post on the NASA IT Summit are many and varied, but NASA has always been about innovating in areas well beyond the engineering of solid rocket boosters…

Let’s look at Cloud Computing — one of those things that you wouldn’t necessarily equate with NASA’s focus. Now you may disagree with their choices, but the fact that they’re making them is what is important to me. They are, in many cases, driving discussion, innovation and development. It’s not everyone’s cup of tea, but then again, neither is a Saturn V.

NASA didn’t choose to cut space exploration and instead divert all available resources and monies toward improving the efficiency and access to computing resources and reducing their cost to researchers. This was set in motion years ago and was compounded by the global economic meltdown.

CIOs (chief information officers), the people responsible for IT-related mission support, are working diligently on new computing platforms like Nebula in large part as a direct response to the very cause of this space-travel deficit: budget cuts. They, like everyone else, are trying to do more with less, quicker, better and cheaper.

The timing is right, the technology is here and it’s an appropriate response. What would you have NASA IT do, Bob? Go on strike until a Saturn V blasts off? The privatization of space exploration will breed all new sets of public-private partnership integration and information collaboration challenges. These new platforms will enable that new step forward when it comes.

The fact that NASA’s IT divisions (whose job it is to deliver services just like this) are innovating shines a light on the fact that, for their needs, the IT industry is simply too slow. NASA must deal with enormous amounts of data, transitive use, and hugely collaborative environments spanning multiple organizations, agencies, research organizations and countries.

Regardless of how you express your disappointment with NASA’s charter and budget, it’s unfortunate that Bob chose to suggest that this is about “…trying to get a little PR glory from IT technology others are working on” since in many cases NASA has led the charge and made advancements and innovated where others are just starting. Have you met Linda Cureton or Chris Kemp from NASA? They’re not exactly glory hunters. They are conscientious, smart, dedicated and driven public servants, far from the picture you paint.

In my view, NASA IT (which is conflated as simply “NASA”) is doing what they should — making excellent use of taxpayer dollars and their budget to deliver services which ultimately support new efforts as well as the very classically-themed remaining missions they are chartered to deliver:

To improve life here,

To extend life to there,

To find life beyond.

I think if you look at the efforts NASA IT is working on, they certainly map to those objectives.

To Bob’s last point:

What’s with these guys? Where’s my flying car, dammit!

I find it odd (and insulting) that some seek to blame those whose job is mission support — and doing a great job of it — as if they’re the cause of the downfall of space exploration. Like the rest of us, they’re doing the best they can…fly a mile in their shoes.

Better yet, take a deeper look at what they’re doing and how it maps to supporting the very things you wish were NASA’s longer-term focus, because at the end of the day, when the global economy recovers, we’ll certainly be looking to go where no man and his computing platform has gone before.

/Hoff


Chris Czarnecki answered Is Azure the Only Cloud Option for .NET Developers? in this 8/26/2010 post to Learning Tree’s Cloud Computing blog:

On a consultancy assignment last week, my client was considering moving their ASP.NET deployment to the cloud but was a little concerned about their lack of knowledge of Azure. This is a valid concern, and knowledge of Azure is required. However, what really interested me was their assumption that Azure was the ONLY cloud option available to .NET developers wishing to deploy to the cloud.

For developers working with ASP.NET, deployment will typically be to servers running Windows Server, IIS and SQL Server. The versions may vary, but the basic configuration will be the same. What my consultancy assignment highlighted was that many .NET development teams are not aware that many cloud providers support exactly the configuration I have described above. For example, Amazon AWS provides a number of servers preconfigured with Windows Server, IIS and SQL Server, in their various versions, off the shelf. This provides organisations with an instantaneous, self-provisioning cloud solution that exactly matches their current configuration. In addition, the software requires no modification at all to run in the cloud environment, and there is flexibility to use, for instance, Oracle, DB2 or MySQL rather than SQL Server.

In addition to Amazon AWS, there are many other service providers, such as Rackspace, with cloud-based solutions appropriate to .NET developers.

So, in summary, Azure is one of many cloud computing options available to .NET developers, not the only one. Which one to choose is both a technical and a business decision and requires a core knowledge of cloud computing to make the correct choice. It’s this kind of scenario that we discuss in the Cloud Computing course I wrote for Learning Tree. Written from a vendor-independent position, it enables attendees to make informed choices.

Andrew’s Leaders in the Cloud: OpenStack post of 8/26/2010 points to Kamesh Pemmaraju’s OpenStack: Will It Prevent a Cloud Mono Culture? post of 8/24/2010:

“People don’t realize that lock-in actually occurs at the architectural level, not at the API- or hypervisor-level”

Randy Bias discusses the impact of OpenStack on the cloud computing ecosystem with sandhill.com’s Kamesh Pemmaraju.

Kamesh outlines the current state of cloud adoption, OpenStack and looks ahead.

Bruce Guptil wrote The Roiling Cloud Surrounding Dell, EMC, HP, Intel, and SAP Research Alert for Saugatuck Technology on 8/25/2010 (site registration required):

What is Happening:

Summer, especially August, is traditionally a quiet period for IT Master Brands and the investment world. But Cloudy weather is creating significant activity, as we witnessed in the preceding week-plus:

- Intel acquired McAfee.

- HP and Dell are engaging in a high-priced bidding war for second-tier storage vendor 3Par.

- EMC rolled out integrated storage management for the Cloud.

- Red Hat announced its intentions and foundation regarding the development of platform-as-a-service (PaaS) capabilities.

In addition, two big rumors drove significant interest and trading in four more IT Master Brands:

- Oracle is rumored to be offloading its Sun hardware lines to IBM.

- SAP was rumored to be buying Red Hat.

Bruce continues with the usual detailed “Why is it Happening” and “Market Impact” sections.


![image_thumb[36] image_thumb[36]](https://psijhg.blu.livefilestore.com/y1mnm6yqvuGRUXLSDvTv_1vyXOEqSOq7xN02Dvr3EE4noHOGqHflv5JmR21-sVd_2Jn6SCOalhc3Qdp6q3kunC3f-VCY4tdPiEuP-tnSSek9arhLvyV31d0VZBSrMfGKjlD7YRKi0OEzkx7piumUX1iBQ/image_thumb%5B36%5D%5B3%5D.png?download&psid=1)

![image_thumb[37] image_thumb[37]](https://psijhg.blu.livefilestore.com/y1mvNnJavZX3BOBQbUibiC11Sw0kGIP2uepf_zrcoj4Y5g5DYkoUFUkeENBjbW-jxgWNEXZdLsStKY1wC74IVPb4oncPNGfp9z0AU5IYPLJUSlPjkyegcgI2bhFhf5atiYL2oSDF5-ur-XOdDzphn558g/image_thumb%5B37%5D%5B3%5D.png?download&psid=1)

![image_thumb[38] image_thumb[38]](https://psijhg.blu.livefilestore.com/y1m8PJkvFma0EN4RV5hsn_i9nLkI2CrbFgLEw82RM6OrIy-TIG4sGHdtIza7dpD9qkPaxUbUCYDhEw0g0luR4S8AmzGwGt8VP0XddC57rXH4HXP_rOXqSZVM41_nS2RWWbAeykr6HobtQpjbCCoXmZjCw/image_thumb%5B38%5D%5B3%5D.png?download&psid=1)