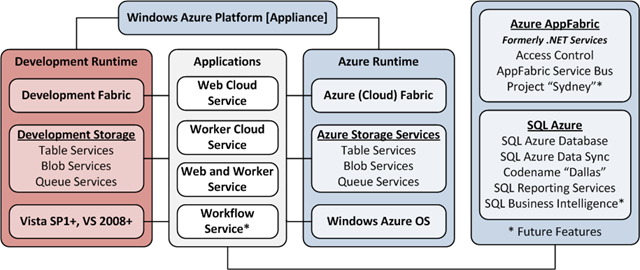

Windows Azure and Cloud Computing Posts for 8/17/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available for download at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

Wayne Walter Berry (@WayneBerry) describes Using the Report Viewer Control with SQL Azure in this 8/17/2010 tutorial:

Currently Microsoft doesn’t provide a PaaS version of SQL Server Reporting Service, where you can run your server-side (.rdl) reports in the cloud. However, if you want to run reports using Microsoft reporting technology you can use Windows Azure to host your client report definition (.rdlc) in a ASP.NET web page. This techique uses the ReportViewer control (that ships with ASP.NET) to execute, generate and display the reports from Windows Azure. This allows you to have your reports in the cloud (Windows Azure) along with your data (SQL Azure). This article will cover the basics for creating a client report definition that will work with SQL Azure using Visual Studio.

If you wanted to run the ASP.NET page with the ReportViewer control pointed at SQL Azure from your local on-premise IIS server you could do that too with this technique.

Here are the steps that need to get done:

- Create a new data connection to SQL Azure using the ODBC data source.

- Create a report client definition (.rdlc) style report using the data source.

- Embed a ReportViewer control into the ASP.NET page that displays the .rdlc.

However, Visual Studio report wizard works in reverse order:

- Create an ASP.NET page to host the report.

- Embed a ReportViewer control into the ASP.NET page.

- Which prompts you for a report, since you don’t have a report yet, you choose New Report.

- Which prompts you for a data source, since you don’t have a data source yet you are prompted to create one.

Let’s go into the steps in detail.

Creating an ASP.NET Page

1) Open Visual Studio and create a new project, Choose Windows Azure Cloud Service

2) Choose the ASP.NET Web Role

3) That creates a default.aspx page. Navigate to default.aspx page and put the page into design mode.

4) Open the toolbox and drag over the MicrosoftReportViewer control found under Reporting section onto the ASP.NET page.

5) Once the ReportViewer control is embedded in the page, a quick menu will protrude from the embedded control. Choose Design a new report.

6) Once you client the Design a new report option, the Report Wizard opens and you are asked to choose a data source.

7) Click on the New Connection Button.

Creating a Data Connection

Currently, the ReportViewer control only allows you to create two types of data connection, using either an OLEDB or ODBC data source. While it might be tempting to try to use the SQL Server Native data source, this will not work. The SQL Server Native data source uses OLEDB and currently there isn’t an OLEDB provider for SQL Azure. Instead, you need to use the OBDC data source.

Now that we have the Add Connection dialog up, here is how to proceed to create an ODBC connection to SQL Azure:

1) Click on Change to change the data source.

2) This will bring up the Change Data Source dialog.

3) Choose the Microsoft ODBC Data Source from the Data Source dialog. Click OK.

4) This will return you to the Add Connection dialog, with the appropriate options for an ODBC data source.

5) Choose Use connection string for the Data source specification.

6) This connection string we are going to get from the SQL Azure Portal. Login to the portal, naviagte to the database that you want to connect to, select that database and press the connection string button at the bottom.

7) This will bring up the connection string dialog with the specifc information filled in for server, database and administrative login. You want to copy the ODBC connection string, by clicking on the copy to clipboard button.

8) Paste this into the Add Connection dialog under Use connection string.

9) Notice where the Pwd attribute is set to myPassword. It is very important to change this to your password for the administrator account. The one that matches the user account in the connection string. If you don’t change it now, the wizard will keep asking you for the password.

10) Click the Test Connection button to test the connection, then press OK.

11) This takes us back to the Data Source Configuration Wizard dialog with our new connection highlighted. Click on Next.

12) Choose to save the connection string, which is saved in the web.config file.

13) Choose the data objects, i.e. tables, from SQL Azure that you want to use in the report. In my screen shots I am using the AdventureWorks database.

14) Press Finish.

Now that we have the data source configured, we have to select it and create a report.

Designing a Report

The report we are going to design creates a client report definition file (.rdlc), that gets embedded in the project files that get deployed to Windows Azure. When the page is requested the ReportViewer control loads the .rdlc file, executes the transact-sql, which calls SQL Azure, and returns the data. When the data is returned the ReportViewer control (running on Windows Azure) formats and displays the report.

1) In the Report Wizard dialog. Choose a data source and press Next.

2) Walk through the dialog to design a report, you can read more about how to do this here.

When you are done with the Report Wizard dialog you will have the report embedded in the ReportViewer, inside the ASP.NET page. Next thing to do is deploy it to Windows Azure.

Deploy to Windows Azure

Before you can deploy to Windows Azure, you need to make sure that the Windows Azure package contains all the assemblies for the ReportViewer control that are not part of the .NET framework:

- Microsoft.ReportViewer.Common.dll

- Microsoft.ReportViewer.WebForms.dll

- Microsoft.ReportViewer.ProcessingObjectModel.dll

- Microsoft.RevportViewer.DataVisualization.dll

These four assemblies are available only if you have Visual Studio installed or if you install the free redistributable package ReportViewer.exe. In order to get them in your Windows Azure package you need to:

- Mark the Microsoft.ReportViewer.dll which is already added as a reference in your project, as Copy Local (true).

- Add the Microsoft.ReportViewer.Common.dll to the assembly references, by doing an add reference. Mark it Copy Local to true also.

- Find the Microsoft.ReportViewer.ProcessingObjectModel.dll and Mircosoft.RevportViewer.DataVisualization.dll in your GAC, copy them into your My Documents folder and then reference the copies as assemblies. Mark them Copy Local to true also.

You also need to make sure all the reports that you generated are tagged in your project as content, instead of embedded resources.

There is a good video about using the ReportViewer control with Windows Azure found here.

Julie Strauss explained Using PowerPivot with “Dallas” CTP3 in this 8/17/2010 post to the PowerPivot Team blog:

What is Codename "Dallas"?

Codename "Dallas" is a service allowing developers and information workers to easily discover, purchase, and manage premium data subscriptions in the Windows Azure platform. By bringing data from a wide range of content from authoritative commercial & public sources together into a single location this information marketplace is perfect for PowerPivot users who want to enrich their applications in innovative ways.

What makes working with the “Dallas” data even more attractive is the build in integration between the two solutions, where you can either pull data from “Dallas” using the Data Feeds button in PowerPivot or push data into PowerPivot by launching PowerPivot directly from the “Dallas” web site.

What’s New in CTP3?

Those of you who have already tried working with the “Dallas” CTPs have likely discovered a 100 row import limit for “Dallas” datasets in PowerPivot. With the “Dallas” CTP3 release, this limit has been removed, which enables analysis using “Dallas” data on a much larger scale.

Additionally in this CTP release of “Dallas”, the security for how “Dallas” datasets are accessed in applications such as PowerPivot has been strengthened. Previously, “Dallas” would automatically include your account key when opening a dataset in PowerPivot; with CTP3 you must now enter this account key yourself.

As before, datasets can be opened in PowerPivot from the “Dallas” Service Explorer by clicking the “Analyze” button. This will open PowerPivot on your machine and show the Table Import Wizard.

At this point, you must provide your “Dallas” account key. To do this, click on the “Advanced” button. Change the “Integrated Security” property to “Basic”, set the “User ID” property to “accountKey”, and change “Persist Security Info” to “True”. You can now paste your “Dallas” account key in the Password property. Your account key is located on the Account Key page of the “Dallas” portal here. The dialog should now look as follows.

Click OK and complete the import wizard.

For existing connections to “Dallas” datasets to continue to refresh, you will need to modify these properties by clicking on the Existing Connections button in the Design ribbon tab. Select the connection and click “Edit”.

When pulling data from the PowerPivot side you click the From Data Feeds option, located in Get External Data group of the Home tab. This will open the Table Import Wizard dialog from where you simply follow the same steps as described above. You can copy the data feed URL from the “Dallas” Service Explorer using the “Copy link to clipboard” option.

Please visit the “Dallas” site to learn more about “Dallas” and available subscriptions.

Azret Botash’s OData Provider for XPO – Using Server Mode to handle Huge Datasets post of 8/16/2010 to DevExpress’s The One With blog claimed:

The most exciting part of OData is its simplicity and at the same time its flexibility. The idea of accessing data over HTTP using a well defined RESTful API, and the fact that by doing so we completely remove direct access to the database from our clients, is indeed very powerful!

So what is Server Mode?

Simply put, Server Mode is a concept of our Grid Controls that, if enabled, instead of fetching the entire data set, the grid only fetches the number of records relevant to the current view. Similarly, sorting, filtering, summary operations and group summaries are delegated to the underlying IQueryable<T>. This is why !summary was very important.

Let’s have a closer look at what the grid actually does. I have a resource set “ServerModeItems” on the server that exposes a SQL Server table with 100,000 records. I then bind it to a WinForms Grid Control is Server Mode like so:

this.gridControl1.DataSource = ServerModeSource2.Create<ServerModeItem>( new Uri("http://localhost:59906/ServerMode.svc"), "ServerModeItems", "OID");Note: Never mind the name ServerModeSource2. It’s a variation of LinqServerModeDataSource designed specifically for handling IQueryables that implement IServerMode2. The actual name will change once this goes to a full CTP. Suggestions are welcome :). Although binding directly to LinqServerModeDataSource and AtomPubQuery<> will work just fine, the ServerModeSource2 is able to utilize a custom !contains extension which is explained below.

Under the Hood

Initial Load

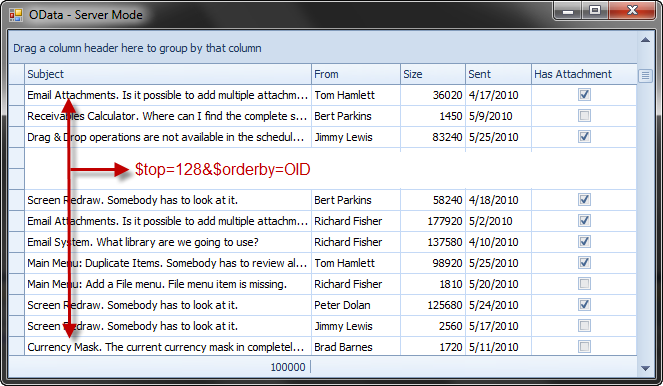

The initial grid data load is incredibly lightweight: Query.OrderBy(it => it.OID).Take(128).Select() which than translates into a ?$top=128&$orderby=OID request.

Sorting by the Sent column for example will execute the following: ?$top=128&$orderby=Sent desc,OID desc

Scrolling

As I start scrolling down, the Grid (the Data Controller actually) will start requesting more data from our IQueryable. It will do this in 2 steps.

Step 1: Fetch the Keys for a specific range.

- LINQ: Query.OrderBy(e => e.OID).Skip(100).Take(768).Select(e => e.OID)

- URL: ?$skip=100&$top=768&$orderby=OID

The range for key selection is not arbitrary. It is calculated and optimized on the fly as you scroll based on how much time it takes to fetch N number records vs. k * N number of records. If getting 2 * N keys takes the same time as getting N keys then we’ll get 2 * N keys.

Step 2: Fetching records for the keys.

Once the keys are selected, the Grid will request the actual data rows for the visible range.

- LINQ: Query.Where(e = > e.OID == @id1 || e.OID == @id2 || e.OID == @id3 etc…)

- URL: ?$filter=OID eq @id1 or OID eq @id2 or OID eq @id3…

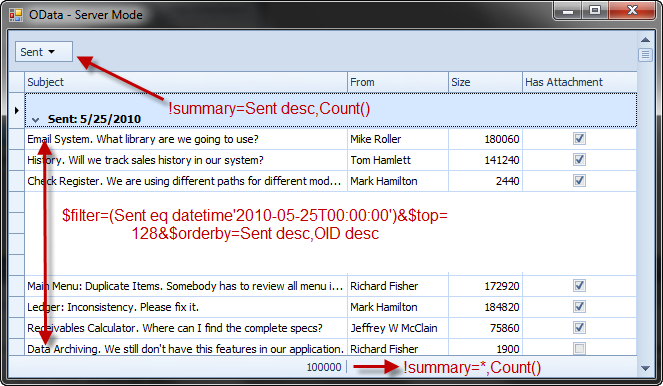

Grouping

When grouping is performed, we first run the GroupBy query:

- LINQ: GroupBy(e => e.Sent).OrderBy(e => e.Key).Select([Key+Summaries])

- URL: ?!summary=Sent asc,[Summaries]

and than when we expand a group, the process of initial load and scrolling repeats itself but this time only for the current group.

- LINQ: Query.Where(e => (e.Sent == 4/6/2010 12:00:00 AM)).OrderBy(e => e.Sent).ThenBy(e => e.OID).Take(128)

- URL: ?$filter=(Sent eq datetime'2010-04-06T00:00:00')&$top=128&$orderby=Sent,OID

Optimizations

Of course, a big trade off of having to fetch data over HTTP is that it is slower than if you had a DB right next to you. Utilizing the Grid in Server Mode solves this, but there is still room for improvements. One improvement that we are thinking about for 10.2 release or right after, is a smart Data Controller that will perform key fetches in the background thus giving us a very smooth scrolling experience.

Other optimization we can do at the query level is to delay load the record fetches (see Step 2). This means that when data is needed for a visible range, we return fakes and issue a fetch request asynchronously. Than when the response comes back, we notify the Grid to repaint itself.

Dealing with URL Length Limitations

Look back at Step 2 above:

URL: ?$filter=OID eq @id1 or OID eq @id2 or OID eq @id3…

This URI is shortened for readability. The actual query string can get really big and ugly, and sooner or later you will run into URL Length Limitations, which you will need to configure for both .NET HTTP Runtime and IIS. Making the acceptable query string sizes bigger will only solve one problem. You will soon run into recursion limits set by the Data Service Library. Because filter expressions are recursive and when they are generated straight out of LINQ and are not simplified they look like this:

Query.Where(it => (((((((((((((((((((((((((((((((((((((((((((((((((((((((it.OID == 6792) OrElse (it.OID == 6818)) OrElse (it.OID == 6882)) OrElse (it.OID == 6959)) OrElse (it.OID == 7008)) OrElse (it.OID == 7038)) OrElse (it.OID == 7045)) OrElse (it.OID == 7064)) OrElse (it.OID == 7089)) OrElse (it.OID == 7124)) OrElse (it.OID == 7167)) OrElse (it.OID == 7176)) OrElse (it.OID == 7268)) OrElse (it.OID == 7270)) OrElse (it.OID == 7306)) OrElse (it.OID == 7349)) OrElse (it.OID == 7444)) OrElse (it.OID == 7560)) OrElse (it.OID == 7573)) OrElse (it.OID == 7607)) OrElse (it.OID == 7616)) OrElse (it.OID == 7649)) OrElse (it.OID == 7726)) OrElse (it.OID == 7755)) OrElse (it.OID == 7786)) OrElse (it.OID == 7862)) OrElse (it.OID == 8018)) OrElse (it.OID == 8069)) OrElse (it.OID == 8074)) OrElse (it.OID == 8104)) OrElse (it.OID == 8141)) OrElse (it.OID == 8215)) OrElse (it.OID == 8329)) OrElse (it.OID == 8371)) OrElse (it.OID == 8439)) OrElse (it.OID == 8590)) OrElse (it.OID == 8643)) OrElse (it.OID == 8660)) OrElse (it.OID == 8688)) OrElse (it.OID == 8792)) OrElse (it.OID == 8939)) OrElse (it.OID == 9033)) OrElse (it.OID == 9042)) OrElse (it.OID == 9067)) OrElse (it.OID == 9123)) OrElse (it.OID == 9143)) OrElse (it.OID == 9193)) OrElse (it.OID == 9287)) OrElse (it.OID == 9329)) OrElse (it.OID == 9341)) OrElse (it.OID == 9387)) OrElse (it.OID == 9393)) OrElse (it.OID == 9502)) OrElse (it.OID == 9507)) OrElse (it.OID == 9542)))

AtomPubQuery<> solves this by:

- 1: Optimizing the the resulting URL to avoid unneeded parentheses.

- 2: Putting the $filter criteria into the HTTP header X-Filter-Criteria

- 3: Using a custom !contains extension designed specifically for fetching data by multiple keys. !contains=OID in (1,3,4,5,6 etc…)

Hope you got excited about all of this :). You can download the Pre CTP from http://xpo.codeplex.com/.

Bruce Kyle’s Migrate MySQL, Access Databases to the Cloud with SQL Server Migration Assistant post of 8/16/2010 to the US ISV Evangelism blog provides a brief explanation of the two new free SSMA versions:

Microsoft has updated the SSMA (SQL Server Migration Assistant) family of products to include support for both Access and MySQL. This release makes it possible to move data directly and easily from local Microsoft Access databases or MySQL databases into SQL Azure. Microsoft refreshed the existing SSMA family of products for Oracle, Sybase and Access with this latest v4.2 release.

The latest SQL Server Migration Assistant is available for free download and preview at:

- SSMA for MySQL v1.0, out of the two downloads, ‘SSMA 2008 for MySQL’ enables migration to SQL Azure

- SSMA for Access v4.2 out of the two downloads, ‘SSMA 2008 for Access’ enables migration to SQL Azure

- SSMA for Oracle v4.2

- SSMA for Sybase v4.2

For more information about the latest update, see the SQL Azure team blog post Microsoft announces SQL Server Migration Assistant for MySQL.

About SQL Server Migration Assistant

SQL Server Migration Assistant for MySQL is the newest migration toolkit, others include Oracle, Sybase, Access, and an analyzer for PowerBuilder. The toolkits were designed to tackle the complex manual process customer’s deal with when migrating databases. In using the SQL Server Migration Assistants, customers and partners reduce the manual effort; as a result the time, cost and risks associated with migrating are significantly reduced.

SSMA for MySQL v1.0 is designed to work with MySQL 4.1 and above. Some of the salient features included in this release are the ability to convert/migrate:

- Tables

- Views

- Stored procedures

- Stored functions

- Triggers

- Cursors

- DML statements

- Control statements

- Transactions

My Installing the SQL Server Migration Assistant 2008 for Access v4.2: FAIL and Workaround post of 8/15/2010 provides additional details about SSMA for Access v4.2.

Wayne Walter Berry (@WayneBerry) describes Security Resources for the Windows Azure Platform in this 8/16/2010 post to the SQL Azure Team blog:

If you’ve been looking for information on SQL Azure security, check out Security Resources for the Windows Azure Platform. This new topic in the MSDN Library provides links to many of the articles, blogs, videos, and webcasts on Windows Azure Platform security authored by and presented by Microsoft experts (including this blog).

You will find content on security across the Windows Azure platform, including Windows Azure, SQL Azure, and Windows Azure AppFabric. The Windows Azure Platform Information Experience team plans to keep this page updated as new security content becomes available.

Repeated in the Cloud Security and Governance below.

Extending data to the cloud using Microsoft SQL Azure is a two-page PDF data sheet targeting the federal government produced by the Microsoft in Government team:

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Brian Swan aids Understanding Windows Azure AppFabric Access Control via PHP with this 8/17/2010 post:

In a post I wrote a couple of weeks ago, Consuming SQL Azure Data with the OData SDK for PHP, I didn’t address how to protect SQL Azure OData feeds with the Windows Azure AppFabric access control service because, quite frankly, I didn’t understand how to do it at the time. What I aim to do in this post is share with you some of what I’ve learned since then. I won’t go directly into how to protect OData feeds with AppFabric access control service (ACS, for short), but I will use PHP to show you how ACS works.

Disclaimer: The code in this post is intended to be educational only. It is simplified code that is intended to help you understand how ACS works. If you already have a good understanding of ACS and are looking to use it with PHP in a real application, then I suggest you check out the AppFabric SDK for PHP Developers (which I will examine more closely in a post soon). If you are interested in playing with my example code, I’ve attached it to this post in a .zip file.

Credits: A series of articles by Jason Follas were the best articles I could find for helping me understand how ACS works. I’ll borrow heavily from his work (with his permission) in this post, including his bouncer-bartender analogy (which Jason actually credits Brian H. Prince with). If you are interested in Jason’s articles, they start here: Windows Azure Platform AppFabric Access Control: Introduction.

AppFabric Access Control Service (ACS) as a Nightclub

The diagram below (adapted from Jason Follas’ blog) shows how a nightclub (with a bouncer and bartender) might go about making sure that only people of legal drinking age are served drinks (I’ll draw the analogy to ACS shortly):

Before the nightclub is open for business, the bouncer tells the bartender that he will be handing out blue wristbands tonight to customers who are of legal drinking age.

Once the club is open, a customer presents a state-issued ID with a birth date that shows he is of legal drinking age.

The bouncer examines the ID and, when he’s satisfied that it is genuine, gives the customer a blue wristband.

Now the customer can go to the bartender and ask for a drink.

The bartender examines the wristband to make sure it is blue, then serves the customer a drink.

I would add one step that occurs before the steps above and is not shown in the diagram:

0. The state issues IDs to people and tells bouncers how to determine if an ID is genuine.

To draw the analogy to ACS, consider these ideas:

You, as a developer, are the State. You issue ID’s to customers and you tell the bouncer how to determine if an ID is genuine. You essentially do this (I’m oversimplifying for now) by giving giving both the customer and bouncer a common key. If the customer asks for a wristband without the correct key, the bouncer won’t give him one.

The bouncer is the AppFabric access control service. The bouncer has two jobs:

He issues tokens (i.e. wristbands) to customers who present valid IDs. Among other information, each token includes a Hash-based Message Authentication Code (HMAC). (The HMAC is generated using the SHA-256 algorithm.)

Before the bar opens, he gives the bartender the same signing key he will use to create the HMAC. (i.e. He tells the bartender what color wristband he’ll be handing out.)

The bartender is a service that delivers a protected resource (drinks). The bartender examines the token (i.e. wristband) that is presented by a customer. He uses information in the token and the signing key that the bouncer gave him to try to reproduce the HMAC that is part of the token. If the HMACs are not identical, he won’t serve the customer a drink.

The customer is any client trying to access the protected resource (drinks). For a customer to get a drink, he has to have a State-issued ID and the bouncer has to honor that ID and issue him a token (i.e. give him a wristband). Then, the customer has to take that token to the bartender, who will verify its validity (i.e. make sure it’s the agreed-upon color) by trying to reproduce a HMAC (using the signing key obtained from the bouncer before the bar opened) that is part of the token itself. If any of these checks fail along the way, the customer will not be served a drink.

To drive this analogy home, I’ll build a very simple system composed of a client (barpatron.php) that will try to access a service (bartender.php) that requires a valid ACS token. …

Brian then walks you through Hiring a Bouncer (i.e., Setting Up ACS), Setting Up the Bartender (i.e. Verifying Tokens), Getting a Wristband (i.e. Requesting a Token), and concludes:

Wrapping Up

Once you have set up ACS (i.e. once you have “Hired a Bouncer”), you should be able to take the two files (bartender.php and barpatron.php) in the attached .zip file, modify them to that they use your ACS information (service namespace, signing keys, etc.), and then load the barpatron.php in your browser to see how it all works.

If you have been reading carefully, you may have noticed a flaw in this bouncer-bartender analogy: in the real world, the bouncer would actually check the DOB claim to make sure the customer is of legal drinking age. In this example, however, it would be up to the bartender to actually verify that the customer is of legal drinking age. (At this time, ACS doesn’t have a rules engine that would allow for this type of check.) Or, the Issuer would have to verify legal drinking age. i.e. You might write a service that only provides the customer with the information necessary to request a token if he has somehow proven that he is of legal drinking age.

I hope this helps in understanding how ACS works. Look for a post soon that shows how to use the AppFabric SDK for PHP Developers to make things easier!

Wade Wegner (@WadeWegner) explains Configuring an ASP.NET Web Application to Use a Windows Server AppFabric Cache for Session State in this 8/17/2010 post:

Below is a walkthrough on how to configure this scenario. In addition to this post, I recommend you take a look at this article on MSDN.

- Thoroughly review Getting Started with Windows Server AppFabric Cache to get everything setup.

- Open up the Cache PowerShell console (Start –> Windows Server AppFabric –> Caching Administration Windows PowerShell). This will automatically import the DistributedCacheAdministration module and use the CacheCluster.

- Start the Cache Cluster (if not already started). Run the following command in the PowerShell console:

Start-CacheCluster

- Create a new cache that you will leverage for your session state provider. Run the following command in the PowerShell console:

New-Cache MySessionStateCache

- Create a new ASP.NET Web Application in Visual Studio 2010 targeting .NET 4.0. This will create a sample project, complete with master page which we’ll leverage later on.

- Add references to the Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core. To do this, use the following steps (thanks to Ron Jacobs for the insight):

- Right-click on your project and select Add Reference.

- Select the Browse tab.

- Enter the following folder name, and press enter:

%windir%\Sysnative\AppFabric

- Locate and select both Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core assemblies.

- Add the configSections element to the web.config file as the very first element element in the configuration element:

<!--configSections must be the FIRST element --> <configSections> <!-- required to read the <dataCacheClient> element --> <section name="dataCacheClient" type="Microsoft.ApplicationServer.Caching.DataCacheClientSection, Microsoft.ApplicationServer.Caching.Core, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" allowLocation="true" allowDefinition="Everywhere"/> </configSections> - Add the dataCacheClient element to the web.config file, after the configSections element. Be sure to replace YOURHOSTNAME with the name of your cache host. In the PowerShell console you can get the HostName (and CachePort) by starting or restarting your cache).

<dataCacheClient> <!-- cache host(s) --> <hosts> <host name="YOURHOSTNAME" cachePort="22233"/> </hosts> </dataCacheClient> - Add the sessionState element to the web.config file in the system.web element. Be sure that the cacheName is the same as the cache you created in step 4.

<sessionState mode="Custom" customProvider="AppFabricCacheSessionStoreProvider"> <providers> <!-- specify the named cache for session data --> <add name="AppFabricCacheSessionStoreProvider" type="Microsoft.ApplicationServer.Caching.DataCacheSessionStoreProvider" cacheName="MySessionStateCache" sharedId="SharedApp"/> </providers> </sessionState> - Now, we need a quick and easy way to test this. There are many ways to do this, below is mine. I loaded data into session, then created a button that writes the session data into a JavaScript alert. Quick and easy:

protected void Page_Load(object sender, EventArgs e) { // Store information into session Session["PageLoadDateTime"] = DateTime.Now.ToString(); // Reference the ContentPlaceHoler on the master page ContentPlaceHolder mpContentPlaceHolder = (ContentPlaceHolder)Master.FindControl("MainContent"); if (mpContentPlaceHolder != null) { // Register the button mpContentPlaceHolder.Controls.Add( GetButton("btnDisplayPageLoadDateTime", "Click Me")); } } // Define the button private Button GetButton(string id, string name) { Button b = new Button(); b.Text = name; b.ID = id; b.OnClientClick = "alert('PageLoadDateTime defined at " + Session["PageLoadDateTime"] + "')"; return b; } - Now, hit control-F5 to start your project. After it loads, click the button labeled “Click Me” – you should see the following alert:

That’s it! You have now configured your ASP.NET web application to leverage Windows Server AppFabric Cache to store all Session State.

While I was putting this together, I encountered two errors. I figured I’d share them here, along with resolution, in case any of you encounter the same problems along the way.

Configuration Error Description: An error occurred during the processing of a configuration file required to service this request. Please review the specific error details below and modify your configuration file appropriately. Parser Error Message: ErrorCode<ERRCA0009>:SubStatus<ES0001>:Cache referred to does not exist. Contact administrator or use the Cache administration tool to create a Cache.

If you received the above error message, it’s likely that the cacheName specified in the sessionState element is wrong. Update the cacheName to reflect the cache you created in step #4.

Configuration Error Description: An error occurred during the processing of a configuration file required to service this request. Please review the specific error details below and modify your configuration file appropriately. Parser Error Message: ErrorCode<ERRCA0017>:SubStatus<ES0006>:There is a temporary failure. Please retry later. (One or more specified Cache servers are unavailable, which could be caused by busy network or servers. Ensure that security permission has been granted for this client account on the cluster and that the AppFabric Caching Service is allowed through the firewall on all cache hosts. Retry later.)

If you received the above error message, it’s likely that the host name specified in the dataCacheClient is wrong. Update the dataCacheClient host name to reflect the name of your host. Note: it’s likely that it’s just your machine name.

Hope this helps!

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Karsten Januszewski’s Architecture Of The Archivist post of 8/16/2010 to the MIX Online website continues his series about a project that uses Windows Azure and SQL Azure storage for archiving tweets:

Building The Archivist introduced several architectural difficulties, which were solved over a number of iterations and trials and error.

Implementing the core three features of The Archivist (archiving, analyzing and exporting) in a scalable and responsive way proved to be a challenge. While I’m tempted not to write this article as some will anticipate my mistakes, I’m going to suck it up for the sake of maybe helping someone else out there.

With this in mind, I’d like to go over the journey of The Archivist architecture with the hope that others may benefit from my experience.

If you don’t know what The Archivist is, you can read about here [or in an OakLeaf Archive and Mine Tweets In Azure Blobs with The Archivist Application from MIX Online Labs post of 7/11/2010].

Architecture I: Prototype

My first architecture was a prototype meant to prove the feasibility of the application. Like many prototypes, I wrote this one quickly to prove the basic idea.

I started out with an instance of SQL Express and created four tables:

- A User table. Because the archivist allowed different users to create archives of tweets, I needed a user table.

- A Tweets table. Of course I needed a table to store tweets.

- A Search table. In this table, I stored the searches: search term, last updated, is active, etc. It was joined to the tweets table.

- An Archive table. This table was basically a look up table between searches and users. Because two users might start the same search, there was no point in duplicating that search, thus the creation of the archive table.

In fact, here’s exactly what it looked like:

I whipped up some data access objects using LINQ to SQL and I was off and running. Tweets were pulled from Twitter, serialized to my data access objects and inserted into the database. To do the analysis, I pulled tweets out of SQL back into CLR objects and ran LINQ queries on demand at runtime to create the various aggregate graphs of data. To export the tweets to Excel, the graph of CLR objects was transformed to a tab delimited text file on the fly.

It was working great in my development environment with one user, one search and about 100 tweets.

I got this basic architecture working with the Azure development environment and then pushed it up to Azure. I had a Web Role for the rudimentary UI and a worker role for polling Twitter.

I then started doing some very basic performance and load tests. Guess what happened? First, performance crawled to a halt. Who can guess where the bottlenecks were? Well, first, the on demand LINQ queries were painfully slow. So was the export to Excel. Also, the performance of inserting tweets into SQL was a surprise bottleneck I hadn’t seen in my development environment.

Performance was a problem, but my database was filling up too… and fast. I had created the default SQL Azure instance of 1 gig. But three archives of 500,000 tweets could fill that up. I was going to fill up databases quickly.

Back to the drawing boards.

Architecture II: SQL-centric

I had always been concerned about the on-demand LINQ aggregate queries and expected they wouldn’t scale. As a result, I looked into moving them to SQL Server as stored procedures. While that helped performance, it still didn’t meet the speed benchmarks required to show all six visualizations in the dashboard. I then got turned on to indexed views. Ah ha, I thought! The solution to my problems! By making the stored procs indexed views in SQL, I thought I had the solution.

But I had another problem on my hands: the size of the SQL databases. My first thought was to have multiple databases and come up with a sharding structure. But the more I looked at the scalability needs growth of The Archivist, the more skeptical I became of this architecture. It seemed complex and error-prone. And I could end up having to manage 100 SQL Servers? In the cloud? Hmm…

I also had performance problems with doing inserts into SQL Azure. In part, this had to do with the SQL Azure replication model, which does three inserts for each insert. Not an issue with a single insert, but it wasn’t looking good for bulk inserts of tweets.

So, SQL could solve my analysis problems but didn’t seem like a good fit for my storage problems.

That’s when I called a meeting with Joshua Allen and David Aiken. Between the three of us, we came up with a new architecture.

Architecture III: No SQL (Well, Less SQL)

The more astute among you probably saw this coming: We dumped SQL for storing tweets and switched to blob storage. So, instead of serializing tweets to CLR objects and then persisting them via LINQ to SQL, I simply wrote the JSON result that came back from the Twitter Search API right to blob storage. The tweet table was no more.

At first, I considered moving the rest of the tables to table storage, but that code was working and not causing any problems. In addition, the data in the other three tables is minimal and the joins are all on primary keys. So I didn’t move them, and never have. So it turns out that The Archivist has a hybrid storage solution: half blob storage and half SQL server.

You might be wondering how I correlate the two stores. It’s pretty simple: Each search has a unique search id in SQL. I use that unique search id as a container name in Blob Storage. Then, I store all the tweets related to that search inside that container.

Moving from SQL to Blob Storage immediately solved my two big archival problems:

First, I no longer had to worry about a sharding structure or managing all those SQL Servers. Second, my insert performance problems disappeared.

But I still had problems with both analysis and export. Both of these operations were simply too taxing on large datasets to do at runtime with LINQ.

Time for another meeting with Joshua and David. Enter additional Azure worker roles and Azure queues.

Architecture IV: Queues

To solve both of these problems, I introduced additional Azure worker roles that were linked by queues. I then moved the processing of each archive (running aggregate queries as well as generating the file to export) to worker roles.

So in addition to my search worker role, I introduced two new roles, one for appending and one for aggregating. It worked as follows:

The Search role queried the search table for any searches that hadn’t been updated in the last half hour. Upon completion of a search that returned new tweets, it put a message in the queue for the Appender.

The Appender role picked up the message and appended the new tweets to the master archive. The master archive was stored as a tab delimited text file. Perfect! This solved my performance problem getting the tweets into that format, as it was now stored natively as that format and available for download from storage whenever necessary.

Upon completing appending, a message was added to the queue for the Aggregator role. That role deserialized the archive to objects via my own deserialization engine.

Once I had the graph of objects, the LINQ queries were run. The result of each aggregate query was then persisted to blob storage as JSON. Again, perfect! Now all six aggregate queries, which sometimes could take up to five minutes to process, were available instantaneously to the UI. (This is why performance is so snappy on the charts for archives of massive size.) In addition, I could also provide these JSON objects to developers as an API.

This architecture worked for quite awhile and seemed to be our candidate architecture.

Until a new problem arose.

The issue was that the aggregate queues were getting backed up. With archives growing to 500,000 tweets, running the aggregate queries was bottlenecking. A single archive could take up to five minutes to process. With archives being updated every half hour and the number of archives growing, the time it took to run the aggregate queries on each archive was adding up. Throwing more instances at it was a possible solution, but I stepped back from the application and really looked at what we were doing. I began to question the need for all that aggregate processing, which was taking up not only time but also CPU cycles. With Azure charging by the CPU hour, that was a consideration as well.

As it was, I was re-running the aggregate queries every half hour on every archive that had new tweets. But really, the whole point of the aggregate queries was to get a sense of what was happening to the data over time. Did the results of those queries change that much between an archive of 300,000 tweets and 301,000 tweets? No. In addition, I was doing all that processing for an archive that may only be viewed once a day – if that. The whole point of The Archivist is to spot trends over time. The Archivist is about analysis. It is not about real time search.

It dawned on me: do I really need to be running those queries every half-hour?

Architecture V: No Queues

We made the call (pretty late in the cycle) to run the aggregate queries only once every 24 hours. Rather than using queues, I changed the Aggregator role to run on a timer similar to how the Searcher role worked. I then partitioned work done by the Appender and moved its logic to the Searcher or the Aggregator.

One thing I like about this architecture is that it gets rid of the queues entirely. In the end, no queues means the whole application is simpler and, in software, the fewer moving parts, the better. So, the final architecture ends up looking like this:

Both the Searcher and the Aggregator are encapsulated in DLLs, such that they can be called directly by the web role (when the user enters an initial query into the database) or by a worker role (when an archive is updated). So each worker role runs on a timer.

We had to make another change to how the Searcher worked pretty late in the game. The Searcher was polling Twitter every half hour for every single archive. This seemed like overkill, especially for archives that didn’t get a lot of tweets—it was a lot of unnecessarily polling of Twitter. With a finite amount of queries allowed per hour to the Search API, this needed revising.

The solution was an elastic degrading polling algorithm. Basically, it means that we try to determine how ‘hot’ an archive is depending on how many tweets it returns. This algorithm is discussed in detail here if you’d like to read more.

If you’d like to learn more about exactly how the architecture works, check out this Channel9 interview at about 10:00 in, where I walk through the whole thing.

Final Thoughts

The Archivist has been an exciting and challenging project to work on. This post didn’t even get into other issues we faced during the development, which included getting the ASP.NET Charts to work in Azure; dealing with deduplication and the Twitter Search API; writing and reading from blob storage; nastiness with JSON deserialization; differences in default date time between SQL Server and .NET; struggles trying to debug with the Azure development; and lots more. But we shipped!

And, perhaps more interestingly, we have made all the source code available for anyone to run his or her own instance of The Archivist.

Return to section navigation list>

VisualStudio LightSwitch

Paul Patterson posted Microsoft LightSwitch – CodeCast Episode 88 Takeaway Notes on 8/17/2010:

Here are some takeaway notes for a recent CodeCast episode - LightSwitch for .Net Developers with Jay Schmelzer.

CodeCast co-hosts Ken Levy and Markus Egger talk with Jay Schmelzer, Program Manager with Microsoft Visual Studio Business Applications team charged with LightSwitch…

New “Product”, a version of Visual Studio with its own price point (SKU).

- Will be priced as an entry level price point into Visual Studio.

- Pricing not set yet, but should be priced somewhere between Visual Studio Express (free) and Visual Studio Professional (not free).

- With MSDN subscription, will be able to integrate with existing Visual Studio installation.

- If you don’t have MDSN and you buy a stand-alone version of LightSwitch, and you already have Visual Studio, it will also integrate.

- Integrating LightSwitch with an existing Visual Studio Pro (or better) will allow the developer to extend a LightSwitch project.

- LightSwitch is being marketed as the easiest way to build .net applications for the desktop or the cloud.

- Create a class of business applications that typically are not being developed by enterprise I.T. departments. For example, business units that cannot use enterprise I.T. groups.

- As well, small and medium sized businesses that do not have I.T. departments.

- Not enterprise critical applications; relatively small number of users.

- A rich-client application by using Silverlight 4.

- Typically a stand-alone, in-browser or on desktop application.

- Will run in any browser that supports Silverlight 4.

- Not a code-generator, but rather a metadata generator.

- Taking a model centric approach to designing an application.

- Silverlight creates a Silverlight 4 client application, a set of WCF RIA services, and Entity Framework model without the developer having to think about anything.

- LightSwitch creates the 3-tier architecture using best practices.

- Goal is to dramatically simplify the development of an application.

- Targeted at someone who is confortable writing some code.

- Typically, a LightSwitch developer will need to write some code.

- Not an Access replacement.

- Model driven approach, using the LightSwitch tool creates the underlying architecture. It is not a wizard based approach. Developers use the environment to define and model the data to be used and then, through dialogs and screens in the IDE, create user interfaces based on the model.

- LightSwitch implements many best practices in its metadata design.

- Allows enterprise I.T. departments a way of allowing non-IT business units the ability to create solutions that can be supported by I.T. For example, provisioning servers for business units to publish their LightSwitch solutions to; bringing the business unit developed solutions into the IT models for support and management.

- Enterprises can create and expose a set a core services that business units can then consume using LightSwitch. The services exposed are controlled by the enterprise, ensuring the rules and protocols are being adhered to.

- Potential huge ecosystem for LightSwitch because of the extension points available. E.g. Templates, business types, etc…

- At its core, LightSwitch is a code Visual Studio application. This means that the LightSwitch IDE can be extended.

- LightSwitch specific extension points include; business types, templates for screens, the overall U.I. shell (or chrome) of the application.

- Not constrained to what LightSwitch creates. You can create your own screen, using a Silverlight user control, and plug it in to your LightSwitch application. You’ll be able to then still bind your own screen using the LightSwitch binding.

- Will have the ability to deploy directly into the Windows Azure and SQL Azure cloud (not using beta 1 though). [Emphasis added.]

Matt Thalman provided an Introduction to Visual Studio LightSwitch Security on 8/16/2010:

Visual Studio LightSwitch is all about creating LOB applications. And, of course, managing who can access the data in those applications is highly important. LightSwitch aims to make the task of managing security simple for both the developer and the application administrator.

Here are the basic points about security within Visual Studio LightSwitch:

It has built-in support for Windows and Forms (user name/password) authentication.

- It supports application-level users, roles, and permissions. Management of these entities are handled within the running application through built-in administration screens.

- Developers are provided with access points to perform security checks.

- It is based on, and an extension of, ASP.NET security. In other words, it makes use of the membership, role, and profile provider APIs defined by ASP.NET. This allows for a familiar experience in configuration and customization.

I’ll address each of these bullet points in detail in subsequent blog posts. Let me know your questions and concerns so that I can address them in those posts.

I’m waiting …

Alex Handy reported Microsoft turns on LightSwitch in this 8/16/2010 article for SDTimes:

Microsoft has introduced LightSwitch—that’s Visual Studio LightSwitch, a point-and-click, template-driven development environment that outputs C# or Visual Basic code through a wizard-driven deployment process. The project is currently in beta form, and no release date has been set yet.

Doug Seven, group product manager of Visual Studio at Microsoft, said that LightSwitch will be a standalone product. “LightSwitch is part of the Visual Studio family of products, and can be installed as an integrated component of Visual Studio Pro and above," he said.

Developers using the software can choose from templates to start their projects. From then on, pointing and clicking can be used to set up the database connection and lay out the on-screen forms and interface.

LightSwitch will generate either C# or Visual Basic code, a choice developers make at the outset of project creation. When asked how maintainable the automatically generated code will be, Seven replied that the generated code is done following best practices and patterns for three-tier architecture, including separation of concerns, client- and server-side validation, and proper data access practices.

A completed LightSwitch application can be deployed (via a wizard) as a desktop client or a Silverlight-based Web client. Additionally, Seven said that LightSwitch will offer publish-to-cloud functionality, although this capability is not implemented in the initial beta. [Emphasis added.]

LightSwitch is targeted at building data-backed applications, said Seven. “LightSwitch enables developers to easily aggregate data from multiple sources by creating Entity models of the data. This enables developers to associate different data sources using patterns similar to foreign-key relationships. Essentially, the working of the various data stores is abstracted, enabling the developer to think about the data model, not the data source.”

Seven also said that LightSwitch is heavily focused on business users, because adding custom business logic and rules is core to line-of-business applications. “LightSwitch makes it easy to add custom logic to models and screens (either as validation rules on models, or control execution events in screens). Ultimately, because LightSwitch is just a .NET implementation, the possibilities are wide open,” he said.

The main goal, said Seven, is saving developers time. “We have been working on the idea of LightSwitch for a while. The idea was born from our developer division as we looked at the common problems faced in both small/mid-size businesses who have limited IT capabilities and resources, and in enterprises where the needs of the IT resources exceed their capacity. We wanted to provide tools that would enable developers to quickly build professional quality line-of-business applications with the flexibility to grow and scale as the needs of the application grow.”

<Return to section navigation list>

Windows Azure Infrastructure

David Linthicum asserts “In many instances clouds will just become a set of new silos, diminishing the value of IT if we're not careful” as he points out The danger of cloud silos in this 8/17/2010 post to InfoWorld’s Cloud Computing blog:

Many consider cloud computing a revolution in how we do computing. However, we could find we're falling back into very familiar and unproductive patterns.

Although clouds can become more effective and efficient ways of using applications, computing, and storage, many clouds are becoming just another set of silos that the enterprises must deal with. But it does not have to be that way -- if you learn to recognize the pattern.

Silos are simple instances of IT, where processes and data reside in their own little universe, typically not interacting with other systems. Clearly IT seems to like the idea of silos. Need an application? Place it on a single-use server, and place that server in the data center ... now repeat. This pattern has been so common that data centers have grown rapidly over the years, while efficiency has fallen significantly. Cloud computing will address efficiency around resource utilization, but any gains there could be rapidly lost if we're just using cloud computing to build more silos.

In the past we've tried to bring all of these silos together through integration projects that were more tactical afterthoughts than strategic approaches for system-to-system synergy. For many enterprises, the cost and the distraction of eliminating silos meant that the silos lived on.

Now there's the cloud. While seemingly great a way to eliminate the inefficiencies we've created over the years, the ongoing temptation is to implement cloud solutions, public or private, that are really just new silos to deal with. For example, creating a new application on a platform service provider without information- and behavior-sharing with existing enterprise applications. Or even easier, just signing up with a SaaS provider and neglecting data-synchronization services, which in many instances are not provided by the SaaS vendors.

The end result is an enterprise IT that becomes more complex and difficult to change, now on the new cloud computing target platform. This siloization counters any efficiencies gained through the use of better-virtualized and sharable infrastructure, as provided by public and private clouds. Indeed, in many instances the use of cloud computing actually makes things worse.

The ability to avoid these issues is brain-dead simple, but it does require some effort and a bit of funding. As we add systems to our portfolio, cloud or noncloud, there has to be careful consideration of how both data and processes are shared between most of the systems. While taking a fair bit of work, the value that such consideration brings will be three or four times the investment.

So please consider this before signing up to more clouds and inadvertently signing up to more silos.

Lori MacVittie (@lmacvittie) asserts Normalizing deployment environments from dev through production can eliminate issues earlier in the application lifecycle, speed time to market, and gives devops the means by which their emerging discipline can mature with less risk as a preface to her Data Center Feng Shui: Normalizing Phased Deployment with Virtualized Network Appliances post of 8/16/2010 to F5’s DevCentral blog:

One of the big “trends” in cloud computing is to use a public cloud as an alternative environment for development and test. On the surface, this makes sense and is certainly a cost effective means of managing the highly variable environment that is development. But unless you can actually duplicate the production environment in a public cloud, the benefits might be offset by the challenges of moving through the rest of the application lifecycle.

NORMALIZATION LEADS to GREATER EFFICIENCIES

One of the reasons developers don’t have an exact duplicate of the production environment is cost. Configuration aside, the cost of the hardware and software duplication across a phased deployment environment is simply too high for most organizations. Thus, developers are essentially creating applications in a vacuum. This means as they move through the application deployment phases they are constantly barraged with new and, shall we say, interesting situations caused or exposed by differences in the network and application delivery network.

Example: One of the most common problems that occurs when moving an application into a scalable production environment revolves around persistence (stickiness). Developers, not having the benefit of testing their creation in a load balanced environment, may not be aware of the impact of a Load balancer on maintaining the session state of their application. A load balancer, unless specifically instructed to do so, does not care about session state. This is also true, in case you were thinking of avoiding this by going “public” cloud, in a public cloud. It’s strictly a configuration thing, but it’s a thing that is often overlooked. This causes problems when developers or customers start testing the application and discover it’s acting “wonky”. Depending on the configuration of the load balancer, this wonkiness (yes, that is a technical term, thank you very much) can manifest in myriad ways and it can take precious time to pinpoint the problem and implement the proper solution. The solution should be trivial (persistence/sticky sessions based on a session id that should be automatically generated and inserted into the HTTP headers by the application server platform) but may not be. In the event of the latter it may take time to find the right unique key upon which to persist sessions and in some few cases may require a return to development to modify the application appropriately.

This is all lost time and, because of the way in which IT works, lost money. It’s also possibly lost opportunity and mindshare if the application is part of an organization’s competitive advantage.

Now, assume that the developer had a mirror image of the production environment. S/He could be developing in the target environment from the start. These little production deployment “gotchas” that can creep up will be discovered early on as the application is being tested for accuracy of execution, and thus time lost to troubleshooting and testing in production is effectively offset by what is a more agile methodology.

DEVELOPING DEVOPS as a DISCIPLINE

Additionally developers can begin to experiment with other infrastructure services that may be available but were heretofore unknown (and therefore untrusted). If a developer can interact with infrastructure services in development, testing and playing with the services to determine which ones are beneficial and which ones may not, they can develop a more holistic approach to application delivery and control the way in which the network interacts with their application.

That’s a boon for the operations and network teams, too, as they are usually unfamiliar with the application and must take time to learn its nuances and quirks and adjust/fine-tune the network and application delivery network to meet the needs of the application. If the developer has already performed these tasks, the only thing left for the ops and network teams is to implement and verify the configuration. If the two networks – production and virtual production – are in synch this should eliminate the additional time necessary and make the deployment phase of the application lifecycle less painful.

If not developers, ops, or network teams, then devops can certainly benefit from a “dev” environment themselves in which they can hone their skills and develop the emerging discipline that is devops. Devops requires integration and development of automation systems that include infrastructure which means devops will need the means to develop those systems, scripts, and applications used to integrate infrastructure into the operational management in production environments. Like developers, this is an iterative and ongoing process that probably shouldn’t use production as an experimental environment. Thus, devops, too, will increasingly find a phased and normalized (commoditized) deployment approach a benefit to developing their libraries and skills.

This assumes the use of virtual network appliances (VNA) in the development environment. Unfortunately the vast majority of hardware-only solutions are not available as VNAs today which makes a perfect mirrored copy of production at this time unrealistic. But for those pieces of the infrastructure that are available as a VNA, it should be an option to deploy them as a copy of production as the means to enable developers to better understand the relationship between their application and the infrastructure required to deliver and secure it. Infrastructure services that most directly impact the application – load balancers, caches, application acceleration, and web application firewall – should be mirrored into development for use by developers as often as possible because it is most likely that they will be the cause of some production-level error or behavioral quirk that needs to be addressed.

The bad news is that if there are few VNAs with which to mirror the production environment there are even fewer that can be/are available in a public cloud environment. That means that the cost-savings associated with developing “in the cloud” may be offset by the continuation of a decades old practice which results in little more than a game of “throw the application over the network wall.”

Petri Salonen continues his series on 8/16/2010 with Law #9 of Bessemer’s Top 10 Cloud Computing Laws and the Business Model Canvas –Mind the GAAP! Cloud accounting is all about matching revenue and costs to consumption…:

The ninth law in Bessemer’s Top 10 Computing Laws is all about accounting and how to recognize revenue. In the traditional software business, the software license revenue is recognized at the time of delivery, while in the SaaS world the scenario is very different. I mentioned in my prior posts that I have been/I still am in enterprise software sales (besides SaaS), and have to deal with both of these models. Let’s review this law in greater detail what it means for a SaaS company. In my prior blog entries about Bessemer Top 10 Cloud Computing Laws and the Business Model Canvas, I have opened the financial side of a SaaS vendor, but in this case we will be looking at how the overall revenue recognition is seen in the SaaS world.

Law #9: Mind the GAAP! Cloud accounting is all about matching revenue and costs to consumption…well, except for professional services!

With enterprise software, revenue is recognized at the time of the delivery (like for example with a CD/DVD) and the software vendor can immediately have that sales in the income statement. This is both good and bad for software vendors. The good side of the thing is that large deals can fund further development and some of the money can be funneled back into marketing. The bad thing is that large deals can also put the software sales organization to sleep, almost paralyzing the entire organization. I have been part of this so many times so this statement is based on my own experience. The other negative side of these large deals is that enterprise software sales is very cyclical with long sales cycles: A software company might be going without deals for months and then gets a large deal that kind of saves the entire year. Yet again, I have personal experiences of this as well. In the enterprise software deal, the support/maintenance revenue is typically recognized on monthly basis based on the length of the maintenance contract. So if you have an annual contract, the support/maintenance will be recognized each month for the entire year. In similar manner, services revenue is recognized after delivery of the service and the common billing cycle is either biweekly or on a monthly basis.

Let’s look at the revenue recognition of cloud computing revenue. There are typically two types of revenue streams in a cloud computing: subscription revenue and professional service revenue. The subscription revenue is recognized based on consumption, whereby the SaaS vendor can start recognizing the revenue when the end user organization starts using the software. What is very different in the SaaS world when compared with the enterprise software world is the revenue recognition of professional services. Based on best practices and audits by the top for auditing companies, revenue recognition of professional services should be recognized with the length of the contract, and some recommendations say that even over the lifetime of the customer. The practical amortization time for these types of contracts is 5-6 years if the customer churn is reasonable. Within the GAAP (Generally Accepted Accounting Principles) world, the SaaS vendor should match revenue with the corresponding costs and this might not be the case in this kind of 5-6 year amortization scenario.

Also, there is an exception to any rule in life. In cases where the software company trains the end user client either 3 months before the delivery of the solution or after the renewal date of the contract, this revenue can be considered as it can be said not be tied or associated with the delivery of the solution. This rule of 3 months seems to have been approved by the top 4 auditing companies as best practices based on the Bessemer blog entry.

The final mention from Bessemer is to exclude deferred revenue from Quick Ratio calculations as this is booked as liability in the balance sheet. Quick Ratio is calculated by dividing total assets with total liabilities. This ratio shows how quickly the company can cover the liabilities with cash or quick assets that can be quickly liquidated. Another term “Acid test” is when you add up cash or cash equivalent, marketable securities and accounts receivable and divide these with current liabilities you get a number that shows you whether you can cover you current liabilities… when the ratio is 1 or better it is considered to be a feasible ratio. Less than 1, you have more debt that you can currently cover.

This makes sense to me as it is revenue that the company (excluding customer churn) will recognize throughout the lifetime of the contract whereby the liability /deferred revenue gets smaller by being moved to income statement whenever the revenue is recognized. Obviously in high growth cases, the SaaS company deferred revenue should only grow as new contracts are signed. This suggestion is specifically important for companies looking for venture funding to avoid financial covenants. Getting paid from customer in advance is a good thing and should not be seen as liability. Let’s review how this law impacts the Business Model Canvas.

Petri continues with a “Summary of our findings in respect to Business Model Canvas” section.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA)

Justin Pirie asks Can Microsoft beat Google at it’s own Game with the Azure Appliance? in this 8/17/2010 post to the Mimecast blog:

Can Microsoft ever catch Google?

That’s a question I’ve heard many times recently. Going to the Microsoft Worldwide Partner Conference in Washington helped me answer some of those nagging questions.

One of the problems with a long time away travelling to conferences like I did in July is that there is hardly any time to really cogitate over what you learn, and even less time to write about it. There’s only one person that I know that does this well is Ray Wang- which prompted another analyst to ask- Does he sleep?

Now that I’m back, I’ve been thinking a lot about the Azure appliance and wondering if it represents the future of all Microsoft products, not just Azure? Let me explain…

I think one of Microsoft’s biggest problems is its customers.

Well, not actually its customers but the way software is deployed to its customers: on-premise.

It’s shipped and customers configure it extensively for their environment. The code and configuration are co-mingled.

But before the software can be shipped it’s extensively tested and throughout its life on-premise it’s continuously patched. This is an incredibly slow and time consuming process because of the sheer number of configurations and variations of hardware the software needs to exist on. Millions and millions of combinations have to be tested, bugs found and fixed, and even then they don’t catch them all and have to patch often. It’s painful for everyone.

One of the key tenets of the SaaS and Cloud business model is multi-tenancy. The reason that analysts get so vexed about multi-tenancy being core to SaaS / Cloud is because it separates the code and configuration. As the vendor upgrades the underlying code it doesn’t change the customers configuration.

The impact of this seemingly small innovation should not be be underplayed. Not only does it underpin the economics of SaaS it also underpins the agility of the vendor. With total control of hardware and software, the vendor is free to continuously deploy and test new code without interrupting client operations in a much more lightweight way than ever before.

This is why Google has been disrupting the on premise email market for the past few years with Google Apps. They use Agile development practices and iterate early and often, all on infrastructure and code they operate and maintain.

But as we know, some customers aren’t happy losing control of all their data to the Cloud, and like it or not, Private Cloud is here to stay. That’s why we’ve developed a Just Enough On Site philosophy- mixing the right amount of Cloud and on-premise IT, not forcing anyone down a prescriptive route but at it’s core still retaining the benefits of the Cloud.

With on-premise appliances has Microsoft figured out a way to beat Google at its own game, by deploying in the Cloud and On-Premise but still retaining enough control of the hardware and code to enable agility?

I think they might just have a shot.

And I wonder in the future we’re likely to see lots of Microsoft’s products being deployed like this?

p.s. You can see Bob Muglia launch the Azure Appliance at WPC here.

Wayne Walter Berry (@WayneBerry) explains Why the Windows Azure Platform Appliance is So Important to Me in this 8/16/2010 post to the SQL Azure Team blog:

To me, as a DBA, the most important thing about the Windows Azure Platform Appliance, announced here, is the translation of my SQL server skill set across all deployment scenarios. The knowledge is portable because the database technology is scalable and the tools are shared. Skills translation goes hand in hand with database portability.

You can move your SQL Server platform database from desktop applications using SQL Express to:

- Enterprise on-premise SQL Server installations

- Off-premise SQL Azure in the cloud

- Private cloud deployments of Windows Azure Platform Appliance.

There is no other single platform that covers such a broad set of usage scenarios. The Microsoft SQL Server platform covers scenario from free SQL Server Express databases up to [10*]

4GB, to on premise SQL Server installations hosting thousands of terabytes of storage, to elastic and reliable SQL Azure databases in the cloud. The wide set of offerings allow us to leverage the knowledge we have in SQL Server across multiple scenarios.One reason why skill translation is so important is that it allows you to take a set of knowledge and use it to encompass many different scenarios. Most of us will not work on just a single project or solve the same problem all the time. We accomplish many different goals using a wide variety of data scenarios in our careers and it is great having a single mental tool set that will accomplish them all. Here are a few scenarios that SQL Server and SQL Azure handle well (with links for context):

- 99.9% uptime for web sites. See our connection handing blog post.

- Geographic Redundancy, part 1 and part 2

- Database Warehousing, Microsoft Overview.

- Business Intelligence: Power Pivot blog posts, Microsoft Overview.

Another reason portability and data transferability are important is that it allows your company to switch between SQL Azure and SQL Server as the requirements for the database change. Growth is just one fluctuating component of a maturing business, as you grow you want to be able to move between database solutions without having learn a different database technology, or worse, the company have to hire someone else. The similarities between SQL Azure and SQL Server are so close that you can easily move from one to another, and get the data transferred easily.

As a DBA, I rarely get the chance to set the company’s business objective. One project might require an on-premise SQL Server installation; one might need the reliability of SQL Azure; one might need both with synchronization setup between them -- it usually is not up to me. Either way, with portable skills I can get the job done by picking the best database solution for the job at hand. Another way to think about it is that the database project isn’t limited to a solution as a result of the staff on hand only knowing one solution.

Added Bonus: Data Transfer

Just as easily as your knowledge transfers between these different deployments, your data and schema transfer. Tools like SQL Server Management Studio, SSIS, bcp utility and other third party tools allow you to jump between solutions as you need greater scalability, reliability, and performance. Microsoft will be continuing to improve the tools for moving data and schemas between deployment solutions.

Summary

Start thinking of your Microsoft data platform DBA skills as not only SQL Server, but SQL Azure skills too. Take some time to learn SQL Azure, you will find that your SQL Server skills closely mirror SQL Azure, and you have the ability to accomplish a wider range of deployment solutions. Do you have questions, concerns, comments? Post them below and we will try to address them.

* Microsoft SQL Server 2008 R2 Express supports 10 GB of storage per database.

Mary Jo Foley posted Microsoft delivers on-premises private-cloud building block on 8/16 and updated it on 8/17/2010:

When it comes to building private clouds, Microsoft is planning to offer customers two ways to go: One using its Windows Azure cloud operating system on forthcoming pre-configured Windows Azure Appliances; and one assembled of various on-premises components atop Windows Server.

On August 15, Microsoft made available the final reannounced availability of the Release Candidate version of one of the building blocks for its latter option. That product — System Center Virtual Machine Manager 2008 R2 Self Service Portal 2.0 (note: I am linking here to the cached version that shows the original headline on the blog post) — is the customer-focused version of what was formerly known as the Dynamic Datacenter Toolkit. It is available from the Microsoft Download Center. (Update: August 17: Even though the team blog made it look as though this was the final version of the portal, in fact, it was not; it’s just a reannouncement of the RC. Still no date on when the final will be available.)

The VMMSSP self-service portal is a collection of tools and guidance for building cloud services on top of the Windows Server (rather than the Windows Azure) platform. As Microsoft explains it, VMMSSP is a partner-extensible offering that can be used to “pool, allocate, and manage resources to offer infrastructure as a service and to deliver the foundation for a private cloud platform inside your datacenter.” The portal features a dynamic-provisioning engine, as well as a pre-built web-based user interface that “has sections for both the datacenter managers and the business unit IT consumers, with role-based access control,” according to a new post on TechNet blogs.

To use the 2.0 version of VMMSSP, users need Windows Server 2008 R2 Enterprise Edition or Windows Server 2008 R2 Datacenter Edition; IIS 7.0, Virtual Machine Manager 2008 R2, SQL Server 2008 Enterprise Edition or Standard Edition; .Net Framework 3.5 SP1, Message Queueing (MSMQ); and PowerShell 2.0. VMMSSP is not considered to be an upgrade to the existing VMM 2008 R2 self-service portal, according to company officials; users can deploy one or both. The new version, unlike the current VMM 2008 R2 portal, makes virtual machine actions extensible, enabling more customization for particular hardware configurations, according to the aforementioned blog post.

In addition to the new self-service portal and its prerequisites, other pieces of Microsoft’s on-premises private-cloud solution include BizTalk Server 2010 and Windows Server AppFabric.

Other reporters didn’t catch that the 8/16/2010 TechNet post announcing a 8/15/2010 release date for VMMSSP disappeared (404) on 8/17/2010 (e.g., Krishnan Subramanian with Microsoft Releases VMMSSP of 3/16/2010).

Although Wilfried Shadenboeck’s Cloud: Microsoft delivers on-premises private-cloud building block TechNet post claimed: