Windows Azure and Cloud Computing Posts for 8/20/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 8/23/2010 with new posts of 8/21 and 8/22/2010 marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

The Windows Azure Team posted How to Get Started with the Windows Azure CDN on 8/20/2010 after I wrote the following article:

A new guide, "Getting Started with the Windows Azure CDN", is now available to help you understand how to enable the Windows Azure CDN and begin caching your content. Included is a short list of prerequisites for using the Windows Azure CDN, as well as step-by-step instructions for getting started, along with links to additional resources if you want to dive deeper. For details about pricing for the Windows Azure CDN, please read our previous blog post here, and for a list of nodes available globally for the Windows Azure CDN, please read our previous blog post here.

It wasn’t that new.

MSDN published a Getting Started with the Windows Azure CDN topic on 8/11/2010, which I missed:

This quick start guide will show you how to enable the Windows Azure CDN and begin caching your content.

Prerequisites for Using the Windows Azure CDN

To get started caching content in the Windows Azure CDN, you first need to have the following prerequisites:

- A Windows Azure subscription. If you don't have one yet, you can sign up using your Windows Live ID. Navigate to the Windows Azure Portal and follow the instructions to sign up.

- A Windows Azure storage account. The storage account is where you will store the content that you wish to cache, using the Windows Azure Blob service. To create a storage account, navigate to the Windows Azure Portal and click the New Service link. Follow the instructions to create and name your storage account.

Enabling the Windows Azure CDN for Your Storage Account

To enable the CDN for your Windows Azure storage account, navigate to the Summary page for your storage account, locate the Content Delivery Network section, and click the Enable CDN button. You'll then see listed on the page the CDN endpoint for the storage account, in the form

http://<identifier>.vo.msecnd.net/, where identifier is a value unique to your storage account. Once you've enabled the CDN for your storage account, publicly accessible blobs within that account will automatically be cached.

Adding Content to Your Storage Account

Any content that you wish to cache via the CDN must be stored in your Windows Azure storage account as a publicly accessible blob. For more details on the Windows Azure Blob service, see Blob Service Concepts.

There are a few different ways that you can work with content in the Blob service:

- By using the managed API provided by the Windows Azure Managed Library Reference.

- By using the free web-based GUI tool myAzureStorage.

- By using a third-party storage management tool.

- By using the Windows Azure Storage Services REST API.

The following code example is a console application that uses the Windows Azure Managed Library to create a container, set its permissions for public access, and create a blob within the container. It also explicitly specifies a desired refresh interval by setting the Cache-Control header on the blob. This step is optional – you can also rely on the default heuristic that's described in About the Windows Azure CDN. …

The source code sample has been excised for brevity.
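The C# sample is omitted here. As a hedged illustration of the optional Cache-Control step it describes, the following Python sketch only computes the header value for a desired CDN refresh interval. The helper name `cache_control_value` is hypothetical, and applying the header to the blob (through the Managed Library, the REST API or a tool) is not shown.

```python
from datetime import timedelta


def cache_control_value(refresh: timedelta) -> str:
    """Return a Cache-Control value asking caches to keep content for `refresh`.

    Hypothetical helper: max-age is expressed in whole seconds, and "public"
    marks the response as cacheable by shared caches such as a CDN.
    """
    seconds = int(refresh.total_seconds())
    if seconds <= 0:
        raise ValueError("refresh interval must be positive")
    return f"public, max-age={seconds}"


if __name__ == "__main__":
    print(cache_control_value(timedelta(hours=1)))  # public, max-age=3600
```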

Test that your blob is available via the CDN-specific URL. For the blob shown above, the URL would be similar to the following:

http://az1234.vo.msecnd.net/cdncontent/testblob.txt

If desired, you can use a tool like wget or Fiddler to examine the details of the request and response.
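According to the endpoint format `http://<identifier>.vo.msecnd.net/` and the example URL, the CDN URL keeps the blob's `/container/blob` path and changes only the host. Here is a small Python sketch of that mapping. It assumes a storage account named `myaccount` (hypothetical), the usual `http://<account>.blob.core.windows.net/` origin host, and the `az1234` identifier from the example.

```python
from urllib.parse import urlsplit


def cdn_url_for_blob(origin_url: str, cdn_identifier: str) -> str:
    """Map a public blob URL to its CDN URL, keeping the /container/blob path."""
    parts = urlsplit(origin_url)
    if not parts.hostname or not parts.hostname.endswith(".blob.core.windows.net"):
        raise ValueError("expected a Windows Azure blob URL")
    return f"http://{cdn_identifier}.vo.msecnd.net{parts.path}"


if __name__ == "__main__":
    print(cdn_url_for_blob(
        "http://myaccount.blob.core.windows.net/cdncontent/testblob.txt", "az1234"))
    # http://az1234.vo.msecnd.net/cdncontent/testblob.txt
```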

See Also

All articles relating to the Azure CDN will now be posted to this section.

<Return to section navigation list>

SQL Azure Database, Codename “Dallas” and OData

• The odata4j Team uploaded odata4j version 0.2 - complete archive distribution (core, source, javadoc and bundle jars) as “Featured Stable” to Google Code on 8/22/2010:

Project Info

odata4j is a new open-source toolkit for building first-class OData producers and first-class OData consumers in Java.

New in version 0.2

- JSON support $format=json and $callback [for] Producers

- Pojo api [for] OData Consumers

- #getEntities/getEntity [for] Consumers

- Support for Dallas CTP3 [Emphasis added]

- Basic Authentication [for] Consumers

- Simplified metadata [for] Consumers

- Simple skiptoken support [for] Producers

- Model links as part of entity model

- Javadoc and source jars as part of each distribution
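The `$format=json`, `$callback` and `$skiptoken` items are OData system query options that a consumer appends to a resource URL. The following rough Python sketch composes such a URL. It is not odata4j code, and `odata_query_url` is a hypothetical helper. The example uses the Netflix catalog root mentioned later in this post.

```python
from urllib.parse import quote, urlencode


def odata_query_url(service_root, entity_set, top=None, skiptoken=None,
                    fmt=None, callback=None):
    """Build an OData resource URL with a few system query options.

    Only options that are given are emitted, in a fixed order; the "$" in the
    option names is left unescaped for readability.
    """
    options = {}
    if top is not None:
        options["$top"] = str(top)
    if skiptoken is not None:
        options["$skiptoken"] = skiptoken  # continuation token for the next page
    if fmt is not None:
        options["$format"] = fmt           # e.g. "json" instead of the Atom default
    if callback is not None:
        options["$callback"] = callback    # JSONP wrapper function name
    url = service_root.rstrip("/") + "/" + entity_set
    if options:
        url += "?" + urlencode(options, safe="$", quote_via=quote)
    return url


if __name__ == "__main__":
    print(odata_query_url("http://odata.netflix.com/Catalog/", "Titles",
                          top=5, fmt="json"))
```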

Goals and Guidelines

- Build on top of existing Java standards (e.g. JAX-RS, JPA, StAX) and use top shelf Java open-source components (jersey, Joda Time)

- OData consumers should run equally well under constrained Java client environments, specifically Android

- OData producers should run equally well under constrained Java server environments, specifically Google AppEngine

Consumers - Getting Started

- Download the latest archive zip

- Add odata4j-bundle-x.x.jar to your build path (or odata4j-clientbundle-x.x.jar for a smaller client-only bundle)

- Create a new consumer using ODataConsumer.create("http://pathto/service.svc/") and use the consumer for client scenarios

Consumers - Examples

(All of these examples work on Android as well)

- Basic consumer query & modification example using the OData Read/Write Test service: ODataTestServiceReadWriteExample.java

- Simple Netflix example: NetflixConsumerExample.java

- List all entities for a given service: ServiceListingConsumerExample.java

- Use the Dallas data service to query AP news stories (requires Dallas credentials): DallasConsumerExampleAP.java

- Use the Dallas data service to query UNESCO data (requires Dallas credentials): DallasConsumerExampleUnescoUIS.java

- Basic Azure table-service table/entity manipulation example (requires Azure storage credentials): AzureTableStorageConsumerExample.java

- Test against the sample odata4j service hosted on Google AppEngine: AppEngineConsumerExample.java

Producers - Getting Started

- Download the latest archive zip

- Add odata4j-bundle-x.x.jar to your build path (or odata4j-nojpabundle-x.x.jar for a smaller bundle with no JPA support)

- Choose or implement an ODataProducer

- Use InMemoryProducer to expose POJOs as OData entities using bean properties. Example: InMemoryProducerExample.java

- Use JPAProducer to expose an existing JPA 2.0 entity model: JPAProducerExample.java

- Implement ODataProducer directly

- Take a look at odata4j-appengine for an example of how to expose AppEngine's Datastore as an OData endpoint

- Hosting in Tomcat: http://code.google.com/p/odata4j/wiki/Tomcat

Status

odata4j is still in its early days. Here is a quick summary of what's implemented:

- Fairly complete expression parser (pretty much everything except complex navigation property literals)

- URI parser for producers

- Complete EDM metadata parser (and model)

- Dynamic entity/property model (OEntity/OProperty)

- Consumer api: ODataConsumer

- Producer api: ODataProducer

- ATOM transport

- Non-standard behavior (e.g. Azure authentication, Dallas paging) via a client extension API

- Transparent server-driven paging [for] Consumers

- Cross-domain policy files for Silverlight clients

- Free WADL for your OData producer thanks to jersey. e.g. odata4j-sample application.wadl

- Tested with current OData client set (.NET, Silverlight, LinqPad, PowerPivot)
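With transparent server-driven paging, the consumer keeps following the server's "next" link until no pages remain, so callers see a single continuous sequence. The following minimal Python sketch shows that loop. `fetch_page` is a hypothetical callable that returns a page's entities plus the next link (or `None`). It stands in for the HTTP request and Atom parsing that a real consumer such as odata4j performs.

```python
from typing import Any, Callable, Iterator, List, Optional, Tuple

# A page is (entities on this page, URL of the next page or None).
Page = Tuple[List[Any], Optional[str]]


def iterate_entities(fetch_page: Callable[[str], Page], first_url: str) -> Iterator[Any]:
    """Yield entities from every page, following next links until they run out."""
    url: Optional[str] = first_url
    while url is not None:
        entities, next_url = fetch_page(url)
        yield from entities
        url = next_url


if __name__ == "__main__":
    fake_pages = {"page1": (["a", "b"], "page2"), "page2": (["c"], None)}
    print(list(iterate_entities(fake_pages.__getitem__, "page1")))
```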

To-do list, in rough priority order:

- DataServiceVersion negotiation

- Better error responses

- gzip compression

- Access control

- Authentication

- Flesh out InMemory producer

- Flesh out JPA producer: map filters to query API, support complex types, etc.

- Bring expression model to 100%, query by expression via consumer

- Producer model: expose functions

• The EntitySpaces Team announced EntitySpaces Support for SQL Azure on 8/21/2010:

Our SQL Azure support now successfully passes our NUnit test suite. It is our plan to release our SQL Azure support on Monday, August 30th. There are also other Cloud databases we are looking at.

There is a new choice, “SQL Azure”, in the driver combo box on the “Settings” tab. Just select it, connect, and generate your EntitySpaces architecture as you normally would.

EntitySpaces LLC

Persistence Layer and Business Objects for Microsoft .NET

http://www.entityspaces.net

• The SQL Server Team announced Cumulative Update package 3 for SQL Server 2008 R2 on 8/20/2010:


Cumulative Update 3 contains hotfixes for the Microsoft SQL Server 2008 R2 issues that have been fixed since the release of SQL Server 2008 R2.

Note: This build of this cumulative update package is also known as build 10.50.1734.0.

We recommend that you test hotfixes before you deploy them in a production environment. Because the builds are cumulative, each new fix release contains all the hotfixes and all the security fixes that were included with the previous SQL Server 2008 R2 fix release. We recommend that you consider applying the most recent fix release that contains this hotfix.
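To check whether an instance already has this update, compare its build number with 10.50.1734.0 numerically, part by part. A plain string comparison does not work, because "10.50.999.0" would sort after "10.50.1734.0". The following Python sketch uses hypothetical helper names. Its input would be a version string such as the one that `SELECT SERVERPROPERTY('ProductVersion')` returns. The sketch relies on the cumulative-build rule above, so it only treats builds on the same 2008 R2 branch as comparable.

```python
CU3_BUILD = "10.50.1734.0"  # SQL Server 2008 R2 Cumulative Update 3


def parse_build(version: str) -> tuple:
    """Turn '10.50.1734.0' into (10, 50, 1734, 0) so comparisons are numeric."""
    return tuple(int(part) for part in version.strip().split("."))


def includes_cu3(product_version: str) -> bool:
    """True if a SQL Server 2008 R2 build is at or beyond Cumulative Update 3.

    Assumption: builds are cumulative within one servicing branch; builds on
    another branch (e.g. a later service pack) should be checked against
    their own KB article instead.
    """
    build = parse_build(product_version)
    if build[:2] != (10, 50):
        raise ValueError("not a SQL Server 2008 R2 (10.50.x) build")
    return build >= parse_build(CU3_BUILD)
```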

For more information, click the following article number to view the article in the Microsoft Knowledge Base: 981356 (http://support.microsoft.com/kb/981356/ ) “The SQL Server 2008 R2 builds that were released after SQL Server 2008 R2 was released.”

Important notes about the cumulative update package

- SQL Server 2008 R2 hotfixes are now multilanguage. Therefore, there is only one cumulative hotfix package for all languages.

- One cumulative hotfix package includes all the component packages. The cumulative update package updates only those components that are installed on the system.

• Doug Finke posted Doug Finke on the OData PowerShell Explorer! on 8/18/2010:

Richard and Greg over on RunAs Radio talked to me about the OData PowerShell Explorer I built and put up on CodePlex.

Check it out. HERE is the podcast.

We talked about:

- OData – Open Data Protocol http://www.odata.org/

- PowerShell

- WPK (WPF for PowerShell, think Tcl/Tk)

- PowerShell and Data visualization, HERE, HERE and HERE

- and more

I want to thank Richard and Greg for having me on, smart guys and smart questions. They got me thinking about next steps for using PowerShell and features to add to the OData PowerShell Explorer.

My (@rogerjenn) Migrating a Moderate-Size Access 2010 Database to SQL Azure with the SQL Server Migration Assistant post of 8/20/2010 (updated 8/21/2010) describes upsizing a 16.8 MB Oakmont.accdb database to a 43.8 MB SQL Azure database:

The SQL Server Migration Assistant for Access (SSMA) v4.2 is a recent update that supports migrating Access 97 to 2010 databases to SQL Azure cloud databases in addition to on-premises SQL Server 2005 through 2008 R2 instances.

Luke Chung’s Microsoft Azure and Cloud Computing...What it Means to Me and Information Workers white paper explains the many benefits and few drawbacks from using SQL Azure cloud databases with Access front ends. Luke is president of FMS, Inc., a leading developer of software for Microsoft Access developers, and publishes products for the SQL Server, Visual Studio .NET, and Visual Basic communities.

Note: You cannot migrate SQL Server databases for Access Data Projects (ADPs) to operable SQL Azure tables because SQL Azure doesn’t support OLE DB connections currently.

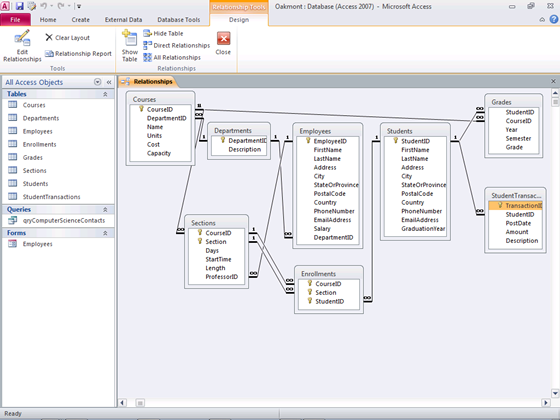

It’s common to use the Northwind or smaller sample databases to demonstrate SSMA v4.2’s capabilities. For example, Chapter 28, “Upsizing Access Applications to Access Data Projects and SQL Azure,” of my Microsoft Access 2010 In Depth book (QUE Publishing) describes how to upsize Northwind.accdb to a SQL Azure database. Most departmental and line-of-business (LOB) databases have substantially more records than Northwind, so Northwind might not reveal performance issues that could arise from increased data size. This article uses the Oakmont database for a fictitious Oakmont University Department-Employees-Courses-Enrollments database, which has a schema illustrated by this Access 2010 Relationships diagram:

The following table lists the database tables and their size:

| Table Name | Rows |
|---|--:|
| Courses | 590 |
| Departments | 14 |
| Employees | 2,320 |
| Sections | 1,770 |
| Enrollments | 59,996 |
| Students | 29,998 |
| Grades | 59,996 |
| Student Transactions | 45,711 |

The size of the Oakmont.accdb Access database is 16,776 KB with one query and one form. It has been included in the downloadable code of several of my books, including Special Edition Using Microsoft [Office] Access 97 through 2007 (QUE Publishing), the forthcoming Microsoft Access 2010 In Depth (QUE Publishing), and Admin 911: Windows 2000 Group Policy (Osborne/McGraw-Hill). Steven D. Gray and Rick A. Llevano created the initial version of the database for Roger Jennings' Database Workshop: Microsoft Transaction Server 2.0 (SAMS Publishing).

Update 8/21/2010: Alternatively, you can link your Access front end to tables in a local (on-premises) SQL Server 2008 [R2] database and then move the database to SQL Azure in a Microsoft data center by using the technique described in my Linking Microsoft Access 2010 Tables to a SQL Azure Database post of 7/28/2010. Luke Chung describes a similar process in his August 2010 Microsoft Access and Cloud Computing with SQL Azure Databases (Linking to SQL Server Tables in the Cloud) white paper.

I continue with a 24-step, fully illustrated tutorial, and conclude:

The increase in size might be the result of the three replicas that SQL Azure creates for data reliability. A Why are SQL Azure Databases Migrated from Access Database with SSMA v4.2 Three Times Larger? thread is pending a response in the SQL Azure – Getting Started forum.
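For reference, the following Python totals the Oakmont row counts and compares the two database sizes quoted at the start of this excerpt (16.8 MB and 43.8 MB):

```python
# Row counts from the Oakmont table listing; sizes as quoted in this post.
ROW_COUNTS = {
    "Courses": 590,
    "Departments": 14,
    "Employees": 2_320,
    "Sections": 1_770,
    "Enrollments": 59_996,
    "Students": 29_998,
    "Grades": 59_996,
    "Student Transactions": 45_711,
}
ACCESS_MB = 16.8      # Oakmont.accdb (16,776 KB)
SQL_AZURE_MB = 43.8   # migrated SQL Azure database

total_rows = sum(ROW_COUNTS.values())
ratio = SQL_AZURE_MB / ACCESS_MB

print(f"{total_rows:,} rows; SQL Azure / Access size ratio: {ratio:.2f}")
# 200,395 rows; SQL Azure / Access size ratio: 2.61
```

By these figures, the migrated database is roughly 2.6 times the size of the .accdb file.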

Note: The Migrating a Moderate-Size Access 2010 Database to SQL Azure with the SQL Server Migration Assistant post to Roger Jennings’ Access Blog updated 8/21/2010 is a copy of this article.

Luke Chung wrote the Deploying Microsoft Databases Linked to a SQL Azure Database to Users without SQL Server Installed on their Machine white paper for FMS, Inc. in August 2010:

Introduction to Linking Microsoft Access Databases to SQL Server on Azure

In my previous paper, I described how to link a Microsoft Access database to a SQL Server database hosted on Microsoft SQL Azure. By using Microsoft's Azure cloud computing platform, you can easily host an enterprise-quality SQL Server database with high levels of availability, bandwidth, redundancy, and support. SQL Azure databases range from 1 to 50 GB, and you pay for what you use, starting at $10 per month.

In that paper, I showed how you needed to have SQL Server 2008 R2 installed on your machine to manage a SQL Azure database and create the data source network file (DSN file) to connect to the SQL Azure database. With this file, your Access database can link (or import) data from your SQL Azure database.

So how can you deploy your database to users who don't have SQL Server 2008 installed on their machines?

Deploying the SQL Server 2008 ODBC Driver

Deploying a Microsoft Access database using a SQL Azure database is similar to deploying any Access database using a SQL Server back end database. For our purposes, this can include Access Jet databases linked to SQL Azure tables directly (MDB or ACCDB formats) or your VBA code that references the SQL Server tables via ADO.

The users of your applications do not need to have SQL Server installed on their machines, just the ODBC driver. Once that's installed, they can use your database. If they need to link to the tables from their own database, they simply need to have the DSN File you created along with the password for the database.
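A DSN file stores the same settings as an ODBC connection string. The following Python sketch builds a DSN-less equivalent. It assumes the SQL Server Native Client 10.0 driver installed by sqlncli.msi, plus SQL Azure's usual conventions: a `tcp:` server name under database.windows.net on port 1433, a login of the form `user@server`, and encrypted connections. The function name and the server, database, login and password values are placeholders.

```python
def sql_azure_odbc_connection_string(server: str, database: str, login: str,
                                     password: str,
                                     driver: str = "SQL Server Native Client 10.0") -> str:
    """Build a DSN-less ODBC connection string for a SQL Azure database.

    Sketch only: values are not escaped, so passwords containing ';', '{'
    or '}' would need ODBC brace quoting before use.
    """
    parts = [
        f"Driver={{{driver}}}",                          # driver installed by sqlncli.msi
        f"Server=tcp:{server}.database.windows.net,1433",
        f"Database={database}",
        f"Uid={login}@{server}",                         # SQL Azure logins use user@server
        f"Pwd={password}",
        "Encrypt=yes",                                   # request an encrypted connection
    ]
    return ";".join(parts) + ";"
```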

How Do I Install the SQL Server 2008 ODBC Driver?

The file you need to run on each machine is the SQL Server client installation program: sqlncli.msi

You have the right to deploy this if you have SQL Server. This is located in your SQL Server setup program under these folders:

- 32 bit: \Enterprise\1033_ENU_LP\x86\Setup\x86

- 64 bit: \Enterprise\1033_ENU_LP\x64\Setup\x64

Run the program and follow the instructions:

Initial screen of the SQL Server 2008 R2 Native Client Setup

For more details, read this MSDN article about Installing SQL Server Native Client. This article also includes silent installation instructions to install the ODBC driver without the user interface prompting your users.

Make Sure Your Users' IP Addresses are Listed on SQL Azure

For security reasons, SQL Azure (like standard SQL Server) lets you specify the IP addresses to allow direct interaction with the database. This is required with SQL Azure. You'll need to specify this in SQL Azure's administration tools if your users are using IP addresses different from yours.
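SQL Azure firewall rules are expressed as ranges that run from a start IP address to an end IP address. The following Python sketch checks whether a user's public IP address falls inside any configured range, which helps you decide whether a new rule is needed. The function name is hypothetical, and the sample addresses come from the reserved documentation ranges.

```python
import ipaddress
from typing import Iterable, Tuple


def is_allowed(client_ip: str, rules: Iterable[Tuple[str, str]]) -> bool:
    """True if client_ip lies within any (start, end) range, inclusive."""
    ip = ipaddress.ip_address(client_ip)
    for start, end in rules:
        low, high = ipaddress.ip_address(start), ipaddress.ip_address(end)
        # Only compare addresses of the same IP version.
        if low.version == ip.version and low <= ip <= high:
            return True
    return False


if __name__ == "__main__":
    office = [("203.0.113.0", "203.0.113.255")]
    print(is_allowed("203.0.113.42", office), is_allowed("198.51.100.7", office))
```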

Conclusion

Hope this helps you create Microsoft Access databases with SQL Server more easily. Good luck and I hope to learn what you're doing or would like to do with Access and Azure.

Blog about it with me here.

Additional Resources for Microsoft Azure and SQL Azure

FMS Technical Papers

- Microsoft Azure and Cloud Computing...What it Means to Me and Information Workers

- Microsoft Access and Cloud Computing with SQL Azure Databases (Linking to SQL Server Tables in the Cloud)

- Deploying Microsoft Databases Linked to a SQL Azure Database to Users without SQL Server Installed on their Machine

- Additional FMS Tips and Techniques

- Detailed Technical Papers

Microsoft Azure, SQL Azure, and Access in Action

- EzUpData Web Site: check out the link to the live application hosted on Windows Azure and SQL Azure

- Microsoft Azure, SQL Server, and Access Consulting Services

Microsoft Resources

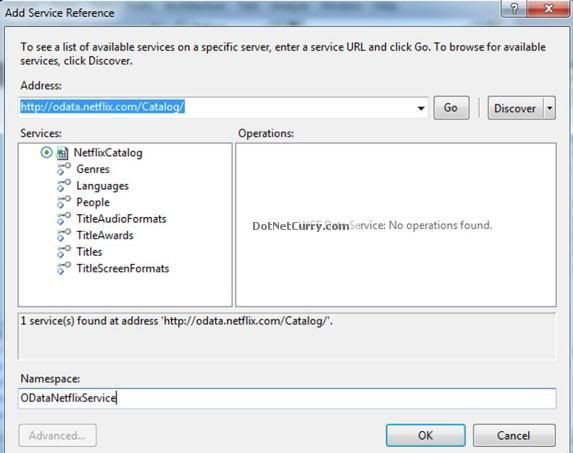

Suprotim Agarwal explains Querying Netflix OData Service using Silverlight 4 in this post of 8/15/2010:

Silverlight 4 includes the WCF Data Services client library, with which you can easily access data from any service that exposes OData or REST endpoints. The ‘Add Service Reference’ feature in Visual Studio 2010 makes this very easy to implement.

The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores (source).

In this article, we will see how to create a Silverlight client that can consume the Netflix OData service and display data in a Silverlight DataGrid control. Netflix partnered with Microsoft to create an OData API for the Netflix catalog information and announced the availability of this API during Microsoft Mix10.

Let us get started:

Step 1: Open Visual Studio 2010 > File > New Project > Silverlight Application > Rename it to ‘SilverlightODataREST’. Make sure that .NET Framework 4 is selected in the Target Framework. Click Ok.

Step 2: Right click the project SilverlightODataREST > Add Service Reference. In the address box, type http://odata.netflix.com/Catalog/. This is where the Netflix OData service is located. Rename the namespace as ‘ODataNetflixService’ and click Go. You should now be able to see the available services as shown below:

Click OK. Visual Studio generates the code files to be able to access this service. …

Suprotim continues with C# and VB source code, excised for brevity. He concludes:

Run the application and hit the Fetch button. It takes a little time to load the data. The output will be similar to the one shown below:

Conclusion

In this article, we saw how easy it is to consume an OData feed in a Silverlight client application developed in Visual Studio 2010. If you want to perform CRUD operations, see the excellent article Consuming OData Feeds (Silverlight QuickStart).

The entire source code of this article can be downloaded over here.

<Return to section navigation list>

AppFabric: Access Control and Service Bus

Brian Swan posted Access Control with the Azure AppFabric SDK for PHP on 8/19/2010:

In my last post I used some bare-bones PHP code to explain how the Windows Azure AppFabric access control service works. Here, I’ll build on the ideas in that post to explain how to use some of the access control functionality that is available in the AppFabric SDK for PHP Developers.

I will again build a barpatron.php client (i.e. a customer) that requests a token from the AppFabric access control service (ACS) (the bouncer). Upon receipt of a token, the client will present it to the bartender.php service (the bartender) to attempt to access a protected resource (drinks). If the service can successfully validate the token, the protected resource will be made available.

0. Set up ACS

In this post, I’ll assume that you have a Windows Azure subscription, that you have set up an access control service, and that you have created a token policy and scope as outlined in the “Hiring a Bartender (i.e. Setting Up ACS)” section of my last post. I’ll also assume you are familiar with the bouncer-bartender analogy I used in that post.

1. Install the AppFabric SDK for PHP Developers

You can download the AppFabric SDK for PHP developers from CodePlex here: http://dotnetservicesphp.codeplex.com/. After you have downloaded and extracted the files, update the include_path in your php.ini file to include the library directory of the downloaded package.

Note: I found what I think are a couple of bugs in the SDK. Here are the changes I had to make to get it working for me:

- Replace POST_METHOD with "POST" on line 113 of the SimpleApiAuthService.php file. Or, define the POST_METHOD constant as "POST".

- Add the following require_once directive to the SimpleApiAuthService.php file: require_once "util\curlRequest.php";

2. Enable the bartender.php service to verify tokens

When presented with a token, a service needs 3 pieces of information to validate it:

The service namespace (which you created when you set up ACS).

The signing key that is shared with ACS (this is the key associated with the token policy you created).

The audience (which is the value of the appliesto parameter you used when creating a scope for the token policy).

As I examined in my last post, this information will be used to make comparisons with information that is presented in the token. Nicely, the AppFabric SDK for PHP Developers has a TokenValidator class that handles all of this comparison work for us – all we have to do is pass it the token and the 3 pieces of information above. So now my bartender.php service looks much cleaner than it did in my previous post (note that I’m assuming the token is passed to the service in an Authorization header):

```php
<?php
require_once "ACS\TokenValidator.php";

define("SIGNING_KEY", "The_signing_key_for_your_token_policy");
define("SERVICE_NAMESPACE", "Your_service_namespace");
define("APPLIES_TO", "http://localhost/bartender.php");

// Check for presence of Authorization header
if (!isset($_SERVER['HTTP_AUTHORIZATION']))
    Unauthorized("No authorization header.");

$token = $_SERVER['HTTP_AUTHORIZATION'];

// Validate token against the namespace, audience and signing key
try
{
    $tokenValidator = new TokenValidator(SERVICE_NAMESPACE,
                                         APPLIES_TO,
                                         SIGNING_KEY,
                                         $token);
    if ($tokenValidator->validate())
        echo "What would you like to drink?";
    else
        Unauthorized("Token validation failed.");
}
catch (Exception $e)
{
    throw($e);
}

// Reject the request with a reason and stop processing
function Unauthorized($reason)
{
    echo $reason." No drink for you!<br/>";
    die();
}
```

Note: The TokenValidator class does not expect the $token parameter to be in the same format as is issued by ACS. Instead, it expects a string prefixed by "WRAP access_token=" with the body of the token enclosed in double quotes. The following is an example of a token in the format expected by the TokenValidator class:

WRAP access_token="Birthdate%3d1-1-70%26Issuer%3dhttps%253a%252f%252fbouncernamespace.accesscontrol.windows.net%252f%26Audience%3dhttp%253a%252f%252flocalhost%252fbartender.php%26ExpiresOn%3d1283981128%26HMACSHA256%3dzBux1nj9yPAMrqNqhmk%252fYvzyc75b3vK%252fqYp8K6DTah4%253d"
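To make these comparisons concrete, here is a minimal Python sketch of what a validator of this kind checks. It is not the SDK's TokenValidator. It assumes the Simple Web Token (SWT) layout visible in the example: URL-encoded name=value pairs whose last pair, HMACSHA256, is a base64 HMAC-SHA256 of everything before it, keyed with the base64-decoded signing key. It also assumes an Issuer of the form https://<namespace>.accesscontrol.windows.net/, as in the example token. `make_swt` is a hypothetical helper included only to produce test tokens.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import parse_qs, quote, unquote, urlencode

SIG_MARKER = "&HMACSHA256="


def _signature(unsigned: str, key_b64: str) -> str:
    """Base64 HMAC-SHA256 of the unsigned token text, keyed with the decoded key."""
    key = base64.b64decode(key_b64)
    digest = hmac.new(key, unsigned.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def make_swt(claims: dict, key_b64: str) -> str:
    """Hypothetical helper: build a signed token (used here only for testing)."""
    unsigned = urlencode(claims)
    return unsigned + SIG_MARKER + quote(_signature(unsigned, key_b64), safe="")


def extract_wrap_token(header: str) -> str:
    """Strip the 'WRAP access_token="..."' wrapper from an Authorization value."""
    prefix = 'WRAP access_token="'
    if not (header.startswith(prefix) and header.endswith('"')):
        raise ValueError("not a WRAP access_token header")
    return header[len(prefix):-1]


def validate_swt(token: str, key_b64: str, service_namespace: str,
                 audience: str, now=None) -> bool:
    """Check signature, issuer, audience and expiry of a raw SWT string."""
    idx = token.rfind(SIG_MARKER)
    if idx < 0:
        return False
    unsigned = token[:idx]
    supplied = unquote(token[idx + len(SIG_MARKER):])
    expected = _signature(unsigned, key_b64)
    # Constant-time comparison of the two signatures.
    if not hmac.compare_digest(expected.encode("ascii"), supplied.encode("utf-8")):
        return False
    claims = {name: values[0] for name, values in parse_qs(unsigned).items()}
    issuer = f"https://{service_namespace}.accesscontrol.windows.net/"
    if claims.get("Issuer") != issuer or claims.get("Audience") != audience:
        return False
    try:
        expires_on = int(claims.get("ExpiresOn", ""))
    except ValueError:
        return False
    return expires_on > (time.time() if now is None else now)
```

`validate_swt` expects the raw token. If a header carries an additionally URL-encoded token, as in the example above, unwrap it with `extract_wrap_token` and decode it once with `unquote` before validation.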

3. Enable the barpatron.php client to request a token

When requesting a token from ACS, a client needs 4 pieces of information:

The service namespace (which you created when you set up ACS).

The issuer name (which you set when creating an Issuer during ACS setup).

The issuer secret key (this was generated when you created an Issuer during ACS setup).

The audience (which is the value of the appliesto parameter you used when creating a scope for the token policy).

As with token validation, the AppFabric SDK for PHP makes creating the request, sending it, and retrieving the token very easy:

```php
<?php
require_once "DotNetServicesEnvironment.php";
require_once "ACS\Scope.php";
require_once "ACS\SimpleApiAuthService.php";

define("SERVICE_NAMESPACE", "Your_service_namespace");
define("ISSUER_NAME", "Your_issuer_name");
define("ISSUER_SECRET", "The_key_associated_with_your_issuer");
define("APPLIES_TO", "http://localhost/bartender.php");

try
{
    // $acmHostName is accesscontrol.windows.net by default
    $acmHostName = DotNetServicesEnvironment::getACMHostName();
    $serviceName = SERVICE_NAMESPACE;

    // Describe the request: issuer credentials, audience and a custom claim
    $scope = new Scope("simpleAPIAuth");
    $scope->setIssuerName(ISSUER_NAME);
    $scope->setIssuerSecret(ISSUER_SECRET);
    $scope->setAppliesTo(APPLIES_TO);
    $scope->addCustomInputClaim("DOB", "1-1-70");

    // Ask ACS for a token
    $simpleApiAuthService = new SimpleApiAuthService($acmHostName, $serviceName);
    $simpleApiAuthService->setScope($scope);
    $token = $simpleApiAuthService->getACSToken();
}
catch (Exception $e)
{
    throw($e);
}
```

Now to send the token, we re-format it (see the note about the TokenValidator class in the section above) and put it in an Authorization header (where the bartender.php service will expect it):

```php
$token = 'WRAP access_token="'.$token.'"';

// Initialize cURL session for presenting token.
$ch = curl_init();
curl_setopt($ch, CURLOPT_URL, "http://localhost/bartender.php");
curl_setopt($ch, CURLOPT_HTTPHEADER, array("Authorization: ".urldecode($token)));
curl_setopt($ch, CURLOPT_HEADER, true);
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);

$bartenderResponse = curl_exec($ch);
curl_close($ch);

// The response includes headers (CURLOPT_HEADER), so print only its last line,
// which holds the bartender's one-line reply.
$responseParts = explode("\n", $bartenderResponse);
echo $responseParts[count($responseParts)-1];
```

That’s it! The bartender.php and barpatron.php files are attached to this post in case you want to play with the service. You should be able to modify the files (updated with your AppFabric service information) and then load the barpatron.php file in your browser.
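For readers curious about what SimpleApiAuthService sends on the wire, the following Python sketch builds the pieces of a token request. It assumes the WRAP v0.9 conventions that ACS used at the time: a form-encoded POST to https://<namespace>.accesscontrol.windows.net/WRAPv0.9/ carrying wrap_name, wrap_password and wrap_scope (plus any custom input claims), answered by a form-encoded body containing wrap_access_token. Verify these endpoint and field names against the ACS documentation. The function names are hypothetical, and the sketch makes no network call.

```python
from urllib.parse import parse_qs, urlencode


def acs_token_endpoint(service_namespace: str,
                       host: str = "accesscontrol.windows.net") -> str:
    """Assumed WRAP v0.9 token endpoint for an ACS service namespace."""
    return f"https://{service_namespace}.{host}/WRAPv0.9/"


def wrap_request_body(issuer_name: str, issuer_secret: str, applies_to: str,
                      custom_claims: dict = None) -> str:
    """Form-encoded POST body: issuer credentials, scope, then custom input claims."""
    fields = {"wrap_name": issuer_name,
              "wrap_password": issuer_secret,
              "wrap_scope": applies_to}
    fields.update(custom_claims or {})
    return urlencode(fields)


def token_from_response(body: str) -> str:
    """Pull wrap_access_token out of the form-encoded response (URL-decoded once)."""
    return parse_qs(body)["wrap_access_token"][0]


def authorization_header(token: str) -> str:
    """Wrap the token the way TokenValidator expects it."""
    return f'WRAP access_token="{token}"'
```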

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Roberto Bonini continues his series with Windows Azure Feed Reader Episode 3 of 8/22/2010:

Sorry for the lateness of this posting. Real life keeps getting in the way.

This week’s episode is a bit of a departure from the previous two episodes. The original recording I did on Friday had absolutely no sound, so instead of redoing everything, I give you a deep walkthrough of the code. Be that as it may, I did condense an hour’s worth of coding into a 20-minute segment, which is probably a good thing.

As I mentioned last week, this week we get our code to actually do stuff – like downloading, parsing and displaying a feed in the MVCFrontEnd.

We get some housekeeping done as well – I re-wrote the OPML reader using LINQ and Extension Methods. We’ll test this next week.
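Roberto's OPML reader is written in C# with LINQ and extension methods. As a rough analogue only, not his code, the following Python sketch uses ElementTree to pull each feed's title and xmlUrl from the outline elements of an OPML subscription list. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def feed_urls(opml_text: str) -> List[Tuple[str, str]]:
    """Return (title, xmlUrl) for every outline element that points at a feed."""
    root = ET.fromstring(opml_text)
    feeds = []
    for outline in root.iter("outline"):   # nested folder outlines are walked too
        url = outline.get("xmlUrl")
        if url:                             # folder outlines have no xmlUrl
            title = outline.get("title") or outline.get("text") or url
            feeds.append((title, url))
    return feeds
```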

The final 20 minutes or so are a fine demonstration of voodoo troubleshooting (i.e., hit Run and see what breaks), but we get Scott Hanselman’s feed parsed and displayed. The View needs a bit of touching up to display the feed better, but be that as it may, it works.

Since we get a lot done this week, it’s rather longer: 1 hour and 13 minutes. I could probably edit out all the pregnant pauses.

Here’s the show [and links to the two earlier episodes.]

Pardon my French, but the HD version that uploaded last night is crap. I’m really not sure why, but I’m cutting a new version from scratch.

Remember, the code lives at http://windowsazurefeeds.codeplex.com

• David Pallman added Part 2: Windows Azure Bandwidth Charges to his “Hidden Costs in the Cloud” series on 8/22/2010:

In Part 1 of this series we identified several categories of “hidden costs” of cloud computing—that is, factors you might overlook or underestimate that can affect your Windows Azure bill. Here in Part 2 we’re going to take a detailed look at one of them, bandwidth. We’ll first discuss bandwidth generally, then zoom in on hosting bandwidth and how your solution architecture affects your charges. Lastly, we’ll look at how you can estimate or measure bandwidth using IIS Logs and Fiddler.
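David's measurement technique isn't reproduced in this excerpt. To illustrate the IIS-log approach, the following Python sketch totals request and response bytes from an IIS W3C extended log. It assumes the optional cs-bytes (bytes received) and sc-bytes (bytes sent) fields are enabled in the site's logging settings. The field list is read from the log's #Fields directive, and the function name is hypothetical.

```python
from typing import Iterable, Tuple


def _to_int(value: str) -> int:
    """IIS writes '-' for empty fields; treat anything non-numeric as 0."""
    return int(value) if value.isdigit() else 0


def total_bytes(log_lines: Iterable[str]) -> Tuple[int, int]:
    """Return (bytes received from clients, bytes sent to clients)."""
    fields = None
    received = sent = 0
    for raw in log_lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            fields = line[len("#Fields:"):].split()  # field order may change mid-file
            continue
        if line.startswith("#") or fields is None:
            continue                                 # other directives, or no header yet
        record = dict(zip(fields, line.split()))
        received += _to_int(record.get("cs-bytes", "-"))
        sent += _to_int(record.get("sc-bytes", "-"))
    return received, sent
```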

KINDS OF BANDWIDTH

Bandwidth (or Data Transfer) charges are a tricky part of the cloud billing equation because they’re harder to intuit. Some cloud estimating questions are relatively easy to answer: How many users do you have? How many servers will you need? How much database storage do you need? Bandwidth, on the other hand, is something you may not be used to calculating. Since bandwidth is something you’re charged for in the cloud, and could potentially outweigh other billing factors, you need to care about it when estimating your costs and tracking actual costs.

Bandwidth charges apply any time data is transferred into the data center or out of the data center. These charges apply to every service in the Windows Azure platform: hosting, storage, database, security, and communications. You therefore need to be doubly aware: not only are you charged for bandwidth, but many different activities can result in bandwidth charges.

In the case of Windows Azure hosting, bandwidth charges apply when your cloud-hosted web applications or web services are accessed. We’re going to focus specifically on hosting bandwidth for the remainder of this article.

One example of Windows Azure Storage incurring bandwidth charges is a web page containing image tags that reference images in Windows Azure blob storage. Another example is any external program that writes to, reads from, or polls blob, queue, or table storage. I encourage you to read a very thorough article on the Windows Azure Storage Team Blog that discusses bandwidth and other storage billing considerations: Understanding your Windows Azure Storage Billing.

Bandwidth charges don’t discriminate between people and programs: they apply equally to both human and programmatic usage of your cloud assets. If a user visits your cloud-hosted web site in a browser, you pay bandwidth charges for the requests and responses. If a web client (program) invokes your cloud-hosted web service, you pay bandwidth charges for the requests and responses. If a program interacts with your cloud-hosted database, you pay bandwidth charges for the T-SQL queries and results.
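Because requests and responses are both billable, a rough hosting-bandwidth estimate can be taken from a web role's IIS logs. The sketch below assumes the logs use the W3C extended format and include the `cs-bytes` (request) and `sc-bytes` (response) fields, which may need to be enabled in IIS logging. The per-GB rates are parameters you supply from the current price list. Treating 1 GB as 2^30 bytes is also an assumption. The result approximates transfer volume at the HTTP level and does not include all network overhead.

```python
# Sketch: estimate hosting bandwidth from an IIS W3C extended log.
# Assumes the cs-bytes (request) and sc-bytes (response) fields are logged.

GB = 1024 ** 3  # assumption: a billing GB is treated as 2**30 bytes


def bandwidth_from_w3c_log(lines):
    """Return (bytes_in, bytes_out) summed over every request in the log."""
    fields = []
    bytes_in = bytes_out = 0
    for raw in lines:
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#Fields:"):
            # The #Fields directive names the columns of the entries that follow.
            fields = line[len("#Fields:"):].split()
            continue
        if line.startswith("#"):
            continue  # other directives, such as #Software or #Date
        entry = dict(zip(fields, line.split()))
        # IIS writes "-" when a field has no value for an entry.
        request = entry.get("cs-bytes", "-")
        response = entry.get("sc-bytes", "-")
        bytes_in += int(request) if request.isdigit() else 0
        bytes_out += int(response) if response.isdigit() else 0
    return bytes_in, bytes_out


def estimate_cost(bytes_in, bytes_out, rate_in_per_gb, rate_out_per_gb):
    """Apply caller-supplied per-GB rates (check the current price list)."""
    return bytes_in / GB * rate_in_per_gb + bytes_out / GB * rate_out_per_gb
```

For example, a log containing the header `#Fields: date time cs-method cs-uri-stem sc-status sc-bytes cs-bytes` followed by two entries with (sc-bytes, cs-bytes) of (5120, 412) and (980, 20480) yields `(20892, 6100)`: 20,892 bytes in and 6,100 bytes out.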

WHEN BANDWIDTH CHARGES APPLY

The good news about bandwidth is that you are not charged for it in every situation. Data transfer charges apply only when you cross the data center boundary: that is, something external to the data center is communicating with something in the data center. There are plenty of scenarios where your software components are all in the cloud; in those cases, communication between them costs you nothing in bandwidth charges.

It’s worth taking a look at how this works out in practice depending on the technologies you are using and the architecture of your solutions. …
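The boundary rule can be expressed as a small predicate. This sketch encodes one interpretation of the rule stated above: a transfer is billable when either party is outside Azure, or when the two parties are in different data centers (a component in one data center is external to another). The data center labels are hypothetical placeholders, not real region names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    """A communicating party; datacenter is None when it is outside Azure."""
    name: str
    datacenter: Optional[str] = None


def crosses_boundary(source: Endpoint, target: Endpoint) -> bool:
    """True when a transfer enters or leaves a data center (i.e. is billable)."""
    if source.datacenter is None or target.datacenter is None:
        return True  # one side is outside the cloud entirely
    # Both sides are in Azure: free only when they share a data center.
    return source.datacenter != target.datacenter


def billable_bytes(transfers):
    """Sum the bytes of (source, target, nbytes) transfers that cross a boundary."""
    return sum(n for src, dst, n in transfers if crosses_boundary(src, dst))
```

For an ASP.NET web role and its SQL Azure database in the same data center, only the browser's requests and the page responses count. Queries between the role and the database do not.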

David continues with illustrated bandwidth profiles of four scenarios:

Bandwidth Profile of an ASP.NET Solution in the Cloud

Bandwidth Profile of an ASP.NET Solution in the Cloud

Bandwidth Profile of a Batch Application in the Cloud

Bandwidth Profile of a Hybrid Application

and additional bandwidth cost analyses.

• Wely Lau continues his “Step-by-step: Migrating ASP.NET Application to Windows Azure” series with Part 3–Converting ASP.NET Web site to Web Role of 8/22/2010:

This is the third post of the “Migrating ASP.NET Application to Windows Azure” series. I encourage you to read my first post, about Preparing the ASP.NET Application, and my second post, about Preparing SQL Azure Database, if you have not done so. Without further ado, let’s get started.

Before converting, note that a web role is actually an ASP.NET Web Application++, not an ASP.NET Web Site. If you are not sure about the difference, please see the following blog posts and discussions:

- http://www.codersbarn.com/post/2008/06/ASPNET-Web-Site-versus-Web-Application-Project.aspx

- http://stackoverflow.com/questions/398037/asp-net-web-site-or-web-application

- http://forums.asp.net/p/1339211/2706297.aspx

- http://webproject.scottgu.com/CSharp/migration2/migration2.aspx

The ++ I mentioned consists of some additional files and minor changes, as follows:

WebRole.cs / WebRole.vb, a class derived from RoleEntryPoint, which initializes the configuration of your Windows Azure web role project.

If you look at your web.config file, you’ll notice an additional section:

```xml
<system.diagnostics>
  <trace>
    <listeners>
      <add type="Microsoft.WindowsAzure.Diagnostics.DiagnosticMonitorTraceListener, Microsoft.WindowsAzure.Diagnostics, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" name="AzureDiagnostics">
        <filter type="" />
      </add>
    </listeners>
  </trace>
</system.diagnostics>
```

This section enables Windows Azure diagnostic monitoring by injecting the trace listener. I encourage you to read this reference to learn more about Windows Azure diagnostics.
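If the role's web.config is ever replaced, the listener section has to be restored by hand. The following is a minimal Python sketch of doing that with the standard library's ElementTree. It assumes a web.config with no default XML namespace, and it reuses the listener `type` string from the section above. Note that ElementTree drops XML comments and reformats the file, so review the result before committing it.

```python
import xml.etree.ElementTree as ET

# Listener type string copied from the web.config section shown above.
LISTENER_TYPE = (
    "Microsoft.WindowsAzure.Diagnostics.DiagnosticMonitorTraceListener, "
    "Microsoft.WindowsAzure.Diagnostics, Version=1.0.0.0, Culture=neutral, "
    "PublicKeyToken=31bf3856ad364e35"
)


def ensure_azure_listener(config_xml: str) -> str:
    """Return config_xml with the AzureDiagnostics trace listener present."""
    root = ET.fromstring(config_xml)  # the <configuration> element
    parent = root
    # Walk system.diagnostics/trace/listeners, creating missing elements.
    for tag in ("system.diagnostics", "trace", "listeners"):
        child = parent.find(tag)
        if child is None:
            child = ET.SubElement(parent, tag)
        parent = child
    listeners = parent
    already_present = any(add.get("name") == "AzureDiagnostics"
                          for add in listeners.findall("add"))
    if not already_present:
        add = ET.SubElement(listeners, "add",
                            {"type": LISTENER_TYPE, "name": "AzureDiagnostics"})
        ET.SubElement(add, "filter", {"type": ""})
    return ET.tostring(root, encoding="unicode")
```

The function is idempotent: running it on a config that already contains the listener leaves the listener count unchanged.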

Alright, now let’s get our hands dirty and convert the core application.

I’ll continue from the steps done in the first post. We have three projects in our solution: the personal website, CloudServicePersonal, and PersonalWebRole.

1. Because our web role must take on the personal website’s content, we need to delete the unused files in the default web role project. In the “PersonalWebRole” project, delete the Default.aspx and web.config files. Note that if we want to implement diagnostics, we’ll need to manually add the diagnostics section back later on.

2. Next, copy the entire content of the personal website to PersonalWebRole: select all of the personal website’s content and drag it to PersonalWebRole. Alternatively, copy the personal website’s content and paste it into PersonalWebRole.

If you’re prompted to merge folders, check “Apply to all items” and select “Yes” to merge.

3. Because an ASP.NET web role is based on an ASP.NET Web Application, not a Web Site, we need to convert the .aspx files to the Web Application format so that each has a .designer.cs file. To do that, right-click PersonalWebRole and select Convert to Web Application.

4. You may delete the personal website, as we don’t need it anymore.

5. Build the solution to check for errors.

6. Because hosting your application on Windows Azure differs somewhat from hosting a traditional web application, we need to examine whether all of the code is still valid.

One example of invalid code is the ListUploadDirectory() method in App_Code\PhotoManager.cs, which tries to get the directory details of the “upload” folder. Comment out this method.

We’ll also need to comment out any other code that calls that method, such as Admin\Photo.aspx.

Where you really need similar functionality, of course, you’ll need to modify the code so it can run on Azure. For example, if your ASP.NET website allows people to upload files and store them on the file system, you will want to implement a similar feature using Windows Azure blob storage. I’ll cover that in a future post if possible.

7. Run the web role in the development fabric by pressing F5 or clicking Run. The development fabric is the local Windows Azure simulation environment that implements features such as load balancing. Make sure your website runs well in the development fabric before deploying it to the real Windows Azure environment.

8. Log in with your credentials to verify that it’s running well.

9. Congratulations if you’ve made it to this step. It means that you have successfully converted your website to a Windows Azure web role and connected it to SQL Azure.

But wait, you are not done yet! Why? Running well in the development fabric doesn’t mean it will run well on Windows Azure. [Emphasis added.]

No worries: in the next post, I’ll show you how to deploy it to Windows Azure and solve any problems that arise.
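The code review in step 6 can be partly automated with a crude scan for .NET calls that assume a persistent, writable local file system. A role instance's local disk is not shared across instances and is not guaranteed to persist. The pattern list below is a hypothetical heuristic, not an official list. A hit such as `Server.MapPath` can be legitimate when it reads content deployed with the role, so treat each hit as something to review rather than as an error.

```python
import re
from pathlib import Path

# Heuristic patterns (an assumption) for calls that usually expect a
# persistent, writable local file system.
SUSPECT_PATTERNS = [
    r"\bDirectoryInfo\b",
    r"\bDirectory\.\w+\(",
    r"\bFile\.(?:WriteAll|AppendAll|Create|Delete|Move|Copy)\w*\(",
    r"\.SaveAs\(",          # e.g. FileUpload.SaveAs(path)
    r"\bServer\.MapPath\(",
]
SUSPECT_RE = re.compile("|".join(f"(?:{p})" for p in SUSPECT_PATTERNS))


def find_suspect_lines(source: str):
    """Return (line_number, text) for each line matching a suspect pattern."""
    return [(n, line.strip())
            for n, line in enumerate(source.splitlines(), start=1)
            if SUSPECT_RE.search(line)]


def scan_project(root: str):
    """Scan every .cs file under root; map file path -> list of hits."""
    report = {}
    for path in Path(root).rglob("*.cs"):
        text = path.read_text(encoding="utf-8", errors="replace")
        hits = find_suspect_lines(text)
        if hits:
            report[str(path)] = hits
    return report
```

Run against a method resembling ListUploadDirectory(), which builds a `DirectoryInfo` from `Server.MapPath("~/Upload")`, the scan flags the `DirectoryInfo` line and does not flag the method signature.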

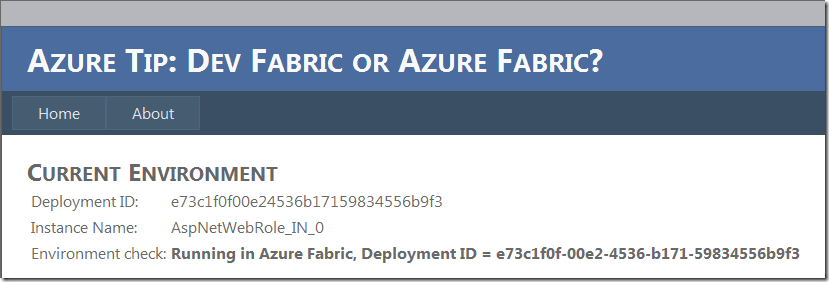

• David Makogon offered an Azure Tip of the Day: Determine if running in Dev Fabric or Azure Fabric on 8/20/2010:

One potential goal, when writing a new Azure application, is to support running in both Azure-hosted and non-Azure environments. The SDK gives us an easy way to check this:

```csharp
if (RoleEnvironment.IsAvailable)
{
    // Azure-specific actions
}
```

With this check, you could, say, build a class library that has specific behaviors depending on the environment in which it’s running.

Now, let’s assume the Azure environment is available, and you need to take a specific action depending on whether you’re running in the Dev Fabric vs. the Azure Fabric. Unfortunately, there’s no specific method or property in RoleEnvironment that helps us out.

This brings me to today’s tip: Determining whether an app is running in Dev Fabric or Azure Fabric.

The Deployment ID

While there’s no direct call such as RoleEnvironment.InDevFabric, there’s a neat trick I use, making it trivial to figure out. A disclaimer first: This trick is dependent on the specific way the Dev Fabric and Azure Fabric generate their Deployment IDs. This could possibly change with a future SDK update.

Whenever you deploy an Azure application, the deployment gets a unique Deployment ID. This value is available in RoleEnvironment.DeploymentId. As it turns out, the deployment ID has two different formats, depending on the runtime environment:

- Dev Fabric: The deployment ID takes the form of deployment(n), where n is sequentially incremented with each Dev Fabric deployment.

- Azure Fabric: The deployment ID is a Guid.

With this little detail, you can now write a very simple method to determine whether you’re in Dev Fabric or Azure Fabric:

```csharp
// Requires: using System; using Microsoft.WindowsAzure.ServiceRuntime;
// Guid.TryParse is available starting with .NET Framework 4.
private bool IsRunningInDevFabric()
{
    // Easiest check: try to parse the deployment ID as a Guid.
    Guid guidId;
    if (Guid.TryParse(RoleEnvironment.DeploymentId, out guidId))
        return false; // Valid Guid? We're in the Azure Fabric.
    return true;      // Can't parse it as a Guid? We're in the Dev Fabric.
}
```

Taking this one step further, I wrote a small ASP.NET demo app that prints out the deployment ID, along with the current instance ID. For example, here’s the output when running locally in my Dev Fabric:

Here’s the same app, published to, and running in, the Azure Fabric:

I uploaded my demo code so you can try it out yourself. You’ll need to change the diagnostic storage account information in the ASP.NET role configuration before deploying it to Azure.

Chris Woodruff interviews Scott Densmore and Eugenio Pace about Moving Applications to the Cloud on Windows Azure on 8/19/2010 in episode 59 of Deep Fried Bytes:

In this episode, Woody sat down with two members of the Patterns & Practices Cloud Guidance team at Microsoft to discuss Azure and the ways to get legacy applications to the cloud. SQL Azure and identity and security in Azure were also discussed during the interview.

Episode 58 of 8/2/2010 was Building Facebook Applications with Windows Azure with Jim Zimmerman:

In this episode, Keith and Woody sit down with Jim Zimmerman to discuss how Jim builds Facebook applications using Windows Azure. Jim was a key developer who worked on and released the Windows Azure Toolkit for Facebook project on CodePlex. The project was released to give the community a good starter kit for getting Facebook apps up and running in Windows Azure.

Michael Spivey made a brief observation about the Windows Azure Service Management API Tool (Command-line) on 8/19/2010:

The Windows Azure Service Management API Tool is a free command-line program that lets you manage deployments and services using the Windows Azure Service Management API. The download comes with an application EXE and a config file. The Usage.txt file included in the downloaded ZIP will tell you which settings you need to edit in the config file for the EXE to work. The tool does require you to use a certificate and the thumbprint needs to be put in the config file.

You can create a self-signed certificate that you then upload to Windows Azure in the API Certificates section of the Windows Azure Developer Portal.
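The thumbprint the tool's config file asks for is the SHA-1 hash of the DER-encoded certificate, which Windows displays as hex. As a sketch, assuming you exported the certificate as a Base-64 (PEM) .cer file, the following computes the thumbprint as uppercase hex without spaces. The helper names are hypothetical. The code only decodes and hashes the certificate block; it does not validate the certificate. For a binary DER .cer file, pass the raw bytes to `thumbprint_from_der` instead.

```python
import base64
import hashlib
import re

PEM_RE = re.compile(
    r"-----BEGIN CERTIFICATE-----(.+?)-----END CERTIFICATE-----", re.DOTALL)


def thumbprint_from_der(der: bytes) -> str:
    """SHA-1 over the DER-encoded certificate bytes, as uppercase hex."""
    return hashlib.sha1(der).hexdigest().upper()


def thumbprint_from_pem(pem_text: str) -> str:
    """Thumbprint of the first BEGIN/END CERTIFICATE block in pem_text.

    Only decodes the Base-64 body and hashes it; no certificate validation.
    """
    match = PEM_RE.search(pem_text)
    if match is None:
        raise ValueError("no BEGIN/END CERTIFICATE block found")
    der = base64.b64decode("".join(match.group(1).split()))
    return thumbprint_from_der(der)
```

Usage, with a hypothetical file name: `thumbprint_from_pem(open("mycert.cer").read())`, then paste the 40-character result into the setting that Usage.txt names.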

FMS, Inc. has announced a Preview Version of its ezUpdata application that runs in Windows Azure and stores data in SQL Azure:

From FMS, Inc.’s ezUpdata FAQs:

EzUpData is hosted by Microsoft on their Azure platform. Azure is a cloud computing system that is highly scalable with data stored on multiple machines in multiple physical locations for extremely reliable service.

Even if there is a failure with a particular piece of hardware, connectivity, electricity, or physical disaster, the site should remain available.

Additionally, Microsoft personnel are monitoring the systems, networks, operating systems, software, etc. to make sure the latest updates and security patches are applied. This is truly enterprise level service at a very affordable price.

FMS president Luke Chung explains Microsoft Azure and Cloud Computing...What it Means to Me and Information Workers in this white paper.

<Return to section navigation list>

Visual Studio LightSwitch

• Brian Schroer questions LightSwitch is a golf cart? in this 8/21/2010 post, which reflects my complaints about developers denigrating LightSwitch before the beta was available:

I was listening to the latest episode of the CodeCast podcast this morning, featuring Microsoft's Jay Schmelzer talking about Visual Studio LightSwitch.

Host Ken Levy made an interesting analogy for the future choice whether to use LightSwitch or Visual Studio:

"Have you ever been to Palm Springs? It's an interesting place because a lot of times you'll see people in golf carts driving around - not just on the golf course - on the street. If someone needs to go from Palm Springs to LA, they need a car. But if you're just putting around the neighborhood, this golf cart where all you do is put your foot on the pedal and steer - that's all you need - It works, right? Most people have both a car and a golf cart in the garage, and they pick which one they need to use."

Now, I haven't seen LightSwitch yet, and I hate it when people form an opinion about something they haven't seen, but…

It concerns me when I read articles like this, and see quotes like:

It’s a tool that relies on pre-built templates to make building applications easier for non-professional programmers.

…or…

Microsoft’s idea is that LightSwitch users will be able to “hand off their apps to professional Visual Studio developers to carry them forward,” when and if needed.

I've been on the receiving end of that handoff way too many times, with Access or Excel "applications" that started with the idea that they would be simple golf carts:

…but they bolt on a feature here and there, trying for this:

…and by the time they admit they're in over their heads and call in a professional, the "hand off" looks like this:

I hope LightSwitch will automate things enough to help the "non-professional programmers" fall into the "pit of success", but I also hope Microsoft understands why professional developers view initiatives like this with trepidation.

I remember a similar reaction by .NET programmers to Polita Paulus’ Blinq framework, which she demonstrated at Microsoft’s 2006 Lang .NET Symposium. See my 2006 Lang .NET Symposium to Expose Blinq for ASP.NET post of 6/19/2006. Blinq became the prototype for ASP.NET Dynamic Data, which many developers use for creating administrative apps quickly and thus cheaply. Like LightSwitch, ASP.NET Dynamic Data uses Entity Framework to aid in autogenerating pages. Click here to read my 63 previous posts that contain ASP.NET Dynamic Data content.

Dave Noderer describes My 1st Lightswitch App in this 8/20/2010 post to his South Florida Does .NET! blog:

On Tuesday August 18th, the first publicly available beta for the Microsoft LightSwitch development tool was posted on MSDN.

For more information about this Silverlight application generator addition to Visual Studio 2010 visit: http://msdn.microsoft.com/en-us/lightswitch/default.aspx

Because it is still a beta, I installed the 500 MB ISO on a Windows 7 Virtual PC VM that already had Visual Studio 2010. I had not spun up this VM for over four months, so there were LOTS of updates to apply first. Although some have reported problems, mine went smoothly.

The default 512 MB memory on the VM was not enough. Understandably it was very slow and when I first tried to run the generated program it timed out. So I increased the VM to 2 GB and it ran much better!

For consultant timekeeping, I’ve been using a classic ASP website and an Access admin program for over 10 years. I could never justify making a change (nobody is paying for it).

I created a new LightSwitch project, both VB and C# are available:

I created a new datasource pointing to a SQL 2005 server (2005 or above is required) at my web host (http://www.Appliedi.net) where the Computer Ways timesheet data resides. Knowing that LightSwitch is based on Entity Framework, and because I’d been meaning to do it anyway, I added foreign key relationships on the main tables I would need. In the past, these relationships were enforced by the application, originally on Access and then on SQL Server 7.

Dave continues with an illustrated walk-through of his app.

I’m test-driving LightSwitch on a VM running 64-bit Windows 7 Professional as the guest OS and 64-bit Windows Server 2008 R2 as the host OS. The CPU is an Intel Core2 Quad Q9550 (2.83 GHz) on an Intel DQ45CB motherboard with 3 GB RAM assigned. I’ve experienced no problems with this configuration so far, but my initial projects are relatively simple.

Josh Barnes reported the Connected Show Crew On Visual Studio LightSwitch to the Innovation Showcase blog on 8/19/2010:

Peter Laudati & Dmitry Lyalin host the edu-taining Connected Show developer podcast on cloud computing and interoperability. Check out episode #35, “Cat Ladies & Acne-Laden Teenagers”. In this episode, the duo cover the latest news around the Microsoft developer space, including Windows Azure updates, interoperability, & Windows Phone 7.

Dmitry & Peter also talk about the new Visual Studio LightSwitch. Is it really for cat ladies? They discuss who the target audience is for LightSwitch as well as how some in the community feel it may or may not impact professional Microsoft Developers in the industry.

If you like what you hear, check out previous episodes of the Connected Show at www.connectedshow.com. You can subscribe on iTunes or Zune. New episodes approximately every two weeks!

<Return to section navigation list>

Windows Azure Infrastructure

• Tony Bishop claimed “The following design principles are proven to enable firms to create an enterprise cloud-oriented datacenter” as a deck to his Nine Design Principles of an Enterprise Cloud-oriented Datacenter Blueprint post of 8/22/2010:

An Enterprise Cloud-oriented datacenter is a top-down, demand driven datacenter design that maximizes efficiency and minimizes traditional IT waste of power, cooling, space, and capacity under-utilization while providing enhanced levels of service and control. The following design principles are proven to enable firms to create an enterprise cloud-oriented datacenter.

#1: Dynamic provisioning

A package-once, deploy-many application facility that incorporates application, infrastructure, and IP dependencies into a simple policy library is critical. This affords the capability to deploy in minutes versus days.

#2: Dynamic execution management

Dynamic execution management performs allocation in real time, based on monitored workloads compared against service contracts, adjusting the allocation of applications, infrastructure services, and infrastructure resources as demand changes throughout the operational day.

#3: Virtualized

In order to achieve dynamic allocation of resources, applications and other types of demand cannot be tied to specific infrastructure. The traditional configuration constraints of forced binding of infrastructure and software must be broken in order to meet unanticipated demand without a significant amount of over-provisioning (usually 2‐6 times projected demand).

#4: Abstracted

The design of each infrastructure component and layer needs to have sufficient abstraction so that the operational details are hidden from all other collaborating components and layers. This maximizes the opportunity to create a virtualized infrastructure, which aids in rapid deployment and simplicity of operation.

#5: Real time

Service and resource allocation, reprovisioning, demand monitoring, and resource utilization must react as demand and needs change, which translates into less manual allocation. Instead, a higher degree of automation is required, driving business rules about allocation priorities in different scenarios into the decision‐making policies of the infrastructure, which can then react to changes in the environment in under a second.

#6: Service-oriented infrastructure

Service-oriented infrastructure is infrastructure (middleware, connectivity, hardware, network) as a defined service, subject to service level agreements (SLAs) that can be enforced through policies in real time, and that can be measured, reported on, and recalibrated as the business demands.

#7: Demand-based infrastructure footprints

Because demand can be categorized in terms of operational qualities, efficient, tailored infrastructure component ensembles can be created to meet different defined types. These ensembles are tailored to fit into operational footprints (or processing execution destinations) that are tailored based on demand.

#8: Infrastructure as a service-oriented utility

The key operating feature of a utility is that a service is provided when needed, so that spikes are processed effectively. It reflects a demand‐based model where services are used as needed and pools of service can be reallocated or shut down as required. A real-time, measured, demand‐based utility promotes efficiency, because consumers will be charged for what they use.

#9: Simplified engineering

The utility will only be viewed as a success if it is easy to use, reduces time to deploy, provisions highly reliably, and is responsive to demand. Applying the principles stated above will simplify engineering and meet the objectives that will make the utility a success. Simplified engineering will promote greater efficiency, enable the datacenter to migrate to a "green" status, and enable greater agility in the deployment of new services, all while reducing cost, waste, and deployment time, and providing a better quality service.

• Bernard Golden asserted “The pervasive growth of the Internet will accelerate cloud adoption” as a preface to his Cloud computing will outlive the web post of 8/22/2010 to Computerworld UK:

The tech world is all a-twitter (literally!) about an article in this month's Wired Magazine which announces "The Web Is Dead. Long Live the Internet". The article recites a litany of problems that are choking the web: the rise of apps that replace use of a web browser, the growth of uber-aggregation sites like Facebook that are closed platforms, the destruction of traditional advertising and replacement by Google - the semi-benevolent search monster and even the move away from HTML and use of port 80-based apps.

In short, Wired has published a jeremiad for the end of the freewheeling open web, being rapidly supplanted by voracious wannabe monopolists who seek to dominate the networked world and reduce all of us to nothing more than predestined consumers of "content" served up by monolithic megabrands. You have to look carefully, but, after all the mournful moping about the terrible things happening on the web, Wired concludes that the Internet is young and still developing, so new things are right down the pike.

I yield to no-one in my admiration for (or, indeed, enjoyment of) Wired. I always find it stimulating and interesting. Nevertheless, one must admit that in its straining for profundity it often overreaches for effect and overstates for conclusion (indeed, the magazine itself admits this when it notes that it predicted the death of the browser over a decade ago). For example, Wired reached a laughably wrong conclusion two years ago when it declared "The End of Theory: The Data Deluge Makes the Scientific Method Obsolete".

As regards the message of the article, I draw a conclusion diametrically opposed to Wired's. It spends perhaps 90% of the piece bewailing the rise of bad forces, and tosses in 10% at the end in a kind of "but it's still early innings for the Internet, so some good things might happen." I look at the same phenomenon and see the huge problems it poses as perhaps 10% of the reality of the Internet, and certainly nothing to be worried about - in fact, unlikely to remain as problems into the near future.

For example, take the move away from web standards like HTML and port 80. Why anyone should be concerned about what port is used for communication across the Internet is beyond me, but in fact port 80 is increasing in importance. In my experience, entire tranches of applications that would be better served with their own ports and protocols ride on port 80 because it's the only one that can reliably be expected to be open on company firewalls. We might shed a tear for poor old port 80, given how it's overworked and overloaded all in aid of letting applications do things that were never envisioned for it when the original HTTP protocol was designed - but we shouldn't conclude it's obsolete and abandoned.

Or the rise of the monoliths. What's surprising is how fragile these unassailable entities actually turn out to be in the world of the Internet. Yahoo! owned the portal space, until it was outfoxed by Google. MySpace was everyone's darling until Facebook came along. Let's not even talk about the fate of the little-lamented AOL.

Cloud Tweaks lists the Top 10 Cloud Computing Most Promising Adoption Factors in this 8/20/2010 post:

Top 10 Cloud Computing Most Promising Adoption Factors

Cloud computing has already fascinated many critics and observers with its progress and fast domination of the IT and business world. It is on the verge of becoming the world's premier technology for providing computing services to organizations, whether small or big, regardless of regional and economic status. It is a bit strange that many people close to this technology, including CIOs and other concerned professionals, still don’t know much about cloud computing.

The Cash Cloud

Cloud computing is estimated to save 20-30 percent of the expenses as compared to the client-server model. This huge saving will attract businesses towards cloud computing. This combination means both will affect and depend on each other.

The Most Important Thing Happening Around

Cloud computing is not only better computing but it is also very agile technology which meets the requirements of fast changing business trends. Cloud computing is introducing the new ways of business, and business changes the shape of cloud according to its own needs.

Shift to Cloud Computing is Inevitable

It is estimated that by 2012 only about 20 percent of businesses will be without cloud computing. The concept of business firms’ privately owned IT infrastructure will disappear by then.

Real-Time Collaboration in the Cloud

At present, real-time collaboration tools are much needed in the business world, and cloud computing has made them easy to implement. Now, workers in different locations are able to work on and use the same document. Google Docs and LotusLive have already offered it. Document sharing and updating from different locations will become very easy and just the way of cloud computing life.

The Consumer Internet Becomes Enterprise

Cloud computing actually started as consumer Internet services like email applications and then eventually came Google Docs. That was a big breakthrough in computing and so the same will be expected from cloud computing new arrivals.

Many Types of Clouds

In the future, it is expected that there will be many established types of computing clouds, chiefly public clouds (used and shared by many people at different locations), private clouds (owned and used only by private firms and organizations), and hybrid clouds that mix both types to some extent. At present, this concept may be a bit confusing because organizations are trying to find out which cloud model will suit them best.

Security May Remain a Concern

Security still remains the main concern about cloud computing. In fact, most clouds will be able to be foolproof in terms of security, but not all. Another reason for this concern is change itself: any change is often associated with a sense of insecurity (fear of the unknown).

Cloud Brings Mobility

One of the best advantages of cloud computing is the freedom it gives you in where you work and how you access your data and information remotely. Now you can access and use your data at any location without needing to install much software and hardware on every system you use.

Cloud is Scalable

Cloud computing is scalable for all types and sizes of businesses. Now, small business firms don’t have to buy costly software and hire skilled professionals to use that technology. They will pay only for the modules they use.

Quality and 24/7 Service

Cloud computing vendors will have enough resources to hire very skilled professionals to work on, maintain, and ensure the security and quality of your data. Businesses will get their data in whatever processed form they desire. Another plus point is the 24/7 availability of cloud computing services. Office workers will be able to work at any time, and there will be almost no downtime because of the resources, skills, and professionalism of cloud computing vendors.

Jerry Huang asks Amazon or Azure? in this 8/20/2010 post to the Gladinet blog:

In a couple of years, Amazon and Azure in Cloud Platform and Services could be like Coke and Pepsi in soft drinks.

With the launch of Amazon S3 in March 2006 and Amazon EC2 in Aug 2006, Amazon publicized “Cloud Services” so early that it has a significant lead over its peers. On the other hand, we have seen time and time again how Microsoft takes the lead from behind. Although Amazon has the leader role now, it is not clear who will be the leader in a couple of years.

When you look at customers in a given product category, there seem to be two kinds of people. There are those who want to buy from the leader and there are those who don’t want to buy from the leader. A potential No.2 has to appeal to the latter group.

Quote: The 22 Immutable Laws of Marketing

So what are the differences that Windows Azure has that appeal to the latter group?

(1) Branding

Amazon brands the storage and computing separately as S3 (Simple Storage Service) and EC2 (Elastic Compute Cloud). The short brands leave strong, lasting impressions. (How can you forget S3?) The 3 and 2 combination also reinforces itself. Amazon has other services, such as SimpleDB, but they are not as easy to remember as the S3/EC2 combo. Everything is under Amazon Web Services (AWS), but not many people are saying “let’s compare AWS to Azure”.

Windows Azure brands the whole Cloud Platform and Service together under Azure. It also gives strong impressions to the platform. (Cloud Computing Service, try Windows Azure).

Some think bottom-up, some think top-down. From the branding perspective, they appeal to different groups of people.

(2) Focus

Azure focuses more on the cloud platform as a whole, with Visual Studio 2010 integration. The “Hello World” examples from Microsoft evangelists are more about how to use VS 2010 to create new apps using SQL Azure instead of SQL Server 2005, and how to use Azure Blob Storage instead of the local file system.

When applications are locked into the Azure Platform, it is like Microsoft Office locks into Windows Platform. The binding is strong and supports each other. Azure has a strong appeal to the Visual Studio and .NET developers.

(3) Channels

Azure has the Azure Appliance, which enterprises can use for a private cloud. Amazon is currently public cloud only. Microsoft has an enterprise customer base and channels. Customers and channels that are already familiar with Windows Server and enterprise solutions will find it easier to accept Azure.

(4) SDK

They both have HTTP REST APIs. To make developers’ lives easy, they both wrap the APIs in SDKs. Azure’s SDK is more object-oriented. For example, if you want to create a blob, you use a container object and invoke the CreateBlob method. Amazon’s SDK is more transaction-based and closely mirrors the REST API. Azure’s SDK is closer to what a VB programmer likes; Amazon’s SDK is closer to what a network engineer likes. They appeal to different groups with different design philosophies.

They may have other differences in pricing and technical details, but over a long period of time these could converge until they no longer distinguish the two.

The branding, the focus, and the channels could be the determining factors that set them apart.

Gladinet is committed to supporting both of them, bringing their cloud services to desktops and file servers and providing an easy access solution for users who want to use Amazon S3 and Azure Storage. Amazon S3 or Azure Storage, it is all good with Gladinet.

John Savageau prefaces his A Cloud Computing Epiphany post of 8/20/2010 with “Cloud Computing- the Divine Form:”

One of the greatest moments a cloud evangelist can enjoy occurs when a listener makes an intuitive leap of understanding after your explanation of cloud computing. There is no greater joy or intrinsic sense of accomplishment.

Government IT managers, particularly those in developing countries, view information and communications technology (ICT) as almost a "black" art. This is unlike the US, Europe, Korea, Japan, and other countries, where the Internet and network-enabled everything have diffused into the core of Generation "Y-ers," Millennials, and Gen "Z-ers." The black art gives IT managers in some legacy organizations the power they need to control the efforts of people and groups needing support, as their limited understanding of ICT still sets them slightly above the abilities of their peers.

But when the "users" suddenly have that right-brain flash of comprehension of a complex topic such as cloud computing, traditional IT control becomes a barrier that must be explained and justified. Everybody from the CFO down to supervisors can become a "virtual" data center operator at the touch of a keyboard, and cloud computing and ICT become a standard tool for work: a utility.

The Changing Role of IT Managers

IT managers normally make marginal business planners. While none of us like to admit it, we usually start an IT refresh project with thoughts like, "What kind of computers should we request budget to buy?" or "That new 'FuzzPort 2000' is a fantastic switch; we need to buy some of those…" We then spend the next fiscal year making excuses for why the IT division cannot meet the needs and requests of users.

Times are changing. The IT manager can no longer think about control, but rather must think about capacity and standards, setting parameters and processes, not limitations.

Think about topics such as cloud computing, and how to build an infrastructure that meets the creativity, processing, management, scaling, and disaster recovery needs of the organization. Think about gaining greater business efficiency and agility through data center consolidation, education, and breaking down ICT barriers.

The IT manager of the future is concerned not only with the basic ICT food groups of concrete, power, air conditioning, and communications, but also with capacity planning and thought leadership.

The Changing Role of Users

There is an old story of the astronomer and the programmer. Both are pursuing graduate degrees at a prestigious university, but from different tracks. By the end of their studies (this is a very old story), the computer science major focusing on software development found his FORTRAN skills were actually below the FORTRAN skills of the astronomer.

"How can this be?" cried the programmer. "I have been studying software development for years, and you've been studying the stars!"

The astronomer replied, "You have been studying FORTRAN as a major for the past three years. I have needed to learn FORTRAN and apply it in real applications in my major, studying the solar system, and needed to learn to code better than you just to do my job."

There will come a point when the Millennials, with their deep-rooted appreciation for all things network and computer, will be able to take our Infrastructure as a Service (IaaS) and use it as their tool for developing great applications that drive their businesses into a globally wired economy and community. Loading a Linux image and a suite of standard applications will cause the average person no more intellectual stress than a "Boomer" sending a fax.

Revisiting the “4th” Utility

Yes, IT managers may become the road construction and maintenance crews of the Internet age, but that is not a bad thing. We have given the Gen Y-ers the tools they need to be great, and we should be proud of our accomplishments. Now is the time to build better tools to make them even more capable, tools such as the 4th utility, which marries broadband communications with an on-demand compute and storage utility.

The cloud computing epiphany awakens both IT managers and users. It stimulates an intellectual and organizational freedom that lets creative and productive people explore more possibilities, with more resources, with little risk of failure (keep in mind that with cloud computing you are potentially just renting your space).

If we look at other utilities, such as roads, water, or electricity, as tools, they can be used in far more ways than originally intended. A road may be considered a place to drive a car from point "A" to point "B," but it can also be used for motorcycles, trucks, bicycles, walking, a temporary hard stand, a temporary runway for airplanes, a stickball field, or a street hockey rink. At the end of the day it is a slab of concrete or asphalt that serves an open-ended scope of use, with only structural limitations.

Cloud computing and the 4th utility are the same. Once we have reached that cloud computing epiphany, our next generations of tremendously smart people will find those creative uses for the utility, and we will continue to develop and grow closer as a global community.

David Linthicum claimed Virtualization and Cloud Computing? Two Different Concepts in this 8/20/2010 post to ebizQ’s Where SOA Meets Cloud blog:

It's funny to me that virtualization and cloud computing are often used interchangeably, when they are very different concepts. This confusion leads some enterprises to think that just because they have virtualization software running, such as VMware, they have a cloud. That's not entirely true.

One of the better definitions of virtualization can be found on Tech Target: "DEFINITION - Virtualization is the creation of a virtual (rather than actual) version of something, such as an operating system, a server, a storage device or network resources." Thus, virtualization is really about how you implement infrastructure, and not as much about sharing in a multitenant environment.

Of course, the core problem is that cloud computing is so broadly defined that it can be almost anything. Thus, when we look at the patterns of cloud computing and see that most leverage virtualization, we naturally assume the concepts are interchangeable.

I see cloud computing as something much more encompassing than simple virtualization, and indeed the industry seems to be moving in this direction. Cloud computing is all about managed sharing of resources, and typically includes the concept of multitenancy to accomplish that. Multitenancy simply means we have a system for sharing those resources among many different users simultaneously.

Also needed is the notion of auto-provisioning, or the allocation or de-allocation of resources as needed. Moreover, cloud computing should also have use-based accounting to track use for billing or charge backs, as well as governance and security subsystems.
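The three ingredients described above (multitenant sharing, auto-provisioning and use-based accounting) can be illustrated with a small, hypothetical Python sketch. `SharedPool`, `scale_to`, `tick` and the integer per-unit-hour rate are assumptions for illustration, not any vendor's API; a real cloud would meter actual resource consumption rather than rely on an explicit `tick` call.

```python
"""Hypothetical multitenant pool with auto-provisioning and use-based accounting."""
from collections import defaultdict


class SharedPool:
    def __init__(self, capacity: int, rate_cents_per_unit_hour: int) -> None:
        self.capacity = capacity                # total units shared by all tenants
        self.rate = rate_cents_per_unit_hour    # price per unit-hour, in cents
        self.allocated = defaultdict(int)       # tenant -> units currently held
        self.usage = defaultdict(int)           # tenant -> accumulated unit-hours

    def free(self) -> int:
        return self.capacity - sum(self.allocated.values())

    def scale_to(self, tenant: str, demand: int) -> None:
        """Auto-provision: grow or shrink a tenant's allocation to match its demand."""
        delta = demand - self.allocated[tenant]
        if delta > self.free():
            raise RuntimeError("insufficient shared capacity")
        self.allocated[tenant] += delta         # a negative delta de-allocates

    def tick(self, hours: int) -> None:
        """Meter usage: every held unit accrues `hours` unit-hours for its tenant."""
        for tenant, units in self.allocated.items():
            self.usage[tenant] += units * hours

    def bill_cents(self, tenant: str) -> int:
        """Use-based accounting: charge = unit-hours consumed x rate."""
        return self.usage[tenant] * self.rate
```

Because the pool is shared, one tenant's scale-up reduces what remains for the others, which is why this sketch places the capacity check inside `scale_to`; the billing records then support charge backs per tenant.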

So, you can think of virtualization as a foundation concept of cloud computing, but not as cloud computing unto itself. I suspect that the two concepts will become even more blurry over time as virtualization technology vendors create more compelling cloudy solutions that include many of the features that make them a true cloud. Moreover, I'm seeing a lot of great technology hit the streets that augments existing virtualization technology in cloud directions, much of it spanning many different virtualization systems at the same time and providing management and provisioning abstraction.

Personal aside: I like Dave’s previous portrait better than this formal photo.

Kevin Fogarty quotes Matson Navigation’s CIO Peter Weis in a Which Apps Should You Move to the Cloud? 5 Guidelines article of 8/18/2010 for ComputerWorld via CIO.com:

To most people -- especially in August -- 'Ocean Services' probably conjures visions of boogie boards, sun umbrellas and bringing the drinks without getting sand in the glass.

To Matson Navigation CIO Peter Weis, it means logistics, and the need to gather, analyze and coordinate information so his customers can monitor the location, condition and progress of finished goods in one container on one freighter in the South Pacific more easily than they could check on a new phone battery being delivered by FedEx.

It's not the kind of information or access the ocean services business has been accustomed to offering.

"Shipping is a very traditional industry," Weis says. "There are a lot of traditional old-school people, and so many moving pieces; solving global problems using IT is much harder than it might be in another industry."

Weis, who took over as CIO of the $1.5 billion Matson Navigation in 2003, says the company is 75 percent of the way through an IT overhaul that focuses on retiring the mainframes, DOS and AS/400 systems the company has depended on for years in favor of a Java-based application-integration platform and a plan to get as many of Matson's business applications as possible from external SaaS providers.

Moving to a heavy reliance on SaaS applications is a key part of the strategy to reduce the company's risk and capital spending on new systems. Matson is unusual not because of its increasing use of software owned and maintained by someone else -- but because it is selecting those services according to a coherent, business-focused strategy and connecting them using an open platform built for the purpose.

"What we're seeing is companies jumping into SaaS or cloud projects without an overarching strategy," according to Kamesh Pemmaraju, director of cloud research at consultancy Sand Hill Group. "There are a lot of departmental initiatives and they do get some quick returns on that, but it's very local in nature and there's no coordination on how to obtain global benefits to get real value out of the cloud."

Defining goals is an obvious step, but not one every company realizes it needs to take, according to Michael West, vice president and distinguished analyst for Saugatuck Technology.

Below are a few more necessary steps to take before deciding to move from a traditional app to a Web-based one.

1. Decide Why You Want SaaS

Weis was hired in 2003 to revamp Matson's IT infrastructure, so part of his mandate was to design an infrastructure and draw up a strategy to coordinate the company's use of SaaS and internal applications.

Business agility, not cost savings, is the leading reason U.S. companies are interested in cloud computing, according to a survey of 500 senior-level IT and business-unit managers Sand Hill released in March. Forty-nine percent of respondents listed business agility as their most important goal; 46 percent listed cost efficiency. The No. 3 response -- freeing IT resources to focus on innovation -- got less than half that support, with 22 percent.

SaaS is spreading quickly among business units and departments, usually without the help or strategic guidance of IT to make sure the functionality isn't duplicated or in conflict with the company's other tools.

"People tend to think of SaaS as a technology choice, which it's not," Pemmaraju says. "It's a business choice, to allow you to access technology to respond to business demands in real time. If you just look at it as technology, you have to answer how you're contributing to your business goals."

2. Think Architecture

Before Weis started signing on SaaS providers and ramping down internal applications, he and his staff spent a year taking inventory of all the company's existing systems and building a reference platform that provided middleware and application servers to connect new and existing applications.

"Our endpoint was that all our apps would run on the target IT platform based on distributed architecture, J2EE-based middleware and apps servers," Weis says. "That year was spent laying that foundation but also restructuring the legacy organization, hiring the skills we needed, building a QA group, test group, architecture group -- a lot of things the legacy organization didn't have."

That kind of thoughtful architectural planning will help any organization in the long run, though it is becoming less necessary as SaaS providers begin to provide more ways to integrate SaaS and legacy applications, sometimes with another SaaS choice, West says.

More than half the transactions on Salesforce.com come through APIs, meaning the software is programmatically connected to other systems, usually through manual integration work on the part of the customer.

SaaS providers like Salesforce are building much better integration into their apps, however. And third parties such as Boomi and CastIron, a recent IBM acquisition, can also help with two-way synchronization of data between on-premises and SaaS applications, West says.
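Two-way synchronization can be implemented in several ways. The minimal Python sketch below uses last-writer-wins on a per-record timestamp, one common and simple policy; the record format and the `sync_two_way` function are hypothetical, it is not how Boomi or CastIron actually work, and it deliberately ignores deletions and clock skew.

```python
"""Hypothetical two-way sync between an on-premises store and a SaaS store."""
from typing import Dict, Tuple

# Hypothetical record format: record id -> (last-modified timestamp, payload).
Store = Dict[str, Tuple[int, str]]


def sync_two_way(onprem: Store, saas: Store) -> None:
    """Merge both stores in place so each ends up holding the newest copy of every record."""
    for rid in set(onprem) | set(saas):
        local, remote = onprem.get(rid), saas.get(rid)
        if local is None:
            newest = remote                     # record exists only in the SaaS app
        elif remote is None:
            newest = local                      # record exists only on premises
        else:
            # Last writer wins; ties go to the on-premises copy.
            newest = local if local[0] >= remote[0] else remote
        onprem[rid] = newest
        saas[rid] = newest
```

Last-writer-wins keeps the example short, but it silently discards the older of two conflicting edits; production integration tools typically offer richer conflict-resolution and field-mapping options.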

Third-party integrators such as Appirio, ModelMetrics, and BlueWolf also offer integrated sets of SaaS applications, sometimes selected by menu from a Web interface, sometimes built in custom engagements.

"You end up with a constellation of functionality, with multiple SaaS providers linked, available on a subscription basis," West says.

3. Take Inventory and Throw Out Apps