Windows Azure and Cloud Computing Posts for 8/25/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA)

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).


Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Jerry Huang asserted “A hard drive is a commodity. Whether it is IDE, EIDE or SATA, you buy the right one and plug it directly into your computer” as a preface to his Cloud Storage Becoming a Commodity post of 8/25/2010:

A hard drive is a commodity. Whether it is IDE, EIDE or SATA, you buy the right one and you can plug it directly into your computer.

A commodity is a good for which there is demand, but which is supplied without qualitative differentiation across a market. Commodities are substances that come out of the earth and maintain roughly a universal price.

(Quote: Wikipedia)

Cloud storage is becoming a commodity. Pricing is converging across cloud storage vendors, and so are the interfaces.

There are three layers in the cloud storage stack:

- cloud storage software facing the end user;

- service providers offering the services;

- cloud storage vendors supplying the solutions to the service providers.

On the user front, more and more cloud storage software is available, supporting more and more cloud storage vendors. For example, in 2008, Gladinet supported SkyDrive, Google Docs, Google Picasa and Amazon S3. In 2010, the list expanded to include AT&T Synaptic Storage, Box.net, EMC Atmos Online, FTP, Google Docs, Google Apps, Google Storage For Developers, Mezeo, Nirvanix, Peer1 CloudOne, Windows Azure, WebDav and more. This trend lets consumers pick and choose the cloud storage services they need.

On the service-provider front (service providers are the biggest cloud storage service providers), not all providers build their cloud storage services themselves. For example, AT&T and Peer1 use EMC Atmos, while Verizon and Planet use Nirvanix. As time goes on, we will see the same provider using multiple back-end solutions to satisfy different needs. Also, when service providers merge, the merged company may end up using back-end solutions from multiple vendors.

On the cloud storage vendor front, more and more are conforming to the Amazon S3 API. We saw Google Storage for Developers, Eucalyptus, Dunkel and Mezeo all creating S3 compatible APIs for their cloud storage solutions. On the other hand, Rackspace is pushing the OpenStack project. All are trying to create a unified interface.

All these are turning cloud storage into a commodity.

If Neo4j can provide Advanced Indexes Using Multiple Keys, as reported by Alex Popescu on 8/25/2010 in his myNoSQL blog, why can’t Azure Tables?

There’s a prototype implementation of a new index which solves this (and some other issues as well, f.ex. indexing for relationships).

The code is at https://svn.neo4j.org/laboratory/components/lucene-index/ and it’s built and deployed over at http://m2.neo4j.org/org/neo4j/neo4j-lucene-index/

The new index isn’t compatible with the old one so you’ll have to index your data with the new index framework to be able to use it.

Original title and link for this post: Neo4j: Advanced Indexes Using Multiple Keys (published on the NoSQL blog: myNoSQL)


SQL Azure Database, Codename “Dallas” and OData

Alex James announced on 8/25/2010 the #odata Daily newspaper that’s updated every day:

A newspaper built from all the articles, blog posts, videos and photos shared on Twitter using the #OData hashtag.

Wayne Walter Berry (@WayneBerry) announced SQL Azure Service Update 4 in an 8/24/2010 post to the SQL Azure Team blog:

Service Update 4 is now live with database copy, improved help system, and deployment of Microsoft Project Code-Named “Houston” to multiple data centers.

Support for database copy: Database copy allows you to make a real-time, complete snapshot of your database into a different server in the data center. This new copy feature is the first step in backup support for SQL Azure, allowing you to get a complete backup of any SQL Azure database before making schema or database changes to the source database. The ability to snapshot a database easily is our top requested feature for SQL Azure, and goes above and beyond our data center replication to keep your data always available. The MSDN documentation with more information is entitled: Copying Databases in SQL Azure.

Additional MSDN Documentation: MSDN has created a new section called Development: How-to Topics (SQL Azure Database) which has links to information about how to perform common programming tasks with Microsoft SQL Azure Database.

- Update on “Houston”: Microsoft Project Code-Named “Houston” (Houston) is a lightweight, web-based database management tool for SQL Azure. Houston, which runs on top of Windows Azure, is now available in multiple data centers, reducing the latency between the application and your SQL Azure database.

Wayne continued with Backing Up Your SQL Azure Database Using Database Copy on 8/24/2010:

With the release of Service Update 4 for SQL Azure you now have the ability to make a snapshot of your running database on SQL Azure. This allows you to quickly create a backup before you implement changes to your production database, or to create a test database that resembles your production database.

The backup is performed in the SQL Azure datacenter using a transactional mechanism without downtime to the source database. The database is copied in full to a new database in the same datacenter. You can choose to copy to a different server (in the same data center) or the same server with a different database name.

A new database created from the copy process is transactionally consistent with the source database at the point in time when the copy completes. This means that the snapshot time is the end time of the copy, not the start time of the copy.

Getting Started

The Transact-SQL syntax looks like this:

CREATE DATABASE destination_database_name AS COPY OF [source_server_name.]source_database_name

To copy the Adventure Works database to the same server, I execute this:

CREATE DATABASE [AdvetureWorksBackup] AS COPY OF [AdventureWorksLTAZ2008R2]

This command must be executed while connected to the master database of the destination SQL Azure server.
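If you script this step, building the statement in code avoids quoting mistakes. Below is a minimal Python sketch. The helper names are hypothetical and not part of any SQL Azure tool. Bracket-quoting with a doubled `]` follows the same rule as T-SQL's QUOTENAME. The sketch only builds the text; you would still execute it against master on the destination server with your own client library.

```python
from typing import Optional


def quote_identifier(name: str) -> str:
    """Bracket-quote a T-SQL identifier, doubling any ']' the way QUOTENAME does."""
    return "[" + name.replace("]", "]]") + "]"


def build_copy_statement(dest_db: str, source_db: str,
                         source_server: Optional[str] = None) -> str:
    """Build a CREATE DATABASE ... AS COPY OF statement (hypothetical helper).

    Run the result while connected to master on the destination server.
    Pass source_server only when copying from a different server.
    """
    source = quote_identifier(source_db)
    if source_server:
        source = quote_identifier(source_server) + "." + source
    return f"CREATE DATABASE {quote_identifier(dest_db)} AS COPY OF {source}"


# prints: CREATE DATABASE [AdvetureWorksBackup] AS COPY OF [AdventureWorksLTAZ2008R2]
print(build_copy_statement("AdvetureWorksBackup", "AdventureWorksLTAZ2008R2"))
```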

Monitoring the Copy

You can monitor a copy in progress by querying a new dynamic management view called sys.dm_database_copies.

An example query looks like this:

SELECT * FROM sys.dm_database_copies

Here is my output from the Adventure Works copy above:
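Rather than re-running the query by hand, a script can poll the view until the copy finishes. This Python sketch makes two assumptions:

- You supply a hypothetical `fetch_percent_complete` function. It selects the `percent_complete` column for your copy from sys.dm_database_copies and returns None once no row comes back.
- The row disappears when the copy ends.

Because of the second assumption, confirm the destination database's final state in sys.databases before relying on the copy.

```python
import time
from typing import Callable, List, Optional


def wait_for_copy(fetch_percent_complete: Callable[[], Optional[float]],
                  poll_seconds: float = 30.0,
                  timeout_seconds: float = 3600.0,
                  sleep: Callable[[float], None] = time.sleep) -> List[float]:
    """Poll until the copy's row is gone from sys.dm_database_copies.

    Returns the progress values observed (useful for logging).
    Raises TimeoutError if the copy is still listed after timeout_seconds.
    """
    observed: List[float] = []
    waited = 0.0
    while True:
        percent = fetch_percent_complete()
        if percent is None:          # assumption: no row means the copy has ended
            return observed
        observed.append(percent)
        if waited >= timeout_seconds:
            raise TimeoutError(f"copy still at {percent}% after {waited} seconds")
        sleep(poll_seconds)
        waited += poll_seconds
```

Passing `sleep` in as a parameter keeps the function easy to test without real waits.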

Permissions Required

When you copy a database to a different SQL Azure server, the exact same login/password executing the command must exist on the source server and destination server. The login must have db_owner permissions on the source server and dbmanager on the destination server. More about permissions can be found in the MSDN article: Copying Databases in SQL Azure.

One thing to note is that the server you copy your database to does not need to belong to the same service account. In fact, you can give or transfer your database to a third party by using this database copy command. As long as the user transferring the database has the correct permissions on the destination server and the login/password match, you can transfer the database. I will show how to do this in a future blog post.

Why Copy to Another Server?

You will obtain the same resource allocation in the data center whether you copy to the same server or a different server. Each server is just an endpoint, not a physical machine; see our blog post entitled A Server Is Not a Machine for more details. So why copy to another server? There are two reasons:

- You want the new database to have a different admin account in the SQL Azure portal than the source database. This would be desirable if you are copying a production database to a testing server, where the testers own the testing server and can create and drop databases as they desire.

- You want the new database to fall under a different service account for billing purposes.

Summary

More information about copying can be found in the MSDN article: Copying Databases in SQL Azure. Do you have questions, concerns, comments? Post them below and we will try to address them.

Wade Wegner and Zane Adam also chimed in on this topic.

Azret Botash posted End-User Report Designer – Viewing Reports (Part 2) on 8/24/2010 to the DevExpress blog:

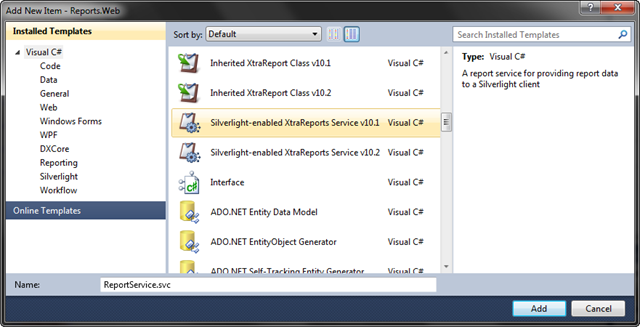

In part 1 we created an end-user report designer that can publish reports to a database using OData protocol. Now, let’s create a Silverlight application that can view the reports that we have published.

Report Service

First we’ll add a printing service to our ASP.NET host application.

By default, the Silverlight-enabled XtraReports service looks up reports by type name; we want to override this behavior and load the report from the database.

protected override XtraReport CreateReport(string reportTypeName, Dictionary<string, object> parameters)
{
    try
    {
        using (List listService = new List())
        {
            using (Session session = new Session(listService.GetDataLayer()))
            {
                // reportTypeName carries the report's GUID rather than a type name
                Report report = session.GetObjectByKey<Report>(new Guid(reportTypeName));
                if (report == null)
                {
                    return Create404Report();
                }
                File file = report.File;
                if (file == null)
                {
                    return Create404Report();
                }
                // Rebuild the report from the layout stored in the database
                XtraReport retVal = new XtraReport();
                using (MemoryStream stream = new MemoryStream(file.Binary))
                {
                    retVal.LoadLayout(stream);
                    return retVal;
                }
            }
        }
    }
    catch (Exception e)
    {
        return Create500Report(e);
    }
}

Note: The new CreateReport assumes that reportTypeName is a report ID (a GUID).

Silverlight Viewer

Inside the Silverlight application, we’ll simply drop the DocumentPreview control on our page and load the report on page load. I have described this process here.

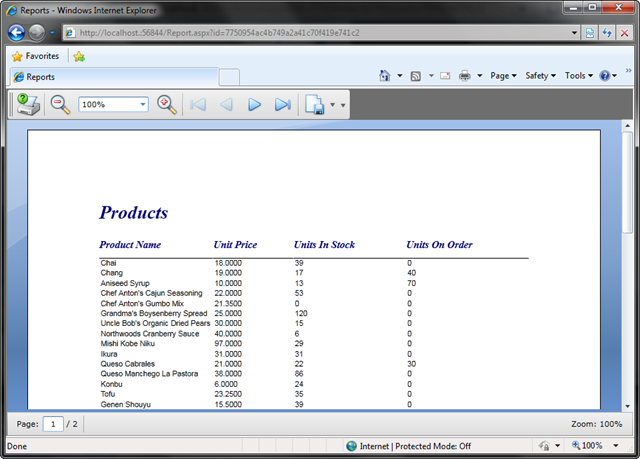

<dxp:DocumentPreview Name="documentPreview1"/>

void MainPage_Loaded(object sender, RoutedEventArgs e)
{
    // The report ID arrives in the page's query string, e.g. Report.aspx?id=...
    if (!HtmlPage.Document.QueryString.ContainsKey("id"))
    {
        return;
    }
    string reportId = HtmlPage.Document.QueryString["id"];
    if (string.IsNullOrWhiteSpace(reportId))
    {
        return;
    }
    ReportPreviewModel model = new ReportPreviewModel(
        new Uri(App.Current.Host.Source.AbsoluteUri + "../../ReportService.svc").ToString());
    // The service's CreateReport override treats this "type name" as the report GUID
    model.ReportTypeName = reportId;
    documentPreview1.Model = model;
    model.CreateDocument();
}

That’s it: we can now access our reports by URL, for example:

http://localhost.:56844/Report.aspx?id=7750954ac4b749a2a41c70f419e741c2
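The viewer passes the `id` value straight through, and the service parses it with `new Guid(...)`. So only URLs carrying a well-formed GUID will resolve to a report. If you generate or validate such links elsewhere, a Python sketch of the same checks might look like this (the helper name is hypothetical):

```python
import uuid
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def report_id_from_url(url: str) -> Optional[uuid.UUID]:
    """Mirror the viewer's checks: a missing or blank id yields None.

    Otherwise the value is parsed as a GUID, which raises ValueError if malformed.
    """
    values = parse_qs(urlsplit(url).query).get("id")  # blank values are dropped by default
    if not values or not values[0].strip():
        return None
    # uuid.UUID accepts the 32-hex-digit form used in the sample URL
    return uuid.UUID(values[0])


print(report_id_from_url("http://localhost.:56844/Report.aspx?id=7750954ac4b749a2a41c70f419e741c2"))
```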

Final Notes

- You can download the complete sample here.

- The included Web.config will be useful to you if you need to copy paste some settings.

- You will also need DevExpress.Xpo.Services.10.1.dll. This file is not included in the provided sample, but you can download it from http://xpo.codeplex.com.

- Get more information and more samples of OData Provider for XPO.

Not to be outdone by the #OData enthusiasts, there’s also an #sqlazure Daily newspaper based on Twitter posts with the #sqlazure tag:

What’s strange is that I can’t find an #Azure or #WindowsAzure newspaper.


AppFabric: Access Control and Service Bus

Vittorio Bertocci (@vibronet) posted Infographic: IPs, Protocols & Token Flavours in the August Labs release of ACS to his personal blog on 8/25/2010:

The newest lab release of ACS shows some serious protocol muscle, covering (to my knowledge) more ground than anything else to date. ACS also does an excellent job of simplifying many scenarios that would traditionally require much more thinking & effort: as a result, it is very tempting to think that any scenario falling in the Cartesian product of possible IPs, protocols, token types and application types can be easily tackled. Although that is true in principle, in reality some uses and scenarios are more natural and easier to implement than others. Discussions about this, in one form or another, are blossoming all over the place, both internally and externally: as a visual person, I think that a visual summary of the current situation can help to scope the problem and use the service more effectively. Here is my first attempt.

I am fairly confident that this should be correct, I discussed it with Hervey, Todd and Erin, but there’s always the possibility that I misunderstood something.

There’s quite a lot of stuff in there; let me walk you through the various parts of the diagram.

The diagram is partitioned into three disjoint vertical regions: on the left are all the identity providers you can use with ACS; on the right, the applications that can trust ACS; and between them, ACS itself. On the borderline between ACS and your applications are the three issuing endpoints offered by ACS: the WS-Federation endpoint, the WS-Trust endpoint and the OAuth WRAP one. I didn’t draw any of the ACS machinery here, from the claim transformation engine to the list of RP endpoints; it’s enough to know that something happens to the claims in their journey from the IP to the ACS issuers.

The diagram is also subdivided into three horizontal regions, which represent the kinds of apps that are best implemented using a given set of identity providers and/or protocols. The WS-Federation issuer is best suited for applications that are meant to be consumed via a web browser; WS-Trust, and the OAuth WRAP profiles that ACS implements, are ideal for server-to-server communications; finally, WS-Trust is also suitable for cases in which the user is taking advantage of rich clients. This classification is one of the areas of maximum confusion, and a likely source of controversy. Of course you can use WS-Federation without a browser (that’s what I do in SelfSTS), and of course you can embed WS-Federation in a rich client and use a browser control to obtain tokens; however, those approaches require writing custom code, a very good grasp of what you are doing and the will to stretch things beyond their intended usage, hence I am not covering them here.

Let’s backtrack through the diagram starting from the ACS issuer endpoints.

The WS-Federation endpoint is probably the one you are most familiar with; it’s the one you take advantage of in order to sign in to your application by leveraging multiple identity providers. It’s also the one that allows a no-code experience for the most common cases, thanks to the WIF SDK’s Add STS Reference wizard.

You can configure that endpoint to issue SAML 1.1, SAML 2.0 and SWT tokens. The latter can be useful for protocol transition scenarios; however, remember that there’s no out-of-the-box support for the format. The sources here are the ones you can see on the portal, and the ones that the ACS-generated home realm discovery page will offer you (if you opted in). Every IP will use its own protocol for authentication (Google and Yahoo use OpenID, Facebook uses Facebook Connect, ADFS2 uses whatever authentication system is active), but in the end your application will get a WS-Federation wresult with a transformed token. Note that “ADFS2” does not strictly indicate an ADFS2 instance: anything that can do WS-Federation should be usable here.
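Since SWT has no out-of-the-box support, validating one means doing the work yourself. The sketch below assumes the SWT layout ACS uses: form-encoded claims, followed by an `HMACSHA256` parameter computed with a base64-encoded symmetric key. On that basis, this Python code checks the signature and the `ExpiresOn` claim. The function names are hypothetical. A real validator should also compare `Issuer` and `Audience` against expected values.

```python
import base64
import hashlib
import hmac
import time
from typing import Dict, Optional
from urllib.parse import parse_qs, quote, unquote

SIGNATURE_PARAM = "&HMACSHA256="


def _hmac(body: str, key_b64: str) -> bytes:
    # HMAC-SHA256 over the unsigned token text, keyed with the base64-decoded symmetric key
    return hmac.new(base64.b64decode(key_b64), body.encode("ascii"), hashlib.sha256).digest()


def sign_swt(unsigned_token: str, key_b64: str) -> str:
    """Append an HMACSHA256 parameter (handy for producing test tokens)."""
    signature = base64.b64encode(_hmac(unsigned_token, key_b64)).decode("ascii")
    return unsigned_token + SIGNATURE_PARAM + quote(signature, safe="")


def verify_swt(token: str, key_b64: str, now: Optional[float] = None) -> Dict[str, str]:
    """Return the token's claims, or raise ValueError if the signature or expiry is bad."""
    body, sep, signature = token.rpartition(SIGNATURE_PARAM)
    if not sep:
        raise ValueError("token has no HMACSHA256 parameter")
    if not hmac.compare_digest(_hmac(body, key_b64), base64.b64decode(unquote(signature))):
        raise ValueError("signature mismatch")
    claims = {name: values[0] for name, values in parse_qs(body).items()}
    now = time.time() if now is None else now
    if "ExpiresOn" in claims and int(claims["ExpiresOn"]) < now:  # seconds since the epoch
        raise ValueError("token expired")
    return claims
```

`hmac.compare_digest` is used so the comparison does not leak timing information about how much of the signature matched.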

The WS-Trust endpoint will issue tokens when presented with a token from a WS-Trust identity provider, that is to say an ADFS2 instance (or equivalent, per the earlier discussion). It will also issue tokens when invoked with username and password associated to a service identity, static credentials maintained directly in ACS.

The OAuth WRAP endpoint will issue SWT tokens when invoked with a service identity credential; it will also accept SAML assertions from a trusted WS-Trust IP, pretty much the ADFS2 integration scenario from V1. Note that the profiles supported by ACS are server to server: the username & password of a service identity are not user credentials, but the means through which a service authenticates with another (including cases in which the user does not even have a session in place).
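To make the server-to-server flow concrete, here is a Python sketch of a client that uses a service identity against the OAuth WRAP endpoint. The `wrap_name`/`wrap_password`/`wrap_scope` fields and the `WRAP access_token="..."` header follow the WRAP v0.9 profile as used in ACS samples. The token endpoint address is an assumption; copy it from your own namespace in the portal. The helper names are hypothetical.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlencode
from urllib.request import Request, urlopen


def build_wrap_request_body(name: str, password: str, scope: str) -> str:
    """Form-encoded body for a token request made with a service identity's name and password."""
    return urlencode({"wrap_name": name, "wrap_password": password, "wrap_scope": scope})


def parse_wrap_response(body: str) -> Tuple[str, Optional[int]]:
    """Return (SWT access token, lifetime in seconds if present) from a form-encoded response."""
    fields = parse_qs(body)
    expires = fields.get("wrap_access_token_expires_in")
    return fields["wrap_access_token"][0], (int(expires[0]) if expires else None)


def authorization_header(token: str) -> str:
    """Value for the HTTP Authorization header when calling the protected service."""
    return f'WRAP access_token="{token}"'


def request_token(token_endpoint: str, name: str, password: str, scope: str) -> str:
    # token_endpoint: your namespace's WRAP issuer address (assumption: taken from the ACS portal)
    body = build_wrap_request_body(name, password, scope).encode("ascii")
    request = Request(token_endpoint, data=body,
                      headers={"Content-Type": "application/x-www-form-urlencoded"})
    with urlopen(request) as response:
        return parse_wrap_response(response.read().decode("ascii"))[0]
```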

That’s it; that should give you a feeling for the scope of what you can do with this release. I’ll probably add to this as things move forward. Have fun!


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Rinat Abdullin (@abdullin) explained how to Redirect TCP Connections in Windows Azure in this 8/25/2010 post:

I've just published a quick and extremely simple open source project that shows how to redirect TCP connections from one IP address/port combination to another in Windows Azure. It is sometimes helpful when dealing with SQL Azure, cloud workers, firewalls and the like.

Lokad Tcp Tunnel for Windows Azure | Download

Usage is extremely simple:

- Get the package.

- Configure ServiceConfiguration to point to the target IP address/port you want to connect to (you can do this later in Azure Developer's Portal).

- Upload the Deployment.cspkg with the config to Windows Azure and start the deployment.

- Connect to deployment.cloudapp.net:1001 as if it was IP:Port from the config.

If you are connecting to SQL Server this way (hosted in Azure or somewhere else), then the address has to be specified like this in SQL Server Management Studio (note the comma):

deployment.cloudapp.net,1001

Actual Azure Worker config settings should look similar to the ones below when configuring TCP routing towards SQL Server (note port 1433, the default port for SQL Server):

<ConfigurationSettings>
  <Setting name="Host" value="ip-of-your-SQL-server" />
  <Setting name="Port" value="1433" />
</ConfigurationSettings>

The project relies on rinetd to do the actual routing and demonstrates how to:

- Bundle non .NET executable in Windows Azure Worker and run it.

- Deal with service endpoints and pass them to the processes.

- Use Cloud settings to configure the internal process.
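In the Lokad package, rinetd does the forwarding. To show what that forwarding amounts to, here is a minimal Python asyncio sketch of a TCP relay. It is not rinetd and not part of Lokad's project. It omits rinetd's allow/deny rules and logging, and it closes both directions as soon as either side disconnects.

```python
import asyncio


async def _pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until the source closes, then close the destination."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # the peer went away; fall through and close our side
    finally:
        writer.close()


async def start_relay(listen_host: str, listen_port: int,
                      target_host: str, target_port: int) -> asyncio.AbstractServer:
    """Listen on listen_host:listen_port and forward every connection to the target."""
    async def handle(client_reader, client_writer):
        try:
            target_reader, target_writer = await asyncio.open_connection(target_host, target_port)
        except OSError:
            client_writer.close()  # target unreachable: drop the client connection
            return
        await asyncio.gather(_pipe(client_reader, target_writer),
                             _pipe(target_reader, client_writer))

    return await asyncio.start_server(handle, listen_host, listen_port)
```

Binding to 0.0.0.0 with the role's endpoint port, and targeting the Host/Port settings, would mirror the rinetd rule the worker writes.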

Since core source code is extremely simple, I'll list it here:

// Requires: using System.Diagnostics; using System.IO; using Microsoft.WindowsAzure.ServiceRuntime;
// "Incoming" is the endpoint declared in the service definition; Host and Port come from ServiceConfiguration.
var point = RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["Incoming"];
var host = RoleEnvironment.GetConfigurationSettingValue("Host");
var port = RoleEnvironment.GetConfigurationSettingValue("Port");

// Write a one-line rinetd rule: bindaddress bindport connectaddress connectport
var tempFileName = Path.GetTempFileName();
var args = string.Format("0.0.0.0 {0} {1} {2}", point.IPEndpoint.Port, host, port);
File.WriteAllText(tempFileName, args);

var process = new Process
{
    StartInfo =
    {
        UseShellExecute = false,
        RedirectStandardOutput = true,
        RedirectStandardError = true,
        CreateNoWindow = true,
        ErrorDialog = false,
        FileName = "rinetd.exe",
        WindowStyle = ProcessWindowStyle.Hidden,
        Arguments = "-c \"" + tempFileName + "\"",
    },
    EnableRaisingEvents = false
};

process.Start();
// Drain the redirected output so rinetd never blocks on a full pipe
process.BeginOutputReadLine();
process.BeginErrorReadLine();
// Keep the role running for as long as rinetd runs
process.WaitForExit();

Tcp Tunnel for Azure is shared by Lokad in the hope that it will save somebody a few hours or a day.
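The worker writes a single rinetd rule of the form `bindaddress bindport connectaddress connectport`. Clients then use the comma-separated host and port shown earlier. If you want to produce either string outside the role, for instance in a deployment script, a small Python sketch could look like this. The helper names are hypothetical, and port 1001 and the target address are example values.

```python
def rinetd_rule(listen_port: int, target_host: str, target_port: int,
                bind_address: str = "0.0.0.0") -> str:
    """One rinetd forwarding rule: bindaddress bindport connectaddress connectport."""
    for port in (listen_port, target_port):
        if not 0 < port < 65536:
            raise ValueError(f"invalid TCP port: {port}")
    return f"{bind_address} {listen_port} {target_host} {target_port}"


def sql_client_server_name(host: str, port: int) -> str:
    """SQL Server client tools separate host and port with a comma, not a colon."""
    return f"{host},{port}"


print(rinetd_rule(1001, "10.0.0.5", 1433))
print(sql_client_server_name("deployment.cloudapp.net", 1001))
```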

Dilip Krishnan summarizes David Pallman’s Hidden Costs in the Cloud, Part 1: Driving the Gremlins Out of Your Windows Azure Billing post of 8/14/2010 in an 8/25/2010 post to the InfoQ blog:

In a recent post David Pallman takes a look at the hidden costs of moving to the cloud, specifically in the context of Azure.

Cloud computing has real business benefits that can help the bottom line of most organizations. However, you may have heard about (or directly experienced) cases of sticker shock where actual costs were higher than expectations.

“These costs aren’t really hidden, of course: it’s more that they’re overlooked, misunderstood, or underestimated,” he says, as he examines and identifies these commonly overlooked costs in cloud-based solutions.

Hidden Cost #1: Dimensions of Pricing

According to David, the #1 source of surprises is failing to take into account the various dimensions along which the provider’s services are metered. Every service used adds more facets along which the offering can be metered: bandwidth, storage, transactions, service fees, etc.

In effect, everything in the cloud is cheap but every kind of service represents an additional level of charge. To make it worse, as new features and services are added to the platform the number of billing considerations continues to increase.

He suggests consumers use service specific ROI calculators such as the Windows Azure TCO Calculator, Neudesic’s Azure ROI Calculator, or the official Windows Azure pricing information.
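The multiplication behind such calculators is simple; the surprises come from forgetting a meter. Here is a minimal Python sketch, assuming you already have monthly quantities per meter. The meter names are hypothetical, and the rates are placeholders to replace with figures from the official pricing page. Raising an error for any meter without a rate is a deliberate choice, so an unpriced dimension can't silently drop out of the estimate.

```python
from typing import Dict


def estimate_monthly_bill(usage: Dict[str, float], rates: Dict[str, float]) -> Dict[str, float]:
    """Multiply each metered quantity by its unit rate and add a 'total' entry."""
    unpriced = sorted(set(usage) - set(rates))
    if unpriced:
        raise KeyError(f"no rate supplied for: {unpriced}")
    charges = {meter: round(quantity * rates[meter], 2) for meter, quantity in usage.items()}
    charges["total"] = round(sum(charges.values()), 2)
    return charges


# Placeholder rates and quantities for illustration only
rates = {"compute_hours": 0.12, "storage_gb_month": 0.15,
         "storage_transactions_per_10k": 0.01, "egress_gb": 0.15}
usage = {"compute_hours": 1440, "storage_gb_month": 10,
         "storage_transactions_per_10k": 50, "egress_gb": 30}
print(estimate_monthly_bill(usage, rates))
```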

Hidden Cost #2: Bandwidth

“Bandwidth is often overlooked or underappreciated in estimating cloud computing charges,” he says. He suggests modeling bandwidth usage with tools such as Fiddler to get a ballpark estimate based on key usage scenarios. One could also throttle bandwidth overages or design the architecture to provide the path of least traffic for any usage scenario.

Hidden Cost #3: Leaving the Faucet Running

He suggests we review the usage charges and billing often to avoid surprises when the bill arrives:

Leaving an application deployed that you forgot about is a surefire way to get a surprising bill. Once you put applications or data into the cloud, they continue to cost you money, month after month, until such time as you remove them. It’s very easy to put something in the cloud and forget about it.

Hidden Cost #4: Compute Charges Are Not Based on Usage

Hidden Cost #6: A Suspended Application is a Billable Application

He emphasizes that if the application is not used or suspended it does not mean the billing charges do not apply. He urges users to check the billing policies.

Since the general message of cloud computing is consumption-based pricing, some people assume their hourly compute charges are based on how much their application is used. It’s not the case: hourly charges for compute time do not work that way in Windows Azure.

Hidden Cost #5: Staging Costs the Same as Production

Many have mistakenly concluded that only Production is billed for when in fact Production and Staging are both charged for, and at the same rates.

Use Staging as a temporary area and set policies that anything deployed there must be promoted to Production or shut down within a certain amount of time. Give someone the job of checking for forgotten Staging deployments and deleting them—or even better, automate this process.
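The automated check Pallman recommends can start as a simple age filter. This Python sketch assumes a hypothetical `Deployment` record that you would populate from the portal or the management API. It only reports candidates and leaves the decision to promote or delete to you.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Deployment:
    # Hypothetical record; fill it from whatever inventory source you use
    service: str
    slot: str            # "Production" or "Staging"
    deployed_at: datetime


def stale_staging(deployments: List[Deployment], now: datetime,
                  max_age: timedelta) -> List[Deployment]:
    """Return Staging deployments older than max_age: candidates to promote or delete."""
    return [d for d in deployments
            if d.slot.lower() == "staging" and now - d.deployed_at > max_age]
```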

Hidden Cost #7: Seeing Double

[Y]ou need a minimum of 2 servers per farm if you want the Windows Azure SLA to be upheld, which boils down to 3 9’s of availability. If you’re not aware of this, your estimates of hosting costs could be off by 100%!
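The doubling is straightforward arithmetic, because compute is billed per deployed instance-hour whether the application is busy or idle (Hidden Cost #4). In this Python sketch the hourly rate is a placeholder, and 720 hours approximates a 30-day month. The `slots` parameter models the same deployment also sitting in Staging (Hidden Cost #5).

```python
def monthly_compute_cost(instances: int, hourly_rate: float,
                         hours_deployed: float = 720.0, slots: int = 1) -> float:
    """Instance-hours are billed while deployed, busy or idle.

    slots=2 models an identical deployment left running in Staging alongside Production.
    """
    if instances < 1 or slots < 1:
        raise ValueError("need at least one instance and one slot")
    return instances * slots * hours_deployed * hourly_rate
```

With the same placeholder rate, `monthly_compute_cost(2, r)` is exactly twice `monthly_compute_cost(1, r)`. That doubling is the "off by 100%" Pallman warns about. Adding `slots=2` doubles it again.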

Hidden Cost #8: Polling

Polling data in the cloud is a costly activity that incurs transaction fees, and costs can add up quickly with the volume of polling. He suggests:

Either find an alternative to polling, or do your polling in a way that is cost-efficient. There is an efficient way to implement polling using an algorithm that varies the sleep time between polls based on whether any data has been seen recently.
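One common way to vary the sleep time is exponential backoff: double the delay after each empty poll, up to a cap, and drop back to the minimum as soon as work appears. Pallman does not specify the exact schedule, so treat this Python sketch as one possible implementation. `fetch`, `handle` and `should_stop` are hypothetical callables; for example, `fetch` might retrieve a batch of queue messages, where each call is a billable transaction.

```python
import time
from typing import Callable, List


def poll_with_backoff(fetch: Callable[[], List], handle: Callable[[List], None],
                      should_stop: Callable[[], bool],
                      min_sleep: float = 1.0, max_sleep: float = 60.0,
                      sleep: Callable[[float], None] = time.sleep) -> None:
    """Poll until should_stop() is true, backing off while nothing arrives."""
    delay = min_sleep
    while not should_stop():
        items = fetch()
        if items:
            handle(items)
            delay = min_sleep            # work arrived: poll again promptly
        else:
            sleep(delay)                 # idle: wait, then lengthen the next wait
            delay = min(delay * 2, max_sleep)
```

The cap (`max_sleep`) bounds the worst-case latency for a message that arrives during a long idle stretch. The doubling keeps idle transaction counts low.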

Hidden Cost #9: Unwanted Traffic and Denial of Service Attacks

He warns that unintended traffic, in the form of DoS attacks, spiders, etc., could increase traffic in unexpected ways. He suggests the best way to deal with such unintended charges is to audit the security of the application and provide control measures such as CAPTCHAs.

If your application is hosted in the cloud, you may find it is being accessed by more than your intended user base. That can include curious or accidental web users, search engine spiders, and openly hostile denial of service attacks by hackers or competitors. What happens to your bandwidth charges if your web site or storage assets are being constantly accessed by a bot?

Hidden Cost #10: Management

Finally, he states that, as a result of all these factors, clouds come with an inherent cost: managing these services for efficient usage and, consequently, efficient billing.

Regularly monitor the health of your applications. Regularly monitor your billing. Regularly review whether what’s in the cloud still needs to be in the cloud. Regularly monitor the amount of load on your applications. Adjust the size of your deployments to match load.

The cloud’s marvelous IT dollar efficiency is based on adjusting deployment larger or smaller to fit demand. This only works if you regularly perform monitoring and adjustment.
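Adjusting deployment size usually comes down to a sizing rule. Here is a minimal Python sketch, assuming you have measured how many requests per second one instance handles. That figure is hypothetical here; you would obtain it through load testing. The sketch keeps a floor of two instances so the compute SLA discussed under Hidden Cost #7 still applies.

```python
import math


def instances_for_load(expected_requests_per_sec: float,
                       requests_per_sec_per_instance: float,
                       minimum: int = 2) -> int:
    """Size a role to the expected load, never dropping below `minimum` instances."""
    if requests_per_sec_per_instance <= 0:
        raise ValueError("per-instance capacity must be positive")
    needed = math.ceil(expected_requests_per_sec / requests_per_sec_per_instance)
    return max(minimum, needed)
```

Rounding up with `math.ceil` errs on the side of capacity. The `minimum` floor trades a little cost for SLA coverage during quiet periods.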

He concludes his article by saying that it’s very possible one might not get things right the first time around, so expect some experimentation; consider getting an assessment of the infrastructure and seeking guidance from experts.

Cloud computing is too valuable to pass by and too important to remain a diamond in the rough.

Be sure to check out the original post and do enrich the comments section with your experiences.

Also, check out David’s latest: Hidden Costs in the Cloud, Part 2: Windows Azure Bandwidth Charges of 8/21/2010.

Brent Stineman (@BrentCodeMonkey) finally posted his Windows Azure Diagnostics Part 2–Options, Options, Options analysis on 8/24/2010:

It’s a hot and muggy Sunday here in Minnesota. So I’m sitting inside, writing this update while my wife and kids get their bags ready for going back to school. It’s hard to believe that summer is almost over already. Heck, I’ve barely moved my ’68 Cutlass convertible this year. But enough about my social agenda.

After 4 months I’m finally getting back to my WAD series. Sorry for the delay folks. It hasn’t been far from my mind since I did part 1 back in April. But I’m back with a post that I hope you’ll enjoy. And I’ve taken some of the time to do testing, digging past the surface and in hopes of bringing you something new.

Diagnostic Buffers

If you’ve read up on WAD at all, you’ve probably read that there are several diagnostic data sources that are collected by default. Something that’s not made very clear in the MSDN articles (or even in many of the other blogs and articles I read while preparing for this) is that this information is NOT automatically persisted to Azure Storage.

So what’s happening is that these data sources are buffers that represent files stored in the local file system. The size of these buffers is governed by a property of the DiagnosticMonitorConfiguration settings, OverallQuotaInMB. This setting represents the total space on the VM that will be used for the storage of all log file information. You can also set quotas for the various individual buffers, the sum total of which should be no greater than the overall quota.

These buffers will continue to grow until their maximum quota is reached at which time the older entries will be aged off. Additionally, should your VM crash, you will likely lose any buffer information. So the important step is to make sure you have each of your buffers configured properly to persist the logs to Azure Storage in such a way that helps protect the information you are most interested in.
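To make the aging behavior concrete, here is a conceptual Python model of a quota-bounded buffer. It also includes a check that individual quotas fit within the overall quota. It is not the WAD API; the real settings are C# properties such as `OverallQuotaInMB`. The class and function names are hypothetical.

```python
from collections import deque
from typing import Deque, Dict


class QuotaBuffer:
    """Conceptual model: when a new entry would exceed the quota, the oldest entries age off."""

    def __init__(self, quota_bytes: int):
        self.quota_bytes = quota_bytes
        self.entries: Deque[bytes] = deque()
        self.size = 0

    def append(self, entry: bytes) -> None:
        if len(entry) > self.quota_bytes:
            raise ValueError("entry larger than the whole buffer")
        while self.size + len(entry) > self.quota_bytes:
            self.size -= len(self.entries.popleft())  # discard the oldest entry
        self.entries.append(entry)
        self.size += len(entry)


def check_quotas(overall_mb: int, buffer_quotas_mb: Dict[str, int]) -> None:
    """Raise if the individual buffer quotas add up to more than the overall quota."""
    total = sum(buffer_quotas_mb.values())
    if total > overall_mb:
        raise ValueError(f"buffer quotas total {total} MB, more than the overall {overall_mb} MB")
```

In this model, anything not yet transferred when it ages off is simply gone. This is why the transfer settings discussed below matter.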

When running in the development fabric, you can actually see these buffers. Launch the development fabric UI and navigate to a role instance and right click it as seen below:

Poke around in there a bit and you’ll find the various file buffers I’ll be discussing later in this update.

If you’re curious about why this information isn’t automatically persisted, I’ve been told it was a conscious decision on the part of the Azure team. If all these sources were automatically persisted, the potential costs associated with Azure Storage could present an issue. So they erred on the side of caution.

OK, with that said, it’s time to move on to configuring the individual data sources.

Windows Azure Diagnostic infrastructure Logs

Simply put, this data source is the chatter from the WAD processes, the role, and the Azure fabric. You can see it start up, configuration values being loaded and changed, etc. This log is collected by default but, as just mentioned, is not persisted automatically. Like most data sources, configuring it is pretty straightforward. We start by grabbing the current diagnostic configuration in whatever manner suits you best (I covered a couple of ways last time), giving us an instance of DiagnosticMonitorConfiguration that we can work with.

To adjust the WAD data source, we’re going to work with the DiagnosticInfrastructureLogs property, which is of type BasicLogsBufferConfiguration. This allows us to adjust the following values:

BufferQuotaInMB – maximum size of this data source’s buffer

ScheduledTransferLogLevelFilter – this is the LogLevel threshold that is used to filter entries when entries are persisted to Azure storage.

ScheduledTransferPeriod – this TimeSpan value is the interval at which the log should be persisted to Azure Storage.
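Here is a conceptual Python model of how the two scheduled-transfer settings interact. Nothing moves until a full period has elapsed; then only entries at or above the filter's severity are persisted. The level names mirror WAD's LogLevel enumeration. The numeric ordering and all function names are assumptions of the sketch, not the WAD agent's implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import IntEnum
from typing import List


class LogLevel(IntEnum):
    # Names mirror WAD's LogLevel; in this sketch a lower number means more severe
    CRITICAL = 1
    ERROR = 2
    WARNING = 3
    INFORMATION = 4
    VERBOSE = 5


@dataclass
class Entry:
    timestamp: datetime
    level: LogLevel
    message: str


def entries_to_transfer(buffer: List[Entry], last_transfer: datetime, period: timedelta,
                        level_filter: LogLevel, now: datetime) -> List[Entry]:
    """Entries due for persistence: none until a full period has elapsed since the last
    transfer, then every newer entry at or above the filter's severity."""
    if now - last_transfer < period:
        return []
    return [e for e in buffer if e.timestamp > last_transfer and e.level <= level_filter]
```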

Admittedly, this isn’t a log you’re going to have call for very often, if ever. But I have to admit, when I looked at it, it was kind of interesting to see more about what was going on under the covers when roles start up.

Windows Azure Logs

The next source that’s collected automatically is Azure Trace Listener messages. This data source is different from the previous one because it only contains what you put into it. Since it’s based on a trace listener, you have to instrument your application to take advantage of it. Proper instrumentation of any cloud-hosted application is something I consider a best practice.

Tracing is a topic so huge that considerable time can be (and has been) spent discussing it. You have switches, levels, etc. So rather than diving into that extensive topic, let me just link you to another source that covers it exceedingly well.

However, I do want to touch on how to get this buffer into Azure Storage. Using the Logs property of DiagnosticMonitorConfiguration, we again access an instance of the BasicLogsBufferConfiguration class, just as with the Azure Diagnostics Infrastructure logs, so the same properties are available. Set them as appropriate, save your configuration, and we’re good to go.

IIS Logs (web roles only)

The last data source that is collected by default, at least for web roles, is the IIS logs. These are a bit of an odd bird in that there’s no way to schedule a transfer or set a quota for them. I’m also not sure whether their size counts against the overall quota. What is known is that if you do an on-demand transfer for ‘Logs’, this buffer will be copied to blob storage for you.

FREB Logs

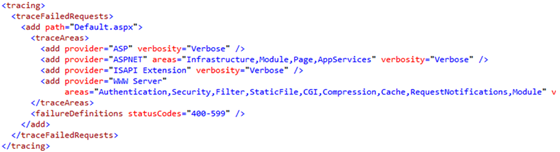

Our next buffer, the Failed Request Event Buffering log or FREB, is closely related to the IIS logs. It records, of course, failed IIS requests. This web-role-only data source is configured by adding a failed-request tracing section to your role’s web.config file.

Unfortunately, I haven’t finished testing how to extract these logs as I write this, but as soon as I do, I’ll update this post with that information. For the moment, my assumption is that once FREB is configured, an on-demand transfer will pull these logs in along with the IIS logs.

Crash Dumps

Crash dumps, like the FREB logs, aren’t automatically collected or persisted. Again, I believe an on-demand transfer will copy them to storage, but I’m still trying to prove it. Configuring the capture of this data also requires a different step. Fortunately, it’s the easiest of all the logs: a single static call that doesn’t even require a reference to the current diagnostic configuration. Its Boolean argument chooses full dumps (true) or mini dumps (false):

Microsoft.WindowsAzure.Diagnostics.CrashDumps.EnableCollection(true);

Windows Event Logs

Do I really need to say anything about these? Actually yes, namely that the security log… forget about it. Adding custom event types/categories? Not an option. However, we can gather entries from the other logs through a simple XPath statement, as follows:

diagConfig.WindowsEventLog.DataSources.Add("System!*");

You can also filter by severity, either in the XPath expression itself or with the WindowsEventLog buffer’s ScheduledTransferLogLevelFilter.
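Here is a rough sketch of the source string format. EventLogSources is a hypothetical helper, not part of the SDK. It builds the "Channel!XPath" strings that the WindowsEventLog data sources take, filtering on the standard Windows event levels (1 = Critical, 2 = Error, 3 = Warning, 4 = Information, 5 = Verbose).

```csharp
using System;
using System.Linq;

// Hypothetical helper (not part of the Azure SDK) that builds event log data source strings.
public static class EventLogSources
{
    // Returns "<channel>!*" for every event, or an XPath filter on System/Level
    // such as "Application!*[System[(Level=1 or Level=2)]]".
    public static string ForLevels(string channel, params int[] levels)
    {
        if (string.IsNullOrWhiteSpace(channel))
            throw new ArgumentException("A channel name such as \"Application\" is required.", nameof(channel));

        // No levels requested: take everything from the channel, like "System!*".
        if (levels == null || levels.Length == 0)
            return channel + "!*";

        // OR together one Level test per requested severity.
        string predicate = string.Join(" or ", levels.Select(l => "Level=" + l));
        return channel + "!*[System[(" + predicate + ")]]";
    }
}
```

The result is what you would pass to diagConfig.WindowsEventLog.DataSources.Add. For example, ForLevels("Application", 1, 2) yields "Application!*[System[(Level=1 or Level=2)]]", which keeps only critical and error entries from the Application log.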

Of course, the real challenge is formatting the XPath. Fortunately, the king of Azure evangelists, Steve Marx, has a blog post that helps us out. At this point I’d probably go on to discuss how to gather these, but you know… Steve already does that. And it would be pretty presumptuous of me to think I know better than the almighty “SMARX”. Alright, enough sucking up… I see the barometer is dropping. So let’s move on.

Performance Counters

We’re almost there. Now we’re down to performance counters, a topic most of us are likely familiar with. The catch is that as developers, you likely haven’t done much more than hear someone complain about them. Performance counters belong in the world of the infrastructure monitoring types. You know, those folks who sit behind closed doors with the projector aimed at a wall of scrolling graphs and numbers? If things start to go badly, a mysterious email shows up in the inbox of a business sponsor warning that a transaction took 10ms longer than it was supposed to. And the next thing you know, you’re called into an emergency meeting to find out what’s gone wrong.

Well, guess what? Mysterious switches in the server are no longer responsible for controlling these values. Now we can control them ourselves through WAD.

We create a new PerformanceCounterConfiguration, specify what we’re monitoring, and set a sample rate. Finally, we add that to the diagnostic configuration’s PerformanceCounters DataSources and set the TimeSpan for the scheduled transfer. Be careful when adding, though: if you add the same counter twice, you’ll get twice the data. So check whether it already exists before adding it.

Something important to note here: my example WON’T WORK. As of the release of Azure Guest OS 1.2 (April 2010), we need to use the version-specific names of the performance counters or we won’t necessarily get results. So before you try this, get the right strings for the CounterSpecifier.
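With that caveat in mind, here is a minimal sketch of the steps described above, including the duplicate check. It assumes the SDK 1.x diagnostics assembly. PerfCounterSettings and AddCounter are hypothetical names, and the two counters are generic OS counters chosen only for illustration, so substitute the exact specifier strings your guest OS exposes.

```csharp
using System;
using System.Linq;
using Microsoft.WindowsAzure.Diagnostics;

// Hypothetical helper; assumes the SDK 1.x diagnostics assembly.
public static class PerfCounterSettings
{
    // Adds the counter only if the same specifier isn't already configured,
    // so the counter is not sampled (and stored) twice.
    public static void AddCounter(DiagnosticMonitorConfiguration config, string specifier, TimeSpan sampleRate)
    {
        bool alreadyPresent = config.PerformanceCounters.DataSources.Any(
            c => string.Equals(c.CounterSpecifier, specifier, StringComparison.OrdinalIgnoreCase));

        if (!alreadyPresent)
        {
            config.PerformanceCounters.DataSources.Add(new PerformanceCounterConfiguration
            {
                CounterSpecifier = specifier,
                SampleRate = sampleRate
            });
        }
    }

    public static void Apply(DiagnosticMonitorConfiguration config)
    {
        // Illustrative counters; verify the specifier strings for your guest OS version.
        AddCounter(config, @"\Processor(_Total)\% Processor Time", TimeSpan.FromSeconds(30));
        AddCounter(config, @"\Memory\Available MBytes", TimeSpan.FromSeconds(30));

        // How often the sampled values are moved to Azure storage.
        config.PerformanceCounters.ScheduledTransferPeriod = TimeSpan.FromMinutes(5);
    }
}
```

Calling Apply twice on the same configuration leaves just two counter entries, which is the point of the check.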

Custom Error Logs

*sigh* Finally! We’re at the end. But not so fast! I’ve actually saved the best for last. How many of you have applications you may be considering moving to Azure? These likely already have complex file-based logging in them, and you’d rather not have to re-instrument them. Maybe you’re using a worker role to deploy an Apache instance and want to make sure you capture its non-Azure logs. Perhaps it’s just a matter of having an application that captures data from another source and saves it to a file, and you want a simple way to save those values into Azure storage without having to write more code.

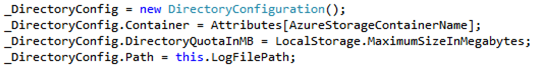

Yeah! You have an option through WAD’s support for custom logs. They call them logs, but I don’t want you to think of them like that. Think of this option as your escape clause for any time there’s a file in the VM’s local file store that you want to capture and save to Azure Storage! And yes, I speak from experience here. I LOVE this option. It’s my catch-all. The code snippet at the left shows how to configure a data source to capture a file. In this snippet, “AzureStorageContainerName” refers to the blob container in Azure Storage that these files will be copied to. LogFilePath is, of course, where the file(s) I want to save are located.

Then we add it to the diagnostic configuration’s Directories data sources. So simple, yet flexible! All that remains is to set a ScheduledTransferPeriod or do an on-demand transfer.
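Here is a minimal sketch of the same idea. It assumes the SDK 1.x diagnostics and service runtime assemblies, plus a hypothetical local storage resource named MyAppLogs declared in ServiceDefinition.csdef. CustomLogSettings, the container name and the quota are also just examples.

```csharp
using System;
using Microsoft.WindowsAzure.Diagnostics;
using Microsoft.WindowsAzure.ServiceRuntime;

// Hypothetical helper; assumes the SDK 1.x diagnostics and service runtime assemblies.
public static class CustomLogSettings
{
    public static void Apply(DiagnosticMonitorConfiguration config)
    {
        // Hypothetical local storage resource declared in ServiceDefinition.csdef.
        LocalResource logs = RoleEnvironment.GetLocalResource("MyAppLogs");

        var source = new DirectoryConfiguration
        {
            Path = logs.RootPath,      // folder whose files should be copied
            Container = "myapp-logs",  // hypothetical blob container name
            DirectoryQuotaInMB = 128   // local quota for this directory (example value)
        };

        config.Directories.DataSources.Add(source);

        // Copy the directory contents to blob storage every 10 minutes (example value).
        config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(10);
    }
}
```

Blob container names must be lowercase, so keep the Container value lowercase.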

Yes, I’m done

OK, I think that does it. This went on far longer than I had originally intended; I guess I just had more to say than I expected. My only regret is that just when I’m getting some momentum going on this blog again, I’m going to have to take some time away. I’ve got another Azure-related project that needs my attention and is unfortunately under NDA.

Once that is finished, I need to dive into preparing several presentations I’m giving in October concerning the Azure AppFabric. If I’m lucky, I’ll have time to share what I learn as I work on those presentations. Until then… stay thirsty my friends.

<Return to section navigation list>

Visual Studio LightSwitch

Tim Anderson (@timanderson) asked Visual Studio LightSwitch – model-driven architecture for the mainstream? on 8/25/2010:

I had a chat with Jay Schmelzer and Doug Seven from the Visual Studio LightSwitch team. I asked about the release date – no news yet.

What else? Well, Schmelzer and Seven had read my earlier blog post so we discussed some of the things I speculated about. Windows Phone 7? Won’t be in the first release, they said, but maybe later.

What about generating other application types from the same model? Doug Seven comments:

The way we’ve architected LightSwitch does not preclude us from making changes .. it’s not currently on the plan to have different output formats, but if demand were high it’s feasible in the future.

I find this interesting, particularly given that the future of the business client is not clear right now. The popularity of Apple’s iPad and iPhone is a real and increasing deployment problem, for example. No Flash, no Silverlight, no Java, only HTML or native apps. The idea of simply selecting a different output format is compelling, especially when you put it together with the fast JIT-compiled JavaScript in modern web browsers. Of course support for multiple targets has long been the goal of model-driven architecture (remember PIM, PSM and PDM?); but in practice the concept of a cross-platform runtime has proved more workable.

There’s no sign of this in the product yet though, so it is idle speculation. There is another possible approach though, which is to build a LightSwitch application, and then build an alternative client, say in ASP.NET, that uses the same WCF RIA Services. Since Visual Studio is extensible, it will be fun to see if add-ins appear that exploit these possibilities.

I also asked about Mac support. It was as I expected – the team is firmly Windows-centric, despite Silverlight’s cross-platform capability. Schmelzer was under the impression that Silverlight on a Mac only works within the browser, though he added “I could be wrong”.

In fact, Silverlight out of browser already works on a Mac; the piece that doesn’t work is COM interop, which is not essential to LightSwitch other than for export to Excel. It should not be difficult to run a LightSwitch app out-of-browser on a Mac, just right-click a browser-hosted app and choose Install onto this computer, but Microsoft is marketing it as a tool for Windows desktop apps, or Web apps for any other client where Silverlight runs.

Finally I asked whether the making of LightSwitch had influenced the features of Silverlight or WCF RIA Services themselves. Apparently it did:

There are quite a few aspects of both Silverlight 4 and RIA services that are in those products because we were building on them. We uncovered things that we needed to make it easier to build a business application with those technologies. We put quite a few changes into the Silverlight data grid.

said Schmelzer, who also mentioned performance optimizations for WCF RIA Services, especially with larger data sets, some of which will come in a future service pack. I think this is encouraging for those intending to use Silverlight for business applications.

There are many facets to LightSwitch. As a new low-end edition of Visual Studio it is not that interesting. As an effort to establish Silverlight as a business application platform, it may be significant. As an attempt to bring model-driven architecture to the mainstream, it is fascinating.

The caveat (and it is a big one) is that Microsoft’s track-record on modelling in Visual Studio is to embrace in one release and extinguish in the next. The company’s track-record on cross-platform is even worse. On balance it is unlikely that LightSwitch will fulfill its potential; but you never know.

Paul Peterson posted Microsoft LightSwitch – Customizing a Data Entity using the Designer on 8/24/2010:

In my last post I created a really simple application that I can use to keep track of some simple customer information. I created a simple entity named Customers, and then created a couple of screens that allow me to manage the entities I create. What I’ll do next is take a closer look at this Customers entity, and see what can be done to it to better meet my needs.

This post is specifically about the “entity”, not the fields within the entity (yet). I want to first understand what I can do with the designer in the management of a single entity. Things like creating and editing the fields and their properties, as well as entity relationships, are all subjects for later posts. I don’t have enough time during my lunch hour to write an all-inclusive post about that stuff, so I am going to create smaller bite-sized tidbits of information that you can print and read during your library break at work.

The Entity

My Customers entity contains some pretty simple information. Not really much there, and probably not nearly enough for what I want to eventually accomplish. Nonetheless, it’s a start and enough to get me going and start exploring.

Here is a look at my Customers entity in LightSwitch

The Customers Entity

Since my last post I’ve had some time to think about my Customers entity. I was thinking that, hey, this entity I created is really supposed to represent a business entity, not a bunch of entities. As such, it is probably a better idea to name my entity in a way that represents a single entity instead of many. So I rename my entity to just Customer.

One of the first things I notice is how LightSwitch automagically saw that I changed the name of my entity. In the Properties window of my (now renamed) Customer entity there is a property labeled Plural Name. Before I renamed the entity, this property had a value of “CustomersSet”. When I changed the entity name to just “Customer”, LightSwitch saw this and automatically pluralized the name of the entity.

When it comes to naming your entities (tables) it is a good idea to follow a consistent naming convention for all entities. Everyone likes to use a different convention when naming anything in an application; it’s really a matter of personal choice. Personally, I like to name a table as if it is a single entity. Others like to name it in its plural form. Some conventions even include one, or a combination of, different casings (upper and/or lower case characters). Whatever you think is going to work for you is ultimately the “right” way, so don’t think that you have to do exactly what someone else says is the “right” way – like when I BBQ!

Just for kicks, I click the Start Debugging button. I want to see if changing the name of the entity had any cascading effects on my application.

Cool! The application fired up just fine and let me add and edit customers just as before, without barfing.

Properties

Looking further at the Properties window for my Customer entity, I see some other properties I can try messing with.

Default Screen

In the earlier post I created a screen showing a list of customers, and a screen that allows me to edit customers. These are not to be confused with Search Data or Details screens. Details screens are screens that I can create to use instead of the default detail screens that LightSwitch might present. If I had created a Details Screen for my Customer entity, the Default Screen dropdown would contain an item for the detail screen I created. For example, if I double-clicked a record in my CustomerList screen, and I had created a Details Screen and selected it from the Default Screen dropdown, that screen would open instead of the default LightSwitch detail edit screen. For now, I am not going to create a default screen. I’ll let LightSwitch present one on the fly as I need it.

Plural Name

As mentioned before, the Plural Name property is the pluralized name of my entity. The plural name is editable and can be customized to whatever you want. Just remember to use something that will make sense to you. Oh, and it can’t contain any spaces or special characters.

Is Searchable

The Is Searchable property tells LightSwitch whether or not the data will be searchable from a screen. This applies to both the list screens, and the search screens that LightSwitch creates. You can still create a screen from the Search Data Screen template, however the search box will only be visible if the Is Searchable property is selected.

For example, here is my CustomerList screen with the Is Searchable property set to true on the Customer entity…

Is Searchable property of entity set to TRUE

… and the same screen where the search box is not there because the Is Searchable property is not checked…

Is Searchable property of entity set to FALSE

Name

This is what I talked about before. This is the name of the business entity.

Description

This is a description of the entity. It may help you better understand the purpose of the entity should you end up with a whole lot of them. I am not really sure where else in LightSwitch this property value is used.

Display Name

This display name is what will show up on top of some of the screens that are created for your entity. The default display name is the same value you first entered as the entity name. If you like, you can give it a different name that may make more sense than what was used for the entity name.

Summary Property

An entity might be made up of a lot of different fields. There is likely a field that could be used as a summary for the entity. In my case, the CustomerName is going to be the Summary Property. If not otherwise defined, the value of the field defined as the summary property will show up on the screen where there is a list involved. Running my application I see that the value of the CustomerName field appears in the list on the CustomerList screen.

Conclusion

That’s it. Not that difficult to understand is it?

In my next post I am going to start playing with the properties of the fields themselves. This is where I am going to do some fun stuff like creating a choice list for one of my fields.

Martin Heller asserted “Beta 1 of Microsoft's LightSwitch shows promise as an easy-to-use development tool, but it doesn't seem to know its audience” in a preface to his InfoWorld preview: Visual Studio LightSwitch chases app dev Holy Grail post of 8/23/2010 to InfoWorld’s Developer-World blog:

One of the Holy Grails of application development has been to allow a businessperson to build his or her own application without needing a professional programmer. Over the years, numerous attempts at this goal have achieved varied levels of success. A few have survived; most have sunk into oblivion.

Microsoft's latest attempt at this is Visual Studio LightSwitch, now in its first beta test. LightSwitch uses several technologies to generate applications that connect with databases. It can run on a desktop or in a Web browser, and it can use up to three application layers: client tier, middle tier, and data access.

The technologies used are quite sophisticated. Silverlight 4.0 is a rich Internet application (RIA) environment that can display screens in a Web browser or on a desktop, and it hosts a subset of .Net Framework 4.0. WCF (Windows Communication Foundation) RIA services allow Silverlight applications to communicate. An entity-relationship model controls the data services. (See the LightSwitch architecture diagram below.)

LightSwitch screens run in three layers of objects. A screen object encapsulates the data and business logic. A screen layout defines the logical layout of objects on the screen. And a visual tree contains physical Silverlight controls bound to the logical objects in the layout.

In a conventional Silverlight application -- or in almost any conventional application built in Visual Studio -- the user works directly with the controls and layout and writes or generates a file that defines the visual layout and data bindings. For Silverlight and WPF (Windows Presentation Foundation), that file is in XAML. The Silverlight and WPF designers in Visual Studio 2010 offer two synchronized panes of XAML code and visuals.

LightSwitch can create two-tier and three-tier desktop and Web applications against SQL Server and other data sources using Silverlight 4 and WCF RIA Services.


Martin concluded page 2 of his post with the following observation:

The database capabilities of LightSwitch are impressive. An ad-hoc table designer generates SQL Server tables. Existing SQL Server databases can be imported selectively, with relations intact. Relations can be added to imported tables. Entities can be imposed on existing fields; for example, a text field holding a phone number can be treated as a Phone Number entity, which supplies a runtime editor that knows about country codes and area codes.

But in the database area, too, LightSwitch doesn't quite deliver. While it can map an integer field to a fixed pop-up list of meanings, it can't yet follow a relation and map the integer foreign key to the contents of the related table. In other words, I can tell LightSwitch that 0 is New York, 1 is London, and 2 is Tokyo, but I can't point it at a database table that lists the cities of the world.

I’m not certain how valuable an asset “a database table that lists the cities of the world” would be, but I’m certain that it’s possible to find a database that contains city and country information for three-letter airport codes and use it with LightSwitch. (LightSwitch has a screen for defining relationships between tables in multiple databases.) Mapping.com sells a database of 36,606 locations (459 IATA/FAA codes represent two locations) for $50. I’m trying to find a free one; otherwise, I’ll import and munge Wikipedia’s Airport Code list with Microsoft Excel or Access. An alternative is a list of US cities by ZIP code from the US Postal Service. Stay tuned for developments.

<Return to section navigation list>

Windows Azure Infrastructure

Wilson Pais claimed Microsoft Invest[ing] $500 million on State of the Art Data Center in Brazil as reported in an 8/25/2010 post to the Near Shore Americas blog:

Total investments in Microsoft’s (Nasdaq: MSFT) Brazil data center will reach US$500mn, the director of technology and innovation for Microsoft’s Chile division, Wilson Pais, told BNamericas.

Construction is well underway, Pais said, but was unable to disclose the center’s exact location. The facility will be up and running next year.

The executive also confirmed Microsoft’s intentions of constructing additional data centers in the region, but said the exact locations are still up in the air.

“There will be more than two in Latin America,” he said. “All the data centers are connected, and they all have Microsoft cloud computing infrastructure. Each data center represents an investment of roughly US$500mn. These are data centers the size of soccer stadiums.”

Pais emphasized that the company will make the final call regarding additional centers once demand reaches a certain level. Factors under analysis also include the quality of local network connectivity and country stability, while politics are being left by the wayside.

“If the consumption in Latin America turns out as we expect it will be, we will obviously need another data center in a short period of time,” he said.

BNamericas previously reported that Brazil and Mexico had garnered the most attention in Microsoft’s datacenter planning. Microsoft will need the centers to support its regional cloud computing offer, which already includes a range of enterprise products, from the recently launched Microsoft online services and Windows Azure to Windows Intune and Microsoft Dynamics CRM online.

Microsoft’s cloud services are now available in Brazil, Mexico, Chile, Colombia, Peru, Puerto Rico, Costa Rica and Trinidad & Tobago.

The company has more than 10 data centers worldwide, including three to four facilities in the US, three in Asia and another three to four in Europe, according to Pais.

I wonder when the new data center will start supporting the Windows Azure Platform.

Stuart J. Johnston reported Microsoft Cops to Cloud Computing Platform Outage to the Datamation blog on 8/25/2010:

Microsoft is apologizing to customers for an outage that kept some of its cloud computing users from being able to access their enterprise applications for more than two hours on Monday.

"On Aug. 23, from 5:30 a.m. [to] 7:45 a.m. PDT, some customers in North America experienced intermittent access to our datacenter. The outage was caused by a network issue that is now fully resolved, and service has returned to normal," a Microsoft (NASDAQ: MSFT) spokesperson said in an email to InternetNews.com.

Around 7 a.m. PDT on Monday, Microsoft sent out an Online Services Notification alert that said it was looking into "a performance issue which may impact connectivity to the North American data center." A second notification announced at around 8:45 a.m. that service had been restored to affected users.

"During the duration of the issue, customers were updated regularly via our normal communication channels. We sincerely apologize to our customers for any inconvenience this incident may have caused them," the Microsoft spokesperson added. The spokesperson declined to say where the affected datacenter is located.

Microsoft, like practically every major enterprise software vendor, has been preaching the benefits of the cloud. Last month, Microsoft touted its successful addition of cloud-based apps and environments to its better-known lineup of deployed software, such as Office and Windows. It also trumpeted client wins like Dow Chemical and Hyatt Hotels & Resorts, which it signed to deals for Microsoft's Business Productivity Online Suite (BPOS), a set of enterprise applications delivered via Microsoft's cloud infrastructure. The suite provides users with hosted versions of Exchange Online, SharePoint Online, Office Live Meeting, Exchange Hosted Services, and Office Communications Online.

As it turns out, however, Monday's outage impacted users of BPOS, which has emerged as one of Microsoft's most popular cloud services.

Also impacted, according to the notification alert, were Microsoft's Online Services Administration Center, Sign In Application, My Company Portal, and Customer Portal.

Microsoft has not said how many users or customer companies were impacted by the outage.

However, Microsoft Server and Tools Division President Bob Muglia said in June at Microsoft's TechEd conference that it has signed up some "40 million paid users of Microsoft Online Services across 9000 business customers and more than 500 government entities."

The news also highlights one of the chief worries discouraging enterprise IT executives from shifting their infrastructures to the cloud: complete reliance on a third party to ensure application availability. Along with the periodic bouts of downtime suffered by Microsoft, Amazon, Google and a slew of other major and up-and-coming cloud players have experienced brief outages that had their cloud and software-as-a-service offerings offline for hours.

Stuart J. Johnston is a contributing writer at InternetNews.com.

According to mon.itor.us, my OakLeaf Systems Azure Table Services Sample Project, which runs as a single instance in the Southwest-US (San Antonio) data center, has had no downtime for the last three weeks (8/1 to 8/22/2010). Watch for my Uptime Report during the first week of September.

John P. Alioto continued his Categorizing the Cloud Azure series by asking in Part 2: Where does my business fit?:

Last time we discussed various categorizations of the cloud and determined that there were three dimensions against which we could categorize a Cloud offering. Those dimensions are Service Model, Deployment Model and Isolation Model. Given that the cardinality of the dimensions is 3, 3, 2, we have 18 possible categorizations. To answer the question “where does my business fit?” we can examine each of the 18 possible categorizations, the nature of the businesses that fall into each category and the Cloud offerings that one might find there.
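To make the 3 × 3 × 2 = 18 arithmetic concrete, here is a small sketch that enumerates the combinations. Only SaaS, Public and Multi-Tenant are named in this post. The PaaS/IaaS, Private/Hybrid and Single-Tenant labels are illustrative assumptions, and CloudCategories is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class CloudCategories
{
    // SaaS, Public and Multi-Tenant appear in the post; the other labels are assumptions.
    public static readonly string[] ServiceModels = { "SaaS", "PaaS", "IaaS" };
    public static readonly string[] DeploymentModels = { "Public", "Private", "Hybrid" };   // assumed
    public static readonly string[] IsolationModels = { "Multi-Tenant", "Single-Tenant" }; // assumed

    // Cartesian product of the three dimensions (2 x 3 x 3 = 18), labelled the way
    // the post writes categories: Isolation/Deployment/Service.
    public static List<string> All() =>
        (from iso in IsolationModels
         from dep in DeploymentModels
         from svc in ServiceModels
         select $"{iso}/{dep}/{svc}").ToList();
}
```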

For each category, I’ve decided on a three-way breakdown: the category, an example technology from Microsoft (and an example technology from Others as available or more precisely as I understand them) and a description/analysis of the situation in each category.

NB: The technology lists are not intended to be comprehensive, but examples of each. Also please note as the disclaimer says, this is my opinion and may differ from the opinion of others or my Employer.

Before the breakdown (hopefully in my case), let’s look at a few interesting themes that will come up throughout these discussions. The first theme is the trade-off between Control and Economy of Scale. The second theme is defining when our requirements are met by a particular Cloud offering. And the third is the old standby “do more with less.” None of these three themes is new -- the technology industry has been steeped in them for years and each is critical in making the best decision when it comes to Cloud offerings (or any other system selection procedure). Strangely, however, when these three themes are applied to the Cloud, the decisions can become confusing and appear new. They are not.

Not New Theme #1: Control vs. EoS

It’s very interesting that this is sometimes presented as a new issue as it pertains to the Cloud. I don’t see it that way. Who among us is not familiar with this trade-off …

We know it, we love it, we work within its constraints all the time. When we first approach a project, we can have a very high degree of control by building things ourselves or we can give up some of that control and buy an off-the-shelf solution. Sometimes the decision is obvious (few, if any, of us would consider building an OS or DBMS from scratch, for example) whereas sometimes it’s not.

How do we go about making this decision in the situations when the right move is less than perfectly clear? We follow a fairly tried-and-true procedure starting with a Requirements Matrix. The Requirements Matrix consists of a list of functionality that we want the system to potentially have.

We then categorize the requirements by criticality (must have, should have, nice to have) and call this our Business Requirements Document (BRD). We do a vendor/product/solution/service analysis (sometimes including an RFP and PoC) and at the end of all that, we decide which solution most meets our requirements. (You will see I did not say meets most of our requirements.) If a solution/product is adequate, we buy it; if none is adequate, we build it. At least this is how it works in the healthy state. (I’ve seen companies decide to build solutions themselves that I never would have.)

When applied to the Cloud, this discussion can somehow seem new. But why is this tradeoff …

… any different than the last? Why should we treat it any differently?

The answer is, we shouldn’t. It’s the very same trade-off we were making with Buy vs. Build with the independent variable being On Premises versus Cloud and the dependent variable remaining Control vs. EoS. The good news is that we can use the same process we used before to determine whether or not there is a product or solution out there that is adequate for our needs. In fact, it is not an entirely new process, but instead fits nicely into our vendor/product/solution selection process.

Indeed, I will argue that it should be our default starting position going into selection. If there is a Cloud solution/product that meets our needs, we should choose it. Only in the case where there is no adequate Cloud offering should we consider hosting a solution ourselves.

Not New Theme #2: The First Love is Formative (or What is “Good Enough”?)

It is critical that solution adoption decisions for Cloud technologies are correct the first time. The First Love, opening gambit, right off the bat, first pig out of the chute (insert your metaphor of choice here) experience for businesses in the Cloud has to be positive. Because of where we sit as an industry in a Diffusion of Technology sense, a bad Cloud decision risks putting a business off the Cloud completely for a good long time. We need the first Cloud love to be an experience that will create a cloud consumer for life.

Public enemy number one here is ignorance. Do not go down a “good enough” road in ignorance. Because I work for MS, I hear the question phrased this way all too often: “what is BPOS?” We must arm ourselves with information before we make Cloud decisions or advise our customers on same. We must be aware of the breadth and depth of Cloud offerings in order to make the correct decision on requirements being met and the correct amount of control to be ceded.

The Cloud is good news for businesses. Let’s keep it that way. We can all make our jobs easier by not setting ourselves up for a battle every time we go into a customer to talk about Cloud.

Not New Theme #3: The Goal

The Goal is three-fold. If you’ve been a CIO like me, then you know the Goal by heart. It’s the CIO’s mantra – say it with me!

- Control Costs!

- Increase Productivity!

- More Innovation!

Some might consider it the CIO’s curse because all three can be wrapped up into one devilishly simple phrase: “Do more with less!” That’s a CIO’s job. That’s your CIO’s job. (Short digression here. If you tend to be puzzled by executive decisions, look at them anew in that light and see if they still puzzle you. You might still disagree, but at least the confusion might go away.) Whether times are tough or times are booming, the pressure to do more with less never goes away.

If you’ve not had the joy of owning a budget, think of Theme #3 like this: If you can pull costs out of operations, you can take that money and invest it back into your people. That investment should increase innovation. The increase in innovation should lower costs even further and the cycle continues. But do not make the novice mistake of equating the three-fold Goal with a “cheaper is better” attitude. You must always look at feasibility of solution versus Total Cost of Ownership. Always remember, cheaper does not imply lower TCO.

Call it what you want, the CIO’s mantra, the fundamental theorem of IT, whatever, Theme #3 is the Goal.

The Categories: Where does my Business Fit?

With those Themes in mind, let’s break down the categories of the Cloud and look at some of the offerings in each category. I will combine categories where it makes sense to do so. It’s not really important to break out each of the 18 categories and name a definitive product or offering in each. It’s enough to determine where a business currently is and then look at the general direction that business will take as it moves to the Cloud. As I mentioned last time, it’s important to consider the correct category or set of categories of the Cloud for the business that you are (or that you are talking to), lest confusion ensue and clarity fade.
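The 18 categories come from crossing three dimensions that appear in the category headings below: tenancy (Multi-Tenant or Dedicated), deployment (Public, Private or Hybrid) and service model (SaaS, PaaS or IaaS). Two tenancy options times three deployments times three service models gives 18. The following is a minimal sketch that assumes those three axes make up the whole taxonomy. The helper name cloud_categories is hypothetical and exists only for illustration.

```python
from itertools import product

# Axes of the taxonomy, using the labels from the category headings in this post.
TENANCY = ("Multi-Tenant", "Dedicated")
DEPLOYMENT = ("Public", "Private", "Hybrid")
SERVICE_MODEL = ("SaaS", "PaaS", "IaaS")


def cloud_categories():
    """Return every Tenancy/Deployment/ServiceModel label (hypothetical helper)."""
    return [
        f"{tenancy}/{deployment}/{model}"
        # Iterate service model outermost so SaaS categories come first, as in the post.
        for model, tenancy, deployment in product(SERVICE_MODEL, TENANCY, DEPLOYMENT)
    ]


if __name__ == "__main__":
    categories = cloud_categories()
    print(len(categories))  # 2 tenancy options x 3 deployments x 3 service models
    for label in categories:
        print(label)
```

Several of the sections that follow group these labels, for example Multi-Tenant/Private/SaaS with Multi-Tenant/Hybrid/SaaS.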

SaaS

Category: Multi-Tenant/Public/SaaS

MS Examples: Bing, BPOS-S, Dynamics CRM Online, Windows Live (Office Web Apps, Mail, SkyDrive, many more)

Other Examples: Google, Gmail, Google Apps, SalesForce CRM

Description: I chose this category first because it is the most recognizable facet of the Cloud. We all use Bing and Live Mail/Calendar, or whatever our SaaS stack of choice is, every day. And all three of the Themes play here.

The name of the game in this category is economy of scale. The goal is to push TCO as far down as possible. Solutions in this category require the most give in terms of control and should offer the most get in terms of TCO. This category is defined by pay-as-you-go (or free), self-service, centrally managed capabilities that scale massively. In many cases, offerings in this category cannot reasonably be built as a one-off; as such, there may be no Buy vs. Build decision to be made.

All businesses will use some capability in this category over the next ten years.

As we sunset our legacy On Premises solutions, Multi-Tenant/Public/SaaS solutions should be first on our list of replacements. Our goal should be to replace as many On Premises solutions as possible with offerings from this category, because the potential cost savings here are huge. Theme #3 looms absolutely gigantic here and is ignored at a business’s peril.

Category: Multi-Tenant/Private/SaaS & Multi-Tenant/Hybrid/SaaS

MS Examples: Line of Business Applications built on Windows Server AppFabric or an Azure Appliance

Other Examples: Other stacks such as IBM

Description: I have seen it argued that Private/SaaS does not make sense. I disagree. If a business invests in a Private/PaaS solution and then builds software services for its business units or subsidiaries on that Private/PaaS, that is Private/SaaS.

We can clump most of these solutions together. They are typically line of business applications offered internally by an IT department. The IT departments working in this category may serve multiple business units or various subsidiary companies that for regulatory or security reasons are treated as separate Tenants. These solutions can stay entirely behind the corporate firewall or reach out and integrate with other systems (sometimes in the Cloud).

These are the systems that we fall back to when no public Cloud offering meets our requirements matrix. This happens less and less frequently as the capabilities of Multi-Tenant/Public/SaaS offerings have grown. It’s very tough for a CIO to tell his employees that they can have a 100 MB mailbox at work and then have them go home and have 25 GB of storage for free in their SkyDrive.

Theme #1 plays here, as businesses that want to remain in this category often cite increased configuration capabilities. A detailed and specific analysis of a Requirements Matrix in this case can reveal many features that have been mislabeled as Must Haves. Businesses need to start asking the question “We pay for all these levers we can pull and dials we can turn, but do we ever pull or turn them? If not, why pay for them?”

Category: Dedicated/Public/SaaS

MS Examples: BPOS-D, BPOS-F

Other Examples: Hosted Solutions, Hosted CRM

Description: These are the solutions that are chosen in the presence of a “Good Reason”. From last time, a Good Reason is one of the following:

- Compliance

- Data Sovereignty

- Residual Risk Reduction for high value business data

Because these solutions tend to be more costly and specialized, look toward Multi-Tenant/Public/SaaS solutions if requirements fall outside the Good Reason category; for example, move from Hosted SPS to BPOS-S.
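The rule of thumb above works as a small decision function: a Dedicated offering is justified only when at least one Good Reason is present. The sketch below is hypothetical and rests on a few assumptions. It treats a requirement set as a collection of plain string labels. It shortens the third Good Reason to "Residual Risk Reduction". It also names the function suggest_saas_category purely for illustration. A real requirements matrix would need far more nuance.

```python
# The three "Good Reasons" listed above (third label shortened for brevity).
GOOD_REASONS = {"Compliance", "Data Sovereignty", "Residual Risk Reduction"}


def suggest_saas_category(requirements):
    """Hypothetical helper: pick a SaaS category from a collection of requirement labels.

    Dedicated/Public/SaaS is suggested only when at least one Good Reason
    appears among the requirements; otherwise the default is Multi-Tenant/Public/SaaS.
    """
    if GOOD_REASONS & set(requirements):
        return "Dedicated/Public/SaaS"
    return "Multi-Tenant/Public/SaaS"


if __name__ == "__main__":
    print(suggest_saas_category({"Email", "Compliance"}))
    print(suggest_saas_category({"Email", "Document Sharing"}))
```

The default branch is Multi-Tenant/Public/SaaS, which matches the advice to treat the more costly, specialized Dedicated offerings as the exception.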

Category: Dedicated/Private/SaaS & Dedicated/Hybrid/SaaS

MS Examples: Line of Business Applications built on Windows Server AppFabric

Other Examples: Other stacks such as IBM or Oracle

Description: These solutions are basically the same as the Multi-Tenant variety, but are served up from an IT department with only one Tenant.

PaaS

Category: Multi-Tenant/Public/PaaS

MS Examples: Windows Azure

Other Examples: GAE, VMForce

Description: PaaS moves the slider a bit more toward control, but still maintains the ability to realize much lower TCO. Even the most optimized and dynamic data centers do not run compute at a cost of $0.12/hour. Businesses taking advantage of this category have the benefits of pay-as-you-go, self-service, elasticity, centralized management and near-infinite scalability.
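A quick calculation shows what $0.12/hour means over a year: one instance running around the clock for 24 × 365 = 8,760 hours costs roughly $1,051. This is a minimal sketch. It assumes a single instance, a non-leap year and compute charges only. Storage, bandwidth and any other charges are deliberately ignored.

```python
HOURLY_RATE_USD = 0.12     # compute-hour price quoted above
HOURS_PER_YEAR = 24 * 365  # one instance running continuously in a non-leap year

# Compute-only cost; storage, bandwidth and other charges are not included.
annual_cost = HOURLY_RATE_USD * HOURS_PER_YEAR

if __name__ == "__main__":
    print(f"${annual_cost:,.2f} per instance per year")
```

To compare this figure with an On Premises number, you would have to add hardware, power, space and the staff time to manage the machines. Those costs are exactly what the TCO argument above is about.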

As discussed last time, the unit of deployment for a PaaS solution is at the service level. The concern then becomes the ambient capabilities that are available to the services in terms of Storage, Compute, Data, Connectivity and Security. These capabilities must be understood completely if a correct platform decision is to be made. For example, NoSQL can be the right way to go, but do not underestimate the value of relational data to an Enterprise.

Azure, for example, offers the following ambient capabilities to services …

Through the following offerings …

Thus, even though Azure offers fewer “levers to pull” than an On Premises deployment of Windows Server 2008 and AppFabric, it nonetheless offers a very complete stack for the development of services.

Multi-Tenant/Public/PaaS is very much the future of the Cloud (see my argument about IaaS below). This is where businesses should target their service development and deployment by default, falling back to other categories only for the minority of services that will not work in this category.

Category: Multi-Tenant/Private/PaaS & Multi-Tenant/Hybrid/PaaS

MS Examples: Azure Appliance or Windows Server AppFabric & System Center Virtual Machine Manager 2008 R2 Self Service Portal 2.0

Other Examples: GAE for Business? vCloud?

Description: These solutions are for businesses that have Good Reasons but want the self-service, elasticity, centralized management and scalability of a Cloud solution. They can buy a Private PaaS Cloud or build one. The typical On Premises data center has only a few of the features of a Private PaaS Cloud: it is generally not self-service, does not offer elasticity and is typically more difficult to manage, all of which results in higher TCO.

Windows Server AppFabric along with System Center Virtual Machine Manager 2008 R2 Self Service Portal 2.0 (VMMSSP – say that 10x fast!) provides capabilities for IT departments to build their own Private Cloud. But businesses can also purchase a pre-fab, pluggable, modular PaaS Private Cloud in the form of an Azure Appliance. This really only makes sense when a business operates at very large scale or has a Good Reason (generally Data Sovereignty).

So where does the lower TCO come from? The answer lies less in the economy of scale of a Public PaaS and more in efficiency and centralized management. It’s a private data center, but it’s a better, more efficient private data center.

Businesses in this category should make a concerted effort to separate out the portions of their operations and/or data that can be moved to the Public Cloud. Then they should build or buy a Private Cloud to host the remaining operations or data.

The Dedicated versions of Private/PaaS and Hybrid/PaaS are single-Tenant versions of the Multi-Tenant/*/PaaS solutions. The Dedicated version of Public/PaaS is something along the lines of a Hosted xRM solution. These solutions are fairly narrow and can likely be repositioned into the Public/PaaS category.

IaaS

I need to make a caveat up front here: IaaS is not as interesting to me as SaaS and PaaS. I don’t see as big an opportunity for IaaS to lower TCO as with PaaS and SaaS. Instead of the complete and centrally managed functionality of SaaS or PaaS, with IaaS a business rents metal. Businesses have been doing that for years with hosting solutions, and it has yet to change the world. At the end of the day, it’s still metal that a business needs to manage, and all the inefficiencies inherent in that situation raise TCO.

Amazon EC2 has done a good job re-invigorating the notion of Utility Computing by adding the elasticity and self-service elements. Amazon has also done a good job commoditizing the sale of extra compute hours in its data center. It’s not a business model I fully understand, however. They are driving the value of a compute hour down as far as it can go, which is good for everyone except companies that sell compute hours! As a result, Amazon can only get so far renting out spare cycles in its datacenters. It is moving more toward a complete PaaS offering, which says to me that even the poster child of IaaS sees PaaS as more interesting. So at least I’m not alone! :)

But, in the interest of completeness …

Category: Multi-Tenant/Public/IaaS & Multi-Tenant/Hybrid/IaaS

MS Examples: N/A

Other Examples: Amazon EC2

Description: Businesses in this category rent metal from another business. They don’t rack and stack the machines, but they deploy and manage them as if they were machines in their own data center. They also buy and maintain licenses for software above the OS level. The notions of elasticity and self-service differentiate an IaaS solution from a hosting solution.

I do not put the Azure VM role in this category. I will not discuss why until more about that offering is officially announced, but suffice it to say that it does not belong here.

Category: Multi-Tenant/Private/IaaS

MS Examples: Windows Server AppFabric & System Center Virtual Machine Manager 2008 R2 Self Service Portal 2.0

Other Examples: vSphere

Description: Businesses in this category have significant investment in On Premises solutions and want to benefit from self-service, elastic, centrally managed capability. Businesses in this category should be looking to move to a combination of Multi-Tenant/Hybrid/PaaS and Multi-Tenant/Hybrid/IaaS solutions to lower TCO as much as possible.

Category: Dedicated/Private/IaaS, Dedicated/Hybrid/IaaS, Dedicated/Public/IaaS

MS Examples: Windows Server AppFabric & System Center Virtual Machine Manager 2008 R2 Self Service Portal 2.0

Other Examples: vSphere, Hosted Solutions

Description: Some businesses in this category have realized the benefits of having someone else rack and stack their servers, but have not moved their traditional hosting solutions to Cloud solutions. Others have a traditional On Premises deployment. Businesses in this category should be looking to move to a combination of Multi-Tenant/Hybrid/PaaS and Multi-Tenant/Hybrid/IaaS solutions to lower TCO as much as possible.

Conclusion

Whew! We made it! This time, we established our Three Themes as they pertain to the Cloud and went into the nitty-gritty of each category of Cloud offering and the type of business that can be found there. As Cloud professionals, it is our responsibility to understand the categories and the themes as well as the Cloud offerings that apply in each category.

Putting the Cloud in the perspective of the Not New Themes makes it more approachable for businesses. CIOs can use the same processes, make the same trade-offs and realize the same results that they are familiar with, but apply them to the Cloud. That makes Cloud a part of the team and not a confusing, unclear outlier. It helps bring analysis and decisions around the Cloud down to size.

Lori MacVittie (@lmacvittie) claimed Cloud is more likely to make an application deployment more – not less – complex, but the benefits are ultimately worth it as a preface to her Cloud + BPM = Business Process Scalability post of 8/25/2010 to F5’s DevCentral blog:

I was a bit disconcerted by the notion put forward that cloud-based applications are somehow less complex than their non-cloud, non-virtualized predecessors. In reality, it’s the same application, after all, and the only thing that has really changed is the infrastructure and its complexity. Take BPM (Business Process Management) as an example. It was recently asserted on Twitter that cloud-based BPM “enables agility”, followed directly by the statement, “There’s no long rollout of a complex app.”

That statement should be followed by the question: “How, exactly, does cloud do anything to address the complexity of an application?” It still needs the same configuration, the same tweaks, the same integration work, the same testing. The only thing that changed is that physical deployment took less time, which is hardly the bulk of the time involved in rolling out an application anyway.