Windows Azure and Cloud Computing Posts for 8/13/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Codename “Dallas” and OData

- AppFabric: Access Control and Service Bus

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Inge Henriksen waxes enthusiastic about Azure Table Storage - the future is now in this 8/14/2010 post:

With the NoSQL movement gaining momentum, I think that Microsoft Azure Table Storage is getting undeservedly little attention. In fact, Azure Table Storage is, for lack of a better word, amazing!

Here are some pointers on why you should definitely consider Azure Table Storage for your next application or service (and no, Microsoft does not pay me to say this ;) ):

- Scales automatically to multiple servers as your application grows; it can in practical terms scale indefinitely in terms of performance. The scaling is completely transparent from a user and a developer point of view

- Supports declarative queries using LINQ

- Flexible schema

- Is already in a cloud so you don’t need your own infrastructure

- The pricing model is very affordable and only gets more expensive as your application grows

- Distributed queries make it really fast

- Just like developing on any other ADO.NET application for an experienced .NET developer

- REST interface for those that do not want to develop their application using .NET

- You can select an Azure data center close to your users for low latency:

  - North Central US - Chicago, Illinois

  - South Central US - San Antonio, Texas

  - North Europe - Dublin, Ireland

  - West Europe - Amsterdam, Netherlands

  - East Asia - Hong Kong

  - Southeast Asia - Singapore

- Support for asynchronous work dispatch

- Up to 100 TB per storage account

Watch this video presentation for more in-depth information: http://www.microsoftpdc.com/2009/SVC09?type=wmv

My main complaint about Azure Table Storage is the lack of the promised secondary indexes.

SQL Azure Database, Codename “Dallas” and OData

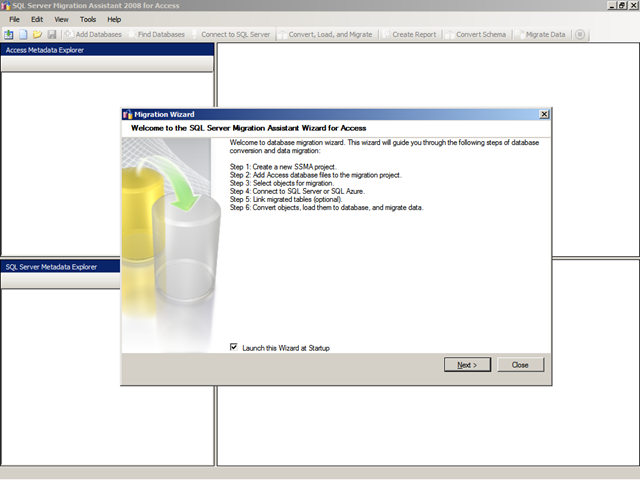

My Installing the SQL Server Migration Assistant 2008 for Access v4.2: FAIL and Workaround post of 8/15/2010 describes a problem with obtaining a license key file and how to work around it:

Installing the SQL Server Migration Assistant v4.2 for Access from the download page mentioned in the Windows Azure and Cloud Computing Posts for 8/12/2010+ post’s Easily migrate Access data to SQL Azure item failed due to a problem with downloading the license file that’s required to run SSMA for Access. …

Workaround

To get a handle on the problem, I installed Eric Lawrence’s Fiddler2 and captured the session of previous steps 7 through 9. Here’s Fiddler2’s capture with the download request highlighted at #61 (click image for full size 1024px capture):

Manually executing the download request by double-clicking line #61 produced the expected result:

You can obtain the license file here, if you encounter the preceding problem:

Note: The file downloads with SSMA 2008 for Access 4.2.license as its name. After you save it to your C:\Users\UserName\AppData\Roaming\Microsoft SQL Server Migration Assistant\a2ss-2008, change its name to access-ssma.license as shown here:

Return focus to the License Management dialog and click Refresh License.

Note: If you receive a message that the license key failed to refresh, check the ssma.log file in the C:\Users\UserName\AppData\Roaming\Microsoft SQL Server Migration Assistant\a2ss-2008\logs folder. The following two errors result from failing to copy the license file to the correct folder and not changing its name to access-ssma.license:

Click OK to open SSMA 2008 for Access with the Migration Wizard active:

A complementary Using the SQL Server Migration Assistant 2008 for Access post that describes migrating and linking an Access application to an SQL Azure file will be posted shortly.

Azret Botash posted OData Provider for XPO – GroupBy(), Count(), Max() and More… to DevExpress’s The One With … blog on 8/15/2010:

Last time, I introduced you to a custom OData extension, !summary. But as exciting as it was, the standard .NET Data Client Library does not support it. Why should it, right? It does not know about it. To solve this, the eXpress Persistent Objects (XPO) Toolkit now includes a client-side library that can understand and work with all the extensions that we make.

The AtomPubQuery<> (similar to the .NET DataServiceQuery<>) will also support all the basic OData operations like $filter, $orderby etc… Let’s see how to use it.

Let’s assume you have an OData service exposing data from the Northwind Database and you want to get top 5 sales broken down by country.

    AtomPubQuery<Order> Orders {
        get {
            return new AtomPubQuery<Order>(
                new Uri("http://localhost:54691/Northwnd.svc", UriKind.Absolute),
                "Orders", "OrderID", this);
        }
    }

    var query = from o in Orders
                group o by o.ShipCountry into g
                orderby g.Count() descending
                select new Sale() { Country = g.Key, Total = g.Count() };

    this.chartControl1.DataSource = query.Take(5).ToList();

This will make the following request:

http://localhost:54691/Northwnd.svc/Orders?!summary=ShipCountry,Count() desc&$top=5

and execute the following query on the server:

select top 5 ShipCountry, count(*) from Orders group by ShipCountry order by count(*) desc

Pretty cool ah? :)

Wayne Walter Berry (@WayneBerry) posted Finding Blocking Queries in SQL Azure to the SQL Azure Team blog on 8/13/2010:

Slow or long-running queries can contribute to excessive resource consumption and can be the consequence of blocked queries; in other words, poor performance. The concept of blocking is no different on SQL Azure than on SQL Server. Blocking is an unavoidable characteristic of any relational database management system with lock-based concurrency.

The query below will display the top ten running queries that have the longest total elapsed time and are blocking other queries.

    SELECT TOP 10 r.session_id, r.plan_handle, r.sql_handle, r.request_id,
        r.start_time, r.status, r.command, r.database_id, r.user_id,
        r.wait_type, r.wait_time, r.last_wait_type, r.wait_resource,
        r.total_elapsed_time, r.cpu_time, r.transaction_isolation_level,
        r.row_count, st.text
    FROM sys.dm_exec_requests r
    CROSS APPLY sys.dm_exec_sql_text(r.sql_handle) as st
    WHERE r.blocking_session_id = 0
        and r.session_id in
            (SELECT distinct(blocking_session_id) FROM sys.dm_exec_requests)
    GROUP BY r.session_id, r.plan_handle, r.sql_handle, r.request_id,
        r.start_time, r.status, r.command, r.database_id, r.user_id,
        r.wait_type, r.wait_time, r.last_wait_type, r.wait_resource,
        r.total_elapsed_time, r.cpu_time, r.transaction_isolation_level,
        r.row_count, st.text
    ORDER BY r.total_elapsed_time desc

The cause of the blocking can be poor application design, bad query plans, the lack of useful indexes, and so on.

Understanding Blocking

On SQL Azure, blocking occurs when one connection holds a lock on a specific resource and a second connection attempts to acquire a conflicting lock type on the same resource. Typically, the time frame for which the first connection locks the resource is very small. When it releases the lock, the second connection is free to acquire its own lock on the resource and continue processing. This is normal behavior and may happen many times throughout the course of a day with no noticeable effect on system performance.

The duration and transaction context of a query determine how long its locks are held and, thereby, their impact on other queries. If the query is not executed within a transaction (and no lock hints are used), the locks for SELECT statements will only be held on a resource at the time it is actually being read, not for the duration of the query. For INSERT, UPDATE, and DELETE statements, the locks are held for the duration of the query, both for data consistency and to allow the query to be rolled back if necessary.

For queries executed within a transaction, the duration for which the locks are held is determined by the type of query, the transaction isolation level, and whether or not lock hints are used in the query. For a description of locking, lock hints, and transaction isolation levels, see the following topics in SQL Server Books Online:

- Locking in the Database Engine

- Customizing Locking and Row Versioning

- Lock Modes

- Lock Compatibility

- Row Versioning-based Isolation Levels in the Database Engine

- Controlling Transactions (Database Engine)

Summary

The short version is that many small, quick queries reduce the chance of blocking compared with fewer, longer-running queries. Breaking up your work into small units, reducing the number of transactions, and writing fast-running queries will get you the best performance. Remember: even one poorly written, slow query can block faster, more efficient queries and make them slow.

AppFabric: Access Control and Service Bus

Ryan Dunn (@dunnry), Steve Marx (@smarx), and Vittorio Bertocci (@vibronet) star in a 00:40:15 Cloud Cover Episode 22 - Identity in Windows Azure episode of 8/15/2010:

Join Ryan and Steve each week as they cover the Microsoft cloud. You can follow and interact with the show at @cloudcovershow.

In this episode:

- Vittorio Bertocci (@vibronet), author of Programming Windows Identity Foundation, joins us to talk about Identity and how it relates to Windows Azure and AppFabric ACS.

- Learn a tip on how to figure out if you are running low on space in SQL Azure.

Show Links:

Ryan Dunn Shares Top Tips for Running in the Cloud

New Windows Azure Security Whitepaper Available

A Server is not a Machine

Try Windows Azure for One Month FREE

A Secure Network Drive for Windows Azure Blob Storage

Lokad.Snapshot

AppFabric Access Control Service (ACS) v2

How to Tell if You are Out of Room

Is Steve Marx standing in a hole, or are Ryan and Vibro standing on bricks?

Wade Wegner (@WadeWegner) explained Getting Started with Windows Server AppFabric Cache on 8/13/2010:

I struggled today to find a good “Getting started with Windows Server AppFabric Cache” tutorial – either my search fu failed me or it simply doesn’t exist. Nevertheless, I was able to piece together the information I needed to get started.

I recommend you break this up into three steps:

- Installing Windows Server AppFabric

- Configuring Windows Server AppFabric Cache

- Testing Windows Server AppFabric Cache with Sample Apps

I think this article will serve as a good tutorial on getting started, and we can refer back to it as the basis for more advanced scenarios.

Installing Windows Server AppFabric

- Get the Web Platform Installer.

- Once it is installed and opened, select Options.

- Under Display additional scenarios select Enterprise.

- Now you’ll see an Enterprise tab. Select it, and choose Windows Server AppFabric. Click Install. This will start a multi-step process for installing Windows Server AppFabric (which in my case required two reboots to complete).

Configuring Windows Server AppFabric Cache

- Open the Windows Server AppFabric Configuration Wizard (Start –> Windows Server AppFabric –> Configure AppFabric).

- Click Next until you reach the Caching Service step. Check Set Caching Service configuration, select SQL Server AppFabric Caching Service Configuration Store Provider for the configuration provider, and click Configure.

- Check Create AppFabric Caching Service configuration database, confirm the Server name, and specify a Database name. Click OK.

- When asked if you want to continue, click Yes.

- You will receive confirmation that your database was created and registered.

- On the Cache Node step, confirm the selected port nodes.

- You will be asked to continue and apply the configuration settings; select Yes.

- On the last step you’ll click Finish.

- Open up an elevated Windows PowerShell window.

- Add the Distributed Cache administration module.

      Import-Module DistributedCacheAdministration

- Set the context of your Windows PowerShell session to the desired cache cluster with Use-CacheCluster. You can run this without parameters to use the connection parameters provided during configuration.

      Use-CacheCluster

- Grant your user account access to the cache cluster as a client. Specify your user and domain name.

      Grant-CacheAllowedClientAccount domain\username

- Verify your user account has been granted access.

      Get-CacheAllowedClientAccounts

- Start the cluster.

      Start-CacheCluster

Testing Windows Server AppFabric Cache with Sample Apps

- Grab a copy of the Microsoft AppFabric Samples, which are a series of very short examples.

- Extract the samples locally.

- Open up CacheSampleWebApp.sln (..\Samples\Cache\CacheSampleWebApp).

- Right-click the CreateOrder.aspx file and select Set As Start Page.

- Hit F5 to start the solution.

- Confirm that the cache is functioning by creating a sample order, getting the sample order, and updating the sample order.

Once you accomplish these three steps, you’ll have the basis for building more complex caching solutions.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

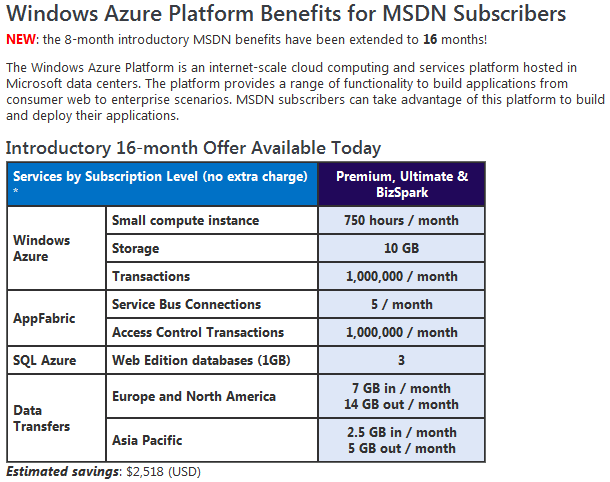

My Opening a Windows Azure Platform One Month Pass or Introductory Special Account post of 8/16/2010 explains how to gain Windows Azure and SQL Azure benefits:

Microsoft offers a number of special pricing schemes for Windows Azure and SQL Azure accounts. If you’re a MSDN subscriber you can take advantage of special offers, such as the Windows Azure Platform Benefits for MSDN Subscribers, which recently had its duration increased:

If you aren’t an MSDN Premium or higher subscriber, you can still get one of two temporary benefits for SQL Azure and Windows Azure:

Windows Azure One Month Pass: Up to 31 days of free SQL Azure and Windows Azure use; no credit card required. Valid only for the calendar month in which the request is received.

Introductory Special: Three months' free use of a 1-GB Azure database, 25 free Windows Azure compute hours per month and other benefits.

Either benefit requires a Windows Live ID.

Following are the steps to sign up for the Windows Azure One-Month Pass:

1. Open the Windows Azure One-Month Pass page, a Windows Azure Web Role:

2. Request a pass by clicking the here link to open your email client, adding your company name and zip code, and marking an “offers and campaigns” box:

3. Wait a maximum of 2-3 working days for a response. See the post for contents of the pass.

The post continues with instructions for obtaining Introductory Special Benefits.

David Pallmann described Hidden Costs in the Cloud, Part 1: Driving the Gremlins Out of Your Windows Azure Billing in this 8/14/2010 post:

grem•lin (ˈgrɛm lɪn) –noun

1. a mischievous invisible being, said by airplane pilots in World War II to cause engine trouble and mechanical difficulties.

2. any cause of trouble, difficulties, etc.

Cloud computing has real business benefits that can help the bottom line of most organizations. However, you may have heard about (or directly experienced) cases of sticker shock where actual costs were higher than expectations. These episodes are usually attributed to “hidden costs” in the cloud which are sometimes viewed as gremlins you can neither see nor control. Some people are spooked enough by the prospect of hidden costs to question the entire premise of cloud computing. Is using the cloud like using a slot machine, where you don’t know what will come up and you’re usually on the losing end?

These costs aren’t really hidden, of course: it’s more that they’re overlooked, misunderstood, or underestimated. In this article series we’re going to identify these so-called “hidden costs” and shed light on them so that they’re neither hidden nor something you have to fear. We’ll be doing this specifically from a Windows Azure perspective. In Part 1 we’ll review “hidden costs” at a high level, and in subsequent articles we’ll explore some of them in detail.

Hidden Cost #1: Dimensions of Pricing

In my opinion the #1 hidden cost of cloud computing is simply the number of dimensions there are to the pricing model. In effect, everything in the cloud is cheap but every kind of service represents an additional level of charge. To make it worse, as new features and services are added to the platform the number of billing considerations continues to increase.

As an example, let’s consider that you are storing some images for your web site in Windows Azure blob storage. What does this cost you? The answer is, it doesn’t cost you very much, but you might be charged in as many as 4 ways:

- Storage fees: Storage used is charged at $0.15/GB per month

- Transaction fees: Accessing storage costs $0.01 per 10,000 transactions

- Bandwidth fees: Sending or receiving data in and out of the data center costs $0.10/GB in, $0.15/GB out

- Content Delivery Network: If you are using this optional edge caching service, you are also paying an additional $0.15/GB and an additional $0.01 per 10,000 transactions

You might still conclude these costs are reasonable after taking everything into account, but this example should serve to illustrate how easily you can inadvertently leave something out in your estimating.
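To make the four billing dimensions concrete, here is a minimal Python sketch that adds them up for one month. The rates are the ones quoted in the list above and should be treated as a 2010 snapshot; the `BlobUsage` fields and the function name are made up for this illustration, and the usage figures in the example are hypothetical.

```python
from dataclasses import dataclass

# Rates as quoted in the article (August 2010). Check current pricing before relying on them.
STORAGE_PER_GB_MONTH = 0.15      # storage fee, $/GB per month
TRANSACTIONS_PER_10K = 0.01      # storage transactions, $ per 10,000
BANDWIDTH_IN_PER_GB = 0.10       # data transfer into the data center, $/GB
BANDWIDTH_OUT_PER_GB = 0.15      # data transfer out of the data center, $/GB
CDN_PER_GB = 0.15                # optional CDN, $/GB
CDN_TRANSACTIONS_PER_10K = 0.01  # optional CDN transactions, $ per 10,000


@dataclass
class BlobUsage:
    """Hypothetical one-month usage figures for a blob storage account."""
    stored_gb: float
    transactions: int
    gb_in: float
    gb_out: float
    cdn_gb: float = 0.0
    cdn_transactions: int = 0


def monthly_blob_cost(usage: BlobUsage) -> dict:
    """Return the charge for each pricing dimension plus the total."""
    cost = {
        "storage": usage.stored_gb * STORAGE_PER_GB_MONTH,
        "transactions": usage.transactions / 10_000 * TRANSACTIONS_PER_10K,
        "bandwidth": usage.gb_in * BANDWIDTH_IN_PER_GB
        + usage.gb_out * BANDWIDTH_OUT_PER_GB,
        "cdn": usage.cdn_gb * CDN_PER_GB
        + usage.cdn_transactions / 10_000 * CDN_TRANSACTIONS_PER_10K,
    }
    cost["total"] = sum(cost.values())
    return cost


if __name__ == "__main__":
    # Hypothetical site: 10 GB of images, 1 million requests, 20 GB served.
    example = BlobUsage(stored_gb=10, transactions=1_000_000, gb_in=1, gb_out=20)
    for dimension, dollars in monthly_blob_cost(example).items():
        print(f"{dimension}: ${dollars:.2f}")
```

Returning the per-dimension breakdown rather than a single number makes it harder to leave a category out, which is the point of this hidden cost.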

What to do about it: You can guard against leaving something out in your calculations by using tools and resources that account for all the dimensions of pricing, such as the Windows Azure TCO Calculator, Neudesic’s Azure ROI Calculator, or the official Windows Azure pricing information. With a thorough checklist in front of you, you won’t fail to consider all of the billing categories. Also make sure that anything you look at is current and up to date as pricing formulas and rates can change over time. You also need to be as accurate as you can in predicting your usage in each of these categories.

Hidden Cost #2: Bandwidth

In addition to hosting and storage costs, your web applications are also subject to bandwidth charges (also called data transfer charges). When someone accesses your cloud-hosted web site in a web browser, the requests and responses incur data transfer charges. When an external client accesses your cloud-hosted web service, the requests and responses incur data transfer charges.

Bandwidth is often overlooked or underappreciated in estimating cloud computing charges. There are several reasons for this. First, it’s not something we’re used to having to measure. Secondly, it’s less tangible than other measurements that tend to get our attention, such as number of servers and amount of storage. Thirdly, it’s usually down near the bottom of the pricing list so not everyone may notice it or pay attention to it. Lastly, it’s nebulous: many have no idea what their bandwidth use is or how they would estimate it.

What to do about it: You can model and estimate your bandwidth using tools like Fiddler, and once running in the cloud you can measure actual bandwidth using mechanisms such as IIS logs. With a proper analysis of bandwidth size and breakdown, you can optimize your application to reduce bandwidth.

You can also exercise control over bandwidth charges through your solution architecture: you aren’t charged for bandwidth when it doesn’t cross in or out of the data center. For example, a web application in the cloud calling a web service in the same data center doesn’t incur bandwidth charges.

Hidden Cost #3: Leaving the Faucet Running

As a father I’m constantly reminding my children to turn off the lights and not leave the faucets running: it costs money! Leaving an application deployed that you forgot about is a surefire way to get a surprising bill. Once you put applications or data into the cloud, they continue to cost you money, month after month, until such time as you remove them. It’s very easy to put something in the cloud and forget about it.

What to do about it: First and foremost, review your bill regularly. You don’t have to wait until end of month and be surprised: your Windows Azure bill can be reviewed online anytime to see how your charges for the month are accruing.

Secondly, make it someone’s job to regularly review that what’s in the cloud still needs to be there and that costs are in line with expectations. Set expiration dates or renewal review dates for your cloud applications and data. Be proactive in recognizing the faucet has been left running before the problem reaches flood levels.

Hidden Cost #4: Compute Charges Are Not Based on Usage

If you put an application in the cloud and no one uses it, does it cost you money? Well, if a tree falls in the forest and no one is around to hear, does it make a sound? The answer to both questions is yes. Since the general message of cloud computing is consumption-based pricing, some people assume their hourly compute charges are based on how much their application is used. It’s not the case: hourly charges for compute time do not work that way in Windows Azure.

Rather, you are reserving machines and your charges are based on wall clock time per core. Whether those servers are very busy, lightly used, or not used at all doesn’t affect this aspect of your bill. Where consumption-based pricing does enter the picture is in the number of servers you need to support your users, which you can increase or decrease at will. There are other aspects of your bill that are charged based on direct consumption such as bandwidth.

What to do about it: Understand what your usage-based and non-usage-based charges will be, and estimate costs accurately. Don’t make the mistake of thinking an unused application left in the cloud is free—it isn’t.

Hidden Cost #5: Staging Costs the Same as Production

If you deploy an application to Windows Azure, it can go in one of two places: your project’s Production slot or its Staging slot. Many have mistakenly concluded that only Production is billed for when in fact Production and Staging are both charged for, and at the same rates.

What to do about it: Use Staging as a temporary area and set policies that anything deployed there must be promoted to Production or shut down within a certain amount of time. Give someone the job of checking for forgotten Staging deployments and deleting them or, even better, automate this process.
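As one possible shape for the automated check, the Python sketch below flags Staging deployments that have outlived a policy window. It is only an illustration: the record layout (`name`, `slot`, `deployed_at`), the function name and the two-day default are assumptions, and fetching the real deployment list (for example, through the Service Management API) is deliberately left out.

```python
from datetime import datetime, timedelta


def stale_staging_deployments(deployments, now, max_age=timedelta(days=2)):
    """Return names of Staging deployments older than max_age.

    `deployments` is a list of dicts with hypothetical keys:
    "name" (str), "slot" ("Staging" or "Production"), "deployed_at" (datetime).
    """
    stale = []
    for deployment in deployments:
        in_staging = deployment["slot"].lower() == "staging"
        too_old = now - deployment["deployed_at"] > max_age
        if in_staging and too_old:
            stale.append(deployment["name"])
    return stale


if __name__ == "__main__":
    # Hypothetical inventory; in practice this would come from your own tooling.
    inventory = [
        {"name": "orders-test", "slot": "Staging", "deployed_at": datetime(2010, 8, 1)},
        {"name": "orders", "slot": "Production", "deployed_at": datetime(2010, 7, 1)},
    ]
    print(stale_staging_deployments(inventory, now=datetime(2010, 8, 14)))
```

Passing `now` in explicitly keeps the policy check easy to test; a scheduled job could call it with the current time and then alert someone or delete the flagged deployments.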

Hidden Cost #6: A Suspended Application is a Billable Application

Applications deployed to Windows Azure Production or Staging can be in a running state or a suspended state. Only in the running state will an application be active and respond to traffic. Does this mean a suspended application does not accrue charges? Not at all—the wall clock-based billing charges accrue in exactly the same way regardless of whether your application is suspended or not.

What to do about it: Only suspend an application if you have good reason to do so, and this should always be followed by a more definitive action such as deleting the deployment or upgrading it and starting it up. It doesn’t make any sense to suspend a deployment and leave it in the cloud: no one can use it and you’re still being charged for it.

Hidden Cost #7: Seeing Double

Your cloud application will have one or more software tiers, which means it is going to need one or more server farms. How many servers will you have in each farm? You might think a good answer is 1, at least when you’re first starting out. In fact, you need a minimum of 2 servers per farm if you want the Windows Azure SLA to be upheld, which boils down to 3 9’s of availability. If you’re not aware of this, your estimates of hosting costs could be off by 100%!

The reason for this 2-server minimum is how patches and upgrades are applied to cloud-hosted applications in Windows Azure. The Fabric that controls the data center has an upgrade domain system where updates to servers are sequenced to protect the availability of your application. It’s a wonderful system, but it doesn’t do you any good if you only have 1 server.

What to do about it: If you need the SLA, be sure to plan on at least 2 servers per farm. If you can live without the SLA, it’s fine to run a single server assuming it can handle your user load.
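The arithmetic behind Hidden Costs #4 and #7 can be sketched in a few lines of Python. The hourly rate used here is a hypothetical placeholder, not a figure from the article, and the function name is made up; note that utilization is not an input at all, because the charge follows wall clock time per core.

```python
def monthly_compute_cost(instances, cores_per_instance, hours_deployed,
                         rate_per_core_hour):
    """Compute charge for one farm: wall clock hours x cores x rate.

    How busy the servers are does not appear anywhere in the formula.
    """
    return instances * cores_per_instance * hours_deployed * rate_per_core_hour


if __name__ == "__main__":
    HYPOTHETICAL_RATE = 0.12  # $/core-hour, assumed for illustration only
    HOURS_IN_30_DAYS = 30 * 24

    one_server = monthly_compute_cost(1, 1, HOURS_IN_30_DAYS, HYPOTHETICAL_RATE)
    two_servers = monthly_compute_cost(2, 1, HOURS_IN_30_DAYS, HYPOTHETICAL_RATE)
    print(f"1 server:  ${one_server:.2f}")
    print(f"2 servers: ${two_servers:.2f} (the SLA minimum doubles the estimate)")
```

Because Staging and suspended deployments are billed the same way, the same function applies to them unchanged.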

Hidden Cost #8: Polling

Polling data in the cloud is a costly activity. If you poll a queue in the enterprise and the queue is empty, this does not explicitly cost you money. In the cloud it does, because simply attempting to access storage (even if the storage is empty) is a transaction that costs you something. While an individual poll doesn’t cost you much (only $0.01 per 10,000 transactions), it will add up to big numbers if you’re doing it repeatedly.

What to do about it: Either find an alternative to polling, or do your polling in a way that is cost-efficient. There is an efficient way to implement polling using an algorithm that varies the sleep time between polls based on whether any data has been seen recently. When a queue is seen to be empty the sleep time increases; when a message is found in the queue, the sleep time is reduced so that message(s) in the queue can be quickly serviced.
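The variable-sleep idea can be sketched in Python as follows. This is one possible reading of the algorithm, not the author's implementation: doubling the delay on an empty poll and resetting it to the minimum when a message arrives are assumptions, as are the function names and the default bounds. `receive` stands in for whatever call fetches a message from your queue and is expected to return `None` when the queue is empty.

```python
import time


def next_sleep(current, found_message, min_sleep=1.0, max_sleep=60.0, factor=2.0):
    """Pick the delay before the next poll.

    Empty poll: back off by `factor`, capped at `max_sleep`.
    Message found: drop back to `min_sleep` so a burst is serviced quickly.
    """
    if found_message:
        return min_sleep
    return min(current * factor, max_sleep)


def poll_queue(receive, handle, max_polls, sleep=time.sleep,
               min_sleep=1.0, max_sleep=60.0):
    """Poll `receive` up to `max_polls` times, adapting the sleep time.

    `receive` and `handle` are caller-supplied callables (hypothetical here).
    Returns the list of delays used, which is handy for inspection.
    """
    delay = min_sleep
    delays = []
    for _ in range(max_polls):
        message = receive()  # each call is one billable storage transaction
        if message is not None:
            handle(message)
        delay = next_sleep(delay, message is not None, min_sleep, max_sleep)
        delays.append(delay)
        sleep(delay)
    return delays
```

With the defaults, an idle queue settles at one poll per minute instead of one per second, which cuts the number of billable transactions roughly sixtyfold while a new message still resets the loop to fast polling.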

Hidden Cost #9: Unwanted Traffic and Denial of Service Attacks

If your application is hosted in the cloud, you may find it is being accessed by more than your intended user base. That can include curious or accidental web users, search engine spiders, and openly hostile denial of service attacks by hackers or competitors. What happens to your bandwidth charges if your web site or storage assets are being constantly accessed by a bot?

Windows Azure does have some hardening to guard against DOS attacks but you cannot completely count on this to ward off all attacks, especially those of a new nature. Windows Azure’s automatic applying of security patches will help protect you. If you enable the feature to allow Windows Azure to upgrade your Guest OS VM image, you’ll gain further protections over time automatically. The firewall in SQL Azure Database will help protect your data. You’ll want to run at least 2 servers per farm so that rapidly-issued security patching does not disrupt your application’s availability.

What to do about it:

- To defend against such attacks, first put the same defenses in place that you would for a web site in your perimeter network, including reliable security, use of mechanisms to defeat automation like CAPTCHA, and coding defensively against abuses such as cross-site scripting attacks.

- Second, learn what defenses are already built into the Windows Azure platform that you can count on.

- Third, perform a threat-modeling exercise to identify the possible attack vectors for your solution—then plan and build defenses. Diligent review of your accruing charges will alert you early on should you find yourself under attack and you can alert Microsoft.

Hidden Cost #10: Management

Cloud computing reduces management requirements and labor costs because data centers handle so much for you automatically including provisioning servers and applying patches. It’s also true, but often overlooked, that the cloud thrusts some new management responsibilities upon you. Responsibilities you dare not ignore at the risk of billing surprises.

What are these responsibilities? Regularly monitor the health of your applications. Regularly monitor your billing. Regularly review whether what’s in the cloud still needs to be in the cloud. Regularly monitor the amount of load on your applications. Adjust the size of your deployments to match load.

The cloud’s marvelous IT dollar efficiency is based on adjusting deployment larger or smaller to fit demand. This only works if you regularly perform monitoring and adjustment. Failure to do so can undermine the value you’re supposed to be getting.

What to do about it:

- Treat your cloud application and cloud data like any resource in need of regular, ongoing management.

- Monitor the state of your cloud applications as you would anything in your own data center.

- Review your billing charges regularly as you would any operational expense.

- Measure the load on your applications and adjust the size of your cloud deployments to match.

Some of this monitoring and adjustment can be automated using the Windows Azure Diagnostic and Service Management APIs. Regardless of how much of it is done by programs or people, it needs to be done.

Managing Hidden Costs Effectively

We’ve exposed many kinds of hidden costs and discussed what to do about them. How can you manage these concerns correctly and effectively without it being a lot of trouble?

- Team up with experts. Work with a Microsoft partner who is experienced in giving cloud assessments, delivering cloud migrations, and supporting and managing cloud applications operationally. You’ll get the benefits of sound architecture, best practices, and prior experience.

- Get an assessment. A cloud computing assessment will help you scope cloud charges and migration costs accurately. It will also get you started on formulating cloud computing strategy and policies that will guard against putting applications in the cloud that don’t make sense there.

- Take advantage of automation. Buy or build cloud governance software to monitor health and cost and usage of applications, and to notify your operations personnel about deployment size adjustment needs or make the adjustments automatically.

- Get your IT group involved in cloud management. IT departments are sometimes concerned that cloud computing will mean they will have fewer responsibilities and will be needed less. Here’s an opportunity to give IT new responsibilities to manage your company’s responsible use of cloud computing.

- Give yourself permission to experiment. You probably won't get it exactly right the first time you try something in the cloud. That's okay--you'll learn some valuable things from some early experimentation, and if you stay on top of monitoring and management any surprises will be small ones.

I trust this has shed light on and demystified “hidden costs” of cloud computing and given you a fuller picture of what can affect your Windows Azure billing. In subsequent articles we’ll explore some of these issues more deeply. It is possible to confidently predict and manage your cloud charges. Cloud computing is too valuable to pass by and too important to remain a diamond in the rough.

Roberto Bonini continues his series with Windows Azure Feed Reader, Episode 2 of 8/14/2010:

A few days late (meant to have this up on Tuesday). Sorry. But here is [the 00:44:30] part 2 of my series on building a Feed Reader for the Windows Azure platform: http://vimeo.com/14128232.

If you remember, last week we covered the basics and we ended by saying that this week’s episode would be working with Windows Azure proper.

Well this week we cover the following:

- Webroles (continued)

- CloudQueueClient

- CloudQueue

- CloudBlobClient

- CloudBlobContainer

- CloudBlob

- Windows Azure tables

- LINQ (no PLINQ yet)

- Lambdas (the very basics)

- Extension Methods

Now, I did do some unplanned stuff this week. I abstracted away all the storage logic into its own worker class. I originally planned to have this in Fetchfeed itself. This actually makes more sense than my original plan.

I’ve added Name services classes for Containers and Queues as well, just so each class lives in its own file.

Like last week’s, this episode is warts and all. I’m slowly getting the hang of this screencasting thing, so I’ll be getting better as time goes on, I’m sure. It’s forcing me to think things through a little more thoroughly as well.

Enjoy:

Next week we’ll start looking at the MVC project and hopefully get it to display a few feeds for us. We might even try to get the OPML reader up to scratch as well.

PS: This week’s show is higher res than the last time. Let me know if it’s better.

Zoiner Tejada’s Sample Code for Hosting a WCF Service in Windows Azure of 8/14/2010 is brief and to the point:

This code supports an article I wrote for DevProConnections. It demonstrates hosting a WCF Service in both a Web and Worker Role, using HTTP and TCP protocols: Download CloudListService.

The download throws an error; I’ve notified Zoiner. Here are links to a few of his recent articles:

- DevConnections Article: Managing WCF Services with Windows Server AppFabric: In this article I provide an overview of the features available in the new Windows Server AppFabric RTM that make your life as a WCF Services developer easier and more productive.

- DevConnections Article: Custom WCF Behaviors: In this article I demonstrate how you can implement and leverage custom service and endpoint behaviors to extend or configure the functionality of your WCF Services.

- DevConnections Article: Validating Workflow Services: In this article I show you how to enforce best practices and ensure your workflow services will run as expected by implementing design-time validation logic in code or XAML.

- DevConnections Article: Securing Workflow Services: In this article, I explain how to control access to Workflow Services using Windows and username/password credentials using a custom service authorization manager.

B2BMarketingOnline reported Alterian teams up with Microsoft Windows Azure platform on 8/13/2010:

CRM technology solutions provider Alterian has announced plans to port Alterian's web and social media engagement solutions to the Microsoft Windows Azure platform.

Alterian will work with Microsoft to build web applications and services which will allow Alterian's customers and partners to benefit from the global presence, real-time scalability and on-demand infrastructure of the Windows Azure platform.

While initial development areas will focus on Alterian's web and social media engagement solutions, other applications will be available in the cloud over time.

"We are pleased that Alterian has chosen the Windows Azure platform as its cloud computing platform," commented Prashant Ketkar, senior director of product marketing, Windows Azure.

"Microsoft is a current user of Alterian's offerings and we see great potential in bringing Alterian's solutions to the cloud. We are confident that the Windows Azure platform will allow Alterian to enhance its position as a world-class provider of customer engagement solutions."

"Smart marketing is all about intelligently listening to customers, learning from them, understanding what needs to be done from a customer engagement perspective, and then proactively speaking to customers in a way that is win-win for everyone involved," said David Eldridge, CEO of Alterian.

"To do this across all communications channels, you need great software. Alterian already has this, but now we're also coupling our software with the world-class cloud computing infrastructure offered by the Windows Azure platform, bringing a wide range of benefits to our customers."

The Windows Azure Team posted Real World Windows Azure: Interview with Rhett Thompson, Cofounder of CoreMotives on 8/12/2010:

As part of the Real World Windows Azure series, we talked to Rhett Thompson, Cofounder of CoreMotives, about using the Windows Azure platform to deliver the company's automated marketing solution. Here's what he had to say:

MSDN: Tell us about CoreMotives and the services you offer.

Thompson: CoreMotives develops business-to-business marketing automation and lead generation software that enables marketing and sales teams to detect, track, and target potential customers. Our marketing automation suite integrates with Microsoft Dynamics CRM and provides business intelligence for customers' website traffic and email marketing initiatives.

MSDN: What was the biggest challenge CoreMotives faced prior to implementing the Windows Azure platform?

Thompson: We're starting to acquire new customers and our small, five-person company was outgrowing our hosted server infrastructure. In some cases, our hosting provider would automatically shut down our servers when the load reached 70 percent for more than five minutes, leaving us unable to process customers' web traffic. Our customers expect near real-time results and, in a worst-case scenario, it could take us up to 14 hours to process data. That's just unacceptable.

MSDN: Can you describe the solution you built with the Windows Azure platform?

Thompson: We compared the Windows Azure platform to other cloud services providers, including Rackspace hosting and Amazon Elastic Compute Cloud (EC2), and Windows Azure had the scalability and reliability we needed. We migrated our existing solution by using Web roles to capture website traffic details and Worker roles to host the logic and process the traffic. We are also using Microsoft SQL Azure in a multitenant environment so that we can isolate the customers' relational data that we store.

MSDN: What makes your solution unique?

Thompson: One thing that is really unique about our solution is that we have developed an account provisioning system that is powered by Windows Azure. Unlike our competitors, we can provision accounts without having to visit customer locations and set up servers and databases on-premises. The provisioning system connects to and installs the CoreMotives solution directly into a customer's instance of Microsoft Dynamics CRM.

MSDN: What benefits have you seen since implementing the Windows Azure platform?

Thompson: We have significantly improved our ability to scale up and meet demands of high-volume traffic. Instead of 14 hours, we can process traffic in 24 seconds, even during peak periods. That's what our customers expect and with Windows Azure, we can deliver. We also now have a reliable infrastructure that complies with standards, such as Sarbanes-Oxley and SAS 70 Type II. This means that, even as a small company, we can go head-to-head with industry-leading, enterprise marketing automation companies. The rest of the world is starting to move in the direction of cloud-based computing, and if you're going to compete in this marketplace, you need to get there fast without compromising the user experience. By using Windows Azure, we can do that and improve our competitive advantage.

Read the full story at: www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000007608

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

The Azure Forum Support Team posted Host Wordpress on Windows Azure: Run PHP applications in Windows Azure on 8/10/2010:

Our Wordpress live sample website: http://wordpress-win-azure.cloudapp.net/

This tutorial article will show you how easy it is to run PHP applications in Windows Azure. In this tutorial, you will experience the basic process of hosting PHP applications in the cloud with Windows Azure.

Prerequisites

IIS 7 (with ASP.NET, WCF HTTP Activation)

Microsoft Visual Studio 2008 SP1 (or above)

Windows Azure Tools for Microsoft Visual Studio (June 2010)

1. Firstly, download the latest version of PHP from http://windows.php.net/download/

The VC9 x86 Non Thread Safe (2010-Jul-21 20:38:25) version is your best choice. The right sidebar of the download page explains: VC6 Versions are compiled with the legacy Visual Studio 6 compiler. VC9 Versions are compiled with the Visual Studio 2008 compiler and have improvements in performance and stability.

As for the difference between PHP thread safe and non thread safe binaries, please refer here.

The team continues with a detailed, illustrated tutorial.

<Return to section navigation list>

Visual Studio LightSwitch

No significant articles today.

<Return to section navigation list>

Windows Azure Infrastructure

Chris Hay and Brian H. Prince posted How Windows Azure Works, a sample chapter from their Azure in Action book for Manning Publications on 8/14/2010:

About the book

This is a sample chapter of the book Azure in Action. It has been published with the exclusive permission of Manning.

Written by: Chris Hay and Brian H. Prince

Pages: 425

Publisher: Manning

ISBN: 9781935182481

Andrew R. Hickey asserted SMBs Gobbling Up Cloud Computing In 2010 on 8/12/2010 in a post to Computer Reseller News:

Cloud services adoption among SMBs rose in the first half of 2010 and is expected to continue to skyrocket through the remainder of the year, a new study has revealed.

The study, overseen by IT management software maker Spiceworks, showed that during the first half of 2010, 14 percent of SMBs reported using cloud computing services while another 10 percent reported plans to deploy cloud-based services.

Spiceworks probed 1,500 SMB IT professionals globally and published the results in a survey SMB Cloud Computing Adoption: What's Hot and What's Not.

The study found that the smaller the company the more willing it is and the more aggressive it is when it comes to cloud services adoption. For example, 38 percent of SMBs with fewer than 20 employees currently use or plan to use cloud computing solutions within the next six months. Meanwhile, 7 percent of organizations with 20 to 99 employees and 22 percent of organizations with more than 100 employees said they plan to use cloud services over the same time period.

"Small companies with little existing infrastructure and outsourced IT are moving most quickly to the cloud, whereas larger SMBs are taking more measured steps due to considerable investments in onsite technology," Jay Hallberg, Co-Founder, Spiceworks, said in a statement.

Additionally, the study showed that SMBs in emerging markets are leading the cloud computing charge with 41 percent of SMBs in Latin America and South America and 35 percent of SMBs in the Asia-Pacific region adopting cloud computing services. That beats out North America, in which 24 percent of SMBs are jumping into the cloud and Europe, where 19 percent are adopting cloud services.

Among different verticals, technology companies are grabbing onto cloud computing at a faster pace, Spiceworks found. Thirty-four percent of SMBs in the tech sector use or are planning to use cloud services, but the services sector, which comprises finance, human resources and consulting, is the next fastest growing segment with 22 percent using or planning to use cloud computing offerings. Other industries hover around the 20 percent adoption rate.

And despite SMBs quickly latching onto the cloud, there are still some holdouts. Spiceworks' research found that 72 percent of SMBs have no plan to move to the cloud in the next 12 months. Their main reasons for avoidance: technology and security concerns. Meanwhile, companies with 20 to 99 employees and a full-time IT pro on staff were the least likely to make the cloud leap.

Sam Johnston (@samj) and Benjamin Black (@b6n) debate What We Need Are Standards in the Cloud in this 00:45:17 session from OSCON 2010 posted by O’Reilly Media on 8/11/2010:

The participants and jury consisted of:

Sam Johnston (Google)

Benjamin Black

Patrick Kerpan (Cohesive Flexible Technologies)

Subra Kumaraswamy (eBay, Inc.)

Mark Masterson (CSC)

Stephen O'Grady (Redmonk)

Ken Corless listed Ten Things I Think I Think About…Cloud Computing in this 8/2/2010 post to the Accenture blog:

Welcome to my 2nd TTITITA (Ten Things I Think I Think About…) post. The concept of "I think I think" is used to reflect the fact that things change so much in IT, I’m never 100% sure what I think. I received a bunch of good feedback on my last post on Agile development. This time I’m going to hit another hot topic – cloud computing.

Cloud computing – the first problem is you can’t even get a solid definition of what it is. Wikipedia’s entry is a good start. However, it’s hard to get a handle on what is really new and different about it.

In Accenture’s Internal IT, we have a private cloud that is used for our email and collaboration platform (i.e. Exchange and SharePoint) – although much of the rest of our infrastructure is virtualized, I wouldn’t consider it cloud. We have also used a few public clouds ranging from Software as a Service (such as Taleo and Workscape) to Platform as a Service (such as Microsoft Azure) to Infrastructure as a Service (Amazon AWS). The thoughts below are based upon those experiences and on discussions I have had with IT leaders at many of our clients.

- Scale Play: While I’ve not always been a huge fan of Nicholas Carr, I do like the core analogy in his book The Big Switch: That computing today is like electricity in the middle 1800s – every factory had its own coal-burning power plant to supply electricity to make the factory run. Today, in the early 21st century, every office has its own computing power plant (called a data center) to supply information to make the office run. Carr posits, and I agree, that just as the electricity grid became a scale play utility, so will computing power. I do not, however, agree with his belief that nearly all computing technology will go this route.

- Lower==Easier: The lower on the technology stack, the more applicable cloud computing is. While there certainly are many exceptions, the closer to the infrastructure you get, the better it can serve as a common platform. Telecom providers have been doing this for years with the network. AOL gave many enterprises a “cloud based” instant messaging platform long before it was hip to call anything cloud (likely in 2006, but perhaps as early as 1996).

- IaaS – good to go: Infrastructure as a Service (IaaS) is the most exciting part of the cloud. If you believe in the cost savings promised by the cloud, you need a way to move some legacy applications there – without business-case-destroying remediation/redesign projects. Putting only new applications in the cloud means a 15-25 year journey for most companies. With IaaS, you must also be clear what you are getting: Who’s patching, who’s administering, who’s accountable for what?

- PaaS – good, scary: Platform as a Service (PaaS) is attractive and scary. I like the added value run-time services it provides, the speed of development, and the scalability design patterns it brings. (Accenture has long been a proponent of investing in common run-time architecture even in the pre-cloud era). The lock-in does, however, make me pause. I don’t like that I can’t bring back in-house an application written for some PaaS offerings. In Accenture’s IT, we’ll likely do some smaller new development on PaaS, but we won’t go all in.

- SaaS - not just packaged software: While Software as a Service (SaaS) on a technical level is nothing more than “packaged software” running on an infrastructure cloud, there is a bit more to it than that. Yes, SaaS has many of the advantages of IaaS (although I’d argue few of them will truly make it as a scale IaaS – see point #9). The commercial model of SaaS is dramatically different from the traditional packaged software model. Low, or no, upfront costs combined with some form of pay by the use/user/transaction is very attractive. Given the diversity in the jobs and roles of Accenture’s nearly 200,000 employees, we lean toward some type of usage/transaction based model rather than user. Additionally, true SaaS offerings are often simply designed better – modern architectures, more modular and built with integration in mind. This design approach can dramatically shorten the time to deployment (and therefore business value). One last caveat around SaaS – make sure you can get your data back if/when your contract ends!

- Rise of commodity: A big part of the cloud advantage that I don’t see talked about much is the ability of cloud providers to give us enterprise class performance, scalability and reliability from commodity hardware and open source software. If you walk around Google’s data center, you don’t see a lot of high end Tier 1 storage or specialized servers. Many Fortune 1000 companies are paying 10x the storage cost and 5x the computing cost for specialized hardware and software. The trend we have seen over the last 20 years will continue – more and more computing will be done on commodity (read: “cheap”) hardware and software.

- Public vs. Private: There is much talk in the industry about public (external) and private (internal) clouds. While Accenture today is using both, I believe it is predominantly a transitory state. While there will certainly be exceptions, I believe that the scale play of the major cloud providers will be too hard to overcome, even for Global 1000 companies. Remember, the biggest proponents of the private clouds are the companies that don’t want to lose you as a customer.

- Lions and Tigers and Bears, Oh my: Security FUD around cloud is overblown. Most people I talk to don’t get it. Yes, there is risk in entrusting your “crown jewels” to a 3rd party. But many companies already do this: they engage in 3rd party non-cloud hosting, they hire contractors who don’t even encrypt their laptops. I’m not saying there is no risk in signing up for Cloud Computing, especially with some of the startups that may lack experience in running enterprise class IT. But you should talk to Google, Microsoft, Verizon or Amazon about their security practices and expertise. In my experience they will stack up very well against your internal capabilities. Where do you think the best minds in security are going?

- SaaS to IaaS?: If I ran a SaaS company, I would run my offering on someone else’s IaaS or PaaS. Strategically, I believe my company’s value would be in the IP tied up in the software, not in running the hardware. Let the scale plays handle that. As enterprises embrace IaaS, I think you will see SaaS (and PaaS) companies moving their infrastructure towards the IaaS of one of the major scale players.

- Accenture’s Cloud Experience: Accenture’s experience with the cloud providers has been quite positive. On the SaaS front, our implementations have gone smoothly and arguably (we don’t run parallel projects to race) more quickly than had we gone a non-SaaS route. The commercial models have been acceptable even though they continue to evolve. Our IaaS and PaaS pilots have made us quite bullish on pushing more of Accenture internal IT to the cloud. While it is all a moving target, our modeling activities with Accenture’s Cloud Computing Accelerator have shown that we could cut a very significant percentage of our hosting costs by going “all in” on cloud computing. As a matter of fact, we have recently agreed to move our internal Exchange and SharePoint to Microsoft BPOS.

<Return to section navigation list>

Cloud Security and Governance

Aleksey Savateyev produced a 00:34:22 Security in Provisioning and Billing Solutions for Windows Azure Platform video on 8/13/2010:

This video provides an in-depth overview of the security aspects associated with development against the Service Management API (SMAPI) and MOCP, within the context of the Cloud Provisioning & Billing (CPB) solution. CPB abstracts and merges operations of the MOCP and Windows Azure portals, providing value-add features necessary for cloud resource resellers and cloud ISVs. CPB is primarily targeted at service providers wanting to sell Windows Azure services and resources in combination with their own, and application vendors wanting to give their customers the ability to customize their applications before they get deployed to Windows Azure.

David McCann claimed “The savings from cloud computing may be considerably less than expected if you don't avoid these missteps” and listed Six Costly Cloud Mistakes in this 8/12/2010 post to the CFO.com blog:

The chance to cut costs is one of the chief reasons companies turn to cloud computing, but how much is saved may depend on avoiding some common mistakes and misperceptions.

On the surface, moving to the cloud seems like a can't-lose deal. When third parties (such as Amazon.com, Google, Hewlett-Packard, IBM, Microsoft, and Savvis) host your applications or computing capacity in their massive virtualized data centers, you save the capital expense of updating hardware, cut maintenance costs, use less power, and free up floor space. The basic cloud pricing model is a flat fee based on capacity consumption, which often amounts to less than the forgone costs.

But there are a number of "hidden gotchas" when it comes to using cloud infrastructure providers, warns Jeff Muscarella, executive vice president of the IT division at NPI, a spend-management consultancy. If a company runs afoul of them, "six months in, the CFO might be wondering where all the savings are," he says.

In particular, Muscarella identifies six potential pitfalls that can make cloud costs pile up higher than expected:

1. Not taking full account of financial commitments on existing hardware. IT departments that have much of the responsibility for technology decisions and purchases may underappreciate the financial impact of committing to a cloud provider before existing physical equipment is fully depreciated. If that equipment cannot be repurposed, the ROI of moving computing workloads to the cloud may suffer. "This has to be looked at from an accounting perspective," says Muscarella.

2. Not factoring in your unique requirements when signing up for a cloud service. Most cloud offerings are fairly generic, as they are designed for the masses. For specialized needs, such as a retailer's need to comply with payment-card industry regulations, a company is likely to face additional charges. Be sure you understand such charges before signing a contract, says Muscarella.

3. Signing an agreement that doesn't account for seasonal or variable demands. In the contract, don't tie usage only to your baseline demands or you'll pay for it, warns Muscarella. If high-demand periods are not stipulated up front, there are likely to be costly up-charges when such periods happen.

4. Assuming you can move your apps to the cloud for free. Many software licenses prohibit the transfer of applications to a multitenant environment, but the provider may let you do it for a price. If you think you may want the flexibility, ask for such permission to be written into licenses at the time you're negotiating them.

5. Assuming an incumbent vendor's new cloud offering is best for you. Many providers have added cloud-based infrastructure to their traditional outsourcing and hosting services, but they're not motivated to give existing customers the lower price point that the cloud typically offers. "They're struggling with how to develop these new offerings without cannibalizing their existing revenue stream," says Muscarella. If you want to switch to a vendor's cloud services, do it at the end of the current contract term, when you can get negotiating leverage by soliciting other vendors.

6. Getting locked in to a cloud solution. Some cloud-services providers, notably Amazon, have a proprietary application program interface as opposed to a standardized interface, meaning you have to customize your data-backup programs for it. That could mean trouble in the future. "Once you have written a bunch of applications to the interface and invested in all the necessary customizations, it will be difficult — and expensive — to switch vendors," says Muscarella.
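One general way to soften the lock-in described in the last item is to have application code depend on a small storage interface that you own, and to confine each vendor's proprietary calls to an adapter behind it. This is a common design technique, not something Muscarella proposes in the article. The sketch below is a minimal illustration in Python: `BlobStore`, `InMemoryBlobStore`, and `backup_file` are hypothetical names, and the in-memory class stands in for a real provider adapter so that no vendor SDK calls are invented here.

```python
from abc import ABC, abstractmethod


class BlobStore(ABC):
    """Storage interface owned by the application (hypothetical)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None:
        """Store data under the given key."""

    @abstractmethod
    def get(self, key: str) -> bytes:
        """Return the data stored under the given key."""


class InMemoryBlobStore(BlobStore):
    """Stand-in adapter; a real one would wrap a single vendor's API."""

    def __init__(self):
        self._items = {}

    def put(self, key: str, data: bytes) -> None:
        self._items[key] = data

    def get(self, key: str) -> bytes:
        return self._items[key]


def backup_file(store: BlobStore, name: str, contents: bytes) -> str:
    """Backup logic written once against the interface, not a vendor."""
    key = f"backups/{name}"
    store.put(key, contents)
    return key
```

With this shape, switching vendors means writing one new adapter class rather than revisiting every backup program, although data transfer charges and feature differences between providers would still apply.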

David McCann is CFO’s Web News Editor.

Dan Woods asserted “We are afraid of the complexity, the learning curve, lock in--and losing our jobs” as a preface to his Overcoming Fear Of The Cloud post of 8/3/2010 to Forbes.com (missed when posted):

Even though the cloud is steadily enveloping the world of information technology, it is easy to forget the magnitude of the transition for those responsible for running and managing the systems. As the economic benefits of cloud technology become more profound, fear will be a smaller barrier. But right now, while we are still in the early days, fear of the cloud is a tangible force that is hard to overcome.

The fears range from the personal (Will I lose my job?) to the operational (How can we make sure we are backed up?) to the strategic (Will we be locked in to one cloud?). Some of the fears expose long-standing weaknesses in traditional IT practices, while others are new to the cloud. While most of us don't like to admit we are constrained by fear, it is worth taking an inventory of these fears to see if we are missing out on important opportunities.

Let's start with the biggest and most frequently encountered: Fear of losing control. Vendors derisively refer to people who are nervous about not being able to visit their machines as "server huggers," but this obscures the limits that one lives under in the cloud. You cannot do anything you want to a machine in the cloud. You cannot get the disk that crashed and send it to a data recovery service. You cannot control the hardware in an unlimited manner.

When do you need to do any of the things you cannot do in the cloud? Are these limits important? The fear comes in most strongly for those who do not have a clear idea of what they want from each piece of their infrastructure. In many ways, you have more control in the cloud because the infrastructure is standardized and controllable through APIs.

The fear of losing control comes up most often with cloud-based infrastructure. People are more comfortable ceding control of applications and using them on a Software-as-a-Service basis. End users, who are often the buyers of SaaS, never had control in the first place, so they do not feel they are missing out on anything. But even for SaaS, there is a loss of control that has been shown recently as Salesforce.com announced its Chatter product. At companies all across the globe, a screen splashed up offering Chatter as an extension to the existing Salesforce.com application. While we are used to seeing this on consumer software, it is another thing to see it in an enterprise application. IT staff were surprised when end-users with administrator privileges chose to install Chatter for hundreds or thousands of users. It is likely that SaaS vendors will market their products proactively without asking permission until users complain. This is a loss of control.

Losing data is another common fear. When your key data is in the cloud, you really don't know where it is. Can it disappear? Absolutely. Just look at the terms of service for your cloud provider: virtually nothing is guaranteed to work. No liability is assumed for complete loss of data. This fear is not based on the lack of reliability in the cloud but rather on the fact that few companies have truly up-to-the-minute backups. In most data centers, you have monthly, weekly and nightly backups. In the cloud you need that, but you also need some persistent transaction log so you can keep track of what was done during the day. The best systems have this already, but many people considering the cloud do not meet this standard, and the cloud exposes this failing. The remedy: Get a better backup system in place.
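The combination of a nightly backup and a persistent transaction log can be illustrated with a small recovery routine: start from the last snapshot, then replay the day's logged operations in order. The sketch below is a minimal Python illustration, not a description of any particular backup product. The JSON-lines log format, the `set` and `delete` operation names, and the `restore` and `apply_entry` functions are assumptions made for the example.

```python
import json


def apply_entry(state, entry):
    """Apply one logged operation to the in-memory state."""
    op = entry["op"]
    if op == "set":
        state[entry["key"]] = entry["value"]
    elif op == "delete":
        state.pop(entry["key"], None)  # deleting a missing key is a no-op
    else:
        raise ValueError(f"unknown operation: {op}")
    return state


def restore(snapshot, log_lines):
    """Rebuild current state from the last backup plus the day's log.

    snapshot  -- dict captured by the most recent backup
    log_lines -- iterable of JSON strings, one operation per line,
                 in the order the operations were performed
    """
    state = dict(snapshot)  # copy so the backup itself is not modified
    for line in log_lines:
        if line.strip():    # skip blank lines in the log
            apply_entry(state, json.loads(line))
    return state
```

The log only helps if it is written somewhere that survives the failure you are guarding against, so in practice it would live apart from the data it describes.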

Security worries are also scary at first. In the cloud, your applications and data are really on the public Internet. In most data centers, there are lots of firewalls and other security, which gives a strong feeling of security. But who is likely to be better at security? You or the cloud provider whose business depends on it? It is true that applications that run on the public Internet are more open to attack than applications inside a data center. But if a hacker comes calling and gets inside your firewall, unsecured applications become a playground. In today's world, all applications on a network should be locked down, whether inside a data center or not. Everyone should be afraid about security and make reasonable investments to provide protection. The cloud really doesn't increase the size of the reasonable investment, in my opinion.

Control, data and security are the big three. The rest of the common fears about the cloud are really the same fears we face about any new technology. We are afraid of the complexity, the learning curve, lock in or losing our jobs. This last one is only rarely a factor. Most IT people I know are more afraid of losing their jobs for not figuring out how to use the cloud than for using it.

Experimentation to build confidence is the best way to conquer these fears. Once a few small projects have been done, it is safe to think big.

But the biggest fear IT people should have about the cloud is failing to understand its true business value. The cloud right now is a technology phenomenon, but to make the biggest impact, its power must be reflected in business terms. Migration of server assets to the cloud or taking advantage of elastic capacity may save a few dollars, but the big money will come when business models of entire industries are reshaped because of the flexibility the cloud offers. The IT person who figures that out will not have to worry about losing his job or losing data. He will be the CEO before too long.

Dan Woods is chief technology officer and editor of Evolved Technologist, a research firm focused on the needs of CTOs and CIOs. He consults for many of the companies he writes about. For more information, go to evolvedtechnologist.com.

<Return to section navigation list>

Cloud Computing Events

Jeff Barr announced on 8/13/2010 an Event: AWS Cloud for the Federal Government to take place 9/23/2010 in Crystal City, Virginia:

Since the announcement of Recovery.gov last March, Amazon has seen an accelerating adoption of the cloud by our Federal customers. These include Treasury.gov, the Federal Register 2.0 at the National Archives, the Supplemental Nutrition Assistance Program at USDA, the National Renewable Energy Lab at DoE, and the Jet Propulsion Laboratory at NASA.

On September 23, 2010 we'll be conducting a half-day event in Crystal City, Virginia to discuss the use of the AWS Cloud in the Federal Government by the agencies and organizations mentioned above.

Speakers will include Amazon CTO Werner Vogels, AWS CISO Steve Schmidt, and a number of AWS customers. There will also be time for Q&A and a cocktail reception afterward for networking.

The event is free but you need to sign up now in order to reserve your spot.

PS - You may also enjoy the story behind the Federal Register 2.0 makeover.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Liz McMillan asserted “Virtualization and Cloud infrastructure consulting firm will provide strategic advice and implementation expertise” in a preface to her CA Technologies Acquires 4Base post of 8/13/2010:

As part of its continued investment in virtualization and cloud management, CA Technologies today announced the acquisition of privately-held 4Base Technology, a virtualization and cloud infrastructure consulting firm. Terms of the transaction were not disclosed.

4Base will become the CA Virtualization and Cloud Strategy group, a competency practice within the CA Services division. With experience in more than 300 engagements, 4Base will help customers move quickly, pragmatically and successfully from virtualization to cloud by providing strategic advice and implementation expertise.

“As CA Technologies expands its role as a strategic partner and advisor to customers on the journey from virtualization to cloud computing, we are investing in both the software and consulting services needed to achieve substantial business results,” said Adam Elster, general manager of CA Services. “4Base has worked with hundreds of customers across a broad spectrum of virtualization and cloud technologies, including CA Technologies, Cisco, EMC, NetApp and VMware. Through this experience, 4Base has developed a broad base of skills, knowledge and experience that will play a critical role in CA Technologies' ability to help customers quickly plan, adopt and execute transformative strategies and technologies that make their business more agile.”

Virtualization and cloud computing are in a fluid and evolving state, and CA Technologies will engage customers with a more comprehensive solution—both products and services—designed to help them fully realize the agility and operational benefits that can be derived from virtualization and cloud computing. With 4Base, CA Technologies customers can access proven approaches, skills and experience as they begin or continue on the virtualization-to-cloud journey, significantly reducing risk and accelerating returns.

“Enterprises have high interest in virtualization and cloud computing, but suffer from a lack of proven best practices,” said Paul Camacho, principal and co-founder of 4Base. “We are thrilled that CA Technologies chose 4Base to bring validated on-site experience, proven approaches and highly skilled resources to its customers. We look forward to helping new and existing CA Technologies customers eliminate VM stall and develop a dynamic IT service supply chain that transforms IT from a cost center to a business enabler, increases business agility, reduces IT costs, and increases quality of service.”

For additional information, please visit http://ca.com/4base.

Alex Williams claimed The Politics of Virtualization is at the Core of CA Acquisition in this 8/13/2010 post to the ReadWriteCloud:

CA Technologies' acquisition of a consulting firm gets to the heart of what happens to companies when they begin to adopt virtualization technologies.

Andi Mann of CA makes the point in a blog post that the company acquired 4Base Technologies to provide companies with the expertise that is often required to really get the most out of virtualization.

Here's what happens. A company deploys virtualization. They reap the benefits but then they get a bit stuck. To deploy a virtualization infrastructure requires expertise that many companies do not have. For CA, 4Base will be the company that helps customers fill that void.

The acquisition also points to another issue. And that's adoption. Or should we just call this what it is? And that's politics. CA is even more blunt. They point to "server huggers," those members of the IT guard who often have a lot of doubt about virtualization and developing a cloud infrastructure.

4Base will be chartered with going into enterprise companies and helping with staffing and skills issues. The idea is to get people on board who can handle the complexities of making the transition to virtualized environments. That again helps ease the political issues. More informed people do make a difference.

There are other aspects to this deal that tell a story about the politics of IT. 4Base brings expertise. For instance, CA cites 4Base and its knowledge of the wide array of hypervisors. This includes VMware, Microsoft, Sun, IBM, Citrix, Red Hat and other platforms. Again, the knowledge exists outside the customer walls. That knowledge needs to move inside. Once that knowledge is in place, the political tensions subside to some degree.

We don't think this is a departure for CA. We see it as a necessary factor of doing business in the enterprise and the company's increasing focus on the cloud and SaaS offerings.

CA competes with companies such as IBM, Hewlett-Packard and Oracle. Like IBM, CA is on an acquisition tear. It bought 3Tera and Nimsoft earlier this year, showing its clear intentions to get into the cloud computing market.

Mike Lewis explained Why Your Startup Should Use A Schema-less Database on 8/13/2010:

Here's a hint. It isn't because it's better than (insert-your-brand-here)SQL. I'm not even going to address that debate right now. It simply has to do with product iteration.

I love MongoDB as a technology, but I'm not even going to argue this from a tech perspective. The point that I want to address here is pivoting. MongoDB scales, it shards, it map-reduces, yadda yadda yadda. I love it for those things, but for a seed-stage startup, its single biggest asset is the fact that it is schema-less, and does it beautifully.

SQL is phenomenal for enforcing rigidity onto tightly defined problems. It's fast, mature, stable, and even a mediocre developer can JOIN their way out of a paper bag. Save it for your next government defense contract. Build your startup's tech on the assumption that your business premise will change, and that you need to be ready for it. Your data schema is a direct corollary of how you view your business' direction and tech goals. When you pivot, especially if it's a significant one, your data may no longer make sense in the context of that change. Give yourself room to breathe. A schema-less data model is MUCH easier to adapt to rapidly changing requirements than a highly structured, rigidly enforced schema.

This advice applies equally to great solutions such as Cassandra, CouchDB, etc. Whatever your flavor is, make sure to give yourself options. Use the most powerful and flexible technologies available to you. If a startup decided to develop their core technologies in C, under the pretense that "It will handle more traffic per server," I'd laugh in their faces. You won't get that traffic if another company comes along and can crank out better features ten times faster than you. Pre-optimization is at the heart of all software evil, and it applies to data design as well. Bring SQL back into rotation after you've found your market, and a specific project calls for it. When you're first searching for that market, use the most flexible tools you can. Use tools that let you move fast, and allow you to salvage as much work as you can from efforts that dead-ended or required a pivot.
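The point about adapting to a pivot can be illustrated with a minimal sketch. It is not taken from Mike's post and does not use MongoDB or any real database driver: the `users` list, the `insert` helper and the `plan_tier` function are hypothetical stand-ins for a document store, written in plain Python under the assumption that documents are simple dictionaries.

```python
# Hypothetical in-memory "collection": a list of dicts standing in for a
# schema-less document store. No real database API is used here.
users = []


def insert(collection, document):
    """Store a copy of the document; no schema is checked or enforced."""
    collection.append(dict(document))


# Before the pivot: documents carry only a name and an email address.
insert(users, {"name": "Ada", "email": "ada@example.com"})

# After the pivot: new documents gain fields, with no migration of old ones.
insert(users, {
    "name": "Grace",
    "email": "grace@example.com",
    "plan": {"tier": "pro", "seats": 5},
})


def plan_tier(document):
    """Read a post-pivot field, falling back to a default for older documents."""
    return document.get("plan", {}).get("tier", "free")


# Old and new document shapes are read through the same code path.
tiers = [plan_tier(user) for user in users]
```

The trade-off is that the application code, rather than the database, now has to supply defaults for fields that older documents lack, which is roughly the flexibility-versus-rigidity exchange the excerpt describes.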

Ezra Zygmuntowicz posted 4 years at Engine Yard, what a long strange trip it's been on 8/13/2010 about his departure from the start-up he cofounded. Ezra’s memorable story is germane to this blog because Engine Yard was one of the first successful PaaS startups:

So by now most folks who know me know that I have resigned from the startup I co-founded, namely Engine Yard Inc. It’s a long strange story that has led up to this point and a lot of it I cannot speak of, but I am going to give a short history of a 4 year startup that took $38 million in VC money over 3 rounds and my view from the cockpit and trenches both.

Back in February of 2006 Ruby on Rails was still in its infancy and things were still changing very fast with regards to deployment especially. It was obvious that Rails was going to be a smashing success and I had actually launched one of the first commercial Rails applications in late 2004 when I worked at the Yakima Herald-Republic newspaper in Yakima, WA. They had a website already written in PHP that got about 250k uniques/day, so not a small amount of traffic but it was no Twitter or anything like that. Still back then almost no one knew how to efficiently deploy Rails apps and scale them without them falling over. And the landscape was going through major changes: CGI, WEBrick, FastCGI, mod_ruby, Mongrel, etc., with a plethora of front end static file web servers to put these things behind.

I was the only technical person at the whole newspaper and I was tasked with rewriting their entire website, intranet and classified and obituary entry system. They had a small data center in the newspaper building and they bought me two Apple X-Serves and said “here you go, your Rails application must take the 250k uniques on day one without faltering or we will roll back to the PHP app and you will be in hot water buddy.”

So I had to become the deployment expert in the Rails community. If you search the Ruby on Rails mailing lists from 2004-2007 you will find literally thousands of my posts helping folks figure out how to deploy and keep their apps running. I was approached by Dave Thomas of the Pragmatic Programmers and asked if I’d like to write a book on Rails Deployment. “Hell yeah, I’d like to write that book,” I said. Little did I know how much work that was going to be with all the changes Rails deployment was about to go through. But it gave me a name in the community as the “Rails Deployment Expert”. And I did finally finish the book “Deploying Rails Applications” two years later with the help of two other authors. But I rewrote most of the book a few times as things changed so often.

Anyway, back to February of 2006: Tom Mornini and Lance Walley called me up out of the blue (I did not know either of these guys before this call) and told me about an idea they had to build a “Rackspace for Rails”. They asked if I was interested and said they found me through my thousands of posts on the Rails mailing list and my Pragmatic beta book and figured I was the obvious expert to help them build their vision. So I agreed to become the 3rd founder with them and we started the planning stages of what was to become Engine Yard Inc.

The first Railsconf was in June of 2006 and we had a teaser website up and I was giving a talk on Rails Deployment at the conference. I announced the then vaporware Engine Yard as my “One more thing” at the end of my speech and linked to our site where we took emails.

We rounded up about $120k by begging, borrowing and stealing (not really) from friends and family. I must admit that Tom and Lance raised most of this first money while I was busy writing the first code for Engine Yard. We raised this seed money in August of 2006 and that was when I quit my full time job and took the plunge. We all flew down to Sacramento where we had rented our first rack at Herakles Data and bought our first 6 Supermicro servers and first Coraid JBOD disk array.

I still remember building the servers and racking them while at the same time fast talking folks on the phone trying to sell early accounts on our not yet running first cluster.

Now you need to know that this was way back before everything was “Cloud”; Amazon had not yet come out with EC2 or any hints of it. But we knew what we needed to make our vision happen, so we ended up building a cloud before the word cloud meant what it means today. We went with Xen and commodity pizza boxes and hand rolled automation written in Ruby and Python.

At this point Tom and I had realized we were out of our league with the low level Linux, Xen, networking and basic ponytail type of stuff. This is when we took on our 4th founder Jayson Vantyl. He was the guy answering all our Xen and Coraid questions on the mailing lists just like I had been the guy answering the Rails deployment stuff. So we first hired him as a contractor and flew him out to help build our first cluster. We quickly realized we needed this guy as the 4th founder and made him an offer, and he joined the company as the 4th and final founder in, I believe, September of 2006.

So we were able to cobble together our first cluster and get it working well enough to take some trusting early customers. I personally hand deployed the first 80 or so customers. I mean I literally took their app code and worked with them to learn about their app, and personally wrote the Capistrano deployment scripts for each of these customers myself. I was the only support staff on call 24/7 for the first year as far as application support went and Jayson was the only cluster support guy for the first 6 months or so as well.

I quickly built up a collection of best practices and a set of automation tools that let me do this easier and easier for each new customer, slowly building out our Engine Yard automation toolkit.

I was also responsible for putting together the “Rails Stack” we used. Jayson chose Gentoo Linux because that was what he was most familiar with and it was the distro we could hand-optimize to get the best speed and flexibility out of. But I built the entire stack that ran after a blank VM was online with networking, storage and all that jazz.