Windows Azure and Cloud Computing Posts for 12/18/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Update 12/19/2010: 14 articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, RDP, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: To find new and updated articles, copy the bullet (•) to the Clipboard, press Ctrl+F, paste the bullet into the Find text box, and repeatedly click Next.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

• Bruno Terkaly posted ADO.NET Entity Framework – Troubleshooting steps for “The underlying provider failed on Open” on 12/19/2010:

The ADO.NET Entity Framework shields you from many problems. But when you troubleshoot connection errors, that shielding can work against you. I developed a technique that can very accurately tell you the root cause. The technique is very simple: use the SqlConnection and SqlCommand classes, because they often provide the most direct route to the underlying root cause.

You will need to substitute your own connection string and SELECT statement.

Some ADO.NET code to test a connection:

using System;
using System.Data.SqlClient;

string conn = "Data Source=.;Initial Catalog=MarketIndexData;Integrated Security=True;";
using (SqlConnection connection = new SqlConnection(conn))
{
    try
    {
        connection.Open();
        SqlCommand cmd = new SqlCommand();
        cmd.CommandText = "select * from AssetPrices";
        cmd.Connection = connection;
        using (SqlDataReader rdr = cmd.ExecuteReader())
        {
            while (rdr.Read()) { }
        }
    }
    catch (Exception ex)
    {
        // Set a breakpoint here: ex.Message usually names the root cause
        string s = ex.Message;
    }
}

Figure: Low Level ADO.NET Code

The most common error is insufficient privileges

In my case, the problem turned out to be an issue with the [NT AUTHORITY\NETWORK SERVICE] account. When I ran my MVC application, its application pool ran as [NT AUTHORITY\NETWORK SERVICE], and it is that account that talks to SQL Server. The steps below show how I solved that problem.

Make sure you have [NT AUTHORITY\NETWORK SERVICE] as a login. If you don’t, right-click “Logins” and add it.

Figure: Logins in SSMS

Go to “User Mapping.” Notice that I was way too liberal. I chose all my databases and gave multiple roles to each database. But then again, there is no production data here anywhere.

Figure: User Mapping – SQL Server

Type “inetmgr” in the run window. Click on “Application Pools.” Notice that the identity is “Network Service.”

What is this funky app pool running as “NetworkService”?

It looks to me as though installing Azure SDK 1.3 added a new app pool in which my cloud apps run in the local development fabric.

Figure: Internet Information Services (IIS) Manager (inetmgr.exe)

Start SQL Server Management Studio and grant privileges to the newly added “Network Service” account.

Figure: SQL Server Management Studio

Damir Dobric analyzed Windows Azure SQL Server T-SQL issues in a 12/12/2010 post (missed when published):

When you move a database from SQL Server to Windows Azure SQL Server by using SQL scripts, you will run into several issues that are not that obvious.

SQL Server in the Windows Azure platform does not support full T-SQL. Here are a few examples. This is the simple table that I want to move to Windows Azure SQL Server:

USE AzureDb
CREATE TABLE [calc].[TClient](
    [ID] [bigint] IDENTITY(1,1) NOT NULL,
    [Name] [nvarchar](50) NOT NULL,
    [ChangedBy] [nvarchar](50) NULL,
    [ChangedAt] [datetime] NULL,
    [CreatedBy] [nvarchar](50) NULL,
    [CreatedAt] [datetime] NULL,
    [ExternalId] [nvarchar](256) NULL,
    [ExternalSystemId] [nvarchar](20) NULL,
    CONSTRAINT [PK_TMandate] PRIMARY KEY CLUSTERED
    (
        [ID] ASC
    )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
) ON [PRIMARY]

After you run this script, the following error appears:

Msg 40508, Level 16, State 1, Line 1
USE statement is not supported to switch between databases. Use a new connection to connect to a different Database.

No problem: just remove the USE AzureDb statement.

Now execute the script again and, oops:

Msg 40517, Level 16, State 1, Line 14
Keyword or statement option 'pad_index' is not supported in this version of SQL Server.

To work around this issue, remove the following parts of the script (but keep the closing parenthesis of the table definition):

WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]
) ON [PRIMARY]

In the end, your script should look like this:

CREATE TABLE [tracking].[TClient](
    [ID] [bigint] IDENTITY(1,1) NOT NULL,
    [Name] [nvarchar](50) NOT NULL,
    [ChangedBy] [nvarchar](50) NULL,
    [ChangedAt] [datetime] NULL,
    [CreatedBy] [nvarchar](50) NULL,
    [CreatedAt] [datetime] NULL,
    [ExternalId] [nvarchar](256) NULL,
    [ExternalSystemId] [nvarchar](20) NULL,
    CONSTRAINT [PK_TMandate] PRIMARY KEY CLUSTERED
    (
        [ID] ASC
    )
)

This will work fine. This post might help you to solve your problem. Unfortunately, my problem is that my script contains 100+ tables.

Read the complete post at http://developers.de/blogs/damir_dobric/archive/2010/12/12/windows-azure-sql-server-t-sql-issues.aspx


MarketPlace DataMarket and OData

Eric White explained Using the OData Rest API for CRUD Operations on a SharePoint List in a 12/17/2010 post to his MSDN blog:

SharePoint 2010 exposes list data via OData. This post contains four super-small code snippets that show how to Create, Read, Update, and Delete items in a SharePoint list using the OData Rest API.

This post is one in a series on using the OData REST API to access SharePoint 2010 list data.

- Getting Started using the OData REST API to Query a SharePoint List

- Using the OData Rest API for CRUD Operations on a SharePoint List

These snippets work as written with the 2010 Information Worker Demonstration and Evaluation Virtual Machine. That VM is a great way to try out SharePoint 2010 development. Also see How to Install and Activate the IW Demo/Evaluation Hyper-V Machine.

See the first post in this series, Getting Started using the OData REST API to Query a SharePoint List, for detailed instructions on how to build an application that uses OData to query a list. These snippets use the list that I describe how to build in that post.

Query a List

using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using Gears.Data;

class Program
{
    static void Main(string[] args)
    {
        // query
        TeamSiteDataContext dc =
            new TeamSiteDataContext(new Uri("http://intranet/_vti_bin/listdata.svc"));
        dc.Credentials = CredentialCache.DefaultNetworkCredentials;
        var result = from d in dc.Inventory
                     select new
                     {
                         Title = d.Title,
                         Description = d.Description,
                         Cost = d.Cost,
                     };
        foreach (var d in result)
            Console.WriteLine(d);
    }
}

Create an Item

using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using Gears.Data;

class Program
{
    static void Main(string[] args)
    {
        // create item
        TeamSiteDataContext dc =
            new TeamSiteDataContext(new Uri("http://intranet/_vti_bin/listdata.svc"));
        dc.Credentials = CredentialCache.DefaultNetworkCredentials;
        InventoryItem newItem = new InventoryItem();
        newItem.Title = "Boat";
        newItem.Description = "Little Yellow Boat";
        newItem.Cost = 300;
        dc.AddToInventory(newItem);
        dc.SaveChanges();
    }
}

Update an Item

using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using Gears.Data;

class Program
{
    static void Main(string[] args)
    {
        // update item
        TeamSiteDataContext dc =
            new TeamSiteDataContext(new Uri("http://intranet/_vti_bin/listdata.svc"));
        dc.Credentials = CredentialCache.DefaultNetworkCredentials;
        InventoryItem item = dc.Inventory
            .Where(i => i.Title == "Car")
            .FirstOrDefault();
        // FirstOrDefault() returns null if no item is titled "Car"
        if (item == null)
        {
            Console.WriteLine("No item titled \"Car\" was found.");
            return;
        }
        item.Title = "Car";
        item.Description = "Super Fast Car";
        item.Cost = 500;
        dc.UpdateObject(item);
        dc.SaveChanges();
    }
}

Delete an Item

using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using Gears.Data;

class Program
{
    static void Main(string[] args)
    {
        // delete item
        TeamSiteDataContext dc =
            new TeamSiteDataContext(new Uri("http://intranet/_vti_bin/listdata.svc"));
        dc.Credentials = CredentialCache.DefaultNetworkCredentials;
        InventoryItem item = dc.Inventory
            .Where(i => i.Title == "Car")
            .FirstOrDefault();
        // FirstOrDefault() returns null if no item is titled "Car"
        if (item == null)
        {
            Console.WriteLine("No item titled \"Car\" was found.");
            return;
        }
        dc.DeleteObject(item);
        dc.SaveChanges();
    }
}
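Under the covers, the generated data context turns each of these snippets into plain HTTP requests against listdata.svc. The following hypothetical Python helper, which is not from Eric's post, sketches which HTTP method and URL each operation roughly corresponds to. It assumes that list items are addressed by a numeric ID as the entity key (the 3 used below is made up) and that updates are sent as MERGE, which is the WCF Data Services client's default for UpdateObject unless SaveChangesOptions.ReplaceOnUpdate requests PUT. It only builds request descriptions; it sends nothing.

```python
from urllib.parse import quote

# Assumption: the service root used in the snippets above.
SERVICE_ROOT = "http://intranet/_vti_bin/listdata.svc"


def odata_request(operation, entity_set, item_id=None, title=None):
    """Return the (HTTP method, URL) pair for a CRUD operation on a list.

    Illustrative only: a real request also needs credentials, an entity body
    for create/update, and typically an If-Match ETag header for update/delete.
    """
    collection = f"{SERVICE_ROOT}/{entity_set}"
    if operation == "read":
        if title is None:
            return "GET", collection
        # OData string literals use single quotes; embedded quotes are doubled
        literal = title.replace("'", "''")
        expr = "Title eq '" + literal + "'"
        return "GET", f"{collection}?$filter={quote(expr)}"
    if operation == "create":
        return "POST", collection           # new entity goes in the request body
    if item_id is None:
        raise ValueError("update and delete need the item's ID")
    entry = f"{collection}({item_id})"      # address a single entity by key
    if operation == "update":
        return "MERGE", entry
    if operation == "delete":
        return "DELETE", entry
    raise ValueError(f"unknown operation: {operation}")


for op in ("read", "create", "update", "delete"):
    print(op, *odata_request(op, "Inventory", item_id=3))
```

The Where(i => i.Title == "Car") queries above correspond to the filtered read, for example odata_request("read", "Inventory", title="Car").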

Eric is a senior programming writer for the Office Developer Center.

The World Bank published Announcing the Apps for Development Competition on 10/7/2010 (missed when posted):

As part of the World Bank's Open Data Initiative, World Bank President Robert Zoellick kicked off the Apps for Development Competition on October 7th, 2010 that will challenge the developer community to create tools, applications, and mash-ups using World Bank data.

Please check the Apps for Development contest Web site for complete contest details.

The contest requires applicants to use World Bank data, a major part of which is the World Development Indicators dataset that’s available in OData format from the Windows Azure DataMarket:

The World Development Indicators database is the primary World Bank database for development data from officially-recognized international sources. It provides a comprehensive selection of economic, social and environmental indicators, drawing on data from the World Bank and more than 30 partner agencies.

The database covers more than 900 indicators for 210 economies with data back to 1960 in many cases.


Windows Azure AppFabric: Access Control and Service Bus

• Neil MacKenzie (@mknz) explained the Azure AppFabric Caching Service in a detailed post on 12/18/2010:

The Azure AppFabric Caching Service was released into the Azure AppFabric Labs at PDC 10. This service makes available to the Azure Platform many of the features of the Windows Server AppFabric Caching Service (formerly known as Velocity). The Azure AppFabric Caching Service supports the centralized caching of data and the local caching of that data. It also provides pre-built session and page-state providers for web sites. These can be used with only configuration changes to an existing web site. (This post doesn’t go into that feature.)

The Azure AppFabric SDK v2 required to use Azure AppFabric Caching can be downloaded from the Help and Resources page on the Azure AppFabric Labs Portal. This download includes documentation. The preview is available currently only in the US South Central Azure datacenter.

Wade Wegner has a great PDC 10 presentation introducing the Azure AppFabric Caching Service. He also participates in a very interesting conversation with Karandeep Anand for Channel 9. The Windows Azure Platform Training Kit (December 2010) contains a hands-on lab for the Azure AppFabric Caching Service (and one day I’ll get it to work). This post complements an earlier post on another Azure AppFabric Labs feature: Durable Message Buffers.

The Azure AppFabric Caching Service appears to be a very powerful and easy-to-use addition to the Azure Platform. A significant unknown is cost – since pricing has not yet been announced. There is an interesting discussion of this on an Azure Forum thread. It will be interesting to see how the Azure AppFabric Caching Service develops over the coming months – but it has got off to a really solid start.

AppFabric Caching

The Windows Server AppFabric Caching Service runs on a cluster of servers that present a unified cache to the client. This service requires IT support to manage and configure the servers. These clusters can be quite large – the documentation, for example, describes the cache management needs of a cluster in the range of 50 cache servers. This IT need disappears in Azure AppFabric Caching since Microsoft handles all service management.

In the Windows Server AppFabric Caching Service it is possible to create named caches and give each cache different configuration characteristics. For example, a cache holding rarely changing data could have a very long time-to-live configured for cached data, while another cache containing more temporary data could have a much shorter time-to-live. Furthermore, it is possible to create regions on a single node in the cache cluster and associate searchable tags with cache entries. Neither of these features is implemented in Azure AppFabric Caching, which supports only the default cache (named, unsurprisingly, default).

The Windows Server AppFabric Caching Service supports cache notifications, to which cache clients can subscribe in order to be notified when various operations are performed on the cache and the items it contains. This feature is not supported in the Azure AppFabric Caching Service.

Both the Azure and the Windows Server AppFabric Caching Service use various techniques to remove data from the cache automatically: expiration and eviction. A cache has a default timeout associated with it after which an item expires and is removed automatically from the cache. This default timeout may be overridden when items are added to the cache. The local cache similarly has an expiration timeout. The Windows Server AppFabric Caching Service supports notification-based expiration but that is not implemented in the Azure AppFabric Caching Service. Eviction refers to the process of removing items because the cache is running out of memory. A least-recently used algorithm is used to remove items when cache memory comes under pressure – this eviction is independent of timeout.
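Expiration and eviction are independent mechanisms, which a toy model makes concrete. The following Python sketch is not the AppFabric implementation: ExpiringLruCache is a hypothetical name, memory pressure is approximated by a fixed item capacity, and a caller-supplied clock makes expiration deterministic.

```python
from collections import OrderedDict


class ExpiringLruCache:
    """Toy model of expiration (timeouts) plus LRU eviction; not AppFabric's code."""

    def __init__(self, capacity, default_timeout, clock):
        self.capacity = capacity                # stands in for available cache memory
        self.default_timeout = default_timeout  # seconds, used when put() gets no timeout
        self.clock = clock                      # callable returning the time in seconds
        self._items = OrderedDict()             # key -> (value, expires_at), LRU first

    def put(self, key, value, timeout=None):
        ttl = self.default_timeout if timeout is None else timeout
        self._items[key] = (value, self.clock() + ttl)
        self._items.move_to_end(key)            # most recent use goes to the end
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)     # evict the LRU item, expired or not

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None                         # never added, or evicted
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._items[key]                # expired: remove and report a miss
            return None
        self._items.move_to_end(key)            # record the use for LRU ordering
        return value


now = [0.0]
cache = ExpiringLruCache(capacity=2, default_timeout=600, clock=lambda: now[0])
cache.put("Malone Dies", "Samuel Beckett")          # default 600 s timeout
cache.put("Molloy", "Samuel Beckett", timeout=60)   # per-item override
now[0] = 61
print(cache.get("Molloy"), cache.get("Malone Dies"))
```

After 61 simulated seconds "Molloy" has expired while "Malone Dies" is still cached; adding more items than the capacity then evicts whichever remaining item was used least recently, regardless of its timeout.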

The Azure and Windows Server AppFabric Caching Services support two concurrency models: optimistic and pessimistic. In optimistic concurrency, a version number is provided when an item is retrieved from the cache. This version number can be supplied when the item is updated in the cache, and the update fails if an old version number is provided. In pessimistic concurrency, the item is locked when it is retrieved from the cache and unlocked when it is no longer needed. However, pessimistic concurrency assumes that all clients obey the rules regarding locks, because an operation that does not use locks can update an item regardless of any current lock.
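The two concurrency models can be illustrated with a toy Python model. It is a sketch under stated assumptions, not the AppFabric implementation: versions are simple counters, lock handles are integers, a caller-supplied clock drives lock expiry, and ConcurrencyModelCache and its method names are hypothetical, loosely mirroring Put, GetAndLock and PutAndUnlock. The plain put() deliberately ignores locks, which is the weakness described above.

```python
import itertools


class VersionMismatch(Exception):
    """Raised when an optimistic update supplies an out-of-date version."""


class ItemLocked(Exception):
    """Raised when a lock is held by someone else or a lock handle is invalid."""


class ConcurrencyModelCache:
    """Toy model of optimistic (version) and pessimistic (lock) concurrency."""

    def __init__(self, clock):
        self.clock = clock                  # callable returning the time in seconds
        self._items = {}                    # key -> [value, version, handle, lock_expires_at]
        self._handles = itertools.count(1)  # source of unique lock handles

    def get_cache_item(self, key):
        value, version, _, _ = self._items[key]
        return value, version

    def put(self, key, value, version=None):
        entry = self._items.get(key)
        if entry is None:
            self._items[key] = [value, 1, None, 0.0]
            return 1
        if version is not None and version != entry[1]:
            raise VersionMismatch(key)      # optimistic check failed
        # Not lock-aware: overwrites the item even while it is locked
        # (this model leaves any existing lock in place).
        entry[0] = value
        entry[1] += 1
        return entry[1]

    def get_and_lock(self, key, timeout):
        entry = self._items[key]
        if entry[2] is not None and self.clock() < entry[3]:
            raise ItemLocked(key)           # an unexpired lock is already held
        entry[2] = next(self._handles)
        entry[3] = self.clock() + timeout   # the lock expires automatically
        return entry[0], entry[2]

    def put_and_unlock(self, key, value, handle):
        entry = self._items[key]
        if entry[2] != handle or self.clock() >= entry[3]:
            raise ItemLocked(key)           # wrong or expired handle
        entry[0] = value
        entry[1] += 1
        entry[2] = None                     # release the lock
        return entry[1]
```

For example, reading a version, letting another writer update the item, and then calling put() with the old version raises VersionMismatch, whereas put() without a version always succeeds, even on a locked item.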

Configuring the Azure AppFabric Caching Service

Note that links to classes in this and subsequent sections are to the documentation for the Windows AppFabric Caching Service. Since the intent is that they use the same API this should be a reasonable proxy until the Azure AppFabric Caching Service documentation is available online.

The documentation for Windows AppFabric Caching Service uses the expression cache cluster to refer to the central (non-local) cache. In this and subsequent sections I use the alternate term AppFabric cache for the central cache to emphasize the fact that we don’t care about its implementation.

The DataCacheFactoryConfiguration class is used to configure the Azure AppFabric Cache. It is declared:

public class DataCacheFactoryConfiguration : ICloneable {
    // Constructors
    public DataCacheFactoryConfiguration();

    // Properties
    public TimeSpan ChannelOpenTimeout { get; set; }
    public Boolean IsRouting { get; set; }
    public DataCacheLocalCacheProperties LocalCacheProperties { get; set; }
    public Int32 MaxConnectionsToServer { get; set; }
    public DataCacheNotificationProperties NotificationProperties { get; set; }
    public TimeSpan RequestTimeout { get; set; }
    public DataCacheSecurity SecurityProperties { get; set; }
    public IEnumerable<DataCacheServerEndpoint> Servers { get; set; }
    public DataCacheTransportProperties TransportProperties { get; set; }

    // Implemented Interfaces and Overridden Members
    public Object Clone();
}

When you configure the Azure AppFabric Caching Service, the Azure AppFabric Labs Portal provides a single cache endpoint and an authentication token. The Servers property specifies the single cache endpoint, and the authentication token is provided via the SecurityProperties property. LocalCacheProperties specifies the configuration of the local cache; it can be set to null if the local cache is not required. The default timeout for the (Azure) AppFabric cache is 10 minutes.

The LocalCacheProperties property is of type DataCacheLocalCacheProperties declared:

public class DataCacheLocalCacheProperties {
    // Constructors
    public DataCacheLocalCacheProperties();
    public DataCacheLocalCacheProperties(Int64 objectCount,
        TimeSpan defaultTimeout,
        DataCacheLocalCacheInvalidationPolicy invalidationPolicy);

    // Properties
    public TimeSpan DefaultTimeout { get; }
    public DataCacheLocalCacheInvalidationPolicy InvalidationPolicy { get; }
    public Boolean IsEnabled { get; }
    public Int64 ObjectCount { get; }
}

DefaultTimeout is the default timeout for the local cache. InvalidationPolicy specifies the invalidation policy for the local cache and must be DataCacheLocalCacheInvalidationPolicy.TimeoutBased, since notification-based expiration is not supported in Azure AppFabric Labs. IsEnabled indicates whether or not the local cache is enabled for the cache client. ObjectCount specifies the maximum number of items that can be stored in the local cache. Items are added to the local cache when they are retrieved from the AppFabric cache. There is no way to add items to the local cache independently of the AppFabric cache.
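The read path through the local cache can be sketched with another toy Python model, under these assumptions: a plain dict stands in for the AppFabric cache, object_count and local_timeout play the roles of ObjectCount and DefaultTimeout under the TimeoutBased policy, and the oldest-inserted entry is dropped when the local cache is full (the real local cache's eviction order is not described here). LocalCacheModel is a hypothetical name. The model shows the practical consequence of timeout-based invalidation: a local copy can be stale until its timeout elapses.

```python
from collections import OrderedDict


class LocalCacheModel:
    """Toy model of a bounded, timeout-based local cache in front of a central cache."""

    def __init__(self, central, object_count, local_timeout, clock):
        self.central = central              # dict standing in for the AppFabric cache
        self.object_count = object_count    # plays the role of ObjectCount
        self.local_timeout = local_timeout  # plays the role of DefaultTimeout
        self.clock = clock                  # callable returning the time in seconds
        self.local = OrderedDict()          # key -> (value, expires_at), oldest first
        self.central_reads = 0              # round trips to the central cache

    def get(self, key):
        cached = self.local.get(key)
        if cached is not None and self.clock() < cached[1]:
            return cached[0]                # local hit: may be stale until it times out
        self.local.pop(key, None)           # expired or absent locally
        self.central_reads += 1
        value = self.central.get(key)
        if value is not None:
            # Items enter the local cache only when read from the central cache.
            self.local[key] = (value, self.clock() + self.local_timeout)
            while len(self.local) > self.object_count:
                self.local.popitem(last=False)  # drop the oldest-inserted entry
        return value
```

With a 60-second local timeout, an update made directly in the central cache is not seen by this client until its local copy times out and the next get() goes back to the central cache.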

The DataCacheFactoryConfiguration is passed into the DataCacheFactory constructor, and the factory then creates the DataCache object used to interact with the cache. DataCacheFactory is declared:

public sealed class DataCacheFactory : IDisposable {
    // Constructors
    public DataCacheFactory(DataCacheFactoryConfiguration configuration);
    public DataCacheFactory();

    // Methods
    public DataCache GetCache(String cacheName);
    public DataCache GetDefaultCache();

    // Implemented Interfaces and Overridden Members
    public void Dispose();
}

The DataCacheFactory() constructor reads the cache configuration from the app.config file. In the Azure AppFabric Caching Service, GetCache(String) can be used with the parameter “default” or, more simply, GetDefaultCache() can be used to retrieve the DataCache object for the default (and only) cache.

The following is an example of cache configuration:

private DataCache InitializeCache()
{
    String hostName = "SERVICE_NAMESPACE.cache.appfabriclabs.com";
    String authenticationToken = @"BASE64 AUTHENTICATION TOKEN";
    Int32 cachePort = 22233;
    Int64 sizeLocalCache = 1000;

    List<DataCacheServerEndpoint> server =
        new List<DataCacheServerEndpoint>();
    server.Add(new DataCacheServerEndpoint(hostName, cachePort));

    // Local cache: at most 1,000 items, each invalidated 60 seconds after it is cached
    DataCacheLocalCacheProperties localCacheProperties =
        new DataCacheLocalCacheProperties(sizeLocalCache, TimeSpan.FromSeconds(60),
            DataCacheLocalCacheInvalidationPolicy.TimeoutBased);

    DataCacheFactoryConfiguration dataCacheFactoryConfiguration =
        new DataCacheFactoryConfiguration();
    dataCacheFactoryConfiguration.ChannelOpenTimeout = TimeSpan.FromSeconds(45);
    dataCacheFactoryConfiguration.IsRouting = false;
    dataCacheFactoryConfiguration.LocalCacheProperties = localCacheProperties;
    dataCacheFactoryConfiguration.MaxConnectionsToServer = 5;
    dataCacheFactoryConfiguration.RequestTimeout = TimeSpan.FromSeconds(45);
    dataCacheFactoryConfiguration.SecurityProperties =
        new DataCacheSecurity(authenticationToken);
    dataCacheFactoryConfiguration.Servers = server;
    dataCacheFactoryConfiguration.TransportProperties =
        new DataCacheTransportProperties() { MaxBufferSize = 100000 };

    DataCacheFactory myCacheFactory =
        new DataCacheFactory(dataCacheFactoryConfiguration);

    // dataCache is assumed to be a DataCache field of the enclosing class.
    // GetCache() requires a cache name, so use GetDefaultCache() here.
    dataCache = myCacheFactory.GetDefaultCache();
    //dataCache = myCacheFactory.GetCache("default");
    return dataCache;
}

This method creates the cache configuration and uses it to create a DataCache object, which it then returns. The configuration provides for only a 60s expiration timeout for the local cache, which contains at most 1,000 items.

DataCache

DataCache is the core class for handling cached data. The following is a very truncated version of the DataCache declaration:

public abstract class DataCache {
    // Properties
    public Object this[String key] { get; set; }

    // Methods
    public DataCacheItemVersion Add(String key, Object value);
    public Object Get(String key);
    public Object GetAndLock(String key, TimeSpan timeout,
        out DataCacheLockHandle lockHandle);
    public DataCacheItem GetCacheItem(String key);
    public Object GetIfNewer(String key, ref DataCacheItemVersion version);
    public DataCacheItemVersion Put(String key, Object value);
    public DataCacheItemVersion PutAndUnlock(String key, Object value,
        DataCacheLockHandle lockHandle);
    public Boolean Remove(String key);
    public void ResetObjectTimeout(String key, TimeSpan newTimeout);
    public void Unlock(String key, DataCacheLockHandle lockHandle);
}

Each of these methods comes in variants with parameters supporting optimistic and pessimistic concurrency. There are also variants with tags, which are not supported in the Azure AppFabric Caching Service. The tags themselves are actually saved with the item and can be retrieved with it, but searching is not functional because of the lack of region support.

The Add() methods add a serializable item to the AppFabric cache. One variant allows the item to have a non-default expiration timeout in the AppFabric cache.

The Get() methods retrieve an item from the cache, while the GetAndLock() methods do so for the pessimistic concurrency model. If the item is present in the local cache, it is retrieved from there; otherwise, it is retrieved from the AppFabric cache and added to the local cache. The objects retrieved with these methods need to be cast to the correct type. GetCacheItem() retrieves all the information about the item from the AppFabric cache. GetIfNewer() retrieves an item from the AppFabric cache if a newer version exists there.

The Put() methods add an item to the AppFabric cache, or overwrite it if it already exists, while the PutAndUnlock() methods overwrite an existing item in the pessimistic concurrency model and release its lock.

The Remove() methods remove an item from the AppFabric cache.

ResetObjectTimeout() modifies the timeout for a specific item in the AppFabric cache.

The Unlock() methods release the lock on an item managed in the pessimistic concurrency model.

The Item property (this[]) provides simple read/write access to the item – similar to an array accessor.

The DataCacheItem class provides a complete description of an item in the AppFabric cache and is declared:

public sealed class DataCacheItem {
    // Constructors
    public DataCacheItem();

    // Properties
    public String CacheName { get; }
    public String Key { get; }
    public String RegionName { get; }
    public ReadOnlyCollection<DataCacheTag> Tags { get; }
    public TimeSpan Timeout { get; }
    public Object Value { get; }
    public DataCacheItemVersion Version { get; }
}

CacheName is the name of the AppFabric cache, i.e., default. Key is the unique identifier of the item. RegionName is the name of the region containing the item; note that regions are not supported in the Azure AppFabric Caching Service, yet this property contains a more-or-less random value. The Tags property contains all the tags associated with the item. Tags are not supported by the Azure AppFabric Caching Service; in fact, the tag methods appear to work, but their functionality is limited by the lack of support for regions. The Timeout property specifies how much longer the item will remain in the AppFabric cache. Value is the actual value of the item and needs to be cast to the correct type. Version specifies the current AppFabric version of the item, as used for optimistic concurrency.

Cache Aside

The cache-aside programming model should be used with the Azure AppFabric Caching Service: if the data is in the cache, it is used; otherwise, it is retrieved from its source, added to the cache, and then used. This is necessary because there is never a guarantee that cached data has not expired or been evicted from the cache.
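The cache-aside read path fits in a few lines. This is a minimal Python sketch, assuming a cache object whose get() returns None on a miss, as DataCache.Get() returns null for a missing key; DictCache, get_or_load and the loader function are hypothetical names, and the loader stands in for whatever the data's real source is (a SQL Azure query, for example).

```python
class DictCache:
    """Stand-in for DataCache: get() returns None when the item is not cached."""

    def __init__(self):
        self._items = {}

    def get(self, key):
        return self._items.get(key)

    def put(self, key, value):
        self._items[key] = value

    def remove(self, key):
        self._items.pop(key, None)   # simulates expiration or eviction


def get_or_load(cache, key, load_from_source):
    """Cache-aside read: use the cached copy if present, else load and cache it."""
    value = cache.get(key)
    if value is None:                # never cached, expired, or evicted
        value = load_from_source(key)
        cache.put(key, value)
    return value


def load_author(title):
    # Hypothetical data source; a real application would query its database here.
    return "Samuel Beckett"


cache = DictCache()
print(get_or_load(cache, "Molloy", load_author))
```

One consequence of this design is that a legitimately null value cannot usefully be cached, because it is indistinguishable from a miss; every read of such a key goes back to the source.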

Examples

The following adds three items to the AppFabric cache:

private void AddEntries()
{
    dataCache.Add("Malone Dies", "Samuel Beckett");
    dataCache.Put("Molloy", "Samuel Beckett", TimeSpan.FromMinutes(1));
    dataCache["The Unnamable"] = "Samuel Beckett";
}

The Put() invocation also reduces the expiration timeout to 1 minute from the default 10 minutes.

The following retrieves three items from the cache:

private void GetEntries()
{
    String molloy = dataCache.Get("Molloy") as String;
    String maloneDies = dataCache["Malone Dies"] as String;
    DataCacheItem dataCacheItem = dataCache.GetCacheItem("The Unnamable");
}

The first two calls use the local cache if the items are present there. The final call always uses the AppFabric cache.

The following removes three items from the AppFabric cache:

private void ClearEntries()
{
    dataCache.Remove("Malone Dies");
    // Assigning null through the indexer also removes the item
    dataCache["Molloy"] = null;
    dataCache["The Unnamable"] = null;
}

The following demonstrates optimistic concurrency:

private void OptimisticConcurrency()
{
    String itemKey = "Malone Dies";
    DataCacheItem dataCacheItem1 = dataCache.GetCacheItem(itemKey);
    DataCacheItem dataCacheItem2 = dataCache.GetCacheItem(itemKey);
    dataCache.Put(itemKey, "A novel written by Samuel Beckett");
    // Following fails with invalid version
    dataCache.Put(itemKey, "Samuel Beckett", dataCacheItem2.Version);
}

The second Put() fails because it uses an older version of the item than the one updated by the first Put().

The following demonstrates pessimistic concurrency:

private void PessimisticConcurrency()
{
    String itemKey = "Ulysses";
    dataCache[itemKey] = "James Joyce";

    DataCacheLockHandle lockHandle1;
    DataCacheLockHandle lockHandle2;
    String ulysses1 = dataCache.GetAndLock(itemKey, TimeSpan.FromSeconds(5),
        out lockHandle1) as String;
    // Wait until the first 5-second lock has expired
    System.Threading.Thread.Sleep(TimeSpan.FromSeconds(6));
    String ulysses2 = dataCache.GetAndLock(itemKey, TimeSpan.FromSeconds(5),
        out lockHandle2) as String;
    // following works regardless of lock status
    //dataCache.Put(itemKey, "James Augustine Joyce");
    dataCache.PutAndUnlock(itemKey, "James Augustine Joyce", lockHandle2);
}

In this example the lock handle acquired in the first GetAndLock() is allowed to expire. The lock handle acquired in the second GetAndLock() is formally unlocked by PutAndUnlock(), which also changes the value of the item. Note that pessimistic concurrency can be overridden by methods that are not lock aware, as alluded to in the final comment.

Gunnar Peipman (@gpeipman) explained ASP.NET MVC 3: Using AppFabric Access Control Service to authenticate users on 12/18/2010:

I had Windows Azure training this week, and I tried out how easy or hard it is to get Access Control Service working with my ASP.NET MVC 3 application. It is easy now, but it was not very easy to get there. In this posting I will describe what I did to get an ASP.NET MVC 3 web application working with Access Control Service (ACS). I will also show you some code you may find useful.

Prerequisites

To get started, you need a Windows Azure AppFabric Labs account. There is a free version of Access Control Service that you can use to try out adding access control support to your applications. On your machine you need a few things:

- ASP.NET MVC 3 RC2

- Windows Azure Tools for Visual Studio 1.3

- Windows Azure platform AppFabric SDK V2.0 CTP - October Update

- Microsoft Windows Identity Foundation Runtime

- Microsoft Windows Identity Foundation SDK

After installing the WIF runtime, you have to restart your machine; otherwise the SDK cannot detect that the runtime is already installed.

Setting up Access Control Service

When all the prerequisites are installed, you are ready to register your (first?) access control service. The fun starts when you open https://portal.appfabriclabs.com/ in your web browser and log in with your Windows Live ID. I don’t put up step-by-step pictures here; if you want a guide with illustrations, feel free to check out the ASP.NET MVC example in the AppFabric Labs sample documentation.

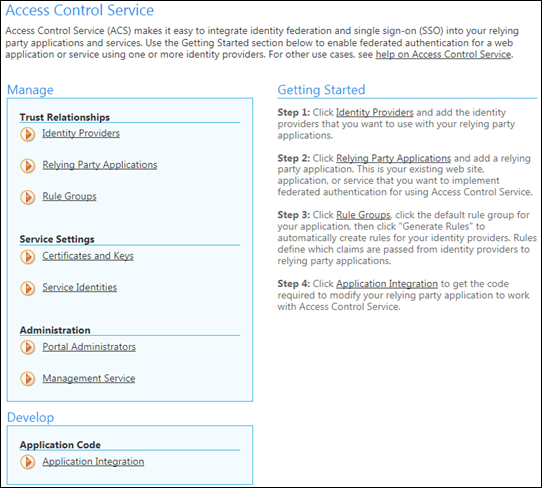

I will show you one screenshot at this point, and it is the most important one: your ACS control panel, where you find all the important operations.

In short, the steps are as follows.

- Add a new project and give it a meaningful name.

- If ACS is not activated after you create the project, activate it.

- Navigate to the ACS settings and open the Identity Providers section (see the screenshot above).

- Add Google as an identity provider (Windows Live ID is already there and you cannot remove it) and move back to the ACS control panel.

- Click the Relying Party Applications link and add the settings for your application there (you can use localhost too). I registered http://localhost:8094/ as my local application and http://localhost:8094/error as my error page. When your application is registered, move back to the ACS control panel.

- Move to the Rule Groups section, select the default rule group that was created when you registered your application, and click the Generate Rules link. All rules for claims are now created, after which you can move back to the ACS control panel.

- Now move to the Application Integration section and leave it open; you will need one link from there soon.

Follow these steps carefully and consult the sample documentation if you need to. Don’t miss step 6 (generating the rules): it is not illustrated in the sample documentation, and if you are in a hurry you can easily miss it.

STS references

Now comes the most interesting, and most annoying, part of the process: something you have to do every time before you deploy your application to the cloud, and again afterward to restore the STS settings for the development environment.

You can see the STS reference in the red box in the image on the right. If you have more than one environment (more than one relying party application) registered, you need a reference for every application instance. If you have the same instance in two environments, which is common in development, you have to generate this file for both environments.

The other option is to run the STS reference wizard before deploying your application to the cloud, giving it the cloud application URL. Of course, after deployment you should run the STS reference wizard again to restore the development environment settings; otherwise you are redirected to the cloud instance after authenticating yourself on ACS.
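If you switch back and forth often, the realm swap can be scripted instead of rerunning the wizard each time. The Python sketch below rewrites the realm in an existing web.config. It assumes the Windows Identity Foundation layout that the Add STS Reference wizard typically writes (an `audienceUris` list of `add` elements and a `wsFederation` element with a `realm` attribute); check those names against your own file. The function name and the cloud URL used below are hypothetical, and each realm must still be registered as a relying party application in ACS.

```python
import xml.etree.ElementTree as ET


def switch_realm(config_xml: str, realm: str) -> str:
    """Point the WIF audience URIs and the WS-Federation realm at `realm`.

    Assumes the element names the Add STS Reference wizard typically writes;
    adjust them if your web.config differs. Note that this simple
    ElementTree round-trip drops XML comments.
    """
    root = ET.fromstring(config_xml)
    audiences = [add for uris in root.iter("audienceUris") for add in uris.findall("add")]
    wsfed = list(root.iter("wsFederation"))
    if not audiences or not wsfed:
        raise ValueError("no WIF audienceUris/wsFederation elements found")
    for add in audiences:
        add.set("value", realm)  # the token audience the application will accept
    for element in wsfed:
        element.set("realm", realm)  # the realm sent to ACS on redirect
    return ET.tostring(root, encoding="unicode")
```

For example, apply `switch_realm(text, "http://myapp.cloudapp.net/")` before publishing and `switch_realm(text, "http://localhost:8094/")` afterward, writing the result back to web.config each time.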

Adding STS reference to your application

- Right-click your web application project and select Add STS Reference…

- You are asked for the location of the web.config file and the URL of the application for which you are adding the STS reference.

The web.config location is filled in automatically; insert the application URL and click the Next button.

- You get some warnings about not using SSL and so on, but keep things as simple as possible while you are just testing. The next step asks you for the location of the STS data.

Select Use an Existing STS and insert the URL from the AppFabric settings (the WS-Federation Metadata URL is the correct one). Click Next.

- You are asked about certificates and other settings in the following dialogs, but you can ignore them for now. Just click Next until the STS reference wizard has no more questions.

Okay, now the STS reference is set, but that’s not all. A few more small steps remain before we can try out the application.

Modifying web.config

The web.config file needs a few modifications. Add these two lines to the appSettings block of your web.config file:

```xml
<!-- inside <appSettings> -->
<add key="enableSimpleMembership" value="false" />
<add key="autoFormsAuthentication" value="false" />
```

Under system.web, add the following lines (or change the existing ones):

```xml
<!-- inside <system.web> -->
<authorization>
  <!-- deny anonymous users so that unauthenticated requests go to ACS -->
  <deny users="?" />
</authorization>
<httpRuntime requestValidationMode="2.0" />
```

If you get request validation errors when authenticating, turn request validation off. I found no better way to make the XML requests to my pages work normally.

Adding reference to Windows Identity Foundation

Before deploying the application to Windows Azure, we need a local reference to the Microsoft.IdentityModel assembly, because this assembly cannot be found in the cloud environment.

If you don’t reference this assembly in your code, you have to make it part of the web application project so that it gets deployed.

I have a simple trick for that: I just include the assembly in my project from the bin folder. It doesn’t affect compilation, but when I deploy the application to the cloud, the assembly is in the package.

Adding error handler

Before we can test the application, we also need an error handler that lets us know what went wrong if we run into problems while authenticating.

I added a special controller called Error to my web application and defined a view called Error for it. You can also find this view and controller code in the ACS sample projects; I took them from there.

Testing application

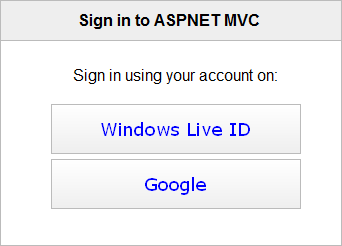

Now run the application. You should be redirected to the ACS identity providers page shown in the screenshot. This page is part of ACS.
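Behind the scenes, the redirect to this page is a WS-Federation passive sign-in request: the browser is sent to the ACS endpoint with `wa=wsignin1.0` and the application's realm in `wtrealm`. The Python sketch below builds such a URL by hand only to show what it carries; WIF generates the real one for you. The host pattern is an assumption modeled on the AppFabric Labs ACS endpoints, and `mynamespace` is a hypothetical service namespace; copy the actual endpoint from the Application Integration section.

```python
from urllib.parse import urlencode


def acs_sign_in_url(namespace: str, realm: str, context: str = "") -> str:
    """Build a WS-Federation passive sign-in request URL for an ACS namespace.

    The endpoint host below is an assumption; use the WS-Federation endpoint
    listed in the ACS Application Integration section.
    """
    endpoint = f"https://{namespace}.accesscontrol.appfabriclabs.com/v2/wsfederation"
    params = {"wa": "wsignin1.0", "wtrealm": realm}  # sign-in action and relying party realm
    if context:
        params["wctx"] = context  # opaque value ACS echoes back, e.g. the page to return to
    return endpoint + "?" + urlencode(params)


print(acs_sign_in_url("mynamespace", "http://localhost:8094/"))
```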

Select Google and log in. You should be redirected back to your application as an authenticated user. You can see a corner of my test page in the following screenshot.

NB! With Windows Live ID you get an empty user name, and this seems to be an issue that is, of course, by design. Hopefully Microsoft works things out and makes Live ID usable in ACS too, but right now, if you are not a level 400 coder, don’t touch it: it means more mess than you need.
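Until that changes, one way to avoid a blank user name is to fall back to another claim. The sketch below assumes (consistent with the empty user name described above) that Windows Live ID sign-ins arrive with only a `nameidentifier` claim and no `name` claim. The claim type URIs are the standard WS-* identity claim types; the function and the `user-` display format are hypothetical, and in the MVC application itself the same logic would be written in C# against the WIF claims.

```python
from typing import Mapping

NAME_CLAIM = "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/name"
NAME_ID_CLAIM = "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier"


def display_name(claims: Mapping[str, str]) -> str:
    """Pick a user-facing name from a claim-type -> value mapping."""
    name = claims.get(NAME_CLAIM, "").strip()
    if name:
        return name  # e.g. Google sign-ins in the walkthrough above
    name_id = claims.get(NAME_ID_CLAIM, "").strip()
    if name_id:
        # Assumed Live ID case: only an opaque, stable identifier is available.
        return "user-" + name_id[:8]
    return "anonymous"
```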

Conclusion

Adding AppFabric ACS support to an ASP.NET MVC 3 web application was not as easy as I expected. ASP.NET MVC 3 has some minor bugs (that’s why we needed the two parameters in the appSettings block), and in the beginning we are not very familiar with Windows Identity Foundation. After some struggling with different problems I got it running, and I hope you can make your applications run too if you follow my instructions.

AppFabric ACS is still in beta, but it looks very promising. Facebook and Yahoo! support is also available, and hopefully more authentication options will be added in the near future. ACS helps you identify your users easily, and you don’t have to write code for each identity provider.

<Return to section navigation list>

Windows Azure Virtual Network, RDP, Connect, and CDN

• Wely Lau described Establishing Virtual Network Connection Between Cloud Instances with On-premise Machine with Windows Azure Connect in a 12/19/2010 post:

I was super excited to hear Microsoft’s announcement of Windows Azure Connect at PDC2010. With Windows Azure Connect, we will be able to connect cloud instances (VMs in the cloud) with on-premise machines through a logical virtual network. This solves many scenarios that we face today, such as:

- Connecting to an on-premise SQL Server database from Windows Azure instances.

- Using an on-premise SMTP gateway from Windows Azure instances.

- Joining Windows Azure instances to a corporate Active Directory domain.

- Remote administration and troubleshooting of Windows Azure roles.

The following figure illustrates how Windows Azure Connect works.

To enable Windows Azure Connect, these are the steps you need to take. A detailed step-by-step walkthrough will follow in a subsequent post.

- Enable Windows Azure Connect at the Windows Azure Developer Portal.

- A tiny “Windows Azure Connect” engine is installed on your on-premise machine.

- A role group must then be created to select which Windows Azure roles and on-premise machines are included.

- Your on-premise machine name is shown on the developer portal when it’s successfully connected.

- The machines in that group, whether in the cloud or on-premise, are then able to ping each other.

I will show you the “how-to” in a subsequent post, so stay tuned.

Wely is a developer advisor in Developer and Platform Evangelism (DPE), Microsoft Indonesia.

• Nico Ploner describes how to create an ephemeral FTP server on an Azure instance in his FTP-Server on Windows Azure from scratch post of 12/19/2010:

Using the new Windows Azure 1.3 SDK and its RDP support, you can create your own FTP server in the cloud in less than 30 minutes! Here is a short guide:

[Consider this procedure an exercise because the “FTP server in the cloud” will disappear if the Windows Azure fabric drops or restarts the instance. See the “Issues” section at the end of this post.]

The three major steps are to open the necessary FTP ports to the virtual server, enable RDP on the virtual server and configure the FTP server role on the virtual server.

- Download and install the Windows Azure 1.3 SDK

- Create a new Windows Azure Project in Visual Studio 2010 and add a role to that project. It doesn’t actually matter what kind of role you add; I started by adding an ASP.NET Web Role.

- In the Solution Explorer, right click on the WebRole and choose Properties

- In the Endpoints tab on the Properties page add a second endpoint that uses the public port 21 (which is the default port for FTP)

- Now right click on the Cloud Project in the Solution Explorer and choose Publish

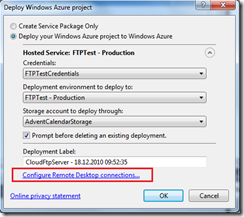

- In the publishing dialog select the option “Deploy your Windows Azure project to Windows Azure” and select the credentials, the deployment environment and the storage account you want to use for the deployment. Then click on “Configure Remote Desktop connections…”

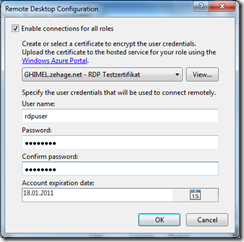

- Check the option “Enable connections for all roles”, select or create a certificate that will be used for the RDP connection and provide a user name and password. This user name and password will grant you administrative rights via remote desktop on the server.

- Close the dialog and publish your Windows Azure project to the cloud.

- After the publishing visit the Windows Azure Management Portal, select the server instance of your Windows Azure project and click on the Remote Desktop Connect button.

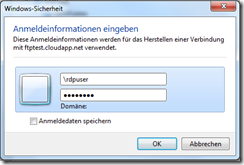

You can either open the .rdp file directly or save it to your computer for later use, so you don’t have to go to the Management Portal again.

- Log in to the server with the user name and password you provided in step #7.

Hint: enter “\<yourUserNameHere>” (your user name with a leading backslash) to remove the domain information from the login.

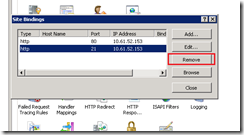

- On the server you first need to remove the binding to port 21 from the IIS default website. Go to: Start > Administrative Tools > Internet Information Services (IIS) Manager, navigate to the only web site in the tree on the left and click on the “Bindings…” link in the Actions pane on the right.

- Remove the binding to port 21.

- Close the bindings window and close the IIS Manager

- Go to: Start > Administrative Tools > Server Manager

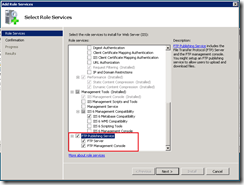

Navigate to the Web Server (IIS) Role in the tree on the left and click on “Add Role Services” in the right part of the window.

- Select the role service “FTP Publishing Service”. This should select the services “FTP Server” and “FTP Management Console” automatically, too. If not, select all three manually.

If necessary, add required role services pointed out by the wizard.

- Click “Next” and then “Install”.

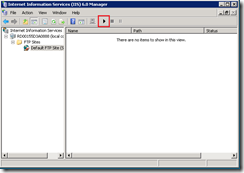

- Go to: Start > Administrative Tools > Internet Information Services (IIS) 6.0 Manager

This tool is used to manage FTP on the server.

- Expand the tree on the left to the “Default FTP Site”, right-click it, and start the site.

When the IIS6 Manager asks whether it should start the FTP Publishing Service, click “Yes”.

- To check whether your FTP server is working, open a command shell (either on the server or on your local machine) and connect to your server. Use the same credentials for the FTP connection as for the RDP login.

You can find the address of your FTP server in the title bar of the RDP window.
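The same check can be scripted with Python's standard `ftplib` module instead of an interactive shell. The sketch below logs in and asks for the current directory, which uses only the control connection on port 21; listing files would open a separate data connection, and in passive mode that needs the extra ports mentioned under Issues. The host name and credentials are placeholders for your deployment's address and the RDP user you created.

```python
from ftplib import FTP
from typing import Tuple


def check_ftp(host: str, user: str, password: str, ftp_factory=FTP) -> Tuple[str, str]:
    """Log in over the control channel and return (welcome banner, current directory).

    `ftp_factory` exists only so the function can be tested without a server.
    """
    with ftp_factory(host, timeout=15) as ftp:
        welcome = ftp.getwelcome()
        ftp.login(user=user, passwd=password)
        return welcome, ftp.pwd()  # PWD needs no data connection
```

For example, `check_ftp("yourservice.cloudapp.net", "yourUserName", "yourPassword")` should return the server's welcome banner and current directory if the login succeeds; a rejected login raises `ftplib.error_perm`.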

Issues:

- To use passive FTP, you need to open more FTP-related ports.

- When the cloud fabric decides to shut down your server, reboot it or move it to another physical machine, all changes you made via RDP are lost!

You might solve this by creating startup scripts or uploading a preconfigured server image.

- When your server is restarted or moved, all locally stored files are deleted, too, so you need to store the FTP uploads somewhere persistent.
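For the passive-mode issue, the extra ports have to be declared as input endpoints in the role's service definition, just as port 21 was on the Properties page. The Python helper below only generates the XML elements for a chosen range; it assumes the `<InputEndpoint name=… protocol="tcp" port=…/>` form used in ServiceDefinition.csdef, and the 20000 to 20009 range in the example is hypothetical. The same range must also be configured as the FTP server's passive port range, and the platform caps how many input endpoints a role can declare, so keep the range small and check the current limit.

```python
def ftp_input_endpoints(passive_start: int, passive_count: int) -> str:
    """Return <InputEndpoint> elements for FTP control port 21 plus a passive data-port range."""
    passive_end = passive_start + passive_count - 1
    if passive_count < 1 or passive_start <= 1024 or passive_end > 65535:
        raise ValueError("passive range must be non-empty and within 1025-65535")
    lines = ['<InputEndpoint name="FTP" protocol="tcp" port="21" />']
    for port in range(passive_start, passive_end + 1):
        # One TCP endpoint per passive data port; endpoint names must be unique.
        lines.append(f'<InputEndpoint name="FtpPassive{port}" protocol="tcp" port="{port}" />')
    return "\n".join(lines)


print(ftp_input_endpoints(20000, 10))
```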

Maarten Balliauw’s Using FTP to access Windows Azure Blob Storage post of 3/14/2010 demonstrates what’s necessary to emulate a persistent Azure FTP server project with Windows Azure blobs.

Richard Parker posted v0.1 (beta) code for an FTP to Azure Blob Storage Bridge to CodePlex on 8/5/2010.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• The Microsoft Case Studies Team posted eCraft: IT Firm Links Web Applications to Powerful Business Management Software on 12/15/2010:

eCraft helps its customers manage their business processes with powerful systems and software such as Microsoft Dynamics NAV. The company wanted to deliver scalable web applications that would link with Microsoft Dynamics NAV, without requiring itself or its customers to make high investments in hardware and infrastructure maintenance. eCraft used Windows Azure from Microsoft to develop an e-commerce website that uses Microsoft Dynamics NAV to manage product information, order processes, payment systems, and delivery logistics. Now the company is reaching new markets and new customers by delivering low-cost, high-performance web applications that provide its customers with the power, focus, and flexibility to grow their businesses.

Partner Profile: Based in Finland and Sweden, eCraft provides custom software development and consulting, and other IT services for companies in manufacturing, energy, and other industries.

Business Situation: eCraft wanted to develop and offer solutions to work with Microsoft Dynamics NAV business management software that did not require large hardware investments, and that would scale up quickly and easily.

Solution: To meet the specific needs of its customer PowerStation Oy, eCraft developed a Microsoft Dynamics NAV multitenant online-shopping website—or webshop—integrated with Windows Azure from Microsoft.

Benefits:

- New customer offerings

- Fast development, simple deployment

- Easy integration and flexibility

- Low costs, high performance

Software and Services:

- Windows Azure

- Microsoft Dynamics NAV

- Service Bus

- Microsoft SQL Azure

Vertical Industries:

- IT Services

Country/Region:

- Finland

Bill Zack recommended Windows Azure Platform Learning and Readiness Guidance in a 12/17/2010 post to the Ignition Showcase blog:

For Independent Software Vendors (ISVs) and System Integrators (SIs): The folks at the Microsoft Partner Network (MPN) have designed a learning path to help you update your Windows Azure technology platform skills or acquire new ones. This includes materials for both your sales and technical professionals. The information available on the site includes topics such as:

- What Is Windows Azure?

- Value Proposition for Migrating Enterprise Applications to Windows Azure

- Building a Windows Azure Practice

- Windows Azure Platform – Business Model for Partners

- WCSD12PAL: Windows Azure Platform – Value for Partners

- Windows Azure Platform – TCO Tool

- The Windows Azure Platform on Microsoft.com

- On the Go? Stay in the Know about the Windows Azure Platform

Other parts of the MPN site link you to information on Microsoft’s Worldwide Partner Conference, as well as to the Microsoft Worldwide Events site, where you can find information on other global and local events and create your own events.

Ron Jacobs interviewed two of five book co-authors in his 00:26:59 endpoint.tv - Applied Architecture Patterns on the Microsoft Platform video segment of 12/17/2010:

Have you ever noticed that there are often several products or solutions available when you want to solve a problem with the Microsoft platform, and so you aren't sure which is the right fit? With AppFabric, for example, there's a fair bit of overlap between BizTalk and Workflow.

Luckily, a group of guys who make a living helping people solve these types of problems wrote a book that I think you might find useful. Applied Architecture Patterns on the Microsoft Platform is definitely worth a read. On this episode, I chat with two of the authors—Rama and Ewan—as they explain what they have learned about solving real world problems with the Microsoft Platform.

Richard Seroter (@rseroter, pictured below) added details about the preceding interview in his My Co-Authors Interviewed on Microsoft endpoint.tv post of 12/18/2010:

You want this book!

-Ron Jacobs, Microsoft

Ron Jacobs (blog, twitter) runs the Channel9 show called endpoint.tv and he just interviewed Ewan Fairweather and Rama Ramani who were co-authors on my book, Applied Architecture Patterns on the Microsoft Platform. I’m thrilled that the book has gotten positive reviews and seems to fill a gap in the offerings of traditional technology books.

Ron made a few key observations during this interview:

- As people specialize, they lose perspective of other ways to solve similar problems, and this book helps developers and architects “fill the gaps.”

- Ron found the dimensions of our “Decision Framework” to be novel and of critical importance when evaluating technology choices. Specifically, evaluating a candidate architecture against design, development, operational and organizational factors can lead you down a different path than you might have expected. Ron particularly liked the “organizational direction” facet, which can be overlooked but should play a key role in technology choice.

- He found the technology primers and full examples of such a wide range of technologies (WCF, WF, Server AppFabric, Windows Azure, BizTalk, SQL Server, StreamInsight) to be among the unique aspects of the book.

- Ron liked how we actually addressed candidate architectures instead of jumping directly into a demonstration of a “best fit” solution.

Have you read the book yet? If so, I’d love to hear your (good or bad) feedback. If not, Christmas is right around the corner, and what better way to spend the holidays than curling up with a beefy technology book?

WordPress.org added Windows Azure Storage for WordPress to the WordPress Plugin Directory:


This WordPress plugin allows you to use Windows Azure Storage Service to host your media for your WordPress powered blog. Windows Azure Storage is an effective way to scale storage of your site without having to go through the expense of setting up the infrastructure for content delivery.

Please refer to UserGuide.pdf to learn more about the plugin.

For more details on Windows Azure Storage Services, please visit the Windows Azure Platform web-site.

Related Links: Plugin Homepage

Author: Microsoft

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

• David Linthicum explained the Relevance of Enterprise Architecture to Cloud Computing in a 12/19/2010 article for ebizQ’s Where SOA Meets Cloud blog:

An Enterprise Architecture (EA) is a strategic information asset base, which defines the mission, the information necessary to perform the mission, the technology necessary to perform the mission, and the transitional processes for implementing new technologies in response to the changing mission needs. An EA characterizes and models the enterprise as a set of interrelated layers or views: strategy and performance, business, data, applications and services, and technology/infrastructure.

Strategic decisions about cloud computing should both draw upon and inform the EA. An organization must have a mature and well formed understanding of its architecture components (e.g., business processes, services, applications and data) to make meaningful decisions related to cloud computing, such as whether a move to the cloud is advantageous, what services most lend themselves to a cloud deployment, and what cloud deployment model (e.g., private, public) makes the most sense.

There are three key roles for EA in facilitating cloud computing strategy and planning:

Front-end decision support. An organization's EA should inform decisions about the desirability of a move to a cloud environment, what services should move to the cloud, and the appropriate deployment models. Existing business processes, services and resources should be analyzed through the lens of cloud characteristics and quality dimensions, such as elasticity, reliability, and security.

Cloud implementation planning support. During the period of transition, an EA provides the current inventory of services and the roadmap to which services are being deployed into what clouds. As new business needs emerge, the EA provides solution architects with a view of what has been deployed in the cloud already and what is planned for future deployment.

Enterprise context. Sharing and reuse of services and resources long have been primary objectives of EA. In the context of a cloud strategy, EA provides a critical enterprise view to ensure cloud decisions are optimized at the enterprise level and independent decisions on cloud-based point solutions are viewed in a broader context.

• Billy Hollis interviewed Burley Kawasaki in a 00:05:40 video segment of 12/18/2010:

Burley Kawasaki is director for developer platform product management at Microsoft Corp. He is responsible for product strategy, business planning, and outbound marketing for the company’s solutions that enable building and managing composite applications across both on-premises and cloud platforms. This includes technologies such as the .NET Framework components, including Windows Communication Foundation and Windows Workflow Foundation, as well as the upcoming AppFabric technology for Windows Server and Windows Azure. Kawasaki is also tasked with shaping and integrating company messages around topics such as Dynamic IT, modeling and cloud computing, and their impact on developer productivity. Kawasaki joined Microsoft in 2004, after serving as the vice president of Solutions for Interlink Group, one of Microsoft’s top regional systems integrators on the West Coast. …

• Amit Chatterjee posted Parallelism: In the Cloud, Cluster and Client to his MSDN blog on 12/18/2010:

Recently I had the opportunity to keynote at the Intel Tech Days conference, and I chose to talk about the trends in Parallelism. I thought I’d share the “presentation” with you, and in the end I will certainly weave in the topic of “testing” into this. I hope you enjoy the post. …

Background “data explosion” paragraphs excised for brevity.

… The final frontier: Parallelism in the Cloud, Cluster and Client

Harnessing the power of a single manycore system alone may not be sufficient to bring the kinds of experiences discussed earlier into our daily lives. There are many scenarios where we need to harness the power of many systems. Moreover, the operating system and the platform should provide the necessary abstractions to deliver that power while hiding the complexity from software developers. A business or enterprise providing rich information and computationally intensive services to individuals needs a combination of cluster, client and cloud. This combination enables it to optimize its investment in on-demand computing power and to use idle computing power in desktops. This is typically the vision of teams like the High Performance Computing (HPC) group at Microsoft. An HPC solution here will look as follows:

Imagine a financial company providing the next generation financial analytics and risk management solutions to its clients. To provide those solutions it needs enormous processing capacity. The organization probably has a computational infrastructure similar to that shown above and a cluster of machines in its data center that takes care of the bulk of the computation. The organization also likely has many powerful client machines in the workplace that are unused during inactive periods. Thus, it would benefit from adding those machines during unused times as compute nodes to its information processing architecture. Finally, it will need the power of the Cloud to provide elastic compute capacity for short periods of intensive needs (or bursts) for which it is not practical to reserve computational power.

The RiskMetrics Group is a financial company that uses an Azure-based HPC architecture like the one outlined above. It provides risk management services to the world’s leading asset managers, banks and institutions to help them measure and model complex financial instruments. RiskMetrics runs its own data center, but to accommodate sharp increases in demand for computing power at specific periods of time, the company needed to expand its technical infrastructure. Rather than buying more servers and expanding its data center, RiskMetrics decided to leverage the Windows Azure Platform to handle the surplus loads. The extra capacity is needed for a couple of hours at a time, and the organization must complete its financial computations within that window. This leads to bursts of computationally intensive activity, as shown in the chart below:

Courtesy Microsoft PDC Conference 2009 and case study [Financial Risk-Analysis Firm Enhances Capabilities with Dynamic Computing]

By leveraging the highly scalable and potentially limitless capacity of Windows Azure, RiskMetrics is able to satisfy the peak bursts of computational needs without permanently reserving its resources. It eventually expects to provision 30,000 Windows Azure instances per day to help with the computational needs! This model enables the organization to extend from fixed scaling to elastic scaling for satisfying its on-demand and ‘burst’ computing needs.
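The burst arithmetic behind this model is simple: whatever demand exceeds the fixed on-premise capacity in a given hour is sent to the cloud, rounded up to whole instances. The Python sketch below shows that calculation with made-up numbers; the demand figures, capacity and per-instance throughput are hypothetical and are not RiskMetrics data.

```python
import math
from typing import List


def burst_instances(hourly_demand: List[float], onprem_capacity: float,
                    per_instance: float) -> List[int]:
    """Cloud instances needed each hour once fixed on-premise capacity is used up.

    All quantities use the same work unit per hour (e.g. risk scenarios per hour).
    """
    if onprem_capacity < 0 or per_instance <= 0:
        raise ValueError("capacity must be >= 0 and per-instance throughput > 0")
    return [math.ceil(max(0.0, d - onprem_capacity) / per_instance) for d in hourly_demand]


# Hypothetical day: quiet hours around a two-hour burst.
print(burst_instances([50, 120, 400, 80], onprem_capacity=100, per_instance=8))  # → [0, 3, 38, 0]
```

Only the hours above on-premise capacity incur cloud instances, which is the "elastic instead of fixed scaling" trade-off described above.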

You then get a sense of how Parallelism in Cloud, Cluster and Client is the solution for today’s and tomorrow’s innovative and information rich applications that need to be scaled to millions of users.

Finally, let us take a look at how the platform and developer tools will allow developers to easily build these massively scalable parallel applications.

What does the development platform look like?

Here’s a picture of what the development platform should look like.

‘Parallel Technologies’ stack is natively a part of Microsoft’s Visual Studio 2010 product as shown below - both for native and managed applications.

Microsoft has invested in the Parallel Computing Platform to leverage the power of Parallel Hardware/Multi-core Evolution

Developers can now:

- Move from fine grain parallelism models to coarse grained application components – both in the managed world and in the native world

- Express concurrency at an algorithmic level and not worry about the plumbing of thread creation, management and control

- The concurrency runtime by itself is extensible. Intel has announced integration with the concurrency runtime for TBB, OpenMP and Parallel Studio

A demonstration of this capability

I will show a small demonstration of how the Microsoft platform supports parallelism. I have a simple application called ‘nBody’ that simulates ‘n’ planetary bodies, where each body positions itself based on the gravitational pull of all the other bodies in its vicinity. This is clearly a very compute-intensive application, and also one that can benefit immensely from executing ‘tasks’ in parallel.

The application is just native C++ code.

If I execute the application ’as-is’ without relying on the Visual Studio PPL libraries, the output of the application and the processor utilization looks as follows:

If you look at the Task Manager closely, you will see that only 13 percent of the CPU is being utilized. On an 8-core machine, that is roughly 1/8 of the total capacity, about one core’s worth of work.

The main part of the program, which draws the bodies, is encapsulated in an update loop, which looks as follows:

To take advantage of the multiple cores and the platform support for parallelism, namely the PPL library, we can simply modify the update loop to use the parallel_for construct shown below:

If I now rerun the program using the UpdateWorldParallel code, the results look something like this:

You can now see that we are using 90 percent of the machine’s processing capacity, and all the cores are operating at almost their full capacity. If you could see the program’s output, you would notice the fluid movement of the bodies, which is perceptibly faster than their jerky motion in the earlier run.
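Amit's C++ listings are not reproduced here, but the idea carries over to other languages. The Python sketch below is an analogue, not his code and not PPL: the per-body force calculation reads only the previous positions, so each iteration of the update loop is independent and can be handed to any parallel `map`, which is the same property that makes the C++ loop safe for `parallel_for`. All names are hypothetical. Because of CPython's global interpreter lock, the thread pool here demonstrates the decomposition rather than the speedup; a `ProcessPoolExecutor`, whose `map` can be passed in the same way, is the usual choice for real CPU parallelism in Python.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import partial
from typing import List, Tuple

Vec = Tuple[float, float]


def acceleration(i: int, pos: List[Vec], mass: List[float],
                 g: float = 1.0, soft: float = 1e-3) -> Vec:
    """Net (softened) gravitational acceleration on body i from every other body."""
    xi, yi = pos[i]
    ax = ay = 0.0
    for j, (xj, yj) in enumerate(pos):
        if j == i:
            continue
        dx, dy = xj - xi, yj - yi
        inv_r3 = (dx * dx + dy * dy + soft * soft) ** -1.5
        ax += g * mass[j] * dx * inv_r3
        ay += g * mass[j] * dy * inv_r3
    return ax, ay


def simulate_step(pos: List[Vec], vel: List[Vec], mass: List[float], dt: float,
                  pmap=map) -> Tuple[List[Vec], List[Vec]]:
    """One simulation step; `pmap` chooses serial (map) or parallel (executor.map)."""
    # Each body's acceleration depends only on the old positions: no shared writes.
    acc = list(pmap(partial(acceleration, pos=pos, mass=mass), range(len(pos))))
    new_vel = [(vx + ax * dt, vy + ay * dt) for (vx, vy), (ax, ay) in zip(vel, acc)]
    new_pos = [(x + vx * dt, y + vy * dt) for (x, y), (vx, vy) in zip(pos, new_vel)]
    return new_pos, new_vel


if __name__ == "__main__":
    pos = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    vel = [(0.0, 0.0)] * 3
    mass = [1.0, 1.0, 1.0]
    serial = simulate_step(pos, vel, mass, 0.01)
    with ThreadPoolExecutor(max_workers=4) as pool:
        parallel = simulate_step(pos, vel, mass, 0.01, pmap=pool.map)
    print(serial == parallel)  # same result, with bodies computed concurrently
```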

What about advances in testing tools for parallelism

Testing such programs is, of course, going to be a challenge; essentially, the problem is finding ways to deal with the nondeterministic nature of parallel program execution. Microsoft Research has a tool, integrated with Visual Studio, that helps here. Check out CHESS: Systematic Concurrency Testing, available at CodePlex. CHESS runs the test code in a loop and controls its execution through a custom scheduler, so that the execution becomes fully deterministic. When a test fails, you can reproduce the exact sequence of execution, which really helps narrow down and fix the bug!
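The core idea, running the same test under every possible schedule and recording any schedule that fails so it can be replayed, can be illustrated with a toy model. The Python sketch below is not CHESS and does not use its API: instead of intercepting real threads, it models each thread as an explicit list of steps, enumerates every interleaving that preserves each thread's program order, and reports the ones that break an invariant. The unsynchronized counter is a hypothetical example of the kind of race such tools find.

```python
from itertools import combinations
from typing import Callable, Dict, Iterator, List, Sequence, Tuple

State = Dict[str, int]
Step = Callable[[State, State], None]  # (shared state, thread-local state)


def interleavings(n_a: int, n_b: int) -> Iterator[Tuple[int, ...]]:
    """Yield every schedule of two threads that keeps each thread's steps in order."""
    total = n_a + n_b
    for a_slots in combinations(range(total), n_a):
        chosen = set(a_slots)
        yield tuple(0 if k in chosen else 1 for k in range(total))


def explore(threads: Sequence[Sequence[Step]], init: State,
            invariant: Callable[[State], bool]) -> List[Tuple[int, ...]]:
    """Run every schedule deterministically; return those that violate the invariant."""
    failing = []
    for schedule in interleavings(len(threads[0]), len(threads[1])):
        shared, local, pc = dict(init), [{}, {}], [0, 0]
        for tid in schedule:  # the "scheduler" decides exactly which thread runs next
            threads[tid][pc[tid]](shared, local[tid])
            pc[tid] += 1
        if not invariant(shared):
            failing.append(schedule)  # a replayable witness of the bug
    return failing


# Hypothetical racy increment: read the counter, then write back the read value + 1.
def read(shared: State, local: State) -> None:
    local["tmp"] = shared["counter"]


def write(shared: State, local: State) -> None:
    shared["counter"] = local["tmp"] + 1


failures = explore([[read, write], [read, write]], {"counter": 0},
                   lambda s: s["counter"] == 2)
total = sum(1 for _ in interleavings(2, 2))
print(f"{len(failures)} of {total} schedules lose an update, e.g. {failures[0]}")
```

Running it reports that 4 of the 6 schedules lose an update, and any listed schedule such as `(0, 1, 0, 1)` can be rerun step by step to reproduce the failure.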

In conclusion

Current global trends in information explosion and innovative experiences in the digital age require immense computational power. The only answer to this need is leveraging parallelism and the multiple cores in today’s processors. This ‘multi-core shift’ is well on its way. To make this paradigm shift seamless for developers, Microsoft is providing parallel processing abstractions in its platforms and development tools. These allow developers to take advantage of parallelism, from managed code as well as native code, and apply it to computation in local clients, clusters, clouds, or a mix of all three!

Creative minds can focus on developing the next generation experiences into reality without worrying about processor performance limitations or requiring advanced education in concurrency.

Amit is Managing Director and General Manager at Microsoft (R&D) India

• Chris Webb reported Dryad and DryadLINQ in Beta in a 12/18/2010 post:

I’ve just seen the news on the Windows HPC blog that Dryad and DryadLINQ are now in beta: Dryad Beta Program Starting [see below].

If you’re wondering what Dryad is, Daniel Moth has blogged a great collection of introductory links; and Jamie [Thomson] and I have been following Dryad for some time too. Although its integration with SSIS seems to have fallen by the wayside, its relevance for very large-scale ETL problems remains: it will allow you to crunch terabytes or petabytes of data in a highly parallel way. And given that you can now join Windows Azure nodes to your HPC cluster, it sounds like a solution that can scale out via the cloud, making it even more exciting. I hope the people working on Microsoft’s regular and cloud BI solutions are talking to the HPC/Dryad teams.

• The Windows HPC Team announced Dryad Beta Program Starting on 12/17/2010:

The HPC team is starting up a new beta program right now, read on for a description and sign-up instructions.

Dryad, DSC, and DryadLINQ are a set of technologies that support data-intensive computing applications that run on a Windows HPC Server 2008 R2 Service Pack 1 cluster.

These technologies allow you to process large volumes of data in many types of applications, including data-mining applications, image and stream processing, and some scientific computations. Dryad and DSC run on the cluster to support data-intensive computing and manage data that is partitioned across the cluster. DryadLINQ allows developers to define data-intensive applications using the .NET LINQ model.
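DryadLINQ turns a LINQ query into a distributed job over data that is partitioned across the cluster. The general shape of such a job (partition the input, aggregate each partition independently, then merge the partial results) can be sketched on a single machine. The Python code below only mimics that shape for a word count; none of its names are Dryad, DSC or DryadLINQ APIs, and the thread pool merely stands in for cluster nodes.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List


def partition(records: List[str], n: int) -> List[List[str]]:
    """Hash-partition records into n buckets; a stable hash keeps each key in one bucket."""
    parts: List[List[str]] = [[] for _ in range(n)]
    for record in records:
        parts[zlib.crc32(record.encode("utf-8")) % n].append(record)
    return parts


def count_words(records: List[str], n_partitions: int = 4, pmap=map) -> Counter:
    """Group-by-and-count over partitions, roughly what a GroupBy/Count query expresses."""
    total: Counter = Counter()
    for partial_counts in pmap(Counter, partition(records, n_partitions)):
        total.update(partial_counts)  # merge step: combine per-partition results
    return total


if __name__ == "__main__":
    words = "the cloud and the cluster and the client".split()
    with ThreadPoolExecutor() as pool:  # stand-in for work spread over cluster nodes
        print(count_words(words, n_partitions=3, pmap=pool.map).most_common(2))
```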

Today we release the first Community Technology Preview (CTP) of these technologies. This initial preview is intended for developers who are exploring data-intensive computing. The DryadLINQ, Dryad and DSC programming interfaces are all in the early phases of development and might change significantly before the final release based on your feedback.

This CTP has known scalability limits. In particular, the current version does not support more than 2048 individual partitions and it has only been tested on up to 128 individual nodes. Furthermore, the DryadLINQ LINQ provider does not yet support all LINQ queries.

The prerequisite is having an HPC Pack 2008 R2 Enterprise-based cluster, with Service Pack 1 installed. A trial version of HPC Pack 2008 R2 Enterprise is available through the Windows HPC Server 2008 R2 Evaluation Program. The Service Pack 1 updater is available [here].

Joining the new beta program is easy. If you have previously registered for an HPC Pack 2008 R2 program, you already have access. If you have never participated in one of our betas, you need to fill out some registration forms. Once you are signed up, just go to our 'Connect Beta' website and look in the 'Downloads' section.

• David Pallman (@davidpallman) announced AzureDesignPatterns.com Re-Launched in an 11/15/2010 post (missed when posted):

AzureDesignPatterns.com has been re-launched after a major overhaul. This site catalogues the design patterns of the Windows Azure Platform. These patterns will be covered in extended detail in my upcoming book, The Azure Handbook.

This site was originally created back in 2008 to catalog the design patterns for the just-announced Windows Azure platform. An overhaul has been long overdue: Azure has certainly come a long way since then and now contains many more features and services, and accordingly many more patterns. Originally there were about a dozen primitive patterns; now there are over 70 catalogued. There are additional patterns to add, but I believe this initial effort decently covers the platform, including the new features shown at PDC 2010.

The first category of patterns is Compute Patterns. This includes the Windows Azure Compute Service (Web Role, Worker Role, etc.) and the new AppFabric Cache Service.

The second category of patterns is Storage Patterns. This includes the Windows Azure Storage Service (Blobs, Queues, Tables) and the Content Delivery Network.

The third category of patterns is Communication Patterns. This covers the Windows Azure AppFabric Service Bus.

The fourth category of patterns is Security Patterns. This covers the Windows Azure AppFabric Access Control Service. More patterns certainly need to be added in this area and will be over time.

The fifth category of patterns is Relational Data Patterns. This covers the SQL Azure Database Service, the new SQL Azure Reporting Service, and the DataMarket Service (formerly called Project Dallas).

The sixth category of patterns is Network Patterns. This covers the new Windows Azure Connect virtual networking feature (formerly called Project Sydney).

The original site also contained an Application Patterns section, which described composite patterns created out of the primitive patterns. These are coming in the next installment.

I’d very much like to hear feedback on the pattern catalog. Are key patterns missing? Are the pattern names, descriptions and icons clear? Is the organization easy to navigate? Let me know your thoughts.

Mark Lee reported Microsoft, China Mobile to Partner on Cloud Computing in a 12/17/2010 article for Bloomberg BusinessWeek:

Microsoft Corp. will collaborate with China Mobile Communications Corp., parent of the world’s biggest phone carrier by market value, on developing technologies including cloud computing for Chinese businesses.

Microsoft, the world’s biggest software company, and China Mobile also will partner on information services for businesses, wireless devices, sales and distribution after signing a memorandum of understanding in Beijing today, according to a joint statement sent by e-mail.

The companies are betting that businesses in the world’s fastest-growing major economy will increase spending on computing services and applications accessed through the Internet instead of through individual corporate servers. International Business Machines Corp. and Amazon.com Inc. also are boosting development of the cloud services.

By March, 90 percent of Redmond, Washington-based Microsoft’s engineers will be working on cloud-related products, Chief Executive Officer Steve Ballmer said in October.

State-owned China Mobile Communications owns 74 percent of Hong Kong-listed China Mobile Ltd., which has more than 500 million mobile-phone users.

IBM, based in Armonk, New York, plans to spend $20 billion on acquisitions in the next five years as the world’s biggest computing-services company invests in operations that include cloud computing, Chief Executive Officer Sam Palmisano said in May.

Jean-Pierre Garbani posted Consider The Cloud As A Solution, Not A Problem to the Forrester Research blog on 12/17/2010:

It’s rumored that the Ford Model T’s track dimension (the distance between the wheels of the same axle) could be traced from the Conestoga wagon to the Roman chariot by the ruts they created. Roman roads forced European coachbuilders to adapt their wagons to the Roman chariot track, a measurement they carried over when building wagons in America in the 19th and early 20th centuries. It’s said that Ford had no choice but to adapt his cars to the rural environment created by these wagons. This cycle was finally broken by paving the roads and freeing the car from the chariot legacy.

IT has also carried over a long legacy of habits and processes that contrast with the advanced technology it uses. While many IT organizations are happy to manage 20 servers per administrator, some Internet service providers are managing 1 or 2 million servers and achieving ratios of 1 administrator per 2,000 servers. The problem is not how to use the cloud to gain 80% savings in data center costs; the problem is how to multiply IT organizations’ productivity by a factor of 100. In other words, don’t try the Model T approach of adapting the car to the old roads; think about building new roads so you can take full advantage of the new technology.
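The factor of 100 is simply the ratio of the two staffing levels quoted above (2,000 ÷ 20). The short Python sketch below makes that arithmetic explicit. The 20 and 2,000 servers-per-administrator figures come from the paragraph; the 10,000-server fleet is a hypothetical example size, not a figure from the post.

```python
"""Sketch of the administrator-ratio arithmetic quoted above.

The 20 and 2,000 servers-per-administrator ratios come from the text;
the fleet size used in the demo is a hypothetical illustration.
"""
import math

TRADITIONAL_RATIO = 20   # servers per administrator in many IT organizations
PROVIDER_RATIO = 2_000   # servers per administrator at some large providers


def admins_needed(servers: int, servers_per_admin: int) -> int:
    """Whole number of administrators needed to manage `servers`."""
    return math.ceil(servers / servers_per_admin)


def productivity_factor(old_ratio: int, new_ratio: int) -> float:
    """How many times more servers each administrator handles at the new ratio."""
    return new_ratio / old_ratio


if __name__ == "__main__":
    fleet = 10_000  # hypothetical fleet size
    print(admins_needed(fleet, TRADITIONAL_RATIO))                 # 500
    print(admins_needed(fleet, PROVIDER_RATIO))                    # 5
    print(productivity_factor(TRADITIONAL_RATIO, PROVIDER_RATIO))  # 100.0
```

At a fixed fleet size, moving from the traditional ratio to the provider ratio cuts the required staff by the same factor of 100.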

Gains in productivity come from technology improvements and economy of scale. The economy of scale is what the cloud is all about: cookie-cutter servers using virtualization as a computing platform, for example. The technology advancement that paves the road to economy of scale is automation. Automation is what will abstract diversity, mask the management differences between proprietary and commodity platforms and eventually make the economy of scale possible.

The key is to turn the issue on its head: Don’t design cloud use around the current organization, but redesign the IT organization around the use of cloud and abstracted diversity. The fundamental question of the next five years is not the cloud per se but the proliferation of services made possible by the reduced cost of technologies. What’s important in Moore’s Law is the exponential growth that it describes. If our current organization manages 1,000 servers today with difficulty, what happens when we have 2,000 servers (or the virtual equivalent) in 2012 and 4,000 servers in 2014? IT organizations can’t grow exponentially. The cloud is our automobile, and we need paved roads.
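The growth in this paragraph follows a doubling every two years: 1,000 servers today, 2,000 in 2012 and 4,000 in 2014. The Python sketch below projects that curve and shows what it means for headcount if administrator productivity stays flat. It makes two assumptions that are not in the original text. First, "today" is taken as 2010, the post's date. Second, the 20-servers-per-administrator ratio from the earlier paragraph is reused purely for illustration.

```python
"""Sketch of the exponential growth described above: the server count
doubles every two years from an assumed 2010 baseline of 1,000 servers."""
import math

BASE_YEAR = 2010        # assumption: "today" is the post's date, 2010
BASE_SERVERS = 1_000    # from the text
DOUBLING_YEARS = 2      # implied by 2,000 in 2012 and 4,000 in 2014
SERVERS_PER_ADMIN = 20  # traditional ratio cited earlier (illustrative reuse)


def projected_servers(year: int) -> int:
    """Servers (or virtual equivalents) expected in `year` under 2-year doubling."""
    return round(BASE_SERVERS * 2 ** ((year - BASE_YEAR) / DOUBLING_YEARS))


def admins_at_fixed_ratio(year: int) -> int:
    """Administrators needed if productivity stays at SERVERS_PER_ADMIN."""
    return math.ceil(projected_servers(year) / SERVERS_PER_ADMIN)


if __name__ == "__main__":
    for year in (2010, 2012, 2014):
        print(year, projected_servers(year), admins_at_fixed_ratio(year))
    # 2010 1000 50
    # 2012 2000 100
    # 2014 4000 200
```

If productivity stays constant, staff must double along with the servers. That is the exponential headcount growth the author says IT organizations cannot sustain.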

Zane Adam summarized recent posts by professional cloud pundits in his Industry Analysts Agree..... post of 12/17/2010:

Over the past 2 months we have seen tremendous validation from leading industry analyst firms (Gartner and Forrester) that we are delivering great value for our customers and are headed in the right direction with our relational database, middleware services and data market in the cloud, as well as our traditional integration offerings.

Following are recent analyst reports on this topic:

SQL Azure Raises The Bar On Cloud Databases (Forrester Research, Inc., November 2, 2010, .pdf file, 187 kb) Over the past six months, Forrester interviewed 26 companies using Microsoft SQL Azure to find out about their implementations. Most customers stated that SQL Azure delivers a reliable cloud database platform to support various small to moderately sized applications as well as other data management requirements such as backup, disaster recovery, testing, and collaboration. In the report, analyst Noel Yuhanna states that key enhancements position SQL Azure as a leader in the emerging category of cloud databases and writes that it delivers the most innovative, integrated and flexible solution.

Windows Azure AppFabric: A Strategic Core of Microsoft's Cloud Platform (Gartner, November 15, 2010) Examines Microsoft’s strategy with Windows Azure AppFabric and the AppFabric services, concluding that “continuing strategic investment in Windows Azure is moving Microsoft toward a leadership position in the cloud platform market, but success is not assured until user adoption confirms the company's vision and its ability to execute in this new environment.”

Unlock the value of your data with Azure DataMarket (Blog post by James Staten, Forrester Research, October 29, 2010) In this article James Staten provides more commentary from the Microsoft Professional Developers Conference, including a deeper look at Azure DataMarket.

Quotes from the article: “What eBay did for garage sales, DataMarket does for data… With Azure DataMarket now you can unlock the potential of your valuable data and get paid for it because it provides these mechanisms for assigning value to your data, licensing and selling it, and protecting it.”

Magic Quadrant for Application Infrastructure for Systematic SOA-Style Application Projects (Gartner, Inc., October 21, 2010) Microsoft is positioned in the Leaders’ Quadrant of this report which examines vendors' application infrastructure worthiness for systematic service-oriented architecture (SOA)-style application projects. According to Gartner, “Systematic projects include long-term consideration and project planning in the process of design and technology selection for the application. They target applications that are intended for extended periods of use, carry advanced service-level requirements and typically have an impact on the overall information context of the business organization.”

Magic Quadrant for Application Infrastructure for Systematic Application Integration Projects (Gartner, Inc., October 18, 2010) Microsoft is positioned in the Leaders’ Quadrant of this report that identifies which products contain the features required to address the needs of systematic application-to-application (A2A), B2B and cloud-based application and integration projects. According to Gartner, “[t]he part of the application infrastructure market focused on integration products for A2A, B2B and cloud-based application integration will undergo consolidation, because multiple product features are used across each of the project types. Many users are looking to support their integration projects with an integrated suite provided by one vendor, thus eliminating the burden to act as a system integrator for application infrastructure.”

The Forrester Wave™: Comprehensive Integration Solutions, Q4 2010 (Forrester Research, Inc., November 9, 2010, .pdf file, 825 kb) Forrester Research Inc., a leading independent research firm, recognized Microsoft as a Leader in The Forrester Wave™: Comprehensive Integration Solutions, Q4 2010 report. Microsoft’s BizTalk 2010 received some of the top-level scores in the areas of integration server and application development support.

Beyond the continued validation we have seen from industry analysts on our relational database and middleware services in the cloud, we have received similar validation from customers this fall at the many industry events we participated in. Your feedback is very important to us and we encourage you to take advantage of our free trial offers of SQL Azure and Windows Azure AppFabric. Click on the links below, give them a try and let us know what you think!

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

• Yasser Abdel Kader posted Private Cloud Architecture - Part 1: Introduction and Definitions to his MSDN blog on 12/19/2010:

Cloud Computing promises to increase quality and agility of the IT Services while decreasing the associated costs. We will discuss how to achieve that in this series.

The promise that cloud computing is based upon will change the way we plan, design and consume computing power for the next decade. Yet it is unclear where we should start or where we will end up.

In this series, I will discuss the principles, concepts and patterns for Private Cloud. I focus on Private Cloud because I believe that, in our area (Middle East and Africa, MEA) and in other areas worldwide, it will be easier for mid- to large-size organizations to achieve and implement.

In the first part, I will start with the basic definitions. In the following parts, I will go through the principles and concepts, and finally I will discuss the patterns to implement Private Cloud.

Public Cloud provides computing power, storage and networking infrastructure (such as firewalls and load balancers) as a service via the public Internet. Private Cloud provides the same services, but in the organization’s own data center.

The following three terms are crucial for our discussion:

Infrastructure as a Service (IaaS) provides cloud computing at the virtual machine level of granularity. For example, computing power will be provided to different consumers inside the organization at the server level.

Platform as a Service (PaaS) provides cloud computing by providing a runtime environment for compiled application code.

Software as a Service (SaaS) provides an entire software application as a service to its consumers.

The Private Cloud’s mission is to offer IaaS inside the organization so that any hosted workload inherits a set of cloud-like attributes, such as infinite capacity and continuous availability, and to drive predictability.

Sounds like a good promise… YEAP! Let’s see how we will achieve that. In the next post, we will discuss the principles behind Private Cloud.

Yasser is a Development Architect on Microsoft’s Middle East and Africa Team, so we probably can expect Azure-specific content in future posts.

<Return to section navigation list>

Cloud Security and Governance