Windows Azure and Cloud Computing Posts for 12/22/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Microsoft’s new Interoperability Bridges and Labs Center site offers a link to the Interoperability @ Microsoft team blog’s IndexedDB Prototype Available for Internet Explorer of 12/21/2010:

As we launch our new HTML5 Labs today, this is one of two guest blogs about the first two HTML5 prototypes. It is written by Pablo Castro, a Principal Architect in Microsoft's Business Platform Division.

With the HTML5 wave of features, Web applications will have most of the building blocks required to build full-fledged experiences for users, from video and vector graphics to offline capabilities.

One of the areas that has seen a lot of activity lately is local storage in the browser, captured in the IndexedDB spec, where there is a working draft as well as a more current editor's draft.

The goal of IndexedDB is to introduce a relatively low-level API that allows applications to store data locally and retrieve it efficiently, even if there is a large amount of it.

The API is low-level to keep it really simple and to enable higher-level libraries to be built in JavaScript and follow whatever patterns Web developers think are useful as things change over time.

Folks from various browser vendors have been working together on this for a while now, and Microsoft has been working closely with the teams at Mozilla, Google and other W3C members that are involved in this to design the API together. Yeah, we even had meetings where all of us were in the same room, and no, we didn't spontaneously combust!

The IE team's approach is to focus IE9 on providing site-ready HTML5 that web developers can use today without having to worry about what is and isn't stable, or about their sites breaking as the specifications and implementations change. Here at HTML5 Labs, we let developers experiment with unstable standards before they are ready for use in production sites.

In order to enable that, we have just released an experimental implementation of IndexedDB for IE. Since the spec is still changing regularly, we picked a point in time for the spec (early November) and implemented that.

The goal of this is to enable early access to the API and get feedback from Web developers on it. Since these are early days, remember that there is still time to change and adjust things as needed. And definitely don't deploy any production applications on it :)

You can find out more about this experimental release and download the binaries from this archive, which contains the actual API implementation plus samples to get you started. [URL edited to remove spurious :91 port designator, which caused HTTP 404 errors.]

For those of you who are curious about the details: we wanted to give folks early access to the API without disrupting their setup, so we built the prototype as a plain COM server that you can register in your box.

That means we don't need to mess with IE configuration or replace files. The only visible effect of this is that you have to start with "new ActiveXObject(...)" instead of the regular window.indexedDB. That would of course go away if we implement this feature.

If you have feedback, questions or want to reach out to us for any other reason, please contact us here. We're looking forward to hearing from you.

As a side note, and since this is a component of IE, if you want to learn more about how IE is making progress in the space of HTML5 and how we think about new features in this context, check out the IE blog here.

Pablo

This implementation has been tested to work on Internet Explorer 8 & 9.

Here’s a capture of the HTML5 IndexedDB Bug Tracker page from Readme.txt’s “Getting Started” section running in IE8:

This IndexedDB post is in the Azure Blob, Drive, Table and Queue Services section because, like Azure tables, it’s a key-value object store. Pablo described it in his HTML5 does databases post of 1/4/2010 as:

… [A]n ISAM API with Javascript objects as the record format. You can create indexes to speed up lookups or scans in particular orders. Other than that there is no schema (any clonable Javascript object will do) and no query language.

Unlike Azure tables, the current IndexedDB implementation can have multiple indexes.
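To make Pablo's "ISAM API with objects as the record format" description concrete, here is a minimal in-memory sketch in Python. It is not the IndexedDB API and makes no claim about how the prototype works internally: the ObjectStore class and its create_index, put, delete, get and scan_index methods are hypothetical names chosen for illustration. Records are schema-less dicts keyed by a primary key, and each secondary index is a sorted list of (value, primary key) pairs, so an ordered range scan starts with a binary search instead of reading every record. Unlike an Azure table, which is indexed only on its PartitionKey and RowKey, this store carries as many indexes as you create.

```python
import bisect
from typing import Any, Dict, Iterator, List, Tuple


class ObjectStore:
    """Hypothetical schema-less object store with sorted secondary indexes."""

    def __init__(self, key_path: str) -> None:
        self.key_path = key_path                     # field holding each record's primary key
        self.records: Dict[Any, dict] = {}           # primary key -> record
        self.index_fields: Dict[str, str] = {}       # index name -> indexed field
        self.indexes: Dict[str, List[Tuple[Any, Any]]] = {}  # name -> sorted (value, key)

    def create_index(self, name: str, field: str) -> None:
        """Index a field, including records stored before the index existed."""
        self.index_fields[name] = field
        self.indexes[name] = sorted(
            (rec[field], key) for key, rec in self.records.items() if field in rec
        )

    def put(self, record: dict) -> None:
        """Insert or overwrite a record, keeping every index in sorted order."""
        key = record[self.key_path]
        if key in self.records:
            self.delete(key)                         # drop stale index entries first
        self.records[key] = record
        for name, field in self.index_fields.items():
            if field in record:
                bisect.insort(self.indexes[name], (record[field], key))

    def delete(self, key: Any) -> None:
        record = self.records.pop(key)
        for name, field in self.index_fields.items():
            if field in record:
                self.indexes[name].remove((record[field], key))

    def get(self, key: Any) -> dict:
        return self.records[key]

    def scan_index(self, name: str, lower: Any, upper: Any) -> Iterator[dict]:
        """Yield records whose indexed value lies in [lower, upper], in index order."""
        entries = self.indexes[name]
        start = bisect.bisect_left(entries, (lower,))  # binary search for the first match
        for value, key in entries[start:]:
            if value > upper:
                break
            yield self.records[key]


# Usage with made-up bug-tracker records:
bugs = ObjectStore(key_path="id")
bugs.create_index("by_priority", "priority")
bugs.put({"id": 1, "title": "Crash on load", "priority": 2})
bugs.put({"id": 2, "title": "Typo in footer", "priority": 5})
bugs.put({"id": 3, "title": "Slow search", "priority": 1})
print([b["id"] for b in bugs.scan_index("by_priority", 1, 2)])  # [3, 1]
```

Keeping each index sorted on every write makes writes a little slower in exchange for cheap ordered reads, which is the kind of trade-off a low-level API leaves to the JavaScript libraries built on top of it.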

It will be interesting to see what higher-level APIs Pablo and his team come up with for RESTful data exchange with Windows Azure tables.

See also Microsoft’s new Interoperability Bridges and Labs Center site offers a link to the Interoperability @ Microsoft team blog’s Introducing the WebSockets Prototype post of 12/21/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Derrick Harris explained Why Hadoop-like ‘Dryad’ Could Be Microsoft’s Big Data Star in a 12/21/2010 post to GigaOm’s Structure blog:

Microsoft’s HPC division opened up the company’s Dryad parallel-processing technologies as a Community Technology Preview (CTP) last week, a first step toward giving Windows HPC Server users a production-ready Big Data tool designed specifically for them. The available components (Dryad, DSC and DryadLINQ) map almost part-for-part to the base Hadoop components (Hadoop MapReduce, Hadoop Distributed File System and the SQL-like Hive programming language) and hint that Microsoft wants to offer Windows/.NET shops their own stack on which to write massively parallel applications. Dryad could be a rousing success, in part because Hadoop – which is written in Java – is not ideally suited to run atop Windows or support .NET applications.

When Microsoft broke into the high-performance computing market in 2005 with its Windows Compute Cluster Server, it affirmed the idea that, indeed, HPC was taking place on non-Linux boxes. Certainly, given the prevalence of large data volumes across organizations of all types, there is equal, if not greater, demand for Hadoop-like analytical tools even in Windows environments. Presently, however, Apache only supports Linux as a production Hadoop environment; Windows is development-only. There are various projects and tools floating around for running Hadoop on Windows, but they exist outside the scope of Apache community support. A Stack Overflow contributor succinctly summed up the situation in response to a question about running Hadoop on Windows Server:

From the Hadoop documentation:

‘Win32 is supported as a development platform. Distributed operation has not been well tested on Win32, so it is not supported as a production platform.’

Which I think translates to: ‘You’re on your own.’

Then there is the Windows Azure angle, where Microsoft appears determined to compete with Amazon Web Services. Not only has it integrated IaaS functionality in Windows Azure, but, seemingly in response to AWS courting the HPC community with Cluster Compute Instances, Microsoft also recently announced free access to Windows Azure for researchers running applications against the NCBI BLAST database, which currently is housed within Azure. Why not counter Elastic MapReduce – AWS’s Hadoop-on-EC2 service – with Dryad applications in Azure?

SD Times actually reported in May that Microsoft was experimenting with supporting Hadoop in Windows Azure, but that capability hasn’t arrived yet. Perhaps that’s because Microsoft has fast-tracked Dryad for a slated production release in 2011. As the SD Times article explains, Hadoop is written in Java, and any support for Hadoop within Windows Azure likely would be restricted to Hadoop developers. That’s not particularly Microsoft-like; the company certainly would prefer to give developers additional reasons to develop in .NET, not Java. Dryad would be such a reason.

What might give the Microsoft developer community even more hope is the Dryad roadmap that Microsoft presented in August. ZDNet’s Mary Jo Foley noted a variety of Dryad subcomponents that will make Dryad even more complete, including a job scheduler codenamed “Quincy.”

But there’s no guarantee that the production version of Dryad will resemble the current iteration too closely. As Microsoft notes in the blog post announcing the Dryad CTP, “The DryadLINQ, Dryad and DSC programming interfaces are all in the early phases of development and might change significantly before the final release based on your feedback.” A prime example of this is that Dryad has only been tested on 128 individual nodes. In the Hadoop world, Facebook is running a cluster that spans 3,000 nodes (sub req’d). Dryad has promise as Hadoop for the Microsoft universe, but, clearly, there’s work to be done.

<Return to section navigation list>

SQL Azure Database and Reporting

Mark Kromer (@mssqldude) posted SQL Server 2008 R2 Feature Pack on 12/22/2010:

I’ve run into a few inquiries recently where folks are having trouble locating all of the add-on goodies for SQL Server 2008 R2 in a single place. Some of this confusion seems to originate from the R2 CTPs and bits & pieces of functionality that had their own trials or early releases as separate downloads.

Go here for the latest definitive SQL Server 2008 R2 Feature Pack, with everything grouped together, such as Remote BLOB Store, Sync Framework, PowerShell extensions, Migration Assistant and many more.

Deepak Kumar reminded readers What you ‘Currently’ don’t get with SQL Azure? in a 12/21/2010 post to his SQLKnowledge.com blog:

SQL Azure is a cloud-based relational database platform built on SQL Server technology; it can store structured, semi-structured and unstructured data in the cloud. Behind the scenes, databases are hosted on clustered SQL Server instances and are load-balanced and replicated for scalability and high availability. Each logical server consists of one master database and N user databases, each of which is either Web or Business edition.

SQL Azure Standard Consumption Pricing (check offers available with MSDN subscription and Microsoft Partner Network)

Web Edition Database:

• $9.99 per database up to 1GB per month

• $49.99 per database up to 5GB per month

Business Edition Database:

• $99.99 per database up to 10GB per month

• $199.98 per database up to 20GB per month

• $299.97 per database up to 30GB per month

• $399.96 per database up to 40GB per month

• $499.95 per database up to 50GB per month

How to connect to a SQL Azure database: You can use SQL Server Management Studio or, alternatively, SQLCMD:

sqlcmd -U <ProvideLogin@Server> -P <ProvidePassword> -S <ProvideServerName> -d master

How to get started?

Once you are connected to the SQL Azure instance, a very basic database creation command, "CREATE DATABASE SQLKnoweldge", creates a Web edition database with the default MAXSIZE of 1GB. You can specify MAXSIZE like this:

CREATE DATABASE SQLKnoweldge (EDITION='WEB', MAXSIZE=5GB)

The following command creates a Business edition database with a default MAXSIZE of 10GB, which you can increase up to 50GB:

CREATE DATABASE SQLKnoweldge (EDITION='BUSINESS')

What if you do not specify an edition? SQL Azure determines the edition from MAXSIZE: if MAXSIZE is set to 10, 20, 30, 40 or 50GB, the database is Business edition. Here is the database creation syntax for SQL Azure:

CREATE DATABASE database_name
{
    (<edition_options> [, ...n])
}

<edition_options> ::=
{
    (MAXSIZE = {1 | 5 | 10 | 20 | 30 | 40 | 50} GB)
    | (EDITION = {'web' | 'business'})
}[;]

To copy a database:

CREATE DATABASE destination_database_name
    AS COPY OF [source_server_name.]source_database_name [;]

What you ‘Currently’ don’t get with SQL Azure?

In addition to lacking SQL Profiler, Perfmon, trace flags, global temporary tables and distributed transactions, SQL Azure does not support heap tables: every table must have a clustered index. If a table is created without a clustered constraint, you must create a clustered index before SQL Azure allows inserts into the table, which is why SELECT INTO statements fail. Read Committed Snapshot Isolation (RCSI) is the default, instead of SQL Server's Read Committed. The connection timeout is 30 minutes, and long-running transactions can be terminated without your knowledge. Here is Microsoft's list for more details:

SQL Server 2008 R2 Features Not Supported by SQL Azure: The following features that were new to SQL Server 2008 R2 are not supported by SQL Azure:

- SQL Server Utility

- SQL Server PowerShell Provider. PowerShell scripts can, however, run on an on-premises computer and connect to SQL Azure using supported objects (such as SQL Server Management Objects or the Data-tier Application Framework).

- Master Data Services

SQL Server 2008 Features Not Supported by SQL Azure: The following features that were new to SQL Server 2008 are not supported by SQL Azure:

- Change Data Capture

- Data Auditing

- Data Compression

- Extended Events

- Extension of spatial types and methods through Common Language Runtime (CLR)

- External Key Management / Extensible Key Management

- FILESTREAM Data

- Integrated Full-Text Search

- Large User-Defined Aggregates (UDAs)

- Large User-Defined Types (UDTs)

- Performance Data Collection (Data Collector)

- Policy-Based Management

- Resource Governor

- Sparse Columns

- SQL Server Replication

- Transparent Data Encryption

SQL Server 2005 Features Not Supported by SQL Azure: The following features that were new to SQL Server 2005 are not supported by SQL Azure:

- Common Language Runtime (CLR) and CLR User-Defined Types

- Database Mirroring

- Service Broker

- Table Partitioning

- Typed XML and XML indexing are not supported, although SQL Azure does support the XML data type.

Other SQL Server Features Not Supported by SQL Azure: The following features from earlier versions of SQL Server are not supported by SQL Azure:

- Backup and Restore

- Replication

- Extended Stored Procedures

- SQL Server Agent/Jobs
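The edition rules and list prices in Deepak's post can be condensed into a short Python sketch. The function names resolve_edition and monthly_price are hypothetical; the prices are the December 2010 figures quoted in the post, and the rules are the ones it states: a bare CREATE DATABASE yields a 1GB Web database, EDITION='BUSINESS' defaults to 10GB, and without an EDITION clause a MAXSIZE of 10GB or more implies Business edition. The sketch also makes visible that every Business tier costs exactly $99.99 per 10GB increment.

```python
from decimal import Decimal
from typing import Optional, Tuple

# December 2010 list prices per database per month (USD), as quoted in the post.
WEB_PRICES = {1: Decimal("9.99"), 5: Decimal("49.99")}
# Each Business tier is $99.99 per 10GB increment.
BUSINESS_PRICES = {gb: Decimal("99.99") * (gb // 10) for gb in (10, 20, 30, 40, 50)}
PRICES = {"web": WEB_PRICES, "business": BUSINESS_PRICES}
DEFAULT_MAXSIZE_GB = {"web": 1, "business": 10}


def resolve_edition(edition: Optional[str] = None,
                    maxsize_gb: Optional[int] = None) -> Tuple[str, int]:
    """Apply the post's CREATE DATABASE defaults; return (edition, maxsize in GB)."""
    if edition is None:
        # No EDITION clause: infer it from MAXSIZE (none given -> 1GB Web).
        edition = "business" if maxsize_gb is not None and maxsize_gb >= 10 else "web"
    edition = edition.lower()
    if edition not in PRICES:
        raise ValueError(f"unknown edition {edition!r}")
    if maxsize_gb is None:
        maxsize_gb = DEFAULT_MAXSIZE_GB[edition]
    if maxsize_gb not in PRICES[edition]:
        raise ValueError(f"MAXSIZE={maxsize_gb}GB is not allowed for the {edition} edition")
    return edition, maxsize_gb


def monthly_price(edition: Optional[str] = None,
                  maxsize_gb: Optional[int] = None) -> Decimal:
    """Monthly list price for one database created with the given options."""
    edition, maxsize_gb = resolve_edition(edition, maxsize_gb)
    return PRICES[edition][maxsize_gb]


print(resolve_edition())               # ('web', 1)
print(resolve_edition("BUSINESS"))     # ('business', 10)
print(resolve_edition(maxsize_gb=30))  # ('business', 30)
print(monthly_price(maxsize_gb=30))    # 299.97
```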

<Return to section navigation list>

Marketplace DataMarket and OData

Mike Flasko, Pablo Castro and Jonathan Carter appear as developers of the Insights OData Service Facebook application:

Stay tuned for more details.

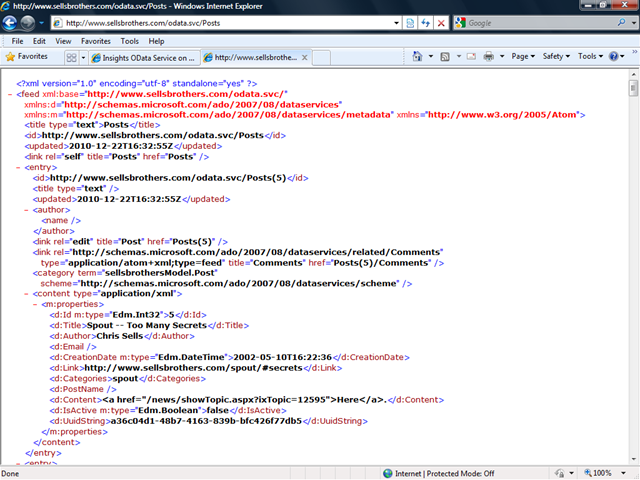

Chris Sells added an OData service to his SellsBrothers.com blog:

See Phil Haack’s See Me in Brazil and Argentina in March 2011 post of 12/21/2010 in the Cloud Computing Events section.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

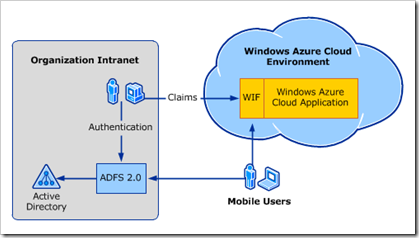

Tim Anderson (@timanderson) asserted Single sign-on from Active Directory to Windows Azure: big feature, still challenging on 12/22/2010:

Microsoft has posted a white paper setting out what you need to do in order to have users who are signed on to a local Windows domain seamlessly use an Azure-hosted application, without having to sign in again.

I think this is a huge feature. Maintaining a single user directory is more secure and more robust than efforts to synchronise a local directory with a cloud-hosted directory, and this is a point of friction when it comes to adopting services such as Google Apps or Salesforce.com. Single sign-on with federated directory services takes that away. As an application developer, you can write code that looks the same as it would for a locally deployed application, but host it on Azure.

There is also a usability issue. Users hate having to sign in multiple times, and hate it even more if they have to maintain separate username/password combinations for different applications (though we all do).

The white paper explains how to use Active Directory Federation Services (ADFS) and Windows Identity Foundation (WIF, part of the .NET Framework) to achieve both single sign-on and access to user data across local network and cloud.

The snag? It is a complex process. The white paper has a walk-through, though to complete it you also need this guide on setting up ADFS and WIF. There are numerous steps, some of which are not obvious. Did you know that “.NET 4.0 has new behavior that, by default, will cause an error condition on a page request that contains a WS-Federation authentication token”?

Of course dealing with complexity is part of the job of a developer or system administrator. Then again, complexity also means more to remember and more to troubleshoot, and less incentive to try it out.

One of the reasons I am enthusiastic about Windows Small Business Server Essentials (codename Aurora) is that it promises to do single sign-on to the cloud in a truly user-friendly manner. According to a briefing I had from SBS technical product manager Michael Leworthy, cloud application vendors will supply “cloud integration modules,” connectors that you install into your SBS to get instant single sign-on integration.

SBS Essentials does run ADFS under the covers, but you will not need a 35-page guide to get it working, or so we are promised. I admit, I have not been able to test this feature yet, and aside from Microsoft’s BPOS/Office 365 I do not know how many online applications will support it.

Still, this is the kind of thing that will get single sign-on with Active Directory widely adopted.

Consider Facebook Connect. Register your app with Facebook; write a few lines of JavaScript and PHP; and you can achieve the same results: single sign-on and access to user account information. Facebook knows that to get wide adoption for its identity platform it has to be easy to implement.

On Microsoft’s platform, another option is to join your Azure instance to the local domain. This is a feature of Azure Connect, currently in beta.

Are you using ADFS, with Azure or another platform? I would be interested to hear how it is going.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Microsoft’s new Interoperability Bridges and Labs Center site offers a link to the Interoperability @ Microsoft team blog’s Introducing the WebSockets Prototype post of 12/21/2010:

As we launch our new HTML5 Labs today, this is one of two guest blogs about the first two HTML5 prototypes. It is written by Tomasz Janczuk, a Principal Development Lead in Microsoft’s Business Platform Division.

In my blog post from last summer I wrote about a prototype .NET implementation of two drafts of the WebSockets protocol specification - draft-hixie-thewebsocketprotocol-75 and draft-hixie-thewebsocketprotocol-76 - making their way through the IETF at that time.

Since then, there have been a number of revisions to the protocol specification, and it is time to revisit the topic. Given the substantial demand for code to experiment with, we are sharing the Windows Communication Foundation server and Silverlight client prototype implementation of one of the latest proposed drafts of the WebSockets protocol: draft-montenegro-hybi-upgrade-hello-handshake-00.

You can read more about the effort and download the .NET prototype code at the new HTML5 Labs site.

What is WebSockets?

WebSockets is one of the HTML 5 working specifications driven by the IETF to define a duplex communication protocol for use between web browsers and servers. The protocol enables applications that exchange messages between the client and the server with communication characteristics that cannot be met with the HTTP protocol.

In particular, the protocol enables the server to send messages to the client at any time after the WebSockets connection has been established, without the overhead of the HTTP protocol. This distinguishes WebSockets from technologies based on the HTTP long-polling mechanism available today.

For this early WebSockets prototype we are using a Silverlight plug-in on the client and a WCF service on the server. In the future, you may see HTML5 Labs using a variety of other technologies.

What are we making available?

Along with the downloadable .NET prototype implementation of the WebSocket proposed draft-montenegro-hybi-upgrade-hello-handshake specification, we are also hosting a sample web chat application based on that prototype in Windows Azure here. The sample web chat application demonstrates the following components of the prototype [Emphasis added]:

- The server side of the WebSocket protocol implemented using Windows Communication Foundation from .NET Framework 4. The WCF endpoint the sample application communicates with implements the draft WebSocket proposal.

- The client side prototype implementation consisting of two components:

- A Silverlight 4 application that implements the same draft of the WebSocket protocol specification.

- A jQuery extension that dynamically adds the Silverlight 4 application above to the page and creates a set of JavaScript WebSocketDraft APIs that delegate their functionality to the Silverlight application using the HTML bridge feature of Silverlight.

The downloadable package contains a .NET prototype implementation consisting of the following components:

- A WCF 4.0 server side binding implementation of the WebSocket specification draft.

- A prototype of the server side WCF programming model for WebSockets.

- Silverlight 4 client side implementation of the protocol.

- .NET 4.0 client side implementation of the protocol.

- An HTML bridge from Silverlight to JavaScript that enables use of the prototype from JavaScript applications running in browsers that support Silverlight.

- Web chat and stock quote samples.

Given the prototype nature of the implementation, the following restrictions apply:

- A Silverlight client (and a JavaScript client, via the HTML bridge) can communicate over the proposed WebSocket protocol only on ports in the range 4502-4534 (a consequence of the Network Security Access Restrictions that Silverlight applies to all direct use of sockets).

- Only text messages shorter than 126 bytes (UTF-8 encoded) can be exchanged.

- There is no support for web proxies in the client implementation.

- There is no support for SSL.

- The server-side implementation limits the number of concurrent WebSocket connections to 5.
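The prototype's limits are easy to trip over when experimenting. A small pre-flight check like the following Python sketch can flag a port outside 4502-4534, a message of 126 or more UTF-8 bytes, or an SSL (wss://) URL before you try to connect or send. It is only an illustration: check_endpoint and check_message are hypothetical helpers that encode the restrictions listed above, they assume conventional ws:// and wss:// URLs with default ports 80 and 443, and they do not talk to the prototype itself.

```python
from typing import List
from urllib.parse import urlparse

ALLOWED_PORTS = range(4502, 4535)   # Silverlight socket restriction: ports 4502-4534 inclusive
MAX_MESSAGE_BYTES = 125             # messages must be under 126 bytes, UTF-8 encoded


def check_endpoint(url: str) -> List[str]:
    """List the ways a WebSocket URL conflicts with the prototype's stated restrictions."""
    problems = []
    parsed = urlparse(url)
    if parsed.scheme == "wss":
        problems.append("SSL (wss://) is not supported by the prototype")
    elif parsed.scheme != "ws":
        problems.append(f"expected a ws:// URL, got scheme {parsed.scheme!r}")
    # With no explicit port, assume the conventional defaults (80 for ws, 443 for wss).
    port = parsed.port or (443 if parsed.scheme == "wss" else 80)
    if port not in ALLOWED_PORTS:
        problems.append(f"port {port} is outside the 4502-4534 range Silverlight allows")
    return problems


def check_message(text: str) -> List[str]:
    """Flag text messages that exceed the prototype's size limit once UTF-8 encoded."""
    size = len(text.encode("utf-8"))
    if size > MAX_MESSAGE_BYTES:
        return [f"message is {size} bytes; the prototype only accepts messages under 126 bytes"]
    return []


print(check_endpoint("ws://localhost:4502/chat"))  # []
print(len(check_message("é" * 63)))                # 1 (63 two-byte characters = 126 bytes)
```

Counting encoded bytes rather than characters matters here: 63 accented characters already reach the 126-byte limit.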

This implementation has been tested to work on Internet Explorer 8 and 9.

Why is this important?

Through access to emerging specifications like WebSockets, the HTML5 Labs sandbox gives you implementation experience with the draft specifications, helps enable faster iterations around Web specifications without getting locked in too early with a specific draft, and gives you the opportunity to provide feedback to improve the specification. This unstable prototype also has the potential to benefit a broad audience.

We want your feedback

As you try this implementation we welcome your feedback and we are looking forward to your comments!

See also Microsoft’s new Interoperability Bridges and Labs Center site offers a link to the Interoperability @ Microsoft team blog’s IndexedDB Prototype Available for Internet Explorer of 12/21/2010 in the Azure Blob, Drive, Table and Queue Services section above.

The Windows Azure Team posted Real World Windows Azure: Interview with Wayne Houlden, Chief Executive Officer at Janison on 12/22/2010:

As part of the Real World Windows Azure series, we talked to Wayne Houlden, CEO at Janison, about using the Windows Azure platform for the online science testing system at the Department of Education & Training of New South Wales. Here's what he had to say:

MSDN: Tell us about Janison and the services you offer.

Houlden: Janison provides an online learning management system and custom learning portals to customers throughout Australia. We offer our innovative learning solutions to a wide variety of organizations that provide online learning services to their staff, students, and customers.

MSDN: What were the biggest challenges that you faced prior to implementing the Windows Azure platform?

Houlden: We have been working on a project with the Department of Education of New South Wales, Australia, to transform the Essential Secondary Science Assessment that high school students take from a paper-based test to an online multimedia assessment. The challenge is to deliver a system that can scale quickly to handle more than 80,000 students across nearly 600 schools in a single day. However, a redundant, highly-available, and load-balanced system with physical servers would require extensive procurement, configuration, and maintenance work, and would be too costly for the department.

MSDN: Can you describe the solution you built with the Windows Azure platform to address your need for cost-effective scalability?

Houlden: Our developers have been working with the Microsoft .NET Framework and other Microsoft technologies for more than a decade, so the Windows Azure platform was a natural fit for Janison. We created a set of multimedia, animated virtual assessments for students and an administration portal for staff and teachers that use Web roles and Worker roles in Windows Azure for compute processing. We populate Table storage with assessment data and use the Windows Azure Content Delivery Network to store multimedia assets. Students have authenticated access to the assessment and their answers are recorded in Table storage and then replicated in an on-premises server running Microsoft SQL Server 2008 data management software.

Built on the Windows Azure platform, the Essential Secondary Science Assessment includes a portal for administrators to track students' progress on the test.

MSDN: What makes your solution unique?

Houlden: We were able to demonstrate the value of multimedia-rich online assessments. Students not only found the test interesting, enjoyable, and engaging, but the Department of Education of New South Wales also found that it was an educationally-sound alternative to the written test. The Windows Azure platform, with its scaling capabilities to handle an enormous compute load, was a critical component.

MSDN: What kinds of benefits are you realizing with the Windows Azure platform?

Houlden: The key benefits for us are the scalability and cost-effectiveness of the Windows Azure platform. We can deliver a solution that can quickly scale to handle a massive number of users for a short period of time, and we pay only for what we use, without the cost of a physical infrastructure. For example, to support 40,000 students we used 250 instances of Windows Azure for a period of 12 hours. With a traditional approach for the same performance, we would have needed 20 physical servers, which, all told, would be 100 times more expensive than using the Windows Azure platform.
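Houlden's scaling figures reduce to a little arithmetic. This Python snippet only restates the numbers given in the interview (40,000 students, 250 instances for 12 hours, versus 20 physical servers); it does not attempt to check the "100 times more expensive" claim, because the interview gives no prices.

```python
# Figures quoted in the interview.
students = 40_000
azure_instances = 250
hours = 12
physical_servers = 20  # the traditional alternative Houlden compares against

instance_hours = azure_instances * hours           # compute consumed over the 12 hours
students_per_instance = students / azure_instances
students_per_server = students / physical_servers

print(instance_hours)          # 3000
print(students_per_instance)   # 160.0
print(students_per_server)     # 2000.0
```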

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

NVoicePay posted a 00:03:53 See how Invoice Payment Company Used Windows Azure, SQL Azure and AppFabric video demo on 12/20/2010:

Find out how NVoicePay partnered with ADP to develop an enterprise-class payment network using Windows Azure, SQL Azure and Azure AppFabric.

From Windows Azure and Cloud Computing Posts for 12/16/2010+: Microsoft PressPass reported “Payment solution provided by NVoicePay is based on Windows Azure platform with Silverlight interface across the Web, PC and phone” as a preface to its Customer Spotlight: ADP Enables Plug-and-Play Payment Processing for Thousands of Car Dealers Throughout North America With Windows Azure press release of 12/16/2010:

The Dealer Services Group of Automatic Data Processing Inc. has added NVoicePay as a participant to its Third Party Access Program. NVoicePay, a Portland, Ore.-based software provider, helps mutual clients eliminate paper invoices and checks with an integrated electronic payments solution powered by the Windows Azure cloud platform, Microsoft Corp. reported today. [Link added.]

NVoicePay’s AP Assist e-payment solution offers substantial savings for dealerships that opt into the program. NVoicePay estimates that paying invoices manually ends up costing several dollars per check; however, by reducing that transaction cost, each dealership stands to save tens of thousands of dollars per year depending on its size.

“Like most midsize companies, many dealerships are using manual processes for their accounts payables, which is fraught with errors and inefficiency,” said Clifton E. Mason, vice president of product marketing for ADP Dealer Services. “NVoicePay’s hosted solution is integrated to ADP’s existing dealer management system, which allows our clients to easily process payables electronically for less than the cost of a postage stamp.”

The NVoicePay solution relies heavily on Microsoft Silverlight to enable a great user experience across multiple platforms, including PC, phone and Web. For example, as part of the solution, a suite of Windows Phone 7 applications allows financial controllers to quickly perform functions such as approving pending payments and checking payment status while on the go.

On the back end, the solution is implemented on the Windows Azure platform. This gives it the ability to work easily with a range of existing systems, and it also provides the massive scalability essential for a growth-stage business. According to NVoicePay, leveraging Windows Azure to enable its payment network allowed the NVoicePay solution to go from zero to nearly $50 million in payment traffic in a single year.

“The Windows Azure model of paying only for the resources you need has been key for us as an early stage company because the costs associated with provisioning and maintaining an infrastructure that could support the scalability we require would have been prohibitive,” said Karla Friede, chief executive officer, NVoicePay. “Using the Windows Azure platform, we’ve been able to deliver enterprise-class services at a small-business price, and that’s a requirement to crack the midmarket.”

About ADP

Automatic Data Processing, Inc. (Nasdaq: ADP), with nearly $9 billion in revenues and about 560,000 clients, is one of the world’s largest providers of business outsourcing solutions. Leveraging 60 years of experience, ADP offers the widest range of HR, payroll, tax and benefits administration solutions from a single source. ADP’s easy-to-use solutions for employers provide superior value to companies of all types and sizes. ADP is also a leading provider of integrated computing solutions to auto, truck, motorcycle, marine, recreational, heavy vehicle and agricultural vehicle dealers throughout the world. For more information about ADP or to contact a local ADP sales office, reach us at 1-800-CALL-ADP ext. 411 (1-800-225-5237 ext. 411).

About NVoicePay

NVoicePay is a B2B Payment Network addressing the opportunity of moving invoice payments from paper checks to electronic networks for mid-market businesses. NVoicePay’s simple, efficient electronic payments have made the company the fastest growing payment network for business.

About Microsoft

Founded in 1975, Microsoft (Nasdaq “MSFT”) is the worldwide leader in software, services and solutions that help people and businesses realize their full potential.

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure

Jason Mar published Microsoft Windows Azure Cloud First impressions on 12/22/2010:

I’ve been trying to help my dad set up a VM image that has HP Quality Control software on it. Normally this wouldn’t be very difficult, and I could probably finish in under an hour. But my dad has an MSDN account and wanted it on Microsoft’s Azure Cloud.

Before you can open the Azure Management Console, you’ll need to install Silverlight. Once you open the console for the first time, you really can’t do anything with it on its own. I found myself scratching my head just figuring out how to start a server. In my opinion, if cloud users need to read over 10 pages of documentation just to be able to create their first server, then something is wrong.

I found out that you have to download and install Visual Studio 2010 and the Windows Azure 1.3 SDK. Then in VS you’ll need to create a new cloud project, add a role to it, and select publish. A Deploy to Azure dialog will appear that requires you to select credentials and a storage account. Then you need to configure remote desktop connections by entering a username and password. Then you’re ready to click deploy to Azure. Once that’s done, open the management console, select the server you just created, and download an RDP connection file to finally connect to the server.

Something that jumped out at me was that virtual servers on Azure aren’t called servers; they’re called Hosted Services. This is completely different from the familiar virtual servers provided by Amazon and other public clouds. Even though Azure is a Microsoft service and runs on Server 2008 R2, there’s no option to simply provision a fresh Server 2008 R2 instance that you can remote desktop into and start configuring. If that’s what you want to do, you basically have to open Visual Studio 2010, and create a “Hello World” app to deploy to Azure. It will have an app running on it that you didn’t really want in the first place.

I can understand Microsoft requiring an app to be specified before starting an instance, but an example configuration should be provided so new users can get going right away. As it is now it will take a very determined developer to take the time to use Azure over any other cloud service, thanks to the poor initial experience Azure is currently presenting.

I don’t know if this is just because I’m used to Amazon, GoGrid, and VMware, but to me the idea of needing to go through Visual Studio just to spin up a fresh server is completely nonsensical.

If you aren’t in TAP (early adopter hand-holding program) I wouldn’t recommend using Windows Azure, because it’s nowhere near as easy to use as Amazon EC2 or other public clouds that are way faster to get started on and are much more friendly towards open source software.

I took exception to Jason’s conclusion in the following comment:

Jason,

You are comparing different services; Amazon is an Infrastructure as a Service (IaaS) offering and Windows Azure is a Platform as a Service (PaaS) product. Azure's primary platform is .NET with Visual Studio, so it shouldn't be surprising that VS 2010 with the Windows Azure SDK 1.3 running under a customized Windows Server OS is the default development environment. (Azure also supports PHP, Java and a few other programming languages, as well as Eclipse tools.) Azure now supports virtual machine roles (VMRoles), plus the original ASP.NET WebRoles and .NET WorkerRoles. Hosted Windows Server IaaS instances are in the works.

Having used both Amazon EC2 and Windows Azure, I find Azure to be far "easier to use" for hosting Visual Studio projects and relational databases (SQL Azure). Support services for high availability and scalability are built in. Microsoft makes its living selling software, so I wouldn't expect Windows Azure to offer Unix/Linux instances in the foreseeable future.

Roger Jennings

http://oakleafblog.blogspot.co...

Irene Bingley surmised Microsoft's Windows 8 Direction Suggested by Job Postings about Win 8’s connections to the cloud on 12/22/2010:

I understand why Microsoft would be reluctant to share too much about its development plans for Windows 8. After all, Windows 7 continues to sell at a steady clip (Microsoft claims 240 million licenses since the operating system's Oct. 2009 launch), and there's always the fear that officially announcing the next version will dissuade some XP and Vista holdouts from upgrading.

Nonetheless, Windows 8 rumors continue to percolate. Within the blogosphere, a 2012 release for the next-generation operating system seems the consensus. The scuttlebutt also suggests that, in keeping with Microsoft's widely touted "all in" strategy, Windows 8 will be tightly integrated with Web applications and content.

Microsoft itself may be publicly tight-lipped about Windows 8. But two new job postings on the company's Website suggest the company's actively searching for people to help build it. Both those postings have now been yanked from Microsoft's Careers Website, but blogs such as Winrumors and Windows8news have reproduced the copy in full; simply because I haven't seen these on Microsoft's Website with my own eyes (although I have no reason to distrust either of those fine blogs), take the following with a hefty grain of salt.

The first job posting seeks a Software Development Engineer who can help with work "on a Windows Azure-based service and integrating with certain Microsoft online services and Windows 8 client backup." [Emphasis added.]

The second is looking for a Windows System Engineer to "play a pivotal role as we integrate our online services with Windows 8."

During the summer, an alleged internal slide deck suggested that the cloud would indeed play a big part in Windows 8. On June 26, a Website called Microsoft Journal (which soon disappeared from its Windows Live Spaces host site) posted the deck, dated April 2010, detailing Microsoft's discussions about the operating system. The features supposedly under consideration included ultra-fast booting, a "Microsoft Store" for downloading apps, fuller cloud integration, and the use of facial recognition for logins.

"Windows accounts could be connected to the cloud," read one of the slides, which followed that with a bullet point: "Roaming settings and preferences associated with a user between PCs and devices."

During a discussion at October's Gartner Symposium/ITxpo 2010 in Orlando, Microsoft CEO Steve Ballmer suggested "the next release of Windows" posed the company's riskiest bet. Although the desktop-based Windows franchise continues to sell well, thinkers such as departing Microsoft Chief Software Architect Ray Ozzie believe the future lies in devices connected to the cloud--devices that take forms beyond traditional desktops and laptops.

"At this juncture, given all that has transpired in computing and communications, it's important that all of us do precisely what our competitors and customers will ultimately do: close our eyes and form a realistic picture of what a post-PC world might actually look like," Ozzie wrote in an Oct. 28 posting on his personal blog, days after the company announced his resignation.

If these job postings prove accurate, it's yet another small bit of evidence that Microsoft's trying to apply its "all in" cloud strategy to Windows. Personally, I'm wondering just how the company will walk that tightrope between the operating system's traditional desktop emphasis and the cloud paradigm. I guess we'll find out the answer in a few years.

Lori MacVittie (@lmacvittie) asserted The right infrastructure will eventually enable providers to suggest the right services for each customer based on real needs as a preface to her McCloud: Would You Like McAcceleration with Your Application? post of 10/22/2010 to F5’s DevCentral blog:

When I was in high school I had a job at a fast food restaurant, as many teenagers often do. One of the first things I was taught was “suggestive selling”. That’s the annoying habit of asking every customer if they’d like an additional item with their meal. Like fries, or a hot apple pie. The reason behind the requirement that employees “suggest” additional items is that studies showed a significant number of customers would, in fact, like fries with their meal if it was suggested to them.

Hence the practice of suggestive selling.

The trick is, of course, that it makes no sense to suggest fries with that meal when the customer ordered fries already. You have to actually suggest something the customer did not order. That means you have to be aware of what the customer already has and, I suppose, what might benefit them. Like a hot apple pie for dessert.

See, it won’t be enough for a cloud provider to simply offer infrastructure services to its customers; they’re going to have to suggest services to customers based on (1) what they already have and (2) what might benefit them.

CONTEXT-AWARE SUGGESTIVE SERVICE MENUS

Unlike the real-time suggestive selling practices used for fast-food restaurants, cloud providers will have to be satisfied with management-side suggestive selling. They’ll have to compare the services they offer with the services customers have subscribed to and determine whether there is a good fit there. Sure, they could just blanket offer services but it’s likely they’ll have better success if the services they suggest would actually benefit the customer.

Let’s say a customer has deployed a fairly typical web-based application. It’s HTTP-based, of course, and the provider offers a number of application- and protocol-specific infrastructure services that may benefit the performance and security of that application. While the provider could simply offer the services based on the existence of that application, the “sell” would more likely succeed if the provider shared the visibility into performance and capacity that provides a “proof point” that such services are needed. Rather than just offer a compression service, the provider could, because it has visibility into the data streams, actually provide the customer with some data pertinent to the customer’s application. For example, telling the customer they could benefit from compression is likely true, but showing the customer that 75% of their data is text, and that compressing it could ostensibly reduce bandwidth by X% (and thus their monthly bandwidth transfer costs), would certainly be better. Similarly, providers could recognize standard applications and pair them with application-specific “templates” that are tailored to improve the performance and/or efficiency of that application, and suggest that customers might benefit from deploying such capabilities in a cloud computing environment.
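To make the compression example concrete, here is a minimal Python sketch of the kind of estimate a provider could show a customer. It assumes the provider can break observed response bytes down by content type and sample some text payloads; the content-type list, the traffic numbers and the function names are hypothetical, and zlib merely stands in for whatever compression the service would actually apply.

```python
import zlib
from typing import Dict, Iterable

# Hypothetical set of content types treated as compressible "text" in this sketch.
TEXT_TYPES = {"text/html", "text/css", "text/plain",
              "application/javascript", "application/json"}


def text_share(bytes_by_type: Dict[str, int]) -> float:
    """Fraction of all observed response bytes whose content type is text."""
    total = sum(bytes_by_type.values())
    if total == 0:
        return 0.0
    text = sum(n for ctype, n in bytes_by_type.items() if ctype in TEXT_TYPES)
    return text / total


def measured_ratio(samples: Iterable[bytes]) -> float:
    """Compressed size divided by original size across sampled payloads (zlib)."""
    original = compressed = 0
    for payload in samples:
        original += len(payload)
        compressed += len(zlib.compress(payload))
    return compressed / original if original else 1.0


def estimated_savings(bytes_by_type: Dict[str, int], ratio: float) -> float:
    """Fraction of total bandwidth saved if only the text bytes shrink to
    `ratio` of their size and all other bytes are sent unchanged."""
    return text_share(bytes_by_type) * (1.0 - ratio)


if __name__ == "__main__":
    # Hypothetical traffic breakdown (bytes per content type) for one application.
    traffic = {"text/html": 600_000, "application/json": 150_000, "image/jpeg": 250_000}
    # Hypothetical sample of the application's text responses.
    sample = [b"<tr><td>invoice</td><td>paid</td></tr>\n" * 200]
    ratio = measured_ratio(sample)
    print(f"text share: {text_share(traffic):.0%}")
    print(f"estimated bandwidth saving: {estimated_savings(traffic, ratio):.0%}")
```

With the hypothetical traffic above, text is 75% of the bytes, as in the example in the text; the savings figure depends entirely on how compressible the sampled payloads really are.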

This requires context, however, as you don’t want to be offering services to which the customer is already subscribed or which may not be of value to the organization. If the application isn’t consistently accessed via mobile devices, for example, attempting to sell the customer a “mobile device acceleration service” is probably going to be annoying as well as unsuccessful. The provider must not only manage its own infrastructure service offerings but also provide visibility into traffic and usage patterns and intelligently offer services that make sense based on actual usage and events occurring in the environment. The provider needs to use its visibility to give customers insight into the application’s daily availability, performance, and security, so that they understand not only what additional infrastructure services may be of value to their organization, but why.
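The context check described above (don’t offer what the customer already has, and only offer what observed usage supports) can be sketched as a filter over a service catalog. The `UsageProfile` fields, the service names and the thresholds below are hypothetical illustrations, not any provider’s actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class UsageProfile:
    """What the provider observes about one customer's application (hypothetical fields)."""
    subscribed: Set[str] = field(default_factory=set)
    mobile_share: float = 0.0  # fraction of requests coming from mobile devices
    text_share: float = 0.0    # fraction of response bytes that are text


# Hypothetical catalog: each service has a rule deciding whether the observed
# usage justifies suggesting it. The thresholds are arbitrary for this sketch.
CATALOG: Dict[str, Callable[[UsageProfile], bool]] = {
    "compression": lambda p: p.text_share >= 0.5,
    "mobile-acceleration": lambda p: p.mobile_share >= 0.25,
}


def suggest(profile: UsageProfile) -> List[str]:
    """Services that fit the observed usage and are not already subscribed."""
    return sorted(
        name
        for name, fits in CATALOG.items()
        if name not in profile.subscribed and fits(profile)
    )


if __name__ == "__main__":
    desktop_app = UsageProfile(subscribed={"compression"}, mobile_share=0.05, text_share=0.75)
    mobile_app = UsageProfile(mobile_share=0.40, text_share=0.75)
    print(suggest(desktop_app))  # []
    print(suggest(mobile_app))   # ['compression', 'mobile-acceleration']
```

The desktop-heavy application gets no suggestions: it already has compression, and its mobile traffic is too small to justify a mobile acceleration pitch.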

SERVICES MUST EXIST FIRST

Of course, in order to offer such services, they must first exist. Cloud computing providers must continue to evolve their environments and offerings to include infrastructure services in addition to their existing compute resource services, lest more and more enterprises turn their eyes inward toward their own data centers. The “private cloud” that does/doesn’t exist (depending on whom you may be speaking to at the moment) is an attractive concept for most organizations precisely because such services can continue to be employed to make the most of a cloud computing environment. While cloud computing in general is more efficient, such environments are made even more efficient by the ability to leverage infrastructure services designed to optimize capacity and improve the performance of applications deployed in such environments.

Cloud computing providers will continue to see their potential market share eaten by private cloud computing implementations if they do not advance the notion of infrastructure as a service and instead continue to offer only compute as a service. It’s not just about security, even though every survey seems to focus on that aspect; it’s about the control, flexibility and visibility organizations require to manage their applications and meet their agreed-upon performance and security objectives. It’s about being able to integrate infrastructure and applications to form a collaborative environment in which applications are delivered securely, made highly available, and perform up to expected standards.

Similarly, vendors must ensure that providers are able to leverage APIs and functionality as services within the increasingly dynamic environments of both providers and enterprises. An API is table stakes, make no mistake, but it’s not the be-all and end-all of Infrastructure 2.0. Infrastructure itself must also be dynamic, able to apply policies automatically based on a variety of conditions, including user environment and device. It must be context-aware and capable of changing its behavior to assist in balancing the demands of users, providers, and application owners simultaneously. Remember, Infrastructure 2.0 isn’t just about rapid provisioning and management; it’s about dynamically adapting to changing conditions in the client, network, and server infrastructure in as automated a fashion as possible.

Derrick Harris predicted What Won’t Happen in 2011: Mass Cloud Adoption, For One (emphasis added) in a 12/22/2010 post to GigaOm’s Structure blog:

One thing that strikes me as I read about cloud computing and other infrastructure trends is how fast they get characterized as ubiquitous, or as having reached their pinnacles, without being anywhere near those points.

A prime example is a recent article on the rate of server virtualization — despite unending talk about VMware and hypervisors over the past several years, the reality is that educated estimates have virtual workloads still in the minority, and the percentage of virtualized servers even further behind. If we’re not there yet on virtualization, what chance is there that cloud computing or Green IT will be there within the next 12 months, or that something will convince Apple to open up? I’d say “minimal,” but I’m keeping my fingers crossed.

Here are my five predictions for what not to expect in 2011, with a few links apiece to provide context. For the full analysis on why I’m skeptical about these things happening in the coming months, read my full post at GigaOM Pro (subscription required).

1. Ubiquitous cloud adoption

- Apps vs. Ops: How Cultural Divides Impede Migration to the Cloud

- What’s Hindering Cloud Adoption? Try Humans.

- The Cloud in the Enterprise: Big Switch or Little Niche?

2. Amazon to announce its AWS revenues

- How Big Is Amazon’s Cloud Computing Business? Find Out.

- AWS Looks on Pace for That $500M in 2010

- Will Amazon Become the King of Cloud Hosting Too?

3. Apple to tell us anything about its data center operations

- Apple’s New North Carolina Data Center Ready to Roll

- Apple Should Open Its Kimono — Pronto

- Gizzard Anyone? Twitter Open Sources Code to Access Distributed Data

4. Legitimate progress on clean IT

- Cloud Computing — Net Loss or Gain for IT Energy Efficiency?

- Structure 2010 LaunchPad Presenter: Greenqloud, Iceland’s Clean Power Cloud Computing Co.

- Greenpeace to Facebook CEO: No More Coal

5. The demise of Intel

- Intel: Desperately Seeking Software (Margins)

- Can Intel and $50B in IT Budgets Achieve Open Clouds?

- Why Intel Is a Likely Winner of Tech Recovery

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

The ITToolbox blog offered Microsoft’s Security Best Practices for Developing Windows Azure Applications whitepaper for download on 12/22/2010 (registration required). From the About This White Paper topic:

This paper focuses on the security challenges and recommended approaches to design and develop more secure applications for Microsoft’s Windows Azure platform. Microsoft Security Engineering Center (MSEC) and Microsoft’s Online Services Security & Compliance (OSSC) team have partnered with the Windows Azure team to build on the same security principles and processes that Microsoft has developed through years of experience managing security risks in traditional development and operating environments.

<Return to section navigation list>

Cloud Computing Events

VBUG (UK) announced a Web Matrix, Expression Web 4, VS LightSwitch, Expression Blend & Sketchflow meeting at the Red Lion Hotel in Fareham on 1/17/2011 from 6:30 PM to 9:00 PM GMT:

Topic: Web Matrix, Expression Web 4, Visual Studio LightSwitch, Expression Blend and Sketchflow

Overview

Ian Haynes will be taking a brief look at some of Microsoft’s new web development tools: Web Matrix, xWeb V4, Visual Studio LightSwitch and, if we don’t run out of time, try out Blend and SketchFlow. There’ll definitely be something here to capture your imagination!

Location: Red Lion Hotel, Fareham, PO16 0BP, GB

Price: FREE and open to everyone

Book online (registration required).

Phil Haack posted See Me in Brazil and Argentina in March 2011 on 12/21/2010:

Along with James Senior, I’ll be speaking at a couple of free Web Camps events in South America in March 2011.

Buenos Aires, Argentina – March 14-15, 2011

São Paulo, Brazil – March 18-19, 2011

The registration links are not yet available, but I’ll update this blog post once they are. For a list of all upcoming Web Camps events, see the events list.

If you’re not familiar with Web Camps, the website provides the following description, emphasis mine:

Microsoft's 2 day Web Camps are events that allow you to learn and build websites using ASP.NET MVC, WebMatrix, OData and more. Register today at a location near you! These events will cover all 3 topics and will have presentations on day 1 with hands on development on day 2. They will be available at 7 countries worldwide with dates and locations confirming soon.

Did I mention that these events are free? The description neglects to mention NuGet, but you can bet I’ll talk about that as well.

I’m really excited to visit South America (this will be my first time) and I hope that schedules align in a way that I can catch a Fútbol/Futebol game or two. I also need to brush up on my Spanish for Argentina and learn a bit of Portuguese for Brazil.

One interesting thing I’m learning is that visiting Brazil requires a Visa (Argentina does not) which is a heavyweight process. According to the instructions I received, it takes a minimum of 40 business days to receive the Visa! Wow. I’m sure the churrascaria will be worth it though.

Good thing I’m getting started on this early. Hey Brazil, I promise not to trash your country. So feel free to make my application go through more quickly.

The comments include numerous observations about Brazilian and US visa requirements and a $US130 Argentine entrance fee in lieu of a visa requirement.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr (@jeffbarr) reported Transloadit - Realtime Video Encoding on Amazon EC2 on 12/22/2010:

Felix Geisendörfer of Debuggable wrote in to tell me about Transloadit. He told me that they now support real-time video encoding using a number of Amazon EC2 instances.

Whereas prior transcoding solutions waited for the entire video to be uploaded, Transloadit runs the encoding process directly on the incoming data stream, using the popular node.js library to capture each chunk of the file as it is uploaded, piping it directly into the also-popular ffmpeg encoder. Because transcoding is almost always faster than uploading, the video is ready to go shortly after the final block has been uploaded. As Felix noted in his email to me, "Since Ec2 can encode videos much faster than most people can upload them, that essentially cuts the encoding time to 0."
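Felix’s point that streaming “essentially cuts the encoding time to 0” follows from a simple pipeline model: if each chunk encodes faster than the next one uploads, the encoder is always waiting on the network, so only the last chunk’s encode time is added after the upload completes. A toy Python model, assuming uniform chunks and a single sequential encoder (the numbers are hypothetical, not Transloadit measurements):

```python
def streaming_finish(n_chunks: int, upload_s: float, encode_s: float) -> float:
    """Time at which the last chunk is encoded when each chunk is encoded as
    soon as it has fully arrived and the (single) encoder is free."""
    finish = 0.0
    for i in range(n_chunks):
        arrived = (i + 1) * upload_s   # chunk i has finished uploading
        start = max(arrived, finish)   # wait for both the chunk and the encoder
        finish = start + encode_s
    return finish


def batch_finish(n_chunks: int, upload_s: float, encode_s: float) -> float:
    """Time at which encoding ends if it only starts after the whole upload."""
    return n_chunks * upload_s + n_chunks * encode_s


if __name__ == "__main__":
    # Hypothetical numbers: 10 chunks, 2 s to upload each, 0.5 s to encode each.
    print(streaming_finish(10, 2.0, 0.5))  # 20.5
    print(batch_finish(10, 2.0, 0.5))      # 25.0
```

Here streaming finishes 0.5 s after the 20 s upload ends, while encode-after-upload adds the full 5 s. If encoding were slower than uploading, the encoder would become the bottleneck and the advantage would shrink.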

Felix told me that they implemented Transloadit using a number of AWS services, including EC2, Elastic Load Balancing, Elastic Block Store, and Amazon S3. They have found that the c1.medium instance type delivers the best price/performance for their application, and are very happy that their Elastic Load Balancer can deliver data to the instances with minimal delay. They are able to deploy data directly to a customer's S3 bucket, and are looking into Multipart Upload and larger objects.

Transloadit also offers image resizing, encoding, thumbnailing, and storage (to S3) services (which they call robots).

Pricing for Transloadit is based on the amount of data transferred and usage is billed monthly. You can even sign up for a free trial and receive $5 of credits toward your usage of Transloadit.

Guy Rosen (@guyro) suggested Using AWS Route 53 to Keep Track of EC2 Instances in a guest post to Shlomo Swidler’s blog on 12/22/2010:

This article is a guest post by Guy Rosen, CEO of Onavo and author of the Jack of All Clouds blog. Guy was one of the first people to produce hard numbers on cloud adoption for site hosting, and he continues to publish regular updates to this research in his State of the Cloud series. These days he runs his startup Onavo which uses the cloud to offer smartphone users a way to slash overpriced data roaming costs.

In this article, Guy provides another technique to track changes to your dynamic cloud services automatically, which is now possible because AWS has released Route 53, its DNS service. Take it away, Guy.

While one of the greatest things about EC2 is the way you can spin up, stop and start instances to your heart’s desire, things get sticky when it comes to actually connecting to an instance. When an instance boots (or comes up after being in the Stopped state), Amazon assigns a pair of unique IPs (and DNS names) that you can use to connect: a private IP used when connecting from another machine in EC2, and a public IP used to connect from the outside. The thing is, when you start and stop dozens of machines daily, you lose track of these constantly changing IPs. How many of you have found, like me, that each time you want to connect to a machine (or hook up a pair of machines that need to communicate with each other, such as a web and database server) you find yourself going back to your EC2 console to copy and paste the IP?

This morning I got fed up with this, and since Amazon launched their new Route 53 service I figured the time was ripe to make things right. Here’s what I came up with: a (really) small script that takes your EC2 instance list and plugs it into DNS. You can then refer to your machines not by their IP but by their instance ID (which is preserved across stops and starts of EBS-backed instances) or by a user-readable tag you assign to a machine (such as “webserver”).

Here’s what you do:

- Sign up to Amazon Route 53.

- Download and install cli53 from https://github.com/barnybug/cli53 (follow the instructions to download the latest Boto and dnspython).

- Set up a domain/subdomain you want to use for the mapping (e.g., ec2farm.mycompany.com):

- Set it up on Route 53 using cli53:

```shell
./cli53.py create ec2farm.mycompany.com
```

- Use your domain provider’s interface to set Amazon’s DNS servers (reported in the response to the create command).

- Run the following script (replace the details and paths, such as the credentials, key files and domain, with your own):

```tcsh
#!/bin/tcsh -f
# Directory containing this script; the certificate, key and cli53 checkout live here.
set root=`dirname $0`

# EC2 API tools and credentials: replace these paths and placeholder keys with your own.
setenv EC2_HOME /usr/local/ec2-api-tools
setenv EC2_CERT $root/ec2_x509_cert.pem
setenv EC2_PRIVATE_KEY $root/ec2_x509_private.pem
setenv AWS_ACCESS_KEY_ID myawsaccesskeyid
setenv AWS_SECRET_ACCESS_KEY mysecretaccesskey

# List instances, print "<instance-id> <public-dns>" (and "<ShortName> <public-dns>"
# for tagged instances), turn each pair into CNAME arguments, and create or replace
# the records in the ec2farm.mycompany.com zone via cli53.
$EC2_HOME/bin/ec2-describe-instances | \
perl -ne '/^INSTANCE\s+(i-\S+).*?(\S+\.amazonaws\.com)/ \
and do { $dns = $2; print "$1 $dns\n" }; /^TAG.+\sShortName\s+(\S+)/ \
and print "$1 $dns\n"' | \
perl -ane 'print "$F[0] CNAME $F[1] --replace\n"' | \
xargs -n 4 $root/cli53/cli53.py \
rrcreate -x 60 ec2farm.mycompany.com
```

Voila! You now have DNS names such as i-abcd1234.ec2farm.mycompany.com that point to your instances. To make things more helpful, if you add a tag called ShortName to your instances, it will be picked up, letting you create names such as dbserver2.ec2farm.mycompany.com. The script creates CNAME records, which means that you will automatically get internal EC2 IPs when querying from inside EC2 and public IPs from the outside.

Put this script somewhere and run it from cron, and you’ll have an auto-updating DNS zone for your EC2 servers.

Short disclaimer: the script above is a horrendous one-liner that roughly works and relies on many assumptions; it works for me, but no guarantees.
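For readers who find the perl pipeline hard to follow, here is a minimal Python sketch of its parsing step: it applies the same two regular expressions to ec2-describe-instances output and builds the argument lists the script hands to cli53 through xargs. The sample output is hypothetical (tab-separated INSTANCE and TAG records, with TAG lines following their instance, the same assumption the script makes), the `cname_records` and `cli53_args` helpers are illustrative names, and the argument order simply mirrors the script rather than any checked cli53 release.

```python
import re
from typing import Iterable, List, Tuple

# Same patterns as the perl one-liner in the script.
INSTANCE_RE = re.compile(r"^INSTANCE\s+(i-\S+).*?(\S+\.amazonaws\.com)")
SHORTNAME_RE = re.compile(r"^TAG.+\sShortName\s+(\S+)")

# Hypothetical ec2-describe-instances output (203.0.113.x is a documentation range).
SAMPLE = (
    "RESERVATION\tr-1234abcd\t123456789012\tdefault\n"
    "INSTANCE\ti-abcd1234\tami-12345678\tec2-203-0-113-10.compute-1.amazonaws.com"
    "\tip-10-0-0-10.ec2.internal\trunning\n"
    "TAG\tinstance\ti-abcd1234\tShortName\tdbserver2\n"
)


def cname_records(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Map instance IDs (and ShortName tags) to the instance's public DNS name.

    As in the script, a TAG line is attributed to the most recent INSTANCE line;
    unlike the script, tags seen before any INSTANCE line are skipped."""
    records: List[Tuple[str, str]] = []
    dns = None
    for line in lines:
        m = INSTANCE_RE.match(line)
        if m:
            dns = m.group(2)
            records.append((m.group(1), dns))
            continue
        m = SHORTNAME_RE.match(line)
        if m and dns:
            records.append((m.group(1), dns))
    return records


def cli53_args(records, zone="ec2farm.mycompany.com", ttl=60):
    """Argument lists mirroring the script's xargs invocation of cli53."""
    return [["cli53.py", "rrcreate", "-x", str(ttl), zone, name, "CNAME", target, "--replace"]
            for name, target in records]


if __name__ == "__main__":
    for args in cli53_args(cname_records(SAMPLE.splitlines())):
        print(" ".join(args))
```

Printing the commands instead of running them is a convenient dry run before pointing the real script at a live zone.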

Romin Irani reported Amazon Web Services Go Mobile with New SDKs to the ProgrammableWeb blog on 12/22/2010:

Amazon Web Services (AWS), the leading infrastructure as a service platform, is now making it easier for its core platform services to be available directly for use in mobile applications. It has wrapped its Storage, Database and Messaging APIs into easy-to-use Mobile SDKs for the Apple iOS and Google Android platforms, so that mobile developers can easily integrate and invoke those services directly from their applications.

With the release of Mobile SDKs for Android and iOS, Amazon clearly wants to reduce the time and effort that mobile developers today spend integrating its services. No more writing your own code for HTTP connections, retries and error handling. You might even want to do away with building additional server-side infrastructure to proxy your requests to and from AWS.

The current AWS Infrastructure Services supported by the Mobile SDKs are:

- Amazon Simple Storage Service (Amazon S3)

- Amazon SimpleDB

- Amazon Simple Queue Service (SQS) and Amazon Simple Notification Service (SNS)

The initial release of the Mobile SDKs is for the iOS and Android platforms. Keep in mind that you will need to handle authentication to AWS services in your mobile applications; there is a guide for that in the Amazon Mobile Developer Center.

The SDKs ship with sample applications that should give you a good idea of the kind of functionality you can implement directly, powered by AWS. The sample applications demonstrate uploading media directly from your mobile application to Amazon S3, a social mobile game that stores moves and high scores in Amazon SimpleDB, and an interesting way to send messages/alerts between devices by directly invoking the Amazon SQS and SNS services.

The “Mobile First, Web Next” design philosophy is slowly gaining prominence and in the middle are the APIs, with vendors making them easier to use via mobile SDK wrappers.

<Return to section navigation list>
