Windows Azure and Cloud Computing Posts for 12/8/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

[SQL Azure Database] [North Central US] [Yellow] SQL Azure Investigation:

- Dec 7 2010 3:04AM We are currently investigating a potential problem impacting SQL Azure Database

- Dec 7 2010 7:31PM We continue to investigate a potential problem impacting SQL Azure Database

- Dec 8 2010 4:08PM Normal service availability is fully restored for SQL Azure Database.

A more detailed description of the problem and its effect on users would be appreciated.

<Return to section navigation list>

Marketplace DataMarket and OData

Stephen Forte reported Telerik Data Services Wizard Updates on 12/8/2010:

If you are creating OData or WCF services in your application and have been using the Telerik Data Services Wizard, things just got a whole lot easier. As I have shown before, you can go from File|New to a new CRUD application in 30 seconds using the wizard. With the Q3 release last month, Telerik gives you more control over the wizard and its output. Some of the new features are: the ability to isolate your service in its own project, the ability to select which CRUD methods get created for each entity, and Silverlight data validation. Let’s take a look.

When you run the wizard, on its first page you now have the option, as shown here, to separate the service in its own class library. You can check the checkbox and type in a project name, and the Data Services Wizard creates the implementation files for the service in this new class library for you.

In previous versions of the wizard, the wizard would create all of the CRUD operations for you automatically. We received feedback from customers who said they would like more control over this process, for example, allowing some entities to be read-only. The Q3 version of the wizard now allows you to select which CRUD methods to generate for each entity.

Lastly, if you choose the automatic Silverlight application generation, the wizard will read the database validation rules and replicate them as client side validation rules, saving you a lot of configuration and coding!

Enjoy the new wizard’s improvements!

The Windows Azure Team reminded developers on 12/8/2010 to Build and Promote your Cloud Applications and Services with the Windows Azure Marketplace:

Are you looking to promote your Windows Azure platform applications and services? Would you like to compose Windows Azure platform applications and services in a modular fashion? Are you looking for consulting services to help build Windows Azure platform applications? Then head on over to the new Windows Azure Marketplace to get listed and connect with customers and partners to boost your business.

The Windows Azure Marketplace is geared towards simplifying the process of building Windows Azure platform applications and helping developers promote their Windows Azure platform-related skills and expertise within the community. The Windows Azure Marketplace includes listings of building block components, training, services, and finished services/applications. These building blocks are designed to be incorporated by other developers into their Windows Azure platform applications. Other examples include developer tools, administrative tools, components and plugins, and service templates.

With unique listings browsable by category, there's a wide range of offerings to choose from. New listings are added weekly so check back regularly to see the latest!

David Linthicum posted The Value of Information-as-a-Service on 12/8/2010 to ebizQ’s Where SOA Meets Cloud blog:

In the world of cloud computing where storage and compute are accessed using well-defined APIs, we also have available to us information that can be as easily accessed, also using well-defined APIs. Typically, these providers offer up zip code or address validation and lookup, payment processing, or other services that validate or complete data. In other words, information-as-a-service.

One of the players out there is Postcode Anywhere, which provides Web services, accessible over the Internet, for this kind of data validation.

The idea is simple. You link to the Web service to perform the validation, typically using a URL. You pass in the parameters required, such as a zip code, and it passes back the result.

The advantage of leveraging this kind of service is that you don't have to maintain the data yourself, which is a daunting if not impossible task. Moreover, you can mix and match these services within applications or processes, as needed. Also, considering that the interfaces are standardized, you don't need to relearn them as you move from API to API.

Applications for these types of services are many, including address validation and payment processing for a commerce site, or perhaps even data cleansing operations in larger batches. Also, you're able to embed these services within other clouds, such as IaaS as needed.

These micro-clouds are not at all new, and they have been refining their services over many years. Now that it's more acceptable to leverage cloud computing, you need to make sure that you look at information-as-a-service as part of your cloud computing and SOA strategy.

Postal code lookup was one of the first commercial applications for .NET XML Web Services created by Visual Studio 2002.

Joel Varty announced on 12/7/2010 that Agility CMS “added a cutting edge OData API into UGC” (User-Generated Content) in his December 2010 Gold Release post:

December 2010 marks one of our most ambitious releases of Agility yet!

We have completely revamped our input forms setup, increased staging and development mode performance, and added a cutting edge OData API into UGC.

All of this along with a host of other smaller features and fixes.

Let’s talk details.

Staging and Development Mode Performance

This has been a stickler for us for quite some time – we are all developers here, and we want the developer’s tools to be as fast and pain-free as possible. To that end we have redesigned our development mode data access model for Agility Web Content such that ALL requests for Content, Page and Documents or Attachments are cached locally and only requested when the developer clicks “Refresh” on the development pane.

This means that all of the content for all pages and modules will be served entirely from the local file cache and not from the server, so your pages will render much faster, usually in less than a second.

Staging/Preview Mode

Using a similar strategy for staging mode as we did for development mode, the staging mode performance for page previews has been increased dramatically. In most cases you will notice a 40-50% speed increase here, and in some cases up to a 70% increase in speed for preview mode!

You may also notice the new “Edit Page” link on the graphic above. This allows you to jump right to this page in the Content Manager if you need to edit a module or content on it.

New Input Forms Builder

We have taken a completely new tack with our Input Forms to make it easier and faster to get your forms ready for content entry. The biggest change here is the removal of any server-side code; the new API is completely client-side and all advanced UI is driven from jQuery plugins. This means you don’t have to mix and match Javascript and C# in order to get custom functionality going – it’s all Javascript from here on out. Of course, if you have custom input forms already coded with the old methodology, they will still work moving forward, but any new input forms you make will inherit the new functionality.

Part and parcel with this plug-in strategy means we have a greater amount of flexibility in how we can render the form out of the box. That means you can now add Tabs and Custom HTML right into the content or module definition properties without having to write any code at all. That’s why we changed the name of the Fields Properties tab to “Form Builder”.

As you can see with the graphic above, you can now add Tabs and Custom Sections (injected as HTML) right into the form. This essentially means you won’t have to create fully customized input forms unless you have a completely different form design paradigm that you need to accomplish. Also, you can write custom Javascript into the Custom Scripts field and hook into the many new Javascript events for onLoad, onLoadComplete, onSave, onSaveComplete, etc.

New Input Form Plugins for Linked Content

Adding onto the work we started last iteration, you can now create linked content to be rendered as a “Search List Box” or “Checkbox List” along with Dropdown, Grid and standard Link.

The “Checkbox List” Linked Content – Render the linked list as a series of checkboxes whose values can be saved.

The “Search List Box” Linked Content – Render the linked content list as a searchable list whose value can be saved in the “Selection” box.

New Plugin for “URL” field

You can now add a URL field type to your forms that will allow the user to choose from a list of Pages or Documents, or let them input a manual URL. They can also set the Title text and Target of the URL field. A fully formed <a> tag will be saved in the actual content.

OData API for UGC

From OData.org: “The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today.”

Agility developers can now access all of their UGC data from our new OData API. This means that every User Generated Content Definition is automatically available from the API endpoint as a Feed. We have implemented a large subset of the OData Protocol with this, including: $format for JSON or ATOM output; $filter for searching; $orderby for sorting data; $select for customizing which columns are outputted and more.
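The query options listed above are appended to a feed URL as ordinary query-string parameters. The following is a minimal sketch of that composition, not Agility's SDK: the class name ODataQuery, the feed URL, and the Rating property used in the usage note are all hypothetical and only illustrate how $filter, $orderby, $select and $format combine.

```csharp
using System;
using System.Collections.Generic;

public static class ODataQuery
{
    // Builds an OData query URL from a feed URL and a list of system query
    // options. Option names are passed without the leading '$'.
    // Hypothetical helper for illustration; not part of any Agility SDK.
    public static string Build(string feedUrl, IEnumerable<KeyValuePair<string, string>> options)
    {
        if (feedUrl == null) throw new ArgumentNullException("feedUrl");
        if (options == null) throw new ArgumentNullException("options");

        var parts = new List<string>();
        foreach (var option in options)
        {
            // Percent-encode the value so that spaces survive in the URL.
            parts.Add("$" + option.Key + "=" + Uri.EscapeDataString(option.Value));
        }

        // With no options, the feed URL is returned unchanged.
        return parts.Count == 0 ? feedUrl : feedUrl + "?" + string.Join("&", parts);
    }
}
```

For example, passing the options filter = "Rating gt 3" and format = "json" for a hypothetical feed at https://example.invalid/ugc/Comments yields https://example.invalid/ugc/Comments?$filter=Rating%20gt%203&$format=json.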

Multiplatform SDKs

The OData protocol is a platform-agnostic standard, meaning you can access your data in .Net, PHP, Objective-C, Java, Javascript (using JSONP), Ruby, Flash, Silverlight etc. The client SDKs are available here. In addition to these standard SDKs, we will continue to provide a custom Javascript API SDK that will facilitate authentication and access to custom OData operations.

New Service Operations for Profiles

We have added a series of OData Service operations especially for Website Profiles, meaning you can now set up a Website Profile Type with Login and Password fields. Any profiles created with this type will automatically have the password encrypted for you. Then you can call the Authenticate method in Javascript and a login cookie will be automatically created with a secure token. There is a method for IsAuthenticated that will evaluate the token on the UGC server and determine if the current user is logged in or not and change their context accordingly. The RetrievePassword method will send the user an email based on their provided login name or email. The ChangePassword allows a user to securely change their password without doing a full profile update.

As with all of our UGC APIs, these new Profile operations are accessible via HTTPS over SSL in addition to the regular port 80 HTTP access.

Some other Highlights

UGC “Draft” Mode

This feature allows you to unlock the power of UGC as a blogging platform for your Website Users! Now you can enable “Draft Mode” on any UGC Content Definition. This enables a new “Draft” state, allowing users to save and edit Drafts of their items that do not show up on the website (or Awaiting Approval queue) until they are submitted. Similarly, any item that is already published can be edited as a draft, creating a new copy of the item that can go through the standard UGC workflow while the originally published item is still live on the website. When the Draft is submitted and/or published, it replaces the originally Published version of the item on the website.

Ooyala Integration in UGC

You can now add files directly to Ooyala from Agility UGC! This integration is similar to the YouTube integration except that the original file is not stored in Amazon S3. The uploads take advantage of the Ooyala Widget and are sent directly to your Ooyala account.

Flash-based Uploads

The Documents and Attachments sections now use a Flash-based uploader for better progress reporting and file size checking. If you have the Flash plug-in enabled on your browser, you will see a new upload button.

This has been a big iteration for us, but we’re just getting started!

Stay tuned for more updates about the next releases that will continue to make Agility better, faster and stronger.

more later – Joel Varty

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

The Windows Azure AppFabric Team reminded developers about an Article on the Access Control service on MSDN Magazine on 12/8/2010:

The latest issue of the MSDN Magazine features a great article on the Windows Azure Access Control service written by our two technical evangelists: Vittorio Bertocci and Wade Wegner.

Want to get an overview of what Access Control is good for and how to get started?

Follow this link to the article: Re-Introducing the Windows Azure AppFabric Access Control Service.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Steve Marx (@smarx) described How to Resolve “SetConfigurationSettingPublisher needs to be called before FromConfigurationSetting can be used” After Moving to Windows Azure SDK 1.3 in a 12/8/2010 post to his and the Windows Azure Team blog:

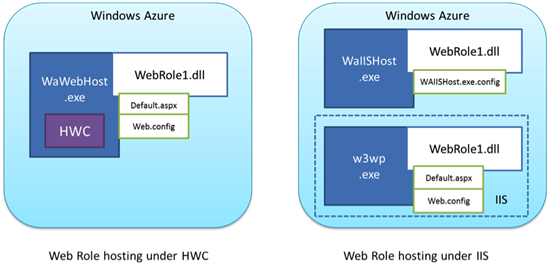

If you’re receiving this exception after migrating a web role from Windows Azure SDK 1.2 to SDK 1.3, this may have to do with changes associated with the new full IIS model introduced in SDK 1.3. This post will help you to understand the change and how to use the FromConfigurationSetting method correctly under the full IIS model. If you want to simply go back to using the Hosted Web Core model (as in previous SDK releases), you can do that too.

The Full IIS Model

Full IIS in Windows Azure introduces new capabilities, such as hosting multiple web sites within a single web role and advanced IIS configuration. For a discussion of the new full IIS hosting model in Windows Azure SDK 1.3 and how it differs from the Hosted Web Core model used previously, see “New Full IIS Capabilities: Differences from Hosted Web Core” on the Windows Azure blog.

The following diagram from that post illustrates one difference that impacts how FromConfigurationSetting is used:

With the Hosted Web Core model, RoleEntryPoint code (e.g., WebRole.cs) and web application code (e.g., Default.aspx.cs for ASP.NET) were executed in the same app domain. Under full IIS, these are two distinct app domains. This means that static objects are no longer shared between these two parts of your application, and that’s the key to understanding how to use FromConfigurationSetting in the new full IIS hosting model.

Before: FromConfigurationSetting with Hosted Web Core

The Hosted Web Core model, where everything ran in the same app domain, led to a common pattern for initializing CloudStorageAccount objects. This is how SDK 1.2 code was commonly written:

In the OnStart method of WebRole.cs:

CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSettingPublisher) =>
{
    var connectionString = RoleEnvironment.GetConfigurationSettingValue(configName);
    configSettingPublisher(connectionString);
});

(Your function may look more complicated than this, but the information in this post still applies.) Then, in Default.aspx.cs (or an ASP.NET MVC controller):

var account = CloudStorageAccount.FromConfigurationSetting("MyConnectionString");

This code worked, because the call to SetConfigurationSettingPublisher was made in the same app domain as the call to FromConfigurationSetting. However, under the full IIS hosting model, these are two different app domains.

After: FromConfigurationSetting with full IIS

A convenient place to put the call to SetConfigurationSettingPublisher is in the Application_Start method, which runs as part of the app domain where all your web application code runs. This method is located in Global.asax.cs. (If you don’t have a Global.asax.cs already, you can add one by right-clicking on the web application project, choosing “Add,” “New Item,” and then “Global Application Class.”)

void Application_Start(object sender, EventArgs e)
{
    CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSettingPublisher) =>
    {
        var connectionString = RoleEnvironment.GetConfigurationSettingValue(configName);
        configSettingPublisher(connectionString);
    });
}

This is exactly the same code that would have been in WebRole.cs, just moved to a location that’s part of the web application’s app domain.

An Alternative

The combination of SetConfigurationSettingPublisher and FromConfigurationSetting abstracts the location of the configuration setting. Some people use this to write code that works both inside and outside of Windows Azure, reading configuration settings from Windows Azure when available and falling back to web.config or some other location otherwise.

If you don’t need this abstraction layer, you can use another method of initializing CloudStorageAccount objects from configuration settings:

var account = CloudStorageAccount.Parse(RoleEnvironment.GetConfigurationSettingValue("MyConnectionString"));
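The fallback behavior that the post mentions (read the setting from Windows Azure when available, otherwise from web.config or another location) can be sketched independently of the SDK. The class and parameter names below are hypothetical and are not part of the Windows Azure SDK or of Steve Marx's post; the two lookups are passed in as delegates so the sketch compiles and runs without any Azure assemblies.

```csharp
using System;

public static class SettingResolver
{
    // Returns the setting from the Windows Azure lookup when the code is
    // running in Windows Azure, otherwise from the local lookup (for
    // example, the appSettings section of web.config).
    // Hypothetical helper for illustration only.
    public static string Resolve(
        string name,
        bool runningInAzure,
        Func<string, string> azureLookup,
        Func<string, string> localLookup)
    {
        if (name == null) throw new ArgumentNullException("name");
        if (azureLookup == null) throw new ArgumentNullException("azureLookup");
        if (localLookup == null) throw new ArgumentNullException("localLookup");

        return runningInAzure ? azureLookup(name) : localLookup(name);
    }
}
```

In a web role, one plausible wiring (an assumption, not taken from the post) is to call Resolve inside the publisher delegate, passing RoleEnvironment.IsAvailable as the flag, RoleEnvironment.GetConfigurationSettingValue as the Azure lookup, and a lambda over ConfigurationManager.AppSettings as the local lookup.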

The SetConfigurationSettingPublisher delegate is one of the modifications I needed to make to get the Windows Azure Platform Training kit demo samples to work. See my Strange Behavior of Windows Azure Platform Training Kit with Windows Azure SDK v1.3 under 64-bit Windows 7 post updated 12/8/2010.

The Windows Azure Team described Specifying Machine Keys with Windows Azure SDK 1.3 in a 12/8/2010 post:

One of the new features introduced in Windows Azure SDK 1.3 is the ability to host web roles under full IIS (instead of Hosted Web Core, as in previous SDK releases). Among other things, full IIS allows customers to host multiple web sites in a single web role. To support multiple web sites, a change was made in SDK 1.3 to set the machineKey element on a per-web-site basis rather than a per-machine basis. This had the unfortunate side effect of overwriting any site-level machineKey elements already specified in web.config.

A new MSDN topic, “Top Windows Azure Support Issues,” includes information about this issue, among others. It describes a workaround:

In prior releases, the user could provide an explicit machine key by specifying the machineKey element in the site’s web configuration file. Explicit site-level configuration would override the automatic machine-level configuration.

In the SDK 1.3 release, automatic configuration occurs at the site-level, overriding any user-supplied value.

Workaround

A workaround is to programmatically update the site-level configuration during role instance start-up.…

If you rely on specifying your own machine keys (e.g., if you use a membership provider which encrypts and hashes passwords), please read and apply the workaround, which includes full source code.

Neil MacKenzie sent the following message to folks who signed up for his Azure Diagnostic presentation to the Azure Cloud Computing Developers Group:

Thanks for coming to the Azure Diagnostics presentation last night.

I uploaded the presentation and demo to my SkyDrive [account]:

- Presentation: http://tinyurl.com/WadPresentation

- Demo: http://tinyurl.com/WadDemo

I have done a number of posts on Azure Diagnostics on my blog that you might find useful:

- Azure Diagnostics: http://convective.wordpress.com/2009/12/08/diagnostics-in-windows-azure/

- Management of Azure Diagnostics: http://convective.wordpress.com/2009/12/10/diagnostics-management-in-windows-azure/

- Custom diagnostics: http://convective.wordpress.com/2009/12/08/custom-diagnostics-in-windows-azure/

- Changes in Azure SDK v 1.3: http://convective.wordpress.com/2010/12/01/configuration-changes-to-windows-azure-diagnostics-in-azure-sdk-v1-3/

I wrote these posts shortly after the release of Azure Diagnostics. However, the API has not changed since then.

Neil Mackenzie

Riccardo Becker explained Performance Counters and Scaling for Windows Azure in a 12/8/2010 post:

To make the right decisions on scaling your Windows Azure instances up or down, you need good metrics. Such metrics can include performance counters like CPU utilization, free memory, etc. Performance counters can be added during startup of a worker or web role, or remotely. Adding a performance counter within the logic of the role itself is fairly easy.

public override bool OnStart()
{
    var config = DiagnosticMonitor.GetDefaultInitialConfiguration();

    // Adding CPU performance counters to the default diagnostic configuration
    config.PerformanceCounters.DataSources.Add(
        new PerformanceCounterConfiguration()
        {
            CounterSpecifier = @"\Processor(_Total)\% Processor Time",
            // Do the actual probe every 5 seconds.
            SampleRate = TimeSpan.FromSeconds(5)
        });

    // Transfer the gathered perfcount data to my storage account every minute
    config.PerformanceCounters.ScheduledTransferPeriod = TimeSpan.FromMinutes(1);

    // Start the diagnostic monitor with the modified configuration.
    // DiagnosticsConnectionString contains my cloudstorageaccount settings
    DiagnosticMonitor.Start("DiagnosticsConnectionString", config);

    return base.OnStart();
}

From now on, this role will gather performance counters indefinitely, giving you the ability to analyze the data.

How do you add a performance counter remotely?

// Create a new DeploymentDiagnosticManager for a given deployment ID
DeploymentDiagnosticManager ddm = new DeploymentDiagnosticManager(csa, this.PrivateID);

You need a storage account (where your WADPerformanceCountersTable is) and the deployment ID of your deployment (multiple instances of a web and/or worker role are possible, since every instance is ultimately a running VM).

// Get the role instance diagnostic managers for all instances of a role
var ridm = ddm.GetRoleInstanceDiagnosticManagersForRole(RoleName);

// Create a performance counter for processor time
PerformanceCounterConfiguration pccCPU = new PerformanceCounterConfiguration();
pccCPU.CounterSpecifier = @"\Processor(_Total)\% Processor Time";
pccCPU.SampleRate = TimeSpan.FromSeconds(5);

// Create a performance counter for available memory
PerformanceCounterConfiguration pccMemory = new PerformanceCounterConfiguration();
pccMemory.CounterSpecifier = @"\Memory\Available Mbytes";
pccMemory.SampleRate = TimeSpan.FromSeconds(5);

// Set the new diagnostic monitor configuration for each instance of the role
foreach (var ridmN in ridm)
{
    DiagnosticMonitorConfiguration dmc = ridmN.GetCurrentConfiguration();

    // Add the new performance counters to the configuration
    dmc.PerformanceCounters.DataSources.Add(pccCPU);
    dmc.PerformanceCounters.DataSources.Add(pccMemory);

    // Update the configuration
    ridmN.SetCurrentConfiguration(dmc);
}

By applying the code above to a certain role (specified by RoleName), every instance of that role is monitored. Both CPU and memory performance counters are sampled every 5 seconds per instance, and the samples are eventually written to the storage account provided.

So how do you make the right decisions on scaling? As you might notice, the raw data in storage doesn't help much because it mostly shows spikes. To discover trends or averages, you need to apply a technique such as smoothing to your data in order to get a readable graph. See the graph below to see the difference.

As you can see, the "smoothed" graph is far more readable and shows you how your role instance is performing and whether it needs to be scaled up or down. Smoothing algorithms like simple or weighted moving average can help you make better decisions than examining the raw data from the WADPerformanceCountersTable in your storage account. Implement this smoothing and run it in a worker role in the same datacenter to avoid massive bandwidth costs if you examine weeks or even months of performance counter data.
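As an illustration of the simple moving average mentioned above, here is a minimal sketch. It is not Riccardo Becker's implementation: the class and method names are hypothetical, and it assumes the counter values have already been read from storage into a list ordered by time.

```csharp
using System;
using System.Collections.Generic;

public static class Smoothing
{
    // Simple moving average: each output value is the mean of the current
    // sample and up to (window - 1) samples immediately before it.
    // Hypothetical helper; input is assumed to be ordered by timestamp.
    public static List<double> SimpleMovingAverage(IList<double> samples, int window)
    {
        if (samples == null) throw new ArgumentNullException("samples");
        if (window < 1) throw new ArgumentOutOfRangeException("window");

        var smoothed = new List<double>(samples.Count);
        double sum = 0;
        for (int i = 0; i < samples.Count; i++)
        {
            sum += samples[i];

            // Drop the sample that has just left the window.
            if (i >= window) sum -= samples[i - window];

            // Early values average over fewer samples than the full window.
            int count = Math.Min(i + 1, window);
            smoothed.Add(sum / count);
        }
        return smoothed;
    }
}
```

With 5-second samples, a window of 12 averages over the last minute. For the CPU readings 10, 90, 20, 80 and a window of 2, the smoothed series is 10, 50, 55, 50, which shows how the spikes are flattened.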

Good luck with it!

<Return to section navigation list>

Visual Studio LightSwitch

Jim Duffy claimed Visual Studio LightSwitch: Yes, these are the droids you’re looking for on 12/6/2010:

With all the news and focus on the new features coming in Silverlight 5 I thought I’d take a few minutes to remind folks about the work that Microsoft has done on LightSwitch since the applications created by LightSwitch are Silverlight applications. LightSwitch makes it easier for non-coders to build business applications and easier for coders to maintain them.

For those not familiar with LightSwitch, it is a new tool that provides an easier and quicker way for coder and non-coder types alike to create line-of-business applications for the desktop, the web, and the cloud. The target audience for this tool is those power-user types who create Access applications for their organization. While those Access applications fill an immediate need, they typically aren’t very scalable, extendable and/or maintainable by the development staff of the organization. LightSwitch creates applications based on technologies built into Visual Studio thus making it easier for corporate developers to extend and maintain them.

LightSwitch is currently in beta but it will ultimately become a new addition to the Visual Studio line of products. Go ahead and download the beta to get a better idea of what the product can do for your organization.

The LightSwitch Developer Center contains

- links to download the beta

- links to instructional videos

- links to tutorials

- links to the LightSwitch Training Kit

Another quality resource for LightSwitch information is the Visual Studio LightSwitch Team Blog. My good friend Beth Massi is on the LightSwitch team and has additional valuable content on her blog.

<Return to section navigation list>

Windows Azure Infrastructure

Phil Garland, Rob Gittings, and Mike Pearl wrote “Reducing technology costs is just the starting point—learn about cloud’s potential to speed innovation, boost customer responsiveness, and create new revenue opportunities” as a preface to their Cloud computing gets strategic article for issue 13 of PriceWaterhouseCoopers’ View publication:

What a difference a year makes. Last fall, View introduced readers to what we called the latest technology trend to capture the attention of businesses, consumers, and investors alike. That description of cloud computing was apt: Momentum—along with considerable hype—for the new approach and its supporting technologies has been considerable. But the real impact is only now beginning to be felt. A year ago, cloud was squarely in the IT domain; now, it’s making its way to the boardroom. Phil Garland, Rob Gittings, and Mike Pearl— leaders in PwC’s Advisory, Technology, and Cloud Computing practices—explain why the real story is less about technology and more about business strategy.

Most organizations today are no longer deciding whether they’ll use cloud computing. Rather, they’re asking how? Will we use software-as-a-service to provide CRM for our sales team instead of managing the application in-house? Should we take advantage of inexpensive, virtualized storage to meet our mushrooming data needs? Will a private cloud enable us to better leverage our technology investments among our different business units?

Good questions—but all too often, the discussion ends there. And even more important, certain crucial stakeholders are missing from the dialogue.

Yes, cloud is absolutely about facilitating better IT. But, as leading companies are discovering, it can also be much more than that. Those companies are taking a broader view: cloud as a new engine for business growth.

The cloud you don’t know

Cloud computing can play a strategic role for all companies, not just those in the technology or services industries. The vision is simple: By doing away with typical IT constraints—limited resources, consuming maintenance, and incompatible systems—cloud computing frees the business to pursue growth and innovation.

In essence, cloud lets you say yes more often. Imagine that you could green-light as many as a half dozen new research and development (R&D) projects instead of betting on just one or two. With cloud’s on-demand approach, it’s possible to quickly—and at a fraction of the current cost—equip teams with the resources they need. Rather than weeks or months, the supporting infrastructure for a project could be set up in just a few days. That kind of rapid start-up means companies could try many new ideas, quickly rejecting ones that weren’t viable in favor of ones that are most promising.

Cloud computing finally brings businesses closer to a world in which technology can truly be an enabler, not an obstacle.

Having more options is a compelling proposition. That was the case for Zagat Survey, the company behind the eponymous dining, travel, and leisure guides and website. When Zagat Survey began rebuilding the Web platform for its Zagat.com subscription service—one of its most strategic and fastest-growing businesses—it determined that using Amazon Elastic Compute Cloud (Amazon EC2) infrastructure was a better choice than managing the hardware in-house. With Amazon EC2, Zagat could easily test different hardware configurations to determine the best setup for the core service.

Companies not held back by IT are free to capitalize on opportunities as they arise. For example, a company unexpectedly overwhelmed by a consumer response to a pop-culture phenomenon might want to quickly launch a Web promotion. Instead of scrambling to set up the required infrastructure, this short-term need could be met quickly with cloud-based resources.

Or consider another way cloud can smooth out IT roadblocks to top-line growth. In, say, a merger or acquisition, system integration is often a complex and synergy-draining proposition.

But think about how much more efficiently the two entities’ data and business processes could be merged if supporting systems resided in the cloud instead of being managed internally.

Admittedly, it sounds too good to be true—as if you could merely snap your fingers and the supporting infrastructure needed for any initiative would magically appear. Of course, that will never quite happen. Cloud computing is no magic bullet. In fact, as a disruptive force, it raises a number of issues that companies need to carefully consider: strategy, finance, risk and governance, data security and privacy, technology, and its impact on other business functions.

Yet cloud computing finally brings businesses closer to a world in which technology can truly be an enabler, not an obstacle. To deliver on that promise, companies find they need to formulate a comprehensive business strategy for cloud computing. Created by the executive team—not solely the CIO—such a strategy takes into account how cloud can best support the business, and it considers the changes the strategy would cause to the ways people work and make decisions.

The auditors (perhaps your organization’s auditors) chime in on cloud computing.

Phil Garland is US CIO Advisory Leader, Rob Gittings is Leader, US Technology Sector, and Mike Pearl is US Cloud Computing Leader for PwC.

David Linthicum asked “Deloitte predicts cloud providers will be big winners in 2011 -- but will that cash improve the cloud offerings for us all?” in a deck for his Money race could strengthen cloud computing article of 12/8/2010 for InfoWorld’s Cloud Computing blog:

Cloud computing continues to be a hot topic, but not as hot as the valuation of the cloud computing companies themselves, according to a Deloitte survey: "Mergers and acquisitions are set to increase in 2011, with cloud computing companies selling for the highest price. This was the finding of Deloitte's twice-annual survey into U.K. technology companies, which claimed to have found a rise in optimism in the market compared to six months ago."

I'm not sure this is news -- it's been feeling a bit 1998 to me for a while now. However, the dynamics of the market are different than in the Internet heyday. In this go-round, we're creating a small, privately held cloud computing bubble, rather than one involving publicly traded firms with widely distributed stock. I suspect this bubble will inflate throughout 2011 and perhaps well into 2012, with price-to-earnings multiples hitting 10 to 15 times revenue for some hot cloud firms.

The good news is that this growth will parallel the expansion of useful cloud computing, and solid benefits are already visible today in cloud-adopting enterprises. The bad news is that few of us personally can make any money in this market -- most of these cloud companies will remain privately held and never go public, given the current regulatory climate.

Additional good news is that venture capitalists will race to fund the cloud computing technology space. They typically move in packs, and the pack now smells profit in the cloud. Their cash will fuel more cloud growth, which may end up resulting in more and better cloud services. However, the bad news is all hyped spaces come to an end, and the bubble will quickly deflate at some point, though I don't think this one will burst.

Jay Fry (@jayfry3) posted Cloud conjures up new IT roles; apps & business issues are front & center to his personal blog on 12/7/2010:

So you’ve managed to avoid going the way of the dodo, and dodged the IT job “endangered species list” I talked about in my last post (and at Cloud Expo). Great. Now the question is, what are some of the roles within IT that cloud computing is putting front & center?

I listed a few of my ideas during my Cloud Expo presentation a few weeks back. My thoughts are based on what I’ve heard and discussed with IT folks, analysts, and other vendors recently. Many of those thoughts even resonated well with what I’ve heard this week here at the Gartner Data Center Conference in Las Vegas.

IT organizations will shift how they are, well, organized

James Urquhart from Cisco put forth an interesting hypothesis a while back on his “Wisdom of Clouds” blog at CNET that identified 3 areas he thought will be (and should be) key for dividing up the jobs when IT operations enters “a cloudy world,” as he put it. First, there’s a group that James called InfraOps. That’s the team focused on the server, network, and storage hardware (and often virtual versions of those). Those selling equipment (like Cisco) posit that this area will become more homogeneous, but I’m not sold on that. James followed that with ServiceOps, the folks managing a service catalog and a service portal, and finally AppOps. AppOps is the group that manages the applications themselves. It executes and operates them, makes sure they are deployed correctly, watches the SLAs, and the like.

I thought these were pretty useful starting points. I agree whole-heartedly with the application-centric view he highlights. In fact, in describing the world in which IT is the manager of an IT service supply chain, that application-first approach seems paramount. The role of the person we talk to about CA 3Tera AppLogic, for example, is best described as “applications operations.”

Equally important as an application-centric approach are the skills to translate business needs into technical delivery, even if you don’t handle the details yourself. More on that below.

Some interesting new business cards

I can already see some interesting new titles on IT people’s business cards in the very near future thanks to the cloud. Here are some of those that I listed in my presentation, several of which were inspired by the research that Cameron Haight and Milind Govekar have been publishing at Gartner (if you’re a Gartner client, their write-up on “I&O Roles for Private Cloud-Computing Environments” is a good one to start with):

- “The Weaver” (aka Cloud Service Architect) – piecing together the plan for delivering business services

- “The Conductor” (aka Cloud Orchestration Specialist) – directing how things actually end up happening inside and outside your IT environment

- “The Decider” (aka Cloud Service Manager) – more of a vendor evaluator and the person who sets up and eliminates relationships for pieces of your business service

- “The Operator…with New Tools” (aka Cloud Infrastructure Administrator) – this may sound like a glorified version of an old network or system administrator, but there’s no way this guy’s going to use the same tools to figure all this out that he has in the past.

In Donna Scott’s presentation at the Gartner Data Center Conference called “Bringing Cloud to Earth for Infrastructure & Operations: Practical Advice and Implementations,” she hit upon these same themes. Some of the new roles needed that she listed included solution architects, the automation specialist, the service owner, cloud capacity manager, and IT financial/costing analyst. Note the focus on business-level issues – both the service and the cost.

Blending

Or maybe the truth is that these roles blend together a bit more. I could see the IT organization evolving to perform three core functions in support of application delivery:

- The first function is Source & Operate Resource Pools: maintaining efficient data center resources, including the management of automation and hypervisors. It covers the ability to manage resources more effectively: to determine how much memory and CPU is available at any given time, and to scale capacity up and down in response to demand (paying only for what you use). These resources might eventually be sourced either internally or externally, and will most often be a combination of the two. This function will be responsible for making sure the right resources are available at the right time and that the cost of those resources is optimized.

- The second function is Application Delivery, focused on building application infrastructure proactively, so that when the next idea comes down the pike, you can be ready. You can proactively build an appropriately flexible application infrastructure. You can provide the business with a series of different, ready-made configurations from which to choose, and you would have the ability (when they are needed) to get these pre-configured solutions up and running quickly.

- The last, higher-level function is the process of engaging with the business closely. Your job is to Assemble Service. You’ll be able to say to the business ‘bring me your best ideas’ and you’ll be able to turn those concepts into real, working systems quickly, without having to dive into lower-level technical bits & bytes that some of the previously mentioned folks would.

In all cases, I’m talking about ways to deliver your application or business service, and the technical underpinnings are fading into the background a bit. “Instead of being builders of systems,” said Mike Vizard in a recent IT Business Edge article, “most IT organizations will soon find themselves orchestrating IT services.”

James Urquhart talks about this as an important tenet of the DevOps approach: The drive to make the application the unit of administration, not the infrastructure. James had another post more recently that underscored his disappointment that shows like Cloud Expo are focusing more on the infrastructure guys still, not the ones thinking about the applications. I’m all in favor. I heard Gartner’s Cameron Haight suggest why this might be true in most IT shops: while development has spent a lot of time working toward things like agile development, IT ops has not. There’s a mismatch, and work is starting on the infrastructure side of things to address it.

Still, how do you get there from here?

So the premise here is that cloud will change the role of IT as a whole, as well as particular IT jobs. So what should you do? How do you know how to ease people into these roles, how to build these roles, or even how to try to make these role transitions yourself?

I’ll repeat the advice I’ve been giving pretty consistently: try it.

- Figure out what risks you and your organization want to take. Understand if a given project or application is more suited to a greenfield, “clean break” kind of approach or to a more gradual (though drawn-out) shift.

- Set up a “clean room” for experimentation. Create the “desired state” (Forrester’s James Staten advocated that on a recent webcast we did with him). Then use this new approach to learn from.

- Arm yourself with the tools and the people to do it right. Experience counts.

- Work on the connection between IT & business aggressively

- Measure & compare (with yourself and others trying similar things)

- Fail fast, then try again. It’s all about using your experience to get to a better and better approach.

There was one thing that I heard this week at the Gartner show from Tom Bittman that sums up the situation nicely. IT, Tom said, has the opportunity to “become the trusted arbiter and broker [of cloud computing] in your organization.” Now that definitely puts IT in a good place, even a place of strength. However, there’s no denying that many, many folks in IT are going to have to get comfortable with roles that are very different from the roles they have today.

(If you would like a copy of the presentation that I gave at Cloud Expo, email me at jay.fry@ca.com.)

Jay is strategy VP for CA's cloud business. He joined via Cassatt acquisition (private cloud software). Jay was employee #6 at BEA, including 3 yrs in London.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

Lydia Leong (@cloudpundit) reported her Observations from the Gartner data center conference on 12/8/2010:

I’m at Gartner’s Data Center Conference this week, and I’m finding it to be an interesting contrast to our recent Application Architecture, Development, and Integration Summit.

AADI’s primary attendees are enterprise architects and other people who hold leadership roles in applications development. The data center conference’s primary attendees are IT operations directors and others with leadership roles in the data center. Both have significant CIO attendance, especially the data center conference. Attendees at the data center conference, especially, skew heavily towards larger enterprises and those who otherwise have big data centers, so when you see polling results from the conference, keep the bias of the attendees in mind. (Those of you who read my blog regularly: I cite survey data — formal field research, demographically weighted, etc. — differently than conference polling data, as the latter is non-scientific.)

At AADI, the embrace of the public cloud was enthusiastic, and if you asked people what they were doing, they would happily tell you about their experiments with Amazon and whatnot. At this conference, the embrace of the public cloud is far more tentative. In fact, my conversations not-infrequently go like this:

Me: Are you doing any public cloud infrastructure now?

Them: No, we’re just thinking we should do a private cloud ourselves.

Me: Nobody in your company is doing anything on Amazon or a similar vendor?

Them: Oh, yeah, we have a thing there, but that’s not really our direction.

That is not “No, we’re not doing anything on the public cloud”. That’s, “Yes, we’re using the public cloud but we’re in denial about it”.

Lots of unease here about Amazon, which is not particularly surprising. That was true at AADI as well, but people were much more measured there — they had specific concerns, and ways they were addressing, or living with, those concerns. Here the concerns are more strident, particularly around security and SLAs.

Feedback from folks using the various VMware-based public cloud providers seems to be consistently positive — people seem to uniformly be happy with the services themselves and are getting the benefits they hoped to get, and are comfortable. Terremark seems to be the most common vendor for this, by a significant margin. Some Savvis, too. And Verizon customers seem to have talked to Verizon about CaaS, at least. (This reflects my normal inquiry trends, as well.)

Neil MacDonald posted Everything You Wanted to Know About Private Clouds on 12/2/2010 (missed when posted):

I’ve just completed work with a team of analysts on a Gartner Research Spotlight focused on private cloud computing.

Whether you like or hate the term “private cloud”, the trend is real. These are the types of questions you are asking us:

- How can we make our own enterprise data centers act more like what the public cloud providers use?

- How can we move towards 1000 to 1 or even 5000 to 1 server-to-administrator ratios?

- How can we add self-service interfaces to make the provisioning of workloads (and associated security policy) integrated and seamless?

- How can we take advantage of scale out architectures on inexpensive x86 compute fabrics?

These are the types of questions addressed by this research set.
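To make the server-to-administrator ratios in the second question concrete, here is a minimal arithmetic sketch. The fleet size of 20,000 servers and the 100-to-1 baseline are hypothetical figures chosen only for illustration; only the 1000-to-1 and 5000-to-1 ratios come from the list above.

```python
def admins_needed(servers: int, servers_per_admin: int) -> int:
    """Smallest whole number of administrators that covers every server."""
    if servers < 0 or servers_per_admin <= 0:
        raise ValueError("servers must be >= 0 and servers_per_admin must be > 0")
    # Integer ceiling division: round up so no server is left unmanaged.
    return -(-servers // servers_per_admin)


if __name__ == "__main__":
    fleet_size = 20_000  # hypothetical fleet, not a figure from the research
    for ratio in (100, 1000, 5000):  # 100:1 is an assumed baseline for contrast
        print(f"{ratio}:1 -> {admins_needed(fleet_size, ratio)} administrators")
```

For this assumed fleet, moving from the baseline to 5000 to 1 changes the staffing requirement by well over an order of magnitude, which is why the questions about automation and self-service follow naturally from the ratio question.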

There are quite a few research notes included:

- “Private Cloud Computing: An Essential Overview”

- “Key Considerations in the Development of a Private Cloud Architecture”

- “From Secure Virtualization to Secure Private Clouds”

- “Test and Quality Management: The First Frontier for Private Cloud”

- “Comparing Infrastructure Utility Services and Private Clouds”

- “Top Seven Considerations for Configuration Management for Virtual and Cloud Infrastructures”

- “Cloud Environments Need a CMDB and a CMS”

- “Microsoft’s Windows Azure Platform Appliance: A Major Experiment”

- “VMware and Private Cloud Computing”

- “VMware Pushes Further Into the Security Market With Its vShield Offerings”

Security plays a critical role in enabling private clouds, and two of the research notes for clients I authored are included in this set – the research note titled “From Secure Virtualization to Secure Private Clouds” and the research note titled “VMware Pushes Further Into the Security Market With Its vShield Offerings”.

<Return to section navigation list>

Cloud Security and Governance

Lori MacVittie (@lmacvittie) asserted It’s time to stop talking about imaginary trolls under the cloud bridge and start talking about the real security challenges that exist in cloud computing as a preface to her There Is No Such Thing as Cloud Security post of 12/8/2010 to F5’s Cloud Central blog:

I’ve been watching with interest a Twitter stream of information coming out of the Gartner Data Center conference this week related to security.

There have been many interesting tidbits that, as expected, are primarily focused on cloud computing and virtualization. That’s no surprise as both are top of mind for IT practitioners, C-level execs, and the market in general.

Another unsurprise would be the response to a live poll conducted at the event indicating the imaginary “cloud security” troll is still a primary concern for attendees.

I say imaginary because “cloud security” is so vague as a descriptor that it has, at this point, no meaning.

Do you mean the security of the cloud management APIs? The security of the cloud infrastructure? Or the security of your applications when deployed in the cloud? Or maybe you mean the security of your data accessed by applications when deployed in the cloud? What “security” is it that’s cause for concern? And in what cloud environment?

See, “cloud security” doesn’t really exist any more than there are really trolls under bridges that eat little children.

Application, data, platform, and network security, however, do exist and are valid concerns regardless of whether such concerns are raised in the context of cloud computing or traditional data centers.

“CLOUD SECURITY” is a TECHNOLOGY GODWIN

For those of you unfamiliar with the concept of a “godwin” I will summarize: the invocation of a comparison to Hitler in a discussion ends the debate. Period. Mike Godwin introduced the “law” as a means to reduce the number of reductio ad Hitlerum arguments that added little to the conversation and were in fact likely born of desperation or angst. What seems to be happening now in the cloud computing arena is the use of what amounts to a reductio ad Cloud Cautio argument that also adds little to the conversation and is often also born of desperation or angst. Let’s call this a “cloudwin”, in honor of Mr. Godwin, shall we?

“Cloud security” is a misused term that implies some all-encompassing security that exists for a cloud computing environment. The cloud has no especial security that cannot be described as being application, data, platform (hypervisor) or network related. This is important as the type of cloud we attach “security” to directly impacts both the provider and the consumer’s responsibility for providing that security. In a SaaS, for example, the application, data, platform, and network security onus is primarily on the provider. In an IaaS, however, application and data and even some network security lies solely in the lap of the consumer, with bare infrastructure and management framework security the responsibility of the provider.
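The split of responsibility described above can be written down as a small lookup table. This is only a sketch under stated assumptions: the names `RESPONSIBILITY` and `consumer_concerns` are hypothetical, "primarily on the provider" is simplified to a single owner, "some network security" under IaaS is recorded as "shared", and assigning the IaaS platform (hypervisor) to the provider is one reading of "bare infrastructure and management framework security".

```python
# Who carries the security onus for each domain, per service model.
# Simplified reading of the paragraph above; the values are assumptions.
RESPONSIBILITY = {
    "SaaS": {
        "application": "provider",
        "data": "provider",
        "platform": "provider",
        "network": "provider",
    },
    "IaaS": {
        "application": "consumer",
        "data": "consumer",
        "platform": "provider",  # assumed: hypervisor counts as bare infrastructure
        "network": "shared",     # "even some network security" falls to the consumer
    },
}


def consumer_concerns(model: str) -> list[str]:
    """Return the security domains the consumer must address, alphabetically."""
    domains = RESPONSIBILITY[model]
    return sorted(d for d, owner in domains.items() if owner in ("consumer", "shared"))


if __name__ == "__main__":
    for model in RESPONSIBILITY:
        print(model, consumer_concerns(model))
```

Asking the table about a named domain and a named service model is the kind of specific question the post argues for, in contrast to asking about "cloud security" in general.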

I could spend an entire blog just making references to Hoff’s blogs on the topic. If you haven’t read his voluminous thoughts on the subject, do so.

Suffice to say that the invocation of the term “cloud security” as a means to justify avoiding public cloud computing is equivalent to a cloudwin. Invoking the argument that “cloud security is missing” or “lax” or “a real concern” adds nothing to the conversation because the term itself means nothing without context, and even with context it still needs further exploration before one can get down to any kind of real discussion of value.

SAY WHAT YOU MEAN, MEAN WHAT YOU SAY

If what you’re really concerned with is data leakage (data security) then say so. If what you’re really concerned with is network security, say so. If you were to ask me, “Hey, what can F5 do to address ‘cloud security’” my response would invariably be, “What are you trying to secure?” because we can’t have a meaningful conversation until I know what you want to secure. Securing a network is different from securing an application, and securing a network in the data center is different from securing a network in the “cloud”.

We need to stop asking survey and research questions about “cloud security” and start breaking it down into at least the four core security demesnes: application, data, platform, and network. It’s fine to distinguish “application security” from “IaaS-deployed application security”.

In fact we need to make that distinction because securing an application that is deployed in an IaaS environment is more challenging than securing an application deployed in a traditional data center environment. Not because there is necessarily a difference in the technology and solutions leveraged, but because the architecture and thus topology are different and create challenges that must be addressed.

Securing applications, data, and the network “in the cloud” is a slightly different game than securing the same in the local data center where IT organizations have complete control and ability to deploy solutions. We do need to differentiate between the two environments but we also need to differentiate between the different types of security so we can have meaningful conversations about how to address those issues. Yes, a web application firewall can enable data leak prevention regardless of whether it’s deployed in a cloud environment or a data center. But the deployment challenges are different based on the environment. These are the challenges we need to speak to but can’t under the all-encompassing and completely inaccurate “cloud security” moniker.

So let’s stop with the cloudwin arguments and start being more specific about the concerns we really have with cloud computing. Let’s talk about application, data, platform, and network security in a cloud environment and the challenges associated with such security rather than in broad, generalized terms of a “thing” that is as imaginary as trolls under the bridge.

<Return to section navigation list>

Cloud Computing Events

The Windows Azure Team on 12/8/2010 is Calling All Bay Area Developers! Don’t Miss a FREE Cloud Development with Windows Azure Hackathon December 18 in San Francisco:

If you live in the San Francisco Bay Area, here's an opportunity to dive into the Windows Azure platform and start building cloud applications. Join other Bay Area .NET developers for this exciting, FREE Hackathon: Cloud Development with Windows Azure, which will take place at Microsoft's San Francisco offices on Saturday, December 18, 2010 from 9:00 AM-2:00 PM PST.

Following a short walkthrough of the Windows Azure platform, you'll have the opportunity to code and collaborate with other cloud developers. In addition to getting the chance to share ideas, code and techniques and learn from experts, all attendees will also receive a FREE Windows Azure Platform 30-day pass. And if that's not enough, at the end of the day, participants will vote for the top two apps developed during the hackathon, with the winners receiving copies of Microsoft Visual Studio 2010 Ultimate with MSDN Subscription (valued at approx. $11,000.)

The only pre-requisites for this event are that you have knowledge of basic ASP.NET development and that you bring your own computer. You will also need to install the Windows Azure SDK using Visual Studio; you can download it in advance here or download at the event using pre-loaded USBs that will be available onsite.

Space is limited so click here to learn more about this event and register.

Wes Yanaga announced Windows Azure Game Developer Event in Seattle, December 15, 2010 at the W Hotel in Seattle, WA:

Knowing the competitive landscape of gaming, especially here in the Northwest, we want to invite you to a 2 hour session to learn about building your next game on Windows Azure. Hear from a game developer just like you, Sneaky Games, who has successfully leveraged the cloud and Windows Azure to build and deploy Facebook games such as Fantasy Kingdoms.

The Windows Azure Platform can revolutionize the way you do business, making your company more agile, efficient and flexible while allowing you to grow your business, enhance your customer's experience and save money towards your bottom line. Join us December 15th at 5:30pm to learn more about what the Windows Azure Platform and cloud computing can do for you as a game developer, network with fellow developers and enjoy open bar and appetizers on us!

Event Date:

Wednesday, 15 December 2010 5:30 PM - 7:30 PM

Location:

W Hotel

1112 4th Avenue

Seattle, WA 98101

United States

To Register – Please visit this link

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Bob Warfield shared my issue with Database.com pricing in his Salesforce’s Database.com: Fat SaaS Here We Come! post of 12/8/2010 to the Enterprise Irregulars blog:

Salesforce is skating to where the puck will be ahead of the other players once again–there’s a reason they’re so much bigger than the rest of the SaaS players. This time it’s all about their latest development in the hotly contested PaaS (Platform as a Service) market. They’ve introduced a fascinating new offering called “database.com” (what do you suppose they paid for that domain?).

What is this database.com offering?

Larry Dignan pegged it best, among the various posts I read. He says it’s a full frontal assault on the incumbents like Oracle, and that Salesforce is building out a stack, only the stack is delivered as a service that lives in the Cloud. That’s exactly what’s happening. Imagine writing software that talks to your database server via an API. That part isn’t hard, because that’s how it already works. Now imagine that you don’t own the database server; it lives in the Salesforce Cloud and you rent. They take care of it and promise to use all the tricks they learned scaling Salesforce.com to make your database scale like crazy too. Pretty cool!

The initial responses from the rest of the blogging world are also interesting:

- Phil Wainewright says they’ve squashed all the little PaaS players.

- Klint Finley reports that Progress software is building out drivers (ODBC, etc.) so your apps can directly call Database.com as their DB.

- Sam Diaz and many others are focused on the Oracle rivalry. Can this be good for Oracle’s share price? Will their database hegemony finally start to crack? And what does it mean to the budding NoSQL world?

Obviously there is a lot of crystal ball work to be done here, and a lot of study of the available information. Most of all, we’ll have to wait to get our hands on Database.com before we can really understand what it means. But I did want to finish this post by talking about Database.com’s relationship to what I’ve been calling “Fat SaaS”.

We’re moving beyond the debate about SaaS versus On-premises. On-prem isn’t dead, but it sure isn’t getting any stronger, while the SaaS world keeps gaining momentum. The truth is that it is a superior model. There used to be a lot of feeling that IT was afraid of it, and that this is what was holding it back. But we’re starting to see considerable evidence IT not only doesn’t fear it, but that they’re embracing it wholeheartedly. More importantly, we’re seeing the SaaS world start to move beyond simple questions (to multitenant or not to multitenant is actually a pretty simple question) and onto how to evolve to the next level.

Fat SaaS is a model that pushes as much business logic into the client as possible and leaves the server-side largely acting as a data store. Given the availability of rich User Experience tools like Adobe Flex with AIR (for creating desktop apps), as well as the onset of the mobile app phenomenon, together with the difficulties of scaling in a multicore world, it’s a logical development. After all, if you survey an Enterprise, do they have more CPUs tied up in client devices, or in servers? Which CPUs are more overworked and which ones have more bandwidth available?

I’ve written about the Fat SaaS idea before, and I think it’s one of the logical next developments we’ll start seeing like crazy. Database.com just opened the door to making it even more logical, because what else would talk to such a thing but a Fat SaaS application? Doing a bunch of centralized number crunching won’t be nearly as happy as a Fat SaaS app with the inevitable latency that comes with having your database in a different Cloud than the software that’s consuming the data. The client is already used to that being the norm.

Now, getting back to Phil Wainewright’s proposition that it has squashed the other players, I don’t think so. It may be hard on the little players, or it may not. Remember, Benioff is trying to out-Oracle Oracle. But even Oracle hasn’t succeeded in squashing the Open Source DB movement, not even after acquiring MySQL. It’s more popular than ever. In the end, neither Salesforce nor Oracle have to squash these littler guys. They’re after the higher end anyway.

What I want to see is competition. Who will be the first to put up a service on Amazon AWS that delivers exactly the same function using MySQL and for a lot less money? You see, Salesforce’s initial pricing on the thing is their Achilles’ heel. I won’t even delve into their by-the-transaction and by-the-record pricing. $10 a month to authenticate the user is a deal killer. How can I afford to give up that much of my monthly SaaS billing just to authenticate? The answer is I won’t, but Salesforce won’t care, because they want bigger fish who will. I suspect their newfound Freemium interest for Chatter is just their discovery that they can’t get a per seat price for everything, or at least certainly not one as expensive as they’ve tried in the past.
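The objection to the $10-per-user monthly authentication charge is easier to weigh as a share of what a SaaS vendor bills per seat. The sketch below is illustrative only: the per-seat prices of $25, $50 and $100 are hypothetical, and the function name `auth_fee_share` is invented for this example. Only the $10 figure comes from the post.

```python
def auth_fee_share(monthly_price_per_user: float, auth_fee_per_user: float = 10.0) -> float:
    """Fraction of per-seat monthly billing consumed by the authentication fee."""
    if monthly_price_per_user <= 0:
        raise ValueError("monthly_price_per_user must be positive")
    return auth_fee_per_user / monthly_price_per_user


if __name__ == "__main__":
    # Hypothetical per-seat prices a SaaS vendor might charge its own customers.
    for price in (25.0, 50.0, 100.0):
        print(f"${price:.0f}/seat: {auth_fee_share(price):.0%} goes to authentication")
```

At the lower assumed price points the fee takes a large slice of revenue before any per-transaction or per-record charges are counted, which fits the argument that the pricing targets larger customers.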

I’ll be watching to see whether the prices come down and whether competition develops. I fully expect both will be underway before we know it. Meanwhile, Bravo Salesforce–you’re showing the rest of the world how it’s done!

See my Preliminary Cost Comparison of Database.com and SQL Azure Databases for 3 to 500 Users post updated 12/8/2010 for current Database.com costs. A substantial part of the income from Database.com undoubtedly will go toward the price paid for the domain name.

Anshu Sharma posted Database.com – Why? How? What? to the Enterprise Irregulars blog on 12/8/2010:

Why?

Developers are moving to the cloud because users expect their applications to be available from multiple devices – not just a PC or Mac – but a myriad new computers that come in the shapes of tablets, smart phones, and purpose-built devices. Users want to be able to access their data from home, work, and on the road; CIOs want this data to be secure and manageable, the databases to be reliable and available; and developers want to be able to build more applications without having to worry about installing software, tuning the database, or worrying about scale.

Developers today are faced with some false choices – they can choose a database that was built for the on-premise world and is hosted in a “blue” cloud replicating the architecture and the pains of on-premise; or, they can choose a database that’s built for the cloud but lacks basic features like the ability to have a schema e.g., key value cloud databases. Moreover, users don’t just want a data store – they want to be able to search this data, report on this data, and have a model for securely sharing this data with the right people.

Our customers have had access to a reliable, scalable, and secure cloud database for over 10 years now. The database that underlies all of salesforce.com, including Sales Cloud, Service Cloud and Force.com, is built for the cloud with a unique multitenant architecture that scales: our customers executed over 25 billion transactions in the last quarter, and now store over 20 billion records in the database. Until today, this database was accessible via our applications and our platform – but we realized that this unique technology can serve the needs of many more developers and customers if it could be accessed by developers on any platform, using any language and with any device or computer.

In launching Database.com, we are taking the next logical step in the evolution of our platform toward openness. Database.com is an open database, accessible from any language – Java, .NET, PHP, Ruby, etc. – any platform, including not only our cloud platforms, such as force.com and VMforce, but also Amazon, Google, Azure, Heroku, etc., and of course accessible directly from any device.

Let’s evaluate the need for Cloud 2 Database from the perspective of three key stakeholders – users, developers and CIOs.

CIO

CIOs no longer want to spend 85 percent of their budget just keeping the lights on. They want to unleash innovation and deliver business value. This year’s Gartner survey reveals the top ten items on CIOs’ budgets. Cloud computing is near the top of the list, which also includes Web 2.0, mobile, and data & document management.

If you are a CIO, here are a few questions to help assess the need for Database.com:

- How much time and money does it take to manage databases today in your current environment?

- How can you match the innovation of the Facebook Era and bring it to your enterprise? The Facebook generation no longer consists only of recent college graduates; it also includes baby boomers (see this New York Times report – requires registration).

- Are you able to deliver apps that are social and mobile?

- How much time and money is wasted in kluging together disparate technologies just to get basic features like search and reporting to work against your existing database systems?

- What if you could write your applications for any platform and any language but not have to worry about mundane tasks that provide neither business value nor agility?

Developer

If you are a developer, here is a list of questions you need to ask about applications that you want to build:

- Can you start building your application right away or do you need to first install and fuse together ten different pieces?

- Are you spending more time writing interesting, new applications or more time simply keeping the old ones running?

- Are your OS upgrades, database upgrades, and hardware upgrades managed for you? Or do you have to spend time doing that?

- Are you spending time on database setup, provisioning, management and other tasks?

- Are you able to write the business logic of the application and then offer features like search and mobile? Or do you have to cobble it all together?

- Are you using an infrastructure cloud today? If so, does it offer all the services you need to build your apps and does it automatically manage those for you?

- Can you build apps that are mobile and social? How do you do that?

- Are you having fun?

Programming used to be fun. We think it can be fun again if you can focus on what you do best and let all the painful stuff like OS and database patch upgrades be managed by the cloud provider.

How?

Database.com was built with three core principles in mind with a clear focus on Openness:

- Any Platform: As an enterprise database in the cloud, it should be accessible to developers on all platforms – cloud or on-premise – through standards-based API’s and protocols.

- Any Language: Being open to any language is critical to supporting the innovation of the developer community. Development and adoption of new languages is increasing at an amazing rate, but data storage remains a universal need.

- Any Device: With the growth of mobile devices and an increasingly connected internet-of-things, the database must be open to use from any and all of these clients in a clean, consistent and secure manner.

Building business logic for an application is becoming easier and easier as tools and Platform-as-a-Service vendors continue to evolve and deliver unparalleled developer productivity. However, setting up and managing a database that is secure and scalable continues to be a challenge. The developer must choose upfront whether they can afford the restrictions of a cloud data store or must take on the administrative burden of a traditional RDBMS.

With Database.com, the developer can avoid having to choose between functionality and complexity and take advantage of the best of both worlds.

- Multitenant: Database.com was designed from the very beginning to manage a vast, ever-changing set of database structures on behalf of each and every application using a common infrastructure. This is not a re-hosting of a 30-year-old notion of a database system.

- Internet Scale: The service is proven to deliver scalability and is already the largest enterprise cloud database on the market.

- Automated Upgrades: Software, hardware, and system infrastructure are upgraded automatically to ensure that your applications can leverage advances in performance and security without effort.

- Automatic Tuning & Backup: It is a given that data and workloads change over time. With Database.com, the administration for ensuring continuing performance is provided as a part of the service. Backups or disaster recovery services are available automatically when needed without impacting development and deployment schedules.

The service is focused on making the life of a developer better:

- Application Developer Centric: Database.com is focused on the application developer – eliminating the need for the low-level administration tasks associated with previous-generation database systems. As a service, it exposes the functionality needed to deliver killer applications and takes care of all the rest for you.

- Search: You have the ability to search any and all data in your enterprise apps.

- Web Services: Without having to install software or write any code, your data is available via web services in a secure, managed way via SOAP and REST APIs.

- Built-in Security: Security in an application is both critical and extremely difficult to get right – the rules and exploits are constantly changing. Database.com takes care of the underlying security so that you can focus on the data privacy needed within your application.

- Event-driven Push: Proactive notifications are quickly becoming a required feature in many applications – particularly mobile apps. Database.com provides a built-in infrastructure for event-driven push without requiring you as a developer to learn, develop and deploy a whole new set of infrastructure components.

- Social Data Model & Feeds: Finally, every database built on Database.com is inherently social – supporting an activity feed schema, API’s for posting comments and files and notifying users of new activities.
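The Web Services item above mentions access over REST. As a rough illustration of what that could look like from client code, the sketch below builds, but does not send, a query request. It assumes the endpoint layout of the Force.com REST API (`/services/data/v<version>/query` with a `q` parameter carrying the query text) and an OAuth bearer token; the instance URL, API version, token, and the `Account` object are all placeholders, and the helper name `build_query_request` is made up for this example.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_query_request(instance_url: str, api_version: str,
                        access_token: str, soql: str) -> Request:
    """Build (but do not send) a GET request that carries a query string.

    The path layout is an assumption based on the Force.com REST API;
    check the current documentation before relying on it.
    """
    query_string = urlencode({"q": soql})
    url = f"{instance_url.rstrip('/')}/services/data/v{api_version}/query?{query_string}"
    headers = {
        "Authorization": f"Bearer {access_token}",  # placeholder OAuth token
        "Accept": "application/json",
    }
    return Request(url, headers=headers)


# Placeholder values only; nothing is sent over the network here.
req = build_query_request(
    "https://example.invalid", "20.0", "PLACEHOLDER_TOKEN",
    "SELECT Id, Name FROM Account LIMIT 5",
)
print(req.get_method(), req.full_url)
```

Passing the returned object to `urllib.request.urlopen` would issue the call; that step is left out because it needs a real instance URL and token.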

Now, What?

If you are a CIO, IT leader, or a business user:

- Talk to your salesforce.com account executive about your database needs and your application development roadmap.

- Watch these videos to learn more about Database.com and the Force.com platform.

- You can get started today with a Free Force.com Developer Edition!

- Encourage your development team to start building applications using the Free Force.com Developer Edition.

If you are a developer:

- Read Developing with Database.com, a practical roadmap to help you get started with Database.com development.

- Sign up for a Free Force.com Developer Edition account and get started today.

- Because Database.com is the database at the heart of Force.com, you can tap into its power today using Force.com APIs and toolkits.

Get ready to be a Cloud 2 Database developer!

Anshu is Vice President of Force.com platform product management at salesforce.com, leading Force.com server technologies, Force.com Sites and VMforce (the Enterprise Java Cloud). He previously founded and led Oracle SaaS Platform, and held engineering and product management roles in SOA and Identity Management.

Jennifer Van Grove reported Salesforce Unleashes 5 New Platforms for Rapid Cloud App Development in a 12/8/2010 post to the Mashable/Business blog:

Salesforce is using its Dreamforce conference to reveal a bevy of news including Database.com, Chatter Free and its acquisition of Ruby platform Heroku for $212 million. The enterprise software company is further pushing its agenda to become the go-to middle man between the cloud and the enterprise with five new platform services.

The enterprise cloud platform services — Appforce, Siteforce, VMforce, ISVforce and Heroku — are now a part of the Salesforce Force.com platform.

The new services speak to Salesforce’s new mission around supporting even more application development for the cloud. It’s a mission big enough to warrant a slight rebrand: Force.com 2 on the Cloud 2 Platform.

Yesterday, CEO Marc Benioff discussed what he believes to be a measurable move from “Cloud 1” in the early 2000s to a newer rendition. “Cloud 2,” as he calls it, is built around a social and mobile web that includes Facebook, feeds, push notifications, touch, smartphones, location, HTML5 and mobile operating systems.

The five new platforms introduced today, described below, are meant to enable developers to build faster, better and more robust “Cloud 2” applications and work around common IT challenges.

- Appforce: A platform for companies to build collaborative departmental apps that scale.

- Siteforce: A website creation solution designed with both developers and business users in mind.

- VMforce: A private beta service for Java developers to run their applications natively on Force.com.

- Heroku: The just-acquired, popular cloud platform for Ruby applications.

- ISVforce: A platform service that enables ISVs to build and deliver multi-tenant cloud apps.

These new platforms are being discussed by Benioff in today’s morning keynote session. We’ll be updating this post with additional details. Stay tuned.

Tim Anderson (@timanderson) posted Salesforce.com acquires Heroku, wants your Enterprise apps on 12/8/2010:

The big news today is that Salesforce.com has agreed to acquire Heroku, a company which hosts Ruby applications using an architecture that enables seamless scalability. Heroku apps run on “dynos”, each of which is a single process running Ruby code on the Heroku “grid” – an abstraction which runs on instances of Amazon EC2 virtual machines. To scale your app, you simply add more dynos.
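Because each dyno is described above as a single process, a back-of-the-envelope estimate of how many dynos an app needs can be sketched with Little's law: average concurrent requests equal arrival rate times average response time. This is only an illustrative model under that one-request-per-dyno assumption, not Heroku's own sizing guidance; the function name `dynos_needed` and all traffic figures are hypothetical.

```python
import math


def dynos_needed(requests_per_second: float, avg_response_seconds: float,
                 headroom: float = 0.0) -> int:
    """Estimate a dyno count, assuming one in-flight request per dyno.

    headroom is an extra capacity fraction (0.5 means 50% spare) to absorb
    bursts; the model ignores queueing effects and traffic variance.
    """
    if requests_per_second < 0 or avg_response_seconds <= 0 or headroom < 0:
        raise ValueError("rate and headroom must be non-negative, response time positive")
    concurrent = requests_per_second * avg_response_seconds  # Little's law
    return max(1, math.ceil(concurrent * (1 + headroom)))


# Hypothetical traffic figures, chosen only for illustration.
print(dynos_needed(40, 0.25))                # steady load, no spare capacity
print(dynos_needed(40, 0.25, headroom=0.5))  # same load with 50% headroom
```

Doubling either the request rate or the response time doubles the estimate, which matches the "add more dynos" scaling model the post describes.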

Why is Salesforce.com acquiring Heroku? Well, for some years an interesting question about Salesforce.com has been how it can escape its cloud CRM niche. The obvious approach is to add further applications, which it has done to some extent with FinancialForce, but it seems the strategy now is to become a platform for custom business applications. We already knew about VMForce, a partnership with VMware currently in beta that lets you host Java applications that are integrated with Force.com, but it is with the announcements here at Dreamforce that the pieces are falling into place. Database.com for data access and storage; now Heroku for Ruby applications.

These services join several others which Salesforce.com is branding a[s] Force.com 2:

- Appforce – in effect the old Force.com, build departmental apps with visual tools and declarative code.

- Siteforce – again an existing capability, build web sites on Force.com.

- ISVForce – build your own multi-tenant application and sign up customers.

Salesforce.com is thoroughly corporate in its approach and its obvious competition is not so much Google AppEngine or Amazon EC2, but Microsoft Azure: too expensive for casual developers, but with strong Enterprise features.

Identity management is key to this battle. Microsoft’s identity system is Active Directory, with federation between local and cloud directories enabling single sign-on. Salesforce.com has its own user directory and developing on its platform will push you towards using it.

Today’s announcement makes sense of something that puzzled me: why we got a session on node.js at Monday’s Cloudstock event. It was a great session and I wrote it up here. Heroku has been experimenting with node.js support, with considerable success, and says it will introduce a new version next year.

Finally, the Heroku acquisition is great news for Enterprise use of Ruby. Today many potential new developers will be looking at it with interest.

Robin Wauters reported Salesforce.com Buys Heroku For $212 Million In Cash in a 12/8/2010 post to TechCrunch:

Salesforce.com has just announced that it is acquiring Heroku, which provides a Ruby application platform-as-a-service, for approximately $212 million in cash.

That’s one hell of an exit for the startup, which was founded in 2007 and has raised only $13 million in funding.

Heroku was initially provided with seed funding through Y Combinator back in 2008.

The startup says it currently powers over 105,000 social and mobile cloud applications.