Windows Azure and Cloud Computing Posts for 12/28/2010+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

SQL Azure Database and Reporting

Manu Cohen-Yashar posted Uploading Your Database To the Cloud on 12/27/2010:

One of the basic steps in migrating an application to the cloud is uploading the database to the cloud. With Windows Azure Connect it is possible to bind the cloud application to a SQL Server instance running on premises, but this is not recommended. The reason is simple: a traditional SQL Server database runs on a single server, which by design does not scale and is not very reliable. The cloud is all about scalability, reliability and availability, and running your data on a single server might break that. I consider connecting on-premises databases to Azure only as the first step in the migration process. The goal is to bring the data to the cloud.

In this post I would like to describe how to upload a database to the cloud.

The idea is simple:

- Create a new empty database in SQL Azure.

- Using SQL Server Management Studio 2008 R2, create a script that creates the database.

- Convert the script to be compatible with SQL Azure.

- Run the script against SQL Azure using Database Manager or any other tool.

In this post I created an empty database and called it pubs.

To create a script that creates the database and copies the data:

1. Open the database in SQL Server Management Studio 2008 R2.

2. Right-click the database and choose Tasks –> Generate Scripts.

3. Follow the wizard.

4. In the Set Scripting Options page choose "Advanced".

5. Set the script type to "Schema and Data" and "Script USE DATABASE" to False.

6. Save the script to a file.

Now it's time to convert the script to be compatible with SQL Azure and run it.

To do that we will use a tool called SQL Azure Migration Wizard v3.4.1.

Download the tool and run it.

The tool will analyze the script we just created and mark all the lines that should be deleted before running it on SQL Azure. If you choose "Analyze and Migrate", the tool will connect to your SQL Azure database and execute the script.

First I want to show the conversion screen.

The lines with a red remark before them should be deleted.

As you can see, "ALTER DATABASE" is an example of a T-SQL command not supported in SQL Azure. After deleting all the "not supported" commands, you have a script compatible with SQL Azure. Save it for future use.
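To make the idea of "marking unsupported lines" concrete, here is a minimal Python sketch of such a check. It is not how the SQL Azure Migration Wizard is implemented; the pattern list is an assumption that contains only the one statement named above (ALTER DATABASE), and the sample script is invented for illustration.

```python
import re

# Assumption: only ALTER DATABASE is taken from the post; a real checker
# would need a much longer, verified list of unsupported statements.
UNSUPPORTED_PATTERNS = [
    re.compile(r"^\s*ALTER\s+DATABASE\b", re.IGNORECASE),
]


def flag_unsupported(script):
    """Return (line_number, text) for each line that starts an unsupported statement."""
    flagged = []
    for number, line in enumerate(script.splitlines(), start=1):
        if any(pattern.search(line) for pattern in UNSUPPORTED_PATTERNS):
            flagged.append((number, line.strip()))
    return flagged


# Invented sample script, loosely modeled on a generated pubs script.
SAMPLE_SCRIPT = """\
ALTER DATABASE [pubs] SET ANSI_NULLS OFF
GO
CREATE TABLE [dbo].[authors]([au_id] [varchar](11) NOT NULL)
GO
"""

for number, text in flag_unsupported(SAMPLE_SCRIPT):
    print(f"line {number}: {text}")
```

Running the sketch reports the ALTER DATABASE line only; the CREATE TABLE statement passes through untouched.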

If you do not really need the script, you can consider the second option, "Analyze and Migrate". You will be prompted to connect to the SQL Azure database you created, and the converted script will be executed automatically.

That's it. The database is ready!

If you saved the script and did not execute it with the conversion tool, you can run it using "Database Manager" by clicking "Manage" in the portal.

Provide your credentials and connect to the database.

Click "Open Query" and open the file with the query you created with SQL Azure Migration Wizard v3.4.1.

Click "Execute" and that is it!

Refresh the database and make sure that all the data is there.

For detailed information about an earlier version of George Huey’s SQL Azure Migration Wizard, see my Using the SQL Azure Migration Wizard v3.3.3 with the AdventureWorksLT2008R2 Sample Database post of 7/18/2010.

Trent Swanson added a Some performance feedback and regex changes thread to the SQL Azure Migration Wizard v3.4.1 CodePlex project on 12/20/2010:

I would first like to add that this is a very useful tool and wonderful contribution to the community. I just wanted to share some experience, usage information, and some minor issues I ran into migrating a 2GB database with ~4000 tables.

1) DB_NAME() shows up as a migration issue and actually seems to work in SQL Azure today.

2) Select Field1, Field2, Field3, SpinTomato FROM FS180 raises a "SELECT INTO" migration issue.

3) As far as performance is concerned... I made it about 15% into the analysis before I had to kill the tool due to performance. After a few quick tweaks to the tool to write out to a file instead of appending to the textbox, I was able to run through the analysis pretty quickly. Also, I was unable to complete the migration of schema/data to SQL Azure from my desktop/server... nothing to do with the tool here, and I gave up after 4 hours. With a larger database there is a HUGE performance benefit to completing this part from a VM Role; I'm sure you could do the same by spinning up another temp role with TS as well if you don't have access to the CTP yet. The migration I gave up on after 4+ hours took less than 25 minutes from an Azure VM Role.
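Item 2 looks like a pattern-matching false positive: the column name SpinTomato happens to contain the letters "inTo". The following Python sketch shows how that could happen and one possible fix. Both patterns are assumptions written for illustration; the thread does not show the regular expression the Migration Wizard actually uses.

```python
import re

QUERY = "Select Field1, Field2, Field3, SpinTomato FROM FS180"

# Assumed naive rule: "select" followed anywhere by the letters "into".
NAIVE_SELECT_INTO = re.compile(r"select.*into", re.IGNORECASE)

# Stricter rule: INTO must stand alone as a keyword (word boundaries on both sides).
# Still a heuristic: it does not parse T-SQL, comments, or string literals.
STRICT_SELECT_INTO = re.compile(r"\bselect\b.*\binto\b", re.IGNORECASE)


def is_flagged(pattern, statement):
    """True when the pattern reports the statement as a SELECT INTO."""
    return pattern.search(statement) is not None


print("naive: ", is_flagged(NAIVE_SELECT_INTO, QUERY))
print("strict:", is_flagged(STRICT_SELECT_INTO, QUERY))
print("strict on a real SELECT INTO:",
      is_flagged(STRICT_SELECT_INTO, "SELECT Field1 INTO NewTable FROM FS180"))
```

The naive rule flags the harmless query because "SpinTomato" contains "inTo", while the word-boundary version flags only the genuine SELECT INTO statement.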

Marketplace DataMarket and OData

Markus Konrad reported about OData endpoints in his CRM 2011 - Webresources and SOAP Endpoint post of 12/28/2010:

As mentioned in my previous post about the limitations of the OData endpoint, there is "currently" no option to assign records or use the Execute functionality of the CRM service through the OData endpoint. I found an interesting hint in the new RC SDK:

You can use JScript and Silverlight Web resources to access Microsoft Dynamics CRM 2011 and Microsoft Dynamics CRM Online data from within the application. There are two web services available, each provides specific strengths. The following table describes the appropriate web service to use depending on the task you need to perform.

Both of these Web services rely on the authentication provided by the Microsoft Dynamics CRM application. They cannot be used by code that executes outside the context of the application. They are effectively limited to use within Silverlight, JScript libraries, or JScript included in Web Page (HTML) Web resources.

The REST endpoint provides a ‘RESTful’ web service using OData to provide a programming environment that is familiar to many developers. It is the recommended web service to use for tasks that involve creating, retrieving, updating and deleting records. However, in this release of Microsoft Dynamics CRM the capabilities of this Web service are limited to these actions. Future versions of Microsoft Dynamics CRM will enhance the capabilities of the REST endpoint.

The SOAP endpoint provides access to all the messages defined in the Organization service. However, only the types defined within the WSDL will be returned. There is no strong type support. While the SOAP endpoint is also capable of performing create, retrieve, update and delete operations, the REST endpoint provides a better developer experience. In this release of Microsoft Dynamics CRM the SOAP endpoint provides an alternative way to perform operations that the REST endpoint is not yet capable of.

That means you have to use the SOAP endpoint for Assign, SetState... in your Web resource. How this can be handled is not shown in the SDK at the moment. I hope for an update.

See the Jeff Barr (@jeffbarr) described Amazon Web Service’s WebServius - Monetization System for Data Vendors on 12/28/2010 article in the Other Cloud Computing Platforms and Services section below for details about a new Marketplace DataMarket competitor.

Windows Azure AppFabric: Access Control and Service Bus

No significant articles today.

Windows Azure Virtual Network, Connect, RDP and CDN

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Christian Weyer explained Monitoring Windows Azure applications with System Center Operations Manager (SCOM) in a 12/28/2010 post:

Windows Azure contains a few options to collect monitoring data at runtime, including event log data, performance counters, Azure logs, your custom logs, IIS logs etc. There are no really good ‘official’ monitoring tools from Microsoft – besides a management pack for System Center Operations Manager (SCOM).

To get started monitoring your Azure applications with an enterprise-style systems management solution, you need to do the following:

- Install SCOM. SCOM is a beast, but the good news is you can install all the components (including Active Directory and SQL Server) on a single server. Here is a very nice walkthrough – long and tedious, but very good.

- Download and import the Azure management pack (MP) for SCOM. Note that the MP is still RC at the time of this writing but Microsoft support treats it like an RTM version already.

- Follow the instructions in the guide from the download page on how to discover and start monitoring your Azure applications.

Voila. If everything worked then you will see something like this:

Note: This is a very ‘enterprise-y’ solution – I surely hope to see a more light-weight solution by Microsoft soon targeted at ISVs and the like.

Christian also posted Running a 32-bit IIS application in Windows Azure on the same date:

Up there, everything is 64 bits. By design.

What to do if you have 32-bit machines locally – erm, sorry: on-premise – and want to move your existing applications, let’s say web applications, to Windows Azure?

For this scenario you need to enable 32-bit support in (full) IIS in your web role. The following is a startup task script that enables 32-bit applications in IIS settings by using appcmd.exe.

enable32bit.cmd:

%windir%\system32\inetsrv\appcmd set config -section:applicationPools -applicationPoolDefaults.enable32BitAppOnWin64:true

And this is the necessary startup task defined in the service definition file:

ServiceDefinition.csdef:

<Startup>
  <Task commandLine="enable32bit.cmd" executionContext="elevated" taskType="simple" />
</Startup>

Dominick Baier described Unlocking the SSL Section in Windows Azure Web Roles on 12/28/2010:

Posting the favourite command line snippet seems to be the newest hobby for Azure developers ;)

Here’s one that is useful to unlock the SSL section (e.g. for client certificates):

%windir%\System32\inetsrv\appcmd.exe unlock config /section:system.webServer/security/access

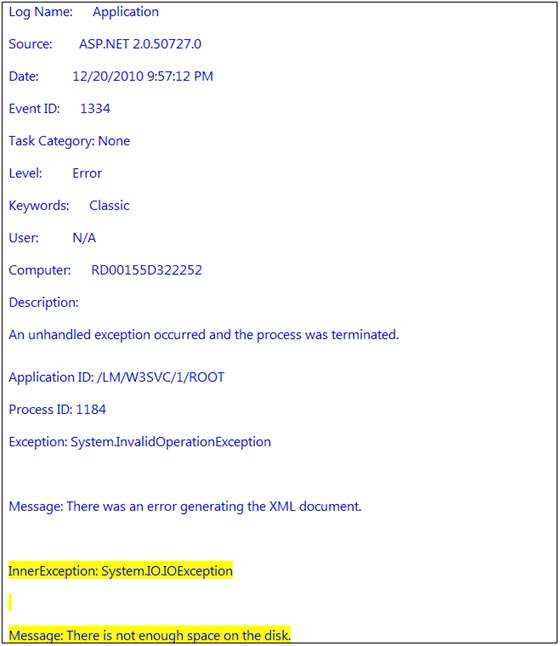

Avkash Chauhan explained how to solve the Windows Azure : There is not enough space on the disk - description and handling error in a 12/28/2010 post:

If you have your application running on Windows Azure, it is possible that:

1. Your Web Role keeps recycling after running successfully for some time

2. You stop getting anything out of your diagnostics log after some time

So if your application is based on Windows Azure SDK 1.3, you can log in to your VM and investigate the issue. Once you log in to your VM, I would suggest looking at:

1. System uptime in the Task Manager window - This tells you how long the VM has been running, so when you look through the event logs you can see when the error started and estimate how fast your Local Storage is filling up

2. Application Event Log - For your IIS and ASP.NET based application, this is the first place to start your investigation

In the event log you may see the following Error:

Now when you explore your VM, you will see that all 3 drives on the VM have significant free space. On a Windows Azure VM you will see 3 drives, C:, D: and E:, and here is a little explanation of them:

1. Drive E: -> This drive holds your Windows Azure service package, which you have uploaded or published to the Azure Portal. When your VM starts, the package is decrypted and added as drive E:\ to the VM.

2. Drive D: -> This drive holds the Windows Server 2008 R2 OS.

3. Drive C: -> This drive can be considered as the working drive where most of the Windows Azure run time data is available.

So a few of you may be confused by the above error, because all 3 drives have lots of free space but the event log still shows the error. Here is the explanation:

When you define Local Storage in your Windows Azure Application, a new folder will be added as below in your drive C: on Azure VM:

C:\Resources\directory\[Your_deploymentID].[your_webrolename]_[Role_Number]\<LocalStorage_name_Dir>

This is the folder which will have a size cap on it.

The error you are getting comes from the size limit on your “Local Storage” folder, so it is directly related to that folder. The Windows Azure OS limits the size of your “Local Storage” folder depending on the size you define in your ServiceDefinition file.

For example, the Local Storage name is “TomcatLocation” and the size defined for “TomcatLocation” in the below CSDEF file is 2GB:

So when the folder hits the defined limit (i.e. 2048MB based on the above CSDEF), the above-described error starts occurring.

To solve this problem, either increase the size of “Local Storage” in the CSDEF file or write file system API based code to monitor the “Local Storage” folder and delete its contents when it reaches a certain threshold. We do not provide any mechanism or API within the Windows Azure SDK to maintain the “Local Storage” setting. So if you want to keep using it, please either increase the size or add more code to your Azure service that uses the file system API to keep checking the folder size and delete the contents before the folder hits the maximum.
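The monitoring approach can be sketched in a few lines. The example below is in Python for brevity (a role would normally do this with the .NET file system API), and it makes two assumptions that are not in the post: files are deleted oldest first rather than all at once, and cleanup starts at 80% of the configured limit. The function names are hypothetical.

```python
import os
import tempfile


def folder_size(path):
    """Total size in bytes of all files under path."""
    total = 0
    for root, _dirs, names in os.walk(path):
        for name in names:
            total += os.path.getsize(os.path.join(root, name))
    return total


def trim_folder(path, limit_bytes, threshold=0.8):
    """Delete the oldest files until the folder is at or below threshold * limit_bytes.

    Returns the list of deleted file paths. Both the threshold and the
    oldest-first policy are assumptions made for this sketch.
    """
    target = limit_bytes * threshold
    files = []
    for root, _dirs, names in os.walk(path):
        for name in names:
            full = os.path.join(root, name)
            files.append((os.path.getmtime(full), os.path.getsize(full), full))
    size = sum(file_size for _mtime, file_size, _full in files)
    deleted = []
    for _mtime, file_size, full in sorted(files):  # oldest modification time first
        if size <= target:
            break
        os.remove(full)
        size -= file_size
        deleted.append(full)
    return deleted


# Demonstration against a temporary folder standing in for Local Storage.
with tempfile.TemporaryDirectory() as demo_dir:
    for i in range(3):
        file_path = os.path.join(demo_dir, f"log{i}.txt")
        with open(file_path, "wb") as handle:
            handle.write(b"x" * 100)
        os.utime(file_path, (1000 + i, 1000 + i))  # make log0.txt the oldest
    removed = trim_folder(demo_dir, limit_bytes=250)
    print([os.path.basename(p) for p in removed], folder_size(demo_dir))
```

In a real service you would call something like trim_folder on a timer, passing the Local Storage root path and the size configured in the CSDEF file.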

One thing to remember is that when a role starts, the Local Storage folder is deleted and then recreated for your service. That is the only time the system deletes it; at any other time, it is the service's responsibility to manage it.

Buck Woody described The Proper Use of the VM Role in Windows Azure in a 12/28/2010 post:

At the Professional Developer’s Conference (PDC) in 2010 we announced an addition to the Computational Roles in Windows Azure, called the VM Role. This new feature allows a great deal of control over the applications you write, but some have confused it with our full infrastructure offering in Windows Hyper-V. There is a proper architecture pattern for both of them.

Virtualization

Virtualization is the process of taking all of the hardware of a physical computer and replicating it in software alone. This means that a single computer can “host” or run several “virtual” computers. These virtual computers can run anywhere - including at a vendor’s location. Some companies refer to this as Cloud Computing since the hardware is operated and maintained elsewhere.

IaaS

The more detailed definition of this type of computing is called Infrastructure as a Service (IaaS) since it removes the need for you to maintain hardware at your organization. The operating system, drivers, and all the other software required to run an application are still under your control and your responsibility to license, patch, and scale. Microsoft has an offering in this space called Hyper-V, which runs on the Windows operating system. Combined with a hardware hosting vendor and the System Center software to create and deploy Virtual Machines (a process referred to as provisioning), you can create a Cloud environment with full control over all aspects of the machine, including multiple operating systems if you like. Hosting machines and provisioning them at your own buildings is sometimes called a Private Cloud, and hosting them somewhere else is often called a Public Cloud.

State-ful and Stateless Programming

This paradigm does not create a new, scalable way of computing. It simply moves the hardware away. The reason is that when you limit the Cloud efforts to a Virtual Machine, you are in effect limiting the computing resources to what that single system can provide. This is because much of the software developed in this environment maintains “state” - and that requires a little explanation.

“State-ful programming” means that all parts of the computing environment stay connected to each other throughout a compute cycle. The system expects the memory, CPU, storage and network to remain in the same state from the beginning of the process to the end. You can think of this as a telephone conversation - you expect that the other person picks up the phone, listens to you, and talks back all in a single unit of time.

In “Stateless” computing the system is designed to allow the different parts of the code to run independently of each other. You can think of this like an e-mail exchange. You compose an e-mail from your system (it has the state when you’re doing that) and then you walk away for a bit to make some coffee. A few minutes later you click the “send” button (the network has the state) and you go to a meeting. The server receives the message and stores it on a mail program’s database (the mail server has the state now) and continues working on other mail. Finally, the other party logs on to their mail client and reads the mail (the other user has the state) and responds to it and so on. These events might be separated by milliseconds or even days, but the system continues to operate. The entire process doesn’t maintain the state, each component does. This is the exact concept behind coding for Windows Azure.

The stateless programming model allows amazing rates of scale, since the message (think of the e-mail) can be broken apart by multiple programs and worked on in parallel (like when the e-mail goes to hundreds of users), and only the order of re-assembling the work is important to consider. For the exact same reason, if the system makes copies of those running programs as Windows Azure does, you have built-in redundancy and recovery. It’s just built into the design.
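As a rough illustration of that idea, the Python sketch below splits a message into numbered pieces, lets independent workers process them in whatever order they finish, and reassembles the results by index. It is a toy model only: the chunk size, the uppercase "work", and the thread pool are assumptions chosen for the example and have nothing to do with how Windows Azure schedules roles.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def process(chunk):
    """Stateless worker: the result depends only on the chunk it is handed."""
    index, text = chunk
    return index, text.upper()


def run(message, workers=4, size=5):
    """Split message into indexed chunks, process them in parallel, reassemble in order."""
    chunks = [
        (index, message[start:start + size])
        for index, start in enumerate(range(0, len(message), size))
    ]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(process, chunk) for chunk in chunks]
        # Results arrive in completion order, not submission order.
        results = [future.result() for future in as_completed(futures)]
    # Only the reassembly order matters, so sort by chunk index.
    return "".join(text for _index, text in sorted(results))


print(run("hello stateless world"))
```

Because no worker keeps anything between calls, any worker can take any chunk, and adding workers changes throughput but not the result.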

The Difference Between Infrastructure Designs and Platform Designs

When you simply take a physical server running software and virtualize it either privately or publicly, you haven’t done anything to allow the code to scale or have recovery. That all has to be handled by adding more code and more Virtual Machines that have a slight lag in maintaining the running state of the system. Add more machines and you get more lag, so the scale is limited. This is the primary limitation with IaaS. It’s also not as easy to deploy these VMs, and more importantly, you’re often charged on a longer basis to remove them. Your agility in IaaS is more limited.

Windows Azure is a Platform - meaning that you get objects you can code against. The code you write runs on multiple nodes with multiple copies, and it all works because of the magic of Stateless programming. You don’t worry, or even care, about what is running underneath. It could be Windows (and it is in fact a type of Windows Server), Linux, or anything else - but that isn’t what you want to manage, monitor, maintain or license. You don’t want to deploy an operating system - you want to deploy an application. You want your code to run, and you don’t care how it does that.

Another benefit to PaaS is that you can ask for hundreds or thousands of new nodes of computing power - there’s no provisioning, it just happens. And you can stop using them quicker - and the base code for your application does not have to change to make this happen.

Windows Azure Roles and Their Use

If you need your code to have a user interface, in Visual Studio you add a Web Role to your project, and if the code needs to do work that doesn’t involve a user interface you can add a Worker Role. They are just containers that act a certain way. I’ll provide more detail on those later.

Note: That’s a general description, so it’s not entirely accurate, but it’s accurate enough for this discussion.

So now we’re back to that VM Role. Because of the name, some have mistakenly thought that you can take a Virtual Machine running, say Linux, and deploy it to Windows Azure using this Role. But you can’t. That’s not what it is designed for at all. If you do need that kind of deployment, you should look into Hyper-V and System Center to create the Private or Public Infrastructure as a Service. What the VM Role is actually designed to do is to allow you to have a great deal of control over the system where your code will run. Let’s take an example.

You’ve heard about Windows Azure, and Platform programming. You’re convinced it’s the right way to code. But you have a lot of things you’ve written in another way at your company. Re-writing all of your code to take advantage of Windows Azure will take a long time. Or perhaps you have a certain version of Apache Web Server that you need for your code to work. In both cases, you think you can code (or already have coded) the software to be “Stateless”; you just need more control over the place where the code runs. That’s the place where a VM Role makes sense.

Recap

Virtualizing servers alone has limitations of scale, availability and recovery. Microsoft’s offering in this area is Hyper-V and System Center, not the VM Role. The VM Role is still used for running Stateless code, just like the Web and Worker Roles, with the exception that it allows you more control over the environment of where that code runs.

Buck also mentioned in a 12/28/2010 tweet that he’s having “Fun - playing with Microsoft Map Cruncher to add overlays in Azure to Bing Maps: http://bit.ly/hWlIOS.”

Kristofer Anderson (@KristoferA) continued his foreign key constraints series with Inferring Foreign Key Constraints in Entity Framework Models (Part 2) on 12/28/2010:

In my last blog entry, Inferring Foreign Key Constraints in Entity Framework Models, I wrote about a new feature in the Model Comparer for EFv4 that allows foreign key constraints to be inferred and added to an Entity Framework model even if some or all FKs are missing in the database. The inferred FKs can then be used to generate associations and navigation properties in Entity Framework models.

In the latest release of Huagati DBML/EDMX Tools, the FK inference feature has been improved with a new regex match-and-replace feature for matching foreign key columns to primary key columns. This allows FKs to be inferred even if the FK/PK columns to be matched use inconsistent naming and/or are named with special prefixes/suffixes etc.

Of course, the basic name matching feature described in the previous article is still there, and can be used under many (if not most) common scenarios. However, when the PK/FK naming is more complex then using Regex match+replace comes in handy for resolving what columns in one table can be mapped to the PK in another table.

The following screenshot shows the settings dialog with the new settings for RegExp-based column matching:

In addition to allowing the standard regular expression language elements to be used in the Match field, and the substitution elements to be used in the Replace field, there are two reserved keywords that can be used in the replacements as well; %pktable% which is replaced with the name of the primary-key-side table and %fktable% which is replaced with the name of the foreign key table when the match is evaluated.

The above screenshot shows how regular expression replacements plus the %pktable% substitution keyword can be used to match two tables even if the PK column is named “id” and the FK column in another table is named using the PK table’s name followed by “_key”. If the result of the Match+Replace on both sides evaluates to the same value, a foreign key constraint is inferred and will be displayed in the diff-tree and diff-reports in the Model Comparer’s main window.
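Since the screenshot is not reproduced here, the following Python sketch shows one way the described matching could work for the “id” / “_key” case. The two rules, the table and column names, and the function names are all hypothetical, and the add-in presumably uses .NET regular expressions, whose substitution syntax ($1) differs from Python's (\1).

```python
import re


def normalize(column, match, replace, pk_table, fk_table):
    """Expand the reserved keywords, then apply the regex match+replace to a column name."""
    replacement = replace.replace("%pktable%", pk_table).replace("%fktable%", fk_table)
    return re.sub(match, replacement, column, flags=re.IGNORECASE)


def infers_fk(pk_table, pk_column, fk_table, fk_column, pk_rule, fk_rule):
    """Infer an FK when both sides normalize to the same value."""
    pk_value = normalize(pk_column, *pk_rule, pk_table, fk_table)
    fk_value = normalize(fk_column, *fk_rule, pk_table, fk_table)
    return pk_value.lower() == fk_value.lower()


# Hypothetical rules as (match, replace) pairs:
PK_RULE = (r"^id$", "%pktable%")   # PK column "id" becomes the PK table's name
FK_RULE = (r"^(.+)_key$", r"\1")   # FK column "<name>_key" becomes "<name>"

print(infers_fk("customer", "id", "orders", "customer_key", PK_RULE, FK_RULE))
print(infers_fk("customer", "id", "orders", "shipper_key", PK_RULE, FK_RULE))
```

With these rules, orders.customer_key is matched to customer.id, while orders.shipper_key is not, because it normalizes to "shipper" rather than "customer".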

The Model Comparer

If you want to read more about the Model Comparer and the other features for Entity Framework contained in the Huagati DBML/EDMX Tools add-in for Visual Studio, see the following links:

- Introducing the Model Comparer for Entity Framework v4

- What’s new in the latest version of the Model Comparer for Entity Framework 4

- Using the Model Comparer to generate difference reports for Entity Framework v4 models

- Mixing inheritance strategies in Entity Framework models

- SQL Azure support in Huagati DBML/EDMX Tools

- Inferring Foreign Key Constraints in Entity Framework Models

- Simplify Entity Framework v4 models with complex types

…and of course, there is a brief summary of the add-in’s supported features for both Entity Framework and Linq-to-SQL at the product page: http://huagati.com/dbmltools/ where you can also download the add-in and get a free 30-day trial license.

Jassand posted Quicksteps to get started with PHP on Windows Azure on 12/28/2010 to the Interoperability @ Microsoft blog on 12/27/2010:

The weather in the northern hemisphere is still a little nippy and if you're like me, you're spending a lot of time indoors with family and friends enjoying the holiday season. If you're spending some of your time catching up and learning new things in the wonderful world of cloud computing, we have a holiday gift of some visual walkthroughs and tutorials on our new "Windows Azure for PHP" center. We pushed up these articles to help you quickly get set up with developing for Windows Azure.

"Getting the Windows Azure pre-requisites via the Microsoft Web Platform Installer 2.0" will help you quickly set up your machine in a "few clicks" with all the necessary tools and settings you will need to work with PHP on Windows, IIS and SQL Server Express. We’ve included snapshots of the entire process you will need to get a developer working with the tools built by the “Interoperability at Microsoft” team.

"Deploying your first PHP application with the Windows Azure Command-line Tools for PHP" will visually walk you through getting the tool, getting familiar with how it's used and packaging up a simple application for deployment to Windows Azure.

I hope this will help you get over the first speed bump of working on Microsoft's cloud computing platform and we look forward to bringing you more of these based on your feedback and input. So please check them out and let us know how you feel!

When added to this article, the original post wasn’t accessible. The above is reproduced from the Atom feed.

Visual Studio LightSwitch

Windows Azure Infrastructure

Jeffrey Schwartz asserted “This time, Rizzo pulls no punches when it comes to talking down Google's effort to compete against Microsoft, and also discusses future integration opportunities with Windows Azure” as a deck for his Q&A Part 2: Microsoft's Tom Rizzo Talks Smack on Online Services post of 12/28/2010 to the Redmond Channel Partner blog:

Google will be hard pressed to make a dent in Microsoft's Office franchise, insists Tom Rizzo, Microsoft's senior director of online services [pictured at right]. Rizzo is pounding the pavement talking up his company's next big cloud offering: Office 365. With the product released to beta this month, Rizzo has been meeting with analysts and the press to explain the next generation of Microsoft's online productivity offering. In part one of his interview with Redmond Channel Partner magazine, Rizzo talked about the partner opportunities for Office 365 and gave some details about the rollout. This week, in part two, Rizzo pulls no punches when it comes to talking down Google's effort to compete against Microsoft, and also discusses future integration opportunities with Windows Azure.

Following are Q&As that relate to Windows Azure:

… What is the relationship, if any between Office 365 and Windows Azure?

We are working really closely with the Azure team to make sure that Azure and Office 365 connect well together, because we realize that the partners, especially ISVs will want to build apps that run in Azure that they can sell to people running in Office 365. We want to make sure identity, security integration is all there so you can have the two solutions integrate plus we are investing a lot in Pinpoint, our marketplace, so it spans not only geographically everywhere but also spans Dynamics CRM, Azure, and Office 365 so a customer has one place to go look for partners whether they're services partners solution providers, or whether they are selling software through the marketplace.What kind of apps will lend themselves to running on Azure from Office 365?

I think it's similar apps to those built and run on premises, so things that maybe extend with horizontal solutions There's a multitude of things that customers can do and partners can build and customers can buy through the cloud by building them on top of Azure. The great thing for the partner is Azure scales, so if they get a great app and people start buying then the partner doesn't' have to worry about scaling their own infrastructure.To what extent are you seeing customers interested in extending Office apps onto Azure?

A lot of the Azure apps today are Web based because we don't have the Office 365 product out yet, and the BPOS product, while it integrates, there's still a bunch of work you have to do as a partner to integrate the two together. Office 365 will make it more seamless. Productivity applications are probably not the majority of the Azure apps but I think you will see that shift over time as we get Office 365 to the market.What horizontal or vertical industry trends do you see in terms of customer interest in cloud computing solutions?

I would split it into two in terms of industries and workloads. The first workload that we see coming to the cloud is email and even Gartner predicts email will be the first to come to market. They said it will be 30 percent by 2015 and 50 percent 2018. So that is definitely the first workload to go and we are seeing that happening in spades. Next is team collaboration and document sharing where you may want to put up a document, share it with others in an extranet business-to-business portal. In terms of industries, we see small and medium business being the first to go to the cloud because they don't want to run IT infrastructure. Enterprises will be slower, or they'll have more hybrid solutions where they've got some stuff on premises, some stuff in the cloud.What about specific verticals?

Manufacturing is definitely one of them, where you may have a set of what we call structured task workers or kiosk workers. For example, a car company where you may have 200,000 people, maybe 100,000 work on the shop floor where they don't need high-end productivity, they just need simple e-mail, simple calendaring and maybe access to a portal to access their HR information. Also financial services is another one looking heavily at the cloud, and from there healthcare. Obviously sensitive information stays on premises but they are moving other stuff into the cloud.

What's your take on how premises and cloud-based solutions will co-exist?

I think hybrid is around for a while, especially in the enterprise. In SMB I think it goes faster where they move to an all-cloud model. In the enterprise, I think on premises is not going anywhere, both in enterprise and large government. I think that presents opportunity to make that integration easier and to also have folks help us with the transition to the cloud over time. I think it also opens the opportunity for the Azure technologies where people can develop applications. And the Azure appliance as well will allow people to move stuff into their private clouds. We hear a lot of conversation around private clouds. I would say one of the other differences between us and Google for sure is we don't require an IT ultimatum. You want to run stuff on prem? Great, we have an on-prem solution. Want to run stuff in the cloud? We have a cloud solution. You want to run both, we have that. Google is one size fits all across everywhere. It all has to be cloud-based.

Tom is Microsoft Senior Director of Online Services.

Full disclosure: Tom Rizzo was the technical editor of my Professional ADO.NET 3.5 with LINQ and the Entity Framework book. I am a contributing editor for Visual Studio Magazine, whose publisher is 1105 Media – also the publisher of Redmond Channel Partner.

Klint Finley paraphrased 14 Cloud Computing Predictions for 2011 from Chirag Mehta and R "Ray" Wang in a 12/27/2010 post to the ReadWriteCloud blog:

Cloud computing blogger Chirag Mehta and Constellation Research Group principal analyst R "Ray" Wang published today a list of their cloud computing predictions for 2011. The pair sees public cloud adoption stalling temporarily, the spread of the app store model in the enterprise, the convergence of Development-as-a-Service and Platform-as-a-Service and an overall simplification of the technology landscape as some of the most important trends in cloud computing in 2011.

Mehta and Wang's predictions are:

- Most new procurement will be replaced with cloud strategies

- Private clouds will serve as a stepping stone to public clouds

- Cloud customers will stop saying "the cloud is not secured" and start asking hard questions about how the cloud can be secured

- Public cloud adoption will be temporarily reduced by concerns about cybersecurity. Private clouds will be kept for security and backups.

- A transition from best-of-breed purpose-built applications to "cloud mega stacks" will occur. (See our article "Jive Just Became a Platform Vendor" for more on this idea.)

- App stores will be the predominant channel for application deployment

- User experience and scale will no longer be mutually exclusive

- Custom application development will shift to the cloud

- Development-as-a-Service and Platform-as-a-Service will merge

- Integration vendors will be expected to offer integration beyond data

- Consumer tech features such as social business platforms, mobile enterprise capabilities, predictive analytical models and unified communications will continue to proliferate in the enterprise

- Customers will demand better virtualization

- The overall technology landscape will simplify thanks to the cloud

- Archiving and data management will be core competencies for cloud users

What do you think of these predictions?

I think cybersecurity concerns may actually drive more enterprises to public clouds. Remember, those diplomatic cables and other materials published by WikiLeaks were hosted on-premise, not in the cloud. So were all those systems hit with Stuxnet. While last week's breach at Microsoft might cause some concern, that problem was minor and fixed quickly without any patching on the part of Microsoft's customers.

For more 2011 cloud predictions, see our article on Forrester's James Staten's predictions.

Update: Part 2 is now available.

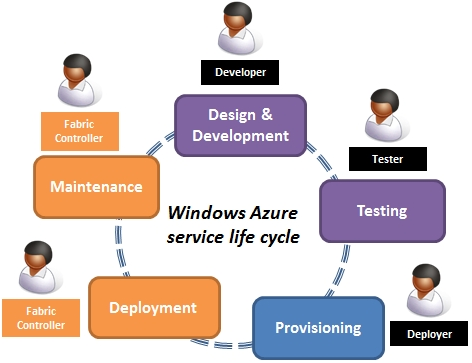

Shamelle described The Windows Azure Service Life Cycle in a 12/26/2010 post:

The 5 distinct phases of the Windows Azure service life cycle

1. Design and development

The on-premise team plans, designs, and develops a cloud service for Windows Azure. The design includes quality attribute requirements for the service and the solution to fulfill them. The key roles involved in this phase are on-premise stakeholders.

2. Testing

The quality attributes of the cloud service are tested during the testing phase. This phase involves on-premise as well as Windows Azure cloud testing. The tester role is in charge of this phase and tests end-to-end quality attributes of the service deployed into the cloud testing or staging environment.

3. Provisioning

Once the application is tested, it can be provisioned to the Windows Azure cloud. The deployer role deploys the cloud service to the Windows Azure cloud. The deployer is in charge of service configurations and makes sure the service definition of the cloud service is achievable through production deployment in the Windows Azure cloud. The configuration settings are defined by the developer, but the production values are set by the deployer.

In this phase, the role responsibilities transition from on-premise to the Windows Azure cloud. The fabric controller in Windows Azure assigns the allocated resources as per the service model defined in the service definition. The load balancers and virtual IP address are reserved for the service.

4. Deployment

The fabric controller commissions the allocated hardware nodes into the end state and deploys services on these nodes as defined in the service model and configuration. The fabric controller also has the capability of upgrading a service in running state without disruptions. The fabric controller abstracts the underlying hardware commissioning and deployment from the services. The hardware commissioning includes commissioning the hardware nodes, deploying operating system images on these nodes, and configuring switches, access routers, and load balancers for the externally facing roles (e.g., Web role).

5. Maintenance

Any service on a failed node is redeployed automatically and transparently, and the fabric controller automatically restarts any failed service roles. The fabric controller allocates new hardware in the event of a hardware failure. Thus, the fabric controller always maintains the desired number of roles irrespective of any service, hardware or operating system failures. The fabric controller also provides a range of dynamic management capabilities like adding capacity, reducing capacity and service upgrades without any service disruptions.
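The maintenance behavior described above, keeping the desired number of role instances running despite failures, can be pictured as a reconciliation loop. The following Python sketch is a toy model only: the `Fabric` class, its method names, and the `node-N` identifiers are hypothetical and are not part of any Windows Azure API.

```python
from dataclasses import dataclass, field


@dataclass
class Fabric:
    """Toy stand-in for a fabric controller (hypothetical, not an Azure API)."""

    desired_instances: int                      # instance count from the service model
    healthy_nodes: set = field(default_factory=set)
    _next_node_id: int = 0

    def _allocate_node(self) -> str:
        """Pretend to commission a fresh hardware node."""
        node = f"node-{self._next_node_id}"
        self._next_node_id += 1
        return node

    def report_failure(self, node: str) -> None:
        """Record that a node (and the role instance on it) has failed."""
        self.healthy_nodes.discard(node)

    def reconcile(self) -> list:
        """Start replacement instances until the desired count is met again."""
        started = []
        while len(self.healthy_nodes) < self.desired_instances:
            node = self._allocate_node()
            self.healthy_nodes.add(node)
            started.append(node)
        return started


fabric = Fabric(desired_instances=3)
fabric.reconcile()                  # initial deployment of three instances
fabric.report_failure("node-1")     # simulate a hardware failure
replacements = fabric.reconcile()   # one new node is allocated to compensate
```

The point of the sketch is that the service owner declares only the desired count; detecting the gap and filling it is the controller's job. Scaling down and in-place upgrades are left out for brevity.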

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

Benjamin Grubin (pictured below) asserted “For those just starting on the private cloud journey, it might be useful to get the lay of the land” as a preface to his The Essential Elements of a Private Cloud post of 12/28/2010:

Not long ago, Forrester analyst James Staten wrote a report with the compelling title: You're Not Ready for Internal Cloud. What Staten meant, of course, by the term "internal cloud" is what we have been referring to in this blog as a private cloud. Whether you're ready or not probably depends a lot on where you are on the project path.

For those just starting on the private cloud journey, it might be useful to get the lay of the land, discuss the essential elements of any private cloud project, and point out areas where my definition diverges from that of Staten, a recognized expert on the subject whose opinion I greatly respect.

A Set of Consistent Services

Staten's first rule is that you need a set of consistent services that your users can access and use with a limited amount of friction.

Staten says beyond this consistent deliverable, the service shouldn't require any custom configuration. The user basically gets that standard service offering and that's it. Think Henry Ford's famous quote about the Model T: "You can have it any color you like, so long as it's black." But as Staten states in his report, "...the capability delivered is repeated, religiously. That's the foundation for how cloud computing achieves mass scalability and differentiated economics."

He's right, of course, but what he doesn't mention is that you need a service catalogue of standardized IT components to make all of this work. Creating that catalogue is what makes the self-service portal Staten discusses possible.

The problem is that standardization is harder than it looks. Organizations have been trying to do this for a long time. The challenge is having a framework that's manageable and works yet is flexible enough to work for your business when it doesn't match the standard offerings.

Usage-based Metering

Another essential pillar of the private cloud is the notion of usage-based metering, or the idea that you pay only for what you use and no more.

This model should appeal to everyone. Managers know what they are getting, and IT can easily determine capital expenditure budgets by tracking usage across the organization. As Staten explains in the report: "Nearly all cloud services leverage this model to provide cost elasticity as your consumption changes."

This is one of the key elements of the private cloud - this ability to meter and measure usage across the organization.
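To make the metering idea concrete, here is a minimal Python sketch of a chargeback calculation. Everything in it is assumed for illustration: the resource names, the per-unit rates, and the sample usage records do not come from Staten's report or from any product.

```python
from collections import defaultdict

# Hypothetical internal rates, in dollars per unit consumed.
RATES = {"vm_hours": 0.12, "storage_gb_months": 0.15}


def chargeback(usage_records, rates=RATES):
    """Sum metered usage into a per-department bill.

    Each record is a (department, resource, quantity) tuple.
    """
    totals = defaultdict(float)
    for department, resource, quantity in usage_records:
        totals[department] += quantity * rates[resource]
    return {dept: round(total, 2) for dept, total in totals.items()}


# Illustrative usage records for one billing period.
records = [
    ("finance", "vm_hours", 100),
    ("finance", "storage_gb_months", 20),
    ("engineering", "vm_hours", 250),
]
bill = chargeback(records)
```

A department that consumes nothing in a period simply does not appear in the bill, which is the "pay only for what you use" property in its simplest form.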

Self-Service and Fast

The final piece of Staten's cloud computing puzzle is self-service. This means you set up a web portal where users can access your services using standard internet protocols. He says it needs to be almost always on and users need to be able to get up and running quickly.

One key piece I see missing from Staten's essential elements is a system to measure what we mean by successful - what you would call a service-level agreement if you were contracting with a public cloud. How do you measure success? What is your up time guarantee and so forth?

We see this as an essential covenant between IT and the company, which spells out exactly what users should expect in terms of service levels from the company's private cloud.

With an understanding of these basic elements of a private cloud project, you are better prepared to begin to understand what you need to do to achieve your organization's cloud computing goals.

Benjamin is the Director of Data Center Management for Novell.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

The Southern California Code Camp Community announced Next Code Camp January 29th & 30th at Cal State Fullerton on 12/27/2010:

Code Camp is a place for developers to come and learn from their peers. This community driven event has become an international trend where peer groups of all platforms, programming languages and disciplines band together to bring content to the community.

Who is speaking at Code Camp? YOU are, YOUR PEERS are, and YOUR LOCAL EXPERTS are… all are welcome! This is a community event and one of the main purposes of the event is to have local community members step up and offer some cool presentations! Don’t worry if you have never given a presentation before, we’ll give you some tips if you need help, and this is a great opportunity to spread your wings. Of course, we do have some ringers on our speaker list as well… stay tuned…

And yes the price is right! FREE FREE FREE

Please just check in when you get to the event so we can know how many people showed up and so we can give you some fun stuff.

The Code Camp includes sessions on Windows Azure, SQL Azure, SQL Azure Reporting Services, and four presentations about OData.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr (@jeffbarr) described Amazon Web Service’s WebServius - Monetization System for Data Vendors on 12/28/2010:

Do you own the rights to some interesting structured or semi-structured data? If so, you may find AWS-powered WebServius to be of interest. They make it easy for you to monetize your data by providing you the ability to sell access via pay-per-use data access APIs and a bulk download process.

Their system is optimized for use with data stored in Amazon SimpleDB. WebServius handles each aspect of the monetization process including developer signup, API key management, usage metering, quota enforcement, usage-based pricing, and billing. In short, all of the messy and somewhat mundane details that you must address before you can start making money from your data. You can access the data in three forms (normal and simplified XML formats or JSON).

As a data vendor you have a lot of flexibility with your pricing. You can price your data per row and/or by column. WebServius offers a free plan for low-traffic (up to 50 subscribers or 10,000 calls to the access APIs, rate-limited to 5 calls per second) access and several usage and revenue-based pricing plans for higher traffic and/or paid access to data.

You can see WebServius in action at several sites including Mergent (historical securities pricing, company fundamentals and executives, annual reports, and corporate actions and dividends), Retailigence (retail intelligence and product data), and Compass Marketing Solutions (rich data on over 16 million business establishments in the US).

You can read more at the WebServius site or in our new WebServius case study.

WebServius sounds a lot like Marketplace DataMarket to me.

Klint Finley started a new cloud database series for the ReadWriteCloud blog on 12/27/2010 with 5 Cloud Startups to Watch in 2011 #1: ClearDB:

As 2010 draws to a close we're taking a look at a few cloud startups that show promise and that we haven't covered on ReadWriteCloud.

ClearDB is a cloud-hosted MySQL database service competing with Salesforce.com's Database.com. It's a fully ACID compliant relational database and is accessible from any platform and any programming language. It delivers query results over a REST API.

Some of ClearDB's features include:

- A 100% ACID compliant database

- Complete support for stored procedures with CGL

- Social Integration Support with CGL httpGet and httpPost

- SSL

- Automatic backup and management by database engineers

- Elasticity and scalability

- A NoSQL management API "for those who can't stand SQL"

- A SQL92 compliant database API based on MySQL technology

- A cloud infrastructure with a 99.999% uptime guarantee for high availability customers

In addition to Database.com, ClearDB will compete with CouchOne's hosted CouchDB instances. I have the feeling that hosted databases are just getting started and that we'll be seeing many more in 2011. These make a lot of sense for websites and developers that have light front-end requirements but need robust database support.

I'm interested in the implications of hosted databases for DBAs. ClearDB, for example, includes the line "Save money by not having to employ database administrators" in its website copy.

Are you a DBA? Are you worried about your job security?

ClearDB’s most obvious competitors are SQL Azure and Amazon RDS. Following are prices, features and capabilities of ClearDB’s service plans:

| Plan | Price | API Calls | DB Size | Stored Procs | Triggers | CGL | Uptime SLA |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Starter | Free | 400,000/mo. | 100 MB | No | No | No | 99.95% |
| Basic | $10/mo. | 1,000,000/mo. | 1 GB | Yes | Yes | Yes | 99.95% |
| Small | $20/mo. | 6,000,000/mo. | 4 GB | Yes | Yes | Yes | 99.95% |
| Medium | $30/mo. | 12,000,000/mo. | 10 GB | Yes | Yes | Yes | 99.995% |
| Large | $90/mo. | 96,000,000/mo. | 50 GB | Yes | Yes | Yes | 99.999% |

CGL: An abbreviation for ClearDB Glass Language

SLA: “Five percent (5%) of the fees for each hour of complete service unavailability, up to 100% of the fees.” The 99.999% uptime guarantee (service level agreement, SLA) for Large-plan databases provides for “double payout on failures that exceed 5 minutes per year.”

Note: All tier uptime ratings exclude scheduled maintenance.
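To put the uptime percentages and the credit clause in perspective, the following Python sketch converts an uptime guarantee into allowed downtime per year and applies the quoted 5%-per-hour credit with its 100% cap. It rests on several assumptions of mine: that "fees" means the monthly plan price, that hours of unavailability are counted as given (including fractions), and that a year has 365 days. The Large plan's "double payout" clause is not modeled because its exact terms aren't quoted above. The function names are my own.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; leap years ignored


def allowed_downtime_minutes(uptime_percent):
    """Minutes of unscheduled downtime per year permitted by an uptime guarantee."""
    return MINUTES_PER_YEAR * (1 - uptime_percent / 100)


def service_credit(monthly_fee, hours_unavailable, credit_rate=0.05):
    """Credit of 5% of fees per hour of complete unavailability, capped at 100%.

    Assumes 'fees' is the monthly plan price (an interpretation, not a quoted term).
    """
    fraction = min(hours_unavailable * credit_rate, 1.0)
    return monthly_fee * fraction


# Allowed downtime for each uptime tier in the table above.
for tier in (99.95, 99.995, 99.999):
    print(f"{tier}% uptime allows about "
          f"{allowed_downtime_minutes(tier):.2f} minutes of downtime per year")
```

Under these assumptions, 99.999% works out to roughly 5.26 minutes per year, which lines up with the "5 minutes per year" threshold in the Large-plan clause, and 20 or more hours of complete unavailability would exhaust the credit at any plan price.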

Following are geographic locations and multi-location support:

- Starter and Basic: US, single-site only

- Small: US or EU, single-site only

- Medium and Large: US, EU, or PAC, multiple sites

I received the following message when attempting to sign up for the free service:

Clicking OK led me to ClearDB’s service plans page. Clicking the Basic button opened a registration form, which contained the data I entered when signing up. Adding a first and last name completed the profile; no credit card info was required.

Following is the New Table Wizard’s form for creating a table and adding fields:

There appears to be no capability to edit the database schema. Changing a table’s structure requires deleting the original table and recreating it, so I had to recreate the Orders table to change the OrderID data type and add the missing ShipRegion field:

The ClearDB API Documentation page lists the following available client APIs:

- ClearDB PHP API Client - Documentation (Login Required)

- ClearDB Java API Client - Documentation - new!

- ClearDB Objective-C API Client - Documentation - new!

Lack of a .NET API Client indicates to me that ClearDB doesn’t expect to convert many .NET developers from using SQL Azure.

A brief perusal of the documentation divulged no instructions for defining foreign key constraints or maintaining referential integrity; I’m continuing to investigate.

Stay tuned for future posts about ClearDB.

<Return to section navigation list>
