Windows Azure and Cloud Computing Posts for 12/14/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock).

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

SQL Azure Database and Reporting

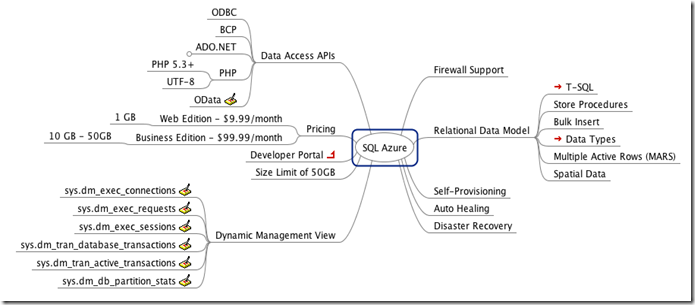

James Vastbinder published a Concept Map of SQL Azure on 12/14/2010:

I’ve been reading through the SQL Azure blog and wiki to get a complete view of the service’s current state. I’m helping the SQL Azure team with marketing and strategy. As an artifact of my efforts to ramp up on SQL Azure, I built a concept map, or mind map, using FreeMind. FreeMind helps me collect, sort and graph my thoughts so that I can quickly view complex concepts in an easily consumable form.

I like that SQL Azure was architected with high availability and failover from the start. I like that you can use the same tools and data access mechanisms as with SQL Server. I’m not sold on OData yet, nor do I like that there’s no free usage tier unless you have an MSDN subscription. Amazon provides one for its cloud data products.

Rénald Nollet (@renaldnollet) posted the first part of a series on SQL Azure Database Manager in Silverlight as SQL Azure Database Manager – Part 1 : How to connect to your SQL Azure DB on 12/14/2010:

The SQL Azure Database Manager is a light, easy-to-use tool for managing your SQL Azure database.

This tool runs inside your browser, thanks to the power of Silverlight (remember, the “dead” Silverlight!).

The URL to access the SQL Azure DB Manager is: https://manage-db3.sql.azure.com/

Once you have the Silverlight application loaded, enter:

- Your SQL Azure Server Name (Fully Qualified DNS Name)

- Your database Name

- Your login Name

- Your password

That's it! Click the Connect button and you're in!

If you have any problem connecting, you'll see a small error link:

Click it to see a more detailed error message:

Once the door is open, you'll see this new view:

More to come… :)

Marketplace DataMarket and OData

Carl Franklin and Richard Campbell interview Shawn Wildermuth (@ShawnWildermuth) in .NET Rocks Show #619, Shawn Wildermuth on Silverlight, Windows Phone 7, HTML 5, oData and more!, on 12/14/2010:

Carl and Richard talk to Shawn Wildermuth about Silverlight, Windows Phone 7, HTML 5, oData and more! Yes, that's a lot of topics, but they all tie together around how people are going to build applications in the future, for the desktop, the web and phones.

Shawn Wildermuth is a Microsoft MVP (C#), member of the INETA Speaker's Bureau and an author of six books on .NET. Shawn is involved with Microsoft as a Silverlight Insider, Data Insider and Connected Technology Advisors (WCF/Oslo/WF). He has spoken at a variety of international conferences, including SDC Netherlands, VSLive, WinDev and DevReach. Shawn has written dozens of articles for a variety of magazines and websites, including MSDN, DevSource, InformIT, CoDe Magazine, ServerSide.NET and MSDN Online. He has over twenty years of experience in software development and regularly blogs about a range of topics, including Silverlight, Oslo, databases, XML and web services, on his blog (http://wildermuth.com).

Telerik described its OData consumers and producers in a Telerik OData Support post of 12/13/2010:

The Open Data Protocol is a data exchange protocol that enables data transactions to be carried over standard web protocols (HTTP, Atom, and JSON). OData producers expose data over the web to a variety of consumers, building on technology that is widely adopted.

Telerik, always being a pioneer in supporting new technologies, not only provides native support for the OData protocol in its products, but also offers several applications and services which expose their data using the OData protocol.

Telerik OData Consumers

OData consumers are controls or applications that consume data exposed through the OData protocol. OData consumers can vary greatly in sophistication, from something as simple as a data-bound control, through developer tools such as ORMs, to full-blown end-user products that take advantage of all features of the OData protocol.

RadGrid for ASP.NET Ajax

RadGrid for ASP.NET Ajax supports automatic client-side data binding for OData services, even at remote URLs (through JSONP), where you get automatic binding, paging, filtering and sorting of the data with the Telerik Ajax Grid. The setup is very easy (it requires setting only three properties) and gets RadGrid to make remote calls to OData services automatically. Read more in our blogs.
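Automatic paging, sorting and filtering against an OData service ultimately comes down to OData system query options on the request URL. The following minimal Python sketch, not Telerik's implementation, shows how a client might build such a URL; the service root, entity set and property names are hypothetical, and `$inlinecount` assumes an OData v2 service.

```python
from urllib.parse import quote, urlencode
from typing import Optional


def build_odata_page_url(service_root: str, entity_set: str, page: int,
                         page_size: int, order_by: Optional[str] = None,
                         filter_expr: Optional[str] = None) -> str:
    """Return an OData v2-style URL for one page of sorted, filtered rows.

    page is zero-based. $skip/$top implement paging, $orderby sorting,
    $filter filtering, and $inlinecount=allpages asks the service to
    return the total row count so a grid can draw its pager.
    """
    options = {}
    if filter_expr:
        options["$filter"] = filter_expr
    if order_by:
        options["$orderby"] = order_by
    options["$skip"] = str(page * page_size)
    options["$top"] = str(page_size)
    options["$inlinecount"] = "allpages"
    # quote (not quote_plus) encodes spaces as %20; safe="$" leaves the
    # option names unescaped.
    query = urlencode(options, safe="$", quote_via=quote)
    return f"{service_root.rstrip('/')}/{entity_set}?{query}"


# Hypothetical service and entity set: third page of 10 products priced
# above 10, sorted by name descending.
print(build_odata_page_url("http://example.com/Northwind.svc/", "Products",
                           page=2, page_size=10,
                           order_by="Name desc", filter_expr="Price gt 10"))
```

A grid control changes only `page`, `order_by` and `filter_expr` as the user interacts; the service does the actual sorting and filtering.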

RadControls for Silverlight and WPF

Being built on a naturally rich UI technology, the Telerik Silverlight and WPF controls will display the data in nifty styles and custom-tailored filters. Hierarchy, sorting, filtering, grouping, etc. are performed directly on the service with no extra development effort.

RadGridView for Silverlight and RadGridView for WPF consume OData services to display data by utilizing GridView's VirtualQueryableCollectionView to fetch the data on-demand from the service. A new Silverlight/WPF RadDomainDataSource for the GridView is coming soon for enhanced data support in our controls. Read more in our blogs.
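The idea of fetching data on demand can be sketched as a list that asks the service for a page only when one of that page's rows is first read. This is a hypothetical Python analogue, not Telerik's VirtualQueryableCollectionView; `fetch_page` stands in for whatever call issues the `$skip`/`$top` request, and `total_count` is assumed to come from an inline count.

```python
from typing import Callable, Dict, List

Row = dict


class OnDemandRows:
    """Hypothetical read-only list that loads rows from a service one page
    at a time, only when a row on that page is first accessed."""

    def __init__(self, total_count: int, page_size: int,
                 fetch_page: Callable[[int, int], List[Row]]) -> None:
        self.total_count = total_count          # e.g. from $inlinecount
        self.page_size = page_size
        self._fetch_page = fetch_page           # called as fetch_page(skip, top)
        self._pages: Dict[int, List[Row]] = {}  # cache of pages already loaded

    def __len__(self) -> int:
        return self.total_count

    def __getitem__(self, index: int) -> Row:
        if not 0 <= index < self.total_count:
            raise IndexError(index)
        page_no = index // self.page_size
        if page_no not in self._pages:
            # One service round trip per page: $skip=page_no*page_size&$top=page_size.
            self._pages[page_no] = self._fetch_page(page_no * self.page_size,
                                                    self.page_size)
        return self._pages[page_no][index % self.page_size]


# Usage with a fake service holding 25 rows; calls records each round trip.
calls = []


def fake_fetch(skip, top):
    calls.append((skip, top))
    return [{"id": i} for i in range(skip, min(skip + top, 25))]


rows = OnDemandRows(total_count=25, page_size=10, fetch_page=fake_fetch)
print(rows[3], rows[24], calls)  # {'id': 3} {'id': 24} [(0, 10), (20, 10)]
```

Scrolling to a row on an already-loaded page costs nothing; only unseen pages trigger a request.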

RadTreeView for Silverlight also offers a flexible API that allows binding to "Object Data", including OData, whether hierarchical or flat. See the online demo and read more in our blogs.

Telerik Reporting

Telerik Reporting can connect to and consume an existing OData feed with the help of WCF Data Services. The process is straightforward and takes a few simple steps using the powerful Telerik Reporting data source components, without breaking best-practice architecture patterns. Watch the video and read more in our blogs.

Telerik OData Producers

OData producers are services that expose their data using the OData protocol.

Telerik OpenAccess ORM

In Q2 2010 Telerik released a LINQ implementation that is simple to use and produces domain models very fast. Built on top of the enterprise-grade OpenAccess ORM, it allows you to connect to any database that OpenAccess supports, such as SQL Server, MySQL, Oracle, SQL Azure and VistaDB. In addition, the LINQ implementation is so easy to use that you can build an OData feed in a few easy steps by using the OpenAccess Visual Designer and the Data Services Wizard. Read more.

Telerik TeamPulse

The TeamPulse Silverlight client interacts with the database using a WCF data service, and more specifically by using the Open Data Protocol (OData) which is a popular way to expose information from a variety of sources including, but not limited to, relational databases, file systems, content management systems and traditional Web sites.

The OData protocol comes in extremely handy for TeamPulse, because the product is used by many teams and people at the same time. Because most teams are complex and integrated, information rarely stays within one repository; it often needs to be shared in many different ways with many different people. For example, several teams may need to mine the information to feed it to other systems in a myriad of formats. This is where OData comes into play: it exposes the TeamPulse data for digestion and distribution, so developers can quickly find what they need within the large repository of valuable information in the TeamPulse data store. Because the integration between TeamPulse and OData is built in, you only need to look at the TeamPulse data service to browse it. Read more in our blogs.

Sitefinity CMS

Sitefinity is ready to host OData services. With its powerful API, you can expose any information from the CMS through your custom-made OData service.

Telerik TV

Telerik TV is filled with hundreds of videos that help customers master the Telerik tools. With recorded, on-demand webinars, interviews with product experts, and countless Telerik how-to and getting-started videos, Telerik TV is a rich learning resource freely available to all Telerik customers. Telerik TV organizes videos into libraries grouped by product, and it provides multiple ways to discover helpful videos, such as search and browse, on tv.telerik.com. The Telerik TV portal is built on ASP.NET MVC using the Telerik Extensions for ASP.NET MVC in the UI and OpenAccess ORM for all data access.

Telerik TV’s OpenAccess ORM-powered data layer allows it to expose a rich WCF Data Service OData endpoint that contains information about all videos on Telerik TV. Any developer can create new visualizations of Telerik TV’s video library by consuming this OData service in new applications. The Telerik TV OData service is featured on the official OData.org OData Producers page. Try the Telerik TV OData service now by visiting: http://tv.telerik.com/services/odata.svc/
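A developer consuming a WCF Data Services endpoint such as this one typically asks for JSON (for example, with an `Accept: application/json` header) and then unwraps the response. The Python sketch below parses the verbose JSON format of OData v1/v2; the sample payload and its `Title` property are made up for illustration and are not taken from the actual Telerik TV schema.

```python
import json
from typing import List, Optional, Tuple


def parse_odata_json(payload: str) -> Tuple[List[dict], Optional[int], Optional[str]]:
    """Return (rows, total_count, next_link) from an OData verbose-JSON feed.

    OData v1 responses wrap an entity set as {"d": [...]}; v2 responses use
    {"d": {"results": [...], "__count": "N", "__next": "..."}}, where
    __count appears only if $inlinecount=allpages was requested and __next
    only if the server paged the results.
    """
    d = json.loads(payload)["d"]
    if isinstance(d, list):              # v1 shape: bare array of entities
        entries, count, next_link = d, None, None
    else:                                # v2 shape: object with "results"
        entries = d.get("results", [])
        count = int(d["__count"]) if "__count" in d else None
        next_link = d.get("__next")
    # Drop the per-entity __metadata block (URI, type) to keep only properties.
    rows = [{k: v for k, v in e.items() if k != "__metadata"} for e in entries]
    return rows, count, next_link


# Made-up sample payload in the v2 shape.
sample = ('{"d": {"results": [{"__metadata": {"uri": "http://example.com/x(1)"},'
          ' "Title": "Getting Started"}], "__count": "1"}}')
print(parse_odata_json(sample))  # ([{'Title': 'Getting Started'}], 1, None)
```

When `next_link` is not `None`, a client requests that URL to get the next page of results.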

Windows Azure AppFabric: Access Control and Service Bus

Alik Levin announced Shaping Windows Azure AppFabric Access Control Service 2.0 Documentation on Codeplex in a 12/14/2010 post:

I have published on Codeplex the first draft of the upcoming documentation for Windows Azure AppFabric Access Control Service 2.0. This is how it looks at the time of this writing. We also publish topics on Codeplex as they become available; they show as linked items in the TOC. We hope to get as much feedback as possible before the documentation goes to MSDN.

Consider providing feedback in the comments below.

It is available here and will be ported to the front page of the ACS documentation soon.

- Release Notes for Version 2.0

- Overview of Windows Azure AppFabric Access Control Service

- Getting Started with the Windows Azure AppFabric Access Control Service

- Prerequisites

- Web Applications and ACS

- Web Services and ACS

- Windows Azure AppFabric Access Control Service Features

- Active Federation

- Authorization in claims aware applications

- Claims token transformation explained

- Integrating ACS in ASP.NET applications

- Integrating ACS in WCF RESTful web service

- Integrating with Internet identity providers

- Integrating with enterprise identity providers

- OAuth WRAP protocol

- Mapping an application to Service Identities

- Management service

- Managing trust using Certificates and Keys

- OAuth 2.0 protocol

- Passing a set of claims in the token between client, relying party, identity provider, and ACS

- Passive federation

- Relying party, Client, Identity Provider, ACS

- SAML Token

- SWT Token

- WS-Federation protocol

- WS-Trust protocol

- Working with the Windows Azure AppFabric Access Control Service Management Portal

- Scenarios and Solutions

- ACS v2 Fast Track

- Challenges – SSO, Identity Flow, Authorization

- Solution concept and ACS v2 Architecture

- Secure Web Applications With ACS v2

- Secure RESTful WCF Services With ACS v2

- Automation Using ACS v2 Management Service

- ACS v2 Functionality

- Federation

- Authentication

- Authorization

- Security Token Flow and Transformation

- Trust Management

- Administration

- Automation

- Security

- Performance and Scalability

- Interoperability

- Extensibility

- Troubleshooting

- How To's Index

- How To: Create My First Claims-based ASP.NET application

- How To: Create My First Claims Aware WCF Service

- How To: Configure ADFS 2.0 as an Identity Provider

- How To: Configure Facebook as an Identity Provider

- How To: Configure Federated Authentication in an ASP.NET Web Application

- How To: Configure Google as an Identity Provider

- How To: Configure Yahoo! as an Identity Provider

- How To: Host Login Pages in the Windows Azure AppFabric Access Control Service

- How To: Host Login Pages in Your ASP.NET Web Application

- How To: Configure Trust Between ACS and ASP.NET Web Applications Using X.509 Certificates

- How To: Configure Trust Between ACS and RESTful WCF Service Using Symmetric Keys

- How To: Implement Claims Based Access Control (CBAC) in a Claims Aware ASP.NET Application Using WIF and ACS

- How To: Implement Role Based Access Control (RBAC) in a Claims Aware ASP.NET Application Using WIF and ACS

- How To: Implement Token Transformation Logic Using Rules

- Guidelines Index

- Checklists Index

- Code Samples Index

- Claim-based Identity Terminology

Related Books

- Programming Windows Identity Foundation (Dev - Pro)

- A Guide to Claims-Based Identity and Access Control (Patterns & Practices) – free online version

- Developing More-Secure Microsoft ASP.NET 2.0 Applications (Pro Developer)

- Ultra-Fast ASP.NET: Build Ultra-Fast and Ultra-Scalable web sites using ASP.NET and SQL Server

- Advanced .NET Debugging

- Debugging Microsoft .NET 2.0 Applications

Mike Benkovich and Brian Prince will present an MSDN Webcast: Windows Azure Boot Camp: Connecting with AppFabric (Level 200) on 2/7/2011:

Register today for new Windows Azure AppFabric training! Join this MSDN webcast training on February 7, 2011 at 11:00 AM PST.

It takes more than calling a REST service to make an application work; identity management, security, and reliability are what make the cake. In this webcast, we look at how to secure a REST service, what you can do to connect services together, and how to defeat evil firewalls and nasty network address translations (NATs).

Presenters: Mike Benkovich, Senior Developer Evangelist, Microsoft Corporation and Brian Prince, Senior Architect Evangelist, Microsoft Corporation

In addition, don’t miss these other Windows Azure and SQL Azure webcasts:

- 11/29: MSDN Webcast: Azure Boot Camp: Introduction to Cloud Computing and Windows Azure

- 12/06: MSDN Webcast: Azure Boot Camp: Windows Azure and Web Roles

- 12/13: MSDN Webcast: Azure Boot Camp: Worker Roles

- 01/03: MSDN Webcast: Azure Boot Camp: Working with Messaging and Queues

- 01/10: MSDN Webcast: Azure Boot Camp: Using Windows Azure Table

- 01/17: MSDN Webcast: Azure Boot Camp: Diving into BLOB Storage

- 01/24: MSDN Webcast: Azure Boot Camp: Diagnostics and Service Management

- 01/31: MSDN Webcast: Azure Boot Camp: SQL Azure

- 02/14: MSDN Webcast: Azure Boot Camp: Cloud Computing Scenarios

Windows Azure Virtual Network, Connect, and CDN

The Windows Azure AppFabric Team offers a Connectivity for the Windows Azure Platform forum for Windows Azure Connect, but as of 12/14/2010, it contained only seven threads.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Robert Duffner posted Thought Leaders in the Cloud: Talking with Maarten Balliauw, Technical Consultant at RealDolmen and Windows Azure Expert on 12/14/2010:

Maarten Balliauw is an Azure consultant, blogger, and speaker, as well as an ASP.NET MVP. He works as a technical consultant at RealDolmen. Maarten is a project manager and developer for the PHPExcel project on CodePlex, as well as a board member for the Azure User Group of Belgium (AZUG.BE).

In this interview we discuss:

- Take off your hosting-colored glasses - architecting for the cloud yields benefits far beyond using it as just a mega-host

- Strange bedfellows - PHP on Azure and why it makes sense

- Average is the new peak - The cloud lets you pay for average compute; on-premises makes you pay for peak capacity

- Datacenter API - It makes sense for cloud providers to expose APIs for many of the same reasons it makes sense for an OS to expose APIs

- Data on the ServiceBus - Transferring data securely between your datacenter and your public cloud applications

Robert Duffner: Could you tell us a little bit about yourself to get us started?

Maarten Balliauw: I work for a company named RealDolmen, based in Belgium. Historically, I've worked with PHP and ASP.NET, and since joining RealDolmen, I have been able to work with both technologies. While my day-to-day job is mostly in ASP.NET, I do keep track of PHP, and I find it really interesting to provide interconnections between the technologies.

And Azure is also a great platform to work with, both from an ASP.NET perspective and a PHP perspective. Also, in that area, I'm trying to combine both technologies to get the best results.

Robert: You recently spoke at REMIX 10, and in your presentation, you talked about when to use the cloud and when not to use the cloud. What's the guidance that you give people?

Maarten: The big problem with cloud computing at this time is that people are looking at it from a perspective based on an old technology, namely classic hosting. If you look at cloud computing with fresh eyes, you will see that it is really an excellent opportunity, and that no matter what situation you are in, your solution will always be more reliable and a lot cheaper if you do it in the cloud.

Still, not every existing application can be ported to the cloud at this time. One important metric to use in choosing between cloud and non-cloud deployments is your security requirements. Do you care about keeping your data and applications on premises versus in the cloud?

Robert: We've obviously engineered Azure to be an open platform that runs a lot more than just .NET, including dynamic languages like Python and PHP that you mentioned, but also languages like Java. But you talk quite a bit about PHP on Azure. From your perspective, why would anyone want to do that when there are so many options for PHP hosting today?

Maarten: You can ask the same question about ASP.NET hosting. There are so many options to host your .NET applications somewhere: on a dedicated server, on a virtual private server, on a cloud somewhere. So I think the same question applies to PHP, Java, Ruby, and whatever language you're using.

Azure provides some quite interesting things with regard to PHP that other vendors don't have. For example, the service bus enables you to securely connect between your cloud application and your application that's sitting in your own data center. You can leverage that feature from .NET as well as from PHP. So it's really interesting to have an application sitting in the cloud, calling back to your on-premises application, without having to open any firewall at all.

Robert: In your talk, you also point to the Azure solution accelerators for Memcached, MySQL, MediaWiki, and Tomcat. In your experience, are most people even aware that these kinds of things run on Azure?

Maarten: I'm not sure, because, traditionally, the Microsoft ecosystem is quite simple. There's Microsoft as a central authority offering services, and then there are other vendors adding additional functionality, bringing some other alternative components. In the PHP world, for example, there is no such thing as a central authority, so information is really distributed across different communities, companies, and user groups.

I think some part of the message from Azure is already out there in all these communities and all these small technology silos in the PHP world, but not everything has come through. So I'm not sure if everyone is aware that all these things can actually run on Azure, if you're using PHP or MySQL or whatever application that you want to host on Azure.

Robert: In another talk, you mentioned "Turtle Door to Door Deliveries," and how they estimated needing six dedicated servers for peak load, but because the load is fairly elastic, they saw savings with Azure. Can you talk a little bit more about that example?

Maarten: That was actually a fictitious door-to-door delivery company, like DHL, UPS, or FedEx, which we created for that talk. They knew the load was going to be around six dedicated servers for peaks, but there were times of the day where only one or two servers would be needed. And when you're using cloud computing, you can actually scale dynamically and, for example, during the office hours have four instances on Azure, and during the evening have six instances, and then in the night scale back to two instances.

And if you take an average of the number of instances that you're actually hosting, you will see that you're not paying for six instances, only for three or, at most, four. That means you have the extra capacity of two machines without paying for it.
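Maarten's averaging argument can be checked with a few lines of Python that weight each instance count by how long it runs. The hour split below is an assumption for illustration; the interview gives only the instance counts (four, six and two), and the sketch assumes compute is billed per instance-hour.

```python
from typing import List, Tuple


def average_instances(schedule: List[Tuple[float, int]]) -> float:
    """Average instance count over a schedule of (hours, instances) blocks."""
    total_hours = sum(hours for hours, _ in schedule)
    instance_hours = sum(hours * count for hours, count in schedule)
    return instance_hours / total_hours


# Hypothetical day for the fictitious delivery company; the hours per
# block are assumptions, only the instance counts come from the interview.
day = [
    (9, 4),   # office hours: 4 instances
    (5, 6),   # evening peak: 6 instances
    (10, 2),  # night: 2 instances
]
avg = average_instances(day)
print(round(avg, 2))  # 3.58 instances on average
print(sum(h * n for h, n in day), "instance-hours vs", 24 * 6, "for six fixed servers")
```

Under these assumptions the day costs 86 instance-hours instead of 144, consistent with paying for "three or, at most, four" instances.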

We have done a project kind of like that, for a company in Belgium, doing timing on sports events. Most of these events have a maximum of 1,000 or 2,000 participants, but there are several per year with 30,000 participants.

We used the same technique for that project as in the example in the talk. We scaled them up to 18 instances during peaks, and at night, for example, we scaled them back to two instances. They actually had the capacity of 18 instances during those peak moments, but on average, they only had to pay for seven instances.
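The sports-timing figures can be expressed as a ratio, assuming the alternative would have been running 18 instances around the clock and that cost scales linearly with instance-hours:

```latex
\[
\frac{\bar{n}}{n_{\text{peak}}} = \frac{7}{18} \approx 0.39,
\qquad
1 - \frac{7}{18} = \frac{11}{18} \approx 0.61
\]
```

In other words, the customer paid for roughly 39% of the compute that static peak provisioning would have required, a saving of about 61%.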

Robert: One important characteristic of clouds is the ability to control them remotely through an API. Amazon Web Services has an API that lets you control instances, and you recently wrote a blog post showing a PowerShell script that makes an app auto-scale out to Azure when it gets overloaded. What are some of the important use cases for cloud APIs?

Maarten: If you have a situation where you need features offered by a specific cloud, then you would need those cloud APIs. For example, if you look at the PHP world, there's an initiative, the Simple Cloud API, which is one interface to talk to storage on different clouds, like Amazon, Azure, and Rackspace. They actually provide the common denominator of all these APIs, and you're not getting all the features of the exact cloud that you are using and testing.
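The "common denominator" trade-off can be illustrated with a tiny Python interface that exposes only operations every provider supports. This is a hypothetical sketch, not the Simple Cloud API itself (a PHP library with its own names); `BlobStore` and `InMemoryBlobStore` are illustrative names.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class BlobStore(ABC):
    """Hypothetical lowest-common-denominator storage interface: only
    operations that every supported provider can implement are exposed."""

    @abstractmethod
    def put(self, container: str, name: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, container: str, name: str) -> bytes: ...

    @abstractmethod
    def list_names(self, container: str) -> List[str]: ...


class InMemoryBlobStore(BlobStore):
    """Stand-in backend for testing; a real adapter would wrap one
    provider's storage API (Azure Blob storage, Amazon S3, and so on)."""

    def __init__(self) -> None:
        self._containers: Dict[str, Dict[str, bytes]] = {}

    def put(self, container: str, name: str, data: bytes) -> None:
        self._containers.setdefault(container, {})[name] = data

    def get(self, container: str, name: str) -> bytes:
        return self._containers[container][name]

    def list_names(self, container: str) -> List[str]:
        return sorted(self._containers.get(container, {}))


store: BlobStore = InMemoryBlobStore()
store.put("photos", "b.jpg", b"...")
store.put("photos", "a.jpg", b"...")
print(store.list_names("photos"))  # ['a.jpg', 'b.jpg']
```

Code written against `BlobStore` can switch providers, but anything a provider offers beyond these three methods is out of reach. That is when you need the provider's own API.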

I think the same analogy goes for why you would need cloud APIs. They're just a direct means of interacting with the cloud platform, not only with the computer or the server that you're hosting your application on but, really, a means of communicating with the platform.

For example, consider diagnostics. To get the logs and performance counters of an application on a normal server, you would log in through Remote Desktop and use a terminal to look at the statistics and see how your application is performing. But if you have a lot of instances running on Azure, it would be difficult to log in to each machine separately.

So what you can do then is use the diagnostics API, which lets you actually gather all this diagnostic data in one location. You have one entry point into all the diagnostics of your application, whether it's running on one server or 1,000 servers.
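The value of a single entry point can be illustrated with a small Python sketch that merges log streams from many instances into one time-ordered view. It is hypothetical and does not call the Windows Azure Diagnostics API; the instance IDs and messages are made up, and each per-instance log is assumed to be sorted by timestamp already.

```python
import heapq
from typing import Dict, Iterable, Iterator, Tuple

LogEntry = Tuple[float, str]  # (timestamp, message)


def _tag(instance: str, entries: Iterable[LogEntry]) -> Iterator[Tuple[float, str, str]]:
    """Attach the instance ID to each of that instance's log entries."""
    for timestamp, message in entries:
        yield timestamp, instance, message


def merge_instance_logs(logs: Dict[str, Iterable[LogEntry]]) -> Iterator[Tuple[float, str, str]]:
    """Merge per-instance logs, each already sorted by timestamp, into one
    time-ordered stream of (timestamp, instance, message) tuples."""
    return heapq.merge(*(_tag(instance, entries) for instance, entries in logs.items()))


# Hypothetical instance IDs and messages.
logs = {
    "WebRole_IN_0": [(1.0, "request served"), (5.0, "high CPU")],
    "WebRole_IN_1": [(2.0, "request served")],
}
for entry in merge_instance_logs(logs):
    print(entry)
```

`heapq.merge` streams the inputs lazily, so the same code works whether there is one instance or 1,000.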

Robert: That's a great example. You also wrote an article titled "Cost Architecting for Windows Azure," talking about the need to architect specifically for the cloud to get all the advantages. Can you talk a little bit about things people should keep in mind when architecting for the cloud?

Maarten: You need to take costs into account when you're architecting a cloud application. If you take advantage of all the specific billing situations that these platforms have to offer, you can actually reduce costs and get a highly cost effective architecture out there.

For example, don't host your data in a data center in the northern US and your application in a data center in the southern US, because you will pay for the traffic between both data centers. If your storage is in the same data center as your hosted application, you won't be paying for internal traffic.

There are a lot of factors that can help you really tune your application. For example, consider the case where a worker process checks a message queue and processes the messages in it. Typically, you would poll the queue for new messages, and if no messages are there, you would keep polling until one arrives. Then you process the message and start polling again. You may be polling the queue a few times a second.

Every time you poll the queue is a transaction. Even though transactions are not that expensive on Windows Azure, if you have a lot of transactions, it can cost you substantial money. Therefore, if you poll less frequently, or with a back off mechanism that causes you to wait a little longer if there are no messages, you can drastically reduce your costs.
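A back-off poller along the lines Maarten describes might look like this Python sketch. `get_message` and `handle` are hypothetical stand-ins for a storage-client call that dequeues one message (returning `None` when the queue is empty) and for your processing code. The delay values are arbitrary assumptions, not recommendations, and the `sleep` parameter exists only so the function can be tested without waiting.

```python
import time
from typing import Callable, Optional


def poll_with_backoff(get_message: Callable[[], Optional[str]],
                      handle: Callable[[str], None],
                      min_delay: float = 0.5,
                      max_delay: float = 30.0,
                      max_polls: Optional[int] = None,
                      sleep: Callable[[float], None] = time.sleep) -> int:
    """Poll a queue, doubling the wait after each empty poll (up to max_delay)
    and resetting it once a message arrives. Returns the number of polls."""
    delay = min_delay
    polls = 0
    while max_polls is None or polls < max_polls:
        message = get_message()   # each poll counts as a storage transaction
        polls += 1
        if message is not None:
            handle(message)
            delay = min_delay     # work is arriving: poll again right away
        else:
            sleep(delay)          # queue empty: wait, then back off further
            delay = min(delay * 2, max_delay)
    return polls


# Usage with a fake queue: two empty polls, one message, then three empty polls.
replies = iter([None, None, "order-42", None, None, None])
waits = []
count = poll_with_backoff(lambda: next(replies), print,
                          min_delay=1, max_delay=4, max_polls=6,
                          sleep=waits.append)
print(count, waits)  # 6 [1, 2, 1, 2, 4]
```

Doubling the delay up to a cap keeps latency low while messages are flowing and cuts the number of empty, billable polls when the queue is idle. For comparison, a worker that polls twice a second makes 2 × 86,400 = 172,800 requests a day; once backed off to a 30-second cap, it makes about 2,880.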

Robert: One of the key challenges for enterprise cloud applications is that they often can't run as a separate island. Can you talk about some of the solutions that exist when identity and data need to be shared between your data center and the cloud?

Maarten: Actually, the Windows Azure AppFabric component is completely focused on this problem. It offers the service bus, which you can use to have an intermediate party between two applications. Say you have an application in the cloud and another one in your own data center, and you don't want to open a direct firewall connection between both applications. You can actually relay that traffic through the Windows Azure service bus and have both applications make outgoing calls to the service bus, and the service bus will route traffic between both applications.

You can also integrate authentication with a cloud application. For example, if you have a cloud application where you want your corporate accounts to be able to log in, you can leverage the Access Control service. That will allow you to integrate your cloud application with your Active Directory, through the AppFabric ACS and an Active Directory federation server.

If you have an application sitting in your own data center but you need a lot of storage, you can actually just host the application in your data center and have a blob storage account on Azure to host your data. So there are a lot of integrations that are possible between both worlds.

Robert: You manage a few open source projects on CodePlex. Can you talk a little bit about the opportunity for open source on platforms like Azure or those operated by other cloud providers?

Maarten: Consider, for example, SugarCRM, which is an open source CRM system that you can just download, install, modify, view the code of, and things like that. What they actually did was take that application and also host it in a cloud. They still offer their product as an open source application that you can download and use freely. But they also have a hosted version, which they use to drive some revenue and to be able to pay some contributors.

They didn't have to invest in a data center. They just said, "Well, we want to host this application," and they hosted it in a cloud somewhere. They had a low entry cost, and as customers start coming, they will just increase capacity.

Normally, if a lot of customers come, the revenue will also increase. And by doing that, they actually now have a revenue model for their open source project, which is still free if you want to download it and use it on your own server. But by using the hosted service that's hosted somewhere on a cloud, they actually get revenue to pay contributors and to make the product better.

Robert: Maarten, I appreciate your time. Thanks for talking to the Windows Azure community.

Maarten: Sure. Thank you.

The Windows Azure Team announced on 12/13/2010 Transport for London Moves to Windows Azure:

Today we have some great news to announce as Transport for London (TfL) has taken to the cloud by moving their Developers Area and new Trackernet data feed to the Windows Azure platform. This forms the first major step forward as part of their comprehensive Digital Strategy and open data policy initiative.

The Developers Area contains TfL's data feeds and allows developers to create applications for mobile and other devices for use by the public. Feeds available include information on the location of cycle hire docking stations, timetable data and real time traffic and roadworks information along the entire TfL transport network in and around London.

The latest data feed to be added to the Area and onto Windows Azure is called 'Trackernet' - an innovative new realtime display of the status of the London Underground 'Tube' network. Trackernet is able to display the locations of trains, their destinations, signal aspects and the status of individual trains at any given time. This works by taking four data feeds from TfL's servers and making them available to developers and the public via the Windows Azure platform.

Microsoft and TfL have worked in partnership to create this scalable and strategic platform. The platform is resilient enough to handle several million requests per day and will enable TfL to make other feeds available in the future via the same mechanism, at a sustainable cost. To check out Trackernet for yourself, take a look here.

Attending the launch in London were Chris MacLeod, Director of Group Marketing, Transport for London, and Mark Taylor, Director of DPE at Microsoft UK.

Chris MacLeod, TfL's Director of Group Marketing, said: "This is great news for TfL passengers. We are committed to making travel information available to passengers how and when they want it. Trackernet - which will lead to some new apps and which many developers have already tested for themselves - is a great example of how TfL is using new technologies to provide better travel tools for public transport users."

Michael Gilbert, TfL's Director of Information Management, said: "TfL, with the help of Microsoft, has created a strategic, scalable technical platform that will aid us in making real-time data sets available; the first of which is Trackernet data."

Mark Taylor had this to say: "TfL asked for a system able to handle in excess of seven million requests per day, as well as being able to scale to handle unpredictable events like snow days. Microsoft's cloud computing platform, Windows Azure, is ideal for this kind of task and provides a great basis for the programme of innovations that TfL has planned over the coming years, such as Trackernet. We look forward to continuing to work with TfL and other organisations seeking a secure, trusted cloud computing experience."
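For a sense of scale, spreading the seven million daily requests Mark Taylor cites evenly over 24 hours gives the average rate below. Real traffic is uneven, so peaks (rush hours, snow days) would be well above it:

```latex
\[
\frac{7\,000\,000\ \text{requests/day}}{86\,400\ \text{s/day}} \approx 81\ \text{requests/s}
\]
```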

Derrick Harris explained Why CloudSwitch Is Gaining Momentum and Resisting VCs in a 12/13/2010 post to GigaOm’s Structure blog:

Updated: Hot cloud computing startup CloudSwitch today released version 2.0 of its flagship product, CloudSwitch Enterprise, which helps companies move enterprise applications to the cloud with a few clicks of the mouse. The new features should help in the company’s quest to become an integral part of customers’ IT environments, but my conversation with CEO John McEleney and Founder/VP Ellen Rubin focused on the company’s future plans and how it’s bucking the seeming trend toward grabbing as much venture capital as possible.

Regarding version 2.0, Rubin says the goal is to make the product more than a one-time experience of porting applications into the cloud and moving on. Whereas version 1.0 let customers port applications into the cloud while maintaining the look and feel of in-house administration, as well as internal policies, version 2.0 lets customers develop applications within the CloudSwitch environment and launch them natively in the cloud. It also lets them utilize advanced networking techniques and apply public IP addresses to customer-facing applications, which Rubin explained will save customers’ data centers from overwhelming load should an application experience heavy traffic.

Going forward, McEleney says that CloudSwitch, which currently lets users work within Amazon EC2 and Terremark, “will support multiple clouds,” including Windows Azure, Savvis and OpenStack. As Rubin added, certain clouds in certain geographies might be better suited for particular workloads, so choice is key to making CloudSwitch Enterprise the most valuable tool possible. CloudSwitch will support the Windows Azure Virtual Machine Role, the IaaS capability announced during Microsoft’s Professional Developers Conference in October. [Emphasis added.]

We also spoke about funding, or, rather, CloudSwitch’s relative lack thereof. The company is among the most-promising cloud computing startups around, having won the Launchpad competition at our Structure 2010 event and already boasting a number of large, even Fortune 500, enterprises as customers. Still, CloudSwitch has raised only $15.4 million and closed its latest round in June 2009. This is in stark contrast to other popular cloud startups, some of which have raised between $30 million and $50 million. RightScale just closed a $25 million round in September. Update: On the other hand, Heroku had raised just $13 million before selling itself to Salesforce.com for $212 million last week.

According to McEleney, it’s not that his company can’t get more money, but rather that “[t]here’s still plenty of gunpowder left.” Although he says selling CloudSwitch’s product is a bit more resource-intensive than selling SaaS via a freemium model (a la New Relic), CloudSwitch still runs its business as efficiently as possible, and it has money coming in from deep, and large, customer engagements. Its investors are there if CloudSwitch needs an injection of capital to spur growth, but McEleney just isn’t keen on diluting its valuation. After all, he joked, it’s not like CloudSwitch is valued as high as Groupon, where tens of millions are merely a drop in the bucket.

If it keeps up its momentum, CloudSwitch might not need to raise another round. Large vendors have been furiously buying cloud startups over the past year, and few have received the accolades that CloudSwitch has. Further, its hybrid nature fits many systems-management vendors’ strategies of keeping applications primarily on-premise but easing the transition to the cloud when necessary. IBM, CA, Dell, Red Hat and BMC all seem like legitimate potential buyers, possibly within the next year.


See also Lori MacVittie (@lmacvittie) claimed Options begin to emerge to address a real management issue with virtualized workloads in public cloud computing in a preface to her “Lights Out” in the Cloud post of 12/14/2010 to F5’s DevCentral blog in the Other Cloud Computing Platforms and Services section below.

Josh Greenbaum explained the importance of Dynamics CRM to Windows Azure in his The Realignment of the Enterprise Software Market: Oracle vs. Everyone, Microsoft in Ascendance, and Watch out for Infor post of 12/13/2010 to his Enterprise Irregulars blog:

Larry Ellison makes no bones about it, he’s willing and able to compete to the death, or at least to the near death, with any and all who stand in his way. So, despite the billions of dollars in joint sales between Oracle and SAP, HP, and IBM, Ellison has been directly, and at times viciously, attacking these three companies in very public and very over the top ways.

These are not some of Ellison’s finest moments.

Not only that, he seems to have neglected one major rival – Microsoft – despite the fact that the relative degree of competition versus joint business between Oracle and Microsoft is significantly smaller than between Oracle and SAP, HP, and IBM. This would theoretically make Microsoft a more logical target for Larry’s wrath – especially if Larry really understood the degree of the threat that Microsoft presents. More on this in a moment.

And everyone seems to be forgetting that a new player is about to emerge in the form of Infor. More on this in a moment too.

Meanwhile, Ellison’s attacks and actions regarding SAP, HP and IBM are having the unintended effect of pushing these three competitors into a relatively united front against the Ironman from Redwood Shores. While SAP, HP and IBM all have many reasons to compete with one another, SAP in particular is beginning to see some benefits of being the wild card in the HP and IBM portfolios that can counter the wildness of Oracle.

SAP EVP Sanjay Poonen described this effect at the SAP Summit last week – adding that in particular his field sales team is teaming up with IBM and HP to beat Oracle as much as possible. The fact that this is happening perhaps more in the field than in the executive suite is key: field sales people are notoriously pragmatic and tactical, and their apparent propensity to team up with erstwhile rivals to take on Oracle is an indication of the value of this new realignment, all other political realities aside.

So, with Ellison talking Oracle into a corner with SAP, HP and IBM – one based, in my opinion, on a theory about the value of the hardware “stack” that is hugely outmoded – it’s interesting how little time he spends dissing Microsoft. To his peril.

What many, Larry obviously among them, don’t realize is that Microsoft Dynamics, the former bastard stepchild of the Microsoft portfolio, is now becoming the poster child of innovation in Redmond. And Redmond is taking notice big time: hidden in the departure of Stephen Elop for Nokia was a significant upgrade for Dynamics: Kirill Tatarinov, the head of Dynamics, now reports directly to Steve Ballmer.

This is no accident, or, as one of my Microsoft contacts told me, “Ballmer is already busy, he doesn’t need more direct reports.” The reason for Ballmer’s direct interest in Dynamics is based on three newly discovered values for Microsoft’s second smallest division:

1) The Dynamics product pull-through: Once, in the days before Jeff Raikes, there was serious talk about jettisoning Dynamics. Then Raikes figured out that Dynamics is a great driver of product pull-through. Today, every dollar of Dynamics generates from $3 to $9 in additional software sales for Microsoft. Now that’s what I call a stack sale.

2) Dynamics’ VARs and ISVs get the software & services = Azure strategy: It’s clear that the value-add of Azure has to be about driving innovation into the enterprise, and that’s not something the average Office or Windows developer really gets. But Dynamics partners live and breathe this concept every day, and Microsoft has been honing the high-value, enterprise-level capabilities of its Dynamics channel this year in a concerted effort to make them enterprise and Azure-ready. [Emphasis added.]

3) Dynamics capabilities will drive much of the functionality in Azure: Dynamics CRM is already queued up to be the #1 app in Azure. Next stop, break up AX into components, and let the partners use them as building blocks for value-added apps. (And do so before SAP’s ByD development kit starts stealing this opportunity.) While SQL Server, SharePoint, Communications Server, Windows Server, and other pieces of the stack will have a big play in Azure, the customer working in that innovative new Azure app will most likely be directly interfacing with a piece of AX, or Dynamics CRM. That makes Dynamics essential to Azure, and vice versa. [Emphasis added.]

What does the Microsoft factor mean in terms of the aforementioned realignment? Well, despite Ellison’s public neglect, Microsoft is setting up a strong case for contending for a big chunk of the market. The case starts with the SME market, where Microsoft is traditionally strong. But the Azure/S&S/Dynamics strategy is heading straight for the large enterprise as well. SAP’s recent strategic definition of the opportunity for on-demand apps to deliver innovation to large enterprise customers locked into an on-premise world plays well for the above Microsoft strategy as well.

This places Microsoft front and center in a battlefield where it is deploying 21st century technology against an Oracle that is fighting the battle as though this were still the 20th century. We’ve all seen this movie before: Black Hawk Down is just one version of the danger of deploying sexy but old technology in a battlefield that has evolved in the middle of the war.

With Microsoft starting to outflank it – its version of a stack is a warehouse full of interchangeable, plug and play container-sized data centers, with virtually no on-site maintenance needed – Larry is driving Oracle towards a focus on hardware that starts to look a little tired. Sure, we saw genuine innovation from Oracle at Open World this fall, but it was buried in a corporate message about hardware and a strategic necessity for a little too much middleware in order to deploy this innovation. Fusion looked good, but it’s not yet ready for the market, and the shenanigans surrounding the circus atmosphere preceding and during the trial against SAP were an unfortunate distraction from the business of actually building and selling innovation.

In fact, I think the impact of the trial will rebound against Oracle as the negatives – mostly centering around the lawyering-up of the enterprise software market – start to come home to roost. I always felt that Oracle would be hard-pressed to show that its hot-footed, heavy-handed pursuit of SAP would have a measurable payback in either market share, stock price, or customer preference, and the impact of adding the fear that Oracle is ready and willing to lawyer up to win in the market will start to trickle down to the customers as well. No vendor wants its customers to worry that they could end up facing down the likes of David Boies if things start to go south.

Meanwhile, I have yet to find a single customer who thinks more highly of Oracle since the trial, or for that matter, less of SAP. I do know a number of customers who have really been scratching their heads at Oracle’s conduct, and I personally worry that the lawyers seem to be running Oracle more and more, to the detriment of the people at Oracle who actually develop, market, and sell product. I love lawyers, including the one I employ to help me when my business dealings need it – but the day he becomes a strategic asset to my business development efforts is the day I look in the mirror and start slapping myself around.

So, with Oracle facing a number of potential self-inflicted wounds of late, Microsoft on the rise, and HP/SAP and IBM/SAP starting to work better and better together, the market is starting to shift gears once again.

And waiting in the wings, but not for long, is Infor, with Charles Phillips at the helm, ready to start throwing its weight around. I firmly believe that Charles Phillips, aided and abetted by Bruce Richardson, the former AMR analyst, is calmly dissecting the market and getting ready to launch a new strategy that will have Phillips’s old company occupying the bullseye in the middle of the target. Not because anyone is vindictive or out for revenge – not in our industry – but because Oracle’s strategy is the one that’s looking a little stale right now. I for one can’t wait to see what the new Infor is cooking up for the market.

So, it’s almost 2011, and my prediction is this: fasten your seatbelts. The new year will bring a shifting of forces and interests that would make Metternich drool and leave Kissinger gasping for air. And regardless of whether you believe any of the above, you have to grant me this last fact: boy, is 2011 going to be an interesting year.

Image credit: Digital Daily

If you’re interested in Microsoft Dynamics CRM, you might enjoy the following video segments posted by Microsoft Dynamics ISV Architect Evangelist John O'Donnell on 12/14/2010:

- New Video - Overview of the Dynamics Communities CRMUG event with the event organizers

- New Video - Talking Dynamics CRM and the CRMUG event with the Dynamics CRM MVP's Part One

- New Video - Talking Dynamics CRM and the CRMUG event with the Dynamics CRM MVP's Part Two

Eric Newell announced Microsoft Dynamics CRM 2011 Release Candidate Bits Available in a 12/14/2010 post:

You can download the Release Candidate version of Microsoft Dynamics CRM 2011 from the Microsoft download servers at http://downloads.microsoft.com. Here are the specific links to the various packages that can be downloaded:

- Microsoft Dynamics CRM 2011 Server Release Candidate

- Microsoft Dynamics CRM 2011 for Microsoft Office Outlook

- Microsoft Dynamics CRM 2011 Language Pack

- Microsoft Dynamics CRM 2011 E-mail Router

- Microsoft Dynamics CRM 2011 Report Authoring Extension

- Microsoft Dynamics CRM 2011 List Component for Microsoft SharePoint Server 2010

This is still a pre-release version of the software, so this is not the final Release to Manufacturing (RTM) build. There is a CRM 2011 launch event planned for January 20, 2011 where information about the final release will be shared. If you would like to watch the launch event, you can find more information and register here: http://crm.dynamics.com/2011launch/.

Eric is a Premier Field Engineering Manager for Microsoft Services focused on the Dynamics family of products.

Dawate started a new series with Into the Cloud with Windows Phone, Windows Azure and Push Notifications on 12/14/2010:

The need for cloud computing (or at least an understanding of it)

Windows Phone 7 supports “smart” multitasking, which means that third-party applications have to manage their own state and cannot run in the background (except for some apps which can play music under a locked screen, although this is really a function of the first-party software on the phone).

That leaves some folks confused: How can I run a “service” type application on the phone?

The answer is that you really can’t. And this answer has many developers heading for the cloud.

Now, ultimately I think this is a good thing. Say you have an app that polls stocks. Instead of sitting there on your phone, draining your battery, the app could be running in the cloud, on a machine plugged into a wall somewhere that has comparatively infinite resources. Whenever that stock goes “bing,” the phone gets a notification and launches the app. That’s a pretty clean way of doing it and leaves you with more precious battery life!

Over the next few blog posts, we’ll explore some of the uncharted vapors of the cloud and how we can utilize them to take your Windows Phone apps to the next level.

Here is the tentative agenda (I’ll be updating this as we move):

- Intro (this post!)

- App Data: SQL Azure

- The Cloud Service: Registering for Push Notifications (via WCF endpoint)

- The Cloud Service: Worker Role

- Writing the Phone App

About the app we’ll build

We will be building a very simple app that watches a few stocks for a user and occasionally alerts that user when a stock changes by some threshold. Easy, right? You’ll be amazed to see all the pieces of Microsoft we touch during this journey.
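The heart of such an app is a simple threshold check that runs in the cloud rather than on the phone. The sketch below is only an illustration under stated assumptions, not code from the series: the function names, the percentage-based threshold, and the ticker symbols and prices are all hypothetical. In the planned design, a worker role would run a check like this and send a push notification for each symbol it returns.

```python
def percent_change(previous: float, current: float) -> float:
    """Change from previous to current, as a percentage of previous."""
    if previous == 0:
        raise ValueError("previous price must be non-zero")
    return (current - previous) / previous * 100.0


def should_alert(previous: float, current: float, threshold_pct: float) -> bool:
    """Hypothetical rule: alert when the absolute move reaches the threshold."""
    return abs(percent_change(previous, current)) >= threshold_pct


def stocks_to_notify(last_seen: dict[str, float], latest: dict[str, float],
                     threshold_pct: float) -> list[str]:
    """Watched symbols whose price moved by at least threshold_pct since last seen."""
    return sorted(
        symbol
        for symbol, price in latest.items()
        if symbol in last_seen and should_alert(last_seen[symbol], price, threshold_pct)
    )


if __name__ == "__main__":
    last_seen = {"AAA": 27.00, "BBB": 50.00}  # hypothetical symbols and prices
    latest = {"AAA": 27.10, "BBB": 53.00}
    print(stocks_to_notify(last_seen, latest, threshold_pct=5.0))  # ['BBB']
```

Keeping this logic server-side is what saves the battery: the phone only wakes up when a notification actually arrives.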

Next…

Join me tomorrow to read about SQL Azure and see how to set up a database using the new management portal (formerly Project Houston).

Brian Hitney continued his series with Folding@home SMP Client on 12/13/2010:

Wouldn’t you know it! As soon as we get admin rights in Azure in the form of Startup Tasks and VM Role, the fine folks at Stanford have released a new SMP client that doesn’t require administrative rights. This is great news, but let me provide a little background on the problem and why this is good for our @home project.

In the @home project, we leverage Stanford’s console client in the worker roles that run their Folding@home application. The application, however, is single threaded. During our @home webcasts where we’ve built these clients, we’ve walked through how to select the appropriate VM size – for example, a single core (small) instance, all the way up to an 8 core (XL) instance.

For our purposes, using a small, single-core instance is best. Because the costs are linear (two single-core instances cost the same as one dual-core instance), we might as well just launch one small VM for each worker role we need. The extra processors wouldn’t be utilized, and it made no difference whether we had one quad-core VM running four client instances or four small VMs each running its own instance.
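The cost argument can be made concrete with a little arithmetic. This is a sketch under two assumptions: price scales linearly with core count (as the post states), and each VM runs one single-threaded client (the post notes that running several clients on one larger VM works out the same). The per-core hourly rate is a hypothetical number for illustration, not a quoted Windows Azure price.

```python
def hourly_cost(instances: int, cores_per_instance: int, rate_per_core_hour: float) -> float:
    """Hourly cost, assuming price scales linearly with the total number of cores."""
    return instances * cores_per_instance * rate_per_core_hour


def busy_cores(instances: int, cores_per_instance: int, threads_per_client: int = 1) -> int:
    """Cores kept busy when each VM runs one client using threads_per_client threads."""
    return instances * min(cores_per_instance, threads_per_client)


RATE = 0.12  # hypothetical $ per core-hour, for illustration only

# Eight single-core VMs versus one 8-core VM, one single-threaded client per VM.
print(hourly_cost(8, 1, RATE) == hourly_cost(1, 8, RATE))  # True: same cost
print(busy_cores(8, 1), busy_cores(1, 8))                   # 8 1
```

With a single-threaded client, the large VM costs the same but leaves seven cores idle, which is why small instances were the natural choice.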

The downside to this approach is that the work units assigned to our single core VMs were relatively small, and consequently the points received were very small. In addition, bonus points are offered based on how fast work is done, which means that for single core machines, we won’t be earning bonus points. Indeed, if you look at the number of Work Units our team has done, it’s a pretty impressive number compared to our peers, but our score isn’t all that great:

As you can see, we’ve processed some 180,000 WUs; that would take one of our small VMs, working alone, some 450 years to complete! Points-wise, though, our results are somewhat ho-hum.

Stanford has begun putting together some high-performance clients that make use of multiple cores; until now, however, these were difficult to install in Windows Azure. With the VM Role and admin startup tasks just announced at PDC, we could now accomplish these tasks inside Azure, but it turns out Stanford (a few months back, actually) put together a drop-in replacement that is multicore capable. Read their install guide here. This is referred to as the SMP (symmetric multiprocessing) client.

The end result is that instead of having (for example) eight single-core clients running the folding app, we can instead have one 8-core machine. While it will crunch fewer Work Units, the power and point value are far superior. To test this, I set up a new account with a username of bhitney-test. After a couple of days, this is the result (everyone else is using the non-SMP client):

36 Work Units processed for 97k points averages about 2,716 points per WU. That’s significantly higher than the single-core client, which pulls in about 100 points per WU. The 2,716 average is quite a bit lower than what the SMP client is earning right now, because bonus points don’t kick in for about the first dozen items.
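The per-WU figure is a simple average, so it is easy to check. The sketch below uses only numbers from the post; note that the rounded 97k total gives about 2,694 points per WU, so the quoted 2,716 average implies an unrounded total of roughly 97,800 points.

```python
def points_per_wu(total_points: float, work_units: int) -> float:
    """Average points earned per work unit."""
    return total_points / work_units


smp_avg = points_per_wu(97_000, 36)  # rounded total from the post
single_core_avg = 100                # approximate single-core figure from the post

print(round(smp_avg))                    # 2694
print(round(smp_avg / single_core_avg))  # 27: roughly 27x the points per WU
print(2_716 * 36)                        # 97776: total implied by the quoted average
```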

Had we been able to use the SMP client from the beginning, we’d be sitting pretty at a much higher rating – but that’s ok, it’s not about the points. :)

<Return to section navigation list>

Visual Studio LightSwitch

Kunal Chowdhury continued his LightSwitch series with Beginners Guide to Visual Studio LightSwitch (Part – 4) – Working with List and Details Screen on 12/2/2010 (possible repeat):

This article is Part 4 of the series “Beginners Guide to Visual Studio LightSwitch”:

- Beginners Guide to Visual Studio LightSwitch (Part – 1) – Working with New Data Entry Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 2) – Working with Search Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 3) – Working with Editable DataGrid Screen

- Beginners Guide to Visual Studio LightSwitch (Part – 4) – Working with List and Details Screen

Visual Studio LightSwitch is a new tool for building data-driven Silverlight applications using the Visual Studio IDE. It automatically generates the user interface for a data source without your writing any code, and you can also write a small amount of code to meet your requirements.

In my previous chapter, “Beginners Guide to Visual Studio LightSwitch (Part – 3)”, I guided you step by step through creating a DataGrid of records, and you learned how to insert, modify and delete records.

In this chapter, I am going to demonstrate how to create a List and Details screen using LightSwitch. This will show you how to integrate two or more tables into a single screen.

Background

If you are new to Visual Studio LightSwitch, I suggest you first read the previous three chapters of this tutorial, where I demonstrated it in detail. In my third chapter, I discussed the following topics:

- Create the Editable DataGrid Screen

- See the Application in Action

- Edit a Record

- Create a New Record

- Delete a Record

- Filter & Export Records

- Customizing Screen

In this chapter, we will discuss the List and Details screen, where we can integrate two or more tables into a single screen. Read the complete tutorial to learn how; there are plenty of images to help you follow along. Don’t forget to vote and leave some feedback on this topic.

Creating the List and Details Screen

Like the other screens, the List and Details screen is very easy to create. I hope you have the basic table available; if you don’t, read the first chapter of this tutorial to create it. Now open the StudentTable in your Visual Studio 2010 IDE. As shown in the screenshot below, click the “Screen…” button to open the Screen Template selector.

In the “Add New Screen” dialog that pops up (which is simply the Screen Template Selector), choose the “List and Details Screen” template. See the screenshot below for details:

In the right-side panel, choose the table that you want to use. Select “StudentTables” from the Screen Data combo box and click “Ok”. This creates the basic UI from the screen template with all the necessary fields.

You will be able to see the UI design inside the Visual Studio IDE itself. This will look just like this:

You will see that the screen has four TextBoxes, named FirstName, LastName, Age and Marks. These are simply the table columns added to the screen.

Now run the application to see it in action. Once the application appears, click the “StudentTableListDetail” menu item in the left navigation panel. If you don’t know what this navigation panel is, read any one of the previous three chapters.

UI Screen Features

Here you will see that there are two sections inside the main tab. The left one shows only the FirstName column of each record, and the right-side panel shows all the details for the selected record.

If you choose a different record from the list on the left, the right panel automatically reflects that record’s details. This screen is called a List and Details screen because of its UI: the left panel is the list of records and the right panel shows the details of the selected record.

Here, you will see that, as on the other screens, the “Add…”, “Edit…”, “Delete”, “Refresh” and “Export to Excel” buttons are available. The “Add” button pops up a modal dialog with a form for entering a new record. The “Edit” button pops up a modal dialog with the selected record so you can modify it. “Delete” removes the record, but it is not actually deleted from the table until you press “Save”. The “Refresh” button re-queries the table to fetch the records. “Export to Excel” opens the Excel application with the listed records.
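LightSwitch handles this behavior for you; no code is needed. Purely to illustrate the "delete now, commit on Save" pattern, here is a conceptual Python sketch. It is not LightSwitch's API or internals, and the class and method names are hypothetical.

```python
class PendingDeleteScreen:
    """Conceptual sketch: deletes are queued and applied only when save() runs."""

    def __init__(self, rows: dict[int, str]):
        self.table = dict(rows)              # stands in for the stored table
        self.pending_deletes: set[int] = set()

    def delete(self, row_id: int) -> None:
        # Mark the row for deletion; the stored table is not touched yet.
        self.pending_deletes.add(row_id)

    def save(self) -> None:
        # Commit: remove every marked row from the stored table.
        for row_id in self.pending_deletes:
            self.table.pop(row_id, None)
        self.pending_deletes.clear()

    def discard(self) -> None:
        # Abandon the pending deletes without changing the stored table.
        self.pending_deletes.clear()


screen = PendingDeleteScreen({1: "Ann", 2: "Raj"})
screen.delete(2)
print(2 in screen.table)  # True: not removed until Save
screen.save()
print(2 in screen.table)  # False
```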

You will also notice a new Combo Box in the UI, called “Arrange By” (as shown in the figure below). If you click the drop-down, you will see all the column names in the list.

From there, you can sort the records by any field. Once you select a different field from the drop down, it will again load the records sorted by that field.

As the screenshot below shows, you will see an indicator while the records are sorted and loaded. No code needs to be written for any of this functionality; these are all out-of-the-box features.

Here you will see how the records have been sorted by FirstName (in ascending order):

If you want to sort them in descending order, just click the small arrowhead to the right of the “Arrange By” text. Here is a screenshot of it (marked with a red circle):
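Conceptually, “Arrange By” plus the direction arrow amounts to sorting the records by one chosen field, ascending or descending. The Python sketch below only illustrates that idea; the column names come from the StudentTable in this tutorial, but the sample rows and the `arrange_by` helper are hypothetical, and LightSwitch itself needs no code for this.

```python
from operator import itemgetter

# Hypothetical StudentTable rows, using the tutorial's column names.
students = [
    {"FirstName": "Priya", "LastName": "Rao", "Age": 21, "Marks": 88},
    {"FirstName": "Amit", "LastName": "Shah", "Age": 23, "Marks": 75},
    {"FirstName": "Neha", "LastName": "Iyer", "Age": 22, "Marks": 91},
]


def arrange_by(records: list[dict], field: str, descending: bool = False) -> list[dict]:
    """Return the records sorted by one field; descending mirrors the arrow toggle."""
    return sorted(records, key=itemgetter(field), reverse=descending)


print([s["FirstName"] for s in arrange_by(students, "FirstName")])
# ['Amit', 'Neha', 'Priya']
print([s["FirstName"] for s in arrange_by(students, "Marks", descending=True)])
# ['Neha', 'Priya', 'Amit']
```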

Add and Edit operations work as in the earlier screens: either press the respective button or double-click the DataGrid. Double-clicking a DataGrid cell performs the operation inline, while pressing the button pops up a modal dialog.

You can see the above figure. Once you click “Edit” button, the modal window will pop up as shown in the figure. Update the records with desired values.

Adding a New Table

Let us create a new table. Open Solution Explorer and right-click “Application Data” to bring up a context menu. As shown below, click the “Add Table” menu item to open the Table designer.

In the table designer screen, enter all the columns with their specific column types. For our example, we will create a StudentMarks table with three new columns named “Math”, “Physics” and “Biology”. The “Id” column is the default primary key field.

All the columns are Int16 type in our case. The new table design will look like this:

Creating the Validation Rules

In earlier chapters, we saw how the IDE creates a validation rule for null values by default. Here, we will go a little deeper and set some additional validation rules. Of course, we won’t write them in code; we will simply set some properties in the Properties panel.

Choose each of the subject columns in turn and set its Minimum and Maximum values. In our case, the minimum value will be 0 (zero) and the maximum value will be 100. Repeat the step shown in the screenshot above for the other two fields. Now those fields will accept an integer value between 0 and 100; you will not be able to enter any value less than 0 or greater than 100.

If you enter a value outside this range, it will fail validation and an appropriate message will appear on the screen. We will see that in action later, but first we need to create the screen.
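Setting Minimum and Maximum amounts to a range check on each subject column. The Python sketch below is conceptual only: it is not LightSwitch's API, and `validate_range` and `validate_marks` are hypothetical helpers. The message wording copies the messages the tool displays, which are quoted in the “Application in Action” section of this chapter.

```python
SUBJECTS = ("Math", "Physics", "Biology")


def validate_range(value: int, minimum: int = 0, maximum: int = 100) -> list[str]:
    """Return validation messages for value; an empty list means it passed."""
    if value > maximum:
        return [f"Value is more than the maximum value {maximum}"]
    if value < minimum:
        return [f"Value is less than the minimum value {minimum}"]
    return []


def validate_marks(row: dict[str, int]) -> dict[str, list[str]]:
    """Validate every subject column of a StudentMarks-like row (hypothetical helper)."""
    errors = {}
    for column in SUBJECTS:
        messages = validate_range(row[column])
        if messages:
            errors[column] = messages
    return errors


print(validate_marks({"Math": 105, "Physics": 80, "Biology": -3}))
# {'Math': ['Value is more than the maximum value 100'], 'Biology': ['Value is less than the minimum value 0']}
```

A row that returns an empty dictionary would be allowed to save; any other result blocks the save until the values are corrected.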

Adding Relationship between two tables

Yes, we can easily create a relationship between two tables. How? Click the “Relationship…” button at the top-left corner of the table designer screen (as shown below):

This brings up the “Add New Relationship” dialog. You will notice that it has two table fields, “from” and “to”, which define the relationship between the two tables. There is also a field called “Multiplicity”, which is simply the relationship type (one to one, one to many, many to one, etc.).

One-to-one relationships are not supported in this Beta 1 version, and I can’t say whether they will be available in later versions. If you select a one-to-one relationship, the wizard shows a message saying “One to one relationships are not supported”. Have a look here:

For our example, choose a Many to One relationship between StudentMarks and StudentTable. You can also select the delete behavior in the wizard: whether it will support restricted or cascading delete operations.
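To make the two delete behaviors concrete, here is a conceptual Python sketch of a many-to-one relationship. It is not how LightSwitch stores data. `Store` and its methods are hypothetical, and the subject columns are omitted so that only the foreign key from each StudentMarks row to its StudentTable row remains. `marks_for` also mirrors what the related-records grid of the List and Details screen shows for a selected student.

```python
from dataclasses import dataclass, field


@dataclass
class Store:
    """In-memory stand-in for two related tables (hypothetical, for illustration)."""
    students: dict[int, str] = field(default_factory=dict)  # StudentTable: Id -> FirstName
    marks: dict[int, int] = field(default_factory=dict)     # StudentMarks: Id -> StudentTable Id

    def marks_for(self, student_id: int) -> list[int]:
        """Ids of the StudentMarks rows that point at one student (the 'many' side)."""
        return sorted(m for m, s in self.marks.items() if s == student_id)

    def delete_student(self, student_id: int, behavior: str = "restricted") -> None:
        if behavior not in ("restricted", "cascade"):
            raise ValueError(f"unknown delete behavior: {behavior}")
        related = self.marks_for(student_id)
        if related and behavior == "restricted":
            # Restricted: refuse to delete a parent that still has child rows.
            raise ValueError(f"student {student_id} has {len(related)} related StudentMarks rows")
        for mark_id in related:
            # Only reached for cascade (restricted stops above if children exist).
            del self.marks[mark_id]
        del self.students[student_id]


store = Store(students={1: "Ann", 2: "Raj"}, marks={10: 1, 11: 1, 12: 2})
print(store.marks_for(1))                    # [10, 11]
store.delete_student(2, behavior="cascade")
print(store.marks)                           # {10: 1, 11: 1}
```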

Once you have set up the relationship between the two tables, click “Ok” to create it. Visual Studio 2010 will now show you how the two tables are bound together.

Here you will find the relationship as a diagram. See the box marked as Red:

You will see that the StudentTable now has a one-to-many relationship with the StudentMarks table, and LightSwitch has created a new field called StudentTable of type “StudentTable”.

Creating the new List and Details Screen

As a beginner, you should first remove the previous List and Details screen. To do this, go to Solution Explorer and remove the StudentTableListDetail screen from the Screens folder. Here is a snapshot:

This step is not required if you are familiar with screen customization. Because this tutorial is aimed at beginners, I am removing the screen to keep things easy to follow.

Let us create a new List and Details screen for our example. Go to Solution Explorer, right-click the Screens folder (as shown in the figure below) and choose “Add Screen…” from the context menu.

This pops up the “Add New Screen” dialog, which you already know well because we have used it several times. In this dialog, select “List and Details Screen” from the template panel on the left.

In the right panel, select the desired table as the data source. In our example, we are going to use “StudentTables”, so select it.

You will see that there is now one more table, “StudentMarks”, that you can choose. Select all the additional tables and click “Ok”.

This creates the UI and opens it in design view inside the Visual Studio IDE. You will see that the StudentMarksCollection (marked in red in the screenshot below) has also been added to the screen and references the StudentTableCollection.

In the design view on the right, you will see that the StudentMarksCollection has been added to the screen as a DataGrid, which means all the data related to the selected StudentTable record will be shown there.

You can choose the fields shown on this screen. If you want, you can remove any field and/or select the control type for each column.

Application in Action

That covers everything we wanted to discuss here. Now it’s time to see it in action, which will show whether we achieved the goal of this chapter.

Let us build the solution and run it. Once it is running, the application UI opens. From the left menu panel, click the menu item called “StudentTableListDetail”. It will open the List and Details screen as a new tab.

You will see that all the records are displayed in the middle panel of the screen, showing only the FirstName from the table. You can choose which field to display here by customizing the UI.

In the right panel, you will see two sections. The top section is a form where you can view and update the selected record. Below that is a DataGrid showing the related records from the second table.

You can easily add, edit, delete and export records within this DataGrid. You learned about this in the previous chapter, so I won’t explain it again here.

Now comes the part where we showcase the validation rules we added for the fields. We entered Maximum and Minimum values for the fields; let’s check whether they actually work.

Enter a negative value or a value higher than 100 (100 was our maximum value and 0 was the minimum value, if you can remember). You will see the proper validation message popup in the screen. If you entered a higher value, it will show “Value is more than the maximum value 100” and if you entered a lower value, it will show “Value is less than the minimum value 0”.

Once you correct those validation errors, you will be able to save the record.

Hey, did you notice something? We didn’t enter the validation messages ourselves. They come from the tool itself; everything is built in. No code. Click, click and click, and your professional-quality application is ready.

End Note

As you can see, throughout the whole application (across all four chapters) I never wrote a single line of code, not even XAML to create the UI. The tool’s templates generate it automatically. The framework handles everything from UI design to adding, updating and deleting records, and even sorting and filtering.

I hope you enjoyed this chapter of the series. I used lots of figures so that you can follow each step easily. If you liked this article, please don’t forget to share your feedback here. I appreciate your feedback, comments, suggestions and votes.

I will post the next chapter on Custom Validation soon. Until then, enjoy reading my other articles published on my blog and on Silverlight Show.

Kunal is a Microsoft MVP in Silverlight, currently working as a Software Engineer in Pune, India.

<Return to section navigation list>

Windows Azure Infrastructure

The Windows Azure OS Upgrades Team announced Windows Azure Guest OS 1.9 (Release 201011-01) on 12/14/2010:

Now deploying to production for guest OS family 1 (compatible with Windows Server 2008 SP1).

The Windows Azure OS Upgrades Team announced Windows Azure Guest OS 2.1 (Release 201011-01) on 12/14/2010:

Now Available for guest OS family 2 (compatible with Windows Server 2008 R2).

Stay tuned for more information about the two OS upgrades.

David Linthicum asserted “The release of the long-anticipated Chrome OS will drive many in the cloud computing space to say, ‘So what?’” in a deck for his Three reasons the cloud does not need Google's Chrome OS post of 12/14/2010 to InfoWorld’s Cloud Computing blog:

Long promoted as the "cloud computing operating system," Google's forthcoming Chrome OS will provide a browser-centric OS that wholly depends on wireless connectivity and the cloud for its core services. The first Chrome OS notebooks won't be available until mid-2011 -- unless you can get into the pilot program for Google's own Cr-48 netbook. Every feature of Chrome OS is synced to the cloud, so users can pick up where they left off regardless of what computer they are leveraging.

If you're old enough to remember "network computing," this is it. In short, never leave anything on your client, and use the network to access core application services and data storage. Fast-forward 15 years, and it's now the cloud and outside of the firewall, but the same rules still apply.

Don't get me wrong: I think Google's Chrome OS is innovative, but it's not needed now. Here are three reasons why:

- Most mobile operating systems, including iOS and Android, already do a fine job leveraging the cloud. They are designed from the ground up to work in a connected world, and as most of you know who lose service from time to time, the operating systems and the applications aren't worth much when not connected to the cloud -- well, perhaps not Angry Birds.

- With the momentum behind the iPad and the coming iPad wannabes, there won't be much call for netbooks, which is the target platform for Chrome OS. Because Google makes both the successful Android OS for smartphones and slates and the Chrome OS for laptops, I suspect you won't see it make Chrome OS available for slates and smartphones.

- Chrome OS assumes good connectivity to the cloud, and while that may happen in a perfect world, it's typically problematic these days. Having to find a Starbucks to connect to a cloud to launch an application or retrieve your data on the road will be annoying, though Chrome OS will have some local storage.

What happens to Chrome OS? It's one of those good ideas, like Google Wave, that just did not find its niche. Perhaps it's too late or too early, but it won't have the uptake that everyone is expecting in the cloud.

Bernard Golden listed his Cloud Computing: 2011 Predictions in a 12/9/2010 post to Network World’s Data Center blog:

It's been an incredibly interesting, exciting, and tumultuous year for cloud computing. But, as the saying goes, "you ain't seen nothin' yet." Next year will be one in which the pedal hits the metal, resulting in enormous acceleration for cloud computing. One way to look at it is that next year will see the coming to fruition of a number of trends and initiatives that were launched this year.

The end of a year often brings a chance to take a breather and think about what lies ahead. Therefore, I've put together this list of what I foresee for 2011 vis a vis cloud computing. Here are ten developments I expect to see next year, broken into two sections: one for cloud service providers, and the other for enterprise users.

Cloud Service Providers

Prediction #1: The CSP business explodes...and then implodes. CSPs will continue to pour money into building cloud computing offerings. Large companies will invest billions of dollars constructing data centers, buying machines and infrastructure, implementing software platforms, and marketing and selling cloud services. Regional and local players will likewise do the same, albeit on a smaller scale.


There will be a frenzy of activity as every colo, hosting, and managed service provider confronts the fact that their current offerings are functionally deficient compared to the agility and low cost of cloud computing. However, by the end of the year it will become obvious that being a cloud provider is a capital-intensive, highly competitive business with customer demand for transparency in pricing.

Many new entrants to the business will conclude that this is a battle they can't win and will hastily exit the business. And don't imagine those retreating will only be small, thinly capitalized companies. Sometimes large, publicly-held companies are the worst in terms of sticking with opportunities that require delayed gratification in terms of profits. I expect that late next year or early in 2012 a private equity play will emerge in rolling up CSP offerings whose owners want to offload their failed CSP initiatives.

Prediction #2: Market Segmentation via Customer Self-Selection. Many vendors and commentators feel that the SMB market is a natural for IaaS computing because of their lack of large, highly skilled IT staffs. Sometime next year everyone will realize that removing tin still leaves plenty of challenging IT problems, and cloud computing delivers a few new problems besides. Once that realization sinks in, everyone will agree that SMBs are a natural fit for SaaS and that only larger companies should imagine themselves as IaaS users. Consequently, SaaS providers will gain an even higher profile as adoption rates increase. However, SaaS will by no means be only an SMB phenomenon -- far from it. SaaS will become the default choice for organizations of all sizes that wish to squeeze costs on non-core applications.

Prediction #3: OpenStack will come into its own. The attractiveness of a complete open source cloud computing software stack will become clear, and interest and adoption worldwide of OpenStack will grow during the next year.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Steve Plank (@plankytronixx) reported Plankytronixx Academy: Windows Azure Connect - Live Meeting MOVED from 15th Dec to 22nd Dec on 12/14/2010:

It’s very unfortunate to have to do this, but the so-called “Plankytronixx Academy” (!) Windows Azure Connect Online Session that was due to run:

15th December 16:00 UK Time (08:00 PST) to 17:15 UK Time (09:15 PST)

…has had to be moved to the same time slot on the 22nd December.

The server from which I was going to do demos, including domain-joining Azure instances to a local Active Directory, has slowed to the slowest of crawls. So slow, in fact, that all troubleshooting measures have become untenable. Though I might find out what’s wrong and get it fixed in time, I suspect not, and I want to give as much notice as I can of the change of date.

Diary commitments mean the earliest I can run the new session is on the 22nd December. I hope this doesn’t cause too much inconvenience for any of you who’d put the original date in your diaries. I’m sure, though, we’ll have a great session on the 22nd December. Get all that knowledge into your head in time for Christmas, when you can ponder how to apply it with your family and friends over mince pies and mulled wine…

To attend the NEW SESSION click here on the 22nd December at 16:00 UK time to join the session.

Silverlight Show Webinars will present Building a Silverlight 4 Application End-to-end on 12/15/2010 at 10:00 to 11:30 AM PST:

Once you start building real-world Silverlight applications, things may start to get a bit more complicated than in the demos. How do you start a complete application? Do you just start coding or do you first think about what you are going to build and how you are going to build it? Should you think about architecture? In this webinar, we’ll look at some common questions developers have when building real-world Silverlight applications. More specifically, the following topics will be discussed:

- SketchFlow

- WCF RIA Services

- Data binding & DataGrid

- MVVM

- MEF

- Commanding & behaviors

- Messaging, navigation & dialogs

- Custom controls & third party controls

- OOB

- Printing

Note that Data binding and MEF were already covered in previous webinars (check them out at http://www.silverlightshow.net/Shows.aspx), so these topics will only be touched upon briefly in this webinar.

Register here.

The Bay Area .NET Users Group will present a Special end-year event: .NET happy hour with the Experts starting at 6:45 PM PST on 12/15/2010 at Murphys City Pub & Cafe, 1 Hallidie Plaza, San Francisco, CA 94102:

When: Wednesday, 12/15/2010 at 6:45 PM

Where: Murphys City Pub & Cafe 1 Hallidie Plaza San Francisco, CA 94102 (BART Powell, close to Microsoft San Francisco)

Event Description: This special end-year event is all about you, .NET and your questions. Come have a happy hour with a group of .NET experts, hear their thoughts on what matters and what it means to be a .NET developer in 2011, and bring your questions for an informal, friendly and open discussion.

Presenters’ Bios:

- John Alioto is an Architect Evangelist at Microsoft; he focuses on working with customers in areas such as distributed systems, service-oriented architecture (SOA) and cloud computing. John helps decision makers from start-ups and large enterprises define architectural strategies and develop innovative approaches for the use of technology.

- Ward Bell is a Microsoft MVP and the V.P. of Technology at IdeaBlade (www.ideablade.com), makers of the "DevForce" .NET application development product. Ward often obsesses on Silverlight, persistence, development practices, and "Prism".

- Bruno Terkaly is a Developer Evangelist at Microsoft. He brings tireless energy to Northern California, helping developers save time and become faster and more efficient, with one mission: have the tools and technology work for YOU.

- Petar Vucetin has been a passionate software developer since the days of the Commodore 64, and loves to share what he has learned poking (peeking?) .NET during the last ten years. For the last three years he has been working for Vertigo as a software engineer on all sorts of interesting projects.

Event Notes: This event will NOT take place at Microsoft.

Guests are expected to pay for their dinner.

Eric Nelson (@ericnel) posted Slides and links from ISV Windows Azure briefing on the 13th Dec 2010 on 12/14/2010:

A big thank you to everyone who attended yesterday’s “informal” briefing on the Windows Azure Platform. There were lots of great discussions during the day and I know we all certainly enjoyed it.

This post contains (ultimately) the slides and links from yesterday.

- David’s on Windows Azure all up (TBD)

- Eric on SQL Azure (which fyi is hosted on Windows Azure Blob Storage)

Links and Notes

- Start following the UK ISV technical team (our blog and twitter accounts) http://bit.ly/firststopuk

- Sign up to Microsoft Platform Ready (MPR) to get access to the Cloud Essentials benefit in January http://bit.ly/ukmprhome

- Profile your company, profile your app – in time self-verify or test it against Azure.

- Cloud Essentials is not yet mentioned on the MPR site but is explained at http://www.microsoftcloudpartner.com/

- SQL Azure team http://blogs.msdn.com/sqlazure

- and http://www.sqlazurelabs.com for futures

- David’s blog http://blogs.msdn.com/b/david_gristwood

- Steve Plank’s blog http://blogs.msdn.com/b/plankytronixx