Windows Azure and Cloud Computing Posts for 12/7/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Update 12/8/2010: Tim Anderson (@timanderson) updated his Database.com extends the salesforce.com platform on 12/8/2010 to include details of “a chat with Database.com General Manager Igor Tsyganskiy.” See the Other Cloud Computing Platforms and Services section at the bottom of this post.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

The two chapters are also available as HTTP downloads at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

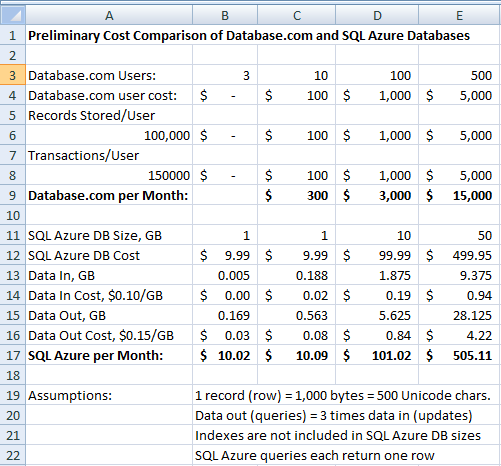

My (@rogerjenn) Preliminary Cost Comparison of Database.com and SQL Azure Databases for 3 to 500 Users of 12/7/2010 details monthly charges for 3, 10, 50, 100 and 500 typical users:

Salesforce.com announced their Database.com cloud database on 12/6/2010 at their Dreamforce 2010 conference at San Francisco’s Moscone Center. My initial review of Database.com’s specifications and pricing indicates to me that its current per-user/per-row storage and transaction charges aren’t competitive with alternative cloud database managers (CDBMs), such as SQL Azure or Amazon RDS. Here’s a capture of Database.com’s Pricing page:

Database.com’s FAQ defines a transaction as “an API call to the database,” which implies that multiple records (rows) can be returned by a single transaction.

Following is a screen capture of a simple worksheet that compares the cost of Database.com and SQL Azure for 3, 10, 100 and 500 users who each store 100,000 records and execute 150,000 transactions per month with the Assumptions shown:

The current comparison tops out at 500 users because the corresponding SQL Azure database size is 50 GB, the maximum size at present.
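The sizing behind that 500-user ceiling can be sketched in a few lines of Python. This is a minimal sketch, not the worksheet's actual formulas: the per-user record and transaction counts and the 50 GB limit come from the text above, while `ASSUMED_BYTES_PER_RECORD` (1,000 bytes per record, indexes included) is an assumption inferred from 500 users corresponding to 50 GB, and the `workload` helper is hypothetical.

```python
# Rough workload totals for the user counts compared in the worksheet.
RECORDS_PER_USER = 100_000         # from the post
TRANSACTIONS_PER_USER = 150_000    # per month, from the post
ASSUMED_BYTES_PER_RECORD = 1_000   # ASSUMPTION: inferred, not taken from the worksheet
SQL_AZURE_MAX_GB = 50              # maximum database size cited in the post


def workload(users: int) -> dict:
    """Return total records, monthly transactions and estimated size for `users`."""
    records = users * RECORDS_PER_USER
    size_gb = records * ASSUMED_BYTES_PER_RECORD / 1e9  # decimal gigabytes
    return {
        "users": users,
        "records": records,
        "transactions_per_month": users * TRANSACTIONS_PER_USER,
        "size_gb": size_gb,
        "fits_sql_azure": size_gb <= SQL_AZURE_MAX_GB,
    }


if __name__ == "__main__":
    for users in (3, 10, 100, 500):
        w = workload(users)
        print(
            f"{w['users']:>4} users: {w['records']:>11,} records, "
            f"{w['transactions_per_month']:>11,} transactions/month, "
            f"~{w['size_gb']:.1f} GB, fits in one database: {w['fits_sql_azure']}"
        )
```

Changing `ASSUMED_BYTES_PER_RECORD` moves the user count at which the 50 GB ceiling is reached, so the estimate is only as good as the record and index size you plug in.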

Wayne Walter Berry’s The Real Cost of Indexes post of 8/19/2010 to the SQL Server Team blog provides the basic steps to estimate the size of SQL Azure indexes.

You can download DbComVsSQLAzureCostPrelim.xlsx from my Windows Live SkyDrive account or open it as an Excel Web App if you want to try different combinations.

If you detect an error in the comparison worksheet or differ with my approach, please leave a comment or suggestion.

Mary Jo Foley (@maryjofoley) wrote Microsoft readies public SQL Azure Reporting test build, as Salesforce launches a cloud database (and quoted my worksheet) for ZDNet’s All About Microsoft blog of 12/7/2010:

Microsoft is allowing testers to register, as of December 7, for a public Community Technology Preview (CTP) test build of the reporting services technology for its SQL Azure cloud database.

Microsoft SQL Azure Reporting is a cloud-based reporting service built on SQL Azure, SQL Server, and SQL Server Reporting Services technologies that allows users to publish, view, and manage reports that display data from SQL Azure data sources.

The test build that will be made available is designated CTP 2 and will be made available to a “limited” number of testers, according to company officials. A first CTP of SQL Azure Reporting Services “was made available to a private group of TAP (Technology Adoption Program) customers prior to the Professional Developers Conference (PDC) announcement to provide early feedback on the Reporting service,” execs said.

Microsoft execs said at the company’s October 2010 PDC that Microsoft will deliver the final version of SQL Azure Reporting Services in the first half of 2011. A number of PDC attendees said at the time that they considered availability of the reporting services to remove an impediment for them to deploying Microsoft’s cloud database.

News of the SQL Azure Reporting Services CTP2 comes on the same day that Microsoft cloud rival Salesforce.com went public with plans for Database.com, its own cloud database that seems to be a 2011 deliverable (best I can tell). I asked Microsoft execs for their take on Salesforce’s newly announced multitenant relational cloud data store — which is the same database that powers Salesforce’s CRM service today.

I received the following response from Zane Adam, a Microsoft General Manager:

“SQL Azure is built for the cloud by design, combining the familiarity and power of SQL Server with the benefits of the only true PaaS (platform as a service) solution on the market, the Windows Azure platform. Tens of thousands of Microsoft customers are already using SQL Azure for large-scale cloud applications, to connect on-premises and cloud databases, and to move applications between in-house IT systems and the cloud. And we’re continually enhancing this functionality; this week, we are releasing technology previews of new SQL Server synchronization and business intelligence capabilities in SQL Azure.”

I also asked a few SQL Azure experts who don’t work for Microsoft for their initial takes on what Salesforce announced today.

OakLeaf Systems blogger and Azure expert Roger Jennings made some quick price-comparison calculations. While he said there may be adjustments and caveats, at first blush, Salesforce’s “per-row transaction and storage charges seem way out of line to me.” Here is Jennings’ comparison:

Microsoft Regional Director and founder of Microsoft analysis and strategy service provider Blue Badge Insights, Andrew Brust, gave me a list of his initial impressions in the form of a pros and cons list. On Brust’s list:

Pros:

- It’s truly relational and supports triggers and stored procedures

- It seems to have BLOB storage and also a “social component,” so it’s not just relational

- RESTful and SOAP APIs

- It’s “automatically elastic” and “massively scalable”

Cons:

- They seem to charge by the seat as well as database size and number of transactions, rather than by the database size and bandwidth alone

- It seems like it’s totally non-standard and proprietary. It’s not Oracle or SQL Server or DB2. It’s not MySQL or Postgres. It’s not Hadoop. That would make it difficult to migrate on to it or off it. And who knows if it’s as good as the others?

- I can’t tell what the triggers and stored procs are written in. Is it some dialect of SQL? Is it Salesforce.com’s Apex language?

- Seems like Progress Software has a JDBC driver and will one day have an ODBC driver for database.com. If it comes from a third party, then the only robustly supported interfaces are the SOAP and REST APIs. That makes migration on or off extremely difficult as well.

- The claims of elasticity and scalability are unsubstantiated, as far as I can tell. That doesn’t mean they are false claims, but it means I have to take them on faith. And I am not very good at that.

- It’s good that it supports stored procedures and triggers. But conventional RDBMSes have been doing that for decades (and even MySQL has been doing that for a while now) and adding lots of features since. Does database.com add any such value of its own?

Any SQL Azure users out there have any further questions or observations about how Salesforce’s offering stacks up against SQL Azure?

Jayaram Krishnaswamy characterized his new Microsoft SQL Azure Enterprise Application Development book as “Everything you wanted to know about SQL Azure” on 12/6/2010:

I hope my latest book, "Microsoft SQL Azure Enterprise Application Development," which should be available in a couple of days, appeals to readers as much as my two other books on Microsoft BI. This book covers most of the changes that took place between the CTP and the updates in October 2010. Even as I am writing this post it must be undergoing more changes, a fact that may take away the shine from what was written in the past. Notwithstanding, the core ideas do not change; what changes, perhaps, are a few modified screens, a new UI, and some additional hooks. The more profound changes will be totally new elements that get added to the product with time, and that is the time for yet another book.

Some details of what you can learn, copied from the Packt site, are reproduced here:

- An easy to understand briefing on Microsoft Windows Azure Platform Services

- Connect to SQL Azure using Microsoft SQL Server Management Studio

- Use new portal after 'early 2011' (see note below)

- Create and manipulate objects on SQL Azure using different tools

- Master the different types of Cloud offerings

- Access SQL Azure through best practices using client and server APIs in VB and C#, and using hosted services with user authentication in Windows Azure

- Learn how to populate the SQL Azure database using various techniques

- Create Business Intelligence Applications using SSIS and SSRS

- Synchronize databases on SQL Azure with on-site enterprise and compact SQL Servers

- Learn how to write an application to access on-site data from a cloud hosted service

- Get a comprehensive briefing on various updates that have been made to SQL Azure and the projects still in incubation

- Understand the future and evolving programs such as the Houston Project, OData Services, Sync Services, and more built to support SQL Azure and transform it into a global enterprise data platform

I take this opportunity to record my sincere thanks to Microsoft Corporation and many others.

Note added on 12/07/2010:

Well, Microsoft has announced a new portal for Windows Azure. As I mentioned in the post, things rapidly evolve in this area. You need a really good crystal ball to write a book on anything related to Cloud Services.

Here is the gateway to the New Portal announced most recently,

<Return to section navigation list>

Marketplace DataMarket and OData

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

Andrew J. Brust (@andrewbrust) wrote Whither BizTalk for his 12/2010 “Redmond Diary” column for Visual Studio Magazine and “Redmond Review” column for Redmond Developer News:

BizTalk Server is a blockbuster product, but it's also a sleeper. Its value is immense, yet its following is limited. Every so often it comes into the Microsoft spotlight, and typically it exits shortly thereafter. Now, because of Windows Azure and Windows Azure AppFabric (both in the cloud and on-premises), the BizTalk profile is rising again. Before it recedes from the foreground, let's consider its history and its future.

As BizTalk has progressed through its numerous versions, it has become more comprehensive, more stable and more results-oriented.

BizTalk Server 2000 and 2002 were COM-based, somewhat buggy and misunderstood by both the market and Microsoft itself. Redmond presented these early releases as Web service developer toolkits, when they were in fact much more. But BizTalk Server 2004, the first Microsoft .NET Framework-based version of the product, brought a stable and full-fledged business-to-business (B2B) data mapping and publish/subscribe integration server.

BizTalk Server 2006 added fit and finish as well as a host of valuable adapters acquired from iWay Software; the 2006 R2 release brought enhanced electronic data interchange (EDI) capabilities, radio-frequency identification (RFID) support and a native adapter for Windows Communication Foundation (WCF). BizTalk 2009 added further EDI enhancements and Hyper-V virtualization support. The recent 2010 release brings integration with Windows Workflow Foundation and the Windows Azure AppFabric Service Bus. If all that were not enough, support for the Health Insurance Portability and Accountability Act (HIPAA), as well as the BizTalk Accelerator for HL7 and BizTalk Accelerator for SWIFT make the product extremely valuable in the health-care and financial services industries. The BizTalk story is now extremely cohesive.

Scatter/Gather

But Microsoft has muddied the waters. The introduction of the service-management component of Windows Server AppFabric, code-named "Dublin," provides an app server that parallels much of the BizTalk architecture, but lacks the latter's vast feature set and manageability. On the cloud side, the Microsoft .NET Service Bus bears some resemblance to the BizTalk message box. Branding-wise, the .NET Service Bus is a component of Windows Azure AppFabric that in its early beta form was actually called BizTalk Services.

BizTalk is Microsoft's integration server. Ironically, it needs some integration of its own. A future version of BizTalk built atop Windows Server AppFabric would make sense for on-premises work. A cloud version of BizTalk, based on a version of Windows Azure AppFabric more conformed to its on-premises counterpart, would make sense too. In fact, a lineup of Windows Server AppFabric, a new BizTalk Server based on it, and a Windows Azure version of BizTalk would parallel nicely the triad of SharePoint Foundation, SharePoint Server and SharePoint Online.

At this year's Microsoft Professional Developers Conference (PDC), the BizTalk "futures" that were discussed came pretty close to this reorganized stack. The notion of a multitenant, cloud-based "Integration as a Service" offering with an on-premises counterpart was introduced, as was the intention to architect both products with Windows Azure AppFabric as the underlying platform. That direction is logical and intuitive, and the ease of provisioning that the cloud provides could bring integration functionality to a wider audience.

Why Does It Matter?

That wider reach is important, both for Microsoft customers and Microsoft itself. Integration is infrastructural and it's hard to get people excited about it. It's hard to get Microsoft excited about it, too. BizTalk revenue, though strong, is peanuts compared to Windows and Office, and is even small compared to SQL Server and SharePoint.

But, high revenue or not, a good integration server is important to the credibility of the Microsoft stack. You can't have data, business intelligence and applications without plumbing, tooling and management features for moving that data between applications -- and that goes double in the cloud. The on- and off-premises versions of Windows Azure AppFabric provide a good skeleton, but for enterprises, Microsoft has to put flesh on those bones. If Microsoft provided only the Windows Azure AppFabric fundamentals, it would be hard to argue a value proposition over open source products like Jitterbit and FuseSource.

Layering on a product like BizTalk Server, with compelling value provided by its adapters, and the BizTalk EDI and RFID features, means Redmond can make the argument and win. Microsoft should be providing more business value than open source, with more compelling economics than other commercial offerings. With the cloud, maybe BizTalk will morph from the little integration engine that could to the big one that does.

Andrew Brust is CTO for Tallan, a Microsoft National Systems Integrator. He is also a Microsoft Regional Director and MVP, and co-author of "Programming Microsoft SQL Server 2008" (Microsoft Press, 2008).

Full disclosure: I’m a contributing editor for 1105 Media’s Visual Studio Magazine.

<Return to section navigation list>

Windows Azure Virtual Network, Connect, and CDN

MSDN posted Connecting Apps with Windows Azure Connect to Microsoft Developer Network > Learn > Courses > Windows Azure Platform Training Course > Windows Azure on 12/7/2010:

Description

Windows Azure Connect's primary scenario is enabling IP-level network connectivity between Azure services and external resources. The underlying connectivity model that supports this is quite flexible. For example, you can use Sydney [the code name for Windows Azure Connect] to set up networking between arbitrary groups of machines that are distributed across the internet in a very controlled and secure manner.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Wes Yanaga reminded developers about the Windows Azure Platform 30 Day Pass in a 12/7/2010 post to the US ISV Evangelism blog:

We're offering a Windows Azure platform 30 day pass, so you can put Windows Azure and SQL Azure through their paces. No credit card required. Sign up for Microsoft Platform Ready to get access to technical, application certification testing and marketing support.

- Use this Promo Code: DPWE01

- Sign Up By Clicking This Link: http://www.windowsazurepass.com/?campid=BB8443D6-11FC-DF11-8C5D-001F29C8E9A8

The Windows Azure platform 30 day pass includes the following resources:

Windows Azure

- 4 small compute instances

- 3GB of storage

- 250,000 storage transactions

SQL Azure

- Two 1GB Web Edition databases

AppFabric

- 100,000 Access Control transactions

- 2 Service Bus connections

Data Transfers (per region)

- 3 GB in

- 3 GB out

Here are some great resources to get you started:

- Step-by-step guidance on developing and deploying on the Windows Azure Platform

- Get hands on learning by taking the Windows Azure virtual lab

- Analyze your potential cost savings with the Windows Azure Platform TCO and ROI Calculator

Robert Duffner posted Thought Leaders in the Cloud: Talking with Todd Papaioannou, VP of Architecture, Cloud Computing Group at Yahoo to the Windows Azure Team blog on 12/7/2010:

Todd Papaioannou is currently at Yahoo!, in the role of vice president of cloud architecture for the cloud platform group. Before taking on that role, Todd was responsible for new product architecture and strategy at Teradata, including driving the entire Cloud computing program. Before that, he was the CTO of Teradata's client software group. Prior to joining Teradata, he was chief architect at Greenplum/Metapa. Dr Papaioannou holds a PhD in artificial intelligence and distributed systems.

In this interview, we discuss:

- Virtualization as the megatrend of this decade

- The world's largest Hadoop clusters

- Cloud benefits to businesses large and small

- "If it's not your business to be running data centers, don't do it."

- Analyzing 100 billion events per day

- A future dominated by hybrid clouds

Robert Duffner: Todd, could you introduce yourself and describe your background and your current role at Yahoo?

Todd Papaioannou: I'm the chief architect for cloud computing here at Yahoo. My official title is VP of Cloud Architecture, and I'm responsible for technology, architecture and strategy across the whole of the cloud computing initiatives here at Yahoo!.

The Yahoo! cloud is the underlying engine on which we run the business, and we like to think of it as one of the world's largest private clouds. So my responsibilities span edge, caching, content distribution, multiple structured and unstructured storage mechanisms, serving containers and the underlying cloud fabric we're focused on rolling out that makes it all possible.

I'm also responsible for Hadoop and the cloud-serving container architecture, as well as all of the data capture and data collection across the whole of the Yahoo network. We dedicate a lot of energy to pulling together a very wide range of technologies as an internal platform as a service.

Robert: I imagine that making the move from Teradata to Yahoo! was significant for you personally. Given that your career has been focused on cloud computing for some time now, has the move to Yahoo made a difference in terms of what kinds of projects, initiatives, and solutions you can personally lead or develop in the cloud computing space?

Todd: Absolutely. At Teradata, I was responsible for driving the cloud computing program from a blank piece of paper through launch and delivery of multiple products, so I have been focused on the cloud for a number of years as well as all of the other big data initiatives and future-facing stuff.

I played the role of looking to the future and helping to drive product strategy and product architecture across the Teradata portfolio. But I became very involved with all of the cloud stuff as I drove that program and saw that this was a very compelling and very interesting part of the market space.

So coming to Yahoo! was an opportunity to help drive one of the largest private clouds in the world. There are probably only two or three other companies in the world that deal with these issues at the scale that Yahoo! does, so it's a fantastic opportunity. It also allows me to work closer to the consumer.

Robert: You said in a presentation that you developed while at Teradata that "virtualization is the megatrend of the next decade." Do you still feel that's the case? And what do you think has the potential to supplant it, either in this decade or the next?

Todd: I still think it's turning out that way. Virtualization is a megatrend that's going on in data centers around the world right now, and virtualization is actually just one component of cloud computing. There's a lot more that goes into cloud computing as a layer above virtualization, and I think the self-service and elasticity aspects are particularly interesting.

As I look to what is going to change stuff in the future, ubiquity of devices is clearly another megatrend, as is the explosion of data. When you take massive data, massive sets of devices, and cloud computing together, you start to see a slightly different vision of how software needs to be built, abstracted, and developed to support both the enterprise and the consumer, going forward.

Robert: Let's talk a little bit about Hadoop. In a quick set of back and forth tweets with Barton George, you clarified who has the largest Hadoop clusters, with Yahoo, Facebook, and eBay being the largest, in that order. Who also is in the top 10, in your estimation, and are there any particularly interesting implementations that fall outside of the top 10 that you think bear watching?

Todd: The top 10 is probably made up of West Coast, Bay Area web companies that are generating huge amounts of data, particularly social graph data, and are finding that traditional tools are not that great for analyzing it. Outside of the ones you mentioned, it's Twitter, Facebook, LinkedIn, Netflix, and those types of folks.

We're also starting to see penetration into the financial industry, where they have huge amounts of data to process as well. US government agencies like the CIA and NSA are using Hadoop now, and they use the Yahoo! distribution of Hadoop to do their processing. They won't tell me what they're doing, but I'd love to know. [laughs]

Robert: You mentioned when you were part of a panel discussion at Structure 2010 that cloud computing enables business users to be separated from infrastructure cycles, so each can move at a different pace. Can you unpack that statement a little bit and tell me what benefits you think cloud computing provides to both smaller and larger businesses?

Todd: To take a simple view of the IT business, there's infrastructure you need to purchase, put in place, and manage. Infrastructure buying cycles tend to be fairly long, because it's a big investment and you want to make sure that you're doing the right thing.

That can be a challenge for a small business, a business unit, or someone that's close to the customer, because they need to move at a much faster pace in today's business climate. Cloud computing, in my mind, allows you to decouple the business logic from the underlying infrastructure and allow those two things to move at separate paces.

As an analogy, consider the fact that building a road, which is infrastructure, takes quite a long time, but small businesses can spring up or shut down along that road, and people can build houses much more quickly. In the same way, cloud computing enables the business to iterate much more quickly, because they don't have to worry about purchasing infrastructure.

Robert: You've tweeted that you see an enormous amount of innovation ongoing today with Hadoop. What excites you the most about the future of that project as a whole?

Todd: I think we're at an inflection point for Hadoop. Obviously, we at Yahoo! are extremely proud that we have created and open sourced Hadoop. Over the last four or five years, we've continued to invest in that environment, and right now we have around 40,000 machines running Hadoop at Yahoo!, which is clearly a huge number, and growing all the time.

There's another set of folks now who are starting to use Hadoop at a smaller scale, and the exciting thing, I think, is that there is now an ecosystem springing up. There are vendors coming into the ecosystem with new tools and new products, and people starting to innovate around the Hadoop core that we built.

Robert: Could you comment on how important the innovation around the core software for the cloud is, in terms of everything that has to happen around running the operations at data centers?

Todd: If you think about the entire business, the data center is the lowest level of infrastructure, and then you have the cloud running above that, and then in our case, the business of web properties running above that. There's a huge amount of innovation that has to happen on a vertical basis.

We've been driving a lot of innovation in how we design our data centers. We recently opened a new data center that got some awards for its design. It's designed [laughs] like a chicken coop, basically, so it's self-cooling in some respects. That was a great, novel approach to some of the problems. When you roll out a cloud, you're basically trying to build infrastructure.

I want to be able to shunt workloads around from data center to data center depending on changing conditions. For example, we need to respond to it if the data center is getting too hot or we are getting a lot of surge traffic because the U.S. is waking up, and that sort of thing.

That can't really be done by humans in front of a keyboard. What you really need to be thinking about is trying to automate everything. One of the big initiatives I've been pushing is to automate everything in the cloud so we can have more of a high-level thought process around control, rather than a low-level, tactical one that focuses on shunting around specific workloads on an as-needed basis.

Robert: How can organizations that want to move to the private cloud benefit from the lessons learned by big companies like Microsoft and Yahoo! that have gone before them?

Todd: If it's not your business to be running data centers, don't do it. You need to make it Someone Else's Problem. Yahoo!, Microsoft, and a few others out there are in the business of running data centers, and smaller companies should take advantage of that availability.

Companies that do need to run their own data centers, for whatever reason, can benefit from the fact that we have open sourced our infrastructure code. One of our stated goals is to open source all the underlying software that sits in our cloud, and we've done that today so far with Hadoop, which is our big data processing and analytic environment, and also more recently with Traffic Server, which is our caching and content distribution network software.

And we do that for a particular reason, which is that if you're building software internally, the minute you deploy it and no one else externally is using it, it's already on a path to legacy. You can continue to invest in that software, but you're continuing to invest in a one-off solution.

We like the open source world, because if we can build a community around a piece of our software and drive it to be a de facto standard, we can build a measure of future-proofing into our software. If people are already working with it outside of the company, we can also hire people who have previous experience with the software.

For the first time, we recently acquired a company that built its product on top of Hadoop, which helps validate our belief that open sourcing our infrastructure software benefits not only us, but the rest of the world as well.

Robert: Considering the role of Linux to the enterprise on servers, do you see an analogous software package developing for the cloud?

Todd: I don't think that has quite resolved itself yet. There's a lot of competition among the big players like Microsoft, Amazon, and Rackspace. Amazon clearly has a lead, but it's not insurmountable. And then there's obviously the open source world, which includes Eucalyptus, OpenStack, Deltacloud, and others.

It's an exciting time to be working in this landscape, and that's one of the reasons I came to Yahoo!. There's a huge amount of innovation going on at every level of the stack, from way down at the hardware level, all the way up to the cloud service level.

Virtualization, a massive expansion in server computing power, and low prices have really acted as catalysts. I really see the cloud as an abstraction layer above a set of underlying compute, storage, bandwidth, and memory resources. That abstraction allows you to get access to those resources on demand.

Because of that, one of the big initiatives I'm driving here at Yahoo! is to think of cloud computing resources as a utility just like electricity or cell phone minutes. You should just be paying for the utility when you need it, as you need it.

Robert: During a panel discussion on big data, you mentioned that Yahoo is analyzing more than 45 billion events per day from various sources to help direct users to the right content and resources on the web. From the user perspective, how does an emphasis on cloud computing technologies enhance their experience with Yahoo as a portal?

Todd: First, just to correct the number there, either I said the wrong number, or I was just talking about audience data. We actually deal with 100 billion events a day. That covers audience data, advertising data, and a bunch of other events that happen across the Yahoo! network.

Our goal at Yahoo! is basically to offer the most compelling and personally relevant experience to our end users. To do that, we need to understand stuff about you, such as whether you're into sports, travel, finance, or other topics. And we need to do that as you span across our multiple properties.

At Yahoo!, we have hundreds of different web properties, each with a different focus and context. So even if you were interested in sports, it may not be so relevant for us to show you a piece of sport content when you're on Yahoo! Finance.

Because of that, we use all of the events that we collect, and we use Hadoop to do all the processing, so we drive better user understanding, and we're able to do better content targeting and ultimately, better behavior targeting from an advertising standpoint.

Our ultimate goal is to understand you across all of our properties, and depending upon what context you're in, to understand the content you'll be interested in. Based on that, we want to be able to put a contextually relevant advert close to that content to better drive engagement for our advertising customers.

Robert: During that same panel, focused on big data, there was a portion of the discussion about the data problem that the Fortune 1000 are having. To quote you for a moment, you said, "They all have the same problem, but they haven't figured out how much they're going to pay to solve it." Can you expand on that a little bit, and how you think cloud computing technologies can help the Fortune 1000, both in the short and long term?

Todd: For any business, there's a spectrum of data that is vitally important right now, whether it's investment management, supply chain management, or user registration. Businesses are willing to pay a certain dollar value for that data, whether it's available or active so they can access it immediately, whether or not it's online.

There is a set of data that you don't know the dollar value of yet, because you haven't discovered what it may teach you. But you know that somewhere within that data, there's value to be found, whether it's better user understanding or better insight into how to run your business.

I think the question was, "How do we know if you have big data?" And my response was, "Everybody has big data. They just don't know how much they want to pay for that big data." And by that I mean, whichever business you go to, you can say you have a whole bunch of data that you can really gain insight out of around your business, which you are currently just dropping on the floor.

On the other hand, you probably believe you should pay a lot less for that data as a business than you would for a more traditional enterprise data warehouse or data mart like you might get from Teradata or Oracle.

Still, the insights you can get from that data are huge. So what you want to do is find the platform that matches your dollar cost profile and that allows you to work on that data, discover insight, and then start to promote it up into a more fully featured platform that ultimately ends up costing you more.

You can stick a bunch of data in a public cloud, and it's going to cost you a lot less to store than if you're buying a whole bunch of filers or disks locally, for most people. There's also a set of technologies like Hadoop that allow you to discover value in that data at a much lower cost than you would pay a traditional vendor.

Because of that, the cloud is a great place for people to process big data or unstructured data that they don't know the value of and are looking for insights into their business.

Robert: That concludes the prepared questions I had for you. Is there anything else you would like to address for our Windows Azure community?

Todd: We dabbled a little bit on the public/private cloud question, but we didn't really get into that too much. In fact, I think the future is going to be dominated by the hybrid cloud. Companies are going to have a menu of options presented to them.

Say you're the CIO of some Global 5,000 company, or even a small 10 person business. You've got to look at this menu and say "Given the business service that I want to run, what are my criteria?" For example, sensitive data or high security demands are likely to push me toward a private cloud.

On the other hand, if I have huge amounts of data that I don't need to be highly available, that's the sort of thing that I would put into the public cloud. That would prevent my having to make a large infrastructure investment, and as we talked about earlier, it also lets me move quickly.

I really think the future for all businesses is to look at this hybrid model. So, what's my service, what's my data, where do I want to put it, how much do I want to pay and why do I want to pay that? And rather than one menu, you'll have a set of rate cards from vendors that you can go and choose from.

Robert: Microsoft's Windows Azure platform appliance announcement concerned the ability to take the services we offer in the public cloud and offer them on premises, while still keeping it very much as fundamentally a service.

Todd: I think that makes a lot of sense when you look at what am I going to be worrying about as a CIO. In the life cycle of an application, I may even move it up and down between the layers of the cloud. I may start off in a public cloud and then bring it back in.

I think one of the areas for innovation and investment that the industry needs to make is in enabling that. I do not want to be locked into a single place where I can't move my application and I'm stuck with a single source vendor.

Being able to move my workload from vendor to vendor, private to public, to me is an important element of what will make a successful ecosystem.

Robert: Clearly, you guys are a quintessential example of the public cloud. What are you looking at with regard to public customers?

Todd: We actually don't offer a public cloud like Amazon or Google App Engine. In many ways, though, we are the cloud. People don't think of Yahoo that way, but we're the personal cloud. In terms of where people's resources such as emails, photos, fantasy sports teams, and financial portfolios, among other things, are to be found, Yahoo is a personal cloud service for hundreds of millions of people. It's just that they don't think of us that way.

Considering whether we would move our workloads out into the public cloud, we finally came to the conclusion that probably, we would not. At the scale we deal with, it doesn't make sense.

There's a certain scale, I think, where it makes sense for you to make it someone else's problem until it becomes a critical part of your business. For us at Yahoo!, running technology and trying to scale technology with 600 million registered users around the world, that is our problem, and it has to be. It's the only way that we can successfully execute on that.

You see this with other folks as well. At the Structure Conference, Jonathan from Facebook was saying they have actually come to that same conclusion and that now they're actually creating their own data center, pouring their own concrete and building up.

And they did that because they realized that, you know what, it was their problem. And they needed to have the level of control and the level of efficiency that you can derive by owning your own infrastructure.

So for folks of our size, it's unlikely we're going to move our workload to Amazon or Azure. It just wouldn't make sense for us.

Robert: Todd, thanks for your time.

Todd: Thank you. It was great to talk to you.

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@BethMassi) described Getting the Most out of LightSwitch Summary Properties in a 12/7/2010 post:

Summary Properties in LightSwitch are properties on your entities (tables) that “describe” them (kind of like the .ToString() method on objects in .NET). A summary property is used by LightSwitch to determine what to display when a row of data is represented on a screen. Therefore, it’s important to get them right so that your data is represented to users in a clear way. In this post I’ll explain how to specify these properties on entities as well as how they work in LightSwitch applications.

Specifying Summary Properties on Entities (Tables)

Regardless of whether you are creating new database tables or attaching to an existing data source, summary properties are used to describe your entities as you model them in the designer. By default, LightSwitch will choose the first string property on your entity as the summary property. For instance, say we have a Customer entity with the following properties pictured below. If you select the Customer entity itself (by clicking on the name of it) you will see that the summary property is automatically set to LastName in the properties window. This is because the LastName property is the first property listed as type string.

In most cases this is the behavior you will want. For a Customer, however, it makes more sense to display their full name instead. We can easily do this using a computed property. To add one, click on the “Computed Property” button at the top of the designer. This sets the Is Computed property in the properties window for you. Name the property FullName and then click on the “Edit Method” link in the properties window. This method is where you return the value of the computed field.

Computed fields are not stored in the underlying database; they are computed on the entity and only live in the data model. For FullName, I will return “LastName, FirstName” by writing the following code:

    Public Class Customer

        Private Sub FullName_Compute(ByRef result As String)
            ' Set result to the desired field value
            result = Me.LastName + ", " + Me.FirstName
        End Sub

    End Class

To set the FullName as the summary property, go back and select the entity’s name and you will now see FullName in the dropdown on the properties window as an available choice to use as the entity’s summary property.

Summary Properties in Action

Summary properties are displayed anytime you use the Summary control on a screen or when LightSwitch generates a layout for you that needs to display a description of the entity. For instance, to see this summary property in action create a search screen for the customer. Click on the “Screen…” button at the top of the designer, select the Search Screen template and select Customer as the screen data:

(Note, if you don’t have any records in your development database then also add a New Data Screen to enter some data first). Take a look at the summary control in the screen designer of the search screen by expanding the DataGridRow. By default, a summary control will display as a hyperlink to allow the user to open the specific record.

Hit F5 to run the application and open the customer search screen. Notice that the summary control is displaying the FullName property we specified as a clickable hyperlink.

If we click it, then LightSwitch will generate an edit screen for us to access the record. Notice that the title of the generated Edit screen also displays the summary property:

Summary properties also show up, via summary controls, in child grids and modal window pickers.

More Examples of Summary Properties

Summary properties are not required to be strings; they just have to be representable as strings. For instance, maybe you have an entity that captures date ranges or some item that would be better represented as a date. For our example say we have an Appointment entity and we’ve set a relation where a Customer can have many Appointments. The Appointment table has properties Subject, StartTime, EndTime and some Notes. We can select any of these properties as summary fields. You can even mix and match them by creating a computed property just like before; you just need to make sure the properties can be converted to a string.

    Private Sub Summary_Compute(ByRef result As String)
        ' Set result to the desired field value
        ' (& converts StartTime to a string before concatenating)
        result = Me.StartTime & ": " & Me.Subject
    End Sub

Another thing that you can do is show data from a related entity. For instance if you have an OrderDetail line item that has a reference lookup value to a Product table, you may want to display the Product Name on the OrderDetail. Create a computed property and then just walk the association to get the property you want on the related entity. Just make sure you perform a null check on the related property first:

    Private Sub Summary_Compute(ByRef result As String)
        ' Set result to the desired field value
        ' (& converts Quantity to a string before concatenating)
        If Me.Product Is Nothing Then
            result = "<New Product> - Quantity: " & Me.Quantity
        Else
            result = Me.Product.Name & " - Quantity: " & Me.Quantity
        End If
    End Sub

Keep in mind that summary properties are meant to be displayed throughout the application and should not be too lengthy or complicated. Keeping them down to 50 characters or less will make the application look less cluttered.

<Return to section navigation list>

Windows Azure Infrastructure

No significant articles today.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Buck Woody posted Windows Azure Learning Plan – Security on 12/7/2010:

This is one in a series of posts on a Windows Azure Learning Plan. You can find the main post here. This one deals with Security for Windows Azure.

General Security Information

Overview and general information about Windows Azure Security - what it is, how it works, and where you can learn more.

General Security Whitepaper – answers most questions

Windows Azure Security Notes from the Patterns and Practices site

http://blogs.msdn.com/b/jmeier/archive/2010/08/03/now-available-azure-security-notes-pdf.aspx

Overview of Azure Security

http://www.windowsecurity.com/articles/Microsoft-Azure-Security-Cloud.html

Azure Security Resources

http://reddevnews.com/articles/2010/08/19/microsoft-releases-windows-azure-security-resources.aspx

Cloud Computing Security Considerations

Security in Cloud Computing – a Microsoft Perspective

Physical Security for Microsoft’s Online Computing

Information on the Infrastructure and Locations for Azure Physical Security.

The Global Foundation Services Group at Microsoft handles physical security

Microsoft’s Security Response Center

Software Security for Microsoft’s Online Computing

Steps we take as a company to develop secure software

Windows Azure is developed using the Trustworthy Computing Initiative

Identity and Access in the Cloud

Security Steps you should take

While Microsoft takes great pains to secure the infrastructure, platform and code for Windows Azure, you have a responsibility to write secure code. These pointers can help you do that.

Securing your cloud architecture, step-by-step

Security Guidelines for Windows Azure

Best Practices for Windows Azure Security

Active Directory and Windows Azure

Understanding Encryption (great overview and tutorial)

Securing your Connection Strings (SQL Azure)

http://blogs.msdn.com/b/sqlazure/archive/2010/09/07/10058942.aspx

Getting started with Windows Identity Foundation (WIF) quickly

http://blogs.msdn.com/b/alikl/archive/2010/10/26/windows-identity-foundation-wif-fast-track.aspx

Gartner’s Thomas Bittman described the Economies of Fail in this 12/7/2010 post from the Gartner Data Center conference:

Interesting discussions here at Gartner’s Data Center Conference in Las Vegas. While discussing the importance of economies of scale to cloud providers, I pointed out that economies of scale is a double-edged sword.

While enterprises tend to have many (often hundreds, or even thousands) IT services that they provide, cloud providers tend to have only one, or a handful, but provided on huge scale. Standardization makes automation much easier, and certainly makes economies of large scale very attractive. But what happens when a “service” suffers a decline in demand? For an enterprise, diversification makes this much less of an issue – usually, a decline in one “service” will be made up by growth in another. The capital expense risk is real, but not huge. But what about a cloud provider that focuses on just that service?

Economies of fail.

Megaproviders in the cloud are not immune to economic declines, or changing demand. One of the benefits of cloud computing for end users is transferring their own capital risk to cloud providers. Doesn’t this sound an awful lot like the mortgage crisis in the U.S.?

For cloud providers to be successful, they must protect themselves. As much as possible they must find corollary markets for their services that are not directly related to their core service market – without abandoning the simplification and standardization that enables automation and economies of scale.

Potential customers of cloud providers should be very aware of a cloud provider’s business risk, and protect themselves. Cloud provider resiliency, market diversification and stability should be selection criteria. Remember: a provider cannot be too big to fail – in fact, some providers might become so big and so focused that failure is inevitable.

Chris Hoff (@Beaker) asserted From the Concrete To The Hypervisor: Compliance and IaaS/PaaS Cloud – A Shared Responsibility in this 12/6/2010 post to his Rational Survivability blog:

* Update: A few hours after writing this last night, AWS announced they had achieved Level 1 PCI DSS Compliance.* If you pay attention to how the announcement is worded, you’ll find a reasonable treatment of what PCI compliance means to an IaaS cloud provider – it’s actually the first time I’ve seen this honestly described:

Merchants and other service providers can now run their applications on AWS PCI-compliant technology infrastructure to store, process and transmit credit card information in the cloud. Customers can use AWS cloud infrastructure, which has been validated at the highest level (Level 1) of PCI compliance, to build their cardholder environment and achieve PCI certification for their applications.

Note how they phrased this, then read my original post below.

However, pay no attention to the fact that they chose to make this announcement on Pearl Harbor Day.

—

Here’s the thing…

A cloud provider can achieve compliance (such as PCI — yes v2.0 even) such that the in-scope elements of that provider which are audited and assessed can ultimately contribute to the compliance of a customer operating atop that environment. We’ve seen a number of providers assert compliance across many fronts, but they marketed their way into a yellow card by over-reaching…

It should be clear already, but for a service to be considered compliant, it clearly means that the customer’s in-scope elements running atop a cloud provider must also undergo and achieve compliance.

That means compliance is elementally additive the same way “security” is when someone else has direct operational control over elements in the stack you don’t.

In the case of an IaaS cloud provider who may achieve compliance from the “concrete to the hypervisor,” (let’s use PCI again,) the customer in turn must have the contents of the virtual machine (OS, Applications, operations, controls, etc.) independently assessed and meet PCI compliance in order that the entire stack of in-scope elements can be described as compliant.

Thus security — and more specifically compliance — in IaaS (and PaaS) is a shared responsibility.
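The additive nature of compliance described above can be sketched in a few lines of Python. This is only an illustration: the function names and the provider-side layer names ("facility", "hardware", "network") are hypothetical stand-ins for "concrete to the hypervisor", while the customer-side layers are the ones the post lists for the virtual machine. It does not model any actual PCI assessment procedure.

```python
# Provider side: "concrete to the hypervisor" (layer names are illustrative).
PROVIDER_LAYERS = ("facility", "hardware", "network", "hypervisor")
# Customer side: the contents of the virtual machine, as listed in the post.
CUSTOMER_LAYERS = ("os", "applications", "operations", "controls")
IN_SCOPE_LAYERS = PROVIDER_LAYERS + CUSTOMER_LAYERS


def unassessed_layers(assessed):
    """Return the in-scope layers that have not passed an assessment.

    `assessed` maps a layer name to True (passed) or False (failed).
    A layer missing from the mapping counts as not assessed.
    """
    return [layer for layer in IN_SCOPE_LAYERS if not assessed.get(layer, False)]


def stack_is_compliant(assessed):
    """The whole stack is compliant only if every in-scope layer passed."""
    return not unassessed_layers(assessed)


# A provider that passed its own audit does not make the customer compliant.
provider_only = {layer: True for layer in PROVIDER_LAYERS}
print(stack_is_compliant(provider_only))   # False
print(unassessed_layers(provider_only))    # the four customer-side layers

# Only when the customer's layers are also assessed does the stack pass.
full_stack = {layer: True for layer in IN_SCOPE_LAYERS}
print(stack_is_compliant(full_stack))      # True
```

The point of the sketch is that the provider's result is a necessary input to the customer's compliance, never a substitute for it.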

I’ve spent many a blog battling marketing dragons from cloud providers that assert or imply that by only using said provider’s network which has undergone and passed one or more audits against a compliance framework, that any of its customers magically inherit certification by default. I trust this is recognized as completely false.

As compliance frameworks catch up to the unique use-cases that multi-tenancy and technologies such as virtualization bring, we’ll see more “compliant cloud” offerings spring up, easing customer pain related to the underlying moving parts. This is, for example, what FedRAMP is aiming to provide with “pre-approved” cloud offerings. We’ve got visibility and transparency issues to solve, as well as temporal issues such as the frequency and period of compliance audits, but there’s progress.

We’re going to see more and more of this as infrastructure- and platform-as-a-service vendors look to mutually accelerate compliance to achieve that which software-as-a-service can more organically deliver as a function of stack control.

/Hoff

* Note: It’s still a little unclear to me how some of the PCI requirements are met in an environment like an IaaS Cloud provider where “applications” that we typically think of that traffic in PCI in-scope data don’t exist (but the infrastructure does,) but I would assume that AWS leverages other certifications such as SAS and ISO as a cumulative to petition the QSA for consideration during certification. I’ll ask this question of AWS and see what I get back.

Related articles

- Navigating PCI DSS (2.0) – Related to Virtualization/Cloud, May the Schwartz Be With You! (rationalsurvivability.com)

- Seeing Through The Clouds: Understanding the Options and Issues (thesecuritysamurai.com)

- I’ll Say It Again: Security Is NOT the Biggest Barrier To Cloud… (rationalsurvivability.com)

- PCI 2.0 Allows For Compliant Clouds, Make Sure Your Service Providers Are PCI Compliant (securecloudreview.com)

- What’s The Problem With Cloud Security? There’s Too Much Of It… (rationalsurvivability.com)

- FedRAMP. My First Impression? We’re Gonna Need A Bigger Boat… (rationalsurvivability.com)

- Mixed Mode and PCI DSS 2.0 (brandenwilliams.com)

- The Future Of Audit & Compliance Is…Facebook? (rationalsurvivability.com)

- Incomplete Thought: Compliance – The Autotune Of The Security Industry (rationalsurvivability.com)

See the Amazon announcement in the Other Cloud Computing Platforms and Services section below.

<Return to section navigation list>

Cloud Computing Events

Brian Loesgen (@BrianLoesgen) announced an Azure Discovery Event in Los Angeles at the Microsoft downtown office on 12/16/2010:

We will be hosting an Azure Discovery event on December 16th at the Microsoft office in downtown L.A.

Windows Azure Platform - Acceleration Discover Event Invitation

Microsoft would like to invite you to a special event specifically designed for ISVs interested in learning more about the Windows Azure Platform. The “Windows Azure Platform Discover Events” are half-day events that will be held worldwide with the goal of helping ISVs understand Microsoft’s Cloud Computing offerings with the Windows Azure Platform, discuss the opportunities for the cloud, and show ISVs how they can get started using Windows Azure and SQL Azure today.

The target audience for these events includes BDMs, TDMs, Architects, and Development leads. The sessions are targeted at the 100-200 level with a mix of business focused information as well as technical information.

Agenda

- Microsoft Partners and the Cloud - How the Windows Azure Platform Can Improve Your Business

- Cloud Business Scenarios for ISVs

- Windows Azure Platform Technical Overview, Pricing and SLAs

Registration Link: http://bit.ly/laazure

Fees

This event is brought to you by Metro - Microsoft’s Acceleration Program and is free of charge.

- Register Today

- Date: 12/16/2010

- Time: 9 AM - 1 PM

- Location:

- Microsoft (LA Office)

- 333 S Grand Ave

- Los Angeles CA

- 90071-1504

- Level: 100-200

- Language: English

- Audience: BDMs, TDMs, Architects, and Development leads

- Registration Info: http://bit.ly/laazure

- Questions: email:wesy@microsoft.com

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Tim Anderson (@timanderson) [pictured below] updated his Database.com extends the salesforce.com platform article on 12/8/2010 to include details of “a chat with Database.com General Manager Igor Tsyganskiy”:

I had a chat with Database.com General Manager Igor Tsyganskiy. He says Microsoft’s SQL Azure is the closest competitor to Database.com but argues that because Salesforce.com is extending its platform in an organic way it will do a better job than Microsoft which has built a cloud platform from scratch. We did not address the pricing comparison directly, but Tsyganskiy says that existing Force.com customers always have the option to “talk to their Account Executive” so there could be flexibility.

Since Database.com is in one sense the same as Force.com, the API is similar. The underlying query language is SOQL – the Salesforce Object Query Language which is based on SQL SELECT though with limitations. The language for stored procedures and triggers is Apex. SQL drivers from Progress Software are intended to address the demand for SQL access.

I mentioned that Microsoft came under pressure to replace its web services API for SQL Server Data Services with full SQL – might Database.com face similar pressure? We’ll see, said Tsyganskiy. The case is not entirely parallel. SQL Azure is a cloud implementation of an existing SQL database with which developers are familiar. Database.com on the other hand abstracts the underlying data store – although Salesforce.com is an Oracle customer, Tsyganskiy said that the platform stores data in a variety of ways so should not be thought of as a wrapper for an Oracle database server.

Although Database.com is designed to be used from anywhere, I’d guess that Java running on VMForce with JPA, and following today’s announcement Heroku apps also hosted by Salesforce.com, will be the most common scenarios for complex applications.

Tim Anderson (@timanderson) asserted Database.com extends the salesforce.com platform in a 12/7/2010 post:

At Dreamforce today Salesforce.com announced its latest platform venture: database.com. Salesforce.com is built on an Oracle database with various custom optimizations; and database.com now exposes this as a generic cloud database which can be accessed from a variety of languages – Java, .NET, Ruby and PHP – and accessed from applications running on almost any platform: VMForce, Smartphones, Amazon EC2, Google App Engine, Microsoft Azure, Microsoft Excel, Adobe Flash/Flex and others.

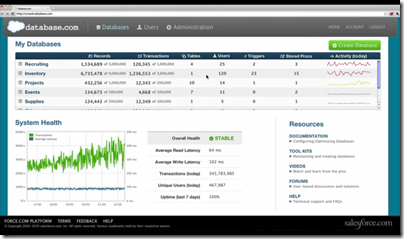

The database.com console is a web application that gives access to your databases and shows useful statistics and system information.

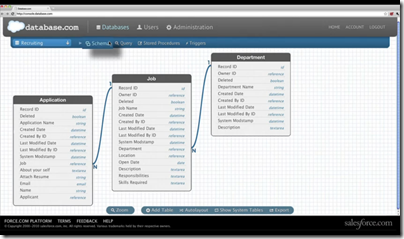

You can also create new databases, specifying the schema and relationships.

The details presented in the keynote today were sketchy – we saw applications that honestly could have been built just as easily with MySQL – but there is more information in the FAQ. The database.com API is through SOAP or REST web services, not SQL. Third parties can create drivers so you can use it with SQL APIs such as ODBC or JDBC. There is row level security, and built-in full text search.

According to the FAQ, database.com “includes a native trigger and stored procedure language”.

Pricing starts from free – for up to 100,000 records, 50,000 transactions and 3 users per month. After that it is $10.00 per month per additional 100,000 records, $10.00 per month per additional 150,000 transactions, and $10.00 per user if you need the built-in authentication and security system – which as you would expect is based on the native force.com identity system.
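To make the tiers above concrete, here is a minimal sketch of the arithmetic in Python. The function name `estimate_monthly_cost` is hypothetical, and two billing details are assumptions that the post does not confirm: partial blocks are billed as whole blocks, and only users beyond the three free ones are charged.

```python
# Free allowances and add-on prices as quoted in the post.
FREE_RECORDS = 100_000
FREE_TRANSACTIONS = 50_000
FREE_USERS = 3
RECORD_BLOCK = 100_000        # $10 per additional block of records
TRANSACTION_BLOCK = 150_000   # $10 per additional block of transactions
PRICE_PER_BLOCK = 10          # US dollars per month
PRICE_PER_USER = 10           # US dollars per month


def blocks_needed(amount, block_size):
    """Number of whole blocks needed to cover `amount` (rounds up)."""
    return -(-amount // block_size)


def estimate_monthly_cost(records, transactions, users):
    """Rough monthly estimate in US dollars under the assumptions above."""
    extra_records = max(0, records - FREE_RECORDS)
    extra_transactions = max(0, transactions - FREE_TRANSACTIONS)
    extra_users = max(0, users - FREE_USERS)  # assumption: first 3 stay free

    record_cost = blocks_needed(extra_records, RECORD_BLOCK) * PRICE_PER_BLOCK
    transaction_cost = (
        blocks_needed(extra_transactions, TRANSACTION_BLOCK) * PRICE_PER_BLOCK
    )
    user_cost = extra_users * PRICE_PER_USER
    return record_cost + transaction_cost + user_cost


# Within the free tier.
print(estimate_monthly_cost(100_000, 50_000, 3))     # 0
# 150,000 extra records (2 blocks), 150,000 extra transactions (1 block),
# and 2 extra users: 20 + 10 + 20.
print(estimate_monthly_cost(250_000, 200_000, 5))    # 50
```

Changing the rounding rule or the treatment of the free users changes the totals, so treat the output as an order-of-magnitude guide rather than a quote.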

As far as I can tell one of the goals of database.com – and also the forthcoming chatter.com free public collaboration service – is to draw users towards the force.com platform.

Roger Jennings has analysed the pricing and reckons that database.com is much more expensive than Microsoft’s SQL Azure – for 500 users and a 50GB database $15,000 per month for database.com vs a little over $500 for the same thing on SQL Azure, though the two are difficult to compare directly and he has had to make a number of assumptions. Responding to a question at the press and analyst Q&A today, Benioff seemed to accept that the pricing is relatively high, but justified in his view by the range of services on offer. Of course the pricing could change if it proves uncompetitive.

Unlike SQL Azure, database.com starts from free, which is a great attraction for developers interested in giving it a try. Trying out Azure is risky because if you leave a service running inadvertently you may run up a big bill.

In practice SQL Azure is likely to be more attractive than database.com for its core market, existing Microsoft-platform developers. Microsoft experimented with a web services API for SQL Server Data Services in Azure, but ended up offering full SQL, enabling developers to continue working in familiar ways.

Equally, Force.com developers will like database.com and its integration with the force.com platform.

Some of what database.com can do is already available through force.com and I am not sure how the pricing looks for organizations that are already big salesforce.com users; I hope to find out more soon.

What is interesting here is the way salesforce.com is making its platform more generic. There will be more force.com announcements tomorrow and I expect to see further efforts to broaden the platform then.

The worst case bill you can run up if you inadvertently keep a 1-GB SQL Azure database running with no activity is US$9.99 per month.

Klint Finley reported Salesforce.com Announces Hosted Service Called Database.com to the ReadWriteCloud on 12/7/2010:

Salesforce.com will announce its new hosted service Database.com today at its Dreamforce conference in San Francisco. Database.com is a stand-alone service available via its SOAP and REST APIs to any language on any platform or device, not just to Force.com developers. Database.com will be generally available in 2011 and, like Force.com, will have a freemium pricing model. This new service brings Salesforce.com into competition with Oracle not just in CRM but in Oracle's oldest turf: databases.

Database.com is the same relational database system that powers Salesforce.com, allowing developers to take advantage of the company's infrastructure and scalability. Progress Software has written drivers to enable existing applications and off-the-shelf software to connect to Database.com.

Database scalability is a major issue for today's web applications. Various NoSQL solutions, as well as projects like VoltDB and Memcached attempt to solve this problem. Database.com is the latest solution and, given Salesforce.com's track record, it will probably be an appealing one for cloud developers. According to Salesforce.com's announcement, the database currently contains more than 20 billion records and delivers more than 25 billion transactions per quarter with a response time averaging less than 300 milliseconds.
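As a back-of-the-envelope check on those figures, the sketch below converts the quarterly total into an average rate and applies Little's law (average requests in flight = arrival rate × average time in the system). It assumes a quarter of 365/4 days and treats the quoted 300 milliseconds as the full time in the system; neither assumption comes from the announcement, and real traffic is bursty, so peaks will sit well above the average.

```python
TRANSACTIONS_PER_QUARTER = 25_000_000_000  # "more than 25 billion" per quarter
AVG_RESPONSE_SECONDS = 0.300               # "less than 300 milliseconds"
# Assumption: an average-length quarter of 365/4 days.
SECONDS_PER_QUARTER = (365 / 4) * 24 * 60 * 60

# Average arrival rate in transactions per second.
avg_tps = TRANSACTIONS_PER_QUARTER / SECONDS_PER_QUARTER
# Little's law: average number of requests being served at any moment.
avg_in_flight = avg_tps * AVG_RESPONSE_SECONDS

print(f"Average rate: about {avg_tps:,.0f} transactions per second")
print(f"Average in flight: about {avg_in_flight:,.0f} requests")
```

Under these assumptions the average works out to a little over 3,000 transactions per second, with somewhat under 1,000 requests in flight at any moment.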

Database.com will be free for three users with up to 100,000 records and 50,000 transactions per month. It will cost $10 a month for each set of 100,000 records beyond that, and an additional $10 a month for each set of 150,000 transactions.

As noted, the Database.com can be used with any language and apps can be run from anywhere - your own datacenter, RackSpace, Amazon Web Services, Google AppEngine and even mobile devices. Although Salesforce.com keeps using the word "open" to describe it, make no mistake: this is a proprietary format.

Users will be able to export their data from Database.com, which is not a SQL database but is very similar. Using Progress Software's DataDirect Connect drivers, users will be able to connect existing applications to Database.com. According to an announcement from Progress, the company will add support for ODBC next.

[Disclosure: Salesforce.com paid for a plane ticket and hotel room for Klint Finley to attend the Dreamforce conference in San Francisco.]

SD Times Newswire reported Progress Software Introduces JDBC Drivers for Salesforce.com's Database.com on 12/7/2010:

The Progress® DataDirect Connect® drivers are unique in the market as they are the only drivers that use SQL to enable developers to access and move data between Java-based programs and Salesforce, and applications built on Force.com or the new Database.com. By providing connectivity to Java and off-the-shelf applications, these drivers will accelerate the adoption of cloud-based data sources across the enterprise.

In addition, Progress has simplified the Java community's migration to cloud-based data sources by enabling developers to leverage existing skills from traditional on-premise databases to Salesforce, the Force.com platform and Database.com.

"Salesforce.com designed Database.com to address the growing need to support mobile, social and cloud-based applications in the enterprise," said George Hu, executive vice president of platform and marketing, salesforce.com. "Progress gives developers full access to an open, proven and trusted database -- Database.com -- that is built for the cloud to power Java-based applications."

Progress will soon add ODBC support for Database.com, which will enable open database access for cross-platform SQL-based Database.com access from Windows, Mac and Linux operating systems without the need for custom code.

"The new class of cloud-enabled Progress DataDirect Connect drivers will complement Database.com by providing open access regardless of platform or operating system," said Jonathan Bruce, senior product manager for Progress Software. "We're delivering JDBC Type 5 functionality to Java developers to allow them to apply their expertise to new cloud data sources such as Salesforce, Force.com and Database.com. Only DataDirect Connect drivers provide this connectivity to Java and off-the-shelf applications, which will accelerate the use of cloud-based data across the enterprise."

The introduction of the new JDBC database drivers extends Progress Software's push to broaden Independent Software Vendor (ISV) cloud enablement using the Progress® OpenEdge® SaaS platform. The OpenEdge SaaS platform is used by several hundred ISVs to build applications for the most demanding and diverse business environments in the world.

Dana Gardner asserted Salesforce revs up the cloud data tier era with Database.com in this 12/7/2010 post to his ZDNet Briefings Direct blog:

San Francisco – Salesforce.com at its Dreamforce conference here today debuted a database in the cloud service, Database.com, that combines attractive heterogeneous features for a virtual data tier for developers of all commercial, technical and open source persuasions.

Salesforce.com is upping the ante on cloud storage services by going far beyond the plain vanilla elastic, pay-as-you-go variety of database and storage services that have come to the market in the past few years, with Amazon Web Services as a leading offering. [Disclosure: Salesforce.com helped defray the majority of travel costs for me to attend Dreamforce this week.]

Database.com offers as a service a cross-language, cross-platform, elastic pricing data tier that should be smelling sweet to developers and — potentially — enterprise architects. Salesforce, taking a page from traditional database suppliers like Oracle, IBM, Microsoft and SAP/Sybase, recognizes that owning the data tier — regardless of where it is — means owning a long-term keystone to IT.

If the new data tier in the cloud service is popular, it could disrupt not only the traditional relational database market, but also the development/PaaS market, the Infrastructure as a Service market, and the middleware/integration markets.

Even more fascinating is the prospect of Database.com becoming a new data services resource darling of open source developers, just as they are losing patience with and interest in MySQL, now under the stewardship of Oracle since it bought Sun Microsystems. This is a core constituency that is in flux and is being courted assiduously by Amazon, VMware, Google and others.

IBM, Microsoft and Oracle will need to respond to Database.com (as will Google, Amazon and VMware), first for open source, mobile and start-up developers and later, to an as yet uncertain degree, for enterprise developers, systems integrators and more conservative ISVs.

So, be sure, the race is on generally to try and provide the best cloud data and increasingly integrated PaaS services at the best cost that proves its mettle in terms of performance, security, reliability and ease of use. If recent cloud interest and adoption are any indication, this could be killer cloud capability that becomes a killer IT capability.

Database.com already raises the stakes for cloud storage providers and wannabes: the value-add to the plain vanilla storage service now has to entice developers by meeting or exceeding what Salesforce.com offers. By catering to a wide swath of tools, frameworks, IT platforms and mobile device platforms, Salesforce.com is heading off the traditional vendors that are trying to (not too fast) usher their installed bases to each vendor's own brand of hybrid and cloud offerings. Think of it as a slick trick: a lock-in on the ground that segues to a lock-in in the sky.

Both Amazon and Salesforce.com have proven that developers are not timid about changing how they attain value and resources. This may well prove true of how they access and procure data tier services, too, which makes the vendors' slick cloud segue trick all the more tricky. Instead of going to the DBA for data services, all stripes of developers could just as easily (maybe more easily) fire up a value-added Database.com instance and support their apps fast and furious.

The stakes are high on attracting the developers, of course, because the more data that Salesforce attracts with Database.com, the more integration and analytics they can offer — which then attracts even more data and applications — and developer allegiance — and so on and so on. It’s a value-add assemblage activity that Salesforce has already shown aptness with Force.com.

What remains to be seen is if this all vaults Salesforce.com beyond its roots as a CRM business applications SaaS provider and emerging PaaS ecosystem supporter for good. If owning the cloud data tier proves as instrumental to business success (as evidenced by Oracle’s consistency in generating enviable profit margins) as the on-premises, distributed computing DB business, well, Salesforce.com is looking at a massive business opportunity. And, like the Internet in general, it can easily become an early winner takes all affair.

It’s now a race for scale and value, one in which cloud-based data accumulation can become super sticky, with the lock-in coming from inescapably attractive benefits, not technical or license lock-in. Can you say insanely good data services? Sure you can. But more easily said than done. Salesforce has to execute well and long on its audacious new offering.

But what if? Like a rolling mountainside snowball gathering mass, velocity and power, Database.com could quickly become more than formidable because of the new nature of data in the cloud. Because the more data from more applications amid and among more symbiotic and collaborative ecosystems, the more insights, analytics and instant marketing prowess the hive managers (and hopefully users) gain.

In the end, Database.com could become a pervasive business intelligence services engine, something that’s far more valuable than a cheap and easy data tier in the sky for hire.

As I noted in a comment to Dana’s post, I don’t understand why Dana believes there’s a “prospect of Database.com becoming a new data services resource darling of open source developers … .” I don’t believe Marc Benioff has mentioned providing the source code for Database.com under an open-source license so users (and competitors) of his cloud database can implement it on premises or in a competing cloud database management system (CDBM). If I’ve missed an unlikely contribution by Benioff to FOSS, please let me know.

Jeff Barr (@jeffbarr) announced AWS Achieves PCI DSS 2.0 Validated Service Provider Status on 12/7/2010:

If your application needs to process, store, or transmit credit card data, you are probably familiar with the Payment Card Industry Data Security Standard, otherwise known as PCI DSS. This standard specifies best practices and security controls needed to keep credit card data safe and secure during transit, processing, and storage. Among other things, it requires organizations to build and maintain a secure network, protect cardholder data, maintain a vulnerability management program, implement strong security measures, test and monitor networks on a regular basis, and to maintain an information security policy.

I am happy to announce that AWS has achieved validated Level 1 service provider status for PCI DSS. Our compliance with PCI DSS v2 has been validated by an independent Qualified Security Assessor (QSA). AWS's status as a validated Level 1 Service Provider means that merchants and other service providers now have access to a computing platform that has been verified to conform to PCI standards. Merchants and service providers with a need to certify against PCI DSS and to maintain their own certification can now leverage the benefits of the AWS cloud and even simplify their own PCI compliance efforts by relying on AWS's status as a validated service provider. Our validation covers the services that are typically used to manage a cardholder environment including the Amazon Elastic Compute Cloud (EC2), the Amazon Simple Storage Service (S3), Amazon Elastic Block Store (EBS), and the Amazon Virtual Private Cloud (VPC).

Our Qualified Security Assessor has submitted a complete Report on Compliance and a fully executed Attestation of Compliance to Visa as of November 30, 2010. AWS will appear on Visa's list of validated service providers in the near future.

Until recently, it was unthinkable to even consider the possibility of attaining PCI compliance within a virtualized, multi-tenant environment. PCI DSS version 2.0, the newest version of DSS published in late October 2010, did provide guidance for dealing with virtualization but did not provide any guidance around multi-tenant environments. However, even without multi-tenancy guidance, we were able to work with our PCI assessor to document our security management processes, PCI controls, and compensating controls to show how our core services effectively and securely segregate each AWS customer within their own protected environment. Our PCI assessor found our security and architecture conformed with the new PCI standard and verified our compliance.

Even if your application doesn't process, store, or transmit credit card data, you should find this validation helpful since PCI DSS is often viewed as a good indicator of the ability of an organization to secure any type of sensitive data. We expect that our enterprise customers will now consider moving even more applications and critical data to the AWS cloud as a result of this announcement.

Learn more by reading our new PCI DSS FAQ.

William Vambenepe (@Vambenepe) claimed Amazon proves that REST doesn’t matter for Cloud APIs in a 12/6/2010 post:

Every time a new Cloud API is announced, its “RESTfulness” is heralded as if it was a MUST HAVE feature. And yet, the most successful of all Cloud APIs, the AWS API set, is not RESTful.

We are far enough down the road by now to conclude that this isn’t a fluke. It proves that REST doesn’t matter, at least for Cloud management APIs (there are web-scale applications of an entirely different class for which it does). By “doesn’t matter”, I don’t mean that it’s a bad choice. Just that it is not significantly different from reasonable alternatives, like RPC.

AWS mostly uses RPC over HTTP. You send HTTP GET requests, with instructions like ?Action=CreateKeyPair added in the URL. Or DeleteKeyPair. Same for any other resource (volume, snapshot, security group…). Amazon doesn’t pretend it’s RESTful, they just call it “Query API” (except for the DevPay API, where they call it “REST-Query” for unclear reasons).
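The RPC-over-HTTP style described above can be sketched in a few lines. This is only an illustration of how the operation name travels in an `Action` query parameter: the helper name `build_query_url` is hypothetical, the endpoint is used purely as an example, and the authentication, version and timestamp parameters that real requests require are deliberately omitted. Nothing is sent over the network.

```python
from urllib.parse import urlencode

# Example endpoint only; real calls also need signature and version parameters.
ENDPOINT = "https://ec2.amazonaws.com/"


def build_query_url(action: str, **params: str) -> str:
    """Return a Query-style URL with the operation name in the Action parameter."""
    query = {"Action": action}
    query.update(sorted(params.items()))  # stable parameter order
    return ENDPOINT + "?" + urlencode(query)


# The same resource is created and deleted by changing only the Action value.
create_url = build_query_url("CreateKeyPair", KeyName="demo-key")
delete_url = build_query_url("DeleteKeyPair", KeyName="demo-key")
```

Note that the verb lives in the URL rather than in the HTTP method, which is what makes this RPC rather than REST.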

Has this lack of RESTfulness stopped anyone from using it? Has it limited the scale of systems deployed on AWS? Does it limit the flexibility of the Cloud offering and somehow force people to consume more resources than they need? Has it made the Amazon Cloud less secure? Has it restricted the scope of platforms and languages from which the API can be invoked? Does it require more experienced engineers than competing solutions?

I don’t see any sign that the answer is “yes” to any of these questions. Considering the scale of the service, it would be a multi-million-dollar blunder if indeed one of them had a positive answer.

Here’s a rule of thumb. If most invocations of your API come via libraries for object-oriented languages that more or less map each HTTP request to a method call, it probably doesn’t matter very much how RESTful your API is.
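The rule of thumb can be illustrated with a toy client library. This is a sketch under stated assumptions, not any vendor's SDK: the class `QueryClient`, its method-name-to-action mapping and the `example.invalid` endpoint are all hypothetical. It shows why callers of such a library never see whether the underlying HTTP request is RESTful or RPC-style.

```python
from urllib.parse import urlencode


class QueryClient:
    """Hypothetical wrapper: each method call becomes one Action-style request URL."""

    def __init__(self, endpoint: str) -> None:
        self.endpoint = endpoint

    def __getattr__(self, name: str):
        # Only invoked for attributes not found normally, e.g. create_key_pair.
        if name.startswith("_"):
            raise AttributeError(name)
        # create_key_pair -> CreateKeyPair
        action = "".join(part.capitalize() for part in name.split("_"))

        def call(**params: str) -> str:
            query = {"Action": action, **dict(sorted(params.items()))}
            # A real library would sign and send this; here we only build it.
            return self.endpoint + "?" + urlencode(query)

        return call


client = QueryClient("https://cloud.example.invalid/")
request_url = client.create_key_pair(KeyName="demo-key")
```

From the caller's side the API is just `client.create_key_pair(...)`; swapping the URL scheme inside `call` for a resource-oriented one would not change any calling code.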

The Rackspace people are technically right when they point out the benefits of their API compared to Amazon’s. But it’s a rounding error compared to the innovation, pragmatism and frequency of iteration that distinguish the services provided by Amazon. It’s the content that matters.

If you think it’s rich, for someone who wrote a series of posts examining “REST in practice for IT and Cloud management” (part 1, part 2 and part 3), to now declare that REST doesn’t matter, well, go back to these posts. I explicitly set them up as an effort to investigate whether (and in what way) it mattered and made it clear that my intuition was that actual RESTfulness didn’t matter as much as simplicity. The AWS API is an example of the latter without the former. As I wrote in my review of the Sun Cloud API, “it’s not REST that matters, it’s the rest”. One and a half years later, I think the case is closed.

Related posts:

- REST in practice for IT and Cloud management (part 1: Cloud APIs)

- REST in practice for IT and Cloud management (part 2: configuration management)

- REST in practice for IT and Cloud management (part 3: wrap-up)

- Waiting for events (in Cloud APIs)

- Separating model from protocol in Cloud APIs