Windows Azure and Cloud Computing Posts for 12/11/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 12/12/2010 with articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), VMRoles, Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Cloud Computing with the Windows Azure Platform published 9/21/2009. Order today from Amazon or Barnes & Noble (in stock.)

Read the detailed TOC here (PDF) and download the sample code here.

Discuss the book on its WROX P2P Forum.

See a short-form TOC, get links to live Azure sample projects, and read a detailed TOC of electronic-only chapters 12 and 13 here.

Wrox’s Web site manager posted on 9/29/2009 a lengthy excerpt from Chapter 4, “Scaling Azure Table and Blob Storage” here.

You can now freely download by FTP and save the following two online-only PDF chapters of Cloud Computing with the Windows Azure Platform, which have been updated for SQL Azure’s January 4, 2010 commercial release:

- Chapter 12: “Managing SQL Azure Accounts and Databases”

- Chapter 13: “Exploiting SQL Azure Database's Relational Features”

HTTP downloads of the two chapters are available at no charge from the book's Code Download page.

Tip: If you encounter articles from MSDN or TechNet blogs that are missing screen shots or other images, click the empty frame to generate an HTTP 404 (Not Found) error, and then click the back button to load the image.

Azure Blob, Drive, Table and Queue Services

Anton Staykov posted Windows Azure Storage Tips on 12/11/2010:

Windows Azure is a great platform. It has different components (like Compute, Storage, SQL Azure, AppFabric) which can be used independently. So for example you can use just Windows Azure Storage (be it Blob, Queue or Table) without even using Compute (Windows Azure Roles) or SQL Azure or AppFabric. And using Windows Azure Storage on its own is worthwhile: the price is very competitive with other cloud storage providers (such as Amazon S3).

To use Windows Azure Storage from within your Windows Forms application you just need to add a reference to the Microsoft.WindowsAzure.StorageClient assembly. This assembly is part of the Windows Azure SDK.

O.K. Assume you have created a new Windows Forms application, added a reference to that assembly, and tried to create your CloudStorageAccount using the static Parse or TryParse method. When you try to build your application, don’t be surprised if you get the following error (warning):

Warning 5 The referenced assembly "Microsoft.WindowsAzure.StorageClient, Version=1.1.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35, processorArchitecture=MSIL" could not be resolved because it has a dependency on "System.Web, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b03f5f7f11d50a3a" which is not in the currently targeted framework ".NETFramework,Version=v4.0,Profile=Client". Please remove references to assemblies not in the targeted framework or consider retargeting your project.

And you will not be able to build.

Well, some of you may not know, but with Service Pack 1 of .NET Framework 3.5, Microsoft introduced a new concept named “.NET Framework Client Profile”, which is available for .NET Framework 3.5 SP1 and .NET Framework 4.0. The short version of what the Client Profile is follows:

The .NET Framework 4 Client Profile is a subset of the .NET Framework 4 that is optimized for client applications. It provides functionality for most client applications, including Windows Presentation Foundation (WPF), Windows Forms, Windows Communication Foundation (WCF), and ClickOnce features. This enables faster deployment and a smaller install package for applications that target the .NET Framework 4 Client Profile.

For the full version – check out the inline links.

What to do in order to use Microsoft.WindowsAzure.StorageClient from within our Windows Forms application: go to project Properties and, from “Target Framework” on the “Application” tab, select “.NET Framework 4” and not the “* Client Profile” one:

The gotcha is that the default setting for Visual Studio is to use the Client Profile of the .NET Framework. You cannot change this setting from the “New Project” wizard, so all new projects you create target the .NET Framework Client Profile (if you choose a .NET Framework 4 or 3.5 project template).

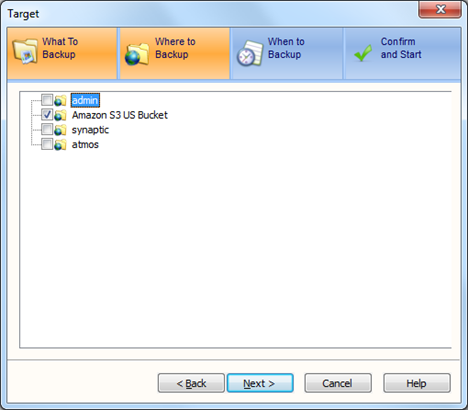

Jerry Huang described Convenient SQL Server Backup to Amazon S3 or Windows Azure Storage in this 12/10/2010 post to his Gladinet blog:

Every SQL Server admin knows how to back up SQL Server. It has been a very mature technology since SQL Server 2005 because it has built-in support for Volume Shadow Copy Service. It knows how to take a consistent snapshot of the database and produce the snapshot as files for backup applications to consume and store.

With Gladinet Cloud Backup, SQL Server Backup to Amazon S3 or any other supported Cloud Storage Services such as Windows Azure or Open Stack is as easy as set-it-and-forget-it, while restore is as simple as point and click. (check Gladinet’s homepage for supported cloud storage services)

Get Cloud Backup

You can have cloud backup in two different ways. The first one is a standalone installer package. The second one is an optional add-on for Gladinet Cloud Desktop. This tutorial will use Gladinet Cloud Desktop add-on for illustration purpose.

Mount S3 Virtual Directory

The next step will involve mounting Amazon S3 as a virtual folder.

Snapshot Backup

SQL Server Backup is available in the Snapshot Backup add-on for Gladinet Cloud Desktop. It is also available in the stand-alone Cloud Backup.

SQL Server Backup is in the Backup by Application section. Application means those applications that support volume shadow copy service.

The SQL Server will show up and you can pick the database that you want to backup. (If SQL Server doesn’t show up, read this MSDN article about SQL Writer, most likely the SQL VSS Writer Service is not started)

Next step you will pick Amazon S3 as the destination.

This is it, the task will run in the background and backup the SQL Server to Amazon S3.

Before we go into the restore, first drop some data from the database to simulate a data loss or corruption case.

Now Restore

First Step is to pick the backup destination since it is now the restore source.

Now select the snapshot you need.

This is it, the database will be restored, you don’t need to restart SQL Server and it continues to run.

<Return to section navigation list>

SQL Azure Database and Reporting

• Steve Peschka continued his CASI (Claims, Azure and SharePoint Integration) Kit series with part 6, Integrating SQL Azure with SharePoint 2010 and Windows Azure, of 12/12/2010:

This post is most useful when used as a companion to my five-part series on the CASI (Claims, Azure and SharePoint Integration) Kit.

- Part 1: an introductory overview of the entire framework and solution, describing what the series is going to try to cover.

- Part 2: the guidance part of the CASI Kit. It starts with making WCF the front end for all your data – could be datasets, Xml, custom classes, or just straight up Html. In phase 1 we take your standard WCF service and make it claims aware – that’s what allows us to take the user token from SharePoint and send it across application or data center boundaries to our custom WCF applications. In phase 2 I’ll go through the list of all the things you need to do to take this typical WCF application from on premises to hosted up in Windows Azure. Once that is complete you’ll have the backend in place to support a multi-application, multi-datacenter solution with integrated authentication.

- Part 3: describes the custom toolkit assembly that provides the glue to connect your claims-aware WCF application in the cloud and your SharePoint farm. I’ll go through how you use the assembly, talk about the pretty easy custom control you need to create (about 5 lines of code) and how you can host it in a page in the _layouts directory as a means to retrieve and render data in a web part. The full source code for the sample custom control and _layouts page will also be posted.

- Part 4: the web part that I’m including with the CASI Kit. It provides an out of the box, no-code solution for you to hook up and connect with an asynchronous client-side query to retrieve data from your cloud-hosted service and then display it in the web part. It also has hooks built into it so you can customize it quite a bit and use your own JavaScript functions to render the data.

- Part 5: a brief walk-through of a couple of sample applications that demonstrate other common scenarios for using the custom control described in part 3. One will be using the control to retrieve some kind of user or configuration data and storing it in the ASP.NET cache, then using it in a custom web part. The other scenario will be using the custom control to retrieve data from Azure and use it in a custom task; in this case, a custom SharePoint timer job. The full source code for these sample applications will also be posted.

With the CASI Kit I gave you some guidance and tools to help you connect pretty easily and quickly to Windows Azure from SharePoint 2010, while sending along the token for the current user so you can make very granular security decisions. The original WCF application that the CASI Kit consumed just used a hard-coded collection of data that it exposed. In a subsequent build (and not really documented in the CASI Kit postings), I upgraded the data portion of it so that it stored and retrieved data using Windows Azure table storage. Now, I’ve improved it quite a bit more by building out the data in SQL Azure and having my WCF in Windows Azure retrieve data from there. This really is a multi-cloud application suite now: Windows Azure, SQL Azure, and (ostensibly) SharePoint Online. The point of this post is really just to share a few tips when working with SQL Azure so you can get it incorporated more quickly into your development projects.

Integration Tips

1. You really must upgrade to SQL 2008 R2 in order to be able to open SQL Azure databases with the SQL Server Management Studio tool. You can technically make SQL Server 2008 work, but there are a bunch of hacky workaround steps to make that happen. 2008 R2 has it baked in the box and you will get the best experience there. If you really want to go the 2008 workaround route, check out this link: http://social.technet.microsoft.com/wiki/contents/articles/developing-and-deploying-with-sql-azure.aspx. There are actually a few good tips in this article so it’s worth a read no matter what.

2. Plan on using T-SQL to do everything. The graphical tools are not available to work with SQL Azure databases, tables, stored procedures, etc. Again, one thing I’ve found helpful since I’m not a real T-SQL wiz is to just create a local SQL Server database first. In the SQL Management tool I create two connections – one to my local SQL instance and one to my SQL Azure instance. I create tables, stored procedures, etc. in the local SQL instance so I can use the graphical tools. Then I use the Script [whatever Sql object] As…CREATE to…New Sql Query Window. That generates the SQL script to create my objects, and then I can paste it into a query window that I have open to my SQL Azure database.

3. This one’s important folks – the default timeout is frequently not long enough to connect to SQL Azure. If you are using the .NET SqlClient classes, you can change it in the connection string, i.e. add "Connection Timeout=30;". If you are using SQL Server Management Studio, click the Advanced button on the dialog where you enter the server name and credentials and change it to at least 30. The default is 15 seconds and fails often, but 30 seconds seems to work pretty well. Unfortunately there isn’t a way to change the connection timeout for an external content type (i.e. BDC application definition) to a SQL Azure data source.

4. Don't use the database administrator account for connecting to your databases (i.e. the account you get to create the database itself). Create a separate account for working with the data. Unfortunately SQL Azure only supports SQL accounts so you can't directly use the identity of the requesting user to make decisions about access to the data. You can work around that by using a WCF application that front-ends the data in SQL Azure and using claims authentication in Windows Azure, which is exactly the model the CASI Kit uses. Also, it takes a few steps to create a login that can be used to connect to data in a specific database. Here is an example:

--create the database first

CREATE DATABASE Customers

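--now create the login (in SQL Azure, run this while connected to master), then create a user in the database from the login (run this while connected to the Customers database)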

CREATE LOGIN CustomerReader WITH PASSWORD = 'FooBarFoo'

CREATE USER CustomerReader FROM LOGIN CustomerReader

--now grant rights to the user

GRANT INSERT, UPDATE, SELECT ON dbo.StoreInformation TO CustomerReader

--grant EXECUTE rights to a stored proc

GRANT EXECUTE ON myStoredProc TO CustomerReader

For more examples and details, including setting server level rights for accounts in SQL Azure, see http://msdn.microsoft.com/en-us/library/ee336235.aspx.

5. You must create firewall rules in SQL Azure for each database you have in order to allow communications from different clients. If you want to allow communication from other Azure services, then you must create the MicrosoftServices firewall rule (which SQL Azure offers to do for you when you first create the database), which is a start range of 0.0.0.0 to 0.0.0.0. If you do not create this rule none of your Windows Azure applications will be able to read, add or edit data from your SQL Azure databases! You should also create a firewall rule to allow communications with whatever server(s) you use to route to the Internet. For example, if you have a cable or DSL router or an RRAS server, then you want to use your external address or NAT address for something like RRAS.

Those should be some good tips to get your data up and running.
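The firewall behavior described in tip 5 can be modeled in a few lines. This is only a sketch of the idea, not SQL Azure's implementation: the rule list, the `OfficeNat` rule name and the 203.0.113.x addresses are made up for illustration, and the 0.0.0.0 to 0.0.0.0 rule is treated as a flag for other Azure services rather than a range that external clients match.

```python
import ipaddress

# Hypothetical rule set: (name, start, end). Only the MicrosoftServices
# rule comes from the text; the office NAT range is an invented example.
RULES = [
    ("MicrosoftServices", "0.0.0.0", "0.0.0.0"),
    ("OfficeNat", "203.0.113.10", "203.0.113.20"),
]


def matching_rule(client_ip, rules=RULES):
    """Return the name of the first rule whose inclusive range covers client_ip, else None."""
    ip = ipaddress.ip_address(client_ip)
    for name, start, end in rules:
        if ipaddress.ip_address(start) <= ip <= ipaddress.ip_address(end):
            return name
    return None


def azure_services_allowed(rules=RULES):
    """True if the special 0.0.0.0 to 0.0.0.0 rule is present."""
    return any(start == "0.0.0.0" and end == "0.0.0.0" for _, start, end in rules)


if __name__ == "__main__":
    print(matching_rule("203.0.113.15"))   # external address of the router or NAT
    print(matching_rule("198.51.100.7"))   # an address no rule covers
    print(azure_services_allowed())
```

The point of the second function is the one the tip stresses: without the special rule, code running in Windows Azure cannot reach the database, no matter which client ranges are open.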

Data Access

Accessing the data itself from your custom code – Windows Azure WCF, web part, etc. – is fortunately pretty much exactly the same as when retrieving data from an on-premise SQL Server. Here’s a quick code example, and then I’ll walk through a few parts of it in a little more detail:

//set a longer timeout because 15 seconds is often not

//enough; SQL Azure docs recommend 30

private string conStr = "server=tcp:foodaddy.database.windows.net;Database=Customers;" +

"user ID=CustomerReader;Password=FooBarFoo;Trusted_Connection=False;Encrypt=True;" +

"Connection Timeout=30";

private string queryString = "select * from StoreInformation";

private DataSet ds = new DataSet();

using (SqlConnection cn = new SqlConnection(conStr))

{

SqlDataAdapter da = new SqlDataAdapter();

da.SelectCommand = new SqlCommand(queryString, cn);

da.Fill(ds);

//do something with the data

}

Actually there’s not really much here that needs explanation, other than the connection string. The main things worth pointing out on it that are possibly different from a typical connection to an on-premise SQL Server are:

- server: precede the name of the SQL Azure server with “tcp:”.

- Trusted_Connection: this should be false since you’re not using integrated security.

- Encrypt: this should be true for any connections to a cloud-hosted database

- Connection Timeout: as described above, the default timeout is 15 seconds and that will frequently not be enough; instead I set it to 30 seconds here.
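As a quick way to apply the checklist above, here is a small sketch that parses a connection string and reports which of the four settings look wrong. The function names are hypothetical, the parser does not handle quoted values or passwords containing `;`, and the 15-second default is the figure quoted in the post.

```python
def parse_connection_string(text):
    """Split 'key=value;key=value' into a dict with lower-cased keys (no quoting support)."""
    pairs = (item.split("=", 1) for item in text.split(";") if item.strip())
    return {key.strip().lower(): value.strip() for key, value in pairs}


def audit(text, default_timeout=15):
    """Return a list of warnings for the settings discussed in the bullet list."""
    settings = parse_connection_string(text)
    problems = []
    if not settings.get("server", "").lower().startswith("tcp:"):
        problems.append("server should start with tcp:")
    if settings.get("trusted_connection", "").lower() != "false":
        problems.append("Trusted_Connection should be False")
    if settings.get("encrypt", "").lower() != "true":
        problems.append("Encrypt should be True")
    if int(settings.get("connection timeout", default_timeout)) < 30:
        problems.append("Connection Timeout should be at least 30")
    return problems


if __name__ == "__main__":
    good = ("server=tcp:foodaddy.database.windows.net;Database=Customers;"
            "user ID=CustomerReader;Password=FooBarFoo;Trusted_Connection=False;"
            "Encrypt=True;Connection Timeout=30")
    print(audit(good))
    print(audit("server=myserver;Database=Customers"))
```

Run against the connection string from the post, the audit returns an empty list; a bare on-premise style string trips all four checks.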

One other scenario I wanted to mention briefly here is data migration. You can use the BCP tool that comes with SQL Server to move data from an on-premise SQL Server to SQL Azure. The basic routine for migrating data is like this:

1. Create a format file – this is used for both exporting the local data and importing it into the cloud. In this example I’m creating a format file for the Region table in the Test database, and saving it to the file region.fmt.

bcp Test.dbo.Region format nul -T -n -f region.fmt

2. Export data from local SQL – In this example I’m exporting out of the Region table in the Test database, into a file called RegionData.dat. I’m using the region.fmt format file I created in step 1.

bcp Test.dbo.Region OUT RegionData.dat -T -f region.fmt

3. Import data to SQL Azure. The main thing that’s important to note here is that when you are importing data into the cloud, you MUST include the “@serverName” with the user name parameter; the import will fail without it. In this example I’m importing data to the Region table in the Customers database in SQL Azure. I’m importing from the RegionData.dat file that I exported my local data into. My server name (the -S parameter) is the name of the SQL Azure server. For my user name (-U parameter) I’m using the format username@servername as I described above. I’m telling it to use the region.fmt format file that I created in step 1, and I’ve set a batch size (-b parameter) of 1000 records at a time.

bcp Customers.dbo.Region IN RegionData.dat -S foodaddy.database.windows.net -U speschka@foodaddy.database.windows.net -P FooBarFoo -f region.fmt -b 1000
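If you migrate more than one table, the three bcp steps above are easy to script. The sketch below only assembles the argument lists, using the same flags as the commands in the post; the function name and its parameters are hypothetical, and nothing is executed unless you pass a list to something like `subprocess.run` yourself.

```python
def bcp_commands(local_table, cloud_table, server, login, password,
                 fmt_file="region.fmt", data_file="RegionData.dat", batch=1000):
    """Build the format, export and import bcp argument lists from the three steps above."""
    # Step 1: create the format file from the local table (trusted connection, native types).
    make_format = ["bcp", local_table, "format", "nul", "-T", "-n", "-f", fmt_file]
    # Step 2: export the local data using that format file.
    export = ["bcp", local_table, "OUT", data_file, "-T", "-f", fmt_file]
    # Step 3: import into SQL Azure; the user name must carry the @servername suffix.
    load = ["bcp", cloud_table, "IN", data_file,
            "-S", server, "-U", f"{login}@{server}", "-P", password,
            "-f", fmt_file, "-b", str(batch)]
    return [make_format, export, load]


if __name__ == "__main__":
    for args in bcp_commands("Test.dbo.Region", "Customers.dbo.Region",
                             "foodaddy.database.windows.net", "speschka", "FooBarFoo"):
        print(" ".join(args))
```

Keeping the `login@server` concatenation in one place guards against the failure mode the post warns about, where the import fails because the suffix was left off.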

That’s all for this post folks. Hopefully this gives you a good understanding of the basic mechanics of creating a SQL Azure database and connecting to it and using it in your SharePoint site. As a side note, I used the CASI Kit to retrieve this data through my Windows Azure WCF front end to SQL Azure and render it in my SharePoint site. I followed my own CASI Kit blog when creating it to try and validate everything I’ve previously published in there and overall found it pretty much on the mark. There were a couple of minor corrections I made in part 3 along with a quick additional section on troubleshooting that I added. But overall it took me about 30 minutes to create a new custom control and maybe 15 minutes to create a new Visual web part.

I used the CASI Kit web part to display one set of SQL Azure data from the WCF, and in the Visual web part I created an instance of the custom control programmatically to retrieve a dataset, and then I bound it to an ASP.NET grid. I brought it all together in one sample page that actually looks pretty good, and could be extended to displaying data in a number of different ways pretty easily. Here’s what it looks like:

Attachment:

CASI_Kit_Part6.zip

Steve is a Microsoft Lead Architect for Portals.

Changir Biyikoglu described How to scale out an app with SQL Azure Federations… The Quintessential Sales DB with Customer and Orders in a 12/11/2010 post:

Continuing on the SQL Azure Federation theme with this post, I’ll cover an important question: what would it take to build applications with SQL Azure Federations? So let’s walk that path… We’ll take the quintessential sales app with customers and orders. I’ll simplify the sample and schema to get you through the headlines. But we won’t lose any fidelity to the basic relationships in the data model. To recap the sample: as you would expect from a sales db, customers have many orders and orders have many order details (line items). We’ll focus on implementation of 2 scenarios: get orders and order details for a given customer, and get all orders of all customers.

Step 1 – Decide the Federation Key

Picking the right federation key is the most critical decision you will need to make, simply because the federation key is something that the application needs to understand and will be a tough thing to change later. How do you pick the federation key? Apps that fit the sharding pattern will have most of their workload focused on a subset of data. With the sample sales app, scenarios mostly revolve around a single customer and its orders. Customers log in, place or view their orders, change demographic info, etc. Some apps may not have a slam dunk choice like customer_id or may have more than one dimension to optimize. If you have more than one natural choice for a federation key, you will need to think about which specific workload you want to optimize to take a pick. We’ll cover techniques for optimizing multiple dimensions in the advanced class.

It will also be critical to pick a federation key that spreads the workload to many nodes instead of concentrating the workload. After picking a federation key, you don’t want all your load to be concentrated at a single federation member or node. In the sales app, customer id as a federation key helps distribute the load across many nodes. One more important factor is the size of the largest federation key instance. Remember that atomic units cannot be split any further, so atomic units, that is, federation key instances, cannot span federation members. When you pick the federation key, the computational capacity requirements of the largest federation key instance should safely fit into a single SQL Azure database.
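One rough way to sanity-check a candidate federation key against the two criteria above (spread of load, and size of the largest atomic unit) is to count rows per key value in a sample of your data. This sketch uses row counts as a stand-in for "computational capacity", which is an assumption; the function name and the `capacity` figure are hypothetical.

```python
from collections import Counter


def key_report(rows, key, capacity):
    """Summarize how a candidate federation key distributes the sampled rows.

    rows: iterable of dicts; key: candidate federation key column;
    capacity: assumed maximum number of rows one federation member can hold.
    """
    counts = Counter(row[key] for row in rows)
    largest_key, largest = counts.most_common(1)[0]
    total = sum(counts.values())
    return {
        "distinct_keys": len(counts),
        "largest_key": largest_key,
        "largest_share": largest / total,          # 1.0 means everything sits in one atomic unit
        "fits_in_one_member": largest <= capacity,  # atomic units cannot span members
    }


if __name__ == "__main__":
    orders = [{"customerid": 1}, {"customerid": 1}, {"customerid": 2}, {"customerid": 3}]
    print(key_report(orders, "customerid", capacity=3))
```

A key with few distinct values or a very large `largest_share` concentrates the workload, and a largest atomic unit that exceeds the capacity of one member cannot be fixed by splitting.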

Step 2 – Denormalize the Schema

With the federation key set, the next step is to modify your schema a little to deploy it into SQL Azure federations. It is a fairly simple change; we need to carry the federation key to all tables that need to be federated. In the sales app, the customer and orders tables naturally have customer_id as a column. However, the order_details table also needs to contain the customer_id column before the schema can be deployed. This is required for us to know how to redistribute your data when a SPLIT command is issued. The script below walks through how to deploy the schema to the SQL Azure federation member.

    -- Connect to 'master'
    CREATE DATABASE SalesDB (EDITION='business',MAXSIZE=50GB)
    GO

    -- Connect to 'SalesDB' and create the federation
    CREATE FEDERATION Orders_Federation(RANGE BIGINT)
    GO

    -- Connect to the 'Orders_Federation' federation member covering customerid 0
    USE FEDERATION Orders_Federation(0) WITH RESET
    GO

    CREATE TABLE orders(
      customerid bigint,
      orderid bigint,
      odate datetime,
      primary key (orderid, customerid))
    FEDERATE ON (customerid)
    GO

    -- note that customerid (the federation key) needs to be part of all unique indexes but not non-unique indexes
    CREATE INDEX o_idx1 on orders(odate)
    GO

    -- create a federated table
    CREATE TABLE orderdetails(
      customerid bigint,
      orderdetailid bigint,
      orderid bigint,
      partid bigint,
      primary key (orderdetailid, customerid))
    FEDERATE ON (customerid)
    GO

    ALTER TABLE orders
      ADD CONSTRAINT orders_uq1 UNIQUE NONCLUSTERED (orderid,customerid)
    GO

    ALTER TABLE orderdetails
      ADD CONSTRAINT orderdetails_fk1 FOREIGN KEY(orderid,customerid) REFERENCES orders(orderid,customerid)
    GO

    -- reference table
    CREATE TABLE uszipcodes(zipcode nvarchar(128) primary key, state nvarchar(128))
    GO

Step 3 – Make Application Federation Aware

For the large part of the workload that is focused on the atomic unit, life is fairly simple. Your app needs to issue an additional statement to rewire the connection to the right atomic unit. Here is the example statement that connects to customer_id 55.

    USE FEDERATION Orders_Federation(55) WITH RESET
    GO

Once you are connected, you can safely operate on data that belongs to this customer_id value 55. Even if SPLITs were to redistribute the data, you don’t need to remember a new physical database name or do anything different in your connection string. Your connection string still connects to the database containing the federation, and you then issue the USE FEDERATION statement to go to customer_id 55. We will guarantee to land you in the correct federation member. Here is a dead simple implementation of GetData for a classic sales app with Orders and OrderDetails tables. The interesting thing to note here is that the connection string points to the SalesDB database and that the net new code here is lines 13 to 17 below.

     1: private void GetData(long customerid)
     2: {
     3:     long fedkeyvalue = customerid;
     4:
     5:     // Connection
     6:     SqlConnection connection = new SqlConnection(@"Server=tcp:sqlazure;Database=SalesDB;User ID=mylogin@myserver;Password=mypassword");
     7:     connection.Open();
     8:
     9:     // Create a DataSet.
    10:     DataSet data = new DataSet();
    11:
    12:     // Routing to Specific Customer
    13:     using (SqlCommand command = connection.CreateCommand())
    14:     {
    15:         command.CommandText = "USE FEDERATION orders_federation(" + fedkeyvalue.ToString() + ") WITH RESET";
    16:         command.ExecuteNonQuery();
    17:     }
    18:
    19:     // Populate data from orders table to the DataSet.
    20:     SqlDataAdapter masterDataAdapter = new SqlDataAdapter(@"select * from orders where (customerid=@customerid1)", connection);
    21:     masterDataAdapter.SelectCommand.Parameters.Add("@customerid1", SqlDbType.BigInt);
    22:     masterDataAdapter.SelectCommand.Parameters[0].Value = customerid;
    23:     masterDataAdapter.Fill(data, "orders");
    24:
    25:     // Add data from the orderdetails table to the DataSet.
    26:     SqlDataAdapter detailsDataAdapter = new SqlDataAdapter(@"select * from orderdetails where (customerid=@customerid1)", connection);
    27:     detailsDataAdapter.SelectCommand.Parameters.Add("@customerid1", SqlDbType.BigInt);
    28:     detailsDataAdapter.SelectCommand.Parameters[0].Value = customerid;
    29:     detailsDataAdapter.Fill(data, "orderdetails");
    30:
    31:     connection.Close();
    32:     connection.Dispose();
    33:
    34:     …
    35: }

For parts of the application that need to fan out queries, in future versions there will be help from SQL Azure. However in v1, you will need to hand craft the queries by firing multiple queries to multiple federation members. If you are simply appending or union-ing result sets across your atomic units, you need to iterate through the federation members. This won’t be too hard. Here is the GetData function expanded to include the scenario where all customers across all federation members are displayed. The function assumes the special customer_id=0 returns all customers, so the T-SQL is adjusted to enable that through the added @customerid2 parameter in the where clause: where (customerid=@customerid1 or @customerid2=0). The net new code here is the loop that wraps lines 14 to 41. Lines 37 to 41 grab the value required to rewire the connection to the next federation member, and the commands repeat until the range high of NULL is hit. NULL is the special value used to represent the maximum value of the federation key in the sys.federation_member_columns system view.

     1: private void GetData(long customerid)
     2: {
     3:     long? fedkeyvalue = customerid;
     4:
     5:     // Connection
     6:     SqlConnection connection = new SqlConnection(@"Server=tcp:sqlazure;Database=SalesDB;User ID=mylogin@myserver;Password=mypassword");
     7:     connection.Open();
     8:
     9:     // Create a DataSet.
    10:     DataSet data = new DataSet();
    11:
    12:     do
    13:     {
    14:         // Routing to Specific Customer
    15:         using (SqlCommand command = connection.CreateCommand())
    16:         {
    17:             command.CommandText = "USE FEDERATION orders_federation(" + fedkeyvalue.ToString() + ") WITH RESET";
    18:             command.ExecuteNonQuery();
    19:         }
    20:
    21:         // Populate data from orders table to the DataSet.
    22:         SqlDataAdapter masterDataAdapter = new SqlDataAdapter(@"select * from orders where (customerid=@customerid1 or @customerid2=0)", connection);
    23:         masterDataAdapter.SelectCommand.Parameters.Add("@customerid1", SqlDbType.BigInt);
    24:         masterDataAdapter.SelectCommand.Parameters[0].Value = customerid;
    25:         masterDataAdapter.SelectCommand.Parameters.Add("@customerid2", SqlDbType.BigInt);
    26:         masterDataAdapter.SelectCommand.Parameters[1].Value = customerid;
    27:         masterDataAdapter.Fill(data, "orders");
    28:
    29:         // Add data from the orderdetails table to the DataSet.
    30:         SqlDataAdapter detailsDataAdapter = new SqlDataAdapter(@"select * from orderdetails where (customerid=@customerid1 or @customerid2=0)", connection);
    31:         detailsDataAdapter.SelectCommand.Parameters.Add("@customerid1", SqlDbType.BigInt);
    32:         detailsDataAdapter.SelectCommand.Parameters[0].Value = customerid;
    33:         detailsDataAdapter.SelectCommand.Parameters.Add("@customerid2", SqlDbType.BigInt);
    34:         detailsDataAdapter.SelectCommand.Parameters[1].Value = customerid;
    35:         detailsDataAdapter.Fill(data, "orderdetails");
    36:
    37:         using (SqlCommand command = connection.CreateCommand())
    38:         {
    39:             command.CommandText = "select cast(range_high as bigint) from sys.federation_member_columns";
    40:             fedkeyvalue = command.ExecuteScalar() as long?; // a NULL range_high (DBNull) becomes null
    41:         }
    42:     } while (customerid == 0 && fedkeyvalue != null);
    43:
    44:     connection.Close();
    45:     connection.Dispose();
    46:
    47:     …
    48: }

Below is the output from a sample sys.federation_member_columns view for the orders_federation. With this federation setup, range_low is inclusive and range_high is exclusive to the given federation member. NULL represents the maximum bigint value +1.
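The routing and fan-out logic can be tried out without a federation by simulating the members in memory. This is a sketch of the iteration pattern only, not of SQL Azure itself: the `MEMBERS` layout, its ranges and the sample orders are invented, `member_for` stands in for the USE FEDERATION statement, and keys are assumed to be non-negative.

```python
import bisect

# Hypothetical federation layout: each member covers [range_low, range_high).
# A range_high of None plays the role of NULL (no further members).
MEMBERS = [
    {"range_low": 0, "range_high": 100, "orders": [(5, "A"), (55, "B")]},
    {"range_low": 100, "range_high": 200, "orders": [(150, "C")]},
    {"range_low": 200, "range_high": None, "orders": [(999, "D")]},
]


def member_for(key, members=MEMBERS):
    """Stand-in for USE FEDERATION: return the member whose range contains key."""
    lows = [m["range_low"] for m in members]
    return members[bisect.bisect_right(lows, key) - 1]


def get_orders(customerid, members=MEMBERS):
    """Return (customerid, order) tuples; customerid == 0 means 'all customers'."""
    results = []
    key = customerid
    while key is not None:
        member = member_for(key, members)
        results += [o for o in member["orders"] if customerid == 0 or o[0] == customerid]
        # When fanning out, range_high is the first key of the next member;
        # for a single customer one member is enough, so stop after the first pass.
        key = member["range_high"] if customerid == 0 else None
    return results


if __name__ == "__main__":
    print(get_orders(55))  # single-member lookup
    print(get_orders(0))   # fan-out across every member
```

Because range_low is inclusive and range_high is exclusive, using the current member's range_high as the next routing key always lands in the following member, and the loop ends when range_high is None.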

The set of scenarios here is obviously not an exhaustive list. There will be more sophisticated types of queries that require cross-federation joins. In v1, those queries will need to be handled on the client side. In the future, things will get better with support from SQL Azure for fan-out queries, which will make querying across federation members easier.

Clearly, SQL Azure federations require changes to your application. The changes typically affect the mechanics of the application, which must coordinate the partitioning effort with the federations, more than its business logic. These changes come with the great benefits of massive scalability and the ability to massively parallelize your workload by engaging many nodes of the cluster. Many applications have been designed around horizontal partitioning and sharding principles over the last decade. These applications did not have the luxury of an RDBMS platform that understood the data distribution and provided robust routing of connections. With SQL Azure federations, horizontal partitioning and sharding become first-class citizens in the server, the bar for entry is lowered, and it becomes much easier to get the flexibility to redistribute data at will, without downtime. These properties translate to great elasticity, the best economics and unlimited scale for applications, so I think the changes are well worth the effort.

Toto Gamboa described My First Shot At Sharding Databases on 12/11/2010:

Sometime in 2004, I was faced with the question of whether our library system’s design, which I started way back in 1992 using Clipper on Novell Netware and later ported to ASP/MSSQL, should be torn apart in favor of a more advanced, scalable and flexible design. The problem I would encounter most often is that academic organizations can have varying structures. In public schools, they have regional structures where libraries are shared by various schools in the region. In some organizations, a school has sister schools with several campuses, each with one or more libraries but managed by only one entity. In one setup, a single campus can have several schools in it, with each having one or more libraries. These variations pose a lot of challenges in terms of programming and deployment. A possible design nightmare.

Each school or library would often emphasize its independence and uniqueness from other schools and libraries, for example wanting to have its own library policies and control over its collections, users and customers, and yet have the desire to share its resources with others and interoperate with them. Even within a campus, one library can operate on a different time schedule from another library just a hallway away. That presented a lot of challenges in terms of having a sound database design.

The old design from 1992 to 2004 was a single database with most tables having references to common tables called “libraries” and “organizations”. That was an attempt to partition content by library or organization (a school). Scaling wasn’t a concern at that time, as even the largest library in the country probably wouldn’t consume more than a few gigs of hard disk space. The challenge came as every query inside the system had to filter everything by library or school. As the features of our library system grew in number and became more advanced and complex, it became apparent that the old design, though very simple when contained in a single database, would soon become a problem. Coincidentally, I had been contemplating advancing the product in terms of feature set. Flexibility was my number one motivator; second was the possibility of doing it all over the web.

Then came the ultimate question: should I retain the design and improve on it, or should I be more daring and ambitious? I scoured the Internet for guidance on a sound design, and after a thorough assessment of current and possible future challenges, including scaling, I decided to break things apart and abandon the single-database mindset. The old design went into my garbage bin. That was the beginning of my love of sharding databases to address issues of library organization, manageability and control and, to some extent, scalability.

The immediate question was how I was going to do the sharding. Picking up the old schema from the garbage bin, it was pretty obvious that breaking it apart by library was the most logical choice. I hadn’t heard of the concept of a “tenant” then, but I didn’t have to, as the logic behind choosing it is as ancient as can be. There were other potential candidates for keys to shard the database, like “schools” or “organization”, but the most logical is the “library”. It is the only entity that can stand drubbing and scrubbing. I went on to design our library system with each database containing only one tenant, the library. As of this writing, our library system has various configurations: one school has several libraries inside its campus; another has several campuses scattered all over Metro Manila, with some campuses having one or more libraries, but everything sits on a VPN accessing a single server.

Our design, though, is yet to become fully sharded at all levels, as another system acts as a common control for all the databases. This violates the concept of a truly sharded design, where there should be no shared entity among shards. A few tweaks here and there would bring it into full compliance with the concept. Our current design is, however, 100% sharded at the library level.

So Why Sharding?

The advent of computing in the cloud presents us with new opportunities, especially for ISVs. With things like Azure, we will be forced to rethink our design patterns. The most challenging part is perhaps how to design not only to address concerns of scalability, but also to make our applications tenant-aware and tenant-ready. This challenge is not only present in the cloud; a lot of on-premise applications can be designed this way too, which could help in everyone’s eventual transition to the cloud. But cloud or not, we could benefit a lot from sharding. In our case, we can support pretty much any configuration out there. We also get to mimic the real-world operation of libraries. And it eases a lot of things like security and control.

Developing Sharded Applications

Aside from databases, applications need to be fully aware that they are no longer accessing a single database where they can query everything with ease, without minding that other data exists somewhere else. It could be in a different database, sitting on another server. Though the application will be a bit more complex in terms of design, it is often easier to comprehend and develop if an app instance minds only a single tenant, as opposed to an app instance trying to filter out other tenants just to get the information set of one tenant.
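The one-tenant-per-database idea described above can be sketched as a small lookup that hands each application instance the location of its own library's database. This is a minimal Python sketch only: the library codes, server names and the `SHARD_MAP` structure are hypothetical illustrations, not part of the author's system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shard:
    """Location of the database that holds exactly one tenant (a library)."""
    server: str
    database: str


# Hypothetical shard map: one database per library, possibly on different servers.
SHARD_MAP = {
    "main-campus": Shard(server="db1.example.edu", database="lib_main"),
    "law-library": Shard(server="db1.example.edu", database="lib_law"),
    "north-campus": Shard(server="db2.example.edu", database="lib_north"),
}


def shard_for(library_code: str) -> Shard:
    """Return the shard for a tenant, failing loudly for unknown tenants."""
    try:
        return SHARD_MAP[library_code]
    except KeyError:
        raise LookupError(f"no shard registered for library {library_code!r}") from None


def connection_string(library_code: str) -> str:
    """Build an illustrative connection string for the tenant's own database."""
    shard = shard_for(library_code)
    return f"Server={shard.server};Database={shard.database}"
```

With a lookup like this, queries issued by an app instance never need a "filter by library" clause, because the connection itself already selects the tenant.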

Our Library System

Currently our library system runs on sharded mode both on premise and on cloud-like hosted environments. You might want to try its online search:

- Running on Premise (http://krc-library.aim.edu/lisa7/lib1/opac/)

- Hosted on a $5-a-month Windows Shared Host (http://www.systemaeskuwela.net/sjcr/)

Looking at SQL Azure

Sharding isn’t automatic on any Microsoft SQL Server platform, including SQL Azure. One needs to do it by hand and from the ground up. This might change in the future, though; I am quite sure Microsoft will see this as a compelling feature. SQL Azure is the only Azure-based product that currently does not have natural/inherent support for scaling out. If I were Microsoft, I would offer a full SQL Server service, like shared Windows hosting sites do, alongside SQL Azure to ease adoption. Our system’s database design readiness (being currently sharded) would allow us to easily embrace the new service. I understand that doing so would affect, and possibly dampen, their SQL Azure efforts. But I would reconsider it rather than offer a very anemic product.

As of now, though we may have wanted to take our library system to Azure with few minor tweaks, we just can’t in this version of SQL Azure for various reasons as stated below:

- SQL Server Full Text Search. SQL Azure does not support this in its current version.

- Database Backup and Restore. SQL Azure does not support this in its current version.

- Reporting Services. SQL Azure does not support this in its current version.

- $109.95 a month on Azure/SQL Azure versus $10 a month shared host with a full-featured IIS7/SQL Server 2008 DB

My Design Paid Off

Now I am quite happy that the potential of a multi-tenant, sharded database design, though ancient, is beginning to get attention with the advent of cloud computing. My 2004 database design is definitely laying the groundwork for cloud computing adoption. Meanwhile, I have to look for solutions to address what’s lacking in SQL Azure. There could be some easy workarounds.

I’ll find time to cover the technical aspects of sharding databases in my future blog posts. I am also thinking that PHISSUG should have one of these sharding tech sessions.

Toto is a database specialist, software designer, and Microsoft MVP.

The MSDN Library described The New Silverlight based SQL Azure Portal in a 12/2010 article:

There are currently two SQL Azure Portals which provide the user interface for provisioning servers and logins, and quickly creating databases. These portals will coexist until early 2011 when the old SQL Azure Portal is scheduled for decommissioning.

The new Silverlight based SQL Azure Portal is integrated with the new Windows Azure Platform Management Portal. It provides all the features included with the old SQL Azure Portal. You can access this new portal at New SQL Azure Portal.

The new portal provides access to a web-based database management tool for existing SQL Azure databases. The management tool supports basic database management tasks like designing and editing tables, views, and stored procedures, and authoring and executing Transact-SQL queries. For more information, see the MSDN documentation for Database Manager for SQL Azure. The database management tool is available when you select a database to manage in the new SQL Azure Portal and click the Manage button on the toolbar. See screenshot below.

The Old SQL Azure Portal

The older SQL Azure Portal is still available at Old SQL Azure Portal. This portal is scheduled to be decommissioned around the first half of 2011.

MSCerts.net posted SQL Azure Primer (part 4) - Creating Logins and Users on 12/6/2010 (missed when posted):

5. Creating Logins and Users

With SQL Azure, the process of creating logins and users is mostly identical to that in SQL Server, although certain limitations apply. To create a new login, you must be connected to the master database. When you're connected, you create a login using the CREATE LOGIN command. Then, you need to create a user account in the user database and assign access rights to that account.

5.1. Creating a New Login

Connect to the master database using the administrator account (or any account with the loginmanager role granted), and run the following command:

CREATE LOGIN test WITH PASSWORD = 'T3stPwd001'

At this point, you should have a new login available called test. However, you can't log in until a user has been created. To verify that your login has been created, run the following command, for which the output is shown in Figure 11:

select * from sys.sql_logins

Figure 11. Viewing a SQL login from the master database

If you attempt to create the login account in a user database, you receive the error shown in Figure 12. The login must be created in the master database.

Figure 12. Error when creating a login in a user database

If your password isn't complex enough, you receive an error message similar to the one shown in Figure 13. Password complexity can't be turned off.

Figure 13. Error when your password isn't complex enough

NOTE

Selecting a strong password is critical when you're running in a cloud environment, even if your database is used for development or test purposes. Strong passwords and firewall rules are important security defenses against attacks to your database.

5.2. Creating a New User

You can now create a user account for your test login. To do so, connect to a user database using the administrator account (you can also create a user in the master database if this login should be able to connect to it), and run the following command:

CREATE USER test FROM LOGIN test

If you attempt to create a user without first creating the login account, you receive a message similar to the one shown in Figure 14.

Figure 14. Error when creating a user without creating the login account first

6. Assigning Access Rights

So far, you've created the login account in the master database and the user account in the user database. But this user account hasn't been assigned any access rights.

To allow the test account to have unlimited access to the selected user database, you need to assign the user to the db_owner group:

EXEC sp_addrolemember 'db_owner', 'test'

At this point, you're ready to use the test account to create tables, views, stored procedures, and more.

NOTE

In SQL Server, user accounts are automatically assigned to the public role. However, in SQL Azure the public role can't be assigned to user accounts for enhanced security. As a result, specific access rights must be granted in order to use a user account.
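The order of operations in sections 5 and 6 (login in master, then user and role membership in the user database) can be captured in a short script generator. The following Python sketch only builds the T-SQL text shown above; the function name, the `user_database` argument and the conservative identifier check are assumptions made for illustration, and the sketch does not connect to any server.

```python
import re


def provisioning_steps(login, password, user_database, role="db_owner"):
    """Return (database, statement) pairs in the order they must be run."""
    # Conservative check (an assumption of this sketch): plain identifiers only.
    for name in (login, role):
        if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", name):
            raise ValueError(f"unsupported identifier: {name!r}")
    # Double any single quote so the password stays one T-SQL string literal.
    quoted_password = password.replace("'", "''")
    return [
        # 1. Logins can only be created while connected to master.
        ("master", f"CREATE LOGIN {login} WITH PASSWORD = '{quoted_password}'"),
        # 2. The user is created in the database the login should access.
        (user_database, f"CREATE USER {login} FROM LOGIN {login}"),
        # 3. Access rights are granted explicitly through role membership.
        (user_database, f"EXEC sp_addrolemember '{role}', '{login}'"),
    ]
```

Calling `provisioning_steps("test", "T3stPwd001", "mydb")` yields the three statements from this section, each paired with the database connection it belongs to.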

7. Understanding Billing for SQL Azure

SQL Azure is a pay-as-you-go model, which includes a monthly fee based on the cumulative number and size of your databases available daily, and a usage fee based on actual bandwidth usage. However, as of this writing, when the consuming application of a SQL Azure database is deployed as a Windows Azure application or service, and it belongs to the same geographic region as the database, the bandwidth fee is waived.

To view your current bandwidth consumption and the databases you've provisioned from a billing standpoint, you can run the following commands:

SELECT * FROM sys.database_usage -- databases defined

SELECT * FROM sys.bandwidth_usage -- bandwidth

The first statement returns the number of databases available per day of a specific type: Web or Business edition. This information is used to calculate your monthly fee. The second statement shows a breakdown of hourly consumption per database.

Figure 15 shows a sample output of the statement returning bandwidth consumption. This statement returns the following information:

time. The hour for which the bandwidth applies. In this case, you're looking at a summary between the hours of 1 AM and 2 AM on January 22, 2010.

database_name. The database for which the summary is available.

direction. The direction of data movement. Egress shows outbound data, and Ingress shows inbound data.

class. External if the data was transferred from an application external to Windows Azure (from a SQL Server Management Studio application, for example). If the data was transferred from Windows Azure, this column contains Internal.

time_period. The time window in which the data was transferred.

quantity. The amount of data transferred, in kilobytes (KB).
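Once the hourly rows are fetched, they are usually more useful when rolled up by direction and class. The Python sketch below assumes the rows arrive as tuples in the column order listed above; the sample rows, the database name and the time_period values are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows; column order follows the list above:
# (time, database_name, direction, class, time_period, quantity_kb)
SAMPLE_ROWS = [
    ("2010-01-22 01:00", "TextDB", "Egress", "External", "OffPeak", 150),
    ("2010-01-22 01:00", "TextDB", "Ingress", "External", "OffPeak", 20),
    ("2010-01-22 02:00", "TextDB", "Egress", "External", "OffPeak", 50),
    ("2010-01-22 02:00", "TextDB", "Egress", "Internal", "OffPeak", 10),
]


def total_kb(rows):
    """Sum the quantity column (KB) for each (direction, class) pair."""
    totals = defaultdict(int)
    for _time, _database, direction, traffic_class, _period, quantity_kb in rows:
        totals[(direction, traffic_class)] += quantity_kb
    return dict(totals)


def external_egress_kb(rows):
    """Outbound KB sent to applications outside Windows Azure."""
    return total_kb(rows).get(("Egress", "External"), 0)
```

Grouping by class matters because, as noted earlier, traffic from Windows Azure applications in the same region as the database is treated differently for billing.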

Visit http://www.microsoft.com/windowsazure for up-to-date pricing information.

Figure 15. Hourly bandwidth consumption

Following are links to earlier members of this series:

- SQL Azure Primer (part 3) - Connecting with SQL Server Management Studio

- SQL Azure Primer (part 2) - Configuring the Firewall

- SQL Azure Primer (part 1)

Julie Lerman (@julielerman) posted the slides from her Working With Sql Azure from Entity Framework On-Premises presentation to the CodeProject’s Virtual Tech Summit of 12/2/2010 (missed when posted):

Be sure to check out the related presentations.

<Return to section navigation list>

Marketplace DataMarket and OData

• Kevin Ritchie (@KevRitchie) published Day 12 - Windows Azure Platform – Marketplace on 12/12/2010:

On the 12th day of Windows Azure Platform Christmas my true love gave to me the Windows Azure Marketplace.

What is the Windows Azure Marketplace?

You’ve probably heard of the Apple App Store; if not, it’s a central point for application developers to market and sell their applications. The Windows Azure Marketplace is Microsoft’s way of allowing Windows Azure Application developers to do the same thing, but with one fundamental difference. The Marketplace has an additional market; the DataMarket.

The DataMarket is a place that allows leading commercial data providers and authoritative public data sources to make data, such as image files, databases, reports on demographics and real-time feeds, readily available (at a price) to business end users and application developers. End users can consume this data using Microsoft Office tools like Excel or even use Business Intelligence tools like PowerPivot or SSRS (SQL Server Reporting Services) to mine this data and present it in a way that fits their business purposes.

Application developers can use data feeds to create real-time, content rich applications using a consistent RESTful approach. This means that developers can consume this data using tools like Visual Studio; well basically any development tools that support HTTP.

Here’s a tad more info on both Markets http://www.microsoft.com/windowsazure/marketplace/

Well, this brings an end to the 12 days of Windows Azure Platform Christmas. For anyone that has been reading and is new to the Windows Azure Platform, I hope this has given you a good insight into what each component of the platform provides and why you should think about using it.

If anything, it has also been a good learning exercise for me.

I have only one thing left to say: Merry Christmas!

Oh and here are some links for more detail on the platform:

- Windows Azure Platform - http://msdn.microsoft.com/en-us/library/dd163896.aspx

- The latest development SDKs - http://www.microsoft.com/downloads/en/details.aspx?FamilyID=7a1089b6-4050-4307-86c4-9dadaa5ed018

- Some express downloads to get you developing - http://www.microsoft.com/express/downloads/

• MirekM updated the download of his Silverlight Data Source Control for OData at CodePlex on 12/10/2010 (site registration required):

Project Description

The library contains a Data Source Control for XAML forms. The control extends the proxy class (created by Visual Studio) to get access to OData Services and cooperates with other Silverlight GUI controls. The control supports master-detail, paging, filtering, sorting, validation, and changing data. You can append your own validation rules. It works like a Data Source Control for RIA Services.

You can try Live demo here http://alutam.cz/samples/DemoOData.aspx

In detail, there are three basic classes: ODataSource, ODataSourceView and Entity.

- ODataSource is a control and you can use it in XAML Forms.

- ODataSourceView is a class which contains a data set. ODataSourceView has the following interfaces: IEnumerable, INotifyPropertyChanged, INotifyCollectionChanged, IList, ICollectionView, IPagedCollectionView, IEditableCollectionView.

- Entity is a class for one data record and has the following interfaces: IDisposable, INotifyPropertyChanged, IEditableObject, IRevertibleChangeTracking, IChangeTracking.

Here’s a capture of Mirek’s live demo:

• Chris Stein reported OData gaining traction in a 12/10/2010 post to the Simba Technologies blog:

It’s been interesting to watch the list of producers of live OData Services grow over the last few months. The early adopters (like Netflix, some municipal & federal government agencies, and several Microsoft projects) have been joined by the likes of Facebook, eBay and twitpic. The list is even gaining a sense of humour, as evidenced by the entry for Nerd Dinner: “Nerd Dinner is a website that helps nerds to meet and talk, not surprisingly it has adopted OData.” You can check out the complete list here: http://www.odata.org/producers.

As of this writing, the list is up to 33 services with a good mix between large/high-profile entities with wide-ranging application possibilities and small initiatives often focused on just a slice of the tech community itself. We need both, because it’s the big names with brand recognition that catch people’s attention, but small-scale tinkering by techies can be an excellent incubator from which new & creative ideas will eventually emerge.

OData, the technology, is “the web-based equivalent of ODBC, OLEDB, ADO.NET and JDBC” (Chris Sells, via MSDN). Just as the ODBC specification and its descendants have enabled data access between clients and servers across widely disparate hardware and OS platforms, the OData specification promises data access between “consumers” (applications using standard web technologies) and “producers” (data sources residing on various web server platforms).

OData, looked at as a movement, is creating an open-ended environment with a whole new range of business decisions for companies to deal with. At the simplest level, the questions for a company choosing to publish an OData service are similar to those you would consider for a web application:

- Scope: Will the data be accessible only via a corporate Intranet, or will it be available on the open Internet?

- Authentication/Security: Will a user have to login to access the data? Will some portion of the data be available without logging in? The Netflix example is a good one to consider here—their movie catalogue is available to the general public, but a user’s movie queue is restricted to authenticated users only.

- Will the data be Read only or Read-write?

- What subset of fields from the database will be exposed?

- What range of data from the database will be exposed?

The big difference, of course, is that you’re not the one creating the application. You may also create an OData consumer application in addition to the OData producer data source, but the key is that you’re not the only one doing so and there are potentially many applications consuming your data feed. This moves you up to a higher level of business questioning:

- How might our data potentially be combined with other data sources (either by us or by others) and what value does that potentially add for our customers?

- What type of new business relationships are possible with other producers of OData feeds?

- What is the value to our business of this data (i.e. do we need to charge for access to it)?

If your company makes a product that generates data in some form, adding an ODBC driver opens it up to a wide range of general-purpose reporting tools so your customers can slice & dice the data to their hearts’ content. The benefits of providing data connectivity to the desktop are proven and clear. If you’re already doing this, what are your company’s plans for providing data connectivity to the web? Let us know, we’d love to hear from you.

Simba Technologies was one of the first third-party providers of ODBC drivers for Microsoft data sources. The firm now offers a Simba ODBC Driver for Microsoft HealthVault, which links HealthVault data with Microsoft Excel and Access.

Vincent-Philippe Lauzon posted Advanced using OData in .NET: WCF Data Services to the CodeProject on 12/10/2010:

Samples showcasing usage of OData in .NET (WCF Data Services); samples go in increasing order of complexity, addressing more and more advanced scenarios.

Introduction

In this article I give code samples showcasing usage of OData in .NET (WCF Data Services).

The samples will go in increasing order of complexity, addressing more and more advanced scenarios.

Background

For some background on what is OData, I wrote an introductory article on OData a few months ago: An overview of Open Data Protocol (OData). In that article I showed what the Open Data Protocol is and how to consume it with your browser.

In this article, I'll show how to expose data as OData using the .NET Framework 4.0. The technology in the .NET Framework 4.0 used to expose OData is called WCF Data Services. Prior to .NET 4.0, it was called ADO.NET Data Services and prior to that, Astoria, which was Microsoft's internal project name.

Using the code

The code sample contains a Visual Studio 2010 Solution. This solution contains a project for each sample I give here.

The solution also contains a solution folder named "DB Scripts" with three files: SchemaCreation.sql (creates a schema with 3 tables into a pre-existing DB), DataInsert.sql (inserts sample data in the tables) & SchemaDrop.sql (drops the tables & schema if needed).

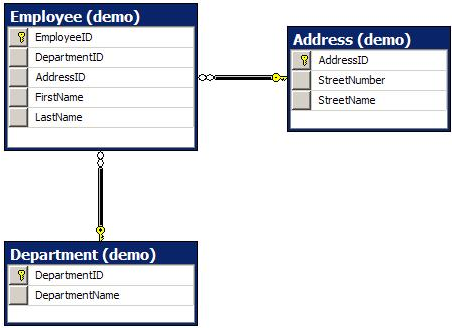

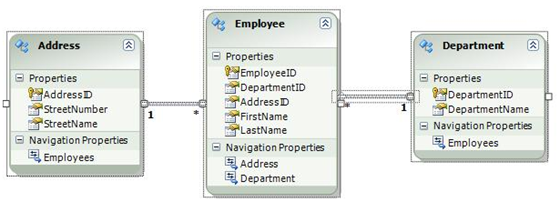

We use the same DB schema for the entire project:

Hello World: your DB on the Web

For the first sample, we'll do a Hello World: we'll expose our schema on the web. To do this, we need to:

- Create an Entity Framework model of the schema

- Create a WCF Data Service

- Map the Data Context to our entity model

- Declare permissions

Creating an Entity Framework model isn't the goal of this article. You can consult MSDN pages to obtain details: http://msdn.microsoft.com/en-us/data/ef.aspx.

Creating a WCF Data Service is also quite trivial: create a new item in the project.

We now have to map the data context to our entity model. This is done by editing the code behind of the service and simply replacing:

public class EmployeeDataService : DataService< /* TODO: put your data source class name here */ >

by

public class EmployeeDataService : DataService<ODataDemoEntities>

Finally, we now have to declare permissions. By default Data Services are locked down. We can open read & write permission. In this article, we'll concentrate on the read permissions. We simply replace:

// This method is called only once to initialize service-wide policies.
public static void InitializeService(DataServiceConfiguration config)
{
    // TODO: set rules to indicate which entity sets and service operations are visible, updatable, etc.
    // Examples:
    // config.SetEntitySetAccessRule("MyEntityset", EntitySetRights.AllRead);
    // config.SetServiceOperationAccessRule("MyServiceOperation", ServiceOperationRights.All);
    config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
}

by

// This method is called only once to initialize service-wide policies.
public static void InitializeService(DataServiceConfiguration config)
{
    config.SetEntitySetAccessRule("Employees", EntitySetRights.AllRead);
    config.SetEntitySetAccessRule("Addresses", EntitySetRights.AllRead);
    config.SetEntitySetAccessRule("Departments", EntitySetRights.AllRead);
    config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
}

There you go, you can run your project and have our three tables exposed as OData feeds on the web.

Exposing another Data Model

Unfortunately, the previous example is what 95% of the samples you'll find on the web are about. But WCF Data Services can be so much more.

In this section, we'll build a data model from scratch, one that isn't even connected to a database. What could we connect to? Of course: the list of processes running on your box!

In order to do this, we're going to have to:

- Create a process model class

- Create a data model exposing the processes

- Create a WCF Data Service

- Map the Data Context to our data model

- Declare permissions

We create the process model class as follows:

// DataServiceKey lives in the System.Data.Services.Common namespace.
/// <summary>Represents the information of a process.</summary>
[DataServiceKey("Name")]
public class ProcessModel
{
    /// <summary>Name of the process.</summary>
    public string Name { get; set; }
    /// <summary>Process ID.</summary>
    public int ID { get; set; }
}

The key takeaways here are:

- Always use simple types (e.g. integers, strings, dates, etc.): they are the only ones that map to Entity Data Model (EDM) types

- Always declare the key properties (equivalent to primary key column in a database table) with the DataServiceKey attribute. The concept of key is central in OData, since you can single out an entity by using its ID.

If you violate one of those two rules, you'll have errors in your web service and not much way to know why.
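Because the key identifies a single entity, it also appears directly in the entity's URL. The Python sketch below shows how such an address could be formed for integer and string keys; the service root is hypothetical, and only these two key types are handled.

```python
from urllib.parse import quote


def key_literal(key):
    """Format a key value as an OData URL literal (integers bare, strings quoted)."""
    if isinstance(key, bool) or not isinstance(key, (int, str)):
        raise TypeError("this sketch only handles int and str keys")
    if isinstance(key, int):
        return str(key)
    # Strings are wrapped in single quotes; embedded single quotes are doubled.
    return "'" + key.replace("'", "''") + "'"


def entity_url(service_root, entity_set, key):
    """Address one entity by its key, e.g. .../Processes('svchost')."""
    # Percent-encode everything except the single quotes around string keys.
    literal = quote(key_literal(key), safe="'")
    return f"{service_root.rstrip('/')}/{entity_set}({literal})"
```

For the process model above, whose key is the string property Name, `entity_url(root, "Processes", "svchost")` ends in `Processes('svchost')`, while an integer-keyed set would produce something like `Employees(7)`.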

We then create the data model exposing the processes like this:

/// <summary>Represents a data model exposing processes.</summary>
public class DataModel
{
    /// <summary>Default constructor.</summary>
    /// <remarks>Populates the model.</remarks>
    public DataModel()
    {
        var processProjection = from p in Process.GetProcesses()
                                select new ProcessModel
                                {
                                    Name = p.ProcessName,
                                    ID = p.Id
                                };
        Processes = processProjection.AsQueryable();
    }
    /// <summary>Returns the list of running processes.</summary>
    public IQueryable<ProcessModel> Processes { get; private set; }
}

Here we could have exposed more than one process for completeness, but we opted for simplicity.

The key here is to:

- Have ONLY properties returning IQueryable of models.

- Populate those collections in the constructor

Here we populate the model list directly, but sometimes (as we'll see in a later section), you can simply populate it with a deferred query, which performs better.
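The difference between populating a collection up front and handing out a deferred query can be illustrated outside .NET as well. The following Python sketch is an analogy only: a generator function stands in for a deferred query, and `get_processes` is a hypothetical callable standing in for the data source. Unlike IQueryable, a generator does not translate filters to the underlying store.

```python
def snapshot_names(get_processes):
    """Eager: call the source once, immediately, and keep the results."""
    return list(get_processes())


def deferred_names(get_processes):
    """Deferred: the source is not touched until the caller starts iterating."""
    for name in get_processes():
        yield name
```

Creating the deferred version costs nothing until a client actually enumerates it, which is the property the text above refers to.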

Creation and mapping of the data service and the permission declaration works the same way as the previous sample. After you've done that, you have an OData endpoint exposing the processes on your computer. You can interrogate this endpoint with any type of client, such as LinqPad.

This example isn't very useful in itself, but it shows that you can expose any type of data as an OData endpoint. This is quite powerful because OData is a rising standard and as you've seen, it's quite easy to expose your data that way.

You could, for instance, have your production servers expose some live data (e.g. number of active sessions) as OData that you could consume at any time.

Exposing a transformation of your DB

Another very useful scenario, somewhat a combination of the previous ones, is to expose data from your DB with a transformation. That might be accomplished by performing mappings in the entity model, but sometimes you might not want to expose the entity model directly, or you might not be able to do the mapping in the entity model. For instance, the OData data objects might be out of your control but you must use them to expose the data.

In this sample, we'll flatten the employee and its address into one entity at the OData level.

- Create an Entity Framework model of the schema

- Create an employee model class

- Create a department model class

- Create a data model exposing both model classes

- Create a WCF Data Service

- Map the Data Context to our data model

- Declare permissions

Creation of the Entity Framework model is done the same way as in the Hello World section.

We create the employee model class as follows:

/// <summary>Represents an employee.</summary>
[DataServiceKey("ID")]
public class EmployeeModel
{
    /// <summary>ID of the employee.</summary>
    public int ID { get; set; }
    /// <summary>ID of the associated department.</summary>
    public int DepartmentID { get; set; }
    /// <summary>ID of the address.</summary>
    public int AddressID { get; set; }
    /// <summary>First name of the employee.</summary>
    public string FirstName { get; set; }
    /// <summary>Last name of the employee.</summary>
    public string LastName { get; set; }
    /// <summary>Address street number.</summary>
    public int StreetNumber { get; set; }
    /// <summary>Address street name.</summary>
    public string StreetName { get; set; }
}

We included properties from both the employee and the address, hence flattening the two models. We also renamed the EmployeeID to ID.

We create the department model class as follows:

/// <summary>Represents a department.</summary>
[DataServiceKey("ID")]
public class DepartmentModel
{
    /// <summary>ID of the department.</summary>
    public int ID { get; set; }
    /// <summary>Name of the department.</summary>
    public string Name { get; set; }
}

We create a data model exposing both models as follows:

/// <summary>Represents a data model exposing both the employee and the department.</summary>
public class DataModel
{
    /// <summary>Default constructor.</summary>
    /// <remarks>Populates the model.</remarks>
    public DataModel()
    {
        using (var dbContext = new ODataDemoEntities())
        {
            Departments = from d in dbContext.Department
                          select new DepartmentModel
                          {
                              ID = d.DepartmentID,
                              Name = d.DepartmentName
                          };
            Employees = from e in dbContext.Employee
                        select new EmployeeModel
                        {
                            ID = e.EmployeeID,
                            DepartmentID = e.DepartmentID,
                            AddressID = e.AddressID,
                            FirstName = e.FirstName,
                            LastName = e.LastName,
                            StreetNumber = e.Address.StreetNumber,
                            StreetName = e.Address.StreetName
                        };
        }
    }
    /// <summary>Returns the list of employees.</summary>
    public IQueryable<EmployeeModel> Employees { get; private set; }
    /// <summary>Returns the list of departments.</summary>
    public IQueryable<DepartmentModel> Departments { get; private set; }
}

We basically do the mapping when we populate the employee query. Here, as opposed to the previous example, we don't physically populate the employees; we define a query to fetch them. Since LINQ always defines deferred queries, the query simply maps information.
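The flattening step itself is just a projection that merges two records into one. As a language-neutral illustration, here is a minimal Python sketch of the same mapping over plain dictionaries. The field names follow the C# sample, while the dictionary-based records and the `addresses_by_id` lookup are assumptions of this sketch.

```python
def flatten_employee(employee, address):
    """Merge an employee record and its address into one flat record."""
    return {
        "ID": employee["EmployeeID"],  # renamed, as in the C# model
        "DepartmentID": employee["DepartmentID"],
        "AddressID": employee["AddressID"],
        "FirstName": employee["FirstName"],
        "LastName": employee["LastName"],
        "StreetNumber": address["StreetNumber"],
        "StreetName": address["StreetName"],
    }


def flattened_employees(employees, addresses_by_id):
    """Lazily yield one flat record per employee."""
    for employee in employees:
        yield flatten_employee(employee, addresses_by_id[employee["AddressID"]])
```

Consumers of the flat records never see that the street fields came from a separate table, which is the point of exposing a transformation rather than the entity model itself.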

We then create a WCF Data Service, map the data context to the data model and declare permissions as follows:

public class EmployeeDataService : DataService<DataModel>
{
    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        config.SetEntitySetAccessRule("Employees", EntitySetRights.AllRead);
        config.SetEntitySetAccessRule("Departments", EntitySetRights.AllRead);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }
}

This scenario is quite powerful. Basically, you can mix data from the database and from other sources, transform it and expose it as OData while still benefiting from the query power of your model (e.g. the database).

Service Operations

Another useful scenario covered by WCF Data Services, the .NET 4.0 implementation of OData, is the ability to expose a service operation that takes input parameters but whose output is treated like any other entity set in OData, that is, queryable in every way.

This is very powerful because queries in OData are much less expressive than in LINQ, i.e. there are a lot of LINQ queries you just can't express in OData. This is quite understandable since queries are packed into a URL. Service Operations fill that gap by allowing you to take parameters in, perform a complicated LINQ query and return the result as a queryable entity set.

Why would you query the result of an operation that is itself the result of a query? Well, for one thing, you might want to page through the result using take and skip. But it might also be that the result still represents a mass of data and you're interested in only a fraction of it. For instance, you could have a service operation returning the individuals in a company with less than a given amount of sick leave; for a big company, that is still a lot of data!

In this sample, we'll expose a service operation that takes a number of employees as input and returns the departments with at least that many employees.

- Create an Entity Framework model of the DB schema

- Create a WCF Data Service

- Map the Data Context to our entity model

- Add a service operation

- Declare permissions

The first three steps are identical to those in the hello world sample.

We define a service operation within the data service as follows:

    [WebGet]
    public IQueryable<Department> GetDepartmentByMembership(int employeeCount)
    {
        var departments = from d in this.CurrentDataSource.Department
                          where d.Employee.Count >= employeeCount
                          select d;

        return departments;
    }

We then add the security as follows:

    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        config.SetEntitySetAccessRule("Employee", EntitySetRights.AllRead);
        config.SetEntitySetAccessRule("Address", EntitySetRights.AllRead);
        config.SetEntitySetAccessRule("Department", EntitySetRights.AllRead);
        config.SetServiceOperationAccessRule("GetDepartmentByMembership", ServiceOperationRights.AllRead);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }

Notice that we needed to enable the read on the service operation as well.
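As a rough illustration of what the operation's query computes, and of why returning a queryable result matters, the same filter can be run with LINQ to Objects over in-memory data. This is only a sketch: the `Dept` class and the sample departments are hypothetical stand-ins for the Entity Framework entities, and only the `where` clause mirrors the article's code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the Entity Framework Department entity.
class Dept
{
    public string Name { get; set; }
    public List<string> Employee { get; set; }
}

class MembershipFilterDemo
{
    // Same where clause as the article's service operation, over an arbitrary source.
    static IQueryable<Dept> GetDepartmentByMembership(IQueryable<Dept> source, int employeeCount)
    {
        return from d in source
               where d.Employee.Count >= employeeCount
               select d;
    }

    static void Main()
    {
        // Made-up sample data for this sketch.
        var departments = new List<Dept>
        {
            new Dept { Name = "Sales", Employee = new List<string> { "Ann", "Bob", "Cy" } },
            new Dept { Name = "Legal", Employee = new List<string> { "Dee" } },
            new Dept { Name = "IT", Employee = new List<string> { "Eve", "Flo" } }
        }.AsQueryable();

        // The caller keeps composing on the result (sort, then page),
        // much as an OData client does with query options.
        var firstPage = GetDepartmentByMembership(departments, 2)
            .OrderBy(d => d.Name)
            .Take(1);

        foreach (var d in firstPage)
            Console.WriteLine(d.Name); // prints "IT"
    }
}
```

Because the operation returns `IQueryable` rather than a materialized list, the sorting and paging are composed onto the query instead of being applied to a result that was already fully loaded.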

We can then hit the service operation with a URL such as:

http://localhost:37754/EmployeeDataService.svc/GetDepartmentByMembership?employeeCount=2
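Since the operation returns a queryable entity set, standard OData query options such as `$orderby`, `$skip` and `$top` can be appended to the same URL. The sketch below only builds and prints such a URL; the service root and operation name come from the sample above, while the choice of sorting on `DepartmentName` and the page size of 10 are assumptions made for illustration.

```csharp
using System;

class ServiceOperationUrlDemo
{
    static void Main()
    {
        // Service root and operation name taken from the article's sample URL.
        string serviceRoot = "http://localhost:37754/EmployeeDataService.svc/";
        string operation = "GetDepartmentByMembership";

        // Operation parameter plus illustrative paging and sorting values (assumptions).
        int employeeCount = 2;
        string orderBy = "DepartmentName";
        int skip = 0;
        int top = 10;

        // The operation parameter comes first, followed by OData system query options.
        string url = string.Format(
            "{0}{1}?employeeCount={2}&$orderby={3}&$skip={4}&$top={5}",
            serviceRoot, operation, employeeCount, orderBy, skip, top);

        Console.WriteLine(url);
        // prints "http://localhost:37754/EmployeeDataService.svc/GetDepartmentByMembership?employeeCount=2&$orderby=DepartmentName&$skip=0&$top=10"
    }
}
```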

You can read more about service operations in this MSDN article.

Consuming OData using .NET Framework

In order to consume OData, you can simply hit the URLs with an HTTP GET using whatever web library is at your disposal. In .NET, you can do better than that.

You can simply add a reference to your OData service and Visual Studio will generate proxies for you. This is quick and dirty and works very well.

In cases where you've defined your own data model (as in our DB transformation sample), you might want to share those models as data contracts between the server and the client. You would then have to define the proxy yourself, which isn't really hard:

    /// <summary>Proxy to our data model exposed as OData.</summary>
    public class DataModelProxy : DataServiceContext
    {
        /// <summary>Constructor taking the service root in parameter.</summary>
        /// <param name="serviceRoot"></param>
        public DataModelProxy(Uri serviceRoot)
            : base(serviceRoot)
        {
        }

        /// <summary>Returns the list of employees.</summary>
        public IQueryable<EmployeeModel> Employees
        {
            get { return CreateQuery<EmployeeModel>("Employees"); }
        }

        /// <summary>Returns the list of departments.</summary>
        public IQueryable<DepartmentModel> Departments
        {
            get { return CreateQuery<DepartmentModel>("Departments"); }
        }
    }

Basically, we derive from System.Data.Services.Client.DataServiceContext and define a property for each entity set, each of which creates a query on that set. We can then use it this way:

    static void Main(string[] args)
    {
        var proxy = new DataModelProxy(new Uri(@"http://localhost:9793/EmployeeDataService.svc/"));
        var employees = from e in proxy.Employees
                        orderby e.StreetName
                        select e;

        foreach (var e in employees)
        {
            Console.WriteLine("{0}, {1}", e.LastName, e.FirstName);
        }
    }

The proxy basically acts as a data context! We treat it as any entity set source and can do queries on it. This is quite powerful since we do not have to translate the queries into URLs ourselves: the platform takes care of it!

Conclusion

We have seen different scenarios using WCF Data Services to expose and consume data. We showed that there is no need to limit ourselves to a database or an Entity Framework model. We can also expose service operations to run queries that would otherwise be impossible to express through OData, and we've seen an easy way to consume OData on a .NET client.

I hope this opens up the possibilities around OData. We typically see samples where a database is exposed on the web and it looks like Access 1995 all over again. But OData is much more than that: it enables you to expose your data on the web but to present it the way you want and to control its access. It's blazing fast to expose data with OData and you do not need to know the query needs of the client since the protocol takes care of it.

License

This article, along with any associated source code and files, is licensed under The Microsoft Public License (Ms-PL)

Vincent-Philippe is a Senior Solution Architect working in Montreal (Quebec, Canada).

<Return to section navigation list>

Windows Azure AppFabric: Access Control and Service Bus

• Suren Machiraju described Hybrid Cloud Solutions With Windows Azure AppFabric Middleware in a chapter-length post of 11/29/2010 (missed when posted):

Abstract

Technical and commercial forces are causing Enterprise Architects to evaluate moving established on-premises applications into the cloud – the Microsoft Windows Azure Platform.

This blog post will demonstrate that there are established application architectural patterns that can get the best of both worlds: applications that continue to live on-premises while interacting with other applications that live in the cloud, which is the hybrid approach. In many cases, such hybrid architectures are not just a transition point but a requirement, since certain applications or data are required to remain on-premises, largely for security, legal, technical and procedural reasons.

Cloud is new, hybrid cloud even newer. There are a bunch of technologies that have just been released or announced so there is no one book or source for authentic information, especially one that compares, contrasts, and ties it all together. This blog, and a few more that will follow, is an attempt to demystify and make sense of it all.

This blog begins with a brief review of two prevalent deployment paradigms and their influence on architectural patterns: On-premises and Cloud. From there, we will delve into a discussion around developing the hybrid architecture.

In authoring this blog posting we take an architect’s perspective and discuss the major building block components that compose this hybrid architecture. We will also match requirements against the capabilities of available and announced Windows Azure and Windows Azure AppFabric technologies. Our discussions will also factor in the usage costs and strategies for keeping these costs in check.

The blog concludes with a survey of interesting and relevant Windows Azure technologies announced at Microsoft PDC 2010 - Professional Developer's Conference during October 2010.

On-Premises Architectural Pattern

On-premises solutions (client or browser applications) are usually designed to access data repositories and services hosted inside a corporate network. In the graphic below, the Web Server and Database Server are within a corporate firewall, and the user, via the browser, is authenticated and has access to data.

Figure 1: Typical On-Premises Solution (Source - Microsoft.com)

The Patterns and Practices Application Architecture Guide 2.0 provides an exhaustive survey of on-premises applications. This Architecture Guide is a great reference document that helps you understand the underlying architecture and design principles and patterns for developing successful solutions on the Microsoft application platform and the .NET Framework.

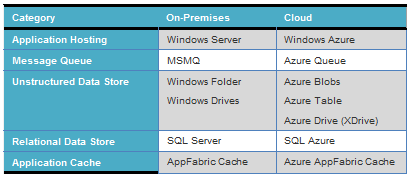

The Microsoft Application Platform is composed of products, infrastructure components, run-time services, and the .NET Framework, as detailed in the following table (source: .NET Application Architecture Guide, 2nd Edition).

Table 1: Microsoft Application Platform (Source - Microsoft.com)

The on-premises architectural patterns are well documented (above), so this blog post will not dive into prescriptive technology selection guidance. However, let’s briefly review some core concepts, since we will reference them while elaborating hybrid cloud architectural patterns.

Hosting The Application

Commonly* on-premises applications (with the business logic) run on IIS (as an IIS w3wp process) or as a Windows Service Application. The recent release of Windows Server AppFabric makes it easier to build, scale, and manage Web and Composite (WCF, SOAP, REST and Workflow Services) Applications that run on IIS.

* As indicated in Table 1 (above), Microsoft BizTalk Server is capable of hosting on-premises applications; however, for the sake of this blog, we will focus on Windows Server AppFabric.

Accessing On-Premises Data

Your on-premises applications may access data from the local file system or network shares. They may also utilize databases hosted in Microsoft SQL Server or other relational and non-relational data sources. In addition, your applications hosted in IIS may well be leveraging Windows Server AppFabric Cache for your session state, as well as other forms of reference or resource data.

Securing Access

Authenticated access to these data stores is traditionally performed by inspecting certificates, user name and password values, or NTLM/Kerberos credentials. These credentials are defined either in the data sources themselves, in heterogeneous repositories such as SQL logins or local machine accounts, or in directory services (LDAP) such as Microsoft Windows Server Active Directory. They are generally verifiable within the same network, but typically not outside of it unless you are using Active Directory Federation Services (ADFS).

Management

Every system exposes a different set of APIs and a different administration console to change its configuration, which obviously adds to the complexity; e.g., Windows Server for configuring network shares, SQL Server Management Studio for managing your SQL Server databases, and IIS Manager for the Windows Server AppFabric based services.

Cloud Architectural Pattern

Cloud applications typically access local resources and services, but they can also interact with remote services running on-premises or in the cloud. Cloud applications are usually hosted by a ‘provider managed’ runtime environment that provides hosting services, computational services, storage services, queues, management services, and load balancers. In summary, cloud applications consist of two main components: those that execute application code and those that provide data used by the application. To quickly acquaint you with the cloud components, here is a contrast with popular on-premises technologies:

Table 2: Contrast key On-Premises and Cloud Technologies

NOTE: This table is not intended to compare and contrast all Azure technologies. Some of them may not have an equivalent on-premises counterpart.

The graphic below presents an architectural solution for ‘Content Delivery’. Content creation and management are handled by on-premises applications, while content storage (Azure Blob Storage) and delivery are handled in the cloud by the Azure Platform infrastructure. In the following sections we will review the components that enable this architecture.

Figure 2: Typical Cloud Solution

Running Applications in Windows Azure

Windows Azure is the underlying operating system for running your cloud services on the Windows Azure AppFabric Platform. The three core services of Windows Azure in brief are as follows:

Compute: The compute or hosting service offers scalable hosting of services on a 64-bit Windows Server platform with Hyper-V support. The platform is virtualized and designed to scale dynamically based on demand. The Azure platform runs web roles on Internet Information Services (IIS) and worker roles as Windows Services.

Storage: There are three types of storage supported in Windows Azure: Table Services, Blob Services, and Queue Services. Table Services provide storage capabilities for structured data, whereas Blob Services are designed to store large unstructured files such as videos, images, and batch files in the cloud. Table Services are not to be confused with SQL Azure; typically you can store the high-volume data in low-cost Azure Storage and use (relatively) expensive SQL Azure to store indexes to this data. Finally, Queue Services are the asynchronous communication channels for connecting services and applications, not only within Windows Azure but also from on-premises applications. Caching Services, currently available via Windows Azure AppFabric LABS, is another strong storage option.

Management: The management service provides automated infrastructure and service management capabilities for Windows Azure cloud services. These capabilities include automatic and transparent provisioning of virtual machines and deploying services in them, as well as configuring switches, access routers, and load balancers.

A detailed review of the above three core services of Windows Azure is available in the subsequent sections.

Application code execution on Windows Azure is facilitated by Web and Worker roles. Typically, you would host Websites (ASP.NET, MVC2, CGI, etc.) in a Web Role and host background or computational processes in a Worker role. The on-premises equivalent for a Worker role is a Windows Service.