Windows Azure and Cloud Computing Posts for 6/21/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Steve Marx (@smarx) posted Windows Azure Storage Emulator: The Process Cannot Access the File Because It is Being Used by Another Process on 6/20/2011:

This question popped up on Stack Overflow. For the benefit of future Bing-ers, I thought I’d share the answer here too. In my experience, the error message in the title is generally the result of a port already being in use.

Check which of the storage services (blobs, tables, or queues) is failing to start, and see if anything on your computer is consuming that port. The most common case is blob storage not starting because BitTorrent is using port 10000.

Quick tip: use netstat to determine which process has the port, as follows:

C:\Users\smarx>netstat -p tcp -ano | findstr :10000

  TCP    127.0.0.1:10000    0.0.0.0:0    LISTENING    3672
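If you'd rather check all three storage services at once, here's a minimal Python sketch that tries to bind each port on the loopback address; a failed bind suggests another process already holds it. It assumes the emulator's default ports (10000 for blobs, 10001 for queues, 10002 for tables), and a bind test is only a heuristic, so netstat remains the way to identify the owning process ID.

```python
import socket

# Assumed default local ports of the storage emulator; adjust if yours differ.
EMULATOR_PORTS = {"blob": 10000, "queue": 10001, "table": 10002}


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if we can bind to host:port, i.e. nothing else appears to hold it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
        return True


def busy_services(ports=EMULATOR_PORTS):
    """List the storage services whose port is already taken."""
    return [name for name, port in ports.items() if not port_is_free(port)]


if __name__ == "__main__":
    for name in busy_services():
        print(f"{name} port {EMULATOR_PORTS[name]} is in use; run netstat -ano to find the PID")
```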

<Return to section navigation list>

SQL Azure Database and Reporting

Cihan Biyikoglu described ID Generation in Federations in SQL Azure: Identity, Sequences and GUIDs (Uniqueidentifier) in a 6/20/2011 post to the SQL Azure Team blog:

This discussion came up today in the preview program aliases, and I wanted to summarize some of our thinking on the topic. Federations in SQL Azure provide the ability to set up a distributed database tier with many member databases. Scaling in distributed environments typically pushes toward decentralizing logic across the parts of the system. This also means that some centralized database functionality, such as identity or sequence generation, no longer works effectively given its definition today. In distributed systems in general, and in federations specifically, I recommend using uniqueidentifier as an identifier. It is globally unique by definition and does not require funneling generation through some centralized logic. With uniqueidentifiers you give up one property: ID generation is no longer sequential.
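To make the trade-off concrete, here's a small Python sketch in which several hypothetical federation members each mint their own random GUIDs (uuid4) without talking to each other. The member names are made up for illustration, and Python's UUID ordering is not the same as SQL Server's uniqueidentifier ordering; the point is only that uniqueness needs no coordinator while the sort order carries no information about when or where an ID was generated.

```python
import uuid


def generate_ids(member: str, count: int):
    """Each (hypothetical) federation member mints its own IDs with no coordination."""
    return [(member, uuid.uuid4()) for _ in range(count)]


members = ["member-A", "member-B", "member-C"]
all_ids = [row for m in members for row in generate_ids(m, 1000)]

# No central generator was involved, yet the IDs do not collide in practice.
assert len({guid for _, guid in all_ids}) == len(all_ids)

# What we give up: sorting by ID interleaves members and ignores generation order.
print([m for m, _ in sorted(all_ids, key=lambda row: row[1])][:5])
```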

So what if you’d like to understand the order in which a set of rows was inserted? Well, that is easy to do within an atomic unit (AU). You can use a datetime data type (ideally datetimeoffset) with high precision and record the event time, such as creation or modification time, in this field. However, I’d strongly encourage giving up on trying this across atomic units if you need an absolute guarantee. Here is the core of the issue: if you need to sort across AUs, datetimeoffset may still work, but it is easy to forget that there isn’t a centralized clock in a distributed system. Because of the arbitrary number of repartitioning operations that may have happened over time, the datetime values may come from many nodes, and nodes are not guaranteed to have synchronized clocks (they can be a few minutes apart). With no centralized clock, datetime values across atomic units may not reflect the exact order in which things happened.
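A toy simulation shows how clock skew can reverse the apparent order. This Python sketch assumes two hypothetical member databases, one whose clock runs two minutes fast; a row inserted earlier on the fast node ends up with the later timestamp. Rows stamped by the same clock keep their relative order, which is why the approach holds up within a unit but not across them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Node:
    """A hypothetical federation member whose local clock is off by `skew`."""
    name: str
    skew: timedelta

    def now(self, true_time: datetime) -> datetime:
        # What a datetimeoffset column would record on this node.
        return true_time + self.skew


def record(events, nodes):
    """Stamp each (true_time, node_name, row_id) event with that node's local clock."""
    by_name = {n.name: n for n in nodes}
    return [(by_name[node].now(t), row) for t, node, row in events]


start = datetime(2011, 6, 20, 12, 0, tzinfo=timezone.utc)
nodes = [Node("member-A", timedelta(minutes=2)), Node("member-B", timedelta(0))]

# Row 1 is truly inserted on member A one minute before row 2 is inserted on member B.
events = [
    (start, "member-A", "row-1"),
    (start + timedelta(minutes=1), "member-B", "row-2"),
]

stamped = record(events, nodes)
order_by_timestamp = [row for _, row in sorted(stamped)]
print(order_by_timestamp)  # ['row-2', 'row-1']: the skewed clock reverses the true order
```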

Secondly, partitioning over ranges of uniqueidentifiers may get confusing. The sorting of uniqueidentifiers is explained in a few blogs; this one explains the issue well: How are GUIDs sorted by SQL Server? It is a mind-stretching exercise, but I expect we’ll have tooling to help with some of this in the future.

Not only are uniqueidentifier values not sequential, they are not well suited to tables whose entities are abstractions of serialized documents, such as sales orders, invoices and checks. Hopefully Cihan will arrange to have SQL Server Denali’s sequence objects implemented in SQL Azure immediately (if not sooner.)

For more details about forthcoming SQL Azure Federations and sharding, see my Build Big-Data Apps in SQL Azure with Federation cover story for Visual Studio Magazine’s March 2011 issue.

Liran Zelkha (@liran_zelkha) described Running TPC-C on MySQL/RDS in a 6/21/2011 post to the High Scalability blog:

I recently came across TPC-C benchmark results obtained on MySQL-based RDS databases. You can see them here. I think the results may shed light on many questions concerning MySQL scalability in general and RDS scalability in particular. (For disclosure, I'm working for ScaleBase, where we are running an internal scale-out TPC-C benchmark these days, and will publish results soon.)

TPC-C

TPC-C is a standard database benchmark used to measure database performance. Database vendors invest big bucks in running this test to show off which database is faster and scales better.

It is a write intensive test, so it doesn’t necessarily reflect the behavior of the database in your application. But it does give some very important insights on what you can expect from your database under heavy load.

The Benchmark Process

First of all, I have some comments for the benchmark method itself.

- Generally, the benchmarks were run in an orderly and rather methodical way, which increases the credibility of the results.

- The benchmark generator client was tpcc-mysql, an open-source implementation provided by Percona. It’s a good implementation, although I think DBT-2, originally developed by OSDL, is more widely used.

- Missing are the mix weights of the different TPC-C transactions in the benchmark. TPC-C defines five types of transactions (“New-Order”, “Payment”, “Order-Status”, “Delivery” and “Stock-Level”), and the mix weights are important.

- The benchmark focused on throughput, not latency. Although TPC-C is mostly about throughput (transactions per minute), I think it’s advisable to address latency (“avg response time”, for example) as well.

- The TPC-C “number of warehouses” determines the size of the database being tested. The number of warehouses ranged between 1 and 32 in this benchmark, which works out to roughly 100 MB to 3 GB. That’s usually considered a small database, and I would be interested in seeing how the benchmark ranks with 1,000 warehouses (around 100 GB) or even more.

- The entire range of RDS instance types was examined, from Small to Quadruple Extra Large (4XL). Some very interesting results turned up, which will be the focus of this blog post.
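Why the mix matters: a run dominated by read-only Order-Status or Stock-Level transactions stresses the database very differently from one dominated by New-Order and Payment. Here's a Python sketch of a weighted transaction driver. The percentages are a commonly used mix in which Payment, Order-Status, Delivery and Stock-Level are held at what I understand to be the specification's minimums and New-Order takes the remainder; check the TPC-C specification before relying on them, and note that tpcc-mysql's own scheduling may differ.

```python
import random
from collections import Counter

# A commonly used TPC-C mix (assumed here): four types at their minimum share,
# New-Order taking the remainder of about 45%.
TPCC_MIX = {
    "New-Order": 45,
    "Payment": 43,
    "Order-Status": 4,
    "Delivery": 4,
    "Stock-Level": 4,
}


def sample_transactions(n, mix=TPCC_MIX, seed=None):
    """Draw n transaction types at random according to the percentage weights."""
    rng = random.Random(seed)
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


def observed_mix(transactions):
    """Return the percentage of each transaction type actually issued."""
    counts = Counter(transactions)
    total = len(transactions)
    return {name: 100.0 * counts[name] / total for name in counts}


if __name__ == "__main__":
    run = sample_transactions(100_000, seed=42)
    for name, pct in sorted(observed_mix(run).items()):
        print(f"{name:13s} {pct:5.1f}%")
```

Publishing the observed mix alongside throughput figures would let readers compare runs like for like.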

Results Analysis

The benchmark results are surprising.

- With hardly any dependency on the database size, MySQL reaches its optimal throughput at around 64 concurrent users. Anything above that causes throughput degradation.

- Throughput improves as machines get stronger. However, there is a sweet spot, a point beyond which adding hardware doesn’t help performance. The sweet spot is around the XL machine, which reaches a throughput of around 7,000 tpm. 2XL and 4XL machines don’t raise this limit.

It would seem that the scale-up approach to scalability is limited and bounded, and, unfortunately, the bounds are rather close.

So, what’s the bottleneck?

- CPU is an unlikely candidate, as CPU power doubles on 2XL machines and quadruples on 4XL machines.

- I/O is also an unlikely candidate, since machine memory (RAM) doubles on 2XL and quadruples on 4XL machines. More RAM means more database cache buffers, and thus less I/O.

- Concurrency? The number of concurrent users? Well, we saw that optimal throughput is achieved with around 64 concurrent sessions on the database (see figure 4b in the benchmark report). With 1 user, throughput is 1,000 transactions per user; with 256 users it drops to 1 transaction per user!
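Throughput and latency are tied together, which is one reason the missing response-time figures matter. Little's law for a closed system says users = throughput × (response time + think time). The Python sketch below applies it, assuming the benchmark client issues transactions back to back with no think time (an assumption; the report would need to confirm it). If total throughput merely stayed flat at about 7,000 tpm past 64 users, the implied response time would grow linearly, from roughly 0.55 s at 64 users to roughly 2.2 s at 256; the degradation the report shows makes it worse still.

```python
def per_user_throughput(total_tpm: float, users: int) -> float:
    """Transactions per minute delivered to each concurrent user."""
    return total_tpm / users


def implied_response_time_s(total_tpm: float, users: int, think_time_s: float = 0.0) -> float:
    """Little's law for a closed system: users = throughput * (response time + think time)."""
    throughput_per_s = total_tpm / 60.0
    return users / throughput_per_s - think_time_s


if __name__ == "__main__":
    for users in (64, 128, 256):
        # Assumes total throughput stays flat at about 7,000 tpm past the sweet spot.
        print(users,
              round(per_user_throughput(7000, users), 1),
              round(implied_response_time_s(7000, users), 2))
```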

Results Explanation

It is definitely weird that adding more parallel users degrades performance, giving lower throughput than we get with fewer parallel users.

Well, the bottleneck is the database server itself. A database is a complex, sophisticated machine with tons of tasks to perform every millisecond it runs. For each query, the database needs to parse it, optimize it, find an execution plan, execute it, and manage transaction logs, transaction isolation and row-level locks.

Consider the following example. A simple update command needs an execution plan to find the qualifying rows; then, while reading those rows, it must lock each and every one. If the command updates 10k rows, this can take a while. Then each update is applied to the table, to each of the table’s relevant indexes, and also written to the transaction log file. And that’s just for a simple update.

Or take another example: a complex query takes a long time to run. Even 1 second is a lot of time in a highly loaded database. During its run, rows in the query’s source table(s) are updated (or added, or deleted). The database must maintain the “isolation level” (see our isolation level blog), which means the user’s result must be a snapshot of the data as it was when the query started. New values in a row that was updated after the query began, even if committed, should not be part of the result. Instead, the database has to go and find the “old snapshot” of the row, meaning the way the row looked at the beginning of the query. InnoDB stores information about old versions of rows in its tablespace, in a data structure called a rollback segment. And if we go back to the previous example, here’s another task the database has to do: update the rollback segment.
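The "old snapshot" mechanism can be illustrated with a toy multiversion store. This Python sketch keeps every committed version of a row with a commit counter, and a query reads the newest version committed at or before its start. It is only a model of the idea; it is not how InnoDB actually lays out rollback segments, and the class names are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedRow:
    """A row plus its older versions, loosely modeled on an undo/rollback log."""
    versions: list = field(default_factory=list)  # (commit_ts, value), oldest first

    def write(self, commit_ts: int, value):
        # The old version must be kept for readers that started earlier.
        self.versions.append((commit_ts, value))

    def read(self, snapshot_ts: int):
        """Return the newest value committed at or before the reader's snapshot."""
        visible = [value for ts, value in self.versions if ts <= snapshot_ts]
        return visible[-1] if visible else None


class ToyStore:
    def __init__(self):
        self.clock = 0
        self.rows = {}

    def commit(self, key, value):
        self.clock += 1
        self.rows.setdefault(key, VersionedRow()).write(self.clock, value)

    def begin_query(self) -> int:
        # A query's snapshot is the commit counter at the moment it starts.
        return self.clock

    def read(self, key, snapshot_ts):
        row = self.rows.get(key)
        return row.read(snapshot_ts) if row else None


store = ToyStore()
store.commit("stock:42", 100)
snapshot = store.begin_query()  # a long-running query starts here
store.commit("stock:42", 95)    # a concurrent update commits mid-query
print(store.read("stock:42", snapshot))             # 100: the query still sees the old version
print(store.read("stock:42", store.begin_query()))  # 95: a new query sees the update
```

Every write now costs an extra version to store and, eventually, to purge, which is the additional work the paragraph above describes.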

We must remember that every command issued to the database actually generates dozens of recursive commands inside the database engine. When session or user concurrency goes up, load inside the database engine increases exponentially. The database is a great machine, but it has its limits.

So What Do We Do?

So, we understand that the database is crowded and has hardware sweet spots, which complicates the scale-up solution: it is expensive and doesn’t give the required performance edge. Even specialized databases have their limits, and while the sweet spot varies, every database has one.

There are a lot of possible solutions to this problem. Adding a caching layer is a must to decrease the number of database hits, and any other measure that reduces the number of hits on the database (like NoSQL solutions) is welcome.

But the real database solution is scale-out. Instead of 1 database engine, we’ll have several, say 10. Instead of 128 or even 256 concurrent users on one database (which, according to the TPC-C benchmark, bring the worst results), we’ll have 10 databases with about 26 users on each, and each database can go up to 64 users (640 concurrent users in total). And of course, if needed, we can add more databases to handle increased scale.
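The arithmetic behind that plan is easy to sketch in Python. The 64-session sweet spot comes from the benchmark above; the modulo routing rule is a hypothetical placeholder for whatever routing a scale-out layer actually uses. Modulo routing is the simplest choice, but it remaps most users whenever the number of databases changes.

```python
import math


def users_per_database(total_users: int, databases: int) -> list[int]:
    """Spread sessions as evenly as possible across the databases."""
    base, extra = divmod(total_users, databases)
    return [base + 1 if i < extra else base for i in range(databases)]


def databases_needed(total_users: int, sweet_spot: int = 64) -> int:
    """Smallest number of databases that keeps each one at or below the sweet spot."""
    return math.ceil(total_users / sweet_spot)


def route(user_id: int, databases: int) -> int:
    """Hypothetical routing rule: pin each user to a database by id modulo N."""
    return user_id % databases


if __name__ == "__main__":
    print(users_per_database(256, 10))  # six databases get 26 users, four get 25
    print(databases_needed(640))        # 10 databases keep every one at 64 users or fewer
```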

It will be interesting to see how scale out solutions for databases handle TPC-C.

<Return to section navigation list>

Marketplace DataMarket and OData

Marcelo Lopez Ruiz of the WCF Data Services Team posted Announcing datajs version 0.0.4 on 6/20/2011:

We are excited to announce that datajs version 0.0.4 is now available at http://datajs.codeplex.com/.

The latest release adds support for Reactive Extensions for JavaScript (RxJs), forward and backward cache filtering, extended metadata and simplified OData call configuration, and provides various performance and quality improvements.

To get started, simply browse over to the Documentation page, where you will find download instructions, overview and detailed topics, and some samples to get started quickly.

This is the last planned release before version 1.0.0. The features and APIs are not expected to change, so this is a great time to try the library out and provide feedback through the project Discussions!

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

The AppFabric Team posted an updated version of its Announcing the Windows Azure AppFabric June CTP! post on 6/21/2011:

Today we are excited to announce the release of the Windows Azure AppFabric June CTP, which includes capabilities that make it easy for developers to build, deploy, manage and monitor multi-tier applications across web, business logic and database tiers as a single logical entity on the Windows Azure Platform. This CTP release consists of:

- AppFabric Developer Tools - Enhancements to Visual Studio that enable developers to visually design and build end-to-end applications on the Windows Azure platform.

- AppFabric Application Manager – A powerful yet easy way to host and manage n-tier applications that span the web, middle and data tiers through the entire application lifecycle, including deployment, configuration, monitoring and troubleshooting. The Application Manager includes a comprehensive application management portal that is powered by a REST API for monitoring the health of your n-tier application and performing management operations on the running applications.

- Composition Model - A set of .NET Framework extensions for composing applications on the Windows Azure platform. This builds on the familiar Azure Service Model concepts and adds new capabilities for describing and integrating the components of an application. The AppFabric Developer Tools leverage the composition model to create an application manifest that is used by the AppFabric Application Manager at deployment time to understand the application structure and metadata.

- Support for running Custom Code, WCF, WF - Formal support for executing Windows Workflow Foundation (WF) on Windows Azure and the composition of Windows Communication Foundation (WCF) and WF services, as well as custom code, into a composite application. In addition, it provides enhanced development, deployment, management and monitoring support for WCF and WF services.

You can read more details in this blog post: Introducing Windows Azure AppFabric Applications and the Channel 9 announcement video.

The release is already live in our LABS/Previews environment at: http://portal.appfabriclabs.com/.

There is limited space available to try out the AppFabric Application Manager CTP, so be sure to sign up as early as possible for a better chance of getting access to the CTP.

The post update added narrative instructions for applying for access to the CTP, which the original version didn’t describe. The following illustrated section replaces that addition.

Sign Up for the Application Manager CTP

1. Log into the AppFabric Labs portal with your AppFabric Labs Windows Live ID:

2. Click the AppFabric Services button to display the Applications button. Click the Applications button to activate the sign-up window and click the Refresh Namespace button to open the first Request Service Namespace dialog:

3. Click Next to open the second Request Service Namespace dialog. Specify a Namespace name, select the Country/Region and AppFabric Labs Subscription, if applicable:

4. Click the Request Namespace button to display the request acknowledgment page:

The AppFabric Team’s post continues:

In order to build applications for the CTP you will need to install the Windows Azure AppFabric CTP SDK and the Windows Azure AppFabric Tools for Visual Studio. Even if you don’t have access to the AppFabric Application Manager CTP you can still install the tools and SDK to build and run applications locally in your development environment.

This CTP is intended for you to check out and provide us with feedback, so please remember to visit the Windows Azure AppFabric CTP Forum to ask questions and provide us with your feedback.

To learn more please use the following resources:

- Composing and Managing Applications with Windows Azure AppFabric video which is part of the Windows Azure AppFabric Learning Series available on CodePlex.

- Watch the relevant TechEd sessions:

- MSDN Documentation

- Visit the Windows Azure AppFabric website

- Visit the Windows Azure AppFabric Developer page

- Read the Windows Azure AppFabric Overview Whitepaper

Don’t forget that we already have several Windows Azure AppFabric services (Service Bus, Access Control, Caching) in production that are supported by a full SLA. If you have not signed up for Windows Azure AppFabric yet be sure to take advantage of our free trial offer. Just click on the image below and get started today!

Here’s what the Windows Azure AppFabric CTP SDK and the Windows Azure AppFabric Tools for Visual Studio install or update:

And here’s the Setup dialog for the Windows Azure AppFabric Developer Tools:

Karandeep Anand (@_karendeep) posted Introducing Windows Azure AppFabric Applications on 6/20/2011 to the AppFabric Team Blog:

Last October, at the PDC 2010 keynote, we shared our vision of building, running, and managing applications reliably and at scale in Windows Azure. Since then, the team has been heads-down to make the vision a reality, and last month at TechEd 2011, we announced that we’ll soon be releasing a rich set of new capabilities to build AppFabric Applications using AppFabric Developer Tools, run them in the AppFabric Container service, and manage them using the AppFabric Application Manager. Today, we are very pleased to announce that the day has come when we can publicly share the first Community Technology Preview (CTP) of these capabilities in Windows Azure! As announced here, the June CTP of AppFabric is now live, and you can start by downloading the new SDK and Developer Tools and by signing up for a free account at the AppFabric Labs portal. Now we’ll go into more detail about what we’ve released today and how you can get started building and managing AppFabric Applications.

AppFabric Applications

Let’s start by defining what we mean when we say AppFabric Applications. In a nutshell, it is any n-tier .NET application that spans the web, middle, and data tiers, composes with external services, and is inherently written to the cloud architecture for scale and availability. In CTP1, we are focusing on a great out-of-box experience for ASP.NET, WCF, and Windows Workflow (WF) applications that consume other Windows Azure services like SQL Azure, AppFabric Caching, AppFabric Service Bus and Azure Storage. The goal is to enable both application developers and ISVs to be able to leverage these technologies to build and manage scalable and highly available applications in the cloud. In addition, the goal is to help both developers and IT Pros, via the AppFabric Developer Tools and AppFabric Application Manager, respectively, to be able to manage the entire lifecycle of an application from coding and testing to deploying and managing.

Now, let’s take a deeper look at the 3 key pieces of the puzzle and how they all fit together to help you through the lifecycle of AppFabric Applications:

- Developer Tools

- AppFabric Container

- Application Manager

Developer Tools

AppFabric Developer Tools provide the rich Visual Studio experience at design-time to build and debug AppFabric Applications. The key goal of the Developer Tools is to enable .NET developers to leverage their existing skills and knowledge with the .NET framework and Visual Studio and extend it to build cloud-ready applications in an easy yet rich way for Windows Azure. The Developer Tools are an extension to the existing way of building .NET applications. And the additional tooling allows developers to not only author business and presentation logic that run in the web and middle-tier, but also to easily enrich and extend their apps by composing with other services through a rich service composition experience.

The first goal with Developer Tools is to enable developers to build cloud-ready—i.e. scalable and available—applications by building on concepts they already know. The AppFabric Container service does the heavy lifting of elastically scaling and making an application available and reliable. In Developer Tools, we distilled the set of design principles and configuration knobs that a developer would need to make their application code leverage these capabilities, and we provided these via simple configuration settings on out-of-box components like WCF services and Workflows. In addition, we also provide a powerful programming model to do state management for scalable and resilient stateful services, but more on that topic later. The developer gets to specify intent on the number of scale instances, number of replicas, the partitioning policies etcetera, depending upon how much control they want; or they could go with the default settings and let the runtime manage most of it. In all cases, basic cloud design principles, like address virtualization and automatic address resolution when deployed in Azure, get taken care of automatically, without ever having to think of the underlying compute or network infrastructure.

The second goal is based on a key trend we see today: to be agile and stay competitive, developers want to focus on building code that adds value to their core offering, and therefore want to just use, or compose with, existing services. There is a large ecosystem of existing web services that help with everything from managing basic identity and access, to processing complex multi-party payments across geographies! Hence, service composition is no longer an afterthought but a core part of designing and building an application. Addressing this became one of the key design principles for the AppFabric Developer Tools experience. The tools provide a simple yet powerful experience for composing applications with first party Azure services like Caching or Storage, as well as for consuming external services like Netflix or PayPal. The extensibility of the developer tools allows even the list of service components we ship out of the box to be extended to include 3rd party services, or your own frequently used custom services. The screenshot below shows a list of out-of-box shipping service components like ASP.Net or Caching (in blue) and some example custom service components like Authorize.Net and Facebook (in black).

To get developers started quickly, we are shipping a few templates for building applications using commonly used patterns like a high-performance ASP.NET application using Caching or a rich Silverlight Business Application. These templates get installed as part of the Developer Tools and provide an easy way to get started for building AppFabric applications.

The third key goal is to enable developers to build and debug the application as a whole. No web site or WCF service is an island; each is part of an end to end multi-tier application. AppFabric Developer Tools allow for a local simulation of building and running an application distributed across a web-tier, middle-tier and data-tier—but by building on top of the existing Windows Azure Compute Emulator that Azure developers are already familiar with.

The last design pivot is to enable developers to easily define the end-to-end multi-tier application in terms of the web and middle-tier code artifacts, database and storage resources, and a spectrum of external services referenced by the application. This metadata is automatically captured as the Composition Model. The model then gets packaged along with the application so that the management system (the Application Manager) can use it for managing the application lifecycle easily.

With CTP1, our goal is to first enable the end-to-end developer experience built around these core principles. We’ll build out richer experiences for each step in the development lifecycle as we go through future iterations of the tools.

AppFabric Container

The AppFabric Container service is the new multi-tenant middle-tier container for hosting and running mid-tier code like WCF and WF, or your custom code, at scale with high availability. At its core, the AppFabric Container consists of a large cluster of machines that have been set up to be resilient, scalable and multi-tenant, and has been pre-provisioned with the necessary frameworks and runtimes required to run your custom code, WCF services or Workflows. With AppFabric Container, we raise the level of abstraction for developers and admins so that they don’t have to deal with any of the complexity of the underlying computing, networking and storage infrastructure. Developers can focus on the business logic, and let the runtime manage the complex details of partitioning, replication, state management, address virtualization, dynamic address resolution, failover, isolation, resiliency, load balancing, request routing etcetera. You simply package and drop your WCF services, Workflows or custom .NET code into the AppFabric Container using the Application Manager or directly from Visual Studio, and let the container host, run and scale the application for you. Then you sit back and manage the service level objectives of your services via the Application Manager.

In addition, the AppFabric Container enables rich hosting scenarios & configurations for both WCF & Windows Workflows (WF). You can build and run brokered services, i.e. message-activated WCF services, with the integration that AppFabric Container provides with Service Bus. Also, you can run rich WF applications on AppFabric Container with support for persisting state easily and providing business process visibility through the management endpoint to get instance status. We’ll go into a lot more detail about building WCF & WF applications to run in Windows Azure using AppFabric Container in future blog posts.

Application Manager

The Application Manager is the last piece of the puzzle. It is the infrastructure and tooling that enables you to manage any AppFabric Application through its lifecycle—configuring, deploying, controlling, monitoring, troubleshooting and scaling, all as a single logical application. It uses the metadata of the application i.e. the Composition Model, to automatically understand the components, dependencies and requirements of your multi-tier application so that you don’t have to. The screenshot below shows a sample application that spans the web, middle and data tiers; the application composes with Windows Azure and external 3rd party services, but is being managed as a single unit in the Application Manager.

One of the first things that the Application Manager does when you publish or upload an n-tier application to it is to help you understand the end to end application structure and its contents. It then allows you to centrally configure your entire application and all its parts, including detailed WCF configuration settings—like setting timeouts and number of concurrent connections, or simple environment settings such as which database to connect to in production versus staging. Once you are done configuring the application, a single click allows you to deploy your entire application across multiple web containers and the AppFabric Container. It also wires the application up to all the internal and external referenced services, including, but not limited to, SQL Azure, Caching, Blob Storage, Service Bus Queues etc. And it uses the Access Control Service (ACS) to secure the namespaces. Anyone who has deployed a distributed application spanning multiple tiers understands all the pain that the Application Manager will take away with a single-click! And never once will you have to deal with any virtual machines, network settings or storage configurations.

In addition to centrally configuring and deploying your application, the Application Manager also helps you manage certificates to secure your application, configures the monitoring and diagnostics stores for your app automatically, and creates the right end points and URIs for your deployed services in your namespace.

Other key investments in the Application Manager are in out-of-box monitoring and troubleshooting support for your application. Once you start using your deployed application, you’ll notice a rich set of metrics being automatically generated and collected for your application—everything from basic ASP.NET metrics like number of requests, to request latency numbers for ASP.NET and WCF services, to SQL DB call execution time, to the number of times your cache gets called. All without writing a single line of code during development! And these metrics get aggregated for you across all the instances of your scaled out application, as it is running on the web and AppFabric Container. In addition, if you have built custom components, say for example, a PayPal service that you reference from your application, you can even generate custom metrics in CTP1. You can then start tracking items like the number of orders sent, the number of orders over $1000 processed, and see those custom metrics rendered along with other out-of-box metrics using rich chart controls right in the Application Manager.
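The post doesn't say how the Application Manager combines metrics from scaled-out instances, but the general idea is worth a sketch because naive averaging gives the wrong answer. In this Python sketch the record layout and names are hypothetical: request counts are summed, and mean latency is computed from total latency over total requests rather than as an average of per-instance averages.

```python
from dataclasses import dataclass


@dataclass
class InstanceMetrics:
    """Hypothetical per-instance counters for one service over one interval."""
    instance: str
    requests: int
    total_latency_ms: float  # sum of the latencies of those requests


def aggregate(metrics):
    """Combine instances into a single logical-service view."""
    requests = sum(m.requests for m in metrics)
    latency = sum(m.total_latency_ms for m in metrics)
    mean_ms = latency / requests if requests else 0.0
    return {"requests": requests, "mean_latency_ms": mean_ms}


instances = [
    InstanceMetrics("web-0", requests=900, total_latency_ms=90_000.0),  # 100 ms each
    InstanceMetrics("web-1", requests=100, total_latency_ms=50_000.0),  # 500 ms each
]
agg = aggregate(instances)
print(agg)  # request-weighted mean of 140 ms, not the 300 ms a mean of means would give
```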

Along similar lines, we’ve made troubleshooting your distributed application a lot easier by collecting and centralizing all troubleshooting logs (you get to pick the diagnostics level of the app when you build it) from all the web and AppFabric Container instances to which the app is deployed at any given point. All logs are available from one location in the Application Manager, and you can open the downloaded logs in the SvcTraceViewer tool for detailed debugging and diagnosis. You no longer have to write tons of scripts, pore through files, and separate infrastructure issues from application issues. You get all the logs through a UI, and programmatically through our REST API. Yes, we do offer a full REST API (and PowerShell samples too!) to automate, or programmatically access, anything that the UI can do, using your own custom dashboards and management tools.

Last but not the least, the Application Manager allows you to scale-out your application with a single-click. You select the number of instances you want to scale different parts of your application to (there are restrictions on the max scale count in CTP1 as we are paying your bills!). And voila—the application automatically and elastically gets scaled out without any downtime!

While I can go on for a while describing all the cool and powerful things you can do with the CTP1 bits, I’ll wrap up this blog post by saying that we are super excited to share this technology preview and hope that you’ll be as excited to try them out and discover all the things we’ve built, and to take the conversation of building and managing applications to the next level! And remember, we have a limited capacity for CTP1 so sign-up quickly for your free account.

We have an exciting roadmap ahead, but we’ll get to that in future blog posts – for now, we’d love to hear what you have to say about your experience with CTP1. Watch out for a series of blog posts in the coming weeks that’ll go into a lot more detail about the various scenarios, features and capabilities across the 3 key parts we’ve discussed today. Meanwhile, you can start with the samples that get installed with the AppFabric June CTP SDK or use the tutorials available on MSDN. You can also watch this short video on Channel 9 that Ron Jacobs and I did to give you a quick overview and demo of the things we’ve discussed above.

Welcome to a new era of building and managing rich cloud applications on Windows Azure!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

No significant articles today.

<Return to section navigation list>

Visual Studio LightSwitch

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

My Windows Azure Platform Maligned by Authors of NetworkWorld Review answers NetworkWorld’s First look at Windows Azure article of 6/20/2011 by Tom Henderson and Brendan Allen. Page 1:

There's "the cloud" and then there's Windows Azure.

We tested the available production-level features in Microsoft's platform-as-a-service (PaaS) offering, and found that Azure is similar in many ways to what already exists in the cloud sky.

But there are some key differences. Azure is homogeneously Windows-based. And big chunks of Azure are still in beta or "customer technology preview."

Three main components of Azure are currently available: Windows Azure 2008/ 2008 R2 Server Edition Compute Services, Windows SQL Azure instances, and storage facilities. These components are sewn together by Microsoft's AppFabric, an orchestration system for messaging, access control and management portal.

However, none of the Windows Azure instances are currently capable of being controlled by Microsoft's System Center management system. They can't be touched by an organization's Active Directory infrastructure today — only by beta, pre-release features. Instance availability through mirroring or clustering is currently unavailable, too*. [Emphasis added. See comment below.]

Overall, it's far too early to recommend Windows Azure. The architectural diagram looks very interesting, and while some pieces appeared ready in testing, big chunks of the Azure offerings aren't ready for enterprise use**. (See how we tested the product.)

What You Get — Today

Windows Azure provides production application support through Windows Azure 2008/2008 R2 Server Edition Compute services, Windows SQL Azure, and several forms of data storage. Customers can buy these services in graduated instance sizes, and deploy them into various geographies, and different Microsoft data centers within some of the geographies.

What's available today is a subset of the grander Windows Azure future architectural road map. Buying into the Azure vision may turn out to have great value in the future, and the pieces that are running today worked well, but they don't satisfy the wide number of use cases associated with IaaS or PaaS.

Another component of Microsoft's PaaS push is Azure Marketplace, where developers can buy, sell and share building blocks, templates and data sets, plus finished services and apps needed to build Azure platform apps. The DataMarket section's offerings are limited, while the apps section isn't commercially available yet.

Microsoft intends to expand the limited Azure Marketplace offerings with both community and also marketed development tools, Azure-based SaaS third party applications, and other business offerings. Ostensibly third-parties will replicate and offer the Azure model to clientele from these and other sources***.

Virtual machine roles

Windows Azure components are defined by roles, currently Web Roles and worker roles (based on IIS and .Net functionality), which can run against SQL Azure database instances. The deployed processes are managed through AppFabric, whose functionality exists inside the Azure resource pools as a management layer and messaging infrastructure.


* Re: “Instance availability through mirroring or clustering is currently unavailable, too.” (Quotes are from the Windows Azure Service Level Agreements page):

For storage, the Windows Azure Platform maintains three replicas and guarantees “that at least 99.9% of the time [Windows Azure] will successfully process correctly formatted requests [received] to add, update, read and delete data. [Windows Azure] also guarantees that your storage accounts will have connectivity to [its] Internet gateway.”

** Re: “big chunks of the Azure offerings aren't ready for enterprise use”:

A substantial number of organizations, ranging in size from Internet startups to Fortune 1000 firms have adopted Azure for numerous enterprise-scale applications. See the Windows Azure Case Studies page.

*** Re: “Ostensibly third-parties will replicate and offer the Azure model to clientele from these and other sources”:

Fujitsu Limited announced in a Fujitsu Launches Global Cloud Platform Service Powered by Microsoft Windows Azure press release of 6/7/2011:

Fujitsu Limited and Microsoft Corporation today announced that the first release of its Global Cloud Platform service powered by Windows Azure, running in Fujitsu's datacenter in Japan, will be launched August 2011. The launch of the service, named FGCP/A5, is the result of the global strategic partnership between Fujitsu and Microsoft announced in July 2010. The partnership allows Fujitsu to work alongside Microsoft providing services to enable, deliver and manage solutions built on Windows Azure. As of April 21, 2011, Fujitsu has been offering the service in Japan on a trial basis to twenty companies. This launch marks the first official production release of the Windows Azure platform appliance by Fujitsu. …

The widely quoted press release preceded the authors’ review by 13 days, which should have been ample time to correct the assertion.

From page 2:

Re “Glaringly absent is the Virtual Machine Role. VM Roles are the commodity-version of Azure Windows Server licenses. We've seen them, but because they aren't available yet, extreme constraints are imposed on the current Azure platform.”

VM Roles, which are available as a beta that the authors chose not to test, emulate IaaS features for applications that can’t easily be adapted to Azure’s PaaS implementation.

Re “Microsoft has developed the Azure infrastructure fabric to be emulated and replicated by future managed services providers (MSP) — likely at the point when both PaaS and IaaS become available.”

See Fujitsu press release quoted above.

Re “We asked for access to the Azure Business Edition:”

There is no “Azure Business Edition.” Only SQL Azure has a Business Edition.

Re “Whatever is done inside of the Azure Cloud is controlled by Microsoft's AppFabric, which will place an instance of a predetermined/pre-selected size in one of its data centers — which are defined by region and specific data centers.”

From Microsoft’s Windows Azure AppFabric Overview:

Windows Azure AppFabric provides a comprehensive cloud middleware platform for developing, deploying and managing applications on the Windows Azure Platform. It delivers additional developer productivity adding in higher-level Platform-as-a-Service (PaaS) capabilities on top of the familiar Windows Azure application model. It also enables bridging your existing applications to the cloud through secure connectivity across network and geographic boundaries, and by providing a consistent development model for both Windows Azure and Windows Server.

Finally, it makes development more productive by providing higher abstraction for building end-to-end applications, and simplifies and maintenance of the application as it takes advantage of advances in the underlying hardware and software infrastructure.

From Yung Chow, senior Microsoft IT pro evangelist:

One very important concept in cloud computing is the notion of fabric which represents an abstraction layer connecting resources to be dynamically allocated on demand. In Windows Azure, this concept is implemented as Fabric Controller (FC) which knows the what, where, when, why, and how of the resources in cloud. As far as a cloud application is concerned, FC is the cloud OS. We use Fabric Controller to shield us from the need to know all the complexities in inventorying, storing, connecting, deploying, configuring, initializing, running, monitoring, scaling, terminating, and releasing resources in cloud.

From page 3:

Re: “Public/Private interaction via the Windows Azure Appliance Platform works only for a few private customers, and is likely CTP”

See above re Fujitsu’s WAPA offering.

Re: “Local storage persistence is CTP; only BLOB storage is guaranteed to be persistent after an instance reboot. Drive C is as good as the instance not rebooting unless you unload it from a BLOB first, after the local NTFS drive is initialized after a reboot.”

Local storage persistence is not CTP. Azure Blob, Queue and Table storage persistence has had production status since Windows Azure released to the Web in January 2010. High availability is provided by three replicas, the cost of which is included in the quoted storage price.

Re: “Azure instances are controlled through AppFabric, which is an Azure-resident cloud middleware API and messaging infrastructure — a service bus in Microsoft parlance.”

See above re Windows Azure AppFabric vs. Fabric Controller.

From page 4:

Re: “All worked fine until an upgrade demanded a reboot of the instance. Then we discovered that NTFS local storage isn't guaranteed to be persistent, although Windows Azure Drives and BLOBs are. Indeed our storage disappeared as we'd mistakenly chosen NTFS local storage — after we rebooted the instance.”

Local storage created by any cloud-based operating system isn’t persistent. For example, only Amazon S3 (blobs), SimpleDB (tables), SQS (queues), and EBS (drives) are persistent.

Readers of the NetworkWorld article should be aware that many of Amazon Web Services’ features were in the beta stage for several years of AWS’ commercial use and that the entire Google App Engine has been in preview mode since its inception.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Jeremy Kirk (@Jeremy_Kirk) asserted “Researchers in Germany have found abundant security problems within Amazon's cloud-computing services due to its customers either ignoring or forgetting published security tips” as a deck to his Researchers: AWS Users Are Leaving Security Holes article of 6/20/2011 for CIO.com:

Researchers in Germany have found abundant security problems within Amazon's cloud-computing services due to its customers either ignoring or forgetting published security tips.

Amazon offers computing power and storage using its infrastructure via its Web Services division. The flexible platform allows people to quickly roll out services and upgrade or downgrade according to their needs.

Thomas Schneider, a postdoctoral researcher in the System Security Lab of Technische Universität Darmstadt, said on Monday that Amazon's Web Services is so easy to use that a lot of people create virtual machines without following the security guidelines.

"These guidelines are very detailed," he said.

In what they termed the most critical discovery, the researchers found that the private keys used to authenticate with services such as the Elastic Compute Cloud (EC2) or the Simple Storage Service (S3) were publicly published in Amazon Machine Images (AMIs), which are pre-configured operating systems and application software used to create virtual machines.

Those keys shouldn't be there. "They [Customers] just forgot to remove their API keys from machines before publishing," Schneider said.
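One way to catch forgotten API keys before publishing an image is to scan its files for strings shaped like AWS access key IDs. The following is a minimal sketch, not an Amazon-supplied tool; it assumes the commonly documented key-ID format ("AKIA" followed by 16 upper-case letters or digits) and only finds key IDs, because secret keys have no fixed prefix to match on. The names `find_access_key_ids` and `scan_tree` are hypothetical.

```python
import re
from pathlib import Path

# Assumed format of long-term AWS access key IDs: "AKIA" + 16 upper-case
# letters or digits. Secret access keys have no fixed prefix, so this
# sketch does not try to detect them.
ACCESS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_access_key_ids(text: str) -> list[str]:
    """Return every substring of `text` that looks like an AWS access key ID."""
    return ACCESS_KEY_ID.findall(text)


def scan_tree(root: Path) -> dict[str, list[str]]:
    """Map each readable file under `root` to the key-ID lookalikes it contains."""
    hits: dict[str, list[str]] = {}
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        try:
            # Binary files are decoded leniently; undecodable bytes are dropped.
            text = path.read_text(errors="ignore")
        except OSError:
            continue  # unreadable file (permissions, special file, etc.)
        found = find_access_key_ids(text)
        if found:
            hits[str(path)] = found
    return hits


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
    sample = "export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE\n"
    print(find_access_key_ids(sample))
```

Pointed at the root of a mounted image (for example, `scan_tree(Path("/mnt/image"))`, where the mount point is hypothetical), any non-empty result flags files to clean up before the AMI is made public.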

But the consequences could be expensive: With those keys, an interloper could start up services on EC2 or S3 using the customer's keys and create "virtual infrastructure worth several thousands of dollars per day at the expense of the key holder," according to the researchers.

The researchers looked at some 1,100 AMIs and found another common problem: One-third of those AMIs contained SSH (Secure Shell) host keys or user keys.

SSH is a common tool used to log into and manage a virtual machine. But unless the host key is removed and replaced, every other instance derived from that image will use the same key. This can cause severe security problems, such as the possibility of impersonating the instance and launching phishing attacks.

Some AMIs also contained SSH user keys for root-privileged logins. "Hence, the holder of the corresponding SSH key can login to instances derived from those images with superuser privileges unless the user of the instance becomes aware of this backdoor and manually closes it," according to a technical data sheet on the research.

Among the other data found in the public AMIs were valid SSL (Secure Sockets Layer) certificates and their private keys, the source code of unpublished software products, passwords and personally identifiable information including pictures and notes, they said.
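The SSH and SSL findings above suggest a similar pre-publication check. The sketch below is an illustration, not the researchers' tooling: it assumes a Linux image mounted at some `root` directory and uses typical OpenSSH locations (host private keys under `etc/ssh`, `authorized_keys` for root and for users under `home`), which vary by distribution. It also flags any file containing a PEM private-key marker, which would catch leftover SSL certificate keys. The function name `audit_image_root` is hypothetical.

```python
from pathlib import Path

# Hypothetical checklist of paths (relative to the mounted image root) where
# Linux images commonly keep SSH keys. Adjust for your distribution.
SSH_KEY_GLOBS = [
    "etc/ssh/ssh_host_*key",        # host private keys, e.g. ssh_host_rsa_key
    "root/.ssh/authorized_keys",    # root login keys (the "backdoor" case)
    "home/*/.ssh/authorized_keys",  # per-user login keys
]

# Fragment shared by PEM headers and footers for RSA, DSA, EC, OpenSSH and
# PKCS#8 private keys, including SSL certificate keys.
PEM_PRIVATE_KEY = "PRIVATE KEY-----"


def audit_image_root(root: Path) -> list[str]:
    """Return sorted paths (relative to root) that should not ship in a public image."""
    findings: set[str] = set()
    # 1. Well-known SSH key locations.
    for pattern in SSH_KEY_GLOBS:
        for path in root.glob(pattern):
            if path.is_file():
                findings.add(path.relative_to(root).as_posix())
    # 2. Any other file that embeds a PEM private key.
    for path in root.rglob("*"):
        if not path.is_file():
            continue
        try:
            if PEM_PRIVATE_KEY in path.read_text(errors="ignore"):
                findings.add(path.relative_to(root).as_posix())
        except OSError:
            continue  # unreadable file; skip it
    return sorted(findings)
```

Anything this returns should be deleted, or regenerated on first boot in the case of host keys, so that instances launched from the image don't share keys or accept a stranger's login.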

Anyone with a credit card can get access to Amazon Web Services, which would enable a person to look at the public AMIs that the researchers analyzed, Schneider said. Once the problem was evident, Schneider said they contacted Amazon Web Services at the end of April. Amazon acted in a professional way, the researchers said, by notifying those account holders of the security issues.

The study was done by the Center for Advanced Security Research Darmstadt (CASED) and the Fraunhofer Institute for Security in Information Technology (SIT) in Darmstadt, Germany, which both study cloud computing security. Parts of the project were also part of the European Union's "Trustworthy Clouds" or TClouds program.

Although it isn’t Amazon’s responsibility to police customers’ misuse of their own AMIs, resulting security breaches could seriously damage AWS’s reputation among enterprise CIOs.

Joe Panettieri (@joepanettieri) reported Cloud Data Centers: Goodbye SAS 70, Hello SSAE 16 in a 6/20/2011 post to the TalkinCloud blog:

When customers and channel partners seek cloud data centers, they often ask about the SAS 70 auditing standard. But starting June 15, 2011, the focus started to shift to SSAE 16 — a new standard that seeks to give data center customers and partners more peace of mind. True believers include Online Tech, a data center provider in Michigan.

According to NDB Accountants and Consultants:

“SSAE 16 effectively replaces Statement on Auditing Standards No. 70 (SAS 70) for service auditor’s reporting periods ending on or after June 15, 2011. Two (2) types of SSAE 16 reports are to be issued, a Type 1 and a Type 2. Additionally, SSAE 16 requires that the service organization provide a description of its “system” along with a written assertion by management.”
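The cutover rule in that quotation depends only on when the reporting period ends. As a simple sketch of that rule as quoted (the helper name `applicable_standard` is hypothetical, and the sketch ignores any voluntary early adoption of SSAE 16), the choice reduces to one date comparison:

```python
from datetime import date

# Per the quoted guidance: SSAE 16 replaces SAS 70 for service auditor's
# reporting periods ending on or after June 15, 2011.
SSAE16_CUTOVER = date(2011, 6, 15)


def applicable_standard(period_end: date) -> str:
    """Return the standard required for a report whose period ends on `period_end`."""
    return "SSAE 16" if period_end >= SSAE16_CUTOVER else "SAS 70"


if __name__ == "__main__":
    for end in (date(2011, 3, 31), date(2011, 6, 15), date(2011, 12, 31)):
        print(end, applicable_standard(end))
```

A report covering January 1 through June 30, 2011 therefore falls under SSAE 16 even though most of its period predates the cutover, because only the period's end date matters.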

SAS 70, short for Statement on Auditing Standards No. 70, has been around since 1992. Data centers widely embraced SAS 70 to show customers and partners that they had proper business controls in place.

The replacement, SSAE 16, is short for Statement on Standards for Attestation Engagements (SSAE) No. 16. SSAE 16 is promoted by the Auditing Standards Board (ASB) of the American Institute of Certified Public Accountants (AICPA).

SSAE 16 keeps pace with the “growing push towards more globally accepted international accounting standards,” according to NDB Accountants and Consultants.

First Movers

True believers in SSAE 16 include Online Tech, which operates data centers in Ann Arbor and Mid-Michigan. A recent audit showed that Online Tech’s data centers comply with SSAE 16. According to a prepared statement from Online Tech, “SSAE 16 provides better alignment with international standards and requires a written assertion from management on the design and operating effectiveness of the data center controls.”

Online Tech also complies with SOC 2 & SOC 3 (Service Organization Control 2 & 3). The standards provide a benchmark by which two data center audits can be compared against each other for security, availability and process integrity, Online Tech asserts.

Talkin’ Cloud will be watching to see if more cloud service providers embrace SSAE 16, SOC 2 and SOC 3.


<Return to section navigation list>

Cloud Computing Events

Jim O’Neil reported geographic expansion of the contest in his RockPaperAzure Hits the Big Time! post of 6/21/2011:

My colleagues and I launched the RockPaperAzure competition last spring as a fun way for developers to gain some familiarity with Windows Azure – and win a few prizes to boot! Well, “RockPaperAzure 2.0” – namely a Grand Tournament - just hit, and it’s huge! We’re opening up the competition to six additional countries and offering $8500 in prizes, with the overall winner pocketing $5000 (USD)!

So whether for you it’s:

you’re welcome to join the competition. And if you’re not a legal resident of one of those countries, we’re sorry we couldn’t accommodate you in the Grand Tournament, but you can still compete for bragging rights in the open rounds!

It’s easy and FREE to play – check out the website http://rockpaperazure.com for all the details, but be sure to get in before the deadline of July 13th!

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Jeff Barr (@jeffbarr) reported Now Available: Amazon EC2 Running Red Hat Enterprise Linux in a 6/21/2011 post:

We continue to add options to AWS in order to give our customers the freedom and flexibility that they need to build and run applications of all different shapes and sizes.

I'm pleased to be able to tell you that you can now run Red Hat Enterprise Linux on EC2 with support from Amazon and Red Hat. You can now launch 32 and 64-bit instances in every AWS Region and on every EC2 instance type. You can choose between versions 5.5, 5.6, 6.0, and 6.1 of RHEL. You can also launch AMIs right from AWS Console's Quick Start Wizard. Consult the full list of AMIs to get started.

If you are a member of Red Hat's Cloud Access program you can use your existing licenses. Otherwise, you can run RHEL on On-Demand instances now, with Spot and Reserved Instances planned for the future. Pricing for On-Demand instances is available here. All customers running RHEL on EC2 have access to an update repository operated by Red Hat. AWS Premium Support customers can contact AWS to obtain support from Amazon and Red Hat.

Jo Maitland (@JoMaitlandTT) posted Cloud computing winners and losers: Our audience says… to SearchCloudComputing.com on 6/20/2011:

Picking the right cloud computing vendor isn't easy, especially when it's unclear what you need, how all the pieces fit together and which suppliers are in it for the long term. Still, IT pros are forging ahead; our latest survey reveals which providers are top of their minds today.

Over 500 respondents, from IT organizations large and small across North America, took the survey from January through March 2011. They were asked about a variety of topics that impact decisions around cloud computing, including security, storage, networking, virtualization, interoperability, sourcing, hiring and TCO. In summary, all of these present new challenges that affect each company differently depending on their environment.

Many respondents said that the "one-size-fits-all" commodity nature of cloud products and services is tough to integrate with legacy IT environments that have been kicking around for decades.

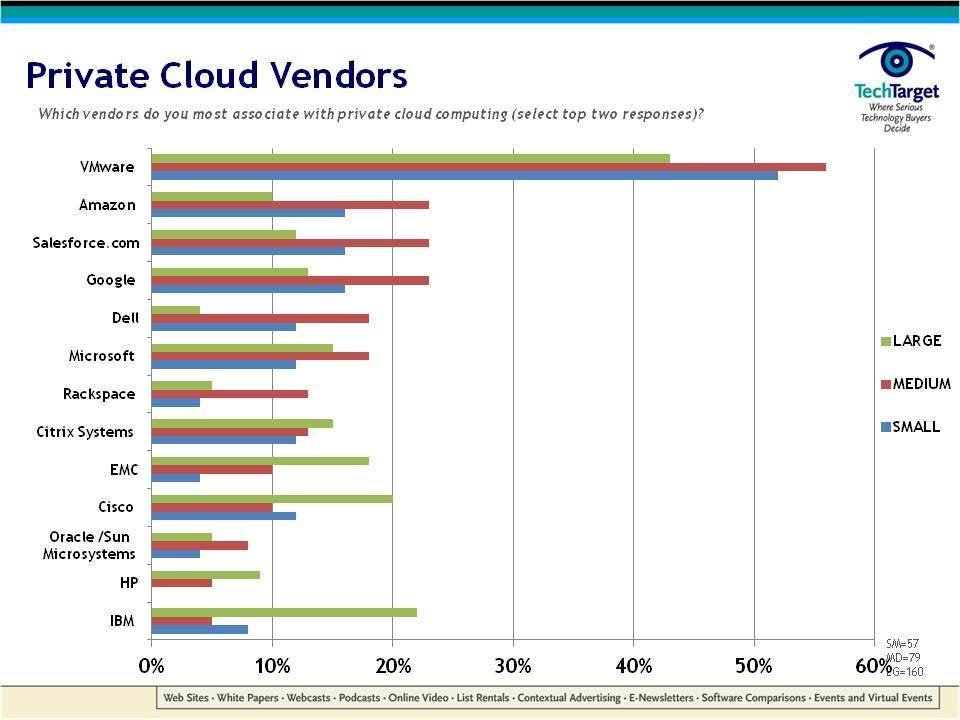

Figure 1: Private cloud vendors

Almost 70% of respondents said they had some portion of a private cloud infrastructure in place today. But as they push ahead with private cloud in an effort to stave off the scary world of public cloud, they run the risk of creating yet another silo of infrastructure that will increase management and cost headaches down the road.

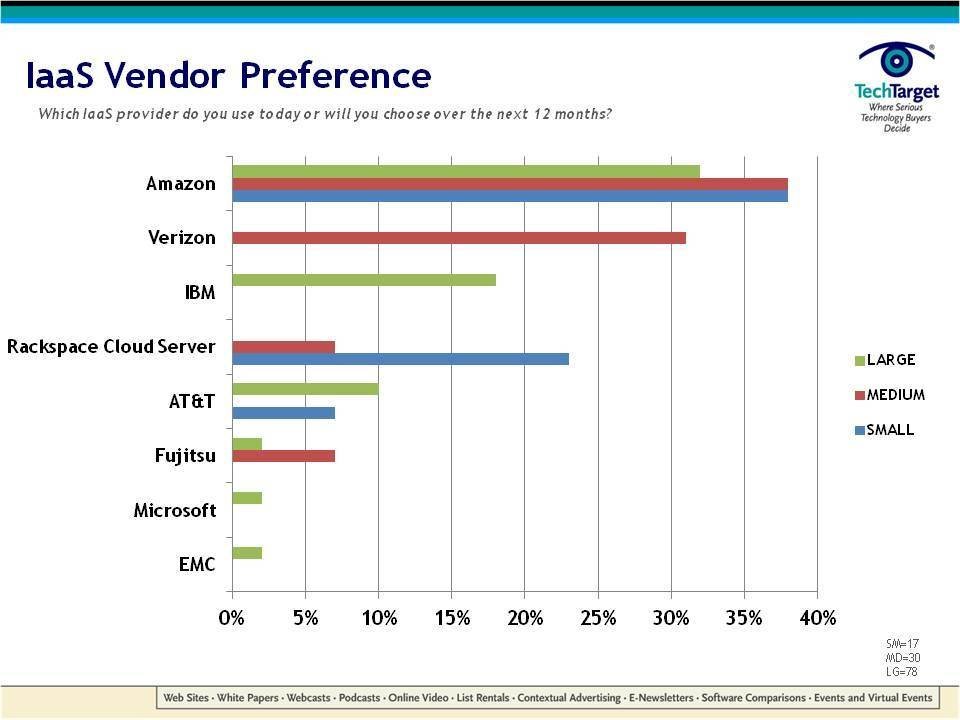

Figure 2: IaaS vendor preference

Flexibility and cost were the top reasons cited for using Infrastructure as a Service (IaaS), and the primary use cases were backup and disaster recovery, application test/dev and Web hosting. Almost 30% of large companies (those with over 1,000 employees) said they have aggressive plans to increase their use of IaaS over the next 12 months.

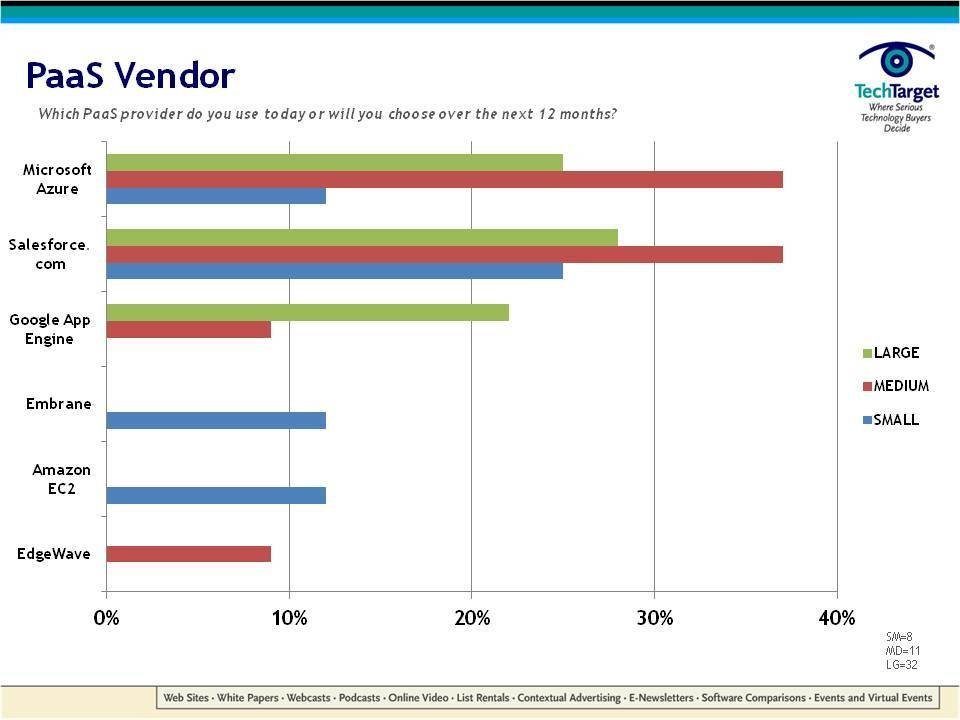

Figure 3: PaaS vendor preference

Fewer infrastructure management headaches and a faster way to run pilot projects were the main drivers for Platform as a Service (PaaS). This market is heating up fast, with new entrants from VMware (Cloud Foundry) and Red Hat (OpenShift) that will most likely show up in our next survey.

Read more: Jo continues with lists of top vendors in the preceding three categories.

Most analysts classify Salesforce.com as CRM SaaS. It’s interesting to see Windows Azure’s lead over Google App Engine, despite what Tom Henderson and Brendan Allen claim are its shortcomings in their First Look at Windows Azure in the Windows Azure Infrastructure and DevOps section above.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

Damon Edwards (@damonedwards) reported Kohsuke Kawaguchi presents Jenkins to Silicon Valley DevOps Meetup (Video) in a 6/20/2011 post to the dev2ops: delivering change blog:

Kohsuke Kawaguchi stopped by the Silicon Valley DevOps Meetup on June 7, 2011 to give an in-depth tour of Jenkins. Kohsuke is the founder of both the Hudson and the Jenkins open source projects and now works for CloudBees.

Kohsuke's presentation covered not only the Jenkins basics but also more advanced topics like distributed builds in the cloud, the matrix project, and build promotion. Video and slides are below.

Once again, thanks to Box.net for hosting the event!

I thought LinkedIn hosted the event at their Mountain View, CA headquarters.

Chris Czarnecki asked What has Happened to Google App Engine for Business? in a 6/20/2011 post to the Learning Tree blog:

Last year Google announced App Engine for Business. This is the standard App Engine PaaS but enhanced so that businesses can host their internal applications on App Engine. To facilitate this, Google offered extra features such as:

- SSL on custom domains

- SQL service

- Service Level Agreements

It seems that GAE for Business has never moved beyond beta stage, with Google announcing that no new applications for accounts are being accepted but that the business features will be available in the next release of the standard GAE. This is both good news and bad. Good in that users of GAE will have improved levels of service and services. But bad because GAE for Business was chargeable, so the chances are the services will be payable when they become part of standard GAE.

Regular users of GAE will have seen the resource quotas available for free reduced over the last few months, so it will be interesting to see what happens with the next release of GAE. There is no doubt that GAE is an excellent environment so payment will not be a barrier to most serious users. However, the changing features and pricing model of Google does raise a more general question for adopters of Cloud Computing. If they choose to move their IT to the cloud, how vulnerable does that make them to being controlled by the cloud vendor when it comes to pricing? Part of any Cloud adoption strategy must be an analysis of the risk exposure an organisation will have to the selected cloud provider, and then devising a solution that reduces that risk to an acceptable level for the business. This is not an easy task and requires an in-depth knowledge of Cloud Computing.

Learning Tree’s Cloud Computing Course considers scenarios such as the one above and introduces strategies for minimising the associated risk. If you are considering using Cloud Computing for your organisation, why not consider attending the course – it will provide you with the information you need to make informed decisions appropriate for your business.

The way I read the tea leaves, Google App Engine will inherit most of the Business version’s purported new features when it leaves preview status later this year.

<Return to section navigation list>
