Windows Azure and Cloud Computing Posts for 6/28/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Martin Ingvar Kofoed Jensen (@IngvarKofoed) reported ContentMD5 not working in the Storage Client library in a 6/28/2011 post:

This is a follow-up to my other blob post about the ContentMD5 blob property (Why is MD5 not part of ListBlobs result?). It is motivated by the comment from Gaurav Mantri stating that the MD5 is actually in the REST response; it is just not populated by the Storage Client library.

So I did some more testing and found out that the MD5 is calculated and set by blob storage when you upload the blob. When I ran a simple test of creating a new container, uploading a simple 'Hello World' txt file and doing a ListBlobs through the REST API, I got the XML shown below. Note that I did not populate the ContentMD5 when I uploaded the file! If I do a ListBlobs method call on a CloudBlobContainer instance, the ContentMD5 is NOT populated (read more about this in my other blog post). So my best guess is that this is an error in the API of some sort.

It would be very nice if this were fixed, so we did not have to compute the MD5 hash by hand and add it to the blob's metadata. It would make code like the one I wrote for the local folder to/from blob storage synchronization much simpler (read it here).

    <EnumerationResults ContainerName="http://myblobtest.blob.core.windows.net/md5test">
      <Blobs>
        <Blob>
          <Name>MyTestBlob.xml</Name>
          <Url>http://myblobtest.blob.core.windows.net/md5test/MyTestBlob.xml</Url>
          <Properties>
            <Last-Modified>Tue, 28 Jun 2011 16:20:30 GMT</Last-Modified>
            <Etag>0x8CE038BC293943D</Etag>
            <Content-Length>12</Content-Length>
            <Content-Type>text/xml</Content-Type>
            <Content-Encoding/>
            <Content-Language/>
            <Content-MD5>LL0183lu9Ms2aq/eo4TesA==</Content-MD5>
            <Cache-Control/>
            <BlobType>BlockBlob</BlobType>
            <LeaseStatus>unlocked</LeaseStatus>
          </Properties>
        </Blob>
      </Blobs>
      <NextMarker/>
    </EnumerationResults>

Here is the code I did for doing the REST call:

    // Requires the System, System.IO, System.Net and
    // System.Security.Cryptography.X509Certificates namespaces.
    string requestUrl = "http://myblobtest.blob.core.windows.net/md5test?restype=container&comp=list";
    string certificatePath = "<path to certificate>"; // Placeholder: path to certificate

    // Build the ListBlobs request against the container.
    HttpWebRequest httpWebRequest = (HttpWebRequest)HttpWebRequest.Create(new Uri(requestUrl));
    httpWebRequest.ClientCertificates.Add(new X509Certificate2(certificatePath));
    httpWebRequest.Headers.Add("x-ms-version", "2009-09-19");
    httpWebRequest.ContentType = "application/xml";
    httpWebRequest.ContentLength = 0;
    httpWebRequest.Method = "GET";

    // Read the raw XML response and print it to the console.
    using (HttpWebResponse httpWebResponse = (HttpWebResponse)httpWebRequest.GetResponse())
    {
        using (Stream responseStream = httpWebResponse.GetResponseStream())
        {
            using (StreamReader reader = new StreamReader(responseStream))
            {
                Console.WriteLine(reader.ReadToEnd());
            }
        }
    }
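As a rough illustration of the two pieces discussed in this post, the sketch below reads the Content-MD5 values out of a ListBlobs XML response like the one above and computes a Base64-encoded MD5 digest locally, which is the form a Content-MD5 value takes. The class and method names (`BlobMd5Helper`, `ComputeContentMd5`, `ReadContentMd5`) are invented for this example and are not part of the Storage Client library; it is only one possible way to do the comparison.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Xml.Linq;

// Hypothetical helper, not part of the Storage Client library.
public static class BlobMd5Helper
{
    // Computes the Base64-encoded MD5 digest of the given content,
    // the same representation used by the Content-MD5 element.
    public static string ComputeContentMd5(byte[] content)
    {
        using (MD5 md5 = MD5.Create())
        {
            return Convert.ToBase64String(md5.ComputeHash(content));
        }
    }

    // Maps blob name to Content-MD5 for every Blob element in a
    // ListBlobs (EnumerationResults) XML document.
    public static Dictionary<string, string> ReadContentMd5(string listBlobsXml)
    {
        XDocument document = XDocument.Parse(listBlobsXml);
        return document
            .Descendants("Blob")
            .ToDictionary(
                blob => (string)blob.Element("Name"),
                blob => (string)blob.Element("Properties").Element("Content-MD5"));
    }
}
```

A synchronization routine could then compare `ComputeContentMd5(File.ReadAllBytes(path))` with the value returned for the matching blob name and skip the upload when the two strings are equal.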

<Return to section navigation list>

SQL Azure Database and Reporting

Richard Mitchell (@richard_j_m) explained SQL Azure Down - how I got labs.red-gate.com back up in a 6/23/2011 post to Red Gate Software’s Simple-Talk blog:

11:06am - Currently SQL Azure in Western Europe is down

How do I know this? Well, on labs.red-gate.com (my Azure website) I have ELMAH installed, which started sending me e-mails about connection failures from 10:40am when trying to get the dynamic content from the database (I was too busy playing with my new Eee Pad Transformer to notice immediately). Going to the website confirmed the failure, and trying to connect to SQL Azure from SQL Server Management Studio and the Management [portal] confirmed bad things were going on.

11:15am - Need to get the site back up

Luckily, thanks to SQL Azure Backup, I have a local database with contents from my SQL Azure database on my local machine. So I now need to get this to be publicly visible, change the connection string in my website and then we're all good (making a lot of assumptions along the way).

My options to get the database live are along these lines:

- Punch a hole in the firewall straight at my computer

- Use an already internet visible SQL Server and put the database there

- Create a new internet visible SQL Server and likewise restore the database

- Create another SQL Azure Server and put the database there

Not everything is going smoothly now. There's a little panic setting in. Punching a hole in the firewall to my computer is a bad idea. We don't have any network visible SQL Servers and changing the firewall rules on anything is going to take too long.

Attempting to create another SQL Azure Server is failing - seems to be claiming I don't have [a subscription]:

We also have monitor.red-gate.com running on AWS - does that have a SQL Server handy for me? - No, although we do have a SQL Azure Server already setup and running in another datacentre. Great! A solution.

12:00(ish)pm Use SQL Compare/SQL Data Compare to restore data contents to Azure

Using SQL Compare and SQL Data Compare I start syncing the schema and data from my local RedGateLabs database to the new database I created in SQL Azure in North America. Whilst doing this I start changing the connection strings to point to the new server and run a local copy to test that the database has synced up properly. All seems good.

12:18pm Deploy site to staging - test if site works and switch to live

Start the publish process from Visual Studio and 12 minutes later we have a working labs.red-gate.com on staging using the SQL Azure Server in North America. Perform a swap to get staging live and we're done. It's back up thanks to a lot of luck and a little preparation.

12:25pm Publish this article

And...breathe...
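The manual connection-string switch described in this post could, in principle, be reduced to picking the first reachable database from an ordered list. The sketch below is only an assumption about how that might look; `ConnectionFailover` and `SelectFirstAvailable` are hypothetical names, and the probe is passed in as a delegate so that no particular data-access library is assumed.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper illustrating a primary/secondary fallback.
public static class ConnectionFailover
{
    // Returns the first connection string for which the probe succeeds,
    // or null when every candidate fails.
    public static string SelectFirstAvailable(
        IEnumerable<string> connectionStrings,
        Func<string, bool> canConnect)
    {
        foreach (string candidate in connectionStrings)
        {
            try
            {
                if (canConnect(candidate))
                {
                    return candidate;
                }
            }
            catch (Exception)
            {
                // Treat any probe failure as "server unavailable"
                // and move on to the next candidate.
            }
        }

        return null;
    }
}
```

In a real site the probe would typically open a connection with a short timeout and return true on success. The secondary database would still have to be kept in sync separately, as the post does with SQL Compare and SQL Data Compare.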


Marketplace DataMarket and OData

Maarten Balliauw (@maartenballiauw) explained Enabling conditional Basic HTTP authentication on a WCF OData service in a 6/28/2011 post:

Yes, a long title, but also something I was not able to find too easily using Google. Here’s the situation: for MyGet, we are implementing basic authentication to the OData feed serving available NuGet packages.

If you recall my post Using dynamic WCF service routes, you may have deduced that MyGet uses that technique to have one WCF OData service serving the feeds of all our users. It’s just convenient! Unless you want basic HTTP authentication for some feeds and not for others…

After doing some research, I thought the easiest way to resolve this was to use WCF interception. Convenient, but how would you go about this? And moreover: how to make it extensible so we can use this for other WCF OData (or WebApi) services in the future?

The solution to this was to create a message inspector (IDispatchMessageInspector). Here’s the implementation we created for MyGet: (disclaimer: this will only work for OData services and WebApi!)

    using System;
    using System.Security.Principal;
    using System.ServiceModel;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Dispatcher;
    using System.Text;
    using System.Web;

    public class ConditionalBasicAuthenticationMessageInspector : IDispatchMessageInspector
    {
        protected IBasicAuthenticationCondition Condition { get; private set; }
        protected IBasicAuthenticationProvider Provider { get; private set; }

        public ConditionalBasicAuthenticationMessageInspector(
            IBasicAuthenticationCondition condition, IBasicAuthenticationProvider provider)
        {
            Condition = condition;
            Provider = provider;
        }

        public object AfterReceiveRequest(ref Message request, IClientChannel channel, InstanceContext instanceContext)
        {
            // Determine HttpContextBase
            if (HttpContext.Current == null)
            {
                return null;
            }
            HttpContextBase httpContext = new HttpContextWrapper(HttpContext.Current);

            // Is basic authentication required?
            if (Condition.Evaluate(httpContext))
            {
                // Extract credentials
                string[] credentials = ExtractCredentials(request);

                // Are credentials present? If so, is the user authenticated?
                if (credentials.Length > 0 && Provider.Authenticate(httpContext, credentials[0], credentials[1]))
                {
                    httpContext.User = new GenericPrincipal(
                        new GenericIdentity(credentials[0]), new string[] { });
                    return null;
                }

                // Require authentication
                HttpContext.Current.Response.StatusCode = 401;
                HttpContext.Current.Response.StatusDescription = "Unauthorized";
                HttpContext.Current.Response.Headers.Add("WWW-Authenticate", string.Format("Basic realm=\"{0}\"", Provider.Realm));
                HttpContext.Current.Response.End();
            }

            return null;
        }

        public void BeforeSendReply(ref Message reply, object correlationState)
        {
            // Noop
        }

        private string[] ExtractCredentials(Message requestMessage)
        {
            HttpRequestMessageProperty request = (HttpRequestMessageProperty)requestMessage.Properties[HttpRequestMessageProperty.Name];

            string authHeader = request.Headers["Authorization"];

            // Require the trailing space so that Substring(6) cannot run past the end.
            if (authHeader != null && authHeader.StartsWith("Basic "))
            {
                string encodedUserPass = authHeader.Substring(6).Trim();

                Encoding encoding = Encoding.GetEncoding("iso-8859-1");
                string userPass = encoding.GetString(Convert.FromBase64String(encodedUserPass));
                int separator = userPass.IndexOf(':');

                // No "user:password" separator: treat as missing credentials.
                if (separator < 0)
                {
                    return new string[] { };
                }

                string[] credentials = new string[2];
                credentials[0] = userPass.Substring(0, separator);
                credentials[1] = userPass.Substring(separator + 1);

                return credentials;
            }

            return new string[] { };
        }
    }

Our ConditionalBasicAuthenticationMessageInspector implements a WCF message inspector that, once a request has been received, checks the HTTP authentication headers to check for a basic username/password. One extra there: since we wanted conditional authentication, we have also implemented an IBasicAuthenticationCondition interface which we have to implement. This interface decides whether to invoke authentication or not. The authentication itself is done by calling into our IBasicAuthenticationProvider. Implementations of these can be found on our CodePlex site.
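To make the header handling easier to follow in isolation, here is a minimal sketch of the Basic scheme itself, separate from WCF: the header value is the word `Basic`, a space, and the Base64 form of `user:password`. The `BasicAuthHeader` class and its method names are assumptions made for this illustration and do not come from the MyGet code; the ISO-8859-1 encoding mirrors the choice made in the inspector above.

```csharp
using System;
using System.Text;

// Hypothetical stand-alone helper; not part of the MyGet sources.
public static class BasicAuthHeader
{
    private static readonly Encoding Latin1 = Encoding.GetEncoding("iso-8859-1");

    // Builds the value of an Authorization header for the Basic scheme.
    public static string Create(string userName, string password)
    {
        string userPass = userName + ":" + password;
        return "Basic " + Convert.ToBase64String(Latin1.GetBytes(userPass));
    }

    // Parses an Authorization header value; returns false when the header
    // is missing, uses another scheme, or is not valid Base64 "user:password".
    public static bool TryParse(string authHeader, out string userName, out string password)
    {
        userName = null;
        password = null;

        if (authHeader == null ||
            !authHeader.StartsWith("Basic ", StringComparison.OrdinalIgnoreCase))
        {
            return false;
        }

        string userPass;
        try
        {
            userPass = Latin1.GetString(
                Convert.FromBase64String(authHeader.Substring(6).Trim()));
        }
        catch (FormatException)
        {
            return false;
        }

        // Only the first colon separates user name and password.
        int separator = userPass.IndexOf(':');
        if (separator < 0)
        {
            return false;
        }

        userName = userPass.Substring(0, separator);
        password = userPass.Substring(separator + 1);
        return true;
    }
}
```

Because only the first colon is treated as the separator, a password may itself contain colons, while a user name may not.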

If you are getting optimistic: great! But how do you apply this message inspector to a WCF service? No worries: you can create a behavior for that. All you have to do is create a new Attribute and implement IServiceBehavior. In this implementation, you can register the ConditionalBasicAuthenticationMessageInspector on the service endpoint. Here’s the implementation:

    using System;
    using System.Collections.ObjectModel;
    using System.ServiceModel;
    using System.ServiceModel.Channels;
    using System.ServiceModel.Description;
    using System.ServiceModel.Dispatcher;

    [AttributeUsage(AttributeTargets.Class)]
    public class ConditionalBasicAuthenticationInspectionBehaviorAttribute
        : Attribute, IServiceBehavior
    {
        protected IBasicAuthenticationCondition Condition { get; private set; }
        protected IBasicAuthenticationProvider Provider { get; private set; }

        public ConditionalBasicAuthenticationInspectionBehaviorAttribute(
            IBasicAuthenticationCondition condition, IBasicAuthenticationProvider provider)
        {
            Condition = condition;
            Provider = provider;
        }

        // Used by the attribute syntax: both types need a public parameterless constructor.
        public ConditionalBasicAuthenticationInspectionBehaviorAttribute(
            Type condition, Type provider)
        {
            Condition = Activator.CreateInstance(condition) as IBasicAuthenticationCondition;
            Provider = Activator.CreateInstance(provider) as IBasicAuthenticationProvider;
        }

        public void AddBindingParameters(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase, Collection<ServiceEndpoint> endpoints, BindingParameterCollection bindingParameters)
        {
            // Noop
        }

        public void ApplyDispatchBehavior(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase)
        {
            // Register the inspector on every endpoint of every channel dispatcher.
            foreach (ChannelDispatcher channelDispatcher in serviceHostBase.ChannelDispatchers)
            {
                foreach (EndpointDispatcher endpointDispatcher in channelDispatcher.Endpoints)
                {
                    endpointDispatcher.DispatchRuntime.MessageInspectors.Add(
                        new ConditionalBasicAuthenticationMessageInspector(Condition, Provider));
                }
            }
        }

        public void Validate(ServiceDescription serviceDescription, ServiceHostBase serviceHostBase)
        {
            // Noop
        }
    }

One step to do: apply this service behavior to our OData service. Easy! Just add an attribute to the service class and you’re done! Note that we specify the IBasicAuthenticationCondition and IBasicAuthenticationProvider on the attribute.

    [ConditionalBasicAuthenticationInspectionBehavior(
        typeof(MyGetBasicAuthenticationCondition),
        typeof(MyGetBasicAuthenticationProvider))]
    public class PackageFeedHandler
        : DataService<PackageEntities>,
          IDataServiceStreamProvider

Azret Botash (@Ba3e64) delivered an OData Internals & Implementing Custom Providers presentation via the InfoQ blog on 6/28/2011:

Summary

Azret Botash talks about OData’s internals, especially URI conventions, and demos the creation of a custom provider.

Bio

Azret Botash works at DevExpress where he focuses on emerging technologies to be included in the future versions of the products.

About the conference

Code PaLOUsa is a conference designed to cover all aspects of software development regardless of technology stack. It has sessions revolving around Microsoft, Java, and other development platforms, along with sessions at higher levels that are platform agnostic. The conference schedule will feature presentations from well-known professionals in the software development community.

Mitch Milam (@mitchmilam) posted CRM 2011: Exploring the Developer Resources Page on 6/28/2011:

CRM 2011 includes a Developer Resources page which provides additional information and tools for the CRM developer.

You find the page by navigating to Settings, Customizations, Developer Resources, where the following page is displayed:

So, how can I use this information?

Organization Unique Name

The Unique Name for an organization is the internal name or the database name that was assigned when you created the organization. This name is sometimes required by applications that connect to your CRM organization to perform operations.

Note: This screen shot is from one of my CRM Online organizations. The Organization Unique Name for an on-premise installation will be more human-readable.

Windows Azure AppFabric Issuer Certificate

If you will be performing any work using the Windows Azure AppFabric, you’ll need to install a certificate that is used during the authentication process.

Developer Center

In the CRM Development Center you can find links to tools, training materials, blogs, and the community forums. In a nutshell, it is the central location to find all things CRM-developer-related.

Discovery Service Endpoint

If you will be working with multiple CRM organizations, you’ll need to establish a connection to the CRM Discovery service which will return to you a list of organizations that your security credentials have access to. More information may be found here.

Organizational Service Endpoint

The Organizational Service is the main API for interacting programmatically with CRM 2011. This is what you connect to in order to perform any create, read, update, delete, or other operation against the CRM data. More information may be found here.

Organizational Data Service

The Organization Data Service is similar to the Organizational Service, but it is restricted to Create, Read, Update, and Delete operations only. More information may be found here.

As noted in MSDN’s Use the REST Endpoint for Web Resources topic:

Microsoft Dynamics CRM 2011 uses the Windows Communication Foundation (WCF) Data Services framework to provide an Open Data Protocol (OData) endpoint that is a REST-based data service. This endpoint is called the Organization Data Service. In Microsoft Dynamics CRM, the service root URI is:

[Your Organization Root URL]/xrmservices/2011/organizationdata.svc

OData sends and receives data by using either ATOM or JavaScript Object Notation (JSON). ATOM is an XML-based format usually used for RSS feeds. JSON is a text format that allows for serialization of JavaScript objects.

To provide a consistent set of URIs that corresponds to the entities used in Microsoft Dynamics CRM, an Entity Data Model (EDM) organizes the data in the form of records of "entity types" and the associations between them.

OData Entity Data Model (EDM)

The Microsoft Dynamics CRM EDM is described in an OData Service Metadata document available at

[Your Organization Root URL]/xrmservices/2011/organizationdata.svc/$metadata

This XML document uses conceptual schema definition language (CSDL) to describe the data available. You will download this document and use it to generate typed classes when you use managed code with Silverlight or as a reference for available objects when you use JScript.

Limitations

The REST endpoint provides an alternative to the WCF SOAP endpoint, but there are currently some limitations.

- Only Create, Retrieve, Update and Delete actions can be performed on entity records

- Messages that require the Execute method cannot be performed.

- Associate and disassociate actions can be performed by using navigation properties.

- Authentication is only possible within the application

- Use of the REST endpoint is limited to JScript libraries or Silverlight Web resources.

- The OData protocol is not fully implemented. Some system query options are not available. For more information, see OData System Query Options Using the REST Endpoint.

- You cannot use late binding with managed code with Silverlight. You will typically use WCF Data Services Client Data Service classes while programming by using managed code for the REST endpoint with Silverlight. These classes allow for early binding so that you get strongly typed classes at design time. The only entities available to you are those defined in the system when the classes were generated. This means that you cannot use late binding to work with custom entities that were not included in the WCF Data Services Client Data Service classes when they were generated.
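To show how the service root URI quoted above combines with OData system query options, here is a small sketch that builds request URIs as strings. The `CrmODataUri` class, the `AccountSet` entity set, the attribute names and the organization URL used in the usage note are illustrative assumptions; which query options a given deployment accepts should be checked against the MSDN topic mentioned in the limitations.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper for composing Organization Data Service URIs.
public static class CrmODataUri
{
    // Path taken from the MSDN excerpt quoted above.
    private const string ServicePath = "/xrmservices/2011/organizationdata.svc";

    // URI of the service metadata (CSDL) document.
    public static string Metadata(string organizationRootUrl)
    {
        return organizationRootUrl.TrimEnd('/') + ServicePath + "/$metadata";
    }

    // URI for querying an entity set with optional $select, $filter and $top.
    public static string Query(
        string organizationRootUrl, string entitySet,
        string select, string filter, int? top)
    {
        List<string> options = new List<string>();
        if (!string.IsNullOrEmpty(select))
        {
            options.Add("$select=" + select);
        }
        if (!string.IsNullOrEmpty(filter))
        {
            // Filter expressions contain spaces, so percent-encode them.
            options.Add("$filter=" + Uri.EscapeDataString(filter));
        }
        if (top.HasValue)
        {
            options.Add("$top=" + top.Value.ToString(CultureInfo.InvariantCulture));
        }

        string uri = organizationRootUrl.TrimEnd('/') + ServicePath + "/" + entitySet;
        return options.Count == 0
            ? uri
            : uri + "?" + string.Join("&", options.ToArray());
    }
}
```

For example, `CrmODataUri.Query("https://contoso.crm.dynamics.com", "AccountSet", "Name,Telephone1", "NumberOfEmployees gt 100", 5)` yields a URI ending in `AccountSet?$select=Name,Telephone1&$filter=NumberOfEmployees%20gt%20100&$top=5`. Given the authentication limitation listed above, such a URI would be requested from inside a web resource rather than from an external client.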


Windows Azure AppFabric: Access Control, WIF and Service Bus

Richard Seroter (@rseroter) explained Sending Messages from Salesforce.com to BizTalk Server Through Windows Azure AppFabric in a 6/27/2011 post:

In a very short time, my latest book (actually Kent Weare’s book) will be released. One of my chapters covers techniques for integrating BizTalk Server and Salesforce.com. I recently demonstrated a few of these techniques for the BizTalk User Group Sweden, and I thought I’d briefly cover one of the key scenarios here. To be sure, this is only a small overview of the pattern, and hopefully it’s enough to get across the main idea, and maybe even encourage you to read the book to learn all the gory details!

I’m bored with the idea that we can only get data from enterprise applications by polling them. I’ve written about how to poll Salesforce.com from BizTalk, and the topic has been covered quite well by others like Steef-Jan Wiggers and Synthesis Consulting. While polling has its place, what if I want my application to push a notification to me? This capability is one of my favorite features of Salesforce.com. Through the use of Outbound Messaging, we can configure Salesforce.com to call any HTTP endpoint when a user-specified scenario occurs. For instance, every time a contact’s address changes, Salesforce.com could send a message out with whichever data fields we choose. Naturally this requires a public-facing web service that Salesforce.com can access. Instead of exposing a BizTalk Server to the public internet, we can use Azure AppFabric to create a proxy that relays traffic to the internal network. In this blog post, I’ll show you that Salesforce.com Outbound Messages can be sent through the AppFabric Service Bus to an on-premises BizTalk Server. I haven’t seen anyone try integrating Salesforce.com with Azure AppFabric yet, so hopefully this is the start of many more interesting examples.

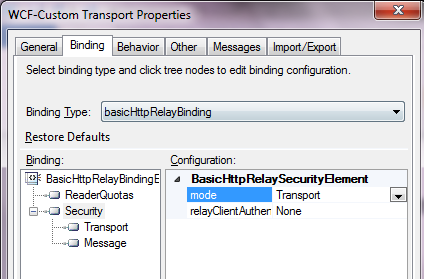

First, a critical point. Salesforce.com Outbound Messaging is awesome, but it’s fairly restrictive with regards to changing the transport details. That is, you plug in a URL and have no control over the HTTP call itself. This means that you cannot inject Azure AppFabric Access Control tokens into a header. So, Salesforce.com Outbound Messages can only point to an Azure AppFabric service that has its RelayClientAuthenticationType set to “None” (vs. RelayAccessToken). This means that we have to validate the caller down at the BizTalk layer. While Salesforce.com Outbound Messages are sent with a client certificate, it does not get passed down to the BizTalk Server as the AppFabric Service Bus swallows certificates before relaying the message on premises. Therefore, we’ll get a little creative in authenticating the Salesforce.com caller to BizTalk Server. I solved this by adding a token to the Outbound Message payload and using a WCF behavior in BizTalk to match it with the expected value. See the book chapter for more.

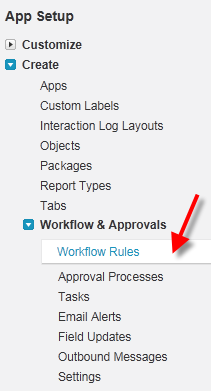
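The post leaves the token check to the book chapter, so the following is only a guess at the general shape of such a check, not the author's WCF behavior: it looks for a token element anywhere in the message payload and compares its value with the expected one. The class name, the element name passed in, and the sample token are all hypothetical.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical validator; the real solution is a WCF behavior in BizTalk.
public static class OutboundMessageTokenValidator
{
    // Returns true when the payload contains an element with the given
    // local name whose value equals the expected token.
    public static bool HasExpectedToken(
        string payloadXml, string tokenElementName, string expectedToken)
    {
        if (expectedToken == null)
        {
            return false;
        }

        XDocument payload = XDocument.Parse(payloadXml);

        // Match on local name so the example does not depend on a namespace.
        XElement tokenElement = payload
            .Descendants()
            .FirstOrDefault(e => e.Name.LocalName == tokenElementName);

        return tokenElement != null
            && FixedTimeEquals(tokenElement.Value, expectedToken);
    }

    // Compares two strings without stopping at the first difference.
    private static bool FixedTimeEquals(string left, string right)
    {
        if (left.Length != right.Length)
        {
            return false;
        }

        int difference = 0;
        for (int i = 0; i < left.Length; i++)
        {
            difference |= left[i] ^ right[i];
        }

        return difference == 0;
    }
}
```

A shared token in the payload is only as safe as the channel carrying it, so a check like this would sit behind an HTTPS Service Bus endpoint.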

Let’s get going. Within the Salesforce.com administrative interface, I created a new Workflow Rule. This rule checks to see if an Account’s billing address changed.

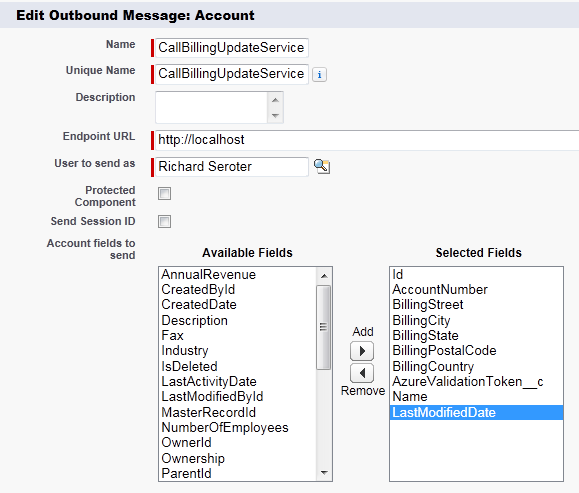

The rule has a New Outbound Message action which doesn’t yet have an Endpoint address but has all the shared fields identified.

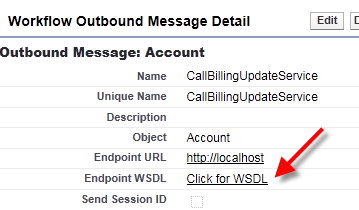

When we’re done with the configuration, we can save the WSDL that complies with the above definition.

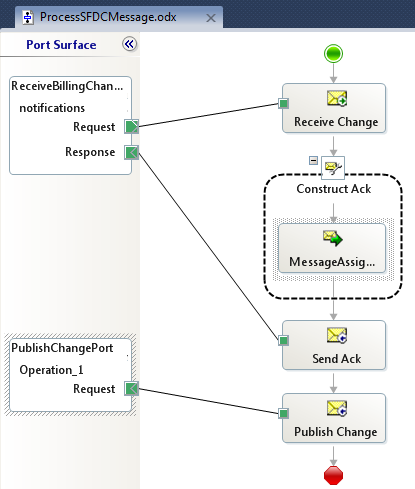

On the BizTalk side, I ran the Add Generated Items wizard and consumed the above WSDL. I then built an orchestration that used the WSDL-generated port on the RECEIVE side in order to expose an orchestration that matched the WSDL provided by Salesforce.com. Why an orchestration? When Salesforce.com sends an Outbound Message, it expects a single acknowledgement to confirm receipt.

After deploying the application, I created a receive location where I hosted the Azure AppFabric service directly in BizTalk Server.

After starting the receive location (whose port was tied to my orchestration), I retrieved the Service Bus address and plugged it back into my Salesforce.com Outbound Message’s Endpoint URL. Once I change the billing address of any Account in Salesforce.com, the Outbound Message is invoked and a message is sent from Salesforce.com to Azure AppFabric and relayed to BizTalk Server.

I think that this is a compelling pattern. There are all sorts of variations that we can come up with. For instance, you could choose to send only an Account ID to BizTalk and then have BizTalk poll Salesforce.com for the full Account details. This could be helpful if you had a high volume of Outbound Messages and didn’t want to worry about ordering (since each event simply tells BizTalk to pull the latest details).

If you’re in the Netherlands this week, don’t miss Steef-Jan Wiggers who will be demonstrating this scenario for the local user group. Or, for the price of one plane ticket from the U.S. to Amsterdam, you can buy 25 copies of the book!

The book’s Amazon page reports “Out of print – Limited availability.”


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan explained Windows Azure Connect: What if Integrator.log file is growing fast? in a 6/28/2011 post:

When you have a VM role deployed with the Windows Azure Connect endpoint software installed, you may see the Integrator.log file grow very fast. This log is located at:

C:\Program Files\Windows Azure Connect\Endpoint\Logs\Integrator.log

When you deactivate the Windows Azure Connect service, Integrator.log stops growing; otherwise it grows very fast, about 1 MB every 5 seconds.

This problem has been fixed in the latest Windows Azure Connect modules, so if you redeploy your role, it should pick up the new Windows Azure Connect integrator, which includes a fix for the problem described above.
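To check whether a role is affected, one option is to sample the size of the log file twice and compute the growth rate. The sketch below is a generic file-size check under that assumption; `LogGrowthMonitor` and its methods are hypothetical names and have nothing to do with the Windows Azure Connect tooling.

```csharp
using System;
using System.IO;
using System.Threading;

// Hypothetical helper for estimating how fast a log file grows.
public static class LogGrowthMonitor
{
    // Growth rate in bytes per second between two size samples.
    public static double BytesPerSecond(long sizeBefore, long sizeAfter, TimeSpan elapsed)
    {
        return (sizeAfter - sizeBefore) / elapsed.TotalSeconds;
    }

    // Samples the file size, waits for the interval, samples again
    // and returns the observed growth rate.
    public static double Measure(string path, TimeSpan interval)
    {
        long before = new FileInfo(path).Length;
        Thread.Sleep(interval);
        long after = new FileInfo(path).Length;
        return BytesPerSecond(before, after, interval);
    }
}
```

The rate reported in the post, about 1 MB every 5 seconds, corresponds to roughly 210,000 bytes per second, so a measured value in that range would suggest the role is still running the older integrator.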


Live Windows Azure Apps, APIs, Tools and Test Harnesses

The Windows Azure Team posted a Real World Windows Azure: Interview with Grzegorz Skowron-Moszkowicz of Transparencia Sp. z o.o case study on 6/28/2011:

MSDN: Where did the idea for nioovo come from?

Skowron-Moszkowicz: In July 2010, Polish Internet company GPC Sp. z o.o. did a study that found that the average paper-based address book contains 137 entries, while electronic versions average 161 entries and only 4.7 percent of those entries contain complete contact information. The study concluded that the primary reason for incomplete contact data is the time-consuming, manual nature of entering and updating information.

To address these challenges, GPC enlisted us to create and maintain a global web portal, called nioovo, which customers could use to update their contact information and then automatically share those updates with family, friends, and colleagues. GPC wanted us to create a truly global service that should work just as well in Poland, Germany, and the United States, as in India, China, and New Zealand.

MSDN: Why did you choose to build on Windows Azure?

Skowron-Moszkowicz: At first we thought that none of the available solutions could fulfill our availability, capacity, cost, and security requirements. But after taking a closer look at Microsoft cloud services, we discovered that Windows Azure perfectly fits our needs. We chose Windows Azure because it is the only solution that we trust to provide fast, reliable, and highly secure operation of our global web portal, at costs that even small companies can afford.

MSDN: What are the key benefits of using Windows Azure for nioovo?

Skowron-Moszkowicz: First of all, this kind of agility and scalability would simply not be possible with a traditional IT solution. For GPC—a young Internet company with ambitious plans for the nioovo web portal—unlimited scalability is crucial to its success. And the global network of Microsoft data centers ensures high availability and virtually guarantees that there are no delays or problems with efficiency.

The fact that there are no upfront infrastructure costs is also a great benefit. We carefully monitor usage to determine the best pricing model and at first, we decided to use the pay-as-you-go model—low load meant lower costs. As we increase our load, fixed monthly payments may turn out to be more cost-effective. As our needs change, so do our pricing options.

Finally, because we’ve built nioovo using Windows Azure, we can offer customers a proper level of security, which is very important for the company’s success. It’s good that we don't have to worry about security, since we already have a lot of responsibilities.

MSDN: What are the key benefits of developing on the Windows Azure platform?

Skowron-Moszkowicz: Windows Azure is a perfect tool for creating IT solutions; developers can use the offline development environment to simulate the Windows Azure cloud on their PCs and test applications before they’re deployed. Plus, Windows Azure offers unlimited scalability, great efficiency, and enormous storage space.

MSDN: Is nioovo now available?

Skowron-Moszkowicz: We launched nioovo after six months of development. The interface, which is accessible through the Internet and 120 different mobile devices supported by mobile telecommunications provider Orange Polska, is currently available in eight languages and contact data is compatible with 138 languages. Now customers click through four simple steps on the web portal—which takes less than one minute—and their complete and updated information automatically reaches the people they want to be in touch with.

Click here to read the full case study.

To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Bruce Kyle reported Microsoft Partner Ecosystem Generates $580 Billion, Cloud to Add Net New $800 Billion in a 6/28/2011 post to the US ISV Evangelism blog:

The worldwide Microsoft ecosystem generated local revenues of $580 billion in 2010, up from $537 billion in 2009 and $475 billion in 2007. The IDC study estimates that for every dollar of revenue made by Microsoft Corp. in 2009, local members of the Microsoft ecosystem generated revenues for themselves of $8.70.

According to the IDC study, implementation of cloud computing is forecast to add more than $800 billion in net new business revenues to worldwide economies over the next three years, helping explain why Microsoft has made cloud computing one of its top business priorities.

“As business models continue to change, the Microsoft Partner Network allows partners to quickly and easily identify other partners with the right skill sets to meet their business needs, so Microsoft partners are set up to compete and drive profits now and in the future,” said Jon Roskill, corporate vice president of the Worldwide Partner Group at Microsoft. “The data provided in IDC’s study reflect the fact that the opportunities available to partners will have them poised for success now and in the future.”

The Microsoft-commissioned IDC report reveals that the modifications made to the Microsoft Partner Network equip Microsoft partners with the training, resources and support they need to be well-positioned in the competitive IT marketplace, both with the current lineup of Microsoft products and in the cloud.

Cloud-based solutions offer Microsoft partners the opportunity to grow by extending their current businesses via cloud infrastructure-as-a-service (private and/or public), software-as-a-service (Microsoft Business Productivity Online Standard Suite and/or Office 365), platform-as-a-service (Windows Azure) or a hybrid combined with on-premise solutions.

More information about joining the Microsoft Partner Network is available at https://partner.microsoft.com, and the IDC white paper, commissioned by Microsoft, can be viewed at http://www.microsoft.com/presspass/presskits/partnernetwork/docs/IDC_WP_0211.pdf.

PowerDNN issued a PowerDNN Goes Green: Cloud Computing Coming To a Server Near You press release via PRWeb on 6/28/2011:

PowerDNN, the leading DotNetNuke hosting and solutions provider, has announced that they are doing a significant upgrade to their infrastructure to bring all current and future customers onto their Cloud Environment.

Omaha, NE (PRWEB) June 28, 2011

This is a huge milestone for all PowerDNN customers as it will bring improved speed, expanded redundancy, and greater flexibility to their services. It also allows PowerDNN to become environmentally friendlier, while maintaining competitive pricing.

"We are excited to make this investment in our customers, infrastructure, and the future growth of the company” says Tony Valenti, CEO of PowerDNN. “PowerDNN is a DotNetNuke web-hosting company for end-users, which means there is a high priority placed on giving our customers a customizable, but useful website from day one. By integrating Cloud Computing into our services for all PowerDNN Customers we are providing them with the same underlying technology that empowers Windows Azure, and allows them flexibility of their website in real time.” [Emphasis added.]

PowerDNN's Shared Cloud Hosting Plans start as low as $20 per month and can easily be upgraded into a cloud server or private cloud. For more information on our different Cloud options please contact sales@powerdnn.com.

About PowerDNN.com-- Founded in 2002, PowerDNN.com is the full circle DotNetNuke solutions provider, servicing organizations ranging from small businesses to Fortune 500 Companies to the Federal Government of the United States of America. Specializing in high-reliability, business-critical DotNetNuke solutions, PowerDNN is the clear choice of business and technology experts who demand exceptional customer service and enterprise engineering support for DotNetNuke. For more information please visit http://www.powerdnn.com

<Return to section navigation list>

Visual Studio LightSwitch

Networking26 uploaded a 00:13:58 VS LightSwitch - Working with details and filter Webcast to YouTube on 6/27/2011:

- By default when we click on a record that opens the details screen we see the details based on that record.

- Because we are working with related data it might come in handy being able to view child records based on the parent record.

- In this example when we click to view a Dog's information we will see the details on that dog as well as its puppies.

- We will also add another screen that will allow us to filter our dogs based on their breed.

Networking26 uploaded an introductory Light Switch Tutorial 01 Webcast on the same day.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Bill Claybrook explained Resolving cloud application migration issues in a 6/28/2011 article for SearchCloudComputing.com:

In part one of this look at cloud application migration, we discussed how cloud providers, through the selection of hypervisors and networking, affect the capability to migrate applications. In part two, we will talk about how appropriate architectures for cloud applications and open standards can reduce the difficulty in migrating applications across cloud environments.

A good deal of time and money in the IT industry has been spent on trying to make applications portable. Not surprisingly, the goal around migrating applications among clouds is to somehow make applications more cloud-portable. This can be done in at least three ways:

- Architect applications to increase cloud portability.

- Develop open standards for clouds.

- Find tools that move applications around clouds without requiring changes.

Most of today's large, old monolithic applications are not portable and must be rebuilt in order to fit the target environment. There are other applications that require special hardware, reducing their portability, and even many of the newer applications being built today are not very portable, certainly not cloud portable.

Application architectures and the cloud

Numerous cloud experts have indicated how strongly an application’s architecture affects the ability to move it from one cloud to another. Appropriate cloud application architectures are part of the solution to cloud interoperability, and existing applications may need to be re-architected to facilitate migration. The key is trying to architect applications that reduce or eliminate the number of difficult-to-resolve dependencies between the application stack and the capabilities provided by the cloud service provider.

Bernard Golden, CEO of HyperStratus, has noted that, to exploit the flexibility of a cloud environment, you need to understand which application architectures are properly structured to operate in a cloud, the kinds of applications and data that run well in cloud environments, data backup needs and system workloads.

There are at least three cloud application architectures in play today:

- Traditional application architectures (such as three-tier architectures) that are designed for stable demand rather than large variations in load. They do not require an architecture that can scale up or down.

- Synchronous application architectures, where end-user interaction is the primary focus. Typically, large numbers of users may be pounding on a Web application in a short time period and could overwhelm the application and system.

- Asynchronous application architectures, which are essentially all batch applications that do not support end-user interaction. They work on sets of data, extracting and inserting data into databases. Cloud computing offers scalability of server resources, allowing an otherwise long running asynchronous job to be dispersed over several servers to share the processing load.
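The asynchronous case in the last bullet can be sketched in a few lines of Python. This is only an illustration, not code from Bill's article: `process_chunk`, `split` and `run_batch` are hypothetical names, and a local thread pool stands in for the "several servers" a cloud provider would supply.

```python
from concurrent.futures import ThreadPoolExecutor


def process_chunk(records):
    """Stand-in for one unit of batch work: total the values in a chunk."""
    return sum(records)


def split(records, n_chunks):
    """Split records into at most n_chunks roughly equal, contiguous slices."""
    size = max(1, -(-len(records) // n_chunks))  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]


def run_batch(records, n_workers=4):
    """Fan the chunks out to workers, then combine the partial results."""
    chunks = split(records, n_workers)
    # Assumption: each worker thread plays the role of a separate server.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(process_chunk, chunks))
    return sum(partials)
```

Because no chunk depends on another, adding workers shortens the job without changing the result, which is why batch workloads of this kind tend to move to elastic infrastructure more easily than interactive ones.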

Platform as a Service (PaaS) providers provide tools for developing applications and an environment for running these applications. To deliver an application with a PaaS platform, you develop and deploy it on the platform; this is the way Google App Engine works. You can only deploy App Engine applications on Google services, but cloud application platforms such as the Appistry CloudIO Platform allow for in-house private cloud deployment as well as deployment on public cloud infrastructures such as Amazon EC2.

Where the application is developed and where it is to be run are factors that feed into the application architecture. For example, if you develop in a private cloud with no multi-tenancy, will this application run in target clouds where multi-tenancy is prevalent? Integrating new applications with existing ones can be a key part of application development. If integration involves working with cloud providers, it is difficult because cloud providers do not typically have open access into their infrastructures, applications and integration platforms.

Older applications that depend on specific pieces of hardware -- meaning they'll want to see a certain type of network controller or disk -- are trouble as well. The cloud provider is not likely to have picked these older pieces of hardware for inclusion in its infrastructure.

In your efforts to migrate applications, you may decide to start working with a cloud provider template where the provider gives you an operating system, such as CentOS or a Red Hat Enterprise Linux template. You'll then try to put your applications on it, fixing up the things that are mismatched between the source application environment and the target environment. The real challenge is that this approach becomes an unknown process, complete with a lot of workarounds and changes.

As you move through a chain of events, fixing problems as you go, you are really rewriting your application. Hopefully you won't have to rewrite it all, but you will surely change configurations and other things. You are then left with a fundamentally different application. This could be good or bad, but either way you'll have at least two versions of your application -- the data center version and the cloud version.

If moving an application back and forth between your data center and a cloud (or from one cloud to another cloud) results in two different versions of the application, you are now managing a collection of apps. As you fix and encounter problems, you'll have to work across however many versions of the application that you have created.

…

Read more: Bill continues with a “Cloud standards and application migration” topic.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Alan Stevens posted From virtualisation to private cloud: Spot the Difference to The Register on 6/20/2011 (missed when posted):

We all know what virtualisation consists of: a host containing one or more virtual machines, usually, but not necessarily, servers.

Scale that up and you get dozens, or hundreds, of servers powering hundreds, if not thousands, of virtual machines. That is becoming typical of today's data centre.

So what is a private cloud in this context? The answer is not so clearcut. Some argue that it is a set of computing resources – networking, storage and raw compute – that an organisation can draw on at will.

Pick your own

It lives either in your data centre or in a co-located facility. The essential characteristic is that you own it and control it. And because you are managing all of it, you pay for all of it.

The difference between virtualisation and private cloud is that one provides the means to achieve the other – but it is not quite so simple.

If you virtualise, you reduce hardware maintenance but you still have to manage those machines, patch their operating systems and applications, and generally keep them up to date.

And when their requirements change – maybe the accounts department is doing its monthly invoice run and needs more disk space and CPU – you have to manage that process manually.

Do the automation

What a private cloud brings is automation of that resource allocation as demand moves around the data centre.

It means the commissioning and de-commissioning of machines and the automatic management of available resources, as well as upgrades, patches and maintenance. Virtual machines can commission additional resources as they need them. In other words, the focus of attention shifts from the infrastructure to service delivery.

There are several ways to achieve this. VMware's vCenter is a form of cloud management. Microsoft’s System Center also plays in this field, as does HP’s CloudSystem Matrix.

The thing to remember is that if a virtual machine is to have complete freedom to move around the data centre, it is not just the server configuration that changes: the storage, network policies and security policies need to move too.

There are several other products out there offering to take control of your data centre and help you manage it as a single entity. They include software from Cloud.com, Abiquo and Nimbula, among others.

Nimbula Director promises to help you migrate applications into the cloud. It includes policy-based authorisation, together with dynamic storage provisioning, monitoring and metering, automated deployment and cloud management.

Cloud.com is open source and promises similar benefits. It comes as a free, community-supported edition as well as in a fully supported commercial version. Abiquo also offers community and commercial versions of its software.

Fuzzy clouds

But as Mark Bowker, an analyst at the Enterprise Strategy Group, has pointed out, none of the vendors, including the major ones such as VMware, Citrix and Microsoft, are clear as to what a private cloud is, although they are united in their view of virtualisation technology as its bedrock.

To summarise, the key definition of a cloud, whether private or public, is its intelligence and responsiveness to demands from a virtual machine for resources.

But that is just a vision for most companies right now and is likely to remain so unless you buy all your data centre gear from one vendor. Unsurprisingly, that is what the big beasts in the IT jungle would prefer and an option they are all offering.

All they have to do is convince enterprises that it is safe to go back into the single-vendor pool, and that might not be quite so easy.

<Return to section navigation list>

Cloud Security and Governance

Rachel King reported Cloud computing infrastructures will routinely fail, panel says in a 6/23/2011 post from the Structure Conference 2011 to ZDNet’s Between the Lines blog (missed when posted):

The major theme being discussed at GigaOm’s Structure conference in San Francisco this week is the future of cloud computing. Most of us can agree that we’re still in the nascent stages of this technology, and that there are a lot of pitfalls to overcome still. But many Silicon Valley players argue that we need to accept the failures of the cloud to learn from them.

While moderating a “guru panel” dubbed “What can the Enterprise Learn from Webscale?” on Wednesday, Facebook’s VP of Technical Operations Jonathan Heiliger said that “web businesses are designed to fail,” meaning that the fundamental components are going to fail, but that the apps should be built to withstand this failure.

Claus Moldt, Global CIO and SVP Service Delivery at Salesforce.com, added to that:

Everything within the infrastructure needs to be designed with failure in mind…That’s how you have to run your business.

However, the panelists agreed that the best way for a business (especially startups) to avoid complete failure when transitioning to the cloud is building on a horizontal scale - not a vertical one.

LinkedIn’s VP of Engineering Kevin Scott warned that if companies don’t think about the infrastructure on a scalable basis in the beginning, they’ll “deeply regret it.”

In addition to having fundamental fault tolerance, you have to think very quickly about how you’re going to scale your applications.

It’s not just about economics…it’s about good system design, dealing with networks and components failing…It forces you to expose clean APIs between these components.
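A minimal sketch of what "designing with failure in mind" behind a clean API can look like, assuming a service that has several interchangeable replicas. The names `call_with_failover`, `flaky` and `healthy` are hypothetical and used only for illustration; none of the panelists described their systems at this level of detail.

```python
class AllReplicasFailed(Exception):
    """Raised when every replica has been tried and none succeeded."""


def call_with_failover(replicas, request):
    """Try each replica in order and return the first successful response."""
    errors = []
    for replica in replicas:
        try:
            return replica(request)
        except Exception as exc:  # a failing component is expected, not fatal
            errors.append(exc)
    raise AllReplicasFailed(f"{len(errors)} replica(s) failed")


def flaky(request):
    """Hypothetical replica that is currently down."""
    raise ConnectionError("replica unavailable")


def healthy(request):
    """Hypothetical replica that answers normally."""
    return f"ok: {request}"
```

Calling `call_with_failover([flaky, healthy], "ping")` returns the healthy replica's response, so the caller never sees the first failure. The caller depends only on the function's contract, not on which component answered.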

Sid Anand, a software architect at Netflix, offered the example of the video streaming site’s move to Amazon’s Web Services cloud, noting that the move was successful because developers rebuilt the apps from scratch with a new DNA. Thus, as Anand mentioned, Netflix had an “infinite scalable and resilient” system because they didn’t “shoe-horn” apps into a new platform that they weren’t originally designed for.

Of course, one of the biggest hindrances to the cloud still for most consumer and enterprise computer users is questionable security. Without delving too much into specifics, Jacob Rosenberg, an architect at Comcast, advised that enterprises need to take a more holistic approach to understanding security. Scott added that this could incorporate “traditional security techniques and aggressive monitoring” by IT departments.

Noting that “there is a new generation of measures that are being taken to secure cloud-type services,” Rosenberg said that these ideas can still be applied to existing hosted products to make them more secure as well.

Related:

- Amazon CTO: Cloud computing is defined by its benefits

- Red Hat delivers strong first quarter, rides data center upgrades

- Assessing the corporate tablet field: Why the enterprise may be different

- IBM launches new Netezza appliance, eyes big data

- Microsoft BPOS users complain of new e-mail and dashboard outage

<Return to section navigation list>

Cloud Computing Events

Cisco Systems announced Full access to Cisco Live Virtual is now FREE! in a 6/28/2011 article:

Cisco Live Virtual now offers free, unlimited access to the world-class content and networking tools that are an essential resource to networking and communications professionals from more than 195 countries.

If you can't make it to the live event, Cisco Live brings the conference to you, including:

- Buzzworthy Cisco Live events such as keynote addresses, Super Sessions, and Town Hall meetings

- More than 70 video and 200 audio webcasts of technical sessions on-demand, after the event - and thousands more from previous events

Cisco Live Virtual offers:

- More than 1,000 live and on-demand technical training sessions, keynotes, and Super Sessions

- Networking tools to connect with your peers, Cisco experts, and Cisco partners

- Connect through blogging tools, Twitter, Facebook, and LinkedIn

- Cisco booth content and partner information

- Play games and win prizes

- Year-round access to other Cisco Live events worldwide

Register today at www.ciscolivevirtual.com

OmniTI (@surgecon) announced in a 6/28/2011 post Surge 2011 to be held 9/28 to 9/30/2011 at the Baltimore Convention Center in Baltimore, MD:

Join the Internet's top engineers.

In Baltimore September 28th - 30th, hear case studies about designing and operating scalable Internet architectures—and, new this year, Hack Day. Register now to take advantage of the best rates during Early Bird registration:

Discussing Scalability Matters...

...because scalability matters. Surge is more than an event, it's a chance to identify emerging trends and meet the architects behind established technologies. Learn from their mistakes and see how their victories can power your business.

Introducing the Surge Hack Day on Sept. 28th. A chance for practitioners to get together for a day, and get their code on.

CloudTimes offered Free Ticket Giveaways for Cloud Control Conference in Boston in a 6/27/2011 post:

Interested in attending the Cloud Control Conference in Boston from July 19th-21st, but don’t have a ticket yet? CloudTimes has 5 free tickets to give away – a $1385 value each! All you need to do is send us an email to info@cloudtimes.org with your name, company and email address, follow us on Twitter and like us on Facebook. Deadline is EOB on June 29th. We will choose the winners randomly.

CloudTimes is a media sponsor of the Cloud Control Conference and Exhibition in Boston.

Cloud Control Conference – Boston

The event will be held at the Hilton Boston Logan Hotel and Conference Center on July 19, 20 and 21, 2011.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Barton George (@barton808) posted Structure: Thoughts by Rich Miller of DataCenter Knowledge on 6/28/2011:

To close out my series of interviews from last week’s Structure conference in San Francisco, below is the chat I had with Data Center Knowledge’s founder and editor, Rich Miller. Last week’s event was the 4th Structure conference that Rich attended and I got his thoughts on some of the hot topics.

Some of the ground Rich covers

- How the discussion of cloud has evolved over the last four years

- (1:21) Rich’s thoughts on OpenFlow and the networking space

- (2:25) Reflections on the next-gen server/chip discussion and the companies on the panel: SeaMicro, Tilera, Calxeda and AMD

- (4:25) Facebook’s OpenCompute project and the new openness in data center design

Extra-credit reading

- Structure: Learning about DevOps & Crowbar from Jesse Robbins

- Structure: Cumulogic, a Java Platform as a Service

- Structure: Why are 1.0 Data Centers suddenly sexy?

- Structure: OpenStack launches Incubation program

Pau for now…

See my Giga Om Structure Conference 2011 - Links to Archived Videos for 6/22/2011 and Giga Om Structure Conference 2011 - Links to Archived Videos for 6/23/2011 posts for more information about the Structure Conference 2011.

App Harbor (@appharbor) announced the add-ons mentioned below in a 6/28/2011 message:

Announcing Add-ons

Today AppHarbor is unveiling our add-on program. As of right now, you can add third-party services from MongoHQ (MongoDB), Cloudant (CouchDB), Redis To Go (Redis), Mailgun (email sending and receiving) and Memcacher (memcached). We have wanted to offer these kinds of services on AppHarbor for a long time, and we think that letting third party service providers onto the platform is the best way to get developers access to a wide selection of high-quality services.

Adding an add-on to one of your applications is very simple: select 'Add-ons' from the application menu on appharbor.com, find the add-on you want and then add it. This causes AppHarbor to provision the resource with the add-on provider so that it is ready for your application to use. The next time you deploy your application, AppHarbor will inject the required configuration information into your application so that the service can be consumed. You can find more detailed information on how that works for the various add-ons in the add-on section of the knowledge base.

We hope to soon offer many more exciting services as add-ons on AppHarbor. If you have an idea for a service that you think should be available (or if you think you can do a better job of providing a service already offered), you can start building an add-on right now. Our add-on API is based on Heroku's and the documentation is open. Have an idea for an add-on? Drop us a line at partner@appharbor.com.

Other AppHarbor Platform Additions

We've added a bunch of other platform features, including:

- Support for SNI and Piggyback SSL

- Support for Service Hooks

- Built-in logging of Application Request Errors

AppHarbor is Hiring!

We have got a long list of features that we want to add to the platform, both the ones you're suggesting on the feedback forum and plenty more that we aren't telling anyone about. We've also managed to secure solid financial backing and would love to grow our team. We are looking for superb engineers willing to work in either San Francisco or Copenhagen, Denmark.

Lots of .NET experience is not required, but a willingness to learn C# and .NET is. What is required, though, is top-notch object-oriented design and development skills, knowledge of distributed version control systems and experience building loosely coupled platforms.

We also value web development experience, experience managing servers and database systems and experience with cloud platforms.

At AppHarbor, you'll get to work on one of the hottest and most talked-about products in the .NET ecosystem. Every day, you'll help make it easier for developers to deploy and scale their code so that they can spend their time thinking about and building great apps. At AppHarbor, we try to live the product we build: We move fast and often deploy new features and fixes many times a day. AppHarbor offers competitive compensation including stock for top candidates.

Interested? Drop us a line at work@appharbor.com with links to code and projects that you've built. Preferably something hosted on AppHarbor.

Upgrade to Windows Server 2008 R2

Friday night, June 24th, we moved all applications from servers running Windows Server 2008 SP2 (and IIS 7) to brand new servers running Windows Server 2008 R2 (and IIS 7.5). The move went well with the AppHarbor platform seamlessly deploying applications to the new servers, shifting the traffic load and removing applications from the old servers.

Because of small changes to the default inherited application configuration from IIS 7 to IIS 7.5, a few applications encountered issues running on the new servers. We either worked with the application developers to resolve the issues immediately, or moved the applications back to the old servers until the issues could be resolved. We are evaluating how to best conduct updates like these in the future to avoid any application downtime.

Since all applications were redeployed, all platform application App_Data folders were also reset. This served as a reminder to some developers not to use the App_Data folder of their application for other purposes than temporary caching. App_Data contents are not preserved across deploys. This includes re-deploys conducted by the AppHarbor platform as part of regular maintenance such as the one described.
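The advice about App_Data amounts to treating local disk as a disposable cache. A language-neutral sketch of that pattern in Python follows; it is not AppHarbor or .NET code. `load_settings`, the `settings.json` file name and the `fetch_from_durable_store` callback are all hypothetical, and the callback stands in for whatever database or storage service actually owns the data.

```python
import json
from pathlib import Path


def load_settings(cache_dir, fetch_from_durable_store):
    """Return settings, using cache_dir only as a disposable local cache."""
    cache_file = Path(cache_dir) / "settings.json"
    if cache_file.exists():
        # Fast path: the cache survived since the last deploy.
        return json.loads(cache_file.read_text())
    # Cache was wiped (for example by a redeploy): rebuild it from the
    # durable store, which remains the source of truth.
    settings = fetch_from_durable_store()
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    cache_file.write_text(json.dumps(settings))
    return settings
```

With this shape, a platform-initiated redeploy that empties the folder costs one extra fetch rather than losing data.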

Martin Tantau reported AppHarbor Introduces Add-On API for .NET Developers in a 6/27/2011 post to the CloudTimes blog:

AppHarbor, a platform as a service (PaaS) provider for .NET developers and businesses, today announced an add-on API that will provide a self-service portal for third-party service providers.

Under the tagline “Azure done right”, the AppHarbor team has made some significant progress since their prototype release in September 2010. I had the chance to meet two of the founders, Michael Friis and Rune Sørensen at last week’s GigaOM Structure event in San Francisco. See the short video with Michael Friis below.

Innovative PaaS provider introduces new service letting developers purchase cloud services from multiple vendors with one click

This new release lets developers purchase cloud services through a single, easy-to-use interface and easily integrate additional functionality into the applications they build on the AppHarbor platform. The catalog of add-ons available with this release includes MongoHQ, Cloudant, Redis To Go, and Mailgun. These services are now offered on the AppHarbor platform and seamlessly integrate into a user’s existing applications, enabling immediate and automatic access to the comprehensive capabilities of each offering.

.NET Deployment as it should be

Add-on programs like AppHarbor’s provide a way to accelerate bringing new applications from beta through to release. .NET developers can leverage the cloud and more quickly deploy and scale applications.

AppHarbor’s platform gives .NET developers access to a Git- and Mercurial-enabled continuous deployment environment that supports a rapid build, test, and deploy workflow common to agile teams and startups. AppHarbor features automated unit testing which allows developers to test code before it gets deployed, eliminating the possibility of deploying code that breaks their site. Additionally, AppHarbor takes only 15 seconds to deploy new code versions, compared to 15 minutes for other solutions on the market. The AppHarbor platform is self-hosting and capable of deploying new versions of itself. AppHarbor makes deploying and scaling .NET applications quick and simple.

“AppHarbor addresses needs that have yet to be resolved by other solutions in the market and this release further differentiates our PaaS offering,” said Michael Friis, co-founder at AppHarbor. “In a short time, our platform has been adopted by more than 5,000 developers. It fulfills a need that the .NET Community has had for some time. We are extremely excited to announce add-ons that make it extremely easy for our users to purchase the cloud services they desire.”

AppHarbor customers can visit the add-ons catalog now at appharbor.com/addon. Once an add-on is selected, developers can immediately start using the service.

Founded in 2010, AppHarbor has over 5,300 users and has received seed funding from Accel Ventures, Ignition Partners, Quest Software, Salesforce, SV Angel, Start Fund and Y Combinator.

Interview with Michael Friis at GigaOM Structure 2011

Barton George (@barton808) reported Structure: Learning about DevOps & Crowbar from Jesse Robbins in a 6/27/2011 post to his Dell Computer blog:

Last week on Day two of Structure the morning sessions ended with an interesting discussion moderated by James Urquhart. The session was entitled “DevOps – Reinventing the Developers Role in the Cloud Age” and featured Luke Kanies – CEO, Puppet Labs and Jesse Robbins – Co-Founder and CEO, Opscode.

After lunch I ran into Jesse and got him to sit down with me and provide some more insight into DevOps as well as explain what Opscode was doing with project Crowbar.

Some of the ground Jesse covers

- (0:21) What is DevOps

- (1:00) The shift that happens between developers and operations. Writing code and getting it into production faster and how it shifts responsibilities between the two groups.

- (2:52) Who are the prime targets for DevOps and how has this changed over time.

- How DevOps began in web shops who needed to do things differently than legacy-bound enterprises.

- How enterprises faced with greenfield opportunities are now embracing devops

- (5:36) The crowbar installer which employs Opscode’s Chef and allows the rapid provisioning of an OpenStack cloud.

Barton George (@barton808) posted #Dell and #OpenStack: An Insider Update to his personal blog on 6/24/2011 (missed when posted):

For those of you who don’t know, I’m a senior cloud strategist for Dell in our Cloud Solutions group, and I’m also the business / marketing lead for Dell’s OpenStack initiative.

We’ve been incredibly busy working on all things OpenStack, so I wanted to provide a bit of an update on where we’ve been, and where we’re going.

Last summer, Dell was one of a few vendors, along with Intel, Citrix and a few others, that got together and supported the fledgling OpenStack movement founded by Rackspace and NASA.

(The ONLY hardware solution provider that had the vision to do so, I should add.)

Since then, we’ve been active in the community, working with partners, and helping customers on real OpenStack engagements.

- Dell’s been an integral part of all three OpenStack Design Summits to date – sponsoring portions of the events, leading discussions on architecture and design, providing hardware for install fests, and meeting with partners and customers.

- Crowbar anyone? We’ve led the way in an operational model that starts with bare metal provisioning but provides a methodology for managing your evolving OpenStack instance.

- Developed the popular technical whitepaper “BootStrapping OpenStack Clouds” authored by Dell’s own OpenStack celebrities Rob Hirschfeld and Greg Althaus, as well as contributions from Rackspace OpenStack celebrity Bret Piatt.

- Numerous lightning talks, OpenStack / Crowbar demos, and working with a number of partners like Rackspace, Citrix, Opscode, and others in their OpenStack initiatives

It should be clear that Dell’s a believer in what the OpenStack community is doing, and we are committed to being a part of the community, providing expertise where our core competencies are. It’s been that way since we started back in July of last year.

So what’s the latest?

- Did you know customers have already started working with Dell on getting OpenStack clouds in their environments?

That’s right – our involvement since the beginning puts us on the short list of community partners that have the most history with OpenStack, so we have a functional reference architecture, Dell-developed Crowbar software, and cloud services that can work for customers today. (Info at the end of this blog entry on how you can learn more about working with Dell to get real live OpenStack clouds in your environment.)

In fact, one of our customers, Cybera, publicly blogged about getting OpenStack running on Dell PowerEdge C technology. (Link to Cybera’s blog at the end of this entry – very deep technical info.)

- Dell’s OpenStack Installer, better known to the community as #Crowbar, is coming along nicely! When we announced the existence of Crowbar earlier this year, we were clear that our intention was to contribute it to the open source community. We are well on our way there. We’ve already submitted our blueprint to the OpenStack governance body for Crowbar as a cloud installer (more on that here from Rob Hirschfeld).

And here’s a quick snapshot of our latest work on the Crowbar UI that Rob posted recently.

(What? No more plain white background? That’s right – we’ve got game.)

Dell Crowbar

- The final comment I’ll make is that we see this summer as an important time for OpenStack – Cactus is out, Diablo is around the corner, partners are joining the community daily, and customers are getting excited. More and more of our customers are finding out HOW REAL OpenStack is, and are getting on board.

Dell is going to continue to be a mover and shaker in OpenStack, so keep an eye out on Dell as we prepare to make our next big move in OpenStack.

Head back here often to stay up to date, and you can also follow me and other Dell OpenStack leads on Twitter – @jbgeorge, @zehicle, @barton808, and others.

Here’s to the summer of OpenStack!

More info:

- Dell’s OpenStack website (where you can get the “Bootstrapping OpenStack Clouds” technical whitepaper) – http://www.Dell.com/OpenStack

- To talk to someone about becoming a Dell OpenStack customer – OpenStack@Dell.com

- Dell customer Cybera’s blog on “Running OpenStack in Production” – http://www.cybera.ca/tech-radar/running-openstack-production-part-1-hardware

- Dell’s Cloud Evangelist’s blog – www.bartongeorge.net

- Dell’s Cloud Solution Architect (and OpenStack expert) Rob Hirschfeld’s blog – www.RobHirschfeld.com

Still no news from Dell about the promised (last year) delivery of the Windows Azure Platform Appliance.

Paula Rooney (@PaulaRooney1) reported Red Hat declares war against VMware on cloud front in a 6/23/2011 post to ZDNet’s Virtually Speaking blog:

Red Hat declared war on VMware’s Cloud Foundry today, announcing that 65 new companies have joined the Open Virtualization Alliance backing KVM in a month’s time.

In May, Red Hat, SUSE, BMC Software, Eucalyptus Systems, HP, IBM and Intel, announced the formation of the Open Virtualization Alliance.

As of today, 65 new members have joined, including Dell. Scott Crenshaw, who leads Red Hat’s cloud effort, denounced what he called VMware’s proprietary cloud platform.

Red Hat has backed and been evangelizing the open source hypervisor since buying Qumranet several years ago, and offers significant KVM support in its Enterprise Linux 6.1. The company also recently announced a number of multi-platform cloud products based on KVM including its OpenShift PaaS and Cloud Forms IaaS.

KVM is incorporated in the Linux kernel and is backed by some open source advocates while others prefer Xen. (Interestingly, the two Xen.org founders left Citrix yesterday to launch a startup). Still, it’s been an uphill battle — and a development challenge — for the lesser known KVM open source hypervisor to make headway in the enterprise market.

At the recent Red Hat Summit, execs insisted that KVM is enterprise ready. Today’s announcements — including the release of Red Hat’s MRG 2.0 — are designed to further that goal.

“The floodgates have been lifted and [there's] a massive wave of support for KVM and Red Hat Enterprise Virtualization,” said Crenshaw, vice president and general manager of Red Hat’s cloud business. “Lock-in doesn’t benefit anyone but VMware … the tide is rising against VMware’s hegemony.”

He said VMware’s Cloud Foundry purports to be open but claimed that at its core it is based on VMware’s proprietary ESX virtualization technology. He also said Microsoft’s claims of openness and no cost for Azure are not yet proven. [Emphasis added.]

The full list of OVA members announced today is packed with many open source companies and includes Abiquo, AdaptiveComputing, Afore Solutions, Arista Networks, Arkeia, autonomicresources, B1 Systems, BlueCat Networks, Brocade, Carbon 14 Software, Cfengine, CheapVPS, Cloud Cruiser, CloudSigma, CloudSwitch, CodeFutures, CohesiveFT, Collax GmbH, Convirture, Corensic, Censtratus, EnterpriseDB, Everis Inc., Fujitsu Frontech, FusionIO, Gluster, Inc., Grid Dynamics, Groundwork Open Source, HexaGrid Computing, IDT us, Infinite Technologies, Information Builders, Killer Beaver, LLC, Likewise, Mindtree Ltd, MontaVista Software, Morph Labs, nanoCloud, Neocoretech, Nicira Networks, Nimbula, novastorm, One Convergence, OpenNebula / C12G Labs, Providence Software (XVT), Proxmox Server Solutions GmbH, Qindel, RisingTide Systems, ScaleOut Software, Sep Software, Shadow Soft, Smartscale, StackOps, stepping stone GmbH, Storix, UC4, Unilogik, Univention, Usharesoft, Virtual Bridges, Vyatta, Weston Software Inc, XebiaLabs and Zmanda.

KVM once battled to be a serious contender to Xen. Now its chief commercial backer, Red Hat, is taking on the behemoth, VMware, for the Virtualization 2.0 dollars.

I don’t recall Microsoft claiming that Windows Azure is “open,” other than to programming languages such as PHP, Java, Ruby, et al., or that it is “no cost.”

<Return to section navigation list>
