Windows Azure and Cloud Computing Posts for 6/3/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

••• Updated Sunday, 6/5/2011 with articles marked ••• from Michael Washington, Steve Nagy, Tim Negris, Simon Munro, Joe Hummel, Lohith Kashyapa and Martin Ingvar Kofoed Jensen (Evaluating combining Friday, Saturday and Sunday articles.)

•• Updated Saturday, 6/4/2011 with articles marked •• from Alik Levin, the Windows Azure Connect team, Azret Botash, Alex Popescu, Jnan Dash, George Trifanov, Doug Finke, Raghav Sharma, David Tesar, Elisa Flasko, MSDN Courses, Stefan Reid, John R. Rymer, Louis Columbus, Brian Harris and Me.

• Updated Friday, 6/3/2011 at 12:00 Noon PDT or later with articles marked • from Klint Finley, Kenneth Chestnut, the Windows Azure OS Updates team, Steve Yi, Alex James, Peter Meister, Scott Densmore and the Windows Azure Team UK.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services •••

- SQL Azure Database, Data Sync, Compact and Reporting •, ••

- Marketplace DataMarket and OData •, ••

- Windows Azure AppFabric: Access Control, WIF and Service Bus •, ••

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN •, ••

- Live Windows Azure Apps, APIs, Tools and Test Harnesses •••

- Visual Studio LightSwitch and Entity Framework 4+ •, •••

- Windows Azure Infrastructure and DevOps •, ••

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds ••, •••

- Cloud Security and Governance ••

- Cloud Computing Events •

- Other Cloud Computing Platforms and Services •, ••, •••

To use the above links, first click the post’s title to display the single article you want to navigate.

•• See New Navigation Features for the OakLeaf Systems Blog for a description of bulleted items.

Azure Blob, Drive, Table and Queue Services

••• Martin Ingvar Kofoed Jensen (@IngvarKofoed) explained the difference of Azure cloud blob property vs metadata in a 6/5/2011 post:

Some of a blob's properties, as well as its metadata collection, can be used to store metadata for a given blob, but there are small differences between them. When working with blob storage, the number of HTTP REST requests plays a significant role in performance, and it becomes very important if the blob storage contains a lot of small files. There are at least three properties in the CloudBlob.Properties property that can be used freely: ContentType, ContentEncoding and ContentLanguage. These can hold very large strings! I have tested with a string containing 100,000 characters and it worked. They could possibly hold a lot more, but hey, 100,000 is a lot! So all three of them can be used to hold metadata.

So, what is the difference between using these properties and using the metadata collection? The difference lies in when they get populated. This is best illustrated by the following code:

CloudBlobContainer container; /* Initialization assumed */

CloudBlob blob1 = container.GetBlobReference("MyTestBlob.txt");
blob1.Properties.ContentType = "MyType";
blob1.Metadata["Meta"] = "MyMeta";
blob1.UploadText("Some content");

CloudBlob blob2 = container.GetBlobReference("MyTestBlob.txt");
string value21 = blob2.Properties.ContentType; /* Not populated */
string value22 = blob2.Metadata["Meta"];       /* Not populated */

CloudBlob blob3 = container.GetBlobReference("MyTestBlob.txt");
blob3.FetchAttributes();
string value31 = blob3.Properties.ContentType; /* Populated */
string value32 = blob3.Metadata["Meta"];       /* Populated */

CloudBlob blob4 = (CloudBlob)container.ListBlobs().First();
string value41 = blob4.Properties.ContentType; /* Populated */
string value42 = blob4.Metadata["Meta"];       /* Not populated */

BlobRequestOptions options = new BlobRequestOptions { BlobListingDetails = BlobListingDetails.Metadata };
CloudBlob blob5 = (CloudBlob)container.ListBlobs(options).First();
string value51 = blob5.Properties.ContentType; /* Populated */
string value52 = blob5.Metadata["Meta"];       /* Populated */

The difference shows up when you call ListBlobs on a container or blob directory, and it depends on the values of the BlobRequestOptions object: ContentType comes back with every listing, while Metadata is only populated when BlobListingDetails.Metadata is requested. It might not seem like a big difference, but imagine that there are 10,000 blobs, each with a metadata string value of 100 characters. That adds up to 1,000,000 characters of extra data to send when listing the blobs. So if the metadata is not needed every time you call ListBlobs, you might consider moving it to the Metadata collection. I will investigate the performance of these methods of storing metadata for a blob in a later blog post.
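The listing cost described above can be put into rough numbers. The following is a minimal Python sketch, not Azure SDK code: the function name `listed_value_chars` is hypothetical, and it only models the rule shown in the C# sample (a property value comes back with every listing, a Metadata value only when the listing asks for metadata) while ignoring XML element overhead.

```python
def listed_value_chars(blob_count: int, value_chars: int,
                       stored_in_properties: bool, include_metadata: bool) -> int:
    """Approximate characters of one custom value returned by a single listing call.

    Hypothetical model: a value kept in a property such as ContentType comes back
    with every listing, while a Metadata entry only comes back when the listing
    requests metadata. XML element overhead is ignored.
    """
    if blob_count < 0 or value_chars < 0:
        raise ValueError("blob_count and value_chars must be non-negative")
    returned = stored_in_properties or include_metadata
    return blob_count * value_chars if returned else 0


if __name__ == "__main__":
    # 10,000 blobs, each with a 100-character value
    print(listed_value_chars(10_000, 100, stored_in_properties=True, include_metadata=False))   # 1000000
    print(listed_value_chars(10_000, 100, stored_in_properties=False, include_metadata=False))  # 0
```

The point of the second call is the design choice the post argues for: if the value lives in Metadata and the listing does not ask for it, the per-listing cost drops to zero.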

Michał Morciniec (@morcinim) described How to Deploy Azure Role Pre-Requisite Components from Blob Storage in a 6/3/2011 post to his ISV Partner Support blog:

In Windows Azure SDK 1.3 we introduced the concept of startup tasks, which allow us to run commands to configure the role instance, install additional components and so on. However, this functionality requires that all pre-requisite components be part of the Azure solution package.

In practice this has the following limitations:

- if you add or modify a pre-requisite component, you need to regenerate the Azure solution package

- you will have to pay the bandwidth charge of transferring the regenerated solution package (perhaps 100s of MB) even though you actually want to update just one small component

- the time to update the entire role instance increases by the time it takes to transfer the solution package to the Azure datacenter

- you cannot update an individual component; rather, you update the entire package

Below I describe an alternative approach, based on the idea of leveraging blob storage to store the pre-requisite components. Decoupling the pre-requisite components (in most cases they have no relationship with the Azure role implementation) has a number of benefits:

- you do not need to touch the Azure solution package; simply upload a new component to the blob container with a tool like Windows Azure MMC

- you can update an individual component

- you only pay the bandwidth cost for the component you are uploading, not the entire package

- your time to update the role instance is shorter because you are not transferring the entire solution package

Here is how the solution is put together:

When the Azure role starts, it downloads the components from a blob container that is defined in the .cscfg configuration file:

<Setting name="DeploymentContainer" value="contoso" />

The components are downloaded to a local disk of the Azure role. Sufficient disk space is reserved for a Local Resource disk defined in the solution's .csdef definition file; in my case I reserve 2GB of disk space:

<LocalStorage name="TempLocalStore" cleanOnRoleRecycle="false" sizeInMB="2048" />

Frequently, the pre-requisite components are installers (self-extracting executables or .msi files). In most cases they will use some form of temporary storage to extract their temporary files. Most installers allow you to specify the location for temporary files, but with legacy or undocumented third-party components you may not have this option. Frequently, the default location will be the directory indicated by the %TEMP% or %TMP% environment variables. There is a 100MB limit on the size of the TEMP target directory, which is documented in the Windows Azure general troubleshooting MSDN documentation.

To avoid this issue I implemented the mapping of the TEMP/TMP environment variables as indicated in this document. These variables point to the local disk we reserved above.

private void MapTempEnvVariable()
{
    string customTempLocalResourcePath =
        RoleEnvironment.GetLocalResource("TempLocalStore").RootPath;
    Environment.SetEnvironmentVariable("TMP", customTempLocalResourcePath);
    Environment.SetEnvironmentVariable("TEMP", customTempLocalResourcePath);
}

The OnStart() method of the role (in my case it is a “mixed role”, a Web role that also implements a Run() method) starts the download procedure on a different thread and then blocks on a wait handle.

public class WebRole : RoleEntryPoint
{
    private readonly EventWaitHandle statusCheckWaitHandle = new ManualResetEvent(false);
    private volatile bool busy = true;
    private const int ThreadPollTimeInMilliseconds = 1500;

    // periodically check if the handle is signalled
    private void WaitForHandle(WaitHandle handle)
    {
        while (!handle.WaitOne(ThreadPollTimeInMilliseconds))
        {
            Trace.WriteLine("Waiting to complete configuration for this role .");
        }
    }

    [...]

    public override bool OnStart()
    {
        // start another thread that will carry out the configuration
        var startThread = new Thread(OnStartInternal);
        startThread.Start();

        [...]

        // block this thread waiting on the handle
        WaitForHandle(statusCheckWaitHandle);
        return base.OnStart();
    }
}

Whether you block in OnStart() or continue depends on the nature of the pre-requisite components and where they are used. If you need your components to be installed before you enter Run(), you should block, because Azure will call that method almost immediately after you exit OnStart(). On the other hand, if you have a pure Web role, you could choose not to block. Instead, you would handle the StatusCheck event and indicate that you are still busy configuring the role; the role will be in the Busy state and will be taken out of the NLB rotation.

// Assumed to be subscribed elsewhere, e.g. in OnStart():
// RoleEnvironment.StatusCheck += RoleEnvironmentStatusCheck;
private void RoleEnvironmentStatusCheck(object sender, RoleInstanceStatusCheckEventArgs e)
{
    if (this.busy)
    {
        e.SetBusy();
        Trace.WriteLine("Role status check-telling Azure this role is BUSY configuring, traffic will not be routed to it.");
    }
    // otherwise role is READY
    return;
}

If you choose to block in OnStart(), there is little point in implementing the StatusCheck event handler: while OnStart() is executing, the role is in the Busy state, and the status check event is not raised until OnStart() exits and the role moves to the Ready state.
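The block-and-poll startup pattern described above (a worker thread doing the configuration, a ManualResetEvent polled with WaitOne(), and a busy flag read by the StatusCheck handler) can be sketched outside Azure. This is a hypothetical Python analogue, not Azure SDK code: `RoleStartup`, `on_start` and `status_check` are illustrative names, and `threading.Event` stands in for the .NET wait handle.

```python
import threading
import time

POLL_SECONDS = 0.05  # the post polls every 1,500 ms; shortened so the demo runs quickly


class RoleStartup:
    """Hypothetical analogue of OnStart()/OnStartInternal()/StatusCheck (not Azure code)."""

    def __init__(self, configure):
        self._configure = configure      # callable doing the download/install work
        self._done = threading.Event()   # stands in for the ManualResetEvent
        self.busy = True                 # read by status_check()
        self.wait_messages = 0

    def _on_start_internal(self):
        try:
            self._configure()
            self.busy = False            # report Ready only if configuration succeeded
        finally:
            self._done.set()             # always unblock on_start(), even on failure

    def status_check(self) -> str:
        # Used when on_start() does not block: report Busy until configuration ends.
        return "Busy" if self.busy else "Ready"

    def on_start(self, block: bool = True) -> bool:
        threading.Thread(target=self._on_start_internal, daemon=True).start()
        if block:
            # Event.wait() returns False on timeout, so log and keep polling.
            while not self._done.wait(POLL_SECONDS):
                self.wait_messages += 1
                print("Waiting to complete configuration for this role .")
        return True


if __name__ == "__main__":
    role = RoleStartup(lambda: time.sleep(0.2))
    role.on_start()
    print(role.status_check())  # Ready
```

Setting the event in a `finally` block is a choice made for this sketch so a failed download cannot leave the startup thread waiting forever; the post's code does not show its error handling.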

The function OnStartInternal() is where the components are downloaded from the preconfigured blob container and written to the local disk that we allocated above. When downloading the files, we look for a file named command.bat.

This batch file holds commands that we run after the download completes, in a similar way to startup tasks. Here is the sample command.bat:

vcredist_x64.exe /q

exit /b 0

I should point out that if you are dealing with large files, you should definitely specify the Timeout property in the BlobRequestOptions for the DownloadToFile() function. The timeout is calculated as the product of the size of the blob in MB and the maximum time we are prepared to wait to transfer 1MB. You can tweak the timeoutInSecForOneMB variable to adjust the code to your network conditions. Any value that works when you deploy the solution to the Compute Emulator and use Azure blob storage will also work when you deploy to Azure Compute, because there you would use blob storage in the same datacenter and the latencies would be much lower.
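The timeout rule just described (blob size in MB, rounded up, times the seconds allowed per MB) is simple arithmetic, sketched here in Python. The function name `download_timeout_seconds` is hypothetical, and treating a zero-byte blob as 1 MB is an assumption added here so the timeout is never zero; the sizes in the usage lines are the two blobs from the trace output below.

```python
BYTES_PER_MB = 1024 * 1024


def download_timeout_seconds(blob_length_bytes: int, seconds_per_mb: int = 5) -> int:
    """Per-blob download timeout: size in MB (rounded up) times the per-MB budget.

    Assumption beyond the post: a zero-byte blob is counted as 1 MB so the
    timeout can never be zero.
    """
    if blob_length_bytes < 0 or seconds_per_mb <= 0:
        raise ValueError("size must be >= 0 and the per-MB budget > 0")
    size_in_mb = -(-blob_length_bytes // BYTES_PER_MB)   # integer ceiling division
    return max(1, size_in_mb) * seconds_per_mb


if __name__ == "__main__":
    print(download_timeout_seconds(36))         # command.bat (36 bytes): 5
    print(download_timeout_seconds(5_718_872))  # vcredist_x64.exe (~5.45 MB): 30
```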

foreach (CloudBlob blob in container.ListBlobs(new BlobRequestOptions() { UseFlatBlobListing = true }))
{
    string blobName = blob.Uri.LocalPath.Substring(blob.Parent.Uri.LocalPath.Length);
    if (blobName.Equals("command.bat"))
    {
        commandFileExists = true;
    }
    string file = Path.Combine(deployDir, blobName);
    Trace.TraceInformation(System.DateTime.UtcNow.ToUniversalTime() + " Downloading blob " + blob.Uri.LocalPath + " from deployment container " + container.Name + " to file " + file);

    // the time in seconds in which 1 MB of data should be downloaded (otherwise we time out)
    int timeoutInSecForOneMB = 5;
    double sizeInMB = System.Math.Ceiling((double)blob.Properties.Length / (1024 * 1024));

    // set a timeout large enough to download a blob of the given size
    blob.DownloadToFile(file, new BlobRequestOptions() { Timeout = TimeSpan.FromSeconds(timeoutInSecForOneMB * sizeInMB) });

    FileInfo fi = new FileInfo(file);
    Trace.TraceInformation(System.DateTime.UtcNow.ToUniversalTime() + " Saved blob to file " + file + "(" + fi.Length + " bytes)");
}

After the download has completed, the RunProcess() function spawns a child process to run the batch file. The runtime process host, WaIISHost.exe, is configured to run elevated; this is done in the service definition file using the Runtime element. Elevation is required so that the batch commands have admin rights (the process runs under the Local System account):

<Runtime executionContext="elevated">
</Runtime>

Then we signal the wait handle (if implemented) and/or clear the busy flag that is used with the StatusCheck event, to indicate that configuration has completed and the role can move to the Ready state.

Trace.WriteLine("---------------Starting Deployment Batch Job--------------------");
if (commandFileExists)
    RunProcess("cmd.exe", "/C command.bat");
else
    Trace.TraceWarning("Command file command.bat was not found in container " + container.Name);
Trace.WriteLine(" Deployment completed.");

// signal that the role is Ready and should be included in the NLB rotation
this.busy = false;

// signal the wait handle to unblock the OnStart() thread, if waiting has been implemented
statusCheckWaitHandle.Set();

The sample solution includes a diagnostics.wadcfg file that transfers the traces and events to Windows Azure storage every 60 seconds (you'll want to delete or rename it once you go to production, to avoid the associated storage costs).

<?xml version="1.0" encoding="utf-8"?>
<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration" configurationChangePollInterval="PT5M" overallQuotaInMB="3005">
  <Logs bufferQuotaInMB="250" scheduledTransferLogLevelFilter="Verbose" scheduledTransferPeriod="PT01M" />
  <WindowsEventLog bufferQuotaInMB="50"
      scheduledTransferLogLevelFilter="Verbose"
      scheduledTransferPeriod="PT01M">
    <DataSource name="System!*" />
    <DataSource name="Application!*" />
  </WindowsEventLog>
</DiagnosticMonitorConfiguration>

You can then deploy the solution to Azure Compute and look up the traces in the WADLogsTable using any Azure storage access tool, such as Azure MMC.

Entering OnStart() - 03/06/2011 9:09:51

Blocking in OnStart() - waiting to be signalled.03/06/2011 9:09:51

OnStartInternal() - Started 03/06/2011 9:09:51

Waiting to complete configuration for this role .

---------------Downloading from Storage--------------------

03/06/2011 9:09:53 Downloading blob /contoso/command.bat from deployment container contoso to file C:\Users\micham\AppData\Local\dftmp\s0\deployment(688)\res\deployment(688).StorageDeployment.StorageDeployWebRole.0\directory\TempLocalStore\command.bat; TraceSource 'WaIISHost.exe' event

03/06/2011 9:09:53 Saved blob to file C:\Users\micham\AppData\Local\dftmp\s0\deployment(688)\res\deployment(688).StorageDeployment.StorageDeployWebRole.0\directory\TempLocalStore\command.bat(36 bytes); TraceSource 'WaIISHost.exe' event

03/06/2011 9:09:53 Downloading blob /contoso/vcredist_x64.exe from deployment container contoso to file C:\Users\micham\AppData\Local\dftmp\s0\deployment(688)\res\deployment(688).StorageDeployment.StorageDeployWebRole.0\directory\TempLocalStore\vcredist_x64.exe; TraceSource 'WaIISHost.exe' event

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

03/06/2011 9:10:01 Saved blob to file C:\Users\micham\AppData\Local\dftmp\s0\deployment(688)\res\deployment(688).StorageDeployment.StorageDeployWebRole.0\directory\TempLocalStore\vcredist_x64.exe(5718872 bytes); TraceSource 'WaIISHost.exe' event

---------------Starting Deployment Batch Job--------------------

Information: Executing: cmd.exe

; TraceSource 'WaIISHost.exe' event

C:\Users\micham\AppData\Local\dftmp\s0\deployment(688)\res\deployment(688).StorageDeployment.StorageDeployWebRole.0\directory\TempLocalStore>vcredist_x64.exe /q ; TraceSource 'WaIISHost.exe' event

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

Waiting to complete configuration for this role .

; TraceSource 'WaIISHost.exe' event

; TraceSource 'WaIISHost.exe' event

C:\Users\micham\AppData\Local\dftmp\s0\deployment(688)\res\deployment(688).StorageDeployment.StorageDeployWebRole.0\directory\TempLocalStore>exit /b 0 ; TraceSource 'WaIISHost.exe' event

; TraceSource 'WaIISHost.exe' event

Information: Process Exit Code: 0

Process execution time: 13,151 seconds.

Deployment completed.

Exiting OnStart() - 03/06/2011 9:10:14

Information: Worker entry point called

Information: Working

If you need to redeploy a component, just upload the changed component versions to blob storage and restart your role to pick them up. When you combine this technique with Web Deploy to update the code of the Azure web role, you should notice that the whole update process is faster and more flexible. This approach is most useful in development and testing, where you would otherwise redeploy the solution multiple times.

You can download the solution here.

Joseph Fultz wrote Multi-Platform Windows Azure Storage for smartphones in MSDN Magazine’s June 2011 issue:

Windows Azure Storage is far from a device-specific technology, and that’s a good thing. This month, I’ll take a look at developing on three mobile platforms: Windows Phone 7, jQuery and Android.

To that end, I’ll create a simple application for each that will make one REST call to get an image list from a predetermined Windows Azure Storage container and display the thumbnails in a filmstrip, with the balance of the screen displaying the selected image as seen in Figure 1.

Figure 1 Side-by-Side Storage Image Viewers

Preparing the Storage Container

I’ll need a Windows Azure Storage account and the primary access key for the tool I use for uploads. If the client I’m developing needed secure access, it would need the key as well. That information can be found in the Windows Azure Platform Management Portal.

I grabbed a few random images from the Internet and my computer to upload. Instead of writing upload code, I used the Windows Azure Storage Explorer, found at azurestorageexplorer.codeplex.com. For reasons explained later, images need to be less than about 1MB. If using this code exactly, it’s best to stay at 512KB or less. I create a container named Filecabinet; once the container is created and the images uploaded, the Windows Azure piece is ready.

Platform Paradigms

Each of the platforms brings with it certain constructs, enablers and constraints. For the Silverlight and Android clients, I took the familiar model of marshaling the data into an object collection for consumption by the UI. While jQuery has support for templates, in this case I found it easier to simply fetch the XML and generate the needed HTML via jQuery directly, making the jQuery implementation rather flat. I won’t go into detail about its flow, but for the other two examples I want to give a little more background.

Windows Phone 7 and Silverlight

If you’re familiar with Silverlight development you’ll have no problem creating a new Windows Phone 7 application project in Visual Studio 2010. If not, the things you’ll need to understand for this example are observable collections (bit.ly/18sPUF), general XAML controls (such as StackPanel and ListBox) and the WebClient.

At the start, the application’s main page makes a REST call to Windows Azure Storage, which is asynchronous by design. Windows Phone 7 forces this paradigm as a means to ensure that any one app will not end up blocking and locking the device. The data retrieved from the call will be placed into the data context and will be an ObservableCollection<> type.

This allows the container to be notified and pick up changes when the data in the collection is updated, without me having to explicitly execute a refresh. While Silverlight can be very complex for complex UIs, it also provides a low barrier of entry for relatively simple tasks such as this one. With built-in support for binding and service calls added to the support for touch and physics in the UI, the filmstrip to zoom view is no more difficult than writing an ASP.NET page with a data-bound Grid.

Android Activity

Android introduces its own platform paradigm. Fortunately, it isn’t something far-fetched or hard to understand. For those who are primarily .NET developers, it’s easy to map to familiar constructs, terms and technologies. In Android, pretty much anything you want to do with the user is represented as an Activity object. The Activity could be considered one of the four basic elements that may be combined to make an application: Activity, Service, Broadcast Receiver and Content Provider.

For simplicity, I’ve kept all the code in the Activity. In a more realistic implementation, the code to fetch and manipulate the images from Windows Azure Storage would be implemented in a Service.

This sample is more akin to putting the database access in the code behind the form. From a Windows perspective, you might think of an Activity as a Windows Form that has a means (in fact, the need) to save and restore state. An Activity has a lifecycle much like a Windows Form; an application can be made of one or more Activities, but only one will be interacting with the user at a time. For more information on the fundamentals of Android applications, go to bit.ly/d3c7C.

This sample app will consist of a single Activity (bit.ly/GmWui), a BaseAdapter (bit.ly/g0J2Qx) and a custom object to hold information about the images.

Creating the UIs

The common tasks in each of the UI paradigms include making the REST call, marshaling the return to a useful datatype, binding and displaying, and handling the click event to show the zoom view of the image. First, I want to review the data coming back from the REST Get, and what’s needed for each example to get the data to something more easily consumable. …
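The REST call all three clients share is the Blob service's List Blobs operation on a container (`?restype=container&comp=list`), which returns an `EnumerationResults` XML document. The following Python sketch shows the marshaling step in a platform-neutral way; it is not the article's code. The account name `myaccount` and the sample response are hypothetical, the unauthenticated GET assumes the container allows public listing, and the 512KB filter simply applies the article's size advice.

```python
import xml.etree.ElementTree as ET

# Hypothetical account and container names.
ACCOUNT = "myaccount"
CONTAINER = "filecabinet"


def list_blobs_url(account: str, container: str) -> str:
    """URL for the List Blobs REST operation on a container."""
    return f"https://{account}.blob.core.windows.net/{container}?restype=container&comp=list"


def parse_blob_list(xml_text: str, container_url: str) -> list:
    """Return (name, url, content_length) for each <Blob> in a List Blobs response."""
    root = ET.fromstring(xml_text)
    results = []
    for blob in root.iter("Blob"):
        name = blob.findtext("Name")
        # Some service versions include <Url>; otherwise build it from the container URL.
        url = blob.findtext("Url") or f"{container_url}/{name}"
        length = int(blob.findtext("Properties/Content-Length", default="0"))
        results.append((name, url, length))
    return results


# Hypothetical response, trimmed to the elements used above.
SAMPLE_RESPONSE = """<EnumerationResults ContainerName="https://myaccount.blob.core.windows.net/filecabinet">
  <Blobs>
    <Blob>
      <Name>harbor.jpg</Name>
      <Url>https://myaccount.blob.core.windows.net/filecabinet/harbor.jpg</Url>
      <Properties><Content-Length>402113</Content-Length><Content-Type>image/jpeg</Content-Type></Properties>
    </Blob>
    <Blob>
      <Name>mountains.jpg</Name>
      <Properties><Content-Length>1500000</Content-Length><Content-Type>image/jpeg</Content-Type></Properties>
    </Blob>
  </Blobs>
</EnumerationResults>"""

if __name__ == "__main__":
    base = f"https://{ACCOUNT}.blob.core.windows.net/{CONTAINER}"
    print(list_blobs_url(ACCOUNT, CONTAINER))
    # keep only images at or under the suggested 512KB ceiling
    for name, url, size in parse_blob_list(SAMPLE_RESPONSE, base):
        if size <= 512 * 1024:
            print(name, url)
```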

Joseph continues with a detailed description of the required code for Silverlight and Android applications.

Joseph is a software architect at AMD, helping to define the overall architecture and strategy for portal and services infrastructure and implementations. Previously he was a software architect for Microsoft working with its top-tier enterprise and ISV customers defining architecture and designing solutions.

Full disclosure: 1105 Media publishes MSDN Magazine and I’m a contributing editor of their Visual Studio Magazine.

See also Roger Struckhoff (@struckhoff) announced “Microsoft's Brian Prince Outlines Strategy at Cloud Expo” in his Microsoft Leverages Cloud Storage for Massive Scale post of 6/3/2011 to the Cloud Computing Journal article in the Cloud Computing Events section.

<Return to section navigation list>

SQL Azure Database, Data Sync, Compact and Reporting

•• See also The Windows Azure Connect Team explained Speeding Up SQL Server Connections in a 6/3/2011 post article in the Windows Azure VM Role, Virtual Network, Connect, RDP and CDN section below.

• Kenneth Chestnut wrote Getting in a Big Data state of mind on 6/3/2011 for SD Times on the Web:

One of the most hyped terms in information management today is Big Data. Everyone seems excited by the concept and related possibilities, but is the "Big" in Big Data mere[ly] a state of mind? Yes, it is.

Organizations are realizing the potential challenges resulting from the explosion of unstructured information: e-mails, images, log files, cables, user-generated content, documents, videos, blogs, contracts, wikis, Web content... the list goes on. Traditional technologies and information management practices may no longer prove sufficient given today’s information environment. Therefore, forward-looking organizations and individuals are evaluating new technologies and concepts like Big Data in an attempt to address the unstructured information overload that is currently underway. Some forward-thinking organizations even view these challenges as opportunities to derive new insight and gain competitive advantage.

On the other side of the equation, some technology vendors are equally excited by the potential of Big Data as a Big Market Opportunity. Storage vendors focus on the sheer volume of Big Data (petabytes of information giving way to exabytes and eventually zettabytes) and the need for organizations to have efficient and comprehensive storage for all of that information. Data warehousing and business intelligence vendors emphasize the need for advanced statistical and predictive analytical capabilities to sift through the vast volume of information to make sense of it, and to find the proverbial needle in the haystack, typically using newer technologies such as MapReduce and Hadoop, which are cheaper than previous technologies. And so on.

While advanced organizations see tremendous opportunity for harnessing Big Data, how do they start addressing both the challenges and the potential opportunities, given the numerous definitions and the confusion that exist in the marketplace today? What if I belong to an organization that doesn’t have information at the petabyte (going to exabyte) scale? Does Big Data apply to me, or to you?

This is why Big Data can be considered a state of mind rather than something specific. It becomes a concept that is applicable to any organization that feels that current tools, technologies, and processes are no longer sufficient for managing and taking advantage of their information management needs, regardless of whether its data is measured in gigabytes, terabytes, exabytes or zettabytes. …

Kenneth is vice president of product marketing at MarkLogic, which sells Big Data solutions.

Erik Ejlskov Jensen (@ErikEJ) posted Populating a Windows Phone “Mango” SQL Server Compact database on desktop on 6/2/2011:

If you want to prepopulate a Mango SQL Server Compact database with some data, you can use the following procedure. (Note that the Mango tools are currently in beta.)

First, define your data context, and run code to create the database on the device/emulator, using the CreateDatabase method of the DataContext. See a sample here. This will create the database structure on your device/emulator, but without any initial data.

Then use the Windows Phone 7 Isolated Storage Explorer to copy the database from the device to your desktop, as described here.

You can now use any tool (see the list of third-party tools on this blog) to populate your tables with data as required. The file format is version 3.5 (not 4.0).

Finally, include the pre-populated database in your WP application as an Embedded Resource.

You can then use code like the following to write out the database file to Isolated Storage on first run:

using System.IO;
using System.IO.IsolatedStorage;
using System.Linq;
using System.Reflection;

public class Chinook : System.Data.Linq.DataContext
{
    public static string ConnectionString = "Data Source=isostore:/Chinook.sdf";
    public static string FileName = "Chinook.sdf";

    public Chinook(string connectionString) : base(connectionString) { }

    public void CreateIfNotExists()
    {
        using (var db = new Chinook(Chinook.ConnectionString))
        {
            if (!db.DatabaseExists())
            {
                // look for the pre-populated database among the embedded resources
                string[] names = this.GetType().Assembly.GetManifestResourceNames();
                string name = names.Where(n => n.EndsWith(FileName)).FirstOrDefault();
                if (name != null)
                {
                    using (Stream resourceStream = Assembly.GetExecutingAssembly().GetManifestResourceStream(name))
                    {
                        if (resourceStream != null)
                        {
                            using (IsolatedStorageFile myIsolatedStorage = IsolatedStorageFile.GetUserStoreForApplication())
                            {
                                using (IsolatedStorageFileStream fileStream = new IsolatedStorageFileStream(FileName, FileMode.Create, myIsolatedStorage))
                                {
                                    using (BinaryWriter writer = new BinaryWriter(fileStream))
                                    {
                                        long length = resourceStream.Length;
                                        byte[] buffer = new byte[32];
                                        int readCount = 0;
                                        using (BinaryReader reader = new BinaryReader(resourceStream))
                                        {
                                            // read file in chunks in order to reduce memory consumption and increase performance
                                            while (readCount < length)
                                            {
                                                int actual = reader.Read(buffer, 0, buffer.Length);
                                                readCount += actual;
                                                writer.Write(buffer, 0, actual);
                                            }
                                        }
                                    }
                                }
                            }
                        }
                        else
                        {
                            // resource stream unavailable: fall back to an empty database
                            db.CreateDatabase();
                        }
                    }
                }
                else
                {
                    // no embedded database found: create an empty one
                    db.CreateDatabase();
                }
            }
        }
    }
}
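The copy-on-first-run step above can be expressed in a few lines of Python as a language-neutral sketch; it is not Windows Phone code, and `copy_if_missing` is a hypothetical name. One design difference from the C# loop is deliberate: this version stops when a read returns no data instead of counting bytes against a precomputed length, so a stream that ends early cannot cause an endless loop. The 4 KB chunk size is an assumption chosen to keep the number of read calls low.

```python
import io
import os
import tempfile

CHUNK_SIZE = 4096  # assumed chunk size; a few KB per read keeps the call count low


def copy_if_missing(source: io.BufferedIOBase, target_path: str, chunk_size: int = CHUNK_SIZE) -> bool:
    """Copy `source` to `target_path` in fixed-size chunks unless the target already exists.

    Returns True if a copy was made. The loop stops on an empty read (end of
    stream) instead of counting bytes against a precomputed length.
    """
    if os.path.exists(target_path):
        return False
    with open(target_path, "wb") as out:
        while True:
            chunk = source.read(chunk_size)
            if not chunk:
                break
            out.write(chunk)
    return True


if __name__ == "__main__":
    data = b"SQLCE" * 10_000
    target = os.path.join(tempfile.mkdtemp(), "Chinook.sdf")
    print(copy_if_missing(io.BytesIO(data), target))  # True: first run copies
    print(copy_if_missing(io.BytesIO(data), target))  # False: file already there
```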

[Please] with (c) (@pleasewithc) described Working with pre-populated SQL CE databases in WP7 in a 6/2/2011 post:

[Don't miss the update below]

When I put my first pre-populated database file into a Mango project & ran it, I was unable to access the distributed database:

“Access to the database file is not allowed.”

Oddly, this error doesn’t appear until you actually attempt to select a record from the database.

When I originally created this database in my DB generator app, I used the following URI as a connection string:

“isostore:/db.sdf”

As you might expect this saves the database to the Isolated Storage of the DB generator app. I point this out because after this database is extracted from the DB generator app, added to your “real” project & deployed to a phone, the database file gets written into a read-only storage area which you can connect to via the appdata:/ URI:

“appdata:/db.sdf”

Although the DataContext constructs without a problem using the appdata:/ URI, attempting to select records from this database threw the file access error above.

I wanted to rule out whether my database had actually been deployed at all, so I tried connecting to a purposefully bogus file:

Which gave me a different error (file not found), so I knew the database had been deployed and was in the expected location; but strangely, it looks like DataContext / LINQ to SQL doesn’t let me select records while the file resides in the app deployment area. Which effectively renders the “appdata:/” connection URI somewhat pointless :P

What you can do is copy the file out of appdata & into isostore:

This may be something to keep in mind, esp. if you planned to deploy a massive database with your app (although distributing a heavy .xap to the marketplace probably isn’t very advisable in the first place).

Arsanth’s database is only ~200KB at the moment, so producing a working copy is a trivial expense in terms of both storage space and IO time. I was just slightly surprised that I actually had to do this in order to select against a pre-populated database distributed with the app, so be aware of this gotcha.

Update

Thanks to @gcaughey who pointed out connection string file access modes, which I was not aware of yet.

So instead of copying to Isolated Storage, I tried this connection string:

"datasource='appdata:/db.sdf'; mode='read only'"

And sure enough, I can select records directly from the distributed database. Right on! If you want full CRUD and persistence for your distributed database, you can always create a copy as described; but if all you need to do is select from the distributed database, you can set the file access mode in the connection string.

Bob Beauchemin (@bobbeauch) reported an Interesting SQL Azure change with next SR on 6/1/2011:

In looking at the what's new for SQL Azure (May 2011) page, I came across the following: "Upcoming Increased Precision of Spatial Types: For the next major service release, some intrinsic functions will change and SQL Azure will support increased precision of Spatial Types."

There are a few interesting things about this announcement.

Firstly, the increased precision for spatial types is not a SQL Server 2008 R2 feature; it's a Denali CTP1 feature. The article doesn't indicate whether they've made a special "pre-Denali" version of this feature, or exactly when "the next major service release" will be (and when SQL Server Denali will be released is unknown). Still, it would be interesting if updated SQL Server spatial functionality made its appearance in SQL Azure *before* appearing in an on-premise release of SQL Server. As far as I know, this would be the first time a new, non-deprecation feature is deployed in the cloud before on-premise (non-deprecation because, for example, the COMPUTE BY clause fails in SQL Azure but not in any on-premise RTM release of SQL Server). Note that usage of SQL Server "opaque" features (for example, are instances managed internally by a variant of the Utility Control Point concept?) cannot be determined.

In addition, this may be the first "impactful change" in SQL Azure Database (BOL doesn't say breaking change, but change with a possible impact, and one never knows what the impact would be in other folks' applications). The BOL entry continues "This will have an impact on persisted computed columns as well as any index or constraint defined in terms of the persisted computed column. With this service release SQL Azure provides a view to help determine objects that will be impacted by the change. Query sys.dm_db_objects_impacted_on_version_change (SQL Azure Database) in each database to determine impacted objects for that database."

Here are a couple of object definitions that will populate this DMV:

create table spatial_test (
  id int identity primary key,
  geog geography,
  area as geog.STArea() PERSISTED
);

-- one row, class_desc INDEX, for the clustered index
select * from sys.dm_db_objects_impacted_on_version_change;

ALTER TABLE spatial_test
ADD CONSTRAINT check_area CHECK (area > 50);

-- two more rows, class_desc OBJECT_OR_COLUMN, for the constraint object
select * from sys.dm_db_objects_impacted_on_version_change;

Before this, the SQL Azure Database koan was "Changes are always backward-compatible." Now there is the sys.dm_db_objects_impacted_on_version_change DMV, and its BOL page even provides sample DDL to handle the impacted objects. But this raises a question: I can run the DMV to determine which objects would be impacted and fix them when the change occurs, but if I don't know when the SU will be released, how can I plan and stage my app change to correspond? Interesting times ahead...

<Return to section navigation list>

MarketPlace DataMarket and OData

••• Lohith Kashyapa (@kashyapa) described Performing CRUD on OData Services using DataJS in a 6/5/2011 post:

I recently released a project named Netflix Catalog using HTML + ODATA + DATAJS + JQUERY, and a reader asked me for a CRUD example using DataJS. This post is about a demo application that performs Create, Read, Update and Delete on an OData service using only the DataJS client-side framework. I have become a big fan of DataJS and I will do whatever it takes to promote it. So this post is a step-by-step walkthrough of using DataJS to perform CRUD. Stay with me and follow carefully.

Pre-Requisites:

To follow along with this demo, you do not need a licensed edition of Visual Studio, such as Developer, Professional or Ultimate. Wherever possible I create my demos and examples using the free Express versions. So here are the pre-requisites:

I prefer IIS Express because it's as if you are developing on a production-level IIS, but it runs locally on your desktop as a user process rather than as a machine-level service. More information on this from Scott Gu here.

Also, I am using SQL CE (SQL Server Compact Edition) as my backend store, and my EF model is tied to this database. If you want to replace it with SQL Express you can; you need to change the EF data model's connection string in web.config. But if you want to follow my path, then you need to install the following:

SQL CE tools for Visual Studio

Note: Follow the order of installation.

If you have finished the pre-requisites, now the real fun begins. Read on:

Step 1: Create ASP.NET Web Site

Fire up Visual Web Developer 2010 express and select File > New Web Site. Select the “Visual C#” from the installed templates and “ASP.NET Web Site” from the project template. Select File System for the web location and provide a path for the project. Click Ok to create the project.

Note: I will be developing this demo using C# as a language.

Step 2: Clean Up

Once the project is created, it will have some default files and folder system as shown below.

For this demo we do not need any of those, so let's clean up those files and folders. I will be deleting the “Account” folder, “About.aspx”, “Default.aspx” and “Site.master”. We will need the Scripts, App_Data and Styles folders, so let's leave them as is for now. Here is how the solution looks after clean up:

Step 3: Set Web Server

By default, when you create any web site project, Visual Studio will use the “Visual Studio Development Server”. To use IIS Express as the web server for the project, right click on the solution and select the option “Use IIS Express”.

Step 4: Create SQL CE Database

As I said earlier, I will be using SQL CE as the back end store. So let's create a simple database for maintaining user account details: userid, username, email and password. Right click on the solution and select “Add New Item”. I have named my database “users”. Note that the extension for the data file is .SDF and it will be stored as a file in your App_Data folder. So if you want to deploy this somewhere, you can just zip and send it, and the app will be up and running after installation.

Note: You will get the following warning when adding the database. Select “Yes”. All it says is that we are adding a special file and it will be placed in the App_Data folder.

Step 5: Create Table

Let's create a new table in the database. It will be a simple table with 4 columns, namely:

UserID which is the PK and Identity column

UserName, string column

Email, string column

Password, string column

Expand App_Data folder and double click on Users.sdf file. This will open the Database Explorer with a connection to Users database.

Right click on Tables and select “Create Table”. You will get the table creation UI. My table definition looks like below:

Step 6: Create Entity Framework Model

Right click on the solution and select “Add New Item”. Select the “ADO.NET Entity Data Model” item type. I have named my model “UsersModel.edmx”.

When asked to choose Model contents select “Generate From Database” and click next.

Next you will have to choose the data connection. By default, the Users.sdf database that we created will be listed. Give a name for the entities and click next.

Next you have to choose the database objects. Expand Tables and select Users. Give a name for the Model Namespace; in my case I have named it UsersModel. Click Finish.

After this, UsersModel.edmx will be added to the App_Code folder. So we now have the EDMX ready for creating an OData service.

Step 7: Create OData Service

Now we will create an OData service to expose our Users data. Right click on the solution and select “Add New Item”. Select WCF Data Service from the item templates and give it a name; in my case I have named it “UsersOData.svc”.

The SVC file will be kept in the root folder, whereas its code-behind will be placed in the App_Code folder. At this point, here is how our solution looks:

Open the UsersOData.cs file from the App_Code folder. You will see the following code set up for you.

public class UsersOData : DataService< /* TODO: put your data source class name here */ >
{
    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        // TODO: set rules to indicate which entity sets and service operations are visible, updatable, etc.
        // Examples:
        // config.SetEntitySetAccessRule("MyEntityset", EntitySetRights.AllRead);
        // config.SetServiceOperationAccessRule("MyServiceOperation", ServiceOperationRights.All);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }
}

Notice the TODO in the DataService< > declaration, which tells us to put our data source class name there. Remember the step where we added the Entity Framework model: the EF entity container name goes here. In my case I had named it UsersEntities. We also need to set some entity access rules. After those changes, here is the modified code:

public class UsersOData : DataService<UsersEntities>
{
    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        // Full read/write access to every entity set: fine for a demo, too permissive for production
        config.SetEntitySetAccessRule("*", EntitySetRights.All);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        // Return detailed error messages, which helps while debugging
        config.UseVerboseErrors = true;
    }
}

At this point, if you run the application and navigate to UsersOData.svc, you will receive the following error:

Well, it says it can't find the type that implements the service. Thanks to the tip provided at http://ef4templates.codeplex.com/documentation, I learned that the .SVC file has an attribute called Service, which points to the implementation class (in our case, the UsersOData class in UsersOData.cs). We need to put the class in a namespace and give the fully qualified name to the Service attribute. Open the UsersOData.cs file and wrap the class inside a namespace as below:

namespace UserServices
{
    public class UsersOData : DataService<UsersEntities>
    {
        // This method is called only once to initialize service-wide policies.
        public static void InitializeService(DataServiceConfiguration config)
        {
            config.SetEntitySetAccessRule("*", EntitySetRights.All);
            config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
            config.UseVerboseErrors = true;
        }
    }
}

Next, open the UsersOData.svc file and modify the Service attribute to include the namespace and the class name as below:

<%@ ServiceHost
    Language="C#"
    Factory="System.Data.Services.DataServiceHostFactory"
    Service="UserServices.UsersOData" %>

If you now navigate to UsersOData.svc in Internet Explorer, you should see the following:

So we have our OData service up and running with one collection, “Users”. Next we will see how to perform CRUD on this service using DataJS.

Step 8: Create Index.htm, Import DataJS & JQuery

Now let's create the front end for the application. Right click on the solution and select “Add New Item”. Select an HTML page and name it “Index.htm”. I will be using JQuery from Microsoft's AJAX CDN. The project comes bundled with JQuery 1.4.1, but I prefer the CDN because it hosts the latest versions at one of the most reliable places on the net. Here are the script references for JQuery and JQuery UI:

<script
    type="text/javascript"
    src="http://ajax.aspnetcdn.com/ajax/jquery/jquery-1.5.1.min.js">
</script>
<script
    type="text/javascript"
    src="http://ajax.aspnetcdn.com/ajax/jquery.ui/1.8.13/jquery-ui.min.js">
</script>

Next, download the DataJS library from the following location: http://datajs.codeplex.com/

Next we need one more JQuery plugin for templating. Download the Jquery Templating library from: https://github.com/jquery/jquery-tmpl.

Let me give you a sneak preview of what we are building: a <table> that lists all the available user accounts, plus the ability to create a new user account, update an existing account and delete a user account.

I will not get into the UI markup now; you can download the code and go through it. Let's start looking at how DataJS is used for the CRUD operations.

Read Operation:

DataJS has a “read” API, which allows us to read data from the OData service. In its simplest form, the read API takes the URL and a callback to execute when the read operation succeeds. In this example, I have a GetUsers() function and a GetUsersCallback() function that handle the read part. Here is the code:

//Gets all the user accounts from service
//USERS_ODATA_SVC holds the URL of the Users collection (defined elsewhere in the page)
function GetUsers()
{
    $("#loadingUsers").show();
    OData.read(USERS_ODATA_SVC, GetUsersCallback);
}

//GetUsers Success Callback
function GetUsersCallback(data, request)
{
    $("#loadingUsers").hide();
    //Remove every row except the first (header) row before re-rendering
    $("#users").find("tr:gt(0)").remove();
    ApplyTemplate(data.results);
}

ApplyTemplate() is a helper function that takes the JSON data, uses JQuery templating to create the UI markup, and appends it to the user account listing table.
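ApplyTemplate() relies on the JQuery templating plugin. As a hedged alternative, here is a minimal plain-JavaScript sketch of the markup such a helper has to produce. buildUserRows and escapeHtml are hypothetical names, not part of DataJS or the author's download. The property names UserID, UserName and Email follow the table definition in Step 5 and are an assumption, so match them to your EF model.

```javascript
// Hypothetical stand-in for the post's ApplyTemplate() helper.
// Assumes each entry of data.results has UserID, UserName and Email properties.

// Escape text before placing it in HTML so user-entered values cannot inject markup.
// "&" is replaced first so the entities produced later are not double-escaped.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Build one <tr> per user account and concatenate them into a single string.
function buildUserRows(results) {
  return results
    .map(function (user) {
      return "<tr><td>" + escapeHtml(user.UserID) + "</td>" +
             "<td>" + escapeHtml(user.UserName) + "</td>" +
             "<td>" + escapeHtml(user.Email) + "</td></tr>";
    })
    .join("");
}
```

In GetUsersCallback, $("#users").append(buildUserRows(data.results)); would take the place of the ApplyTemplate call. Escaping matters here because the user names and emails come straight from form input.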

Create Operation:

If the “read” API lets us get data, DataJS's “request” API lets us do the CUD (Create, Update and Delete) operations. OData has no specific Create/Update/Delete calls; instead, we use HTTP verbs to tell the server which operation to perform. Create uses the “POST” verb, Update uses “PUT” and Delete uses “DELETE”. When creating a new user, the client supplies three of the User entity's fields (username, email and password), because UserID is generated by the identity column. I create a JSON representation of the data that needs to be sent to the server and pass it to the request API. The code for the create is as follows:

//Handle the DataJS call for new user account creation
function AddUser()
{
    $("#loading").show();
    var newUserdata = {
        username: $("#name").val(),
        email: $("#email").val(),
        password: $("#password").val() };
    var requestOptions = {
        requestUri: USERS_ODATA_SVC,
        method: "POST",
        data: newUserdata
    };

    OData.request(requestOptions,
        AddSuccessCallback,
        AddErrorCallback);
}

//AddUser Success Callback
function AddSuccessCallback(data, request)
{
    $("#loading").hide('slow');
    $("#dialog-form").dialog("close");
    GetUsers();
}

//AddUser Error Callback
function AddErrorCallback(error)
{
    alert("Error : " + error.message);
    $("#dialog-form").dialog("close");
}

The newUserdata object is where I create the JSON representation of the data to be sent; in this case, username, email and password. Also notice that the method is set to “POST”. The request API takes the request options, a success callback and an error callback. In the success callback I fetch all the users again and repaint the UI.
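The create, update and delete calls in this walkthrough differ only in the HTTP verb, the target URI and whether a payload is sent. Here is a minimal sketch of that mapping. It assumes the options shape used in the post (requestUri, method, data). VERBS and buildRequestOptions are hypothetical names. The sketch only builds the options object and does not call DataJS itself.

```javascript
// Hypothetical mapping from CUD operations to the HTTP verbs described in the post.
var VERBS = { create: "POST", update: "PUT", remove: "DELETE" };

// Build an options object shaped like the one passed to OData.request in the post.
function buildRequestOptions(operation, collectionUri, key, data) {
  var method = VERBS[operation];
  if (!method) {
    throw new Error("Unknown operation: " + operation);
  }
  // Create posts to the collection; update and delete address one entity by key.
  var requestUri = operation === "create"
    ? collectionUri
    : collectionUri + "(" + key + ")";
  var options = { requestUri: requestUri, method: method };
  // DELETE sends no payload, so data is attached only for POST and PUT.
  if (method !== "DELETE") {
    options.data = data;
  }
  return options;
}
```

For example, OData.request(buildRequestOptions("create", USERS_ODATA_SVC, null, newUserdata), AddSuccessCallback, AddErrorCallback) would send the same request as AddUser above. Keeping the verb table in one place makes it harder to pair the wrong verb with the wrong URI.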

Update Operation:

To update a resource exposed through OData, we follow the same construct we used for Create. The only change is the URL you point to. If I have a User whose UserID (the primary key in this example) is 1, then the URL for this user is http://<server>/UsersOData.svc/Users(1). Notice that we pass the user id in the URL itself; this is how you address a resource in OData. To update this resource, we again prepare the data as JSON and call the request API, but with the method set to “PUT”. PUT is the HTTP verb that tells the server to update the resource with the new values passed. Here is the code block to handle the Update:

//Handle DataJS calls to Update user data
function UpdateUser(userId)
{
    $("#loading").show();
    var updateUserdata = {
        username: $("#name").val(),
        email: $("#email").val(),
        password: $("#password").val() };
    //Address the single entity by key, e.g. .../Users(1)
    var requestURI = USERS_ODATA_SVC + "(" + userId + ")";
    var requestOptions = {
        requestUri: requestURI,
        method: "PUT",
        data: updateUserdata
    };

    OData.request(requestOptions,
        UpdateSuccessCallback,
        UpdateErrorCallback);
}

//UpdateUser Success callback
function UpdateSuccessCallback(data, request) {
    $("#loading").hide('slow');
    $("#dialog-form").dialog("close");
    GetUsers();
}

//UpdateUser Error callback
function UpdateErrorCallback(error) {
    alert("Error : " + error.message);
    $("#dialog-form").dialog("close");
}

Delete Operation:

The Delete operation is very similar to Update. The only difference is that we don't pass any data. Instead, we set the URL to the resource that needs to be deleted and set the method to “DELETE”. DELETE is the HTTP verb the server looks for when a resource needs to be deleted. For example, to delete the user with id 1, we set the URL to http://<server>/UsersOData.svc/Users(1) and set the method to “DELETE”. Here is the code for the same:

//Handles DataJS calls for delete user
function DeleteUser(userId)
{
    var requestURI = USERS_ODATA_SVC + "(" + userId + ")";
    var requestOptions = {
        requestUri: requestURI,
        method: "DELETE"
    };

    OData.request(requestOptions,
        DeleteSuccessCallback,
        DeleteErrorCallback);
}

//DeleteUser Success callback
function DeleteSuccessCallback()
{
    //$dialog is a JQuery UI dialog defined elsewhere in the full source
    $dialog.dialog('close');
    GetUsers();
}

//DeleteUser Error callback
function DeleteErrorCallback(error)
{
    alert(error.message);
}

That’s it. With a single HTML file, the DataJS JavaScript library and a little bit of JQuery to spice up the UI, we built complete CRUD operations in just a couple of minutes.
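Both UpdateUser and DeleteUser build the entity URI by appending the key in parentheses to USERS_ODATA_SVC. That works for an integer key like UserID. If a key were a string, OData would expect a quoted literal instead. Here is a minimal sketch that assumes OData's literal rules for string keys (wrap the value in single quotes and double any embedded quote). formatKey and entityUri are hypothetical helpers, not DataJS APIs.

```javascript
// Hypothetical helper: format a key value as an OData key literal.
function formatKey(key) {
  if (typeof key === "number") {
    // This sketch only handles integer keys such as UserID.
    if (!Number.isInteger(key)) {
      throw new Error("Only integer numeric keys are handled in this sketch");
    }
    return String(key);
  }
  // String keys become OData string literals: single-quoted, embedded quotes doubled.
  return "'" + String(key).replace(/'/g, "''") + "'";
}

// Build the URI of a single entity, e.g. /UsersOData.svc/Users(1).
function entityUri(collectionUri, key) {
  // Percent-encode the literal so spaces and other reserved characters survive.
  return collectionUri + "(" + encodeURIComponent(formatKey(key)) + ")";
}
```

With this helper, entityUri(USERS_ODATA_SVC, userId) could replace the string concatenation in both UpdateUser and DeleteUser.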

DataJS is now gaining traction, and many people have started to use and follow this library. Hopefully this demo gives a pointer to everyone who wanted to know more about the request API. My main goal was to focus on DataJS's request API and how to use it. I hope I have done it justice.

Find attached the source code here.

Till next time, as usual, Happy Coding. Code with passion, Decode with patience.

•• MSDN Courses reported the availability of an Accessing Cloud Data with Windows Azure Marketplace course on 6/1/2011:

Description

Working in the cloud is becoming a major initiative for application development departments. Windows Azure is Microsoft's cloud incorporating data and services. This lab will guide the reader through a series of exercises that creates a Silverlight Web Part that displays Windows Azure Marketplace data on a Silverlight Bing map control.

Overview

- Exercise 1: Subscribe to a Microsoft Windows Azure Marketplace dataset

- Exercise 2: Create a Business Data Catalog Model to Access the Dataset

- Exercise 3: Create an External List to Consume the MarketPlace Dataset

- Exercise 4: Create a Web Part to Display the MarketPlace Data

- Exercise 5: Deploy the Web Part

- Summary

Objectives

This lab will demonstrate how you can consume Windows Azure data using SharePoint 2010 and a Silverlight Web Part. To demonstrate connecting to Windows Azure data, the reader will:

- Subscribe to a Microsoft Windows Azure Marketplace dataset

- Create a Business Data Catalog model to access the dataset

- Create an external list to expose the dataset

- Create a Silverlight Web Part to display the data

- Deploy the Web Part.

System Requirements

You must have the following items to complete this lab:

- 2010 Information Worker Demonstration and Evaluation Virtual Machine

- Microsoft Visual Studio 2010

- Bing Silverlight Map control and Bing Map Developer Id

- Silverlight WebPart

- Internet Access

Setup

You must perform the following steps to prepare your computer for this lab...

- Download the 2010 Information Worker Demonstration and Evaluation Virtual Machine from http://tinyurl.com/2avoc4b and create the Hyper-V image.

- Install the Visual Studio 2010 Silverlight Web Part. The Silverlight Web Part is an add-on to Visual Studio 2010.

- Create a document library named SilverlightXaps located at http://intranet.contoso.com/silverlightxaps. This is where you will store the Silverlight Xap in SharePoint.

- Create a Bing Map Developer Account.

- Download and install the Bing Map Silverlight Control.

Exercises

This Hands-On Lab comprises the following exercises:

- Subscribe to a Microsoft Windows Azure MarketPlace Dataset

- Create a Business Data Catalog Model to Access the Dataset

- Create an External List to Consume the MarketPlace Dataset

- Create a Web Part to Display the MarketPlace Data

- Deploy the Web Part

Estimated time to complete this lab: 60 minutes.

Starting Materials

This Hands-On Lab includes the following starting materials.

- Visual Studio solutions. The lab provides the following Visual Studio solutions that you can use as a starting point for the exercises.

- <Install>\Labs\ACDM\Source\Begin\TheftStatisticsBDCModel\TheftStatisticsBDCModel.sln: This solution creates the Business Data Catalog application.

- <Install>\Labs\ACDM\Source\Begin\TheftStatisticsWebPart\TheftStatisticsWebPart.sln: This solution creates the Silverlight Web Part and Silverlight application that will consume data using an external list.

Note:

Inside each lab folder, you will find an end folder containing a solution with the completed lab exercise.

Read more: next >

•• Elisa Flasko (@eflasko) posted Announcing More New DataMarket Content! to the Windows Azure Marketplace DataMarket Blog on 6/3/2011:

Have you ever wondered if there are any environmental hazards around your house? If so, we’ve got the solution! Environmental Data Resources, Inc. is publishing their Environmental Hazard Rank offering. The EDR Environmental Hazard Ranking System depicts the relative environmental health of any U.S. ZIP code based on an advanced analysis of its environmental issues. The results are then aggregated by ZIP code to provide you with a rank so you can see how the ZIP code you're interested in stacks up.

It’s Trivia Time! Today’s question: Is the buying power of the US dollar greater today than it was in 1976? And today’s Bonus Question: was the buying power of the US dollar greater in 1976 than it was in 1923? If you’re sitting there scratching your head thinking “Hmm, these are good questions, Mr. Azure DataMarket Blog Writer Man”, then fear not, MetricMash has got you covered. They’re publishing their U.S. Consumer Price Index - 1913 to Current offering.

This offering provides the changes in the prices paid by consumers for over 375 goods and services in major expenditure groups – such as food, housing, apparel, transportation, medical care and education cost. The CPI can be used to measure inflation and adjust the real value of wages, salaries and pensions for regulating prices and for calculating the real rate of return on investments. And, speaking of buying power, MetricMash is offering a free trial on this offering to make it easy for developers to use this information inside their apps.
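The inflation adjustment mentioned above is a simple ratio. To restate an amount from one period in another period's dollars, multiply it by the ratio of the two CPI values. Here is a worked sketch. The function names are hypothetical, and the index values in the usage note are made up for illustration; they are not MetricMash data.

```javascript
// Restate an amount from the "from" period in "to" period dollars using CPI values.
function adjustForInflation(amount, cpiFrom, cpiTo) {
  if (cpiFrom <= 0 || cpiTo <= 0) {
    throw new Error("CPI values must be positive");
  }
  return amount * (cpiTo / cpiFrom);
}

// Inflation rate between two periods: the relative change in the index.
function inflationRate(cpiFrom, cpiTo) {
  return (cpiTo - cpiFrom) / cpiFrom;
}
```

For example, with a hypothetical index of 50 in an earlier year and 200 today, adjustForInflation(100, 50, 200) returns 400: $100 then buys what $400 buys now, so a dollar's buying power has fallen to a quarter.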

Elisa’s MetricMash description sounds more to me like a Yelp review.

•• George Trifanov (@GeorgeTrifonov) posted ODATA WCF Data Services Friendly URLS using Routing

When you create your first OData WCF Data Service, a common task is to give it a friendly URL instead of using filename.svc as the entry point. You can achieve this with the ASP.NET URL routing feature (the same routing that ASP.NET MVC uses). Just modify the route registration block in your Global.asax.cs to include the following lines.

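using System.Data.Services;             // DataServiceHostFactory
using System.ServiceModel.Activation;   // ServiceRoute
using System.Web.Routing;               // RouteCollection

public static void RegisterRoutes(RouteCollection routes)
{
    routes.Clear();
    var factory = new DataServiceHostFactory();
    // Serve TestOdataService at the friendly "API" prefix instead of a .svc file
    routes.Add(new ServiceRoute("API", factory, typeof(TestOdataService)));
}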

This tells the system to associate the data service factory handler with the given URL.

•• Doug Finke (@dfinke) asserted One clear advantage of OData is its commonality and posted a link to his OData PowerShell Explorer in a 6/2/2011 article:

If you care at all about how data is accessed – and you should - understanding the basics of OData is probably worth your time.

Dave Chappell’s post, Introducing OData, does a great job of walking through what OData is, covering REST, Atom/AtomPub, JSON, OData filter query syntax, mobile devices, the Cloud and more.

Our world is awash in data. Vast amounts exist today, and more is created every year. Yet data has value only if it can be used, and it can be used only if it can be accessed by applications and the people who use them.

Allowing this kind of broad access to data is the goal of the Open Data Protocol, commonly called just OData. This paper provides an introduction to OData, describing what it is and how it can be applied.

OData PowerShell Explorer

I open sourced an OData PowerShell Explorer complete with a WPF GUI using WPK. The PowerShell module allows you to discover and drill down through OData services using either the command line or the GUI interface.

Other Resources

Doug is a Microsoft Most Valuable Professional (MVP) for PowerShell.

• Alex James (@adjames) answered How do I do design? to enable queries against properties of related OData collections in a 6/3/2011 post:

For a while now I've been thinking that the best way to get better at API & protocol design is to try to articulate how you do design.

Articulating your thought process has a number of significant benefits:

- Once you know your approach you can critique and try to improve it.

- Once you know what you'd like to do, in a collaborative setting you can ask others for permission to give it a go. In my experience phrases like "I'd like to explore how this is similar to LINQ ..." work well if you want others to brainstorm with you.

- Once you understand what you do perhaps you can teach or mentor.

- Or perhaps others can teach you.

Clearly these are compelling.

So I thought I'd give this a go by trying to document an idealized version of the thought process for Any/All in OData...

The Problem

OData had no way to allow people to query entities based on properties of a related collection, and a lot of people, myself included, wanted to change this...

Step 1: Look for inspiration

I always look for things that I can borrow ideas from. Pattern matching, if you will, with something proven. Here you are limited only by your experience and imagination; there is an almost limitless supply of inspiration. The broader your experience, the more source material you have and the higher the probability you will be able to see a useful pattern.

If you need it – and you shouldn’t - this is just another reason to keep learning new languages, paradigms and frameworks!

Sometimes there is something obvious you can pattern match with, other times you really have to stretch...

In this case the problem is really easy. Our source material is LINQ. LINQ already allows you to write queries like this:

from movie in Movies
where movie.Actors.Any(a => a.Name == "Zack")
select movie;

This filters movies by the actors (a related collection) in the movie, and that is exactly what we need in OData.

Step 2: Translate from source material to problem domain

My next step is generally to take the ideas from the source material and try to translate them to my problem space. As you do this you'll start to notice the differences between source and problem, some insignificant, some troublesome.

This is where judgment comes in: you need to know when to go back to the drawing board. For me, I know I'm on thin ice if I start saying things like 'well if I ignore X and Y, and imagine that Z is like this'.

But don't give up too early either. Often the biggest wins are gained by comparing things that seem quite different until you look a little deeper and find a way to ignore the differences that don't really matter. For example, relational databases and the web seem very different until you focus on ForeignKeys vs Hyperlinks; pull vs push seems different until you read about IQueryable vs IObservable.

Notice that not giving up here is all about having tolerance for ambiguity: the higher your tolerance, the further you can stretch an idea, which is vital if you want to be really creative.

In the case of Any/All it turns out that the inspiration and problem domain are very similar, and the differences are insignificant.

So how do you do the translation?

In OData predicate filters are expressed via $filter, so we need to convert this LINQ predicate:

(movie) => movie.Actors.Any(actor => actor.Name == "Zack")

into something we can put in an OData $filter.

Let's attack this systematically from left to right. In LINQ you need to name the lambda variable in the predicate (i.e. movie), but in OData there is no need to name the thing you are filtering, because it is implicit. For example, this:

from movie in Movies
where movie.Name == "Donnie Darko"
select movie;

is expressed like this in OData:

~/Movies/?$filter=Name eq 'Donnie Darko'

Notice there is no variable name, we access the Name of the movie implicitly.
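To make the implicit-variable form concrete, here is a minimal sketch that builds such a $filter URL. It assumes the usual OData conventions: string literals are single-quoted with embedded quotes doubled, and the expression is percent-encoded in the query string. odataString and filterUrl are hypothetical helpers.

```javascript
// Format a JavaScript string as an OData string literal ('...' with '' for ').
function odataString(value) {
  return "'" + value.replace(/'/g, "''") + "'";
}

// Append a $filter expression to a collection URL.
// The expression is percent-encoded (spaces become %20); the $filter name is not.
function filterUrl(collectionUri, filterExpression) {
  return collectionUri + "/?$filter=" + encodeURIComponent(filterExpression);
}
```

filterUrl("~/Movies", "Name eq " + odataString("Donnie Darko")) yields ~/Movies/?$filter=Name%20eq%20'Donnie%20Darko'. Name refers to the movie's property implicitly, so no lambda variable appears anywhere in the URL.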

So we can skip the ‘movie’.

Next, in LINQ the Any method is a built-in extension method called directly on the collection using '.Any'. In OData '/' is used in place of '.', and built-in methods are all lowercase, so that points to something like this:

~/Movies/?$filter=Actors/any(????)

As mentioned previously, in OData we don't name variables; everything is implicit, which means we can ignore the actor variable. That leaves only the filter, which we can convert using the existing LINQ to OData conversion rules to yield something like this:

~/Movies/?$filter=Actors/any(Name eq 'Zack')

Step 3: Did you lose something important?

There is a good chance you lost something important in this transformation, so my next step is generally to assess what information has been lost in translation. Paying particular attention to things that are important enough that your source material had specific constructs to capture them.

As you notice these differences you need to either convince yourself they don’t matter, or you need to add something new to your solution to bring it back.

In our case you'll remember that in LINQ the actor being tested in the Any method had a name (i.e. 'actor'), yet in our current OData design it doesn't.

Is this important?

Yes it is! Unlike the root filter, where there is only one variable in scope (i.e. the movie), inside an Any/All there are potentially two variables in scope (i.e. the movie and the actor). And if neither are named we won't be able to distinguish between them!

For example this query, which finds any movies with the same name as any actors who star in the movie, is unambiguous in LINQ:

from movie in Movies
where movie.Actors.Any(actor => actor.Name == movie.Name)
select movie;

But our proposed equivalent is clearly nonsensical:

~/Movies/?$filter=Actors/any(Name eq Name)

It seems we need a way to refer to both the inner (actor) and outer variables (movie) explicitly.

Now, we can't change the way existing OData queries work without breaking clients and servers that are already deployed, which means we can't explicitly name the outer variable. We can, however, introduce a way to refer to it implicitly. This should be a reserved name so it can't collide with any properties. OData already uses the $ prefix for reserved names (e.g. $filter, $skip), so we could try something like $it. This results in something like this:

~/Movies/?$filter=Actors/any(Name eq $it/Name)

And now the query is unambiguous again.

But unfortunately we aren't done yet. We need to make sure nested calls work too, for example:

from movie in Movies
where movie.Actors.Any(actor => actor.Name == movie.Name && actor.Awards.Any(award => award.Name == "Oscar"))
select movie;

If we translate this with the current design, we get this:

~/Movies/?$filter=Actors/any(Name eq $it/Name and Awards/any(Name eq 'Oscar'))

But now it is unclear whether Name eq 'Oscar' refers to the actor or the award. Perhaps we need to be able to name the actor and award variables too. Here we are not restricted by the past, Any/All is new, so it can include a way to explicitly name the variable. Again we look at LINQ for inspiration:

award => award.Name == "Oscar"

Clearly we need something like '=>' that is URI-friendly and compatible with the current OData design. It turns out ':' is a good candidate, because it works well in query strings, it isn't currently used by OData, and, even better, there is a precedent in Python lambdas (notice the pattern matching again). So the final proposal is something like this:

~/Movies/?$filter=Actors/any(actor: actor/Name eq $it/Name)

Or for the nested scenario:

~/Movies/?$filter=Actors/any(actor: actor/Name eq $it/Name and actor/Awards/any(award: award/Name eq 'Oscar'))

And again nothing is ambiguous.
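The proposed syntax composes cleanly. Here is a minimal sketch that builds the nested filter above from a helper that takes the lambda variable name and a callback producing the predicate. Because the inner call receives its own variable, the inner predicate can refer to the award, the actor and the outer $it without ambiguity. anyFilter is a hypothetical helper. The sketch spells the logical operator in lowercase (and), as OData's other operators such as eq are.

```javascript
// Build "collection/any(variable: predicate)" per the proposal in the post.
// buildPredicate receives the variable name so nested calls can refer to it.
function anyFilter(collection, variable, buildPredicate) {
  return collection + "/any(" + variable + ": " + buildPredicate(variable) + ")";
}

// The nested example: actors whose name equals the movie's name ($it) and who
// have won an award named 'Oscar'.
var filter = anyFilter("Actors", "actor", function (actor) {
  return actor + "/Name eq $it/Name and " +
    anyFilter(actor + "/Awards", "award", function (award) {
      return award + "/Name eq 'Oscar'";
    });
});
```

Here filter is Actors/any(actor: actor/Name eq $it/Name and actor/Awards/any(award: award/Name eq 'Oscar')). That is the expression that goes after ~/Movies/?$filter= in the final proposal.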

Step 4: Rinse and Repeat

In this case the design feels good, so we are done.

Clearly that won't always be the case, but you should at least know whether the design looks promising. If not, it is back to the drawing board.

If it does look promising, I would essentially repeat steps 1-3 using some other inspiration to inform further tweaking of the design, hopefully ending up with something that feels complete.

Conclusion

While writing this up, I definitely teased out a number of things that had previously been unconscious, and I'm sure this will help me going forward. Hopefully it will help you too.

What do you think about all this? Do you have any suggested improvements?

Next time I do this I'll explore something that involves more creativity... and I'll try to tease out more of the unconscious tools I use.

Bob Brauer (@sibob) will present an MSDN Webcast: Integrating High-Quality Data with Windows Azure DataMarket (Level 200) on 6/21/2011 at 8:00 AM PDT:

Event ID: 1032487799

- Language(s): English.

- Product(s): Windows Azure.

- Audience(s): Pro Dev/Programmer.

All of us are aware of the importance of high-quality data, especially when organizations communicate with customers, create business processes, and use data for business intelligence. In this webcast, we demonstrate the easy-to-integrate data services that are available within Windows Azure DataMarket from StrikeIron. We look at the service itself and how it works. You'll learn how to integrate it and view several different use cases to see how customers are benefitting from this data quality service that is now available in Windows Azure DataMarket.

Presenter: Bob Brauer, Chief Strategy Officer and Cofounder, StrikeIron, Inc.

Bob Brauer is an expert in the field of data quality, including creating better, more usable data via the cloud. He first founded DataFlux, one of the industry leaders in data quality technology, which has been acquired by SAS Institute. Then he founded StrikeIron in 2003, leveraging the Internet to make data quality technology accessible to more organizations. Visit www.strikeiron.com for more information.

Steve Milroy reported DataMarket Mapper app provides quick, map-based visualization of DataMarket data to the Bing Maps blog on 6/2/2011:

Update: at 11:15am PT, we changed the hyperlink to the DataMarket Application so that our international viewers could access the app. Sorry for any inconvenience.

The Windows Azure DataMarket is a growing repository of data sources and services mediated by Microsoft. It allows customers to purchase vector and tiled datasets using Open Data Protocol (OData) specifications. Available datasets include weather, crime, demographics, parcels, plus many other layers. The OData working group has been looking at full spatial support for this specification; in the meantime, DataMarket datasets can still be used with geospatial applications and Bing Maps.

To further assist use of datasets, we are happy to announce the launch of DataMarket Mapper, a map app that allows quick and easy map-based visualization of DataMarket data. The app was developed by OnTerra Systems, along with the Microsoft DataMarket group. Look for it in the Bing Maps App Gallery. With a DataMarket subscription, you can access layers and visualize them on Bing Maps. If latitudes and longitudes don’t exist in the data source, the Bing Maps geocoder will geocode the locations on the fly. Even if you don’t have a DataMarket account, we’ve made some crime and demographic data available in the app.

Figure 1 – DataMarket Mapper showing crime statistics per city using Data.gov crime data.

You can also use DataMarket data in Bing Maps applications by using the Bing Maps AJAX and Silverlight APIs. This involves using the vector shape or tile layer methods. Figure 2 shows a parcel tile layer overlaid on the Bing Maps AJAX Control 7.0. If you have a DataMarket key with access to these layers, you can also access these demos directly for testing and code samples:

• Alteryx Demographics

• BSI Parcels

• Weatherbug Station Locations

Figure 2 – DataMarket parcel data from B.S.I. shown in a web mapping application using the Bing Maps AJAX 7 API.

We’re excited to launch this new app. After you visit the app and see it in action, we’d enjoy hearing your feedback. We’re already working on updates. In the near future, you’ll see the addition of country-based datasets, Windows Live ID/OAuth, and thematic mapping. If you have any questions or comments or would like help in building a custom Bing Maps application with DataMarket Mapper, please contact OnTerra Systems.com.

Steve is CEO of OnTerra Systems.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

••• Martin Ingvar Kofoed Jensen (@IngvarKofoed) described A basic inter webrole broadcast communication on Azure using the service bus in a 5/19/2011 article (missed when posted):

In this blog post I'll try to show a bare-bones setup that does inter-webrole broadcast communication. The code is based on Valery M's blog post. The code in his post is based on a customer solution and contains a lot more code than is needed to get the setup working, but it also provides much more robust broadcast communication, with retries and other features that make the communication reliable. I have omitted all of this to make the sample as easy to understand and recreate as possible. The idea is that the code I provide could be used as a basis for setting up your own inter-webrole broadcast communication. You can download the code here: InterWebroleBroadcastDemo.zip (17.02 kb)

Windows Azure AppFabric SDK and using Microsoft.ServiceBus.dll

We need a reference to Microsoft.ServiceBus.dll in order to do the inter webrole communication. The Microsoft.ServiceBus.dll assembly is a part of the Windows Azure AppFabric SDK found here.

When you use Microsoft.ServiceBus.dll, you add it as a reference like any other assembly, by browsing to the directory where the AppFabric SDK was installed. Unlike most other references, however, you need to set the reference's "Copy Local" property to true (the default is false).

I have put all my code in a separate assembly, and the main classes are then used in the WebRole.cs file. Even though I had added Microsoft.ServiceBus.dll to my own assembly with "Copy Local" set to true, I still had to add it to the WebRole project and set "Copy Local" to true in that project as well. This is a very important detail!

Creating a new Service Bus Namespace

Here is a short step-by-step guide to creating a new Service Bus namespace. If you have already done this, you can skip these steps and use the existing namespace and its values.

- Go to the section "Service Bus, Access Control & Caching"

- Click the button "New Namespace"

- Check "Service Bus"

- Enter the desired Namespace (This namespace is the one used for EndpointInformation.ServiceNamespace)

- Click "Create Namespace"

- Select the newly created namespace

- Under Properties (on the right), find Default Key and click "View"

- Here you will find the Default Issuer (This value should be used for EndpointInformation.IssuerName) and Default Key (This value should be used for EndpointInformation.IssuerSecret)
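The three values collected in these steps end up in the sample's EndpointInformation class. The hypothetical ServiceBusSettings class below sketches how they fit together: it validates the values and derives an sb:// address, assuming the <namespace>.servicebus.windows.net host name and a service path of your choosing. Its name and members are assumptions, not the sample's code. With the SDK itself, ServiceBusEnvironment.CreateServiceUri is the usual way to build such an address.

```csharp
using System;

// Hypothetical settings holder for the values collected in the portal steps.
// Names are illustrative; the sample's own EndpointInformation class may differ.
public sealed class ServiceBusSettings
{
    public ServiceBusSettings(string serviceNamespace, string issuerName, string issuerSecret)
    {
        // Reject missing values early instead of failing later when the channel opens.
        ServiceNamespace = Require(serviceNamespace, nameof(serviceNamespace));
        IssuerName = Require(issuerName, nameof(issuerName));
        IssuerSecret = Require(issuerSecret, nameof(issuerSecret));
    }

    public string ServiceNamespace { get; }  // the namespace entered in the portal
    public string IssuerName { get; }        // the "Default Issuer" value
    public string IssuerSecret { get; }      // the "Default Key" value

    // Builds sb://<namespace>.servicebus.windows.net/<servicePath>/ by string formatting.
    public Uri CreateServiceUri(string servicePath) =>
        new Uri($"sb://{ServiceNamespace}.servicebus.windows.net/{servicePath.Trim('/')}/");

    private static string Require(string value, string name) =>
        string.IsNullOrWhiteSpace(value) ? throw new ArgumentException("A value is required.", name) : value;
}
```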

The code

Here I will go through all the classes in my sample code. The full project, including the WebRole project, can be downloaded here: InterWebroleBroadcastDemo.zip (17.02 kb)

BroadcastEvent

We start with the BroadcastEvent class. This class represents the data we send across the wire, which is declared with the DataContract attribute on the class and the DataMember attribute on its members. In this sample code I only send two simple strings. SenderInstanceId is not required, but I use it to display where the message came from.

```csharp
using System.Runtime.Serialization;

[DataContract(Namespace = BroadcastNamespaces.DataContract)]
public class BroadcastEvent
{
    public BroadcastEvent(string senderInstanceId, string message)
    {
        this.SenderInstanceId = senderInstanceId;
        this.Message = message;
    }

    [DataMember]
    public string SenderInstanceId { get; private set; }

    [DataMember]
    public string Message { get; private set; }
}
```

BroadcastNamespaces

This class only contains some constants that are used by some of the other classes.

```csharp
public static class BroadcastNamespaces
{
    public const string DataContract = "http://broadcast.event.test/data";

    public const string ServiceContract = "http://broadcast.event.test/service";
}
```

IBroadcastServiceContract

This interface defines the contract that the web roles use when communicating with each other. In this simple example, the contract has only one method, Publish. In the contract's implementation (BroadcastService), this method is used to send BroadcastEvents to all web roles that have subscribed to this channel. There is another method, Subscribe, which is inherited from IObservable<BroadcastEvent>. It is used to subscribe to BroadcastEvents when some web role publishes them, and it is also implemented in the BroadcastService class.

```csharp
using System;
using System.ServiceModel;

[ServiceContract(Name = "BroadcastServiceContract",
    Namespace = BroadcastNamespaces.ServiceContract)]
public interface IBroadcastServiceContract : IObservable<BroadcastEvent>
{
    // One-way operation: the publisher does not wait for a reply message.
    [OperationContract(IsOneWay = true)]
    void Publish(BroadcastEvent e);
}
```

IBroadcastServiceChannel

This interface defines the channel through which the web roles communicate. This is done by also inheriting from the IClientChannel interface.

```csharp
using System.ServiceModel;

public interface IBroadcastServiceChannel : IBroadcastServiceContract, IClientChannel
{
}
```

BroadcastEventSubscriber

The web role subscribes to the channel by creating an instance of this class and registering it. For testing purposes, this implementation only logs each BroadcastEvent it receives.

```csharp
using System;
using Microsoft.WindowsAzure.ServiceRuntime;

public class BroadcastEventSubscriber : IObserver<BroadcastEvent>
{
    public void OnNext(BroadcastEvent value)
    {
        // Logger is a logging helper that is not shown in this post.
        Logger.AddLogEntry(RoleEnvironment.CurrentRoleInstance.Id +
            " got message from " + value.SenderInstanceId + " : " +
            value.Message);
    }

    public void OnCompleted()
    {
        /* Handle on completed */
    }

    public void OnError(Exception error)
    {
        /* Handle on error */
    }
}
```

BroadcastService

This class implements the IBroadcastServiceContract interface. It handles publishing by calling the OnNext method on all subscribers in parallel. The reason for doing this in parallel is that OnNext is blocking, so there is a good chance of a reasonable performance gain.

The other method is Subscribe. This method adds the BroadcastEvent observer to the subscribers and returns an object of type UnsubscribeCallbackHandler that unsubscribes the observer when it is disposed. This is part of the IObserver/IObservable pattern.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.ServiceModel;

[ServiceBehavior(InstanceContextMode = InstanceContextMode.Single,
    ConcurrencyMode = ConcurrencyMode.Multiple)]
public class BroadcastService : IBroadcastServiceContract
{
    // ConcurrencyMode.Multiple lets WCF call these methods concurrently,
    // so every access to the subscriber list is guarded by this lock.
    private readonly object _syncRoot = new object();

    private readonly IList<IObserver<BroadcastEvent>> _subscribers =
        new List<IObserver<BroadcastEvent>>();

    public void Publish(BroadcastEvent e)
    {
        // Take a snapshot so subscribers can be added or removed while publishing.
        IObserver<BroadcastEvent>[] snapshot;
        lock (_syncRoot)
        {
            snapshot = _subscribers.ToArray();
        }

        // OnNext may block, so notify all subscribers in parallel.
        snapshot.AsParallel().AsUnordered().ForAll(subscriber =>
        {
            try
            {
                subscriber.OnNext(e);
            }
            catch (Exception ex)
            {
                try
                {
                    subscriber.OnError(ex);
                }
                catch (Exception)
                {
                    /* Ignore exception */
                }
            }
        });
    }

    public IDisposable Subscribe(IObserver<BroadcastEvent> subscriber)
    {
        lock (_syncRoot)
        {
            if (!_subscribers.Contains(subscriber))
            {
                _subscribers.Add(subscriber);
            }
        }

        return new UnsubscribeCallbackHandler(this, subscriber);
    }

    private class UnsubscribeCallbackHandler : IDisposable
    {
        private readonly BroadcastService _service;
        private readonly IObserver<BroadcastEvent> _subscriber;

        public UnsubscribeCallbackHandler(BroadcastService service,
            IObserver<BroadcastEvent> subscriber)
        {
            _service = service;
            _subscriber = subscriber;
        }

        public void Dispose()
        {
            if (_subscriber == null) return;

            lock (_service._syncRoot)
            {
                // Remove is a no-op if the subscriber was already removed.
                _service._subscribers.Remove(_subscriber);
            }
        }
    }
}
```
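Because BroadcastService depends on WCF and the Service Bus, its publish/subscribe logic is awkward to try in isolation. The sketch below mirrors that logic in memory under assumed names: SafeBroadcastHub and CountingObserver are hypothetical, and messages are plain strings rather than BroadcastEvent. It guards the subscriber list with a lock and publishes to a snapshot, since List<T> is not safe for concurrent reads and writes. It keeps the same contract: Subscribe returns an IDisposable that removes the observer when disposed.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical in-memory hub mirroring BroadcastService's publish/subscribe logic,
// with string messages instead of BroadcastEvent and no WCF or Service Bus.
public sealed class SafeBroadcastHub : IObservable<string>
{
    private readonly object _gate = new object();
    private readonly List<IObserver<string>> _subscribers = new List<IObserver<string>>();

    public void Publish(string message)
    {
        IObserver<string>[] snapshot;
        lock (_gate)
        {
            // Copy under the lock so Subscribe/Dispose can run during a publish.
            snapshot = _subscribers.ToArray();
        }

        // Notify subscribers in parallel; Parallel.ForEach returns when all are done.
        Parallel.ForEach(snapshot, subscriber =>
        {
            try
            {
                subscriber.OnNext(message);
            }
            catch (Exception ex)
            {
                try { subscriber.OnError(ex); }
                catch (Exception) { /* Ignore, as BroadcastService does. */ }
            }
        });
    }

    public IDisposable Subscribe(IObserver<string> subscriber)
    {
        if (subscriber == null) throw new ArgumentNullException(nameof(subscriber));

        lock (_gate)
        {
            if (!_subscribers.Contains(subscriber)) _subscribers.Add(subscriber);
        }
        return new Unsubscriber(this, subscriber);
    }

    // Removes the observer when disposed; disposing twice is harmless.
    private sealed class Unsubscriber : IDisposable
    {
        private readonly SafeBroadcastHub _hub;
        private readonly IObserver<string> _subscriber;

        public Unsubscriber(SafeBroadcastHub hub, IObserver<string> subscriber)
        {
            _hub = hub;
            _subscriber = subscriber;
        }

        public void Dispose()
        {
            lock (_hub._gate)
            {
                _hub._subscribers.Remove(_subscriber);
            }
        }
    }
}

// Hypothetical test observer that counts the messages it receives.
public sealed class CountingObserver : IObserver<string>
{
    private int _count;

    public int Count => Volatile.Read(ref _count);

    // Interlocked because Publish may call OnNext from several threads.
    public void OnNext(string value) => Interlocked.Increment(ref _count);
    public void OnCompleted() { }
    public void OnError(Exception error) { }
}
```

Suppose two observers subscribe and one message is published. The first subscription is then disposed and a second message is published. The first observer receives one message and the second receives two.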

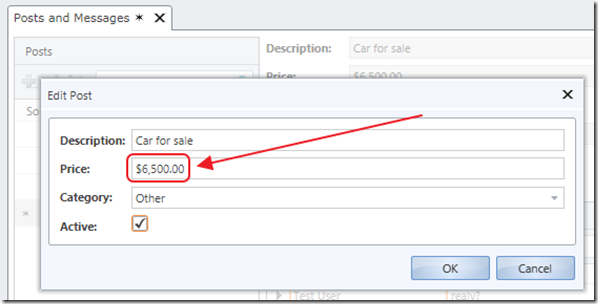
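The BroadcastEvent data contract shown earlier can also be checked in isolation, without WCF or the Service Bus. The sketch below round-trips an event through DataContractSerializer, the serializer WCF uses for data contracts by default, to show roughly what travels across the wire. To stay self-contained it redeclares BroadcastEvent with the namespace constant inlined. The BroadcastEventSerialization helper and the sample values are hypothetical. DataContractSerializer does not call the constructor when deserializing, so the class needs no parameterless constructor, and it can populate the private setters.

```csharp
using System.IO;
using System.Runtime.Serialization;
using System.Text;

// Redeclared from the sample, with BroadcastNamespaces.DataContract inlined.
[DataContract(Namespace = "http://broadcast.event.test/data")]
public class BroadcastEvent
{
    public BroadcastEvent(string senderInstanceId, string message)
    {
        SenderInstanceId = senderInstanceId;
        Message = message;
    }

    [DataMember]
    public string SenderInstanceId { get; private set; }

    [DataMember]
    public string Message { get; private set; }
}

// Hypothetical helper for inspecting the serialized form of an event.
public static class BroadcastEventSerialization
{
    private static readonly DataContractSerializer Serializer =
        new DataContractSerializer(typeof(BroadcastEvent));

    // Serializes an event to XML, roughly what WCF puts in the message body.
    public static string ToXml(BroadcastEvent e)
    {
        using var stream = new MemoryStream();
        Serializer.WriteObject(stream, e);
        return Encoding.UTF8.GetString(stream.ToArray());
    }

    // Reads the XML back; the BroadcastEvent constructor is not called here.
    public static BroadcastEvent FromXml(string xml)
    {
        using var stream = new MemoryStream(Encoding.UTF8.GetBytes(xml));
        return (BroadcastEvent)Serializer.ReadObject(stream);
    }
}
```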

ServiceBusClient