Windows Azure and Cloud Computing Posts for 6/15/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Update 6/15/2011 5:00 PM PDT with new articles marked • from Doug Henschen, Stacey Higginbotham, Microsoft NERD Center, MicrosoftFeed, John K. Walters, Derrick Harris, Mary Jo Foley, Robert Duffner and Jason Bloomberg.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database, Big Data and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database, Big Data and Reporting

• Derrick Harris (@derrickharris) reported Xeround enters GA, tests the SQL-in-the-cloud water in a 6/13/2011 post to GigaOM’s Structure blog:

Xeround’s cloud-based MySQL service enters general availability Monday [6/13/2011], becoming the first cloud-based third-party MySQL distribution that actually requires customers to pay for the service. Other SQL services exist, but they’re generally offered directly from cloud providers (e.g., Amazon Web Services’ Relational Database Service or Microsoft’s SQL Azure), which puts Xeround in the guinea pig position of trying to convince cloud users they should pay an entirely new database vendor. If it’s successful, there are plenty of other cloud database startups waiting in the wings to ride the SQL-in-the-cloud wave.

Xeround offers an entirely cloud-based MySQL database service that presently runs atop AWS, Rackspace Cloud Servers and Heroku. However, the underlying technology is platform-agnostic, so the company promises support for more clouds soon. CEO Razi Sharir told me in an interview Xeround has more than 2,000 customers spread fairly equally between the United States and Europe.

Going forward, its challenge will be converting beta users to paying customers and bringing in fresh users. Sharir cites an industry-wide conversion rate of 5 to 10 percent in terms of getting beta users to pay, and he doesn’t see any reason his company can’t hit the high end of that range. He noted that a good number of beta users loaded live data into Xeround’s service despite its beta status, indicating their confidence in the service. Thus, getting live applications running in the production-ready version shouldn’t be too difficult. Sharir expects to have thousands of paying customers within the next couple of years.

Xeround charges 12 cents per gigabyte per hour for data storage and 46 cents per gigabyte for data transfer. With those prices, customers get a 99.9 percent uptime guarantee, e-mail/web/phone support, and automatic scaling and healing of resources. The way Sharir describes the latter capabilities, Xeround customers needn’t worry at all about adding resources or failing over because the technology is designed to automate everything.

As we’ll highlight in a Structure 2011 panel next week (of which Xeround will be a part), there is a whole ecosystem of cloud database options waiting to make their marks, including fellow SQL-supporting startups such as NimbusDB, GenieDB and Starcounter.

Xeround appears extremely expensive to me. (The Xeround site confirms Derrick’s reported prices.) SQL Azure rents for $9.99 per month for a 1-GB database with high availability provided by a primary and two secondary replicas. SQL Azure’s US$9.99/GB-month fixed price converts to about US$0.014 per GB-hour (assuming a 730-hour month), roughly one-ninth of Xeround’s storage price. SQL Azure data transfer costs $0.10/GB in and $0.15/GB out in North America and Europe.
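The storage-price comparison can be checked with a few lines of arithmetic. This sketch uses only the per-GB prices quoted above, assumes a 730-hour month (365 days times 24 hours, divided by 12), and ignores data transfer charges:

```python
# Storage-price comparison using the per-GB prices quoted in the post.
# HOURS_PER_MONTH is an assumption: 365 days * 24 hours / 12 months.
HOURS_PER_MONTH = 365 * 24 / 12          # 730.0

XEROUND_PER_GB_HOUR = 0.12               # US$ per GB-hour (reported)
SQL_AZURE_PER_GB_MONTH = 9.99            # US$ per month for a 1-GB database

def per_gb_hour(monthly_price, hours=HOURS_PER_MONTH):
    """Convert a per-GB monthly price into a per-GB hourly price."""
    return monthly_price / hours

sql_azure_hourly = per_gb_hour(SQL_AZURE_PER_GB_MONTH)
xeround_monthly = XEROUND_PER_GB_HOUR * HOURS_PER_MONTH
price_ratio = XEROUND_PER_GB_HOUR / sql_azure_hourly

print(f"SQL Azure: ${sql_azure_hourly:.4f} per GB-hour")   # $0.0137
print(f"Xeround:   ${xeround_monthly:.2f} per GB-month")    # $87.60
print(f"Ratio:     {price_ratio:.1f}x")                     # 8.8x
```

Using a 720-hour (30-day) month instead shifts the SQL Azure figure only slightly, to about $0.0139 per GB-hour.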

• Mary Jo Foley described TidyFS: Microsoft's simpler distributed file system in a 6/15/2011 post to her All About Microsoft blog for ZDNet:

Just about a year ago, I first mentioned TidyFS, a new, small distributed file system under development by Microsoft Research. Later this week at the USENIX ’11 conference, the Microsoft researchers behind TidyFS will share more details about their work publicly.

TidyFS is a distributed file system for parallel computations on clusters. On commodity, “shared-nothing” clusters, the primary workloads tend to be generated by distributed execution engines like MapReduce, Hadoop or Microsoft’s Dryad, the Microsoft researchers note in the abstract of their presentation. Other vendors have created distributed file systems for these workloads, such as the Google File System (GFS) and the Hadoop Distributed File System (HDFS). Microsoft has one in development, too: TidyFS.

Here’s an architectural diagram from Microsoft from a year ago showing how researchers were envisioning that TidyFS and other experimental components would fit together:


Microsoft researchers are emphasizing the simplicity and small size of TidyFS as differentiators from the other parallel file systems out there. And they’re sharing some of their experiences using the file system in a limited way inside Microsoft Research in their white paper detailing their TidyFS work.

From the TidyFS white paper:

“The TidyFS storage system is composed of three components: a metadata server; a node service that performs housekeeping tasks running on each cluster computer that stores data; and the TidyFS Explorer, a graphical user interface which allows users to view the state of the system.”
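The quoted description names the three components but not their internals. As an illustration only, here is a toy Python model of the split between a metadata server that does pure bookkeeping and a per-node service that performs housekeeping (here, re-replicating data). The stream/part vocabulary, the replication target, and all class and function names are assumptions for the sketch, not TidyFS’s actual design:

```python
from dataclasses import dataclass, field

@dataclass
class Part:
    part_id: int
    size_bytes: int
    replicas: set = field(default_factory=set)   # names of nodes holding a copy

class MetadataServer:
    """Hypothetical metadata server: maps stream names to ordered lists of
    parts and records which nodes hold each part. No file data passes
    through it, only bookkeeping."""

    def __init__(self, replication_target=2):
        self.replication_target = replication_target
        self.streams = {}      # stream name -> ordered list of part ids
        self.parts = {}        # part id -> Part
        self._next_id = 0

    def add_part(self, stream, size_bytes, node):
        """Register a part that was just written on `node`."""
        part = Part(self._next_id, size_bytes, {node})
        self._next_id += 1
        self.parts[part.part_id] = part
        self.streams.setdefault(stream, []).append(part.part_id)
        return part.part_id

    def stream_size(self, stream):
        return sum(self.parts[p].size_bytes for p in self.streams.get(stream, []))

    def under_replicated(self):
        return [p for p in self.parts.values()
                if len(p.replicas) < self.replication_target]

def housekeeping_pass(server, nodes):
    """Hypothetical node-service task: copy under-replicated parts to nodes
    that don't hold them yet, and record the new replicas on the server."""
    copies = []
    for part in server.under_replicated():
        for node in nodes:
            if len(part.replicas) >= server.replication_target:
                break
            if node not in part.replicas:
                part.replicas.add(node)          # stands in for a real file copy
                copies.append((part.part_id, node))
    return copies

server = MetadataServer(replication_target=2)
server.add_part("logs/day1", 64_000_000, "node-a")
server.add_part("logs/day1", 32_000_000, "node-b")
print(server.stream_size("logs/day1"))           # 96000000
print(housekeeping_pass(server, ["node-a", "node-b", "node-c"]))
print(server.under_replicated())                 # []
```

Keeping replication out of the write path and in a background pass is one way a design can stay small and simple, which is the property the researchers emphasize.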

Microsoft Research has been actively using TidyFS for the past year on a research cluster of 256 servers running large-scale, data-intensive computations, according to the white paper. The research cluster is used only for programs run using DryadLINQ, a parallelizing compiler for .NET programs that uses Dryad. (I’ve written before about Dryad, a first commercial version of which Microsoft is planning to deliver later this year as part of a service pack for Windows Server 2008 R2 HPC.)

“On a typical day, several terabytes of data are read and written to TidyFS through the execution of DryadLINQ programs,” the white paper notes.

The experimental TidyFS cluster also is making use of a cluster-wide scheduler, codenamed “Quincy,” and a computational cache-manager, codenamed “Nectar.” Even though TidyFS was designed in conjunction with these various other distributed-clustering research projects, the Dryad and DryadLINQ pieces seem to be further along the path to commercialization. (When I asked Microsoft officials earlier this year if Quincy and Nectar would be commercialized later this year along with Dryad, I was told they were not on the same delivery trajectory.)

Nonetheless, the white paper says that “rather than making TidyFS more general, one direction we are considering is integrating it more tightly with our other cluster services.”

As with all Microsoft research projects, there is no absolute guarantee as to when and if TidyFS will evolve into a commercial product or part of a commercial product. However, given that Dryad is on its way to being released as “LINQ to HPC” later this year, I’m thinking TidyFS may not be that far behind, and may someday find its place in the Microsoft “cloud as supercomputer” strategy, alongside Dryad.

• Doug Henschen asserted “Microsoft's CEO says an inside-the-enterprise business intelligence focus misses the real opportunity for large-scale insight” in a deck for his Ballmer To IBM, Oracle: You Don't Know Big Data article of 6/15/2011 for InformationWeek:

Microsoft is doubling down on "big data," one of the tech trends that's emerging as a top priority for its customers, says Steve Ballmer. But Microsoft's CEO isn't talking about the kind of internal business intelligence that he says is the focus for IBM, Oracle and other rivals.

In a day of interviews with four InformationWeek editors at Microsoft's headquarters last week, Ballmer and several of his lieutenants provided an expansive vision for what big data and the related cloud computing movement will bring. It remains to be seen whether Microsoft can translate that vision into an advantage over rivals IBM and Oracle, or, more importantly, into real value for its customers. But we heard compelling arguments for blending on-premises data and computing capacity with new resources and capabilities in the cloud.

If you think about big data through the narrow lens of large-scale data warehousing, Microsoft is the greenhorn among the likes of EMC (by way of its Greenplum acquisition), Hewlett-Packard (through its Vertica acquisition), IBM, Oracle, and Teradata. Those vendors have fielded products for the top end of the market for years, while Microsoft didn't introduce its SQL Server 2008 R2 Parallel Data Warehouse (PDW) database until late last year. Hardware-complete PDW appliances from HP and other partners weren't available until early this year.

In fact, Microsoft didn't win its first PDW customer, the Direct Edge stock exchange, until last month, as I reported in this article. At 30-plus-terabytes, the Direct Edge project is larger than any we've seen on Oracle's Exadata. (BNP Paribas's deployment, which started at 23 terabytes, is the largest Exadata reference customer we know of).

The Direct Edge deployment won't be operational until later this year. And even at its zenith, this project won't hold a candle to the petabyte-scale deployments running on Greenplum, Netezza, and Teradata. Direct Edge says its deployment might scale up to about 200 terabytes.

So just where was Ballmer coming from when he said, "Nobody plays in big data, really, except Microsoft and Google"?

Search And Big Insight

Ballmer's perspective on big data is tied to the Bing Internet search engine, a business we heard much more about from Satya Nadella. Until a few months ago, Nadella was the senior VP in charge of engineering for Microsoft's online business, which includes search (Bing), the MSN portal, and Internet ad-serving. It says something that Nadella was Ballmer's hand-picked choice to take over as president of Microsoft's Server and Tools division in January, following the resignation of Bob Muglia.

Nadella has been at Microsoft since 1992, serving in Microsoft Business Solutions (responsible for Microsoft Dynamics applications) and on the server side of the business (working on Windows NT and other server products). During his four-plus years with Microsoft's online business, Nadella says he "relearned everything about infrastructure," something Microsoft's server business needs to do as it moves into cloud computing. [Emphasis added.]

Microsoft's online operation puts big data into perspective. Bing's infrastructure consists of 250,000 Windows Server machines and manages some 150 petabytes of data. Microsoft processes two to three petabytes per day. "You really have to figure out how to process that kind of data to keep your index fresh," Nadella says.
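To put those Bing figures in more familiar units, here is a back-of-the-envelope calculation. It assumes decimal units (1 PB = 10^15 bytes), an even spread of data across servers, and processing spread evenly over 24 hours; none of these assumptions come from the article:

```python
PB = 10**15                       # decimal petabyte (assumption)
GB = 10**9
SECONDS_PER_DAY = 24 * 60 * 60

def sustained_gb_per_second(bytes_per_day):
    """Average rate needed to process bytes_per_day within 24 hours."""
    return bytes_per_day / SECONDS_PER_DAY / GB

servers = 250_000
stored_bytes = 150 * PB
avg_per_server_gb = stored_bytes / servers / GB   # assumes an even spread

print(f"Average data per server: {avg_per_server_gb:.0f} GB")   # 600 GB
for daily_pb in (2, 3):
    rate = sustained_gb_per_second(daily_pb * PB)
    print(f"{daily_pb} PB/day = about {rate:.1f} GB/s, around the clock")
```

Two to three petabytes per day works out to roughly 23 to 35 GB of data every second, before any replication or reprocessing.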

Those interested in running apps in the cloud might dismiss Bing-related processing as being stateless -- not a continuously running component of a mission-critical app. Nadella points to Microsoft's AdCenter, which is a complicated business application with a transactional data store. All Internet ad deliveries have to be tracked, and for every search, Microsoft runs some 30,000 auctions simultaneously to re-rank the ads. "That's as stateful an app as you can get," Nadella says. …


• Stacey Higginbotham (@gigastacey) reported Comcast likely to show off gigabit cable broadband in a 6/15/2011 post to GigaOM’s Broadband blog:

Comcast will apparently show off a 1 gigabit per second connection Thursday at The Cable Show in Chicago, according to Karl Bode, editor at Broadband Reports. Bode, who is a trusted source in the industry, said the nation’s top broadband provider would show off the gigabit connection and launch a symmetrical 100 Mbps speed tier. He tweeted a picture he identified as Comcast demonstrating 100 Mbps upload speeds.

To support these claims, an email from Comcast sent Tuesday asked me to “be sure to mark your calendars for June 16th at 9:00am CDT/10am EST when Brian Roberts will preview this next-gen service and provide a glimpse into the future of broadband speed.” Verizon is clearly nervous. A Verizon spokesman is emailing me with assertions about the superiority of fiber over cable (he’s right, fiber is better, but cable is far more prevalent). Of course, those emails may have been launched by the story I wrote on Tuesday noting that cable equipment provider Arris (arrs) had shown off 4.5 Gbps cable speeds. Arris does sell its gear to Comcast.

If Comcast does show off 1 Gbps speeds Thursday, it should expect to answer a lot of questions, starting with whether this is a demonstration or something it plans to launch in the near future. I’m also curious what such speeds mean for the end consumer on a shared cable network, and how many television channels it would have to sacrifice in order to deliver those speeds (this question is relevant for the faster upstream speeds it would need to deliver as well). Also, does it make sense to have a 250 GB monthly cap when offering gigabit speeds? I’ve asked Comcast about the demonstrations and everything else, but haven’t heard back. But when Roberts makes his big reveal tomorrow (here’s the live stream), it’s what I’ll be listening for.
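The data-cap question is easy to quantify. This sketch assumes decimal units (1 GB = 8,000 megabits) and a link that actually runs at its full advertised rate, which a shared cable segment may not deliver:

```python
def hours_to_exhaust_cap(cap_gb, link_mbps):
    """Hours of continuous transfer needed to use up a monthly data cap.
    Assumes decimal units and a link running at its full rated speed."""
    cap_megabits = cap_gb * 8_000
    seconds = cap_megabits / link_mbps
    return seconds / 3600

for mbps in (100, 1_000):
    minutes = hours_to_exhaust_cap(250, mbps) * 60
    print(f"250 GB cap at {mbps} Mbps: used up in about {minutes:.0f} minutes")
```

At a full gigabit, a 250 GB cap lasts about 33 minutes of continuous use; at 100 Mbps, about 5.6 hours.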


Working with Big Data in the cloud requires symmetrical, high-speed connections, not the anemic upload rates of telco ADSL lines. See my Scott Guthrie Reports Some Windows Azure Customers Are Storing 25 to 50 Petabytes of Data post, updated 6/11/2011, for the time (and cost) required to transfer a petabyte of data to and from Windows Azure.
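A rough feel for why upload speed matters: the sketch below computes how long one decimal petabyte takes to move at a few illustrative link rates. The rates, and the optional efficiency factor for protocol overhead, are assumptions rather than figures from the linked post:

```python
def days_to_transfer(num_bytes, link_mbps, efficiency=1.0):
    """Days needed to move num_bytes over a link of link_mbps megabits/s.
    efficiency < 1.0 models protocol overhead (an assumed value)."""
    bits = num_bytes * 8
    seconds = bits / (link_mbps * 1_000_000 * efficiency)
    return seconds / 86_400

PETABYTE = 10**15   # decimal petabyte

# Illustrative link rates; actual ADSL upstream speeds vary by plan.
for label, mbps in [("1 Mbps ADSL upstream", 1),
                    ("100 Mbps symmetrical", 100),
                    ("1 Gbps", 1_000)]:
    print(f"{label}: {days_to_transfer(PETABYTE, mbps):,.0f} days per petabyte")
```

Even at a sustained 1 Gbps, a petabyte takes about three months; at a typical ADSL upload rate it takes centuries.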

Steve Yi recommended on 6/15/2011 a Troubleshooting and Optimizing Queries with SQL Azure TechNet article by Dinakar Nethi:

Dinakar Nethi has written an interesting TechNet article about the Dynamic Management Views (DMVs) available in SQL Azure and how you can use them for troubleshooting. Take a look to get a feel for how easy SQL Azure is to use.

Click here for the TechNet article, “Troubleshooting and Optimizing Queries with SQL Azure.”

Todd Hoff offered 101 Questions to Ask When Considering a NoSQL Database in a 6/15/2011 post to the High Scalability blog:

You need answers, I know, but all I have here are some questions to consider when thinking about which database to use. These are taken from my webinar What Should I Do? Choosing SQL, NoSQL or Both for Scalable Web Applications. It's a companion article to What The Heck Are You Actually Using NoSQL For?

Actually, I don't even know if there are 101 questions, but there are a lot, way too many. You might want to use these questions as a kind of NoSQL I Ching, guiding your way through the immense possibility space of options in front of you. Nothing is fated, all is interpreted, but it might just trigger a new insight or two along the way.

Where are you starting from?

- A can-do-anything greenfield application?

- In the middle of a project and worried about hitting bottlenecks?

- Worried about hitting the scaling wall once you deploy?

- Adding a separate loosely coupled service to an existing system?

- What are your resources? Expertise? Budget?

- What are your pain points? What's so important that if it fails you will fail? What forces are pushing you?

- What are your priorities? Prioritize them. What is really important to you, what must get done?

- What are your risks? Prioritize them. Is the risk of being unavailable more important than being inconsistent?

What are you trying to accomplish?

- What are you trying to accomplish?

- What's the delivery schedule?

- Do the research to be specific, like Facebook did with their messaging system:

Facebook chose HBase because they monitored their usage and figured out what was needed: a system that could handle two types of data patterns.

Things to Consider...Your Problem

- Do you need to build a custom system?

- Access patterns: 1) a short set of temporal data that tends to be volatile; 2) an ever-growing set of data that rarely gets accessed; 3) high write loads; 4) high throughput; 5) sequential; 6) random

- Requires scalability?

- Is availability more important than consistency, or is it latency, transactions, durability, performance, or ease of use?

- Cloud or colo? Hosted services? Resources like disk space?

- Can you find people who know the stack?

- Tired of the data transformation (ORM) treadmill?

- Store data that can be accessed quickly and is used often?

- Would like a high level interface like PaaS?

Things to Consider...Money

- Cost? With money you have different options than if you don't. You can probably make the technologies you know best scale.

- Inexpensive scaling?

- Lower operations cost?

- No sysadmins?

- Type of license?

- Support costs?

Things to Consider...Programming

- Flexible datatypes and schemas?

- Support for which language bindings?

- Web support: JSON, REST, HTTP, JSON-RPC

- Built-in stored procedure support? Javascript?

- Platform support: mobile, workstation, cloud

- Transaction support: key-value, distributed, ACID, BASE, eventual consistency, multi-object ACID transactions.

- Datatype support: graph, key-value, row, column, JSON, document, references, relationships, advanced data structures, large BLOBs.

- Prefer the simplicity of transaction model where you can just update and be done with it? In-memory makes it fast enough and big systems can fit on just a few nodes.

Things to Consider...Performance

- Performance metrics: IOPS, reads, writes, streaming?

- Support for your access pattern: random read/write; sequential read/write; large or small or whatever chunk size you use.

- Are you storing frequently updated bits of data?

- High Concurrency vs High Performance?

- Problems that limit the type of work load you care about?

- Peak QPS on highly-concurrent workloads?

- Test your specific scenarios?

Things to Consider...Features

- Spooky scalability at a distance: support across multiple data-centers?

- Ease of installation, configuration, operations, development, deployment, support, management, upgrades, etc.

- Data Integrity: In DDL, Stored Procedure, or App

- Persistence design: Memtable/SSTable; Append-only B-tree; B-tree; On-disk linked lists; In-memory replicated; In-memory snapshots; In-memory only; Hash; Pluggable.

- Schema support: none, rigid, optional, mixed

- Storage model: embedded, client/server, distributed, in-memory

- Support for search, secondary indexes, range queries, ad-hoc queries, MapReduce?

- Hitless upgrades?
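The first persistence design on that list, Memtable/SSTable (the log-structured approach used by Bigtable-style stores), is easier to evaluate once you see its moving parts. A minimal in-memory sketch; it deliberately omits the write-ahead log, deletes (tombstones) and compaction that a real store needs for durability and read performance:

```python
import bisect

class TinyLSM:
    """Toy Memtable/SSTable store. Writes go to a mutable in-memory table;
    when it fills up, it is flushed as an immutable, sorted run (standing in
    for an on-disk SSTable). Reads check the memtable, then runs newest first."""

    def __init__(self, memtable_limit=3):
        self.memtable_limit = memtable_limit
        self.memtable = {}
        self.sstables = []        # list of (sorted_keys, values), oldest first

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def flush(self):
        if self.memtable:
            items = sorted(self.memtable.items())
            self.sstables.append(([k for k, _ in items], [v for _, v in items]))
            self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        for keys, values in reversed(self.sstables):   # newest run wins
            i = bisect.bisect_left(keys, key)           # binary search in sorted run
            if i < len(keys) and keys[i] == key:
                return values[i]
        return None

db = TinyLSM(memtable_limit=2)
db.put("a", 1)
db.put("b", 2)        # memtable full: flushed as the first run
db.put("a", 10)       # newer value for "a" lives in the memtable
db.put("c", 3)        # flushed as a second run containing a=10, c=3
print(db.get("a"), db.get("b"), len(db.sstables))   # 10 2 2
```

The trade-off this illustrates: writes are cheap sequential appends, while reads may have to search several runs, which is why such stores compact runs in the background.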

Things to Consider...More Features

- Tunability of consistency models?

- Tools availability and product maturity?

- Expand rapidly? Develop rapidly? Change rapidly?

- Durability? On power failure?

- Bulk import? Export?

- Hitless upgrades?

- Materialized views for rollups of attributes?

- Built-in web server support?

- Authentication, authorization, validation?

- Continuous write-behind for system sync?

- What is the story for availability, data-loss prevention, backup and restore?

- Automatic load balancing, partitioning, and repartitioning?

- Live addition and removal of machines?
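"Tunability of consistency models" usually refers to Dynamo-style quorums, where N is the number of replicas per item, a read waits for R of them and a write waits for W. A small helper, written for this post, that classifies a configuration using the standard overlap rules; it assumes strict quorums and ignores refinements such as sloppy quorums and hinted handoff:

```python
def quorum_properties(n, r, w):
    """Classify an N/R/W replica configuration (strict quorums assumed).
    n: replicas per item; r: replicas a read waits for; w: replicas a write waits for."""
    if not (1 <= r <= n and 1 <= w <= n):
        raise ValueError("r and w must be between 1 and n")
    return {
        # Every read quorum overlaps every write quorum, so a read reaches
        # at least one replica holding the latest acknowledged write.
        "read_sees_latest_write": r + w > n,
        # Any two write quorums share at least one replica.
        "write_quorums_overlap": 2 * w > n,
        # Replicas that can be down before reads or writes must wait or fail.
        "read_fault_tolerance": n - r,
        "write_fault_tolerance": n - w,
    }

for n, r, w in [(3, 2, 2), (3, 1, 1), (3, 1, 3)]:
    print((n, r, w), quorum_properties(n, r, w))
```

N=3, R=2, W=2 is a common balanced choice; R=1, W=1 favors latency and availability at the cost of possibly stale reads.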

Things to Consider...The Vendor

- Viability of the company?

- Future direction?

- Community and support list quality?

- Support responsiveness?

- How do they handle disasters?

- Quality and quantity of partnerships developed?

- Customer support: enterprise-level SLA, paid support, none

Yes, Todd, some answers would be helpful. For an overview, see my Choosing a cloud data store for Big Data article of 6/14/2011 for SearchCloudComputing.com.

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.


Marketplace DataMarket and OData

Dhananjay Kumar (@debugmode_) posted Consuming OData in Windows Phone 7.1 or Mango Phone on 6/11/2011:

Background

If you have read my earlier posts on WCF Data Services and Windows Phone 7, you know that consuming a WCF Data Service from Windows Phone 7 involved many steps:

- Download the OData client for Windows Phone 7 and add it as a reference in the Windows Phone 7 project.

- Create a proxy for the WCF Data Service using DataSvcUtil.exe.

- Add the generated proxy to the Windows Phone 7 project as an existing item.

- Perform the operations from the phone.

You can read the previous article here.

In the beta release of Windows Phone 7.1 (Mango), consuming OData has improved impressively; you no longer need to perform all of the steps described in the previous articles.

OData URL

This post uses the Northwind OData service, available at the following URL:

http://services.odata.org/Northwind/Northwind.svc/

We are going to fetch the Customers table from the Northwind database.
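Under the covers, the client library turns the LINQ query in the full listing (from r in context.Customers select r) into an HTTP GET against the Customers entity set. A minimal sketch of how such OData URLs are formed, including optional system query options such as $select and $top; the helper name entity_set_url is hypothetical and is not part of the OData client:

```python
from urllib.parse import quote

SERVICE_ROOT = "http://services.odata.org/Northwind/Northwind.svc/"

def entity_set_url(root, entity_set, **options):
    """Build an OData query URL. Each keyword becomes a system query
    option: select=... -> $select=..., top=5 -> $top=5."""
    url = root.rstrip("/") + "/" + entity_set
    if options:
        query = "&".join(
            f"${name}={quote(str(value), safe=',')}"   # keep $select commas readable
            for name, value in options.items()
        )
        url += "?" + query
    return url

# Equivalent of the listing's query: every Customer entity.
print(entity_set_url(SERVICE_ROOT, "Customers"))
# Only the three columns the ListBox binds to, first five rows.
print(entity_set_url(SERVICE_ROOT, "Customers",
                     select="CompanyName,ContactName,Phone", top=5))
```

Pasting either URL into a browser is a quick way to see the feed the phone app will receive.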

Create Project and Add Service Reference

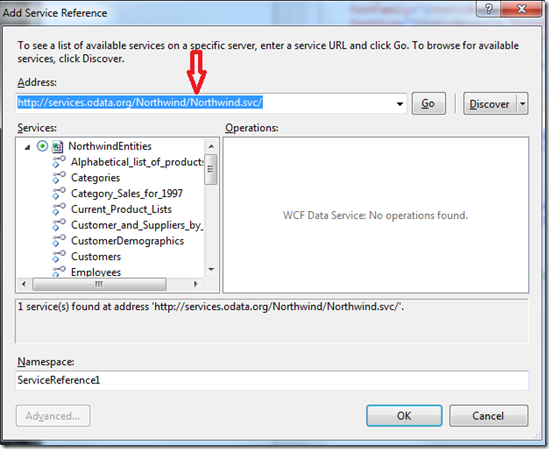

Create a new Windows Phone project and choose Windows Phone 7.1 as the target framework.

Right-click the project and choose Add Service Reference.

In the Address box, enter the OData service URL. As discussed above, here I am using the URL of the Northwind database hosted at services.odata.org.

Writing Code behind

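Add the namespaces shown at the top of the MainPage.xaml.cs listing below to your MainPage.xaml.cs file.

Pay attention to the ODatainMangoUpdated.ServiceReference1 namespace: it combines my project name (ODatainMangoUpdated) with the name I gave the service reference (ServiceReference1), so adjust it to match your own project.

Next, define the class-level fields: the NorthwindEntities context, the service URI and the DataServiceCollection of Customer entities.

In the page constructor:

- Create an instance of NorthwindEntities.

- Create an instance of DataServiceCollection, passing it the context.

- Write the LINQ query.

- Attach a LoadCompleted event handler to the DataServiceCollection object.

- Fetch the results asynchronously.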

In the LoadCompleted event handler:

- Check whether a next page exists; if it does, load it automatically.

- Otherwise, set the DataContext of the layout root to the result.
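The completed-event logic just described follows OData’s server-driven paging: when a service returns only part of a result set, it includes a continuation (a "next" link), and the client keeps requesting pages until none comes back. A language-neutral sketch of that loop, with a hypothetical in-memory fetch function standing in for the HTTP call; whether and at what page size a service pages a given entity set is up to the server:

```python
def load_all_pages(fetch_page):
    """Follow continuations until the server stops returning one.
    fetch_page(token) -> (items, next_token); token None requests page one.
    Mirrors the handler: continuation present -> load next set, else done."""
    items, token = fetch_page(None)
    results = list(items)
    while token is not None:
        items, token = fetch_page(token)
        results.extend(items)
    return results

# Hypothetical stand-in for a paged Customers feed with a page size of 2.
CUSTOMERS = ["ALFKI", "ANATR", "ANTON", "AROUT", "BERGS"]

def fake_fetch(token, page_size=2):
    start = 0 if token is None else token
    page = CUSTOMERS[start:start + page_size]
    more = start + page_size < len(CUSTOMERS)
    return page, (start + page_size if more else None)

print(load_all_pages(fake_fetch))   # all five IDs, gathered over three pages
```

Binding only after the last page arrives, as the listing does, avoids showing a partial list; the alternative is to bind immediately and let the DataServiceCollection grow as pages load.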

Design Page and Bind List Box

Create a ListBox and, in its DataTemplate, stack three TextBlock controls vertically. Bind the CompanyName, ContactName and Phone columns of the table to the text blocks.

The full source code is given below for reference; feel free to use it.

MainPage.Xaml

```xml
<phone:PhoneApplicationPage
    x:Class="ODatainMangoUpdated.MainPage"
    xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
    xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
    xmlns:phone="clr-namespace:Microsoft.Phone.Controls;assembly=Microsoft.Phone"
    xmlns:shell="clr-namespace:Microsoft.Phone.Shell;assembly=Microsoft.Phone"
    xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
    xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
    mc:Ignorable="d" d:DesignWidth="480" d:DesignHeight="768"
    FontFamily="{StaticResource PhoneFontFamilyNormal}"
    FontSize="{StaticResource PhoneFontSizeNormal}"
    Foreground="{StaticResource PhoneForegroundBrush}"
    SupportedOrientations="Portrait" Orientation="Portrait"
    shell:SystemTray.IsVisible="True">

    <!--LayoutRoot is the root grid where all page content is placed-->
    <Grid x:Name="LayoutRoot" Background="Transparent">
        <Grid.RowDefinitions>
            <RowDefinition Height="Auto"/>
            <RowDefinition Height="*"/>
        </Grid.RowDefinitions>

        <!--TitlePanel contains the name of the application and page title-->
        <StackPanel x:Name="TitlePanel" Grid.Row="0" Margin="12,17,0,28">
            <TextBlock x:Name="ApplicationTitle" Text="OData in Mango"
                       Style="{StaticResource PhoneTextNormalStyle}"/>
        </StackPanel>

        <!--ContentPanel - place additional content here-->
        <Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">
            <ListBox x:Name="MainListBox" Margin="0,0,-12,0" ItemsSource="{Binding}">
                <ListBox.ItemTemplate>
                    <DataTemplate>
                        <StackPanel Margin="0,0,0,17" Width="432">
                            <TextBlock Text="{Binding Path=CompanyName}" TextWrapping="NoWrap"
                                       Style="{StaticResource PhoneTextExtraLargeStyle}"/>
                            <TextBlock Text="{Binding Path=ContactName}" TextWrapping="NoWrap"
                                       Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                            <TextBlock Text="{Binding Path=Phone}" TextWrapping="NoWrap"
                                       Margin="12,-6,12,0" Style="{StaticResource PhoneTextSubtleStyle}"/>
                        </StackPanel>
                    </DataTemplate>
                </ListBox.ItemTemplate>
            </ListBox>
        </Grid>
    </Grid>
</phone:PhoneApplicationPage>
```

MainPage.Xaml.cs

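```csharp
using System;
using System.Linq;
using System.Windows;                          // MessageBox
using System.Data.Services.Client;             // DataServiceCollection, LoadCompletedEventArgs
using Microsoft.Phone.Controls;
using ODatainMangoUpdated.ServiceReference1;   // generated proxy: NorthwindEntities, Customer

namespace ODatainMangoUpdated
{
    public partial class MainPage : PhoneApplicationPage
    {
        private NorthwindEntities context;
        private readonly Uri ODataUri =
            new Uri("http://services.odata.org/Northwind/Northwind.svc/");
        private DataServiceCollection<Customer> lstCustomers;

        public MainPage()
        {
            InitializeComponent();

            // Context for the Northwind OData service and a collection that tracks results.
            context = new NorthwindEntities(ODataUri);
            lstCustomers = new DataServiceCollection<Customer>(context);

            var result = from r in context.Customers select r;

            lstCustomers.LoadCompleted +=
                new EventHandler<LoadCompletedEventArgs>(lstCustomers_LoadCompleted);
            lstCustomers.LoadAsync(result);   // asynchronous load of the first page
        }

        void lstCustomers_LoadCompleted(object sender, LoadCompletedEventArgs e)
        {
            if (e.Error != null)
            {
                // Report service or network failures instead of showing an empty list.
                MessageBox.Show(e.Error.Message);
                return;
            }

            if (lstCustomers.Continuation != null)
            {
                // Partial page returned: request the next one.
                lstCustomers.LoadNextPartialSetAsync();
            }
            else
            {
                // All pages loaded: bind the collection to the page.
                this.LayoutRoot.DataContext = lstCustomers;
            }
        }
    }
}
```

Run Application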

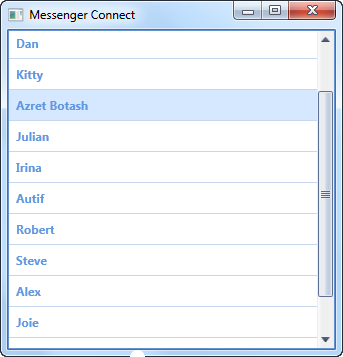

Press F5 to run the application. You should see all the records from the Customers table in the list box.


Windows Azure AppFabric: Access Control, WIF and Service Bus

Riccardo Becker (@riccardobecker) posted Manage Windows Azure AppFabric Cache and some other considerations on 6/15/2011:

Windows Azure AppFabric Caching is a very powerful and easy-to-use mechanism that can speed up your applications and enhance performance and user experience.

It's Windows Server Cache but different

Windows Azure AppFabric Caching offers a subset of the features of Windows Server AppFabric Caching. Developing for either requires the Microsoft.ApplicationServer.Caching namespace. You can use the same API, but with some differences (a shame, because without them, choosing between the two would be a matter of deployment instead of an architectural decision). For example, regions, notifications and tags are not available (yet). The maximum size of a serialized object in Azure Cache is 8 MB. Furthermore, since it's the cloud, you don't manage or influence the cache directly. So if you want to develop for both Azure and on-premises platforms, you need to account for these differences and design for them. Always design for items missing from the cache, since you are not in charge (the Azure Overlord is), and items might disappear for one reason or another, especially when you exceed your cache limit.
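"Design for missing items" usually means the cache-aside pattern: every read tolerates a miss by falling back to the source of truth and repopulating the cache. A minimal, client-agnostic sketch in which a plain dict stands in for the cache client and load_profile is a hypothetical data-access function (in the AppFabric API, a miss shows up as a null return from Get):

```python
def get_or_load(cache, key, load_from_source):
    """Cache-aside read: never assume the item is still cached.
    cache: any dict-like object; load_from_source: called only on a miss."""
    value = cache.get(key)
    if value is None:                 # evicted, expired, or never cached
        value = load_from_source(key)
        cache[key] = value            # repopulate for the next caller
    return value

calls = []

def load_profile(user_id):            # hypothetical stand-in for a database query
    calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}

cache = {}
get_or_load(cache, 42, load_profile)  # miss: loaded from the source
get_or_load(cache, 42, load_profile)  # hit: no source call
cache.clear()                         # simulate the service evicting everything
get_or_load(cache, 42, load_profile)  # miss again: reloaded transparently
print(calls)                          # [42, 42]
```

Because the application never depends on an item being present, the same code works whether the cache is the Azure service, an on-premises cache cluster, or nothing at all.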

Expiration is not the default behavior of the Windows Azure cache, so the least recently used items are evicted when the cache reaches its limit. Remember that you can add items with an expiration time to override this default behavior.

```csharp
cache.Add(key, data, TimeSpan.FromHours(1));
```

This statement causes my "data" to expire after one hour.
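The interplay between capacity-based eviction and explicit expiration can be modeled locally. This is a toy model of the behavior described above, not the Azure service’s actual eviction algorithm: the least recently used item goes when the cache is full, and an optional per-item time-to-live (the counterpart of passing TimeSpan.FromHours(1)) expires items early. The injectable clock exists only to make the example deterministic:

```python
import time
from collections import OrderedDict

class LruTtlCache:
    """Capacity-bounded cache: evicts the least recently used item when
    full, and honors an optional per-item time-to-live in seconds."""

    def __init__(self, capacity, clock=time.monotonic):
        self.capacity = capacity
        self.clock = clock
        self._items = OrderedDict()        # key -> (value, expires_at or None)

    def add(self, key, value, ttl_seconds=None):
        expires_at = None if ttl_seconds is None else self.clock() + ttl_seconds
        self._items[key] = (value, expires_at)
        self._items.move_to_end(key)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)    # drop least recently used

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and self.clock() >= expires_at:
            del self._items[key]               # expired: behave like a miss
            return None
        self._items.move_to_end(key)           # mark as recently used
        return value

now = [0.0]
cache = LruTtlCache(capacity=2, clock=lambda: now[0])
cache.add("a", 1)                      # no TTL: stays until evicted
cache.add("b", 2, ttl_seconds=3600)    # one-hour expiration
cache.get("a")                         # touch "a", so "b" is least recently used
cache.add("c", 3)                      # over capacity: "b" is evicted
now[0] = 7200.0                        # two hours later
print(cache.get("a"), cache.get("b"), cache.get("c"))   # 1 None 3
```

Note that this sketch’s add overwrites an existing key; it makes no claim about how the AppFabric Add method treats duplicates.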

Keep in mind that Windows Azure Caching gives you caching on tap and keeps you from plumbing your own cache. It keeps you focused on the application itself: you just 'enable' caching in Azure and start using it. Fast access, massive scalability (especially compared with SQL Azure), one layer that provides cache access, and a very easy, understandable pricing structure.

A good alternative even for onpremise applications!

Azret Botash (@Ba3e64) reported Windows Live developer platform adds OAuth 2.0 in a 6/15/2011 post to his DevExpress blog:

Today the Windows Live team announced support for OAuth 2.0.

Quote “Not only have we made Messenger Connect the most up-to-date implementation of OAuth 2.0, but we’ve also taken this opportunity to improve key aspects of the developer and end user experience when going through the user authorization process…”

I blogged about our new OAuth library recently and showed you how to connect to Facebook. Here is another example; this time we’ll connect to the new Windows Live API, v5.0.
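Whatever library you use, the first step of an OAuth 2.0 authorization-code flow is redirecting the user to the provider’s authorization endpoint with a handful of query parameters. A generic sketch of building that URL, not the DevExpress library’s API; the endpoint, client ID, redirect URI and scope names below are placeholders, so check the Windows Live documentation for the real values:

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs

def build_authorization_url(authorize_endpoint, client_id, redirect_uri, scopes):
    """Build an OAuth 2.0 authorization-code request URL.
    Returns (url, state). Keep `state` and compare it with the value the
    provider echoes back to redirect_uri; a mismatch suggests a forged request."""
    state = secrets.token_urlsafe(16)
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),         # space-separated scope list
        "state": state,
    }
    return authorize_endpoint + "?" + urlencode(params), state

# Placeholder values, for illustration only.
url, state = build_authorization_url(
    "https://login.example.com/oauth/authorize",
    client_id="YOUR_CLIENT_ID",
    redirect_uri="https://app.example.com/callback",
    scopes=["wl.signin", "wl.basic"],
)
query = parse_qs(urlparse(url).query)      # round-trip to inspect the parameters
print(url)
```

After the user consents, the provider redirects back with a short-lived code, which the application then exchanges for an access token at the provider’s token endpoint.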


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• My Cloud Computing with the Windows Azure Platform book is #3 in MicrosoftFeed’s Top 10 Books for Windows Azure Development post of 6/15/2011:

Windows Azure is a cloud services development, hosting and management environment. Here is the list of the top 10 books for Windows Azure development, which explain how to build, deploy, host, and manage applications using Windows Azure’s programming model and essential storage services.

Discusses the cost model and application life cycle management for cloud-based applications. Explains the benefits of using the Azure Services Platform for cloud computing and much more. Which one is your favorite?

Note: We have included only those books in the list which are available right now in the market.

…

3. Cloud Computing with the Windows Azure Platform

Of course, there might be only 10 books available in the genre. But still not bad, considering the book’s age. It was published in August 2010 to be ready for PDC 2010 and was the first in the category.

• Robert Duffner interviewed Jason Bloomberg in a Thought Leaders in the Cloud: Talking with Jason Bloomberg of ZapThink LLC post of 6/15/2011 to the Windows Azure Team blog:

Jason Bloomberg is managing partner and senior analyst at the enterprise architecture advisory firm ZapThink LLC. He is a thought leader in the areas of enterprise architecture and service-oriented architecture, and he helps organizations around the world better leverage their IT resources to meet changing business needs.

He is a frequent speaker, prolific writer, and pundit. His book, Service Orient or Be Doomed! How Service Orientation Will Change Your Business (John Wiley & Sons, 2006, coauthored with Ron Schmelzer), is widely recognized as the leading business book on service orientation.

In this interview, we discuss:

- Fault tolerance and cloud brokering

- Architecting for failure

- Making the cloud part of your enterprise architecture

- The shift from forecasting demand to forecasting usage costs

- Public versus private cloud

- Different models for multi-tenancy

Robert Duffner: Could you please take a minute to introduce yourself?

Jason Bloomberg: I am managing partner with ZapThink. We are an industry advisory firm focused on service-oriented architecture, enterprise architecture, and broadly speaking, helping organizations be more agile. We take an architectural approach to dealing with the issues large organizations face in leveraging heterogeneous IT environments.

Robert: You recently wrote, “Without cloud brokering, managing a hybrid cloud may be more trouble than it’s worth.” Can you expand on that a little?

Jason: Interestingly enough, I wrote that article just a few days before the most recent Amazon cloud issue, where several different parts of the Amazon cloud infrastructure went down. A lot of companies were caught unaware by that and had cloud-based applications that went down as a result, since they were under the common perception that the cloud is inherently fault tolerant, so by extension, Amazon’s infrastructure is inherently fault tolerant.

Amazon has multiple availability zones, and we are doing all this great stuff in the cloud, yet it still went down. In fact, companies that were betting on the Amazon cloud had actually violated one of the core principles of computing systems, which is no less true of the cloud than anywhere else: you need to avoid single points of failure.

By putting all of their eggs into a single cloud basket, those companies actually made the Amazon cloud itself a single point of failure. That’s where cloud brokering comes in. If you are using the public cloud, you need to have multiple public cloud providers and broker across them. That requirement obviously raises the bar in terms of how organizations can leverage the public cloud.
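At its simplest, brokering across public clouds is an ordered failover: try each provider in turn and use the first one that answers. The following is a minimal sketch. It assumes each provider is wrapped in a hypothetical zero-argument function, and `broker_call`, `cloud_a` and `cloud_b` are illustrative names rather than part of any brokering product. A real broker would also need health checks, timeouts and replication of data between clouds.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the broker's list has failed."""


def broker_call(providers: Sequence[tuple[str, Callable[[], str]]]) -> tuple[str, str]:
    """Try each (name, call) pair in order and return (name, result) from the
    first provider that succeeds; raise AllProvidersFailed if none does."""
    errors = []
    for name, call in providers:
        try:
            return name, call()
        except Exception as exc:  # treat any failure as "try the next provider"
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


def cloud_a() -> str:
    # Hypothetical provider that is suffering an outage.
    raise ConnectionError("region unavailable")


def cloud_b() -> str:
    # Hypothetical provider that is healthy.
    return "ok"


print(broker_call([("cloud-a", cloud_a), ("cloud-b", cloud_b)]))  # ('cloud-b', 'ok')
```

Listing the providers in order of preference keeps the broker simple. The cost is that the secondary cloud must already hold everything the application needs when the primary fails.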

Robert: When all this happened, the last thing we were going to do at Microsoft was engage in any schadenfreude, and for us, it was an opportunity to bring the issue to our customers and partners around their deployment strategy, and have conversations about architecting for resiliency.

Jason: It’s inevitable that outages are going to occur to everyone, and you are inevitably going to get egg on your face when the problem hits you. There is nothing perfect in the world of IT, and you can depend on the fact that problems are going to develop where they are least expected, whoever you are.

Robert: That’s exactly right. We have some customers who deploy to just one data center, and we have other customers who deploy to multiple data centers. It seems that the Amazon customers that only deployed to a single data center probably experienced the most serious outage.

Jason: That’s the single point of failure problem again.

Robert: Indeed. You made another great post called “Failure Is the Only Option,” where you talk about the importance of architecting for failure. Can you expand a little bit on that?

Jason: That also touches on this same topic of avoiding a single point of failure. You should expect failure anywhere and everywhere in your overall IT environment, asking what would happen if each piece failed: the database, the network, the processor, the presentation tier, and so on.

For every single question, you should have a good answer, and if anything fails, it shouldn’t cause everything to go down. The cloud actually makes that precept even more important, because the cloud’s inherent resilience leads cloud providers to use cheap hardware and cheap networks.

They use commodity equipment and rely upon dynamic cloud provisioning and failover to deal with issues of failure. The customer has to understand that the cloud is designed and expected to fail, and they need to build applications accordingly.

You can’t put everything into a single cloud instance, because the cloud provider doesn’t have any sort of magic, no-failure pixie dust. These considerations often mean raising the bar, in terms of how you architect your applications.
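Part of the "what happens if each piece fails" exercise can be automated. This sketch assumes a hypothetical inventory that maps each tier to the zones (or data centers) hosting its replicas. It flags any tier that would go down if a single instance or a single zone failed, which is the position of the single-data-center deployments mentioned earlier in the interview.

```python
def single_points_of_failure(deployment: dict[str, list[str]]) -> list[str]:
    """Return the tiers that would go down if one instance or one zone failed.

    `deployment` maps each tier to the zones hosting its replicas,
    one list entry per replica.
    """
    # A tier survives a single failure only if its replicas span at least two
    # distinct zones (which also implies at least two replicas).
    return sorted(tier for tier, zones in deployment.items() if len(set(zones)) < 2)


deployment = {
    "web": ["zone-a", "zone-b"],
    "database": ["zone-a", "zone-a"],  # two replicas, but both in one zone
    "cache": ["zone-a"],               # a single replica
}
print(single_points_of_failure(deployment))  # ['cache', 'database']
```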

Robert: You deal with a lot of enterprises. Where do you often find the low-hanging fruit, in the sense of the things where cloud computing can make the most difference immediately?

Jason: Cloud computing is still relatively new, and most enterprise organizations are still at the phase of dipping their toe in the water. There are a few early adopters that are willing to place bigger bets on some of the more advanced capabilities, but that’s the exception more than the rule.

Most enterprises are still at the level where they will do some storage in the cloud or maybe some virtual machine instances, but they haven’t yet gotten to the point of thinking about application modernization or application consolidation leveraging the cloud.

That step requires much more of an enterprise-architected perspective on how to leverage the cloud. Some organizations are working through that now, and we are placing a great deal of focus there, helping organizations understand how the cloud adds to their options.

It has to fit into their architecture, and the cloud is not some sort of magic alternative to their data centers. The right approach is for them to think of it as one of the different options they have.

Robert: I get to do a lot of executive briefing presentations for customers from all over the world, and it seems that most of the enterprise interest has been around infrastructure-as-a-service versus platform-as-a-service. I think they are looking for the low-hanging fruit, in terms of just taking existing apps and having someone host them in the cloud.

Jason: That’s the most straightforward aspect of cloud adoption, and it’s something of a “horseless carriage” approach. Those organizations understand how to build an app and host it internally, and this approach allows them to extend that understanding to an external hosting model.

It does over-simplify the problem, and it carries some missed opportunity. On one hand, you need to re-architect your apps to take full advantage of the cloud. On the other hand, the cloud opens up new capabilities that you didn’t have before. That’s where the big win is, as opposed to something like just taking your existing ERP solution and sticking it in the cloud.

That’s not the right way to think about the problem. The way to think about the problem is that the cloud is going to reinvent what it means to have enterprise applications, but that’s going to take time. We are not ready for that yet.

Robert: What do you see as things that may never move to the cloud?

Jason: I’m reluctant to use the word never, because when you start thinking about the long-term prospects of a trend like this, what it means to do cloud computing becomes broad and ubiquitous. The cloud is just going to come to mean dynamic provisioning and virtualization deployed in an automated fashion.

We can do that internally or externally, leveraging resources wherever they happen to be. In 5, 10, 15, or 20 years, we probably won’t think of it as cloud anymore. It’s just going to be the way IT is done.

Therefore, it’s not really a question of whether something is cloud or not. It’s really just a question of where you want your virtualization. Where do you need dynamic provisioning? Where do you need your levels of abstraction? How are those abstractions going to provide flexibility to the users?

With that perspective in place, it becomes a continuum of best practices, more than seeing cloud as a particular thing where some things belong and some don’t.

Robert: Mark Wilkinson is giving a talk at the Cloud Expo on “Overcoming the Final Barriers to Widespread Enterprise Cloud Adoption,” where he says many organizations view moving to the cloud as a leap of faith. Do you think organizations typically worry about the right things?

Jason: The companies that are placing big bets on the cloud are early adopters, and they have a particular kind of psychology. They are willing to place the bet on something that has risks, because they perceive that they can get a strategic advantage by being first.

Those companies are willing to assume the risks of the cloud in exchange for beating their competition to the cloud, and then taking that to the customers before the other guy. Only certain companies have that psychology; most companies are too risk-averse to do that.

A few are willing to place larger bets, and most understand that there are risks from the fact that it’s still a new approach, with missing pieces, a lack of standards support, and all the other issues you have with an emerging technology.

Robert: Joe Weinman put up a recent post on the Cloudonomics blog, where he offers some details around his “10 Laws of Cloudonomics.” One is the notion that on-demand trumps forecasting. Do you have any thoughts around that?

Jason: I guess it depends on what you mean by “forecasting.” In the context of a traditional way of thinking where you have existing on-premises infrastructure, you know how many servers you’re going to need in the future, because you’re going to track your usage patterns and things like that.

The cloud frees you from matters such as having to forecast how many servers you need to buy, but on the other hand, it opens up new kinds of forecasting. We now need to forecast how we’re going to leverage the cloud and take advantage of cloud-based capabilities as they mature.

That different type of forecasting is very hard to do this early in the market, because there are too many unknowns. It’s easier to say, “Based upon the usage trends, I’m going to need to buy 10 servers next month.” That sort of forecasting is pretty straightforward.
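The "straightforward" kind of forecasting Bloomberg describes can be sketched as a linear trend projection. The sketch assumes hypothetical monthly peak loads and a fixed capacity per server. Real capacity planning would also account for seasonality and headroom.

```python
import math


def servers_needed_next_month(monthly_peak_load: list[float], capacity_per_server: float) -> int:
    """Fit a least-squares line to past monthly peaks, project one month ahead,
    and round up to whole servers. Needs at least two months of history."""
    n = len(monthly_peak_load)
    if n < 2:
        raise ValueError("need at least two months of history")
    mean_x = (n - 1) / 2  # months are indexed 0..n-1
    mean_y = sum(monthly_peak_load) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(monthly_peak_load)) / sum(
        (x - mean_x) ** 2 for x in range(n)
    )
    projected = mean_y + slope * (n - mean_x)  # value of the fitted line at month n
    return math.ceil(projected / capacity_per_server)


# Peak requests/second over the last four months; one server handles 100 requests/second.
print(servers_needed_next_month([600, 700, 800, 900], 100))  # 10
```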

Robert: More and more, I’m starting to see a blurring between infrastructure and platform-as-a-service, and even, to some level, software-as-a-service. A lot of what I call SaaS 2.0 vendors are fundamentally building platforms. Do you see infrastructure and platform-as-a-service coming together?

Jason: I would say, actually, that the distinctions among the three different deployment models were a bit artificial to begin with. That model was based on some early thinking about how these things would play out. Now we’re talking about how infrastructure as a service grew out of virtual servers and things like that, and we have this notion of platform as a service as well, sort of in between, as an outgrowth of middleware markets.

As time goes on, it’s really more of a continuum. How many capabilities do you put into your infrastructure before you have platform-type capabilities? Likewise, some of the elements that characterize platform as a service include application frameworks that you can build applications with. How robust does an application framework have to be before it’s software as a service?

Those answers don’t really matter, of course, because these terms are just meant to help clarify. They’re arbitrary distinctions to help conversations along, more than they are hard and fast distinctions in the technology.

Robert: That’s a good point. For example, with Elastic Beanstalk, Amazon is offering abstraction layers on top of the simple ability to run a VM hosted on one of their servers.

Jason: Amazon’s an interesting case, because they’ll run anything up the flagpole they think is cool, and Google does the same thing. If it takes off, great. If not, they’ll do something different. That means that, just because they’re doing something doesn’t mean it’ll become an established market, or even that it’s necessarily a good idea. It just means it was something they could do and they thought it was cool, so they figured they’d do it.

We’ll have to see how all this plays out. Over time, the individual market categories will consolidate and become clearer, but for the moment, it’s sort of all over the place. Of course, from the customer perspective, that just makes things even more confusing.

Robert: Gartner just published a research note saying that three quarters of respondents were pursuing a private cloud strategy and would invest more in private cloud than public. I’ve also seen some good research from James Staten of Forrester. One piece I specifically remember was called “You’re Not Ready for a Private Cloud.”

I think that discussion centers around what it fundamentally takes organizationally to deploy a private cloud. It’s one thing to virtualize workloads and reduce the number of physical servers through consolidation, but it’s a whole other thing to fundamentally deploy a private cloud and services layer.

What advice do you have for organizations looking at a private cloud strategy? And do you think this is really only for the IT shops that have huge budgets, such as large multi-national banks?

Jason: I would offer different advice for different customers, depending on what problems they want to solve. Keep in mind, though, that there’s been a disproportionate emphasis put on private cloud, and I see that as being a result of vendor spin.

From the vendor perspective, private cloud is a great way to sell more gear, so the big middleware and hardware vendors are pushing private cloud on the one hand because it sells more gear. On the other hand, particularly in IBM’s case, they see themselves as providing the gear to the service provider market.

They’re looking to the telcos who have been IBM customers to provide cloud capabilities to their customers. And of course, who are the telcos going to buy the underlying gear from other than IBM?

So there’s this emphasis on that because the vendors want you to do that. In reality, in many cases, there’s a better value proposition available from public cloud for enterprises, as they get a handle on what that means.

After all, public cloud gives you a lot of the cost advantages, the shift to operational expense, and some of the dynamic provisioning capabilities. One of the challenges of the private cloud is that you still have to provision enough capacity to handle traffic spikes, just as you have had to do in your own data centers since day one.

With the public cloud, there are multiple customers, and hopefully everybody has spikes in traffic at different times. That’s the bet, anyway, and if it comes true, everyone benefits from much better utilization of the servers. The private cloud doesn’t provide that advantage, so in many ways, cloud value propositions are watered down in the private cloud.
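A little arithmetic makes the bet that "everybody has spikes in traffic at different times" concrete. The numbers below are hypothetical: three customers each need 10 servers at their own peak and 2 the rest of the time. Provisioning separately means sizing for the sum of the individual peaks. A shared pool only has to cover the peak of the combined demand.

```python
def capacity_if_separate(demands: list[list[int]]) -> int:
    """Each customer provisions for its own peak (e.g. its own data center)."""
    return sum(max(series) for series in demands)


def capacity_if_pooled(demands: list[list[int]]) -> int:
    """A shared provider provisions for the peak of the combined demand."""
    return max(sum(slot) for slot in zip(*demands))


# Hypothetical servers needed by three customers over four time slots;
# each customer peaks in a different slot.
demands = [
    [10, 2, 2, 2],
    [2, 10, 2, 2],
    [2, 2, 10, 2],
]
print(capacity_if_separate(demands))  # 30
print(capacity_if_pooled(demands))    # 14
```

If the peaks coincided, the two figures would be equal and the pooling advantage would disappear. That is why Bloomberg calls it a bet.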

Vendors are giving a lot of play to security and governance issues with the public cloud. Some of that is true, but you also have those issues in the private cloud as well. So some of it is vendor FUD (fear, uncertainty, and doubt), and some of it is just a reflection of the immaturity of the market.

It is important for cloud consumers to consider the role of vendor spin, as they are trying to push big enterprises to buy more gear. Many times, the story they tell is, “You have a data center, but now you need to build another one, which we’re going to call a private cloud. You need to buy all new racks, blades, and software.”

Some telcos want to build a private cloud to offer cloud capabilities to customers, and essentially, they’re building a managed service provider infrastructure. Conversely, some large enterprises think of a private cloud as their own internal service provider that will provide cloud capabilities to multiple divisions across a large enterprise.

There are still value propositions for companies in the public cloud, and over time, they’ll figure it all out. They’ll say public clouds are good for this and private clouds are good for that, with best practices that help define the pros and cons of each. For the moment, though, there’s an excessive emphasis on private cloud, in large part because the vendors see more dollar signs there.

Robert: That’s the end of my prepared questions. Is there anything else you’d like to address or expand on?

Jason: I recently published an article called “Cloud Multi-Tenancy: More Than Meets the Eye.” Multi-tenancy, as you know, is a key characteristic of public clouds, but I have found that there are actually different kinds of multi-tenancy at different levels.

First, there’s full multi-tenancy, or shared-schema multi-tenancy, which is the kind that Salesforce has, for example, where everybody is essentially in the same application and the same tables. That model brings certain advantages, such as being easy to manage and scale, but it’s a one-size-fits-all kind of option.

There are other approaches as well. For example, there’s the clustered, shared-schema approach, where there are clusters of customers on different SaaS applications. Each of those can be configured to meet the needs of particular customers, which provides a bit more flexibility, but it’s also harder to manage.

Another model is what you might call isolated tenancy, where a big vendor might move a legacy app into the cloud, with a different instance for each customer. It’s not really multi-tenancy at all, at that level, but from the customer perspective, it can look like multi-tenancy.

That could actually encompass a bit more vendor spin, where every customer still gets their own application stack, but it’s now theoretically in the cloud, although, in reality, it’s more of a hosted provider option.
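Here is a rough sketch of the shared-schema model Bloomberg describes first. Every tenant's rows live in the same table and are told apart by a tenant column. It uses Python's built-in sqlite3 module as a stand-in database, and the `orders` table and tenant names are hypothetical. It is not meant to show how Salesforce or any other vendor implements it. In the isolated-tenancy model, by contrast, each customer would get a separate database or application stack and there would be no `tenant_id` column at all.

```python
import sqlite3


def create_shared_schema() -> sqlite3.Connection:
    """One database and one table for every tenant; each row carries a tenant_id."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (tenant_id TEXT NOT NULL, item TEXT NOT NULL)")
    return conn


def add_order(conn: sqlite3.Connection, tenant_id: str, item: str) -> None:
    conn.execute("INSERT INTO orders (tenant_id, item) VALUES (?, ?)", (tenant_id, item))


def orders_for(conn: sqlite3.Connection, tenant_id: str) -> list[str]:
    # Every query must filter on tenant_id; omitting the filter would leak
    # one tenant's data to another.
    rows = conn.execute(
        "SELECT item FROM orders WHERE tenant_id = ? ORDER BY item", (tenant_id,)
    )
    return [item for (item,) in rows]


conn = create_shared_schema()
add_order(conn, "acme", "widget")
add_order(conn, "acme", "gadget")
add_order(conn, "contoso", "bolt")
print(orders_for(conn, "acme"))     # ['gadget', 'widget']
print(orders_for(conn, "contoso"))  # ['bolt']
```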

Robert: That’s great. Thanks for taking the time to share your perspectives.

Jason: Thank you; it’s been a pleasure.

ZapThink was one of the early thought leaders in the SOAP Web services boomlet.

Martin Beebe posted Featured Article: Getting Tech.Days on Azure, Episode Two: Attack of the Nodes to the MSDN UK Team Blog on 6/15/2011:

It’s been over 6 months since we decided that we would build the Tech.Days website using Umbraco and host it in Azure, and in that time a number of solutions have been created to get Umbraco working with Azure. Today I’m going to show you how you can use the new Accelerator launched in April to get an Umbraco 4.7 site on to Azure with multiple instances.

When we first put the Umbraco site live on Azure late last year, we had a problem: the first version of the Accelerator would only allow us to add one node. The Accelerator enabled us to get things on Azure but, critically, didn’t allow us to scale. If we tried, the whole site would enter a painful “waiting for node to start” loop. Having a cloud solution that is only capable of having one node is about as useful as a chocolate fire guard… and so we searched for a solution.

As a temporary measure we managed to solve the issue by writing our own code based on the Accelerator. This enabled us to initialise a handful of nodes to cope with potential traffic spikes; however, getting it working was quite labour intensive and required me to learn a lot about the Azure SDK and Umbraco. The result was a rather messy solution that worked but wasn’t particularly reusable. I talked about this solution at Tech.Days.

Surely there was a better way.

Luckily there was. Two better ways in fact.

- Firstly, the new Umbraco Azure Accelerator.

- Secondly, Umbraco 5; you can read more about it here. It went into CTP today and was built with Azure in mind. We look forward to using it for future versions of our websites.

The new Umbraco Azure Accelerator is what we used for the second version of the Tech.Days website, which went live today. The new Accelerator enabled two critical features:

- Multiple instances: you can now scale your application up or down easily by spinning up as many instances as you wish.

- Editing individual files: you can edit individual files and the changes are synced to all instances, without redeploying a whole new Azure package.

The architecture of a site using the new accelerator looks like this:

Basically, the Umbraco website is stored in blob storage, and when a web role instance starts up it loads the site from blob storage and attaches it to IIS. If you edit a file in blob storage, the change is replicated to all of the instances; if you change a file on an instance’s file system, the change is replicated back to blob storage and from there sent out to the other instances.
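The two-way replication described above can be modelled as a last-writer-wins sync. This is a hypothetical sketch, not the Accelerator's actual code. Blob storage and each instance's disk are represented as in-memory dictionaries keyed by path, and `FileVersion` and `sync` are illustrative names. Deleted files and simultaneous edits are ignored, and a real implementation would have to handle both.

```python
from dataclasses import dataclass


@dataclass
class FileVersion:
    modified: float  # last-modified timestamp
    content: bytes


def sync(blob: dict[str, FileVersion], instances: list[dict[str, FileVersion]]) -> None:
    """Last-writer-wins sync between a central blob store and instance disks.

    1. Push any file that is newer on an instance up to blob storage.
    2. Push every blob that is newer than (or missing from) an instance down to it.
    """
    for local in instances:
        for path, version in local.items():
            current = blob.get(path)
            if current is None or version.modified > current.modified:
                blob[path] = version
    for local in instances:
        for path, version in blob.items():
            if path not in local or local[path].modified < version.modified:
                local[path] = version


blob = {"web.config": FileVersion(1.0, b"v1")}
instance_a = {"web.config": FileVersion(5.0, b"v2")}  # edited on instance A
instance_b = {"web.config": FileVersion(1.0, b"v1")}
sync(blob, [instance_a, instance_b])
print(blob["web.config"].content, instance_b["web.config"].content)  # b'v2' b'v2'
```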

All of the data is stored in SQL Azure. You can either let the Accelerator create a fresh database for you or use this tool to migrate an existing SQL database into SQL Azure (this was the tool I used to get the Tech.Days database from our staging SQL Server onto SQL Azure).

All of the session data is stored either in a SQL Azure database or, if you are feeling particularly fancy, in the Windows Azure AppFabric Caching service (there are instructions on how to do this in the documentation that ships with the Accelerator).

Complete instructions on how to create and deploy the application are included in the download, but the general steps are as follows:

- Create a Windows Azure Storage Account

- Run the setup script; it will ask for the blob storage details and then configure the Accelerator project with this information.

- Build and publish the service configuration and package files using Visual Studio, and deploy these two files to a Windows Azure hosted service.

- Create a SQL Azure database or enter your existing database credentials.

- Allow the script to create a session database for you.

- Allow the script to deploy Umbraco to blob storage.

- Wait 10-20 minutes and your site will be live.

Once your site is live it’s pretty easy to adjust the number of instances through the Azure Management portal. All you need to do is:

- Log on to the Windows Azure Platform portal, and click Hosted Services, Storage Accounts & CDN on the lower part of the left pane.

- In the upper part of the left pane, choose Hosted Services.

- Select your Accelerator deployment in the middle pane and click Configure on the ribbon.

- In the Configure Deployment dialog, choose Edit current configuration, and change the Instances count value in the configuration XML.

Figure 1 - Configure Deployment Dialog
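The configuration XML edit in the last step changes a single attribute. The sketch below assumes the standard Windows Azure .cscfg layout, where a `<Role>` element contains an `<Instances count="…"/>` element, and rewrites that count for a named role. `UmbracoWebRole` and `UmbracoAccelerator` are placeholder names, not necessarily what the Accelerator's setup script generates. The edited XML would still have to be applied through the portal's Configure dialog or the Service Management API.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows Azure service configuration (.cscfg) files.
NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def set_instance_count(cscfg_xml: str, role_name: str, count: int) -> str:
    """Return the configuration XML with the named role's Instances count changed."""
    ET.register_namespace("", NS)  # keep the default namespace when serializing
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{NS}}}Role"):
        if role.get("name") == role_name:
            role.find(f"{{{NS}}}Instances").set("count", str(count))
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"role {role_name!r} not found")


# Hypothetical configuration; the service and role names are placeholders.
SAMPLE = """<ServiceConfiguration serviceName="UmbracoAccelerator"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="UmbracoWebRole">
    <Instances count="2" />
  </Role>
</ServiceConfiguration>"""

print(set_instance_count(SAMPLE, "UmbracoWebRole", 4))
```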

The Windows Azure Accelerator for Umbraco will automatically replicate the Umbraco sites on each new instance, allowing your Umbraco instance to scale out.

Alternatively, you can change the number of instances programmatically using the Service Management API. You could, for example, write a service that scales the application out or reduces capacity in response to traffic spikes.
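A traffic-based scaling service like that needs a rule for turning load into an instance count. The following is a minimal sketch. It assumes a hypothetical fixed throughput per instance and hypothetical floor and ceiling values. It deliberately leaves out the Service Management API call that would actually apply the new count. The default floor of two instances is a design choice that keeps a single instance failure from taking the site down.

```python
import math


def desired_instances(requests_per_sec: float, capacity_per_instance: float,
                      min_instances: int = 2, max_instances: int = 20) -> int:
    """Instances needed to serve the current load, clamped to [min, max]."""
    needed = math.ceil(requests_per_sec / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


print(desired_instances(50, 100))    # 2  (quiet period: stay at the floor)
print(desired_instances(950, 100))   # 10 (traffic spike)
print(desired_instances(5000, 100))  # 20 (capped by max_instances)
```

In practice, you would also smooth the traffic measurement over a few minutes so that a brief burst doesn't trigger a scale-out that takes longer to complete than the burst lasts.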

To use the new accelerator there are a number of prerequisites:

- You need to be using version 4.7 of Umbraco

- Visual Studio 2010 and .NET 4

- Windows Azure SDK and Tools for Visual Studio (March 2011) version 1.4

The new version of Umbraco (Umbraco 5) was only released today as a CTP. As yet I haven’t had a chance to test it, but I am assured that Azure support will be built into the application architecture. I’m heading off to Denmark today for the CodeGarden event and will be sure to update you afterwards once I know more. Incidentally, they are using Azure to host the CodeGarden event site too.

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) explained Adding Static Images and Text on a LightSwitch Screen in a 6/15/2011 post:

A lot of times you want to display static images and text on your data entry screens, say a company logo or some help text presented in a label to guide the user on the screen. Adding images to buttons on screens is as easy as setting it in the properties window. However, when you want to display an image or static text on the screen directly, you need to do a couple of things. If you take a look at the Contoso Construction sample that I released a couple weeks ago, you will see that the “Home” screen displays a lot of static images and text in a completely customized screen layout. In this post I will show you how you can add static text and images to your LightSwitch screens by walking through this sample.

Screen Data Items and the Content Tree

By default, all the controls on screens you create in Visual Studio LightSwitch have to be bound to a data item. The screen designer displays all the data items in your view model on the left side. In the center is what’s called a “Content Tree”. Content Items make up the tree – these are the controls bound to each of the data items.

So when building screens in LightSwitch every control in the content tree must be bound to a data item. This data item can be a field on an entity, a collection of entities, or any other data item that you add to the view model. The screen templates help guide you in setting up a layout and all the data binding for a particular type of screen; be that a search screen, details screen, new data screen, etc. These templates are just starting points that you can customize further. In fact, the Home screen in this example is a completely custom layout. After selecting a template, I deleted all the items in the content tree and built up my own layout.

Data items can be basic properties like strings or integers, they can be methods that you call from screen code or buttons, or they can be entities that originate from queries. To add a data item manually to a screen click the “Add Data Item” button at the top of the screen designer. You can then specify the name and type of the data item. The home screen is a combination of all of these types of data items.

Setting Up Data Items

You add static images and text to the screen as local properties via the Add Data Item dialog and then lay them out in the content tree by dragging them from the view model on the left. So to add a static image to the screen first click “Add Data Item”, select Local Property, set the type to Image, and then name the property.

Click OK and it will appear in your view model on the left of the screen designer. Drag the data item where you want it in the content tree (if you don’t get it right, you can run the application later (F5) and move it around on the screen in real time). Finally, change the control from Editor to Image Viewer.

Repeat the same process for static text. To add static text to the screen first Add Data Item, select Local Property, set the type to String and then name the property. Drag the item into the content tree and then change the control to Label.

Initializing Static Properties

Because static screen properties do not originate from a data entity, you need to set the property value before the screen is displayed. You can do this in the screen’s InitializeDataWorkspace method which runs before any queries execute. You can access screen methods by dropping down the “Write Code” button at the top right of the screen designer. To set one image and one text static property you would write this code:

Private Sub Home_InitializeDataWorkspace(saveChangesTo As List(Of IDataService))
    ' Initialize text properties
    Text_Title = "Contoso Construction Project Manager"

    ' Initialize image properties
    Image_Logo = MyImageHelper.GetImageByName("logo.png")
End Sub

In order to load static images you need to switch to file view, right-click on the Client project’s \Resources folder and select Add –> Existing Item. Browse for the image and then set the build action to “Embedded Resource”.

Next you need to write some code to load the image. In the Contoso Construction sample application it uses static images in a variety of screens so I created a helper class called MyImageHelper that can be used from anywhere in the client code. While in file view, right-click on the \UserCode folder in the Client project and select Add –> Class. Name it MyImageHelper and create a static (Shared) method that loads the image.

Imports System.IO

''' <summary>
''' This class makes it easy to load images from the client project.
''' Images should be placed in the Client project \Resources folder
''' with Build Action set to "Embedded Resource"
''' </summary>
''' <remarks></remarks>
Public Class MyImageHelper
    Public Shared Function GetImageByName(fileName As String) As Byte()
        Dim assembly As Reflection.Assembly = Reflection.Assembly.GetExecutingAssembly()
        Dim stream As Stream = assembly.GetManifestResourceStream(fileName)
        Return GetStreamAsByteArray(stream)
    End Function

    Private Shared Function GetStreamAsByteArray(ByVal stream As System.IO.Stream) As Byte()
        If stream Is Nothing Then
            ' The resource name was not found in the assembly
            Return Nothing
        End If

        Dim streamLength As Integer = Convert.ToInt32(stream.Length)
        Dim fileData(streamLength - 1) As Byte
        ' Stream.Read may return fewer bytes than requested, so loop until done
        Dim offset As Integer = 0
        While offset < streamLength
            Dim bytesRead As Integer = stream.Read(fileData, offset, streamLength - offset)
            If bytesRead = 0 Then Exit While
            offset += bytesRead
        End While
        stream.Close()
        Return fileData
    End Function
End Class

You can add any custom code you need to the client this way. You have a lot of flexibility to add your own custom code and classes to LightSwitch and this is one simple example.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “IT is revisiting its data integration strategies and products because of the cloud; here's what to look for” as a deck to his Data integration technologies that focus on the cloud are the way to go post of 6/15/2011 to InfoWorld’s Cloud Computing blog:

Every enterprise needs data integration at some point, whether you're talking about simple ETL to move sales transactions from an operational data store to a data warehouse or real-time event-driven integration that supports a fast-paced business. Truth is, in many respects, those wars were fought and won long ago. Many IT organizations have long since created their own integration approaches and list of vendors they use.
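For readers who haven't built one, the "simple ETL" job Linthicum mentions looks roughly like the sketch below. It uses Python's built-in sqlite3 module as a stand-in for both the operational store and the data warehouse, and the `sales` and `daily_sales` tables are hypothetical. A cloud-focused integration product would replace the two local connections with connectors to SaaS or cloud-hosted data sources.

```python
import sqlite3


def etl_daily_sales(source: sqlite3.Connection, warehouse: sqlite3.Connection) -> int:
    """Extract sales rows, transform them into per-day totals, load the totals.

    Returns the number of daily rows loaded.
    """
    # Extract + transform: ISO timestamps are truncated to the date (YYYY-MM-DD).
    rows = source.execute(
        "SELECT substr(sold_at, 1, 10) AS day, SUM(amount) "
        "FROM sales GROUP BY day ORDER BY day"
    ).fetchall()
    # Load: upsert so that re-running the job for the same days gives the same result.
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS daily_sales (day TEXT PRIMARY KEY, total REAL)"
    )
    warehouse.executemany(
        "INSERT OR REPLACE INTO daily_sales (day, total) VALUES (?, ?)", rows
    )
    warehouse.commit()
    return len(rows)


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (sold_at TEXT, amount REAL)")
source.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("2011-06-14T09:30:00", 10.0), ("2011-06-14T16:05:00", 5.5), ("2011-06-15T11:00:00", 20.0)],
)
warehouse = sqlite3.connect(":memory:")
print(etl_daily_sales(source, warehouse))  # 2
print(warehouse.execute("SELECT * FROM daily_sales ORDER BY day").fetchall())
# [('2011-06-14', 15.5), ('2011-06-15', 20.0)]
```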

But the cloud changes everything.

The use of cloud computing -- private, public, or hybrid -- means IT is giving data integration technology providers a second look and perhaps a second chance. These days, the newer data integration providers get the ink around their sustained focus on the cloud, and a few older providers are redoing their technologies to address the special needs of cloud computing connections.

Providers are taking a few macro approaches to data integration for cloud computing:

The "do nothing about cloud computing" guys. A few of the much older integration providers consider cloud computing as just another set of systems. They are not doing much to position themselves in that space, other than some press spin. These are typically hugely expensive and locally hosted technologies that are very feature-rich. If they are in the cloud space, it's often through the acquisition of a separate set of technologies, such as the next two I describe.

The "integration on the cheap" guys. These are typically locally deployed technologies that are priced anywhere from free to $50,000. There are some open source providers here, ESB (enterprise service bus) providers, and a few data integration startups. They support the cloud, but their focus is more on tactical integration. If you find the cloud there, that's fine, but not the point.

The "cloud is everything" guys. These are a mix of new and midsized providers that have taken an all-in approach to cloud computing. These firms provide both on-premises and multitenant cloud-deployed versions of their integration stacks. They are investing heavily in building or maintaining connectors for major cloud providers.

For cloud integration, I'd use the technologies that focus on -- call me crazy -- cloud integration. As cloud computing technology progresses, you'll need somebody to keep up with the changes, and you don't want to use a data integration technology provider that's more concerned about the SAP API changes than the Salesforce.com or Amazon Web Service API changes. These are two very different worlds, and they require a very different focus.

Klint Finley (@Klintron) reported Gartner Sees PaaS Search Query Spike in a 6/12/2011 post to the ReadWriteCloud blog:

A recent report from Gartner details a spike the firm observed in the number of search queries for the term "PaaS" from users of its website. Independent researcher Louis Columbus summarizes the report on his blog. He notes that at Gartner, search terms are considered a leading indicator of future IT spending.

The number of searches for PaaS on Gartner.com spiked from around 250 in December to over 700 in January, and remained between 650 and 700 in February and March.

The spike in interest was likely caused by high profile acquisitions of PaaS vendors by Red Hat and Salesforce.com in December. Red Hat acquired Makara (now OpenShift), and Salesforce.com acquired Heroku.

The spike Gartner saw does not match Google's search trends. Google Trends shows a big spike in April of 2011, with a return to normal in May. Google's spike seems to correspond with VMware's announcement of Cloud Foundry.

Previously, Gartner revealed that SaaS is one of its top search terms.

We covered the Forrester Wave on PaaS here.


<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

James Saull (@jamessaull) posted Passing the private cloud duck test to the Cloud Comments.net blog on 6/15/2011:

Clouds need Self Service Portals (SSP). I often wonder to whom “self” refers, and I think it would help a lot if people clarified that when describing their products. Is it a systems administrator, a software developer, a sales manager?

I have just read the Forrester report “Market Overview: Private Cloud Solutions, Q2 2011” by James Staten and Lauren E. Nelson, which is actually pretty good. They cover IaaS private cloud solutions from the likes of Eucalyptus Systems, Dell, HP, IBM, Microsoft, VMware etc. What I particularly liked is the way they interviewed and asked the vendors to demonstrate their cloud from a user-centred perspective: “as a cloud administrator do…”, “as an engineering user perform…”, “logged in as a marketing user show…”. This moves the conversation away from rhetoric and techy details about hypervisors to the benefits realised by the consumers.

If it doesn’t look like a duck or quack like a duck it probably isn’t a duck.

Forrester have also tried to be quite strict in narrowing down the vendors they included in this report because, frankly, things weren’t passing the duck test. They also asked them to supply solid enterprise customer references where their solution was being used as a private cloud and they found: “Sadly, even some of those that stepped up to our requests failed in this last category”.

Good. Let’s get tough on cloud-washing.

Adam Hall offered a Reminder–the Orchestrator Evaluation Program starts Thursday! in a 6/15/2011 post to the System Center Team Blog:

Hi everyone,

This week we will be releasing the System Center Orchestrator BETA! The team are locking down the build so keep an eye out for the announcement real soon.

And on Thursday we will start the Orchestrator Beta Evaluation Program so make sure you are signed up! This program will provide you with ready-made environments, a structured evaluation of Orchestrator and Lync calls with the Product Team every 2 weeks so you can provide your feedback, ask questions and even WIN STUFF!

I look forward to talk[ing] to you very soon.

Adam is a Senior Technical Product Manager.

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) reported @Beaker Performs A vMotion… in a 6/15/2011 post to his Rational Survivability blog:

The hundreds of tweets of folks guessing as to where I might end up may have been a clue.

Then again, some of you are wise to steer well and clear of The Twitters.

In case you haven’t heard, I’ve decided to leave Cisco and journey over to the “new” hotness that is Juniper.

The reasons. C’mon, really?

OK: Lots of awesome people, innovative technology AND execution, a manageable size, some very interesting new challenges and the ever-present need to change the way the world thinks about, talks about and operationalizes “security.”

The Beakerettes and I are on our way, moving to San Jose next week and I start at the Big J on July 5th.

All my other hobbies, bad habits (Twitter, this here blog) and side projects (HacKid, CloudAudit, etc.) remain intact.

Catch you all on the flip side.

/Hoff

It must have been a great offer to cause @Beaker and the Beakerettes to leave the Right for the Left Coast.

<Return to section navigation list>

Cloud Computing Events

• John K. Walters will moderate the 11:00 AM PDT Learn How Google App Engine Can Help Easily Build Scalable Applications In The Cloud Web cast of 6/29/2011 sponsored by Application Development Trends:

Google App Engine, which provides the ability to develop and host applications on Google’s infrastructure, has gained momentum quickly since it launched in preview in 2008. More than 100,000 developers use App Engine every month to deliver apps that dynamically scale with usage without the need to manage hardware or software. App Engine now hosts more than 200,000 active apps that serve over 1.5 billion site views daily.

App Engine gives you the tools and platform to quickly and easily bring your applications to users. Once your app is built, it gives you the flexibility of dynamically scaling to virtually any amount of traffic, with centralized management consoles to make maintenance very simple. In addition, you can constantly innovate with your app with a wide range of world-class APIs and data analytic services.

Come learn about the various features of Google App Engine as well as learn about our future plans for the platform. We will discuss how to build, manage, and scale an application and give you the knowledge on critical things such as our pricing, SLA, and support plans to ensure you can run a successful business. Register today!

- Date: 06/29/2011

- Time: 11:00 am PT

- Duration: 1 hour

It will be interesting to see if Google provides any info about their new relational database for Google App Engine for Business (GAE4B). I haven’t been able to find any RDBMS details so far. GAE4B is being merged into GAE in the forthcoming upgrade, which will bring higher prices for basic GAE services.

• The Microsoft New England Research and Development (NERD) Center announced the Boston Azure Boot Camp will be held there on 9/30 to 10/1/2011:

Event Details


- Date: 9/30/2011 - 10/1/2011

- Location: Microsoft New England R&D Center, One Memorial Drive, Cambridge, MA 02142

- Time: 8:30am - 9:00pm

- Audience: Developers and Architects interested in Cloud Computing or Windows Azure

- Twitter: @bostonazure

- Description: This free event is a two-day, hands-on bootcamp - Friday September 30 and Saturday October 1. The goal of this two-day deep dive is to get you up to speed on developing for Windows Azure. The class includes trainers with deep real world experience with Azure, as well as a series of labs so you can practice what you just learned.

Through an alternating sequence of lectures and labs, you will learn a whole lot about the Windows Azure Platform - from key concepts to building, debugging, and deploying real applications. You will bring your own Azure-ready laptop - or let us know on the signup form if you would like a loaner, or would like to pair with someone for the coding part.

The Microsoft Partner Network invited partners to Help Shape Steve Ballmer’s WPC 2011 Keynote Speech! in a 6/13/2011 News Detail item:

WPC 2011 is your event, and we would love to get your comments on topics you want to hear from Steve Ballmer, Microsoft’s CEO. Help us shape his 2011 WPC Keynote speech by leaving your requests below.

Are you interested in learning how Microsoft is leading the industry transformation to the cloud? Or how Microsoft is committed to partner success? Is there something specific about the business vision, commitment, or consumerization of business that relates especially to you, the partner, which you want to hear Steve Ballmer speak to?

Let us know by leaving your request as a comment! Submissions close June 22.

I posted the following request on 6/15/2011:

I'd like to hear the current status of the Windows Azure Platform Appliance (WAPA) announced at last year's Worldwide Partner Conference. Fujitsu has announced their WAPA implementation in their Japanese data center, but purported partners HP and Dell Computer have been conspicuous by their silence on offerings. What are Microsoft's intentions regarding making WAPA available to partners with organizations smaller than Fujitsu, HP, Dell and eBay?

An update of eBay's progress with implementing WAPA would also be of interest.

Thanks in advance,

Roger Jennings, OakLeaf Systems

Registered Partner

Nancy Medica (@nancymedica) reminded me of a Webinar: Lander Leverages Azure and SQL Azure to build an application to be held 6/22/2011 at 9:00 AM PDT:

- Starts: Wednesday June 22, 2011, 11:00AM CDT

- Ends: Wednesday June 22, 2011, 12:00PM CDT

- Event Type: Conference

- Location: This is a virtual event.

- Website: http://webinar.getcs.com/

- Industry: information technology and services

- Keywords: lander, common sense, webinar, azure, windows azure, cloud, cloud computing, application, software, development, application development, cio, cto, it

- Intended For: CIOs, CTOs, IT Managers, IT Developers, Lead Developers

- Organization: Common Sense

Webinar: "Lander Leverages Azure and SQL Azure to build its core application."

- How to make the most of Azure elasticity, storage and scalability on a global SaaS app

- How to use storage caching to render web pages efficiently

Presented by Juan De Abreu, Common Sense's Vice President.

Juan is also Common Sense's Delivery and Project Director and a Microsoft Sales Specialist for Business Intelligence. He has nineteen years of Information Systems Development and Implementation experience.

A CBR Staff Writer reported Microsoft formally joins Cloud Industry Forum in a 6/15/2011 post:

Over 10,000 corporate customers use Windows Azure cloud services

Microsoft UK has formally joined the Cloud Industry Forum (CIF), an industry body that promotes the adoption and use of online cloud-based services by businesses.

Microsoft is one of the first IT companies to offer cloud services. Its Windows Azure already has over 10,000 corporate customers making it a leader in the cloud computing market.

In an effort to improve consumer confidence in cloud computing, the company started 'Cloud Power' campaigns in 2010. The campaign focused on creating awareness of private cloud, public cloud and cloud productivity. It promoted the benefits of cloud computing over those of traditional computing.

CIF chairman Andy Burton said that the experience Microsoft has had in recent months in educating end users will be of tremendous value to the industry body.

Burton said, "We have always acknowledged the breadth and diversity of the Cloud marketplace and have welcomed organisations from every part of the cloud supply chain from ISVs to service providers, and from local vendors to truly global partners such as Microsoft."

The CIF was established in 2009 to provide transparency through certification to a Code of Practice for credible online Cloud service providers and to assist end users in determining core information necessary to enable them to adopt these services.

Both the CIF and Microsoft said that this was the right time for Microsoft to join the forum.

Burton said, "We have prided ourselves on the work we have done since inception in terms of developing a credible, independent voice for the sector. We have alliances in place from BASDA, Eurocloud, the Cloud Security Alliance as well as the likes of Intellect. We are recognised through our work on standards and briefing Government at the highest levels as acknowledged experts in Cloud computing. So the time is right for organisations such as Microsoft to become members."

Microsoft director of partner strategy and programmes Janet Gibbons said Microsoft has been following the work of the Cloud Industry Forum over the past few years with interest and the company supports its aspirations to champion the adoption of cloud services.

Gibbons said, "We share a common goal: improving consumer confidence in the Cloud. This lies behind our investment in the 'Cloud Power' campaigns and is the driving force behind the push for a Code of Practice, as promoted by the Cloud Industry Forum."

"With over 10,000 corporate customers already using Windows Azure, we are firmly at the forefront of the market and we felt the time was now right to endorse the work of the Cloud Industry Forum in championing its certified Code of Practice throughout the Cloud supply chain, building confidence in Cloud solutions for ever increasing numbers of users," she added.

The CIF has announced that the third Annual Cloud Computing World Forum, which includes a conference and free-to-attend exhibition, will be held on 21 and 22 June in London.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Barton George (@barton808) promoted the Dell Modular Data Center but not the Windows Azure Platform Appliance in his My Cloud Expo preso: Taking the Revolutionary Approach post of 6/15/2011:

Last week Dell was out in force at the Cloud Computing Expo in New York as the event’s diamond sponsor. Besides the keynote that Steve Schuckenbrock, President of Dell Services, delivered, Dell also gave or participated in 11 other talks.

I also gave one of the talks; mine focused on the revolutionary approach to the cloud and how this approach is setting a new bar for IT efficiency.

Here’s the deck:

Taking The Revolutionary Approach To Cloud Building: How To Architect For The Greatest Efficiency (available on SlideShare from Dell Services)

Talking with Press and Analysts

At the event I also met with press and analysts. One of the things I find helpful in explaining Dell’s strategy and approach to the cloud is to sketch it out for someone in real time. I guess analysts Chris Gaun and Tony Iams of Ideas International found it helpful, since they both tweeted a picture of it.

Besides analysts, I also met with several individuals from the press. Mark Bilger, CTO of Dell Services, and I met with Michael Vizard of IT Business Edge, which resulted in the following article: Cloud Computing Starts to Get a Little Foggy.

Additionally, to support the event and Dell’s cloud efforts going forward, Dell launched the Dell in the Clouds site. It’s pretty cool, you may just want to check it out.

Extra-credit reading (all my posts from Cloud Expo):

- Survey Results, is IT optimistic or pessimistic about Cloud?

- Talking to RightScale about “myCloud” and their work with Zynga

- An Update from Eucalyptus’s CTO and Founder

- Ubuntu cloud update — OpenStack, Eucalyptus, Ensemble & Orchestra

- Cloud.com on OpenStack and powering RightScale’s myCloud

- Cloud Expo: Cloud.com, what’s it all about?

- Cloud Expo: Boomi case study — Enterprise Community Housing