Windows Azure and Cloud Computing Posts for 6/29/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Tim Anderson (@timanderson) delivers the SharePoint Online to SQL Azure connectivity story in his Microsoft Office 365: the detail and the developer story post of 6/29/2011:

I attended the UK launch of Office 365 yesterday and found it a puzzling affair. The company chose to focus on small businesses, and what we got was several examples of customers who had discovered the advantages of storing documents online. We were even shown a live video conference with a jerky, embarrassing webcam stream adding zero business value and reminding me of NetMeeting back in 1995 – which by the way was a rather cool product. Most of what we saw could have been done equally well in Google Apps, except for a demo of the vile SharePoint Workspace for offline editing of a shared document, though if you were paying attention you could see that the presenter was not really offline at all.

There seems to be a large amount of point-missing going on.

There is also a common misconception that Office 365 is “Office in the cloud”, based on Office Web Apps. Although Office Web Apps is an interesting and occasionally useful feature, it is well down the list of what matters in Office 365. It is more accurate to say that Office 365 is for those who do not want to edit documents in the browser.

I am guessing that Microsoft’s focus on small businesses is partly a political matter. Microsoft has to offer an enterprise story and it does, with four enterprise plans, but it is a sensitive matter considering Microsoft’s relationship with partners, who get to sell less hardware and will make less money installing and maintaining complex server applications like Exchange and SharePoint. The, umm, messaging at the Worldwide Partner Conference next month is something I will be watching with interest.

The main point of Office 365 is a simple one: that instead of running Exchange and SharePoint yourself, or with a partner, you use these products on a multi-tenant basis in Microsoft’s cloud. This has been possible for some time with BPOS (Business Productivity Online Suite), but with Office 365 the products are updated to the latest 2010 versions and the marketing has stepped up a gear.

I was glad to attend yesterday’s event though, because I got to talk with Microsoft’s Simon May and Jo Carpenter after the briefing, and they answered some of my questions.

The first was: what is really in Office 365, in terms of detailed features? You can get this information here, in the Service Description documents for the various components. If you are wondering what features of on-premise SharePoint are not available in the Office 365 version, for example, this is where you can find out. There is also a Support Service Description that sets out exactly what support is available, including response time objectives. Reading these documents is also a reminder of how deep these products are, especially SharePoint which is a programmable platform with a wide range of services.

That leads on to my second question: what is the developer story in Office 365? SharePoint is built on ASP.NET, and you can code SharePoint applications in Visual Studio and deploy them to Office 365. Not all the services available in on-premise SharePoint are in the online version, but there is a decent subset. Microsoft has a Sharepoint Online for Office 365 Developer Guide with more details.

Now start joining the dots with technologies like Active Directory Federation Services – single sign-on to Office 365 using on-premise Active Directory – and Windows Azure which offers hosted SQL Server and App Fabric middleware. What about using Office 365 not only for documents and email, but also as a portal for cloud-hosted enterprise applications?

That makes sense to me, though there are still limitations. Here is a thread where someone asks:

Does some[one] know if it is possible to make a database connection with Office365, SharePoint (Designer) and SQL Azure database ?

and the answer from Microsoft’s Mark Kashman on the SharePoint team:

You cannot do this via SharePoint Designer today. What you can do is to create a Silverlight or javaScript client application that calls out to SQL Azure.

In the near future, we are designing a way to make these connections using the base SharePoint technology called BCS (Business Connectivity Services) where then you could develop a service to service to SQL Azure.

If you cannot wait, check out the Cloud Connector for SharePoint 2010 from Layer 2 GmbH.

It seems obvious that Office 365 and Azure together have potential as a developer platform.

What about third-party applications and extensions for Office 365? This is another thing that Microsoft did not talk about yesterday; but it seems to me that there is potential here as well. It is not well integrated, but you can search Microsoft Pinpoint for Office 365 applications and get some results. If Office 365 succeeds, and I think it will, there is an opportunity for developers here.


Tim also posted Office 365 and why it will succeed on 6/28/2011.

<Return to section navigation list>

Marketplace DataMarket and OData

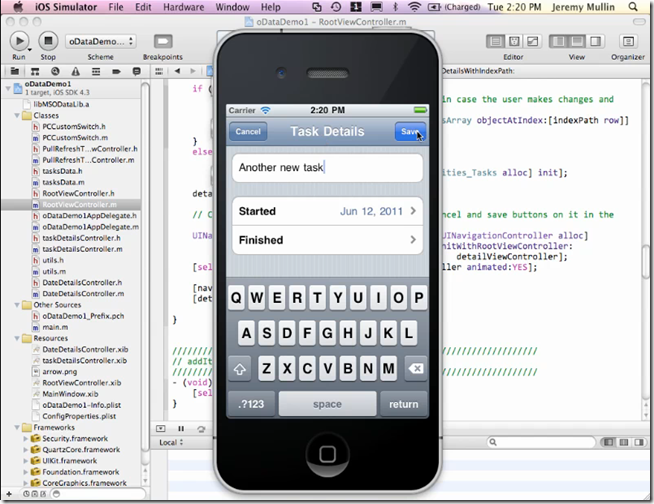

J. D. Mullin (@jdmullin) continued his series with iPhone oData Client, Part 3 of 6/22/2011 (missed when posted):

In Part 2 of this series we moved our service query to a background thread and added “pull to refresh” functionality. In Part 3 we take a quick look at insert, update and delete functionality.

You can stream the screencast here, or download (33MB) and view it locally.

Resources

- The Example Application Source Code

- Paul Crawford’s PCCustomSwitch

- Part 2 of this series

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF, Cache and Service Bus

The AppFabric Team (@AppFabric) posted Client Side Tracing for Windows Azure AppFabric Caching on 6/29/2011:

In this post, I will describe how to enable Client-side traces for AppFabric Caching Service. I will also touch upon some basic problems you might face while trying out the same.

To configure the cache client to generate System.Diagnostics traces pertaining to the AppFabric cache, please follow the steps below:

Configuration File Option

In your app.config/web.config you need to add <tracing sinkType="DiagnosticSink" traceLevel="Verbose" />, to the appropriate location as per the cases below:

a. DataCacheClients Section

If you have a ‘dataCacheClients’ section in your app.config/web.config file under <configuration> section, then add the tracing section as below:

    <configuration>
      <configSections>
        <section name="dataCacheClients"
                 type="Microsoft.ApplicationServer.Caching.DataCacheClientsSection, Microsoft.ApplicationServer.Caching.Core"
                 allowLocation="true" allowDefinition="Everywhere" />
      </configSections>
      <dataCacheClients>
        <!--traceLevel can have the following values : Verbose, Info, Warning, Error or Off-->
        <tracing sinkType="DiagnosticSink" traceLevel="Verbose" />
        <dataCacheClient name="default">
          <hosts>
            <host name="[YOUR CACHE ENDPOINT]" cachePort="22233" />
          </hosts>
          <securityProperties mode="Message">
            <messageSecurity authorizationInfo="[YOUR TOKEN]">
            </messageSecurity>
          </securityProperties>
        </dataCacheClient>
      </dataCacheClients>
      ...
    </configuration>

Note: Tracing section should be a direct child of the <dataCacheClients> section and should not be added under <dataCacheClient> section(s), if <dataCacheClients> section is present.

b. DataCacheClient Section

If you do not have a <dataCacheClients> section, and the app.config/web.config has only a <dataCacheClient> section, then add the Tracing section as below:

    <configuration>
      <configSections>
        <section name="dataCacheClient"
                 type="Microsoft.ApplicationServer.Caching.DataCacheClientSection, Microsoft.ApplicationServer.Caching.Core"
                 allowLocation="true" allowDefinition="Everywhere" />
      </configSections>
      <dataCacheClient>
        <!--traceLevel can have the following values : Verbose, Info, Warning, Error or Off-->
        <tracing sinkType="DiagnosticSink" traceLevel="Verbose" />
        <hosts>
          <host name="[YOUR CACHE ENDPOINT]" cachePort="22233" />
        </hosts>
        <securityProperties mode="Message">
          <messageSecurity authorizationInfo="[YOUR TOKEN]">
          </messageSecurity>
        </securityProperties>
      </dataCacheClient>
      ...
    </configuration>

Programmatic Option

You may also choose to set the System.Diagnostics trace sink for the cache client programmatically, by calling the method below at any point:

    // Argument 1 specifies that a System.Diagnostics.Trace will be used, and
    // Argument 2 specifies the trace level filter.
    DataCacheClientLogManager.SetSink(DataCacheTraceSink.DiagnosticSink, System.Diagnostics.TraceLevel.Verbose);

You may also change the level at which the trace logs are filtered programmatically, by calling the method below at any stage (say, 5 minutes after your service has started and stabilized you may want to set the cache client trace level to Warning instead of Verbose):

    DataCacheClientLogManager.ChangeLogLevel(System.Diagnostics.TraceLevel.Warning);

Troubleshooting

If you are using Azure SDK 1.3 or above and try to get cache client-side traces within a Web Role using the above mechanism in the Development Fabric environment, you will not be able to see the cache client traces in the Development Fabric Emulator (or DevFabric UI).

Reasoning/Explanation for the given behavior

With SDK 1.3, the IIS (Role Runtime) runs in a separate process as compared to web role (for ASP.Net session state provider scenario) and all the logs related to Web Role can be seen in the DevFabric UI, however, the cache client side traces go as part of IIS i.e. the Role Runtime. The DevFabric Emulator attaches its own listener “DevelopmentFabricTraceListener” by default and its UI emits logs only heard by this listener. Since IIS is in a different process, this listener is not present and logs cannot be seen on the DevFabric UI.

Resolution

Note: The resolution below is only to see logs for DevFabric scenario; otherwise the logs would go to xStore (Azure Storage Account) in a perfectly fine manner. For your project, add the below in web.config under <system.diagnostics>\<trace>\<listeners> section:

    <add type="Microsoft.ServiceHosting.Tools.DevelopmentFabric.Runtime.DevelopmentFabricTraceListener, Microsoft.ServiceHosting.Tools.DevelopmentFabric.Runtime, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"
         name="DevFabricListener">
      <filter type="" />
    </add>

You may add this only for your Web.Debug.config, so that when you actually deploy to an Azure environment, this section does not get added as you will use Web.Release.config file.

You also need *not* add a reference to the DLL “Microsoft.ServiceHosting.Tools.DevelopmentFabric.Runtime.dll”, because the machine on which you deploy to the Development Fabric should already have this DLL in the GAC (search for this DLL under %windir%\assembly).

Deploy the sample on DevFabric, and you should now be able to see the Cache traces on the Development Fabric UI.
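The placement rule for the tracing element in the two configuration cases above can be checked mechanically before deployment. The following is a minimal Python sketch, not part of the AppFabric SDK: the function name `tracing_placement_ok` is hypothetical, and it only looks at the element names shown in this post.

```python
import xml.etree.ElementTree as ET


def tracing_placement_ok(config_xml: str) -> bool:
    """Return True if <tracing> sits where the post says it should (hypothetical helper)."""
    root = ET.fromstring(config_xml)
    clients = root.find("dataCacheClients")
    if clients is not None:
        # Case a: <tracing> must be a direct child of <dataCacheClients>
        # and must not appear under any <dataCacheClient> element.
        direct = clients.find("tracing") is not None
        nested = any(
            client.find("tracing") is not None
            for client in clients.findall("dataCacheClient")
        )
        return direct and not nested
    # Case b: only <dataCacheClient> exists, so <tracing> goes directly under it.
    client = root.find("dataCacheClient")
    return client is not None and client.find("tracing") is not None
```

Passing the text of app.config or web.config to this function returns False when the tracing element is missing or nested under the wrong section.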

Kathleen Richards delivered a First Look at Tools for Azure AppFabric Apps to her RDN Express blog on 6/29/2011:

Office in the cloud is stealing headlines today but the most important cloud developments for developers occurred last week.

Microsoft released the Windows Azure AppFabric June CTP. As promised at Tech-Ed North America 2011, it offers the first glimpse of tools and components for AppFabric Applications. The new tools and services are designed to help developers build n-tier applications (web, middle and data-tiers) that are "cloud-ready" and then manage all of the components and dependencies as a single logical entity, throughout the application's lifecycle.

The Visual Studio tools and components include simple configurations for Windows Communication Foundation (WCF) and Windows Workflow (WF), according to Microsoft, an Application Container service for running WCF or WF code and an Application Manager where developers deploy and monitor AppFabric apps with help from compositional models.

Microsoft's Karandeep Anand explained the new tools, components and concepts in the AppFabric Team blog:

In CTP1, we are focusing on a great out-of-box experience for ASP.NET, WCF, and Windows Workflow (WF) applications that consume other Windows Azure services like SQL Azure, AppFabric Caching, AppFabric Service Bus and Azure Storage. The goal is to enable both application developers and ISVs to be able to leverage these technologies to build and manage scalable and highly available applications in the cloud.

You can download the Windows Azure AppFabric SDK – June Update here. To sign up for a chance to try out the Application Manager CTP in the Labs environment, you'll need to sign in to the AppFabric Management Portal and follow the instructions outlined in this AppFabric Team blog.

Roger Jennings, a developer and author of the OakLeaf Systems blog and Cloud Computing with the Windows Azure Platform (WROX, 2009) has yet to try out the new tools but says he thinks that AppFabric is "quite innovative and has a great deal of potential."

Jennings explained in an email:

AppFabric (in general) is Microsoft's primary distinguishing feature for Windows Azure. I’ve been waiting for the AppFabric Applications CTP since they dropped support for workflows before Windows Azure released.

Check out Jennings' June VSM cover story, "New Migration Paths to the Microsoft Cloud", for more on Azure developer tools and hybrid platform features.

Express your thoughts on Microsoft and the cloud. Are you more excited about Office 365, or the potential of new Windows Azure AppFabric tools and components? Drop me a line at krichards@1105media.com

Full disclosure: I’m a Contributing Editor for Visual Studio Magazine (VSM). VSM and RDN are 1105 Media properties.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Ryan Waite of the Windows Azure Team reported “Windows Azure integration gets better” but no Dryad/DryadLINQ in its HPC Pack 2008 R2 Service Pack 2 (SP2) Released post of 6/29/2011:

The essence of High Performance Computing (HPC) is processing power, and Windows Azure’s ability to provide on-demand access to a vast pool of computing resources will put HPC at the fingertips of a broader set of users. With this in mind we're excited to announce the release of a second ‘service pack’ (SP2) for Windows HPC Server 2008 R2. This release is focused on providing customers with a great experience when expanding their on-premises clusters to Windows Azure, and we’ve also included some features for on-premises clusters. Here’s an overview of the new functionality:

Windows Azure integration gets better

We’ve continued our work to provide a single set of management tools for both local compute nodes and Windows Azure compute instances. We’ve now integrated directly with the Windows Azure APIs to provide a simplified experience for provisioning compute nodes in Windows Azure for applications and data. Scaling on-premises cluster workloads to the Cloud is easy for administrators to configure, while ensuring complete transparency to end users. Furthermore, our new features include a tuned MPI stack for the Windows Azure network, support for Windows Azure VM role (currently in beta), and automatic configuration of the Windows Azure Connect preview to allow Windows Azure based applications to reach back to enterprise file servers and license servers via virtual private networks.

Parametric sweep applications

Not all HPC applications are MPI applications. The most significant growth in the HPC market comes from conveniently parallel applications like parametric sweep applications and the WCF-based cluster SOA model to build scale-out calculation services. These programming models are commonly used in Monte Carlo analysis or risk calculation in finance. Applications using these scale-out models are ideal for the Cloud because they can typically scale linearly. Add more resources and get your work done faster!
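To make the parametric sweep model concrete, here is a minimal Python sketch of a Monte Carlo sweep in which each parameter value is an independent task. It is an illustration only and does not use the HPC Pack APIs: the function names, the normally distributed return model and the 10% loss threshold are all assumptions, and the local thread pool merely stands in for cluster nodes or Windows Azure instances.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def estimate_loss_probability(volatility: float, trials: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(return < -10%) for one parameter value (toy model)."""
    rng = random.Random(seed)  # private generator, so tasks share no state
    losses = sum(1 for _ in range(trials) if rng.gauss(0.0, volatility) < -0.10)
    return losses / trials


def sweep(volatilities):
    """Run one independent task per parameter value and collect the results."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(estimate_loss_probability, volatilities))
    return dict(zip(volatilities, results))
```

Because no task depends on another, adding workers shortens the sweep roughly in proportion, which is the near-linear scaling the post refers to.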

Support for LINQ to HPC beta

In the coming days, we will release a second beta for building data intensive applications (previously codenamed Dryad). We’re now calling it ‘LINQ to HPC’. LINQ has been an incredibly successful programming model and with LINQ to HPC we allow developers to write data intensive applications using Visual Studio and LINQ and deploy those data intensive applications to clusters running Windows HPC Server 2008 R2 SP2. Programmers can now use thousands of servers, each of them with multiple processors or cores, to process unstructured data and gather insights. [Emphasis added.]

New scenarios for on-premises clustering

We also added several features in SP2 that will enable new scenarios for on-premises cluster customers:

- Resource pools allow partitioning cluster resources by groups such that users submitting jobs to their group’s pool are guaranteed a minimum number of resources, but idle resources can be shared with other groups when they have more work to compute.

- A new REST API and web portal makes it easy to submit jobs from any platform and monitor the status from a user “heat map.”

- Soft card authentication support enables users to submit jobs to a cluster in organizations that use smart cards instead of passwords.

- Expanded support for adding Windows 7 desktops to a cluster for “desktop cycle stealing.” These resources can now reside in any trusted Active Directory domain.

- New APIs that support staging and accessing common data that is required by all calculation requests within a session. The client application can include code that sends the data to the cluster and makes it available to each instance of the SOA service on the cluster.
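The resource pool behavior in the first bullet above (a guaranteed minimum per group, with idle resources lent to busier groups) can be sketched as a two-step allocation. This Python sketch is an assumption about the general idea, not the scheduler's actual algorithm; the `allocate` function and its round-robin lending policy are hypothetical, and it assumes the guarantees sum to no more than the cluster size.

```python
def allocate(total_cores: int, pools: dict) -> dict:
    """pools maps name -> (guaranteed, demand); returns name -> granted cores."""
    # Step 1: every pool receives its demand, capped at its guaranteed share.
    granted = {name: min(guaranteed, demand) for name, (guaranteed, demand) in pools.items()}
    spare = total_cores - sum(granted.values())
    # Step 2: lend idle cores, one at a time, to pools that still have work queued.
    while spare > 0:
        hungry = [name for name, (_, demand) in pools.items() if granted[name] < demand]
        if not hungry:
            break
        for name in hungry:
            if spare == 0:
                break
            granted[name] += 1
            spare -= 1
    return granted
```

For example, with 100 cores and pools `{"a": (40, 10), "b": (40, 90), "c": (20, 20)}`, pool "a" uses only 10 of its 40 guaranteed cores, so the 30 idle cores are lent to pool "b".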

Be sure to learn about all of the features in the release and try it out. I’m sure you’ll be pleased to see what we have in store for you!

Mary Jo Foley provides additional background details in her What's in (and not) with Microsoft's new high-performance computing service pack post of 6/29/2011 to her ZDNet All About Microsoft blog.

Rob Gillen (@argodev) explained how to overcome problems with Using Nevron Controls in Azure in a 6/29/2011 post:

I’ve been playing around with the Nevron Controls for an Azure application I’m building (hopefully more on that soon) and I’ve been fighting with a simple problem that I’m posting here for my own remembrance and hopefully to help a few others.

The problem has been, that the Nevron controls worked fine when I was testing the web app directly, but would cause the dev fabric to blow up if I tried to run it there. I even tried to simply deploy it to Azure assuming that possibly it was a “feature” of the dev fabric – no dice.

Well, today I had some time to dig to the bottom of it and found that it was a simple problem with the way the http handlers were registered. By default, I had the handlers registered like this:

    <system.web>
      <httpHandlers>
        <add verb="*" path="NevronDiagram.axd" type="Nevron.Diagram.WebForm.NDiagramImageResourceHandler" validate="false"/>
        <add verb="GET,HEAD" path="NevronScriptManager.axd" type="Nevron.UI.WebForm.Controls.NevronScriptManager" validate="false"/>
      </httpHandlers>
    </system.web>

However, as Shan points out in this post: http://social.msdn.microsoft.com/Forums/en/windowsazure/thread/0103ca2d-e952-4c28-8733-47630535c05c, you need to use the newer IIS 7 integrated pipeline. A closer look at the official Nevron samples, shows that they accounted for this and I simply missed it. The setup should be something like this:

    <system.webServer>
      <validation validateIntegratedModeConfiguration="false"/>
      <handlers>
        <add name="NevronDiagram" preCondition="integratedMode" verb="*" path="NevronDiagram.axd" type="Nevron.Diagram.WebForm.NDiagramImageResourceHandler"/>
        <add name="NevronScriptManager" preCondition="integratedMode" verb="*" path="NevronScriptManager.axd" type="Nevron.UI.WebForm.Controls.NevronScriptManager"/>
      </handlers>
    </system.webServer>

Notice in particular that not only is the structure a little different, the declarations are under the system.webServer node rather than the system.web node.
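For a config file with many handlers, the same move from system.web to system.webServer could be scripted. The Python sketch below is a hypothetical helper, not a Nevron or Microsoft tool: it derives each handler's name from its path (an assumption that happens to match the example above), drops the validate attribute, and keeps the original verb, whereas the sample above uses verb="*" for both handlers.

```python
import xml.etree.ElementTree as ET


def to_integrated_handlers(system_web_xml: str) -> str:
    """Convert a <system.web><httpHandlers> block into a <system.webServer> block."""
    old_root = ET.fromstring(system_web_xml)
    new_root = ET.Element("system.webServer")
    ET.SubElement(new_root, "validation", {"validateIntegratedModeConfiguration": "false"})
    handlers = ET.SubElement(new_root, "handlers")
    for add in old_root.findall("./httpHandlers/add"):
        path = add.get("path", "")
        ET.SubElement(handlers, "add", {
            "name": path.rsplit(".", 1)[0],      # assumption: name = path without extension
            "preCondition": "integratedMode",
            "verb": add.get("verb", "*"),
            "path": path,
            "type": add.get("type", ""),
        })
    return ET.tostring(new_root, encoding="unicode")
```

The output is a single-line XML string; review it by hand before pasting it into web.config.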

Steve Plank (@plankytronixx) posted links to Windows Azure Bootcamps Labs: SQL Azure Labs 1 to 3 on 6/29/2011:

The second section of the bootcamp deals with SQL Azure. This is not a standalone lab – it builds on what you have already built in Web, Worker and Storage Labs 1 to 4. Refer to the Intro video and the post for Labs 1 to 4.

Maarten Balliauw (@maartenballiauw) explained a new feature to Delegate feed privileges to other users on MyGet in a 6/29/2011 post:

One of the first features we had envisioned for MyGet and which seemed increasingly popular was the ability to provide other users a means of managing packages on another user’s feed.

As of today, we’re proud to announce the following new features:

- Delegating feed privileges to other users – This allows you to make another MyGet user “co-admin” or “contributor” to a feed. This eases management of a private feed as that work can be spread across multiple people.

- Making private feeds private by requiring authentication – It’s now possible to configure a feed so that nobody can consult its list of packages unless a valid login is provided. This feature is not yet available for use with NuGet 1.4.

- Global deployment – We’ve updated our deployment so managing feeds can now be done on a server that’s closer to you.

Now when is Microsoft going to buy us out :-)

Delegating feed privileges to other users

MyGet now allows you to make another MyGet user “co-admin” or “contributor” to a feed. This eases management of a private feed as that work can be spread across multiple people. If combined with the “private feeds” option, it’s also possible to give some users read access to the feed while unauthenticated users can not access the feed created.

To delegate privileges to a user, navigate to the feed details and click the Feed security tab. This tab allows you to change feed privileges for different users. Adding feed privileges can be done by clicking the Add feed privileges… button (duh!).

Available privileges are:

- Has no access to this feed (speaks for itself)

- Can consume this feed (allows the user to use the feed in Visual Studio / NuGet)

- Can manage packages for this feed (allows the user to add packages to the feed via the website and via the NuGet push API)

- Can manage users and packages for this feed (extends the above with feed privilege management capabilities)
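The four privilege levels above can be modeled as an ordered enumeration. This Python sketch is illustrative only and says nothing about how MyGet implements privileges: the names are hypothetical, and it assumes each level includes the capabilities of the levels below it, which the post states explicitly only for the last level.

```python
from enum import IntEnum


class FeedPrivilege(IntEnum):
    """The four privilege levels listed above, lowest to highest."""
    NO_ACCESS = 0
    CONSUME = 1
    MANAGE_PACKAGES = 2
    MANAGE_USERS_AND_PACKAGES = 3


def is_allowed(granted: FeedPrivilege, required: FeedPrivilege) -> bool:
    """Assumption: a higher level includes every capability of the lower ones."""
    return granted >= required


def effective_privilege(user_grants: dict, everyone: FeedPrivilege, user: str) -> FeedPrivilege:
    """A user's explicit grant wins; otherwise the feed's 'Everyone' setting applies."""
    return user_grants.get(user, everyone)
```

Under this model, a feed whose Everyone setting is `NO_ACCESS` stays readable only for users with an explicit grant of `CONSUME` or higher.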

After selecting the privileges, the user receives an e-mail in which he/she can claim the acquired privileges:

Privileges are not granted immediately: after they are assigned, the user has to claim them by clicking a link in the automated e-mail that is sent.

Making private feeds private by requiring authentication

It’s now possible to configure a feed so that nobody can consult its list of packages unless a valid login is provided. Combined with the feed privilege delegation feature one can granularly control who can and who can not consume a feed from MyGet. Note that this feature is not yet available for use with NuGet 1.4; we hope to see support for this shipping with NuGet 1.5.

In order to enable this feature, on the Feed security tab change feed privileges for Everyone to Has no access to this feed.

This will instruct MyGet to request basic authentication when someone accesses a MyGet feed. For example, try our sample feed: http://www.myget.org/F/mygetsample/
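A client that needs to read such a private feed has to send an HTTP Basic Authorization header. The following Python sketch shows how that header is built with the standard library; the function name and the credentials are placeholders, and nothing here is specific to MyGet beyond the sample URL quoted above.

```python
import base64
import urllib.request


def build_feed_request(feed_url: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request carrying HTTP Basic credentials."""
    # Basic authentication is "username:password", base64-encoded.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(feed_url)
    request.add_header("Authorization", f"Basic {token}")
    return request
```

Passing the returned object to `urllib.request.urlopen` would perform the call. Basic credentials are only encoded, not encrypted, so they should be sent over HTTPS where the server supports it.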

Global deployment

We’ve updated our deployment so managing feeds can now be done on a server that’s closer to you. Currently we have a deployment running in a European datacenter and one in the US. We hope to expand this further as well as leverage a content delivery network for high-speed distribution of packages.

We need your opinion!

As features keep popping into our head, the time we have to work on MyGet in our spare time is not enough. To support some extra development, we are thinking along the lines of introducing a premium version which you can host in your own datacenter or on a dedicated cloud environment. We would love some feedback on the following survey:

See original post for survey link.

Jonathan Rozenblit (@jrozenblit) described What’s In the Cloud: Canada Does Windows Azure - Connect2Fans in a 6/29/2011 post:

Since it is the week leading up to Canada day, I thought it would be fitting to celebrate Canada’s birthday by sharing the stories of Canadian developers who have developed applications on the Windows Azure platform. A few weeks ago, I started my search for untold Canadian stories in preparation for my talk, Windows Azure: What’s In the Cloud, at Prairie Dev Con.

I was just looking for a few stories, but was actually surprised, impressed, and proud of my fellow Canadians when I was able to connect with several Canadian developers who have either built new applications using Windows Azure services or have migrated existing applications to Windows Azure. What was really amazing to see was the different ways these Canadian developers were using Windows Azure to create unique solutions.

This week, I will share their stories.

Connect2Fans

Connect2Fans is a BizSpark Startup who has developed a solution to support artists, writers, musicians, photographers, and other people in the arts and entertainment industry who are finding it difficult to connect directly with their fans. With Connect2Fans, artists have complete control over all fees that are charged to their fans, which will lower or eventually eliminate service fees altogether. Through the Connect2Fans software, the artists and entertainment industry personnel will be able to offer inventory items such as digital files (MP3s, Ringtones, Videos, PDF Books, Software etc), tangible merchandise (CDs, DVDs, t-shirts, photos, and paintings), ticketing (barcoded, PDF, and reserved seating) and hospitality services (restaurants, limo, and VIP services amongst others).

I had a chance to sit down with Jason Lavigne (@Jason_Lavigne), co-founder and director of Connect2Fans to find out how he and his team built Connect2Fans using Windows Azure.

Jonathan: When you and your team were designing Connect2Fans, what was the rationale behind your decision to develop for the Cloud, and more specifically, to use Windows Azure?

Jason: Dave Monk and I put together a video that answers just that.

Jonathan: What Windows Azure services are you using? How are you using them?

Jason: We are using Web Roles, Worker Roles, Windows Azure Storage, and SQL Azure. The one item that we think that would be unique would be our use of MongoDB for our main data store that allows us to maintain 10,000+ concurrent connections per node.

Jonathan: During development, did you run into anything that was not obvious and required you to do some research? What were your findings? Hopefully, other developers will be able to use your findings to solve similar issues.

Jason: The biggest gotcha would be that the throughput of Windows Azure Storage Tables (internally we call this Azure DHT) was too slow for the kind of traffic we needed to support. Our solution was to set up MongoDB in worker roles for writes and a replica set on each web role for reads.

Jonathan: Lastly, what were some of the lessons you and your team learned as part of ramping up to use Windows Azure or actually developing for Windows Azure?

Jason: Every day we are learning more and more, but the biggest lesson I learned while learning and using Windows Azure was that Microsoft is a fantastic resource for support. I am not just saying that because you are from Microsoft; I am serious when I say that Microsoft has made it clear from the very beginning that they are there for us and they have certainly stepped up when we needed it.

That’s it for today’s interview with Jason. As you can see, Jason and his team broke away from what you would consider the norm. The Windows Azure platform’s flexibility and ability to custom tailor to a specific set of requirements allowed them to achieve the best results for their specific solution.

I’d like to take this opportunity to thank Jason for sharing his story. If you’d like to find out more about Connect2Fans, you can check out the site, Twitter, and Facebook. To find out more about how Connect2Fans is using the Windows Azure platform, you can reach out to Jason on Twitter.

Tomorrow – another Windows Azure developer story.

Join The Conversation

What do you think of this solution’s use of Windows Azure? Has this story helped you better understand usage scenarios for Windows Azure? Join the Ignite Your Coding LinkedIn discussion to share your thoughts.


<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) explained Using SharePoint Data in your LightSwitch Applications in a 6/29/2011 post:

One of the great features of LightSwitch is that it lets you connect to and manipulate data inside of SharePoint. Data can come from any of the built in SharePoint lists like Tasks or Calendar or it can come from custom lists that you create in SharePoint. In this post I’m going to show you how you can work with user task lists in SharePoint via LightSwitch. For a video demonstration of this please see: How Do I: Connect LightSwitch to SharePoint Data?

Connecting to SharePoint

The first thing we do is connect to a SharePoint 2010 site and choose what data we want to work with. If you have just created a new LightSwitch project then on the “Start with Data” screen select Attach to External Data Source. Otherwise you can right-click on the Data Sources node in the Solution Explorer on the right and select “Add Data Source…” to open the Attach Data Source Wizard.

Select SharePoint and Click Next. Next you need to specify the SharePoint site address. In the SharePoint Site Address box, type the URL of the SharePoint site that contains the list that you want to connect to. For example, if the URL of your list is http://sharepoint/sites/mysite/Lists/Tasks/AllItems.aspx, type http://sharepoint/sites/mysite/ into the SharePoint Site Address box. Then it will ask you how you want to log into SharePoint. If you are building a LightSwitch application for internal/company use then you should select Windows credentials here. This means that users on the company domain will connect to SharePoint using their Windows credentials when they run your LightSwitch application. Click Next.
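The rule for deriving the site address from a list URL can be expressed as a small helper. This Python sketch is a convenience illustration, not part of LightSwitch or SharePoint: the function name is hypothetical, and it assumes the list lives under a /Lists/ path segment, as in the example URL above.

```python
from urllib.parse import urlsplit, urlunsplit


def site_address_from_list_url(list_url: str) -> str:
    """Strip everything from the /Lists/ segment onward, keeping the trailing slash."""
    parts = urlsplit(list_url)
    index = parts.path.find("/Lists/")
    if index == -1:
        raise ValueError("URL does not contain a /Lists/ segment")
    site_path = parts.path[: index + 1]
    return urlunsplit((parts.scheme, parts.netloc, site_path, "", ""))
```

Called with http://sharepoint/sites/mysite/Lists/Tasks/AllItems.aspx, it returns http://sharepoint/sites/mysite/, the value to type into the SharePoint Site Address box.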

Lastly you need to select the lists that you want to pull into LightSwitch and name the Data Source. For this example I’m selecting Tasks. Notice that UserInformationList is selected by default. This is because every list item in SharePoint has a Modified and Created By field that relates to the users who have access to the site which is stored here. When you select a table from a database or a list from SharePoint, any related entities are also pulled into LightSwitch for you. Click Finish and LightSwitch will warn you that it can’t import predecessor tasks because it does not support many-to-many relationships at this time. Click Continue and the Data Designer will open allowing you to modify the Task and UserInformationList entities.

LightSwitch sets up some smart defaults for you when it imports content types from SharePoint. For Task you will notice that a lot of the internal fields are set to not display on the screen by default. Also LightSwitch creates any applicable Choice Lists for you. For instance, you will see that you can only select from a list of predefined values that came from SharePoint for Priority and Status.

Modifying your SharePoint Entities

Even though LightSwitch did some nice heavy-lifting here for us we still are going to want to make some modifications. First I’ll select the ContentType field and uncheck the “Display by Default” in the properties window. This property is automatically set to “Task” by SharePoint when we save an item and I’m going to create a screen that only displays Tasks so I don’t need to show this to the user. I’ll do the same for the TaskGroup property. I’ll also set the Summary Property of the Task entity to the Title and the UserInformationList to the Name property. Finally I’ll set the StartDate and DueDate to type Date instead of Date Time since I don’t want to display the time portion to the user.

Depending on how you’ll be displaying the data from SharePoint you typically want to tweak your entities a little bit like this. I also want to implement a couple business rules that set some defaults on properties for me. The first thing I want to do is set the Complete percentage to 1 or 0 if the user selects a Status value of Completed or Not Started. In the Data Designer click the Status property and then drop down the “Write Code” button at the top right of the Designer and select “Status_Validate” method. Write this code:

    Private Sub Status_Validate(results As EntityValidationResultsBuilder)
        If Me.Status <> "" Then
            Select Case Me.Status
                Case "Not Started"
                    Me.Complete = 0
                Case "Completed"
                    Me.Complete = 1
            End Select
        End If
    End Sub

Using Windows Authentication for Personalization

Next I want to automatically set the AssignedTo property on the Task to the logged in user. I also want to be able to present the logged in user with just their tasks on their startup screen. To do that, I need to specify that my LightSwitch application uses Windows Authentication just like SharePoint. Open the project Properties (from the main menu select Project –> Application Properties…) and then select the Access Control tab. Select “Use Windows authentication”.

If you want to allow any authenticated user on your Windows domain access to the application then select that option like I have pictured above. Otherwise if you want to control access using permissions and entering users and roles into the system then you have that option as well. (For more information on access control see: How Do I: Set up Security to Control User Access to Parts of a Visual Studio LightSwitch Application?)

Because the AssignedTo property on the Task is of the type UserInformationList we need to query SharePoint for the right user record. Fortunately, once we have enabled Windows authentication this is easy to do by using a query. In the Solution Explorer right click on UserInformationLists and select “Add Query” to open the query designer. Name the query GetUserByName and add a filter where Account is equal to a new parameter named Account. Also in the Properties window make sure to change the “Number of Results Returned” to “One”.

Now that we have this query set up we can go back to the Data Designer by double-clicking on Tasks in the Solution Explorer and then drop down the “Write Code” button again but this time select the Task_Created method. _Created methods on entities are there so you can set defaults on fields. The method runs any time a new entity is created. In order to call the query we just created in code, you use the DataWorkspace object. This allows you to get at all the data sources in your application. In my case I have only one data source called Team_SiteData which is the SharePoint data source. Once you drill into the data source you can access all the queries. Notice that LightSwitch by default generates query methods to return single records in a data source in which you pass an ID. You can access the complete sets as well.

What we need to pass into our GetUserByName query is the account for the logged in user which you can get by drilling into the Application object. Here’s the code we need:

    Private Sub Task_Created()
        Me.AssignedTo = Me.DataWorkspace.Team_SiteData.GetUserByName(Me.Application.User.Name)
    End Sub

We also want to add another query for our screen that will only pull up tasks for the logged in user. Right-click on the Tasks entity in the Solution Explorer and select “Add Query”. Call this one MyTasks and add a filter by expanding the AssignedTo property and selecting Account equals a new parameter named Account. Also add a sort by DueDate ascending.

Creating a Screen to Edit SharePoint Data

Next we just need to create a screen based on the MyTasks query. From the query designer you can click the “Add Screen” button or you can right click on the Screens node in the Solution Explorer and select Add Screen. Select the List and Details Screen and for the Screen Data select MyTasks. Click OK

The Screen Designer opens and you should notice that LightSwitch has added a textbox at the top of our content tree for the screen parameter that it added called TaskAccount which is fed into the query parameter Account. Once we set the TaskAccount with a value the query will execute. As it is though, the user would need to enter that data manually and that’s not what we want. We want to set that control to a Label and set the value programmatically.

To do that drop down the Write Code button on the Screen Designer and select the InitializeDataWorkspace method. This method runs before any data is loaded. Here is where we will set the value of TaskAccount to the logged in user:

    Private Sub MyTasksListDetail_InitializeDataWorkspace(
        saveChangesTo As System.Collections.Generic.List(Of Microsoft.LightSwitch.IDataService))
        ' Write your code here.
        Me.TaskAccount = Me.Application.User.Name
    End Sub

Hit F5 to build and run the application. The My Task List Detail screen should open with just the logged in user’s tasks. Click the “Design Screen” at the top right to tweak the layout. For instance I suggest changing the ModifiedBy and CreatedBy to summary fields because these are automatically filled in by SharePoint when you save. I also changed the List to a DataGrid and show the DueDate since we are sorting by that and made the description field bigger. You can make any other modifications you need here.

Add a new task and you will also see the AssignedTo field is automatically filled out for us when we add new records. Cool! In the next post I’ll show you how you can relate SharePoint list data to your LightSwitch application data and work with it on the same screen.

See my SharePoint 2010 Lists’ OData Content Created by Access Services is Incompatible with ADO.NET Data Services post of 3/22/2011 regarding LightSwitch’s problems using OData data sources created by moving Access 2010 tables to SharePoint lists.

Paul Patterson asked Microsoft LightSwitch – What Do You Want in the Release? in a 6/29/2011 post:

I am curious what others would like to see in the release version, that does not exist in the current beta…

Microsoft Visual Studio LightSwitch has been out in Beta 2 for some time now. All this time in Beta 2 has definitely caused me some angst; but only because I am getting more and more impatient with waiting for the official release – or word of it. I know Microsoft is doing its due diligence in getting the right tool out for the right reasons. Taking their time to do it right makes sense. Having said that,

What is interesting is how the beta version has put a clear perspective on just how simple LightSwitch is to use. I went through a few months of writing a number of blog posts that addressed most, if not all, of the features of using LightSwitch. Then there came a point when I couldn’t think of anything else to write about without repeating myself.

Keep in mind that I haven’t really scratched the surface on the extensibility aspects of LightSwitch. That is because the context of my articles is for those who want to use LightSwitch, not program LightSwitch. I will eventually do some deep diving into writing extensions, but not until I exhaust everything else from an end user productivity perspective.

So, with that being said, in anticipation of the release version of LightSwitch, here is what is on my own release wishlist;

- Integrated Reporting.

- More Shells

- More Themes

- Mobile Templates

- HTML5

- More Freebies!

Reporting

For reporting, I want easy to use report development capabilities and not the, “..create and add a custom Silverlight control..”, type of reporting. I want to have an extension that I can simply turn on in the properties dialog, and then have a wizard available to allow the writing of reports.

For example; when I turn on a reporting extension for my LightSwitch application, I would love to have the Visual Studio menu updated with a new “Reports” menu item. From that menu I could select to add or edit reports in my application.

I am okay with manually creating the processes to get the reports to show up and display. If LightSwitch recognized that reporting exists, then a wizard based interface could be used within the screen designers, prompting the user for information about what report to open.

Shells and Themes

Creating an attractive and intuitive user interface is a challenge for most developers; myself included. I am not a graphic artist and I am by no means a user experience professional. However, I do understand that the perception of a solution’s usability is a critical success factor in most user-centric software. The more choices I have for shells and themes, the more likely I will have success in finding something that my customer will like and use.

Mobile Templates

Gearing a solution for a mobile device will be a big win. An inclusive LightSwitch project type that uses a combination of screen templates, shells, and themes for mobile devices would certainly get some kudos in my books.

HTML5

There is a lot of discussion around the future of Silverlight, or more specifically, XAML. Windows 8 is just around the corner and it seems that there is a lot of concern about what support Windows 8 will have for XAML (do a Google search for Windows 8 XAML Jupiter).

Notwithstanding the Windows 8 questions, outputting an HTML5 client would be awesome.

Free or Almost Free

I like free – who doesn’t! The more free stuff that I can get for LightSwitch, the better. At the same time, however, I would not have a problem forking out a few bucks for something that will save me time in the end. After all, time is money.

By the way, I put together a site named LightSwitchMarket.com. The plan is to offer anything that is LightSwitch – both paid for and free!

What do you want?

So tell me, what do you want to see in the LightSwitch release?

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Makogon (@dmakogon) reported My team is hiring: Work with ISV’s and Windows Azure! on 6/29/2011:

I work with a really cool team at Microsoft. Our charter is to help Independent Software Vendors (ISV’s) build or migrate applications to the Windows Azure platform. We’re growing, and looking to fill two specific roles in the US:

Architect Evangelist (DC area, Silicon Valley area). 80% technical, 20% business development experience. Excerpt from the job description:

“You will be responsible for identifying, driving, and closing Azure ISV opportunities in your region. You will help these partners bring their cloud applications to market by providing architectural guidance and deep technical support during the ISV’s evaluation, development, and deployment of the Azure services. This position entails evangelizing the Windows Azure Platform to ISV partners, removing roadblocks to their deployment, and driving partner satisfaction.”

Platform Strategy Advisor (New York area). 50% technical, 50% business development experience. Excerpt from the job description:

“You will be responsible for identifying, driving, and closing Azure ISV opportunities in your region. You will help these partners bring their cloud applications to market by providing business model and architectural guidance and supporting them through their development and go-to-market activities. This position entails evangelizing the Windows Azure Platform to ISV partners, removing roadblocks to their deployment, and driving partner satisfaction.”

Both of these roles are work-from-home positions, along with onsite customer visits and additional travel as necessary.

Job postings are on LinkedIn and the Microsoft Careers site:

- Architect Evangelist (Silicon Valley): LinkedIn, Microsoft Careers

- Platform Strategy Advisor (New York): LinkedIn, Microsoft Careers

I’ll post an update as soon as the DC-area position is posted (should be later today).

Andy Cross (@andybareweb) posted Tips and Tools for a Better Azure Deployment Lifecycle on 6/28/2011:

This post briefly details the tools and techniques I use when creating an Azure solution. They focus around the core Azure technologies rather than third party add-ons and typically are lessons learned that help ease deployment management.

Use Web Deploy for iterative Web Role updates

With Version 1.4 of the Azure SDK came the ability to use “Web Deploy” with Azure Web Roles. This allows the very rapid update of single instance code bases and, where it can be used, is by far the fastest way to update code running on an Azure Web Role.

Described in more detail here http://blog.bareweb.eu/2011/04/using-web-deploy-in-azure-sdk-1-4-1/, the Web Deploy technique is invaluable in shaving off huge quantities of time that would otherwise be spent starting up brand new instances.

Update your Configuration changes rather than redeploying … and …

Upgrade running roles rather than deleting and recreating them.

These two tips are very similar, but important. When you want to make a configuration change, there’s no need to redeploy your whole project! The Windows Azure portal gives you the ability to make configuration changes to ServiceConfiguration.cscfg. You can then use the RoleEnvironment.Changed event in order to have your instances detect this change, and make any appropriate change to their function.

When you want to make a code change, you can either destroy your old instances or upgrade them. There are different reasons for doing either during development, but it is significantly simpler and SPEEDIER to use the Upgrade route rather than stopping, deleting and recreating manually.

For more information, this is an excellent read: http://blog.toddysm.com/2010/06/update-upgrade-and-vip-swap-for-windows-azure-servicewhat-are-the-differences.html

Use Intellitrace to debug issues

When errors occur within roles, whether they fail to run or experience difficulty whilst running, it is important to know about Intellitrace. The caveat to this is that this tool is not available on all versions of Visual Studio, but if you do have it it is one of the most powerful tools available to you.

More details here: http://blogs.msdn.com/b/jnak/archive/2010/06/07/using-intellitrace-to-debug-windows-azure-cloud-services.aspx

Use Remote Desktop to get access to your instances

This one speaks for itself – one of the most standard and powerful ways of managing remote environments on the Windows platform is now available in Azure. You should enable this at all times during development – its usefulness in diagnosing issues in production is unsurpassed. http://msdn.microsoft.com/en-us/library/gg443832.aspx

When using Startup Tasks, follow @smarx!

Startup tasks are some of the more powerful ways of getting an Azure role to be just how you want it before it begins to run your code. You can install software, tweak settings and other such details. The problem (if you can call it such) with them is that since they are running in the cloud, before you have your main software running, any failure with them can prove catastrophic with little chance of a UI to report the issues.

I don’t think I can summarise it better than following the recommendation of Steve Marx, as described here: http://blog.smarx.com/posts/windows-azure-startup-tasks-tips-tricks-and-gotchas

Use Windows Azure Bootstrapper

Available at http://bootstrap.codeplex.com/ is a tool that allows you to automatically pull down dependencies as your project is starting up and run them. This can be incredibly useful for tracing, logging and other reasons. I recommend you check it out.

I will be updating these as I find more ways of improving the deployment lifecycle.

Ryan Bateman (@ryanbateman) posted Cloud Provider Global Performance Ranking – May to the CloudSleuth blog on 6/28/2011:

As is our monthly custom, here are our May results gathered via the Global Provider View, ranking the response times seen from various backbone locations around the world.

Fig1: Cloud Provider Response times (seconds) from backbone locations around the world.

- April’s results are available here.

- Visit the Global Provider View Methodology for the back story on these numbers.

Damon Edwards posted Video with John Allspaw at Etsy: What to put on the big dashboards on 6/24/2011:

I had the pleasure of visiting the folks at Etsy recently. Etsy is well known in DevOps circles for their Continuous Deployment and Metrics efforts.

I'll be posting more videos soon, but in the meantime here is a quick hit I recorded with John Allspaw while he gave me a tour of their Brooklyn HQ. I had just asked John about how they decide what to put on the big dashboards on the wall.

Here is what he told me...

John Allspaw on selecting what to dashboard at Etsy from dev2ops.org on Vimeo.

Joannes Vermorel (@vermorel) recommended Three features to make Azure developers say "wow" in a 6/22/2011 post (missed when posted):

Wheels that big aren't technically required. The power of the "wow" effect seems frequently under-estimated by analytical minds. Nearly a decade ago, I remember a time when analysts were predicting that the adoption of color screens on mobile phones would take a while to take off as color was serving no practical purpose.

Indeed, color screens arrived several years before the widespread bundling of cameras within cell phones. Even today, there are still close to zero mobile phone features that actually require a color screen to work smoothly.

Yet, I also remember that the first reaction of practically every single person holding a phone with a color screen for the first time was simply: wow, I want one too; and within 18 months or so, the world had upgraded from grayscale screens to color screens, nearly without any practical use for color justifying the upgrade (at the time, mobile games were nonexistent too).

Windows Azure is a tremendous public cloud, probably one of the finest products released by Microsoft, but frequently I feel Azure is underserved by a few items that trigger something close to an anti-wow effect in the mind of the developer discovering the platform. In those situations, I believe Windows Azure is failing at winning the heart of the developer, fostering adoption out of sheer enthusiasm.

[1.] No instant VM kick-off

With Azure, you can compile your .NET cloud app as an Azure package - weighing only a few MB - and drag & drop the package as a live app on the cloud. Indeed, on Azure, you don't deploy a bulky OS image, you deploy an app, which is about 100x smaller than a typical OS.

Yet booting your cloud app takes the Windows Azure Fabric a minimum of 7 mins (according to my own unreliable measurements), even if your app requires no more than a single VM to start with.

Here, I believe Windows Azure is missing a big opportunity to impress developers by bringing their app live within seconds. After all - assuming that a VM is ready on standby somewhere in the cloud - starting an average .NET app does not take more than a few seconds anyway.

Granted, there is no business case that absolutely requires instant app kick-off, and yet, I am pretty sure that if Azure were capable of that, every single 101 Windows Azure session would start by demoing a cloud deployment. Currently, the 7 mins delay is simply killing any attempt at public demonstration of a Windows Azure deployment. Do you really want to keep your audience waiting for 7 mins? No way.

Worse, I typically avoid demoing Azure to fellow developers out of fear of looking stupid while waiting 7 mins for my "Hello World" app to get deployed...

[2.] Queues limited to 500 messages / sec

One of the most exciting aspects of the cloud is scalability: your app will not need a complete rewrite every time usage increases by a 10x factor. Granted, most apps ever written will never need to scale, for lack of market adoption. From a rational viewpoint, scalability is irrelevant for about 99% of the apps.

Yet, nearly every single developer putting an app in the cloud dreams of being the next Twitter, and thinks (or rather dreams) about the vast scalability challenges that lie ahead.

The Queue Storage offers a tremendous abstraction to scale out cloud apps, sharing the workload over an arbitrarily large number of machines. Yet, when looking at the fine print, the hope of the developer is crushed when discovering that the supposedly vastly scalable cloud queues can only process 500 messages per second, which is about 1/10th of what MSMQ was offering out of the box on a server in 1997!

Yes, queues can be partitioned to spread the workload. Yes, most apps will never reach 500 msg / sec. Yet, as far as I can observe from the community questions raised by adopters of Lokad.Cloud and Lokad.CQRS (open source libraries targeting Windows Azure), queue throughput is a concern raised by nearly every single developer tackling Windows Azure. This limitation is killing enthusiasm.

Again, Windows Azure is missing a cheap opportunity to impress the community. I would suggest shooting for no less than 1 million messages / second. For the record, Sun was already achieving 3 million messages / sec on a single quasi-regular server 1 year ago with insane latency constraints. So 1 million is clearly not beyond the reach of the cloud.
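To put the partitioning workaround in numbers, here is a minimal Python sketch. It is an illustration only, not code from the post or from any Azure SDK: the 500 messages / sec figure is the per-queue limit quoted above, while `queues_needed`, `queue_for` and the `work-` queue name prefix are hypothetical names made up for the example.

```python
import hashlib
import math

# Per-queue throughput limit quoted in the post (messages per second).
PER_QUEUE_LIMIT = 500


def queues_needed(target_rate, per_queue_limit=PER_QUEUE_LIMIT):
    """Smallest number of queues whose combined limit covers target_rate."""
    return max(1, math.ceil(target_rate / per_queue_limit))


def queue_for(message_key, queue_count, prefix="work"):
    """Map a message key to one of queue_count queue names.

    A digest is used instead of Python's built-in hash() so that every
    process sends the same key to the same queue.
    """
    digest = hashlib.md5(message_key.encode("utf-8")).hexdigest()
    index = int(digest, 16) % queue_count
    return f"{prefix}-{index}"


if __name__ == "__main__":
    count = queues_needed(1_000_000)
    print(count)
    print(queue_for("order-42", count))
```

Under that assumption, reaching the suggested 1 million messages / second through partitioning alone would take 2,000 queues, which is the kind of bookkeeping the post argues developers should not have to think about.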

[3.] Instant cloud metrics visualization

One frequent worry about on-demand pricing is: what if my cloud consumption gets out of control? In the Lokad experience, cloud computing consumption is very predictable and thus, a non-issue in practice. Nevertheless, the fear remains, and is probably dragging down adoption rates as well.

What does it take to transform this "circumstance" into a marketing weapon? Not that much. It takes a cloud dashboard that reports your cloud consumption live, key metrics being:

- VM hours consumed for the last day / week / month.

- GB stored on average for the last day / week / month.

- GB transferred In and Out ...

- ...
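As a rough idea of how little computation sits behind the first of these metrics, the following Python sketch totals VM hours over trailing day / week / month windows. It assumes usage arrives as simple `(timestamp, vm_hours)` pairs; that record shape and the `vm_hours_by_window` name are hypothetical and do not correspond to any actual Windows Azure billing API.

```python
from datetime import datetime, timedelta


def vm_hours_by_window(records, now, windows=(1, 7, 30)):
    """Total VM hours over trailing windows measured in days.

    records is a list of (timestamp, vm_hours) pairs; the result maps
    each window length in days to the hours consumed within it.
    """
    totals = {}
    for days in windows:
        cutoff = now - timedelta(days=days)
        totals[days] = sum(
            hours for ts, hours in records if cutoff < ts <= now
        )
    return totals


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [
        (datetime(2011, 6, 28, 12), 24.0),
        (datetime(2011, 6, 25), 48.0),
        (datetime(2011, 6, 1), 100.0),
    ]
    print(vm_hours_by_window(sample, now=datetime(2011, 6, 29)))
```

The same trailing-window sum would apply to GB stored or GB transferred; only the measured quantity changes.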

As it stands, Windows Azure offers a bulky Silverlight console that takes about 20s to load on a broadband network connection. Performance is a feature: not having a lightweight dashboard page is a costly mistake. Just think of developers at BUILD discussing their respective Windows Azure consumption over their WP7 phones. With the current setup, it cannot happen.

Those 3 features can be dismissed as anecdotal and irrational, and yet I believe that capturing a (relatively) cheap "wow" effect would give a tremendous boost to the Windows Azure adoption rate.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Sourya Biswas explained What Bromium’s Funding Means for Cloud Security in a 6/29/2011 post to the CloudTweaks blog:

What can prompt three experienced professionals in the IT industry – former CTO and SVP of engineering at Phoenix Technologies Gaurav Banga, former CTO of the Data Center & Cloud Division of Citrix Simon Crosby, and former VP of advanced products in the Virtualization and Management Division at Citrix Ian Pratt – to abandon established careers and get together to form a new company?

More importantly, what can prompt three experienced venture capitalist funds – Andreessen Horowitz, Ignition Partners, and Lightspeed Venture Partners – to invest $9.2 million in the aforementioned company?

The answer to both these questions is – a viable idea. Bromium, a company looking to make cloud computing safer, certainly has one. Especially since security is perceived as the greatest threat of going to the cloud, and often cited by naysayers as the reason why they are averse to welcoming this emergent technology (See: Can The RSA Conference Help Dispel Cloud Computing Security Fears?).

Since cloud computing service providers seem to be hesitant in assuming the mantle of responsibility (See: Are Cloud Computing Service Providers Shirking Responsibility on Security?), Bromium can certainly fill this niche. In other words, a customer need exists and there’s no one to address this need. Ergo, Bromium has a ready market.

Secondly, it seems to have the talent to service this need as well. The three cofounders have decades of experience solving problems in Information Technology, with additional cloud computing experience thrown in. With this move, they are emulating entrepreneurs like the founders of German software giant SAP who left IBM to start their own venture, and the Infosys co-founders who resigned from Patni Computer Systems to create one of the largest IT service companies in the world.

“Virtualization and cloud computing offer enterprises a powerful set of tools to enhance agility, manageability and availability, and enable IT infrastructure, desktops and applications to be delivered as a service. But these benefits are out of reach unless the infrastructure is secure and can be trusted,” said Bromium co-founder, President, and CEO Gaurav Banga. “Bromium has the team, the technology and the investors to enable us to deliver fundamental changes in platform security, from client to cloud.”

“The way that virtualization is being used today is in the management domain. But it is our belief that you can do a lot more with it, and the big thing we are interested in is security. There are all sorts of malware out there and we are not doing a very good job of protecting against it. This is one of those problems I like because this is hard,” said Ian Pratt, senior vice president in charge of products at the company.

Cloud computing startups receiving funding is not a rare phenomenon, neither is the rapid success of some of these new ventures (See: Venture Capitalists Flock To Cloud Computing Startup). However, a startup working exclusively on cloud security is comparatively rare. If Bromium lives up to its promise, not only will it become a dominant player, but it can benefit the entire cloud computing industry and accelerate its broader adoption.

Scott Godes posted Join me for a free webinar about D&O and cyberinsurance: "Executive Summary": What You Need to Know Before You Walk into the Boardroom on 6/27/2011:

Please join me on July 19, 2011, at 2:00 pm Eastern, for a free webinar hosted by Woodruff Sawyer & Co. Priya Cherian Huskins, Lauri Floresca, and I will discuss D&O insurance, cyberinsurance, and insurance coverage for privacy issues, data breaches, cyberattacks, denial-of-service attacks and more. Here are the details from Woodruff Sawyer’s announcement:

Disclaimer:

This blog is for informational purposes only. This may be considered attorney advertising in some states. The opinions on this blog do not necessarily reflect those of the author’s law firm and/or the author’s past and/or present clients. By reading it, no attorney-client relationship is formed. If you want legal advice, please retain an attorney licensed in your jurisdiction. The opinions expressed here belong only to the individual contributor(s). © All rights reserved. 2011.

<Return to section navigation list>

Cloud Computing Events

The Windows Azure Team (@WindowsAzure) announced New Academy Live Sessions Share Tips and Tricks for Migration and Monetization with Windows Azure in a 6/28/2011 post:

Don’t miss these two new Academy Live Sessions to learn more about how to monetize your solutions on Windows Azure and options for migrating to Windows Azure. Both FREE Academy Live sessions start at 8:00 a.m. PT and run 60 minutes. Click on the session title below to learn more and to register.

Windows Azure Based Unified Ad Serving Platform, Out of the Box Monetization Magic

Thursday, June 30, 2011, 8:00 – 9:00 am PT

Watch this session to learn more about TicTacTi’s Windows Azure-based unified cross device/operating system monetization platform, which enables dynamic insertion of advertisements into any game, application, or video.

Easy On-Boarding of Mission-Critical Java Apps to the Windows Azure Cloud

Tuesday, July 5, 2011, 8:00 – 9:00 am PT

In this session, GigaSpaces will provide an overview of its Cloudify for Windows Azure enterprise Java application platform, which helps on-board mission-critical JEE/Spring/big-data applications to Windows Azure without architectural or code changes. Included will be a live demo illustrating a swift deployment of a multi-tier Spring application onto Windows Azure.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

David Linthicum (@DavidLinthicum) asserted “The cloud is about doing work any time, anywhere; Office 365 is about doing work only on a PC” in a deck for his Office 365: Fewer customers than Microsoft thinks post of 6/29/2011 to InfoWorld’s Cloud Computing blog:

Microsoft Office 365 is not getting as much play as I thought, other than the reality check by my colleague Galen Gruman that this cloud service works well on Windows but not on other platforms, including mobile. Oh Microsoft, we thought you changed.

There will be plenty of hype over the next few weeks, so let's not tread that ground. Instead let's try to understand what this means for the enterprise market -- that is, whether you should make the move.

The value of Office 365 really depends on who you are. If you're an existing Windows and Office user, you'll find that migration path easy and the user experience to be much the same. Those who are sold on Office will be sold on Office 365 -- apart from customers who don't relish the idea of using a cloud-based system for calendaring, email, and document processing. In many instances, people don't mind keeping that info on their own hard disks. In fact, much of the existing Office user base is not yet sold on the cloud.

As a result, the market for this cloud service could be more limited than many people think, and Microsoft may be missing the true value of the cloud.

Those moving to cloud-based systems, even productivity applications, are doing so because of the pervasiveness of the cloud. We can use it everywhere and anywhere, replicating the same environment and file systems from place to place. According to Gruman's article, Microsoft seems to miss that understanding.

Moreover, those moving to cloud computing are typically innovators and early adopters. To my point above, that's an audience Microsoft no longer has. Those who consider themselves innovative around the use of new technology, such as the cloud, have moved away from Microsoft and Windows products in the recent past, myself included.

Finally, the enterprise market: Many people consider Office to have reached feature saturation long ago and don't look forward to upgrades anymore. For them to go Office 365, they will need a more compelling reason than the fact it's on the cloud.

Don't get me wrong; I think Office 365 will be a compelling cloud-based service, in many respects better than existing options such as Google Apps. However, Microsoft has taken a very narrow view of this market, so the market will take the same narrow view of Microsoft.

Kurt Marko wrote IBM CloudBurst: Bringing Private Clouds to the Enterprise for InformationWeek::Analytics on 6/28/2011 (premium subscription required):

Hardware vendors are competing to build integrated systems of servers, edge networks and pooled storage for virtualized workloads. IBM's aptly named entrée into the world of unified computing stacks is distinguished by application provisioning and service management software worthy of the cloud appellation. (S2870511)

Table of Contents

3 Author's Bio

4 Executive Summary

5 IBM Storms the Private Cloud Market

5 Figure 1: Implementation of a Private Cloud Strategy

6 Figure 2: Virtualization Implementation

7 Figure 3: Technology Deployment Plans

8 Inside CloudBurst

9 Leading With Software

10 Figure 4: CloudBurst Self-Provisioning UI

11 Figure 5: Interest in Single-Manufacturer Blade Systems

12 Figure 6: Small CloudBurst Pricing

13 Server Components

15 Networking and Virtualization

15 Figure 7: CloudBurst System Typology

16 Figure 8: Technology Decisions Guided by Idea of Data Center Convergence

17 Figure 9: Top Drivers for Adopting Technologies Supporting Convergence

18 Figure 10: Interest in Combined-Manufacturer Complete Systems

19 Pooled, Virtualized Storage

20 Use Cases and Conclusions

22 Related Reports

Download (Premium subscription required)

SDTimes on the Web posted a Cloudera Unveils Industry's First Full Lifecycle Management and Automated Security Solution for Apache Hadoop Deployments press release on 6/29/2011:

Cloudera Inc., the leading provider of Apache Hadoop-based data management software and services, continues to drive enterprise adoption of Apache Hadoop with new software that makes it simpler than ever to run and manage Hadoop throughout the entire operational lifecycle of a system. The company today announced the immediate availability of Cloudera Enterprise 3.5, a substantial update that includes new automated service, configuration and monitoring tools and one-click security for Hadoop clusters. Cloudera Enterprise is a subscription service that includes a management suite plus production support for Apache Hadoop and the rest of the Hadoop ecosystem. Concurrently, the company also announced the launch of Cloudera SCM Express, which is based on the new Service and Configuration Manager in Cloudera Enterprise 3.5, making it fast and easy for anyone to install and configure a complete Apache Hadoop-based stack.

"As interest in Hadoop expands from early adopters to mainstream enterprise and government users, we are increasingly seeing the focus shift from development and testing to understanding potential use cases for the core distribution to the value-added tools and services that will enable and accelerate enterprise adoption," said Matt Aslett, senior analyst at The 451 Group. "Cloudera has gained an enviable position at the center of the expanding Hadoop ecosystem, and we continue to be impressed with both its commercial product plans and engagement with the open source community."

Cloudera Enterprise 3.5: Full Lifecycle Management of Apache Hadoop Deployments

Cloudera Enterprise 3.5, a subscription service comprised of Cloudera Support and a portfolio of software in the Cloudera Management Suite, is a major enabler for the enterprise adoption and production use of Apache Hadoop. For the first time, enterprises can manage the complete operational lifecycle of their Apache Hadoop systems because Cloudera Enterprise 3.5 facilitates deep visibility into Hadoop clusters and automates the ongoing system changes needed to maintain and improve the quality of operations.

"We have seen extensive adoption of Apache Hadoop across our client base, but the management challenges of running the Hadoop stack in production can be prohibitive," said Matt Dailey, Hadoop applications developer for the High Performance Computing Center at SRA International, Inc. "This latest release of Cloudera's Management Suite goes a long way to giving enterprises the confidence to operate a Hadoop system in production."

<Return to section navigation list>
