Windows Azure and Cloud Computing Posts for 6/18/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

No significant articles today.


Marketplace DataMarket and OData

My (@rogerjenn) Reading and Updating Office 365 Beta’s SharePoint Online Lists with OData post of 6/19/2011 describes retrieving the OData representations of SharePoint lists created by moving Access 2010 tables to SharePoint Online:

My earlier Access Web Databases on AccessHosting.com: What is OData and Why Should I Care? post (updated 3/16/2011) described working with multi-tenant SharePoint 2010 Server instances provided by AccessHosters.com. Microsoft is about to release Office 365 as a commercial service (presently scheduled for 6/28/2011), so the details for using OData to manipulate SharePoint Online lists have become apropos.

On 5/23/2011 I delivered a Moving Access Tables to SharePoint 2010 or SharePoint Online Lists Webcast for Que Publishing, which provides detailed instructions for creating Web databases by moving conventional Access *.accdb databases to SharePoint Online lists and, optionally, creating Web pages that emulate Access forms and reports. Click here to open the Webcast’s slides, which begin by describing how to move Access tables to an onsite SharePoint 2010 server installation and how to overcome problems with relational features that SharePoint lists don’t support. Slides 30 through 34 describe how to move the tables from Northwind.mdb (the classic version) to a ServiceName.sharepoint.com/TeamSite/Nwind subsite, where ServiceName is the name you chose when you signed up for Office 365 (oakleaf for this example):

Opening an OData list of Collections

Log into your site’s default public web site at http://ServiceName.sharepoint.com/, click the Member Login navigation button, type your Microsoft Online username (UserName@ServiceName.onmicrosoft.com) and password if requested, and click OK to enter the default TeamSite in editing mode.

Click the SubsiteName, NWind for this example, and Lists links to display the lists’ names, descriptions, number of items and Last Modified date:

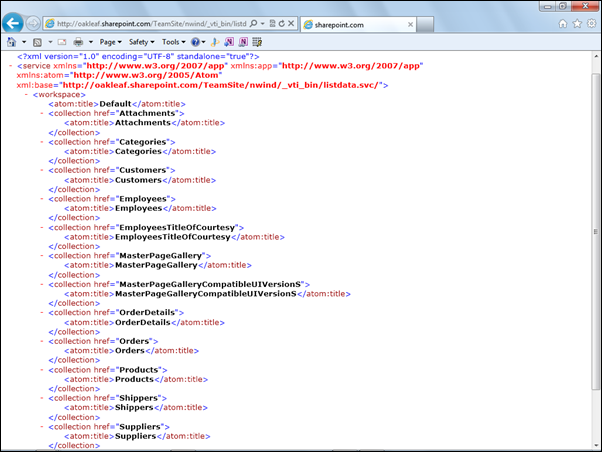

To display in OData a list of the SharePoint lists in a subsite, NWind for this example, type http://ServiceName.sharepoint.com/TeamSite/NWind/_vti_bin/listdata.svc in the address bar of IE9 or later:

Note: vti is an abbreviation for Vermeer Technologies, Inc., the creators of the FrontPage Web authoring application. Microsoft acquired Vermeer in January 1996 and incorporated FrontPage in the Microsoft Office suite from 1997 to 2003.

The EmployeesTitleOfCourtesy item is a lookup list with Dr., Miss, Mr., Mrs. and Ms. choices. The Attachment and two MasterPage… items are default lists found in all sites.
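The listdata.svc endpoint returns an AtomPub service document in which each SharePoint list appears as an `<app:collection>` element. The following Python sketch pulls those collection names out of such a document. The sample XML is a hand-written, abbreviated illustration (not a captured response from oakleaf.sharepoint.com), and fetching the document from Office 365, which requires an authenticated session, is assumed to happen elsewhere.

```python
import xml.etree.ElementTree as ET

# Namespace of the AtomPub service document (RFC 5023).
APP_NS = "http://www.w3.org/2007/app"

def list_collections(service_xml: str) -> list:
    """Return the href of every <app:collection> element, in document order."""
    root = ET.fromstring(service_xml)
    return [c.get("href") for c in root.iter(f"{{{APP_NS}}}collection")]

# Abbreviated, illustrative service document (not a real capture).
SAMPLE_SERVICE_DOC = """<service xmlns="http://www.w3.org/2007/app"
         xmlns:atom="http://www.w3.org/2005/Atom"
         xml:base="http://oakleaf.sharepoint.com/TeamSite/NWind/_vti_bin/listdata.svc/">
  <workspace>
    <atom:title>Default</atom:title>
    <collection href="EmployeesTitleOfCourtesy"><atom:title>EmployeesTitleOfCourtesy</atom:title></collection>
    <collection href="OrderDetails"><atom:title>OrderDetails</atom:title></collection>
  </workspace>
</service>"""

if __name__ == "__main__":
    for name in list_collections(SAMPLE_SERVICE_DOC):
        print(name)
```

Each returned href is relative to the `xml:base` URL, so appending it to the service root gives the collection URL used in the next steps.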

Display Items in a Collection

…

Verify Query Throttling

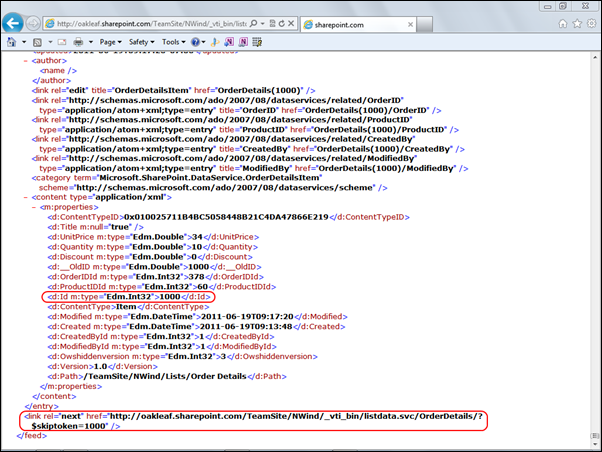

According to the All Site Content page, the OrderDetails table has 2,155 items. To determine if queries are throttled to return a maximum number of items, change the URL’s /CollectionName/ suffix from /EmployeesTitleOfCourtesy/ to /OrderDetails/. Press Ctrl+PgDn to navigate to the last item:

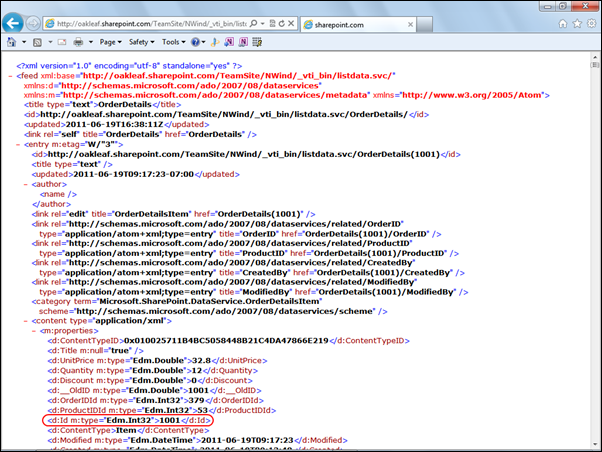

Office 365 beta throttles OData queries by delivering a maximum of 1,000 records per query, as indicated by the last item’s ID of 1000 and the <link rel="next" href="http://oakleaf.sharepoint.com/TeamSite/NWind/_vti_bin/listdata.svc/OrderDetails/?$skiptoken=1000" /> element immediately above the </feed> closing tag. Copy the URL with the skiptoken query option into the address bar to return the next 1,000 Order Details items, starting with ID 1001:

Paging to the end of the document confirms a $skiptoken=2000 value.
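Because each response carries at most 1,000 entries plus a `<link rel="next">` element, a client that wants the whole list has to keep following those links until none remains. A minimal Python sketch of that loop follows. `fetch` is a hypothetical callable that returns a page's Atom XML for a URL (in practice it would have to carry the Office 365 sign-in cookies, which this sketch does not handle), and the two-page feed in the usage lines is made up for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Iterator, Optional

ATOM_NS = "http://www.w3.org/2005/Atom"

def next_link(feed_xml: str) -> Optional[str]:
    """Return the href of the feed-level <link rel="next">, or None on the last page."""
    root = ET.fromstring(feed_xml)
    # findall() only looks at direct children, so per-entry links are ignored.
    for link in root.findall(f"{{{ATOM_NS}}}link"):
        if link.get("rel") == "next":
            return link.get("href")
    return None

def count_entries(feed_xml: str) -> int:
    """Count the <entry> elements directly under <feed>."""
    return len(ET.fromstring(feed_xml).findall(f"{{{ATOM_NS}}}entry"))

def iter_pages(first_url: str, fetch: Callable[[str], str]) -> Iterator[str]:
    """Yield every page of a server-paged feed by following $skiptoken next links."""
    url: Optional[str] = first_url
    while url is not None:
        page = fetch(url)
        yield page
        url = next_link(page)

if __name__ == "__main__":
    # Made-up two-page feed standing in for real HTTP responses.
    base = "http://example.invalid/listdata.svc/OrderDetails/"
    pages = {
        base: f'<feed xmlns="{ATOM_NS}"><entry/><entry/>'
              f'<link rel="next" href="{base}?$skiptoken=2"/></feed>',
        base + "?$skiptoken=2": f'<feed xmlns="{ATOM_NS}"><entry/></feed>',
    }
    total = sum(count_entries(p) for p in iter_pages(base, pages.__getitem__))
    print(total)  # 3
```

Against the OrderDetails list described above, the loop would be expected to stop after the third page, the first one without a next link.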

To return a single entry, append the Id value enclosed in parentheses to the list name, as in http://oakleaf.sharepoint.com/TeamSite/NWind/_vti_bin/listdata.svc/OrderDetails(2001).
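Addressing a single entry is just string composition on the collection URL. This small Python helper is an illustrative sketch (the function name is ours, not part of any SharePoint or OData library); it assumes an integer key such as the Id column SharePoint adds, because string keys would have to be quoted and escaped.

```python
def entry_url(service_root: str, collection: str, entry_id: int) -> str:
    """Build an OData entry URI of the form <root>/<Collection>(<Id>)."""
    # bool is a subclass of int, so reject it explicitly.
    if not isinstance(entry_id, int) or isinstance(entry_id, bool):
        raise TypeError("this sketch only handles integer keys such as SharePoint's Id")
    return f"{service_root.rstrip('/')}/{collection}({entry_id})"

print(entry_url("http://oakleaf.sharepoint.com/TeamSite/NWind/_vti_bin/listdata.svc",
                "OrderDetails", 2001))
# http://oakleaf.sharepoint.com/TeamSite/NWind/_vti_bin/listdata.svc/OrderDetails(2001)
```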

Note: SharePoint adds an autoincrementing Id primary key value to all lists generated from Access tables and saves the original primary key value in an __OldID column. This column is the source of the problem I reported in my SharePoint 2010 Lists’ OData Content Created by Access Services is Incompatible with ADO.NET Data Services post of 3/22/2011. …

The post continues with a list of useful OData technical references.

Sudhir Hasbe described a Building Model Service from Sanborn available on Windows Azure Marketplace in a 6/19/2011 post:

Link: https://datamarket.azure.com/dataset/bfa417be-be79-4915-82c7-efae9ced5cb7

Type: Paid

Sanborn Building Model Products are an essential ingredient to virtual city implementations. Combining a strong visual appearance with a standards-compliant relational objects database, Sanborn Building Model offers building and geospatial feature accuracy for the most demanding of users. Data contains the information necessary to construct a digital city with hyper-local central business district building data.

Sanborn Building Model can provide valuable geospatial and visualization data for users and organizations involved with: State and major urban Fusion Centers, Local, State and Federal Operations Centers, Architectural / Engineering / Construction, Real-Estate and Land Development, Navigation Applications, Urban and Transportation Planning, Security Planning and Training, Public and private utility providers, and Gaming and internet companies. Sanborn Building Model provides a critical component to urban geo-spatial information analysis and modeling that can be used in production environments for GIS, CAD, LULC, planning, and other aspects.

Sudhir Hasbe announced LexisNexis dataset now available!!! in a 6/19/2011 post:

Link: https://datamarket.azure.com/dataset/101eb297-c17f-4f22-a844-16ca2e52252d

Type: Free

The LexisNexis Legal Communities provide access to the largest collection of Legal Blogs written by leading legal professionals, more than 1,600 Legal Podcasts featuring legal luminaries, information about Top Cases and Emerging Issues. The communities enable you to keep current with the latest Legal News, Issues and Trends.

Practice areas covered include: Bankruptcy, Copyright & Trademark Law, Emerging Issues, Environment and Climate Change Law, Estate Practice and Elder Law, Insurance, International & Foreign Law, Patent, Real Estate, Rule of Law, Tax Law, Torts, UCC, Commercial Contracts and Business and Workers Compensation. Professional areas of interest include: Law Firm Professionals, Corporate Legal Professionals, Government Information Professionals, Legal Business, Librarians and Information Professionals, Litigation Professionals, Law Students, and Paralegals.

Sudhir Hasbe reported Chanjet: First Dataset from China on Windows Azure Marketplace in a 6/29/2011 post:

Link: https://datamarket.azure.com/dataset/6bd3a6b3-2fe0-4609-ba11-959190000047

Type: Free

There are over 40 million small and medium-sized enterprises (SMEs) in China. This business segment plays a crucial role in the unprecedented development and growth of the Chinese economy. SMEs represent 99% of businesses in China, 60% of the economy and 75% of employment. Chanjet provides SME operation and management KPI data based on its nationwide survey conducted among SMEs from multiple industries.

The dataset mainly reports on the following five measures: operating margin, inventory turnover, accounts receivable turnover, operating profit growth and sales growth. The survey yields some interesting findings. First, sales grew substantially in 2010, by 16.13% compared with 2009, due to the national control measures after the financial crisis. Second, the combination of increasing raw-material prices and RMB appreciation resulted in a decline in profits. Third, inventory turnover decreased 0.14% and receivable turnover decreased 0.51%, indicating stable internal management. In short, the operational indicators for the SME segment show that the impact of external factors outweighs that of internal factors, and this is increasing operational risk for Chinese SMEs. To minimize that risk, SMEs should focus on three key areas: capital risk, receivable risk and inventory risk. Chanjet's data service platform integrates industrialization and informatization and provides a valuable service in supporting SMEs' robust growth.
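The dataset description does not spell out how Chanjet computes its measures. For readers unfamiliar with them, the conventional textbook definitions follow; treat them as an assumption about Chanjet's methodology rather than its documented formulas, with X standing for any of the measures in a given year.

```latex
\begin{align*}
\text{Operating margin} &= \frac{\text{Operating profit}}{\text{Sales}} \\
\text{Inventory turnover} &= \frac{\text{Cost of goods sold}}{\text{Average inventory}} \\
\text{Receivable turnover} &= \frac{\text{Net credit sales}}{\text{Average accounts receivable}} \\
\text{Growth of } X \text{ in 2010} &= \frac{X_{2010} - X_{2009}}{X_{2009}}
\end{align*}
```

Under the growth definition, the reported 16.13% sales growth means 2010 sales were about 1.1613 times 2009 sales.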

SAP released a How To... Create Services Using the OData Channel white paper for SAP NetWeaver Gateway 2.0 in April 2011 and subsequently published it to the Web. From the beginning topics:

1. Business Scenario

Your backend SAP system contains Banking business data that you want to expose via a REST-based API so that it will be easily consumable by the outside world.

2. Background Information

SAP NetWeaver Gateway offers an easy way to expose data via the REST-based Open Data (OData) format. With Gateway you can generate services based on BOR objects, RFCs, or Screen Scraping. There is also another way using the OData Channel API, which is an ABAP Object API. You develop classes using this API on the backend system where the SAP data resides, not on the actual Gateway system. The OData Channel is very flexible and powerful; it allows you to create simple services or complex ones. This guide will focus on creating a simple service with OData Channel. It is the hope of the author that once you learn how to create this very simple service you will move on to developing more complex and interesting services with OData Channel.

3. Prerequisites

- SAP NetWeaver Gateway 2.0 installed and configured.

- Backend SAP System with the BEP gateway add-on installed.

- Backend SAP System is connected to the SAP NetWeaver Gateway system.

- Knowledge of ABAP Object Oriented programming is required. That said, this is a very detailed step by step guide which you could probably complete without ABAP OO experience…but you most likely wouldn’t be able to fully understand it. …

The article continues with step-by-step procedures for the GET_ENTITYSET, GET_ENTITY, UPDATE_ENTITY and CREATE_ENTITY methods.
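The four methods correspond to the usual OData request patterns: reading a whole entity set, reading one entry by key, updating an entry, and creating one. The Python sketch below only illustrates that routing. It is not SAP code (the real implementation is ABAP classes in the backend system), and the `BankCollection` entity-set name and the exact verb coverage (for example, whether MERGE also reaches UPDATE_ENTITY) are assumptions.

```python
import re
from typing import Optional

# Hypothetical URL shapes: /EntitySet and /EntitySet(key)
_ENTITY_SET = re.compile(r"^/(?P<set>\w+)$")
_ENTITY_KEY = re.compile(r"^/(?P<set>\w+)\((?P<key>[^)]+)\)$")

def dpc_method(http_method: str, path: str) -> Optional[str]:
    """Name the data-provider method that would handle an OData request, if any."""
    verb = http_method.upper()
    if _ENTITY_SET.match(path):
        # Operations on the whole collection.
        return {"GET": "GET_ENTITYSET", "POST": "CREATE_ENTITY"}.get(verb)
    if _ENTITY_KEY.match(path):
        # Operations on a single entry addressed by its key.
        return {"GET": "GET_ENTITY", "PUT": "UPDATE_ENTITY"}.get(verb)
    return None  # not covered by the four methods in the guide

if __name__ == "__main__":
    for verb, path in [("GET", "/BankCollection"),
                       ("GET", "/BankCollection('0001')"),
                       ("PUT", "/BankCollection('0001')"),
                       ("POST", "/BankCollection")]:
        print(verb, path, "->", dpc_method(verb, path))
```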


Windows Azure AppFabric: Access Control, WIF and Service Bus

Sudhir Hasbe reported the availability of an Azure AppFabric Service bus, Silverlight Integration - End-to-End (walkthrough) in a 6/19/2011 post:

AppFabric Service Bus is one of the most important services in Windows Azure. Saravana Kumar, MVP, has written two blog posts explaining how to use Service Bus in Silverlight applications. These posts are really detailed.

In this article we are going to see the end-to-end integration of Azure AppFabric Service bus and Silverlight. First we are going to create a very basic "Hello World" Silverlight application that interacts with a WCF operation called "GetWelcomeText" and displays the text returned in the UI. In order to explain the core concepts clearly, we are going to keep the sample as simple as possible. The initial solution is going to look as shown below. The SL application is hosted on a local IIS/AppFabric (server) web server and accessed via a browser, as shown below.

Next, we are going to extend the same sample "Hello World" example to introduce Azure AppFabric service bus as shown in the below picture and show the power of AppFabric Service bus platform, which enables us to use the remote WCF services hosted geographically somewhere in the wild.

Original Source: http://blogs.digitaldeposit.net/saravana

The first part of the article doesn't touch Azure AppFabric Service bus at all; it's all about creating a simple SL/WCF application.

Azure AppFabric Service bus, Silverlight Integration - End-to-End (walkthrough) - Part 1


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Steve Plank (@plankytronixx) posted Connecting the Windows Azure network to your corporate network - Monday 23rd from the UK TechDays 2011 conference:

Extending your corporate network into the Windows Azure datacenter might be important to enhance security for enterprise workloads: for example, performing a domain-join between Windows Azure applications and your AD, or perhaps keeping sensitive data in an on-premises SQL Server database and connecting to it from Windows Azure using Windows Integrated Security.

A virtual network environment permits these topologies. This session covers how Windows Azure Connect enables these scenarios.

Download Planky’s slides here.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Joannes Vermorel (@vermorel) posted Shelfcheck, on-shelf availability optimizer announced on 6/19/2011:

Last Wednesday, at the Strategies Logistique Event in Paris, we announced a new upcoming product named Shelfcheck. This product targets retailers and it will help them to improve on-shelf availability, a major issue for nearly all retail segments.

In short, through an advanced demand forecasting technology such as Lokad's, it becomes possible to accurately detect divergence between real-time sales and anticipated demand. If the observed sales for a given product drop to a level that is very improbable considering anticipated demand, then an alert can be issued to store staff for early corrective actions.

On-shelf availability has been challenging the software industry for decades, yet, as far as we can observe, no solution available on the market today comes even close to being satisfying. For us, it represents a tough challenge that we are willing to tackle. We believe on-shelf availability is the sort of problem that is near-impossible to solve without cloud computing; at least near-impossible within reasonable costs for the retailer. Shelfcheck will be deployed on Windows Azure, a cloud computing platform that has proved extremely satisfying for retail, as illustrated by the feedback we routinely get for Salescast. [Windows Azure emphasis added.]

A retail pain in the neck

Have you ever experienced frustration in a supermarket when facing a shelf where the product you were looking for was missing? Unless you've been living under a rock, we bet you've faced this situation more than once.

Recent studies (*) have shown that, on average, about 10% of the products offered in store are unavailable. Worse, the situation seems to have slightly degraded over the last couple of years.

(*) See materials published on ECR France.

In practice, there are many factors that cause on-shelf unavailability:

- Product is not at the right place and customers can't find it.

- Product has been stolen and incorrect electronic inventory level prevents reordering.

- Packaging is damaged and customers put it back after having a close look.

- ...

Whenever a product is missing from a shelf, sales are lost, as studies have shown that clients go for a direct substitute only 1/3 of the time. Missing products also generate client frustration, which turns into a loss of loyalty, and they give your clients an incentive to check whether competitors do better.

With about 10% on-shelf unavailability, a back-of-the-envelope calculation indicates that the losses for the retail industry amount to roughly 100 billion USD per year worldwide. Disclaimer: that's only an order of magnitude, not a precise estimate. Also, this estimate does not factor in industry-wide cannibalization: sales lost by one retailer aren't always lost for everyone.
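The post doesn't show its calculation, but the two figures it cites can be combined into a rough lost-sales fraction. Assume, for simplicity, that the 10% unavailability rate applies uniformly to demand and that every shopper who does not pick a direct substitute is a lost sale, and let S be the sales the industry would make with full availability:

```latex
\text{Lost sales} \approx S \times \underbrace{0.10}_{\text{OOS rate}} \times \underbrace{\left(1 - \tfrac{1}{3}\right)}_{\text{no substitute}} \approx 0.067\,S
```

That is roughly 6.7% of sales before any cannibalization adjustment; turning it into the post's 100 billion USD figure requires a worldwide sales base that the post does not state.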

You can't improve what you don't measure

On-shelf availability is especially painful because detecting the occurrence of the problem is so hard in the first place:

- Direct shelf control is extremely labor intensive.

- RFID remains too expensive for most retail segments.

- Rule-based alerts are too inaccurate to be of practical use.

Thus, the primary goal of Shelfcheck is to deliver a technology that lets retailers detect out-of-shelf (OOS) issues early on. Shelfcheck is based on an indirect measurement method: instead of trying to assess the physical state of the shelf itself, it looks at the consequences of an OOS within real-time sales. If sales for a given product drop to a level that is considered extremely improbable, then the product is very likely to suffer an OOS issue, and an alert is issued.
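Lokad doesn't say what model sits behind Shelfcheck's alerts. As a minimal sketch of the indirect-measurement idea only, assume sales of a product over a short interval follow a Poisson distribution whose mean is the forecast demand; an alert fires when observing so few sales would be very improbable under that forecast. The Poisson assumption, the function names and the 0.001 threshold are all ours, not Lokad's.

```python
import math

def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed term by term."""
    term = math.exp(-lam)  # P(X = 0); underflows to 0.0 for lam above ~745
    total = term
    for i in range(1, k + 1):
        term *= lam / i    # P(X = i) derived from P(X = i - 1)
        total += term
    return total

def oos_alert(observed_sales: int, expected_sales: float,
              threshold: float = 0.001) -> bool:
    """Flag a probable out-of-shelf issue when sales this low are very improbable."""
    return poisson_cdf(observed_sales, expected_sales) < threshold

if __name__ == "__main__":
    print(oos_alert(0, 2.0))    # slow mover, zero sales: not alarming
    print(oos_alert(0, 12.0))   # fast mover, zero sales: alert
```

The same zero-sales interval is unremarkable for a slow mover (probability e^-2, about 0.135) but alarming for a fast mover (e^-12, about 6e-6). Raising or lowering the threshold trades missed OOS events against false positives, and a production version would work in log space to avoid underflow for very large expected counts.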

By offering a reliable OOS metering system, Shelfcheck will help retailers to improve their on-shelf availability levels, a problem that proved to be extremely elusive to quantitative methods so far.

Store staff frustration vs. client frustration

The core technological challenge behind Shelfcheck is the quality of OOS alerts. Indeed, it's relatively easy to design software that keeps pouring out truckloads of OOS alerts, but the time of the store staff is precious (and expensive too), and they simply can't waste their energy chasing false-positive alerts, that is to say, "phantom problems" advertised by the software that have no real counterparts within the store.

Delivering accurate OOS alerts, finely prioritized and mindful of the store geography (*), is the number one goal for Shelfcheck. Considering the amount of data involved when dealing with transaction data at the point-of-sale level, we believe that cloud computing is an obvious fit for the job. Fortunately, the Lokad team happens to be somewhat experienced in this area.

(*) Store employees certainly don't want to end up running to the other side of the hypermarket in order to check the next OOS problem.

Product vision

The retail software industry is crippled by consultingware, where multi-million-dollar solutions are sold that do not live up to expectations. We, at Lokad, do not share this vision. Shelfcheck will follow the vision behind Salescast, our safety stock optimizer.

Shelfcheck will be:

- Plug & Play, with simple documented ways of feeding sales data into Shelfcheck.

- Dead simple, no training required as Lokad manages all the OOS detection logic.

- Robotized, no human intervention required to keep the solution up and running.

- SaaS & cloud hosted, fitting any retailer from the family shop up to the largest retail networks.

The pricing will come as a monthly subscription. It will be strictly on demand, metered by the amount of data to be processed. We haven't taken any final decision on that matter, but we are shooting for something in the range of $100 per month per store. Cost will probably be lower for minimarkets and higher for hypermarkets. In any case, our goal is to make Shelfcheck vastly affordable.

Feature-wise, Shelfcheck is at the pre-alpha stage; hence, the feature set of v1.0 is still heavily subject to change. Still, here is an overview:

- Real time OOS alerts available through web and mobile access.

- Real time prioritization based on confidence in the alerts and financial impact of the OOS issues.

- OOS dashboard feeding the management with key on-shelf performance indicators.
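Lokad hasn't published how Shelfcheck will rank alerts. One plausible reading of "confidence in the alerts and financial impact" is to drop low-confidence alerts (sparing staff the false positives described earlier) and sort the rest by expected loss, that is, confidence multiplied by the money at risk. The Python sketch below assumes exactly that; the `OosAlert` fields, the 0.8 cut-off and the sample figures are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass
class OosAlert:
    sku: str
    confidence: float      # estimated probability that the OOS is real (0..1)
    margin_at_risk: float  # hypothetical: margin lost per hour while the shelf is empty

    @property
    def expected_loss(self) -> float:
        return self.confidence * self.margin_at_risk

def prioritize(alerts: list[OosAlert], min_confidence: float = 0.8) -> list[OosAlert]:
    """Keep confident alerts and order them by expected loss, largest first."""
    kept = [a for a in alerts if a.confidence >= min_confidence]
    return sorted(kept, key=lambda a: a.expected_loss, reverse=True)

if __name__ == "__main__":
    queue = prioritize([
        OosAlert("yogurt-500g", 0.95, 10.0),
        OosAlert("coffee-1kg", 0.85, 20.0),
        OosAlert("batteries-aa", 0.50, 100.0),  # dropped: too uncertain
    ])
    print([a.sku for a in queue])  # ['coffee-1kg', 'yogurt-500g']
```

Note that the uncertain battery alert is dropped despite its large potential impact; whether that is the right trade-off is exactly the store-staff-versus-client tension the post describes.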

1 year free usage for early adopters

We are extremely excited by the sheer potential of this new technology. We are looking for volunteers to beta-test Shelfcheck. Lokad will offer:

- Until Shelfcheck is released, early adopters will be provided with unlimited free access.

- Upon release, early adopters will get 1 year of further free usage, within a limit of 10 points of sale.

In exchange, we will kindly ask for feedback, first about the best way for Lokad to acquire your sales data, and second about the usage of Shelfcheck itself.

Naturally, this feedback will drive our development efforts, bringing Shelfcheck closer to your specific needs. Want to grab the opportunity? Just mail us at contact@lokad.com.

Cory Fowler (@SyntaxC4) described Windows Azure Examples in a 6/18/2011 post:

It was brought to my attention today that there is a lack of “Examples” of Windows Azure Applications. This completely baffled me, as I know of many sites that offer Code Snippets and Full Windows Azure Applications. To address this issue I bring you the Windows Azure Examples Blog post as a follow-up to my highly acclaimed Essential Resources for Getting Started with Windows Azure Post that I co-authored with John Bristowe.

Are there Example Applications for Windows Azure?

There are a number of great resources for finding Windows Azure applications to help model a cloud-based solution, no matter what the architectural pattern.

Open Source Solutions

Microsoft All-in-One Code Framework

AzureFest (Open Source Presentation)

Smarx Role

DeployToAzure (TFS Build Activities)

MultiTenantWebRole (NuGet: Install-Package MultiTenantWebRole)

[Microsoft] Learning Resources

Windows Azure Platform Training Kit

Windows Identity Foundation (WIF) Training Kit

Windows Azure Tools

Hadoop in Windows Azure

Windows Azure Team Blog

SQL Azure Team Blog

Windows Azure Storage Team Blog

Windows Azure Code Samples

Windows Azure How-To Topics

[Community] Learning Resources

Brian Swan (@brian_swan) posted Interview with Ben Waine, 2011 PHP on Azure Contest Winner on 6/17/2011:

I recently had a chance to catch up via e-mail with Ben Waine, winner of the 2011 PHP on Azure contest. The announcement of his victory was actually made at the Dutch PHP Conference in May, but we’ve both been extremely busy since then, so exchanging e-mails has taken a while. I only followed the contest from a distance while it was happening, but after hearing that Ben had won the contest (I had the good fortune of meeting Ben in person at the 2010 Dutch PHP Conference) and after reading his blog series about building his application, I wanted to find out more about his experience.

He does a great job of detailing his experience on his blog, so I highly suggest reading his articles if you are looking to understand the benefits and challenges of running PHP applications on the Windows Azure platform. If you are interested in learning more about Ben and his hindsight perspective on his project, read on…

Brian: For our readers, can you introduce yourself? Who are you? What do you do?

Ben: I'm a software engineer at Sky Betting and Gaming in the UK. Previous to that I worked at SEO Agency Stickyeyes. During these two jobs I have also been completing my undergraduate degree in Computing at Leeds Met University. I'm a PHP developer focusing on back end development. I'm a little front end phobic!

Brian: How did you find out about the PHP on Azure contest? What piqued your interest in it?

Ben: I heard about the competition at PHPBNL11 while interviewing Katrien De Graeve about her talk on Azure. I have to confess, it was initially the prospect of going to Vegas that piqued my interest. I knew I would have to produce a large body of work over the next few months as I had a dissertation to write. As I am quite comfortable with the Linux stack, I thought it would be interesting to produce an application on Azure, a platform I wouldn't normally develop for.

Brian: Nothing wrong with wanting to go to Vegas! (I want to go too…I’ve never been there!) Were you surprised to win? (Did you think you had a chance at winning?)

Ben: I thought I might have a chance of winning. A number of Microsoft competitions have been run in recent years, and while take up is usually high, so is competitor attrition. The competitions usually end with just a few (high quality) entries. But, I knew I'd have to complete the work or fail my degree!

I knew when the competition winner would be announced (at the end of DPC11), so I'd planned to look on Twitter at 6 pm but didn't account for the time difference between Leeds and Amsterdam. When I logged in there were already loads of messages of congratulations and support. It was a great feeling!

But, I was also quite surprised. The UI for my app was a little rough and ready, and a number of features I would have loved to include fell by the wayside because I had to work on my dissertation. I only had time to produce the bare-bones functionality, like harvesting data from Twitter and producing graphs and data tables (which were the most important aspects of the application).

Brian: Did the work you did on this application count towards your dissertation?

Ben: The brief set by my university was quite broad: “Produce a software project that does something”. Many people chose to develop e-commerce websites or CMS systems. As a developer doing those things all day, I wanted to try something a little different. It was great to work on a project two days a week that was totally different to the work I do for my employers. I submitted the entire software piece as the practical component of the dissertation and used the data it generated in the write up.

Brian: What gave you the idea for your Twitter Sentiment Engine (TSE)?

Ben: I've always liked Twitter, it's a really fast paced and relevant medium. With no experience in sentiment analysis, I thought my dissertation would be a great chance to learn about this exciting subject.

Brian: In retrospect, what were the biggest pain points in using PHP on the Azure Platform? How did you overcome them?

Ben: The biggest pain point for me on Azure was deployment. Knowing little about the platform, I first used the command line tools for PHP and packaged my project for deployment via the management portal. This process generally took around 40 minutes from start to finish. Compare this to the five minute automated deployments I'm used to and it's easy to see why deployment was frustrating at times. I plan to continue developing for Azure and have been looking into the Service Management API. My next project will be to create a build server on an Azure instance utilizing some custom phing tasks to allow quick deployment onto Azure from a VCS.

I think PHP on Azure is still in its infancy. There is a lot of good technical content provided by Microsoft and early adopters, but there isn't yet the breadth of documentation PHP developers have come to expect. It was sometimes difficult to get answers to questions about things like application logging, deployment, and some of the tooling. However, I did always manage to figure it out in the end as Azure does have a nice community of developers around it.

Brian: What were the biggest (nice) surprises?

Ben: The nicest surprise was SQL Azure. It was easy to set up, integrates well with SQL Management Studio, and, from reading about it, seems to be extremely reliable. Coupled with the PDO_SQLSRV driver, it showed that SQL Server is definitely PHP ready. It was a great example of how cloud computing can 'just work', putting a familiar facade in front of redundant hardware.

As of v5.3, PHP is feature complete on Windows. It wasn't necessary to change any of the preliminary work I had done on the project on a Linux platform. This is a great step for interoperability and definitely a nice surprise.

Brian: That’s great feedback…good to hear we are moving in the right direction. What advice would you give to PHP developers who are interested in the Azure platform?

Ben: Take some time to familiarize yourself with the various demos and guides provided on MSDN. Writing PHP isn't the hard bit. You don't have to change anything in the way you write code. The hard bit is deploying successfully and consistently to the platform. Do the simple 'hello world' app and then go further and deploy the 'hello world' app of your chosen framework as well. This will give you some of the knowledge required to deploy more complex real-world applications.

Brian: Sounds like good advice. What are your plans for your Twitter Sentiment Engine going forward?

Ben: I've really enjoyed working on TSE, but I know it's not a complete project. It served its purpose (gathering information for my dissertation) but there are so many features I'd like to write:

- Search

- Multi User Support

- User Trends (what other users are tracking / popular searches)

- A three tiered architecture based on message queues

- A nice UI

By the time Vegas comes round I'd like to have a full-featured application deployed on Azure. I've started to componentize the Bayesian filtering logic I used in the first iteration of TSE. It's available on GitHub: https://github.com/benwaine/BayesPHP. I plan to apply a similar process to some of the other features, like Twitter sample gathering. Each of the components will be discrete and fully unit tested. The new version of the application will also feature unit and integration tests. Now that I have a good prototype, the next iteration will concentrate on quality, scalability and features.

Brian: Were you using Bayesian filtering to filter out Twitter spam?

Ben: The project used Bayesian classification to classify tweets into categories: positive and negative. The number of positive and negative tweets was then plotted onto a graph over time. The idea of the project was to capture real time changes in sentiment using a machine learning process that required no human interaction.

The lifecycle of a ‘keyword tracking request’ has two components: sampling & learning, and classification. During the learning phase, Twitter’s search API is used to harvest tweets containing the specified keyword and smiley face glyphs like :-) or :-(. This gives the learning process a loose indication of the sentiment of the tweets. It uses these to learn the words that indicate positive or negative sentiment in the context of the keyword. In the classification phase of the lifecycle, any tweet containing the keyword is classified as positive or negative based on the words that appeared in the learning phase.

Brian: That sounds very cool! Thanks Ben.

If you are interested in learning more about Ben’s Twitter Sentiment Engine and how he used the Azure platform, follow Ben on Twitter (but be careful what you say…he’ll be listening!) and subscribe to his blog. Ben also assured me that he welcomes contributions to his project on Github: https://github.com/benwaine/BayesPHP.
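Ben's own implementation is the PHP library at the GitHub link above. Purely to illustrate the lifecycle he describes (label tweets by their smileys, learn per-class word frequencies, then classify new tweets), here is a minimal multinomial naive Bayes sketch in Python with add-one (Laplace) smoothing. The emoticon sets, tokenizer, smoothing and class names are our assumptions, and the sketch assumes the tweets passed in already contain the tracked keyword.

```python
import math
import re
from collections import Counter
from typing import Iterable, List, Optional

POSITIVE = (":-)", ":)")  # assumed "positive" smileys
NEGATIVE = (":-(", ":(")  # assumed "negative" smileys

def tokenize(text: str) -> List[str]:
    """Lower-case word tokens; punctuation and smileys are discarded."""
    return re.findall(r"[a-z']+", text.lower())

def emoticon_label(tweet: str) -> Optional[str]:
    """Loose sentiment label from smileys; None if absent or contradictory."""
    pos = any(e in tweet for e in POSITIVE)
    neg = any(e in tweet for e in NEGATIVE)
    if pos and not neg:
        return "positive"
    if neg and not pos:
        return "negative"
    return None

class NaiveBayes:
    def __init__(self) -> None:
        self.word_counts = {"positive": Counter(), "negative": Counter()}
        self.doc_counts: Counter = Counter()

    def learn(self, tweets: Iterable[str]) -> None:
        """Learning phase: count words per class using smiley-derived labels."""
        for tweet in tweets:
            label = emoticon_label(tweet)
            if label is None:
                continue  # no usable sentiment signal
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(tweet))

    def classify(self, tweet: str) -> str:
        """Classification phase: pick the class with the highest log posterior."""
        vocab = set(self.word_counts["positive"]) | set(self.word_counts["negative"])
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "positive", -math.inf
        for label, counts in self.word_counts.items():
            # Smoothed class prior plus smoothed per-word likelihoods;
            # max(..., 1) only avoids division by zero before any training.
            score = math.log((self.doc_counts[label] + 1) / (total_docs + 2))
            denom = sum(counts.values()) + max(len(vocab), 1)
            for word in tokenize(tweet):
                score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

if __name__ == "__main__":
    nb = NaiveBayes()
    nb.learn([
        "love the new phone :-)",
        "great battery, love it :)",
        "awful screen :-(",
        "hate the awful battery :(",
        "meh phone",  # ignored: no smiley
    ])
    print(nb.classify("I love this phone"))  # positive
    print(nb.classify("awful, I hate it"))   # negative
```

Plotting the share of tweets classified as positive per time bucket would then give the sentiment-over-time graph Ben describes.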


Visual Studio LightSwitch


Windows Azure Infrastructure and DevOps

John Foley asserted “In this Q&A, Microsoft's CEO pushes back, but also provides new insights into the company's cloud computing business, its hardware strategy, and his customers' next big bet” in a deck for his Feisty Ballmer Talks Cloud, Office 365, And Big Data article of 6/17/2011 for InformationWeek:

Microsoft’s cloud computing business is on a “steep ascent,” in the words of CEO Steve Ballmer, but what does that mean in terms of revenue, profit, per-seat economics, and rate of growth?

Quantifying its cloud business -- providing hard numbers on specific services -- isn’t something that Microsoft is ready to do. Ballmer says “millions of seats” have moved to Business Productivity Online Services, which includes software-as-a-service versions of Exchange and SharePoint, and the company’s other SaaS offerings, but that’s as far as he would go. “I don’t know whether we’d give you something more specific or not,” Ballmer said in a June 9 interview with InformationWeek editors at the company’s Redmond, Wash., headquarters.

Microsoft’s progress in the cloud, including the pending launch of Office 365, which will replace BPOS, was top of mind for the InformationWeek team (Rob Preston, Doug Henschen, Paul McDougall, and me) on this visit. Just 14 months ago, Ballmer told us that Microsoft’s cloud business was on the cusp of “hockey stick” growth. We’re monitoring the pace of adoption closely because of the massive change it represents not just for Microsoft, but for its business customers.

Ballmer was in a recalcitrant mood -- parrying some of our questions, calling others “unanswerable.” But he was also forthcoming, providing new insights into the company’s Windows Azure business (it’s growing slower than expected), its hardware strategy (it wants more control over the hardware), the secret to making SaaS pay off (minimize churn), and more.

Following is a lightly edited transcript of our conversation.

We started by asking Ballmer about his forecast last year that “hockey stick” growth was ahead for Microsoft’s cloud business, which includes BPOS, Windows Azure, and the soon-to-launch Office 365.

Ballmer: We've got a lot of room still to go, but we've definitely seen a hockey stick in terms of adoption of Business Productivity Online Services, soon to be Office 365. Literally rising to millions of seats effectively in a very short time. In that case, we have seen absolutely good liftoff, hockey stick-like liftoff.

In terms of Azure, we've seen good acceptance. But if you were to say, What percentage of enterprise computing is likely to move? Mission critical apps as a percentage will move to the cloud more slowly than information worker infrastructure. We’ll see a different pace and cadence.

Now, we're a big company; the numbers are all big. Every growth in Office 365 is something else that somebody didn't buy. You can look at our financial results by segment or the whole company, and you may not say, yes, I got it, I see it, it's right there. On the other hand, there is seat explosion, and if you look at the list of reference customers, people have moved, people are moving. We are in a very steep ascent, both in terms of commitments and deployments.

InformationWeek then asked whether there were any surprises in Microsoft’s cloud strategy, any areas where adoption was actually faster than expected.

Ballmer: I would have said, we were expecting faster growth [rather] than we're seeing faster growth. If anything, if you ask a year later about private clouds and public clouds, particularly as it relates to line of business applications, I'm smarter than I was and we're all smarter than we were a year ago.

The private cloud thing was important; it's still important. Is it a private cloud, or is it really just a virtualized data center? There is a conceptual difference between a virtualized data center and a private cloud, yet a lot of what’s getting sold as private clouds are really virtualized data centers. And a lot of what’s being done in the public cloud is the hosting of virtual machines, which, again, is a little bit different. We would not run our big services with the same kind of technology that are running enterprise data centers.

So, the move to next-generation operations and management infrastructure is going to be a little tougher, because there's a lot of legacy, a lot of understanding [required] of current approaches. Things will move a little more slowly, and people aren't willing to commit line of business apps en masse to the public cloud anyway right now. They're willing to commit websites. Startups are willing to move in that direction. But there's not an en masse move to the cloud versus virtualization.

Virtualization is in full flight, of course. The Hyper-V private cloud stuff that we've announced with our System Center Management tools I'm real excited about. But we're smarter 12 months into it than we were when I saw you guys last.

Ballmer and other Microsoft execs continue to talk about cloud growth, but where’s the evidence of it? Microsoft doesn’t break out cloud services in its financial statements, and quantifiable data is scant.

Ballmer: We've got literally many millions of seats that moved to the cloud. Millions of seats -- stop and think about that, that's a big statement, millions of seats from enterprise customers. I'm not talking about small and medium business. I don't know whether we'd give something more specific or not. I'm not close to what we're saying publicly on that, but I'll let Frank Shaw [Microsoft’s VP of corporate communications] get the correct public pronouncement that's not going to cause us a problem with the financial guys who haven't heard it before. But I am really excited about the speed with which that's happening. You'll see it mostly as reference customers as opposed to the numbers.

Whether the number was one million, 5 million, or 10 million it's hard to assess the difference. Across the planet, there's about 1.2 billion computers installed. Roughly a third would be in some kind of managed enterprise environment, say 400 million-ish. Millions is a big number. It's a big number.

Read more: Page 2: Cloud Economics

Simon May described 3 new heroes in next generation IT Departments in a 6/17/2011 post:

I’ve already highlighted a few times how IT departments are going to change over the next few years, and what some people in those departments will be doing as their roles inevitably change, but some new roles are going to appear too. These roles will start to shape how the IT department works for the next few years and, like all IT jobs, they’re a response to the changes going on in the business and in the market. As the industry chases the promised benefits of cloud, such as reduced cost and increased agility, these roles are going to be the new linchpins (as Seth would say), able to cross boundaries and pull levers.

They won’t be the only people on the team; they will be new hires or people who’ve stepped over from another discipline.

Cloud integration engineer

Linking everything together is going to be critical in the future. I wouldn’t say we’re at cloud version 1.0 yet; we’re probably closer to 0.7, where people are working out how to do cool things and there’s lots of experimentation going on. As we as an industry march forward, we’re turning over more rocks and identifying new bugs that need squashing. Who does the squashing depends on the type of bug, but it’s clear that, as with anything new, technology is blazing a trail while lawmakers and regulators play catch-up. Add to that the fear of vendor lock-in that some people have, the fear of giving up “control” and the worries that accompany anything new, and you’ve got a complex mix of problems to overcome.

That’s where hero number one, the Cloud Integration Engineer, comes to the rescue with his superpower: connectivity. Cloud integration engineers will be adept at connecting public and private clouds to create the much-touted hybrid cloud, part in your sphere of granular control, part in the sphere of vendor management. These guys will know the technology in Windows Azure deeply enough to understand how to connect an Azure application with an on-premises application (hint: they’ll be using Windows Azure Connect). They will also know how to throw up an EC2 instance and have it pull data from a SQL Azure database, and they’ll know how to connect Exchange 2010 to Office 365 in a coexistence scenario so that the 2000 guys in a field in Devon get fast internal email over their 256k internet connection whilst the field guys get rapid connections wherever they are around the world.

Some will be experts in plumbing finished services (SaaS) together using APIs and code to do magic, others will be PowerShell gurus who can provision 100 mailboxes with a single command, and others still will make ninja-like use of the tooling. These guys will likely be capable of coding, but they will understand the plumbing of enterprises well enough to make things seamless. They’ll have a better understanding of security than most developers and IT pros, but because they’re connected to the business, they’ll act as guides to security rather than gatekeepers to access.

Cloud operations manager

Operations in the cloud are far simpler than with traditional infrastructures, primarily because you don’t need to manage everything at the most granular level. Managing IT operations in the data centre is about managing servers: making sure they’re up, making sure the hardware is working, and making sure they have enough memory and CPU for their highest load (+15% or so). For some this will be the old days, but for most it’s today, even if they’re virtualised. Virtualisation doesn’t make resource management go away; it just changes the economics.

With the cloud you still need to manage resources and ensure availability to some extent, but it is an easier job. You don’t need to make sure that servers have enough memory any more; you need to make a choice about scaling up or out on demand. Scaling out is adding more servers; scaling up is adding more powerful servers. It takes intelligence to make that call and an understanding of the business value of scaling, though both the process of scaling and the decision itself can be automated. To crystallise that, let’s take the much-overused tap metaphor of cloud.

In the tap metaphor we equate supply to a tap: when you turn it on, the water runs and you get the resource you want. Cool. However, you pay for what you use, so what happens if you’re not doing anything useful with your water, if it’s just running down the drain? If you equate the tap to a cloud service and the water to compute resource, you get the picture, especially when you consider that taps get broken, develop leaks or are occasionally installed by a rogue plumber. You need to ensure that the capacity you use is being used for the business, and that’s where the Cloud Operations Manager comes to the rescue with their power of insight.

These guys will be able to use their close business relationships to determine when it’s right to let the tap run free and when it’s time to turn it down: when you’re under a DDoS attack versus when you’re seeing a sales boom, or when your internal users are hammering your SharePoint because the company just announced pay rises versus because Bob from accounts accidentally posted his personal photos to the whole company and he looks funny in them.
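The automatable part of that call can be sketched as a simple threshold rule. This is a minimal illustration only, assuming average CPU utilisation is the scaling signal; the `ScalingPolicy` fields, thresholds and function name are hypothetical and not part of any Windows Azure API. The `max_instances` cap is where the business judgement about how far to let the tap run gets encoded.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical thresholds; real values depend on the workload and budget.
    scale_out_cpu: float = 0.75  # add an instance above this average CPU
    scale_in_cpu: float = 0.25   # remove an instance below this average CPU
    min_instances: int = 2       # floor kept for availability
    max_instances: int = 10      # cost ceiling agreed with the business


def desired_instances(current: int, avg_cpu: float, policy: ScalingPolicy) -> int:
    """Scale out or in (change the instance count) based on average CPU."""
    if avg_cpu > policy.scale_out_cpu:
        target = current + 1
    elif avg_cpu < policy.scale_in_cpu:
        target = current - 1
    else:
        target = current
    # Clamp to the agreed floor and ceiling.
    return max(policy.min_instances, min(policy.max_instances, target))


if __name__ == "__main__":
    policy = ScalingPolicy()
    print(desired_instances(3, 0.90, policy))   # busy: scale out to 4
    print(desired_instances(10, 0.95, policy))  # capped at the ceiling: 10
```

A rule like this cannot tell a DDoS attack from a sales boom; both look like high CPU, which is why the human oversight described above still matters.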

Cloud Operations Managers will also be watching what’s happening to ensure it’s sensible and complies with policy (which is obviously boring and stifles innovation and agility), but who else is going to make sure that when Bob in accounts leaves, he doesn’t take his Docs account with him and the company doesn’t lose all that information (the knowledge, the business intelligence, the competitive edge) just because an employee left with their personal documents in cloud storage? They have the superpower of understanding.

IT Marketing and Communications Officer

Communication is why businesses succeed or fail: you can have a great plan, a sound strategy and superb revenue streams, but if your team can’t communicate, they can’t succeed. When IT is one of the resources in the mix that allows your organisation to get things done, communicating its benefits is essential. If you’re going to guide your organisation to make the most informed choices and to make the most of what it has paid for, you need someone evangelising the options.

We’ve tried this as an industry many times before, but a skill set that allows your IT department to market its wares is what’s needed. You need your people to know that they will benefit from using cloud storage because it’s pervasive and lets them access their information anywhere. Previously, we IT guys would probably have expressed that as “to reduce the load on the file server so that we can save 2 GB of storage”, terms that don’t matter to the end user. Marketers are adept at making things relevant to their market; internally, this means translating, explaining and engaging a user community. I’ve seen this done a few times, often under different banners and with differing levels of success, but it has worked best when a marketer got involved. Essentially, these folks will breed cooperation.

Summary

So there we have it: three new roles with the superpowers of connectivity, understanding and cooperation, which, when you think about it, don’t sound that super, do they? By the way, all of these roles are available on job sites today. Whilst researching, I found a role paying £120k per annum doing Windows Azure integration with existing systems, Operations Manager roles that commonly reference SaaS/IaaS/PaaS and private cloud, and IT Marketing roles hidden away in specialist recruitment circles, which is always a good sign.

If you think that these new roles will add cost to IT then, sorry, you’re wrong, for two reasons. First, they may not be part of IT itself: IT will virtualise these roles into other areas of the organisation to provide deep integration with the day-to-day reality of what the organisation does. Second, they’ll be saving money or making it. The IT Marketing Officer will be extracting every last penny from the move to cloud-based email like Exchange Online, the Cloud Operations Manager will be ensuring things stay on track and costs don’t scale out of control, and the Cloud Integration Engineer will be helping make money by doing new, innovative stuff day in, day out, creating competitive advantage.

As an IT Pro, how do you get there? Learn. Learn about virtualisation, learn about cloud. We have webcasts, jumpstarts, downloads and resources, and you probably have free training hours if you have Software Assurance or an Enterprise Agreement. Use it.

Rainer Stropek (@rstropek) described 10 Things You Can Do to Use Windows Azure More Effectively in a presentation to Microsoft IT and Dev Connections. From the abstract:

Are you already using Windows Azure, or are you planning to take the step into the cloud? In this session Rainer Stropek, MVP for Windows Azure, presents some of the most important tips for getting the most out of your investment in Microsoft’s cloud computing platform. Did you know that you can host multiple websites in a single Azure web role? Rainer will cover things like this during his session. You will learn about the theory behind them and see them presented in practical examples.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Yves Goeleven (@YvesGoeleven) presented a 01:11:53 MSDN Live Meeting: Building Hybrid applications with the Windows Azure Platform session on 6/7/2011 (missed when presented):

There are plenty of business drivers for enterprises to consider a move to the cloud, but at the same time there are plenty of reasons why these enterprises will not be able to move their entire stack in the short term. At least for the next ten years, hybrid systems will be the de facto standard way of implementing cloud-based systems in the corporate world.

In this session I will show you around the different services, offered by the Windows Azure Platform, that ensure a seamless integration between cloud and on-premises resources.

<Return to section navigation list>

Cloud Security and Governance

Gorka Sadowski described new PCI Compliance in Virtualized Environments requirements on 6/18/2011 for the LogLogic blog:

The PCI Council released a document on PCI Compliance in Virtualized environments. This plays really well with LogLogic’s VMware strategy and some of our upcoming technology.

Turns out that PCI recognizes that:

- Logging is even more important, but more difficult, in virtualized environments

- Logs are important in more sections than just Section 10, for example 3.4 and 5.2

For example:

Page 18:

- Section "Harden the Hypervisor"

- Separate administrative functions such that hypervisor administrators do not have the ability to modify, delete, or disable hypervisor audit logs.

- Send hypervisor logs to physically separate, secured storage as close to real-time as possible.

- Monitor audit logs to identify activities that could indicate a breach in the integrity of segmentation, security controls, or communication channels between workloads.

Page 33:

- Requirement 5.2

- Ensure that all anti-virus mechanisms are current, actively running, and generating audit logs.

Page 32:

- Requirement 3.4 Render PAN unreadable anywhere it is stored (including on portable digital media, backup media, and in logs) by using any of the following approaches:

- One-way hashes based on strong cryptography (hash must be of the entire PAN)

- Truncation (hashing cannot be used to replace the truncated segment of PAN)

- Index tokens and pads (pads must be securely stored)

- Strong cryptography with associated key-management processes and procedures

Page 37:

- Logging of activities unique to virtualized environments may be needed to reconstruct the events required by PCI DSS Requirement 10.2. For example, logs from specialized APIs that are used to view virtual process, memory, or offline storage may be needed to identify individual access to cardholder data.

- The specific system functions and objects to be logged may differ according to the specific virtualization technology in use.

- Audit trails contained within virtual machines are usually accessible to anyone with access to the virtual machine image.

- Specialized tools may be required to correlate and review audit log data from within virtualized components and networks.

- It may be difficult to capture, correlate, or review logs from a virtual shared hosting or cloud- based environment.

- Additional Best Practices / Recommendations:

- Do not locate audit logs on the same host or hypervisor as the components generating the audit logs.
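Requirement 3.4 explicitly covers logs, which is where a log-management pipeline meets the PAN. As a minimal sketch, assuming a first-six/last-four truncation format and an HMAC-SHA256 keyed hash (the key and all function names are hypothetical, and key management is out of scope), the Python below truncates PANs, hashes the entire PAN, and redacts PAN-like digit runs in a log line. It is not LogLogic’s implementation, and the regex only catches unseparated 13 to 19 digit runs that pass a Luhn check.

```python
import hashlib
import hmac
import re

# Unseparated 13-19 digit runs; a Luhn check below filters out most non-PANs.
PAN_PATTERN = re.compile(r"\b\d{13,19}\b")


def _digits(pan: str) -> str:
    return "".join(ch for ch in pan if ch.isdigit())


def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def truncate_pan(pan: str) -> str:
    """Keep at most the first six and last four digits; mask the rest."""
    digits = _digits(pan)
    if len(digits) < 13:
        raise ValueError("PAN too short")
    return digits[:6] + "*" * (len(digits) - 10) + digits[-4:]


def hash_pan(pan: str, key: bytes) -> str:
    """Keyed one-way hash (HMAC-SHA256) over the entire PAN."""
    return hmac.new(key, _digits(pan).encode("ascii"), hashlib.sha256).hexdigest()


def redact_log_line(line: str) -> str:
    """Replace Luhn-valid PAN-like runs in a log line with their truncated form."""
    def _mask(match):
        digits = match.group()
        return truncate_pan(digits) if luhn_valid(digits) else digits
    return PAN_PATTERN.sub(_mask, line)


if __name__ == "__main__":
    print(redact_log_line("auth ok card=4111111111111111"))
```

The Luhn filter is a design choice to avoid mangling other long numbers such as millisecond timestamps; it does not guarantee that every masked run was really a card number.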

These requirements clearly cry out for LogLogic’s solutions. Please feel free to leave your comments.

David Navetta posted David Navetta Discusses Cyber Insurance on FOX News Live on 6/17/2011 to the Information Law Group blog:

David Navetta recently spoke to FOX News Live concerning cyber insurance and risk transfer. You can watch the interview HERE.

<Return to section navigation list>

Cloud Computing Events

Microsoft World Wide Events will present MSDN Webcast: Integrating High-Quality Data with Windows Azure DataMarket (Level 200) on 6/21/2011 at 8:00 AM PDT:

Event ID: 1032487799

Language(s): English.

Product(s): Windows Azure.

Audience(s): Pro Dev/Programmer.

All of us are aware of the importance of high-quality data, especially when organizations communicate with customers, create business processes, and use data for business intelligence. In this webcast, we demonstrate the easy-to-integrate data services that are available within Windows Azure DataMarket from StrikeIron. We look at the service itself and how it works. You'll learn how to integrate it and view several different use cases to see how customers are benefitting from this data quality service that is now available in Windows Azure DataMarket.

Presenter: Bob Brauer (@sibob), Chief Strategy Officer and Cofounder, StrikeIron, Inc. Bob Brauer is an expert in the field of data quality, including creating better, more usable data via the cloud. He first founded DataFlux, one of the industry leaders in data quality technology, which has been acquired by SAS Institute. Then he founded StrikeIron in 2003, leveraging the Internet to make data quality technology accessible to more organizations. Visit www.strikeiron.com for more information.

Jim O’Neill announced Rock, Paper, Azure LIVE in Cambridge–Jun 23rd in a 6/18/2011 post:

If you follow my blog, you know that some of my evangelist colleagues and I put together a series of webcasts in April and May focused around playing an on-line version of Rock, Paper, Scissors via Windows Azure. We’ve just completed an East Coast run of live events as well that have incorporated the contest, and now I'm bringing it home to the Boston Azure User Group this Thursday, June 23rd.

This upcoming meeting will feature a brief overview of how we leveraged Windows Azure for this project, but the majority of the time will be a ‘hackathon’ allowing you to get some hands-on experience deploying to Windows Azure and have some fun coding your own automated ‘bot’ to play Rock, Paper, Scissors (with a few twists) against your fellow attendees. If you’ve put off kicking the tires of Windows Azure, this is a great time to jump in!

Did I mention prizes?? The Boston Azure User Group will have an Xbox/Kinect, a Visual Studio Professional license, and some other goodies to give away based on the leaderboard at the end of the night!

Want in (well, who wouldn’t)? Here’s what you need to do:

- RSVP via the Boston Azure User Group

- Sign up for your FREE (no-strings-attached) 30-day Windows Azure account (Promo code: PLAYRPA)

- Download (the also FREE) Visual Web Developer 2010 Express (or Visual Studio 2010 Pro or higher)

- Install the Windows Azure Tools and SDK

We have some videos and instructions as well on the Get Started section of the RockPaperAzure Challenge site to help you get set up. Don’t have a Windows laptop with Vista or Windows 7? No worries, we’ll have some additional machines available – just make sure you note that you’ll need one when you RSVP.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Derrick Harris (@derrickharris) asked Can Google App Engine compete in the enterprise? in a 6/18/2011 post to GigaOM’s Structure blog:

Google has killed its App Engine for Business offering, the enterprise-friendly version of its Platform as a Service that included a partnership with VMware around the Spring Java framework. Part of a bevy of changes to App Engine announced at last month’s Google I/O conference, the news went largely unnoticed in the shadow of major pricing adjustments. But the missteps with App Engine for Business are indicative of the types of challenges Google faces as it attempts to ready its PaaS service to compete with companies like Red Hat and Salesforce.com in luring enterprise developers.

No more App Engine for Business

I spoke with Gregory D’alesandre, senior product manager for Google App Engine, who explained to me Google’s new approach to selling PaaS to businesses. In retrospect, he said, Google might have announced App Engine for Business too early — more than a year ago — before it had a chance to gauge reaction to some of its more-limiting features. The company’s “trusted testers,” it turns out, liked many of the features, but they didn’t like the idea of a separate offering or the fact that App Engine for Business locked down API access from outside the owner’s domain.

So, D’alesandre explained, many of App Engine for Business’s features have been or will be rolled into App Engine proper, which Google actually will take out of “preview” mode later this year and make an official part of its Google Enterprise portfolio. D’alesandre said that SQL support, SSL encryption and full-text search, all parts of the App Engine for Business offering, are top priorities not just for the App Engine team, but for Google overall, and should be ready once the product loses its “preview” status. SQL support, he added, is particularly important because of its appeal to enterprise developers, but incorporating it into a platform that wasn’t designed with SQL in mind is a complex process.

App Engine also still will support Spring for developing Java applications, D’alesandre said, and Google is actively working with VMware to figure out the next steps to advance their cloud computing partnership even beyond Spring. Other features of the new, improved App Engine will be a 99.95 percent SLA and additional support options. D’alesandre noted that expanded Python support and some new API improvements are in the works, too.
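To put the 99.95 percent figure in concrete terms, the arithmetic below converts an availability percentage into permitted downtime. This is a generic sketch; the article doesn’t say over what period Google would measure the SLA, so the 30-day month and the 365-day year are assumptions.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: float = 30) -> float:
    """Maximum downtime (minutes) over a period that still meets an availability SLA."""
    unavailable_fraction = 1 - sla_percent / 100
    return unavailable_fraction * period_days * 24 * 60


if __name__ == "__main__":
    print(f"{allowed_downtime_minutes(99.95):.1f} min per 30-day month")  # 21.6
    print(f"{allowed_downtime_minutes(99.95, 365) / 60:.2f} h per year")   # 4.38
```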

Too little, too late?

What’s not clear, however, is whether enterprise developers will buy into the new App Engine or whether its existing hundreds of thousands of developers will stick with the platform once the changes finally take effect. The latter group will have to adjust to higher prices than they were paying in the “preview” phase, and the former group, notoriously wary of vendor lock-in, will have to contend with Google’s very specific set of technologies and coding practices. As The Register’s Cade Metz explained in a recent in-depth look at App Engine, the platform is very proprietary and requires sticking to a defined set of best practices.

D’alesandre isn’t convinced the concerns around limited language support and proprietary technologies are dealbreakers. The way he sees it, owning the App Engine stack from servers up through APIs means Google can easily troubleshoot and optimize with full knowledge of all the components. As for language support, he sees benefit in providing deep sets of features for the languages it does support: Python, Java and Google’s own Go. It’s difficult to argue with his stance regarding languages, considering that PaaS is notorious for being single-language-only and has only recently begun to break those chains in the forms of VMware’s Cloud Foundry, DotCloud and Red Hat’s OpenShift. Google actually was ahead of the game by adding Java support relatively early on.

However, even D’alesandre acknowledged that he’s impressed with some of the PaaS offerings that have hit the market lately. If anything, timing might be critical for Google, which will need to get the production-ready App Engine into the market before any of the myriad other PaaS offerings gather too much momentum. I thought App Engine for Business was a formidable competitor when announced, but time and new PaaS launches — including from Amazon Web Services — have dulled its edge.

Software inferiority?

There’s also the issue of Google’s “outdated” architecture, as described in a recent blog post by ex-Googler Dhanji Prasanna. He opined that while Google’s data center infrastructure is second to none, its software stack is aging and has become a hindrance in terms of performance and development flexibility. D’alesandre declined to comment specifically on Prasanna’s criticisms, but called him a “good friend” and “absolutely brilliant engineer” who “was looking for something different” than Google’s development culture.

On his personal blog today, IBM’s Savio Rodrigues offered a down-to-earth take on the debate over Google’s software stack. While Prasanna cites Hadoop and MongoDB (among others) as being superior to their Google counterparts MapReduce and MegaStore — a position that very well might be accurate — Rodrigues notes that “[c]ombining individual best of breed software building blocks into a cloud platform as a service environment that offers the functionality of Google App Engine, requires enterprises to do a lot more work than simply using Google App Engine.”

Of course, he added, Google’s strict adherence to its own code raises a big question: “how your enterprise needs will be prioritized against the needs of internal Google developers.”

We’ll discuss the potential potholes for PaaS at next week’s Structure 2011 event, many of which are exemplified by the questions surrounding Google App Engine. It’s still early enough in the game for Google’s strategy change to pay off, but it will require some serious effort to convince enterprise developers that Google’s approach to PaaS is worth buying into.

How about competition with Windows Azure and SQL Azure, Derrick? I still haven’t been able to find any details about the App Engine’s forthcoming relational database management system (RDBMS).

Alex Popescu posted a link to a MongoDB at Foursquare: Practical Data Storage video presentation to his myNoSQL blog on 6/18/2011:

The fine guys from 10gen have granted me access to publish here videos from their Mongo events organized across US and Europe—thanks Meghan.

I’ve decided to start this series with one high-profile company in the MongoDB portfolio, Foursquare; you’ll understand why I’m saying this if you check the content I’ve published before about Foursquare.

Without further ado, Harry Heymann’s talk: Practical data storage: MongoDB at Foursquare.

This video was made available by 10gen, creators of MongoDB and organizers of the Mongo events.

<Return to section navigation list>

1 comment:

Thanks a lot for relaying our Shelfcheck announcement! Joannes from Lokad
