Windows Azure and Cloud Computing Posts for 6/20/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi (pictured below) reminded SQL Azure database architects and developers on 6/20/2011 of Cihan Biyikoglu’s Considerations When Building Database Schema with Federations in SQL Azure:

Cihan Biyikoglu has written an interesting article on database schema when working with Federations.

“With federations, you take parts of your schema and scale it out. With each federation in the root database, a subset of objects are scaled-out. You may create multiple federations because distribution characteristics and scalability requirements may vary across sets of tables. For example, with an ecommerce app, you may both have a large customer-orders set of tables and a very large product catalog that may have completely different distribution requirements.”

For more details about forthcoming SQL Azure Federations and sharding, see my Build Big-Data Apps in SQL Azure with Federation cover story for Visual Studio Magazine’s March 2011 issue.

Sudhir Hasbe described SQL Azure Data Sync Service in a 6/18/2011 post:

For a while I have wanted to try out Data Sync Service. Today I finally got to it. SQL Azure Data Sync service enables you to sync data between SQL Azure and SQL Azure databases or SQL Azure and SQL Server databases. The video by Liam Cavanagh was super helpful.

<Return to section navigation list>

Marketplace DataMarket and OData

My (@rogerjenn) updated Reading Office 365 Beta’s SharePoint Online Lists with the Open Data Protocol (OData) of 6/20/2011 replaces the earlier Reading and Updating Office 365 Beta’s SharePoint Online Lists with OData, which didn’t include update details. The updated version’s new content:

Remote Authentication in SharePoint Online Using Claims-Based Authentication

Third-party OData browsers, such as Fabrice Marguerie’s Sesame Data Browser, enable displaying, querying and, in some cases, updating content delivered by most OData providers. Sesame is a Silverlight application, which runs from the desktop or in a browser. Sesame offers a built-in set of sample data sets and enables a variety of authentication methods, including Windows Azure, SQL Azure, Azure DataMarket and HTTP Basic.

Here’s a screen capture of browser-based Sesame with a connection specified to Fabrice’s Northwind sample database OData source:

Clicking OK displays members of the tables collection in the left-hand frame. Clicking an entry displays up to its first 15 elements. Hovering over a record selection arrow opens a button to display items in a related table by means of a lookup column, such as orders for AROUT in the following example:

Clicking the related table button opens the first 15 or fewer related entries in a linked query window:

The query string is Customers('AROUT')/Orders.
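The query string above is a relative OData resource path: a key lookup on Customers followed by navigation to the related Orders. A minimal Python sketch of composing such a URL follows; the service root is a placeholder assumption, not the address of Fabrice’s sample service, and the helper name is hypothetical.

```python
from urllib.parse import quote

def related_entities_url(service_root, entity_set, key, navigation):
    """Build an OData URL that navigates from one entity to a related set."""
    # OData string keys are wrapped in single quotes; embedded quotes are doubled.
    escaped_key = quote(key.replace("'", "''"), safe="")
    path = f"{entity_set}('{escaped_key}')/{navigation}"
    return f"{service_root.rstrip('/')}/{path}"

# Hypothetical service root, used only for illustration.
url = related_entities_url(
    "http://example.com/Northwind.svc", "Customers", "AROUT", "Orders"
)
print(url)
```

The path portion of the printed URL matches the query string Sesame shows for the linked query window.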

Problems Displaying SharePoint Online Lists in OData Format with the Sesame browser

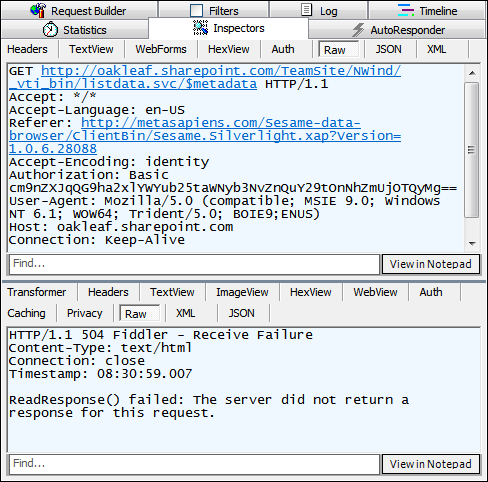

Attempting to open the OData representation of a SharePoint Online list with Sesame’s Basic authentication (your Office 365 username and password) fails with a .NET Not Found exception. Here’s Fiddler 2.3.4.4’s display of the raw HTTP request and response messages involved:

Following is a Fiddler capture for a successful IE9 browser request for the OData representation of the SharePoint Online Products list:

Here’s Fiddler’s Headers view of the successful operation:

SharePoint Online doesn’t accept Basic authentication. Instead, it requires a pair of login cookies to enable remote claims-based authentication.
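As a rough illustration of what “a pair of login cookies” means for a client, the Python sketch below assembles request headers that carry both cookies instead of a Basic Authorization header. The cookie names FedAuth and rtFA are taken from Robert Bogue’s article excerpted below; the cookie values, the helper name and the Accept media type are placeholder assumptions.

```python
def claims_cookie_header(fed_auth, rt_fa):
    """Return a Cookie header value carrying both login cookies."""
    return f"FedAuth={fed_auth}; rtFA={rt_fa}"

# Placeholder values; real cookies are issued by the sign-in sequence.
headers = {
    "Cookie": claims_cookie_header("<FedAuth value>", "<rtFA value>"),
    "Accept": "application/atom+xml",  # assumption: ask for an Atom-formatted feed
}
print(headers["Cookie"])
```

Obtaining the cookie values is the hard part, which is what the remote authentication article addresses.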

Robert Bogue’s Remote Authentication in SharePoint Online Using Claims-Based Authentication article for the MSDN Library’s “Claims and Security Articles for SharePoint 2010” topic explains how remote claims-based authentication works:

Introduction to Remote Authentication in SharePoint Online Using Claims-Based Authentication

The decision to rely on cloud-based services, such as Microsoft SharePoint Online, is not made lightly and is often hampered by the concern about access to the organization's data for internal needs. In this article, I will address this key concern by providing a framework and sample code for building client applications that can remotely authenticate users against SharePoint Online by using the SharePoint 2010 client-side object model.

Note: Although this article focuses on SharePoint Online, the techniques discussed can be applied to any environment where the remote SharePoint 2010 server uses claims-based authentication.

I will review the SharePoint 2010 authentication methods, provide details for some of the operation of SharePoint 2010 with claims-mode authentication, and describe an approach for developing a set of tools to enable remote authentication to the server for use with the client-side object model.

Brief Overview of SharePoint Authentication

In Office SharePoint Server 2007, there were two authentication types: Windows authentication, which relied upon authentication information being transmitted via HTTP headers, and forms-based authentication. Forms-based authentication used the Microsoft ASP.NET membership and roles engines for managing users and roles (or groups). This was a great improvement in authentication over the 2003 version of the SharePoint technologies, which relied exclusively on Windows authentication. However, it still made it difficult to accomplish many scenarios, such as federated sign-on and single sign-on.

To demonstrate the shortcomings of relying solely on Windows authentication, consider an environment that uses only Windows authentication. In this environment, users whose computers are not joined to the domain, or whose configurations are not set to automatically transmit credentials, are prompted for credentials for each web application they access, and in each program they access it from. So, for example, if there is a SharePoint-based intranet on intranet.contoso.com, and My Sites are located on my.contoso.com, users are prompted twice for credentials. If they open a Microsoft Word document from each site, they are prompted two more times, and two more times for Microsoft Excel. Obviously, this is not the best user experience.

However, if the same network uses forms-based authentication, after users log in to SharePoint, they are not prompted for authentication in other applications such as Word and Excel. But they are prompted for authentication on each of the two web applications.

Federated login systems, such as Windows Live ID, existed, but integrating them into Office SharePoint Server 2007 was difficult. Fundamentally, the forms-based mechanism was designed to authenticate against local users, that is, it was not designed to authenticate identity based on a federated system. SharePoint 2010 addressed this by adding direct support for claims-based authentication. This enables SharePoint 2010 to rely on a third party to authenticate the user, and to provide information about the roles that the user has. …

Robert continues with an “Evolution of Claims-Based Authentication” topic and …

SharePoint Claims Authentication Sequence

Now that you have learned about the advantages of claims-based authentication, we can examine what actually happens when you work with claims-based security in SharePoint. When using classic authentication, you expect that SharePoint will issue an HTTP status code of 401 at the client, indicating the types of HTTP authentication the server supports. However, in claims mode a more complex interaction occurs. The following is a detailed account of the sequence that SharePoint performs when it is configured for both Windows authentication and Windows Live ID through claims.

- The user selects a link on the secured site, and the client transmits the request.

- The server responds with an HTTP status code of 302, indicating a temporary redirect. The target page is /_layouts/authenticate.aspx, with a query string parameter of Source that contains the server relative source URL that the user initially requested.

- The client requests /_layouts/authenticate.aspx.

- The server responds with a 302 temporary redirect to /_login/default.aspx with a query string parameter of ReturnUrl that includes the authentication page and its query string.

- The client requests the /_login/default.aspx page.

- The server responds with a page that prompts the user to select the authentication method. This happens because the server is configured to accept claims from multiple security token services (STSs), including the built-in SharePoint STS and the Windows Live ID STS.

- The user selects the appropriate login provider from the drop-down list, and the client posts the response on /_login/default.aspx.

- The server responds with a 302 temporary redirect to /_trust/default.aspx with a query string parameter of trust with the trust provider that the user selected, a ReturnUrl parameter that includes the authenticate.aspx page, and an additional query string parameter with the source again. Source is still a part of the ReturnUrl parameter.

- The client follows the redirect and gets /_trust/default.aspx.

- The server responds with a 302 temporary redirect to the URL of the identity provider. In the case of Windows Live ID, the URL is https://login.live.com/login.srf with a series of parameters that identify the site to Windows Live ID and a wctx parameter that matches the ReturnUrl query string provided previously.

- The client and server iterate an exchange of information, based on the operation of Windows Live ID and then the user, eventually ending in a post to /_trust/default.aspx, which was configured in Windows Live ID. This post includes a Security Assertion Markup Language (SAML) token that includes the user's identity and Windows Live ID signature that specifies that the ID is correct.

- The server responds with a redirect to /_layouts/authenticate.aspx, as was provided initially as the redirect URL in the ReturnUrl query string parameter. This value comes back from the claims provider as wctx in the form of a form post variable. During the redirect, the /_trust/default.aspx page writes two or more encrypted and encoded authentication cookies that are retransmitted on every request to the website. These cookies consist of one or more FedAuth cookies, and an rtFA cookie. The FedAuth cookies enable federated authorization, and the rtFA cookie enables signing out the user from all SharePoint sites, even if the sign-out process starts from a non-SharePoint site.

- The client requests /_layouts/authenticate.aspx with a query string parameter of the source URL.

- The server responds with a 302 temporary redirect to the source URL.
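The nesting of Source inside ReturnUrl in the sequence above is easier to see in code. The following Python sketch builds the first two redirect targets and then unwraps them again. It only illustrates the parameter nesting: the function names and the requested URL are hypothetical, and the exact encoding SharePoint applies may differ.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

def authenticate_url(source):
    # First redirect: carries the originally requested, server-relative URL.
    return "/_layouts/authenticate.aspx?" + urlencode({"Source": source})

def login_url(source):
    # Second redirect: ReturnUrl holds the authenticate page, query string included.
    return "/_login/default.aspx?" + urlencode({"ReturnUrl": authenticate_url(source)})

def original_source(login):
    # Unwrap ReturnUrl, then Source, to recover what the user first asked for.
    return_url = parse_qs(urlsplit(login).query)["ReturnUrl"][0]
    return parse_qs(urlsplit(return_url).query)["Source"][0]

requested = "/sites/team/Lists/Products/AllItems.aspx"  # hypothetical list URL
login = login_url(requested)
print(login)
print(original_source(login))
```

Because each hop wraps the previous URL as a single parameter value, the original request survives the whole chain and can be restored by the final redirect.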

Note: If there is only one authentication mechanism for the zone on which the user is accessing the web application, the user is not prompted for which authentication to use (see step 6). Instead, /_login/default.aspx immediately redirects the user to the appropriate authentication provider, in this case Windows Live ID.

SharePoint Online Authentication Cookies

An important aspect of this process, and the one that makes it difficult but not impossible to use remote authentication for SharePoint Online in client-side applications, is that the FedAuth cookies are written with an HTTPOnly flag. This flag is designed to prevent cross-site scripting (XSS) attacks. In a cross-site scripting attack, a malicious user injects script onto a page that transmits or uses cookies that are available on the current page for some nefarious purpose. The HTTPOnly flag on the cookie prevents Internet Explorer from allowing access to the cookie from client-side script. The Microsoft .NET Framework observes the HTTPOnly flag also, making it impossible to directly retrieve the cookie from the .NET Framework object model.

Note: For SharePoint Online, the FedAuth cookies are written with an HTTPOnly flag. However, for on-premises SharePoint 2010 installations, an administrator could modify the web.config file to render normal cookies without this flag. …
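To make the HTTPOnly flag concrete, here is a small Python sketch that parses a Set-Cookie header value and checks for the flag. The header value is made up for illustration; real FedAuth cookies are long, encrypted strings. Note that Python’s standard parser merely reports the flag. It does not hide the cookie the way the article says the .NET Framework does.

```python
from http.cookies import SimpleCookie

# Made-up header value, for illustration only.
set_cookie = "FedAuth=example; path=/; secure; HttpOnly"

jar = SimpleCookie()
jar.load(set_cookie)

morsel = jar["FedAuth"]
# Flag attributes such as HttpOnly are stored as True when present.
is_http_only = bool(morsel["httponly"])
print(morsel.value, is_http_only)
```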

Robert concludes the article with “Using the Client Object Models for Remote Authentication in SharePoint Online” and “Reviewing the SharePoint Online Remote Authentication Sample Code Project.”

Fabrice will need to add a SharePoint authentication option with code similar to that described in the article to enable interacting with OData content from SharePoint Online.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

No significant articles today.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

The MSDN Samples Gallery team posted links to 64 Windows Azure applications in an MSDN Developer Samples: Learn Through Code post of 6/20/2011:

Entelect Solutions reported Entelect pioneers Azure cloud implementation for Liberty in a 6/20/2011 press release from Johannesburg, South Africa:

After less than a year of development, Entelect Software is preparing for the upcoming launch of the new Liberty Health burn jOules Customer Loyalty Programme. The development and rollout of this programme is a significant step forward for the use of cloud computing in South Africa. Entelect Software used the Microsoft Azure platform for the entire system, including self-service portals for members, partners, intermediaries and administrators.

The company chose Azure because it allows them to build, host and scale software applications in secure Microsoft data centres.

“Hosting on Microsoft Azure was the reason we could provide Liberty with delivery in the timeframes they required. It also simplified and enhanced the quality of the overall solution. Certainly there were challenges, but for a system architected from scratch, requiring such a complex distribution of partners, the cloud proved to be the best solution, without a doubt,” said Martin Naude, Chief Technology Officer at Entelect Software.

The project started in July 2010 with an in-depth consulting and specifications stage. A key requirement was finding a central location for the project's diverse stakeholders and the business requirement to be able to roll out the programme internationally, and facilitate an unlimited number of partners, members and brokers in the future.

Entelect had to overcome a variety of serious challenges in the development of the burn jOules product, but it was well worth the effort for the benefits involved.

The cloud platform, while it requires a learning curve in development, provides a range of services that simplifies and enhances the system. The provision of services such as queuing, reporting, integration and optimisation, are cost effective and efficient through economies of scale. Another advantage is how Windows Azure takes care of countless software versions and backward compatibility issues. While there are always risks no matter what you do, the platform offers the best minds on a large scale to secure your company and stakeholder information.

Entelect Software has planned and tested the product extensively before going live. The company's careful attention to detail upfront has allowed them to produce a superb product, which meets all the client requirements.

Entelect is using the cloud platform for a number of other projects, and the experience they gained through their innovative use of the Azure platform is helping them to cement their position as pioneers in the field.

Other Azure projects currently in development by Entelect Software include a digital store, a labour management portal for specialists in engineering, a dynamic system for managing inventory and high-value equipment across multiple sites, a bird watching information platform, and Entelect's Web, event and retail management online product suites. With projects such as these currently being developed, the company is rapidly expanding its knowledge base and market exposure.

Entelect

Entelect is a leading software engineering and solutions company with head offices in Johannesburg, South Africa. Our rapid growth and respected reputation is driven by our strong delivery track record, exceptional people and approach to solving business problems.

Since inception in 2001, Entelect has established itself as a quality player with a strong client base spanning most industry sectors, some of which include financial services, healthcare, entertainment, telecommunications, engineering, mining and logistics.

For more information, please visit http://www.entelect.co.za.

Srinivasan Sundara Rajan asked Is it Time for the Windows Azure Tablet? in a 6/20/2011 post to the SYS-CON Media blog:

Several industry predictions point to an explosion of tablets as the most favored productivity device for the enterprise and the consumer. The analysts have predicted a worldwide increase in usage for tablets.

The biggest advantage of tablets is that they are lightweight yet powerful enough to carry out enterprise-class productivity operations, offering much more than a smartphone in terms of enterprise productivity needs.

Tablets and Cloud

While the tablets are another dimension of improvements in client devices from the desktop era to today, their popularity is closely aligned with the growth of the Cloud Computing paradigm. In one of my earlier articles, I stressed the fact that application software of the future will likely have a piece that runs on clients and a piece that runs in the Cloud, and tablets are part of client strategy of many enterprises.

The productivity gains achieved through the mobility of tablets can only be realized if the corresponding server applications can be connected in cloud and the tablet clients can consume the required data on the fly. Also the tablet clients can sync up their modified data with the cloud on an as-needed basis.

Windows Azure Tablet

Many new tablet devices along with their respective operating system of choice are entering the market, most notable being the HP TouchPad with advanced features built on the robust webOS operating system. We expect to see the HP TouchPad make great waves in the generic tablet market.

In this context, we expect the Microsoft Azure client-specific tablet will likely be worthy for Windows Azure Cloud consumers and to provide a value proposition accordingly.

Some of the features expected of the so-called Windows Azure tablet are:

- Full-featured Windows Azure Client APIs and environment so that Windows Azure applications can run standalone or on the Cloud

- Wizard-driven connectors for Office 365 and other Azure offerings such as SharePoint online

- Availability of Windows App Fabric components and SQL Azure Data Sync, so mobile workers can perform required changes in a disconnected manner, yet sync up with the Cloud when there is connectivity

- Ability to use local storage and back them up to Windows Azure storage services based on need

- If the number of transactions is high, they can switch over to Windows Azure Server Queues so that the transactions are guaranteed to be completed.

- Ability for the client applications running on the tablet to burst to Windows Azure Cloud based on the resource usage and finally sync up the results to the client once the task is completed

- Finally free bundled subscriptions to Windows Azure Cloud for tablet owners to have a certain level of personal space and also compute and storage allocation.

Summary

As per my predictions in earlier blogs, we see that tablets are more than consumer entertainment devices; rather they will act as office productivity devices of the IT enterprise in the short term and continue till further innovations take place. In this context, Cloud and tablets are seen as natural allies toward taking the office productivity to the new levels. With the support from major players already happening, the availability of the Windows Azure Tablet will provide further choices for the enterprises in their IT transformation initiatives toward improved office productivity.

I’m even less sanguine about the prospects for a Windows Azure tablet than I am for the Google Chrome netbook. The Windows Azure SDK gets revved too often to include in a consumer device.

The Windows Azure Team posted a Real World Windows Azure: Interview with MetraTech CEO Scott Swartz on Metanga to the Windows Azure Team blog on 6/20/2011:

MSDN: Tell us about your company and the solution that you have created.

Swartz: I started MetraTech in 1998 and Metanga is our new Software-as-a-Service (SaaS) offering. Metanga is a multi-tenant SaaS billing solution designed to help ISVs monetize today’s customer and partner relationships as they move to a SaaS model. We’ve always said that billing should fit a customer’s business model, not the other way around. Our unique flexibility enables us to be easily integrated with other software components to create a complete solution. Our MetraNet billing solution, available as on-premises software or as a managed service, enables customers to build innovative models across 90 countries, 26 currencies and 12 languages.

MSDN: What are the key benefits of using Windows Azure with Metanga?

Swartz: Billing is a mission critical application, so the fact that we can provide disaster recovery, scalability and the ability to respect data residency requirements make building natively on Windows Azure a no brainer. Multi-tenancy and the cloud also offers elasticity allowing us to support large and small clients alike without the inherent compromises we’d have to make in a traditional data center. Additionally, the Windows Azure platform offers incredible technology such as Windows Azure AppFabric caching and Windows Azure Storage that enables us to build a far better service for our clients.

MSDN: Could you give us an overview of the solution?

Swartz: Simply put, Metanga enables ISVs to make more money. Selling shrink-wrapped software and support has traditionally been a straightforward model. But the game is changing, and as ISVs deploy more of their solutions in the cloud, they are faced with new financial challenges. In order to provide a complete and positive online customer experience, the billing system needs to provide the financial information on the state of supporting agreements from all parties to the relationship portal(s). Customers expect to buy SaaS applications differently and managing metered charges, subscriptions, upgrades and downgrades, channel partners, commissions, notifications and entitlements are all part of a new model where the customer drives an ever-changing contractual agreement. ISVs could try to build all that as a part of their application, but it’s time consuming and costly. In turn, if they use manual billing methods they may lose 2-4% in revenue leakage. We offer a faster and simpler path for an ISV when it comes to cloud monetization.

MSDN: Is this solution available to the public right now?

Swartz: Yes, we just officially launched the application on Windows Azure; customers can enroll from our site and request a sandbox environment. Our team will give customers a walkthrough of the API and training documentation and then let them try out the system. We’re available at any time to assist in the setup of products or prices, or if someone needs help.

Click here to learn more about Metanga and Windows Azure.

The Microsoft-All-In-One Code Framework team posted a New release of code samples from Microsoft All-In-One Code Framework (2011-6-19) article on 6/19/2011:

A new release of Microsoft All-In-One Code Framework is available on June 19th. We expect that its 14 new code samples would reduce developers’ efforts in solving the following typical programming tasks.

Download address: http://1code.codeplex.com/releases/view/68596

Alternatively, you can download the code samples using Sample Browser or Sample Browser Visual Studio extension. They give you the flexibility to search samples, download samples on demand, manage the downloaded samples in a centralized place, and automatically be notified about sample updates.

If it is the first time that you hear about Microsoft All-In-One Code Framework, please watch the introduction video on Microsoft Showcase, and read the introduction on our homepage http://1code.codeplex.com/.

---------------------------------

New Windows Azure Code Samples

We released a set of Windows Azure code samples based on customers’ heatedly requested code sample topics in the official Windows Azure Code Samples Voting Forum.

CSAzureStartupTask

Download: http://code.msdn.microsoft.com/CSAzureStartupTask-ebb574a0

The sample demonstrates using startup tasks to install the prerequisites or to modify configuration settings for your environment in Windows Azure. The code sample answers the request Startup tasks and Internet Information Services (IIS) configuration, which received 27 votes.

You can use Startup element to specify tasks to configure your role environment. Applications that are deployed on Windows Azure usually have a set of prerequisites that must be installed on the host computer. You can use the start-up tasks to install the prerequisites or to modify configuration settings for your environment. Web and worker roles can be configured in this manner.

CSAzureManagementAPI, VBAzureManagementAPI

Downloads

C# version: http://code.msdn.microsoft.com/CSAzureManagementAPI-609fc31a

VB version: http://code.msdn.microsoft.com/VBAzureManagementAPI-3200a617

Managing hosted services and deployments is another hot Windows Azure sample request. Twenty developers voted for it.

The Windows Azure Service Management API is a REST API for managing your services and deployments. It provides programmatic access to much of the functionality available through the Management Portal. Using the Service Management API, you can manage your storage accounts and hosted services, your service deployments, and your affinity groups. This code sample shows how to create a new hosted service on Windows Azure using the Windows Azure Service Management API.
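For readers who want a feel for the REST call before downloading the sample, the Python sketch below assembles (but does not send) a Create Hosted Service request. It is not taken from the sample. The endpoint, the x-ms-version value and the XML element names are written from my recollection of the Service Management REST reference and should be treated as assumptions to verify against the documentation. The subscription ID, service name and location are placeholders. A real call must also be authenticated with a management certificate over SSL, which the sketch omits.

```python
import base64
from xml.sax.saxutils import escape

def create_hosted_service_request(subscription_id, name, label, location):
    """Assemble URL, headers and body for a Create Hosted Service call."""
    # Assumed endpoint shape; check it against the REST API reference.
    url = (
        "https://management.core.windows.net/"
        f"{subscription_id}/services/hostedservices"
    )
    # The label travels base64-encoded in the request body (assumption).
    encoded_label = base64.b64encode(label.encode("utf-8")).decode("ascii")
    body = (
        '<?xml version="1.0" encoding="utf-8"?>'
        '<CreateHostedService xmlns="http://schemas.microsoft.com/windowsazure">'
        f"<ServiceName>{escape(name)}</ServiceName>"
        f"<Label>{encoded_label}</Label>"
        f"<Location>{escape(location)}</Location>"
        "</CreateHostedService>"
    )
    headers = {"x-ms-version": "2010-10-28", "Content-Type": "application/xml"}
    return url, headers, body

# Placeholder values, for illustration only.
url, headers, body = create_hosted_service_request(
    "00000000-0000-0000-0000-000000000000", "myservice", "My Service", "South Central US"
)
print(url)
print(body)
```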

CSAzureWebRoleBackendProcessing, VBAzureWebRoleBackendProcessing

Downloads

C# version: http://code.msdn.microsoft.com/CSAzureWebRoleBackendProces-d0e501dc

VB version: http://code.msdn.microsoft.com/VBAzureWebRoleBackendProces-5c14157d

This code sample shows how a web role can be used to perform all of the frontend and backend operations for an application, according to this popular Windows Azure code sample request: Using a web role for both the frontend and backend processing of an application.

Windows Azure provides the worker role to perform backend processing. Generally, a worker role is deployed to one or more instances separate from the web role. However, sometimes we want to perform backend processing in the same instance as the web role, for example to save cost.

This sample shows how to achieve this goal by using a startup task in a web role to run a console application in the backend. The backend processor works much like a worker role. The difference is that it runs as a startup task in the same instance as the web role.

Please note that if a VM crashes, both the frontend web role and the backend processor on that VM stop working, and the scalability of the backend processor is coupled to that of the web role, which may not suit some scenarios.

New Windows Workflow Foundation Code Sample

VBWF4ActivitiesCorrelation

Download: http://code.msdn.microsoft.com/VBWF4ActivitiesCorrelation-3563324c

Consider that there are two such workflow instances:

start                     start
  |                         |
Receive activity          Receive activity
  |                         |
Receive2 activity         Receive2 activity
  |                         |

A WCF request comes to call the second Receive2 activity. Which one should take care of the request? The answer is Correlation. This sample will show you how to correlate two workflow services to work together.
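The idea behind correlation can be sketched independently of Windows Workflow Foundation: each running instance is registered under a key carried by its first message, and later messages with the same key are routed back to that instance. The Python below is a conceptual illustration only, not the WF4 API; the class name, the method names and the use of an order ID as the correlation key are all hypothetical.

```python
class CorrelationRouter:
    """Routes follow-up messages to the workflow instance that owns their key."""

    def __init__(self):
        self._instances = {}

    def receive(self, correlation_key):
        # First Receive: start a new instance and remember it under its key.
        instance = {"key": correlation_key, "steps": ["Receive"]}
        self._instances[correlation_key] = instance
        return instance

    def receive2(self, correlation_key):
        # Second Receive: look up the waiting instance by the same key.
        instance = self._instances[correlation_key]
        instance["steps"].append("Receive2")
        return instance

router = CorrelationRouter()
first = router.receive("order-1")
second = router.receive("order-2")

# A later request carrying "order-2" reaches the second instance only.
handled_by = router.receive2("order-2")
print(handled_by is second, first["steps"], second["steps"])
```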

If you have any feedback, please fill out this quick survey or email us: onecode@microsoft.com

<Return to section navigation list>

Visual Studio LightSwitch

Dan Moyer (@danmoyer) described Connecting to a Workflow Service from LightSwitch Part 3 in a 2/13/2011 post (missed when posted):

Overview

In the third and final post of this topic I’ll show one way of using the results from a workflow service from a LightSwitch application.

The content of this post draws heavily from two other blog posts:

- Beth Massi’s How Do I Video: Create a Screen that can Both Edit and Add Records in a LightSwitch Application …

- Robert Green’s Using Both Remote and Local Data in a LightSwitch Application

Take note of some problems I have in this demo implementation. Look for Issue near the end of this post for more discussion.

As time permits, I plan to investigate further and will be soliciting feedback from the LightSwitch developer’s forum.

If you know of a different implementation to avoid the problems I’ve encountered, please don’t hesitate to send me an email or comment here.

Implementation

I want to keep the example easy to demonstrate, so I’ll use only the OrderHeader table from the AdventureWorks sample database.

The OrderHeader table contains fields such as ShipMethod, TotalDue, and Freight. For demo purposes I’m treating the data in Freight as Weight, instead of a freight charge.

Based on its business rules, the workflow service returns a value of a calculated shipping amount. The OrderHeader table does not contain a ShippingAmount. So I thought this a good opportunity to use what Beth Massi and Robert Green explained in their blog to have LightSwitch create a table in its intrinsic database and connect that table’s data to the AdventureWorks OrderHeader table.

In Part 2 of this topic, you created a LightSwitch application and added a Silverlight library project to the solution containing an implementation of a proxy to connect to the workflow service. In the LightSwitch project, you should already have a connection to the AdventureWorks database and the SalesOrderHeader table.

Now add a new table to the project. Right click on Data Source node, and select Add a Table in the context menu. Call the table ShippingCost. This new table is created in the LightSwitch intrinsic database.

Add a new field, ShipCost of type Decimal to the table.

Add a relationship to the SalesOrderHeaders table, with a zero-or-one to one multiplicity.

Change the Summary Property for ShippingCost from the default Id to ShipCost.

Now add a search and detail screen for the SalesOrderHeader entity. The default names of the screens are SearchSalesOrderHeader and SalesOrderHeaderDetail.

I want to use the SalesOrderHeaderDetail as the default screen when a user clicks the Add… button on the search screen.

How to do this is explained in detail in Beth Massi’s How Do I video and Robert Green’s blog.

In the SearchSalesOrderHeader screen designer, add the Add… button. Right-click the Add… button and select Edit Execute Code in the context menu. LightSwitch opens the SearchSalesOrderHeader.cs file. Add the following code to the gridAddAndEditNew_Execute() method for LightSwitch to display the SalesOrderHeaderDetail screen.

Now open the SalesOrderHeaderDetail screen in the designer. Rename the query to SalesOrderHeaderQuery. Add a SalesOrderHeader data item via the Add Data Item menu at the top of the designer. I’m being brief on the detailed steps here because they are very well explained in Beth Massi’s video and Robert Green’s blog.

Your screen designer should appear similar to this:

Click on the Write Code button in the upper left menu in the designer and select SalesOrderHeaderDetail_Loaded under General Methods.

With the SalesOrderHeaderDetail.cs file open, add a private method which this screen code can call for getting the calculated shipping amount.

This method creates an instance of the proxy to connect to the workflow service and passes the Freight (weight), SubTotal, and ShipMethod from the selected SalesOrderHeader row. (Lines 87-90)

It returns the calculated value with a rounding to two decimal places. (Line 91)

When the user selects a SalesOrderHeader item from the search screen, the code in SalesOrderHeaderDetail_Loaded() is called and the SalesOrderID.HasValue will be true. (Line 17)

The _Loaded() method calls the workflow service to get the calculated shipping data. (line 21)

It then checks if the selected row already contains a ShippingCost item. (Line 23).

When you first run the application, the ShippingCost item will be null because no ShippingCost item was created and associated with the SalesOrderHeader item from the AdventureWorks data.

So the implementation creates a new ShippingCosts entity, sets the calculated ShippingCost, associates the new SalesOrderItem entity to the new ShippingCosts entity, and saves the changes to the database. Lines 25-29.

If the SalesOrderHeader does have a ShippingCost, the code gets the ShippingCost entity, updates the ShipCost property with the latest calculated shipping cost and saves the update. (Line 33 – 38)
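The C# listing those line numbers refer to isn’t reproduced in this post, so here is a minimal Python sketch of the create-or-update flow just described. Every name in it (SalesOrderHeader, ShippingCost, get_calculated_shipping, on_loaded, fake_service) is a hypothetical stand-in rather than the LightSwitch API, the service rule is invented purely for illustration, and the half-up rounding mode is an assumption.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP
from typing import Callable, Optional


@dataclass
class ShippingCost:
    ship_cost: Decimal


@dataclass
class SalesOrderHeader:
    freight: Decimal      # treated as a weight in this demo
    sub_total: Decimal
    ship_method: str
    shipping_cost: Optional[ShippingCost] = None


def get_calculated_shipping(order: SalesOrderHeader, service: Callable) -> Decimal:
    """Ask the (stubbed) workflow service for a cost, rounded to two decimals."""
    raw = service(order.freight, order.sub_total, order.ship_method)
    return raw.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def on_loaded(order: SalesOrderHeader, service: Callable, save: Callable) -> None:
    """Create the related ShippingCost if it is missing, otherwise update it."""
    cost = get_calculated_shipping(order, service)
    if order.shipping_cost is None:
        # First run: no ShippingCost row exists yet for this order.
        order.shipping_cost = ShippingCost(ship_cost=cost)
    else:
        # Later runs: overwrite with the latest calculated value.
        order.shipping_cost.ship_cost = cost
    save(order)


def fake_service(weight: Decimal, sub_total: Decimal, ship_method: str) -> Decimal:
    """Hypothetical stand-in for the workflow service's business rules."""
    return weight * Decimal("1.255")
```

Passing the service and the save action in as callables keeps the sketch runnable without a database or a workflow host; the real screen code would call the proxy and the screen’s save method instead.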

Lines 19 – 40 handle the case when the user selects an existing SalesOrderHeader item from the search screen. Here the implementation handles the case when the user selects the Add… button and the SalesOrderID.HasValue (Line 17) is false.

For this condition, the implementation creates a new SalesOrderHeader item and a new ShippingCost item. The AdventureWorks database defaults some of the fields when a new row is added. It requires some fields to be initialized, such as the rowguid, to a new unique value. (Line 53)

For this demo, to create the new SalesOrderHeader without an exception, I initialized the properties with the values shown in Lines 45 – 53. Similarly, I initialized the properties of the new ShippingCost item as shown in lines 56 – 58.

Note the setting of ShipCost to -1 in line 56. This is another ‘code smell.’ I set the property here and check for that value in the SalesOrderHeaderDetail_Saved() method. I found when this new record is saved, in the _Saved() method, it’s necessary to call the GetCalculatedShipping() method to overwrite the uninitialized (-1) value of the Shipping cost.

Issue: There must be a better way of doing this implementation and I would love to receive comments in email or to this posting of ways to improve this code. This works for demo purposes. But it doesn’t look right for an application I’d want to use beyond a demo.

Finally, there is the case where the user has clicked the Add… button and saves the changes or where the user has made a change to an existing SalesOrderHeader item and clicks the Save button.

Here, I found you need to check whether SalesOrderHeader.ShippingCost is undefined. (Lines 69 – 76).

If there is not an existing ShippingCost entity associated with the SalesOrderHeader item, a new one is created (line 71) and its properties are set (lines 73 – 75). LightSwitch will update the database appropriately.

And there is that special case mentioned above, where SalesOrderHeader does contain an existing ShippingCost item, but contains a non-initialized ShippingCost item. (Line 77). For this case, the ShipCost is updated.
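The two save-time cases above can be sketched in Python as follows. This is only an illustration of the branching, not the post’s C# code: the class and function names are hypothetical, and the calculation is passed in as a plain callable so the sketch runs on its own.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Optional

# Sentinel the post assigns to a brand-new ShippingCost before the first save.
UNINITIALIZED = Decimal("-1")


@dataclass
class ShippingCost:
    ship_cost: Decimal = UNINITIALIZED


@dataclass
class SalesOrderHeader:
    freight: Decimal
    shipping_cost: Optional[ShippingCost] = None


def on_saved(order: SalesOrderHeader, calculate: Callable) -> bool:
    """Return True if a ShippingCost was created or its sentinel was replaced."""
    if order.shipping_cost is None:
        # Case 1: no related ShippingCost entity exists, so create one.
        order.shipping_cost = ShippingCost(ship_cost=calculate(order))
        return True
    if order.shipping_cost.ship_cost == UNINITIALIZED:
        # Case 2: the entity exists but still holds the -1 placeholder.
        order.shipping_cost.ship_cost = calculate(order)
        return True
    return False
```

One possible way to remove the magic number, assuming the field could be made nullable, would be to store no value at all instead of -1 and treat both cases as "missing"; whether LightSwitch validation would allow that here is untested.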

Issue: I also discovered in this scenario that the user needs to click the Save button twice in order to have the data for both the SalesOrderHeader and the ShippingCost entities saved.

Perhaps I haven’t done the implementation here as it should be. Or perhaps I’ve come across a behavior which exists in the Beta 1 version in the scenario of linking two entities and needing to update the data in both of them.

Again, I solicit comments for a better implementation to eliminate the problems I tried to work around.

I think some of the problems in the demo arise from using the intrinsic ShippingCost table with the external AdventureWorks table and the way LightSwitch does validation when saving associated entities.

If I modified the schema of the AdventureWorks OrderHeader table to include a new column, say for instance, a CalculatedShippingCost column, this code would become less problematic, because it would be working with just the SalesOrderHeader entity, and not two related entities in two databases.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Lori MacVittie (@lmacvittie) asserted “We focus a lot on encouraging developers to get more “ops” oriented, but seem to have forgotten networking pros also need to get more “apps” oriented” as an introduction to her WILS: Content (Application) Switching is like VLANs for HTTP post of 6/20/2011:

Most networking professionals know their relevant protocols, the ones they work with day in and day out, so well that many of them are able to read a live packet capture without requiring a protocol translation to “plain English”. These folks can narrow down a packet as having come from a specific component from its ARP address because they’ve spent a lot of time analyzing and troubleshooting network issues.

And while these same pros understand load balancing from a traffic routing decision making point of view because in many ways it is similar to trunking and link aggregation (LAG) – teaming and bonding – things get a bit less clear as we move up the stack. Sure, TCP (layer 4) load balancing makes sense, it’s port and IP based and there’s plenty of ways in which networking protocols can be manipulated and routed based on a combination of the two. But let’s move up to HTTP and Layer 7 load balancing, beyond the simple traffic in –> traffic out decision making that’s associated with simple load balancing algorithms like round robin or its cousins least connections and fastest response time.

Content – or application - switching is the use of application protocols or data in making a load balancing (application routing) decision. Instead of letting an algorithm decide which pool of servers will service a request, the decision is made by inspecting the HTTP headers and data in the exchange. The simplest, and most common case, involves using the URI as the basis for a sharding-style scalability domain in which content is sorted out at the load balancing device and directed to appropriate pools of compute resources.

CONTENT SWITCHING = VLANs for HTTP

Examining a simple diagram, it’s a fairly trivial configuration and architecture that requires only that the URIs upon which decisions will be made are known and simplified to a common factor. You wouldn’t want to specify every single possible URI in the configuration, that would be like configuring static routing tables for every IP address in your network. Ugly – and not of the Shrek ugly kind, but the “made for SyFy" horror-flick kind, ugly and painful.

Networking pros would likely never architect a solution that requires that level of routing granularity as it would negatively impact performance as well as make any changes behind the switch horribly disruptive. Instead, they’d likely leverage VLANs and VLAN routing to provide the kind of separation of traffic necessary to implement the desired network architecture. When a packet arrives at the switch in question, it has (or may have) a VLAN tag. The switch intercepts the packet, inspects it, and upon finding the VLAN tag routes the packet out the appropriate egress port to the next hop. In this way, traffic and users and applications can be segregated, bandwidth utilization more evenly distributed across a network, and routing tables simplified because they can be based on VLAN ID rather than individual IP addresses, making adds and removals non-disruptive from a network configuration viewpoint.

The use of VLAN tagging enables network virtualization in much the same way server virtualization is used: to divvy up physical resources into discrete, virtual packages that can be constrained and more easily managed. Content switching moves into the realm of application virtualization, in which an application is divvied up and distributed across resources as a means to achieve higher efficiency and better performance.

Content (application or layer 7) switching utilizes the same concepts: an HTTP request arrives, the load balancing service intercepts it, inspects the HTTP header (instead of the IP headers) for the URI “tag”, and then routes the request to the appropriate pool (next hop) of resources. Basically, if you treat content switching as though it were VLANs for HTTP, with the “tag” being the HTTP header URI, you’d be right on the money.
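To make the analogy concrete, here is a minimal Python sketch of URI-based content switching. The pool names, server addresses and URI prefixes are all hypothetical, and a real load balancer would express this in its own rule language; the point is only that a small table of prefixes plays the role of the VLAN tag, and an ordinary algorithm (round robin here) still picks the member inside the chosen pool.

```python
from itertools import cycle
from typing import Dict, List, Tuple

# Hypothetical pools of compute resources behind the load balancer.
POOLS: Dict[str, List[str]] = {
    "images": ["10.0.1.10", "10.0.1.11"],
    "api": ["10.0.2.10", "10.0.2.11"],
    "default": ["10.0.3.10"],
}

# Ordered (URI prefix, pool name) rules: a handful of prefixes,
# not one entry per possible URI.
RULES: List[Tuple[str, str]] = [("/images/", "images"), ("/api/", "api")]


def select_pool(uri: str, rules: List[Tuple[str, str]], default: str = "default") -> str:
    """Return the pool for the first rule whose prefix matches the URI."""
    for prefix, pool in rules:
        if uri.startswith(prefix):
            return pool
    return default


class ContentSwitch:
    """Chooses a pool from the URI, then round-robins within that pool."""

    def __init__(self, pools: Dict[str, List[str]], rules: List[Tuple[str, str]]):
        self._rules = rules
        self._members = {name: cycle(servers) for name, servers in pools.items()}

    def route(self, uri: str) -> Tuple[str, str]:
        pool = select_pool(uri, self._rules)
        return pool, next(self._members[pool])
```

Adding or removing a server only changes the member list of one pool; the prefix rules stay untouched, which mirrors the non-disruptive adds and removals that VLAN-based routing gives the network.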

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

The Windows Azure Platform Team posted yet another job opening in a Software Development Engineer, Senior Job post of 6/20/2011 to LinkedIn:

Job Description

- Job Category: Software Engineering: Development

- Location: Redmond, WA, US

- Job ID: 751978-38947

- Division: Server & Tools Business

Our job is to drive the next generation of the security and identity support for Windows Azure and we need senior development talent.

We are the AAD Storage team and are part of the Directory, Access and Identity Platform (DAIP) team which owns Active Directory and its next generation cloud equivalents. AAD Storage team's job is to design and build a scalable, distributed storage model that will become the foundation for the Azure Active Directory implementation. As part of this charter we also directly own delivering in the near future the next generation security and identity service that will enable Windows Azure and Windows Azure Platform Appliance to boot up. If you have great passion for the cloud, for excellence in engineering, and hard technical problems, our team would love to talk with you about this rare and unique opportunity.

In this role you will:

- Work with the talented team of SDEs designing and implementing directory storage model that will scale to cover Windows Azure customer's needs

- Contribute to the relationship between DAIP and the Windows Azure teams in addressing the next generation security and identity infrastructure for Windows Azure's customers

- Contribute broadly to the product in areas including infrastructure, sync, storage and service delivery

- Provide leadership in component and feature design, coding, engineering process, and setting the product vision.

Despite having only one WAPA customer (Fujitsu), the team continues to expand.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

No significant articles today.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Michael Coté (@cote) posted Justin Sheehy on Basho, NoSQL, and Velocity 2011 to his Redmonk blog on 6/20/2011:

While at Velocity 2011, I asked Basho‘s Justin Sheehy to tell us how things have been going at Basho and what the current state of the NoSQL world is. We also have a good discussion of how developers are finding the “post-relational database world” and how GitHub plays into Basho’s business.

Disclosure: GitHub is a client. …

David Strom described A New Way to Track Amazon Cloud Costs in a 6/20/2011 post to the ReadWriteCloud:

Tired of trying to keep track of AWS' convoluted pricing for your cloud deployment? Then take a look at what Uptime Software has with its new UptimeCloud service, beginning tomorrow. The idea is to connect to your cloud environment at Amazon and keep track of what you are spending - and more importantly, will be spending - so that when the bill arrives at the end of the month there are no surprises.

The UptimeCloud service will show you the current costs of all your running instances, as well as make recommendations for how to save money by changing your cloud configuration. Here you see a dashboard summary, and there is a lot more detailed information available too.

Uptime collects historical information so you can see what has been going on in your cloud provider's environment, and also tracks instances that may not be currently running but consuming storage fees.

Right now the service is only available for Amazon's Web Services, but the company will be slowly adding in other providers such as GoGrid and Rackspace in the future. They are also looking at adding other infrastructure providers to have hybrid cloud pricing too. They want to provide the total cost visibility for every kind of cloud application and be able to conduct cost comparisons at a very accurate level.

Uptime claims they are the first to offer this kind of service, at least to the level of graphical visualization and simplicity involved. The company has a deep monitoring and management background with all kinds of infrastructure deployments. The beta service is available tomorrow, but no pricing has been announced, and it will initially be free for the first customers.

NOTE: I briefly did some consulting work for the company last year.

Martin Tantau (@cloudtimesorg) reported LongJump Goes Social and Mobile – Exclusive Interview with CEO Pankaj Malviya (pictured below) in a 6/20/2011 post to the CloudTimes blog:

LongJump is introducing two new services today: LongJump Javelin and LongJump Relay, which bolster its cloud platform aimed at helping companies turn their data into customizable cloud-based business apps that can go mobile in minutes. CloudTimes had the chance to interview LongJump’s Founder and CEO, Pankaj Malviya, about LongJump’s mission and today’s product announcement.

What is Platform-as-a-Service and what do you mean with a Cloud Application Platform?

Over the past few years, Platform-as-a-Service (PaaS) has been gaining rapid adoption throughout the industry as a technology for providing web services that include a programmable environment where no dedicated servers are required to run applications. LongJump’s Cloud Application Platform can run as a PaaS or as a privately installed instance, allowing companies to rapidly create robust business applications in a public or private cloud – without requiring any coding. These applications feature a full suite of security tools that companies will require and productivity tools that workers will expect in the applications.

Which problem do you solve and what is LongJump about?

There are numerous business software products that focus on doing one specific thing well. However, most organizations have untold numbers of procedures that go unmet with a fixed solution. As a result, companies cobble together spreadsheets, homegrown databases, or forgo software entirely to try and get a handle on their data. LongJump is helping companies make this process easier to manage, share and collaborate around important data by enabling businesses to quickly build cloud-based apps that are fully customizable on-the-fly. LongJump’s platform comes with more than 50 ready-to-use templates that companies can run immediately, and assists with all record and data management, process management, reporting, and data delivery to solve every unique problem.

Today, the company is introducing two brand new offerings called LongJump Javelin and Relay. LongJump Relay provides a social interface to business apps, and LongJump Javelin turns these custom apps into standalone mobile apps, with no coding required. LongJump is the first PaaS provider to offer an integrated platform, turbocharged with social and mobile capabilities, enabling companies to embrace the convergence of cloud, social networking and mobile.

Please read the related press release here.

Why did you pick out specifically databases, CRM and PaaS?

These areas are fundamental to many businesses. Online databases are required for record management of data that is unique to each company or process. CRM is required for customer record management. PaaS provides a fully extensible foundation for building comprehensive applications.

Which are your key features and what are the key benefits for your customers?

- Rapidly build robust data management applications in minutes

- Built-in policy and workflow engine for making that data actionable

- Social interface (Relay) for companies to track and monitor ever-changing data

- Mobile interface (Javelin) for making that data accessible on any mobile device

- Reporting and dashboards for analytics to measure and assess activities across a range of areas (sales, product, performance, etc.)

- Field-level permissions for maximum, flexible security definitions

- Complete REST API access for integration with other web services

- Full Java extensibility for providing an easy and engaging user experience

Which are your target customers and how big is the market?

For online database, the target is small and medium sized businesses and departments. For CRM, the target is Sales and Marketing teams. For PaaS, the market is enterprises and ISVs transitioning into providing SaaS or web-based solutions.

What is your business model and when do you expect to be profitable?

The company is already profitable. We have a mix of subscription-based SaaS business, licensed software, and professional services.

How do you differentiate yourself from other database-as-a-service and CRM providers?

None of the three areas we focus on are “turnkey.” While our software is easy to customize and use, even for the non-developer, there are still foundational principles around data and process modeling that require an understanding of how the platform works to get the most value from it. As such, we pride ourselves on being highly attuned to individual customers and helping them build their applications, customize their data and workflow, and streamline their reporting. We also have an incredible amount of functional depth in our product for a highly affordable price. Our customer service is also highly regarded.

Who are the founders and investors in LongJump?

Pankaj Malviya and Rick McEachern are the co-founders. LongJump officially launched in 2007, was bootstrapped and has never taken outside investment.

Yet another PaaS play (YAPP).

Chris Czarnecki asked Cloud Performance: Who is the Fastest? in a 6/19/2011 post to the Learning Tree blog:

For any organisation considering deploying applications to the cloud, performance of the selected provider is a primary consideration. Cost and reliability are others too, but if a provider cannot deliver an acceptable level of performance then any advantages in cost savings and improvements in reliability are quickly lost. Having helped many organisations deploy applications to the cloud as well as deploying them for my organisation, I am continually looking at the performance metrics of the providers we use. It was thus with great interest that I read the results of a recent survey by Compuware that ranked the major cloud computing providers on performance for the month of April. Compuware have an application that they have deployed to the major cloud providers and monitored its performance during the month. The top five performing providers were reported as

- Google App Engine

- Windows Azure (US Central)

- GoGrid (US-East)

- OpSource (US-East)

- Rackspace (US-East)

Compuware stated that there is very little difference between these top 5 organisations in delivered performance. The most glaring omission from this list of top performing providers is of course Amazon. They actually came in 6th and this in the month when they had the large outage. [Replaced serve with ware in several places.]

The results published by Compuware are interesting, but what do they really tell us? No details of the application or type of application were released. Is it one application whose code base is deployed unchanged to all the vendors, or the same application implemented in different programming languages and deployed to the respective vendor platforms? There are many, many unanswered questions that the survey results raise.

For somebody with little knowledge of Cloud Computing and the different vendor offerings, the Compuware results appear conclusive, but those with a good knowledge know that Google is prepared for Java and Python applications and Azure for .NET applications, for instance, whilst Amazon is prepared for all types. Google, Amazon and Microsoft Azure offer cloud scale storage which is not available from some of the other providers – were any of these used in the application? In reality, whilst I welcome the comparison study Compuware have published, it’s very difficult to directly compare all the vendors equally as their offerings are not equal. As a result, unless more details of the study are made available, it could be that apples are being compared to pears.

To be able to make sense of the different Cloud Computing products available, and to recognise the reality and limitations of reports such as the Compuware one, which can then be used by organisations to make well informed decisions on moving to the cloud, a lot of information and knowledge is required. This exact knowledge can be gained by attending Learning Tree’s Cloud Computing course, which provides detailed technical and business information on all the major Cloud Computing products, enabling informed business driven decisions to be made about Cloud Computing and its adoption. If you would like to know more about Cloud Computing, why not consider attending? It would be great to see you at one of the courses; a complete schedule can be found here.

As I mentioned in a comment to Chris’ post:

I’d recommend that you search for “Compuserve” and replace it with “Compuware.”

As one of the few remaining users of an ancient CompuServe e-mail alias (now provided by AOL), I can assure you that CompuServe isn’t running cloud performance tests (or much else anymore).

Derrick Harris (@derrickharris) posted GoDaddy unveils its take on cloud computing to Giga Om’s Structure blog on 6/16/2011 (missed when posted):

Updated: It looks like web hosting giant GoDaddy (s dady) is now in the cloud computing business with a new service called Data Center On Demand, which could potentially make a dent in the market share of providers such as Amazon Web Services (s amzn) or Rackspace (s rax). The service is currently in a limited-release phase and is expected to launch in July.

According to a marketing brochure for the service, GoDaddy plans to offer three options for users. However, all three levels provide fixed resource amounts for a monthly fee, with additional resources available “a la carte.” This is a deviation from the standard infrastructure as a service model of charging for resources on an hourly basis and allowing for the number of servers to be spun up or down on demand.

In a fairly major deviation from the standard IaaS value proposition, GoDaddy’s offering also “requires technical expertise,” so the company suggests customers have a professional IT staff in place. Arguably, IaaS always requires some degree of server administration know-how, but those tasks have been handled largely by developer-friendly APIs and GUIs.

Here’s GoDaddy’s disclaimer regarding its management process:

Currently, Data Center On Demand machines do not come with control panels installed. This means, to use Data Center On Demand, you should be comfortable managing machines’ Web services through shell commands (bash) or installing control panels yourself.

Update: A GoDaddy spokesperson informed me that Data Center On Demand will, indeed, include a graphical interface for server management when the service is publicly available. I can attest to this, having seen screenshots of the interface in its current form. GoDaddy’s cloud uses Cloud.com’s CloudStack private-cloud software for the resource-orchestration layer, making it one of many service providers white-labeling the Cloud.com product.

GoDaddy’s take on Infrastructure-as-a-Service looks like it has some shortcomings in terms of developer-friendliness and pricing flexibility, but the company does have household-name status and a large contingent of satisfied web hosting customers from which to pull cloud users.

Not surprisingly coming from a domain-name registrar, too, GoDaddy is hosting Data Center On Demand on at least two URLs: datacenterondemand.com and elasticdatacenters.com. The company’s support forums seem to indicate that the service has been available to early users since some time in May.

I have contacted GoDaddy for further details and will update this story should I receive additional information.

Welcome to the IaaS rat race, Go Daddy!

<Return to section navigation list>
