Windows Azure and Cloud Computing Posts for 6/14/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 6/14/2011 at 4:50 PM PDT with new articles marked • by Michael Coté, SD Times on the Web, LightSwitch Team, Windows Azure Team, Greg Oliver, Mary Jo Foley and Wes Yanaga.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

• Mary Jo Foley (@maryjofoley) asked Is Apple really using Windows Azure to power iCloud? in a 6/14/2011 post to her All About Microsoft blog for ZDNet:

Over the past month, I received a couple of tips claiming that Apple was a Windows Azure customer.

At first, I just dismissed the tips as nothing but a crazy rumor. Then I got more serious and started asking around, to no avail.

But once Apple launched the beta of its iCloud iMessage service, the Infinite Apple site, along with a few other blogs, analyzed the traffic patterns and found that Apple appeared to be using both Azure and Amazon Web Services for hosting.

“We don’t believe iCloud stores actual content. Rather, it simply manages links to uploaded content,” according to an updated June 13 Infinite Apple post.

I asked Microsoft for comment and was told the company does not share the names of its customers. I asked Apple for comment and heard nothing back.

I’ve heard some speculate that Apple may simply be using Azure’s Content Delivery Network (CDN) capability. The Azure CDN extends the storage piece of the Windows Azure cloud operating system, allowing developers to deliver high-bandwidth content more quickly and efficiently by placing delivery points closer to users.

Based on Infinite Apple’s info, Apple seems to be using Azure’s BLOB (binary large object) storage, which is part of the Windows Azure core. However, the Windows Azure Content Delivery Network (CDN) is integrated directly with Azure’s storage services.

Apple’s iCloud is still in beta, and Apple is just in the process of turning on its much-touted $500 million North Carolina data center. Apple’s datacenter, as my ZDNet colleague Larry Dignan noted, is running a combination of Mac OS X, IBM/AIX, Sun/Solaris, and Linux systems.

Maybe Apple’s seeming reliance on Azure and AWS — in whatever capacity they’re actually being used — is temporary. It’s still interesting, though. (And probably something the Softies wish they could use in an official case study.)

See also Goncalvo Ribeiro's Guess What: iCloud Uses Windows Azure Services For Hosting Data post of 6/13/2011 to the Redmond Pie blog, in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

• Greg Oliver continued his series with Automating Blob Uploads, Part 2 on 6/14/2011:

I tossed out a challenge in a comment I left on the first of this series – there’s a problem with this approach to automating blob uploads. It’s not a problem that affects everyone. For example, perhaps you have a multi-tenant solution where each customer has their own storage account.

When a Windows Client wants access to the Azure Storage Service directly, the storage credentials must be available locally in order to structure the REST requests. Usually, as in the code that I’ve provided, the credentials are stored in clear text in the configuration file. However, if the client is part of a hybrid solution and many customers will have it, you probably don’t want to do this. These credentials are the keys to the kingdom – with them anything can be done with any of the data in the storage account.

One solution to this problem is to put the credentials behind a web service call. SSL encrypt the call to the web service and authenticate callers with the AppFabric Access Control Service (ACS) or some other means, then pass the storage credentials back to the client. Without going into the details of encryption/decryption, certificates, etc., this solution is the same as the one that I have already provided with very minor tweaks to the code.

Another solution is the one for which I am providing additional sample code. Use a Shared Access Signature (SAS) to protect blob storage access and put the accesses to queue storage behind secured and authenticated web service calls. In a nutshell, a SAS is a time-bombed key with specific access rights to either blobs in a container or a single blob.
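The "time-bombed key with specific access rights" idea can be sketched in a few lines of Python. This is a generic illustration of the concept only, not the Azure SAS wire format: the field layout, the `make_token` and `check_token` names, and the sample key are all assumptions made for the example.

```python
import base64
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical account key; the real key stays on the server and never reaches clients.
ACCOUNT_KEY = b"server-side-secret"


def _sign(resource: str, permissions: str, expiry: int) -> str:
    """HMAC-SHA256 over the fields the token is meant to protect."""
    message = f"{permissions}\n{expiry}\n{resource}".encode("utf-8")
    digest = hmac.new(ACCOUNT_KEY, message, hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii")


def make_token(resource: str, permissions: str, ttl_seconds: int,
               now: Optional[float] = None) -> dict:
    """Server side: grant `permissions` on `resource` until the expiry time."""
    issued = time.time() if now is None else now
    expiry = int(issued) + ttl_seconds
    return {"resource": resource, "permissions": permissions,
            "expiry": expiry, "sig": _sign(resource, permissions, expiry)}


def check_token(token: dict, resource: str, needed: str,
                now: Optional[float] = None) -> bool:
    """Storage side: accept only an untampered, unexpired token with the needed right."""
    current = time.time() if now is None else now
    expected = _sign(token["resource"], token["permissions"], token["expiry"])
    return (hmac.compare_digest(expected, token["sig"])
            and token["resource"] == resource
            and needed in token["permissions"]
            and current < token["expiry"])
```

The point of the design is that the client only ever holds the token: it can do what the token allows until it expires, and changing any field invalidates the signature.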

In this modified solution, I have added a WCF web role to my Azure service. This WCF web role is based on the Visual Studio template that is listed along with web role, worker role, etc, when creating a new Cloud Solution.

The flow of control is altered only slightly:

- Prior to uploading the blob, a call is made to request the SAS from the web service. The SAS is a string. This string is supplied as a parameter on the upload calls.

- Instead of accessing queue storage directly, additional calls were added to the web service:

- Send notification message

- Get acknowledgement message

- Delete acknowledgement message

While this solution solves some security concerns, there is again a cost. The client cannot run without the server in this architecture. I have lost loose coupling in favor of security. In my third article on this topic I’ll partially deal with this problem. (Hint: AppFabric Service Bus Queues)

Full source is provided here.

Maarten Balliauw (@maartenballiauw) described Advanced scenarios with Windows Azure Queues in a 6/14/2011 post:

For DeveloperFusion, I wrote an article on Windows Azure queues. Interested in working with queues and want to use some advanced techniques? Head over to the article:

Last week, in Brian Prince’s article, Using the Queuing Service in Windows Azure, you saw how to create, add messages into, retrieve and consume those messages from Windows Azure Queues. While being a simple, easy-to-use mechanism, a lot of scenarios are possible using this near-FIFO queuing mechanism. In this article we are going to focus on three scenarios which show how queues can be an important and extremely scalable component in any application architecture:

- Back off polling, a method to lessen the number of transactions in your queue and therefore reduce the bandwidth used.

- Patterns for working with large queue messages, a method to overcome the maximal size for a queue message and support a greater amount of data.

- Using a queue as a state machine.

The techniques used in every scenario can be re-used in many applications and often be combined into an approach that is both scalable and reliable.
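As a rough idea of what back-off polling looks like, here is a minimal Python sketch. It is not code from Maarten's article: the `poll_with_backoff` function, its parameters, and the doubling strategy are assumptions chosen for illustration, and `get_message` stands in for whatever call actually reads the queue.

```python
import time
from typing import Callable, Optional


def poll_with_backoff(get_message: Callable[[], Optional[str]],
                      handle: Callable[[str], None],
                      max_polls: int,
                      min_delay: float = 1.0,
                      max_delay: float = 60.0,
                      sleep: Callable[[float], None] = time.sleep) -> list:
    """Poll a queue, doubling the wait after each empty poll and resetting on a hit.

    Returns the delays that were used, which makes the behaviour easy to inspect.
    """
    delay = min_delay
    delays = []
    for _ in range(max_polls):
        message = get_message()
        if message is not None:
            handle(message)
            delay = min_delay      # work arrived: go back to eager polling
            continue
        sleep(delay)               # queue was empty: wait before the next transaction
        delays.append(delay)
        delay = min(delay * 2, max_delay)
    return delays
```

An idle queue is then polled less and less often, up to `max_delay`, which is where the saving in transactions comes from.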

To get started, you will need to install the Windows Azure Tools for Microsoft Visual Studio. The current version is 1.4, and that is the version we will be using. You can download it from http://www.microsoft.com/windowsazure/sdk/.

N.B. The code that accompanies this article comes as a single Visual Studio solution with three console application projects in it, one for each scenario covered. To keep code samples short and to the point, the article covers only the code that polls the queue and not the additional code that populates it. Readers are encouraged to discover this themselves.

Want more content? Check Advanced scenarios with Windows Azure Queues. Enjoy!

Steve Marx (@smarx) explained Appending to a Windows Azure Block Blob in a 6/14/2011 post:

The question of how to append to a block blob came up on the Windows Azure forums, and I ended up writing a small console program to illustrate how it’s done. I thought I’d share the code here too:

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Linq;
    using System.Text;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.StorageClient;

    class Program
    {
        static void Main(string[] args)
        {
            if (args.Length < 3)
            {
                Console.WriteLine("Usage:");
                Console.WriteLine("AppendBlob <storage-connection-string> <path-to-blob> <text-to-append>");
                Console.WriteLine(@"(e.g. AppendBlob DefaultEndpointsProtocol=http;AccountName=myaccount;AccountKey=v1vf48... test/test.txt ""Hello, World!"")");
                Console.WriteLine(@"NOTE: AppendBlob doesn't work with development storage. Use a real cloud storage account.");
                Environment.Exit(1);
            }

            var blob = CloudStorageAccount.Parse(args[0]).CreateCloudBlobClient().GetBlockBlobReference(args[1]);
            List<string> blockIds = new List<string>();

            try
            {
                // Read the current contents and the IDs of the committed blocks.
                Console.WriteLine("Former contents:");
                Console.WriteLine(blob.DownloadText());
                blockIds.AddRange(blob.DownloadBlockList(BlockListingFilter.Committed).Select(b => b.Name));
            }
            catch (StorageClientException e)
            {
                if (e.ErrorCode != StorageErrorCode.BlobNotFound)
                {
                    throw;
                }
                Console.WriteLine("Blob does not yet exist. Creating...");
                blob.Container.CreateIfNotExist();
            }

            // Zero-pad the counter so every block ID has the same length, as Put Block requires.
            var newId = Convert.ToBase64String(Encoding.Default.GetBytes(blockIds.Count.ToString("d6")));
            blob.PutBlock(newId, new MemoryStream(Encoding.Default.GetBytes(args[2])), null);

            // Commit the existing block IDs plus the new one.
            blockIds.Add(newId);
            blob.PutBlockList(blockIds);

            Console.WriteLine("New contents:");
            Console.WriteLine(blob.DownloadText());
        }
    }

As the usage text says, this doesn’t seem to work against development storage, so use the real thing.

Very similar code can be used to insert, delete, replace, and reorder blocks. For more information, see the MSDN documentation for Put Block and Put Block List.
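To make the "insert, delete, replace, and reorder" remark concrete, here is a toy in-memory model in Python. It is not the storage client API: `ToyBlockBlob` and its methods are hypothetical stand-ins, and the only behavior modeled is that the list of block IDs passed on commit defines the blob's content.

```python
class ToyBlockBlob:
    """In-memory stand-in for a block blob: uploaded blocks plus a committed ID list."""

    def __init__(self):
        self._blocks = {}      # block ID -> bytes
        self._committed = []   # ordered block IDs that make up the blob

    def put_block(self, block_id: str, data: bytes) -> None:
        # Uploading a block does not change the blob's content yet.
        self._blocks[block_id] = data

    def put_block_list(self, block_ids: list) -> None:
        missing = [b for b in block_ids if b not in self._blocks]
        if missing:
            raise KeyError(f"unknown block IDs: {missing}")
        # The committed list alone decides which blocks appear, and in what order.
        self._committed = list(block_ids)

    def block_ids(self) -> list:
        return list(self._committed)

    def content(self) -> bytes:
        return b"".join(self._blocks[b] for b in self._committed)


blob = ToyBlockBlob()
blob.put_block("000000", b"Hello")
blob.put_block_list(["000000"])

# Append: upload one more block, then commit the old IDs plus the new one.
blob.put_block("000001", b", World!")
blob.put_block_list(blob.block_ids() + ["000001"])
```

Reordering or deleting is then just a matter of committing a different list, for example `blob.put_block_list(["000001", "000000"])` or `blob.put_block_list(["000000"])`.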

SQL Azure Database and Reporting

Harish Ranganathan (@ranganh) described the solution to an Entity Framework with SQL Azure, "There is already an open DataReader associated with this Command which must be closed first." problem in a 6/14/2011 post:

I hit this error when I deployed an app built with Entity Framework onto Windows Azure. Obviously, I wasn’t explicitly using a DataReader, so it kind of puzzled me, as happens every time.

It worked well with a local database, and with the application running locally against a database on SQL Azure. But once I used the Dev Fabric to test the application locally, it failed with the above error.

Entity Framework relies on Multiple Active Result Sets (MARS), which is enabled by multipleactiveresultsets=True in the connection string. Earlier, SQL Azure didn’t support MARS, so we had to explicitly set it to “false” before deploying to SQL Azure.

But starting April 2010, SQL Azure supports MARS (yeah, that is the level of update I had on SQL Azure, i.e. one year late), and this post nicely covers this particular topic: http://blogs.msdn.com/b/adonet/archive/2010/06/09/remember-to-re-enable-mars-in-your-sql-azure-based-ef-apps.aspx

On the new SQL Azure features, read http://blogs.msdn.com/b/sqlazure/archive/2010/04/16/9997517.aspx

In my case, I wired up the connection string myself using the Azure user account and password and totally forgot the MARS setting.

All I had to do was to add the multipleactiveresultsets=True to my connection string and it started working fine.
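For anyone wiring up connection strings by hand, a small helper can guard against forgetting the setting. The Python sketch below is only an illustration: `ensure_mars` is a hypothetical name, the server, database and user values are made up, and the naive split on semicolons assumes no quoted values that contain a semicolon (so it applies to the plain SQL connection string, not to a full Entity Framework string that wraps it in quotes).

```python
def ensure_mars(connection_string: str) -> str:
    """Return the connection string with MultipleActiveResultSets=True set exactly once."""
    parts = [p for p in connection_string.split(";") if p.strip()]
    # Drop any existing setting (in any letter case) so a stale "False" cannot survive.
    kept = [p for p in parts
            if p.split("=", 1)[0].strip().lower() != "multipleactiveresultsets"]
    kept.append("MultipleActiveResultSets=True")
    return ";".join(kept) + ";"


# Made-up values for illustration only.
example = ("Server=tcp:myserver.database.windows.net;Database=mydb;"
           "User ID=user@myserver;Password=secret;")
fixed = ensure_mars(example)
```

Calling the helper on a string that already has the setting leaves it unchanged, so it is safe to apply unconditionally.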

Marketplace DataMarket and OData

rsol1 claimed “OData is to REST what MS Access is to databases” in an OData the silo buster post of 6/14/2011:

Introduction

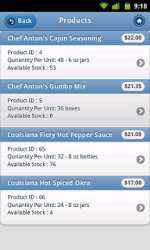

Like many others, I am eagerly awaiting the release of SAP Netweaver Gateway, a technology which promises to simplify SAP integration. By exposing SAP Business Suite functionality as REST-based OData services, Gateway will enable SAP applications to share data with a wide range of devices, technologies and platforms in a manner that is easy to understand and consume.

The intention of this blog is to give a high level overview to the Open Data Protocol (OData), showing by example how to access and consume the services. For a more concise definition I recommend the following recently released article - Introducing OData – Data Access for the Web, the cloud, mobile devices, and more

What is OData?

“OData is to R[EST] what MS Access is to databases” is a complimentary tweet I read: because MS Access is very easy to learn, it allows users to get close to the data and empowers them to build simple and effective data-centric applications.

“OData can be considered as the ODBC API for the Web/Cloud” – Open Database Connectivity (ODBC) is a standard API independent of programming language for doing Create, Read, Update and Delete (CRUD) methods on most of the popular relational databases.

“OData is a data silo buster”

Source: SAPNETWEAVER GATEWAY OVERVIEW

Essentially OData is an easy to understand extensible Web Protocol for sharing data. Based on the REST design pattern and AtomPub standard, it provides consumers with a predictable interface for querying a variety of datasources not only limited to traditional databases.

OData Services

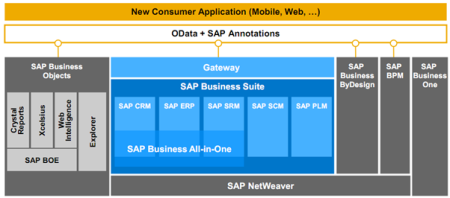

Odata.org provides a sample of the Northwind database exposed as OData services; it is a great resource for exploring the protocol.

Note: If you are using IE, you may want to disable the feed reading view.

The link below is to the Northwind service document. It lists all of the service operations; each operation represents a collection of queryable data.

http://services.odata.org/Northwind/Northwind.svc/

To access the Customers collection, we append the link listed for it in the service document to the base URL, as follows:

http://services.odata.org/Northwind/Northwind.svc/Customers/

We can access specific Customers via the links provided in the feed:

http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')

With the query string operations we can start to control the data provided, for example paging Customers 20 at a time.

http://services.odata.org/Northwind/Northwind.svc/Customers?$top=20

http://services.odata.org/Northwind/Northwind.svc/Customers?$skip=20&$top=20

Using filters we can search for Customers in a particular city or Products in our price range

http://services.odata.org/Northwind/Northwind.svc/Customers?$filter=City eq 'London'

Once we have found the data we wanted, we can use the links to navigate to the related data.

http://services.odata.org/Northwind/Northwind.svc/Suppliers(2)/Products

http://services.odata.org/Northwind/Northwind.svc/Products(5)/Order_Details

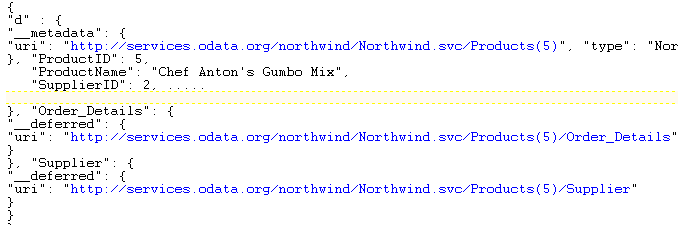

Finally we can format the response as JSON

http://services.odata.org/Northwind/Northwind.svc/Products(5)?$format=json
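The query URLs above follow a regular pattern, so they are easy to generate. The Python sketch below builds them with the standard library only and makes no network calls; the `odata_url` helper and its keyword-to-`$option` mapping are assumptions for illustration, not part of any OData client library.

```python
from urllib.parse import quote

SERVICE_ROOT = "http://services.odata.org/Northwind/Northwind.svc"


def odata_url(resource: str, **options: object) -> str:
    """Build a query URL; each keyword becomes a $-prefixed system query option."""
    url = f"{SERVICE_ROOT}/{resource}"
    if options:
        # Percent-encode spaces and quotes in the values, but leave "$" in the names.
        query = "&".join(f"${name}={quote(str(value), safe='')}"
                         for name, value in options.items())
        url = f"{url}?{query}"
    return url


page_two = odata_url("Customers", skip=20, top=20)
londoners = odata_url("Customers", filter="City eq 'London'")
as_json = odata_url("Products(5)", format="json")
```

Browsers encode the spaces and quotes in a `$filter` expression automatically; code that issues the request itself has to do it explicitly, which is why the filter value is passed through `quote`.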

Consuming OData

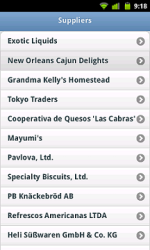

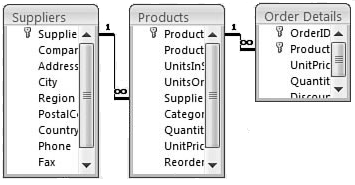

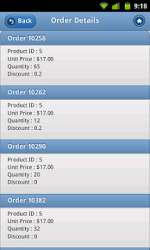

To illustrate how easy it is to consume OData, I am sharing a simple jQuery Mobile application; it uses the following tables and relationships.

OData Sample Application - hopefully the 100 lines of HTML, JavaScript and annotations are easy to follow. Copy the source to an HTML file, save it locally and run it in a browser. Best viewed in the latest versions of Firefox, Chrome and IE.

Opening up more than data

A couple of months ago I set off on an exercise to learn all I could about OData. Along the way I started investigating some of the available open source libraries like datajs and odata4j, rediscovering a passion for JavaScript and Java. Through the many examples and resources available, it was surprising how easy it was to take my limited skills into the world of Cloud and Mobile application development.

Conclusion

Similar in many ways to ODBC and SOAP, OData is an initiative started by Microsoft as a means of providing a standard for platform-agnostic interoperability. For it to become successful, it needs to be widely adopted and managed.

From a high level, the strengths of OData are that it can be used with a multitude of data sources and that it is easy to understand and consume. From what I have seen, it will be great for simple scenarios and prototyping. It is too early to tell what its real weaknesses are; however, to be simple and easy to use there has to be either a lot of pipes and plumbing or constraints and limitations when it is used on top of technologies like BAPIs and BDCs. Things like managing concurrency, transactions and state come to mind. Conversely, the threats of not being adopted and of competing standards and initiatives like RDF and GData represent some big opportunities for an extensible protocol.

I’m not sure I agree that “OData is to REST what MS Access is to databases,” but the remainder of rsol1’s post appears on target.

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Wes Yanaga reported the availability of Windows Azure AppFabric Access Control Services Academy Videos in a 6/14/2011 post to the US ISV Evangelism blog:

Access Control provides an easy way to provide identity and access control to web applications and services, while integrating with standards-based identity providers, including enterprise directories such as Active Directory®, and web identities such as Windows Live ID, Google, Yahoo! and Facebook.

The service enables authorization decisions to be pulled out of the application and into a set of declarative rules that can transform incoming security claims into claims that applications understand. These rules are defined using a simple and familiar programming model, resulting in cleaner code. It can also be used to manage users’ permissions, saving the effort and complexity of developing these capabilities.
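The notion of declarative rules that turn incoming claims into claims the application understands can be sketched as follows. This Python example is a toy model, not the ACS rule engine or its API: the `Claim` and `Rule` shapes, the `transform` function, and the sample claim types are all assumptions made for illustration.

```python
from typing import NamedTuple, Optional


class Claim(NamedTuple):
    claim_type: str
    value: str


class Rule(NamedTuple):
    """If an incoming claim matches, issue an output claim."""
    in_type: str
    in_value: Optional[str]    # None matches any value
    out_type: str
    out_value: Optional[str]   # None passes the incoming value through


def transform(incoming: list, rules: list) -> list:
    """Apply every rule to every incoming claim and collect the issued claims."""
    issued = []
    for claim in incoming:
        for rule in rules:
            if claim.claim_type != rule.in_type:
                continue
            if rule.in_value is not None and claim.value != rule.in_value:
                continue
            value = claim.value if rule.out_value is None else rule.out_value
            out = Claim(rule.out_type, value)
            if out not in issued:      # avoid issuing the same claim twice
                issued.append(out)
    return issued


rules = [
    Rule("group", "Domain Admins", "role", "administrator"),
    Rule("email", None, "name", None),
]
incoming = [
    Claim("group", "Domain Admins"),
    Claim("email", "ann@contoso.com"),
    Claim("group", "Sales"),
]
issued = transform(incoming, rules)
```

The application then only has to check for a `role` claim; which directory groups map to that role is a matter of configuration, not code.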

Here’s a brief description of potential usage scenarios, benefits and features. I’ve added eight links to a collection of short videos about ACS; each video is approximately two minutes long. These are “demystifying” videos about ACS, with supporting slides available for download. Thanks to fellow Microsoft employee Alik Levin for providing these links.

Use Access Control to

- Create user accounts that federate a customer's existing identity management system that uses Active Directory® service, other directory systems, or any standards-based infrastructure

- Exercise complete, customizable control over the level of access that each user and group has within your application

- Apply the same level of security and control to Service Bus connections

Access Control Benefits:

- Federated identity and access control through rule based authorization enables applications to respond as if the user accounts were managed locally

- Flexible standards-based service that supports multiple credentials and relying parties

- Lightweight developer-friendly programming model based on the Microsoft .NET Framework and Windows Communication Foundation

Access Control Features:

- Set up issuer trust with a simple Web interface or programmatically through APIs

- Supports Active Directory and other identity infrastructures, with minimal coding

- Support for multiple credentials, including X.509 certificates

- Support for standard protocols including REST

- Applications that run inside and outside the organizational boundary can rely on the service

- Validate application and user requests from data and connectivity services

ACS Academy Videos

- What is ACS?

- What can ACS do for me?

- ACS Architecture

- ACS Functionality

- ACS Deployment Scenarios

- ACS and the Cloud

- ACS and WIF

- ACS and ADFS

About Windows Azure

The Windows Azure platform is commercially available in 41 countries and enables developers to build, host and scale applications in Microsoft datacenters located around the world. It includes Windows Azure, which offers developers an Internet-scale hosting environment with a runtime execution environment for managed code, and SQL Azure, which is a highly available and scalable cloud database service built on SQL Server technologies. Developers can use existing skills with Visual Studio, .NET, Java, PHP and Ruby to quickly build solutions, with no need to buy servers or set up a dedicated infrastructure, and with automated service management to help protect against hardware failure and downtime associated with platform maintenance.

Getting Started with Windows Azure

- See the Getting Started with Windows Azure site for links to videos, developer training kit, software developer kit and more. Get free developer tools too.

- See Tips on How to Earn the ‘Powered by Windows Azure’ Logo.

- For free technical help in your Windows Azure applications, join Microsoft Platform Ready.

Learn What Other ISVs Are Doing on Windows Azure

For other videos about independent software vendors (ISVs) on Windows Azure, see:

- Accumulus Makes Subscription Billing Easy for Windows Azure

- Azure Email-Enables Lists, Low-Cost Storage for SharePoint

- Crowd-Sourcing Public Sector App for Windows Phone, Azure

- Food Buster Game Achieves Scalability with Windows Azure

- BI Solutions Join On-Premises To Windows Azure Using Star Analytics Command Center

- NewsGator Moves 3 Million Blog Posts Per Day on Azure

- How Quark Promote Hosts Multiple Tenants on Windows Azure

Steve Plank (@plankytronixx) posted Click-by-Click Video: Using on-premise Active Directory to authenticate to a Windows Azure App on 6/14/2011:

This video is a click-by-click, line-by-line screen-cam of how to authenticate using an on-premise Active Directory with a Windows Azure application. It uses Active Directory Federation Services V2.0 (ADFS 2.0) and Windows Azure’s App Fab Access Control Service V2 (App Fab ACS V2 – which was released on April 11th 2011) to achieve this.

You could just watch this and follow along with your own infrastructure, Visual Studio 2010 and your Windows Azure subscription (or get a free one here if you don’t already have one) and try it out for yourself…

This video has been a long time coming since I said I’d create one when I made this video, which describes the process from an architectural perspective. I’ve received quite a few emails saying “when is the screen-cam coming”… Well – here it is!

Vittorio Bertocci (@vibronet) reported a Powerscripting Podcast: ACS, Windows Azure and… PowerShell in a 6/13/2011 post:

Last week I had the pleasure of being a guest on the PowerScripting podcast, THE show about PowerShell. That’s actually pretty uncharacteristic for me, considering that at every session I deliver at TechEd I spend the first few minutes on colorful disclaimers about the session being for developers, as opposed to IT administrators… however both the hosts (Hal & Jonathan) and the guys in chat were simply awesome and made me feel perfectly at home!

You can find the recording here. What did we chat about? Well, when I finally managed to stop speaking about myself we went through Windows Azure & PaaS, the idea of claims based identity, WIF & ACS, and finally on the Windows Azure and ACS cmdlets we released on Codeplex. I got a lot of encouragement, you guys seem to like those really a lot, and I also got a ton of useful feedback (“plurals? really? Snapins instead of modules? REALLY?” - Guys, what do I know, I am a dev) that we are already working into the planning.

If you are an IT administrator, I would be super grateful if you could take a quick look at a simple 4-questions survey: that will be of immense help for prioritizing our next wave of releases, and will give you the chance to be heard.

Roopesh Sheenoy summarized Windows Azure AppFabric CTP - Queues and Topics in a 6/13/2011 post to the InfoQ blog:

The Azure team recently shipped a Community Preview (CTP) for AppFabric, with Service Bus Queues and Service Bus Topics, which can be leveraged in a whole new set of scenarios to build Occasionally Connected or Distributed Systems.

The AppFabric Service Bus already provides a simple messaging platform, which is in production. This works fine as long as the Producer and the Consumer are online at the same time. What the CTP introduces in the form of Service Bus Queues is the ability to create asynchronous messages, where the messages will be reliably stored till the consumer consumes the message – if the Consumer is offline, the messages will just keep queuing till the consumer comes back online.

As David Ingham explains, Asynchronous Queues offer a lot of potential benefits, especially load leveling and balancing, and loose coupling between the different components of a system.

Service Bus Topics are very similar to Queues, with one major difference – the publish/subscribe pattern provided by Topics allows each published message to be available to multiple subscriptions once they are registered to that Topic. Each subscription tracks the last message it read in a particular Topic, so from the consumer end, each subscription looks like a separate virtual Queue.

Subscriptions can also have rules in place to filter messages from the Topic based on their properties – so each consumer can get its distinct subset of messages published in the Topic.
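The publish/subscribe behavior described above can be modeled in a few lines of Python. This is a toy in-memory sketch, not the Service Bus API: the `Topic` class and its methods are hypothetical, and it simplifies matters by giving each subscription its own queue rather than a read position in a shared log.

```python
from collections import deque
from typing import Callable, Dict, Optional


class Topic:
    """Toy publish/subscribe topic: every subscription acts as its own virtual queue."""

    def __init__(self) -> None:
        self._subscriptions: Dict[str, tuple] = {}

    def subscribe(self, name: str,
                  rule: Callable[[dict], bool] = lambda props: True) -> None:
        """Register a subscription; `rule` filters on message properties."""
        self._subscriptions[name] = (rule, deque())

    def publish(self, body: str, **properties: object) -> None:
        """Deliver a copy of the message to every subscription whose rule accepts it."""
        for rule, queue in self._subscriptions.values():
            if rule(properties):
                queue.append(body)

    def receive(self, name: str) -> Optional[str]:
        """Take the next message for one subscription, or None if it has none."""
        _, queue = self._subscriptions[name]
        return queue.popleft() if queue else None


topic = Topic()
topic.subscribe("audit")                                              # sees everything
topic.subscribe("big-orders", rule=lambda p: p.get("amount", 0) > 1000)
topic.publish("order-1", amount=50)
topic.publish("order-2", amount=5000)
```

Here the `audit` subscription receives both orders, while `big-orders` receives only `order-2`; reading from one subscription does not affect the other.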

There could be some confusion in terminology between Service Bus Queues and Windows Azure Queues that form a part of the Azure Storage. As Clemens Vasters describes it in an interview on Channel9, the Windows Server equivalent of Azure Storage Queues is Named Pipes and Mailslots, whereas the equivalent of Service Bus Queues is MSMQ. More guidance on when to use which option is expected to come when the Service Bus Queue goes into production.

AppFabric is a cloud middleware platform for developing, deploying and managing applications on Windows Azure. It provides secure connectivity across network and geographic boundaries.

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Jaime Alva Bravo posted Certificates in Windows Azure to the AppFabricCAT blog on 6/13/2011:

Since Azure requires a heightened level of security, certificates have become a key concept. As I was looking into it, I found the management a bit confusing and have seen similar questions popping up in the forums. Hence, the content below tries to give a quick overview of where certificates exist in the Azure portal as well as in Visual Studio and how they map to what you want to accomplish, while at the same time offering links on the subject to help you go into deeper detail.

The first time I saw the “Management Certificates” node (see top left red circle in figure 1), it seemed to me to say “manage certificates”, as in the place where you manage all your Azure certificates; but this is not the case. For those familiar with certificates this will be clear, since “Management Certificates” are a type of certificate, and yes, this is where they are shown. Once I understood this, it then seemed to me that Azure only uses management certificates, which is not true. The other type of certificate is called a “Service Certificate”, which raises the question: where is the node for those?

Management certificates only contain a public key and their extension is “.cer”. The other type of certificate, the private type, has the additional need of a password to authenticate (beyond what a public certificate already requires). You do not manage those under “Management Certificates” in the Windows Azure Management Portal. The private or service certificates have the “.pfx” extension and, as you will notice, they cannot be uploaded under “Management Certificates”. They are required when you want to remote into the machine carrying your Azure role (VM, worker or web). This private certificate is uploaded to the “Certificates” folder for the particular Hosted Service in the “Hosted Services” node, as shown below (see left red circle in figure 1). When you select the “Certificates” folder, you will see the “Add Certificates” button in the Management Portal (see top left box in figure 1).

Figure 1: Public vs. Private certificates in Azure

At this point, what got me confused is that in both cases (public and private certificates) the “Add Certificate” button is the same, except for the small title below the button: the public certificate shows “Certificates” and the private certificate shows “hosted Service Certificate”.

In essence: adding management/public certificates (.cer) is done via the “Management Certificates” tab which can be reused by many of your projects but the service/private certificates (.pfx) go under one of your “Hosted Services”, because they are dedicated to that hosted service only (obviously, for security reasons sharing the same credentials across services would not be recommended).

For further reference, this is the MSDN page that talks about these two types of certificates (but not in the context of the Azure portal).

Using public and private certificates side by side in Visual Studio

Just to put it all in context, let’s take the example of setting up the ability to remote desktop to an Azure role, because it involves two steps that use certificates. After publishing your project (see Figure 2), the first window will require your public certificate (see Figure 3).

Figure 2: publishing

Figure 3: Public certificates in VS

To then allow a remote desktop connection to the role you are about to publish, you will need to click on “Configure Remote Desktop connections…” and specify the private certificate there (see Figure 4). As you will notice, only files with the .pfx extension are allowed.

Figure 4: Private certificates in VS

Useful links on certificates

- Certificates in Azure management – http://blog.bareweb.eu/2011/01/using-certificates-in-windows-azure-management/

- SSL in Azure – http://msdn.microsoft.com/en-us/rfid/wazplatformtrainingcourse_deployingapplicationsinwindowsazurevs2010_topic5

- Creating certificates for Azure roles – http://msdn.microsoft.com/en-us/library/gg432987(v=MSDN.10).aspx

Reviewer: Jason Roth

It’s difficult not to type “Jaime Alfa Bravo.”

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• The Windows Azure Team published Cross-Post: Java Sample Application for Windows Azure on 6/14/2011:

If you’re looking for a ready-to-use sample Java application for Windows Azure, you should check out Thomas Conte’s recent post, “Java sample application for Windows Azure.” In this helpful post, he describes the source code for a very simple Java Web application that he has published on GitHub. This code includes all the basic building blocks you would need to start developing a “real” Java application for Windows Azure that uses blob storage and SQL Azure.

The sample is an Eclipse project that contains the following elements:

- A “classic” JSP application created using the “Dynamic Web Project” template in Eclipse

- The Windows Azure SDK for Java, including all its pre-requisites in terms of third-party libraries

- The JDBC Driver for SQL Server and SQL Azure (version 3.0)

- Hibernate (3.6.4)

Click here to access the code on GitHub; click here to read Tom’s post.

Techila Technologies, Inc. reported Cloud Computing With Techila and Windows Azure Opens up new Possibilities for Cancer Research in a 6/14/2011 press release via PRNewswire:

The greatest challenge in cancer research is to identify genes that can predict the progress of the disease. The number of genes and gene combinations is vast, so powerful computing tools are required to achieve this. Assisted by Techila Technologies Ltd (http://www.techilatechnologies.com), a Finnish company specialising in autonomic high-performance and technical computing, a research team at the University of Helsinki utilized the huge computing capacity of the Windows Azure cloud computing service for the first time. A computing task that usually requires some 15 years can now be completed in less than five days.

In the treatment of breast cancer, it is of vital importance to be able to predict the likelihood of the development of new metastases. The prognosis for a breast cancer treatment in general is good. 'However, metastases make breast cancer a fatal disease. Cells from the primary tumour infiltrate other parts of the body, forming metastases. The problem is that we are far from understanding this process in sufficient detail. We do not know how and which cells become separated from the tumor, where they go or how they act there,' says Sampsa Hautaniemi, docent at the University of Helsinki.

The research team has hundreds of breast cancer tumors at their disposal. The aim is to determine who is most likely to develop metastases by studying the activity of the genes and combining research data.

According to Hautaniemi, computing already plays a significant role in modern medical research because the data available to researchers are getting more extensive all the time. 'It certainly makes the future seem brighter knowing that thanks to cloud computing services, computational resources no longer restrict the setting of research objectives. Earlier on we had to define our research plans based on whether certain computations could be performed.'

Efficiency almost 100 per cent

According to Rainer Wehkamp, founder of Techila Technologies Ltd, this was the first time the Windows Azure cloud service was utilised on such a massive scale. 'Our aim was to demonstrate to the University of Helsinki that the technology developed by Techila makes it easy to utilize massive computing power from a cloud. There are also many potential applications outside universities; this kind of computing power could be utilised by e.g. insurance companies in their risk assessment calculations. And as the systems are global in scale, the possibilities are almost limitless.'

The computing was performed in a cloud using the Autonomic Computing technology developed by Techila, and a total of 1,200 processor cores were involved in the operation. The Techila technology was originally developed to utilise the computing power available in table-top computers and servers in businesses and universities, so the transition from a complex multiplatform environment to a homogenous cloud environment presented no problems. 'Azure can be harnessed solely for computing purposes, so its efficiency is extremely high, even as high as 99 per cent,' says Wehkamp.
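The figures quoted in the release (about 15 years of computing, 1,200 cores, up to 99 per cent efficiency, under five days) can be sanity-checked with simple arithmetic. The sketch below assumes the "15 years" refers to single-core run time and that the work divides evenly across cores; neither assumption is stated in the release, and the function name is purely illustrative.

```python
DAYS_PER_YEAR = 365.25


def wall_clock_days(serial_years: float, cores: int, efficiency: float) -> float:
    """Estimate elapsed days when serial work is spread evenly across cores.

    Assumption: the job is embarrassingly parallel, so elapsed time is
    serial time divided by (cores * efficiency).
    """
    if cores <= 0:
        raise ValueError("cores must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    serial_days = serial_years * DAYS_PER_YEAR
    return serial_days / (cores * efficiency)


if __name__ == "__main__":
    # Figures taken from the press release; the interpretation is an assumption.
    days = wall_clock_days(serial_years=15, cores=1200, efficiency=0.99)
    print(f"Estimated elapsed time: {days:.1f} days")
```

Under those assumptions the estimate comes out at roughly 4.6 days, which is consistent with the "less than five days" claim.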

Microsoft is happy with the results of the pilot project. 'Together, Techila's Grid solution and Windows Azure create a well-functioning and easy-to-use system. Cloud computing takes projects involving high-performance computing to a totally new level. Azure is a massively scalable computing platform service with sufficient resources to complete even the most demanding projects,' promises solution sales manager Matti Antila of Microsoft Finland.

Further information:

- Full case-study: http://www.techilatechnologies.com/azure-case-study

- Techila+Azure integration: http://www.techilatechnologies.com/azure

- Windows Azure: http://www.microsoft.com/windowsazure

Goncalvo Ribeiro joins the chorus with a Guess What: iCloud Uses Windows Azure Services For Hosting Data post of 6/13/2011 to the Redmond Pie blog:

The wizards at InfiniteApple, a recently-established Apple-related blog have found out that Apple’s iCloud online services actually use third-party services, namely Amazon Cloud Services and Windows Azure.

It turns out that iCloud seems to use Windows Azure and Amazon Cloud Services for storage, as shown by a packet dump. Below we’re quoting some of the juicy parts:

PUT https://mssat000001.blob.core.windows.net:443/cnt/1234.5678 HTTP/1.1

Host: mssat000001.blob.core.windows.net:443

HTTP/1.1 201 Created

Content-MD5: [redacted]

Last-Modified: [redacted]

ETag: [redacted]

Server: Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0

x-ms-request-id: [redacted]

x-ms-version: 2009-09-19

Date: [redacted]

Connection: close

Content-Length: 0

While we’re no network experts, it’s pretty easy to infer that iCloud is using third-parties to store most data, including images, as explained by InfiniteApple. The dump above only shows references to Windows Azure, although the service contacts Amazon Cloud Services in a number of instances.
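For readers who want to repeat this kind of inference on their own captures, here is a minimal sketch of the check involved: parse the response head and look at the Server header and the request host. The sample text reuses only the non-redacted lines from the dump above; the function names are hypothetical, and matching on the Server header and the blob.core.windows.net host suffix is a heuristic, not proof of who operates the service.

```python
RAW_RESPONSE = (
    "HTTP/1.1 201 Created\r\n"
    "Server: Windows-Azure-Blob/1.0 Microsoft-HTTPAPI/2.0\r\n"
    "x-ms-version: 2009-09-19\r\n"
    "Connection: close\r\n"
    "Content-Length: 0\r\n"
    "\r\n"
)


def parse_response_head(raw: str):
    """Split a raw HTTP response into (status code, reason, headers dict)."""
    head = raw.split("\r\n\r\n", 1)[0]
    lines = head.split("\r\n")
    _version, code, reason = lines[0].split(" ", 2)
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        # Header names are case-insensitive, so normalize them.
        headers[name.strip().lower()] = value.strip()
    return int(code), reason, headers


def looks_like_azure_blob(headers: dict, host: str) -> bool:
    """Heuristic: Azure Blob Server header plus a blob endpoint host name."""
    server = headers.get("server", "")
    hostname = host.lower().split(":")[0]  # drop an optional :port suffix
    return server.startswith("Windows-Azure-Blob/") and hostname.endswith(
        ".blob.core.windows.net"
    )


if __name__ == "__main__":
    status, reason, headers = parse_response_head(RAW_RESPONSE)
    print(status, reason)
    print(looks_like_azure_blob(headers, "mssat000001.blob.core.windows.net:443"))
```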

Yesterday, I went to great lengths to explain why I believe iCloud will have a great shot at realizing Microsoft’s original "software plus services" vision, since it does a lot to keep all devices owned by the same user in sync. While it’s rather funny that Apple has made a brand-new product that has technology made by one of its longtime rivals at its heart, I believe that’s not necessarily a downside: by outsourcing its storage and server management to an outside company, Apple can now spend more energy on making sure the product itself works.

Microsoft and Apple have worked together in the past. Back in 1997, when Steve Jobs returned to the then-struggling company, he struck a deal with Microsoft for the Redmond company to develop software for the Mac platform, in order to revive it. Back in 2008, Apple worked with Microsoft again to bring Exchange, a centralized e-mail and contacts service used by many companies, to the iPhone, in order to appeal to business customers, which were lacking at the time.

Having such a reputable company as a customer was probably one of Microsoft’s dreams when it created Azure. With data centers all over the world, Microsoft will now have the opportunity to power a service like iCloud and pride itself in the technological advancements of Azure; while Apple can pride itself in the user base iCloud might one day get. I believe it’s a win-win for both companies.

It’s important to note that this is merely related to iCloud’s back end and it’s unlikely that Microsoft had any major involvement in developing iCloud.

(via InfiniteApple)

Harish Ranganathan (@ranganh) posted Web Role Instances recreated, repaired while deploying MVC 3 Applications on Windows Azure on 6/14/2011:

Recently, I was deploying an MVC 3 Application on Windows Azure. The deployment took longer than expected, and the roles just kept restarting and repairing; the role instances never started. The only thing that seemed to work was uploading the package. After that, the roles were simply unable to start and become ready. I deleted the deployment, re-created the package and deployed again, still to no avail. The package would upload successfully, but after that the roles would never start. They would just keep getting recycled.

I then figured out that MVC 3 is not supported in Windows Azure yet and in fact, you need to explicitly add MVC 3 assemblies to the bin folder or have MVC 3 installed as a start up task along with your Windows Azure project.

Steve Marx has an excellent post (and to the point, of all the posts I read) on getting MVC 3 installed as a start up task on Windows Azure, at http://blog.smarx.com/posts/asp-net-mvc-in-windows-azure

Just follow the instructions and you should be fine to have your MVC 3 Application running on Windows Azure.
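If you take the bin-folder route instead, a quick pre-deployment check can catch a missing assembly before the roles start recycling. The sketch below is only an illustration: the assembly list is an assumption based on commonly cited MVC 3 bin-deployment lists, not something taken from Harish's or Steve's posts, so verify it against your own project's references. The function names are hypothetical.

```python
from pathlib import Path

# Assumption: the assemblies usually named for bin-deploying ASP.NET MVC 3.
# Check this list against your project's references before relying on it.
MVC3_ASSEMBLIES = (
    "Microsoft.Web.Infrastructure",
    "System.Web.Helpers",
    "System.Web.Mvc",
    "System.Web.Razor",
    "System.Web.WebPages",
    "System.Web.WebPages.Deployment",
    "System.Web.WebPages.Razor",
)


def missing_assemblies(file_names, required=MVC3_ASSEMBLIES):
    """Return the required assemblies with no matching .dll in file_names."""
    present = {name.lower() for name in file_names}
    return [a for a in required if f"{a}.dll".lower() not in present]


def list_bin(bin_dir):
    """List the file names in a bin folder (empty if the folder is absent)."""
    folder = Path(bin_dir)
    if not folder.is_dir():
        return []
    return [p.name for p in folder.iterdir() if p.is_file()]


if __name__ == "__main__":
    # "MyMvcApp/bin" is a placeholder path for illustration only.
    for name in missing_assemblies(list_bin("MyMvcApp/bin")):
        print(f"Missing from bin: {name}.dll")
```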

Harish is a Developer Evangelist for Microsoft India.

<Return to section navigation list>

Visual Studio LightSwitch

• The LightSwitch Team posted a link to a New Video– How Do I: Deploy a LightSwitch Application to Azure? (Beth Massi) on 6/14/2011:

Check it out, we just released a new “How Do I” video on the Developer Center that shows you how to deploy your Visual Studio LightSwitch application to the Azure cloud.

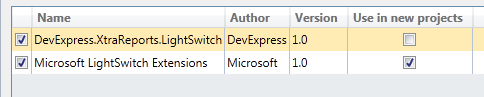

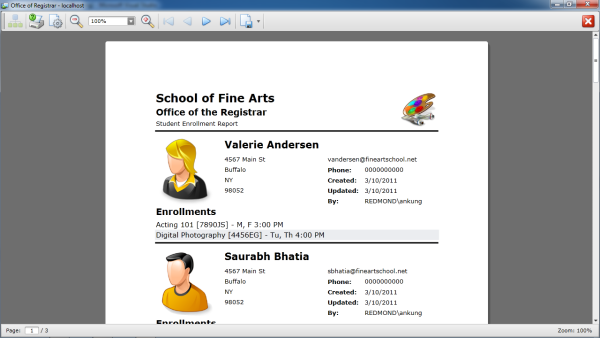

Seth Juarez (@SethJuarez) announced a LightSwitch Reporting Preview in DevExpress Reporting v11.1 in a 6/13/2011 post:

With our recent 11.1 release we have added support for Microsoft’s brand new development platform: Visual Studio LightSwitch. LightSwitch enables developers to quickly create business applications using preconfigured screens that adapt to the data you create. Unlike the Access databases of yore, the projects LightSwitch creates are written in .NET and adhere to standard practices when developing Silverlight applications. Additionally, LightSwitch allows for customer extensibility.

Here is where we come in.

DevExpress has partnered with Microsoft to bring you our award winning reporting solution within the context of LightSwitch. I will give you a quick tour using a pre-configured LightSwitch project. A little warning though: LightSwitch is still in Beta! Because of this little detail, our reporting extension will also be in beta up until there is an actual release of the LightSwitch platform. What I am showing here is indeed subject to change.

The screenshots posted here use the Course Manager application available over at MSDN.

The Extension

The first (and important) step is activating the extension. This is done by navigating over to the project properties and checking the appropriate extension.

The Service

LightSwitch is actually creating a Silverlight application. Those familiar with our reporting suite know that in order to have a correctly functioning Silverlight report viewer one must add our Report Service. This is also the case for LightSwitch:

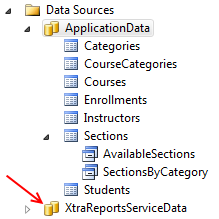

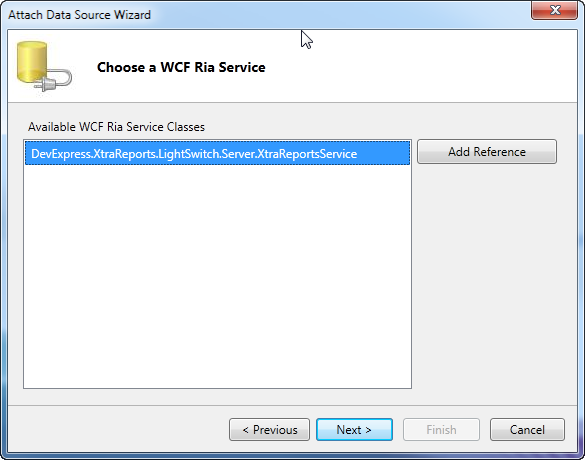

The procedure to get this up and loaded is to add a new Data Source (by selecting a WCF RIA Service) and choosing the XtraReportService option.

The Report

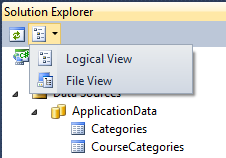

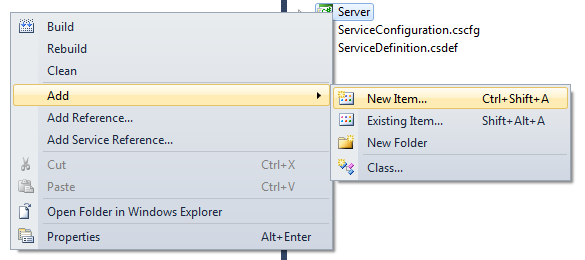

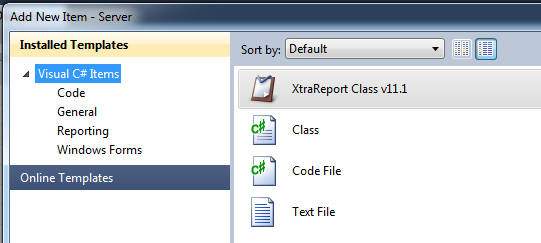

Once these two things are set up, it's time to actually build the report! This is done by switching from Logical View over to File View and adding a new XtraReport to the Server project.

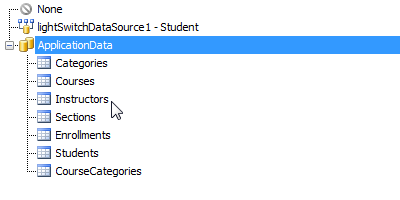

Once there, the first thing to do is to select the data to which the report will be bound.

We have a special LightSwitchDataSource which allows the direct querying of the entities you define within LightSwitch. We never intended this implementation to be a hack of sorts that relied on extraneous data sources. We felt that a native LightSwitch experience that allowed the querying of in-project entities would provide the maximum benefit.

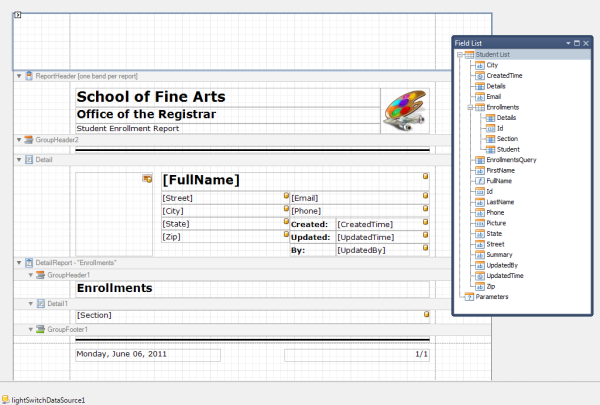

The nice thing about the entities designed within LightSwitch is that they also support the notion of master-detail type reports. Here is a screenshot of a report design in action:

This particular report is bound to the LightSwitch Student entity. As you can see, the enrollments link is preserved in order to allow for a master-detail type report.

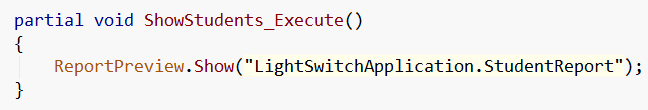

The Code

One of the benefits of using LightSwitch is their notion of Screens. When editing Screens in LightSwitch the user has access to the Visual Tree. It is there that one can add buttons to trigger events. Once the button is added, the Execute Code can be edited. We have created a special method to render your reports once designed. Take a look:

How simple is that!

The Result

Here is what the report looks like when everything is done:

Summary

Hopefully this little tour has answered some of your questions. I would like to reiterate, however, that this is still in beta. There are still a number of details that we need to polish up. The first question you might have is “how much will it cost?” A great question! This is one of those details we are still working out. Stay tuned for more information!

If you have any LightSwitch related questions, a great resource can be found over at MSDN: http://msdn.com/LightSwitch. There you can find tons of downloads, samples, and training materials.

As always, if there are any comments and/or questions, feel free to get a hold of me!

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “With the forthcoming release of iCloud, there's finally a cloud for every home -- and to help enterprise adoption” in the deck for his The rise of home clouds will boost cloud usage in business article for InfoWorld’s Cloud Computing blog:

By now we've all heard about Apple's iCloud service: iCloud provides the ability to share applications, videos, music, data, and other resources among Apple devices. I suspect Apple will have a good run with iCloud, considering it solves a problem many Apple users -- including me -- have and is priced right.

However, what's most important about the appearance of iCloud is not the service itself, but the fact it signifies the move of IaaS clouds into homes. I call these home clouds.

We've had clouds at home for some time now, such as the use of SaaS services like those provided by Google. However, iCloud is certainly an IaaS-oriented service, and the ability to use a mass-marketed IaaS cloud is new.

Although Apple gets most of the ink, the reality is that providers such as DropBox, Box.net, and Google already provide file-sharing and storage services. iCloud simply provides many of the same file-sharing services with tight integration into Apple software such as iTunes, iWork, iOS, and Mac OS X.

At the same time, many of the newer network storage devices, such as those from Iomega, not only let you use clouds for tiered and/or redundant storage, but actually let you become a cloud as well. This "personal cloud" service is free with the Iomega NAS device.

What's important here is that most people got SaaS when they used SaaS in their personal lives, such as Yahoo Mail and Gmail. Now, the same can be said about IaaS with the emergence of iCloud and other file-sharing and storage services that focus on the home market. Thus, I won't need to have as many long whiteboard conversations as I try to explain the benefits of leveraging storage and compute infrastructure you don't actually own. I can now say, "It's like iCloud." And the lights suddenly come on.

With the acceptance of the home cloud, more acceptance of the enterprise clouds will naturally follow. Thanks, Apple -- I think.

As I mentioned in a Tweet today, “Classifying iCloud as a ‘home cloud’ is a stretch IMO.” David replied “<- You[‘re] missing the point” to which I replied “<= Exactly.”

Carl Brooks (@eekygeeky) contends “Apple iCloud is not cloud computing. Thanks for nothing, Jobs” in his impassioned Apple fuels cloud computing hype all over again post of 6/2/2011 to SearchCloudComputing.com:

… And you, IT person, grumpily reading this over your grumpy coffee and your grumpy keyboard, you have Apple to thank for turning the gas back on under the hype balloon. Now, when you talk about cloud to your CIO, CXO, manager or whomever, and their strange little face slowly lights up while they say, "Cloud? You mean like that Apple thing? My daughter has that…" and you have to explain it all over again, you will hate the words "cloud computing" even more. …

Full disclosure: I’m a paid contributor to SearchCloudComputing.com.

See the DevOpsDays.org will present the DevOpsDays Mountain View 2011 Conference on 6/17 and 6/18/2011 and O’Reilly Media’s Velocity Conference started today at the Santa Clara Convention Center in Santa Clara articles in the Cloud Computing Events section below.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• Andy Gottlieb asserted “WAN Virtualization enables reliable public cloud networking” as an introduction to his Beware the Network Cost Gotchas of Cloud Computing post of 6/14/2011 to the Cloud Computing Journal:

Although promising cost efficiency with its "pay as you need" model, the cost of implementing cloud computing can skyrocket when the expense of reliable and secure connectivity with sufficient bandwidth delivered via private WANs such as MPLS is added to the total bill. A less expensive potential alternative is to leverage less expensive Internet connections at data centers and radically less expensive broadband connections like ADSL and cable at remote locations. But everyone knows public WANs aren't reliable enough for enterprise use. Or are they?

For enterprises looking to move aggressively toward public, private or hybrid cloud computing, the need for a Wide Area Network (WAN) access layer that is scalable, reliable and inexpensive clearly exists.

Most enterprises today deploy MPLS networks from AT&T, Verizon and the like because they are reliable enough, meeting "four nines" (99.99 percent) reliability standards. This reliability, however, comes at a high price. At typical U.S. domestic pricing of ~$350 to as much as $2,500 (internationally) per Mbps per month for copper T1-based connections for a remote office, enterprises have not been able to grow their WAN bandwidth commensurate with the rate at which demand is growing. And that is before future cloud computing needs are taken into account. Although less expensive per Mbps than branch connectivity, data center MPLS bandwidth generally costs between $40 and $200 per Mbps per month.

By contrast, Internet bandwidth is priced from sub-$4 to ~$15 per Mbps per month for broadband cable and ADSL connections, and as low as $5 per Mbps per month for high-speed bandwidth at carrier-neutral colocation (colo) facilities. This 100x price/bit disparity exists because public Internet connectivity offers only about 99 percent reliability. By reliability, we are referring to the union of simple availability - the network is up and running with basic connectivity - and that packets are getting through to their destinations without being lost or excessively delayed. Unaided, Internet connections are indeed not reliable enough for most enterprise internal WAN requirements today, and as more enterprise applications are moved to "the cloud," the need for reliable and predictable performance from the WAN will only increase. Yet the popular perception of the Internet on its own is indeed accurate: it works pretty well most of the time. But "pretty well" is not good enough for most enterprises, and "most of the time" is not good enough for pretty much any.
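To put the "100x" figure in context, the per-Mbps prices quoted in this article can be compared directly. The sketch below uses only the article's own numbers; the pairings chosen for comparison and the names are illustrative assumptions, and "sub-$4" is treated as $4 for simplicity.

```python
# Monthly price per Mbps in US dollars, as quoted in the article.
PRICES = {
    "branch_mpls_domestic": 350.0,
    "branch_mpls_international": 2500.0,
    "datacenter_mpls_low": 40.0,
    "datacenter_mpls_high": 200.0,
    "broadband_low": 4.0,    # "sub-$4" treated as 4 for simplicity
    "broadband_high": 15.0,
    "colo_internet": 5.0,
}


def price_ratio(private_per_mbps: float, internet_per_mbps: float) -> float:
    """How many times more the private link costs per Mbps."""
    if internet_per_mbps <= 0:
        raise ValueError("internet price must be positive")
    return private_per_mbps / internet_per_mbps


if __name__ == "__main__":
    comparisons = [
        ("branch_mpls_domestic", "broadband_low"),
        ("branch_mpls_domestic", "broadband_high"),
        ("branch_mpls_international", "broadband_low"),
        ("datacenter_mpls_low", "colo_internet"),
        ("datacenter_mpls_high", "colo_internet"),
    ]
    for private, public in comparisons:
        ratio = price_ratio(PRICES[private], PRICES[public])
        print(f"{private} vs {public}: {ratio:.1f}x")
```

On these figures the gap ranges from single digits at the data center to several hundred times for international branch links, so "100x" reads as an order-of-magnitude summary rather than a precise ratio.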

A solution to the problem of cost-effective WAN connectivity for both private and public cloud computing that does not sacrifice reliability and performance predictability now exists. WAN Virtualization is changing the structure of the enterprise WAN in the same way that VMware and server virtualization are changing enterprise computing, by enabling reliable public cloud networking.

Where cloud/utility computing with server virtualization leverages the efficient pooling of computing and storage resources, WAN Virtualization delivers a similar efficient pooling of WAN resources, wrapping a layer of hardware and intelligent software around multiple WAN connections - existing private MPLS WANs, as well as any kind of Internet WAN links - to augment or replace those private WAN connections.

WAN Virtualization solutions are generally appliance-based, and are usually implemented as a two-ended solution to ensure the target reliability. They require network diversity per location, whether this is two (or more) different ISPs at each network location, or as little as an existing MPLS connection plus the local Internet access / VPN backup link. Aggregating several links per remote location, many of which are from the same service provider, is also beneficial to allow cost-effective bandwidth scalability.

They support per-flow or per-packet classification and QoS across these aggregated connections. They will typically support data security over the Internet via encryption; IPSec or 128-bit AES encryption, as for SSL VPNs, is common.
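The network-diversity requirement can be motivated with a small availability calculation. This is an idealized sketch that assumes link failures are statistically independent, which real links sharing a provider, conduit or building entry often are not; the function names are illustrative only.

```python
HOURS_PER_YEAR = 8760


def combined_availability(link_availabilities) -> float:
    """Availability of a site that is up whenever at least one link is up.

    Assumption: links fail independently, so the chance that all are down
    at once is the product of the individual outage probabilities.
    """
    p_all_down = 1.0
    for availability in link_availabilities:
        if not 0 <= availability <= 1:
            raise ValueError("availability must be between 0 and 1")
        p_all_down *= 1.0 - availability
    return 1.0 - p_all_down


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours of outage per year at the given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    single = 0.99
    pair = combined_availability([0.99, 0.99])
    print(f"One 99% link: {downtime_hours_per_year(single):.1f} h/year down")
    print(f"Two 99% links: {pair:.4%} available, "
          f"{downtime_hours_per_year(pair) * 60:.0f} min/year down")
```

Under the independence assumption, two 99 percent links together reach the "four nines" level quoted earlier for MPLS, which is why diversity of providers matters more than the raw number of links.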

Because packet loss is the biggest killer of IP application performance, WAN Virtualization needs to do its best to avoid loss and mitigate the effects when loss occurs, through such techniques as buffering, retransmission, re-ordering, and even selective packet replication.

A successful WAN Virtualization solution will do continuous measurement of the state of each network path in each direction: loss, latency, jitter and bandwidth utilization, preferably many times a second. Uni-directional measurement, rather than simply measuring these statistics on a round-trip basis, is important in ensuring optimal handling of network congestion events. The real key to a WAN Virtualization solution is its ability to use that continuous measurement information to react sub-second to severe problems with any network connection to ensure performance predictability - addressing not just link failures, but also the kinds of congestion-related network problems that may occur more frequently on the public Internet than on private WANs.
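The measure-then-react loop described above can be sketched as a simple per-path cost function. Everything here is hypothetical: the class, the weights and the congestion threshold are illustrative choices, not taken from any particular WAN Virtualization product, and a real appliance would measure each direction separately and far more often.

```python
from dataclasses import dataclass


@dataclass
class PathStats:
    """Latest one-way measurements for a single WAN path."""
    name: str
    loss_pct: float      # packet loss, in percent
    latency_ms: float    # one-way latency
    jitter_ms: float     # latency variation
    utilization: float   # fraction of bandwidth in use, 0.0 to 1.0


def path_cost(stats: PathStats,
              loss_weight: float = 100.0,
              jitter_weight: float = 2.0,
              congestion_threshold: float = 0.9,
              congestion_penalty: float = 1000.0) -> float:
    """Lower is better. Loss is weighted heavily because it hurts most."""
    cost = (stats.latency_ms
            + jitter_weight * stats.jitter_ms
            + loss_weight * stats.loss_pct)
    if stats.utilization >= congestion_threshold:
        cost += congestion_penalty  # steer traffic away from saturated links
    return cost


def best_path(paths):
    """Pick the path with the lowest cost from the latest measurements."""
    if not paths:
        raise ValueError("no paths available")
    return min(paths, key=path_cost)


if __name__ == "__main__":
    mpls = PathStats("mpls", loss_pct=0.0, latency_ms=40, jitter_ms=2, utilization=0.5)
    cable = PathStats("cable", loss_pct=0.0, latency_ms=25, jitter_ms=5, utilization=0.3)
    print(best_path([mpls, cable]).name)

    # A congestion event on the cable link shows up as loss in the next sample.
    cable.loss_pct = 2.0
    print(best_path([mpls, cable]).name)
```

Re-evaluating the choice on every measurement interval, rather than only at session start, is what lets traffic move off a degraded link before it fails outright.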

Migration Path to Cloud Computing - No Forklift WAN Upgrades

A significant advantage of the WAN Virtualization approach for a cloud computing migration is that it can be deployed not just a site at a time, but an application, user or server at a time, at the discretion of the enterprise WAN manager. Enterprises may choose to use WAN Virtualization, whether temporarily or permanently, to augment, rather than completely replace, an existing MPLS WAN. Complementary to existing WAN Optimization technology, WAN Virtualization is typically deployed as a network overlay, either in-line or out-of-line, supporting both fail-to-wire capability and high-availability redundancy options for both ease of deployment and maximum reliability.

WAN Virtualization solutions also ensure that real-time traffic like VoIP and interactive traffic such as VDI or web-based applications - the most common cloud computing uses - are continuously put on the best performing network paths, rather than simply placed on the connection that is "usually" better at session initiation, to be moved only in the event of a complete link failure, as simple link load balancers do.

By making inexpensive Internet bandwidth reliable, WAN Virtualization complements perfectly the growing move to carrier-neutral colo facilities - places where essentially unlimited amounts of Internet bandwidth can be had for as little as $5 per Mbps per month.

Such a colo is the perfect place to deploy WAN bandwidth-hungry private cloud services. It's also ideal for centralizing network complexity, e.g., put the next-generation firewall at a colo, and use it to provide inexpensive, scalable Internet access, for secure access to both public cloud services as well as "generic" Internet sites. Just a small handful of colo facilities (two to seven) can deliver scalable, reliable Internet access for even the largest global WANs.

Hybrid Clouds "Solved"

With a private cloud deployment at such a colo, it's now straightforward to take advantage of public-cloud services in the same facility using a Gigabit Ethernet cross-connect within the building, because WAN Virtualization has now allowed enterprises to solve the network reliability and application performance predictability issues and the network security issues of access to public cloud services available at that facility, in a cost effective and scalable manner. This is the most sensible way to "do" hybrid cloud computing, moving even huge workloads from private servers to public ones, because it can deliver the performance and it scales.

WAN Virtualization technology, when combined with carrier-neutral colo facilities, provides a pragmatic, evolutionary path to leveraging cloud computing. It solves network reliability, predictability and WAN cost issues, as well as many of the security and IT control issues, of a move to public or hybrid clouds. WAN Virtualization allows the network manager to be ready for the demands cloud computing will place on his/her enterprise WAN - without breaking the bank.

Jeff Wettlaufer reported Configuration Manager 2012 Beta 2 VHD now available in a 6/14/2011 post to the System Center Team blog:

Hi everyone, we are really excited to release the Beta 2 VHD of ConfigMgr 2012. You can download this VHD here.

This download comes as a pre-configured Virtual Hard Disk (VHD). It enables you to evaluate System Center Configuration Manager 2012 Beta 2 on Windows Server 2008 R2. It is an exported Hyper-V virtual machine, pre-configured as a single-server site environment. Not all site roles are configured, but all can be added in your testing.

You can download the VHD here.

Jeff is Senior Technical Product Manager, System Center, Management and Security Division

<Return to section navigation list>

Cloud Security and Governance

InformationWeek::Analytics announced the availability of their Insurance & Technology Digital Issue: July 2011 in a 6/14/2011 post:

LEARNING TO FLY IN THE CLOUD: These days, it's difficult to talk data centers without getting into a conversation about the cloud. But are the benefits of cloud computing real, or are they a lot of hot air? Insurance & Technology's July digital edition helps you cut through the hype and find the true value of the cloud.

Table of Contents

4 QUESTIONS EVERY INSURER MUST ASK ABOUT THE CLOUD: Think you have the cloud figured out? If you haven't addressed these four critical questions, your cloud strategy may never get off the ground.

THE BUILDING BLOCKS OF A MODERN DATA CENTER: Data center transformation is built on automation, virtualization and cloud computing. But reinventing the data center with these technologies begins with an IT transformation.

CARRIERS' CORE CONCERNS ABOUT THE CLOUD: Insurers are cautiously taking to the cloud. But security concerns are likely to keep core systems in-house for the foreseeable future.

PLUS:

Case Study: Optimizing Data Center Performance

Hidden Costs of Hybrid Clouds: Integration Challenges

Creating an Intelligent Infrastructure for Decision Support

About the Author

Insurance & Technology's editorial mission is to provide insurance company technology and business executives with the targeted and timely information and analysis they need to get a return on the technology investments required to advance business strategies and be more profitable, productive and competitive. Our content spans across multiple media platforms -- including a print publication, Web site, digital edition, e-newsletter, live events, virtual events, Webcasts, video, blogs and RSS feeds -- so insurance executives can access information via any channel/platform they prefer. I&T’s audience comprises the key lines of business -- life, property/casualty, reinsurance and health -- and ranges from global and multiline insurance companies to specialty lines carriers. Key ongoing areas of focus include policy administration, claims, distribution, regulation/compliance, risk management/underwriting, security, customer insight/business intelligence and architecture/infrastructure.

<Return to section navigation list>

Cloud Computing Events

DevOpsDays.org will present the DevOpsDays Mountain View 2011 Conference on 6/17 and 6/18/2011. From the conference schedule:

Friday 17 June (registration sold out)

- 09:00-09:30 Registration and Breakfast

- 09:30-09:45 Introduction + Devops State of the Union

- 09:45-10:30 To Package or not to Package

- 10:30-11:15 Orchestration at Scale

- 11:15-11:30 break

- 11:30-12:15 DevOps Metrics and Measurement

- 12:15-13:30 Lunch

- 13:30-14:30 4 short talks

- 14:30-15:15 DevOps..Where's the QA?

- 15:15-16:00 Break

- 16:00-16:45 Ignite talks 5 minutes of fame

- 16:45-17:30 Escaping the DevOps Echo Chamber

- 17:30-18:30 Closing/Openspace ideas

- 20:00-... Social Event/Drinks

O’Reilly Media’s Velocity Conference started today at the Santa Clara Convention Center in Santa Clara. Following are Operations sessions that start Tuesday afternoon and later:

3:45pm Tuesday, 06/14/2011

- Managing the System Lifecycle and Configuration of Apache Hadoop and Other Distributed Systems

- Ballroom EFGH

- Philip Zeyliger (Cloudera)

What new challenges does managing and operating distributed systems pose? How is managing distributed systems fundamentally different than managing single-node machines? In this talk, we discuss the problems and work through the architecture of a system that eases lifecycle and configuration management of HDFS, MapReduce, HBase, and a few others. Read more.

- 1:00pm Wednesday, 06/15/2011

- Asynchronous Real-time Monitoring with MCollective

- Mission City

- Jeff McCune (Puppet Labs)

Traditional methods of monitoring service performance have shortcomings due to the on-demand nature of cloud computing. Learn how to deploy MCollective to provide a self-organizing service monitoring and remediation. Read more.

- 1:50pm Wednesday, 06/15/2011

- Why the Yahoo FrontPage Went Down and Why It Didn't Go Down For up to a Decade before That

- Mission City

- Jake Loomis (Yahoo!)

This year the Yahoo! frontpage went dark. Tweets rang out, "What happened? Yahoo! is never down. I can't remember the last time this happened." What enabled www.yahoo.com's remarkable record of reliability? This session will cover the top 5 tricks that contributed to the stability of Yahoo!'s frontpage and a description of what caused the eventual downtime. Read more.

- 2:40pm Wednesday, 06/15/2011

- Where Is Your Data Cached (And Where Should It Be Cached)?

- Mission City

- Sarah Novotny (Blue Gecko)

Modern web stacks have many different layers that can benefit from caching. From RAID card caches, disk caches and CPU caches to database caching, application code caching and rendered page caching there is a lot to consider when optimizing and troubleshooting. Let's investigate what's redundant, what's optimal and where your applications might benefit from a closer look. Read more.

- 4:05pm Wednesday, 06/15/2011

- Distributed Systems, Databases, and Resilience

- Mission City

- Justin Sheehy (Basho Technologies)

We will face the reality that anything we depend on can fail, and in the worst possible combination. We will learn how to build systems that let us happily sleep at night despite this troubling realization. Read more.

- 4:55pm Wednesday, 06/15/2011

- Creating the Dev/Test/PM/Ops Supertribe: From "Visible Ops" To DevOps

- Mission City

- Gene Kim (Visible IT Flow)

I'm going to share my top lessons of how great IT organizations simultaneously deliver stellar service levels and fast flow of new features into production. It requires creating a "super-tribe", where development, test, IT operations and information security genuinely work together to solve business objectives as opposed to throwing each under the bus. Read more.

- 5:20pm Wednesday, 06/15/2011

- Building for the Cloud: Lessons Learned at Heroku

- Mission City

- Mark Imbriaco (Heroku)

The Heroku platform grew from hosting 0 applications to more than 120,000 without ever buying a server. We'll talk about the overall architecture of the platform and dive into some of the operational consequences of those architectural choices. Read more.

- 1:00pm Thursday, 06/16/2011

- Wikia: The Road to Active/Active

- Ballroom ABCD

- Jason Cook (Wikia)

In the pursuit of making our site fast, efficient, and resilient in the face of failure we have chosen to use geographically dispersed data centers. In this session I will cover the tools and techniques we used to extend MediaWiki to render pages out of multiple geographically dispersed data centers. Read more.

- 1:00pm Thursday, 06/16/2011

- Software Launch Failures: The Good Parts

- Ballroom EFGH

- Kate Matsudaira (SEOmoz)

What do you do when everything falls apart during your product launch? This talk will cover tools for surviving the worst, and making the most of it. We'll look at post-launch checklists to help recover rapidly, managing expectations to instill confidence in your customers and management, keeping up team morale, and successful post-mortems. Read more.

- 1:50pm Thursday, 06/16/2011

- Instrumenting the real-time web: Node.js, DTrace and the Robinson Projection

- Ballroom ABCD

- Bryan Cantrill (Joyent, Inc.)

We describe our experiences developing DTrace-based system visualization of Node.js in an early production environment: the 2010 Node Knockout programming contest. We describe the challenges of instrumenting a distributed, dynamic, highly virtualized system -- and what our experiences taught us about the problem, the technologies used to tackle it, and promising approaches. Read more.

- 2:40pm Thursday, 06/16/2011

- Scaling Concurrently

- Ballroom ABCD

- John Adams (Twitter)

Interconnecting many systems to express a single function is often a difficult task. What seems like a simple problem becomes a difficult one when trying to serve millions of users at the same time without failure or downtime. Timeouts, redundancy, humans, and high levels of concurrency are a challenge, and I'll walk through problems and solutions encountered while scaling twitter.com. Read more.

- 4:05pm Thursday, 06/16/2011

- Actionable Web Performance for Operations

- Ballroom ABCD

- Theo Schlossnagle (OmniTI)

We all know that web performance is key. It drives adoption, loyalty and revenues. While it is so important, real-time web performance metrics are often absent from operations culture. Which metrics are important to operations? How can I use these metrics to improve performance, availability and stability? Learn how to make operational sense out of these critical performance indicators. Read more.

- 4:55pm Thursday, 06/16/2011

- A Network Perspective on Application Performance

- Ballroom ABCD

- Mohit Lad (Thousand Eyes Inc)

How many locations should one serve content from to make their application truly fast globally? At what point does the improvement become marginal? These are questions that we answer in this talk using actual network performance data and arm the audience with the knowledge necessary to make informed decisions on network planning for optimizing application performance. Read more.

- 4:55pm Thursday, 06/16/2011

- reddit.com War Stories: The mistakes we made and how you can avoid them.

- Ballroom EFGH

- Jeremy Edberg (reddit.com)

reddit.com does 1.3 billion pageviews a month, and that number grows by about 10% each month. Through that growth they've made some mistakes along the way. Some they have fixed, and for some they are still paying the price. Come hear Jeremy Edberg, reddit's head of technology, speak about their successes and failures. Read more.

- 5:20pm Thursday, 06/16/2011

- Post-Mortem Round Table

- Ballroom EFGH

- Moderated by: Mandi Walls (AOL)

- Panelists: Mark Imbriaco (Heroku), Matt Hackett (Tumblr)

A panel discussion featuring folks from sites that have had large outages in the past months. Discussion topics will cover causes, resolutions, process changes after the outage, cultural changes after the outage, and lingering aftereffects with regard to how the userbase reacted. Read more.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

• Michael Coté (@cote) posted a not-so-brief Opscode 1.0 – Brief Note to his Redmonk blog on 6/14/2011:

Opscode GA’s its offerings. It’s time for it to start some automation knife fighting.

Opscode, the commercial company around the automation project Chef, had a bundle of what I’d call “1.0” announcements today: firming up their product offerings for general availability:

- They’ve fully baked their hosted Chef suite, renaming it Opscode Hosted Chef (née Opscode Platform).

- They released Opscode Private Chef for all those folks who can’t stomach running their automation suite in the cloud.

- You can also see them staking out a claim in the next generation IT management space with their “Cloud Infrastructure Automation” term, used to describe running IT in a cloud-centric and inspired way, e.g., by using Chef.

Also, there’s Windows support, which is sort of a minority interest at the moment but damn fine to start hammering out now.

But does anyone care?

There’s plenty of momentum for Opscode as their numbers-porn slide shows:

Segment Context & Such

Indeed, I continue to see interest in Chef, particularly from developers and ISV types – see their Crowbar partnership with Dell, putting together OpenStack, Chef, and Dell hardware for quick-clouds on the cheap. Check the small Opscode partnering mention in El Reg’s piece on Calxeda today as well.

I tend to hear more interest in Puppet from IT types (see coverage of their offering here), something the Private Chef offering might help address. IT folks have been skittish about using cloud for their software, and, why not? As one admin told me last year, “well, if the Internet goes down, I’m dead in the water,” he has no tools. And, despite the fact that you’re probably dead in the water in all cases where “the Internet goes down” and that ServiceNow seems to be doing just fine, that’s some FUD that doesn’t deserve much scorn.

On the broader front, I’m still not seeing much regard from the traditional automation vendors for these model-driven automation up-starts, Chef and Puppet. But they should be paying attention more: both are classic “fixing a moribund category that sucks” strategies that seem to be actually working in removing The Suck by focusing on speed.

Downloadable PoCs

And if you’re on the other end of the stick – buying and/or using automation software – checking out these new approaches is definitely worth your time. The reports are sounding similar to the early days of open source, where the CIO is stuck in multi-month PoCs and license renewals, while a passionate admin somewhere just downloads Puppet or Chef, does a quick-n-dirty PoC, and then gets clearance to take more time to consider these whacky, new methods.

More

• SD Times on the Web reported VMware Introduces vFabric 5, an Integrated Application Platform for Virtual and Cloud Environments in a 6/14/2011 post of a press release:

VMware, Inc. (NYSE: VMW) … today announced VMware vFabric 5, an integrated application platform for virtual and cloud environments. Combining the market-leading Spring development framework for Java and the latest generation of vFabric application services, vFabric 5 will provide the core application platform for building, deploying and running modern applications. vFabric 5 introduces for the first time a flexible packaging and licensing model that will allow enterprises to purchase application infrastructure software based on virtual machines, rather than physical hardware CPUs, and to pay only for the licenses in use. This model will eliminate the decades old need for organizations to purchase excess software in anticipation of peak loads, incurring significant costs and allowing software licenses to sit dormant outside of peak periods. The model in vFabric 5 more closely aligns to cloud computing models that directly link the cost of software with use, consumption and value delivered to the organization.

“Cloud computing is reshaping not just how IT resources are consumed by the business, but how those resources are purchased, licensed and delivered,” said Tod Nielsen, President, Application Platform, VMware. “While application infrastructure technologies have advanced to meet the needs of today’s enterprise, to date, the business models have remained rigid and out of date. With the introduction of VMware vFabric 5, VMware will inextricably link the cost of application infrastructure software to volume utilized by the organization and the value delivered back to the business, helping all organizations advance further toward a cloud environment.”

Optimized for vSphere

vFabric 5 is engineered specifically to take advantage of the server architecture of VMware vSphere, the most widely deployed virtualization platform. The new Elastic Memory for Java (EM4J) capability that will be available in vFabric tc Server will allow for optimal management of memory across Java applications through the use of memory ballooning in the JVM. This capability, in combination with VMware vSphere will enable greater application server density for Java workloads on vFabric. …

Read more: Next Page; Pages 2, 3

Raghav Sharma reported NCR Joins the Cloud Bandwagon in a 6/14/2011 post to the CloudTimes blog:

NCR Corporation, a leading technology and solutions provider for the retail and financial services industry, is the newest entrant in the growing cloud computing market.

NCR recently launched its new cloud hosting service, NCR Hosting Services, which enables cloud providers to gain access to NCR’s IT and service support infrastructure. The new services are targeted at telecom carriers, original equipment manufacturers (OEMs) and system integrators. However, the service is currently limited to the USA.

NCR has modeled its services in a rather flexible way, allowing customers to choose between a facility-plus-assets service solution and leasing rack space for their own equipment in NCR’s data centers. NCR is currently offering services based out of its high-security, temperature-controlled facility at Beltsville, MD.

According to Nadine Routhier, vice president of NCR Telecom and Technology, “With our new hosting services NCR is able to help cloud service providers scale their businesses quickly while minimizing capital expenditure and risk. We are already trusted by some of the biggest brands in the U.S. to deliver a reliable, high-availability and high-security service.”

Gartner has estimated the worldwide cloud computing market to be worth $150.0 billion by 2014. Cloud-based services, which sometimes act as utility services and are therefore sometimes referred to as utility computing, are growing in popularity due to the pay-as-you-go model. Given the breadth of options available to suit different kinds of customers and the low time to market, cloud computing and the services based on it are fast becoming the de facto way of getting into business.

According to their press release, “NCR’s state-of-the-art, high-security tier 4 hosting center offers redundant hardware, dual Tier one Internet Service Providers (ISPs) and automatic failover, backed by a comprehensive disaster recovery facility to deliver high system availability.”

Given the increasing focus on cloud security, NCR has shown considerable intent there. They have put in place audits, reviews, and multiple examinations and certifications for their facilities and services. These include, but are not limited to, “Type II SAS 70 reviews, U.S. Federal Financial Institution Examination Council (FFIEC) governance and examinations, ISO/IEC 27001:2005 certification”.

Welcome to the crowd.

Joe Panattieri posted The Talkin’ Cloud 50: Top 50 Cloud Computing VARs & MSPs to the TalkinCloud blog on 6/14/2011:

Nine Lives Media Inc. today unveiled the Talkin’ Cloud 50 report, which identifies the top VARs and MSPs building and offering cloud services for their customers. The list and report are available now in the Talkin’ Cloud 50 portal.

Among the report’s highlights:

- Growth: Annual cloud revenues among the Talkin’ Cloud 50 rose 47 percent, on average, from 2009 to 2010.

- Revenues: The average Talkin’ Cloud 50 company now generates more than $6 million in annual cloud revenues.

- Primary Services: 68 percent of the companies offer Infrastructure as a Service (IaaS); 42 percent offer Platform as a Service (PaaS); and 74 percent offer Software as a Service (SaaS).

- Specific Focus Areas: The report also covers specific segment areas such as hosted/SaaS email; virtual servers in the cloud; storage as a service; security as a service; hosted unified communications; and more.

- Strategic Relationships: The most popular cloud partners included VMware, Microsoft BPOS/Office 365, Google Apps, Zenith Infotech, Amazon Simple Storage Service, Rackspace, Intermedia and Ingram Micro Services.

The Talkin’ Cloud 50’s top 10 companies are:

- OpSource, www.opsource.net

- Apptix, Inc., www.apptix.com

- mindSHIFT Technologies, www.mindshift.com

- iland Internet Solutions, www.iland.com

- Alliance Technology Group, www.alliance-it.com

- Anittel Group Limited, www.anittel.com.au

- HEIT, www.goheit.com

- NetBoundary, www.netboundary.com

- MySecureCloud, www.mysecurecloud.com

- Excel Micro, Inc., www.excelmicro.com

Check the Talkin’ Cloud 50 portal for the complete list and additional details. The Talkin’ Cloud 50 report is based on data from Talkin’ Cloud’s online survey, conducted through March 2011. The Talkin’ Cloud report recognizes top VARs and MSPs in the cloud based on cloud-centric revenues, applications offered, users supported, and additional variables. Cloud aggregators, cloud brokers and cloud services providers (CSPs) are also profiled in the Talkin’ Cloud 50 report.

We’ll be back soon with more insights and analysis from the Talkin’ Cloud 50 report.

<Return to section navigation list>
