Windows Azure and Cloud Computing Posts for 3/29/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

Brian Swan explained Java Access to SQL Azure via the JDBC Driver for SQL Server on 3/29/2011:

I’ve written a couple of posts (here and here) about Java and the JDBC Driver for SQL Server with the promise of eventually writing about how to get a Java application running on the Windows Azure platform. In this post, I’ll deliver on that promise. Specifically, I’ll show you two things: 1) how to connect to a SQL Azure Database from a Java application running locally, and 2) how to connect to a SQL Azure database from an application running in Windows Azure. You should consider these as two ordered steps in moving an application from running locally against SQL Server to running in Windows Azure against SQL Azure. In both steps, connection to SQL Azure relies on the JDBC Driver for SQL Server and SQL Azure.

The instructions below assume that you already have a Windows Azure subscription. If you don’t already have one, you can create one here: http://www.microsoft.com/windowsazure/offers/. (You’ll need a Windows Live ID to sign up.) I chose the Free Trial Introductory Special, which allows me to get started for free as long as I keep my usage limited. (This is a limited offer. For complete pricing details, see http://www.microsoft.com/windowsazure/pricing/.) After you purchase your subscription, you will have to activate it before you can begin using it (activation instructions will be provided in an email after signing up).

Connecting to SQL Azure from an application running locally

I’m going to assume you already have an application running locally and that it uses the JDBC Driver for SQL Server. If that isn’t the case, then you can start from scratch by following the steps in this post: Getting Started with the SQL Server JDBC Driver. Once you have an application running locally, then the process for running that application with a SQL Azure back-end requires two steps:

1. Migrate your database to SQL Azure. This only takes a couple of minutes (depending on the size of your database) with the SQL Azure Migration Wizard - follow the steps in the Creating a SQL Azure Server and Creating a SQL Azure Database sections of this post.

2. Change the database connection string in your application. Once you have moved your local database to SQL Azure, you only have to change the connection string in your application to use SQL Azure as your data store. In my case (using the Northwind database), this meant changing this…

String connectionUrl = "jdbc:sqlserver://serverName\\sqlexpress;"
    + "database=Northwind;"
    + "user=UserName;"
    + "password=Password";

…to this…

String connectionUrl = "jdbc:sqlserver://xxxxxxxxxx.database.windows.net;"
    + "database=Northwind;"
    + "user=UserName@xxxxxxxxxx;"
    + "password=Password";

(where xxxxxxxxxx is your SQL Azure server ID).
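The connection-string change can also be captured in a small helper. The following is a minimal sketch, not part of the original post: the class name SqlAzureJdbcUrl and its build method are hypothetical, and the values passed in main are the same placeholders used above. It does no escaping, so a value containing a semicolon would need extra handling.

```java
final class SqlAzureJdbcUrl {

    /**
     * Builds a JDBC URL in the same shape as the SQL Azure connection string
     * shown above. serverId is the SQL Azure server ID (the "xxxxxxxxxx"
     * placeholder in the post).
     */
    static String build(String serverId, String database,
                        String user, String password) {
        return "jdbc:sqlserver://" + serverId + ".database.windows.net;"
                + "database=" + database + ";"
                // Mirrors the user@server form used in the connection string above.
                + "user=" + user + "@" + serverId + ";"
                + "password=" + password;
    }

    public static void main(String[] args) {
        // Placeholder values only; substitute your own server ID and credentials.
        System.out.println(build("xxxxxxxxxx", "Northwind", "UserName", "Password"));
    }
}
```

Keeping the server ID in one parameter avoids the easy mistake of updating the host name but forgetting the @xxxxxxxxxx suffix on the user name.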

Connecting to SQL Azure from an application running in Windows Azure

The heading for this section might be a bit misleading. Once you have a locally running application that is using SQL Azure, then all you have to do is move your application to Windows Azure. The connecting part is easy (see above), but moving your Java application to Windows Azure takes a bit more work. Fortunately, Ben Lobaugh has written a great post that shows how to use the Windows Azure Starter Kit for Java to get a Java application (a JSP application, actually) running in Windows Azure: Deploying a Java application to Windows Azure with Command-Line Ant. (If you are using Eclipse, see Ben’s related post: Deploying a Java application to Windows Azure with Eclipse.) I won’t repeat his work here, but I will call out the steps I took in modifying his instructions to deploy a simple JSP page that connects to SQL Azure.

1. Add the JDBC Driver for SQL Server to the Java archive. One step in Ben’s tutorial (see the Select the Java Runtime Environment section) requires that you create a .zip file from your local Java installation and add it to your Java/Azure application. Most likely, your local Java installation references the JDBC driver by setting the classpath environment variable. When you create a .zip file from your Java installation, the JDBC driver will not be included and the classpath variable will not be set in the Azure environment. I found the easiest way around this was to simply add the sqljdbc4.jar file (probably located in C:\Program Files\Microsoft SQL Server JDBC Driver\sqljdbc_3.0\enu) to the \lib\ext directory of my local Java installation before creating the .zip file.

Note: You can put the JDBC driver in a separate directory, include it when you create the .zip folder, and set the classpath environment variable in the startup.bat script. But, I found the above approach to be easier.

2. Modify the JSP page. Instead of the code Ben suggests for the HelloWorld.jsp file (see the Prepare your Java Application section), use code from your locally running application. In my case, I just used the code from this post after changing the connection string and making a couple minor JSP-specific changes:

<%@ page language="java"
    contentType="text/html; charset = ISO-8859-1"
    import = "java.sql.*"
%>
<html>
<head>
<title>SQL Azure via JDBC</title>
</head>
<body>
<h1>Northwind Customers</h1>
<%
try{
    Class.forName("com.microsoft.sqlserver.jdbc.SQLServerDriver");
    String connectionUrl = "jdbc:sqlserver://xxxxxxxxxx.database.windows.net;"
        + "database=Northwind;"
        + "user=UserName@xxxxxxxxxx;"
        + "password=Password";
    Connection con = DriverManager.getConnection(connectionUrl);
    out.print("Connected.<br/>");
    String SQL = "SELECT CustomerID, ContactName FROM Customers";
    Statement stmt = con.createStatement();
    ResultSet rs = stmt.executeQuery(SQL);
    while (rs.next()) {
        out.print(rs.getString(1) + ": " + rs.getString(2) + "<br/>");
    }
}catch(Exception e){
    out.print("Error message: "+ e.getMessage());
}
%>
</body>

</html>

That’s it! To summarize the steps…

- Migrate your database to SQL Azure with the SQL Azure Migration Wizard.

- Change the database connection in your locally running application.

- Use the Windows Azure Starter Kit for Java to move your application to Windows Azure. (You’ll need to follow instructions in this post and instructions above.)
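The JSP page shown earlier never closes its connection, statement or result set. As a hedged sketch only (not from the original post), the same query could be wrapped in a try-with-resources block, which requires Java 7 or later. The class name NorthwindCustomers and its methods are hypothetical, and the sketch assumes the driver jar is on the classpath and registers itself automatically, which is why there is no Class.forName call.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;

final class NorthwindCustomers {

    /** Formats one row the same way the JSP page does: "ID: Name<br/>". */
    static String formatRow(String customerId, String contactName) {
        return customerId + ": " + contactName + "<br/>";
    }

    /**
     * Runs the same query as the JSP page and returns the formatted rows.
     * try-with-resources closes the ResultSet, Statement and Connection
     * in reverse order, even if the query throws.
     */
    static List<String> loadCustomers(String connectionUrl) throws SQLException {
        List<String> rows = new ArrayList<>();
        String sql = "SELECT CustomerID, ContactName FROM Customers";
        try (Connection con = DriverManager.getConnection(connectionUrl);
             Statement stmt = con.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            while (rs.next()) {
                rows.add(formatRow(rs.getString(1), rs.getString(2)));
            }
        }
        return rows;
    }

    public static void main(String[] args) throws SQLException {
        if (args.length == 1) {
            // args[0] is assumed to be a full JDBC URL like the one shown earlier.
            for (String row : loadCustomers(args[0])) {
                System.out.println(row);
            }
        } else {
            // No URL supplied: just show the row format with made-up sample values.
            System.out.println(formatRow("ID01", "Sample Name"));
        }
    }
}
```

Returning the rows as a list, rather than printing inside the loop, keeps the database handles open only for as long as the query runs.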


Marketplace DataMarket and OData

Michael Crump (@mbcrump) continued his OData series with Producing and Consuming OData in a Silverlight and Windows Phone 7 application (Part 2) post of 3/28/2011 for the Silverlight Show blog:

This article is Part 2 of the series “Producing and Consuming OData in a Silverlight and Windows Phone 7 application.”

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 1) – Creating our first OData Data Source and querying data through the web browser and LinqPad.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 2) – Consuming OData in a Silverlight Application.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 3) – Consuming OData in a Windows Phone 7 Application.

To refresh your memory on what OData is:

The Open Data Protocol (OData) is simply an open web protocol for querying and updating data. It allows for the consumer to query the datasource (usually over HTTP) and retrieve the results in Atom, JSON or plain XML format, including pagination, ordering or filtering of the data.

To recap what we learned in the previous section:

- We learned how you would produce an OData Data Source starting from File->New Project and selecting empty ASP.NET Application.

- We generated a SQL Compact 4 Edition DB and populated it with data.

- We created our Entity Framework 4 Model and OData Data Service.

- Finally, we learned about basic sorting and filtering of the OData Data Source using the web browser and a free utility called LinqPad.

In this article, I am going to show you how to consume an OData Data Source using Silverlight 4. In the third and final part of the series, we will consume the data using Windows Phone 7. Read the complete series of articles to have a deep understanding of OData and how you may use it in your own applications.

See the video tutorial

Download the source code for part 2 | Download the slides

Adding on to our existing project.

Hopefully you have completed Part 1 of the series. If you have not, you can still follow this exercise by downloading the source code for Part 1. I would recommend at least watching the video for Part 1 in order to have a better understanding of where you can add OData to your own applications.

Go ahead and load the SLShowODataP1 project inside of Visual Studio 2010, and look under Solution Explorer. Now right click CustomerService.svc and Select “View in Browser”.

If everything is up and running properly, then you should see the following screen (assuming you are using IE8).

Please note that any browser will work. I chose to use IE8 for demonstration purposes only.

If you got the screen listed above, then we know everything is working properly and can continue. If not, download the solution and continue with the rest of the article.

To keep things simple, we are going to use this same project and add in our Silverlight Application. So to begin, you will Right Click on the Solution and select “Add” then “New Project”.

You will want to select “Silverlight” –> “Silverlight Application” –> and give it a name of “SLODataApp” and hit “OK”.

You will be presented with the following screen and we are going to leave everything as the default.

Now our project should look like the following:

We have a Silverlight Application as well as an ASP.NET Web Application. You should notice that the ASP.NET application has automatically added the Silverlight helper JavaScript as well as the test page to load our Silverlight Application.

The first thing that we are going to do is create our user interface. This will allow us to select any of our customers and display a “Details” view.

Here is a screenshot from the completed application.

Double click on your MainPage.xaml and replace the existing Grid with the code snippet provided below.

 1: <Grid x:Name="LayoutRoot" Background="White">
 2:     <Grid.RowDefinitions>
 3:         <RowDefinition />
 4:         <RowDefinition />
 5:     </Grid.RowDefinitions>
 6:     <ListBox ItemsSource="{Binding}" DisplayMemberPath="FirstName" x:Name="customerList" Margin="8" />
 7:     <StackPanel DataContext="{Binding SelectedItem, ElementName=customerList}" Margin="8" Grid.Row="1">
 8:         <TextBlock Text="Details:"/>
 9:         <TextBlock Text="{Binding FirstName}"/>
10:         <TextBlock Text="{Binding LastName}"/>
11:         <TextBlock Text="{Binding Address}"/>
12:         <TextBlock Text="{Binding City}"/>
13:         <TextBlock Text="{Binding State}"/>
14:         <TextBlock Text="{Binding Zip}"/>
15:     </StackPanel>
16: </Grid>

Lines 1-5 set up our display to allow two rows: one for the ListBox that contains our collection and one for our “details view” that will provide additional information about our customer.

Line 6 contains the ListBox that we are going to bind the collection to. The DisplayMemberPath tells the ListBox that we want to show the “FirstName” column. We also give this ListBox a name so that our StackPanel’s DataContext can refer to it. Finally, we give it a margin so it appears nice and neat on the form.

Lines 7-15 contain our StackPanel, which represents our “Details” view. We set the DataContext to bind to the SelectedItem of our ListBox to populate the first name, last name, address, city, state and zip code when a user selects an item.

Line 16 is simply the closing tag for the Grid.

Now that we have our UI built, it should look like the following inside of Visual Studio 2010.

We will now need to add a Service Reference to our OData Data Service before we can write code-behind to retrieve the data from the service.

We will need to right-click on our project and select Add Service Reference.

On the “Add Service Reference” screen, click Discover then type “DataServices” as the name for the Namespace and finally hit OK.

Note: You can use a name other than “DataServices”; I typically use it as the Namespace name to avoid confusing myself and others.

If this OData service were hosted somewhere, you could use a URL such as http://www.yourdomain.com/CustomerService.svc instead of specifying the port number.

You may notice that you have a few extra .dll’s added to your project as well as a new folder called Service References. You may also notice how large the project has become since our initial ASP.NET Empty Web Project!

Now that we are finished with our UI and adding our Service Reference, let’s add some code behind to call our OData Data Service and retrieve the data into our Silverlight 4 Application.

Now would be a good time to go ahead and build our project. Select Build from the menu and then Build Solution. Now we can double-click the MainPage.xaml.cs file and replace the MainPage() constructor with the following code snippet.

 1: public MainPage()
 2: {
 3:     InitializeComponent();
 4:     this.Loaded += new RoutedEventHandler(MainPage_Loaded);
 5: }
 6:
 7: void MainPage_Loaded(object sender, RoutedEventArgs e)
 8: {
 9:     var ctx = new CustomersEntities(new Uri("/CustomerService.svc", UriKind.Relative));
10:     var qry = from g in ctx.CustomerInfoes
11:               select g;
12:
13:     var coll = new DataServiceCollection<CustomerInfo>();
14:     coll.LoadCompleted += new EventHandler<LoadCompletedEventArgs>(coll_LoadCompleted);
15:     coll.LoadAsync(qry);
16:
17:     DataContext = coll;
18: }
19:
20: void coll_LoadCompleted(object sender, LoadCompletedEventArgs e)
21: {
22:     if (e.Error != null)
23:     {
24:         MessageBox.Show("Error Detected, handle accordingly.");
25:     }
26: }

Lines 1-5 are the standard generated code, except for line 4, which hooks the Loaded event to a new event handler called MainPage_Loaded.

Line 9 creates a new context with our CustomersEntities (which was generated by Entity Framework 4) and passes the URI of our data service which is located in our ASP.NET Project called CustomerService.svc.

Lines 10-11 simply create a query that returns all records in our collection. We will modify this shortly to create a where and an orderby example.

Lines 13-15 create a DataServiceCollection, which provides notification if an item is added, deleted or the list is refreshed. It is expecting a collection that was also created by Entity Framework.

Line 17 is simply setting the DataContext of MainPage to the collection.

Finally lines 20-26 are checking for an error and displaying a MessageBox. Of course, in a production application you may want to log that error instead of interrupting the user with a message they shouldn’t be concerned with.

If we go ahead and run this application, we would get the following screen.

After selecting a user, we would get the Details view as shown below:

Let’s go back and add a query to filter the data. So go back to your MainPage.xaml.cs file and change this line:

var qry = from g in ctx.CustomerInfoes
          select g;

to:

var qry = from g in ctx.CustomerInfoes
          where g.ID < 3
          orderby g.FirstName
          select g;

Now if we run this project you will notice that we only have 2 results returned and ordered by the FirstName. So in this example, Jon is listed first.
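Behind the scenes, a filtered and ordered query like this reaches the service as an OData URI. The following is a rough sketch in Java (the language of the JDBC sample earlier in this post) of how such a URI could be built with the $filter and $orderby query options. The ODataQueryUri class, the localhost host name and port 1234 are hypothetical, and the exact URI the Silverlight client generates may differ in detail.

```java
import java.net.URI;
import java.net.URISyntaxException;

final class ODataQueryUri {

    /**
     * Builds a query URI for an entity set using the OData $filter and
     * $orderby options. The multi-argument URI constructor percent-encodes
     * characters that are illegal in a query, such as the spaces in "ID lt 3".
     */
    static URI build(String serviceRoot, String entitySet,
                     String filter, String orderBy) {
        try {
            URI root = new URI(serviceRoot);
            String query = "$filter=" + filter + "&$orderby=" + orderBy;
            return new URI(root.getScheme(), root.getAuthority(),
                    root.getPath() + "/" + entitySet, query, null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Invalid service root or query", e);
        }
    }

    public static void main(String[] args) {
        // "ID lt 3" is the OData form of the LINQ condition g.ID < 3.
        URI uri = build("http://localhost:1234/CustomerService.svc",
                "CustomerInfoes", "ID lt 3", "FirstName");
        System.out.println(uri);
        // prints http://localhost:1234/CustomerService.svc/CustomerInfoes?$filter=ID%20lt%203&$orderby=FirstName
    }
}
```

Pasting a URI of this shape into a browser is one way to check what the service returns independently of the Silverlight client.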

Conclusion

At this point, we have seen how you would produce an OData Data Source and learned some basic sorting and filtering using the web browser and LinqPad. We have also consumed the data in a Silverlight 4 Application and queried the data with and without a filter. In the next part of the series, I am going to show you how to consume this data in a Windows Phone 7 Application. Again, thanks for reading and please come back for the final part.

Michael is an MCPD who shares his findings in his personal blog http://michaelcrump.net.

Doris Chen posted Redmond MVC & jQuery WebCamp |Presentation, Demo and Labs Available to Download on 3/28/2011:

The following is the agenda for the WebCamp on Friday (3/25) in Redmond, WA and I am going to use this agenda as a reference to post the WebCamp content for both MVC and jQuery sessions.

As we have shown you in the WebCamp, the presentation, demo and labs material for MVC3 sessions can be downloaded from

- http://trainingkit.webcamps.ms/AspNetMvc.htm (for both beginner and intermediate levels)

The presentation, demo and labs for jQuery sessions are as follows:

- Welcome and Introduction

- Session 1 jQuery Fundamentals

- Session 2 Templating & New Plugins

- Session 3 jQuery, OData & MVC

- Suggested labs for jQuery:

… Thanks for attending the WebCamp and hope to see you in the upcoming WebCamps soon.


Windows Azure AppFabric: Access Control, WIF and Service Bus

Itai Raz continued his AppFabric series with Introduction to Windows Azure AppFabric blog posts series – Part 5: Scale-out Application Infrastructure on 3/29/2011:

In the previous posts in this series we covered the challenges that Windows Azure AppFabric is trying to solve, and started discussing the Middleware Services in the post regarding Service Bus and Access Control, the post regarding Caching, and the post regarding Building Composite Applications.

In this post we will discuss how the AppFabric Application Infrastructure is optimized for cloud-scale services and middle-tier components, which enables you to get the benefits of cloud computing. These benefits include: horizontal scale-out, high-availability, and multi-tenancy.

Scale-out Application Infrastructure

As noted above, we already covered the AppFabric Middleware Services, and the support you get for developing and running Composite Applications. As illustrated by the image below, the next crucial AppFabric component that enables all these great capabilities is the AppFabric Container.

The AppFabric Container is an infrastructure run-time component. It enables services and components running on top of it to get all of the great benefits that a cloud-based environment provides.

Services that can run on top of the container include the AppFabric Middleware Services mentioned in previous posts, other middle-tier components provided by Microsoft, and components that you create.

Let's discuss the main capabilities that the AppFabric Container provides.

Composition Runtime

The Composition Runtime enables managing the full lifecycle of application components including: loading, unloading, starting, and stopping. It also supports configurations such as auto-start and on-demand activation of components. You can configure these at development time, but you can also use the AppFabric Management Portal to manage components' runtime state, set components' runtime properties, and control components' lifecycle. Instead of having to develop these capabilities on your own, you get them out-of-the-box from the Container, and you are able to easily set and configure them.

Multi-tenancy and Sandboxing

One of the basic concepts of cloud computing is optimizing the use of all available resources in a highly elastic way, so that resource usage is maximized and load and resilience is distributed across the available resources. To make this happen, you need to support many tenants in a highly dense way and ensure that no tenant interferes with another. In addition, this has to be handled in an automated manner, based on the requirements of the application. Once again, the Container is engineered to provide these capabilities so you can maximize the use of the underlying resources through multi-tenancy while providing sandboxing of the different tenants.

State Management

When designing applications, it is complicated to implement state management and scale-out in conjunction. In order to be able to scale-out your applications, you end up moving your state management to a different application tier, such as the database. This allows stateless components to be more easily scaled-out; however, this really just moves the bottleneck of state management to a different tier.

What if components and state could reside together and still be able to scale horizontally? This way they could scale-out together, saving the developer the need to worry about complexities of external state management and other infrastructure capabilities. The Container provides state management capabilities that enable you to scale-out your application without having to externalize your components' state.

Scale-out and High Availability

With state management capabilities provided out-of-the-box, you would next like to optimize your application performance and provide high availability. You want your application to automatically scale according to the application load. The Container provides these abilities. It takes care of transparently replicating application components so there are no single points of failure. It can also automatically spin-up new instances of components as the application load increases and then spin them down as the application load decreases.

Dynamic Address Resolution and Routing

When you run in a fabric-based environment such as the cloud, components can be placed, replaced, and reconfigured dynamically by the environment in order to provide the application with optimal scale and availability. With all of these moving parts, it is critical to keep track of them and work out where they are and how to invoke them. The Container plays a key role in managing all of this logic for you and automatically and efficiently routes requests to the target services and components, saving you the need to worry about changes occurring in the environment.

As you can see, the concepts and capabilities discussed above are key for you to be able to get the advantages of a cloud-based environment. If you really want to optimize your application to get these benefits, and had to implement all of these capabilities on your own, it would cost you a lot of time and require you to implement some pretty complicated concepts. This is just another example of how AppFabric, and specifically the AppFabric Container, can make the life of the developer a lot easier and more productive when building applications, by providing these capabilities out-of-the-box.

A first Community Technology Preview (CTP) of the features discussed in this post will be released in a few months, so you will be able to start testing them soon.

As a reminder, you can start using our CTP services in our LABS/Preview environment at: https://portal.appfabriclabs.com/. Just sign up and get started.

Other places to learn more on Windows Azure AppFabric are:

- Windows Azure AppFabric website and specifically the Popular Videos under the Featured Content section

- Windows Azure AppFabric developers MSDN website

Be sure to start enjoying the benefits of Windows Azure AppFabric with our free trial offer. Just click on the image below and start using it today!


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

The Windows Azure Team announced CONTENT UPDATE: Delivering High-Bandwidth Content with the Windows Azure CDN in a 3/29/2011 post:

The MSDN library content about how to use the Windows Azure Content Delivery Network (CDN) has been revised, reorganized, and expanded to cover the new features available in the Windows Azure Platform Management Portal. In Delivering High-Bandwidth Content with the Windows Azure CDN, the updated content provides a detailed overview of the CDN and step-by-step instructions for how to set up, enable, and manage the CDN using the new functionality available in the Management Portal. Check it out, and let us know what you think by using the on-page rating/feedback or by sending email to azuresitefeedback@microsoft.com.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Steve Marx (@smarx) posted a preview of a live Windows Azure Cassandra + Tornado Test app on 3/29/2011:

Stay tuned for more info.

Rachel Collier reviewed the Moving applications to the cloud on the Microsoft Windows Azure platform book from patterns & practices on 3/29/2011:

This book is the first volume in a series about the Windows Azure platform. Here’s a summary:

Moving Applications to the Cloud on the Microsoft Windows Azure Platform

How do you build applications to be scalable and have high availability? Along with developing the applications, you must also have an infrastructure that can support them. You may need to add servers or increase the capacities of existing ones, have redundant hardware, add logic to the application to handle distributed computing, and add logic for failovers. You have to do this even if an application is in high demand for only short periods of time.

The cloud offers a solution to this dilemma. The cloud is made up of interconnected servers located in various data centers. However, you see what appears to be a centralized location that someone else hosts and manages. By shifting the responsibility of maintaining an infrastructure to someone else, you're free to concentrate on what matters most: the application.

This is just one of the marvelous books you can find out about in the MSDN library.

Michal Morciniec explained How to Obtain Powered by Azure Logo for your Windows Azure website in a post to his MSDN blog of 3/28/2011:

To help partners market their applications Microsoft has created a new logo “Powered by Windows Azure”. You can read about the objectives of this co-marketing program in this web page. In this blog we will have a look at the process and requirements to obtain this logo assuming that you have already deployed your web site to Azure.

1. The first step is to access the Microsoft Platform Ready website. Click the Sign Up link, where you can provide some information about yourself and your company. You can then Sign In with your Live ID to access the portal.

2. Now we are redirected to a page whose My MPR panel lists the applications registered for certification. An application can be added by clicking the small Add an app link below the Application table.

3. A form appears where you provide information about the Windows Azure website that you want to certify. The application name, version, description and the URL to the application are provided.

4. In the remaining section of the form we indicate the language of the website and our interest in certifying it on Windows Azure. If the application is already working on Azure, specify the current date for Target Compatibility Date. Press the Save & Submit button.

5. We now go back to the main MPR page and can see Windows Azure Platform technology listed when our Azure website is selected in the list of the applications. We can now click the Download tool link that appears in the Actions column (it may take a minute or two for this link to become active).

6. When the link is clicked, the tabbed section below the list of applications changes to the Test Phase. In that section we can download the certification tool by clicking the Microsoft Platform Ready Test Tool link. This page also allows us to upload the test results generated by this tool for our registered application.

7. Install the Microsoft Platform Ready Test Tool on your computer.

8. Start the tool by typing Microsoft Platform in the Start->Search… textbox. In the Microsoft Platform Ready Test Tool, click the Start New Test button.

9. In the Test Information screen, select the Windows Azure checkbox, fill out the name of the test and press the Next button.

10. In the next screen, click the Edit link to fill out the details required by the test.

11. The test requires a URL to the Windows Azure website and its IP address.

12. Log in to the Windows Azure developer portal to look up these details.

13. Copy and paste the DNS name (without the http:// prefix) and Input Endpoints to the test pre-requisites screen and press the Verify button. The Configuration Status should be Pass for both items. Press the Close button.

14. On the next screen, verify that the pre-requisite status is Pass and press the Next button.

15. On the next page, mark the check box and press the Next button. The status will be Executing tests.

16. On the next screen you can view the status of the test, which should be Pass. Click Next to view the report.

17. On the final screen you can click the Show button to view the report. Then click the Reports button to generate the test results package.

18. On this page, select the tests that you want to include in the test results package. Press the Next button.

19. Review the summary of the tests and press the Next button.

20. Provide the application name and version and press Next.

21. Now we need to specify additional information to generate the package. In particular, we specify the Application ID from http://www.microsoftplatformready.com that was assigned in step 5. On this screen we can also review the Logo agreement. Press Next to generate the package.

22. On the final screen, make a note of the location of the test package.

23. Now we go back to http://www.microsoftplatformready.com and log in. In the Dashboard, click the application Name in the application table, then click the Test tab on the horizontal tab bar and the Test My Apps tab on the vertical tab bar. You should see a screen as below.

24. Now upload the test package. This is a .zip archive located in the Documents folder, in the MPR\Results Package subfolder.

25. Press the Upload button to submit it to the portal.

26. Press the Refresh link to refresh the test result processing state. You should see a page as below.

You can now download the Powered by Windows Azure logo by clicking the download link. You can also get access to additional marketing material by clicking on the Verify vertical tab and then on the Market horizontal tab.

The process has changed a bit and the Microsoft Platform Ready pages have been redone since I went through this process to obtain the Powered by Windows Azure logo for my OakLeaf Systems Azure Table Services Sample Project - Paging and Batch Updates Demo:


Visual Studio LightSwitch

The Visual Studio LightSwitch Team added an Excel Importer [Extension] for Visual Studio LightSwitch to the MSDN Code Samples library on 3/28/2011:

Introduction

Excel Importer is a Visual Studio LightSwitch Beta 2 Extension. The extension will add the ability to import data from Microsoft Excel to Visual Studio LightSwitch applications. The importer can validate the data that is being imported and will even import data across relationships.

Getting Started

To build and run this sample, Visual Studio 2010 Professional, the Visual Studio SDK and LightSwitch are required. Unzip the ExcelImporter zip file into your Visual Studio Projects directory (My Documents\Visual Studio 2010\Projects) and open the LightSwitchUtilities.sln solution.

Building the Sample

To build the sample, make sure that the LightSwitchUtilities solution is open and then use the Build | Build Solution menu command.

Running the Sample

To run the sample, navigate to the Vsix\Bin\Debug or the Vsix\Bin\Release folder. Double click the LightSwitchUtilities.Vsix package. This will install the extension on your machine.

Create a new LightSwitch application. Double click on the Properties node underneath the application in Solution Explorer. Select Extensions and check off LightSwitch Utilities. This will enable the extension for your application.

In your application, add a screen for some table. For example, create a Table called Customer with a single String property called Name. Add a new Editable Grid screen for Customers. Create a new button for the screen called ImportfromExcel. In the button's code, call LightSwitchUtilities.Client.ImportFromExcel, passing in the screen collection you'd like to import data into.

Run the application and hit the Import From Excel button. This should prompt you to select an Excel file. This Excel file must be located in the Documents directory due to Silverlight limitations. The Excel file's first row should identify each column of data with a title. These titles will be displayed in the LightSwitch application to allow you to map them to properties on the corresponding LightSwitch table.

The Visual Studio LightSwitch Team updated its Visual Studio LightSwitch Vision Clinic Walkthrough & Sample app in the MSDN Code Samples library for Beta 2 on 3/28/2011:

Learn how to get started building business applications with Visual Studio LightSwitch.

This download contains the database and sample application for the "Walkthrough: Creating the Vision Clinic Application" topic in the product documentation. Both Visual Basic and C# samples are available.

This sample is intended for use with Visual Studio LightSwitch Beta 2. To learn more about Visual Studio LightSwitch and download the Beta, please visit the LightSwitch Developer Center. For questions please visit the LightSwitch Forums.

Walkthrough: Creating the Vision Clinic Application

This walkthrough demonstrates the end-to-end process of creating an application in Visual Studio LightSwitch. You will use many of the features of LightSwitch to create an application for a fictional vision clinic. The application includes capabilities for scheduling appointments and creating invoices.

Steps:

Create a Project: Create the application project.

Define Tables: Add Patient, Invoice, and Invoice Detail entities.

Create a Choice List: Create a list of values.

Define a Relationship: Link related tables.

Add Another Entity: Add the Appointment entity.

Create a Screen: Create a screen to display patients.

Run the Application: Run the application and enter data.

Connect to a Database: Connect to an external database.

Make Changes to Entities: Modify the Products and Product Rebate entities.

Create a List and Details Screen: Create a screen to display products.

Change the Screen Layout: Modify the layout of the Product List screen.

Make Runtime Changes: Make changes in the running application.

Create a Query: Create a parameterized query and bind it to a screen.

Add a Computed Field: Create a computed field and add it to a screen.

Create a Cross-database Relationship: Create a virtual relationship between entities in different databases.

Create the Invoices Screen: Create a screen to display invoices.

Modify the Invoices Screen: Change the layout of the Invoices screen in the running application.

Add Screen Logic: Write code to calculate dates.

Add More Computed Fields: Create more computed fields and add them to the Invoices screen.

Deploy the Application: Publish the application as a 2-tier Desktop application.

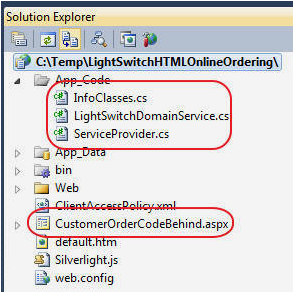

Michael Washington (@defwebserver) posted LightSwitch and HTML to The Code Project on 3/28/2011:

Using LightSwitch For The Base Of All Your Projects

The number one reason you would not use LightSwitch is that you have a web application that needs to be accessed by clients who cannot, or will not, install the Silverlight plug-in.

For example, if we create the application covered here: OnlineOrdering.aspx, and we decide we want to allow Customers to place orders with their iPad, we would assume that we cannot use LightSwitch for the project. This article will demonstrate that it is easy to add normal HTML pages to allow access to the LightSwitch application.

Because LightSwitch uses a normal SQL database, this is not difficult. However, one of the benefits of LightSwitch is that it allows you to easily create and maintain business rules. These business rules are the heart (and the point) of any application. You want any 'web-based client' to work with any LightSwitch business rules. In addition, you want the security to also work.

For example, in the LightSwitch Online Ordering project, we allow an administrator to place orders for any Customer, but when a Customer logs in, they can only see, and place orders for, themselves. This article will demonstrate how this can be implemented in HTML pages. If a change is made to the LightSwitch application, the HTML pages automatically get the new business logic without needing to be re-coded.

The Sample Application

We first start with the application covered here: OnlineOrdering.aspx.

We add some pages to the deployed application.

We log into the application using normal Forms Authentication.

These pages work fine with any standard web browser, including an iPad.

A Customer is only able to see their orders.

The Customer can place an order.

All the business rules created in the normal LightSwitch application are enforced.

How It's Done

The key to making this work is that LightSwitch is built on top of standard Microsoft technologies such as RIA Domain Services. While this is not normally done, it is possible to connect to RIA Domain Services using ASP.NET code.

One blog covers doing this using MVC: http://blog.bmdiaz.com/archive/2010/04/03/using-ria-domainservices-with-asp.net-and-mvc-2.aspx. As a side note, you can use MVC to create web pages. I originally created this sample using MVC but switched back to web forms because that is what I code in mostly.

The Service Provider

It all starts with the Service Provider. When calling RIA Domain Services from ASP.NET code, you need a Service Provider. Min-Hong Tang of Microsoft provided the basic code that I used to create the following class:


    using System;
    using System.Security.Principal;
    using System.Web;

    public class ServiceProvider : IServiceProvider
    {
        public ServiceProvider(HttpContext htpc)
        {
            this._httpContext = htpc;
        }

        private HttpContext _httpContext;

        // Hand back the current user or the HttpContext on request;
        // any other service type is not supported and returns null
        public object GetService(Type serviceType)
        {
            if (serviceType == null)
            {
                throw new ArgumentNullException("serviceType");
            }

            if (serviceType == typeof(IPrincipal))
                return this._httpContext.User;

            if (serviceType == typeof(HttpContext))
                return this._httpContext;

            return null;
        }
    }

The Domain Service

Next we have the Domain Service. This is the heart of the application. The LightSwitch team intentionally does not expose any of the WCF service points that you would normally connect to. The LightSwitch Domain Service is one of the few LightSwitch internal components you can get to outside of the normal LightSwitch client.

The following class uses the Service Provider and returns a Domain Service that will be used by the remaining code.


    using System.Web;
    using System.ServiceModel.DomainServices.Server;
    using LightSwitchApplication.Implementation;

    public class LightSwitchDomainService
    {
        public static ApplicationDataDomainService
            GetLightSwitchDomainService(HttpContext objHtpContext, DomainOperationType DOT)
        {
            // Create a Service Provider
            ServiceProvider sp = new ServiceProvider(objHtpContext);

            // Create a Domain Context
            // Set DomainOperationType (Invoke, Metadata, Query, Submit)
            DomainServiceContext csc = new DomainServiceContext(sp, DOT);

            // Use the DomainServiceContext to instantiate an instance of the
            // LightSwitch ApplicationDataDomainService
            ApplicationDataDomainService ds =
                DomainService.Factory.CreateDomainService(
                    typeof(ApplicationDataDomainService), csc)
                as ApplicationDataDomainService;

            return ds;
        }
    }

Authentication

When we created the original application, we selected Forms Authentication. LightSwitch simply uses standard ASP.NET Forms Authentication. This allows you to insert a LightSwitch application into an existing web site and use your existing users and roles.

I even inserted a LightSwitch application into a DotNetNuke website (I will cover this in a future article).

We can simply drag a standard ASP.NET Login control and drop it on the page.

I only needed to write a small bit of code to show and hide panels when the user is logged in:


    protected void Page_Load(object sender, EventArgs e)
    {
        if (this.User.Identity.IsAuthenticated) // User is logged in
        {
            // Only run this if the panel is hidden
            if (pnlLoggedIn.Visible == false)
            {
                pnlNotLoggedIn.Visible = false;
                pnlLoggedIn.Visible = true;
                ShowCustomerOrders();
            }
        }
        else // User not logged in
        {
            pnlNotLoggedIn.Visible = true;
            pnlLoggedIn.Visible = false;
        }
    }

You can even open the site in Visual Studio, and go into the normal ASP.NET Configuration...

And manage the users and roles.

A Simple Query

When a user logs in, a simple query shows them the Orders that belong to them:


    private void ShowCustomerOrders()
    {
        // Make a collection to hold the final results
        IQueryable<Order> colOrders = null;

        // Get LightSwitch DomainService
        ApplicationDataDomainService ds =
            LightSwitchDomainService.GetLightSwitchDomainService(
                this.Context, DomainOperationType.Query);

        // You can't invoke the LightSwitch domain Context on the current thread
        // so we use the LightSwitch Dispatcher
        // (serviceDescription, errors and totalCount are not declared in this excerpt)
        ApplicationProvider.Current.Details.Dispatcher.BeginInvoke(() =>
        {
            // Define query method
            DomainOperationEntry entry =
                serviceDescription.GetQueryMethod("Orders_All");

            // execute query
            colOrders = (IQueryable<Order>)
                ds.Query(new QueryDescription(entry, new object[] { null }),
                    out errors, out totalCount).AsQueryable();
        });

        // We now have the results - bind them to a DataGrid
        GVOrders.DataSource = colOrders;
        GVOrders.DataBind();
    }

When a user clicks on the Grid, this code is invoked:


    protected void GVOrders_RowCommand(object sender, GridViewCommandEventArgs e)
    {
        if (e.CommandName == "Select")
        {
            LinkButton lnkButtton = (LinkButton)e.CommandSource;
            int intOrderID = Convert.ToInt32(lnkButtton.CommandArgument);
            ShowOrderDetails(intOrderID);
        }
    }

This code invokes a slightly more complex series of queries because we must display the Product names, show the total for all Order items, and format the output using a custom class.


    private void ShowOrderDetails(int intOrderID)
    {
        // Make a collection to hold the final results
        List<OrderDetailsInfo> colOrderDetailsInfo = new List<OrderDetailsInfo>();
        // A value to hold order total
        decimal dOrderTotal = 0.00M;

        // Make collections to hold the results from the Queries
        IQueryable<Product> colProducts = null;
        IQueryable<OrderDetail> colOrderDetails = null;

        // Get LightSwitch DomainService
        ApplicationDataDomainService ds =
            LightSwitchDomainService.GetLightSwitchDomainService(
                this.Context, DomainOperationType.Query);

        // You can't invoke the LightSwitch domain Context on the current thread
        // so we use the LightSwitch Dispatcher
        ApplicationProvider.Current.Details.Dispatcher.BeginInvoke(() =>
        {
            // Define query method
            DomainOperationEntry entry =
                serviceDescription.GetQueryMethod("Products_All");

            // execute query
            colProducts = (IQueryable<Product>)
                ds.Query(new QueryDescription(entry, new object[] { null }),
                    out errors, out totalCount).AsQueryable();
        });

        ApplicationProvider.Current.Details.Dispatcher.BeginInvoke(() =>
        {
            // Define query method
            DomainOperationEntry entry =
                serviceDescription.GetQueryMethod("OrderDetails_All");

            // execute query
            colOrderDetails = (IQueryable<OrderDetail>)
                ds.Query(new QueryDescription(entry, new object[] { null }),
                    out errors, out totalCount).AsQueryable();
        });

        // We only want the Order details for the current order
        var lstOrderDetails =
            colOrderDetails.Where(x => x.OrderDetail_Order == intOrderID);

        foreach (var Order in lstOrderDetails)
        {
            // Get the Product from the ProductID
            var objProduct =
                colProducts.Where(x => x.Id == Order.OrderDetail_Product)
                    .FirstOrDefault();

            // Add the item to the final collection
            colOrderDetailsInfo.Add(new OrderDetailsInfo
            {
                Quantity = Order.Quantity,
                ProductName = objProduct.ProductName,
                ProductPrice = objProduct.ProductPrice,
                ProductWeight = objProduct.ProductWeight
            });

            // Update order total
            dOrderTotal = dOrderTotal + (objProduct.ProductPrice * Order.Quantity);
        }

        // We now have the results - bind them to a DataGrid
        gvOrderDetails.DataSource = colOrderDetailsInfo;
        gvOrderDetails.DataBind();
        lblOrderTotal.Text =
            String.Format("Order Total: ${0}", dOrderTotal.ToString());
    }

Inserting Data

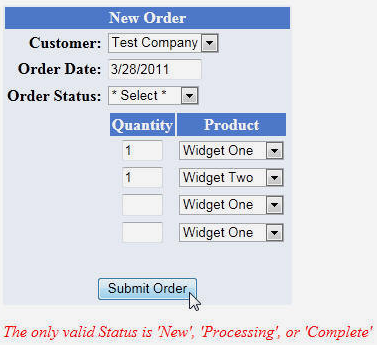

You can click the New Order link to switch to the New Order form and create a new Order.

If we look at the table structure in SQL Server, we see that when you click Submit, an Order entity needs to be created, and any OrderDetail entities need to be added to it.
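The parent-child shape described above can be sketched outside LightSwitch. The following is only a minimal illustration, not the LightSwitch-generated code: the `Product`, `OrderDetail` and `Order` classes and the `OrderBuilder.Build` helper are hypothetical stand-ins, and the form rows are modeled as plain (quantity text, product ID) pairs. It shows one Order being created and an OrderDetail being attached only for rows whose quantity box is filled in.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-ins for the LightSwitch-generated entities;
// the real Order and OrderDetail classes come from the LightSwitch project.
public class Product
{
    public int Id { get; set; }
    public string ProductName { get; set; }
    public decimal ProductPrice { get; set; }
}

public class OrderDetail
{
    public int Quantity { get; set; }
    public Product Product { get; set; }
}

public class Order
{
    public DateTime OrderDate { get; set; }
    public int CustomerId { get; set; }
    public List<OrderDetail> OrderDetails { get; } = new List<OrderDetail>();
}

public static class OrderBuilder
{
    // Each row carries the raw text of a quantity box and the selected product ID.
    // Rows with a blank quantity are skipped, so only filled-in rows become details.
    public static Order Build(
        DateTime orderDate,
        int customerId,
        IEnumerable<(string QuantityText, int ProductId)> rows,
        IReadOnlyDictionary<int, Product> productsById)
    {
        var order = new Order { OrderDate = orderDate, CustomerId = customerId };

        foreach (var (quantityText, productId) in rows)
        {
            if (string.IsNullOrWhiteSpace(quantityText))
            {
                continue;
            }

            order.OrderDetails.Add(new OrderDetail
            {
                Quantity = int.Parse(quantityText),
                Product = productsById[productId]
            });
        }

        return order;
    }
}

public static class Program
{
    public static void Main()
    {
        var products = new Dictionary<int, Product>
        {
            [1] = new Product { Id = 1, ProductName = "Widget", ProductPrice = 2.50M },
            [2] = new Product { Id = 2, ProductName = "Gadget", ProductPrice = 10.00M }
        };

        // Three form rows; the second has no quantity and is ignored.
        var rows = new[] { ("3", 1), ("", 2), ("1", 2) };

        Order order = OrderBuilder.Build(new DateTime(2011, 3, 28), 42, rows, products);

        decimal total = order.OrderDetails.Sum(d => d.Quantity * d.Product.ProductPrice);
        Console.WriteLine("Details: " + order.OrderDetails.Count);
        Console.WriteLine("Total: " + total);
    }
}
```

Looping over the rows keeps the per-row logic in one place, which avoids the copy-and-paste slips that creep in when the same block is repeated once per quantity box.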

In addition, we need to capture any errors and display them.

The following code does that:


    protected void btnSubmitOrder_Click(object sender, EventArgs e)
    {
        try
        {
            // Hold the results from the Queries
            IQueryable<Product> colProducts = null;

            // Get LightSwitch DomainService
            ApplicationDataDomainService ds =
                LightSwitchDomainService.GetLightSwitchDomainService(
                    this.Context, DomainOperationType.Submit);

            // Get the ID of the Customer Selected
            int CustomerID = Convert.ToInt32(ddlCustomer.SelectedValue);

            // We will need all the Products
            // lets reduce hits to the database and load them all now
            ApplicationProvider.Current.Details.Dispatcher.BeginInvoke(() =>
            {
                colProducts = ds.Products_All("");
            });

            // Create New Order
            Order NewOrder = Order.CreateOrder
                (
                    0,
                    Convert.ToDateTime(txtOrderDate.Text),
                    ddlOrderStatus.SelectedValue,
                    CustomerID
                );

            // Get the OrderDetails
            // (rows 2-4 are assumed to read their own dropdowns, ddlProduct2
            // through ddlProduct4; the original listing read ddlProduct1 for every row)
            if (txtQuantity1.Text != "")
            {
                int ProductID1 = Convert.ToInt32(ddlProduct1.SelectedValue);

                OrderDetail NewOrderDetail1 = new OrderDetail();
                NewOrderDetail1.Quantity = Convert.ToInt32(txtQuantity1.Text);
                NewOrderDetail1.Product =
                    colProducts.Where(x => x.Id == ProductID1).FirstOrDefault();
                NewOrder.OrderDetails.Add(NewOrderDetail1);
            }

            if (txtQuantity2.Text != "")
            {
                int ProductID2 = Convert.ToInt32(ddlProduct2.SelectedValue);

                OrderDetail NewOrderDetail2 = new OrderDetail();
                NewOrderDetail2.Quantity = Convert.ToInt32(txtQuantity2.Text);
                NewOrderDetail2.Product =
                    colProducts.Where(x => x.Id == ProductID2).FirstOrDefault();
                NewOrder.OrderDetails.Add(NewOrderDetail2);
            }

            if (txtQuantity3.Text != "")
            {
                int ProductID3 = Convert.ToInt32(ddlProduct3.SelectedValue);

                OrderDetail NewOrderDetail3 = new OrderDetail();
                NewOrderDetail3.Quantity = Convert.ToInt32(txtQuantity3.Text);
                NewOrderDetail3.Product =
                    colProducts.Where(x => x.Id == ProductID3).FirstOrDefault();
                NewOrder.OrderDetails.Add(NewOrderDetail3);
            }

            if (txtQuantity4.Text != "")
            {
                int ProductID4 = Convert.ToInt32(ddlProduct4.SelectedValue);

                OrderDetail NewOrderDetail4 = new OrderDetail();
                NewOrderDetail4.Quantity = Convert.ToInt32(txtQuantity4.Text);
                NewOrderDetail4.Product =
                    colProducts.Where(x => x.Id == ProductID4).FirstOrDefault();
                NewOrder.OrderDetails.Add(NewOrderDetail4);
            }

            // Set-up a ChangeSet for the insert
            var ChangeSetEntry =
                new ChangeSetEntry(0, NewOrder, null, DomainOperation.Insert);
            var ChangeSetChanges =
                new ChangeSet(new ChangeSetEntry[] { ChangeSetEntry });

            // Perform the insert
            ds.Submit(ChangeSetChanges);

            // Display errors if any
            if (ChangeSetChanges.HasError)
            {
                foreach (var item in ChangeSetChanges.ChangeSetEntries)
                {
                    if (item.HasError)
                    {
                        foreach (var error in item.ValidationErrors)
                        {
                            lblOrderError.Text =
                                lblOrderError.Text + "<br>" + error.Message;
                        }
                    }
                }
            }
            else
            {
                lblOrderError.Text = "Success";
            }
        }
        catch (Exception ex)
        {
            lblOrderError.Text = ex.Message;
        }
    }

Use At Your Own Risk

I would like to thank Karol and Xin of Microsoft for their assistance in creating this code. However, Microsoft wanted me to make it very clear that this is unsupported. The LightSwitch team only supports calling LightSwitch code from the Silverlight LightSwitch client. What this means:

- Don't call Microsoft for support if you have problems getting this working

- It is possible that a future service pack could disable something

- In LightSwitch version 2 (and beyond) they may decide to make their own HTML page output, or make a lot of changes that would break this code.

My personal opinion (follow at your own risk)

- I will still use the LightSwitch forums for help as needed.

- I can write code that talks directly to the SQL server tables (you would have to code the business rules manually)

- You don't have to upgrade the project to LightSwitch 2 (and beyond).

I think I understand why they won't officially support this scenario. Number one, if a security issue arose, they want the ability to control both ends of the data flow, and they can't promise to support client-side code whose contents they have no way of knowing. Also, they want to leave their options open in future versions of LightSwitch.

Because I know I can always code directly against the SQL Server data, the risk won't keep me up at night.

License

This article, along with any associated source code and files, is licensed under The Microsoft Public License (Ms-PL)

Michael is a Microsoft Silverlight MVP and is one of the founding members of The Open Light Group.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

The Windows Azure Team described Steps to Set Up Invoicing in this 3/28/2011 window:

Josh Duncan posted Next-Gen Ops Management Needed in Cloud to the Data Center Knowledge blog on 3/22/2011 (missed when published):

Today’s IT operations management requirements are changing. While the basics of performance and availability management are still the same, extending traditional IT operations to the cloud introduces a new layer of complexity, which requires organizations to manage the operational challenges that come with a dynamic environment.

When organizations are considering scaling their operations to the cloud, attention is often spent on the basic setup of the infrastructure, and operations management typically gets pushed out to a later date. While the architecture is certainly a challenge in itself, overlooking the day-in and day-out management of a new, virtualized environment in the planning stages can create bigger problems down the road, not to mention undermine the success of the cloud-based infrastructure.

Consider service delivery and usage

More often than not, enterprises that are internally building a private cloud take an “if we build it, they will come” mentality. The problem with this approach is if you can’t guarantee service delivery, people will lose faith in your cloud. It’s that simple. If users don’t believe your shared resource is an advantage to them, there is going to be resistance. This lack of faith can cause a downward spiral, and before you know it, you won’t be able to get critical resources on it. The bottom line is, if you can’t guarantee service delivery, you can’t drive up utilization in your cloud environment. This will drive up costs and your cloud will be looked at as an under-utilized or wasted resource.

Start with a strong foundation

IT organizations need to prepare to manage the challenges of the cloud from the start. First, the cloud may be virtual, but behind the scenes, there is a lot of physical infrastructure required. The notion that once you move to the cloud the physical parts go away is simply not true. You still have to purchase, deploy, and provision the hardware to power the cloud. Second, the legacy physical management challenges that were never easy to deal with in the first place are now in an environment where dynamic resource allocation is the standard. Virtualization has added another layer of management challenges that must be managed and correlated with the physical dependencies.

Clear insight into activities in the cloud is necessary

Because all events are interconnected in the cloud, guaranteeing service delivery requires complete insight into everything that’s happening, and the ability to determine the likely impacts. The inability to answer important questions like “What applications are running on this server?” and “Can I allocate their resources over to a different device fast enough so the service isn’t impacted?” can create a ripple effect throughout the environment when a problem occurs. And before you can address the issue, your cloud SLAs have been impacted and your customers are contacting you about a problem you didn’t even know existed. That’s a scenario all businesses want to avoid.

The benefits of the cloud can only be achieved when an organization is solving traditional and dynamic management problems of IT and service delivery. Applying only traditional management approaches in a more complex and dynamic world is a recipe for disaster.

Think ops management from the beginning

Ultimately, those who are going to be successful in the cloud are the ones who figure out how to operate it effectively and efficiently. Cloud builders embarking on the journey to the cloud need to make operations management a priority from Day One. You simply can’t expect to bolt it on later and be successful. This is why designing a next-generation cloud-based infrastructure requires a next-generation operations management approach. Doing so will help eliminate problems down the road and increase your chances of success in the cloud.

Josh Duncan is a Product Evangelist at Zenoss.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Jon Brodkin asserted “Microsoft pitches self-service private cloud in System Center 2012 at its Microsoft Management Summit” as he reported Private cloud can prevent IT run arounds, Microsoft says in a 3/29/2011 article for ITWorld-Canada:

If IT shops want to prevent users from going to the cloud, they have to bring the cloud to the users.

That was Microsoft Corp.'s message to customers Tuesday as the Microsoft Management Summit kicked off with a preview of System Center 2012. When IT shops don't provision services to end users fast enough, Microsoft officials said, the users will get what they want from Amazon's Elastic Compute Cloud or perhaps from Windows Azure.

But a private cloud model, enabled by new self-service capabilities in System Center, can make IT delivery so efficient that users won't need to make an end run around IT, Microsoft said.

There are many pieces to Microsoft's overhaul of its management platform, but the key for the user is a project code-named "Concero," a self-service portal for deploying business applications without having to deal with the underlying physical and virtual infrastructure.

Just as Amazon's Elastic Compute Cloud and Windows Azure let anyone get the computing and storage capacity they need, Concero lets business users request resources from their IT shops from a Web browser. The users can still access public cloud services like Azure, but they do so in a controlled environment that complies with company policies and regulations.

Demonstrating the new capabilities onstage, product manager Jeremy Winter showed how users can navigate a service catalog published in an internal IT portal to request additional resources -- for example, to boost the storage and CPU allocated to a particular application. They can also view their applications, virtual machines and clouds running both in the internal data center and on Windows Azure, and perform tasks like stopping and starting virtual machines. But while fine-grained control is available to those who want it, Microsoft said its overall goal is to abstract the complexity away.

"It's not about the virtual machines. It's about the applications that run in them," Winter said.

System Center 2012 is due out later this year, although some pieces are available in beta, such as Virtual Machine Manager. Even though Microsoft argued that Microsoft applications run best on Hyper-V, rather than VMware, the company said System Center 2012 will manage VMware and Xen in addition to its own Hyper-V virtualization technology.

"We have the only product that offers heterogeneous management across all major hypervisors," said program manager Michael Michael. Virtual Machine Manager 2012 will simplify the process of creating virtual machines from bare-metal capacity, using host profiles to standardize the process.

Using application virtualization, System Center 2012 will separate the operating system from the application, allowing multiple applications to share the same OS image and reducing the number of OS images an IT shop must maintain, Microsoft said.

Concero and Virtual Machine Manager are part of a broader group of offerings within System Center. Once a user makes a request from Concero, an IT manager can respond to that request through a portal that allows selection of logical networks, load balancers, storage, memory, virtual CPUs and anything else needed to meet the request.

Drawing from Microsoft's acquisition of performance monitoring company AVIcode, System Center 2012 will have new tools to help diagnose problems when performance is degraded. Microsoft is also unveiling System Center Advisor, a Web portal that provides ongoing assessment of configuration changes and reduces downtime by providing warnings before problems occur. Customers who have already purchased Software Assurance on Windows Server, Exchange and other applications will automatically be licensed to use System Center Advisor, which is now available as a release candidate.

<Return to section navigation list>

Cloud Security and Governance

No significant articles today.

<Return to section navigation list>

Cloud Computing Events

Bruce Kyle recommended that you Watch Countdown to MIX but included a dead link in a 3/29/2011 post to the US ISV Evangelism blog:

Find out more about what’s happening at MIX on Channel 9. Jennifer Ritzinger (event owner) and Mike Swanson (keynote and content owner) host a weekly video show to talk about the latest news and ‘behind-the-scenes’ information about the MIX event.

Visit the [corrected] MIX Countdown page on Channel 9 to view current and past episodes.

See you at MIX 11.

Aviraj Ajgekar posted Announcing ‘Ask the Experts’ Webcast Series…Do Register !!! to the TechNet blog on 3/28/2011:

Here are the cloud-related Webcast details:

| Date & Time | Topic | Speakers | Register |
| --- | --- | --- | --- |
| April 6th, 2011, 4:00 PM to 5:30 PM | Building a Private Cloud using Hyper-V and System Center & What's in Windows Azure for IT Professionals | MS Anand, Saranya Sriram | |

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Joe Panettieri reported HP CloudSystem Partner Program: Hits and Misses in a 3/29/2011 post to the TalkinCloud blog:

Hewlett-Packard, as expected, launched the HP CloudSystem Partner Program today at the HP Americas Partner Conference in Las Vegas. HP Channel Chief Stephen DiFranco (pictured) also shared some key channel milestones with roughly 1,000 VARs at the conference. But I have to concede: Other than reselling HP hardware, I’m still a little confused about how channel partners will profit from some portions of HP’s cloud strategy.

On the upside, the HP CloudSystem Partner Program:

- “provides tools for channel partners to build expert cloud practices by evolving their business models and developing cloud-focused skills.”

- “enables partners to build and manage complete, integrated cloud solutions across private, public and hybrid cloud environments.”

According to company executives, the HP CloudSystem is a complete, open, integrated hardware/software solution that channel partners can deploy for customers. The system includes the following components (click on graphic for larger image):

For large solutions providers — especially those that serve big service providers and enterprises — the HP CloudSystem partner program sounds intriguing.

Missing Pieces

Still, I believe HP missed an opportunity to promote its cloud strategy to SMB channel partners, smaller VARs and MSPs. My two major questions:

1. What Is the HP Cloud?: HP CEO Leo Apotheker said a so-called HP Cloud is under development. It sounds like a public cloud alternative to Amazon Web Services, Microsoft Windows Azure, Rackspace Cloud and more. But Apotheker didn’t describe whether the HP Cloud will include a channel program. Nor did he say when HP Cloud will debut. After Apotheker’s keynote, I think some partners were left with more questions than answers.

2. White Label Cloud Services?: Many TalkinCloud readers, particularly smaller VARs and MSPs, want white label SaaS applications that they can rebrand and sell as their own. Examples include online backup, storage, disaster recovery, hosted email, hosted PBX, anti-spam and encryption. But I didn’t hear any plans involving HP Cloud delivering white label services to channel partners. Moreover, DiFranco said HP partners typically build their businesses by leveraging the HP brand. Generally speaking, I think SMB partners increasingly want to lead with their own brand, particularly when it comes to cloud computing and managed services.

Smart Moves

Still, HP is making some smart SMB cloud moves. You just need to poke around to find them. Off stage, HP VP Meaghan Kelly described the company’s growing relationship with Axcient, a cloud storage provider that works closely with MSPs in the SMB market.

The HP-Axcient relationship, only about a month old, has engaged 200 MSPs that generate roughly $500,000 in annual recurring revenues. That’s an impressive start. And I suspect HP is preparing to do more to promote additional SMB-centric cloud solutions to VARs and MSPs.

Perhaps HP Cloud Maps and HP Cloud Discovery Workshop, two related efforts, will assist those SMB efforts. Here’s why.

- HP Cloud Maps involves tested configurations of popular applications, such as Microsoft Exchange. Plenty of partners want to get into the SaaS email market. But here again, I wonder: How many channel partners really want to launch their own hosted Exchange data centers? I suspect most channel partners are simply looking to white label third-party hosted Exchange services.

- HP CloudSystem Community: Apparently, it will allow channel partners to share and sell Cloud Map services. That sounds promising. Done right, perhaps major service providers can leverage Cloud Map services to extend white label cloud offerings to smaller VARs and MSPs.

Ultimately, HP wants channel partners to sell more servers, networking and storage into the cloud computing market. That makes sense for plenty of HP channel partners. But so far I think HP’s cloud effort overlooks smaller VARs and MSPs that want to become cloud integrators — tying together multiple third-party SaaS systems. …


David Linthicum claimed “In spite of its weak cloud strategy, Oracle is profiting handsomely from the cloud movement” in a preface to his Oracle's journey to the cloud -- and to the bank post of 3/29/2011 to InfoWorld’s Cloud Computing blog:

As I predicted in this blog, Oracle has found a profitable journey in the cloud over and above its existing open source database offerings. Specifically, Oracle sales increased 37 percent to $8.76 billion last quarter, according to Bloomberg. Oracle credits cloud computing for the revenue jump; indeed, Oracle's databases posted a 29 percent gain in new license sales.

This is due to the fact that we're hooked on Oracle, we're moving to the cloud, and we're bringing Oracle along for the ride, even though Oracle was late to market with its cloud strategy and the company's real goal is to rebrand its existing products as "cloud." However, as clouds are created for public consumption or within the enterprises, Oracle will find a place at the table now and for some time to come.

Klint Finley over at ReadWriteWeb picked up on this as well, citing my past predictions around the growth of Oracle and the relative lack of interest in open source databases:

Even in cases where NoSQL tools are adopted, traditional databases tend to remain. For example, CERN and CMS adopted Apache CouchDB and MongoDB for certain uses, but kept Oracle for others. And the numbers out from Oracle today suggest that the company's databases are not just being used by cloud customers, but behind the scenes as well.

The issue is that Oracle is almost like an infrastructure you can't get rid of, no matter how expensive, even compared to the value and features of open source alternatives. Too many Oracle-savvy users depend on the core features of the company's database to build applications, even in the cloud, and they are not willing to change -- often for good reason, I'm sure. However, we have to consider the long-term value of not seeking thriftier alternatives.

Oracle’s earnings increase proves cloud-washing is profitable.

Matthew Weinberger posted his Amazon Virtual Private Cloud Gets Dedicated EC2 analysis to TalkinCloud on 3/29/2011:

Amazon Web Services says the Amazon Virtual Private Cloud (VPC) offering has gained hardware-isolated Amazon EC2 Dedicated Instances. The goal is to let cloud service providers leverage the scalable, elastic EC2 cloud computing platform, but maintain the option of keeping their private cloud, well, private.

Only recently, Amazon Web Services launched a major enhancement to Amazon VPC that enabled administrators to access their private cloud infrastructure through the Internet (as opposed to by a VPN from datacenter to AWS).

While that certainly added to Amazon VPC’s channel appeal right there, these Dedicated Instances look to give organizations that don’t want their data in the public cloud access to the same compute engine as everyone else, according to the official blog entry. That’s a potential plus for organizations who need to meet compliance needs but also want to handle workloads in the cloud.

And Amazon VPC is letting users mix-and-match dedicated and regular instances. For instance, you can have certain applications run in one of these physically isolated, customer-specific Amazon EC2 instances, but let others run free within your VPC.

Of course, you’ll have to pay for the privilege of having Amazon dedicate hardware to you and you alone – taking advantage of these Dedicated Instances costs an extra $10 per use-hour on top of the normal pay-as-you-go VPC and EC2 rates. …


Lydia Leong (@cloudpundit) analyzed the economics of Amazon’s dedicated instances in a 3/28/2011 post:

Back in December, I blogged about the notion of Just Enough Privacy — the idea that cloud IaaS customers could share a common pool of physical servers, yet have the security concerns of shared infrastructure addressed through provisioning rules that would ensure that once a “private” customer got a virtual machine provisioned on a physical server, no other customers would then be provisioned onto that server for the duration of that VM’s life. Customers are far more willing to share network and storage than they are compute, because they’re worried about hypervisor security, so this approach addresses a significant amount of customer paranoia with no real negative impact to the provider.

Amazon has just added EC2 Dedicated Instances, which are pretty much exactly what I wrote about previously. For $10 an hour per region with single-tenancy, plus a roughly 20% uplift to the normal Amazon instance costs, you can have single-tenant servers. There are some minor configuration complications, and dedicated reserved instances have their own pricing (and are therefore separate from regular reserved instances), but all in all, these combine with the recently-released VPC features for a reasonably elegant set of functionality.

The per-region charge carries a significant premium over any wasted capacity. An extra-large instance is a full physical server; it’s 8x larger than a small instance, and its normal pricing is exactly 8x, $0.68/hour vs. a small’s $0.085/hour (Linux pricing). Nothing costs more than a quadruple extra large high-memory instance ($2.48/hour), also a full physical server. Dedicated tenancy should never waste more than a full physical server’s worth of capacity, so the “wasted” capacity carries around a 15x premium on normal instances and a 4x premium on the expensive high-memory instances, compared to if that capacity had simply been sold as a multi-tenant server. It’s basically a nuisance charge for really small customers, and not even worth thinking about by larger customers (it’s a lot less than the cost of a cocktail at a nice bar in San Francisco). All in all, it’s pretty attractive financially for Amazon, since they’re getting a 20%-ish premium on the instance charges themselves, too. (And if retail is the business of pennies, those pennies still add up when you have enough customers.)
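The premium figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only the prices cited in the excerpt; the constant and function names are illustrative, and it assumes the worst case is exactly one full physical server sitting idle while the $10/hour regional fee is being paid.

```python
# Hourly prices quoted in the excerpt (Linux pricing, US dollars).
REGION_FEE = 10.00      # dedicated-tenancy fee, per region
SMALL = 0.085           # small instance
EXTRA_LARGE = 0.68      # extra-large instance (a full physical server)
HIGH_MEM_4XL = 2.48     # quadruple extra large high-memory instance

def premium(region_fee: float, full_server_price: float) -> float:
    """Ratio of the regional fee to the price of one idle full server."""
    return region_fee / full_server_price

if __name__ == "__main__":
    # Extra-large vs. small: the excerpt says pricing scales exactly 8x.
    print(f"size ratio: {EXTRA_LARGE / SMALL:.1f}x")
    # Premium over one wasted server at each price point.
    print(f"normal server premium: {premium(REGION_FEE, EXTRA_LARGE):.1f}x")
    print(f"high-memory premium:   {premium(REGION_FEE, HIGH_MEM_4XL):.1f}x")
```

The two ratios come out near 14.7 and 4.0, which matches the "around 15x" and "4x" figures in the text.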

Amazon has been on a real roll since the start of the year — the extensive VPC enhancements, the expansion of the Identity and Access Management features, and the CloudFormation templates are among the key enhancements. And the significance of the Citrix/Amazon partnership announcement shouldn’t be overlooked, either.

Randy Bias (@randybias) updated his AWS Feature Releases, Enterprise Clouds, and Legacy App Adoption post on 3/29/2011:

A couple of weeks ago I posted about Amazon’s continued rapid release cycle and tallied up their releases by year. I think it’s even more interesting to look at where these feature releases are happening by service.

The stacked graph by service is as follows (click through for full size image):

And here is a slightly different view that unpacks the various services a bit:

Obviously the EC2 service, which encapsulates a number of sub-services (e.g. ELB, EBS, Elastic IP), has the lion’s share of updates, but every service is being touched on a regular basis.

Perhaps most importantly, in 2010, every single service had significant feature updates and releases. This, I think, is the crux of one of Amazon’s key competitive advantages: a fast-firing multi-service release cycle that allows them to continue to plow ahead of others in the marketplace.

In my Cloud Connect 2011 Keynote, I panned the so-called “enterprise cloud” model for building clouds. This is the model epitomized by traditional (I prefer “legacy”) enterprise vendors who are trying to help cloud service providers capture the non-existent legacy application outsourcing market.

Perhaps enterprise clouds will ultimately be successful, but can anyone really see a legacy enterprise vendor providing this level of release cycle across 10+ services on a monthly, quarterly, or annual basis? It requires a whole different kind of DNA; the kind we see in large web/Internet operators and cloud pioneers such as Amazon and Google.

Meantime, AWS continues to release feature after feature that reduces the impedance mismatch for legacy applications to adopt their cloud. Mark my words, while greenfield apps are driving AWS today, legacy apps will eventually need clouds to move to and I suspect that by the time we see mass adoption (2-3 years out most likely) AWS will be as attractive a target as an ‘enterprise cloud’, but at a fraction of the price.

I doubt if Randy included in his graphs the new Amazon consumer cloud features announced below.

Werner Vogels (@werner) described Music to my Ears - Introducing Amazon Cloud Drive and Amazon Cloud Player on 3/28/2011:

Today Amazon.com announced new solutions to help customers manage their digital music collections. Amazon Cloud Drive and Amazon Cloud Player enable customers to securely and reliably store music in the cloud and play it on any Android phone, tablet, Mac or PC, wherever they are.

As a big music fan with well over 100 GB in digital music, I am particularly excited that I now have access to all my digital music anywhere I go.

Order in the Chaos

The number of digital objects in our lives is growing rapidly. What used to be only available in physical formats now often has digital equivalents and this digitalization is driving great new innovations. The methods for accessing these objects are also rapidly changing; where in the past you needed a PC or a Laptop to access these objects, now many of our electronic devices have become capable of processing them. Our smart phones and tablets are obvious examples, but many other devices are quickly gaining these capabilities; TV Sets and HiFi systems are internet enabled, and soon our treadmills and automobiles will be equally plugged into the digital world.

Managing all these devices, along with the content we store and access on them, is becoming harder and harder. We see that with our Amazon customers; when they hear a great tune on a radio they may identify it using the Shazam or Soundhound apps on their mobile phone and buy that song instantly from the Amazon MP3 store. But now this mp3 is on their phone and not on the laptop that they use to sync their iPod with and not on the Windows Media Center PC that powers their HiFi TV set. That's frustrating - so much so that customers tell us they wait to buy digital music until they are in front of the computer they store their music library on, which brings back memories of a world constrained by physical resources.

The launch of Amazon Cloud Drive, Amazon Cloud Player and Amazon Cloud Player for Android will help to bring order in this chaos and will ensure that customers can buy, access and play their music anywhere. Customers can upload their existing music library into Amazon Cloud Drive and music purchased from the Amazon MP3 store can be added directly upon purchase. Customers then use the Amazon Cloud Player Web application to easily manage their music collections with download and stream options. The Amazon Cloud Player for Android is integrated with the Amazon MP3 app and gives customers instant access to all the music they have stored in Amazon Cloud Drive on their mobile device. Any purchases that customers make on their Android devices can be stored in Amazon Cloud Drive and are immediately accessible from anywhere.

A Drive in the Cloud

To build Amazon Cloud Drive the team made use of a number of cloud computing services offered by Amazon Web Services. The scalability, reliability and durability requirements for Cloud Drive are very high, which is why they decided to make use of the Amazon Simple Storage Service (S3) as the core component of their service. Amazon S3 is used by enterprises of all sizes and is designed to handle scaling extremely well; it stores hundreds of billions of objects and easily performs several hundreds of thousands of storage transactions a second.

Amazon S3 uses advanced techniques to provide very high durability and reliability; for example it is designed to provide 99.999999999% durability of objects over a given year. Such a high durability level means that if you store 10,000 objects with Amazon S3, you can on average expect to incur a loss of a single object once every 10,000,000 years. Amazon S3 redundantly stores your objects on multiple devices across multiple facilities in an Amazon S3 Region. The service is designed to sustain concurrent device failures by quickly detecting and repairing any lost redundancy; for example, there may be a concurrent loss of data in two facilities without the customer ever noticing.
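The "one object every 10,000,000 years" figure follows directly from the durability number. The sketch below reproduces that arithmetic under a simplifying assumption that is not stated in the post: each object is lost independently, with an annual loss probability of one minus the durability. The function name is illustrative, and `Decimal` is used only to avoid floating-point rounding on an 11-nines figure.

```python
from decimal import Decimal

# Design durability quoted in the post: 99.999999999% per object per year.
DURABILITY = Decimal("0.99999999999")

def years_per_expected_loss(num_objects: int,
                            durability: Decimal = DURABILITY) -> Decimal:
    """Average number of years between single-object losses.

    Assumes independent losses (an assumption made for this sketch).
    """
    annual_loss_probability = 1 - durability            # 1E-11
    expected_losses_per_year = num_objects * annual_loss_probability
    return 1 / expected_losses_per_year

if __name__ == "__main__":
    years = years_per_expected_loss(10_000)
    print(f"{int(years):,} years per expected lost object")
```

With 10,000 objects the expected loss rate is 10,000 × 10⁻¹¹ = 10⁻⁷ objects per year, or one object per 10⁷ years, which agrees with the quoted figure.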

Cloud Drive also makes extensive use of AWS Identity and Access Management (IAM) to help ensure that objects owned by a customer can only be accessed by that customer. IAM is designed to meet the strict security requirements of enterprises and government agencies using cloud services and allows Amazon Cloud Drive to manage access to objects at a very fine grained level.

A key part of the Cloud Drive architecture is a Metadata Service that allows customers to quickly search and organize their digital collections within Cloud Drive. The Cloud Player Web Applications and Cloud Player for Android make extensive use of this Metadata service to ensure a fast and smooth customer experience.

Making it simple for everyone

Amazon Cloud Drive and Amazon Cloud Player are important milestones in making sure that customers have access to their digital goods at any time from anywhere. I am excited about this because it is already making my digital music experience simpler and I am looking forward to the innovation that these services will drive on behalf of our customers.

If you are an engineer interested in working on Amazon Cloud Drive and related technologies the team has a number of openings and would love to talk to you! More details at http://www.amazon.com/careers.

The price for storage beyond the 5 GB free level is $1 per GB per year.

As Randy reports and I’ve asserted before, Amazon is truly on a “feature roll,” but these new cloud services don’t appear to be part of AWS.

Jeff Barr (@jeffbarr) reported in a 3/28/2011 post that Amazon Web Services is Adding a Second AWS Availability Zone in Tokyo:

Our hearts go out to those who have suffered through the recent events in Japan. I was relieved to hear from my friends and colleagues there in the days following the earthquake. I'm very impressed by the work that the Japan AWS User Group (JAWS) has done to help some of the companies, schools, and government organizations affected by the disaster to rebuild their IT infrastructure.