Windows Azure and Cloud Computing Posts for 3/7/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security, Governance and DevOps

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

My (@rogerjenn) Signing Up for the SQL Azure Reporting Services CTP post of 3/4/2011 reported:

I finally received my invitation code for the first SQL Azure Reporting Services CTP. The message from the SQL Azure team is reasonably explicit with regard to the steps involved, but users unfamiliar with the new Windows Azure Portal might find the following screen captures of the steps useful.

Important: You must have an active Windows Azure Platform account to complete the CTP signup process.

1. Open the Windows Azure Platform Portal at http://windows.azure.com and click the Reporting button in the left frame to open the SQL Azure Reporting CTP window:

Tip: If you haven’t signed up for the CTP, clicking the Register button is the quickest way to do this.

2. Click the Provision button to open the Terms of Service dialog and mark the I agree to the Terms of Use check box:

3. Click Next to open the Subscription selection dialog and select a subscription that includes an SQL Azure database:

Important: Only the South Central US (San Antonio) data center hosts the SQL Azure Reporting Services CTP, so you’ll save egress and ingress bandwidth if you select a subscription for a Windows Azure compute instance in the same data center.

4. Click Next to open the Invitation Code dialog, paste the invitation code at the bottom of your invitation message into the text box, type a complex password (with three of four required features) for the service, and repeat the password:

5. Click Next to complete the signup process and display SQL Azure’s Reporting Services information page at http://www.microsoft.com/en-us/SQLAzure/reporting.aspx:

The invitation message concludes with the following:

DOCUMENTATION AND FEEDBACK

- Documentation for getting started and using the SQL Azure Reporting CTP can be found in the SQL Azure library on MSDN at http://msdn.microsoft.com/en-us/library/gg430130.aspx.

- You can provide us feedback through the Connect site at https://connect.microsoft.com/SQLServer/Feedback by filing a Bug or Suggestion (select Category = "SQL Azure Reporting"), or by visiting the SQL Azure forum at http://social.msdn.microsoft.com/Forums/en-US/ssdsgetstarted/threads.

- To vote on feature requests and make suggestions for V1 features, please visit http://www.mygreatsqlazurereportingidea.com.

Stay tuned for future posts explaining how to use the reporting services.


Marketplace DataMarket, OData and WCF

Glenn Block explained Adding vcard support and bookmarked URIs for specific representations with WCF Web Apis in a 3/7/2011 post to the .NET Endpoint blog:

REST is primarily about two things: resources and representations. If you’ve seen any of my recent talks, you’ve probably seen me open Fiddler and show how our ContactManager sample supports multiple representations, including form URL-encoded, XML, JSON and, yes, an image. I use the image example continually, not necessarily because it is exactly what you would do in the real world, but to drive home the point that a representation can be anything. HTTP resources / services can return anything and are not limited to XML, JSON or even HTML, though those are the media type formats most people are used to.

One advantage of this is your applications can return domain specific representations that domain specific clients (not browsers) can understand. What do I mean? Let’s take the ContactManager as an example. The domain here is contact management. Now there are applications like Outlook, ACT, etc that actually manage contacts. Wouldn’t it be nice if I could point my desktop contact manager application at my contact resources and actually integrate the two allowing me to import in contacts? Turns out this is where media types come to the rescue. There actually is a format called vcard that encapsulates the semantics for specifying an electronic business card which includes contact information. rfc2425 then defines a “text/directory” media type which clients can use to transfer vcards over HTTP.

A sample of how a vcard looks is below (taken from the RFC):

    begin:VCARD
    source:ldap://cn=bjorn%20Jensen, o=university%20of%20Michigan, c=US
    name:Bjorn Jensen
    fn:Bj=F8rn Jensen
    n:Jensen;Bj=F8rn
    email;type=internet:bjorn@umich.edu
    tel;type=work,voice,msg:+1 313 747-4454
    key;type=x509;encoding=B:dGhpcyBjb3VsZCBiZSAKbXkgY2VydGlmaWNhdGUK
    end:VCARD

Notice, it is not xml, not json and not an image :-) It is an arbitrary format, thus driving home the point I was making about the flexibility of HTTP.

Creating a vcard processor

So putting two and two together that means if we create a vcard processor for our ContactManager that supports “text/directory” then Outlook can import contacts from the ContactManager right?

OK, here is the processor for VCARD.

    public class VCardProcessor : MediaTypeProcessor
    {
        public VCardProcessor(HttpOperationDescription operation)
            : base(operation, MediaTypeProcessorMode.Response)
        {
        }

        // The media types this processor can write.
        public override IEnumerable<string> SupportedMediaTypes
        {
            get { yield return "text/directory"; }
        }

        // Writes a Contact to the response stream as a vcard.
        public override void WriteToStream(object instance, Stream stream, HttpRequestMessage request)
        {
            var contact = instance as Contact;
            if (contact != null)
            {
                var writer = new StreamWriter(stream);
                writer.WriteLine("BEGIN:VCARD");
                writer.WriteLine(string.Format("FN:{0}", contact.Name));
                writer.WriteLine(string.Format("ADR;TYPE=HOME;{0};{1};{2}", contact.Address, contact.City, contact.Zip));
                writer.WriteLine(string.Format("EMAIL;TYPE=PREF,INTERNET:{0}", contact.Email));
                writer.WriteLine("END:VCARD");
                writer.Flush();
            }
        }

        // Reading (posting) vcards is not implemented.
        public override object ReadFromStream(Stream stream, HttpRequestMessage request)
        {
            throw new NotImplementedException();
        }
    }

The processor above does not support posting vcards, but it actually could.
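To experiment with the format outside WCF, here is a minimal sketch in Python (not the post's C#) that serializes the same contact fields. The `Contact` class and the `to_vcard` and `escape` names are hypothetical. The sketch assumes the usual vcard property layout, `NAME;parameters:value`, with the ADR value made of seven semicolon-separated components, which is slightly different from the ADR line written by the processor above. As I read the vCard 3.0 specification, a fully conforming card would also carry VERSION and N properties, which are left out here to mirror the processor.

```python
from dataclasses import dataclass


@dataclass
class Contact:
    # Hypothetical stand-in for the ContactManager sample's Contact type.
    name: str
    address: str
    city: str
    postal_code: str
    email: str


def escape(value: str) -> str:
    """Escape characters that have structural meaning inside a vcard value."""
    return (value.replace("\\", "\\\\")
                 .replace(";", "\\;")
                 .replace(",", "\\,")
                 .replace("\n", "\\n"))


def to_vcard(contact: Contact) -> str:
    """Serialize a contact using the same properties the processor writes."""
    # ADR components: PO box; extended address; street; city; region;
    # postal code; country. Unused components stay empty.
    adr = ";".join(["", "", escape(contact.address), escape(contact.city),
                    "", escape(contact.postal_code), ""])
    lines = [
        "BEGIN:VCARD",
        f"FN:{escape(contact.name)}",
        f"ADR;TYPE=HOME:{adr}",
        f"EMAIL;TYPE=PREF,INTERNET:{escape(contact.email)}",
        "END:VCARD",
    ]
    # Content lines are separated by CRLF.
    return "\r\n".join(lines) + "\r\n"
```

Calling `to_vcard(Contact(...))` returns the text that a "text/directory" response body would carry.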

Right. However, there is one caveat: Outlook won’t send Accept headers; all it has is a “File-Open” dialog. There is hope though. It turns out that the dialog supports URIs, so as long as I can give it a URI that is a bookmark to a vcard representation, we’re golden.

This is where in the past it gets a bit hairy with WCF. Today to do this means I need to ensure that my UriTemplate has a variable i.e. {id} is fine, but then I have to parse that ID to pull out the extension. It’s ugly code point blank. Jon Galloway expressed his distaste for this approach (which I suggested as a shortcut) in his post here (see the section Un-bonus: anticlimactic filename extension filtering epilogue).

In that post, I showed parsing the ID inline. See the ugly code in bold?

    [WebGet(UriTemplate = "{id}")]
    public Contact Get(string id, HttpResponseMessage response)
    {
        int contactID = !id.Contains(".")
            ? int.Parse(id, CultureInfo.InvariantCulture)
            : int.Parse(id.Substring(0, id.IndexOf(".")), CultureInfo.InvariantCulture);

        var contact = this.repository.Get(contactID);
        if (contact == null)
        {
            response.StatusCode = HttpStatusCode.NotFound;
            response.Content = HttpContent.Create("Contact not found");
        }
        return contact;
    }

Actually that only solves part of the problem as I still need the Accept header to contain the media type or our content negotiation will never invoke the VCardProcessor!
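To make the content negotiation point concrete, here is a simplified sketch in Python (not the post's C#, and not how WCF Web APIs implements negotiation). The `parse_accept` and `negotiate` names are hypothetical, and the sketch ignores type wildcards such as `text/*`. It shows why a client that sends no Accept header, as Outlook does here, gets the server's default representation rather than the vcard.

```python
def parse_accept(header):
    """Return the media types in an Accept header, most preferred first."""
    ranked = []
    for position, item in enumerate(header.split(",")):
        fields = [field.strip() for field in item.split(";")]
        media_type = fields[0].lower()
        if not media_type:
            continue
        quality = 1.0  # default weight when no q parameter is given
        for field in fields[1:]:
            key, _, value = field.partition("=")
            if key.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        if quality > 0:  # q=0 means "not acceptable"
            ranked.append((-quality, position, media_type))
    # Sort by descending quality, then by order of appearance.
    return [media_type for _, _, media_type in sorted(ranked)]


def negotiate(accept_header, supported):
    """Pick a representation; `supported` is in server preference order."""
    preferences = parse_accept(accept_header or "")
    if not preferences:
        return supported[0]  # no Accept header: fall back to the default
    for media_type in preferences:
        if media_type == "*/*":
            return supported[0]
        if media_type in supported:
            return media_type
    return None  # nothing acceptable; a server would typically answer 406
```

With `supported = ["application/xml", "text/directory"]`, an empty Accept header selects `application/xml`, so the vcard representation is only chosen once something puts `text/directory` into the header.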

Another processor to the rescue

Processors are one of the swiss army knives in our Web Api. We can use processors to do practically whatever we want to an HTTP request or response before it hits our operation. That means we can create a processor that automatically rips the extension out of the uri so that the operation doesn’t have to handle it as in above, and we can make it automatically set the accept header based on mapping the extension to the appropriate accept header.

And here’s how it is done, enter UriExtensionProcessor.

    public class UriExtensionProcessor : Processor<HttpRequestMessage, Uri>
    {
        private IEnumerable<Tuple<string, string>> extensionMappings;

        public UriExtensionProcessor(IEnumerable<Tuple<string, string>> extensionMappings)
        {
            this.extensionMappings = extensionMappings;
            this.OutArguments[0].Name = HttpPipelineFormatter.ArgumentUri;
        }

        public override ProcessorResult<Uri> OnExecute(HttpRequestMessage httpRequestMessage)
        {
            var requestUri = httpRequestMessage.RequestUri.OriginalString;
            var extensionPosition = requestUri.IndexOf(".");
            if (extensionPosition > -1)
            {
                var extension = requestUri.Substring(extensionPosition + 1);
                var query = httpRequestMessage.RequestUri.Query;

                // Uri.Query already includes the leading "?", so append it as-is.
                requestUri = string.Format("{0}{1}", requestUri.Substring(0, extensionPosition), query);

                // Map the extension to its media type.
                var mediaType = extensionMappings.Single(map => extension.StartsWith(map.Item1)).Item2;
                var uri = new Uri(requestUri);

                // Replace whatever the client sent with the mapped media type.
                httpRequestMessage.Headers.Accept.Clear();
                httpRequestMessage.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue(mediaType));

                var result = new ProcessorResult<Uri>();
                result.Output = uri;
                return result;
            }
            return new ProcessorResult<Uri>();
        }
    }

Here is how it works (note how this is done will change in future bits but the concept/approach will be the same).

- First, UriExtensionProcessor takes a collection of Tuples with the first value being the extension and the second being the media type.

- The output argument is set to the key “Uri”. This is because in the current bits the UriTemplateProcessor grabs the Uri to parse it. This processor will replace it.

- In OnExecute the first thing we do is look to see if the uri contains a “.”. Note: This is a simplistic implementation which assumes the first “.” is the one that refers to the extension. A more robust implementation would look after the last uri segment at the first dot. I am lazy, sue me.

- Next strip the extension and create a new uri. Notice the query string is getting tacked back on.

- Then do a match against the mappings passed in to see if there is an extension match.

- If there is a match, set the accept header to use the associated media type for the extension.

- Return the new uri.
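The more robust variant mentioned in the list (look for the first dot within the last URI segment rather than the first dot anywhere in the URI) can be sketched as follows. This is Python rather than the post's C#, and it is only an illustration of the parsing step, not the processor itself. The `strip_extension` name and the port in the usage note are hypothetical; only the "vcf" to "text/directory" mapping comes from the post.

```python
from urllib.parse import urlsplit, urlunsplit

# Extension-to-media-type mappings; "vcf" is the one registered in the post.
EXTENSION_MEDIA_TYPES = {"vcf": "text/directory"}


def strip_extension(uri, mappings=EXTENSION_MEDIA_TYPES):
    """Return (rewritten_uri, media_type).

    If the last path segment ends in a known extension, the extension is
    removed and its media type returned so the caller can set the Accept
    header. Otherwise the URI is returned unchanged with None.
    """
    parts = urlsplit(uri)
    # Only the last path segment is inspected, so dots in the host name
    # (for example "example.com") are never mistaken for an extension.
    head, slash, last_segment = parts.path.rpartition("/")
    name, dot, extension = last_segment.partition(".")
    media_type = mappings.get(extension) if dot else None
    if media_type is None:
        return uri, None
    new_path = head + slash + name
    # The query string and fragment are carried over untouched.
    rewritten = urlunsplit(
        (parts.scheme, parts.netloc, new_path, parts.query, parts.fragment))
    return rewritten, media_type
```

For example, `strip_extension("http://localhost:9000/contacts/1.vcf?format=full")` yields the URI without `.vcf`, with the query string preserved, together with `"text/directory"`.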

With our new processors in place, we can now register them in the ContactManagerConfiguration class first for the request.

    public void RegisterRequestProcessorsForOperation(
        HttpOperationDescription operation,
        IList<Processor> processors,
        MediaTypeProcessorMode mode)
    {
        var map = new List<Tuple<string, string>>();
        map.Add(new Tuple<string, string>("vcf", "text/directory"));
        processors.Insert(0, new UriExtensionProcessor(map));
    }

Notice above that I am inserting the UriExtensionProcessor first. This is to ensure that the parsing happens BEFORE the UriTemplateProcessor executes.

And then we can register the new VCardProcessor for the response.

    public void RegisterResponseProcessorsForOperation(
        HttpOperationDescription operation,
        IList<Processor> processors,
        MediaTypeProcessorMode mode)
    {
        processors.Add(new PngProcessor(operation, mode));
        processors.Add(new VCardProcessor(operation));
    }

Moment of truth – testing Outlook

Now with everything in place we “should” be able to import a contact into Outlook. First we have Outlook before the contact has been imported. I’ll use Jeff Handley as the guinea pig. Notice below when I search through my contacts he is NOT there.

Now after launching the ContactManager, I will go to the File->Open->Import dialog, and choose to import .vcf.

Click ok, and then refresh the search. Here is what we get.

What we’ve learned

- Applying a RESTful style allows us to evolve our application to support a new vcard representation.

- Using representations allows us to integrate with a richer set of clients such as Outlook and ACT.

- WCF Web APIs allows us to add support for new representations without modifying the resource handler code (ContactResource)

- We can use processors for a host of HTTP related concerns including mapping uri extensions as bookmarks to variant representations.

- WCF Web APIs is pretty cool

I will post the code. For now you can copy paste the code and follow my direction using the ContactManager. It will work.

What’s next.

In the next post I will show you how to use processors to do a redirect or set the content-location header.

The Microsoft Case Studies team posted Data Visualization Firm Uses Data Service to Create Contextualized Business Dashboards on 2/25/2011 (missed when posted):

As a producer of data visualization and business dashboard software, Dundas continually strives to display its customers’ key performance indicators (KPIs) in ways that offer the best possible strategic insight. To put KPIs in the right context, many organizations want to combine internally gathered information with data feeds from outside sources—a process that has traditionally been cumbersome and expensive.

To address this issue, Dundas updated its Dashboard product to support DataMarket, a part of Windows Azure Marketplace. Now customers can supply Dundas Dashboard with rich, reliable data from a single cloud-based source using a simple cost-effective subscription model. They can also easily modify dashboards for more contextualized KPIs with no development effort or additional storage hardware, saving money and improving business intelligence.

Organization Size: 80 employees

Partner Profile: Based in Toronto, Ontario, Dundas Data Visualization provides data visualization and dashboard solutions to thousands of clients worldwide. It has 80 employees and annual revenues of about CDN$15 million (U.S.$15 million).

Business Situation: Dundas wanted to put customers’ internal business intelligence data in better context by comparing it with data from multiple external sources, which is traditionally a cumbersome and expensive process.

Solution: The company updated its Dundas Dashboard product to support DataMarket (a part of Windows Azure Marketplace) to give customers cost-effective access to reliable, trusted public domain and premium commercial data.

Benefits:

- Enhances business intelligence to promote customers’ growth

- Lowers data acquisition costs

- Helps expand business, provides a competitive edge

- Reduces latency to improve services

Software and Services:

- Microsoft Silverlight

- Microsoft SQL Server 2008

- Windows Azure Marketplace DataMarket

- Microsoft .NET Framework

Elisa Flasko (@eflasko, pictured below) posted Real World DataMarket: Interview with Ayush Yash Shrestha, Professional Services Manager at Dundas Data Visualization on 3/2/2011 (Missed when posted):

As part of the Real World DataMarket series, we talked to Ayush Yash Shrestha, the Professional Services Manager at Dundas Data Visualization, about how his company is taking advantage of DataMarket (a part of Windows Azure Marketplace) to enhance its flagship Dundas Dashboard business intelligence software.

MSDN: Can you tell us about Dundas Data Visualization and the services you provide?

Shrestha: Dundas produces data visualization and dashboard solutions that are used by some of the largest companies in the world. Dundas Dashboard is a one-stop shop for bringing in data from various systems, building business models, and finding key performance indicators (KPIs). Through a simple drag-and-drop interface, a fairly sophisticated business user, even without development experience, can turn raw data into a useful dashboard in about four steps. It’s self-service business intelligence (BI).

MSDN: What business challenges did Dundas and its customers face before it recently began using DataMarket?

Shrestha: We wanted to help our customers contextualize their internal BI data by comparing it with data from outside the enterprise. A KPI without proper context is meaningless. You can use your BI system to gather internal data and determine if you’re meeting your goals, but if you don’t have the ability to combine the data with outside information, then your goals may not be contextually sound. Traditionally, buying this information from multiple providers and using multiple data feeds with different formats has been cumbersome and expensive, with a lot of IT support requirements.

MSDN: How does DataMarket help you address the need to cost-effectively contextualize BI data?

Shrestha: We’re able to bring in reliable, trusted public domain and premium commercial data into Dashboard without a lot of development time and costs. This is possible because DataMarket takes a standardized approach, with a REST-based model for consuming services and data views that are offered in tabular form or as OData feeds. Without having a standard in place, we’d be forced to write all kinds of web services and retrieval mechanisms to get data from the right places and put it into the proper format for processing.

MSDN: What are the biggest benefits to Dundas customers by incorporating DataMarket into Dashboard?

Shrestha: Our customers just create a single DataMarket account, and they’re ready to get the specific data that they need. This will result in significant savings, especially for companies that would otherwise make several large business-to-business data purchases. Also, the data provided through DataMarket is vetted. Without a service such as DataMarket in place, there is a risk of businesses adding inaccurate data to their dashboards. With DataMarket being such a reliable source, we’re able to provide meaningful context that few other dashboard providers offer at this time. Finally, we can build new dashboards for a customer once, and they can be easily improved as the DataMarket data catalog continues to gain depth. We can integrate additional KPI context into existing dashboards with a few mouse clicks, versus the old approach of reengineering the system.

MSDN: And what are the biggest benefits to Dundas?

Shrestha: The most important are added value, competitive edge, and new business opportunities. If a customer is comparing Dundas Dashboard with a competing product that does not use DataMarket, we immediately have an edge thanks to the vast additional resources that DataMarket provides. Also, customers often expect us to offer analysis services in addition to pure data visualization, which in the past we did not have the resources to provide. With DataMarket, we’re now in a position to offer this additional level of service, which will help us expand our customer base and increase revenue.

Matt Stroshane (@MattStroshane) posted Introducing the OData Client Library for Windows Phone, Part 3 on 3/3/2011 (missed when published):

This is the third part of the 3-part series, Introducing the OData Client Library. In today’s post, we’ll cover how to load data from the Northwind OData service into the proxy classes that were created in part one of the series. This post is also part of the OData + Windows Phone blog series.

Prerequisites

Introducing the OData Client Library for Windows Phone, Part 1

Introducing the OData Client Library for Windows Phone, Part 2

How to Load Data from Northwind

There are four steps for loading data with the OData Client Library for Windows Phone. The following examples continue with the Northwind “Customer” proxy classes that you created in part one. Exactly where this code is located in your program is a matter of style. Later posts will demonstrate different approaches to using the client library and proxy classes.

Note: Data binding implementation will vary depending on your programming style. For example, whether or not you use MVVM. To simplify the post, I will not address it here and assume that, one way or another, you will bind your control to the DataServiceCollection object.

1. Create the DataServiceContext Object

To create the data service context object, all you need to do is specify the service root URI of the Northwind OData service, the same URI that you used to create the proxy classes.

If you look inside the NorthwindModel.vb file, you’ll see that DataSvcUtil.exe created a class inheriting from DataServiceContext, named NorthwindEntities. This is the context class that is used in the following example:

2. Create the DataServiceCollection Object

To create the data service collection object, you need to specify that you’ll be putting Customer objects in it, and that you will load the collection with our NorthwindEntities context. Because the collection contains Customer objects, the collection is named Customers in the following example:

Note: You’ll need to register for the Customers LoadCompleted event later on. In VB, you set up for this by using the WithEvents keyword. In C#, you typically register for the event handler in the next step, when the data is requested.

3. Request the Data

Now that you have your context and collection objects ready to go, you are ready to start loading the data. Because all networking calls on the Windows Phone are asynchronous, this has to happen in two parts. The first part involves requesting the data; the second part (next step) involves receiving the data. In the following example, you use the LoadAsync method of the DataServiceCollection to initiate the request.

Because the collection knows the context, and the context knows the root URI, all you have to do is specify the URI that is relative to the root. For example, the full URI of the Customers resource is:

http://services.odata.org/Northwind/Northwind.svc/Customers

Because the root URI is already known by the context, all you need to specify is the relative URI, /Customers. This is what’s used in the following code example:

Note: The LoadAsync method can be called in a variety of places, for example, when you navigate to a page or click a “load” button.

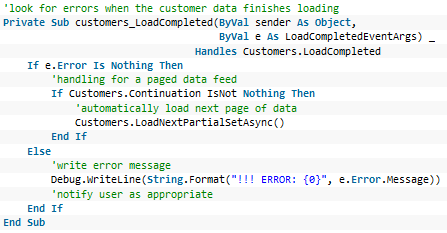

4. Receive the Data

If you have a Silverlight control bound to the data service collection, it will automatically be updated when the first set of data comes back (the amount of which depends on your request and how that particular service is configured). Even with data binding, it is still important to handle the LoadCompleted event of the DataServiceCollection object. This allows you to handle errors from the data request. Additionally, the following example uses the Continuation property to check for more pages of data and automatically load them using the LoadNextPartialSetAsync() method.

Note: This is just one example for handling the LoadCompleted event. You’ll likely see many other types of implementations.
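At the protocol level, following the continuation amounts to requesting each "next" link until the service stops returning one. The sketch below is Python, not the VB or C# used with the client library, and it does not use the client library at all. It assumes the OData V2 verbose JSON shape, in which a paged feed arrives as `{"d": {"results": [...], "__next": "<uri>"}}`; `load_all` and `fetch_page` are hypothetical names, with `fetch_page` standing in for whatever performs the HTTP GET and decodes the JSON.

```python
def load_all(first_uri, fetch_page):
    """Collect every entity by following server-driven paging links.

    `fetch_page(uri)` is a caller-supplied function (an assumption of this
    sketch) that returns the decoded JSON payload for one page.
    """
    entities = []
    uri = first_uri
    while uri:
        payload = fetch_page(uri)["d"]
        entities.extend(payload["results"])
        # The service includes "__next" only when more pages remain.
        uri = payload.get("__next")
    return entities
```

This is a synchronous simplification. The phone library does the same work asynchronously, raising LoadCompleted after each page so the handler can decide whether to request the next one.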

Choosing a Home for Your Code

If you have a single-page application that is not very complicated, it is just fine putting your DataServiceContext and DataServiceCollection in the code-behind page, for example MainPage.xaml.vb.

In the case of multi-page apps, the model-view-viewmodel (MVVM) programming pattern is a popular choice for Windows Phone apps. With MVVM, the data service context and collection are typically placed in the ViewModel code file, for example MainViewModel.vb.

Conclusion

This series covered the basics of getting started with the OData Client Library for Windows Phone.

The Microsoft development teams are always looking for ways to improve your programming experience, so I don’t expect it will stay this way forever. Keep an eye out for new features with each release of the Windows Phone development tools. The documentation will always detail changes in the topic What’s New in Windows Phone Developer Tools.

See Also: Community Content

Netflix Browser for Windows Phone 7 – Part 1, Part 2

OData v2 and Windows Phone 7

Data Services (OData) Client for Windows Phone 7 and LINQ

Learning OData? MSDN and I Have the videos for you!

OData and Windows Phone 7, OData and Windows Phone 7 Part 2

Fun with OData and Windows Phone

Developing a Windows Phone 7 Application that consumes OData

Lessons Learnt building the Windows Phone OData browser

See Also: Documentation

Open Data Protocol (OData) Overview for Windows Phone

How to: Consume an OData Service for Windows Phone

Connecting to Web and Data Services for Windows Phone

Open Data Protocol (OData) – Developers

WCF Data Services Client Library

WCF Data Service Client Utility (DataSvcUtil.exe)


Windows Azure AppFabric: Access Control, WIF and Service Bus

Pluralsight released Richard Seroter’s Integrating BizTalk Server with Windows Azure AppFabric course to its library on 3/7/2011:

Following are the course’s main segments:

- Introduction to Integrating BizTalk Server and Windows Azure AppFabric (00:21:55)

- Sending Messages from BizTalk Server to Windows Azure AppFabric (00:46:36)

- Sending Messages from Windows Azure AppFabric to BizTalk Server (00:37:14)

Keith Bauer posted Leveraging the Windows Azure Service Management REST API to Create your Hosted Service Namespace on 3/7/2011:

If you’re looking to leverage the Windows Azure Service Management REST API to determine if a hosted service namespace is available, and to subsequently create the hosted service through your own interface and/or tools, then you will want to be aware of the subtleties of this process in order to complete it in the most effective way possible. This article will discuss an approach which leverages the Service Management REST API for determining if the sought-after namespace is still available and what you need to implement in order to create your hosted service via the REST API. This article provides all the sample code you will need, including details for ensuring all of the preliminary tasks are taken care of, such as creating and assigning your own certificate, which is required in order to securely manage Windows Azure via the Service Management REST API.

This article is based on the needs of a recent customer that expressed an interest in automating a process for determining whether or not a given DNS prefix (e.g., <DNS Prefix>.cloudapp.net) is already taken, and if it’s not, what needs to be done to register this namespace… all through their own custom management utility.

Introduction

In a majority of scenarios, I would imagine customers will just leverage the Windows Azure Management Portal, where we provide a user interface for creating your hosted services. However, this process requires you to interact with the Windows Azure portal design, and the Windows Azure portal process, as you attempt various DNS (a.k.a. URL) prefixes while you work to obtain your official hosted service namespace. Fortunately, there is already information on the internet that discusses this topic, including How to Create a Hosted Service. The dialog for accomplishing this is also shown in Figure 1. What is not so prevalent are examples for accomplishing this same task in its entirety via the REST API. Therefore, that will be the focus of this post: to show the requirements of this same task leveraging the Windows Azure Service Management REST API. Along this journey, I will share some important notes that you will find indispensable as you venture down the path of using the REST API for this purpose.

Figure 1 – Create a new Hosted Service

Getting Started

Let’s get started by reviewing the two basic elements of what we are trying to accomplish via the Windows Azure Service Management REST API:

1. Determine if a hosted service namespace is available

2. Create the hosted service

As it turns out, we can tackle the second requirement (i.e., create the hosted service), fairly easily with the Windows Azure Service Management REST API. As you will see in code examples below, this API contains a Create Hosted Service operation which helps us accomplish this task. It is actually the first requirement, determining if a hosted service namespace is available, that causes us a bit of grief. Unfortunately we do not have a supported means of querying Windows Azure to determine if a hosted service namespace already exists; this is not yet part of the Service Management REST API. Therefore, we will have to resort to some brute force tactics in order to provide this feedback. That is, at least until the Windows Azure team provides us with an API for this particular task. These brute force tactics result in us having to capture an HTTP response after attempting to create the service.
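The brute-force idea (attempt the creation and read the HTTP status) can be sketched as below, in Python rather than the C# a .NET management utility would use. Everything specific here is an assumption drawn from my recollection of the Service Management API reference rather than from this article, and should be checked against that reference: the `CreateHostedService` XML shape and element order, the base64-encoded Label, and the reading of 201 as "created" and 409 as "name already taken". The function names are hypothetical.

```python
import base64
from xml.sax.saxutils import escape

# Assumed XML namespace of the Service Management API.
AZURE_NS = "http://schemas.microsoft.com/windowsazure"


def create_hosted_service_body(service_name, label, location, description=""):
    """Build the request body for a Create Hosted Service attempt."""
    # The label is assumed to be sent base64-encoded.
    encoded_label = base64.b64encode(label.encode("utf-8")).decode("ascii")
    return (
        '<?xml version="1.0" encoding="utf-8"?>'
        f'<CreateHostedService xmlns="{AZURE_NS}">'
        f"<ServiceName>{escape(service_name)}</ServiceName>"
        f"<Label>{encoded_label}</Label>"
        f"<Description>{escape(description)}</Description>"
        f"<Location>{escape(location)}</Location>"
        "</CreateHostedService>"
    )


def interpret_create_status(status):
    """Translate the HTTP status of the attempt into an availability answer."""
    if status == 201:
        return "created"   # the name was free and now belongs to us
    if status == 409:
        return "taken"     # assumed: conflict means the name already exists
    return "error"         # authentication, validation or service failure
```

Note the side effect: when the name is available, the probe has already created the hosted service, so a tool that only wants to check availability would have to delete it again.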

Preliminary Steps

Since we have decided to leverage the Windows Azure Service Management REST API, we need to first take care of a couple important preliminary steps. Without these steps, you will not be able to leverage the REST API since there is a requirement of mutual authentication of certificates over SSL to ensure that a request made to the management service is secure. Here are the preliminary steps we need to tackle:

1. Obtain or create an X.509 certificate (this is required for using the Windows Azure Service Management REST API)

2. Associate the certificate to your Windows Azure Subscription

This sounds simple enough, and it really is. We just need to be aware of the options you have and how to work around any errors you may encounter as you attempt to create and associate a certificate with your Windows Azure subscription.

Obtain or Create an X.509 Certificate

There are a couple common ways of obtaining an X.509 certificate. You can contact your CA (Certificate Authority) and request one, or more commonly for developers that need a quick-n-dirty approach, you can create your own self-signed certificate. The steps below will walk you through creating your own self-signed X.509 certificate for use with the Windows Azure Service Management REST API. The two most common approaches for doing this on your own include using makecert.exe (a Certificate Creation Tool), or by leveraging IIS. This article will pursue the IIS approach since it just takes a few clicks and we’ll be on our way with a Windows Azure compatible certificate.

To create your own self-signed certificate using IIS you need to open the IIS Management Console and double-click Server Certificates as shown in Figure 2.

Figure 2 – IIS Manager

Once the Server Certificates panel opens, you can just right-click on the background of the panel to display the Server Certificates popup menu, as shown in Figure 3, and select Create Self-Signed Certificate. Then, just follow the screen prompts, enter a name for your certificate, and click OK to complete the process.

Figure 3 – IIS Manager | Server Certificates

Note: A benefit of leveraging IIS for the self-signed certificate creation process is that it automatically defaults to the requirements needed for Windows Azure. These certificate requirements include ensuring that your certificate is a valid X.509 v3 certificate and that it is at least 2048 bits.

Note: A downside of leveraging IIS for the self-signed certificate creation process is that it automatically assigns default values to some of the certificate fields, such as the Subject field, leaving you little option for customization. By contrast, the makecert.exe tool mentioned earlier provides more flexibility during the certificate creation process, as values can be explicitly defined.

Now that your certificate is created we can move on to the next preliminary step.

Associate the Certificate to your Windows Azure Subscription

In order to associate your newly created certificate to your Windows Azure account we first need to export the certificate to a file that we can upload to the Windows Azure portal. We can do this directly in the IIS Management Console. Open the property dialog for your new certificate by double-clicking the certificate that was just created, navigate to the Details tab, and select the Copy to File command as shown in Figure 4.

Figure 4 – IIS Manager | Server Certificates | Certificate Property Dialog

This will launch the Certificate Export Wizard. As you click through this wizard, there are two options you want to pay particular attention to: 1) when asked if you want to export your private key, you should select “No, do not export the private key”, and 2) when asked what format you want to use, you should select “DER encoded binary X.509 (.CER)”; this is illustrated in Figure 5. This process will generate the (.cer) file you need to upload to Windows Azure.

Figure 5 – Certificate Export Wizard

Next, you will need to upload the certificate file [.cer] to the Windows Azure portal. You will need to sign in (if you are not automatically signed in), and navigate to the Management Certificates section, and then choose Add Certificate as indicated in Figure 6 below.

Figure 6 – Windows Azure | Management Certificates | Add Certificate

Important: There are two pieces of information you will want to protect: 1) your subscription ID, and 2) your management certificate. From this point on, anyone you share this information with will be able to access and manage your deployments, your storage account credentials, and your hosted services in Windows Azure.

Now that the preliminary steps of creating a certificate and associating it with our Windows Azure subscription are out of the way, we can get to the meat of this topic and get going with the Windows Azure Service Management REST API’s.

Working with the Windows Azure Service Management REST API’s

There are a few different ways we can choose to interact with the Windows Azure Service Management REST API’s. We can raise the level of abstraction and use the WCF REST Starter Kit, or even the recently announced (PDC 2010) WCF Web API’s. We can also leverage csmanage (a command line Windows Azure Service Management API tool). Additionally, we can leverage raw HTTP requests. I chose the raw HTTP request approach because it doesn’t hide much of what is going on and provides a solid foundation for learning exactly what is needed; this also builds the basis for better understanding any of the higher level abstractions. Specifically, we will try to use the REST API to verify whether a hosted service namespace is available, as well as to create the hosted service.

As we start to research the list of operations available in the Windows Azure Service Management REST API, you will soon discover that we currently do not provide an operation for determining if a hosted service namespace is available. This is unfortunate and presents us with a dilemma. To complicate matters further, we can’t attempt to just resolve the DNS for this verification either. This is because hosted services which have no deployments will have already had their DNS name created and registered; however, the DNS name will be pointing to an IP address of 0.0.0.0. Only once a deployment takes place will we update the DNS record to point to the public virtual IP. Therefore, we are forced into attempting to create the hosted service and checking the response code in order to determine if a given namespace is already taken. We can do this by calling the Create Hosted Service operation and inspecting the result for an HTTP 409 (Conflict) response. I know this is not a great solution, but as of now, it is the only viable one we have.

Let’s analyze the code needed to implement all of this.

We can start with some basic declarations.

Line [2] represents the Windows Azure subscription ID. In case it isn’t obvious, the ID provided above is not a valid subscription ID. You should replace this with your own subscription ID as it will be required for every REST API call we make.

Line [3] represents the certificate thumbprint. You will have to replace this with your own valid thumbprint. This is the thumbprint of the certificate you created to manage your Windows Azure subscription, and it can be copied from the Certificate property dialog, shown in Figure 4 above. We will use the thumbprint later when retrieving the certificate from our local certificate store.

Lines [4-5] represent the management services base URI and the hosted services URI. When combined with your subscription ID, they will form the complete Request URI (e.g., https://management.core.windows.net/<subscription-id>/services/hostedservices).

Note: Defining both azureSubscriptionID and azureCertificateThumbprint as constants was done for didactic reasons. Typically, this information would be stored in a secured configuration file or in the registry.
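The declarations discussed above are not reproduced in this post's text. As a rough illustration only, here is a Python sketch (not the author's C# code) of how the base URI, the subscription ID, and the hosted services path might combine into the request URI. The constant names, the helper `build_request_uri`, and the all-zero placeholder values are hypothetical.

```python
# Placeholder values (assumptions): substitute your own subscription ID and
# certificate thumbprint. These all-zero strings are not valid credentials.
AZURE_SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
AZURE_CERTIFICATE_THUMBPRINT = "0000000000000000000000000000000000000000"

# Base URI and hosted services path, as described in the text.
MANAGEMENT_BASE_URI = "https://management.core.windows.net"
HOSTED_SERVICES_PATH = "services/hostedservices"


def build_request_uri(subscription_id: str, operation_path: str) -> str:
    """Join the base URI, the subscription ID, and the operation path."""
    return "/".join([
        MANAGEMENT_BASE_URI.rstrip("/"),
        subscription_id.strip("/"),
        operation_path.strip("/"),
    ])


if __name__ == "__main__":
    print(build_request_uri(AZURE_SUBSCRIPTION_ID, HOSTED_SERVICES_PATH))
```

Keeping the operation path as a separate argument makes it easy to swap in a different path for operations outside the hosted services collection.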

Next, it is important to remember that every Service Management REST API call will require us to pass along a certificate. We have optimized this process by centralizing the code which returns the needed certificate into a GetManagementCertificate method. The following code snippet extracts the IIS generated self-signed certificate from your personal certificate store on the local machine. The bulk of the work is line [3] where we specify the certificate store name and location, and in line [10] where we execute the Find method and search for our certificate. The results of the Find method return a collection, so lines [13-22] ensure we only return the first element from this collection. In reality, since we are searching by a unique characteristic of the certificate, we should only get 1 element. If we do happen to obtain more than one certificate, which would only be possible if you changed the X509FindType enumeration to search for a non-unique property, we will only return the first element as determined by the code in line [16].
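The lookup-by-thumbprint idea can be sketched outside of .NET as well. The Python below is an illustration under stated assumptions, not the author's GetManagementCertificate method: it treats the "store" as a plain list of DER-encoded certificates and relies on the fact that a certificate thumbprint is the SHA-1 hash of the DER bytes. The function names are hypothetical.

```python
import hashlib
from typing import Iterable, Optional


def normalize_thumbprint(text: str) -> str:
    """Keep only hex digits and upper-case them, so 'ab cd' matches 'ABCD'.

    Thumbprints copied from a certificate dialog often contain spaces or
    other stray characters; filtering to hex digits removes them.
    """
    return "".join(ch for ch in text if ch in "0123456789abcdefABCDEF").upper()


def thumbprint_of(der_bytes: bytes) -> str:
    """SHA-1 digest of the DER-encoded certificate, as upper-case hex."""
    return hashlib.sha1(der_bytes).hexdigest().upper()


def find_by_thumbprint(store: Iterable[bytes], wanted: str) -> Optional[bytes]:
    """Return the first certificate whose thumbprint matches, else None."""
    target = normalize_thumbprint(wanted)
    matches = [der for der in store if thumbprint_of(der) == target]
    # Mirror the behavior described above: only the first match is returned.
    return matches[0] if matches else None
```

Returning None for an empty result makes the "zero element collection" case explicit for the caller instead of raising an index error.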

Extracting the certificate and passing it along with the request can be a troublesome task if you don’t know what to watch out for. Here are some guidelines to help understand some of the intricacies.

Note: You may be one of those people that don’t like the certificate store, and you would rather simplify matters by just reading from the file system. After all, you already exported your certificate and created a [.cer] file to upload into Windows Azure. Why not just send that along with every request? It would certainly be easier than searching the certificate store and returning the first item in the collection. The X509Certificate2 class even has a constructor for just this purpose.

Well, it turns out that you cannot leverage the certificate file [.cer] we exported from IIS. If you remember, the file you exported does not contain your private key, and this is required when making calls to the Windows Azure Service Management REST API. This is why we call the certificate store. We need to obtain the correct certificate and ensure the private key is provided in our request. However, if you would prefer to read your certificate from the file system, as opposed to the more secure certificate store, then you should export your certificate with the private key included. This will create a [.pfx] file and will require a password. More details on how to code for this approach can be found here: http://stackoverflow.com/questions/5036590/how-to-retrieve-certificates-from-a-pfx-file-with-c

If you do not have the right certificate, or if the private key is not provided, you may see the following error when attempting your REST API call: “System.Net.WebException: The remote server returned an error: (403) Forbidden”.

Note: A potentially misleading aspect of creating a self-signed certificate with IIS is that the “Friendly Name” you specify in the wizard does not correlate to the “Subject Name” of the certificate. This can be troublesome when executing the X509Certificate2Collection.Find method using the X509FindType enumeration FindBySubjectName member as a certificate matching the “Friendly Name” will not be returned. Instead, you will get a collection with zero elements. If you happen to encounter a zero element collection when you are sure the certificate exists, then make sure this is not the culprit.

Now that all of the pre-requisites are complete, and we have the code created to obtain our certificate, we can finally execute on what we originally set out to do – create a hosted service by leveraging the REST API. I have included a complete method below which accomplishes this task. Following the code block, I will explain the primary concepts underpinning these lines of code.

Line [1] defines our CreateHostedService method with a string parameter representing the name of the hosted service we would like to create.

Line [6] builds the complete request URI for the Create Hosted Service operation. This is basically a concatenation of the base management URI, your Windows Azure subscription ID, and the hosted services URI. Should you decide to call REST API operations that are not part of the hosted services collection of operations, you will have to substitute the hosted services URI with a more relevant URI.

Line [12] returns a certificate by calling our GetManagementCertificate function. This function leverages the certificate thumbprint to search for and return a valid certificate.

Be aware that although a certificate thumbprint is unique, it is also subject to change when you renew your certificate. This may have implications on your ability to successfully execute code which relies on a certificate thumbprint.

Lines [16-17] create the required request headers for the Create Hosted Service operation. Failure to supply these headers will result in a failure of your request. Also, realize that if you decide to execute other hosted service operations, such as List Hosted Services or Get Hosted Service Properties, you may have a different set of required request headers or request header values. You should refer to the documentation of each hosted service operation for details.

Lines [21-26] create the string which stores the required request body. This request body provides the guts of our REST call. Let’s break these down a bit and analyze a few of the important elements.

Line [22] contains the required operation name <CreateHostedService>. A complete list of all hosted service operations can be found here.

Line [23] contains the required <ServiceName> element. The value of the service name was passed in as an argument to our CreateHostedService method and represents the actual name of the hosted service we will try and create.

Line [24] contains the required <Label> element. Pay close attention to this one. The value provided here must be Base64 encoded. This is accomplished by calling Convert.ToBase64String(Encoding.UTF8.GetBytes("yourLabelValue")).

Line [25] contains the <Location> element. Note that this element is required if the <AffinityGroup> element is not specified. Either the <Location> or <AffinityGroup> element must be present in the call, but not both. For purposes of this example, I selected a location: North Central US. You can obtain a list of all valid locations by calling the List Locations operation. At the time of this post, the valid list of locations returned from the List Locations operation included: “Anywhere US”, “South Central US”, “North Central US”, “Anywhere Europe”, “North Europe”, “West Europe”, “Anywhere Asia”, “Southeast Asia”, and “East Asia”.
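To make the shape of the request body concrete, here is a minimal Python sketch (not the author's C# string-building code). It covers only the elements discussed above: the operation name, the service name, the Base64-encoded label, and exactly one of location or affinity group. The XML namespace value and the function name are assumptions, so check them against the Create Hosted Service documentation before relying on them.

```python
import base64
import xml.etree.ElementTree as ET

# Assumption: the namespace used by Service Management request bodies.
AZURE_NS = "http://schemas.microsoft.com/windowsazure"


def build_create_hosted_service_body(service_name, label,
                                     location=None, affinity_group=None):
    """Return the request body as UTF-8 bytes."""
    # Either Location or AffinityGroup must be present, but not both.
    if (location is None) == (affinity_group is None):
        raise ValueError("Specify exactly one of location or affinity_group")

    ET.register_namespace("", AZURE_NS)  # serialize as the default namespace
    root = ET.Element(f"{{{AZURE_NS}}}CreateHostedService")
    ET.SubElement(root, f"{{{AZURE_NS}}}ServiceName").text = service_name

    # The label must be Base64 encoded (UTF-8 bytes, then Base64 text).
    encoded_label = base64.b64encode(label.encode("utf-8")).decode("ascii")
    ET.SubElement(root, f"{{{AZURE_NS}}}Label").text = encoded_label

    if location is not None:
        ET.SubElement(root, f"{{{AZURE_NS}}}Location").text = location
    else:
        ET.SubElement(root, f"{{{AZURE_NS}}}AffinityGroup").text = affinity_group

    declaration = b'<?xml version="1.0" encoding="utf-8"?>'
    return declaration + ET.tostring(root, encoding="utf-8")
```

Building the body with an XML library rather than string concatenation also escapes any special characters in the service name or location.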

Lines [28-52] provide a fairly typical implementation of how to send an XML string within the body of a web request. This includes escaping the double quotes, defining a byte array, establishing the content length, writing an XML string to a request body stream, and finally calling GetResponse to receive the response stream which we will return along with the status code to whatever procedure calls our CreateHostedService method.
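For comparison, the same request pattern can be sketched with Python's standard library. This is an illustration under assumptions, not the author's code: the `x-ms-version` value shown is an assumed API version, and because Python's `ssl` module loads client certificates from PEM files rather than the Windows certificate store, the `cert_file` and `key_file` parameters are hypothetical paths to an exported certificate and its private key.

```python
import ssl
import urllib.error
import urllib.request


def build_request(uri: str, body: bytes, api_version: str = "2010-10-28"):
    """Create a POST request carrying the XML body and the request headers."""
    return urllib.request.Request(
        uri,
        data=body,
        method="POST",
        headers={
            "x-ms-version": api_version,       # assumed version string
            "Content-Type": "application/xml",
            "Content-Length": str(len(body)),  # length of the encoded body
        },
    )


def send(request, cert_file: str, key_file: str):
    """Send the request with a client certificate; return (status, body)."""
    context = ssl.create_default_context()
    # The private key is required; a public-only .cer export is not enough.
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    try:
        with urllib.request.urlopen(request, context=context) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as err:
        # Error statuses such as 400, 403, and 409 arrive as exceptions.
        return err.code, err.read()
```

Separating `build_request` from `send` keeps the header and body logic inspectable without making a network call.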

Line [57] provides the check we need to determine if this hosted service name already exists. Remember earlier in this post, where we had the requirement to determine if a hosted service already existed? Well, we do not yet have an API we can call to check for this. Therefore, we must use the only alternative we currently have available to us… trapping for the exception that tells us the hosted service already exists. A (409) Conflict HTTP status code will alert us of this condition.

Note: When first running this sample, I discovered my logged in user account did not have the correct permissions to attach the private key from the certificate to the request (even though I was able to obtain the certificate from the certificate store). This resulted in the following error when attempting to execute this method: “The request was aborted: Could not create SSL/TLS secure channel.” To overcome this, you should ensure the account executing this code has the appropriate permissions, or for a quick-n-dirty approach, you can run Visual Studio as an administrator. There are more details on this particular exception in a related MSDN Forum post.

Note: If you have exceeded your hosted services quota, if your request body is malformed, if your request body is missing a required field, or if the <Label> element is not Base64 encoded, you may receive a generic “The remote server returned an error: (400) Bad Request.” More details on this status code and other possible causes can be found in the Service Management Status and Error Codes documentation.

Note: If your hosted service namespace already exists, then you will receive the following response when attempting to call the Create Hosted Service operation: “The remote server returned an error: (409) Conflict.”
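The status codes mentioned in these notes can be gathered into one small decision function. The Python sketch below is illustrative only; the function names and result labels are hypothetical, and treating any 2xx status as success is an assumption rather than something stated in the article.

```python
def interpret_create_status(status_code: int) -> str:
    """Map the HTTP status of a Create Hosted Service call to an outcome."""
    if 200 <= status_code < 300:
        return "created"       # assumption: any 2xx status means success
    if status_code == 409:
        return "name-taken"    # Conflict: the hosted service name exists
    if status_code == 400:
        return "bad-request"   # malformed body, quota exceeded, bad label
    if status_code == 403:
        return "forbidden"     # wrong certificate or missing private key
    return "unexpected"


def is_name_taken(status_code: int) -> bool:
    """True only when the service reports a naming conflict."""
    return interpret_create_status(status_code) == "name-taken"
```

Note that this check has a side effect: when the name is available, the attempt actually creates the hosted service.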

Conclusion

I hope you find this guidance useful as you venture into using the Windows Azure Service Management REST API’s.

Keith is a Principal Program Manager for the Microsoft Business Platform Division (BPD) AppFabric Customer Advisory Team (CAT).

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Sajo Jacob started a new series with a Startup tasks, Elevated privileges & VM Role – Part 1 post of 3/7/2011:

In this series of blog posts, let’s do a hands on implementation of the different role types in Azure and how they differ from each other.

A Primer on Windows Azure Roles

Windows Azure supports three types of roles:

- Web role: used for web applications and is supported by IIS 7.

- Worker role: used for general development and/or to perform any background processing work for a web role.

- VM role: used to run an image of Windows Server 2008 R2 created on-premise with any required customizations.

In addition to these, with the 1.3 SDK you now have the ability to launch your Web/Worker role with elevated privileges and/or use startup tasks to run scripts, EXEs, MSIs, etc. with elevated privileges. So think of it as a Web/Worker role with jet packs.

With great power comes greater responsibility

The diagram above is not meant to say one is better or the other is bad; it is meant to give you an understanding of how the level of abstraction and control varies with each of them. As you start changing the environment going upwards, manageability of the application environment becomes more of your responsibility rather than the Fabric’s.

Startup Tasks

To demonstrate a scenario where you need to prep your application environment with a dependency, let’s attempt to install Internet Explorer 9 beta on a Cloud VM which is running on Windows Server 2008 R2.

Please note that to try this on Windows Server 2008 (non R2) you might have to additionally install Platform update for Windows Server 2008 for DirectX (which is a dependency for IE9).

Here is the screenshot of my solution explorer for visual clarity.

I have a tiny little REST service “DistributeRain” which simply returns the installed browser information

public class DistributeRain
{
    Version ver = null;

    //REST Service to return browser information
    [WebInvoke(UriTemplate = "/GetBrowserVersion", Method = "GET")]
    public string GetBrowserVersion()
    {
        Thread t = new Thread(new ThreadStart(GetBrowserInfo));
        t.SetApartmentState(ApartmentState.STA);
        t.Start();
        t.Join();
        return ver.ToString();
    }

    //Grab the version
    public void GetBrowserInfo()
    {
        WebBrowser browser = new WebBrowser();
        ver = browser.Version;
    }
}

First let’s try an out-of-box install of our service with some of the default configuration settings in the ServiceConfiguration file that is generated with the 1.3 SDK. We still haven’t done anything to install IE9 beta.

Here is how my service configuration looks, notice the highlighted line

<?xml version="1.0" encoding="utf-8"?>
<ServiceConfiguration serviceName="RainCloud" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration" osFamily="1" osVersion="*">
  <Role name="RainService">
    <Instances count="1" />
    <ConfigurationSettings>
      <Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="UseDevelopmentStorage=true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.Enabled" value="true" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountUsername" value="username" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountEncryptedPassword" value="password" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteAccess.AccountExpiration" value="2999-03-23T23:59:59.0000000-07:00" />
      <Setting name="Microsoft.WindowsAzure.Plugins.RemoteForwarder.Enabled" value="true" />
    </ConfigurationSettings>
    <Certificates>
      <Certificate name="Microsoft.WindowsAzure.Plugins.RemoteAccess.PasswordEncryption" thumbprint="thumbprint" thumbprintAlgorithm="sha1" />
    </Certificates>
  </Role>
</ServiceConfiguration>

The following attributes determine the operating system on which your role will be deployed:

osFamily="1" osVersion="*"

1.X versions of the OS are compatible with Windows Server 2008 SP2 and 2.X versions are compatible with Windows Server 2008 R2.
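As a quick way to check which guest OS family a configuration file asks for, the two attributes can be read programmatically. The Python sketch below is illustrative; the function name and the descriptive strings are hypothetical, and reading `osVersion="*"` as "latest available version" is an assumption about how the wildcard is interpreted.

```python
import xml.etree.ElementTree as ET

# Mapping taken from the text: family 1 is Windows Server 2008 SP2
# compatible, family 2 is Windows Server 2008 R2 compatible.
OS_FAMILY_NAMES = {
    "1": "Windows Server 2008 SP2 compatible",
    "2": "Windows Server 2008 R2 compatible",
}


def describe_guest_os(service_configuration_xml: str) -> str:
    """Summarize the osFamily/osVersion attributes of the root element."""
    root = ET.fromstring(service_configuration_xml)
    family = root.get("osFamily")
    version = root.get("osVersion")
    family_name = OS_FAMILY_NAMES.get(family, "unknown OS family")
    # Assumption: "*" means the newest guest OS version in that family.
    if version == "*":
        version_text = "latest available version"
    else:
        version_text = f"version {version}"
    return f"osFamily={family} ({family_name}), {version_text}"
```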

Let’s deploy the above solution as is and check the results.

So we got back Internet Explorer 7, which comes installed out-of-box with a Windows Server 2008 SP2 compatible OS.

Take a look at the OS matrix for the OS flavors available on Azure.

Now let’s flip the osFamily switch and redeploy with the following change.

osFamily="2" osVersion="*"

As expected, you get back a version corresponding to Internet Explorer 8, which is running on a Windows Server 2008 R2 compatible OS.

You can change the version of the Operating System from the portal

Now that we know the lay of the land, let’s attempt to install Internet Explorer 9 beta on a Windows Server 2008 R2 instance.

Install IE9 beta

So the magic piece of code is highlighted below in the ServiceDefinition file.

<?xml version="1.0" encoding="utf-8"?>
<ServiceDefinition name="RainCloud" xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="RainService">
    <Sites>
      <Site name="Web">
        <Bindings>
          <Binding name="Endpoint1" endpointName="Endpoint1" />
        </Bindings>
      </Site>
    </Sites>
    <Endpoints>
      <InputEndpoint name="Endpoint1" protocol="http" port="80" />
    </Endpoints>
    <Imports>
      <Import moduleName="Diagnostics" />
      <Import moduleName="RemoteAccess" />
      <Import moduleName="RemoteForwarder" />
    </Imports>
    <Startup>
      <Task commandLine="IE9.cmd" executionContext="elevated" taskType="simple">
      </Task>
    </Startup>
  </WebRole>
</ServiceDefinition>

You simply need to set commandLine to the name of the program or script that you want to run, and executionContext to the permissions with which you want that command line to run.
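When a service definition grows, it can help to list its startup tasks and their execution contexts before deploying. The following Python sketch is an illustration, not part of the SDK tooling; the function name is hypothetical, and the namespace is the one shown in the ServiceDefinition file above.

```python
import xml.etree.ElementTree as ET

# Namespace copied from the ServiceDefinition root element shown above.
SD_NS = {"sd": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"}


def list_startup_tasks(service_definition_xml: str):
    """Return one dict per <Task> found under any <Startup> element."""
    root = ET.fromstring(service_definition_xml)
    tasks = []
    for task in root.iterfind(".//sd:Startup/sd:Task", SD_NS):
        tasks.append({
            "commandLine": task.get("commandLine"),
            "executionContext": task.get("executionContext"),
            "taskType": task.get("taskType"),
        })
    return tasks
```

Any task reported with an `executionContext` of `elevated` is worth a second look, since it runs with administrative permissions.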

So what is in our IE9.cmd file?

IE9-Windows7-x64-enu.exe /quiet

Yes, that’s it!

Remember to use silent switches (non UI) while installing executables/MSI’s to avoid the need for user intervention.

Make sure to change the command file properties in Visual Studio to “Copy to the Output Directory”

Seems like we’re all set to have IE9 installed on Azure, let’s try another deploy and retest.

So it seems like our IE9 install failed for some reason. Some debugging by remoting in revealed an error: “Process exit code 0x00000070 (112) [There is not enough space on the disk.]”

I did notice in the logs, that the IE9 install was attempting to install a .MSU file (which is a windows update), and anytime you think about Windows Update in Azure, know that Windows update is disabled since all OS updates are managed for you by the platform itself. So I went ahead and turned on Windows Update with a slight change to my startup task.

I added the following to my IE9.cmd batch file.

sc config wuauserv start= auto
net start wuauserv
IE9-Windows7-x64-enu.exe /quiet

Ideally you should turn off Windows Update right after your installs are completed. Note also that installing IE9 introduces reboot scenarios; I will not cover install + reboot scenarios in this blog post.

So I was feeling slightly more confident, but the next deploy with this change had the same issue.

Steve Marx suggested remoting into the Azure VM and running the Startup task as a “System” user which is a great way to simulate what the startup task was attempting to do. I saw the same error when running as System which validated that the issue had something to do with space where the install files were expanding.

%TEMP%

There is a 100 MB limit on the TEMP folder used by startup tasks. To get around the quota and make sure that my process picked up a different TEMP location, I wrote a console app that spawned my IE9 install; before spawning the install, it did the following to set the TEMP variable. All you need to do after that is call the console app from the startup task.

Directory.CreateDirectory(path);
Environment.SetEnvironmentVariable("TEMP", path);

Let’s see what our REST service returns after this change.

Perfect, we now get back the version corresponding to IE9 beta. Hopefully this will get you started with thinking about the possibilities and applications for Startup tasks.
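The TEMP redirection trick described earlier (create a roomier directory, point TEMP at it, then spawn the installer) can be sketched in a few lines. This Python version is an illustration, not the author's console app: the function name is hypothetical, and setting TMP alongside TEMP is an extra assumption, since some programs consult TMP instead.

```python
import os
import subprocess
import sys


def run_with_temp(command, temp_dir):
    """Run `command` with TEMP (and TMP) pointing at `temp_dir`.

    Returns the child's exit code. The directory is created if missing.
    """
    os.makedirs(temp_dir, exist_ok=True)
    # Copy the current environment and override only the temp variables,
    # so the child process sees the new location.
    env = dict(os.environ, TEMP=temp_dir, TMP=temp_dir)
    return subprocess.run(command, env=env, check=False).returncode


if __name__ == "__main__":
    # Example: show the TEMP value seen by a child Python process.
    run_with_temp(
        [sys.executable, "-c", "import os; print(os.environ['TEMP'])"],
        os.path.join(os.getcwd(), "bigtemp"),
    )
```

Passing the modified environment to the child, rather than changing the parent's own variables, keeps the change scoped to the one install process.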

I will cover Elevated privileges and VM Roles in subsequent blog posts. Stay tuned!

Sajo is a Senior Program Manager on the Windows Azure Team.

Stuart Dredge prefaced his A Windows Phone 7 app that explores the outer atmosphere article of 3/7/2011 for the Guardian with “How the University of Southampton developed an app following a balloon 70,000 feet above the ground:”

Sending an HTC 7 Trophy smartphone into the stratosphere in a balloon might sound like an attention-grabbing stunt. However, last Friday a team from Southampton University did it for entirely serious atmospheric research reasons. And yes, there was indeed an app for that.

The School of Engineering Science team worked with Microsoft to develop a Windows Phone 7 application to help track the location of the handset and balloon, and predict its ultimate landing site.

In Flight Mode, the app installed on the handset was able to record and transmit GPS and location data part of the way up and down – while within range of mobile networks – while in Hunter Mode on the team's own handsets, it plotted the latest data and predicted a landing site on a Bing map.

The flight was part of the university's Astra project – Atmospheric Science Through Robotic Aircraft. "We want to explore the outer atmosphere using low-cost high-altitude unmanned aircraft," said research fellow Andras Sobester.

"We are collecting data which will then plug in to various atmospheric science projects, monitoring pollutants such as volcanic ash for example, and informing the science behind modelling the climate and earth system in general."

Friday's flight was a test run to see if the handset was up to the task – it passed with flying colours – while testing the technology out. The positioning data was sent to a Windows Azure server, which did the number-crunching work to calculate its course. The software and app was the work of developer Segoz, working with the university team. [Emphasis added.]

Why use a phone to do this? "10 years ago, using a box with a 1GHz processor wouldn't have come in under 10kg in weight," said research fellow Steven Johnston. The need to reduce the "payload" on the balloon is what made a smartphone appealing, but why Windows Phone 7 rather than, say, an iPhone or Android handset?

"We looked at iPhone, but objective C is just not for me, on a personal level," says Johnston. "I love my Visual Studio and do a lot of net development, so I didn't need to learn any new skills for this. The key thing is that we're not doing all the calculations on the phone itself: Azure comes in for the serious backend processing."

Johnston said he's looking forward to Microsoft extending the Bluetooth stack in Windows Phone 7, which would enable a handset to communicate with more on-board devices. One use for this might be to transmit data via satellite to track the entire flight rather than just the parts within range of a GSM network. Meanwhile, other sensors – he mentions a compass – will enable the phones to record more useful data.

Geoff Hughes, academic team lead in Microsoft's developer platform and evangelism division, says the app's Hunter Mode shows potential for other kinds of scientific research, when there is a wider audience of people who would be interested in following events.

"If you could get a whole series of people following a scientific experiment in this way, they could potentially take part in the project," he says. "It's an exciting spin on trying to get the community involved with what is a very fascinating science project."

Sue Tabbit asserted “Moving its computing system into the cloud has helped the postal service cut costs and improve flexibility” as a deck for her Microsoft Cloud Computing helps modernise Royal Mail article of 3/7/2011 for The Telegraph:

Difficult times call for drastic measures. So it is that Royal Mail, by its own admission a very conservative organisation, finds itself blazing a trail in cloud computing.

Facing threats of increased competition and now privatisation, the national postal service is under growing pressure to operate more efficiently. For the IT department, the challenge is even greater. Without a flexible, scalable IT infrastructure, there is only so dynamic the company can be.

It was for this reason that Royal Mail began to look into the cloud option – at a time when it was still very much ‘‘bleeding edge’’, Mr Steel notes. “It was 2008 and we were entering the recession,” he explains. “We were looking for rapid modernisation.”

Although it already had an external service provider running its IT systems from a data centre, flexibility to adjust the terms of the agreement was limited. The company needed to be able to scale and adapt its IT systems more dynamically, without paying a premium for the privilege.

Royal Mail has swapped an old, on-premise Lotus Notes office application for a flexible, modern solution (Microsoft Business Productivity Online Suite – BPOS) which is hosted entirely in the cloud. Servers, software, storage and bandwidth are all supplied on demand, so that Royal Mail pays only for the resources that it consumes. As Mr Steel explains, when staff numbers swell by 20 per cent to cope with the Christmas rush, the systems are scaled up accordingly – then switched off again in January.

Over its current four-year contract, the company will save 10-15 per cent on maintenance costs alone, as it no longer owns the IT systems involved; these are now the responsibility of Microsoft and CSC, Royal Mail’s broader IT service provider.

Security and compliance is not a major issue, Mr Steel adds. Royal Mail began conservatively; reaping the benefits of the cloud for office applications while keeping more sensitive material in house.

Now Mr Steel’s team has opted for another of Microsoft’s solutions, Hyper-V, for more sensitive line-of-business applications such as order processing.

This provides a private cloud environment, with all the associated advantages of cloud computing such as flexibility and scalability, while allowing sensitive data to be kept on premise and under direct control. “Importantly, we can seamlessly move applications in and out of the cloud as and when we choose,” Mr Steel notes. The success of these bold initiatives has been surprising, even to him.

A survey of 30,000 users revealed that there was ‘‘phenomenally’’ high user satisfaction with the transition. Crucially, the project has not been solely about maintaining the status quo while throwing in a bit more flexibility. Operating on a platform that has been optimised for the cloud means that employees can collaborate with unprecedented ease.

Soon after going live, 142,000 online meeting minutes were hosted in the cloud in a single month.

Developing additional customised collaboration applications based on SharePoint is easy too, with CSC developing and deploying these within days or weeks rather than months. Examples include a system for booking rooms (users can search and view suitable venues and check availability) and a corporate YouTube application.

“We spent a lot of time making our version of SharePoint very graphical so that it draws users in,” Mr Steel notes.

“This is important, because we know we’ll be using the cloud increasingly in future,” he adds. “We are seriously considering rewards in cloud storage. Instead of paying £30 a year per gigabyte to store documents, we can look towards costs in the region of £1-£1.50 per gigabyte, because the process depends on sharing high-capacity resources with other companies. More significantly, it will achieve a level of business agility that would otherwise have cost up to £10m to attain.”

Mr Steel is now something of a cloud evangelist. “In terms of its importance, cloud computing is right up there with the internet itself,” he says.

“It has given us a foundation to adapt and change so, whatever the future holds, we are ready. Decisions are infinitely quicker now.”

Related Articles

- The power of cloud computing, 17 Feb 2011

- Cloud Computing works for social enterprises, 07 Mar 2011

It appears to me that this page was sponsored by Microsoft because there’s a Windows Phone 7 contest announcement in the right IFrame. However, I didn’t see a note to that effect.

Aaron Godfrey posted An interesting read from CIO at http://bit.ly/ga7yTk (10/15/2009) but good to see that we've come a long way since 2009 when it comes to migrating to Azure to LinkedIn’s Windows Azure User Group on 3/7/2011:

Many of the issues the article raises have been resolved through the Visual WebGui Instant CloudMove solution, which takes a migration approach combined with a virtualization engine.

Through its unique virtualization framework, a layer existing between the web server and the application and its highly automated process, Instant CloudMove converts legacy applications to native .Net code and then optimizes it for Microsoft's Azure platform. This produces new software that runs fast and efficiently on the cloud while retaining the desktop user controls from the original app. It also offers a modernization alternative complementing the automatic migration process with user interface modernization. The underlying Visual WebGui framework implements an exceptionally light HTTP/XML web protocol using AJAX which remains transparent to the developers.

Automation that reduces costs dramatically is the essence of the product. The process is structured in such a way that it reduces the number of human decisions to a bare minimum. It can almost be said that Instant CloudMove is 'C/S to web for dummies'.

But perhaps the greatest strength of Instant CloudMove is how it handles security. The issue of security is a major problem on the cloud, due to AJAX-based GUIs. Visual WebGui, however, implements AJAX in a completely secure fashion. It opens a single secured channel and the server side components will not accept input of any type or from any region of the application that is not expected. Its input algorithm in many ways resembles a web application firewall, blocking any malicious or out-of-place input.

To this end, Gizmox prides itself on an open offer of a $10,000 prize to any hacker who succeeds in compromising a Visual WebGui application!

Instant CloudMove comprises two tools: the CloudMove Assessment Tool and the CloudMove Transposition Tool. The first is an essential aid in analyzing the source application and estimating its suitability for transition to the cloud platform. It can review a number of apps and rank them according to their suitability for migration, and even predict their running costs on Azure. The Transposition Tool's job is to turn that estimate into reality by transposing the .NET code to work efficiently on the Windows Azure platform.

Gizmox is offering the CloudMove Assessment Tool absolutely free so ISVs and enterprises alike can see just what the migration is going to involve before they decide.

A bonus of using Instant CloudMove is that after the system is up and running on the Azure cloud platform, continued web development can be done in a pure .Net environment through the highly successful Visual WebGui Professional Studio, maximizing existing resources on the new platform.

Summary

Instant CloudMove is a major cost saver for enterprises and ISVs looking to leverage cloud platform technology now. The contributory factors to this are:

- It provides a realistic path to the cloud model now seen more than ever as a highly cost-effective infrastructure, compared to existing platforms.

- It lets that path be taken quickly and with minimal changes in HR and expertise requirements.

- It runs highly efficiently on the Microsoft Azure cloud, a resource-based model, consuming less CPU and less bandwidth than web solutions and therefore costing less to run.

- It produces web applications with rock-solid security and a rich GUI, just like the original C/S application.

And on top of all that, by facilitating the move to cloud, Instant CloudMove expands the availability and accessibility of the (once) legacy application to virtually any computing device or mobile phone, giving a SaaS model to ISVs and unlimited user scope to enterprise apps. To learn more please follow @VisualWebGui.

The Windows Azure Team got around to announcing NOW AVAILABLE: February 2011 Update of the Windows Azure Platform Training Kit on 3/7/2011:

February 2011 update of the Windows Azure Platform Training Kit is now available for download. The Windows Azure Platform Training Kit provides hands-on labs (HOLs), presentations, and samples to help you understand how to build applications on the Windows Azure platform. This new version includes several updated HOLs to include support for the Windows Azure AppFabric February CTP and the new AppFabric Caching, Access Control, and Service Bus portal experience.

Specific updates include:

- HOL: Building Windows Azure Apps with the Caching service

- HOL: Using the Access Control Service to Federate with Multiple Business Identity Providers

- HOL: Introduction to the AppFabric Access Control Service V2

- HOL: Introduction to the Windows Azure AppFabric Service Bus Futures

- Improved PHP installer script for Advanced Web and Worker Roles

- Demo Script - Rafiki PDC Keynote Demo

In addition to these new updates, the demo scripts and setup scripts for all HOLs have been updated to allow users on machines running Windows 7 SP1 to access all materials. [Emphasis added.]

Click here to download the February 2011 update of the Windows Azure Platform Training kit. Click here to browse through all of the HOLs on MSDN.

The download page has 3/1/2011 as its date.

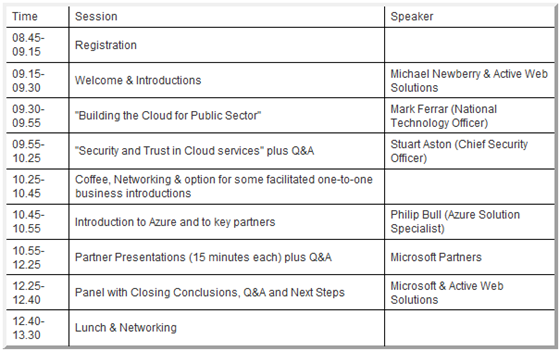

Eric Nelson (@ericnel) answered Q&A: Where does high performance computing fit with Windows Azure? in a 3/7/2011 post:

Answer

I have been asked a couple of times this year about taking compute intensive operations to Windows Azure and/or High Performance Computing on Windows Azure. It is an interesting (if slightly niche) area. The good news is we have a great paper from David Chappell on HPC Server and Windows Azure integration.

As a taster: A SOA application running entirely on Windows Azure runs its WCF services in Azure Worker nodes.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework

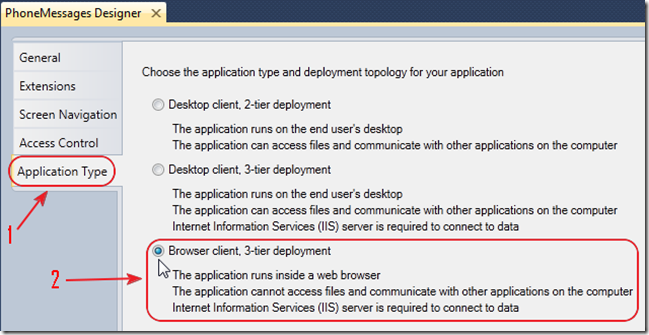

Paul Patterson (@PaulPatterson) explains how to Make Money with Microsoft LightSwitch in a 3/7/2011 post:

A lot of rhetoric is circulating the web regarding Microsoft [Visual Studio] LightSwitch. So far only a beta version of LightSwitch has been released for vetting by the developer community. Even at the currently released beta version, LightSwitch has certainly stirred up some discussion on how and where this new software development tool will fit into the grand scheme of things.

By releasing the beta version of LightSwitch to the software developer community, Microsoft is soliciting feedback in the hopes that when released, LightSwitch will add significant value (and more sales) to the Microsoft Visual Studio line of software development tools. The value proposition to developers is that LightSwitch will dramatically decrease the amount of time it takes to create professional quality software.

So how can my business make money with LightSwitch?

Last summer I wrote an article (see here) that discussed how LightSwitch could provide value to an organization by empowering it with a tool that can deliver an enterprise class solution in a substantially shorter period of time. Can you say, “Quicker ROI”?

In the 9 months since that article I have poked and prodded LightSwitch (beta 1). I have created numerous silly applications that are meaningless but, nonetheless, have provided me some insight that has been invaluable.

So here it is…

LightSwitch will help your business make more money when LightSwitch is used for creating software solutions.

Here is how…

The three main risks, or constraints, of a typical project are the scope (the requirements) of the project, the amount of time available to complete the project, and the amount of money available to pay for the project. These constraints affect one another and ultimately provide the inputs that measure the success of a project.

LightSwitch offers value in a number of ways that mitigate each of the risks mentioned above. Most remarkable will be the decrease in the costs and time required to create and write a software solution.

LightSwitch is a development tool that uses point and click style dialogs to configure a data centric software application, without the need to write any software development code. Using this data centric approach to development, information about the types of data being used is entered into simple dialog windows within LightSwitch. User interface screens are configured and generated using point and click interactions. Similar point and click interactions mean that a developer can create and deploy a software solution to the desktop or the web.

Anyone Can Use It (well, almost)

This means that the developer does not necessarily need to be a “developer”. A person with a little knowledge of data design will have the necessary knowhow to create an application using LightSwitch.

Yes, LightSwitch will give those who “know enough to be dangerous” the ammunition needed to create something for their organization. LightSwitch, however, has been designed to mitigate the risks associated with those types of users. LightSwitch constrains most of the design and generated output to baked-in software best practices. Using Silverlight and RIA based service technology, even the simplest designs can churn out a quality solution.

Some developer pundits liken LightSwitch to a code generation tool. Others suggest that LightSwitch is a glorified Microsoft Access. Subjective as it may seem, it’s my opinion that LightSwitch is simply a tool and should not be looked upon as a replacement for anything. Regardless of which way you look at it, LightSwitch is a development tool, plain and simple.

Using LightSwitch, I can create a web ready Silverlight based solution in less than an hour. Using the traditional approach would have meant several days of combined coding and database development efforts, not to mention the time it would take to deploy the solution.

Admittedly, LightSwitch constrains a generated solution in areas such as user interface design, but the tradeoff is huge. As long as the scope of function points is met, the project sponsors are happy and are more than comfortable with the graphical user interface that LightSwitch produces, as long as it does the job and helps them make money.

Notwithstanding, by design LightSwitch is “extendable”, meaning that the baseline solution that gets created can easily be extended to meet other requirements through all kinds of data sources, user interface elements, and various other extension points.

Having said that, LightSwitch is currently released as a beta 1 version of a product. Beta 2 is expected soon and with the developer community’s continued involvement, the official release of LightSwitch will be piggy backed with extensions that will make for a very attractive and community supported product.

Been There, Done That

My years of experience in business analyst and project management roles have given me a perspective that I believe most software developers have not had an opportunity to experience and learn from. That perspective is one from a greater organizational view, and how an organization measures the value of a (custom) developed software solution.

When presenting a business case to the key stakeholders of a business, it is the answers to the “how will it make me money?” questions that they want to hear. I can show each of them that I can deliver a quality solution faster than the competitors. And if a project sponsor wants to know, “How quickly will I see my ROI?”, what do you think has a greater chance of being championed and approved: something that gets created with lots of bells and whistles, or something that simply gets the job done?

You tell me…

Arthur Vickers answered When is Code First not code first? in Entity Framework 4.1 on 3/7/2011:

We recently announced the upcoming release of EF 4.1 which includes support for the Code First approach to EF development. This name fits nicely into the three approaches supported by the Entity Framework: Database First, Model First, and Code First. However, it can be a little misleading in that using Code First does not always mean writing your code first.

Database First

The idea behind Database First is that you start with an existing database and use tools (such as the EF Designer in Visual Studio) to generate C# or VB classes from the database. You can modify the classes that are created and the mappings between the classes and the database through the EF designer, or even, if you are feeling brave, through modification of the underlying XML. The key to this approach is that the database comes first and the code and model come later.

Model First

The idea behind Model First is that you start with a blank canvas and then design an Entity Data Model, again using the EF Designer. This model can then be used to generate a database and also to generate C# or VB classes. The key to this approach is that the model comes first and the code and database come later.

Code First generating a database

In the original prototypes of Code First it was actually called Code Only. This was because the only thing that you needed to write was code—the model and the database were created for you. This is the approach we have demoed at conferences and have shown in walkthroughs, etc.

This approach can also be considered a model first approach depending on how you think about your model. The Model First approach described above is for people who like to create an Entity Data Model using a visual designer. However, many developers instead choose to model their application domain using just the code. This approach is often associated with Domain Driven Design (DDD) where the domain model is represented by the code. So, depending on how you view modeling and how you want to represent your model, you may also consider Code First to be a model first approach.

Having Code First generate your database for you is certainly a very powerful approach and we see a lot of people using it, but if it doesn’t work for you then read on!
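
As a rough illustration of the "write only code" workflow described above, here is a minimal C# sketch. The `Blog`, `Post` and `BloggingContext` names are hypothetical and do not come from the original article, and the type names follow the EF 4.1 release; earlier previews used different names. With no connection string supplied, the context falls back on its default conventions to locate or create a database, so the exact target depends on your local setup.

```csharp
using System;
using System.Collections.Generic;
using System.Data.Entity;   // EF 4.1 (EntityFramework.dll)
using System.Linq;

// Hypothetical domain classes; by convention "BlogId" and "PostId" become keys.
public class Blog
{
    public int BlogId { get; set; }
    public string Title { get; set; }
    public virtual ICollection<Post> Posts { get; set; }
}

public class Post
{
    public int PostId { get; set; }
    public string Body { get; set; }
    public int BlogId { get; set; }           // foreign key inferred by convention
    public virtual Blog Blog { get; set; }
}

// The context exposes the sets; the model and database are derived from the classes.
public class BloggingContext : DbContext
{
    public DbSet<Blog> Blogs { get; set; }
    public DbSet<Post> Posts { get; set; }
}

public static class Program
{
    public static void Main()
    {
        using (var db = new BloggingContext())
        {
            // First use of the context triggers database creation if none exists.
            db.Blogs.Add(new Blog { Title = "Hello Code First" });
            db.SaveChanges();
            Console.WriteLine(db.Blogs.Count());
        }
    }
}
```

Note that no mapping file or designer surface is involved here; the classes themselves act as the model.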

Code First with an existing database

Imagine you have an existing database and maybe your DBA doesn’t even let you modify that database. Can you still use Code First? The answer is “Yes!” and we are seeing lots of people doing this. Furthermore, this isn’t just an afterthought; Code First has been specifically designed to support this. The steps are very simple:

- Make sure your DbContext knows how to find your existing database. One way to do this is to put a connection string in your config file and then tell DbContext about it by passing “name=MyConnectionStringName” to the DbContext constructor. See this post for more details.

- Create the classes that you want to map to your database.

- Add additional mapping using data annotations or the Code First fluent API to take care of the places where the Code First conventions need to be overridden to get correct mappings.

This approach is essentially a database first approach, but using code for the mapping instead of a visual designer and XML. We hope to make a T4 template available that would start from an existing database and do the above steps for you—we’d like to hear from you if you would find such a template useful.
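
The three steps above might look roughly like the following C# sketch. The `Customer` class, the `LegacyContext` name, and the table and column names (`tbl_Customer`, `customer_id`, `customer_name`) are invented for illustration; only the `name=MyConnectionStringName` convention comes from the article. Disabling the database initializer is an extra precaution that the article does not mention. It is included here on the assumption that the schema must never be touched.

```csharp
using System.Data.Entity;   // EF 4.1 (EntityFramework.dll)

// Step 2: a plain class to map onto an existing table (hypothetical schema).
public class Customer
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public class LegacyContext : DbContext
{
    static LegacyContext()
    {
        // Assumption, not from the article: turn off initialization so
        // Code First never tries to create or alter the existing database.
        Database.SetInitializer<LegacyContext>(null);
    }

    // Step 1: look up the connection string by name in the config file.
    public LegacyContext() : base("name=MyConnectionStringName") { }

    public DbSet<Customer> Customers { get; set; }

    protected override void OnModelCreating(DbModelBuilder modelBuilder)
    {
        // Step 3: override conventions where the existing schema differs.
        modelBuilder.Entity<Customer>().ToTable("tbl_Customer");
        modelBuilder.Entity<Customer>().HasKey(c => c.Id);
        modelBuilder.Entity<Customer>().Property(c => c.Id).HasColumnName("customer_id");
        modelBuilder.Entity<Customer>().Property(c => c.Name).HasColumnName("customer_name");
    }
}
```

The same overrides could be expressed with data annotations on the `Customer` class instead; the fluent API is used here only because it keeps the mapping in one place and leaves the class free of attributes.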