Windows Azure and Cloud Computing Posts for 3/4/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 3/5/2011 1:00 PM with additional 3/4/2011 articles marked •

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services


Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

The SQL Server Team added a new Community Page to their site on 3/4/2011.


Marketplace DataMarket and OData

• Bruno Aziza interviewed Jamie MacLennan, CTO of Predixion Software, in a 00:06:23 Predicting the Future: The Next Step in BI Bizintelligence.tv segment:

Predictive analytics is the next step in BI: not only can you be retrospective and see what has happened in your company in the past, but now we can distill new information from the old information to actually predict what will happen in the future. Jamie MacLennan, CTO of Predixion Software, explains the difference between business intelligence and predictive analytics and shares a program that Predixion has created in Excel to review the Practice Fusion data.

The following video shows how Predixion used medical data available on Azure Marketplace DataMarket to quickly build an application on Excel 2010 with PowerPivot. Titled "Prescription Finder," this application uses historical medical data to find the top 10 medications prescribed for any given medical diagnosis, while also using predictive analytics to suggest possible related conditions.
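At its core, a "top 10 medications for a diagnosis" query is a group-and-count followed by a descending sort. The Java sketch below shows that step over an in-memory list; the `Prescription` record, the sample rows and the alphabetical tie-breaking rule are assumptions for illustration only, not Predixion's implementation (which the video describes as built in Excel 2010 with PowerPivot).

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

public class PrescriptionFinderSketch {
    // Hypothetical shape of one historical prescription row.
    record Prescription(String diagnosis, String medication) {}

    // Returns up to n medications most often prescribed for the diagnosis,
    // most frequent first; ties are broken alphabetically so output is stable.
    static List<String> topMedications(List<Prescription> rows, String diagnosis, int n) {
        Map<String, Long> counts = rows.stream()
                .filter(r -> r.diagnosis().equals(diagnosis))
                .collect(Collectors.groupingBy(Prescription::medication, Collectors.counting()));
        return counts.entrySet().stream()
                .sorted(Map.Entry.<String, Long>comparingByValue().reversed()
                        .thenComparing(Map.Entry.<String, Long>comparingByKey()))
                .limit(n)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Prescription> rows = List.of(
                new Prescription("hypertension", "lisinopril"),
                new Prescription("hypertension", "amlodipine"),
                new Prescription("hypertension", "lisinopril"),
                new Prescription("asthma", "albuterol"));
        System.out.println(topMedications(rows, "hypertension", 10));
    }
}
```

With the sample rows, `main` prints `[lisinopril, amlodipine]`. A production query would run against the DataMarket data set rather than an in-memory list.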

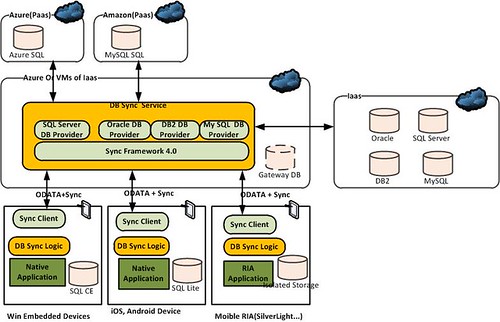

• Paul posted Android OData + Sync client to the Flick Software blog on 2/22/2011 (missed when posted):

In a previous blog post, we talked about integrating mobile RDBs with enterprise clouds using Sync Framework 4.0: http://flicksoftware.com/2010/12/integrate-mobile-rdb-with-rdb-in-the-clouds-using-sync-framework-4-0/. Since then, we have spent some time developing an OData + Sync client on Android and building a prototype on top of it.

The following is an intro to OData + Sync protocol from the Microsoft website. "The OData + Sync protocol enables building a service and clients that work with common set of data. Clients and the service use the protocol to perform synchronization, where a full synchronization is performed the first time and incremental synchronization is performed subsequent times. The protocol is designed with the goal to make it easy to implement the client-side of the protocol, and most (if not all) of the synchronization logic will be running on the service side. OData + Sync is intended to be used to enable synchronization for a variety of sources including, but not limited to, relational databases and file systems. The OData + Sync Protocol comprises of the following group of specifications, which are defined later in this document:”

| Specification | Description |
| --- | --- |
| | Defines conventions for building HTTP based synchronization service, and Web clients that interact with them. |
| | Defines the request types (upload changes, download changes, etc…) and associated responses used by the OData + Sync protocol. |
| [OData + Sync: ATOM] | Defines an Atom representation for the payload of an OData + Sync request/response. |
| [OData + Sync: JSON] | Defines a JSON representation of the payload of an OData + Sync request/response. |

Our Android OData + Sync client implements the OData + Sync: Atom protocol: it tracks changes in SQLite, incrementally transfers DB changes and resolves conflicts. It wraps up these general sync tasks so that applications can focus on implementing business-specific sync logic. Using it, developers can sync their SQLite DB with almost any type of relational DB on the backend server or in the cloud. It can speed up the development of mission-critical business applications on Android. These types of applications use SQLite as local storage to persist data, and sync data to and from the backend (clouds) when a connection is available. As a result, the business workflow won't be interrupted by a poor wireless signal, data loss caused by hardware or software defects will be minimized, and data integrity will be guaranteed.
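The first-full-then-incremental synchronization described above can be sketched in Java with in-memory maps standing in for SQLite and the sync service. This is a hypothetical model, not the Flick Software client or the OData + Sync wire format: the `Service` and `Client` classes, the integer version "anchor" and the last-writer-wins conflict rule are all assumptions chosen to keep the example short.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SyncSketch {
    // A row with a server-assigned version; 0 means "not yet synced".
    record Row(String id, String value, long version) {}

    // Stand-in for the sync service.
    static class Service {
        final Map<String, Row> rows = new HashMap<>();
        long clock = 0; // last version number handed out

        // Upload: store each change under a new version; later uploads
        // overwrite earlier ones (last writer wins).
        void upload(Collection<Row> changes) {
            for (Row r : changes) {
                clock++;
                rows.put(r.id(), new Row(r.id(), r.value(), clock));
            }
        }

        // Download: every row newer than the client's anchor.
        // An anchor of 0 returns everything, i.e. a full synchronization.
        List<Row> changesSince(long anchor) {
            List<Row> out = new ArrayList<>();
            for (Row r : rows.values()) {
                if (r.version() > anchor) out.add(r);
            }
            return out;
        }
    }

    // Stand-in for the Android client: local table, change log and anchor.
    static class Client {
        final Map<String, Row> local = new HashMap<>();
        final Map<String, Row> pending = new LinkedHashMap<>(); // tracked local changes
        long anchor = 0; // 0 = never synced, so the first sync is a full sync

        void edit(String id, String value) {
            Row r = new Row(id, value, 0);
            local.put(id, r);
            pending.put(id, r); // only the latest edit per row is uploaded
        }

        void sync(Service service) {
            service.upload(pending.values()); // send local changes first
            pending.clear();
            for (Row r : service.changesSince(anchor)) { // then pull newer rows
                local.put(r.id(), r);
                anchor = Math.max(anchor, r.version());
            }
        }
    }
}
```

In a real client, the pending-change log would be a SQLite table, and `upload`/`changesSince` would be OData + Sync HTTP requests carrying Atom payloads.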


Windows Azure AppFabric: Access Control, WIF and Service Bus

The Windows Azure AppFabric Team advised Recent changes might cause Windows Azure AppFabric Portal error message for a few days in a 3/4/2011 post:

In a few days, we’ll be deploying an updated Windows Azure AppFabric portal to correspond to recent changes in our environment. In the interim, users of the AppFabric portal located at http://appfabric.azure.com may experience the following issue:

When creating a new service namespace, the namespace appears as “Activating” for several seconds and then appears as “Active”.

If you click on the namespace link you may be redirected to an error page.

If you wait 2-5 minutes, the problem should go away and you should be able to view your namespace information.

We will launch the new portal which will resolve these errors in a few days. We apologize for any inconvenience.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Polly S. Traylor wrote Businesses in the Cloud: Stories From the Ground on 3/3/2011 for the Fox Small Business Center:

If you still can’t wrap your brain around "the cloud" and what it can do for your business, just think of it as a Web-based form of outsourcing. For a small business that doesn't have the resources to manage repetitive business processes and technology in-house, cloud computing can be a valuable time- and money-saver.

Arzeda, a four-person Seattle biotech firm, needed more data processing power for product development — but this would've required an investment of $250,000 in new technology to plan for peak capacity. Instead, the company signed up for a Microsoft cloud service, Windows Azure, which gives it the computing power it needs for a lot less (read case study). [Emphasis added.]

“We pay only for what we use,” says Alexandre Zanghellini, co-founder of Arzeda. “We’re spending only 13 percent of what would have been our capital expense for an on-premises solution, and have made it just an operational expense."
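A quick check of the figures, assuming the 13 percent refers to the $250,000 peak-capacity investment mentioned in the previous paragraph, puts Arzeda's spending at roughly:

$$0.13 \times \$250{,}000 = \$32{,}500$$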

How it works

Cloud service providers charge fees to remotely host and manage their customers’ applications, infrastructure or data. Workers can access the data and applications from any device with an Internet connection, using a login and password.

Cloud services are so affordable because the provider can spread costs among many customers, making it a shared resource rather than a dedicated one. Customers benefit by paying only for what they use, and gain the flexibility to scale up or down as needs change. In addition, companies don't have to purchase software licenses or manage any technology themselves. There are many ways a small company can use the cloud to offload time-intensive and costly tasks: Cloud providers can handle storage, administer backups and security, host business software or manage virtually any process that can be computerized.

Jeremy Pyles — CEO and founder of Niche Modern, a Beacon, New York, maker of glass-blown pendant lights and chandeliers — used to spend anywhere from 20 to 30 hours a week on bookkeeping activities. Since he began using an online billing service called Bill.com in 2008, he's spending only two or three hours weekly.

This is despite the fact that the company, originally a retail business in Manhattan, switched gears when he and his wife launched a design and manufacturing business in 2005. In every year except 2009, the six-person company has doubled its sales. "It's been very exciting, but also tricky because we are manufacturing the products ourselves and we don't have much of an infrastructure," Pyles says. "That put a lot of stress on me and my wife."

Why the cloud?

Pyles decided he needed a solution that would allow him to focus more on business strategy and marketing, and less on document management. His first step was to use a paperless check service offered by his bank, Bank of America. But he couldn't integrate the electronic checks with his company's accounting system, Intuit QuickBooks, and there was no workflow for approvals. After doing more research online, he settled on Bill.com, which met his requirements for workflow and syncing with QuickBooks, and would be easy for anyone in his company to use. The service costs $50 per month, which is a bargain compared to hiring a bookkeeper at $40 an hour, he says.
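To put that comparison in concrete terms, at the quoted rates the monthly Bill.com fee would buy only this much bookkeeper time:

$$\frac{\$50 \text{ per month}}{\$40 \text{ per hour}} = 1.25 \text{ hours per month}$$

That is less than the two or three hours a week Pyles still spends on bookkeeping after adopting the service, and far less than the 20 to 30 hours a week he spent before.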

Now, Niche Modern’s vendors (including the company's full-time contractors) e-mail invoices to Bill.com, where they are processed and stored. Within minutes, Bill.com e-mails the invoices to Pyles’ assistant, who routes them to managers for approval. Every day, Pyles logs on to Bill.com for a few minutes to view and approve bills and schedule payments in advance.

"I still get to be involved in the last step of workflow, which is the peace-of-mind component," he says. Pyles’ assistant can also scan and fax paper invoices and send them to the Bill.com e-mail address. Within 15 days, vendors get paid.

There are other benefits, too: Bill.com provides a cash flow forecast based on Niche Modern’s billing and invoicing records, which Pyles says is invaluable for business planning. Finally, with QuickBooks integration, Niche Modern has an internal backup of all billing documentation, in addition to storage at Bill.com. Pyles says he's considering using Bill.com for customer invoicing as well. It can take a leap of faith to put part of your business in the cloud. Strong security and backup processes are mandatory, and you'll need to consider the provider’s track record and history. But consider what the extra time can do for you.

It gave Pyles the ability to focus on renegotiating business contracts, including insurance, which has saved his company thousands of dollars per year. Being more efficient has even played a role in helping the company grow and hire three new employees in the last year. “[Now] we want a new hire to do things that only humans can do, not what computers can do," says Pyles.

Polly is a former high-tech magazine journalist with CIO and The Industry Standard, among others. She writes about business, health care and technology from Golden, Colorado.

• Mauricio Rojas explained Azure Migration: ASP.NET Site with Classic Pipeline mode in a 3/4/2011 post to the ArtinSoft blog:

Recently, while we were migrating a blog engine written in ASP.NET to Azure, we had to deal with some issues caused by the differences between IIS6 and IIS7.

Windows Azure Web Roles use IIS7 with Integrated Pipeline mode, and that is a breaking change for some ASP.NET sites. There are at least two main changes:

The first concerns HTTP modules and handlers. (This info was taken from: http://arcware.net/use-a-single-web-config-for-iis6-and-iis7/)

"For IIS6, they were configured in the <system.web> section, as such:

```xml
<system.web>
  <httpModules>
    <add name="..." type="..." />
    <add name="..." type="..." />
    <add name="..." type="..." />
  </httpModules>
  <httpHandlers>
    <add verb="..." path="..." type="..." />
    <add verb="..." path="..." type="..." />
  </httpHandlers>
</system.web>
```

However, to get these to work in IIS7 you must *move them* from the <system.web> section to the new <system.webServer> section, which is what IIS7 requires when running in Integrated Pipeline mode (it’s not needed for Classic Pipeline mode).

So instead of the above, you have this:

```xml
<system.webServer>
  <modules>
    <add name="..." type="..." />
  </modules>
  <handlers accessPolicy="Read, Write, Script, Execute">
    <add verb="..." name="..." path="..." type="..." />
  </handlers>
</system.webServer>
```

Notice there are a couple of slight changes, which means you can’t just copy and paste these as-is from <system.web> into <system.webServer>:

<httpModules> and <httpHandlers> have been renamed to <modules> and <handlers>, respectively.

Each handler in IIS7 requires a name attribute. If you don’t specify it, you'll get an error message.

The handlers node has an optional, but good-to-define accessPolicy attribute.

This value depends on your handlers, so use the ones that are right for you."

The second change is that IIS7 imposes some restrictions when you are running in Integrated mode.

For example, you cannot use the Request property of the HttpContext object in Application_Start: calls to HttpContext.Current.Request there fail because no request is available in that context. You can see more details here: http://mvolo.com/blogs/serverside/archive/2007/11/10/Integrated-mode-Request-is-not-available-in-this-context-in-Application_5F00_Start.aspx

• Bruce Kyle described How to Get Started with Java, Tomcat on Windows Azure in this 3/4/2011 post to the US ISV Evangelism blog:

Step-by-step instructions on building a Java application in Eclipse and installing it on Windows Azure have been published in the Interoperability Bridges and Labs Center.

The post by Ben Lobaugh shows how you can take advantage of the high availability and scalability of the Windows Azure cloud using Java on Eclipse. The post will walk you through each step. See Deploying a Java application to Windows Azure with Eclipse.

Summary of the Steps

You’ll need the Windows Azure Starter Kit for Java from the CodePlex project page. There you will find a Zip file that contains a template project.

Next, get Eclipse. The Windows Azure Starter Kit for Java was designed to work either as a simple command-line build tool or in the Eclipse integrated development environment (IDE). You then import the project into Eclipse and select the Java server environment and the Java Runtime Environment. You can now build your app.

For Azure, you’ll configure project files startup.cmd and ServiceDefinition.csdef. You’ll edit the startup.cmd file to support either Jetty or Tomcat.

You can start up the local Windows Azure cloud platform using the Windows Azure SDK Command Prompt, and then build the project.

Finally, package the application for deployment on Windows Azure and deploy it to the cloud.

Sample Code to Get Tomcat Running in Azure

You can get … the projects from http://ricardostorage.blob.core.windows.net/projects/AzureJavaTomcatJBossEclipse.zip

It includes two Eclipse projects, AzureJava (simple Tomcat) and AzureJBoss. You’ll need to follow Deploying a Java application to Windows Azure with Eclipse first. The code will show how to integrate Tomcat into your Azure project.

Special thanks to Ricardo Villalobos for his work on this project.

Inge Henriksen described Azure Membership, Role, and Profile Providers and provided a link to source code in a 3/4/2011 post:

I have made an open-source ASP.NET application with custom Membership, Role, and Profile providers that store data in Azure Table and Blob Storage. This solution is very useful if you want to get up and running quickly with your own Azure web application, since the foundation for users, roles, and profiles is already in place.

Project homepage: http://azureproviders.codeplex.com/

License: MIT

Project Description

Complete ASP.NET solution that uses Azure Table Storage and Azure Blob Storage as the data source for custom Membership, Role, and Profile providers. Developed in C# on the .NET 4 framework using Azure SDK V1.3. Helps you get up and running with Azure in no time. MIT license.

Features

- Complete membership provider that stores data in the Azure Table Storage

- Complete role provider that stores data in the Azure Table Storage

- Complete profile provider that stores data in the Azure Table Storage and Blob Storage

- Register account page with e-mail confirmation and Question/Answer

- Sign In page with automatic locking after too many failed attempts

- Sign Out page

- Change Password page with e-mail confirmation

- Reset Password page where new password is sent to e-mail account

- Locked account functionality where the user can unlock the account using a link sent to the user's e-mail account

- User e-mail validation by using link sent after account validation, account is not activated before e-mail is validated

- User profile page where the user can set his/her gender and upload a profile image that is stored as an Azure Blob

- Admin page that shows an overview of all the roles

- Admin page that shows some site statistics

- Admin page that shows an overview of all the users
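The "automatic locking after too many failed attempts" feature follows a common pattern: count failed sign-ins within a time window and lock the account once a threshold is reached. The Java sketch below is a hypothetical illustration of that pattern, loosely modeled on the ASP.NET Membership `maxInvalidPasswordAttempts` and `passwordAttemptWindow` settings; the `LockoutPolicy` class is not code from the azureproviders project, which is written in C# and keeps its state in Azure Table Storage.

```java
import java.time.Duration;
import java.time.Instant;

public class LockoutPolicy {
    private final int maxAttempts;   // cf. maxInvalidPasswordAttempts
    private final Duration window;   // cf. passwordAttemptWindow
    private int failures = 0;
    private Instant windowStart = null;
    private boolean locked = false;

    public LockoutPolicy(int maxAttempts, Duration window) {
        this.maxAttempts = maxAttempts;
        this.window = window;
    }

    // Records a failed sign-in at time 'now'; returns true if the account is locked.
    public boolean recordFailure(Instant now) {
        if (locked) return true;
        if (windowStart == null || now.isAfter(windowStart.plus(window))) {
            windowStart = now; // the previous window expired, so start counting again
            failures = 0;
        }
        failures++;
        if (failures >= maxAttempts) locked = true;
        return locked;
    }

    // A successful sign-in clears the failure count (unless already locked).
    public void recordSuccess() {
        if (!locked) {
            failures = 0;
            windowStart = null;
        }
    }

    // Called when the user follows the unlock link sent by e-mail.
    public void unlock() {
        locked = false;
        failures = 0;
        windowStart = null;
    }

    public boolean isLocked() {
        return locked;
    }
}
```

Passing the time in explicitly, rather than reading the clock inside the class, keeps the policy easy to test and lets a storage-backed provider persist `failures` and `windowStart` alongside the user record.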

Thanks, Inge. This will save many Azure developers many hours of writing and testing boiler-plate code.

Cliff Saran reported Azure propels Southampton's Windows Phone 7 balloon above the clouds in a 3/4/2011 article for ComputerWeekly.com:

Microsoft Azure is helping Southampton University to reach above the clouds in a first-of-its-kind experiment to probe the stratosphere using an unmanned vehicle.

The Atmospheric Research through Robotic Aircraft project aims to show how a low-cost helium high altitude balloon could be used to send a payload with atmospheric monitoring equipment into the upper atmosphere.

Andras Sobester, research fellow at University of Southampton, said, "We are limited to devices that weigh next to nothing, but our data processing requirements are immense."

According to Sobester, there are not many options for launching sensors into the upper atmosphere. The helium balloon is the cheapest approach. "A fully manned research aircraft costs £10,000 per hour," he said.

The launch, which took place earlier on Friday, March 4, propelled a Windows Phone 7 device and a digital camera over 60,000 feet into the stratosphere. The phone runs an application developed by mobile apps specialist Segoz.co.uk, which uses mobile connectivity to connect to the Microsoft Azure cloud.

"[Processing in] Azure is used to predict the projected landing site," Sobester said. [Emphasis added.]

Steven Johnson, who built the app, says the phone is a good device for data capture compared to a dedicated data logger, as it contains a relatively powerful 1 GHz processor and up to 32 Gbytes of memory.

The Windows Phone 7 Bluetooth stack could also be used to connect wirelessly to instrumentation.

In the second stage of the project, the researchers hope to use the balloon to launch robot gliders that have an autopilot with a pre-programmed flight path.
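The article says Azure processing is used to predict the balloon's projected landing site but does not say how. As a purely hypothetical illustration of the kind of calculation involved, the following Java sketch dead-reckons a landing point. It assumes a constant descent rate, ignores drift during ascent, takes horizontal winds as east/north components for stacked altitude layers, and converts the drift to latitude and longitude with a flat-Earth (equirectangular) approximation. The `WindLayer` record and `predict` method are invented for this example.

```java
import java.util.List;

public class LandingPredictor {
    // Hypothetical input: an altitude band's thickness (m) and its mean wind (m/s).
    record WindLayer(double thicknessM, double eastMs, double northMs) {}

    static final double EARTH_RADIUS_M = 6_371_000.0; // mean Earth radius

    // Dead-reckons the landing point from the burst position, assuming a constant
    // descent rate and no ascent drift. Returns {latitude, longitude} in degrees.
    static double[] predict(double burstLatDeg, double burstLonDeg,
                            List<WindLayer> layers, double descentRateMs) {
        double eastM = 0, northM = 0;
        for (WindLayer layer : layers) {
            double seconds = layer.thicknessM() / descentRateMs; // time spent in this layer
            eastM += layer.eastMs() * seconds;
            northM += layer.northMs() * seconds;
        }
        // Flat-Earth conversion: a reasonable approximation for short drifts.
        double dLat = Math.toDegrees(northM / EARTH_RADIUS_M);
        double dLon = Math.toDegrees(eastM / (EARTH_RADIUS_M * Math.cos(Math.toRadians(burstLatDeg))));
        return new double[] {burstLatDeg + dLat, burstLonDeg + dLon};
    }
}
```

Real balloon predictors also model the ascent, a descent rate that varies with air density, and forecast wind data; this sketch shows only the core integration step.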

Matt Stroshane (@mattstroshane) listed Download Links for Windows Phone and Windows Azure Developers in a 3/4/2011 post:

This page describes the full spectrum of what you might want to download for developing cloud-enabled Windows Phone applications.

You don’t need to install everything all at once to get started. In upcoming blog posts, I will list which of these pieces you need in order to follow along with the post.

I will do my best to keep this page up to date, but always see App Hub: Getting Started and Windows Azure: Downloads for the latest details.

Note: Are you a student that wants to get rocking with the latest professional tools? Check out Microsoft DreamSpark: “It’s about giving students Microsoft professional tools at no charge.”

Overview

For Windows Phone development downloads, see:

- Baseline Setup: Visual Basic Development for Windows Phone

- Baseline Setup: C# Development for Windows Phone

- Notable Downloads for Windows Phone Development

For Windows Azure platform (a.k.a. “Cloud”) development downloads, see:

- Baseline Setup: Windows Azure Platform Development

- Notable Downloads for Windows Azure Platform Development

When installing everything on a fresh OS, see:

- Fresh OS Installation Sequence

Baseline Setup: Visual Basic Development for Windows Phone

VB development requires Visual Studio 2010 Professional (or greater) and an additional download to install the VB project types, etc.

- Visual Studio 2010 Professional, Premium, or Ultimate

- Windows Phone Developer Tools (Release Notes)

- Windows Phone Developer Tools January 2011 Update (Release Notes)

- Download and install the Windows Phone Developer Tools Fix

- Visual Basic for Windows Phone Developer Tools – RTW

Baseline Setup: C# Development for Windows Phone

- (optional) Visual Studio 2010 Professional, Premium, or Ultimate

- Windows Phone Developer Tools (Release Notes) – Visual Studio 2010 Express will be installed if you do not have Professional|Premium|Ultimate

- Windows Phone Developer Tools January 2011 Update (Release Notes)

- Download and install the Windows Phone Developer Tools Fix

Notable Downloads for Windows Phone Development

- OData Client for Windows Phone: CodePlex, info

- MVVM Light Toolkit: CodePlex, info

- Silverlight for Windows Phone Toolkit - Feb 2011: CodePlex, info, NuGet

- Coding4Fun Windows Phone Toolkit: CodePlex, info, NuGet

- ReactiveUI for Windows Phone 7: Github zip, info, NuGet

- Phoney Tools for Windows Phone 7: CodePlex, info, NuGet

- RestSharp, supporting WP7: Github, info, NuGet

- WP7Contrib for Windows Phone 7: CodePlex

Baseline Setup: Windows Azure Platform Development

This setup prepares your computer for developing cloud services and databases on the Windows Azure platform.

- (optional) Visual Studio 2010 Professional, Premium, or Ultimate

- Windows Azure “One Simple Installer” installs:

  * Windows Azure tools for Microsoft Visual Studio
  * Windows Azure SDK
  * Visual Web Developer 2010 Express (if you don’t have VS 2010)
  * Required IIS feature settings
  * Required hot fixes
  * More information on the Windows Azure: Download page

Notable Downloads for Windows Azure Platform Development

- WCF Data Services Toolkit: CodePlex

- Windows Azure Management Tools

- Windows Azure Tools for Eclipse (includes a Windows Azure storage explorer)

- Windows Azure AppFabric SDK

- Fiddler2 HTTP debugging proxy, info

- Open Data Protocol Visualizer for Visual Studio 2010

Fresh OS Installation Sequence

When installing everything on a fresh operating system, I recommend the following installation sequence. Note: I haven’t tried a full setup with the free tools, but will add that information when time allows.

- SQL Server 2008 R2 – if you’re going to have it, I recommend that this goes on before Visual Studio

- Visual Studio 2010 Professional, Premium, or Ultimate

- Expression Studio 4 Ultimate – if you’re going to have it, I recommend that this goes on before the WP7 tools

- Baseline setup for Visual Basic or C# Windows Phone development

- Baseline setup for Windows Azure platform development

- Notable downloads: Windows Phone notables and Windows Azure notables

References

App Hub: Getting Started

Windows Azure: Downloads

Microsoft DreamSpark

David Gristwood interviewed Richard Prodger in this 00:12:30 How Active Web Solutions built their “Global Alerting Platform” on Windows Azure Channel9 video clip of 3/4/2011:

David Gristwood met up with Richard Prodger, from Active Web Solutions (AWS), to learn more about their new “Global Alerting Platform” (http://www.globalalerting.com/), a system that ushers in a new generation of devices meaning that “no matter where you are, you can be sure that you will always be in contact and you can be found”. He finds out why they built it and why they chose the Windows Azure platform.

Chris Czarnecki asked Why Does Microsoft do it Differently with Azure Java Support ? in a 3/4/2011 post to the Learning Tree blog:

I recently posted that Microsoft have support for running Java applications on Azure. I was intrigued by this offering and wondered why Microsoft were chasing this market when they have yet to convince their own .NET community that Azure is a suitable cloud platform over say Amazon AWS.

I develop for both the Java and .NET platforms so am not biased towards one or the other, and having a Platform as a Service (PaaS) such as Azure being capable of supporting both would be highly attractive to me and my company as we could streamline our cloud usage to a common platform. Until the recent Windows Azure Starter Kit for Java, the process of deploying a Java application to Azure involved writing some .NET code in C# and some Azure specific config files. The new CTP makes the process a little simpler but it is still tedious if I take the view I am a Java developer and not a .NET developer. With the CTP release Microsoft have said they are interested in getting feedback from Java developers so they can ‘nail down the correct experience for Java developers’.

I find this approach very naive. Java has always defined a standard structure for Web applications that enables them to be deployed to many different servers, from vendors such as IBM and Oracle, with no change in code or configuration. When it comes to cloud deployment, Amazon with Elastic Beanstalk, VMware with Code2Cloud and Google with Google App Engine all enable standard Java Web applications to be deployed to their clouds. They have taken the standard structure, worked with it and created tools that make deployment seamless. Developers need not change anything from the way they normally develop to the way they normally deploy to use their clouds. So why have Microsoft decided to be different and create a tool that seems to want to mix a bit of .NET and a bit of Azure into the standard Java Web application world? I really cannot understand the approach they have taken.

So in a highly competitive space, Microsoft have released an inferior toolset, requiring new skills to deploy to a cloud that is more expensive than the equivalent others on a per hour basis. To me it seems they have failed at the very beginning of their attempt to entice Java developers to the Azure world. Hopefully I am wrong, because as I stated before it would suit me to be able to deploy Java to Azure, but I am not expecting anything better soon. If you would like to try the toolkit out, Microsoft are offering 750 hours per month free on their extra small instances plus a SQL Azure database. Let me know how you find it.

Doug Rehnstrom asserted Government Should Consider Windows Azure as Part of its “Cloud-First” Strategy in a 3/4/2011 post to the Learning Tree blog:

Recently, the United States Chief Information Officer, Vivek Kundra, published a 25 point plan to reform the government’s IT management.

Point number 3 states that the government should shift to a “Cloud-First” policy. When describing that point, Mr. Kundra tells of a private company that managed to scale up from 50 to 4,000 virtual servers in three days, to support a massive increase in demand. He contrasts that with the government’s “Cash-For-Clunkers” program, which was overwhelmed by unexpected demand.

You might be interested in the entire report at this URL, http://www.cio.gov/documents/25-Point-Implementation-Plan-to-Reform-Federal%20IT.pdf.

Microsoft Windows Azure is perfect for this type of scenario. If your program is less successful than you hoped, you can scale down. If you’re lucky enough to be the next Twitter, you can scale up as much as you need to. Tune your application up or down to handle spikes in demand. You only pay for the resources that you use, and Microsoft handles the entire infrastructure for you. Scaling up or down is simply a matter of specifying the number of virtual machines required, in a configuration file.
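In Windows Azure, the instance count lives in the service configuration (.cscfg) file as an `<Instances count="..."/>` element under each `<Role>`. The Java sketch below, which uses only the JDK's DOM and transformer APIs, rewrites that count for one role; the helper name, the sample role name `WebRole1` and the idea of editing the file programmatically are assumptions for illustration. The edited configuration still has to be applied to the running deployment (for example, through the Windows Azure portal) before the change takes effect.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

public class ScaleConfig {
    // Returns a copy of the .cscfg XML with the named role's instance count replaced.
    static String setInstanceCount(String cscfgXml, String roleName, int count) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // .cscfg elements live in a default namespace
            Document doc = factory.newDocumentBuilder()
                    .parse(new InputSource(new StringReader(cscfgXml)));
            NodeList roles = doc.getElementsByTagNameNS("*", "Role");
            for (int i = 0; i < roles.getLength(); i++) {
                Element role = (Element) roles.item(i);
                if (!roleName.equals(role.getAttribute("name"))) continue;
                Element instances = (Element) role.getElementsByTagNameNS("*", "Instances").item(0);
                if (instances == null) {
                    throw new IllegalArgumentException("Role has no Instances element: " + roleName);
                }
                instances.setAttribute("count", Integer.toString(count));
                StringWriter out = new StringWriter();
                TransformerFactory.newInstance().newTransformer()
                        .transform(new DOMSource(doc), new StreamResult(out));
                return out.toString();
            }
        } catch (ParserConfigurationException | SAXException | IOException | TransformerException e) {
            throw new IllegalStateException("Could not update the service configuration", e);
        }
        throw new IllegalArgumentException("No role named " + roleName);
    }
}
```

For example, `setInstanceCount(xml, "WebRole1", 4)` on a configuration whose `WebRole1` has `<Instances count="2"/>` returns the same document with `count="4"`.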

Visual Studio and the .NET Framework make programming Windows Azure applications easy. Visual Studio automates much of the deployment of an Azure application. Web sites can be created using ASP.NET. Web services are easy with Windows Communication Foundation. There’s seamless integration of data using either Azure storage or SQL Azure. Plus, you can leverage the existing knowledge of your .NET developers.

If you prefer Java or PHP, Azure supports those as well. Most any program that will run on Windows will also run on Azure, as under the hood it’s just Windows Server 2008.

In point 3.2 of that report, it states that each agency must find 3 “must move” services to migrate to the cloud. If you’re a government IT worker or contractor trying to help meet that goal, you might be interested in learning more about Windows Azure. Come to Learning Tree course 2602, Windows Azure Platform Introduction: Programming Cloud-Based Applications.

There are many other cloud computing platforms and services besides Azure. These include Amazon EC2, Google App Engine and many others. To learn more about the broad range of cloud services and choices available, you might like to come to Learning Tree Course 1200: Cloud Computing Technologies.

Daniel de Oliveira, Fernanda Araújo Baião and Marta Mattoso described Migrating Scientific Experiments to the Cloud in a 3/4/2011 post to the HPC in the Cloud blog:

The most important advantage behind the concept of cloud computing for scientific experiments is that the average scientist is capable of accessing many types of resources without having to buy or configure the whole infrastructure.

This is a fundamental need for scientists and scientific applications. It is preferable that scientists be isolated from the complexity of configuring and instantiating the whole environment, focusing only on the development of the in silico experiment.

The number of published scientific and industrial papers provides evidence that cloud computing is being considered a definitive paradigm and is already being adopted by many scientific projects.

However, many issues have to be analyzed when scientists decide to migrate a scientific experiment to be executed in a cloud environment. The article “Azure Use Case Highlights Challenges for HPC Applications in the Cloud” presents several challenges focused on HPC support, specifically, for [the] Windows Azure Platform. We discuss in this article some important topics on cloud computing support from a scientific perspective. Some of these topics were organized as a taxonomy in our chapter “Towards a Taxonomy for Cloud Computing from an e-Science Perspective” of the book “Cloud Computing: Principles, Systems and Applications”[11]. [Emphasis added.]

Background on e-Science and Scientific Workflows

Over the last decades, the effective use of computational scientific experiments has evolved at a fast pace, leading to what is being called e-Science. The e-Science experiments are also known as in silico experiments [12]. In silico experiments are commonly found in many domains, such as bioinformatics [13] and deep water oil exploitation [14]. An in silico experiment is conducted by a scientist, who is responsible for managing the entire experiment, which comprises composing, executing and analyzing it. Most in silico experiments are composed of a set of programs chained in a coherent flow. This flow of programs aiming at a final scientific goal is commonly called a scientific workflow [12,15].

A scientific workflow may be defined as an abstraction that allows the structured, controlled composition of programs and data as a sequence of operations aiming at a desired result. Scientific workflows represent an attractive alternative to model pipelines or script-based flows of programs or services that represent solid algorithms and computational methods. Scientific Workflow Management Systems (SWfMS) are responsible for the workflow execution by coordinating the invocation of programs, either locally or in remote environments. SWfMS need to offer support throughout the whole experiment life cycle, including: (i) designing the workflow through a guided interface (to follow a specific scientific method [16]); (ii) controlling several variations of workflow executions [15]; (iii) executing the workflow in an efficient way (often in parallel); (iv) handling failures; and (v) accessing, storing and managing data.
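Two of the SWfMS duties listed above, chaining programs so that each consumes the previous one's output and handling failures, can be sketched in a few lines of Java. This is a hypothetical, sequential illustration: the `Activity` record, the string-typed data and the retry-then-abort policy are assumptions, and a real SWfMS would add parallel execution, data management and remote (for example, cloud) dispatch.

```java
import java.util.List;
import java.util.function.UnaryOperator;

public class WorkflowSketch {
    // Hypothetical activity: a named program that transforms the data it receives.
    record Activity(String name, UnaryOperator<String> program) {}

    // Runs activities in sequence, passing each one's output to the next.
    // A failing activity is retried up to maxRetries more times; if it still
    // fails, the whole workflow is aborted with the last error attached.
    static String run(List<Activity> workflow, String input, int maxRetries) {
        String data = input;
        for (Activity activity : workflow) {
            RuntimeException lastError = null;
            boolean succeeded = false;
            for (int attempt = 0; attempt <= maxRetries && !succeeded; attempt++) {
                try {
                    data = activity.program().apply(data);
                    succeeded = true;
                } catch (RuntimeException e) {
                    lastError = e; // remember the failure and try again
                }
            }
            if (!succeeded) {
                throw new IllegalStateException("Activity failed: " + activity.name(), lastError);
            }
        }
        return data;
    }
}
```

Retrying individual activities rather than restarting the whole workflow matters in the cloud, where transient failures of single nodes are expected and a long workflow should not lose completed work.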

Combining life cycle support with an HPC environment poses many challenges for SWfMS because of the heterogeneous environments in which the workflow executes. When the HPC environment is a cloud platform, more issues arise, as discussed next.

Cloud check-list before migrating a scientific experiment

We discuss scientific workflow issues related to cloud computing in terms of architectural characteristics, business model, technology infrastructure, privacy, pricing, orientation and access, as shown in Figure 1.


The Windows Azure Team described the National September 11 Memorial & Museum and Archetype Unveil Interactive Timeline Hosted on Windows Azure in a 3/4/2011 post:

The National September 11 Memorial & Museum's mission is to bear witness to the terrorist attacks of September 11, 2001, and February 26, 1993, and honor the nearly 3,000 victims of these attacks and those who risked their lives to save others. In order to drive a deep level of engagement with the website and museum collections, the organization worked with Archetype to design and develop a set of dynamic and interactive applications that are both media-rich and highly accessible.

The result was the 9/11 Interactive Timeline, which was launched last Wednesday, February 23, 2011. Hosted on Windows Azure, the 9/11 Interactive Timeline incorporates a rich set of audio, video and images from the Museum's collection to enable users to follow the events of September 11, 2001. As users interact with the timeline, they can learn more about specific artifacts and can share their experiences with others via social networking tools such as Facebook and Twitter.

Archetype also designed and developed the Lady Liberty interactive experience for the National 9/11 Memorial and Museum. Also hosted on Windows Azure, the Lady Liberty interactive experience brings to life a replica of Lady Liberty, which was placed outside a Manhattan firehouse in the days following September 11, 2001. In order to allow online visitors to experience the tributes and remembrances that adorn Lady Liberty from torch to toe, the online application allows users to select from a whole series of images, as well as pan through and zoom into or magnify any part of an image. Users can also learn more about some of the artifacts through intuitive callouts and pop-ups.

Archetype also developed an advanced Windows Azure-based administration tool for these applications, allowing the 9/11 Memorial and Museum staff to manage content, as well as stage, preview and test updates. You can learn more about Archetype here.

<Return to section navigation list>

Visual Studio LightSwitch

• Ira Bell posted a detailed Connecting to Microsoft SQL Azure with Microsoft Visual Studio LightSwitch to the NimboTech blog on 3/3/2011:

In this blog I will explain how to quickly create a project with Microsoft Visual Studio LightSwitch that connects to Microsoft SQL Azure. It’s important to note that LightSwitch is currently in Beta, so you should be careful when working with this product and obviously refrain from your desire to manipulate production data. I think this is a wonderful product because it could potentially bridge the educational gap for those who want to learn how to develop applications but struggle with the tedious aspect of learning all of the fundamentals. That said, I still think it’s important to learn the fundamentals.

In case you’re wondering what Visual Studio LightSwitch is, take a look at the blog posting I created on this subject:

http://blog.nimbotech.com/2011/03/what-is-microsoft-visual-studio-lightswitch/

The first thing that you’ll need to do is to install the Microsoft Visual Studio LightSwitch Beta. You can do that by accessing the following site and clicking on the Download LightSwitch Beta button:

Once you’ve got LightSwitch installed you’ll need to log in to the Windows Azure Developer Portal. If you haven’t signed up for a Windows Azure account, you can do so at the following link by clicking the Sign up now button:


Creating a SQL Azure Database

- In the Windows Azure Developer Portal (which is built on Microsoft Silverlight), click the Database menu item

- Then, click the server name under the Azure Subscription menu on the left. (Note: If you don’t see a server listed you’ll need to click the Create New Server button on the top menu.)

- Next, click the Create button in the database portion of the menu.

The steps above are highlighted in the image below:

Next, you will need to type a unique name for your new database.

- For this demonstration I chose the name skydivingrules (because I think it does)

- Select Web for the Edition

- Select 1GB for the Maximum Size

Note: There are several options here. You’re welcome to use whatever you need based on your requirements. Since this is a demonstration, I’ve selected the cheapest and smallest. However, at the time of this writing there is a 5GB option available for the Web Edition. The other Edition available is called Business, and currently has options of 10GB, 20GB, 30GB, 40GB, and 50GB. I suspect Microsoft will increase these size limits eventually – but who knows for sure.

The steps above are highlighted in the image below:

Next you need to access the management portal for your database. To do this, you simply click the Manage button in the database portion of the menu.

The image below shows the Manage button:

You’ll need to authenticate with your administrator name and password. As you can see, I’ve named an administrator as irabell.

The image below shows the authentication screen for the Azure database management portal:

In this portal, the cube in the center will display the name of the database you’re accessing in the top header. It’s probably a best practice to verify that you’re actually working on the correct database.

- Select New Table from the Operations menu

The steps above are highlighted in the image below:

For this demonstration, I’ve decided to implement the following:

- I’ve named the table SkydivingInstructors

- I’ve created a FirstName column which is an nvarchar data type with a 25 character limit

- I’ve created a LastName column which is an nvarchar data type with a 15 character limit

- I’ve created an Over500HoursExperience column which is a bit data type (Note: LightSwitch will pick up this type as Boolean)

- I’ve created a NumberOfJumps column which is an int data type
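The portal’s table designer amounts to a T-SQL CREATE TABLE statement. As a hedged illustration, the Python sketch below builds that statement from the four columns listed above; the `dbo` schema, the `create_table_sql` helper and the default nullability are assumptions, and any key column the designer may add on its own is left out.

```python
from typing import List, Tuple

# Column definitions from the walkthrough: (name, SQL Server type).
# Nullability and any key column are assumptions not covered by this sketch.
COLUMNS: List[Tuple[str, str]] = [
    ("FirstName", "nvarchar(25)"),
    ("LastName", "nvarchar(15)"),
    ("Over500HoursExperience", "bit"),  # LightSwitch surfaces bit as Boolean
    ("NumberOfJumps", "int"),
]


def create_table_sql(table: str, columns: List[Tuple[str, str]]) -> str:
    """Build a T-SQL CREATE TABLE statement, bracket-quoting each identifier."""
    body = ",\n".join(f"    [{name}] {sql_type}" for name, sql_type in columns)
    return f"CREATE TABLE [dbo].[{table}] (\n{body}\n);"


print(create_table_sql("SkydivingInstructors", COLUMNS))
```

Running the generated statement in the database manager’s query window should produce the same four columns as the designer steps above.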

I can then simply click the Save button in the General menu to save the changes I’ve made to my SkydivingInstructors table.

The steps above are highlighted in the image below:

SQL Azure is secure! You’ll need to add an allowance policy to the firewall for connectivity. Otherwise, you’ll get a nasty error saying that you aren’t authorized to access the environment. Since we don’t want that, you’ll need to click your server name on the left then click the Firewall Rules button in the center of the screen to access the firewall manager.

The steps above are highlighted in the image below:

Once inside the firewall manager, you’re able to quickly add the IP address that you’ll be connecting from. The tool provides your current IP address so that you don’t need to use one of the common sites such as IPChicken.com or WhatIsMyIP.com.
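A SQL Azure firewall rule is a named range of client IP addresses, from a start address to an end address; adding your current IP creates a rule whose start and end are the same. The Python sketch below models that check to show why a connection from any other address is refused. The rule name and the 203.0.113.x documentation addresses are hypothetical, and the real check is enforced by the service, not by client code.

```python
import ipaddress
from typing import List, NamedTuple


class FirewallRule(NamedTuple):
    """A server-level rule: clients whose IPv4 address lies in [start_ip, end_ip] are allowed."""
    name: str
    start_ip: str
    end_ip: str


def is_allowed(client_ip: str, rules: List[FirewallRule]) -> bool:
    """Return True if client_ip falls inside any rule's inclusive start/end range."""
    ip = ipaddress.IPv4Address(client_ip)
    return any(
        ipaddress.IPv4Address(r.start_ip) <= ip <= ipaddress.IPv4Address(r.end_ip)
        for r in rules
    )


# Hypothetical rule for a single developer workstation (start == end).
rules = [FirewallRule("DevMachine", "203.0.113.25", "203.0.113.25")]
print(is_allowed("203.0.113.25", rules))
print(is_allowed("203.0.113.26", rules))
```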

The steps above are highlighted in the image below:

The final step in the Windows Azure Platform Developer Portal is to make a note of the Fully Qualified Server Name for connectivity. You’ll need that to specify your connection options in LightSwitch.

The steps above are highlighted in the image below:

Creating a Sample LightSwitch Application

Open up Microsoft Visual Studio and select LightSwitch from your list of Installed Templates.

You have two options for your project, as shown in the image below (Visual Basic or Visual C#):

Select Attach to external database, as shown in the image below:

Select Database and click Next, as shown in the image below:

On the Connection Properties screen, you’ll need to enter the information that you captured in the steps above.

For my properties, I entered the following:

- Server name: mhr19sybzi.database.windows.net

- Select the Use SQL Server Authentication radio button

- User name: irabell

- Enter the Password

- Select the skydivingrules database from the dropdown menu under Select or enter a database name
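For comparison with the dialog values above, the Python sketch below assembles the same settings into an ADO.NET-style connection string of the kind commonly shown for SQL Azure. The `sql_azure_connection_string` helper, the `yourserver` name and the password are placeholders; the `user@server` login form, port 1433 and `Encrypt=True` reflect general SQL Azure guidance of the time rather than anything the LightSwitch dialog displays.

```python
def sql_azure_connection_string(server: str, database: str, user: str, password: str) -> str:
    """Build an ADO.NET-style connection string for a SQL Azure server.

    server is the short server name, i.e. the part before .database.windows.net.
    """
    fqdn = f"{server}.database.windows.net"
    parts = {
        "Server": f"tcp:{fqdn},1433",       # TCP on the standard SQL Server port
        "Database": database,
        "User ID": f"{user}@{server}",       # login written as user@server
        "Password": password,
        "Trusted_Connection": "False",       # SQL Server authentication, not Windows
        "Encrypt": "True",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items()) + ";"


conn_str = sql_azure_connection_string("yourserver", "skydivingrules", "irabell", "<password>")
print(conn_str)
```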

The steps above are highlighted in the image below:

Now I probably don’t need to hit the Test Connection button because the skydivingrules database wouldn’t have shown up if I had made an error. But, because we all love the feeling of success I went ahead and clicked the Test Connection button.

The results of the Test Connection button event are highlighted in the image below:

LightSwitch is now able to connect to the skydivingrules database and provide a list of the database objects. From this section you can select which objects you’d like to have present for your application. Since I only created one table, I’ll select the SkydivingInstructors checkbox and then click Finish. Note: You have the opportunity to name the Data Source here, or later in the Visual Studio Solution Explorer.

The steps above are highlighted in the image below:

As you can see in the image below, Visual Studio LightSwitch is now ready for my specifications on the table I created.

Please note that I’ve deleted the Azure server used in this demonstration. The server address I used for the connection string will not work for you. You’ll need to create your own using the steps I’ve provided above! Enjoy!

<Return to section navigation list>

Windows Azure Infrastructure

• Larry Grothaus posted a review of the Businessweek on Cloud Providers and Customers article to TechNet’s ITInsights blog on 3/4/2011:

I was reading an interesting story just published by Bloomberg Businessweek titled “The Cloud: Battle of the Tech Titans” [see article below]. The story covers some of the more obvious benefits of cloud computing such as reducing the costs associated with having underutilized hardware and time spent maintaining software, but it also drills into a variety of other benefits. These include the correlating benefit of IT having more time and budget to spend on innovating and moving their business forward, as well as the availability of the massive computing capacity which cloud services deliver. This is particularly important in relation to the ever-expanding data and information available to companies, which is also discussed in the piece.

One of the excerpts in the article that caught my eye was about the data volume availability and how this technology shift differs from previous examples:

“What's different this time—as compared with the rise of the mainframe or the PC—is scale. As the consumer Web exploded, the global mass of computer data went supernova. This year, according to IDC, the world's digital universe will reach 1.2 zettabytes, or 1.2 quadrillion megabytes. If you take every word ever written in every language, it's about 20,000 times that.”

The topic of private cloud infrastructures is also covered in the story, which describes it as a “more efficient version of traditional IT infrastructure”. Also included is information about how companies can use private cloud solutions as a bridge between traditional IT and public cloud infrastructures. The story also includes cloud-related companies that are private cloud naysayers and who, not surprisingly, don’t offer these types of hybrid, flexible solutions, and only have off-premise cloud offerings.

A variety of Microsoft customers, such as Starbucks and Kraft, also talk about their real-world cloud experiences in the story. One of the included customer examples is the City of Miami. The City had its 911 system for emergencies and also a 311 hotline for reporting non-emergencies, like streets needing to be repaired, lost dogs or minor complaints. They wanted to improve their 311 system and add a web application that would allow citizens to report and track resolution of their calls.

Even though they were facing an IT budget that was down 18 percent and an aging IT infrastructure, they still wanted to make this happen for their customers. After considering the options, they selected the Windows Azure Platform to run their new online 311 service. Their IT group has seen an estimated 75 percent cost savings in the first year of the service, and developers are planning to further enhance the system with things like the ability to upload photos. The full details of the City of Miami story are available in a video and case study here.

I found “The Cloud: Battle of the Tech Titans” article to be a good read; let me know if you have thoughts on it as well. If you’re looking for more information on what Microsoft has to offer businesses interested in cloud computing, check out our Cloud Power site too.

• Ashlee Vance asserted “Amazon, Google, and Microsoft are going up against traditional infrastructure makers like IBM and HP as businesses move their most important work to cloud computing, profoundly changing how companies buy computer technology” in her The Cloud: Battle of the Tech Titans cover story of 3/3/2011 for Bloomberg Business Week’s The Power of the Cloud issue for 3/7/2011.

The Cloud: Battle of the Tech Titans (page 5 of 7)

Other global corporations such as Bechtel, Eli Lilly (LLY), and Pfizer (PFE) have experienced this ground-up push. So, too, has InterContinental Hotels Group, which manages 650,000 rooms at 4,500 hotels worldwide. IHG has started a project to revamp its technological lifeblood, its central reservation system. The current version relies on decades-old mainframe technology; the new one is coming to life on Amazon's cloud. Bryson Koehler, a senior vice-president at IHG, says the company considered keeping the software in-house, just on upgraded hardware. "But this is a six-year journey," he says. "The last thing I want to do is move over to current technology and then be outdated by the time we finish the project." Koehler expects that pooling all of the company's data into the cloud will let IHG deliver information more quickly to customers regardless of their location, and to perform more complex analysis of users' behavior.

Strapped city, state, and federal technology departments have been some of the organizations most enchanted by the quick turnarounds the cloud promises. Miami, for example, has built a service that monitors nonemergency 311 requests. Local residents can go to a website that pulls up a map of the city and places pins in every spot tied to a 311 complaint. Before the cloud, the city would have needed three months to develop a concept, buy new computing systems (including extras in case of a hurricane), get a team to install all the necessary software, and then build the service. "Within eight days we had a prototype," says Derrick Arias, the assistant director of IT for the City of Miami. "That blew everyone away internally."

Companies such as Starbucks (SBUX) and Kraft Foods (KFT) have been early backers of Microsoft's cloud services, which include online versions of Office and its business software platform, Azure. Starbucks uses Microsoft's cloud e-mail to keep its army of baristas up to date about sales and daily deals. When Kraft bought Cadbury last year, it too relied on Microsoft software to merge the two companies' technology systems. Kraft has also begun conducting more complex computing experiments on Azure. "I want to change to a model at Kraft where basically our core infrastructure is part of the Internet," says Kraft CIO Mark Dajani. He's looking to upgrade about 10 percent of Kraft's infrastructure per year and wishes he could move faster. "If I built Kraft from the ground up today," he says, echoing the feelings of many big company CIOs, "I would leverage the cloud even more." [Emphasis added.]

All this chatter about handing over crucial data and processes to places such as Amazon clearly annoys executives at the technology giants. "This is our 100th year," says Ric Telford, IBM's vice-president for cloud services. "We have had new competitors, new niches, and new markets come and go." Telford's counterparts at Cisco (CSCO), EMC, HP, IBM, and the other incumbents share similar sentiments. The old-school tech giants don't reject the cloud. They're just anti-chaos. Their view, with some justification, is that conducting key parts of business elsewhere carries risks. The cloud is fine for experimentation around the edges, but better to keep the mission-critical functions in-house. They contend that big technological shifts should not be left to enterprise-computing amateurs such as Amazon and Google. (Microsoft, which has sold vast amounts of technology to corporations for decades, can hardly be called an amateur.) Big tech understands the nuances of doing business on a global scale and how to take care of the finance, health-care, and government customers concerned about an information free-for-all. As Telford puts it, "You can't just take a credit card and swipe it and be on our cloud."


• Charles Babcock asserted “CloudSleuth's comparison of 13 cloud services showed a tight race among Microsoft, Google App Engine, GoGrid, Amazon EC2, and Rackspace” in a deck for his Microsoft Azure Named Fastest Cloud Service article of 3/4/2011:

In a comparative measure of cloud service providers, Microsoft's Windows Azure has come out ahead. Azure offered the fastest response times to end users for a standard e-commerce application. But the amount of time that separated the top five public cloud vendors was minuscule.

These are the first results I know of that try to show the ability of various providers to deliver a workload result. The same application was placed in each vendor's cloud, then banged on by thousands of automated users over the course of 11 months.


Google App Engine was the number two service, followed by GoGrid, market leader Amazon EC2, and Rackspace, according to tests by Compuware's CloudSleuth service. The top five were all within 0.8 second of each other, indicating the top service providers show a similar ability to deliver responses from a transaction application.

For example, the test involved the ability to deliver a Web page filled with catalog-type information consisting of many small images and text details, followed by a second page consisting of a large image and labels. Microsoft's Azure cloud data center outside Chicago was able to execute the required steps in 10.142 seconds. GoGrid delivered results in 10.468 seconds and Amazon's EC2 Northern Virginia data center weighed in at 10.942 seconds. Rackspace delivered in 10.999 seconds.
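As a quick check on how close these results are, the snippet below computes the spread and each provider's gap from the fastest, using only the four December averages quoted above (the article doesn't give Google App Engine's time). The Azure-to-Rackspace spread comes to about 0.86 second, slightly more than the 0.8 second cited, but still under 9 percent of the fastest time.

```python
# December average response times quoted in the article, in seconds.
# Google App Engine ranked second, but its figure is not quoted, so it is omitted.
times = {
    "Windows Azure (Chicago)": 10.142,
    "GoGrid": 10.468,
    "Amazon EC2 (N. Virginia)": 10.942,
    "Rackspace": 10.999,
}

fastest = min(times.values())
spread = max(times.values()) - fastest
print(f"spread: {spread:.3f} s")

for provider, t in sorted(times.items(), key=lambda kv: kv[1]):
    # Percentage by which each provider trails the fastest measurement.
    print(f"{provider}: +{(t - fastest) / fastest:.1%}")
```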

It's amazing, given the variety of architectures and management techniques involved, that the top five show such similar results. "Those guys are all doing a great job," said Doug Willoughby, director of cloud strategy at Compuware. I tend to agree. The results listed are averages for the month of December, when traffic increased at many providers. Results for October and November were slightly lower, between 9 and 10 seconds.

The response times might seem long compared to, say, the Google search engine's less than a second responses. But the test application is designed to require a multi-step transaction that's being requested by users from a variety of locations around the world. CloudSleuth launches queries to the application from an agent placed on 150,000 user computers.

It's the largest bot network in the world, said Willoughby, then he corrected himself. "It's the largest legal bot network," since malware bot networks of considerable size keep springing up from time to time.


• David Chou [pictured below] reviewed David Pallman’s The Windows Azure Handbook, Volume 1: Strategy & Planning e-book in a 3/4/2011 post:

I was happy to see that a colleague and friend, David Pallman (GM at Neudesic and one of the few Windows Azure MVPs in the world), successfully published the first volume of his The Windows Azure Handbook series:

- Planning & Strategy

- Architecture

- Development

- Management

I think that is a very nice organization/separation of different considerations and intended audiences. And the ‘sharding’ of content into four separate volumes is really not intended as a ploy to sell more of what could be squeezed into one book. :) The first volume itself has 300 pages! And David only spent the minimally required time on the requisite “what is” cloud computing and Windows Azure platform topics (just the two initial chapters, as a matter of fact). He then immediately dived into more detailed discussions around the consumption-based billing model and its impacts, and many aspects that contribute to a pragmatic strategy for cloud computing adoption.

Having worked with the Windows Azure platform since its inception and successfully delivered multiple real-world customer projects, David definitely has a ton of lessons, best practices, and insights to share. Really looking forward to the rest of the series.

Kudos to David!

David Chou is a technical architect at Microsoft. David Pallman is a Windows Azure MVP.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

• The Server & Tools Business team posted a Senior Program Manager Job specification for the Windows Server Cloud Engineering (WSCE) Group on 3/4/2011:

- Date: Mar 4, 2011

- Location: Redmond, WA, US

- Job Category: Software Engineering: Program Management


- Job ID: 745009-36987

- Division: Server & Tools Business

Do you want to be at the center of Microsoft’s future in cloud computing? Do you like to make complex technology highly usable? Can you put yourself in the shoes of different customers to drive the creation of a compelling feature set? If so, the Windows Server Cloud Engineering (WSCE) Group is for you!

We are looking for a Senior Program Manager to help drive the full realization of the Windows Azure Platform Appliance. Infrastructure as a Service and Platform as a Service are where computing is headed; Software as a Service providers will use the Windows Azure Platform Appliance so that they can focus on what they do best. If you want to be in the center of this transformation of server computing, driving the Windows Azure Platform Appliance is the place for you.

This position will help plan and spec Windows Server, Windows Azure Platform and the Windows Azure Platform Appliance features that bring a new form of cloud computing to our best customers, and work across STB with products building on these technologies. Both specifying deep technical aspects of complex service platform functionality and the capability to think on a broad, product wide scale will be part of this position.

Our ideal candidate is a proven and experienced PM with both technical and business skills and a passion for building innovative new products. V1 product experience is preferred but not required. The candidate we seek will have a track record of at least 4-6 years of senior technical contribution and a rich background in software and services. This experience should demonstrate a proven track record of looking not only to their own business and product, but across multiple products for Microsoft-wide impact.

This position will contribute directly to the technical innovation and product strategy of the Windows Azure Platform Appliance. The ideal candidate will have both business insights and engineering experience in a technical leadership role as part of a startup group or company. Candidates must be able to analyze a complicated set of engineering and business constraints in order to contribute effectively. A high degree of autonomy is expected, as is contribution across the wider organization.


It’s clear that WAPA is still alive and (presumably) well in the STB division.

• Srinivasan Sundara Rajan described Migrating ECM Work Load to Cloud in his Hybrid Enterprise Content Management and Cloud Computing post of 3/4/2011:

Content Management as a Cloud Candidate

In my previous article on data warehouse workloads as candidates for the cloud, I elaborated on how the hybrid delivery model supports migrating a data warehouse into the cloud. Most of the major IT companies predict hybrid delivery in the future, whereby enterprises will need a delivery model in which some workloads run in clouds while others remain in data centers, along with a way to integrate them.

Much like data warehouses and business intelligence, content management is also a fit candidate for the cloud, especially in a hybrid delivery model.

Before we go further into a blueprint of how content management fits within a hybrid cloud environment, we will look at the salient features of content management and at how the tenets of cloud computing make it a very viable workload to move to the cloud.

Enterprise content management is about managing the lifecycle of electronic documents. Electronic documents take many forms - data, text, images, graphics, voice or video - but all need to be managed so that they can be properly stored, located when needed, secured against unauthorized access, and properly retained.

Content management employs whatever components are necessary to capture, store, classify, index, version, maintain, use, secure and retain electronic documents.

The following are the ideal steps for migrating an on-premise content management system to a cloud platform.

1. Capture Metadata About the Content

Typically, documents that form part of a content management system are sourced from different applications within the organization. An enterprise content management system is useful only if its users are able to locate the documents of interest to them using advanced search facilities. Searching documents is possible only if relevant metadata (data about the document) is extracted and stored. There could be multiple types of metadata about a document that are enabled for searches.

Typically, the metadata about the documents is stored in a separate metadata repository outside of the content store itself, and that metadata store is typically a relational database.

This model lets business users search the document attributes using various combinations before they retrieve the actual document itself.

Because this metadata is searched much more frequently than the actual documents are viewed, it is best to keep the metadata store on premises. Metadata is also created in near real time, in step with the underlying business processes.

These characteristics make it preferable to keep metadata capture and the associated storage on premises rather than moving them to the cloud.
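A minimal sketch of the split described in step 1, assuming a simple schema: searchable attributes live in an on-premises relational table, and each row holds only a pointer (here a hypothetical Azure blob URL) to the document body in cloud storage. SQLite stands in for the on-premises database; the table, column names, storage account and URLs are illustrative only.

```python
import sqlite3

# In-memory SQLite stands in for the on-premises relational metadata repository.
# Each row points at the document body held in cloud storage (hypothetical URLs).
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE document_metadata (
           doc_id INTEGER PRIMARY KEY,
           title TEXT NOT NULL,
           doc_type TEXT NOT NULL,
           department TEXT NOT NULL,
           blob_url TEXT NOT NULL
       )"""
)
conn.executemany(
    "INSERT INTO document_metadata (title, doc_type, department, blob_url) VALUES (?, ?, ?, ?)",
    [
        ("Pump station drawing", "drawing", "Engineering",
         "https://example.blob.core.windows.net/docs/1.dwg"),
        ("Site inspection video", "video", "Operations",
         "https://example.blob.core.windows.net/docs/2.mp4"),
        ("Pump maintenance manual", "text", "Engineering",
         "https://example.blob.core.windows.net/docs/3.pdf"),
    ],
)


def search(department: str, title_word: str) -> list:
    """Query metadata only; the returned URLs are fetched from the cloud later, on demand."""
    rows = conn.execute(
        "SELECT title, blob_url FROM document_metadata "
        "WHERE department = ? AND title LIKE ? ORDER BY doc_id",
        (department, f"%{title_word}%"),
    )
    return rows.fetchall()


print(search("Engineering", "Pump"))
```

Only the small metadata rows are touched during a search; the large document is retrieved from cloud storage only after the user picks a result, which is the linking that step 3 relies on.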

2. Storage of the Actual Documents in Cloud Storage

Although the metadata is important, it is relatively small; the actual documents are larger, especially if they include photos, videos or engineering drawings. These documents are ideal candidates for storage on the cloud platform. PaaS or SaaS content management platforms on the cloud, like SharePoint Online, have APIs to create folder structures and other attributes required to physically store documents in the cloud.

Platforms like Windows Azure (PaaS) and SharePoint Online (a SaaS offering that is part of Office 365) are well suited to storing documents in the cloud.

The worker role and associated APIs can facilitate asynchronous storage of the documents, so that after the metadata is saved, the end user or the application need not wait for the documents to be committed to the cloud.
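This hand-off is essentially a producer/consumer queue: the application records metadata, enqueues the document and returns, while a background worker (a Windows Azure worker role in the author's design) performs the upload. The Python sketch below illustrates only that control flow. In-memory dictionaries stand in for the metadata database and cloud storage, all names are hypothetical, and in Windows Azure the hand-off would more likely go through a Queue service message than an in-process queue.

```python
import queue
import threading
from typing import Dict

metadata_store: Dict[str, dict] = {}   # stands in for the on-premises metadata database
cloud_store: Dict[str, bytes] = {}     # stands in for cloud blob storage
upload_queue: queue.Queue = queue.Queue()


def save_document(doc_id: str, metadata: dict, content: bytes) -> None:
    """Commit metadata synchronously, then hand the document body to the background worker."""
    metadata_store[doc_id] = metadata
    upload_queue.put((doc_id, content))  # returns immediately; the upload happens later


def upload_worker() -> None:
    """Background loop, analogous to a worker role draining a queue."""
    while True:
        item = upload_queue.get()
        if item is None:  # sentinel value: stop the worker
            upload_queue.task_done()
            break
        doc_id, content = item
        cloud_store[doc_id] = content  # a real worker would call the storage API here
        upload_queue.task_done()


worker = threading.Thread(target=upload_worker, daemon=True)
worker.start()
save_document("drawing-42", {"title": "Pump station drawing"}, b"...binary drawing...")
upload_queue.put(None)  # ask the worker to finish after draining pending uploads
worker.join()
print(sorted(cloud_store))
```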

3. Rendering of Documents Based on User Request to the Cloud

While the user can search the metadata repository using different search conditions, the actual document can be viewed only by linking the metadata to the document stored on the cloud platform. Viewing a document normally involves rendering its particular content type, applying special algorithms to compress and decompress the content, and using buffering and similar features to improve performance.

This can be typically solved by utilizing the SaaS-based rendition platforms on the cloud or extending the PaaS platform.

Summary

The above steps provide a blueprint for hybrid enterprise content management on the cloud. The diagram below depicts it.

<Return to section navigation list>

Cloud Security and Governance

K. Scott Morrison reported on 3/4/2011 an Upcoming Webinar: Extending Enterprise Security Into The Cloud to be held on 3/15/2011 at 9:00 AM PST:

On March 21[??], 2011 Steve Coplan, Security Analyst from the 451 Group and I will present a webinar describing strategies CIOs and enterprise architects can implement to create a unified security architecture between on-premise IT and the cloud.

I have great respect for Steve’s research. I think he is one of the most cerebral analysts in the business; but what impresses me most is that he is always able to clearly connect the theory to its practical instantiation in the real world. It’s a rare skill. He also has a degree in Zulu, which has little to do with technology, but makes him very interesting nonetheless.

Lately Steve and I have been talking about the shrinking security perimeter in the cloud and what this means to the traditional approaches for managing single sign-on and identity federation. This presentation is a product of these discussions, and I’m anticipating that it will be a very good one.

I hope you can join us for this webinar. It’s on Tuesday, March 15, 2011 9:00 AM PST | 12:00 PM EST | 5:00 PM GMT. You can register here. [Emphasis added.]

Overview:

For years enterprises have invested in identity, privacy and threat protection technologies to guard their information and communication from attack, theft or compromise. The growth in SaaS and IaaS usage however introduces the need to secure information and communication that spans the enterprise and cloud. This webinar will look at approaches for extending existing enterprise security investments into the cloud without significant cost or complexity.

<Return to section navigation list>

Cloud Computing Events

• Matt Morrolo announced a Private Clouds for Development: Exposing and Solving the Self-Service Gap Webcast in a 3/4/2011 post to the Application Development Trends (ADT) site:

Date: March 16, 2011 at 11:00 AM PST

Speaker: Anders Wallgren, Chief Technical Officer at Electric Cloud

Cloud computing is happening in a big way. No wonder: infrastructure-as-a-service, resource elasticity, and user self-service promise huge returns.

Software development and test teams need scalable, flexible, compute-intensive IT infrastructure, which makes them great customers for the benefits of cloud computing. Whether it is vast clusters for testing, dozens of machines to run ALM tools, or the incessant requests for “just one more box,” development teams are always asking for something and it is always changing.

Because of these unique needs, as well as security concerns, many enterprises are turning first to building a private cloud run by IT and used by development, within their own firewall. This webinar explores:

- A blueprint for creating a private development cloud that enables IT to provide infrastructure-as-a-service to developers

- How task and workflow automation, resource management, and tool integrations allow development teams to effectively use a private cloud infrastructure and run these tasks in a shared environment

- How IT organizations at leading enterprises have leveraged Electric Cloud solutions to transform generic grid and VM infrastructure into private development clouds tailored to their development teams

• The Windows Azure Team recommended on 3/4/2011 that you Don't Miss Free Webinar Tuesday, March 8: "Moving Client/Server and Windows Forms Applications to Windows Azure with Visual WebGui Instant Cloud Move":

Join a free webinar Tuesday, March 8, 2011 at 8:00 am PT with Gizmox VP of Research and Development, Itzik Spitzen, to learn how new migration and modernization tools are empowering businesses to transform their existing client/server applications into native Web applications. This transformation to web applications can enable businesses to combine the economics and scalability of the cloud with the richness, performance, security and ease of development of client/server. During this session, you will also learn how Visual WebGui (VWG) provides the shortest path from client/server business applications to Windows Azure, and how Gizmox has helped its customers move to Windows Azure.

Click here to learn more and register for this session.

• The Windows Azure User Group of the Netherlands (WAZUG NL) announced that Panagiotis Kefalidis will deliver A Windows Azure Instance - What is under the hood? presentation on 3/17/2011 at 5:00 PM in Utrecht:

17:00 Session 1: "A Windows Azure Instance - What is under the hood?"

by Panagiotis Kefalidis (a Windows Azure MVP from Greece):

"Did you ever wonder what is going on inside an instance of Windows Azure? How does it get notifications from the Fabric Controller? How do they communicate, and what kind of messages do they exchange? Is it all native, or is there a managed part? We'll give a quick overview of what an instance is and what happens when you click the Deploy button, and then proceed to an anatomy. This is a highly technical, hardcore session including tracing, debugging and tons of geekiness. Very few slides, lots of live demos."

Additional sessions:

19:00 Session 2: "Spending Tax Money on Azure Solutions"

by Rob van der Meijden (CIBG):

How to build a mobile solution for citizens with Windows Azure. This session covers the motivations, architecture and preconditions for a Windows Azure solution for a mobile device, and how government services can make use of cloud developments in the future.

19:45 Session 3: "Cost-effective Architecture in Windows Azure"

by Hans ter Wal:

"Windows Azure offers us many possibilities in terms of scalability and agility that were perhaps unthinkable before. Previously, such solutions were often simply too expensive because of hardware and software costs. Where are the costs in Windows Azure, and what should you watch out for when setting up your architecture?"

Location: Mitland Hotel Utrecht, ARIËNSLAAN 1, 3573 PT UTRECHT

www.mitland.nl

• The CloudTweaks blog reported on 3/4/2011 The CIO Cloud Summit – June 14th-16th, 2011:

Register with our event partner to meet and network with several leading CIOs on June 14th-16th, 2011

Event Description

The CIO Cloud Summit, Jun 14 – Jun 16, 2011, will bring together CIOs and IT thought-leaders from various industries to discuss cloud computing topics and trends, including security, public versus private cloud, cloud optimization, and more.

Meet And Network with Leading CIOs

The CIO Cloud Summit will help C-suite executives better understand the true capabilities of cloud computing and the transformational opportunities it can bring to their business. Take advantage of this unique opportunity to make new business contacts and network with a group of leading technology executives. The CIO Cloud Summit will include representatives from various industries, including, but not limited to:

- Healthcare

- Transportation

- Telecom

- Finance

- Insurance

- Retail

- Government

- Utilities

Top Reasons You Need to Be There

- Exclusive Attendee List – The CIO Cloud Summit brings together CIOs and top IT executives across several industry verticals. The discussions will be on your level and on topics you want to discuss.

- Learning Opportunity – To make the best decisions, you need to be up-to-date on the latest IT trends. We determine which topics really matter and make sure you get the chance to have in-depth discussions.

- Intimate Environment – The CIO Cloud Summit agenda is designed to ensure that your ideas and opinions can be shared with your peers. Huge technology conferences have a place, but why become another face in the crowd?

Testimonials from CDM Media’s CIO Events

Past attendees had some great things to say. Check out our testimonial page to hear for yourself.

Wade Wegner (@WadeWegner) announced Cloud Cover Episode 38 - VM Role with Corey and Cory on 3/4/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show @CloudCoverShow.

In this episode, Steve and Wade [pictured at right] are joined by both Corey Sanders—a PM on the Windows Azure Fabric Controller team—and Cory Fowler—Canada’s only Windows Azure MVP—as they discuss the Virtual Machine (VM) Role in Windows Azure. Corey explains the purpose of VM Role, how to get it set up and working, and when to use it (and not use it).

Reviewing the news, Wade and Steve:

- Highlight the February 2011 update of the Windows Azure Platform Training Kit and Course

- Reveal a new node available for the Windows Azure CDN in Doha, Qatar

- Review MD5 checking in Windows Azure Blobs

- Discuss the improved experience for Java Developers with Windows Azure

- Cover the availability of a schema-free graph engine called Neo4j on Windows Azure

If you’re looking to try out the Windows Azure Platform free for 30 days (without using a credit card), try the Windows Azure Pass with promo code “CloudCover”.

Show links:

Cory Fowler (@SyntaxC4) posted Cloud Cover Show Visit on 3/4/2011:

While I was at MVP Summit 2011, I was invited to join Steve Marx and Wade Wegner on my favourite Channel 9 program, the Cloud Cover Show. The show featured Corey Sanders from the Windows Azure Fabric Controller Team, who talks about his role in the Windows Azure VM Role.

It was great to be able to provide the tip of the week; I chose to highlight the Microsoft Research project [Windows] Azure Throughput Analyzer.

The [Windows] Azure Throughput Analyzer is a tool that can be used to test the performance of transactions to Windows Azure Storage Services from your on-premises application.
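The post doesn't describe how the Throughput Analyzer works internally. Purely as an illustration of the kind of number such a tool reports, here is a minimal sketch that times repeated transfers and divides the bytes moved by the elapsed seconds. `transfer` is a hypothetical callable standing in for a real upload to Windows Azure storage, and the injectable `clock` exists only to make the arithmetic testable.

```python
import time
from typing import Callable


def measure_throughput(
    transfer: Callable[[bytes], None],
    payload_size: int,
    repetitions: int = 5,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Return average throughput in megabytes per second (1 MB = 1,000,000 bytes).

    `transfer` is a hypothetical stand-in for an upload to a storage service;
    this is an illustrative sketch, not the Throughput Analyzer's method.
    """
    if payload_size <= 0 or repetitions <= 0:
        raise ValueError("payload_size and repetitions must be positive")
    payload = b"\0" * payload_size
    start = clock()
    for _ in range(repetitions):
        transfer(payload)  # e.g. a blob upload in a real measurement
    elapsed = clock() - start
    if elapsed <= 0:
        raise RuntimeError("elapsed time too small to measure")
    return (payload_size * repetitions) / elapsed / 1_000_000
```

For example, `measure_throughput(lambda data: None, 1_000_000)` measures only loop overhead; a meaningful figure requires plugging in an actual network transfer.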

Enjoy the Show…

Do you want to develop on Windows Azure without a credit card?

The Cloud Cover Show now gives you the ability to do so: head over to Windows Azure Pass and use the promo code cloudcover.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Ken Fromm (@frommww) continued his series with Cloud + Machine-to-Machine = Disruption of Things: Part 2 in a 3/4/2011 guest post to the ReadWriteCloud:

Editor's note: This is the second in a two-part series on the advantages that cloud computing brings to the machine-to-machine space. It was first published as a white paper by Ken Fromm. Fromm is VP of Business Development at Appoxy, a Web app development company building high-scale applications on Amazon Web Services. He can be found on Twitter at @frommww.

Once data is in the cloud, it can be syndicated (made accessible to other processes) in very simple and transparent ways. The use of REST APIs and JSON or XML data structures, combined with dynamic language data support, allows data to be accessed, processed and recombined in flexible and decentralized ways. A management console, for example, can set up specific processes to watch ranges of sensors and perform operations on specific data sets. These processes can be launched and run on any server at any time, controlled via a set schedule or initiated in response to other signals.
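To make the "watch a range of sensors" idea concrete, here is a minimal sketch, assuming a hypothetical JSON payload of the form `{"readings": [{"sensor_id": ..., "value": ...}]}` returned by some REST endpoint. The field names, ID range and threshold are invented for illustration; the article does not specify a data format.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    sensor_id: int
    value: float


def parse_readings(payload: str) -> list[Reading]:
    """Parse a JSON document of the (hypothetical) form
    {"readings": [{"sensor_id": 101, "value": 22.5}, ...]}."""
    doc = json.loads(payload)
    return [Reading(int(r["sensor_id"]), float(r["value"])) for r in doc["readings"]]


def watch_range(readings: list[Reading], first_id: int, last_id: int,
                threshold: float) -> list[Reading]:
    """Return readings from sensors first_id..last_id (inclusive) above threshold."""
    return [r for r in readings
            if first_id <= r.sensor_id <= last_id and r.value > threshold]


if __name__ == "__main__":
    body = ('{"readings": [{"sensor_id": 101, "value": 22.5}, '
            '{"sensor_id": 102, "value": 31.0}, {"sensor_id": 205, "value": 40.2}]}')
    print(watch_range(parse_readings(body), 100, 199, threshold=30.0))
```

Because the watcher is just a function over parsed data, the same code could run on any server, on a schedule or in response to a trigger, as the article describes.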

Data can also propagate easily throughout a system so that it can be used by multiple processes and parties. Much like Twitter streams, which use a simple subscription/asymmetric follow approach, M2M data streams can be exposed to credentialed entities by a similar loosely coupled and asymmetric subscription process.

The model is essentially a simple data bus containing flexible data formats, which other processes can access without need for a formal agreement. Data sets, alarms and triggers can move throughout the system much as status updates and other federated signals flow within the consumer Web 2.0.
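The loosely coupled, Twitter-style subscription model can be sketched as an in-memory publish/subscribe bus. This is a hypothetical illustration, not any product's API: a subscriber presenting a known credential follows a named stream, and the publisher needs no knowledge of, or agreement with, its followers. A production system would use a hosted queue or messaging service rather than in-process callbacks.

```python
from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[str, Any], None]


class DataBus:
    """A minimal in-memory publish/subscribe bus (illustrative sketch only).

    Subscriptions are asymmetric, like following on Twitter: a subscriber
    follows a stream without the publisher following back. Only holders of
    a known (hypothetical) credential may subscribe.
    """

    def __init__(self, credentials: set[str]):
        self._credentials = set(credentials)
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, stream: str, credential: str, handler: Handler) -> None:
        if credential not in self._credentials:
            raise PermissionError("unknown credential")
        self._subscribers[stream].append(handler)

    def publish(self, stream: str, message: Any) -> int:
        """Deliver message to every follower of stream; return the delivery count."""
        handlers = self._subscribers.get(stream, [])  # .get does not create entries
        for handler in handlers:
            handler(stream, message)
        return len(handlers)
```

Usage: `bus = DataBus({"ops-console-token"})`, then `bus.subscribe("plant7/temperature", "ops-console-token", handler)`; every later `bus.publish("plant7/temperature", reading)` reaches that handler, while publishing to a stream nobody follows simply delivers nothing.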

Low-Cost Sensors and Reliable Data Transmission

The cloud benefits outlined here are predicated on low-cost sensors being available (and low-power for a number of uses) as well as inexpensive, high-availability data transmission.

The first assumption is a pretty solid bet now and will only become more true in the next year or two. And with mobile companies looking at M2M as an important sector, and wireless IP access extending in both coverage and simplicity (and Bluetooth to mobile in some instances), being able to reliably and pervasively send data to the Web is also becoming more of a certainty.

Flexible and Agile App Development

Another core benefit of the cloud is development speed and agility. Because screen interfaces can be separated from data collection, inexpensive and remote devices can be used as the interfaces. Separating views from data and from application logic means that widely available languages and tools can be used, making it easier and faster to add new features and rapidly improve interfaces. Device monitoring and control can take on all the features, functions and capabilities that Web apps and browsers provide, without requiring developers to learn special languages or use obscure device SDKs.

Developing these applications can be done quickly by leveraging popular languages and frameworks such as Ruby on Rails, Python and Java. Ruby on Rails (Ruby is the language; Rails is an application framework) offers many advantages when it comes to developing Web applications:

- simple dynamic object-oriented language

- built-in Web application framework

- transparent model-view-controller architecture

- simple connections between applications and databases

- large third-party code libraries

- vibrant developer community

Here's Marc Benioff, CEO of Salesforce, explaining his purchase of Heroku, a Ruby on Rails cloud development platform, to ComputerWeekly in December 2010:

"Ruby is the language of Cloud 2 [applications for real-time mobile and social platforms]. Developers love Ruby. It's a huge advancement. It offers rapid development, productive programming, mobile and social apps and massive scale. We could move the whole industry to Ruby on Rails."

Developing applications in the cloud provides added speed and agility because of reduced development cycles. Projects can be developed quickly with small teams. Cloud-based services and code libraries can be used so that teams develop only what is core to their application.

Adding charts and graphs to an application is a matter of including a code library or signing up for a third-party service and connecting to it via REST APIs. Adding geolocation capabilities involves a similar process. In this way, new capabilities can be added quickly and current capabilities extended without having to develop entire stacks of functionality not core to a company's competencies.
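Connecting to a third-party service via a REST API usually amounts to an authenticated HTTP request with a JSON body. The sketch below only builds such a request with Python's standard `urllib.request`; the endpoint URL, payload fields and bearer-token header are all hypothetical, since the article names no specific charting service.

```python
import json
import urllib.request

# Hypothetical endpoint for a third-party charting service; not a real API.
CHART_API_URL = "https://charts.example.com/v1/charts"


def build_chart_request(title: str, points: list[tuple[str, float]],
                        api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request asking a hypothetical
    charting service to render a line chart from (label, value) points."""
    body = json.dumps({
        "type": "line",
        "title": title,
        "series": [{"label": label, "value": value} for label, value in points],
    }).encode("utf-8")
    return urllib.request.Request(
        CHART_API_URL,
        data=body,
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},  # auth scheme is assumed
        method="POST",
    )
```

Sending it against a real service would be a single `urllib.request.urlopen(req)` call; the point is that the application code stays small because the charting itself lives in the external service.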

Here's VMware's CEO Paul Maritz on the advantages of programming frameworks:

"Developers are moving to Django and Rails. Developers like to focus on what's important to them. Open frameworks are the foundation for new enterprise application development going forward. By and large developers no longer write Windows or Linux apps. Rails developers don't care about the OS - they're more interested in data models and how to construct the UI."

Monitoring and control dashboards and data visualization are critical to creating effective M2M applications. Having dynamic languages and frameworks that facilitate rapid development and rapid iteration means companies can move quickly to roll out new capabilities and respond rapidly to customer needs.

Circuit photo by pawel_231; cloud photo by Rybson.

Big Data Processing