Windows Azure and Cloud Computing Posts for 3/11/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

•• Updated 3/13/2011 with additional articles by John Gilham, David Linthicum, Lydia Leong and Ernest Mueller marked ••.

• Updated 3/12/2011 with additional articles by Robin Shahan, Andy Cross, Steve Marx, Glenn Gailey, Bill Zack, Steve Yi, Greg Shields, Lucas Roh, Adron Hall, Jason Kincaid and Microsoft Corporate Citizenship marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Dan Liu listed .NET Types supported by Windows Azure Table Storage Domain Service in a 3/11/2010 post:

Kyle [McClellan] has several blog posts [from November 2010 and earlier] explaining how to use Windows Azure Table Storage (WATS) Domain Service, the support of which comes from WCF RIA Services Toolkit.

Below is a list of types that are currently working with WATS Domain Service.

Jerry Huang described A Quick and Easy Backup Solution for Windows Azure Storage in a 3/9/2011 post to his Gladinet blog:

Windows Azure Storage is getting more popular and more and more Gladinet customers are using Azure Storage now.

One of the primary use cases for Windows Azure Storage is Online Backup. It is pretty cool to have your local documents and folders backed up to Windows Azure, inside data centers run by Microsoft, a strong brand name that you can trust with your important data.

This article will walk you through the new Gladinet Cloud Backup 3.0, a simple and yet complete backup solution for your Windows PC, Server and Workstations.

Step 1 – Download and Install Gladinet Cloud Backup

Step 2 – Add Windows Azure as Backup Destination

From the drop down list, choose Windows Azure Blob Storage

You can get the Azure Blob Storage credentials from the Azure web site (windows.azure.com)

Step 3 – Manage Backup Tasks from Management Console

There are two modes of backup. You can use the one that works the best for you.

- Mirrored Backup – the local folder will be copied to Azure Storage. Once it is done, the copy on the Azure storage is exactly the same as your local folder’s content.

- Snapshot Backup – A snapshot of the local folder will be taken first and then the snapshot will be saved to Azure Storage.

If you need to back up SQL Server and other types of applications that support Volume Shadow Copy Service, you can use the snapshot backup to get it done.

Related Posts

<Return to section navigation list>

SQL Azure Database and Reporting

Steve Yi posted SQL Azure Pricing Explained on 3/11/2011:

We created a quick 10 minute video that provides an overview of the pricing meters and billing associated with using SQL Azure. The pricing model for SQL Azure is one of the most straightforward in the industry - it's basically just a function of database size and amount of data going in and out of our cloud datacenters.

What's covered are:

- the business benefits of utilizing our cloud database

- a comparison and overview of the different editions of SQL Azure database

- understanding the pricing meters

- examples of pricing

- a walk-through of viewing your bill

You can also view it in full-screen mode by going here and clicking on the full-screen icon in the lower right when you play the video.

If you haven't already, for a limited time, new customers to SQL Azure get a 1GB Web Edition Database for no charge and no commitment for 90 days. You also get free usage of Windows Azure and AppFabric services through June 30. This is a great way to evaluate SQL Azure and the Windows Azure platform without any of the risk. Details on the offer are here. There are also several different offers available on the Windows Azure offer page.

… We have some great information lined up for next week. I'm a huge fan of the Discovery Channel, and Shark Week is an event I annually mark on my calendar. Imitation is the sincerest form of flattery, so next week will be "Migration Week" here on the blog, where we'll have some materials and guidance on migrating from Access and on-premises databases to SQL Azure and why it's important. … [Emphasis added.]

<Return to section navigation list>

Marketplace DataMarket and OData

• Glenn Gailey (@gailey777) answered When Should I Version My OData Service? in a 3/12/2011 post:

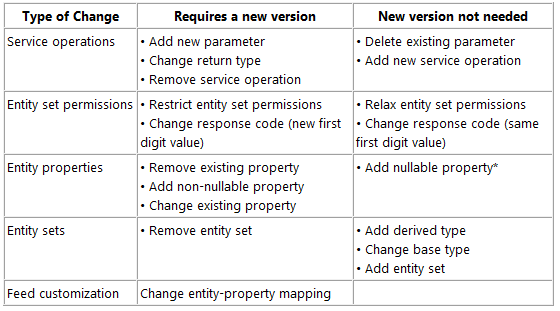

While researching an answer [to] a forum post on versioning, I was digging into one of the v1 product specs and found a couple of very useful logical flowcharts that describe how to handle data service versioning scenarios. Sadly, this information has yet to make it into the documentation. I will do my best in this post to distill the essence of these rather complex flowcharts into some useful rules of thumb for when to version your data service. (Then I will probably also put this information in the topic Working with Multiple Versions of WCF Data Services.)

Data Model Changes that Recommend a New Data Service Version

The kinds of changes that require you to create a new version of a data service can be divided into the following two categories:

- Changes to the service contract—which includes updates to service operations or changes to the accessibility of entity sets (feeds)

- Changes to the data contract— which includes changes to the data model, feed formats, or feed customizations.

The following table details for which kinds of changes you should consider publishing a new version of the data service:

* You can set the IgnoreMissingProperties property to true to have the client ignore any new properties sent by the data service that are not defined on the client. However, when inserts are made, the properties not sent by the client (in the POST) are set to their default values. For updates, any existing data in a property unknown to the client may be overwritten with default values. In this case, it is safest to use a MERGE request (the default). For more information, see Managing the Data Service Context (WCF Data Services).
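The difference between a merge-style update and a full replacement can be modeled with plain dictionaries. The sketch below is not WCF Data Services code: the function names `apply_merge` and `apply_replace`, the `DEFAULTS` table, and the `Rating` property are all hypothetical, and it assumes that a replacement resets every property the client did not send to its default value, as the note above describes.

```python
# Hypothetical model of a stored entity whose server-side schema has gained
# a "Rating" property that an older client does not know about.
DEFAULTS = {"Title": "", "Year": 0, "Rating": None}


def apply_merge(stored, sent):
    """MERGE-style update: only the properties the client sent are changed."""
    updated = dict(stored)
    updated.update(sent)
    return updated


def apply_replace(stored, sent):
    """Replace-style update: properties the client omitted fall back to defaults."""
    updated = dict(DEFAULTS)
    updated.update(sent)
    return updated


stored = {"Title": "Alien", "Year": 1979, "Rating": "R"}
# The old client changes the title and knows nothing about "Rating".
sent = {"Title": "Alien (Director's Cut)", "Year": 1979}

merged = apply_merge(stored, sent)
replaced = apply_replace(stored, sent)
print(merged["Rating"])    # the unknown property survives
print(replaced["Rating"])  # the unknown property is reset to its default
```

In this toy model the merged entity keeps the server-side value, which is why a merge is the safer choice for clients that lag behind the data model.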

How to Version a Data Service

When required, a new OData service version is defined by creating a new instance of the service with an updated service contract or data contract (data model). This new service is then exposed by using a new URI endpoint, which differentiates it from the previous version.

For example:

- Old version: http://myhost/v1/myservice.svc/

- New version: http://myhost/v2/myservice.svc/

Note that Netflix has already prepared for versioning by adding a v1 segment in the endpoint URI of their OData service: http://odata.netflix.com/v1/Catalog/

When you upgrade your data service, your clients will need to also be updated with the new data service metadata and the new root URI. The good thing about creating a new, parallel data service is that it enables clients to continue to access the old data service (assuming your data source can continue to support both versions). You should remove the old version when it is no longer needed.
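As a rough illustration of the URI convention described above, the following sketch builds versioned root URIs and reads the version back out of one. The helper names `service_root` and `version_of` are made up for this example, and it assumes the version is always the first path segment in the form `v<number>`.

```python
from urllib.parse import urlsplit


def service_root(host, version, service="myservice.svc"):
    """Build a root URI that carries the service version as a path segment."""
    return f"http://{host}/v{version}/{service}/"


def version_of(root_uri):
    """Extract the integer version from the first path segment, e.g. 'v2' -> 2."""
    first_segment = urlsplit(root_uri).path.strip("/").split("/")[0]
    if not (first_segment.startswith("v") and first_segment[1:].isdigit()):
        raise ValueError(f"no version segment in {root_uri!r}")
    return int(first_segment[1:])


old_root = service_root("myhost", 1)
new_root = service_root("myhost", 2)
print(old_root)
print(new_root)
print(version_of("http://odata.netflix.com/v1/Catalog/"))
```

Because both roots exist side by side, a client pinned to the old root keeps working until it is regenerated against the new metadata.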

The Microsoft Office 2010 Team published Connecting PowerPivot to Different Data Sources in Excel 2010 to the MSDN Library in 3/2011:

- Summary: Learn how to import data from different sources by using Microsoft PowerPivot for Excel 2010.

- Applies to: Microsoft Excel 2010 | Microsoft PowerPivot for Excel 2010

- Published: February 2011

- Provided by: Steve Hansen, Microsoft Visual Studio MVP and founder of Grid Logic.

The PowerPivot add-in for Microsoft Excel 2010 can import data from many data sources, including relational databases, multidimensional sources, data feeds, and text files. The real power of this broad data-source support is that you can import data from multiple data sources in PowerPivot, and then either combine the data sources or create ad hoc relationships between them. This enables you to perform an analysis on the data as if it were from a unified source.

Download PowerPivot for Excel 2010

This article discusses three of the data sources that you can use with PowerPivot:

- Microsoft Access

- Microsoft Azure Marketplace DataMarket

- Text files

See the Read It section in this article for a comprehensive list of data sources that PowerPivot supports.

Microsoft Access

Microsoft Access 2010 is a common source of data for analytical applications. By using PowerPivot, you can easily connect to an Access database and retrieve the information that you want to analyze.

To use Microsoft Access with PowerPivot

Open a new workbook in Excel and then click the PowerPivot tab.

Click the PowerPivot Window button on the ribbon.

In the Get External Data group, click From Database, and then select From Access.

Click the Browse button, select the appropriate Access database, and then click Next.

At this point, you have two options.

- You can import data from a table that is in the database or from a query that is in the database. If you do not want all of the records from a table, you can apply a filter to limit the records that are returned.

- You can import the data by using an SQL query. Unlike the first option, where you can return data based on a query that is defined in the database, you use the second option to specify an SQL query in PowerPivot that is executed against the database to retrieve the records.

The next steps show how to retrieve data from tables and queries in the database.

Select the option, Select from a list of tables…, and then click Next.

Select the tables that you want to import.

By default, all of the columns and records in the selected tables are returned. To filter the records or specify a subset of the columns, click Preview & Filter.

To automatically include tables that are related to the selected tables, click Select Related Tables.

Click Finish to load the data into PowerPivot.

Note:

Importing data from a Microsoft SQL Server database requires a connection to a SQL Server instance, but is otherwise identical to importing data from a Microsoft Access database.

Microsoft Azure Marketplace DataMarket

The Microsoft Azure Marketplace DataMarket offers a large number of datasets on a wide range of subjects.

Note:

To take advantage of the Azure Marketplace DataMarket, you must have a Windows Live account to subscribe to the datasets.

To use Microsoft Azure Marketplace DataMarket data in PowerPivot

In PowerPivot, in the Get External Data group, select From Azure Datamarket.

Important:

If you do not see the option, you have an earlier version of PowerPivot and should download the latest version of PowerPivot.

Figure 1. PowerPivot, Connect to an Azure DataMarket Dataset

Click View available Azure DataMarket datasets to browse the available datasets.

After you locate a dataset, click the Subscribe button in the top-right part of the page. Clicking the button subscribes you to the dataset and enables you to access the data in it. After you subscribe, go back to the dataset home page.

Click the Details tab. Partway down the page, locate the URL under Service root URL and copy it into the PowerPivot import wizard. This URL is the dataset URL that PowerPivot uses to retrieve the data.

Figure 2. Azure Marketplace DataMarket, Service Root URL

To locate the account key that is associated with your account, click Find in the Table Import Wizard to open a page that displays the key. (You might have to log in first to view the key.)

After you locate the key, copy and paste it into the import wizard and then click Next to display a list of available tables. From here, you can modify the tables and their corresponding columns to select which data to import.

Click Finish to import the data.

Note:

Data from the Azure DataMarket is exposed by using Windows Communication Foundation (WCF) Data Services (formerly ADO.NET Data Services). If you select the From Data Feeds option when you retrieve external data, you can connect to other WCF Data Services data sources that are not exposed through the Azure DataMarket.
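Because the datasets are ordinary OData feeds, the service root URL and account key collected in the steps above are, in principle, all a client needs. The sketch below only prepares a request and does not send it. The service root, the `CityCrime` entity set, and the key are placeholders, and it assumes the feed accepts HTTP Basic authentication with the account key as the password; check the dataset's own documentation before relying on that.

```python
import base64
from urllib.parse import urlencode, quote
from urllib.request import Request

# Hypothetical values: substitute the Service root URL and account key
# shown for your own subscription.
SERVICE_ROOT = "https://example.invalid/Data.ashx/SomePublisher/SomeDataset/"
ACCOUNT_KEY = "your-account-key"


def build_feed_request(service_root, entity_set, account_key, top=10):
    """Prepare (but do not send) an OData GET request for one entity set."""
    # "$top" is a standard OData query option; keep the "$" unescaped.
    query = urlencode({"$top": top}, safe="$")
    url = f"{service_root}{quote(entity_set)}?{query}"
    # Assumption: Basic authentication with the account key as the password.
    credentials = f"accountKey:{account_key}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return Request(url, headers={"Authorization": f"Basic {token}"})


request = build_feed_request(SERVICE_ROOT, "CityCrime", ACCOUNT_KEY, top=5)
print(request.full_url)
```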

Text File

PowerPivot lets users import text files with fields that are delimited by using a comma, tab, semicolon, space, colon, or vertical bar.

To load data from a text file

In the Get External Data group in the PowerPivot window, select From Text.

Click Browse, and then navigate to the text file that you want to import.

If the file includes column headings in the first row, select Use first row as column headers.

Clear any columns that you do not want to import.

To filter the data, click the drop-down arrows in the field(s) that you want to use as a filter and select the field values to include.

Click Finish to import the data.
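The same import choices (delimiter, header row, column selection, value filter) can be approximated outside PowerPivot. This is a minimal sketch using Python's standard `csv` module; the sample text, the `load_delimited` helper, and its parameters are invented for illustration and do not reflect how PowerPivot itself parses files.

```python
import csv
import io

# Hypothetical pipe-delimited sample standing in for a text file on disk.
SAMPLE = "Building|City|Floors\nAlpha Tower|Seattle|40\nBeta Plaza|Portland|12\n"


def load_delimited(text, delimiter, keep_columns, row_filter=None):
    """Read delimited text, treating the first row as column headers."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    rows = []
    for record in reader:
        if row_filter is not None and not row_filter(record):
            continue
        # Keep only the requested columns, mirroring "clear any columns".
        rows.append({name: record[name] for name in keep_columns})
    return rows


rows = load_delimited(
    SAMPLE,
    delimiter="|",
    keep_columns=["Building", "Floors"],
    row_filter=lambda r: r["City"] == "Seattle",
)
print(rows)
```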

One of the compelling features of PowerPivot is that it enables you to combine data from multiple sources and then use the resulting dataset as if the data were unified. For example, suppose that you manage a portfolio of office buildings. Suppose further that you have a SQL Server database that contains generic information about the buildings in your portfolio, an Analysis Services cube that contains financial information such as operating expenses for the buildings, and access to crime information via the Azure Marketplace DataMarket. By using PowerPivot, you can combine all of this data in PowerPivot, create ad hoc relationships between the sources, and then analyze it all in Excel as if it were a single data source.
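Conceptually, an ad hoc relationship is a join on a shared key. The sketch below relates three small in-memory tables that stand in for the database, the cube, and the data feed in the office-building example. All rows, column names, and the `combine` helper are made up for illustration; PowerPivot performs this through its own relationship engine, not through code like this.

```python
# Hypothetical rows standing in for three separately imported sources.
buildings = [  # e.g. from a relational database
    {"BuildingId": 1, "Name": "Alpha Tower", "City": "Seattle"},
    {"BuildingId": 2, "Name": "Beta Plaza", "City": "Portland"},
]
expenses = [  # e.g. from a financial cube
    {"BuildingId": 1, "OperatingExpense": 120000},
    {"BuildingId": 2, "OperatingExpense": 45000},
]
crime = [  # e.g. from a subscribed data feed
    {"City": "Seattle", "Incidents": 310},
    {"City": "Portland", "Incidents": 275},
]


def combine(buildings, expenses, crime):
    """Relate the three sources on BuildingId and City, one row per building."""
    expense_by_id = {row["BuildingId"]: row["OperatingExpense"] for row in expenses}
    incidents_by_city = {row["City"]: row["Incidents"] for row in crime}
    return [
        {
            "Name": b["Name"],
            "OperatingExpense": expense_by_id.get(b["BuildingId"]),
            "Incidents": incidents_by_city.get(b["City"]),
        }
        for b in buildings
    ]


combined = combine(buildings, expenses, crime)
for row in combined:
    print(row)
```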

You can use PowerPivot to leverage data from the following supported data sources in your next analysis project:

- Microsoft SQL Server

- Microsoft SQL Azure

- Microsoft SQL Server Parallel Data Warehouse

- Microsoft Access

- Oracle

- Teradata

- Sybase

- Informix

- IBM DB2

- Microsoft Analysis Services

- Microsoft Reporting Services

- Data Feeds (WCF Data Services, formerly ADO.NET Data Services)

- Excel workbook

- Text file

Tip:

This is not an exhaustive list; there is also an Others option that you can use to create a connection to data sources via an OLE DB or ODBC provider. This option alone significantly increases the number of data sources that you can connect to.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

•• John Gilham described Single Sign-on between Windows Azure and Office 365 services with Microsoft Online Federation and Active Directory Synchronization in a 3/13/2011 post to the AgileIT blog:

A lot of people don’t realize there will be 2 very interesting features in Office 365 which make connecting the dots with your on-premise environment and Windows Azure easy. The 2 features are directory sync and federation. It means you can use your AD account to access local apps in your on-premise environment; just like you always have. You can also use the same user account and login process to access Office 365 up in the cloud, and you could either use federation or a domain-joined application running in Azure to also use the same AD account and achieve single-sign-on.

<snip>

Extending the model to the cloud

Windows Azure Connect (soon to be released to CTP) allows you to not only create virtual private networks between machines in your on-premise environment and instances you have running in Windows Azure, but it also allows you to domain-join those instances to your local Active Directory. In that case, the model I described above works exactly the same, as long as Windows Azure Connect is configured in a way to allow the client computer to communicate with the web server (which is hosted as a domain-joined machine in the Windows Azure data centre). The diagram would look like this and you can follow the numbered points using the list above:

Diagram 2: Extending AD in to Windows Azure member servers

Office 365

Office 365 uses federation to “extend” AD in to the Office 365 Data Centre. If you know nothing of federation, I’d recommend you read my federation primer to get a feel for it.

The default way that Office 365 runs, is to use identities that are created by the service administrator through the MS Online Portal. These identities are stored in a directory service that is used by Sharepoint, Exchange and Lync. They have names of the form:

planky@plankytronixx.emea.microsoftonline.com

However if you own your own domain name you can configure it in to the service, and this might give you:

planky@plankytronixx.com

…which is a lot more friendly. The thing about MSOLIDs that are created by the service administrator, is that they store a password in the directory service. That’s how you get in to the service.

Directory Synchronization

However you can set up a service to automatically create the MSOLIDs in to the directory service for you. So if your Active Directory Domain is named plankytronixx.com then you can get it to automatically create MSOLIDs of the form planky@plankytronixx.com. The password is not copied from AD. Passwords are still mastered out of the MSOLID directory.

Diagram 3: Directory Sync with on-premise AD and Office 365

The first thing that needs to happen, is that user entries made in to the on-premise AD, need to have a corresponding entry made in to the directory that Office 365 uses to give users access. These IDs are known as Microsoft Online IDs or MSOLIDs. This is achieved through directory synchronization. Whether directory sync is configured or not – the MS Online Directory Service (MSODS) is still the place where passwords and password policy are managed. MS Online Directory Sync needs to be installed on-premise.

When a user uses either Exchange Online, Sharepoint Online or Lync, the Identities come from MSODS and authentication is performed by the identity platform. The only thing Directory Sync really does in this instance is to ease the burden on the administrator to use the portal to manually create each and every MSOLID.

One of the important fields that is synchronised from AD to the MSODS is the user’s AD ObjectGUID. This is a unique immutable identifier that we’ll come back to later. It’s rename safe, so although the username, UPN, First Name, Last Name and other fields may change, the ObjectGUID will never change. You’ll see why this is important.
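Why a rename-safe key matters can be shown with a toy model. The sketch below is not the MS Online Directory Sync tool: the `sync_directory` function, the dictionary layout, and the GUID are invented for illustration. It only mirrors two points made above: entries are matched on the immutable ObjectGUID, and passwords are not copied from the on-premise directory.

```python
def sync_directory(on_premise_users, online_directory):
    """Create or update online entries keyed by the immutable ObjectGUID.

    Passwords are deliberately not copied; an existing online password is kept.
    """
    for user in on_premise_users:
        entry = online_directory.setdefault(user["ObjectGUID"], {"password": None})
        entry["upn"] = user["upn"]
        entry["display_name"] = user["display_name"]
    return online_directory


online = {}
guid = "3f2b0c1e-0000-0000-0000-000000000001"  # made-up identifier
sync_directory([{"ObjectGUID": guid, "upn": "planky@plankytronixx.com",
                 "display_name": "Planky", "password": "local-secret"}], online)
# The password is mastered in the online directory, not copied from AD.
online[guid]["password"] = "set-in-online-directory"

# The user is renamed on-premise; the ObjectGUID stays the same.
sync_directory([{"ObjectGUID": guid, "upn": "plankton@plankytronixx.com",
                 "display_name": "Plankton", "password": "local-secret"}], online)
print(len(online), online[guid]["upn"], online[guid]["password"])
```

Matching on the username instead would have produced a second, orphaned entry after the rename.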

<snip>

Read the complete article @> Single-sign-on between on-premise apps, Windows Azure apps and Office 365 services. - Plankytronixx - Site Home - MSDN Blogs

Ron Jacobs (@ronljacobs) announced the availability of the AppFabric WCF Service Template (C#) in a 3/11/2011 post:

Now available: Download the AppFabric WCF Service Template (C#)

Windows Communication Foundation (WCF) is Microsoft’s unified programming model for building service-oriented applications. Windows Server AppFabric provides tools for managing and monitoring your web services and workflows.

The AppFabric WCF Service template brings these two products together providing the following features:

- Monitor the calls to your service across multiple servers with AppFabric Monitoring

- Create custom Event Tracing for Windows (ETW) events that will be logged by AppFabric Monitoring

Setup

To build and test an AppFabric WCF Service you will need the following:

Walkthrough

- Add a new project using the ASP.NET Empty Web Application Template.

- Add a new item to your project using the AppFabric WCF Service template named SampleService.svc

- Open the SampleService.svc.cs file and replace the SayHello method with the following code:

    public string SayHello(string name)
    {
        // Output a warning if name is empty
        if (string.IsNullOrWhiteSpace(name))
            AppFabricEventProvider.WriteWarningEvent(
                "SayHello",
                "Warning - name is empty");
        else
            AppFabricEventProvider.WriteInformationEvent(
                "SayHello",
                "Saying Hello to user {0}",
                name);

        return "Hello " + name;
    }

Enable Monitoring

- Open web.config

- Enable the EndToEndMonitoring Tracking profile for your web application

Verify with Development Server

- In the Solution Explorer window, right click on the SampleService.svc file and select View in Browser.

- The ASP.NET Development Server will start and the SampleService.svc file will load in the browser.

- After the browser opens, select the URL in the address box and copy it (CTRL+C).

- Open the WCF Test Client utility.

- Add the service using the endpoint you copied from the browser.

- Double click the SayHello operation, enter your name in the name parameter and click Invoke.

- Verify that your service works.

Verify with IIS

To see the events in Windows Server AppFabric you need to deploy the Web project to IIS or modify your project to host the solution in the local IIS Server. For this example you will modify the project to host with the local IIS server.

Run Visual Studio as Administrator

If you are not running Visual Studio as Administrator, exit and restart Visual Studio as Administrator and reload your project. For more information see Using Visual Studio with IIS 7.

- Right click on the Web Application project you’ve recently created and select properties

- Go to the Web tab

- Check Use Local IIS Web Server and click Create Virtual Directory

- Save your project settings (Debugging will not save them)

- In the Solution Explorer window, right click on the SampleService.svc file and select View in Browser. The address should now be that of the IIS (“http://localhost/applicationName/”) and not of the ASP.NET Development Server (“http://localhost:port/”).

- After the browser opens, select the URL in the address box and copy it (CTRL+C).

- Open the WCF Test Client utility.

- Add the service using the endpoint you copied from the browser.

- Double click the SayHello operation, enter your name in the name parameter and click Invoke.

- Verify that your service works.

- Leave the WCF Test Client open (you will need to use it in the next step).

Verify Monitoring

- Open IIS Manager (from command line: %systemroot%\system32\inetsrv\InetMgr.exe)

- Navigate to your web application (In the Connections pane open ComputerName → Sites → Default Web Site → ApplicationName)

- Double Click on the AppFabric Dashboard to open it

- Look at the WCF Call History; you should see some successful calls to your service.

- Switch back to the WCF Test Client utility. If you’ve closed the utility, repeat steps 5-8 in the previous step, Verify with IIS.

- Double click the SayHello operation, enter your name in the name parameter and click Invoke.

- Change the name to an empty string, or select null from the combo box and invoke the service again. This will generate a warning event.

- To see the monitoring for this activity switch back to the IIS Manager and refresh the AppFabric Dashboard.

- Click on the link in the WCF Call History for SampleService.svc and you will see events for the completed calls. In this level you can see calls made to get the service’s metadata (Get calls) and calls for the service operations (SayHello calls).

- To see specific events, right click on an entry for the SayHello operation and select View All Related Events.

- In the list of related events you will see the user defined event named SayHello. The payload of this event contains the message logged by the operation.

Itai Raz continued his AppFabric series with Introduction to Windows Azure AppFabric blog posts series – Part 4: Building Composite Applications of 3/10/2011:

In the previous posts in this series we covered the challenges that Windows Azure AppFabric is trying to solve, and started discussing the Middleware Services in this post regarding Service Bus and Access Control, and this post regarding Caching. In the current post we will discuss how AppFabric addresses the challenges developers and IT Pros face when Building Composite Applications and how the Composite App service plays a key role in that.

Building Composite Applications

As noted in the first blog post, multi-tier applications, and applications that consist of multiple components and services which also integrate with other external systems, are difficult to deploy, manage and monitor. You are required to deploy, configure, manage and monitor each part of the application individually, and you lack the ability to treat your application as a single logical entity.

Here is how Windows Azure AppFabric addresses these challenges.

The Composition Model

To get the ability to automatically deploy and configure your composite applications, and later get the ability to manage and monitor your application as a single logical entity, you first need to define which components and services make up your composite application, and what the relationships between them are. This is done using the Composition Model, which is a set of extensions to the .NET Framework.

Tooling Support

You can choose to define your application model in code, but when using Visual Studio to develop your application, you also get visual design time capabilities. In Visual Studio you can drag-and-drop the different components that make up your application, define the relationships between the components, and configure the components as well as the relationships between them.

The image below shows an example of what the design time experience of defining your application model within Visual Studio looks like:

In addition to the development tools experience, you also get runtime tooling support through the AppFabric Portal. Through the portal you get capabilities to make runtime configuration changes, as well as get monitoring capabilities and reporting, which are discussed in the Composite App service section below.

Composite App service

By defining your application model, you are now able to get a lot of added value capabilities when deploying and running your application.

You can automatically deploy your end-to-end application to Windows Azure AppFabric from within Visual Studio, or you can create an application package that can be uploaded to the Windows Azure AppFabric Portal.

No matter how you choose to deploy your application, the Composite App service takes care of automatically provisioning, deploying and configuring all of the different components, so you can just start running your application. This reduces a lot of complexity and manual steps required from developers and IT Pros today.

In addition, the service enables you to define component level as well as the end-to-end application level performance requirements, monitoring, and reports. You are also able to more easily troubleshoot and optimize the application as a whole.

Another important capability of the Composite App service is to enable you to run Windows Communication Foundation (WCF) as well as Windows Workflow Foundation (WF) services on Windows Azure AppFabric.

These are two very important technologies that you should use when building service oriented and composite applications. The Composite App service enables you to use these technologies as part of your cloud application, and to use them as components that are part of your composite application.

So, as we showed in this post, Windows Azure AppFabric makes it a lot easier for you to develop, deploy, run, manage, and monitor your composite applications. The image below illustrates how you are able to include the different AppFabric Middleware Services, as well as data stores and other applications, including applications that reside on-premises, as part of your composite application:

A first Community Technology Preview (CTP) of the features discussed in this post will be released in a few months, so you will be able to start testing them soon. [Emphasis added.]

To learn more about the capabilities of AppFabric that enable Building Composite Applications, please watch the following video: Composing Applications with AppFabric Services | Speaker: Karandeep Anand.

Also make sure to familiarize yourself with Windows Communication Foundation (WCF) and Windows Workflow Foundation (WF). These technologies already provide great capabilities for building service oriented and composite applications on-premises today, and will be available soon on Windows Azure AppFabric in the cloud.

As a reminder, you can start using our CTP services in our LABS/Preview environment at: https://portal.appfabriclabs.com/. Just sign up and get started.

Other places to learn more on Windows Azure AppFabric are:

- Windows Azure AppFabric website and specifically the Popular Videos under the Featured Content section.

- Windows Azure AppFabric developers MSDN website

Be sure to start enjoying the benefits of Windows Azure AppFabric with our free trial offer. Just click on the image below and start using it today!

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• Steve Marx (@smarx) explained Using the Windows Azure CDN for Your Web Application in a 3/11/2011 post:

The Windows Azure Content Delivery Network has been available for more than a year now as a way to cache and deliver content from Windows Azure blob storage with low latency around the globe. Earlier this week, we announced support in the CDN for caching web content from any Windows Azure application, which means you can get these same benefits for your web application.

To try out this new functionality, I built an application last night: http://smarxcdn.cloudapp.net. It fetches web pages and renders them as thumbnail images. (Yes, most of this code is from http://webcapture.cloudapp.net, which I’ve blogged about previously.) This application is a perfect candidate for caching:

- The content is expensive to produce. Fetching a web page and rendering it can take a few seconds each time.

- The content can’t be precomputed. The number of web pages is practically infinite, and there’s no way to predict which ones will be requested (and when).

- The content changes relatively slowly. Most web pages don’t change second by second, so it’s okay to serve up an old thumbnail. (In my app, I chose five minutes as the threshold for how old was acceptable.)

I could have cached the data at the application level, with IIS’s output caching capabilities or Windows Azure AppFabric Caching, but caching with the CDN means the data is cached and served from dozens of locations around the globe. This means lower latency for users of the application. (It also means that many requests won’t even hit my web servers, which means I need fewer instances of my web role.)

The Result

You can try a side-by-side comparison with your own URLs at http://smarxcdn.cloudapp.net, but just by viewing this blog post, you’ve tested the application. The image below comes from http://az25399.vo.msecnd.net/?url=blog.smarx.com:

If you see the current time in the overlay, it means the image was just generated when you viewed the page. If you see an older time, up to five minutes, that means you’re viewing a cached image from the Windows Azure CDN. You can try reloading the page to see what happens, or go play with http://smarxcdn.cloudapp.net.
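The "current time versus older time" behavior is what any max-age cache produces. The following toy cache is a sketch of that idea only; the `ThumbnailCache` class and its `render` callback are hypothetical and say nothing about how the Windows Azure CDN is implemented internally. Time is passed in explicitly so the behavior is easy to follow.

```python
MAX_AGE_SECONDS = 300  # five minutes, the threshold mentioned in the post


class ThumbnailCache:
    """Toy cache that regenerates an entry once it is older than max_age."""

    def __init__(self, render, max_age=MAX_AGE_SECONDS):
        self._render = render      # callable(url, now) -> content
        self._max_age = max_age
        self._entries = {}         # url -> (created_at, content)

    def get(self, url, now):
        cached = self._entries.get(url)
        if cached is not None and now - cached[0] < self._max_age:
            return cached[1]       # still fresh: serve the cached copy
        content = self._render(url, now)
        self._entries[url] = (now, content)
        return content


cache = ThumbnailCache(render=lambda url, now: f"{url} rendered at t={now}")
first = cache.get("blog.smarx.com", now=0)
second = cache.get("blog.smarx.com", now=120)   # within five minutes
third = cache.get("blog.smarx.com", now=301)    # older than five minutes
print(first, second, third, sep="\n")
```

The second request returns the timestamp of the first render, which corresponds to seeing an older time in the image overlay.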

Setting up a CDN Endpoint

Adding a Windows Azure CDN endpoint to your application takes just a few clicks in the Windows Azure portal. I’ve created a screencast to show you exactly how to do this (also at http://qcast.it/pXhWH):

The Code

To take advantage of the Windows Azure CDN, your application needs to do two things:

- Serve the appropriate content under the /cdn path, because that’s what the CDN endpoint maps to. (e.g., http://az25399.vo.msecnd.net/?url=blog.smarx.com maps to http://smarxcdn.cloudapp.net/cdn/?url=blog.smarx.com.)

- Send the content with the correct cache control headers. (e.g., Images from my application are served with a Cache-Control header value of public, max-age=300, which allows caching for up to five minutes.)

To meet the first requirement, I used routing in my ASP.NET MVC 3 application to map /cdn URLs to the desired controller and action:

    routes.MapRoute(
        "CDN",
        "cdn",
        new { controller = "Webpage", action = "Fetch" }
    );

To meet the second requirement, the correct headers are set in the controller:

    public ActionResult Fetch()
    {
        Response.Cache.SetCacheability(HttpCacheability.Public);
        Response.Cache.SetMaxAge(TimeSpan.FromMinutes(5));
        return new FileStreamResult(GetThumbnail(Request.QueryString["url"]), "image/png");
    }

The two Response.Cache lines are what set the Cache-Control header on the response. You can also use web.config to set cache control headers on static content.

[UPDATE 3:37pm] David Aiken pointed out that there’s a better way to get this header emitted in ASP.NET MVC 3. This code seems to be approximately equivalent to what I wrote originally. Thanks, David!

    [OutputCache(Duration=300, Location=OutputCacheLocation.Any)]
    public ActionResult Fetch()
    {
        return new FileStreamResult(GetThumbnail(Request.QueryString["url"]), "image/png");
    }

Download

You can download the full source code for http://smarxcdn.cloudapp.net here: http://cdn.blog.smarx.com/files/smarxcdn_source.zip. Note that I have not included CutyCapt.exe, which is required for creating the thumbnails. You can get CutyCapt here. Also note that my CDN URL is hardcoded in this solution, so be sure to change that if you’re building your own application.

Side Note

This application uses ASP.NET MVC 3. Having already made use of several techniques for installing ASP.NET MVC 3 in Windows Azure, I decided it was time for a new one. This code includes curl, and uses that to download the ASP.NET MVC 3 installer before running it.

Here’s the side-by-side view with the latest page from my Access In Depth blog:

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Robin Shahan (@robindotnet) reported that IIS Failed Request Logs haven’t been fixed in her Azure SDK 1.4 and IIS Logging post of 3/11/2011:

I was excited about the release of Azure SDK 1.4. With 1.3, I had to put in a workaround to ensure that the IIS logs were transferred to blob storage correctly (as they were in version 1.2). In the release notes for Azure SDK 1.4, this is included in the list of changes:

Resolved an IIS log file permission Issue which caused diagnostics to be unable to transfer IIS logs to Windows Azure storage.

If true, this means I can take out the startup task I put in (explained in this article) to work around the problem.

Having learned never to assume, I decided to check out the new version and test two of my Azure instances – one that hosts a WCF service and one that hosts a web application.

I installed SDK 1.4, published the two cloud projects, and opened the URLs in the web browser. After navigating around a bit to create IIS logging, I put in some invalid links to generate Failed Request Logging. Then I used the Cerebrata Azure Diagnostics Manager to check it out.

The good news is that the IIS logging has been fixed. It transfers to blob storage as it did in Tools/SDK 1.2.

The bad news is that the IIS Failed Request logs have NOT been fixed. I RDP’d into the instance, and had to change the ownership on the directory for the failed request logs to see if it was even writing them, and it was not. So there’s no change from SDK 1.3.

If you want to use SDK 1.4 and have IIS Failed Request logs created and transferred to blob storage correctly, you can follow the instructions from this article on setting up a startup task and remove the code from the PowerShell script that applies to the IIS logs, leaving behind just the code for the IIS Failed Request logs. It will look like this:

    echo "Output from Powershell script to set permissions for IIS logging."

    Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime

    # wait until the azure assembly is available
    while (!$?)
    {
        echo "Failed, retrying after five seconds..."
        sleep 5

        Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
    }

    echo "Added WA snapin."

    # get the DiagnosticStore folder and the root path for it
    $localresource = Get-LocalResource "DiagnosticStore"
    $folder = $localresource.RootPath

    echo "DiagnosticStore path"
    $folder

    # set the acl's on the FailedReqLogFiles folder to allow full access by anybody.
    # can do a little trial & error to change this if you want to.

    $acl = Get-Acl $folder

    $rule1 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Administrators", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")
    $rule2 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Everyone", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")

    $acl.AddAccessRule($rule1)
    $acl.AddAccessRule($rule2)

    Set-Acl $folder $acl

    # You need to create a directory name "Web" under FailedReqLogFiles folder
    # and immediately put a dummy file in there.
    # Otherwise, MonAgentHost.exe will delete the empty Web folder that was created during app startup
    # or later when IIS tries to write the failed request log, it gives an error on directory not found.
    # Same applies for the regular IIS log files.
    mkdir $folder\FailedReqLogFiles\Web
    "placeholder" >$folder\FailedReqLogFiles\Web\placeholder.txt

    echo "Done changing ACLs."

This will work just fine until they fix the problem with the IIS Failed Request logs, hopefully in some future release.
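The placeholder step at the end of the script is the easiest part to overlook. As a rough illustration only, here is the same idea in Python rather than PowerShell: create the Web folder and drop a dummy file into it, so that a process that prunes empty directories leaves it alone. The function name `ensure_placeholder` and the directory passed in to stand for the DiagnosticStore root are assumptions for the example; nothing here talks to Windows Azure or sets any ACLs.

```python
from pathlib import Path


def ensure_placeholder(diagnostic_root: Path) -> Path:
    """Create FailedReqLogFiles/Web under the given root and keep it non-empty."""
    web_dir = diagnostic_root / "FailedReqLogFiles" / "Web"
    # parents=True builds FailedReqLogFiles as well; exist_ok makes reruns harmless.
    web_dir.mkdir(parents=True, exist_ok=True)
    placeholder = web_dir / "placeholder.txt"
    if not placeholder.exists():
        placeholder.write_text("placeholder\n")
    return placeholder
```

Because the function is idempotent, running it on every role start would not disturb a folder that already holds real log files.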

• Andy Cross (@andybareweb) described a Workaround: WCF Trace logging in Windows Azure SDK 1.4 in a 3/12/2011 post:

This post shows a workaround to the known issue in Windows Azure SDK 1.4 that prevents the capture of WCF svclog traces by Windows Azure Diagnostics. The solution is an evolution of RobinDotNet’s work on correcting IIS logging, and a minor change to the workaround I produced for Windows Azure SDK v1.3.

When using WCF trace logging, certain problems can be encountered. The error that underlies these issues revolves around file permissions on the log files, which prevent the Windows Azure Diagnostics Agent from accessing the log files and transferring them to Windows Azure blob storage. These permission problems were evident in SDK v1.3 and are still around in SDK v1.4. The particular problem I am focussing on now is getting access to WCF Trace Logs.

This manifests itself in a malfunctioning Windows Azure Diagnostics setup – log files may be created but they are not transferred to Blob Storage, meaning they become difficult to get hold of, especially in situations where multiple instances are in use.

The workaround is achieved by adding a Startup task to the WCF Role that you wish to collect service level tracing for. This Startup task then sets ACL permissions on the folder that the logs will be written to. In SDK version 1.3 we also created a null (zero-byte) file with the exact filename that the WCF log is going to assume. In version 1.4 of the SDK, this is unnecessary and prevents the logs being copied to blob storage.

The Startup task should have a command line that executes a powershell script. This allows much freedom on implementation, as powershell is a very rich scripting language. The Startup line should read like:

    powershell -ExecutionPolicy Unrestricted .\FixDiagFolderAccess.ps1>>C:\output.txt

The main work then, is done by the file FixDiagFolderAccess.ps1. I will run through that script now – it is included in full with this post: http://blog.bareweb.eu/2011/03/implementing-and-debugging-a-wcf-service-in-windows-azure/

    echo "Windows Azure SDK v1.4 Powershell script to correct WCF Trace logging errors. Andy Cross @andybareweb 2011"
    echo "Thank you RobinDotNet, smarx et al"

    Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime

    # wait until the azure assembly is available
    while (!$?)
    {
        echo "Failed, retrying after five seconds..."
        sleep 5

        Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
    }

    echo "Added WA snapin."

This section of code sets up the Microsoft.WindowsAzure.ServiceRuntime cmdlets, a set of useful scripts that allow us access to running instances and information regarding them. We will use this to get paths of “LocalResource” – the writable file locations inside an Azure instance that will be used to store the svclog files.

    # get the ######## WcfRole.svclog folder and the root path for it
    $localresource = Get-LocalResource "WcfRole.svclog"
    $folder = $localresource.RootPath

    echo "DiagnosticStore path"
    $folder

    # set the acl's on the FailedReqLogFiles folder to allow full access by anybody.
    # can do a little trial & error to change this if you want to.

    $acl = Get-Acl $folder

    $rule1 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Administrators", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")
    $rule2 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Everyone", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")

    $acl.AddAccessRule($rule1)
    $acl.AddAccessRule($rule2)

    Set-Acl $folder $acl

At this point we have just set the ACL for the folder that SVCLogs will go to. In the previous version of the Windows Azure SDK, one also needed to create a zero-byte file in this location, but this is no longer necessary. Indeed doing so causes problems itself.

You can find an example in the following blog post: http://blog.bareweb.eu/2011/03/implementing-and-debugging-a-wcf-service-in-windows-azure/. Full source for SDK version 1.4 is here: WCFBasic v1.4

A full listing follows:

    echo "Windows Azure SDK v1.4 Powershell script to correct WCF Trace logging errors. Andy Cross @andybareweb 2011"
    echo "Thank you RobinDotNet, smarx et al"

    Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime

    # wait until the azure assembly is available
    while (!$?)
    {
        echo "Failed, retrying after five seconds..."
        sleep 5

        Add-PSSnapin Microsoft.WindowsAzure.ServiceRuntime
    }

    echo "Added WA snapin."

    # get the ######## WcfRole.svclog folder and the root path for it
    $localresource = Get-LocalResource "WcfRole.svclog"
    $folder = $localresource.RootPath

    echo "DiagnosticStore path"
    $folder

    # set the acl's on the FailedReqLogFiles folder to allow full access by anybody.
    # can do a little trial & error to change this if you want to.

    $acl = Get-Acl $folder

    $rule1 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Administrators", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")
    $rule2 = New-Object System.Security.AccessControl.FileSystemAccessRule(
        "Everyone", "FullControl", "ContainerInherit, ObjectInherit",
        "None", "Allow")

    $acl.AddAccessRule($rule1)
    $acl.AddAccessRule($rule2)

    Set-Acl $folder $acl

• The Microsoft Corporate Citizenship site posted Microsoft Disaster Response: Community Involvement: 2011 Japan Earthquake on 3/11/2011:

On March 11, 2011 at 14.46 (local time), a magnitude 8.9 earthquake struck 81 miles (130km) east of Sendai, the capital of Miyagi prefecture (Japan), followed by a 13 foot tsunami. It is with great concern we are seeing the images from Japan. The scene of the devastation is quite amazing. It will be a while for all of us to get a full sense of the disaster and its impact. Microsoft has activated its disaster response protocol to monitor the situation in Japan and other areas on tsunami warning alert, and offer support as appropriate. We are taking a number of steps, including ensuring the safety of our employees and their families and assessing all of our facilities for any impact.

Microsoft is putting in place a range of services and resources to support relief efforts in Japan including:

- Reaching out to customers, local government, inter-government and non-government agencies to support relief efforts.

- Working with customers and partners to conduct impact assessments.

- Providing customers and partners impacted by the earthquake with free incident support to help get their operations back up and running.

- Offering free temporary software licenses to all impacted customers and partners as well as lead governments, non-profit partners and institutions involved in disaster response efforts.

- Making Exchange Online available at no cost for 90 days to business customers in Japan whose communications and collaboration infrastructure may be affected. We hope this will help them resume operations more quickly while their existing systems return to normal.

- Making a cloud-based disaster response communications portal, based on Windows Azure, available to governments and nonprofits to enable them to communicate between agencies and directly with citizens. [Emphasis added.]

…

The Windows Azure Team posted Real World Windows Azure: Interview with Glen Knowles, Cofounder of Kelly Street Digital on 3/11/2011:

As part of the Real World Windows Azure series, we talked to Glen Knowles, Cofounder of Kelly Street Digital, about using the Windows Azure platform to deliver the Campaign Taxi platform in the cloud. Here's what he had to say:

MSDN: Tell us about Kelly Street Digital and the services you offer.

Knowles: Kelly Street Digital is a self-funded startup company with eight employees, six of whom are developers. We created Campaign Taxi, an application available by subscription that helps customers track consumer interactions across multiple marketing campaigns. Designed for advertising and marketing agencies, it helps customers set up digital campaigns, add functionality to their websites, store consumer information in a single database, and present the data in reports.

MSDN: What was the biggest challenge Kelly Street Digital faced prior to implementing Campaign Taxi on the Windows Azure platform?

Knowles: The Campaign Taxi beta application resided on Amazon Web Services for seven months. We had to hire consultants to manage the cloud environment and the database servers. Not only was it expensive to hire consultants, but it was unreliable because they sometimes had conflicting priorities and they lived in different time zones. In December 2009, a few days before the Christmas holiday, the instance of Campaign Taxi in the Amazon cloud stopped running. Our consultant was on vacation in Paris, France. I couldn't call Amazon, and they provided no support options. The best we could do was post on the developer forum. When you rely on the developer community for support, you can't rely on them at Christmas time because they're on holiday. We needed a more reliable cloud solution.

MSDN: Describe the solution you built with the Windows Azure platform?

Knowles: We did a two-week pilot program with Campaign Taxi on the Windows Azure platform. The first thing we noticed was that the response time of the application was significantly faster than it had been with Amazon Web Services. It took one developer three weeks to migrate the application to Windows Azure. We sent our lead developer to a four-hour training through Microsoft BizSpark, and then he quickly wrote a script that ported the application's relational database to SQL Azure. The migration from Microsoft SQL Server to Microsoft SQL Azure was quite straightforward because they're very similar. We use Blob storage to import consumer data, temporarily store customers' uploaded data files, and store backup instances of SQL Azure.

The Campaign Taxi application aggregates consumer interactions during marketing campaigns.

MSDN: What benefits have you seen since implementing the Windows Azure platform?

Knowles: We paid U.S.$4,970 each month for Amazon Web Services; the cost of subscribing to the Windows Azure platform is only 16 percent of that cost ($795 a month) for the same configuration. I can use the annual cost savings to pay a developer's salary. Also, Campaign Taxi runs much faster on the Windows Azure platform. For that kind of improved latency, we would have paid a premium. Windows Azure is an unbelievable product. I'm an evangelist for it in my network of startups. We've chosen this cloud platform and we're sticking with it.
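The figures quoted above are easy to check. A quick sketch in Python, using only the two monthly amounts from the interview; the variable names are illustrative, and the annual figure simply assumes twelve identical months, which the interview does not state.

```python
aws_monthly = 4970     # U.S. dollars per month, as quoted in the interview
azure_monthly = 795    # U.S. dollars per month, as quoted in the interview

ratio = azure_monthly / aws_monthly
monthly_saving = aws_monthly - azure_monthly
annual_saving = monthly_saving * 12   # assumption: twelve identical months

print(f"Azure cost as a share of the AWS cost: {ratio:.0%}")
print(f"Monthly saving: ${monthly_saving:,}")
print(f"Annual saving:  ${annual_saving:,}")
```

The ratio rounds to the 16 percent Knowles cites, and the monthly difference of $4,175 works out to roughly $50,000 a year under that assumption.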

- Read the full story at: http://www.microsoft.com/casestudies/casestudy.aspx?casestudyid=4000009046

- To read more Windows Azure customer success stories, visit: www.windowsazure.com/evidence

Steve Plank (@plankytronixx) posted London scrubs up to a high gloss finish with Windows Azure and Windows Phone 7 on 3/11/2011:

The Mayor of London is an entertaining fellow. A regular on the TV news quiz “Have I Got News For You” (the clip is very funny…).

Here he presents a Windows Phone 7 app using Windows Azure at the back end which allows Londoners to report graffiti, rubbish dumping and so on. Of course the local council get a photo of the offending eyesore, plus its location.

Windows Azure and Windows Phone 7 app to clean up London

It’s a great example of that H+S+S (Hardware + Software + Services) caper that keeps raising its head. What I like about this is that it’s pretty difficult to forecast the likely success (as someone once said – “forecasting is a very difficult job. Especially if it’s about the future…”) of this. Will Londoners completely ignore it, or will they take to it like ducks to water? It’s one of those 4 key Windows Azure workloads.

Whether it’s a storming success with tens of thousands of graffiti reports per hour coming in from all corners of London, or just 2 or 3 reports – the local council can scale this to give whatever citizens use it a great experience. In that sense, I think it means government departments and local authorities can take chances on things they’d have never considered before because the cost of building an infrastructure for an idea that may never be successful is now so low.

Wade Wegner (@WadeWegner) posted Cloud Cover Episode 39 - Dynamically Deploying Websites in a Web Role on 3/11/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show @CloudCoverShow.

In this episode, Steve and Wade are joined by Nate Totten—a developer from Thuzi—as they look at a solution for dynamically deploying websites in a Windows Azure Web Role. This is a slick solution that you can use to zip up your website and drop it into storage, and a service running in your Web Role then picks it up and programmatically sets up the website in IIS—all in less than 30 seconds.

Reviewing the news, Wade and Steve:

- Highlight the release of Windows Azure SDK 1.4, updates to the Windows Azure Management Portal, CDN, and the Windows Azure Connect CTP Refresh.

- Cover the release of David Pallmann's book The Windows Azure Handbook, Volume 1: Strategy & Planning.

- Mention that the production Windows Azure AppFabric Portal may show an error message. Note: By show release, this has been resolved by updates to the portal.

Multitenant Windows Azure Web Roles with Live Deployments

Windows Azure Cloud Package Download and sample site

MultiTenantWebRole NuGet Package

Andy Cross (@andybareweb) described Implementing Azure Diagnostics with SDK V1.4 in a 3/11/2011 post:

The Windows Azure Diagnostics infrastructure has a good set of options available to activate in order to diagnose potential problems in your Azure implementation. Once you are familiar with the basics of how Diagnostics work in Windows Azure, you may wish to move on to configuring these options and gaining further insight into your application’s performance.

This post is an update to the previous post Implementing Azure Diagnostics with SDK v1.3. If you are interested in upgrading from 1.3 to 1.4, it should be noted that there are NO BREAKING CHANGES between the two.

Here is a cheat-sheet table that I have built up of the ways to enable the Azure Diagnostics using SDK 1.4. This cheat-sheet assumes that you have already built up a DiagnosticMonitorConfiguration instance named “config”, with code such as the below. This code may be placed somewhere like the “WebRole.cs” “OnStart” method.

    string wadConnectionString = "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString";
    CloudStorageAccount storageAccount = CloudStorageAccount.Parse(RoleEnvironment.GetConfigurationSettingValue(wadConnectionString));

    RoleInstanceDiagnosticManager roleInstanceDiagnosticManager = storageAccount.CreateRoleInstanceDiagnosticManager(RoleEnvironment.DeploymentId, RoleEnvironment.CurrentRoleInstance.Role.Name, RoleEnvironment.CurrentRoleInstance.Id);
    DiagnosticMonitorConfiguration config = DiagnosticMonitor.GetDefaultInitialConfiguration();

Note that although roleInstanceDiagnosticManager has not yet been used, it will be later.

Alternatively, and if you wish to configure Windows Azure Diagnostics at the start and then modify its configuration later in the execution lifecycle without having to repeat yourself, you can use “RoleInstanceDiagnosticManager.GetCurrentConfiguration()”. It should be noted that if you use this approach in the OnStart method, your IISLogs will not be configured, so you should use the previous option.

    string wadConnectionString = "Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString";
    CloudStorageAccount storageAccount = CloudStorageAccount.Parse(RoleEnvironment.GetConfigurationSettingValue(wadConnectionString));

    RoleInstanceDiagnosticManager roleInstanceDiagnosticManager = storageAccount.CreateRoleInstanceDiagnosticManager(RoleEnvironment.DeploymentId, RoleEnvironment.CurrentRoleInstance.Role.Name, RoleEnvironment.CurrentRoleInstance.Id);
    DiagnosticMonitorConfiguration config = roleInstanceDiagnosticManager.GetCurrentConfiguration();

The difference between the two approaches is the final line, the instantiation of the variable config.

If you debug both, you’ll find that config.Directories.DataSources has 3 items in its collection for the first set of code, and only 1 for the second. In brief this means that the first can support crashdumps, IIS logs and IIS failed requests, whereas the second can only support crashdumps. This difference is a useful indication of what this config.Directories.DataSources collection is responsible for – it is a list of paths (and other metadata) that Windows Azure Diagnostics will transfer to Blob Storage.

Furthermore, once you have made changes to the initial set of config data (choosing either of the above techniques), it is best practice to use the second approach for any later change; otherwise you will always overwrite the changes that you have already made.
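The overwrite risk is easier to see in a toy model. The Python below is not the Windows Azure Diagnostics API; `ToyDiagnosticManager` and its methods are made-up stand-ins that only mimic the idea of a default configuration versus a stored current one.

```python
import copy

# Toy stand-in for illustration only; this is NOT the Windows Azure Diagnostics API.
_DEFAULTS = {
    "directories": ["crash dumps", "IIS logs", "IIS failed requests"],
    "log_transfer_minutes": 0,
    "event_logs": [],
}


class ToyDiagnosticManager:
    """Holds one 'current' configuration, the way a role instance would."""

    def __init__(self):
        self._current = copy.deepcopy(_DEFAULTS)
        self._current["directories"] = ["crash dumps"]

    def get_default_initial_configuration(self):
        return copy.deepcopy(_DEFAULTS)

    def get_current_configuration(self):
        return copy.deepcopy(self._current)

    def set_current_configuration(self, config):
        self._current = copy.deepcopy(config)


manager = ToyDiagnosticManager()

# First change: start from the defaults and turn on log transfer.
config = manager.get_default_initial_configuration()
config["log_transfer_minutes"] = 1
manager.set_current_configuration(config)

# Later change, done the risky way: starting from the defaults again
# silently drops the log transfer setting made above.
from_defaults = manager.get_default_initial_configuration()
from_defaults["event_logs"].append("System!*")

# Later change, done the safer way: start from whatever is current.
from_current = manager.get_current_configuration()
from_current["event_logs"].append("System!*")

print(from_defaults["log_transfer_minutes"])  # 0
print(from_current["log_transfer_minutes"])   # 1
```

Saving `from_defaults` would discard the earlier transfer setting, while `from_current` carries it forward alongside the new event log source.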

Data source: Windows Azure logs
Storage format: Table
How to enable:

    config.Logs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1D);
    config.Logs.ScheduledTransferLogLevelFilter = LogLevel.Undefined;

Data source: IIS 7.0 logs
Storage format: Blob
How to enable: Collected by default, simply ensure Directories are transferred

    config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(1D);

Data source: Windows Diagnostic infrastructure logs
Storage format: Table
How to enable:

    config.DiagnosticInfrastructureLogs.ScheduledTransferLogLevelFilter = LogLevel.Warning;
    config.DiagnosticInfrastructureLogs.ScheduledTransferPeriod = TimeSpan.FromMinutes(1D);

Data source: Failed Request logs
Storage format: Blob
How to enable: Add this to Web.Config and ensure Directories are transferred

    <tracing>
      <traceFailedRequests>
        <add path="*">
          <traceAreas>
            <add provider="ASP" verbosity="Verbose" />
            <add provider="ASPNET" areas="Infrastructure,Module,Page,AppServices" verbosity="Verbose" />
            <add provider="ISAPI Extension" verbosity="Verbose" />
            <add provider="WWW Server" areas="Authentication,Security,Filter,StaticFile,CGI,Compression,Cache,RequestNotifications,Module" verbosity="Verbose" />
          </traceAreas>
          <failureDefinitions statusCodes="400-599" />
        </add>
      </traceFailedRequests>
    </tracing>

Code:

    config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(1D);

Data source: Windows Event logs
Storage format: Table
How to enable:

    config.WindowsEventLog.DataSources.Add("System!*");
    config.WindowsEventLog.DataSources.Add("Application!*");
    config.WindowsEventLog.ScheduledTransferPeriod = TimeSpan.FromMinutes(1D);

Data source: Performance counters
Storage format: Table
How to enable:

    PerformanceCounterConfiguration procTimeConfig = new PerformanceCounterConfiguration();
    procTimeConfig.CounterSpecifier = @"\Processor(*)\% Processor Time";
    procTimeConfig.SampleRate = System.TimeSpan.FromSeconds(1.0);
    config.PerformanceCounters.DataSources.Add(procTimeConfig);

Data source: Crash dumps
Storage format: Blob
How to enable:

    CrashDumps.EnableCollection(true);

Data source: Custom error logs
Storage format: Blob
How to enable: Define a local resource.

    LocalResource localResource = RoleEnvironment.GetLocalResource("LogPath");

Use that resource as a path to copy to a specified blob

    config.Directories.ScheduledTransferPeriod = TimeSpan.FromMinutes(1.0);
    DirectoryConfiguration directoryConfiguration = new DirectoryConfiguration();
    directoryConfiguration.Container = "wad-custom-log-container";
    directoryConfiguration.DirectoryQuotaInMB = localResource.MaximumSizeInMegabytes;
    directoryConfiguration.Path = localResource.RootPath;
    config.Directories.DataSources.Add(directoryConfiguration);

After you have done this, remember to set the Configuration back for use, otherwise all of your hard work will be for nothing!

    roleInstanceDiagnosticManager.SetCurrentConfiguration(config);

This completes the setup of Windows Azure Diagnostics for your role. If you are using a VM role or are interested in another approach, you can read this blog on how to use diagnostics.wadcfg.

Avkash Chauhan explained Handling Error while upgrading ASP.NET WebRole from .net 3.5 to .net 4.0 - The configuration section 'system.web.extensions' cannot be read because it is missing a section declaration in a 3/10/2011 post:

After you have upgraded your Windows Azure application, which includes an ASP.NET WebRole, from .net 3.5 to .net 4.0 (with Windows Azure SDK 1.2, 1.3 or 1.4), it is possible that your role will be stuck in the Busy or Starting state.

If you are using Windows Azure SDK 1.3 or later, you have the ability to access your Windows Azure VM over RDP. Once you RDP to your VM and launch the internal HTTP endpoint in a web browser, you may see the following error:

    Server Error
    Internet Information Services 7.0

    Error Summary
    HTTP Error 500.19 - Internal Server Error
    The requested page cannot be accessed because the related configuration data for the page is invalid.

    Detailed Error Information
    Module           IIS Web Core
    Notification     Unknown
    Handler          Not yet determined
    Error Code       0x80070032
    Config Error     The configuration section 'system.web.extensions' cannot be read because it is missing a section declaration
    Config File      \\?\E:\approot\web.config
    Requested URL    http://<Internal_IP>:<Internal_port>/
    Physical Path
    Logon Method     Not yet determined
    Logon User       Not yet determined
    Failed Request Tracing Log Directory    C:\Resources\directory\<Deployment_Guid>.Website.DiagnosticStore\FailedReqLogFiles

    Config Source
    277: </system.web>
    278: <system.web.extensions>
    279: <scripting>

Web.config will have the system.web.extensions section as below:

    <system.web.extensions>
      <scripting>
        <scriptResourceHandler enableCompression="true" enableCaching="true"/>
      </scripting>
    </system.web.extensions>

You can also verify that ApplicationHost.config has extensions defined as below:

%windir%\system32\inetsrv\config\ApplicationHost.config

    <add name="ScriptModule-4.0" type="System.Web.Handlers.ScriptModule, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" preCondition="managedHandler,runtimeVersionv4.0" />

To solve this problem you will need to add the following in <configSections> section in your web.config:

    <sectionGroup name="system.web.extensions" type="System.Web.Configuration.SystemWebExtensionsSectionGroup, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35">
      <sectionGroup name="scripting" type="System.Web.Configuration.ScriptingSectionGroup, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35">
        <section name="scriptResourceHandler" type="System.Web.Configuration.ScriptingScriptResourceHandlerSection, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" requirePermission="false" allowDefinition="MachineToApplication"/>
        <sectionGroup name="webServices" type="System.Web.Configuration.ScriptingWebServicesSectionGroup, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35">
          <section name="jsonSerialization" type="System.Web.Configuration.ScriptingJsonSerializationSection, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" requirePermission="false" allowDefinition="Everywhere"/>
          <section name="profileService" type="System.Web.Configuration.ScriptingProfileServiceSection, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" requirePermission="false" allowDefinition="MachineToApplication"/>
          <section name="authenticationService" type="System.Web.Configuration.ScriptingAuthenticationServiceSection, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" requirePermission="false" allowDefinition="MachineToApplication"/>
          <section name="roleService" type="System.Web.Configuration.ScriptingRoleServiceSection, System.Web.Extensions, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" requirePermission="false" allowDefinition="MachineToApplication"/>
        </sectionGroup>
      </sectionGroup>
    </sectionGroup>
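Before redeploying, it can help to confirm that a web.config both uses the section and declares it. The following Python is a minimal sketch under stated assumptions: the helper names `uses_section` and `declares_section` are hypothetical, the file is assumed to have no XML namespace on its root element, and the check looks only at the web.config text itself, not at anything machine.config or ApplicationHost.config may already declare.

```python
import xml.etree.ElementTree as ET

SECTION = "system.web.extensions"


def uses_section(web_config_xml: str, name: str = SECTION) -> bool:
    """True if the configuration root has a direct child element called `name`."""
    root = ET.fromstring(web_config_xml)
    return any(child.tag == name for child in root)


def declares_section(web_config_xml: str, name: str = SECTION) -> bool:
    """True if <configSections> holds a section or sectionGroup named `name`."""
    root = ET.fromstring(web_config_xml)
    config_sections = root.find("configSections")
    if config_sections is None:
        return False
    return any(
        child.tag in ("section", "sectionGroup") and child.get("name") == name
        for child in config_sections
    )
```

A file for which `uses_section` is true and `declares_section` is false matches the situation in the error above.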

PRNewswire reported Matco Tools implementing the Virtual Inventory Cloud (VIC) solution from GCommerce, which is built on the Microsoft Azure platform in a 3/9/2011 press release:

A major market leader in the tools & equipment aftermarket is taking a new cloud-based solution to market. Matco Tools, a manufacturer and distributor of professional automotive equipment, is implementing the Virtual Inventory Cloud (VIC) solution from GCommerce, which is built on the Microsoft Azure platform. [Emphasis added.]

“Special Orders continue to increase due to the continuous expansion of part numbers, and the industry cannot afford to continue throwing people at a broken process,” said Steve Smith, President & CEO of GCommerce. “Our partnership with Matco Tools will lead to further improvements in VIC for the entire automotive aftermarket industry and others like it.”

VIC™ enables automation of the special/drop-ship ordering process between distributors/retailers and manufacturers/suppliers, allowing both parties to capture sales opportunities and streamline a slow manual process. GCommerce has emerged as the leading provider of a B2B solution for the automotive aftermarket by providing Software as a Service (SaaS) using elements of cloud-based technologies to automate procurement and purchasing for national retailers, wholesalers, program groups and their suppliers.

Windows Azure and Microsoft SQL Azure enable VIC to be a more efficient trading system capable of handling tens of millions of transactions per month for automotive part suppliers. GCommerce’s VIC implementation with support from Microsoft allows suppliers to create a large-scale virtual data warehouse that eliminates manual processes and leverages a technology-based automation system that empowers people. This platform is designed to work with existing special order solutions and technologies, and has potential to transform distribution supply chain transactions and management across numerous industries.

“VIC is a top line and bottom line solution for Matco and its suppliers, enabling both increased revenue opportunities and process cost savings. It’s all about speed and access to information,” said Mike Smith, VP Materials, Engineering and Quality of Matco Tools. “There is a growing concern around drop-ship special orders, which is why we are approaching the issue with a solution that fits the industry at large, as well as Matco individually.”

Currently, it can be time-consuming and inefficient for retailers and distributors when tracking down a product for a customer. With VIC, information required to quote a special order can be captured in seconds vs. the minutes, hours, and sometimes days delay of decentralized manual methods.

About GCommerce

GCommerce, founded in 2000, is a leading provider of Software-as-a-Service (SaaS) technology solutions designed to streamline distribution supply chain operations. Their connectivity solutions facilitate real-time, effective information sharing between incompatible business systems and technologies, enabling firms to improve revenue, operational efficiencies, and profitability. For more information, contact GCommerce at (515) 288-5850 or info(at)gcommerceinc(dot)com.

About Matco Tools

Matco Tools is a manufacturer and distributor of quality professional automotive equipment, tools and toolboxes. Its product line now numbers more than 13,000 items. Matco also guarantees and services the equipment it sells. Matco Tools is a subsidiary of Danaher Corporation, a Fortune 500 company and key player in several industries, including tools, environmental and industrial process and control markets.

<Return to section navigation list>

Visual Studio LightSwitch

Michael Otey asserted “Microsoft's new development environment connects to SQL Server and more” in a deck for his FAQs about Visual Studio LightSwitch article of 3/10/2011 for SQL Server Magazine:

Providing a lightweight, easy-to-access application development platform is a challenge. Microsoft had the answer at one time with Visual Basic (VB). However, as VB morphed from VB 6 to VB.NET, the simplicity and ease of development was lost. Visual Studio (VS) LightSwitch is Microsoft’s attempt to create an easy-to-use development environment that lets non-developers create data-driven applications. Whether it succeeds is still up in the air. Here are answers to frequently asked questions about LightSwitch.

1. What sort of projects does LightSwitch create?

LightSwitch lets you create Windows applications (two-tiered or three-tiered) and browser-based Silverlight applications. Two-tiered applications are Windows Communication Foundation (WCF) applications that run on the Windows desktop. Three-tiered applications are WCF applications that connect to IIS on the back end. LightSwitch desktop applications can access local system resources. LightSwitch web applications connect to IIS and can’t access desktop resources.

2. I heard Silverlight was dead. Why does LightSwitch generate Silverlight apps?

Like Mark Twain’s famous quote, the rumors of Silverlight’s death are greatly exaggerated. People talk about Silverlight being replaced by HTML 5, but Silverlight is meant to work with HTML and is designed to do the things that HTML doesn’t do well. Silverlight will continue to be a core development technology for the web, Windows, and Windows Phone.

3. How is LightSwitch different from WebMatrix or Visual Web Developer Express?

All three can be used to develop web applications. However, WebMatrix and Visual Web Developer Express are code-centric tools that help code web applications written in VB or C#. LightSwitch is an application generator that lets you build working applications with no coding required.

4. Can LightSwitch work with other databases besides SQL Server?

The version of LightSwitch that’s currently in beta connects to SQL Server 2005 and later and to SQL Azure. However, LightSwitch applications at their core are .NET applications, and the released version of LightSwitch should be able to connect to other databases where there’s a .NET data provider.

5. Is it true that LightSwitch doesn’t have a UI designer?

Yes. Microsoft development has taken a misguided (in my opinion) route, thinking templates are an adequate substitute for screen design. LightSwitch doesn’t have a visual screen designer or control toolbox. Instead, when you create a new window, which LightSwitch calls a Screen, you select from several predefined templates. It does offer a Customize Screen button that lets you perform basic tasks like renaming and reordering items on the screen.

6. Can you modify applications created by LightSwitch?

Yes. LightSwitch generates standard VS web projects that you can modify. Open the LightSwitch .lsproj project file or .sln solution file in one of the other editions of VS, then you can modify the project.

7. Will VS LightSwitch be free?

Microsoft hasn’t announced the pricing for LightSwitch; however, it’s not likely to be free. That said, as with other members of the VS family, the applications that you create with LightSwitch will be freely distributable, with no runtime charges or licensing required to execute them.

8. Where can I get LightSwitch?

LightSwitch is currently in beta. To find out more about LightSwitch and download the free beta go to the Visual Studio LightSwitch website.

I agree that lack of a UI designer for LightSwitch is misguided, but I doubt if it will gain one at this point. According to Microsoft’s “Soma” Somasegar, Beta 2 of LightSwitch is due “in the coming weeks.”

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

•• David Linthicum asked Does SOA Solve Integration? in a 3/13/2011 post to ebizQ’s Where SOA Meets cloud blog:

Loraine Lawson wrote a compelling blog post: "Did SOA Deliver on Integration Promises?" Great question.

"So did SOA solve integration? No. But then again, no one ever promised you that. As Neil observes, we'll probably never see a 'turnkey enterprise integration solution,' but that's probably a good thing - after all, organizations have different needs, and such a solution would require an Orwellian-level of standardization."

The fact of the matter is that SOA and integration are two different, but interrelated concepts. SOA is a way of doing architecture, where integration may be a result of that architecture. SOA does not set out to do integration, but it may be a byproduct of SOA. Confused yet?

Truth be told, integration is a deliberate approach, not a byproduct. Thus, you need to have an integration strategy and architecture that's a part of your SOA, and not just a desired outcome. You'll never get there, trust me.

The issue is that there are two architectural patterns at play here.

First, is the objective to externalize both behavior and data as sets of services that can be configured and reconfigured into solutions. That's at the heart of SOA, and the integration typically occurs within the composite applications and processes that are created from the services.

Second, is the objective to replicate information from source to target systems, making sure that information is shared between disparate applications or complete systems, and that the semantics are managed. This is the objective of integration, and was at the heart of the architectural pattern of EAI.

Clearly, integration is a deliberate action and thus has to be dealt with within architecture, including SOA. Thus, SOA won't solve your integration problems; you have to address those directly.
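The two patterns described above can be contrasted in a minimal Python sketch. Everything in it is a hypothetical illustration rather than anything from Linthicum's post: the service functions, the `FIELD_MAP` field names and the sample data are all assumed. The first half composes services into a solution, the second replicates records from a source to a target while mapping field semantics explicitly.

```python
from typing import Dict, List

# Pattern 1 (SOA): behavior and data are externalized as services that
# are composed into a solution. These service names are hypothetical.
def lookup_customer(customer_id: str) -> Dict[str, str]:
    return {"id": customer_id, "name": "Example Co"}

def quote_order(customer: Dict[str, str], part: str) -> Dict[str, str]:
    return {"customer": customer["name"], "part": part, "status": "quoted"}

def composite_quote(customer_id: str, part: str) -> Dict[str, str]:
    # Any integration here happens inside the composite application.
    return quote_order(lookup_customer(customer_id), part)

# Pattern 2 (integration, EAI style): records are replicated from a
# source system to a target system, and the semantic mapping between
# their field names is managed explicitly. The mapping is hypothetical.
FIELD_MAP = {"cust_no": "id", "cust_nm": "name"}

def replicate(source_rows: List[Dict[str, str]],
              target: List[Dict[str, str]]) -> List[Dict[str, str]]:
    for row in source_rows:
        # Copy only mapped fields, renamed to the target's vocabulary.
        target.append({FIELD_MAP[k]: v for k, v in row.items() if k in FIELD_MAP})
    return target
```

In the first half nothing is copied between systems, while in the second the mapping table is itself a deliberate design artifact, which is the sense in which integration has to be addressed directly.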

•• Ernest Mueller (@ernestmueller) reported a DevOps at CloudCamp at SXSWi! meetup in a 3/12/2011 post:

Isn’t that some 3l33t jargon. Anyway, Dave Nielsen is holding a CloudCamp here in Austin during SXSW Interactive on Monday, March 14 (followed by the Cloudy Awards on March 15) and John Willis of Opscode and I reached out to him, and he has generously offered to let us have a DevOps meetup in conjunction.

John’s putting together the details but is also traveling, so this is going to be kind of emergent. If you’re in Austin (normally or just for SXSW) and are interested in cloud, DevOps, etc., come on out to the CloudCamp (it’s free and no SXSW badge required) and also participate in the DevOps meetup!

In fact, if anyone’s interested in doing short presentations or show-and-tells or whatnot, please ping John (@botchagalupe) or me (@ernestmueller)!

• Bill Zack added another positive review of David Pallmann’s first volume in a Mapping your Move to the Windows Azure Platform post of 3/12/2011:

The Windows Azure Handbook, Volume 1 by David Pallmann has just been published. It is the most up-to-date work on Windows Azure that I have seen so far. It is available in print or you can get it as an eBook at Amazon.com.

Volume 1 is on Planning and Strategy. Subsequent volumes will cover Architecture, Development and Management.

This is an excellent book for anyone evaluating and planning for Windows Azure. It also provides an excellent comparison of several other public cloud services including Amazon Web Services and Google AppEngine.

One of its strengths is detailed documentation of a complete methodology for evaluating the technical and business reasons for determining which of your applications should be moved to the cloud first.

Tad Anderson published a Book Review: Microsoft Azure Enterprise Application Development by Richard J. Dudley and Nathan Duchene on 3/11/2011, calling the title a “Nice concise overview of Microsoft Azure:”

I have not had any clients express interest in the cloud yet. They are not willing to hear about giving up the control over their environments which is a stigma the cloud conversation carries with it. I still wanted to know what is going on with Azure, without having to spend 2 months mulling through a tome. This book was the perfect size and depth for getting me up to speed quickly.

The book is intended to give you enough information to decide whether or not further movement toward the cloud is something you want to do, and it does that perfectly. The book starts off with an overview of cloud computing, an introduction to Azure, and covers setting up a development environment. After that the rest of the book designs and builds a sample application which is used to introduce the key components of Microsoft Azure.

The book has a chapter on each of the following topics: Azure Blob Storage, Azure Table Storage, Queue Storage, Web Role, Web Services and Azure, Worker Roles, Local Application for Updates, Azure AppFabric, Azure Monitoring and Diagnostics, and Deploying to Windows Azure.

Most chapters introduce the topic and then show a working example. The others, which just describe the topic, cover it in enough detail that you have a good understanding of it, and they provide good references if you want to dig deeper.

The book did a really good job covering the different types of services and different types of storage available. It also did a great job of describing the differences in SQL Server and SQL Azure.

All in all I thought this book did exactly what it set out to do. It provided me with enough information that I now feel like I know what Microsoft Azure is all about.

I highly recommend this book to anyone who wants an introduction to the Microsoft Azure platform.

Steve Plank (@plankytronixx) recommended Don’t Kill the Messenger: Explaining to the Board how Planning for the Cloud will Help the Business in a detailed 3/11/2011 post:

What we need, in our dual-roles as both technologists and business-analysts, is a sort of kit bag of tools we can use to explain in simple, low-jargon, understandable language to, say, the CIO or other C-level executive of a business, how a move to the cloud will benefit the organisation.

It’s a problem more of communication than anything. They’ve all seen the latest, greatest things come and go like fashion. There’s no doubt about the debate and hubbub around cloud technologies, but it’s pretty confusing.

It’s like when you take a family holiday. You get back after 2 weeks in the sun and perhaps buy a newspaper at the airport and you realise “whoa – a lot of stuff has been going on”. You come straight in to the middle of 1 or 2 big news stories and because you weren’t there at the start, it’s confusing.

That’s what I mean when I say the cloud is confusing to non-technology audiences. There’s a lot of buzz about it in business circles and even in the mainstream media. But they are coming in to the middle of the story and it’s like a news story. It’s an evolving feast. There is no definitive story. It’s not like looking up Newton’s laws of physics or Darwin’s theories of evolution. In every business-focussed cloud presentation I’ve ever seen, there’s an attempt at a definitive explanation of what the cloud is. They try to distil it in to one or two sentences. These things often end up looking like company mission statements. I’ve seen some of these mission statements that are so far up their own backsides they can see daylight at the other end. “Cloud Computing is the Egalitarianism of a Scalable IT Infrastructure”. Well, yes it is, but it’s more than just that – and does the language help somebody who has come along to find out what it really means? Hmmm – I’m doubtful. Perhaps the eyes of the CIO who has just had his budgets slashed glazed over at this point. Perhaps the CEO has no idea what a “Scalable IT Infrastructure” is or even why his car-manufacturing business needs one.

I personally think the notion of trying to reduce a description of cloud computing to the most compact statement you can create just leads to more misunderstanding.

However, when you talk to technologists like us – we can all identify with the 4 key workloads that are very suitable to cloud computing.

As technologists we can all immediately relate to these diagrams of load/time. These are not the only uses for the cloud, but they are the key uses. However, the C-level audience rapidly starts to lose interest. They have to have the stories associated with these diagrams, before they get to see the diagrams. Showing the diagrams after we’ve told the stories gives us credibility because it proves we’ve done our homework.