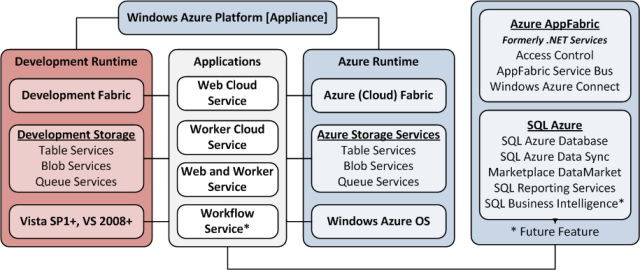

Windows Azure and Cloud Computing Posts for 3/8/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Wes Yanaga reported New Windows Azure Videos on MSDEV in a 3/8/2011 post to the US ISV Evangelism blog:

There are three new videos posted to MSDEV related to Windows Azure.

Windows Azure Drives - Demonstrates how to create and use a Windows Azure Drive, which lets you mount a VHD as an NTFS volume, exposed as a local drive letter to an Azure application, so legacy applications that expect to access data from a local drive can be migrated to Azure with minimal code changes.

Windows Azure Storage: Tables - This screencast shows you how to get started with Windows Azure storage tables, including how to create tables and add, edit, or delete data.

Windows Azure Connect - Describes Connect, a feature of Windows Azure that lets you establish a secure virtual network connection between your local, on-premises resources and cloud resources, using standard IP protocols. Includes a demonstration of using Connect to allow an application running in the cloud to access a SQL Server database running on a local server.

If you are interested in giving Windows Azure a free test drive, please visit this link and use Promo Code: DPWE01

<Return to section navigation list>

SQL Azure Database and Reporting

Liam Cavanagh (@liamca) posted SQL Azure Data Sync Update on 3/8/2011:

It’s been a while since we announced SQL Azure Data Sync CTP2 and I wanted to provide an update on how CTP2 is progressing as well as an update on the service in general.

Late last year at PDC we announced CTP2 and started accepting registrations for access to the preview release. In mid-December we started providing access to the service and have continued to work our way through the registrations, adding more users. We recently made a few updates to the service as a direct result of feedback we’ve received: bug fixes and a number of usability enhancements.

We’ve seen huge interest in CTP2, so much so that we may not be able to give everyone who registered access to the preview. We are going to continue to process registrations throughout March, but for those of you that do not get access we will be releasing CTP3 this summer and will make that preview release open to everyone. Feel free to continue to register for CTP2 as that will allow us to email you as soon as CTP3 becomes available.

While on the subject of CTP3, here are some of the new features planned:

A new user interface integrated with the Windows Azure portal.

The ability to select a subset of columns to sync as well as to specify a filter so that only a subset of rows are synced.

The ability to make certain schema changes to the database being synced without having to re-initialize the sync group and re-sync the data.

The ability to specify a conflict resolution policy.

General usability enhancements.

CTP3 will be the last preview before the final release later this year. We’ll announce the release dates on this blog when available.

Finally, here are some links that may be helpful:

To ask questions, report problems and provide feedback on SQL Azure Data Sync CTP2, use the SQL Azure forum: http://social.msdn.microsoft.com/forums/en-US/ssdsgetstarted/threads/

To submit new feature requests or vote on existing feature requests: http://www.mygreatwindowsazureidea.com/forums/44459-sql-azure-data-sync-feature-voting

To register for access to CTP2 and be notified about CTP3: http://go.microsoft.com/fwlink/?LinkID=204776&clcid=0x409

<Return to section navigation list>

Marketplace DataMarket and OData

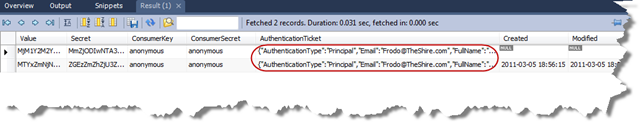

Azret Botash continued his series with OData and OAuth - Part 5 – OAuth 1.0 Service Provider in ASP.NET MVC (with XPO as the data source) on 3/7/2011:

Implementing an OAuth service provider is not a difficult task once you select an OAuth library that you like. The one I am going to be using is the one I like the most. It’s simple to use and gives me a lot of freedom when it comes to token management.

The authentication flow should already be familiar from our experience as an OAuth consumer.
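Both sides of this flow rely on the OAuth 1.0 request signature. The consumer signs each request, and the provider recomputes the signature and compares it. The following is a minimal, self-contained sketch of HMAC-SHA1 signing as the OAuth 1.0 spec describes it. It is not the API of the library used in this post, and the `OAuth10Signature` class and its method names are hypothetical. It also assumes the caller passes an already-normalized base URL (lowercase scheme and host, no default port, no query string) and supplies every query, form and `oauth_*` parameter as a name/value pair.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Security.Cryptography;
using System.Text;

// Hypothetical helper illustrating OAuth 1.0 HMAC-SHA1 signing; not the library's API.
public static class OAuth10Signature
{
    // RFC 3986 percent-encoding as OAuth 1.0 requires: unreserved characters
    // (A-Z a-z 0-9 - . _ ~) pass through, every other UTF-8 byte becomes %XX.
    public static string PercentEncode(string value)
    {
        var sb = new StringBuilder();
        foreach (byte b in Encoding.UTF8.GetBytes(value ?? ""))
        {
            char c = (char)b;
            bool unreserved = (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z')
                || (c >= '0' && c <= '9') || c == '-' || c == '.' || c == '_' || c == '~';
            if (unreserved)
                sb.Append(c);
            else
                sb.Append('%').Append(b.ToString("X2"));
        }
        return sb.ToString();
    }

    // Signature base string: METHOD&encoded(base URL)&encoded(normalized parameters).
    public static string BaseString(string httpMethod, string normalizedBaseUrl,
        IEnumerable<KeyValuePair<string, string>> parameters)
    {
        // Encode each name and value, drop any oauth_signature, then sort by
        // encoded name and, for duplicate names, by encoded value.
        IEnumerable<string> pairs = parameters
            .Where(p => p.Key != "oauth_signature")
            .Select(p => new { Name = PercentEncode(p.Key), Value = PercentEncode(p.Value) })
            .OrderBy(p => p.Name, StringComparer.Ordinal)
            .ThenBy(p => p.Value, StringComparer.Ordinal)
            .Select(p => p.Name + "=" + p.Value);

        return httpMethod.ToUpperInvariant()
            + "&" + PercentEncode(normalizedBaseUrl)
            + "&" + PercentEncode(string.Join("&", pairs));
    }

    // HMAC-SHA1 keyed with encoded(consumer secret)&encoded(token secret);
    // the token secret is empty (or null) when asking for a request token.
    public static string Sign(string baseString, string consumerSecret, string tokenSecret)
    {
        string key = PercentEncode(consumerSecret) + "&" + PercentEncode(tokenSecret);
        using (var hmac = new HMACSHA1(Encoding.ASCII.GetBytes(key)))
        {
            byte[] hash = hmac.ComputeHash(Encoding.ASCII.GetBytes(baseString));
            return Convert.ToBase64String(hash);
        }
    }

    // Provider side: compare recomputed and received signatures in constant time.
    public static bool SignaturesMatch(string expected, string received)
    {
        if (expected == null || received == null || expected.Length != received.Length)
            return false;
        int diff = 0;
        for (int i = 0; i < expected.Length; i++)
            diff |= expected[i] ^ received[i];
        return diff == 0;
    }
}
```

The constant-time comparison avoids leaking, through response timing, how many leading characters of a forged signature were correct.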

Let’s follow the spec. From section 6 - Authenticating with OAuth of the 1.0 spec:

Service Provider Grants Request Token

Typically, you would write an IHttpHandler for handling OAuth requests in ASP.NET. I find that it’s much cleaner to have them go through the MVC Controller.

```csharp
public ActionResult GetRequestToken()
{
    Response response = ServiceProvider.GetRequestToken(
        Request.HttpMethod,
        Request.Url);

    this.Response.StatusCode = response.StatusCode;
    return Content(response.Content, response.ContentType);
}
```

Behind the scenes, ServiceProvider.GetRequestToken() parses the query parameters and validates the signature of the request based on the specified signing method. The specified consumer key is validated using a ConsumerStore object that we can configure in Web.config.

```xml
<section name="oauth.consumerTokenStore" type="BankOfShire.OAuth.ConsumerTokenStore" />
```

The default implementation accepts anonymous/anonymous, but it’s a good idea to require consumer registration and issue consumer keys and secrets, just like we did for Twitter here.
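Requiring registration means giving each consumer a key and secret up front, then looking the secret up when a signed request arrives. Below is a minimal in-memory sketch of that bookkeeping. `ConsumerRegistry` is hypothetical and is not the library’s `IConsumerStore`; a real store would persist registrations, for example in the database behind XPO. The sketch draws keys and secrets from a cryptographic random number generator.

```csharp
using System;
using System.Collections.Concurrent;
using System.Security.Cryptography;

// Hypothetical in-memory registry of consumer keys and secrets.
public sealed class ConsumerRegistry
{
    private readonly ConcurrentDictionary<string, string> _secretsByKey =
        new ConcurrentDictionary<string, string>(StringComparer.Ordinal);

    // URL-safe random string built from cryptographically strong bytes.
    private static string NewRandomValue(int byteCount)
    {
        byte[] bytes = new byte[byteCount];
        using (RandomNumberGenerator rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(bytes);
        }
        return Convert.ToBase64String(bytes).TrimEnd('=').Replace('+', '-').Replace('/', '_');
    }

    // Registers a new consumer: returns its key and hands back the secret via out.
    public string Register(out string consumerSecret)
    {
        consumerSecret = NewRandomValue(32);
        string consumerKey;
        do
        {
            consumerKey = NewRandomValue(16);
        } while (!_secretsByKey.TryAdd(consumerKey, consumerSecret));
        return consumerKey;
    }

    // Returns null for unknown keys, much as GetConsumer returns null for them.
    public string GetSecret(string consumerKey)
    {
        string secret;
        if (consumerKey != null && _secretsByKey.TryGetValue(consumerKey, out secret))
            return secret;
        return null;
    }

    // Revoking a consumer makes its key unknown from then on.
    public bool Revoke(string consumerKey)
    {
        string removed;
        return consumerKey != null && _secretsByKey.TryRemove(consumerKey, out removed);
    }
}
```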

```csharp
public class ConsumerStore : ConfigurationSection, IConsumerStore
{
    public virtual IConsumer GetConsumer(string consumerKey)
    {
        if (String.Equals(consumerKey, "anonymous"))
        {
            return new ConsumerBase()
            {
                CallbackUri = null,
                ConsumerKey = "anonymous",
                ConsumerSecret = "anonymous",
            };
        }
        return null;
    }
}
```

The responsibility for issuing request tokens is assigned to RequestTokenStore, which is also configurable in Web.config:

```xml
<section name="oauth.requestTokenStore" type="BankOfShire.OAuth.RequestTokenCache" />
```

Request tokens in OAuth 1.0 are short-lived. They should only be valid during the authentication process; in the authentication flow above, that is from B to F.

So how long should the request token lifetime be? Yahoo, for example, issues tokens that expire after one hour. Is this enough time for the user to type in their credentials? I think so. Maybe it’s even too long. Remember, a longer lifespan means more live tokens at any moment, and therefore more physical hardware to hold them. Ideally, request tokens are kept in memory without having to persist them in the database. For small sites, the ASP.NET Cache should do fine:

```csharp
public class RequestTokenCache : RequestTokenStore
{
    public override IToken GetToken(string token)
    {
        HttpContext context = HttpContext.Current;
        if (context != null)
        {
            return context.Cache.Get("rt:" + token) as IToken;
        }
        return null;
    }

    public override IToken AuthorizeToken(string token, string authenticationTicket)
    {
        HttpContext context = HttpContext.Current;
        if (context == null)
        {
            return null;
        }

        IToken unauthorizeToken = context.Cache.Get("rt:" + token) as IToken;
        if (unauthorizeToken == null || unauthorizeToken.IsEmpty)
        {
            return null;
        }

        // Same token, secret and verifier, now carrying the user's authentication ticket.
        // (The second argument is the consumer secret, not the consumer key.)
        IToken authorizeToken = new Token(
            unauthorizeToken.ConsumerKey,
            unauthorizeToken.ConsumerSecret,
            unauthorizeToken.Value,
            unauthorizeToken.Secret,
            authenticationTicket,
            unauthorizeToken.Verifier,
            unauthorizeToken.Callback);

        context.Cache.Insert(
            "rt:" + authorizeToken.Value,
            authorizeToken,
            null,
            DateTime.UtcNow.AddSeconds(base.ExpirationSeconds),
            System.Web.Caching.Cache.NoSlidingExpiration);

        return authorizeToken;
    }

    public override IToken CreateUnauthorizeToken(string consumerKey, string consumerSecret, string callback)
    {
        IToken unauthorizeToken = new Token(
            consumerKey,
            consumerSecret,
            Token.NewToken(TokenLength.Long),
            Token.NewToken(TokenLength.Long),
            String.Empty, /* unauthorized */
            Token.NewToken(TokenLength.Short),
            callback);

        HttpContext context = HttpContext.Current;
        if (context != null)
        {
            context.Cache.Insert(
                "rt:" + unauthorizeToken.Value,
                unauthorizeToken,
                null,
                DateTime.UtcNow.AddSeconds(base.ExpirationSeconds),
                System.Web.Caching.Cache.NoSlidingExpiration);
        }
        return unauthorizeToken;
    }
}
```

But I strongly recommend exploring other caching solutions, such as Memcached, Velocity and other distributed caches, if you can. If you can’t, just store the tokens in the database and clean up expired ones periodically.
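The expire-and-clean-up idea is the same whether tokens live in memory or in a database table. Here it is sketched in memory, without ASP.NET. `ExpiringTokenStore` is hypothetical. Each entry gets an absolute expiration, mirroring `NoSlidingExpiration` in `RequestTokenCache`. Lookups treat expired entries as missing. `PurgeExpired` is meant to be called periodically, for example from a timer. The clock is injectable so that expiry can be tested. As a single-process store, it neither survives restarts nor spans several web servers.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;

// Hypothetical in-memory token store with absolute expiration and periodic purge.
public sealed class ExpiringTokenStore<TValue> where TValue : class
{
    private struct Entry
    {
        public TValue Value;
        public DateTime ExpiresUtc;
    }

    private readonly ConcurrentDictionary<string, Entry> _entries =
        new ConcurrentDictionary<string, Entry>(StringComparer.Ordinal);
    private readonly TimeSpan _lifetime;
    private readonly Func<DateTime> _utcNow;

    public ExpiringTokenStore(TimeSpan lifetime, Func<DateTime> utcNow = null)
    {
        _lifetime = lifetime;
        _utcNow = utcNow ?? (() => DateTime.UtcNow);
    }

    // Absolute expiration: the lifetime starts when the token is stored and is never extended.
    public void Put(string token, TValue value)
    {
        _entries[token] = new Entry { Value = value, ExpiresUtc = _utcNow() + _lifetime };
    }

    // Expired entries are reported as missing and removed on the spot.
    public TValue Get(string token)
    {
        Entry entry;
        if (!_entries.TryGetValue(token, out entry)) return null;
        if (entry.ExpiresUtc <= _utcNow())
        {
            _entries.TryRemove(token, out entry);
            return null;
        }
        return entry.Value;
    }

    // Periodic cleanup; returns how many expired entries were removed.
    public int PurgeExpired()
    {
        DateTime now = _utcNow();
        int removed = 0;
        foreach (KeyValuePair<string, Entry> pair in _entries)
        {
            Entry ignored;
            if (pair.Value.ExpiresUtc <= now && _entries.TryRemove(pair.Key, out ignored))
                removed++;
        }
        return removed;
    }

    public int Count
    {
        get { return _entries.Count; }
    }
}
```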

Service Provider Directs User to Consumer

Once the request token is issued, the consumer redirects the user to the provider’s site so that the user can enter their credentials. We’ll need to mark up some UI.

```csharp
[HttpPost]
public ActionResult AuthorizeToken(AuthorizeModel model)
{
    try
    {
        IToken token;
        if (!VerifyRequestToken(out token))
        {
            return View(model);
        }

        // The user denied access: send them back to the consumer without a verifier.
        if (model.Allow != "Allow")
        {
            return Redirect(token.Callback);
        }

        MixedAuthenticationIdentity identity = MixedAuthentication.GetMixedIdentity();
        if (identity == null || !identity.IsAuthenticated)
        {
            if (!ModelState.IsValid)
            {
                return View(model);
            }
        }

        // Reuse the signed-in identity, or sign in with the submitted credentials.
        MixedAuthenticationTicket ticket = identity != null && identity.IsAuthenticated
            ? identity.CreateTicket()
            : MembershipService.ResolvePrincipalIdentity(model.Email, model.Password);

        bool authorized = false;
        if (CanImpersonate(ticket))
        {
            if (AuthorizeRequestToken(ticket, out token))
            {
                MixedAuthentication.SetAuthCookie(ticket, true, null);
                authorized = true;
            }
        }
        else
        {
            ModelState.AddModelError("", "Email address or Password is incorrect.");
        }

        if (authorized)
        {
            // Return to the consumer with the authorized token and its verifier.
            Uri returnUri = ((Url)token.Callback).ToUri(
                Parameter.Token(token.Value),
                Parameter.Verifier(token.Verifier));
            return Redirect(returnUri.ToString());
        }
        return View(model);
    }
    catch (Exception e)
    {
        ModelState.AddModelError("", e);
        return View(model);
    }
}
```

ServiceProvider has a helper method to deal with request token authorization. We’ll authorize request tokens using the same ticket that we use for normal sign-ins. (You can read about MixedAuthenticationTicket here.) The RequestTokenStore is responsible for issuing authorized tokens; see the AuthorizeToken override in RequestTokenCache above.
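After a successful sign-in, the action above sends the user back to the consumer’s callback with `oauth_token` and `oauth_verifier` appended. The post does this through the library’s `Url.ToUri` helper. Here is a minimal sketch of the same step that uses only `System.UriBuilder`; `CallbackUrl.Build` is hypothetical. It keeps any query string the consumer registered. It also treats the OAuth 1.0a callback value `oob` (out-of-band) as "no redirect", in which case the provider would display the verifier to the user instead.

```csharp
using System;

// Hypothetical helper; the post uses the library's Url.ToUri(...) for this step.
public static class CallbackUrl
{
    // Appends oauth_token and oauth_verifier to the consumer's callback URL,
    // keeping any existing query string. Returns null for an "oob" callback.
    public static string Build(string callback, string token, string verifier)
    {
        // OAuth 1.0a out-of-band: no redirect; show the verifier to the user instead.
        if (string.Equals(callback, "oob", StringComparison.Ordinal)) return null;

        var builder = new UriBuilder(callback);
        string extra = "oauth_token=" + Uri.EscapeDataString(token)
            + "&oauth_verifier=" + Uri.EscapeDataString(verifier);

        // UriBuilder.Query includes the leading '?' when read; strip it before re-assigning.
        string existing = builder.Query.TrimStart('?');
        builder.Query = existing.Length == 0 ? extra : existing + "&" + extra;
        return builder.Uri.AbsoluteUri;
    }
}
```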

```csharp
bool AuthorizeRequestToken(MixedAuthenticationTicket ticket, out IToken token)
{
    ValidationScope scope;
    token = ServiceProvider.AuthorizeRequestToken(
        Request.HttpMethod,
        Request.Url,
        ticket.ToJson(),
        out scope);

    if (token == null || token.IsEmpty)
    {
        // Report every validation error; fall back to a generic message if there were none.
        bool reported = false;
        if (scope != null)
        {
            foreach (ValidationError error in scope.Errors)
            {
                ModelState.AddModelError("", error.Message);
                reported = true;
            }
        }
        if (!reported)
        {
            ModelState.AddModelError("", "Invalid / expired token");
        }
        return false;
    }
    return true;
}
```

Service Provider Grants Access Token

Once the request token has been authorized, we need to issue a permanent (or long-lived) access token.
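This exchange accepts the request token only if three conditions hold: it belongs to the calling consumer, the user has authorized it, and the verifier matches. The provider then discards the request token and issues an access token that carries the same authentication ticket. Below is a minimal in-memory sketch of those checks. `AccessTokenIssuer` and `RequestTokenRecord` are hypothetical stand-ins for the library’s `ServiceProvider.GetAccessToken` and the token stores shown in this post. The sketch assumes signature validation has already happened.

```csharp
using System;
using System.Collections.Generic;
using System.Security.Cryptography;

// Hypothetical record of an issued request token.
public sealed class RequestTokenRecord
{
    public string ConsumerKey;
    public string Verifier;
    public string AuthenticationTicket = ""; // empty until the user authorizes the token
}

// Hypothetical issuer; not the OAuth library's API.
public sealed class AccessTokenIssuer
{
    private readonly object _gate = new object();
    private readonly Dictionary<string, RequestTokenRecord> _requestTokens =
        new Dictionary<string, RequestTokenRecord>(StringComparer.Ordinal);
    private readonly Dictionary<string, string> _ticketsByAccessToken =
        new Dictionary<string, string>(StringComparer.Ordinal);

    public void AddRequestToken(string token, RequestTokenRecord record)
    {
        lock (_gate) { _requestTokens[token] = record; }
    }

    // Called once the user has signed in and allowed access.
    public bool Authorize(string token, string authenticationTicket)
    {
        lock (_gate)
        {
            RequestTokenRecord record;
            if (!_requestTokens.TryGetValue(token, out record)) return false;
            record.AuthenticationTicket = authenticationTicket;
            return true;
        }
    }

    // Returns a new access token, or null if the exchange is refused.
    public string Exchange(string consumerKey, string requestToken, string verifier)
    {
        lock (_gate)
        {
            RequestTokenRecord record;
            if (!_requestTokens.TryGetValue(requestToken, out record)) return null;
            if (record.ConsumerKey != consumerKey) return null;
            if (string.IsNullOrEmpty(record.AuthenticationTicket)) return null; // not authorized yet

            // Single use: consume the request token before checking the verifier,
            // so a wrong guess cannot be retried against the same token.
            _requestTokens.Remove(requestToken);
            if (record.Verifier != verifier) return null;

            string accessToken = NewToken();
            _ticketsByAccessToken[accessToken] = record.AuthenticationTicket;
            return accessToken;
        }
    }

    // Later requests resolve the access token back to the user's ticket.
    public string GetTicket(string accessToken)
    {
        lock (_gate)
        {
            string ticket;
            return _ticketsByAccessToken.TryGetValue(accessToken, out ticket) ? ticket : null;
        }
    }

    // 32 random bytes as 64 hex characters.
    private static string NewToken()
    {
        byte[] bytes = new byte[32];
        using (RandomNumberGenerator rng = RandomNumberGenerator.Create())
        {
            rng.GetBytes(bytes);
        }
        return BitConverter.ToString(bytes).Replace("-", "");
    }
}
```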

```csharp
public ActionResult GetAccessToken()
{
    Response response = ServiceProvider.GetAccessToken(
        Request.HttpMethod,
        Request.Url);

    this.Response.StatusCode = response.StatusCode;
    return Content(response.Content, response.ContentType);
}
```

It is the responsibility of the AccessTokenStore to issue new tokens. Here is the implementation that we use for our site. Remember, we use XPO for our data access, with MySQL in the back end.

```xml
<section name="oauth.accessTokenStore" type="BankOfShire.OAuth.AccessTokenCache" />
```

```csharp
public class AccessTokenCache : AccessTokenStore
{
    public override IToken GetToken(string token)
    {
        if (String.IsNullOrEmpty(token))
        {
            return null;
        }
        IToken t = null;
        using (Session session = _Global.CreateSession())
        {
            t = session.GetObjectByKey<Data.Token>(token);
            if (t == null || t.IsEmpty)
            {
                return null;
            }
        }
        return t;
    }

    public override void RevokeToken(string token)
    {
        if (String.IsNullOrEmpty(token))
        {
            return;
        }
        using (Session session = _Global.CreateSession())
        {
            session.BeginTransaction();
            Data.Token t = session.GetObjectByKey<Data.Token>(token);
            if (t != null)
            {
                t.Delete();
            }
            session.CommitTransaction();
        }
    }

    public override IToken CreateToken(IToken requestToken)
    {
        if (requestToken == null || requestToken.IsEmpty)
        {
            throw new ArgumentException("requestToken is null or empty.");
        }

        Token token = new Token(
            requestToken.ConsumerKey,
            requestToken.ConsumerSecret,
            Token.NewToken(TokenLength.Long),
            Token.NewToken(TokenLength.Long),
            requestToken.AuthenticationTicket,
            String.Empty,
            String.Empty);

        using (Session session = _Global.CreateSession())
        {
            DateTime utcNow = DateTime.UtcNow;
            session.BeginTransaction();
            Data.Token t = new Data.Token(session);
            t.Value = token.Value;
            t.Secret = token.Secret;
            t.ConsumerKey = token.ConsumerKey;
            t.ConsumerSecret = token.ConsumerSecret;
            t.AuthenticationTicket = token.AuthenticationTicket;
            t.Created = utcNow;
            t.Modified = utcNow;
            t.Save();
            session.CommitTransaction();
        }
        return token;
    }
}
```

An XPO object for our token store:

```csharp
[Persistent("Tokens")]
public class Token : XPLiteObject, DevExpress.OAuth.IToken
{
    public Token() : base() { }
    public Token(Session session) : base(session) { }

    [Key(AutoGenerate = false)]
    [Size(128)]
    public string Value { get; set; }

    [Size(128)]
    public string Secret { get; set; }

    [Indexed(Unique = false)]
    [Size(128)]
    public string ConsumerKey { get; set; }

    [Size(128)]
    public string ConsumerSecret { get; set; }

    [Size(SizeAttribute.Unlimited)]
    public string AuthenticationTicket { get; set; }

    public DateTime Created { get; set; }
    public DateTime Modified { get; set; }

    bool DevExpress.OAuth.IToken.IsEmpty
    {
        get
        {
            return String.IsNullOrEmpty(Value)
                || String.IsNullOrEmpty(Secret)
                || String.IsNullOrEmpty(ConsumerKey)
                || String.IsNullOrEmpty(ConsumerSecret);
        }
    }

    bool DevExpress.OAuth.IToken.IsCallbackConfirmed { get { return true; } }
    string DevExpress.OAuth.IToken.Callback { get { return String.Empty; } }
    string DevExpress.OAuth.IToken.Verifier { get { return String.Empty; } }
}
```

That’s it, as far as the authentication flow goes. Now, when a request for a protected resource comes in (with a previously issued access token), we can check the ‘Authorization’ header and create the appropriate MixedAuthenticationIdentity.
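The ‘Authorization’ header checked here follows the OAuth 1.0 format: the scheme name `OAuth`, then comma-separated `name="value"` pairs whose names and values are percent-encoded. `ServiceProvider.GetTokenIdentity` does the real parsing and validation in the code below. The parser here is a hypothetical, minimal sketch that assumes well-formed input and splits on commas. Splitting on commas is safe for the `oauth_*` parameters because their values are percent-encoded; a `realm` value that contains a comma would not be handled.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical parser for an OAuth 1.0 Authorization header; performs no signature checks.
public static class OAuthAuthorizationHeader
{
    // Returns the decoded parameters, or null if the header is not a well-formed OAuth header.
    public static Dictionary<string, string> Parse(string header)
    {
        if (header == null) return null;
        header = header.Trim();
        const string scheme = "OAuth ";
        if (!header.StartsWith(scheme, StringComparison.OrdinalIgnoreCase)) return null;

        var result = new Dictionary<string, string>(StringComparer.Ordinal);
        foreach (string part in header.Substring(scheme.Length).Split(','))
        {
            string item = part.Trim();
            if (item.Length == 0) continue;

            int eq = item.IndexOf('=');
            if (eq <= 0) return null;
            string name = item.Substring(0, eq).Trim();
            string value = item.Substring(eq + 1).Trim();

            // Values must be double-quoted.
            if (value.Length < 2 || value[0] != '"' || value[value.Length - 1] != '"') return null;
            value = value.Substring(1, value.Length - 2);

            result[Uri.UnescapeDataString(name)] = Uri.UnescapeDataString(value);
        }
        return result;
    }
}
```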

```csharp
MixedAuthenticationTicket ticket;

HttpContext context = HttpContext.Current;
string authorizationHeader = context != null
    ? context.Request.Headers["Authorization"]
    : null;

if (!String.IsNullOrEmpty(authorizationHeader))
{
    ValidationScope scope;
    TokenIdentity tokenIdentity = ServiceProvider.GetTokenIdentity(
        context.Request.HttpMethod,
        context.Request.Url,
        authorizationHeader,
        out scope);

    if (tokenIdentity == null)
    {
        // Surface the first validation error as an HttpException.
        if (scope != null)
        {
            foreach (ValidationError error in scope.Errors)
            {
                throw new HttpException(error.StatusCode, error.Message);
            }
        }
        return null;
    }

    ticket = MixedAuthenticationTicket.FromJson(tokenIdentity.AuthenticationTicket);
}
```

Note that the MixedAuthentication helper class takes care of this, so our Application_AuthenticateRequest works the same for both Forms Authentication (cookie) and OAuth (access token).

```csharp
protected void Application_AuthenticateRequest(object sender, EventArgs e)
{
    MixedAuthenticationPrincipal p = MixedAuthentication.GetMixedPrincipal();
    if (p != null)
    {
        HttpContext.Current.User = p;
        Thread.CurrentPrincipal = p;
    }
}
```

What’s next

Download source code for Part 5

- Part 1: Introduction

- Part 2: FormsAuthenticationTicket & MixedAuthentication

- Part 3: Understanding OAuth 1.0 and OAuth 2.0, Federated logins using Twitter, Google and Facebook

- Part 4: Managing Identities

- Part 5: Implementing OAuth 1.0 Service Providers (storing and caching tokens, distributed caches)

- Part 6: Securing OData feeds without query interceptors

- Part 7: Client Apps

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

No significant articles today.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Wes Yanaga reported New Windows Azure Videos on MSDEV in a 3/8/2011 post to the US ISV Evangelism blog:

The post, which covers new MSDEV videos on Windows Azure Drives, Windows Azure Storage Tables and Windows Azure Connect, is reproduced in full in the Azure Blob, Drive, Table and Queue Services section above.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Steve Plank (@plankytronixx) reported Windows Phone 7 + Cloud Services SDK Developer Preview is now Available in a 3/8/2011 post to his Plankytronixx blog:

Microsoft Research has made available for download a developer preview of its Windows Phone 7 + Cloud Services Software Development Kit (SDK).

The new SDK is related to Project Hawaii, a mobile research initiative focusing on using the cloud to enhance mobile devices. The “building blocks” for Hawaii applications/services include computation (Windows Azure); storage (Windows Azure); authentication (Windows Live ID); notification; client-back-up; client-code distribution and location (Orion).

The SDK is for the creation of Windows Phone 7 (WP7) applications that leverage research services not yet available to the general public.

The first two services that are part of the January 25 SDK are Relay and Rendezvous. The Relay Service is designed to enable mobile phones to communicate directly with each other, and to get around the limitation created by mobile service providers who don’t provide most mobile phones with consistent public IP addresses. The Rendezvous Service is a mapping service “from well-known human-readable names to endpoints in the Hawaii Relay Service.” These names may be used as rendezvous points that can be compiled into applications, according to the Hawaii Research page.

For more information see SDK page on Microsoft Research web site.

The ADO.NET Team reported Entity Framework Improvements in Visual Studio 2010 SP1 in a 3/8/2011 post:

Today’s release of Visual Studio 2010 SP1 includes several performance and stability improvements for Entity Framework 4.0. MSDN subscribers can download the service pack immediately, and the release will have general availability on Thursday, March 10.

- Download Service Pack 1 (MSDN Subscribers)

- Thursday's general availability download is here.

Here’s what’s new in SP1 for Entity Framework:

Performance Improvements for Large Models

The most notable improvement is performance in the designer (or at the command-line using EDMGen.exe) when working with large models. In Visual Studio 2010, reverse-engineering a model from a medium to large database (>200 tables) would take an unnecessarily long time. With SP1, a large model (for example, 500 tables) can now be reverse-engineered within seconds.

Hotfixes included in SP1

- "System.StackOverflowException" unhandled exception occurs when you run a .NET Framework 4.0-based application if a custom base class inherits from the EntityObject class

- FIX: The principal entity in an SQL application generates unnecessary updates when the application uses the Entity Framework in the .NET Framework 4

Bug Fixes for Self-Tracking Entities

- BUG & Workaround: Using Entity Framework Self Tracking Entities with AppFabric CACHE (‘Velocity’) and .NET 4.0 RTM

- Edmxes and HandleCascadeDelete

- Self-Tracking Entity Template fails to generate code for function imports that don’t have a return value

- Entity does not get marked as modified when FK association is nulled via EntityReference.EntityKey

- Miscellaneous code-generation changes to conform to VB pretty-lister guidelines

- Template generates uncompilable code for dot-separated namespaces

- Template doesn’t generate default values for scalar properties on complex types

<Return to section navigation list>

Visual Studio LightSwitch

Beth Massi (@bethmassi) reported Visual Studio 2010 Service Pack 1 Released and warned about its incompatibility with Visual Studio LightSwitch Beta 1 in a 3/8/2011 post:

Jason Zander just announced the availability of Visual Studio 2010 Service Pack 1! [see post below.] The download is available today for MSDN subscribers and will be made available to the general public on Thursday March 10th.

This release addresses customer reported bug fixes as well as some feature updates. Some of my favorites are IntelliTrace support for 64-bit platforms as well as unit testing for SharePoint projects. There is also a much better help viewer included in SP1. Take a look at the full list of changes in the Visual Studio and Team Foundation Server knowledge base articles. Also see this important information on SP1 compatibility with other downloads and the SP1 Readme.

Compatibility Note: Be aware that if you’re evaluating LightSwitch Beta 1, do not install the Visual Studio 2010 Service Pack 1 (SP1) as these releases are not compatible. Please see the LightSwitch Team Blog for details. If you’ve already installed SP1 then to remove it, go to your Add/Remove Programs and uninstall it there. If you are still having problems, perform a Repair on LightSwitch Beta 1 and you should be up and running again. The LightSwitch team will be addressing this issue very soon with the release of LightSwitch Beta 2 so please stay tuned for more information. [Emphasis Beth’s.]

The Visual Studio LightSwitch Team (@VSLightSwitch) seconded Beth's warning in its LightSwitch Beta 1 is incompatible with Visual Studio 2010 SP1 post of 3/8/2011:

We want to inform you that if you’re working with LightSwitch Beta 1, you should not install Visual Studio 2010 Service Pack 1 (SP1), as these releases are not compatible. If you want to install LightSwitch Beta 1 into Visual Studio 2010 Pro or Ultimate, please be aware that LightSwitch Beta 1 works only with Visual Studio 2010 RTM (the released version). If you have just installed LightSwitch Beta 1, please do not install Visual Studio 2010 SP1 afterward.

Here are the symptoms of what will happen if you install LightSwitch Beta1 and VS2010 SP1 on the same machine (install sequence does not matter):

- No errors are encountered at setup for either LightSwitch Beta1 or VS2010 SP1

- You will hit the following error at F5/Build for a C# or VB LightSwitch project (reported in the Error List tool window).

An exception occurred when building the database for the application. Method not found: 'Void Microsoft.Data.Schema.Sql.SchemaModel.ISqlSimpleColumn.set_IsIdentity(Boolean)'.

If you’ve already installed SP1 then to remove it, go to your Add/Remove Programs and uninstall it there. If you are still having problems, perform a Repair on LightSwitch Beta 1 and you should be up and running again. We will be addressing this issue very soon so please stay tuned for more information here on the blog.

S. “Soma” Somasegar announced from TechEd ME 2011 “In the coming weeks, we will make available Visual Studio LightSwitch Beta 2” in his Visual Studio 2010 enhancements post of 3/7/2011:

Today, I have the privilege of keynoting TechEd Middle East in Dubai for the second year in a row.

TechEd Middle East debuted last year, and this year the event has grown significantly. TechEd provides a nice opportunity to show off some of the cool work that teams at Microsoft do. I love connecting with customers and hearing how they're using our products, and for me, TechEd is a great way to get that.

I'm sharing several pieces of news with the live audience in Dubai that I want to share with you as well.

Visual Studio 2010 SP1

Visual Studio 2010 shipped about 11 months ago, and we continue to work on it and respond to customer feedback received through Visual Studio Connect. This feedback has guided our focus to improve several areas, including IntelliTrace, unit testing, and Silverlight profiling.

You can learn more about how we're improving Visual Studio 2010 on Jason Zander's blog later today. On March 8th, MSDN subscribers will be able to download and install Visual Studio 2010 SP1 from their subscriber downloads. If you're not an MSDN subscriber, you can get the update on Thursday, March 10th.

TFS-Project Server Integration Feature Pack

Also available for Visual Studio Ultimate with MSDN subscribers via Download Center today is the TFS-Project Server Integration Feature Pack. Integration between Project Server and Team Foundation Server enables teams to work more effectively together using Visual Studio, Project, and SharePoint and coordinates development between teams using disparate methodologies, such as waterfall and agile, via common data and metrics.

Visual Studio Load Test Feature Pack

We know that ensuring your applications perform continuously at peak levels at all times is central to your success. Yet load and performance testing often happen late in the application lifecycle. And fixing defects and detecting architectural and design issues later in the application lifecycle is more expensive than defects caught earlier in development. This is why we've built load and performance testing capabilities into the Visual Studio IDE itself.

Today, we're introducing a new benefit - Visual Studio 2010 Load Test Feature Pack - available to all Visual Studio 2010 Ultimate with MSDN subscribers. With this feature pack, you can simulate as many virtual users as you need without having to purchase additional Visual Studio Load Test Virtual User Pack 2010 licenses. For more information regarding this new Visual Studio 2010 Ultimate with MSDN benefit, visit the Visual Studio Load Test Virtual User Pack 2010 page.

Visual Studio LightSwitch Beta 2

Visual Studio LightSwitch offers a simple way to develop line of business applications for the desktop and cloud. Since the launch of Visual Studio LightSwitch Beta 1, we have seen over 100,000 downloads of the tool and a lot of developer excitement. In the coming weeks, we will make available Visual Studio LightSwitch Beta 2. With this second beta, we will also enable you to build line of business applications that target Windows Azure and SQL Azure. [Emphasis added.]

In the meantime you can learn more about LightSwitch and follow the @VisualStudio Twitter account for announcements.

Namaste!

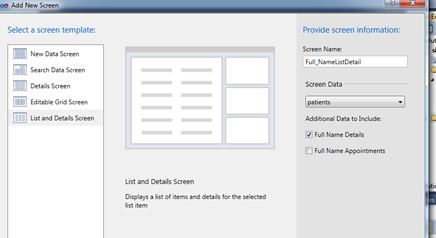

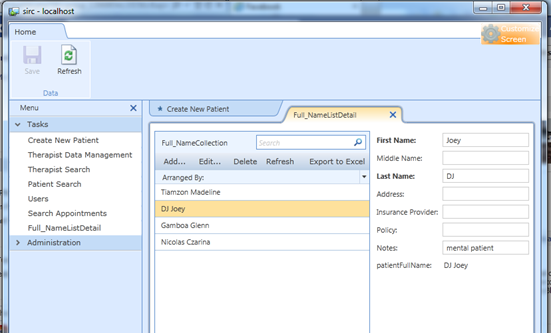

Edu Lorenzo described Adding a user control to a Visual Studio LightSwitch Application in a 3/7/2011 post:

Okay... this is also a response to offline inquiries about how to change the look of a Visual Studio LightSwitch application, and whether it is even remotely possible to add your own control to it, so here is a blog post showing how it is done. If you have been reading the previous parts of my series on developing a Visual Studio LightSwitch application, you won’t be surprised to hear that VS-LS uses Silverlight OOB (Out-Of-Browser) technology to create the application. So how do we add a user control? We add a new SILVERLIGHT control to our application.

I don’t really have a need for a user control in the application that I am making so I will just add a new screen that we can mess around with :p

So I add a new screen to my existing project:

But won’t do anything to it, yet. I just need a place to drop the user control.

Now, to create a user control, I add a new Silverlight Class Library by right-clicking the solution, choosing Add > New Project, and selecting Silverlight Class Library from the list.

And now we have a place to play :)

The next thing we do is add a Silverlight user control to this Silverlight project: right-click the class library, choose Add > New Item, and pick Silverlight User Control.

I named mine “lightList” because.. er.. yeah.

Now I have a nifty XAML file that I can edit using either Visual Studio or Expression Studio.

I’m not that good with Silverlight and XAML, so I asked a friend, Abram Limpin, to come up with some XAML that I can bind to a data source (an ItemsSource, in Silverlight terms).

And he guided me towards this:

```xml
<Grid x:Name="LayoutRoot" Background="White">
    <ComboBox ItemsSource="{Binding Screen.Full_NameCollection}"
              SelectedItem="{Binding Screen.Full_NameCollection.SelectedItem, Mode=TwoWay}"></ComboBox>
</Grid>
```

Okay let’s see what it does..

It is basically a ComboBox that is bound using the ItemsSource property to the Screen with a collection called Full_NameCollection. Where is this screen? This is the one that we made at the start of this blog.

So, in effect, we bound the ComboBox to a screen and to that screen’s Full_NameCollection. We also created a user control that is permanently bound to that ItemsSource. If you ever need a data control whose ItemsSource is dynamic, create a user control with a blank ItemsSource property and set it when you use the control. I might be able to illustrate that later on.

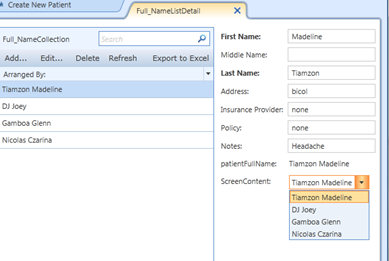

And now we get to the highlight.. adding the user control to the screen that we created earlier.

But before that, let’s take a look at what the screen looks like before we add the user control

Here we have the screen with the names of the patients with their details also in the screen.

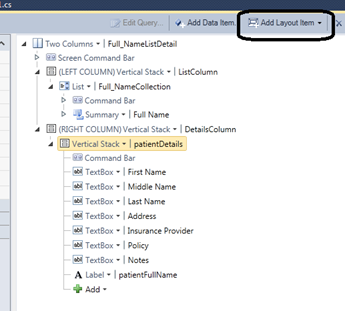

And taking a look at the design view of the ViewModel for the Patient’s details..

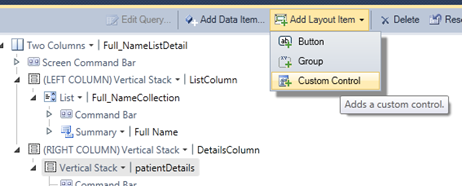

We see an Add Layout Item button.. and we use that to add our user control. With the Vertical Stack on the Right Column highlighted, we click Add Layout Item and choose Add User Control

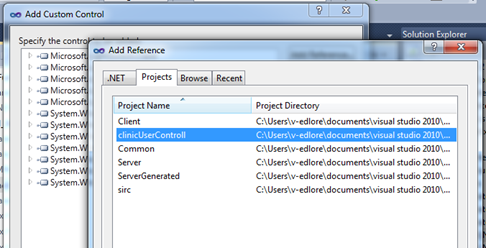

This should bring up the Add Custom Control Dialog and we add an in-project reference to the Silverlight Project we created earlier.

If successful this will give you the name of the project as part of the controls available to you.

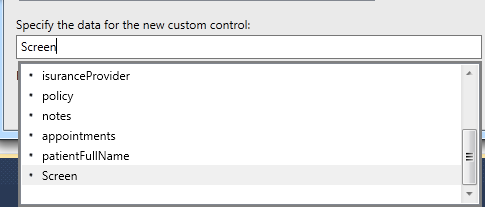

I highlighted the “Specify the data for the new custom control:” to show where you should edit to enable a user control with a blank ItemsSource to get its data.

If you experiment by placing your cursor inside that textbox, you will see your options.

Okay, so we now have a user control, in the form of a combobox, in the screen we added earlier on.

Let’s give it a try.

And there you have it! A databound user control that wasn’t there before!

<Return to section navigation list>

Windows Azure Infrastructure

Lydia Leong (@CloudPundit) offered advice about Contracting in the cloud in a 3/8/2011 post:

There are plenty of cloud (or cloud-ish) companies that will sell you services on a credit card and a click-through agreement. But even when you can buy that way, it is unlikely to be maximally to your advantage to do so, if you have any volume to speak of. And if you do decide to take a contract (which might sometimes be for a zero-dollar-commit), it’s rarely to your advantage to simply agree to the vendor’s standard terms and conditions. This is just as true with the cloud as it is with any other procurement. Vendor T&Cs, whether click-through or contractual, are generally not optimal for the customer; they protect the vendor’s interests, not yours.

Do I believe that deviations from the norm hamper a cloud provider’s profitability, ability to scale, ability to innovate, and so forth? It’s potentially possible, if whatever contractual changes you’re asking for require custom engineering. But many contractual changes are simply things that protect a customer’s rights and shift risk back towards the vendor and away from the customer. And even in cases where custom engineering is necessary, there will be cloud providers who thrive on it, i.e., who find a way to allow customers to get what they need without destroying their own efficiencies. (Arguably, for instance, Salesforce.com has managed to do this with Force.com.)

But the brutal truth is also that as a customer, you don’t care about the vendor’s ability to squeeze out a bit more profit. You don’t want to negotiate a contract that’s so predatory that your success seriously hurts your vendor financially (as I’ve sometimes seen people do when negotiating with startups that badly need revenue or a big brand name to serve as a reference). But you’re not carrying out your fiduciary responsibilities unless you do try to ensure that you get the best deal that you can — which often means negotiating, and negotiating a lot.

Typical issues that customers negotiate include term of delivery of service (i.e., can this provider give you 30 days’ notice that they’ve decided to stop offering the service, and poof, you’re done?), what happens in a change of control, what happens at the end of the contract (data retrieval and so on), data integrity and confidentiality, data retention, SLAs, pricing, and the conditions under which the T&Cs can change. This is by no means a comprehensive list; it’s just a start.

Yes, you can negotiate with Amazon, Google, Microsoft, etc. And even when vendors publish public pricing with specific volume discounts, customers can negotiate steeper discounts when they sign contracts.

My colleagues Alexa Bona and Frank Ridder, Gartner analysts who cover sourcing, have recently written a series of notes on contracting for cloud services that I’d encourage you to check out:

- Four Risky Issues When Contracting for Cloud Services

- How to Avoid the Pitfalls of Cloud Pricing Variations

- Seven Ways to Reduce Hidden Upfront Costs of Cloud Contracts

- Six Ways to Avoid Escalating Costs During the Life of a Cloud Contract

(Sorry, the above notes are available to Gartner clients only.)

David Linthicum asserted “Kicking and screaming, many businesses are adopting the public cloud because their data and budget needs leave them no choice” in a deck for his Data growth drives most reluctant enterprises to the cloud post of 3/8/2011 to InfoWorld’s Cloud Computing blog:

I'm always taken aback by the fact that no matter how much I max out the storage on my new computers, I always seem to quickly run out. This is largely due to the explosion in the size and amount of digital content like images, video, and audio files, along with the need to deal with larger and larger volumes of data.

The same thing is happening within enterprises. They are simply running out of room and have no place to turn but the cloud. Many are going to the cloud kicking and screaming, but most have no choice, given budget constraints paired with the need to expand capacity. …

The news here: Enterprises that have pushed back -- and perhaps are still pushing back -- on cloud computing are getting their hands forced as they run out of database and file storage headroom. Those who stated just six months ago that the cloud was "too risky" are now making deals with providers to secure a new location for file and database processing. Those with pilot projects under way to evaluate the use of cloud computing are now moving quickly to production.

I'm not sure I saw this coming, but I'm often hearing from clients that they may hate the cloud, but need to get there ASAP.

The cloud won't always be the cheapest place to manage file storage and databases, but it's certainly the fastest path to a storage solution. The incremental subscription payments go down a lot easier than hundreds of thousands of dollars in capital hardware and software expenses.
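To make the subscription-versus-capital trade-off concrete, here is a minimal sketch with entirely hypothetical figures (none come from the article). It finds the month in which cumulative subscription payments would first exceed an upfront hardware purchase plus its monthly running costs; `breakeven_month` and its parameters are illustrative names, not part of any product or pricing API.

```python
"""Hypothetical capex-vs-subscription comparison; all figures are illustrative."""


def breakeven_month(capex: float, capex_monthly_opex: float,
                    subscription_monthly: float, horizon_months: int = 120):
    """Return the first month in which cumulative subscription spend exceeds
    cumulative on-premises spend (upfront capex plus monthly opex), or None
    if that never happens within the horizon."""
    on_prem = capex   # the capital outlay is paid up front
    cloud = 0.0       # subscriptions start at zero and accrue monthly
    for month in range(1, horizon_months + 1):
        on_prem += capex_monthly_opex
        cloud += subscription_monthly
        if cloud > on_prem:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical: a $300,000 storage purchase costing $2,000/month to run,
    # versus a $9,000/month storage subscription.
    print(breakeven_month(300_000, 2_000, 9_000))
```

With these made-up numbers the subscription stays cheaper for more than three years, which illustrates the article's point: even where the cloud is not the cheapest long-run option, avoiding the upfront outlay makes it the path of least resistance.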

This path of least resistance is behind these enterprises' cloud computing adoption -- not elasticity, scalability, or other benefits of cloud computing that the experts continue to repeat. Color me surprised -- not shocked, but definitely surprised that this has turned out to be the major motivator to send reluctant enterprises to the cloud.

Buck Woody (@buckwoody) continued his series with Windows Azure Use Case: Infrastructure Limits on 3/8/2011:

This is one in a series of posts on when and where to use a distributed architecture design in your organization's computing needs. You can find the main post here: http://blogs.msdn.com/b/buckwoody/archive/2011/01/18/windows-azure-and-sql-azure-use-cases.aspx

Description:

Physical hardware components take up room, use electricity, create heat and therefore need cooling, and require wiring and special storage units. All of these requirements cost money to rent at a data-center or to build out at a local facility.

In some cases, this can be a catalyst for evaluating options to remove this infrastructure requirement entirely by moving to a distributed computing environment.

Implementation:

There are three main options for moving to a distributed computing environment.

Infrastructure as a Service (IaaS)

The first option is simply to virtualize the current hardware and move the VMs to a provider. You can also do this with Microsoft’s Hyper-V product or other software, building the systems and hosting them locally on fewer physical machines. This is a good option for canned applications (the kind you install by running setup.exe) but not as useful for custom applications, as you still have to license and patch those servers, and there are hard limits on the VM sizes.

Software as a Service (SaaS)

If there is already software available that does what you need, it may make sense to purchase not only the software license but also the use of it on the vendor’s servers. Microsoft’s Exchange Online is an example of simply using a vendor’s offering on the vendor’s servers. If you do not need a great deal of customization, have no interest in owning or extending the source code, and need to implement a solution quickly, this is a good choice.

Platform as a Service (PaaS)

If you do need to write software for your environment, your next choice is a Platform as a Service such as Windows Azure. In this case you no longer manage physical or even virtual servers. You start at the code and data level of control and responsibility, and your focus is more on the design and maintenance of the application itself. You own the source code and can extend or change it as you see fit. An interesting side benefit of using Windows Azure as a PaaS is that the Application Fabric component allows a hybrid approach, which gives on-premise applications a basis for leveraging distributed computing paradigms.

No one solution fits every situation. It’s common to see organizations pick a mixture of on-premise, IaaS, SaaS and PaaS components. In fact, that’s a great advantage of this form of computing: choice.
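The rules of thumb in this post can be encoded as a tiny decision helper. This is a hypothetical sketch that paraphrases the post's guidance, not an official Microsoft decision procedure; `suggest_option` and its flags are illustrative names, and a real assessment would weigh many more factors and often mix options across workloads.

```python
from enum import Enum


class Option(Enum):
    IAAS = "Infrastructure as a Service"
    SAAS = "Software as a Service"
    PAAS = "Platform as a Service"


def suggest_option(existing_software_fits: bool,
                   needs_customization: bool,
                   wants_source_ownership: bool,
                   is_packaged_app: bool) -> Option:
    """Hypothetical helper paraphrasing the post's rules of thumb."""
    # SaaS: software that does what you need already exists, and you neither
    # need heavy customization nor want to own or extend the source code.
    if existing_software_fits and not needs_customization and not wants_source_ownership:
        return Option.SAAS
    # IaaS: a canned (packaged) application moved onto VMs; you still
    # license and patch those virtual servers yourself.
    if is_packaged_app:
        return Option.IAAS
    # PaaS: you write and own the code; the platform manages the servers.
    return Option.PAAS


if __name__ == "__main__":
    print(suggest_option(False, True, True, False).value)
```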

References:

5 Enterprise steps for adopting a Platform as a Service: http://blogs.msdn.com/b/davidmcg/archive/2010/12/02/5-enterprise-steps-for-adopting-a-platform-as-a-service.aspx?wa=wsignin1.0

Application Patterns for the Cloud: http://blogs.msdn.com/b/kashif/archive/2010/08/07/application-patterns-for-the-cloud.aspx

Sean Deuby attempts to dispel Cloud Computing Confusion: “5 characteristics, 3 service models, and 4 deployment models: No wonder it's confusing!” in a 3/7/2011 article for Windows IT Pro:

The first session I attended at Microsoft’s 2011 MVP Global Summit last week was a town hall–style meeting with a collection of Microsoft VPs lined up in the front of the room and multiple rows of supporting product managers in the back of the room. I can’t discuss the session details because of the nondisclosure agreement every MVP must sign, but one thing really struck me. Even in an audience full of elite technologists, the questions that were asked made it obvious there’s still a lot of confusion about what cloud computing is...and what it isn’t.

One question that generated a lot of discussion, both from the panel and the audience, was “What’s the difference between a private cloud and a private LAN? Aren’t they the same thing?” This kind of question comes from all roles in the organization, from the IT pro in the trenches who thinks this cloud stuff is all hype, to the CIO who’s been hearing about the financial benefits but is afraid of the unknown. One audience member asked, “If we’re confused about cloud computing, how are we supposed to explain it to managers and executives who can barely use their computers?”

Cloud computing is more than just having computers on your private LAN. It’s the result of the synergy between a number of different technologies and service models, some of which have been around for a long time. One of the challenges in explaining cloud computing is that there are three sets of definitions to digest: the characteristics of the cloud model, the deployment models, and the service models. To understand how cloud computing differs from previous IT technologies, let’s review the definitions.

Essential Characteristics of the Cloud Computing Model

The five characteristics of the cloud computing model were originally defined by the National Institute of Standards and Technology (NIST) and have since been refined by a number of experts. The model has been published many times before, but if you’re like me you need to see something several times before you really internalize it. Frankly, I recommend that you memorize this list; you’ll have the vague term “cloud” thrown at you many times in the future, and understanding these five characteristics will help you judge whether the latest offering you’re being shown is really cloud computing. And as an added plus, you’ll be able to finally explain cloud computing to your friends and relatives.

- On-demand self-service. You can quickly and easily configure the computing resources you need all by yourself, without filling out forms or emailing the service provider. An important point is that what you’re using is service-based (“I need 15 computing units”), not resource-based (“I need an HP ProLiant DL380 G6 with 32GB of RAM”). Your computing needs are abstracted from what you’re really being allocated. You don’t know, and in most cases you shouldn’t care. This is one of the biggest hurdles for IT departments that want to create their own internal cloud computing environment.

- Broad network access. You can access these resources from anywhere you can access the Internet, and you can access them from a browser, from a desktop with applications designed to work with them, or from a mobile device. One of the most popular application models (such as iPhone apps) is a mobile application that communicates with a cloud-based back end.

- Resource pooling. The cloud service provider, whether it’s Amazon or your own IT department, manages all of its cloud’s physical resources; creates a pool of virtual processor, storage, and network resources; and securely allocates them between all of its customers. This means your data might physically reside anywhere at any moment, although you can generally make certain broad choices for regulatory reasons (e.g., what country your data resides in). But you won’t know whether it’s in the San Antonio or Chicago data center, and certainly not what physical servers you’re using.

- Rapid elasticity. You can grow and shrink your capacity (processing power, storage, network) very quickly, in minutes or hours. Self-service and resource pooling are what make rapid elasticity possible. Triggered by a customer request, the service provider can automatically allocate more or less resources from the available pool.

- Measured service. Also described as subscription-based, measured service means that the resources you’re using are metered and reported back to you. You pay for only the resources you need, so you don’t waste processing power like you do when you have to buy it on a server-by-server basis.
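Because the article suggests using these five characteristics as a test for whether an offering is really cloud computing, the checklist can be expressed as data. The sketch below is a hypothetical illustration: the `Offering` type and its yes/no fields are assumptions, and in practice each answer is a judgment call rather than a boolean.

```python
from dataclasses import dataclass, fields


@dataclass
class Offering:
    """Yes/no answers for an offering, one per essential characteristic."""
    on_demand_self_service: bool
    broad_network_access: bool
    resource_pooling: bool
    rapid_elasticity: bool
    measured_service: bool


def missing_characteristics(offering: Offering) -> list:
    """Names of the essential characteristics the offering lacks."""
    return [f.name for f in fields(offering) if not getattr(offering, f.name)]


def is_cloud(offering: Offering) -> bool:
    """An offering qualifies only if it has all five characteristics."""
    return not missing_characteristics(offering)


if __name__ == "__main__":
    # Assumed example: traditional hosting that rents a physical server,
    # makes configuration changes on your behalf, and bills per server.
    hosting = Offering(on_demand_self_service=False, broad_network_access=True,
                       resource_pooling=False, rapid_elasticity=False,
                       measured_service=False)
    print(missing_characteristics(hosting))
```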

Cloud Computing Service Models

A service model describes how the capability is provided to the customer. I find the easiest way to understand cloud service models is with a layered approach, very similar to the OSI networking model, with the infrastructure at the bottom and the upper layers the user sees at the top.

Infrastructure as a Service (IaaS). The lowest level of the service model stack, an IaaS provider delivers fundamental computing power in the form of virtual machines (VMs) for the user to install and run OSs and applications as though they were in the user’s own data center. The user maintains everything but the VM itself.

Platform as a Service (PaaS). The next layer up the service model stack, PaaS takes care of both the computing and the application infrastructure (such as the programming language and its associated tools) for the customer to develop cloud-based software applications (SaaS). In addition to serving external customers, Microsoft is using its Azure PaaS offering as a platform to transform its own enterprise applications into SaaS applications.

Software as a Service (SaaS). The topmost layer of the service model, SaaS applications hide the entire IT infrastructure running in the cloud and present only the application to the user. Typically, these applications can be accessed only through a web browser, although some SaaS applications require installing components on a user’s desktop or in the user’s IT infrastructure for full functionality. This is by far the most popular and best-known service model, with thousands of examples, from Gmail to hosted Exchange Server to Salesforce to Facebook to Twitter.

Other “as a Service” models. Cloud service models don’t have to necessarily follow the layered approach; practically any aspect of software can be abstracted into the cloud and provided to the customer as a service. For example, Federation as a Service (FaaS) takes the work of establishing federated trusts between an enterprise and various cloud service providers away from the enterprise. The FaaS provider establishes trusts with hundreds of cloud providers (usually SaaS), and the enterprise simply connects to a portal with all the providers represented in a menu.
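The layered view of IaaS, PaaS, and SaaS can be sketched as a stack in which each service model moves the provider/customer boundary higher. The layer names and boundaries below are a simplification assumed for illustration, based on the descriptions above; real providers draw these lines differently (for example, under SaaS the customer still owns its data and configuration).

```python
# Layers ordered bottom (index 0) to top, mirroring the layered view above.
LAYERS = [
    "physical infrastructure",
    "virtual machine",
    "operating system",
    "runtime and tools",
    "application",
]

# Assumed simplification: index of the highest layer the provider manages.
PROVIDER_TOP = {
    "IaaS": LAYERS.index("virtual machine"),    # user maintains all but the VM
    "PaaS": LAYERS.index("runtime and tools"),  # provider adds language/tools
    "SaaS": LAYERS.index("application"),        # provider runs the whole stack
}


def customer_manages(model: str) -> list:
    """Layers left to the customer under the given service model."""
    return LAYERS[PROVIDER_TOP[model] + 1:]


if __name__ == "__main__":
    for model in ("IaaS", "PaaS", "SaaS"):
        print(model, customer_manages(model))
```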

Cloud Computing Deployment Models

The other set of definitions for cloud computing relates to how these services are physically deployed for the customer to use.

Public cloud. This is the best-known cloud computing deployment model, and it’s what’s usually being referred to when “cloud” is used with no qualifiers. A public cloud is hosted by a service provider, and its resources are pooled across many customers (although the resources appear to be dedicated to the customer). Amazon Elastic Compute Cloud (EC2), Windows Azure, and Salesforce.com are all public cloud providers. Note that although they share the same deployment model, they have different service models. Amazon is best known for its IaaS services, Microsoft provides PaaS, and Salesforce uses a SaaS model. Public cloud service providers represent the most mature technology and practices at this point.

Private cloud. NIST defines a private cloud simply as a cloud infrastructure that’s operated solely for an organization; in other words, it’s not shared with anyone. The major driving factors for private cloud are security and regulatory/compliance requirements; if you want to take advantage of cloud computing’s flexibility and cost savings, but you have strict requirements for where your data resides, then you must keep it private. Many of the big security concerns being voiced about cloud computing can be remedied with a private cloud.

Note that this definition makes no distinction between a private cloud hosted on-premise by your company’s IT organization and one hosted off-premise by a service provider; the erroneous assumption is often made that if it’s private, it must be in-house. Most companies are just beginning to explore what it takes to build their own private cloud, and technology companies are marketing hardware and software (such as the Windows Azure Platform Appliance) to make this enormous task easier.

Hybrid cloud. The hybrid cloud is pretty self-explanatory. It’s a combination of public and private clouds that are maintained separately but share, for example, the same application running in both. The best-known use case for this deployment model is an application that runs in a private cloud but can tap into its public cloud component to provide burst capacity (such as an online toy retailer during the holiday season).

This model is still in its infancy, but the hybrid cloud is the future of cloud computing for enterprises. It will eventually become the most widely used model because it provides the best of both public and private cloud benefits.

Community cloud. A relatively unknown variation of the public cloud, a community cloud is shared across several organizations that have common concerns or goals.
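The hybrid cloud's burst-capacity use case can be sketched as a simple placement policy: fill the private cloud first and overflow to the public cloud only when the private pool is full. `Pool`, `place`, and the capacity units are hypothetical names for illustration; a real deployment would also have to handle data placement, latency, and releasing capacity when demand falls.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A hypothetical capacity pool measured in abstract units of work."""
    name: str
    capacity: int
    in_use: int = 0

    def try_allocate(self, units: int) -> bool:
        """Reserve units if they fit; report whether the allocation succeeded."""
        if self.in_use + units <= self.capacity:
            self.in_use += units
            return True
        return False


def place(units: int, private: Pool, public: Pool) -> str:
    """Prefer the private cloud; burst to the public cloud only when the
    private pool cannot fit the request. Raise if neither has room."""
    for pool in (private, public):
        if pool.try_allocate(units):
            return pool.name
    raise RuntimeError("no capacity in either cloud")


if __name__ == "__main__":
    private, public = Pool("private", 10), Pool("public", 100)
    print([place(4, private, public) for _ in range(3)])
```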

Sifting Through the Hype

No wonder cloud computing is a confusing topic! Five characteristics, three service models, and four deployment models equals a lot of things to take into consideration. My recommendation is to first understand and internalize the essential characteristics, then learn the basics of the service and deployment models.

With all these definitions in mind, let’s revisit some common remarks and questions about cloud computing:

- “What’s the difference between a private cloud and a private LAN? Aren’t they the same thing?” A private LAN describes only the network layer, the very bottom of the IT infrastructure, and doesn’t address any of the many layers of software above it that must be in place to qualify as a private cloud.

- “We’ve been doing this for years by another name—it’s called hosting services.” Hosting services might be cloud service providers, but by no means are they cloud service providers by default. Traditional hosted services require you to purchase all or part of a physical server (i.e., the computing presented to the user isn’t abstracted from the infrastructure in the physical data center), changing configuration is managed by the hosting company rather than the customer (i.e., it’s not self-service), and you typically can’t dynamically change capacity. The lesson from this common objection is to hold the objection up to the five essential characteristics of cloud computing, and see if it fits.

- In InfoWorld’s interview with Intel CIO Diane Bryant, Diane said, “As a large enterprise I have a very large infrastructure—I have 100,000 servers in production—and so I am a cloud. I have the economies of scale, I have the virtualization, I have the agility.” I spent 10 years in Intel’s IT division on the corporate Active Directory team, and although Intel has virtualization, without massive pooling of compute and storage resources and subscription-based, full user self-service, it’s not a (private) cloud.

- Finally, there’s a very pragmatic way to answer the assertion that “This cloud computing thing is mostly hype.” Microsoft has invested $2.3 billion into cloud computing and has 30,000 engineers working on it. In addition, the company intends to create SaaS versions of its entire software portfolio. Say what you will about Microsoft, but no company invests that much of its money into a computing model without being completely convinced that it’s worth it. It wasn’t that long ago that Microsoft abruptly shifted to face the web, and many people were also skeptical of that shift at the time—but look at the world we’re in today.

Cloud computing isn’t just hype. No, it won’t solve world hunger, and it’s not for everyone. But it’s here to stay, and it will transform the IT world; in fact, it already is. You’d best start working to understand it, because it will be a part of your future.

David Pallmann (@davidpallman) announced Now on Kindle: The Windows Azure Handbook, Volume 1: Planning & Strategy on 3/7/2011:

Last week my book, The Windows Azure Handbook, Volume 1: Planning & Strategy, became available (a 300-page print book). This book is now also available as an e-book on Amazon Kindle.

Kindle e-books can be read not only on the Kindle book reader device but also on many PC, phone, and tablet devices - including Windows PC, Mac, iPad, iPhone, Android, BlackBerry, and Windows Phone 7.

The Windows Azure Team added its imprimatur on the New Book by Windows Azure MVP David Pallmann, "The Windows Azure Handbook, Volume 1: Strategy & Planning", Now Available on 3/8/2011:

David Pallmann, one of our Windows Azure MVPs, has just published a great new book, "The Windows Azure Handbook, Volume 1: Strategy & Planning". According to David's post, this book has a two-fold mission: to make the case to readers that cloud computing is worth exploring, and that they should make Windows Azure their cloud-computing platform of choice. Designed to help business and technical decision makers evaluate and plan, the book is split into two parts: Understanding Windows Azure and Planning for Windows Azure.

According to David, this is the first of a series of books covering the Windows Azure platform across the full lifecycle, from both business and technical perspectives.

Subsequent volumes will focus on architecture, development, and management. Learn more about David's plans for the series in this post.

Jay Fry (@jayfry3) posted a Survey: cloud really is shaking up the IT role -- with some new job titles to prove it on 3/7/2011:

Cloud computing may still only be in the early stages of adoption, but it’s getting harder and harder to say that cloud is just a minor tremor in the IT world. More and more evidence is pointing to the conclusion that this is a full-on earthquake.

In the survey announced today, conducted by IDG Research Services and sponsored by CA Technologies, Inc. (my employer), almost every participant (96% of them, anyway) believed that the main thing the IT department does, and is valued for, has changed in the past 5 years. It's not just about running the hardware and software systems any more. Seven in 10 expect that shift to continue in the next 2 years.

Over half of the people attribute the changes to one thing: cloud computing. And that’s with adoption still in its early stages (our survey at the end of last year showed quite a bit of progress with adoption: 92% of the largest enterprises have at least one cloud service; 53% of IT implementers report more than 6. But most everything else isn’t being done in the cloud).

Tracking the shift in IT by watching the new IT titles. So what is this new area of value that IT is shifting to? One way to find out is to ask about the job titles. This particular survey did something that surveys often don’t do: it asked an open-ended, free-form question. The question was this: “In your opinion what job title(s) will exist in two years that do not exist today?”

I got a peek at the raw results: the approximately 200 respondents listed 112 separate answers. I guess that range of comments isn’t surprising. It’s hard to summarize, but very interesting to thumb through. But I’ll give it a shot, anyway:

A bunch of the new titles from both the U.S. and European respondents had cloud in the title. These included cloud manager, cloud computing optimizer, cloud service manager, cloud capacity planning, supervision of the clouds, chief cloud officer, and the like. These cloudy titles describe some of the new IT jobs that are appearing; I walked through some similar new titles in a previous post. Today’s new survey supports many of those earlier assertions from me and others I’ve been following.

Respondents also mentioned some interesting new titles that didn’t explicitly say “cloud.” In fact, only 40 of the new titles that respondents mentioned included the word “cloud.” The rest didn’t. These other titles described some of the increasingly important capabilities, in many cases directly connected to the shifts that cloud computing is causing.

So what were some of those other new titles expected over the next few years? Vendor manager, outsourcing manager, ombudsperson of IT issues, IT vendor performance manager, security/risk manager, chief process officer, chief communications officer, chief business enabler, virtualization solutions manager, business relationship manager, and chief analytics officer (which one respondent specifically said should report to the board of directors).

Pointing to requirements in the shift to an IT service supply chain. While this second set of titles doesn’t explicitly talk about cloud computing, they are being called out precisely because of the shift that the cloud is causing. Specifically:

- There’s a greater need to monitor and manage 3rd party vendors that are providing pieces of IT service to enterprises.

- Risk and compliance are called out as even more important than they are now. In fact, a later question in the survey tagged security management as the role that would change the most as a result of cloud computing. In addition, the survey asked what would be the moment that we’ll all know that “cloud has arrived”? Most popular answer: cloud will have “arrived” when vendors deliver solutions adequately addressing security and performance.

- Virtualization titles were also noted in the list of new ones, but many were for higher-level managers, focused on solutions rather than particular technologies. In fact, when we asked which job titles IT people thought would be most likely to disappear over the next 2 years, they listed infrastructure managers and administrators of all sorts. The folks doing administration, configuration, and management of servers, databases, and networks were voted most likely to fade away. (I also did a previous post on the IT job “endangered species list,” if you're interested in more on that topic.)

More business savvy, please. The rest of the survey backed up the requirement implied in these new titles. The belief is that IT is today mainly known as owner and operator of the IT infrastructure. And that is what is changing. More than half (54%) of the respondents see the primary value of IT coming from its role managing an IT service supply chain within two years.

I’ve posted about this shift to treating IT like a supply chain several times in this blog, and it’s something that I and CA Technologies (judging from a lot of the bets the company is making in this space) expect to become more and more real over the next few years.

And with this move, the jobs will inevitably shift, too. That shift, if this survey is right, is starting now. The IT roles, our respondents predict, will focus more on being a business enabler – doing a better job integrating what IT can provide with what the business is asking for. The relationship management and communication skills with the business side of the house become much more important. Does a move to this supply chain model mean business savvy will trump technical IT skills? It certainly seems to be headed that way.

That doesn’t mean IT will banish its techies. On the contrary, those skills will continue to be important, too, given how central technology remains to IT’s success. However, cloud computing is giving IT a new focus and position on the business side of the house that it hasn’t really had before. IT has a chance to contribute more to business strategy. I expect many in IT to jump at the chance.

So...do the titles mentioned by our survey respondents make sense to you? What new titles are you seeing appear (or fade away) in your organization?

You can find a summary of the IDG survey, “The Changing Role of IT and the Move to an IT Supply Chain Model,” including graphics of some of the more interesting results and links to download the complete write-up, here.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Richard Santalesa reported an Update: Video archive version of last week's cloud webinar now available on 3/8/2011:

Update: In the event you were unable to attend last week's webinar covering various Legal Issues of Cloud Computing, an archived video version is available for viewing at your convenience at:

http://event.on24.com/r.htm?e=285427&s=1&k=DE81EEFD2A15B72713DB88007F7C2AA6

for the next 30 days, and then thereafter in the MSPtv video archives.

Background: Information Law Group attorneys, Richard Santalesa and Boris Segalis, are presenting the first in a series of monthly cloud computing-related webinars, beginning this Wednesday, March 2, 2011 – 12:30 pm ET

Click here or http://tinyurl.com/6dhfppf to register for this free webinar. Cloud computing’s very real benefits have taken the IT market by storm, combining quick implementation, low overhead and the ability to scale as needed. However, the potential legal risks raised by diving into cloud computing without a full and joint assessment by IT, legal and operations are very real.

In this introductory webinar on cloud computing, we will review the cloud landscape, cover basic definitions and provide an overview of legal and other risks that should be considered when moving to the cloud, regardless of the cloud infrastructure being planned for or already in use.

As always, we will be taking your questions for follow-up in our Q & A session as time allows. We look forward to seeing you!

Greg Shields asked Can you trust your public cloud provider? and “Is a vendor assertion of compliance enough?” in a 3/7/2011 post to the SearchCloudComputing.com blog:

In a recent speech to a roomful of large organization IT professionals, I was asked to explain private cloud computing. During our conversation, however, the topic very quickly turned toward public cloud.

This happens a lot, particularly among IT pros who don't yet fully understand these two concepts. Yet it was the emotion stemming from these differences, not the technical differences themselves, that struck me as notable. To make a sweeping generalization, large organization IT trusts no one.

Any finger-pointing, however, appears to be equally directed toward both legitimate potential risks and the very vendors that supply a large organization's IT needs.

It is this mindset, in my opinion, that places so many large organizations at odds with public cloud. No matter which major form of public cloud you are considering, the internal cultures and processes of large organizations are poorly suited for extension into the cloud.

A major component of that misalignment has to do with the (very legitimate) concerns of data security and data ownership, along with some industry and governmental regulations. Certain established regulatory and security policies were created long before the notion of public cloud entered IT's collective eye. In many cases, these prohibit the storage, processing or transmittal of data across assets not owned by the company.

Ignoring for a moment the gory details of security policy or regulatory compliance requirements, think for a minute about how this hurdle might be overcome. Listening to the individuals in that room -- and many others just like it -- you'll hear the same arguments:

- "We can't ensure data security in a cloud service."

- "We can't ensure that we're meeting regulatory compliance in a cloud service."

- "We can't ensure inappropriate accesses are prevented in a cloud service."

These individuals’ assurance concerns are absolutely valid, as they all have security policies to meet and regulatory compliance to fulfill. At issue, though, are the mechanisms by which said policies can be met.

Dreaming of regulatory compliance in the cloud

Few in our industry can claim to be experts in regulatory compliance; most know that if they follow a specific set of agreed-upon steps in configuring and maintaining systems, they'll get a good grade when the auditor walks in the door.

Yet achieving security and compliance fulfillment can be a contractual matter as well as a technical one. Consider a situation where your business falls under a regulation such as Sarbanes-Oxley (SOX). You'd then have a series of activities necessary to maintain compliance for each device and service: turn on the firewall, configure Event Log settings, restrict rights and permissions, and so on. Completing these steps and ensuring they remain unchanged means achieving fulfillment.

But who's to say another party couldn't complete these activities on your behalf? Who's to say that same party couldn't legally and contractually assert that completion? If this external party provided a legally and contractually binding assertion of SOX fulfillment, would that be enough to meet the needs of your auditors?

You're already doing this in several ways. You hand IT administration tasks to outside contractors who work within your walls; those people aren't employees. From a legal perspective, that company/contractor relationship isn't a far cry from the relationship between company and cloud service provider: They're performing a service while giving you an assertion of fulfillment vis-à-vis their contract with you. In return, you give them the keys to your applications.

In any case, some level of auditing is obviously required. In the cloud scenario, one assumes it would be completed by the vendor's auditors with the results federated to yours. If every auditor is equally certified and follows the same rules -- which, in a perfect world, they do -- this federation of auditing could be considered valid fulfillment.

We might not be fully there yet, though; by nature, security and regulatory compliance are always a trailing function. That said, the seeds of this future state are already being sown. In January of this year, the National Institute of Standards and Technology (NIST) released its first special publication on cloud computing. Still in draft, this document outlines NIST's definition of cloud computing while delivering very high-level guidance for entities considering moving toward or away from public cloud services.

In the end, is a vendor assertion of compliance enough? Possibly. One hopes such assertions may soon provide the necessary legal backing that facilitates cloud computing adoption in large organizations. The economics of that movement are already well-established. All that remains is the trust.


Greg is a Microsoft MVP and a partner at Concentrated Technology.

Full Disclosure: I’m a paid contributor to SearchCloudComputing.com.


Cloud Computing Events

Joe Panettieri asked HP Americas Partner Conference: Ready to Talk Cloud? in a 3/8/2011 post to the TalkinCloud blog:

As the HP Americas Partner Conference approaches (March 28-30, Las Vegas), it’s a safe bet Hewlett-Packard CEO Léo Apotheker (pictured) and Channel Chief Stephen DiFranco will roll out cloud strategies to VARs, managed services providers and aspiring cloud services providers (CSPs).

After all, HP has little choice but to counter — or leapfrog — recent cloud partner program moves by Cisco and IBM. The Cisco Cloud Partner Program debuted at last week’s Cisco Partner Summit, and IBM launched a Cloud Computing Specialty at the IBM PartnerWorld conference in February 2011. Also, Dell is mulling some cloud partner program ideas amid a storage channel push, according to TalkinCloud’s sister site, The VAR Guy.

In stark contrast, HP is a “question mark” in the cloud market, according to BusinessWeek. The mainstream magazine notes: “…the new CEO has yet to articulate a cloud strategy.”

I bet that changes at the HP Americas Partner Conference. CEO Apotheker and Channel Chief DiFranco are set to keynote the event. DiFranco has extensive experience in the managed services market. Indeed, he previously developed MSP-centric partner initiatives while leading Lenovo's channel efforts. I'd expect DiFranco to apply those MSP channel experiences to the cloud and SaaS markets.

Also of note: HP in December 2010 announced a managed services enterprise push, and I suspect HP could extend that push toward the cloud market. Somewhere along the line, HP will also need to articulate a clear strategy that counters VCE, the joint venture from VMware, Cisco and EMC. Rumor has it VCE is now preparing its own dedicated partner program for VARs focused on virtualization and private clouds.

HP needs a response. My best guess: I expect to hear it at the HP Americas Partner Conference.



Bruno Terkaly reported Peter Kellner To Speak at Azure Meetup in a 3/8/2011 post:

Don’t miss this important meetup


ConnectionRoad

Peter’s Product

Synchronizing files from a Windows PC to a cloud server seems simple, but doing it for hundreds of thousands of users simultaneously, while tracking bandwidth limits and cloud space limits and still delivering awesome performance and file security, is a huge challenge.

In this presentation, Peter Kellner will talk through how the application was built from the ground up on Azure's three legs (Compute, Storage and Database) to achieve this.

For those of you who have ever used Microsoft’s SyncToy, be prepared to see the next generation.

Peter Kellner, a Microsoft ASP.NET MVP since 2007, is founder and president of ConnectionRoad and a seasoned software professional specializing in high-quality, scalable and extensible .NET web applications.

His experience includes building and leading engineering teams both on and off shore.

When not working, Peter spends most of his free time biking. He has ridden his bike across the globe. Most recently, he and his wife, Tammy, rode across the U.S., from California to Georgia, in just 27 days.

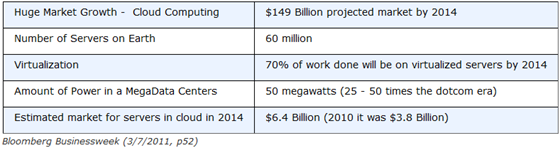

Bruno Terkaly posted Cloud Opportunities near you–Growth of Cloud Computing on 3/7/2011:

MSDN Presents…

Rob Bagby and Bruno Terkaly in a city near you:

Cloud Development is one of the fastest growing trends in our industry.

Don't get left behind!

In this event, Rob Bagby and Bruno Terkaly will provide an overview of developing with Windows Azure. They will cover both where and why you should consider taking advantage of the various Windows Azure services in your application, and give you a great head start on how to accomplish it. This half-day event will be split into three sections. The first section will cover the benefits and nuances of hosting web applications and services in Windows Azure, as well as taking advantage of SQL Azure. The second section will cover the ins and outs of Windows Azure storage, while the third will illustrate Windows Azure AppFabric.

The fact is that cloud computing offers affordable innovation. At most corporations, IT spending is growing at unsustainable rates. This is making innovation very difficult.

Companies, scientists, and innovators are turning to the cloud. Don’t get left behind.

| Date | Location | Time | Registration Link |
|---|---|---|---|
| 4/11/2011 | Denver | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480157&Culture=en-US |
| 4/12/2011 | San Francisco | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480158&Culture=en-US |
| 4/15/2011 | Tempe | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480159&Culture=en-US |
| 4/18/2011 | Bellevue | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480160&Culture=en-US |
| 4/19/2011 | Portland | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480161&Culture=en-US |
| 4/20/2011 | Irvine | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480162&Culture=en-US |
| 4/21/2011 | Los Angeles | 1:00 PM – 5:00 PM | https://msevents.microsoft.com/CUI/EventDetail.aspx?EventID=1032480163&Culture=en-US |

Why you should go: Cloud computing facts

The cloud makes new levels of computing power possible:

(1) Tens of thousands of cores

(2) Hundreds of terabytes of data

(3) Hundreds of thousands of daily jobs

(4) Incredible efficiencies, with typical CPU utilization of 80%-90%

Amazing Statistics:

Alex Williams (@alexwilliams, pictured below) posted a Live Blog from CloudConnect: Amazon CTO Werner Vogels and the Scaling Cloud (and other keynoters) to the ReadWriteCloud blog on 3/8/2011:

Amazon CTO Werner Vogels is the lead keynote today at CloudConnect, an event that has sold out this year with about 3,000 people in attendance.

Lew Tucker, Cloud CTO of Cisco Systems, and Cloudscaling CEO Randy Bias will follow Vogels.

We'll be looking for three key themes:

- The Importance of an Open Infrastructure.

- How the API Plays a Growing Role in the Cloud.

- The Future of Compute, Storage and I/O.

8:59 a.m.: Let's get started.

9:04 a.m.: Vogels says this will not be a sales talk. Yay! Very choppy video. Ugh. Vogels is talking about the ecosystem and the growth of mobile.

9:08 a.m.: Cloud service terms are muddling the way we think about infrastructure and its impacts. The real importance is in the types of applications we build. Cloud services need to exhibit the benefits of the greater cloud. The service provider should be running the infrastructure so people can just use it. That is what the cloud looks like these days.

9:11 a.m.: Most important is to grow the ecosystem. Enterprise software was built for enterprise operations. This is leading to major changes. Startups are growing to bigger sizes than enterprise companies. With increasing competition, enterprises are becoming more startup-like. He is mentioning companies such as SafeNet that provide ways for enterprises to interrelate with the cloud.

9:16 a.m.: Vogels is recounting a conversation with a potential customer who showed him a Gartner Magic Quadrant. He was being asked about hosting. But the real difference is how growth can be supported. Hosting architectures need to support high-performing Web sites.

9:20 a.m.: In this high-performance environment, companies are leveraging the ecosystem. SAP, for instance, is using the cloud to measure carbon impact.

9:22 a.m.: Still getting a very choppy stream. Hard to follow. Companies are using the redundancy of the Amazon server network. Some are using the EU region for hot loads, with the US region kept cold but available in case the load needs to be transferred, due to an outage, for example.

9:25 a.m.: Business environments change radically. Companies need to continually update disaster recovery. He is citing the importance of having a network such as Amazon's.

9:27 a.m.: Vogels is going through the company's announcements of the past year. Its goal is to make sure the ecosystem really blossoms.

Lew Tucker, Cloud CTO, Cisco Systems

9:31 a.m.: What are effective networks? It's really about network entropy. It's about decomposing and reconstructing into new configurations. Tucker is bringing up John Gage, who talked about the network being the computer. Today, we see the massive growth of TCP/IP and the world of networked devices. That movement has pushed us into the age of laptops, mobile platforms and the future mesh that will transform how society functions.

9:35 a.m.: Cloud computing is arriving just in time, Tucker says. It is like a perfect storm. What we have learned is that the Web won and applications need to be built in its form. That means a unified infrastructure. But what do we do to push things forward so applications are independent of the underlying infrastructure?

9:42 a.m.: Talking about joining OpenStack and Cisco's commitment to open standards. Missed a bit due to the poor stream.

Now, we're on to Randy Bias.

Randy Bias, CEO, Cloudscaling

9:45 a.m.: Cloudscaling builds clouds. Bias is walking through how he and his company think about building clouds. What he is really referring to are the public clouds we see being built. Private clouds are needed because the enterprise needs to be serviced differently than Amazon services its customers. He sees two battles being waged, one over the commodity public clouds provided by Amazon and the other over enterprise clouds.

You have to ask yourself: Where is the enterprise cloud that compares to Amazon Web Services? Amazon, he notes, is taking off at an amazing pace. Amazon S3 is growing at 150%. That's petabytes of data. Extrapolate and you see a scaling business.

9:55 a.m.: For the enterprise cloud, the cost to build and maintain is far greater than for commodity clouds. Amazon is winning, and enterprise cloud providers need to consider how to compete more effectively against it.

10:07 a.m.: I've been following the interviews with Matthew Lodge of VMware and Matt Thompson, General Manager, Developer and Platform Evangelism at Microsoft. The focus is again on developers and the importance of infrastructure that supports application growth: programming the cloud, not the individual computer.

Kevin McEntee, VP of Ecommerce and Systems Engineering, Netflix

10:14 a.m.: In 1998, the company used a big Oracle database and a big Java programming environment. It crashed. They went to the cloud looking for high availability. They got that, but they also discovered new agility for developers and for the business. Agility came from eliminating complexity. Accidental complexities came with adjusting to a Web environment.

10:22 a.m.: Netflix culture fits with the AWS programming environment. There is no CIO. There is no control.

We're going to stop there. Thanks for following!

Brenda Michelson (pictured below) delivered a briefer recap of more Cloud Connect: Opening Keynotes on 3/8/2011:

The conference opens with short keynotes and a panel discussion on private clouds.