Windows Azure and Cloud Computing Posts for 3/24/2011+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

• Updated 3/26/2011 with articles marked • from Patrick Butler Monterde, Nick J. Trough, Alik Levin, Steef-Jan Wiggers, Me, Vijay Tewari, Thomas Claburn, Jeff Wettlauter, Adam Hall, System Center Team, Michael Crump, Srinivasan Sundara Rajan, Cihan Biyikoglu, SQL Server Team, Bernardo Zamora, David Linthicum, Jonathan Rozenblit, Todd Hoff, and Nicole Hemsoth.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

No significant articles today.

SQL Azure Database and Reporting

• Cihan Biyikoglu announced a session about SQL Azure Federations at TechEd 2011 in a 3/26/2011 post:

See you all in Atlanta at TechEd in May 2011 [for a session described here:]

DBI403 Building Scalable Database Solutions Using Microsoft SQL Azure Database Federations

- Session Type: Breakout Session

- Level: 400 - Expert

- Track: Database & Business Intelligence

- Speaker(s): Cihan Biyikoglu

SQL Azure provides an information platform that you can easily provision, configure and use to power your cloud applications. In this session we explore the patterns and practices that help you develop and deploy applications that can exploit the full power of the elastic, highly available, and scalable SQL Azure Database service. The session details modern scalable application design techniques such as sharding and horizontal partitioning and dives into future enhancements to SQL Azure Databases.

- Product/Technology: Microsoft® SQL Azure™

- Key Learning: SQL Azure Federations

- Key Learning: Building scalable solutions on SQL Azure

- Key Learning: Developing multi-tenant solutions on SQL Azure

Cihan Biyikoglu explained Moving to Multi-Tenant Database Model Made Easy with SQL Azure Federations in a 3/23/2011 post:

In an earlier post I talked about how federations provide a robust connection model. I want to take a moment to drill into filtering connections and how they make it easy to move a classic on-premises database model, known as the single-tenant-per-database model, to a fully dynamic multi-tenant database model. With the move, the app needs only small changes to its business logic but can now support multiple tenants per database to take advantage of better economics.

Obvious to many, but for completeness, let's first talk about why the single-database-per-tenant model causes challenges:

- In some cases the capacity requirements of a small tenant can fall below the smallest database available in SQL Azure. Assume you have tenants with only megabytes of data. Consuming a 1 GB database for each wastes valuable dollars. You may ask why there is a scale-down limit on a database in SQL Azure. It is a long discussion, but the short answer is this: there is a constant cost to a database, and a smaller database does not reduce that cost enough to provide benefits. In the future we may be able to make optimizations, but for now the 1 GB Web Edition database is the smallest database you can buy.

- When the tenant count is large, managing a very large number of independent surfaces causes issues. For example: with 100K tenants, if you were to create 100K databases for them, you would find that operations scoped globally to address all your data, such as schema deployments or queries that report size usage per tenant across all databases, would take excessively long to complete. The reason is the sheer number of connects and disconnects that you have to perform.

- Apps that have to work with such a large number of databases end up causing connection pool fragmentation. I touched on this in a previous post as well, but to reiterate: assuming that your middle tier can get a request to route its query to any one of the 100K tenants, the app server ends up maintaining 100K pools with a single connection in each pool. Since each app server does not get a request for each of the 100K tenants frequently enough, the app often finds no connection in the pool and needs to establish a cold connection from scratch.

Having multiple tenants in a single database with federations solves all these issues:

- The multi-tenant database model with federations allows you to dynamically adjust the number of tenants per database at any moment in time. Repartitioning commands like SPLIT allow you to perform these operations without any downtime.

- Simply rolling multiple tenants into a single database optimizes the management surface and allows batching of operations for higher efficiency.

- With federations you can connect through a single endpoint and don’t have to worry about connection pool fragmentation for your application’s regular workload. SQL Azure’s gateway tier does the pooling for you.
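The connection pool argument above can be illustrated with a small simulation. This is only a sketch in Python: it assumes pools are keyed by connection string (as client-side pooling typically works), that a pooled connection never expires, and that requests pick tenants uniformly at random. The server and database names are made up.

```python
import random

def pool_key_per_tenant(tenant_id: int) -> str:
    # Single-tenant model: one database per tenant, so every tenant
    # needs its own connection string (hypothetical names).
    return f"Server=example-server;Database=tenant_{tenant_id}"

def pool_key_federated(tenant_id: int) -> str:
    # Federated model: every request connects to the same endpoint and
    # is routed afterwards, so the connection string never changes.
    return "Server=example-server;Database=orders_root"

def cold_connections(key_for, tenants: int, requests: int, seed: int = 0) -> int:
    """Count requests that find no pooled connection for their key."""
    rng = random.Random(seed)
    pooled = set()
    cold = 0
    for _ in range(requests):
        key = key_for(rng.randrange(tenants))
        if key not in pooled:
            cold += 1          # nothing pooled yet: connect from scratch
            pooled.add(key)    # the connection stays in the pool afterwards
    return cold
```

With 100K tenants and a few thousand requests, the per-tenant model opens a cold connection for nearly every request, while the single-endpoint model opens exactly one.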

What’s the catch? Isn’t there any downside to multi-tenancy?

Sure, there are downsides to implementing multi-tenancy in general. The most important one is database isolation. With the multi-tenant database model, you don’t get strict security isolation of data between tenants. For example, you can no longer have a user_id that can only access a specific tenant in that database in SQL Azure with a multi-tenant database. You always have to govern access through a middle tier to ensure that isolation is protected. Access to data through open protocols like OData or Web services can solve these issues.

Another complaint I hear often is the need to monitor and provide governance between tenants in your application in the multi-tenant case. Imagine a case where you have 100 tenants in a single database and one of these tenants consumes an excessive amount of resources. Your next query from a well-behaved tenant may get slowed down, blocked or throttled in the database. In v1, federations provide no help here. Without app-level governance you cannot create a fair system between these tenants in federations v1. The solutions to the governance problem tend to be app specific anyway, so I’d argue you would want this logic in the app tier and not in the db tier. Here is why: with all governance logic, it is best to engage and deny or slow down the work as early as possible in its execution lifetime. So pushing this logic higher in the stack to the app tier for evaluation is a good principle to follow. That is not to say the db should not participate in the governance decision. SQL Azure can contribute by providing data about a tenant’s workload, and in future versions we may help with that.
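As one possible shape for the app-tier governance described above, here is a minimal per-tenant token bucket in Python. It is a sketch, not something federations provide: the class name, the rate and burst parameters, and the injectable clock are all assumptions made for illustration.

```python
import time
from collections import defaultdict

class TenantThrottle:
    """Per-tenant token bucket, checked in the app tier before a query is sent."""

    def __init__(self, rate_per_sec: float, burst: int, clock=time.monotonic):
        self.rate = rate_per_sec      # tokens added per second, per tenant
        self.burst = burst            # maximum tokens a tenant can accumulate
        self.clock = clock            # injectable so the logic can be tested
        self._tokens = defaultdict(lambda: float(burst))
        self._last = {}

    def allow(self, tenant_id: int) -> bool:
        now = self.clock()
        last = self._last.get(tenant_id, now)
        # Refill in proportion to elapsed time, capped at the burst size.
        tokens = min(self.burst, self._tokens[tenant_id] + (now - last) * self.rate)
        self._last[tenant_id] = now
        if tokens >= 1:
            self._tokens[tenant_id] = tokens - 1
            return True
        self._tokens[tenant_id] = tokens
        return False
```

A middle tier would call `allow(tenant_id)` before opening the filtered connection and reject or delay the request when it returns `False`, so a noisy tenant is stopped before its work reaches the shared database.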

Yes, there are issues to consider… But multi-tenancy has been around for a while and there are also good compromises and workarounds in place.

How do federations help with porting single-database-per-tenant (single-tenant) apps to the multi-tenant model?

Let’s switch gears and assume you are sold… Multi-tenancy it is… Let’s see how federations help.

In a single-tenant app, the query logic in the application is coded with the assumption that all data in a database belongs to one tenant. With multi-tenant apps that work with identical schemas, refactored code simply injects tenant_id into the schema (tables, indexes, etc.), and every query the app issues contains the tenant_id=? predicate. In a federation where tenant_id is the federation key, you are still asked to implement the schema changes. However, federations provide a connection type called a FILTERING connection that automatically injects this tenant_id predicate without requiring app refactoring. Our data-dependent routing sets up a FILTERING connection by default. Here is how:

    USE FEDERATION orders_federation(tenant_id=155) WITH RESET, FILTERING=ON

Since SQL Azure federations know about your schema and your federation key, you can simply continue to issue the “SELECT * FROM dbo.orders” query on a filtered connection, and the algebrizer in SQL Azure auto-magically injects “WHERE tenant_id=155” into your query. This basically means that connections to federations do the app logic refactoring for you… You don’t need to rewrite business logic and fully validate this code to be sure that you have tenant_id injected into every query.

With federations there are still good reasons to use UNFILTERING connections. FILTERING connections cannot do schema updates and do not work well when you’d like to fan out a query. “FILTERING=OFF” connections come with no restrictions and can help with efficiency. Here is how you get an UNFILTERING connection:

    USE FEDERATION orders_federation(tenant_id=155) WITH RESET, FILTERING=OFF

The existence of two connection modes, however, also creates an issue: what happens when T-SQL intended for FILTERING connections is executed on an UNFILTERING connection? We can help here: federations provide a way for you to detect the connection type. In sys.dm_exec_sessions you can query the federation_filtering_state field to detect the connection state.

    IF (select federation_filtering_state from sys.dm_exec_sessions
        where @@SPID=session_id)=1
    BEGIN
        EXEC your single-tenant logic …
    END
    ELSE
    BEGIN
        RAISERROR ..., "Not on a filtering connection!"
    END

There are many other ways to ensure this does not happen, but defensive programming is still the best approach to ensure robust results.
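For an app tier that issues these statements itself, the two connection modes and the defensive check could be wrapped roughly as follows. This is a Python sketch under stated assumptions: the helper names are hypothetical, the federation key is assumed to be an integer, and the statement text simply mirrors the preview syntax quoted in the post rather than a released API.

```python
import re

# Conservative identifier check so names are never spliced in unvalidated.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")

def use_federation(federation: str, key: str, value: int, filtering: bool = True) -> str:
    """Build the USE FEDERATION statement in the form shown in the post."""
    for name in (federation, key):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    state = "ON" if filtering else "OFF"
    return f"USE FEDERATION {federation}({key}={int(value)}) WITH RESET, FILTERING={state}"

def require_filtering(federation_filtering_state: int) -> None:
    """App-side counterpart of the T-SQL guard: refuse to run single-tenant
    logic unless the session reports a filtering connection (state 1)."""
    if federation_filtering_state != 1:
        raise RuntimeError("Not on a filtering connection!")
```

Here `use_federation("orders_federation", "tenant_id", 155)` yields the FILTERING=ON statement from the post, and passing `filtering=False` yields the FILTERING=OFF variant. The value handed to `require_filtering` would come from querying `federation_filtering_state` in `sys.dm_exec_sessions` for the current session.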

So net net, multi-tenancy is great for a number of reasons, and with federations you can move applications with heavy business logic coded in the single-tenant model to a multi-tenant model with less work. I specifically avoid calling this a transparent port because of the issues I discussed, and you still need to consider compromises in your code.

When federations become available this year, we’ll have more examples of how to move single-tenant applications over to multi-tenant model with federations but for those of you dreading the move, there is help coming.

The question is when is the “help coming” in the form of a SQL Azure Federation CTP?

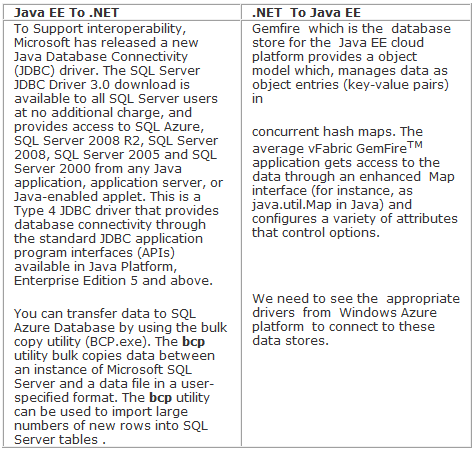

Edu Lorenzo explained How to Move Data to [SQL] Azure on 3/23/2011:

Here is a short blog [post] on how to move data to Azure. This is just part of a longer blog on how to publish a databound app so bear with me.

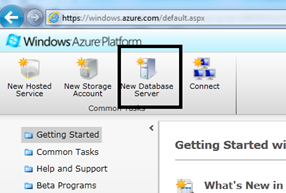

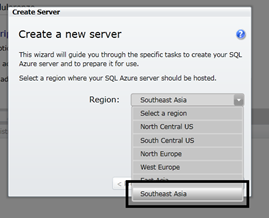

Create an Azure database. Call it whatever you want.

Create a new server that will house your database. I suggest choosing an area near you geographically. I chose Southeast Asia as that is the nearest to me the last time I checked :p

Fill in credentials for your database Admin. I used “eduadmin” as the admin name and “M!cR0$0f+” as password… yeah right

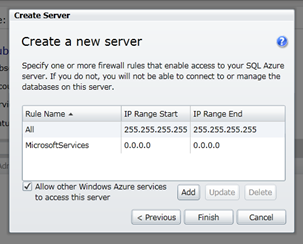

Then we add some rules. These are going to be your connectivity rules.

You now will have a database server with a master database.

It is now time to click CREATE to create a new DB. I click Create then type in “adventureworks” as the database name.

- You now have an empty database called “adventureworks” up in the cloud.

- Now to put some data in it. I will use SQL Server 2008 R2’s Management Studio so I can show you some new stuff that you can use to migrate data.

Open up Management Studio and use the Fully Qualified DNS Name that Azure gives you as the server, along with the credentials you entered earlier, so you can connect Management Studio directly to your Azure database. Take note of how I wrote the username.
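The same connection details can be assembled for a program rather than typed into Management Studio. The Python sketch below makes several assumptions, because the original screenshot is not reproduced here: that the fully qualified name has the form `<server>.database.windows.net`, that the login is written as `login@server`, and that the ODBC driver named in the default argument is installed. The server name used in the usage note is made up.

```python
def sql_azure_odbc_string(server: str, database: str, login: str, password: str,
                          driver: str = "SQL Server Native Client 10.0") -> str:
    """Assemble an ODBC connection string for a SQL Azure server.

    `server` is the short server name. Values containing ';' or braces
    would need ODBC escaping, which this sketch does not handle.
    """
    parts = [
        ("Driver", "{" + driver + "}"),
        ("Server", f"tcp:{server}.database.windows.net,1433"),
        ("Database", database),
        ("Uid", f"{login}@{server}"),   # login@server form (assumption)
        ("Pwd", password),
        ("Encrypt", "yes"),             # SQL Azure connections are encrypted
    ]
    return ";".join(f"{key}={value}" for key, value in parts) + ";"
```

For example, `sql_azure_odbc_string("myserver", "adventureworks", "eduadmin", "...")` produces a string whose `Uid` is `eduadmin@myserver`, which an ODBC client library could then use to open the connection.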

My Management Studio is now connected to both my local version of the Adventureworks database and my cloud-based database.

Then I script out the data and schema from my local copy of adventureworks using Management Studio, taking note of some advanced options.

As you can see, we can now (using R2) script data out to an Azure-friendly format.

- And then I run the script against my Azure version of adventureworks.

And the database has been created.

To double check, I use the browser-based Azure database manager.

And that’s it. A lot of things I did not indicate. This is a quickie blog [post].

Buck Woody (@buckwoody) described a SQL Azure Use Case: Department Application Data Store by moving Access tables to SQL Azure on 3/22/2011:

This is one in a series of posts on when and where to use a distributed architecture design in your organization's computing needs. You can find the main post here: http://blogs.msdn.com/b/buckwoody/archive/2011/01/18/windows-azure-and-sql-azure-use-cases.aspx

Description:

Most organizations use a single set of enterprise applications where employees do their work. This system is designed to be secure, safe, and to perform well. It is attended and maintained by a staff of trained technical professionals. But not all business-critical work is contained in these systems. Users who have installed software such as Microsoft Office often set up small data-stores and even programs to do their work. This is often because the system is “bounded” - the data or program only affects an individual’s work.

The issue arises when an individual’s data system is shared with other users. In effect the user’s system becomes a server.

There are many issues with this arrangement. The “server” in this case is not secure or safe and does not perform well at higher loads. It may have design issues, and normally it cannot be accessed from outside the office. Users might even install insecure software to allow them to access the system when they are on the road, adding to the insecurity. When the system becomes unavailable or has a design issue, IT may finally become aware of the application’s existence and its criticality.

Implementation:

There are various options to correct this situation. The first and most often used is to bring the system under IT control, re-write or adapt the application to a better design, and run it from a proper server that is backed up, performs well, and is safe and secure. Depending on how critical the application is, how many users are accessing it, and the level of effort, this is often the best choice.

But this means that IT will have to assign resources, including developer and admin time, licenses, hardware and other infrastructure and development resources, and this takes time and adds to the budget.

If the application simply needs to have a central data store, then SQL Azure is a possible architecture solution. In smaller applications such as Microsoft Access, data can be separated from the application - in other words, the users can still create reports, forms, queries and so on and yet use SQL Azure as the location of the data store. A minimal investment of IT time in designing the tables and indexes properly then allows the users to maintain their own application. No servers, installations, licenses or maintenance is needed.

Another advantage to this arrangement is that the user’s department can set up the subscription to SQL Azure, which shifts the cost burden to the department that uses the data, rather than to IT.

Microsoft Access is only one example - many programs can use an ODBC connection as a source, and since SQL Azure uses this as a connection method, to the application it appears as any other database. The application then can be used in any location, not just from within the building, so there’s no need for a VPN into the building - the front end application simply goes with the user.

Another option for a front-end is to have the power-user create a LightSwitch application. More of a pure development environment than Microsoft Access, it still offers an easy process for a technical business user to create applications. Pairing this environment with SQL Azure makes for a mobile application that is safe and secure, paid for by the department. Interestingly, if designed properly, the data store can serve multiple kinds of front-end programs simultaneously, allowing a great deal of flexibility and the ability to transfer the data to an enterprise system at a later date if that is needed.

Resources:

Example process of moving a Microsoft Access data back-end to SQL Azure: http://www.informit.com/guides/content.aspx?g=sqlserver&seqNum=375

Marketplace DataMarket and OData

• My (@rogerjenn) Windows Azure and OData Sessions at MIX11 post updated 3/26/2011 lists 15 sessions for a search on Azure and 4 (2 duplicates) for a search on OData.

MIX11 will be held 4/12 through 4/14/2011 at the Mandalay Bay hotel, Las Vegas, NV. Hope to see you there.

• Michael Crump announced on 3/24/2011 a new series with a Producing and Consuming OData in a Silverlight and Windows Phone 7 application (Part 1) post:

I have started a new series on SilverlightShow.net called Producing and Consuming OData in a Silverlight and Windows Phone 7 application. I decided that I wanted to create a very simple and easy to understand article that not only guides you step-by-step but includes a video and full source code. I personally believe this is the best way to teach someone something and I hope you enjoy the series. I also want to thank SilverlightShow for giving me this opportunity to help other developers get up to speed quickly with OData.

The table of contents for the series is listed below. I will be adding parts 2 and 3 shortly.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 1) – Creating our first OData Data Source and querying data through the web browser and LinqPad.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 2 ) – Consuming OData in a Silverlight Application.

- Producing and Consuming OData in a Silverlight and Windows Phone 7 application. (Part 3) – Consuming OData in a Windows Phone 7 Application.

What is OData?

The Open Data Protocol (OData) is simply an open web protocol for querying and updating data. It allows for the consumer to query the datasource (usually over HTTP) and retrieve the results in Atom, JSON or plain XML format, including pagination, ordering or filtering of the data.
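To show what paging, ordering and filtering look like on the wire, here is a small Python sketch that builds an OData query URL from the standard `$filter`, `$orderby`, `$top` and `$skip` system query options. The helper name, the service root and the entity set in the usage note are made up for illustration and do not come from the series.

```python
from urllib.parse import quote

def odata_query(service_root: str, entity_set: str, filter_expr=None,
                orderby=None, top=None, skip=None) -> str:
    """Build an OData query URL from the common system query options."""
    options = [
        ("$filter", filter_expr),   # e.g. "City eq 'London'"
        ("$orderby", orderby),      # e.g. "Name desc"
        ("$top", top),              # page size
        ("$skip", skip),            # number of entries to skip
    ]
    pairs = []
    for name, value in options:
        if value is not None:
            # Percent-encode spaces but keep characters OData expressions use.
            pairs.append(name + "=" + quote(str(value), safe="'(),"))
    url = service_root.rstrip("/") + "/" + entity_set
    return url + ("?" + "&".join(pairs) if pairs else "")
```

Calling `odata_query("http://localhost:1234/Service.svc", "Customers", filter_expr="City eq 'London'", orderby="Name desc", top=10, skip=20)` gives a URL that can be pasted into a browser to request the third page of ten matching customers.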

In this series of articles, I am going to show you how to produce an OData Data Source and consume it using Silverlight 4 and Windows Phone 7. Read the complete series of articles to have a deep understanding of OData and how you may use it in your own applications.

The Full Article

The full article is hosted on SilverlightShow and you can access it by clicking here. Don’t forget to rate it and leave comments, or email me (michael[at]michaelcrump[dot]net) if you have any problems.

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Alik Levin continued his series with Windows Azure Web Role WCF Service Federated Authentication Using AppFabric Access Control Service (ACS) v2 – Part 2 on 3/25/2011:

This is a continuation to Windows Azure Web Role WCF Service Federated Authentication Using AppFabric Access Control Service (ACS) v2 – Part 1.

Step 2 – Create and configure WCF Service as relying party in ACS v2 Management Portal

The content in this step is adapted from How To: Authenticate with Username and Password to the WCF Service Protected by Windows Azure AppFabric Access Control Service Version 2.0.

In the next procedures you will create and configure a relying party using the ACS v2.0 management portal. A relying party application is the WCF service for which you want to implement federated authentication using ACS.

To create and configure a relying party

- Navigate to https://portal.appfabriclabs.com and authenticate using Live ID.

- Click on Service Bus, Access Control & Caching tab.

- Click on Access Control node in the treeview under the AppFabric root node. List of your namespaces should appear.

- Select your namespace by clicking on it and then click on Access Control Service ribbon. New browser tab should open an Access Control Service page.

- Click the Relying Party Applications link in the Trust Relationships section.

- On the Relying Party Applications page, click the Add link.

- On the Add Relying Party Application page specify the following information:

In the Relying Party Application Settings section:

- Name—Specify the display name for this relying party. For example, Windows Azure Username Binding Sample RP.

- Mode—Choose the Enter settings manually option.

- Realm—Specify the realm of your WCF service. For example, http://localhost:7000/Service/Default.aspx.

- Return URL—Leave blank.

- Error URL—Leave blank.

- Token format—Choose the SAML 2.0 option.

- Token encryption policy—Choose the Require encryption option.

- Token lifetime (secs) —Leave the default of 600 seconds.

In the Authentication Settings section:

- Identity providers—Leave all unchecked.

- Rule groups—Check the Create New Rule Group option.

In the Token Signing Options section:

- Token signing—Choose the Use a dedicated certificate option.

- Certificate:

- File—Browse for an X.509 certificate with a private key (.pfx file) to use for signing.

- Password—Enter the password for the .pfx file in the field above.

In the Token Encryption section:

- Certificate:

- File—Browse to load the X.509 certificate (.cer file) for the token encryption for this relying party application. It should be the certificate without private key, the one that will be used for SSL protection of your WCF service.

- Click Save.

- Saving your work also triggers creating a rule group. Now you need to generate rules for the rule group.

To generate rules in the rule group

- Click the Rule Groups link.

- On the Rule Groups page, click the Default Rule Group for Windows Azure Username Binding Sample RP rule group.

- On the Edit Rule Group page, click the Add link at the bottom.

- On the Add Claim Rule page, in the If section, choose the Access Control Service option. Leave the default values for the rest of the options.

- Click Save.

In the next procedures you will create and configure the service identity to respond to a token request based on a username and password.

To configure the service identity for using username and password credentials

- Navigate to https://portal.appfabriclabs.com and authenticate using Live ID.

- Click on Service Bus, Access Control & Caching tab.

- Click on Access Control node in the treeview under the AppFabric root node. List of your namespaces should appear.

- Select your namespace by clicking on it and then click on Access Control Service ribbon. New browser tab should open an Access Control Service page.

- Click the Service Identities link in the Service Settings section.

- On the Service Identities page, click the Add link.

- On the Add Service Identity page provide a Name. You will use it as a username for your username and password pair when requesting a token.

- Optionally, add a description in the Description section.

- Click Save. The page title should change to Edit Service Identity.

- Click the Add link in the Credentials section. You should be redirected to the Add Credentials page.

- On the Add Credentials page, provide the following information:

- Type—Choose Password from drop-down list.

- Password—Enter your desired password. You will use it as a password for your username and password pair when requesting a token.

- Effective date—Enter the effective date for this credential.

- Expiration date—Enter the expiration date for this credential.

- Click Save.

Related Materials

- Windows Azure Web Role WCF Service Federated Authentication Using AppFabric Access Control Service (ACS) v2 – Part 1

- Windows Azure Content And Guidance Map For Developers – Part 1, Getting Started

- Securing Windows Azure Distributed Application Using AppFabric Access Control Service (ACS) v2 – Scenario and Solution Approach

- Windows Azure Web Role ASP.NET Application Federated Authentication Using AppFabric Access Control Service (ACS) v2 – Part 1

- Windows Azure Web Role ASP.NET Application Federated Authentication Using AppFabric Access Control Service (ACS) v2 – Part 2

- Windows Azure Web Role ASP.NET Application Federated Authentication Using AppFabric Access Control Service (ACS) v2 – Part 3

- Securing Windows Azure Web Role ASP.NET Web Application Using Access Control Service v2.0 (same as above on one page)

Alik’s post might have benefitted from a few screen captures.

• Eugenio Pace (@eugenio_pace) began a new series with Authentication in WP7 client with REST Services–Part I on 3/24/2011:

In the last drop, we included a sample that demonstrates how to secure a REST web service with ACS, and a client calling that service running in a different security realm:

In this case, ACS is the bridge between the WS-Trust/SAML world (Litware in the diagram) and the REST/SWT side (Adatum’s a-Order app)

This is just a technical variation of the original sample we had in the book, that was purely based on SOAP web services (WS-Trust/SAML only):

But we have another example in preparation, which is a Windows Phone 7 client. Interacting with REST-based APIs is pretty popular with mobile devices. In fact, it is what we decided to use when building the sample for our Windows Phone 7 Developer Guide.

There’s no WIF for the phone yet, so implementing this in the WP7 takes a little bit of extra work. And, as usual, there’re many ways to solve it.

The “semi-active” way:

This is a very popular approach. In fact, it’s the way you’re likely to see this done with the phone in many samples. It essentially involves using an embedded browser (browser = IE) and delegating all token negotiation to it until it gets the token you want. This negotiation is nothing other than the classic “passive” token negotiation, based on HTTP redirects, that we have discussed ad infinitum, ad nauseam.

The trick is in the “until you get the token you want”. Because the browser is embedded in the host application (a Silverlight app in the phone), you can handle and react to all kinds of events raised by it. A particularly useful event to handle is Navigating. This signals that the browser is trying to initiate an HTTP request to a server. We know that the last interaction in the (passive) token negotiation is actually posting the token back to the relying party. That’s the token we want!

So if we have a way of identifying the last POST attempt by the browser, then we have the token we need. There are many ways of doing this, but most look like this:

In this case we are using the “ReplyTo” address, that has been configured in ACS with a specific value “break_here” and then extract the token with the browser control SaveToString method. The Regex functions you see there, simply extract the token from the entire web page.
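The original code listing is not reproduced here, so the following Python sketch only illustrates the idea of spotting the final POST and pulling the token out of the page. It assumes the last page is a WS-Federation style auto-posting form that carries the token in a hidden `wresult` field, with the `name` attribute appearing before `value`; the actual sample may use a different field or pattern. The function names are hypothetical.

```python
import html
import re

# Matches <input ... name="wresult" ... value="..."> and captures the value.
_WRESULT = re.compile(
    r'<input[^>]*\bname="wresult"[^>]*\bvalue="([^"]*)"', re.IGNORECASE)

def is_final_post(url: str, marker: str = "break_here") -> bool:
    """True when the browser is navigating to the marker reply address."""
    return marker in url

def extract_token(page: str):
    """Return the unescaped token from the page, or None if it is absent."""
    match = _WRESULT.search(page)
    return html.unescape(match.group(1)) if match else None
```

In the phone app the equivalent logic would run inside the browser control's Navigating handler: when the target URL contains the marker, cancel the navigation, read the page content and extract the token.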

Once you’ve got the token, then you use it in the web service call and voila!

With this approach your phone code is completely agnostic of how you actually get the final token. This works with any identity provider, and any protocol supported by the browser.

Here’re some screenshots of our sample:

The first one is the home screen (SL). The second one shows the embedded browser with a login screen (adjusted for the size of the phone screen) and the last one the result of calling the service.

JavaScript in the browser control in the phone has to be explicitly enabled:

If you don’t do this, the automatic redirections will not happen and you will see this:

You will have to click on the (small) button for the process to continue. This is exactly the same behavior that happens with a browser on a desktop (only that in most cases scripting is enabled).

In next post I’ll go into more detail of the other option: the “active” client. By the way, this sample will be posted to our CodePlex site soon.

• Eugenio Pace (@eugenio_pace) announced Drop #2 of Claims Identity Guide on CodePlex on 3/22/2011:

Second drop of samples and draft chapters is now available on CodePlex. Highlights:

- All 3 samples for ACS v2: ("ACS as a Federation Provider", "ACS as a FP with Multiple Business Partners" and "ACS and REST endpoints"). These samples extend all the original "Federation samples" in the guide with new capabilities (e.g. protocol transition, REST services, etc.)

- Two new ACS specific chapters and a new appendix on message sequences

Most samples will work without an ACS account, since we pre-provisioned one for you. The exception is the “ACS and Multiple Partners”, because this requires credentials to modify ACS configuration. You will need to subscribe to your own instance of ACS to fully exercise the code (especially the “sign-up” process).

The 2 additions to the appendix are:

Message exchanges between Client/RP/ACS/Issuer:

And the Single-Sign-Out process (step 10 below):

You will also find the Fiddler sessions with explained message contents.

Feedback always welcome!

Clemens Vasters (@clemensv) delivered a 00:07:51 What I do at work – Cloud and Service Bus for Normal People video segment on 3/25/2011:

David Chou posted Internet Service Bus and Windows Azure AppFabric on 3/24/2011:

Microsoft’s AppFabric, part of a set of “application infrastructure” (or middleware) technologies, is (IMO) one of the most interesting areas on the Microsoft platform today, and where a lot of innovations are occurring. There are a few technologies that don’t really have equivalents elsewhere at the moment, such as the Windows Azure AppFabric Service Bus. However, its uniqueness is also often a source of confusion when people try to understand what it is and how it can be used.

Internet Service Bus

Oh no, yet another jargon in computing! And just what do we mean by “Internet Service Bus” (ISB)? Is it hosted Enterprise Service Bus (ESB) in the cloud, B2B SaaS, or Integration-as-a-Service? And haven’t we seen aspects of this before in the forms of integration service providers, EDI value-added networks (VANs), and even electronic bulletin board systems (BBS’s)?

At first look, the term “Internet Service Bus” almost immediately draws an equal sign to ‘Enterprise Service Bus’ (ESB), and aspects of other current solutions, such as ones mentioned above, targeted at addressing issues in systems and application integration for enterprises. Indeed, Internet Service Bus does draw relevant patterns and models applied in distributed communication, service-oriented integration, and traditional systems integration, etc. in its approaches to solving these issues on the Internet. But that is also where the similarities end. We think Internet Service Bus is something technically different, because the Internet presents a unique problem domain that has distinctive issues and concerns that most internally focused enterprise SOA solutions do not. An ISB architecture should account for some very key tenets, such as:

- Heterogeneity – This refers to the set of technologies implemented at all levels, such as networking, infrastructure, security, application, data, functional and logical context. The internet is infinitely more diverse than any one organization’s own architecture. And not just current technologies based on different platforms, there is also the factor of versioning as varying implementations over time can remain in the collective environment longer than typical lifecycles in one organization.

- Ambiguity – the loosely-coupled, cross-organizational, geo-political, and unpredictable nature of the Internet means we have very little control over things beyond our own organization’s boundaries. This is in stark contrast to enterprise environments which provide higher-levels of control.

- Scale – the Internet’s scale is unmatched. And this doesn’t just mean data size and processing capacity. No, scale is also a factor in reachability, context, semantics, roles, and models.

- Diverse usage models – the Internet is used by everyone, most fundamentally by consumers and businesses, by humans and machines, and by opportunistic and systematic developments. These usage models have enormously different requirements, both functional and non-functional, and they influence how Internet technologies are developed.

For example, it is easy to think that integrating systems and services on the Internet is a simple matter of applying service-oriented design principles and connecting Web services consumers and providers. While that may be the case for most publicly accessible services, things get complicated very quickly once we start layering on different network topologies (such as through firewalls, NAT, DHCP and so on), security and access control (such as across separate identity domains), and service messaging and interaction patterns (such as multi-cast, peer-to-peer, RPC, tunneling). These are issues that are much more apparent on the Internet than within enterprise and internal SOA environments.

On the other hand, enterprise and internal SOA environments don’t need to be as concerned with these issues because they can benefit from a more tightly controlled and managed infrastructure environment. Plus, integration in enterprise and internal SOA environments tends to be at a higher level, and deals more with semantics, contexts, and logical representation of information and services, etc., organized around business entities and processes. This doesn’t mean that these higher-level issues don’t exist on the Internet; they’re just comparatively more “vertical” in nature.

In addition, there’s the factor of scale, in terms of the increasing adoption of service compositions that cross on-premises and public cloud boundaries (such as when participating in a cloud ecosystem). Indeed, today we can already facilitate external communication to and from our enterprise and internal SOA environments, but doing so requires configuring static openings on external firewalls, deploying applications and data appropriate for the perimeter, applying proper change management processes, delegating to middleware solutions such as B2B gateways, etc. As we move towards a more inter-connected model when extending an internal SOA beyond enterprise boundaries, these changes will become progressively more difficult to manage collectively.

An analogy can be drawn from our mobile phones and cellular networks, and how their proliferation changed the way we communicate with each other today. Most of us take for granted that a myriad of complex technologies (e.g., cell sites, switches, networks, radio frequencies and channels, movement and handover, etc.) is used to facilitate our voice conversations, SMS, MMS, packet-switched Internet access, etc. We can effortlessly connect to any person, regardless of that person’s location, type of phone, cellular service and network, etc. The problem domain, considerations, and solution approaches for cellular services are very different from even the current unified communications solutions for enterprises. The point is, as we move forward with cloud computing, organizations will inevitably need to integrate assets and services deployed in multiple locations (on-premises, multiple public clouds, hybrid clouds, etc.). To do so, it will be much more effective to leverage SOA techniques at a higher level and build on a seamless communication/connectivity “fabric” than to rely on the current class of transport (HTTPS) or network-based (VPN) integration solutions.

Thus, the problem domain for Internet Service Bus is more “horizontal” in nature, as the need is vastly broader in scope than current enterprise architecture solution approaches. And from this perspective Internet Service Bus fundamentally represents a cloud fabric that facilitates communication between software components using the Internet, and provides an abstraction from complexities in networking, platform, implementation, and security.

Opportunistic and Systematic Development

It is also worthwhile to discuss opportunistic and systematic development (as examined in The Internet Service Bus by Don Ferguson, Dennis Pilarinos, and John Shewchuk in the October 2007 edition of The Architecture Journal), and how they influence technology directions and Internet Service Bus.

Systematic development, in a nutshell, is the world we work in as professional developers and architects. The focus and efforts are centered on structured and methodical development processes, to build requirements-driven, well-designed, and high-quality systems. Opportunistic development, on the other hand, represents casual or ad-hoc projects, and end-user programming, etc.

This is interesting because the majority of development efforts in our work environments, such as in enterprises and internal SOA environments, are aligned towards systematic development. But the Internet advocates both systematic and opportunistic development, and increasingly so as influenced by Web 2.0 trends. As “The Internet Service Bus” article suggests, a lot of what we do manually today across multiple sites and services could be considered a form of opportunistic application if we were to implement it as a workflow or cloud-based service.

And that is the basis for service compositions. But to enable that type of opportunistic services composition (which can eventually mean composing layers of compositions), technologies and tools have to evolve into a considerably simpler and more abstracted form, such as model-driven programming. Most importantly, composite application development should not have to deal with complexities in connectivity and security.

And thus this is one of the reasons why Internet Service Bus is targeted at a more horizontal, and infrastructure-level set of concerns. It is a necessary step in building towards a true service-oriented environment, and cultivating an ecosystem of composite services and applications that can simplify opportunistic development efforts.

ESB and ISB

So far we have discussed the fundamental difference between Internet Service Bus (ISB) and current enterprise integration technologies. But perhaps it’s worthwhile to discuss in more detail how exactly it differs from Enterprise Service Bus (ESB). Typically, an ESB should provide these functions (core plus extended/supplemental):

- Dynamic routing

- Dynamic transformation

- Message validation

- Message-oriented middleware

- Protocol and security mediation

- Service orchestration

- Rules engine

- Service level agreement (SLA) support

- Lifecycle management

- Policy-driven security

- Registry

- Repository

- Application adapters

- Fault management

- Monitoring

In other words, an ESB helps with bridging differences in syntactic and contextual semantics, technical implementations and platforms, and providing many centralized management capabilities for enterprise SOA environments. However, as we mentioned earlier, an ISB targets concerns at a lower, communications infrastructure level. Consequently, it should provide a different set of capabilities:

- Connectivity fabric – helps set up raw links across boundaries and network topologies, such as NAT and firewall traversal, mobile and intermittently connected receivers, etc.

- Messaging infrastructure – provides comprehensive support for application messaging patterns across connections, such as bi-directional/peer-to-peer communication, message buffers, etc.

- Naming and discovery – a service registry that provides stable URI’s with a structured naming system, and supports publishing and discovering service end point references

- Security and access control – provides a centralized management facility for claims-based access control and identity federation, and mapping to fine-grained permissions system for services

A figure of an Internet Service Bus architecture is shown below.

And this is not just simply a subset of ESB capabilities; ISB has a couple of fundamental differences:

- Works through any network topology; whereas ESB communications require well-defined network topologies (the ESB works on top of them)

- Contextual transparency; whereas ESB has more to do with enforcing context

- Services federation model (community-centric); whereas ESB aligns more towards a services centralization model (hub-centric)

So what about some of the missing capabilities that are a part of ESB, such as transformation, message validation, protocol mediation, complex orchestration, and rules engine, etc.? For ISB, these capabilities should not be built into the ISB itself. Rather, leverage the seamless connectivity fabric to add your own implementation, or use one that is already published (can either be cloud-based services deployed in Windows Azure platform, or on-premises from your own internal SOA environment). The point is, in the ISB’s services federation model, application-level capabilities can simply be additional services projected/published onto the ISB, then leveraged via service-oriented compositions. Thus ISB just provides the infrastructure layer; the application-level capabilities are part of the services ecosystem driven by the collective community.

On the other hand, for true ESB-level capabilities, ESB SaaS or Integration-as-a-Service providers may be more viable options, while ISB may still be used for the underlying connectivity layer for seamless communication over the Internet. Thus ISB and ESB are actually pretty complementary technologies. ISB provides the seamless Internet-scoped communication foundation that supports cross-organizational and federated cloud (private, public, community, federated ESB, etc.) models, while ESB solutions in various forms provide the higher-level information and process management capabilities.

Windows Azure AppFabric Service Bus

The Service Bus provides secure messaging and connectivity capabilities that enable building distributed and disconnected applications in the cloud, as well as hybrid applications across both on-premises and the cloud. It enables using various communication and messaging protocols and patterns, and saves the developer from having to worry about delivery assurance, reliable messaging and scale.

In a nutshell, the Windows Azure AppFabric Service Bus is intended as an Internet Service Bus (ISB) solution, while BizTalk continues to serve as the ESB solution, though the cloud-based version of BizTalk may be implemented in a different form. And Windows Azure AppFabric Service Bus will play an important role in enabling application-level (as opposed to network-level) integration scenarios, to support building hybrid cloud implementations and federated applications participating in a cloud ecosystem.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• Steef-Jan Wiggers posted BizTalk AppFabric Connect: WCF-Adapter Service Stored Procedure on 3/24/2011:

In a post yesterday I [showed] a way to invoke a stored procedure in SQL Azure using the WCF-SQL Adapter. There is another [way] to invoke a stored procedure, using the AppFabric Connect for Services functionality in BizTalk Server 2010. This new feature brings together the capabilities of BizTalk Server and Windows Azure AppFabric, thereby enabling enterprises to extend the reach of their on-premises Line of Business (LOB) systems and BizTalk applications to the cloud. To bridge the capabilities of BizTalk Server and Windows Azure AppFabric you will need the BizTalk Server 2010 Feature Pack.

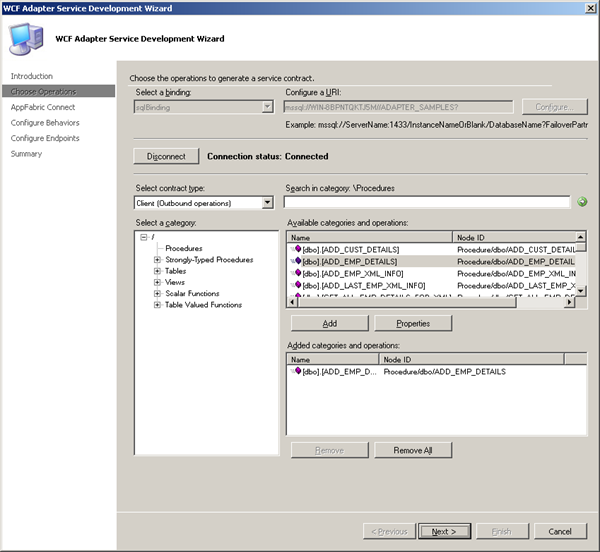

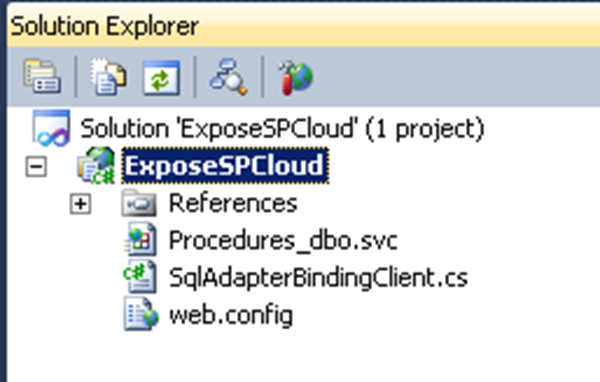

In this post I will show how to leverage the BizTalk WCF-SQL Adapter to extend the reach of a stored procedure to the cloud. First I will create a service that exposes the stored procedure. I create a Visual Studio project and select WCF Adapter Service. The steps that follow are also described in an article called Exposing LOB Services on the Cloud Using AppFabric Connect for Services. I skip the first page and go to the page for choosing the operations to generate a service contract.

I choose the local database and the stored procedure ADD_EMP_DETAILS (same as in previous posts) and click Next. On the AppFabric Connect page, select the Extend the reach of the service on the cloud checkbox. In the Service Namespace text box, enter the service namespace that you must have already registered with the Service Bus.

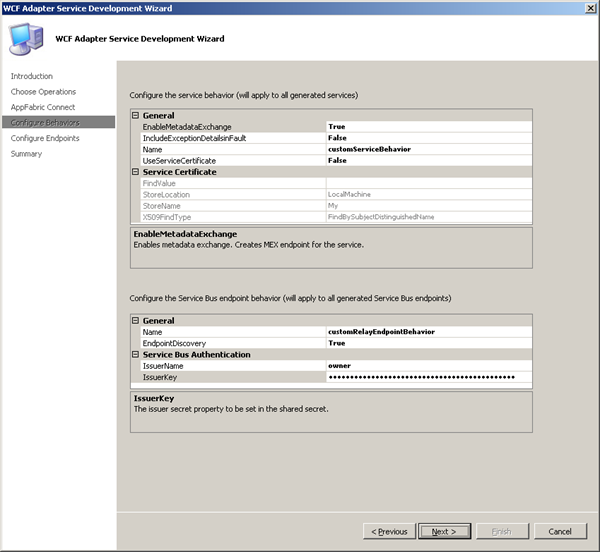

The next step is to configure the service behavior. You will have to configure the service behavior (for both on-premises and cloud-based services) and the endpoint behavior (for endpoints on the Service Bus only).

I set EnableMetadataExchange to True, so the service metadata is available using standardized protocols, such as WS-Metadata Exchange (MEX). I am not using security features such as certificates, so UseServiceCertificate is set to False. I enabled EndpointDiscovery, which makes the endpoints publicly discoverable. The next page is about configuring endpoints.

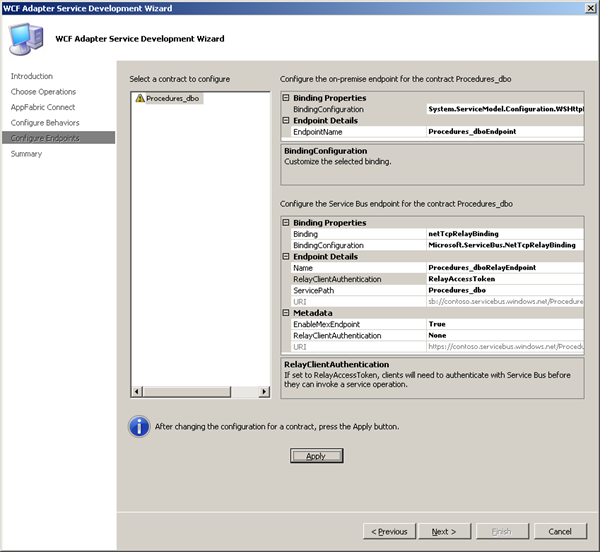

I accepted the defaults for the on-premises endpoint and focused on configuring the Service Bus endpoints. I selected netTcpRelayBinding, for which the URL scheme is sb, and set EnableMexEndPoint to True. For the rest I accepted the default values. After configuration you will have to click Apply. Then continue, and the final screen will appear.

Click Finish and the wizard creates both on-premises and Service Bus endpoints for the WCF service.

The next steps involve publishing the service. In the Visual Studio project for the service, right-click the project in Solution Explorer and click Properties. On the Properties page, in the Web tab, under the Servers category, select the Use Local IIS Web Server option. The Project URL text box is automatically populated; then click Create Virtual Directory. In Solution Explorer, right-click the project again and then click Build. When the service is built you will have to configure it in IIS for auto-start. When you right-click the service, choose Manage WCF and WF Service and then Configure, you might run into this error (which you can ignore, as this element is not present in IntelliSense).

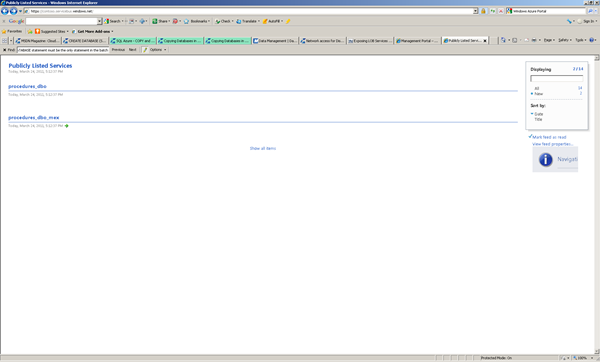

In Auto-Start you can set it to enabled. The next step is to verify that the Service Bus endpoints for the WCF service are published. You can view all the available endpoints in the Service Bus ATOM feed page for the specified service namespace:

https://<namespace>.servicebus.windows.net

I typed this URL in a Web browser and saw a list of all the endpoints available under the specified Service Bus namespace. To consume the WCF service and invoke the procedure you will need to build a client. I added a new Windows Forms Application project to my solution and added a service reference using the URL for the relay metadata exchange endpoint.

In the Service Bus endpoint, RelayClientAuthentication was set to RelayAccessToken, which means that the client needs to pass an authentication token to authenticate itself to the Service Bus. Therefore you will have to add the following section to app.config.
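Both addresses used in this walkthrough, the HTTPS feed page and the sb:// relay endpoint, follow from the service namespace. The following is only a minimal sketch in Python of how they are put together. The helper names are hypothetical and the `contoso` namespace is a sample value; only the URL shapes are taken from the post.

```python
# Hypothetical helpers for illustration; only the URL shapes come from the post.
SERVICE_BUS_SUFFIX = "servicebus.windows.net"


def feed_url(namespace):
    """HTTPS address of the feed page that lists a namespace's endpoints."""
    return "https://%s.%s" % (namespace, SERVICE_BUS_SUFFIX)


def relay_address(namespace, service_path):
    """sb:// address of the kind used by a netTcpRelayBinding endpoint."""
    return "sb://%s.%s/%s/" % (namespace, SERVICE_BUS_SUFFIX, service_path.strip("/"))


if __name__ == "__main__":
    print(feed_url("contoso"))
    print(relay_address("contoso", "Procedures_dbo"))
```

Substitute your own registered namespace for `contoso` when trying this out.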

```xml
<behaviors>
  <endpointBehaviors>
    <behavior name="secureService">
      <transportClientEndpointBehavior credentialType="SharedSecret">
        <clientCredentials>
          <sharedSecret issuerName="<name>" issuerSecret="<value>" />
        </clientCredentials>
      </transportClientEndpointBehavior>
    </behavior>
  </endpointBehaviors>
</behaviors>
```

You must get the values for issuerName and issuerSecret from the organization (e.g. your Azure account) that hosts the service. The endpoint configuration in app.config has to be changed to this:

```xml
<endpoint address="sb://contoso.servicebus.windows.net/Procedures_dbo/"
          binding="netTcpRelayBinding"
          bindingConfiguration="Procedures_dboRelayEndpoint"
          contract="ServiceReferenceSP.Procedures_dbo"
          name="Procedures_dboRelayEndpoint"
          behaviorConfiguration="secureService" />
```

The code that makes this work (the implementation of the Invoke button) is:

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Windows.Forms;
using ExposeSPCloudTestClient.ServiceReferenceSP;

namespace ExposeSPCloudTestClient
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        private void btnInvoke_Click(object sender, EventArgs e)
        {
            int returnValue = -1;

            // Create the proxy from the relay endpoint defined in app.config.
            Procedures_dboClient client = new Procedures_dboClient("Procedures_dboRelayEndpoint");

            // User name and password supplied with the call (sample values).
            client.ClientCredentials.UserName.UserName = "sa";
            client.ClientCredentials.UserName.Password = "B@rRy#06";

            try
            {
                Console.WriteLine("Opening client...");
                Console.WriteLine();
                client.Open();
                client.ADD_EMP_DETAILS("Steef-Jan Wiggers", "Architect", 20000, out returnValue);
                client.Close();
            }
            catch (Exception ex)
            {
                // Close() throws on a faulted channel, so abort it instead.
                client.Abort();
                MessageBox.Show("Exception: " + ex.Message);
            }

            txtResult.Text = returnValue.ToString();
        }
    }
}
```

Querying the employee table before executing the code above gives the result depicted below:

After invoking the stored procedure by consuming the service, the new record is added.

As you can see, I am added to the employees table through the adapter service called by the client. As you can see, there is another way of invoking a stored procedure, making use of the BizTalk AppFabric Connect feature.

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Patrick Butler Monterde described the Windows Azure Toolkit for Windows Phone 7 in a 3/25/2011 post:

Link: http://watoolkitwp7.codeplex.com/

Hello,

I am pleased to announce the Windows Azure Toolkit for Windows Phone 7. The toolkit is designed to make it easier for you to build mobile applications that leverage cloud services running in Windows Azure. The toolkit includes Visual Studio project templates for Windows Phone 7 and Windows Azure, class libraries optimized for use on the phone, sample applications, and documentation.

Windows Azure

Windows Azure is a cloud-computing platform that lets you run applications and store data in the cloud. Instead of having to worry about building out the underlying infrastructure and managing the operating system, you can simply build your application and deploy it to Windows Azure. Windows Azure provides developers with on-demand compute, storage, networking, and content delivery capabilities.

For more information about Windows Azure, visit the Windows Azure website. For developer focused training material, download the Windows Azure Platform Training Kit or view the online Windows Azure Platform Training Course.

Getting started: If you’re looking to try out the Windows Azure Platform free for 30-days—without using a credit card—try the Windows Azure Pass with promo code “CloudCover”. For more details, see Try Out Windows Azure with a Free Pass.

Content

- Setup and Configuration

- Toolkit Content

- Getting Started

- Troubleshooting

- Videos (coming soon)

Requirements

You must have the following items to run the project template and the sample solution included in this toolkit:

- Microsoft Visual Studio 2010 Professional (or higher) or both Microsoft Visual Web Developer 2010 Express and Microsoft Visual Studio 2010 Express for Windows Phone

- Windows Phone Developer Tools

- Internet Information Services 7 (IIS7)

- Windows Azure Tools for Microsoft Visual Studio 2010 (November Release)

• Jonathan Rozenblit asserted Connecting Windows Azure to Windows Phone Just Got A Whole Lot Easier in a 3/24/2011 post:

As of today, connecting Windows Azure to your application running on Windows Phone 7 just got a whole lot easier with the release of the Windows Azure Toolkit for Windows Phone 7 designed to make it easier for you to leverage the cloud services running in Windows Azure. The toolkit, which you can find on CodePlex, includes Visual Studio project templates for Windows Phone 7 and Windows Azure, class libraries optimized for use on the phone, sample applications, and documentation.

The toolkit contains the following resources:

- Binaries – Libraries that you can use in your Windows Phone 7 applications to make it easier to work with Windows Azure (e.g. a full storage client library for blobs and tables). You can literally add these libraries to your existing Windows Phone 7 applications and immediately start leveraging services such as Windows Azure storage.

- Docs – Documentation that covers setup and configuration, a review of the toolkit content, getting started, and some troubleshooting tips.

- Project Templates – VSIX (which is the unit of deployment for a Visual Studio 2010 Extension) files are available that create project templates in Visual Studio, making it easy for you to build brand new applications.

- Samples – Sample application that fully leverages the toolkit, available in both C# and VB.NET. The sample application is also built into one of the two project templates created by the toolkit.

There’s a really great article on how to get started in the wiki. Definitely check that out before you get started. To help make it even easier to get started with the toolkit, today’s (3/25) Cloud Cover episode will focus on the toolkit and how to get started using it. Over the next few weeks, videos, tutorials, demo scripts, and other great resources to go along with the toolkit will be released. Stay tuned here – I’ll keep you posted with all the new stuff as soon as it becomes available!

Want more?

If those resources aren’t enough to get you started, make sure to stop by Wade Wegner’s blog (a fellow evangelist) for a quick “how to get started” tutorial.

Get Windows Azure free for 30 days

As a reader of the Canadian Mobile Developers’ blog, you can get free access to Windows Azure for 30 days while you’re trying out the toolkit. Go to windowsazurepass.com, select Canada as your country, and enter the promo code CDNDEVS.

If you think you need more than 30 days, no problem. Sign up for the Introductory Special instead. From now until June 30, you’ll get 750 hours per month free!

If you have an MSDN subscription, you have Windows Azure hours included as part of your benefits. Sign in to MSDN and go to your member’s benefits page to activate your Windows Azure benefits.

Next Steps

Download the toolkit and get your app connected to Windows Azure.

This post is also featured on the Canadian Mobile Developers’ Blog.

Steve Marx (@smarx) and Wade Wegner (@WadeWegner) produced a Cloud Cover Episode 41 - Windows Azure Toolkit for Windows Phone 7 on 3/25/2011:

Join Wade and Steve each week as they cover the Windows Azure Platform. You can follow and interact with the show @CloudCoverShow.

In this episode, Steve and Wade walk through the new Windows Azure Toolkit for Windows Phone 7, which is designed to make it easier to build phone applications that use services running in Windows Azure. Wade explains why it was built, where to get it, and how it works.

Reviewing the news, Wade and Steve:

- Highlight the new Eclipse Plugin CTP for Windows Azure

- Show a neat way to get MVC 3 project types to the cloud service wizard

- Describe the ease LightSwitch Beta 2 brings to Windows Azure and SQL Azure development

Windows Azure Toolkit for Windows Phone 7

Microsoft delivers toolkit for using Windows Azure to build Windows Phone 7 apps

Windows Azure Toolkit for Windows Phone 7 Released

Wade also introduces his new son, Ethan James Wegner, born last Saturday:

Patriek van Dorp described Updates and Patches in Windows Azure in a 3/25/2011 post:

My latest post on “When to deploy a VM Role in Windows Azure?” triggered a discussion on when the Fabric Controller brings down your Windows Azure Role instances. Someone said that updates and patches were only automatically deployed on Web Roles and Worker Roles and not on VM Roles so VM Roles wouldn’t be subject to the monthly updates. He was half right and to understand what happens you need to know what happens under the hood in the Windows Azure Fabric.

Datacenters used by Microsoft for hosting Windows Azure consist of thousands of multi-core servers placed in racks. These racks are placed in containers and those containers are managed as a whole. If a certain percentage of all the servers in a container is malfunctioning, the entire container is replaced.

Depending on the number of cores a physical server has (this will always be in multiples of 8), a number of so called Host VM’s are running per server. Each Host VM utilizes 8 physical cores (this is also the number of cores of the largest Guest VM size). Each Host VM hosts 0 to 8 Guest VM’s, which are the VM’s we configure in our Azure Roles. These Guest VM’s can be of different sizes; Small (1 core), Medium (2 cores), Large (4 cores) and Extra Large (8 cores). (For the sake of simplicity I’ll omit the Extra Small instance which shares cores amongst instances)

Figure 1 shows how Guest VM’s are allocated amongst different Host VM’s on the same server.

Figure 1: Hardware, Host VM and Guest VM situated on top of each other
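The core counts above imply a simple packing constraint: the Guest VMs on one Host VM can use at most 8 cores in total. The sketch below illustrates that constraint in Python with a first-fit placement. The placement strategy and all function names are assumptions made for illustration; the post does not describe how the Fabric Controller actually allocates instances.

```python
# Illustrative only: first-fit placement of Guest VMs onto 8-core Host VMs.
# The real Fabric Controller allocation policy is not described in the post.
HOST_VM_CORES = 8  # each Host VM uses 8 physical cores (from the post)

# Guest VM sizes and core counts as listed in the post (Extra Small omitted).
GUEST_CORES = {"Small": 1, "Medium": 2, "Large": 4, "ExtraLarge": 8}


def host_vms_per_server(physical_cores):
    """Number of Host VMs on a server whose core count is a multiple of 8."""
    if physical_cores <= 0 or physical_cores % HOST_VM_CORES != 0:
        raise ValueError("core count must be a positive multiple of 8")
    return physical_cores // HOST_VM_CORES


def place_guests(guest_sizes, host_count):
    """Assign each guest to the first Host VM with enough free cores.

    Returns one list of guest sizes per Host VM and raises ValueError
    when a guest does not fit on any Host VM.
    """
    free = [HOST_VM_CORES] * host_count
    hosts = [[] for _ in range(host_count)]
    for size in guest_sizes:
        cores = GUEST_CORES[size]
        for i in range(host_count):
            if free[i] >= cores:
                free[i] -= cores
                hosts[i].append(size)
                break
        else:
            raise ValueError("no Host VM has %d free cores for %s" % (cores, size))
    return hosts


if __name__ == "__main__":
    guests = ["Large", "Medium", "Small", "ExtraLarge", "Small"]
    for index, placed in enumerate(place_guests(guests, host_vms_per_server(16))):
        print("Host VM", index, placed)
```

With a 16-core server (two Host VMs), the Extra Large instance takes a whole Host VM while the four smaller instances share the other one. Eight Small instances would fill a single Host VM, which matches the "0 to 8 Guest VM’s" range mentioned above.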

Now, Guest VM instances of VM Roles are not updated automatically by the Fabric Controller. The Host VM, on the other hand, is automatically updated on “patch Tuesday” once a month (this is not necessarily on a Tuesday). This affects all Guest VM instances hosted on that Host VM, including any instances of a VM Role that happen to be hosted on that particular Host VM.

Additionally Web Roles and Worker Roles are also affected by the automatic Guest VM OS updates.


Visual Studio LightSwitch

Beth Massi (@BethMassi) posted a LightSwitch Beta 2 Content Rollup on 3/25/2011:

Well, it’s been 10 days since we released Visual Studio LightSwitch Beta 2 and we’ve been working really hard to get all of our samples, “How Do I” videos, articles and blog posts updated to reflect Beta 2 changes. Yes, that’s right. We’re going through all of our blog posts and updating them so folks won’t get confused when they find the posts later down the road. You will see notes at the top of them that indicate whether the information only applies to Beta 1 or has been updated to Beta 2 (if there’s no notes, then it applies to both). Check them out, a lot of the techniques have changed. I especially encourage you to watch the “How Do I” videos again.

So here’s a rollup of Beta 2 content that’s been done, reviewed, or completely redone from the team. You will find all this stuff categorized nicely on the new LightSwitch Learning Center into Getting Started, Essential and Advanced Topics. We add more each week!

Developer Center:

- Learning Center Redesign for Beta 2

- What's New in Beta 2

- Community Page (Started)

- "What is LightSwitch?"

How Do I Videos:

#1 - How Do I: Define My Data in a LightSwitch Application?

#2 - How Do I: Create a Search Screen in a LightSwitch Application?

#3 - How Do I: Create an Edit Details Screen in a LightSwitch Application?

#4 - How Do I: Format Data on a Screen in a LightSwitch Application?

#5 - How Do I: Sort and Filter Data on a Screen in a LightSwitch Application?

#6 - How Do I: Create a Master-Details (One-to-Many) Screen in a LightSwitch Application?

#7 - How Do I: Pass a Parameter into a Screen from the Command Bar in a LightSwitch Application?

#8 - How Do I: Write business rules for validation and calculated fields in a LightSwitch Application?

#9 - How Do I: Create a Screen that can Both Edit and Add Records in a LightSwitch Application?

#10 - How Do I: Create and Control Lookup Lists in a LightSwitch Application?

#11 - How Do I: Set up Security to Control User Access to Parts of a Visual Studio LightSwitch Application?

Blogs:

Data

- Using Both Remote and Local Data in a LightSwitch Application

- How to Create a Many-to-Many Relationship

- Getting the Most out of LightSwitch Summary Properties

- How Do I: Import and Export Data to/from a CSV file

Screens

- How to Create a Screen with Multiple Search Parameters in LightSwitch

- Creating a Custom Search Screen in Visual Studio LightSwitch

- How to Programmatically Control LightSwitch UI

Queries

- Query Reuse in Visual Studio LightSwitch

- How Do I: Create and Use Global Values In a Query

- How Do I: Filter Items in a ComboBox or Modal Window Picker in LightSwitch

Office

- How to Send Automated Appointments from a LightSwitch Application

- How To Create Outlook Appointments from a LightSwitch Application

- How To Send HTML Email from a LightSwitch Application

- Using Microsoft Word to Create Reports For LightSwitch (or Silverlight)

Deployment

- Deployment Guide: How to Configure a Web Server to Host LightSwitch Applications

- Step-by-Step: How to Publish to Windows Azure

Tips, Tricks & Gotchas

- LightSwitch Gotcha: How To Break Your LightSwitch Application in Less Than 30 Seconds

- Where Do I Put My Data Code In A LightSwitch Application?

- Validating Collections of Entities (Sets of Data) in LightSwitch

Security

- Filtering data based on current user in LightSwitch apps

- How to reference security entities in LightSwitch

Architecture

- The Anatomy of a LightSwitch Application Series Part 1 - Architecture Overview

- The Anatomy of a LightSwitch Application Series Part 2 – The Presentation Tier

- The Anatomy of a LightSwitch Application Part 3 – the Logic Tier

- The Anatomy of a LightSwitch Application Part 4 – Data Access and Storage

Customization & Extensibility

- Using Custom Controls to Enhance Your LightSwitch Application UI - Part 1

- LightSwitch Beta 2 Extensibility “Cookbook”

Samples:

- LightSwitch Course Manager End-to-End Application

- Visual Studio LightSwitch Vision Clinic Walkthrough & Sample

- LightSwitch Beta 2 Extensibility “Cookbook”

Training:

Channel 9:

Edu Lorenzo described Publishing a Visual Studio LightSwitch App to Windows Azure on 3/24/2011:

Well whaddayaknow!!! The two things that I have been playing around with just got married!!! Nah, not really like that. I was just so pleasantly surprised when I found that now.. Visual Studio Lightswitch can Publish to Azure!!!!

So here goes!!!!

Off the top of my head, I think I will need both a hosted storage and a database because VS-LS apps are mostly data driven. Hmmm…. I’ll make one of both.

Then I create a lightswitch app and publish!

Of course you should publish it as a web app

And tell Lightswitch that it is to be deployed to Windows Azure

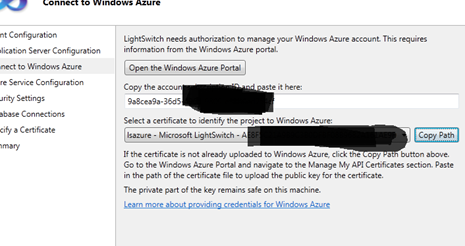

I supply the subscription ID and create a certificate for it

Upload the certificate I just made to my azure portal

I click next on the Lightswitch Publish wizard and choose appropriate values

Create a new Certificate

Connect to my Database

Then

And publish!

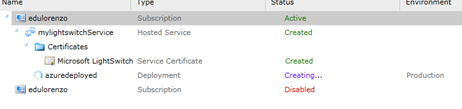

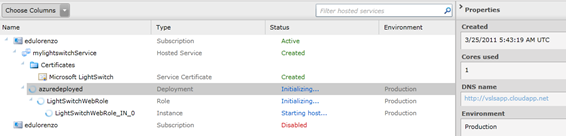

Watch as your management portal is being updated..

Wait for it… … …

And you now have.. a LightSwitch app deployed on azure!

Beth Massi (@BethMassi) provided a detailed and lavishly illustrated Deployment Guide: How to Configure a Web Server to Host LightSwitch Applications on 3/23/2011:

Note: This information applies to LightSwitch Beta 2.

Visual Studio LightSwitch applications are logically three-tier applications and consist of a client, application services (a.k.a. middle-tier), and data store (a.k.a. database). LightSwitch applications can be deployed to a variety of environments in a couple of different ways. You can deploy the client as a Desktop application or a Web (browser-based) application.

A Desktop application runs outside the browser on a Windows machine and has access to the computer storage and other running applications. Users see a desktop icon to launch the application like any other Windows application. A Web application runs inside the browser and does not have full access to storage or other applications on the machine, however, a Web application can support multiple browsers and run on Mac as well as Windows computers.

If you select a desktop application you can choose to host the application services locally on the same machine. This creates a two-tier application where all the components (client + middle-tier) are installed on the user’s Windows computer and they connect directly to the database. This type of deployment avoids the need for a web server and is appropriate for smaller deployments on a local area network (LAN) or Workgroup. In this situation, the database can be hosted on one of the clients as long as they can all connect directly.

Both desktop and browser-based clients can be deployed to your own (or web hoster’s) Internet Information Services (IIS) server or hosted in Azure. This sets up a three-tier application where all that is installed on the client is a very small Silverlight runtime and the application services (middle-tier) are hosted on a web server. This is appropriate if you have many users and need more scalability and/or you need to support Web browser-based clients over the internet.


If you don’t have your own web server or IT department then deploying to Azure is an attractive option. Check out the pricing options here as well as the Free Azure Trial. Last week Andy posted on the team blog how to publish your LightSwitch application to Azure. If you missed it, see:

Step-by-Step: How to Publish to Windows Azure

In this post I’m going to show you how you can set up your own server to host the application as well as some configuration tips and tricks. First I’ll walk through details of configuring a web server for automatic deployment directly from the LightSwitch development environment and then move onto the actual publishing of the application. I’ll also show you how to create install packages for manual deployment later, like to an external web hoster. We’ll also cover Windows and Forms authentication. Finally I’ll leave you with some tips and tricks on setting up your own application pools, integrated security and SSL that could apply to not only LightSwitch applications, but any web site or service that connects to a database. Here’s what we’ll walk through:

Configuring a server for deployment

- Installing Prerequisites with Web Platform Installer

- Verifying IIS Settings, Features and Services

- Configuring Your Web Site and Database for Network Access

Deploying your LightSwitch application

- Direct Publishing a LightSwitch Application

- Creating and Installing a LightSwitch Application Package

- Deploying Applications that use Forms Authentication

- Deploying Applications that use Windows Authentication

Configuration tips & tricks

- Configuring Application Pools & Security Considerations

- Using Windows Integrated Security from the Web Application to the Database

- Using Secure Sockets Layer (SSL) for LightSwitch Applications

Keep in mind that once you set up the server then you can deploy multiple applications to it from LightSwitch. And if you don’t have a machine or the expertise to set this up yourself, then I’d suggest either a two-tier deployment for small applications, or looking at Azure as I referenced above. This guide is meant for developers and IT Pros that want to host LightSwitch web applications on premises.
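The deployment choices outlined so far can be summarized as a small decision helper. This is only an illustrative sketch in Python: the function name, its parameters and the returned labels are hypothetical and are not part of LightSwitch or its Publish Wizard.

```python
def suggest_deployment(client: str, have_web_server: bool) -> str:
    """Return a rough deployment suggestion based on the guide's overview.

    client: "desktop" or "web" (the two client types the guide describes).
    have_web_server: True if you can set up or already run your own IIS server.
    """
    client = client.lower()
    if client not in ("desktop", "web"):
        raise ValueError("client must be 'desktop' or 'web'")
    if have_web_server:
        # Both client types can use a three-tier deployment on your own IIS.
        return "three-tier on IIS"
    if client == "desktop":
        # Desktop clients can host the application services locally.
        return "two-tier (small deployments) or Azure"
    # Browser-based clients always need hosted application services.
    return "three-tier on Azure or an external web hoster"
```

For example, `suggest_deployment("desktop", False)` reflects the advice above for someone without a server: a two-tier deployment for small applications, or Azure.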

So let’s get started!

Installing Prerequisites with Web Platform Installer

You can use the Web Platform Installer (WPI) to set up a Windows web server fast. It allows you to select IIS, the .NET Framework 4 and a whole bunch of other free applications and components. All the LightSwitch prerequisites are there which includes setting up IIS and the web deploy service properly as well as including SQL Server Express. This makes it super easy to set up a machine with all the stuff you need. Although you can do this all manually, I highly recommend you set up your LightSwitch server this way. You can run the Web Platform Installer on Windows Server 2008 (& R2), Windows 7, and Windows Server 2003. Both IIS 7.0 and IIS 6.0 are supported. If the server already has IIS 6.0 installed, IIS 7.0 will not be installed.

(Note that LightSwitch Beta 2 prerequisites have changed from Beta 1: they no longer contain any assemblies that are loaded into the Global Assembly Cache. Therefore you must uninstall any Beta 1 prerequisites before installing Beta 2 prerequisites.)

To get started, on the Web Platform tab select the Products link at the top then select Tools on the left and click the Add button for the Visual Studio LightSwitch Beta 2 Server Runtime. This will install IIS, .NET Framework 4, SQL Server Express 2008 and SQL Server Management Studio for you so you DO NOT need to select these components again.

If you already have one or more of these components installed then the installer will skip those. LightSwitch server prerequisites do not install any assemblies; they just make sure that the server is ready so you have a smooth deployment experience for your LightSwitch applications. Here's the breakdown of the important dependencies that get installed:

- IIS 7 with the correct features turned on like ASP.NET, Windows Authentication, Management Services

- .NET Framework 4 + SP1

- Web Deployment Tool 1.1 and the msdeploy provider so you can deploy directly from the LightSwitch development environment to the server

- SQL Server Express 2008 (engine & dependencies) and SQL Server Management Studio (for database administration). (Note: LightSwitch will also work with SQL Server 2008 R2 but you will need to install that manually if you want that version.)

The prerequisites are also used as a special LightSwitch-specific customization step for your deployed web applications. When deploying to IIS 7, they will:

- Make sure your application is in the ASP.NET v4.0 Application Pool

- Make sure the matching authentication type has been set for the web application

- Add an application administrator when your application requires one

So by installing these you don’t have to worry about any manual configuration of the websites after you deploy them. Please note, however, that if you already have .NET Framework 4 on the machine and then install IIS afterwards (even via WPI) then ASP.NET may not get configured properly. Make sure you verify your IIS settings as described below.

To begin, click the "I Accept" button at the bottom of the screen and then you'll be prompted to create a SQL Server Express administrator password. Next the installer will download all the features and start installing them. Once the .NET Framework is installed you'll need to reboot the machine and then the installation will resume. Once you get to the SQL Server Express 2008 setup you may get this compatibility message:

If you do, then just click "Run Program" and after the install completes, install SQL Server 2008 Service Pack 1.

Plan on about 30 minutes to get everything downloaded (on a fast connection) and installed.

Verifying IIS Settings, Features and Services

Once IIS is installed you’ll need to make sure some features are enabled to support LightSwitch (or any .NET web application). If you installed the Visual Studio LightSwitch Server Prerequisites on a clean machine then these features should already be enabled.

In Windows 2008 you can check these settings by going to Administrative Tools –> Server Manager and under Roles Summary click on Web Server (IIS). Then scroll down to the Role Services. (In Windows 7 you can see this information by opening “Add or Remove Programs” and selecting “Turn Windows features on or off”.) You will need to make sure to install IIS Management Service, Application Development: ASP.NET (this will automatically add additional services when you check it), and under Security: Windows Authentication.

Next we need to make sure the Web Deployment Agent Service is started. Open up Services and right-click on Web Deployment Agent Service and select Start if it’s not already started.
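If you prefer to check the service from a script rather than the Services console, a minimal Python sketch could wrap the Windows `sc query` command. It assumes that `MsDepSvc` is the short service name of the Web Deployment Agent Service on your machine (confirm this in the service's Properties dialog); the function names are hypothetical.

```python
import re
import subprocess

# Assumption: "MsDepSvc" is the short name of the Web Deployment Agent
# Service; confirm it in the Services console before relying on it.
SERVICE_NAME = "MsDepSvc"


def parse_service_state(sc_output: str) -> str:
    """Extract the state word (e.g. RUNNING, STOPPED) from `sc query` output."""
    match = re.search(r"STATE\s*:\s*\d+\s+(\w+)", sc_output)
    return match.group(1) if match else "UNKNOWN"


def query_service_state(name: str = SERVICE_NAME) -> str:
    """Run `sc query <name>` (Windows only) and return the parsed state."""
    result = subprocess.run(["sc", "query", name], capture_output=True, text=True)
    return parse_service_state(result.stdout)
```

On the web server, calling `query_service_state()` should return `RUNNING` once the service has been started. Any other value suggests starting it from the Services console as described above.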

Configuring Your Web Site and Database for Network Access

Now before moving on we should make sure we can browse to the default site. First, on the web server, you should be able to open a browser to http://localhost and see the IIS 7 logo. If that doesn’t happen something got hosed in your install and you should troubleshoot that in the IIS forums or the LightSwitch forums. Next we should test that other computers can access the default site. In order for other computers on the network to access IIS you will need to enable “World Wide Web Services (HTTP Traffic-In)” under Inbound Rules in your Windows Firewall. This should automatically be set up when you add the Web Server role to your machine (which happens when you install the prerequisites above).

At this point you should be able to navigate to http://<servername> from another computer on your network and see the IIS 7 logo. (Note: If you still can’t get it to work, try using the machine’s IP address instead of the name)
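The same check can be scripted from another computer on the network. The following is a minimal Python sketch, not part of the LightSwitch tooling; the function name is hypothetical. It deliberately bypasses any configured proxy, since the point is to test direct access over the local network.

```python
import urllib.error
import urllib.request

# Connect directly, ignoring proxy settings, because this is a LAN check.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def site_is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to `url` gets any HTTP response at all."""
    try:
        with _opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Name resolution failure, refused connection, timeout, etc.
        return False
```

Call it with your own server's address, for example `site_is_reachable("http://<servername>/")`. If the name fails but the machine's IP address works, the problem is name resolution rather than IIS or the firewall.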

Next you need to make sure the SQL Server instance to which you want to deploy the LightSwitch application database (which stores your application data, user names, permissions and roles) is available on the network. In this example I’m going to deploy the database to the same machine as the web server but you could definitely put the database on its own machine running either SQL Server or Express depending on your scalability needs. For smaller, internal, departmental applications running the database on the same machine is probably just fine. SQL 2008 Express is installed as part of the prerequisites above; you just need to enable a few things so that you can connect to it from another machine on your network.

Open up SQL Server Configuration Manager and expand the SQL Server Services and start the SQL Server Browser. You may need to right-click and select Properties to set the start mode to something other than Disabled. The SQL Server Browser makes the database instance discoverable on the machine. This allows you to connect via <servername>\<instancename> syntax to SQL Server across a network. This is going to allow us to publish the database directly from LightSwitch as well.

Next you need to enable the communication protocols so expand the node to expose the Protocols for SQLEXPRESS as well as the Client Protocols and make sure Named Pipes is enabled. Finally, restart the SQLEXPRESS service.

Direct Publishing a LightSwitch Application

Here is the official documentation on how to publish a LightSwitch application - How to: Deploy a 3-tier LightSwitch Application. As I mentioned, there are a couple of ways you can deploy to the server: one is directly from the LightSwitch development environment, and the other is by creating an application package and manually installing it on the server. I’ll show you both.

Now that we have the server set up, the Web Deployment Agent service running, and remote access to SQL Server, we can publish our application directly from the LightSwitch development environment. For this first example, I'm going to show how to deploy an application that does not have any role-based security set up. We’ll get to that in a bit. So back on your LightSwitch development machine, right-click on the project in the Solution Explorer and select “Publish”.

The Publish Wizard opens and the first question is what type of client application you want, desktop or Web (browser-based). I’ll select desktop for this example.

Next we decide where and how we want to deploy the application services (middle-tier). I want to host this on the server we just set up, so select the “IIS Server” option. For this first example, I will also choose to deploy the application directly to the server and since I installed the prerequisites already, I’ll leave the “IIS Server has the LightSwitch Deployment Prerequisites installed” box checked.

Next we need to specify the server details. Enter the URL to the web server and specify the Site/Application to use. By default, this will be set to the Default Web Site/AppName. Unless you have set up another website besides the default one leave this field alone. Finally, specify an administrator username and password that has access to the server.

Next you need to specify a couple of connection strings for the application database. The first one is the connection that the deployment wizard will use to create or update the database. This refers specifically to the intrinsic application database that is maintained by every LightSwitch application and exists regardless of whether you create new tables or attach to an existing database for your data. Make sure you enter the correct server and instance name in the connection string; in my case it’s called LSSERVER\SQLEXPRESS. Leaving the rest as integrated security is fine because I am an administrator of the database and it will use my Windows credentials to connect to the database to install it. Regardless of what connection string you specify, the user must have dbcreator rights in SQL Server.
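To make the shape of such a connection string concrete, here is a minimal Python sketch that assembles one from its parts. `Data Source`, `Initial Catalog` and `Integrated Security` are standard SQL Server connection-string keywords, but the helper function and the database name `MyAppData` are hypothetical, and the exact string the Publish Wizard generates may differ.

```python
def build_connection_string(server: str, instance: str, database: str) -> str:
    """Build an ADO.NET-style connection string using Windows integrated security."""
    parts = {
        # Named instances are addressed as <servername>\<instancename>.
        "Data Source": f"{server}\\{instance}",
        "Initial Catalog": database,
        "Integrated Security": "True",
    }
    return ";".join(f"{key}={value}" for key, value in parts.items())


# "MyAppData" is a made-up database name used only for illustration.
print(build_connection_string("LSSERVER", "SQLEXPRESS", "MyAppData"))
```

With integrated security no user name or password appears in the string, so the connection runs under the Windows account of whoever is publishing, which is why that account needs dbcreator rights.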