Windows Azure and Cloud Computing Posts for 3/26/2011+

| A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles. |

• Updated 3/27/2011 with articles marked • from the Windows Azure Team, Firedancer, Flick Software, Alex Williams, SQL Azure Labs, Saugatuck Technology, Damir Dobric, Graham Callidine, Bruce Guptill, David Linthicum, Robert Green, Inge Henriksen and Bert Craven.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

Richard Parker and Valeriano Tortola reported on 3/26/2011 the availability of Beta 2 of their FTP to Azure Blob Storage Bridge project on CodePlex:

Project Description

Deployed in a worker role, the code creates an FTP server that can accept connections from all popular FTP clients (like FileZilla, for example) for command and control of your blob storage account.

What does it do?

It was created to let each authenticated user upload to their own unique container within your blob storage account, and to restrict them to that container only (exactly like their 'home directory'). You can, however, easily modify the authentication code to support almost any configuration you can think of.

Using this code, you can configure an FTP server much like a traditional deployment, backed by blob storage and serving multiple users.

This project contains slightly modified source code from Mohammed Habeeb's project, "C# FTP Server" and runs in an Azure Worker Role.

Can I help out?

Most certainly. If you're interested, please download the source code, start tinkering and get in touch!
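The per-user "home directory" behaviour described above comes down to mapping each authenticated user to one container and rejecting paths that climb out of it. The Ruby sketch below is a hypothetical illustration, not code from the CodePlex project. `home_container_for` and `resolve_path` are names invented here. The name check follows the blob container naming rules: 3 to 63 characters; lowercase letters, digits and hyphens; starts and ends with a letter or digit; no consecutive hyphens.

```ruby
# Hypothetical helpers illustrating a per-user "home container" policy.
# Container names: 3-63 chars, [a-z0-9-], start/end alphanumeric, no "--".
CONTAINER_NAME = /\A[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}\z/

def home_container_for(username)
  # Lowercase, turn disallowed characters into hyphens, collapse
  # runs of hyphens, and trim hyphens from both ends.
  name = username.downcase.gsub(/[^a-z0-9]/, '-').squeeze('-').gsub(/\A-+|-+\z/, '')
  # Pad short names and truncate long ones (then re-trim a trailing hyphen).
  name = name.ljust(3, '0')[0, 63].sub(/-+\z/, '')
  # Defensive check: the steps above should always yield a valid name.
  raise ArgumentError, "cannot map #{username.inspect}" unless name =~ CONTAINER_NAME
  name
end

# Resolves an FTP path (relative to the user's home) to [container, blob name],
# refusing ".." segments that would climb out of the home container.
def resolve_path(username, ftp_path)
  parts = []
  ftp_path.split('/').each do |seg|
    next if seg.empty? || seg == '.'
    if seg == '..'
      raise ArgumentError, 'path escapes home container' if parts.empty?
      parts.pop
    else
      parts << seg
    end
  end
  [home_container_for(username), parts.join('/')]
end

p home_container_for('Alice.Smith')             # => "alice-smith"
p resolve_path('Alice.Smith', '/docs/../a.txt') # => ["alice-smith", "a.txt"]
```

Note that sanitizing can map two distinct user names (for example `alice.smith` and `alice_smith`) to the same container. A real deployment would need a collision-free mapping, such as a lookup table.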

Thomas Conte posted Deploying Ruby in Windows Azure with access to Windows Azure Blobs, Tables and Queues on 3/22/2011:

You probably know already that the Windows Azure Platform supports many types of technologies; we often mention Java or PHP in that regard, but today I would like to show you how to deploy a basic Ruby application in Windows Azure. This will also give us the opportunity to see a few utilities and tips & tricks around application deployment automation in Windows Azure Roles using Startup Tasks.

First, a quick word about Windows Azure Platform support in Ruby in general: I am going to use the Open Source WAZ-Storage library that allows you to access Windows Azure Blobs, Tables & Queues from your Ruby code. This will allow us both to run our Ruby application in the cloud, and leverage our non-relational storage services.

To start with the most compact application possible, I am going to use the Sinatra framework, which allows very terse Web application development. Here is the small test application I am going to deploy. It consists of a single Ruby file:

```ruby
require 'rubygems'
require 'sinatra'
require 'waz-blobs'
require 'kconv'

# Lists the names of the blobs in the "images" container, one per line.
get '/' do
  # Replace 'foo' and 'bar' with your storage account name and access key.
  WAZ::Storage::Base.establish_connection!(:account_name => 'foo', :access_key => 'bar', :use_ssl => true)
  c = WAZ::Blobs::Container.find('images')
  blobnames = c.blobs.map { |blob| blob.name }
  blobnames.join("<br/>")
end
```

Here are a few key points of this superb application:

- It is a test application that will run within Sinatra’s “development” configuration, and not a real production configuration… But this will do for our demonstration!

- The WAZ-Storage library is very easy to use: just replace “foo” and “bar” in establish_connection!() with your Storage account name and key, then choose an existing container name for the call to Container.find()

- The code will assemble the list of Blob names from the container into a character string that will be displayed in the resulting page

- The WAZ-Storage library of course exposes a much more complete API, which allows creation, modification and deletion of Blobs and Containers… You can download the library from its GitHub page, and you are of course welcome to contribute ;-)
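The route body above mixes the storage call with building the page. The small refactor below is an assumption, not part of the original post. It moves the string-building into a plain method, here called `blob_list_html` (a name invented for this example), which you can test locally without a storage account. It also HTML-escapes each name, because blob names can contain characters such as `<` or `&`.

```ruby
require 'cgi'

# Builds the HTML fragment returned by the '/' route: one escaped
# blob name per line, separated by <br/> tags as in the original app.
def blob_list_html(blob_names)
  blob_names.map { |name| CGI.escapeHTML(name) }.join('<br/>')
end

puts blob_list_html(['cat.png', 'a<b>.jpg'])
# prints: cat.png<br/>a&lt;b&gt;.jpg
```

Inside the Sinatra route, the last two lines would then become `blob_list_html(c.blobs.map { |blob| blob.name })`.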

Let’s see how we are going to prepare our environment to execute this application in Windows Azure.

We are going to use a Worker Role to execute the Ruby interpreter. The benefit of using the Ruby interpreter and the Sinatra framework is that their impact on the system is very light: all you need to do is install the interpreter, download a few Gems, and you will have everything you need. It’s a perfect scenario to use Startup Tasks in a Worker Role, a feature that will allow us to customize our environment and still benefit from the Platform As A Service (PAAS) features, like automatic system updates & upgrades.

I have created a new project of type Cloud Service in Visual Studio 2010, where I added a Worker Role. I then added a “startup” directory in the Worker Role project, where I will store all the files required to install Ruby. It looks like this:

Let’s see what all these files do:

ruby-1.9.2-p180-i386-mingw32.7z is of course the Ruby interpreter itself. I chose the RubyInstaller for Windows version, which is ready to use. I chose the 7-Zip version instead of the EXE installer, because it will just make operations simpler (all you need to do is extract the archive contents). I could also dynamically download the archive (see below), but since it’s only 6 MB, I finally decided to include it directly in the project.

test.rb is of course my Ruby “application”.

curl is a very popular command-line utility, that allows you to download URL-accessible resources (like files via HTTP). I am not using it for the moment, but it can be very useful for Startup Tasks: you can use it to download the interpreter, or additional components, directly from their hosting site (risky!) or from a staging area in Blob Storage. It is typically what I would do for bigger components, like a JDK. I downloaded the Windows 64-bit binary with no SSL.

7za is of course the command-line version of 7-Zip, the best archiver on the planet. You will find this version on the download page.

WAUtils will be used in the startup script to find the path of the local storage area that Windows Azure allocated for me. This is where we will install the Ruby interpreter. There is no way to “guess” the path of this area; you need to ask the Azure runtime environment for it. The source code for WAUtils is laughably simple. I just compiled it in a separate project and copied the executable into my “startup” folder.

```csharp
using Microsoft.WindowsAzure.ServiceRuntime;

namespace WAUtils
{
    class Program
    {
        static void Main(string[] args)
        {
            if (args.Length < 2)
            {
                System.Console.Error.WriteLine("usage: WAUtils [GetLocalResource <resource name>|GetIPAddresses <rolename>]");
                System.Environment.Exit(1);
            }
            switch (args[0])
            {
                case "GetLocalResource":
                    GetLocalResource(args[1]);
                    break;
                case "GetIPAddresses":
                    // Not implemented in this sample.
                    break;
                default:
                    System.Console.Error.WriteLine("unknown command: " + args[0]);
                    System.Environment.Exit(1);
                    break;
            }
        }

        // Prints the root path of the named local storage resource declared in ServiceDefinition.csdef.
        static void GetLocalResource(string arg)
        {
            LocalResource res = RoleEnvironment.GetLocalResource(arg);
            System.Console.WriteLine(res.RootPath);
        }
    }
}
```

As you can see, I use the RoleEnvironment.GetLocalResource() method to find the path to my local storage.

Let’s now have a look at my solution’s ServiceDefinition.csdef. This is where I will add the local storage definition, called “Ruby”, for which I requested 1 GB.

```xml
<LocalResources>
  <LocalStorage name="Ruby" cleanOnRoleRecycle="false" sizeInMB="1024" />
</LocalResources>
```

I will also add an Input Endpoint, asking the Windows Azure network infrastructure to expose my local port 4567 (Sinatra’s default) to the outside world as port 80.

```xml
<Endpoints>
  <InputEndpoint name="Ruby" protocol="tcp" port="80" localPort="4567" />
</Endpoints>
```

I will then add two Startup Tasks:

```xml
<Startup>
  <Task commandLine="startup\installruby.cmd" executionContext="elevated" />
  <Task commandLine="startup\startruby.cmd" taskType="background" />
</Startup>
```

- installruby.cmd will execute with Administrator rights (even if it is not strictly required in this case… but this is typically what you would use for system configuration tasks)

- startruby.cmd will start the Ruby interpreter, will not use Administrator rights (for security reasons), and will run in “background” mode, which means that the Worker Role will not wait for the task to complete before finishing its startup procedure.

Let’s now see these two scripts!

First, installruby.cmd:

```bat
cd %~dp0
for /f %%p in ('WAUtils.exe GetLocalResource Ruby') do set RUBYPATH=%%p
echo y| cacls %RUBYPATH% /grant everyone:f /t
7za x ruby-1.9.2-p180-i386-mingw32.7z -y -o"%RUBYPATH%"
set PATH=%PATH%;%RUBYPATH%\ruby-1.9.2-p180-i386-mingw32\bin
call gem install sinatra haml waz-storage --no-ri --no-rdoc
exit /b 0
```

Here are the steps:

- We run WAUtils.exe to find the local storage path, and we save it in a variable called RUBYPATH

- We use the Windows cacls.exe command to grant Everyone full control of this directory (the “echo y|” answers cacls’ confirmation prompt)

- We extract the 7-Zip archive using 7za, in the RUBYPATH directory

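- We add Ruby’s “bin” sub-directory to the PATH. This change applies only to the script’s own command session, not to the system PATH, which is why the second script sets it again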

- Finally, we use the “gem” command to download and install the Ruby libraries we need (Sinatra, Haml and WAZ-Storage); “call” is required because gem is itself a batch file on Windows

Now, startruby.cmd, which runs my application:

```bat
for /f %%p in ('startup\WAUtils.exe GetLocalResource Ruby') do set RUBYPATH=%%p
set PATH=%PATH%;%RUBYPATH%\ruby-1.9.2-p180-i386-mingw32\bin
ruby test.rb
```

That’s it! I hope these guidelines will help you re-create your own Ruby service in Windows Azure.

And thanks to my colleague Steve Marx for inspiration & guidance :-)


SQL Azure Database and Reporting

• The SQL Azure Labs Team announced the availability of a Database Import and Export for SQL Azure CTP on 3/26/2011:

SQL Azure database users have a simpler way to archive SQL Azure and SQL Server databases, or to migrate on-premises SQL Server databases to SQL Azure. Import and export services through the Data-tier Application (DAC) framework make archival and migration much easier.

The import and export features provide the ability to retrieve and restore an entire database, including schema and data, in a single operation. If you want to archive or move your database between SQL Server versions (including SQL Azure), you can export a target database to a local export file which contains both database schema and data in a single file. Once a database has been exported to an export file, you can import the file with the new import feature. Refer to the FAQ at the end of this article for more information on supported SQL Server versions.

This release of the import and export feature is a Community Technology Preview (CTP) for upcoming, fully supported solutions for archival and migration scenarios. The DAC framework is a collection of database schema and data management services, which are strategic to database management in SQL Server and SQL Azure.

Microsoft SQL Server “Denali” Data-tier Application Framework v2.0 Feature Pack CTP

The DAC Framework simplifies the development, deployment, and management of data-tier applications (databases). The new v2.0 of the DAC framework expands the set of supported objects to full support of SQL Azure schema objects and data types across all DAC services: extract, deploy, and upgrade. In addition to expanding object support, DAC v2.0 adds two new DAC services: import and export. Import and export services let you deploy and extract both schema and data from a single file identified with the “.bacpac” extension.

For an introduction to the DAC Framework and more information about it, see this whitepaper: http://msdn.microsoft.com/en-us/library/ff381683(SQL.100).aspx

Installation

In order to use the new import and export services, you will need the .NET 4 runtime installed. You can obtain the .NET 4 installer from the following link.

With .NET 4 installed, you will then install the following five SQL Server redistributable components on your machine. The only ordering requirement is that SQLSysClrTypes be installed before SharedManagementObjects.

1. SQLSysClrTypes.msi

2. SharedManagementObjects.msi

3. DACFramework.msi

4. SqlDom.msi

5. TSqlLanguageService.msi

Note: Side-by-side installations with SQL Server “Denali” CTP 1 are not supported and will cause problems. Installations of SQL Server 2008 R2 and earlier will not be affected by these CTP components.
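If you script the installation, you can encode the one ordering rule stated above (SQLSysClrTypes before SharedManagementObjects) directly in the script. The Ruby sketch below only builds `msiexec` command lines in the listed order and checks that rule. `/i` (install) and `/qn` (no UI) are standard msiexec switches. However, it is an unverified assumption that these CTP packages install unattended without extra properties. On Windows, you would run each command with `system(*cmd)`.

```ruby
# The five redistributables, in the order listed above.
PACKAGES = %w[
  SQLSysClrTypes.msi
  SharedManagementObjects.msi
  DACFramework.msi
  SqlDom.msi
  TSqlLanguageService.msi
].freeze

# Returns one argv array per package; raises if the only documented
# ordering constraint (SQLSysClrTypes before SharedManagementObjects) is broken.
def install_commands(packages = PACKAGES)
  clr = packages.index('SQLSysClrTypes.msi')
  smo = packages.index('SharedManagementObjects.msi')
  unless clr && smo && clr < smo
    raise ArgumentError, 'SQLSysClrTypes must precede SharedManagementObjects'
  end
  packages.map { |msi| ['msiexec', '/i', msi, '/qn'] }
end

install_commands.each { |cmd| puts cmd.join(' ') }
```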

Usage

You can leverage the import and export services by using the following .EXE which is provided as an example only.

DacImportExportCli.zip

Sample Commands

Assume a database exists that is running on SQL Server 2008 R2, which a user has federated (or integrated security) access to. The database can be exported to a “.bacpac” file by calling the sample EXE with the following arguments:

DacImportExportCli.exe -s serverName -d databaseName -f C:\filePath\exportFileName.bacpac -x -e

Once exported, the export file can be imported to a SQL Azure database with:

DacImportExportCli.exe -s serverName.database.windows.net -d databaseName -f C:\filePath\exportFileName.bacpac -i -u userName -p password

A DAC database running in SQL Server or SQL Azure can be unregistered and dropped with:

DacImportExportCli.exe -s serverName.database.windows.net -drop databaseName -u userName -p password

You can also just as easily export a SQL Azure database to a local .bacpac file and import it into SQL Server.
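The three sample commands differ only in their switches. As a hypothetical convenience, the Ruby sketch below assembles the argument lists using exactly the switches shown in the samples (`-s`, `-d`, `-f`, `-x`, `-e`, `-i`, `-u`, `-p`, `-drop`). The method names are invented here. The meaning of each switch (for example, `-e` for integrated security) is inferred from the examples, not taken from documentation of the sample EXE. Passing an array to Ruby's `system` runs the tool without going through a shell, so passwords need no quoting.

```ruby
EXE = 'DacImportExportCli.exe'

# Export using integrated security, as in the first sample command.
def export_args(server, database, file)
  [EXE, '-s', server, '-d', database, '-f', file, '-x', '-e']
end

# Import a .bacpac into SQL Azure with SQL authentication, as in the second sample.
def import_args(server, database, file, user, password)
  [EXE, '-s', server, '-d', database, '-f', file, '-i', '-u', user, '-p', password]
end

# Unregister and drop a DAC database, as in the third sample.
def drop_args(server, database, user, password)
  [EXE, '-s', server, '-drop', database, '-u', user, '-p', password]
end

p export_args('serverName', 'databaseName', 'C:\filePath\exportFileName.bacpac')
# On Windows, system(*export_args(...)) would run the export.
```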

Frequently Asked Questions

A FAQ is provided to answer some common questions about importing and exporting databases with SQL Azure.

The FAQ can be found on the TechNet Wiki.

See also Steve Yi’s Simplified Import and Export of Data post of 3/24/2011 to the SQL Azure Team blog below.

• The Windows Azure Team recommended on 3/26/2011 that you try the Windows Azure Platform Academic 180 day pass if you meet the qualifications and have a promo code; see the Windows Azure Infrastructure and DevOps section below. (Users of previous Windows Azure passes need not apply.)

Steve Yi reported Simplified Import and Export of Data in a 3/24/2011 post to the SQL Azure Team blog:

Importing and exporting data between on-premises SQL Server and SQL Azure just got a lot easier, and you can get started today with the availability of the Microsoft SQL Server "Denali" Data-tier Application (DAC) Framework v2.0 Feature Pack CTP. Let's call this the DAC framework from this point on. To learn more about DAC, you can read this whitepaper.

If you're eager to try it out, go to the SQL Azure Labs page; otherwise read on for a bit to learn more.

There are 3 important things about this update to the DAC framework:

- 1. New import & export feature: This DAC CTP introduces an import and export feature that lets you store both database schema and data into a single file with a ".bacpac" file extension, radically simplifying data import and export between SQL Server and SQL Azure databases with the use of easy commands.

- 2. Free: This functionality will ship in all editions of the next version of SQL Server "Denali", including free SKUs, and everyone will be able to download it.

- 3. The Future = Hybrid Applications Using SQL Server + SQL Azure: Freeing information to move back and forth from on-premises SQL Server and SQL Azure to create hybrid applications is the future of data. The tools that ship with SQL Server "Denali" will use the DAC framework to enable data movement as part of normal management tasks.

The Data-tier Application (DAC) framework is a collection of database schema and data management libraries that are strategic to database management in SQL Server and SQL Azure. In this CTP, the new import and export feature allows the retrieval and restoration of a full database, including schema and data, in a single operation.

If you want to archive or move your database between SQL Server versions and SQL Azure, you can export a target database to a single export file that contains both the database schema and the data. The export also includes logins, users, tables, columns, constraints, indexes, views, stored procedures, functions, and triggers. Once a database has been exported, users can import the file with the new import operation.

This release of the import and export feature is a preview for fully supported archival and migration capability of SQL Azure and SQL Server databases. In coming months, additional enhancements will be made to the Windows Azure Platform management portal. Tools and management features shipping in upcoming releases of SQL Server and SQL Azure will have more capabilities powered by DAC, providing increased symmetry in what you can accomplish on-premises and in the cloud.

How Do I Use It?

Assume a database exists that is running within an on-premises SQL Server 2008 R2 instance that a user has access to. You can export the database to a single ".bacpac" file by going to a command line and typing:

DacImportExportCli.exe -s serverName -d databaseName -f C:\filePath\exportFileName.bacpac -x -e

Once exported, the newly created file with the extension ".bacpac" can be imported to a SQL Azure database if you type:

DacImportExportCli.exe -s serverName.database.windows.net -d databaseName -f C:\filePath\fileName.bacpac -i -u userName -p password

A DAC database running in SQL Server or SQL Azure can be unregistered and dropped with:

DacImportExportCli.exe -s serverName.database.windows.net -drop databaseName -u userName -p password

You can also just as easily export a SQL Azure database to a local export file and import it into SQL Server.

How Should I Use the Import and Export Features?

It's important to note that export is not a recommended backup mechanism for SQL Azure databases. (We're working on that, so look for an update in the near future.) The export file doesn't store transaction log or historical data. The export file simply contains the contents of a SELECT * for any given table and is not transactionally consistent by itself.

However, you can create a transactionally consistent export by creating a copy of your SQL Azure database and then doing a DAC export on that copy. This article has details on how you can quickly create a copy of your SQL Azure database. If you export from on-premises SQL Server instances, you can isolate the database by placing it in single-user mode or read-only mode, or by exporting from a database snapshot.
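The copy-then-export approach can be scripted. This Ruby sketch assumes SQL Azure's `CREATE DATABASE ... AS COPY OF ...` statement. It also assumes the `state_desc` column of `sys.databases`, which reports `COPYING` until the copy completes and `ONLINE` afterwards. The script only generates the T-SQL and the export arguments. The database names and the `_export_copy` suffix are hypothetical. Combining the export switch `-x` with `-u`/`-p` is also an assumption, modelled on how the import sample uses them. Run the T-SQL against the `master` database of your SQL Azure server and wait for `ONLINE` before exporting. Because the copy is a separate database, drop it when you are done.

```ruby
# Quotes a T-SQL identifier with brackets, doubling any closing bracket.
def quote_ident(name)
  "[#{name.gsub(']', ']]')}]"
end

# Step 1 (run in master): start a transactionally consistent copy.
def copy_sql(source, copy)
  "CREATE DATABASE #{quote_ident(copy)} AS COPY OF #{quote_ident(source)};"
end

# Step 2: poll until this returns ONLINE (it reports COPYING while in progress).
def copy_state_sql(copy)
  "SELECT state_desc FROM sys.databases WHERE name = '#{copy.gsub("'", "''")}';"
end

# Step 3 (assumption): export the copy with SQL authentication.
def export_copy_args(server, copy, file, user, password)
  ['DacImportExportCli.exe', '-s', server, '-d', copy, '-f', file, '-x', '-u', user, '-p', password]
end

source = 'Sales'
copy = "#{source}_export_copy"   # hypothetical naming convention
puts copy_sql(source, copy)      # prints: CREATE DATABASE [Sales_export_copy] AS COPY OF [Sales];
puts copy_state_sql(copy)
```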

We are considering additional enhancements to make it easier to export or restore SQL Azure databases with export files stored in cloud storage - so stay tuned.

Tell Us What You Think

We're really interested to hear your feedback and learn about your experience using this new functionality. Check out the SQL Azure forum and send us your thoughts here.

Check out the SQL Azure Labs page for installation and usage instructions, and frequently asked questions (FAQ).

Tony Bailey claimed SQL Migration Wizard “solves a big problem for me” in a 3/24/2011 post to the TechNet blogs:

My colleague George [Huey] is working hard on the SQL Azure Migration Wizard [SQLAzureMW]

Some of the tool reviews speak for themselves.

Why SQL Azure?

- Relational database service built on SQL Server technologies

- If you know SQL, why waste development hours trying to configure and maintain your own or your hosted SQL Server box?

- SQL Azure provides a highly available, scalable, multi-tenant database service hosted by Microsoft in the cloud

So, use the tool to migrate a SQL database to SQL Azure. SQLAzureMW is a user interactive wizard that walks a person through the analysis / migration process.

Got a question about SQL migration? Get free help.

Sign up for Microsoft Platform Ready: http://www.microsoftplatformready.com/us/dashboard.aspx

Chat and phone support hours are 7:00 am to 3:00 pm Pacific Time.

I can attest to SQLAzureMW’s superior upgrade capabilities. See my Using the SQL Azure Migration Wizard v3.3.3 with the AdventureWorksLT2008R2 Sample Database post of 7/18/2010.

For a bit of history, read my Using the SQL Azure Migration Wizard with the AdventureWorksLT2008 Sample Database post of 9/21/2009.

Steve Yi pointed to an MSDN Article: How to Connect to SQL Azure Using sqlcmd in a 3/24/2011 post to the SQL Azure team blog:

One of the reasons that SQL Azure is such a powerful tool is that it's based on the same database engine as SQL Server. For example, you can connect to the Microsoft SQL Azure Database with the sqlcmd command prompt utility that is included with SQL Server. The sqlcmd utility lets you enter Transact-SQL statements, system procedures, and script files at the command prompt. MSDN has written a tutorial on how to do this. Take a look and get a feel for how easy it is to get started using SQL Azure.
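The Ruby sketch below illustrates the kind of connection the tutorial describes by building a sqlcmd argument list for SQL Azure. It uses the standard sqlcmd switches `-S`, `-d`, `-U`, `-P` and `-Q`. It also follows the SQL Azure conventions of prefixing the server name with `tcp:` and writing the user name as `login@server`. The server, login, password and query values are placeholders, and the method name is invented here.

```ruby
# Builds argv for running one query with sqlcmd against SQL Azure.
def sqlcmd_args(server, database, login, password, query)
  short_name = server.split('.').first   # e.g. "x4ju9mtuoi"
  ['sqlcmd',
   '-S', "tcp:#{server}",                # fully qualified name with tcp: prefix
   '-d', database,
   '-U', "#{login}@#{short_name}",       # SQL Azure expects login@server
   '-P', password,
   '-Q', query]                          # run the query, then exit
end

args = sqlcmd_args('x4ju9mtuoi.database.windows.net', 'master', 'admin', 'secret', 'SELECT @@VERSION')
puts args.join(' ')
# system(*args) would run it on a machine with sqlcmd installed.
```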

Steve Yi pointed to another MSDN Article: SQL Azure Data Sync Update in a 3/24/2011 post to the SQL Azure Team blog:

SQL Azure Data Sync CTP2, announced at PDC 2010, has been progressing successfully and has drawn so much interest that we are going to continue processing registrations throughout March 2011. For those of you who are waiting for access, we appreciate your patience. We'll release CTP3 this summer, and it will be available to everyone.

While on the subject of CTP3, here are some of the new features planned:

- A new user interface integrated with the Windows Azure management portal.

- The ability to select a subset of columns to sync as well as to specify a filter so that only a subset of rows are synced.

- The ability to make certain schema changes to the database being synced without having to re-initialize the sync group and re-sync the data.

- The ability to specify a conflict resolution policy.

- General usability enhancements.

CTP3 will be the last preview, before the final release later this year. We'll announce the release dates on this blog when available.

Click here for the link to the MSDN article

Bill Ramo (@billramo) explained Migrating from MySQL to SQL Azure Using SSMA in a 3/23/2011 post to the Microsoft SQL Server Migration Assistant (SSMA) Team blog:

In this blog post, I will describe how to set up your trial SQL Azure account for your migration project, create a “free” database on SQL Azure and walk through the differences in the process of using SSMA to migrate the tables from the MySQL Sakila sample database to SQL Azure. For a walkthrough of how to migrate a MySQL database to SQL Server, please refer to the post “MySQL to SQL Server Migration: How to use SSMA”. This blog assumes that you have a local version of the MySQL Sakila-DB sample database already installed and that you have SQL Server Migration Assistant for MySQL v1.0 (SSMA) installed and configured using the instructions provided in the “MySQL to SQL Server Migration: How to use SSMA” blog post.

Getting Started with SQL Azure

If you don’t have a SQL Azure account, you can get a free trial special at http://www.microsoft.com/windowsazure/offers/ through June 30th, 2011. The trial includes a 1GB Web Edition database. Click the Activate button to get your account up and running. You’ll log in with your Windows Live ID and then complete a four-step wizard. Once you are done, the wizard will take you to the Windows Azure Platform portal. If you miss this trial, stay tuned for additional trial offers for SQL Azure.

The next step is to create a new SQL Azure Server by clicking on the “Create a new SQL Azure Server” option in the Getting Started page. You will be prompted for your subscription name that you created in the wizard, the region where your SQL Azure server should be hosted, the Administrator Login information, and the firewall rules. You will need to configure the firewall rules to specify the IPv4 address for the computer where you will be running SSMA.

Click on the Add button to add your firewall rule. Give it a name and then specify a start and end range. The dialog will display your current IPv4 address so that you can enter it in for your start and end range.
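A firewall rule is an inclusive start/end range of IPv4 addresses. The Ruby sketch below, which uses the standard `ipaddr` library, shows how such a rule matches a client address. It illustrates the concept only and does not show how the SQL Azure service implements its firewall. The `FirewallRule` struct, the rule name and the addresses (taken from a documentation range) are hypothetical.

```ruby
require 'ipaddr'

# An inclusive IPv4 range like the start/end pair entered in the portal.
FirewallRule = Struct.new(:name, :start_ip, :end_ip) do
  def allows?(client_ip)
    ip = IPAddr.new(client_ip).to_i
    IPAddr.new(start_ip).to_i <= ip && ip <= IPAddr.new(end_ip).to_i
  end
end

# Single-address rule: start and end are both the machine running SSMA.
ssma_box = FirewallRule.new('SSMA workstation', '203.0.113.25', '203.0.113.25')
puts ssma_box.allows?('203.0.113.25')  # prints: true
puts ssma_box.allows?('203.0.113.26')  # prints: false
```

Entering the same address as both start and end, as the portal dialog suggests, yields a rule that admits exactly one machine.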

Once you are done with the firewall rules, you can click your subscription name in the Azure portal to display the fully qualified server name that was just created for you. It will look something like this: x4ju9mtuoi.database.windows.net. You are going to use this server name for your SSMA project.

Using the Azure Portal to Create a Database

SSMA can create a database as part of the project, but for this blog post I’m going to walk through the process of creating the database using the portal and then use the SSMA feature to place the migrated objects in a schema within that database. Within the Azure portal, with the subscription selected, click the Create Database command to start the process.

Enter the name of the database and keep the other options at their defaults for your free trial account. If you have already paid for a SQL Azure account, you can use the Web edition to go up to 5 GB or switch to the Business edition to raise the size limit to between 10 GB and 50 GB. Once the database is created, click the Connection Strings – View button to display the connection string information you will use for your SSMA project, shown below. The password value is only a placeholder.
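The connection strings the portal displays follow the ADO.NET pattern. The Ruby sketch below assembles one from the pieces discussed so far. The keys reflect the usual SQL Azure format: a `tcp:` server prefix, port 1433, a `login@server` user ID, and `Encrypt=True`. Compare the result with what your own portal shows. The database name, login and password are placeholders. The sketch does not escape values, so a password containing `;` would need quoting.

```ruby
# Assembles an ADO.NET-style connection string for a SQL Azure database.
def sql_azure_connection_string(fq_server, database, login, password)
  short_name = fq_server.split('.').first
  {
    'Server'             => "tcp:#{fq_server},1433",
    'Database'           => database,
    'User ID'            => "#{login}@#{short_name}",
    'Password'           => password,
    'Trusted_Connection' => 'False',
    'Encrypt'            => 'True'
  }.map { |key, value| "#{key}=#{value}" }.join(';') + ';'
end

puts sql_azure_connection_string('x4ju9mtuoi.database.windows.net', 'sakila', 'admin', 'myPassword')
# prints: Server=tcp:x4ju9mtuoi.database.windows.net,1433;Database=sakila;User ID=admin@x4ju9mtuoi;Password=myPassword;Trusted_Connection=False;Encrypt=True;
```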

You are now ready to set up your SSMA project.

Using SSMA to Migrate a MySQL Database to SQL Azure

Once you start SSMA, click the New Project command shown in the image below, enter the name of the project, and select the SQL Azure option for the Migration To control.

You will then follow the same processes described in the “MySQL to SQL Server Migration: How to use SSMA” blog post that I will outline below.

- Click on the Connect to MySQL toolbar command to complete the MySQL connection information.

- Expand out the Databases node to expand the Sakila database and check the Tables folder as shown below.

- Click on the Create Report command in the toolbar button. You can ignore the errors. For information about the specific errors with converting the Sakila database, please refer to the blog post “MySQL to SQL Server Migration: Method for Correcting Schema Issues”. Close the report window.

- Click on the Connect to SQL Azure button to complete the connection to your target SQL Azure database. Use the first part of the server name and the user name for the administrator as shown below. If you need to change the server name suffix to match your server location, click on the Tools | Project Settings command, click on the General button in the lower left of the dialog and then click on the SQL Azure option in the tree above. From there you can change the suffix value.

- Expand out the Databases node to see the name of the database created in the SQL Azure portal. You will see a Schemas folder under the database name that will be the target for the Sakila database as shown below.

- With the Tables node selected in the MySQL Metadata Explorer, click the Convert Schema command to create a schema named Sakila containing the Sakila tables within the SSMA project as shown below.

- Right-click the Sakila schema above and choose the Synchronize with Database command to write the schema changes to your SQL Azure database, then click OK to confirm the synchronization of all objects. This process creates a SQL Server schema object named Sakila within your database, and all the objects from your MySQL database go into that schema.

- Select the Tables node for the Sakila database in the MySQL Metadata Explorer and then issue the Migrate Data command from the toolbar. Complete the connection dialog to your MySQL database and the connection dialog to the SQL Azure database to start the data transfer. Assuming all goes well, you can dismiss the Data Migration Report as shown below.

At this point, you now have all of the tables and their data loaded into your SQL Azure database contained in a schema named Sakila.

Validating the Results with the SQL Azure Database Manager

To verify the transfer of the data, you can use the Manage command in the Azure Portal, as shown below. Just select the database and press the Manage command.

This will launch the SQL Azure Database Manager program in a new browser window with the Server, Database, and Login information prepopulated in the connection dialog. If you have other SQL Azure databases you want to connect to without having to go to the portal, you can always connect via the URL - https://manage-ch1.sql.azure.com/.

Once connected, you can expand the Tables node and select a table like sakila.film to view the structure of the table. You can click on the Data command in the toolbar to view and edit the table’s data as shown below.

The SQL Azure Database Manager also allows you to write and test queries against your database: click the Database command and then select the New Query button in the ribbon. To learn more about this tool, check out the MSDN topic Getting Started with The Database Manager for SQL Azure.

Additional SQL Azure Resources

To learn more about SQL Azure, see the following resources.

Steve Yi explained Working with SQL Azure using .NET in a 3/23/2011 post to the SQL Azure Team blog:

In this quick 10 minute video, you'll get an understanding of how to work with SQL Azure using .NET to create a web application. I'll demonstrate this using the latest technologies from Microsoft, including ASP.NET MVC3 and Entity Framework.

Although the walkthrough uses Entity Framework, the same techniques can be used with traditional ADO.NET or other third-party data access systems such as NHibernate or SubSonic. In a previous walk-through, we used Microsoft Access to track employee expense reports. We showed how to move the expense-report data to SQL Azure while still maintaining the on-premises Access application.

In this video, we will show how to extend the usage of this expense-report data that's now in our SQL Azure cloud database and make it more accessible via an ASP.NET MVC web application.

The sample code and database scripts are available on Codeplex, if you want to try this for yourself.

If you want to view it in full-screen mode, go here and click on the full-screen icon in the lower right-hand corner of the playback window.

We've got several other tutorials on the SQL Azure Codeplex site at http://sqlazure.codeplex.com. Other walk-throughs and samples there include ones on how to secure a SQL Azure database, programming .NET with SQL Azure, and several others.

If you haven't started experimenting with SQL Azure, for a limited time, new customers to SQL Azure get a 1GB Web Edition Database for no charge and no commitment for 90 days. This is a great way to try SQL Azure and the Windows Azure platform without any of the risk. Check it out here.

Steve Yi announced another video about SQL Azure in Securing SQL Azure of 3/23/2011:

In this brief 10 minute video, I will demonstrate how to use the security features in SQL Azure to control access to your cloud database. This walkthrough shows how to create a secure connection to a database server, how to manage SQL Azure database settings on the firewall, and how to create and map users and logins to control authentication and authorization.

One of the strengths of SQL Azure is that it provides encrypted SSL communications between the cloud database and the running application. It doesn't matter whether it is running on Windows Azure, on-premises, or with a hosting service. All communications between the SQL Azure Database and the application utilize encryption (SSL) at all times.

Firewall rules allow only specific IP addresses or IP-address ranges to access a SQL Azure database. As in SQL Server, logins and users can be mapped to specific database-level or server-level roles, which can provide complete control, restrict a user to read-only access to a database, or deny access altogether.
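Conceptually, each SQL Azure firewall rule is a named start/end range of IPv4 addresses, and a client gets through only if its address falls inside at least one rule. The Python sketch below models that check; the tuple shape, rule names and addresses are hypothetical, and SQL Azure performs the real check on its side.

```python
import ipaddress
from typing import Iterable, Optional, Tuple

# (rule name, start IPv4 address, end IPv4 address): an illustrative shape,
# not the SQL Azure management API.
Rule = Tuple[str, str, str]


def matching_rule(client_ip: str, rules: Iterable[Rule]) -> Optional[str]:
    """Return the first rule whose inclusive range contains client_ip, or None."""
    addr = ipaddress.IPv4Address(client_ip)
    for name, start, end in rules:
        if ipaddress.IPv4Address(start) <= addr <= ipaddress.IPv4Address(end):
            return name
    return None  # no rule matched, so the connection attempt is refused


# Hypothetical rules using documentation-only address blocks.
rules = [
    ("Office", "203.0.113.0", "203.0.113.255"),
    ("BuildServer", "198.51.100.7", "198.51.100.7"),
]

print(matching_rule("203.0.113.42", rules))  # Office
print(matching_rule("192.0.2.1", rules))     # None
```

A single-address rule is simply a range whose start and end are the same, as with the hypothetical BuildServer entry.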

I will walk you through a straightforward example here, which really shows the benefits of SQL Azure. After checking out this video, visit Sqlazure.com for more information and for additional resources. There's also database scripts and code you can download on Codeplex to follow along and re-create this example.

If you want to view it in full-screen mode, go here and click on the full-screen icon in the lower right-hand corner of the playback window.

<Return to section navigation list>

MarketPlace DataMarket and OData

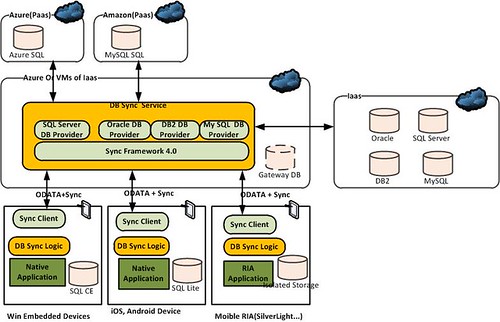

• Flick Software introduced an OData Sync Client for Android on 3/26/2011:

The following is an intro to OData + Sync protocol from the Microsoft website:

"The OData + Sync protocol enables building a service and clients that work with common set of data. Clients and the service use the protocol to perform synchronization, where a full synchronization is performed the first time and incremental synchronization is performed subsequent times.

The protocol is designed with the goal to make it easy to implement the client-side of the protocol, and most (if not all) of the synchronization logic will be running on the service side. OData + Sync is intended to be used to enable synchronization for a variety of sources including, but not limited to, relational databases and file systems. The OData + Sync Protocol comprises the following group of specifications, which are defined later in this document:”

| Specification | Description |
| --- | --- |
| OData + Sync: HTTP | Defines conventions for building HTTP-based synchronization services, and Web clients that interact with them. |
| OData + Sync: Operations | Defines the request types (upload changes, download changes, etc.) and associated responses used by the OData + Sync protocol. |
| OData + Sync: ATOM | Defines an Atom representation for the payload of an OData + Sync request/response. |
| OData + Sync: JSON | Defines a JSON representation of the payload of an OData + Sync request/response. |

Our Android OData + Sync client implements the OData + Sync: ATOM protocol: it tracks changes in SQLite, incrementally transfers DB changes and resolves conflicts. It wraps up these general sync tasks so that applications can focus on implementing business-specific sync logic. Using it, developers can sync their SQLite DB with almost any type of relational DB on the backend server or in the cloud. It can give a boost to the development of mission-critical business applications on Android. These types of applications use SQLite as local storage to persist data and sync data to/from the backend (clouds) when a connection is available. So the business workflow won’t be interrupted by a poor wireless signal, data loss caused by hardware/software defects will be minimized and data integrity will be guaranteed.
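The "tracks changes in SQLite, incrementally transfers DB changes" part can be illustrated with Python's built-in sqlite3 module. This is a minimal sketch, not Flick Software's implementation: the `customers` and `change_log` tables and the integer anchor are hypothetical. Triggers log every insert, update and delete, and each sync reads only the log entries after the last anchor, which is what keeps every synchronization after the first one incremental. Conflict resolution and the Atom payload are not shown.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical schema: a business table plus a change log populated by triggers.
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE change_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,  -- ever-increasing sync anchor
    row_id INTEGER NOT NULL,
    op TEXT NOT NULL                        -- 'insert', 'update' or 'delete'
);
CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN INSERT INTO change_log (row_id, op) VALUES (NEW.id, 'insert'); END;
CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN INSERT INTO change_log (row_id, op) VALUES (NEW.id, 'update'); END;
CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN INSERT INTO change_log (row_id, op) VALUES (OLD.id, 'delete'); END;
"""


def open_db() -> sqlite3.Connection:
    """Create an in-memory database with change tracking enabled."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def changes_since(conn: sqlite3.Connection, anchor: int) -> Tuple[int, List[Tuple[int, str]]]:
    """Return the new anchor and the (row_id, op) changes recorded after `anchor`."""
    rows = conn.execute(
        "SELECT seq, row_id, op FROM change_log WHERE seq > ? ORDER BY seq", (anchor,)
    ).fetchall()
    new_anchor = rows[-1][0] if rows else anchor
    return new_anchor, [(row_id, op) for _, row_id, op in rows]


conn = open_db()
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Alfreds')")
conn.execute("INSERT INTO customers (id, name) VALUES (2, 'Ana Trujillo')")
anchor, pending = changes_since(conn, 0)       # first sync starts from anchor 0
print(anchor, pending)  # 2 [(1, 'insert'), (2, 'insert')]

conn.execute("UPDATE customers SET name = 'Alfreds Futterkiste' WHERE id = 1")
anchor, pending = changes_since(conn, anchor)  # later sync: only the delta
print(anchor, pending)  # 3 [(1, 'update')]
```

The client would persist the anchor after each successful upload, so a dropped connection simply means the same delta is sent again next time.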

Pablo Castro described An efficient format for OData in a 3/25/2011 post to the OData blog:

The need for a more efficient format for OData has been coming up often lately, with this thread being the latest on the topic. I thought I would look into the topic in more detail and take a shot at characterizing the problem and start to drill into possible alternatives.

Discussing the introduction of a new format for OData is something that I think about with a lot of care. As we discussed in this forum in the past, special formats for closed systems are fine, but if we are talking about a format that most of the ecosystem needs to support then it is a very different conversation. We need to make sure it does not fragment the ecosystem and does not leave particular clients or servers out.

All that said, OData is now used in huge server farms where CPU cycles used in serializing data are tight, and in phone applications where size, CPU utilization and battery consumption need to be watched carefully. So I think it is fair to say that we need to look into this.

What problem do we need to solve?

Whenever you have a discussion about formats and performance the usual question comes up right away: do you optimize for size or speed? The funny thing is that in many, many cases the servers will want to optimize for throughput while the clients talking to those servers will likely want to optimize for whatever maximizes battery life, with whoever is paying for bandwidth wanting to optimize for size.

The net of this is that we have to find a balanced set of choices, and whenever possible leave the door open for further refinement while still allowing for interoperability. Given that, I will loosely identify the goal as creating an "efficient" format for OData and avoid embedding in the problem statement whether more efficient means more compact, faster to produce, etc.

What are the things we can change?

Assuming that the process of obtaining the data and taking it apart so it is ready for serialization is constant, the cost of serialization tends to be dominated by the CPU time it takes to convert any non-string data into strings (if doing a text-based format), encode all strings into the right character encoding and stitching together the whole response. If we were only focused on size we could compress the output, but that taxes CPU utilization twice, once to produce the initial document and then again to compress it.

So we need to write less to start with, while still not getting so fancy that we spend a bunch of time deciding what to write. This translates into finding relatively obvious candidates and eliminating redundancy there.

On the other hand, there are things that we probably do not want to change. Having OData requests and responses be self-contained is important, as it enables communication without out-of-band knowledge. OData also makes extensive use of URLs for allowing servers to encode whatever they want, such as the distribution of entities or relationships across different hosts, the encoding of continuations, locations of media in CDNs, etc.

What (not) to write (or read)

If you take a quick look at an OData payload you can quickly guess where the redundancy is. However I wanted to have a bit more quantitative data on that so I ran some numbers (not exactly a scientific study, so take it kind of lightly). I used 3 cases for reference from the sample service that exposes the classic Northwind database as an OData service:

- 1Customer: http://services.odata.org/Northwind/Northwind.svc/Customers?$top=1

- 100CustomersAndOrders: http://services.odata.org/Northwind/Northwind.svc/Customers?$top=100&$expand=Orders

- 100NarrowCustomers: http://services.odata.org/Northwind/Northwind.svc/Customers?$top=100&$select=CustomerID,CompanyName

So a really small data set, a larger and wider data set, and a larger but narrower data set.

If you contrast the Atom versus the JSON size for these, JSON is somewhere between half and a third of the size of the Atom version. Most of it comes from the fact that JSON has less redundant/unneeded content (no closing tags, no empty required Atom elements, etc.), although some is actually less fidelity, such as lack of type annotations in values.

Analyzing the JSON payload further, metadata and property names make up about 40% of the content, and system-generated URLs ~40% as well. Pure data ends up being around 20% of the content. For those that prefer to see data visually:

There is quite a bit of variability in this. I've seen feeds where URLs are closer to 20-25% and others where metadata/property names go up to 65%. In any case, there is a strong case for addressing both of them.

Approach

I'm going to try to separate the choice of actual wire format from the strategy to make it less verbose and thus "write less". I'll focus on the latter first.

From the data above it is clear that the encoding of structure (property names, entry metadata) and URLs both need serious work. A simple strategy would be to introduce two constructs into documents:

- "structural templates" that describe the shape of compound things such as records, nested records, metadata records, etc. Templates can be written once and then actual data can just reference them, avoiding having to repeat the names of properties on every row.

- "textual templates" that capture text patterns that repeat a lot throughout the document, with URLs being the primary candidates for this (e.g. you could imagine the template being something like "http://services.odata.org/Northwind/Northwind.svc/Customers('{0}')" and then the per-row value would be just what is needed to replace "{0}").

We could inline these with the data as needed, so only the templates that are really needed would go into a particular document.
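To make the textual-template idea concrete, here is a minimal Python sketch that derives a `{0}` template from a set of URLs by taking their longest common character prefix and suffix, then keeps only the varying part per row. The helper names are hypothetical, this is not a proposed OData wire format, and the character-level heuristic can absorb characters that the keys happen to share.

```python
import os
from typing import List


def derive_template(urls: List[str]) -> str:
    """Build a '{0}' textual template from the URLs' common prefix and suffix.

    Character-level heuristic for illustration; it assumes the prefix itself
    never contains the literal text '{0}'.
    """
    prefix = os.path.commonprefix(urls)
    suffix = os.path.commonprefix([u[::-1] for u in urls])[::-1]
    # Don't let the suffix overlap the prefix (e.g. when every URL is identical).
    room = min(len(u) for u in urls) - len(prefix)
    suffix = suffix[len(suffix) - room:] if room < len(suffix) else suffix
    return prefix + "{0}" + suffix


def compress(urls: List[str], template: str) -> List[str]:
    """Keep only the per-row part of each URL that replaces '{0}'."""
    prefix, _, suffix = template.partition("{0}")
    return [u[len(prefix):len(u) - len(suffix)] for u in urls]


def expand(template: str, value: str) -> str:
    """Rebuild the full URL from the template and one per-row value."""
    prefix, _, suffix = template.partition("{0}")
    return prefix + value + suffix


urls = [
    "http://services.odata.org/Northwind/Northwind.svc/Customers('ALFKI')",
    "http://services.odata.org/Northwind/Northwind.svc/Customers('BERGS')",
    "http://services.odata.org/Northwind/Northwind.svc/Customers('CACTU')",
]
template = derive_template(urls)
values = compress(urls, template)
print(template)  # http://services.odata.org/Northwind/Northwind.svc/Customers('{0}')
print(values)    # ['ALFKI', 'BERGS', 'CACTU']
assert [expand(template, v) for v in values] == urls
```

The template is written once; each row then carries only a five-character key instead of a 70-character URL.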

Actual wire format

The actual choice of wire format has a number of dimensions:

- Text or binary?

- What clients should be able to parse it?

- Should we choose a lower level transport format and apply our templating scheme on top, or use a format that already has mechanisms for eliminating redundancy?

Here are a few I looked at:

- EXI: this is a W3C recommendation for a compact binary XML format. The nice thing about this is that it is still just XML, so we could slice it under the Atom serializers and be done. It also achieves impressive levels of compression. I worry though that we would still do all the work in the higher layers (so I'm not sure about CPU savings), plus not all clients will be able to use it directly, and implementing it seems like quite a task.

- "low level" binary formats: I spent some time digging into BSON, Avro and Protocol Buffers. I call them "low level" because this would still require us to define a format on top to transport the various OData idioms, and if we want to reduce redundancy in most cases we would have to deal with that (although to be fair Avro seems to already handle the self-descriptive aspect on the structural side).

- JSON: this is an intriguing idea someone in the WCF team mentioned some time ago. We can define a "dense JSON" encoding that uses structural and textual templates. The document is still a JSON document and can be parsed using a regular JSON parser in any environment. Fancy parsers would go directly from the dense format into their final output, while simpler parsers can apply a simple JSON -> JSON transform that would return the kind of JSON you would expect for a regular scenario, with plain objects with repeating property names and all that. This approach probably comes with less optimal results in size but great interoperability while having reasonable efficiency.

Note that for the binary formats and for JSON I'm leaving compression aside. Since OData is built on HTTP, clients and servers can always negotiate compression via content encoding. This allows servers to make choices around throughput versus size, and clients to suggest whether they would prefer to optimize for size or for CPU utilization (which may reflect on battery life on portable devices).
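As a rough illustration of leaving compression to HTTP content negotiation, the Python sketch below gzips a response body only when the client's Accept-Encoding header lists gzip, and otherwise spends no CPU on compression. The header parsing is deliberately simplified (q-values such as `gzip;q=0` are ignored) and the feed is made up; it is an assumption-laden sketch, not how any particular OData server behaves.

```python
import gzip
import json
from typing import Dict, Tuple


def encode_response(body: bytes, accept_encoding: str) -> Tuple[bytes, Dict[str, str]]:
    """Return (payload, extra headers), gzip-compressing only if the client accepts it.

    Simplification: q-values are not honored.
    """
    codings = [c.split(";")[0].strip().lower() for c in accept_encoding.split(",") if c.strip()]
    if "gzip" in codings:
        return gzip.compress(body), {"Content-Encoding": "gzip"}
    return body, {}  # identity encoding


# A made-up feed with the kind of repetition the post describes.
rows = [{"CustomerID": f"C{i:04d}", "CompanyName": f"Company {i}"} for i in range(100)]
body = json.dumps({"d": rows}).encode("utf-8")

compressed, headers = encode_response(body, "gzip, deflate")
plain, plain_headers = encode_response(body, "identity")
print(len(body), len(compressed), headers)
```

A server optimizing for throughput could skip compression even when gzip is offered; that policy decision is exactly what negotiation leaves open.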

A dense JSON encoding for OData

Of these, I'm really intrigued by the JSON option. I love the idea of keeping the format as text, even though it could get pretty cryptic with all the templating stuff. I also really like the fact that it would work in browsers in addition to all the other clients.

This is already a long write-up, and the actual definition of the JSON encoding belongs in a separate discussion, provided that folks think this is an interesting direction. So let me just give an example for motivation and leave it at that.

Let's say we have a bunch of rows for the Title type from the Netflix examples above. We would typically have full JSON objects for each row, all packaged in an array, with a __metadata object each containing "self links" and such. The dense version would instead consist of an object stream that has "c", "m" or "d" objects for "control", "metadata" and "data" respectively. Each object represents an object in the top level array, and each value is either a metadata object introducing a new template or a data value representing a particular value for some part of the template, with values matched in template-definition order; control objects are usually first/last and indicate things like count, array/singleton, etc. It would look like this:

(there are some specifics that aren't quite right on this, take it as an illustration and not a perfect working example)

Note the two structural templates (JSON-schema-ish) and the one textual template. For a single row this would be a bit bigger than a regular document, but probably not by much. As you have more and more rows, they become densely packed objects with no property names and mostly fixed order for unpacking. With a format like this, the "100NarrowCustomers" example above would be about a third of the size of the original JSON. That's not just smaller on the wire, but a third of text that does not need to be processed at all.
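To make the "c"/"m"/"d" stream more concrete, the Python sketch below produces a stream in that spirit and then applies the "simple JSON -> JSON transform" to get ordinary objects back, using nothing but a regular JSON parser. The exact shapes (a control object carrying only a count, a `props` list as the structural template, one template per call) are assumptions for illustration, not the format the post proposes.

```python
import json
from typing import Any, Dict, List


def to_dense(rows: List[Dict[str, Any]]) -> str:
    """Encode uniform rows as a control object, one structural template, then data arrays."""
    props = list(rows[0].keys()) if rows else []
    stream: List[Dict[str, Any]] = [
        {"c": {"count": len(rows)}},  # control: how many rows follow
        {"m": {"props": props}},      # metadata: the structural template
    ]
    # Data objects carry values only, in template-definition order.
    stream += [{"d": [row[p] for p in props]} for row in rows]
    return json.dumps(stream, separators=(",", ":"))


def from_dense(text: str) -> List[Dict[str, Any]]:
    """The simple JSON -> JSON transform: rebuild regular objects from the stream."""
    rows: List[Dict[str, Any]] = []
    props: List[str] = []
    for obj in json.loads(text):
        if "m" in obj:
            props = obj["m"]["props"]  # a later template replaces the current one
        elif "d" in obj:
            rows.append(dict(zip(props, obj["d"])))
        # "c" objects carry counts and similar hints; this sketch ignores them.
    return rows


rows = [{"CustomerID": f"C{i:03d}", "CompanyName": f"Company {i}"} for i in range(100)]
dense = to_dense(rows)
regular = json.dumps(rows, separators=(",", ":"))
assert from_dense(dense) == rows
print(len(dense), len(regular))  # the dense form drops the repeated property names
```

A "fancy" parser would consume the data arrays directly instead of materializing the intermediate objects.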

Next steps?

We need a better evaluation of the various format options. I like the idea of a dense JSON format but we need to have validation that the trade-offs work. I will explore that some more and send another note. Similarly I'm going to try and get to the next level of detail in the dense JSON thing to see how things look.

If you read this far you must be really motivated by the idea of creating a new format…all feedback is welcome.

<Return to section navigation list>

Windows Azure AppFabric: Access Control, WIF and Service Bus

• Bert Craven explained Implementing a REST-ful service using OpenRasta and OAuth WRAP with Windows Azure AppFabric ACS in a 3/27/2011 post:

I’ve been building prototypes again and I wanted to build a service that exposed a fairly simple, resource-based data API with granular security, i.e. some of my users would be allowed to access one resource but not another or they might be allowed to read a resource but not create or update them.

To do this I’ve used OpenRasta and created a security model based on OAuth/WRAP claims issued by the Windows Azure AppFabric Access Control Service (ACS).

The client can now make a REST call to the ACS, passing an identity and secret key. In return, they will be issued a set of claims. A typical claim encompasses a resource in my REST service and the action(s) the user is allowed to perform, so their claim set might show that they were allowed to execute GET against resource foo but not POST or PUT.

In my handler in OpenRasta I add method attributes that indicate what claims are required to invoke that method; for instance, in my handler for resources of type foo I might have the following method:

```csharp
[RequiresClaims("com.somedomain.api.resourceaction", "GetFoo")]
public OperationResult GetFooByID(int id)
{
    //elided
}
```

In my solution I have created an OpenRasta interceptor which examines inbound requests, validates the claim set and then compares the claims required by the method attribute to the claims in the claim set. Only if there is a match can the request be processed.
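Bert's attribute and interceptor are C# (see the gists below). Purely to illustrate the matching logic he describes, here is a Python analogue, assuming the validated claim set is a dict that maps claim types to sets of values; the decorator, exception and function names are hypothetical and are not part of OpenRasta.

```python
from typing import Any, Callable, Dict, Set

# Claim type -> values granted to the caller (an assumed shape for a validated token).
ClaimSet = Dict[str, Set[str]]


class Forbidden(Exception):
    """Raised when the caller's claims don't satisfy the handler's requirement."""


def requires_claims(claim_type: str, value: str) -> Callable[[Callable], Callable]:
    """Decorator that records the claim a handler needs, like the C# attribute."""
    def decorate(func: Callable) -> Callable:
        func.required_claim = (claim_type, value)
        return func
    return decorate


def invoke_with_claims(handler: Callable, claims: ClaimSet, *args: Any) -> Any:
    """Interceptor: call the handler only if the claim set holds its required claim.

    Handlers without a requirement are let through (an assumption of this sketch).
    """
    required = getattr(handler, "required_claim", None)
    if required is not None:
        claim_type, value = required
        if value not in claims.get(claim_type, set()):
            raise Forbidden(f"missing claim {claim_type}={value}")
    return handler(*args)


@requires_claims("com.somedomain.api.resourceaction", "GetFoo")
def get_foo_by_id(foo_id: int) -> str:
    return f"foo {foo_id}"  # placeholder for the elided handler body


granted = {"com.somedomain.api.resourceaction": {"GetFoo"}}
print(invoke_with_claims(get_foo_by_id, granted, 42))  # foo 42
```

Keeping the requirement on the handler and the check in one interceptor means no handler has to repeat the authorization code.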

I was going to write a long blog post about how to build this from scratch with diagrams and screen shots and code samples but I found that I couldn’t be arsed. If you’d like more info on how to do this, just drop me a line. In the meantime I’ve dropped the source for the interceptor, validator and attribute as follows:

RequiresClaimsAttribute : https://gist.github.com/889319

ClaimsAuthorizingInterceptor : https://gist.github.com/889324

TokenValidator : https://gist.github.com/889325
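The TokenValidator gist is C#. For a feel of what validating an ACS-issued WRAP token involves, here is a hedged Python sketch assuming the Simple Web Token (SWT) format: form-encoded name/value pairs that end in an `HMACSHA256` signature computed over everything before `&HMACSHA256=`, with `Issuer`, `Audience` and `ExpiresOn` (seconds since the Unix epoch) among the pairs. The key, issuer and audience values are hypothetical, and this is not a port of Bert's code.

```python
import base64
import hashlib
import hmac
import time
from typing import Dict, List, Optional
from urllib.parse import parse_qs, quote, unquote, urlencode

SIG_MARKER = "&HMACSHA256="
RESERVED = ("Issuer", "Audience", "ExpiresOn")


def _signature(body: str, key: bytes) -> str:
    """Base64-encoded HMAC-SHA256 of the unsigned part of the token."""
    digest = hmac.new(key, body.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def sign_swt(pairs: Dict[str, str], key: bytes) -> str:
    """Build a signed token; used here only to produce test input."""
    body = urlencode(pairs)
    return body + SIG_MARKER + quote(_signature(body, key), safe="")


def validate_swt(token: str, key: bytes, issuer: str, audience: str,
                 now: Optional[float] = None) -> Dict[str, List[str]]:
    """Check signature, issuer, audience and expiry; return the remaining claims."""
    body, sep, encoded_sig = token.rpartition(SIG_MARKER)
    if not sep:
        raise ValueError("token is not signed")
    if not hmac.compare_digest(_signature(body, key), unquote(encoded_sig)):
        raise ValueError("bad signature")
    fields = parse_qs(body, strict_parsing=True)
    if fields.get("Issuer") != [issuer]:
        raise ValueError("unexpected issuer")
    if fields.get("Audience") != [audience]:
        raise ValueError("unexpected audience")
    expires_on = int(fields.get("ExpiresOn", ["0"])[0])  # seconds since 1970-01-01 UTC
    if (time.time() if now is None else now) >= expires_on:
        raise ValueError("token expired")
    # Multi-valued claims are comma-separated in SWT.
    return {k: v[0].split(",") for k, v in fields.items() if k not in RESERVED}


key = b"hypothetical-signing-key"  # ACS hands out a base64 key; decode it before use
issuer = "https://example-namespace.accesscontrol.windows.net/"
audience = "http://localhost/api/"
token = sign_swt({
    "com.somedomain.api.resourceaction": "GetFoo,PutFoo",
    "Issuer": issuer,
    "Audience": audience,
    "ExpiresOn": str(int(time.time()) + 600),
}, key)
print(validate_swt(token, key, issuer, audience))
# {'com.somedomain.api.resourceaction': ['GetFoo', 'PutFoo']}
```

The returned dict is the kind of claim set an interceptor would then compare against each handler's required claims.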

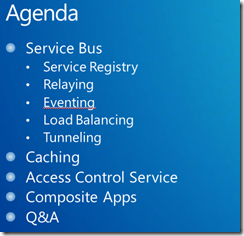

• Damir Dobric described My Session at WinDays 2011 to be given 4/6/2011 16:20:00 in Rovinj, Croatia:

Welcome to all of you who will attend WinDays 2011. This year I will give a session about technologies owned by the Microsoft Business Platform Division. If you do not know much about this division, just note which technologies are in focus: WCF, WF, BizTalk, Windows Server AppFabric, Windows Azure AppFabric, Service Bus, Access Control Service, and so on.

This time the major focus is Windows Azure AppFabric, which includes many serious technologies for professional enterprise developers.

My personal title of the session is:

Here is the agenda:

Note that I reserve the right to change the program slightly (you know, like TV).

- TIME: 6.4.2011. 16:20:00

- Hall: 7

- Category: DEVELOPMENT

- DEMO: 80%

- LEVEL: 300+

- Where: Rovinj – Croatia

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• Graham Calladine continued his video series with a 00:26:49 Windows Azure Platform Security Essentials: Module 5 - Secure Networking using Windows Azure Connect video segment:

Graham Calladine, Security Architect with Microsoft Services, provides a detailed description of Windows Azure Connect, a new mechanism for establishing, managing and securing IP-level connectivity between on-premises and Windows Azure resources.

You’ll learn about:

- Potential usage scenarios of Windows Azure Connect

- Descriptions of the different components of Windows Azure Connect, including client software, relay service, name resolution service, networking policy model

- Management overview

- Joining your cloud-based virtual machines to Active Directory

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Inge Henriksen reported Version 1.1 of Azure Providers has been released on 3/27/2011:

I have released version 1.1 of my [Azure Membership, Role, Profile, and Session-State Providers] project on Codeplex.

New in version 1.1:

- Complete Session-State Provider that uses Azure Table Storage so that you can share sessions between instances

- All e-mail sent from the application is handled by a worker role that picks e-mails from the Azure Queue

- Automatic thumbnail creation when uploading a profile image

- Several bugs have been fixed

- Added retry functionality for accessing table storage

- General rewrite of how table storage is accessed, which requires less code and is more readable
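The release notes don't describe how the retry functionality works. As a generic illustration only, here is a Python sketch of retrying a transient storage call with exponential backoff; the `TransientError` class, the attempt count and the delays are assumptions, not taken from the Azure Providers code.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Stands in for a timeout or 'server busy' response from table storage."""


def with_retries(op: Callable[[], T], attempts: int = 4, base_delay: float = 0.1,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call op(), retrying TransientError with exponential backoff (0.1 s, 0.2 s, 0.4 s...)."""
    for attempt in range(attempts):
        try:
            return op()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * (2 ** attempt))
    raise ValueError("attempts must be at least 1")


calls = {"n": 0}


def flaky_insert() -> str:
    """Hypothetical storage call that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("server busy")
    return "inserted"


delays = []
print(with_retries(flaky_insert, sleep=delays.append))  # inserted
print(delays)  # [0.1, 0.2]
```

Injecting `sleep` keeps the example fast and testable; only errors known to be transient should be retried, since retrying a bad request just delays the failure.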

Project home page: http://azureproviders.codeplex.com/

• The Windows Azure Team recommended on 3/26/2011 that you Try the Windows Azure Platform Academic 180 day pass if you meet the qualifications and have a promo code:

Click the Don’t have a promo code? button and enter your Windows Live ID for more information. If you’ve received any free Windows Azure promotion previously, you’ll see a message similar to the following:

A Windows Azure platform 30 day pass has already been requested for this Windows Live ID. Limit one per account.

Limiting potential Azure developers to a single free pass, no matter how short the duration, doesn’t seem very generous to me.

Jas Sandhu (@jassand) reported on Microsoft Interoperability at EclipseCon 2011 in a 3/25/2011 post:

I've just returned from EclipseCon 2011 in wet and less-than-usually sunny Santa Clara, California, and it was definitely a jam-packed, busy event with a lot going on. Interoperability @ Microsoft was a Bronze Sponsor for the event and we also had a session, “Open in the Cloud: Building, Deploying and Managing Java Applications on Windows Azure Platform using Eclipse” by Vijay Rajagopalan, previously architect on our team, Interoperability Strategy, and now leading the Developer Experience work for the Windows Azure product team.

The session primarily covers the work we have done on Windows Azure to make it an open and interoperable platform which supports development using many programming languages and tools. In the session, you can learn the basics of building large-scale applications in the cloud using Java, taking advantage of new Windows Azure Platform as a Service features, and building Windows Azure applications using Java with Eclipse Tools, Eclipse Jetty, Apache Tomcat, and the Windows Azure SDK for Java.

We have been working on improving the experience for Java developers who use Eclipse to work with Windows Azure. At this session we announced the availability of a new Community Technology Preview (CTP) of a new plugin for Eclipse which provides Java developers with a simple way to build and deploy web applications for Windows Azure. The Windows Azure Plugin for Eclipse with Java, March 2011 CTP, is an open source project released under the Apache 2.0 license, and it is available for download here. This project has been developed by Persistent Systems and Microsoft is providing funding and technical assistance. For more info in this regard please check out the post, “New plugin for Eclipse to get Java developers off the ground with Windows Azure” by Craig Kitterman and the video interview and demo with Martin Sawicki, Senior Program Manager in the Interoperability team. Please send us feedback on what you like, or don’t like, and how we can improve these tools for you.

I would like to thank the folks at the Eclipse foundation and the community for welcoming us and I look forward to working with you all in the future and hope to see you at EclipseCon next year!

Jas is a Microsoft Technical Evangelist

Steve Peschka explained Using WireShark to Trace Your SharePoint 2010 to Azure WCF Calls over SSL in a 3/19/2011 post:

One of the interesting challenges when trying to troubleshoot remotely connected systems is figuring out what they're saying to each other. The CASI Kit that I've posted about other times on this blog (http://blogs.technet.com/b/speschka/archive/2010/11/06/the-claims-azure-and-sharepoint-integration-toolkit-part-1.aspx) is a good example whose main purpose in life is providing plumbing to connect data center clouds together. One of the difficulties in troubleshooting it is that the traffic travels over SSL, which makes it fairly hard to inspect. I looked at using both NetMon 3.4, which now has an Expert add-in for SSL that you can get from http://nmdecrypt.codeplex.com/, and WireShark. I've personally always used NetMon but had some difficulties getting the SSL expert to work, so I decided to give WireShark a try.

WireShark appears to have had support for SSL for a couple of years now; it just requires that you provide the private key used with the SSL certificate that is encrypting your conversation. Since the WCF service is one that I wrote, it's easy enough to get that. A lot of the documentation around WireShark suggests that you need to convert the PFX file of your SSL certificate (the format that you get when you export your certificate and include the private key) into PEM format. If you read the latest WireShark SSL wiki (http://wiki.wireshark.org/SSL), though, it turns out that's not actually true. Citrix actually has a pretty good article on how to configure WireShark to use SSL (http://support.citrix.com/article/CTX116557), but the instructions are way too cryptic when it comes to what values you should be using for the "RSA keys list" property in the SSL protocol settings (if you're not sure what that is, just follow the Citrix support article above). So, combining that Citrix article and the info on the WireShark wiki, here is a quick rundown on those values:

- IP address - this is the IP address of the server that is sending you SSL encrypted content that you want to decrypt

- Port - this is the port the encrypted traffic is coming across on. For a WCF endpoint this is probably always going to be 443.

- Protocol - for a WCF endpoint this should always be http

- Key file name - this is the location on disk where you have the key file

- Password - if you are using a PFX certificate, this is a fifth parameter that is the password to unlock the PFX file. This is not covered in the Citrix article but is in the WireShark wiki.

So, suppose your Azure WCF endpoint is at address 10.20.30.40, and you have a PFX certificate at C:\certs\myssl.pfx with password of "FooBar". Then the value you would put in the RSA keys list property in WireShark would be:

10.20.30.40,443,http,C:\certs\myssl.pfx,FooBar

Alternatively, you can download OpenSSL for Windows and create a PEM file from a PFX certificate. I just happened to find this download at http://code.google.com/p/openssl-for-windows/downloads/list, but there appear to be many download locations on the web. Once you've downloaded the bits that are appropriate for your hardware, you can create a PEM file from your PFX certificate with this command line in the OpenSSL bin directory:

openssl.exe pkcs12 -in <drive:\path\to\cert>.pfx -nodes -out <drive:\path\to\new\cert>.pem

So, suppose you did this and created a PEM file at C:\certs\myssl.pem; then your RSA keys list property in WireShark would be:

10.20.30.40,443,http,C:\certs\myssl.pem

One other thing to note here - you can add multiple entries separated by semi-colons. So for example, as I described in the CASI Kit series I start out with a WCF service that's hosted in my local farm, maybe running in the Azure dev fabric. And then I publish it into Windows Azure. But when I'm troubleshooting stuff, I may want to hit the local service or the Windows Azure service. One of the nice side effects of taking the approach I described in the CASI Kit of using a wildcard cert is that it allows me to use the same SSL cert for both my local instance as well as Windows Azure instance. So in WireShark, I can also use the same cert for decrypting traffic by just specifying two entries like this (assume my local WCF service is running at IP address 192.168.20.100):

10.20.30.40,443,http,C:\certs\myssl.pem;192.168.20.100,443,http,C:\certs\myssl.pem
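The value format described above (comma-separated fields per entry, semicolons between entries, and an optional fifth password field for PFX files) is easy to mistype. Here is a small Python helper, hypothetical and not part of WireShark, that assembles the value from the example addresses and paths used in this post:

```python
from typing import List, NamedTuple, Optional


class KeyEntry(NamedTuple):
    ip: str
    port: int
    protocol: str
    key_file: str
    password: Optional[str] = None  # only needed for PFX files


def rsa_keys_list(entries: List[KeyEntry]) -> str:
    """Join entries as ip,port,protocol,keyfile[,password], separated by semicolons."""
    parts = []
    for e in entries:
        fields = [e.ip, str(e.port), e.protocol, e.key_file]
        if e.password is not None:
            fields.append(e.password)
        parts.append(",".join(fields))
    return ";".join(parts)


print(rsa_keys_list([
    KeyEntry("10.20.30.40", 443, "http", r"C:\certs\myssl.pem"),
    KeyEntry("192.168.20.100", 443, "http", r"C:\certs\myssl.pem"),
]))
# 10.20.30.40,443,http,C:\certs\myssl.pem;192.168.20.100,443,http,C:\certs\myssl.pem
```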

That's the basics of setting up WireShark, which I really could have used late last night. :-) Now, the other really tricky thing is getting the SSL decrypted. The main problem it seems from the work I've done with it is that you need to make sure you are capturing during the negotiation with the SSL endpoint. Unfortunately, I've found with all the various caching behaviors of IE and Windows that it became very difficult to really make that happen when I was trying to trace my WCF calls that were coming out of the browser via the CASI Kit. In roughly 2 plus hours of trying it on the browser I only ended up getting one trace back to my Azure endpoint that I could actually decrypt in WireShark, so I was pretty much going crazy. To the rescue once again though comes the WCF Test Client.

The way that I've found now to get this to work consistently is to:

- Start up WireShark and begin a capture.

- Start the WCF Test Client

- Add a service reference to your WCF (whether that's your local WCF or your Windows Azure WCF)

- Invoke one or more methods on your WCF from the WCF Test Client.

- Go back to WireShark and stop the capture.

- Find any frame where the protocol says TLSv1

- Right-click on it and select Follow SSL Stream from the menu

A dialog will pop up that should show you the unencrypted contents of the conversation. If the conversation is empty then it probably means either the private key was not loaded correctly, or the capture did not include the negotiated session. If it works it's pretty sweet because you can see the whole conversation, or only stuff from the sender or just receiver. Here's a quick snip of what I got from a trace to my WCF method over SSL to Windows Azure:

```
POST /Customers.svc HTTP/1.1
Content-Type: application/soap+xml; charset=utf-8
Host: azurewcf.vbtoys.com
Content-Length: 10256
Expect: 100-continue
Accept-Encoding: gzip, deflate
Connection: Keep-Alive

HTTP/1.1 100 Continue

HTTP/1.1 200 OK
Content-Type: application/soap+xml; charset=utf-8
Server: Microsoft-IIS/7.0
X-Powered-By: ASP.NET
Date: Sat, 19 Mar 2011 18:18:34 GMT
Content-Length: 2533

<s:Envelope xmlns:s="http://www.w3.org/2003/05/soap-envelope" blah blah blah
```

So there you have it; it took me a while to get this all working so hopefully this will help you get into troubleshooting mode a little quicker than I did.

The Windows Azure Team posted a Seattle Radio Station KEXP Takes to the Cloud with Windows Azure story on 3/22/2011:

At the Nonprofit Technology Conference in Washington, D.C., last week, Akhtar Badshah, Microsoft's senior director of Global Community Affairs, shared how Microsoft and popular Seattle radio station KEXP have teamed up to give the station a "technological makeover." Leveraging Microsoft software and services such as Windows Azure, KEXP is transforming operations ranging from the station's internal communications to how listeners worldwide interact with music. The makeover will take three years to complete but listeners will start seeing dramatic improvements this spring.

KEXP, which began as a 10-watt station in 1972, grew into a musical force in Seattle and beyond with the help of a supportive membership base and Internet streaming. KEXP was the first station in the world to offer CD-quality 24/7 streaming audio and now broadcasts in Seattle on 90.3, in New York City on 91.5, and worldwide via the web at KEXP.org.

The radio station's colorful history and widespread support among musicians has made it a virtual treasure chest of musical media, from its music library to related content such as live performances, podcasts and videos, album reviews, blog posts, DJ notes and videos. KEXP's website allows users to listen live and to studio performances and archives of recent shows, as well as read about artists and buy music. To date, much of this vast musical information has been compartmentalized, unconnected, or not available online.

That's about to change, thanks to a metadata music and information "warehouse" that KEXP has built on the Windows Azure platform. The warehouse, or "Music Service Fabric", will store all of the related bits of data and put them at listeners' fingertips. The Music Service Fabric will normalize all play data throughout the station and will be used to power the real-time playlist, streaming services and new features such as contextual social sharing.

According to Badshah, KEXP's willingness to experiment made the station a natural partner, and the station's use of Microsoft technology ranging from Windows Azure to SharePoint Online to Microsoft Dynamics CRM helps show how technology can help nonprofits in a holistic way.

To learn more about this story, please read the related posts, "Tech Makeover: KEXP Takes Its Musical Treasure Chest to the Cloud", on Microsoft News Center and, "KEXP.org: Where the Music and Technology Matter (Part 2)", on the Microsoft Unlimited Potential Blog.

I was a transmitter engineer and disc jockey for KPFA in Berkeley, CA, the first listener-sponsored (and obviously non-profit) radio station, in a prior avatar (when I was at Berkeley High School and UC Berkeley). KPFA started life in 1949 with a 250-watt transmitter. To say the least, KPFA has had a “colorful history,” which you can read about here.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4+

• Robert Green announced an Updated Post on Where Do I Put My Data Code? in a 3/27/2011 post:

I have just updated Where Do I Put My Data Code In A LightSwitch Application? for Beta 2. I reshot all the screens and have made some minor changes to both the text and the narrative. The primary difference is that I have moved my data code into the screen’s InitializeDataWorkspace event handler, rather than using the screen’s Created handler (which was known as Loaded in Beta 1).

I am working on updating the rest of my posts for Beta 2. Stay tuned.

Julie Lerman (@julielerman) described Round tripping a timestamp field for concurrency checks with EF4.1 Code First and MVC 3 in a 3/25/2011 post to her Don’t Be Iffy blog:

MVC is, by definition, stateless, so how do you deal with a timestamp that needs to be persisted across post backs in order to be used for concurrency checking?

Here’s how I’m achieving this using EF4.1 (RC), Code First and MVC 3.

I have a class, Blog.

Blog has a byte array property called TimeStamp that I’ve marked with the Code First DataAnnotation Timestamp:

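[Timestamp] // TimestampAttribute, namespace System.ComponentModel.DataAnnotations
public byte[] TimeStamp { get; set; }

If you let Code First generate the database, you’ll get a column of the timestamp data type as a result of the combination of the byte array and the Timestamp attribute.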

So the database will do the right thing thanks to the timestamp (aka rowversion) data type: it updates that value any time the row changes.

EF will do the right thing, too, thanks to the annotation: it uses the original TimeStamp value for updates and deletes and refreshes it as needed, if it’s available, that is.

But none of this will work out of the box with MVC. You need to be sure that MVC gives you the original timestamp value when it’s time to call update.

So…first we’ll have to assume that I’m passing a blog entity to my Edit view.

public ActionResult Edit(int id)
{
    var blogToEdit = someMechanismForGettingThatBlogInstance();
    return View(blogToEdit);
}

In my Edit view, I need to make sure that the form knows the TimeStamp property value of this Blog instance.

I’m using this razor based code in my view to keep track of that value:

[After posting this, Kathleen Dollard made me think harder about this, and I realized that MVC 3 provides an even simpler way thanks to the HiddenFor helper… edits noted]

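In the original version of this post, I kept the value in a separately named hidden field:

@Html.Hidden("OriginalTimeStamp", Model.TimeStamp)

The simpler replacement uses the HiddenFor helper:

@Html.HiddenFor(model => model.TimeStamp)

This ensures that the original timestamp value stays in the TimeStamp property even though I’m not displaying it in the form.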
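A small illustration of why a byte array can survive a stateless round trip at all: to my understanding, MVC's hidden-field helpers write a byte[] value into the form as a Base64 string and the default model binder decodes it on post back, but treat that as an assumption to check against the MVC 3 documentation. The Python sketch below only mimics that encode/decode step for an 8-byte rowversion value; `render_hidden_value` and `bind_posted_value` are hypothetical names, not MVC APIs.

```python
import base64


def render_hidden_value(row_version: bytes) -> str:
    # Hypothetical stand-in for the text a hidden-field helper writes into value="..."
    return base64.b64encode(row_version).decode("ascii")


def bind_posted_value(form_value: str) -> bytes:
    # Hypothetical stand-in for the model binder turning the posted string back into bytes
    return base64.b64decode(form_value)


original = bytes.fromhex("0000000000001771")  # the 8-byte rowversion seen in this post's SQL trace
hidden = render_hidden_value(original)
print(hidden)  # AAAAAAAAF3E=
print(bind_posted_value(hidden) == original)  # True: the value survives the round trip
```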

In the original version, when I posted back, I accessed that value through an extra parameter and shoved it into the blog instance using Entry.Property.OriginalValue:

[HttpPost] public ActionResult Edit(byte[] originalTimeStamp, Blog blog) {
    db.Entry(blog).State = System.Data.EntityState.Modified;
    db.Entry(blog).Property(b => b.TimeStamp).OriginalValue = originalTimeStamp;
    db.SaveChanges();

With HiddenFor, when I post back, Entity Framework can still get at the original timestamp value in the blog object that’s passed back through model binding, so the extra parameter goes away. Then, in the code I use to attach that edited blog to a context and update it, EF will recognize the timestamp value and use it in the update. (There’s no need to explicitly set the original value now that I’ve already got it.)

[HttpPost] public ActionResult Edit(Blog blog) {
    db.Entry(blog).State = System.Data.EntityState.Modified;
    db.SaveChanges();

After making the Dollard-encouraged modification, I verified that the timestamp was being used in the update:

set [Title] = @0, [BloggerName] = @1, [BlogDetail_DateCreated] = @2, [BlogDescription] = @3
where (([PrimaryTrackingKey] = @4) and ([TimeStamp] = @5))
select [TimeStamp]
from [dbo].[InternalBlogs]
where @@ROWCOUNT > 0 and [PrimaryTrackingKey] = @4',N'@0 nvarchar(128),@1 nvarchar(10),@2 datetime2(7),@3 nvarchar(max),@4 int,@5 binary(8)',@0=N'My Code First Blog',@1=N'Julie',@2='2011-03-01 00:00:00',@3=N'All about code first',@4=1,@5=0x0000000000001771

You can use the same pattern with any property that you want to use for concurrency checking, even if it is not a timestamp; however, you should use the [ConcurrencyCheck] annotation instead. The Timestamp annotation is only for byte arrays and can be used only once in a given class.
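The WHERE clause in that trace is plain optimistic concurrency: the update matches the row only if its timestamp still equals the value the user originally loaded, and EF treats an update that affects no rows as a concurrency conflict. Here is a minimal Python/SQLite sketch of the same check. It assumes an integer `version` column that is bumped by hand, because SQLite has no rowversion type; the table and function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # SQLite has no rowversion type, so an integer 'version' column is bumped by hand on each update
    conn.execute(
        "CREATE TABLE blogs (id INTEGER PRIMARY KEY, title TEXT NOT NULL, version INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO blogs VALUES (1, 'My Code First Blog', 1)")
    conn.commit()
    return conn


def read_version(conn: sqlite3.Connection, blog_id: int) -> int:
    return conn.execute("SELECT version FROM blogs WHERE id = ?", (blog_id,)).fetchone()[0]


def update_title(conn: sqlite3.Connection, blog_id: int, new_title: str, original_version: int) -> bool:
    """Apply the edit only if the row still carries the version the user originally loaded."""
    cur = conn.execute(
        "UPDATE blogs SET title = ?, version = version + 1 WHERE id = ? AND version = ?",
        (new_title, blog_id, original_version),
    )
    conn.commit()
    # Zero affected rows means someone else changed the row first: a concurrency conflict
    return cur.rowcount == 1


conn = make_db()
alice_saw = read_version(conn, 1)
bob_saw = read_version(conn, 1)
print(update_title(conn, 1, "Alice's title", alice_saw))  # True
print(update_title(conn, 1, "Bob's title", bob_saw))  # False: Bob's original version is stale
```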

If you use ConcurrencyCheck on an editable property, you’ll need to use a hidden element (Html.Hidden, not HiddenFor) to retain the original value of that property separately; otherwise EF will use the value coming from the form as the original value. Then grab that value as a parameter of the Edit post back method and, finally, use Entry.Property.OriginalValue (the steps from the original version of this post) to shove the value back into the entity before saving changes.
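The reason the original value has to travel separately can be shown with the same kind of Python/SQLite sketch. It assumes a hypothetical `blogs` table whose editable `title` column is the concurrency-checked property. The WHERE clause compares against the original title, so feeding it the edited value from the form produces a false conflict.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE blogs (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")
    conn.execute("INSERT INTO blogs VALUES (1, 'My Code First Blog')")
    conn.commit()
    return conn


def save_edit(conn: sqlite3.Connection, blog_id: int, posted_title: str, original_title: str) -> bool:
    # The concurrency-checked column is compared with its ORIGINAL value, not the edited one
    cur = conn.execute(
        "UPDATE blogs SET title = ? WHERE id = ? AND title = ?",
        (posted_title, blog_id, original_title),
    )
    conn.commit()
    return cur.rowcount == 1


conn = make_db()
# Wrong: the edited form value doubles as the "original", so nothing matches (a false conflict)
print(save_edit(conn, 1, "Renamed blog", "Renamed blog"))  # False
# Right: the original title travelled separately, e.g. in its own hidden field
print(save_edit(conn, 1, "Renamed blog", "My Code First Blog"))  # True
```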

The next video in my series of EF4.1 videos for MSDN (each accompanied by articles) is on Annotations. It’s already gone through the review pipeline and should be online soon. It does have an example of using the TimeStamp annotation but doesn’t go into detail about using that in an MVC 3 application, so I hope this additional post is helpful.

Robert Green announced the availability of an Updated Post on Using Remote and Local Data in a 3/23/2011 post:

I have just updated Using Both Remote and Local Data in a LightSwitch Application for Beta 2. I reshot all the screens and have made some changes to both the text and the narrative. I also added a section on the code you need to write to enable saving data in two different data sources. In Beta 1, you could have two data sources on a screen and edit both of them by default. Starting with Beta 2, the default is that you can only have one editable data source on a screen. If you want two or more, you have to write a little code. I cover that in the updated post.

I am working on updating the rest of my posts for Beta 2. Stay tuned.


Windows Azure Infrastructure and DevOps

• David Linthicum asserted Cloud Integration Not as Easy as Many Think in a 3/27/2011 post to ebizQ’s Where SOA Meets Cloud blog:

Okay, you need to push your customer data to Salesforce.com, and back again? There are dozens of technologies, cloud and not cloud, which can make this happen. Moreover, there are many best practices and perhaps pre-built templates that are able to make this quick and easy.

But what if you're not using Salesforce.com, and your cloud is a rather complex IaaS or PaaS cloud that is not as popular, and thus not as well supported with templates and best practices? Now what?

Well, you're back in the days when integration was uncharted territory and you had to be a bit creative when attempting to exchange information between one complex, abstract system and another. This means data mapping, transformation and routing logic, and adapters: old-school integration concepts that seem to be a lost art these days. The fact that your source or target system is a cloud rather than a traditional system does not make it any easier.
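To make "data mapping, transformation and routing logic, and adapters" concrete, here is a deliberately tiny Python sketch. Every field name, the EU routing rule and the adapter callables are hypothetical, and a real integration product would add error handling, retries and schema management on top.

```python
from typing import Callable, Dict, List

Record = Dict[str, str]

# Hypothetical mapping from an on-premise CRM row to a cloud service's field names
FIELD_MAP: Dict[str, str] = {"CUST_NO": "customerId", "CUST_NAME": "name", "CNTRY": "country"}
EU_COUNTRIES = {"DE", "FR", "IE"}


def map_record(row: Record) -> Record:
    """Data mapping plus a small transformation: rename fields, trim values, upper-case the country."""
    out = {FIELD_MAP[k]: v.strip() for k, v in row.items() if k in FIELD_MAP}
    out["country"] = out.get("country", "").upper()
    return out


def route(record: Record, adapters: Dict[str, Callable[[Record], None]]) -> str:
    """Routing logic: pick a target adapter based on the message content."""
    target = "eu" if record["country"] in EU_COUNTRIES else "default"
    adapters[target](record)
    return target


sent: Dict[str, List[Record]] = {"eu": [], "default": []}
adapters = {name: sent[name].append for name in sent}  # stand-ins for real connectors
rec = map_record({"CUST_NO": " 42 ", "CUST_NAME": "Contoso", "CNTRY": "de", "LEGACY_FLAG": "Y"})
print(rec)  # {'customerId': '42', 'name': 'Contoso', 'country': 'DE'}
print(route(rec, adapters))  # eu
```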

The good news is that there are an awful lot of effective integration technologies around these days, most of them on-premise, with a few of them cloud-delivered. But learning to use these products still requires that you have a project mentality when approaching cloud-to-enterprise integration, rather than treating it as an afterthought, as it is many times. This means time, money and learning that many enterprises have not factored into their cloud enablement projects.

Many smaller consulting firms are benefiting from this confusion and are out there promoting their ability to connect stuff in your data center with stuff in the cloud. Most fall way short in delivering the value and promise of cloud integration, and I'm seeing far too many primitive connections, such as custom-programmed interfaces and FTP solutions, out there. That's a dumb option these days, considering that the problem has already been solved by others.

I suspect that integration will continue to be an undervalued part of cloud computing until it becomes the cause of many cloud computing project failures. It's time to stop underestimating the work that needs to be done here.

• Bruce Guptill authored Open Network Foundation Announces Software-Defined Networking as a Saugatuck Technology Research Alert on 3/23/2011 (site registration required):

What Is Happening? On Tuesday March 22, 2011, the Open Networking Foundation (ONF) announced its intent to standardize and promote a series of data networking protocols, tools, interfaces, and controls known as Software-Defined Networking (SDN) that could enable more intelligent, programmable, and flexible networking. The linchpin of SDN is the OpenFlow interface, which controls how packets are forwarded through network switches. The SDN standard set also includes global management interfaces. See Note 1 for a current list of ONF member firms.
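For readers new to OpenFlow, the core idea is a match-action flow table that a controller programs and that a switch consults for each packet. The Python sketch below is a toy model of that idea only, not the OpenFlow wire protocol or any switch API. The header field names, the priority ordering and the "send to the controller on a table miss" default are simplifying assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FlowEntry:
    priority: int
    match: Dict[str, object]  # header fields that must be equal; fields not listed are wildcards
    action: str  # e.g. "output:2" or "drop"
    packets: int = 0  # per-entry counter, incremented on every match


@dataclass
class FlowTable:
    entries: List[FlowEntry] = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        # The controller installs entries; keep the highest priority first
        self.entries.append(entry)
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def forward(self, packet: Dict[str, object]) -> str:
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                entry.packets += 1
                return entry.action
        return "controller"  # table miss: assumed default is to ask the controller


table = FlowTable()
table.install(FlowEntry(10, {"ip_dst": "10.0.0.2"}, "output:2"))
table.install(FlowEntry(100, {"tcp_dst": 23}, "drop"))
print(table.forward({"ip_dst": "10.0.0.2", "tcp_dst": 80}))  # output:2
print(table.forward({"ip_dst": "10.0.0.2", "tcp_dst": 23}))  # drop
print(table.forward({"ip_dst": "10.0.0.9"}))  # controller
```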

ONF members indicate that the organization will first coordinate the ongoing development of OpenFlow, and freely license it to member firms. Defining global management interfaces will follow next.

If sufficiently developed and uniformly adopted, SDN could significantly improve network interoperability and reliability, while improving traffic management and security, and reducing the overall costs of data networking. This could enable huge improvements in the ability of Cloud platforms and services to interoperate securely, efficiently, and cost-effectively. SDN could also engender massive changes in basic Internet/web connectivity and management, and raise significant concerns regarding network neutrality. …

Bruce continues with the usual “Why Is It Happening” and “Market Impact” sections.

James Hamilton posted Brad Porter’s Prioritizing Principals in "On Designing and Deploying Internet-Scale Services" in a 3/26/2011 post:

Brad Porter is Director and Senior Principal Engineer at Amazon. We work in different parts of the company, but I have known him for years and he’s actually one of the reasons I ended up joining Amazon Web Services. Last week Brad sent me the guest blog post that follows, in which, on the basis of his operational experience, he prioritizes the most important points in the LISA paper On Designing and Deploying Internet-Scale Services.

--jrh

Prioritizing the Principles in "On Designing and Deploying Internet-Scale Services"

By Brad Porter

James published what I consider to be the single best paper to come out of the high-availability systems world in many years. He gave simple, practical advice for delivering on the promise of high availability. James presented “On Designing and Deploying Internet-Scale Services” at LISA 2007.

A few folks have commented to me that implementing all of these principles is a tall hill to climb. I thought I might help by highlighting what I consider to be the most important elements and why.

1. Keep it simple

Much of the work in recovery-oriented computing has been driven by the observation that human errors are the number one cause of failure in large-scale systems. However, in my experience complexity is the number one cause of human error.

Complexity originates from a number of sources: lack of a clear architectural model, variance introduced by forking or branching software or configuration, and implementation cruft never cleaned up. I'm going to add three new sub-principles to this.

Have Well Defined Architectural Roles and Responsibilities: Robust systems are often described as having "good bones." The structural skeleton upon which the system has evolved and grown is solid. Good architecture starts from having a clear and widely shared understanding of the roles and responsibilities in the system. It should be possible to introduce the basic architecture to someone new in just a few minutes on a white-board.

Minimize Variance: Variance arises most often when engineering or operations teams use partitioning, typically through branching or forking, as a way to handle different use cases or requirements sets. Every new use case creates a slightly different variant. Variations occur along software boundaries, configuration boundaries, or hardware boundaries. To the extent possible, systems should be architected, deployed, and managed to minimize variance in the production environment.

Clean-Up Cruft: Cruft can be defined as those things that clearly should be fixed, but no one has bothered to fix. This can include unnecessary configuration values and variables, unnecessary log messages, test instances, unnecessary code branches, and low priority "bugs" that no one has fixed. Cleaning up cruft is a constant task, but it is necessary to minimize complexity.
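One way to put "minimize variance" into practice is to diff each host's configuration against a single baseline and treat every difference as something to justify or remove; a stray key that shows up this way is often cruft as well. Here is a minimal Python sketch. It assumes configurations are flat key/value dictionaries, and the host names and keys are made up for illustration.

```python
from typing import Dict, List, Tuple

Config = Dict[str, str]
MISSING = "<missing>"


def variance_report(baseline: Config, hosts: Dict[str, Config]) -> List[Tuple[str, str, str, str]]:
    """Return (host, key, expected, actual) for every setting that differs from the baseline.

    Keys present on only one side also count as variance and show up as MISSING.
    """
    drift = []
    for host in sorted(hosts):
        cfg = hosts[host]
        for key in sorted(set(baseline) | set(cfg)):
            expected = baseline.get(key, MISSING)
            actual = cfg.get(key, MISSING)
            if expected != actual:
                drift.append((host, key, expected, actual))
    return drift


baseline = {"version": "2.3.1", "max_conns": "512"}
hosts = {
    "web-01": {"version": "2.3.1", "max_conns": "512"},
    "web-02": {"version": "2.3.1-hotfix", "max_conns": "512", "debug": "true"},
}
for row in variance_report(baseline, hosts):
    print(row)
# ('web-02', 'debug', '<missing>', 'true')
# ('web-02', 'version', '2.3.1', '2.3.1-hotfix')
```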

2. Expect failures

At its simplest, a production host or service need only exist in one of two states: on or off. On or off can be defined by whether that service is accepting requests or not. To "expect failures" is to recognize that "off" is always a valid state. A host or component may switch to the "off" state at any time without warning.

If you're willing to turn a component off at any time, you're immediately liberated. Most operational tasks become significantly simpler. You can perform upgrades when the component is off. In the event of any anomalous behavior, you can turn the component off.
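The on/off model is easy to express in code: if callers only ever pick from hosts currently marked "on," any host can be switched off for an upgrade or because it misbehaves. Here is a minimal Python sketch, with a hypothetical `Pool` class standing in for whatever load balancer or service registry a real system uses.

```python
import random
from typing import Dict, List


class Pool:
    """Each host is either 'on' (accepting requests) or 'off'; 'off' is always a valid state."""

    def __init__(self, hosts: List[str]) -> None:
        self.on: Dict[str, bool] = {h: True for h in hosts}

    def turn_off(self, host: str) -> None:
        # Safe at any moment: for an upgrade, or as soon as the host behaves oddly
        self.on[host] = False

    def turn_on(self, host: str) -> None:
        self.on[host] = True

    def pick(self) -> str:
        """Route a request only to hosts that are currently on."""
        candidates = [h for h, up in self.on.items() if up]
        if not candidates:
            raise RuntimeError("no hosts are accepting requests")
        return random.choice(candidates)


pool = Pool(["web-01", "web-02", "web-03"])
pool.turn_off("web-02")  # upgrade web-02 without coordinating with callers
print(all(pool.pick() != "web-02" for _ in range(1000)))  # True
pool.turn_on("web-02")
```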

3. Support version roll-back

Roll-back is similarly liberating. Many system problems are introduced on change-boundaries. If you can roll changes back quickly, you can minimize the impact of any change-induced problem. The perceived risk and cost of a change decreases dramatically when roll-back is enabled, immediately allowing for more rapid innovation and evolution, especially when combined with the next point.
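Here is a minimal Python sketch of cheap roll-back. It assumes deployments are tracked as an ordered history of version strings, with a caller-supplied health check deciding whether a change is kept. `Deployment` and `deploy_checked` are hypothetical names, not part of any deployment tool.

```python
from typing import Callable, List


class Deployment:
    """Tracks deployed versions in order so the latest change can always be undone."""

    def __init__(self, initial: str) -> None:
        self.history: List[str] = [initial]

    @property
    def current(self) -> str:
        return self.history[-1]

    def deploy(self, version: str) -> None:
        self.history.append(version)

    def roll_back(self) -> str:
        if len(self.history) < 2:
            raise RuntimeError("nothing to roll back to")
        self.history.pop()
        return self.current

    def deploy_checked(self, version: str, healthy: Callable[[str], bool]) -> bool:
        """Deploy, then roll back immediately if the caller's health check fails."""
        self.deploy(version)
        if healthy(version):
            return True
        self.roll_back()
        return False


d = Deployment("1.4")
print(d.deploy_checked("1.5", lambda v: True))  # True: 1.5 stays live
print(d.deploy_checked("1.6", lambda v: False))  # False: 1.6 was rolled back
print(d.current)  # 1.5
```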

4. Maintain forward-and-backward compatibility

Forcing simultaneous upgrade of many components introduces complexity, makes roll-back more difficult, and in some cases just isn't possible as customers may be unable or unwilling to upgrade at the same time.

If you have forward-and-backward compatibility for each component, you can upgrade that component transparently. Dependent services need not know that the new version has been deployed. This allows staged or incremental roll-out. It also allows a subset of machines in the system to be upgraded and to receive real production traffic alongside older versions of the component, as the last phase of the test cycle.
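One common technique for forward-and-backward compatibility of messages is a "tolerant reader." It requires only the fields every version sends, supplies defaults for fields added later and ignores fields it doesn't recognize. The Python sketch below assumes a hypothetical JSON order message whose version 2 added an optional `priority` field. It illustrates the general idea and is not taken from Brad's post.

```python
import json
from typing import Any, Dict

# Hypothetical message: version 1 sent id and item; version 2 added an optional "priority"
DEFAULT_PRIORITY = "normal"


def parse_order(raw: str) -> Dict[str, Any]:
    """Tolerant reader: required fields are read, fields added later get defaults,
    and fields this version does not know about are ignored."""
    msg = json.loads(raw)
    order = {"id": msg["id"], "item": msg["item"]}  # present in every version
    order["priority"] = msg.get("priority", DEFAULT_PRIORITY)  # absent from v1 senders
    return order  # any other keys (e.g. from a newer sender) are dropped


old = parse_order('{"id": 1, "item": "disk"}')  # from an older sender
new = parse_order('{"id": 2, "item": "disk", "priority": "high", "region": "eu"}')  # from a newer sender
print(old)  # {'id': 1, 'item': 'disk', 'priority': 'normal'}
print(new)  # {'id': 2, 'item': 'disk', 'priority': 'high'}
```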

5. Give enough information to diagnose