Windows Azure and Cloud Computing Posts for 10/19/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Jon Zuanich (@jonzuanich) posted Hadoop World 2011: A Glimpse into Operations to the Cloudera blog on 10/19/2011:

Check out the Hadoop World 2011 conference agenda!

Find sessions of interest and begin planning your Hadoop World experience among the sixty breakout sessions spread across five simultaneous tracks at http://www.hadoopworld.com/agenda/.

Preview of Operations Track and Sessions

The Operations track at Hadoop World 2011 focuses on the practices IT organizations employ to adopt and run Apache Hadoop with special emphasis on the people, processes and technology. Presentations will include initial deployment case studies, production scenarios or expansion scenarios with a focus on people, processes and technology. Speakers will discuss advances in reducing the cost of Hadoop deployment and increasing availability and performance.

Unlocking the Value of Big Data with Oracle

Jean-Pierre Dijcks, Oracle

Analyzing new and diverse digital data streams can reveal new sources of economic value, provide fresh insights into customer behavior and identify market trends early on. But this influx of new data can create challenges for IT departments. To derive real business value from Big Data, you need the right tools to capture and organize a wide variety of data types from different sources, and to be able to easily analyze it within the context of all your enterprise data. Attend this session to learn how Oracle’s end-to-end value chain for Big Data can help you unlock the value of Big Data.

Hadoop as a Service in Cloud

Junping Du, VMware

The Hadoop framework was originally designed to run natively on commodity hardware. However, with the growth of cloud computing, there is a stronger requirement to build Hadoop clusters on public or private clouds so that customers can benefit from virtualization and multi-tenancy. This session discusses how to address some of the challenges of providing a Hadoop service on a virtualization platform, such as performance, rack awareness, job scheduling and memory overcommitment, and proposes some solutions.

Hadoop in a Mission Critical Environment

Jim Haas, CBS Interactive

Our need for better scalability in processing weblogs is illustrated by the change in requirements – processing 250 million vs. 1 billion web events a day (and growing). The Data Warehouse group at CBSi has been transitioning core processes to re-architected Hadoop processes for two years. We will cover strategies used for successfully transitioning core ETL processes to big data capabilities and present a how-to guide of re-architecting a mission critical Data Warehouse environment while it’s running.

Practical HBase

Ravi Veeramchaneni, NAVTEQ

Many developers have experience in working on relational databases using SQL. The transition to NoSQL data stores, however, is challenging and oftentimes confusing. This session will share experiences of using HBase, from hardware selection and deployment to design, implementation and tuning of HBase. At the end of the session, the audience will be in a better position to make the right choices on hardware selection, schema design and tuning HBase to their needs.

Hadoop Troubleshooting 101

Kate Ting, Cloudera

Attend this session and walk away armed with solutions to the most common customer problems. Learn proactive configuration tweaks and best practices to keep your cluster free of fetch failures, job tracker hangs, and other common issues.

I Want to Be BIG – Lessons Learned at Scale

David “Sunny” Sundstrom, SGI

SGI has been a leading commercial vendor of Hadoop clusters since 2008. Leveraging SGI’s experience with high performance clusters at scale, SGI has delivered individual Hadoop clusters of up to 4000 nodes. In this presentation, through the discussion of representative customer use cases, you’ll explore major design considerations for performance and power optimization, how integrated Hadoop solutions leveraging CDH, SGI Rackable clusters, and SGI Management Center best meet customer needs, and how SGI envisions the needs of enterprise customers evolving as Hadoop continues to move into mainstream adoption.

There are several training classes and certification sessions provided surrounding the Hadoop World conference. Don’t forget to register and become Cloudera Certified in Apache Hadoop.

Jack Norris (@Norrisjack) posted The ins and outs of MapReduce on 10/19/2011 to the SD Times on the Web blog:

MapReduce is a cost-effective way to process “Big Data” consisting of terabytes, petabytes or even exabytes of data, although there are also good reasons to use MapReduce in much smaller applications.

MapReduce is essentially a framework for processing widely disparate data quickly without needing to set up a schema or structure beforehand. The need for MapReduce is being driven by the growing variety of information sources and data formats, including both unstructured data (e.g. freeform text, images and videos) and semi-structured data (e.g. log files, click streams and sensor data). It is also driven by the need for new processing and analytic paradigms that deliver more cost-effective solutions.

MapReduce was popularized by Google as a means to deal effectively with Big Data, and it is now part of an open-source solution called Hadoop.

A brief history of Hadoop

Hadoop was created in 2004 by Doug Cutting (who named the project after his son’s toy elephant). The project was inspired by Google’s MapReduce and Google File System papers that outlined the framework and distributed file system necessary for processing the enormous, distributed data sets involved in searching the Web. In 2006, Hadoop became a subproject of Lucene (a popular text-search library) at the Apache Software Foundation, and then its own top-level Apache project in 2008.

Hadoop provides an effective way to capture, organize, store, search, share, analyze and visualize disparate data sources (unstructured, semi-structured, etc.) across a large cluster of computers, and it is capable of scaling from tens to thousands of commodity servers, each offering local computation and storage resources.

The map and reduce functions

MapReduce is a software framework that includes a programming model for writing applications capable of processing vast amounts of distributed data in parallel on large clusters of servers. The “Map” function normally has a master node that reads the input file or files, partitions the data set into smaller subsets, and distributes the processing to worker nodes. The worker nodes can, in turn, further distribute the processing, creating a hierarchical tree structure. As such, the worker nodes can all work on much smaller portions of the problem in parallel.
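As a rough illustration of the programming model described above, the following single-process Python sketch counts words with a map step, a shuffle that groups intermediate pairs by key, and a reduce step. The function names (map_phase, shuffle, reduce_phase, word_count) and the sample input are illustrative assumptions, not part of Hadoop's API; on a real cluster the map and reduce calls would run on separate worker nodes.

```python
from collections import defaultdict


def map_phase(document):
    """Emit an intermediate (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group intermediate values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    # Each document stands in for an input split handled by one worker.
    intermediate = []
    for document in documents:
        intermediate.extend(map_phase(document))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    splits = ["big data big clusters", "data in parallel"]
    print(word_count(splits))
```

Because each map call sees only its own split and each reduce call sees only one key, both phases can be spread across machines without the functions themselves changing.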

Jack is VP Marketing for MapR Technologies.

Mauricio Rojas answered Can I FTP to my Azure account? in a 10/18/2011 post:

Azure Storage easily gives you 10TB of storage.

So a common question is how you can upload information to your Storage Account.

FTP is a standard way of uploading files but is usually not available in Azure.

However, is it possible to implement something that mimics an FTP server but saves the uploaded data to Azure Storage?

Well, I thought about trying to implement something like that, but luckily several people have already done it.

Richard Parker has a post on his blog and has also posted the source code on CodePlex as FTP2Azure. This implementation works with clients like FileZilla. It only does Active connections.

Maarten Balliauw also did some similar work. He did not provide the source code, but he does active and passive connections, so a mix with Richard’s post can be interesting.

Jacques Bughin, John Livingston, and Sam Marwaha asserted “Companies are learning to use large-scale data gathering and analytics to shape strategy. Their experiences highlight the principles—and potential—of big data” in a deck for their Seizing the potential of ‘big data’ article for the 11/2011 McKinsey Quarterly issue:

Large-scale data gathering and analytics are quickly becoming a new frontier of competitive differentiation. While the moves of companies such as Amazon.com, Google, and Netflix grab the headlines in this space, other companies are quietly making progress.

In fact, companies in industries ranging from pharmaceuticals to retailing to telecommunications to insurance have begun moving forward with big data strategies in recent months. Together, the activities of those companies illustrate novel strategic approaches to big data and shed light on the challenges CEOs and other senior executives face as they work to shatter the organizational inertia that can prevent big data initiatives from taking root. From these experiences, we have distilled four principles that we hope will help CEOs and other corporate leaders as they try to seize the potential of big data. …

Read more.

Jacques Bughin is a director in McKinsey’s Brussels office, John Livingston is a director in the Chicago office, and Sam Marwaha is a director in the New York office.

<Return to section navigation list>

SQL Azure Database and Reporting

My (@rogerjenn) updated PASS Summit: SQL Azure Sync Services Preview and Management Portal Demo post of 10/19/2011 adds a new section with a flow diagram and links to TechNet wiki articles:

Getting Ready to Set Up SQL Azure Sync Services

Testing SQL Azure Sync Services requires a Windows Azure Platform subscription with at least one Web database and a local SQL Server 2008 R2 [Express] sample database (Northwind for this example). You must also migrate a copy of the database to SQL Azure before you define a Sync Group. The Northwind Orders tables’ datetime fields have 13 years added to bring the date ranges from 2008 to 2011.

To comply with critical constraints:

- The Order Details table has been renamed to OrderDetails, because the Preview doesn’t support syncing tables with spaces in their names.

- The Employees table’s ReportsTo column is removed because it creates a circular synchronization problem.

The Microsoft TechNet Wiki has a SQL Azure Data Sync - Known Issues topic that covers issues that are known to the SQL Azure Data Sync team but for which there are no workarounds.

Note: If you don’t have a subscription with an unused database benefit, you can obtain a free 30-day pass here. The TechNet Wiki’s SQL Azure Data Sync - How to Get Started article provides detailed instructions for obtaining a subscription and setting up a new SQL Azure server and database.

Create your SQL Azure server in the North Central US region, which is the only US region that currently supports Data Sync. West Europe is the only European region. I used George Huey’s SQL Azure Migration Wizard as described in my Using the SQL Azure Migration Wizard v3.3.3 with the AdventureWorksLT2008R2 Sample Database post of 7/18/2010 except for the source database. SQL MigWiz v3.7.7 is current.

Note: You must have SQL Server 2008 R2 [Express] SP1 installed to use SQL MigWiz v3.7.7.

The flow diagram at the upper right is from the TechNet Wiki’s SQL Azure Data Sync - Create a Sync Group article (updated 10/13/2011.)

Brian Swan (@brian_swan) described Using SQL Azure to Store PHP Session Data in a 10/19/2011 post to the Silver Lining blog:

In my last post, I looked at the session handling functionality that is built into the Windows Azure SDK for PHP, which uses Azure Tables or Azure Blobs for storing session data. As I wrote that post, I wondered how easy it would be to use SQL Azure to store session data, especially since using a database to store session data is a common and familiar practice when building distributed PHP applications. As I found out, using SQL Azure to store session data was relatively easy (as I’ll show in this post), but I did run into a couple of small hurdles that might be worth taking note of.

Note: Because I’ll use the SQL Server Drivers for PHP to connect to SQL Azure, you can consider this post to also cover “Using SQL Server to Store PHP Session Data”. The SQL Server Drivers for PHP connect to SQL Azure or SQL Server by simply changing the connection string.

The short story here is that I simply used the session_set_save_handler function to map session functionality to custom functions. The biggest hurdle I ran into was that I had to heed this warning in the session_set_save_handler documentation: “As of PHP 5.0.5 the write and close handlers are called after object destruction and therefore cannot use objects or throw exceptions. The object destructors can however use sessions. It is possible to call session_write_close() from the destructor to solve this chicken and egg problem.” I got around this by putting my session functions in a class and including a __destruct method that called session_write_close(). A smaller hurdle was that I needed to write a stored procedure that inserted a new row if the row didn’t already exist, but updated it if it did exist.

The complete story follows. I’ll assume that you already have a Windows Azure subscription (if you don’t, you can get a free trial subscription here: http://www.microsoft.com/windowsazure/free-trial/). Keep in mind that this code is “proof of concept” code – it needs some refining to be ready for production.

1. Create the database, table, and stored procedure (the stored procedure described above). To keep my PHP code simple, it assumes that you have created a database called SessionsDB with a table called sessions and a stored procedure called UpdateOrInsertSession. (A TODO item is to add the creation of the table and stored procedure to the PHP code, but the creation of the database will have to be done separately.) To create these objects, execute the code below using the SQL Azure Portal or SQL Server Management Studio (details in this article – Overview of Tools to Use with SQL Azure):

Create table:

CREATE TABLE sessions
(
    id NVARCHAR(32) NOT NULL,
    start_time INT NOT NULL,
    data NVARCHAR(4000) NOT NULL,
    CONSTRAINT [PK_sessions] PRIMARY KEY CLUSTERED ([id])
)

Create stored procedure:

CREATE PROCEDURE UpdateOrInsertSession
(
    @id AS NVARCHAR(32),
    @start_time AS INT,
    @data AS NVARCHAR(4000)
)
AS
BEGIN
    IF EXISTS (SELECT id FROM sessions WHERE id = @id)
    BEGIN
        UPDATE sessions
        SET data = @data
        WHERE id = @id
    END
    ELSE
    BEGIN
        INSERT INTO sessions (id, start_time, data)
        VALUES (@id, @start_time, @data)
    END
END

One thing to note about the table: the data column will contain all the session data in a serialized form. This allows for more flexibility in the data you store.
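The update-or-insert logic can be tried locally without a SQL Azure server. The sketch below is a stand-in under stated assumptions: it uses Python's built-in sqlite3 module with an in-memory database instead of SQL Azure, SQLite column types instead of NVARCHAR and INT, and JSON as the serialized form. The helper names create_sessions_table and update_or_insert_session are hypothetical. It follows the same two branches as the stored procedure: update the data column when the id exists, otherwise insert a new row.

```python
import json
import sqlite3
import time


def create_sessions_table(conn):
    # Same three columns as the sessions table above, with SQLite types.
    conn.execute(
        "CREATE TABLE sessions ("
        "id TEXT NOT NULL PRIMARY KEY, "
        "start_time INTEGER NOT NULL, "
        "data TEXT NOT NULL)"
    )


def update_or_insert_session(conn, session_id, start_time, data):
    """Mirror the stored procedure: UPDATE if the row exists, else INSERT."""
    row = conn.execute(
        "SELECT id FROM sessions WHERE id = ?", (session_id,)
    ).fetchone()
    if row is not None:
        # As in the procedure, only the data column changes on update.
        conn.execute(
            "UPDATE sessions SET data = ? WHERE id = ?", (data, session_id)
        )
    else:
        conn.execute(
            "INSERT INTO sessions (id, start_time, data) VALUES (?, ?, ?)",
            (session_id, start_time, data),
        )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_sessions_table(conn)
    # All session values travel as one serialized string in the data column.
    update_or_insert_session(conn, "abc123", int(time.time()),
                             json.dumps({"username": "alice"}))
    update_or_insert_session(conn, "abc123", int(time.time()),
                             json.dumps({"username": "bob"}))
    print(conn.execute("SELECT id, data FROM sessions").fetchall())
```

Calling the helper twice with the same id leaves a single row whose start_time is that of the first call, which matches the procedure's UPDATE touching only the data column.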

2. Add the SqlAzureSessionHandler class to your project. The complete class is attached to this post, but I’ll call out a few things here…

The constructor takes your server ID, username, and password. Formatting the connection options is taken care of, but will need to be changed if you are using SQL Server. (i.e. The username will not require the “@serverId” suffix and your server name will not require the “tcp” prefix and “.database.windows.net” suffix.)

Also note that session_set_save_handler is called in the constructor.

public function __construct($serverId, $username, $password)
{
    // SQL Azure expects the login in "user@server" form.
    $connOptions = array("UID" => $username."@".$serverId,
                         "PWD" => $password,
                         "Database" => "SessionsDB");
    $this->_conn = sqlsrv_connect("tcp:".$serverId.".database.windows.net", $connOptions);
    if (!$this->_conn)
    {
        // Pass true so print_r returns the error text for die() to output.
        die(print_r(sqlsrv_errors(), true));
    }
    // Route PHP's session callbacks to the methods of this class.
    session_set_save_handler(array($this, 'open'),
                             array($this, 'close'),
                             array($this, 'read'),
                             array($this, 'write'),
                             array($this, 'destroy'),
                             array($this, 'gc'));
}

The write method serializes and base64 encodes all the session data before writing it to SQL Azure. Note that the UpdateOrInsertSession stored procedure is used here so that new session data is inserted, but existing session data is updated:

public function write($id, $data)
{
    // Serialize, then base64 encode, so the data is stored as plain text.
    $serializedData = base64_encode(serialize($data));
    $start_time = time();
    $params = array($id, $start_time, $serializedData);
    $sql = "{call UpdateOrInsertSession(?,?,?)}";
    $stmt = sqlsrv_query($this->_conn, $sql, $params);
    if ($stmt === false)
    {
        die(print_r(sqlsrv_errors(), true));
    }
    return $stmt;
}

Of course, when session data is read, it must be base64 decoded and unserialized:

public function read($id)
{
    // Read data
    $sql = "SELECT data FROM sessions WHERE id = ?";
    $stmt = sqlsrv_query($this->_conn, $sql, array($id));
    if ($stmt === false)
    {
        die(print_r(sqlsrv_errors(), true));
    }
    if (sqlsrv_has_rows($stmt))
    {
        $row = sqlsrv_fetch_array($stmt, SQLSRV_FETCH_ASSOC);
        // Reverse the encoding applied in write().
        return unserialize(base64_decode($row['data']));
    }
    return '';
}

There are no surprises in the close, destroy, and gc methods. However, note that this __destruct method must be included:

function __destruct()
{
    session_write_close(); // IMPORTANT!
}

3. Instantiate SqlAzureSessionHandler before calling session functions as you normally would. After creating a new SqlAzureSessionHandler object, you can handle sessions as you normally would (but the data will be stored in SQL Azure):

require_once "SqlAzureSessionHandler.php";
$sessionHandler = new SqlAzureSessionHandler("serverId", "username", "password");
session_start();
if (isset($_POST['username']))
{
    $username = $_POST['username'];
    $password = $_POST['password'];
    $_SESSION['username'] = $username;
    $_SESSION['time'] = time();
    $_SESSION['otherdata'] = "some other session data";
    header("Location: welcome.php");
}

That’s it. Hope this is informative if not useful.
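The write and read handlers in this post are inverses of each other: write serializes and base64 encodes, read base64 decodes and unserializes. The Python sketch below shows that round trip in isolation. It swaps in JSON for PHP's serialize() format, and the function names encode_session and decode_session are hypothetical, so treat it as an analogue rather than the handler's actual encoding.

```python
import base64
import json


def encode_session(data):
    """Serialize a dict to JSON, then base64-encode it for storage as text."""
    serialized = json.dumps(data).encode("utf-8")
    return base64.b64encode(serialized).decode("ascii")


def decode_session(stored):
    """Reverse encode_session; an empty value means no session data yet."""
    if not stored:
        return {}
    return json.loads(base64.b64decode(stored).decode("utf-8"))


if __name__ == "__main__":
    stored = encode_session({"username": "alice", "time": 1319000000})
    print(stored)
    print(decode_session(stored))
```

One consequence worth keeping in mind: base64 output is four characters for every three input bytes, so a 4000-character data column leaves room for roughly 3000 bytes of serialized session data.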

<Return to section navigation list>

Marketplace DataMarket and OData

The SQL Azure Labs Team recently posted (somewhat of) an answer to “Where is the OData lab that was previously posted to SQL Azure Labs?” on the FAQ page:

Thank you for your interest in the OData Service for SQL Azure previewed on SqlAzureLabs. At this time we are not accepting new registrations for this lab.

Existing registered SQL Azure OData services will continue to be supported through the end of March, 2012. We will shortly be updating this site to allow users to reconfigure those existing SQL Azure OData endpoints, using previously registered Windows Ids, but we will not be accepting new registrations.

We gathered great feedback from this lab and are continuing to evaluate the inclusion of this functionality for SQL Azure. If you have any additional comments or feedback, please let us know at http://social.msdn.microsoft.com/Forums/en-US/sqlazurelabssupport/threads.

For information on deploying a custom OData Service over your SQL Azure database, see http://msdn.microsoft.com/en-us/data/gg192994.

Notice the missing reference in Julie’s article:

You can learn more about the Visual Studio Entity Data Model designer integration with SQL Azure in the whitepaper: _____________________________________.

Greg Duncan posted Microsoft Research is now Open Data to the OData Primer wiki on 10/12/2011 (missed when posted):

Have you ever wondered about the following questions:

- What areas of interest does Microsoft invest in for Research?

- Who are the people at Microsoft Research?

- What publications do Microsoft Researchers publish?

- How do Microsoft Researchers collaborate?

- What projects are currently going on in Microsoft Research?

- Where can you find the latest software from the Microsoft Research Labs?

...

Microsoft has released what they call “RMC OData”. Yes, the data behind research.microsoft.com is now available as OData. Here is the URL: http://odata.research.microsoft.com/

What is RMC OData, you may ask. Here is the official description of this service: RMC OData is a queryable version of research.microsoft.com (RMC) data, produced in the OData protocol (see odata.org for more information about the OData protocol). RMC data provides metadata about assets currently published on research.microsoft.com, such as publications, projects, and downloads.

So what data is available for consumption as part of RMC OData? Here it is:

- Downloads – Applications and tools which can be installed on your own machine or data sets which you can use in your own experiments. Downloads are created by groups from all Microsoft Research labs.

- Events – Events occurring in different locations around the world hosted by or in collaboration with Microsoft Research, with different themes and subject focus.

- Groups – Groups from all Microsoft Research labs; groups usually contain a mix of researchers and engineering staff.

- Labs – Microsoft Research locations around the world.

- Projects – Projects from all Microsoft Research labs.

- Publications – Publications authored by or in collaboration with researchers from all Microsoft Research labs.

- Series – Collections of videos created by Microsoft Research, grouped by event or subject focus.

- Speakers – Speakers for Microsoft Research videos; in many cases speakers are not Microsoft Research staff.

- Users – Microsoft Research staff; predominantly researchers but also includes staff from other teams and disciplines within Microsoft Research.

- Videos – Videos created by Microsoft Research; subjects include research lectures and marketing/promotional segments, some with guest lecturers and visiting speakers.
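Because the service speaks OData, each collection listed above should be addressable by URL and narrowed with the protocol's system query options such as $top, $filter and $orderby. The Python sketch below only builds such URLs and makes no network calls. It assumes that the URL quoted in the post is the service root and that the entity sets carry the names in the list; the build_query helper and the Year property are hypothetical.

```python
from urllib.parse import quote, urlencode

# URL quoted in the post; treating it as the exact service root is an assumption.
SERVICE_ROOT = "http://odata.research.microsoft.com/"


def build_query(entity_set, **options):
    """Build an OData URL; keyword names map to $-prefixed system query options."""
    url = SERVICE_ROOT + quote(entity_set)
    if options:
        params = {"$" + name: str(value) for name, value in options.items()}
        # Keep "$" and "," readable and encode spaces as %20 rather than "+".
        url += "?" + urlencode(params, safe="$,", quote_via=quote)
    return url


if __name__ == "__main__":
    # "Year" is a hypothetical property name used only for illustration.
    print(build_query("Publications", top=5, orderby="Year desc"))
    print(build_query("Labs"))
```

The service's $metadata document would be the place to confirm the real entity set and property names before relying on any of these URLs.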

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Yves Goeleven posted a Video: Simplifying distributed application development with NServiceBus and the Windows Azure Platform to his Cloud Shaper blog on 10/19/2011:

Yesterday I delivered a presentation to my fellow MVPs on how NServiceBus and the Windows Azure Platform can be combined to simplify distributed application development in the cloud. The session has been recorded and is now available for everyone who is interested.

Enjoy (and sorry for my crappy presentation skills)

The Windows Azure Customer Advisory Team (CAT), formerly the Windows Azure AppFabric CAT, announced We Have Moved to MSDN in a 10/18/2011 post:

All new content will be located on the MSDN Library.

See you there!

Their first four MSDN articles are:

- Windows Azure Developer Guidance

- How to integrate a BizTalk Server application with Service Bus Queues and Topics

- Leveraging Node.js libuv in Windows Azure

- Best Practices for Leveraging Windows Azure Service Bus Brokered

Seb posted Azure AppFabric + MS CRM Online, updates to his Mind the Cloud blog on 10/16/2011:

After the latest updates, MS CRM 2011 Online supports Azure AppFabric integration with Access Control Services (ACS) 2.0. That is quite significant, since all new AppFabric namespaces are now created as v2.0. Not much has changed in the configuration on the MS CRM side; you still need to use the plugin registration tool, but be sure you have the version from SDK 5.0.6, in which support for Windows Azure AppFabric Access Control Services (ACS) 2.0 was added.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Tom Hollander (@tomhollander) described Updates to Windows Azure MSBuild post for SDK 1.5 in a 10/19/2011 post:

Just a quick note that I’ve edited my previous post Using MSBuild to deploy to multiple Windows Azure environments based on some changes to the platform that have come with the Windows Azure SDK 1.5 and Windows Azure PowerShell cmdlets 2.0.

The main changes are to the MSBuild targets added to the .ccproj file:

- The default service configuration file has been changed to ServiceConfiguration.cloud.cscfg

- A new property <PackageForComputeEmulator> was added and set to true. This is counterintuitive as I’m not packaging for the compute emulator, but this is required to enable packaging of a default web site without specifying the physicalDirectory. If you don’t include the <PackageForComputeEmulator> setting, you may see an error in your build output like “error CloudServices077: Need to specify the physical directory for the virtual path 'Web/' of role WebRole1”.

- The location of the generated package has moved from previous releases. Rather than specify my own property for this I’m now reusing $(PublishDir) which is set by the built-in CorePublish target.

The only other change I made to the post was to update the PowerShell script to use the new snap-in name that came with the Windows Azure Platform PowerShell cmdlets 2.0 – it’s now called WAPPSCmdlets instead of AzureManagementToolsSnapIn.

Note that I haven’t revisited the documented approach to transforming configuration files. You may want to check out Joel Forman’s post for some tips on how to do this with the latest SDK.

Steve Marx (@smarx) asserted “Good tools integration and an elegant design make it easy to get your first app up and running on Microsoft's cloud” in an introduction to his Getting Started with Microsoft's Windows Azure Cloud: The Lay of the Land article of 10/19/2011 for Dr. Dobb's:

Recently, cloud platforms have emerged as a great way to build and deploy applications that require elastic scale, high reliability, and a rapid release cadence. By eliminating the need to buy and maintain hardware, the cloud gives developers inexpensive, on-demand access to computers, storage, and bandwidth without any commitment. Developing for the cloud also makes it easy and less risky to try out new ideas. If an idea is successful, it can be scaled up by adding more hardware. If an idea is unsuccessful, it can be shut down quickly.

The core benefits of the cloud were obvious from the start, but when I joined the Windows Azure team in 2007, the cloud was primarily seen as a way to provision virtual machines on demand. Today, I'm excited to see that the idea of a platform as a service (PaaS) model has grown and matured, and there are now many cloud providers that are recognizing this model.

As much as Windows Azure has changed and grown since I first joined the team, the core benefits have remained constant. Windows Azure offers an easy development experience, it gives developers the flexibility to choose their favorite tools, and it supports very demanding workloads.

Building Your First Cloud Application

Because Windows Azure supports many kinds of applications, programming languages, and tools, there are lots of ways you can get started, but let's start with an ASP.NET web application as an example.

The Installing the SDK and Getting a Subscription tutorial walks you through the process of getting your development environment ready for Windows Azure. Note that you don't actually need to sign up for Windows Azure to get the tools and start developing. The steps below are based on version 1.5 of the SDK.

Once you have the tools installed, start Visual Studio as an administrator, and create a new project based on the "Windows Azure Project" template as pictured in Figure 1.

Figure 1.

Next, you'll be prompted to choose one or more "roles" for your application. In the next section, we'll learn more about roles and the part they play in cloud architecture. For now, just choose an ASP.NET MVC 3 Web Role by highlighting it and clicking the right arrow to add it to your solution, as in Figure 2.

Figure 2.

As with any ASP.NET MVC application, the next screen will ask you what kind of MVC application you want to build. Just to get something to play with, I suggest choosing the "Internet Application" template; see Figure 3.

Figure 3.

At this point, you have a functioning Windows Azure application. You can run it locally by pressing F5, and when you're ready to deploy to the cloud, you can choose either "Package" to create the files you'll need to deploy to the cloud, or "Publish" to deploy directly from Visual Studio; see Figure 4.

Figure 4.

Now you can develop your custom application simply by editing the default ASP.NET MVC template, as you would for any ASP.NET application.

What you've seen so far may not look that impressive on the surface. We built a simple web application and ran it locally. It was easy to do, but we could have done that without Windows Azure. What's truly impressive is that this same application, without modification, can easily be deployed and scaled across many servers and even multiple data centers. I can also update my application at any time without having to touch individual servers and without any downtime. All of this is possible because of Windows Azure's scalable programming model.

Scalable Programming Model

Not every application needs to grow to thousands of virtual machines and terabytes of data, but some do; and it's often the case that the developer of an application doesn't know what scale will ultimately be required. So, it's important to use a cloud platform that supports applications of any scale.

As is typical in the cloud, Windows Azure encourages a scale-out architecture. Scale-out means that your application's elastic scale is achieved by adding or removing virtual machines (not by moving to bigger and bigger servers, which would be called "scale up"). Scale-out architecture also gives your application reliability, high availability, and the ability to be upgraded without downtime.

In Windows Azure, this scale-out architecture is implemented by use of roles. A role is a component of your application that runs on multiple instances (virtual machines). Each instance of a role runs identical code, so a role can be scaled simply by adding or removing instances.

In the application we built in the previous section, our application had exactly one role (the ASP.NET MVC 3 web role). In a typical three-tier application, you might have a front-end web role and a back-end service role (along with a database for the third tier). Each role has its own code and can be scaled independently. Any role that accepts traffic from the internet (like a web application) sits behind a load balancer, ensuring that all instances contribute to your application's scalability.

For this architecture to work, each virtual machine needs to be identical. That means it needs to be running the same code (which it is, thanks to the concepts of roles). It also needs to have the same data. To make sure that all virtual machines have the same data, data should be centralized in a storage system. This model is exactly like a web farm with a database for storage. …

Brian Swan (@brian_swan) posted Handling PHP Sessions in Windows Azure on 10/18/2011 to the Silver Lining blog:

One of the challenges in building a distributed web application is in handling sessions. When you have multiple instances of an application running and session data is written to local files (as is the default behavior for the session handling functions in PHP) a user session can be lost when a session is started on one instance but subsequent requests are directed (via a load balancer) to other instances. To successfully manage sessions across multiple instances, you need a common data store. In this post I’ll show you how the Windows Azure SDK for PHP makes this easy by storing session data in Windows Azure Table storage.
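The failure mode described above is easy to reproduce in miniature. The following Python sketch is a toy model, not Azure code: the Instance class, the round_robin balancer and the dictionaries standing in for local session files and for a common data store are all assumptions made for illustration. Two requests from the same user land on different instances; the session survives only when both instances share one store.

```python
import itertools


class Instance:
    """One application instance; local_sessions stands in for local session files."""

    def __init__(self, name, shared_store=None):
        self.name = name
        self.local_sessions = {}
        self.shared_store = shared_store

    def _store(self):
        # Use the common store when one is configured, else the local one.
        if self.shared_store is not None:
            return self.shared_store
        return self.local_sessions

    def login(self, session_id, username):
        self._store()[session_id] = {"username": username}

    def whoami(self, session_id):
        session = self._store().get(session_id)
        return session["username"] if session else None


def round_robin(instances):
    """A toy load balancer that hands each request to the next instance in turn."""
    return itertools.cycle(instances)


def simulate(shared_store=None):
    instances = [Instance("web_0", shared_store), Instance("web_1", shared_store)]
    balancer = round_robin(instances)
    next(balancer).login("sess-1", "alice")  # first request lands on web_0
    return next(balancer).whoami("sess-1")   # second request lands on web_1


if __name__ == "__main__":
    print("local files: ", simulate())
    print("shared store:", simulate(shared_store={}))
```

With local storage the second instance has never seen the session, so the lookup comes back empty; with the shared dictionary both instances read and write the same data.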

In the 4.0 release of the Windows Azure SDK for PHP, session handling via Windows Azure Table and Blob storage was included in the newly added SessionHandler class.

Note: The SessionHandler class supports storing session data in Table storage or Blob storage. I will focus on using Table storage in this post largely because I haven’t been able to come up with a scenario in which using Blob storage would be better (or even necessary). If you have ideas about how/why Blob storage would be better, I’d love to hear them.

The SessionHandler class makes it possible to write code for handling sessions in the same way you always have, but the session data is stored on a Windows Azure Table instead of local files. To accomplish this, precede your usual session handling code with these lines:

    require_once 'Microsoft/WindowsAzure/Storage/Table.php';
    require_once 'Microsoft/WindowsAzure/SessionHandler.php';
    $storageClient = new Microsoft_WindowsAzure_Storage_Table('table.core.windows.net', 'your storage account name', 'your storage account key');
    $sessionHandler = new Microsoft_WindowsAzure_SessionHandler($storageClient , 'sessionstable');
    $sessionHandler->register();

Now you can call session_start() and other session functions as you normally would. Nicely, it just works.

Really, that’s all there is to using the SessionHandler, but I found it interesting to take a look at how it works. The first interesting thing to note is that the register method is simply calling the session_set_save_handler function to essentially map the session handling functionality to custom functions. Here’s what the method looks like from the source code:

    public function register()
    {
        return session_set_save_handler(array($this, 'open'),
                                        array($this, 'close'),
                                        array($this, 'read'),
                                        array($this, 'write'),
                                        array($this, 'destroy'),
                                        array($this, 'gc'));
    }

The reading, writing, and deleting of session data is only slightly more complicated. When writing session data, the key-value pairs that make up the data are first serialized and then base64 encoded. The serialization of the data allows for lots of flexibility in the data you want to store (i.e. you don’t have to worry about matching some schema in the data store). When storing data in a table, each entry must have a partition key and row key that uniquely identify it. The partition key is a string (“sessions” by default, but this is changeable in the class constructor) and the row key is the session ID. (For more information about the structure of Tables, see this post.) Finally, the data is either updated (if it already exists in the Table) or a new entry is inserted. Here’s a portion of the write function:

    $serializedData = base64_encode(serialize($serializedData));
    $sessionRecord = new Microsoft_WindowsAzure_Storage_DynamicTableEntity($this->_sessionContainerPartition, $id);
    $sessionRecord->sessionExpires = time();
    $sessionRecord->serializedData = $serializedData;
    try
    {
        $this->_storage->updateEntity($this->_sessionContainer, $sessionRecord);
    }
    catch (Microsoft_WindowsAzure_Exception $unknownRecord)
    {
        $this->_storage->insertEntity($this->_sessionContainer, $sessionRecord);
    }

Not surprisingly, when session data is read from the table, it is retrieved by session ID, base64 decoded, and unserialized. Again, here’s a snippet that shows what is happening:

    $sessionRecord = $this->_storage->retrieveEntityById($this->_sessionContainer,
                                                         $this->_sessionContainerPartition,
                                                         $id);
    return unserialize(base64_decode($sessionRecord->serializedData));

As you can see, the SessionHandler class makes good use of the storage APIs in the SDK. To learn more about the SessionHandler class (and the storage APIs), check out the documentation on Codeplex. You can, of course, get the complete source code here: http://phpazure.codeplex.com/SourceControl/list/changesets.
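The pattern Brian describes (serialize, base64 encode, then store under a partition key and a session ID in a shared table) is not specific to PHP. The following is a minimal Python sketch of the same idea, not the SDK's implementation: `InMemoryTable` and `TableSessionStore` are hypothetical names, the in-memory dictionary stands in for Windows Azure Table storage, and JSON is swapped in for PHP's `serialize()`.

```python
import base64
import json
import time


class InMemoryTable:
    """Hypothetical stand-in for a shared table store.

    Entities are keyed by (partition key, row key), as in the post.
    """

    def __init__(self):
        self.rows = {}

    def upsert(self, partition_key, row_key, entity):
        # Update the entity if it exists, otherwise insert it.
        self.rows[(partition_key, row_key)] = entity

    def get(self, partition_key, row_key):
        return self.rows.get((partition_key, row_key))

    def delete(self, partition_key, row_key):
        self.rows.pop((partition_key, row_key), None)


class TableSessionStore:
    """Stores session data in a shared table instead of local files."""

    def __init__(self, table, partition_key="sessions"):
        self.table = table
        self.partition_key = partition_key

    def write(self, session_id, data):
        # Serialize the key-value pairs, then base64 encode the result
        # so it can be stored as a single schema-free string property.
        serialized = json.dumps(data).encode("utf-8")
        encoded = base64.b64encode(serialized).decode("ascii")
        entity = {"sessionExpires": time.time(), "serializedData": encoded}
        self.table.upsert(self.partition_key, session_id, entity)

    def read(self, session_id):
        entity = self.table.get(self.partition_key, session_id)
        if entity is None:
            return {}
        return json.loads(base64.b64decode(entity["serializedData"]))

    def destroy(self, session_id):
        self.table.delete(self.partition_key, session_id)


# Two store objects sharing one table play the part of two web role instances.
shared_table = InMemoryTable()
instance_a = TableSessionStore(shared_table)
instance_b = TableSessionStore(shared_table)
instance_a.write("abc123", {"user": "brian", "cart": [1, 2]})
print(instance_b.read("abc123"))
```

Because both objects read and write the same table, a session started on one instance is visible to the other, which is the property the load-balanced scenario needs.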

As I investigated the session handling in the Windows Azure SDK for PHP, I noticed that the absence of support for SQL Azure as a session store was conspicuous. I’m curious about how many people would prefer to use SQL Azure over Azure Tables as a session store. If you have an opinion on this, please let me know in the comments.

Avkash Chauhan (@avkashchauhan) described Increasing Endpoints in your Windows Azure Application using In-Place Upgrade from Management Portal on 10/19/2011:

Previously: If you need to change the number or type of endpoints for existing roles, you must delete and redeploy the service.

A recent upgrade to Windows Azure lets you apply an in-place upgrade to your Windows Azure application that includes the addition of new endpoints. Please be sure to upgrade your Windows Azure application to Windows Azure SDK 1.5, because the in-place upgrade should work with applications using SDK 1.5 and that is what I tested with. More information and documentation will be added to MSDN soon.

For example, suppose your service has been running without RDP. If you now add RDP access to your service, you have added two more endpoints to it. You can now just apply an in-place update to replace the previously running service with the new one that includes the new RDP endpoints.

Read more about In-Place Upgrade: http://blogs.msdn.com/b/windowsazure/archive/2011/10/19/announcing-improved-in-place-updates.aspx

Kevin Kell recommended that you (and Herman Cain) Use Cloud Bursting to Handle Unexpected Spikes in Demand in a 10/18/2011 post to the Knowledge Tree blog:

I am not a Republican, nor am I a Democrat. I am, however, an American voter interested in the issues. I am also a techie.

Tonight I am watching the latest Republican primary debate in Las Vegas. How many more are there left to go?

Anyway, the first part of the debate basically became a discussion of Herman Cain’s “9-9-9” plan. It seemed that there was much confusion among the candidates. There were multiple analyses that each was citing. To his credit, Herman Cain himself said that everyone should “do their own math” and check out the analysis on his website.

The analysis may or may not be interesting, but right now I don’t know. What is more interesting to me is this:

Figure 1 – Service Unavailable

Now I’m no expert in political strategy but this, to me, seems like a bad thing. Obviously the goal of the site is to get the message out to as many people as possible. This implementation falls short.

Could this problem have been avoided using “the Cloud”? Absolutely. Is there any issue with security or data privacy on this site? No.

This is exactly the type of problem that can be solved by “Cloud Bursting”. That is using public cloud resources to handle anticipated or unanticipated spikes in demand. Had this site used this technique it could have made use of elastic scalability to quickly provision additional resources and avoid this outage. Tomorrow, when everyone has forgotten about tonight’s debate, the solution deployment could be scaled back in to avoid paying for unused resources.

All of this is tangential, of course, to the actual debate that is going on right now. As I am typing this the debate has taken a bit of a negative turn. I hope that a fist fight does not break out between the Governor of Texas and the former Governor of Massachusetts. I guess that is why I am more interested in technology than in politics.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) announced the availability of Video: How Do I Handle Database Concurrency Issues? in a post to the Visual Studio LightSwitch blog on 10/19/2011:

Check it out, we released a new “How Do I” video on the LightSwitch Developer Center:

How Do I: Handle Database Concurrency Issues?

In a multi-user environment, when two users pull up the same record from the database and modify it, the second user's save raises a data conflict because the original values no longer match what is stored in the database. In this video you will learn how LightSwitch handles these database concurrency issues automatically as well as how to control what happens yourself in code.

Janet I. Tu (@janettu) reported Cool vid: Microsoft Australia launches LightSwitch with light show to her Seattle Times Business / Technology blog on 10/19/2011:

It appears that Microsoft Australia launched Visual Studio LightSwitch with a light show featuring some office towers in Sydney.

Visual Studio LightSwitch is a self-service development tool that enables people to build business applications for the desktop and cloud.

The blog TechAU noted that "as part of the Australian launch, Microsoft used a computer controlled lighting grid to display animations on two skyscrapers in Sydney. The effect is pretty impressive and while technically not done using light switches, you get the correlation." (Hat tip to blogger Long Zheng.)

Here's the video from MicrosoftAustralia's YouTube channel:

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Drew McDaniel posted Announcing Improved In-place Updates to the Windows Azure blog on 10/19/2011:

Based on your feedback, today we are making some helpful updates to Windows Azure that provide developers flexibility for making in-place updates to deployed services. Best of all, these updates are easily made without changing the public IP of the service.

Windows Azure now supports a wider range of changes to a deployed service without requiring the use of a VIP swap or a complete delete and re-deploy of the service. With the following changes permitted through an in-place update, you will now be allowed to make almost all possible updates without requiring a VIP swap or a re-deploy!

The newly allowed in-place updates are:

- Change the virtual machine size (scale-up or scale-down)

- Increase local storage

- Add or remove roles to a deployment

- Change the number or type of endpoints

Summary of changes allowed

| Changes permitted to hosting, services, and roles | In-place update | Staged (VIP swap) | Delete and re-deploy |
| --- | --- | --- | --- |
| Operating system version | Yes | Yes | Yes |
| .NET trust level | Yes | Yes | Yes |
| Virtual machine size | Yes. Warning: Changing virtual machine size will destroy local data. When making this change using the service management APIs, the force flag is required. Note: Requires Windows Azure SDK 1.5 or later. | Yes | Yes |
| Local storage settings | Yes: Increase only. Note: Requires Windows Azure SDK 1.5 or later. | Yes | Yes |
| Add or remove roles in a service | Yes | Yes | Yes |
| Number of instances of a particular role | Yes | Yes | Yes |
| Number or type of endpoints for a service | Yes. Note: Requires Windows Azure SDK 1.5 or later. Note: Availability may be temporarily lost as endpoints are updated. | No | Yes |
| Names and values of configuration settings | Yes | Yes | Yes |
| Values (but not names) of configuration settings | Yes | Yes | Yes |
| Add new certificates | Yes | Yes | Yes |
| Change existing certificates | Yes | Yes | Yes |
| Deploy new code | Yes | Yes | Yes |
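For readers who want to check a planned change against the summary above in a deployment script, the table can be encoded as a small lookup. This is only an illustrative Python sketch: the dictionary keys are condensed, hypothetical labels for the table rows (the configuration-setting and certificate rows are merged), and nothing here calls the service management APIs.

```python
# Column order follows the summary table.
OPTIONS = ("in-place update", "staged (VIP swap)", "delete and re-deploy")

# True means the table lists the change as permitted with that option.
PERMITTED = {
    "operating system version": (True, True, True),
    ".net trust level": (True, True, True),
    "virtual machine size": (True, True, True),
    "local storage settings": (True, True, True),  # in-place: increase only
    "add or remove roles": (True, True, True),
    "number of instances": (True, True, True),
    "number or type of endpoints": (True, False, True),
    "configuration settings": (True, True, True),
    "certificates": (True, True, True),
    "deploy new code": (True, True, True),
}

# In-place changes that the table marks as requiring Windows Azure SDK 1.5 or later.
NEEDS_SDK_1_5_IN_PLACE = {
    "virtual machine size",
    "local storage settings",
    "number or type of endpoints",
}


def update_options(change):
    """Return the update options the table permits for the given change."""
    flags = PERMITTED[change.lower()]
    return [name for name, allowed in zip(OPTIONS, flags) if allowed]


def in_place_needs_sdk_1_5(change):
    """Report whether the in-place form of the change needs SDK 1.5 or later."""
    return change.lower() in NEEDS_SDK_1_5_IN_PLACE


print(update_options("Number or type of endpoints"))
print(in_place_needs_sdk_1_5("Virtual machine size"))
```

The only row that rules out an option is the endpoint change, which the table does not allow through a VIP swap.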

Changing Virtual Machine Size example

The following example shows how to change the virtual machine size through the Windows Azure portal.

Figure 1: Initial state of the deployment

When attempting to change the size of the VM without checking the box for “Allow VM size or role count to be updated” the following error will be shown:

Figure 2: Error message when attempting to change role count

Now when the same operation is attempted with the “Allow VM size or role count to be updated” checkbox checked the VM size will be updated:

Figure 3: Update with the checkbox checked

Figure 4: Deployment after update has completed

More Control over in-place updates

In addition to the wider applicability of an in-place update, you now have greater control over the update process itself: you can roll back an update that is in progress or even start a second update. While an update is in progress (defined by at least one instance of the service not yet updated to the new version) you can roll back the update by using the Rollback Update Or Upgrade operation.

If the rollback operation is specified to be in automatic mode, the rollback will begin immediately in the first upgrade domain without waiting for all instances in the upgrade domain that is currently offline to return to the Ready state. To prevent multiple upgrade domains from being offline simultaneously the rollback can be done in manual mode. In manual mode the first upgrade domain will not be rolled back until Walk Upgrade Domain is called for the first upgrade domain.

Multiple Update Operations

Initiating a second update operation while the first update is ongoing behaves similarly to the rollback operation. If the second update is in automatic mode, the first upgrade domain will be upgraded immediately, possibly leading to instances from multiple upgrade domains being offline at the same point in time. Further details on using the rollback operation or initiating multiple mutating operations can be found here.

Summary of Update Options

Now that most modifications can be performed using any of the three options for updating a hosted service, it is worth taking some time to describe when each option is most appropriate. This is best described by the following table of advantages and disadvantages:

| Update option | Description | Advantages | Disadvantages |
| --- | --- | --- | --- |
| In-place update | The new package is uploaded and applied to the running instances of the service. | Only a single running deployment is required. Service availability can be maintained as long as there are at least two instances for each role. | Service capacity is limited while instances are brought down, updated and then restarted. Service code must be able to support multiple versions of the code running on different instances as the instances are updated. |
| Staged (VIP swap) | Upload the new package and swap it with the existing production version. This is referred to as a VIP swap because it simply swaps the visible IP addresses of the services. | No service downtime or loss of capacity. | Two deployments are required to be running at least during the update. |
| Redeploy the service | Suspend and then delete the service, and then deploy the new version. | Only a single running deployment is required. | Service will be down between deleting the service and redeploying the service. Virtual IP (VIP) will often change when the service is redeployed. |
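The in-place row above says availability holds only with at least two instances per role, and that capacity drops while instances are updated. A rough way to see why is to simulate walking the upgrade domains one at a time. The Python sketch below is a toy model under stated assumptions: the round-robin placement of instances into upgrade domains and the function names are hypothetical, not taken from the Windows Azure documentation.

```python
def assign_upgrade_domains(instance_count, domain_count):
    """Spread instances across upgrade domains round-robin (assumed policy)."""
    domains = [[] for _ in range(domain_count)]
    for i in range(instance_count):
        domains[i % domain_count].append(f"instance_{i}")
    return domains


def in_place_update(domains):
    """Walk the domains one at a time; return (domain index, instances online)."""
    total = sum(len(domain) for domain in domains)
    steps = []
    for index, domain in enumerate(domains):
        # The instances in the domain being updated are offline for this step.
        steps.append((index, total - len(domain)))
    return steps


def minimum_online(instance_count, domain_count):
    """Lowest number of instances serving traffic at any point in the walk."""
    steps = in_place_update(assign_upgrade_domains(instance_count, domain_count))
    return min(online for _, online in steps)


# One instance leaves nothing online during its update; two instances keep one up.
print(minimum_online(1, 2))
print(minimum_online(2, 2))
print(minimum_online(6, 3))
```

In this model a single-instance role drops to zero capacity during the walk, while a two-instance role always keeps one instance online, at half capacity.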

David Linthicum (@DavidLinthicum) posted Understanding Cloud Performance Metrics on 10/19/2011 as a guest article for the CloudSleuth blog:

Those who focus on the performance of public cloud computing systems often concentrate on a single aspect, typically response times within very specific problem domains for short durations of time. I’m asserting that you have to take a more sophisticated approach to consider cloud performance holistically. Let’s explore that in this blog.

At this point in the maturation of cloud computing I’m willing to present a few core concepts that should be points of consideration when you look at the performance measurements of public clouds. These include:

• Think Globally

• Consider Mixed Use

• Leverage Long Time Horizons

• Consider Composite or Borderless Cloud

The concept of thinking globally refers to the fact that public clouds are leveraged from sites all over the world, thus you must consider that fact in the performance metrics. For example, a buddy of mine tested cloud performance from a location that was only 5 miles from the provider’s data center. The assumption was made that his performance would match the performance of an office in Asia. He was wrong, and the project had to be reworked around the performance issues discovered after deployment.

Indeed, as you can see from the latest “Cloud Provider Global Performance Ranking – 12 Month Average,” performance varies greatly by geographical region. Consider the location of the provider’s points of presence, and the consumer’s. The results vary greatly. Recognize the need to leverage mechanisms such as the Gomez Performance Network (GPN) which is able to run test transactions on the deployed target applications and monitor the response time and availability from multiple points around the globe.

Consider mixed use means that we measure cloud computing performance using a set of static tests, and we change up those tests to best reflect the changing use of public cloud computing environments over time. Thus, we move from data- and I/O-intensive applications, to those that are processor saturating, and perhaps user- and/or API interface-intensive. The idea is to develop a good profile of overall public cloud computing provider performance, no matter what type of application is running.

Leverage long time horizons refers to the concept that the longer you measure performance, the more accurate those measurements will be. Performance results that only reflect a few days or weeks are largely meaningless. Instead, make sure to focus on performance data that considers long time horizons, including time when there is network saturation, outages, and fail-overs from center-to-center. That data tells a more realistic story than simple response time data over shorter durations.
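A small worked example makes the "think globally" and "long time horizons" points concrete. The Python sketch below uses made-up sample data (the region names, response times, and the day-20 outage are all hypothetical) to show how a one-week average from a nearby site can hide both regional differences and an outage that a 30-day window captures.

```python
from collections import defaultdict
from statistics import mean


def window(samples, first_day, last_day):
    """Keep only samples measured between first_day and last_day inclusive."""
    return [s for s in samples if first_day <= s[0] <= last_day]


def mean_response_by_region(samples):
    """samples: iterable of (day, region, response_seconds) tuples."""
    by_region = defaultdict(list)
    for _day, region, seconds in samples:
        by_region[region].append(seconds)
    return {region: mean(values) for region, values in by_region.items()}


# Hypothetical measurements: one test per region per day for 30 days.
samples = []
for day in range(1, 31):
    samples.append((day, "near data center", 0.2))
    # The distant site is slower, and sees a 10-second response on day 20.
    samples.append((day, "overseas office", 10.0 if day == 20 else 1.0))

first_week = mean_response_by_region(window(samples, 1, 7))
full_month = mean_response_by_region(window(samples, 1, 30))
print(first_week)
print(full_month)
```

With this data the first week shows the overseas office at a steady 1 second, while the month-long average rises to roughly 1.3 seconds once the outage day is included; the nearby site's numbers reveal neither effect.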

Finally, you need to consider borderless or composite cloud applications within the performance metrics profile, else you’ll only be telling a portion of the story.

Cloud applications are very often a mix of elements provided by both the application’s owner and third-party service providers. Indeed, considering the research provided by our host, most commercial web sites use an average of more than 9 different services supplied from outside the domain of the application’s owner.

Indeed, as I’m building cloud computing applications for clients, the trend of creating cloud applications using many external services will only continue. I suspect within just a few years there will be twice as many different services supplied from outside the core application domain, many of which will be tightly coupled.

Count on the core metrics we use to leverage cloud performance to change over time as we get better at gathering cloud computing performance data, and the use of cloud computing increases. Still, considering the information above, I think we’re off to a pretty good start.

Microsoft’s North Central US (Chicago, IL) region consistently places first in CloudSleuth’s monthly cloud performance sweepstakes. I’ve requested CloudSleuth to add the South Central US (San Antonio, TX) region, where my OakLeaf Systems Azure Table Services Sample Project is located.

The HPC in the Cloud blog’s HPCWire reported Intel Exec Sees Cloudy Future for HPC in a 10/19/2011 post:

This week Jeff Ehrlich interviewed Bill Magro, director of HPC software at Intel about how the cloud will deliver high performance computing to small IT shops that never imagined they would have access to high-end resources.

Magro argues that “commercial shops will likely adopt HPC systems in greater numbers once a few technical and commercial barriers are eliminated.” He also notes that contrary to popular opinion, HPC has indeed moved beyond the academic setting to include a number of small to mid-sized enterprises. He says that “HPC systems are often run within engineering departments, outside the corporate datacenters, which is a factor in why IT professionals don’t see as much HPC in industry.” He says that in any case, well over half of HPC is in industry currently—and the proportion will continue to grow as smaller enterprises find new ways to use HPC.

The concept of small to medium sized businesses finding ways to access and use HPC resources is one that has become a centerpiece for organizations like the Council on Competitiveness, which argues there is a large “missing middle” for HPC. In simple terms, this middle includes those not at the top end (academic institutions and laboratories).

When asked whether or not the “killer app” to reach the missing middle in HPC is cloud computing, Magro says that “Cloud is a delivery mechanism for computing; HPC is a style of computing. HPC is the tool, and the cloud is one distribution channel for that tool.” He says that cloud can help to increase overall HPC adoption, “but new users need to learn the value of HPC as a first step.”

Nonetheless, he does see a clear path for clouds increasing the commercial adoption of HPC, even though there are still some technical barriers. As Magro stated, “If you look at Amazon’s EC2 offering, it has specific cluster-computing instances, suitable for some HPC workloads and rentable by the hour. Others have stood up HPC/Cloud offerings, as well. So, yes, a lot of people are looking at how to provide HPC in the cloud, but few see cloud as replacing tightly integrated HPC systems for the hardest problems.”

Full story at Intelligence in Software

Michael Biddick (@michaelbiddick) wrote the InformationWeek 2011 IT Process Automation Survey in 8/2011 and Information Week posted it on 10/18/2011:

Process Before Tools

Shifting spending from IT operations to innovation is a key goal for every CIO we know. One way to accomplish that is with a highly virtualized data center, where any processes that can be automated are, and where on-premises IT offerings are augmented with public cloud services where it makes sense. We launched our InformationWeek 2011 IT Process Automation Survey to look at the state of automation and see what lessons can be learned from business technology professionals using these tools.

While many in our poll find real benefits in automation, make sure you're being realistic. Cost reductions can be elusive, but respondents validate our belief that automated IT processes lead to improved quality and efficiency, while freeing time to spend on higher-value projects. We also found that private clouds are driving a new renaissance, as highly scalable and virtualized data centers just can’t happen without automation. Public cloud environments, in contrast, add complexity to process automation, as workloads are set up in silos, but they also provide potential solutions in the form of cloud-broker technology.

The success of any IT process automation project will largely depend on your systems. The more standardization, the easier automation will be. If you have a complex environment, with homegrown applications and complicated workflows, automation will be equally complex and costly. Still, it's worth doing, and we'll explain how. (R3381011)

Survey Name: InformationWeek 2011 IT Process Automation Survey

Survey Date: August 2011

Region: North America

Number of Respondents: 345

Purpose: To examine the challenges and progress around IT process automation

Table of Contents

Author's Bio

Executive Summary

Research Synopsis

Process Before Tools

Cloud Impact on Automation

Private Policy

Automation Vendors

Under the Hood

Enabling Automation

Overcoming Challenges

Process Automation Success Factors

Related Reports

Michael is president and CTO of Fusion PPT and an InformationWeek Analytics contributor.

Tony Bailey reported Windows Azure LIVE CHAT Goes… Live on 10/16/2011:

Trying out and adopting the Windows Azure platform just got a little easier.

Call center teams are ready and willing to help answer your Windows Azure platform questions.

- How much will the Windows Azure platform really cost me?

- What am I getting myself into?

- What is a compute instance?

- I have a client-server application. Is it viable to move it to the Windows Azure platform?

- I want to market and sell my cloud application. What are the best ways to connect to a marketplace?

Visit www.azure.com and see what I mean.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Yung Chou reported Post-Event Live Chats to Discuss Building Private Cloud on 10/19/2011:

After the upcoming TechNet events, we will be hosting three post-event Live Chats where everyone can share their experience and get answers to questions about building a private cloud. Here are the dates and registration information:

- Live! IT Time: Private Cloud Chat (Episode 1), 11/9/2011 10:00:00 AM Pacific Time

- Live! IT Time: Private Cloud Chat (Episode 2), 12/21/2011 10:00:00 AM Pacific Time

- Live! IT Time: Private Cloud Chat (Episode 3), 1/11/2012 10:00:00 AM Pacific Time

The above conference calls are specifically for following up with those who have (1) attended one of the TechNet events and (2) tried building a private cloud. If you have not already done so, make sure you also register to attend one of the events and download System Center Virtual Machine Manager 2012 RC to start getting some experience with building a private cloud.

There is no easy way to learn private cloud. It is a complex topic and takes time to master. I encourage you to invest your time to learn private cloud and become the next private cloud expert in your organization.

Barton George (@barton808) posted Cote and I discuss Dell World and our new Web|Tech Vertical on 10/19/2011:

Last week we held Dell’s first Dell World event here in Austin, Texas. The two-day event was targeted at CxOs and IT professionals and featured an expo, panels and speakers such as CEOs Marc Benioff, Paul Otellini, Steve Ballmer and Paul Maritz as well as former CIO of the United States, Vivek Kundra. And of course, being Austin, it also featured a lot of great music and barbeque.

At the end of the first day Michael Cote grabbed some time with me and we talked about the event.

Some of the ground I cover:

- Dell World overview and our Modular Data Center

- (3:35) Talking to press/analysts about our new Web|Tech vertical and our focus on developers

- (6:00) The event’s attempt to up-level the conversation rather than diving into speeds, feeds and geeky demos.

The Dell Modular Data Center on the expo floor (photo: Yasushi Osonoi)


Extra Credit reading

- Silicon Angle: Dell Gets It – Developers are the new Kingmakers

- Time lapse Video: Dell’s Modular Data Center powers Bing Maps

- Video: A Walk-through of Dell’s Modular Data Center

Maybe there’s hope for a WAPA from Dell after all.

Lori MacVittie (@lmacvittie) continued Examining architectures on which hybrid clouds are based… with her Cloud Infrastructure Integration Model: Virtualization post of 10/19/2011 to F5’s DevCentral blog:

IT professionals, in general, appear to consider themselves well along the path toward IT as a Service with a significant plurality of them engaged in implementing many of the building blocks necessary to support the effort. IaaS, PaaS, and hybrid cloud computing models are essential for IT to realize an environment in which (manageable) IT as a Service can become reality.

That IT professionals – 65% of them to be exact – note their organization is in-progress or already completed with a hybrid cloud implementation is telling, as it indicates a desire to leverage resources from a public cloud provider.

What the simple “hybrid cloud” moniker doesn’t illuminate is how IT organizations are implementing such a beast. To be sure, integration is always a rough road and integrating not just resources but its supporting infrastructure must certainly be a non-trivial task. That’s especially true given that there exists no “standard” or even “best practices” means of integrating the infrastructure between a cloud and a corporate data center.

Existing standards and best practices with respect to network and site-level virtualization provide an alternative to a bridged integration model.

Without diving into the mechanism – standards-based or product solution – we can still examine the integration model from the perspective of its architectural goals, its advantages and disadvantages.

THE VIRTUALIZATION CLOUD INTEGRATION ARCHITECTURE

The basic premise of a virtualization-based cloud integration architecture is to transparently enable communication with and use of cloud-deployed resources. While the most common type of resources to be integrated will be applications, it is also the case that these resources may be storage or even solution focused. A virtualization-based cloud integration architecture provides for transparent run-time utilization of those resources as a means to enable on-demand scalability and/or improve performance for a highly dispersed end-user base.

Sometimes referred to as cloud-bursting, a virtualized cloud integration architecture presents a single view of an application or site regardless of how many physical implementations there may be. This model is based on existing GSLB (Global Server Load Balancing) concepts and leverages existing best practices around those concepts to integrate physically disparate resources into a single application “instance”.

This allows organizations to leverage commoditized compute in cloud computing environments either to provide greater performance – by moving the application closer to both the Internet backbone and the end-user – or to enhance scalability by extending resources available to the application into external, potentially temporary, environments.

A global application delivery service is responsible for monitoring the overall availability and performance of the application and directing end-users to the appropriate location based on configurable variables such as location, performance, costs, and capacity. This model has the added benefit of providing a higher level of fault tolerance because should either site fail, the global application delivery service simply directs end-users to the available instance. Redundancy is an integral component of fault tolerant architectures, and two or more sites fulfill that need. Performance is generally improved by leveraging the ability of global application delivery services to compare end-user location, network conditions and application performance and determine which site will provide the best performance for the given user.
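The routing decision described above can be sketched in a few lines. The following Python example is a simplified illustration, not any vendor's GSLB algorithm: the `Site` fields, the `max_load` threshold, and the way latency and cost are combined into one score are all assumptions chosen to show failover and bursting behavior.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    healthy: bool            # result of the availability monitor
    latency_ms: float        # measured latency from the user's location
    load: float              # fraction of capacity in use, 0.0 to 1.0
    cost_per_request: float  # relative cost of serving from this site


def choose_site(sites, max_load=0.9, cost_weight=1000.0):
    """Pick the best available site for a user (hypothetical scoring)."""
    healthy = [s for s in sites if s.healthy]
    if not healthy:
        raise RuntimeError("no healthy site available")
    # Prefer sites with spare capacity; fall back to any healthy site.
    candidates = [s for s in healthy if s.load < max_load] or healthy
    return min(candidates,
               key=lambda s: s.latency_ms + cost_weight * s.cost_per_request)


data_center = Site("corporate data center", True, 40.0, 0.5, 0.001)
cloud = Site("cloud-hosted instance", True, 60.0, 0.1, 0.002)

print(choose_site([data_center, cloud]).name)

# When the data center nears capacity, traffic bursts to the cloud site.
data_center.load = 0.95
print(choose_site([data_center, cloud]).name)
```

If either site is marked unhealthy, the same function directs users to the remaining site, which is the fault-tolerance benefit the paragraph describes.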

Because this model does not rely upon a WAN or tunnel, as with a bridged model, performance is also improved because it eliminates much of the overhead inherent in intra-environment communications on the back-end.

There are negatives, however, that can prevent these benefits from being realized. Inconsistent architectural components may inhibit accurate monitoring, which impedes some routing decisions. Best practice models for global application delivery imply a local application delivery service responsible for load balancing. If a heterogeneous model of local application delivery is used (two different load balancing services) then it may be the case that monitoring and measurements are not consistently available across the disparate sites. This may result in decisions being made by the global application delivery service that are not as able to meet service-level requirements as would be the case when using operationally consistent architectural components.

This lack of architectural consistency can also result in a reduced security posture if access and control policies cannot be replicated in the cloud-hosted environment. This is particularly troubling in a model in which application data from the cloud-hosted instances may be reintroduced into corporate data stores. If data in the cloud is corrupted, it can be introduced into the corporate data store and potentially wreak havoc on applications, systems, and end-users that later access that tainted data.

Because of the level of reliance on architectural parity across environments, this model requires more preparation to ensure consistency in security policy enforcement as well as ensuring the proper variables can be leveraged to make the best-fit decision with respect to end-user access.

<Return to section navigation list>

Cloud Security and Governance

Jack Greenfield continued his Business Continuity Basics series on 10/19/2011:

This post describes the basics of business continuity. Business continuity poses both business and technical challenges. In this series, we focus on the technical challenges, which include failure detection, response, diagnosis, and defect correction. Since failure diagnosis and defect correction are difficult to automate effectively in the platform, however, we narrow the focus even further to failure detection and response.

Failure Detection

Failures are expected during normal operation due to the characteristics of the cloud environment.

They can be detected by monitoring the service. Reactive monitoring observes that the service is not meeting Service Level Objectives (SLOs), while proactive monitoring observes conditions which indicate that the service may fail, such as rapidly increasing response times or memory consumption.
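The distinction between reactive and proactive monitoring can be sketched in a few lines of Python. This is only an illustration, not anything from the original post: the `Sample` type, the function names, the SLO threshold and the `growth` factor of 1.5 are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    response_ms: float  # observed response time for one probe (hypothetical metric)


def reactive_alert(samples: List[Sample], slo_ms: float) -> bool:
    """Reactive: the most recent sample already violates the SLO."""
    return bool(samples) and samples[-1].response_ms > slo_ms


def proactive_alert(samples: List[Sample], slo_ms: float, growth: float = 1.5) -> bool:
    """Proactive: still within the SLO, but response time has grown by at
    least `growth` times between the first and last sample in the window."""
    if len(samples) < 2:
        return False
    first, last = samples[0].response_ms, samples[-1].response_ms
    return last <= slo_ms and first > 0 and last / first >= growth
```

A real monitor would look at more than one metric (the post also mentions memory consumption) and would likely fit a trend rather than compare two points.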

Failure detection is always relative to some observer. For example, in a partial network outage, the service may be available to one observer but not another. If the network outage blocks all external access to the service, it will appear to be unavailable to all observers, even if it is still operating correctly. A service therefore cannot reliably detect its own failure.

Failure Response

Failure response involves two related but different objectives: high availability and disaster recovery. Both boil down to satisfying SLOs: high availability is about maintaining acceptable latencies, while disaster recovery is about recovering from outages in an acceptable amount of time with no more than an acceptable level of data loss.

High Availability

Availability is generally measured in terms of responsiveness. A service is considered responsive if it responds to requests within a specified amount of time. Availability is measured as the amount of time the service is responsive in some time window divided by the length of the window, expressed as a percentage. Most services typically offer at least 99.9% availability, which amounts to 43.2 minutes of downtime or less per month.
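As a quick check of the arithmetic above, the downtime allowed by an availability percentage can be computed directly. The function name and the 30-day month are assumptions made for this sketch.

```python
def downtime_budget_minutes(availability_pct: float, window_days: float = 30) -> float:
    """Minutes of downtime permitted in the window at the given availability."""
    window_minutes = window_days * 24 * 60  # 43,200 minutes in a 30-day month
    return window_minutes * (1 - availability_pct / 100)


# 99.9% over a 30-day month allows roughly 43.2 minutes of downtime
budget = downtime_budget_minutes(99.9)
```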

Availability SLOs may not offer an accurate indication of the actual availability of a service in practice, because of how responsiveness is defined. For example, a common way to define availability is to say that a service is considered responsive if at least one request succeeds in every 5 minute interval. By this definition, the service can be unresponsive for 4 minutes and 59 seconds in every 5 minute interval, providing an actual availability of 0.3%, while satisfying a stated SLO of 99.9% availability.
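The gap between stated and actual availability can be illustrated with a small simulation. The sketch below assumes per-second responsiveness samples and treats the SLO as the share of 5-minute intervals containing at least one responsive second; both function names are hypothetical.

```python
from typing import List


def slo_availability_pct(responsive: List[bool], interval_s: int = 300) -> float:
    """Share of intervals that contain at least one responsive second."""
    if not responsive:
        raise ValueError("no samples")
    chunks = [responsive[i:i + interval_s] for i in range(0, len(responsive), interval_s)]
    return 100 * sum(1 for chunk in chunks if any(chunk)) / len(chunks)


def actual_availability_pct(responsive: List[bool]) -> float:
    """Share of individual seconds in which the service was responsive."""
    if not responsive:
        raise ValueError("no samples")
    return 100 * sum(responsive) / len(responsive)


# One hour of samples: responsive only in the last second of each 5-minute interval.
hour = ([False] * 299 + [True]) * 12
stated = slo_availability_pct(hour)      # every interval has one success
actual = actual_availability_pct(hour)   # 12 responsive seconds out of 3,600
```

Under this definition the stated figure is 100% while the actual figure is about a third of one percent, which matches the 0.3% quoted above.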

Disaster Recovery

Disaster recovery is governed by SLOs that become relevant when the service becomes unavailable.

- A Recovery Time Objective (RTO) is the amount of time that can elapse before normal operation is restored following a loss of availability. It typically includes the time required to detect the failure, and the time required to either restore the affected components of the service, or to fail over to new components if the affected components cannot be restored fast enough.

- A Recovery Point Objective (RPO) is a point in time to which the service must recover data committed by the user prior to the failure. It should be less than or equal to what users consider an "acceptable loss" in the event of a disaster.
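The two objectives above can be expressed as simple checks against the timeline of an incident. This sketch assumes the RPO is stated as a maximum data-loss window (a duration) rather than as an absolute point in time; the function and parameter names are illustrative only.

```python
from datetime import datetime, timedelta


def meets_rto(failed_at: datetime, restored_at: datetime, rto: timedelta) -> bool:
    """True if normal operation was restored within the RTO after the failure."""
    return restored_at - failed_at <= rto


def meets_rpo(failed_at: datetime, last_recovered_commit_at: datetime, rpo: timedelta) -> bool:
    """True if the data lost (commits made after the last recovered commit
    and before the failure) spans no more than the RPO window."""
    return failed_at - last_recovered_commit_at <= rpo
```

For example, with a 30-minute RTO and a 5-minute RPO, a service restored 20 minutes after the failure with data recovered up to 4 minutes before it satisfies both objectives.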

Dave Asprey (@daveasprey) reported Encrypting Amazon Storage: Not So Simple on 10/17/2011:

At my Interop presentation last week, a few people asked me about how secure storage on Amazon AWS can be. Here’s my take.

First off, you’d have to say which type of storage you’re thinking of using before you figure out how secure you can make it. There are 3 different kinds of storage within Amazon:

Instance storage – This is storage which comes included with your Server instance when you start it up in AWS. Think of this as your normal C: drive. The storage is specific only to that instance and does not persist when the instance is terminated. This storage is just about as secure as your Amazon instance itself is.

Raw block storage – known as Elastic Block Store (EBS). Provides a fast, persistent raw data store which is retained independent of the server (AMI) instances. Think of this as your disk drives in the cloud. When server(s) want to access this storage, they “mount” a file system on top of these raw blocks and then proceed to perform I/O. This is the only way AWS offers block storage today and, coincidentally, this is also where our Secure Cloud encryption key management service works by encrypting the EBS blocks and then providing access control when a server image tries to gain access. (We partner with Amazon Web Services on this service.)

Simple Storage Service (S3) – This is an independent, persistent, cloud storage service offered by AWS which is accessible “only” via a well defined HTTP based web API. Customers can store and retrieve large blobs of data in S3 by using this Web service and most typically use this to store Amazon Machine Images (i.e. templates for instantiating active server instances). AWS does not impose any structure on the stored data and does not disclose how the data is stored in the backend. Some third parties have come up with solutions which layer file systems etc. on S3, but by and large, these are still in their infancy due to various limitations. To allay customers’ concerns about storing sensitive data in S3, AWS recommends customers utilize an encryption library, which has to be designed into the application that the customer is using to store the data on S3, and then manage the encryption keys separately. Amazon recently announced the launch of S3 Server Side Encryption for Data at Rest, along with a diagram of how it works.

With this new announcement, Amazon provides an option for customers to use encryption hosted by AWS to encrypt/decrypt S3 data – which in turn provides some relief to S3 customers who do not want to rewrite their applications to use the client library.

One significant limitation is that there is no external key management provided with this solution – the keys are associated with your S3 credentials and if those credentials are hacked, everything is accessible. The best use case as far as I can tell for this is to satisfy compliance policies for storing data in S3, or possibly to guard against someone hacking/breaking into AWS and stealing S3 stores without using your account credentials. (This last example is highly unlikely…)

From the point of view of storing files on a server, the new S3 Server-Side-Encryption is doing exactly what Dropbox does, except that with S3-SSE, Amazon itself holds the keys while with Dropbox it is Dropbox that holds the keys. In both cases, the actual data that users upload always sits encrypted on each provider’s servers, and the data owner (customer) has no control over the encryption keys.

In the case of S3 Client Side Encryption, the data owner does control the keys, but has to manage the keys manually.

Dave is VP Cloud Security for Trend Micro.

Encryption is not so simple with Windows Azure storage and SQL Azure, either. I’ve been lobbying for Transparent Data Encryption (TDE) for SQL Azure for a couple of years with no success.

<Return to section navigation list>

Cloud Computing Events

Eric Nelson (@ericnel) posted Six Weeks of Windows Azure – Tips to make the most of those six weeks! on 10/19/2011:

We have designed Six Weeks of Windows Azure [UK] to ensure a company with no exposure to the Windows Azure Platform or Cloud Computing will get plenty of value by taking part. However, we are also focused on giving you options between now and then to maximise the value you get from the six weeks.

The first two tips are now live over on the Six Weeks of Windows Azure website:

- Getting Ready Tip 1: Attend a Windows Azure Discovery Workshop (Nov to Jan)

- Next is Nov 8th

- Getting Ready Tip 2: Send your developers to a Windows Azure Bootcamp (Nov)

- Edinburgh is Nov 11th

- London is Nov 25th

Related Links:

- Detailed overview of Six Weeks of Windows Azure and FAQ.

- Signup

Robert Cathey (robertcathey) announced on 10/18/2011 a Webinar: Web-Scale Cloud Building with Arista Networks and Quanta to be held on 10/21/2011 at 10:00 AM PDT:

We spend a lot of time on stage at conferences talking about building web-scale clouds. This Friday, we’re partnering with Quanta and Arista to deliver a webinar for engineers and anyone else wanting to dig deeper into the details of architecture, equipment selection, and other technical issues that are sometimes ignored in similar presentations. We’ll also spend some time talking about successful cloud business models based on these design approaches. Here’s the info:

Friday, 21 October 2011

10:00 am PDT

Duration: 1 hour

Register here

Enterprise technology providers have been positioning their solutions for public and private clouds with meager results. It is fundamentally impossible to build profitable cloud services when paying the enterprise computing, storage, and virtualization taxes to companies whose business model does not support the operation of profitable cloud services. Arista has partnered to deliver several successful clouds to market. This session will go in depth into the architectures and equipment selected, how to cost-effectively scale the operation, and mistakes to avoid, and will discuss successful business models for transformation into a cloud provider.

Spread the word to anyone that wants to learn about building web-scale clouds from folks who’ve done it.

Martin Tantow (@mtantow) posted REMINDER: Cloud Mafia on Wednesday on 10/17/2011:

The long awaited second gathering of Cloud Mafia is coming up. Easily the most fun cloud event in the Bay Area. Don’t miss it.

Wednesday, October 19 · 6:00pm - 9:00pm

Manor West

750 Harrison St

San Francisco, CA

Schedule:

6 to 7pm Networking

7 to 7:30pm Welcome/Introductions

7:30 to 10pm Networking/Party

Cloud Mafia is a community built of companies and individuals who live and breathe cloud. The meetup group is open to anyone (developers, CEOs, sales and marketing teams) to meet peers, build networks, share ideas, learn about the cloud and help others learn. We will have lightning talks and guest speakers, including customers with real-world stories, industry luminaries, VCs and more. At heart, this is an informal, social gathering of like-minded cloud enthusiasts out to change the world via the cloud.

Sign up here:

Sorry for the short notice.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Tim Anderson (@timanderson) reported Zend’s PHP cloud: develop in the cloud, deploy anywhere on 10/19/2011:

Zend has announced Zend Studio 9 beta, the latest version of its IDE for PHP. The feature that caught my eye is integrated support for the Zend Developer Cloud, currently in technical preview. Setting up a PHP development environment is not too difficult, but can be a hassle to maintain, and the idea of being able to fire up an IDE anywhere and start coding is attractive.

You do not need Zend Studio to use the Developer Cloud; they are independent projects, and you can use the free Eclipse PHP Development Tools (PDT) or another IDE or editor.

The PHP Developer Cloud is not just a shared hosting environment for PHP applications:

All applications are housed within a container on the Zend Application Fabric. This container is separate from all other containers and has its own database instance and is easily connected to your IDE.

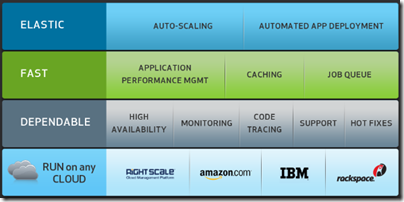

The Zend Application Fabric is for deployment as well as development. It is a server framework that includes the Zend Framework and also the capability of scaling on demand.

Once you have developed your app, you can deploy to any cloud provider that supports the Zend Application Fabric, including Amazon Web Services, IBM SmartCloud, a private “custom cloud”, or a resilient multiple cloud option which Zend calls RightScale. You can deploy to RightScale using both Amazon and Rackspace together, which I presume means your app will keep on going even if one of these providers were to fail.

Details on the site are sketchy, but if Zend has got this right it ticks a lot of boxes for enterprise PHP developers.

Related posts:

Windows Azure also supports the Zend framework.

Lukas Eder (@laseder) reported Cloud Fever now also at Sybase on 10/19/2011:

After SQL Server (SQL Azure) and MySQL (Google Cloud SQL), there is now also a SQL Anywhere database available in the cloud:

It’s called Sybase SQL Anywhere OnDemand, code-named Fuji. I guess the connotation is that your data might as well be relocated to Fuji. Or your DBA might as well work from Fuji. Who knows?

I don’t know where to start adding integration tests for jOOQ with all those cloud-based SQL solutions. Anyway, exciting times are coming! [Link added.]

Considering SAP’s emphasis on the cloud, I’m surprised it took Sybase so long to announce a beta. It appears from Sybase’s site that Sybase SQL Anywhere OnDemand is intended for ISVs to install on their own hardware with bursting to Amazon EC2 instances for peak traffic periods. For more information, see their About SQL Anywhere OnDemand Edition page and video.

Signed up and should receive my registration key “in a few minutes.”

David Linthicum (@DavidLinthicum) asserted “Although Apple's iCloud is a watered-down version of cloud computing, it will have a major effect on cloud perceptions” in a deck for his iCloud's big implications for cloud computing article of 10/19/2011 for InfoWorld’s Cloud Computing blog:

Apple's iCloud is out. Like many of you, I spent part of the day playing around with the new features such as applications, data, and content syncing. I even purchased 20GB of cloud storage and sent a few items back and forth among my iPad, Mac, and iPhone. It's a good upgrade, but not a great one.

That's a personal take. What about the implications for IT? As you might expect, the phone began to ring soon after iCloud's debut last week. What does a "cloud guy" think about iCloud, and how will it benefit or hurt cloud computing itself going forward? There are two implications to consider.