Windows Azure and Cloud Computing Posts for 10/4/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP, Traffic Manager and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

Andrew Brust (@AndrewBrust) asserted “SQL Azure has become a major pillar in Microsoft's cloud-based strategy” in a deck for his Can SQL Server Punch Through the Clouds to No. 1? post of 10/1/2011 to his Redmond Review blog for Redmond Developer News:

At the end of August, while everyone was enjoying a last blast of summer, Microsoft announced a last blast of its own: Redmond told us that the next version of SQL Server, code-named "Denali," will be the final version to support the OLE DB data-access API. Microsoft says that SQL Server will now be aligned with ODBC. Considering that OLE DB was introduced roughly 15 years ago as a replacement for ODBC, there's a modicum of irony here.

But it's not a crazy move: Throughout the OLE DB era, ODBC has continued to enjoy the industry's broad support. Maybe this decision is just Redmond's belated recognition of a market preference. Microsoft has had a succession of database APIs over the last 20 years, and killing OLE DB may be just one more twist to the tale. But the decision seems to go beyond mere API support issues, extending to SQL Server's status as a cross-platform standard, and the status of SQL Azure as a major pillar in the Microsoft cloud strategy.

It's All About the Interop

Fundamental to my thinking are the blog posts announcing the OLE DB decision (bit.ly/pLpvRC and bit.ly/oYWm2f). They discuss not just the favored status of ODBC, but also SQL Server support for Java and PHP. At first I saw this as a non sequitur -- even a deliberate distraction, meant to quell criticism. But after further reflection, it started to make sense: Microsoft is decoupling SQL Server from Windows-centric OLE DB to broaden the flagship database's appeal across different developer stacks.

Of course, SQL Server has long been usable from non-Windows platforms. Support for Java developers isn't new, and explicit support for PHP developers goes back to 2009 (see my May column from that year). But supporting and evangelizing SQL Server on these platforms has been more of a gesture than a market-altering initiative. So why the renewed interop focus? Why deprecate OLE DB now?

Two words: the cloud. SQL Server may have worked with Java and PHP before, but applications written in those languages often run on OSes other than Windows. Bringing a Windows server into the mix just for the database, in many cases, has simply been a deal-breaker. But as developers warm up to cloud computing, especially for database management, the underlying OS is an abstract matter; the database box is black. For developers using cloud databases, the API matters most. And for developers on non-Microsoft platforms, the call-level interface of ODBC works better than the COM-based interface of OLE DB. So SQL Server becomes more palatable, ODBC wins, and so do Java and PHP developers. For Microsoft to beat its competitors, sometimes it needs to help them.

Looking to Precedent

We've seen this before. Another Microsoft enterprise product, Exchange Server, provides support for the effectiveness of this "coopetition" approach. When Exchange ActiveSync was introduced, it provided push e-mail service to Windows Mobile devices. At the time, that Windows Mobile-Exchange combination was a breakthrough, meant to challenge the dominance of RIM BlackBerry devices and BlackBerry Enterprise Server in push e-mail. But the Windows Mobile market share challenges constrained the success of Exchange in this arena. Microsoft's response? License Exchange ActiveSync to its smartphone competitors, including Apple and Google.

Licensing Exchange ActiveSync helped make iPhone much more compelling than it was upon introduction, and it made Android 2.x handsets acceptable to business users, too. This probably hurt Windows Mobile, which lasted for only one more major update and one subsequent dot release. But it consolidated Microsoft's dominant position in the enterprise e-mail space; has likely contributed to the success of Exchange Online and Office 365; inflicted at least 10 of the 1,000 cuts now plaguing RIM; and hammered another nail in the IBM Lotus Notes coffin.

That takes us right back to the database world: According to the product Web site, SQL Server had overtaken another IBM product -- DB2 -- for the No. 2 spot in relational database license revenue by 2009. You can bet that Microsoft Server & Tools Business President Satya Nadella has his eyes on No. 1. And knowing Nadella, he'll want to use the cloud to get Microsoft over the top.

Interoperability helps server market share. Server market share begets cloud momentum. If the cloud is SQL's road to No. 1 in the database world, then maybe SQL is Windows Azure's ticket to No. 1 in the cloud world. Exchange ActiveSync brought e-mail interop and Microsoft's enterprise e-mail leadership. Maybe the renaissance of ODBC, one of Microsoft's first 1990s client/server salvos, can lead to similar leadership in this decade for Microsoft in the cloud.

Don’t forget that SQL Server 2008 R2 Reporting Services also can expose data from reports as OData.


Marketplace DataMarket and OData

Evan Czaplicki and Shayne Burgess (@shayneburgess) of the OData team described OData Compression in Windows Phone 7.5 (Mango) in a 10/4/2011 post:

One of the most frequently requested features for OData is payload compression. We have added two events to DataServiceContext in the OData phone client included in the Windows Phone SDK 7.1 that enable you to work with compressed payloads:

- WritingRequest – occurs immediately before a request is sent

- ReadingResponse – occurs immediately after a response is received

Now, let’s take a look at these in action. Here is a method that adds support for gzip compression for OData feeds in a Windows Phone app:

    using System;
    using System.Data.Services.Client;
    using SharpCompress.Compressor;
    using SharpCompress.Compressor.Deflate;

    private void EnableGZipResponses(DataServiceContext ctx)
    {
        // Ask the server for a gzip-compressed response.
        ctx.WritingRequest += new EventHandler<ReadingWritingHttpMessageEventArgs>(
            (_, args) =>
            {
                args.Headers["Accept-Encoding"] = "gzip";
            }
        );

        // Decompress the body only when the server reports gzip encoding.
        ctx.ReadingResponse += new EventHandler<ReadingWritingHttpMessageEventArgs>(
            (_, args) =>
            {
                if (args.Headers.ContainsKey("Content-Encoding") &&
                    args.Headers["Content-Encoding"].Contains("gzip"))
                {
                    args.Content = new GZipStream(args.Content, CompressionMode.Decompress);
                }
            }
        );
    }

This method registers two event handlers that make all of the client-side modifications to the message necessary to accept gzip compressed messages; just pass in your DataServiceContext. We’ll examine each event handler individually.

The WritingRequest event handler

Here we simply set the Accept-Encoding to gzip to inform the server that we can accept a gzip’d response. With this header set, the server will send us a gzip’d response, if it can.

The ReadingResponse event handler

The Content-Encoding header tells us how the server encoded the response body. We only modify the response body (in args.Content) when this header has been set to gzip. In this way, gzip-compressed content is decompressed with GZipStream, and everything else is just left as is.
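The same decision rule can be shown outside the phone client. The following is a minimal Python sketch, not the OData client's code: `decode_body` is a hypothetical helper that takes a plain dictionary of response headers and the raw body bytes, and gunzips the body only when Content-Encoding mentions gzip.

```python
import gzip


def decode_body(headers, body):
    """Return the plain payload, gunzipping only when the header says gzip."""
    encoding = headers.get("Content-Encoding", "")
    if "gzip" in encoding.lower():
        return gzip.decompress(body)
    # No gzip marker: leave the body exactly as received.
    return body


# Illustrative payload; the JSON shape here is made up for the example.
payload = b'{"d": []}'
print(decode_body({"Content-Encoding": "gzip"}, gzip.compress(payload)))
print(decode_body({}, payload))
```

Both calls print the same bytes, which is the point of checking the header first: uncompressed responses pass through untouched.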

Unfortunately, GZipStream is not currently supported on Windows Phone. We must rely on a third-party library to perform decompression and so we have used the SharpCompress Library, which worked quite well. If you download SharpCompress, you’ll get a zipped folder containing some libraries. Just add SharpCompress.WP7.dll to your project references, and you’ll be ready to use GZipStream on Windows Phone. If you’ve never added a reference before, check out this walkthrough. You can probably skip straight to the section called “To add a reference in Visual C#”. Browse to where you saved the SharpCompress.WP7.dll, and then add it to your project.

Note that if we also wanted to send a compressed request (POST) to the data service, we could simply set the Content-Encoding header to gzip in the WritingRequest handler and compress the payload stream returned by the Content property.
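As a rough illustration of the request direction, here is a small Python sketch under stated assumptions: `prepare_request` is a hypothetical helper, headers are a plain dictionary, and whether a given data service accepts compressed requests is not something the post covers.

```python
import gzip


def prepare_request(headers, body):
    """Return a (headers, body) pair with the body gzipped and labelled as such."""
    out_headers = dict(headers)  # copy so the caller's headers are not modified
    out_headers["Content-Encoding"] = "gzip"
    return out_headers, gzip.compress(body)


# Illustrative header and payload values only.
headers, body = prepare_request({"Content-Type": "application/atom+xml"}, b"<entry/>")
print(headers["Content-Encoding"])
print(gzip.decompress(body))
```

The header and the compressed body are produced together so that one is never sent without the other.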

Server-Side Configuration

The client side changes we’ve discussed so far require that our server be configured to support message compression. If you are using IIS, check out these links to get compression set up: IIS 6.0, IIS 7.0, or a more extensive guide for both. You should be compressing OData payloads as soon as your server is ready.

Turker Keskinpala (@tkes) announced OData Service Validation Tool Available on Codeplex in an 8/26/2011 post to the OData blog (missed when published due to feed failure):

We are happy to announce that the OData Service Validation Tool is now an Outercurve project and is available on Codeplex (http://bit.ly/nqEVlH). We released it in such a way that the tool is fully open source and we will be able to accept contributions. This is something we are very excited about since we saw this project as a community project from the very beginning. Please feel free to blog/tweet about this and let the OData world know.

You can immediately fork it or download the source code, play with it, deploy in your environment and experiment. We are going to be improving the Codeplex content and documentation (e.g. how to write new rules) over the next weeks. We will also populate the issue list with issues currently in our internal issue database so that you can start tackling some issues if you are itching for contributing to the project.

We will continue to have a hosted version updated with the same 2 week cadence from the Codeplex branch at http://validator.odata.org.

Thank you for your patience while we worked through all the legal issues and for your continued support for the project. We are looking forward to improving the interoperability of OData as a community through the OData Service Validation Tool.

Please let us know if you have any questions and jump in the discussion if you haven't already done so either on this mailing list or on the discussion list on project page.


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Microsoft’s Download Center offered a Microsoft Dynamics CRM Online FixIssuer Tool for Windows Azure AppFabric Integration download on 10/4/2011:

Tool to fix the Microsoft Dynamics CRM certificate for Windows Azure AppFabric integration.

Quick details

- Version: 1.0

- Date Published: 9/26/2011

- Language: English

- File Name: FixIssuer.exe

- Size: 77 KB

Overview

This download applies only to Microsoft Dynamics CRM Online organizations who have implemented integration with Windows Azure AppFabric Service Bus. You do not need to apply this update if you do not use this feature.

The Windows Azure AppFabric team recently made security enhancements that require the Microsoft Dynamics CRM Online service to use a new certificate to authenticate against the Windows Azure AppFabric service. Running the command line tool mentioned above will modify the configuration in your Azure namespace. The changes allow the messages sent from the Microsoft Dynamics CRM Online service to your Windows Azure AppFabric service endpoint to be authenticated with both the current certificate and the updated certificate.

To download the instructions for using this tool, see Related Resources below or cut and paste this link into your browser:

System requirements

Supported Operating Systems: Windows 7, Windows Server 2008

Petri Wilhelmsen of Tech Days Norge uploaded a 00:02:23 What features of AppFabric Service Bus will make cloud applications great? video interview with Ralph Squillace to YouTube on 9/29/2011:

Windows Azure SDK: http://bit.ly/nuT1gi

Learn Windows Azure: http://bit.ly/nictJ7

Windows [Azure] AppFabric Blog: http://bit.ly/rgLyM0

Slides + Scripts: http://bit.ly/pG9x5K


Windows Azure VM Role, Virtual Network, Connect, RDP, Traffic Manager and CDN

Duncan MacKenzie reported Channel 9 has gone global! on 10/3/2011:

Before we launched the most recent version of Channel 9 we did a lot of work to make sure site performance was acceptable for our users. This involves data collection to try to determine what aspects of the site are slow, and then work to make it all better. At some point though, we run into problems that just can’t be solved.

One of those issues occurs naturally when you have a web site being served from a single location, in our case the United States, to people located all around the world. For some folks the site will be slower than for others, and it seems like there is very little that could be done about this problem. We could move our site hosting to a new location, but that would just move the problem… now different people would have a good connection and others would have a poor one.

Turns out, if you are hosted in Azure, there is a better solution: multiple web servers located in different geographic regions around the world. This is a concept that is used by many large web sites already, but it requires specialized DNS services and (until now) wasn’t something that a ‘regular’ site could take advantage of. Now, using the Windows Azure Traffic Manager (WATM), it is possible to add this functionality to any Windows Azure hosted site by [Emphasis added]:

- Creating multiple instances of your site (placed in different geographic regions),

- Creating a ‘performance’ based traffic manager policy,

- Adding all of your production instances into this policy, and

- Modifying the DNS entry of your site (in our case the entry for channel9.msdn.com) to point at the special DNS name associated with your new WATM policy.

A ‘performance’ based policy, like we are using, sends traffic to the fastest performing active instance in the policy, based on network performance. This is not a real time indicator, but instead is based on aggregate performance data, and so the policy will generally always send a given user to the same location.
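To make the idea concrete, here is a toy Python sketch of a 'performance' style choice. It is not how WATM is implemented: `pick_instance` is a hypothetical function, and the latency figures are invented for illustration. It only shows the selection rule described above: among the active instances, prefer the one with the best aggregate latency for the client's network.

```python
def pick_instance(latency_ms, active):
    """Return the active region with the lowest aggregate latency."""
    candidates = {region: ms for region, ms in latency_ms.items() if region in active}
    if not candidates:
        raise ValueError("no active instance in the policy")
    return min(candidates, key=candidates.get)


# Hypothetical aggregate latencies (ms) as seen from one client network.
latency_ms = {"North Central US": 180, "East Asia": 35, "Northern Europe": 240}

print(pick_instance(latency_ms, {"North Central US", "East Asia", "Northern Europe"}))
# If the closest instance is taken out of rotation, the next best one is chosen.
print(pick_instance(latency_ms, {"North Central US", "Northern Europe"}))
```

Because the input is an aggregate table rather than a live measurement, the same client network keeps getting the same answer until the table or the set of active instances changes.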

You can start out with only a single production instance in the policy (which wouldn’t actually do anything at this point, since all the traffic would be going to that single instance) and then add additional production instances around the world as you wish. In our case we started with three instances:

- Our original production site in the North Central US,

- A new site in East Asia, and

- A new site in Northern Europe

While the exact location of these servers isn’t something that is publicly discussed, they correspond to three of the six Windows Azure data centers as shown in this picture.

Recently you may have noticed a new icon along the top of the site:

Hovering over this icon will show you the data center you are currently hitting. Hopefully this will be the best data center for you, but if it isn’t there are a few possibilities. One is that the data center you are hitting is truly the fastest option for you (sometimes network performance does not correspond to the real world distances between you and a data center), and the other is that you seem to be coming from a different location than you really should be. One issue to be aware of is that some large shared DNS services can cause this issue, because they can make it seem like you are physically located wherever their DNS servers are sitting… which could be quite far from your actual location. This article discusses the issue with services such as Google’s DNS service and OpenDNS briefly for those of you that are interested.

For more information on the Windows Azure Traffic Manager, check out this episode of Cloud Cover!


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Avkash Chauhan described Handling "System.ServiceModel.AddressAlreadyInUseException: HTTP could not register URL" Exception in Compute Emulator with Windows Azure SDK 1.5 in a 10/4/2011 post:

Using Windows Azure SDK 1.5, if you create a worker role with an HTTP internal endpoint and use a weak wildcard binding (http://+:<port_number>), you may encounter the following error while running your application in the compute emulator:

System.ServiceModel.AddressAlreadyInUseException: HTTP could not register URL http://+:<port_number>/. Another application has already registered this URL with HTTP.SYS. ---> System.Net.HttpListenerException: Failed to listen on prefix 'http://+:<port_number>/' because it conflicts with an existing registration on the machine.

Up to Windows Azure SDK 1.4, when you ran a multiple-instance Windows Azure application in the Compute Emulator, all instances had the same IP address but different ports. With SDK 1.5, however, each instance now gets its own IP address and port. (Click here for more info.) This is why a weak wildcard binding, which is not tied to any one instance's IP address, causes the error above.

To solve this problem in SDK 1.5, bind to the instance's specific IP address rather than the weak wildcard "+". Running a multiple-instance Windows Azure application in the compute emulator will then no longer cause the error described above.
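The conflict can be illustrated with a toy model. This Python sketch is not HTTP.SYS and makes no claim about its full rules: `PrefixTable` is a hypothetical stand-in that simply refuses a URL prefix that is already registered, and the instance names, addresses, and port are invented. It assumes, as the error message suggests, that the instances try to register the same port.

```python
class PrefixTable:
    """Toy stand-in for a URL-prefix registration table (not the real HTTP.SYS)."""

    def __init__(self):
        self._owners = {}  # prefix -> name of the instance that registered it

    def register(self, prefix, owner):
        if prefix in self._owners:
            raise RuntimeError(
                "could not register %s for %s: already registered by %s"
                % (prefix, owner, self._owners[prefix])
            )
        self._owners[prefix] = owner


def prefix_for(host, port):
    return "http://%s:%d/" % (host, port)


def start_instances(table, instances, port, use_wildcard):
    """Register one prefix per instance; return the names that failed."""
    failed = []
    for name, ip in instances:
        host = "+" if use_wildcard else ip
        try:
            table.register(prefix_for(host, port), name)
        except RuntimeError:
            failed.append(name)
    return failed


# Hypothetical instance names, addresses, and port.
instances = [("WorkerRole1_IN_0", "127.0.0.1"), ("WorkerRole1_IN_1", "127.0.0.2")]
print(start_instances(PrefixTable(), instances, 8080, use_wildcard=True))
print(start_instances(PrefixTable(), instances, 8080, use_wildcard=False))
```

With the wildcard, both instances ask for the identical prefix and the second one fails; with per-instance addresses the prefixes differ and both registrations succeed.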

Brian Swan (@brian_swan) described Support for Worker Roles in the Windows Azure SDK for PHP in a 10/4/2011 post:

Since the release of version 4.0 of the Windows Azure SDK for PHP, one of the missing pieces has been the ability to package Worker roles as part of a deployment. As of this past weekend, that is no longer the case. Maarten Balliauw, the main contributor to the SDK, has added support for worker roles to the default scaffolder, which now makes it extremely easy to run not only one worker role, but many worker roles (and many web roles too). In this post, I’ll show you how to package and deploy a worker role as part of a web application.

Note: If you aren’t familiar with scaffolds, I’d suggest reading the following tutorials for context:

Here are the steps to including a Worker role in an Azure/PHP deployment:

1. Get the latest Windows Azure SDK for PHP.

As of this writing, you’ll need to get the latest bits from here: http://phpazure.codeplex.com/SourceControl/list/changesets. You should soon see these latest bits available for download directly from the CodePlex site here: http://phpazure.codeplex.com/releases/view/73437. If you haven’t already installed and set up the SDK, you can do so by following this tutorial: Set Up the Windows Azure SDK for PHP.

2. Run the default scaffolder.

This tutorial, Build and Deploy a Windows Azure PHP Application, will give you a good idea of what a scaffold is and how to use the default scaffold in the SDK. The short story is that a scaffold provides a project skeleton that takes care of much of the set up and configuration you would have to do for a Windows Azure PHP project. All you have to do is add your source code. To create the default scaffold with a worker role, run this command:

scaffolder run -out="c:\path\to\output\directory" -web="WebRoleName" -workers="WorkerRoleName"

I ran this command with my output directory set to c:\workspace\test, a Web role with name WebRole1, and a Worker role with name WorkerRole1. Here is what you should see as the resulting directory:

Note: With the new SDK, you can create a scaffold that has many Web roles and many Worker roles with a command similar to this:

scaffolder run -out="c:\path\to\output\directory" -web="WebRoleName1,WebRoleName2,...,WebRoleNameN" -workers="WorkerRoleName1,WorkerRoleName2,...,WorkerRoleNameN"

3. Add the source code for your Web and Worker roles.

Now, simply add the source code for your Web Role to the WebRole1 directory and the source code for your Worker role to the WorkerRole1 directory.

4. Test by running in the Compute Emulator.

You can test your code by running your project in the Windows Azure Compute Emulator with this command:

package create -in="C:\path\to\scaffold\directory" -out="c:\path\to\drop\packge\files" -dev=true

So in my case, I set the -in parameter to c:\workspace\test and the -out parameter to c:\workspace\test\build.

5. Package and deploy to Windows Azure.

Finally, when you are ready to deploy your application to Windows Azure, you can do so with this command (same as above but with dev=false):

package create -in="C:\path\to\scaffold\directory" -out="c:\path\to\drop\packge\files" -dev=false

In your output directory, you should find two files: a .cspkg file and a .cscfg file. You can deploy these to Windows Azure through the developer portal: https://windows.azure.com/ (more info on how to do this here). Other deployment options include using the command line tools available in the SDK, or using WAZ-PHP-Utils tools available on GitHub (and developed by Ben Lobaugh).
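If you script these steps, the command lines can be assembled from role names and directories. The Python sketch below is only a string-building aid under stated assumptions: `scaffolder_command` and `package_command` are hypothetical helpers, they use only the flags shown in the commands above, and they do not run anything.

```python
def scaffolder_command(out_dir, web_roles, worker_roles):
    """Build the scaffolder command line; role names are joined with commas."""
    return 'scaffolder run -out="{}" -web="{}" -workers="{}"'.format(
        out_dir, ",".join(web_roles), ",".join(worker_roles)
    )


def package_command(scaffold_dir, out_dir, dev):
    """Build the package command line; dev=True targets the Compute Emulator."""
    return 'package create -in="{}" -out="{}" -dev={}'.format(
        scaffold_dir, out_dir, "true" if dev else "false"
    )


# Directory and role names taken from the walkthrough above.
print(scaffolder_command(r"c:\workspace\test", ["WebRole1"], ["WorkerRole1"]))
print(package_command(r"c:\workspace\test", r"c:\workspace\test\build", dev=True))
print(package_command(r"c:\workspace\test", r"c:\workspace\test\build", dev=False))
```

Keeping the emulator and deployment variants behind one `dev` flag mirrors the post's point that the two package commands differ only in that setting.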

That’s it. Of course, all of this begs the question, WHY use worker roles? The answer to that (or at least one answer) is coming in my next post.

Karl Shiflett edited the patterns & practices - Case Study: TailSpin Windows Phone 7 [Mango] Survey Application CodePlex project on 10/3/2011:

You can download the current project drop here.

Current Drop

Overview

Windows Phone 7 Mango provides an exciting opportunity for companies and developers to build applications that travel with users, are interactive and attractive, and are available whenever and wherever users want to work with them.

By combining Windows Phone 7 Mango applications with on-premises services and applications, or remote services and applications that run in the cloud (such as those using the Windows Azure™ technology platform), developers can create highly scalable, reliable, and powerful applications that extend the functionality beyond the traditional desktop or laptop; and into a truly portable and much more accessible environment.

This guide describes a scenario around a fictitious company named Tailspin that has decided to encompass Windows Phone 7 Mango as a client device for their existing cloud-based application. Their Windows Azure-based application named Surveys is described in detail in a previous book in this series, Developing Applications for the Cloud on the Microsoft Windows Azure Platform. For more information about that book, see the page by the same name on MSDN® at Windows Phone 7 Developer Guide.

In addition to describing the client application, its integration with the remote services, and the decisions made during its design and implementation, this book discusses related factors, such as the design patterns used, the capabilities and use of Windows Phone 7, and the ways that the application could be extended or modified for other scenarios.

The result is that, after reading this case study, you will be familiar with how to design and implement applications for Windows Phone 7 Mango that take advantage of remote services to obtain and upload data while providing a great user experience on the device.

Windows Phone 7 Test Guidance Survey

The Windows Phone 7 Guidance team has posted a very short, 10 question survey that centers on testing of Windows Phone 7 applications.

http://www.surveymonkey.com/s/NBZ3FS8

This survey will be used to drive the second phase of our current project.

Project's scope

This project updates the current Windows® Phone 7.0 Guidance published on MSDN at Windows Phone 7 Developer Guide to Windows Phone 7.5 Mango while at the same time reducing the written guidance down to a case study of the TailSpin application. This case study will also examine and provide additional guidance for unit testing Windows Phone 7 applications.

This project and the written guidance have been renamed to Case Study: TailSpin Windows Phone 7 Survey Application.

The key themes for these projects are:

1. A Windows Phone 7 Mango client application

2. A Windows Azure backend for the system

3. Unit testing for Windows Phone 7 Mango client applications


Visual Studio LightSwitch and Entity Framework 4.1+

The Visual Studio LightSwitch Team (@VSLightSwitch) reminded readers about a new MSDN Magazine: Securing Access to LightSwitch Applications article in a 10/4/2011 post:

Let’s face it: Implementing application security can be daunting. Luckily, Visual Studio LightSwitch makes it easy to manage permissions-based access control in line-of-business (LOB) applications, allowing you to build applications with access-control logic to meet the specific needs of your business.

In this month’s issue of MSDN Magazine we’ve got a feature article on LightSwitch Security you should check out: LightSwitch Security: Securing Access to LightSwitch Applications.

Seth Juarez (@SethJuarez) reported Building Business Applications with Visual Studio LightSwitch 2011– A Webinar with Alessandro Del Sole (Oct. 4th 10:00AM PST) in a 10/3/2011 post to the DevExpress Reporting Blog:

I am tremendously excited to welcome Alessandro Del Sole as one of our newest webinar presenters! He is a Microsoft MVP in Visual Basic Development, Author, and all around LightSwitch genius! He will be guiding us through the process of developing real applications using Microsoft’s new addition to the Visual Studio family: LightSwitch.

His description says it all:

Visual Studio LightSwitch is the new development tool from Microsoft for rapidly building business applications for the Desktop, the Web, and the Cloud. LightSwitch is for developers of all skill levels, offering lots of coding-optional features, providing a variety of pre-built templates and tools so that the only code you write is for optimizing your business logic. This avoids the need of writing all the code for data access and the user interface, saving you an incredible amount of time. LightSwitch makes it simple to interact with multiple, existing data sources and deploying applications to clients. In this session you will get started with Visual Studio LightSwitch 2011 and you will see how easy and quick it can be to build high-quality, professional business applications, including an overview of the Microsoft technologies running under the hood.

This webinar is a live training session starting at 10:00AM PST on Tuesday October 4th. I personally was surprised by the amount of things possible with LightSwitch, its general design strategies, as well as how fast I could make real applications. I will also be on hand during the webinar to answer general questions as well as any LightSwitch related reporting questions.

Please excuse the short notice. Watch the recorded Webinar here.


Windows Azure Infrastructure and DevOps

Herman Mehling asserted “Emerging app performance testing solutions are offering real-time testing, deep visibility into apps, integration with multiple technologies, and DevOps collaboration features” in a deck for his Application Performance Testing in the DevOps Model article of 10/4/2011 for DevX (site registration required):

The quest to bring developers and operational people closer together in our Web-centric and increasingly cloud-centric world has created numerous innovations over the years. Many of those innovations, such as SOA, APM and DevOps, focus on the performance testing of apps -- a space that neatly intersects the concerns and responsibilities of both developers and operations teams.

The whole DevOps idea has grown up around the awareness that there is a disconnect between development activity and operations activity. This disconnect frequently breeds conflict and inefficiency, exacerbated by a swirl of competing motivations and processes.

For the most part, developers are paid to create solutions (aka changes) that will address the evolving needs of a business. Companies often incentivize developers to create better, faster solutions as quickly and efficiently as possible.

At the same time, companies rely on operations teams to do the exact opposite: preserve the status quo by ensuring that current products and services are stable and reliable, and making money. For operations teams, changes are threatening at best and unwelcome at worst.

Complicating the scene even more is that dev and ops teams often use different software tools, report to competing corporate leaders, and practice conflicting corporate politics. Some dev and ops teams even work poles apart geographically.

App Performance Testing That Serves Dev and Ops

Over the past year, a number of app performance testing solutions have appeared, some offering real-time testing, deep visibility into apps, integration with multiple technologies, and collaborative working for DevOps stakeholders.

Some of the more interesting solutions include MuleSoft's Mule ESB and SaaS-based variants of real-user monitoring (RUM) from Compuware and New Relic.

MuleSoft provides enterprise-class software based on Mule ESB, Apache Tomcat, and other open source application infrastructure products. Mule ESB is a lightweight enterprise service bus (ESB) and integration platform. The tool allows cross-functional IT teams to leverage the same Web-based console to deploy, monitor and manage applications, with the goal of enabling more agile deployment processes.

The main value that RUM provides is that it measures key front-end metrics from the moment a user request is initiated in the app to the final loading of the resulting Web page. Compuware's Gomez Real-User Monitoring measures mobile Web site and native application performance. It measures performance directly from a user's browser and mobile device, allowing developers and IT professionals to evaluate real-user performance by device or browser, geography, network and connection speed, as well as the resultant impact on business metrics such as page views, conversions, abandonment and end-user satisfaction.

Delivered on demand, Gomez RUM does not require any hardware installation or maintenance. Key capabilities include: mobile browser-based, real-user performance monitoring across all JavaScript-enabled mobile browsers, and mobile native application-based real-user performance monitoring across iOS and Android.

New Relic's RUM is one of the first commercial implementations of technology built on the Episodes framework for measuring Web page load times. For each page request, New Relic captures network time, time in the application itself and time spent rendering the Web page. The software also tracks the type and version of browser, operating system and geographic location of the user. …
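As a rough illustration of splitting a page load into those three segments, here is a Python sketch. It is not New Relic's algorithm: `split_page_load`, its parameters, and the definitions of each segment are assumptions made for the example, and the timings are invented. It assumes the browser reports when navigation started, when the first byte arrived, and when the page finished loading, and that the server reports how long the application spent on the request.

```python
def split_page_load(nav_start_ms, first_byte_ms, load_end_ms, app_ms):
    """Split one page load into network, application, and rendering time (ms)."""
    backend_ms = first_byte_ms - nav_start_ms  # everything before the first byte
    if not 0 <= app_ms <= backend_ms or load_end_ms < first_byte_ms:
        raise ValueError("inconsistent timings")
    return {
        "network": backend_ms - app_ms,          # time on the wire, by subtraction
        "application": app_ms,                   # time reported by the server
        "rendering": load_end_ms - first_byte_ms,  # first byte to page loaded
    }


# Hypothetical timings in milliseconds.
segments = split_page_load(nav_start_ms=0, first_byte_ms=420, load_end_ms=1500, app_ms=300)
print(segments)
print(sum(segments.values()))
```

The three segments always add up to the total load time, which makes it easy to see which part dominates for a given request.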


Janet Tu reported Elop: First Nokia Windows Phones this quarter in a 10/4/2011 post to the Seattle Times’ Business / Technology blog:

Nokia CEO Stephen Elop promised Tuesday that the Finnish phonemaker would unveil its first Windows Phones this quarter, according to Reuters.

The launch is expected to coincide with the annual Nokia World trade show in London starting Oct. 26 but it remains to be seen whether the devices will be shipped in time for the holiday-sales season, Reuters reports.

A fall launch date had been widely expected.

Nokia has bet all its smartphone chips on a collaboration with Microsoft, saying earlier this year that it would be ditching its own Symbian system in favor of Windows Phone on all its smartphones. A lot is at stake for both Nokia and Microsoft in this partnership, with both companies having seen their shares of the mobile phone market slide.

Just last week, Nokia announced it was cutting 3,500 jobs.

Jesus Rodriguez described Managing Your Datacenter From Your Smartphone or Tablet. Moesion: The Vision, The Product, The Future in a 10/4/2011 post:

A few days ago, Tellago Studios announced the release of Moesion, a cloud platform to manage your IT systems, whether on-premise or on the cloud, from your smartphone or tablet. Moesion attempts to make IT professionals more responsive and productive by enabling traditional system management capabilities from their mobile device without the need of a computer or a VPN connection.

As a platform, Moesion extends the best practices and techniques of decades of IT system management with modern concepts of cloud, mobile and social computing. Moesion is the first manifestation of a bigger vision to bring true mobility to enterprise IT.

The Vision

These are exciting times in the software industry. Movements like cloud, mobility and social computing are revolutionizing the way we build, interact with and even think about software applications. While we regularly witness the impact of these technologies in the consumer world, we think enterprise software is not exempt from these transformations. On the contrary, we think that applying mobile, cloud and social computing dynamics to traditional enterprise software can drastically enhance the way organizations do business while, by the same token, bringing a much needed breath of innovation to enterprise IT.

Moesion is our first step executing towards this vision by building a cloud platform that enables the management of IT systems from your mobile device. Moesion is based on a series of simple principles that, we believe, will be the foundation of the next generation of IT system management software.

- IT Pros should be able to manage their systems anytime, anywhere: The times in which you needed a computer and a VPN connection to manage your IT infrastructure are about to change. We firmly believe that IT professionals should be able to manage their IT systems directly from their mobile device without the need to remotely login to their corporate networks.

- Modern IT systems should be manageable from your mobile device: We believe that any modern, relevant IT system should be manageable from your smartphone or tablet.

- IT system management should be social: System management is an intrinsically social activity. We believe that IT professionals should be able to share knowledge and collaborate with each other while managing their IT systems.

- IT system management should be simple: Call us radicals, but we think that managing and monitoring an IT system shouldn’t be more difficult than interacting with a friend in your favorite social network. Taking this principle even further, we believe that some social networking concepts such as tagging, poking, following, and liking can drastically improve the experience of managing an enterprise IT infrastructure.

- Cloud infrastructures are the future of IT system management: Let’s face it, IT system management software requires expensive on-premise infrastructures and is ridiculously difficult to maintain, scale and upgrade. We believe that the next generation of IT system management software will be based on cloud infrastructures and platforms that will drastically simplify the adoption experience while bringing an unprecedented level of innovation to enterprise IT.

The Product

Moesion represents the first iteration of the vision explained in the previous section. We can try to better explain Moesion using a fictional but very common story of an IT pro whom, for this example, we can call Bob. Like many other IT pros, Bob is responsible for managing dozens of servers in his company. One day Bob is traveling to a business meeting in another city when he receives a call from his manager explaining that the main employee portal doesn’t seem to be working. At that time, Bob brings up his iPhone and quickly queries the event log of the web server hosting the company portal. The quick inspection reveals a series of errors thrown by the Internet Information Services instance hosting the employee portal. Without leaving his iPhone, Bob brings up an Internet Information Services Manager application and goes to the list of websites, only to find that the employee portal website has been stopped. Directly from his iPhone, Bob starts up the specific website and calls back his manager to confirm that the portal is working again.

Despite the fictional example, the previous story describes what IT professionals can accomplish by using Moesion.

In a nutshell, Moesion could be defined as a cloud based platform that enables the management of IT systems, whether on-premise or on the cloud, from your mobile device. As an IT professional, you can use Moesion to extend your system management experience to your mobile device including:

- Manage your IT systems and cloud services from your smartphone or tablet

- Publish and execute management scripts directly from your mobile device

- Have immediate access to dozens of system management applications and hundreds of scripts directly from your smartphone or tablet.

The following picture illustrates a high level diagram of the Moesion platform.

Expanding on the previous diagram, we could explain the Moesion platform as five fundamental components.

- Dozens of mobile HTML5 applications to manage your IT systems from your smartphone or tablet

- A social script repository to publish and execute scripts from your mobile device.

- A lightweight agent that controls the system management capabilities of specific servers

- An application store on which you can access dozens of new applications and hundreds of scripts to manage your IT infrastructure

- A multi-tenant cloud infrastructure to host, provision and scale your Moesion deployment.

By combining the previous capabilities, Moesion provides a very flexible platform that allows IT professionals to efficiently manage their IT infrastructure using their smartphone or tablet.

The Future

This initial version of Moesion is just our initial step towards providing a complete experience to manage your IT infrastructure from your mobile device. Together with this initial release, the Moesion team announced the immediate roadmap for the platform which could be summarized in the following topics.

- More and more apps: Almost every week, you will find new applications in the Moesion app store. The immediate roadmap includes on-premise systems such as VMware, Citrix or Oracle DB as well as cloud platforms such as Amazon AWS or RightScale.

- Monitoring (Twitter for your IT systems): Very soon Moesion will include capabilities to subscribe to events in your IT infrastructure by using similar social dynamics to platforms such as Twitter, LinkedIn or Facebook.

- Knowledge management: Moesion will provide the infrastructure to capture and evolve blocks of knowledge about your IT infrastructure that will facilitate understanding and troubleshooting your IT systems.

Conclusion

Moesion is a new platform that enables the management of your IT infrastructure from your smartphone or tablet. Moesion combines the principles of mobile, cloud and social computing to extend the management of your IT systems to your mobile device. Currently, Moesion is available as a private Beta at http://www.moesion.com

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Microsoft’s Server and Tools Business group indicated that WAPA was still alive (if not well) by placing an advert for a SR Eng Service Engineer Job on 10/3/2011:

Are you passionate about Cloud Services? Do you want to be a part of enabling enterprises, hosters and governments to run cloud services via the Cloud Appliance? Are you an experienced operations manager who lives and breathes site up, availability, and reliability? If you answered ‘yes’ to the questions above, this job is for you!

The Windows Azure Platform Appliance (WAPA) integrates hardware, software and services together in a single package that will light up on-premises instances of the Windows Azure Platform technologies at tremendous scale. It provides unique ways for enterprises, hosters and governments to efficiently manage elastic virtualized environments with compute, networking, storage, database and application development capabilities. We will deliver WAPA with the help of hardware and operational partners to bring together the best in hardware innovation, IT services and software.

We are looking to significantly expand the program beyond the current four partners, Dell, eBay, Fujitsu and HP. The Cloud Appliance Production Services team is looking for a few passionate, driven and experienced Operations Managers to drive this expansion. Come help us scale the appliance program and deliver a new technology to our customers and partners.

Key Responsibilities: In this role you will be operating the Windows Azure Platform Appliance at various customer locations around the world and working with the product team to improve a v1 product. Individuals in this role are accountable for designing and driving operability into the platform, leading “run-it” execution in our operational silos (incident management, T3 customer support, platform deployment, platform build-out), and leading service restoration during a crisis. The successful candidate will be able to demonstrate breadth and depth managing complex, highly available, and scalable services.

Responsibilities:

- Champion platform operability by designing and driving improvements into features, monitors and tools.

- Oversight and accountability for performance in incident management, software deployment, hardware build-out and customer support operational silos in a 24X7X365 environment

- Cut minutes off outages. Manage technical response during critical incidents (Incident Commander)

- POC for key internal and external partners

- Develop and maintain operational playbooks that guide engineering teams' day to day activities

- Participate in the design of v.next cloud architecture

- Drive continuous improvement into the product, process, and technology through gap analysis and solution design.

Required skills:

- Operations Experience in a 24 x 7 x 365 enterprise environment

- Demonstrated strategic thinking, quantitative and analytical skills, team leadership, and collaboration

- Excellent problem resolution, judgment, negotiating and decision making skills

- Detail and results oriented with a good balance of passion for technology and eagerness to accept ownership and accountability.

- Must effectively manage and prioritize multiple tasks in accordance with high level objectives/projects.

- Excellent written and oral communication skills required; Ability to communicate to a variety of audiences including engineers, executive management and customers.

- 6-10 years of experience managing high volume web services, Windows Server, and virtualization in a complex, high availability environment

- 6-10 years of experience managing scalable networks with a deep understanding of the OSI Model, enterprise networks, TCP/IP, diagnosing network faults

- BS/BA in Computer Science, math, telecommunications, or equivalent education or experience

Nearest Major Market: Seattle

Nearest Secondary Market: Bellevue

Job Segments: Application Developer, Cloud, Computer Science, Customer Service, Database, Engineer, Engineering, System Administrator, Technology, Telecom, Telecommunications, Virtualization

I haven’t seen any evidence of continued interest in WAPA by Dell and HP, both of whom have announced competitive public and private cloud implementations. As far as I’ve heard, only Fujitsu has implemented WAPA (in August 2011) and eBay is still experimenting with the appliance.

Deb McFadden (@debrajoh) reported System Center Configuration Manager 2012 Blog Series Kick-Off in a 10/4/2011 post to the Microsoft Server and Cloud Platform Team blog:

As you may know, the upcoming release of System Center Configuration Manager 2012 introduces a user-centric paradigm for desktop management that helps address the management challenge of consumer devices entering the workplace. A majority of users want flexible options to be more productive – they want to work from any device, from anywhere and expect always-on access to the corporate resources. IT organizations need a consistent way of managing diverse devices and users without compromising corporate compliance or security. System Center Configuration Manager 2012 will enable the IT organizations to deliver rich, consistent experiences to every user, regardless of where they are located or what device they might be using while maintaining a unified management infrastructure.

To help bring you up to speed on the new features and functionality in Configuration Manager 2012, we are kicking off a new blog series this week. These blogs will provide background on how the solution was developed, share best practices for implementation and administration, and highlight tools and guidance for configuring and operating the new release. We very much want this blog series to be a two-way dialogue – so please make sure to share your comments and questions. In the meantime, download the Configuration Manager 2012 Beta 2 trial and check out the evaluation resources.

We are genuinely excited to talk about the upcoming release of System Center Configuration Manager and engage with the passionate System Center community!

Deb is a Microsoft Principal Group Program Manager. I hope she’ll include substantial content for Project “Concero,” which is reported to be a part of the SCCM release.

Kevin Remde (@KevinRemde) posted Doh! (What I learned about Windows Failover Clustering. The hard way.) on 10/4/2011:

Okay.. I feel like sharing this because it’s pretty stupid, but in a geeky-sort-of-way the solution was interesting enough to share. Think Chicken & Egg. (or “Catch-22”).

As the title of this post suggests, the subject is Windows Failover Clustering. For those of you who are not familiar with it, Windows Failover Clustering is a built-in feature available in Windows Server 2008 R2 Enterprise and Datacenter editions. Along with shared storage (which we implemented with the free iSCSI Software Target from Microsoft), it provides a very easy-to-configure and easy-to-use cluster for serving up highly available services. In our case, this would be virtual machines running on two clustered virtualization hosts.

The Background

As a training platform, but primarily for use as a demonstration platform for our presentations (and certainly more real-world than one laptop alone can demonstrate), our team received budget to acquire several Dell servers. We found a partner (Thank you Groupware Technology!) who was willing to house the servers for us. The idea was that we, the 12 IT Pro Evangelists (ITEs) in the US, would travel to San Jose in groups of 3-4 and do the installation of a solid private cloud platform, using Microsoft’s current set of products (Windows Server 2008 R2 and System Center). This past week I was fortunate enough to be a member of the first wave, along with my good buddies Harold Wong, Chris Henley, and John Weston. The goal was to build it, document it, and then hand it off to the next groups to use our documentation and start from scratch, eventually leaving us with great documentation, and a platform to do demonstrations of Microsoft’s current and future management suites.

The Process

We all arrived in San Jose Monday morning, and installed all 5 server operating systems in the afternoon. We installed them again Tuesday morning.

“Huh? Why?”

It’s a long story involving how Dell had configured the storage we ordered. We needed to swap some drives between machines and set up RAID and partitioning in a way that was more workable to our goals. I’ll leave that discussion for one of my teammates to blog about.

Anyway, once we had the servers up, I installed and configured the Microsoft iSCSI software target on our “VMSTORAGE” server, and configured two other servers as Hyper-V Hosts in a host cluster, with Windows Failover Clustering and Cluster Shared Volume (CSV) storage. By the end of the week we had overcome hardware, networking and missed-BIOS-checkmark problems (did you know that Hyper-V will install, but you can’t actually use it if you somehow miss enabling Virtualization support on the CPU on one of the host cluster machines? Who’da thunk it?!), and we had 5 physical and a half-dozen virtual servers installed and running, with Live Migration enabled for the VMs in the cluster. Our domain had two domain controllers: one as a clustered, highly-available VM, and the other as a VM that was not clustered, but still living in the CSV volume (C:\ClusterStorage\Volume1 in our case). (That’s a hint, by the way. Do you see the problem yet?)

The “Doh!”

One of the many hurdles we had to overcome early on was an inadequate network switch for our storage network. 100Mbps wasn’t going to cut it, so until our Gig-Ethernet switch arrived on Friday, Harold used his personal switch that he carries with him. On Friday before we left for the airport, we shut down the servers and let the folks there install the new switch. Harold needed his switch back at home.

But in restarting the servers, here’s the catch: Windows Failover Clustering requires Active Directory. The storage mount-point (C:\ClusterStorage\Volume1) on our cluster nodes requires Failover Clustering. And remember where I said our domain controllers were?

“Um.. So… Your DCs couldn’t start, because their location wasn’t available. And their location wasn’t available, because the DC’s hadn’t started. And your DC’s couldn’t start, because their storage location wasn’t available, and… !!”

Bingo. Exactly. Chicken, meet Egg. It was our, “Oh shoot!” moment. (Not exactly what I said, but you get the idea.)

“So how did you fix it?”

I’ll tell you…

The Resolution

Our KVM was a Belkin unit that supports IP connections and access to the machines through a browser. We configured it to be externally accessible. So I was able to use that to get in to the physical servers and try to solve this “missing DCs” puzzle; though to make matters much more difficult, the web interface for that KVM is really, REALLY horrible. The mouse didn’t track to my mouse directly, no ALT+ key support, TAB key didn’t work.. I ended up doing a lot of the work from a command-line simply because it was easier than trying to line up and click on things! Perhaps in a future blog post I will give Belkin a piece of my mind regarding this piece-of-“shoot” device…

So, my solution was based on two important facts:

- The Microsoft iSCSI Target creates storage “devices” that are really just fixed-size .VHD files, and

- Windows Server 2008 R2 natively supports the mounting of VHD files into the file system.

“Ah ha! So on the storage machine, you mounted the .VHD file that was your cluster storage disk, and you copied out the .VHD file from one of the domain controller VMs!”

Yeah.. that’s basically it. Though I did have one problem. The .VHD file was in-use; probably by the iSCSI Software Target service. So when I tried to attach it, the OS wouldn’t let me.

Fortunately I found that by stopping that “Microsoft iSCSI Software Target” service on the storage server (I also stopped the “Cluster Service” on the two Hyper-V cluster nodes), I was able to attach to the .VHD, navigate into it, and copy out the .VHD disk for the needed Domain Controller. (Actually, I also removed the .VHD from its original location. I didn’t want the DC to come alive again when the storage came back online, if the identical DC was already awake and functioning.)

So after that, it was as simple (?) as this:

- Re-create the DC virtual machine on one of our standalone Hyper-V Servers (using local storage this time),

- Attach the DC’s retrieved .VHD file to the new machine,

- Fire it up,

- Reconfigure networking within the DC (the running machine saw its NIC as a different adapter, but that was easily fixed) and verify that it was alive on the network,

- And then restart the iSCSI Target service on the VMSTORAGE server, and then the Cluster Service on the Hyper-V nodes.
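The ordering of these recovery steps matters, so it may help to see the whole sequence written down as data. The sketch below only builds a list of (host, command) pairs and executes nothing. The service display names and the VMSTORAGE host name come from Kevin's post; the file paths, the `recovery_plan` helper and the explicit "detach vdisk" step are assumptions added for illustration. `net stop`, `net start`, `move` and the diskpart `select vdisk` / `attach vdisk` / `detach vdisk` commands exist on Windows Server 2008 R2, but the post doesn't say which tools were actually used.

```python
def recovery_plan(cluster_vhd, dc_vhd, rescue_dir):
    """Return ordered (host, command) pairs for the recovery; nothing is executed.

    All three arguments are hypothetical paths supplied by the caller.
    """
    return [
        # Release the backing .VHD so the OS will allow it to be attached.
        ("VMSTORAGE", 'net stop "Microsoft iSCSI Software Target"'),
        ("each Hyper-V node", 'net stop "Cluster Service"'),
        # Mount the fixed-size .VHD that backs the cluster storage disk.
        ("VMSTORAGE", f'diskpart: select vdisk file="{cluster_vhd}"'),
        ("VMSTORAGE", "diskpart: attach vdisk"),
        # Move (copy, then remove) the DC's disk so a duplicate DC cannot boot later.
        ("VMSTORAGE", f'move "{dc_vhd}" "{rescue_dir}"'),
        # Assumption: detach before the iSCSI target needs the file again.
        ("VMSTORAGE", "diskpart: detach vdisk"),
        ("standalone Hyper-V host",
         "re-create the DC VM on local storage, attach the rescued .VHD, start it"),
        # Only once a DC is alive can the storage and the cluster come back.
        ("VMSTORAGE", 'net start "Microsoft iSCSI Software Target"'),
        ("each Hyper-V node", 'net start "Cluster Service"'),
    ]


if __name__ == "__main__":
    plan = recovery_plan(r"D:\iSCSI\ClusterDisk.vhd",   # hypothetical path
                         r"E:\DC2\DC2.vhd",             # hypothetical path
                         r"D:\Rescue")                  # hypothetical path
    for step, (host, command) in enumerate(plan, start=1):
        print(f"{step}. [{host}] {command}")
```

The key constraints are that the iSCSI target is stopped before the .VHD is attached, and that the cluster service is the last thing restarted, after a domain controller is reachable again.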

Everything came back to life almost immediately; including the Remote Desktop Gateway that we had configured so that we could remotely connect to the machines in a more meaningful, functional way.

So the moral of the story is:

When you’re building your own test lab, or even considering where to put your DCs in your production environment, make sure you have at least one DC that comes online without depending upon other services (such as high-availability solutions) that, in turn, require a DC to be functioning.

All-in-all, it was a great week.
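The chicken-and-egg problem in Kevin's story is a cycle in a dependency graph, and that kind of cycle can be found mechanically before the power goes off. What follows is a minimal sketch, assuming a simplified model in which each component lists the things it needs in order to start. The component names and the `find_cycle` helper are hypothetical, and the model treats Active Directory as depending on a single domain controller, which is a simplification.

```python
def find_cycle(depends_on):
    """Return one dependency cycle as a list of names, or None if there is none.

    depends_on maps a component name to the names it needs before it can start.
    """
    WHITE, GREY, BLACK = 0, 1, 2          # unvisited, on current path, finished
    state = {name: WHITE for name in depends_on}
    path = []

    def visit(node):
        state[node] = GREY
        path.append(node)
        for dep in depends_on.get(node, ()):
            if state.get(dep, WHITE) == GREY:
                # dep is already on the current path, so we have looped back.
                return path[path.index(dep):] + [dep]
            if state.get(dep, WHITE) == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[node] = BLACK
        return None

    for name in list(depends_on):
        if state[name] == WHITE:
            cycle = visit(name)
            if cycle:
                return cycle
    return None


# The lab as first built: both DCs live on the cluster shared volume.
lab = {
    "domain-controller-vm": ["cluster-shared-volume"],
    "cluster-shared-volume": ["failover-clustering"],
    "failover-clustering": ["active-directory"],
    "active-directory": ["domain-controller-vm"],
}

# The lab after the fix: one DC runs from local storage on a standalone host.
fixed = {
    "clustered-dc-vm": ["cluster-shared-volume"],
    "cluster-shared-volume": ["failover-clustering"],
    "failover-clustering": ["active-directory"],
    "active-directory": ["standalone-dc-vm"],
    "standalone-dc-vm": ["local-disk"],
}

if __name__ == "__main__":
    print("as built:", find_cycle(lab))
    print("after fix:", find_cycle(fixed))
```

A depth-first search with three node states is used here because it reports the actual loop, which is more useful during a design review than a plain yes or no.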

Kevin is an IT Pro Evangelist with Microsoft.

Bruce Kyle described how to Connect Your SharePoint Online Apps to Azure Through Business Connectivity Services (BCS) in a 10/3/2011 post to the US ISV Evangelism blog:

Microsoft plans to expand the business critical services delivered by Office 365 and Azure and make moving to the cloud even easier for customers. By the end of the year, the first round of service updates to SharePoint Online since Office 365 launched will be complete and will enable customers to use Business Connectivity Services (BCS) to connect to data sources via Windows Communication Foundation (WCF) Web Services endpoints. BCS lets customers use and search data from other systems as if it lives in SharePoint—in both read and write modes.

The announcement was made as SharePoint Conference began in Anaheim, California today, and in the SharePoint team blog post Welcome to Microsoft SharePoint Conference 2011!

The announcement is key for ISVs who want to develop applications for SharePoint Online. BCS provides the way you can call from the server to databases and Web Services.

Learn more about Using Business Connectivity Services in SharePoint 2010.

<Return to section navigation list>

Cloud Security and Governance

Dave Shackleford (@daveshackleford) posted Network segmentation best practices in virtual and private cloud environments to TechTarget’s SearchCloudSecurity blog on 10/4/2011:

The concept of network segmentation and isolation is far from new -- in fact, security professionals have long espoused the concepts of defense in depth and layered network security, both of which include network segmentation as a vital component. But how does this work within virtualized infrastructures? Can the same types of network isolation be achieved within these environments? Fortunately, the answer is yes, although the techniques and technologies may differ somewhat from traditional physical networks. Let’s take a look at some network segmentation best practices enterprises can follow to ensure security in their virtual and private cloud infrastructure.

Network segmentation best practices: Segmentation categories

There are several categories of segmentation that need to occur within virtual and private cloud environments. The first is between different types of network segments particular to the virtual environment itself. These include network segments that carry production traffic to and from virtual machines, segments that carry management traffic, and specialized traffic such as that used for storage networks and memory/data migration between hypervisor platforms.

For most enterprise virtualization platforms, there is a specialized virtual network connection designated for management traffic. For VMware platforms, this is known as the “service console” traffic, and is used to connect hypervisor platforms to management systems such as vCenter, as well as standalone SSH connections and vSphere client connections. As this traffic may contain sensitive data, it should be segregated with a completely distinct virtual switch, if possible, as well as a separate network interface card (NIC) in the server.

The second major traffic type in most virtual environments is virtualization operations traffic, usually associated with dynamic memory migration (VMware vMotion or Microsoft Live Migration, for example) and storage operations (commonly iSCSI-based). This traffic may contain the contents of a virtual machine’s dynamic memory (RAM) or storage information, and also should be isolated on a separate virtual switch and NIC. The third traffic type is virtual machine production traffic, and this should be separate from the other two types discussed. If separate virtual switches are not possible, separate subdivisions of virtual switches called port groups can be used to isolate the specific ports needed for these traffic types.

Network segmentation best practices: Switch-based segmentation

The second major type of segmentation capability available to virtual environments is traditional switch-based segmentation. Aside from separate virtual switches and port groups, all virtualization platforms support traditional layer 2 virtual LAN (VLAN) tags, which separate broadcast domains. These can be applied in a number of places. For most complex virtualization deployments, virtual switches are assigned with distinct VLAN tags for numerous port groups that connect to a physical switch trunk port via one or more NICs. For the simplest implementation, one VLAN can be assigned to an entire virtual switch, and each virtual machine connected to the switch communicates on that VLAN alone. However, this can lead to a large number of virtual switches, and this may become unwieldy, especially in systems with a limited number of physical NICs. Most large deployments will simply assign different VLANs to separate port groups on each virtual switch, and then pass these through physical switch trunk ports.
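The port-group-per-VLAN layout described above lends itself to a simple consistency check. The following sketch is illustrative only: `PortGroup`, `check_port_groups` and `trunk_vlans` are hypothetical names, the VLAN numbers are made up, and no hypervisor API is involved. It relies on the fact that 802.1Q VLAN IDs are 12-bit values with 1 through 4094 usable, and it flags any VLAN that carries more than one of the three traffic types (management, operations, production).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    name: str
    traffic: str   # "management", "operations" or "production"
    vlan_id: int   # 802.1Q VLAN ID; usable range is 1-4094


def check_port_groups(groups):
    """Return a list of problems; an empty list means the layout passes."""
    problems = []
    vlan_traffic = {}
    for g in groups:
        if not 1 <= g.vlan_id <= 4094:
            problems.append(f"{g.name}: VLAN {g.vlan_id} is outside 1-4094")
            continue
        # Remember the first traffic type seen on each VLAN.
        seen = vlan_traffic.setdefault(g.vlan_id, g.traffic)
        if seen != g.traffic:
            problems.append(
                f"{g.name}: VLAN {g.vlan_id} mixes {seen} and {g.traffic} traffic")
    return problems


def trunk_vlans(groups):
    """VLAN IDs the physical switch trunk port must carry for this virtual switch."""
    return sorted({g.vlan_id for g in groups})


if __name__ == "__main__":
    groups = [
        PortGroup("mgmt", "management", 10),
        PortGroup("migration", "operations", 20),
        PortGroup("web", "production", 30),
        PortGroup("db", "production", 30),
    ]
    print(check_port_groups(groups))
    print(trunk_vlans(groups))
```

Two production port groups sharing a VLAN pass the check, while a management port group placed on a production VLAN would be reported.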

For organizations that require even more granularity, some virtual switch types support private VLANs (PVLANs), which allow a single VLAN to be subdivided into additional port groupings. VMware’s Distributed Switch, a standard vSphere feature, supports PVLANs, and so does Cisco’s Nexus 1000v switch, among others.

Network segmentation best practices: Higher-layer segmentation

The final isolation technique for virtual and private cloud infrastructure is higher layer segmentation, namely using IP addresses (Layer 3). This is often accomplished with router access control lists (ACLs), firewall rule sets and load balancers, although other access control devices like proxies can also be used. Many network access control devices can now be implemented as virtual appliances, allowing higher-level network segmentation to occur completely within the hypervisor platform itself. Existing network access control platforms can also be used to segment traffic into and out of virtual environments, although some re-architecture or segment design work may be required to create new rules for all virtual machines within a specific segment.
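Layer 3 segmentation with access control lists comes down to matching source and destination IP addresses against an ordered rule list. Here is a minimal sketch using Python's standard `ipaddress` module. The `evaluate_acl` helper, the rule format and the subnets are all assumptions made for illustration; real router and firewall ACLs also match on ports and protocols, which this sketch omits. It assumes first-match-wins evaluation with an implicit deny at the end, which is a common convention.

```python
import ipaddress


def evaluate_acl(rules, src, dst):
    """Return 'permit' or 'deny' for the first rule that matches src and dst.

    rules is an ordered list of (action, source_network, destination_network).
    """
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for action, src_net, dst_net in rules:
        if (src_ip in ipaddress.ip_network(src_net)
                and dst_ip in ipaddress.ip_network(dst_net)):
            return action
    return "deny"  # implicit deny when no rule matches


# Hypothetical segments: 10.0.10.0/24 management, 10.0.30.0/24 production VMs.
rules = [
    ("permit", "10.0.10.0/24", "10.0.30.0/24"),  # management may reach production
    ("deny",   "10.0.30.0/24", "10.0.10.0/24"),  # production may not reach management
    ("permit", "10.0.30.0/24", "10.0.30.0/24"),  # production VMs may talk to each other
]

if __name__ == "__main__":
    print(evaluate_acl(rules, "10.0.10.5", "10.0.30.8"))
    print(evaluate_acl(rules, "10.0.30.8", "10.0.10.5"))
    print(evaluate_acl(rules, "10.0.20.4", "10.0.30.8"))
```

The same rule list could be enforced by a physical router or by a virtual appliance inside the hypervisor; the matching logic is the same either way.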

Security practitioners have known for some time that network segmentation is a useful step in protecting their networks, and fortunately, this can be done very effectively within virtual and private cloud infrastructures. Although some planning and architecture design may be needed, network segmentation best practices are still a key control to help combat threats, prevent data breaches and meet compliance requirements.

Dave is the senior vice president of research and the chief technology officer at IANS. He’s also a SANS analyst, instructor and course author, as well as a GIAC technical director.

Full disclosure: I’m a paid contributor to TechTarget’s SearchCloudComputing.com blog.

<Return to section navigation list>

Cloud Computing Events

Bruno Terkaly (@BrunoTerkaly) posted Announcing Windows Azure Camps–Free 2-Days of cloud computing on 10/4/2011:

Come join us for 2 days of cloud computing!

Developer Camps (DevCamps for short) are free, fun, no-fluff events for developers, by developers. You learn from experts in a low-key, interactive way and then get hands-on time to apply what you've learned.

What am I going to learn at the Windows Azure Developer Camp?

At the Azure DevCamps, you'll learn what's new in developing cloud solutions using Windows Azure. Windows Azure is an internet-scale cloud computing and services platform hosted in Microsoft data centers. Windows Azure provides an operating system and a set of developer services used to build cloud-based solutions.

The Azure DevCamp is a great place to get started with Windows Azure development or to learn what's new with the latest Windows Azure features.

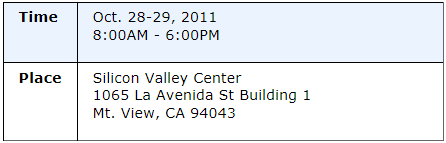

Time and Place

Agenda

Come for one day, come for both. Either way, you'll learn a ton. Here's what we'll cover.

Featured Speakers

BusinessWire reported Seattle Interactive Conference Announces Agenda and Programming Agreements with Windows Azure, Gnomedex, Biznik, TechStars and AdReady; Sets "Battle of Geek Bands" Details in a 10/4/2011 press release:

Seattle Interactive Conference (www.seattleinteractive.com), a major two-day event celebrating the convergence of online technology, creativity, and emerging trends in one of the world's most innovative cities, today announced its agenda, along with a series of powerful programming alliances and details for its "Battle of the Geek Bands" event.

Set for November 2-3 at The Conference Center at the Washington State Convention Center in downtown Seattle, Seattle Interactive Conference (SIC) will feature more than 50 visionary speakers ( http://bit.ly/nsBHBv ) in areas ranging from online commerce and social media to gaming, interactive advertising, entertainment, and more. Some of the many highlights include: "Transforming the Digital Music Frontier," a lunchtime keynote featuring Sir Mix-a-Lot, Death Cab for Cutie bassist Nick Harmer, KEXP's Aaron Starkey and Tim Bierman, manager of Pearl Jam's immensely popular online fan club; "The Transformation of News Media," examining how interactive technology continues to affect the business of news media daily; a presentation by Molly Norris, head of interactive for The World Bank; and Online gaming visionary Ed Fries of Halo 2600. SIC will also include a packed schedule of business networking opportunities and distinctly Seattle-flavored social events.

"We developed the Seattle Interactive Conference with the singular purpose of bringing a world-class interactive technology event to Seattle and the Pacific Northwest," said Brian Rauschenbach, SIC curator. "With more than 50 interactive industry luminaries and thought leaders set to present, powerful additional programming partnerships and an array of uniquely Seattle social and networking events, such as Battle of the Geek Bands, we are thrilled to soon be opening our doors to attendees from throughout the US and beyond."

Events, alliances and other highlights include:

- "Battle of the Geek Bands" and SIC Opening Party on Tuesday evening, November 1 featuring a special concert by The Presidents of the United States of America: Sponsored by FILTER and set for 8:00 pm (doors at 7:00), in Seattle's Showbox SoDo, Battle of the Geek Bands was developed to showcase the many great musicians who happen to work throughout the vibrant online technology sector. Five qualifying bands will get to perform on-stage at The Showbox SoDo that evening. The performances will be judged by a celebrity panel of music industry luminaries and geek VIPs including the iconic Sir Mix-a-Lot, along with Jason Finn and Chris Ballew of The Presidents of the United States of America and John Cook, co-founder of GeekWire. The evening's emcee will be John Roderick, lead singer/guitarist for the Long Winters and an entertainment media veteran. Winners get an SIC trophy, bragging rights and a cash prize. For more information on how bands can enter: http://bit.ly/pBkKHv . Following the contest, The Presidents of the United States of America will perform a special set for all SIC attendees.

- Windows Azure (Microsoft) will provide SIC attendees with two days of technical sessions and access to product and technical experts. The Cloud Experience track is for experienced developers who want to learn how to leverage the cloud for mobile, social and Web application scenarios. No matter what platform or technology is involved, these sessions will provide attendees with a deeper understanding of cloud architecture, back-end services and business models to scale for user demand and business growth. Cloud Experience sessions and the expert-staffed lab are open to all registered attendees of SIC.

- Gnomedex: This event is now coming out of retirement, if only for a day on November 2, to join the SIC experience and further engender this community's spirit. For ten years, Gnomedex brought to the stage hundreds of thought leaders and many influencers before they were influential. Innovation, inspiration, and ideation are still very much alive, and Gnomedex festivities will once again kindle excitement around these values. As has been true for every one of its past events, every Gnomedex participant will be treated like a VIP.

- Biznik: The overall theme for SIC is transformations, and the entrepreneurs on the Biznik Entrepreneurial Stage get what it takes to move businesses from here to there. A transformation from an old way of doing things to a new way requires the right mix of innovation and risk. Throughout the day of November 3, the presenters on the Biznik Stage will examine that risk; what transformations take place in employees, products, and cultures when true innovation changes how the game is played.

- TechStars Demo Day - On November 3rd, SIC is co-producing TechStars Demo Day, which targets the investment community and which will unveil ten exciting new companies from the TechStars class of 2011. Held at Seattle's Showbox SoDo, Demo Day will give each company a six-minute presentation window during which it can highlight its business and investment opportunities. The style is fun and entertaining - it's a different kind of pitch event that includes amazing opportunities for networking. TechStars' after-party will merge with SIC's Closing Party at Showbox SoDo that evening for a powerful and fun night of networking and socializing.

- SIC://City: This is far more than a standard exhibit hall. SIC://City is a showplace of truly unique spaces, not standard booths, but "blank canvases" companies can transform into a space all their own for use throughout the conference's duration. Exhibiting companies will have the opportunity to get creative, have meaningful interactions, and leave powerful impressions. SIC://City provides a venue at which companies can demo, showcase and sell their offerings, or just create an experience to spread the word while providing potential customers and partners a unique place to meet, greet and experience each business first-hand. More information: http://bit.ly/qT8ZLL .

- AdReady: This leading demand-side online display advertising platform will run its own annual partners' program on November 2 at the SIC venue and is encouraging its customers to participate directly in SIC. "As a sponsor, we want to see the conference succeed, as participants, we want to be a part of the conversation, but as a company we want to have our partners help shape the future of online advertising," said Karl Siebrecht, CEO, AdReady. "Whether for agencies, advertisers or publishers, that future is beyond search, more than a banner and even more than can be found in the social media."

OTHER SIC EVENTS

Other SIC social events open to all Full Conference and Flex Pass holders include:

- An after-party featuring another exclusive, only-for-SIC concert by Shabazz Palaces and a Vinyl vs. Analog DJ set by Fourcolorzack in Showbox at the Market on Wednesday evening, November 2: Doors at 7:00 pm, show starts at 8:00;

- Closing Party featuring special musical guests on the evening of Thursday, November 3 at Showbox SoDo: Doors at 7:00 pm, show starts at 8:00;

- Meet-ups and other targeted business networking opportunities throughout the event.

About Seattle Interactive Conference

The first-annual Seattle Interactive Conference is a two-day event celebrating the convergence of online technology, creativity, and emerging trends in one of the world's most innovative cities. SIC brings together entrepreneurs, developers and online business professionals from throughout the US and beyond for a powerful combination of in-depth presentations, networking opportunities, and uniquely Seattle social events. Attendees have the rare opportunity to explore transformative technologies and business models with visionary thinkers and peers in areas ranging from online commerce and social media to gaming, interactive advertising, entertainment, and much more.

SOURCE: Seattle Interactive Conference

Gregory Leake reported on SQL Azure content in the Upcoming SQL PASS Summit 2011 and Microsoft SQL Azure in a 10/4/2011 post:

The Professional Association for SQL Server will hold the PASS Summit 2011 from Oct 11-14 in Seattle, Washington. Are you attending? There are many reasons why you should be, including dozens of sessions with Windows Azure Platform content and keynotes by Microsoft leaders highlighting new technologies. PASS Summit is the largest SQL Server and BI conference in the world, planned and presented by the SQL Server community, for the SQL Server community. If you are attending the event, you can visit this web page and learn about many of the sessions that should be of interest to Windows Azure platform developers. In addition to the numerous sessions on Microsoft SQL Azure, the upcoming release of SQL Server “Denali” will also be a key focus of the event. If you can’t attend in person, the keynotes will be made available in streaming format—so you can follow them as well as news for the event at http://www.sqlpass.org/summit/2011/.

Event Keynotes

- Wednesday, October 12th : 8:15 AM PST

Ted Kummert

Senior Vice President, Business Platform Division

Microsoft Corporation

- Thursday, October 13th : 8:15 AM PST

Quentin Clark

Corporate Vice President, SQL Server Database Systems Group

Microsoft Corporation

- Friday, October 14th : 8:15 AM PST

David J. DeWitt

Technical Fellow, Data and Storage Platform Division

Microsoft Corporation

In all, there will be over 170 sessions at the event, as well as Kiosks and ExpertPods where you can meet and interact with technical experts from both Microsoft and the SQL Server community. While there is a dedicated SQL Azure track, SQL Azure will also be a key component of many of the core SQL Server sessions in the BI and Development tracks as well.

So we invite you to learn from the top industry experts in the world about best practices, effective troubleshooting, how to prevent issues, save money, and build a better SQL Server environment for your company or clients. We hope to see you there!

Follow PASS Summit on Twitter

You can post and follow PASS Summit news on Twitter using #sqlpass . We will be tweeting about PASS Summit from @WindowsAzure as usual.

Erica Thinesen described Windows Azure [UK] Online Conference: What to Expect in a 10/4/2011 post to the ITProPortal:

The Windows Azure online conference is a new series sponsored by UK Tech Days. The goal of this conference is to teach participants the ins and outs of Azure.

For those who attend the Windows Azure online conference, expect an in-depth look at the benefits of Windows Azure and ways to utilise the platform for development purposes. Prior to the online conference, it is recommended that participants review videos and other content provided after reserving a spot. These videos provide background information about cloud computing and Windows Azure that may be important to know during the conference.

Participants will also learn the differences between developing applications for cloud computing and for in-house platforms. Differences in SQL and storage, for example, may seem strange to those used to traditional platforms. One of the goals of this conference is to dispel myths and remove any insecurities people have about cloud development.

As technology continues to improve and grow, conferences like this one provide participants with information about how to use new technologies in the best ways possible. Even though cloud computing is relatively new, this technology has gained in popularity quickly. Understanding and using cloud-based platforms like Windows Azure can make working with new technology much easier and more efficient.

Those who attend the online conference do not need prior experience using Windows Azure or in cloud development. The conference provides basic information about developing apps for the platform. Participants are encouraged to ask questions about ways to start a project, coding, how to migrate an application or questions about the platform's architecture.

In addition to presenting information about Windows Azure, session leaders and participants will have the opportunity to discuss the particulars of Azure during an interactive discussion. Writing down questions or comments in advance helps participants focus and ask the types of questions most important to them.

Participants do not have to be professional web developers to attend. Students, those with an avid interest in technology or those who simply want to learn more about Azure are welcome to attend. The conference is free to attend, so everyone is welcome. With a variety of people attending this conference, the types of questions asked will touch on many areas. This is a good learning opportunity for anyone who wants to learn more about Windows Azure and cloud development. Experts will be on hand to answer questions and cultivate new points for discussion during the interactive segment of the conference.

<Return to section navigation list>

Other Cloud Computing Platforms and Services

Martin Tantow (@mtantow) recommended that you Be Ready for Oracle’s Big Data Appliance with NoSQL and Hadoop in a 10/4/2011 post to the CloudTimes blog:

Oracle was previously perceived as slow moving in terms of its readiness and transformation for the new era of the cloud. But finally, it broke the silence last Monday at the OpenWorld 2011 event when it announced its most recent and most important product release, the Oracle Big Data Appliance. This new cloud technology is strongly built on NoSQL and Hadoop, which are the key movers in huge cloud data servers.

Oracle retained its flagship database, which is built on rock-solid traditional technology that the company refers to as relational data storage. Recently, however, with new websites moving to cloud platforms and a major customer base clamoring for a shift from relational databases to global cloud scale, Oracle decided to upgrade to a new level of scalability and speed. Popular websites like Facebook, Twitter and Netflix are just a few that have been using NoSQL to meet the demands of their users and the global market.

Oracle’s key purpose in acquiring NoSQL and Hadoop is not merely for loading speed, but to allow their business and their stakeholders to gather unstructured data from anywhere across the web and to use them as new powerful sources of new business opportunities. Beyond this, Oracle will also provide a connection for its other products using the traditional Oracle relational database to do business reports and real-time analytics to combine both the structured and unstructured data statistics.

EMC, an Oracle business partner, provided a real picture of how this would work, using an auto insurance company as an example. Currently, this insurer uses software that stores data for its large customer base and sets the rate for each customer. In reality, the old software has the majority of customers subsidizing the cost of a few bad drivers. With the new software, which can perform business analytics, rates can be customized so that most customers see some savings while bad drivers pay more for their insurance premiums.

Another example is based on a household’s social networking graph, where it will be possible to give discounts to users who apply more parental restrictions compared to those who show thrill-seeking behavior on the web. This may sound scary and is still in the development stage, but it is not very far from reality. This may also be seen as an opportunity to teach web users to be more responsible, since penalties can easily be imposed using this software.

Another frightful consequence of Oracle’s Big Data Appliance is its ability to mine public data that are within the cloud. In an official Oracle announcement, Andrew Mendelsohn, senior vice president of Oracle Server Technologies, said, “With the explosion of data in the past decade, including more machine-generated data and social data, companies are faced with the challenge of acquiring, organizing and analyzing this data to make better business decisions. New technologies, such as Hadoop, offer some relief, but don’t provide a holistic solution for customers’ Big Data needs. With today’s announcement, Oracle becomes the first vendor to offer customers a complete and integrated set of products to address critical Big Data requirements, unlock efficiencies, simplify management and create data insights that maximize business value.”

Jaikumar Vijayan (@jaivijayan) asserted “Oracle's Big Data appliance validates NoSQL market, vendors say” in his Oracle does about-face on NoSQL article of 10/4/2011 for Computerworld: