Windows Azure and Cloud Computing Posts for 10/10/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

David Pallmann posted The Cloud Gourmet 30-Minute Meal: Static Web Site Migration to Windows Azure on 10/9/2011:

Bonjour and welcome again to The Cloud Gourmet with your host, Chef Az-ure-D.

My friends, today I would like to share with you the first of several “30 minute meal” recipes for Windows Azure or as you might put it, “low-hanging fruit”. In cooking, not everything must be a masterpiece that requires a great effort. So it is in the cloud: a great many useful things can be done easily and in a short period of time. We are not talking about “fast food” here, which I detest, but simple meals that are nutritious and satisfying that also happen to be quick and easy.

Perhaps some of you reading this have been considering Windows Azure for some time but have been afraid to, shall we say, take the plunge? I have the perfect remedy. Create one of these 30 minute meals and see for yourself how satisfying Windows Azure is. Doing so will give you confidence and something to show your colleagues. In no time you will be on your way to more ambitious projects.

Recipe: Migrating Static Web Sites to Windows Azure in 30 Minutes

Web sites come in all sizes and complexities. We have in mind here static web sites, in which we are serving up content but there is no server-side authentication, logic, database, web services, or dynamic page creation. If this is indeed the case, then your needs are simple: you need something to serve up HTML/CSS/JavaScript files along with media content (images, video, audio) or downloadable content such as documents or zip files. Here’s a step-by-step recipe for migrating your static web site to Windows Azure in 30 minutes.

I just happen to have one of these made already that I can pull out of the oven and show you: http://azuredesignpatterns.com. Note that even though the site is static that doesn’t mean it has to be limited in terms of visuals or interactivity. You can use everything at your disposal on the client side including HTML5, CSS, and JavaScript. This site in fact, static though it is, works on PC browsers as well as touch devices like tablets and phones.

Plan

The standard way to host a web site in Windows Azure is to use the Compute service and a web role. However, that’s overkill and an unnecessary expense for a static web site. An alternative is to use blob storage. This works because blob storage is similar to file storage, and blobs can be served up publicly over the Internet if you arrange for the proper permissions. In essence, the Windows Azure Blob Service becomes your web server. I personally use this approach quite often and it works well.

Ingredients:

1 web site consisting of n files to be served up (may be flat or in a folder hierarchy).

You Will Need:

- A Windows Azure subscription

- A cloud storage tool to work with Windows Azure blob storage such as Cloud Storage Studio or Azure Storage Explorer. We’ll use Azure Storage Explorer in our screen captures.

Directions

Step 1: Create a Windows Azure Storage Account

In this step you will create a Windows Azure Storage account (you can also use an existing storage account if you prefer).

1A. In a web browser, go to the Windows Azure portal

1B. Navigate to the Hosted Service, Storage Accounts & CDN category on the lower left.

1C. Navigate to the Storage Accounts area on the upper left.

1D. Click the New Storage Account toolbar button. A dialog appears.

Note: if you want to use an existing storage account instead of creating a new one, advance to Step 1G.

1E. Specify a unique name for the storage account, select a region, and click OK. We’re using the name mystaticwebsite1 here; you will need to use a different name.

1F. The storage account will soon appear in the portal. Wait for it to show a status of Created, which sometimes takes a few minutes.

1G. Ensure the storage account is selected in the portal, then click the View Access Keys toolbar button. Record one of the keys (which you will need later) and then click Close.

Step 2: Create a Container

In this step you will create a container in blob storage to hold your files. A container is similar to a file folder. We will use a cloud storage tool for this step:

2A. Launch your preferred cloud storage tool.

2B. Open the storage account in your tool. You will need to supply the storage account name (Step 1E) and a storage key (Step 1G).

2C. Use your cloud storage tool to create a new container. Be sure to specify both name and container access level in the dialog.

- Name: Give the container any name you like, such as web.

- Access Control: Specify public blob access.

Step 3: Upload Files

In this step you will upload your static web files to the container you created in Step 2 using your cloud storage tool.

3A. In your cloud storage tool, select the container and click the toolbar button to upload blobs. A file open dialog will appear.

3B. Navigate to the folder where your static web files reside, select the files to upload and click Open.

3C. Wait for your files to upload and appear in the cloud storage tool.

3D. Check the Name (Uri) and ContentType property of each file you uploaded and revise them if necessary:

- Name: If you uploaded from your root web folder, no changes are needed. If you uploaded from a subfolder (such as /Images), you must rename blobs to have the “folder/” prefix in their name. For example, if you uploaded logo.jpg from an images subfolder, rename the blob from “logo.jpg” to “images/logo.jpg”.

- ContentType: The content type must be set or a web browser will be unsure what to do with the file. The content type may have automatically been set properly based on the file you uploaded and the tool you are using. If not, manually set it. The table below shows some common web file types and the proper ContentType value that should be specified.

Be sure to check and correct the name and content type of each file you have uploaded.
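The checks in Step 3D can also be scripted. The following C# sketch derives a blob name (with its folder prefix) and a ContentType value from a file path relative to the web root. The class name, method names, and the extension list are illustrative assumptions and are not part of any storage tool; the MIME types shown are the standard values for these common web file types.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper for Step 3D; not part of any Azure tool or SDK.
public static class StaticSiteBlobHelper
{
    // Common web file extensions and their standard MIME types.
    private static readonly Dictionary<string, string> ContentTypes =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { ".html", "text/html" },
            { ".htm", "text/html" },
            { ".css", "text/css" },
            { ".js", "application/javascript" },
            { ".jpg", "image/jpeg" },
            { ".jpeg", "image/jpeg" },
            { ".png", "image/png" },
            { ".gif", "image/gif" },
            { ".pdf", "application/pdf" },
            { ".zip", "application/zip" }
        };

    // Returns the ContentType for a file, or a generic binary type if unknown.
    public static string GetContentType(string fileName)
    {
        string extension = Path.GetExtension(fileName);
        string contentType;
        return ContentTypes.TryGetValue(extension, out contentType)
            ? contentType
            : "application/octet-stream";
    }

    // Converts a path relative to the web root into a blob name,
    // for example images\logo.jpg becomes images/logo.jpg.
    public static string GetBlobName(string relativePath)
    {
        return relativePath.Replace('\\', '/').TrimStart('/');
    }
}
```

For example, `StaticSiteBlobHelper.GetBlobName(@"images\logo.jpg")` yields `images/logo.jpg`, and `StaticSiteBlobHelper.GetContentType("logo.jpg")` yields `image/jpeg`.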

3E. If you have subfolders of web content, repeat steps 3A-3D for each subfolder.

Step 4: Test Navigation

In this step you will confirm your ability to access the site.

4A. Find the root index file of your web site and compute its URL as follows (the cloud storage tool you are using may be able to do this for you):

http://<storageaccountname>.blob.core.windows.net/<containername>/<indexfilename>

In the example we have been showing, the URL is:

http://mystaticwebsite1.blob.core.windows.net/web/index.html
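If your tool does not compute the URL for you, the rule in Step 4A is easy to express in code. This is a minimal sketch, assuming the public blob endpoint host has the form `<storageaccountname>.blob.core.windows.net`; the class and method names are hypothetical.

```csharp
using System;

// Hypothetical helper for Step 4A; the names here are illustrative.
public static class BlobUrlBuilder
{
    // Builds the public URL of a blob from the storage account name,
    // container name, and blob name (which may include a folder prefix).
    public static string Build(string accountName, string containerName, string blobName)
    {
        return String.Format(
            "http://{0}.blob.core.windows.net/{1}/{2}",
            accountName.ToLowerInvariant(),     // account names are lowercase
            containerName.ToLowerInvariant(),   // container names are lowercase
            blobName.TrimStart('/'));
    }
}
```

Calling `BlobUrlBuilder.Build("mystaticwebsite1", "web", "index.html")` returns the URL of the example site's index page.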

4B. Visit your web URL in a browser. The site should come up, and should be fully navigable. Voila!

If the site is not accessible or is partially accessible with content missing, double check you have correctly performed the previous steps. Here’s a checklist:

- Did you create your storage account?

- Are the storage account name and key you have been using the correct ones?

- Did you create your container with a public access level?

- Is the container name you are using the correct one?

- Did you upload all of your web files to the container?

- Is the index file name you are using the correct one?

- Did you rename blobs from subfolders to have the folder prefix in their names?

- Did you verify the blobs have the correct content type?

Concluding Remarks

Congratulations, I knew you could do it! I have never been prouder.

It’s beyond the scope of this recipe, but be aware that if you want a prettier domain name you can map a custom domain to your container or forward a custom domain to your container/root-index-file.

Although running your static web site out of blob storage is quite inexpensive, you should remember to delete the blobs when you no longer need them; otherwise you’ll be charged for the storage and bandwidth used month after month.

What we’ve done here is quite different from how you would host a complex or dynamic web site using the Compute service; I hope it has served its purpose to get something of yours up in the cloud in a short period of time—and that it spurs you on to something more ambitious.

Dhananjay Kumar (@debug_mode) described Three simple steps to create Azure table in a 10/10/2011 post to his debug mode… blog:

In this post I will show you three simple steps to create an Azure table.

Let us say you want to create an Azure table called Employee with the following columns:

- EmployeeID

- EmployeeName

- EmployeeSalary

Step 1: Create Entity class

The first step is to create an entity class representing an employee.

Each row of an Azure table contains at least the following columns:

- Row key

- Partition Key

- Time Stamp

Custom columns are stored along with these. So the Employee table will have the following columns altogether:

- Row Key

- Partition Key

- Time Stamp

- EmployeeID

- EmployeeName

- EmployeeSalary

To represent the entity, we need to create a class that inherits from the TableServiceEntity class. The EmployeeEntity class is shown below:

    using System;
    using Microsoft.WindowsAzure.StorageClient;

    namespace Test
    {
        public class EmployeeEntity : TableServiceEntity
        {
            // Creates an entity with explicit partition and row keys.
            public EmployeeEntity(string partitionKey, string rowKey)
                : base(partitionKey, rowKey)
            {
            }

            // Parameterless constructor that assigns default keys.
            public EmployeeEntity()
                : base()
            {
                PartitionKey = "test";
                RowKey = String.Empty;
            }

            // Custom columns of the Employee table.
            public string EmployeeID { get; set; }
            public string EmployeeName { get; set; }
            public int EmployeeSalary { get; set; }
        }
    }

There are a couple of points worth discussing about the above class.

- It inherits from TableServiceEntity class

- It has two constructors

- It passes the row key and partition key as string values to the base constructor

- Custom columns are exposed as public properties of the class.

Step 2: Create Data Context class

Once Entity class is in place, we need to create DataContext class. This class will be used to create the table.

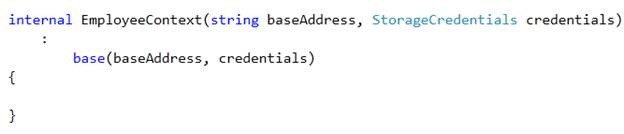

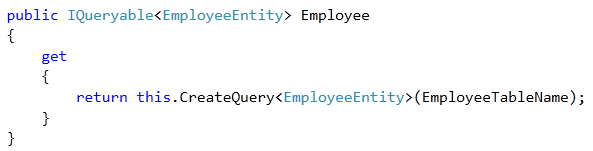

    using System;
    using System.Linq;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.StorageClient;

    namespace Test
    {
        public class EmployeeContext : TableServiceContext
        {
            internal EmployeeContext(string baseAddress, StorageCredentials credentials)
                : base(baseAddress, credentials)
            {
            }

            internal const string EmployeeTableName = "Employee";

            public IQueryable<EmployeeEntity> Employee
            {
                get
                {
                    return this.CreateQuery<EmployeeEntity>(EmployeeTableName);
                }
            }
        }
    }

There are a couple of points worth discussing about the above code:

- It inherits from TableServiceContext class

- In constructor we are passing credentials

- In constructor we are passing base address to create the table.

- Table name is given inline in the code as below; Employee is name of the table we want to create.

- We need to add an IQueryable property to create the query.

Step 3: Creating Table

To create the table we need only a little code.

- Create the account. In the code below, DataConnectionString is the name of the connection string setting. It could point to local development storage or to Azure Tables.

- We create the table from the data model, passing the EmployeeContext class as a parameter.

    using System;
    using System.Linq;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.ServiceRuntime;
    using Microsoft.WindowsAzure.StorageClient;

    namespace Test
    {
        public class CrudClass
        {
            public void CreateTable()
            {
                // Read the storage connection string from the role's service configuration.
                CloudStorageAccount account = CloudStorageAccount.Parse(
                    RoleEnvironment.GetConfigurationSettingValue("DataConnectionString"));

                // Create a table for each IQueryable property exposed by EmployeeContext.
                CloudTableClient.CreateTablesFromModel(
                    typeof(EmployeeContext),
                    account.TableEndpoint.AbsoluteUri,
                    account.Credentials);
            }
        }
    }

When you call the CreateTable() method of CrudClass, it will create the Employee table for you in Azure table storage.
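Once the table exists, rows can be added through the same context class. The following is a minimal sketch rather than part of the original post. It assumes the EmployeeEntity and EmployeeContext classes above are in the same assembly (the context constructor is internal) and that the same DataConnectionString setting is available. The EmployeeWriter class, the AddEmployee method, and the "employees" partition key are hypothetical. AddObject and SaveChangesWithRetries come from the storage client's TableServiceContext and its DataServiceContext base class.

```csharp
using System;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.ServiceRuntime;
using Microsoft.WindowsAzure.StorageClient;

namespace Test
{
    // Hypothetical class showing how a row could be added to the Employee table.
    public class EmployeeWriter
    {
        public void AddEmployee(string id, string name)
        {
            CloudStorageAccount account = CloudStorageAccount.Parse(
                RoleEnvironment.GetConfigurationSettingValue("DataConnectionString"));

            EmployeeContext context = new EmployeeContext(
                account.TableEndpoint.AbsoluteUri, account.Credentials);

            // "employees" is an arbitrary example partition key. The row key must be
            // unique within the partition, so the employee ID is used for it.
            EmployeeEntity employee = new EmployeeEntity("employees", id)
            {
                EmployeeID = id,
                EmployeeName = name
                // The remaining custom columns are set the same way.
            };

            // Queue the insert against the Employee table, then send it to the service.
            context.AddObject(EmployeeContext.EmployeeTableName, employee);
            context.SaveChangesWithRetries();
        }
    }
}
```

Running this requires the Windows Azure SDK assemblies and either the local storage emulator or a real storage account.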

<Return to section navigation list>

SQL Azure Database and Reporting

My SQL Azure Sessions at the PASS Summit 2011 post of 10/10/2011 provides searchable descriptions of the following nine regular sessions in the SQL Azure track at the Professional Association for SQL Server (PASS) Summit to be held Tuesday 10/11 through Friday 10/14/2011:

- Beyond the Hype - Hybrid Solutions for On-Premise and In-Cloud Database Applications (AZ-202-M) by Buck Woody (Microsoft)

- Everything Your Developer Won’t Tell You About SQL Azure (AZ-200) by Arie Jones (Perpetual Technologies, Inc.)

- Getting your Mind Wrapped Around SQL Azure (AZ-301-C) by Evan Basalik (Microsoft CSS)

- How to migrate your databases to SQL Azure (and what's new) (AZ-300) by Nabeel Derhem (MOHE)

- Migrating Large Scale Application to the Cloud with SQL Azure Federations (AZ-400-M) by Cihan Biyikoglu (Microsoft)

- SQL Azure - Where We Are Today, and Microsoft's Cloud Data Strategy (AZ-201-M) by Gregory Leake (Microsoft) and Lynn Langit (Microsoft)

- SQL Azure Sharding with the Open Source Enzo Library (AZ-100) by Herve Roggero (Blue Syntax Consulting LLC)

- SQLCAT: What are the Largest Azure Projects in the World and how do they Scale (AZ-302-A) by Kevin Cox (Microsoft) and Michael Thomassy (Microsoft Corp.)

- This Ain't Your Father's Cloud (AZ-101) by Buck Woody (Microsoft) and Kevin Kline (Quest Software)

<Return to section navigation list>

Marketplace DataMarket and OData

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Mike Wood (@mikewo) posted Tips for Working with the Windows Azure AppFabric Caching Service – Part III of III to his Cumulux blog on 10/9/2011:

This post will complete our series of tips for working with the Windows Azure AppFabric Caching Service. In our first post we discussed some of the differences between the Windows Azure AppFabric Caching Service and the Windows Server AppFabric Caching Service available for your own data center. These differences could help drive decisions on where and what you decide to cache in your Windows Azure solutions. We followed this with a second post focusing on how to choose the size of cache for Windows Azure AppFabric Caching.

For our final post we will cover some considerations you’ll want to have when scaling your Windows Azure solution that is using the Windows Azure AppFabric Caching service. This post may refer to the Windows Azure AppFabric Caching as simply the Caching Service. References to the Windows Server AppFabric Caching will be called out specifically.

Scaling up

A big benefit of cloud computing is elastic scaling: easily increasing or decreasing your resources to meet the demand on your system. Given that you are specifically choosing a cache size, which has some limitations on throughput, you will want to select a cache size that will easily meet your needs for the load you are predicting. When you need to scale your usage of Windows Azure AppFabric Caching you can do so by visiting the Windows Azure Management portal to request the cache size be increased (or decreased). This sounds perfectly simple, and really it is, but there are a couple of things you’ll need to take into account as you scale in either direction.

If you change the cache size by increasing the amount of cache, the existing cache data stays intact. The quotas and size are simply increased. If you decrease the size of the cache you are using, the system will start to asynchronously purge the cache of the least recently accessed items until the size of the cache is within the new size you selected. While the system is purging items from the cache, whether from a cache size change or because the limit has been reached and there is memory pressure as new items are added, it is possible to get an Exception when attempting to add new items, but it is more likely that the clients won’t see this issue at all and the least recently accessed items will just fall out of the cache. Note that this can cause other issues if code blindly expects a value to be in cache. Your code should always be prepared to deal with the fact that an item previously placed into the cache is now gone. Also note that this size change is not instantaneous; it may take a few minutes for the change to occur.
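One common way to make code tolerant of evicted items is the cache-aside pattern: treat a missing item as normal, reload it from the persisted store, and try to put it back. The sketch below is an illustration under stated assumptions, not the Caching Service API itself. The CacheAside class is hypothetical, and the get and put delegates stand in for the cache client (in a role they might wrap calls such as DataCache.Get and DataCache.Put).

```csharp
using System;

// Hypothetical cache-aside helper. The get/put delegates stand in for the
// cache client; in a real role they would wrap the caching API.
public class CacheAside<T> where T : class
{
    private readonly Func<string, object> get;
    private readonly Action<string, object> put;

    public CacheAside(Func<string, object> get, Action<string, object> put)
    {
        this.get = get;
        this.put = put;
    }

    // Returns the cached value, or reloads it from the source of truth
    // when the item has been evicted or was never cached.
    public T GetOrLoad(string key, Func<T> loadFromStore)
    {
        T cached = get(key) as T;
        if (cached != null)
        {
            return cached;
        }

        T fresh = loadFromStore();
        try
        {
            put(key, fresh);
        }
        catch (Exception)
        {
            // A failed put (for example while the cache is being purged)
            // should not fail the request; the fresh value is still returned.
        }
        return fresh;
    }
}
```

Catching every exception on the put is a deliberately blunt choice for a sketch; production code would more likely catch the cache client's specific exception type and log it.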

Something to keep in mind as you change the size of your cache to scale is that, unlike instance counts for your roles, which you can change at any time, once you request a cache size change in the management portal you must wait a day before you request another scale operation for that cache. This means if you are scaling down in response to a drop in load on your system you won’t be able to scale back up in response to a quick spike until the next day with that specific cache. You will therefore probably want to size your cache for a peak scenario rather than rely on the flexible up-and-down scaling you can do with your compute instance counts. Note that you are not limited to a single cache and you can have several caches to achieve your goals, each with their own size and usage.

If you get caught needing to scale in either direction shortly after making a scale request, one way to work around the one-day limitation is to create a new cache and update your solution to use it. This requires the cache settings to live in the Service Configuration for your solution rather than in web.config or app.config, so that a change won’t require a full redeployment of your solution. You can add code to the environment changing events so that updates to the configuration reset the cache client if the cache location changes. Obviously in this case it would be a completely new cache and the system wouldn’t have access to the items stored in the previous cache, so take that into consideration as you make this kind of decision. Depending on what you are caching this may not be an option for you.
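As a rough sketch of reacting to such a configuration update, the class below subscribes to the RoleEnvironment.Changed event and invokes a callback when one particular setting changes. The CacheSettingWatcher class and the "CacheHostName" setting name are assumptions made for illustration, and how the cache client is actually rebuilt is left to the callback.

```csharp
using System;
using System.Linq;
using Microsoft.WindowsAzure.ServiceRuntime;

// Hypothetical watcher; "CacheHostName" is an assumed setting name.
public class CacheSettingWatcher
{
    private const string CacheSettingName = "CacheHostName";
    private readonly Action<string> resetCacheClient;

    // resetCacheClient receives the new setting value and is expected to
    // rebuild whatever cache client the application uses.
    public CacheSettingWatcher(Action<string> resetCacheClient)
    {
        this.resetCacheClient = resetCacheClient;
    }

    public void Start()
    {
        RoleEnvironment.Changed += OnRoleEnvironmentChanged;
    }

    private void OnRoleEnvironmentChanged(object sender, RoleEnvironmentChangedEventArgs e)
    {
        // Look only at configuration setting changes that touch the cache setting.
        bool cacheSettingChanged = e.Changes
            .OfType<RoleEnvironmentConfigurationSettingChange>()
            .Any(change => change.ConfigurationSettingName == CacheSettingName);

        if (cacheSettingChanged)
        {
            resetCacheClient(RoleEnvironment.GetConfigurationSettingValue(CacheSettingName));
        }
    }
}
```

A role would typically create the watcher and call Start() from its OnStart method. This only runs inside a Windows Azure role (or the compute emulator), since RoleEnvironment is not available elsewhere.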

In conclusion…

The Windows Azure AppFabric Caching service is an in-memory distributed cache that can really help to take the load off your persisted stores and increase the performance and scalability of your hosted solutions. Once you are aware of the differences between the cloud and on-premise versions of the AppFabric Caching services, and the capabilities of the cloud version specifically, you’ll be a long way in having the information you need to make good decisions on when and if to include these caching services in your Windows Azure hosted solutions.

There are some really great resources on the internet on how to use the caching services. Please check out the Windows Azure Platform Training Kit which has a hands on lab (Building Windows Azure Applications with the Caching Service) for using the caching services and try it out in the AppFabric labs environment.

Tom Hollander described Using Service Bus Topics and Subscriptions with WCF in a 10/8/2011 post:

Introduction

In my last post, I showed how to use Windows Azure AppFabric Service Bus Queues with WCF. Service Bus Queues provide a great mechanism for asynchronous communication between two specific applications or services. However, in complex systems it’s often useful to support this kind of messaging between many applications and services. This is particularly important for systems utilising a “Message Bus” approach, where one application “publishes” events which may be consumed by any number of “subscriber” applications. While it’s possible to simulate this kind of pattern with a simple queuing mechanism, the Service Bus now has built-in support for this pattern using a feature called Topics. A topic is essentially a special kind of queue which allows multiple subscribers to independently receive and process their own messages. Furthermore, each subscription can be filtered, ensuring the subscriber only receives the messages that are of interest.

In this post I will build on the previous Service Bus Queues sample, replacing the queue with a topic. I also include two subscriber services, each of which uses its own subscription attached to the topic. The first subscription is configured to receive all messages from the publisher, while the second contains a filter that only allows messages through if their EventType property is equal to “Test Event”. The basic architecture is shown below, and the full code sample can be downloaded here.

If you’ve looked through my previous Queues sample, you’ll find that there are very few changes required to support Topics and Subscriptions. The changes are:

- The application now creates Topics and Subscriptions (including Filters) instead of Queues, using the same configuration-driven helper library

- The client’s WCF configuration points to the topic URI

- The two services’ WCF configuration point to the topic URI as the address and the subscription URI as the listenUri

- The client code has been extended to send metadata along with the message, allowing the subscription filters to act upon the metadata.

Although most of the sample is the same as last time, I’ve included the full description of the sample in this post to prevent readers from needing to reference both posts.

Creating the Topic and Subscriptions

To use the Service Bus, you first need to have a Windows Azure subscription. If you don’t yet have one, you can sign up for a free trial. Once you have an Azure subscription, log into the Windows Azure Portal, navigate to Service Bus, and create a new Service Namespace. You can then create one or more topics directly from the portal, and then create subscriptions under each topic. However at the time of writing, there’s no way to specify filters when creating subscriptions from the portal, so every subscription will receive every message sent to the topic. To access the full power of topics and subscriptions, you should create them programmatically. For my sample I built a small library that lets you define your topics, subscriptions (including filters) and queues in a configuration file so they can be created when needed by the application. Here’s how I created the topic and subscription used for Service Two, which is configured to filter for events where the EventType metadata property is equal to “Test Event”:

    <serviceBusSetup>
      <credentials namespace="{your namespace here}" issuer="owner" key="{your key here}" />
      <topics>
        <add name="topic1">
          <subscriptions>
            <add name="sub2" createMode="DeleteIfExists" filter="EventType = 'Test Event'" />
          </subscriptions>
        </add>
      </topics>
    </serviceBusSetup>

Note that for any interactions with Service Bus, you’ll need to know your issuer name (“owner” by default) and secret key (a bunch of Base64 gumph), as well as your namespace, all of which can be retrieved from the portal. For my sample, this info needs to go in a couple of places in each configuration file.
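For readers who prefer not to use a configuration-driven helper, the same topic and filtered subscription can be created directly against the Service Bus management API. This is a minimal sketch, not the helper library from the sample. It assumes a version of Microsoft.ServiceBus.dll that provides NamespaceManager and SqlFilter; the TopicSetup class and its method are hypothetical, and treating createMode="DeleteIfExists" as "delete, then recreate" is an interpretation of the setting's name.

```csharp
using System;
using Microsoft.ServiceBus;
using Microsoft.ServiceBus.Messaging;

// Hypothetical setup class; not the configuration-driven helper from the sample.
public static class TopicSetup
{
    public static void CreateFilteredSubscription(string serviceNamespace, string issuerKey)
    {
        Uri address = ServiceBusEnvironment.CreateServiceUri("sb", serviceNamespace, String.Empty);
        TokenProvider tokenProvider =
            TokenProvider.CreateSharedSecretTokenProvider("owner", issuerKey);
        NamespaceManager namespaceManager = new NamespaceManager(address, tokenProvider);

        // Create the topic only if it is not there yet.
        if (!namespaceManager.TopicExists("topic1"))
        {
            namespaceManager.CreateTopic("topic1");
        }

        // Assumed meaning of createMode="DeleteIfExists": drop any existing subscription first.
        if (namespaceManager.SubscriptionExists("topic1", "sub2"))
        {
            namespaceManager.DeleteSubscription("topic1", "sub2");
        }

        // Only messages whose EventType property equals 'Test Event' are delivered to sub2.
        namespaceManager.CreateSubscription(
            "topic1", "sub2", new SqlFilter("EventType = 'Test Event'"));
    }
}
```

Running this requires a real Service Bus namespace and its issuer key, so it cannot be exercised offline.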

Defining the Contract

As with any WCF service, you need to start with the contract. Queuing technologies are inherently one-way, so you need to use the IsOneWay property on the OperationContract attribute. I chose to use a generic base interface that accepts any payload type, which can be refined for specific concrete payloads. However if you don’t want to do this, a simple single interface would work just fine.

    [ServiceContract]
    public interface IEventNotification<TLog>
    {
        [OperationContract(IsOneWay = true)]
        void OnEventOccurred(TLog value);
    }

    [ServiceContract]
    public interface IAccountEventNotification : IEventNotification<AccountEventLog>
    {
    }

    [DataContract]
    public class AccountEventLog
    {
        [DataMember]
        public int AccountId { get; set; }

        [DataMember]
        public string EventType { get; set; }

        [DataMember]
        public DateTime Date { get; set; }
    }

Building and Hosting the Services

This sample includes two “subscriber” services, both implemented identically but running in their own IIS applications. The services are implemented exactly the same way as any other WCF service. You could build your own host, but I choose to host the services in IIS via a normal .svc file and associated code-behind class file. For my sample, whenever I receive a message I write a trace message and also store the payload in a list in a static variable, which is also displayed on a simple web page.

    public class Service1 : IAccountEventNotification
    {
        public void OnEventOccurred(AccountEventLog log)
        {
            Trace.WriteLine(String.Format("Service One received event '{0}' for account {1}", log.EventType, log.AccountId));
            Subscriber.ReceivedEvents.Add(log);
        }
    }

The magic of wiring this service up to the Service Bus all happens in configuration. First, make sure you’ve downloaded and referenced the latest version of the Microsoft.ServiceBus.dll. NuGet is the easiest way to get this (just search for “WindowsAzure.ServiceBus”).

Now it’s just a matter of telling WCF about the service, specifying the NetMessagingBinding and correct URIs, and configuring your authentication details. Since I haven’t got the SDK installed, the definitions for the bindings are specified directly in my web.config files instead of in machine.config.

The WCF configuration for Service One is shown below. Note that the topic URI is specified in the service endpoint’s address attribute, and the subscription URI is specified in its listenUri attribute.

    <system.serviceModel>
      <!-- These <extensions> will not be needed once our sdk is installed-->
      <extensions>
        <bindingElementExtensions>
          <add name="netMessagingTransport" type="Microsoft.ServiceBus.Messaging.Configuration.NetMessagingTransportExtensionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
        </bindingElementExtensions>
        <bindingExtensions>
          <add name="netMessagingBinding" type="Microsoft.ServiceBus.Messaging.Configuration.NetMessagingBindingCollectionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
        </bindingExtensions>
        <behaviorExtensions>
          <add name="transportClientEndpointBehavior" type="Microsoft.ServiceBus.Configuration.TransportClientEndpointBehaviorElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
        </behaviorExtensions>
      </extensions>
      <behaviors>
        <endpointBehaviors>
          <behavior name="securityBehavior">
            <transportClientEndpointBehavior>
              <tokenProvider>
                <sharedSecret issuerName="owner" issuerSecret="{your key here}" />
              </tokenProvider>
            </transportClientEndpointBehavior>
          </behavior>
        </endpointBehaviors>
      </behaviors>
      <bindings>
        <netMessagingBinding>
          <binding name="messagingBinding" closeTimeout="00:03:00" openTimeout="00:03:00" receiveTimeout="00:03:00" sendTimeout="00:03:00" sessionIdleTimeout="00:01:00" prefetchCount="-1">
            <transportSettings batchFlushInterval="00:00:01" />
          </binding>
        </netMessagingBinding>
      </bindings>
      <services>
        <service name="ServiceBusPubSub.ServiceOne.Service1">
          <endpoint name="Service1"
                    listenUri="sb://{your namespace here}.servicebus.windows.net/topic1/subscriptions/sub1"
                    address="sb://{your namespace here}.servicebus.windows.net/topic1"
                    binding="netMessagingBinding"
                    bindingConfiguration="messagingBinding"
                    contract="ServiceBusPubSub.Contracts.IAccountEventNotification"
                    behaviorConfiguration="securityBehavior" />
        </service>
      </services>
    </system.serviceModel>

One final (but critical) thing to note: Most IIS-hosted WCF services are automatically “woken up” whenever a message arrives. However, this does not happen when working with the Service Bus: the service only starts listening to the subscription after it is already awake. During development (and with the attached sample) you can wake up the services by manually browsing to the .svc file. However, for production use you’ll obviously need a more resilient solution. For applications hosted on Windows Server, the best solution is to use Windows Server AppFabric to host and warm up the service as documented in this article. If you’re hosting your service in Windows Azure, you’ll need to use a more creative solution to warm up the service, or you could host in a worker role instead of IIS. I’ll try to post more on possible solutions sometime in the near future.

Building the Client

Building the client is much the same as for any other WCF application. I chose to use a ChannelFactory so I could reuse the contract assembly from the services, but any WCF proxy approach should work fine.

The one thing I needed to do differently was explicitly attach some metadata when publishing the event to the topic. This is necessary whenever you want to allow subscribers to filter messages based on this metadata, as is done by Service Two in my sample. As you can see from the code, this is achieved by attaching a BrokeredMessageProperty object to the OperationContext.Current.OutgoingMessageProperties:

    var factory = new ChannelFactory<IAccountEventNotification>("Subscribers");
    var clientChannel = factory.CreateChannel();
    ((IChannel)clientChannel).Open();

    using (new OperationContextScope((IContextChannel)clientChannel))
    {
        // Attach metadata for subscriptions
        var bmp = new BrokeredMessageProperty();
        bmp.Properties["AccountId"] = accountEventLog.AccountId;
        bmp.Properties["EventType"] = accountEventLog.EventType;
        bmp.Properties["Date"] = accountEventLog.Date;
        OperationContext.Current.OutgoingMessageProperties.Add(BrokeredMessageProperty.Name, bmp);

        clientChannel.OnEventOccurred(accountEventLog);
    }

    // Close sender
    ((IChannel)clientChannel).Close();
    factory.Close();

Again, the interesting part is the configuration, although it matches the service pretty closely. In the case of the client, you only need to specify the topic’s URI in the endpoint definition since it knows nothing about the subscribers:

    <system.serviceModel>
      <extensions>
        <bindingElementExtensions>
          <add name="netMessagingTransport" type="Microsoft.ServiceBus.Messaging.Configuration.NetMessagingTransportExtensionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
        </bindingElementExtensions>
        <bindingExtensions>
          <add name="netMessagingBinding" type="Microsoft.ServiceBus.Messaging.Configuration.NetMessagingBindingCollectionElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
        </bindingExtensions>
        <behaviorExtensions>
          <add name="transportClientEndpointBehavior" type="Microsoft.ServiceBus.Configuration.TransportClientEndpointBehaviorElement, Microsoft.ServiceBus, Version=1.5.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
        </behaviorExtensions>
      </extensions>
      <behaviors>
        <endpointBehaviors>
          <behavior name="securityBehavior">
            <transportClientEndpointBehavior>
              <tokenProvider>
                <sharedSecret issuerName="owner" issuerSecret="{your key here}" />
              </tokenProvider>
            </transportClientEndpointBehavior>
          </behavior>
        </endpointBehaviors>
      </behaviors>
      <bindings>
        <netMessagingBinding>
          <binding name="messagingBinding" sendTimeout="00:03:00" receiveTimeout="00:03:00" openTimeout="00:03:00" closeTimeout="00:03:00" sessionIdleTimeout="00:01:00" prefetchCount="-1">
            <transportSettings batchFlushInterval="00:00:01" />
          </binding>
        </netMessagingBinding>
      </bindings>
      <client>
        <endpoint name="Subscribers"
                  address="sb://{your namespace here}.servicebus.windows.net/topic1"
                  binding="netMessagingBinding"
                  bindingConfiguration="messagingBinding"
                  contract="ServiceBusPubSub.Contracts.IAccountEventNotification"
                  behaviorConfiguration="securityBehavior" />
      </client>
    </system.serviceModel>

Summary

Windows Azure AppFabric Service Bus makes it easy to build loosely-coupled, event-driven systems using the Topics and Subscriptions capability. While you can program directly against the Service Bus API, using the WCF integration via the NetMessagingBinding allows you to use your existing skills (and possibly leverage existing code) to take advantage of this great capability.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Traffic Manager, Connect, RDP and CDN

Brian Hitney explained Geo-Load Balancing with the Azure Traffic Manager in a 10/10/2011 post:

One of the great new features of the Windows Azure platform is the Azure Traffic Manager, a geo load balancer and durability solution for your cloud solutions. For any large website, managing traffic globally is critical to the architecture for both disaster recovery and load balancing.

When you deploy a typical web role in Azure, each instance is automatically load balanced at the datacenter level. The Azure Fabric Controller manages upgrades and maintenance of those instances to ensure uptime. But what about if you want to have a web solution closer to where your users are? Or automatically direct traffic to a location in the event of an outage?

This is where the Azure Traffic Manager comes in, and I have to say, it is so easy to set up – it boggles my mind that in today’s day and age, individuals can prop up large, redundant, durable, distributed applications in seconds that would rival the infrastructure of the largest websites.

From within the Azure portal, the first step is to click the Virtual Network menu item.

On the Virtual Network page, we can set up a number of things, including the Traffic Manager. Essentially the goal of the first step is to define what Azure deployments we’d like to add to our policy, what type of load balancing we’ll use, and finally a DNS entry that we’ll use as a CNAME:

We can route traffic for performance (best response time based on where user is located), failover (traffic sent to primary and only to secondary/tertiary if primary is offline), and round robin (traffic is equally distributed). In all cases, the traffic manager monitors endpoints and will not send traffic to endpoints that are offline.

I had someone ask me why you’d use round robin over routing based on performance – there’s one big case where that may be desirable: if your users are very geography centric (or inclined to hit your site at a specific time) you’d likely see patterns where one deployment gets maxed out, while another does not. To ease the traffic spikes to one deployment, round robin would be the way to go. Of course, an even better solution is to combine traffic shaping based on performance with Azure scaling to meet demand.
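The three routing choices described above can be sketched in a few lines of Python. This is only an illustration of the selection logic, not how Traffic Manager is implemented (the real service answers DNS queries rather than routing individual requests); the `Endpoint` class, the `choose_endpoint` function, the policy names, and the latency table are all hypothetical names made up for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str          # hypothetical deployment label
    online: bool       # result of the most recent health probe
    latency_ms: dict   # hypothetical: client region -> measured latency


def choose_endpoint(policy, endpoints, client_region=None, request_number=0):
    """Pick a deployment for one request; `endpoints` is in priority order."""
    # Whatever the policy, offline endpoints never receive traffic.
    live = [e for e in endpoints if e.online]
    if not live:
        return None
    if policy == "failover":
        # First healthy endpoint in priority order (primary, then secondary...).
        return live[0]
    if policy == "performance":
        # Healthy endpoint with the lowest latency for this client's region.
        return min(live, key=lambda e: e.latency_ms[client_region])
    if policy == "round_robin":
        # Spread requests evenly across the healthy endpoints.
        return live[request_number % len(live)]
    raise ValueError(f"unknown policy: {policy}")
```

With a South Central primary and a North Central secondary, the failover policy keeps returning the primary until its `online` flag goes false, which mirrors the failover test described in this post.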

In the above image, let’s say I want to create a failover for the Rock Paper Azure botlab (a fairly silly example, but it works). I first added my main botlab (deployed to South Central) to the DNS names, and then added my instance deployed to North Central:

From the bottom of the larger image above, you can see I’m picking a DNS name of botlab.ctp.trafficmgr.com as the public URL. What I’d typically do at this point is go in to my DNS records, and add a CNAME, such as “www.rockpaperazure.com” –> “rps.ctp.trafficmgr.com”.

In my case, I want this to be a failover policy, so users only get sent to my North Central datacenter in the event the South Central instance is offline. To simulate that, I took my South Central instance offline, and from the Traffic Manager policy report, you’d see something like this:

To test, we’ll fetch the main page in IE:

… and we’re served from North Central. Of course, the user doesn’t know (short of a traceroute) where they are going, and that’s the general idea. There’s nothing stopping you from deploying completely different instances except of course for the potential end-user confusion!

But what about database synchronization? That’s a topic for another post …

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Barb Darrow (@gigabarb) asked Can Microsoft Web Matrix 2.0 lure developers to Azure? in a 10/10/2011 post to Giga Om’s Structure blog:

Microsoft hopes to use the company’s Web Matrix 2.0 tool–now in beta–to lure new-age web developers to Azure, its cloud-computing platform-as-a-service.

While the Azure PaaS has a potentially huge built-in audience of .Net programmers, it lacks cachet among the “cool kid,” next-gen web developers. Those developers tend to gravitate towards Linux and Perl, Ruby, Python or Java languages.

Microsoft has talked a tiny bit about Web Matrix 2.0 on various company blogs, but nowhere does it mention Azure as a potential target site for those applications.

One Microsoft blogger described Web Matrix 2.0 as:

“…an all-in-one tool you can use to download, install and tweak and publish a website based on an open source app like WordPress, Umbraco, Drupal, … OR program your own site with an easy-to-use Razor syntax and ASP.NET Web Pages.”

But the tool could serve a vital purpose. Microsoft has spent years and billions of dollars building out Azure infrastructure and services, which went live in February 2010. But the effort lacks stature among the web and cloud cognoscenti who don’t necessarily see Azure’s Windows heritage as a good thing.

For many of those highly technical developers, Azure’s PaaS stack is overkill; they prefer to deploy on Amazon Web Services IaaS instead. And those who really want to avail themselves of a PaaS would be more likely to check out VMware’s Cloud Foundry or Salesforce.com’s Heroku.

Our own Stacey Higginbotham wrote that CloudFoundry “will offer developers tools to build out applications on public clouds, private clouds and anyplace else, whether the underlying server runs VMware or not.”

That’s an attractive proposition for developers who want the widest possible addressable market. Developers can use non-Microsoft languages to write for Azure, but many in the non-.Net camp don’t see the appeal of writing applications that will run in a Microsoft-only environment.

“What Azure needs is a way for developers to use it without caring about it being Azure. [The] ideal state would be a dude writing an app in PHP or Java or Ruby and being able to run it on Azure or Heroku or Google App Engine without having to care,” said Carl Brooks, infrastructure analyst with Tier 1 Research, a division of The 451 Group.

Disclosure: Automattic, the maker of WordPress.com, is backed by True Ventures, a venture capital firm that is an investor in the parent company of this blog, Giga Omni Media. Om Malik, founder of Giga Omni Media, is also a venture partner at True.

Microsoft has emphasized deploying Visual Studio LightSwitch applications on Windows Azure.

John White posted SharePoint Tools for Windows Azure, Visual Studio, jQuery, and HTML5 to his The White Pages blog on 10/10/2011:

As I mentioned in my last post, at the recent SharePoint 2011 conference, I attended a number of sessions where Visual Studio played a major role. Andrew Connell articulated design patterns around using SharePoint with Windows Azure, Ted Pattison showed patterns around jQuery, HTML5 and OData, and Eric Shupps used the performance testing tools in VS2010 to show the impact of performance tweaks.

In all of the sessions mentioned above, reference was made to add ins, extensions, or other tools that make working with SharePoint and Azure a great deal easier. I took note of most of them, and in the process of summarizing them, thought that I should amalgamate them with my own current list of dev tools, and post it here. Extensions can and should be installed via the extensions manager in Visual Studio, and I’ll note them below:

- Cloudberry: Utility for working with Azure BLOB Storage. Makes moving files to/from blob storage simple.

- Visual Studio 2010 SharePoint Power Tools*: Adds a sandboxed Visual Web Part item template and other enhancements.

- CKS Development Tools for SharePoint*: Community-led effort that includes many tools and templates for SharePoint development.

- CAML Intellisense for VS2010*: Adds Intellisense to VS2010 for those of us still stuck with CAML.

- Visual Studio 2010 Silverlight Web Part*: Project template for writing Silverlight web parts – both full trust and sandboxed supported.

- Web Standards Update for Visual Studio 2010 SP1*: Adds Intellisense for HTML5 and CSS3 to VS2010.

- SharePoint Timer Job Item*: Supports the creation of administrative timer jobs in SharePoint 2010.

- SharePoint 2010 and Windows Azure Training Course: Training course to get up to speed on working with SharePoint 2010 and Windows Azure.

- jQuery Libraries: Main libraries for working with jQuery.

- jQuery UI Library: UI controls for use with jQuery.

- jQuery Templates: Add-in for the templating of controls in jQuery.

- Modernizr: Open source project to allow older browsers to work with HTML5/CSS3 elements.

Ilya Lehrman (@ilya_l) reported Business Connectivity Services for SharePoint Online - available end-of-year on 10/5/2011:

Business Connectivity Services come to SharePoint Online. Microsoft plans to expand the business critical services delivered by Office 365 and Azure and make moving to the cloud even easier for customers. By the end of the year, the first round of service updates to SharePoint Online since Office 365 launched will be complete and will enable customers to use Business Connectivity Services (BCS) to connect to data sources via Windows Communication Foundation (WCF) Web Services endpoints. BCS lets customers use and search data from other systems as if it lives in SharePoint—in both read and write modes.

At the SharePoint 2011 conference Microsoft announced that by the end of the year SharePoint Online will get BCS (via WCF end-points). That will allow much deeper integration into customer business environments, that heretofore required on-premises SharePoint installations. I can also see using Windows Azure as a platform for hosting those WCF end-points, utilizing Windows Azure Connect to reach inside the datacenter to provide the data to SharePoint Online... Anyone up for a proof of concept implementation? ;)

This post is germane because it cites an official Microsoft source for the report.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Jan van der Haegen (@janvanderhaegen) described Hacking into the heart of a LightSwitch application in a 10/9/2011 post:

I received an email a couple of weeks ago, in which one consultant to another mentioned “LightSwitch or another code generation tool”…

Although it is true that LightSwitch generates code for you, a running LightSwitch application contains only a really small amount of generated code.

Like any MVVM application, the heart of the application is the model.

In LightSwitch, this heart is called the application model. When you debug through a LightSwitch application, you can investigate this application model and see that it is “nothing more” than a big dictionary with over 800 entries for even the simplest application.

Since this is the heart of any LightSwitch application, it’s important to understand how this dictionary is filled, and where it is used…

The LightSwitch application model.

The application model is a combination of three major parts that I’m aware of…

- Core: Multiple LSML files (xml compliant documents called “LightSwitch Markup Language” files) hidden in the LightSwitch internal assemblies.

- Extensibility: multiple LSML files from different modules. Each time you create an extension, a file called module.lsml is added along with a ModuleLoader implementation that loads that file. Extensions are not the only modules that provide input for the application model, though: LightSwitch comes with a Security module (users, rights, user groups, …) which is also a module, and so is your LightSwitch application itself!

- Application: the latter, your LightSwitch application, contains a file called ApplicationDefinition.lsml.

The application model is used in three different places as well:

- Runtime: obviously, as stated before, it’s the heart of your running application.

- Compile time: from the data in your application model, the LightSwitch framework generates everything from classes to config to the database (Entity Framework).

- Design time: this may sound a bit weird, but the LightSwitch-specific screens in Visual Studio aren’t much more than advanced graphical XML (LSML) editors…

LSML editor: all screen definitions are found in the different modules that form the application definition

LSML editor: all screens and data sources are found in the different modules that form the application definition

LSML editor: all properties are found in the different modules that form the application definition

Ok, enough theory, time to get our hands dirty.

The basic idea is simple: if, during the installation of our extension, we can get our hands on the ApplicationDefinition.lsml file, we can add anything we want to the LightSwitch application…

If you wonder why we want to do this, the reason is simple. We want to create a skinning extension that is capable of storing user specific skin configurations and need a better alternative than the LightSwitch default implementation.

Before I show you this ugly hack (and man o’ man, it’s one of the ugliest pieces of code I have probably ever written…), a word to the wise: with great power comes great responsibility. It is really easy to corrupt the file, and thus destroy the developer’s LightSwitch application. Don’t hold me responsible if you do, though… Also, if LightSwitch releases a new version, the implementation might be subject to change, so having your crucial business needs depend on this hack is a bad idea.

Right, let’s get to it. The perfect place to implement our hack is the point where our extension is loaded by the Visual Studio environment.

Create a new extension (any extension will do at this point: shell, theme, user control, datasource, whatever) and have a look at the project called MyExtension.Common: there is a class called ModuleLoader.cs, which is of course our prime candidate…

It contains a method called LoadModelFragments.

[ModuleDefinitionLoader("MyExtension")]
internal class ModuleLoader : IModuleDefinitionLoader
{
    #region IModuleDefinitionLoader Members
    /* ... */
    IEnumerable<Stream> IModuleDefinitionLoader.LoadModelFragments()
    {

Doing a bit of research, I found that this method is indeed called both at runtime of the LightSwitch application and at design time, by the Visual Studio LightSwitch environment. It’s a must that we find a way to differentiate between both cases: runtime is simply too late, since the database tables have been created and the corresponding classes have been generated. We’re only interested in modifying the application definition at design time…

        Assembly assembly = Assembly.GetExecutingAssembly();
        Assembly s = Assembly.GetCallingAssembly();
        if (s.FullName.StartsWith("Microsoft.LightSwitch.Design.Core.Internal"))
        {

The implementation of our hack keeps getting dirtier, don’t judge my coding skills by what you saw above, and what you’re about to see below!!

At this point, we found a point in time where we are sure that the Visual Studio LightSwitch environment is loading our extension. Before we can add our custom tables to the default dataworkspace in the application definition, and basically get a free ride from the LightSwitch framework to actually add our tables to the database and generate the model and data access classes, we must first get a reference to the ApplicationDefinition.lsml file.

Because this code resides in an extension, one can be sure that our extension has been copied to the solution’s folder called _Pvt_Extensions. Let’s backtrack the ApplicationDefinition file from there…

            string assemblyUrl = assembly.CodeBase;
            int index = assemblyUrl.LastIndexOf("_Pvt_Extensions");
            if (index != -1)
            {
                // Skip the 8-character "file:///" prefix and keep everything up to the _Pvt_Extensions folder.
                string ApplicationDefinition = assemblyUrl.Substring(8, index - 8) + "Data/ApplicationDefinition.lsml";
                /* HomeWork time ... */

That’s all there is to it.
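To make the string arithmetic easier to follow, here is the same path derivation as a small Python sketch (Python rather than the extension’s C#, purely as an illustration). The function name and the example path are hypothetical; the only assumptions carried over from the code above are that the assembly location is a `file:///` URL and that it sits somewhere under a `_Pvt_Extensions` folder.

```python
def application_definition_path(code_base):
    """Derive the ApplicationDefinition.lsml path from an assembly's file URL."""
    # Find the last occurrence of the extensions folder, as LastIndexOf does.
    index = code_base.rfind("_Pvt_Extensions")
    if index == -1:
        return None  # not loaded from a LightSwitch solution's extension folder
    prefix = "file:///"  # 8 characters, hence the Substring(8, index - 8) above
    solution_folder = code_base[len(prefix):index]
    return solution_folder + "Data/ApplicationDefinition.lsml"


# Hypothetical location of an extension assembly inside a solution:
example = "file:///C:/Projects/MyApp/_Pvt_Extensions/MyExtension/MyExtension.Common.dll"
path = application_definition_path(example)
```

For the hypothetical URL above, `path` ends up pointing at `Data/ApplicationDefinition.lsml` directly under the solution folder `C:/Projects/MyApp/`.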

I kind of ran out of time here (running late for a training today), so school is out, and here’s your homework for today: to know what one needs to add to this file, create a new LightSwitch application. Take a backup of the ApplicationDefinition.lsml file, then compare it to a version where you added a really simple table. Try to implement this process where indicated in the code above. There are two more pitfalls to encounter, and I’m curious to see how you will handle them.

Meanwhile, I’ll clean up my sample a bit and release it later this week. As a little teaser: my sample uses a similar (yet not exactly the same) technique as described above to merge different LightSwitch applications together…

Jan van der Haegen (@janvanderhaegen) also described Looking for a ServiceProxy.UserSettingsService alternative in another 10/9/2011 post:

While creating my skinning extension, I’m noticing some weird behaviour with the default UserSettingsService returned by the imported IServiceProxy. One would think that this service stores settings that are specific to the user, you know, the one logged in to the LightSwitch application, managed in the LightSwitch’s Security module (users & rights)…

Unfortunately…

Bill R -MSFT (MSFT)

Hi Jan,

You are correct that the user settings are not stored in the database. Also the settings are stored on the PC and not “with” the app so it is true that the users settings will not persist across machines.

As far as where we write them to:

On a desktop app we write to “Environment.SpecialFolder.MyDocuments” + \Microsoft\LightSwitch\Settings\[App]

On a web app we use Silverlight Isolated Storage (http://www.silverlight.net/learn/graphics/file-and-local-data/isolated-storage-(silverlight-quickstart))

The settings you can store and retrieve with the UserSettingsService, are saved in locations specific to the currently logged in Windows user, on that particular machine, which is insufficient for our needs.

In my opinion, LightSwitch user settings are a valid candidate to be stored in the default dataworkspace ( i.e. the database). That way, different users can have different settings even if they access the LightSwitch application from the same Windows account on the same machine (unless you use Windows authentication obviously), and the user’s settings persist over different machines.
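As a rough illustration of that idea, the Python sketch below keys settings by the application’s user name in a database table instead of by Windows account and machine. It is not the skinning extension’s code: the `UserSettings` table, its columns, and the helper functions are hypothetical, and an in-memory SQLite database stands in for the LightSwitch database.

```python
import sqlite3


def open_store():
    """Create a stand-in settings table keyed by application user and setting name."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE UserSettings ("
        " UserName TEXT, Name TEXT, Value TEXT,"
        " PRIMARY KEY (UserName, Name))"
    )
    return db


def save_setting(db, user, name, value):
    # One row per (user, setting); saving again overwrites the previous value.
    db.execute(
        "INSERT OR REPLACE INTO UserSettings VALUES (?, ?, ?)",
        (user, name, value),
    )


def load_setting(db, user, name, default=None):
    row = db.execute(
        "SELECT Value FROM UserSettings WHERE UserName = ? AND Name = ?",
        (user, name),
    ).fetchone()
    return row[0] if row else default
```

Because the lookup depends only on the logged-in application user, two users sharing one Windows account get separate settings, and the same user sees the same settings from any machine that reaches the database.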

That’s why the skinning extension comes with a lengthy install guide asking the developer to “please create this table in the dataworkspace with these specific fields”… Not a good practice, I know, but our hands are kind of tied because of the LightSwitch UserSettingsService implementation.

Unless of course… We can come up with some kind of alternative…

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

[Windows Azure Compute] [South Central US] reported [Yellow] Windows Azure Compute Partial Service Degradation on 10/9/2011:

Oct 9 2011 12:12PM Our monitoring has detected an intermittent issue with Windows Azure Compute in the South Central US region. At this time it is not possible to service existing hosted services already deployed in a particular cluster in this region (create, update, or delete deployments). It is possible to deploy new hosted services however, and existing hosted services are not affected. Storage accounts in this region are not affected either. We are actively investigating this issue and working to resolve it as soon as possible. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

Oct 9 2011 1:10PM The investigation is still underway to identify the root cause of this issue. If your application needs to be serviced and is affected by this issue, remember that Windows Azure Compute is currently fully functional in all other regions, so if your application can be geo-located in another region, you may deploy your service successfully elsewhere. Then updating your DNS records to direct your application traffic to that newly deployed service would in fact be equivalent to servicing your application currently deployed in the affected cluster in the South Central US region. Further updates will be published to keep you apprised of the situation. We apologize for any inconvenience this causes our customers.

Oct 9 2011 1:58PM The root cause has been identified and the repair steps have been executed and validated. Windows Azure Compute is now fully functional in the South Central US region. We apologize for any inconvenience this caused our customers.

The issue didn’t affect my OakLeaf Systems Azure Table Services Sample Project (Tools v1.4 with Azure Storage Analytics) demo app.

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Yung Chou (@yungchou) described System Center Virtual Machine Manager (VMM) 2012 as Private Cloud Enabler (3/5): Deployment with Service Template in a 10/10/2011 post:

By this time, I assume we all have some clarity that virtualization is not cloud. There are indeed many significant differences between the two. A main point of departure is the approach to deploying apps. In this 3rd article of the 5-part series listed below, I would like to examine the service-based deployment introduced in VMM 2012 for building a private cloud.

- Part 1. Private Cloud Concepts

- Part 2. Fabric, Oh, Fabric

- Part 3. Deployment with Service Template (This article)

- Part 4. Private Cloud Lifecycle

- Part 5. Application Controller

VMM 2012 can carry out both traditional virtual machine (VM)-centric and emerging service-based deployments. The former is virtualization-focused and operates at a VM level, while the latter is a service-centric approach intended for private cloud deployment.

This article is intended for those with some experience administering VMM 2008 R2 infrastructure. Notice that in cloud computing, “service” is a critical and must-understand concept, which I have discussed elsewhere. And just to be clear, in the context of cloud computing, a “service” and an “application” mean the same thing, since in the cloud everything is delivered to the user as a service, for example SaaS, PaaS, and IaaS. Throughout this article, I use the terms service and application interchangeably.

VM-Centric Deployment

In virtualization, deploying a server has conceptually become shipping/building and booting from a (VHD) file. Those who would like to refresh their knowledge of virtualization are invited to review the 20-Part Webcast Series on Microsoft Virtualization Solutions.

Virtualization has brought many opportunities for IT to improve processes and operations. With system management software such as System Center Virtual Machine Manager 2008 R2 or VMM 2008 R2, we can deploy VMs and install an OS to a target environment with few or no operator interventions. And from an application point of view, with or without automation, the associated VMs are essentially deployed and configured individually.

For instance, a multi-tier web application like the one shown above is typically deployed with a pre-determined number of VMs, followed by installing and configuring the application among the deployed VMs individually based on application requirements. Particularly when there is a back-end database involved, a system administrator typically must follow a particular sequence to first bring a target database server instance online by configuring specific login accounts with specific db roles, securing specific ports, and registering in AD before proceeding with subsequent deployment steps. These operator interventions are likely required due to the lack of a cost-effective, systematic, and automatic way of streamlining and managing the concurrent and event-driven inter-VM dependencies which become relevant at various moments during an application deployment.

Although there may be a system management infrastructure in place, like VMM 2008 R2 integrated with other System Center members, at an operational level VMs are largely managed and maintained individually in a VM-centric deployment model. And perhaps more significantly, in a VM-centric deployment it is too often labor-intensive, with relatively high TCO, to deploy a multi-tier application “on demand” (in other words, as a service), deploy it multiple times, and run multiple releases concurrently in the same IT environment, if it is technically feasible at all. Now in VMM 2012, the ability to deploy services on demand, deploy multiple times, and run multiple releases concurrently in the same environment becomes noticeably straightforward and amazingly simple with a service-based deployment model.

Service-Based Deployment

A VM-centric model lacks an effective way to address event-driven and inter-VM dependencies during a deployment, nor is there a concept of fabric, which is an essential abstraction of cloud computing. In VMM 2012, a service-based deployment means all the resources encompassing an application, i.e. the configurations, installations, instances, dependencies, etc., are deployed and managed as one entity with fabric. The integration of fabric in VMM 2012 is a key deliverable and is clearly illustrated in the VMM 2012 admin console as shown on the left. And the precondition for deploying services to a private cloud is all about first laying out the private cloud fabric.

Constructing Fabric

To deploy a service, the process normally employs administrator and service accounts to carry out the tasks of installing and configuring infrastructure and application on servers, networking, and storage based on application requirements. Here servers collectively act as a compute engine to provide a target runtime environment for executing code. Networking interconnects all relevant application resources and peripherals to support all management and communication needs, while storage is where code and data actually reside and are maintained. In VMM 2012, this is collectively managed with a single concept: fabric.

There are three resource pools/nodes encompassing fabric: Servers, Networking, and Storage. Servers contain various types of servers including virtualization host groups, PXE, Update (i.e. WSUS) and other servers. Host groups are containers that logically group servers with virtualization hosting capabilities and ultimately represent the physical boxes where VMs can be deployed, either with specific network settings or dynamically selected by VMM Intelligent Placement, as applicable, based on defined criteria. VMM 2012 can manage Hyper-V based, VMware, as well as other virtualization solutions. When a host is added to a host group, VMM 2012 installs an agent on the target host, which then becomes a managed resource of the fabric.

A Library Server is a repository where the resources for deploying services and VMs are available via network shares. As a Library Server is added into fabric, by specifying the network shares defined in the Library Server, file-based resources like VM templates, VHDs, ISO images, service templates, scripts, server app-v packages, etc. become available to be used as building blocks for composing VM and service templates. As various types of servers are brought into the Server pool, the coverage expands and capabilities increase, as if additional fibers were woven into fabric.

Networking presents the wiring among resource repositories, running instances, deployed clouds and VMs, and the intelligence for managing and maintaining the fabric. It essentially forms the nervous system to filter noise, isolate traffic, and establish interconnectivity among VMs based on how Logical Networks and Network Sites are put in place.

Storage reveals the underlying storage complexities and how storage is virtualized. In VMM 2012, a cloud administrator can discover, classify and provision remote storage on supported storage arrays through the VMM 2012 console. VMM 2012 fully automates the assignment of storage to a Hyper-V host or Hyper-V host cluster, and tracks the storage that is managed by VMM 2012.

Deploying Private Cloud

A leading feature of VMM 2012 is the ability to deploy a private cloud, or more specifically to deploy a service to a private cloud. The focus of this article is to depict the operational aspects of deploying a private cloud with the assumption that an intended application has been well tested, signed off, and sealed for deployment, and that the application resources including code, service template, scripts, server app-v packages, etc. are packaged and provided to a cloud administrator for deployment. In essence, this package has all the intelligence, settings, and contents needed to be deployed as a service. This self-contained package can then be easily deployed on demand by validating instance-dependent global variables and repeating the deployment tasks on a target cloud. The following illustrates the concept, where a service is deployed in update releases and various editions with specific feature compositions, all running concurrently in VMM 2012 fabric. Not only is this relatively easy to do by streamlining and automating all deployment tasks with a service template, but the service template can also be configured and deployed to different private clouds.

The secret sauce is a service template, which includes all the where, what, how, and when of deploying all the resources of an intended application as a service. It should be apparent that the skill sets and amount of effort needed to develop a solid service template are not trivial, because a service template needs to include not only intimate knowledge of an application, but also the best practices of Windows deployment, in addition to system and network administration, server app-v, and system management of Windows servers and workloads. The following is a sample service template of Stock Trader imported into VMM 2012 and viewed with Designer, where Stock Trader is a sample application for cloud deployment downloaded from Windows Connect.

Here are the logical steps I follow to deploy Stock Trader with VMM 2012 admin console:

- Step 1: Acquire the Stock Trader application package from Windows Connect.

- Step 2: Extract and place the package in a designated network share of a target Library Server of VMM 2012 and refresh the Library share. By default, the refresh cycle of a Library Server is every 60 minutes. To make the newly added resources immediately available, refreshing an intended Library share will validate and re-index the resources in added network shares.

- Step 3: Import the service templates of Stock Trader and follow the step-by-step guide to remap the application resources.

- Step 4: Identify/create a target cloud with VMM 2012 admin console.

- Step 5: Open Designer to validate the VM templates included in the service template. Make sure SQLAdminRAA is correctly defined as RunAs account.

- Step 6: Configure deployment of the service template and validate global variables in specialization page.

- Step 7: Deploy Stock Trader to a target cloud and monitor the progress in Job panel.

- Step 8: Troubleshoot the deployment process, restart the deployment job, and repeat the step as needed.

- Step 9: Upon successful deployment of the service, test the service and verify the results.

A successful deployment of Stock Trader with minimal instances in my all-in-one-laptop demo environment (running on a Lenovo W510 with sufficient RAM) took about 75 to 90 minutes as reported in Job Summary shown below.

Once the service template is successfully deployed, Stock Trader becomes a service in the target private cloud supported by VMM 2012 fabric. The following two screen captures show a Pro Release of Stock Trader deployed to a private cloud in VMM 2012 and the user experience of accessing a trader’s home page.

Not If, But When

Witnessing the way the IT industry has been progressing, I envision that private cloud will soon become, just like virtualization, a core IT competency and no longer a specialty. While private cloud is still a topic that is being actively debated and shaped, the upcoming release of VMM 2012 just in time presents a methodical approach for constructing private cloud based on a service-based deployment with fabric. It is a high-speed train and the next logical step for enterprise to accelerate private cloud adoption.

Closing Thoughts

I here forecast the future is mostly cloudy with scattered showers. In the long run, I see a clear cloudy day coming.

Being ambitious and opportunistic is what I encourage of everyone. When it comes to Microsoft private cloud, the essentials are Windows Server 2008 R2 SP1 with Hyper-V and VMM 2012. Those who first master these skills will stand out, become the next private cloud subject matter experts, and lead the IT pro communities. Recognizing that private cloud adoption is not a technology issue but a culture shift and an opportunity for career progression, IT pros must make the first move.

In an upcoming series of articles tentatively titled “Step-by-Step Deployment of Private Cloud with VMM 2012,” I will walk through the operations of the above steps and detail the process of deploying Stock Trader to a private cloud. To keep yourself informed of my content deliveries, I invite you to follow me on Twitter and subscribe to my blog.

David Linthicum (@DavidLinthicum) asserted “As more enterprises seek the flexibility of hybrid clouds, many end up driving them into the ground instead” in a deck for his The hybrid cloud is not a silver bullet post of 10/10/2011 for InfoWorld’s Cloud Computing blog:

"Hybrid clouds can save us" -- that's the mantra I'm hearing more and more from corporate IT these days.

You can't blame these folks. With hybrid clouds, you can leverage both private and public clouds and dynamically choose where processes and data should reside. Indeed, many of the cloud technology vendors talk about dragging and dropping images, data, and processes from private to public cloud. But it's not that easy.

The problem comes when enterprises rely too much on vendor promises and not enough on their own architectural requirements. The vendors show up with their interpretation of what a hybrid cloud should be, enterprises understand the actual limitations too late, the budget runs out, and the plug is pulled.

The problems are the lack of architectural forethought around the business requirements to the technology requirements, as well as the absence of a clear understanding of the advantages and limitations of the technology available. Workers involved with these projects get caught up in the hype, feel pressured to get something cloudlike up and running, and hit walls they don't see until it's too late.

There's an easy fix. First, you need an honest assessment of the core requirements to determine if a hybrid cloud is appropriate. In many of the problems that I see, private clouds or public clouds -- not a hybrid -- are a better fit. However, some people are so sold on the hybrid idea that it's difficult for them to change their minds.

If a hybrid cloud is needed, make sure to understand your requirements in the context of the available technology. Yes, you can knit it together yourself, but I do not recommend that at this point.

And remember that there is no silver bullet: Cloud computing platforms and products require that you make some trade-offs. That's fine. Just understand them before committing.

"Hybrid clouds can save us" from what? Losing our jobs? I’m not hearing this “mantra.”

<Return to section navigation list>

Cloud Security and Governance

Jay Heiser asked “” in the introduction to his Scooters & flashlights means your data is secure with us post of 10/10/2011 to his Gartner blog:

In April, Google uploaded a marketing video to YouTube, Security and Data Protection in a Google Data Center. This 7 minute piece explains that interior doors are protected with biometric sensors, and that scooters are provided for the guards. Take a silent moment to try to visualize the sort of infosec security failure that would be solved with scooters. Is there some sort of Google security training camp where security staff are taught advanced scooter incident response techniques? Instead of Mall Cop Two, can we anticipate Google Cop?

Just before (and continuing after) Irene struck, Amazon’s AWS service health dashboard included the reassuring news “We are monitoring Hurricane Irene and making all possible preparations, e.g. generator fuel, food/water, flashlights, radios, extra staff.” Laying in some extra staff to temporarily beef up the inherently lean personnel of a cloud service sounds like a great idea, but shouldn’t a provider always have enough generator fuel to outlive unforeseen power failures, let alone scheduled hurricanes? Why wouldn’t they always have some MREs on hand to tide their admin crew through a snow storm or civil emergency? How about a deck of cards, or a board game, too? Once they get to the point where they need flashlights and radios, the extra staff will need something else to do while waiting for the power and the Internet to come back up.

Taking a more minimalist approach, Dropbox is sticking with the marvelously ambiguous position “We use the same secure methods as banks.” Does that mean the same methods that banks use to prevent robbers from getting into your safety deposit boxes, or the same methods that UBS uses to monitor for fraud?

Best practice for determining if a service provider is fit for purpose remains an open topic. I do have some ideas on what a buyer should be told, and I’m confident that this sort of information is not it.

Jay is a Gartner research vice president specializing in the areas of IT risk management and compliance, security policy and organization, forensics, and investigation.

StrongAuth, Inc. announced the StrongKey CryptoEngine, a Java-based free and open-source software (FOSS) encryption engine on 8/16/2011 (missed when published):

The StrongKey CryptoEngine™ (SKCE) is Java-based free and open-source software (FOSS) that can either be directly integrated into applications or established as a web-service on the network. It performs the following functions:

- Encrypts/Decrypts files of any type and any size, using the AES or 3DES algorithms

- Generates and escrows the symmetric encryption keys to a network-based Key Management solution - the StrongAuth KeyAppliance™ [See post below.] It also automatically recovers the appropriate key from the key-management system when decrypting ciphertext

- Stores/Retrieves encrypted files automatically to/from public clouds such as Amazon's S3 or Microsoft's Azure. It can also store/retrieve encrypted files to/from private clouds built using Eucalyptus, from Storage Area Networks, Network Attached Storage and local file-systems [Emphasis added.]

- Works with applications in any programming language as long as the application can make a SOAP-based web-service request

- Helps prove compliance to regulatory requirements, such as PCI-DSS, FFIEC, HIPAA, GLBA and 201 CMR 17.00, for encryption and key-management

- Saves encrypted files using the W3C XMLEncryption standard format for portability

- Authenticates and authorizes requests against Active Directory or OpenDS

The StrongKey CryptoEngine™ is available free of licensing cost under the LGPL. Free support is also available through the forum at SourceForge. Sites interested in a Service Level Agreement (SLA) with a guaranteed response time for service requests should contact us to discuss their needs.

StrongAuth, Inc. also announced its StrongAuth CryptoAppliance™, a US$4,995 integrated solution for encrypting files and automatically transferring them to public cloud storage services such as Amazon's S3, Microsoft Azure, RackSpace, etc.:

The StrongAuth CryptoAppliance™ is an integrated solution for encrypting files and automatically transferring them to public cloud storage services such as Amazon's S3, Microsoft Azure, RackSpace, etc. The encrypted files can also be transferred to private clouds enabled by tools such as Eucalyptus and OpenStack. The encryption keys are escrowed on and managed securely by the StrongAuth KeyAppliance™, providing proof of compliance for protecting sensitive data in accordance with security regulations such as PCI-DSS.

The StrongAuth CryptoAppliance™ includes the following features; the ability to:

- Encrypt files from any application by calling a web-service on the appliance

- Automatically generate and escrow encryption keys with the StrongAuth KeyAppliance™

- Leverage public clouds for storing the encrypted files. The appliance currently supports Amazon S3; Microsoft Azure and RackSpace Cloud Files are targeted for June 30, 2011;

- Leverage private clouds built on Eucalyptus for storing the encrypted files; OpenStack is targeted for June 30, 2011.

- Prove compliance to regulatory requirements, such as PCI-DSS, for encryption and key-management;

- Save encrypted files in the W3C XMLEncryption standard format so they are portable anywhere;

- Integrate the appliance to existing identity management systems, such as Active Directory or other LDAP-based identity management systems; and

- Use a GUI-based application to enable immediate use of the appliance's web-services to encrypt, decrypt and move files to and between public and private clouds.

The CryptoAppliance™, with its unprecedented blend of features, is available at the price of $4,995 per appliance.

How to buy

Contact sales@strongauth.com to find out more.

<Return to section navigation list>

Cloud Computing Events

John White described How to Batch Download Videos and Presentations from SharePoint Conference 2011 in a 10/10/2011 post:

I’ve seen a number of questions about this, and I’m in the process of collecting all of the presentations and slide decks from the conference, so I thought that I’d share my process. It’s relatively straightforward to batch these up.

The videos are all in one location, and the presentations are in another. The base folder for the videos is http://media.conferencevue.com/media and the naming format for the files is SPCsessionnumber.zip, so for session 301, the filename is SPC301.zip. You can simply punch the full URL into a browser and the files will start downloading. In order to batch download a bunch, I am using the open source WGET utility, recommended to me by Ziad Wakim. I simply created a batch file that calls WGET for all of the presentations that I wanted.

The process is the same for the slide decks, but the site base is http://www.mssharepointconference.com/SessionPresentations and the file name format is identical to the videos with the .zip replaced with .pptx. However, the conference site expects a cookie, so you’ll first need to log into the myspc site. Once done, export the cookie for the site to a text file (in IE, use File – Import and Export – Export to a file) that’s available to the WGET command. After that, it’s a simple command switch on the end of the WGET command to load the cookie for each request. If the cookie is exported to a file named cookies.txt, then the command will look something like:

wget http://www.mssharepointconference.com/SessionPresentations/SPC413.pptx --load-cookies cookies.txt

Another batch file with a line for each slide deck you want, and you can run it and download everything at once. One note – you’ll need to be patient. Given the speeds, I don’t think that I’m the only one who has thought of this….
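The batch approach described above can be sketched as a small shell script. This is only an illustration, assuming a POSIX shell rather than the Windows batch file the post describes. The function names video_url, deck_url and print_commands are hypothetical; the base URLs, the SPC file-name pattern and the cookies.txt file name come from the post.

```shell
# Base folders quoted in the post.
VIDEO_BASE="http://media.conferencevue.com/media"
DECK_BASE="http://www.mssharepointconference.com/SessionPresentations"

# Build the video URL for one session number, e.g. 301 -> .../SPC301.zip
video_url() {
  printf '%s/SPC%s.zip\n' "$VIDEO_BASE" "$1"
}

# Build the slide-deck URL for one session number, e.g. 413 -> .../SPC413.pptx
deck_url() {
  printf '%s/SPC%s.pptx\n' "$DECK_BASE" "$1"
}

# Print (not run) one wget command per file for each session number given.
# Slide decks need the exported cookie file; videos do not.
print_commands() {
  for session in "$@"; do
    printf 'wget %s\n' "$(video_url "$session")"
    printf 'wget --load-cookies cookies.txt %s\n' "$(deck_url "$session")"
  done
}
```

Calling print_commands 301 413 prints four wget lines without downloading anything. Piping that output to sh would start the downloads, assuming wget is on the PATH and cookies.txt sits in the current directory.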

Attempting to download a sample file from http://media.conferencevue.com/media/SPC301.zip threw an HTTP 503 Service Unavailable error with Internal-Reason: 132 when I tested the URL at 2:30 PM PDT. Subsequent tests hung with a Waiting for response from conferencevue.com … status message. At 5:00 PM PDT, I was able to connect to the endpoint, but the download rate was so slow that I abandoned the effort.

John O’Donnell explained Why you should consider attending the Microsoft Dynamics CRM User Group (CRMUG) Summit Nov 9-11 in a 10/10/2011 post:

Each year the Dynamics CRM User Group or CRMUG hosts a great user conference where you can network not only with partners but customers who are using the product each day. Here are some of the reasons you will want to attend this unique event:

Network with CRM Users to Solve your CRM Challenges

CRMUG Summit is designed with one thing in mind: to connect you, the Dynamics CRM User, with the right resources to address your specific CRM challenges, issues and priorities. We create ample networking opportunities, both formal and informal, to make it easy for you to connect with other Users, partners, or Microsoft staff members who have the experiences and answers you need. Those opportunities include structured networking sessions, Microsoft Town Hall meetings, exclusive expo times, interactive discussions, Microsoft Conduits, role/industry/location-based roundtables, Ask the Experts sessions, meetings-on-the-fly, networking receptions and more. And, of course, there will be plenty of opportunities to keep the conversations and the fun going even after the day’s planned agenda is complete (after all, we will be in Vegas)!

Learn From the Experiences of Other CRM Users