Windows Azure and Cloud Computing Posts for 10/3/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

I was honored by the award of MVP status for Windows Azure on 10/1/2011. Click here for a link to my new public MVP profile. My congratulations to other new and all renewed MVPs.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

No significant articles today.


SQL Azure Database and Reporting

Cihan Biyikoglu reported his presentations at SQL PASS in Oct and SQL Rally in Nov in a 10/3/2011 post:

Federations is on its way out the door with the next update of SQL Azure. With that, I’ll be on the road performing. Here is the schedule. Stop by, say hi and speak up to talk about your own “federation” stories!

SQL PASS – Seattle, WA – Oct 11-14th

- Migrating Large Scale Application to the Cloud with SQL Azure Federations (SQL Azure)

- SQL Server Engine Team - Unplugged (Application and Database Development)

SQL Rally – Aronsborg, Sweden – Nov 8 - 9th

- Building Large Scale Applications on SQL Azure – (Developer Track)


Marketplace DataMarket and OData

Jianggan Li reported Taipei launches open govt portal in a 10/3/2011 post to the Asia-Pacific Future Gov blog:

Taipei City Government’s official open data portal, data.taipei.gov.tw, has been made live.

The City Government has been building mobile apps for residents and visitors since last year, covering areas such as city administration, transportation and tourism. Now the portal serves as the unified access point for the public to use government open data.

The data sets will be made available in batches. Chang Chia-sheng, Commissioner for IT of Taipei City Government, explains to FutureGov that the selection criteria for the first batch include:

- Focus mainly on data that city residents could use

- Mainly information that citizens can already enquire about free of charge

- Focus on data sets that have already been formatted for easy export

The first batch of data covers areas including transportation, administration/politics, public safety, education, culture & arts, health, environmental protection, housing & construction as well as facilities. In total 131 different data sets are made available through the portal.

Chang explains that the challenges encountered during the process mainly involved data classification, consolidating data in disparate formats, and ensuring the data are comprehensive and accurate.

“The quality of data is very important for the convenience of users,” Chang says. “Therefore it became a big challenge for us to ensure proper classification of the data, to make sure users can get the information they want within three or four clicks.”

After studying the classification methods of existing open government portals around the world and the patterns of user enquiries in Taiwan, the Department found that organizing data by its nature and by the responsible department is most intuitive for users. This is supplemented by indexing and search modes. Chang says this will “create premium quality directory service”.

Also, because the data is consolidated from different departments, the formats and fields differ. To ensure that the data on the portal are consistent and scalable, the portal uses the Open Data Protocol (OData) standard (http://www.odata.org/), which follows many of the principles of REST (Representational State Transfer), a software architectural style for distributed hypermedia systems. [Emphasis added.] This simplifies data-related operations and enhances service efficiency, according to Chang.
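To illustrate what a REST-style OData request looks like, here is a minimal Ruby sketch that composes a query URL from OData system query options ($filter, $orderby, $top). The service root, the entity set name WaterOutages and the property names District and StartTime are hypothetical placeholders; the article does not say how the Taipei portal's OData endpoints are named.

```ruby
require 'erb'

# Build an OData query URL from system query options such as
# $filter, $orderby and $top. Option values are percent-encoded
# (spaces become %20, quotes %27); the "$" prefixes are left as-is.
def odata_query_url(service_root, entity_set, options = {})
  base = "#{service_root.chomp('/')}/#{entity_set}"
  return base if options.empty?

  query = options.map { |name, value| "$#{name}=#{ERB::Util.url_encode(value.to_s)}" }
  "#{base}?#{query.join('&')}"
end

# Hypothetical service root, entity set and properties, for illustration only.
url = odata_query_url('http://example.org/odata.svc', 'WaterOutages',
                      filter: "District eq 'Xinyi'", orderby: 'StartTime desc', top: 10)
puts url
# => http://example.org/odata.svc/WaterOutages?$filter=District%20eq%20%27Xinyi%27&$orderby=StartTime%20desc&$top=10
```

Because the query is just a URL, any HTTP client can consume the portal's data without a department-specific API, which is the uniformity Chang describes.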

Update frequencies are not uniform, because they depend on the nature of each data set and on the processes of the responsible department. For example, water supply disruption information has to be updated daily, while information about cultural topics such as historical buildings is updated much less frequently.

“For the update of data to be smooth, we need to study and understand the operational process and data update timeframe of all the relevant departments,” Chang says. “And this also allows us to monitor and ensure that each department updates their data promptly.”
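The monitoring Chang describes can be pictured as a simple freshness check: each data set has an expected update interval, and any data set whose last update is older than that interval is flagged. This is a hypothetical Ruby sketch; the catalog entries, the one-year interval for historical buildings and all timestamps are made up, and the article only states that water supply disruptions are updated daily and historical-building data less often.

```ruby
# Hypothetical catalog entry: expected update interval (seconds) and
# the time the responsible department last refreshed the data set.
DataSet = Struct.new(:name, :update_interval, :last_updated)

DAY = 24 * 60 * 60

# Names of data sets whose last update is older than their expected
# update interval at time `now`.
def overdue_data_sets(data_sets, now)
  data_sets.select { |ds| now - ds.last_updated > ds.update_interval }.map(&:name)
end

now = Time.utc(2011, 10, 3, 12, 0, 0)
catalog = [
  DataSet.new('Water supply disruptions', DAY, Time.utc(2011, 10, 1, 8, 0, 0)),
  DataSet.new('Historical buildings', 365 * DAY, Time.utc(2011, 3, 1))
]
p overdue_data_sets(catalog, now) # => ["Water supply disruptions"]
```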

Apps contest

Learning from some advanced cities in the West, Taipei City Government plans to hold software development competitions on a regular basis, drawing on the talent of the public to improve government services. This year, leveraging the software competition run by the Industrial Development Bureau of the Ministry of Economic Affairs, Taipei City Government is organising a contest for developing innovative apps based on government open data. The aim is to encourage students, business-minded individuals, companies and other organisations to use government data to develop more interesting, practical and valuable software apps and services, “making Taipei city a more convenient, friendly and intelligent city”.

Next steps

Moving forward, in addition to continuously enlarging the available data sets, the City Government is also looking at providing more innovative services. For example, an app has been developed for the “Mayor’s mailbox”, giving residents a new way to provide feedback that complements the paper, e-mail and web channels. The app also lets them send pictures more easily, using the built-in cameras and location services of their mobile devices. “This will allow us to have more accurate information about the case and deal with it more timely, accurately,” says Chang.

The City Government also plans to continue seminars and contests to promote its open government policy, encourage public participation, and “make Taipei a more liveable place”.


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Zerg Zergling described Ruby web sites and Windows Azure AppFabric Access Control in a 10/3/2011 post to the Windows Azure’s Silver Lining blog:

Recently I was trying to figure out how to allow users to log in to a Ruby (Sinatra) web site using an identity service such as Facebook, Google, Yahoo, etc. I ended up using Windows Azure AppFabric Access Control Service (ACS) since it has built-in support for:

- Facebook

- Google

- Yahoo

- Windows Live ID

- WS-Federation Identity Providers

The nice thing is that you can use one or all of those, and your web application just needs to understand how to talk to ACS and not the individual services. I worked up an example of how to do this in Ruby, and will explain it after some background on ACS and how to configure it for this example.

What is ACS?

ACS is a claims-based, token-issuing provider. This means that when a user authenticates through ACS to Facebook or Google, ACS returns a token to your web application. This token contains various ‘claims’ about the identity of the authenticating user.

The tokens returned by ACS are either Simple Web Tokens (SWT) or Security Assertion Markup Language (SAML) 1.1 or 2.0 tokens. The token contains the claims, which are statements that the issuing provider makes about the user being authenticated.

The claims returned may, at a minimum, just contain a unique identifier for the user, the identity provider name, and the dates that the token is valid. Additional claims such as the user name or e-mail address may be provided – it’s up to the identity provider as to what is available. You can see the claims returned for each provider, and select which specific ones you want, using the ACS administration web site.

ACS costs $1.99 a month per 100,000 transactions. However, there’s currently a promotion running until January 1, 2012, during which you won’t be charged for using the service. See http://www.microsoft.com/windowsazure/features/accesscontrol/ for more details.
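For a quick estimate at the list price quoted above, here is a small Ruby sketch. It assumes charges scale linearly with the transaction count (the post does not say how partial blocks of 100,000 are billed) and uses Rational to avoid floating-point rounding.

```ruby
# ACS list price quoted in the post: $1.99 per 100,000 transactions per month.
PRICE_PER_BLOCK = Rational(199, 100) # US dollars
BLOCK_SIZE = 100_000

# Assumption: linear, pro-rata billing; actual rounding rules may differ.
def acs_monthly_cost(transactions)
  PRICE_PER_BLOCK * transactions / BLOCK_SIZE
end

puts format('$%.2f', acs_monthly_cost(1_000_000)) # => $19.90
```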

Configuring ACS

To configure ACS, you’ll need a Windows Azure subscription. Sign in at http://windows.azure.com, then navigate to the “Service Bus, Access Control & Caching” section. Perform the following tasks to configure ACS:

- Using the ribbon at the top of the browser, select “New” from the Service Namespace section.

- In the “Create a new Service Namespace” dialog, uncheck Service Bus and Cache, enter a Namespace and Region, and then click the Create Namespace button.

- Select the newly created namespace, and then click the Access Control Service icon on the ribbon. This will take you to the management site for your namespace.

- Follow the “Getting Started” steps on the page.

1: Select Identity Providers

In the Identity Providers section, add the identity providers you want to use. Most are fairly straightforward, but Facebook is a little more involved, as you’ll need an Application ID, Application secret, and Application permissions. You can get those through http://www.facebook.com/developers. See http://blogs.objectsharp.com/cs/blogs/steve/archive/2011/04/21/windows-azure-access-control-services-federation-with-facebook.aspx for a detailed walkthrough of using ACS with Facebook.

2: Relying Party Applications

This is your web application. Enter a name, the Realm (the URL for your site), the Return URL (where ACS sends tokens), error URLs, etc. For token format you can select SAML or SWT; I selected SWT, which is what the code I use below expects. You’ll need to select the identity providers you’ve configured. Also be sure to check “Create new rule group”. For token signing settings, click Generate to create a new key and save the value somewhere.
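To make the role of the token signing key concrete, here is a minimal Ruby sketch of how an SWT’s HMACSHA256 value relates to that key: it is an HMAC-SHA256, keyed with the base64-decoded signing key, over the form-encoded name/value pairs that precede “&HMACSHA256=”. The helper names sign_swt, swt_hmac and swt_signature_valid? are hypothetical, and the claim values and the all-“k” key are made up for illustration; ACS does the signing, your site only verifies.

```ruby
require 'openssl'
require 'base64'
require 'cgi'

# Base64-encoded HMAC-SHA256 of the unsigned portion of an SWT.
def swt_hmac(body, base64_key)
  digest = OpenSSL::HMAC.digest(OpenSSL::Digest.new('SHA256'), Base64.decode64(base64_key), body)
  Base64.strict_encode64(digest)
end

# Build a signed SWT: form-encoded name/value pairs, then HMACSHA256.
def sign_swt(pairs, base64_key)
  body = pairs.map { |k, v| "#{CGI.escape(k)}=#{CGI.escape(v.to_s)}" }.join('&')
  "#{body}&HMACSHA256=#{CGI.escape(swt_hmac(body, base64_key))}"
end

# Recompute the HMAC over everything before "&HMACSHA256=" and compare
# it with the value carried in the token.
def swt_signature_valid?(token, base64_key)
  body, encoded_sig = token.split('&HMACSHA256=', 2)
  return false if encoded_sig.nil?

  expected = swt_hmac(body, base64_key)
  actual = CGI.unescape(encoded_sig)
  return false unless expected.bytesize == actual.bytesize

  # Constant-time comparison so timing does not reveal how many bytes matched.
  expected.bytes.zip(actual.bytes).reduce(0) { |acc, (a, b)| acc | (a ^ b) }.zero?
end

key = Base64.strict_encode64('k' * 32) # illustrative 256-bit key
token = sign_swt({ 'Issuer' => 'https://yournamespace.accesscontrol.windows.net/',
                   'Audience' => 'http://your.realm.com/',
                   'ExpiresOn' => '1317700000' }, key)
puts swt_signature_valid?(token, key)                                 # => true
puts swt_signature_valid?(token.sub('your.realm', 'evil.realm'), key) # => false
```

Anyone holding the key can mint tokens your site will accept, which is why the generated value should be stored as carefully as a password.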

3: Rule groups

If you checked “Create new rule group”, you’ll have a “Default Rule Group” waiting for you here. If not, click Add to add one. Either way, edit the group and click Generate to add some rules to it. Rules control which claims are returned to your application in the token. Using Generate is a quick and easy way to populate the list. Once you have the rules configured, click Save.

4: Application Integration

In the Application Integration section, select Login pages, then select the application name. You’ll be presented with two options: a URL to an ACS-hosted login page for your application, and a button to download an example login page to include in your application. For this example, copy the link to the ACS-hosted login page.
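The hosted login page link is a WS-Federation passive sign-in request. As a sketch, assuming the ACS v2 endpoint path /v2/wsfederation on your namespace plus the standard WS-Federation parameters wa=wsignin1.0 and wtrealm, a link of that shape can be composed as below. Treat the path as an assumption and prefer the exact link copied from the portal; the namespace and realm are placeholders, and acs_sign_in_url is a hypothetical helper.

```ruby
require 'uri'

# Assumed ACS v2 WS-Federation sign-in endpoint; verify against the
# link shown in the ACS management portal.
def acs_sign_in_url(namespace, realm)
  query = URI.encode_www_form('wa' => 'wsignin1.0', 'wtrealm' => realm)
  "https://#{namespace}.accesscontrol.windows.net/v2/wsfederation?#{query}"
end

puts acs_sign_in_url('yournamespace', 'http://your.realm.com/')
# => https://yournamespace.accesscontrol.windows.net/v2/wsfederation?wa=wsignin1.0&wtrealm=http%3A%2F%2Fyour.realm.com%2F
```

After sign-in, WS-Federation passive responses are POSTed back to the Return URL with the token in the wresult form field; in a Sinatra route that value would be available as params['wresult'].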

ACS with Ruby

The code below parses the token returned by the ACS-hosted login page and returns a hash of claims, or an array containing any errors encountered while validating the token. It doesn't stop at the first validation failure, because examining all of the failures can help you figure out what went wrong. Also note that the constants need to be populated with values specific to your application and the values entered in the ACS management site.

require 'nokogiri'
require 'time'
require 'base64'
require 'cgi'
require 'openssl'

REALM = 'http://your.realm.com/'
TOKEN_TYPE = 'http://schemas.xmlsoap.org/ws/2009/11/swt-token-profile-1.0' # SWT
ISSUER = 'https://yournamespace.accesscontrol.windows.net/'
TOKEN_KEY = 'the key you generated in relying party applications above'

class ResponseHandler
  attr_reader :validation_errors, :claims

  def initialize(wresult)
    @validation_errors = []
    @claims = {}
    @wresult = Nokogiri::XML(wresult)
    parse_response
  end

  def is_valid?
    @validation_errors.empty?
  end

  private

  # parse through the document, performing validation & pulling out claims
  def parse_response
    parse_address
    parse_expires
    parse_token_type
    parse_token
  end

  # does the address field have the expected address?
  def parse_address
    address = get_element('//t:RequestSecurityTokenResponse/wsp:AppliesTo/addr:EndpointReference/addr:Address')
    @validation_errors << 'Address field is empty.' and return if address.nil?
    @validation_errors << 'Address field is incorrect.' unless address == REALM
  end

  # is the expire value valid?
  def parse_expires
    expires = get_element('//t:RequestSecurityTokenResponse/t:Lifetime/wsu:Expires')
    @validation_errors << 'Expiration field is empty.' and return if expires.nil?
    begin
      expires_at = Time.iso8601(expires) # raises ArgumentError on malformed input
    rescue ArgumentError
      @validation_errors << 'Invalid format for expiration field.' and return
    end
    # compare Time objects rather than strings so time-zone offsets are honored
    @validation_errors << 'Expiration date occurs in the past.' unless Time.now < expires_at
  end

  # is the token type what we expected?
  def parse_token_type
    token_type = get_element('//t:RequestSecurityTokenResponse/t:TokenType')
    @validation_errors << 'TokenType field is empty.' and return if token_type.nil?
    @validation_errors << 'Invalid token type.' unless token_type == TOKEN_TYPE
  end

  # parse the binary token
  def parse_token
    binary_token = get_element('//t:RequestSecurityTokenResponse/t:RequestedSecurityToken/wsse:BinarySecurityToken')
    @validation_errors << 'No binary token exists.' and return if binary_token.nil?

    decoded_token = Base64.decode64(binary_token)
    name_values = {}
    decoded_token.split('&').each do |entry|
      next if entry.empty?
      # split on the first '=' only; names and values are URL-encoded
      name, value = entry.split('=', 2)
      name_values[CGI.unescape(name).chomp] = CGI.unescape(value.to_s).chomp
    end

    @validation_errors << 'Response token is expired.' if Time.now.to_i > name_values['ExpiresOn'].to_i
    @validation_errors << 'Invalid token issuer.' unless name_values['Issuer'] == ISSUER
    @validation_errors << 'Invalid audience.' unless name_values['Audience'] == REALM

    # is HMAC valid? It is computed over everything before "&HMACSHA256="
    swt = decoded_token.split('&HMACSHA256=').first.to_s
    hmac = OpenSSL::HMAC.digest(OpenSSL::Digest.new('SHA256'), Base64.decode64(TOKEN_KEY), swt)
    @validation_errors << 'HMAC does not match computed value.' unless name_values['HMACSHA256'] == Base64.strict_encode64(hmac)

    # remove non-claims from collection and make claims available
    @claims = name_values.select { |key, _value| key.include?('/claims/') }
  end

  # given an XPath, return the content of the first matching element (nil if none)
  def get_element(xpath_statement)
    @wresult.xpath(xpath_statement,
                   't' => 'http://schemas.xmlsoap.org/ws/2005/02/trust',
                   'wsu' => 'http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd',
                   'wsp' => 'http://schemas.xmlsoap.org/ws/2004/09/policy',
                   'wsse' => 'http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd',
                   'addr' => 'http://www.w3.org/2005/08/addressing')[0].content
  rescue StandardError
    nil
  end
end

So what’s going on here? The main pieces are:

- get_element, which is used to pick out various pieces of the XML document.

- parse_address, parse_expires and parse_token_type, which pull out and validate the individual elements.

- parse_token, which decodes the binary token, validates it, and returns the claims collection.

After processing the token, you can test for validity by using is_valid? and then examine either the claims hash or the validation_errors array. I'll leave it up to you to decide what to do with the claims; in my case I just wanted to know the identity provider the user selected and their unique identifier with that provider, so that I could store it along with my site's user-specific information for the user.
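The lookup described above might look like the following Ruby sketch, which pulls the identity provider and the user's identifier out of a claims hash such as the one ResponseHandler exposes. The two claim-type URIs are the ones I believe ACS emits for these claims (the standard nameidentifier type and the ACS identityprovider type); confirm them against the claims listed in your rule group. The helper name user_key and the sample values are hypothetical.

```ruby
# Assumed claim types; check the rule group in the ACS management site.
NAME_IDENTIFIER = 'http://schemas.xmlsoap.org/ws/2005/05/identity/claims/nameidentifier'
IDENTITY_PROVIDER = 'http://schemas.microsoft.com/accesscontrolservice/2010/07/claims/identityprovider'

# Returns [provider, user_id] from a claims hash, or nil if either is missing.
def user_key(claims)
  provider = claims[IDENTITY_PROVIDER]
  user_id = claims[NAME_IDENTIFIER]
  return nil if provider.nil? || user_id.nil?

  [provider, user_id]
end

claims = { NAME_IDENTIFIER => 'abc123', IDENTITY_PROVIDER => 'Google' } # made-up values
p user_key(claims) # => ["Google", "abc123"]
```

Storing the pair rather than the identifier alone matters because a name identifier is only guaranteed to be unique within the provider that issued it.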

Summary

As mentioned in the introduction, ACS lets you use a variety of identity providers without requiring your application to know the details of how to talk to each one. Your application just needs to understand how to use the token returned by ACS. Note that some of the claims provided may let you gather additional information directly from the identity provider. For example, Facebook returns an AccessToken claim that you can use to obtain other information about the user directly from Facebook.

As always, let me know if you have questions on this or suggestions on how to improve the code.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

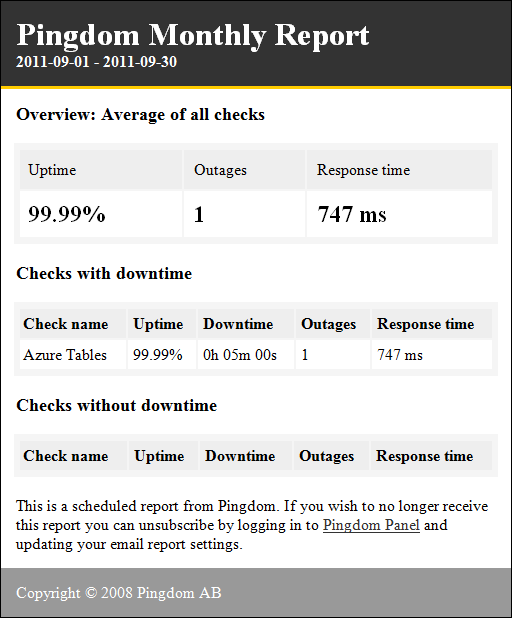

My (@rogerjenn) Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: September 2011 includes detailed response-time data from Pingdom.com:

My live OakLeaf Systems Azure Table Services Sample Project demo runs two small Azure Web role instances from Microsoft’s US South Central (San Antonio, TX) data center. Here’s its uptime report from Pingdom.com for September 2011:

The exact 00:05:00 downtime probably is a 5-minute sampling interval artifact.

[Detailed response-time data from Pingdom.com elided for brevity.]
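To put the 5 minutes of recorded downtime in perspective, the monthly uptime percentage is simple arithmetic. This Ruby sketch assumes the downtime figure is the only outage Pingdom recorded and uses September's 30 days.

```ruby
# Percentage uptime for a month, given minutes of recorded downtime.
def uptime_percent(downtime_minutes, days_in_month)
  total_minutes = days_in_month * 24 * 60
  100.0 * (total_minutes - downtime_minutes) / total_minutes
end

puts format('%.3f%%', uptime_percent(5, 30)) # => 99.988%
```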

This is the fourth uptime report for the two-Web role version of the sample project. Reports will continue on a monthly basis.

The Azure Table Services Sample Project

See my Republished My Live Azure Table Storage Paging Demo App with Two Small Instances and Connect, RDP post of 5/9/2011 for more details of the Windows Azure test harness instance.

I believe this project is the oldest continuously running Windows Azure application. I first deployed it in June 2009 when I was writing Cloud Computing with the Windows Azure Platform for Wiley/WROX, which was the first book published on Windows Azure.

Himanshu Singh described Cross-Post: Environments for Windows Azure Development in a 10/3/2011 post to the Windows Azure Team blog:

Anyone who has worked on a software development project will be familiar with the concept of an “environment”. Simply put, an environment is a set of infrastructure that you can deploy your application to, supporting a specific activity in your software development lifecycle. Any significant project will have multiple environments, generally with names such as “Test”, “UAT”, “Staging” and “Production”.

When teams first start working with Windows Azure, they are often unsure about how to effectively represent the familiar concept of “environments” using the capabilities of the cloud. They note that every deployment has a “Production” and a “Staging” slot, but what about all their other environments, such as Test or UAT? Other teams ask if they should host some environments on their own servers running under the compute emulator.

Tom Hollander from Microsoft Services’ Worldwide Windows Azure Team has put together a post that explains how to use Windows Azure abstractions such as billing accounts, subscriptions and hosted services to represent different environments needed throughout the development lifecycle. With some careful planning, each team and activity should be able to use their environments with an appropriate mix of flexibility and control.

Click here to read the full post and learn how to plan environments for your next Windows Azure team project.

Himanshu is a Senior Product Manager on the Windows Azure Marketing team. Part of his job is to drive outbound marketing activities, including managing the Windows Azure Team blog.

Avkash Chauhan asked Does Windows Azure Startup task have time limit? What to do with heavy processing startup task in Windows Azure Role? in a 10/3/2011 post:

Suppose you have a startup task in any Windows Azure role that performs the following actions:

- The startup task’s taskType is set to “simple”. (Background and foreground tasks do not block the role from starting.)

- Download ZIP installer from blob storage.

- Unzip it

- Install in silent mode.

- Run some script to start the application

If these steps together take X minutes to complete, you may have the following questions:

- Is there any time limit for a startup task to finish?

- Should the time taken by a startup task follow some guideline?

- What happens to the role if the startup task runs forever or gets stuck?

- What is the best way to handle such a situation?

Here are some details to consider if your Windows Azure application follows the scenario above:

While your role instance is getting ready, it is not added to the load balancer; once it is ready, it is added to the load balancer, which indicates that it can accept traffic from outside. The instance is added to the load balancer when the role returns from OnStart, so while your startup task is still running, the instance is not yet in the load balancer. During the time your startup task is running, the role status should be “Busy” (any other state should be considered a problem). So if your role takes more than X minutes to initialize because of the startup task, that simply means your role cannot yet accept traffic, which is indicated by its “Busy” status.

The fact:

There is no time limit for your role to start, but if it takes more than 15 minutes, your service might experience an outage while your role is getting ready.

So what is the 15-minute limit?

There is a 15-minute timeout on role start that applies only when your service is set for automatic upgrade. The 15-minute limit is how long the fabric controller will wait for a role instance to reach the Ready state during a rolling upgrade before proceeding to the next upgrade domain.

So if the fabric controller has moved on to the next upgrade domain, your role instance will continue to run and wait in OnStart, and your service will be unavailable if the fabric controller takes down all of the upgrade domains before the instances in the first upgrade domain finish starting.

What about Automatic versus Manual upgrade mode:

As you may know, if your service is set to Manual upgrade, the fabric controller upgrades one domain at a time and waits until it is ready before moving to the next upgrade domain. So with Manual upgrade the 15-minute limit does not apply, because the fabric controller waits for your startup task to finish before moving to the next upgrade domain. You might therefore consider the Manual upgrade mode; however, the same concern still applies during regular host OS updates.

How to handle such scenarios:

If you believe your startup task will take more than 15 minutes and you don’t want a service outage, you can increase the number of upgrade domains for your service, and correspondingly the number of instances, so that you always have at least one instance up and ready to take traffic while others are initializing.
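Why more upgrade domains help can be seen with a deliberately simplified Ruby model, which is an illustration and not how the fabric controller is implemented. It assumes an automatic rolling upgrade in which the fabric controller moves to the next domain when the current one is Ready or 15 minutes have passed, whichever comes first, that every domain has at least one instance, and that each domain is down for the full startup time once its upgrade begins. The helper names are hypothetical.

```ruby
FC_TIMEOUT_MIN = 15

# [start, end) minute windows during which each upgrade domain is down.
def outage_windows(domains, startup_min, timeout_min = FC_TIMEOUT_MIN)
  step = [startup_min, timeout_min].min
  (0...domains).map { |d| [d * step, d * step + startup_min] }
end

# Largest number of upgrade domains down at the same time. The count can
# only increase where a window starts, so checking start times suffices.
def max_domains_down(domains, startup_min, timeout_min = FC_TIMEOUT_MIN)
  windows = outage_windows(domains, startup_min, timeout_min)
  windows.map { |t, _| windows.count { |s, e| s <= t && t < e } }.max
end

# True if, at some moment, every upgrade domain is down at once.
def full_outage?(domains, startup_min, timeout_min = FC_TIMEOUT_MIN)
  max_domains_down(domains, startup_min, timeout_min) == domains
end

puts full_outage?(2, 40)     # => true (both domains down from minute 15 to 40)
puts max_domains_down(5, 40) # => 3 (two of the five domains are always up)
```

With a 40-minute startup, at most three domains overlap in this model (roughly 40 / 15 rounded up), so any service with more upgrade domains than that keeps some capacity online, which matches the advice above.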

Good Read:

- Windows Azure Fabric Controller Details

- Upgrade & Fault Domains

Jialiang Ge reported Microsoft All-In-One Code Framework Sample Browser v4 Released – A New Way to Enjoy 700 Microsoft Code Samples in a 10/3/2011 post to the Microsoft All-In-One Code Framework’s MSDN blog:

Today, we reached a new milestone for Microsoft All-In-One Code Framework. It gives me great pleasure to announce that our newest Microsoft All-In-One Code Framework Sample Browser, v4, is now available worldwide. With this release, we hope to give developers around the world a completely new and amazing experience for enjoying over 700 Microsoft code samples.

Compared with the previous version, this new version of Sample Browser has been completely redesigned from top to toe. We listened to many users’ suggestions about how we could do better, so Sample Browser v4 brings numerous changes to the user interface and to the sample search and download experience. I hope that you will love our effort here.

Install: http://aka.ms/samplebrowser

If you have already installed the previous version of Sample Browser, simply restart the application to get the automatic update.

The main user interface of the Sample Browser is composed of three columns.

The left column is the search condition bar. You can easily enter query keywords and filter the results by Visual Studio version, programming language, and technology. The search history is recorded, so you can return to a past search with a single click.

In the middle, the sample browser lists the sample search result. We added the display of the customer ratings and the number of raters. We also integrated social media so that you can quickly share certain samples in your network – you are highly encouraged to do it! You can selectively download the samples, or download all samples after the search is completed.

All sample downloads are queued. You can check their download status by clicking the “DOWNLOADING” button.

If an update is available for a sample, you will be reminded to get the update. Downloading and canceling samples is very flexible. Enjoy the flexibility! :-)

The right column of Sample Browser displays detailed information about the selected code sample. You can review all properties of the sample and read its documentation before you decide to download it. In the near future, we will add the “social” feature, which lets you see what people are saying about the sample on social networks.

Besides the above features, we added another highly requested one: NEWEST samples. If you click NEWEST, the search results display samples released in the past two months. Clicking it again cancels the NEWEST filter.

The Sample Request Service page introduces how you can request a code sample directly from Microsoft All-In-One Code Framework if you cannot find the wanted sample in Sample Browser. The service is free of charge!

Last but not least, here is the settings page, where you can configure the sample download location and the network proxy.

We love to hear your feedback. Your feedback is our source of passion. Please try the Sample Browser and tell us what you think of it. Our email address is onecode@microsoft.com.

What’s Coming Soon?

We never stop improving the Sample Browser! Here are the features that are coming soon this year.

- Visual Studio Integration: We will upgrade the old Sample Browser Visual Studio extension to the new version, so you can enjoy the same experience from within Visual Studio.

- Expanding to all MSDN Samples Gallery samples: Today, the Microsoft All-In-One Code Framework Sample Browser searches only Microsoft All-In-One Code Framework code samples. We will start to expand the scope to all samples in the MSDN Samples Gallery.

- Add the "social" feature in the details panel to allow you to see the discussion of a sample on social networks.

- Add the "FAVORITES" feature to allow you to tag certain samples as your favorites.

- Add the "MINE" feature to allow you to add your PRIVATE samples to Sample Browser.

- Add the offline search function – index the downloaded samples and allow you to search samples offline.

- Integrate the MSDN Samples Gallery Sample Request Forum with the Sample Browser

Special Thanks

First of all, special thanks to all customers who provided feedback to onecode@microsoft.com. Your feedback helped us understand where we can do better than the last version, and we will do better and better thanks to your suggestions!

This new version of Sample Browser was written by our developer Leco Lin, who put lots of effort into it. Our tester Qi Fu tested the application and squashed the bugs. Jialiang Ge designed the features and functions of the Sample Browser. The UI was worked out by Lissa Dai and Jialiang together. Special thanks to Anand Malli, who reviewed the source code of Sample Browser and shared lots of suggestions. Special thanks to Ming Zhu and Bob Bao, who gave Leco seamless WPF technical support and smoothed the development. Special thanks to the Garage, a Microsoft internal community of over twenty-three hundred employees who like building innovative things in their free time. These people gave us many ideas about the Sample Browser. Special thanks to Mei Liang and Dan Ruder for their support and suggestions. They will next introduce the Sample Browser to other teams in Microsoft and collect feedback. Last but not least, I want to particularly thank Steven Wilssens and his MSDN Samples Gallery team. This team created the amazing MSDN Samples Gallery, the host of all samples. We have a beautiful partnership, and together we made the idea of Sample Browser come true.


Visual Studio LightSwitch and Entity Framework 4.1+

The Visual Studio LightSwitch Team (@VSLightSwitch) described Advanced LightSwitch: Writing Queries in LightSwitch Code in a 10/3/2011 post:

This post describes how to query data in LightSwitch using code and how to determine where that query code is executing. It doesn’t describe how to model a query in the designer, how to write query interception code, or how to bind a query to a screen. If you are new to queries in LightSwitch, I suggest you start here – Working With Queries. This post is all about coding and where the query code executes. The goal of the LightSwitch query programming model is to get query code to execute where it performs best: as close to the database as possible.

LightSwitch Query Programming Model

Here is how things are structured in the LightSwitch query programming model. LightSwitch uses three different types to support its LINQ-like query syntax: IDataServiceQueryable, IEnumerable and IQueryable.

- IDataServiceQueryable – This is a LightSwitch-specific type that allows a restricted set of “LINQ-like” operators that are remote-able to the middle-tier and ultimately issued to the database server. This interface is the core of the LightSwitch query programming model. IDataServiceQueryable has a member to execute the query, which returns results that are IEnumerable.

- IEnumerable – Once a query has been executed and results are available on the calling tier, IEnumerable allows them to be further restricted using the full set of LINQ operators over the set of data. The key thing to remember, though, is that the data is all on the calling tier and is just being filtered there. LINQ operators issued over IEnumerable do not execute on the server.

- IQueryable – This is the interface that provides the full set of LINQ operators and passes the expression to the underlying provider. The only place you will see IQueryable in LightSwitch is in the _PreprocessQuery method, which always resides on the service tier. Since this post is just about coding, we won’t be talking about IQueryable.
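The difference between remote and local execution can be mimicked in plain Ruby. In this hypothetical sketch, RemoteQuery plays the role of IDataServiceQueryable: order_by_desc and take only record operators, nothing runs until the query is enumerated, and only the resulting rows count as “transferred”. The local equivalent, like LINQ over an IEnumerable, needs every order loaded first. RemoteQuery is an analogy for illustration, not a LightSwitch API.

```ruby
# Hypothetical stand-in for a deferred, remotely executed query.
class RemoteQuery
  include Enumerable

  attr_reader :rows_transferred

  def initialize(table, ops = [])
    @table = table # stands in for the database table
    @ops = ops     # recorded operators, not yet executed
    @rows_transferred = 0
  end

  def order_by_desc(&key)
    RemoteQuery.new(@table, @ops + [[:order_desc, key]])
  end

  def take(n)
    RemoteQuery.new(@table, @ops + [[:take, n]])
  end

  # Enumerating runs the composed query "on the server" and returns only the results.
  def each(&block)
    result = @ops.reduce(@table) do |rows, (op, arg)|
      case op
      when :order_desc then rows.sort_by(&arg).reverse
      when :take then rows.first(arg)
      end
    end
    @rows_transferred = result.size
    result.each(&block)
  end
end

# 1,000 orders with unique discounts 0..999 (37 and 1000 are coprime).
orders = (1..1000).map { |i| { id: i, discount: (i * 37) % 1000 } }

remote = RemoteQuery.new(orders).order_by_desc { |o| o[:discount] }.take(10)
top = remote.to_a
puts remote.rows_transferred # => 10

# Local execution: all 1,000 orders must already be loaded on the calling tier.
local = orders.max_by(10) { |o| o[:discount] }
puts local == top # => true
```

Both paths yield the same ten orders; the difference is how much data has to reach the calling tier first, which is exactly the trade-off the scenarios below weigh.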

LightSwitch Code Generation

Next, let’s talk about the members that get generated when you model an entity with relationships in LightSwitch. These are the members that are used in the LightSwitch query programming model.

For each entity, LightSwitch generates:

An entity set member on the Data Source. For example, if you had a Customer entity in your SalesData data source, you’d get an entity set member like this:

C#

this.DataWorkspace.SalesData.Customers

VB

Me.DataWorkspace.SalesData.Customers

For each “many” relationship, LightSwitch generates an entity collection member and query member for the relationship. For example, if you had a 1:many relationship between Customer and Order in your SalesData model, you’d get the following members on Customer:

C#

EntityCollection<Order> Orders; // EntityCollection is IEnumerable
IDataServiceQueryable<Order> OrdersQuery;

VB

Orders As EntityCollection(Of Order) ' EntityCollection is IEnumerable
OrdersQuery As IDataServiceQueryable(Of Order)

Here’s a diagram of the members/types and transitions between them:

LightSwitch Query Scenarios

Here are some example query scenarios and the code that you could write to solve them. The code for any of these examples can be written anywhere you can write code in LightSwitch, client or server.

Scenario 1: Get the top 10 Orders with the largest discount:

- Using the entity set, the operators are all processed remotely, since they are all supported LightSwitch operators. The query doesn’t execute until it is enumerated in the “for each” statement.

VB:

Dim orders = From o In Me.DataWorkspace.SalesData.Orders
             Order By o.Discount Descending
             Take 10

For Each ord As Order In orders
Next

C#:

IDataServiceQueryable<Order> orders = (from o in this.DataWorkspace.SalesData.Orders
                                       orderby o.Discount descending
                                       select o).Take(10);

foreach (Order ord in orders)
{
}

Scenario 2: Get the top 10 Orders with the largest discount from a given customer:

- There are two different ways of doing this: one uses the Orders member and executes locally, the other uses the OrdersQuery member and executes remotely. If all of the orders are already loaded, the local query is just fine. The orders may be loaded if you have a list on a screen that contains all orders or if you’ve already accessed the “Orders” member somewhere else in code. If you’re only looking for the top 10 Orders and that’s all you’re going to load, the remote query is preferable.

VB:

' Local execution
Dim localOrders = From o In cust.Orders
                  Order By o.Discount Descending
                  Take 10

For Each ord As Order In localOrders
Next

' Remote execution
Dim remoteOrders = From o In cust.OrdersQuery
                   Order By o.Discount Descending
                   Take 10

For Each ord As Order In remoteOrders
Next

C#:

// Local execution
foreach (Order ord in (from o in cust.Orders
                       orderby o.Discount descending
                       select o).Take(10))
{
}

// Remote execution
foreach (Order ord in (from o in cust.OrdersQuery
                       orderby o.Discount descending
                       select o).Take(10))
{
}

Scenario 3: Get the top 10 Orders with the largest discount from a query:

- This is a modeled query called “CurrentOrders”. This query executes remotely.

VB:

Dim orders = From o In Me.DataWorkspace.SalesData.CurrentOrders
             Order By o.Discount Descending
             Take 10

For Each ord As Order In orders
Next

C#:

foreach (Order ord in (from o in this.DataWorkspace.SalesData.CurrentOrders()
                       orderby o.Discount descending
                       select o).Take(10))
{
}

Scenario 4: Sum Total of top 10 Orders with no Discount

- This query retrieves the top 10 orders and then aggregates them locally. The Execute() method is what executes the query to bring the orders to the caller.

VB:

Dim suborders = From o In Me.DataWorkspace.SalesData.Orders
                Where o.Discount = 0
                Order By o.OrderTotal Descending
                Take 10

Dim sumOrders = Aggregate o In suborders.Execute() Into Sum(o.OrderTotal)

C#:

decimal sumOrders = (from o in this.DataWorkspace.SalesData.Orders
                     where o.Discount == 0
                     orderby o.OrderTotal descending
                     select o).Take(10).Execute().Sum(o => o.OrderTotal);

Scenario 5: Sum Totals of top 10 Orders with no Discount from a Customer

- Again, there are two different ways of doing this, one using the Orders member and the other using the OrdersQuery member. The Orders version runs entirely locally; the OrdersQuery version filters, sorts, and takes the top 10 remotely, then sums locally. The same rule applies as in Scenario 2: if all of the orders are already loaded, the local query is just fine. If you’re only looking for the sum of the top 10 Orders and that’s all you’re going to load, the remote query is preferable.

VB:

' Local execution
Dim sumLocal = Aggregate o In cust.Orders
               Where o.Discount = 0
               Order By o.OrderTotal Descending
               Take 10 Into Sum(o.OrderTotal)

' Remote & local execution
Dim ordersQuery = From o In cust.OrdersQuery
                  Where o.Discount = 0
                  Order By o.OrderTotal Descending
                  Take 10

Dim sumRemote = Aggregate o In ordersQuery.Execute() Into Sum(o.OrderTotal)

C#:

// Local execution
decimal sumLocal = (from o in cust.Orders
                    where o.Discount == 0
                    orderby o.OrderTotal descending
                    select o).Take(10).Sum(o => o.OrderTotal);

// Remote & local execution
decimal sumRemote = (from o in cust.OrdersQuery
                     where o.Discount == 0
                     orderby o.OrderTotal descending
                     select o).Take(10).Execute().Sum(o => o.OrderTotal);

Scenario 6: Sum Totals of top 10 Orders with no Discount from a query

- This is very similar to the previous scenario, except that calling the modeled query always executes remotely. The sum is still performed locally.

VB:

Dim orders = From o In Me.DataWorkspace.SalesData.CurrentOrders
             Where o.Discount = 0
             Order By o.OrderTotal Descending
             Take 10

Dim sumOrders = Aggregate o In orders.Execute() Into Sum(o.OrderTotal)

C#:

decimal sumOrders = (from o in this.DataWorkspace.SalesData.CurrentOrders()
                     where o.Discount == 0
                     orderby o.OrderTotal descending
                     select o).Take(10).Execute().Sum(o => o.OrderTotal);

I hope these scenarios have helped you see how the LightSwitch query programming model is there to help you write the queries you need while helping to keep things as performant as possible.


Windows Azure Infrastructure and DevOps

David Pallman reported Relaunching AzureDesignPatterns.com with HTML5 and Expanded Content on 10/3/2011:

For several years now I’ve maintained a catalog of design patterns for Windows Azure (Microsoft’s cloud computing platform) at AzureDesignPatterns.com. I’ve recently overhauled the site with expanded content, and it is now HTML5-based. Since this is a work in progress, you can still access the former site.

Expanded Content

Expanded content on each pattern makes the site a lot more useful. You’ll find the typical sections found in most design pattern catalogs: problem, solution, analysis, and examples.

The patterns are arranged topically (Compute, Storage, Relational Data, Communication, Security, Network, and Application). Currently the Compute patterns are up on the site, and content for the other categories is on its way. The navigation panel on the left allows you to open one of these categories and quickly find the pattern you want, and it works equally well with touch or mouse. By keyboard, you can enter the name of a pattern in the search area with auto-complete.

This content ties in closely to my upcoming Windows Azure architecture book which is nearing completion. The book includes two parts. Part 1 describes the Windows Azure platform services and the architecture of applications that use it. Part 2 is a comprehensive design pattern catalog.

HTML5 Overhaul

I’ve recreated the site as an HTML5 site for several reasons. First, to broaden its reach: AzureDesignPatterns.com is now accessible across PC browsers as well as touch devices like tablets and phones. This is achieved by avoiding the use of plugins, handling backward compatibility with Modernizr, and using fluid layout. The site selects one of four CSS layouts (desktop, tablet-landscape, tablet-portrait, or phone) to best fit the device it is rendering on. Layout areas and type are proportionally sized in accordance with the principles of responsive web design. Second, I’m going deep on HTML5 this year, and upgrading my existing sites is one way to practice and learn. This is my first “real” HTML5 experiment.

I found some nice shared resources online that I incorporated into the site. I made use of the Less Framework to plan my device layouts and create my baseline CSS. To provide auto-complete on keyboard input in the search area, I adapted this auto-complete control. For animating the cloudscape behind the navigation panel, I used this animation example that leverages the MooTools JavaScript framework.

Hosting in Windows Azure

It would be kind of hypocritical for a design patterns site for Windows Azure not to be hosted in Windows Azure. However, this is a content-only site with no server-side logic. It doesn’t actually require hosting in the Compute service, and we can save some expense by serving the site from low-cost Windows Azure Blob Storage, a technique I've previously written about. The same technique was used for the former Silverlight-based site.

I hope you enjoy the new Azure Design Patterns site and find [it] useful.

Lori MacVittie (@lmacvittie) asserted “The secret to live migration isn’t just a fat, fast pipe – it’s a dynamic infrastructure” in an introduction to her Live Migration versus Pre-Positioning in the Cloud post of 10/3/2011 to F5’s DevCentral blog:

Very early on in the cloud computing hype cycle we posited different use cases for the “cloud.” One that remains intriguing, and is increasingly possible thanks to a better understanding of the challenges associated with the process, is cloud bursting. The first time I wrote about cloud bursting and detailed the high-level process, the inevitable question that remained was, “Well, sure, but how did the application get into the cloud in the first place?”

Back then there was no good answer because no one had really figured it out yet.

Since that time, however, many niche solutions have grown up that provide just that functionality, in addition to the ability to achieve such a “migration” using virtualization technologies. You just choose a cloud and click a button and voila!

Yeah. Right. It may look that easy, but under the covers there are a lot more details involved than might at first meet the eye, especially when we’re talking about live migration.

LIVE MIGRATION versus PRE-POSITIONING

Many architecture-based cloud bursting solutions require pre-positioning of the application. In other words, the application must have been transferred into the cloud before it was needed to fulfill additional capacity demands on applications experiencing suddenly high volume. It assumes, in a way, that operators are prescient and budgets are infinite. While it’s true you only pay when an image is active in the cloud, there can be storage costs associated with pre-positioning as well as the inevitable wait time between seeing the need and filling the need for additional capacity. That’s because launching an instance in a cloud computing environment is never immediate. It takes time, sometimes as long as ten minutes or more. So either your operators must be able to see ten minutes into the future, or the challenge for which you’re implementing a cloud bursting strategy (handling overflow) may not be addressed by that strategy.

Enter live migration. Live migration of applications attempts to remove the issues inherent in pre-positioning (or no positioning at all) by migrating on demand to a cloud computing environment while maintaining the availability of the application. That means the architecture must be capable of:

- Transferring a very large virtual image across a constrained WAN connection in a relatively short period of time

- Launching the cloud-hosted application

- Recognizing the availability of the cloud-hosted application and somehow directing users to it

- Siphoning users off (quiescing) the cloud-hosted application instance when demand decreases

- Taking the cloud-hosted application down when no more users are connected to it

Reading between the lines, you should see a common theme: collaboration. The ability to recognize and act on what are essentially “events” occurring in the process requires awareness of the process and a level of collaboration traditionally not found in infrastructure solutions.
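One way to make those “events” concrete is to model the live migration lifecycle from the list above as a state machine. This is a minimal C# sketch only: the phase and event names and the LiveMigration helper are hypothetical and do not come from F5 or any cloud product. In a real deployment each transition would depend on information shared between global and local application delivery services, which is exactly the collaboration being described.

```csharp
using System;

// Hypothetical phases, one per capability in the live migration list.
public enum MigrationPhase { Transferring, Launching, Serving, Draining, Stopped }

// Hypothetical events the collaborating infrastructure must detect and share.
public enum MigrationEvent { ImageTransferred, InstanceHealthy, DemandDecreased, LastUserDisconnected }

public static class LiveMigration
{
    // Returns the next phase, or throws if the event is not valid in the current phase.
    public static MigrationPhase Advance(MigrationPhase phase, MigrationEvent evt) =>
        (phase, evt) switch
        {
            (MigrationPhase.Transferring, MigrationEvent.ImageTransferred) => MigrationPhase.Launching,
            // Users are directed to the cloud instance only after it is recognized as available.
            (MigrationPhase.Launching, MigrationEvent.InstanceHealthy) => MigrationPhase.Serving,
            // Quiesce: stop sending new users while existing sessions finish.
            (MigrationPhase.Serving, MigrationEvent.DemandDecreased) => MigrationPhase.Draining,
            (MigrationPhase.Draining, MigrationEvent.LastUserDisconnected) => MigrationPhase.Stopped,
            _ => throw new InvalidOperationException($"Event {evt} is not valid in phase {phase}.")
        };

    // New users are routed to the cloud-hosted instance only while it is serving.
    public static bool AcceptsNewUsers(MigrationPhase phase) => phase == MigrationPhase.Serving;
}
```

Rejecting out-of-order events is a design choice in this sketch; it keeps traffic from being sent to an instance that has not yet been confirmed healthy.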

CLOUD is an EXERCISE in INFRASTRUCTURE INTEGRATION

Sound familiar? It should. Live migration, and even the ability to leverage pre-positioned content in a cloud computing environment, is at its core an exercise in infrastructure integration. There must be collaboration and sharing of context, automation as well as orchestration of processes to realize the benefits of applications deployed in “the cloud.” Global application delivery services must be able to monitor and infer the health at the site level, and in turn local application delivery services must monitor and infer the health and capacity of the application if cloud bursting is to successfully support the resiliency and performance requirements of application stakeholders, i.e. the business.

The relationship between capacity, location, and performance of applications is well-known. The problem is pulling all the disparate variables together from the client, application, and network components which individually hold some of the necessary information – but not all. These variables comprise context, and it requires collaboration across all three “tiers” of an application interaction to determine on-demand where any given request should be directed in order to meet service level expectations. That sharing, that collaboration, requires integration of the infrastructure components responsible for directing, routing, and delivering application data between clients and servers, especially when they may be located in physically diverse locations.

As customers begin to really explore how to integrate and leverage cloud computing resources and services with their existing architectures, it will become more and more apparent that at the heart of cloud computing is a collaborative and much more dynamic data center architecture, and that without the ability not just to automate and orchestrate, but to integrate and collaborate infrastructure across highly diverse environments, cloud computing (aside from SaaS) will not achieve the success predicted for it.

Jay Fry (@jayfry3) asked Bridging the mobility (and fashion) divide: can enterprise IT think more like the consumer world? in a 10/2/2011 post:

GigaOm’s Mobilize 2011 conference last week seemed to be a tale of two worlds – the enterprise world and the consumer world – and how they can effectively incorporate mobility into their day-to-day business. And in some cases, how they are failing to do that.

I could feel that some of the speakers (like Steve Herrod of VMware and Tom Gillis of Cisco) were approaching some of the mobility issues on the table with their traditional big, complex, enterprise-focused world firmly in view. Of course, that approach also values robustness, reliability, and incremental improvements. It’s what enterprises and their IT departments reward, and rightly so.

But there was another group of speakers at Mobilize, too: those who come at things with the consumer world front and center. Mobility was certainly not optional for these guys. Another telling difference: the first thing on the mind of these folks was user experience. This included the speakers from Pandora, Twitter, and Instagram, among others.

Even fashion was a dead give-away

In what seemed like an incidental observation at first, I’d swear you could tell what side of this enterprise/consumer divide someone would fall on based on how Mobilize speakers and attendees were dressed. The enterprise-trained people in the room (and I have no choice but to begrudgingly put myself in this category) were sporting dress shirts, slacks, and shiny shoes. Those that were instead part of the mobile generation were much more casual, in a simplistically chic sort of way. Jeans, definitely. Plus a comfortable shirt that looked a bit hipper. And most definitely not tucked in.

This latter group talked about getting to the consumer, with very cool ideas and cooler company names, putting a premium on the user experience. Of course, many of these were also still in search of a real, sustainable business model.

So, GigaOm did a good job of bringing these two camps together, and giving them a place to talk through the issues. The trick now? Make sure the two contingents don’t talk past each other and instead learn what the other has to offer to bridge this divide.

Impatience with the enterprise IT approach?

In conversations with blogger and newly minted GigaOm contributor Dave O’Hara (@greenm3) and others at the event, I got a feeling that some of the folks immersed in the mobile side of the equation don’t have a good feel for the true extent of what enterprise adoption of a lot of these still-nascent technologies can mean, revenue-wise especially. Nor do they have a good understanding of all the steps required to make it happen in big IT organizations.

Getting enterprises to truly embrace what mobility can mean for them faces many of the same hurdles I’ve seen over the past few years with cloud computing. Even if the concepts seem good, enterprise adoption is not always as simple as it seems like it should be. Or as fast as those with consumer experience would expect or want.

That’s where folks with an enterprise bent can, I think (selfishly, probably), play a useful role. If you can get enterprise IT past the initial knee-jerk “no way are you bringing that device into my world” reaction, there are some great places where these new, smart, even beautiful mobile devices could make a difference.

Getting the enterprises to listen

The mobile trends being identified at Mobilize 2011 were on target in many cases, but in some cases even the lingo could have rubbed those with enterprise backgrounds the wrong way – or seemed slightly tone-deaf to what enterprises have to deal with.

Olof Schybergson (@Olof_S), CEO of Fjord, made some really intriguing points, for example, about key mobile service trends: digital is becoming physical. The economy of mash-up services needs orchestrators. Privacy is now a kind of currency. And, the user is the new operating system when it comes to thinking about mobile services.

There were many good thoughts there that IT guys in a large organization would probably take as logical, or even a given. But that last point, the bit about the user being an OS, just doesn’t ring true, and would probably get a few of the enterprise IT guys to scratch their heads.

The user isn’t the OS; he or she is the design point and the most important entity – the one calling the shots. The user is really the focal point of the design for integrating mobile devices into the existing environment.

Consumerization is pressuring enterprise mobility

But many of the right issues came up in Philippe Winthrop’s panel on mobility in the enterprise.

Bob Tinker from MobileIron believed that this is indeed all coming together nicely and we’ll look back and see that “2011 was the year that mobile IT was born. It was the year that the IT industry figured out mobile. It’s the year the mobile industry figured out IT.” Why? For no other reason than there is no other option. And, people are themselves becoming more tech savvy, something he called the “ITization of the consumer.”

Chuck Goldman from Apperian noted that there is considerable pressure on the C-suite in large enterprises not just to begin to figure out how to incorporate a broad array of mobile devices, but to “build apps that are not clunky.”

Tinker agreed that the IT consumerization effect is significant. “Users are expecting the same level of mobility they have in their consumer world in their workplace. And I think the ramifications for this are fairly profound.” That seems to be the underlying set-up for much of what’s happening in the enterprise around mobility, for sure.

Mobility is causing disruption…and opportunity

Which raises the question: who is looking at the integration of consumer and enterprise approaches in the right way to bridge this gap? At Mobilize, Cisco and VMware certainly were talking about doing so. I saw tweets from the Citrix analyst symposium the week before about some of the efforts they are making to connect the dots here.

But mobile will turn IT on its head, said Tinker in the panel. And it will rearrange the winners and losers in the vendor space along the way. “Look at the traditional IT industry and ask how will they adapt to mobile. Many of them will not,” said Tinker.

To me, this signals a market with a lot of opportunity. Especially to innovate in a way or at a speed that makes it hard for some of these larger companies to deliver on. Frankly, it’s one of the opportunity areas that my New Thing is definitely immersed in. And I expect other start-ups to do the same.

Another golden chance for IT to lead

Apperian’s Goldman believes that this is a great chance for IT, in much the same way that I’ve argued cloud computing can be. The move to adopt mobility as part of a company’s mainstream way of delivering IT means that “IT has an opportunity here that is golden,” said Goldman. “It gives them the opportunity to be thought leaders. Once you start doing that, your employees start loving IT and that love translates into good will” and impacts your organization’s top and bottom lines.

Loving IT? To most, that sounds like crazy talk.

It certainly won’t be easy. Getting ahead of the curve on IT consumerization and mobility requires a bit of imagination on the side of the enterprises, and a bit of patience and process-orientation by the folks who understand mobile. It will require people who have been steeped in enterprise IT, but are willing to buck the trend and try something new. It will require people with mobility chops who can sit still long enough to crack into a serious enterprise.

As for my part in this, it probably also means I’ll have to learn to wear jeans more often. Or at least leave my shirt untucked. And, frankly, I’m OK with that. I’ll keep you posted on both my take on this evolving market and my fashion sense.

Jay is now “with a stealthy cloud/mobility startup” and was formerly VP of Marketing for CA Technologies and Cassatt Corp.

David Linthicum (@DavidLinthicum) asserted “A new study shows that corporate IT is concerned about the deployment of cloud computing applications without the involvement of IT” in a deck for his Uh oh: The cloud is the new 'bring your own' tech for users article of 9/30/2011 for InfoWorld’s Cloud Computing blog:

A new study by cloud monitoring provider Opsview finds that more than two thirds of U.K. organizations are worried about something called "cloud sprawl." Cloud sprawl happens when employees deploy cloud computing-based applications without the involvement of their IT department. In the U.S., we call these "rogue clouds," but it looks like this situation is becoming an international issue -- and reflects the same "consumerized IT" trend reflected by the invasion of personal mobile devices into the enterprise in the last 18 months.

Here's my take on this phenomenon: If IT does its job, then those at the department levels won't have to engage cloud providers to solve business problems. I think that most in IT disagree with this, if my speaking engagements are any indication. However, if I were in IT and somebody told me they had to use a cloud-based product to solve a problem because they could no longer wait for IT, I would be more likely to apologize than to tell them they broke some rule. Moreover, I would follow up with guidance and learn how to use the cloud myself more effectively. …

In the rogue cloud arena, most uses of cloud computing are very tactical in nature. They might include building applications within Google App Engine to automate a commission-processing system, using a cloud-based shared-calendar system for project staffers, or using a database-as-a-service provider to drive direct marketing projects. You name it. …


Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Kevin Holman described OpsMgr: [Management Pack] Update: New Base OS MP 6.0.6957.0 adds Cluster Shared Volume monitoring, BPA, new reports, and many other changes in a 9/30/2011 post:

Get it from the download center here: http://www.microsoft.com/download/en/details.aspx?id=9296

This really looks like a nice addition to the Base OS MP’s. This update centers around a few key areas for Windows 2008 and 2008 R2:

- Adds Cluster Shared Volume discovery and monitoring for free space and availability. This is critical for those Hyper-V clusters on Server 2008 R2.

- Adds a new monitor to execute the Windows Best Practices Analyzer for different discovered installed Roles, and then generate alerts until these are resolved.

- Changes to many built in rules/monitors, to reduce noise, database space and I/O, and increase a positive “out of the box” experience. Also added a few new monitors and rules.

- Changes to the MP Views – removing some old stuff and adding some new

- Addition of some new reports – way cool

Let’s take a look at these changes in detail:

Cluster Shared Volume discovery and monitoring:

We added a new discovery and class for cluster shared volumes:

We added some new monitors for this new class:

NTFS State Monitor and State monitor are disabled by default. The guide states:

- This monitor is disabled as normally the state of the NTFS partition is not needed (Dirty State notification).

- This monitor is disabled because, when enabled, it may cause false negatives during backups of the Cluster Shared Volumes

I’d probably leave these turned off.

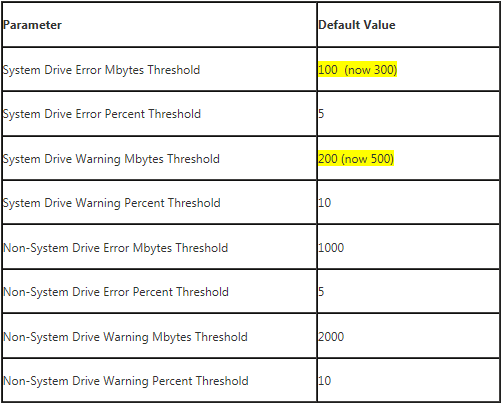

The free space monitoring for CSV’s is different than how we monitor Logical disks. This is good – because CSV’s are hosted by the cluster virtual resource name, not by the Node, as logical disks are handled. What CSV’s have is two monitors, which both run a script every 15 minutes, and compare against specific thresholds. Free space % is 5 (critical) and 10 (warning) while Free space MB is 100 (critical) and 500 (warning) by default. Obviously you will need to adjust these to what’s actionable in your Hyper-V cluster environment.

BOTH of these unit monitors act and alert independently, as seen in the above graphic for state, and below graphic for alerts:

Some notes on how free space monitoring of CSV’s works:

- Each unit monitor has state (critical or warning) and generates individual alerts (warning ONLY)

- There is an aggregate rollup monitor (Cluster Share Volume – Free Space Rollup Monitor) that will roll up WORST STATE of any member, and ALSO generate alerts, when the WORST state rolls up CRITICAL. This is how we can generate warning alerts to notify administrators, but then also generate a new, different CRITICAL alert for when error thresholds are breached. I really like this new design better than the Logical Disk monitoring…. it gives the most flexibility to be able to generate warning and critical alerts when necessary. Perhaps you only email notify the warning alerts, but need to auto-create incidents on the critical. The only downside is that if a CSV volume fills up and breaches all thresholds in a short time frame, you will potentially get three alerts.
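To make the interaction between the two unit monitors and the rollup easier to follow, here is a simplified C# model of the behavior described above. It is not Operations Manager code: the types and method names are hypothetical. Only the default thresholds (5% critical / 10% warning free, 100 MB critical / 500 MB warning free), the worst-state rollup, and the alerting pattern come from the post; treating “below threshold” as strictly less than is an assumption.

```csharp
using System.Collections.Generic;
using System.Linq;

public enum HealthState { Healthy = 0, Warning = 1, Critical = 2 }

public static class CsvFreeSpace
{
    // Default thresholds quoted in the post; "below" as strict less-than is an assumption.
    public const double PercentCritical = 5, PercentWarning = 10;
    public const double MegabytesCritical = 100, MegabytesWarning = 500;

    static HealthState Evaluate(double value, double critical, double warning) =>
        value < critical ? HealthState.Critical :
        value < warning ? HealthState.Warning : HealthState.Healthy;

    // Unit monitor 1: free space as a percentage of the volume size.
    public static HealthState PercentMonitor(double freeMb, double totalMb) =>
        Evaluate(100.0 * freeMb / totalMb, PercentCritical, PercentWarning);

    // Unit monitor 2: free space in absolute megabytes.
    public static HealthState MegabyteMonitor(double freeMb) =>
        Evaluate(freeMb, MegabytesCritical, MegabytesWarning);

    // Aggregate rollup monitor: the worst state of any member.
    public static HealthState Rollup(params HealthState[] members) => members.Max();

    // Each unhealthy unit monitor raises its own warning alert; the rollup raises a
    // separate critical alert only when the worst state is Critical.
    public static string[] Alerts(double freeMb, double totalMb)
    {
        var pct = PercentMonitor(freeMb, totalMb);
        var mb = MegabyteMonitor(freeMb);
        var alerts = new List<string>();
        if (pct != HealthState.Healthy) alerts.Add("Warning: CSV free space % below threshold");
        if (mb != HealthState.Healthy) alerts.Add("Warning: CSV free space MB below threshold");
        if (Rollup(pct, mb) == HealthState.Critical) alerts.Add("Critical: CSV free space rollup");
        return alerts.ToArray();
    }
}
```

With a 100,000 MB volume that drops to 50 MB free, both unit monitors go critical and the rollup fires as well, which reproduces the three-alert case the post mentions; at 8,000 MB free only the percentage monitor raises a warning.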

There are also collection rules for the CSV performance:

Best Practices Analyzer monitor:

A new monitor was added to run the Best Practices Analyzer. You can read more about the BPA here:

http://technet.microsoft.com/en-us/library/dd392255(WS.10).aspx

We can open Health Explorer and get detailed information on what's not up to snuff:

Alternatively – we can run this task on demand to ensure we have resolved the issues:

Changes to built in Monitors and Rules:

Many rules and monitors were changed from a default setting, to provide a better out of the box experience. You might want to look at any overrides you have against these and give them a fresh look:

- “Logical Disk Availability Monitor” renamed to “File System error or corruption”

- I wrote more about this monitor here: http://blogs.technet.com/b/kevinholman/archive/2010/07/29/logical-disk-availability-is-critical-what-does-this-mean.aspx

- “Avg Disk Seconds per Write/Read/Transfer” monitors changed from Average Threshold monitortype to Consecutive Samples Threshold monitortype.

- This is VERY good – this stops all the noise for the default enabled Sec/Transfer monitor, caused by momentary perf spikes.

- The default threshold is set to “0.04” which is 40ms latency. This is a good generic rule of thumb for the typical server.

- The default sample rate is once per minute, for 15 consecutive samples.

- Note – make sure you implement or at least evaluate hotfixes 2470949 or 2495300 for 2008R2 and 2008 Operating systems, which affect these disk counters.

- Make sure you look at any overrides you had previously set on these – as they likely should be reviewed to see if they are still needed.

- Disabled “Percentage Committed Memory in Use” monitor

- This monitor used to change state when more than 80% of memory was utilized. This created unnecessary noise due to the fact that more and more server roles (SQL, Exchange) utilize all available memory, and this monitor was not always actionable.

- Disabled “Total Percentage Interrupt Time” and “Total DPC Time Percentage”.

- These monitors would often generate alert and state noise in heavily virtualized environments, especially when the CPU’s are oversubscribed or heavily consumed temporarily. These were turned off by default, because there are better performance counters at the Hypervisor host level to track this condition than these OS level counters.

- Added “Free System Page Table Entries” and “Memory Pages per Second” monitors. These are both enabled out of the box to track excessive paging conditions. Also added MANY perf collection rules targeting memory counters, some disabled by default, some enabled.

- “Total CPU Utilization Percentage” monitor was increased from 3 to 5 samples. The timeout was shortened from 120 to 100 seconds (to be less than the interval of 120 seconds).

- Disabled the following perf counter collection rules by default:

- Avg Disk Sec/Write

- Avg Disk Sec/Read

- Disk Writes Per Second

- Disk Reads Per Second

- Disk Bytes Per Second

- Disk Read Bytes Per Second

- Disk Write Bytes Per Second

- Average Disk Read Queue Length

- Average Disk Write Queue Length

- Average Disk Queue length

- Logical Disk Split I/O per second

- Memory Commit Limit

- Memory Committed Bytes

- Memory % Committed Bytes in use

- Memory Page Reads per Second

- Memory Page writes per second

- Page File % use

- Pages Input per second

- Pages output per second

- System Cache Resident Bytes

- System Context Switches per second

- Enabled the following perf counter collection rules by default:

- Memory Pool Paged Bytes

- Memory Pool Non-Paged bytes
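The switch of the “Avg Disk Seconds per Write/Read/Transfer” monitors from an average threshold to a consecutive-samples threshold, noted in the list above, is what removes the noise from momentary spikes. The simplified C# comparison below uses the new defaults from the post (0.04 seconds, sampled once per minute, 15 consecutive samples). The averaging window passed to AverageBreached is a hypothetical parameter for illustration, and neither method is the actual OpsMgr monitor type.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class DiskLatencyMonitor
{
    public const double ThresholdSeconds = 0.04; // 40 ms, the new default
    public const int ConsecutiveSamples = 15;     // one sample per minute

    // Consecutive samples: unhealthy only if each of the last N samples exceeds the threshold.
    public static bool ConsecutiveBreached(IReadOnlyList<double> samples,
                                           double threshold = ThresholdSeconds,
                                           int count = ConsecutiveSamples) =>
        samples.Count >= count &&
        samples.Skip(samples.Count - count).All(s => s > threshold);

    // Average over a (hypothetical) window: one large spike can drag the mean over the threshold.
    public static bool AverageBreached(IReadOnlyList<double> samples, int window,
                                       double threshold = ThresholdSeconds) =>
        samples.Count >= window &&
        samples.Skip(samples.Count - window).Average() > threshold;
}
```

Fourteen 10 ms samples followed by a single 500 ms spike push a five-sample average to about 108 ms and trip the average check, but the consecutive-samples check stays healthy; only sustained latency above 40 ms for 15 minutes changes its state.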

A full list of all disabled rules, monitors and discoveries is available in the guide in the Appendix section. The disabling of all these logical disk and memory perf collections is AWESOME. This MP really collected more perf data than most customers were ready to consume and report on. By including these collection rules, but disabling them, we are saving LOTS of space in the databases, valuable transactions per second in SQL, network bandwidth, etc. Good move. If a customer desires them, they are already built and a quick override to enable them is all that’s necessary. Great work here. I’d like to see us do more of this out of the box from a perf collection perspective.

Changes to MP views:

The old on the left – new on the right:

Top level logical disk and network adapter state views removed.

Added new views for Cluster Shared Volume Health, and Cluster Shared Volume Disk Capacity.

New Reports! Performance by system, and Performance by utilization:

There are two new reports deployed with this new set of MP’s, provided you import the new reports MP that ships with this download (available only from the MSI and not the catalog).

To run the Performance by System report – open the report, select the time range you’d like to examine data for, and click “Add Object”. This report has already been filtered to return only Windows Computer objects. Search based on computer name, and add in the computer objects that you’d like to report on. On the right – you can pick and choose the performance objects you care about for these systems. We can even show you if the performance value is causing an unhealthy state – such as my Avg % memory used – which is yellow in the example:

Additionally – there is a report for showing you which computers are using the most, or the least resources in your environment. Open “Performance by Utilization”, select a time range, choose a group that contains Windows Computers, and choose “Most”. Run that, and you get a nice dashboard – with health indicators – of which computers are consuming the most resources, and potentially also impacted by this:

Using the report below – I can see I have some memory issues impacting my Exchange server, and my Domain Controller is experiencing disk latency issues.

By clicking the DC01 computer link in the above report – it takes me to the “Performance by System” report for that specific computer – very cool!

Summary:

In summary – the Base OS MP is already a rock solid management pack. This update makes some key changes to make the MP even less noisy out of the box, and adds critical support for discovering and monitoring Cluster Shared Volumes.

Known Issues in this MP:

1. A note on upgrading these MP’s – I do not recommend using the OpsMgr console to show “Updates available for Installed Management Packs”. The reason for this is that the new MP’s shipping with this update (for CSV’s and BPA) are shipped as new, independent MP’s… and will not show up as needing an update. If you use the console to install the updated MP’s – you will miss these new ones. This is why I NEVER recommend using the Console/Catalog to download or update MP’s… it is a worst practice in my personal opinion. You should always download the MSI from the web catalog at http://systemcenter.pinpoint.microsoft.com and extract the MP’s from it – otherwise you will likely end up missing MP’s you need.

2. There might be an issue when you try to execute the reports:

An error has occurred during report processing

Query execution failed for dataset ‘PerfDS’ or Query execution failed for dataset ‘PerformanceData’

The EXECUTE permission was denied on the object ‘Microsoft_SystemCenter_Report_Performace_By_System’, database ‘OperationsManagerDW’, schema ‘dbo’.

I recommend enabling remote errors on your reporting server so the report output will show you the full details of the error: http://technet.microsoft.com/en-us/library/aa337165.aspx (without remote errors enabled – you might only see the top two lines of the error above).

This is due to a security permission on the new stored procedures which are deployed with this report. Thanks to PFE Tim McFadden for bringing this to my attention and to PFE Antoni Hanus for determining a resolution before we even had a chance to look into it:

- Open SQL Mgmt Studio and connect to the SQL server hosting the Data Warehouse (OperationsManagerDW)

- Navigate to OperationsManagerDW > Programmability > Stored Procedures > dbo.Microsoft_SystemCenter_Report_Performace_By_System

- Right click dbo.Microsoft_SystemCenter_Report_Performace_By_System and choose Properties

- Click the Permissions page. Click the Search button. Click Browse. Check [OpsMgrReader] and click OK. Click OK again.

- Click the check box in the Grant column for EXECUTE in the Permission row. It should look like this:

- Click OK

- Repeat the steps above, from navigating to the stored procedure onward, for the stored procedure dbo.Microsoft_SystemCenter_Report_Performace_By_Utilization
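If you would rather script the fix than click through SSMS, the same permission can be granted with a T-SQL `GRANT EXECUTE` against OperationsManagerDW. The minimal Python sketch below only generates those statements for you to review and run in a query window. It assumes the database role is named OpsMgrReader and that the two stored procedure names match the ones listed above (including the “Performace” spelling), so verify both in your own data warehouse first.

```python
# Sketch: emit T-SQL that grants EXECUTE on the two new report stored procedures.
# Assumptions: database OperationsManagerDW and database role [OpsMgrReader];
# check both names in your own environment before running the output.

PROCEDURES = [
    "dbo.Microsoft_SystemCenter_Report_Performace_By_System",
    "dbo.Microsoft_SystemCenter_Report_Performace_By_Utilization",
]


def grant_statements(role: str = "OpsMgrReader", procedures=PROCEDURES) -> list[str]:
    """Return one GRANT EXECUTE statement per stored procedure."""
    return [f"GRANT EXECUTE ON OBJECT::{proc} TO [{role}];" for proc in procedures]


if __name__ == "__main__":
    # Paste the printed script into a query window connected to the DW server.
    print("USE OperationsManagerDW;")
    print("GO")
    for statement in grant_statements():
        print(statement)
```

Generating the text rather than executing it keeps a human in the loop, which is the same level of control the SSMS dialog gives you.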

If you are getting a specific error about “System.Data.SqlClient.SqlException: Procedure or function Microsoft_SystemCenter_Report_Performace_By_Utilization has too many arguments specified” that is still under investigation.

3. The logical disk free space monitortypes for both Windows 2003 and Windows 2008 were re-written. They were changed to a consecutive samples monitortype. However – in doing the modifications – several changes were made that might cause an impact:

The following three overridable properties were changed:

- DebugFlag – removed

- TimeoutSeconds – removed

- SystemDriveWarningMBytesThreshold – renamed to “SystemDriveWarningMBytesTheshold” (I am sure this wasn’t by design)

If you previously had overrides referencing any of these properties, you might get an error when importing or modifying your existing override MP:

Date: 10/3/2011 2:14:21 PM

Application: System Center Operations Manager 2007 R2

Application Version: 6.1.7221.81

Severity: Error

Message:: Verification failed with [1] errors:

-------------------------------------------------------

Error 1:

: Failed to verify Override [OverrideForMonitorMicrosoftWindowsServer2003LogicalDiskFreeSpaceForContextMicrosoftWindowsServer2003LogicalDisk02b92b47f8f74b2393f88f6a673823f5].

Cannot find OverridableParameter with name [SystemDriveWarningMBytesThreshold] defined on [Microsoft.Windows.Server.2003.FreeSpace.Monitortype]

-------------------------------------------------------

You will be stuck and will not be able to save any more overrides to that MP until you resolve the issue.

You MUST export the XML of your broken override MP at this point. In the XML – search for: “SystemDriveWarningMBytesThreshold”

Modify the following:

Parameter="SystemDriveWarningMBytesThreshold"

change it to:

Parameter="SystemDriveWarningMBytesTheshold"

Save the modified XML and reimport it (always save a backup copy FIRST before making any changes!). You will now be able to use your existing override MP again.
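For anyone with several override MPs to fix, the search-and-replace above can be scripted. This is a minimal Python sketch, not an official tool: it assumes the exported override MP is a UTF-8 XML file on disk (the file name in the usage line is hypothetical), copies it to a `.bak` backup first, and then rewrites only the exact `Parameter="SystemDriveWarningMBytesThreshold"` attribute text.

```python
import shutil
from pathlib import Path

OLD_ATTR = 'Parameter="SystemDriveWarningMBytesThreshold"'
# The updated MP really does spell the parameter "Theshold" (missing the r).
NEW_ATTR = 'Parameter="SystemDriveWarningMBytesTheshold"'


def fix_parameter_name(xml_text: str) -> tuple[str, int]:
    """Return the corrected XML text and the number of attributes renamed."""
    count = xml_text.count(OLD_ATTR)
    return xml_text.replace(OLD_ATTR, NEW_ATTR), count


def fix_override_mp(path: str) -> int:
    """Back up the exported MP, then rewrite it in place; returns the rename count."""
    source = Path(path)
    # Backup FIRST: MyOverrides.xml -> MyOverrides.xml.bak
    shutil.copy2(source, source.with_name(source.name + ".bak"))
    fixed, count = fix_parameter_name(source.read_text(encoding="utf-8"))
    source.write_text(fixed, encoding="utf-8")
    return count
```

Usage: `fix_override_mp("MyOverrides.xml")`, then reimport the file. A return value of 0 means the MP never referenced the renamed property.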

If your issue was caused by overrides on TimeoutSeconds or DebugFlag – then simply delete those overrides in the XML.
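Deleting those overrides can be scripted too. This is a minimal sketch using Python’s standard `xml.etree.ElementTree`. It assumes the overrides appear as `MonitorConfigurationOverride` elements carrying a `Parameter` attribute (an assumption about the MP schema, so check your exported XML) and that nothing else in the MP references their IDs. ElementTree drops comments and the XML declaration when it re-serializes, so diff the output against your backup before reimporting.

```python
import xml.etree.ElementTree as ET

# Overridable parameters that no longer exist on the rewritten free space monitortypes.
REMOVED_PARAMETERS = {"TimeoutSeconds", "DebugFlag"}


def remove_obsolete_overrides(xml_text: str) -> str:
    """Drop monitor overrides that target removed parameters; return the new XML."""
    root = ET.fromstring(xml_text)
    # Snapshot every element first so removals cannot disturb the traversal.
    for parent in list(root.iter()):
        for child in list(parent):
            if (child.tag == "MonitorConfigurationOverride"
                    and child.get("Parameter") in REMOVED_PARAMETERS):
                parent.remove(child)
    return ET.tostring(root, encoding="unicode")
```

Overrides on any other parameter, including the renamed SystemDriveWarningMBytesTheshold, are left untouched.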

4. The knowledge is out of date for the new default values in the free space monitors. The changed values are referenced below:

5. The BPA monitors can be noisy for Server 2008 R2 systems.

The new BPA monitor runs a PowerShell script that calls the built-in BPA in the Server 2008 R2 operating system. It runs this once per day. It cannot filter out known configurations or BPA issues that you choose not to resolve: while the BPA UI lets you create exclusions for specific issues in the BPA results, this monitor does not support that functionality. As a result, this monitor could cause a large percentage of your servers to generate an alert and enter a warning state. It is designed as a very simple monitor to bring attention to the BPA in Server 2008 R2, and to recommend adherence to best practices. If you don’t want this monitor to generate alerts or affect health state – then disable it via overrides.

6. The “Performance by Utilization” report section dealing with Logical Disk % Idle Time is flip-flopped. In “Most Utilized” it reports “100%” as the highest, descending to smaller numbers, when in fact a disk that is 100% idle is NOT utilized at all. The same issue shows up in the “Least Utilized” report model. So for now – these specific values aren’t helpful. However, as a workaround – you can still run a “Performance Top Objects” report for this same counter, and choose “Top N” and “Bottom N” in the report to get at the same data.
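To see why the ranking is upside down: % Idle Time measures how much of the time the disk is *not* busy, so a utilization ranking has to sort on the complement (100 minus % Idle Time), or equivalently sort % Idle Time ascending. A small Python sketch with made-up sample values (the computer names and numbers are hypothetical, not report output):

```python
def rank_by_disk_busy(idle_pct: dict[str, float], most: bool = True) -> list[tuple[str, float]]:
    """Rank computers by disk busy time, derived as 100 - % Idle Time."""
    busy = {name: 100.0 - idle for name, idle in idle_pct.items()}
    # "Most utilized" means highest busy percentage first.
    return sorted(busy.items(), key=lambda item: item[1], reverse=most)


samples = {"SRV-A": 100.0, "SRV-B": 20.0, "SRV-C": 55.0}  # hypothetical % Idle Time
print(rank_by_disk_busy(samples))
# [('SRV-B', 80.0), ('SRV-C', 45.0), ('SRV-A', 0.0)]
```

A 100% idle disk lands at the bottom of “Most Utilized”, which is the opposite of what the report currently shows.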

<Return to section navigation list>

Cloud Security and Governance

<Return to section navigation list>

Cloud Computing Events

The HPC in the Cloud blog reported Oracle Executives to Outline Oracle's Comprehensive Cloud Strategy in a 10/2/2011 press release:

- On Thursday, October 6, 2011, during Oracle OpenWorld, Oracle senior executives will outline Oracle's comprehensive cloud strategy and roadmap.

- The Oracle Powers the Cloud event includes more than 25 cloud sessions, 15 cloud demos and an Executive keynote address.

- Executives will discuss how Oracle offers the broadest, most complete integrated offerings for enterprise cloud computing.

- Oracle provides a comprehensive strategy that includes both private and public clouds, and all cloud layers -- software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS).

- Oracle OpenWorld full-conference registrants can attend the Oracle Powers the Cloud event at no additional charge.

About Oracle OpenWorld

Oracle OpenWorld San Francisco, the information technology event dedicated to helping businesses optimize existing systems and understand upcoming technology breakthroughs, draws more than 45,000 attendees from 117 countries. Oracle OpenWorld 2011 offers more than 2000 educational sessions, 400 product demos, exhibitions from 475 partners showcasing applications, middleware, database, server and storage systems, industries, management and infrastructure -- all engineered for innovation. Oracle OpenWorld 2011 is being held October 2-6 at The Moscone Center in San Francisco. For more information or to register please visit www.oracle.com/openworld. Watch Oracle OpenWorld live on YouTube for keynotes, sessions and more at www.youtube.com/oracle.

About Oracle

Oracle is the world's most complete, open, and integrated business software and hardware systems company. For more information about Oracle, please visit our Web site at http://www.oracle.com.

Laid on a bit thickly when you consider Larry Ellison’s earlier derisive statements about cloud computing (see below).

Joe Panettieri reported Oracle Touts Hybrid Cloud, Mobile Strategy at OpenWorld in a 10/2/2011 post from Oracle OpenWorld 2011:

Oracle Fusion Applications are the best-designed applications for on-premise, cloud and mobile platforms, asserted Steve Miranda, senior VP, applications development, Oracle. Miranda made the assertion during Oracle PartnerForum, part of the broader Oracle OpenWorld conference that opened today in San Francisco.

“Fusion is the only app software that uses the exact same code base on-premise and in the cloud,” said Miranda. He told roughly 4,500 Oracle channel partners that Oracle Fusion Applications are fully mobile enabled, running in a web browser on iPads and other mobile devices — with no coding changes required.

True Believers

Meanwhile, Oracle is putting the spotlight on cloud software partners that have optimized their applications for Oracle Exadata servers. Oracle Channel Chief Judson Althoff claimed that Exadata and Exalogic systems deliver 10X to 20X performance increases to help partners “transform customers’ data centers.”

To build his case, Althoff invited three Oracle ISVs onto the stage — at least two of which have bet heavily on cloud computing.

1. Alon Aginsky, CEO, cVidya: The company has launched a cloud application that allows customers and service providers to gain “revenue intelligence.” cVidya is looking at Exadata to help small service providers gain economies of scale against big rivals.

2. Doug Daugherty, CTO, Triple Point: The company focuses on commodity and risk management solutions, including SaaS solutions. “Our tests have completely changed how we’re approaching performance,” said Daugherty.

The database has always been the bottleneck when something is performance-intensive, he noted. “When we ran on Exadata, the five-hour process came back down to 51 minutes at 30 percent utilization through the entire test,” he added.

3. Michael Meriton, CEO, GoldenSource: The company focuses on enterprise data management in capital markets. Though not necessarily a cloud solution (I think), the GoldenSource example reinforced Oracle’s push to promote scalable Exadata and Exalogic solutions.

The hybrid cloud chatter is just starting at Oracle OpenWorld. CEO Larry Ellison is set to keynote twice — once today (Oct. 2) and again on Oct. 4. Pundits think Ellison will focus heavily on Oracle’s cloud strategy on Oct. 4.


Eric Nelson (@ericnel) posted Slides and Links from UK BizSpark Azure Camp Sep 30th on 10/3/2011:

On Friday David and I delivered a new session as part of a great agenda for the BizSpark Azure Camp. Our session was “10 Great Questions to ask about the Windows Azure Platform”.

A big thank you to everyone who attended and asked such great questions – and also to the other speakers for making it a great day.

One of the things we discussed was “next steps”. I would recommend the monthly Windows Azure Discovery Workshops if you are new to the platform and would like to explore the possibilities whilst getting a more detailed grounding in the technology. They take place in Reading, are completely FREE and the next is on October the 11th. Hope to see some of you there.

Slides

Links

- Eric Nelson http://www.ericnelson.co.uk

- David Gristwood http://blogs.msdn.com/david_gristwood

- Get better connected with our team http://bit.ly/ukisvfirststop (Blog/Twitter/LinkedIn/FREE Events)

- 2b Will it run Foo?

- Umbraco on Azure http://uktechdays.cloudapp.net/home.aspx

- http://things.smarx.com/