Windows Azure and Cloud Computing Posts for 9/7/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

• Updated 9/7/2011 4:30 PM with articles marked • by Walter Myers III, the Windows Azure Platform Appliance Team, Windows Azure Team and Avkash Chauhan.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, WF, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Steve Marx (@smarx) described Building a Task Scheduler in Windows Azure in a 9/7/2011 post:

In my last blog post (“Managing Concurrency in Windows Azure with Leases”), I showed how blob leases can be used as a general concurrency control mechanism. In this post, I’ll show how we can use that to build a commonly-requested pattern in Windows Azure: task scheduling.

1. We should be able to add tasks in any order and set arbitrary times for each.

2. When it’s time, tasks should be processed in parallel. (If lots of tasks are ready to be completed, we should be able to scale out the work by running more instances.)

3. There should be no single point of failure in the system.

4. Each task should be processed at least once, and ideally exactly once.

Note the wording of that last requirement. In a distributed system, it’s often impossible to get “exactly once” semantics, so we need to choose between “at least once” and “no more than once.” We’ll choose the former. These semantics mean that our tasks still need to be idempotent, just like any task you use a queue for.

Representing the Schedule

Tackling requirement #1, we’ll use a table to store the list of tasks and when they’re due. We’ll store a simple entity called a ScheduledItem:

    public class ScheduledItem : TableServiceEntity
    {
        public string Message { get; set; }
        public DateTime Time { get; set; }

        public ScheduledItem(string message, DateTime time)
            : base(string.Empty, time.Ticks.ToString("d19"))
        {
            Message = message;
            Time = time;
        }

        public ScheduledItem() { }
    }

Note that we’re using a timestamp for the row key (the partition key is left empty), meaning that the natural sort order of the table will put the tasks in chronological order, with the next task to complete at the top. As we’ll see, this sort order makes it easy to query for which tasks are due.
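The reason for the "d19" format is worth a quick illustration: keys are compared as strings, so the tick count has to be zero-padded to a fixed width for string order to match time order. The following is a minimal, self-contained sketch of that idea only; the class name RowKeyOrderingSketch and the sample dates are made up for illustration, and no storage calls are involved.

```csharp
using System;
using System.Linq;

public static class RowKeyOrderingSketch
{
    // Same format string as the ScheduledItem constructor: ticks rendered
    // as a zero-padded, 19-digit decimal string.
    public static string ToRowKey(DateTime time)
    {
        return time.Ticks.ToString("d19");
    }

    public static void Main()
    {
        var times = new[]
        {
            new DateTime(2011, 9, 7, 16, 30, 0, DateTimeKind.Utc),
            new DateTime(1, 1, 1, 0, 0, 1, DateTimeKind.Utc), // very small tick count
            new DateTime(2011, 9, 6, 8, 0, 0, DateTimeKind.Utc),
        };

        // Sort by the string key (ordinal comparison) and by the actual time.
        var byKey = times.OrderBy(t => ToRowKey(t), StringComparer.Ordinal).ToArray();
        var byTime = times.OrderBy(t => t).ToArray();

        // The two orderings agree because every key has the same width.
        Console.WriteLine(byKey.SequenceEqual(byTime));

        // Without padding, string order and numeric order can disagree:
        // "9" sorts after "10".
        Console.WriteLine(string.CompareOrdinal("9", "10") > 0);
    }
}
```

Nineteen digits is enough for any DateTime tick count, which is presumably why that width was chosen.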

Managing Concurrency

We could at this point write code that polls the table, looking for work that’s ready to complete, but we have a concurrency problem. If all instances did this in a naïve way, we would end up processing tasks more than once when multiple instances saw the tasks at the same time (violating requirement #4). We could have just a single instance of the role that does the work, but that would violate requirements #2 (parallel processing) and #3 (no single point of failure).

Part of the solution is to use a queue. A queue could distribute the work in parallel to multiple worker instances. However, we’ve just pushed the concurrency problem elsewhere. We still need a way to move items from the task list onto the queue in a way that avoids duplication.

Now, we have a fairly easy concurrency problem to solve with leases. We just need to make sure that only one instance at a time looks at the schedule and moves ready tasks onto the queue. Here’s code that does exactly that:

    using (var arl = new AutoRenewLease(leaseBlob))
    {
        if (arl.HasLease)
        {
            // inside here, this instance has exclusive access
            var ctx = tables.GetDataServiceContext();
            foreach (var item in ctx.CreateQuery<ScheduledItem>("ScheduledItems")
                .Where(s => s.PartitionKey == string.Empty
                    && s.RowKey.CompareTo(DateTime.UtcNow.Ticks.ToString("d19")) <= 0)
                .AsTableServiceQuery())
            {
                q.AddMessage(new CloudQueueMessage(item.Message));

                // potential timing issue here: If we crash between enqueueing the message and removing it
                // from the table, we'll end up processing this item again next time. This is unavoidable.
                // As always, work needs to be idempotent.
                ctx.DeleteObject(item);
                ctx.SaveChangesWithRetries();
            }
        }
    } // lease is released here

We can safely run this code on all of our instances, knowing that the lease will protect us from having more than one instance doing this simultaneously.

Once the messages are on a queue, all the instances can work on the available tasks in parallel. (Nothing about this changes.)

All Together

Putting this all together, we have http://scheduleditems.cloudapp.net, which consists of:

- A web role inserting entities into the ScheduledItems table. Each entity has a string message and a time when it’s ready to be processed. These are maintained in sorted order by time.

- A worker role that loops forever. One instance at a time (guarded by a lease) transfers due tasks from the table to a queue, and all instances process work from that queue.

This scheme meets all of our original requirements:

- Tasks can be added in any order, since they’re always maintained in chronological sort order.

- Tasks are executed in parallel, because they’re being distributed by a queue.

- There’s no single point of failure, because any worker instance can manage the schedule.

- Because tasks aren’t removed from the table until they’re on a queue, every task will be processed at least once. In the case of failures, it’s possible for a message to be processed multiple times (if an instance fails between enqueuing a message and deleting it from the table, or if an instance fails between dequeuing a message and deleting it).
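Since a message can be delivered more than once, the work itself has to tolerate duplicates. One common way to get there is to remember which tasks have already been handled and skip repeats. The sketch below is only an illustration of that idea: the IdempotentProcessor class and the task IDs are hypothetical (the ScheduledItem above carries only a message), and a real worker would keep the completed set in durable storage rather than in memory.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory handler, not part of the post's code.
public class IdempotentProcessor
{
    private readonly HashSet<string> completed = new HashSet<string>();

    // Counts how many times the "real work" actually ran.
    public int SideEffects { get; private set; }

    // Returns true if the work was performed, false if it was a duplicate.
    public bool Process(string taskId)
    {
        if (!completed.Add(taskId))
        {
            return false; // already handled; a redelivery is a no-op
        }

        SideEffects++; // stands in for the real work
        return true;
    }

    public static void Main()
    {
        var processor = new IdempotentProcessor();

        // "task-1" arrives twice, as could happen after a crash between
        // enqueueing a message and deleting the entity from the table.
        foreach (var id in new[] { "task-1", "task-2", "task-1" })
        {
            Console.WriteLine("{0}: {1}", id,
                processor.Process(id) ? "processed" : "skipped duplicate");
        }

        Console.WriteLine("side effects: {0}", processor.SideEffects);
    }
}
```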

The mechanism presented here could certainly be improved. For example, rescheduling a task is possible by performing an insert and a delete as a batch transaction. Recurring tasks could be supported by rescheduling a task instead of deleting it once it’s been inserted onto the queue.

Feedback

The snippets of code above are really all you need to implement task scheduling, but I wonder if a reusable library would be useful for this, or if it might make sense to integrate with some existing library. Let me know on Twitter (@smarx) what you think.

[UPDATE 11:12PM PDT] I had meant to include (but forgot) a note that Windows Azure AppFabric Service Bus Queues have a built-in way to schedule when the message appears on the queue: http://msdn.microsoft.com/en-us/library/microsoft.servicebus.messaging.brokeredmessage.scheduledenqueuetimeutc.aspx

Steve Marx (@smarx) explained Managing Concurrency in Windows Azure with Leases in a 9/6/2011 post:

Concurrency is a concept many developers struggle with, both in the world of multi-threaded applications and in distributed systems such as Windows Azure. Fortunately, Windows Azure provides a number of mechanisms to help developers deal with concurrency around storage:

- Blobs and tables use optimistic concurrency (via ETags) to ensure that two concurrent changes won’t clobber each other.

- Queues help manage concurrency by distributing messages such that (under normal circumstances), each message is processed by only one consumer.

- Blob leases allow a process to gain exclusive write access to a blob on a renewable basis. Windows Azure Drives use blob leases to ensure that only a single VM has a VHD mounted read/write.

These mechanisms generally work transparently around your access to storage. As an example, if you read a table entity and then update it, the update will fail if someone else changed the entity in the meantime.

Sometimes, however, an application needs to control concurrency around a different resource (one not in storage). For example, I see a somewhat regular stream of questions from customers about how to ensure that some initialization routine is performed only once. (Perhaps every time your application is deployed to Windows Azure, you need to download some initial data from a web service and load it into a table.)

Leases for concurrency control

Of the concurrency primitives available in Windows Azure, blob leases are the most general and can be used in much the same way that an object is used for locking in a multi-threaded application. A lease is the distributed equivalent of a lock. Locks are rarely, if ever, used in distributed systems, because when components or networks fail in a distributed system, it’s easy to leave the entire system in a deadlock situation. Leases alleviate that problem, since there’s a built-in timeout, after which resources will be accessible again.
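To make the difference between a lock and a lease concrete, here is a minimal in-memory sketch of a lease with a timeout. It is not the blob lease API: the InMemoryLease class, its TryAcquire method, and the instance names are all hypothetical, and the clock is passed in explicitly so the behavior is easy to follow.

```csharp
using System;

// Hypothetical in-memory stand-in for a lease; not the blob lease API.
public class InMemoryLease
{
    private readonly TimeSpan duration;
    private string owner;
    private DateTime expiresAt;

    public InMemoryLease(TimeSpan duration)
    {
        this.duration = duration;
    }

    // Non-blocking: returns false right away if someone else holds an
    // unexpired lease. Calling it again as the current owner renews it.
    public bool TryAcquire(string requester, DateTime now)
    {
        if (owner != null && owner != requester && now < expiresAt)
        {
            return false;
        }

        owner = requester;
        expiresAt = now + duration;
        return true;
    }

    public static void Main()
    {
        var lease = new InMemoryLease(TimeSpan.FromSeconds(60));
        var start = new DateTime(2011, 9, 6, 12, 0, 0, DateTimeKind.Utc);

        // A acquires the lease.
        Console.WriteLine(lease.TryAcquire("instance-A", start));

        // B is refused while A's lease is still valid.
        Console.WriteLine(lease.TryAcquire("instance-B", start.AddSeconds(30)));

        // A never renews (say it crashed); once the timeout passes, B can take over.
        Console.WriteLine(lease.TryAcquire("instance-B", start.AddSeconds(61)));
    }
}
```

With a plain lock, the crash of instance-A in this scenario would leave the resource locked indefinitely; with the timeout, it becomes available again on its own.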

Returning to our initialization example, we might create a blob named “initialize” and use a lease on that blob to ensure that only one Windows Azure role instance is performing the initialization.

This pattern of using leases for concurrency control is so powerful that I included a special class called AutoRenewLease in my storage extensions NuGet package (smarx.WazStorageExtensions). AutoRenewLease helps you write these parts of your code similarly to how you might use a lock block in C#. Here’s the basic usage:

    using (var arl = new AutoRenewLease(leaseBlob))
    {
        if (arl.HasLease)
        {
            // inside here, this instance has exclusive access
        }
    } // lease is released here

AutoRenewLease will automatically create the blob if it doesn’t already exist. Note that unlike C#’s lock, this is a non-blocking operation. If the lease can’t be acquired (because the blob has already been leased), execution will continue (with a HasLease value of false).

As the name indicates, AutoRenewLease takes care of renewing the lease, so you can spend as much time as you need inside the using block without worrying about the lease expiring. (Blob leases expire after 60 seconds. AutoRenewLease renews the lease every 40 seconds to be safe.)

Revisiting our initialization example once again, we might write a method like this to perform our initialization only once (and have all instances block until initialization is complete):

    // blob.Exists has the side effect of calling blob.FetchAttributes, which populates the metadata collection
    while (!blob.Exists() || blob.Metadata["progress"] != "done")
    {
        using (var arl = new AutoRenewLease(blob))
        {
            if (arl.HasLease)
            {
                // do our initialization here, and then
                blob.Metadata["progress"] = "done";
                blob.SetMetadata(arl.leaseId);
            }
            else
            {
                Thread.Sleep(TimeSpan.FromSeconds(5));
            }
        }
    }

Here, we’re using the blob for leasing purposes, but we’re also using its metadata to track progress. This gives us a way to have all the instances wait until initialization is actually complete before moving on.

I found this pattern useful enough that I made a convenience method for it and included that in my storage extensions too. Here’s its basic usage:

    AutoRenewLease.DoOnce(blob, () =>
    {
        // do initialization here
    });

To make sure something is done on every new deployment, you might use the deployment ID as the blob name:

    AutoRenewLease.DoOnce(container.GetBlobReference(RoleEnvironment.DeploymentId), () =>
    {
        // do initialization here
    });

Download

The classes and methods mentioned above are all part of smarx.WazStorageExtensions (NuGet package, GitHub repository).

Next Steps

Leases are incredibly useful for managing all sorts of concurrency challenges. In upcoming blog posts, I’ll cover using leases to help with task scheduling and general leader election. Stay tuned!


SQL Azure Database and Reporting

• Walter Myers III (@WalterMyersIII) wrote Field Note: Using Certificate-Based Encryption in Windows Azure Applications for the new Windows Azure Field Notes Group on 9/7/2011:

This is the first in a series of articles focused on sharing “real-world” technical information from the Windows Azure community. This article was written by Walter Myers III, Principal Consultant, Microsoft Consulting Services.

Problem

I have seen various Windows Azure-related posts where developers have chosen to use symmetric key schemes for the encryption and decryption of data. An important scenario is when a developer needs to store encrypted data in SQL Azure, which will then be decrypted in a Windows Azure application for presenting to the user. Another is a data synchronization scenario where on-premises data must be kept synchronized with data in SQL Azure, with the data encrypted while off-premises in Windows Azure. [Emphasis added.]

A developer might store the encryption key in Windows Azure storage as a blob, which will be secure as long as the storage key that references the Windows Azure storage is safe; but this is not a best practice, as the developer must have access to the symmetric key and may unknowingly compromise the symmetric key on premises. Additionally, if the Windows Azure application is compromised, then it is possible the key will become compromised as well. This article provides a model and code for certificate-based encryption/decryption of data for Windows Azure applications.

Solution

First, let me provide some background. With a certificate-based (asymmetric key) approach, a best practice is to follow a “separation of concerns” protocol in order to protect the private key. Thus, IT would be responsible for any certificates with private keys that are uploaded to the Windows Azure Management Portal as service certificates for use by Windows Azure applications (service certificates available to Windows Azure applications must be uploaded to the corresponding hosted service). Developers will be provided with the public key only for use on their development machines at the time of application deployment. When testing in the development fabric, the developer must use a certificate that they have created through self-certification using IIS7. When deploying, they would simply replace the thumbprint in their encrypt/decrypt code with that of the service certificate uploaded to Windows Azure and also deploy the public key of the service certificate with their application.

The developer must deploy the public key with their application so that, when Windows Azure spins up role instances, it will match up the thumbprint in the service definition with the uploaded service certificate and deploy the private key to the role instance. The private key is intentionally non-exportable to the .pfx format, so you won’t be able to grab the private key through an RDC connection into a role instance.

Implementation of Solution

Now that we have tackled a little theory, let’s walk through this to see the concepts demonstrated concretely. Note that this solution uses the Visual Studio-provided functionality for certificate management.

If you haven’t already, go ahead and install the public key certificate into your personal certificate store. Use Local Computer instead of the Current User store, so your code will be consistent with where Windows Azure will deploy your certificate. Note that in order to see the certificate, you can’t just launch certmgr.msc, because it will take you to the Current User store. You will have to launch mmc.exe and select the File | Add/Remove Snap-In… menu item, add the Certificates snap-in, and select Computer Account in order to see the Local Computer Certificates, as seen in the snapshot below.

So your certificate console should now look something like below:

Now let’s take a look at how things will look in Visual Studio 2010 before you deploy your application, and then we will look at how the certificate console looks in a Windows Azure role instance. Below is a screen shot where I selected the Properties page for my web role, and selected the Certificates tab. I added the certificate highlighted in the above screenshot and renamed it EncryptDecrypt. Note that the store location is LocalMachine, and the Store Name is My, which is what we want.

Once you have added your certificate here, you can now go to the ServiceDefinition.csdef file and yours should look similar to below. You will also find an entry along with the thumbprint in the ServiceConfiguration.cscfg file.
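For readers without the screenshots, the two entries typically look something like the fragments below. This is a sketch rather than the author's actual files: the role name WebRole1 is a placeholder, the certificate name and store settings come from the Certificates tab described above, and the thumbprint is the one used in the sample code later in this article.

```xml
<!-- ServiceDefinition.csdef (fragment); the role name is a placeholder -->
<WebRole name="WebRole1">
  <Certificates>
    <Certificate name="EncryptDecrypt" storeLocation="LocalMachine" storeName="My" />
  </Certificates>
</WebRole>

<!-- ServiceConfiguration.cscfg (fragment) -->
<Role name="WebRole1">
  <Certificates>
    <Certificate name="EncryptDecrypt"
                 thumbprint="D3E6F7F969546ED620A255794CAB31D8C07E9F31"
                 thumbprintAlgorithm="sha1" />
  </Certificates>
</Role>
```

The definition file names the certificate and its store; the configuration file carries the thumbprint, which is why only the configuration needs to change when the certificate is swapped at deployment time.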

After you have deployed your application, you can then establish a Remote Desktop Connection (RDC) to any instance (presuming you have configured RDC when you published your application). In the same manner as above, launch mmc.exe and add the Certificates snap-ins for both Local Computer and Current User. Your RDC window should look similar to below.

Notice that certificates are installed into the Local Computer personal certificates store, but none have been installed in the Current User personal store. It was the combination of uploading the service certificate to your hosted service and configuring the certificate for your role that caused Windows Azure to install the certificate in your certificate store. Now if you right-click on the certificate and attempt to export, as discussed above, you will see that the private key is not exportable, which is what we would expect, as seen below.

So now we know how the certificate we will use for encrypt/decrypt of our data is handled. Let’s next take a look at the encrypt/decrypt routine that will do the work for us.

    using System;
    using System.Security.Cryptography;
    using System.Security.Cryptography.X509Certificates;

    public static class X509CertificateHelper
    {
        public static X509Certificate2 LoadCertificate(StoreName storeName,
            StoreLocation storeLocation, string thumbprint)
        {
            // The following code gets the cert from the keystore
            X509Store store = new X509Store(storeName, storeLocation);
            store.Open(OpenFlags.ReadOnly);

            X509Certificate2Collection certCollection =
                store.Certificates.Find(X509FindType.FindByThumbprint, thumbprint, false);
            X509Certificate2Enumerator enumerator = certCollection.GetEnumerator();

            X509Certificate2 cert = null;
            while (enumerator.MoveNext())
            {
                cert = enumerator.Current;
            }
            return cert;
        }

        public static byte[] Encrypt(byte[] plainData, bool fOAEP, X509Certificate2 certificate)
        {
            if (plainData == null)
            {
                throw new ArgumentNullException("plainData");
            }
            if (certificate == null)
            {
                throw new ArgumentNullException("certificate");
            }

            using (RSACryptoServiceProvider provider = new RSACryptoServiceProvider())
            {
                provider.FromXmlString(GetPublicKey(certificate));
                // We use the public key to encrypt.
                return provider.Encrypt(plainData, fOAEP);
            }
        }

        public static byte[] Decrypt(byte[] encryptedData, bool fOAEP, X509Certificate2 certificate)
        {
            if (encryptedData == null)
            {
                throw new ArgumentNullException("encryptedData");
            }
            if (certificate == null)
            {
                throw new ArgumentNullException("certificate");
            }

            using (RSACryptoServiceProvider provider = (RSACryptoServiceProvider) certificate.PrivateKey)
            {
                // We use the private key to decrypt.
                return provider.Decrypt(encryptedData, fOAEP);
            }
        }

        public static string GetPublicKey(X509Certificate2 certificate)
        {
            if (certificate == null)
            {
                throw new ArgumentNullException("certificate");
            }
            return certificate.PublicKey.Key.ToXmlString(false);
        }

        public static string GetXmlKeyPair(X509Certificate2 certificate)
        {
            if (certificate == null)
            {
                throw new ArgumentNullException("certificate");
            }
            if (!certificate.HasPrivateKey)
            {
                throw new ArgumentException("certificate does not have a PK");
            }
            else
            {
                return certificate.PrivateKey.ToXmlString(true);
            }
        }
    }

Note that in the Encrypt and Decrypt routines above, we have to get the public key for the encryption but must get the private key for the decryption. This makes sense, because Public Key Infrastructure (PKI) allows us to perform encryption by anyone who has the public key, but only the person with the private key has the privilege of decrypting the encrypted string. A notable difference is when we get the key, we can export the public key to XML as seen in the Encrypt routine, but we can’t export the private key to XML in the Decrypt routine, since certificates are deployed with the private key set as non-exportable on Windows Azure, which we learned earlier.
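One practical point when calling Encrypt with an RSA key directly: a single RSA operation can only take a small amount of plaintext, and the limit depends on the key size and the padding mode. The sketch below just does that arithmetic. The class name RsaPayloadLimitSketch is made up for illustration, and the key sizes are assumptions, since the article does not say what size key the certificate uses.

```csharp
using System;

public static class RsaPayloadLimitSketch
{
    // Largest plaintext, in bytes, that one RSA encryption can take.
    // OAEP here means OAEP with SHA-1 (20-byte hash), the padding that
    // RSACryptoServiceProvider applies when fOAEP is true.
    public static int MaxPlaintextBytes(int keySizeInBits, bool fOAEP)
    {
        int modulusBytes = keySizeInBits / 8;
        int overhead = fOAEP ? 2 * 20 + 2 : 11; // OAEP: 2*hLen + 2; PKCS#1 v1.5: 11
        return modulusBytes - overhead;
    }

    public static void Main()
    {
        // Key sizes chosen for illustration only.
        foreach (int bits in new[] { 1024, 2048 })
        {
            Console.WriteLine("{0}-bit key: {1} bytes with OAEP, {2} bytes with PKCS#1 v1.5",
                bits, MaxPlaintextBytes(bits, true), MaxPlaintextBytes(bits, false));
        }
    }
}
```

Short strings such as the one in the test code that follows fit comfortably; larger payloads would need a different approach than a single call to Encrypt.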

Let’s now take a look at some code I wrote to simply encrypt a string and decrypt a string using the X509 encrypt/decrypt helper class from above:

    string myText = "Encrypt me.";

    X509Certificate2 certificate = X509CertificateHelper.LoadCertificate(
        StoreName.My, StoreLocation.LocalMachine,
        "D3E6F7F969546ED620A255794CAB31D8C07E9F31");
    if (certificate == null)
    {
        Response.Write("Certificate is null.");
        return;
    }

    byte[] encoded = System.Text.UTF8Encoding.UTF8.GetBytes(myText);
    byte[] encrypted;
    byte[] decrypted;

    try
    {
        encrypted = X509CertificateHelper.Encrypt(encoded, true, certificate);
    }
    catch (Exception ee)
    {
        Response.Write("Encrypt failed with error: " + ee.Message + "<br>");
        return;
    }

    try
    {
        decrypted = X509CertificateHelper.Decrypt(encrypted, true, certificate);
    }
    catch (Exception ed)
    {
        Response.Write("Decrypt failed with error: " + ed.Message + "<br>");
        return;
    }

So in the code above I loaded my certificate, using the personal store on the local machine. The last parameter in the LoadCertificate method of my X509 encrypt/decrypt class holds the thumbprint that I grabbed from the Certificates tab in the property page for the role. As an exercise, you can write some code to retrieve this string from the ServiceConfiguration.cscfg file.

References: http://www.josefcobonnin.com/post/2007/02/20/Encrypting-with-Certificates.aspx


Marketplace DataMarket and OData

Shayne Burgess (@shayneburgess) wrote Build Great Experiences on Any Device with OData for the 9/2011 issue of MSDN Magazine:

In this article, I’ll show you what I believe is a serious data challenge facing many organizations today, and then I’ll show you how the Open Data Protocol (OData) and its ecosystem can help to alleviate that challenge. I’ll then go further and show how OData can be used to build a great experience on Windows Phone 7 using the new OData library for Windows Phone 7.1 beta (code-named “Mango”).

The Data Reach Challenge

An interesting thing happened at the end of 2010: for the first time in history, the number of smartphone shipments surpassed the number of PC shipments. A key thing to note is that this isn’t a one-horse race. There are many players (Apple Inc., Google Inc., Microsoft, Research In Motion Ltd. and so on) and platforms (desktop, Web, phones, tablets and more coming all the time). Many organizations want to target client experiences on all or most of these devices. And many organizations also have a variety of data and services that they want to make available to client devices. These may be on-premises, available through traditional Web services or built in the cloud.

The combination of a large and ever-expanding variety of client platforms with a large and ever-expanding variety of services creates a significant cost and complexity problem. When support for a new client platform is added, the data and services often have to be updated and modified to support that platform. And when a new service is added, all of the existing client platforms need to be modified to support that service. This is what I consider to be the data reach problem. How can we define a service that’s flexible enough to suit the needs of all the existing client experiences—and new client experiences that haven’t been invented yet? How can we define client libraries and applications that can work with a variety of services and data sources? These are some of the key questions that I hope to show can be partly addressed with OData.

The Open Data Protocol

OData is a Web protocol for querying and updating data that provides a uniform way to unlock your data. Simply put, OData provides a standard format for transferring data and a uniform interface for accessing that data. It’s based on ATOM and JSON feeds and the interface uses standard HTTP (REST) interfaces for exposing querying and updating capabilities. OData is a key component in bridging the data reach problem because, being a uniform, flexible interface, the API can be created once and used from a variety of client experiences.
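As a rough illustration of that uniform interface, the sketch below builds a few request URIs by plain string concatenation. The service root is the Northwind sample service mentioned later in the article; the ODataUriSketch class, its BuildQuery helper, and the particular filter are made up for illustration, and no request is actually sent.

```csharp
using System;

public static class ODataUriSketch
{
    // Public Northwind sample service referenced in the article.
    public const string ServiceRoot = "http://services.odata.org/Northwind/Northwind.svc/";

    // Hypothetical helper: an entity set plus the $filter and $top query options.
    public static string BuildQuery(string entitySet, string filter, int top)
    {
        return ServiceRoot + entitySet
            + "?$filter=" + Uri.EscapeDataString(filter)
            + "&$top=" + top;
    }

    public static void Main()
    {
        // All entities in a set.
        Console.WriteLine(ServiceRoot + "Customers");

        // A single entity addressed by its key.
        Console.WriteLine(ServiceRoot + "Customers('ALFKI')");

        // A filtered, size-limited query.
        Console.WriteLine(BuildQuery("Products", "UnitPrice gt 20", 5));
    }
}
```

Any client that can issue an HTTP GET and parse the ATOM or JSON response can use these same addresses, which is the point of the reach argument above.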

The OData Ecosystem

The flexible OData interface that can be used from a variety of clients is most powerful when there are a variety of client experiences that can be built using existing OData-enabled clients. The OData client ecosystem has evolved over the last few years to the point where there are client libraries for the majority of client devices and platforms, with more coming all the time. Figure 1 lists just a sampling of the client and server platforms available at the time this article was written (a much more complete list of existing OData services is at odata.org/producers).

Figure 1 A Sampling of the OData Ecosystem

Clients: .NET, Silverlight, Windows Phone, WebOS, iPhone, DataJS, Excel, Tableau

Services: Windows Azure, SQL Azure, DataMarket, SharePoint, SAP, Netflix, eBay, Wine.com

I can generally target any of the listed services in Figure 1 with any client. To help demonstrate this in practice, I’ll give examples of OData flexibility using a couple of different clients.

I’ll use the Northwind OData (read-only) sample service available on odata.org at http://services.odata.org/Northwind/Northwind.svc/. If you’re familiar with the Northwind database, the Northwind service shown in Figure 2 simply exposes the Northwind model as an OData service directly. Figure 2 shows the service document in the Northwind service from the browser; the service document exposes the entity sets in the service that are basically just the tables from the Northwind database.

Figure 2 The Northwind Service

Let’s look at a couple of examples of using the client libraries to consume and build experiences using the Northwind OData service. For the first example, let’s consider a client experience that doesn’t require any code to build. The PowerPivot add-in for Excel provides an in-memory analysis engine that can be used directly from within the Excel interface and supports importing data directly from an OData feed. The PowerPivot add-in also includes support for importing data directly from the Windows Azure Marketplace DataMarket, as well as a number of other data sources.

Figure 3 shows a pivot chart created by importing the Products and Orders data from the Northwind OData service and joining the result. The price-per-unit and quantity fields of the order details are combined to produce a total value for each order, and then the average total value for each order is shown, grouped by product and then displayed on a simple pivot chart for analysis. All of this is done using the built-in interface and tools in PowerPivot and Excel.

Figure 3 PowerPivot Add-In

Let’s look next at another example of how to build an OData client experience on a different platform: iOS. The OData client library for iOS lets you build apps on that platform (such as for the iPad and iPhone) that consume existing OData services. The iOS library for OData includes a code-generation tool, odatagen, which will generate a set of proxy classes from the metadata on the service. The proxy classes provide facilities for generating OData URIs, deserializing a response into client-side objects and issuing create, read, update and delete (CRUD) operations against the service. The following code snippet shows an example of executing a request for the set of customers from the OData service:

    // Create the client side proxy and specify the service URL.
    NorthwindCatalog *proxy =
        [[NorthwindCatalog alloc] initWithUri:@"http://host/Northwind.svc"];

    // Create a query.
    DataServiceQuery *query = [proxy Customers];
    QueryOperationResponse *response = [query execute];
    customers = [query getResults];

The DataServiceQuery and QueryOperationResponse are used to execute a query by generating a URI and executing it against the service.

The Windows Phone 7 Library

Next, I’ll demonstrate another example of an OData library on a third platform. I did a quick overview of building on OData on other platforms, but for this one I’ll do a detailed walk-through to give insight into what it takes to build an app. To do this, I’ll show you the basic steps needed to write a data-driven experience on Windows Phone 7 using the new Windows Phone 7.1 (Mango) tools. For the rest of the article, I’ll walk through the key considerations when building a Windows Phone 7 app and show how the OData library is used. The app I build will target the same Northwind sample I used in the previous two examples (the Excel PowerPivot add-in and the iOS library).

The latest release of the Windows Phone 7.1 (Mango) beta tools features a significant upgrade in the SDK support for OData. The new library supports a set of new features that makes creating OData-enabled Windows Phone 7 apps easier. The Visual Studio Tools for Windows Phone 7 were updated to support the generation of a custom client proxy based on a target OData service. For those familiar with the existing Microsoft .NET Framework and Silverlight OData clients, this, too, will be familiar. To use the Visual Studio tools to automatically generate the proxy in a Windows Phone 7 project, right-click the Service References node in the project tree and select Add Service Reference (note that this is only available if the new Mango tools are installed). Once the Add Service Reference dialog appears, enter the URI of the OData service you’re targeting and select Go. The dialog will display the entity sets exposed by the service; you can then enter a namespace for the service and select OK. The first time the code generation is performed using Add Service Reference, it will use default options, but it’s possible to further configure the code generation by clicking the Show All Files option in the solution explorer, opening the .datasvcmap file in the service reference and configuring the parameters. Figure 4 shows the completed Add Service Reference dialog with the list of entity sets available.

Figure 4 Using the Add Service Reference Dialog

A command-line tool is also included in the Visual Studio Tools for Windows Phone 7 that’s able to perform the same code-generation step. In either case, the code generation will create a client proxy class (DataServiceContext) for interacting with the service, and a set of proxy classes that reflect the shape of the service. …

Shayne continues with details of LINQ support, tombstoning and credentials. He concludes:

Learning More

This article gives a summary of OData, the ecosystem that has been built around it and a walk-through using the OData client for Windows Phone 7.1 (Mango) beta to build great mobile app experiences. For more information on OData, visit odata.org. To get more information on WCF Data Services, see bit.ly/pX86x6 or the WCF Data Services blog at blogs.msdn.com/astoriateam.

Shayne is a program manager in the Business Platform Division at Microsoft, working specifically on WCF Data Services and the Open Data Protocol.


Windows Azure AppFabric: Apps, WF, Access Control, WIF and Service Bus

• The Windows Azure Platform Team (@WindowsAzure) sent the following e-mail on 9/7/2011:

Dear Customer,

Today we are excited to announce an update to the Windows Azure AppFabric Service Bus. This release introduces enhancements to Service Bus that improve pub/sub messaging through features like Queues, Topics and Subscriptions, and enables new scenarios on the Windows Azure platform, such as:

- Async Cloud Eventing - Distribute event notifications to occasionally connected clients (e.g. phones, remote workers, kiosks, etc.)

- Event-driven Service Oriented Architecture (SOA) - Building loosely coupled systems that can easily evolve over time

- Advanced Intra-App Messaging - Load leveling and load balancing for building highly scalable and resilient applications

These capabilities have been available for several months as a preview in our LABS/Previews environment as part of the Windows Azure AppFabric May 2011 CTP. More details on this can be found here: Introducing the Windows Azure AppFabric Service Bus May 2011 CTP.

To help you understand your use of the updated Service Bus and optimize your usage, we have implemented the following two new meters in addition to the current Connections meter:

- Entity Hours - A Service Bus "Entity" can be any of: Queue, Topic, Subscription, Relay or Message Buffer. This meter counts the time from creation to deletion of an Entity, totaled across all Entities used during the period.

- Message Operations - This meter counts the number of messages sent to, or received from, the Service Bus during the period. This includes message receive requests that return empty (i.e. no data available).

These two new meters are provided to best capture and report your use of the new features of Service Bus. You will continue to only be charged for the relay capabilities of Service Bus using the existing Connections meter. Your bill will display your usage of these new meters but you will not be charged for these new capabilities.

We continue to add additional capabilities and increase the value of Windows Azure to our customers and are excited to share these new enhancements to our platform. For any questions related to Service Bus, please visit the Connectivity and Messaging - Windows Azure Platform forum.

Windows Azure Platform Team
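To make the two new meters in the e-mail above concrete, here is a minimal sketch of how they could be tallied. It is an illustration only, not the billing system's actual algorithm: the function names are hypothetical, and clipping each entity's lifetime to the reporting period is an assumption.

```python
from datetime import datetime, timedelta


def entity_hours(entities, period_start, period_end):
    """Total hours that entities existed during the period.

    `entities` is a list of (created, deleted) datetime pairs; `deleted`
    is None for an entity that still exists. Clipping lifetimes to the
    period boundaries is an assumption made for this sketch.
    """
    total = timedelta()
    for created, deleted in entities:
        start = max(created, period_start)
        end = min(deleted or period_end, period_end)
        if end > start:
            total += end - start
    return total.total_seconds() / 3600


def message_operations(sends, receives_with_data, empty_receives):
    """Count sends and receives; empty receive requests count as well."""
    return sends + receives_with_data + empty_receives


if __name__ == "__main__":
    day_start = datetime(2011, 9, 1)
    day_end = datetime(2011, 9, 2)
    entities = [
        (datetime(2011, 9, 1, 0), datetime(2011, 9, 1, 12)),  # queue, lived 12 hours
        (datetime(2011, 9, 1, 6), None),                      # topic, still exists
    ]
    print(entity_hours(entities, day_start, day_end))  # 30.0
    print(message_operations(10, 7, 3))                # 20
```

Note that a receiver polling an empty queue still adds to Message Operations, so the polling interval affects the reported usage.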

Eric Nelson (@ericnel) claimed Are you using Workflow Foundation? And Azure? Then you need the CTP of the Activity Pack in a 9/7/2011 post:

In September we released the first CTP of the Workflow Foundation Activity Pack for Windows Azure. It includes activities which you can “call” from your Workflows in Workflow Foundation 4.0. It can be found on the CodePlex page and is also available via NuGet: type “Install-Package WFAzureActivityPack” in your Package Manager Console to install the activity pack.

This Activity Pack includes the following:

For Windows Azure Storage Service – Blob

- PutBlob creates a new block blob, or replaces an existing block blob.

- GetBlob downloads the binary content of a blob.

- DeleteBlob deletes a blob if it exists.

- CopyBlob copies a blob to a destination within the storage account.

- ListBlobs enumerates the list of blobs under the specified container or a hierarchical blob folder.

For Windows Azure Storage Service – Table

- InsertEntity<T> inserts a new entity into the specified table.

- QueryEntities<T> queries entities in a table according to the specified query options.

- UpdateEntity<T> updates an existing entity in a table.

- DeleteEntity<T> deletes an existing entity in a table using the specified entity object.

- DeleteEntity deletes an existing entity in a table using partition and row keys.

For Windows Azure AppFabric Caching Service

- AddCacheItem adds an object to the cache, or updates an existing object in the cache.

- GetCacheItem gets an object from the cache as well as its expiration time.

- RemoveCacheItem removes an object from the cache.


<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Avkash Chauhan described an Experiment with Role.Instances Collection and creating all Role Instances Order in a 9/7/2011 post:

With multiple instances in a web role, I wanted to check whether the Role.Instances collection is ordered consistently on all endpoints. I also wanted to know whether, when the instance count is increased by modifying the service configuration, the new instances are added to the end of the collection or placed at random.

So I created a web role and changed the instance count to 10. I also modified the OnStart() function as below:

    public override bool OnStart()
    {
        foreach (RoleInstance roleInst in RoleEnvironment.CurrentRoleInstance.Role.Instances)
        {
            Trace.WriteLine("Current Time: " + DateTime.Now.TimeOfDay);
            Trace.WriteLine("Instance ID: " + roleInst.Id);
            Trace.WriteLine("Instance Role Name: " + roleInst.Role.Name);
            Trace.WriteLine("Instance Count: " + roleInst.Role.Instances.Count);
            Trace.WriteLine("Instance EndPoint Count: " + roleInst.InstanceEndpoints.Values.Count);
            Trace.WriteLine("IP : " + RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["EndPoint1"].IPEndpoint.Address);
            int port = RoleEnvironment.CurrentRoleInstance.InstanceEndpoints["EndPoint1"].IPEndpoint.Port;
            Trace.WriteLine("Port: " + port);
        }
        return base.OnStart();
    }

I started the application in the Compute Emulator so I could look at the start time of each instance in my web role, and collected the following info for each instance:

| Role[#] | Current Time | Instance ID | IP | Port | Start Order |
| --- | --- | --- | --- | --- | --- |
| Role[0] | 15:07:05.9445984 | deployment(341).WebRoleTest.ASPWebRole.0 | 127.0.0.1 | 5145 | 2 |
| Role[1] | 15:07:06.3676226 | deployment(341).WebRoleTest.ASPWebRole.1 | 127.0.0.1 | 5146 | 5 |
| Role[2] | 15:07:06.4216257 | deployment(341).WebRoleTest.ASPWebRole.2 | 127.0.0.1 | 5147 | 7 |
| Role[3] | 15:07:06.2376152 | deployment(341).WebRoleTest.ASPWebRole.3 | 127.0.0.1 | 5148 | 4 |
| Role[4] | 15:07:05.9345979 | deployment(341).WebRoleTest.ASPWebRole.4 | 127.0.0.1 | 5149 | 1 |
| Role[5] | 15:07:06.5746345 | deployment(341).WebRoleTest.ASPWebRole.5 | 127.0.0.1 | 5150 | 9 |
| Role[6] | 15:07:06.2106137 | deployment(341).WebRoleTest.ASPWebRole.6 | 127.0.0.1 | 5151 | 3 |
| Role[7] | 15:07:06.3846236 | deployment(341).WebRoleTest.ASPWebRole.7 | 127.0.0.1 | 5152 | 6 |
| Role[8] | 15:07:06.5906354 | deployment(341).WebRoleTest.ASPWebRole.8 | 127.0.0.1 | 5153 | 10 |
| Role[9] | 15:07:06.4746288 | deployment(341).WebRoleTest.ASPWebRole.9 | 127.0.0.1 | 5154 | 8 |

Based on the above, I could create the following instance start order:

- 1 deployment(341).WebRoleTest.ASPWebRole.4

- 2 deployment(341).WebRoleTest.ASPWebRole.0

- 3 deployment(341).WebRoleTest.ASPWebRole.6

- 4 deployment(341).WebRoleTest.ASPWebRole.3

- 5 deployment(341).WebRoleTest.ASPWebRole.1

- 6 deployment(341).WebRoleTest.ASPWebRole.7

- 7 deployment(341).WebRoleTest.ASPWebRole.2

- 8 deployment(341).WebRoleTest.ASPWebRole.9

- 9 deployment(341).WebRoleTest.ASPWebRole.5

- 10 deployment(341).WebRoleTest.ASPWebRole.8

When I ran the same application a few times, the order was not consistent. Adding new instances also changed the order. So you can see that the current role instance is always first in the list; however, the ordering of the rest varies from run to run.

So if you need to create an instance order list, the best approach is to have each instance write an entity to a table when it starts up (with a timestamp or a row key that represents the current time). Then you’ll be able to construct an ordered list.
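The suggestion above can be sketched in a few lines. This is only an illustration under stated assumptions: a plain Python list stands in for the Azure table, the function names are hypothetical, and the fixed-width start time string is used directly as the row key so that sorting by row key sorts by start time (valid only while all times fall on the same day).

```python
def record_startup(table, instance_id, start_time):
    """Called once by each instance at startup; appends one 'entity'.

    `table` is a list standing in for an Azure table (an assumption).
    `start_time` is a fixed-width HH:MM:SS.fffffff string used as RowKey.
    """
    table.append({
        "PartitionKey": "startup",
        "RowKey": start_time,
        "InstanceId": instance_id,
    })


def startup_order(table):
    """Return instance IDs ordered by their recorded start time."""
    return [e["InstanceId"] for e in sorted(table, key=lambda e: e["RowKey"])]


if __name__ == "__main__":
    table = []
    # Four of the start times collected in the experiment above.
    record_startup(table, "deployment(341).WebRoleTest.ASPWebRole.0", "15:07:05.9445984")
    record_startup(table, "deployment(341).WebRoleTest.ASPWebRole.1", "15:07:06.3676226")
    record_startup(table, "deployment(341).WebRoleTest.ASPWebRole.3", "15:07:06.2376152")
    record_startup(table, "deployment(341).WebRoleTest.ASPWebRole.4", "15:07:05.9345979")
    for position, instance_id in enumerate(startup_order(table), start=1):
        print(position, instance_id)
```

In a real deployment the row key would need to include the date (or use ticks) so that the ordering survives midnight and restarts.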

Herve Roggero (@hroggero) claimed Spotlight (R) on Azure: Cool Tool in a 9/7/2011 post:

While testing the performance characteristics of the SQL Azure backup tool I am building (called Enzo Backup for SQL Azure), I decided to try Spotlight (R) on Azure in order to obtain specific performance metrics from a virtual machine (VM) running in Microsoft's data center. Indeed, my backup solution comes with a cloud agent (running as a worker role in Azure) that performs backup and restore operations entirely in the cloud. Due to the nature of this application, I needed to have an understanding of possible memory and CPU pressures. [Emphasis added.]

As of today, very few techniques are available to capture a VM's performance metrics in Microsoft Azure. One thing you can do is to configure your application to send Diagnostics data to Azure Tables and Blobs; however, this requires a lot of work when it comes time to parse the information. I had previously tried the Azure Management Pack of System Center. While the Management Pack was indeed powerful, it was difficult to install and came with a very limited number of metrics by default, making it more difficult for a small organization like mine to customize and fully leverage. System Center's capabilities with Azure will grow over time and will most likely appeal to larger organizations. [Emphasis added.]

When it comes to small companies with few operational capabilities, Spotlight (R) on Azure delivers (from Quest). The installation is extremely simple and the tool comes with many metrics out of the box. In my tests, I was able to obtain a quick read on my virtual machine and review performance graphs showing CPU Utilization, CPU Queue Length, Memory Utilization and Network Traffic. The tool shows by default the last 15 minutes of data, which is very handy. If you have a large farm of virtual machines, you can also view metrics rolled up across your virtual machines. Finally, the tool allows you to declare Alerts if you cross performance thresholds (the threshold values can be changed to suit your needs).

All in all, this is a simple, yet powerful performance monitoring tool for your Azure virtual machines. Here is a link that shows the tool in action: http://www.youtube.com/watch?v=FDJjytW-VFI and here is a link where you can download the tool for a test drive: http://communities.quest.com/docs/DOC-9906

Not only is the Monitoring Pack for Windows Azure Applications difficult to install (it takes 60 steps; see my Installing the Systems Center Monitoring Pack for Windows Azure Applications on SCOM 2012 Beta of 9/5/2011), but the % Processor Time Total (Azure) and Memory Available Megabytes (Azure) metrics are also disabled by default (see my Configuring the Systems Center Monitoring Pack for Windows Azure Applications on SCOM 2012 Beta of 9/6/2011).

Linda Rosencrance (@Reporter1035) reported Microsoft Dynamics NAV ISVs Gearing Up for NAV 7 in Windows Azure in a 9/6/2011 post to the MSDynamicsWorld.com blog:

As Microsoft Corp. gears up to launch Microsoft Dynamics NAV 7, including its Windows Azure-hosted, multi-tenant version, ISVs are also working to figure out how to get their NAV-compatible add-on solutions ready to run in the Azure cloud as well.

Microsoft's director of US ISV strategy, Mark Albrecht, told a group of Dynamics partners at a recent GP Partner Connections event that the company is actively focusing on cloud readiness of Dynamics NAV ISV partners to accelerate their development of Azure-friendly versions of their solutions in conjunction with the NAV 7 release on Azure. Microsoft understands, Albrecht says, that having enough ISV solutions available in conjunction with a multi-tenant, Azure-based NAV solution will be a key differentiator.

"Our initial efforts in moving ISVs to the cloud will be [focused on] verticals and industries that have the biggest impact on the customer's purchase decision," Albrecht added. "We're working to make sure that both product and business model are making the shift to the cloud in the right time frame for that partner."

ISVs prepare

To-Increase is one of those ISVs getting its solutions ready for the Azure-ready release of NAV 7. To-Increase develops Dynamics NAV solutions for the industrial equipment manufacturing industry.

"To-Increase is an ISV so we are always preparing for the next release of Microsoft [Dynamics products]," said Adri Cardol, Product Manager Microsoft Dynamics NAV, To-Increase. "We have the information Microsoft is making available on the new release. We're studying what the impact is on our solution and how we can best modify the design, adjust the design or redevelop the product to meet the requirements of the new Azure platform. So what we are doing is getting ready, but the available information is coming to us in bits and pieces."

Cardol said To-Increase is studying the information step-by-step and trying to estimate the impact of every piece of information that it gets so it can be ready when NAV 7 is rolled out. As part of Microsoft's early access beta program, they know what NAV 7 is all about. However, he said currently To-Increase is studying the new functionality of the newest build from Microsoft to determine how it will affect the company's solutions.

"Since NAV will be running on the Azure platform, we sort of assume that any new functionality that we develop will also run on that platform, simply as a hosted solution that will not be specific for the Azure platform," he said.

RMI Corp. is also working to get an Azure-hosted version of ADVANTAGE, the company's Dynamics NAV-based system for companies that rent, sell and service equipment, ready for the NAV 7 launch, according to Paul Chapdelaine, RMI's owner.

"We're a Dynamics NAV ISV and about four years ago we started offering a SaaS offering, at Microsoft's request, and about a year and a half ago we actually stopped offering the on-premise and went 'all in' the cloud," he said. "The way I view this is that Microsoft will start offering another layer. Right now, we're hosting the software on SQL Server and we're combining other functional elements so we deliver to the end user a completely integrated Office environment."

"We're not looking to host individual installations on a box some place, we need true multi-tenancy," he said. "We've accomplished that where we are now, but we did it through a lot of hard work because NAV doesn't lend itself to that environment natively."

However, Chapdelaine declined to offer specifics on exactly how RMI accomplished that feat because "we've done it and no one else has. It's a competitive advantage and it took a lot of effort."

But Chapdelaine said he has some questions about how the Azure version of NAV 7 will work with his company's other offerings.

"As long as Microsoft Azure is a SQL database, a data storage device, that may work very well," he said. "But we want to see how it evolves with our other offerings. [And we want to know] who's going to control the end user experience. We want to make sure we can continue to control the end user's experience with the software. I need to be able to configure it to meet their needs with ancillary products."

Finding new ways to tailor a NAV solution

Going to cloud-based or hosted solutions also opens new opportunities but it also creates restrictions for ISVs, which is why it's important to estimate what the impact will be on your solution, Cardol said.

"If you want to fully use the possibility of hosted solutions you have to go to a much more templated type solution for an ERP application than we are used to in the ERP world," said Cardol of To-Increase. "The ERP world has a basic solution with which you go on-premise and, together with the customer, you implement, you customize, you tailor it to the specific needs of that customer. So that is an area that has to change in the sense that to fully use the benefit of hosted you have to come up with much more templated solutions that are much more standardized toward certain business areas."

That's exactly what To-Increase is working on, he said.

And although Dynamics NAV is the main attraction for RMI's customers, they also find themselves tailoring their solution for customers by offering other ISV add-ons to round out its offering like Commerce Clearing House sales tax updates, Matrix Documents, and Jet Reports, Chapdelaine said.

"How are we going to control how all of that works together?" he asked. "We're not concerned, we just don't know. We've talked to some folks within Microsoft and they have assured us that as soon as Dynamics NAV is ready to go at 7, they'll let us play in that sandbox first and do some pilots so we can figure out how to do it."

Planning for the NAV 7 release

Cardol said when his company's solutions will be ready depends on the actual release date of Dynamics NAV 7.

"We know it will be mid-2012 and we have a release schedule that's more or less in sync with that," he said. "We have our releases in May and November so ideally we will still catch it in the May release, maybe even delay it a month or so to meet the NAV 7 release. But if the NAV 7 release is going to be in August or September 2012, then we might want to postpone our releases until November."

Microsoft is expected to release an initial beta version of NAV 7 at the beginning of 2012 with an announcement at the NAV Directions conference.

"They're going to show it at Directions for the first time," he said. "We haven't even got a copy of it," says RMI's Chapdelaine. "It's at that point, we'll start moving to Azure."

Joris de Gruyter (@jorisdg) described a 10-Minute AX 2012 App: Windows Azure (Cloud) in a 9/6/2011 post:

Powerfully simple put to the test. If you missed the first article, I showed you how to create a quick WPF app that reads data from AX 2012 queries and displays the results in a grid. In this article, I will continue showing you how easy it is to make use of AX 2012 technology in conjunction with other hot Microsoft technologies. Today, we will create a Windows Azure hosted web service (WCF), to which we'll have AX 2012 publish product (item) data. Again, this will take 10 minutes of coding or less. Granted, it was a struggle to strip this one down to the basics to keep it a 10-minute project.

Windows Azure is Microsoft's cloud services platform. We'll be creating a web role here, hosting a WCF service. The idea I'm presenting here is an easy push of data out of AX to an Azure service. There, one could build a cloud website or web service, for example to serve mobile apps (Microsoft has an SDK to build iOS, Android and Windows Phone 7 apps based on Azure services). We'll spend most time setting up the Azure WCF service (and a quick webpage to show what's in the table storage), and finally we'll create a quick AX 2012 VS project to send the data, and hook that into AX using an event handler!

So, we'll build an Azure WCF service using Table storage (as opposed to BLOB storage or SQL Azure storage). Make sure to evaluate SQL Azure if you want to take this to the next level. So, our ingredients for today will require you to install something you may not have already...

Ingredients:

- Visual Studio 2010

- Windows Azure SDK (download here)

- Access to an AX 2012 AOS

Now, the Azure SDK has some requirements of its own. I have it using my local SQL server (I did not install SQL Express) and my local IIS (I did not install IIS express).

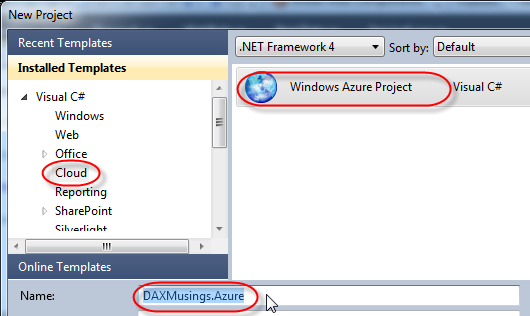

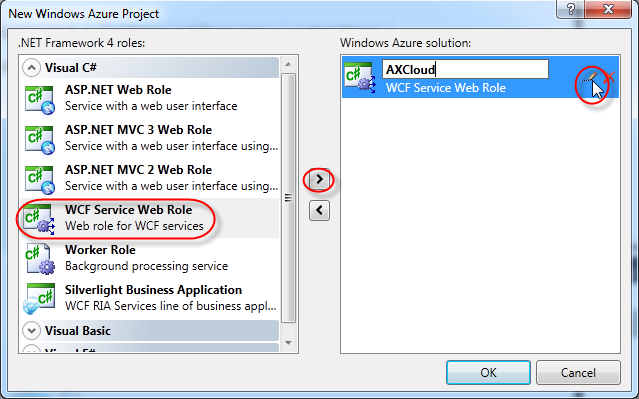

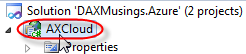

Ok, everything installed and ready? Start the timer! You need to start Visual Studio 2010 as administrator. So right-click Visual Studio in your start menu and select "Run as Administrator". This is needed to be able to run the Windows Azure storage emulators. We'll start a new project and select Cloud > Windows Azure Project. I've named this project "DAXMusings.Azure". Once you click OK, Visual Studio will ask you for the Azure role and type of project. I've selected "WCF Service Web Role". Click the little button to add the role to your solution. Also, click the edit icon (which won't appear until your mouse hovers over it) to change the name to "AXCloud".

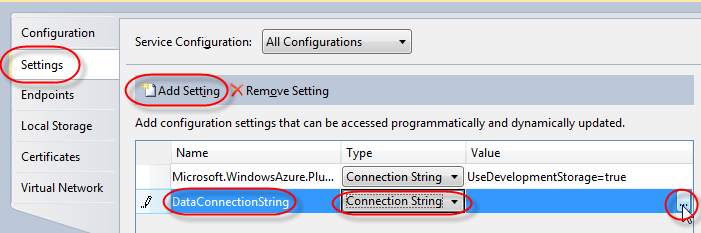

Ok, first things first: set up the data connection for the table storage. Right-click on "AXCloud" under "Roles" and select Properties.

Under settings, click the "Add Setting" button, name it "DataConnectionString", set the drop down to... "Connection String", and click the ellipsis. On that dialog screen, just select the "Use the Windows Azure storage emulator" and click OK.

Hit the save button and close the properties screen. So, what we will be storing, and communicating over the webservice, is item data. So, let's add a new class and call it ItemData. To do this, we right-click on the AXCloud project, and select Add > New Item. On the dialog, select Class and call it "ItemData".

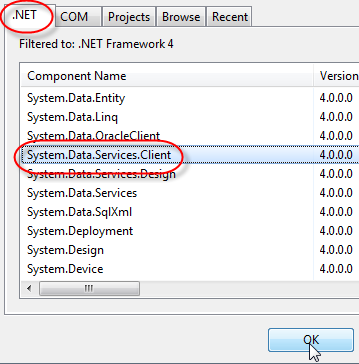

So, here's the deal. For WCF to be able to use this, we need to declare this class as a data contract. For Azure table storage to be able to use it, we need three properties: RowKey (string), Timestamp (DateTime) and PartitionKey (string). For item data, we will get an ITEMID from AX, but we'll store it in the RowKey property since that is the unique key. We'll just add a Name property as well, to have something more meaningful to look at. To support the data contract attribute, you'll need to add the namespace "System.Runtime.Serialization" and for the DataServiceKey you need to add the namespace "System.Data.Services.Common". The PartitionKey we'll set to a fixed value of "AX" (this property has to do with scalability and where the data lives in the cloud; also, the RowKey+PartitionKey technically make up the primary key of the data...). We'll also initialize the Timestamp to the current DateTime, and provide a constructor accepting the row and partition key.

Click here for screenshot or copy the code below:

    using System;
    using System.Data.Services.Common;      // DataServiceKey
    using System.Runtime.Serialization;     // DataContract, DataMember

    namespace AXCloud
    {
        [DataContract]
        [DataServiceKey(new[] { "PartitionKey", "RowKey" })]
        public class ItemData
        {
            [DataMember]
            public string RowKey { get; set; }

            [DataMember]
            public String Name { get; set; }

            [DataMember]
            public string PartitionKey { get; set; }

            [DataMember]
            public DateTime Timestamp { get; set; }

            // Default constructor: fixed partition, timestamp set to now.
            public ItemData()
            {
                PartitionKey = "AX";
                Timestamp = DateTime.Now;
            }

            public ItemData(string partitionKey, string rowKey)
            {
                PartitionKey = partitionKey;
                RowKey = rowKey;
            }
        }
    }

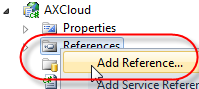

Next, we'll need a TableServiceContext class. We'll need to add a reference to "System.Data.Services.Client" in our project. Just right-click on the "References" node of the AXCloud project and select "Add Reference". On the .NET tab, find the "System.Data.Services.Client" assembly and click OK.

For easy coding, let's add another using statement at the top as well, for the Azure storage assemblies.

And we'll add the Context class. This will provide a property that's Queryable, and an easy way to add an item through an AddItem() method.

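Click here for screenshot or copy the code below:

    // At the top of the file:
    // using System.Linq;
    // using Microsoft.WindowsAzure;
    // using Microsoft.WindowsAzure.StorageClient;

    public class ItemDataServiceContext : TableServiceContext
    {
        public ItemDataServiceContext(string baseAddress, StorageCredentials credentials)
            : base(baseAddress, credentials)
        {
        }

        // Queryable view over the "Items" table.
        public IQueryable<ItemData> Items
        {
            get { return this.CreateQuery<ItemData>("Items"); }
        }

        // Adds one item and saves it to table storage immediately.
        public void AddItem(string itemId, string name)
        {
            ItemData item = new ItemData();
            item.RowKey = itemId;
            item.Name = name;
            this.AddObject("Items", item);
            this.SaveChanges();
        }
    }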
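As a language-neutral illustration of the key model described above, the following sketch mimics a table whose entities are identified by the (PartitionKey, RowKey) pair. It is not the Azure storage client: the `ItemTable` class is a hypothetical in-memory stand-in, and rejecting a duplicate key pair with `KeyError` is a simplification of the conflict error the real service returns on insert.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ItemData:
    row_key: str                      # the AX ItemId, unique within the partition
    name: str
    partition_key: str = "AX"         # fixed partition, as in the walkthrough
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ItemTable:
    """Hypothetical in-memory stand-in for the "Items" table."""

    def __init__(self):
        self._rows = {}

    def add_item(self, item_id, name):
        item = ItemData(row_key=item_id, name=name)
        key = (item.partition_key, item.row_key)   # composite primary key
        if key in self._rows:
            raise KeyError("entity %r already exists" % (key,))
        self._rows[key] = item
        return item

    def items(self):
        return list(self._rows.values())


if __name__ == "__main__":
    table = ItemTable()
    table.add_item("A0001", "Widget")
    table.add_item("A0002", "Gadget")
    print([i.row_key for i in table.items()])
```

Because every item lands in the single "AX" partition, uniqueness here rests entirely on the ItemId stored in the row key.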

So, the first time we use this app (or any time you restart your storage emulator), the table needs to be created before we can use it. In a normal scenario this would not be part of your application code, but we'll just add it in here for good measure. Open the "WebRole.cs" file in your project, which contains a method called "OnStart()". At the top, add the using statements for the Windows Azure assemblies. Inside the OnStart() method, we'll add code that creates the table on the storage account if it doesn't exist.

Click here for a full screenshot or copy the below code to add before the return statement:

    // Tell the storage client how to resolve configuration setting names.
    CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSettingPublisher) =>
    {
        var connectionString = Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment.GetConfigurationSettingValue(configName);
        configSettingPublisher(connectionString);
    });

    // Create the "Items" table if it is not there yet.
    CloudStorageAccount storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString");
    storageAccount.CreateCloudTableClient().CreateTableIfNotExist("Items");

Next, we'll create a new operation on the WCF service to actually add an item. Since we already have all the ingredients, we just need to add a method to the interface and its implementation. First, in the IService.cs file, we'll add a method where the TODO is located. You probably want to remove all the standard stuff there, but to keep down the time needed and to make it easier for you to see where I'm adding code, I've left it in these screenshots.

Now, into the Service1.svc.cs we'll add the implementation of the method, for which we'll need to add the Windows Azure using statements again:

Click here for a full screenshot or copy the code below:

    public void AddItem(ItemData item)
    {
        CloudStorageAccount.SetConfigurationSettingPublisher((configName, configSettingPublisher) =>
        {
            var connectionString = Microsoft.WindowsAzure.ServiceRuntime.RoleEnvironment.GetConfigurationSettingValue(configName);
            configSettingPublisher(connectionString);
        });

        CloudStorageAccount storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString");

        new ItemDataServiceContext(storageAccount.TableEndpoint.ToString(), storageAccount.Credentials)
            .AddItem(item.RowKey, item.Name);
    }

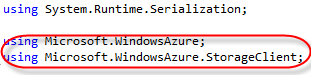

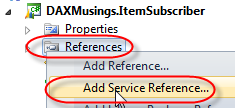

So now the last thing to do is create a class that consumes this service, which we can use as a post event handler for the insert() method on the InventTable table in AX... I am skipping through some of the steps here, but basically, we create a new class library project in Visual Studio (open a second instance of Visual Studio, since we'll need to keep the Azure service running!), and "Add it to AOT". For a walkthrough on how to do this, check this blog article. We're calling this class library "DAXMusings.ItemSubscriber".

Alright, when you have the new project created and added to the AOT, we need to add a service reference to the Azure WCF service. Since we're running all of this locally, you'll need to make sure the Azure service is started (F5 on the Azure project). This will open Internet Explorer to your service page (on mine it's http://127.0.0.1:81/Service1.svc). If Azure opens your web site but not the .svc file (you will probably get a 403 forbidden error then), just add "Service1.svc" behind the URL it did open. If you renamed the WCF service in the project, you'll have to use whatever name you gave it.

So, we'll add that URL as a service reference to our subscriber project, and I'm giving it namespace "AzureItems":

Now that we have the reference, we will make a static method to use as an event handler in AX. First, we need to add references to XppPrePostArgs and InventTable by opening the Application Explorer, navigating to Classes\XppPrePostArgs and Data Dictionary\Tables\InventTable respectively. For each one, right-click on the object and select "Add to project" (for more information on the why and how, check this blog post on managed handlers).

Next, there's one ugly part I will have you add. Since the reference to the service is in a class library, which will then be used in an application (AX), the service reference configuration (bindings.. the endpoint etc) which gets stored in the App.config for your class library, will not be available to the AX executables. Technically, we should copy/paste these values into the Ax32Serv.exe.config (or the client one, depending where your code will run). However, this would require a restart of the AOS server, etc. So (.NET gurus will kill me here, but just remember: this is supposed to be up and running in 10 minutes!) for the sake of moving along, we will just hardcode a quick endpoint into the code...

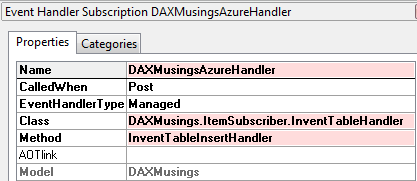

Click here for full screenshot of the class.

    public static void InventTableInsertHandler(XppPrePostArgs args)
    {
        InventTable inventTable = new InventTable((Microsoft.Dynamics.AX.ManagedInterop.Record)args.getThis());

        AzureItems.ItemData itemData = new AzureItems.ItemData();
        itemData.RowKey = inventTable.ItemId;
        itemData.Name = inventTable.NameAlias;

        // Binding and endpoint are hardcoded instead of read from a config file.
        System.ServiceModel.BasicHttpBinding binding = new System.ServiceModel.BasicHttpBinding();
        binding.Name = "BasicHttpBinding_IService1";
        System.ServiceModel.EndpointAddress address = new System.ServiceModel.EndpointAddress("http://127.0.0.1:83/Service1.svc");

        AzureItems.Service1Client client = new AzureItems.Service1Client(binding, address);
        client.AddItem(itemData);
    }

Notice how the URL is hardcoded in here (this is stuff that should go into that config file). One thing I've noticed, with the Azure project running emulated on my local machine, is that it seems to change ports every now and then (I started and stopped it a few times). So you may need to tweak this while you're testing this stuff out.

So the last thing to do is add this as a Post-Handler on the InventTable insert() method... (for more information and a walkthrough on adding managed post-handlers on X++ objects, check this blog post)
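Since the emulator port can change between runs, one way to avoid editing code each time is to let an external setting override the hardcoded address. The sketch below shows the idea in Python purely as an illustration; the `AXCLOUD_SERVICE_URL` variable name and the `service_endpoint` function are hypothetical and not part of the walkthrough, which would use the AOS or client .config file for the same purpose.

```python
import os

# Fallback used when no override is supplied (the address from the walkthrough).
DEFAULT_ENDPOINT = "http://127.0.0.1:83/Service1.svc"


def service_endpoint(env=None):
    """Return the service URL, preferring an external override.

    `env` is any mapping of settings; it defaults to the process
    environment. The setting name is an assumption for this sketch.
    """
    settings = os.environ if env is None else env
    return settings.get("AXCLOUD_SERVICE_URL", DEFAULT_ENDPOINT)


if __name__ == "__main__":
    # Without an override, the hardcoded default is used.
    print(service_endpoint({}))
    # When the emulator moves to another port, only the setting changes.
    print(service_endpoint({"AXCLOUD_SERVICE_URL": "http://127.0.0.1:81/Service1.svc"}))
```

The design choice is the same one the post alludes to: keep the default in code for a quick start, but read the real value from configuration once the setup stabilizes.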

Time to add an item and test! If you wish to debug this to see what's going on, remember the insert method will be running on the AX server tier so you need to attach the debugger to the AOS service.

How do you now know your item was actually added in the Azure table storage? Well, I will post some extra code tomorrow (a quick and dirty ASP page that lists all items in the table).

I am expecting you will run into quite a few issues trying to get through this exercise. I know I have, some of them were minor things but took me hours to figure out what I was missing. Feel free to contact me or leave a comment, and I'll try to look into each issue.

Brian Swan (@brian_swan) posted an Overview of Command Line Deployment and Management with the Windows Azure SDK for PHP on 9/6/2011:

I’ve been exploring the Windows Azure SDK for PHP command line tools that allow you to create, deploy, and manage new hosted services and web roles. In this post, I’ll walk you through the basics of using the tools, but first I want to point out a couple of the benefits that come to mind when using these tools:

- The tools are platform-independent (with the exception of the package tool). The service, certificate, and deployment tools essentially wrap the REST-based Windows Azure management API. (If you look at the files in the SDK, you’ll notice that there are .bat and .sh files for these tools.) So, you can manage your Windows Azure services and deployments from the platform of your choice. (Note that because the management API is REST-based, you can use it via the language of your choice, not just PHP. In fact, tools based on languages other than PHP already exist - See this Ruby example on github.)

- The tools allow you to programmatically manage your Windows Azure services and deployments. This series of articles, Building Scalable Applications for Windows Azure, demonstrates how to programmatically scale an application using the PHP classes in the SDK. The same type of management is possible with the command line tools.

In the rest of this post, I’ll assume that you have set up the Windows Azure SDK for PHP and that you have a ready to deploy application (see Packaging Applications).

Overview of service, certificate, and deployment tools

The operations exposed by the service, certificate and deployment tools should give you a good idea of what you can do with them. The following tables list the operations that are available. For more information (such as short operation names and parameter information), type the tool name at the command line.

service

Note: The list operation returns two properties: ServiceName and Url. The value of the Url property is the management API endpoint, not the service URL. If you want the service URL, you can create it from the ServiceName property: http://ServiceName.cloudapp.net.

certificate

deployment

Note: The getproperties operation returns 5 properties: Name, DeploymentSlot, Label, Url, and Status. The value of the Name property is the deployment ID.

Using the service, certificate, and deployment tools

Creating and uploading a service management API certificate

Before you can use the command line management tools, you need to create certificates for both the server (a .cer file) and the client (a .pem file). Both files can be created using OpenSSL, which you can download for Windows and run in a console.

To create the .pem certificate, execute this:

openssl req -x509 -nodes -days 365 -newkey rsa:1024 -keyout mycert.pem -out mycert.pem

To create the .cer certificate, execute this:

openssl x509 -inform pem -in mycert.pem -outform der -out mycert.cer

(For a complete description of OpenSSL parameters, see the documentation at http://www.openssl.org/docs/apps/openssl.html.)
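If you create certificates often, the two OpenSSL invocations can be wrapped in a small script. The following is a minimal Python sketch, assuming openssl is on the PATH. The function names and the basename parameter are hypothetical; the OpenSSL arguments are the ones shown above.

```python
import subprocess
from typing import List


def openssl_commands(basename: str = "mycert", days: int = 365) -> List[List[str]]:
    """Return the two OpenSSL command lines: .pem first, then .cer."""
    pem, cer = f"{basename}.pem", f"{basename}.cer"
    return [
        # Self-signed certificate; key and certificate go into the same .pem file.
        ["openssl", "req", "-x509", "-nodes", "-days", str(days),
         "-newkey", "rsa:1024", "-keyout", pem, "-out", pem],
        # Convert the PEM certificate to DER for upload as a .cer file.
        ["openssl", "x509", "-inform", "pem", "-in", pem,
         "-outform", "der", "-out", cer],
    ]


def create_certificates(basename: str = "mycert") -> None:
    """Run both commands in order, stopping at the first failure."""
    for command in openssl_commands(basename):
        subprocess.run(command, check=True)
```

The first command prompts for the certificate subject fields, so create_certificates() is meant to be run from an interactive console.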

Next, you’ll need to upload the .cer certificate to your Windows Azure account. To do this, go to the Windows Azure portal, select Hosted Services, Storage Accounts & CDN, choose Management Certificates, and click Add Certificate.

Now you are ready to use the service, certificate, and deployment tools.

Create a configuration file

The command line tools in the Windows Azure SDK for PHP support using a configuration file for commonly used parameters. Here’s what I put into my config.ini file:

-sid="my_subscription_id"
-cert="path\to\my\certificate.pem"

This makes it possible to use the -F option on any operation to include my SID and certificate.
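If you manage several subscriptions, generating this file can be scripted. Here is a minimal Python sketch, assuming the file takes one option per line as in the example above. The function names are hypothetical and not part of the SDK.

```python
from pathlib import Path


def render_config(subscription_id: str, cert_path: str) -> str:
    """Render the two options, one per line, in the layout assumed above."""
    return f'-sid="{subscription_id}"\n-cert="{cert_path}"\n'


def write_config(path: str, subscription_id: str, cert_path: str) -> None:
    """Write the rendered options to the given config file."""
    Path(path).write_text(render_config(subscription_id, cert_path), encoding="utf-8")
```

For example, write_config("config.ini", "my_subscription_id", r"path\to\my\certificate.pem") produces a file that can then be passed to any operation with -F.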

List hosted services

The following command will list all hosted services:

service list -F="C:\config.ini"

This operation will return two properties: ServiceName and Url. As mentioned above, the Url property is the management URL, not the service URL. You can create the service URL from the value of the ServiceName property: http://ServiceName.cloudapp.net.
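The ServiceName-to-URL rule is simple enough to capture in a helper. This is a small Python sketch; the function name is hypothetical, and the URL pattern is the one given above.

```python
def service_url(service_name: str) -> str:
    """Build the public service URL from a ServiceName (the DNS prefix)."""
    prefix = service_name.strip()
    if not prefix:
        raise ValueError("ServiceName must not be empty")
    return f"http://{prefix}.cloudapp.net"
```

For a service named myphpapp, service_url("myphpapp") gives the address to browse to, as opposed to the management endpoint reported in the Url property.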

Create a new hosted service

The following command will create a new hosted service:

service create -F="C:\config.ini" --Name="unique_dns_prefix" --Location="North Central US" --Label="your_service_label"

Note that the value of the Name parameter must be your unique DNS prefix. This is the value that will prefix cloudapp.net to create your service URL (e.g. http://your_dns_prefix.cloudapp.net). When this operation completes successfully, it will return your unique DNS prefix.

Add a certificate

The command below will add a certificate to a hosted service. Note that this isn’t a management certificate, but one that might be used to enable RDP, for example.

certificate addcertificate -F="C:\config.ini" --ServiceName="dns_prefix" --CertificateLocation="path\to\your\certificate.cer" --CertificatePassword=your_certificate_password

Note that the ServiceName parameter takes the DNS prefix of your service. When this operation completes successfully, it returns the DNS prefix of the targeted service.

Create a staging deployment from a local package

Next, to create a new deployment from a local package (.cspkg file) and service configuration file (.cscfg)…

deployment createfromlocal -F="C:\config.ini" --Name="dns_prefix" --DeploymentName="deployment_name" --Label="deployment_label" --Staging --PackageLocation="path\to\your.cspkg" --ServiceConfigLocation="path\to\ServiceConfiguration.cscfg" --StorageAccount="your_storage_account_name"

Again, the Name parameter is the DNS prefix of your hosted service. When this operation completes successfully, it returns the request ID of the request. (Look for future posts on using this request ID with the getasynchronousoperation tool in the SDK.)

Get deployment properties

Once a deployment has been created, you can get its properties:

deployment getproperties -F="C:\config.ini" --Name="dns_prefix" --BySlot=Staging

Note that you can get deployment properties BySlot (staging or production) or ByName (the name of the deployment).

Move staging deployment to production

To move a deployment from staging to production…

deployment swap -F="C:\config.ini" --Name="dns_endpoint"

When this operation completes successfully, it returns the request ID of the request.

Add an instance

And lastly, to change the number of instances for a deployment…

deployment editinstancenumber -F="C:\config.ini" --Name="dns_prefix" --BySlot=production --RoleName="role_name" --NewInstanceNumber=2

Wrap Up

Hopefully, those examples are enough to give you the idea. In future posts, I’ll take a look at how to create scripts using these commands to make deployment and management quick and easy. In the meantime, we would love to hear your thoughts on these command line tools. How usable are they for you? What’s missing? Etc.
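As a rough idea of what such a script might look like, here is a Python sketch that assembles the command lines for a staging deployment followed by a swap. It is a dry-run planner only: the function names are hypothetical, nothing is executed, and it uses only the operations and parameters shown in the examples above.

```python
from typing import List, Union

Value = Union[str, int, bool]


def build_command(tool: str, operation: str, config_path: str,
                  **params: Value) -> List[str]:
    """Assemble one tool invocation as an argument list."""
    args = [tool, operation, f"-F={config_path}"]
    for name, value in params.items():
        if value is True:
            args.append(f"--{name}")  # bare switch, e.g. --Staging
        elif value is False:
            continue  # switch turned off: omit it entirely
        else:
            args.append(f"--{name}={value}")
    return args


def deployment_plan(config_path: str, dns_prefix: str, package: str,
                    service_config: str, storage_account: str) -> List[List[str]]:
    """Commands to deploy to staging, inspect the slot, then swap."""
    return [
        build_command("deployment", "createfromlocal", config_path,
                      Name=dns_prefix, DeploymentName="deployment_name",
                      Label="deployment_label", Staging=True,
                      PackageLocation=package,
                      ServiceConfigLocation=service_config,
                      StorageAccount=storage_account),
        build_command("deployment", "getproperties", config_path,
                      Name=dns_prefix, BySlot="Staging"),
        build_command("deployment", "swap", config_path, Name=dns_prefix),
    ]
```

Because createfromlocal returns a request ID rather than a finished deployment, a real script would presumably need to wait for that operation to complete before running the swap.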

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

The ADO.NET Entity Framework Team reported Code First Migrations: Alpha 2 Released in a 9/6/2011 post:

Six weeks back we released the first preview of Code First Migrations. This preview was called ‘Code First Migrations August 2011 CTP’. However, after some changes to how we name our releases it is best to think of that release as ‘Code First Migrations Alpha 1’. Today we are happy to announce that ‘Code First Migrations Alpha 2’ is available.

Alpha 2 is still primarily focused on the developer experience inside of Visual Studio. We realize this isn’t the only area in which migrations is important and our team is also working on scenarios including team build, deployment and invoking migrations from outside of Visual Studio as well as from custom code.

What Changed

The short answer… a lot! You gave us a lot of feedback on our first alpha and we summarized it in the 'Code First Migrations: Your Feedback' blog post. The changes are pretty widespread so we would recommend reading the Code First Migrations Alpha 2 Walkthrough to get an overview of the new functionality. Here are some of the more notable changes:

- We did a lot of work to improve the ‘no-magic’ workflow, including a code based format for expressing migrations. Our walkthrough also starts with the no-magic approach and then shows how to transition to a more-magic approach for folks who want to use it.

- A provider model is now available. Providers need to inherit from the System.Data.Entity.Migrations.Providers.DbMigrationSqlGenerator base class and are registered in a new Settings class that is added when you install the NuGet package. Alpha 2 includes a provider for SQL Server (including SQL Azure) and SQL Compact.

- We’ve taken a NuGet package name change. Alpha 1 was released as EntityFramework.SqlMigrations, but given the design changes that name makes less sense. We have now dropped the ‘Sql’ and are now just EntityFramework.Migrations.

- We’ve improved how we do type resolution to find your context etc. In the first preview there were a number of issues around how we discovered your context, especially in solutions with multiple projects. This would result in various errors when trying to run either of the migration commands. We’ve done some work to make this more robust in Alpha 2.

Feedback

We really want your feedback on this work so please download it, use it and let us know what you like and what you would like to see change. You can provide feedback by commenting on this blog post.

The key areas we really want your feedback on are:

- Shape of the API for expressing migrations. The API for migrations isn’t complete yet but Alpha 2 contains a large part of what we expect the final API to look like. We’d love your feedback on whether we are heading down the right path.

- Will you use the automatic migrations functionality? We support a purely imperative workflow where all changes are explicitly expressed in code. A lot of folks have told us that is how they want to work. We want to know if we should continue to invest in the option of having sections of the migrations process handled automatically.

Getting Started

The Code First Migrations Alpha 2 Walkthrough gives an overview of migrations and how to use it for development.

Code First Migrations Alpha 2 is available via NuGet as the EntityFramework.Migrations package.

Known Issues & Limitations

We really wanted to get your feedback on these design changes so Alpha 2 hasn’t been polished and isn’t a complete set of the features we intend to ship.

Some known issues, limitations and ‘yet to be implemented’ features include:

- You must start using migrations targeting an empty database that contains no tables or targeting a database that does not yet exist. Code First Migrations assumes that it is starting against an empty database. If you target a database that already contains some/all of the tables for your model then you will get an error when migrations tries to recreate the same tables. We are currently working on a boot strapping feature that will allow you to start using migrations against an existing schema.

- VB.Net is not yet supported. Migrations can currently only be scaffolded in C#. We are adding VB.Net code generation at the moment and will release another Alpha shortly that includes VB.Net support. You can, however, use a C# project to work with migrations that reference a domain model and context that are defined in a separate VB.Net project(s).

- There is an issue with using Code First Migrations in a team environment that we are currently working through. Code First Migrations generates some additional metadata alongside each migration that captures the source and target model for the migration. The metadata is used to replicate any automatic migrations between each code-based migration. If the source model of a migration matches the target model of the previous migration then the automatic pipeline is skipped. The issue arises when two developers add migrations locally and then check in. Because the metadata of their migrations is captured during scaffolding, it doesn’t reflect the model changes made by the other developer, even if the second developer to check in syncs before committing their changes. This can cause the automatic pipeline to kick in when the migrations are run. We realize this is the key team development scenario and are working to resolve it at the moment.

- No outside-of-Visual-Studio experience. Alpha 2 only includes the Visual Studio integrated experience. We also plan to deliver a command line tool and an MSDeploy provider for Code First Migrations.

- Downgrade is currently not supported. When generating migrations you will notice that the Down method is empty; we will support down migration soon.

- Migrate to a specific version is not supported. You can currently only migrate to the latest version; we will support migrations up/down to any version soon.
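The team-environment issue in the list above can be illustrated with a toy model. The Python sketch below is not how Code First Migrations stores its metadata (the post does not describe the format). It only assumes that each migration records a source and a target model, and that the automatic pipeline runs whenever a migration's source differs from the previous migration's target. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Migration:
    name: str
    source_model: str  # model snapshot the migration was scaffolded from
    target_model: str  # model snapshot the migration produces


def automatic_pipeline_triggers(migrations: List[Migration]) -> List[str]:
    """Names of migrations whose source does not match the previous target."""
    triggered = []
    for previous, current in zip(migrations, migrations[1:]):
        if current.source_model != previous.target_model:
            triggered.append(current.name)
    return triggered


# Two developers scaffold from the same starting model, then both check in.
dev_a = Migration("AddBlogUrl", "model-v0", "model-v0+url")
dev_b = Migration("AddBlogRating", "model-v0", "model-v0+rating")

# What the second migration would look like if scaffolded after the first.
dev_b_rescaffolded = Migration("AddBlogRating", "model-v0+url", "model-v0+url+rating")
```

With the migrations ordered [dev_a, dev_b], the second one is flagged because its recorded source still says model-v0. With [dev_a, dev_b_rescaffolded] the chain is consistent and nothing is flagged.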

Prerequisites & Incompatibilities

Migrations is dependent on EF 4.1 Update 1. This updated release of EF 4.1 will be automatically installed when you install the EntityFramework.Migrations package.

Important: If you have previously run the EF 4.1 standalone installer you will need to upgrade or remove the installation before using migrations. This is required because the installer adds the EF 4.1 assembly to the Global Assembly Cache (GAC), causing the original RTM version to be used at runtime rather than Update 1.

This release is incompatible with “Microsoft Data Services, Entity Framework, and SQL Server Tools for Data Framework June 2011 CTP”.

Support

This is a preview of features that will be available in future releases and is designed to allow you to provide feedback on the design of these features. It is not intended or licensed for use in production. If you need assistance we have an Entity Framework Pre-Release Forum.

Rowan Miller of the ADO.NET Entity Framework Team posted Code First Migrations: Alpha 2 Walkthrough on 9/6/2011:

We have released the second preview of our migrations story for Code First development; Code First Migrations Alpha 2. This release includes a preview of the developer experience for incrementally evolving a database as your Code First model evolves over time.

This post will provide an overview of the functionality that is available inside of Visual Studio for interacting with migrations. This post assumes you have a basic understanding of the Code First functionality that was included in EF 4.1. If you are not familiar with Code First then please complete the Code First Walkthrough.

Building an Initial Model

Before we start using migrations we need a project and a Code First model to work with. For this walkthrough we are going to use the canonical Blog and Post model.

- Create a new ‘Demo’ Console application

- Add the EntityFramework NuGet package to the project

- Tools -> Library Package Manager -> Package Manager Console

- Run the ‘Install-Package EntityFramework’ command

- Add a Model.cs class with the code shown below. This code defines a single Blog class that makes up our domain model and a BlogContext class that is our EF Code First context.

Note that we are removing the IncludeMetadataConvention to get rid of the EdmMetadata table that Code First adds to our database. The EdmMetadata table is used by Code First to check if the current model is compatible with the database, which is redundant now that we have the ability to migrate our schema. It isn’t mandatory to remove this convention when using migrations, but one less magic table in our database is a good thing, right?

using System.Data.Entity;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;
using System.Data.Entity.Infrastructure;

namespace Demo
{
    public class BlogContext : DbContext
    {
        public DbSet<Blog> Blogs { get; set; }

        protected override void OnModelCreating(DbModelBuilder modelBuilder)
        {
            modelBuilder.Conventions.Remove<IncludeMetadataConvention>();
        }
    }

    public class Blog
    {
        public int BlogId { get; set; }
        public string Name { get; set; }
    }
}

Installing Migrations

Now that we have a Code First model let’s get Code First Migrations and configure it to work with our context.

- Add the EntityFramework.Migrations NuGet package to the project

- Run the ‘Install-Package EntityFramework.Migrations’ command in Package Manager Console