Windows Azure and Cloud Computing Posts for 9/19/2011+

A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Avkash Chauhan reminded readers of a Great new feature introduced in Windows Azure Storage: Geo Replication in a 9/18/2011 post:

Now anyone using Windows Azure Storage can sleep without worry, knowing that their data in Windows Azure Storage (blob, table and queue) has another safe copy replicated to a second location hundreds of miles away within the same region. I am awake at 10:20 PM for other reasons, but I am breathing a sigh of relief knowing that a copy of my data is replicated.

A few terms to know about:

- Geo-Failover

- Geo-Replication Transaction Consistency

- Primary & Secondary Location

- Disabling Geo-Replication

- Re-Bootstrap Storage Account

- Cross-PartitionKey relationships

To learn about the above terms and many more, please visit: http://blogs.msdn.com/b/windowsazurestorage/archive/2011/09/15/introducing-geo-replication-for-windows-azure-storage.aspx

Preps2 posted a Comparison of Windows Azure Storage Queues and Service Bus Queues on 9/17/2011:

| Feature | Windows Azure Storage Queues | Service Bus Queues | Comments |
|---|---|---|---|
| **Programming Models** | | | |
| Raw REST/HTTP | Yes | Yes | |
| .NET API | Yes (Windows Azure Managed Library) | Yes (AppFabric SDK) | |
| Windows Communication Foundation (WCF) binding | No | Yes | |
| Windows Workflow Foundation (WF) integration | No | Yes | |
| **Protocols** | | | |
| Runtime | REST over HTTP | REST over HTTP; bi-directional TCP | The Service Bus managed API leverages the bi-directional TCP protocol for improved performance over REST/HTTP. |
| Management | REST over HTTP | REST over HTTP | |
| **Messaging Fundamentals** | | | |
| Ordering Guarantees | No | First-In-First-Out (FIFO) | Note: guaranteed FIFO requires the use of sessions. |
| Message processing guarantees | At-Least-Once (ALO) | At-Least-Once (ALO); Exactly-Once (EO) | The Service Bus generally supports the ALO guarantee; however, EO can be supported by using SessionState to store application state and using transactions to atomically receive messages and update the SessionState. The AppFabric workflow uses this technique to provide EO processing guarantees. |
| Peek Lock | Yes (visibility timeout: default = 30 s; max = 2 h) | Yes (lock timeout: default = 30 s; max = 5 min) | Windows Azure queues allow a visibility timeout to be set on each receive operation, while Service Bus lock timeouts are set per entity. |
| Duplicate Detection | No | Yes, send-side duplicate detection | The Service Bus will remove duplicate messages sent to a queue/topic (based on MessageId). |
| Transactions | No | Partial | The Service Bus supports local transactions involving a single entity (and its children). Transactions can also include updates to SessionState. |
| Receive Behavior | Non-blocking, i.e., returns immediately if there are no messages | REST/HTTP: long poll based on a user-provided timeout. .NET API: three options: blocking, blocking with timeout, non-blocking. | |
| Batch Receive | Yes (explicit) | Yes, either (a) implicitly using prefetch, or (b) explicitly using transactions. | |
| Batch Send | No | Yes (using transactions) | |
| Receive and Delete | No | Yes | Ability to reduce operation count (and associated cost) in exchange for lowered delivery assurance. |
| **Advanced Features** | | | |
| Dead lettering | No | Yes | Windows Azure queues offer a ‘dequeue count’ on each message, so applications can choose to delete troublesome messages themselves. |
| Session Support | No | Yes | Ability to have logical subgroups within a queue or topic. |
| Session State | No | Yes | Ability to store arbitrary metadata with sessions. Required for integration with Workflow. |
| Message Deferral | No | Yes | Ability for a receiver to defer a message until it is prepared to process it. Required for integration with Workflow. |
| Scheduled Delivery | No | Yes | Allows a message to be scheduled for delivery at some future time. |
| **Security** | | | |
| Authentication | Windows Azure credentials | ACS roles | ACS allows for three distinct roles: admin, sender and receiver. Windows Azure has a single role with total access, and no ability for delegation. |
| **Management Features** | | | |
| Get Message Count | Approximate | No | Service Bus queues offer no operational insight at this point, but plan to in the future. |
| Clear Queue | Yes | No | Convenience function to clear a queue efficiently. |
| Peek / Browse | Yes | No | Windows Azure queues offer the ability to peek a message without locking it, which can be used to implement browse functionality. |
| Arbitrary Metadata | Yes | No | Windows Azure queues allow an arbitrary set of <key, value> pairs on queue metadata. |
| **Quotas/Limits** | | | |
| Maximum message size | 64 KB (previously 8 KB) | 256 KB | |
| Maximum queue size | Unlimited | 5 GB | Specified at queue creation, with specific values of 1, 2, 3, 4 or 5 GB. |
| Maximum number of entities per service namespace | n/a | 10,000 | |

Neil MacKenzie noted that “Windows Azure SDK v1.5 increased maximum message size to 64KB” in a 9/18/2011 comment. (Corrected above.)
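The Duplicate Detection row says the Service Bus drops repeat sends based on MessageId. The Python below is a minimal sketch of that idea, not Service Bus code: the `DedupQueue` class is hypothetical, and the length of the history window during which a MessageId is remembered is an arbitrary assumption. With this behavior, a sender that retries after a timeout can resend without enqueuing the same order twice.

```python
from collections import deque


class DedupQueue:
    """Hypothetical queue that discards sends whose MessageId was seen recently."""

    def __init__(self, history_window_seconds=600.0):
        self.window = history_window_seconds
        self.messages = deque()  # accepted (message_id, body) pairs, in FIFO order
        self.seen = {}           # message_id -> time it was first accepted

    def send(self, message_id, body, now):
        # Forget ids whose history window has expired.
        expired = [mid for mid, t in self.seen.items() if now - t >= self.window]
        for mid in expired:
            del self.seen[mid]
        if message_id in self.seen:
            return False  # duplicate: dropped on the send side
        self.seen[message_id] = now
        self.messages.append((message_id, body))
        return True


q = DedupQueue(history_window_seconds=600.0)
print(q.send("order-42", {"total": 270.49}, now=0.0))    # True
print(q.send("order-42", {"total": 270.49}, now=5.0))    # False (retry dropped)
print(q.send("order-42", {"total": 270.49}, now=700.0))  # True (window expired)
print(len(q.messages))                                   # 2
```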

<Return to section navigation list>

SQL Azure Database and Reporting

Rob Tiffany (@robtiffany) posted Sync Framework v4 is now Open Source, and ready to Connect any Device to SQL Server and SQL Azure on 9/11/2011 (missed when published):

The profound effects of the Consumerization of IT (CoIT) are blurring the lines between consumers and the enterprise. The fact that virtually every type of mobile device is now a candidate to make employees productive means that cross-platform, enabling technologies are a must. Luckily, Microsoft has brought the power to synchronize data with either SQL Server on-premise or SQL Azure in the cloud to the world of mobility. If you’ve ever synched the music on your iPhone with iTunes, the calendar on your Android device with Gmail, or the Outlook email on your Windows Phone with Exchange, then you understand the importance of sync. In my experience architecting and building enterprise mobile apps for the world’s largest organizations over the last decade, data sync has always been a critical ingredient.

The new Sync Framework Toolkit found on MSDN builds on the existing Sync Framework 2.1’s ability to create disconnected applications, making it easier to expose data for synchronization to apps running on any client platform. Where Sync Framework 2.1 required clients to be based on Windows, this free toolkit allows other Microsoft platforms to be used for offline clients such as Silverlight, Windows Phone 7, Windows Mobile, Windows Embedded Handheld, and new Windows Slates. Additionally, non-Microsoft platforms such as iPhones, iPads, Android phones and tablets, Blackberries and browsers supporting HTML5 are all first-class sync citizens. The secret is that we no longer require the installation of the Sync Framework runtime on client devices. When coupled with use of an open protocol like OData for data transport, no platform or programming language is prevented from synchronizing data with our on-premise and cloud databases. When the data arrives on your device, you can serialize it as JSON, or insert it into SQL Server Compact or SQLite depending on your platform preferences.

The Sync Framework Toolkit provides all the features enabled by the Sync Framework 4.0 October 2010 CTP. We are releasing the toolkit as source code samples on MSDN with the source code utilizing Sync Framework 2.1. Source code provides the flexibility to customize or extend the capabilities we have provided to suit your specific requirements. The client-side source code in the package is released under the Apache 2.0 license and the server-side source code under the MS-LPL license. The Sync Framework 2.1 is fully supported by Microsoft and the mobile-enabling source code is yours to use, build upon, and support for the apps you create.

Now some of you might be wondering why you would use a sync technology to move data rather than SOAP or REST web services. The reason has to do with performance and bandwidth efficiency. Using SOA, one would have to retrieve all the data to the device in order to see what has changed in SQL Server. The same goes for uploading data. Using the Sync Framework Toolkit, only the changes, or deltas, are transmitted over the air. This boosts performance and reduces bandwidth usage, which saves time and money in a world of congested mobile data networks with capped mobile data plans. You also get a feature called batching, which breaks up the data sent over wireless networks into manageable pieces. This not only prevents you from blowing out your limited bandwidth, but it also keeps you from using too much RAM both on the server and on your memory-constrained mobile device. When combined with conflict resolution and advanced filtering, I’m sold!
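To make the bandwidth argument concrete, here is a minimal Python sketch of delta sync with batching. It is not the Sync Framework Toolkit’s API or wire format: the `ChangeLog` class, its version counter and the `batch_size` parameter are hypothetical. The client remembers the last version it has seen (its anchor) and asks only for rows changed after it, and those changes arrive in fixed-size batches rather than as one large payload.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeLog:
    """Hypothetical server-side table that stamps every write with a version number."""

    version: int = 0
    rows: dict = field(default_factory=dict)  # key -> (value, version of last change)

    def upsert(self, key, value):
        self.version += 1
        self.rows[key] = (value, self.version)

    def changes_since(self, anchor, batch_size):
        """Yield (batch, new_anchor) pairs covering every row changed after `anchor`."""
        changed = sorted(
            ((ver, key, value) for key, (value, ver) in self.rows.items() if ver > anchor),
            key=lambda change: change[0],
        )
        for start in range(0, len(changed), batch_size):
            batch = changed[start:start + batch_size]
            yield [(key, value) for _, key, value in batch], batch[-1][0]


server = ChangeLog()
for i in range(1000):
    server.upsert(f"customer-{i}", {"name": f"Customer {i}"})

# First sync: the client has no anchor yet, so all 1,000 rows arrive in batches of 250.
client, anchor = {}, 0
for batch, anchor in server.changes_since(anchor, batch_size=250):
    client.update(batch)

# Later, only two rows change on the server; the next sync transfers just those deltas.
server.upsert("customer-7", {"name": "Customer Seven"})
server.upsert("customer-1000", {"name": "New Customer"})
for batch, anchor in server.changes_since(anchor, batch_size=250):
    print(len(batch), "changed rows")  # 2 changed rows
    client.update(batch)
```

Deletes (tombstones), conflict detection and filtering, which the Toolkit also handles, are deliberately left out of this sketch.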

I think you’ll find the Sync Framework Toolkit to be an immensely valuable component of your MEAP solutions for the enterprise as well as the ones you build for consumers.

Keep Synching,

<Return to section navigation list>

MarketPlace DataMarket and OData

Glenn Gailey (@ggailey777) posted Creating “Cool Looking” Windows Phone Apps on 9/19/2011:

So far, I’ve written several Windows Phone 7 apps, including the OData and Windows Phone quickstart, a Northwind-based app for my MVVM walkthrough, and a couple of others, mostly consuming (as you might guess) OData. Since I created these apps to supplement documentation, they have never been much to look at; I never intended to publish them to the Marketplace. However, now that I have my Samsung Focus unlocked for development, I figured it was time to try to create a “real-world” app that I can a) get certified for the Marketplace and b) be proud of having on my mom’s phone.

Fortunately, I’ve been working on an Azure-based OData content service project that can integrate very nicely with a Windows Phone app, so for the past few weeks I have been coding and learning what makes these apps look so cool. I’ll try to share some of what I’ve learned in this post.

Coolest Windows Phone Apps (IMHO)

Just to give you some idea of where I’m coming from, here’s a partial list of some of the cooler-looking apps that I’ve seen on the Windows Phone platform (feel free to add your favs in comments to this post):

- Pictures – this built-in app features a Panorama control that displays layers of pictures, which makes even the most mundane snaps seem cooler.

- IMDB – OK, I admit that I must use IMDB when I watch movies; I’m weird that way. Plus this app is slick, with its use of multiple levels of Panorama controls and tons of excellent graphics (image is everything in Hollywood, right?); if anything, there may be too much bling.

- Ferry Master – A nifty little app written by someone on the phone team for folks who take Washington State ferries, including background images of Puget Sound scenes.

- Mix11 Explorer – this app, written for the Mix 2011 conference, uses a simple layering of basic shapes that mirrors the design of the Mix 2011 web site.

- Kindle – Amazon’s classy eReader on the phone, with obviously high production value and graphics.

I would love to show screenshots of these, but most screens aren’t even available outside of the phone (just search Marketplace for these app names and check out their screenshots).

Find Classy Graphics

I know that the general design principles from the phone folks are that “modern design in a touch application is undecorated, free of chrome elements, and minimally designed.” However, graphics is the kind of content that really pops on the phone. Make sure that the graphics that you use are clean and have impact, and that you have the rights to use them or you could get blocked in certification (and you don’t want to get hassled by the owner after you publish the app). Many professional apps leverage some of the brand images and design themes of their corporate web sites.

Use Expression Blend

Up to this point, I’ve used Visual Studio Express exclusively to write my Windows Phone apps. As I mentioned, my apps have mostly involved programming the OData Client Library for Windows Phone. While Visual Studio is much better for writing code (IMHO) and essential for debugging, its design facilities are, shall we say, limited. Unless you are an expert in the powerful-but-labyrinthine XAML markup language, you are going to need some extra help. Fortunately, Microsoft’s Expression Blend is designed specifically for XAML (WPF, Silverlight, Windows Phone), and it even supports animations. Here’s a rundown of Expression Blend versus Visual Studio Express:

Expression Blend

While I’m still not completely comfortable with the UI, Expression Blend has proven very adept at these design aspects:

- Applying styles – Expression is much more intuitive and visual than VS, even making it easy to create gradients

- Working with graphics – very easy to add background images to elements

- Animations – I haven’t even tried any of these yet, but good luck creating a storyboard in VS

- Entering text – XAML is weird with multi-line text in text-blocks, and Expression will generate this code automagically

- Preview in both orientations and in both light and dark themes – this is akin to previewing web pages in multiple browsers—make sure you take advantage of this functionality

Visual Studio Express

Visual Studio is hands-down best with these programming aspects:

- Solution/project management – I prefer to set up the project and add resources in VS

- Build/debug – both will launch the emulator, but in VS you can actually debug in the emulator—or the device, which is much better

- Add Service Reference – I don’t think that Expression has anything like this tool, which is very important for OData (especially in Mango when it actually works)

Because each IDE has its own strengths, I’ve found myself keeping my project open in both Expression and VS, and flipping back and forth during development.

Use Layering

In XAML, you can control the layer (z-order) of elements in the display as well as their opacity. This control enables you to create a nice, modern layered look (think of today’s Windows 7 versus XP), with background elements partly visible through the elements in front. The result is a more “composed” screen with a sense of depth.
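The see-through effect comes down to alpha blending. As an illustration only (a generic "source over" blend, not Silverlight’s renderer), an element drawn at opacity `a` over a background pixel produces `a * front + (1 - a) * back` in each color channel; the hypothetical `blend` helper below applies that formula to 8-bit RGB values.

```python
def blend(front, back, opacity):
    """Source-over blend of a `front` RGB pixel drawn at `opacity` onto a `back` RGB pixel."""
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0 and 1")
    return tuple(round(opacity * f + (1.0 - opacity) * b) for f, b in zip(front, back))


# A white panel at 60% opacity over a dark-blue background pixel
print(blend((255, 255, 255), (0, 0, 128), 0.6))  # (153, 153, 204)
```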

User Interaction

Smartphones are interactive devices, and you can make your apps respond to orientation changes, motion, location, and even jiggling. Plus, navigating a page by swiping with your finger is the best part. In fact, swiping is so cool that Windows 8 features it heavily in the newly announced Metro style UI (which looks a lot like Windows Phone 7). Make sure that you include some of this in your apps; at the very least, handle the orientation change from portrait to landscape, which is easier than you think if you do your XAML correctly.

Panorama Control is Cool

As a key component of nearly all of my favorite apps, the Panorama control features all of the aspects we just discussed: leveraging graphics, using layers, and user interactions. It’s basically a long horizontal control that, unlike the Pivot control, displays a single, contiguous background image across multiple screen-sized items. As the user flips between items, there is a nice layered motion effect (like in those 1930s Popeye cartoons) where the individual items, and their graphics, move faster than the background image, giving an illusion of depth to the app.
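The depth illusion is a parallax effect: the items scroll at the finger’s pace while the background scrolls only a fraction as far. The sketch below is an assumption about how such offsets can be computed, not the Panorama control’s actual implementation; the hypothetical `panorama_offsets` function scales the background offset by the ratio of how far the background can travel to how far the content travels, so both layers reach their ends together.

```python
def panorama_offsets(scroll_x, item_width, item_count, background_width, screen_width):
    """Return (content_offset, background_offset) in pixels for a horizontal scroll position."""
    content_travel = item_width * (item_count - 1)               # scroll distance to the last item
    background_travel = max(background_width - screen_width, 0)  # how far the background can move
    scroll_x = min(max(scroll_x, 0), content_travel)             # clamp to the scrollable range
    if content_travel == 0:
        return 0, 0.0
    # The background moves proportionally less, so both layers reach their ends together.
    return scroll_x, scroll_x * background_travel / content_travel


# Four 480-px-wide items, a 1024-px-wide background image, a 480-px-wide screen
for x in (0, 480, 960, 1440):
    content, background = panorama_offsets(x, 480, 4, 1024, 480)
    print(f"content moved {content}px, background moved {background:.1f}px")
```

The real control also wraps from the last item back to the first, which this sketch does not model.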

Follow the Guidelines

To support the general “coolness” of the platform, the Windows Phone team has published an extensive set of design guidelines. Of course, they recommend that you stick as closely as possible to the phone-driven themes (which the IMDB app doesn’t do too well), but most of the guidance is meant to promote easy-to-use apps and a more uniform platform. (I’m not sure how this compares to, say, iPhone apps, since I’ve never had an iPhone.)

At any rate, since I am planning to go through the entire Marketplace process, I will probably write another blog post about the results of my adventure.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Clemens Vasters (@clemensv) posted Service Bus Topics and Queues – Advanced on 9/18/2011:

This session is a follow-up to the Service Bus session that I did at the BUILD conference and explains advanced usage patterns.

Clemens later posted Securing Service Bus with the Access Control Service on 9/19/2011:

This session explains how to secure Service Bus using the Access Control Service. This is also an extension session for my session at BUILD, but watching the BUILD session is not a strict prerequisite.

Abhishek Lal described Windows Azure Service Bus Queues, Topics / Subscriptions in a 9/14/2011 post (missed when published):

We just released to production the Queues and Publish-Subscribe (Topics / Subscriptions) features that were showcased earlier in the Community Technology Preview of Service Bus. For an introductory period these Brokered Messaging features are free, but you continue to pay for Azure data transfer, and the Relay features continue to be charged as before. Following are steps to get started quickly, and links to additional resources:

The steps below download and run a simple application that showcases the publish-subscribe capabilities of Service Bus. You will need to have Visual Studio and NuGet installed. We will start by creating a new console application in VS:

Open the Project –> Properties and change the Target framework to .NET Framework 4

In Solution Explorer, right-click the References node and choose Add Library Package Reference. This menu item appears only if you have the NuGet extension installed. (To learn more about NuGet, see this TechEd video.)

Search for “AppFabric” and select the Windows Azure AppFabric Service Bus Samples – PublishSubscribe item. Then complete the Install and close this dialog.

Note that the required client assemblies are now referenced and some new code files are added.

Add the following line to the Main method in Program.cs and press F5:

```csharp
Microsoft.Samples.PublishSubscribe.Program.Start(null);
```

At this point you will be prompted to provide a ServiceNamespace, Issuer Name and Key. You can create your own namespace from https://windows.azure.com/

Once a new namespace has been created, you can retrieve the key from the Properties section by clicking View under Default Key.

These values can now be used in the Console application.

At this point you can run through the different scenarios showcased by the sample. Following are some additional resources:

- Download page for SDK and Samples

- Documentation for Service Bus September Release

- Forum for feedback

Looking forward to your feedback / questions / concerns / suggestions.

Rick Garibay (@rickggaribay) reported Azure AppFabric Service Bus Brokered Messaging GA & Rude CTP Diffs on 9/14/2011 (missed when posted):

Today at the BUILD conference in Anaheim, California, Satya Nadella, President of the Server and Tools Business, announced general availability of the production release of AppFabric Queues and Topics, otherwise known as Brokered Messaging.

Brokered Messaging introduces durable queue capabilities and rich, durable pub-sub with topics and subscriptions that complement the existing Relayed Messaging capabilities.
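The difference between a queue and a topic can be sketched in a few lines of Python. This is a toy in-memory model, not the Service Bus API: the hypothetical `Topic` class gives every subscription its own FIFO, so one `send` is copied to each subscription whose filter matches (a subscription without a filter receives everything), and each subscriber consumes its copy independently of the others. The `EuropeFulfillment` subscription is likewise made up for illustration.

```python
from collections import deque


class Topic:
    """Toy topic: each subscription keeps its own FIFO copy of matching messages."""

    def __init__(self):
        self.subscriptions = {}  # name -> (filter predicate or None, deque of messages)

    def subscribe(self, name, predicate=None):
        self.subscriptions[name] = (predicate, deque())

    def send(self, properties, body):
        for predicate, inbox in self.subscriptions.values():
            if predicate is None or predicate(properties):
                inbox.append((dict(properties), body))  # independent copy per subscription

    def receive(self, name):
        _, inbox = self.subscriptions[name]
        return inbox.popleft() if inbox else None


orders = Topic()
orders.subscribe("InventoryServiceSubscription")
orders.subscribe("CreditServiceSubscription")
orders.subscribe("EuropeFulfillment", lambda p: p.get("CountryOfOrigin") in ("UK", "France"))

orders.send({"CountryOfOrigin": "USA"}, "Order 42")
print(orders.receive("InventoryServiceSubscription"))  # ({'CountryOfOrigin': 'USA'}, 'Order 42')
print(orders.receive("CreditServiceSubscription"))     # ({'CountryOfOrigin': 'USA'}, 'Order 42')
print(orders.receive("EuropeFulfillment"))             # None
```

A queue, by contrast, would hand "Order 42" to only one of several competing receivers.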

I covered Brokered Messaging following the May CTP release of Queues and followed up shortly with an overview and exploration of Topics (please see some other great resources at the end of this post).

Since then, there was a June CTP release which included the new AppFabric Application and no visible changes to Brokered Messaging. Since its release, however, the AppFabric Messaging team has been hard at work refining the API and behaviors based on feedback from Advisors, MVPs and the community at large.

Since I’ve already covered Queues and Topics in the aforementioned posts, I’ll dive right into some terse examples which demonstrate the API changes. Though this is not an exhaustive review of all of the changes, I’ve covered the types that you’re most likely to come across, and I will cover Queues, Topics and Subscriptions extensively in my upcoming article in CODE Magazine, which will also include more in-depth walk-throughs of the .NET Client API, REST API and WCF scenarios.

Those of you who have worked with the CTPs will find some subtle and not-so-subtle changes, but all in all I think the refinements are for the best, and I think you’ll appreciate them as I have. For those new to Azure AppFabric Service Bus Brokered Messaging, you’ll benefit most from reading my first two posts based on the May CTP (or any of the resources at the end of this post) to get an idea of the why behind queues and topics, and then come back here to explore the what and how.

A Quick Note on Versioning

In the CTPs that preceded the release of the new Azure AppFabric Service Bus features, a temporary assembly called “Microsoft.ServiceBus.Messaging.dll” was added to serve as a container for new features and deltas that were introduced during the development cycle. The final release includes a single assembly called “Microsoft.ServiceBus.dll” which contains all of the existing relay capabilities that you’re already familiar with as well as the addition of support for queues and topics. If you are upgrading from the CTPs, you’ll want to get ahold of the new Microsoft.ServiceBus.dll version 1.5, which includes everything plus the new queue and topic features.

The new 1.5 version of the Microsoft.ServiceBus.dll assembly targets the .NET 4.0 framework. Customers using .NET 3.5 can continue using the existing Microsoft.ServiceBus.dll assembly (version 1.0.1123.2) for leveraging the relay capabilities, but must upgrade to .NET 4.0 to take advantage of the latest features presented here.

.NET Client API

Queues

Below is a representative sample for creating, configuring, sending and receiving a message on a queue:

Administrative Operations

```csharp
using System;
using System.Linq;
using Microsoft.ServiceBus;
using Microsoft.ServiceBus.Messaging;

// issuer, key, serviceNamespace and queueName are defined elsewhere in the sample

// Configure and create NamespaceManager for performing administrative operations
NamespaceManagerSettings settings = new NamespaceManagerSettings();
settings.TokenProvider = TokenProvider.CreateSharedSecretTokenProvider(issuer, key);

NamespaceManager manager = new NamespaceManager(
    ServiceBusEnvironment.CreateServiceUri("sb", serviceNamespace, string.Empty), settings);

// Check for existence of queues on the fabric
var qs = manager.GetQueues();

var result = from q in qs
             where q.Path.Equals(queueName, StringComparison.OrdinalIgnoreCase)
             select q;

if (!result.Any())
{
    Console.WriteLine("Queue does not exist");

    // Create Queue with a 5-second peek-lock duration
    Console.WriteLine("Creating Queue...");
    manager.CreateQueue(new QueueDescription(queueName) { LockDuration = TimeSpan.FromSeconds(5.0d) });
}
```

Runtime Operations

```csharp
// Create and configure a MessagingFactory to provision the QueueClient
MessagingFactorySettings messagingFactorySettings = new MessagingFactorySettings();
messagingFactorySettings.TokenProvider = TokenProvider.CreateSharedSecretTokenProvider(issuer, key);
MessagingFactory messagingFactory = MessagingFactory.Create(
    ServiceBusEnvironment.CreateServiceUri("sb", serviceNamespace, string.Empty), messagingFactorySettings);

QueueClient queueClient = messagingFactory.CreateQueueClient(queueName, ReceiveMode.PeekLock);

// Order is the sample's data contract (definition not shown)
Order order = new Order();
order.OrderId = 42;
order.Products.Add("Kinect", 70.50M);
order.Products.Add("XBOX 360", 199.99M);
order.Total = order.Products["Kinect"] + order.Products["XBOX 360"];

// Create a BrokeredMessage from the Order object
BrokeredMessage msg = new BrokeredMessage(order);

/***********************
 *** Send Operations ***
 ***********************/

queueClient.Send(msg);

/**************************
 *** Receive Operations ***
 **************************/

BrokeredMessage recdMsg;
Order recdOrder;

// Receive and lock message
recdMsg = queueClient.Receive();

if (recdMsg != null)
{
    // Convert from BrokeredMessage to native Order
    recdOrder = recdMsg.GetBody<Order>();

    Console.ForegroundColor = ConsoleColor.Green;
    Console.WriteLine("Received Order {0} \n\t with Message Id {1} \n\t and Lock Token:{2} \n\t from {3} \n\t with total of ${4}",
        recdOrder.OrderId, recdMsg.MessageId, recdMsg.LockToken, "Receiver 1", recdOrder.Total);

    // Complete the message so it is deleted from the queue
    recdMsg.Complete();
}
queueClient.Close();
```

Note that MessageSender and MessageReceiver are now optional. Here’s an example that shows peek-locking a message and, if processing throws an exception, abandoning it so that it can be tried again:

```csharp
// Alternate receive approach using the entity-agnostic MessageReceiver (receive mode defaults to PeekLock)
MessageReceiver receiver = messagingFactory.CreateMessageReceiver(queueName);
recdMsg = null; // reset so a failed Receive() never abandons a stale message

try
{
    // Receive and lock message (null if no message arrives in time)
    recdMsg = receiver.Receive();

    if (recdMsg != null)
    {
        // Convert from BrokeredMessage to native Order
        recdOrder = recdMsg.GetBody<Order>();

        // Complete read, release and delete message from the fabric
        receiver.Complete(recdMsg.LockToken);

        Console.ForegroundColor = ConsoleColor.Green;
        Console.WriteLine("Received Order {0} \n\t with Message Id {1} \n\t and Lock Token:{2} \n\t from {3} \n\t with total of ${4} \n\t at {5}",
            recdOrder.OrderId, recdMsg.MessageId, recdMsg.LockToken, "Receiver 2", recdOrder.Total, DateTime.Now.ToString("HH:mm:ss"));
    }
}
catch (Exception)
{
    // Should processing fail, release the lock so the message becomes available for later processing.
    if (recdMsg != null)
    {
        receiver.Abandon(recdMsg.LockToken);

        Console.ForegroundColor = ConsoleColor.Red;
        Console.WriteLine("Message could not be processed.");
    }
}
finally
{
    receiver.Close();
}
```

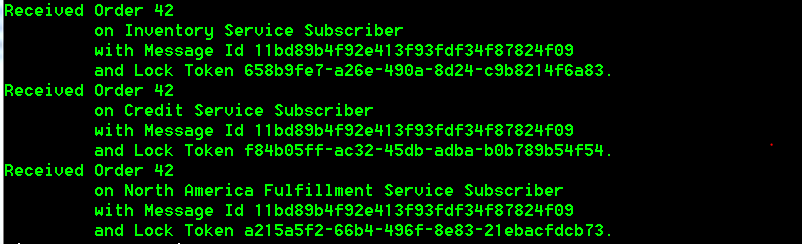

As shown below, this sample results in order 42 being received by the QueueClient:
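The Abandon call in the MessageReceiver example works because a peek-locked message is only hidden, not removed, until it is completed. The Python below models that life cycle under simplified assumptions; it is not the Service Bus implementation. The hypothetical `PeekLockQueue` makes a locked message visible again when it is abandoned or its lock expires (the sample’s queue uses a 5-second LockDuration), counts deliveries, and, once a message has been delivered `max_delivery_count` times without being completed (a limit assumed here for illustration), moves it to a dead-letter list, much as the comparison table suggests applications can do themselves with Azure queues’ dequeue count.

```python
import itertools
from collections import deque


class PeekLockQueue:
    """Toy peek-lock queue with lock expiry, abandon, delivery counts and dead-lettering."""

    def __init__(self, lock_duration=5.0, max_delivery_count=3):
        self.lock_duration = lock_duration
        self.max_delivery_count = max_delivery_count
        self.available = deque()  # message dicts ready for delivery, FIFO
        self.locked = {}          # lock token -> (message, lock expiry time)
        self.dead_letters = []
        self._tokens = itertools.count(1)

    def send(self, body):
        self.available.append({"body": body, "delivery_count": 0})

    def _make_available(self, msg):
        # Messages that have used up their deliveries go to the dead-letter list.
        if msg["delivery_count"] >= self.max_delivery_count:
            self.dead_letters.append(msg)
        else:
            self.available.appendleft(msg)  # put it back at the front

    def _release_expired_locks(self, now):
        for token, (msg, expires) in list(self.locked.items()):
            if now >= expires:
                del self.locked[token]
                self._make_available(msg)

    def receive(self, now):
        """Lock the next message and return (lock_token, message), or None if none is visible."""
        self._release_expired_locks(now)
        if not self.available:
            return None
        msg = self.available.popleft()
        msg["delivery_count"] += 1
        token = next(self._tokens)
        self.locked[token] = (msg, now + self.lock_duration)
        return token, msg

    def complete(self, token):
        del self.locked[token]  # processed: the message is gone for good

    def abandon(self, token):
        msg, _ = self.locked.pop(token)  # release the lock right away
        self._make_available(msg)


q = PeekLockQueue(lock_duration=5.0, max_delivery_count=3)
q.send("Order 42")

# Processing fails three times in a row; each failure abandons the message.
for attempt in range(3):
    token, msg = q.receive(now=float(attempt))
    q.abandon(token)

print(q.receive(now=10.0))                                         # None
print([(m["body"], m["delivery_count"]) for m in q.dead_letters])  # [('Order 42', 3)]
```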

Topics

Below is a representative sample for creating, configuring, sending and receiving a message on a topic:

Administrative Operations

```csharp
using System;
using System.Linq;
using Microsoft.ServiceBus;
using Microsoft.ServiceBus.Messaging;

// Configure and create NamespaceManager for performing administrative operations
NamespaceManagerSettings settings = new NamespaceManagerSettings();
settings.TokenProvider = TokenProvider.CreateSharedSecretTokenProvider(issuer, key);
NamespaceManager manager = new NamespaceManager(
    ServiceBusEnvironment.CreateServiceUri("sb", serviceNamespace, string.Empty), settings);

// Check for existence of topics on the fabric
var topics = manager.GetTopics();

var result = from t in topics
             where t.Path.Equals(topicName, StringComparison.OrdinalIgnoreCase)
             select t;

if (!result.Any())
{
    Console.WriteLine("Topic does not exist");

    // Create Topic
    Console.WriteLine("Creating Topic...");
    TopicDescription topic = manager.CreateTopic(topicName);
}

// Create Subscriptions for InventoryServiceSubscription and CreditServiceSubscription and associate to OrdersTopic
SubscriptionDescription inventoryServiceSubscription = new SubscriptionDescription(topicName, "InventoryServiceSubscription");
SubscriptionDescription creditServiceSubscription = new SubscriptionDescription(topicName, "CreditServiceSubscription");

// Set up Filters for NorthAmericaFulfillmentServiceSubscription
RuleDescription northAmericafulfillmentRuleDescription = new RuleDescription();
northAmericafulfillmentRuleDescription.Filter = new SqlFilter("CountryOfOrigin = 'USA' OR CountryOfOrigin = 'Canada' OR CountryOfOrigin = 'Mexico'");
northAmericafulfillmentRuleDescription.Action = new SqlRuleAction("set FulfillmentRegion='North America'");

// Create Subscriptions
SubscriptionDescription northAmericaFulfillmentServiceSubscription = new SubscriptionDescription(topicName, "NorthAmericaFulfillmentServiceSubscription");

// Delete existing subscriptions (errors are ignored if they don't exist yet)
try { manager.DeleteSubscription(topicName, inventoryServiceSubscription.Name); } catch { }
try { manager.DeleteSubscription(topicName, creditServiceSubscription.Name); } catch { }
try { manager.DeleteSubscription(topicName, northAmericaFulfillmentServiceSubscription.Name); } catch { }

// Add Subscriptions and Rules to Topic
manager.CreateSubscription(inventoryServiceSubscription);
manager.CreateSubscription(creditServiceSubscription);
manager.CreateSubscription(northAmericaFulfillmentServiceSubscription, northAmericafulfillmentRuleDescription);
```
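A subscription keeps only the messages whose properties satisfy its rule’s filter, and the rule’s action can then stamp extra properties on the copy it keeps. The Python below mimics the NorthAmericaFulfillmentServiceSubscription rule with a plain predicate and a property assignment; the functions are hypothetical stand-ins, not Service Bus’s SQL filter engine. Subscriptions created without a rule behave like a match-everything filter, which is why the inventory and credit subscribers both see every order.

```python
def north_america_filter(properties):
    # Stand-in for: CountryOfOrigin = 'USA' OR CountryOfOrigin = 'Canada' OR CountryOfOrigin = 'Mexico'
    return properties.get("CountryOfOrigin") in ("USA", "Canada", "Mexico")


def north_america_action(properties):
    # Stand-in for: set FulfillmentRegion='North America' (applied to the subscription's copy only)
    updated = dict(properties)
    updated["FulfillmentRegion"] = "North America"
    return updated


def apply_rule(properties, rule_filter=None, rule_action=None):
    """Return the properties the subscription stores, or None if the message is filtered out."""
    if rule_filter is not None and not rule_filter(properties):
        return None
    return rule_action(properties) if rule_action else dict(properties)


sent = {"CountryOfOrigin": "USA", "FulfillmentRegion": ""}
print(apply_rule(sent))  # no rule: copy kept unchanged
print(apply_rule(sent, north_america_filter, north_america_action))
print(apply_rule({"CountryOfOrigin": "Germany"}, north_america_filter, north_america_action))  # None
```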

Runtime Operations

```csharp
// Create and configure a MessagingFactory to provision the TopicClient
MessagingFactorySettings runtimeSettings = new MessagingFactorySettings();
runtimeSettings.TokenProvider = TokenProvider.CreateSharedSecretTokenProvider(issuer, key);
MessagingFactory messagingFactory = MessagingFactory.Create(
    ServiceBusEnvironment.CreateServiceUri("sb", serviceNamespace, string.Empty), runtimeSettings);

// Create Topic Client for sending messages to the Topic
TopicClient client = messagingFactory.CreateTopicClient(topicName);

/***********************
 *** Send Operations ***
 ***********************/

// Prepare BrokeredMessage and corresponding properties
Order order = new Order();
order.OrderId = 42;
order.Products.Add("Kinect", 70.50M);
order.Products.Add("XBOX 360", 199.99M);
order.Total = order.Products["Kinect"] + order.Products["XBOX 360"];

// Set the body to the Order data contract
BrokeredMessage msg = new BrokeredMessage(order);

// Set properties for use in RuleDescription
msg.Properties.Add("CountryOfOrigin", "USA");
msg.Properties.Add("FulfillmentRegion", "");

// Send the message to the OrdersTopic
client.Send(msg);
client.Close();

/**************************
 *** Receive Operations ***
 **************************/

BrokeredMessage recdMsg;
Order recdOrder;

// Inventory Service Subscriber
SubscriptionClient inventoryServiceSubscriber = messagingFactory.CreateSubscriptionClient(topicName, "InventoryServiceSubscription", ReceiveMode.PeekLock);

// Read messages from the OrdersTopic until none arrives within 5 seconds
while ((recdMsg = inventoryServiceSubscriber.Receive(TimeSpan.FromSeconds(5))) != null)
{
    // Convert from BrokeredMessage to native Order
    recdOrder = recdMsg.GetBody<Order>();

    // Complete read, release and delete message from the fabric
    inventoryServiceSubscriber.Complete(recdMsg.LockToken);

    Console.ForegroundColor = ConsoleColor.Green;
    Console.WriteLine("Received Order {0} \n\t on {1} \n\t with Message Id {2} \n\t and Lock Token {3}.", recdOrder.OrderId, "Inventory Service Subscriber", recdMsg.MessageId, recdMsg.LockToken);
}
inventoryServiceSubscriber.Close();

// Credit Service Subscriber
SubscriptionClient creditServiceSubscriber = messagingFactory.CreateSubscriptionClient(topicName, "CreditServiceSubscription");

// Read the message from the OrdersTopic (may return null if no message arrives)
recdMsg = creditServiceSubscriber.Receive();

if (recdMsg != null)
{
    // Convert from BrokeredMessage to native Order
    recdOrder = recdMsg.GetBody<Order>();

    // Complete read, release and delete message from the fabric
    creditServiceSubscriber.Complete(recdMsg.LockToken);

    Console.ForegroundColor = ConsoleColor.Green;
    Console.WriteLine("Received Order {0} \n\t on {1} \n\t with Message Id {2} \n\t and Lock Token {3}.", recdOrder.OrderId, "Credit Service Subscriber", recdMsg.MessageId, recdMsg.LockToken);
}
creditServiceSubscriber.Close();

// Fulfillment Service Subscriber for the North America Fulfillment Service Subscription
SubscriptionClient northAmericaFulfillmentServiceSubscriber = messagingFactory.CreateSubscriptionClient(topicName, "NorthAmericaFulfillmentServiceSubscription");

// Read the message from the OrdersTopic for the North America Fulfillment Service Subscription
recdMsg = northAmericaFulfillmentServiceSubscriber.Receive(TimeSpan.FromSeconds(5));

if (recdMsg != null)
{
    // Convert from BrokeredMessage to native Order
    recdOrder = recdMsg.GetBody<Order>();

    // Complete read, release and delete message from the fabric
    northAmericaFulfillmentServiceSubscriber.Complete(recdMsg.LockToken);

    Console.ForegroundColor = ConsoleColor.Green;
    Console.WriteLine("Received Order {0} \n\t on {1} \n\t with Message Id {2} \n\t and Lock Token {3}.", recdOrder.OrderId, "North America Fulfillment Service Subscriber", recdMsg.MessageId, recdMsg.LockToken);
}
else
{
    Console.ForegroundColor = ConsoleColor.Yellow;
    Console.WriteLine("No messages for North America found.");
}

northAmericaFulfillmentServiceSubscriber.Close();
```

When running this sample, you’ll see that Order 42 is received on my Inventory, Credit and North America Fulfillment Service subscriptions.
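The sample depends on subscription rules (driven by the CountryOfOrigin and FulfillmentRegion properties) that are created elsewhere and are not shown in this excerpt. To illustrate the fan-out behavior in isolation, here is a minimal in-memory Python sketch. The class names and the filter predicates are hypothetical stand-ins for the real RuleDescriptions; this is not the Service Bus API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A filter decides, from a message's properties, whether a subscription gets a copy.
Filter = Callable[[Dict[str, str]], bool]


@dataclass
class Subscription:
    name: str
    matches: Filter
    queue: List[dict] = field(default_factory=list)


@dataclass
class Topic:
    subscriptions: List[Subscription] = field(default_factory=list)

    def send(self, body: dict, properties: Dict[str, str]) -> List[str]:
        """Copy the message to every subscription whose filter matches."""
        delivered = []
        for sub in self.subscriptions:
            if sub.matches(properties):
                sub.queue.append({"body": body, "properties": dict(properties)})
                delivered.append(sub.name)
        return delivered


def build_orders_topic() -> Topic:
    # Hypothetical rules: Inventory and Credit accept every order; the
    # North America subscription filters on the CountryOfOrigin property.
    north_america = {"USA", "Canada", "Mexico"}
    return Topic([
        Subscription("InventoryServiceSubscription", lambda p: True),
        Subscription("CreditServiceSubscription", lambda p: True),
        Subscription("northAmericaFulfillmentServiceSubscription",
                     lambda p: p.get("CountryOfOrigin") in north_america),
    ])
```

Sending an order whose CountryOfOrigin is "USA" through build_orders_topic() delivers a copy to all three subscriptions, while an order from another country would reach only the first two under these assumed rules. Each subscription holds its own copy, which is why completing the message on one subscriber does not remove it from the others.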

WCF

One of the great things about the WCF programming model is that it abstracts away much of the underlying communication detail. As a result, other than dropping in a new assembly and refactoring the binding and configuration, WCF code is not greatly affected by the API changes from the May/June CTP to GA.

As I mentioned, one thing that has changed is that the ServiceBusMessagingBinding has been renamed to NetMessagingBinding. I’ll be covering an end-to-end example of using the NetMessagingBinding in my upcoming article in CODE Magazine.

REST API

The REST API is key to delivering these new capabilities across a variety of client platforms, and it remains largely unchanged. One key change, however, is how message properties are handled: instead of an individual header for each property, there is now a single header with all of the properties JSON-encoded. Please refer to the updated REST API Reference doc for details. I’ll also be covering an end-to-end example of using the REST API to write to and read from a queue in my upcoming article in CODE Magazine.
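To make the "one JSON-encoded header" idea concrete, here is a minimal Python sketch that packs properties into a single header and decodes them again. The header name BrokerProperties, the example property names and the helper functions are assumptions for illustration; check the updated REST API Reference for the exact header and property contract. No HTTP request or authorization is shown.

```python
import json


def build_send_headers(properties, content_type="text/plain"):
    """Pack message properties into one JSON-encoded header (assumed shape)."""
    return {
        "Content-Type": content_type,
        # Assumed header name: all properties travel together as one JSON object
        # instead of one HTTP header per property.
        "BrokerProperties": json.dumps(properties, separators=(",", ":")),
    }


def read_properties(headers):
    """Decode the JSON-encoded properties header from a received message."""
    return json.loads(headers.get("BrokerProperties", "{}"))
```

For example, build_send_headers({"MessageId": "42", "Label": "Order"}) yields a single header whose value is the compact JSON object, and read_properties turns it back into a dictionary on the receiving side.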

More Coming Soon

As I mentioned, in my upcoming article in CODE Magazine I’ll cover the Why, What, and How behind Azure AppFabric Service Bus Brokered Messaging, including end-to-end walkthroughs with the .NET Client API, the REST API and the WCF binding. The November/December issue should be on newsstands (including Barnes and Noble) or in your mailbox toward the end of October. You can also find the article online at http://code-magazine.com

Resources

You can learn more about this exciting release as well as download the GA SDK version 1.5 by visiting the following resources:

- Azure AppFabric SDK 1.5: http://www.microsoft.com/download/en/details.aspx?id=27421

- Clemens Vasters on the May CTP: http://vasters.com/clemensv/2011/05/16/Introducing+The+Windows+Azure+AppFabric+Service+Bus+May+2011+CTP.aspx

- Great video by Clemens Vasters on Brokered Messaging: http://vasters.com/clemensv/2011/06/11/Understanding+Windows+Azure+AppFabric+Queues+And+Topics.aspx

- David Ingham on Queues: http://blogs.msdn.com//b/appfabric/archive/2011/05/17/an-introduction-to-service-bus-queues.aspx

- David Ingham on Topics: http://blogs.msdn.com//b/appfabric/archive/2011/05/25/an-introduction-to-service-bus-topics.aspx

- My Introduction to Queues: http://rickgaribay.net/archive/2011/05/17/appfabric-service-bus-v2-ctp.aspx

- My Introduction to Topics: http://rickgaribay.net/archive/2011/05/31/exploring-appfabric-service-bus-v2-may-ctp-topics.aspx


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Brian Swan (@brian_swan) explained How to Get Diagnostics Info for Azure/PHP Applications–Part 1 in a 9/19/2011 post to the Windows Azure’s Silver Lining blog:

In this post, I’ll look at why getting diagnostic data for applications in the cloud is important for all applications (not just PHP), provide a short overview of what Windows Azure Diagnostics is, and show how to get diagnostics data for PHP applications (although much of what I look at is applicable regardless of language). In this post I’ll focus on how to use a configuration file (the diagnostics.wadcfg file) to get diagnostics, while in Part 2 I’ll look at how to do this programmatically.

Why get diagnostic data?

There are lots of reasons to collect and analyze data about applications running in the cloud (or any application, for that matter). One obvious reason is to help with troubleshooting. Without data about your application, it’s very difficult to figure out why something is broken when it breaks. However, gathering diagnostic data for applications running in the cloud takes on added importance. Without diagnostic data, it becomes difficult (perhaps impossible) to take advantage of scalability, a basic value proposition of the cloud. In order to know when to add or subtract instances from a deployment, it is essential to know how your application is performing. This is what Windows Azure Diagnostics allows you to do. (Look for more posts soon about how to scale an application based on diagnostics.)

What is Windows Azure Diagnostics?

In understanding how to get diagnostic data for my PHP applications running in Azure, it first helped me to understand what this thing called “Windows Azure Diagnostics” is. So, here’s the 30,000-foot view that, hopefully, will provide context for the how-to section that follows…

Windows Azure Diagnostics is essentially an Azure module that monitors the performance (in the broad sense) of role instances. When a role imports the Diagnostics module (which is specified in the ServiceDefinition.csdef file for a role), the module does two things:

- The Diagnostics module creates a configuration file for each role instance and stores these files in your Blob storage (in a container called wad-control-container). Each file contains information such as what diagnostic information should be gathered, how often it should be gathered, and how often it should be transferred to your Table storage. You can specify these settings (and others) in a diagnostics.wadcfg file that is part of your deployment (the Diagnostics module will look for this file when it starts). Regardless of whether you include the diagnostics.wadcfg file with your deployment, you can create or change these settings programmatically after deployment (the Diagnostics module will create and/or update the configuration files in Blob storage).

- According to settings in the configuration files in Blob storage, the Diagnostics module begins writing data to local storage and transferring it to Table storage (to enable the transfer you have to supply storage connection string information in your ServiceConfiguration.cscfg file).

So that’s the high-level view (import a module, configure via a file or programmatically, diagnostics info is written to your Azure storage account). Now for the details…

How to get diagnostic data using a configuration file

There are two basic ways you can get diagnostics data for a Windows Azure application: using a configuration file and programmatically. In this post, I’ll examine how to use a configuration file (I’ll look at how to get diagnostics programmatically in Part 2). I’ll assume you have followed this tutorial, Build and Deploy a Windows Azure PHP Application, and have a ready-to-package PHP application.

Note: Although I will walk through this configuration in the context of a PHP application, the configuration steps are the same for any Azure deployment, regardless of language.

Step 1: Import the Diagnostics Module

To specify that a role should import the Diagnostics module, you need to edit your ServiceDefinition.csdef file. After you run the default scaffolder in the Windows Azure SDK for PHP (see the tutorial mentioned above), you will have a skeleton Azure PHP application whose directory structure includes the ServiceDefinition.csdef, ServiceConfiguration.cscfg and diagnostics.wadcfg files.

Open the ServiceDefinition.csdef file in your favorite XML editor and make sure the <Imports> element has this child element:

<Import moduleName="Diagnostics"/>

That’s all that’s necessary to import the Diagnostics module.

Step 2: Enable transfer to Azure storage

To allow the Diagnostics module to transfer data from local storage to your Azure storage account, you need to provide your storage account name and key in the ServiceConfiguration.cscfg file. Again, in your favorite XML editor, open this file and make sure the <ConfigurationSettings> element has the following child element:

<Setting name="Microsoft.WindowsAzure.Plugins.Diagnostics.ConnectionString" value="DefaultEndpointsProtocol=https;AccountName=your_account_name;AccountKey=your_account_key"/>

Obviously, you’ll have to fill in your storage account name and key.

Step 3: Edit the configuration file (diagnostics.wadcfg)

Now you can specify what diagnostic information you’d like to collect. The scaffolded project includes a diagnostics.wadcfg file; open it in an XML editor and you’ll begin to see exactly what you can configure. I’ll point out some of the important pieces and provide one example.

In the root element (<DiagnosticMonitorConfiguration>), you can configure two settings with the configurationChangePollInterval and overallQuotaInMB attributes:

- The frequency with which the Diagnostic Monitor looks at the configuration files in your blob storage for updates (see the What is Windows Azure Diagnostics section above for more information) is controlled by the configurationChangePollInterval.

- The amount of local storage set aside for storing diagnostic information is controlled by the overallQuotaInMB attribute. When this quota is reached, old entries are deleted to make room for new ones.
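The "delete the oldest entries to make room" behavior of overallQuotaInMB can be modeled as a size-bounded FIFO buffer. The Python sketch below is a conceptual illustration only (the class name is hypothetical, and sizes are in bytes rather than megabytes); it is not how the Diagnostics Monitor is actually implemented.

```python
from collections import deque


class DiagnosticBuffer:
    """Hypothetical model of a local diagnostics store with a size quota.

    When adding an entry would exceed the quota, the oldest entries are
    dropped first to make room for the new one.
    """

    def __init__(self, quota_bytes):
        self.quota_bytes = quota_bytes
        self.entries = deque()
        self.used = 0

    def write(self, entry):
        size = len(entry.encode("utf-8"))
        if size > self.quota_bytes:
            raise ValueError("entry is larger than the whole quota")
        # Evict the oldest entries until the new one fits.
        while self.used + size > self.quota_bytes:
            oldest = self.entries.popleft()
            self.used -= len(oldest.encode("utf-8"))
        self.entries.append(entry)
        self.used += size
```

With a 10-byte quota, writing "aaaa", "bbbb" and then "cccc" evicts "aaaa", leaving the two newest entries. The practical consequence is the same as for the real quota: data that has not been transferred to storage before the buffer fills is lost.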

In the example here, these settings are 1 minute and 4GB respectively:

<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration" configurationChangePollInterval="PT1M" overallQuotaInMB="4096">

Note: If you want to increase the value of the overallQuotaInMB setting, you may also need to edit your ServiceDefinition.csdef file prior to deployment.

The following table describes what each of the child elements in the default diagnostics.wadcfg file does. (For more detailed information, see Windows Azure Diagnostics Configuration Schema.)

- <DiagnosticInfrastructureLogs>: Logs information about the diagnostic infrastructure, the RemoteAccess module, and the RemoteForwarder module.

- <Directories>: Defines the buffer configuration for file-based logs that you can define.

- <Logs>: Defines the buffer configuration for basic Windows Azure logs.

- <PerformanceCounters>: Logs information about how well the operating system, application, or driver is performing. For a list of performance counters that you can collect, see List of Performance Counters for Windows Azure Web Roles.

- <WindowsEventLog>: Logs events that are typically used for troubleshooting application and driver software.

The bufferQuotaInMB attribute on these elements controls how much local storage is set aside for each type of diagnostic data; the sum of these values cannot exceed the value of the overallQuotaInMB attribute. If the value is set to zero, no cap is set for that particular diagnostic, but the total collected at any one time will still not exceed the overall quota.
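The quota rule above can be checked before you package a deployment. Here is a minimal Python sketch, assuming you have already read the attribute values out of diagnostics.wadcfg into a dictionary; the function name is hypothetical. A value of zero is treated as "no per-diagnostic cap", so it adds nothing to the explicit sum.

```python
def validate_buffer_quotas(overall_quota_mb, buffer_quotas_mb):
    """Check per-element bufferQuotaInMB values against overallQuotaInMB.

    buffer_quotas_mb maps an element name (for example "PerformanceCounters")
    to its bufferQuotaInMB value. Returns a list of problems (empty if valid).
    """
    problems = []
    for name, quota in buffer_quotas_mb.items():
        if quota < 0:
            problems.append(f"{name}: bufferQuotaInMB must not be negative")
    # Zero means "uncapped"; those buffers are still bounded by the overall quota.
    explicit_total = sum(q for q in buffer_quotas_mb.values() if q > 0)
    if explicit_total > overall_quota_mb:
        problems.append(
            f"sum of bufferQuotaInMB ({explicit_total}) exceeds "
            f"overallQuotaInMB ({overall_quota_mb})"
        )
    return problems
```

For the 4096 MB overall quota used in this post, {"PerformanceCounters": 0, "Logs": 1024} passes, while {"Logs": 3000, "Directories": 2000} is reported as exceeding the overall quota.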

The scheduledTransferPeriod attribute controls the interval at which information is transferred to your Azure storage account.
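The time-valued attributes (configurationChangePollInterval, scheduledTransferPeriod and sampleRate) take ISO 8601 duration strings such as PT1M (one minute) and PT5M (five minutes). If you generate or sanity-check these values from a script, a parser for the day/time subset is enough. The Python helper below is a hypothetical sketch, not part of any Azure SDK, and it deliberately rejects years and months.

```python
import re
from datetime import timedelta

# Day/time subset of ISO 8601 durations, e.g. "PT1M", "PT5M", "PT1H30M", "P1D".
_DURATION = re.compile(
    r"^P(?:(?P<days>\d+)D)?"
    r"(?:T(?:(?P<hours>\d+)H)?(?:(?P<minutes>\d+)M)?"
    r"(?:(?P<seconds>\d+(?:\.\d+)?)S)?)?$"
)


def parse_duration(text):
    """Convert a duration such as "PT5M" into a timedelta."""
    match = _DURATION.match(text)
    # Reject strings with no components at all ("P", "PT") or a dangling "T".
    if not match or text in ("P", "PT") or text.endswith("T"):
        raise ValueError(f"unsupported duration: {text!r}")
    parts = {name: float(value) for name, value in match.groupdict().items() if value}
    return timedelta(**parts)
```

For instance, parse_duration("PT5M").total_seconds() gives 300, the five-minute transfer period used in the example configuration.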

As an example, this simple configuration file collects information about specified performance counters (the sampleRate attribute specifies how often the counter should be collected):

<DiagnosticMonitorConfiguration xmlns="http://schemas.microsoft.com/ServiceHosting/2010/10/DiagnosticsConfiguration"
    configurationChangePollInterval="PT1M"
    overallQuotaInMB="4096">
  <PerformanceCounters bufferQuotaInMB="0" scheduledTransferPeriod="PT5M">
    <PerformanceCounterConfiguration counterSpecifier="\Processor(_Total)\% Processor Time" sampleRate="PT1M" />
    <PerformanceCounterConfiguration counterSpecifier="\Memory\Available Mbytes" sampleRate="PT1M" />
    <PerformanceCounterConfiguration counterSpecifier="\TCPv4\Connections Established" sampleRate="PT1M" />
  </PerformanceCounters>
</DiagnosticMonitorConfiguration>

Step 4: Package and deploy your project

Now, you are ready to package and deploy your project. Instructions for doing so are here: Build and Deploy a Windows Azure PHP Application.

Step 5: Collect diagnostic information

After you deploy your application to Windows Azure, the Diagnostics module will begin writing data to your storage account (it may take several minutes before you see the first entry). In the case of performance counters (as shown in the example configuration file above), diagnostic information is written to a table called WADPerformanceCountersTable in Table storage. To get this information, you can query the table using the Windows Azure SDK for PHP like this:

// Assumes the Windows Azure SDK for PHP is on the include_path
require_once 'Microsoft/WindowsAzure/Storage/Table.php';

define("STORAGE_ACCOUNT_NAME", "Your_storage_account_name");
define("STORAGE_ACCOUNT_KEY", "Your_storage_account_key");

// Connect to Table storage and read the rows written by the Diagnostics module
$table = new Microsoft_WindowsAzure_Storage_Table('table.core.windows.net', STORAGE_ACCOUNT_NAME, STORAGE_ACCOUNT_KEY);
$metrics = $table->retrieveEntities('WADPerformanceCountersTable');

// Print one numbered line per counter sample
$i = 1;
foreach ($metrics as $m) {
    echo $i . ". " . $m->DeploymentId . " - " . $m->RoleInstance . " - " . $m->CounterName . ": " . $m->CounterValue . "<br/>";
    $i++;
}

Of course, getting this data can be somewhat trickier if you have several roles writing data to your storage account. And this raises the question: what do I do with this data now that I’ve got it? So, it looks like I have plenty to cover in future posts.
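When several roles or instances write to the same storage account, a straightforward first step is to group the rows by RoleInstance and CounterName and summarize each group. The sketch below uses Python rather than PHP and assumes the entities have already been retrieved into plain dictionaries carrying the RoleInstance, CounterName and CounterValue fields printed by the query above; the function name is hypothetical.

```python
from collections import defaultdict


def summarize_counters(rows):
    """Average CounterValue per (RoleInstance, CounterName) pair."""
    totals = defaultdict(lambda: [0.0, 0])  # key -> [running sum, sample count]
    for row in rows:
        key = (row["RoleInstance"], row["CounterName"])
        totals[key][0] += float(row["CounterValue"])
        totals[key][1] += 1
    return {key: total / count for key, (total, count) in totals.items()}
```

Averages per instance make it easy to spot one busy instance among several, which is the kind of signal you would later feed into scaling decisions.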

Michael Washam (MWashamMS) described Windows Azure Diagnostics and PowerShell – Performance Counters in a 9/19/2011 post:

With the introduction of Windows Azure PowerShell Cmdlets 2.0, we have added a lot of functionality for managing Windows Azure Diagnostics. This is the second article in a series that covers various aspects of diagnostics and PowerShell; the previous article covers using tracing and the Windows Azure Log.

As in the other articles, you will need to add the PowerShell snap-in (or module):

Add-PsSnapin WAPPSCmdlets

Next, I have a handy helper function called GetDiagRoles that I use for all of my diagnostic examples:

Initialization and Helper Function

$storageAccountName = "YourStorageAccountName"
$storageAccountKey = "YourStorageAccountKey"
$deploymentSlot = "Production"
$serviceName = "YourHostedService"
$subscriptionId = "YourSubscriptionId"

# Thumbprint for your cert goes below
$mgmtCert = Get-Item cert:\CurrentUser\My\D7BECD4D63EBAF86023BB4F1A5FBF5C2C924902A

function GetDiagRoles
{
    Get-HostedService -ServiceName $serviceName -SubscriptionId $subscriptionId -Certificate $mgmtCert | `
        Get-Deployment -Slot $deploymentSlot | `
        Get-DiagnosticAwareRoles -StorageAccountName $storageAccountName -StorageAccountKey $storageAccountKey
}

I’ve also written a helper function that creates a new PerformanceCounterConfiguration object. You will need an array of these to pass to the Set-PerformanceCounter cmdlet. The function takes the performance counter specifier (more on this shortly) and the sample rate, in seconds, for each counter.

Helper Function that Initializes a Counter Object

function CPC($specifier, $SampleRate)
{
    # Build one PerformanceCounterConfiguration for Set-PerformanceCounter
    $perfCounter = New-Object Microsoft.WindowsAzure.Diagnostics.PerformanceCounterConfiguration
    $perfCounter.CounterSpecifier = $specifier
    $perfCounter.SampleRate = [TimeSpan]::FromSeconds($SampleRate)
    return $perfCounter
}

From there, it is up to you to pass in the performance counters you are interested in. I’ve written another function that wraps up the work of creating a PowerShell array, populating it with the counters I am interested in, and setting them for the diagnostic-aware role I pass in from GetDiagRoles. Note: I’m also comparing the current role name to the name of one of my web roles, so I only add web-related counters on the instances I care about. I retrieved the counter names by running typeperf.exe /qx while logged into my Windows Azure roles via RDP. This way I can get counters specific to the machines in the service (and to processes such as RulesEngineService.exe, my sample NT service). Finally, the function calls the Set-PerformanceCounter cmdlet with the array of performance counters and configures diagnostics to transfer them to Windows Azure Storage every 15 minutes.

Helper Function that Configures Counters for a Role

function SetPerfmonCounters
{
    $sampleRate = 15
    $counters = @()
    $counters += CPC "\Processor(_Total)\% Processor Time" $sampleRate
    $counters += CPC "\Memory\Available MBytes" $sampleRate
    $counters += CPC "\PhysicalDisk(*)\Current Disk Queue Length" $sampleRate
    $counters += CPC "\System\Context Switches/sec" $sampleRate

    # Process-specific counters (retrieved via typeperf.exe /qx)
    $counters += CPC "\Process(RulesEngineService)\% Processor Time" $sampleRate
    $counters += CPC "\Process(RulesEngineService)\Private Bytes" $sampleRate

    # Collect the diagnostic-aware role piped in from GetDiagRoles so its
    # RoleName can be checked ($input can only be enumerated once).
    $roles = @($input)
    if ($roles[0].RoleName -eq "SomeWebRole")
    {
        $counters += CPC "\W3SVC_W3WP(*)\Requests / Sec" $sampleRate
        $counters += CPC "\ASP.NET\Requests Queued" $sampleRate
        $counters += CPC "\ASP.NET\Error Events Raised" $sampleRate
        $counters += CPC "\ASP.NET\Request Wait Time" $sampleRate

        # Process-specific counters (retrieved via typeperf.exe /qx)
        $counters += CPC "\Process(W3WP)\% Processor Time" $sampleRate
        $counters += CPC "\Process(W3WP)\Private Bytes" $sampleRate
    }
    $roles | Set-PerformanceCounter -PerformanceCounters $counters -TransferPeriod 15
}

Linking all of this together to configure performance logging is now pretty simple. In the snippet below, I call GetDiagRoles, which returns a collection of all of the diagnostic-aware roles in my service, and then pipe each role individually to the SetPerfmonCounters function created previously.

Set Counters for each Role

GetDiagRoles | foreach { $_ | SetPerfmonCounters }

Now I am successfully logging perfmon data and transferring it to storage. What’s next? Analysis and cleanup, of course! To analyze the data, we have added another cmdlet, Get-PerfmonLog, which downloads the data and optionally converts it to .blg for analysis. The snippet below creates a perfmon log for each role in your service in the BLG format. Note that Get-PerfmonLog also supports -From and -To (or -FromUTC and -ToUTC), so you can selectively download performance counter data.

Download Perfmon Logs for Each Role

GetDiagRoles | foreach {
    $Filename = "c:\DiagData\" + $_.RoleName + "perf.blg"
    $_ | Get-PerfmonLog -LocalPath $Filename -Format BLG
}

Once the data is downloaded, you can use Clear-PerfmonLog to clean up the previously downloaded perfmon counters from Windows Azure Storage. Note that all of the Clear-* diagnostic data cmdlets support -From and -To parameters, so you can control which data to delete.

Delete Perfmon Data from Storage for Each Role

GetDiagRoles | foreach {
    $_ | Clear-PerfmonLog -From "9/19/2011 6:00:00 AM" -To "9/19/2011 9:00:00 AM"
}

Finally, one last example: if you ever need to completely clear out and reset your perfmon counters, there is a -Clear switch that gives you this functionality.

Reset Perfmon Logs for Each Role

GetDiagRoles | foreach { $_ | Set-PerfmonLog -Clear }

See Michael’s earlier post below.

HPC in the Cloud reported Microsoft Platform Powers Mission-Critical Operations in Financial Services on 9/19/2011:

TORONTO, September 19 -- Today at SIBOS 2011, Microsoft Corp. announced that a growing number of financial services customers are benefiting from the significant gains in agility, operational efficiency and cost savings achieved by moving to the high-performance Windows Server operating system and Microsoft SQL Server database.

These customers have not only reduced the cost of running their core processes, they have also realized substantial benefits by making these core processes part of a dynamic IT infrastructure that enables them to understand and serve customers better, bring new products to market more quickly, continually improve operations, and collaborate with an evolving set of partners in their global value chains.

"Microsoft is making a long-term commitment to supporting the mission-critical business applications of our financial services customers," said Karen Cone, general manager, Worldwide Financial Services, Microsoft. "Our customers are testament to this commitment to delivering a solid foundation for mission-critical workloads, with the dependability, performance and flexibility required to achieve sustainable competitive advantage in today's financial services industry."

Legacy System Modernization

Skandinavisk Data Center (SDC), which services the banking businesses of more than 150 financial institutions in Denmark, Sweden, Norway and the Faroe Islands, is expecting to save $20 million annually by moving its core banking system from its mainframe platform to Windows Server and SQL Server. With client growth and cost reduction at the forefront of SDC's business imperatives, it required a solution to help minimize spending while retaining and gaining new member banks. By migrating from the mainframe to a Windows platform, it is estimated that SDC will reduce operational costs for its core banking system by 30 percent, giving it a competitive edge. The migration of online transactions in the core banking system was completed this fall, reducing operational costs by more than 20 percent annually. As the next and final step, the systems database will be migrated from DB2 to SQL Server.

In addition, with partner Temenos Group AG, Microsoft is supporting banks across the globe with the TEMENOS T24 (T24) core banking system optimized for SQL Server. Microsoft and Temenos recently completed a high-performance benchmark that measured the high-end scalability of T24 on SQL Server and Windows Server 2008 Datacenter. The model bank environment, created to reflect tier-one retail banking workloads, consisted of 25 million accounts and 15 million customers across 2,000 branches. At peak performance, the system processed more than 3,400 transactions per second in online testing and averaged more than 5,200 interest accrual and capitalizations per second during close of business processing. The testing demonstrated near-linear scalability (95 percent) in building up toward the final hardware configuration. Banks such as Sinopac in Taiwan and Rabobank Australia and New Zealand are among the first to benefit from expertise and capabilities developed at the Microsoft and Temenos competency center. Furthermore, Microsoft and Temenos recently announced that Mexican financial institutions are live on T24 on Microsoft's cloud platform, Windows Azure. Banks that select T24 on a Microsoft platform for on-premises deployment today can therefore be confident of a road map to the cloud. [Emphasis added.]

In the past, only mainframes could run and maintain mission-critical trading solutions that require a lot from the database infrastructure: high-availability, redundancy, transaction and data integrity, consistency, predictability, and the balancing of proactive prevention with effective recovery. One such solution, the SunGard Front Arena, is a global capital markets solution that delivers electronic trading and position control across multiple asset classes and business lines. Integrating sales and distribution, trading and risk management, and settlement and accounting, Front Arena helps capital markets and businesses around the world improve performance, transparency and automation. Front Arena was designed to handle very large data flows and, in high-volume environments such as equities exchange trading, Front Arena customers routinely enter as many as 130,000 trades per day. As a result, in March and April 2011, engineers from SunGard and Microsoft worked together to confirm the performance and scalability of Front Arena on Microsoft SQL Server 2008 R2 at the Microsoft Platform Adoption Center in Redmond, Wash. The team designed a benchmark test to emulate a real-world, enterprise-class financial workload, running the software on industry-standard hardware typically found in datacenters today. Front Arena running on SQL Server 2008 R2 exceeded the goals set by the team, confirming that SQL Server 2008 R2 delivers the performance, scalability and value companies demand from their trading platform.

Mitigating Risk

The Financial Crime Risk Management solution suite from Fiserv Inc., built on the Microsoft platform, provides a comprehensive portfolio of fraud risk mitigation and anti-money laundering capabilities. AML Manager, a key component of the solution suite, will adopt the newest release of Microsoft SQL Server, code-named "Denali," in the future.

Transforming the Reconciliation Process

Saxo Bank, a specialist in trading and investment, has determined that its transaction volumes have increased with the help of SunGard's Ambit Reconciliation solution, deployed on Windows Server and SQL Server. The solution provides a real-time matching and reconciliation platform on which Saxo Bank has consolidated its reconciliation and exception management operations across all trading platforms at the bank. According to Saxo Bank, it is now able to process more transactions per day compared with four years ago, with fewer staff to manage the increase in transaction volumes.

Improving Payments Efficiency and Reliability

In a recent report based on its Uptime Meter, a six-month availability aggregate, Stratus demonstrated that its fault-tolerant servers running Windows Server and S1 Corp.'s payments solutions are achieving "six nines" (99.9999 percent) of availability. Both hardware- and software-related incidents are included in the measurement. The combination of mission-critical technologies from S1, Stratus and Microsoft provides a compelling alternative to more costly mainframe solutions.

The success of Microsoft's mission-critical strategy depends not only on the technology and guidance provided by Microsoft, but also on the products and services provided by its large ecosystem of partners. A new generation of solutions from independent software vendors -- across banking, capital markets and insurance -- combined with Microsoft's development tools and technologies, is now delivering the dynamic environment needed to realize the benefits of next-generation, mission-critical platforms from on-premises to the cloud.
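To put the "six nines" figure from the Stratus report in concrete terms: 99.9999 percent availability leaves only 0.0001 percent of the time for outages. The short Python helper below is a generic calculation (not part of the report) that converts an availability percentage into the maximum downtime it permits over a period.

```python
def allowed_downtime_seconds(availability_percent, period_days):
    """Maximum downtime, in seconds, permitted by an availability figure."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * period_days * 24 * 60 * 60
```

allowed_downtime_seconds(99.9999, 365) comes to roughly 31.5 seconds per year; over a six-month window like the Uptime Meter's (about 182.5 days), it is roughly 15.8 seconds.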

Michael Washam (MWashamMS) described Getting Started with Windows Azure Diagnostics and PowerShell in a 9/16/2011 post from the //BUILD/ Windows conference:

In my BUILD session Monitoring and Troubleshooting Windows Azure Applications I mention several code samples that make life much easier for implementing diagnostics with the Windows Azure PowerShell Cmdlets 2.0. This is the first in a series that walks you through configuring end-to-end diagnostics for your Windows Azure Application with PowerShell.

To get started with the Windows Azure PowerShell Cmdlets you will first need to add the WAPPSCmdlets snapin to your script:

Add-PsSnapin WAPPSCmdlets

After the snapin is loaded, you will need to get access to the roles that you want to configure or retrieve diagnostic information about.

$storageAccountName = "YourStorageAccountName"
$storageAccountKey = "YourStorageAccountKey"
$deploymentSlot = "Production"
$serviceName = "YourHostedService"
$subscriptionId = "YourSubscriptionId"

# Thumbprint for your cert goes below
$mgmtCert = Get-Item cert:\CurrentUser\My\D7BECD4D63EBAF86023BB4F1A5FBF5C2C924902A

function GetDiagRoles
{
    Get-HostedService -ServiceName $serviceName -SubscriptionId $subscriptionId -Certificate $mgmtCert | `
        Get-Deployment -Slot $deploymentSlot | `
        Get-DiagnosticAwareRoles -StorageAccountName $storageAccountName -StorageAccountKey $storageAccountKey
}

GetDiagRoles performs the following steps:

1) Retrieves your hosted service

2) Retrieves the correct deployment (staging or production)

3) Returns a collection of all of the roles that have diagnostics enabled.

For example, the following code will print all of the role names that are returned:

GetDiagRoles | foreach { write-host $_.RoleName }

Note: $_ is an automatic variable that refers to the “current” object in the pipeline.

Of course we want to configure diagnostics and not just print out role names.

The following example sets the diagnostic system to transfer any trace logs that have a log level of Error to storage every 15 minutes:

GetDiagRoles | foreach {
    # Sets Windows Azure Diagnostics to transfer any trace messages from your code
    # that are errors to storage every 15 minutes.
    $_ | Set-WindowsAzureLog -LogLevelFilter Error -TransferPeriod 15
}

This makes it very useful to instrument your code to capture errors, or simply to trace its execution when looking for logic errors.

try
{
    // something that could throw an exception
}
catch (Exception exc)
{
    System.Diagnostics.Trace.TraceError(DateTime.Now + " " + exc.ToString());
}

How do you retrieve the data? Once the diagnostic system has had time to transfer the trace logs to storage, it’s as simple as the following examples:

# Dump all trace logs in storage to the console
GetDiagRoles | Get-WindowsAzureLog

# Dump all trace logs in storage that
# have "exception" in the message to the console
GetDiagRoles | Get-WindowsAzureLog | `
    Where { $_.Message -like "*exception*" }

# Save all trace logs to the file system (as .csv)
GetDiagRoles | foreach {
    $FileName = "c:\DiagData\" + $_.RoleName + "azurelogs.csv"
    $_ | Get-WindowsAzureLog -LocalPath $FileName
}

Of course, there are -From and -To arguments for all of the Get-* diagnostic cmdlets, so you can filter by time range.
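Conceptually, the log-level filter, the -From/-To arguments and the Where -like clause all narrow the same set of trace records. The Python sketch below models their combined effect on records that have already been downloaded. The record shape (time, level, message) and the numeric severity ordering, in which an Error filter also keeps Critical entries, are assumptions for illustration, not the cmdlets’ implementation.

```python
import fnmatch
from datetime import datetime

# Assumed severity order: a lower number means more severe.
LEVELS = {"Critical": 1, "Error": 2, "Warning": 3, "Information": 4, "Verbose": 5}


def filter_logs(records, min_level="Error", start=None, end=None, pattern="*"):
    """Keep records at or above min_level, inside [start, end], whose message
    matches a case-insensitive wildcard pattern (like PowerShell's -like)."""
    threshold = LEVELS[min_level]
    result = []
    for rec in records:
        if LEVELS[rec["level"]] > threshold:
            continue  # less severe than the filter level
        if start is not None and rec["time"] < start:
            continue
        if end is not None and rec["time"] > end:
            continue
        if not fnmatch.fnmatchcase(rec["message"].lower(), pattern.lower()):
            continue
        result.append(rec)
    return result
```

For example, filter_logs(records, "Error", datetime(2011, 9, 19, 6), datetime(2011, 9, 19, 9), "*exception*") returns only Error or Critical records from that three-hour window that mention an exception.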

A few other goodies we have added are the ability to clear your diagnostic settings (reset them to none) and the ability to clean out your diagnostic storage:

# Clear all settings for the Windows Azure Log
GetDiagRoles | foreach { $_ | Set-WindowsAzureLog -Clear }

# Clear all data for the Windows Azure Log
Clear-WindowsAzureLog -StorageAccountKey $storageAccountKey -StorageAccountName $storageAccountName

Look for more posts from me on more advanced Windows Azure diagnostics, such as perfmon logs and file system logging.

Michael Washam (MWashamMS) posted Announcing the release of Windows Azure Platform PowerShell Cmdlets 2.0 from the //BUILD/ Windows conference on 9/16/2011:

Even while we are here at BUILD we are working hard to make deploying and managing your Windows Azure applications simpler. We have made some significant improvements to the Windows Azure Platform PowerShell Cmdlets and we are proud to announce we are releasing them today on CodePlex: http://wappowershell.codeplex.com/releases.

In this release we focused on the following scenarios:

- Automation of Deployment Scenarios

- Windows Azure Diagnostics Management

- Consistency and Simpler Deployment

As part of making the cmdlets more consistent and easier to deploy, we have renamed a few cmdlets and enhanced others with the intent of following the PowerShell cmdlet design guidelines much more closely. From a deployment perspective, we have merged the Access Control Service PowerShell Cmdlets with the existing Windows Azure PowerShell Cmdlets so that both are in a single installation.

In addition to those changes we have added quite a few new and powerful cmdlets in this release:

Toddy Mladenov posted Demystifying physicalDirectory or How to Configure the Site Entry in the Service Definition File for Windows Azure on 9/11/2011 (missed when posted):

If you have played a bit more with site configuration in Windows Azure, you may have discovered some inconsistent behavior between what Visual Studio does and what the cspack.exe command line does when it comes to the physicalDirectory attribute. I certainly did! Here is the problem I encountered while trying to deploy PHP on Windows Azure.

Project Folder Structure

I was following the instructions on Installing PHP on Windows Azure leveraging Full IIS Support but decided to leverage the help of Visual Studio instead of building the package by hand. Not a good idea for this particular scenario :( After creating my cloud solution in Visual Studio, I ended up with the following folder structure:

+ PHPonAzureSol
    + PHPonAzure
        …
        - ServiceConfiguration.cscfg
        - ServiceDefinition.csdef
    + PHPRole
        …
        + bin
            - install-php.cmd
            - install-php-azure.cmd
        + PHP-Azure
            - php-azure.dll
        + Sites
            + PHP
                - index.php
        + WebPI-cmd
            …

Where:

- PHPonAzureSol was my VS solution folder;

- PHPonAzure was my VS project folder containing the CSDEF and CSCFG files; and

- PHPRole was my VS project folder containing the code for my web role.

The PHPRole folder contained the WebPI command line tool needed to install PHP in the cloud, stored in the WebPI-cmd subfolder; the PHP extensions for Azure in the PHP-Azure subfolder; the installation scripts in the bin subfolder; and, most importantly, my PHP pages in the Sites\PHP subfolder (in this case I had a simple index.php page containing phpinfo()).

Configuring Site Entry in the CSDEF File

Of course, my goal was to configure the site to point to the folder where my PHP files were stored. In this particular case this was the PHPonAzureSol\PHPRole\Sites\PHP folder, if you follow the structure above. This is simply done by adding the physicalDirectory attribute to the Site tag in CSDEF. Here is what my Site tag looked like:

<Site name="Web" physicalDirectory="..\PHPRole\Sites\PHP">
  <Bindings>
    <Binding name="Endpoint1" endpointName="Endpoint1" />
  </Bindings>
</Site>
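If you want to check which Site entries (and physicalDirectory values) a CSDEF actually declares before packaging, a few lines of script can list them. The following is a minimal, hypothetical Python sketch using the standard xml.etree.ElementTree module; the trimmed-down sample CSDEF is made up around the Site tag above, and matching on local tag names (ignoring the XML namespace) is a simplifying assumption.

```python
"""List the <Site> entries declared in a CSDEF (hypothetical helper)."""
from __future__ import annotations

import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    # ElementTree reports namespaced tags as "{namespace}Name"; keep only "Name".
    return tag.rsplit("}", 1)[-1]


def list_sites(csdef_xml: str) -> list[tuple[str | None, str | None]]:
    """Return (name, physicalDirectory) for every <Site>; None if an attribute is absent."""
    root = ET.fromstring(csdef_xml)
    return [
        (elem.get("name"), elem.get("physicalDirectory"))
        for elem in root.iter()
        if local_name(elem.tag) == "Site"
    ]


if __name__ == "__main__":
    # Trimmed-down, hypothetical CSDEF wrapped around the Site tag shown above.
    sample = r"""<ServiceDefinition name="PHPonAzure"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition">
  <WebRole name="PHPRole">
    <Sites>
      <Site name="Web" physicalDirectory="..\PHPRole\Sites\PHP">
        <Bindings>
          <Binding name="Endpoint1" endpointName="Endpoint1" />
        </Bindings>
      </Site>
    </Sites>
  </WebRole>
</ServiceDefinition>"""
    for name, path in list_sites(sample):
        print(f"{name}: {path}")  # Web: ..\PHPRole\Sites\PHP
        if name == "Web" and path:
            # Per the 9-12-2011 update below, VS ignored physicalDirectory for a site named "Web".
            print("  note: consider renaming this site if you package with Visual Studio")
```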

My expectation was that with this setting in CSDEF, IIS would be configured to point to the content that comes from the physicalDirectory folder. Hence, if I typed the URL of my Windows Azure hosted service, I should be able to see the index.php page (i.e. http://[my-hosted-service].cloudapp.net should point to my PHP code).

Visual Studio handling of physicalDirectory attribute

Of course, when I used Visual Studio to pack and deploy my Web Role I was unpleasantly surprised. It seems Visual Studio ignores the physicalDirectory attribute in your CSDEF file and points the site to your Web Role’s approot folder (or the content of the PHPRole folder, if you follow the structure above). Thus, if I wanted to access my PHP page, I had to type the following URL: http://[my-hosted-service].cloudapp.net/Sites/PHP/index.php

Not exactly what I wanted :(

The reason for this is that Visual Studio calls cspack.exe with additional options (either /sitePhysicalDirectories or /sites) that override the physicalDirectory attribute from CSDEF. As of now I am not aware of a way to change this behavior in VS.

Update (9-12-2011): It seems VS ignores the physicalDirectory attribute ONLY if your web site is called Web (i.e. name="Web" as in the example above). If you rename the site to something else (name="PHPWeb", for example) you will end up with the expected behavior described below. Unfortunately, name="Web" is the default setting, and this may result in unexpected behavior for your application.

cspack.exe handling of physicalDirectory attribute

The solution to the problem is to call cspack.exe from the command line (without the above-mentioned options, of course :)).
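A minimal Python sketch of scripting that command-line call follows. It assumes cspack.exe (from the Windows Azure SDK) is on the PATH, that the role name and role folder are both PHPRole and the CSDEF sits in PHPonAzure as in the structure above, and that the output package name is made up. Only the standard /role and /out options are passed, so the /sites and /sitePhysicalDirectories overrides that Visual Studio adds are left out. The tool is run from the solution folder, which is the gotcha described next.

```python
"""Script a cspack.exe call from the solution folder (hypothetical sketch)."""
from __future__ import annotations

import subprocess
from pathlib import Path


def cspack_args(csdef: str, role: str, role_dir: str, out: str) -> list[str]:
    # Only the standard /role:<RoleName>;<RoleDirectory> and /out:<file> options
    # are passed; the /sites and /sitePhysicalDirectories overrides that Visual
    # Studio adds are omitted so the CSDEF physicalDirectory value is used.
    return ["cspack.exe", csdef, f"/role:{role};{role_dir}", f"/out:{out}"]


def run_cspack(solution_dir: Path, args: list[str]) -> int:
    # cwd=solution_dir: the relative paths in args are resolved from the solution
    # folder, which is what cleared the "Could not find a part of the path" errors.
    return subprocess.run(args, cwd=solution_dir, check=False).returncode


if __name__ == "__main__":
    args = cspack_args(
        r"PHPonAzure\ServiceDefinition.csdef",  # CSDEF path relative to the solution folder
        "PHPRole",                              # role name (assumed to match the folder)
        "PHPRole",                              # role content folder
        "PHPonAzure.cspkg",                     # hypothetical output package name
    )
    print(" ".join(args))
    # Needs the Windows Azure SDK; the solution path below is a placeholder:
    # run_cspack(Path(r"C:\path\to\PHPonAzureSol"), args)
```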

There are a few gotchas in how you call cspack.exe using the folder structure that Visual Studio creates. After a few rounds of trial and error, in which I received several errors like this one:

Error: Could not find a part of the path '[some-path-here]'.

I figured out that you should call cspack.exe from the solution folder (PHPonAzureSol in the above structure). Once I did this, everything worked fine and I was able to access my index.php by just typing my hosted service’s URL.

How does the physicalDirectory attribute work?

For those of you interested in how the physicalDirectory attribute works, here is a simple explanation.

The MSDN documentation for How to Configure a Web Role for Multiple Web Sites points out that the physicalDirectory attribute value is relative to the location of the Service Configuration (CSCFG) file. This is true in the majority of cases; however, I think the following two clarifications are necessary:

- Because the attribute is present in the Service Definition (CSDEF) file, the correct statement is that the physicalDirectory attribute value is relative to the location of the Service Definition (CSDEF) file instead. Of course, if you use Visual Studio to build your folder structure, you can always assume that the Service Configuration (CSCFG) and the Service Definition (CSDEF) files are placed in the same folder. If you build your project manually, you should be careful how you set the physicalDirectory attribute. This is, of course, important if you want to use relative paths in the attribute.

- This one, I think, is much more important than the first: you can use absolute paths in the physicalDirectory attribute. The physicalDirectory attribute can contain any valid absolute path on the machine where you build the package. This means that you can point cspack.exe to include any random folder from your local machine as your site’s root.

Here is how this works.

What cspack.exe does is take the content of the folder configured in the physicalDirectory attribute and copy it under the [role]/sitesroot/[num] folder in the package. Here is how my package structure looked (follow the path in the address line):

[Screenshot: package structure]

During deployment, IIS on the cloud VM is configured to point the site to the sitesroot\[num] folder and serve the content from there. Here is how it is deployed in the cloud:

[Screenshot: deployed folders on the cloud VM]

And here is the IIS configuration for this cloud VM:

[Screenshot: IIS configuration]
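The path handling described above (relative values resolved against the CSDEF’s folder, absolute values used as-is, and each site’s content copied to [role]/sitesroot/[num]) can be modeled in a few lines. This is a hypothetical Python sketch, not cspack.exe’s actual implementation; ntpath is used so Windows path rules apply on any OS, the C:\src location is made up, and numbering sites from 0 in declaration order is an assumption.

```python
"""Model where a Site's physicalDirectory lands in the package (hypothetical sketch)."""
from __future__ import annotations

import ntpath  # Windows path rules, usable on any OS


def resolve_physical_directory(csdef_path: str, physical_directory: str) -> str:
    """Absolute values are used as-is; relative ones are resolved against the CSDEF's folder."""
    if ntpath.isabs(physical_directory):
        return ntpath.normpath(physical_directory)
    csdef_dir = ntpath.dirname(csdef_path)
    return ntpath.normpath(ntpath.join(csdef_dir, physical_directory))


def package_layout(role: str, csdef_path: str, site_dirs: list[str]) -> dict[str, str]:
    """Map each package folder [role]/sitesroot/[num] to the local folder copied into it.

    Numbering sites from 0 in declaration order is an assumption for illustration.
    """
    return {
        f"{role}/sitesroot/{num}": resolve_physical_directory(csdef_path, site_dir)
        for num, site_dir in enumerate(site_dirs)
    }


if __name__ == "__main__":
    csdef = r"C:\src\PHPonAzureSol\PHPonAzure\ServiceDefinition.csdef"  # hypothetical location
    layout = package_layout("PHPRole", csdef, [r"..\PHPRole\Sites\PHP"])
    for target, source in layout.items():
        print(f"{source} -> {target}")
    # C:\src\PHPonAzureSol\PHPRole\Sites\PHP -> PHPRole/sitesroot/0
```

Because "..\PHPRole\Sites\PHP" is resolved from the PHPonAzure folder that holds the CSDEF, it lands on the same Sites\PHP folder the Site tag was meant to point to.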



Visual Studio LightSwitch and Entity Framework 4.1+

Paul Patterson posted An Introduction to LightSwitch – Edmonton Dot Net User Group Presentation – September 12, 2011 on 9/13/2011 (missed when posted):

The following slide deck was presented at the September 12, 2011 Edmonton .Net User Group meetup…

…please forgive me for the shameless header image of me at the presentation. I am usually quite shy.

Slide 1

- So, as mentioned, my name is Paul Patterson.

- I work for an organization named Quercus Solutions Inc. We are a technology-based company specializing in Microsoft technologies such as .Net, SharePoint, Office 365, and Microsoft Azure.

- First off, by show of hands, how many of you have had an opportunity to play with LightSwitch at all?

- Cool. So most, if not all, of the information I have is probably already familiar to you – which is good because if I fail on something, I will call on you to bail me out. :)

- So, what I am going to present to you is a summary of what Microsoft Visual Studio LightSwitch is, and what it may mean to you as a professional software developer, as well as what it may mean to you as a “non-programmer”. Probably more so for the non-programmer.

- With this presentation, I will be talking from the perspective of the non-professional software developer – those departmental people who may look at LightSwitch as an option for solving a business problem, for example.

Slide 2

- So, just so you know what to expect, here is a quick look at an agenda.

- I’ll first give you a quick introduction to LightSwitch, which will include a peek at the technologies that make LightSwitch tick.

- The introduction will include a quick demo where I’ll put some of what is presented into practice.

- Next I’ll touch on some extensibility points about LightSwitch.

- And then, with time permitting, I’ll skim over a few deployment points, and possibly show a quick deployment scenario for you.

- As far as questions go, at the risk of not having enough time to get through the presentation and demos, if we could hold on to the questions until the end, we might be able to get through the entire presentation.

- Having said that, I have to caveat that LightSwitch is a huge topic. We could easily spend an entire day going through all the fine details of LightSwitch. Given the one-hour time box here, I have to cover the higher-level points, so I totally expect some questions; if we can just hold off until the end, that would serve us best – thanks.

Slide 3

- So, according to Microsoft… in using Microsoft Visual Studio LightSwitch 2011, we, “…will be able to build professional quality business applications quickly and easily, for the desktop and the cloud.”

- Okay! First off, I am not a designated LightSwitch “Champion”. I am just a curious fella who happened onto something that piqued my interest a few years back.

- Before you start laying into me with some subjectivity, you should first understand where I am coming from, and how I think.

- I generally like to find things that help me take care of a task in as short a time as possible.

- This probably came from the various roles as the technical go-to guy within the non-technical departments I’ve worked in.

- A lot of my early experience to solving business problems involved the use of Excel and Access.

- When I first read about this tool that Microsoft was working on, one that had the potential to do something fast and easy, I was intrigued.

- I started watching the development of LightSwitch very early on, even before the first beta was released.

- I believe it was sometime in 2008, maybe 2007, that Microsoft made people aware that it was working on this new tool codenamed KittyHawk.

- Rumour had it that the KittyHawk team included some former FoxPro people – which would make sense because of the timing of FoxPro’s retirement.

- Anyway, it was sometime early last year that I read about this LightSwitch tool that Microsoft was readying for beta testing.

- After culling through some forums and interweb rumour mills, I took it upon myself to keep a diligent eye on this thing – hence the start of my blog PaulSPatterson.com.

- So, since early last year I have been keeping my ear to the ground, listening and watching how this product has evolved into what it is today.

Slide 4

- So why did I tell you all that?

- I am going on my intuition and gut instincts that LightSwitch is going to have a relatively large impact on the industry. Maybe not tomorrow, or even within the next year, but something is telling me to keep an eye on this tool.

- Software developers tend to keep some technologies close to their chest.

- All I am saying is to keep an open mind about LightSwitch, and don’t discount the obvious – such as the value proposition the product has.

Slide 5

- Back to the agenda, let’s talk about the technology behind LightSwitch…

Slide 6

- LightSwitch is a part of the Visual Studio family of products.

- It essentially sits as a SKU between Visual Studio Pro and the free Express products.

- I believe the current retail price for LightSwitch is about $200.00.

- When you install LightSwitch, if you already have Visual Studio 2010 (Professional or better), it automagically integrates with Visual Studio, making its templates available for selection from the new project templates dialogs.

- If you don’t have Visual Studio installed, LightSwitch installs as a stand-alone tool, using the same familiar Visual Studio IDE.

- Using LightSwitch, it is possible to create and deploy an application without writing a single line of code.

- As such, you can already begin to imagine the value proposition that this will have with the non-developer types.

- Like I said before, LightSwitch is data-centric, and all that someone has to do is provide some data, select to add some screens for the data, and presto, you have an application ready to show off to all your work buddies.

- It really is just that easy! (That’s Shell Busey, the home improvement guy!) Yes, I just dated myself.

Slide 7

- LightSwitch uses “best practices” in how it creates applications.

- For example, LightSwitch applications are built on a classic three-tier architecture where each tier runs independently of the others and performs a specific role in the application.

- Here is an example of a three-tier architecture model: the presentation tier (or “UI”); the logic tier, which is the liaison between the presentation tier and the… data storage tier, which is responsible for the application data.
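To make the separation concrete, here is a generic Python sketch of the three tiers just described. It is a hypothetical illustration, not code that LightSwitch generates (in LightSwitch these roles are played by Silverlight, WCF RIA DomainServices and SQL Server, as Slide 8 explains); the Customer entity and all class names are made up. The point is the direction of the calls: the presentation tier talks only to the logic tier, and only the logic tier touches the data tier.

```python
"""A generic three-tier separation (hypothetical; not LightSwitch-generated code)."""
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Customer:
    id: int
    name: str


class DataTier:
    """Data storage tier: owns the application data (an in-memory stand-in for a database)."""

    def __init__(self) -> None:
        self._rows: dict[int, Customer] = {}

    def save(self, customer: Customer) -> None:
        self._rows[customer.id] = customer

    def get(self, customer_id: int) -> Customer | None:
        return self._rows.get(customer_id)


class LogicTier:
    """Logic tier: validates requests and is the only caller of the data tier."""

    def __init__(self, data: DataTier) -> None:
        self._data = data

    def add_customer(self, customer_id: int, name: str) -> Customer:
        if not name.strip():
            raise ValueError("name is required")
        customer = Customer(customer_id, name.strip())
        self._data.save(customer)
        return customer

    def find_customer(self, customer_id: int) -> Customer | None:
        return self._data.get(customer_id)


def presentation_tier(logic: LogicTier, customer_id: int) -> str:
    """Presentation tier: formats results for the user and talks only to the logic tier."""
    customer = logic.find_customer(customer_id)
    return customer.name if customer else "(not found)"


if __name__ == "__main__":
    logic = LogicTier(DataTier())
    logic.add_customer(1, "Contoso")
    print(presentation_tier(logic, 1))  # Contoso
```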

Slide 8

- We can map specific technologies used in LightSwitch to this architecture.

- The presentation tier is a Silverlight 4.0 application.

- It can run as a Windows desktop application or be hosted in any browser that supports Silverlight.

- Which, by the way, can be done on a Mac; I’ve done it, I just can’t remember if it was Chrome or Safari that I got it to work in.

- For the logic tier, WCF RIA DomainServices is used.

- The logic tier process can be hosted locally (on the end-user’s machine), on an IIS server, or in Windows Azure.

- For the data tier, a LightSwitch application’s primary application storage (for development) uses SQL Server (SQL Express) technologies.

- This database access is accomplished via an Entity Framework provider and custom-built WCF RIA DomainServices.

- There are also opportunities to consume other data sources, which are exposed, typically, via WCF RIA services – OData is a good example.