Windows Azure and Cloud Computing Posts for 9/5/2011+

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Alex Popescu (@al3xandru) described Accumulo: A New BigTable Inspired Distributed Key/Value by NSA in a 9/5/2011 post to his MyNoSQL blog:

The National Security Agency has submitted to Apache Incubator a proposal to open source Accumulo, a BigTable-inspired key-value store that they have been building since 2008. The project proposal page provides more details about Accumulo's history, building blocks, and how it compares to the other open source BigTable implementation, HBase:

Access Labels: Accumulo has an additional portion of its key that sorts after the column qualifier and before the timestamp. It is called column visibility and enables expressive cell-level access control. Authorizations are passed with each query to control what data is returned to the user.

Iterators: Accumulo has a novel server-side programming mechanism that can modify the data written to disk or returned to the user. This mechanism can be configured for any of the scopes where data is read from or written to disk. It can be used to perform joins on data within a single tablet.

Flexibility: Accumulo places no restrictions on the column families. Also, each column family in HBase is stored separately on disk. Accumulo allows column families to be grouped together on disk, as does BigTable.

Logging: HBase uses a write-ahead log on the Hadoop Distributed File System. Accumulo has its own logging service that does not depend on communication with the HDFS NameNode.

Storage: Accumulo has a relative key file format that improves compression.
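The access-label idea described above can be illustrated with a small Python model. This is only a sketch under stated assumptions, not Accumulo's API (Accumulo itself is written in Java): the `Key` tuple, `is_visible` and `scan` are hypothetical names, the label grammar is reduced to `&`, `|` and parentheses, and treating an empty label as visible to everyone is an assumption. The only facts taken from the proposal are that the column visibility sits between the column qualifier and the timestamp in the sort order, and that authorizations are passed with each query.

```python
import re
from typing import NamedTuple


class Key(NamedTuple):
    """Hypothetical key layout: visibility sorts after qualifier, before timestamp."""
    row: str
    family: str
    qualifier: str
    visibility: str
    timestamp: int


def is_visible(expression: str, authorizations: set) -> bool:
    """Evaluate a simplified visibility expression against a set of authorizations."""
    tokens = re.findall(r"[A-Za-z0-9_]+|[&|()]", expression)
    if not tokens:
        return True  # assumption: an empty label is visible to everyone
    pos = 0

    def parse_or() -> bool:
        nonlocal pos
        result = parse_and()
        while pos < len(tokens) and tokens[pos] == "|":
            pos += 1
            rhs = parse_and()  # always parse the right side, then combine
            result = result or rhs
        return result

    def parse_and() -> bool:
        nonlocal pos
        result = parse_term()
        while pos < len(tokens) and tokens[pos] == "&":
            pos += 1
            rhs = parse_term()
            result = result and rhs
        return result

    def parse_term() -> bool:
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token == "(":
            result = parse_or()
            pos += 1  # skip the closing parenthesis
            return result
        return token in authorizations

    return parse_or()


def scan(cells, authorizations):
    """Return cells in key order, keeping only those the caller is authorized to see."""
    ordered = sorted(cells, key=lambda cell: cell[0])
    return [(k, v) for k, v in ordered if is_visible(k.visibility, authorizations)]


if __name__ == "__main__":
    table = [
        (Key("row1", "cf", "name", "admin&audit", 1), "secret"),
        (Key("row1", "cf", "name", "", 1), "open"),
        (Key("row1", "cf", "email", "admin|ops", 1), "mail"),
    ]
    for key, value in scan(table, {"ops"}):
        print(key, value)
```

Because the label is part of the key, two cells that differ only in visibility are distinct entries, which is what allows cell-level filtering at read time.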

You can read more about Accumulo here and check the Hacker News and Reddit discussions.

Michael Stack has commented on the HBase mailing list:

The cell based ‘access labels’ seem like a matter of adding an extra field to KV and their Iterators seem like a specialization on Coprocessors. The ability to add column families on the fly seems too minor a difference to call out especially if online schema edits are now (soon) supported. They talk of locality group like functionality too — that could be a significant difference. We would have to see the code but at first blush, differences look small.

No significant articles today.

<Return to section navigation list>

SQL Azure Database and Reporting

No significant articles today.

<Return to section navigation list>

Marketplace DataMarket and OData

No significant articles today.

<Return to section navigation list>

Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

Adxstudio explained how to enable Azure AppFabric ACS Authentication (4.1) for Adxstudio on 9/5/2011:

This article covers the steps for enabling Windows Azure AppFabric Access Control Service (ACS) federated authentication for an Adxstudio portal. The first step involves configuring ACS on Windows Azure. The next step involves taking settings from the first step and applying them to the ASP.Net portal configuration.

Contents

- Prerequisites

- Configuring Access Control Service

- Open ACS Management Portal

- ACS Management Portal

- Adding Identity Providers

- Add Relying Party Application

- Gathering Portal Settings

- Configuring the Portal

- Update the Connection String

- Publish the ACS Configuration

Prerequisites

A Windows Azure platform subscription should be available which provides access to the Azure Management Portal. The ASP.Net portal can be hosted from Windows Azure Hosted Services or on-premises from an IIS 7.0 server running .Net Framework 4.0.

Configuring Access Control Service

A Service Namespace is required to contain the authentication settings.

- Sign into the Azure Management Portal

- Browse over to the "Access Control" table

- Bottom left: Click Service Bus, Access Control & Caching

- Top Left: Click Access Control

- Select the subscription that contains the ACS namespace

- Click New to open the new ACS namespace dialog

- Specify an available namespace ensuring that "Access Control" service is selected

- Click Create Namespace

Open ACS Management Portal

- The new namespace should appear in the table

- Select the namespace

- Click the Access Control Service button to open the ACS Management Portal

ACS Management Portal

The ACS-based federated authentication settings are managed under this area of the portal. The two major steps to completing these settings are selecting Identity Providers and specifying Relying Party Applications.

Adding Identity Providers

Identity providers decide which user accounts are available for authentication. By default, the Windows Live ID identity provider is available which allows authentication by Live ID users.

- Click the Add link to select a new identity provider

- Some providers only require a display name as a label for authenticating users

- Other providers require further credentials to be established between the identity provider and ACS. Refer to the Learn more links for details.

- Repeat this step for each identity provider you wish to make available

Add Relying Party Application

At least one relying party application must be set up for federated authentication to succeed. Navigate to the Relying Party applications area and click the "Add" link.

On the Add Relying Party Application page provide a display Name that identifies the relying party. Note that this name may also appear to the end user during sign-in depending on the implementation of the portal's sign-in page.

Under the Realm field, specify the domain URL of the portal. Valid URLs include:

- http://localhost/

- http://localhost:8080/

- http://contoso.com/

- https://contoso.com/

Authentication against ACS will fail if the portal is not hosted from this URL. It is not necessary for the portal to be available to the Internet.

For the Return URL, specify the realm URL and append a path of /Federation.axd.

- http://localhost/Federation.axd

If you are hosting one of the Adxstudio sample portals, an Error.aspx page is available that can be specified as the Error URL.

- http://localhost/Error.aspx
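The relationship between the Realm, Return URL and Error URL values can be sketched in a few lines of Python. This is an illustrative helper only: `relying_party_urls` is a hypothetical name, not part of ACS or Adxstudio, and the only rules it encodes are the ones stated above (the return URL is the realm plus `/Federation.axd`, and the sample portals expose `Error.aspx`).

```python
from urllib.parse import urljoin, urlsplit


def relying_party_urls(realm: str) -> dict:
    """Derive the Return URL and Error URL from a realm URL (hypothetical helper)."""
    parts = urlsplit(realm)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"realm must be an absolute http(s) URL: {realm!r}")
    # Normalise to a trailing slash so urljoin appends rather than replaces.
    base = realm if realm.endswith("/") else realm + "/"
    return {
        "realm": base,
        "return_url": urljoin(base, "Federation.axd"),
        "error_url": urljoin(base, "Error.aspx"),
    }


if __name__ == "__main__":
    for name, url in relying_party_urls("http://localhost:8080").items():
        print(f"{name}: {url}")
```

Deriving the values from a single realm string avoids the most likely mistake in this step, which is entering a return URL that does not match the realm the portal is actually hosted from.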

For the Token format, specify SAML 2.0. The Token encryption policy can be set to None and the Token lifetime can be left at the default of 600 seconds.

Under the Identity Providers section, check the providers that are allowed to authenticate.

If this is the first relying party application, check the box to Create new rule group which creates a default rule group when saved. Subsequent relying party applications may share this default rule group rather than creating a new one.

Under the Token signing section, specify Use service namespace certificate (standard).

Click Save.

This step may be repeated to add more relying party applications. This enables a single sign-on experience between all the relying party portals.

Gathering Portal Settings

At this point, the required ACS management settings are complete and several values need to be applied to the ASP.Net portal. Take note of the following settings:

- Record the Service Namespace value

- Under Trust relationships -> Relying Party application, open the relying party details, record the Realm URI

- Under Service settings -> Certificates and keys -> Token Signing, open the Service Namespace for the x.509 Certificate. Under the Certificate section, record the thumbprint

- Under Development -> Application integration -> Endpoint Reference, record the WS-Federation Metadata

Configuring the Portal

Portal configuration involves taking the values collected from the ACS Management Portal and applying them to the web.config file of the portal. This will be demonstrated against the Adxstudio sample portals.

- Browse to the folder containing the Adxstudio sample portals:

- %adxstudio install root%\XrmPortals\4.1.xxxx\Samples\

- Open the XrmSamples.sln solution in Visual Studio 2010

- In the Solution Explorer, select a portal to deploy such as the BasicPortal

- Open the Web.ACS.config web config transform file (expand the Web.config node)

- Replace the placeholder values within the Web.ACS.config file with the values gathered in the previous step

The following is a condensed form of the Web.ACS.config file to help locate the relevant configuration attributes that need to be updated.

<configuration>
  <appSettings>
    <add key="FederationMetadataLocation" value="[WS-Federation Metadata]"/>
  </appSettings>
  <microsoft.identityModel>
    <service>
      <audienceUris>
        <add value="[Realm URI]" />
      </audienceUris>
      <federatedAuthentication>
        <wsFederation issuer="https://[Service Namespace].accesscontrol.windows.net/v2/wsfederation" realm="[Realm URI]"/>
      </federatedAuthentication>
      <issuerNameRegistry>
        <trustedIssuers>
          <add thumbprint="[Thumbprint]" name="https://[Service Namespace].accesscontrol.windows.net/" />
        </trustedIssuers>
      </issuerNameRegistry>
    </service>
  </microsoft.identityModel>
</configuration>
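As a rough illustration of the placeholder replacement, the following Python sketch fills the four bracketed values into the condensed configuration and checks that the result is still well-formed XML. It is not part of the Adxstudio tooling: `fill_acs_config` is a hypothetical helper, and every value in `SETTINGS` (the `contoso` namespace, the metadata URL and the thumbprint) is an invented example to be replaced with the values recorded from the ACS Management Portal.

```python
import re
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

TEMPLATE = """<configuration>
  <appSettings>
    <add key="FederationMetadataLocation" value="[WS-Federation Metadata]"/>
  </appSettings>
  <microsoft.identityModel>
    <service>
      <audienceUris>
        <add value="[Realm URI]" />
      </audienceUris>
      <federatedAuthentication>
        <wsFederation issuer="https://[Service Namespace].accesscontrol.windows.net/v2/wsfederation" realm="[Realm URI]"/>
      </federatedAuthentication>
      <issuerNameRegistry>
        <trustedIssuers>
          <add thumbprint="[Thumbprint]" name="https://[Service Namespace].accesscontrol.windows.net/" />
        </trustedIssuers>
      </issuerNameRegistry>
    </service>
  </microsoft.identityModel>
</configuration>"""

# Example values only; substitute the ones gathered from your own ACS namespace.
SETTINGS = {
    "Service Namespace": "contoso",
    "Realm URI": "http://localhost/",
    "Thumbprint": "0123456789ABCDEF0123456789ABCDEF01234567",
    "WS-Federation Metadata": "https://contoso.accesscontrol.windows.net/FederationMetadata/2007-06/FederationMetadata.xml",
}


def fill_acs_config(template: str, settings: dict) -> str:
    """Replace each [Placeholder] with its value and verify the XML still parses."""
    result = template
    for name, value in settings.items():
        # Escape characters that are not allowed inside an XML attribute value.
        result = result.replace(f"[{name}]", escape(value, {'"': "&quot;"}))
    leftover = re.findall(r"\[[^\]]+\]", result)
    if leftover:
        raise ValueError(f"unfilled placeholders: {sorted(set(leftover))}")
    ET.fromstring(result)  # raises ParseError if the document is malformed
    return result


if __name__ == "__main__":
    print(fill_acs_config(TEMPLATE, SETTINGS))
```

Failing loudly on an unfilled placeholder is a deliberate choice here, since a forgotten `[Thumbprint]` would otherwise only surface as a sign-in failure at run time.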

Update the Connection String

As with any Adxstudio Portal, the connection string needs to be updated to specify a valid Microsoft Dynamics CRM 2011 server. For details refer to this article.

Publish the ACS Configuration

The Web.ACS.config file is a transformation file used to convert a sample portal running against a MembershipProvider into a portal that supports ACS federated authentication.

The XrmSamples.sln solution already contains the build configuration needed to apply this transform during deployment. With this solution still open in VS2010, go to the menu -> Build -> Configuration Manager.... Within the Configuration Manager dialog, use the Active solution configuration dropdown to select the ACS option. Each portal that is capable of an ACS transform, should show a value of ACS under the Configuration column. Close the Configuration Manager.

Return to the Solution Explorer and right-click the portal project. Select Publish... to open the Publish Web dialog. Check that the Build Configuration value indicates "ACS". Select a Publish method such as "File System" and set a Target Location to be the Physical Path of the IIS website or web application that is hosting the portal (this should initially be an empty folder). Click Publish to transform the web.config and copy the portal files to the Target Location.

Create a website or web application specifying the Physical Path to be the published Target Location. The website should also specify the Realm URI value as its site binding setting.

In a web browser, open the Realm URI to navigate to the website. Click the Login link to open the portal's identity provider selection page. If the configuration is correct, a module should appear in the body of the page allowing the user to select from the configured identity providers.

Inside Azure AppFabric ACS Authentication (4.1)

<Return to section navigation list>

Windows Azure VM Role, Traffic Manager, Virtual Network, Connect, RDP and CDN

Avkash Chauhan posted Windows Azure Traffic Manager: Using Performance Load balancing method for multiple services in the same DC on 9/5/2011:

As you may know, Windows Azure Traffic Manager (WATM) BETA is available. Windows Azure Traffic Manager is a load balancing solution that enables the distribution of incoming traffic among different hosted services in your Windows Azure subscription, regardless of their physical location. Traffic routing occurs as the result of policies that you define and that are based on one of the following criteria:

- Performance – traffic is forwarded to the closest hosted service in terms of network latency

- Round Robin – traffic is distributed equally across all hosted services

- Failover – traffic is sent to a primary service and, if this service goes offline, to the next available service in a list
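The three policy types can be modelled in a few lines of Python to make the differences concrete. This is a toy model, not the Traffic Manager API or its actual algorithm: `HostedService`, the latency figures and the `pick_*` functions are all hypothetical, and real routing happens at the DNS level rather than per request.

```python
from dataclasses import dataclass


@dataclass
class HostedService:
    """Hypothetical stand-in for a hosted service behind a policy."""
    name: str
    latency_ms: float  # assumed latency as seen from one client
    online: bool = True


def _online(services):
    candidates = [s for s in services if s.online]
    if not candidates:
        raise LookupError("no hosted service is online")
    return candidates


def pick_performance(services):
    """Performance: choose the online service with the lowest latency."""
    return min(_online(services), key=lambda s: s.latency_ms)


def pick_failover(services):
    """Failover: choose the first online service in list order."""
    return _online(services)[0]


class RoundRobin:
    """Round Robin: rotate through the online services in turn."""

    def __init__(self, services):
        self._services = list(services)
        self._next = 0

    def pick(self):
        candidates = _online(self._services)
        service = candidates[self._next % len(candidates)]
        self._next += 1
        return service


if __name__ == "__main__":
    services = [
        HostedService("us-north", 120.0),
        HostedService("eu-west", 35.0),
        HostedService("asia-east", 210.0),
    ]
    print("performance:", pick_performance(services).name)
    print("failover:", pick_failover(services).name)
    rr = RoundRobin(services)
    print("round robin:", [rr.pick().name for _ in range(4)])
```

Note that in this model two services with identical latency would tie under the Performance method, and `min` would simply return the first one.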

More Info: http://msdn.microsoft.com/en-us/gg197529

Here is a scenario in which Windows Azure Traffic Manager (WATM) is used with “Performance” load balancing method:

For example, suppose a total of 4 services are hosted in 3 different data centers (DCs). Because there are 4 services in 3 DCs, at least 2 services must share a DC. The following table shows the distribution:

Traffic Manager Policy: mywatmpolicy.ctp.trafficmgr.com

Which hosted service will be returned to the client?

For the above scenario, in which the same service is hosted in multiple DCs, the “Performance” load balancing method is best because it is intended for geo-distributed scenarios.

Scenarios in which two copies of the same service are hosted in the same DC are not supported in the Windows Azure Traffic Manager (WATM) CTP. In the above example, only one hosted service (either #2 or #3) will get all the traffic, regardless of the order in which they are displayed in the UI.

Suggestion: Instead of creating additional hosted services in the same region, you must increase the number of instances of just one hosted service in the region.

You may ask whether the limitation on having more than one hosted service in the same DC applies only to the "Performance" method. Yes, this limitation applies only to the Performance method of WATM; there is no such limitation for Round Robin, where all hosted services are treated equally regardless of their region. If you choose the "Round Robin" setting in WATM, you can use more than one hosted service in the same region and configure them with WATM.

If you have multiple hosted services in the same DC with the WATM Performance setting, there are a few things you must know:

- Only one hosted service will get all traffic and other(s) will have none

- You will have no control over which one (out of many) hosted service will get the traffic, from the UI

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Anna Leach asserted “Next bus is in 5 minutes. It has 3 annoying youths on board” in a deck for her London bus timings mobile beta site spotted post of 9/5/2011 to The Register:

Londoners will soon be able to use their phones to check when the next bus is coming, thanks to a new feed of data opened up by Transport For London and available on a mobile-optimised website. The Live Bus Departures Countdown service will be useful for passengers lingering, fretful and uninformed, at the 17,000 London bus stops without road-side countdown tickers.

Live Bus Departures is still in a beta testing phase but was spotted this weekend by a Twitter user. TFL promises an official launch this autumn. There is an SMS-based version of the site for those with older phones.

To get local bus times, type in a street, postcode or route number and pick a stop from the map that pops up. Each bus stop has a five digit PIN number that you can save to get quick access to the information from your most used stops.

Some Londoners have been seriously enthused by the development – blogger Diamond Geezer said: "This website could make a genuine difference to my quality of life." We hope so.

Transport for London says:

"TfL has commenced user testing of the new ‘Countdown’ system which will provide real time bus arrival information for all 19,000 bus stops across London via the web and SMS.

“The existing roadside sign service is currently limited to 2,000 bus stops and this new service covering all buses and all stops will launch fully in the Autumn of this year.”

Transport for London has been keen to release transport information - check out their TFL data page where they offer up information feeds to outside developers. Feeds available include live CCTV footage of key roads and routes – images updated every two minutes, and information about Tube train locations updated every 30 seconds.

The existing real-time data feeds on the TFL site are managed by Microsoft's Windows Azure, its cloud computing platform which allows for pay-as-you-use-it hosting and fast data syndication.

Janet I. Tu (@janettu) described How Twitter is integrated into Microsoft Windows Phone Mango in a 9/5/2011 post to the Seattle Times’ Business/Technology blog:

OK, not me, specifically. But the updated version of Windows Phone 7, codenamed "Mango," will be integrated with Twitter, making it easier for users to instantly see what their friends are saying about them across different social platforms, including Twitter and Facebook.

Andy Myers, a writer on the Windows Phone Engineering Team, has a post up on the official Microsoft Windows Phone blog demonstrating how that works for the consumer. He says he finds the integration especially useful for the Me Card, a tile on the start screen that notifies the user anytime someone mentions the user in a tweet, comments on his/her Facebook status or posts something on their wall.

(The comments to Myers' post, suggesting features users would like to see, are interesting too.)

The Windows Phone Mango update adds about 500 features to Windows Phone 7. Phone manufacturers have not yet announced when new handsets carrying Mango will go on sale in the U.S., though they are expected this fall; nor has a date been announced for when owners of current Windows Phone 7 handsets will get the update.

(Image of HTC Titan running Windows Phone Mango from Microsoft)

Eric Nelson (@ericnel) reported Windows Azure Tools from Quest including graphical monitoring with Spotlight on Azure in a 9/5/2011 post to his IUpdateable blog:

I haven’t had a chance to play with them yet (downloading now) – but these three tools from Quest look very interesting and worth sharing “un-tried.” Also (IMHO) it looks to me like the discussion forums could do with more folks to help shape the evolution of these products.

- Spotlight on Azure – provides in-depth monitoring and performance diagnostics of Azure environments from the individual resources level up to the application level.

- Quest Cloud Storage Manager for Azure – provides file and storage management that enables users to easily access multiple storage accounts in Azure using a simple GUI interface.

- Quest Cloud Subscription Manager for Azure – drills into Azure subscription data, providing a detailed view of resource utilization with customized reporting and project mapping.

There are lots of storage managers out there, hence what stuck out for me is Subscription Manager and Spotlight.

They have just blogged on Subscription Manager and created a very promising video on Spotlight on Azure – which has some very appealing graphics. It deserves a lot more views than the current 204! Get viewing now …

I’ve tried Spotlight on Azure and liked it. However, I’d like to be able to choose my own color palette for the UI, rather than the purple provided. The downloads are 30-day trial versions. See my Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: August 2011 post of 9/3/2011 to see why (scroll down.)

David Pallman posted When Worlds Collide #2: HTML5 + Cloud = Elasticity² on 9/5/2011:

In a previous post I’ve written about the dual revolutions going on in web applications: HTML5 and devices on the front end, and cloud computing on the back end—how they influence each other and are together changing the design of modern web applications. In this post I’ll talk about an aspect both share, elasticity.

Elastic Scale of the Cloud: Elasticity is a term frequently used in cloud computing. Amazon Web Services even named their compute service “Elastic Compute Cloud” (EC2). Elasticity means we can allocate or drop resources at will: if we need more servers or more storage, we ask for the resources we want and have them minutes later, ready to use. If we’re done with a resource, we release it and stop paying for it. This is all self-serve, mind you: there’s no bureaucratic process or personnel to go through. Elasticity is one of those defining characteristics that sets true cloud computing apart from traditional hosting.

Using the Windows Azure platform as an example, there are several levels of elasticity available to us. The elasticity most often spoken of is the ability to change the number of VM instances in the roles (VM farms) that make up your hosted cloud application. There are other areas we can consider elastic as well: we can make our individual VMs larger or smaller, choosing from 5 different VM sizes that give us varying amounts of cores, memory, and local storage. We can choose from a variety of database sizes. We can even scale our application to a global level by deploying it to multiple data centers around the world and using a traffic manager to route access, based on locale or other criteria.

Elastic scale can be enlisted manually, where you simply request the changes you want interactively; or it can be automated, where you programmatically alter the scale of your cloud application. You might change the size of your deployment based on activity levels you are monitoring; or you might be able to anticipate load changes by something very predictable like the calendar and well-known seasonal patterns of behavior. Elasticity isn’t just important for scale reasons: it’s also an essential ingredient in the cloud’s cost model, where you only pay for what you use and only use what you need.
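A minimal sketch of the automated case might look like the following Python function, which combines a monitored activity level with a calendar rule. Everything here is an assumption made for illustration: the function name, the 75% and 25% CPU thresholds, the peak months and the instance limits are invented, and the code only computes a target count; actually applying it would go through whatever management interface your platform provides.

```python
from datetime import date


def target_instances(
    current: int,
    avg_cpu_percent: float,
    today: date,
    *,
    minimum: int = 1,
    maximum: int = 20,
    peak_months: tuple = (11, 12),
    peak_floor: int = 4,
) -> int:
    """Return the instance count to request next (hypothetical policy)."""
    desired = current
    # Reactive rule: follow the activity level being monitored.
    if avg_cpu_percent > 75:
        desired = current + 1
    elif avg_cpu_percent < 25:
        desired = current - 1
    # Predictive rule: keep a higher floor during known seasonal peaks.
    if today.month in peak_months:
        desired = max(desired, peak_floor)
    # Never leave the allowed range, whatever the rules say.
    return max(minimum, min(maximum, desired))


if __name__ == "__main__":
    print(target_instances(2, 90.0, date(2011, 9, 5)))
    print(target_instances(1, 10.0, date(2011, 12, 1)))
```

Changing one instance at a time keeps the sketch simple; a real policy would probably also add a cool-down period so that brief spikes do not cause repeated scaling.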

You can think of the cloud, then, as a very big rubber band: one that lets you run as small as a tiny, barely-costs-anything “caretaker” deployment all the way to global scale that can service millions. A change you could put in effect the same day you discerned the need for it, by the way.

Elastic Experiences in HTML5: Although we often use different words for it, web applications can be elastic too, but in a different way. Modern web sites can and should be responsive: that is, adaptive to a variety of devices, screen sizes, aspect ratios, and input methods. The philosophy, movement, and features behind HTML5 promote and enable this notion of elastic experience. While it’s not uncommon today to implement web applications individually for browsers and mobile devices (either as m. sites or native device applications), where we’re heading is to more typically implement a site once that runs on a PC browser as well as a touch tablet or phone. As our world becomes more and more about smaller devices and larger screens, and using more than one device to access that world, this “write once, run anywhere” approach is simply going to make more and more sense and minimize expense. Not always, but much of the time.

The elasticity of experience is not only about adapting to smaller size or larger screens, though: there are a host of related considerations. Layouts have to change to suit aspect ratio and orientation; the form of input and navigation can vary from mouse and keyboard to touch; some font choices are unusable or look ridiculous on extremely small or large displays; some functional aspects (such as the availability of JavaScript) aren’t always available. What is needed then is more than mere fluid layout: applications need to make intelligent decisions about many things in order to maximize their usefulness and usability in whatever context they find themselves. Historically there has been a widespread belief in the need for “graceful degradation,” where you give up features and aspects of the intended experience with more limited devices; the new thinking turns this around to say we begin with a very decent and tuned experience even on limited devices and practice “progressive enhancement” as we get larger screens and the availability of more features. For a very good treatment of this subject, see Ethan Marcotte’s inspiring article and book on Responsive Web Design.

So you can also think of web sites done in the spirit of HTML5 as a rubber band, too: one that’s all about the elasticity of layout, navigation, and progressive enhancement, providing good experiences without compromise that bring out the best in whatever device you happen to be using today. Actually, a more fitting analogy than a rubber band is a gas. A gas? That’s right; remember what you learned about gases in high school physics? A gas will expand in volume to fill its container no matter what its size and shape. Go and do likewise. And you thought you’d never use Boyle’s Law in real life!

Elasticity Squared: The Combined Effect: As we’ve seen, both the front and the back end of modern web applications share this characteristic of elasticity. There are even more dimensions of elasticity we could discuss, such as the elasticity of data, but we’ll save that discussion for another time.

In software design, as in many areas of life, there is often the “can’t see the forest for the trees” problem where you have to decide whether to focus on the big picture of how you serve your user constituency vs. the individual experience you are providing. All too often one gets attention and not the other on projects. When you join the new web with cloud computing, you bring together two disciplines that naturally pay attention to each area. Together, HTML5 and cloud computing bring elasticity to both the individual and the community they are a part of.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

The Visual Studio LightSwitch (@VSLightSwitch) team posted Update for Microsoft Visual Studio LightSwitch 2011 (KB2603917) on 9/1/2011:

This update allows Visual Studio LightSwitch projects to be successfully published to SQL Azure.

VS10SP1-KB2603917-x86.exe, 2.0 MB: Download

Overview

When publishing a LightSwitch project to SQL Azure, the user receives the following error during the publish operation "SQL Server version not supported."

System requirements

Supported Operating Systems: Windows 7, Windows Server 2003 R2 (32-Bit x86), Windows Server 2003 R2 x64 editions, Windows Server 2008 R2, Windows Vista Service Pack 2, Windows XP

Microsoft Visual Studio LightSwitch 2011

Instructions

- Click the Download button on this page to start the download, or select a different language from the Change language drop-down list and click Change.

- Do one of the following:

- To start the installation immediately, click Run.

- To save the download to your computer for installation at a later time, click Save.

- To cancel the installation, click Cancel.

In case you missed this on 9/1/2011.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

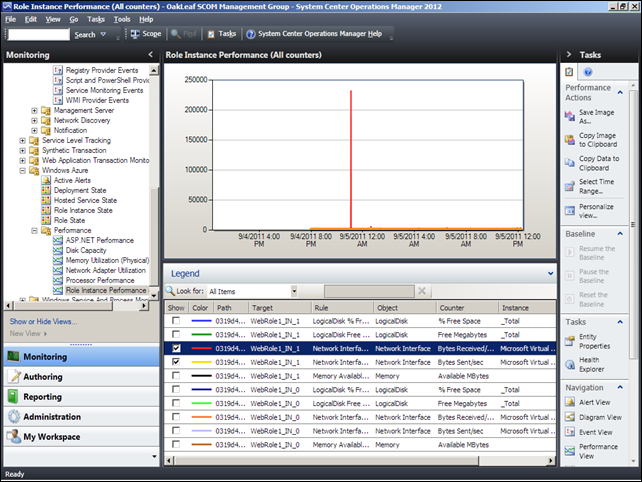

My (@rogerjenn) Configuring the Systems Center Monitoring Pack for Windows Azure Applications on SCOM 2012 Beta post of 9/5/2011 described how to configure performance monitoring and grooming Windows Azure Diagnostics data:

The Systems Center Operations Manager team’s “Guide for System Center Monitoring Pack for Windows Azure Applications” documentation provides sketchy instructions for configuring the monitors you install for a service hosted by Windows Azure. (See the Installing the Systems Center Monitoring Pack for Windows Azure Applications on SCOM 2012 Beta post of 9/4/2011.)

This article is an illustrated tutorial for configuring performance monitoring and grooming Windows Azure Diagnostics data to prevent its storage requirements from costing large sums each month.

Inspecting Default Windows Azure Monitor Data

The “Key Monitoring Scenarios” section of the documentation says:

The following performance collection rules, which run every 5 minutes, collect performance data for each Windows Azure application that you discover:

- ASP.NET Applications Requests/sec (Azure)

- Network Interface Bytes Received/sec (Azure)

- Network Interface Bytes Sent/sec (Azure)

- Processor % Processor Time Total (Azure)

- LogicalDisk Free Megabytes (Azure)

- LogicalDisk % Free Space (Azure)

- Memory Available Megabytes (Azure)

However, the docs fail to mention until the last page that collecting Processor % Processor Time Total (Azure) and Memory Available Megabytes (Azure) data is disabled by default.
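The interaction between default-enabled rules and overrides can be pictured with a small Python model. This is not the Operations Manager API or its management pack format; `DEFAULT_RULES`, `effective_rules` and `enabled_rules` are hypothetical names, and the only facts encoded are the seven rule names listed above and the statement that the processor and memory rules are disabled by default.

```python
# Rule name -> "Enabled by Default" value, as described in the documentation.
DEFAULT_RULES = {
    "ASP.NET Applications Requests/sec (Azure)": True,
    "Network Interface Bytes Received/sec (Azure)": True,
    "Network Interface Bytes Sent/sec (Azure)": True,
    "Processor % Processor Time Total (Azure)": False,
    "LogicalDisk Free Megabytes (Azure)": True,
    "LogicalDisk % Free Space (Azure)": True,
    "Memory Available Megabytes (Azure)": False,
}


def effective_rules(defaults: dict, overrides: dict) -> dict:
    """Apply overrides on top of the defaults without modifying the defaults."""
    merged = dict(defaults)
    for name, enabled in overrides.items():
        if name not in defaults:
            raise KeyError(f"unknown rule: {name}")
        merged[name] = enabled
    return merged


def enabled_rules(rules: dict) -> list:
    """List the names of the rules that will actually collect data."""
    return [name for name, enabled in rules.items() if enabled]


if __name__ == "__main__":
    overrides = {
        "Processor % Processor Time Total (Azure)": True,
        "Memory Available Megabytes (Azure)": True,
    }
    for name in enabled_rules(effective_rules(DEFAULT_RULES, overrides)):
        print(name)
```

Keeping the overrides separate from the defaults mirrors the idea that the shipped values stay untouched and only the override layer records what was changed.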

Clicking the Monitoring button and selecting the Monitoring \ Windows Azure \ Performance \ Role Instance Performance shows all available counters that are enabled by default. To display one or more counters, mark their check box(es):

This chart for Bytes/sec Sent by the second Web Role (WebRole1_IN_1) Network Interface represents the outbound network traffic (chargeable) starting about four hours after completing installation of the WAzMP at about 4:00 PM Sunday, 9/4/2011, through noon on Monday 9/5/2011.

Relatively steady-state bursts from 1,500 to about 1,800 bytes/sec probably represent monitoring traffic. The three pulses to about 2,300, 3,100 and 2,100 are likely to be from early risers in Europe.

You can mark additional check boxes to overlay additional data, such as Bytes/sec Received (not chargeable). The 240 MB spike near midnight might be from a denial of service attempt.

The post continues with the following topics:

- Enabling Available MB of Memory and Total CPU Utilization Percentage Monitoring for a Windows Azure Role Instance (ServiceName)

- Making Windows Azure Monitors Visible in the Authoring \ Management Pack Objects \ Monitors List

- Changing the Enabled by Default value from False to True with an Override

- Enabling Windows Azure Diagnostics Data Storage Grooming

<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

No significant articles today.

<Return to section navigation list>

Cloud Security and Governance

Chris Hoff (@Beaker) analyzed VMware’s vShield – Why It’s Such A Pain In the Security Ecosystem’s *aaS… in a 9/4/2011 post:

Whilst attending VMworld 2011 last week, I attended a number of VMware presentations, hands-on labs and engaged in quite a few discussions related to VMware’s vShield and overall security strategy.

I spent a ton of time discussing vShield with customers — some who love it, some who don’t — and thought long and hard about writing this blog. I also spent some time on SiliconAngle’s The Cube discussing such, here.

I have dedicated quite a lot of time discussing the benefits of VMware’s security initiatives, so it’s important that you understand that I’m not trying to be overtly negative, nor am I simply pointing fingers as an uneducated, uninterested or uninvolved security blogger intent on poking the bear. I live this stuff…every day, and like many, it’s starting to become messy. (Ed: I’ve highlighted this because many seem to have missed this point. See here for example.)

I’ve become…comfortably numb…

It’s fair to say that I have enjoyed “up-to-the-neck” status with VMware’s various security adventures since the first marketing inception almost 4 years ago with the introduction of the VMsafe APIs. I’ve implemented products and helped deliver some of the ecosystem’s security offerings. My previous job at Cisco was to provide the engineering interface between the two companies, specifically around the existing and next generation security offerings, and I now enjoy a role at Juniper which also includes this featured partnership.

I’m also personal friends with many of the folks at VMware on the product and engineering teams, so I like to think I have some perspective. Maybe it’s skewed, but I don’t think so.

There are lots of things I cannot and will not say out of respect for obvious reasons pertaining to privileged communications and NDAs, but there are general issues that need to be aired.

Geez, enough with the CYA…get on with it then…

As I stated on The Cube interview, I totally understand VMware’s need to stand-alone and provide security capacities atop their platform; they simply cannot expect to move forward and be successful if they are to depend solely on synchronizing the roadmaps of dozens of security companies with theirs.

However, the continued fumbles and mis-management of the security ecosystem and their partnerships as well as the continued competitive nature of their evolving security suite makes this difficult. Listening to VMware espouse that they are in the business of “security ecosystem enablement” when there are so few actually successful ecosystem partners involved beyond antimalware is disingenuous…or at best, a hopeful prediction of some future state.

Here’s something I wrote on the topic back in 2009: The Cart Before the Virtual Horse: VMware’s vShield/Zones vs. VMsafe APIs that I think illustrates some of the issues related to the perceived “strategy by bumping around in the dark.”

A big point of confusion is that vShield simultaneously describes both an ecosystem program and a set of products, and those products cover more than just the anti-malware capabilities where the bulk of integration is placed today.

Analysts and journalists continue to miss the fact that “vShield” is actually made up of 4 components (not counting the VMsafe APIs):

- vShield Edge

- vShield App

- vShield Endpoint

- vShield Manager

What most people often mean when they refer to “vShield” are the last two components, completely missing the point that the first two products — which are now monetized and marketed/sold as core products for vSphere and vCloud Director — basically make it very difficult for the ecosystem to partner effectively since it’s becoming more difficult to exchange vShield solutions for someone else’s.

An important reason for this is that VMware’s sales force is incentivized (and compensated) on selling VMware security products, not the ecosystem’s — unless of course it is in the way of a big deal that only a partnership can overcome. This is the interesting juxtaposition of VMware’s “good enough” versus incumbent security vendors “best-of-breed” product positioning.

VMware is not a security or networking company, and ignoring the fact that big companies with decades of security and networking products are not simply going to fade away is silly. This is as true of networking as it is of security (see software-defined networking as an example).

Technically, vShield Edge is becoming more and more a critical piece of the overall architecture for VMware’s products — it acts as the perimeter demarcation and multi-tenant boundary in their Cloud offerings and continues to become the technology integration point for acquisitions as well as networking elements such as VXLAN.

As a third party tries to “integrate” a product which is functionally competitive with vShield Edge, the problems start to become much more visible and the partnerships more and more clumsy, especially in the eyes of the most important party privy to this scenario: the customer.

Jon Oltsik wrote a story recently in which he described the state of VMware’s security efforts: “vShield, Cloud Computing, and the Security Industry”

So why aren’t more security vendors jumping on the bandwagon? Many of them look at vShield as a potentially competitive security product, not just a set of APIs.

In a recent Network World interview, Allwyn Sequeira, VMware’s chief technology officer of security and vice president of security and network solutions, admitted that the vShield program in many respects “does represent a challenge to the status quo” … (and) vShield does provide its own security services (firewall, application layer controls, etc.)

Why aren’t more vendors on-board? It’s because of this positioning of VMware’s own security products, which enjoy privileged and unobstructed access to the platform that ISVs in the ecosystem do not have. You can’t move beyond the status quo when there’s not a clear plan for doing so and the past and present are littered with the wreckage of prior attempts.

VMware has its own agenda: tightly integrate security services into vSphere and vCloud to continue to advance these platforms. Nevertheless, VMware’s role in virtualization/cloud and its massive market share can’t be ignored. So here’s a compromise I propose:

- Security vendors should become active VMware/vShield partners, integrate their security solutions, and work with VMware to continue to bolster cloud security. Since there is plenty of non-VMware business out there, the best heterogeneous platforms will likely win.

- VMware must make clear distinctions among APIs, platform planning, and its own security products. For example, if a large VMware shop wants to implement vShield for virtual security services but has already decided on Symantec (Vontu) or McAfee DLP, it should have the option for interoperability with no penalties (i.e., loss of functionality, pricing/support premiums, etc.).

Item #1 sounds easy enough, right? Except it’s not. If the way in which the architecture is designed effectively locks out the ecosystem from equal access to the platform except perhaps for a privileged few, “integrating” security solutions in a manner that makes those solutions competitive and not platform-specific is a tall order. It also limits innovation in the marketplace.

Look how few startups still exist that orbit VMware as a platform. You can count them on fewer fingers than exist on a single hand. As an interesting side-note, Catbird, a company that used to produce its own security enforcement capabilities along with its strong management and compliance suite, has OEM’d VMware’s vShield App product instead of bothering to compete with it.

Now, item #2 above is right on the money. That’s exactly what should happen; the customer should match his/her requirements against the available options, balance the performance, efficacy, functionality and costs and ultimately be free to choose. However, as they say in Maine… “you can’t get there from here…” at least not unless item #1 gets solved.

In a complementary piece to Jon’s, Ellen Messmer writes in “VMware strives to expand security partner ecosystem”:

Along with technical issues, there are political implications to the vShield approach for security vendors with a large installed base of customers as the vShield program asks for considerable investment in time and money to develop what are new types of security products under VMware’s oversight, plus sharing of threat-detection information with vShield Manager in a middleware approach.

…and…

The pressure to make vShield and its APIs a success is on VMware in some respects because VMware’s earlier security APIs, the VMsafe APIs, weren’t that successful. Sequeira candidly acknowledges that, saying, “we got the APIs wrong the first time,” adding that “the major security vendors have found it hard to integrate with VMsafe.”

Once bitten, twice shy…

So where’s the confidence that guarantees it will be easier this time? Besides the anti-malware functionality provided by integration with vShield Endpoint, there’s not really a well-defined ecosystem-wide option for integrating with VMware now. Even VMware’s own roadmaps for integration are confusing. In the case of vCloud Director, while vShield Edge is available as a bundled (and critical) component, vShield App is not!

Also, forcing security products to integrate directly with vShield Manager makes for even more challenges.

There are a handful of security products besides anti-malware in the market based on the VMsafe APIs, which are expected to be phased out eventually. VMware is reluctant to pin down an exact date, though some vendors anticipate end of next year.

That’s rather disturbing news for those companies who have invested in the roadmap and certification that VMware has put forth, isn’t it? I can name at least one such company for whom this is a concern.

Because VMware has so far reserved the role of software-based firewalls and data-loss prevention under vShield to its own products, that has also contributed to unease among security vendors. But Sequeira says VMware is in discussions with Cisco on a firewall role in vShield. And there could be many other changes that could perk vendor interest. VMware insists its vShield APIs are open but in the early days of vShield has taken the approach of working very closely with a few selected vendors.

Firstly, that’s not entirely accurate regarding firewall options. Cisco and Juniper have both had VMware-specific “firewalls” on the market for some time, albeit using different delivery vehicles. Cisco uses the tightly co-engineered effort with the Nexus 1000v to provide access to their VSG offering and Juniper uses the VMsafe APIs for the vGW (née Altor) firewall. The issue is now one of VMware’s architecture for integrating moving forward.

Cisco has announced their forthcoming vASA (virtual ASA) product which will work with the existing Cisco VSG atop the Nexus 1000v, but this isn’t something that is “open” to the ecosystem as a whole, either. To suggest that the existing APIs are “open” is inaccurate and without an API-based capability available to anyone who has the wherewithal to participate, we’ll see more native “integration” in private deals the likes of which we’re already witnessing with the inclusion of RSA’s DLP functionality in vShield/vSphere 5.

Not being able to replace vShield Edge with an ecosystem partner’s “edge” solution is really a problem.

In general, the potential for building a new generation of security products specifically designed for VMware’s virtualization software may be just beginning…

Well, it’s a pretty important step and I’d say that “beginning” still isn’t completely realized!

It’s important to note that these same vendors who have been patiently navigating VMware’s constant changes are also looking to emerging competitive platforms to hedge their bets. Many have already been burned by their experience thus far and see competitive platform offerings from vendors who do not compete with their own security solutions as much more attractive, regardless of how much marketshare they currently enjoy. This includes community and open source initiatives.

Given their druthers, with a stable, open and well-rounded program, those in the security ecosystem would love to continue to produce top-notch solutions for their customers on what is today the dominant enterprise virtualization and cloud platform, but it’s getting more frustrating and difficult to do so.

It’s even worse at the service provider level where the architectural implications make the enterprise use cases described above look like cake.

It doesn’t have to be this way, however.

Jon finished up his piece by describing how the VMware/ecosystem partnership ought to work in a truly cooperative manner:

This seems like a worthwhile “win-win,” as that old tired business cliche goes. Heck, customers would win too as they already have non-VMware security tools in place. VMware will still sell loads of vShield product and the security industry becomes an active champion instead of a suspicious player in another idiotic industry concept, “coopitition.” The sooner that VMware and the security industry pass the peace pipe around, the better for everyone.

The only thing I disagree with is how this seems to paint the security industry as the obstructionist in this arms race. It’s more than a peace pipe that’s needed.

Puff, puff, pass…it’s time for more than blowing smoke.

/Hoff

Related articles

- VMware’s (New) vShield: The (Almost) Bottom Line (rationalsurvivability.com)

- VMware strives to expand security partner ecosystem (infoworld.com)

- VMware preparing data loss prevention features for vShield (infoworld.com)

- Highlights from VMworld 2011 (datacenterknowledge.com)

- Clouds, WAFs, Messaging Buses and API Security… (rationalsurvivability.com)

- AWS’ New Networking Capabilities – Sucking Less (rationalsurvivability.com)

- Sourcefire Enables Application Control Within Virtual Environments (it-sideways.com)

- Using The Cloud To Manage The Cloud (informationweek.com)

- Virtualizing Your Appliance Is Not Cloud Security (securecloudreview.com)

- OpenFlow & SDN – Looking forward to SDNS: Software Defined Network Security (rationalsurvivability.com)


Cloud Computing Events

The East Bay .NET User Group announced on 9/5/2011 a New Day, New Time and New Location for its Azure for Developers presentation by Robin Shahan (@RobinDotNet), Microsoft MVP:

In this talk, Robin will show the different bits of Windows Azure and how to code them. She will explain why you would use each bit, sharing her experience migrating her company’s infrastructure to Azure last year. Topics include:

- SQL Azure: Migrating a database from the local SQL Server to a SQL Azure instance.

- Diagnostics: Adding code to handle the transfer of diagnostics to Table Storage and Blob Storage - tracing, IIS logs, performance counters, Windows event logs, and infrastructure logs.

- Web roles: Creating a Web Role with a WCF service.

- WCF: Adding methods to the service that read and write to/from the SQL Azure database. This includes exponential retry code for SQL Azure connection management.

- Connections: Changing the connection strings and publishing the service to the cloud.

- Clients: Changing a client app to consume the service, showing how to add a service reference and then call it. Changing the client to run against the service in the cloud. Showing the diagnostics using the tools from Cerebrata.

- Queues: Adding a method to the service to submit an entry to the queue. Adding code to initialize the queue as needed.

- Worker roles: Adding code to the worker role to retrieve the entries from the queue and process them.

- Blobs: The method that processes the queue entries writes the messages to blob storage.
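The "exponential retry code" mentioned in the WCF topic above is not shown in the announcement. As a rough illustration of the general technique only (not Robin's code, and not any Azure or SQL client API), here is a minimal Python sketch. `TransientError`, `retry_with_backoff` and `make_flaky_query` are hypothetical names invented for this example, and the delays and 10% jitter are arbitrary assumptions.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical stand-in for a transient connection failure."""


def retry_with_backoff(operation, max_attempts=5, base_delay=0.5,
                       max_delay=8.0, sleep=time.sleep):
    """Call operation(); on TransientError, wait and retry.

    The wait doubles after each failed attempt (base_delay * 2**attempt),
    is capped at max_delay, and gets a little random jitter added.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * (2 ** attempt))
            # Up to 10% jitter so many clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))


def make_flaky_query(failures_before_success):
    """Build a fake query that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    def query():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise TransientError("connection dropped")
        return "rows"

    return query
```

Calling `retry_with_backoff(make_flaky_query(2))` would fail twice, pause roughly 0.5 and then 1 second, and return on the third attempt. Passing a custom `sleep` callable makes the waits easy to observe or skip in tests.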

Speaker: Robin Shahan

Robin is a Microsoft MVP with over 20 years of experience developing complex, business-critical applications for Fortune 100 companies such as Chevron and AT&T. She is currently the Director of Engineering for GoldMail, where she recently migrated their entire infrastructure to Microsoft Azure. Robin regularly speaks at various .NET User Groups and Code Camps on Microsoft Azure and her company's migration experience. She can be found on Twitter as @RobinDotNet and you can read exciting and riveting articles about ClickOnce deployment and Microsoft Azure on her blog at http://robindotnet.wordpress.com

Rob Gillen (@argodev) posted Slides from DevLink 2011 on 9/5/2011:

I had the privilege of speaking at DevLink 2011 a few weeks ago in Downtown Chattanooga, TN. I have been a bit OBE (overcome by events) since I left the conference and have been unable to post my slides until now. I hope to get the videos and other materials up in the coming week or so. If you came to one of these sessions – thanks – the attendance at both was great and I appreciated the questions from the audience.

Intro to GPGPU with CUDA (DevLink)

AWS vs. Azure


Other Cloud Computing Platforms and Services

Joe Brockmeier (@jzb) reported Coming Soon to Engine Yard: MongoDB, PostgreSQL 9 in a 9/5/2011 post to the ReadWriteCloud blog:

Engine Yard is moving well beyond its roots. On August 23rd, the company signed an agreement to acquire Orchestra to add PHP to its Platform-as-a-Service (PaaS) offerings. Now it's announcing plans to add to its database stack.

In a post last week, Engine Yard's Ines Sombra outlined plans to upgrade the MySQL implementation, and expand the database stack to PostgreSQL 9 and MongoDB.

According to Sombra, Engine Yard is going to be upgrading MySQL to 5.1 and 5.5. (You can find the current supported stack on the Engine Yard site.) PostgreSQL 9 is in a limited alpha preview for Engine Yard customers, with plans for a public beta shortly. MongoDB is not yet in alpha, but Sombra writes that Engine Yard has partnered with MongoHQ and MongoLab.

Engine Yard has its work cut out for it keeping up with Heroku, which was acquired by Salesforce.com in December 2010. Heroku has been busy as well, adding Node.js last year and Clojure recently. (Heroku also supports Ruby, of course, and Java.) For datastores, Heroku supports a wide variety – MySQL, MongoDB, CouchDB, Memcache, and PostgreSQL.

After picking up PHP, PostgreSQL, and MongoDB, where do you think Engine Yard should go next?

Kenneth Van Surksum (@kennethvs) reported the availability of Papers: vCloud Architecture ToolKit 2.0 in a 9/5/2011 post to his CloudComputing.info blog:

In June this year, cloudcomputing.info reported that VMware released a set of papers, dubbed the vCloud Architecture Toolkit (vCAT) providing guidance for customers planning to implement their own cloud infrastructure.

Now VMware has updated the vCloud Architecture Toolkit to version 2.0, which contains the following papers:

- Document Map, providing an overview of the documents below

- vCAT introduction, providing considerations when first deploying a cloud strategy.

- Private VMware vCloud Service Definition, providing business and functional requirements for Private cloud architectures including sample use cases

- Public VMware vCloud Service Definition, providing business and functional requirements for Public cloud architectures including sample use cases

- Architecting a VMware vCloud, providing design considerations for architecture

- Operating a VMware vCloud, providing operational considerations for running a vCloud

- Consuming a VMware vCloud, providing organization and user considerations for building and running vApps within a vCloud

- vCloud Implementation Examples:

- Hybrid VMware vCloud Use Case

Or you can download the whole bundle.

Anuradha Shukla reported Tieto Accelerates Time-to-Market with Cloud in a 9/5/2011 post to the CloudTweaks.com blog:

IT service company Tieto is taking SAP and Database platforms to the cloud by introducing two new PaaS (Platform as a Service) offerings.

By leveraging the Dynamic SAP Landscape, enterprises can run applications without spending on expensive hardware or technical expertise. The Dynamic SAP Landscape, Tieto claims, has a tested environment, ready models for implementations and operations, operational processes as well as tools in place.

“Cloud service model is bringing a new level of efficiency and flexibility in all core areas of IT,” said director Mika Tuohimetsä at Tieto’s Managed Services and Transformation. “We see that PaaS has started to catch attention of customers’ executives for its ability to accelerate time-to-market and advantages of cost reduction. New cloud platform services are our answers to those needs.”

Tieto notes that the enterprises investing in this Database platform delivered as a service will be able to enjoy benefits from optimization of hardware resources and make significant savings on software licenses. The company is offering these services 24/7 and emphasizes that Database platform as a service offers high availability for all databases on hardware and application level, as well as horizontal and vertical scaling.

In addition to including robust backup management, the Database platform offers customers the possibility to choose between different levels of disaster recovery. Based in Northern Europe, Tieto provides IT and product engineering services and aims to become a leading service integrator creating the best service experience in IT.

It offers highly specialized IT solutions and services that are complemented by a strong technology platform to create tangible business benefits for its local and global customers.

The company’s PaaS services utilize energy-efficient and highly secured data centre facilities in Finland and Sweden, with advanced cloud service capabilities.

The Cloud Journal staff reported China Telecom Announces Plans for the Cloud on 9/5/2011:

From ChinaTechNews.Com.

“Chinese telecom operator China Telecom has announced its eSurfing cloud computing strategy, brand, and solution, and it plans to launch eSurfing cloud devices and cloud storage products in 2012.

China Telecom established a cloud computing project team in 2009 and the company has completed tests in Shanghai, Guangdong, Guangxi, and Jiangxi. According to Yang Jie, vice general manager of China Telecom, the data center is the core for the scale development of cloud computing. At present, China Telecom has 300 cloud computing rooms, covering a total area of ten million square meters. For the cloud application sector, Yang said China Telecom’s cloud devices and cloud storage products have been put into commercial trial. The formal operation is expected to be started at the beginning of 2012.

Yang also said that network capacity is the foundation for the scale development of cloud computing. At the beginning of 2011, China Telecom launched a project to promote the access of optical fiber network. For network coverage, China Telecom has deployed its network in 342 cities and 2,055 counties, and 90% of villages and towns in China.”

The rest of this article may be found here.

Brian Gracely (@bgracely) posted Thoughts from VMworld 2011 to his Clouds of Change blog on 9/4/2011:

Prior to VMworld, I jotted down some thoughts on areas that I wanted to explore during the week. As I stated then, it feels like we're at a point where there is going to be significant change in many segments of the IT industry.

It was quite a busy week for me, as it was for most people. I didn't get to explore all of the areas in my original list. But I did get to walk the exhibitor floor, attend the keynotes and have many hallway conversations with experts in most of those areas. So here's what I gleaned from the week:

- Recorded The Cloudcast (.NET) - Live - Eps.18 - vCloud and vCloud Security

- Part of a Silicon Angle "Cloud Realities" panel with Jay Fry (@jayfry3) and Matthew Lodge (@mathewlodge)

- Recorded the Daily Blogger Techminute for Cisco

- Recorded an Intel "Conversations in the Cloud" podcast with (@techallyson) - airing date TBD

- Presented "Cisco UCS and Cloud Vision" whiteboard in Intel booth.

VMworld ("the conference"): Of all the events I attend each year, VMworld seems to be the one that does the best job at building interactive communities. Whether it was the outstanding "Hangout Area", huge social media wall, vExpert events or the daily vTweet-up events (often hosted by non-VMware groups), there were always casual opportunities to meet new people and interact around new ideas. Just an awesome collection of brain-power and really great people. Kudos to everyone at VMware for making community a top priority.

The only two suggestions I'd make to the organizers would be:

- Put people's name on both sides of the badge, and in larger fonts. Whether you're meeting someone in a booth, in the hallway or an event, it's good to know who you're talking to (especially in crowds) and all too often the badge is flipped over.

- I heard about many sessions, especially in the new areas (Cloud Application Platforms) where the rooms were under-reserved. While I understand the policy was that all sessions must be pre-booked to attend, it would be great to know when attendance is low and allow first-come-first-seated attendance to those events (full-pass or partial-pass). Just send a tweet 5 minutes before if seats aren't filling up.

VMware Announcements & Strategy: As I watched Steve Herrod's keynote, which covered an incredible range of technologies and business use-cases, it became very clear that VMware has reached a point (that many companies with huge market-share in a certain category reach) where they are going off in many different directions. And as has been the case with many companies before, it's not exactly clear what the identity of the company will be going forward. Back in 2010, I joked that the company should change its name from VMware to CLOUDware. Maybe that wasn't too far off. Or maybe APPSware or SERVICESware would be a better fit. At a macro-level, they are trying to play in all the game-changing markets (virtualization, IaaS, PaaS, SaaS, VDI, mobile) and their portfolio is very impressive. But managing the revenue transitions, since not all those technologies need VMware vSphere, and the broad mix of skills to make it all work are the challenges. It will be interesting to see how this evolves over the next 2-3 years.

vSphere is now a 5th generation technology, which is often (historically in technology cycles) the point where innovation slows and companies either move into broad market-segmentation or they begin to mostly add functionality that previously existed elsewhere (partner or competitive products). vSphere 5 cleared the final hurdle of being able to handle any size, any performance-level VM ("Monster VMs"), so what's left to conquer in the hypervisor space?

VMware Partners:

The partner ecosystem has always been a huge part of VMworld, but this year seemed different for a few reasons:

- As was noted back when the session catalog was announced, the bulk of the sessions were either given by VMware employees or were focused on VMware-only technologies. In the past, it was fairly balanced between VMware and complementary ecosystem discussions.

- Neither Paul's nor Steve's keynote made any significant mention of partners, unlike in the past when partners often came on-stage for demonstrations.

- Many partners expressed concern about the way that the "open API or open plugin" architecture that was so robust and successful with vCenter has not been extended with vCloud Director. This may just be that it wasn't until vCenter 3.5 that plugins started and we're only on vCloud Director 1.5, but the limited guidance or consistency from VMware about how this will evolve did come up in conversations many times.

It's quite possible that the task of appearing impartial to dozens of huge technology partners (Diamond and Gold Sponsors) has resulted in the shift in focus, so it might not be worth reading too much into this. But it will be an interesting area to watch going forward.

Next-Generation End User Computing:

This was probably the area that had the most people excited, at least from a VMware technology perspective. Between Project Octopus (Enterprise DropBox), AppBlast (HTML5 App Client), Horizon Mobile (Mobile Hypervisor) and "Universal Service Broker", it appears that VMware has laid out an architecture for the next-generation end-user experience. As someone who doesn't want IT involved in the desktop/mobile space, I was excited to see the possibilities. But it created some questions in my mind:

- Will this be something that is run by IT, with their history of locking down functionality? Or will it be offered by Cloud Providers directly to Lines of Business or small groups, where they may value flexibility and pace more than the typical control of IT?

- It wasn't really clear how AppBlast works (where are those OS-bound, or Windows applications actually running?), but it did feel like a way to leap-frog past traditional VDI. So are we at the beginning of an era of "legacy-VDI" and "Cloud-VDI" (or "Cloud-Desktop"), where "Cloud" could refer to either the delivery model (in-house or hosted) or the viewing model (in a browser, independent of OS)?

- Enterprise-specific tablets have had a difficult enough time finding success in the market, so did Horizon Mobile just put the final nail in that coffin? It doesn't work with iOS yet (huge gap), but if you're an Enterprise (or Gov't), why not just have a BYOD policy (subsidized or not) and use the mobile hypervisor? The downside is lock-in to the VMware Horizon Manager for AppStore. It also seems like a huge opportunity for some company to fill in the lack-of-iOS support with a product/service/solution.

Next-Generation Applications: This is a very new space for VMware (Spring, vFabric, Cloud Foundry), but it's definitely near and dear to Paul Maritz's heart, as was stated throughout his keynote. There were some announcements around vFabric Data Director as well as partnerships (and micro) for Cloud Foundry (Cloudcast interview with Dave McCrory about Cloud Foundry), but the show is still dominated by infrastructure-centric attendees. While topics like vFabric or Cloud Foundry might be more appropriate topics at conferences like OSCON, GlueCon, LinuxCon or Cloud Camps, VMware might want to take a page from EMC's book (Data Scientist Summit at EMC World) and hold a half-day event specifically for PaaS developers.

Storage: From purely a technology standpoint, Storage got the most buzz at VMworld. Specifically, any storage that involved the words "SSD" and/or "Flash". This has been building for most of 2011, so this wasn't new, but it's starting to reach a point where big things are going to happen. Those things will be M&A activities and huge customer deployment announcements. Fusion-io still gets the most buzz, but there was quite a bit of anticipation about where all-flash arrays like Pure Storage would stick in the market, and where heavyweights like EMC or NetApp would go next. The other two concepts that seemed to get occasional mention were:

- Combined compute + storage solutions that deliver HA/DRS/vMotion capabilities without the use of external SAN arrays.

- The need to move past traditional File/Block methods of describing how data is stored for VMs, and moving to native-VM-aware technologies that aren't bound by the limitations of File/Block overhead.

Networking: VMworld isn't a networking-centric show, like InterOp, so you don't expect a lot of networking to be discussed. But there were a few interesting areas:

- The IETF Draft of VXLAN was announced - jointly written by VMware, Cisco, Arista, Broadcom, Citrix and RedHat. VXLAN provides a L2 overlay for L3 networks, putting control of VM mobility at the edges of the network (hypervisor, vSwitch, vFirewall edge).

- With the VXLAN announcement, many people asked what this meant for existing "VM Mobility in the Network", such as OTV, LISP or the variety of ways that network vendors are attempting to build next-generation Layer 2 (or "flat") networks. I suspect that customers will ultimately use a combination of technologies and architectures. It's not like this is the first time "all the smarts at the edge" approaches have come along. Microsoft tried to put IPsec and SSL in every Windows endpoint to make the network dumb ("and simpler") but that never quite caught on. Maybe this time will be different, or maybe it won't. Only time will tell.

- Cisco and Juniper announced enhancements to their virtual firewall products, but (as mentioned above), it's not 100% clear how the VMware vShield roadmap and/or APIs will allow these to integrate and innovate.
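To make the "L2 overlay for L3 networks" idea in the VXLAN item above a little more concrete, here is a small Python sketch of the encapsulation step. It assumes the 8-byte VXLAN header layout as later standardized (8 flag bits with the VNI-valid bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier, 8 reserved bits). The function names are made up for this illustration, and the outer Ethernet/IP/UDP headers a real endpoint would add are omitted.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value


def pack_vxlan_header(vni):
    """Return the 8-byte VXLAN header for a 24-bit network identifier."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vxlan_header(header):
    """Read the VNI back out of the first 8 bytes of a VXLAN payload."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8


def encapsulate(vni, inner_ethernet_frame):
    """Prefix an inner L2 frame with a VXLAN header.

    In a real deployment this result would travel as the payload of a UDP
    datagram between tunnel endpoints; that outer layer is left out here.
    """
    return pack_vxlan_header(vni) + inner_ethernet_frame
```

The point of the sketch is that the virtual machine's original Ethernet frame rides unchanged inside an ordinary routed packet, with the 24-bit identifier telling the receiving edge which virtual segment the frame belongs to.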

Cloud Management, Automation and Orchestration: Nothing huge here in terms of product announcements, but it was definitely top-of-mind for almost every infrastructure-centric professional I met. There is an urgency to "get smart about Cloud Management, Automation and Orchestration".

They seem to realize that they can either watch their roles get automated, or be the expert on how the automation gets designed and deployed. The trick is figuring out which Management/Automation/Orchestration tools work and how to differentiate them. This still seemed to be an open-ended question for many people.

Huge opportunity for people to create trainings, organize local discussion events, recruit talent, etc.

Interesting Twists: This demo got me thinking quite a bit about the possibilities for bringing data closer to compute, or compute closer to data.

Brian is a cloud evangelist for Cisco.
