Windows Azure, Build and Cloud Computing Posts for 9/12/2011+ (Part II)

| A compendium of Windows Azure, SQL Azure Database, AppFabric, Windows Azure Platform Appliance and other cloud-computing articles. |

Note: This post contains the overflow from Windows Azure and //BUILD/ Posts for 9/12/2011+.

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table and Queue Services

Michael Collier (@MichaelCollier) recommended that you Back Up Your Dev Storage BEFORE Updating to Windows Azure SDK 1.5 in a 9/15/2011 post:

Yesterday Microsoft made available a new version of the Windows Azure SDK. The new Windows Azure SDK 1.5 has many great features, some of which I discussed previously.

One thing that was not apparent to me before upgrading from 1.4 to 1.5 was that, as part of the upgrade, a new development storage database would need to be created and I’d lose access to all the data previously stored in development storage. When you start the storage emulator for the first time after the tooling upgrade, DSINIT should run. DSINIT will create a new development storage database for you. The new database should be named “DevelopmentStorageDb20110816”. You can then connect to it using your favorite storage tool, such as Cerebrata Storage Studio or Azure Storage Explorer. Once connected, you will notice that all the data you had previously stored in dev storage is now gone!

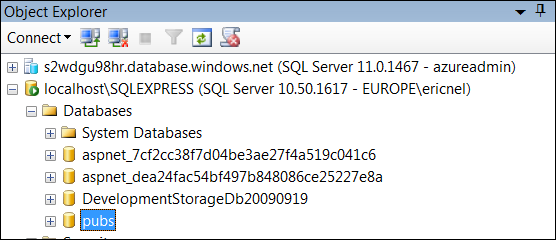

Actually, the data doesn’t appear to be so much “gone” as “I can’t get there from here”. If you take a look at your local SQL Express instance, you’ll likely notice both the new “DevelopmentStorageDb20110816” database and the prior “DevelopmentStorageDb20090919” database. How you can easily get to that data, I’m not yet sure.

What to do now? Your best bet is likely to grab Mr. Fusion and your DeLorean and go back in time to the point before you installed the updated Windows Azure tools. Back up your dev storage data, and then proceed to install the new tools. You can then restore the backup data to the new dev storage. Cerebrata has a nice walkthrough of doing so here (minus the Mr. Fusion and DeLorean part). If you don’t have Mr. Fusion handy, you should also be able to uninstall the 1.5 tools, install the 1.4 tools, back up the data, reinstall the 1.5 tools, and then restore the data.
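If you can still run the old tools, the backup itself can be as simple as dumping every entity to a file. The sketch below is a minimal illustration, not Cerebrata's procedure: it assumes you have already read the entities out of dev storage as plain dicts (carrying the standard PartitionKey and RowKey properties) with whatever tool or SDK you prefer, and that property values are JSON-serializable (convert dates to ISO strings first). `backup_entities` and `restore_entities` are hypothetical helper names.

```python
import json
from typing import Iterable


def backup_entities(entities: Iterable[dict], path: str) -> int:
    """Write one JSON object per line (JSON Lines); return how many entities were saved."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for entity in entities:
            f.write(json.dumps(entity, sort_keys=True) + "\n")
            count += 1
    return count


def restore_entities(path: str) -> dict:
    """Read the backup and index entities by (PartitionKey, RowKey), ready to re-insert."""
    restored = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            entity = json.loads(line)
            restored[(entity["PartitionKey"], entity["RowKey"])] = entity
    return restored
```

Writing the restored entities into the new DevelopmentStorageDb20110816 store is again left to your storage tool; keying by (PartitionKey, RowKey) mirrors how table entities are uniquely identified.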

The lesson here – back up your dev storage data before updating to the latest Windows Azure tools.


SQL Azure Database and Reporting

Eric Nelson (@ericnel) described Backing up SQL Azure with Backup from Red-Gate in a 9/13/2011 post:

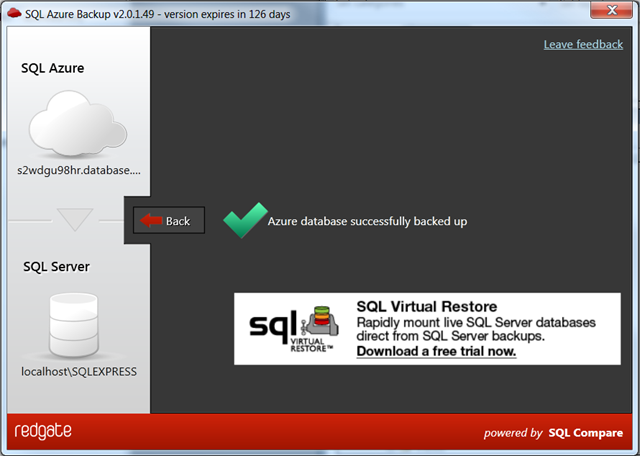

Last week I finally got round to trying out the beta of a new tool from Red-Gate software – SQL Azure Backup. It is an example of one of those tools that just makes you smile: small download, no install, works as advertised and even has a little “character”.

It can back up to SQL Server or to Windows Azure Blob Storage using Microsoft’s Import/Export Service.

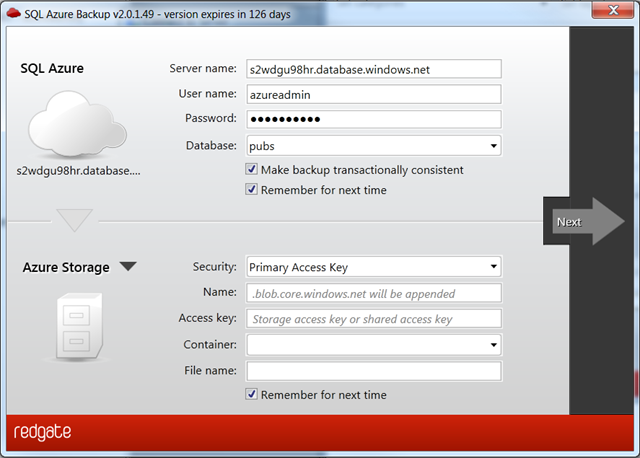

Step 1: Enter your SQL Azure Details

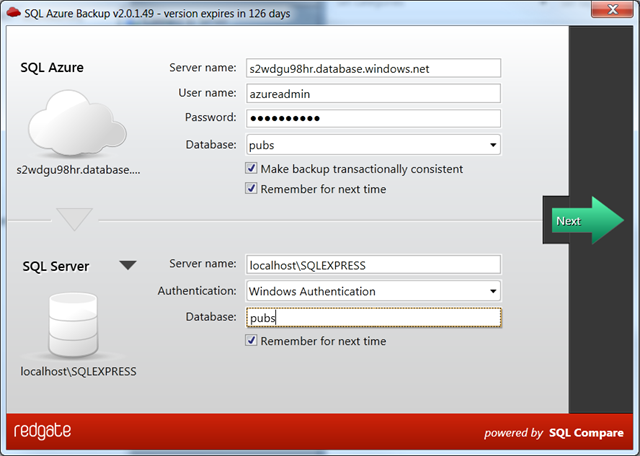

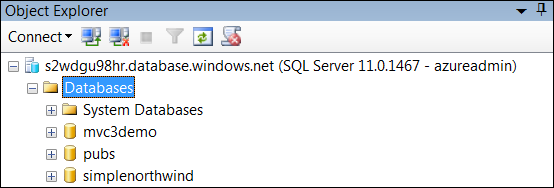

Step 2: Enter details of the target – in this case a local SQL Express

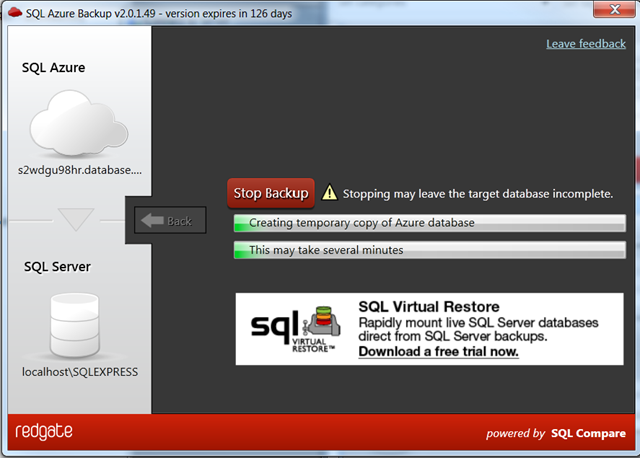

Step 3: Make a cup of tea

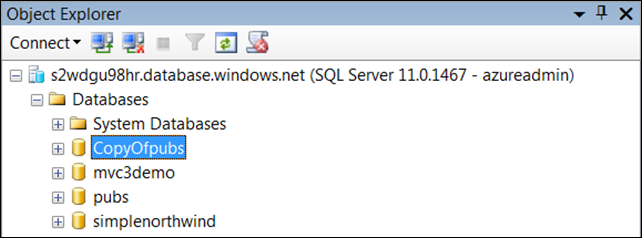

Or, watch what is happening: it creates a new database in SQL Azure for transactional consistency.

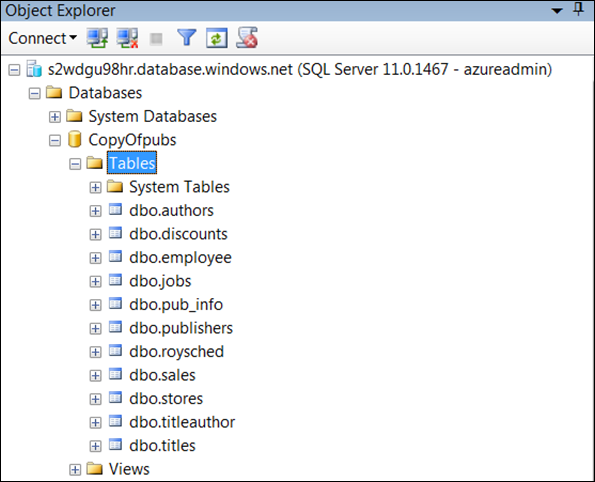

The schema and data are then read from that copy.

The copy is ultimately deleted.

Step 4: Backup is complete

Step 5: And the target database is ready
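The “new database for transactional consistency” step matches SQL Azure's database copy feature, which produces a transactionally consistent snapshot of a database. Assuming the tool works that way (the post does not say how Red Gate implements it), the Transact-SQL involved looks roughly like what this hypothetical Python helper generates; the `_backupcopy_` naming scheme is invented for illustration, and database names are assumed to be trusted input.

```python
from datetime import datetime, timezone


def consistent_backup_plan(source_db: str, now: datetime) -> list:
    """Return the T-SQL statements for a copy-then-export backup (run them in master)."""
    copy_db = f"{source_db}_backupcopy_{now:%Y%m%d%H%M%S}"
    return [
        # 1. Start a transactionally consistent copy of the source database.
        f"CREATE DATABASE [{copy_db}] AS COPY OF [{source_db}];",
        # 2. Poll until the copy has finished (state_desc changes to ONLINE).
        f"SELECT state_desc FROM sys.databases WHERE name = '{copy_db}';",
        # (The client now reads schema and data from the copy, not the live database.)
        # 3. Remove the temporary copy once the export is done.
        f"DROP DATABASE [{copy_db}];",
    ]
```

Reading from a copy rather than the live database means the exported schema and data reflect a single point in time, even while the application keeps writing.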


Gilad Parann-Nissany discussed Database security in the cloud in a 9/13/2011 post to the Porticor blog:

We often get requests for best practices related to relational database security in the context of cloud computing. People want to install their database of choice, whether it be Oracle, MySQL, MS SQL, or IBM DB2…

This is a complex question but it can be broken down by asking “what’s new in the cloud?” Many techniques that have existed for ages remain important, so let’s briefly review database security in general.

Database security in context

A database usually does not stand alone; it needs to be regarded in the light of the environment it inhabits. From the security perspective, it pays to stop and think about:

- Application security. The application which uses the database (“sits atop” the DB) is itself open to various attacks. Securing the application will close major attack vectors to the data, such as SQL injection

- Physical security. In the cloud context, this means choosing a cloud provider that has implemented and documented security best practices

- Network security. Your cloud environment and third-party security software should include network security techniques such as firewalls, virtual private networks, and intrusion detection and prevention

- Host security. In the cloud, your instances (a.k.a. virtual servers) should run an up-to-date, patched operating system with virus and malware protection, and should monitor and log all activities
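Of the application-level vectors, SQL injection is the one most directly aimed at the database. A minimal illustration of the standard defense, parameterized queries, is shown below; Python's built-in sqlite3 module stands in for Oracle, MySQL, MS SQL or DB2, whose drivers offer the same placeholder mechanism with their own syntax. The `users` table is hypothetical.

```python
import sqlite3


def find_user(conn: sqlite3.Connection, name: str) -> list:
    # The ? placeholder sends `name` to the engine as data, never as SQL text,
    # so input such as "x' OR '1'='1" cannot change the query's logic.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


def demo_connection() -> sqlite3.Connection:
    """Build a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn
```

Had the query been built by string concatenation, the injected input would have matched every row.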

Having secured everything outside the database, you are still left with threats to the database itself. They often involve:

- direct attacks on the data itself (in an attempt to get at it)

- indirect attacks on the data (such as at the log files)

- attempts to tamper with configuration

- attempts to tamper with audit mechanisms

- attempts to tamper with the DB software itself (e.g. tamper with the executables of the database software)

So far, these threats are recognizable to any database security expert with years of experience in the data center. So what changes in the cloud?

Data at rest in the cloud

At the end of the day, databases save “everything” on disks, often in files that may represent tables, configuration information, executable binaries, or other logical entities.

Defending and limiting access to these files is of course key. In the “old” data center, this was usually done by placing the disks in a (hopefully) secure location, i.e. in a room with good walls and restricted access. In the cloud, virtual disks are accessible through a browser, and also to some of the employees of the cloud provider; obviously some additional thinking is required to secure them.

Besides keeping your access credentials closely guarded, it is universally recommended that virtual disks with sensitive data should always be encrypted.

There are two basic ways to defend these files:

- File-level encryption. Basically you need to know which specific files you wish to protect, and encrypt them by an appropriate technique

- Full disk encryption. This encrypts everything on the disks

Full disk encryption today is the best practice. It ensures nothing is forgotten.

Encryption keys in the cloud

Encrypting your data at rest on virtual disks is definitely the right way to go. You should also consider where the encryption keys are kept, since an attacker who gets hold of the keys will be able to decrypt your data.

It is recommended to avoid solutions that keep your keys right next to your data, since then you actually have no security.
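One common way to keep keys away from the data is envelope encryption: each record is encrypted with its own data key, only a copy of that key wrapped by a master key is stored beside the data, and the master key lives somewhere else (for example, back in your data center). The sketch below shows that structure only. It is not Porticor's scheme, and its SHA-256 counter-mode keystream is a toy stand-in so the example needs no third-party packages; it has no integrity protection, so use a vetted cipher such as AES-GCM for real data.

```python
import hashlib
import secrets


def _keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || nonce || counter) blocks."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def encrypt_record(master_key: bytes, plaintext: bytes) -> dict:
    data_key = secrets.token_bytes(32)  # per-record key, stored only in wrapped form
    nonce = secrets.token_bytes(16)
    key_nonce = secrets.token_bytes(16)
    return {
        "nonce": nonce,
        "ciphertext": _keystream_xor(data_key, nonce, plaintext),
        "key_nonce": key_nonce,
        "wrapped_key": _keystream_xor(master_key, key_nonce, data_key),
    }


def decrypt_record(master_key: bytes, record: dict) -> bytes:
    # Without the master key (kept away from the disk), the wrapped key is useless.
    data_key = _keystream_xor(master_key, record["key_nonce"], record["wrapped_key"])
    return _keystream_xor(data_key, record["nonce"], record["ciphertext"])
```

Everything in the returned dict can sit on the cloud disk; an attacker who copies the disk still needs the master key.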

It is also recommended to avoid vendors that tell you “don’t trust the cloud, but trust us, and let us save your keys”. There are a number of such vendors in the market. The fact is that cloud providers such as Amazon, Microsoft, Rackspace or Google know their stuff. If you do not trust them with your precious data, why trust cloud vendor X?

One approach that does work from a security perspective – you can keep all your keys back in your data center. But that is cumbersome; in fact you went out to the cloud because you wanted to move out of the data center.

A unique solution does exist. Porticor provides its unique key management solution which allows you to trust no one but yourself, yet enjoy the full power of a pure cloud implementation. For more on this, see this white paper. This solution also fully implements full disk encryption, as noted above.

Database security in the cloud is a complex subject, yet entirely possible today.

About the author: Gilad Parann-Nissany is the founder and CEO of Porticor Cloud Security, a leading security provider for cloud Infrastructure (IaaS) and Platforms (PaaS).


Marketplace DataMarket and OData

No significant articles today.


Windows Azure AppFabric: Apps, Access Control, WIF and Service Bus

No significant articles today.


Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.


Live Windows Azure Apps, APIs, Tools and Test Harnesses

Brian Raymor of the IE Team described the status of Site Ready WebSockets in a 9/15/2011 post:

The Web gets richer and developers are more creative when sites and services can communicate and send notifications in real-time. WebSockets technology has made significant progress over the last nine months. The standards around WebSockets have converged substantially, to the point that developers and consumers can now take advantage of them across different implementations, including IE10 in Windows 8. You can try out a WebSockets test drive that shows real time, multiuser drawing that works across multiple browsers.

What is WebSockets and what does it do?

WebSockets enable Web applications to deliver real-time notifications and updates in the browser. Developers have faced problems in working around the limitations in the browser’s original HTTP request-response model, which was not designed for real-time scenarios. WebSockets enable browsers to open a bidirectional, full-duplex communication channel with services. Each side can then use this channel to immediately send data to the other. Now sites ranging from social networking and games to financial services can deliver better real-time scenarios, ideally using the same markup across different browsers.
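The channel is opened by an ordinary HTTP request carrying `Upgrade: websocket` and a random `Sec-WebSocket-Key`; the server proves it understood by returning a derived `Sec-WebSocket-Accept` value with a 101 Switching Protocols response, after which either side can send at any time. A minimal sketch of that derivation, as specified in the protocol's final form (RFC 6455, published after this post), is:

```python
import base64
import hashlib

# Fixed GUID defined by the WebSocket protocol (RFC 6455, section 1.3).
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Compute the Sec-WebSocket-Accept header a server returns for a client's key."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")
```

For the sample key `dGhlIHNhbXBsZSBub25jZQ==` used in the RFC, the function returns `s3pPLMBiTxaQ9kYGzzhZRbK+xOo=`. The browser's WebSocket API performs this exchange for you; the code only shows what happens on the wire.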

What has changed with WebSockets?

WebSockets have come a long way since we wrote about them in December 2010. At that time, there were a lot of ongoing changes in the basic technology, and developers trying to build on it faced a lot of challenges both around efficiency and just getting their sites to work. The standard is now much more stable as a result of strong collaboration across different companies and standards bodies (like the W3C and the Internet Engineering Task Force).

The W3C WebSocket API specification has stabilized, with no substantive issues blocking last call. The specification has new support for binary message types. There are still issues under discussion, like improving the validation of subprotocols. The protocol is also sufficiently stabilized that it’s now on the agenda of the Internet Engineering Steering Group for final review and approval.
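At the protocol level, text and binary messages differ only in the frame opcode (0x1 for text, 0x2 for binary). The sketch below builds a single, final, unmasked frame of the kind a server sends to a browser, following the base framing of RFC 6455; it is an illustration, not a complete implementation (client-to-server frames must additionally be masked, and fragmentation and control frames are omitted).

```python
import struct

OPCODE_TEXT = 0x1
OPCODE_BINARY = 0x2


def encode_frame(payload: bytes, opcode: int = OPCODE_BINARY) -> bytes:
    """Encode one unfragmented, unmasked (server-to-client) WebSocket frame."""
    header = bytes([0x80 | opcode])  # FIN bit set, no extension bits
    length = len(payload)
    if length < 126:
        header += bytes([length])  # 7-bit length
    elif length < 1 << 16:
        header += bytes([126]) + struct.pack("!H", length)  # 16-bit extended length
    else:
        header += bytes([127]) + struct.pack("!Q", length)  # 64-bit extended length
    return header + payload
```

For example, `encode_frame(b"hi", OPCODE_TEXT)` yields the four bytes `81 02 68 69`.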

Site-ready technologies

The Web moves forward when developers and consumers can rely on technologies to work well. When WebSockets technology was shifting and “under construction,” we used HTML5 Labs as a venue for experimentation and feedback from the community. With a prototype we gain implementation experience that leads to stronger engagement in the working group and the opportunity to collect feedback from the community, both of which ultimately lead to a better, and more stable, design for developers and consumers. We’re excited and encouraged by how HTML5 Labs helped us work with the community to bring WebSockets to where it is today.

Jason Zander (@jlzander) posted Announcing Visual Studio 11 Developer Preview on 9/14/2011:

Today in the BUILD keynote I had the opportunity to show some of the new functionality in Microsoft® Visual Studio® 11 Developer Preview and Microsoft® Team Foundation Server Preview. MSDN subscribers can download the previews today as well as the new release of .NET Framework 4.5 Developer Preview; general availability is on Friday, September 16.

- Download Visual Studio 11, Team Foundation Server 11, and several other components today (MSDN Subscribers Only Downloads).

- Friday at 10:00 AM PDT: Visual Studio 11 and Team Foundation Server 11 become generally available for download.

Some exciting announcements are being made here at BUILD. Visual Studio 11 provides an integrated development experience that spans the entire lifecycle of software creation from architecture to code creation, testing and beyond. This release adds support for Windows 8 and HTML 5, enabling you to target platforms across devices, services and the cloud. Integration with Team Foundation Server enables the entire team to collaborate throughout the development cycle to create quality applications.

.NET 4.5 has focused on top developer requests across all our key technologies, and includes new features for Asynchronous programming in C# and Visual Basic, support for state machines in Windows Workflow, and increased investments in HTML5 and CSS3 in ASP.NET.

We’ve shared a lot at BUILD already; for more on the future of Windows development, I suggest you take a look at Steven Sinofsky’s and S. Somasegar’s blogs. More details on Team Foundation Server, including the new service announced at BUILD and how we’re helping teams be more productive, can be found on Brian Harry’s blog.

Quick Tour around Visual Studio 11 Features

Visual Studio 11 has several new features, all designed to provide an integrated set of tools for delivering great user and application experiences; whether working individually or as part of a team. Let me highlight a few:

Develop Metro style Apps for Windows 8

Visual Studio 11 includes a set of templates that get you started quickly developing Metro style applications with JavaScript, C#, VB or C++. The blank Application template provides the simplest starting point with a default project structure that includes sample resources and images. The Grid View, Split View, and Navigation templates are designed to provide a starting point for more complex user interfaces.

From Visual Studio 11, seamlessly open up your Metro style app with JavaScript in Expression Blend to add the style and structure of your application.

Due to the dynamic nature of HTML it is often difficult to see how a web page is going to look unless it is running. Blend’s innovative interactive design mode enables you to run your app on the design surface as a live app instead of a static visual layout.

Enhancements for Game Development

We have added Visual Studio Graphics tools to help game developers become more productive, making it easier to build innovative games. Visual Studio 11 provides access to a number of resource editing, visual design, and visual debugging tools for writing 2D / 3D games and Metro style applications. Specifically, Visual Studio Graphics includes tools for:

- Viewing and basic editing of 3D models in Visual Studio 11.

- Viewing and editing of images and textures with support for alpha channels and transparency.

- Visually designing shader programs and effect files.

- Debugging and diagnostics of DirectX based output.

Code Clone Analysis

Visual Studio has historically provided tools that enable a developer to improve code quality by refactoring code. However, this process depends on the developer determining where such reusable code is likely to occur. The Code-Clone Analysis tool in Visual Studio 11 examines your solution looking for logic that is duplicated, enabling you to factor this code out into one or more common methods. The tool does this very intelligently; it does not just search for identical blocks of code, but rather searches for semantically similar constructs using heuristics developed by Microsoft Research.

This technique is useful if you are correcting a bug in a piece of code and you want to find out whether the same bug resulting from the same programmatic idiom occurs elsewhere in the project.
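A much simpler cousin of this idea can be sketched in a few lines: normalize every identifier to a placeholder and compare the resulting syntax trees, so code that differs only in naming is flagged as a clone. This is not Microsoft Research's heuristic (it misses reordered or partially edited logic); it uses Python's `ast` module purely to illustrate why matching structure, rather than text, finds duplicates that a plain search would miss. The helper names are hypothetical.

```python
import ast


class _Normalizer(ast.NodeTransformer):
    """Replace variable, parameter and function names with "_" so only structure remains."""

    def visit_Name(self, node):
        return ast.copy_location(ast.Name(id="_", ctx=node.ctx), node)

    def visit_arg(self, node):
        node.arg = "_"
        return node

    def visit_FunctionDef(self, node):
        node.name = "_"
        self.generic_visit(node)
        return node


def fingerprint(source: str) -> str:
    tree = _Normalizer().visit(ast.parse(source))
    return ast.dump(tree, annotate_fields=False)


def are_clones(a: str, b: str) -> bool:
    return fingerprint(a) == fingerprint(b)
```

Two summing loops written with different variable names produce the same fingerprint, so `are_clones` reports them as duplicates.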

Code Review Workflow with Team Explorer

Visual Studio 11 Preview works hand in hand with Team Foundation Server 11 to provide best-in-class application lifecycle management. Visual Studio 11 facilitates collaboration by enabling developers to request and perform code reviews using Team Explorer. This feature defines a workflow in Team Foundation Server that saves project state and routes review requests as work items to team members. These workflows are independent of any specific process or methodology, so you can incorporate code reviews at any convenient point in the project lifecycle.

The Request Review link in the My Work pane enables you to create a new code review task and assign it to one or more other developers.

The reviewer can accept or decline the review, and respond to any messages or queries associated with the code review, add annotations and more. Visual Studio 11 displays the code by using a “Diff” format, showing the original code and the changes made by the developer requesting the review. This feature enables the reviewer to quickly understand the scope of the changes and work more efficiently.
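The “Diff” view a reviewer reads boils down to lines removed from the original and lines added by the change. Visual Studio 11 renders its own side-by-side comparison; as a minimal illustration of the same idea, Python's standard `difflib` can produce a unified diff of a change (`review_diff` is a hypothetical helper name).

```python
import difflib


def review_diff(original: str, changed: str, path: str) -> str:
    """Return a unified diff: '-' lines are the original code, '+' lines the proposed change."""
    return "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            changed.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
        )
    )
```

Only the changed hunks (plus a few lines of context) appear, which is what lets a reviewer grasp the scope of a change quickly.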

Exploratory Testing and Enhanced Unit Testing

As development teams become more flexible and agile, they demand adaptive tools that still ensure a high commitment to quality. The Exploratory Testing feature is an adaptive tool for agile testing that enables you to test without performing formal test planning. You can now directly start testing the product without spending time writing test cases or composing test suites. When you start a new testing session, the tool generates a full log of your interaction with the application under test. You can annotate your session with notes, and you can capture the screen at any point and add the resulting screen shot to your notes. You can also attach a file providing any additional information if required. With the exploratory testing tool you can also:

- File actionable bugs fast. The Exploratory Testing tool enables you to generate a bug report, and the steps that you performed to cause unexpected behavior are automatically included in the bug report.

- Create test cases. You can generate test cases based on the steps that caused the bugs to appear.

- Manage exploratory testing sessions. When testing is complete, you can return to Microsoft Test Manager, which saves the details of the testing session and includes information such as the duration, which new bugs were filed, and which test cases were created.
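The key mechanism is that the session log is recorded as you go, so a bug report or test case can reuse the steps without retyping them. The following hypothetical model (not the Microsoft Test Manager API) sketches that flow.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExploratorySession:
    """Hypothetical session log: every action is recorded as it happens."""

    steps: List[str] = field(default_factory=list)
    notes: List[str] = field(default_factory=list)

    def record(self, action: str) -> None:
        self.steps.append(action)

    def note(self, text: str) -> None:
        self.notes.append(text)

    def file_bug(self, title: str) -> dict:
        # Repro steps are copied from the log automatically.
        return {"title": title, "repro_steps": list(self.steps), "notes": list(self.notes)}

    def to_test_case(self, title: str) -> dict:
        # The same steps, numbered, become a reusable test case.
        return {"title": title, "steps": [f"{i}. {s}" for i, s in enumerate(self.steps, 1)]}
```

Because bugs and test cases are derived from one log, what the tester actually did and what the report says cannot drift apart.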

What’s New in .NET 4.5

.NET 4.5 has focused on our top developer requests. Across ASP.NET, the BCL, MEF, WCF, WPF, Windows Workflow, and other key technologies, we’ve listened to developers and added functionality in .NET 4.5. Important examples include state machine support in Windows Workflow, and improved support for SQL Server and Windows Azure in ADO.NET. ASP.NET has increased investments in HTML5, CSS3, device detection, page optimization, and the NuGet package system, and introduces new functionality with MVC4. Learn more at Scott Guthrie’s blog.

.NET 4.5 also helps developers write faster code. Support for Asynchronous programming in C# and Visual Basic enables developers to easily write client UI code that doesn’t block, and server code that scales more efficiently. The new server garbage collector reduces pause times, and new features in the Parallel Computing Platform enable Dataflow programming and other improvements.
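The asynchronous model means awaiting an I/O operation yields control instead of blocking a thread, so several operations can be in flight at once. C# and Visual Basic express this with `async`/`await`; since this post carries no C# sample, the sketch below shows the same pattern with Python's `asyncio`, using `asyncio.sleep` as a stand-in for a network call.

```python
import asyncio


async def fetch(name: str, delay: float) -> str:
    # Awaiting yields control to the event loop instead of blocking the thread.
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> list:
    # Both "requests" run concurrently: total time is roughly the longest delay, not the sum.
    return await asyncio.gather(fetch("orders", 0.05), fetch("customers", 0.05))
```

Running `asyncio.run(main())` returns `["orders done", "customers done"]`, with results in the order the calls were passed to `gather`.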

Start Coding

Visual Studio 11 includes several new features that will help developers collaborate more effectively while creating exciting experiences for their users. Here are some resources to help you get started.

Andy Cross (@AndyBareWeb) reported a Windows Azure v1.5 W3WP Crash and workaround in this 9/14/2011 post:

I have been updating my Windows Azure projects to the new SDK and ran into a problem. I introduced this issue myself, but it may help a few people if I spell out what it was. All 7 production apps are now running v1.5 with no problems; the error is in a development piece of code (R&D quality).

When tracing using the TraceListener “DevelopmentFabricTraceListener”, the application throws an exception as it tries to write to the Trace listeners.

In Visual Studio, the web.config entry

```xml
<add type="Microsoft.ServiceHosting.Tools.DevelopmentFabric.Runtime.DevelopmentFabricTraceListener, Microsoft.ServiceHosting.Tools.DevelopmentFabric.Runtime, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"
     name="DevFabricListener">
  <filter type="" />
</add>
```

is highlighted and underlined, with the tooltip “Invalid module qualification: Failed to resolve assembly Microsoft.ServiceHosting.Tools.DevelopmentFabric.Runtime”.

In my application, a Web Role, I was tracing using this trace listener, which would throw an exception. In the Application_Error handler in Global.asax I was also tracing this exception, causing another exception to be thrown by the tracing infrastructure. Very quickly this deteriorates into a StackOverflowException and crashes W3WP.

The resolution is to simply comment out this particular TraceListener.

I am yet to discover why the name is failing to resolve (I will update here if I do – or if you find out why please let me know). A new version number would be my guess.
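Beyond removing the broken listener, the general lesson is that an error handler must never let tracing failures feed back into itself. A minimal sketch of a defensive wrapper is below, written in Python rather than .NET; `GuardedTracer` is a hypothetical class, not part of System.Diagnostics, and the author's actual fix was simply commenting out the listener.

```python
import threading


class GuardedTracer:
    """Hypothetical wrapper: tracing failures are swallowed and re-entrant calls are dropped."""

    def __init__(self, listener):
        self._listener = listener
        self._local = threading.local()
        self.dropped = 0

    def trace(self, message: str) -> None:
        if getattr(self._local, "busy", False):
            self.dropped += 1  # already tracing on this thread: refuse to recurse
            return
        self._local.busy = True
        try:
            self._listener(message)
        except Exception:
            self.dropped += 1  # never let a broken listener throw into error handling
        finally:
            self._local.busy = False
```

With this guard, a listener that throws (or that traces back into the tracer) costs one dropped message instead of an unbounded chain of exceptions ending in a stack overflow.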

The Bytes by MSDN blog posted Bytes by MSDN: September 14 - Lynn Langit on 9/14/2011:

Tune in as Dave Nielsen (@davenielsen) and Lynn Langit (@llangit, pictured at right) discuss their work with governments and non-profit organizations. They cover how not-for-profits and government entities can save money by leveraging Cloud Computing. It’s a no-brainer, whether using Software as a Service (SaaS) or expanding storage, Windows Azure can provide a pay-for-what-you-need solution. Watch this conversation with Dave and Lynn to find out how the state of Florida is using the Cloud’s scalability during tax season to save money.

Video Downloads: WMV (Zip) | WMV | iPod | MP4 | 3GP | Zune | PSP

Audio Downloads: AAC | WMA | MP3 | MP4

Lynn Langit and Dave Nielsen recommend you check out

The HPC in the Cloud blog published a GigaSpaces Enables Seamless Deployment of Mission-Critical Java Applications to Windows Azure press release on 9/13/2011:

GigaSpaces Technologies, a pioneer of next generation application platforms for mission-critical applications, is now offering a solution that provides seamless on-boarding for enterprise Java and big-data applications to the Windows Azure platform.

"Customers that have enterprise Java applications can leverage the GigaSpaces solution to help them transition to the Windows Azure platform," said Prashant Ketkar, Director of Product Marketing, Windows Azure at Microsoft. "GigaSpaces is working with Microsoft to give Windows Azure customers deployment, management and monitoring capabilities for running applications in an agile and cost-effective way."

The GigaSpaces solution for Azure leverages the company's decade of experience in delivering application platforms for large-scale, mission-critical Java applications and was recognized at the recent Microsoft Worldwide Partners Conference as a building block for Windows Azure.

Windows Azure enables developers to build, host and scale applications in Microsoft datacenters located around the world. Developers can use existing skills with .NET, Java, Visual Studio, PHP and Ruby to quickly build solutions with no need to buy servers or set up a dedicated infrastructure. The solution also has automated service management to help protect against hardware failure and downtime associated with platform maintenance.

"Our platform enables businesses to move their applications to the cloud with no code change and minimal learning curve -- meaning that they can continue to develop in their traditional environment and leverage existing skills and assets, while gaining the cost and agility benefits of deploying to the Windows Azure cloud," says Adi Paz, EVP Marketing and Business Development at GigaSpaces. "At the same time, we provide enterprises and ISVs the ability to burst into the Windows Azure cloud at peak loads, run their pre-production on Windows Azure and save the costs associated with large-scale system testing."

The GigaSpaces solution for Windows Azure provides the following unique values:

- On-board mission critical enterprise Java/JEE/Spring and big-data applications to Windows Azure: Quickly and seamlessly, with no code change.

- True enterprise-grade production environment: Continuous availability and failover, elastic scaling across the stack and automating the application deployment lifecycle.

- Make Windows Azure services natively available to enterprise Java applications: Through tight integration between the GigaSpaces platform and Azure.

- The GigaSpaces market-leading Java in-memory data grid: Provides extreme performance, low latency and fine-grained multi-tenancy.

- Control and visibility: Built-in application- and cluster-aware monitoring.

- Avoiding vendor lock-in: Enables businesses to maintain existing development practices, and provides support for any application stack.

"GigaSpaces has brought to the Windows Azure implementation more than 10 years of experience in developing and deploying enterprise-grade application platforms for large-scale deployments running mission-critical applications for the world's largest organizations," continues GigaSpaces' Paz. "We look forward to bringing these same benefits to Windows Azure customers, on top of the many benefits of the Windows Azure platform."

To learn more about this integration, visit www.gigaspaces.com/azure, or see the Microsoft-GigaSpaces webcast. Cloudify for Azure will be available in Q4.

About GigaSpaces

GigaSpaces Technologies is the pioneer of a new generation of application virtualization platforms and a leading provider of end-to-end scaling solutions for distributed, mission-critical application environments, and cloud enabling technologies. GigaSpaces is the only platform on the market that offers truly silo-free architecture, along with operational agility and openness, delivering enhanced efficiency, extreme performance and always-on availability. The GigaSpaces offering includes solutions for enterprise application scaling and enterprise PaaS and ISV SaaS enablement that are designed from the ground up to run on any cloud environment -- private, public, or hybrid -- and offers a pain-free, evolutionary path to meet tomorrow's IT challenges.

Hundreds of organizations worldwide are leveraging GigaSpaces' technology to enhance IT efficiency and performance, among which are Fortune Global 500 companies, including top financial service enterprises, e-commerce companies, online gaming providers and telecom carriers.

For more information, please visit http://www.gigaspaces.com, or our blog at http://blog.gigaspaces.com.

Simon Munro (@simonmunro) described running MongoDB on Windows Azure in a 9/13/2011 post:

I was asked via email to confirm my thoughts on running MongoDB on Windows Azure, specifically the implication that it is not good practice. Things have moved along and my thoughts have evolved, so I thought it may be necessary to update and publish my thoughts.

Firstly, I am a big fan of SQL Azure, and think that the big decision to remove backwards compatibility with SQL Server was a good one that enabled SQL Azure to rid itself of some of the problems with RDBMSs in the cloud. But, as I discussed in Windows Azure has little to offer NoSQL, Microsoft is so big on SQL Azure (for many good reasons) that NoSQL is a second-class citizen on Windows Azure. Even Azure Table Storage is lacking in features that have been asked for for years, and if it moves forward, it will do so grudgingly and slowly. That means that an Azure architecture that needs the goodness offered by NoSQL products needs to roll an alternative product into some Azure role of sorts (worker or VM role). (VM Roles don’t fit in well with the Azure PaaS model, but for purposes of this discussion the differences between a worker role and VM role are irrelevant.)

Azure roles are not instances. They are application containers (that happen to have some sort of VM basis) that are suited to stateless application processing; Microsoft refers to them as Windows Azure Compute, which gives a clue that they are primarily to be used for computing, not persistence. In the context of an application container, Azure roles are far more unstable than an AWS EC2 instance. This is both by design and a good thing (if what you want is compute resources). All of the good features of Windows Azure, such as automatic patching and failover, are only possible if the fabric controller can terminate roles whenever it feels like it. (I’m not sure how this termination works, but I imagine that, at least with web roles, there is a process to gracefully terminate the application by stopping the handling of incoming requests and letting the running ones come to an end.) There is no SLA for a single Windows Azure compute instance as there is for an EC2 instance. The SLA clearly states that you need two or more role instances to get 99.95% uptime:

For compute, we guarantee that when you deploy two or more role instances in different fault and upgrade domains your Internet facing roles will have external connectivity at least 99.95% of the time.
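The reason two instances matter is simple arithmetic: if instances failed independently, the chance that all of them are down at once shrinks geometrically with the instance count. The sketch below works that out; independence is an idealization (placing instances in different fault and upgrade domains is meant to approximate it), and this is not how Microsoft computes its SLA.

```python
def combined_availability(per_instance: float, instances: int) -> float:
    """Probability that at least one instance is up, assuming independent failures."""
    return 1 - (1 - per_instance) ** instances
```

For example, two instances that are each up 99% of the time give 1 - 0.01² = 99.99% combined availability under this assumption, which is why a single instance's uptime says little about the service.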

On 4 February 2011, Steve Marx from Microsoft asked Roger Jennings to stop publishing his Windows Azure Uptime Report [emphasis added]:

Please stop posting these. They’re irrelevant and misleading.

To others who read this, in a scale-out platform like Windows Azure, the uptime of any given instance is meaningless. It’s like measuring the availability of a bank by watching one teller and when he takes his breaks.

Think, for a moment, what this means when you run MongoDB in Windows Azure – your MongoDB role is going to be running where the “uptime of any given instance is meaningless”. That makes using a role for persistence really hard. The only way then is to run multiple instances and make sure that the data is on both instances.

Before getting into how this would work on Windows Azure, consider for a moment that MongoDB is unashamedly fast and that speed is gained by committing data to memory instead of disk as the default option. So committing to disk (using ‘safe mode’) or to a number of instances (and their disks) goes against some of what MongoDB stands for. The MongoDB API allows you to specify the ‘safe’ option (or “majority” in 2.0, but more about that later) for individual commands. This means that you can fine-tune when you are concerned about ensuring that data is saved. So, for important data you can be safe, and in other cases you may be able to put up with occasional data loss.
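In current drivers this per-operation choice is expressed as a write concern document with the fields `w` (how many members must acknowledge, or `"majority"`), `j` (wait for the journal) and `wtimeout` (milliseconds). The mapping from kinds of data to levels below is a hypothetical policy, and `{"w": 1, "j": True}` is only a rough modern equivalent of 2011's “safe mode”.

```python
# Write concern documents (real MongoDB fields; the policy itself is hypothetical).
FIRE_AND_FORGET = {"w": 0}                      # unacknowledged, fastest, may lose data
SAFE = {"w": 1, "j": True}                      # acknowledged and journaled by the primary
MAJORITY = {"w": "majority", "wtimeout": 5000}  # acknowledged by most replica-set members


def write_concern_for(kind: str) -> dict:
    """Pick a durability level per kind of write, defaulting to SAFE."""
    policy = {"page_view": FIRE_AND_FORGET, "comment": SAFE, "payment": MAJORITY}
    return policy.get(kind, SAFE)
```

With PyMongo, for instance, such a document can be applied per operation through `collection.with_options(write_concern=WriteConcern(**SAFE))`, so only the important writes pay the durability cost.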

MongoDB (semi-)officially supports Windows Azure with the MongoDB Wrapper, which is currently an alpha release. In summary, per the documentation:

- It allows running a single MongoDB process (mongod) on an Azure worker role with journaling. It also optionally allows for having a second worker role instance as a warm standby for the case where the current instance either crashes or is being recycled for a normal upgrade.

- MongoDB data and log files are stored in an Azure Blob mounted as a cloud drive.

- MongoDB on Azure is delivered as a Visual Studio 2010 solution with associated source files.

There are also some additional screen shots and instructions in the Azure Configuration docs.

What is interesting about this solution is the idea of a ‘warm standby’. I’m not quite sure what that is and how it works, but since ‘warm standby’ generally refers to some sort of log shipping and the role has journaling turned on, I assume that the journals are written from the primary to the secondary instances. How this works with safe mode (and ‘unsafe’ mode) will need to be looked at and I invite anyone who has experience to comment. Also, I am sure that all of this journaling and warm standby has some performance penalty.
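Under the log-shipping assumption above, a warm standby simply replays the primary's journal entries it has not seen yet, which also exposes the weakness: anything written after the last shipment is lost if the primary is recycled first. The toy model below illustrates that assumption only; it is not how the 10gen wrapper is actually implemented, and both classes are hypothetical.

```python
class Primary:
    def __init__(self):
        self.data = {}
        self.journal = []  # append-only log of (key, value) writes

    def write(self, key, value):
        self.journal.append((key, value))
        self.data[key] = value


class WarmStandby:
    def __init__(self):
        self.data = {}
        self.applied = 0  # number of journal entries already replayed

    def ship_from(self, primary):
        # Replay only new entries; writes made after this call are at risk
        # until the next shipment.
        for key, value in primary.journal[self.applied:]:
            self.data[key] = value
        self.applied = len(primary.journal)
```

The gap between shipments is the data-loss window, which is where safe mode and its performance penalty come into play.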

It is unfortunate that there is only support for a standalone mode, as MongoDB really comes into its own when using replica sets (which is the recommended way of deploying it on AWS). One of the comments on the page suggests that they will have more time to work on supporting replica sets in Windows Azure sometime after the 2.0 release, which shipped today.

MongoDB 2.0 has some features that would be useful when trying to get it to work on Windows Azure, particularly the Data Centre Awareness “majority” tagging. This means that a write can be tagged so that it is written across the majority of the servers in the replica set. With MongoDB 2.0, you should be able to run it in multiple worker roles as replicas (not just as a warm standby) and ensure that if any of those roles were recycled, data would not be lost. However, there will still be issues with a recycled instance rejoining the replica set that need to be resolved, and this isn’t easy on AWS either.
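To make the “majority” arithmetic concrete, here is a minimal Python sketch. It is a simplified model, not the MongoDB driver API: the helper names are hypothetical, and it ignores elections, rollback and journaling. It only counts how many replica-set members must acknowledge a write, and how many acknowledged copies remain in the worst case when role instances are recycled.

```python
def majority(members: int) -> int:
    """Number of replica-set members that must acknowledge a write for a strict majority."""
    if members < 1:
        raise ValueError("a replica set needs at least one member")
    return members // 2 + 1


def write_is_majority_acked(members: int, acks: int) -> bool:
    """True if a write acknowledged by `acks` members satisfies the majority rule."""
    return acks >= majority(members)


def copies_left_worst_case(acks: int, recycled: int) -> int:
    """Acknowledged copies that remain if every recycled instance held one of them."""
    return max(acks - recycled, 0)


if __name__ == "__main__":
    for members in (1, 2, 3, 5):
        print(members, "members -> majority of", majority(members))
    # Three worker-role replicas, one recycled: a majority-acked write still has a copy.
    print(copies_left_worst_case(acks=majority(3), recycled=1))  # 1
    # Only one instance acknowledged: recycling it can lose the write.
    print(copies_left_worst_case(acks=1, recycled=1))  # 0
```

In this model, with three instances a majority-acknowledged write survives any single recycle, while a write acknowledged by one instance does not; that is the speed-versus-safety trade-off behind using “safe” or “majority” selectively.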

I don’t think that any Windows Azure application can get by with SQL Azure alone; there are a lot of scenarios where SQL Azure is not suitable. That leaves Windows Azure Table Storage or some other database engine. Windows Azure Table Storage, while well integrated into the Windows Azure platform, is short on features and could be more trouble than it is worth. In terms of other database engines, I am a fan of MongoDB, but there are other options (RavenDB, CouchDB), although they all suffer from the same problem of recycling instances. I imagine that 10gen will continue to develop their Windows Azure Wrapper, and I expect that a 2.0 replica-set-enabled wrapper would be a fairly good option. So at this stage MongoDB should be a safe enough technology bet, but make sure that you use “safe mode” or “majority” sparingly in order to take advantage of the benefits of MongoDB.

My Uptime Report for my Live OakLeaf Systems Azure Table Services Sample Project: June 2011 post was the first uptime report with two Web role instances. The Republished My Live Azure Table Storage Paging Demo App with Two Small Instances and Connect, RDP post of 5/9/2011 described the change from one to two Web role instances for the sample project.

Abel Cruz posted Part I – Moving Microsoft Partner Network’s engine (Partner Velocity Platform – PVP) to the Microsoft® Windows® Azure™ Platform on 9/13/2011:

In 2009 Microsoft released the Windows Azure platform, an operating environment for developing, hosting, and managing cloud-based services. Windows Azure established a foundation that allows customers to easily move their applications from on-premises locations to the cloud. Since then, Microsoft, analysts, customers, partners and many others have been telling stories of how customers benefit from increased agility, a very scalable platform, and reduced costs.

This post is the first in a planned series about Windows® Azure™. I will attempt to show how you can adapt an existing, on-premises ASP.NET application, like the Partner Velocity Platform (PVP), which is the engine that drives all partner-related functions behind the Microsoft Partner Network (MPN), to one that operates in the cloud. The series of posts is intended for any architect, developer, or information technology (IT) professional who designs, builds, or operates applications and services that are appropriate for the cloud. Although applications do not need to be based on the Microsoft® Windows® operating system to work in Windows Azure, these posts are written for people who work with Windows-based systems. You should be familiar with the Microsoft .NET Framework, Microsoft Visual Studio®, ASP.NET, and Microsoft Visual C#®.

Introduction to the Windows Azure Platform

I could spend tons of time duplicating what others have already written about Windows Azure, but I will not. I will, however, provide you with pointers to where you can get great information that will give you a comprehensive introduction to it. Other than that, I will concentrate on adding to what others have written and providing context as it pertains to the migration of the PVP platform to Windows Azure.

Introduction to the Windows Azure Platform provides an overview of the platform to get you started with Windows Azure. It describes web roles and worker roles, and the different ways you can store data in Windows Azure.

Here are some additional resources that introduce what Windows Azure is all about.

There is a great deal of information about the Windows Azure platform in the form of documentation, training videos, and white papers. Here are some Web sites you can visit to get started:

- The portal to information about Microsoft Windows Azure is at http://www.microsoft.com/windowsazure/. It has links to white papers, tools such as the Windows Azure SDK, and many other resources. You can also sign up for a Windows Azure account here.

- The Windows Azure platform Training Kit contains hands-on labs to get you quickly started. You can download it at http://www.microsoft.com/downloads/details.aspx?FamilyID=413E88F8-5966-4A83-B309-53B7B77EDF78&displaylang=en.

- Find answers to your questions on the Windows Azure Forum at http://social.msdn.microsoft.com/Forums/en-US/windowsazure/threads.

In my next post I will set the stage and tell you about the PVP Platform, MPN, and the challenges Microsoft IT faces with the current infrastructure and code behind the PVP platform. We will discuss some of our goals and concerns, as well as the strategy behind the move of PVP to Azure.

Subscribed. Abel is a Principal PM at Microsoft IT Engineering.

Luiz Santos reiterated Microsoft SQL Server OLEDB Provider Deprecation Announcement in a 9/13/2011 post to the ADO.NET Team blog:

The commercial release of Microsoft SQL Server, codename “Denali,” will be the last release to support OLE DB. Support details for the release version of SQL Server “Denali” will be made available within 90 days of release to manufacturing. For more information on Microsoft Support Lifecycle Policies for Microsoft Business and Developer products, please see Microsoft Support Lifecycle Policy FAQ. This deprecation applies to the Microsoft SQL Server OLE DB provider only. Other OLE DB providers as well as the OLE DB standard will continue to be supported until explicitly announced.

It’s important to note that this announcement does not affect ADO’s and ADO.NET’s roadmaps. In addition, while Managed OLEDB classes will continue to be supported, you will be impacted if you are using them to connect to SQL Server through the SNAC OLEDB Provider.

If you use SQL Server as your RDBMS, we encourage you to use SqlClient as your .NET Provider. In case you use other database technologies, we would recommend that you adopt their native .NET Providers or Managed ODBC in the development of new and future versions of your applications. You don’t need to change your existing applications using OLE DB, as they will continue to be supported, but you may want to consider migrating them as a part of your future roadmap.

Microsoft is fully committed to making this transition as smooth and easy as possible. In order to prepare and help our developer community, we will be providing assistance throughout this process. This will include providing guidance via technical documentation as well as tools to jump start the migration process and being available right away to answer questions on the SQL Server Data Access forum.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Beth Massi (@bethmassi) described Filtering Lookup Lists with Large Amounts of Data on Data Entry Screens in a 9/15/2011 post:

First off let me say WOW, it’s great to be back to blog writing! Sorry I have been away for a couple weeks – I’ve been working on a lot of cool stuff internally since I got back from my trip. And I know I have a loooooong list of article and video suggestions from all of you that I need to get to so thanks for bearing with me! Today I’m going to show you another common (and often requested) technique when creating data entry screens.

In my previous posts on data-driven lookup lists (sometimes called “Pick Lists”) I showed a few techniques for formatting, editing and adding data to them. If you missed them:

- How to Create a Multi-Column Auto-Complete Drop-down Box in LightSwitch

- How to Allow Adding of Data to an Auto-Complete Drop-down Box in LightSwitch

In this post I’m going to show you a couple of different ways you can help users select from large sets of lookup list data on your data entry screens. For instance, say we have a one-to-many relationship from category to product, so when entering a new product we need to pick a category from an auto-complete box. LightSwitch generates this automatically for us based on the relation when we create the product screen. We can then format it like I showed in my previous example.

Now say we’ve set up our product catalog of hundreds or even thousands of these products and we need to select from them when creating Orders for our Customers. Here’s the data model I’ll be working with – this illustrates that a Product needs to be selected on an OrderDetail line item. OrderDetail also has a parent OrderHeader that has a parent Customer, just like good ‘ol Northwind.

In my product screen above there are only about 20 categories to choose from so displaying all the lookup list data from the category table in this drop down works well. However, that’s not the best option for the product table with lots of data -- that’s just too much data to bring down and display at once. This may not be a very efficient option for our users either as they would need to either scroll through the data or know the product name they were specifically looking for in order to use the auto-complete box. A better option is to use a modal window picker instead which allows for more search options as well as paging through data. Another way is to filter the list of products by providing a category drop-down users select first. Let’s take a look at both options.

Using a Modal Window Picker

Say I have selected the Edit Details Screen template to create a screen for entering an OrderDetail record. By default, LightSwitch will generate an auto-complete box for the Product. It does the same for the OrderHeader, since this is also a parent of OrderDetail. On this screen, however, I don’t want the user to change the OrderHeader, so I’ll change that to a summary control. I’ll also change the Auto Complete Box control on the Product to a Modal Window Picker:

I also want the products to display in alphabetical order so I’ll create a query called SortedProducts and then at the top of the screen select “Add Data Item” and then choose the SortedProducts query:

Once you add the query to the screen, select the Product in the content tree and set the “Choices” property to “SortedProducts” instead of Auto.

You can also fine-tune how many rows of data will come down per page by selecting the SortedProducts query and then setting the number of items to display per page in the properties window. By default 45 rows per page are brought down.
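Conceptually, the picker works with one page of the sorted query at a time rather than the whole product table. The Python sketch below only illustrates that page arithmetic with the default of 45 rows per page; it assumes an already-sorted in-memory list and hypothetical helper names, and it is not how LightSwitch itself is implemented.

```python
from math import ceil
from typing import Sequence, TypeVar

T = TypeVar("T")

DEFAULT_PAGE_SIZE = 45  # LightSwitch's default number of rows per page


def page_count(total: int, page_size: int = DEFAULT_PAGE_SIZE) -> int:
    """How many pages are needed to show `total` rows."""
    if page_size < 1:
        raise ValueError("page_size must be positive")
    return ceil(total / page_size)


def page_of(items: Sequence[T], page_index: int, page_size: int = DEFAULT_PAGE_SIZE) -> list[T]:
    """Return one zero-based page of an already-sorted sequence."""
    if page_index < 0 or page_size < 1:
        raise ValueError("page_index must be >= 0 and page_size must be positive")
    start = page_index * page_size
    return list(items[start:start + page_size])


if __name__ == "__main__":
    # Hypothetical stand-in for a SortedProducts query over 1,000 products.
    products = sorted(f"Product {i:04d}" for i in range(1000))
    print(page_count(len(products)))   # 23
    print(len(page_of(products, 0)))   # 45
    print(len(page_of(products, 22)))  # 10 (the last, partial page)
```

In this model only 45 of the 1,000 rows are handled per page, instead of every choice at once as with an unfiltered auto-complete box.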

Now hit F5 to run the application and see what you get. Notice that when you run the screen you can now select the ellipsis next to Product, which brings up the Modal Window Picker. Here users can search and page through the data. Not only does this make it easier for users to find what they are looking for, a Modal Window Picker is also the most efficient option on the server.

Using Filtered Auto-Complete Boxes

Another technique is to use one auto-complete box as a filter for the next. This limits the number of choices that need to be brought down and displayed to the user. This technique is also useful if you have cascading filtered lists where the first selection filters data in the second, which filters data in the next, and so forth. Data can come from either the same table or separate tables like in my example; all you need is to set up the queries on your screen correctly so that they are filtered by the proper selections.

So going back to the OrderDetail screen above, set the Product content item back to an Auto Complete Box control. Next we’ll need to add a data item to our screen for tracking the selected category. This category we will use to determine the filter on the Product list so that users only see products in the selected category. Click “Add Data Item” again and this time add a Local Property of type Category called SelectedCategory.

Next, drag the SelectedCategory over onto the content tree above the Product. LightSwitch will automatically create an Auto Complete Box control for you.

If you also want to sort your categories list, do it the same way we did with products: create a query that sorts how you like, add the data item to the screen, and then set the Choices property from Auto to the query.

Now we need to create a query over our products that filters by category. There are two ways to do this: you can create a new global query called ProductsByCategory or, if this query is only going to be used for this specific screen, you can just click Edit Query next to the SortedProducts query we added earlier. Let’s just do it that way. This opens the query designer, which allows you to modify the query locally here on the screen. Add a parameterized filter on Category.Id by clicking the +Filter button; then in the second drop-down choose Category.Id, in the fourth drop-down select Parameter, and in the last drop-down choose “Add New…” to create a parameterized query. You can also make this parameter optional or required. Let’s keep this required so users must select the category before any products are displayed.

Lastly we need to hook up the parameter binding. Back on the screen select the Id parameter that you just created on the SortedProducts query and in the properties window set the Parameter Binding to SelectedCategory.Id. Once you do this a grey arrow on the left side will indicate the binding.

Once you set the value of a query parameter, LightSwitch will automatically execute the query for you, so you don’t need to write any code for this. Hit F5 and see what you get. Notice now that the Product drop-down list is empty until you select a Category, at which point the selection feeds the SortedProducts query and executes it. Also notice that if you make a product selection and then change the category, the selection is still displayed correctly; it doesn’t disappear. Just keep in mind that any time a user changes the category, the product query is re-executed against the server.
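The behavior of the required Id parameter can be modeled outside LightSwitch with a small Python sketch. Everything here is hypothetical (the dataclasses, the Northwind-style sample rows and the function name); it simply mirrors a SortedProducts query filtered on Category.Id, where an unset required parameter yields no rows.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Category:
    id: int
    name: str


@dataclass(frozen=True)
class Product:
    name: str
    category: Category


def sorted_products_by_category(products: list[Product], category_id: Optional[int]) -> list[Product]:
    """Model of SortedProducts with a required Category.Id parameter.

    No selected category (None) means the required parameter is unset, so the
    query returns nothing and the Product box stays empty.
    """
    if category_id is None:
        return []
    matches = [p for p in products if p.category.id == category_id]
    return sorted(matches, key=lambda p: p.name)  # alphabetical, like SortedProducts


if __name__ == "__main__":
    beverages = Category(1, "Beverages")
    condiments = Category(2, "Condiments")
    catalog = [Product("Chang", beverages), Product("Aniseed Syrup", condiments), Product("Chai", beverages)]

    print(sorted_products_by_category(catalog, None))  # [] until a category is picked

    selected_category = beverages  # the user picks a category, so the query runs again
    print([p.name for p in sorted_products_by_category(catalog, selected_category.id)])  # ['Chai', 'Chang']
```

Each change of the selected category means a fresh call here, just as each change on the screen re-executes the product query against the server.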

One additional thing that you might want to do is to initially display the category to which the product belongs. As it is, the Selected Category comes up blank when the screen is opened. This is because it is bound to a screen property which is not backed by data. However we can easily set the initial value of the SelectedCategory in code. Back in the Screen Designer drop down the “Write Code” button at the top right and select the InitializeDataWorkspace method and write the following:

```vb
Private Sub OrderDetailDetail_InitializeDataWorkspace(saveChangesTo As List(Of IDataService))
    ' Start with the category of the product already on this OrderDetail (if any)
    ' so the Selected Category box is not blank when the screen opens.
    If Me.OrderDetail.Product IsNot Nothing Then
        Me.SelectedCategory = Me.OrderDetail.Product.Category
    End If
End Sub
```

Now when you run the screen again, the Selected Category will be displayed.

Using Filtered Auto-Complete Boxes on a One-to-Many Screen

The above example uses a simple screen that is editing a single OrderDetail record – I purposely made it simple for the lesson. However in real order entry applications you are probably going to be editing OrderDetail items at the same time on a one-to-many screen with the OrderHeader. For instance the detail items could be displayed in a grid below the header.

Using a Modal Window Picker is a good option for large pick lists that you want to use in an editable grid or on a one-to-many screen where you are editing a lot of the “many”s like this. However using filtered auto-complete boxes inside grid rows isn’t directly supported. BUT you can definitely still use them on one-to-many screens, you just need to set up a set of controls for the “Selected Item” and use the filtered boxes there. Let me show you what I mean.

Say we create an Edit Detail Screen for our OrderHeader and choose to include the OrderDetails. This automatically sets up an editable grid of OrderDetails for us.

Change the Product in the Data Grid Row to a Modal Window Picker and you’re set – you’ll be able to edit the line items and use the Modal Window Picker on each row. However in order to use the filtered drop downs technique we need to create an editable detail section below our grid. On the content tree select the “children” row layout and then click the +Add button and select Order Details – Selected Item.

This will create a set of fields below the grid for editing the selected detail item (it will also add Order Header, but since we don’t need that field here you can delete it). I’m also going to make the Data Grid Row read-only by selecting it and, in the Properties window, checking “Use Read-only Controls”, as well as remove the “Add…” and “Edit…” buttons from the Data Grid command bar. I’ll add an “AddNew” button instead. This means that modal windows won’t pop up when entering items; instead we will do it in the controls below the grid. You can make all of these changes while the application is running in order to give you a real-time preview of the layout. Here’s what my screen looks like now in customization mode.

Now that we have our one-to-many screen set up the rest of the technique for creating filtered auto-complete boxes is almost exactly the same. The only difference is the code you need to write if you want to display the Selected Category as each line item is edited. To recap:

- Create a parameterized query for products that accepts an Id parameter for Category.Id

- Add this query to the screen (if it’s not there already) and set it as the Choices property on the Product Auto Complete Box control

- Add a data item of type Category to the screen for tracking the selected category

- Drag it to the content tree above the Selected Item’s Product to create an Auto Complete Box control under the grid

- Set the Id parameter binding on the product query to SelectedCategory.Id

- Optionally write code to set the Selected Category

- Run it!

The only difference when working with collections (the “many”s) is step 6 where we write the code to set the Selected Category. Instead of setting it once, we will have to set it anytime a new detail item is selected in the grid. On the Screen Designer select the OrderDetails collection on the left side then drop down the “Write Code” button and select OrderDetails_SelectionChanged. Write this code:

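```vb
Private Sub OrderDetails_SelectionChanged()
    ' Each time a different line item is selected in the grid, sync the
    ' Selected Category box to that item's product category.
    If Me.OrderDetails.SelectedItem IsNot Nothing AndAlso Me.OrderDetails.SelectedItem.Product IsNot Nothing Then
        Me.SelectedCategory = Me.OrderDetails.SelectedItem.Product.Category
    End If
End Sub
```

Wrap Up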

In this article I showed you a couple techniques available to you in order to display large sets of lookup list data to users when entering data on screens. The Modal Window Picker is definitely the easiest and most efficient solution. However sometimes we need to really guide users into picking the right choices and we can do that with auto-complete boxes and parameterized queries.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

Karl Shiflett (@kdawg02) provided several useful tips for the Windows 8 Developer Preview in his Windows 8 Gives New Life to Older Hardware post of 9/18/2011:

Like most of you, I’ve been wonderfully surprised by the Microsoft BUILD conference this last week. The delivered software and presentations to help us get started with Windows 8 far exceeded any expectations I had.

To try and add anything to what has been clearly communicated would be foolish on my part. Instead let me tell you about my “Lazarus” experience this week.

I’ve been eyeing the Asus EP121 for several weeks now. I got to play with one at the Bellevue Microsoft Store. This is one sweet unit.

Well, I have a dusty HP tm2 TouchSmart laptop/tablet. It has a Core i3 1.2GHz CPU, 4GB of memory, an integrated graphics card and a slow 5,400rpm drive. My thinking was, if I can pull a Lazarus on this computer for 6-12 months, I’ll save myself the $1,000 now and wait for next-generation hardware with a fast CPU, SSD, HD screen, etc.

I did use the HP tm2 for Windows Phone 7 development and OneNote note taking. It was kind of slow, especially compared to other modern hardware.

The slowness was not attributed to Windows 7, but rather to lame hardware.

PC hardware manufacturers, please start making decent hardware that competes with Apple’s hardware, and PLEASE stop putting crapware on my new PC. All crapware should be an opt-in line item.

I need to move off this topic before I go into a tirade.

On the good side, one of the keynotes at BUILD showed new hardware coming soon that looks like the MacBook Air, metal, thin, etc. At last. Please offer good components in your units, I’ll pay for them.

So I replaced the first-generation 5,400rpm hard drive with a 7,200rpm second-generation SATA drive. I was getting just a little excited about breathing new life into my laptop.

Lazarus!

Following simple directions on Scott Hanselman’s blog, I loaded the Win8 Preview onto a USB drive.

When I booted the laptop, I changed the default boot device to the USB drive so I could install Windows. When Windows restarts, don’t forget to change the default boot device back to your hard drive.

Installation took 12 minutes; Windows, Visual Studio, demo applications, etc. Core i3 and a decent disk, still respectable.

The laptop boots very quickly, applications are responsive and fun to use. I have not installed Office yet, but will soon. For now, just learning to get around Windows 8 and how to write Metro XAML apps.

Scud Missile

After I logged in, I ran Windows Update, and one of the items installed was “Microsoft IntelliPoint 8.2 Mouse Software for Windows – 64 bit”. This update caused touch to quit working on my laptop, so I used Add/Remove Programs to uninstall it, rebooted and got touch working again.

Visual Studio XAML Designer Patch

You need to install a patch published by the Expression Team to correct a mouse issue with the designer.

https://connect.microsoft.com/VisualStudio/Downloads/DownloadDetails.aspx?DownloadID=38599

After downloading, don’t forget to “Unblock” the .zip file. The instructions left this out.

You MUST follow the installation instructions; most importantly, you must install the patch as an administrator.

The fun part will be trying to figure out how to open an Administrator Command Prompt. I could not figure out how to do this using the Metro interface. So… I opened Windows Explorer in the Desktop, navigated to the \Windows\system32 folder, right-clicked on the cmd.exe file and selected “Run as Administrator.” While you’re at it, go ahead and pin that Administrator Command Window to the taskbar; problem solved.

Getting Around Windows 8

Since you probably won’t be writing code using your touch-screen keyboard, you’ll want to get up to speed on Windows 8 keyboard shortcuts. The following blog post is being recommended by several people on Twitter, so I’ve included it here as well.

http://www.winrumors.com/windows-8-tips-and-tricks-for-mousekeyboard-users/

Before Your First Project

Before you dive into your first Metro project, take time and watch some of the BUILD videos. If you only watch one video, watch this one: http://channel9.msdn.com/events/BUILD/BUILD2011/BPS-1004. Jensen Harris clearly explains Metro and the thinking behind it. He is also one of the best presenters at BUILD and connects with the audience and viewers alike.

Video

The video below shows my HP tm2 after the Lazarus operation. It’s short (3 minutes) and gives you a good feel for how a Core i3 runs Windows 8.

Close

These are good times for Windows developers.

For me, I’m finishing up my WPF/Prism BBQ Shack program and will move the cash register and online purchasing modules to Metro. Metro is perfect for a touch screen cash register. This will be so much fun to write.

Karl works on the Microsoft p&p Prism, Phone and Web Guidance teams.

Doug Seven clarified the relationship between the Windows 8 Platform, the CLR and Development Tools in his A bad picture is worth a thousand long discussions post of 9/15/2011:

While here at BUILD I’ve been in lots of conversations with customers, other attendees, Microsoft MVPs, Microsoft Regional Directors, and Microsoft engineering team members. One of the recurring topics that I’ve been talking about ad nauseam is the “boxology” diagram of the Windows 8 Platform and Tools (shown here).

Now let me tell you, I have drawn a lot of these “marketecture” diagrams in my time, and it’s not easy. These kinds of diagrams are never technically accurate. There is simply no easily digestible way to make a technically accurate diagram for a complex system that renders well on a slide and is easy to present and explain. The end result is that you create a diagram that is missing a lot of boxes, that is, missing a lot of the actual technology that is present in the system. Unfortunately, that is exactly what has happened here: the Windows 8 boxology is missing some of the actual technology that is present.

One of the conversations that has come up is around the missing box in the “green side” (aka Metro style apps) for the .NET Framework and the CLR. Do VB and C# in Metro style apps compile and run directly against the WinRT? Is this the end of the .NET Framework?

Others who have done some digging into the bits are wondering if there are two CLRs. What the heck is going on in Windows 8?

I spent some time with key members of the .NET CLR team last night (no names, but trust me when I say, these guys know exactly how the system works), and here’s the skinny.

Basic Facts:

- There is only one CLR. Each application or application pool (depending on the app type) spins up a process, and the CLR is used within that process. This means that a Metro style app and a Desktop Mode app running at the same time are using the same CLR bits, but separate instances of the CLR.

- The .NET Framework 4.5 is used in both Desktop Mode apps and Metro style apps. There is a difference, though. Metro style apps use what is best described as a different .NET Profile (e.g. Desktop apps use the .NET Client Profile and Metro style apps use the .NET Metro Profile). There is NOT actually a different profile, but the implementation of .NET in Metro style apps is LIKE a different profile. Don’t go looking for this profile; it’s basically rules built into the .NET Framework and CLR that define what parts of the framework are available.

- Whether a Desktop Mode app or a Metro style app, if it is a .NET app, it is compiled to the same MSIL. There isn’t a special Windows 8 Metro IL – there is, like the CLR, only one MSIL.

A More Accurate Picture

A more correct diagram (but still marketecture that is not wholly technically accurate) would look like this:

In this diagram you can see that the CLR and the .NET Framework 4.5 are used for C# and Visual Basic apps in either Desktop Mode apps (blue side) or Metro style apps (green side). Silverlight is still only available in Desktop Mode as a plug-in to Internet Explorer (yes, out-of-browser is still supported in Desktop Mode). Another addition in this diagram is DirectX, which was strangely absent from the original diagram. DirectX is the de facto technology for high-polygon-count applications, such as immersive games. DirectX leverages the power of C++ and can access the GPU.

The biggest confusion, as I mentioned, has been around the use of the .NET Framework across the blue side and green side. The reason for what I call the .NET Metro Profile is that Metro style apps run in an app container that limits what the application can access, in order to protect the end user from potentially malicious apps. As such, the Metro Profile is a subset of the .NET Client Profile and simply takes away some of the capabilities that aren’t allowed by the app container for Metro style apps. Developers used to .NET will find accessing the WinRT APIs very intuitive; it works similarly to having an assembly reference and accessing the members of said referenced assembly.

Additionally, some of the changes in the Metro Profile are to ensure Metro style apps are constructed in the preferred way for touch-first design and portable form factors. An example is File.Create(). Historically, if you were using .NET to create a new file, you would use File.Create(string fileLocation) to create the new file on the disk, then use a stream writer to write the contents of the file as a string. This is a synchronous operation: you make the call and the process stalls while you wait for the return. The idea of modern Metro style apps is that asynchronous programming practices should be used to cut down on things like I/O latency, such as that created by file system operations. What this means is that the .NET Metro Profile doesn’t provide access to File.Create() as a synchronous operation. Instead, you can still call File.Create() (or File.CreateNew()…I can’t recall right now) as an asynchronous operation. Once the callback is made, you can still open a stream reader or writer and work with the file contents as a string, just like you would have.
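The synchronous-versus-asynchronous point is not specific to WinRT. As a loose analogy only (Python’s asyncio, not the WinRT or .NET Metro Profile API, with hypothetical function names), the sketch below hands the blocking create-and-write to a worker thread and awaits it, so the caller’s event loop, standing in for a UI thread, is not stalled while the file system work completes.

```python
import asyncio
import tempfile
from pathlib import Path


def _create_and_write(path: Path, text: str) -> None:
    """Blocking file-system work: create a new file and write its contents."""
    with open(path, "x", encoding="utf-8") as f:  # mode "x" fails if the file already exists
        f.write(text)


async def create_file_async(path: Path, text: str) -> Path:
    """Await the blocking work on a worker thread instead of stalling the event loop."""
    await asyncio.to_thread(_create_and_write, path, text)  # requires Python 3.9+
    return path


async def main() -> str:
    with tempfile.TemporaryDirectory() as folder:
        created = await create_file_async(Path(folder) / "note.txt", "hello, Metro")
        # The "callback" point: the file now exists and its contents can be read back.
        return created.read_text(encoding="utf-8")


if __name__ == "__main__":
    print(asyncio.run(main()))  # hello, Metro
```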

Ultimately all of this means that you have some choice, but you don’t have to sacrifice much if anything along the way. You can still build .NET and Silverlight apps the way you are used to, and they will run on Windows for years to come. If you want to build a new Metro style app, you have four options to choose from:

- XAML and .NET (C# or VB): You don’t have to give up too much of the .NET Framework (remember, you only give up what is forbidden by the Application Container), and you get access to WinRT APIs for sensor input and other system resources.

- XAML and C++: You can use your skills in XAML and C++ in order to leverage (or even extend) WinRT. Of course you don’t get the benefit of the .NET Framework, but hey… some people like managing their own garbage collection.

- HTML and JavaScript: You can leverage the skills you have in UI layout, and make calls from JavaScript to WinRT for access to system resources and sensor input.

- DirectX and C++: If you’re building an immersive game, you can use DirectX and access the device sensors and system resources through C++ and WinRT.

Hopefully this adds some clarity to the otherwise only slightly murky picture that is the Windows 8 boxology.

Don’t forget to check out Telerik.com/build.

Doug is Executive VP at Telerik.

Jason Perlow (@jperlow) asked Is Windows Server 8: The Ultimate Cloud OS? in a 9/14/2011 post to ZDNet’s Tech Broiler blog:

Since last Thursday, I have been under strict nondisclosure orders to keep my mouth shut. And that was really hard for me to do, because I could barely contain my enthusiasm for what is probably the most significant server operating system release that Microsoft has ever planned to roll out.

Nothing from Microsoft, and I mean literally nothing, has ever been this ambitious or has tried to achieve so much in a single server product release since Windows 2000, when Active Directory was first introduced.

Last week, a group of about 30 computer journalists were invited to Microsoft’s headquarters in Redmond to get an exclusive two-day preview of what is tentatively being referred to as “Windows Server 8”.

So much was covered over the course of those two days that the caffeine-fueled and sleep-deprived audience was sucking feature and functionality improvements through a proverbial fire-hose.

We weren’t given any PowerPoints or code to take home — that material will be reserved for after the BUILD conference taking place in Anaheim this week, and I promise to get you galleries and demos of the technology as soon as I can.

[UPDATE: I now have the PowerPoints, and I'll be updating the content of this article to reflect the comprehensive feature list.]

Still, I did take enough notes to give you a brief, albeit far from complete, overview of the server OS that Microsoft is likely to ship within the next year. And it will definitely make huge waves in the enterprise space, I guarantee.

It’s not fully known if “Windows Server 8″ is just a working title or if it is the actual product name, but what was shown to us in the form of numerous demos and about 20 hours of PowerPoints will be the Server OS that will replace Windows Server 2008 R2.

Server 8 will unleash a massive tsunami of new features specifically targeted at building and managing infrastructure for large multi-tenant Clouds, along with drastically improved scalability and reliability in the areas of Virtualization, Networking, Clustering and Storage, and significant security enhancements.

Frankly, I am amazed by the amount of features — numbering in the hundreds — that have been added to this product, and how many are actually working right now given the Alpha-level code we were shown. In all the demos, very few glitches occurred, and much of the underlying code and functionality appears to be very mature.

My perception, based on the maturity of the code we were shown, is that these features have been under development for several years, possibly going back as far as the Windows Vista timeframe. That leads me to believe a great deal of this work was left on the cutting-room floor for the Server 2008 and Server 2008 R2 releases, held back by the Server group's top brass until it was truly ready for prime time.

We did see some new UI improvements, namely the new Server Manager, which is designed to replace much of the MMC drill-down and its associated snap-ins. It is aimed at sysadmins who need to manage multiple views of a large number of systems simultaneously, based on the actual services and roles running on the managed systems, using a “Scenario-Driven” user interface.

However, a lot of what we saw in terms of actual look-and-feel was just standard old-school Windows UI, and a lot of PowerShell.

In fact, I would say that Microsoft is pushing PowerShell really hard to sysadmins because you can actually get some very sophisticated tasks done in only a single command, such as migrating one or multiple virtual machines to another host, or altering storage quotas.

Thousands of PowerShell “cmdlets” have already been written to take advantage of the scripting functionality and heavy automation that large-scale Windows Server 8 and Cloud deployments will require.

This is not to say Windows Server 8 will be going all command-line Linux-y. There will be significant new UI pieces, but Microsoft appears to have done its software development in reverse this time around: build the API layers and underlying engines first, then write the UI layers that interface with them afterwards.

They’ve got a year now to polish the UI elements, a number of which, we were told, share some commonality with the “Metro” UI shown at BUILD for the Windows 8 client. As I said, we didn’t get to see them at the special Reviewers Workshop, but I’ll show them to you as soon as I am able.

Microsoft also stressed that the APIs for many of the new features, including the entire management API, will be opened to third-party vendors so they can integrate with them and write their own UIs.

One way they are going to do this is by releasing a completely portable, brand-new Web-Based Enterprise Management (WBEM) CIM server for Linux called NanoWBEM, written by one of the main developers of OpenPegasus. It has been designed to work with Windows Server 8’s new management APIs, so that vendors can build that functionality into their products via a common provider interface.

While not Open Source per se, NanoWBEM will be readily licensable to other companies, which is a big step for Microsoft in opening up interfaces into Windows management.

Windows Management Instrumentation (WMI) has also been enhanced considerably: it can now talk to WS-Management (WS-MAN) or DCOM directly, which makes it much easier for developers to write new WMI providers.

As is to be expected of a Cloud-optimized Windows Server release, many of the enhancements come in the form of improvements to Hyper-V. And boy, are they big ones.

For starters, Hyper-V will now support up to 32 virtual processors and 512GB of RAM per VM. To accommodate larger virtual disks, a new virtual disk format, .VHDX, will be introduced, allowing virtual disk files larger than 2 terabytes.

Not impressed? How about 63-node Hyper-V clusters that can run up to 4,000 VMs simultaneously? No, I’m not joking. They actually showed it to us, for real, and it worked flawlessly.

Live Migration in Hyper-V has also been greatly enhanced, to the point where clustered storage is no longer required to perform a VM migration.

Microsoft demonstrated the ability to literally “beam” — a la Star Trek — a virtual machine between two Hyper-V hosts with only an IP connection.

A VM stored on a developer’s laptop hard disk and running on Hyper-V was sent over Wi-Fi to another Hyper-V server without any downtime; all we saw was a single dropped PING packet. We also observed Hyper-V doing live migrations across different subnets, with multiple live migrations queued up and transferring simultaneously.

Microsoft told us that the number of VM and storage migrations that can run simultaneously across a Hyper-V cluster is limited only by the bandwidth you actually have. There is no limit on the number of concurrent live migrations in the OS itself. None.
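To see why bandwidth becomes the governing factor, here is a back-of-the-envelope sketch. It is not how Hyper-V schedules migrations; it simply assumes every VM's memory is copied exactly once at full line rate over one shared link (real live migration also re-sends pages dirtied during the copy, so actual times are longer), and `min_migration_seconds` is a hypothetical helper.

```python
def min_migration_seconds(vm_memory_gb: list[float], link_gbps: float) -> float:
    """Lower-bound time to live-migrate a set of VMs that share one network link.

    Assumptions (illustrative only): each VM's memory crosses the wire once,
    the link runs at full rate, and 1 GB = 8 gigabits (decimal units).
    """
    if link_gbps <= 0:
        raise ValueError("link bandwidth must be positive")
    # Total data to move, converted from gigabytes to gigabits.
    total_gigabits = sum(gb * 8 for gb in vm_memory_gb)
    # Concurrent migrations share the same pipe, so only the total matters.
    return total_gigabits / link_gbps


if __name__ == "__main__":
    print(min_migration_seconds([8], 1.0))             # one 8 GB VM over 1 Gbps
    print(min_migration_seconds([8, 8, 8, 8], 10.0))   # four 8 GB VMs over 10 Gbps
```

Under these assumptions, one 8 GB VM over a 1 Gbps link needs at least 64 seconds, and four 8 GB VMs over 10 Gbps need at least 25.6 seconds in total. Queuing more migrations at once does not make the link any faster; it only divides the same bandwidth further.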

It should also be added that with the new SMB 2.2 support, Hyper-V virtual machines can now live on CIFS/SMB network shares.

Another notable improvement to Hyper-V is “Hyper-V Replica,” which is roughly analogous to the asynchronous, consistent replication functionality sold with Novell’s PlateSpin Protect 10 virtualization disaster recovery product. Of course, this will be a built-in feature of the OS and will not require any additional licensing.

The list of Hyper-V features goes on and on. A new Open Extensible Virtual Switch will allow 3rd parties to plug into Hyper-V’s switch architecture. SR-IOV, which gives VMs direct access to PCI Express devices, has now been implemented, as have CPU metering and resource pools, which should be a welcome addition for anyone currently using them in existing VMware environments to portion out virtual infrastructure.

VDI… Did I mention the VDI improvements? Windows Server 8’s Remote Desktop Session Host, or RDSH (what used to be called Terminal Server) now fully supports RemoteFX and is enabled by default out of the box.

What’s the upside to this? Now you can put GPU cards in your VDI server so that your remote clients, be they terminals, tablets or Windows desktops running the new RemoteFX-enabled RDP client software, can run multimedia-rich applications remotely with virtually no performance degradation.

As in, completely smooth video playback on remote desktops, as well as the ability to experience full-blown, hardware-accelerated Windows 7 Aero and Windows 8 Metro UIs with full DirectX 10 and OpenGL 1.1 support on virtualized desktops.

This will work with full remote desktop UIs as well as “Published” applications, a la Citrix. And no, you won’t need Citrix XenApp in order to support load balanced remote desktop sessions anymore. It’s all built-in.

RemoteFX and the new RDSH are killer, but you know what’s really significant? You can template virtual desktops from a single gold master image that is stored on disk and instantiated in memory as a single VM, then use system policy to customize individual sessions with roaming profiles, customized desktops and apps, and personal storage. That conserves a heck of a lot of disk space and memory on the VDI server.

And in Server 8, RDP is also now much more WAN optimized than in previous incarnations.

Can you say hasta la vista, Citrix? I knew that you could.

[Editor's Note: This is my personal opinion. As far as Microsoft is concerned, Citrix is still one of its most valued partners, and it has in no way indicated to us that this new RDSH functionality is intended to replace XenApp.]

One of the demos we saw using this technology was a 10-finger multitouch display running RDP and RemoteFX, with the Microsoft “Surface” interface virtualized over the network. It was truly stunning to see.

A number of network improvements have also been implemented that benefit Hyper-V as well as all services and roles running on the Server 8 stack, including full network virtualization and network isolation for multi-tenant environments.

This includes Port ACLs that can block by source and destination VM, implementations of Private VLANs (PVLAN), network resource pools and open network QoS as well as packet-level IP re-write with GRE encapsulation and consistent device naming.

Multi-Path I/O (MPIO) drivers (such as EMC’s PowerPath and IBM’s SDDPCM), combined with Microsoft’s virtual HBA provider, can now also be installed inside virtual machines on virtualized Fibre Channel host bus adapters (HBAs), so that VMs can take better advantage of enterprise SAN performance and have direct access to SAN LUNs.

Windows Server 8 will also include improved Offloaded Data Transfer, so that when you drag and drop files between two server windows, the server OS knows to transfer data directly from one system to another, rather than passing it through your workstation or through another server.

“Branch Caching” performance has also been improved, reducing the need for expensive WAN optimization appliances. Microsoft has also implemented a kind of BitTorrent-like technology for the enterprise that lets client systems in branch offices find the files they need locally, on other client systems and servers, instead of going across the WAN.
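The peer-lookup idea can be sketched roughly as follows: content is identified by a hash, a client asks nearby peers for data matching that hash, verifies what it gets, and only falls back to the WAN when no peer has it. This is a simplified illustration, not the BranchCache protocol itself; the `Peer` class, `fetch` function and SHA-256 identifier scheme are hypothetical choices made for the example.

```python
import hashlib
from typing import Callable, Optional


def content_id(data: bytes) -> str:
    """Identify content by its SHA-256 hash (an assumed identifier scheme)."""
    return hashlib.sha256(data).hexdigest()


class Peer:
    """Hypothetical branch-office machine with a local cache keyed by content hash."""

    def __init__(self) -> None:
        self.cache: dict[str, bytes] = {}

    def lookup(self, cid: str) -> Optional[bytes]:
        return self.cache.get(cid)


def fetch(cid: str, peers: list[Peer], wan_fetch: Callable[[str], bytes]) -> tuple[bytes, str]:
    """Try local peers first; go across the WAN only if no peer has valid content."""
    for peer in peers:
        data = peer.lookup(cid)
        # Check the peer's copy against the hash before trusting it.
        if data is not None and content_id(data) == cid:
            return data, "peer"
    return wan_fetch(cid), "wan"


if __name__ == "__main__":
    report = b"quarterly report contents"
    cid = content_id(report)
    origin = {cid: report}  # stands in for the head-office server
    alice, bob = Peer(), Peer()

    data, source = fetch(cid, [bob], origin.__getitem__)
    print(source)  # wan: no branch peer has the file yet
    alice.cache[cid] = data  # Alice's machine now holds a local copy

    data, source = fetch(cid, [alice], origin.__getitem__)
    print(source)  # peer: served from inside the branch office
```

Verifying the hash on every peer response is what makes it safe to pull data from arbitrary machines on the local network: a stale or tampered copy simply fails the check and the client moves on.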

The NFS server and client code within Server 8 has also been completely re-written from the ground up and is now much faster, which should be a big help when needing to inter-operate with Linux and UNIX systems.

Server 8 will also include built-in NIC teaming, a “feature” that has long been available for Windows Server but has always been provided by 3rd-party vendors. With the new built-in NIC teaming, network adapters from different vendors can be mixed into bonded teams of trunked interfaces, providing both performance improvements and redundancy.

No more need for 3rd-party utilities and driver kits to do this.

Storage in Server 8 has also been greatly enhanced, most importantly with the introduction of data de-duplication as part of the OS. Built on two years of work at Microsoft on the algorithm alone, de-duplication uses commonality factoring to hugely reduce the amount of data stored on a volume, with no significant performance implications.

Naturally, this also allows the backup window for a server with a de-duplicated file system to be reduced dramatically.
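The workshop didn't go into the algorithm's internals, so the following is only a minimal sketch of the general idea behind chunk-level de-duplication: split data into chunks, fingerprint each chunk with a hash, and store each unique chunk once. It assumes fixed-size 4 KB chunks and SHA-256 fingerprints for simplicity; production de-duplication engines typically use variable-size, content-defined chunking plus compression. `ChunkStore` is a hypothetical name.

```python
import hashlib

CHUNK_SIZE = 4096  # assumed fixed chunk size in bytes (illustrative only)


class ChunkStore:
    """Hypothetical store that keeps each unique chunk once, keyed by SHA-256 digest."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}

    def write(self, data: bytes) -> list[str]:
        """Split data into chunks and return the file's 'recipe' of chunk digests."""
        recipe = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            # Only store the chunk if an identical one isn't already present.
            self.chunks.setdefault(digest, chunk)
            recipe.append(digest)
        return recipe

    def read(self, recipe: list[str]) -> bytes:
        """Reassemble a file from its list of chunk digests."""
        return b"".join(self.chunks[d] for d in recipe)

    def stored_bytes(self) -> int:
        """Physical bytes actually kept after de-duplication."""
        return sum(len(c) for c in self.chunks.values())


if __name__ == "__main__":
    store = ChunkStore()
    block = b"A" * CHUNK_SIZE
    file1 = block * 10                      # ten identical chunks
    file2 = block * 5 + b"B" * CHUNK_SIZE   # shares chunks with file1
    r1, r2 = store.write(file1), store.write(file2)
    assert store.read(r1) == file1 and store.read(r2) == file2
    print(len(file1) + len(file2), "logical bytes ->", store.stored_bytes(), "stored bytes")
```

Running it prints `65536 logical bytes -> 8192 stored bytes`: sixteen logical chunks collapse to two unique ones. The same effect explains the shorter backup window, since only the unique chunks need to be copied.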

Oh, and chkdsk? Huge storage volumes can be checked and fixed while online in a mere fraction of the time it took before. Like, in ten percent of the time it used to.

Server 8 will have built-in support for JBODs, as well as new support for SMB storage over RDMA (Remote Direct Memory Access) networks, allowing large storage pools to be built on commodity 10 Gigabit Ethernet networks rather than much more expensive Fibre Channel SAN technology. Microsoft also demonstrated Server 8’s ability to “Thin Provision” storage on JBODs.

Clustered disks can now be fully encrypted using BitLocker, and the new Cluster Shared Volumes (CSV) 2.0 implementation fully supports storage integration for built-in replication as well as hardware snapshots.

And we saw a bunch of new storage virtualization stuff too. I didn’t take good notes that day, sorry.

I’m sure I’m leaving out a large number of other important things, including an all-new IP address management UI (appropriately named IPAM), as well as some new schema extensions to Active Directory that greatly improve file security when using native Windows 8 servers. And all of the new stuff that’s been added to IIS and Windows’ networking stack to accommodate large multi-tenant environments and hybrid clouds.

By the end of the second day at the Windows Server 8 Reviewer’s Workshop I was literally ready to pass out from the sheer amount of stuff being shown to us, and my brain had turned to mush, but all of this should whet your appetites for Server 8 when I finally have some code running and can actually demonstrate some of this stuff.

While Microsoft has certainly gotten its act together with its last two Server releases in terms of basic stability, has brought its core OS up to date with Windows 7 and has made a good college try at virtualization with early releases of Hyper-V, I haven’t been truly excited about a Windows Server release in a long time.

Call me excited.

In my opinion, Server 8 changes everything, particularly in terms of its virtualization and storage value proposition. CIOs are going to be very hard pressed to resist the product, simply because of all the stuff you get built in that you would otherwise have to spend an utter fortune on in 3rd-party products.

Are these new features worth the wait? Should Microsoft’s cloud and virtualization software competitors be worried? Talk Back and Let Me Know.