Windows Azure and Cloud Computing Posts for 12/24/2010+

A compendium of Windows Azure, Windows Azure Platform Appliance, SQL Azure Database, AppFabric and other cloud-computing articles.

Merry Christmas/Happy Holidays and a Happy and Prosperous New Year

•• Updated 12/26/2010 with articles marked ••.

• Updated 12/25/2010 with articles marked •.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Azure Blob, Drive, Table and Queue Services

- SQL Azure Database and Reporting

- Marketplace DataMarket and OData

- Windows Azure AppFabric: Access Control and Service Bus

- Windows Azure Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch

- Windows Azure Infrastructure

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

To use the above links, first click the post’s title to display the single article you want to navigate.

Azure Blob, Drive, Table and Queue Services

•• Added my (@rogerjenn) Testing IndexedDB with the SqlCeJsE40.dll COM Server and Microsoft Internet Explorer 8 or 9 Beta post as an expanded version of the earlier item about Microsoft’s new Interoperability Bridges and Labs Center site, which links to the Interoperability @ Microsoft team blog’s IndexedDB Prototype Available for Internet Explorer article of 12/21/2010. Following is the added content:

The installation folder also contains a CodeSnippets folder with four asynchronous and corresponding synchronous ECMAScript examples:

- Create IndexedDB Sample: Create_Open Indexed DB (opens the DB if it exists)

- ObjectStore Samples: Create ObjectStores, Delete ObjectStores

- CRUD Samples (requires running sample #2): Put, Add, Get, Delete, Cursor Update and Cursor Get records

- Index Samples (requires running sample #3): Create Index, Index Open Key Cursor, Index Open Cursor, Index Get Methods, Index getKey Methods, Delete Index

Here’s a screen capture of Asynchronous Sample #1 after creating the AsyncIndexedDB database:

To invoke a function, open the page and click the associated button.

SQL Server Compact provides the persistent store, which IE saves as \Users\Username\AppData\Local\Microsoft\DatabaseID\DatabaseName.sdf:

Following is a capture of SQL Server 2008 R2 Management Studio displaying the content of the KIDSTORE ObjectStore created by running all four Asynchronous API Samples:

The autoincrementing __internal__keygen column reflects two previous additions and deletions of six kids with Asynchronous API Samples #3.

Following is a capture of IE8’s built-in JScript debugger in use with the IndexedDB Asynchronous API Samples 3.html page (#3 CRUD Samples). The breakpoint is in the Async_Delete_Records() function.

The debugger conveniently displays properties and methods of IndexedDB’s objects. To learn more, see Deepak Jain’s JScript Debugger in Internet Explorer 8 post of 3/12/2008 and the WebDevTools Team’s JScript Debugging: Made easy with IE8 article of 3/5/2008.

•• Updated My (@rogerjenn) Testing IndexedDB with the Trial Tool Web App and Mozilla Firefox 4 Beta 8 post of 12/25/2010 with additional details of problems creating ObjectStores with the current Firefox 4 beta:

Do the following to initialize an IndexedDB instance and open a BookShop database:

1. Click the lower (console) pane’s Output tab to enable console output.

2. Click the Open Database link to add code to open the database.

3. Click Load Pre-requisites to add code to initialize the window.IndexedDB object.

4. Click Run to execute the code added in steps 2 and 3.

5. Click the [Object] link in the console’s Database Opened item to display the empty database properties, as shown here:

An ObjectStore is IndexedDB’s equivalent of a Windows Azure table. To (attempt to) create a BookList ObjectStore after opening the database with the preceding example, do the following:

- Open the Create Object Store example.

- Click Load Pre-requisites to create the instance and open the BookShop database.

- Add a document.write("Attempting to create a 'BookList' ObjectStore …"); instruction after the ConsoleHelper.waitFor() … line to verify that the code block executes (see below).

- Click Run to execute the code.

Problem: Executing the Create Object Store example fails to create the BookList data store. The DAO.db.createObjectStore() method fails silently; neither the success nor the error message appears in the console:

Executing the Get All Object Stores example returns an empty list.

Note: The Firebug add-on doesn’t work with and won’t install on Firefox 4 Beta 8.

•• Morebits posted Building Windows Azure Service Part3: Table Storage on 12/26/2010:

This post shows how to create a project that contains the classes which enable the GuestBook application to store guest entries in the Windows Azure Table Storage.

The Table Storage service offers semi-structured storage in the form of tables that contain collections of entities. Entities have a primary key and a set of properties, where a property is a (name, typed-value) pair. The primary key consists of two properties, PartitionKey and RowKey, which together uniquely identify each entity in the table.

Every entity in the Table Storage service also has a Timestamp system property, which allows the service to keep track of when an entity was last modified. This Timestamp field is intended for system use and should not be accessed by the application.
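To make the role of the two key properties more concrete, here is a minimal sketch in JavaScript (not the .NET StorageClient API) of one way to derive a date-based PartitionKey and a reverse-chronological RowKey, similar in spirit to what the GuestBookEntry constructor later in this post does. The function names, the millisecond-based timestamp and the uniqueSuffix parameter are assumptions made for illustration; the actual sample uses .NET ticks and a GUID.

```javascript
// Largest timestamp a JavaScript Date can hold, in milliseconds (8.64e15).
const MAX_DATE_MS = 8640000000000000;

// PartitionKey: one partition per UTC day, formatted MMddyyyy.
function partitionKeyFor(date) {
  const mm = String(date.getUTCMonth() + 1).padStart(2, "0");
  const dd = String(date.getUTCDate()).padStart(2, "0");
  const yyyy = String(date.getUTCFullYear()).padStart(4, "0");
  return mm + dd + yyyy;
}

// RowKey: inverted timestamp, zero-padded so that string order matches
// numeric order, followed by a caller-supplied unique suffix
// (a hypothetical stand-in for the GUID used in the .NET sample).
function rowKeyFor(date, uniqueSuffix) {
  const inverted = MAX_DATE_MS - date.getTime();
  return String(inverted).padStart(16, "0") + "_" + uniqueSuffix;
}

// Usage: a later entry gets a smaller RowKey, so it sorts first.
const earlier = new Date(Date.UTC(2010, 11, 26));
const later = new Date(Date.UTC(2010, 11, 27));
console.log(partitionKeyFor(earlier));
console.log(rowKeyFor(later, "b") < rowKeyFor(earlier, "a"));
```

Because keys are compared as strings, the zero padding matters: without it, an inverted value with fewer digits would sort out of order.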

The Table Storage API provides a TableServiceEntity class that defines the necessary properties, which you can use as the base class for your entities. This API is compliant with the REST API provided by Data Services and allows you to use the .NET Client Library for Data Services to work with data in the Table service using .NET objects.

Although the Table Storage does not enforce any schema for tables, which makes it possible for two entities in the same table to have different sets of properties, the GuestBook application uses a fixed schema to store its data.

In order to use the .NET Client Library for Data Services to access the data in Table Storage, you need to create a context class that derives from TableServiceContext, which itself derives from DataServiceContext in the .NET Client Library for Data Services.

The Table Storage API allows applications to create the tables from the context class. For this to happen, the context class must expose each required table as a property of type IQueryable<SchemaClass>, where SchemaClass is the class that models the entities stored in the table.

In this post, you will perform the following tasks:

- Create the data model project.

- Create a schema class for the entities stored by the GuestBook application.

- Create a context class to use the .NET Client Library for Data Services to access the data in Table Storage.

- Create a data source class that enables the creation of a data source object. The data source object can be bound to ASP.NET data controls which enable the user to access the Table Storage data.

To create the data model project

- In Solution Explorer, right-click the GuestBook solution and select New Project.

- In the Add New Project dialog window, choose the Windows category.

- Select Class Library in the templates list.

- In the Name box, enter GuestBook_Data.

- Click OK.

Figure 5 Creating the Data Model Project

- In Solution Explorer right-click the GuestBook_Data project.

- Select Add Reference.

- In the Add Reference dialog, select the .NET tab.

- Add a reference to System.Data.Services.Client.

- Repeat the previous steps to add a reference to Microsoft.WindowsAzure.StorageClient.

- Delete the default Class1.cs generated by the template.

To create the schema class

This section shows how to create the class that defines the schema for the GuestBook table entries. Its parent class Microsoft.WindowsAzure.StorageClient.TableServiceEntity defines the properties required by every entity stored in a Windows Azure table.

- Right-click the GuestBook_Data project and click Add New Item.

- In the Add New Item dialog window, select the Code category.

- In the templates list select Class.

- In the Name box enter GuestBookEntry.cs.

- Click Add.

- Open the GuestBookEntry.cs file and replace its content with the following code.

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using Microsoft.WindowsAzure.StorageClient;

namespace GuestBook_Data
{
    /// <summary>
    /// The GuestBookEntry class defines the schema for each entry (row)
    /// of the guest book table that stores guest information.
    /// <remarks>
    /// The parent class TableServiceEntity defines the properties required
    /// by every entity that uses the Windows Azure Table Services. These
    /// properties include PartitionKey and RowKey.
    /// </remarks>
    /// </summary>
    public class GuestBookEntry : Microsoft.WindowsAzure.StorageClient.TableServiceEntity
    {
        /// <summary>
        /// Create a new instance of the GuestBookEntry class and
        /// initialize the required keys.
        /// </summary>
        public GuestBookEntry()
        {
            // The partition key allows partitioning the data so that
            // there is a separate partition for each day of guest
            // book entries. The partition key is used to assure
            // load balancing for data access across fabric nodes (servers).
            PartitionKey = DateTime.UtcNow.ToString("MMddyyyy");

            // Row key allows sorting, this assures the rows are
            // returned in time order.
            RowKey = string.Format("{0:10}_{1}",
                DateTime.MaxValue.Ticks - DateTime.Now.Ticks, Guid.NewGuid());
        }

        // Define the properties that contain guest information.
        public string Message { get; set; }
        public string GuestName { get; set; }
        public string PhotoUrl { get; set; }
        public string ThumbnailUrl { get; set; }
    }
}

To create the context class

This section shows how to create the context class that enables Windows Azure Table Storage services. Its parent class Microsoft.WindowsAzure.StorageClient.TableServiceContext manages the credentials required to access your account and provides support for a retry policy for update operations.

- Right-click the GuestBook_Data project and click Add New Item.

- In the Add New Item dialog window, select the Code category.

- In the templates list select Class.

- In the Name box enter GuestBookDataContext.cs.

- Click Add.

- Open the GuestBookDataContext.cs file and replace its content with the following code.

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

namespace GuestBook_Data
{
    /// <summary>
    /// The GuestBookDataContext class enables Windows
    /// Azure Table Services.
    /// <remarks>
    /// The parent class TableServiceContext manages the credentials
    /// required to access account information and provides support for a
    /// retry policy for update operations.
    /// </remarks>
    /// </summary>
    public class GuestBookDataContext : TableServiceContext
    {
        /// <summary>
        /// Create an instance of the GuestBookDataContext class
        /// and initialize the base
        /// class with storage access information.
        /// </summary>
        /// <param name="baseAddress"></param>
        /// <param name="credentials"></param>
        public GuestBookDataContext(string baseAddress, StorageCredentials credentials)
            : base(baseAddress, credentials)
        { }

        /// <summary>
        /// Define the property that returns the GuestBookEntry table.
        /// </summary>
        public IQueryable<GuestBookEntry> GuestBookEntry
        {
            get
            {
                return this.CreateQuery<GuestBookEntry>("GuestBookEntry");
            }
        }
    }
}

To create the data source class

This section shows how to create the data source class that allows for the creation of data source objects that can be bound to ASP.NET data controls and allow for the user to interact with the Table Storage.

- Right-click the GuestBook_Data project and click Add New Item.

- In the Add New Item dialog window, select the Code category.

- In the templates list select Class.

- In the Name box enter GuestBookDataSource.cs.

- Click Add.

- Open the GuestBookDataSource.cs file and replace its content with the following code.

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

namespace GuestBook_Data
{
    /// <summary>
    /// The GuestBookEntryDataSource class allows for the creation of data
    /// source objects that can be bound to ASP.NET data controls. These
    /// controls enable the user to perform Create, Read, Update and Delete
    /// (CRUD) operations.
    /// </summary>
    public class GuestBookEntryDataSource
    {
        // Storage services account information.
        private static CloudStorageAccount storageAccount;

        // Context that allows the use of Windows Azure Table Services.
        private GuestBookDataContext context;

        /// <summary>
        /// Initializes storage account information and creates a table
        /// using the defined context.
        /// <remarks>
        /// This constructor creates a table using the schema (model)
        /// defined by the
        /// GuestBookDataContext class and the storage account information
        /// contained in the configuration connection string settings.
        /// Declaring the constructor static assures that the initialization
        /// tasks are performed only once.
        /// </remarks>
        /// </summary>
        static GuestBookEntryDataSource()
        {
            // Create a new instance of a CloudStorageAccount object from a
            // specified configuration setting.
            // This method may be called only after the SetConfigurationSettingPublisher
            // method has been called to configure the global configuration setting publisher.
            // You can call the SetConfigurationSettingPublisher method in the OnStart method
            // of the web or worker role before calling FromConfigurationSetting.
            // If you do not do this, the system raises an exception.
            storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString");

            // Create table using the schema (model) defined by the
            // GuestBookDataContext class and the storage account information.
            CloudTableClient.CreateTablesFromModel(
                typeof(GuestBookDataContext),
                storageAccount.TableEndpoint.AbsoluteUri,
                storageAccount.Credentials);
        }

        /// <summary>
        /// Initialize context used to access table storage and the retry policy.
        /// </summary>
        public GuestBookEntryDataSource()
        {
            // Initialize context using account information.
            this.context = new GuestBookDataContext(
                storageAccount.TableEndpoint.AbsoluteUri, storageAccount.Credentials);

            // Initialize retry update policy.
            this.context.RetryPolicy = RetryPolicies.Retry(3, TimeSpan.FromSeconds(1));
        }

        /// <summary>
        /// Gets the contents of the GuestBookEntry table.
        /// </summary>
        /// <returns>
        /// results: the GuestBookEntry table contents.
        /// </returns>
        /// <remarks>
        /// This method retrieves today's guest book entries by using the current
        /// date as the partition key. The web role uses this method to
        /// bind the results to a data grid to display the guest book.
        /// </remarks>
        public IEnumerable<GuestBookEntry> Select()
        {
            var results = from g in this.context.GuestBookEntry
                          where g.PartitionKey == DateTime.UtcNow.ToString("MMddyyyy")
                          select g;
            return results;
        }

        /// <summary>
        /// Insert new entries in the GuestBookEntry table.
        /// </summary>
        /// <param name="newItem"></param>
        public void AddGuestBookEntry(GuestBookEntry newItem)
        {
            this.context.AddObject("GuestBookEntry", newItem);
            this.context.SaveChanges();
        }

        /// <summary>
        /// Update the thumbnail URL for a table entry.
        /// </summary>
        /// <param name="partitionKey"></param>
        /// <param name="rowKey"></param>
        /// <param name="thumbUrl"></param>
        public void UpdateImageThumbnail(string partitionKey, string rowKey, string thumbUrl)
        {
            var results = from g in this.context.GuestBookEntry
                          where g.PartitionKey == partitionKey && g.RowKey == rowKey
                          select g;
            var entry = results.FirstOrDefault<GuestBookEntry>();
            entry.ThumbnailUrl = thumbUrl;
            this.context.UpdateObject(entry);
            this.context.SaveChanges();
        }
    }
}

For related topics, see the following posts.

- Building Windows Azure Service Part1: Introduction

- Building Windows Azure Service Part2: Service Project

- Building Windows Azure Service Part4: Web Role UI Handler

- Building Windows Azure Service Part5: Worker Role Background Tasks Handler

- Building Windows Azure Service Part6: Service Configuration

- Building Windows Azure Service Part7: Service Testing


SQL Azure Database and Reporting

Dave Noderer reported on 12/24/2010 the availability of Herve Roggero’s “Azure Scalability Prescriptive Architecture using the Enzo Multitenant Framework” whitepaper of 12/16/2010 in a SQL Sharding and SQL Azure… post:

Herve Roggero has just published a paper that outlines patterns for scaling using SQL Azure and the Blue Syntax [Consulting] ([his] and Scott Klein’s company) sharding API. You can find the paper at: http://www.bluesyntax.net/files/EnzoFramework.pdf

Herve and Scott have also just released an Apress book, Pro SQL Azure.

The idea of being able to split (shard) database operations automatically and control them from a web based management console is very appealing.

These ideas have been talked about for a long time and implemented in thousands of very custom ways that have been costly, complicated and fragile. Now, there is light at the end of the tunnel. Scaling database access will become easier and move into the mainstream of application development.

The main cost is using an API whenever accessing the database. The API will direct the query to the correct database(s) which may be located locally or in the cloud. It is inevitable that the API will change in the future, perhaps incorporated into a Microsoft offering. Even if this is the case, your application has now been architected to utilize these patterns and details of the actual API will be less important.

Herve does a great job of laying out the concepts which every developer and architect should be familiar with!

From the “Azure Scalability Prescriptive Architecture using the Enzo Multitenant Framework” whitepaper’s Introduction:

Cloud computing, and more specifically the Microsoft Azure platform, offers new opportunities for businesses seeking scalability, high availability and a utility-based consumption model for their applications. As part of its infrastructure, Microsoft Azure places an emphasis on scale-out designs; indeed it is relatively easy to create and deploy web applications in Windows Azure. And with the push of a button, administrators can run a web application on 2 or more web servers.

However, scaling out OLTP (online transactional processing) databases has traditionally been difficult for many reasons, including the lack of transparent distributed database support and limited availability of management tools forcing developers to go through a difficult learning curve. Although the need for increased performance and scalability has always been prevalent, distributed data management has always been considered difficult to tackle without more support from database vendors.

In data warehousing however, distributed data stores along with optimized data access paths have shown that building a distributed data access layer is possible, and manageable. Indeed data warehouses have limited needs to update data and are primarily used to run reports and data analysis tools. The most prominent data warehousing systems leverage a shared nothing data architecture minimizing data access latency.

While performance is always important, cloud-based applications place more emphasis on scalability. Certain cloud applications have a need to host data that belong to a specific customer (such as SaaS applications) or some other entity (such as a date range, or a geographic location); these applications are referred to as multitenant applications in this paper. Multitenant applications provide a shared presentation layer, a shared service layer for implementing business rules and caching, and one or more databases logically or physically separating customer data for increased privacy, performance and scalability. A successful multitenant design allows corporations to keep adding customers without having to worry about the various application layers. The primary objective of scalability is to support business growth by optimizing resources and flexibility.

Planning to build a multitenant system that leverages distributed data stores can be complicated and requires careful planning. This white paper introduces you to a multitenant architecture leveraging the performance and scalability advantages of a scale out architecture using the Enzo Multitenant Framework.

The Microsoft India blog summarized the recent Sharding With SQL Azure white paper in a 12/23/2010 post:

Earlier this week we published a whitepaper entitled Sharding with SQL Azure to the TechNet wiki. In the paper, Michael Heydt and Michael Thomassy discuss the best practices and patterns to select when using horizontal partitioning and sharding with your applications.

Specific guidance shared in the whitepaper:

- Basic concepts in horizontal partitioning and sharding

- The challenges involved in sharding an application

- Common patterns when implementing shards

- Benefits of using SQL Azure as a sharding infrastructure

- High level design of an ADO.NET based sharding library

- Introduction to SQL Azure Federations

Overview

So what is sharding and partitioning, and why is it important?

Often the need arises where an application’s data requires both high capacity for many users and support for very large data sets that require lightning performance. Or perhaps you have an application that by design must be elastic in its use of resources such as a social networking application, log processing solution or an application with a very high number of requests per second. These are all use cases where partitioning data across physical tables residing on separate nodes, whether through sharding or SQL Azure Federations, is capable of providing a performant scale-out solution.

In order to scale-out via sharding, an architect must partition the workload into independent units of data or atomic units. The application then must have logic built into it to understand how to access this data through the use of a custom sharding pattern or through the upcoming release of SQL Azure Federations.
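As a rough illustration of the application-level logic described above, the following JavaScript sketch routes an atomic-unit key to the shard that owns it. It is not taken from the whitepaper: the shard map, the numeric customer IDs used as keys, the database names and the function names are all hypothetical, and a real sharding library would also have to manage connections and map changes.

```javascript
// Hypothetical shard map: each entry owns a half-open range [low, high)
// of customer IDs, which play the role of the atomic-unit key here.
const shardMap = [
  { low: 0, high: 1000, database: "shard0" },
  { low: 1000, high: 2000, database: "shard1" },
  { low: 2000, high: Infinity, database: "shard2" },
];

// Return the name of the database that owns the given key.
function findShard(map, key) {
  const shard = map.find((s) => key >= s.low && key < s.high);
  if (!shard) {
    throw new RangeError("No shard covers key " + key);
  }
  return shard.database;
}

// Group a batch of keys by shard so each database receives one request.
function groupByShard(map, keys) {
  const groups = {};
  for (const key of keys) {
    const db = findShard(map, key);
    (groups[db] = groups[db] || []).push(key);
  }
  return groups;
}

console.log(findShard(shardMap, 1500));
console.log(groupByShard(shardMap, [5, 1500, 7]));
```

A range-based map is only one option; a hash of the key would spread load more evenly but makes range queries span every shard.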

Multi-Master Sharding Archetype

Also introduced by the paper is a multi-master sharding pattern where all shards are considered read/write and there is no inherent replication of data between shards. The model is referred to as “shared nothing” because no data is shared or replicated between shards.

Use the Multi-Master Pattern if:

- Clients of the system need read/write access to data

- The application needs the ability for the data to grow continuously

- Linear scalability of data access rate is needed as data size increases

- Data written to one shard must be immediately accessible to any client

To use sharding with SQL Azure, application architecture must take into account:

- Current 50GB resource limit on SQL Azure database size

- Multi-Tenant performance throttling and connection management when adding/removing shards

- Currently sharding logic must be written at the application level until SQL Azure Federations is released

- Shard balancing is complicated and may require application downtime while shards are created or removed

SQL Azure Federations

SQL Azure Federations will provide the infrastructure to support the dynamic addition of shards and the movement of atomic units of data between shards providing built-in scale-out capabilities. Federation Members are shards that act as the physical container for a range of atomic units. Federations will support online repartitioning as well as connections, which are established with the root database to maintain continuity and integrity.
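To picture what repartitioning a range of atomic units means, here is a small JavaScript sketch that splits one range-based member into two at a chosen key. This is not the SQL Azure Federations API, which had not been released when this was written; the member objects and the splitMember function are hypothetical, and moving the underlying rows is left out entirely.

```javascript
// Split the member whose half-open range [low, high) strictly contains
// splitPoint into two adjacent members. Returns a new array and leaves
// the input untouched; if no member contains splitPoint, nothing changes.
function splitMember(members, splitPoint) {
  const result = [];
  for (const m of members) {
    if (splitPoint > m.low && splitPoint < m.high) {
      result.push({ low: m.low, high: splitPoint });
      result.push({ low: splitPoint, high: m.high });
    } else {
      result.push(m);
    }
  }
  return result;
}

const before = [{ low: 0, high: 100 }, { low: 100, high: 200 }];
console.log(splitMember(before, 40));
```

The ranges stay contiguous after the split, so every key is still owned by exactly one member.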

More Information

Additionally, we have spoken publicly about coming SQL Azure Federations technology at both PDC and PASS this year. Since that time we have published a number of blog posts and whitepapers for your perusal:

- Evaluation of Scale-up vs Scale-out: how to evaluate the scalability options when using SQL Azure

- Intro to SQL Azure Federations: SQL Azure Federations overview

- Scenarios and Applications for SQL Azure Federations: highlighting the power of SQL Azure Federations technology

- Scaling with SQL Azure Federations: quick walkthrough of building an app with SQL Azure Federations


Marketplace DataMarket and OData

•• Jonathan Kolbo wrote the first installment of DataView jQuery Plugin - Part 1 on 12/25/2010:

I recently worked on a project that utilized an OData data service and jQuery to display product information. The product information is displayed in several different ways like an html table, list, or predefined html block. In addition to multiple display types, the data also needs to be paged, sorted, and searched. After reviewing the project specs, I realized that it might be possible to write a jQuery plugin that can provide all of this functionality.

I have a lot of experience with asp.net, and one of the big benefits to that framework is the ability to drag and drop controls on to a page, set a few parameters, and boom! you are done. One of my favorite controls is the ListView control introduced in ASP.NET 3.5. This control allows you to wire up a datasource and then specify an html template. That template is used to render each record of data. It is extremely flexible and when partnered with the paging control, gets us really close to what I want. The problem is my project wasn’t an asp.net webforms project. I think that the ListView control is a good model for what I want to duplicate with my jQuery plugin.

Setting up an html templating system in jQuery is trivial. There are quite a few jQuery templating engines out there so we will use one of those and add the rest of the functionality. I want my plugin to provide html templating, paging, sorting, and filtering. Throw in a few extra options and I think I will have an extremely powerful plugin. Also, why stop there? Why not make it possible to use this plugin for any web service? Forget only OData! I want to use this same plugin for asmx, SOAP, or REST web services as well!

This may be a rather lengthy post, so I will split it up over a few days. I don’t pretend that this is production ready (although I am running something similar in production now) and it may not be the cleanest way to do this, but it is a start. And hey, It works for me!

What is OData?

I don’t want to spend too much time on how OData works but according to the OData website:

[T]he Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol(AtomPub) and JSON to provide access to information from a variety of applications, services, and stores. The protocol emerged from experiences implementing AtomPub clients and servers in a variety of products over the past several years. OData is being used to expose and access information from a variety of sources including, but not limited to, relational databases, file systems, content management systems and traditional Web sites.

It basically allows us to access your data the same way you access a webpage, via URL. You can append a query to the end of the url to limit or filter the records that are returned. You can learn more about OData here, here, and here. Several sites are using OData to provide access to their data so that developers can use that data in their own apps. Netflix has an OData feed set up at http://odata.netflix.com/Catalog. This feed gives us access to Netflix’s entire video catalog, so it makes a perfect feed to use in our examples.
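To show what appending a query to the URL can look like, here is a minimal JavaScript sketch that builds an OData request URL from paging, sorting and filtering options using the standard $filter, $orderby, $skip and $top system query options. The buildODataUrl helper and its option names are hypothetical, and the Titles entity set and the ReleaseYear and Name properties are assumptions about the Netflix feed rather than details given in the post.

```javascript
// Build an OData query URL from paging, sorting and filtering options.
function buildODataUrl(serviceRoot, entitySet, options) {
  const opts = options || {};
  const params = [];
  if (opts.filter) {
    params.push("$filter=" + encodeURIComponent(opts.filter));
  }
  if (opts.orderBy) {
    params.push("$orderby=" + encodeURIComponent(opts.orderBy));
  }
  if (opts.pageSize) {
    const page = opts.page || 0; // zero-based page index
    params.push("$skip=" + page * opts.pageSize);
    params.push("$top=" + opts.pageSize);
  }
  // Drop trailing slashes from the root before appending the entity set.
  const base = serviceRoot.replace(/\/+$/, "") + "/" + entitySet;
  return params.length ? base + "?" + params.join("&") : base;
}

// Usage: third page (index 2) of ten titles released in 2009, sorted by name.
console.log(
  buildODataUrl("http://odata.netflix.com/Catalog", "Titles", {
    filter: "ReleaseYear eq 2009",
    orderBy: "Name",
    page: 2,
    pageSize: 10,
  })
);
```

Keeping URL construction in one function means a paging or sorting control only has to change an option and re-request, which is the behavior the plugin described above needs.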

jQuery, jQuery UI and Widget Factory

In addition to OData, we are going to make extensive use of jQuery and jQuery UI. I have written many jQuery plugins in my day, but before jQuery really exploded on to the scene, I was a big MS Ajax guy. I really like the way MS Ajax allowed you to develop “controls” in javascript that you can then use to give functionality to an element on the page. Unlike jQuery plugins, these controls were actual object instances that run independently of each other. This gives you the ability to track state, and just made more sense to me in my object oriented world of server side development. I longed for something similar in jQuery. Eventually I got smart enough to use the data property of a jQuery object to store state information, but it was still not very clean. And then, one day I found jQuery UI’s Widget Factory, and all was right in the world. A jQuery widget is different from a jQuery plugin. A widget has lifecycle events, can track state, and communicate with other widgets. The widget factory is part of jQuery UI, and it is used to build all of the widgets in the UI Library. Finding good information on how to use the widget factory isn’t very easy, but I found everything I needed to know at this site.

If you feel comfortable with these pieces, you should be ready to move on.

Michael Cloots posted Creating a reusable WCF RIA DAL layer for OData, JSON and SOAP protocols to the Prism for Silverlight blog on 12/24/2010:

Having a reusable DAL layer is a necessity when it comes to building a maintainable modular application. I was thinking about creating a separate Silverlight application, including LINQ to SQL and Domain Services, which will function as a DAL layer in all the prism modules. After a lot of headaches, I finally came to the following best practice:

Building the DAL layer -> Silverlight 4 Application

For the DAL layer, we’ll start with a simple Silverlight application. Add LINQ to SQL or ADO.NET entity framework to the web part and create your domain service class. So far so easy! You can test this setup by adding a domain data source to the client part. Bind this to your datagrid. This step is successful when the data is shown!

Domain Service Class as a WCF Service

I have read a very interesting article about the domain service class and its WCF role:

This article [informed] me that a domain service class is a superior WCF service. So it should be possible to host a domain service class on your IIS and call it from other Silverlight applications (Prism modules) by adding a Service Reference.

Preparing the domain service class

Before we can consume the domain service class as a WCF service, we have to modify the Web.config. Our <system.serviceModel> element should contain some endpoints for the domain service class. Before you can do that you must add the ‘Microsoft.ServiceModel.DomainServices.Hosting’ reference. If you can[‘t] find this reference, you should reinstall the WCF RIA Services Toolkit, which can be found here: http://www.microsoft.com/downloads/en/details.aspx?FamilyID=c4f02797-5f9e-4acf-a7dc-c5ded53960a6&displaylang=en

<system.serviceModel>
  <domainServices>
    <endpoints>
      <add name="JSON" type="Microsoft.ServiceModel.DomainServices.Hosting.JsonEndpointFactory, Microsoft.ServiceModel.DomainServices.Hosting, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
      <add name="OData" type="System.ServiceModel.DomainServices.Hosting.ODataEndpointFactory, System.ServiceModel.DomainServices.Hosting.OData, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
      <add name="Soap" type="Microsoft.ServiceModel.DomainServices.Hosting.SoapXmlEndpointFactory, Microsoft.ServiceModel.DomainServices.Hosting, Version=4.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35" />
    </endpoints>
  </domainServices>
  <serviceHostingEnvironment aspNetCompatibilityEnabled="true"
                             multipleSiteBindingsEnabled="true" />
</system.serviceModel>

When this is done, you can simply publish your Web project to IIS (remember the localhost port number).

Accessing the Domain Service class in your Prism module

That was the difficult part. Accessing the domain service class is easy: just add a service reference and enter the URI. The URI will look like the following:

http://[hostname]/[namespacename]-[classname].svc

For me it will be:

http://localhost:3650/Services/DS-Web-DomainServiceAll.svc
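The naming pattern above can be sketched in a few lines of C#. This is only an illustration: the DomainServiceUri helper is hypothetical, and the full type name DS.Web.DomainServiceAll is an assumption inferred from the example URI (dots in the namespace appear to become hyphens in the .svc name).

```csharp
using System;

// Hypothetical helper, not part of WCF RIA Services.
public static class DomainServiceUri
{
    // Builds the .svc address for a domain service class by replacing the
    // dots in its full type name with hyphens and appending ".svc".
    public static Uri Build(Uri baseAddress, string fullTypeName)
    {
        if (baseAddress == null)
            throw new ArgumentNullException("baseAddress");
        if (string.IsNullOrEmpty(fullTypeName))
            throw new ArgumentException("A type name is required.", "fullTypeName");

        string fileName = fullTypeName.Replace('.', '-') + ".svc";

        // baseAddress should end with a slash so the file name is appended to it.
        return new Uri(baseAddress, fileName);
    }
}
```

Called with new Uri("http://localhost:3650/Services/") and "DS.Web.DomainServiceAll", the helper yields the address shown above.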

Calling the functions in the domain service class works just like calling a WCF service.

Congratulations! You just created a reusable DAL layer based on a domain service class!

Note

In a modular Prism application you have to do an extra step. Because the module is loaded in the Shell, the Shell must also be aware that there is a service to be called. When you add the service reference, a clientconfig file is generated, and the Shell needs access to this file as well.

This problem can be solved by adding a link to the clientconfig file in the Shell project (client).

Ben Day posted a related Silverlight Asynchronous WCF Calls Without Ruining Your Architecture (with ReturnResult<T>) article on 12/24/2010, which includes a link to sample code:

A while back I wrote a blog post about how Silverlight 4’s asynchronous network calls make a layered client-side architecture difficult. In that post I talk about wanting to have a loosely coupled, testable, n-tier architecture on my Silverlight client application. Basically, I wanted to have a client-side architecture that was nicely layered like Figure 1 rather than a flat, 1-tier architecture like Figure 2.

Figure 1 – A layered client-side Silverlight architecture

Figure 2 – A flat client-side Silverlight architecture

Although I didn’t write it in that original post, unit testing and unit testability was one of my top reasons for wanting a layered architecture. If everything is clumped into one big XAP/DLL, it’s going to be difficult to test small “units” of functionality. My top priority was to keep the WCF data access code (aka the code that calls the back-end WCF services) separated from the rest of my code behind Repository Pattern interfaces. Keeping those Repositories separated and interface-driven is essential for eliminating unit test dependencies on those running WCF services.

A Change In Plans

When I wrote that original post back in May of 2010, I was *fighting* the asynchronous WCF network calls. After a little bit of time, I had one of those moments where I asked myself “self, why is this so *&#$@!& difficult? There’s no way that this is right. I think we’re doing something wrong here.” I’ve since changed my approach: I’ve embraced the asynchronous nature of Silverlight and made it a core part of my architecture.

The Problem with WCF Data Access

Let’s take the Repository Pattern. The Repository Pattern is an encapsulation of logic to read and write to a persistent store such as a database or the file system. If you can write data to it, pull the plug on your computer, and you’re still able to read that data the next time that you start your computer, it’s a persistent store. When you’re writing a Silverlight application that talks to WCF Services, your persistent store is your WCF Service.

If you look at IRepository<T> or IPersonRepository in Figure 3, you’ll see that the GetById() and GetAll() methods return actual, populated IPerson instances. This is how it would typically look in a non-Silverlight, non-async implementation. Some other object decides it wants Person #1234, gets an instance of IPersonRepository, calls GetById(), passes in id #1234, waits for the GetById() call to return, and then continues going about its business. In this case, the call to GetById() is a “blocking call”. The code calls IPersonRepository.GetById, the thread of execution enters the repository implementation for GetById(), does its work, and then the repository issues a “return returnValue;” statement that returns the populated value, and the thread of execution leaves the repository. While the thread of execution is inside of the repository, the caller is doing nothing else other than waiting for the return value.

Figure 3 – Server-side, non-Silverlight Repository Pattern implementation

In Silverlight, if your Repository calls a WCF service to get its data, this *CAN NOT BE IMPLEMENTED LIKE THIS* unless you do something complex like what I did in my previous post (translation: unless you do something that’s probably wrong). The reason is that the majority of the work that happens in the IPersonRepository.GetById() method is actually going to happen on a different thread from the thread that called the GetById() method. IPersonRepository.GetById() will initiate the process of loading that IPerson but it won’t be responsible for finishing it and won’t even know when the call has been finished. This means that IPersonRepository.GetById() can’t return an instance of IPerson anymore…it now has to return void!

I know what you’re thinking. You’re *sure* that I’m wrong. Give it a second. Think it over. It’s kind of a mind-bender. Re-read the paragraph a few more times. Maybe sketch some designs out on paper. Trust me, I’m right.

These asynchronous WCF calls mean that none of your repositories can return anything other than void. Think this out a little bit more. This also means that anything that in turn calls a WCF Repository is also not permitted to return anything but void. It’s insidious and it destroys your plans for a layered architecture (Figure 1) and pushes you closer to the “lump” architecture (Figure 2).

A New Hope

Letting these asynchronous WCF calls ruin my layered architecture was simply unacceptable to me. What I needed was to get something like return values but still be async-friendly. I also needed a way to handle exceptions in async code, too. If you think about it, exceptions are kind of like return values. If an exception happens on the thread that’s doing the async work, because there isn’t a continuous call stack from the client who requested the work to the thread that’s throwing the exception, there’s no way to get the exception back to the original caller because throwing exceptions relies on the call stack. No blocking call, no continuous call stack.

Enter ReturnResult<T>. This class encapsulates the connection between the original caller and the asynchronous thread that does the work.
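The post does not list the class itself, so here is a minimal sketch of what a ReturnResult<T> along these lines might look like. It is inferred only from how the class is used in the figures that follow (a constructor taking a callback, two Notify() overloads, and Result and Error properties); the real implementation in the downloadable sample may well differ, for example in how it handles threading.

```csharp
using System;

// A sketch only: carries either a result or an exception from the code that
// completes an asynchronous call back to the code that started it.
public class ReturnResult<T>
{
    private readonly Action<ReturnResult<T>> _callback;

    public ReturnResult(Action<ReturnResult<T>> callback)
    {
        if (callback == null) throw new ArgumentNullException("callback");
        _callback = callback;
    }

    // The value produced by the call; default(T) when the call failed.
    public T Result { get; private set; }

    // The exception raised by the call; null when the call succeeded.
    public Exception Error { get; private set; }

    // Called by the repository when the operation succeeded.
    public void Notify(T result)
    {
        Result = result;
        Error = null;
        _callback(this);
    }

    // Called by the repository when the operation failed.
    public void Notify(Exception error)
    {
        if (error == null) throw new ArgumentNullException("error");
        Result = default(T);
        Error = error;
        _callback(this);
    }
}
```

One design consequence of the two overloads: the argument must have a definite static type, so a bare Notify(null) will not compile for reference types. A typed variable, as in the figures below, avoids the ambiguity.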

An implementation of IPersonRepository that uses ReturnResult<T> would look something like the code in Figure 5. The key point here is that when the WCF service call is finished and the GetByIdCompleted() method is executed, the repository has access to the ReturnResult<T> through the callback variable. When the Repository has something to return to the caller – either an exception or an instance of IPerson – it calls the Notify() method on ReturnResult<T>. From there the ReturnResult<T> class takes care of the rest and the Repository doesn’t need to know anything about its caller.

public class WcfPersonRepository : IPersonRepository
{
    public void GetById(ReturnResult<IPerson> callback, int id)
    {
        PersonServiceClient service = null;

        try
        {
            // create an instance of the WCF PersonServiceClient proxy
            service = new PersonServiceClient();

            // subscribe to the completed event for GetById()
            service.GetByIdCompleted +=
                new EventHandler<GetByIdCompletedEventArgs>(
                    service_GetByIdCompleted);

            // call the GetById service method
            // pass "callback" as the userState value to the call
            service.GetByIdAsync(id, callback);
        }
        catch
        {
            if (service != null) service.Abort();
            throw;
        }
    }

    void service_GetByIdCompleted(object sender, GetByIdCompletedEventArgs e)
    {
        var callback = e.UserState as ReturnResult<IPerson>;

        if (callback == null)
        {
            // this is bad
            throw new InvalidOperationException(
                "e.UserState was not an instance of ReturnResult<IPerson>.");
        }

        if (e.Error != null)
        {
            // something went wrong
            // "throw" the exception up the call stack
            callback.Notify(e.Error);
            return;
        }

        try
        {
            // do whatever needs to be done to create and populate an
            // instance of IPerson (null here is only a placeholder)
            IPerson returnValue = null;

            callback.Notify(returnValue);
        }
        catch (Exception ex)
        {
            callback.Notify(ex);
        }
    }
}

Figure 5 – Asynchronous IPersonRepository implementation with ReturnResult<T>

Now, let’s look at an example of something that calls IPersonRepository. A common example of this would be PersonViewModel needing to call in to the IPersonRepository to get a person to display. In Figure 6 you can see this implemented and the key line is repository.GetById(new ReturnResult<IPerson>(LoadByIdCompleted), id);. This line creates an instance of ReturnResult<IPerson>() and passes in a callback delegate to the ViewModel’s LoadByIdCompleted() method. This is how the ViewModel waits for return values from the Repository without having to wait while the thread of execution is blocked.

public class PersonViewModel
{
    public void LoadById(int id)
    {
        IPersonRepository repository = GetRepositoryInstance();

        // initiate the call to the repository
        repository.GetById(
            new ReturnResult<IPerson>(LoadByIdCompleted), id);
    }

    private void LoadByIdCompleted(ReturnResult<IPerson> result)
    {
        // handle the callback from the repository
        if (result == null)
        {
            throw new InvalidOperationException("Result was null.");
        }
        else if (result.Error != null)
        {
            HandleTheException(result.Error);
        }
        else
        {
            IPerson person = result.Result;

            if (person == null)
            {
                // person not found...invalid id?
                Id = String.Empty;
                FirstName = String.Empty;
                LastName = String.Empty;
                EmailAddress = String.Empty;
            }
            else
            {
                Id = person.Id;
                FirstName = person.FirstName;
                LastName = person.LastName;
                EmailAddress = person.EmailAddress;
            }
        }
    }

    // the rest of the implementation details go here
}

Figure 6 – PersonViewModel calls IPersonRepository.GetById()

Summary

ReturnResult<T> solved my asynchronous Silverlight problems and allowed me to keep a layered and unit testable architecture by encapsulating all of the asynchronous “glue” code.
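To make the unit-testability point concrete, here is a rough sketch of an in-memory repository that could stand in for the WCF-backed one in a test. Everything in it is illustrative rather than taken from the sample code: InMemoryPersonRepository and Person are hypothetical names, IPerson is trimmed to two members, and a minimal ReturnResult<T> is restated so the sketch compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Trimmed, hypothetical version of the IPerson interface.
public interface IPerson { int Id { get; } string FirstName { get; } }

public class Person : IPerson
{
    public int Id { get; set; }
    public string FirstName { get; set; }
}

// Minimal stand-in for ReturnResult<T>, restated for self-containment.
public class ReturnResult<T>
{
    private readonly Action<ReturnResult<T>> _callback;
    public ReturnResult(Action<ReturnResult<T>> callback) { _callback = callback; }
    public T Result { get; private set; }
    public Exception Error { get; private set; }
    public void Notify(T result) { Result = result; _callback(this); }
    public void Notify(Exception error) { Error = error; _callback(this); }
}

public interface IPersonRepository
{
    void GetById(ReturnResult<IPerson> callback, int id);
}

// Test double: no WCF service required, and the callback fires immediately.
public class InMemoryPersonRepository : IPersonRepository
{
    private readonly Dictionary<int, IPerson> _people = new Dictionary<int, IPerson>();

    public void Add(IPerson person) { _people[person.Id] = person; }

    public void GetById(ReturnResult<IPerson> callback, int id)
    {
        IPerson person;
        _people.TryGetValue(id, out person); // person stays null when the id is unknown
        callback.Notify(person);
    }
}
```

Because the caller only sees IPersonRepository and ReturnResult<IPerson>, a ViewModel written against the interface should not need to change when the fake is swapped in for the WCF implementation.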

Click here to download the sample code.

-- Looking for help with your Silverlight architecture? Need some help implementing your asynchronous WCF calls? Hit a wall where you need to chain two asynchronous calls together? Drop us a line at info@benday.com.


Windows Azure AppFabric: Access Control and Service Bus

• See the description of thinktecture Starter STS, which follows the Dominick Baier explained Handling Configuration Changes in Windows Azure Applications article of 12/25/2010 in the Live Windows Azure Apps, APIs, Tools and Test Harnesses section below.

Dominick has posted the following three related projects to CodePlex:

- Thinktecture.IdentityModel v0.8: Helper library for the Windows Identity Foundation (WIF)

- InfoCardSelector v1.4: ASP.NET WebControl to easily integrate Information Cards into ASP.NET applications

- Custom Basic Authentication for IIS v1.0: HTTP Module to allow HTTP Basic Authentication against non-Windows accounts in IIS


Windows Azure Virtual Network, Connect, RDP and CDN

•• Cory Fowler (@SyntaxC4) posted Connecting to an Azure Instance via RDP on 12/25/2010:

This post is a conclusion to a series of blog entries on how to RDP into a Windows Azure instance. If you haven’t already done so, you may want to read the previous posts:

- Export & Upload a Certificate to an Azure Hosted Service

- Setting up RDP to a Windows Azure Instance: Part 1

- Setting up RDP to a Windows Azure Instance: Part 2

This post will provide two pieces of information: first, now that your Windows Azure Platform Portal has been configured for RDP, I will show you how to initialize the RDP Connection to a Windows Azure Instance. Second, I’ll step back and explain how to set up the Cloud Service Configuration manually (which is typically automated by Visual Studio).

Connecting to Windows Azure via RDP

At this point you should have already uploaded a Certificate to the Hosted Service, checked the Enable checkbox in the Remote Access section of the Portal Ribbon and configured a Username and Password for accessing the particular Hosted Service.

In the Hosted Service Configuration page, select the Instance you would like to connect to.

This will enable the Connect button within the Remote Access section of the Portal Ribbon. Click on the Connect button to initialize a download of the RDP (.rdp) file to connect to that particular instance.

You can obviously choose Open; however, this is a good opportunity to save the RDP connection to an instance, just for that odd chance you can’t access the Windows Azure Platform Portal to download it again. I would suggest saving at least one RDP file in a safe location for this very reason.

You may need to accept a Security warning because the RDP file is Unsigned.

Then supply your username and password, which were set up in the previous set of posts.

Once the connection has been initialized there is one last security warning to dismiss before the desktop of your Windows Azure Instance appears.

Welcome to your Windows Azure Instance in the Cloud!

Manually Configuring RDP Access to Windows Azure

In order to manually configure RDP access to a Windows Azure Instance in the cscfg file, there are a few things that need to be done. There is a well-written outline on MSDN in a post entitled “Setting up a Remote Desktop Connection for a Role”.

During Step 2 of the process outlined on MSDN, Encrypting the Password with PowerShell, there is the need to provide a thumbprint for a Self-Signed Certificate. What isn’t mentioned within the article is that it is necessary to Capitalize the Letters and Remove the Spaces in the thumbprint in order for the PowerShell script to work.
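The thumbprint clean-up described above is easy to get wrong by hand, so here is a small C# sketch of it. The Thumbprint.Normalize helper is hypothetical (it is not part of the MSDN script or the Azure SDK); it simply removes whitespace and upper-cases the letters, as the paragraph describes.

```csharp
using System;
using System.Linq;

// Hypothetical helper for tidying a thumbprint copied from the certificate dialog.
public static class Thumbprint
{
    // Removes all whitespace and converts the letters to upper case.
    public static string Normalize(string thumbprint)
    {
        if (thumbprint == null) throw new ArgumentNullException("thumbprint");

        char[] kept = thumbprint.Where(c => !char.IsWhiteSpace(c)).ToArray();
        return new string(kept).ToUpperInvariant();
    }
}
```

For example, an input of "bc 2b 61 b6" comes back as "BC2B61B6".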

Maarten Balliauw explained The quickest way to a VPN: Windows Azure Connect in this 12/23/2010 post:

First of all: Merry Christmas in advance! But to be honest, I already have my Christmas present… I’ll give you a little story first as it’s winter, dark outside and stories are better when it’s winter and you are reading this post in front of your fireplace. Last week, I received the beta invite for Windows Azure Connect, a simple and easy-to-manage mechanism to setup IP-based network connectivity between on-premises and Windows Azure resources. Being targeted at interconnecting Windows Azure instances to your local network, it also contains a feature that allows interconnecting endpoints. Interesting!

Now why would that be interesting… Well, I recently moved into my own house, having lived with my parents since birth, now 27 years ago. During that time, I bought a Windows Home Server that was living happily in our home network and making backups of my PC, my work laptop, my father’s PC and laptop and my brother’s laptop. Oh right, and my virtual server hosting this blog and some other sites. Now what on earth does this have to do with Windows Azure Connect? Well, more than you may think…

I’ve always been struggling with the idea on how to keep my Windows Home Server functional, between the home network at my place and the home network at my parents’ place. Having tried PPTP tunnels, IPSEC, OpenVPN, TeamViewer’s VPN capabilities, I found these solutions working but they required a lot of tweaking and installation woes. Not to mention that my ISP (and almost all ISPs in Belgium) blocks inbound TCP connections to certain ports.

Until I heard of Windows Azure Connect and that tiny checkbox on the management portal that states “Allow connections between endpoints in group”. Aha! So I started installing the Windows Azure Connect connector on all machines. A few times “Next, I accept, Next, Finish” later, all PC’s, my virtual server and my homeserver were talking cloud with each other! Literally, it takes only a few minutes to set up a virtual network on multiple endpoints. And it also routes through proxies, which means that my homeserver should even be able to make backups of my work laptop when I’m in the office with a very restrictive network. Restrictive for non-cloudians, that is :-)

Installing Windows Azure Connect connector

This one’s easy. Navigate to http://windows.azure.com, and in the management portal for Windows Azure Connect, click “Install local endpoint”.

You will be presented a screen containing a link to the endpoint installer.

Copy this link and make sure you write it down as you will need it for all machines that you want to join in your virtual network. I tried just copying the download to all machines and installing from there, but that does not seem to work. You really need a fresh download every time.

Interconnecting machines

This one’s really, really easy. I remember configuring Cisco routers when I was in school, but this approach is a lot easier I can tell you. Navigate to http://windows.azure.com and open the Windows Azure Connect management interface. Click the “Create group” button in the top toolbar.

Next, enter a name and an optional description for your virtual network. Next, add the endpoints that you’ve installed. Note that it takes a while for them to appear; this can be forced by going to every machine that you installed the connector for and clicking the “Refresh” option in the system tray icon for Windows Azure Connect. Anyway, here are my settings:

Make sure that you check “Allow connections between endpoints in group”. And eh… that’s it! You now have interconnected your machines on different locations in about five minutes. Cloud power? For sure!

As a side note: it would be great if one endpoint could be joined to multiple “groups” or virtual networks. That would allow me to create a group for other situations, and make my PC part of all these groups.

Some findings

Just for the techies out there, here are some findings… Let’s start with doing an ipconfig /all on one of the interconnected machines:

Windows Azure Connect really is a virtual PPP adapter added to your machine. It operates over IPv6. Let’s see if we can ping other machines. Ebh-vm05 is the virtual machine hosting my blog, running in a datacenter near Brussels. I’m issuing this ping command from my work laptop in my parents’ home network near Antwerp. Here goes:

Bam! Windows Azure Connect even seems to advertise hostnames on the virtual network! I like this very, very much! This would mean I can also do remote desktop between machines, even behind my company’s restrictive proxy. I’m going to try that one on Monday. Eat that, corporate IT :-)

One last thing I’m interested in: the IPv6 addresses of all connected machines seem to be in different subnets. Let’s issue a traceroute as well.

Sweet! It seems that there’s routing going on inside Windows Azure Connect to communicate between all endpoints.

As a side note: Yes, those are high ping times. But do note that I was at my parents’ home when taking this screenshot, and the microwave was defrosting Christmas meals between my laptop and the wireless access point.

Conclusion

I’m probably abusing Windows Azure Connect doing this. However, it’s a great use case in my opinion and I really hope Microsoft keeps supporting this. It would be even better if they offered Windows Azure Connect in the setup I described above for home users as well. It would be a great addition to Windows Intune, too!


Live Windows Azure Apps, APIs, Tools and Test Harnesses

•• Zulfiqar (@zahmed) explained using WCF Certificates in Compute Emulator in a 12/21/2010 post (missed when published):

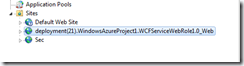

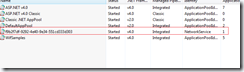

Windows Azure SDK 1.3 introduced significant changes to the local development environment. The old DevFabric is broken down into “Compute emulator” & “Storage emulator” which are the local emulated environments for the compute and storage respectively.

Azure SDK 1.3 uses the ‘full IIS’ feature for the WebRole running in the compute emulator which makes it much easier to configure and debug applications in the emulator. For example, when you run your azure project (containing a WebRole) in the Compute emulator it transparently creates web sites and application pools in IIS and configures them correctly by pointing to the physical application directory. Your WebRole code executes inside the good-old worker process (w3wp.exe) and can be configured using the appPool properties plus you can directly edit the web.config to change application settings.

You can configure HTTPS endpoints for your application and the emulator automatically sets up SSL bindings using a test certificate. These bindings can be viewed using the netsh.exe utility.

If your WebRole however requires additional certificates then you have to manually deploy those. For example, WCF message security would require a service certificate which needs to be referenced in the web.config.

<serviceCredentials>
  <serviceCertificate findValue="bc2b61b66fda75dbaae50ae2757ad756cfeff016" x509FindType="FindByThumbprint" storeLocation="LocalMachine" storeName="My" />
</serviceCredentials>

The AppPool created by the Compute emulator is configured to run under the ‘Network Service’ account, so additional certificates need to be copied into the local machine store (inside the Personal folder) and the ‘Network Service’ account needs to have read permissions to the private keys.
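Before starting the role, it can help to confirm that the certificate really is where the configuration expects it. The following is a rough sketch using the standard X509Store API; the ServiceCertificateCheck class is a hypothetical name. Note that it only checks that the certificate is present in LocalMachine\My. It does not prove that ‘Network Service’ can read the private key, which still has to be granted separately.

```csharp
using System;
using System.Security.Cryptography.X509Certificates;

// Hypothetical helper for checking the LocalMachine\My (Personal) store on Windows.
public static class ServiceCertificateCheck
{
    // Returns the certificate with the given thumbprint, or null when it is absent.
    public static X509Certificate2 Find(string thumbprint)
    {
        var store = new X509Store(StoreName.My, StoreLocation.LocalMachine);
        store.Open(OpenFlags.ReadOnly);
        try
        {
            // validOnly is false because test certificates are usually self-signed.
            X509Certificate2Collection matches = store.Certificates.Find(
                X509FindType.FindByThumbprint, thumbprint, false);

            return matches.Count > 0 ? matches[0] : null;
        }
        finally
        {
            store.Close();
        }
    }
}
```

A caller might pass the findValue from the serviceCertificate element above, then check that the result is not null and that its HasPrivateKey property is true.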

• Paul Patterson explained Deploying a WCF Service to Windows Azure in a 12/24/2010 post:

I have never used Windows Azure, so I am posting about my first attempts at deploying something to the Windows Azure Platform.

If you’ve seen my posts on Windows Phone 7 (WP7) development, you may have seen where I am creating a WP7 application that uses a WCF service. A deliverable from this is a WCF service that I want to make available from the “cloud”.

So, here is what I did to achieve this…

Up to this point, I have already signed up to Windows Azure.

Download and install the Windows Azure SDK for Microsoft Visual Studio. Link to download site… Windows Azure SDK for Microsoft Visual Studio

Installing the SDK will add Windows Azure Project templates to Visual Studio, used in the sections that follow.

Creating the Project

The first thing I did was to create an Empty solution using Visual Studio.

From the File menu in Visual Studio, select File > New > Project

In the New Project dialog, select the Cloud template. In my case, I am using the Visual Basic version of the template.

Selecting the Cloud template will display the Windows Azure Project item in the center of the dialog. Select the item, and then give your project a name and location. Click the OK button.

This launches a wizard that allows you to select one or more roles to use in your Azure project. A role is basically the type of project you want to create. I selected to use the ASP.Net Web Role because I want to build out both the service, and a web site that I can use to inform people about the service (and my new WP7 application). So, I select the ASP.Net Web Role and click the right arrow button to add the role. (Don’t click OK yet).

Hover over the newly added item in the Windows Azure solution list box and click the editor icon that appears. This allows you to rename your project that gets created. For example, I renamed mine to Zenfolio.WP7.Web.

After renaming the project, click the OK button. Visual Studio will then create the solution for your project.

Visual Studio creates two things in your solution. The first is the ASP.Net project that will be used for the web site and services. The second is the project containing information to be used by Visual Studio to later deploy the ASP.Net project, and its services to Azure.

Here is an example of the solution that I am now using. The solution includes the ASP.Net project, the Azure deployment project, and my WP7 project that I added later on.

In the Azure deployment project (named Zenfolio.WP7.Cloud in my solution), expand the Roles folder. You can see how the ASP.Net Web project is shown to be one of the roles to be deployed to Azure.

The Deployment

In that last image above you can see that my Zenfolio.WP7.Web project contains a service named ZenfolioService.svc. I want to now deploy this project to Azure so that I can use it in my WP7 application from anywhere I have Interweb access.

Preparing the Package

The first thing to do is prepare the project for deployment to Azure.

Right-click the Azure deployment project and select Publish.

In the Deploy to Windows Azure project dialog, select Create Service Package Only. Click the OK button.

Visual Studio creates a deployment package and then opens a File Explorer window to display where the package is saved.

Prepare Azure

I have an Azure account, however I still need to configure Azure to host my new service. So, I log into Azure at http://windows.azure.com.

I am presented with the Azure homepage. I click the Hosted Services, Storage Accounts & CDN link in the lower left of the site.

I then click the Hosted Services link from the list on the left.

I don’t have any services there yet, so the list will only contain information about my subscription.

Next I click the New Hosted Service item in the ribbon bar on the top of the page.

The Create a new Hosted Service dialog is presented. In this dialog I:

- enter a Service Name – which is ZenfolioWCFApi in my example,

- enter a URL prefix, or subdomain, name prefix. I use Zenfolio for mine (which I may get in trouble for later on…we’ll see),

- select Anywhere US for the region,

- select a deployment option of Deploy to stage environment because I am only testing right now,

- check the Start after successful deployment option,

- enter a deployment name of ZenfolioWCFApiStaging,

- select the package and configuration files created earlier by Visual Studio.

Here is what my dialog entries look like…

I then click the OK button. Azure immediately prompts with a warning message.

The message suggests that I deploy more than one instance of my service for availability purposes. I am not really worried about availability right now because I am only testing. So I select to override and submit my package; I click the Yes button.

The package starts uploading…

…and does some initializing….

After a number of minutes (be patient!!) , the service is shown as being hosted in a staging environment in Azure.

Remember what you are deploying to Azure is going to use resources. It doesn’t matter if it’s for development or not, it still uses resources. If you deploy something only for testing, get rid of it after you are done. That way you are not chewing up your Azure Hours when not doing anything.

The Proof

Cool! Now that I have the service up and running, I want to test it out.

First, I need to find out the URI for my service that is hosted on Azure. Remember that this is not a production deployment, but rather a staging environment. Azure creates a DNS name specifically for staging. The DNS name can be found by viewing the properties of the deployment on the Azure management portal…

Clicking on the DNS Name link in the management portal will navigate your browser to the location of the deployed application. I did this in mine and this is what it showed.

Yay! This worked. Not much to the web application itself (yet), however it is exactly what I expected to appear.

Now to test that the service is working, I enter the URL to the service itself…

Score!

Now I am going to update the Service Reference I use in my WP7 project to point to the Azure installed (staged) service.

Now let’s run the WP7 app and see what happens…

Success!!

What’s Next

Now that I know how to do that, the rest should be pudding…right? We’ll see.

Stay tuned for more shenanigans from my WP7 and Azure journey !!

• Dominick Baier explained Handling Configuration Changes in Windows Azure Applications in a 12/25/2010 post:

While finalizing StarterSTS 1.5, I had a closer look at lifetime and configuration management in Windows Azure. (This is not new information – just some bits and pieces compiled at one single place – plus a bit of a reality check)

When dealing with lifetime management (and especially configuration changes), there are two mechanisms in Windows Azure – a RoleEntryPoint derived class and a couple of events on the RoleEnvironment class. You can find good documentation about RoleEntryPoint here. The RoleEnvironment class features two events that deal with configuration changes – Changing and Changed.

Whenever a configuration change gets pushed out by the fabric controller (either changes in the settings section or the instance count of a role) the Changing event fires. The event handler receives an instance of the RoleEnvironmentChangingEventArgs type. This contains a collection of type RoleEnvironmentChange. This in turn is a base class for two other classes that detail the two types of possible configuration changes I mentioned above: RoleEnvironmentConfigurationSettingChange (configuration settings) and RoleEnvironmentTopologyChange (instance count). The two respective classes contain information about which configuration setting and which role has been changed. Furthermore the Changing event can trigger a role recycle (aka reboot) by setting EventArgs.Cancel to true.

So your typical job in the Changing event handler is to figure out whether your application can handle these configuration changes at runtime, or whether you would rather have a clean restart. Prior to SDK 1.3, the VS templates generated the following code to reboot if any configuration settings have changed:

private void RoleEnvironmentChanging(object sender, RoleEnvironmentChangingEventArgs e)
{
    // If a configuration setting is changing
    if (e.Changes.Any(change => change is RoleEnvironmentConfigurationSettingChange))
    {
        // Set e.Cancel to true to restart this role instance
        e.Cancel = true;
    }
}

This is a little drastic as a default since most applications will work just fine with changed configuration – maybe that’s the reason this code has gone away in the 1.3 SDK templates (more).

The Changed event gets fired after the configuration changes have been applied. Again the changes will get passed in just like in the Changing event. But from this point on RoleEnvironment.GetConfigurationSettingValue() will return the new values. You can still decide to recycle if some change was so drastic that you need a restart. You can use RoleEnvironment.RequestRecycle() for that (more).

As a rule of thumb: When you always use GetConfigurationSettingValue to read from configuration (and there is no bigger state involved) – you typically don’t need to recycle.

In the case of StarterSTS, I had to abstract away the physical configuration system and read the actual configuration (either from web.config or the Azure service configuration) at startup. I then cache the configuration settings in memory. This means I indeed need to take action when configuration changes – so in my case I simply clear the cache, and the new config values get read on the next access to my internal configuration object. No downtime – nice!
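The cache-and-clear approach described above could be sketched roughly as follows. This is not StarterSTS code: CachedConfiguration is a hypothetical class, and the setting reader is passed in as a delegate so the sketch runs without the Azure SDK. The comment at the top shows how it might be wired to RoleEnvironment in a web role.

```csharp
using System;
using System.Collections.Generic;

// In a web role this might be wired up from Application_Start in global.asax
// (requires a reference to Microsoft.WindowsAzure.ServiceRuntime):
//
//   var config = new CachedConfiguration(RoleEnvironment.GetConfigurationSettingValue);
//   RoleEnvironment.Changed += (sender, e) => config.Clear();
//
public class CachedConfiguration
{
    private readonly Func<string, string> _readSetting;
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();
    private readonly object _sync = new object();

    public CachedConfiguration(Func<string, string> readSetting)
    {
        if (readSetting == null) throw new ArgumentNullException("readSetting");
        _readSetting = readSetting;
    }

    // Returns the cached value, reading it from the underlying store on first access.
    public string Get(string name)
    {
        lock (_sync)
        {
            string value;
            if (!_cache.TryGetValue(name, out value))
            {
                value = _readSetting(name);
                _cache[name] = value;
            }
            return value;
        }
    }

    // Drops all cached values so the next Get() re-reads the current configuration.
    public void Clear()
    {
        lock (_sync)
        {
            _cache.Clear();
        }
    }
}
```

With this shape, a configuration change costs one extra read per setting on the next access instead of a role recycle.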

Gotcha

A very natural place to hook up the RoleEnvironment lifetime events is the RoleEntryPoint derived class. But with the move to the full IIS model in 1.3 – the RoleEntryPoint methods get executed in a different AppDomain (even in a different process) – see here. You might not be able to call into your application code to e.g. clear a cache. Keep that in mind! In this case you need to handle these events from e.g. global.asax.

Dominick described thinktecture Starter STS (Community Edition) v1.2, which is available for download from CodePlex, as follows:

Project Description

StarterSTS is a compact, easy to use security token service that is completely based on the ASP.NET provider infrastructure. It is built using the Windows Identity Foundation and supports WS-Federation., WS-Trust, REST, OpenId and Information Cards.Disclaimer

Though you could use StarterSTS directly as a production STS, be aware that this was not the design goal.

StarterSTS did not go through the same testing or quality assurance process as a "real" product like ADFS2 did. StarterSTS is also lacking all kinds of enterprisy features like configuration services, proxy support or operations integration. The main goal of StarterSTS is to give you a learning tool for building non-trivial security token services. Another common scenario is to use StarterSTS in a development environment.

High level features

- Active and passive security token service

- Supports WS-Federation, WS-Trust, REST, OpenId, SAML 1.1/2.0 tokens and Information Cards

- Supports username/password and client certificate authentication

- Based on standard ASP.NET membership, roles and profile infrastructure

- Control over security policy (SSL, encryption, SOAP security) without having to touch WIF/WCF configuration directly

- Automatic generation of WS-Federation metadata for federating with relying parties and other STSes

Documentation

http://identity.thinktecture.com/stsce/docs/

Screencasts

Initial Setup & Configuration

Federating your 1st Web App

Federating your 1st Web Service

Single-Sign-On

Using the REST Endpoint

Using the OpenId Bridge

Tracing

Using Client Certificates

Using Information Cards

My Blog

http://www.leastprivilege.com

Jose Barreto reported the public availability of his BingMatrix – A Windows Azure application that provides a fun way to mine data from Bing project on 12/23/2010:

I wanted to share a little application I put together using Windows Azure. It uses Bing queries to find out the popularity of a specific set of keywords on a specific set of sites. I actually created this for my own use while researching how frequently some registry keys are mentioned on Microsoft support, on TechNet blogs or on the TechNet forums.

To get started, go to http://bingmatrix.cloudapp.net and provide:

- Title (optional)

- List of keywords

- List of web sites

- Additional keywords (optional)

Here’s a sample screenshot of the input screen:

You can use one of the sample queries provided. For instance, to get the data above I simply clicked the “SMB2” button on the right. To get your results, click on the big “Build my BingMatrix” button on the left. Please note that it will take a few seconds to build the matrix, so be patient. Here are the results for the sample above:

For the specific example above, it searches for the 4 keywords on the 5 different sites, so it goes to Bing 20 times to get the results. For each query, it uses one keyword from the “keywords list”, one of the sites from the “sites list” and adds the contents of the “additional keywords” field. For instance, for the query on “Performance” on the “blogs” site, it passes the following query string to Bing: +"Performance" +"File Server" +SMB2 site:blogs.technet.com. The table with the results includes links to each individual query, so you can go directly to Bing to find the details.
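
To make the combination concrete, here is a rough sketch of how such a matrix of query strings could be built. It is not Jose's code: the BingMatrixQueries class and its Build method are hypothetical, and the query format is inferred only from the single example string quoted above:

```csharp
using System;
using System.Collections.Generic;

public static class BingMatrixQueries
{
    // Builds one query string per (keyword, site) pair, keyword by keyword.
    // additionalKeywords is appended verbatim, as typed by the user.
    public static List<string> Build(
        IEnumerable<string> keywords, IEnumerable<string> sites, string additionalKeywords)
    {
        var queries = new List<string>();
        foreach (var keyword in keywords)
        {
            foreach (var site in sites)
            {
                queries.Add(string.Format(
                    "+\"{0}\" {1} site:{2}", keyword, additionalKeywords, site));
            }
        }
        return queries;
    }
}
```

With 4 keywords and 5 sites, Build returns 20 query strings, one per cell of the matrix, which is why larger matrices take longer to process.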

Here are a few additional sample results:

You should interpret these results carefully, since they can vary based on the additional keywords provided. Obviously, Bing is constantly crawling the Internet, so the output for the same query will change over time. The numbers you see on the screenshots above will probably be different by the time you try them out. Also note that if you get millions of hits for a certain query, the numbers are obviously less precise. If you get just a few dozen, they are usually fairly accurate.

You can also provide direct links to a BingMatrix query. For instance, here are direct URLs to the complete list of 12 sample queries provided on the main page of the site:

- Kinect Games

- Windows Phone 7 Devices

- Famous Movie Series

- File Server and SMB2

- Linux Distributions

- Technology Podcasters

- Technology Buzzwords

- Popular Brands

- Top Celebrities in 2010

- Car Brands

- Homework Topics in Puget Sounds School Districts

- Top Words in 2010

Try it out at http://bingmatrix.cloudapp.net and make sure to post a comment if you like it. You can obviously type in any keywords, sites or additional keywords to build your own matrix. Just keep in mind that if your matrix is too big, it will take longer to process. It might also time out. This was a weekend project for me and I have made a few updates in the last few weekends. Feel free to provide feedback and suggest improvements.

Updated on 12/15/2010: Deployed a new version that works faster by using multiple threads to query Bing.

Updated on 12/21/2010: New sample queries added (8 total now).

Updated on 12/23/2010: New sample queries added (12 total now). Support for passing in parameters in the URL. Added title field. Added “Building…” message.

Here’s my search screen for the OakLeaf Systems blog:

And the results, which appear suspiciously high for all search terms except OData and Azure AppFabric:

I’ve left a comment about this issue on Jose’s post.

Wictor Wilén added an Azure FotoBadge application to Facebook on 12/20 and updated it on 12/23/2010:

The MSDN Library added an Introduction to the VM Role Development Process topic in 12/2010:

[This topic contains preliminary content for the current release of Windows Azure.]

The Windows Azure virtual machine (VM) role enables you to deploy a custom Windows Server 2008 R2 image to Windows Azure. With a VM role, you can work in a familiar environment, using standard Windows technologies to create an image, install your software to it, prepare it for deployment to Windows Azure, and manage it once it has been deployed. The Windows Azure VM role is designed for compatibility with ordinary Windows Server workloads, making it an ideal option for migrating existing applications to Windows Azure.

When you deploy a VM role to Windows Azure, you are running an instance of Windows Server 2008 R2 in Windows Azure. A VM role gives you a high degree of control over the virtual machine, while also providing the advantages of running within the Windows Azure environment: immediate scalability, in-place upgrades with no service downtime, integration with other components of your service, and load-balanced traffic.

Note: Windows Server 2008 R2 is the only supported operating system for a VM role.

Note also that a server instance running in Windows Azure is subject to certain limitations that an on-premises installation of Windows is not. Some network-related functionality is restricted; for example, in order to use the UDP protocol, you must also use Windows Azure Connect. Additionally, a server instance running in Windows Azure does not persist state. If the server instance is re-created from the image, either because the operator requested a reimage or because Windows Azure needed to move the server instance to different hardware, any state associated with that instance that has not been persisted to a durable storage medium outside of the virtual machine is lost.

It's recommended that you use the Windows Azure storage services to persist state, either by writing it to a blob or to a Windows Azure drive. Data that is written to the local storage resource directory is persisted when a server instance is reimaged; however, this data may be lost in the event of a transient failure in Windows Azure that requires your server instance to be moved to different hardware.

The process of developing and deploying a VM role consists of the following steps:

- Preparing your image and uploading it to the Windows Azure image repository associated with your subscription.

- Designing your service model and preparing your service package.

- Uploading any certificates to the certificate store.

- Deploying your service to Windows Azure.

The following image provides an overview of the steps involved, and the subsequent sections provide additional information on each step:

Preparing Your Customized Image

You can use standard Windows image creation technologies to create your custom Windows Server 2008 R2 image. The process of creating your custom image and preparing it for deployment is described in Getting Started with Windows Azure VM Role. Step-by-step instructions are available in How to: Manage Windows Azure VM Roles. You may also wish to consult the documentation for the Windows Automated Installation Kit (Windows AIK) for a more thorough explanation of the process of creating a Windows image.

Installing the Windows Azure Integration Components

As part of preparing your Windows Server image on-premises, you must install the Windows Azure Integration Components onto the image. For information on installing the Integration Components onto your image, see How to Install the Windows Azure Integration Components. Once the image has been deployed to Windows Azure as a server instance, the Windows Azure Integration Components handle integrating the server instance with the Windows Azure environment.

Installing Software Applications

As part of the process of preparing your image, you must install any software applications that you want to run on your server instances.

To prepare your image, you will run the System Preparation (Sysprep) tool; Sysprep is also used to set up your server instances once you have deployed your image to Windows Azure. Consult your software application's documentation for information about its compatibility with Sysprep.

Develop the Adapter

When you develop and deploy a custom image, you are installing and configuring software applications to run in the dynamic environment of Windows Azure. In many cases, you may need to provide configuration information for your application that is not available at development time, and must be gathered at runtime. The recommended way to configure your applications for the dynamic environment is by writing an adapter that interacts with Windows Azure and prepares and runs your application. For details on developing an adapter, see Developing the VM Role Adapter.

Configure the Firewall

As part of the process of preparing your image, you should configure the Windows firewall to open any ports that your application needs once it is running on your server instance in Windows Azure.

It's recommended that your service use well-known ports. You can specify port numbers for endpoints that you define for any role – web, worker, or virtual machine. See (xref) for more information on specifying ports in your service definition.

You can configure the firewall in any of the following ways:

- Manually, during the process of preparing your image.

- By using the Windows Firewall API to open a port programmatically.

- By using the Windows Installer XML (WiX) toolset. If you are writing an installer to install software to your server instance, you can use WiX to open a port in the firewall when the installer runs.

Generalize the Image for Deployment

After you have installed the Windows Azure Integration Components, your software, and your adapter and configured the firewall, you need to generalize your image for deployment to Windows Azure by running Sysprep. The process of generalization strips out information that is specific to the current running instance of Windows, so that the image may be deployed to one or more virtual machines in Windows Azure. For more information on using Sysprep to generalize your image, see How to Prepare the Image for Deployment. For technical details on Sysprep, see the Sysprep Technical Reference.

During the process of preparing your image for deployment, you may generalize and then specialize it several times, in an iterative process. Each time you create a virtual machine from the image, Windows Setup runs and performs specialization functions for that machine. Your final task before you upload your image to the Windows Azure image repository is to generalize the image.

The specialization pass happens again once your image is deployed to Windows Azure. Each server instance that is created from your image runs an automated Windows setup process using Sysprep. The automated specialization pass uses configuration values from the answer file that is installed to the image's root directory by the Windows Azure Integration Components. For more information, see About the Windows Azure Integration Components.

For information on uploading your image to Windows Azure, see How to Deploy an Image to Windows Azure.

Designing Your Service Model

Your service definition and service configuration files define your VM role and specify the image to use for it; specify where any certificates should be installed; and specify the number of server instances to create. For details on creating your service model and deploying your service package, see Creating the Service Model and Deploying the Package.

Uploading Certificates to the Windows Azure Certificate Store

Any certificates required by your VM role should be installed to the Windows Azure certificate store; you should not include them with your image, as Sysprep strips out private key information. For more information on installing certificates to your server instances, see About the Windows Azure Integration Components.

For general information on working with service certificates in Windows Azure, see Managing Service Certificates.

Deploying Your Service to Windows Azure