Windows Azure and Cloud Computing Posts for 3/5/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Joe Giardino of the Windows Azure Storage Team posted a Windows Azure Storage Client for Java Overview on 3/5/2012:

We released the Storage Client for Java with support for Windows Azure Blobs, Queues, and Tables. Our goal is to continue to improve the development experience when writing cloud applications using Windows Azure Storage. This release is a Community Technology Preview (CTP) and will be supported by Microsoft. As such, we have incorporated feedback from customers and forums for the current .NET libraries to help create a more seamless API that is both powerful and simple to use. This blog post serves as an overview of the library and covers some of the implementation details that will be helpful to understand when developing cloud applications in Java. We’ve also provided two further blog posts that cover some of the unique features and programming models for the blob and table services.

Packages

The Storage Client for Java is distributed in the Windows Azure SDK for Java jar (see below for locations). For the optimal development experience import the client sub package directly (com.microsoft.windowsazure.services.[blob|queue|table].client). This blog post refers to this client layer.

The relevant packages are broken up by service:

Common

com.microsoft.windowsazure.services.core.storage – This package contains all storage primitives such as CloudStorageAccount, StorageCredentials, Retry Policies, etc.

Services

com.microsoft.windowsazure.services.blob.client – This package contains all the functionality for working with the Windows Azure Blob service, including CloudBlobClient, CloudBlob, etc.

com.microsoft.windowsazure.services.queue.client – This package contains all the functionality for working with the Windows Azure Queue service, including CloudQueueClient, CloudQueue, etc.

com.microsoft.windowsazure.services.table.client – This package contains all the functionality for working with the Windows Azure Table service, including CloudTableClient, TableServiceEntity, etc.

Services

While this document describes the common concepts for all of the above packages, it’s worth briefly summarizing the capabilities of each client library. Blob and Table each have some interesting features that warrant further discussion. For those, we’ve provided additional blog posts linked below. The client API surface has been designed to be easy to use and approachable; however, to accommodate more advanced scenarios, we have provided optional extension points where necessary.

Blob

The Blob API supports all of the normal Blob operations (upload, download, snapshot, set/get metadata, and list), as well as the normal container operations (create, delete, list blobs). However, we have gone a step further and also provided some additional conveniences such as Download Resume, Sparse Page Blob support, simplified MD5 scenarios, and simplified access conditions.

To better explain these unique features of the Blob API, we have published an additional blog post which discusses these features in detail. You can also see additional samples in our article How to Use the Blob Storage Service from Java.

Sample – Upload a File to a Block Blob

    // You will need these imports
    import java.io.File;
    import java.io.FileInputStream;
    import com.microsoft.windowsazure.services.blob.client.CloudBlobClient;
    import com.microsoft.windowsazure.services.blob.client.CloudBlobContainer;
    import com.microsoft.windowsazure.services.blob.client.CloudBlockBlob;
    import com.microsoft.windowsazure.services.core.storage.CloudStorageAccount;

    // Initialize Account
    CloudStorageAccount account = CloudStorageAccount.parse([ACCOUNT_STRING]);

    // Create the blob client
    CloudBlobClient blobClient = account.createCloudBlobClient();

    // Retrieve reference to a previously created container
    CloudBlobContainer container = blobClient.getContainerReference("mycontainer");

    // Create or overwrite the "myimage.jpg" blob with contents from a local file
    CloudBlockBlob blob = container.getBlockBlobReference("myimage.jpg");
    File source = new File("c:\\myimages\\myimage.jpg");
    blob.upload(new FileInputStream(source), source.length());

(Note: It is best practice to always provide the length of the data being uploaded if it is available; alternatively, a user may specify -1 if the length is not known.)

Table

The Table API provides a minimal client surface that is incredibly simple to use but still exposes enough extension points to allow for more advanced “NoSQL” scenarios. These include built-in support for POJO entities, HashMap-based “property bag” entities, and projections. Additionally, we have provided optional extension points that allow clients to customize the serialization and deserialization of entities, which enables more advanced scenarios such as creating composite keys from various properties.

Due to some of the unique scenarios listed above, the Table service has some requirements and capabilities that differ from the Blob and Queue services. To better explain these capabilities and to provide a more comprehensive overview of the Table API, we have published an in-depth blog post which includes the overall design of Tables, the relevant best practices, and code samples for common scenarios. You can also see more samples in our article How to Use the Table Storage Service from Java.

Sample – Upload an Entity to a Table

    // You will need these imports
    import com.microsoft.windowsazure.services.core.storage.CloudStorageAccount;
    import com.microsoft.windowsazure.services.table.client.CloudTableClient;
    import com.microsoft.windowsazure.services.table.client.TableOperation;

    // Retrieve storage account from connection-string
    CloudStorageAccount storageAccount = CloudStorageAccount.parse([ACCOUNT_STRING]);

    // Create the table client.
    CloudTableClient tableClient = storageAccount.createCloudTableClient();

    // Create a new customer entity.
    CustomerEntity customer1 = new CustomerEntity("Harp", "Walter");
    customer1.setEmail("Walter@contoso.com");
    customer1.setPhoneNumber("425-555-0101");

    // Create an operation to add the new customer to the people table.
    TableOperation insertCustomer1 = TableOperation.insert(customer1);

    // Submit the operation to the table service.
    tableClient.execute("people", insertCustomer1);

Queue

The Queue API includes convenience methods for all of the functionality available through REST: creating, modifying and deleting queues; adding, peeking, getting, deleting, and updating messages; and getting the message count. Here is a sample of creating a queue and adding a message, and you can also read How to Use the Queue Storage Service from Java.

Sample – Create a Queue and Add a Message to it

    // You will need these imports
    import com.microsoft.windowsazure.services.core.storage.CloudStorageAccount;
    import com.microsoft.windowsazure.services.queue.client.CloudQueue;
    import com.microsoft.windowsazure.services.queue.client.CloudQueueClient;
    import com.microsoft.windowsazure.services.queue.client.CloudQueueMessage;

    // Retrieve storage account from connection-string
    CloudStorageAccount storageAccount = CloudStorageAccount.parse([ACCOUNT_STRING]);

    // Create the queue client
    CloudQueueClient queueClient = storageAccount.createCloudQueueClient();

    // Retrieve a reference to a queue
    CloudQueue queue = queueClient.getQueueReference("myqueue");

    // Create the queue if it doesn't already exist
    queue.createIfNotExist();

    // Create a message and add it to the queue
    CloudQueueMessage message = new CloudQueueMessage("Hello, World");
    queue.addMessage(message);

Design

When designing the Storage Client for Java, we set up a series of design guidelines to follow throughout the development process. In order to reflect our commitment to the Java community working with Azure, we decided to design an entirely new library from the ground up that would feel familiar to Java developers. While the basic object model is somewhat similar to our .NET Storage Client Library there have been many improvements in functionality, consistency, and ease of use which will address the needs of both advanced users and those using the service for the first time.

Guidelines

- Convenient and performant – The default implementation is simple to use; however, we will always be able to support performance-critical scenarios. For example, Blob upload APIs require the length of the data for authentication purposes. If this is unknown, a user may pass -1 and the library will calculate it on the fly. However, for performance-critical applications it is best to pass in the correct number of bytes.

- Users own their requests – We have provided mechanisms that will allow users to determine the exact number of REST calls being made, the associated request ids, HTTP status codes, etc. (See OperationContext in the Object Model discussion below for more). We have also annotated every method that will potentially make a REST request to the service with the @DoesServiceRequest annotation. This all ensures that you, the developer, are able to easily understand and control the requests made by your application, even in scenarios like Retry, where the Java Storage Client library may make multiple calls before succeeding.

- Look and feel –

  - Naming is consistent. Logical antonyms are used for complementary actions (e.g. upload and download, create and delete, acquire and release).

  - get/set prefixes follow Java conventions and are reserved for local client-side “properties”.

  - Minimal overloads per method: one with the minimum set of required parameters and one including all optional parameters, which may be null. The one exception is that listing methods have two minimum overloads to accommodate the common scenario of listing with a prefix.

- Minimal API Surface – In order to keep the API surface smaller we have reduced the number of extraneous helper methods. For example, Blob contains a single upload and download method that use Input / OutputStreams. If a user wishes to handle data in text or byte form, they can simply pass in the relevant stream.

- Provide advanced features in a discoverable way – In order to keep the core API simple and understandable advanced features are exposed via either the RequestOptions or optional parameters.

- Consistent Exception Handling – The library will immediately throw any exception encountered prior to making the request to the server. Any exception that occurs during the execution of the request will subsequently be wrapped inside a StorageException.

- Consistency – Objects are consistent in their exposed API surface and functionality. For example, a Blob, Container, or Queue all expose an exists() method.

Object Model

The Storage Client for Java uses local client side objects to interact with objects that reside on the server. There are additional features provided to help determine if an operation should execute, how it should execute, as well as provide information about what occurred when it was executed. (See Configuration and Execution below)

Objects

StorageAccount

The logical starting point is a CloudStorageAccount which contains the endpoint and credential information for a given storage account. This account then creates logical service clients for each appropriate service: CloudBlobClient, CloudQueueClient, and CloudTableClient. CloudStorageAccount also provides a static factory method to easily configure your application to use the local storage emulator that ships with the Windows Azure SDK.

A CloudStorageAccount can be created by parsing an account string in the following format:

    "DefaultEndpointsProtocol=http[s];AccountName=<account name>;AccountKey=<account key>"

Optionally, if you wish to specify a non-default DNS endpoint for a given service, you may include one or more of the following in the connection string:

    "BlobEndpoint=<endpoint>", "QueueEndpoint=<endpoint>", "TableEndpoint=<endpoint>"

Sample – Creating a CloudStorageAccount from an account string

    // Initialize Account
    CloudStorageAccount account = CloudStorageAccount.parse([ACCOUNT_STRING]);

ServiceClients

Any service wide operation resides on the service client. Default configuration options such as timeout, retry policy, and other service specific settings that objects associated with the client will reference are stored here as well.

For example:

- To turn on Storage Analytics for the blob service a user would call CloudBlobClient.uploadServiceProperties(properties)

- To list all queues a user would call CloudQueueClient.listQueues()

- To set the default timeout to 30 seconds for objects associated with a given client a user would call Cloud[Blob|Queue|Table]Client.setTimeoutInMs(30 * 1000)

Cloud Objects

Once a user has created a service client for the given service, it’s time to start working directly with the Cloud Objects of that service. A CloudObject is a CloudBlockBlob, CloudPageBlob, CloudBlobContainer, or CloudQueue, each of which contains methods to interact with the resource it represents in the service.

Below are basic samples showing how to create a Blob Container, a Queue, and a Table. See the samples in the Services section for examples of how to interact with CloudObjects.

Blobs

    // Retrieve reference to a previously created container
    CloudBlobContainer container = blobClient.getContainerReference("mycontainer");

    // Create the container if it doesn't already exist
    container.createIfNotExist();

Queues

    // Retrieve a reference to a queue
    CloudQueue queue = queueClient.getQueueReference("myqueue");

    // Create the queue if it doesn't already exist
    queue.createIfNotExist();

Tables

Note: You may notice that, unlike blob and queue, the table service does not use a CloudObject to represent an individual table. This is due to the unique nature of the table service, which is covered in more depth in the Tables deep dive blog post. Instead, table operations are performed via the CloudTableClient object:

    // Create the table if it doesn't already exist
    tableClient.createTableIfNotExists("people");

Configuration and Execution

In the maximum overload of each method provided in the library, you will note two or three extra optional parameters, depending on the service. All of them accept null, so users can utilize just the subset of features they require. For example, to utilize only RequestOptions, simply pass null for AccessCondition and OperationContext. The objects passed for these optional parameters give the user an easy way to determine if an operation should execute, control how it executes, and retrieve additional information about how it executed once it completes.

AccessCondition

An AccessCondition’s primary purpose is to determine if an operation should execute, and it is supported when using the Blob service. Specifically, AccessCondition encapsulates Blob leases as well as the If-Match, If-None-Match, If-Modified-Since, and If-Unmodified-Since HTTP headers. An AccessCondition may be reused across operations as long as the given condition is still valid. For example, a user may only wish to delete a blob if it hasn’t been modified since last week. By using an AccessCondition, the library will send the HTTP "If-Unmodified-Since" header to the server, which will not process the operation if the condition is not met. Additionally, blob leases can be specified through an AccessCondition so that only operations from users holding the appropriate lease on a blob may succeed.

AccessCondition provides convenient static factory methods to generate an AccessCondition instance for the most common scenarios (IfMatch, IfNoneMatch, IfModifiedSince, IfNotModifiedSince, and Lease); however, it is possible to utilize a combination of these by simply calling the appropriate setter on the condition you are using.
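To make the relationship between a condition object and the HTTP headers it stands for more concrete, here is a minimal, SDK-free sketch. `ConditionSketch` and its methods are hypothetical names invented for illustration and are not part of the Storage Client for Java; the header names are the standard HTTP conditional headers plus the `x-ms-lease-id` header used by the Blob REST API. Dates are passed as preformatted HTTP date strings to keep the example short.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical stand-in that shows how a condition object maps to request headers. */
final class ConditionSketch {
    private final Map<String, String> headers = new LinkedHashMap<>();

    private ConditionSketch() { }

    /** Only proceed if the resource still has this ETag. */
    static ConditionSketch ifMatch(String etag) {
        ConditionSketch condition = new ConditionSketch();
        condition.headers.put("If-Match", etag);
        return condition;
    }

    /** Only proceed if the caller holds this lease. */
    static ConditionSketch lease(String leaseId) {
        ConditionSketch condition = new ConditionSketch();
        condition.headers.put("x-ms-lease-id", leaseId);
        return condition;
    }

    /** Setter-style combination: adds a second condition to an existing one. */
    ConditionSketch ifUnmodifiedSince(String httpDate) {
        headers.put("If-Unmodified-Since", httpDate);
        return this;
    }

    /** Read-only view of the headers that would accompany the request. */
    Map<String, String> toHeaders() {
        return Collections.unmodifiableMap(headers);
    }

    public static void main(String[] args) {
        ConditionSketch combined = ConditionSketch.lease("my-lease-id")
                .ifUnmodifiedSince("Mon, 05 Mar 2012 00:00:00 GMT");
        System.out.println(combined.toHeaders());
    }
}
```

Because the sketch only stores header values and carries no per-request state, the same instance could be attached to several requests, which mirrors the reuse property described above.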

The following example illustrates how to use an AccessCondition to only upload the metadata on a blob if it is a specific version.

    blob.uploadMetadata(AccessCondition.generateIfMatchCondition(currentETag),
            null /* RequestOptions */, null /* OperationContext */);

Here are some examples:

    // Perform operation if the given resource is not a specified version:
    AccessCondition.generateIfNoneMatchCondition(eTag)

    // Perform operation if the given resource has been modified since a given date:
    AccessCondition.generateIfModifiedSinceCondition(lastModifiedDate)

    // Perform operation if the given resource has not been modified since a given date:
    AccessCondition.generateIfNotModifiedSinceCondition(date)

    // Perform operation with the given lease id (Blobs only):
    AccessCondition.generateLeaseCondition(leaseID)

    // Perform operation with the given lease id if it has not been modified since a given date:
    AccessCondition condition = AccessCondition.generateLeaseCondition(leaseID);
    condition.setIfUnmodifiedSinceDate(date);

RequestOptions

Each Client defines a service specific RequestOptions (i.e. BlobRequestOptions, QueueRequestOptions, and TableRequestOptions) that can be used to modify the execution of a given request. All service request options provide the ability to specify a different timeout and retry policy for a given operation; however some services may provide additional options. For example the BlobRequestOptions includes an option to specify the concurrency to use when uploading a given blob. RequestOptions are not stateful and may be reused across operations. As such, it is common for applications to design RequestOptions for different types of workloads. For example an application may define a BlobRequestOptions for uploading large blobs concurrently, and a BlobRequestOptions with a smaller timeout when uploading metadata.

The following example illustrates how to use BlobRequestOptions to upload a blob using up to 8 concurrent operations with a timeout of 30 seconds each.

    BlobRequestOptions options = new BlobRequestOptions();

    // Set ConcurrentRequestCount to 8
    options.setConcurrentRequestCount(8);

    // Set timeout to 30 seconds
    options.setTimeoutIntervalInMs(30 * 1000);

    blob.upload(new ByteArrayInputStream(buff), blobLength,
            null /* AccessCondition */, options, null /* OperationContext */);

OperationContext

The OperationContext is used to provide relevant information about how a given operation executed. This object is by definition stateful and should not be reused across operations. Additionally the OperationContext defines an event handler that can be subscribed to in order to receive notifications when a response is received from the server. With this functionality, a user could start uploading a 100 GB blob and update a progress bar after every 4 MB block has been committed.

Perhaps the most powerful function of the OperationContext is to provide the ability for the user to inspect how an operation executed. For each REST request made against a server, the OperationContext stores a RequestResult object that contains relevant information such as the HTTP status code, the request ID from the service, start and stop date, etag, and a reference to any exception that may have occurred. This can be particularly helpful to determine if the retry policy was invoked and an operation took more than one attempt to succeed. Additionally, the Service Request ID and start/end times are useful when escalating an issue to Microsoft.
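The idea of keeping one result per REST request can be sketched without the SDK. The class below is a hypothetical illustration, not the library's OperationContext or RequestResult; the names `ContextSketch`, `Attempt`, `record` and `wasRetried` are assumptions made for this example. It shows how a per-operation list of results lets a caller see whether more than one attempt was needed.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical per-operation record of every request attempt. */
final class ContextSketch {
    /** One entry per REST request: the status code and the service request id. */
    static final class Attempt {
        final int statusCode;
        final String requestId;

        Attempt(int statusCode, String requestId) {
            this.statusCode = statusCode;
            this.requestId = requestId;
        }
    }

    private final List<Attempt> attempts = new ArrayList<>();

    /** Called once for each response received while the operation runs. */
    void record(int statusCode, String requestId) {
        attempts.add(new Attempt(statusCode, requestId));
    }

    /** The most recent result, or null if no request has been made yet. */
    Attempt lastResult() {
        return attempts.isEmpty() ? null : attempts.get(attempts.size() - 1);
    }

    /** True when the operation needed more than one request to finish. */
    boolean wasRetried() {
        return attempts.size() > 1;
    }

    public static void main(String[] args) {
        ContextSketch context = new ContextSketch();
        context.record(503, "request-1"); // first attempt throttled
        context.record(201, "request-2"); // retry succeeded
        System.out.println("retried: " + context.wasRetried()
                + ", last status: " + context.lastResult().statusCode);
    }
}
```

Since the list grows with every request, an instance like this belongs to a single operation, which is the reason the real object should not be shared across operations.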

The following example illustrates how to use OperationContext to print out the HTTP status code of the last operation.

    OperationContext opContext = new OperationContext();
    queue.createIfNotExist(null /* RequestOptions */, opContext);
    System.out.println(opContext.getLastResult().getStatusCode());

Retry Policies

Retry Policies have been engineered so that the policies can evaluate whether to retry on various HTTP status codes. Although the default policies will not retry 400 class status codes, a user can override this behavior by creating their own retry policy. Additionally, RetryPolicies are stateful per operation which allows greater flexibility in fine tuning the retry policy for a given scenario.

The Storage Client for Java ships with 3 standard retry policies which can be customized by the user. The default retry policy for all operations is an exponential backoff with up to 3 additional attempts as shown below:

    new RetryExponentialRetry(
            3000 /* minBackoff in milliseconds */,
            30000 /* deltaBackoff in milliseconds */,
            90000 /* maxBackoff in milliseconds */,
            3 /* maxAttempts */);

With the above default policy, retries will occur after approximately 3,000ms, 35,691ms and 90,000ms.

If the number of attempts should be increased, one can use the following:

    new RetryExponentialRetry(
            3000 /* minBackoff in milliseconds */,
            30000 /* deltaBackoff in milliseconds */,
            90000 /* maxBackoff in milliseconds */,
            6 /* maxAttempts */);

With the above policy, retries will occur after approximately 3,000ms, 28,442ms, 80,000ms, 90,000ms, 90,000ms and 90,000ms.

NOTE: the time provided is an approximation because the exponential policy introduces a +/-20% random delta as described below.

NoRetry - Operations will not be retried

LinearRetry - Represents a retry policy that performs a specified number of retries, using a specified fixed time interval between retries.

ExponentialRetry (default) - Represents a retry policy that performs a specified number of retries, using a randomized exponential backoff scheme to determine the interval between retries. This policy introduces a +/- 20% random delta to even out traffic in the case of throttling.
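The approximate wait times quoted above can be reproduced with a small stand-alone calculation. This is a sketch under an assumed formula, not the library's source: the wait is taken to be `min(minBackoff + (2^retryCount - 1) * jitteredDelta, maxBackoff)`, with `jitteredDelta` drawn uniformly from 80% to 120% of the delta backoff. That assumption is consistent with the figures given for the default policy, but the exact implementation may differ. `BackoffSketch` and `backoffMs` are hypothetical names.

```java
import java.util.Random;

/** Sketch of a randomized exponential backoff calculation (assumed formula). */
final class BackoffSketch {
    /**
     * Returns the wait in milliseconds before retry number retryCount (0-based).
     * The delta is scaled by a random factor in [0.8, 1.2) and then multiplied
     * by (2^retryCount - 1); the result is capped at maxBackoffMs.
     */
    static long backoffMs(int retryCount, long minBackoffMs, long deltaBackoffMs,
                          long maxBackoffMs, Random random) {
        double jitter = 0.8 + 0.4 * random.nextDouble();
        double jitteredDelta = deltaBackoffMs * jitter;
        double increment = (Math.pow(2, retryCount) - 1) * jitteredDelta;
        return Math.min(minBackoffMs + Math.round(increment), maxBackoffMs);
    }

    public static void main(String[] args) {
        Random random = new Random();
        // Same parameters as the default policy: 3 s minimum, 30 s delta, 90 s cap.
        for (int retry = 0; retry < 3; retry++) {
            System.out.println("retry " + retry + ": "
                    + backoffMs(retry, 3000, 30000, 90000, random) + " ms");
        }
    }
}
```

Under this assumption the first retry always waits the minimum backoff, the second falls between 27,000ms and 39,000ms, and later retries quickly reach the 90,000ms cap.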

A user can configure the retry policy for all operations directly on a service client, or specify one in the RequestOptions for a specific method call. The following illustrates how to configure a client to use a linear retry with a 3 second backoff between attempts and a maximum of 3 additional attempts for a given operation.

    serviceClient.setRetryPolicyFactory(new RetryLinearRetry(3000, 3));

Or

    TableRequestOptions options = new TableRequestOptions();
    options.setRetryPolicyFactory(new RetryLinearRetry(3000, 3));

Custom Policies

There are two aspects of a retry policy: the policy itself and an associated factory. To implement a custom policy, a user must derive from the abstract base class RetryPolicy and implement the relevant methods. Additionally, an associated factory class must be provided that implements the RetryPolicyFactory interface to generate unique instances for each logical operation. For simplicity’s sake, the policies mentioned above implement the RetryPolicyFactory interface themselves; however, it is possible to use two separate classes.
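The policy-plus-factory split can be illustrated with a self-contained sketch. The `Policy` and `PolicyFactory` interfaces below are hypothetical stand-ins written for this example; they are not the SDK's RetryPolicy base class or RetryPolicyFactory interface, whose method signatures are not shown in this post. The sketch follows the same shortcut as the shipped policies: one class plays both roles and hands out a fresh instance for each logical operation. It also retries a caller-chosen set of 400-class codes, which the default policies do not.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

final class RetrySketch {
    /** Hypothetical per-operation policy. */
    interface Policy {
        /** Returns the wait before the next attempt in ms, or -1 to stop retrying. */
        long nextDelayMs(int retriesSoFar, int httpStatus);
    }

    /** Hypothetical factory that produces one policy instance per operation. */
    interface PolicyFactory {
        Policy createInstance();
    }

    /** Fixed-interval policy that also retries selected client error codes. */
    static final class FixedIntervalPolicy implements Policy, PolicyFactory {
        private final long intervalMs;
        private final int maxRetries;
        private final Set<Integer> retryableClientErrors;

        FixedIntervalPolicy(long intervalMs, int maxRetries, Integer... retryableClientErrors) {
            this.intervalMs = intervalMs;
            this.maxRetries = maxRetries;
            this.retryableClientErrors = new HashSet<>(Arrays.asList(retryableClientErrors));
        }

        @Override
        public long nextDelayMs(int retriesSoFar, int httpStatus) {
            if (retriesSoFar >= maxRetries) {
                return -1; // retry budget for this operation is used up
            }
            boolean serverError = httpStatus >= 500;
            boolean allowedClientError = retryableClientErrors.contains(httpStatus);
            return (serverError || allowedClientError) ? intervalMs : -1;
        }

        @Override
        public Policy createInstance() {
            // A fresh copy per logical operation keeps any per-operation state isolated.
            return new FixedIntervalPolicy(intervalMs, maxRetries,
                    retryableClientErrors.toArray(new Integer[0]));
        }
    }

    public static void main(String[] args) {
        PolicyFactory factory = new FixedIntervalPolicy(3000, 3, 408);
        Policy policy = factory.createInstance();
        System.out.println("503 on first failure -> wait " + policy.nextDelayMs(0, 503) + " ms");
        System.out.println("404 on first failure -> wait " + policy.nextDelayMs(0, 404) + " ms");
    }
}
```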

Note about .NET Storage Client

During the development of the Java library we have identified many substantial improvements in the way our API can work. We are committed to bringing these improvements back to .NET while keeping in mind that many clients have built and deployed applications on the current API, so stay tuned.

Summary

We have put a lot of work into providing a truly first-class development experience for the Java community to work with Windows Azure Storage. We very much appreciate all the feedback we have gotten from customers and through the forums; please keep it coming. Feel free to leave comments below,

Joe Giardino

Developer

Windows Azure Storage

Resources

Get the Windows Azure SDK for Java

Learn more about the Windows Azure Storage Client for Java

- Windows Azure Storage Client for Java Tables Deep Dive

- Windows Azure Storage Client for Java Blob Features

- Windows Azure Storage Client for Java Storage Samples

- How to Use the Blob Storage Service from Java

- How to Use the Queue Storage Service from Java

- How to Use the Table Storage Service from Java

- Windows Azure SDK for Java Developer Center

Learn more about Windows Azure Storage

Joe Giardino of the Windows Azure Storage Team also posted detailed Windows Azure Storage Client for Java Tables Deep Dive and Windows Azure Storage Client for Java Blob Features articles on 3/5/2012.

Robin Cremers (@robincremers) posted a very detailed Everything you need to know about Windows Azure Table Storage to use a scalable non-relational structured data store article on 3/4/2012:

In my attempt to cover most of the features of the Microsoft Cloud Computing platform Windows Azure, I’ll be covering Windows Azure storage in the next few posts.

You can find the Windows Azure Blob Storage post here:

Everything you need to know about Windows Azure Blob Storage including permissions, signatures, concurrency, …

Why use Windows Azure storage:

- Fault-tolerance: Windows Azure Blobs, Tables and Queues stored on Windows Azure are replicated three times in the same data center for resiliency against hardware failure. No matter which storage service you use, your data will be replicated across different fault domains to increase availability.

- Geo-replication: Windows Azure Blobs and Tables are also geo-replicated between two data centers hundreds of miles apart from each other on the same continent, to provide additional data durability in the case of a major disaster, at no additional cost.

- REST and availability: In addition to using Storage services for your applications running on Windows Azure, your data is accessible from virtually anywhere, anytime.

- Content Delivery Network: With one-click, the Windows Azure CDN (Content Delivery Network) dramatically boosts performance by automatically caching content near your customers or users.

- Price: It’s insanely cheap storage

The only reason you would not be interested in the Windows Azure storage platform would be if you’re called Chuck Norris …

Now if you are still reading this line it means you aren’t Chuck Norris, so let’s get on with it.

The Windows Azure Table storage service stores large amounts of structured data. The service is a NoSQL datastore which accepts authenticated calls from inside and outside the Azure cloud. Azure tables are ideal for storing structured, non-relational data. Common uses of the Table service include:

- Storing TBs of structured data capable of serving web scale applications

- Storing datasets that don’t require complex joins, foreign keys, or stored procedures and can be denormalized for fast access

- Quickly querying data using a clustered index

- Accessing data using the OData protocol and LINQ queries with WCF Data Service .NET Libraries

You can use the table storage service to store and query huge sets of structured, non-relational data, and your tables will scale as demand increases.

If you do not know what the OData protocol is and what it is used for, you can find more information about it in this post:

WCF REST service with ODATA and Entity Framework with client context, custom operations and operation interceptors

The concept behind the Windows Azure table storage is as follows:

There are 3 things you need to know about to use Windows Azure Table storage:

- Account: All access to Windows Azure Storage is done through a storage account. The total size of blob, table, and queue contents in a storage account cannot exceed 100TB.

- Table: A table is a collection of entities. Tables don’t enforce a schema on entities, which means a single table can contain entities that have different sets of properties. An account can contain many tables, the size of which is only limited by the 100TB storage account limit.

- Entity: An entity is a set of properties, similar to a database row. An entity can be up to 1MB in size
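To illustrate what "no enforced schema" means in practice, the following in-memory sketch stores two entities with different property sets in the same table. It does not call Windows Azure, and it ignores keys and the size limits mentioned above; `SchemalessTableSketch` and the sample property names are hypothetical and chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** In-memory illustration of a schema-less table; it does not call Windows Azure. */
final class SchemalessTableSketch {
    // Each entity is a bag of named properties, similar to a database row.
    private final List<Map<String, Object>> entities = new ArrayList<>();

    /** Stores a copy of the entity; no schema check is performed. */
    void insert(Map<String, Object> entity) {
        entities.add(new LinkedHashMap<>(entity));
    }

    int size() {
        return entities.size();
    }

    /** Union of all property names used by any entity in the table. */
    Set<String> propertyNames() {
        Set<String> names = new TreeSet<>();
        for (Map<String, Object> entity : entities) {
            names.addAll(entity.keySet());
        }
        return names;
    }

    public static void main(String[] args) {
        SchemalessTableSketch customers = new SchemalessTableSketch();

        Map<String, Object> person = new LinkedHashMap<>();
        person.put("Name", "Walter Harp");
        person.put("Email", "Walter@contoso.com");

        Map<String, Object> company = new LinkedHashMap<>();
        company.put("Name", "Contoso");
        company.put("EmployeeCount", 250);

        // Both inserts succeed although the property sets differ.
        customers.insert(person);
        customers.insert(company);
        System.out.println(customers.propertyNames());
    }
}
```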

1. Creating and using the Windows Azure Storage Account

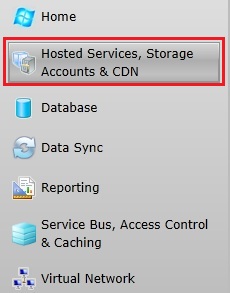

To be able to store data in the Windows Azure platform, you will need a storage account. To create a storage account, log in to the Windows Azure portal with your subscription and go to the Hosted Services, Storage Accounts & CDN service:

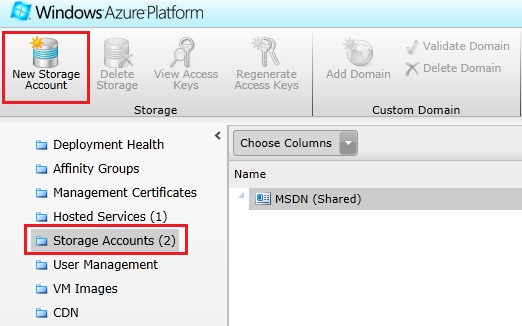

Select the Storage Accounts service and hit the Create button to create a new storage account:

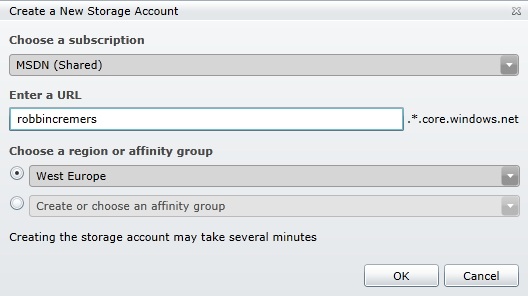

Define a prefix for the storage account you want to create:

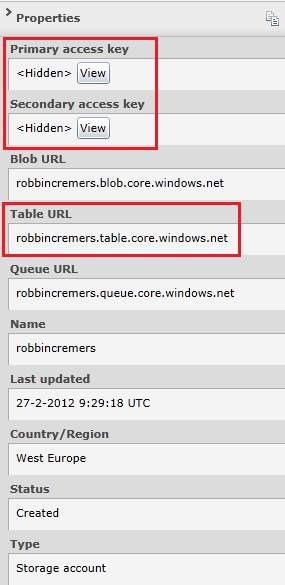

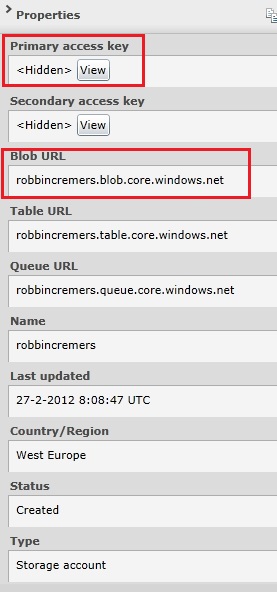

After the Windows Azure storage account is created, you can view the storage account properties by selecting the storage account:

The storage account can be used to store data in the blob storage, table storage or queue storage. In this post, we will only cover table storage. One of the properties of the storage account is the primary and secondary access key. You will need one of these 2 keys to be able to execute operations on the storage account. Both the keys are valid and can be used as an access key.

When you have an active Windows Azure storage account in your subscription, you’ll have a few possible operations:

- Delete Storage: Delete the storage account, including all data related to the storage account

- View Access Keys: Shows the primary and secondary access key

- Regenerate Access Keys: Allows you to regenerate one or both of your access keys. If one of your access keys is compromised, you can regenerate it to revoke access for the compromised access key

- Add Domain: Map a custom DNS name to the storage account blob storage. For example map the robbincremers.blob.core.windows.net to static.robbincremers.me domain. Can be interesting for storage accounts which directly expose data to customers through the web. The mapping is only available for blob storage, since only blob storage can be publicly exposed.

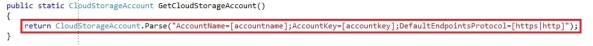

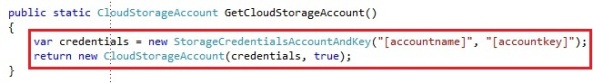

Now that we have created our Windows Azure storage account, we can start by getting a reference to our storage account in our code. To do so, you will need to work with the CloudStorageAccount, which belongs to the Microsoft.WindowsAzure namespace:

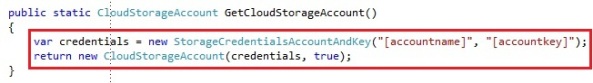

We create a CloudStorageAccount by parsing a connection string. The connection string takes the account name and key, which you can find in the Windows Azure portal. You can also create a CloudStorageAccount by passing the values as parameters instead of a connection string, which could be preferable. You need to create an instance of the StorageCredentialsAccountAndKey and pass it into the CloudStorageAccount constructor:

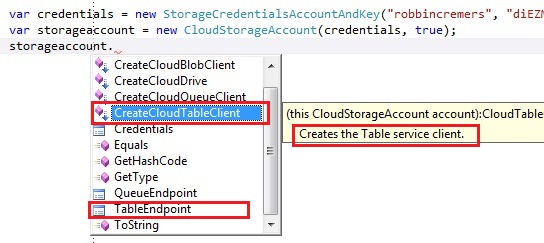

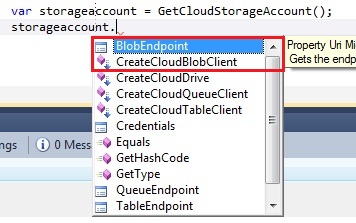
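A connection string of this kind is a semicolon-separated list of `Name=value` settings such as DefaultEndpointsProtocol, AccountName and AccountKey. The sketch below shows one way such a string could be split into its settings without any SDK. It is an illustration only, not how CloudStorageAccount parses its input; `AccountStringSketch` and `parse` are hypothetical names, and the account values in `main` are placeholders.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical parser for "Name=value;Name=value" account strings. */
final class AccountStringSketch {
    static Map<String, String> parse(String accountString) {
        Map<String, String> settings = new LinkedHashMap<>();
        for (String pair : accountString.split(";")) {
            if (pair.trim().isEmpty()) {
                continue; // tolerate a trailing semicolon
            }
            // Split on the first '=' only: Base64 account keys may end in '='.
            int equalsIndex = pair.indexOf('=');
            if (equalsIndex <= 0) {
                throw new IllegalArgumentException("Malformed setting: " + pair);
            }
            settings.put(pair.substring(0, equalsIndex).trim(),
                    pair.substring(equalsIndex + 1).trim());
        }
        // This sketch treats the account name and key as mandatory.
        for (String required : new String[] {"AccountName", "AccountKey"}) {
            if (!settings.containsKey(required)) {
                throw new IllegalArgumentException("Missing setting: " + required);
            }
        }
        return settings;
    }

    public static void main(String[] args) {
        Map<String, String> settings = parse(
                "DefaultEndpointsProtocol=https;AccountName=myaccount;AccountKey=bXlrZXk=");
        System.out.println(settings.get("AccountName"));
    }
}
```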

The boolean that the CloudStorageAccount takes is to define whether you want to use HTTPS or not. In our case we chose to use HTTPS for our operations on the storage account. The storage account only has a few operations, like exposing the storage endpoints, the storage account credentials and the storage specific clients:

The storage account exposes the endpoint of the blob, queue and table storage. It also exposes the storage credentials by the Credentials operation. Finally it also exposes 4 important operations:

- CreateCloudBlobClient: Creates a client to work on the blob storage

- CreateCloudDrive: Creates a client to work on the drive storage

- CreateCloudQueueClient: Creates a client to work on the queue storage

- CreateCloudTableClient: Creates a client to work on the table storage

You won’t be using the CloudStorageAccount much, except for creating the service client for a specific storage type.

2. Basic operations for managing Windows Azure table storage

There are 2 levels to work with in Windows Azure table storage: the table and the entity.

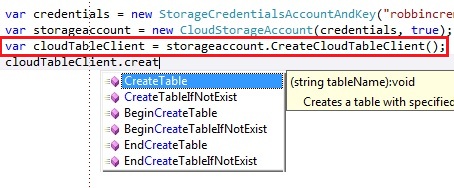

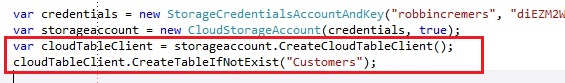

To manage the windows azure tables, you need to create an instance of the CloudTableClient through the CreateCloudTableClient operation on the CloudStorageAccount:

The CloudTableClient exposes a bunch of operations to manage the storage tables:

- CreateTable: Create a table with a specified name. If the table already exists, a StorageClientException will be thrown

- CreateTableIfNotExist: Create a table with a specified name, only if the table does not exist yet

- DoesTableExist: Check whether a table with the specified name exists

- DeleteTable: Delete a table and its content from the table storage. If you attempt to delete a table that does not exist, a StorageClientException will be thrown

- DeleteTableIfExist: Delete a table and its content from the table storage, if the table exists

- ListTables: List all the tables or all the tables with a specified prefix that belong to the storage account table storage

- GetDataServiceContext: Get a new untyped DataServiceContext to query data with table storage

Creating a storage table:

If you run this code, a storage table will be created with the name “Customers”.

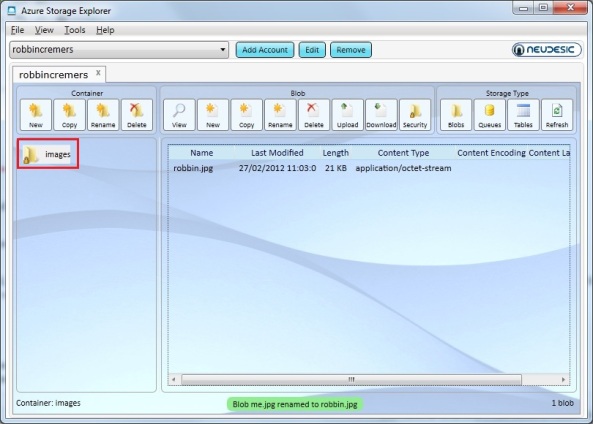

To explore my storage accounts, I use a free tool called Azure Storage Explorer which you can download on codeplex:

http://azurestorageexplorer.codeplex.com/

You can see your storage tables and storage data with the Azure Storage Explorer after you connect to your storage account:

3. Creating Windows Azure table storage entities with TableServiceEntity

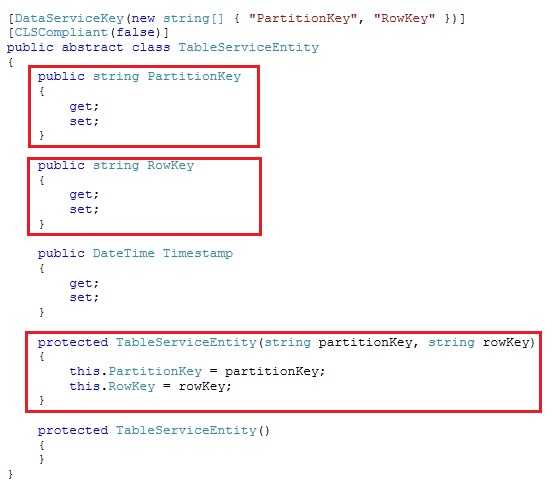

Entities map to C# objects derived from TableServiceEntity. To add an entity to a table, create a class that defines the properties of your entity and that derives from the TableServiceEntity.

The TableServiceEntity abstract class belongs to the Microsoft.WindowsAzure.StorageClient namespace. The TableServiceEntity looks as follows:

There are 3 properties to the TableServiceEntity:

- PartitionKey: The first key property of every table. The system uses this key to automatically distribute the table’s entities over many storage nodes

- RowKey: A second key property for the table. This is the unique ID of the entity within the partition it belongs to.

- Timestamp: Every entity has a version maintained by the system which is used for optimistic concurrency. The Timestamp value is managed by the Windows Azure platform

Together, an entity’s partition and row key uniquely identify the entity in the table. Entities with the same partition key can be queried faster than those with different partition keys. Deciding on the PartitionKey and RowKey is a discussion in itself and can make a large difference to the performance of retrieving data. We will not discuss best practices for choosing partition keys here.

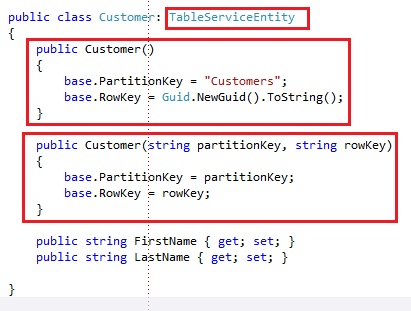

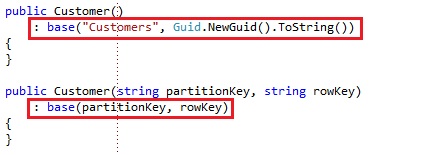

We create a class Customer, which will contain some basic information about a customer:

We have a few properties which store information about the customer. Finally we have 2 constructors set for the Customer class:

- No parameters: Sets the PartitionKey and RowKey by default. For now we set the PartitionKey to Customers and we assign the RowKey a unique identifier, so each entity has a unique combination

- Take the partition key and row key as a parameter and set them to the related properties that are defined on the base class, which is the TableServiceEntity

You can write the constructors also like this, but that’s up to preference:

It simply calls into the base class constructor, which is the TableServiceEntity, which takes a partition key and row key as parameters. This is all that’s necessary to store the entities at the Windows Azure table storage. You take these 2 steps to be able to use the class for the table storage:

- Derive the class from TableServiceEntity

- Set the partition key and row key on the base class, either through the base class constructor or through direct assignment

4. Storing and retrieving table storage data with the TableServiceContext

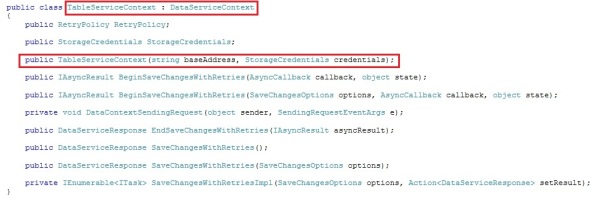

To store and retrieve entities in the Windows Azure table storage, you will need to work with a TableServiceContext, which also belongs to the Microsoft.WindowsAzure.StorageClient namespace.

If we have a look in the framework code at the TableServiceContext, it looks like this:

The Windows Azure TableServiceContext object is derived from the DataServiceContext object provided by the WCF Data Services. This object provides a runtime context for performing data operations against the Table service, including querying entities and inserting, updating, and deleting entities. The DataServiceContext belongs to the System.Data.Services.Client namespace.

The DataServiceContext is something you might already have seen with Entity Framework and WCF Data Services. I already covered the DataServiceContext in chapter 2, "Querying the WCF OData service by a client DataServiceContext", of this article:

WCF REST service with ODATA and Entity Framework with client context, custom operations and operation interceptors

If you are not familiar with Entity Framework, you can find the necessary information in the articles about Entity Framework on the sitemap:

http://robbincremers.me/sitemap/

From this point on, I’ll assume you are familiar with retrieving and storing data with Entity Framework, lazy loading, change tracking, LINQ and the OData protocol.

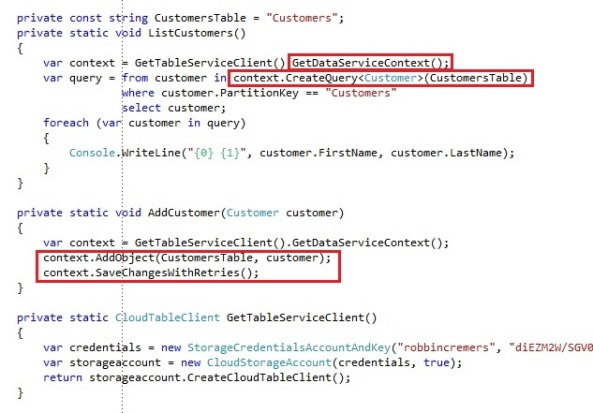

The CloudTableClient exposes a GetDataServiceContext operation, which returns a TableServiceContext object for performing data operations against the Table service. To use the TableServiceContext provided by the GetDataServiceContext operation, you would use some code looking like this:

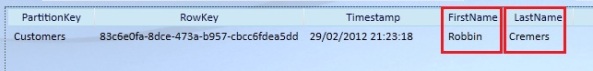

If you would execute the code to add a customer to the Customers table:

You can see the Customer got added to the Customers table and the FirstName and LastName properties got added to the structure of the table.

We are using some common Entity Framework code for retrieving entities and storing entities. The SaveChangesWithRetries operation is an operation added on the TableServiceContext, which is basically an Entity Framework SaveChanges operation with a retry policy in case a request fails. The only annoying issue with working with CloudTableClient.GetDataServiceContext is that you get a non-strongly typed context, by which I mean you get a context that does not expose the available tables. You need to define the return type and the table name every time you use the CreateQuery operation. If you have 10 or 20+ tables exposed in your table storage, it becomes a mess to know which tables are exposed and to define the table names with every single operation you execute with the context.

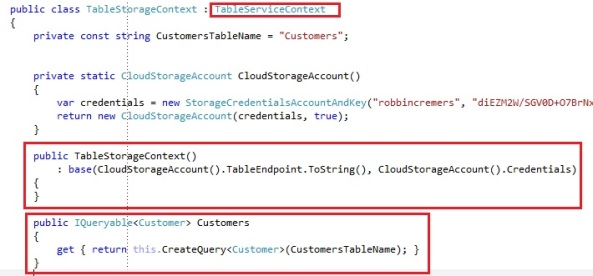

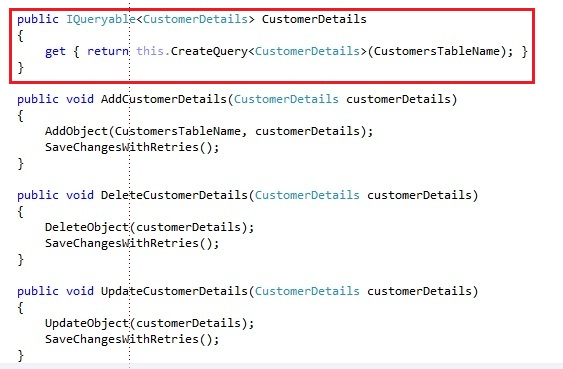

A preferred possibility is to create your own context that derives from the TableServiceContext and which allows you to define what tables are being exposed through the context:

We write a class that derives from the TableServiceContext. The constructor of our custom context calls to the base constructor of the TableServiceContext, which looks like this:

You need to pass the endpoint uri of the Windows Azure table storage and you need to pass a StorageCredentials. We decided to add our CloudStorageAccount details in the context itself, that way we do not have to pass the storage account details into the constructor of our custom context.

We also expose 1 operation, which is an IQueryable<Customer>, which should look familiar if you know the basics about Entity Framework. It basically wraps the CreateQuery<T> code with the table name you want the query to run on. We specified the Customers table name in a private field in the context.

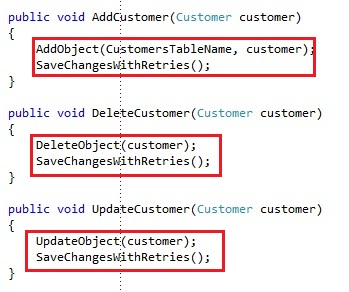

You can also add some operations for managing entities if you want to make your client code less verbose:

Doing it this way, you don’t need to share the CustomersTableName variable anywhere outside of the custom context, but again, this is up to preference. If you would run an example with the provided code, the operations will behave as we expect them to.

Personally I prefer creating a custom class deriving from the TableServiceContext. It will allow you to manage your exposed tables a lot more and it will keep your data access code in a single location, instead of spread all over. Adding strongly typed data operations for the entity types is just providing an easier use of the context for other people and avoids people needing to know what the table names are outside of our context code.

The TableServiceContext does not add much functionality to the DataServiceContext, except for this:

- SaveChangesWithRetries: Save changes with retries, depending on the retry policy

- RetryPolicy: Allows you to specify the retry policy when you want to save changes with retries

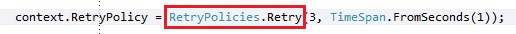

It is suggested to save your entities with retries, because requests sent over the internet can fail transiently. Setting the RetryPolicy on your custom context is done like this:

You set it to a RetryPolicies retry policy value. There are 3 possible RetryPolicy values:

- NoRetry: A retry policy that performs no retries.

- Retry: A retry policy that retries a specified number of times, with a specified fixed time interval between retries.

- RetryExponential: A policy that retries a specified number of times with a randomized exponential backoff scheme. The delay increases with every retry. The minimum and maximum delay are defined by the DefaultMinBackoff and DefaultMaxBackoff properties, or you can pass them as parameters to one of the RetryExponential policies

The RetryExponential retry policy is the default retry policy for the CloudBlobClient, CloudQueueClient and CloudTableClient objects
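To make the difference between a fixed and an exponential policy concrete, here is a minimal sketch that only computes the delay before each retry. It is not the StorageClient implementation: the class name `BackoffSketch`, the formula and the jitter range are assumptions chosen for illustration.

```csharp
using System;

// Hypothetical helper for illustration; this is NOT the StorageClient implementation.
public static class BackoffSketch
{
    // Fixed-interval policy: every retry waits the same amount of time.
    public static TimeSpan FixedDelay(int attempt, TimeSpan interval)
    {
        return interval;
    }

    // Exponential policy (assumed formula): min + (2^attempt - 1) * jittered delta,
    // capped at max. The jitter is a random factor between 0.8 and 1.2.
    public static TimeSpan ExponentialDelay(
        int attempt, TimeSpan min, TimeSpan max, TimeSpan delta, Random random)
    {
        double jitteredDeltaMs = delta.TotalMilliseconds * (0.8 + 0.4 * random.NextDouble());
        double incrementMs = (Math.Pow(2, attempt) - 1) * jitteredDeltaMs;
        double delayMs = Math.Min(min.TotalMilliseconds + incrementMs, max.TotalMilliseconds);
        return TimeSpan.FromMilliseconds(delayMs);
    }
}
```

With a minimum of 3 seconds, a maximum of 90 seconds and a delta of 2 seconds (example values), attempt 0 waits the minimum, attempt 1 waits roughly 5 seconds, and later attempts grow until they hit the cap. The randomization keeps many clients from retrying at exactly the same moment.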

5. Understanding the structure of windows azure table storage

Windows Azure Storage Tables don’t enforce a schema on entities, which means a single table can contain different entities that have different sets of properties. This might require some mind switch to move from thinking in the relational model that has been used for the last decades.

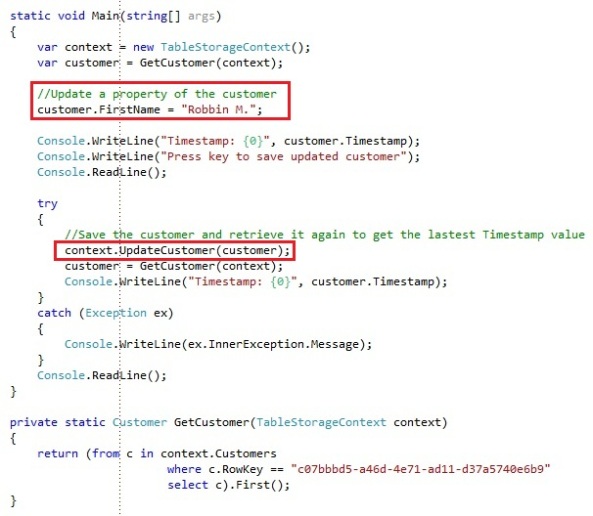

When we added a customer to our Windows Azure table storage table, it had the following properties:

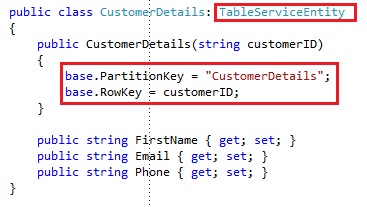

Let’s suppose we would have a CustomerDetails class:

The CustomerDetails class sets the partition key to “CustomersDetails” and the row key is being passed on as a parameter. The customer id will be the row key that is specified for the customer instance. Our class has 3 properties defined, of which 1 is identical to the Customer class.

Since we like working with a typed TableServiceContext, we will add some code to our custom context to make our lives, or those of other developers, a bit easier:

Notice we store the CustomerDetails entities also in the Customers table. You could store the CustomerDetails entities in a separate table, but we decided to place them in the same table as the Customers entities.

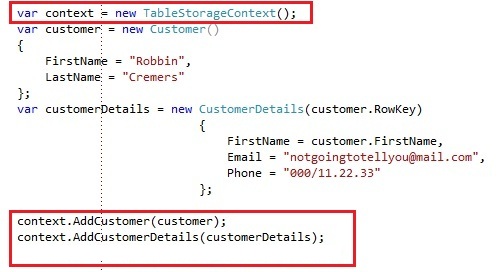

If we run some code to store a new Customer and CustomerDetails entity in our windows azure table storage table:

PS: This code can be optimized by changing your custom TableServiceContext to not save each entity when adding it and creating a SaveChangesBatch operation that saves all the changes in a batch. However, this again belongs to the scope of Entity Framework

The Customers table in our windows azure table storage will be looking like this:

Both our Customer and our CustomerDetails entity have been saved in the same table, even though their structure is different. Notice that the FirstName property value is set for both entities, since they both share this property. The Email and Phone property is set for the CustomerDetails entity, while it is not for the Customer entity, while the LastName property is set for the Customer entity and not for the CustomerDetails entity.

Tables don’t enforce a schema on entities, which means a single table can contain entities that have different sets of properties. A property is a name-value pair. Each entity can include up to 252 properties to store data. Each entity also has 3 system properties that specify a partition key, a row key, and a timestamp. Entities with the same partition key can be queried more quickly, and inserted/updated in atomic operations. Each entity is uniquely identified by the combination of its partition key and row key.

At first you might question why you would store different entity types in the same table. We are used to thinking in the relational model, which has a schema for each table and where each table maps to a single entity type. However, saving different entity types in the same table can be interesting if they share the same partition key, because you can then query these entities a lot faster than if you had split them into two different tables.

You could save different entity types in the same table if they are related and you often need to load them together. If you save them in the same table and they all have the same partition key, you’ll be able to load the related data really quickly, whereas in the relational model you would have to join a bunch of tables to get the necessary information.
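The idea of schema-less entities that share a partition can be modelled without any storage library. The sketch below is an in-memory stand-in, not the StorageClient API: `SketchEntity`, `SketchTable` and the key values used with it are hypothetical names for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory model; not the StorageClient API.
public class SketchEntity
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }

    // Each entity carries its own set of named properties; there is no shared schema.
    public Dictionary<string, object> Properties { get; private set; }

    public SketchEntity(string partitionKey, string rowKey)
    {
        PartitionKey = partitionKey;
        RowKey = rowKey;
        Properties = new Dictionary<string, object>();
    }
}

public class SketchTable
{
    private readonly Dictionary<Tuple<string, string>, SketchEntity> rows =
        new Dictionary<Tuple<string, string>, SketchEntity>();

    // The (PartitionKey, RowKey) pair must be unique within the table.
    public void Insert(SketchEntity entity)
    {
        var key = Tuple.Create(entity.PartitionKey, entity.RowKey);
        if (rows.ContainsKey(key))
            throw new InvalidOperationException("An entity with this key already exists.");
        rows.Add(key, entity);
    }

    // Loads every entity in one partition, whatever its shape, ordered by row key.
    public List<SketchEntity> QueryPartition(string partitionKey)
    {
        return rows.Values
            .Where(e => e.PartitionKey == partitionKey)
            .OrderBy(e => e.RowKey, StringComparer.Ordinal)
            .ToList();
    }
}
```

If a customer entity and its details entity are both inserted with a partition key such as "customer-42" (an example value), a single `QueryPartition("customer-42")` call returns both, even though one has a LastName property and the other has Email and Phone.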

6. Managing concurrency with Windows Azure table storage

Just as with all other data services, the Windows Azure table storage provides an optimistic concurrency control mechanism. The optimistic concurrency control in Windows Azure table storage is being done by the Timestamp property on the TableServiceEntity. This is the same as the Etag concurrency mechanism.

The issue is as follows:

- Client 1 retrieves the entity with a specified key.

- Client 1 updates some property on the entity

- Client 2 retrieves the same entity.

- Client 2 updates some property on the entity

- Client 2 saves the changes on the entity to table storage

- Client 1 saves the changes on the entity to table storage. The changes client 2 made to the entity were overwritten and are lost since those changes were not retrieved yet by client 1.

The idea behind the optimistic concurrency is as follows:

- Client 1 retrieves the entity with the specified key. The Timestamp is currently 01:00:00 in table storage

- Client 1 updates some property on the entity

- Client 2 retrieves the same entity. The Timestamp is currently 01:00:00 in table storage

- Client 2 updates some property on the entity

- Client 2 saves the changes on the entity to table storage with a Timestamp of 01:00:00. The Timestamp of the entity in table storage is 01:00:00, the provided Timestamp on the entity by the client is 01:00:00, so the client had the latest version of the entity. The update is validated and the entity is updated. The Timestamp property is changed to 01:00:10 in table storage.

- Client 1 saves the changes on the entity to table storage with a Timestamp of 01:00:00. The Timestamp of the entity in table storage is 01:00:10, the provided Timestamp on the entity by the client is 01:00:00, so the client does not have the latest version of the entity since both Timestamp properties do not match. The update fails and an exception is being returned to the client.

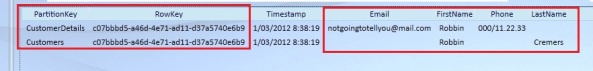

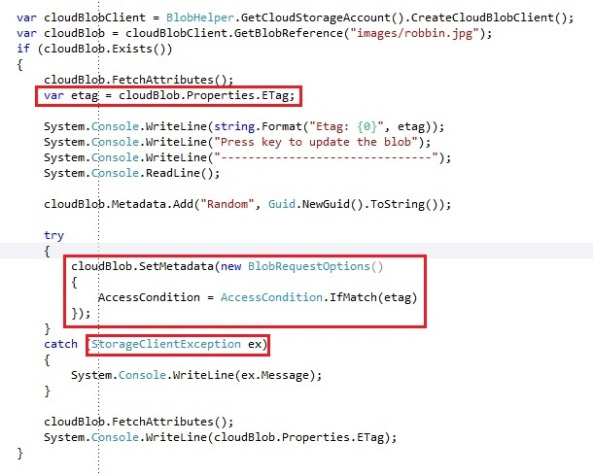

Some dummy code in the console application to show this behavior of optimistic concurrency control through the Timestamp property:

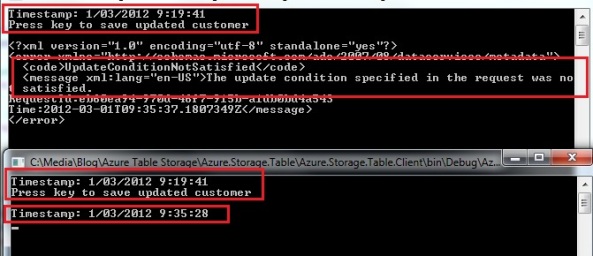

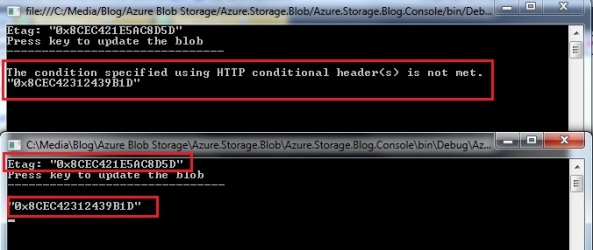

Running the console application twice and updating the 2nd client first and afterwards attempting to save the update on the 1st client:

You will get an optimistic concurrency error: The update condition specified in the request was not satisfied, meaning that we tried to update an entity that was already updated by someone else since we retrieved it. That way we avoid overwriting updated data in the table storage and losing the changes made by another user. The exception you get back is a DataServiceClientException. You can warn the client that the item has been changed since he retrieved it and give him the option to either reload the latest version or overwrite the latest version with his version.

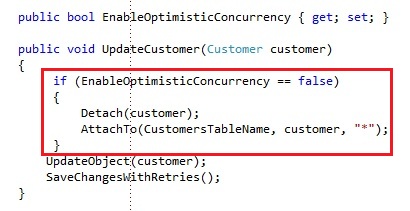

If you want to overwrite the latest version with an older version, you’ll need to disable the optimistic concurrency mechanism. You can disable this default behavior by detaching the object and reattaching it with the AttachTo operation, using the overload that takes an ETag string, like this:

We add a property to our custom TableServiceContext to specify whether we want to use optimistic concurrency or not. If we update a customer and optimistic concurrency is not enabled, we detach and reattach the customer with an ETag value of “*”.

The great thing is that optimistic concurrency is enabled by default in table storage. You do not need to write any additional code like with everything else. Optimistic concurrency is very light-weight so it’s useful to have. In case you would need to disable it, you have the possibility to do so, but it’s a bit verbose.
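The version check described in this section, including the "*" wildcard, can be modelled in a few lines. This is an in-memory sketch and not the table service or the StorageClient API: `VersionedStore`, `ConcurrencyConflictException` and the integer version counter are assumptions for illustration (the real service compares ETags derived from the Timestamp).

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types that model the ETag check; not the StorageClient API.
public class ConcurrencyConflictException : Exception
{
    public ConcurrencyConflictException(string message) : base(message) { }
}

public class VersionedStore
{
    private readonly Dictionary<string, string> values = new Dictionary<string, string>();
    private readonly Dictionary<string, int> versions = new Dictionary<string, int>();

    public void Insert(string key, string value)
    {
        values[key] = value;
        versions[key] = 1;
    }

    public string GetValue(string key) { return values[key]; }

    // The ETag a client receives when it reads the entity.
    public string GetETag(string key) { return versions[key].ToString(); }

    // Updates only when the caller's ETag matches the stored version.
    // An ETag of "*" skips the check, so the last writer wins.
    public void Update(string key, string value, string etag)
    {
        if (etag != "*" && etag != GetETag(key))
            throw new ConcurrencyConflictException(
                "The update condition specified in the request was not satisfied.");
        values[key] = value;
        versions[key] = versions[key] + 1;
    }
}
```

If two clients read the same ETag, the first `Update` succeeds and bumps the version, the second one throws, and only an update sent with "*" would overwrite the newer data.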

7. Working with large data sets and continuation tokens

A query against the Table service may return a maximum of 1,000 items at one time and may execute for a maximum of five seconds. If the result set contains more than 1,000 items, if the query did not complete within five seconds, or if the query crosses the partition boundary, the response includes headers which provide the developer with continuation tokens to use in order to resume the query at the next item in the result set.

Note that the total time allotted to the request for scheduling and processing the query is 30 seconds, including the five seconds for query execution.

If you are familiar with the WCF Data Services, then this concept will be familiar to you. It allows the client to keep retrieving information when the service is exposing data through paging.

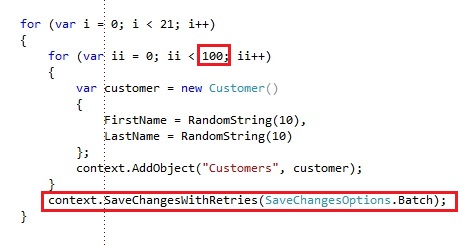

We will add some table storage data to our table:

Notice we add entities in iterations of 100, and every 100 entities we submit the changes with a SaveChangesOptions of Batch, which sends all the operations in a single request. This is a lot faster than doing 100 requests separately: we pay the request latency once per batch instead of once per entity. Using batching is highly recommended when inserting or updating multiple entities.

To use batching, you need to fulfill the following requirements:

- All entities subject to operations as part of the transaction must have the same PartitionKey value

- An entity can appear only once in the transaction, and only one operation may be performed against it

- The transaction can include at most 100 entities, and its total payload may be no more than 4 MB in size.
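The key and count requirements above can be checked before a batch is sent. The following is a minimal sketch with hypothetical helper types (`EntityKey`, `BatchRules`); it does not call the table service, and it leaves out the 4 MB payload check because that depends on the serialized request.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper types for illustration only.
public class EntityKey
{
    public string PartitionKey { get; set; }
    public string RowKey { get; set; }
}

public static class BatchRules
{
    public const int MaxEntitiesPerBatch = 100;

    // Returns null when the batch satisfies the key and count rules, otherwise the reason.
    // The 4 MB payload limit is not checked here.
    public static string Validate(IList<EntityKey> batch)
    {
        if (batch.Count > MaxEntitiesPerBatch)
            return "more than 100 entities";
        if (batch.Select(e => e.PartitionKey).Distinct().Count() > 1)
            return "more than one PartitionKey";
        if (batch.Select(e => e.RowKey).Distinct().Count() != batch.Count)
            return "an entity appears more than once";
        return null;
    }

    // Groups entities by PartitionKey and cuts each group into chunks of at most 100.
    public static List<List<EntityKey>> Split(IEnumerable<EntityKey> entities)
    {
        var batches = new List<List<EntityKey>>();
        foreach (var partition in entities.GroupBy(e => e.PartitionKey))
        {
            List<EntityKey> items = partition.ToList();
            for (int i = 0; i < items.Count; i += MaxEntitiesPerBatch)
                batches.Add(items.Skip(i).Take(MaxEntitiesPerBatch).ToList());
        }
        return batches;
    }
}
```

Splitting first and saving each chunk as its own batch keeps every request within the rules, at the cost of one request per chunk instead of one request per entity.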

You can find some more textual information about continuation tokens and partition boundaries by Steve Marx:

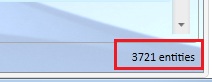

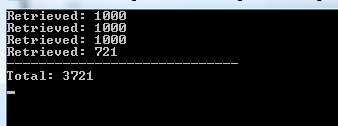

http://blog.smarx.com/posts/windows-azure-tables-expect-continuation-tokens-seriously

If we look in Azure Storage Explorer, we see we currently have 3,721 Customer entities in our Customers table:

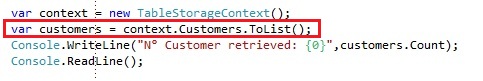

If we want to retrieve all customer entities from our storage, by default we would have used a normal LINQ query like this:

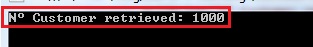

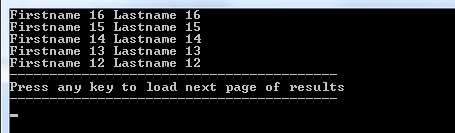

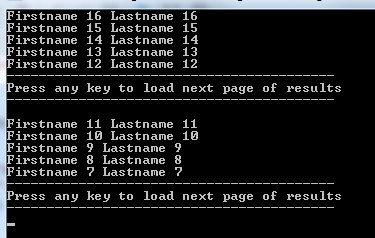

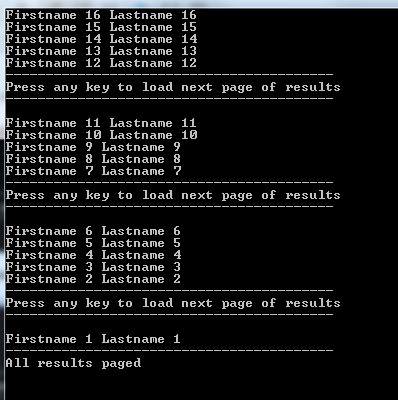

If you would run this code:

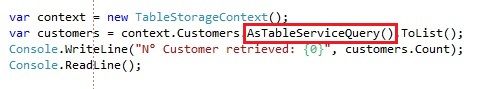

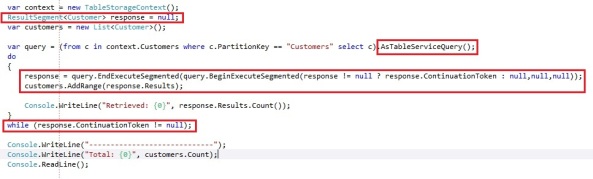

Windows Azure table storage by design returns at most 1000 entities per operation, so only 1000 entities are returned. However, we still want to get the other entities, and that’s where continuation tokens come into play. You can write the continuation processing yourself, but there is also an extension method for IQueryable we can use:

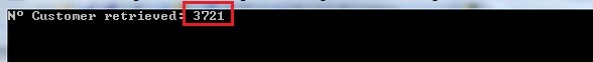

We use the AsTableServiceQuery extension method on the IQueryable, which returns a CloudTableQuery<T> instead of an IQueryable<T>. The CloudTableQuery implements IQueryable and IEnumerable and adds functionality to handle continuation tokens. If you would execute the code above:

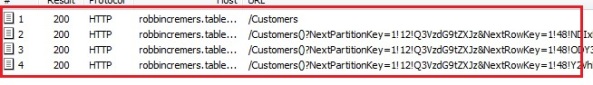

All the entities are returned now that we use the CloudTableQuery instead of the IQueryable. If I disable HTTPS on the CloudStorageAccount (to see what requests are being sent out, otherwise you’ll only see encrypted mumbo jumbo) and run Fiddler while we retrieve our customers:

Notice that the single request we made in code is split up into multiple requests to the table storage behind the scenes. Since we are retrieving 3721 entities, and the maximum number of entities returned is 1000, the request is split up into 4 requests. The first request returns 1000 entities and a continuation token. To request the next 1000 entities, we do another request and pass the continuation token along. We keep repeating this until we no longer receive a continuation token, which means all data has been received. The continuation token basically consists of the next partition key and the next row key from which to resume retrieving data, which makes sense.

8. Writing paging code with ResultContinuation and ResultSegment<T>

The CloudTableQuery also exposes a few other useful operations:

- Execute: Execute a query with the retry policy, which returns the data directly as IEnumerable<T>

- BeginExecuteSegmented / EndExecuteSegmented: An asynchronous execution of the query which returns as a result segment, which is of type ResultSegment<T>

Both these operations allow you to pass a ResultContinuation so you can retrieve the next set of data based on the continuation token. Suppose we want to retrieve all the entities in the Customers table by doing the continuation token paging ourselves:

We run through the following steps to retrieve all windows azure table entities with continuation tokens:

- Create our CloudTableQuery through the AsTableServiceQuery extension method

- Invoke the EndExecuteSegmented operation and pass it the result of the BeginExecuteSegmented operation. The BeginExecuteSegmented operation takes a ContinuationToken, an async callback and a state object as parameters. We pass the callback and state object as null. We pass the ContinuationToken of the previous ResultSegment response if that response is not null. This operation returns a ResultSegment<T>.

- We get the IEnumerable<T> from the ResultSegment by the Results property and add it to our list of customers

- We keep repeating steps 2 and 3 as long as the ResultSegment.ContinuationToken is not null.

The first time we execute this, the ResultSegment<T> response is set to null, so the first call passes null into the ContinuationToken parameter. This makes sense, since a continuation token can only be retrieved after the first data request, when it turns out there are more results than were retrieved. The ResultSegment we get back will contain the first 1000 Customer entities and it will also contain a ContinuationToken. Since the ContinuationToken is not null, the query is executed again, but this time the response variable is set and the ContinuationToken is passed along in the BeginExecuteSegmented call, meaning the next series of entities will be retrieved.

If our Customers table contained fewer than 1000 entities, the ResultSegment Results property would be populated with the retrieved entities, but the ContinuationToken would be set to null, since there are no more entities to be retrieved after this request. Since the ContinuationToken would be null, we would jump out of the loop.

If you would execute this, it would retrieve the full 3721 entities from our table storage:

For each 1000 entities we retrieve, we get a continuation token back. With this continuation token, we do another request which returns the next set of results. We keep doing this until we no longer get a continuation token.
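The loop described above can be sketched against an in-memory stand-in for the table service. `Segment<T>`, `FakeTable<T>` and `Paging` are hypothetical types, not part of the StorageClient library, and the token here is simply the index of the next row, whereas the real service returns the next partition key and row key.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for ResultSegment<T>.
public class Segment<T>
{
    public List<T> Results { get; set; }
    public int? ContinuationToken { get; set; } // null means there is no more data
}

// Hypothetical stand-in for a table that returns a limited number of rows per request.
public class FakeTable<T>
{
    private readonly List<T> rows;
    private readonly int maxPerRequest;

    public FakeTable(IEnumerable<T> rows, int maxPerRequest)
    {
        this.rows = rows.ToList();
        this.maxPerRequest = maxPerRequest;
    }

    public int RequestCount { get; private set; }

    public Segment<T> ExecuteSegmented(int? token)
    {
        RequestCount++;
        int start = token ?? 0;
        List<T> page = rows.Skip(start).Take(maxPerRequest).ToList();
        int next = start + page.Count;
        return new Segment<T>
        {
            Results = page,
            ContinuationToken = next < rows.Count ? (int?)next : null
        };
    }
}

public static class Paging
{
    // Keeps requesting segments until no continuation token comes back.
    public static List<T> ReadAll<T>(FakeTable<T> table)
    {
        var all = new List<T>();
        int? token = null; // the first request has no token
        do
        {
            Segment<T> segment = table.ExecuteSegmented(token);
            all.AddRange(segment.Results);
            token = segment.ContinuationToken;
        } while (token != null);
        return all;
    }
}
```

With 3721 rows and a limit of 1000 rows per request, `ReadAll` issues 4 requests, which matches the behavior seen in Fiddler earlier.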

One of the main reasons for doing the continuation token paging yourself is when you want to implement paging in your application. If you show 5 entities in your application, you might provide a link to get to the next page. To get the next 5 entities, you would need to pass along the continuation token that was provided after you requested the first 5 entities.

I wrote some code to add 16 customers to the table storage for testing with paging. They look like this:

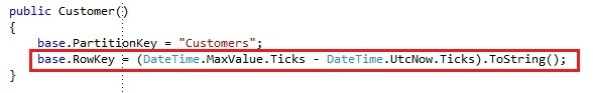

You might notice the RowKey is different. I changed the generation of the row key from a GUID to a datetime calculation:

This makes sure the latest inserted entity ends up at the top of the table. Tables are sorted by the partition and row key. It’s a common way to create unique row keys which result in the latest inserted item being added at the top of the table.
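One common variant of such a calculation subtracts the current ticks from the maximum tick value, so that newer entities get smaller keys. The sketch below is an assumption about the general technique, not necessarily the exact expression used in the screenshot; `RowKeys` is a hypothetical helper name.

```csharp
using System;

// Hypothetical helper; the exact expression used in the article may differ.
public static class RowKeys
{
    // Newer timestamps yield lexicographically smaller keys, so they sort first.
    // The key is zero-padded to 19 digits so string order matches numeric order.
    public static string NewestFirst(DateTime utcNow)
    {
        long inverted = DateTime.MaxValue.Ticks - utcNow.Ticks;
        return inverted.ToString("D19");
    }
}
```

Two entities created within the same tick would get the same key, so in practice a suffix such as a GUID is often appended to keep the row key unique within the partition.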

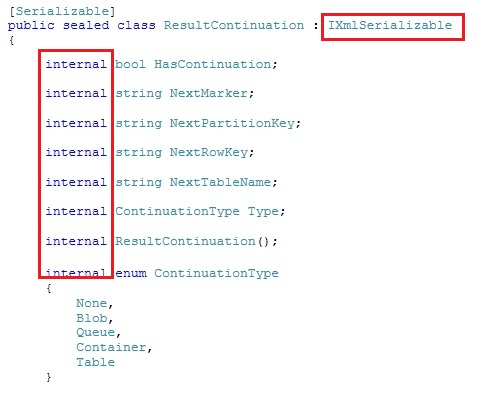

We start from the idea that we have a web page that shows 5 results in a grid and provides a link to see the next 5 results. When you click the link, the next 5 results get loaded. I wrote some code to simulate this behavior in a console application. Internally the ResultContinuation class looks like this:

The operations are defined as internal, which means we can’t access some of the values we need. We need to be able to pass the necessary information of the ResultContinuation to our website, so that when the user clicks the link for the next page, we know the user wants to get the next page, which corresponds with a certain ResultContinuation. That’s why the ResultContinuation derives from IXmlSerializable. It allows you to serialize the ResultContinuation to an xml string or deserialize it back to the ResultContinuation object. After we serialized it to an xml string, we can pass it along to the client, which can return it to us at a later moment to retrieve the next set of entities.

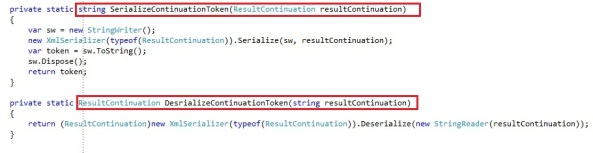

First up I wrote 2 operations: one to serialize the ResultContinuation to an XML string and one to deserialize the XML string back to a ResultContinuation.

When we get the first 5 entities, we serialize the ResultContinuation to an XML string and pass this XML string to the client. If the client wants to get the next 5 entities, he simply has to pass this XML string back to us, which we deserialize back into a ResultContinuation. With the ResultContinuation object we can invoke the BeginExecuteSegmented operation and pass the continuation token along, which will fetch the next series of entities.
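The round trip between an object and an XML string can be sketched with the standard XmlSerializer. The helpers below are generic and are shown with a hypothetical `SketchToken` class that stands in for the continuation token; the property names are assumptions, and this is not the article's original code.

```csharp
using System.IO;
using System.Xml.Serialization;

// Hypothetical stand-in for a continuation token.
public class SketchToken
{
    public string NextPartitionKey { get; set; }
    public string NextRowKey { get; set; }
}

public static class XmlTokens
{
    // Serializes any XmlSerializer-compatible object to an XML string.
    public static string Serialize<T>(T token)
    {
        var serializer = new XmlSerializer(typeof(T));
        using (var writer = new StringWriter())
        {
            serializer.Serialize(writer, token);
            return writer.ToString();
        }
    }

    // Rebuilds the object from the XML string produced by Serialize.
    public static T Deserialize<T>(string xml)
    {
        var serializer = new XmlSerializer(typeof(T));
        using (var reader = new StringReader(xml))
        {
            return (T)serializer.Deserialize(reader);
        }
    }
}
```

The XML string can travel to the client in a hidden field or query string and come back with the next request, at which point `Deserialize` restores the token.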

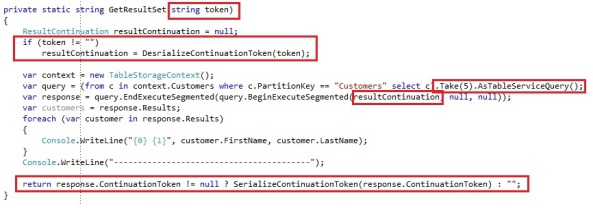

We have an operation which gets 5 entities from the table storage, depending on a continuation token:

If an XML token is passed along, we deserialize it back to a ResultContinuation and this continuation token is used to get the next series of entities. If the XML token is empty, which will be the case on the first request, then the first 5 entities are retrieved. In the response we get back, we check if there is a ContinuationToken present and if there is, we serialize it to XML and pass it back to the client. If there is no continuation token available, we simply return an empty string, which means there are no more entities available after the current set of entities.

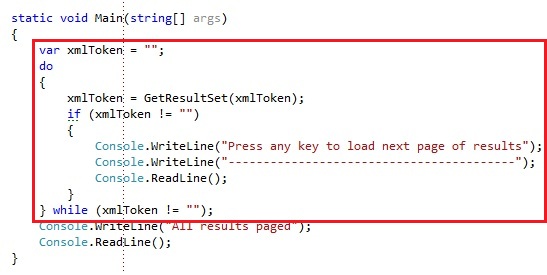

The client code could look like this to simulate the paging:

When executing the console application it starts by listing the first 5 entities:

It will get a xml continuation token back, so the client knows there are more entities to be retrieved. When we press any key, we call the GetResultSet operation again and pass this xml continuation token. The operation will deserialize the xml continuation token back to a ResultContinuation, which will be passed along in our BeginExecuteSegmented operation.

If we hit any key, it will receive the next 5 values:

When we keep paging, we keep getting the next 5 results. We are able to keep paging as long as there are results after the current set of data. When we retrieve the 4th set of 5 entities, only 1 entity is returned and no continuation token is returned, which means we are done paging.

If you would want to go back to a previous page, you can store the tokens in a variable or in session state. That way you could go back to a previous page by providing a token that you already used before to page forward. I will not write dummy code since it would look very similar to the previous example, but the scenario would be like this:

- First 5 entities are being returned and a continuation token of “getpage2” is being returned

- We invoke the operation again and pass the “getpage2” continuation token along

- We will get the next set of 5 entities and a continuation token of “getpage3” is being returned

- We invoke the operation again and pass the “getpage3” continuation token along

- We will get the next set of 5 entities and a continuation token of “getpage4” is being returned

- At this point we want to go back to the previous page of 5 entities, so we invoke the operation again and pass the “getpage2” continuation token instead of the “getpage4” continuation token. Since we are at page 3, we need to pass the continuation token we got when retrieving page 1.

- We will get the 5 entities that belong to page 2 and a continuation token of “getpage3” is being returned

You have now implemented a previous and next paging mechanism with table storage. The only thing you need to account for is storing the continuation tokens and the page index. You can wrap this up in a nice wrapper, which abstracts this away from your code. If you are using a web application, you’ll need to store this in session state.
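A small wrapper for the token bookkeeping could look like the sketch below. `PageHistory` is a hypothetical class, and the tokens are treated as opaque strings such as the serialized XML; an empty string stands for "no token".

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper that remembers which token loads which page.
public class PageHistory
{
    // tokens[i] is the token that loads page i + 1; the first page needs no token.
    private readonly List<string> tokens = new List<string> { "" };
    private int index = 0;

    public int CurrentPage { get { return index + 1; } }
    public string CurrentToken { get { return tokens[index]; } }

    // Store the token returned together with the current page (empty on the last page).
    public void RecordNextToken(string nextToken)
    {
        if (index + 1 < tokens.Count) tokens[index + 1] = nextToken;
        else tokens.Add(nextToken);
    }

    public bool CanGoNext
    {
        get { return index + 1 < tokens.Count && tokens[index + 1] != ""; }
    }

    public bool CanGoPrevious { get { return index > 0; } }

    // Returns the token to pass to the service to load the next page.
    public string MoveNext()
    {
        if (!CanGoNext) throw new InvalidOperationException("No next page.");
        index++;
        return tokens[index];
    }

    // Returns the token to pass to the service to reload the previous page.
    public string MovePrevious()
    {
        if (!CanGoPrevious) throw new InvalidOperationException("No previous page.");
        index--;
        return tokens[index];
    }
}
```

Following the scenario above: after recording “getpage2”, “getpage3” and “getpage4” while paging forward to page 3, a call to `MovePrevious` returns “getpage2”, the token that loads page 2.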

PS: Do not store your session state on your machine itself. When using session state with windows azure you’ll need to account for the round-robin load balancing so you’ll need to make sure your session state is shared over all your instances. You can store your session state in the Windows Azure distributed caching service or you can store it in SQL azure or table storage.

9. Why use Windows Azure Table Storage

The storage system achieves good scalability by distributing the partitions across many storage nodes.

The system monitors the usage patterns of the partitions, and automatically balances these partitions across all the storage nodes. This allows the system and your application to scale to meet the traffic needs of your table. That is, if there is a lot of traffic to some of your partitions, the system will automatically spread them out to many storage nodes, so that the traffic load will be spread across many servers. However, a partition i.e. all entities with same partition key, will be served by a single node. Even so, the amount of data stored within a partition is not limited by the storage capacity of one storage node.

The entities within the same partition are stored together. This allows efficient querying within a partition. Furthermore, your application can benefit from efficient caching and other performance optimizations that are provided by data locality within a partition. Choosing a partition key is important for an application to be able to scale well. There is a tradeoff here between trying to benefit from entity locality, where you get efficient queries over entities in the same partition, and the scalability of your table, where the more partitions your table has the easier it is for Windows Azure Table to spread the load out over many servers.

You want the most common and latency critical queries to have the PartitionKey as part of the query expression. If the PartitionKey is part of the query, then the query will be efficient since it has to only go to a single partition and traverse over the entities there to get its result. If the PartitionKey is not part of the query, then the query has to be done over all of the partitions for the table to find the entities being looked for, which is not as efficient. A table partition consists of all of the entities in a table with the same partition key value, and most tables have many partitions. The throughput target for a single partition is:

- Up to 500 entities per second

- Note that this is for a single partition, not a single table. Therefore, a table with good partitioning can process up to a few thousand requests per second (up to the storage account target)
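The difference between a query that includes the PartitionKey and one that does not can be illustrated with a small in-memory model. This is only a sketch in plain Python, not the table service or its client API; `ToyTable` and its method names are hypothetical, and the "visited" counter is a stand-in for the work the service has to do.

```python
from collections import defaultdict


class ToyTable:
    """In-memory stand-in for a table: entities grouped by partition key."""

    def __init__(self):
        # partition key -> {row key -> entity}
        self.partitions = defaultdict(dict)

    def insert(self, partition_key, row_key, **properties):
        entity = {"PartitionKey": partition_key, "RowKey": row_key, **properties}
        self.partitions[partition_key][row_key] = entity

    def query_partition(self, partition_key, predicate):
        """Query that names a PartitionKey: only that partition is visited."""
        rows = self.partitions.get(partition_key, {})
        matches = [e for e in rows.values() if predicate(e)]
        return matches, len(rows)

    def query_all(self, predicate):
        """Query without a PartitionKey: every partition has to be scanned."""
        visited = 0
        matches = []
        for rows in self.partitions.values():
            for entity in rows.values():
                visited += 1
                if predicate(entity):
                    matches.append(entity)
        return matches, visited
```

With three partitions of ten entities each, `query_partition` touches ten entities while `query_all` touches all thirty, which is the tradeoff between entity locality and partition count described above.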

Windows Azure table storage is designed for high scalability, but it has some drawbacks:

- There is no possibility to sort the data through your query. The data is being sorted by default by the partition and row key and that’s the only order you can retrieve the information from the table storage. This can often be a painful issue when using table storage. Sorting is apparently an expensive operation, so for scalability this is not supported.

- Each entity will have a primary key based on the partition key and row key

- The only clustered index is on the PartitionKey and the RowKey. That means if you need to build a query that searches on a property other than these, performance will go down. If you need to query for data without filtering on the partition key, performance will go down drastically. With a relational database we are used to filtering on almost any column when needed. With table storage this is not a good idea, or you might end up with slow data retrieval.

- Joining related data is not possible by default. You need to read from separate tables and do the stitching yourself

- There is no possibility to execute a count on your table, except for looping over all your entities, which is a very expensive query

- Paging with table storage can be more of a challenge than it was with the relational database

- Generating reports from table storage is nearly impossible as it’s non-relational
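Several of these restrictions come down to doing the work on the client. The following is a minimal sketch in plain Python, assuming the entities have already been retrieved as dictionaries; the helper names and the `CustomerId` property are hypothetical and are not part of any storage client library.

```python
from operator import itemgetter


def sort_by(entities, property_name):
    """Sort on the client; the service only returns PartitionKey/RowKey order."""
    return sorted(entities, key=itemgetter(property_name))


def count(entities):
    """Count by walking every entity, which is why counting is expensive."""
    return sum(1 for _ in entities)


def join(customers, orders):
    """Stitch two result sets together on a shared CustomerId property."""
    by_id = {c["CustomerId"]: c for c in customers}
    return [
        {**by_id[o["CustomerId"]], **o}
        for o in orders
        if o["CustomerId"] in by_id
    ]
```

Each helper needs the full result set in memory or streamed through the client, so the cost grows with the number of entities transferred.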

If you cannot live with these restrictions, then Windows Azure table storage might not be the ideal storage solution. Whether to use Windows Azure table storage depends on the needs and priorities of your application. But if you have a look at how large companies like Twitter, Facebook, Bing, Google and so forth work with data, you’ll see they are moving away from the traditional relational data model. It’s trading some features like filtering, sorting and joining for scalability and performance. The larger your data volume grows, the more the latter will be impacted.

This is an awesome video by Brad Calder about Windows Azure storage, which I really suggest you have a look at:

http://channel9.msdn.com/Events/BUILD/BUILD2011/SAC-961T

Some tips and tricks for performance for .NET and ADO.NET Data Services for Windows Azure table storage:

http://social.msdn.microsoft.com/Forums/en-US/windowsazuredata/thread/d84ba34b-b0e0-4961-a167-bbe7618beb83

The Windows Azure Storage Team have promised secondary indexes to provide sorting Windows Azure table rowsets by other than PartitionKey and RowKey values for several years, but haven’t yet delivered.

Robin Cremers (@robincremers) described Everything you need to know about Windows Azure Blob Storage including permissions, signatures, concurrency, … in detail on 2/27/2012 (missed when posted):

In my attempt to cover most of the features of the Microsoft cloud computing platform, Windows Azure, I’ll be covering Windows Azure storage in the next few posts.

Why use Windows Azure storage:

- Fault-tolerance: Windows Azure Blobs, Tables and Queues stored on Windows Azure are replicated three times in the same data center for resiliency against hardware failure. No matter which storage service you use, your data will be replicated across different fault domains to increase availability

- Geo-replication: Windows Azure Blobs and Tables are also geo-replicated between two data centers 100s of miles apart from each other on the same continent, to provide additional data durability in the case of a major disaster, at no additional cost.

- REST and availability: In addition to using Storage services for your applications running on Windows Azure, your data is accessible from virtually anywhere, anytime.

- Content Delivery Network: With one-click, the Windows Azure CDN (Content Delivery Network) dramatically boosts performance by automatically caching content near your customers or users.

- Price: It’s insanely cheap storage

The only reason you would not be interested in the Windows Azure storage platform would be if you’re called Chuck Norris …

Now if you are still reading this line it means you aren’t Chuck Norris, so let’s get on with it, as long as it is serializable.

In this post we will cover Windows Azure Blob Storage, one of the storage services provided by the Microsoft cloud computing platform. Blob storage is the simplest way to store large amounts of unstructured text or binary data such as video, audio and images, but you can save just about anything in it.

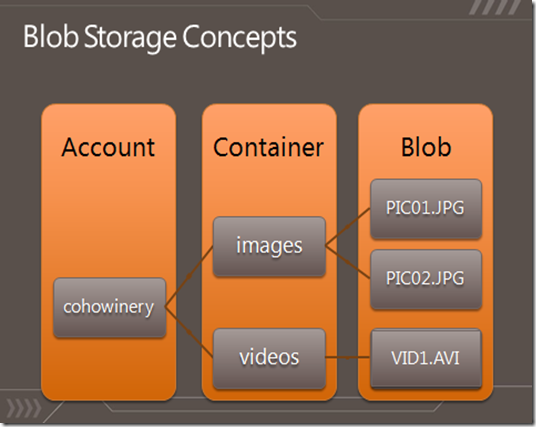

The concept behind Windows Azure Blob storage is as follows:

There are 3 things you need to know about to use Windows Azure Blob storage:

- Account: Windows Azure storage account, which is the account that contains blob, table and queue storage. The blob storage of a storage account can contain multiple containers.

- Container: blob storage container, which behaves like a folder in which we store items

- Blob: Binary Large Object, which is the actual item we want to store in the blob storage

1. Creating and using the Windows Azure Storage Account

To be able to store data in the Windows Azure platform, you will need a storage account. To create a storage account, log in to the Windows Azure portal with your subscription and go to the Hosted Services, Storage Accounts & CDN service:

Select the Storage Accounts service and hit the Create button to create a new storage account:

Define a prefix for the storage account you want to create:

After the Windows Azure storage account is created, you can view the storage account properties by selecting the storage account:

The storage account can be used to store data in the blob storage, table storage or queue storage. In this post, we will only cover the blob storage. One of the properties of the storage account is the primary and secondary access key. You will need one of these 2 keys to be able to execute operations on the storage account. Both the keys are valid and can be used as an access key.

When you have an active Windows Azure storage account in your subscription, you’ll have a few possible operations:

- Delete Storage: Delete the storage account, including all the related data to the storage account

- View Access Keys: Shows the primary and secondary access key

- Regenerate Access Keys: Allows you to regenerate one or both of your access keys. If one of your access keys is compromised, you can regenerate it to revoke access for the compromised access key

- Add Domain: Map a custom DNS name to the blob storage of the storage account. For example, map robbincremers.blob.core.windows.net to the static.robbincremers.me domain. This can be interesting for storage accounts which directly expose data to customers through the web. The mapping is only available for blob storage, since only blob storage can be publicly exposed.

Now that we have created our Windows Azure storage account, we can start by getting a reference to our storage account in our code. To do so, you will need to work with the CloudStorageAccount, which belongs to the Microsoft.WindowsAzure namespace:

We create a CloudStorageAccount by parsing a connection string. The connection string takes the account name and key, which you can find in the Windows Azure portal. You can also create a CloudStorageAccount by passing the values as parameters instead of a connection string, which could be preferable. You need to create an instance of the StorageCredentialsAccountAndKey and pass it into the CloudStorageAccount constructor:

The boolean that the CloudStorageAccount takes is to define whether you want to use HTTPS or not. In our case we chose to use HTTPS for our operations on the storage account. The storage account only has a few operations, like exposing the storage endpoints, the storage account credentials and the storage specific clients:

The storage account exposes the endpoint of the blob, queue and table storage. It also exposes the storage credentials by the Credentials operation. Finally it also exposes 4 important operations:

- CreateCloudBlobClient: Creates a client to work on the blob storage

- CreateCloudDrive: Creates a client to work on the drive storage

- CreateCloudQueueClient: Creates a client to work on the queue storage

- CreateCloudTableClient: Creates a client to work on the table storage

You won’t be using the CloudStorageAccount much, except for creating the service client for a specific storage type.
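To make the connection string and the exposed endpoints more concrete, here is a minimal sketch in plain Python rather than the .NET client. It assumes the usual `DefaultEndpointsProtocol=...;AccountName=...;AccountKey=...` form and the public `<account>.<service>.core.windows.net` endpoint pattern; the function names are hypothetical, and falling back to https when no protocol is given is a choice made for this sketch, not documented library behavior.

```python
def parse_connection_string(connection_string):
    """Split a storage connection string into a dict of its settings."""
    settings = {}
    for part in connection_string.split(";"):
        if not part.strip():
            continue
        # Split on the first '=' only: base64 account keys can end in '=' padding.
        name, _, value = part.partition("=")
        settings[name.strip()] = value.strip()
    missing = {"AccountName", "AccountKey"} - settings.keys()
    if missing:
        raise ValueError("missing settings: " + ", ".join(sorted(missing)))
    return settings


def endpoints(settings):
    """Build the blob, queue and table endpoints for the parsed account."""
    # Defaulting to https is an assumption of this sketch.
    protocol = settings.get("DefaultEndpointsProtocol", "https")
    account = settings["AccountName"]
    return {
        service: f"{protocol}://{account}.{service}.core.windows.net"
        for service in ("blob", "queue", "table")
    }
```

For an account named `myaccount` over HTTPS, the blob endpoint comes out as `https://myaccount.blob.core.windows.net`.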

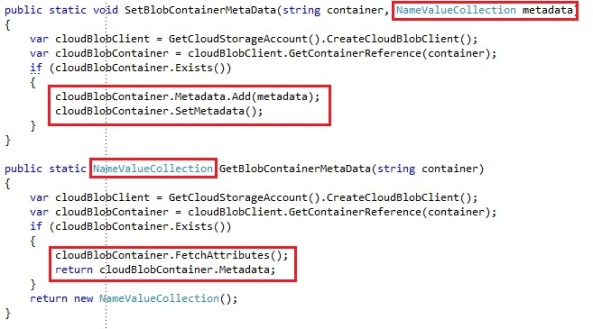

2. Basic operations for managing blob storage containers

A blob container is basically a folder in which we place our blobs. You can do the usual stuff like creating and deleting blob containers. There is also a possibility to set some permissions and metadata on our blob container, but those will be covered in the next chapters after we have looked into the basics of the CloudBlobContainer and the CloudBlob.

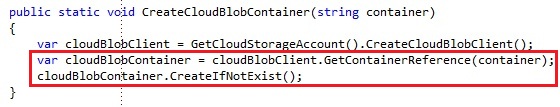

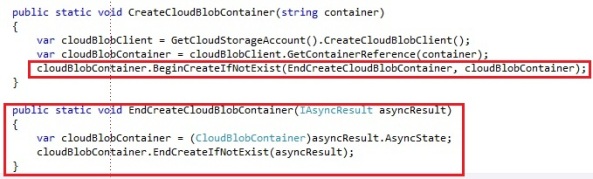

Creating a blob container synchronously:

You start by creating the CloudBlobClient from the CloudStorageAccount through the CreateCloudBlobClient on the CloudStorageAccount. The CloudBlobClient exposes a bunch of operations which will be used to manage blob containers and to manage and store blobs. To create or retrieve a blob container, you use the GetContainerReference operation. This will return a reference to the blob container, even if the container does not exist yet. The reference does not execute a request over the network. It simply creates a reference with the values the container would have, and is returned as a CloudBlobContainer.

To create the blob container, you invoke the Create or CreateIfNotExists operation on the CloudBlobContainer. The Create operation will throw a StorageClientException if the container you are trying to create already exists. You can also use the CreateIfNotExists operation, which only attempts to create the container if it does not exist yet. As a parameter you pass the name of the container. It is important to know that a blob container name can only contain numbers, lowercase letters and dashes, and has to be between 3 and 63 characters long.
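Checking a container name before sending the request avoids a failed round trip. The sketch below is plain Python, not part of the storage client, and assumes the service's documented naming rules: 3 to 63 characters, lowercase letters, numbers and dashes only, starting and ending with a letter or number, with no consecutive dashes. The function name is hypothetical, and special names such as `$root` are deliberately not handled.

```python
import re

# One or more lowercase alphanumeric runs separated by single dashes.
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(-[a-z0-9]+)*")


def is_valid_container_name(name):
    """Return True if the name satisfies the assumed container naming rules."""
    if not 3 <= len(name) <= 63:
        return False
    return _CONTAINER_NAME.fullmatch(name) is not None
```

Names like `images` and `my-container-01` pass, while `Images`, `my--container` and `-images` are rejected.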

For almost every synchronous operation there is an asynchronous operation available as well.

Creating the blob container asynchronously by the BeginCreateIfNotExists and EndCreateIfNotExists operation:

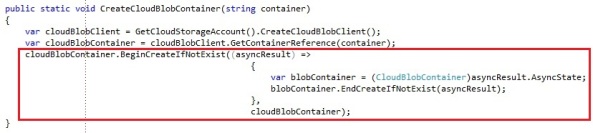

It follows the default Begin and End pattern of the asynchronous operations. If you are a fan of lambda expressions (awesomesauce), you can avoid splitting up your operation with a lambda expression:

You could do almost anything asynchronously, which is highly recommended. If my Greek ninja master would see me writing synchronous code, he would most likely slap me, but for demo purposes I will continue using synchronous code throughout this post, since this might be easier to follow and understand for some people.
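The shape of the Begin/End pair and of the lambda callback can be approximated outside .NET as well. This is only a rough analogue using Python's `concurrent.futures`, not the storage client's asynchronous API; `create_if_not_exists` and the `existing_containers` set are hypothetical stand-ins for the service call and the storage account.

```python
from concurrent.futures import ThreadPoolExecutor

existing_containers = set()  # stands in for the storage account


def create_if_not_exists(name):
    """Pretend service call: returns True only when the container was created."""
    if name in existing_containers:
        return False
    existing_containers.add(name)
    return True


with ThreadPoolExecutor(max_workers=1) as pool:
    # "Begin": start the call and get a handle back immediately.
    pending = pool.submit(create_if_not_exists, "images")
    # "End": block until the call finishes and collect its result.
    created = pending.result()

    # Callback style: a lambda receives the finished call, so the
    # operation does not have to be split into two methods.
    results = []
    second = pool.submit(create_if_not_exists, "images")
    second.add_done_callback(lambda done: results.append(done.result()))
```

The first call creates the container; the second finds it already present, so the callback records `False`.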

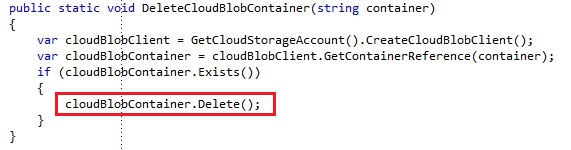

Deleting a blob container is as straightforward as creating one. We simply invoke the Delete operation on the CloudBlobContainer, which will execute a request to the REST storage interface:

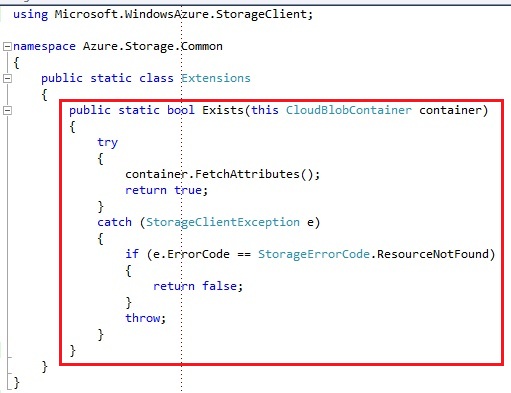

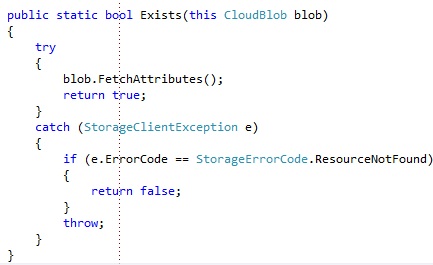

There is 1 remark about this piece of code, and it is the Exists operation. By default, there is no operation to check whether a blob container already exists or not. I added an extension method on the CloudBlobContainer, which will check whether the blob container exists. This way of validating the existence of the container was suggested by Steve Marx. We will come back to the FetchAttributes method in a later chapter.
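The idea behind such an existence check is to attempt a cheap attribute fetch and treat only a "not found" failure as a negative answer. The sketch below shows that pattern in plain Python; `StorageError`, its `error_code` values and the `exists` helper are hypothetical names for illustration, not the .NET extension method itself.

```python
class StorageError(Exception):
    """Hypothetical error type carrying a service error code."""

    def __init__(self, error_code):
        super().__init__(error_code)
        self.error_code = error_code


def exists(fetch_attributes):
    """Return True if the resource exists, judged by fetching its attributes."""
    try:
        fetch_attributes()
        return True
    except StorageError as error:
        if error.error_code == "ResourceNotFound":
            return False
        raise  # any other failure is a real error, not "does not exist"
```

Re-raising everything except the not-found case matters: an authentication or network failure should not be reported as a missing container.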

I added an identical extension method to check if a Blob exists:

If you do not know what extension methods are, you can find an easy article about it here:

Implementing and executing C# extension methods

To explore my storage accounts, I use a free tool called Azure Storage Explorer which you can download on codeplex:

http://azurestorageexplorer.codeplex.com/

Once you have created the new blob container, you can view and change its properties with the Azure Storage Explorer:

I manually uploaded an image to the blob container so we have some data to test with.

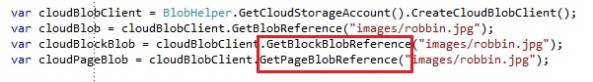

There are a few other operations exposed on the CloudBlobClient, which you might end up using:

- ListContainers: Allows you to retrieve a list of blob containers that belong to the storage account blob storage. You can list all containers or search by a prefix.

- GetBlobReference: Allows you to retrieve a CloudBlob reference through the absolute uri of the blob.

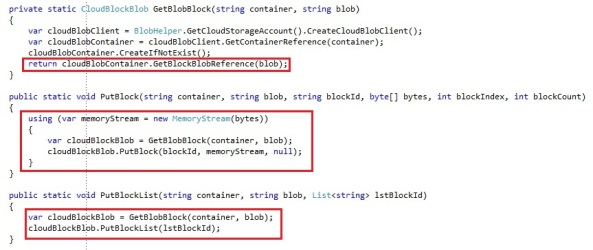

- GetBlockBlobReference: See chapter 9

- GetPageBlobReference: See chapter 9

- SetMetadata: See chapter 4

- SetPermissions: See chapter 6

- FetchAttributes: See chapter 4

One thing that might surprise you is that it is not possible to nest one container beneath another.
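Since containers cannot be nested, the absolute URI of a blob always has the container as its first path segment and the blob name as everything after it. The following plain Python sketch splits such a URI; it assumes the default `<account>.blob.core.windows.net` host form (a custom domain would not yield the account name this way) and does not handle blobs addressed through the root container. The function name is hypothetical.

```python
from urllib.parse import urlparse


def split_blob_uri(uri):
    """Split an absolute blob URI into (account, container, blob name)."""
    parsed = urlparse(uri)
    # Assumes the default host form <account>.blob.core.windows.net.
    account = parsed.hostname.split(".")[0]
    # The first path segment is the container; the rest is the blob name,
    # which may itself contain slashes.
    container, _, blob_name = parsed.path.lstrip("/").partition("/")
    return account, container, blob_name
```

A blob name that contains slashes, such as `2012/03/logo.png`, still belongs to a single flat container.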

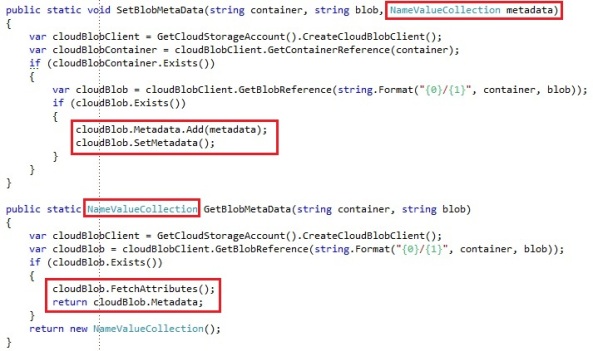

3. Basic operations for storing and managing blobs

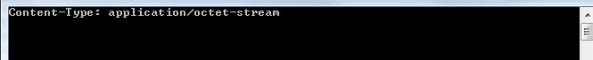

Blob stands for Binary Large Object, but you can basically store just about anything in blob storage. Ideally it is built for storing images, files and so forth. But you can just as easily serialize an object and store it in blob storage. Let’s cover the basics for the CloudBlob.

There are a few possible operations on the CloudBlob to upload a new blob in a blob container:

- UploadByteArray: Uploads an array of bytes to a blob

- UploadFile: Uploads a file from the file system to a blob

- UploadFromStream: Uploads a blob from a stream

- UploadText: Uploads a string of text to a blob

There are a few possible operations on the CloudBlob to download a blob from blob storage:

- DownloadByteArray: Downloads the blob’s contents as an array of bytes

- DownloadText: Downloads the blob’s contents as a string

- DownloadToFile: Downloads the blob’s contents to a file

- DownloadToStream: Downloads the contents of a blob to a stream

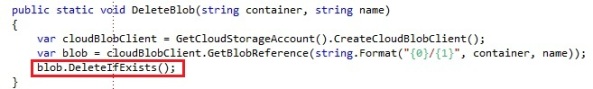

There are a few possible operations on the CloudBlob to delete a blob from blob storage:

- Delete: Delete the blob. If the blob does not exist, the operation will fail

- DeleteIfExists: Delete the blob only if it exists

A few other common operations on the CloudBlob you might run into:

- OpenRead: Opens a stream for reading the blob’s content

- OpenWrite: Opens a stream for writing to the blob

- CopyFromBlob: Copy an existing blob with content, properties and metadata to a new blob

They all work identically, so we will only cover the upload and download of a file to blob storage as an example.

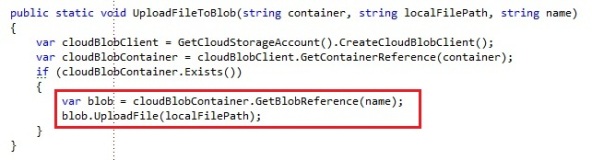

Uploading a file to blob storage:

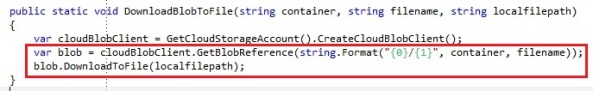

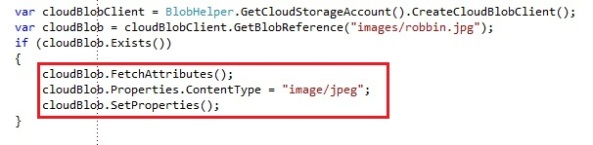

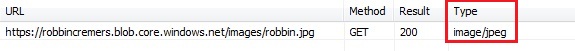

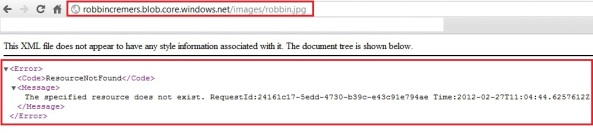

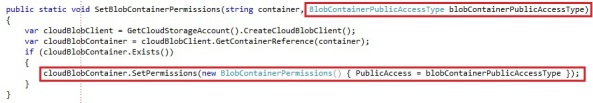

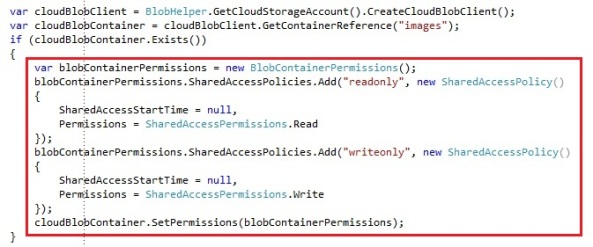

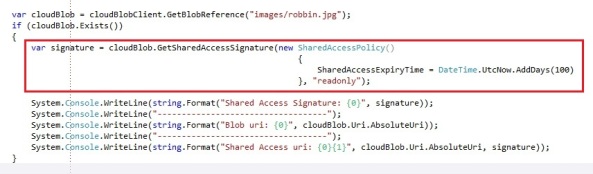

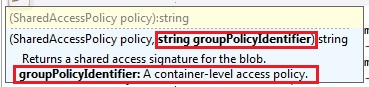

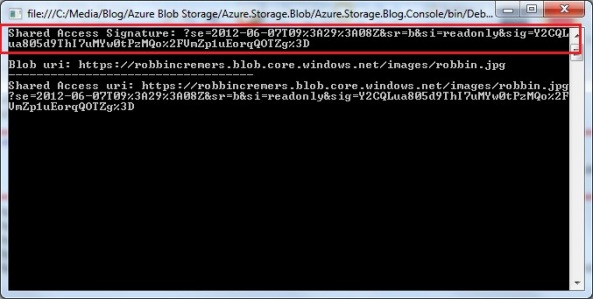

In the GetBlobReference we pass along the name we want the blob to be called in the Windows Azure blob storage. Finally we upload our local file to blob storage.

Retrieving a file from blob storage: