Windows Azure and Cloud Computing Posts for 3/16/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

•• Updated 3/21/2012 with new Klout score (up from 35 to 45 and persons influenced from 135 to 806) in the Windows Azure Blob, Drive, Table, Queue and Hadoop Services section.

• Updated 3/17/2012 with additional articles marked • by Austin Jones, Microsoft All-In-One Code Gallery, Anupama Mandya, Xpert360, Bruno Terkaly, Maarten Balliauw, Kostas Christodoulou, Derrick Harris and the Microsoft patterns & practices team.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

• Derrick Harris (@derrickharris) explained Why Klout is making its bed with Hadoop and … Microsoft in a 3/6/2012 post to GigaOm’s Structure blog (missed when published):

Look under the covers of almost any data-focused web application — including Klout — and you’ll find Hadoop. The open-source big data platform is ideal for storing and processing the large amounts of information needed for Klout to accurately measure and score its users’ social media influence. But Klout also has another important, and very not-open-source, weapon in its arsenal — Microsoft SQL Server.

Considering the affinity most web companies have for open source software, the heavy use of Microsoft technology within Klout is a bit surprising. The rest of the Klout stack reads like a who’s who of hot open source technologies — Hadoop, Hive, HBase, MongoDB, MySQL, Node.js. Even on the administrative side, where open source isn’t always an option, Klout uses the newer and less-expensive Google Apps instead of Microsoft Exchange.

“I would rather go open source, that’s my first choice always,” Klout VP of Engineering Dave Mariani told me during a recent phone call. “But when it comes to open source, scalable analysis tools, they just don’t exist yet.”

Klout’s stack is telling of the state of big data analytics, where tools for analyzing data processed by and stored in Hadoop can be hard to use effectively, and where Microsoft has actually been turning a lot of heads lately.

How Klout does big data

“Data is the chief asset that drives our services,” said Mariani, and being able to understand what that data means is critical. Hadoop alone might be fine if the company were just interested in analyzing and scoring users’ social media activity, but it actually has to satisfy a customer set that includes users, platform partners (e.g., The Palms hotel in Las Vegas, which ties into the Klout API and uses scores to decide whether to upgrade guests’ rooms) and brand partners (the ones who target influencers with Klout Perks).

As it stands today, Hadoop stores all the raw data Klout collects — about a billion signals a day on users, Mariani said — and also stores it in an Apache Hive data warehouse. When Klout’s analysts need to query the data set, they use the Analysis Services tools within SQL Server. But because SQL Server can’t yet talk directly to Hive (or Hadoop, generally), Klout has married Hive to MySQL, which serves as the middleman between the two platforms. Klout loads about 600 million rows a day into SQL Server from Hive.

It’s possible to use Hive for querying data in a SQL-like manner (that’s what it was designed for), but Mariani said it can be slow, difficult and not super-flexible. With SQL Server, he said, queries across the entire data set usually take less than 10 seconds, and the product helps Klout figure out if its algorithms are putting the right offers in front of the right users and whether those campaigns are having the desired effects.

Analysis Services also functions as a sort of monitoring system, Mariani explained. It lets Klout keep a close eye on moving averages of scores so it can help spot potential problems with its algorithms or problems in the data-collection process that affect users’ scores.
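The moving-average check Mariani describes can be illustrated with a small Python sketch. This is not Klout's implementation (the article says that work runs in Analysis Services); the function name, the window length and the alert threshold are all assumptions chosen for illustration.

```python
from collections import deque
from typing import Iterable, Iterator, Tuple


def moving_average_alerts(
    daily_scores: Iterable[float],
    window: int = 7,         # hypothetical window length, in days
    max_jump: float = 5.0,   # hypothetical alert threshold, in score points
) -> Iterator[Tuple[int, float, bool]]:
    """Yield (day_index, moving_average, alert) once the window is full.

    alert is True when the moving average moved by more than max_jump
    compared with the previous day's moving average.
    """
    recent = deque(maxlen=window)
    previous_avg = None
    for day, score in enumerate(daily_scores):
        recent.append(score)
        if len(recent) < window:
            continue  # not enough history yet
        avg = sum(recent) / window
        alert = previous_avg is not None and abs(avg - previous_avg) > max_jump
        yield day, avg, alert
        previous_avg = avg
```

A sudden shift in the average of many users' scores is more likely to point to an algorithm or data-collection problem than to a real change in influence, which is why the sketch flags jumps in the average rather than in individual scores.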

Elsewhere, Klout uses HBase to serve user profiles and scores, and MongoDB for serving interactions between users (e.g., who tweeted what, who saw it, and how it affected everyone’s profiles).

Why Microsoft is turning heads in the Hadoop world

Although Klout is using SQL Server in part because Mariani brought it along with him from Yahoo and Blue Lithium before that, Microsoft’s recent commitment to Hadoop has only helped ensure its continued existence at Klout. Since October, Microsoft has been working with Yahoo spinoff Hortonworks on building distributions of Hadoop for both Windows Server and Windows Azure. It’s also working on connectors for Excel and SQL Server that will help business users access Hadoop data straight from their favorite tools.

Mariani said Klout is working with Microsoft on the SQL Server connector, as his team is anxious to eliminate the extra MySQL hop it currently must take between the two environments.

Microsoft’s work on Hadoop on Windows Azure is actually moving at an impressive pace. The company opened a preview of the service to 400 developers in December and on Tuesday, coinciding with the release of SQL Server 2012, opened it up to 2,000 developers. According to Doug Leland, GM of product management for SQL Server, Hadoop on Windows Azure is expanding its features, too, adding support for the Apache Mahout machine-learning libraries and new failover and disaster recovery capabilities for the problematic NameNode within the Hadoop Distributed File System.

Leland said Microsoft is trying “to provide a service that is very easy to consume for customers of any size,” which means an intuitive interface and methods for analyzing data. Already, he said, Webtrends and the University of Dundee are among the early testers of Hadoop on Windows Azure, with the latter using it for genome analysis.

We’re just three weeks out from Structure: Data in New York, and it looks as if our panel on the future of Hadoop will have a lot to talk about, as will everyone at the show. As more big companies get involved with Hadoop and the technology gets more accessible, it opens up new possibilities for who can leverage big data and how, as well as for an entirely new class of applications that use Hadoop like their predecessors used relational databases.

•• The top of my Klout topics dashboard (updated 3/21/2012):

Andrew Brust (@andrewbrust) described HDFS and file system wanderlust in a 3/16/2012 post to ZDNet’s Big on Data blog:

A continuing theme in Big Data is the commonality, and developmental isolation, between the Hadoop world on the one hand and the enterprise data, Business Intelligence (BI) and analytics space on the other. Posts to this blog — covering how Massively Parallel Processing (MPP) data warehouse and Complex Event Processing (CEP) products tie in to MapReduce – serve as examples.

The Enterprise Data and Big Data worlds will continue to collide and, as they do, they’ll need to reconcile their differences. The Enterprise is set in its ways. And when Enterprise computing discovers something in the Hadoop world that falls short of its baseline assumptions, it may try and work around it. From what I can tell, a continuing hot spot for this kind of adaptation is Hadoop’s storage layer.

Hadoop, at its core, consists of the MapReduce parallel computation framework and the Hadoop Distributed File System (HDFS). HDFS’ ability to federate disks on the cluster’s worker nodes, and thus allow MapReduce processing to work against storage local to the node, is a hallmark of what makes Hadoop so cool. But HDFS files are immutable — which is to say they can only be written to once. Also, Hadoop’s reliance on a “name node” to orchestrate storage means it has a single point of failure.

Pan to the enterprise IT pro who’s just discovering Hadoop, and cognitive dissonance may ensue. You might hear a corporate database administrator exclaim: “What do you mean I have to settle for write-once read-many?” This might be followed with outcry from the data center worker: “A single point of failure? Really? I’d be fired if I designed such a system.” The worlds of Web entrepreneur technologists and enterprise data center managers are so similar, but their relative seclusion makes for a little bit of a culture clash.

Maybe the next outburst will be “We’re mad as hell and we’re not going to take it anymore!” The market seems to be bearing that out. MapR’s distribution of Hadoop features DirectAccess NFS, which provides the ability to use read/write Network File System (NFS) storage in place of HDFS. Xyratex’s cluster file system, called Lustre, can also be used as an API-compatible replacement for HDFS (the company wrote a whole white paper on just that subject, in fact). Appistry’s CloudIQ storage does likewise. And although it doesn’t swap out HDFS, the Apache HCatalog system will provide a unified table structure for raw HDFS files, Pig, Streaming, and Hive.

Sometimes open source projects do things their own way. Sometimes that gets Enterprise folks scratching their heads. But given the Hadoop groundswell in the Enterprise, it looks like we’ll see a consensus architecture evolve. Even if there’s some creative destruction along the way.

<Return to section navigation list>

SQL Azure Database, Federations and Reporting

Rick Saling (@RickAtMicrosoft) described Versioning SQL Azure Schemas by Using Federations in a 3/15/2012 post:

This topic is now published on the MSDN Library at http://msdn.microsoft.com/en-us/library/hh859735(VS.103).aspx.

The bottom-line idea of the topic is that because SQL Azure Federation members can have different database schemas, you can upgrade your schema incrementally. This can be handy if you have an application with multiple customers, or a multi-tenant application: it enables you to roll out new features selectively, instead of all at once. You might want to do this if your customers vary in how quickly they wish to adopt new features. And it could be a useful way of validating your testing: just go live with a small subset of customers, and the impact of any bugs will be more contained.
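To make the incremental rollout idea concrete, here is a minimal Python sketch of the bookkeeping involved. It is a model only, not the MSDN topic's procedure: the `FederationMember` class, the tenant-id ranges and the version numbers are hypothetical, and the actual schema change would be a script run against each chosen member.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class FederationMember:
    range_low: int        # inclusive lower bound of the tenant-id range
    range_high: int       # exclusive upper bound of the tenant-id range
    schema_version: int   # schema version currently deployed to this member


def member_for(tenant_id: int, members: List[FederationMember]) -> FederationMember:
    """Return the federation member whose range contains tenant_id."""
    for member in members:
        if member.range_low <= tenant_id < member.range_high:
            return member
    raise LookupError(f"no federation member covers tenant {tenant_id}")


def upgrade_for_tenants(
    members: List[FederationMember], early_adopters: Iterable[int], new_version: int
) -> List[FederationMember]:
    """Upgrade only the members that host the early-adopter tenants.

    Returns the members that were changed. In a real deployment this is
    where the schema-change script would run against each member.
    """
    upgraded = []
    for tenant_id in early_adopters:
        member = member_for(tenant_id, members)
        if member.schema_version < new_version:
            member.schema_version = new_version
            upgraded.append(member)
    return upgraded
```

One consequence the model makes visible: a schema change applies to a whole federation member, so every tenant that shares a member with an early adopter is upgraded too. Rolling out per customer therefore depends on how tenants are distributed across members.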

I'd love to hear about your experiences using this scenario: is it easy to use? Are there glitches in the process that Microsoft could improve? Our software development gets driven by customer requests and experiences, so let us know what you think.

This topic describes one scenario that comes from being able to have different database schemas in federation members. But perhaps you have discovered a new scenario where you can use this feature. If so, we'd love to hear about it.

As an experiment, this was first released on the Microsoft Technet Wiki, at http://social.technet.microsoft.com/wiki/contents/articles/7894.versioning-sql-azure-schemas-by-using-federations.aspx. As a wiki article, you can easily edit it and make changes to it, unlike with MSDN articles. I'll be monitoring the wiki article for updates and comments, so that I can incorporate them into the MSDN version.

Thomas LaRock (@SQLRockstar) posted March Madness – SQL Azure – sys.sql_logins to his SQL RockStar blog on 3/15/2012:

It’s that time of year again folks and March Madness is upon us. Last year I did a blog series on the system tables found within SQL Server. This year I am leaving the earth-bound editions of SQL Server behind and will focus on SQL Azure.

If you are new to SQL Azure then I will point you to this resource list by Buck Woody (blog | @buckwoody). If you are not new, but simply cloud-curious, then join me for the next 19 days as we journey into the cloud and back again. Also, Glenn Berry (blog | @GlennAlanBerry) has a list of handy SQL Azure queries, and between his blog and Buck Woody I have compiled the items that will follow for the next 19 days.

How To Get Started With SQL Azure

It really isn’t that complex. Here’s what you do:

- Go to http://windows.azure.com

- Either create an account or use your Microsoft account to sign in

- You can select the option to do a free 90 day trial

- Create a new SQL Azure server

- Create a new database

- Get the connection string details and use them inside of SSMS to connect to SQL Azure

That’s really all there is to it, so simple even I could do it.

What Will This Blog Series Cover?

I am going to focus on the currently available system tables, views, functions, and stored procedures you may find most useful when working with Azure. Keep in mind that SQL Azure changes frequently. So frequently in fact that you should bookmark this page on MSDN that details what is new with the current build of SQL Azure.

Last year I started with the syslogins table. Well, that table doesn’t exist in SQL Azure, but sys.sql_logins does. But wait…before I even go there…how do I know what is available inside of SQL Azure? Well, I could go over to MSDN and poke around the documentation all day. Or, I could take 10 minutes to open up an Azure account and just run the following query:

SELECT name, type_desc FROM sys.all_objects

You should understand that you only get to connect to one database at a time in SQL Azure. The exception is when you connect inside of SSMS initially to the master database then you *can* toggle to a user database in the dropdown inside of SSMS, but after that you are stuck in that user database. I created a quick video to help show this better.

You need to open a new connection in order to query a new database, including master. Why am I telling you this? Because you won’t be able to run this from a user database:

SELECT name, type_desc FROM master.sys.all_objects

You will get back this error message:

Msg 40515, Level 15, State 1, Line 16

Reference to database and/or server name in ‘master.sys.all_objects’ is not supported in this version of SQL Server.

I found that master has 242 objects listed in the sys.all_objects view but only 210 are returned against a user database. So, which ones are missing? Well, you’ll have to go find out on your own. I will give you one interesting item…

It would appear that the master database in SQL Azure has two views named sys.columns and sys.COLUMNS. Why the two? I have no idea. From what I can tell they return the exact same sets of data. So where is the difference? It’s all about the schema. If you run the following:

SELECT name, schema_id, type_desc FROM master.sys.all_objects WHERE name = 'COLUMNS'

You will see that there are two different schema ids in play, a 3 (for the INFORMATION_SCHEMA schema) and a 4 (for the sys schema).

Of course, now I am curious to know about the collation of this instance, but we will save that for a later post. Let’s get back to those logins. To return a list of all logins that exist on your SQL Azure instance you simply run:

SELECT * FROM sys.sql_logins

You can use this view to gather details periodically to make certain that the logins to your SQL Azure instance are not being added, removed or modified, for example.
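The periodic check suggested above amounts to comparing two snapshots of the view. Here is a minimal Python sketch of that comparison; it assumes each snapshot has already been fetched as a list of dictionaries carrying at least the `name` and `modify_date` columns of sys.sql_logins, and the function name is hypothetical. How the rows are fetched (driver, connection string) is left out on purpose.

```python
from typing import Any, Dict, Iterable, List, Mapping


def diff_logins(
    before_rows: Iterable[Mapping[str, Any]],
    after_rows: Iterable[Mapping[str, Any]],
) -> Dict[str, List[str]]:
    """Compare two snapshots of sys.sql_logins rows, keyed by login name."""
    before = {row["name"]: row for row in before_rows}
    after = {row["name"]: row for row in after_rows}
    return {
        # logins present only in the newer snapshot
        "added": sorted(after.keys() - before.keys()),
        # logins present only in the older snapshot
        "removed": sorted(before.keys() - after.keys()),
        # logins present in both whose modify_date changed
        "modified": sorted(
            name
            for name in before.keys() & after.keys()
            if before[name]["modify_date"] != after[name]["modify_date"]
        ),
    }
```

Remember that the view has to be queried over a connection to the master database, since cross-database references are rejected from a user database.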

OK, that’s enough for day one. Tomorrow we will peek under the covers of a user database.

<Return to section navigation list>

MarketPlace DataMarket, Social Analytics, Big Data and OData

• Austin Jones described How to Increase the 50 Record Page Limit on OData Retrieve Responses for Dynamics CRM 2011 in a 3/16/2012 post to the Dynamics CRM in the Field blog:

An inherent limitation placed on retrieving data via CRM 2011’s OData endpoint (OrganizationData.svc) is that the response can only include up to a maximum of 50 records (http://msdn.microsoft.com/en-us/library/gg334767.aspx#BKMK_limitsOnNumberOfRecords_). For the most part, this does not impact your ability to retrieve the primary entity data sets because each response will provide the URI for a subsequent request that includes a paging boundary token. As you can see below, the URI for the next page of results is provided in the JSON response object:

Here is what the full URI looks like from the example response shown above, including the $skiptoken paging boundary parameter:

Similarly for Silverlight, a DataServiceContinuation<T> object is returned in the IAsyncResult which contains a NextLinkUri property with the $skiptoken paging boundary. The CRM 2011 SDK includes examples for retrieving paged results both via JavaScript and Silverlight:

- JavaScript OData paged retrieve demonstration: http://msdn.microsoft.com/en-us/library/gg985387.aspx

- Silverlight OData paged retrieve demonstration: http://msdn.microsoft.com/en-us/library/gg985388.aspx
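The next-link pattern described above is the same whatever the client language. As a rough illustration outside the SDK samples, here is a Python sketch that keeps requesting pages until no next link is returned. It assumes the OData v2 JSON layout, in which each page arrives as `{"d": {"results": [...], "__next": "<uri>"}}`; `fetch_json` is a hypothetical caller-supplied function that performs the authenticated HTTP GET and returns the parsed JSON.

```python
from typing import Any, Callable, Dict, Iterator, Optional


def iter_odata_records(
    first_url: str,
    fetch_json: Callable[[str], Dict[str, Any]],
) -> Iterator[Dict[str, Any]]:
    """Yield every record of a paged OData result set.

    Follows the "__next" URI (which carries the $skiptoken paging
    boundary) until the service stops returning one.
    """
    url: Optional[str] = first_url
    while url:
        payload = fetch_json(url)["d"]
        yield from payload["results"]
        url = payload.get("__next")  # absent on the last page
```

Passing the fetch function in keeps the paging logic independent of how requests are authenticated, and makes it easy to exercise with canned pages.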

Nevertheless, this 50 record limit can become problematic when using $expand/.Expand() to return related entity records in your query results. The expanded record sets do not contain any paging boundary tokens, thus you will always be limited to the first 50 related records.

The next logical question – can this limit be increased? Yes, it can. 50 records is a default limit available for update via an advanced configuration setting stored in MSCRM_Config. Note that increasing the limit may only delay the inevitable for encountering results that exceed the limit. It may also cause your responses to exceed the message limits on your WCF bindings depending on how you have that configured. To avoid these scenarios, you may instead consider breaking up your expanded request into a series of requests where each result set can be paged. Also, before proceeding, I’d be remiss not to point out the following advisory from CRM 2011 SDK:

**Warning**

You should use the advanced settings only when indicated to do so by the Microsoft Dynamics CRM customer support representatives.

That said, now on with how to modify the setting…

The specific setting that imposes the 50 record limit is called ‘MaxResultsPerCollection’ and it’s part of the ServerSettings configuration table. Go here for the description of MaxResultsPerCollection and other ServerSettings available: http://msdn.microsoft.com/en-us/library/gg334675.aspx.

Advanced configuration settings can be modified either programmatically via the Deployment Service or via PowerShell cmdlet. The CRM 2011 SDK provides an example of how to set an advanced configuration setting via the Deployment Service here: http://msdn.microsoft.com/en-us/library/gg328128.aspx

To update the setting via PowerShell, execute each of the following at the PowerShell command-line:

Add-PSSnapin Microsoft.Crm.PowerShell

$setting = New-Object "Microsoft.Xrm.Sdk.Deployment.ConfigurationEntity"

$setting.LogicalName = "ServerSettings"

$setting.Attributes = New-Object "Microsoft.Xrm.Sdk.Deployment.AttributeCollection"

$attribute = New-Object "System.Collections.Generic.KeyValuePair[String, Object]" ("MaxResultsPerCollection", 75)

$setting.Attributes.Add($attribute)

Set-CrmAdvancedSetting -Entity $setting

Alternatively, you could use the PowerShell script provided in the CRM 2011 SDK to update advanced configuration settings: [SDK Install Location]\samplecode\ps\setadvancedsettings.ps1

To be able to execute scripts, you must first set your PowerShell execution policy to allow unsigned local scripts.

Set-ExecutionPolicy RemoteSigned

Then, execute the ‘setadvancedsettings.ps1’ PowerShell script from the SDK and pass in the configuration entity name (string), attribute name (string), and new value (Int32).

& "[SDK Install Location]\setadvancedsettings.ps1" "ServerSettings" "MaxResultsPerCollection" 75

After performing either of the two methods above to update your setting, you can verify that the change was successful by inspecting the ServerSettingsProperties table in MSCRM_Config database.

Since these configuration values are cached, perform an IISRESET on the application server if you want them to take immediate effect.

Below are the results of a basic retrieve of AccountSet showing that more than the default 50 records were returned after applying this configuration change.

A few parting words … just because you can, doesn’t mean you should. [Emphasis added.]

• Anupama Mandya wrote a WCF Data Services and OData for Oracle Database tutorial in March 2012:

Purpose

This tutorial covers developing WCF Data Services and Open Data Protocol (OData) applications for the Oracle Database using Visual Studio.

Time to Complete

Overview

Microsoft WCF Data Services enables creating and consuming Web data services. To do so, it uses OData, which exposes data as URI-addressable resources, such as website URLs. Entity Data Models (EDMs) via Microsoft Entity Framework can expose data through WCF Data Services and OData to allow EDMs to be more widely consumed.

In this tutorial, you learn how to expose Oracle Database data via WCF Data Services and OData through Oracle's Entity Framework support.

You will start by creating a new EDM from the HR schema. Next, you will create a WCF Data Service that uses OData to expose this EDM via the Web. Last, you will run the Web application and execute URL queries to retrieve data from the database.
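The URL queries mentioned in the last step follow OData's system query options such as `$top`, `$filter` and `$orderby`. The Python sketch below only builds such URLs; the service root, the `EMPLOYEES` entity set and the `SALARY` property are placeholders assumed from the HR sample schema, not values taken from the tutorial.

```python
from urllib.parse import quote

# Characters OData expressions commonly use that can stay unescaped.
SAFE_CHARS = "'(),"


def odata_query_url(service_root: str, entity_set: str, **options: object) -> str:
    """Build an OData query URL from system query options.

    Each keyword becomes a "$name=value" pair, e.g. top=5 -> "$top=5".
    """
    base = f"{service_root.rstrip('/')}/{entity_set}"
    if not options:
        return base
    query = "&".join(
        f"${name}={quote(str(value), safe=SAFE_CHARS)}"
        for name, value in options.items()
    )
    return f"{base}?{query}"
```

For example, `odata_query_url("http://localhost:1234/WcfDataService.svc", "EMPLOYEES", filter="SALARY gt 5000", top=5)` produces a URL that can be pasted into the browser once the service is running, with the host and port replaced by your own.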

Prerequisites

Before starting this tutorial, you should:

- Install Microsoft Visual Studio 2010 with .NET Framework 4 or higher.

- Install Oracle Database 10.2 or later or Oracle Database XE.

- Install Oracle Data Access Components (ODAC) 11.2.0.3 or later from OTN. The ODAC download includes Oracle Developer Tools for Visual Studio and ODP.NET that will be used in this lab.

- Install Internet Explorer 7 or later versions or any browser that supports modern Web protocols.

- Extract these files on to your working directory

Anupama continues with the following detailed sections:

- Creating a New Website Project

- Creating a new ADO.NET Entity Data Model

- Creating a WCF Data Service with EDM data

- Summary

Jonathan Allen posted OData for LightSwitch to the InfoQ blog on 3/16/2012:

Microsoft is continuing its plan to make OData as ubiquitous as web services. Their latest offering allows LightSwitch 2 to both produce and consume OData services.

LightSwitch is a rapid application development platform based on .NET with an emphasis on self-service. Based around the concept of the “citizen programmer”, Visual Studio LightSwitch is intended to allow for sophisticated end users to build their own business applications without the assistance of an IT department. So why should a normal office worker knocking out a CRUD application care about OData?

OData brings something to the table that neither REST nor Web Services offer: a standardized API. While proxy generation tools can format WS requests and parse the results, they don’t offer any way of actually hooking the service into the application. Instead developers have to painstakingly map the calls and results into the correct format. And REST is even lower on the stack than that, usually offering only raw XML or JSON.

By contrast, every OData API looks just like any other OData API. The requests are always made the same way through the same conventions; the only significant difference is the columns being returned. This allows tools like LightSwitch to work with OData much in the same way it works with database drivers. In fact, consuming an OData service from LightSwitch is done using the same Attach Data Source wizard used for databases.

To expose OData from LightSwitch one simply needs to deploy the application in one of the three-tier configurations. From there you can consume the OData service from not only LightSwitch but also any other OData enabled application. Beth Massi’s example shows importing LightSwitch data into Excel using the PowerPivot extension.

Another new feature in LightSwitch is the ability to redefine relationships between tables that come from external data sources. John Stallo writes,

One of the most popular requests we received was the ability to allow data relationships to be defined within the same data container (just like you can add relationships across data sources today). It turns out that there are quite a few databases out there that don’t define relationships in their schema – instead, a conceptual relationship is implied via data in the tables. This was problematic for folks connecting LightSwitch to these databases because while a good number of defaults and app patterns are taken care for you when relationships are detected, LightSwitch was limited to only keying off of relationships pre-defined in the database. Folks wanted the ability to augment the data model with their own relationships so that LightSwitch can use a richer set of information to generate more app functionality. Well, problem solved – you can now specify your own user-defined relationships between entities within the same container after importing them into your project.

LightSwitch offers a “Metro-inspired Theme” but at this time it doesn’t actually build Windows 8 or Windows Phone Metro-style applications. It does, however, offer options for hosting LightSwitch applications on Windows Azure.

<Return to section navigation list>

Windows Azure Service Bus, Access Control, Identity and Workflow

Wade Wegner (@WadeWegner) announced Episode 72 - New Tools for Windows Identity Foundation and ACS in a 3/16/2012 post:

Join Wade and David each week as they cover Windows Azure. You can follow and interact with the show at @CloudCoverShow.

In this episode, Wade is joined by Vittorio Bertocci—Principal Program Manager at Microsoft and affectionately known to all of us as Admiral Identity—who shows us the new Identity and Access Tool for Visual Studio 11 Beta that, amongst other things, is closely integrated into the Access Control Service. You can get all the details at Windows Identity Foundation in the .NET Framework 4.5 Beta: Tools, Samples, Claims Everywhere and grab the bits here.

In the news:

Vittorio Bertocci (@vibronet) concluded his Windows Identity Foundation Tools for Visual Studio 11 Part IV: Get an F5 Experience with ACS2 series on 3/15/2012:

Welcome to the last walkthrough (for now) of the new WIF tools for Visual Studio 11 Beta! This is my absolute favorite, where we show you how to take advantage of ACS2 from your application with just a few clicks.

The complete series includes Using the Local Development STS, manipulating common config settings, connecting with a business STS, and getting an F5 experience with ACS2.

Let’s say that you downloaded the new WIF tools (well done!) and you at least checked out the first walkthrough. That test stuff is all fine and dandy, but now you want to get to something a bit more involved: you want to integrate with multiple identity providers, even if they come in multiple flavors.

Open the WIF tools dialog, and from the Provider tab pick the “Use the Windows Azure Access Control Service” option.

You’ll get to the UI shown below. There’s not much, right? That’s because in order to use ACS2 from the tools you first need to specify which namespace you want to use. Click on the “Configure…” link.

You get a dialog which asks you for your namespace and for the management key.

Why do we ask you for those? Well, the namespace is your development namespace: that’s where you will save the trust settings for your applications. Depending on the size of your company, you might not be the one that manages that namespace; you might not even have access to the portal, and the info about namespaces could be sent to you by one of your administrators.

Why do we ask for the management key? As part of the workflow followed by the tool, we must query the namespace itself for info and we must save back your options in it. In order to do that, we need to use the namespace key.

As you can see, the tool offers the option of saving the management key: that means that if you always use the same development namespace, you’ll need to go through this experience only once.

As mentioned above, the namespace name and management key could be provided to you from your admin; however let’s assume that your operation is not enormous, and you wear both the dev and the admin hats. Here is how to get the management key value from the ACS2 portal.

Navigate to http://windows.azure.com, sign in with your Windows Azure credentials, 1) pick the Service Bus, Access Control and Cache area, 2) select access control 3) pick the namespace you want to use for dev purposes and 4) hit the Access Control Service button for getting into the management portal.

Once here, pick the management service entry on the left navigation menu; choose “management client”, then “symmetric key”. Once here, copy the key text in the clipboard (beware not to pick up extra blanks!).

Now get back to the tool, paste in the values and you’re good to go!

As soon as you hit OK, the tool downloads the list of all the identity providers that are configured in your namespace. In the screenshot below you can see that I have all the default ones, plus Facebook which I added in my namespace. If I had other identity providers configured (local ADFS2 instances, OpenID providers, etc.) I would see them as checkboxes as well. Let’s pick Google and Facebook, then click OK.

Depending on the speed of your connection, you’ll see the little donut pictured below informing you that the tools are updating the various settings.

As soon as the tool closes, you are done! Hit F5.

Surprise surprise, you get straight to the ACS home realm discovery page. Let’s pick Facebook.

Here there’s the familiar FB login…

…and we are in!

What just happened? Leaving the key acquisition out for a minute, let me summarize.

- you went to the tools and picked ACS as provider

- you got a list of checkboxes, one for each of the available providers, and you selected the ones you wanted

- you hit F5, and your app showed that it is now configured to work with your providers of choice

Now, I am biased: however to me this seems very, very simple; definitely simpler than the flow that you had to follow until now.

Of course this is just a basic flow: if you need to manage the namespace or do more sophisticated operations the portal or the management API are still the way to go. However now if you just want to take advantage of those features you are no longer forced to learn how to go through the portal. In fact, now dev managers can just give the namespace credentials without necessarily giving access to the Windows Azure portal for all the dev staff.

What do you think? We are eager to hear your feedback!

Don’t forget to check out the other walkthroughs: the complete series includes Using the Local Development STS, manipulating common config settings, connecting with a business STS, and getting an F5 experience with ACS2.

Vittorio Bertocci (@vibronet) continued his Windows Identity Foundation Tools for Visual Studio 11 Part III: Connecting With a Business STS (e.g. ADFS2) series on 3/15/2012:

Welcome to the second walkthrough of the new WIF tools for Visual Studio 11 Beta! This is about using the tools to modify common settings of your app without editing the web.config.

The complete series includes Using the Local Development STS, manipulating common config settings, connecting with a business STS, and getting an F5 experience with ACS2.

Let’s say that you downloaded the new WIF tools (well done!) and you went through the first walkthrough, and you are itching to go deeper into the rabbit hole. Pronto, good Sir/Ma’am!

Let’s go back to the tool and take a look at the Configuration tab. What’s in there, exactly?

In V1 the tools operated in fire & forget fashion: they were a tool for establishing a trust relationship with a WS-Federation or WS-Trust STS, and every time you opened them it was expected that your intention was to create a new relationship (or override (most of) an existing one).

The WIF tools for .NET 4.5 aspire to be something more than that: when you re-open them, you’ll discover that they are aware of your current state and they allow you to tweak some key properties of your RP without having to actually get to the web.config itself.

The main settings you find here are:

- Realm and AudienceUri

The Realm and AudienceUri are automatically generated assuming local testing; however, before shipping your code to staging (or packaging it in a cspack) you’ll likely want to change those values. Those two fields help you do just that.

- Redirection Strategy

For most business app developers WIF’s default strategy of automatically redirecting unauthenticated requests to the trusted authority makes a lot of sense. In business settings it is very likely that the authentication operation will be silent, and the user will experience single sign on (e.g. they type the address of the app they want, next thing they see the app UI).

There are however situations in which the authentication experience is not transparent: maybe there is a home realm discovery experience, or there is an actual credentials gathering step. In that case, for certain apps or audiences the user could be disoriented (e.g. they type the address of the app they want, next thing they see the STS UI). In order to handle that, the tool UI offers the possibility of specifying a local page (or controller) which will take care of handling the authentication experience. You can see this in action in the ClaimsAwareMVCApplication sample.

- Flags: HTTPS, web farm cookies

The HTTPS flag is pretty self-explanatory: by default we don’t enforce HTTPS, given the assumption that we are operating in a dev environment; this flag lets you turn the mandatory HTTPS check on.

The web farm cookie flag needs a bit of background. In WIF 4.5 we have a new cookie transform based on MachineKey, which you can activate by simply pasting the appropriate snippet in the config. That’s what happens when you check this flag.

Those are of course the most basic settings: we picked them because of how often we observed people having to change them. Did we get them right? Let us know!

Don’t forget to check out the other walkthroughs: the complete series includes Using the Local Development STS, manipulating common config settings, connecting with a business STS, and getting an F5 experience with ACS2.

Vittorio Bertocci (@vibronet) started his Windows Identity Foundation Tools for Visual Studio 11 Part I: Using The Local Development STS series on 3/15/2012:

Welcome to the first walkthrough of the new WIF tools for Visual Studio 11 Beta! This is about using the local STS feature to test your application on your dev machine.

The complete series includes Using the Local Development STS, manipulating common config settings, connecting with a business STS, and getting an F5 experience with ACS2.

Let’s say that you downloaded the new WIF tools (well done!) and you are itching to see them in action. Right away, good Sir/Ma’am!

Fire up Visual Studio 11 as Administrator (I know, I know… I’ll explain later) and create a new ASP.NET Web Forms Application.

Right-click the project in Solution Explorer and you’ll find a very promising entry which sounds along the lines of “Identity and Access”. Go for it!

You get to a dialog which, in (hopefully) non-threatening terms, suggests that it can help you handle your authentication options.

The default tab, Providers, offers three options. The first option sounds pretty promising: we might not know what an STS exactly is, but we do want to test our application. Let’s pick that option and hit OK.

That’s it? There must be something else I have to do, right? Nope. Just hit F5 and witness the magic of claims-based identity unfold in front of your very eyes. (OK, this is getting out of hand. I’ll tone it down a little).

As you hit F5, keep an eye on the system tray: you’ll see a new icon appear, informing you that “Local STS” is now running.

Your browser opens on the default page, shows the usual signs of redirection, and lands on the page with an authenticated user named Terry. OK, that was simple! But what happened exactly?

Stop the debugger and go back to the Identity and Access dialog, then pick the Local Development STS tab.

The Local STS is a test endpoint, provided by the WIF tools, which can be used on the local machine for getting claims of arbitrary types and values in your application. By choosing “Use the local development STS to test your application” you told the WIF tools that you want your application to get tokens from the local STS, and the tools took care to configure your app accordingly. When you hit F5, the tools launched an instance of LocalSTS and your application redirected the request to it. LocalSTS does not attempt to authenticate requests, it just emits a token with the claim types and values it is configured to emit. In your F5 session you got the default claim types (name, surname, role, email) and values: if you want to modify those and add your own the Local Development STS tab offers you the means to do so, plus a handful of other knobs.

What does this all mean? Well, for one: you no longer need to rely on the kindness of strangers (i.e. your admins) to set up a test/staging ADFS2 endpoint to play with claim values; you also no longer need to create a custom STS and then modify directly the code in order to get the values you need to test your application.

Also: all the settings for the LocalSTS come from one file, LocalSTS.exe.config, which lives in your application’s folder. That means that you can create multiple copies of that file with different settings for your various test cases; you can even email values around for repro-ing problems and similar. We think it’s pretty cool.

Now: needless to say, this is absolutely for development-time and test-time only activities. This is absolutely not fit for production, in fact the F5 experience is enabled by various defaults which assume that you’ll be running this far, far away from production (“you don’t just walk in Production”). In v1 the tools kind of tried to enforce some production-level considerations, like HTTPS, and your loud & clear feedback is that at development time you don’t want to be forced to deal with those and that you’ll do it in your staging/production environment. We embraced that, please let us know how that works for you!

Don’t forget to check out the other walkthroughs: the complete series includes Using the Local Development STS, manipulating common config settings, connecting with a business STS, and getting an F5 experience with ACS2.

Vittorio Bertocci (@vibronet) announced Windows Identity Foundation in the .NET Framework 4.5 Beta: Tools, Samples, Claims Everywhere in a 3/15/2012 post:

The first version of Windows Identity Foundation was released in November 2009, in the form of an out-of-band package. There were many advantages in shipping out of band, the main one being that we made WIF available to both .NET 3.5 and 4.0, to SharePoint, and so on. The other side of the coin was that it complicated redistribution (when you use WIF in Windows Azure you need to remember to deploy WIF’s runtime with your app) and that it imposed a limit to how deep claims could be wedged in the platform. Well, things have changed. Read on for some announcements that will rock your world!

No More Moon in the Water

With .NET 4.5, WIF ceases to exist as a standalone deliverable. Its classes, formerly housed in the Microsoft.IdentityModel assembly & namespace, are now spread across the framework as appropriate. The trust channel classes and all the WCF-related entities moved to System.ServiceModel.Security; almost everything else moved under some sub-namespace of System.IdentityModel. A few things disappeared, some new classes showed up; but in the end this is largely the WIF you got to know in the last few years, just wedged deeper in the guts of the .NET framework. How deep?

Very deep indeed.

To get a feeling of it, consider this: in .NET 4.5 GenericPrincipal, WindowsPrincipal and RolePrincipal all derive from ClaimsPrincipal. That means that now you’ll always be able to use claims, regardless of how you authenticated the user!

In the future we are going to talk more at length about the differences between WIF1.0 and 4.5. Why did we start talking about this only now? Well, because unless your name is Dominic or Raf chances are that you will not brave the elements and wrestle with WS-Federation without some kind of tool to shield you from the raw complexity beneath. Which brings me to the first real announcement of the day.

Brand New Tools for Visual Studio 11

I am very proud to announce that today we are releasing the beta version of the WIF tooling for Visual Studio 11: you can get it from here, or directly from within Visual Studio 11 by searching for “identity” directly in the Extensions Manager.

The new tool is a complete rewrite, which delivers a dramatically simplified development-time experience. If you are interested in a more detailed brain dump on the thinking that went into this new version, come back in a few days and you’ll find a more detailed “behind the scenes” post. To give you an idea of the new capabilities, here are a few highlights:

- The tool comes with a test STS which runs on your local machine when you launch a debug session. No more need to create custom STS projects and tweak them in order to get the claims you need to test your apps! The claim types and values are fully customizable. Walkthrough here

- Modifying common settings directly from the tooling UI, without the need to edit the web.config. Walkthrough here

- Establish federation relationships with ADFS2 (or other WS-Federation providers) in a single screen. Walkthrough here

- My personal favorite. The tool leverages ACS capabilities to offer you a simple list of checkboxes for all the identity providers you want to use: Facebook, Google, Live ID, Yahoo!, any OpenID provider and any WS-Federation provider… just check the ones you want and hit OK, then F5; both your app and ACS will be automatically configured and your test application will just work. Walkthrough here

- No more preferential treatment for web sites. Now you can develop using web applications project types and target IIS express, the tools will gracefully follow.

- No more blanket protection-only authentication, now you can specify your local home realm discovery page/controller (or any other endpoint handling the auth experience within your app) and WIF will take care of configuring all unauthenticated requests to go there, instead of slamming you straight to the STS.

Lots of new capabilities, all the while trying to do everything with fewer steps and simpler things! Did we succeed? You guys let us know!

In V1 the tools lived in the SDK, which combined the tool itself and the samples. When venturing in Dev11land, we decided there was a better way to deliver things to you: read on!

New WIF Samples: The Great Unbundling

If you had the chance to read the recent work of Nicholas Carr, you’ll know he is especially interested in the idea of unbundling: in a (tiny) nutshell, traditional magazines and newspapers were sold as a single product containing a collection of different content pieces whereas the ‘net (as in the ‘verse) can offer individual articles, with important consequences on business models, filter bubble, epistemic closure and the like (good excerpt here).

You’ll be happy to know that this preamble is largely useless, I just wanted to tell you that instead of packing all the samples in a single ZIP you can now access each and every one of them as individual downloads, again either via browser (code gallery) or from Visual Studio 11’s extensions manager. The idea is that all samples should be easily discoverable, instead of being hidden inside an archive; also, the browse code feature is extremely useful when you just want to look up something without necessarily downloading the whole sample.

Also in this case we did our best to act on the feedback you gave us. The samples are fully redesigned, accompanied by exhaustive readmes and their code is thoroughly documented. Ah, and the look&feel does not induce that “FrontPage called from ‘96, it wants his theme back” feeling. While still very simple, as SDK samples should be, they attempt to capture tasks and mini-scenarios that relate to the real-life problems we have seen you using WIF for in the last couple of years.

- ClaimsAwareWebApp

This is the classic ASP.NET application (as opposed to web site), demonstrating basic use of authentication externalization (to the local test STS from the tools).

- ClaimsAwareWebService

Same as above, but with a classic WCF service.

- ClaimsAwareMvcApplication

This sample demonstrates how to integrate WIF with MVC, including some juicy bits like non-blanket protection and code which honors the forms authentication redirects out of the LogOn controller.

- ClaimsAwareWebFarm

This sample is an answer to the feedback we got from many of you guys: you wanted a sample showing a farm-ready session cache (as opposed to a tokenreplaycache) so that you can use sessions by reference instead of exchanging big cookies; and you asked for an easier way of securing cookies in a farm.

For the session cache we included a WCF-based one: we would have preferred something based on Windows Azure blob storage, but we are .NET 4.5 only hence for the time being we went the WCF service route.

For the session securing part: in WIF 4.5 we have a new cookie transform based on MachineKey, which you can activate by simply pasting the appropriate snippet in the config. The sample demonstrates this new capability. I think I can see Shawn in the audience smiling from ear to ear, or at least that’s what I hope.

Note: the sample itself is not “farmed”, but it demonstrates what you need for making your app farm-ready.

- ClaimsAwareFormsAuthentication

This very simple sample demonstrates that in .NET 4.5 you get claims in your principals regardless of how you authenticate your users. It is a simple sample, no alliteration intended, but it makes an important point. You can of course generalize the same concept for other authentication methods as well (extra points… ehhm.. claims, if you are in a Windows8 domain and you do Kerberos authentication).

- ClaimsBasedAuthorization

In this sample we show how to use your ClaimsAuthorizationManager class and the ClaimsAuthorizationModule for applying your own authorization policies. We decided to keep it simple and use basic conditions, but we are open to feedback!

- FederationMetadata

In this sample we demonstrate both dynamic generation (on a custom STS) and dynamic consumption (on a very trusty RP: apply this with care!) of metadata documents. As simple as that.

- CustomToken

Finally, we have one sample showing how to build a custom token type. Not that we anticipate many of you will need this all that often, but just in case…

We factored the sample in a way that shows you how you would likely consume an assembly with a custom token (and satellite types, like resolvers) from a claims issuer (custom STS) and consumer (in this case a classic ASP.NET app). Ah, and since we were at it, we picked a token type that might occasionally come in useful: it’s the Simple Web Token, or SWT.

Icing on the cake: if you download a sample from Visual Studio 11, you’ll automatically get the WIF tools. Many of the samples have a dependency on the new WIF tools, as every time we need a standard STS (e.g. we don’t need to show features that can be exercised only by creating a custom STS) we simply rely on the local STS.

The Crew!!!

Normally at this point I would encourage you to go out and play with the new toys we just released, but while I have your attention I would like to introduce you to the remarkable team that brought you all this, as captured at our scenic Redwest location:

…and of course there’s always somebody that does not show up at picture day, but I later chased them down and immortalized their effigies for posterity:

Thank you guys for an awesome, wild half year! Looking forward to fighting again at your side.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

• MSDN published the Microsoft patterns & practices Team’s Appendix C - Implementing Cross-Boundary Communication in March 2012 as part of its Building Hybrid Applications in the Cloud on Windows Azure guidance:

A key aspect of any solution that spans the on-premises infrastructure of an organization and the cloud concerns the way in which the elements that comprise the solution connect and communicate. A typical distributed application contains many parts running in a variety of locations, which must be able to interact in a safe and reliable manner. Although the individual components of a distributed solution typically run in a controlled environment, carefully managed and protected by the organizations responsible for hosting them, the network that joins these elements together commonly utilizes infrastructure, such as the Internet, that is outside of these organizations’ realms of responsibility.

Consequently the network is the weak link in many hybrid systems; performance is variable, connectivity between components is not guaranteed, and all communications must be carefully protected. Any distributed solution must be able to handle intermittent and unreliable communications while ensuring that all transmissions are subject to an appropriate level of security.

The Windows Azure™ technology platform provides technologies that address these concerns and help you to build reliable and safe solutions. This appendix describes these technologies.

On this page:

- Use Cases and Challenges

- Accessing On-Premises Resources From Outside the Organization

- Accessing On-Premises Services From Outside the Organization

- Implementing a Reliable Communications Channel across Boundaries

- Cross-Cutting Concerns

- Security

- Responsiveness

- Interoperability

- Windows Azure Technologies for Implementing Cross-Boundary Communication

- Accessing On-Premises Resources from Outside the Organization Using Windows Azure Connect

- Guidelines for Using Windows Azure Connect

- Windows Azure Connect Architecture and Security Model

- Limitations of Windows Azure Connect

- Accessing On-Premises Services from Outside the Organization Using Windows Azure Service Bus Relay

- Guidelines for Using Windows Azure Service Bus Relay

- Guidelines for Securing Windows Azure Service Bus Relay

- Guidelines for Naming Services in Windows Azure Service Bus Relay

- Selecting a Binding for a Service

- Windows Azure Service Bus Relay and Windows Azure Connect Compared

- Implementing a Reliable Communications Channel across Boundaries Using Service Bus Queues

- Service Bus Messages

- Guidelines for Using Service Bus Queues

- Guidelines for Sending and Receiving Messages Using Service Bus Queues

- Sending and Receiving Messages Asynchronously

- Scheduling, Expiring, and Deferring Messages

- Guidelines for Securing Service Bus Queues

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

• Bruno Terkaly (@brunoterkaly) began a new series with Node.js–The most popular modules and how they work-Part 1-A bakers dozen on 3/17/2012:

Starting with the Node Package Manager

I start this post by installing Node Package Manager. This is your gateway to Node nirvana. NPM will allow you to leverage thousands of lines of other people’s code to make your Node development efficient.

After installing NPM, we will download the library called underscore. This library has dozens of utility functions. I will walk you through about 12 of them. It will provide a quick background and allow you to start using underscore almost immediately in your code.

In a nutshell, you will learn:

Helps you install all modules from GitHub.

Windows or Mac – It is easy to install npm.exe (Node Package Manager)

Easy Install for the Node Package Manager: http://npmjs.org/dist/

Let’s run through the most popular packages

Here are the “most used” packages in Node

underscore

The command to install using the node package manager is:

npm install underscore

Purpose of underscore

To bring functional language capabilities to Node.js. There are tons of wrapper functions to help you.

What is a functional language anyway?

underscore01.js

The code below loops through the array of numbers [1, 2, 3]. For each number it adds the value to the module-level variable tot and increments count. Finally, it prints the average.

    // Include underscore library
    var _ = require('underscore')._;

    // Needs module scope to work
    var tot = 0;
    var count = 0;

    _.each([1, 2, 3], function (num) {
        // increment tot
        tot += num;
        count++;
    });

    console.log('The average is ' + tot / count);

underscore02.js

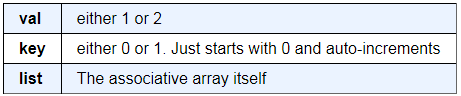

Uses map functions to build associative arrays. Notice the callback parameters

Note you can look up values in the map using syntax like this:

    list[key]

    // Include underscore library
    var _ = require('underscore')._;

    _.map([1, 2], function (val, key, list) {
        console.log('key is ' + key + ', ' + 'value is ' + val);
        console.log('list[' + key + '] = ' + list[key]);
    });

underscore03.js

Notice we provide the keys in this example. So if we say something like list['one'] we get the value of 1.

    // Include underscore library
    var _ = require('underscore')._;

    // Using a map with keys declared. Notice the {} instead of []
    _.map({ one: 1, two: 2 }, function (val, key, list) {
        console.log('key is ' + key + ', ' + 'value is ' + val);
        console.log('list[' + key + '] = ' + list[key]);
    });

underscore04.js – recursive techniques

I show two versions here. The first version uses the underscore library. The second version is hand written.

    // Include underscore library
    var _ = require('underscore')._;

    var sum = _.reduce([1, 2, 3, 4], function (memo, num) {
        console.log('memo is ' + memo + ' and num is ' + num +
            ' and total is ' + (memo + num));
        // Simple compounding function
        return memo + num;
    }, 0);

    console.log('sum is ' + sum);

    // You could build it by hand also
    var result = compound(4);
    console.log('hand written result is ' + result);

    // My handwritten version that is recursive
    function compound(aNumber) {
        if (aNumber < 0) {
            return 0;
        } else if (aNumber == 0) {
            return 0;
        } else {
            return (aNumber + compound(aNumber - 1));
        }
    }
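To make the role of memo more concrete, here is a minimal, dependency-free sketch of a left fold, which is roughly what a reduce helper does. The name reduceSketch is hypothetical and this is not Underscore's actual implementation; it only illustrates how the value returned by the callback is carried into the next step.

```javascript
// Hypothetical helper: a minimal left fold, not Underscore's own code.
function reduceSketch(list, iterator, memo) {
  for (var i = 0; i < list.length; i++) {
    // The value returned by the callback becomes the memo for the next element.
    memo = iterator(memo, list[i]);
  }
  return memo;
}

var sketchSum = reduceSketch([1, 2, 3, 4], function (memo, num) {
  return memo + num;
}, 0);

console.log('sketch sum is ' + sketchSum);
```

Starting from a memo of 0, the callback sees the running total on every call, so no module-level accumulator is needed.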

underscore05.js – using reject

Have you ever been rejected? Well, this code shows you how to reject a genre of music.

    // Include underscore library
    var _ = require('underscore')._;

    // The callback receives each element (named 'list' here) and its index
    // (named 'iterator' here); elements for which it returns true are dropped.
    var odds = _.reject(['80s music', '50s music', '90s music'], function (list, iterator) {
        console.log('list is ' + list + ', iterator is ' + iterator);
        return list == '80s music';
    });

    for (var i = 0; i < odds.length; i++) {
        console.log('odds[' + i + ']=' + odds[i]);
    }

underscore06.js

The function determines where in the list 35 fits in. It is the index at which the value would be inserted to keep the list sorted (3 in this example).

    // Include underscore library
    var _ = require('underscore')._;

    var lookupnumber = 35;
    var listOfNumbers = [10, 20, 30, 40, 50];
    var answer = _.sortedIndex(listOfNumbers, lookupnumber);

    for (var i = 0; i < listOfNumbers.length; i++) {
        console.log('listOfNumbers [' + i + ']=' + listOfNumbers[i]);
    }
    console.log('lookupnumber is ' + lookupnumber + ', answer is ' + answer);
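One plausible way to find such an insertion point is a binary search over the sorted list. The sketch below is dependency-free and uses a hypothetical name, sortedIndexSketch; it is an illustration of the idea, not a copy of Underscore's code.

```javascript
// Hypothetical helper: binary search for the insertion point in a sorted array.
function sortedIndexSketch(sortedList, value) {
  var low = 0;
  var high = sortedList.length;
  while (low < high) {
    var mid = (low + high) >> 1;   // midpoint, rounded down
    if (sortedList[mid] < value) {
      low = mid + 1;               // value belongs to the right of mid
    } else {
      high = mid;                  // value belongs at mid or to its left
    }
  }
  return low;
}

var insertAt = sortedIndexSketch([10, 20, 30, 40, 50], 35);
console.log('35 would be inserted at index ' + insertAt);
```

Because the search halves the range on every step, it only works if the list is already sorted.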

Javascript itself has a lot of built in power, but not as much

From ScriptPlanet.com you see some examples of Javascript primitives. These are awesome but there are just a few.

Array Functions

Underscore07.js – lets you blend arrays together

Lets you take the elements at the same position in each of 3 arrays and glue them together.

    // Include underscore library
    var _ = require('underscore')._;

    var stooges = ['moe', 'larry', 'curly'];
    var verb = ['has a violence level of ', 'is not violent', 'can be violent'];
    var violencelevel = ['very', 'not really', 'kind of'];

    var result = _.zip(stooges, verb, violencelevel);
    showArray(result);

    function showArray(myarray) {
        for (var i = 0; i < myarray.length; i++) {
            console.log('result [' + i + ']=' + myarray[i]);
        }
    }

Function Functions

These are helper functions. They can fix up ‘this’ pointers or help with timing when calling functions.

Underscore08.js – using _.bind()

    // Include underscore library
    var _ = require('underscore')._;

    var stooges = ['moe', 'larry', 'curly'];
    var verb = ['has a violence level of ', 'is not violent', 'can be violent'];
    var violencelevel = ['very', 'not really', 'kind of'];

    var result = _.zip(stooges, verb, violencelevel);

    var func = function showArray(myarray) {
        for (var i = 0; i < myarray.length; i++) {
            console.log(this.name + '[' + i + ']=' + myarray[i]);
        }
    };

    // This means that there will be a this.name property in the function
    // with the value of 'result'
    func = _.bind(func, { name: 'result' }, result);

    // Now call showArray()
    func();
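For comparison, the same idea can be tried without any library, using the built-in Function.prototype.bind. This is only a sketch: the function summarize and its data are made up for illustration.

```javascript
// Plain-JavaScript sketch using the built-in Function.prototype.bind.
function summarize(items) {
  // 'this' is whatever object the function was bound to.
  return this.name + ' has ' + items.length + ' items';
}

// Fix 'this' to { name: 'result' } and pre-fill the first argument.
var boundSummarize = summarize.bind({ name: 'result' }, ['moe', 'larry', 'curly']);

var description = boundSummarize();
console.log(description);
```

As in the Underscore version, the bound function needs no arguments at call time because both the context and the array were supplied when binding.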

underscore09.js

Lets you run a function after the call stack is free. Notice that ‘calling bigJob’ shows up immediately. That is because we deferred calling ‘bigJob.’

    // Include underscore library
    var _ = require('underscore')._;

    _.defer(function () {
        bigJob();
    });

    console.log('calling bigJob');

    function bigJob() {
        // Declare the loop counters so they do not leak into global scope
        var i, j;
        for (i = 0; i < 1000000; i++) {
            for (j = 0; j < 1000; j++) {
            }
        }
        console.log('bigJob has ended');
    }
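The ordering can be reproduced with nothing but the built-in setTimeout. The sketch below assumes that deferring behaves like a timer with a minimal delay; deferSketch and the events array are hypothetical names used only for this illustration.

```javascript
// Sketch of deferring work with the built-in setTimeout.
var events = [];

function deferSketch(fn) {
  // A zero-delay timer runs fn only after the current call stack has unwound.
  return setTimeout(fn, 0);
}

deferSketch(function () {
  events.push('deferred job ran');
  console.log(events.join(' -> '));
});

// This line runs first, even though it appears after the deferSketch call.
events.push('scheduled the job');
```

The deferred callback cannot run until the script's synchronous code has finished, which is why the "scheduled" entry is always recorded first.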

underscore10.js – using the throttle function

Can’t get it to run less than twice.

    // Include underscore library
    var _ = require('underscore')._;

    var throttled = _.throttle(bigJob, 1000);

    // Will execute only twice
    // Can't get it to run once only.
    throttled();
    throttled();
    throttled();
    throttled();
    throttled();

    function bigJob() {
        // Declare the loop counters so they do not leak into global scope
        var i, j;
        for (i = 0; i < 1000000; i++) {
            for (j = 0; j < 1000; j++) {
            }
        }
        console.log('bigJob has ended');
    }
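Running twice is what you would expect from a throttle that fires once on the first call and once more at the end of the wait period if further calls arrived in the meantime. Assuming the Underscore version used here behaves that way, the dependency-free sketch below shows the mechanism. throttleSketch is a hypothetical name, and this is not Underscore's actual code.

```javascript
// Hypothetical leading-plus-trailing throttle; not Underscore's actual code.
function throttleSketch(fn, wait) {
  var timer = null;     // non-null while a wait window is open
  var pending = false;  // true if calls arrived during the window
  return function () {
    if (timer === null) {
      fn();                          // leading call runs immediately
      timer = setTimeout(function () {
        timer = null;
        if (pending) {
          pending = false;
          fn();                      // one trailing call for the whole burst
        }
      }, wait);
    } else {
      pending = true;                // remember the burst, but do not run yet
    }
  };
}

var runCount = 0;
var throttledSketch = throttleSketch(function () {
  runCount++;
  console.log('job ran, runCount is ' + runCount);
}, 50);

// Five rapid calls: one runs now, one runs about 50 ms later.
for (var n = 0; n < 5; n++) {
  throttledSketch();
}
```

With this design a single call runs the job once, while any burst of two or more calls within the window runs it exactly twice.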

underscore11.js

Will execute only once.

    // Include underscore library
    var _ = require('underscore')._;

    var justonce = _.once(bigJob);

    // Will execute only once
    justonce();
    justonce();
    justonce();
    justonce();
    justonce();

    function bigJob() {
        // Declare the loop counters so they do not leak into global scope
        var i, j;
        for (i = 0; i < 1000000; i++) {
            for (j = 0; j < 10; j++) {
            }
        }
        console.log('bigJob has ended');
    }

underscore12.js

Enumerating properties and values.

    // Include underscore library
    var _ = require('underscore')._;

    var customobject = {
        hottest_pepper: 'habanero',
        best_sauce: 'putanesca',
        least_healthy: 'alfredo'
    };

    var properties = _.keys(customobject);
    var values = _.values(customobject);

    console.log('');
    console.log('Displaying properties');
    showArray(properties);

    console.log('Displaying values');
    showArray(values);

    function showArray(myarray) {
        for (var i = 0; i < myarray.length; i++) {
            console.log(myarray[i]);
        }
        console.log('');
    }
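If you only need this on a plain object, the built-in Object.keys gets you most of the way. A small sketch follows; the object and variable names are made up for illustration.

```javascript
// Enumerate property names with the built-in Object.keys,
// then look up each value by its key.
var pasta = { hottest_pepper: 'habanero', best_sauce: 'putanesca' };

var propertyNames = Object.keys(pasta);
var propertyValues = propertyNames.map(function (key) {
  return pasta[key];
});

console.log('properties: ' + propertyNames.join(', '));
console.log('values: ' + propertyValues.join(', '));
```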

Wrap up for now

The next post will continue with some new modules. I recommend doing what I did. I tweaked and combined different examples to learn better how they work together. I plan to post a few more samples on the other modules soon. As always, life is busy. Hope this gets you one step closer to using Node effectively.

• The Microsoft All-In-One Code Gallery posted Retry Azure Cache Operations (CSAzureRetryCache) on 3/11/2012 (missed when published):

This sample implements retry logic to protect the application from crashing in the event of transient errors in Windows Azure. This sample uses Transient Fault Handling Application Block to implement retry mechanism. …

Introduction

When using cloud-based services, it is very common to receive exceptions similar to the one below while performing cache operations such as get and put. These are called transient errors.

Developers are required to implement retry logic to complete their cache operations successfully.

ErrorCode<ERRCA0017>:SubStatus<ES0006>:There is a temporary failure. Please retry later. (One or more specified cache servers are unavailable, which could be caused by busy network or servers. For on-premises cache clusters, also verify the following conditions. Ensure that security permission has been granted for this client account, and check that the AppFabric Caching Service is allowed through the firewall on all cache hosts. Also the MaxBufferSize on the server must be greater than or equal to the serialized object size sent from the client.)

This sample implements retry logic to protect the application from crashing in the event of transient errors. It uses the Transient Fault Handling Application Block to implement the retry mechanism.

Building the Sample

1) Ensure Windows Azure SDK 1.6 is installed on the machine. Download Link

2) Modify the highlighted cachenamespace and authorizationInfo attributes under the DataCacheClient section of web.config, providing the values of your own cache namespace and authentication token. Steps to obtain the authentication token and cache namespace values can be found here


    <dataCacheClients>
      <dataCacheClient name="default">
        <hosts>
          <host name="skyappcache.cache.windows.net" cachePort="22233" />
        </hosts>
        <securityProperties mode="Message">
          <messageSecurity authorizationInfo="YWNzOmh0dHBzOi8vbXZwY2FjaGUtY2FjaGUuYWNjZXNzY29udHJvbC53aW5kb3dzLm5ldC9XUkFQdjAuOS8mb3duZXImOWRINnZQeWhhaFMrYXp1VnF0Y1RDY1NGNzgxdGpheEpNdzg0d1ZXN2FhWT0maHR0cDovL212cGNhY2hlLmNhY2hlLndpbmRvd3MubmV0">
          </messageSecurity>
        </securityProperties>
      </dataCacheClient>
    </dataCacheClients>

Running the Sample

- Open the Project in VS 2010 and run it in debug or release mode

- Click on “Add To Cache” button to add a string object to Azure cache. Upon successful operation, “String object added to cache!” message will be printed on the webpage

- Click on “Read From Cache” button to read the string object from Azure Cache. Upon successful operation, the value of the string object stored in Azure cache will be printed on the webpage. By default it will be “My Cache” (if no changes are made to the code)

Using the Code

1. Define required objects globally, so that they are available for all code paths within the module.

    // Define DataCache object
    DataCache cache;

    // Global variable for retry strategy
    FixedInterval retryStrategy;

    // Global variable for retry policy
    RetryPolicy retryPolicy;

2. This method configures strategies, policies, actions required for performing retries.

    /// <summary>
    /// This method configures strategies, policies, actions required for performing retries.
    /// </summary>
    protected void SetupRetryPolicy()
    {
        // Define your retry strategy: in this sample, I'm retrying operation 3 times with 1 second interval
        retryStrategy = new FixedInterval(3, TimeSpan.FromSeconds(1));

        // Define your retry policy here. This sample uses CacheTransientErrorDetectionStrategy
        retryPolicy = new RetryPolicy<CacheTransientErrorDetectionStrategy>(retryStrategy);

        // Get notifications from retries from Transient Fault Handling Application Block code
        retryPolicy.Retrying += (sender1, args) =>
        {
            // Log details of the retry.
            var msg = String.Format("Retry - Count:{0}, Delay:{1}, Exception:{2}",
                args.CurrentRetryCount, args.Delay, args.LastException);

            // Logging the notification details to the application trace.
            Trace.Write(msg);
        };
    }

3. Create the Cache Object using the DataCacheClient configuration specified in web.config and perform initial setup required for Azure cache retries

    protected void Page_Load(object sender, EventArgs e)
    {
        // Configure retry policies, strategies, actions
        SetupRetryPolicy();

        // Create cache object using the cache settings specified web.config
        cache = CacheUtil.GetCacheConfig();
    }

4. Add string object to Cache on a button click event and perform retries in case of transient failures

    protected void btnAddToCache_Click(object sender, EventArgs e)
    {
        try
        {
            // In order to use the retry policies, strategies defined in Transient Fault Handling
            // Application Block, user calls to cache must be wrapped within ExecuteAction delegate
            retryPolicy.ExecuteAction(
                () =>
                {
                    // I'm just storing simple string object here .. Assuming this call fails,
                    // this sample retries the same call 3 times with 1 second interval before it gives up.
                    cache.Put("MyDataSet", "My Cache");
                    Response.Write("String object added to cache!");
                });
        }
        catch (DataCacheException dc)
        {
            // Exception occurred after implementing the Retry logic.
            // Ideally you should log the exception to your application logs and show user friendly
            // error message on the webpage.
            Trace.Write(dc.GetType().ToString() + dc.Message.ToString() + dc.StackTrace.ToString());
        }
    }

5. Read the value of string object stored in Azure Cache on a button click event and perform retries in case of transient failures

    protected void btnReadFromCache_Click(object sender, EventArgs e)
    {
        try
        {
            // In order to use the retry policies, strategies defined in Transient Fault
            // Handling Application Block, user calls to cache must be wrapped within
            // ExecuteAction delegate.
            retryPolicy.ExecuteAction(
                () =>
                {
                    // Getting the object from azure cache and printing it on the page.
                    Response.Write(cache.Get("MyDataSet"));
                });
        }
        catch (DataCacheException dc)
        {
            // Exception occurred after implementing the Retry logic.
            // Ideally you should log the exception to your application logs and show user
            // friendly error message on the webpage.
            Trace.Write(dc.GetType().ToString() + dc.Message.ToString() + dc.StackTrace.ToString());
        }
    }

More Information

Refer to the blog entry below for more information: http://blogs.msdn.com/b/narahari/archive/2011/12/29/implementing-retry-logic-for-azure-caching-applications-made-easy.aspx
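For readers who want to see what a fixed-interval retry amounts to without the Enterprise Library dependency, here is a minimal, self-contained sketch. The `FixedIntervalRetry` class, its `Execute` method and the `isTransient` callback are hypothetical names invented for this illustration; they are not part of the Transient Fault Handling Application Block, and the sketch makes no claim to match the block's internal behavior.

```csharp
using System;
using System.Threading;

// Hypothetical helper for illustration only; not the Application Block's API.
public static class FixedIntervalRetry
{
    // Runs 'action' once. If it throws an exception that 'isTransient' accepts,
    // waits 'interval' and tries again, for at most 'retryCount' retries.
    // Any other exception, or a failure after the last retry, propagates.
    public static void Execute(
        Action action,
        int retryCount,
        TimeSpan interval,
        Func<Exception, bool> isTransient,
        Action<int, Exception> onRetry = null)
    {
        for (int attempt = 0; ; attempt++)
        {
            try
            {
                action();
                return; // success, stop retrying
            }
            catch (Exception ex) when (attempt < retryCount && isTransient(ex))
            {
                // Report the retry number (1-based) and the failure that caused it.
                onRetry?.Invoke(attempt + 1, ex);
                Thread.Sleep(interval);
            }
        }
    }
}
```

A caller would pass the cache operation as `action`, a predicate that recognizes transient cache exceptions as `isTransient`, and optionally a logging callback as `onRetry`, mirroring the roles of `ExecuteAction`, the detection strategy and the `Retrying` event in the sample above.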

Bruce Kyle recommended that you Harness [the] Power of Windows Azure in Your Windows 8 Metro App in a 3/16/2012 post:

Windows Azure Toolkit for Windows 8 helps you create Windows 8 Metro Style applications that can harness the power of Windows Azure. The idea is to connect your Windows application to data in the cloud.

The toolkit makes it easy to send Push Notifications to your Windows 8 client apps from Windows Azure via the Windows Push Notification Service. A video demonstration shows you how, all in about 4 minutes. See How to Send Push Notifications using the Windows Push Notification Service and Windows Azure on Channel 9.

Windows 8 recently reached the Consumer Preview milestone. ISVs are downloading the preview, and developers are eagerly building and testing new Metro Style app experiences and sketching out what unique Windows 8 Metro Style apps can add to their portfolios.

The new version of the Windows Azure Toolkit for Windows 8 helps Azure applications reach Windows 8 and helps Windows developers leverage the power of Azure. In just minutes, developers can download working code samples that use Windows Azure and the Windows Push Notification Service to send Toast, Tile and Badge notifications to a Windows 8 Metro Style application.
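To give a feel for what a Toast notification looks like on the wire, the sketch below builds the XML body that the Windows Push Notification Service accepts for a simple one-line toast. It does not use the toolkit's NuGet packages, whose API is not shown in this post; the `ToastPayload` class and its members are hypothetical names for this illustration, and the actual HTTP POST to the channel URI (with an OAuth bearer token) is deliberately left out.

```csharp
using System;
using System.Xml.Linq;

// Hypothetical helper for illustration; not part of the Windows Azure Toolkit for Windows 8.
public static class ToastPayload
{
    // WNS expects this header on the request so it knows the notification type.
    public const string WnsTypeHeaderName = "X-WNS-Type";
    public const string WnsTypeHeaderValue = "wns/toast";

    // Builds the body for a single-line text toast using the ToastText01 template.
    // XElement takes care of escaping characters such as '&' and '<' in the message.
    public static string Build(string message)
    {
        if (message == null) throw new ArgumentNullException(nameof(message));

        var toast = new XElement("toast",
            new XElement("visual",
                new XElement("binding",
                    new XAttribute("template", "ToastText01"),
                    new XElement("text", new XAttribute("id", "1"), message))));

        return toast.ToString(SaveOptions.DisableFormatting);
    }
}
```

The resulting string would be sent as the request body with a `text/xml` content type to the channel URI that the client app registered with the cloud service.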

The core features of the toolkit include:

- Automated Install that scripts all dependencies.

- Project Templates, such as Windows 8 Metro Style app project templates, in both XAML/C# and HTML5/JavaScript with a supporting C# Windows Azure Project for Visual Studio 2010.

- NuGet Packages for Push Notifications and the Sample ACS scenarios. You can find the packages here and full source in the toolkit under /Libraries.

- Five App Samples that demonstrate ways Windows 8 Metro Style apps can use Azure-based ACS and Push Notifications

- Documentation – Extensive documentation including install, file new project walkthrough, samples and deployment to Windows Azure.

Getting Started with Windows 8

For more information about how you can get started on Windows 8, see Windows 8 Dev Center.

The toolkit would be much more useful if Windows Azure tools for the Visual Studio 11 Beta were available with it.


Visual Studio LightSwitch and Entity Framework 4.1+

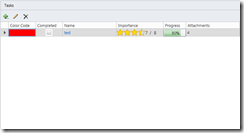

• Kostas Christodoulou announced availability of a CLASS Extensions Version 2 Demo for LightSwitch:

After a long time of preparation, my company Computer Life and [I] are ready to publish the next (commercial) version of CLASS Extensions.

In this SkyDrive location you can find 2 videos briefly presenting the capabilities of this new version. In less than a week a page will be started here, where you will be able to buy the package you prefer. There will be 2 packages: one without source code and one with source code and code documentation. Pricing will be announced along with the official release.

CLASS Extensions Demo Part I.swf

CLASS Extensions Demo Part II.swf

In these videos you will find a brief demonstration of the following controls and/or business types:

- Color Business Type and Control. This was also available in the first free version. It has been improved to automatically detect whether it is in a grid or a detail view and whether it is read-only.

- BooleanCheckbox and BooleanViewer controls. These were also available in the first version and are unchanged.

- Rating Business Type and Control. A new business type to support ratings. You can set maximum rating, star size, show/hide stars and/or labels, allow/disallow half stars.

- Image Uri Business Type and Control. A new business type to support displaying images from a URI location instead of binary data.

- Audio Uri Business Type and Control. A new business type to support playing audio files from a URI location.

- Video Uri Business Type and Control. A new business type to support playing video files from a URI location.

- Drawing Business Type and Control. A new business type that allows the user to create drawings using an image URI (not binary) as background. The drawing can be saved either as a property of an entity (the underlying type is string) or in the IsolatedStorage of the application. Also, every time the drawing is saved a PNG snapshot of the drawing is available and can be used any way you want, with 2 (two) lines of additional code. The drawings, whether local or provided by the data service, can be retrieved at any time, modified and saved back again.

- FileServer Datasource Extension. A very important RIA Service data source provided to smoothly integrate all the URI-based features. This data source exports two entity types: ServerFile and ServerFileType. It also provides methods to retrieve ServerFile entities representing the content files stored on your application server (Image, Audio, Video and Document are the ServerFileTypes supported in the current version). You can also delete ServerFile entities. Upload (insert/update) is not implemented in this version because it has to be done differently depending on whether your application is running out of browser or not.

Last but not least, there is a list of people whose code and ideas helped me a lot in the implementation of this version. Upon official release they will be properly credited, as community is priceless.

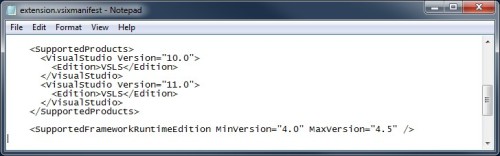

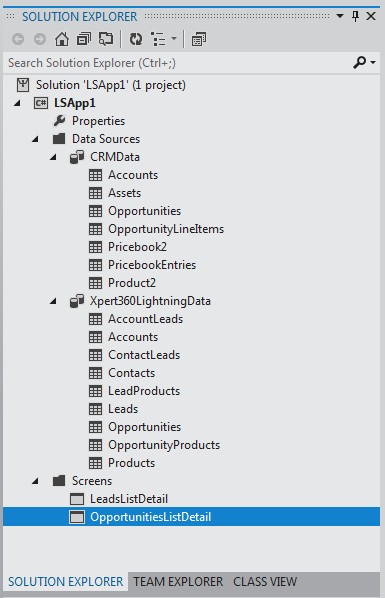

• Xpert360 described Working with SalesForce and Dynamics CRM data in Visual Studio 11 Beta in a 3/6/2012 post (missed when published):

Microsoft Visual Studio 11 beta and Windows 8 Consumer Previews were made available on Feb 29th 2012. They will be valid through to June 2012.

The Xpert360 development team took the beta for a spin with the latest versions of the Xpert360 Lightning Series product builds: WCF RIA Services data source extensions for LightSwitch and .NET 4 that connect to salesforce and Dynamics CRM Online instances.

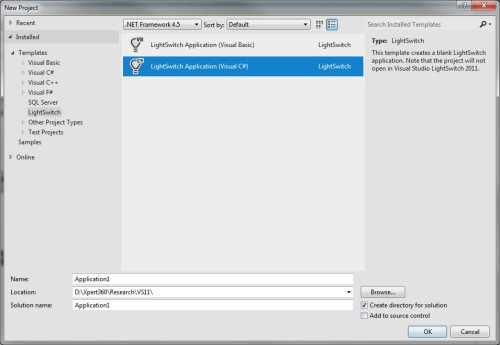

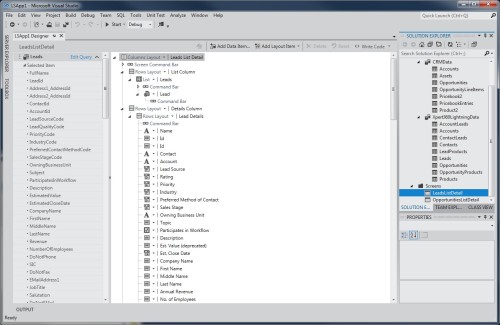

VS11 Beta Premium Applying: LightSwitch Beta Core

After the VS11 install a quick rebuild of the data extensions VSIX in VS2010 pulled in the latest software versioning as shown below:

Clip for LightSwitch Data Extension vsixmanifest

The new VSIX files now prompt for the version of Visual Studio if it is not already installed. Within ten minutes of the VS11 install we were building our first Visual Studio LightSwitch 11 application to interact with our CRM test systems.

LightSwitch project templates in VS11 Beta

Then we create new data connections with the Xpert360 Lightning Data Extensions.

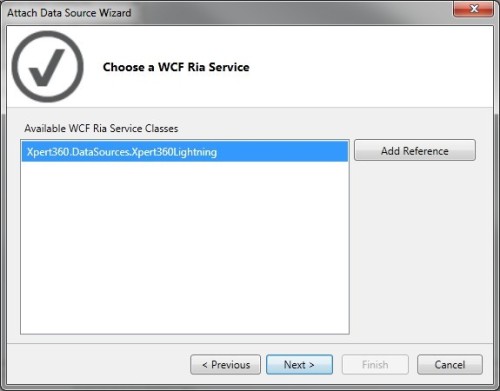

LightSwitch designer - Choose a WCF Ria Service!

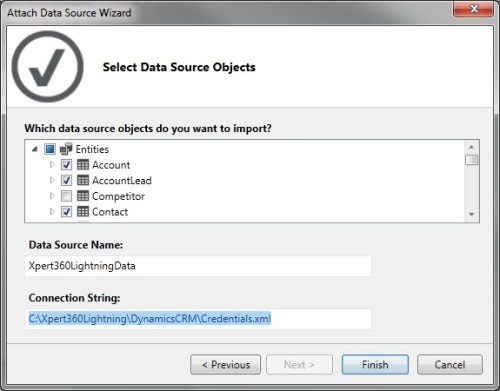

… and we move on and choose some of the CRM entities exposed by the service.

LightSwitch designer - Select Data Source Objects from Dynamics CRM Online

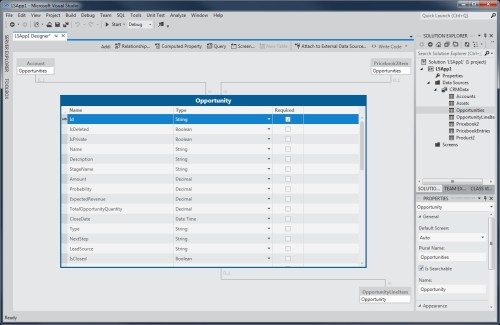

The chosen entities appear against the data source in the LightSwitch designer and can be explored and manipulated as usual. Notice the automatically available entity relationships between the salesforce entities.

LightSwitch designer - SalesForce Opportunity Entity with chosen subset of relationships

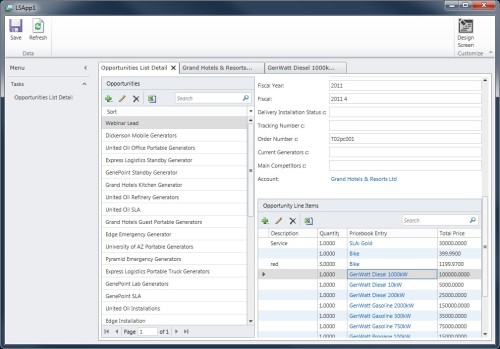

A few more clicks to build a list detail screen…

LightSwitch designer - Xpert360 Lightning Data Extensions

Ten minutes later we have our first CRM data from salesforce and Dynamics CRM Online.

LightSwitch - SalesForce Opportunities List Details at your disposal

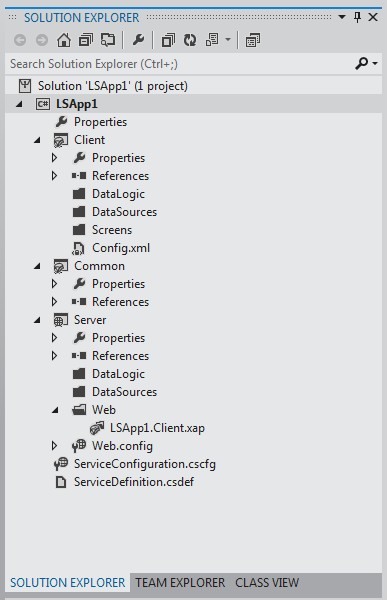

Looking around in the VS11 IDE, the default theme has faded to grey; perhaps it's vying for an Oscar with “The Artist”. Here are the two LightSwitch project views available in Solution Explorer:

LightSwitch designer - Logical and File views

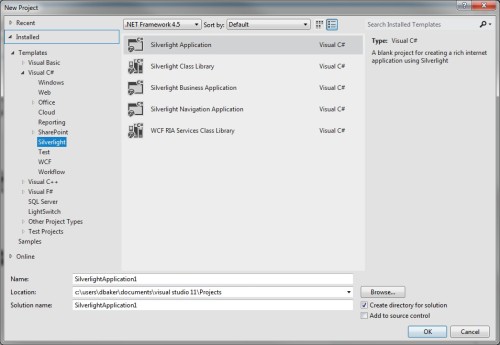

If you are embarking on custom UI controls with Silverlight 5 then here are the available project templates in VS11:

VS11 Beta Silverlight project templates

We are also eagerly awaiting the VS11 LightSwitch Extensibility Toolkit which has been indicated to be ready in a few weeks by Beth Massi:

The Xpert360 Lightning Series data extensions will unleash the true power of LightSwitch onto your salesforce CRM and Dynamics CRM Online data very soon. They are currently undergoing private beta testing, which will now be extended to include the VS11 Beta, as this platform has a go-live license.

If you somehow missed all the announcements, here are the links:

- Announcing LightSwitch in Visual Studio 11 Beta! – LS Team blog

- Welcome to the Beta of VS11 and .NET 4.5 – Jason Zander's Weblog

- Visual Studio 11 Beta downloads – Microsoft Visual Studio

Any feedback or enquiries are welcome at info@xpert360.com.

For more details about Xpert360 Lightning Series extensions in VS 2010, see Xpert360’s Using SalesForce and Dynamics CRM with Visual Studio LightSwitch post of 3/1/2012.

Matt Thalman described Using the SecurityData service in LightSwitch in a 3/15/2012 post:

In this blog post, I’m going to describe how LightSwitch developers can programmatically access the security data contained in a LightSwitch application. Having access to this data is useful for any number of reasons. For example, you can use this API to programmatically add new users to the application.

This data is exposed in code as entities, just like your own custom data, so it’s an easy and familiar API to work with. It’s available from both client and server code.

First I’d like to describe the security data model. Here’s a UML diagram describing the service, entities, and supporting classes:

SecurityData

This is the data service class that provides access to the security entities as well as a few security-related methods. It is available from your DataWorkspace object via its SecurityData property.

SecurityData is a LightSwitch data service and behaves in the same way as the data service that gets generated when you add a data source to LightSwitch. It exposes the same type of query and save methods. It just operates on the built-in security entities instead of your entities.

Some important notes regarding having access to your application’s security data:

In a running LightSwitch application, users who do not have the SecurityAdministration permission are only allowed to read security data; they cannot insert, update, or delete it. In addition, those users are only able to read security data that is relevant to themselves. So if a user named Bob, who does not have the SecurityAdministration permission, queries the UserRegistrations entity set, he will only see a UserRegistration with the name of Bob. He will not be able to see that there also exists a UserRegistration with the name of Kim, since he is not logged in as Kim. Similarly with roles: if Bob queries the Roles entity set, he can see that a role named SalesPerson exists because he is assigned to that role, but he cannot see that a role named Manager exists because he is not assigned to that role.
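The read rule in the Bob and Kim example can be modeled with a few lines of plain C#. This is only a sketch of the visibility rule as described in the post, not LightSwitch code: `DemoUser` and `SecurityVisibility` are hypothetical stand-ins for the real security entities, and the real filtering is performed by the SecurityData service itself rather than by application code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a registered user; not a LightSwitch type.
public sealed class DemoUser
{
    public string UserName { get; }
    public bool IsSecurityAdmin { get; }   // models the SecurityAdministration permission
    public IReadOnlyList<string> Roles { get; }

    public DemoUser(string userName, bool isSecurityAdmin, params string[] roles)
    {
        UserName = userName;
        IsSecurityAdmin = isSecurityAdmin;
        Roles = roles;
    }
}

// Hypothetical model of the read rule described above.
public static class SecurityVisibility
{
    // A security administrator sees every registration; anyone else sees only their own.
    public static IEnumerable<string> VisibleUserNames(DemoUser caller, IEnumerable<DemoUser> all) =>
        caller.IsSecurityAdmin
            ? all.Select(u => u.UserName)
            : all.Where(u => u.UserName == caller.UserName).Select(u => u.UserName);

    // A security administrator sees every role; anyone else sees only roles assigned to them.
    public static IEnumerable<string> VisibleRoles(DemoUser caller, IEnumerable<string> allRoles) =>
        caller.IsSecurityAdmin
            ? allRoles
            : allRoles.Where(r => caller.Roles.Contains(r));
}
```

With Bob modeled as a non-administrator in the SalesPerson role, querying the user list yields only Bob and querying the role list yields only SalesPerson, which matches the behavior the post describes.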

Users who do have the SecurityAdministration permission are not restricted in their access to security data. They are able to read all stored security data and have the ability to modify it.

In addition to entity sets, SecurityData also exposes a few useful methods:

- ChangePassword

This method allows a user to change their own password. They need to supply their old password in order to do so. If the oldPassword parameter doesn’t match the current password or the new password doesn’t conform to the password requirements, an exception will be thrown. This method is only relevant when Forms authentication is being used. This method can be called by any user; they do not require the SecurityAdministration permission.

- GetWindowsUserInfo