Analyzing Air Carrier Arrival Delays with Microsoft Codename “Cloud Numerics”

Table of Contents

- Introduction

- The FAA On_Time_Performance Database’s Schema and Size

- The Architecture of Microsoft Codename “Cloud Numerics”

- The Microsoft Codename “Cloud Numerics” Sample Solution for Analyzing Air Carrier Arrival Delays

- Prerequisites for the Sample Solution and Their Installation

- Creating the OnTimePerformance Solution from the Microsoft Cloud Numerics Application Template

- Configuring the AirCarrierOnTimeStats Solution

- Deploying the Cluster to Windows Azure and Processing the MSCloudNumericsApp.exe Job

- Interpreting FlightDataResult.csv’s Data

•• Updated 3/26/2012 12:40 PM PDT with two added graphics, clarification of the process for replacing storage account placeholders in the MSCloudNumericsApp project with actual values, a link to The “Cloud Numerics” Programming and runtime execution model documentation, and The Architecture of Microsoft Codename “Cloud Numerics” section.

• Updated 3/21/2012 9:00 AM PDT with an added Prerequisites for the Sample Solution and Their Installation section.

Introduction

The U.S. Bureau of Transportation Statistics (BTS) also publishes summaries of on-time performance, such as the Percent of Flights On Time (2011-2012) chart highlighted here:

Clicking one of the bars displays flight delay details for the past 10 years by default:

You can filter the data by airport, carrier, month, and arrival or departure delays. Many travel-related Web sites and some airlines use the TranStats data for consumer-oriented reports.

Return to Table of Contents

The FAA On_Time_Performance Database’s Schema and Size

The database file for January 2012, which was the latest available when this post was written, has 486,133 rows of 83 columns, only a few of which are of interest for analyzing on-time performance:

The ZIP file includes a Readme.html file with a record layout (schema) containing field names and descriptions.

The extracted CSV file for January 2012 is 213,455 KB (about 213 MB), so a year’s data would have about 5.8 million rows and occupy about 2.5 GB, which borders on qualifying for Big Data status.
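The yearly estimate is a simple extrapolation from the January 2012 file. The following Python sketch reproduces it, assuming the file size is 213,455 KB (the reading that is consistent with the 2.5 GB yearly figure) and that every month is about the same size as January:

```python
# Figures quoted in the text for the January 2012 file.
ROWS_PER_MONTH = 486_133
KB_PER_MONTH = 213_455  # assumption: extracted CSV size in KB


def yearly_estimate(rows_per_month, kb_per_month, months=12):
    """Extrapolate one month's row count and size to a number of months."""
    rows = rows_per_month * months
    gigabytes = kb_per_month * months / 1_000_000  # decimal GB
    return rows, gigabytes


rows_per_year, gb_per_year = yearly_estimate(ROWS_PER_MONTH, KB_PER_MONTH)
print(f"{rows_per_year:,} rows per year")
print(f"{gb_per_year:.2f} GB per year")
```

The result is roughly 5.8 million rows and a little over 2.5 GB, in line with the estimate above.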

Applying a filter to display flights with departure delays >0 shows that 151,762 (31.2%) of the 486,133 flights for the month suffered departure delays of 1 minute or more:

You’ll notice that many flights with departure delays had no arrival delays, which means that the flight beat its scheduled duration. Arrival delays are of more concern to passengers so a filter on arrival delays >0 (149,036 flights, 30.7%) is more appropriate:
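The same two filters can be applied outside Excel. The sketch below is a minimal illustration in Python, not part of the sample solution; the five-row extract and the `DepDelay` and `ArrDelay` column names are hypothetical stand-ins for the real file's layout, which is documented in Readme.html.

```python
import csv
import io

# Hypothetical five-row extract; column names are assumptions, not copied
# from the actual BTS file.
SAMPLE = """DepDelay,ArrDelay
12,-3
0,7
45,40
-2,5
,
"""


def delayed_share(rows, column):
    """Return (count, percent) of rows whose delay in `column` exceeds 0 minutes."""
    delayed = sum(1 for r in rows if r[column] and float(r[column]) > 0)
    return delayed, 100.0 * delayed / len(rows)


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
print(delayed_share(rows, "DepDelay"))
print(delayed_share(rows, "ArrDelay"))

# The percentages quoted in the text follow from the same ratio:
print(round(100 * 151_762 / 486_133, 1), round(100 * 149_036 / 486_133, 1))
```

The first sample row shows the case described above: a flight that left 12 minutes late but arrived 3 minutes early, so it counts as a departure delay but not as an arrival delay.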

Return to Table of Contents

The Architecture of Microsoft Codename “Cloud Numerics”

•• Microsoft Codename “Cloud Numerics” is an SQL Azure Labs incubator project for numerical and data analysis by “data scientists, quantitative analysts, and others who write C# applications in Visual Studio. It enables these applications to be scaled out, deployed, and run on Windows Azure” with high-performance computing (HPC) techniques for parallel processing of distributed data arrays.

Codename “Cloud Numerics” Components

The following diagram (from the team’s downloadable Cloud_Numerics_WP_Architecture.pdf and online The “Cloud Numerics” Programming and runtime execution model documents) describes the relationship of “Cloud Numerics” major components:

A downloadable CloudNumericsLab.chm v0.1.1 help file describes members of the Microsoft.Numerics namespaces and the NumericsRuntime Class:

Codename “Cloud Numerics” Deployment

Uploading and deploying the .NET components created locally with the Cloud Numerics Template for Visual Studio 2010 creates a Windows Azure High Performance Computing Cluster with two or more Compute Nodes:

The user configures and uploads a Windows Azure FrontEnd Web Role, as well as HeadNode and two or more ComputeNode Worker roles to a Windows Azure account. Each ComputeNode incorporates the user application (MSCloudNumericsApp for this example), CloudNumerics Runtime, CloudNumerics Libraries and System Libraries. Deploying these roles creates a Windows Azure High Performance Computing cluster, which computes the results in parallel and reassembles them when done. The FrontEnd Web Role implements the interactive Windows Azure HPC Scheduler, which is described later in this post.

For the AirCarrierOnTimeStats solution, users obtain the results of the computation by downloading a Windows Azure blob containing a flightdataresult.csv file and opening the file in Excel.

Codename “Cloud Numerics” v0.1 Capabilities

The current “Cloud Numerics” version is limited to arrays that fit in the main memory of a Windows Server 2008 R2 cluster. As the “Cloud Numerics” Team observes, disk-based data can be pre-processed by existing “big data” processing tools [such as Hadoop/MapReduce] and ingested into a “Cloud Numerics” application for further processing.

The “Cloud Numerics” Team included the source code for four sample solutions with the initial Community Technical Preview of 1/10/2012:

- Default Random Values (for testing prerequisites and configuration on the development machine)

- Latent Semantic Indexing

- Statistics

- Time Series

The OakLeaf blog has two earlier posts about Codename “Cloud Numerics” sample solutions 1 and 2:

- OakLeaf’s Introducing Microsoft Codename “Cloud Numerics” from SQL Azure Labs post of 1/22/2012 (updated 3/17/2012) describes creating the default sample application with the Microsoft Cloud Numerics Application template for Visual Studio 2010 and running it in the Windows Azure Development Fabric and Storage Emulators on your local development computer. This sample uses a matrix of internally generated random numbers as its data.

- The Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters post of 1/28/2012 describes deploying a slightly more complex Latent Semantic Indexing sample application to hosted compute clusters and storage services in Windows Azure.

The Microsoft Codename “Cloud Numerics” Sample Solution for Analyzing Air Carrier Arrival Delays

Roope Astala of the Codename “Cloud Numerics” Team described a “Cloud Numerics” Example: Analyzing Air Traffic “On-Time” Data in a 3/8/2012 post to the team’s blog:

You sit at the airport only to witness your departure time get delayed. You wait. Your flight gets delayed again, and you wonder “what’s happening?” Can you predict how long it will take to arrive at your destination? Are there many short delays in front of you or just a few long delays? This example demonstrates how you can use “Cloud Numerics” to sift though and calculate a big enough cross section of air traffic data needed to answer these questions. We will use on-time performance data from the U.S. Department of Transportation to analyze the distribution of delays.

The data is available at http://www.transtats.bts.gov/DL_SelectFields.asp?Table_ID=236&DB_Short_Name=On-Time. This data set holds data for every scheduled flight in the U.S. from 1987 to 2011 and is —as one would expect— huge! For your convenience, we have uploaded a sample of 32 months—one file per month with about 500,000 flights in each—to Windows Azure Blob Storage at this container URI: http://cloudnumericslab.blob.core.windows.net/flightdata.

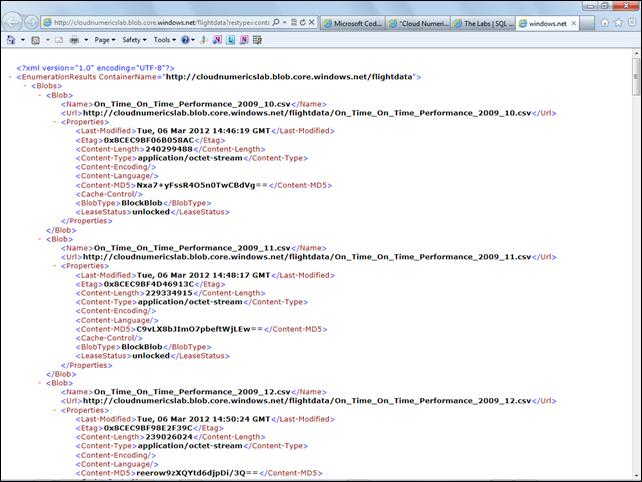

You cannot access this URI directly in a browser; you must use a Storage Client C# API … or a REST API query (http://cloudnumericslab.blob.core.windows.net/flightdata?restype=container&comp=list) to return a public blob list, the first three items of which are shown here:

Dates of the sample files range from May 2009 to December 2011; months don’t appear sequentially in the list.
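The REST query returns an XML document that lists the container's blobs. The following Python sketch shows one way to pull blob names and URLs out of such a response. The sample body is a hypothetical, trimmed-down listing with placeholder names and an `example.invalid` host; the `EnumerationResults`, `Blob`, `Name` and `Url` element names are assumed to match the List Blobs response format of that era.

```python
import xml.etree.ElementTree as ET

# Hypothetical response body; blob names and URLs are placeholders, not the
# real contents of the flightdata container.
SAMPLE_LISTING = """<EnumerationResults ContainerName="http://example.invalid/flightdata">
  <Blobs>
    <Blob><Name>month_a.csv</Name><Url>http://example.invalid/flightdata/month_a.csv</Url></Blob>
    <Blob><Name>month_b.csv</Name><Url>http://example.invalid/flightdata/month_b.csv</Url></Blob>
  </Blobs>
</EnumerationResults>"""


def list_blobs(listing_xml):
    """Return (name, url) pairs for every Blob element in a container listing."""
    root = ET.fromstring(listing_xml)
    return [(b.findtext("Name"), b.findtext("Url")) for b in root.iter("Blob")]


for name, url in list_blobs(SAMPLE_LISTING):
    print(name, url)
```

In practice the XML text would come from an HTTP GET of the query URL shown above, if that container is still reachable.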

Cloud Numerics’ IParallelReader interface could use four ExtraLarge compute instances having eight cores each to process all 32 months in parallel, if you have obtained permission to exceed the default 20 cores per subscription. Unlike most other SQL Azure Labs incubator projects, Cloud Numerics doesn’t offer free Windows Azure resources to run sample projects. ExtraLarge compute instances cost US$0.96 per hour of deployment, so assigning a core to each month would cost US$3.84 per deployed hour. Roope’s post recommends:

You should use two to four compute nodes when deploying the application to Windows Azure. One node might not have enough memory, and for larger-sized deployments there are not enough files in the sample data set to assign to all distributed I/O processes. You should not attempt to run the application on a local system because of data transfer and memory requirements. [Emphasis added.]

Note!

You specify how many compute nodes are allocated when you use the Cloud Numerics Deployment Utility to configure your Windows Azure cluster [three is the default]. For details, see this section in the Getting Started guide.

•• Following is a graphical summary of the steps in Visual Studio, the Cloud Numerics Deployment Utility, Windows Azure Portal and Windows Azure HPC Scheduler required to create, run, and obtain the results from the sample AirCarrierOnTimeStats solution:

The process is similar for all Codename “Cloud Numerics” projects.

Return to Table of Contents

Prerequisites for the Sample Solution and Their Installation

The project template and sample program have the following operating system and software prerequisites:

- Windows 7 or Windows Server 2008 R2 SP1, x64 (32-bit OS isn’t supported)

- Visual Studio 2010 Professional or Ultimate Edition with SP1 with Visual C++ components installed*

- SQL Server 2008 R2 Express or higher

- Windows Azure SDK v1.6 and Windows Azure Tools for Visual Studio, November 2011 or later x64 edition

- A Windows Azure subscription for deploying projects from local emulator mode to Windows Azure.

- Windows Azure HPC Scheduler SDK

- Windows HPC Pack 2008 R2 with SP3 Client Utilities

- Microsoft HPC Pack 2008 R2 MS-MPI with SP3 redistributable package

If any of the following obsolete components are present, installation will appear to succeed but you probably won’t be able to open a new “Cloud Numerics” project:

- Microsoft HPC Pack 2008 R2 Azure Edition

- Microsoft HPC Pack 2008 R2 Client Components

- Microsoft HPC Pack 2008 R2 MS-MPI Redistributable Pack

- Microsoft HPC Pack 2008 R2 SDK

- Windows Azure SDK v1.5

- Windows Azure AppFabric v1.5

- Windows Azure Tools for Microsoft VS2010 1.5

* Microsoft C++ Redistributable Files Required for Installing the Windows Azure HPC Scheduler

Important: The build script expects to find msvcp100.dll and msvcr100.dll files installed by VS2010 in the C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\redist\x64\Microsoft.VC100.CRT folder and the msvcp100d.dll and msvcr100d.dll debug versions in the C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\redist\Debug_NonRedist\x64\Microsoft.VC100.DebugCRT folder. If these files aren’t present in the specified locations, the Windows Azure HPC Scheduler will fail when attempting to run MSCloudNumericsApp.exe as Job 1.

After you install VS 2010 SP1, attempts to use setup’s Add or Remove Features option to add the VC++ compilers fail.

Note: You only need to take the following steps if you don’t have the Visual C++ compilers installed:

- Download the Microsoft Visual C++ 2010 SP1 Redistributable Package (x64) (vcredist_x64.exe) to a well-known location

- Run vcredist_x64.exe to add the msvcp100.dll, msvcp100d.dll, msvcr100.dll and msvcr100d.dll files to the C:\Windows\System32 folder.

- Create a C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\redist\x64\Microsoft.VC100.CRT folder and copy the msvcp100.dll and msvcr100.dll files to it.

- Create a C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\redist\Debug_NonRedist\x64\Microsoft.VC100.DebugCRT folder and copy the msvcp100d.dll and msvcr100d.dll files to it.
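The folder creation and copy steps above can also be scripted. The following Python sketch is one possible way to do it, not a tool supplied with “Cloud Numerics”; the `stage_crt_files` helper and its parameters are hypothetical names introduced for illustration.

```python
import shutil
from pathlib import Path

RELEASE_DLLS = ["msvcp100.dll", "msvcr100.dll"]
DEBUG_DLLS = ["msvcp100d.dll", "msvcr100d.dll"]


def stage_crt_files(system_dir, redist_root):
    """Copy the CRT DLLs from system_dir into the folders the build script expects."""
    system_dir, redist_root = Path(system_dir), Path(redist_root)
    targets = {
        redist_root / "x64" / "Microsoft.VC100.CRT": RELEASE_DLLS,
        redist_root / "Debug_NonRedist" / "x64" / "Microsoft.VC100.DebugCRT": DEBUG_DLLS,
    }
    copied = []
    for folder, names in targets.items():
        folder.mkdir(parents=True, exist_ok=True)  # create the folder tree if missing
        for name in names:
            shutil.copy2(system_dir / name, folder / name)
            copied.append(folder / name)
    return copied
```

On a development machine the call would look something like `stage_crt_files(r"C:\Windows\System32", r"C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\redist")`, run from an elevated prompt because the target is under Program Files. The call raises `FileNotFoundError` if any of the four DLLs is missing from the source folder.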

Installing the HPC and “Cloud Numerics” Components

Follow the instructions in the Microsoft Codename "Cloud Numerics" wiki article’s “Software Requirements” section to install the four components listed earlier.

Note: Links to http://connect.microsoft.com/ in the wiki article won’t work because you don’t receive an invitation code to enter.

Go directly to the "Cloud Numerics" Microsoft Connect Site to download:

- Installer for "Cloud Numerics": Downloads the MSI installer for "Cloud Numerics." Make sure to also download the documentation for "Cloud Numerics" (see below).

- Release Notes

- Getting Started with "Cloud Numerics": This document walks you through the installation and deployment process. By the end you should have a "Cloud Numerics" application running on Azure. For online version see here.

- Documentation for "Cloud Numerics": Documentation in the form of a Windows Help file (.chm). Download the file, save it in an easy to remember location, and open by double-clicking the file (see below).

- "Cloud Numerics" architecture white paper: White paper describing the architecture and technology behind "Cloud Numerics"

- Example applications: Three end-to-end C# examples: Latent Semantic Indexing, Statistics, and Time-series. The Air Carrier Arrival Delays project is an extension of the Statistics sample.

- A sample F# program

- An implementation of a CSV Loader (CSVLoader)

- An implementation of a sequence loader that works with the Data Transfer service (DataTransfer)

The wiki article’s Simple Examples section includes several example programs that you can run locally by replacing the code in the MSCloudNumerics project’s Sample.cs file.

Return to Table of Contents

Creating the OnTimePerformance Solution from the Microsoft Cloud Numerics Application Template

The Microsoft Cloud Numerics Application template proposes to create a new MSCloudNumerics1.sln solution with six prebuilt projects:

- AppConfigure

- AzureSampleService

- ComputeNode

- FrontEnd

- HeadNode

- MSCloudNumericsApp

Changes to the template code are required only to the MSCloudNumericsApp project’s Program.cs class file.

To create the AirCarrierOnTimeStats.sln solution and add a required reference, do the following:

1. Launch Visual Studio 2010 Web Developer Express or higher, choose New, Project to open the New Project dialog, select the Microsoft Cloud Numerics Application template and name the project AirCarrierOnTimeStats:

2. Click OK to create the templated solution.

3. Right-click the MSCloudNumericsApp node and choose Add Reference to open the eponymous dialog. Scroll to and select the .NET tab’s Microsoft.WindowsAzure.StorageClient library:

4. Click OK to add the reference to the project.

Return to Table of Contents

Replacing Template Code with OnTimePerformance-Specific Procedures

1. Recreate the MSCloudNumericsApp project’s prebuilt Program.cs class by replacing all of its code with the following using block and procedure stubs:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;
    using msnl = Microsoft.Numerics.Local;
    using msnd = Microsoft.Numerics.Distributed;
    using Microsoft.Numerics.Statistics;
    using Microsoft.Numerics.Mathematics;
    using Microsoft.Numerics.Distributed.IO;
    using Microsoft.WindowsAzure;
    using Microsoft.WindowsAzure.StorageClient;

    namespace FlightOnTime
    {
        [Serializable]
        public class FlightInfoReader : IParallelReader<double>
        {
        }

        class Program
        {
            static void WriteOutput(string output)
            {
            }

            static void Main()
            {
                // Initialize runtime
                Microsoft.Numerics.NumericsRuntime.Initialize();

                // Shut down runtime
                Microsoft.Numerics.NumericsRuntime.Shutdown();
            }
        }
    }

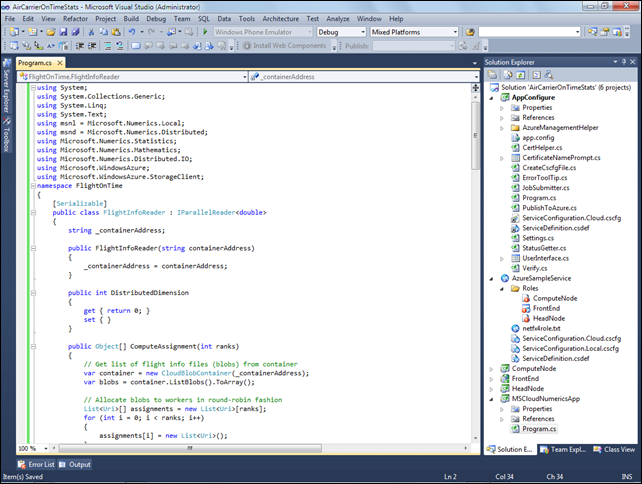

2. Add methods for reading blob data by replacing the public class FlightInfoReader stub with the following code:

    [Serializable]
    public class FlightInfoReader : IParallelReader<double>
    {
        string _containerAddress;

        public FlightInfoReader(string containerAddress)
        {
            _containerAddress = containerAddress;
        }

        public int DistributedDimension
        {
            get { return 0; }
            set { }
        }

        public Object[] ComputeAssignment(int ranks)
        {
            // Get list of flight info files (blobs) from container
            var container = new CloudBlobContainer(_containerAddress);
            var blobs = container.ListBlobs().ToArray();

            // Allocate blobs to workers in round-robin fashion
            List<Uri>[] assignments = new List<Uri>[ranks];
            for (int i = 0; i < ranks; i++)
            {
                assignments[i] = new List<Uri>();
            }
            for (int i = 0; i < blobs.Count(); i++)
            {
                int currentRank = i % ranks;
                assignments[currentRank].Add(blobs[i].Uri);
            }
            return (Object[])assignments;
        }

        public msnl.NumericDenseArray<double> ReadWorker(Object assignment)
        {
            List<Uri> assignmentUris = (List<Uri>)assignment;

            // If there are no blobs to read, return empty array
            if (assignmentUris.Count == 0)
            {
                return msnl.NumericDenseArrayFactory.Create<double>(new long[] { 0 });
            }

            List<double> arrivalDelays = new List<double>();
            for (int blobCount = 0; blobCount < assignmentUris.Count; blobCount++)
            {
                // Open blob and read text lines
                var blob = new CloudBlob(assignmentUris[blobCount].AbsoluteUri);
                var rows = blob.DownloadText().Split(new char[] { '\n' });
                int nrows = rows.Count();

                // Offset by one row because of header file, also, note that last row is empty
                for (int i = 1; i < nrows - 1; i++)
                {
                    // Remove quotation marks and split row
                    var thisRow = rows[i].Replace("\"", String.Empty).Split(new char[] { ',' });

                    // Filter out canceled and diverted flights
                    if (!thisRow[49].Contains("1") && !thisRow[51].Contains("1"))
                    {
                        // Add arrival delay from column 44 to list
                        arrivalDelays.Add(System.Convert.ToDouble(thisRow[44]));
                    }
                }
            }

            // Convert list to numeric dense array and return it from reader
            return msnl.NumericDenseArrayFactory.CreateFromSystemArray<double>(arrivalDelays.ToArray());
        }
    }

3. Read the data and implement the statistics algorithm by replacing the static void Main stub with the following:

    static void Main()
    {
        // Initialize runtime
        Microsoft.Numerics.NumericsRuntime.Initialize();

        // Instantiate StringBuilder for writing output
        StringBuilder output = new StringBuilder();

        // Read flight info
        string containerAddress = @"http://cloudnumericslab.blob.core.windows.net/flightdata/";
        var flightInfoReader = new FlightInfoReader(containerAddress);
        var flightData = Loader.LoadData<double>(flightInfoReader);

        // Compute mean and standard deviation
        var nSamples = flightData.Shape[0];
        var mean = Descriptive.Mean(flightData);
        flightData = flightData - mean;
        var stDev = BasicMath.Sqrt(Descriptive.Mean(flightData * flightData) * ((double)nSamples / (double)(nSamples - 1)));
        output.AppendLine("Mean (minutes), " + mean);
        output.AppendLine("Standard deviation (minutes), " + stDev);

        // Compute how much of the data is below or above 0, 1,...,5 standard deviations
        long nStDev = 6;
        for (long k = 0; k < nStDev; k++)
        {
            double aboveKStDev = 100d * Descriptive.Mean((flightData > k * stDev).ConvertTo<double>());
            double belowKStDev = 100d * Descriptive.Mean((flightData < -k * stDev).ConvertTo<double>());
            output.AppendLine("Samples below and above k standard deviations (percent), " + k + ", " + belowKStDev + ", " + aboveKStDev);
        }

        // Write output to a blob
        WriteOutput(output.ToString());

        // Shut down runtime
        Microsoft.Numerics.NumericsRuntime.Shutdown();
    }

4. Write results to a blob in your Windows Azure storage account by replacing the WriteOutput stub with the following:

    static void WriteOutput(string output)
    {
        // Write to blob storage
        // Replace "myAccountKey" and "myAccountName" by your own storage account key and name
        string accountKey = "myAccountKey";
        string accountName = "myAccountName";

        // Result blob and container name
        string containerName = "flightdataresult";
        string blobName = "flightdataresult.csv";

        // Create result container and blob
        var storageAccountCredential = new StorageCredentialsAccountAndKey(accountName, accountKey);
        var storageAccount = new CloudStorageAccount(storageAccountCredential, true);
        var blobClient = storageAccount.CreateCloudBlobClient();
        var resultContainer = blobClient.GetContainerReference(containerName);
        resultContainer.CreateIfNotExist();
        var resultBlob = resultContainer.GetBlobReference(blobName);

        // Make result blob publicly readable
        var resultPermissions = new BlobContainerPermissions();
        resultPermissions.PublicAccess = BlobContainerPublicAccessType.Blob;
        resultContainer.SetPermissions(resultPermissions);

        // Upload result to blob
        resultBlob.UploadText(output);
    }

You will replace myAccountName with the name of your Windows Azure Storage account and myAccountKey with the value of that account’s primary access key after you complete the cluster configuration process in the next section.

5. Right-click the AppConfigure node and choose Set as StartUp Project:

Note: Compiling the project with the MSCloudNumericsApp as the Startup Project creates a MSCloudNumericsApp.exe executable file. You upload and run this file to transfer data from the blob to the Windows Azure HPC cluster as a distributed array and perform numeric operations on the array in the later Deploying the Cluster to Windows Azure and Processing the MSCloudNumericsApp.exe Job section.
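The two jobs of the FlightInfoReader class in step 2, dealing blobs out to workers and extracting arrival delays from each file, can be tried locally without the “Cloud Numerics” runtime. The Python sketch below mirrors that logic under stated assumptions: the function names are hypothetical, and the default column positions (44, 49 and 51) are simply those used in the C# code.

```python
def compute_assignment(blob_uris, ranks):
    """Deal blob URIs to `ranks` workers in round-robin order."""
    assignments = [[] for _ in range(ranks)]
    for i, uri in enumerate(blob_uris):
        assignments[i % ranks].append(uri)
    return assignments


def arrival_delays(csv_text, delay_col=44, cancelled_col=49, diverted_col=51):
    """Extract arrival delays, skipping the header row, empty rows, and
    cancelled or diverted flights."""
    delays = []
    for line in csv_text.split("\n")[1:]:
        if not line.strip():
            continue
        fields = line.replace('"', "").split(",")
        if "1" in fields[cancelled_col] or "1" in fields[diverted_col]:
            continue
        delays.append(float(fields[delay_col]))
    return delays


# 32 monthly files spread over three workers.
sizes = [len(a) for a in compute_assignment(list(range(32)), 3)]
print(sizes)
```

With 32 files and three workers, two workers receive 11 files and one receives 10, which illustrates why adding compute nodes beyond the number of files would leave some distributed I/O processes idle.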

Return to Table of Contents

Configuring the AirCarrierOnTimeStats Solution

This section assumes that you have Windows Azure Compute and Storage accounts, which are required to upload and run the solution in Windows Azure. If you don’t have a subscription with these accounts, you can sign up for a Three-Month Free Trial here. The Free Trial includes:

- Compute Virtual Machine: 750 Small Compute hours per month

- Relational Database: 1GB Web edition SQL Azure database

- Storage: 20GB with 1,000,000 storage transactions

- Content Delivery Network (CDN): 500,000 CDN transactions

- Data Transfer (Bandwidth): Unlimited inbound / 20GB Outbound

Small Compute nodes have the equivalent of a single 1.6 GHz CPU core, 1.75 GB of RAM, 225 GB of instance storage and “moderate” I/O performance. If you configure the minimum recommended number of Extra Large CPU instances with eight cores each, you will consume 18 Small Compute hours per hour that the solution is deployed. The additional two Small Compute instances are for the Head Node and Web Role (Front End). See step 13 below for more details.
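The core count and hourly charge can be checked with a few lines of arithmetic. This Python sketch assumes a per-core rate derived from the US$0.96 hourly price of an eight-core ExtraLarge instance quoted earlier, and the default limit of 20 cores per subscription; the function names are illustrative only.

```python
CORES_PER_EXTRA_LARGE = 8
CORE_LIMIT = 20  # default cores per subscription
# Assumption: per-core rate derived from US$0.96 per ExtraLarge instance hour.
RATE_PER_CORE_HOUR = 0.96 / CORES_PER_EXTRA_LARGE


def cluster_cores(compute_nodes, overhead_small_instances=2):
    """Total cores: ExtraLarge compute nodes plus Head Node and Front End."""
    return compute_nodes * CORES_PER_EXTRA_LARGE + overhead_small_instances


def hourly_cost(cores):
    """Hourly charge in US dollars for the given number of cores."""
    return round(cores * RATE_PER_CORE_HOUR, 2)


for nodes in (2, 3):
    cores = cluster_cores(nodes)
    print(nodes, cores, cores <= CORE_LIMIT, hourly_cost(cores))
```

Two compute nodes give 18 cores, which fits under the limit, while three give 26, which does not.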

Tip: See the Deploying “Cloud Numerics” Sample Applications to Windows Azure HPC Clusters post for a similar Cloud Numerics sample application if you want more detailed instructions for deploying the project.

1. Create or use a subscription with an Affinity Group that specifies the North Central US data center, where the data blobs are stored.

2. In Visual Studio, choose Build, Configuration Manager to open the Configuration Manager dialog, select Release in the Active Solution Configurations list, and click OK to change the build configuration from Debug to Release:

Important: If you don’t build for Release, the Cloud Numerics job you submit at the end of this post will fail to run to completion.

3. Press F5 to build the solution and start the configuration process with the Cloud Numerics Deployment Utility.

4. Copy the Subscription ID from the management portal and paste it to the Subscription ID text box:

5. If you’ve created a Microsoft Cloud Numerics Azure Management Certificate previously, click the Browse button to open the Windows Security dialog and select the certificate in the list:

Otherwise, click the Create button to open the Certificate Name Dialog, accept the Certificate Name, browse to the folder in which to store the *.cer file, and specify the File Name:

Click OK to accept the certificate and close the dialog.

6. If you created a new certificate, the following dialog appears.

Click OK to confirm either process.

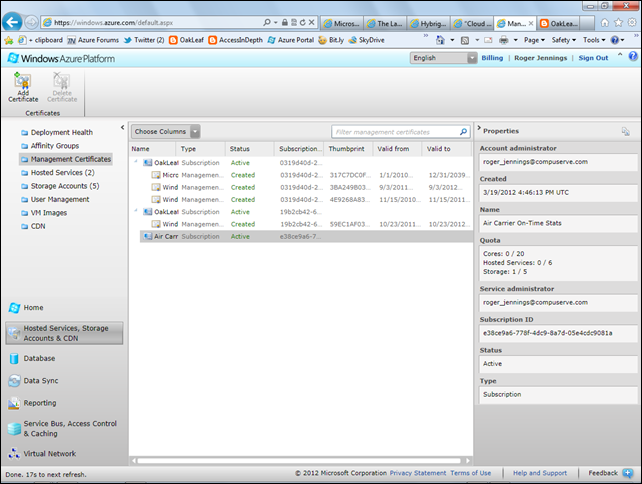

7. Return to the Management Portal, select Management Certificates in the navigation pane, and select the appropriate subscription:

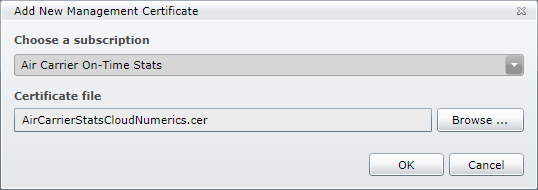

8. Click the Certificates group’s Add Certificate button to open the Add New Management Certificate dialog.

9. Click the Browse button to open the Open dialog, browse to the location where you saved the certificate file for the previous or new certificate, and double-click the *.cer file to add it:

10. Click OK to complete the certificate addition process, verify that the certificate appears under the subscription node, and return to the Utility dialog.

Important: If you don’t add the Management Certificate at this point, you won’t be able to select the Service Location in the following step.

11. Type a globally unique hosted Service Name, aircarrierstats for this example, and select the North Central US data center in the Location list:

The configuration process also will create a Storage Account with the Service Name as its name.

12. Click Next to select the Cluster in Azure tab, type an administrator name and password, and confirm the password:

13. By default, the Utility specifies 3 Extra Large Compute nodes which have 8 CPU cores per instance. The default maximum number of cores (without requesting more from Windows Azure Billing Support) is 20, so change the number of Compute nodes to 2, which results in a total of 18 cores, including the Head and Web FrontEnd.

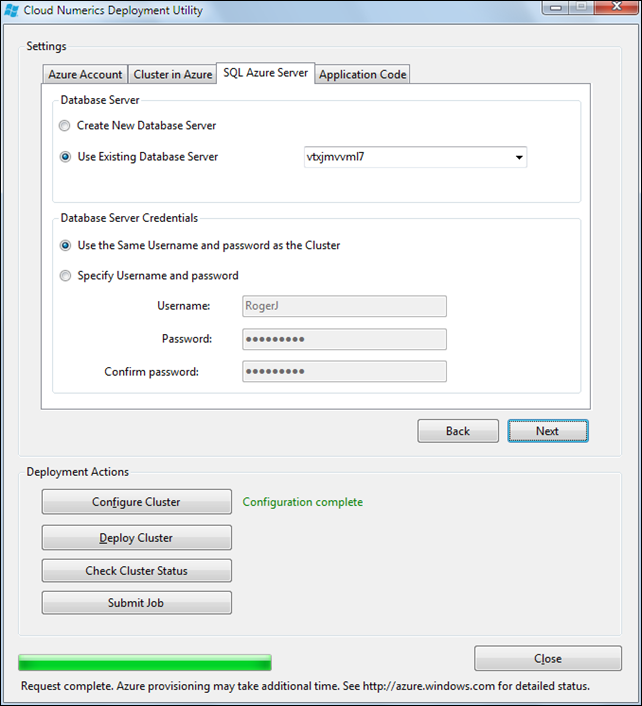

14. Click Next to activate the SQL Azure Server tab, accept the New Server and Administrator defaults and click Configure Cluster to start the configuration process. (You don’t need to complete the Application Code page until you submit a job.) After a few minutes, the cluster configuration process completes:

15. Select Storage Accounts under your subscription in the Windows Azure Portal’s navigation pane to verify that the aircarrierstats storage account has been created:

16. Click the Primary Access Key’s View button to open a dialog with primary and secondary key values:

17. Click the Clipboard icon to the right of the Primary Key text box to copy the value and click Close to dismiss the dialog.

•• 18. Press Shift+F5 to stop the running 64-bit solution and enable code editing.

19. Open the MSCloudNumericsApp project’s Program.cs file, if necessary, replace myAccountName with aircarrierstats, and paste the Clipboard value over myAccountKey.

•• 20. Select the MSCloudNumericsApp project node and choose Set as StartUp Project.

•• 21. Press F5 to build and run the solution and create the MSCloudNumericsApp.exe executable (job) file.

In the next section, you’ll upload and deploy the cluster to Windows Azure and create the 1-GB SQL Azure database.

Return to Table of Contents

Deploying the Cluster to Windows Azure and Processing the MSCloudNumericsApp.exe Job

The final steps in the process are to deploy the project to a Windows Azure hosted service and submit (run) it to generate a results blob in the aircarrierstats storage service.

•• 1. Right-click AppConfigure and set it as the Startup Project.

•• 2. Press F5 to run the solution and open the Cloud Numerics Deployment Utility dialog.

3. Click Deploy Cluster from any tab to create the aircarrierstats hosted service, apply its Service Certificate, and start the deployment process:

Note: Copying the HPC package to blob storage is likely to take an hour or more, depending on your Internet connection’s upload speed. Initializing the four nodes takes an additional 15 to 20 minutes.

4. Completing deployment enables the Check Cluster Status button:

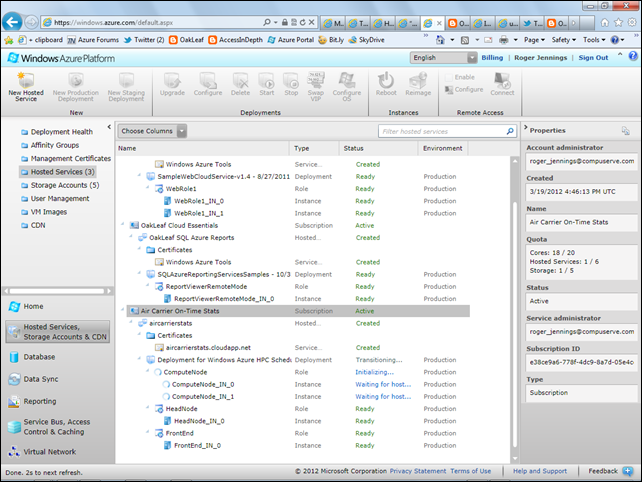

5. Return to the Windows Azure Portal, select Hosted Services in the navigation pane to display the status of the four nodes and select the Air Carrier On-Time Stats subscription to display its properties:

6. When all nodes reach Ready status, reopen the Cloud Numerics Deployment Utility, click the Application Code tab, click the Browse button, navigate to the \MyDocuments\Visual Studio 2010\Projects\AirCarrierOnTimeStats\MSCloudNumericsApp\bin\Release folder, select MSCloudNumericsApp.exe as the executable to run, and click Submit Job:

Using the Windows Azure HPC Scheduler Web Portal to Check Job Status

1. When the “Job successfully submitted” message appears, type the URL for the hosted service (https://aircarrierstats.cloudapp.net for this example) and click the Continue to Web Site link to temporarily accept the self-signed certificate and open the Sample Application page with a Certificate Warning in the address bar:

2. Click the Certificate Warning to display an Untrusted Certificate popup and click View Certificates to open the Certificate dialog:

3. Click the Install Certificate button to start the Certificate Import Wizard, click Next to display the Certificate Store page, and accept the default option:

4. Click Next to import the certificate, click Finish, and click OK to dismiss “The import was successful” message.

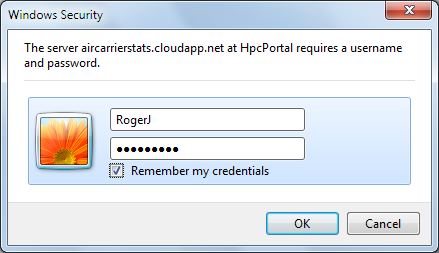

5. Type your Cluster Administrator username and password in the Windows Security dialog:

6. Click OK to open the Windows Azure HPC Scheduler Web Portal page and click All Jobs to display the submitted job status:

Note: If you receive an error message, click the My Jobs link. If the MSCloudNumericsApp.exe item shows a Failed state, you probably compiled the project in Debug, rather than the required Release mode.

7. Click the MSCloudNumericsApp.exe link to open the job details page:

Notice that the job completed in about two minutes.

8. Click the View Tasks tab to display execution details:

The Help link opens Release Notes for Microsoft HPC Pack 2008 R2 Service Pack 2, which doesn’t provide any information about the HPC Scheduler Web Portal.

Viewing the FlightDataResult Data in Excel

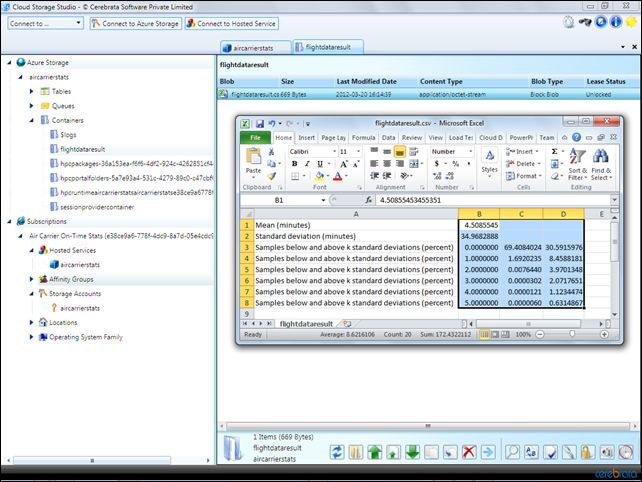

Open flightdataresult.csv in Excel from the aircarrierstats storage account’s flightdataresult container in an Azure storage utility, such as Cerebrata’s Cloud Storage Studio, to display the result of the standard deviation computations:

Note: The Azure Web Storage Explorer by Sebastian Gomez (@sebagomez) is a live Windows Azure Web application that’s a free alternative to Cloud Storage Studio.
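The rows in flightdataresult.csv come from the mean, sample standard deviation and tail-percentage calculations in the Main method. As a rough local cross-check, the same calculations can be sketched in plain Python on a small list of delays; the `summarize` function is a hypothetical stand-in and does not use the “Cloud Numerics” libraries.

```python
import math


def summarize(delays, n_stdev=6):
    """Return mean, sample standard deviation, and for k = 0..n_stdev-1 the
    percent of samples more than k standard deviations below and above the mean."""
    n = len(delays)
    mean = sum(delays) / n
    centered = [d - mean for d in delays]
    # Mean of squares scaled by n / (n - 1) gives the sample variance.
    stdev = math.sqrt(sum(c * c for c in centered) / n * (n / (n - 1)))
    rows = []
    for k in range(n_stdev):
        above = 100.0 * sum(c > k * stdev for c in centered) / n
        below = 100.0 * sum(c < -k * stdev for c in centered) / n
        rows.append((k, below, above))
    return mean, stdev, rows


mean, stdev, rows = summarize([0, 0, 0, 10])
print(mean, stdev)
for k, below, above in rows[:2]:
    print(k, below, above)
```

Each tuple corresponds to one "Samples below and above k standard deviations (percent)" line in the result file.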

Important: Be sure to delete your deployment and database in the Windows Azure Portal after reviewing and, optionally, making a local copy of the blob data. Otherwise, you will continue to accrue charges of US$2.16 per hour for your 18 cores, which occur regardless of whether you are accessing the deployment or not, and $9.99 per month for the SQL Azure database.

Return to Table of Contents

Interpreting FlightDataResult.csv’s Data

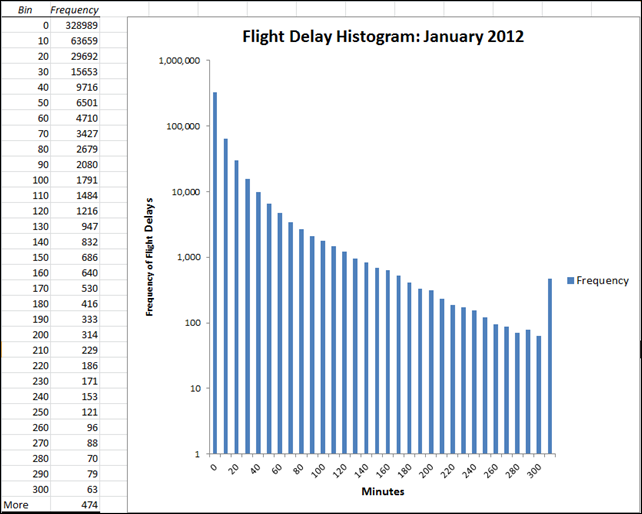

Following is a histogram for January 2012 flight arrival delays from 0 to 5 hours in 10-minute increments created with the Excel Data Analysis add-in’s Histogram tool from the unfiltered On_Time_On_Time_Performance_2012_1.csv worksheet:

The logarithmic Frequency scale shows an exponential decrease in the number of flight delays for increasing delay times starting at about one hour. Distributions for earlier months are similar.
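A comparable frequency table can be built without Excel. The Python sketch below counts delays in 10-minute bins from 0 to 5 hours; it is an approximation of the Histogram tool's output, since it assumes half-open bins (lower bound included, upper bound excluded), which is not necessarily how Excel assigns boundary values.

```python
def histogram(delays_minutes, bin_width=10, max_minutes=300):
    """Count delays in [0, max_minutes) using fixed-width, half-open bins."""
    counts = [0] * (max_minutes // bin_width)
    for d in delays_minutes:
        if 0 <= d < max_minutes:
            counts[int(d // bin_width)] += 1
    return counts


counts = histogram([0, 5, 10, 19.5, 299, 300, -4])
print(counts[0], counts[1], counts[29], sum(counts))
```

Plotting the counts on a logarithmic axis is what makes an exponential decline show up as a roughly straight line.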

Roope observes in the “Step 5: Deploy the Application and Analyze Results” section of his 3/8/2012 post:

Let’s take a look at the results. We can immediately see they’re not normal-distributed at all. First, there’s skew —about 70% of the flight delays are [briefer than the] average of 5 minutes. Second, the number of delays tails off much more gradually than a normal distribution would as one moves away from the mean towards longer delays. A step of one standard deviation (about 35 minutes) roughly halves the number of delays, as we can see in the sequence 8.5 %, 4.0 %, 2.1%, 1.1 %, 0.6 %. These findings suggests that the tail could be modeled by an exponential distribution. [See above histogram.]

This result is both good news and bad news for you as a passenger. There is a good 70% chance you’ll arrive no more than five minutes late. However, the exponential nature of the tail means —based on conditional probability— that if you have already had to wait for 35 minutes there’s about a 50-50 chance you will have to wait for another 35 minutes.
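The "roughly halves" observation and the 50-50 conclusion can be checked numerically. The Python sketch below computes the ratio between successive tail percentages quoted above and then applies the memoryless property of an exponential tail; treating 35 minutes as an exact half-life is a simplifying assumption for illustration.

```python
# Tail percentages quoted above, one standard deviation (about 35 minutes) apart.
TAIL_PERCENTAGES = [8.5, 4.0, 2.1, 1.1, 0.6]
STEP_MINUTES = 35

ratios = [b / a for a, b in zip(TAIL_PERCENTAGES, TAIL_PERCENTAGES[1:])]
mean_ratio = sum(ratios) / len(ratios)


def survival_ratio(extra_minutes, half_step=STEP_MINUTES):
    """P(delay > s + extra | delay > s) for a tail that halves every half_step
    minutes; for an exponential tail this does not depend on s."""
    return 0.5 ** (extra_minutes / half_step)


print([round(r, 2) for r in ratios], round(mean_ratio, 2))
print(survival_ratio(35), survival_ratio(70))
```

Every ratio falls close to one half, and under that assumption a passenger who has already waited has a 50% chance of waiting a further 35 minutes and a 25% chance of waiting a further 70.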

Return to Table of Contents.
