Windows Azure and Cloud Computing Posts for 3/26/2012+


A compendium of Windows Azure, Service Bus, EAI & EDI Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

• Updated 3/28/2012 3:30 PM PDT with Mary Jo Foley’s “Antares” article in the Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds section and Lori MacVittie’s “Identity Gone Wild” post in the Windows Azure Access Control, Identity and Workflow section.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Denny Lee (@dennylee) posted A Primer on Hadoop (from the Microsoft SQL Community perspective) on 3/27/2012:

For a quick primer on Hadoop (from the perspective of the Microsoft SQL Community), as well as Microsoft Hadoop on Azure and Windows, check out the SlideShare.NET presentation below.

Above the cloud: Big Data and BI (PowerPoint presentation from Denny Lee)

Note, as well, there is a great end-to-end Microsoft Hadoop on Azure and Windows presentation available at:

Michael Roberson of the Windows Azure Storage Team described Getting the Page Ranges of a Large Page Blob in Segments in a 3/26/2012 post:

One of the blob types supported by Windows Azure Storage is the Page Blob. Page Blobs provide efficient storage of sparse data by physically storing only pages that have been written and not cleared. Each page is 512 bytes in size. The Get Page Ranges REST service call returns a list of all contiguous page ranges that contain valid data. In the Windows Azure Storage Client Library, the method GetPageRanges exposes this functionality.

Get Page Ranges may fail in certain circumstances where the service takes too long to process the request. Like all Blob REST APIs, Get Page Ranges takes a timeout parameter that specifies the time a request is allowed, including the reading/writing over the network. However, the server is allowed a fixed amount of time to process the request and begin sending the response. If this server timeout expires then the request fails, even if the time specified by the API timeout parameter has not elapsed.

In a highly fragmented page blob with a large number of writes, populating the list returned by Get Page Ranges may take longer than the server timeout and hence the request will fail. Therefore, it is recommended that if your application usage pattern has page blobs with a large number of writes and you want to call GetPageRanges, then your application should retrieve a subset of the page ranges at a time.

For example, suppose a 500 GB page blob was populated with 500,000 writes throughout the blob. By default the storage client specifies a timeout of 90 seconds for the Get Page Ranges operation. If Get Page Ranges does not complete within the server timeout interval then the call will fail. This can be solved by fetching the ranges in groups of, say, 50 GB. This splits the work into ten requests. Each of these requests would then individually complete within the server timeout interval, allowing all ranges to be retrieved successfully.

To be certain that the requests complete within the server timeout interval, fetch ranges in segments spanning 150 MB each. This is safe even for maximally fragmented page blobs. If a page blob is less fragmented then larger segments can be used.
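As a rough illustration of the segmenting arithmetic above, the Python sketch below lists the byte ranges that a segmented Get Page Ranges loop would ask for. The function name `segment_ranges` is hypothetical; only the 512-byte page size and the `bytes=start-end` form of the range header come from the post. Unlike the extension method that follows, this sketch clamps the final segment to the blob size.

```python
PAGE_SIZE = 512  # page blobs are addressed in 512-byte pages

def segment_ranges(blob_size, segment_size):
    """Yield inclusive (start, end) byte offsets covering blob_size bytes."""
    if segment_size <= 0 or segment_size % PAGE_SIZE != 0:
        raise ValueError("segment_size must be a positive multiple of 512")
    start = 0
    while start < blob_size:
        # Clamp the last segment so it never runs past the end of the blob.
        end = min(start + segment_size, blob_size) - 1
        yield start, end
        start += segment_size

GB = 1024 ** 3
ranges = list(segment_ranges(500 * GB, 50 * GB))
headers = ["bytes={0}-{1}".format(s, e) for s, e in ranges]
print(len(ranges))   # 10 requests for a 500 GB blob in 50 GB segments
print(headers[0])    # range header value for the first request
```

Smaller segments mean more round trips but less server work per call, which is the trade-off the 150 MB recommendation above is making.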

Client Library Extension

We present below a simple extension method for the storage client that addresses this issue by providing a rangeSize parameter and splitting the requests into ranges of the given size. The resulting IEnumerable object lazily iterates through page ranges, making service calls as needed.

As a consequence of splitting the request into ranges, any page ranges that span across the rangeSize boundary are split into multiple page ranges in the result. Thus for a range size of 10 GB, the following range spanning 40 GB

[0 – 42949672959]

would be split into four ranges spanning 10 GB each:

[0 – 10737418239]

[10737418240 – 21474836479]

[21474836480 – 32212254719]

[32212254720 – 42949672959].

With a range size of 20 GB the above range would be split into just two ranges.
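The splitting behavior in this example can be checked with a small Python sketch. `split_range` is a hypothetical helper written for illustration, not part of the storage client library; it cuts an inclusive byte range at every multiple of the range size.

```python
def split_range(start, end, range_size):
    """Split the inclusive byte range [start, end] at multiples of range_size."""
    pieces = []
    while start <= end:
        # Last byte of the range_size-aligned segment that contains `start`.
        boundary = (start // range_size + 1) * range_size - 1
        pieces.append((start, min(end, boundary)))
        start = boundary + 1
    return pieces

GB = 1024 ** 3
for piece in split_range(0, 40 * GB - 1, 10 * GB):
    print(piece)  # four pieces of 10 GB each
```

A caller that needs the original contiguous ranges back can merge adjacent pieces whose end and start offsets differ by one.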

Note that a custom timeout may be used by specifying a BlobRequestOptions object as a parameter, but the method below does not use any retry policy. The specified timeout is applied to each of the service calls individually. If a service call fails for any reason then GetPageRanges throws an exception.

    namespace Microsoft.WindowsAzure.StorageClient
    {
        using System;
        using System.Collections.Generic;
        using System.Linq;
        using System.Net;
        using Microsoft.WindowsAzure.StorageClient.Protocol;

        /// <summary>
        /// Class containing an extension method for the <see cref="CloudPageBlob"/> class.
        /// </summary>
        public static class CloudPageBlobExtensions
        {
            /// <summary>
            /// Enumerates the page ranges of a page blob, sending one service call as needed for each
            /// <paramref name="rangeSize"/> bytes.
            /// </summary>
            /// <param name="pageBlob">The page blob to read.</param>
            /// <param name="rangeSize">The range, in bytes, that each service call will cover. This must be a multiple of
            /// 512 bytes.</param>
            /// <param name="options">The request options, optionally specifying a timeout for the requests.</param>
            /// <returns>An <see cref="IEnumerable"/> object that enumerates the page ranges.</returns>
            public static IEnumerable<PageRange> GetPageRanges(
                this CloudPageBlob pageBlob,
                long rangeSize,
                BlobRequestOptions options)
            {
                int timeout;

                if (options == null || !options.Timeout.HasValue)
                {
                    timeout = (int)pageBlob.ServiceClient.Timeout.TotalSeconds;
                }
                else
                {
                    timeout = (int)options.Timeout.Value.TotalSeconds;
                }

                if ((rangeSize % 512) != 0)
                {
                    throw new ArgumentOutOfRangeException("rangeSize", "The range size must be a multiple of 512 bytes.");
                }

                long startOffset = 0;
                long blobSize;

                do
                {
                    // Generate a web request for getting page ranges
                    HttpWebRequest webRequest = BlobRequest.GetPageRanges(
                        pageBlob.Uri,
                        timeout,
                        pageBlob.SnapshotTime,
                        null /* lease ID */);

                    // Specify a range of bytes to search
                    webRequest.Headers["x-ms-range"] = string.Format(
                        "bytes={0}-{1}",
                        startOffset,
                        startOffset + rangeSize - 1);

                    // Sign the request
                    pageBlob.ServiceClient.Credentials.SignRequest(webRequest);

                    List<PageRange> pageRanges;

                    using (HttpWebResponse webResponse = (HttpWebResponse)webRequest.GetResponse())
                    {
                        // Refresh the size of the blob
                        blobSize = long.Parse(webResponse.Headers["x-ms-blob-content-length"]);

                        GetPageRangesResponse getPageRangesResponse = BlobResponse.GetPageRanges(webResponse);

                        // Materialize response so we can close the webResponse
                        pageRanges = getPageRangesResponse.PageRanges.ToList();
                    }

                    // Lazily return each page range in this result segment.
                    foreach (PageRange range in pageRanges)
                    {
                        yield return range;
                    }

                    startOffset += rangeSize;
                }
                while (startOffset < blobSize);
            }
        }
    }

Usage Examples:

    pageBlob.GetPageRanges(10L * 1024 * 1024 * 1024 /* 10 GB */, null);

    pageBlob.GetPageRanges(150 * 1024 * 1024 /* 150 MB */, options /* custom timeout in options */);

Summary

For some fragmented page blobs, the GetPageRanges API call might not complete within the maximum server timeout interval. To solve this, the page ranges can be incrementally fetched for a fraction of the page blob at a time, thus decreasing the time any single service call takes. We present an extension method implementing this technique in the Windows Azure Storage Client Library.

Michael Roberson


SQL Azure Database, Federations and Reporting

My (@rogerjenn) Tips for deploying SQL Azure Federations article of 3/28/2012 for TechTarget’s SearchSQLServer.com begins:

Microsoft is determined to enshrine Windows Azure and SQL Azure as the world’s flagship public-cloud Platform as a Service (PaaS) and relational database. Confronted by a continuous stream of new Infrastructure as a Service (IaaS) improvements to Amazon Web Services, the Windows Azure and SQL Azure teams have quickened their pace of adding new features and cutting service prices.

Managing SQL Azure Federations

Clicking the Databases tile and the Summary arrow of the database you want to manage in the Databases list opens the database’s Summary page, which includes a Query Usage (CPU) chart, Database Properties pane and Federations pane (see Figure 1). The sample federated database contains about 5 GB of event counter data from a live Windows Azure application.

Figure 1

This Summary page for a 5 GB federated database includes a link arrow at the lower right to open a management page for the named federation. Selecting the federation name enables the Drop Federation link. The summary page for all databases includes a New button (circled) to create a new federation root. …

and ends:

… Then in February, the SQL Azure team announced more changes -- a new, smaller Web edition database priced at $4.99 a month for up to 100 MB of data and substantial across-the-board price reductions for SQL Azure databases, as shown in Table 1.

Table 1

Table 1 lists the monthly cost of each SQL Azure database size and the savings under the new pricing for Web Edition and Business Edition databases. Database sizes range from 100 MB to 150 GB.

Finer-grained pricing took the bite out of 9 GB or 10 GB transitions for both Web (up to 10 GB) and Business (10 GB to 150 GB) editions, as shown in Figure 7.

Figure 7

Comparison of cost per month of SQL Azure databases in maximum sizes from 100 MB to 150 GB with prices adjusted on December 12, 2011 (Old) and February 14, 2012 shows more gradual transitions between database sizes.

Conclusion

Over its brief two-year lifetime, SQL Azure has grown from a maximum database size of 10 GB to 150 GB, gained more sophisticated management tools and has become substantially less costly to implement. If your organization needs a proven, highly available relational database in a multinational public cloud, give SQL Azure a test drive. The Windows Azure 90-Day Free Trial includes use of a 1 GB SQL Azure database at no charge for three months.

Ian Hardenburgh described Hybrid on-demand business intelligence with SQL Azure Reporting in a 3/22/2012 post to TechRepublic’s The Enterprise Cloud blog:

Takeaway: Ian Hardenburgh describes Microsoft’s positioning of SQL Server, SQL Azure, and SQL Azure Reporting Services for enterprises that need data analysis and business intelligence tools.

Enterprise-class business intelligence software has become an essential part of many companies’ financial and operational decision-making. As exemplified by preeminent solution providers like IBM and SAS, BI adoption is growing at an exponential rate, mostly catalyzed by faster processors and cheaper data storage for heightened data warehousing and querying initiatives. This has afforded businesses the ability to perform a more intense type of data analysis, or what is known to some as analytics, where vast sets of information are disseminated across the enterprise.

An upshot from the demand for better BI is the need for scalable and well distributed business intelligence tools, to pervade the enterprise even further, instead of limiting it to just a few key analysts, by way of some kind of desktop software. Furthermore, as these same businesses move their data to off-premise cloud storage environments, uninterrupted use of these tools also becomes a concern. Microsoft’s SQL Azure Reporting on-demand service is not only well positioned to address this changing tide, but is already outfitted for hybrid use on and off Azure, Microsoft’s public cloud, to set up companies for the inevitable all-in-the-cloud future.

If you’re familiar with Microsoft SQL Server, you’re most likely also familiar with its SQL Server Reporting Services. SQL Azure and SQL Azure Reporting could be considered toned-down versions of SQL Server and its major reporting component, Reporting Services. In fact, many of the same development tools, like BIDS (Business Intelligence Development Studio) and SSMS (SQL Server Management Studio), are used to deploy reports from ad-hoc queries or stored and tasked database objects.

For those unfamiliar with Reporting Services, I wouldn’t be too concerned, as you can probably use all the web-based tools that ship with a subscription to SQL Azure. That is, at least for the foreseeable future. Where you require greater flexibility in the design and development of your database objects and reports, on-premise SQL Server might be a welcome addition. However, this might be considered something of a luxury, as SQL Azure Reporting is robust enough to address most reporting deliverables, outside of Analysis Services-style OLAP and data mining capabilities. But in my experience, only a very limited set of users in any given company knows how to take advantage of advanced analytics such as multi-dimensional analysis or arcane data mining methods. As alluded to above, SQL Azure Reporting and on-premise SQL Server are well situated to address hybrid concerns like these. As Microsoft continues to expand its public cloud offering, you can also expect further service options to become available for on-demand database and business intelligence.

For a good understanding of SQL Azure Reporting capabilities/limitations, in comparison with on-premise SQL Server (2008 R2 edition), see this link. Take careful notice of the following tables entitled:

- High-Level Comparison of Reporting Services Features and SQL Azure Reporting Features

- Reporting Services Features Not Available in SQL Azure Reporting

- Tool Compatibility


Marketplace DataMarket, Social Analytics, Big Data and OData

My (@rogerjenn) Analyzing Air Carrier Arrival Delays with Microsoft Codename “Cloud Numerics” begins:

Table of Contents

Introduction

- The FAA On_Time_Performance Database’s Schema and Size

- The Architecture of Microsoft Codename “Cloud Numerics”

- The Microsoft Codename “Cloud Numerics” Sample Solution for Analyzing Air Carrier Arrival Delays

- Prerequisites for the Sample Solution and Their Installation

- Creating the OnTimePerformance Solution from the Microsoft Cloud Numerics Application Template

- Configuring the AirCarrierOnTimeStats Solution

- Deploying the Cluster to Windows Azure and Processing the MSCloudNumericsApp.exe Job

- Interpreting FlightDataResult.csv’s Data

•• Updated 3/26/2012 12:40 PM PDT with two added graphics, clarification of the process for replacing storage account placeholders in the MSCloudNumericsApp project with actual values, a link to The “Cloud Numerics” Programming and runtime execution model documentation, and The Architecture of Microsoft Codename “Cloud Numerics” section.

• Updated 3/21/2012 9:00 AM PDT with an added Prerequisites for the Sample Solution and Their Installation section.

Introduction

The U.S. Federal Aviation Administration (FAA) publishes monthly an On-Time Performance dataset for all airlines holding a Department of Transportation (DOT) Air Carrier Certificate. The FAA’s Research and Innovative Technology Administration (RITA) of the Bureau of Transportation Statistics (BTS) publishes the data sets in the form of prezipped comma-separated value (CSV, Excel) files here:

…

You’ll notice that many flights with departure delays had no arrival delays, which means that the flight beat its scheduled duration. Arrival delays are of more concern to passengers so a filter on arrival delays >0 (149,036 flights, 30.7%) is more appropriate:

and concludes:

Interpreting FlightDataResult.csv’s Data

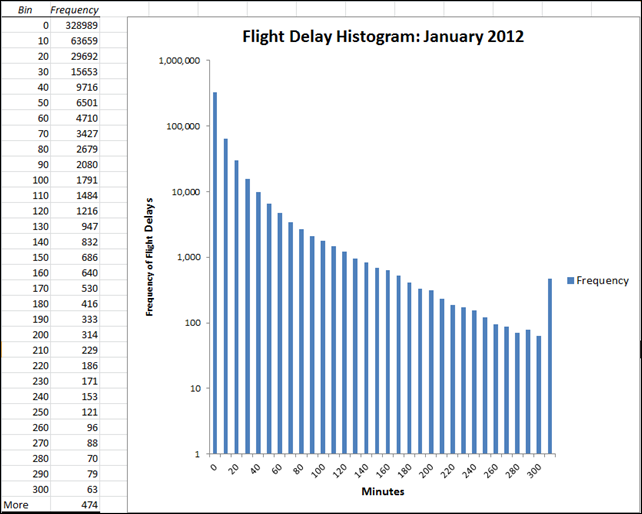

Following is a histogram for January 2012 flight arrival delays from 0 to 5 hours in 10-minute increments created with the Excel Data Analysis add-in’s Histogram tool from the unfiltered On_Time_On_Time_Performance_2012_1.csv worksheet:

The logarithmic Frequency scale shows an exponential decrease in the number of flight delays for increasing delay times starting at about one hour.
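For readers without the Excel add-in, the same kind of 10-minute binning can be sketched in Python. This is only an approximation of the approach: `delay_histogram` is a hypothetical helper, the sample values are made up rather than taken from the FAA file, and the bins here include their lower edge, whereas Excel's Histogram tool counts values up to and including each bin's upper edge.

```python
from collections import Counter

def delay_histogram(delays_min, bin_width=10, max_delay=300):
    """Count delays in [0, max_delay) minutes per bin_width-minute bin."""
    counts = Counter()
    for d in delays_min:
        if 0 <= d < max_delay:
            # Key each bin by its lower edge, e.g. 12 minutes falls in bin 10.
            counts[int(d // bin_width) * bin_width] += 1
    return [(lo, counts.get(lo, 0)) for lo in range(0, max_delay, bin_width)]

# Made-up arrival delays in minutes, not FAA data.
sample = [3, 7, 12, 18, 25, 41, 95, 130, 290, 310, -5]
hist = delay_histogram(sample)
for lower_edge, count in hist[:3]:
    print(lower_edge, count)
```

Plotting the counts on a logarithmic axis makes an exponential tail show up as a roughly straight line.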

[Microsoft’s] Roope [Astala] observes in the “Step 5: Deploy the Application and Analyze Results” section of his 3/8/2012 post:

Let’s take a look at the results. We can immediately see they’re not normal-distributed at all. First, there’s skew: about 70% of the flight delays are [briefer than the] average of 5 minutes. Second, the number of delays tails off much more gradually than a normal distribution would as one moves away from the mean towards longer delays. A step of one standard deviation (about 35 minutes) roughly halves the number of delays, as we can see in the sequence 8.5 %, 4.0 %, 2.1%, 1.1 %, 0.6 %. These findings suggest that the tail could be modeled by an exponential distribution. [See above histogram.]

This result is both good news and bad news for you as a passenger. There is a good 70% chance you’ll arrive no more than five minutes late. However, the exponential nature of the tail means —based on conditional probability— that if you have already had to wait for 35 minutes there’s about a 50-50 chance you will have to wait for another 35 minutes.
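The 50-50 claim follows from the memoryless property of an exponential tail. The Python sketch below assumes, as the quote suggests, that a 35-minute step halves the number of remaining delays; the function names and the derived rate are illustrative and were not fitted to the FAA data.

```python
import math

HALVING_STEP_MIN = 35.0                 # step that roughly halves the tail, per the quote
RATE = math.log(2) / HALVING_STEP_MIN   # implied exponential rate per minute

def survival(t):
    """P(delay > t) for an exponential tail with the rate above."""
    return math.exp(-RATE * t)

def conditional_survival(waited, extra):
    """P(delay > waited + extra | delay > waited)."""
    return survival(waited + extra) / survival(waited)

print(round(conditional_survival(35, 35), 3))   # 0.5
print(round(conditional_survival(120, 35), 3))  # 0.5, however long you have waited

# Successive ratios in the quoted sequence, for comparison with the ideal 0.5.
observed = [8.5, 4.0, 2.1, 1.1, 0.6]
ratios = [later / earlier for earlier, later in zip(observed, observed[1:])]
print([round(r, 2) for r in ratios])
```

The conditional probability does not depend on the time already waited, which is why the outlook after 35 minutes is no better than it was at the start.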

Michael Washington (@DefWebServer) described Consuming The Netflix OData Service Using App Inventor in a 3/27/2012 post:

App Inventor is a program that allows you to easily make applications that run on the Android system. This includes the Amazon Kindle Fire. Here are some links to get you started with App Inventor:

- Setup: Set up your computer. Run the emulator. Set up your phone. Build your first app.

- Tutorials: Learn the basics of App Inventor by working through these tutorials.

- Reference Documentation: Look up how specific components and blocks work. Read about concepts in App Inventor, like displaying lists and accessing images and sounds.

You can use the server that is set up at MIT or download the App Inventor Server source code and run your own server.

You will need to go through the Tutorials to learn how to manipulate the App Inventor Blocks.

The Netflix OData Feed

For the sample application, we desire to create an Android application that will allow us to browse the Netflix catalog by Genre.

If we go to: http://odata.netflix.com/v2/Catalog/ we see that Netflix has an OData feed of their catalog. This feed provides the information that we will use for the application.

The Netflix Genre Browser

The completed Android program allows you to choose a Movie Genre, and then a movie in the selected Genre. After you choose a Movie, it will display the summary of the movie:

[Screenshots 1 through 7 of the completed application]

The Screen

In the Screen designer of App Inventor, we create a simple layout with two List Picker Buttons and two Labels. We also include two Web controls.

The Blocks

With App Inventor we create programs using Blocks.

First we create definition Blocks. These Blocks are variables that are used to hold values that will be used in the application. The Text (string) variables have a “text” Block plugged into their right-hand side. The List variables have a “make a list” Block plugged into them.

We can also create a procedure, which is a method that can take parameters and return results.

We create the RemoveODataHeader procedure that will remove the first two items from a list passed to it. This procedure will be called from other parts of the application.

The List Of Genres

The first procedure to run is the Screen1.Initialize procedure.

After it runs, OData is returned to the application.

The next procedure to run is the webComponentGenres.GotText procedure. This procedure fills the Genre List Picker Button so that the list of Genre entries will show when the user clicks the Button.

The first part of the procedure breaks the OData result and parses it into a List called lstAllOData.

The second part of the procedure loops through each item in the list, strips out everything but the title, and builds a list that is then set as the item source of the Genre List Picker Button.
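For readers who think in text rather than Blocks, the split-and-strip steps described above might look roughly like the following Python sketch. The split marker, the sample feed and the assumption that the two "header" items are the preamble and the feed-level title are all hypothetical; the post does not show the actual delimiters used in the Blocks.

```python
def remove_odata_header(items):
    """Drop the first two items, mirroring the RemoveODataHeader procedure."""
    return items[2:]

def extract_titles(feed_text, marker="<title"):
    """Split raw feed text on a marker and keep only the text of each title."""
    pieces = remove_odata_header(feed_text.split(marker))
    titles = []
    for piece in pieces:
        # Each piece looks like ' type="text">Comedy</title>...'
        after_tag = piece.split(">", 1)[1]
        titles.append(after_tag.split("</title>", 1)[0])
    return titles

# Hypothetical, heavily trimmed Atom-style response (not real Netflix output).
SAMPLE_FEED = (
    '<feed xmlns="http://www.w3.org/2005/Atom">'
    '<title type="text">Genres</title>'
    '<entry><title type="text">Action</title></entry>'
    '<entry><title type="text">Comedy</title></entry>'
    '</feed>'
)

print(extract_titles(SAMPLE_FEED))
```

In a language with an XML parser available, parsing the feed properly would be more robust than string splitting; the sketch follows the string-based approach only because that is what the Blocks do.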

When the user clicks the Button, the list appears.

Choosing A Genre

When a user selects a Genre from the list, the ListPickerGenres.AfterPicking procedure runs. The first thing it does is display some hidden Screen elements and reinitialize the Movie Title and Movie Description lists (the user may have a list already displayed and simply chosen another Genre).

The next part of the procedure displays the selected Genre in a Label, constructs a URL, and queries Netflix for Movies in the selected Genre.

The selected Genre is displayed and the list of movies in that Genre is retrieved from Netflix.
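A URL for such a genre query could be assembled along the following lines. Only the catalog root comes from the post; the `Genres` and `Titles` resource names, the `Name` and `Synopsis` properties and the helper name are assumptions made for illustration, and the sketch builds the string without calling the service.

```python
from urllib.parse import quote

CATALOG_ROOT = "http://odata.netflix.com/v2/Catalog/"  # root URL given in the post

def titles_url_for_genre(genre, top=50):
    """Build a query URL for titles in one genre (resource names are assumed)."""
    # OData string keys double any embedded single quote; then percent-encode.
    key = quote(genre.replace("'", "''"), safe="'")
    return "{0}Genres('{1}')/Titles?$select=Name,Synopsis&$top={2}".format(
        CATALOG_ROOT, key, top)

print(titles_url_for_genre("Sci-Fi & Fantasy"))
```

Encoding the key matters for genre names that contain spaces or ampersands, which would otherwise break the URL.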

Choosing A Movie

After the ListPickerGenres.AfterPicking procedure runs, OData is returned to the application.

The next procedure to run is the webComponentTitles.GotText procedure.

This procedure fills the Movie List Picker Button so that the list of Movie entries will show when the user clicks the Button.

The first part of the procedure breaks the OData result and parses it into lstAllOData.

The next step to create a list of Movie titles is similar to what we did previously to build the list of Genres.

However, this time we build a second list of Movie Descriptions.

We will use this second list to display the Movie description when a Movie is selected.

We set the Movie List Picker to the list of Movie Titles and the list of Movies displays when the Button is selected.

When a Movie is selected, we will take the index position selected to retrieve the proper Movie Description in the lstMovieDescription list.
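The parallel-list lookup can be sketched in a few lines of Python. The list contents are placeholders and `description_for_selection` is a hypothetical name; the one detail worth noting is that App Inventor list indexes start at 1, so the index is shifted before it is used with a Python list.

```python
# Placeholder data; the two lists are kept in the same order.
movie_titles = ["Example Movie A", "Example Movie B"]
movie_descriptions = ["Synopsis for A.", "Synopsis for B."]

def description_for_selection(selection_index):
    """Look up a description by 1-based index (App Inventor lists start at 1)."""
    if not 1 <= selection_index <= len(movie_descriptions):
        raise IndexError("selection is outside the list")
    return movie_descriptions[selection_index - 1]

print(description_for_selection(2))
```

Keeping titles and descriptions in two lists works only while both are built in the same loop, which is how the procedure above fills them.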

The Movie is then displayed.

Download

You can download the source code at this link.


David Menninger (@dmenningervr) reported Research Uncovers Keys to Using Predictive Analytics in a 3/27/2012 post to his Ventana Research blog:

As a technology, predictive analytics has existed for years, but adoption has not been widespread among businesses. In our recent benchmark research on business analytics among more than 2,600 organizations, predictive analytics ranked only 10th among technologies they use to generate analytics, and only one in eight of those companies use it. Predictive analytics has been costly to acquire, and while enterprises in a few vertical industries and specific lines of business have been willing to invest large sums in it, they constitute only a fraction of the organizations that could benefit from it. Ventana Research has just completed a benchmark research project to learn about how the organizations that have adopted predictive analytics are using it and to acquire real-world information about their levels of maturity, trends and best practices. In this post I want to share some of the key findings from our research.

As I have noted, varieties of predictive analytics are on the rise. The huge volumes of data that organizations accumulate are driving some of this interest. Our Hadoop research highlights the intersection of this big data and predictive analytics: More than two-thirds (69%) of Hadoop users perform advanced analytics such as data mining. Regardless of the reasons for the rise, our new research confirms the importance of predictive analytics. Participants overwhelmingly reported that these capabilities are important or very important to their organization (86%) and that they plan to deploy more predictive analytics (94%). One reason for the importance assigned to predictive analytics is that most organizations apply it to core functions that produce revenue. Marketing and sales are the most common of those. The top five sources of data tapped for predictive analytics also relate directly to revenue: customer, marketing, product, sales and financial.

Although participants are using predictive analytics for important purposes and are generally positive about the experience, they do not minimize its complexities. While now usable by more types of people, this technology still requires special skills to design and deploy, and in half of organizations the users of it don’t have them. Having worked for two different vendors in the predictive analytics space, I personally can testify that the mathematics of it requires special training. Our research bears this out. For example, 58 percent don’t understand the mathematics required. Although not a math major, I had always been analytically oriented, but to get involved in predictive analytics I had to learn new concepts or new ways to apply concepts I knew.

Organizations can overcome these issues with training and support. Unfortunately, most are not doing an adequate job in these areas. Fewer than half (44%) said their training in predictive analytics concepts and techniques is adequate, and fewer than one-fourth (24%) provide adequate help desk resources. These are important places to invest because organizations that do an adequate job in these two areas have the highest levels of satisfaction with their use of predictive analytics; 89% of them are satisfied vs. 66% overall. But we note that product training is not the most important type. That also correlated to higher levels of satisfaction, but training in concepts and the application of those concepts to business problems showed stronger correlation.

Timeliness of results also has an impact on satisfaction. Organizations that use real-time scoring of records occasionally or regularly are more satisfied than those that use real-time scoring infrequently or not at all. Our research also shows that organizations need to update their models more frequently. Almost four in 10 update their models quarterly or less frequently, and they are less satisfied with their predictive analytics projects than those who update more frequently. In some ways model updates represent the “last mile” of the predictive analytics process. To be fully effective, organizations need to build predictive analytics into ongoing business processes so the results can be used in real time. Using models that aren’t up to date undermines the whole effort.

Thanks to our sponsors, IBM and Alpine Data Labs, for helping to make this research available. And thanks to our media sponsors, Information Management, KD Nuggets and TechTarget, for helping in gaining participants and promoting the research and educating the market. I encourage you to explore these results in more detail to help ensure your organization maximizes the value of its predictive analytics efforts.

Asad Khan reported an OData meetup in a 3/26/2012 post to the OData Team blog:

This week we had our first OData meetup hosted by Microsoft. People representing 20+ companies came together to learn from other attendees’ experiences, chatted about everything OData, and enjoyed the food, beverages and awesome weather (no, really!) in Redmond.

We had some great presentations

- Mike Pizzo had fun stories on the Evolution of OData. He talked about the open design approach that the OData team adopted from the very beginning and how it helped to bring the community on board. He concluded that OData design has benefited greatly from broad community participation.

- Pablo Castro and Alex James covered the new features that are coming as part of OData v3. OData v3 has a ton of features that augment the RESTful story for OData. Features like vocabularies and functions provide the necessary extension points that enable implementers to go beyond what is offered in the core implementation and still be able to play within the OData ecosystem.

- Ralf Handl from SAP talked about how OData helped them achieve the vision for ‘Open Data’ – Any Environment, Any Platform, Any Experience. In later talks by SAP they showed some of their products that are powered by OData. In addition to the OData feeds they publish, they demonstrated client tools that enable developers to easily consume SAP OData feeds on the platform of their choice.

- Dana Gutride from Citrix walked through their experience of enabling OData in some of their products. OData’s standards-based approach, capabilities like type safety, and ease of access made it an obvious choice for their product.

- Webnodes presented how they integrated OData into their CMS system

- Eastbanc Technologies talked about their metropolitan transit visualization tool

- Viecore demoed its advanced decision support and control systems for the U.S. military

- APIgee’s Anant Jhingran gave more of a Zen talk; Anant hit a few themes that are worth mentioning:

  - If Data isn’t your core business, then you should give it away

  - Opportunity for OData community is immense – question is whether we’ll grab it

  - Data as an information halo surrounding core business is the OData opportunity

- Pablo Castro gave another talk titled ‘OData: The Good, the Bad, and the Ugly’, which focused on what things Microsoft has done right and wrong in implementing their OData stack (beer was served during this talk to ensure these points do not last long in people’s memory)

The first day ended with a delicious dinner at the Spitfire restaurant in Redmond.

On the second day of the meetup we used Open Space format (http://www.openspaceworld.org/) to encourage loosely-structured discussion. Through Arlo Belshee’s awesome coordination, we put together by the end of the first hour an exciting agenda for the rest of the day.

Some of the conversations that happened and themes that emerged:

- The topic of vocabularies sparked a great discussion, in which we were trying to decide what tools and communications media would best help groups create vocabularies and then advertise them to others. We also talked about whether there were vocabularies that were central enough to warrant definition by the OData community as a whole.

- SAP led a discussion exploring ways to model Analytical data (cubes) in OData, and meetup attendees had many good suggestions.

- There was a lot of talk about open source, ODataLib, and a shared query processor. Some people talked about porting ODataLib to other languages. Others discussed getting improvements folded back into existing projects, such as OData4J. We had several conversations about a query processor, and what form it could take. We even got into some architectural discussion about potential programming APIs.

- We heard repeatedly that there isn’t enough marketing of OData to CIOs and other decision makers, and we discussed different ways to improve the odata.org website to make it more useful for the community.

- JSON Light came up several times. We kicked the tires around some of the current thinking and explored how that would interact with people’s existing implementations.

The two days were both educational and fun-filled, and they showed how big the OData community has grown in recent years. There was a strong interest from the attendees to do more of these community-driven events.

Sorry I missed this meeting!



Windows Azure Service Bus, Access Control, Identity and Workflow

• Lori MacVittie (@lmacvittie) asserted Identity lifecycle management is out of control in the cloud in an introduction to her Identity Gone Wild! Cloud Edition post of 3/28/2012 to F5’s DevCentral blog:

Remember the Liberty Alliance? Microsoft Passport? How about the spate of employee provisioning vendors snatched up by big names like Oracle, IBM, and CA?

That was nearly ten years ago.

That’s when everyone was talking about “Making ID Management Manageable” and leveraging automation to broker identity on the Internets. And now, thanks to the rapid adoption of SaaS driven, so say analysts, by mobile and remote user connectivity, we’re talking about it again.

“Approximately 48 percent of the respondents said remote/mobile user connectivity is driving the enterprises to deploy software as a service (SaaS). This is significant as there is a 92 percent increase over 2010.” -- Enterprise SaaS Adoption Almost Doubles in 2011: Yankee Group Survey

So what’s the problem? Same as it ever was, turns out. The lack of infrastructure integration available with SaaS models means double trouble: two sets of credentials to manage, synchronize, and track.

IDENTITY GONE WILD

Unlike Web 2.0 and its heavily OAuth-based federated identity model, enterprise-class SaaS lacks these capabilities. Users who use Salesforce.com for sales force automation or customer relationship management services have a separate set of credentials they use to access those services, giving rise to perhaps one of the few shared frustrations across IT and users – Yet Another Password. Worse, there’s less control over the strength (and conversely the weakness) of those credentials, and there’s no way to prevent a user from simply duplicating their corporate credentials in the cloud (a kind of manual single-sign on strategy users adopt to manage their lengthy identity lists). That’s a potential attack vector and one that IT is interested in cutting off sooner rather than later.

The lack of integration forces IT to adopt manual synchronization processes that lag behind reality. Synchronization of accounts often requires manual processes that extract, zip and share corporate identity with SaaS operations as a means to level access on a daily basis. Inefficient at best, dangerous at worst, this process can easily lead to orphaned accounts – even if only for a few weeks – that remain active for the end-user even as they’ve been removed from corporate identity stores.

“Orphan accounts refer to active accounts belonging to a user who is no longer involved with that organization. From a compliance standpoint, orphan accounts are a major concern since orphan accounts mean that ex-employees and former contractors or suppliers still have legitimate credentials and access to internal systems.” -- TEST ACCOUNTS: ANOTHER COMPLIANCE RISK

What users – and IT – want is a more integrated system. For IT it’s about control and management, for end-users it’s about reducing the impact of credential management on their daily workflows and eliminating the need to remember so many darn passwords.

IDENTITY GOVERNANCE: CLOUD STYLE

From a technical perspective what’s necessary is a better method of integration that puts IT back in control of identity and, ultimately, access to corporate resources wherever they may be.

It’s less a federated governance model and more a hierarchical trust-based governance model. Users still exist in both systems – corporate and cloud – but corporate systems act as a mediator between end-users and cloud resources to ensure timely authentication and authorization. End-users get the benefit of a safer single-sign on like experience, and IT sleeps better at night knowing corporate passwords aren’t being duplicated in systems over which they have no control and for which quantifying risk is difficult.

Much like the Liberty Alliance’s federated model, end-users authenticate to corporate identity management services and then a corporate identity bridging (or brokering) solution asserts to the cloud resource the rights and role of that user. The corporate system trusts the end-user by virtue of compliance with its own authentication standards (certificates, credentials, etc…) while the SaaS trusts the corporate system. The user still exists in both identity stores – corporate and cloud – but identity and access is managed by corporate IT, not cloud IT.

This problem, by the way, is not specific to SaaS. The nature of cloud is such that almost all models impose the need for a separate set of credentials in the cloud from that of corporate IT. This means an identity governance problem is being created every time a new cloud-based service is provisioned, which increases risks and the costs associated with managing those assets as they often require manual processes to synchronize.

Identity bridging (or brokering) is one method of addressing these risks. By putting control over access back in the hands of corporate IT, much of the risk of orphan accounts is mitigated. Compliance with corporate credential policies (strength and length of passwords, for example) can be restored because authentication occurs in the data center rather than in the cloud. And perhaps most importantly, if corporate IT is properly set up, there is no lag between an account being disabled in the corporate identity store and access to cloud resources being denied. The account may still exist, but because access is governed by corporate IT, the risk is diminished to nearly nothing; the user cannot gain access to that resource without the permission of corporate IT, which is immediately denied.
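The bridging flow described above can be illustrated with a minimal Python sketch. Everything in it is a hypothetical stand-in (the `CorporateDirectory`, `IdentityBridge` and `SaasApp` classes, their methods, and the sample user); it is not any vendor's API, and a real deployment would use a signed assertion format and hashed credentials rather than a plain dictionary. The point is only to show why disabling the corporate account cuts off cloud access even though the cloud account still exists.

```python
# Illustrative sketch only: every class and method name here is hypothetical.
from dataclasses import dataclass, field


@dataclass
class CorporateDirectory:
    """Stands in for the corporate identity store, the single source of truth."""
    accounts: dict = field(default_factory=dict)

    def add(self, user, password, role):
        self.accounts[user] = {"password": password, "role": role, "enabled": True}

    def disable(self, user):
        self.accounts[user]["enabled"] = False

    def authenticate(self, user, password):
        acct = self.accounts.get(user)
        return bool(acct and acct["enabled"] and acct["password"] == password)


class IdentityBridge:
    """Authenticates against corporate IT, then asserts the user's role to the SaaS."""

    def __init__(self, directory):
        self.directory = directory

    def assert_identity(self, user, password):
        if not self.directory.authenticate(user, password):
            return None  # no assertion is issued, so the SaaS has nothing to trust
        return {"subject": user, "role": self.directory.accounts[user]["role"]}


class SaasApp:
    """Trusts the bridge's assertion instead of checking a password of its own."""

    def __init__(self):
        self.local_accounts = set()

    def open_session(self, assertion):
        return assertion is not None and assertion["subject"] in self.local_accounts


directory = CorporateDirectory()
directory.add("alice", "s3cret", "sales")
bridge = IdentityBridge(directory)
saas = SaasApp()
saas.local_accounts.add("alice")  # the user exists in both identity stores

before = saas.open_session(bridge.assert_identity("alice", "s3cret"))
directory.disable("alice")  # account disabled in the corporate store only
after = saas.open_session(bridge.assert_identity("alice", "s3cret"))
```

In this sketch `before` is true and `after` is false, although the SaaS-side account is never touched: the orphaned account remains, but it is unreachable without a fresh assertion from corporate IT.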

This is one of the reasons why identity and access management go hand in hand today. The distributed nature of cloud requires that IT be able to govern both identity and access, and a unified set of services enables IT to do just that.

<Return to section navigation list>

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

<Return to section navigation list>

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Himanshu Singh (@himanshuks) reported Microsoft Research and Windows Azure Partner to Influence Discovery and Sharing in a 3/28/2012 post to the Windows Azure Team blog:

This past month at Microsoft’s annual TechFest, Microsoft Research demoed three new research projects powered by Windows Azure which aim to unify data to empower discovery and sharing.

All three projects – Microsoft Translator Hub, ChronoZoom and FetchClimate! – involve machine learning and/or computing big data sets. Using Windows Azure helps each project accomplish significant computations and allows all to be used by online communities in the cloud. These tools are primarily used by scientists and researchers, but all are available to the general public for download.

Microsoft Translator Hub – Microsoft Translator Hub implements a self-service model for building a highly customized automatic translation service between any two languages. Microsoft Translator Hub empowers language communities, service providers and corporations to create automatic translation systems, allowing speakers of one language to share and access knowledge with speakers of any other language. By enabling translation to languages that aren’t supported by today’s mainstream translation engines, this also keeps less widely spoken languages vibrant and in use for future generations. This Windows Azure-based service allows users to upload language data for custom training, and then build and deploy custom translation models. These machine translation services are accessible using the Microsoft Translator APIs or a Webpage widget.

ChronoZoom – Powered by Windows Azure and SQL Azure, ChronoZoom is a collaborative tool that organizes history-related collections in one place. Given there are thousands of digital libraries, archives, collections and repositories, there hasn’t been an easy way to leverage these datasets for teaching, learning and research. With ChronoZoom, users can easily consume audio, video, text, charts, graphs and articles in one place. Using HTML5, ChronoZoom enables users to browse historical knowledge affixed to logical visual time scales, rather than digging it out piece by piece.

FetchClimate!: FetchClimate! is a powerful climate data service that provides climate information on virtually any point or region in the world and for a range of years. Deployed on Windows Azure, it can be accessed either through a simple web interface, or via a few lines of code inside any .NET program. All climate datasets are stored on Windows Azure as well.

Founded in 1991, Microsoft Research is dedicated to conducting both basic and applied research in computer science and software engineering. More than 850 Ph.D. researchers focus on more than 60 areas of computing and openly collaborate with leading academic, government, and industry researchers to advance the state of the art of computing, help fuel the long-term growth of Microsoft and its products, and solve some of the world’s toughest problems through technological innovation. Microsoft Research has expanded over the years to seven countries worldwide and brings together the best minds in computer science to advance a research agenda based on an array of unique talents and interests. More information can be found here.

Bruce Kyle asserted Up-to-Date Information on Windows Azure Now Available in New USCloud Blog in a 3/28/2012 post to the US ISV Evangelism blog:

My colleagues are now pooling their blogs into a single feed to provide up-to-date news for readers interested in the latest in cloud. The US Cloud Connection site is now live and has added the ability to aggregate Azure-related blog posts. My colleagues will provide details on the latest offerings and let you know about events across the US.

Be sure to check out the contributions of Bruno Terkaly, Sanjay Jain, Zhiming Xue, Adam Hoffman, and myself.

For example, check out Peter Laudati’s Windows Azure for the ASP.NET Developer Series.

Find the top stories, links to the best deals, and how you get started with Windows Azure.

See USCloud.

Himanshu Singh (@himanshuks) posted Real World Windows Azure: Interview with Jan Kopmels, CEO of Crumbtag to the Windows Azure blog on 3/27/2012:

As part of the Real World Windows Azure interview series, I talked to Jan Kopmels, Cofounder and Chief Executive Officer at Crumbtag, about using Windows Azure to provide on-demand processing power for its ad-placement application. Read the customer success story. Here’s what he had to say.

Himanshu Kumar Singh: Where did the idea for Crumbtag come from?

Jan Kopmels: Some online advertising companies provide user-based ad matching, placing ads on web pages based on visitor profiles. This relies on the use of cookies—data that is stored locally on a user’s computer. The problem with cookies is that they are raising privacy concerns, and many countries are outlawing them. Plus, cookies stay on users’ PCs, so advertisers cannot store them centrally for analysis.

I wanted to capture user web-behavior data and process it centrally in a giant statistical database. This would not only allow customers to place ads without relying on cookies but also let them take advantage of dynamic ad placements that are adjusted and refined with every webpage view and click.

HKS: When was Crumbtag launched?

JK: We launched Crumbtag in 2009 and our small team spent two years developing technology for the ad-placement application. I soon determined that we’d have to spend millions of dollars on data center infrastructure to process the prodigious amounts of data involved, and millions more to expand across Europe. We needed a whole new infrastructure and business model to make the business viable.

HKS: So you turned to the cloud?

JK: Yes, we began evaluating cloud service providers in December 2010. We turned to Windows Azure primarily because we’re a committed user of Microsoft technology and had developed our ad placement application using the .NET Framework and SQL Server 2008. We did look briefly at Amazon cloud solutions but felt they were too immature.

HKS: How does Crumbtag use Windows Azure?

JK: We use Windows Azure compute to provide on-demand processing power for our ad-placement application, which processes about 4000 requests a second and provides an 80-millisecond response time to well-known Dutch companies such as ABNAmro and KPN. Additionally, we use SQL Azure to store statistical information on visitors, as well as Windows Azure Service Bus to communicate ad-matching parameters to hundreds of virtual machines across our network. And Windows Azure Caching provides high-speed communication between those virtual machines.

HKS: How was the migration to Windows Azure?

JK: For an experienced .NET developer, moving to Windows Azure is a piece of cake. It took us just six weeks to move our application to Windows Azure - about 20 minutes of which was required to migrate the database to SQL Azure.

HKS: How does your application actually work?

JK: When a customer launches an ad campaign on Crumbtag, it uploads the ad and indicates how many clicks or views it wants to purchase. Crumbtag then places the ad randomly on the web. When the first web visitors click on the ad, Crumbtag starts determining statistical anomalies in the ‘clickers’ based on the site the visitor came from, where the visitor lives, what day and time it is, and so forth—and starts matching users with similar data. All campaigns are matched in real time against the Crumbtag statistical database.
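The interview does not say how Crumbtag detects "statistical anomalies" in clickers, so the following Python sketch is only one plausible reading, not the company's method. It assumes a hypothetical list of impression records and computes, for one attribute such as the referring site, how much more (or less) often visitors with each value click compared with the overall click rate. The `click_lift` function and the sample data are invented for illustration.

```python
from collections import Counter


def click_lift(impressions, attribute):
    """Return click-rate lift per attribute value, relative to the overall click rate.

    A lift above 1.0 means visitors with that value click more often than average.
    """
    shown = Counter()
    clicked = Counter()
    for imp in impressions:
        shown[imp[attribute]] += 1
        if imp["clicked"]:
            clicked[imp[attribute]] += 1
    total_clicks = sum(clicked.values())
    if total_clicks == 0:
        return {}  # nothing to compare against until the first clicks arrive
    overall_rate = total_clicks / len(impressions)
    return {value: (clicked[value] / shown[value]) / overall_rate for value in shown}


# Hypothetical sample: the ad was shown four times each to visitors from two referrers.
impressions = (
    [{"referrer": "news", "clicked": True}] * 3
    + [{"referrer": "news", "clicked": False}]
    + [{"referrer": "sports", "clicked": True}]
    + [{"referrer": "sports", "clicked": False}] * 3
)
lift = click_lift(impressions, "referrer")
```

A placement engine along these lines could then favor visitors whose attribute values show high lift, re-computing the figures as new views and clicks arrive.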

HKS: How has your business benefitted from running on Windows Azure?

JK: By launching our ad placement business on Windows Azure, we’ve been able to scale our business rapidly, pitch ourselves to the biggest businesses, and avoid significant costs. We can quickly scale to serve our growing number of customers; in fact we plan to expand into the rest of Europe in 2012.

With Microsoft taking care of the Crumbtag infrastructure, we have more time to concentrate on growing our business. We’re a technology-driven business, but we don’t want to devote our resources to supporting hardware and managing IT systems. We’ve outsourced these tasks to Microsoft, which lowers our costs and allows us to focus on the business.

HKS: What have the cost-savings translated to for your business and your customers?

JK: By using cloud computing, we’ve been able to lower our operating costs and offer a more cost-effective solution that helps us win business against larger, more established players that are saddled with on-premises IT setups. And because we’re not spending millions of dollars on IT infrastructure, we can pass those savings on to customers. We have been able to win multinational customers as a small startup, but also demonstrate to them that we use cutting-edge technology.

I estimate that we have also avoided spending between U.S.$5 and 10 million a year on data centers and personnel, or up to $40 million in the first five years. As a startup, we did not have millions of dollars to build an enormous IT infrastructure. To expand across Europe, we would have to install a data center in each country. Our business model would not have been viable if we had not moved to Windows Azure.

Read the full case study. Learn how other companies are using Windows Azure.

Bruno Terkaly (@brunoterkaly) posted Microsoft Azure (Cloud) DevCamps–If you can’t make it in person.. tutorials on 3/27/2012:

Introduction

The purpose of this post is to bring you up to speed writing Windows Azure cloud-based applications from scratch. I assume you just have the hardware and the willingness to get started by installing the software.

Not everybody can afford the time to attend a DevCamp.

So what this post is about is getting you installed and executing even though you were not able to make it in person.

This first section is about getting setup and configured. You will need to download a number of things and you will create a test project to validate the setup.

Exercise 1: Getting the correct hardware and software

What you will need:

Exercise 2: Validating that your cloud project will run

Creating your first test project with Windows Azure and making sure it runs:

Summary

The core lessons in this post are:

Exercise 1: Getting the correct hardware and software

This exercise is about figuring out what you have and where you need to be. Currently, from a software point of view, I'm using VS 2010. So please try to get to Visual Studio version 2010. Shouldn't be a problem because you can use the Express version of VS for free. Does your hardware measure up to at least these standards?

Exercise 1: Task 1 - Validating your current hardware

This task is about figuring out the hardware currently available for your developer machine. I recommend a little more than what you see here. The best environment I've ever had is a Lenovo w520, 16GB RAM, solid state drive. Everything loads in seconds. If you can afford solid state, I highly recommend it if you are an impatient developer type.

Figure 1

Computer Properties

Exercise 1: Task 2 – Installing the software

You will need a combination of Visual Studio, SQL Server 2008 R2 Management Studio Express with SP1, SDKs, and operating system settings.

Figure 2

Turn features on/off

- Under Microsoft .NET Framework 3.5, select Windows Communication Foundation HTTP Activation.

Figure 3

Changing Windows Features

- Under Internet Information Services, expand World Wide Web Services, then Application Development Features, then select .NET Extensibility, ASP.NET, ISAPI Extensions and ISAPI Filters.

- Under Internet Information Services, expand World Wide Web Services, then Common HTTP Features, then select Directory Browsing, HTTP Errors, HTTP Redirection, Static Content.

- Under Internet Information Services, expand World Wide Web Services, then Health and Diagnostics, then select Logging Tools, Request Monitor and Tracing.

- Under Internet Information Services, expand World Wide Web Services, then Security, then select Request Filtering.

- Under Internet Information Services, expand Web Management Tools, then select IIS Management Console.

- Install the selected features.

Exercise 1: Task 3 - Exploring the labs

A subset of labs are provided. The next section will guide you through the process of installing the content and testing the labs to make sure they can run.

Note

The Windows Azure Camps Training Kit uses the new Content Installer to install all prerequisites, hands-on labs and presentations that are used for the Windows Azure Camp events.

Note – The two kits to install

Windows Azure Devcamps: http://www.contentinstaller.net/Install/ContentGroup/WAPCamps

Figure Content Installer

For the Windows Azure Devcamp

http://www.microsoft.com/download/en/details.aspx?displaylang=en&id=8396

Figure Windows Azure Platform Training Kit

This is an additional download

Exercise 2: Validating that your cloud project will run

The purpose of this section is to validate that we can create and run a Windows Azure project.

We will create a new project and run it in the local emulators. We will then start opening the projects from: (1) Azure Dev Camp Kit; (2) Windows Azure Platform Kit.

Exercise 2: Task 1 - File / New Project

We will create a new project from scratch to build a "hello world" application.

- Start Visual Studio 2010 as administrator. You need those administrator privileges.

- You will add an ASP.NET Web role

Figure Starting Visual Studio as administrator

How to start Visual Studio as administrator

- Select File/New Project from the Visual Studio menu.

- Provide a name of Hello World

Figure Creating a new project

How to create a cloud project

Figure Adding an ASP.NET Web Role

How to add a web role

Figure Validating our project

How to create a cloud based solution

Exercise 2: Task 2 - Adding basic code

We will add some very basic code to validate our project. We will not be using storage for the demo.

- Navigate to default.aspx. Add your name to the h2 section as follows.

<%@ Page Title="Home Page" Language="C#" MasterPageFile="~/Site.master" AutoEventWireup="true"
    CodeBehind="Default.aspx.cs" Inherits="WebRole1._Default" %>

<asp:Content ID="HeaderContent" runat="server" ContentPlaceHolderID="HeadContent">
</asp:Content>
<asp:Content ID="BodyContent" runat="server" ContentPlaceHolderID="MainContent">
    <h2>
        Welcome to ASP.NET! to you, Bruno Terkaly
    </h2>
    <p>
        To learn more about ASP.NET visit <a href="http://www.asp.net" title="ASP.NET Website">www.asp.net</a>.
    </p>
    <p>
        You can also find <a href="http://go.microsoft.com/fwlink/?LinkID=152368&clcid=0x409"
            title="MSDN ASP.NET Docs">documentation on ASP.NET at MSDN</a>.
    </p>
</asp:Content>

Exercise 2: Task 3 - Running your project

This next section will test if your Compute Emulator works.

It will not test the storage emulator. We will cover that in another post.

- Navigate to the Debug menu. Choose Start debugging.

- Validate you see the following window.

Figure Verifying your cloud project runs.

How to verify your cloud project can run in the compute emulator.

PRNewsWire asserted “Microfinance software specialist simplifies infrastructure, optimizes customer delivery, and expands capability and global scalability by adopting Microsoft cloud services platform” in an introduction to an Independent Software Vendor Gradatim Migrates to Windows Azure, Helping Reduce Deployment Time by 84 Percent While Saving $2 Million press release of 3/27/2012:

NEW DELHI, March 27, 2012 /PRNewswire/ -- Independent software vendor Gradatim, a specialist in creating technology solutions for businesses that offer microfinance products and services, migrated to the Windows Azure cloud platform to simplify its service configuration and gain global scalability, achieving an 84 percent decrease in project deployment time and saving an estimated $2 million (U.S.).

In 2011, Gradatim evaluated a number of shared services platforms, including IBM SmartCloud, and selected Windows Azure, the Microsoft cloud services development, hosting and management environment.

"We wanted a platform-as-a-service solution, not just cloud infrastructure," said CV Prakash, founder and CEO of Gradatim. "We concluded that Windows Azure offered the best long-term value and the most reliable cloud platform for transforming our business."

"Microfinance" describes the market for financial instruments such as loans and insurance policies, which involve small principal and transaction amounts. Service providers must be agile enough to quickly develop low-cost, custom products that can be accessed anywhere, including on mobile devices. To capitalize on the growing demand for its insurance policy and loan management applications, Gradatim chose to migrate its solutions to Windows Azure to achieve simplicity and global scalability.

By eliminating the need to set up datacenters and invest in server hardware to deliver its solutions, Gradatim substantially reduced its operating expenses. The company estimates that the cost savings from adopting Windows Azure will total about $2 million (U.S.) over the next 18 months.

The company previously needed about three months to fully deploy its solutions to customers. The same work can now be done in two weeks, which represents an 84 percent increase in efficiency. "Faster deployments mean that we start generating revenue from each project faster, so we have more flexibility in the investment decisions that we make to grow our business," Prakash said. The on-demand resource availability and scalability offered by Azure also helps the company spend less time planning customer deployments.
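The 84 percent figure is easiest to read as a reduction in deployment time. A quick check in Python, assuming "about three months" means roughly 90 days and "two weeks" means 14 days (both conversions are assumptions, since the press release gives no exact durations):

```python
# Assumption: "about three months" is taken as 90 days and "two weeks" as 14 days.
before_days = 90
after_days = 14

# Share of the original deployment time that was eliminated.
reduction_pct = (before_days - after_days) / before_days * 100

# How many times faster a deployment now completes.
speedup = before_days / after_days

print(f"Deployment time reduced by about {reduction_pct:.0f} percent")
print(f"Deployments complete roughly {speedup:.1f} times faster")
```

Under those assumptions the reduction rounds to the 84 percent quoted in the release, which corresponds to deployments finishing a little over six times faster.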

Windows Azure allowed Gradatim to not only shift to cloud-based delivery of its products but also to make major improvements to its pricing model. Rather than buying a perpetual license to access the software, customers can choose to pay a nominal subscription fee, along with a percentage of the value of each transaction or a fixed fee for each loan or insurance policy they manage, using Gradatim technology. "We've been able to restructure our pricing to better align with the way customers across the industry consume our services," Prakash said.

"We are excited about the impact this migration has for Gradatim and its customers," said Srikanth Karnakota, director, Server and Cloud Business, Microsoft India. "Azure has been enabling customers to innovate, reduce time to market and access newer markets. The current offering from Gradatim strengthens its position in the finance industry as a company focused on delivering affordable, high-caliber service."

More information on Gradatim's move to Windows Azure is available in the Microsoft case study and Microsoft News Center.

Jason Zander (@jlzander) described a Visual Studio Ultimate Roadmap in a 3/27/2012 post:

Today at DevConnections I shared some insight into the roadmap ahead for our Visual Studio Ultimate product. Visual Studio Ultimate is our complete, state-of-the art toolset. It provides tools for all members of the team, from product owners to testers, and is ideal for the development of mission critical enterprise applications. It contains unique features like architecture modeling, code discovery, Quality of Service testing, and advanced cross-environment diagnostic tools, which help save the team time throughout the software development lifecycle. Beyond the product features, Visual Studio Ultimate subscribers also enjoy additional MSDN subscriber benefits year-round, including feature packs.

As developers, we want to provide solutions to customer problems and we’d like to deliver those improvements faster than before while ensuring high quality. In my past few blog posts, I’ve talked about new features in Visual Studio 11 which help you optimize for that faster development cycle including support for DevOps. We want to deliver that same level of continuous improvement for Visual Studio users as well. Today I shared news that after the Visual Studio 11 release, we will ship Visual Studio 11 Ultimate Feature Packs as an ongoing benefit. The goal of these feature packs is to further build on the value and scenarios that we’re delivering in Visual Studio 11. The main themes for the first feature pack will be SharePoint Quality of Service Testing scenarios, and the ability to debug code anywhere it’s run using our IntelliTrace technology. These are two challenges we see at the interface between development and operations teams, which we can help address with the right tools.

In Visual Studio 11, we’re removing friction between the development teams building software and the operations groups managing software in production. Enhanced IntelliTrace capabilities and features like TFS connector for System Center Operations Manager allow teams to monitor and debug their apps anywhere: in environments spanning development, test and even in production. In the first Ultimate Feature Pack after Visual Studio 11, we’ll continue building upon the IntelliTrace enhancements in Visual Studio 11. We’ll add new capabilities for customizing collection of trace data, including the ability to refine the scope of an IntelliTrace collection to a specific class, a specific ASP.NET page, or a specific function. This fine grained control will enable more targeted investigations and allow you to debug issues more quickly, saving hours of effort. We’ll also invest in results filtering making it faster to find the data you need as well as improved summary pages for quickly identifying core issues.

In Visual Studio 11 we are expanding our support for teams working with SharePoint with features like performance profiling, unit testing, and IntelliTrace support. In the first Ultimate Feature Pack after Visual Studio 11 we’ll make it easy to test your site for high volume by introducing SharePoint load testing. We will also make it easier to do SharePoint unit testing by providing Behaviors support for SharePoint APIs. This is a great win for teams developing SharePoint solutions.

I’m happy to share this future roadmap with you today, and excited about the benefits we’ll be offering to our Ultimate subscribers in this first feature pack and beyond. These announcements are a sneak peek at the road ahead, and we will keep you updated as these plans materialize in the future.

Also make sure to visit Brian Harry’s blog to learn more about another announcement we made today, regarding build in the cloud for the Team Foundation Service Preview.

Richard Conway (@azurecoder) announced Release of Azure Fluent Management v0.1 library on 3/26/2012:

Wanted to let you know that we’re pleased to announce v0.1 of Azure Fluent Management. This is an API that I’ve pulled together primarily from demos that we’ve given in the past few months. It’s a fluent API that we hope will be used for all management tasks in Windows Azure for those that would prefer to use C# in code directly rather than PowerShell. We feel that it allows for a much more reactive approach. In v0.1 we’ve included the following:

- Ability to create a hosted service, followed by a deployment

- Ability to use an existing hosted service and deploy to a particular deployment slot

- Can alter instance counts for roles on the fly

It’s lacking in several areas at the moment, the immediate fixes for which will follow in the next few days and weeks:

- No direct operations on hosted services

- No code comments

- Direct use of the storage service through REST APIs – as such, there is a limit on the size of the .cspkg payload that can be sent to Azure

- Currently need to provide a storage connection string, in future will enumerate services and upload to one

Longer term goals for the library:

- Full Sql Azure, Service Bus and ACS fluent management library

Source is currently hosted on bitbucket and the package has been uploaded to nuget. To see:

> Install-Package Elastacloud.AzureManagement.Fluent

I’ve tested two paths currently so feel free to have a play. Would welcome feedback – this is still very much in beta.

new Deployment("67000000-0000-0000-0000-0000000000ba")
    .ForNewDeployment("hellocloud")
    .AddCertificateFromStore("FFFFFFFFF0961B6A6C51D4AC657B0ADBFFFFFFFF")
    .WithExistingHostedService("pastasalad")
    .WithPackageConfigDirectory(@"C:\mydir\bin")
    .WithStorageConnectionStringName("DataConnectionString")
    .AddDescription("My new hosted services")
    .AddEnvironment(DeploymentSlot.Production)
    .AddLocation(Deployment.LocationNorthEurope)
    .AddParams(DeploymentParams.StartImmediately)
    .ForRole("HelloCloud.Web")
    .WithInstanceCount(3)
    .AndRole("HelloCloud.Worker")
    .WithInstanceCount(3)
    .Go();

Let’s go through some of the ways of using this. Creating a new Deployment takes a subscription id as a constructor parameter. You can either add a certificate from a store, an X509Certificate2 object or from a .publishsettings file. You can use an existing service or you can use .WithNewHostedService instead. WithPackageConfigDirectory expects a valid .cspkg and .cscfg file and will use these to upload the package to storage and configure the deployment respectively. WithStorageConnectionStringName will allow you to use a connection string in the .cscfg Settings and upload the package temporarily. Currently it doesn’t delete the package after deployment but that will be optional in a future version. Deployment parameters such as locations, descriptions, deployment slots, StartImmediately and TreatWarningsAsError can all be configured. Each role can be configured with a new instance count by using ForRole/WithInstanceCount and AndRole/WithInstanceCount. Then call Go and you’re done!

The library blocks so you’ll have to wait until the operation is finished but internally it uses continuation tokens via GetOperationStatus to ensure that the activities are completed and bubbles up any WebExceptions to the hosting applications.
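To make the two ideas above concrete (chained configuration calls, then a blocking call that polls until the operation finishes), here is a minimal Python sketch of the pattern. It is not the Elastacloud library and does not call Windows Azure: the `Deployment` class, its snake_case methods, the `make_fake_service` helper and the status strings are all hypothetical, and simple status polling stands in for whatever the library does internally with GetOperationStatus.

```python
import time


class Deployment:
    """Hypothetical fluent builder that mimics the chaining style, not the real API."""

    def __init__(self, subscription_id):
        self.settings = {"subscription_id": subscription_id, "roles": {}}
        self._current_role = None

    def with_existing_hosted_service(self, name):
        self.settings["hosted_service"] = name
        return self  # returning self is what allows the calls to be chained

    def for_role(self, role_name):
        self._current_role = role_name
        self.settings["roles"].setdefault(role_name, 1)
        return self

    and_role = for_role  # alias so that longer chains read naturally

    def with_instance_count(self, count):
        if self._current_role is None:
            raise ValueError("call for_role() before with_instance_count()")
        self.settings["roles"][self._current_role] = count
        return self

    def go(self, submit, get_status, poll_seconds=0.0, max_polls=100):
        """Submit the request, then block until the operation leaves 'InProgress'."""
        operation_id = submit(self.settings)
        for _ in range(max_polls):
            status = get_status(operation_id)
            if status != "InProgress":
                return status
            time.sleep(poll_seconds)
        raise TimeoutError("operation did not finish within the polling limit")


def make_fake_service(polls_until_done=3):
    """Stand-in for a management service: 'InProgress' a few times, then 'Succeeded'."""
    remaining = {"count": polls_until_done}

    def submit(settings):
        return "op-1"

    def get_status(operation_id):
        if remaining["count"] > 0:
            remaining["count"] -= 1
            return "InProgress"
        return "Succeeded"

    return submit, get_status


submit, get_status = make_fake_service()
deployment = (
    Deployment("hypothetical-subscription-id")
    .with_existing_hosted_service("pastasalad")
    .for_role("HelloCloud.Web").with_instance_count(3)
    .and_role("HelloCloud.Worker").with_instance_count(3)
)
result = deployment.go(submit, get_status)
```

Passing `submit` and `get_status` in as functions keeps the sketch testable without a network; a real client would issue management requests at those two points and surface any failures to the caller.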

It was late last night so may have missed a System.Xml dependency from Nuget so you may need to adjust. Over time I’ll get some documentation together on bitbucket for this.

Expect new releases every couple of weeks as we add more features.

Enjoy and give us your feedback or wishlists. As we’re writing this library to enable grid deployments for HPC and other parallel workloads (our business – anyone with any consultancy requests on this side of things please get in touch) expect it to be quite comprehensive.

David Pallman announced the beginning of a new series in his Outside-the-Box Pizza, Part 1: A Social, Mobile, and Cloudy Modern Web Application post of 3/26/2012:

Outside-the-Box Pizza, Part 1: A Social, Mobile, and Cloudy Modern Web Application

In this series we’ll be showing how we developed Outside-the-Box Pizza, a modern web application that combines HTML5, mobility, social networking, and cloud computing—by combining open standards on the front end with the Microsoft web and cloud platforms on the back end. This is a public online demo developed by Neudesic.

Here in Part 1 we’ll provide an overview of the application, and in subsequent parts we’ll delve into each of the technologies individually.

Scenario: A National Pizza Chain

The scenario for Outside-the-Box Pizza is a [fictional] national pizza chain with 1,000 stores across the US. All IT is in the cloud: the web presence is integral not only to customers placing orders but also to store operation, deliveries, and enterprise management.

Outside-the-Box Pizza’s name is a reference to the company’s strategy of stressing individuality and pursuing the younger mobile-social crowd. In addition to “normal” pizza, they offer unusual shapes (such as heart-shaped) and toppings (such as elk and mashed potato). The web site works on tablets and phones as well as desktop browsers. The site integrates with Twitter, and encourages customers to share their unusual pizzas over the social network. The most unusual pizzas are given special recognition.

Technologies Used

Outside-the-Box Pizza uses the following technologies and techniques:

• Web Client: HTML5, CSS, JavaScript, jQuery, Modernizr

• Mobility: Responsive Web Design, CSS Media Queries

• Web Server: MVC4, ASP.NET, IIS, Windows Server

• Cloud: Windows Azure Compute, Storage, SQL Azure DB, CDN, Service Bus

• Social: Twitter

Again, we’ll go into detail about these technologies in subsequent posts.

Home Page

On the home page, customers can view suggested special offers as well as videos showing how Outside-the-Box Pizza prepares its pizzas. The first video shows fresh ingredients, and the second video shows artisan pizza chefs practicing their craft.

The web site adapts layout for mobile devices, using the techniques of responsive web design. Here’s how it appears on an iPad:

And here’s how it appears on a smartphone:

Ordering

On the Order page, customers can design their masterpiece. Pizzas come in round, square, heart, and triangle shapes. Sauce choices are tomato, alfredo, bbq, and chocolate. Toppings are many and varied, a mix of traditional and non-traditional. Customers click or touch the options they want, enter their address, and click Order to place their order.

Order Fulfillment

Once an order has been placed, customers see a simulation of order fulfillment on the screen. Since this is a demo and we don’t actually have stores out there making pizzas, the fulfillment process is simulated. It’s also sped up to take about a minute so we don’t have to wait 30-45 minutes as we would in real life.

The order is first transmitted to the web site back end in the cloud and placed in a queue for the target store.

As the order is received by the store, the pizza dough is made and sauce and toppings are added. After that, the pizza goes into the oven for baking.

After baking, the pizza is sent out for delivery. Once delivered to your door, order fulfillment is complete.
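The fulfillment steps just described form a simple linear workflow. As a rough sketch of how such a simulation could track progress, the code below models the stages as an enum with a single transition function. The names OrderStage and OrderFulfillment are hypothetical, and the demo's actual implementation is not shown in the post.

```csharp
using System;

// Hypothetical stage names derived from the steps described above.
public enum OrderStage { Queued, Preparing, Baking, OutForDelivery, Delivered }

public static class OrderFulfillment
{
    // Returns the stage that follows the given one. Delivered is terminal.
    public static OrderStage Next(OrderStage current)
    {
        switch (current)
        {
            case OrderStage.Queued: return OrderStage.Preparing;
            case OrderStage.Preparing: return OrderStage.Baking;
            case OrderStage.Baking: return OrderStage.OutForDelivery;
            case OrderStage.OutForDelivery: return OrderStage.Delivered;
            case OrderStage.Delivered:
                throw new InvalidOperationException("The order has already been delivered.");
            default:
                throw new ArgumentOutOfRangeException(nameof(current));
        }
    }
}
```

A simulation could call Next on a timer, every 15 seconds or so, to walk an order from Queued to Delivered in about a minute.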

Social Media

On the Tweetza Pizza page, customers can view the Twitter feed for the #outsideboxpizza hashtag or post their own tweets.

To post a tweet, the user clicks the Connect with Twitter button and signs in to Twitter. They can then send tweets through the application.

The most impressive pizzas are promoted on the Cool Pizzas page.

The Store View

In the individual pizza stores, each store can view online orders. Orders are distributed to each store through cloud queues: each of the 1,000 stores has its own orders queue. The appropriate store is determined from the zip code of the order.
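To make the per-store routing concrete, here is a minimal in-memory sketch, assuming a lookup table from zip code to store number. StoreOrderRouter, the queue naming scheme, and the use of in-process Queue objects in place of cloud queues are all assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical router; in-memory queues stand in for cloud queues.
public sealed class StoreOrderRouter
{
    private readonly Dictionary<string, int> _storeByZip;
    private readonly Dictionary<int, Queue<string>> _queues = new Dictionary<int, Queue<string>>();

    public StoreOrderRouter(Dictionary<string, int> storeByZip)
    {
        _storeByZip = storeByZip ?? throw new ArgumentNullException(nameof(storeByZip));
    }

    // Assumed naming scheme: one queue per store, e.g. "orders-store-0042".
    public static string QueueName(int storeId)
    {
        return "orders-store-" + storeId.ToString("D4");
    }

    // Looks up the store for the zip code and appends the order to its queue.
    public int Enqueue(string zipCode, string order)
    {
        int storeId;
        if (!_storeByZip.TryGetValue(zipCode, out storeId))
            throw new KeyNotFoundException("No store serves zip code " + zipCode + ".");

        Queue<string> queue;
        if (!_queues.TryGetValue(storeId, out queue))
        {
            queue = new Queue<string>();
            _queues[storeId] = queue;
        }
        queue.Enqueue(order);
        return storeId;
    }

    // Number of orders waiting for the given store.
    public int PendingCount(int storeId)
    {
        Queue<string> queue;
        return _queues.TryGetValue(storeId, out queue) ? queue.Count : 0;
    }
}
```

Giving each store its own queue means a store only ever reads its own orders, and a backlog at one store does not delay the others.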

Once a pizza has been prepared, a delivery order is queued for the driver.

Driver View

Drivers get a view of delivery orders, integrated with Bing Maps so they can easily determine routes.

Enterprise Sales Activity View

Lastly, the enterprise can view the overall sales activity from the Sales page. Unit sales and revenue can be examined for day, month, or year; and grouped by national region, state, or store.
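Grouping sales by region, state, or store is a natural fit for LINQ. The sketch below shows one way such a summary could be computed, assuming a simple Sale record. The Sale and SalesReport types and their property names are hypothetical, not taken from the demo.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record of a single sale.
public sealed class Sale
{
    public string Region { get; set; }
    public string State { get; set; }
    public int StoreId { get; set; }
    public DateTime Date { get; set; }
    public int Units { get; set; }
    public decimal Revenue { get; set; }
}

public static class SalesReport
{
    // Totals units (Item1) and revenue (Item2) per group key.
    // Pass s => s.Region, s => s.State, or s => s.StoreId.ToString().
    public static Dictionary<string, Tuple<int, decimal>> Summarize(
        IEnumerable<Sale> sales, Func<Sale, string> keySelector)
    {
        return sales
            .GroupBy(keySelector)
            .ToDictionary(
                g => g.Key,
                g => Tuple.Create(g.Sum(s => s.Units), g.Sum(s => s.Revenue)));
    }
}
```

To restrict the summary to a day, month, or year, filter first, for example sales.Where(s => s.Date.Year == 2012), and pass the result to Summarize.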

Summary

Outside-the-Box Pizza is a modern web application: it's social, mobile, and cloud-based. Together, the use of HTML5, adaptive layout for mobile devices, and cloud computing mean it can be run anywhere and everywhere: it has broad reach.

Outside-the-Box Pizza can be demoed online at http://outsidetheboxpizza.com. We aren’t quite ready to share the source code to Outside-the-Box Pizza yet. It’s still a work in progress, and we need to replace some licensed stock photos and a commercial chart package before we can do that. However, it is our eventual goal to make the source available.

Stay tuned for the next installment, coming soon.


Visual Studio LightSwitch and Entity Framework 4.1+

Andrew Lader described New Business Types: Percent & Web Address (Andrew Lader) in a 3/28/2012 post to the Visual Studio LightSwitch Team blog:

As many of you know, the first version of LightSwitch came with several built-in business types, specifically Email Address, Money and Phone Number. In the upcoming release of LightSwitch, two new business types have been added: the Percent and the Web Address. In this post, I’m going to explain the functionality they provide and how you can leverage them in your LightSwitch applications.

The Percent Business Type

Starting with the Percent business type, this new addition gives you the ability to treat a particular field in your entity model as a percentage. In other words, your customers will be able to view, edit and validate percentages as an intrinsic data type. Like other business types in LightSwitch, it is part of the LightSwitch Extensions included with LightSwitch and is enabled by default. Keeping this enabled gives you all of the familiar business types like Money, Phone and Email Address.

Using Percent in the Entity Designer

With this release, you can choose the Percent data type for a field in your entity, just like you can for the other existing data types. When you create a field, or edit an existing one, simply click on the drop down in the Type column of the entity; within the drop down list is a data type called “Percent”. Selecting it lets LightSwitch know that this field is to be treated as a Percent business type.

Percent Decimal Places Property

This business type shares two familiar properties with the Decimal data type, Scale and Precision, and they are used in exactly the same way. What’s new with the Percent business type is a property called Percent Decimal Places, which is tied closely to the Scale property. This value is the number of decimal places displayed when the value is formatted as a percent: if it is set to 4, a value of 60% is displayed as 60.0000%. By default, this value is set to 2. It must be greater than or equal to 0 and no greater than the Scale value minus 2; with a Scale of 6, for example, Percent Decimal Places can be at most 4.
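The range rule for Percent Decimal Places can be written as a small check. This is only a sketch of the constraint as stated in the post. PercentDecimalPlacesRule is a hypothetical helper, not a LightSwitch API, and LightSwitch enforces the rule itself in the designer.

```csharp
using System;

// Hypothetical helper expressing the rule: 0 <= places <= scale - 2.
public static class PercentDecimalPlacesRule
{
    // Largest allowed Percent Decimal Places for a given Scale (never below 0).
    public static int MaxDecimalPlaces(int scale)
    {
        return Math.Max(0, scale - 2);
    }

    public static bool IsValid(int decimalPlaces, int scale)
    {
        return decimalPlaces >= 0 && decimalPlaces <= scale - 2;
    }
}
```

The offset of 2 presumably reflects that a percentage is stored as a fraction (60% as 0.60), so two of the stored decimal digits are consumed when the value is multiplied by 100 for display.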

Choosing the Percent Business Type

For this introduction, I am going to use a simple application that records and displays how a community has voted on certain topics. There is one table, Topics, that tracks the votes for each particular topic being considered. As the image below shows, the Entity Designer for field types now contains Percent in the drop down menu:

The Topics table is pretty basic, as the picture below illustrates. It contains fields for defining the name and description of the topic. And there are three integers which are used to record votes for Yes, No and Undecided. The other field of note is the ThresholdNeededToPass field, which is a Percent used to decide the percentage needed for the particular topic to pass. For example, it might be a simple majority like 51%, or it might be something higher like 60%. The last three fields are computed fields that present the percentage values for those in favor of the topic, those against it, and those that are undecided:

Percent Computed Fields

The code for the computed fields is also pretty simple and straight forward. Here is the code I used to implement these computed properties using Visual C#:

private decimal TotalNumberOfVotes
{
    get
    {
        decimal totalNumberOfVotes = 0;
        if ((this.VoteYes != null) && (this.VoteNo != null) && (this.VoteUndecided != null))
        {
            totalNumberOfVotes = (decimal)(this.VoteNo + this.VoteYes + this.VoteUndecided);
        }
        return totalNumberOfVotes;
    }
}

partial void InFavor_Compute(ref decimal result)
{
    if (TotalNumberOfVotes > 0)
    {
        result = Decimal.Round((decimal)this.VoteYes / TotalNumberOfVotes, 6);
    }
    else
    {
        result = 0;
    }
}

partial void Against_Compute(ref decimal result)
{
    if (TotalNumberOfVotes > 0)
    {
        result = Decimal.Round((decimal)this.VoteNo / TotalNumberOfVotes, 6);
    }
    else
    {
        result = 0;
    }
}

partial void Undecided_Compute(ref decimal result)
{
    if (TotalNumberOfVotes > 0)
    {
        result = Decimal.Round((decimal)this.VoteUndecided / TotalNumberOfVotes, 6);
    }
    else
    {
        result = 0;
    }
}

And here is the same code written using VB.NET:

Private ReadOnly Property TotalNumberOfVotes() As Decimal
    Get
        Dim totalNumberOfVotes__1 As Decimal = 0
        If (Me.VoteYes IsNot Nothing) AndAlso (Me.VoteNo IsNot Nothing) AndAlso (Me.VoteUndecided IsNot Nothing) Then
            totalNumberOfVotes__1 = CDec(Me.VoteNo + Me.VoteYes + Me.VoteUndecided)
        End If
        Return totalNumberOfVotes__1
    End Get
End Property

Partial Private Sub InFavor_Compute(ByRef result As Decimal)
    If TotalNumberOfVotes > 0 Then
        result = [Decimal].Round(CDec(Me.VoteYes) / TotalNumberOfVotes, 6)
    Else
        result = 0
    End If
End Sub

Partial Private Sub Against_Compute(ByRef result As Decimal)
    If TotalNumberOfVotes > 0 Then
        result = [Decimal].Round(CDec(Me.VoteNo) / TotalNumberOfVotes, 6)
    Else
        result = 0
    End If
End Sub

Partial Private Sub Undecided_Compute(ByRef result As Decimal)
    If TotalNumberOfVotes > 0 Then
        result = [Decimal].Round(CDec(Me.VoteUndecided) / TotalNumberOfVotes, 6)
    Else
        result = 0
    End If
End Sub

Using Percent in the Screen Designer

In addition to having this new business type for your entities, your screens will be aware of the Percent business type as well. By default, the screen designer will use the Percent Editor control for fields of this data type, while computed fields of type Percent will default to using the Percent Viewer control. Like other business types, you can choose to use either control for fields in the screen designer:

How does it work in the Runtime?

Once you have an entity that contains a field of this type, and have created a screen that displays it, what will it look like when you run your application?

The Percent Viewer Control

The Percent Viewer control works just as you would expect. Much like other viewer controls, it is a read-only, data-bound textbox that displays the percentage value like this:

Please note that the format of the percentage value is based on the culture of the LightSwitch application. In my example above, you can see another screen entitled “Vote On Topics”. This is a straightforward screen I created using the Editable Grid screen template, and it lets you edit each topic in a grid. I entered a value of 37 for the VoteYes field, 26 for the VoteNo field and 10 for the VoteUndecided field. That’s what yielded the numbers you see in the image above.

Remember the Percent Decimal Places property we discussed earlier? Close the application and change that property to a value of 4 for the Undecided computed field. Now run it again. You will see that the list details screen displays a value of 13.6986% instead of 13.70%. This gives you some freedom to control how the values are displayed.
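The numbers above can be checked by hand: 37 + 26 + 10 = 73 votes in total, and 10 / 73 rounds to 0.136986 at six decimal places. The following standalone sketch reproduces that arithmetic outside LightSwitch. VoteShare is a hypothetical helper, and the formatting uses the invariant culture with a plain "%" suffix, whereas LightSwitch formats according to the application's culture.

```csharp
using System;
using System.Globalization;

// Hypothetical helper that mirrors the computed fields' arithmetic.
public static class VoteShare
{
    // Fraction of the total, rounded to 6 decimals as in the computed fields.
    public static decimal Fraction(int votes, int total)
    {
        return total > 0 ? Decimal.Round((decimal)votes / total, 6) : 0m;
    }

    // Formats a stored fraction as a percentage with a fixed number of decimals.
    public static string AsPercent(decimal fraction, int decimalPlaces)
    {
        return (fraction * 100m).ToString("F" + decimalPlaces, CultureInfo.InvariantCulture) + "%";
    }
}
```

With these helpers, VoteShare.Fraction(10, 73) returns 0.136986, and AsPercent on that value yields "13.70%" with 2 decimal places and "13.6986%" with 4.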

The Percent Editor Control

The editor control for the Percent business type lets your customers view and edit a percentage value, and it keeps presenting the value as a percentage even while it is being edited. For example, tabbing into the “Threshold Needed To Pass” field selects only the value, excluding the “%” symbol. If the user then deletes the value, the control removes the value but leaves the “%” symbol:

Even if the user deletes the “%” symbol, it is restored after the user tabs off. So for example, if the user deletes everything including the “%” symbol, and then enters a value of “60”, when they tab off, the field will display the following:
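As a rough illustration of the normalization this section describes, the sketch below accepts typed text with or without the “%” symbol and returns it in percentage form. PercentInput is a hypothetical helper. It assumes the typed number is read as a percentage (so "60" means 60%), that the stored value is the fraction 0.60, and that invariant-culture formatting is acceptable. It is not the Percent Editor control's actual logic.

```csharp
using System;
using System.Globalization;

// Hypothetical helper; not the Percent Editor control's implementation.
public static class PercentInput
{
    // Accepts "60", "60%", or " 60 % " and returns the fraction 0.60.
    // Returns null when the text is not a number.
    public static decimal? Parse(string text)
    {
        if (text == null) return null;
        string digits = text.Replace("%", "").Trim();
        decimal percent;
        if (!decimal.TryParse(digits, NumberStyles.Number, CultureInfo.InvariantCulture, out percent))
            return null;
        return percent / 100m;
    }

    // Re-displays the typed text with the "%" symbol restored, or an empty
    // string when the text cannot be parsed.
    public static string Normalize(string text, int decimalPlaces)
    {
        decimal? fraction = Parse(text);
        if (fraction == null) return "";
        return (fraction.Value * 100m).ToString("F" + decimalPlaces, CultureInfo.InvariantCulture) + "%";
    }
}
```

Stripping the symbol before parsing is what makes "60" and "60%" equivalent inputs, so the symbol can always be restored on display.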

The Web Address Business Type

The new Web Address business type provides you with the ability to represent hyperlinks in your application’s entity model and screens. This means that your customers will be able to edit, test and use hyperlinks. And just like the Percent business type, the Web Address business type is part of the LightSwitch Extensions included with LightSwitch.

Adding it to an Entity

When editing fields in the Entity Designer, you can choose to add the Web Address data type by clicking on the drop down for the field’s data type. This will yield the following menu: