Windows Azure and Cloud Computing Posts for 3/19/2012+

A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics, Big Data and OData

- Windows Azure Access Control, Identity and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Windows Azure Blob, Drive, Table, Queue and Hadoop Services

Denny Lee (@dennylee) described Connecting Hadoop on Azure to your Amazon S3 Blob storage in a 3/20/2012 post:

When working with Hadoop on Azure, you may be used to the idea of putting your data in the Cloud. In addition to using Azure Blob Storage, another option is to connect your Hadoop on Azure cluster to query data stored in Amazon S3. The steps below configure Hadoop on Azure to connect to S3; they presume that you already have an Amazon AWS/S3 account and have uploaded data into it.

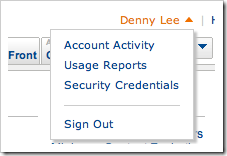

1) Log into your Amazon AWS account and click Security Credentials.

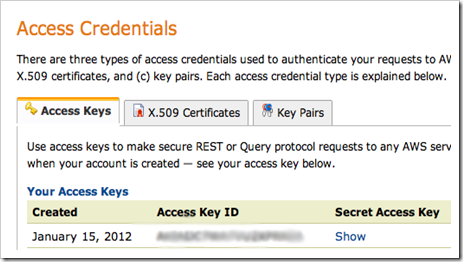

2) Obtain your access credentials – you’ll need both your Access Key ID and Secret Access Key.

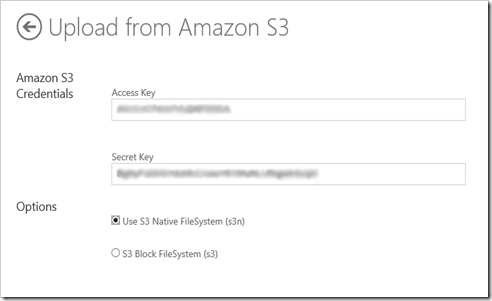

3) Next, log into your Hadoop on Azure account, click the Manage Cluster live tile, and click Set up S3. Enter your Access Key and Secret Key, and click Save Settings.

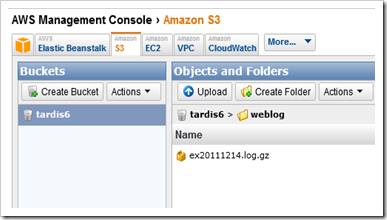

4) Once you have saved your Amazon S3 settings, you can access your Amazon S3 files from Hadoop on Azure. For example, I have a bucket named tardis6 containing a weblog folder that holds a sample weblog file.

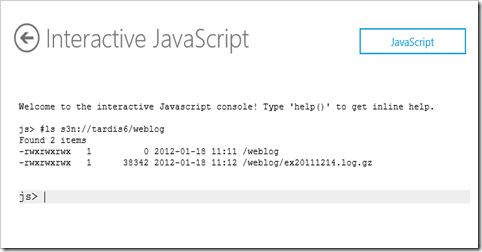

To access this file, use the s3n protocol from Hadoop on Azure. For example, open the Interactive JavaScript console and type the command:

#ls s3n://tardis6/weblog
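A Hadoop s3n:// path names the S3 bucket in its authority part and a key prefix in its path, so s3n://tardis6/weblog refers to objects in bucket tardis6 whose keys begin with weblog. The Python sketch below shows that mapping using only the standard library; the helper name split_s3n_uri is hypothetical and not part of Hadoop on Azure. For reference, Hadoop conventionally reads s3n credentials from the fs.s3n.awsAccessKeyId and fs.s3n.awsSecretAccessKey configuration properties; that the Set up S3 tile stores the keys there is an assumption this post does not confirm.

```python
from typing import Tuple
from urllib.parse import urlsplit


def split_s3n_uri(uri: str) -> Tuple[str, str]:
    """Split an s3n:// URI into (bucket, key prefix).

    Hypothetical helper for illustration only.
    """
    parts = urlsplit(uri)
    if parts.scheme != "s3n" or not parts.netloc:
        raise ValueError(f"not an s3n URI: {uri!r}")
    # The authority (netloc) is the bucket name; the path, without its
    # leading slash, is the key prefix to list under.
    return parts.netloc, parts.path.lstrip("/")


print(split_s3n_uri("s3n://tardis6/weblog"))  # ('tardis6', 'weblog')
```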

Mary Jo Foley (@maryjofoley) reported Microsoft nabs former Yahoo chief scientist to work on big data integration in a 3/20/2012 post to ZDNet’s All About Microsoft blog:

Microsoft has added a new technical fellow to the ranks who will focus on big-data integration.

Raghu Ramakrishnan is now a technical fellow working in Microsoft’s Server and Tools Business (STB). His area of focus, according to his online bio, will be “big data and integration between STB’s cloud offerings and the Online Services Division’s platform assets.”

Microsoft increasingly is playing up its big data assets, including the work it is doing with Hortonworks to create new Hadoop distributions for Windows Azure and Windows Server.

Ramakrishnan has been chief scientist for three divisions at Yahoo over the past five years (Audience, Cloud Platforms, Search). He also was a Yahoo Fellow leading applied science and research teams in Yahoo Labs, his bio says, and he worked on Yahoo’s CORE personalization project, the PNUTS geo-replicated cloud service platform and the creation of Yahoo’s Web of Objects through Web-scale information extraction.

According to reports, Yahoo may be cutting many of its research staff as part of larger corporate headcount cutbacks. …

Read more.

SQL Azure Database, Federations and Reporting

No significant articles today.

Marketplace DataMarket, Social Analytics, Big Data and OData

My (@rogerjenn) detailed Analyzing Air Carrier Arrival Delays with Microsoft Codename “Cloud Numerics” tutorial of 3/21/2012 begins:

Introduction

The U.S. Federal Aviation Administration (FAA) publishes a monthly On-Time Performance dataset for all airlines holding a Department of Transportation (DOT) Air Carrier Certificate. The DOT’s Research and Innovative Technology Administration (RITA), through its Bureau of Transportation Statistics (BTS), publishes the data sets as prezipped comma-separated value (CSV) files, which open in Excel.

…

The FAA On_Time_Performance Database’s Schema and Size

The database file for January 2012, which was the latest available when this post was written, has 486,133 rows of 83 columns, only a few of which are of interest for analyzing on-time performance.

The ZIP file includes a Readme.html file with a record layout (schema) containing field names and descriptions.

The size of the extracted CSV file for January 2012 is 213,455 KB, which indicates that a year’s data would have about 5.8 million rows and be about 2.5 GB in size, which borders on qualifying for Big Data status. …
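The yearly estimate is a straight twelve-fold extrapolation of the January figures. The short Python check below reproduces it under two assumptions: that January is a typical month (traffic actually varies seasonally), and that the 213,455 file-size figure is in kilobytes, which is the unit the 2.5 GB estimate implies.

```python
# Naive extrapolation of one month of On-Time Performance data to a full year.
ROWS_JAN_2012 = 486_133
SIZE_JAN_2012_KB = 213_455  # extracted CSV size; unit assumed to be kilobytes
MONTHS_PER_YEAR = 12

rows_per_year = ROWS_JAN_2012 * MONTHS_PER_YEAR
gb_per_year = SIZE_JAN_2012_KB * MONTHS_PER_YEAR / 1_000_000  # decimal KB -> GB

print(f"{rows_per_year / 1e6:.1f} million rows, about {gb_per_year:.2f} GB")
# prints: 5.8 million rows, about 2.56 GB
```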

And ends:

Interpreting FlightDataResult.csv’s Data

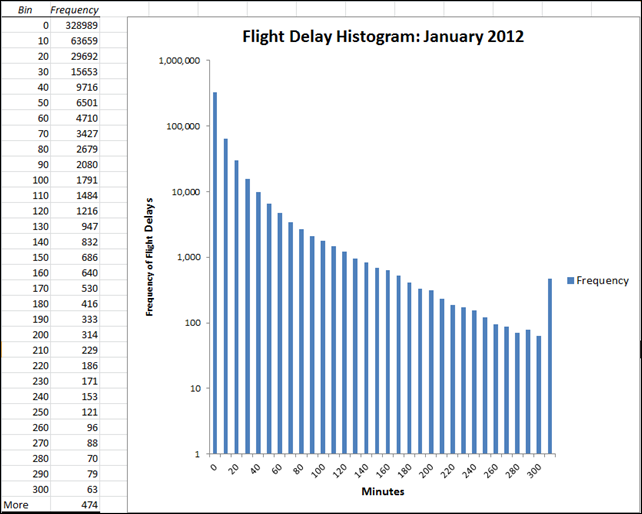

Following is a histogram for January 2012 flight arrival delays from 0 to 5 hours in 10-minute increments created with the Excel Data Analysis add-in’s Histogram tool from the unfiltered On_Time_On_Time_Performance_2012_1.csv worksheet:

The logarithmic Frequency scale shows an exponential decrease in the number of flight delays for increasing delay times starting at about one hour.
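An exponential decline shows up as a roughly straight, downward-sloping line on a logarithmic frequency axis, which is why the log scale makes the pattern easy to spot. The Python sketch below reproduces only the binning step (0 to 5 hours in 10-minute bins) on a list of arrival delays in minutes; reading the delays out of the CSV is omitted, and the sample values are hypothetical. It uses half-open bins [start, start + 10); Excel's Histogram tool treats each bin value as an inclusive upper boundary, so counts for delays that fall exactly on a multiple of 10 minutes can differ slightly.

```python
from collections import Counter
from typing import Iterable, List, Tuple

BIN_MINUTES = 10
MAX_MINUTES = 300  # 5 hours


def delay_histogram(delays: Iterable[float]) -> List[Tuple[int, int]]:
    """Count arrival delays (in minutes) into 10-minute bins from 0 to 300.

    Early arrivals (negative delays) and delays of 300 minutes or more are
    skipped, matching the 0-to-5-hour range of the histogram described above.
    Returns (bin_start_minutes, count) pairs, including empty bins.
    """
    counts = Counter(
        int(d // BIN_MINUTES) * BIN_MINUTES
        for d in delays
        if 0 <= d < MAX_MINUTES
    )
    return [(start, counts.get(start, 0)) for start in range(0, MAX_MINUTES, BIN_MINUTES)]


# Hypothetical sample delays, not taken from the FAA file.
sample = [-3, 0, 4, 12, 12, 57, 299, 301]
for start, count in delay_histogram(sample):
    if count:
        print(f"{start:3d}-{start + BIN_MINUTES:3d} min: {count}")
```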

Roope observes in the “Step 5: Deploy the Application and Analyze Results” section of his 3/8/2012 post:

Let’s take a look at the results. We can immediately see they’re not normally distributed at all. First, there’s skew: about 70% of the flight delays are [briefer than the] average of 5 minutes. Second, the number of delays tails off much more gradually than a normal distribution would as one moves away from the mean towards longer delays. A step of one standard deviation (about 35 minutes) roughly halves the number of delays, as we can see in the sequence 8.5%, 4.0%, 2.1%, 1.1%, 0.6%. These findings suggest that the tail could be modeled by an exponential distribution. [See above histogram.]

This result is both good news and bad news for you as a passenger. There is a good 70% chance you’ll arrive no more than five minutes late. However, the exponential nature of the tail means, based on conditional probability, that if you have already had to wait for 35 minutes, there’s about a 50-50 chance you will have to wait for another 35 minutes.
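The 50-50 figure follows from the memoryless property of the exponential distribution: P(delay > s + t | delay > s) = P(delay > t). The Python sketch below models only the tail of the delay distribution as exponential, with the rate chosen so that the probability halves every 35 minutes (an assumption read off the quoted 8.5%, 4.0%, 2.1%, ... sequence), and checks the conditional probability numerically.

```python
import math

HALVING_MINUTES = 35.0  # assumed: each ~35-minute step halves the tail probability
RATE = math.log(2) / HALVING_MINUTES  # exponential rate parameter, per minute


def survival(t: float) -> float:
    """P(delay > t) for an exponential delay tail with the rate above."""
    return math.exp(-RATE * t)


def p_wait_more(already: float, extra: float) -> float:
    """P(delay > already + extra | delay > already)."""
    return survival(already + extra) / survival(already)


print(round(p_wait_more(35, 35), 3))   # 0.5
print(round(p_wait_more(120, 35), 3))  # 0.5 again: the exponential is memoryless
```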

Andrew Brust (@andrewbrust) described Big Data’s Ground Floor Consulting Opportunity in a 3/20/2012 post:

If you’re in the technology consulting world for very long, you quickly encounter the opposing force of horizontal competency and industry vertical specialty. This isn’t a Big Data issue, per se; it’s a technology services issue. Clients want consultants who know their business, while technologists usually prefer to concentrate on the technology. Some technologists just don’t care much about business domain expertise; others have a real passion for it, but don’t want to limit themselves to one vertical. Either way, the gravitas most technologists achieve and pursue is on the horizontal plane.

Early on in a technology’s life cycle, the requirement of vertical expertise is trumped by demand for the raw technology skill. This set of circumstances doesn’t last forever, though. As a technology matures, tech knowledge stops being the prize and favoritism falls on those who can apply the technology with industry savvy. At that point, competition becomes tougher, barriers to entry higher and the larger technology and professional services firms tend to gain big leverage over boutique shops.

Currently Big Data is in its horizontal golden age. The technology is significantly more powerful, in terms of speed and data volume tolerance, than the relational database technology that has preceded it. That drives demand. Meanwhile, the open source Hadoop stack is rather enterprise un-friendly, which puts the customer’s emphasis on competency rather than business domain knowledge. Competent implementation is Job One; contextual knowledge can come later.

I recently spoke to a medium-sized, but growing, technology services firm based in the Boston metropolitan region. They are relatively new to Hadoop and related technologies, but they’ve picked it up quickly and have established a competency in it that’s already an important line of business for them. Their Big Data practice augments, and is augmented by, the more conventional enterprise database and software development services that they offer.

As I spoke to this firm’s leadership, they mentioned something casually that I found to be rather significant. They explained how the Big Data project they did for a travel client provided them with expertise in a certain type of data analysis. All by itself that was good stuff. But the intriguing part came when they explained to me how they were able to apply that same technique on a different project, this time for a health care customer. To me that’s huge.

Intellectually, it’s not that surprising that analysis techniques are portable between industries. But from the business point of view, customer markets and consulting teams tend to be so stratified that this kind of industrial transcendence doesn’t often occur. 20 years ago it did, but as client/server technology, followed by Web development, and then mobile app development, matured, this has become far less common. Now, it seems to me, Big Data has created a new horizontal era.

For the Boston firm I chatted with, this allows them to move beyond their travel customer base and move into healthcare. If it plays its cards right, this company will gain the vertical expertise that will become a prerequisite again at some point, but by then the company will be ready, and won’t be locked out. Viewed this way, Big Data has become an equalizer for smaller companies (not to mention new bloggers). This shakes up the industry a bit. It forces the larger, incumbent tech firms to step up their game or to step aside and make room for new competitors.

This phenomenon, a disruption in its own right, is good for the tech world, and good for its customers too. Firms just need to bear in mind that it’s also a trait of a relatively immature technology space, and the window of horizontal opportunity will certainly close at some point.

Good advice, Andrew.

The Visual Studio LightSwitch Team (@VSLightSwitch) reported OData Applications in LightSwitch for Visual Studio 11 Beta (Matt Sampson) on 3/20/2012:

Matt Sampson has posted part 1 of a multi-part blog post this week that walks through attaching to a popular public transit OData Service, and making a few customizations to produce a LightSwitch application.

The blog post is here - OData Apps In LightSwitch, Part 1

See also the article in the Visual Studio LightSwitch and Entity Framework v4+ section.

Frans Bouma (@FransBouma) announced on 3/19/2012 LLBLGen Pro v3.5 has been released! and that it now supports OData:

Last weekend we released LLBLGen Pro v3.5! Below is the list of what's new in this release. Of course, not everything is on this list, like the large amount of work we put into refactoring the runtime framework. The refactoring was necessary because our framework has two paradigms which were added to the framework at different times and, from a design perspective, in the wrong order (the paradigm we added first, SelfServicing, should have been built on top of Adapter, the other paradigm, which was added more than a year after the first released version). The refactoring made sure the framework re-uses more code across the two paradigms (they already shared a lot of code) and is better prepared for the future. We're not done yet, but refactoring a massive framework like ours without breaking interfaces and existing applications is ... a bit of a challenge ;)

To celebrate the release of v3.5, we give every customer a 30% discount! Use the coupon code NR1ORM with your order :)

The full list of what's new:

Designer

- Rule-based .NET Attribute definitions.

It's now possible to specify a rule using fine-grained expressions with an attribute definition to define which elements of a given type will receive the attribute definition. Rules can be assigned to attribute definitions on the project level, to make it even easier to define attribute definitions in bulk for many elements in the project. More information...

- Revamped Project Settings dialog.

Multiple project-related properties and settings dialogs have been merged into a single dialog called Project Settings, which makes it easier to configure the various settings related to project elements. It also makes it easier to find features previously not used by many (e.g. type conversions). More information...

- Home tab with Quick Start Guides.

To make new users feel right at home, we added a home tab with quick start guides, which guide you through four main use cases of the designer.

- System Type Converters.

Many common conversions have been implemented by default in system type converters, so users don't have to develop their own type converters anymore for these type conversions.

- Bulk Element Setting Manipulator.

Changing setting values for multiple project elements used to be a little cumbersome, requiring a lot of clicking and opening various editors. This dialog makes changing settings for multiple elements very easy.

- EDMX Importer.

It's now possible to import entity model data information from an existing Entity Framework EDMX file.

- Other changes and fixes

See the online documentation for the full list of changes and fixes.

LLBLGen Pro Runtime Framework

- WCF Data Services (OData) support has been added.

It's now possible to use your LLBLGen Pro runtime framework powered domain layer in a WCF Data Services application using the VS.NET tools for WCF Data Services. WCF Data Services is a Microsoft technology for .NET 4 to expose your domain model using OData. More information...

- New query specification and execution API: QuerySpec.

QuerySpec is our new query specification and execution API, an alternative to Linq and our more low-level API. It's built, like our Linq provider, on top of our lower-level API. More information...

- SQL Server 2012 support.

The SQL Server DQE allows paging using the new SQL Server 2012 style. More information...

- System Type converters.

For a common set of types, the LLBLGen Pro runtime framework contains built-in type conversions, so you don't need to write your own type converters anymore.

- Public/NonPublic property support.

It's now possible to mark a field / navigator as non-public, which is reflected in the runtime framework as an internal/friend property instead of a public property. This way you can hide properties from the public interface of a generated class and still access them through code added to the generated code base.

- FULL JOIN support.

It's now possible to perform FULL JOIN joins using the native query API and QuerySpec. It's left to the developer to check whether the target database supports FULL (OUTER) JOINs. Using a FULL JOIN with entity fetches is not recommended; it should only be used when neither participant in the join is the target of the fetch.

- Dependency Injection Tracing.

It's now possible to enable tracing on dependency injection. Enable tracing at level '4' on the traceswitch 'ORMGeneral'. This will emit trace information about which instance of which type got an instance of type T injected into property P.

- Entity Instances in projections in Linq.

It's now possible to return an entity instance in a custom Linq projection. It's now also possible to pass this instance to a method inside the query projection. Inheritance is fully supported in this construct.

Entity Framework support

The Entity Framework has been updated in the past year with code-first support and a new, simpler context API: DbContext (with DbSet). The amount of code to generate is smaller and the context simpler. LLBLGen Pro v3.5 comes with support for DbContext and DbSet and generates code which utilizes these new classes.

NHibernate support

- NHibernate v3.2+ built-in proxy factory factory support.

By default the built-in ProxyFactoryFactory is selected.

- FluentNHibernate Session Manager uses 1.2 syntax.

Fluent NHibernate mappings generate a SessionManager which uses the v1.2 syntax for the ProxyFactoryFactory location.

- Optionally emit schema / catalog name in mappings.

Two settings have been added which allow the user to control whether the catalog name and/or schema name as known in the project in the designer is emitted into the mappings.

Windows Azure Service Bus, Access Control, Identity and Workflow

No significant articles today.

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

No significant articles today.

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Cory Fowler (@SyntaxC4) answered on 3/21/2012 how to determine whether the Windows Azure Storage Emulator or the live Storage Service is in use:

Depending on your development process and what you do on the Windows Azure Platform, today I’m going to announce [again for the very first time] one of the best-kept secrets of Windows Azure development with the emulator tools.

Secret, you say? How secret is it?

It’s such a secret that even the person that originally disclosed this juicy tip doesn’t even remember saying it!

Ryan Dunn originally released this information on Episode 18 of the Cloud Cover Show.

The suspense is killing me! What is this about?

Sometimes when using the Storage Emulator while creating a Windows Azure application, you will run into a scenario where you attempt to read an entity from storage before actually creating an entity of that kind.

This is a problem due to the way the Storage Emulator is structured. The schema for a particular entity is stored in an instance of SQL Express (by default); because of this, an entity must first be stored to generate the schema before the emulator is aware of what you are querying for.

Using this work around will allow you to pre-check to see if the storage account the application is pointing at is the Storage Emulator or the live Storage Service. Once you’ve determined local storage is being used you can create a fake entity save it to Storage (which creates the schema), then promptly delete it before doing your query.

Recently, I worked on a project which used multiple storage accounts for separating data into logical containers. Due to this separation I found myself creating storage connections dynamically. In order to get these accounts to work well locally this trick came in handy.

Enough already, show us the Codez!

It is a relatively simple fix: it uses the IsLoopback property of the Uri class. One slight caveat: if [in the future, or you’ve done some hacking] the Storage Emulator were located on another computer on the local network, this check would not recognize it as the emulator, because the MSDN documentation describes IsLoopback as:

A Boolean value that is true if this Uri references the local host; otherwise, false.

But this works great as long as your storage stays local to your dev machine.
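The post's own example relies on .NET's Uri.IsLoopback. As a language-neutral illustration of the same idea, the Python sketch below treats a storage endpoint as the emulator when its host is localhost or a loopback address; the function name is hypothetical. The emulator's default blob endpoint, http://127.0.0.1:10000/devstoreaccount1, passes the check, whereas a live endpoint such as https://myaccount.blob.core.windows.net (myaccount is a placeholder) does not. The caveat above still applies: an emulator on another machine would not be detected.

```python
import ipaddress
from urllib.parse import urlsplit


def is_local_storage_endpoint(endpoint: str) -> bool:
    """Return True if the endpoint's host is this machine (loopback).

    Hypothetical helper mirroring the idea behind .NET's Uri.IsLoopback:
    the host is 'localhost' or a loopback IP address (127.x.x.x or ::1).
    """
    host = urlsplit(endpoint).hostname  # lower-cased, brackets stripped
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # An ordinary DNS name, such as a live storage account endpoint.
        return False


print(is_local_storage_endpoint("http://127.0.0.1:10000/devstoreaccount1"))
print(is_local_storage_endpoint("https://myaccount.blob.core.windows.net"))
```

If the check returns True, the application can fall back to the workaround described above: save a throwaway entity to create the schema, delete it, then run the query.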

Brian Swan (@brian_swan) described Deploying Drupal at Scale on Microsoft Platform in a 3/20/2012 post:

I find that every conference I attend is a humbling experience. There are just so many knowledgeable people that I’m constantly reminded of how much I don’t know. The pre-conference training at DrupalCon Denver was no exception (and the real conference hadn’t even begun!). In the Deploying Drupal at Scale on Microsoft Platform training yesterday, Alessandro Pilotti delivered a densely packed training session that, once again, left me feeling humble. Alessandro’s breadth and depth of knowledge about running PHP applications on Windows (and Drupal in particular) is truly impressive.

As you can see from the training agenda, there is no way I can re-cap the day’s work in a single blog post, so I won’t try. I will, however, do my best to summarize his final demo of the day: creating a Drupal Web Farm using the Microsoft Web Farm Framework. What stood out at the end of the demo was the ease and speed with which he had a Drupal Web Farm up and running (almost completely from scratch!). Alessandro’s slides, which are attached to this post, go into much more detail than I will, so check them out if you want more than an overview.

Note: I will capture the high-level steps that Alessandro went through in creating a Drupal Web Farm. I’ve selected several slides from his presentation to do this, all of which show how to use the IIS GUI tooling. However, Alessandro made a point of showing how everything he did could also be done from the command line using appcmd.exe and PowerShell commands.

Alessandro did a live demo of the following scenario: he had four Windows Server VMs running on his laptop (480 GB SSD with 16 GB RAM!), which served as a load balancer (Application Request Routing + Web Farm Framework), two web servers (each hosting Drupal), and a database server/file server.

Note: For the purposes of the demo, he used one VM for both the database server and file server, but he pointed out that having each on a separate machine (as shown in the diagram) would be the way to go for a production site.

Next, he used the Web Platform Installer to install Drupal and configure IIS on the primary server.

Again using the Web Platform Installer, he then installed Application Request Routing and Web Farm Framework.

With ARR and WFF installed, he could create a Web Farm…

…and add a primary host to the farm. (He also added the 2nd web server as a secondary host using the same UI.)

Finally, he provisioned the second server (and could have just as easily done more) using WFF. (Note that WFF uses Web Deploy. Complete details are in the attached slide deck.)

And, Voila! A scalable Drupal Web Farm. Of course, it wasn’t quite as simple as Voila!, but he did manage to go from four “blank” Windows Server VMs to a running web farm in about 45 minutes…very impressive IMHO.

Be sure to check out the attached slides from Alessandro’s presentation: DrupalCon2012_DrupalOnIIS.zip

Himanshu Singh (@himanshuks) posted Customers Talk About How Windows Azure Helps Them Run and Grow Their Business in Three New Videos to the Windows Azure blog on 3/20/2012:

We like to share how our customers are using Windows Azure. Check out these new videos with customers to hear them share some of the benefits they’ve seen from their move to Windows Azure.

Canada Does Windows Azure: Agility

Host Jonathan Rozenblit talks with Joel Varty, director of development at Agility Inc. about how the company uses Windows Azure and SQL Azure to run its content management system for managing content across digital channels.

Soundtracker Builds Global Geosocial Music Service on Multiple Platforms Including Windows Azure and Windows Phone 7

Daniele Calabrese, founder and CEO of Soundtracker, a Microsoft BizSpark One company, discusses with Zhiming Xue the enabling technologies behind their geosocial music service Soundtracker. During this interview, they explain the high-level architecture for the music service and how Windows Azure powers its instant and predictive search function.

Online Gaming Firm BetOnSoft Taps SQL Azure To Build Real-Time Analytics and Scale for Planned Growth

In this video, BetOnSoft describes how it deployed a hybrid application solution that takes advantage of the high-availability features in SQL Server 2012 AlwaysOn and the scalability of SQL Azure to exceed 10 times its previous peak loads while running intensive real-time data analytics. Read the case study.

Go here to watch the videos.

PRNewsWire asserted “PaperTracer attracts international customers by migrating to Windows Azure to store, organize and safeguard its sensitive information in security-enhanced Microsoft global datacenters” in a deck for its HIPAA-Compliant Document Management Solution Expands Into Global Market by Migrating to Windows Azure Cloud Platform in a 3/20/2012 press release:

PaperTracer, a Jacksonville, Fla.-based document-management service that assures compliance with the Health Insurance Portability and Accountability Act (HIPAA) of 1996, has moved to the Windows Azure cloud platform to deliver its services to customers worldwide. By backing its HIPAA compliance with the security features of Microsoft Corp. datacenters, PaperTracer expects to boost revenues by 30 percent in the first year alone.

A subsidiary of Health Asset Management Inc. (HAMi), PaperTracer adopted cloud-computing technologies early on, delivering its Web-based solution as a service since its founding in 1999. For the past decade, PaperTracer has managed and maintained a server infrastructure that it hosted in a dedicated private cloud environment through a third-party provider in Florida.

PaperTracer wanted to expand its business overseas but found that potential international customers viewed its single-location, U.S.-based infrastructure as a concern. "When customers are storing sensitive information such as patient data or intellectual property, they want to know that their data is stored locally," said Michael Tarpley, CEO of HAMi. "Customers located outside the U.S. were not inclined to use PaperTracer."

The company resolved to move its services to a public cloud model, eventually narrowing the field to Windows Azure. "The decision to move to Windows Azure was an easy one," said Keith Hoot, the company's chief technology officer. "Not only does Microsoft offer security-enhanced global datacenters, but also its brand is recognized as synonymous with reliability."

Working with Microsoft partner Arth Systems, PaperTracer migrated to Windows Azure in April 2011. It hosts its application in Web roles in Windows Azure for background processing tasks and uses Microsoft SQL Azure for data storage. Using Windows Azure, the company packages its application and deploys it to the Microsoft datacenter nearest the customer's location.

PaperTracer recently created a variant that enables small and midsize businesses to take advantage of fast deployment on Windows Azure. Announced as 2EZData in November 2011, the solution is similar to PaperTracer and includes the same HIPAA-compliant features, such as activity-logging at the file level, role-based access controls, and forced password changes and logouts after periods of inactivity.

By the end of 2011, the company had two customers in the U.S. and one in India using PaperTracer on Windows Azure. It also had 15 customers in the pipeline for 2EZData, many of which are located in India and other overseas locations. "If it weren't for using Windows Azure, we would not have closed the deal with our PaperTracer customer in India," said Rajashree Varma, the director of Global Alliance at HAMi.

PaperTracer has traditionally provided customers the HIPAA-compliant features they require in a records management solution, but today it offers the service on a security-enhanced infrastructure that customers trust worldwide. With the previous infrastructure, it took a week or longer to procure the hardware and software required to get a customer up and running. On Windows Azure, PaperTracer can allocate compute and storage resources quickly, onboarding new customers in a day or even half a day.

But Tarpley reiterated that trust is the key factor in connecting with international customers. "They see the Microsoft name, and they know that everything has been put in place to help safeguard their data," he said. "That's invaluable for our continued success."

More information on PaperTracer's move to Windows Azure is available in the Microsoft case study and the Microsoft Customer Spotlight Newsroom.

Founded in 1975, Microsoft (Nasdaq: MSFT) is the worldwide leader in software, services and solutions that help people and businesses realize their full potential.

SOURCE Microsoft Corp.

Brian Swan (@brian_swan) explained Azure Real World: Migrating a Drupal Site from LAMP to Windows Azure on 3/19/2012:

Last month, the Interoperability team at Microsoft highlighted work done to move the Screen Actors Guild Awards Drupal website from a Linux-Apache-MySQL-PHP (LAMP) environment to the Windows Azure platform: SAG Awards Drupal Website Moves to Windows Azure. The move was the result of collaboration between SAG Awards engineers and engineers from Microsoft’s Interoperability Team and Customer Advisory Team (CAT). The move allowed the SAG Awards website to handle a sustained traffic spike during the SAG Awards show in January. Since then, I’ve had the opportunity to talk with some of the engineers who helped with the move. In this post I’ll describe the challenges and steps taken in moving the SAG Awards website from a LAMP environment to the Windows Azure platform.

Background

The Screen Actors Guild (SAG) is the United States’ largest union representing working actors. In January of every year since 1995, SAG has hosted the Screen Actors Guild Awards (SAG Awards) to honor performers in motion pictures and TV series. In 2011, the SAG Awards Drupal website, deployed on a LAMP stack, was impacted by site outages and slow performance during peak-usage days, with SAG having to consistently upgrade their hardware to meet demand for those days. That upgraded hardware was then not optimally used during the rest of the year. In late 2011, SAG Awards engineers began working with Microsoft engineers to migrate its website to Windows Azure in anticipation of its 2012 show. In January of 2012, the SAG Website had over 350K unique visitors and 1.1M page views, with traffic spiking to over 160K visitors during the show.

Overview and Challenges

In many ways, the SAG Awards website was a perfect candidate for Windows Azure. The website has moderate traffic throughout most of the year, but has a sustained traffic spike shortly before, during, and after the awards show in January. The elastic scalability and fast storage services offered by the Azure platform were designed to handle this type of usage.

The main challenge that SAG Awards and Microsoft engineers faced in moving the SAG Awards website to Windows Azure was in architecting for a very high, sustained traffic spike while accommodating the need of SAG Awards administrators to frequently update media files during the awards show. Both intelligent use of Windows Azure Blob Storage and a custom module for invalidating cached pages when content was updated were key to delivering a positive user experience.

Note: In this post I will focus on how the Drupal website was moved to Windows Azure, as well as how content and data were moved to Windows Azure Blob Storage and SQL Azure. I won’t cover the details of the caching strategy.

The process for moving the SAG Awards website from a LAMP environment to the Windows Azure platform can be broken down into five high-level steps:

- Export data. A custom Drush command (portabledb-export) was used to create a database dump of MySQL data. A .zip archive of media files was created for later use.

- Install Drupal on Windows. The Drupal files that comprised the installation in the LAMP environment were copied to Windows Server/IIS as an initial step in discovering compatibility issues.

- Import data to SQL Azure. A custom Drush command (portabledb-import) was used together with the database dump created in step 1 to import data to SQL Azure.

- Copy media files to Azure Blob Storage. After unpacking the .zip archive in step 1, CloudXplorer was used to copy these files to Windows Azure Blob Storage.

- Package and deploy Drupal. The Azure packaging tool cspack was used to package Drupal for deployment. Deployment was done through the Windows Azure Portal.

Note: The portabledb commands mentioned above are authored and maintained by Damien Tournoud.

Details for each of these high-level steps are in the sections below.

Export Data

Microsoft and SAG engineers began investigating the best way to export MySQL data by looking at Damien Tournoud’s portabledb Drush commands. They found that this tool worked perfectly when moving Drupal to Windows and SQL Server, but they needed to make some modifications to the tool for exporting data to SQL Azure. (These modifications have since been incorporated into the portabledb commands, which are now available as part of the Windows Azure Integration Module.)

The names of media files stored in the file_managed table were of the form public://field/image/file_name.avi. In order for these files to be streamed from Windows Azure Blob Storage (as they would be by the Windows Azure Integration module when deployed in Azure), the file names needed to be modified to this form: azurepublic://field/image/file_name.avi. This was an easy change to make.
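The rename described above is a prefix swap on each stored URI. The sketch below is a minimal Python illustration, not the portabledb code (its internals aren't shown here). It assumes the URIs are stored exactly as `public://...` strings, and the function name `to_azure_uri` is hypothetical:

```python
OLD_SCHEME = "public://"
NEW_SCHEME = "azurepublic://"  # stream wrapper name used by the Windows Azure Integration module


def to_azure_uri(uri: str) -> str:
    """Swap the public:// scheme for azurepublic://, leaving every other URI untouched."""
    if uri.startswith(OLD_SCHEME):
        return NEW_SCHEME + uri[len(OLD_SCHEME):]
    return uri


# Example: rewrite a batch of (fid, uri) rows as they might come from file_managed.
rows = [(1, "public://field/image/file_name.avi"), (2, "private://reports/q1.pdf")]
rewritten = [(fid, to_azure_uri(uri)) for fid, uri in rows]
```

Checking the prefix, rather than doing a blind string replace, also makes the rewrite safe to run twice. An `azurepublic://` URI does not start with `public://`, so it is left alone, whereas a plain replace would turn it into `azureazurepublic://`.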

Because the SAG Awards website would be retrieving all data from the cloud, Windows Azure Storage connection information needed to be stored in the database. The portabledb tool was modified to create a new table, azure_storage, for containing this information.

Finally, to allow all media files to be retrieved from Blob Storage, Drupal’s file_default_scheme variable needed to be set to the stream wrapper name: azurepublic.

Using the modified portabledb tool, the following command produced the database dump:

```bash
drush portabledb-export \
  --use-windows-azure-storage=true \
  --windows-azure-stream-wrapper-name=azurepublic \
  --windows-azure-storage-account-name=azure_storage_account_name \
  --windows-azure-storage-account-key=azure_storage_account_key \
  --windows-azure-blob-container-name=azure_blob_container_name \
  --windows-azure-module-path=sites/all/modules \
  --ctools-module-path=sites/all/modules > drupal.dump
```

Note that the portabledb-export command does not copy media files themselves. Instead, the local media files were compressed in a .zip archive for use in a later step.

Install Drupal on Windows

In order to use the portabledb-import command (the counterpart to the portabledb-export command above), a Drupal installation needed to be set up on Windows (with Drush for Windows installed). This was necessary, in part, because connectivity to SQL Azure was to be managed by the Commerce Guys’ SQL Server/SQL Azure module for Drupal, which relies on the SQL Server Drivers for PHP, a Windows-only PHP extension. Having a Windows installation of Drupal would also make it possible to package the application for deployment to Windows Azure. For this reason, Microsoft and SAG Awards engineers copied the Drupal files from the LAMP environment to a Windows Server machine. The team incrementally moved the rest of the application to an IIS/SQL Server Express stack before moving the backend to SQL Azure.

Note: The Windows Server machine was actually a virtual machine running in a Windows Azure Web Role in the same data center as SQL Azure. The Web Role was configured to allow RDP connections, which the team used to install and configure the SAG Awards website. This was done to avoid timeouts that occurred when attempting to upload data from an on-premises machine to SQL Azure.

There were, however, some customizations made to the Drupal installation before running the portabledb-import command. Specifically,

- The SQL Server/SQL Azure module for Drupal was installed and enabled.

- The memcache module for Drupal was installed and enabled.

- The Windows Azure Integration module for Drupal was installed and enabled. Note that this module has a dependency on the CTools module. It also requires Damien Tournoud’s branch of the Windows Azure SDK for PHP, which must be unpacked and put into a folder called phpazure in the module’s main directory. (As of this writing, Damien Tournoud’s changes have not been merged with the Windows Azure SDK for PHP. However, they may be merged in the future.)

- Database connection information in the settings.php file was modified to connect to SQL Azure.

- A custom caching module was installed and enabled.

Some customizations to PHP were also necessary since this PHP installation would be packaged with the application itself:

- The php_pdo_sqlsrv.dll extension was installed and enabled. This extension provided connectivity to SQL Azure.

- The php_memcache.dll extension was installed and enabled. This would be used for caching purposes.

- The php_azure.dll extension was installed and enabled. This extension allowed configuration information to be retrieved from the Windows Azure service configuration file after the application was deployed. This allowed changes to be made without having to re-package and re-deploy the entire application. For example, database connection information could be retrieved in the settings.php file like this:

```php
// settings.php: SQL Azure connection details come from the service configuration via php_azure
$databases['default']['default']['driver'] = 'sqlsrv';
$databases['default']['default']['username'] = azure_getconfig('sql_azure_username');
$databases['default']['default']['password'] = azure_getconfig('sql_azure_password');
$databases['default']['default']['host'] = azure_getconfig('sql_azure_host');
$databases['default']['default']['database'] = azure_getconfig('sql_azure_database');
```

With Drupal running on Windows, and with the customizations to Drupal and PHP outlined above, the importing of data could begin.
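The settings.php snippet looks each value up by name in the Windows Azure service configuration. That is why a value can be changed there without repackaging. The Python sketch below makes the name/value shape of that file concrete by reading settings from a .cscfg document. It only illustrates the file's structure and is not how the php_azure extension works internally. The service name, instance count, and setting values are hypothetical. The setting names are taken from the snippet above.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows Azure service configuration (.cscfg) files.
CSCFG_NS = {"sc": "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"}


def read_role_settings(cscfg_xml: str, role_name: str) -> dict:
    """Return the name -> value settings declared for one role in a .cscfg document."""
    root = ET.fromstring(cscfg_xml)
    for role in root.findall("sc:Role", CSCFG_NS):
        if role.get("name") == role_name:
            return {
                setting.get("name"): setting.get("value")
                for setting in role.findall("sc:ConfigurationSettings/sc:Setting", CSCFG_NS)
            }
    raise KeyError(f"role {role_name!r} not found")


# Hypothetical configuration for illustration only.
SAMPLE = """<ServiceConfiguration serviceName="SAGAwards"
    xmlns="http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration">
  <Role name="WebRole">
    <Instances count="2" />
    <ConfigurationSettings>
      <Setting name="sql_azure_host" value="example.database.windows.net" />
      <Setting name="sql_azure_database" value="drupal" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

settings = read_role_settings(SAMPLE, "WebRole")
```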

Import Data to SQL Azure

There were two phases to importing the SAG Awards website data: importing database data to SQL Azure and copying media files to Windows Azure Blob Storage. As alluded to above, importing data to SQL Azure was done with the portabledb-import Drush command. With SQL Azure connection information specified in Drupal’s settings.php file, the following command copied data from the drupal.dump file (which was copied to Drupal’s root directory on the Windows installation) to SQL Azure:

```
drush portabledb-import --delete-local-files=false --copy-files-blob-storage=false --use-production-storage=true drupal.dump
```

Note: The copy-files-blob-storage flag was set to false in the command above. While the portabledb-import command can copy media files to Blob Storage, Microsoft and SAG engineers had some work to do in modifying media file names (discussed in the next section). For this reason, they chose not to use this tool for uploading files to Blob Storage.

The next step was to create stored procedures on SQL Azure that are designed to handle some SQL that is specific to MySQL. The SQL Server/SQL Azure module for Drupal normally creates these stored procedures when the module is enabled, but since Drupal would be deployed with the module already enabled, these stored procedures needed to be created manually. Engineers executed the stored procedure creation DDL that is defined in the module by accessing SQL Azure through the management portal.

After the import was complete, the Windows installation of the SAG Awards website was retrieving all database data from SQL Azure. However, recall that the portabledb-export command modified the names of media files in the file_managed table so that the Windows Azure Integration module would retrieve media files from Blob Storage. The final phase in importing data was to copy media files to Blob Storage.

Note: After this phase was complete, engineers cleared the cache through the Drupal admin panel.

Copy Media Files to Blob Storage

The main challenge in copying media files to Windows Azure Blob Storage was in handling Linux file name conventions that are not supported on Windows. While Linux supports a colon (:) as part of a file name, Windows does not. Consequently, when the .zip archive of media files was unpacked on Windows, file names were automatically changed: all colons were converted to underscores (_). However, colons are supported in Blob Storage as part of blob names. This meant that files could be uploaded to Blob Storage from Windows with underscores in the blob names, but the blob names would have to be modified manually to match the names stored in SQL Azure.

Engineers used WinRAR to unpack the .zip archive of media files. WinRAR provided a record of all file names that were changed in the unpacking process. Engineers then used CloudXplorer to upload the media files to Blob Storage and to change the modified file names, replacing underscores with colons.
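The engineers did this renaming by hand, using WinRAR's record and CloudXplorer. The underlying logic is sketched in Python below. It assumes you have the list of original (Linux) file names, and the function names are hypothetical. The sketch doesn't talk to Blob Storage. It only works out which uploaded blob names need to be changed back, and to what.

```python
from typing import Dict, Iterable


def windows_unpacked_name(original: str) -> str:
    # Colons are legal in Linux file names and in blob names, but not on Windows,
    # so unpacking the archive on Windows turned each ':' into '_'.
    return original.replace(":", "_")


def build_rename_map(original_names: Iterable[str]) -> Dict[str, str]:
    """Map each name as unpacked on Windows back to the original blob name.

    Only names that actually changed are included. A blind '_' -> ':' swap would
    corrupt legitimate underscores (e.g. file_name.avi), so the map is built from
    the list of original names instead.
    """
    originals = set(original_names)
    rename: Dict[str, str] = {}
    for original in sorted(originals):
        unpacked = windows_unpacked_name(original)
        if unpacked == original:
            continue
        # Two distinct originals landing on the same Windows name cannot be told apart.
        if unpacked in originals or unpacked in rename:
            raise ValueError(f"{original!r} collides with another file as {unpacked!r}")
        rename[unpacked] = original
    return rename
```

For example, `build_rename_map(["field/image/file_name.avi", "field/image/12:30_clip.avi"])` returns a single entry that maps `field/image/12_30_clip.avi` back to `field/image/12:30_clip.avi`. The underscore in `file_name.avi` is left alone.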

At this point in the migration process, the SAG Awards website was fully functional on Windows and was retrieving all data (database data and media files) from the cloud.

Package and Deploy Drupal

There were two main challenges in packaging the SAG Awards website for deployment to Windows Azure: packaging a custom installation of PHP and creating the necessary startup tasks.

Because customizations were made to the PHP installation powering Drupal, engineers needed to package their custom PHP installation for deployment to Windows Azure. The other option was to rely on Microsoft’s Web Platform Installer to install a “vanilla” installation of PHP and then write scripts to modify it on start up. Since it is relatively easy to package a custom PHP installation for deployment to Azure, engineers chose to go that route. (For more information, see Packaging a Custom PHP Installation for Windows Azure.)

The startup tasks that needed to be performed were the following:

- Configure IIS to use the custom PHP installation.

- Register the Service Runtime COM Wrapper (which played a role in the caching strategy).

- Put Drush (which was packaged with the deployment) in the PATH environment variable.

The final project structure, prior to packaging, was the following:

```
\SAGAwards
    \WebRole
        \bin
            \php
            install-php.cmd
            register-service-runtime-COM-Wrapper.cmd
            WinRMConfig.cmd
            startup-tasks-errorlog.txt
            startup-tasks-log.txt
        \resources
            \drush
            \ServiceRuntimeCOMWrapper
            \WebPICmdLine
        (Drupal files and folders)
```

Finally, the Windows Azure Memcached Plugin was added to the Windows Azure SDK prior to packaging so that memcache would run on startup and restart if killed.

The SAG Awards website was then packaged using cspack and deployed to Windows Azure through the developer portal.

Summary

The work in moving the SAG Awards Drupal website to the Windows Azure platform was an excellent example of Microsoft’s commitment to supporting popular OSS applications on the Windows Azure platform. The collaboration between engineers from SAG and from Microsoft’s Interoperability and Customer Advisory Teams resulted in a win for SAG (the SAG Awards website was able to handle sustained spikes in traffic that it could not handle previously) and in valuable lessons learned for the Windows Azure team about supporting migration and scalability of OSS applications on the Azure Platform.

<Return to section navigation list>

Visual Studio LightSwitch and Entity Framework 4.1+

Matt Sampson began a series with OData Apps In LightSwitch, Part 1 on 3/19/2012:

One of the cooler features that comes with LightSwitch in Visual Studio 11 Beta is its support for attaching to OData services and exposing its own data as an OData service.

OData stands for Open Data Protocol. It’s an open protocol that defines how to expose your data for querying and updating. This enables consumers (like LightSwitch) to connect to an OData service like it would with other data sources, for example SQL.

There are more and more places out there exposing their data through an OData service (see some at http://odata.org/producers).

One of the slickest ones that I have found is the Public Transit Data Community (PTDC) service. This OData feed combines all the different public transit data feeds from around the United States and exposes them through one well-defined OData feed.

The PTDC has made some nice progress so far and is exposing transit information for over a dozen different agencies. I’ve got a favorite agency on this list and that would be the Washington DC Metro agency or ‘WMATA’ for short.

If you’ve ridden the DC metro (or any subway system) more than a couple times you’ve probably found yourself wondering a few things like:

1) Where is my metro stop?

2) When is my train arriving?

3) How the heck do I get there?

4) My personal favorite – how many escalators will be broken today?

We can figure all this out by making a LightSwitch app that consumes this OData service.

We’ll keep it simple for this blog post and then expand on some features over a couple more posts. Here’s the rundown of what we are going to accomplish in this post:

- Consume a popular real-time public OData feed

- Create custom queries to limit the data to just DC Metro

- Create computed properties that query a cached entity set

- Use the new Web Address data type

Attach To It

For those of you familiar with LightSwitch V1, you’ll find that consuming OData with LightSwitch is just as easy as if you were consuming a SQL database.

Let’s get started walking through the creation of our simple app to simplify our life on the DC Metro.

- Open up Visual Studio 11 Beta

- Create a new project and select LightSwitch Application (Visual C#)

- Call the app “MyDCApp”

- We should see a screen something like this now:

- Click on “Attach to external Data Source” and you will see this:

- Click “Next”

- Use the url: http://transit.cloudapp.net/DevTransitODataService.svc

- Select “None” for the authorization method

- Click “Next”

- Import the following entities for now: Agencies, Arrivals, Entrances, Incidents, Regions, Routes, Stops, and Vehicles

- Select “Finish”

- We should have something like this now (minus the screens which we add later):

- Open up the Routes entity designer

- Change the KML property from a “String” type to a “Web Address” type.

- This is a new type in LightSwitch and since the KML property is really a web address it makes sense to use it here

- Change the “Summary Property” value to RouteName

- Open up the Stops entity

- Change the “Summary Property” value to Name

Create Some Queries

We need to limit the data that we get back to be specific to the DC Metro for the purpose of our app. To do that we can create some queries based off the entities to limit it to just the DC Metro agency.

- Right click on the Arrivals entity and select “Add Query”

- Call the query MetroByArrivalTime

- Add two filters on the query, both filters will compare against a “Literal”:

- RouteType=Metro

- AgencyAbbreviation=WMATA

- Add a sort: ArrivalTime=Ascending

- Add a query on Routes entity, call it RoutesDCMetro.

- Add two filters on the query, both filters will compare against a “Literal”:

- RouteType=Metro

- AgencyAbbreviation=WMATA

- Add a query on Stops entity, call it StopsDCMetro

- Add one filter on the query, the filter will compare against a “Literal”:

- Type=Metro

You should have three queries now, one each under entities: Arrivals, Routes, and Stops.

Each query makes sure that we only get data for the DC Metro system. Additionally the Arrivals query makes sure that we get the data returned to us in order of their arrival time with the “arriving” trains coming back first.
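LightSwitch talks to the feed over the OData protocol, so each filter above corresponds to a query option on the request. As a rough illustration (not necessarily the exact request LightSwitch sends), here is a Python sketch that builds an OData URL with the same filters and sort as MetroByArrivalTime. It assumes the entity set and property names match those shown in the designer. The helper names are hypothetical.

```python
from urllib.parse import quote, urlencode

# Feed URL from the walkthrough; everything else here is illustrative.
SERVICE_ROOT = "http://transit.cloudapp.net/DevTransitODataService.svc"


def odata_string(value: str) -> str:
    """Format a Python string as an OData string literal (single quotes doubled)."""
    return "'" + value.replace("'", "''") + "'"


def build_query_url(entity_set: str, equals: dict, orderby: str = "") -> str:
    """Build a URL that filters entity_set on property == literal pairs, ANDed together."""
    clauses = [f"{prop} eq {odata_string(val)}" for prop, val in equals.items()]
    options = {"$filter": " and ".join(clauses)}
    if orderby:
        options["$orderby"] = orderby
    # quote_via=quote encodes spaces as %20; '$' and single quotes are kept readable.
    return f"{SERVICE_ROOT}/{entity_set}?" + urlencode(options, quote_via=quote, safe="$'")


# Same filters and sort as the MetroByArrivalTime query.
metro_arrivals_url = build_query_url(
    "Arrivals",
    {"RouteType": "Metro", "AgencyAbbreviation": "WMATA"},
    orderby="ArrivalTime asc",
)
```

Opening a URL like this in a browser is a quick way to check what a feed returns before wiring it into screens, provided the service supports `$filter` and `$orderby`.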

Add Computed Properties

The Routes entity has a FinalStop0Id and a FinalStop1Id. These two properties represent the two different ending “Stops” of the Metro route. These aren’t very intuitive when viewed on a screen and we need to clean these up a little bit to give them a proper readable name.

We’ll add some computed properties now to accomplish this. Add two properties to this entity called FinalStop0Name and FinalStop1Name. After this is done, right click the table entity “Routes” and select View Table Code.

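```csharp
// Goes inside the Routes entity's partial class (opened via View Table Code).
// Requires "using System.Collections.Generic;" at the top of the file.

// StopId -> Name for every DC Metro stop, loaded once and shared by all Routes.
private static Dictionary<string, string> cachedFinalStops;

public static Dictionary<string, string> GetCachedFinalStops(DataWorkspace myDataWorkspace)
{
    if (cachedFinalStops == null)
    {
        // Fill a local dictionary first so a failed query never leaves a half-built cache.
        var stops = new Dictionary<string, string>();
        IEnumerable<c_Stop> myStops = myDataWorkspace.TransitDataData.StopsDCMetro().Execute();
        foreach (c_Stop myStop in myStops)
        {
            // Indexer assignment tolerates a duplicate StopId, where Add would throw.
            stops[myStop.StopId] = myStop.Name;
        }
        cachedFinalStops = stops;
    }
    return cachedFinalStops;
}

partial void FinalStop1Name_Compute(ref string result)
{
    Dictionary<string, string> cachedStops = GetCachedFinalStops(this.DataWorkspace);
    // TryGetValue sets result to null for an unknown Id instead of throwing KeyNotFoundException.
    if (this.FinalStop1Id != null)
    {
        cachedStops.TryGetValue(this.FinalStop1Id, out result);
    }
}

partial void FinalStop0Name_Compute(ref string result)
{
    Dictionary<string, string> cachedStops = GetCachedFinalStops(this.DataWorkspace);
    if (this.FinalStop0Id != null)
    {
        cachedStops.TryGetValue(this.FinalStop0Id, out result);
    }
}
```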

There are a couple of things going on here. The FinalStop0Name_Compute and FinalStop1Name_Compute methods display the names of the Route’s two final stops instead of just their Ids. They do this by looking up each stop Id in a dictionary that maps every DC Metro StopId to its Name.

The only trick here is that we cache all of the stops returned by the StopsDCMetro query the first time either Compute method runs, which happens when our screen first loads. We do this because Metro stops don’t change their names. So we can safely cache this information and let our Compute methods look names up in memory instead of querying the service for every route, which speeds things up a bit.

I want to stress here though that this method of caching is really ONLY appropriate for "static" data. That is data that we know won't be changing. I felt comfortable caching the names of the Metro Stops as I said, because that really is data that won't change. But you obviously would NOT want to cache, for example, the "Arrivals" entity this way because that data is definitely not static and using this method would result in you having old data in your cache.

You should have a Routes entity that looks something like this:

Create Some Screens

Let’s create some screens around the queries that we made now.

These screens will be read only, because the OData service we are querying against is read only. So we will want to remove our insert, edit, and delete buttons. Also, we’ll clean up our screens a bit to remove some useless data.

- Create a List and Details screen for the MetroByArrivalTime query.

- Change the “Summary” List to be a Columns Layout with the following fields:

- Arrival Time, Status, Route Name, and Stop Name

- Delete the Add, Edit, and Delete buttons under the command bar since we won’t need them

- You can customize the “Details” portion of the screen however you like, but below is what mine ended up looking like:

- Create a List and Details screen for the RoutesDCMetro query and for the StopsDCMetro query

- Delete the Add, Edit, and Delete buttons under the command bar since we won’t need them

- You can customize the screens however you like, my RoutesDCMetro and StopsDCMetro screens are displayed below respectively:

- Note how the Routes screen displays the Kml property as a clickable “Web Link”


Run It

Hit F5 now in Visual Studio to run it and check out some of the features of the application and what LightSwitch provides.

The Metro By Arrival Time screen shows us the arriving metro trains, how many cars are arriving, what the status is (BRD for Boarding or ARR for Arriving), and what the destination stop is.

The Routes screen has the stop information for each route, as well as a clickable map of the route. If you click on the Kml link it will open up in an application like Google Earth and provide an overview of the route (see below).


And lastly the Stops DC Metro screen displays the location and name of each stop.

Concluding Part 1

To summarize what we’ve covered up to this point: we gave a basic example of how Visual Studio LightSwitch can attach to a public OData feed. We showed the new Web Address data type. We used computed fields with caching to improve our performance. And we used queries to narrow down our data.

We still have a few problems we need to get to in the next blog post. We will customize the Stops screen so that it can provide us with a map of the Stop’s location instead of just latitude and longitude coordinates. The Incidents data contains all the information we want regarding broken escalators, so we will need to hook that up too so that we can retrieve that information for each given stop. Also, since this data is real-time, it’d be nice to add some automatic refresh to our application so that the data we are viewing doesn’t get too stale.

I’ll be looking to update the blog again sometime next week, until then feel free to leave any comments or questions and I’ll do my best to get back to them.

Thanks a lot, Cheers -

PS – I’ll get the application we developed uploaded here shortly and will post a link to it (in both VB and C# because I know how much people love VB.NET)

I like the Cheers icon, but who puts cherries in martinis?

Beth Massi (@bethmassi) posted LightSwitch Cosmopolitan Shell and Theme for VS 11 Beta on 3/19/2012:

Last week the team released the LightSwitch Cosmopolitan Shell and Theme for Visual Studio 11 Beta and there has been a ton of great feedback so far. So this morning I decided to update my Contoso Construction sample application to use it.

This shell displays the application logo at the top right, so I made some minor tweaks to the Home Screen to remove the logo image I had there before. I also updated some of the icons on the screen command bars to make them look a little better with this shell, like adding a transparent background.

Check it out! (click pictures to enlarge)

What I really like is the ability to brand the application with your own logo by specifying an image on the general properties of the application. This appears across the top of the main window. If you don’t specify an image then no space is taken up for it.

Another thing I really like is the cleaner menu system, which takes up a lot less space than the previous “Office” theme. This theme will become the default theme for LightSwitch applications you create with LightSwitch V2 in Visual Studio 11. You’ll still be able to select the Office theme, but this one will be the default on new apps.

I’ve updated the sample here:

Contoso Construction - LightSwitch Advanced Sample (Visual Studio 11 Beta)

Let us know what you think of the new shell by leaving a comment here or on the LightSwitch team blog post. There are a lot of great comments there already.

<Return to section navigation list>

Windows Azure Infrastructure and DevOps

David Linthicum (@DavidLinthicum) asserted “With recent outages and price cuts, it may make sense to layer your cloud providers -- now it's affordable too” in a deck for his Use a tiered cloud strategy to skirt outages article of 3/21/2012 for InfoWorld’s Cloud Computing blog:

Two seemingly unrelated events in the cloud computing space should make you think about taking a different approach to your sourcing strategy for the cloud. The first event: Cloud outages, such as the recent Microsoft Azure failure, have shown us that no single cloud is infallible. The second event: Google, Amazon.com, and Microsoft have all dropped their cloud services' prices.

The second event means you can deal with the first event better. In your own data center, you'd have your servers fail over to others if needed. And any large organization will have a failover strategy to other data centers or external providers for business-critical information and processes so that an outage doesn't stop the business.

You should have exactly the same strategy in the cloud. With the lower prices, it may now be cost effective to use a few clouds at a time to hedge your IT bets, or to use cloud providers as tiers (much as you tier storage and compute servers within the data center) when reliability through redundant systems is a requirement.

You already know how to do this (or should!). All that's changed are the resources involved. You sign up with two or more cloud providers that provide the same service, such as storage. You make sure the data persists in each cloud service as a mirror of the other. You then use them as tiers, such as a primary cloud, a secondary cloud, and perhaps a cloud designated for disaster recovery. If the primary fails, you move to the secondary one automatically until the primary cloud service is restored.
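The read path of such a tiered setup is easy to sketch. The Python below is a minimal illustration that assumes the data is already mirrored to every tier. It tries the primary first and falls through to the secondary and disaster-recovery tiers in order. The `fetch` callables are hypothetical placeholders, not any provider's SDK. The harder parts are not shown: keeping the mirrors in sync and returning to the primary once it is restored.

```python
from typing import Callable, List, Sequence, Tuple

# A tier is a (name, fetch) pair; fetch is a hypothetical stand-in for a provider SDK call.
Tier = Tuple[str, Callable[[str], bytes]]


class AllTiersFailed(Exception):
    """Raised when every provider tier failed to serve the request."""


def read_with_failover(tiers: Sequence[Tier], key: str) -> Tuple[str, bytes]:
    """Try the primary first, then each fallback tier in order; return (tier name, data)."""
    errors: List[str] = []
    for name, fetch in tiers:
        try:
            return name, fetch(key)
        except Exception as exc:  # treat any provider error as an outage of that tier
            errors.append(f"{name}: {exc}")
    raise AllTiersFailed("; ".join(errors))
```

A caller would pass something like `[("primary", primary_fetch), ("secondary", secondary_fetch), ("dr", dr_fetch)]`. Returning the tier name alongside the data makes it easy to log, or alert on, every request that did not come from the primary.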

If you do this right, users should not even notice an outage. Also, your uptime will vastly improve because the odds of both cloud services being down at the same time are tiny. (Just make sure that your secondary providers aren't just reselling your primary cloud provider's services under their name.)
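A little arithmetic makes the claim that simultaneous outages are unlikely concrete. Suppose each provider is up a fraction a of the time and outages are independent. Then both are down only (1 - a)² of the time. Here is a quick Python check, using a hypothetical 99.9% availability per provider rather than any vendor's actual SLA:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def combined_availability(*availabilities: float) -> float:
    """Probability that at least one tier is up, assuming the tiers fail independently."""
    all_down = 1.0
    for a in availabilities:
        all_down *= 1.0 - a
    return 1.0 - all_down


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes per year during which the service is unavailable."""
    return (1.0 - availability) * MINUTES_PER_YEAR


single = 0.999                                  # hypothetical 99.9% per provider
paired = combined_availability(single, single)  # roughly 0.999999
```

Under these assumptions, two 99.9% tiers go from about 525.6 minutes (roughly 8.8 hours) of downtime a year to about half a minute. The independence assumption carries all of that gain. A secondary that resells the primary's infrastructure, or that shares some other common failure mode, would erase most of it.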

Of course, there are two drawbacks:

- Cost. You'll pay for two or more cloud providers. However, with the recent price reductions, the business case for this is much more compelling than before. Count on even more price reductions in the near future.

- Complexity. This strategy adds a lot of work for administrators, so be sure to consider the complex nature of this tiered architecture, including software systems to manage the replication and the failover. Be sure to apply it where it justifies the added effort and complexity.

On the bright side, if done correctly, this strategy will provide better service to your users than the traditional in-house systems -- let's not forget that they typically suffer more outages than cloud-based systems do. Where the amount of money lost from outages could be in the millions of dollars in a single year, the use of a tiered cloud strategy becomes self-funding at worst and contributes significantly to the bottom line (via the avoided revenue disruptions) at best.

It's something to consider as you migrate to cloud-based services.

Joe Panettieri (@joepanettieri) asked Windows Azure User Groups: Cloud Customers Partnering Up? in a 3/20/2012 post to the TalkinCloud blog:

Windows Azure user groups — focused on Microsoft’s cloud computing platform — are popping up across the globe. Indeed, cloud computing customers and independent software developers (ISVs) seem determined to share best practices and tips for mastering Windows Azure. The big question: Can Windows Azure user groups help Microsoft itself to raise the cloud computing platform’s visibility?

Admittedly, some of the Windows Azure user groups are Microsoft-driven. But some Windows Azure special interest groups (SIGs), including this list, appear to be taking on lives and educational sessions of their own:

- Azure South Florida User Group

- Boston Azure Cloud User Group

- UK Windows Azure User Group (a.k.a. London Windows Azure User Group)

- Windows Azure User Group – NYC/NJ

- Windows Azure Sydney User Group

- Seattle Azure User Group

Talkin’ Cloud is poking around to see what types of applications and services user group members are launching in the Windows Azure cloud. Near term, Microsoft continues to face plenty of questions about Windows Azure adoption and reliability — especially after the recent Windows Azure Leap Year Outage. But long term, Talkin’ Cloud believes Microsoft will gain critical mass with Windows Azure, especially as Microsoft connects the dots between its own business applications (such as Dynamics CRM) and Azure.

Still, Windows Azure will continue to face intense competition from early market leaders (Amazon Web Services, Rackspace, Google) and emerging market disrupters. An HP public cloud initiative, for instance, is expected to launch within two months.

Microsoft has spent millions of dollars advertising its cloud services platforms. But I wonder: Does Windows Azure’s best hope for mainstream success involve grass-roots user groups, where customers and partners are busy sharing best practices? I’m betting the answer to that question is a solid yes.


<Return to section navigation list>

Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

Yigal Edery (@yigaledery) described Cloud Datacenter Network Architecture in the Windows Server “8” era in a 3/19/2012 post:

In the previous blog, we talked about what makes Windows Server “8” a great cloud platform and how to actually get your hands dirty building your own mini-cloud. I hope by now that you’ve had a chance to explore the Windows Server “8” cloud infrastructure technologies, so now you’re ready to start diving a bit deeper into the implications of the new features for what a cloud architecture can look like in the Windows Server “8” era. We can also start talking more about the “why” and less about the “how”. We’ll start with the networking architecture of the datacenter.

Until today, if you wanted to build a cloud, the best practice recommendation was that each of your Hyper-V hosts should have at least 4-5 different networks. This is because of the need to have security and performance guarantees for the different traffic flows coming out of a Hyper-V server. Specifically, you would have a separate NIC and network for cluster traffic, for live migration, for storage access (that could be either Fibre Channel or Ethernet), for out-of-band management to access the servers, and of course, for your actual VM tenant workloads.

The common practice with Hyper-V today is usually to have physical separation and 4-5 NICs in each server, usually 1GbE. If you wanted resiliency, you’d even double that, and indeed, many customers have added NICs for redundant access to storage and sometimes even more. Eventually, each server ends up looking something like this:

Simplifying the Network architecture

Windows Server “8” presents new opportunities to simplify the datacenter network architecture. With 10GbE and Windows Server 8 support for features like host QoS, DCB support, Hyper-V Extensible Switch QoS and isolation policies, and Hyper-V over SMB, you can now rethink the need for separate networks, as well as how many physical NICs you actually need on the server. Let’s take a look at two approaches for simplification.

Approach #1: Two networks, Two NICs (or NIC teams) per server

Given enough bandwidth on the NIC (which 10GbE provides), you can now converge your entire datacenter networks into basically two physically isolated networks: a datacenter network can carry all the storage, live migration, clustering and management traffic flows, and a second network can carry all of the VM tenant generated traffic. You can still apply QoS policies to guarantee minimum bandwidth for each flow (both at the host OS level for the datacenter’s native traffic and at the Hyper-V switch level for virtual machines). Also, thanks to the Hyper-V support for remote SMB server storage (did you read Jeffrey Snover’s blog?), you can use your Ethernet fabric to access the file servers and can minimize your non-Ethernet fabric. You end up with a much simpler network, which will look something like this:

Notice that now you have just two networks. There are no hidden VLANs, although you could have them if you wanted to. Also note that the file server obviously still needs to be connected to the actual storage, and there are various options there as well (FC, iSCSI or SAS), but we’ll cover that in another blog.
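To make the idea of per-flow minimum bandwidth on a converged network concrete, here is a small Python sketch of how relative weights translate into guaranteed floors on one 10GbE NIC. It is arithmetic only, assuming a simple proportional-weight policy. The flow names and weights are hypothetical, and the sketch doesn't configure anything in Windows Server “8”. The actual host QoS and Hyper-V switch settings are separate.

```python
from typing import Dict


def guaranteed_minimums(link_gbps: float, weights: Dict[str, int]) -> Dict[str, float]:
    """Split a link's capacity into per-flow minimum guarantees proportional to weights.

    These are floors, not caps: when some flows are idle, the others may use
    the spare capacity.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {flow: link_gbps * w / total for flow, w in weights.items()}


# Hypothetical weights for the converged datacenter network on one 10GbE NIC.
datacenter_flows = {"storage": 50, "live_migration": 30, "cluster": 10, "management": 10}
minimums = guaranteed_minimums(10.0, datacenter_flows)
```

With these weights, storage is guaranteed 5 Gbps, live migration 3 Gbps, and cluster and management traffic 1 Gbps each. Even a burst of live migrations cannot starve storage access below its floor.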

Once you adopt this approach, you can now add more NICs and create teams to introduce resiliency to network failures, so you can end up with two NIC teams on each server. The new Windows Server “8” feature called Load Balancing and Failover (LBFO) makes NIC teams easy.

Approach #2: Converging to a single NIC (or NIC team)

Now, if we take this even further down the network convergence road, we could reduce the number of NICs in the server to a single NIC or NIC team and use VLANs to run different traffic flows on different networks. This is again made possible by the fact that you can create additional switch ports on the Hyper-V extensible switch and route host traffic through them, while still applying isolation policies and QoS policies at the switch level. Such a configuration would look like this (and we promise a how-to guide very soon. It’s in the works, and you will need some PowerShell to make it work):

In summary, Windows Server “8” truly increases the flexibility in datacenter network design. You can still have different networks for different traffic flows, but you don’t have to. You can use QoS and isolation policies, combined with the increased bandwidth of 10GbE, to converge them. In addition, the number of NICs on the server doesn’t have to equal the number of networks you have. You can use VLANs for separation, but you can also use the built-in NIC teaming feature and the Hyper-V extensible switch to create virtual NICs from a single NIC and separate the traffic that way while keeping your isolation and bandwidth guarantees. It’s up to you to decide which approach works and integrates best with your datacenter.

So, what do you think? We would really like to hear from you! Would you converge your networks? If yes, which approach would you take? If not, why? Please comment back on this blog and let’s start a dialog!

Michael Otey asserted “SQL Server can leverage the benefits of a private cloud environment in several ways” in a deck for his Microsoft SQL Server in the Private Cloud: Is it Possible? article for SQL Server Pro magazine:

It's clear that the public cloud and the private cloud are two important future trends that are looming over IT. For cloud-based database services, Microsoft has implemented SQL Server in the public cloud with SQL Azure. Most businesses are very hesitant to jump into the public cloud for multiple reasons, including concerns about security, ownership of data, performance, accessibility, and legal boundaries for where data resides.

However, the private cloud is another story. The private cloud offers most of the same benefits as the public cloud, and because it's implemented using your organization's resources, IT remains in complete control of its infrastructure. In addition, the private cloud provides flexibility similar to that of the public cloud and, to some degree, similar scalability benefits. Although the private cloud might offer fewer overall computing resources than a Microsoft global data center, it can be designed to offer computing power that matches the organization's demands. More importantly, the private cloud enables resources, such as virtual machines (VMs), to be moved dynamically between hosts in response to changing workloads. For Microsoft infrastructures, Microsoft System Center Virtual Machine Manager 2012 (VMM) will be the platform that enables the private cloud.

However, does SQL Server really fit into the private cloud? A SQL Server database isn't like a web server where you can add servers to a web farm and immediately gain scalability benefits. SQL Server clusters are designed to increase availability, but they aren’t designed to increase scalability. Sure, you can utilize features such as distributed partitioned views to create scale-out databases. However, this requires you to re-architect your database, and the process isn’t nearly as simple as adding a new server to a cluster. Furthermore, applications are designed to connect to a specific database that's hosted by a particular SQL Server instance. Although future versions might change the way databases are implemented, at this point databases don't really move between servers. In many ways, SQL Server and enterprise databases seem like they would be poor candidates for the private cloud. For the most part, databases are fixed entities and aren't very dynamic.

Although there are limitations, SQL Server can definitely participate in the private cloud. With that said, a critical prerequisite for taking advantage of the private cloud is virtualization. This technology is the key enabler for dynamic automated management. Although databases themselves don't move between servers, technologies such as live migration coupled with VMM 2012's Dynamic Optimization let you move VMs dynamically between hosts in response to changing CPU and memory demands. This lets you move virtualized SQL Server instances to new hosts when the CPU or memory utilization in the VM or its host exceeds a given level, which can help your applications continuously meet service level agreements (SLAs) without manual intervention.
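The threshold-driven rebalancing described above can be illustrated with a simplified Python sketch. It is not VMM's Dynamic Optimization algorithm: it looks at CPU only (memory could be handled the same way), uses a single hypothetical utilization threshold, and proposes one live migration at a time, moving the largest VM that fits from the most overloaded host to the least-loaded host that stays under the threshold.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Host:
    name: str
    cpu_capacity: float                                  # e.g. logical cores
    vms: Dict[str, float] = field(default_factory=dict)  # VM name -> CPU demand

    def demand(self) -> float:
        return sum(self.vms.values())

    def load(self) -> float:
        return self.demand() / self.cpu_capacity


def plan_migration(hosts: List[Host], threshold: float) -> Optional[Tuple[str, str, str]]:
    """Return (vm, source_host, target_host) for one live migration, or None.

    The source is the host with the highest load above the threshold. VMs are
    tried from largest to smallest demand; the target is the least-loaded
    other host that would remain at or below the threshold after the move.
    """
    overloaded = [h for h in hosts if h.load() > threshold]
    if not overloaded:
        return None
    source = max(overloaded, key=Host.load)
    for vm, demand in sorted(source.vms.items(), key=lambda kv: kv[1], reverse=True):
        targets = [
            h for h in hosts
            if h is not source and (h.demand() + demand) / h.cpu_capacity <= threshold
        ]
        if targets:
            target = min(targets, key=Host.load)
            return vm, source.name, target.name
    return None


if __name__ == "__main__":
    hosts = [Host("host1", 8, {"sql1": 5.0, "web1": 2.0}), Host("host2", 8, {"app1": 1.0})]
    print(plan_migration(hosts, threshold=0.8))  # ('sql1', 'host1', 'host2')
```

Here the SQL Server VM moves because host1 is at 87.5% of its CPU capacity, above the 80% threshold, and host2 can absorb it while staying at 75%.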

With System Center 2012, it's also possible to take advantage of the automated management technologies in Orchestrator to create runbooks that dynamically reconfigure VMs to hot-add CPU or RAM. In addition, a new generation of database hardware, such as the HP Database Consolidation Appliance (DBC), lets you essentially create a private cloud for all of your on-premises databases. To learn more about the DBC Appliance, see "First Look: HP Enterprise Database Consolidation Appliance" and Hewlett-Packard's product page.

The private cloud also helps with the deployment of new applications. The private cloud provides a higher level of management in which IT can work with applications as services, rather than working with individual VMs that might be required to deploy the application. The service is a collection of VMs and other virtual resources that perform a common task. For instance, a service might consist of two VMs that act as front-end web servers, another VM that provides middle-tier application logic, and a fourth VM that provides SQL Server database services. The private cloud lets all related VMs be deployed as a single unit or service. They can also be turned on or off and managed as a single unit. Finally, features such as Dynamic Power Optimization in VMM 2012 can take advantage of private cloud resources to reduce power consumption during periods of low resource utilization.
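The idea of managing a multi-tier application as one service rather than as individual VMs can be sketched as a small Python data structure. The tier numbers and the start-order rule (database first, web front ends last, reversed on shutdown) are assumptions for illustration, not how VMM 2012 service templates are actually defined.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VM:
    name: str
    tier: int              # hypothetical rule: lower tiers start first
    running: bool = False


@dataclass
class Service:
    """A group of VMs that is started and stopped as a single unit."""
    name: str
    vms: List[VM] = field(default_factory=list)

    def start(self) -> List[str]:
        # Bring up back-end tiers before the tiers that depend on them.
        order = sorted(self.vms, key=lambda vm: vm.tier)
        for vm in order:
            vm.running = True
        return [vm.name for vm in order]

    def stop(self) -> List[str]:
        # Shut down in reverse tier order (front ends first).
        order = sorted(self.vms, key=lambda vm: vm.tier, reverse=True)
        for vm in order:
            vm.running = False
        return [vm.name for vm in order]

    def is_running(self) -> bool:
        return bool(self.vms) and all(vm.running for vm in self.vms)


if __name__ == "__main__":
    svc = Service("OrderEntry", [
        VM("web1", tier=2), VM("web2", tier=2),
        VM("app1", tier=1), VM("sql1", tier=0),
    ])
    print(svc.start())  # ['sql1', 'app1', 'web1', 'web2']
    print(svc.stop())   # ['web1', 'web2', 'app1', 'sql1']
```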

The private cloud will definitely be a growing trend throughout the next couple of years, and SQL Server isn’t excluded from playing in the cloud. You don't need to move to technologies such as the public cloud and SQL Azure to gain flexibility, scalability, and dynamic operations. You can have all the same benefits with the private cloud by using the resources your organization already has.


Cloud Security and Governance

Members of the Microsoft Forefront Team announced the availability of a Microsoft Endpoint Protection for Windows Azure Customer Technology Preview download on 3/15/2012 (missed when published). Elyasse Elyacoubi (@elyas_yacoubi) posted Microsoft Endpoint Protection for Windows Azure Customer Technology Preview is now available for free download on 3/16/2012:

Today we released the customer technology preview of Microsoft Endpoint Protection (MEP) for Windows Azure, a plugin that allows Windows Azure developers and administrators to include antimalware protection in their Windows Azure VMs. The package is installed as an extension on top of the Windows Azure SDK. After installing the MEP for Windows Azure CTP, you can enable antimalware protection on your Windows Azure VMs by simply importing the MEP antimalware module into your roles' definition:

The MEP for Windows Azure can be downloaded and installed from http://www.microsoft.com/download/en/details.aspx?id=29209. Windows Azure SDK 1.6 or later must be installed first.

Functionality recap

When you deploy the antimalware solution as part of your Windows Azure service, the following core functionality is enabled:

- Real-time protection monitors activity on the system to detect and block malware from executing.

- Scheduled scanning periodically performs targeted scanning to detect malware on the system, including actively running malicious programs.

- Malware remediation takes action on detected malware resources, such as deleting or quarantining malicious files and cleaning up malicious registry entries.

- Signature updates install the latest protection signatures (aka “virus definitions”) to ensure protection is up-to-date.

- Active protection reports metadata about detected threats and suspicious resources to Microsoft to ensure rapid response to the evolving threat landscape, and enables real-time signature delivery through the Dynamic Signature Service (DSS).

Microsoft’s antimalware endpoint solutions are designed to run quietly in the background without requiring human intervention. If malware is detected, the endpoint protection agent automatically takes action to remove the threat. Refer to the document “Monitoring Microsoft Endpoint Protection for Windows Azure” for information on monitoring for malware-related events or VMs that get into a “bad state.”

Providing feedback

The goal of this technology preview version of Microsoft Endpoint Protection for Windows Azure is to give you a chance to evaluate this approach to providing antimalware protection to Windows Azure VMs and provide feedback. We want to hear from you! Please send any feedback to eppazurefb@microsoft.com.

How it works

Microsoft Endpoint Protection for Windows Azure includes SDK extensions to the Windows Azure Tools for Visual Studio that let you configure your Windows Azure service to include endpoint protection in specified roles. When you deploy your service, an endpoint protection installer startup task is included that runs as part of spinning up the virtual machine for each instance. The startup task pulls down the full endpoint protection platform components from Windows Azure Storage in the geographical region specified in the service configuration (.cscfg) file, installs them, and applies the other configuration options specified there.
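As an illustration of where those options live, the Python sketch below reads one plugin's settings for one role from a .cscfg file. It assumes the SDK's usual `Microsoft.WindowsAzure.Plugins.<Module>.<Setting>` naming convention applies to the Antimalware plugin; the `ServiceLocation` setting name and its value in the sample are assumptions, so check the settings Visual Studio actually adds to your file.

```python
import xml.etree.ElementTree as ET
from typing import Dict

CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def plugin_settings(cscfg_xml: str, role_name: str, module: str) -> Dict[str, str]:
    """Return {short_name: value} for one plugin's settings in one role."""
    prefix = f"Microsoft.WindowsAzure.Plugins.{module}."
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{CSCFG_NS}}}Role"):
        if role.get("name") != role_name:
            continue
        result = {}
        for setting in role.iter(f"{{{CSCFG_NS}}}Setting"):
            name = setting.get("name", "")
            if name.startswith(prefix):
                result[name[len(prefix):]] = setting.get("value", "")
        return result
    raise KeyError(f"role {role_name!r} not found")


SAMPLE = f"""<ServiceConfiguration serviceName="MyService" xmlns="{CSCFG_NS}">
  <Role name="WorkerRole1">
    <Instances count="2" />
    <ConfigurationSettings>
      <!-- Hypothetical setting name and value, for illustration only. -->
      <Setting name="Microsoft.WindowsAzure.Plugins.Antimalware.ServiceLocation" value="North Central US" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

if __name__ == "__main__":
    print(plugin_settings(SAMPLE, "WorkerRole1", "Antimalware"))  # {'ServiceLocation': 'North Central US'}
```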

Once up and running, the endpoint protection client downloads the latest protection engine and signatures from the Internet and loads them. At this point the virtual machine is running with antimalware protection enabled. Diagnostic information such as logs and antimalware events can be configured for persistence in Windows Azure storage for monitoring. The following diagram shows the “big picture” of how all the pieces fit together:

Prerequisites

Before you get started, you should already have a Windows Azure account configured and have an understanding of how to deploy your service in the Windows Azure environment. You will also need Microsoft Visual Studio 2010. If you have Visual Studio 2010, the Windows Azure Tools for Visual Studio, and have written and deployed Windows Azure services, you’re ready to go.

If not, do the following:

- Sign up for a Windows Azure account http://windows.azure.com

- Install Visual Studio 2010 http://www.microsoft.com/visualstudio

- Install Windows Azure Tools for Visual Studio http://msdn.microsoft.com/en-us/library/windowsazure/ff687127.aspx

Deployment

Once you have Visual Studio 2010 and the Windows Azure Tools installed, you’re ready to get antimalware protection up and running in your Azure VMs. To do so, follow these steps:

- Install Microsoft Endpoint Protection for Windows Azure

- Enable your Windows Azure service for antimalware

- Optionally customize antimalware configuration options

- Configure Azure Diagnostics to capture antimalware related information

- Publish your service to Windows Azure

Install Microsoft Endpoint Protection for Windows Azure

Run the Microsoft Endpoint Protection for Windows Azure setup package. The package can be downloaded from the Web at http://go.microsoft.com/fwlink/?LinkID=244362.

Follow the steps in the setup wizard to install the endpoint protection components. The required files are installed in the Windows Azure SDK plugins folder. For example:

C:\Program Files\Windows Azure SDK\v1.6\bin\plugins\Antimalware
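If you want to confirm the install from a script, a small Python check like the one below looks for an Antimalware folder under each SDK version's bin\plugins directory. The default SDK root mirrors the example path above and may differ on your machine (for example, with a different SDK install location); treat it as an assumption.

```python
from pathlib import Path
from typing import List

# Assumed default, taken from the example path above; adjust for your machine.
DEFAULT_SDK_ROOT = Path(r"C:\Program Files\Windows Azure SDK")


def find_antimalware_plugins(sdk_root: Path = DEFAULT_SDK_ROOT) -> List[Path]:
    """Return every <sdk_root>/<version>/bin/plugins/Antimalware folder found."""
    if not sdk_root.is_dir():
        return []
    return sorted(p for p in sdk_root.glob("v*/bin/plugins/Antimalware") if p.is_dir())


if __name__ == "__main__":
    found = find_antimalware_plugins()
    print("\n".join(map(str, found)) if found else "Antimalware plugin not found")
```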

Once the components are installed, you’re ready to enable antimalware in your Windows Azure roles.

Enable your Windows Azure service for antimalware

To enable your service to include endpoint protection in your role VMs, simply add the “Antimalware” plugin when defining the role.

1. In Visual Studio 2010, open the service definition file for your service (ServiceDefinition.csdef).

2. For each role defined in the service definition (e.g. your worker roles and web roles), update the `<Imports>` section to import the “Antimalware” plugin by adding the following line:

`<Import moduleName="Antimalware" />`

The following image shows an example of adding antimalware for the worker role “WorkerRole1” but not for the project’s Web role.

3. Save the service definition file.

In this example, the worker role instances for the project will now include endpoint protection running in each virtual machine. However, the web role instances will not include antimalware protection, because the antimalware import was specified only for the worker role. The next time the service is deployed to Windows Azure, the endpoint protection startup task will run in the worker role instances and install the full endpoint protection client from Windows Azure Storage, which will then download the protection engine and signatures from the Internet. At this point the virtual machine will have active protection up and running.
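Where editing ServiceDefinition.csdef by hand is inconvenient (for example, in a build script), the same change can be sketched in Python with the standard library's ElementTree. This is an illustrative helper, not part of the SDK. It adds the Antimalware import only to the named roles, does nothing if the import is already present, and, to avoid guessing at the schema's element order, expects each role to already have an Imports section, as Visual Studio-generated roles typically do.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

CSDEF_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceDefinition"
ROLE_TAGS = ("WebRole", "WorkerRole")


def q(tag: str) -> str:
    """Qualify a tag name with the service definition namespace."""
    return f"{{{CSDEF_NS}}}{tag}"


def add_antimalware_import(csdef_xml: str, role_names: Iterable[str]) -> str:
    """Add <Import moduleName="Antimalware" /> to the named roles (idempotent)."""
    wanted = set(role_names)
    ET.register_namespace("", CSDEF_NS)  # serialize with a default xmlns, no prefix
    root = ET.fromstring(csdef_xml)
    for tag in ROLE_TAGS:
        for role in root.findall(q(tag)):
            if role.get("name") not in wanted:
                continue
            imports = role.find(q("Imports"))
            if imports is None:
                raise ValueError(f"role {role.get('name')!r} has no <Imports> section")
            modules = {i.get("moduleName") for i in imports.findall(q("Import"))}
            if "Antimalware" not in modules:
                ET.SubElement(imports, q("Import"), moduleName="Antimalware")
    return ET.tostring(root, encoding="unicode")


SAMPLE = f"""<ServiceDefinition name="MyService" xmlns="{CSDEF_NS}">
  <WebRole name="WebRole1">
    <Imports>
      <Import moduleName="Diagnostics" />
    </Imports>
  </WebRole>
  <WorkerRole name="WorkerRole1">
    <Imports>
      <Import moduleName="Diagnostics" />
    </Imports>
  </WorkerRole>
</ServiceDefinition>"""

if __name__ == "__main__":
    # Mirrors the example above: antimalware for WorkerRole1 only.
    print(add_antimalware_import(SAMPLE, ["WorkerRole1"]))
```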

Optionally customize antimalware configuration options

When you enable a role for antimalware protection, the configuration settings for the antimalware plugin are automatically added to your service configuration file (ServiceConfiguration.cscfg). The configuration settings have been pre-optimized for running in the Windows Azure environment. You do not need to change any of these settings. However, you can customize these settings if required for your particular deployment.
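If you prefer to script such customizations, for example to vary them per environment, here is a minimal Python sketch that changes the value of one existing setting for one role in ServiceConfiguration.cscfg. It deliberately refuses to add new settings, since the configuration file should only contain settings that are declared for the role. The setting name used in the example is hypothetical; use the names the plugin actually added to your file.

```python
import xml.etree.ElementTree as ET

CSCFG_NS = "http://schemas.microsoft.com/ServiceHosting/2008/10/ServiceConfiguration"


def set_role_setting(cscfg_xml: str, role_name: str, setting_name: str, value: str) -> str:
    """Return the configuration with one existing setting's value changed."""
    ET.register_namespace("", CSCFG_NS)  # serialize with a default xmlns, no prefix
    root = ET.fromstring(cscfg_xml)
    for role in root.findall(f"{{{CSCFG_NS}}}Role"):
        if role.get("name") != role_name:
            continue
        for setting in role.iter(f"{{{CSCFG_NS}}}Setting"):
            if setting.get("name") == setting_name:
                setting.set("value", value)
                return ET.tostring(root, encoding="unicode")
        raise KeyError(f"setting {setting_name!r} not found in role {role_name!r}")
    raise KeyError(f"role {role_name!r} not found")


# Hypothetical setting name, for illustration only.
NAME = "Microsoft.WindowsAzure.Plugins.Antimalware.ServiceLocation"
SAMPLE = f"""<ServiceConfiguration serviceName="MyService" xmlns="{CSCFG_NS}">
  <Role name="WorkerRole1">
    <Instances count="2" />
    <ConfigurationSettings>
      <Setting name="{NAME}" value="North Central US" />
    </ConfigurationSettings>
  </Role>
</ServiceConfiguration>"""

if __name__ == "__main__":
    print(set_role_setting(SAMPLE, "WorkerRole1", NAME, "West Europe"))
```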

Default antimalware configuration added to service configuration

The following table summarizes the settings available to configure as part of the service configuration:

Updating configuration for deployed services