Windows Azure and Cloud Computing Posts for 2/24/2012+

| A compendium of Windows Azure, Service Bus, EAI & EDI, Access Control, Connect, SQL Azure Database, and other cloud-computing articles. |

Note: This post includes articles published on 2/24/2012 through 3/2/2012 while I was preparing for and attending the Microsoft Most Valuable Professionals (MVP) Summit 2012 in Seattle, WA, and the following weekend.

Note: This post is updated daily or more frequently, depending on the availability of new articles in the following sections:

- Windows Azure Blob, Drive, Table, Queue and Hadoop Services

- SQL Azure Database, Federations and Reporting

- Marketplace DataMarket, Social Analytics and OData

- Windows Azure Access Control, Service Bus, and Workflow

- Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

- Live Windows Azure Apps, APIs, Tools and Test Harnesses

- Visual Studio LightSwitch and Entity Framework v4+

- Windows Azure Infrastructure and DevOps

- Windows Azure Platform Appliance (WAPA), Hyper-V and Private/Hybrid Clouds

- Cloud Security and Governance

- Cloud Computing Events

- Other Cloud Computing Platforms and Services

Azure Blob, Drive, Table, Queue and Hadoop Services

Denny Lee (@dennylee) posted BI and Big Data–the best of both worlds! on 2/29/2012:

As part of the excitement of the Strata Conference this week, Microsoft has been talking about Big Data and Hadoop. It started off with Dave Campbell’s question: Do we have the tools we need to navigate the New World of Data? Some of the tooling callouts specific to Microsoft include references to PowerPivot, Power View, and the Hadoop JavaScript framework (Hadoop JavaScript – Microsoft’s VB shift for Big Data).

As noted in the GigaOM article Microsoft’s Hadoop play is shaping up, and it includes Excel, the great callout is:

to make Hadoop data analyzable via both a JavaScript framework and Microsoft Excel, meaning many millions of developers and business users will be able to work with Hadoop data using their favorite tools.

Big Data for Everyone!

The title of the Microsoft BI blog post says it best: Big Data for Everyone: Using Microsoft’s Familiar BI Tools with Hadoop – it’s about helping make Big Data accessible to everyone by use of one of the most popular and powerful BI tools – Excel.

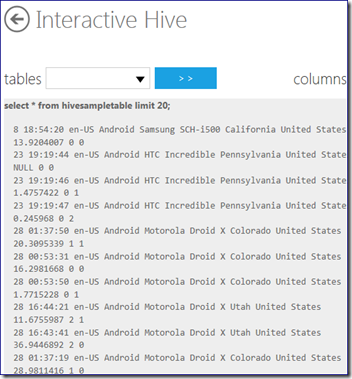

So what does accessible to everyone mean – in the BI sense? It’s about being able to go from this (which is a pretty nice view of a Hive query against Hadoop in the Hadoop on Azure Hive Console)

and getting it into Excel or PowerPivot.

The most important callout here is that you can use PowerPivot and Excel to merge data sets not just from Hadoop, but also bring in data sets from SQL Server, SQL Azure, PDW, Oracle, Teradata, Reports, Atom feeds, Text files, other Excel files, and via ODBC – all within Excel! (thanks @sqlgal for that reminder!)

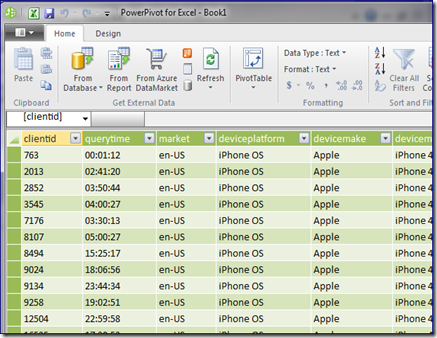

From here users can manipulate the data using Excel macros and the PowerPivot DAX language, respectively. Below is a screenshot of data extracted from Hive and placed into PowerPivot for Excel.

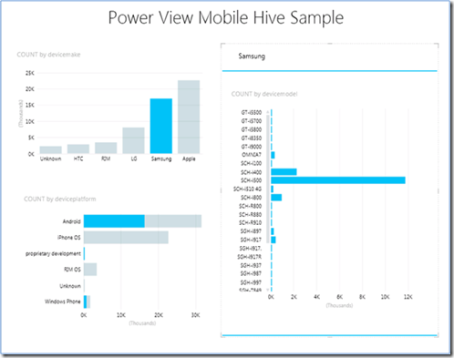

But even cooler, data-visualization-wise: once your PowerPivot for Excel workbook is uploaded to SharePoint 2010 with SQL Server 2012, you can create an interactive Power View report.

For more information on how to get PowerPivot and Power View to connect to Hadoop (in this case, it’s Hadoop on Azure, but conceptually they are the same), please reference the links below:

- How To Connect Excel to Hadoop on Azure via HiveODBC

- Connecting PowerPivot to Hadoop on Azure – Self Service BI to Big Data in the Cloud

- Connecting Power View to Hadoop on Azure

- Connecting Power View to Hadoop on Azure [Video]

So what’s so Big about Big Data?

As noted in the post What’s so Big about Big Data?, Big Data is important because of the sheer amount of machine-generated data that needs to be made sense of.

As noted by Alexander Stojanovic (@stojanovic), the Founder and General Manager of Hadoop on Windows and Azure:

It’s not just your “Big Data” problems, it’s about your BIG “Data Problems”

To learn more, check out my 24HOP (24 Hours of PASS) session:

Tier-1 BI in the Age of Bees and Elephants

In this age of Big Data, data volumes become exceedingly larger while the technical problems and business scenarios become more complex. This session provides concrete examples of how these can be solved. Highlighted will be the use of Big Data technologies including Hadoop (elephants) and Hive (bees) with Analysis Services. Customer examples including Klout and Yahoo! (with their 24TB cube) will highlight both the complexities and solutions to these problems.

To make this real, a great case study is the one at Klout, which includes a great blog post: Big Data, Bigger Brains. And below is a link to Bruno Aziza (@brunoaziza) and Dave Mariani’s (@dmariani) YouTube video on how Klout Leverages Hadoop and Microsoft BI Technologies To Manage Big Data.

Avkash Chauhan (@avkashchauhan) explained Primary Namenode and Secondary Namenode configuration in Apache Hadoop in a 2/27/2012 post:

The Apache Hadoop primary Namenode and secondary Namenode architecture is designed as follows:

Namenode Master:

The conf/masters file defines the master nodes of any single-node or multinode cluster. On the master, conf/masters looks like this:

localhost

The conf/slaves file lists the hosts, one per line, where the Hadoop slave daemons (datanodes and tasktrackers) will run. When the master box also acts as a Hadoop slave, you will see the same hostname listed in both the masters and slaves files.

On the master, conf/slaves looks like this:

localhost

If you have additional slave nodes, just add them to the conf/slaves file, one per line. Be sure that your namenode can ping the machines listed in your slaves file.

Secondary Namenode:

If you are building a test cluster, you don’t need to set up the secondary namenode on a different machine; a pseudo-distributed cluster install is one such case. However, if you’re building out a real distributed cluster, you should move the secondary namenode to another machine. Running the secondary Namenode on a machine other than the primary Namenode helps in case the primary Namenode goes down.

The masters file contains the name of the machine where the secondary namenode will start. If you have modified the scripts to change your secondary namenode details, i.e. location and name, be sure that when the DFS service starts it reads the updated configuration so it can start the secondary namenode correctly.

In a Linux-based Hadoop cluster, the secondary namenode is started by bin/start-dfs.sh on the nodes specified in the conf/masters file. bin/start-dfs.sh calls bin/hadoop-daemons.sh, where you specify the name of the masters/slaves file as a command-line option.

Start Secondary Name node on demand or by DFS:

The location of your Hadoop conf directory is set using the $HADOOP_CONF_DIR shell variable. Different distributions, e.g. Cloudera or MapR, set it up differently, so check where your Hadoop conf folder is.

To start the secondary namenode on any machine, use the following command:

$HADOOP_HOME/bin/hadoop --config $HADOOP_CONF_DIR secondarynamenode

When the secondary namenode is started by DFS, it works as follows:

$HADOOP_HOME/bin/start-dfs.sh starts SecondaryNameNode

>>>> "$bin"/hadoop-daemons.sh --config $HADOOP_CONF_DIR --hosts masters start secondarynamenode

If you have changed the name of the secondary namenode hosts file to, say, “hadoopsecondary”, you need to provide that file name when starting the secondary namenode, and be sure the change is in place when bin/start-dfs.sh runs:

"$bin"/hadoop-daemons.sh --config $HADOOP_CONF_DIR --hosts hadoopsecondary start secondarynamenode

which will start the secondary namenode on ALL hosts specified in the file “hadoopsecondary”.

How Hadoop DFS Service Starts in a Cluster:

In Linux based Hadoop Cluster:

- Namenode Service: starts the Namenode on the same machine from which we start DFS.

- DataNode Service: looks into the slaves file and starts a DataNode on all slaves using the following command:

  #>$HADOOP_HOME/bin/hadoop-daemon.sh --config $HADOOP_CONF_DIR start datanode

- SecondaryNameNode Service: looks into the masters file and starts a SecondaryNameNode on all hosts listed in the masters file using the following command:

  #>$HADOOP_HOME/bin/hadoop-daemon.sh --config $HADOOP_CONF_DIR start secondarynamenode
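As a rough illustration of the start-up order described above (this is not part of Avkash's post and is not the Hadoop scripts themselves), the following Python sketch reads masters/slaves-style host lists and produces the sequence of daemons that would be started. The host names `master01`, `slave01`, `slave02` and `hadoopsecondary`, and the function names, are hypothetical; skipping `#` comments and blank lines is an assumption about the file format.

```python
def read_hosts(text):
    """Return host names from masters/slaves-style text: one host per line.

    Blank lines and anything after a '#' are ignored (an assumption made
    for this sketch).
    """
    hosts = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            hosts.append(line)
    return hosts


def plan_dfs_start(local_host, masters_text, slaves_text):
    """List (host, daemon) pairs in the order described in the post.

    1. The namenode starts on the machine where DFS is started.
    2. A datanode starts on every host in the slaves file.
    3. A secondarynamenode starts on every host in the masters file.
    """
    plan = [(local_host, "namenode")]
    plan += [(host, "datanode") for host in read_hosts(slaves_text)]
    plan += [(host, "secondarynamenode") for host in read_hosts(masters_text)]
    return plan


if __name__ == "__main__":
    masters = "hadoopsecondary\n"
    slaves = "slave01\nslave02\n"
    for host, daemon in plan_dfs_start("master01", masters, slaves):
        print(f"{host}: start {daemon}")
```

The sketch only models which daemon lands on which host; it does not open SSH connections or invoke any Hadoop script.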

Alternative to backup Namenode or Avatar Namenode:

The secondary namenode is created as a backup of the primary namenode to keep the cluster going in case the primary namenode goes down. There are alternatives to the secondary namenode in case you want to build namenode HA. One such method is to use an Avatar namenode. An Avatar namenode can be created by migrating a namenode to an Avatar namenode, and the Avatar namenode must be built on a separate machine.

Technically, once migrated, the Avatar namenode is a hot standby for the namenode, so the Avatar namenode is always in sync with the namenode. If you create a new file on the master namenode, you can also read it on the standby Avatar namenode in real time.

In standby mode, the Avatar namenode is a read-only namenode. At any given time you can transition the Avatar namenode to act as the primary namenode. When needed, you can switch from standby mode to full active mode in just a few seconds. To do that, you must have a VIP for namenode migration and an NFS share for namenode data replication.

The Namenode Master paragraph is a bit mysterious.

Wely Lau (@wely_live) explained Uploading Big Files in Windows Azure Blob Storage using PutListBlock in a 2/25/2012 post:

Windows Azure Blob Storage can be thought of as a file system in the cloud. It enables us to store any unstructured data file such as text, images, video, etc. In this post, I will show how to upload a big file into Windows Azure Storage. Please be informed that we will be using a Block Blob for this case. For more information about Block Blobs and Page Blobs, please visit here.

I assume that you know how to upload a file to Windows Azure Storage. If you don’t, I would recommend you check out this lab from the Windows Azure Training Kit.

Uploading a blob (commonly-used technique)

The following snippet shows you how to upload a blob using a commonly-used technique, blob.UploadFromStream(), which eventually invokes the PutBlob REST API.

    // Requires: using Microsoft.WindowsAzure; using Microsoft.WindowsAzure.StorageClient;
    protected void btnUpload_Click(object sender, EventArgs e)
    {
        var storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString");
        CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
        CloudBlobContainer container = blobClient.GetContainerReference("image2");
        container.CreateIfNotExist();

        // Make the container publicly readable.
        var permission = container.GetPermissions();
        permission.PublicAccess = BlobContainerPublicAccessType.Container;
        container.SetPermissions(permission);

        // Upload the whole file in a single call.
        string name = fu.FileName;
        CloudBlob blob = container.GetBlobReference(name);
        blob.UploadFromStream(fu.FileContent);
    }

The above code snippet works well in most cases. Although you can upload a maximum of 64 MB per file this way (for a block blob), I recommend uploading with another technique, which I describe in more detail below.

Uploading a blob by splitting it into chunks and calling PutBlockList

The idea of this technique is to split a block blob into smaller chunks (blocks), upload them one by one or in parallel, and eventually join them all by calling PutBlockList().

    // Requires: using System.Collections.Generic; using System.IO;
    // using Microsoft.WindowsAzure; using Microsoft.WindowsAzure.StorageClient;
    protected void btnUpload_Click(object sender, EventArgs e)
    {
        var storageAccount = CloudStorageAccount.FromConfigurationSetting("DataConnectionString");
        CloudBlobClient blobClient = storageAccount.CreateCloudBlobClient();
        CloudBlobContainer container = blobClient.GetContainerReference("mycontainer");
        container.CreateIfNotExist();

        var permission = container.GetPermissions();
        permission.PublicAccess = BlobContainerPublicAccessType.Container;
        container.SetPermissions(permission);

        string name = fu.FileName;
        CloudBlockBlob blob = container.GetBlockBlobReference(name);

        int maxSize = 1 * 1024 * 1024; // 1 MB: larger files are uploaded in blocks
        if (fu.PostedFile.ContentLength > maxSize)
        {
            byte[] data = fu.FileBytes;
            int id = 0;
            int byteslength = data.Length;
            int bytesread = 0;
            int index = 0;
            List<string> blocklist = new List<string>();
            int numBytesPerChunk = 250 * 1024; // 250 KB per block

            // Upload full-size blocks while more than one block of data remains.
            do
            {
                byte[] buffer = new byte[numBytesPerChunk];
                int limit = index + numBytesPerChunk;
                for (int loops = 0; index < limit; index++)
                {
                    buffer[loops] = data[index];
                    loops++;
                }
                bytesread = index;
                string blockIdBase64 = Convert.ToBase64String(System.BitConverter.GetBytes(id));
                blob.PutBlock(blockIdBase64, new MemoryStream(buffer, true), null);
                blocklist.Add(blockIdBase64);
                id++;
            } while (byteslength - bytesread > numBytesPerChunk);

            // Upload the left-over bytes as the final block.
            int final = byteslength - bytesread;
            byte[] finalbuffer = new byte[final];
            for (int loops = 0; index < byteslength; index++)
            {
                finalbuffer[loops] = data[index];
                loops++;
            }
            string blockId = Convert.ToBase64String(System.BitConverter.GetBytes(id));
            blob.PutBlock(blockId, new MemoryStream(finalbuffer, true), null);
            blocklist.Add(blockId);

            // Commit all uploaded blocks, in order, as the blob's content.
            blob.PutBlockList(blocklist);
        }
        else
        {
            blob.UploadFromStream(fu.FileContent);
        }
    }

Explanation about the code snippet

Since the idea is to split the big file into chunks, we need to define the size of each chunk, in this case 250 KB. Dividing the actual file size by the chunk size tells us how many chunks we need.

We also need a list of strings (in this case, the blocklist variable) to record which blocks belong together. We then loop through each chunk, upload it by calling blob.PutBlock(), and add its block ID (as a Base64 string) to the blocklist.

Note that there’s a left-over block that isn’t uploaded inside the loop; we upload it separately after the loop. When all blocks are successfully uploaded, we finally call blob.PutBlockList(), which commits all the blocks that we’ve uploaded previously.
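To make the split-upload-commit flow easier to see in isolation, here is a minimal Python sketch (not from Wely's post). It does not call Windows Azure: `FakeBlockBlob` is a hypothetical in-memory stand-in whose `put_block` and `put_block_list` methods only mimic the PutBlock/PutBlockList idea, and the function names are assumptions for illustration. Block IDs are built from a 4-byte counter so that every Base64 ID has the same length, mirroring the BitConverter approach in the C# sample.

```python
import base64
import struct


def split_into_blocks(data, block_size):
    """Return (block_id, chunk) pairs that cover data in order.

    Slicing handles the shorter left-over block at the end automatically.
    """
    blocks = []
    for number, start in enumerate(range(0, len(data), block_size)):
        # 4-byte little-endian counter, Base64-encoded: fixed-length IDs.
        block_id = base64.b64encode(struct.pack("<i", number)).decode("ascii")
        blocks.append((block_id, data[start:start + block_size]))
    return blocks


class FakeBlockBlob:
    """In-memory stand-in for a block blob (hypothetical, not the Azure SDK)."""

    def __init__(self):
        self.uncommitted = {}
        self.content = b""

    def put_block(self, block_id, chunk):
        # Uploaded blocks stay invisible until they are committed.
        self.uncommitted[block_id] = chunk

    def put_block_list(self, block_ids):
        # Committing assembles the blob from the listed blocks, in list order.
        self.content = b"".join(self.uncommitted[b] for b in block_ids)
        self.uncommitted.clear()


def upload_in_blocks(blob, data, block_size=250 * 1024):
    """Upload data block by block, then commit the ordered block list."""
    block_ids = []
    for block_id, chunk in split_into_blocks(data, block_size):
        blob.put_block(block_id, chunk)
        block_ids.append(block_id)
    blob.put_block_list(block_ids)
    return block_ids


if __name__ == "__main__":
    blob = FakeBlockBlob()
    ids = upload_in_blocks(blob, b"x" * 600_000)
    print(len(ids), "blocks committed,", len(blob.content), "bytes")
```

Because each block is uploaded independently, a failed `put_block` call could be retried for that block alone before the final commit.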

Pros and Cons

The benefits (pros) of the technique

There are a few benefits of using this technique:

- If uploading one of the blocks fails for whatever reason (connection time-out, lost connection, etc.), we only need to upload that particular block again, not the entire big file / blob.

- It’s also possible to upload the blocks in parallel, which might result in a shorter upload time.

- The first technique only allows you to upload a block blob of at most 64 MB. With this technique, you can upload much more, almost without limit.

The drawbacks (cons) of the technique

Despite the benefits, there are also a few drawbacks:

- You have more code to write. As you can see from the sample, you can simply call the one-line blob.UploadFromStream() in the first technique, but you need to write 20+ lines of code for the second technique.

- It incurs more storage transactions, which may lead to higher cost in some cases. Referring to a post by the Azure Storage team, the more chunks you have, the more storage transactions are incurred:

Large blob upload that results in 100 requests via PutBlock, and then 1 PutBlockList for commit = 101 transactions
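The arithmetic behind that figure is simple enough to sketch in Python: one transaction per block uploaded, plus one for the commit. The function name is hypothetical, and the sketch assumes no retries (each retry of a failed block would add a transaction).

```python
def block_upload_transactions(file_size, block_size):
    """Transactions for a block upload: one PutBlock per block + one PutBlockList.

    Sizes are in bytes. Assumes every block succeeds on the first attempt.
    """
    if file_size <= 0 or block_size <= 0:
        raise ValueError("file_size and block_size must be positive")
    blocks = -(-file_size // block_size)  # ceiling division
    return blocks + 1


if __name__ == "__main__":
    block = 250 * 1024
    # A file of exactly 100 blocks: 100 PutBlock calls + 1 PutBlockList.
    print(block_upload_transactions(100 * block, block))
```

Choosing a larger block size reduces the transaction count, at the price of re-sending more data when a single block fails.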

Summary

I’ve shown you how to upload a file with a simple technique at the beginning. Although it’s easy to use, it has a few limitations. The second technique (using PutBlockList) is more powerful, as it can do more than the first one. However, it certainly has some pros and cons as well.


SQL Azure Database, Federations and Reporting

Cihan Biyikoglu (@cihangirb) started a Scale-First Approach to Database Design with Federations: Part 1 – Picking Federations and Picking the Federation Key series on 2/29/2012:

Scale-out with federations means you build your application with scale in mind as your first and foremost goal. Federations are built around this idea and allow applications to annotate their schema with additional information declaring their scale-out intent. Generating your data model and your database design are basic parts of app design. At this point in the app lifecycle, it is also time to pick your federations and federation keys and work the scale-first principles into your data and database design.

In a series of posts, I’ll walk through the process of designing, coding and deploying applications with federations. If you’d like to design a database with scalability in mind using sharding [as] the technique, this post will help you get there as well... This is a collection of my personal experience designing a number of sharded databases in a past life and the experience of some of the customers in the preview program we started back in June 2011, plus the experiences of customers who are going live with federations.

In case you missed earlier posts, here is a quick definition of federation and federation key:

A federation is an object defined to scale out parts of your schema. Every database can have many federations. Federations use federation members, which are regular SQL Azure databases, to scale out your data in one or many tables along with all associated rich programming properties like views, indexes, triggers and stored procs. Each federation has a name and a federation key, which is also called a distribution scheme. The federation key or federation distribution scheme defines 3 properties:

- A federation key label, which helps self-document the meaning of the federation key, like tenant_id or product_id.

- A data domain, to define the distribution surface for your data. In v1, the supported data domains are INT, BIGINT, UNIQUEIDENTIFIER (guid) and VARBINARY (up to 900 bytes).

- A distribution style, to define how the data is distributed across the data domain. At this point the distribution style can only be RANGE.
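To picture what a RANGE distribution over a data domain means, here is a toy Python model (not from Cihan's post and not the SQL Azure API). The class name `RangeFederation`, its methods, and the names `Orders_Fed` and `cust_id` are hypothetical; the sketch assumes each member owns a key range that includes its low boundary and excludes its high boundary.

```python
import bisect


class RangeFederation:
    """Toy model of a RANGE-distributed federation (illustrative only)."""

    def __init__(self, name, key_label, split_points=()):
        self.name = name
        self.key_label = key_label
        # Sorted boundaries; N boundaries define N + 1 members.
        self.split_points = sorted(split_points)

    @property
    def member_count(self):
        return len(self.split_points) + 1

    def member_for(self, key):
        """Index of the member whose range [low, high) contains key."""
        return bisect.bisect_right(self.split_points, key)

    def split_at(self, boundary):
        """Add a boundary, turning one member's range into two."""
        if boundary in self.split_points:
            raise ValueError("boundary already exists")
        bisect.insort(self.split_points, boundary)


if __name__ == "__main__":
    fed = RangeFederation("Orders_Fed", "cust_id", [100, 200])
    print(fed.member_for(42), fed.member_for(100), fed.member_for(250))
    fed.split_at(150)
    print(fed.member_count)
```

All rows that share one key value always map to the same member, which is the property the later discussion of atomic units relies on.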

Let’s assume you are designing the AdventureWorks (AW) database. You have a basic idea of the entities at play, like Stores, Customers, Orders, Products, SalesPeople etc., and are thinking about how you’d like to scale it out…

Picking your Federations:

Picking your federations and federation keys is much like other steps of the data modeling and database design process, like normalization. You can come up with multiple alternatives, each optimized for different situations. You can get to your final design only by knowing the transactions and queries that are critical to your app.

Step #1: Identify entities you want to scale out: You first identify the entities (collections of tables) in your database that are going to be under pressure and need scale-out. These entities are the candidate federations in your design.

Step #2: Identify tables that make up these entities: After identifying the central entities you want to scale out, like customer, order and product, it is fairly easy to traverse the relationships and identify the group of objects tied to these entities through relationships, access patterns and properties. Typically these are groups of tables with one-to-one or one-to-many relationships.

Picking your Federation Key:

Step #3: Identify the Federation Key: The federation key is the key used for distributing the data, and it defines the boundary of atomic units. Atomic units are rows across all scaled-out tables (federated tables) that share the same federation key instance. One important rule in federations is that an atomic unit cannot be SPLIT. Ideal federation keys have the following properties:

- Atomic units are the target of most query traffic and transaction boundaries.

- The key distributes workload equally to all members; it decentralizes load to many atomic units as opposed to concentrating the load.

- Atomic units cannot be split, so the largest atomic unit must not exceed the boundaries of a single federation member.
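One hedged way to sanity-check properties 2 and 3 for a candidate key is to group sample rows by that key and look at the resulting atomic units. The Python sketch below is only an illustration (not from the post): the function names, the sample column names `store_id` and `customer_id`, and the idea of expressing member capacity as a row count are all assumptions.

```python
from collections import Counter


def atomic_unit_sizes(rows, key):
    """Count rows per federation key value; each value is one atomic unit."""
    return Counter(row[key] for row in rows)


def evaluate_key(rows, key, member_capacity):
    """Summarize how well a candidate key spreads a non-empty list of rows.

    member_capacity is the number of rows one member can hold in this toy
    model; real capacity also depends on size and compute.
    """
    sizes = atomic_unit_sizes(rows, key)
    largest = max(sizes.values())
    return {
        "atomic_units": len(sizes),
        "largest_unit": largest,
        "largest_share": largest / len(rows),
        "fits_in_member": largest <= member_capacity,
    }


if __name__ == "__main__":
    # Hypothetical order rows: one big store with several customers.
    orders = [{"store_id": 1, "customer_id": c} for c in (1, 1, 2, 3)]
    orders.append({"store_id": 2, "customer_id": 4})
    for key in ("store_id", "customer_id"):
        print(key, evaluate_key(orders, key, member_capacity=3))
```

A key whose largest atomic unit holds a big share of all rows concentrates load, and one whose largest unit exceeds member capacity runs into the "cannot split the atom" rule.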

Walking through AW

The database design for AW is something I hope you are already familiar with. You can find details here on the schema for SQL Azure: http://msftdbprodsamples.codeplex.com/releases/view/37304. For this app we want to be able to handle Mil customers, 100 Mil orders, and Mil products. These are the largest entities in our database. I’ll add a few more details on the workload to help guide our design; here are our most popular transactions and queries:

‘place an order’, ‘track/update orders’, ‘register/update a customer’, ‘get customer orders’,’get top ordering customers’, ‘register/update products’, ’get top selling products’

and here are some key transactions and consistent query requirements;

‘place an order’, ‘import/export orders for a customer and stores’, ‘monthly bill for customers’

Step #1: We have the classic sales database setup with customer, order and product in AW. In this example, we expect orders to be the most active part; its tables will be the target of most of our workload. We expect many customers and must also handle cases where there are large product catalogs.

Step #2: In AW, the Store and Customer tables are used to identify the customer entity. The SalesTerritory, SalesOrderHeader and SalesOrderDetail tables contain properties of orders. The Customer and Order entities have a one-to-many relationship. On the other hand, the Product entity has a many-to-many relationship back to the Order and Customer entities. When scaling out, you can align one-to-one and one-to-many relationships together but not many-to-many relationships. Thus we can only group Customers and Orders together, but not Products.

Step #3: Given the Customer (Store and Customer tables) and Order (SalesOrderHeader, SalesOrderDetail, SalesTerritory) entities, we can think of a few setups here for the federation key.

- StoreID as the Federation Key:

- Well, that would work for all transactions, so principle #1 of ideal federation keys is taken care of! That is great.

- However, stores may vary in size and may not distribute the load well if the variance in customer and order traffic between stores is too wide. Not so great on principle #2 of ideal federation keys.

- StoreID as a federation key means all customers and all their orders in that store will be a single atomic unit (AU). If you have stores that could get large enough to challenge the computational capacity of a single federation member, you will hit the ‘split the atom’ case and get stuck, because you cannot split an AU.

So StoreID may be too coarse a granule to distribute load equally and may be too large an AU if a store gets ‘big’. By the way, TerritoryID is a similar alternative with a very similar set of issues, so the same argument applies to it as well.

- OrderID as the Federation Key:

- OrderID certainly satisfies #2 and #3 of the ideal federation key principles but has an issue with #1, so let’s focus on that.

- That could work as well, but it is too fine a granule for queries that fetch orders per customer. It also won’t align with the transactional requirements of importing/exporting customer and store orders. Another important note: with this setup, we will need a separate federation for the Customer entity. That means common queries like ‘get all orders of a customer’ or ‘get order of customer dated X/Y/Z’ will need to hit all or at least multiple members. Also, with this setup we lose the ability to transact on multiple orders from a customer, which we may do when importing or exporting a customer’s orders.

Fan-out is not necessarily bad. It promotes parallel execution and can provide great efficiencies. However, efficiencies are lost when we hit all members and can’t fast-eliminate members that don’t have any data to return, and when the cost of parallelization overwhelms the processing of the query. With OrderID as the federation key, queries like ‘get orders of a customer’ or ‘get top products per customer’ will have to hit all members.
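The difference in fan-out between the two keys can be illustrated with a small Python sketch (not from the post). It only models where matching rows live under a range distribution; the function name, the sample rows and the split points are hypothetical, and a real system that cannot eliminate members up front would have to query all of them.

```python
import bisect


def members_touched(rows, federation_key, split_points, predicate):
    """Return the set of member indexes holding at least one matching row.

    Rows are placed by range: member i owns keys in [split[i-1], split[i]).
    """
    return {
        bisect.bisect_right(split_points, row[federation_key])
        for row in rows
        if predicate(row)
    }


if __name__ == "__main__":
    # Eight hypothetical orders alternating between two customers.
    orders = [{"order_id": i, "customer_id": 1 + i % 2} for i in range(1, 9)]

    def is_customer_1(row):
        return row["customer_id"] == 1

    # Federated on OrderID across four members: the customer's orders scatter.
    print(members_touched(orders, "order_id", [3, 5, 7], is_customer_1))
    # Federated on CustomerID across two members: one member holds them all.
    print(members_touched(orders, "customer_id", [2], is_customer_1))
```

With the customer as the federation key, ‘get orders of a customer’ stays within one atomic unit and therefore one member; with the order as the key, the same query spreads across every member that happens to hold one of that customer's orders.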

- OrderDetailID as the Federation Key:

- The case of OrderDetailID has the same issues as the OrderID case above, but amplified on principle #1. With this setup, we lose the transactional boundary needed to place a single order in a transaction. In this case, there will be more queries that need to be fanned out, like ‘get all customer orders’ or ‘get an order’… It makes assembling an order a full fan-out query, which can get quite expensive.

- CustomerID as the Federation Key:

- With CustomerID, #2 and #3 are not likely to be an issue. The only exception is a customer that gets so large that its AU overwhelms the computational capacity of a member. For most cases, CustomerID can be a great way to decentralize the load, getting around the issues StoreID or TerritoryID would create.

- This setup also satisfies almost all of #1, except for 2 cases. One is ‘get top selling products across all customers’; however, that case isn’t satisfied by any of the other alternatives either. This setup does focus the DML (INSERT/UPDATE/DELETE) transactions and lets both multiple-order and single-order placement transactions work seamlessly, so it looks like a good choice from the #1 standpoint. The second is import/export at the store boundary; for example, importing all of a store’s customers and orders in a single transaction will not be possible with this setup. Some people may be able to live with consistency at the customer level and be OK relaxing consistency at the store level. You need to ask yourself: can you work with eventual consistency without transactions at the store level by depending on things like datetime stamps or some other store-level sequence generator? If you can, this is the right choice.

How about Product Entity?

We have not touched on how to place products in this case. To remind you of the issue: there is a many-to-many relationship between products and orders/customers, so products cannot be aligned with a federation built around orders and/or customers. Well, you have 3 choices when it comes to products.

- Products as a Central Table: In this case, you’d leave the Product entity in the root. That would risk making the root and products a bottleneck, especially if you have a fast-updating catalog of products and you don’t build caching facilities to minimize hitting the root for product information in popular queries and transactions. The advantage of this setup is that the product entity can be maintained in a single place with full consistency.

- Product as a Reference Table: In this case, you would place a copy of the products entity in each federation member. This would mean you need to pay more for storing this redundant information. This would mean updating the product catalog will have to be done across many copies of the data and will mean you need to live with eventual consistency on product entity across members. That is at any moment in time, copies of products in member 1 & 2 may not be identical. Upside is this would give you good performance like local joins.

- Product as a separate Federation: In this case, you have a separate federation with a key like productID that holds product entity = all the tables associated with that. You would set up products in a fully consistent setup so there would not be redundancy and you would be setting up products with great scale characteristics. You can independently decide how many nodes to engage and choose to expand if you run out of computational capacity for processing product queries. Downside compared to the reference table option is that you no longer enjoy local joins.

To tie all this together: given the constraints, most people will choose CustomerID as the federation key and place customers and orders in the same federation, and given the requirement to handle large catalogs, most people will choose a separate federation for products.

In this case, I chose a challenging database schema to highlight the variety of options; for partition-able workloads, life isn’t this complicated. However, for sophisticated schemas with a variety of relationships, there are pros and cons to evaluate, much like in other data and database design exercises. It is clear that designing with federations puts ‘scale’ as the top goal and concern, and that may mean that you evaluate some compromises on transactions and query processing.

In part 2, I’ll cover the schema changes that you need to make with federations, which is step 2 in designing or migrating an existing database design over to federations.

Steve Jones (@way0utwest) asserted The Cloud is good for your career in a 2/29/2012 post to the Voice of the DBA blog:

I think most of us know that the world is not really a meritocracy. We know that the value of many things is not necessarily intrinsic to the item; value is based on perception. That’s a large part of the economic theory of supply and demand. The more people want something, the more it should cost.

I ran across a piece that surveyed some salaries and it shows that people working with cloud platforms are commanding higher salaries, even when the underlying technologies are the same. It seems crazy, but that’s the world we live in. Perceptions drive a lot of things in the world, especially the ones that don’t seem to make sense.

Should you specialize in cloud technologies? In one sense, the platform is not a lot different than what you might run in your local data center. Virtualized machines, connections and deployments to remote networks, and limited access to the physical hardware. There are subtle differences, and learning about them and working with them, could be good for your next job interview, when the HR person or technology-challenged manager asks you about Azure.

Part of your career is getting work done, using your skills and talents in a practical and efficient manner. However a larger part of it, IMHO, is the marketing of your efforts and accomplishments. The words you choose, the way you present yourself, these things matter. I would rather be able to talk about SQL Azure as a skill, and then relate that to local Hyper-V installations of SQL Server than the other way around.

If you are considering new work, or are interested in the way cloud computing might fit into an environment, I’d urge you to take a look at SQL Azure or the Amazon web services. You can get a very low cost account for your own use, and experiment. You might even build a little demo for your next interview that impresses the person who signs the paychecks.

Cihan Biyikoglu (@cihangirb) asked What would you like us to work on next? in a 2/28/2012 post:

10 weeks ago we shipped federations in SQL Azure and it is great to see the momentum grow. This week, I'll be spending time with our MVPs in Redmond and we have many events like this where we get together with many of you to talk about what you'd like to see us deliver next on federations. I'd love to hear from the rest of you who don't make it to Redmond or to one of the conferences to talk to us; what would you like us to work on next?

If you like what you see today, what would make it even better? If you don't like what you see today, why? What experience would you like to see taken care of or simplified? Could be big or small. Could be NoSQL or NewSQL or CoSQL or just plain vanilla SQL. Could be in APIs, tools or in Azure or in SQL Azure gateway or the fabric or the engine. Could be the obvious or the obscure...

Open field; fire away and leave a comment or simply tweet #sqlfederations and tell us what you'd like us to work on next...

Peter Laudati (@jrzyshr) posted Get Started with SQL Azure: Resources on 2/28/2012:

Earlier this month, SQL Azure prices were drastically reduced, and a new pricing level of 100 MB for $5 a month was introduced. This news has certainly gotten some folks looking at SQL Azure for the first time. I thought I’d share some resources to help you get started with SQL Azure.

Unlike the new “Developer Centers” for .NET, Node.js, Java, and PHP on WindowsAzure.com, there does not appear to be a one-stop shop for finding all of the information you’d need or want for SQL Azure. The information is out there, but it is spread around all over the place.

I’ve tried to organize these into three high-level categories based on the way one might think about approaching this platform:

- What do I need to know about SQL Azure to get started?

- Can I migrate my data into it?

- How do I achieve scale with it?

Note: This is by no means an exhaustive list of every SQL Azure resource out there. You may find (many) more that I’m not aware of. You also may come across documentation & articles that are older and possibly obsoleted by new features. Be wary of articles with a 2008 or 2009 date on the byline.

Getting Started

Understanding Storage Services in Windows Azure

Storage is generally provided “as-a-service” in Windows Azure. There is no notion of running or configuring your own SQL Server ‘server’ in your own VM. Windows Azure takes the hassle of managing infrastructure away from you. Instead, storage services are provisioned via the web-based Windows Azure management portal, or using other desktop-based tools. Like in a restaurant, you essentially look at a menu and order what you’d like.

Storage services in Windows Azure are priced and offered independently of compute services. That is, you do not have to host your application in Windows Azure to use any of the storage services Windows Azure has to offer. For example, you can host an application in your own datacenter, but store your data in Azure with no need to ever move your application there too. Exploring Windows Azure’s storage services is an easy (and relatively low-cost) way to get started in the cloud.

There are currently three flavors of storage available in Windows Azure:

- Local storage (in the compute VMs that host your applications)

- Non-relational storage (Blobs, Tables, Queues – a.k.a. “Windows Azure Storage”)

- Relational storage (SQL Azure)

For a high-level overview of these, see: Data Storage Offerings in Windows Azure

Note: This post is focused on resources for SQL Azure only. If you’re looking for information on the non-relational storage services (Blobs, Tables, Queues), this post “is not the droids you’re looking for”.

Getting Started With SQL Azure

I started my quest to build this post at the new WindowsAzure.com (“new” in December 2011). Much of the technical content for the Windows Azure platform was reorganized into this new site. Some good SQL Azure resources are here. Others are still elsewhere. Let’s get started…

Start here:

- What is SQL Azure? – This page on WindowsAzure.com explains what SQL Azure is and the high-level scenarios it is good for.

- Business Analytics – This page on WindowsAzure.com explains SQL Azure Reporting at a high-level.

- Overview of SQL Azure – This whitepaper is linked from the “Whitepapers” page on WindowsAzure.com. It is older (circa 2009), but it still appears to provide a relevant overview of SQL Azure.

- SQL Azure Migration Wizard (Part 1): SQL Azure – What Is It? – This screencast on Channel 9 by my colleagues Dave Bost and George Huey may have “Migration Wizard” in the title, but it goes here in the first section. These guys provide a good high-level overview of what SQL Azure is.

Get your hands dirty with the equivalent of a “Hello World” example:

- How To Use SQL Azure – Dive right in. This article walks you through setting up a simple SQL Azure database and then connecting to it from a .NET application.

- Managing SQL Azure Servers and Databases Using SQL Server Management Studio – SQL Azure can be managed via many different tools. One of the most popular is SQL Server Management Studio (SSMS). This article walks you through the basics of doing that.
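
As a rough sketch of what connecting from code involves, the snippet below assembles an ODBC-style connection string of the shape SQL Azure expects: a fully qualified `*.database.windows.net` server name, TCP port 1433, a `user@server` login, and encryption turned on. The server, database, and credential values are placeholders, the driver name is an assumption about what is installed locally, and `build_sql_azure_conn_str` is a hypothetical helper rather than part of any library.

```python
def build_sql_azure_conn_str(server, database, user, password,
                             driver="SQL Server Native Client 10.0"):
    """Assemble an ODBC connection string for a SQL Azure database.

    `server` is the short server name (a placeholder here); the default
    driver name is an assumption about the local machine.
    """
    host = f"{server}.database.windows.net"
    parts = [
        ("Driver", "{" + driver + "}"),
        ("Server", f"tcp:{host},1433"),
        ("Database", database),
        ("Uid", f"{user}@{server}"),  # SQL Azure logins use the user@server form
        ("Pwd", password),
        ("Encrypt", "yes"),           # request an encrypted connection
    ]
    return ";".join(f"{key}={value}" for key, value in parts) + ";"


# Placeholder values for illustration only.
conn_str = build_sql_azure_conn_str("myserver", "mydb", "myuser", "secret")
print(conn_str)
```

The resulting string could then be handed to whatever ODBC-capable client library you use; the articles above show the equivalent steps for a .NET application.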

BIG FLASHING NOTE #1:

You must have SQL Server Management Studio 2008 R2 SP1 to manage a SQL Azure database! SSMS 2008 and SSMS 2008 R2 are just NOT good enough. If you don’t have SSMS 2008 R2 SP1, it will cause a gap in the space time continuum! The errors you will receive if you don’t have SSMS 2008 R2 SP1 are obscure and not obvious indicators of the problem. You may be subject to losing valuable hours of your personal time seeking the correct solution. Be sure you have the right version.

BIG FLASHING NOTE #2:

You CAN run SQL Server Management Studio 2008 R2 SP1 even if you’re NOT running SQL Server 2008 R2 SP1. For example, if you need to still run SQL Server 2008 R2, 2008, or older edition, you can install SSMS 2008 R2 SP1 side-by-side without impacting your existing database installation. Disclaimer: Worked on my machine.

BIG FLASHING TIP:

How can I get SQL Server Management Studio 2008 R2 SP1?

Unfortunately, I found it quite difficult to parse through documentation to find the proper download for this. Searching for “ssms 2008 r2 sp1 download” on Google or Bing will present you with Microsoft Download Center pages that have multiple file download options. I present you with two options here:

- Microsoft SQL Server 2008 R2 SP1 – Express Edition – This page contains multiple download files to install the Express edition of SQL Server. The easiest thing to do here is download either SQLEXPRWT_x64_ENU.exe or SQLEXPRWT_x86_ENU.exe depending on your OS-version (32 vs 64 bit). These files contain both the database and the management tools. When you run the installation process, you can choose to install ONLY the management tools if you don’t want to install the database on your machine.

- Microsoft SQL Server 2008 R2 SP1 – This page contains multiple download files to install just SP1 to an existing installation of SQL Server 2008 R2. If you already have SQL Server Management Studio 2008 R2, you can run SQLServer2008R2SP1-KB2528583-x86-ENU.exe or SQLServer2008R2SP1-KB2528583-x64-ENU.exe, depending on your OS version (32 or 64 bit) to upgrade your existing installation to SP1.

The reason I call so much attention to this issue is because it is something that WILL cause you major pain if you don’t catch it. While some documents call out that you need SSMS 2008 R2 SP1, many do not provide the proper download links and send you on a wild goose chase looking for them. Thank me. I’ll take a bow.

The next place I recommend spending time reading is the SQL Azure Documentation on MSDN.

Content here is broken down into three high-level categories:

- SQL Azure Database – This is the top of a treasure trove of good content.

- SQL Azure Reporting

- SQL Azure Data Sync

You can navigate the tree on your own, but some topics of interest might be:

- SQL Azure Overview - I’m often asked what’s the difference between SQL Azure & SQL Server. This sheds some light on that.

- Guidelines & Limitations – This gets a little more specific on SQL Server features NOT supported on SQL Azure.

- Development: How-to Topics – There’s a smorgasbord of “How To” links here on how to connect to SQL Azure from different platforms and technologies.

- Administration – All the details you need to know to manage your SQL Azure databases. See the “How-to” sub-topic for details on things like backing up your database, importing/exporting data, managing the firewall, etc.

- Accounts & Billing in SQL Azure – Detailed info on pricing & billing here. (Be sure to see my post clarifying some pricing questions I had.)

- Tools & Utilities Support – Many of the same tools & utilities you use to manage SQL Server work with SQL Azure too. This is a comprehensive list of them and brief overview of what each does.

Windows Azure Training Kit – No resource list would be complete without the WATK! The WATK contains whitepapers, presentations, demo code, and labs that you can walk through to learn how to use the platform. This kit has grown so large, it has its own installer! You can selectively install only the documentation and sample labs that you want. The SQL Azure related content here is great!

Migrating Your Data

The resources in the previous section should hopefully give you a good understanding of how SQL Azure works and how to do most basic management of it. The next task most folks want to do is figure out how to migrate their existing databases to SQL Azure. There are several options for doing this.

Start here: Migrating Databases to SQL Azure – This page in MSDN provides a high-level overview of the various options.

Three migration tools you may find yourself interested in:

- SQL Azure Migration Wizard – The SQLAzureMW is an open source project on CodePlex. It was developed by my colleague George Huey. This is a MUST have tool in your toolbox!

SQLAzureMW is designed to help you migrate your SQL Server 2005/2008/2012 databases to SQL Azure. SQLAzureMW will analyze your source database for compatibility issues and allow you to fully or partially migrate your database schema and data to SQL Azure.

SQL Azure Migration Wizard (SQLAzureMW) is an open source application that has been used by thousands of people to migrate their SQL database to and from SQL Azure. SQLAzureMW is a user interactive wizard that walks a person through the analysis / migration process.

- SQL Azure Migration Assistant for MySQL

- SQL Azure Migration Assistant for Access

Channel 9 SQL Azure Migration Wizard (Part 2): Using the SQL Azure Migration Wizard - For people who are new to SQL Azure and just want to get an understanding of how to get a simple SQL database uploaded to SQL Azure, George Huey & Dave Bost did a Channel 9 video on the step by step process of migrating a database to SQL Azure with SQLAzureMW. This is a good place to get an idea of what’s involved.

Tips for Migrating Your Applications To The Cloud – MSDN Magazine article by George Huey & Wade Wegner covering the SQLAzureMW.

Overview of Options for Migrating Data and Schema to SQL Azure – I found this Wiki article on TechNet regarding SQL Azure Migration. It appears to be from 2010, but with updates as recent as January 2012. The information here appears valid still.

Scaling with SQL Azure

Okay, you’ve figured out how to get an account and get going. You’re able to migrate your existing databases to SQL Azure. Now it’s time to take it to the next level: Can you scale?

Just because you can migrate your existing SQL Server database to SQL Azure doesn’t mean it will scale the same. SQL Azure is a multi-tenant “database-as-a-service” that is run in the Azure datacenters on commodity hardware. That introduces a new set of concerns regarding performance, latency, competition with other tenants, etc.

I recommend watching this great video from Henry Zhang at TechEd 2011 in Atlanta, GA:

- Microsoft SQL Azure Performance Considerations and Troubleshooting – In this talk, Henry goes deep on how SQL Azure is implemented under the covers, providing you a better understanding of how the system works. He covers life in a multi-tenant environment, including throttling, and how to design your databases for it. (Henry’s talk is an updated version of one delivered by David Robinson at TechEd Australia in 2010.)

There is a 150GB size limit on SQL Azure databases (recently up from 50GB). So what do you do if your relational needs are greater than that limit? It’s time to learn about the art of sharding and SQL Azure Federation. While SQL Azure may take away the mundane chores of database administration (clustering/replication/etc), it does introduce problems which require newer skillsets to solve. This is a key example of that.
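
To make the idea of sharding concrete, here is a minimal, library-free sketch of range-based routing, the general technique behind splitting one large database into several smaller ones: each member database owns a contiguous range of a key, and a lookup decides which member holds a given row. The member names and split points are invented for illustration, and `RangeShardMap` is a hypothetical class; this is not the SQL Azure Federations API.

```python
import bisect


class RangeShardMap:
    """Maps an integer key to the shard that owns its range."""

    def __init__(self, split_points, shard_names):
        # N split points divide the key space into N + 1 ranges.
        if len(shard_names) != len(split_points) + 1:
            raise ValueError("need exactly one more shard than split points")
        if list(split_points) != sorted(split_points):
            raise ValueError("split points must be sorted")
        self.split_points = list(split_points)
        self.shard_names = list(shard_names)

    def shard_for(self, key):
        # bisect_right sends a key equal to a split point to the upper
        # range, so every range is [low, high).
        index = bisect.bisect_right(self.split_points, key)
        return self.shard_names[index]


# Hypothetical layout: three members split at keys 1000 and 2000.
shards = RangeShardMap([1000, 2000], ["member_0", "member_1", "member_2"])
print(shards.shard_for(42))    # member_0
print(shards.shard_for(1000))  # member_1
print(shards.shard_for(5000))  # member_2
```

Splitting a range that has grown too large amounts to adding one split point and one member, which is why the choice of key matters so much in a sharded design.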

Start off by watching this video by Cihan Biyikoglu from TechEd 2011 in Atlanta, GA:

Building Scalable Database Solutions Using Microsoft SQL Azure Database Federations – Cihan Biyikoglu

In this talk Cihan explains what a database federation is, and how federations work in SQL Azure.

Note: This talk is from May 2011 when SQL Azure Federations were only available as a preview/beta. The SQL Azure Federations feature was officially released into production in December 2011. So there may be variances between the May video and current service feature. He released a short updated video here.

Next read George Huey’s recent MSDN Magazine Article:

- SQL Azure: Scaling Out with SQL Azure Federation

In this article, George covers the what, the why, and the how of SQL Azure Federations, and how SQL Azure Migration Wizard and SQL Azure Federation Data Migration Wizard can help simplify the migration, scale out, and merge processes. This article is geared to architects and developers who need to think about using SQL Azure and how to scale out to meet user requirements. (Cihan, from the previous video, was a technical reviewer on George’s article!)

Follow that up with George Huey as a guest on Cloud Cover Episode #69:

- Channel 9 Episode 69 - SQL Azure Federations with George Huey – George covers a lot of the same information with Wade Wegner in a Channel 9 Cloud Cover session on SQL Azure Federations. Like the article above, this video covers what SQL Azure Federations is, the process of migrating / scaling out using SQL Azure Federations, and some of the architectural considerations that need to be addressed during the design process.

As a follow-up to the SQL Azure Migration Wizard, George has also produced another great tool:

- SQL Azure Federation Data Migration Wizard (SQLAzureFedMW)

SQL Azure Federation Data Migration Wizard simplifies the process of migrating data from a single database to multiple federation members in SQL Azure Federation.

SQL Azure Federation Data Migration Wizard (SQLAzureFedMW) is an open source application that will help you move your data from a SQL database to (1 to many) federation members in SQL Azure Federation. SQLAzureFedMW is a user interactive wizard that walks a person through the data migration process.

That about wraps up my resource post here. Questions? Feedback? Leave it all below in the comments! Hope this helped you on your way to learning SQL Azure.

Gregory Leake posted Protecting Your Data in SQL Azure: New Best Practices Documentation Now Available on 2/27/2012:

We have just released new best practices documentation for SQL Azure under the MSDN heading SQL Azure Business Continuity. The documentation explains the basic SQL Azure disaster recovery/backup mechanisms, and best practices to achieve advanced disaster recovery capabilities beyond the default capabilities. This new article explains how you can achieve business continuity in SQL Azure to enable you to recover from data loss caused by failure of individual servers, devices or network connectivity; corruption, unwanted modification or deletion of data; or even widespread loss of data center facilities. We encourage all SQL Azure developers to review this best practices documentation.


MarketPlace DataMarket, Social Analytics, Big Data and OData

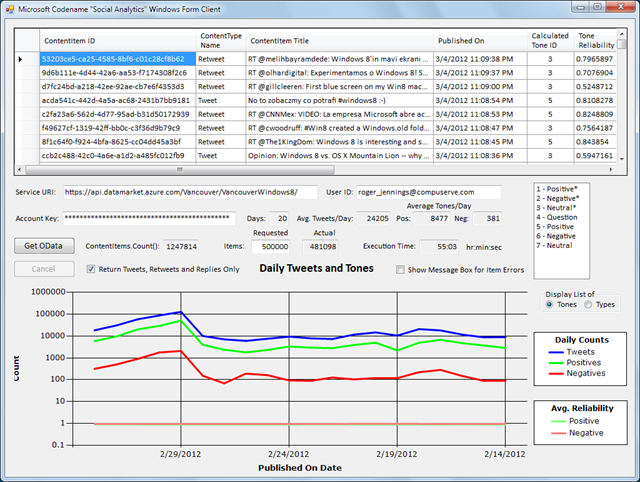

My (@rogerjenn) Windows 8 Preview Engagement and Sentiment Analysis with Microsoft Codename “Social Analytics” post of 3/4/2012 begins:

The VancouverWindows8 data set created by the Codename “Social Analytics” team and used by my Microsoft Codename “Social Analytics” Windows Form Client demo application is ideal for creating a time series of Twitter-user engagement and sentiment during the first few days of availability of the Windows 8 preview bits.

Following is a screen capture showing summary engagement (tweet count per day) and sentiment (Tone) data for 481,098 tweets, retweets and replies about Windows 8 from 2/12 to 3/4/2012:

Note: The execution time to retrieve the data was 04:55:05. Hour data is missing from the text box. (This problem has been corrected in the latest downloadable version.) The latest data (for 3/4/2012) isn’t exact because it’s only for 23 hours.

The abrupt increase in Tweets/Day from an average of 24,205 to more than 100,000 on 2/29/2012 is to be expected because Microsoft released the Windows 8 Consumer Preview and Windows 8 Server Beta on that date.
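
The engagement and sentiment series described above boils down to grouping items by calendar day, counting them, and averaging a tone score. The sketch below shows that aggregation on a few made-up records; the `published` and `tone` field names and the sample values are assumptions for illustration, not the actual “Social Analytics” schema.

```python
from collections import defaultdict
from datetime import datetime


def daily_engagement(items):
    """Return {date: (item_count, average_tone)} for an iterable of dicts."""
    buckets = defaultdict(list)
    for item in items:
        # Group by the calendar day of the (assumed) ISO-style timestamp.
        day = datetime.strptime(item["published"], "%Y-%m-%dT%H:%M:%S").date()
        buckets[day].append(item["tone"])
    return {
        day: (len(tones), sum(tones) / len(tones))
        for day, tones in sorted(buckets.items())
    }


# Made-up sample records; real data would come from the OData feed.
sample = [
    {"published": "2012-02-28T09:15:00", "tone": 1.0},
    {"published": "2012-02-29T10:00:00", "tone": 3.0},
    {"published": "2012-02-29T18:30:00", "tone": 5.0},
]
for day, (count, avg_tone) in daily_engagement(sample).items():
    print(day, count, avg_tone)
```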

…

For more information about the downloadable “Social Analytics” sample app, see the following posts (in reverse chronological order):

- More Features and the Download Link for My Codename “Social Analytics” WinForms Client Sample App

- New Features Added to My Microsoft Codename “Social Analytics” WinForms Client Sample App

- My Microsoft Codename “Social Analytics” Windows Form Client Detects Anomaly in VancouverWindows8 Dataset

- Microsoft Codename “Social Analytics” ContentItems Missing CalculatedToneId and ToneReliability Values

- Querying Microsoft’s Codename “Social Analytics” OData Feeds with LINQPad

- Problems Browsing Codename “Social Analytics” Collections with Popular OData Browsers

- Using the Microsoft Codename “Social Analytics” API with Excel PowerPivot and Visual Studio 2010

- SQL Azure Labs Unveils Codename “Social Analytics” Skunkworks Project

Paul Patterson described Microsoft LightSwitch – Consuming OData with Visual Studio LightSwitch 2011 in a 3/3/2012 post:

The integrated OData (Open Data Protocol) data source features included with Microsoft Visual Studio 2011 (Beta) LightSwitch look exciting.

Just how simple is it to consume OData with LightSwitch? Check it out…

Launch the new Visual Studio 2011 Beta and select to create a new LightSwitch project. I named mine LSOData001.

Next, in the Solution Explorer, Right-Click the Data Sources node and select Add Data Source…

Then, from the Attach Data Source Wizard, select OData Service from the Choose a Data Source Type dialog…

Microsoft has produced a great resource for OData developers. www.OData.org is where you’ll find some terrific information about all things OData, including a directory of OData publishers.

For this example post, I wanted to try out the Northwind OData service that is available. So, in the connection information dialog that resulted from the step above, I entered the source address for the OData service, and then tested the connection…

With a successful connection, I closed the Test Connection dialog and then clicked Next. This action opens the Choose your Entities dialog. From that dialog I selected the Customers AND the Orders (not shown below). Make sure to also select Orders.
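
An OData feed is queried over HTTP using entity-set URLs with query options such as `$top`, `$filter`, and `$expand`. As a rough illustration of what a client requests, the sketch below builds such a URL for Customers together with their related Orders. The service root shown is an assumption (the post does not spell out the address it used), and `odata_query_url` is a hypothetical helper.

```python
from urllib.parse import quote

# Assumed service root for the public Northwind sample; substitute your own.
SERVICE_ROOT = "http://services.odata.org/Northwind/Northwind.svc"

# Characters left unescaped so filter expressions stay readable.
SAFE_CHARS = "',()/"


def odata_query_url(entity_set, **options):
    """Build an OData query URL; keyword names become $-prefixed options."""
    query = "&".join(
        f"${name}={quote(str(value), safe=SAFE_CHARS)}"
        for name, value in options.items()
    )
    url = f"{SERVICE_ROOT}/{entity_set}"
    return f"{url}?{query}" if query else url


url = odata_query_url("Customers", expand="Orders", top=5,
                      filter="Country eq 'Germany'")
print(url)
```

Pasting a URL of this shape into a browser is a quick way to check that a feed is reachable before attaching it as a data source.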

Clicking the Finish button (above) causes LightSwitch to evaluate the entities selected. In the example I am using, the Customers and Orders entities each have some constraints that need to be included as part of the operation to attach the datasource. Shippers, Order_Details, and Employees are entities that need to be included in the operation. Also, note the warning about the many-to-many relationship that is not supported.

Next, I click the Continue button to… continue

…and PRESTO! The entity designer appears, showing the Customer entity.

Next I quickly add a List Detail screen so that I can actually see that the binding works when I run the application.

From the entity designer, I click “Screen…”…

Then I define what kind of screen, including the data source, to create…

I click the OK button (above), which results in the display of the screen designer. And being the over-enthusiastic sort of fella I am, I click the start button just to give myself that immediate rush of accomplishment….

Way COOL!!… er, I mean, yeah that’s what I knew would happen!

This opens a lot of opportunity, this whole OData goodness. I have lots of new ideas for posts now.

Glenn Gailey (@ggailey777) posted Blast from the Past: Great Post on Using the Object Cache on the Client on 3/2/2012:

In the course of trying to answer a customer question on how to leverage the WCF Data Services client for caching, I came across a great blog post on the subject—which I had almost completely forgotten about:

Working with Local Entities in Astoria Client

(This post, by OData developer Peter Qian, is in fact so old that it refers to WCF Data Services by its original code name “Astoria.”)

The customer was looking for a way to maintain a read-only set of relatively static data from an OData service in memory so that this data could be exposed by his web app. As Peter rightly points out, the best thing to do is use a NoTracking merge option when requesting the objects. In this case, the object data is available in the Entities collection of the DataServiceContext and can be exposed in various ways. The entity data stored by the context is wrapped in an EntityDescriptor that includes the entity’s tracking and metadata, so some fancier coding is involved to expose this cached data as an IQueryable<T>, which has the LINQ-iness that we all really want.
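
The caching idea itself is independent of the client library: load the entities once, keep them in memory without change tracking, and expose them only through read-only queries. The Python sketch below illustrates that pattern with plain dictionaries. It is an analogy rather than the WCF Data Services client API, and the `ReadOnlyEntityCache` class, the loader function, and the sample data are all hypothetical.

```python
from types import MappingProxyType


class ReadOnlyEntityCache:
    """Loads entities once and serves them as immutable views."""

    def __init__(self, loader):
        self._loader = loader   # callable returning an iterable of dicts
        self._entities = None   # filled on first access

    def _load(self):
        if self._entities is None:
            # Wrap each record so callers cannot modify the cached copy.
            self._entities = tuple(
                MappingProxyType(dict(record)) for record in self._loader()
            )
        return self._entities

    def where(self, predicate):
        """Return the cached entities that satisfy the predicate."""
        return [entity for entity in self._load() if predicate(entity)]


calls = []


def fake_loader():
    # Stand-in for a single request to the remote feed.
    calls.append(1)
    return [{"Id": 1, "Country": "US"}, {"Id": 2, "Country": "CA"}]


cache = ReadOnlyEntityCache(fake_loader)
us_only = cache.where(lambda e: e["Country"] == "US")
everything = cache.where(lambda e: e["Id"] > 0)
print(len(us_only), len(everything), len(calls))  # the loader ran only once
```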

Just re-read the post yourself, and see if you agree with me that it’s a rediscovered gem for using the WCF Data Services client.

Tip:

Remember to try and avoid the temptation to use IQueryable<T> collections on the client as the data source for another OData feed (using the reflection provider). This kind of republishing using the WCF Data Services client can get you into a lot of problems. This is because the WCF Data Services client library does not support the full set of LINQ queries used by an OData service.

David Campbell asked Do we have the tools we need to navigate the New World of Data? in a 2/29/2012 post to the SQL Server Team blog:

Last October at the PASS Summit we began discussing our strategy and product roadmap for Big Data including embracing Hadoop as part of our data platform and providing insights to all users on any data. This week at the Strata Conference, we will talk about the progress we have been making with Hortonworks and the Hadoop ecosystem to broaden the adoption of Hadoop and the unique opportunity for organizations to derive new insights from Big Data.

In my keynote tomorrow, I will discuss a question that I’ve been hearing a lot from the customers I’ve been talking to over the past 18 months, “Do we have the tools we need to navigate the New World of Data?” Organizations are making progress at learning how to refine vast amounts of raw data into knowledge to drive insight and action. The tools used to do this, thus far, are not very good at sharing the intermediate results to produce “Information piece parts” which can be combined into new knowledge.

I will share some of the innovative work we’ve been doing both at Microsoft and with members of the Hadoop community to help customers unleash the value of their data by allowing more users to derive insights by combining and refining data regardless of the scale and complexity of data they are working with. We are working hard to broaden the adoption of Hadoop in the enterprise by bringing the simplicity and manageability of Windows to Hadoop based solutions, and we are expanding the reach with a Hadoop based service on Windows Azure. Hadoop is a great tool but, to fully realize the vision of a modern data platform, we also need a marketplace to search, share and use 1st and 3rd party data and services. And, to bring the power to everyone in the business, we need to connect the new big data ecosystem to business intelligence tools like PowerPivot and Power View.

There is an amazing amount of innovation going on throughout the ecosystem in areas like stream processing, machine learning, advanced algorithms and analytic languages and tools. We are working closely with the community and ecosystem to deliver an Open and Flexible platform that is compatible with Hadoop and works well with leading 3rd party tools and technologies enabling users of non-Microsoft technologies to also benefit from running their Hadoop based solutions on Windows and Azure.

We have recently reached a significant milestone in this journey, with our first series of contributions to the Apache Hadoop projects. Working with Hortonworks, we have submitted a proposal to the Apache Software Foundation for enhancements to Hadoop to run on Windows Server and are also in the process of submitting further proposals for a JavaScript framework and a Hive ODBC Driver.

The JavaScript framework simplifies programming on Hadoop by making JavaScript a first class programming language for developers to write and deploy MapReduce programs. The Hive ODBC Driver enables connectivity to Hadoop data from a wide range of Business Intelligence tools including PowerPivot for Excel.
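
For readers new to the model, a MapReduce program consists of a map function that emits key/value pairs and a reduce function that combines all values sharing a key. The sketch below runs a word count in a single Python process to show the shape of the two functions. It does not use Hadoop or the proposed JavaScript framework, and the function names are illustrative.

```python
from collections import defaultdict


def map_words(line):
    """Map step: emit (word, 1) for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def reduce_counts(word, counts):
    """Reduce step: combine all counts emitted for one word."""
    return word, sum(counts)


def run_job(lines):
    # Shuffle step: group mapped values by key before reducing.
    grouped = defaultdict(list)
    for line in lines:
        for word, count in map_words(line):
            grouped[word].append(count)
    return dict(reduce_counts(word, counts) for word, counts in grouped.items())


result = run_job(["big data", "Big insights from data"])
print(result)
```

On a real cluster the map and reduce calls run on many machines and the grouping step happens across the network, but the two user-written functions keep this same shape.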

In addition, we have also been working with several leading Big Data vendors like Karmasphere, Datameer and HStreaming and are excited to see them announce support for their Big Data solutions on our Hadoop based service on Windows Server & Windows Azure.

Just 10 years ago, most business data was locked up behind big applications. We are now entering a period where data and information become “first class” citizens. The ability to combine and refine these data into new knowledge and insights is becoming a key success factor for many ventures.

A modern data platform will provide new capabilities including data marketplaces which offer content, services, and models; and it will provide the tools which make it easy to derive new insights taking Business Intelligence to a whole new level.

This is an exciting time for us as we also prepare to launch our data platform that includes SQL Server 2012 and our Big Data investments. To learn more on what we are doing for Big Data you can visit www.microsoft.com/bigdata.

OData.org published OData Service Validation Tool Update: Ground work for V3 Support on 2/28/2012:

OData Service Validation Tool was updated with 5 new rules and the ground work for supporting OData v3 services:

- 4 new common rules

- 1 new entry rule

- Added version annotation to rules to differentiate rules by version

- Modified the rule selection engine to support rule version.

- Changed rule engine API to support rule versions

- Changed database schema to log request and response headers

- Added a V3 checkbox for online and offline validation scenarios

This update brings the total number of rules in the validation tool to 152. You can see the list of the active rules here and the list of rules that are under development here.

Moreover, with this update the validation tool is positioned to support rules for validating the payloads of OData v3 services.

Keep on validating and let us know what you think either on the mailing list or on the discussions page on the Codeplex site.

The Microsoft BI Team (@MicrosoftBI) asked Do We Have The Tools We Need to Navigate The New World of Data? in a 2/28/2012 post:

Today, with the launch of the 2012 Strata Conference, the SQL Server Data Platform Insider blog is featuring a guest post from Dave Campbell, Microsoft Technical Fellow in Microsoft’s Server and Tools Business. Dave discusses how Microsoft is working with the big data community and ecosystem to deliver an open and flexible platform as well as broadening the adoption of Hadoop in the enterprise by bringing the simplicity and manageability of Windows to Hadoop based solutions.

Be sure to also catch Dave Campbell’s Strata Keynote streaming live via http://strataconf.com/live at 9:00am PST Wednesday February 29th, 2012 where he will cover the topics mentioned in the SQL Server DPI blog post in more detail. [See post above.]

Finally, to learn more about Microsoft’s Big Data Solutions, visit www.microsoft.com/bigdata.

The Microsoft BI Team (@MicrosoftBI) reported Strata Conference Is Coming Up! on 2/27/2012:

The O’Reilly Strata Conference is the place to be to find out how to make data work for you. It starts tomorrow, Tuesday, February 28th, and goes through Thursday, March 1st. We’re excited to be participating in this great event. We’ll be covering a wide range of hot topics including Big Data, unlocking insights from your data, and self-service BI.

If you’re attending the event, you’ll want to check out our 800 sq. ft. booth. We’ll be at #301, and will have 3 demo pods featuring our products and technologies including what’s new with Microsoft SQL Server 2012, including Power View, as well as Windows Azure marketplace demos. We have a run-down of the sessions we’ll be speaking at, but first, a special note about February 29. From 1:30-2:10 PM, Microsoft Technical Fellow Dave Campbell will be hosting Office Hours. This is your chance to meet with the Strata presenters in-person. Dave Campbell is a Technical Fellow working on Microsoft’s Server and Tools business. His current product development interests include cloud-scale computing, realizing value from ambient data, and multidimensional, context-rich computing experiences. For those attending the conference, there is no need to sign up for this session. Just stop by the Exhibit Hall with your questions and feedback.

For those who aren’t able to attend in person, we’ll have representatives from the Microsoft BI team tweeting from the event. Be sure you are following @MicrosoftBI on Twitter so you can participate. Start submitting your questions today to @MicrosoftBI and we will incorporate questions into the Office Hours for Dave to answer.

Be sure to also catch Dave Campbell’s Strata Keynote streaming live via http://strataconf.com/live at 9:00am PST Wednesday February 29th, 2012. Mac Slocum will also be interviewing Dave at 10:15 A.M. PST on February 29th. You can watch this interview at the link above as well.

Now, a summary of what we’ll be presenting on!

SQL and NoSQL Are Two Sides Of The Same Coin

- Erik Meijer (Microsoft)

- 9:00am Tuesday, 02/28/2012

The nascent NoSQL market is extremely fragmented, with many competing vendors and technologies. Programming, deploying, and managing NoSQL solutions requires specialized and low-level knowledge that does not easily carry over from one vendor’s product to another.

A necessary condition for the network effect to take off in the NoSQL database market is the availability of a common abstract mathematical data model and an associated query language for NoSQL that removes product differentiation at the logical level and instead shifts competition to the physical and operational level. The availability of such a common mathematical underpinning of all major NoSQL databases can provide enough critical mass to convince businesses, developers, educational institutions, etc. to invest in NoSQL.

In this article we developed a mathematical data model for the most common form of NoSQL—namely, key-value stores as the mathematical dual of SQL’s foreign-/primary-key stores. Because of this deep and beautiful connection, we propose changing the name of NoSQL to coSQL. Moreover, we show that monads and monad comprehensions (i.e., LINQ) provide a common query mechanism for both SQL and coSQL and that many of the strengths and weaknesses of SQL and coSQL naturally follow from the mathematics.

In contrast to common belief, the question of big versus small data is orthogonal to the question of SQL versus coSQL. While the coSQL model naturally supports extreme sharding, the fact that it does not require strong typing and normalization makes it attractive for “small” data as well. On the other hand, it is possible to scale SQL databases by careful partitioning.

What this all means is that coSQL and SQL are not in conflict, like good and evil. Instead they are two opposites that coexist in harmony and can transmute into each other like yin and yang. Because of the common query language based on monads, both can be implemented using the same principles.
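
A small sketch may help make the duality tangible. In the relational shape, child rows point to their parent through a foreign key; in the key-value shape, the parent points to its children. The same comprehension-style query works over both. The data and names below are invented for illustration and are not taken from the talk.

```python
# Relational shape: each order row refers to its customer by foreign key.
customers = [{"id": 1, "name": "Ann"}, {"id": 2, "name": "Bob"}]
orders = [
    {"id": 10, "customer_id": 1, "total": 25},
    {"id": 11, "customer_id": 1, "total": 40},
    {"id": 12, "customer_id": 2, "total": 15},
]

# Key-value shape: each customer key maps to a value holding its orders.
store = {
    1: {"name": "Ann", "orders": [{"id": 10, "total": 25},
                                  {"id": 11, "total": 40}]},
    2: {"name": "Bob", "orders": [{"id": 12, "total": 15}]},
}

# Query: (customer name, order total) for every order above 20.
sql_style = [
    (c["name"], o["total"])
    for c in customers
    for o in orders
    if o["customer_id"] == c["id"] and o["total"] > 20
]

cosql_style = [
    (value["name"], o["total"])
    for value in store.values()
    for o in value["orders"]
    if o["total"] > 20
]

print(sql_style)
print(cosql_style)
```

The relational version needs a join condition because the pointer runs from child to parent; the key-value version walks from parent to child and needs none, yet both comprehensions return the same pairs.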

Do We Have The Tools We Need To Navigate The New World Of Data?

- Dave Campbell (Microsoft)

- 9:00am Wednesday, 02/29/2012

In a world where data is increasing 10x every 5 years and 85% of that information is coming from new data sources, how do our existing technologies to manage and analyze data stack up? This talk discusses some of the key implications that Big Data will have on our existing technology infrastructure and where we need to go as a community and ecosystem to make the most of the opportunity that lies ahead.

Unleash Insights On All Data With Microsoft Big Data

- Alexander Stojanovic (Microsoft)

- 11:30am Wednesday, 02/29/2012

Do you plan to extract insights from mountains of data, including unstructured data that is growing faster than ever? Attend this session to learn about Microsoft’s Big Data solution that unlocks insights on all your data, including structured and unstructured data of any size. Accelerate your analytics with a Hadoop service that offers deep integration with Microsoft BI and the ability to enrich your models with publicly available data from outside your firewall. Come and see how Microsoft is broadening access to Hadoop through dramatically simplified deployment, management and programming, including full support for JavaScript.

Hadoop + JavaScript: what we learned

- Asad Khan (Microsoft)

- 2:20pm Wednesday, 02/29/2012

In this session we will discuss two key aspects of using JavaScript in the Hadoop environment. The first one is how we can reach a much broader set of developers by enabling JavaScript support on Hadoop. The JavaScript fluent API, which works on top of other languages like PigLatin, lets developers define MapReduce jobs in a style that is much more natural, even to those who are unfamiliar with the Hadoop environment.

The second one is how to enable simple experiences directly through an HTML5-based interface. The lightweight Web interface gives developers the same experience as they would get on the server. The web interface provides a zero-installation experience to the developer across all client platforms. This also allowed us to use HTML5 support in the browsers to give some basic data visualization support for quick data analysis and charting.

During the session we will also share how we used other open source projects like Rhino to enable JavaScript on top of Hadoop.
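To give a feel for what a fluent style of defining a MapReduce job looks like, here is a minimal in-memory sketch in Python. It is not the JavaScript API discussed in the session: the `Job` class and its `map`, `reduce`, and `run` methods are hypothetical names invented for this illustration, and nothing here runs on Hadoop.

```python
from collections import defaultdict


class Job:
    """Hypothetical in-memory stand-in for a fluent MapReduce job builder."""

    def __init__(self, records):
        self._records = list(records)
        self._mapper = None
        self._reducer = None

    def map(self, mapper):
        # mapper: record -> iterable of (key, value) pairs
        self._mapper = mapper
        return self  # returning self is what makes the calls chainable

    def reduce(self, reducer):
        # reducer: (key, list of values) -> result for that key
        self._reducer = reducer
        return self

    def run(self):
        # Shuffle phase: group the mapped values by key.
        groups = defaultdict(list)
        for record in self._records:
            for key, value in self._mapper(record):
                groups[key].append(value)
        # Reduce phase: collapse each group to a single result.
        return {key: self._reducer(key, values) for key, values in groups.items()}


# Word count, written as one chained expression.
lines = ["big data", "big insights"]
counts = (
    Job(lines)
    .map(lambda line: [(word, 1) for word in line.split()])
    .reduce(lambda word, ones: sum(ones))
    .run()
)
```

The chained form reads top to bottom as a description of the job, which is the appeal of a fluent API over assembling mapper and reducer classes by hand.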

Democratizing BI at Microsoft: 40,000 Users and Counting

- Kirkland Barrett (Microsoft)

- 10:40am Thursday, 03/01/2012

Learn how Microsoft manages a 10,000-person IT organization utilizing Business Intelligence capabilities to drive communication of strategy, performance monitoring of key analytics, employee self-service BI, and leadership decision-making throughout the global Microsoft IT organization. The session will focus on high-level BI challenges and needs of IT executives, Microsoft IT’s BI strategy, and the capabilities that helped to drive BI internal use from 300 users to over 40,000 users (and growing) through self-service BI methodologies.

Data Marketplaces for your extended enterprise: Why Corporations Need These to Gain Value from Their Data

- Piyush Lumba (Microsoft), Francis Irving (ScraperWiki Ltd.)

- 2:20pm Thursday, 03/01/2012

One of the most significant challenges faced by individuals and organizations is how to discover and collaborate with data within and across their organizations, which often stays trapped in application and organizational silos. We believe that internal data marketplaces or data hubs will emerge as a solution to this problem of how data scientists and other professionals can work together in a friction-free manner on data inside corporations and between corporations, and unleash significant value for all.

This session will cover this concept in two dimensions.

Piyush from Microsoft will walk through the concept of internal data markets – an IT-managed solution that allows organizations to efficiently and securely discover, publish and collaborate on data from various sub-groups within an organization, and from partners and vendors across the extended organization.

Francis, from ScraperWiki, will talk through stories of how people have already used data hubs, and stories that give signs of what is to come. For example – how Australian activists use collaborative web scraping to gather a national picture of planning applications, and how Nike are releasing open corporate data to create disruptive innovation. There’ll be a section where the audience can briefly tell how they use the Internet to collaborate on working with data, and the session ends with a challenge to use open data as a weapon.

Additional Information

For additional details on Microsoft’s presence at Strata and Big Data resources visit this link.

Finally, if you’re not able to attend Strata and want a fun way to interact with our technologies, be sure to participate in our #MSBI Power View Contest happening Tuesdays and Thursdays through March 6th 2012.

The Microsoft BI Team (@MicrosoftBI) posted Ashvini Sharma’s Big Data for Everyone: Using Microsoft’s Familiar BI Tools with Hadoop on 2/24/2012:

In our second Big Data technology guest blog post, we are thrilled to have Ashvini Sharma, a Group Program Manager in the SQL Server Business Intelligence group at Microsoft. Ashvini discusses how organizations can provide insights for everyone from any data, any size, anywhere by using Microsoft’s familiar BI stack with Hadoop.

Whether through blogs, Twitter, or technical articles, you’ve probably heard about Big Data, and a recognition that organizations need to look beyond the traditional databases to achieve the most cost-effective storage and processing of extremely large data sets, unstructured data, and/or data that comes in too fast. As the prevalence and importance of such data increases, many organizations are looking at how to leverage technologies such as those in the Apache Hadoop ecosystem. Recognizing one size doesn’t fit all, we began detailing our approach to Big Data at the PASS Summit last October. Microsoft’s goal for Big Data is to provide insights to all users from structured or unstructured data of any size. While very scalable, accommodating, and powerful, most Big Data solutions based on Hadoop require highly trained staff to deploy and manage. In addition, the benefits are limited to a few highly technical users who are as comfortable programming their requirements as they are using advanced statistical techniques to extract value. For those of us who have been around the BI industry for a few years, this may sound similar to the early 90s, when the benefits of our field were limited to a few within the corporation through the Executive Information Systems.

Analysis on Hadoop for Everyone

Microsoft entered the Business Intelligence industry to enable orders of magnitude more users to make better decisions from applications they use every day. This was the motivation behind being the first DBMS vendor to include an OLAP engine with the release of SQL Server 7.0 OLAP Services that enabled Excel users to ask business questions at the speed of thought. It remained the motivation behind PowerPivot in SQL Server 2008 R2, a self-service BI offering that allowed end users to build their own solutions without dependence on IT, as well as provided IT insights on how data was being consumed within the organization. And, with the release of Power View in SQL Server 2012, that goal will bring the power of rich interactive exploration directly in the hands of every user within an organization.

Enabling end users to merge data stored in a Hadoop deployment with data from other systems or with their own personal data is a natural next step. In fact, we also introduced the Hive ODBC driver, currently in Community Technology Preview, at the PASS Summit in October. This driver allows connectivity to Apache Hive, which in turn facilitates querying and managing large datasets residing in distributed storage by exposing them as a data warehouse.
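To make the idea of ODBC connectivity to Hive concrete, the sketch below shows how a client program might query Hive through an ODBC data source. It is an illustration under assumptions, not part of the original post: it relies on the third-party `pyodbc` package, and the DSN name, table, and column passed in by the caller are hypothetical. The helper functions are invented for this example.

```python
def build_connection_string(dsn):
    """Return an ODBC connection string for a preconfigured data source name."""
    # "DSN=<name>" is standard ODBC connection-string syntax.
    return f"DSN={dsn}"


def top_n_query(table, column, limit):
    """Build a HiveQL query counting rows per distinct value of a column."""
    # Identifiers are interpolated directly, so pass only trusted names.
    return (
        f"SELECT {column}, COUNT(*) AS hits "
        f"FROM {table} GROUP BY {column} "
        f"ORDER BY hits DESC LIMIT {limit}"
    )


def fetch_rows(dsn, query):
    """Run a query through ODBC and return all rows."""
    import pyodbc  # third-party package, imported lazily so the helpers above need no driver

    connection = pyodbc.connect(build_connection_string(dsn), autocommit=True)
    try:
        cursor = connection.cursor()
        cursor.execute(query)
        return cursor.fetchall()
    finally:
        connection.close()
```

With a Hive ODBC data source configured under a name such as `HiveDemo` (an assumed name), a call like `fetch_rows("HiveDemo", top_n_query("weblogs", "country", 10))` would return the ten most frequent values. Any ODBC-capable tool, including Excel, follows the same pattern of data source plus query.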

This connector brings the benefit of the entire Microsoft BI stack and ecosystem on Hive. A few examples include:

- Bring Hive data directly to Excel through the Microsoft Hive Add-in for Excel

- Build a PowerPivot workbook using data in Hive

- Build Power View reports on top of Hive

- Instead of manually refreshing a PowerPivot workbook based on Hive on their desktop, users can use the scheduled data refresh feature of PowerPivot for SharePoint to refresh a central copy shared with others, without worrying about the time or resources it takes.

- BI professionals can build a BI Semantic Model or Reporting Services reports on Hive in SQL Server Data Tools

- Of course all of the 3rd party client applications built on the Microsoft BI stack can now access Hive data as well!

Klout is a great customer that’s leveraging the Microsoft BI stack on Big Data to provide mission-critical analysis for both internal users and its customers. In fact, Dave Mariani, the VP of Engineering at Klout, has taken some time out to describe how they use our technology. This is recommended viewing not just to see examples of possible applications but also to get a better understanding of how new options complement technology you are already familiar with. Dave also blogged about their approach here.

Best of both worlds

As we mentioned in the beginning of this blog article, one size doesn’t fit all, and it’s important to recognize the inherent strengths of options available to choose when to use what. Hadoop broadly provides:

- an inexpensive and highly scalable store for data in any shape,

- a robust execution infrastructure for data cleansing, shaping and analytical operations typically in a batch mode, and

- a growing ecosystem that provides highly skilled users many options to process data.

The Microsoft BI stack is targeted at a significantly larger user population and provides:

- functionality in tools such as Excel and SharePoint that users are already familiar with,

- interactive queries at the speed of thought,

- a business layer that allows users to understand the data, combine it with other sources, and express business logic in more accessible ways, and

- mechanisms to publish results for others to consume and build on themselves.

Successful projects may use both of these technologies in a complementary manner, like Klout does. Enabling this choice has been the primary motivator for providing Hive ODBC connectivity, as well as investing in providing a Hadoop-based distribution for Windows Server and Windows Azure.

More Information

This is an exciting field, and we’re thrilled to be a top-tier Elite sponsor of the upcoming Strata Conference between February 28th and March 1st 2012 in Santa Clara, California. If you’re attending the conference, you can find more information about the sessions here. We also look forward to meeting you at our booth to understand your needs.

Following that, on March 7th, we will be hosting an online event that will allow you to immerse yourself in the exciting New World of Data with SQL Server 2012. More details are here.

For more information on Microsoft’s Big Data offering, please visit http://www.microsoft.com/bigdata.

Windows Azure Access Control, Service Bus and Workflow

Alan Smith reported PDF and CHM versions of Windows Azure Service Bus Developer Guide Available & Azure Service Bus 2-day Course in a 3/1/2012 post:

I’ve just added PDF and CHM versions of “Windows Azure Service Bus Developer Guide”, you can get them here.

The HTML browsable version is here.

I have the first delivery of my 2-day course “SOA, Connectivity and Integration using the Windows Azure Service Bus” scheduled for 3-4 May in Stockholm. Feel free to contact me via my blog if you have any questions about the course, or would be interested in an on-site delivery. Details of the course are here.

Alan Smith announced availability of this Windows Azure Service Bus Developer Guide in a 2/29/2012 post:

I’ve just published a web-browsable version of “Windows Azure Service Bus Developer Guide”. “The Developers Guide to AppFabric” has been re-branded, and has a new title of “Windows Azure Service Bus Developer Guide”. There is not that much new in the way of content, but I have made changes to the overall structure of the guide. More content will follow, along with updated PDF and CHM versions of the guide.

Windows Azure VM Role, Virtual Network, Connect, RDP and CDN

Avkash Chauhan (@avkashchauhan) described Windows Azure CDN and Referrer Header in a 2/27/2012 post:

The Windows Azure CDN, like any other CDN, attempts to be a transparent caching layer and doesn’t care what the referring site might be. So it is correct to say that Windows Azure CDN does not have any dependency on the Referrer header. Any client solution created using the Referrer header will have no direct impact on how Windows Azure CDN works.

Trying to control CDN access based on the referrer may not be a good idea, because it is very easy to use tools like wget or curl to create an HTTP request that carries your referrer URL. Windows Azure CDN does not publicly support any mechanism for authentication or authorization of requests. If a request is received, it’s served. There is no way for Windows Azure CDN to reject requests based on the Referrer header or on any other header.
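As a small sketch of how little effort a forged referrer takes, the Python snippet below builds a request with an arbitrary `Referer` header using only the standard library (the HTTP header name is spelled with a single "r"). The `example.com` URLs and the helper name are placeholders for illustration, and the request is only constructed, not sent.

```python
from urllib.request import Request


def build_spoofed_request(asset_url, claimed_referrer):
    """Build a GET request that claims to come from an arbitrary page."""
    request = Request(asset_url)
    # The client sets this header freely, so the server cannot trust it.
    request.add_header("Referer", claimed_referrer)
    return request


request = build_spoofed_request(
    "http://cdn.example.com/images/logo.png",  # placeholder CDN asset
    "http://www.example.com/page.html",        # placeholder "allowed" page
)
```

Passing the object to `urllib.request.urlopen` would send it with the forged header, which is the same effect as the `--referer` option of wget or curl.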

Live Windows Azure Apps, APIs, Tools and Test Harnesses

Himanshu Singh posted Real World Windows Azure: Interview with Rajasekar Shanmugam, CTO at AppPoint on 3/2/2012:

As part of the Real World Windows Azure interview series, I talked to Rajasekar Shanmugam, Chief Technology Officer at AppPoint, about using Windows Azure for its cloud-based process automation and systems integration services. Here’s what he had to say.

Himanshu Kumar Singh: Tell me about AppPoint.

Rajasekar Shanmugam: AppPoint is an independent software vendor and we provide process automation and system integration services to enterprises. At the core of our solutions is a business-application infrastructure with a unique model-driven development environment to help simplify the development process. We originally developed our platform on Microsoft products and technologies, including the .NET Framework 4 and SQL Server 2008 data management software.

HKS: What led you to consider a move to the cloud?